Introduction

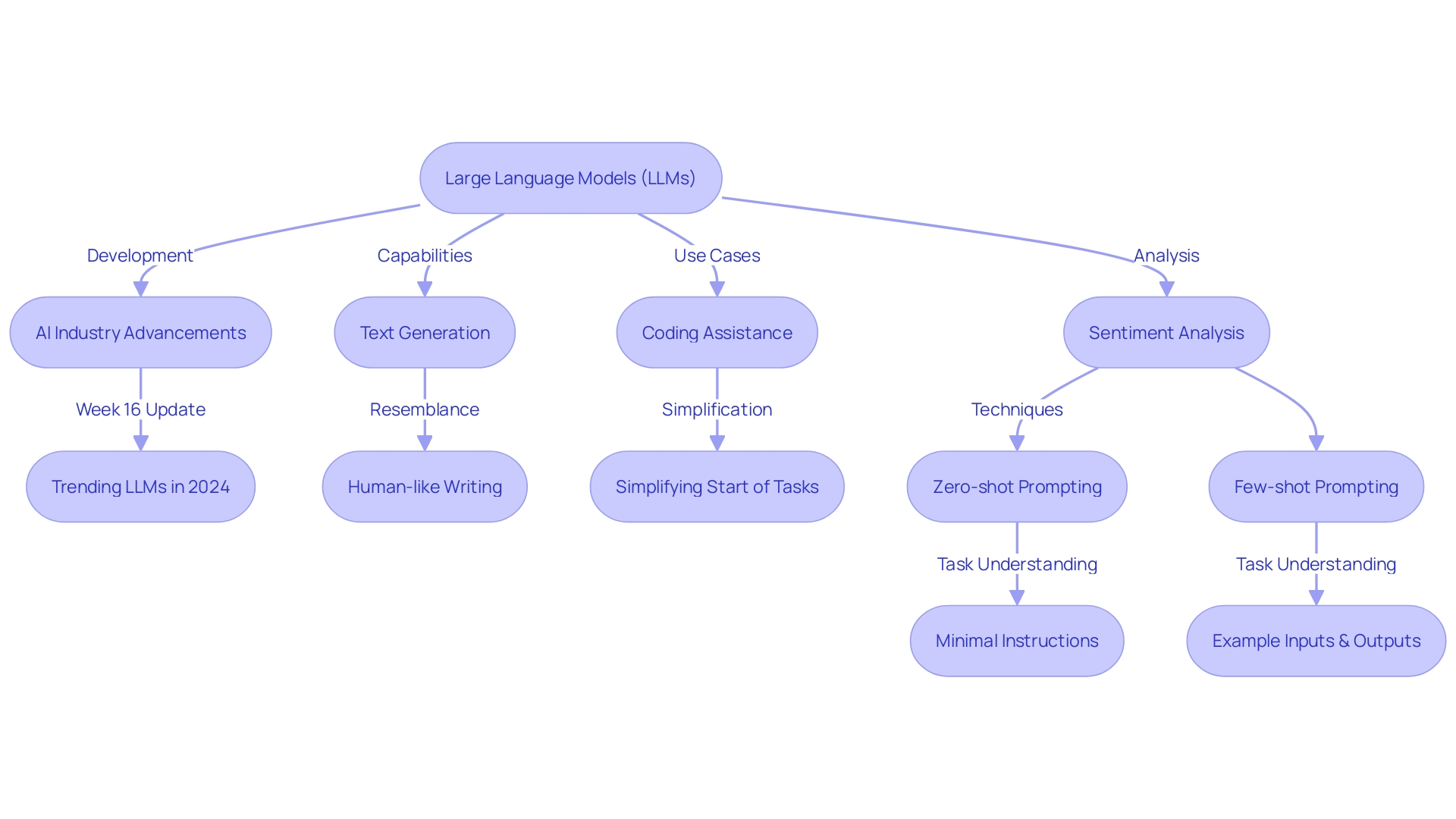

Large Language Models (LLMs) have revolutionized natural language processing and business problem-solving. These models, such as GPT-3.5 and GPT-4, possess intricate components that enable them to understand and generate human language accurately. The architecture of LLMs, built upon layers that process and produce language patterns, is a foundational element.

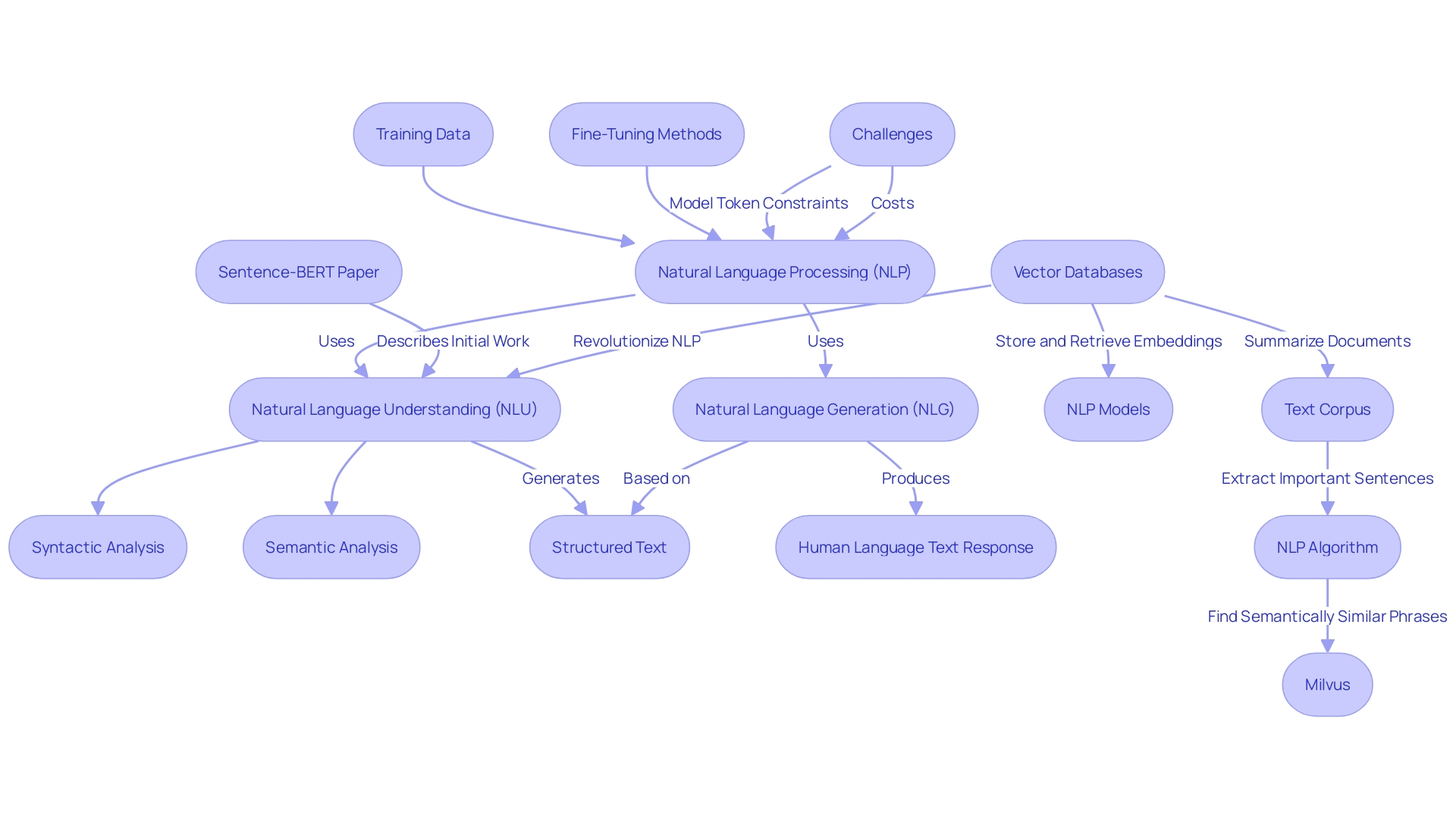

Training data, fine-tuning, and complementary techniques such as prompt engineering and retrieval-augmented generation further refine the models’ capabilities. However, challenges such as token limits (the bounded amount of text a model can process at once) and the associated costs need to be addressed. By dissecting these components, we enhance our comprehension of LLMs and pave the way for more effective and safe applications in various domains.

In this article, we explore the key components, training, applications, architecture, transformers, embedding layers, attention mechanisms, fine-tuning and prompt-tuning techniques, evaluation, and ethical considerations of LLMs.

Key Components of LLMs

Large Language Models (LLMs) like GPT-3.5 and GPT-4 are reshaping natural language processing (NLP) and business problem-solving. They are made up of several interacting components that allow them to comprehend and produce human language with impressive accuracy. The architecture is a fundamental component, built from layers that analyze and generate language patterns. Training data is another crucial aspect: large amounts of diverse text prepare the models for a wide range of questions and prompts. Fine-tuning, together with techniques such as prompt engineering and retrieval-augmented generation, further improves the models, yielding more relevant and precise responses to particular queries. These techniques come with their own challenges, including token limits and the associated costs. By analyzing these components, we not only improve our understanding but also open up opportunities for more efficient and secure applications in various fields.

Training and Fine-Tuning LLMs

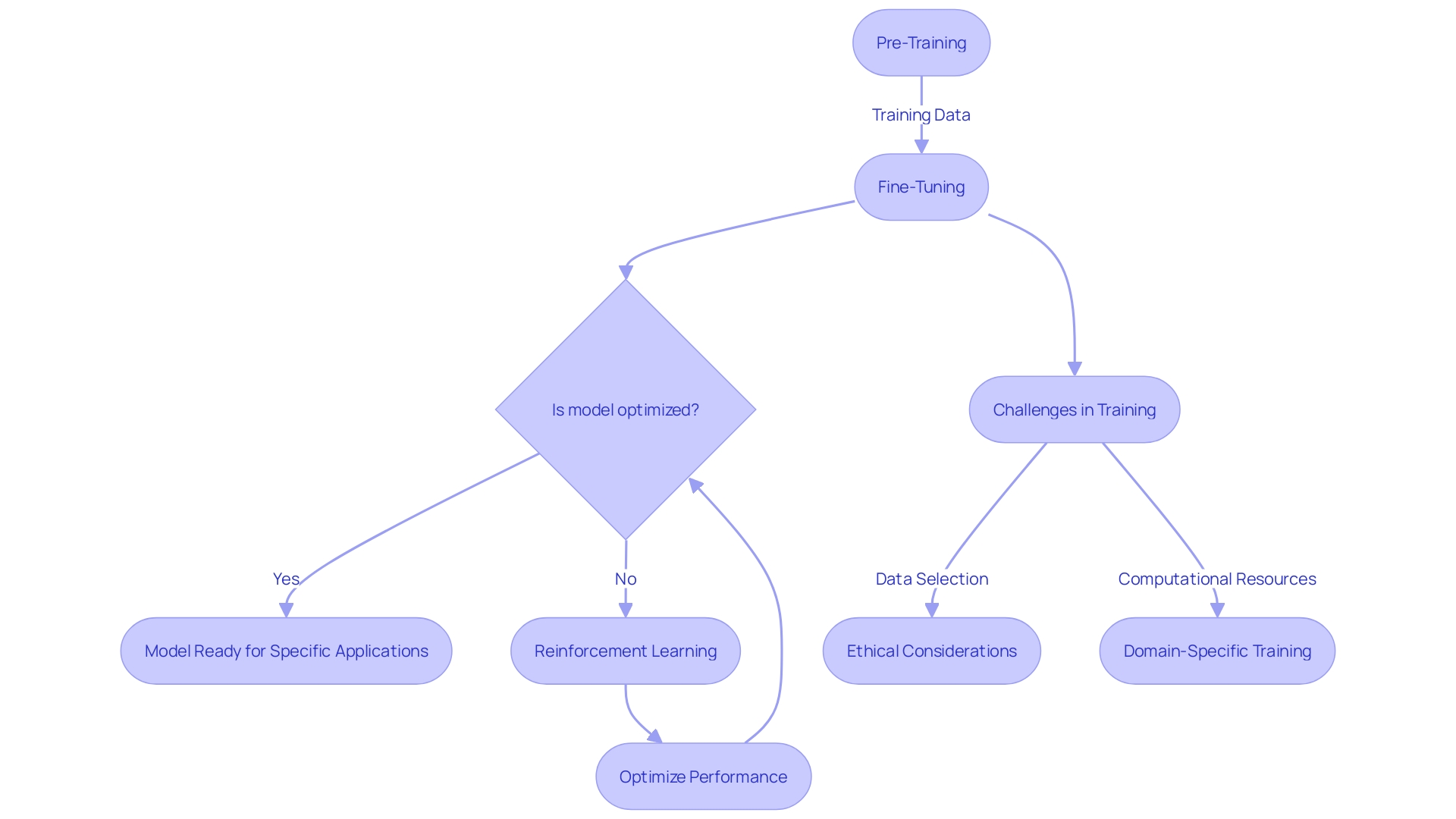

Building large language models requires a careful training process that combines methods such as pre-training, fine-tuning, and reinforcement learning. The initial phase, pre-training, exposes the model to vast amounts of text, enabling it to learn language patterns and structure. The subsequent fine-tuning phase adjusts the model’s parameters for specific tasks by training on a smaller, task-focused dataset, so that performance is optimized for the intended application.
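The two phases can be illustrated with a deliberately tiny analogy. The sketch below is not how real LLMs are trained (they update neural network weights by gradient descent); it swaps in a count-based bigram model so that the effect of "general training first, task-focused training second" is visible in a few lines. The corpora and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train(counts, text):
    """Update bigram counts in place from whitespace-tokenized text."""
    tokens = text.lower().split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Phase 1: "pre-training" on broad, general text (toy corpus, hypothetical).
general_corpus = "the contract was signed . the weather was nice . the weather was cold"
model = train(defaultdict(Counter), general_corpus)
before = predict_next(model, "the")

# Phase 2: "fine-tuning" continues training on a smaller, task-focused corpus.
legal_corpus = "the contract binds both parties . the contract is void . the contract expires"
train(model, legal_corpus)
after = predict_next(model, "the")

print("before fine-tuning:", before)
print("after fine-tuning:", after)
```

After the second phase the model's preferred continuation shifts toward the domain vocabulary, which is the intuition behind fine-tuning on a task-focused dataset.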

Training LLMs isn’t without its challenges. Training data must be selected with caution, considering the guidelines that govern the use of LLMs as well as the ethical implications of the data used. This matters because emergent abilities can make a model’s behavior unpredictable. Moreover, the complexity of these models demands significant computational resources, raising concerns about privacy and the environmental impact of building them.

The development of language models has been marked by advances like the Transformer architecture, which transformed the field by capturing both the context and the position of tokens in text. Meanwhile, case studies highlight the importance of domain-specific training, where models are tailored to comprehend and analyze lengthy, complex documents such as legal texts or regulatory frameworks.

In the dynamic landscape of AI research, continuous open-sourcing of projects and collaborative efforts are propelling advancements. Research papers such as ‘Large Language Models for Education: A Survey’ demonstrate the wide range of uses of LLMs across different sectors, from education to law. As research teams influence technology on a global scale, the incorporation of LLMs into products and services is becoming increasingly commonplace, demonstrating their practical utility and relevance in today’s digital ecosystem.

Applications of LLMs

Large Language Models are changing the way we engage with technology, providing transformative solutions in various industries. These AI systems excel in a variety of tasks, including natural language understanding (NLU), natural language generation (NLG), and dynamic interactions through chatbots and machine translation services. LLMs can process and analyze large volumes of data, allowing them to comprehend natural language with impressive accuracy. This is driving advancements in areas such as smart education, where LLMs are becoming an integral component, and it is also shaping tools for cyber defense and for public health classification and extraction tasks.

In education, for instance, researchers such as Hanyi Xu are investigating the educational uses of LLMs, which are proving to be effective supplementary tools that integrate technologies such as deep learning and reinforcement learning. The smart use of LLMs in education is becoming a priority worldwide, as they can personalize learning, offer automated assessment, and power intelligent tutoring systems.

In the field of cybersecurity, authors such as Mohammed Hassanin have provided comprehensive overviews on leveraging language models for cyber defenses, highlighting opportunities where these approaches can identify and counteract threats, improving the security landscape significantly.

Moreover, the use of LLMs in public health is demonstrated by the research of Joshua Harris and his team, who assessed how well these models perform classification and extraction tasks on public health data, showcasing their capacity to help healthcare practitioners manage information.

These applications are just the tip of the iceberg. According to industry experts, LLMs are valuable as sounding boards: they help us understand intricate subjects, support brainstorming, and help express content effectively. The same experts remind us to begin with clear business objectives and metrics, and to select an LLM tool that aligns with business needs while taking into account computational resources and cost-effectiveness.

Adopting a culture of ongoing experimentation and keeping up with the latest advancements is essential for fully leveraging the potential of language models. As research into the inner workings of AI models like Claude Sonnet shows, our understanding of these systems is deepening, leading us towards more reliable and safe AI applications. With these insights, it’s evident that language models are not merely academic curiosities but practical tools ready to address real-world challenges.

Understanding the Architecture of LLMs

To explore the complexities of Large Language Models (LLMs), one must recognize the crucial role their architecture plays in processing and generating text. At the heart of these models lies the transformer, a neural network architecture that has revolutionized how machines understand text. Transformers are adept at handling sequences of data, making them well suited to language modeling. With multiple layers of interconnected neurons, these models are trained to recognize patterns in text, enabling them to predict subsequent words and generate coherent passages.

The effectiveness of LLMs depends on their capacity to assess responses within context. Consider the example of Claude Sonnet, a state-of-the-art LLM that has been dissected to reveal how millions of concepts are represented within its neural network. This advance in AI interpretability opens the door to more dependable and trustworthy models. Just as Claude Sonnet’s inner workings have been examined, other LLMs are evaluated with scoring systems that rate responses for lack of bias, logical soundness, and overall quality.

One recent advancement in LLM architecture is Infini-attention, a method that extends a model’s ‘context window’, the span of data it can consider at any given time. This innovation is designed to let models maintain performance even on very long text sequences, so that earlier parts of a conversation are not lost or disregarded due to limits on processing capacity.

Furthermore, LLMs are not just about generating text; they also comprehend and analyze the vast quantities of information that human communication contains. For instance, Computational Mechanics, a theory developed to study prediction limits in complex systems, reveals that optimal prediction requires intricate structures within the model, structures that are often more complex than the data-generating process itself.

In short, the architecture of an LLM is a carefully coordinated stack of processing layers, each adding to the model’s capacity to understand, predict, and produce language with a growing degree of sophistication. As the field develops, it is essential to stay updated on these advancements to realize the full potential of language models across a wide range of applications.

The Role of Transformers in LLMs

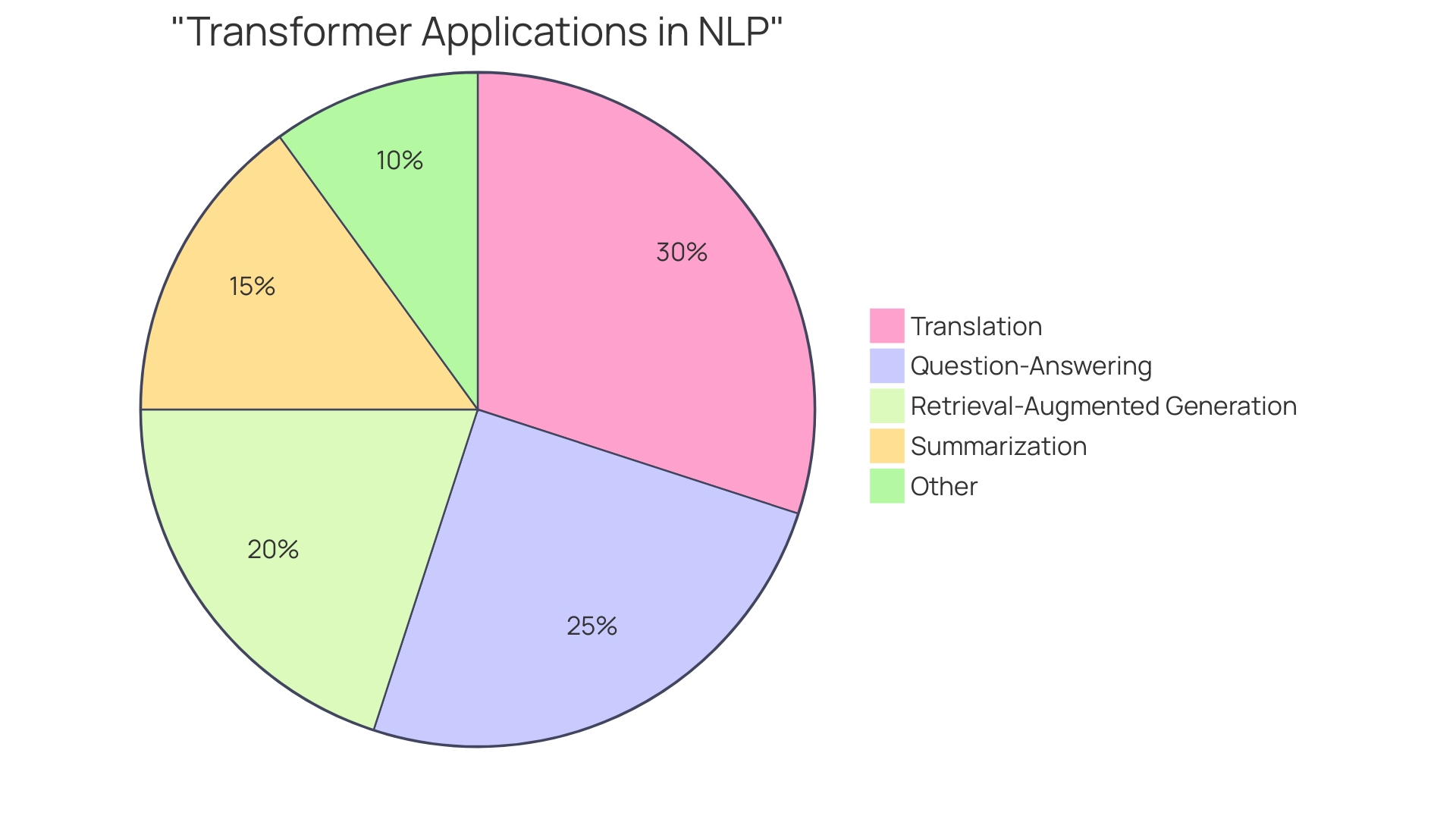

Transformers play a crucial role in enhancing the capabilities of natural language processing, acting as the foundation for Large Language Models. These models use transformers to process and learn from extensive datasets, interpreting and generating text that resembles human writing. Notably, transformers excel at understanding context, which is pivotal for tasks such as translation, summarization, and question-answering.

Recent advancements, like Infini-attention from Google researchers, have tackled constraints on the model’s context window (the range of text that can be taken into account at a given moment) by enabling LLMs to handle very long, in principle unbounded, inputs with bounded memory and compute requirements. The goal is for even extended interactions, such as long conversations with a chatbot like ChatGPT, to remain coherent and contextually aware throughout.

In Retrieval-Augmented Generation (RAG), transformers are further enhanced by integrating external knowledge bases, which helps responses be both factual and nuanced. This approach grounds the model’s outputs in retrieved information and supplies domain-specific insights, showcasing the transformers’ adaptability.
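The retrieve-then-prompt flow behind RAG can be sketched as follows. This is a minimal illustration under stated assumptions: production systems typically rank documents by embedding similarity, whereas this sketch swaps in simple word overlap, and the knowledge base, function names, and prompt wording are all hypothetical. No model is called; the output is only the prompt that would be sent to one.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    cleaned = "".join(c if c.isalnum() else " " for c in text.lower())
    return set(cleaned.split())

def retrieve(query, documents, k=1):
    """Rank documents by word overlap with the query and return the top k."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Place the retrieved passages ahead of the question to ground the answer."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical external knowledge base.
knowledge_base = [
    "The refund window for online orders is 30 days.",
    "Support is available by email on weekdays.",
    "Shipping to Europe takes five business days.",
]

query = "How long is the refund window?"
prompt = build_prompt(query, knowledge_base)
print(prompt)
```

Because the retrieved passage travels inside the prompt, the model can answer from the supplied facts rather than from whatever it memorized during training.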

The scalability of transformers is underpinned by their architecture, which assigns contextual meaning to individual tokens within large bodies of text. As noted in recent literature, transformers are the basis of pre-trained language models, which range from models with 110 million parameters, like Google’s BERT-base, to models with a reported 340 billion parameters, such as Google’s PaLM 2.

Despite their transformative impact, LLMs and transformers are not without challenges. There are concerns about the ethical consequences of AI, specifically the use of creative works for training without permission. There is also the risk of perpetuating biases present in the training data, potentially leading to discriminatory language or content. Researchers have also highlighted information hazards, malicious uses, and economic harms connected to LLMs.

Understanding the dual nature of transformers, their impressive abilities and the obstacles they pose, is essential for the continued advancement and conscientious use of language models. As the field evolves, so does the dialogue around the societal implications of these technologies, driving the need for continued research and thoughtful implementation.

Embedding Layers and Attention Mechanisms

Embedding layers and attention mechanisms are not just jargon in the realm of large language models (LLMs); they are the foundation that gives these models their remarkable ability to comprehend and produce language. Embeddings serve as a translation mechanism, converting words (more precisely, tokens) into numerical vectors that capture their meaning in a form machines can process. Imagine a vast library where each book represents a word, and embeddings are librarians that organize these books so efficiently that relationships and context are preserved across the shelves of this high-dimensional space.
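To make the library analogy concrete, the sketch below compares word vectors with cosine similarity, a common way to measure how close two embeddings are. The three-dimensional vectors are made up for illustration; real embedding layers learn vectors with hundreds or thousands of dimensions during training.

```python
import math

# Hand-picked toy vectors (hypothetical values, not learned embeddings).
embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

related = cosine_similarity(embeddings["king"], embeddings["queen"])
unrelated = cosine_similarity(embeddings["king"], embeddings["apple"])

print(f"king vs queen: {related:.3f}")
print(f"king vs apple: {unrelated:.3f}")
```

Words that sit on nearby "shelves" score close to 1, while unrelated words score much lower, which is how relationships are preserved in the vector space.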

At the same time, attention mechanisms function as discerning readers who know precisely which books to pull from the shelves in order to comprehend a sentence or create a response. They weigh the importance of each word in a sentence, enabling the model to concentrate on the most informative parts when making predictions or producing text. This combination of embedding layers and attention mechanisms forms the foundation of models such as Gemini, which pushes boundaries with improved processing and enhanced linguistic comprehension, and Meta Llama 3, known for its performance in a wide range of tasks from healthcare classification to multilingual translation.
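The weighting described above can be sketched with scaled dot-product attention, the formulation introduced with the Transformer. This is a single-query, pure-Python illustration with made-up numbers; real models use learned projection matrices, many attention heads, and batched tensor operations.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns the attention weights and the weighted sum of the values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy inputs (hypothetical): three tokens, two dimensions each.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print("weights:", [round(w, 3) for w in weights])
print("output:", [round(x, 3) for x in output])
```

Tokens whose keys align with the query receive larger weights, so their values contribute more to the output; this is the "pulling the right books" step of the analogy.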

The advancement of embedding layers and attention mechanisms is continuously documented in academic papers, such as those posted on arXiv, where researchers explore and optimize these components for better language understanding. For example, the RDR method, detailed in one of these papers, reflects ongoing work on mechanisms that aim to recap, deliberate, and respond more effectively to complex linguistic data.

These technological advancements are not merely theoretical exercises but have real-world consequences in different domains, such as public health, where the LLMs evaluated in Joshua Harris’s research aid in classification and extraction tasks. The adaptability and efficiency of language models are exactly why they are at the forefront of AI advancements, underpinning applications that range from the everyday, such as search and recommendation systems, to the extraordinary, such as aiding legal professionals, as discussed in the paper ‘Better Call GPT’.

Fine-Tuning and Prompt-Tuning Techniques

Customizing Large Language Models (LLMs) to perform well in specific domains and tasks involves techniques like fine-tuning and prompt-tuning. Fine-tuning adjusts the model’s parameters using domain-specific data, which improves its proficiency in generating high-quality, relevant content. Prompt-tuning, used here in the broad sense of prompt engineering, focuses on crafting inputs that guide the model towards text that matches a desired outcome or style, essentially instructing the model on how to approach a task.

A noteworthy example of the power of fine-tuning is the paper titled ‘Bitune: Bidirectional Instruction-Tuning’, available on arXiv. This paper illustrates the meticulous process of refining LLMs for enhanced performance.

Prompt-tuning has also had its share of the spotlight, with approaches like ‘chain-of-thought’ (CoT) prompting. This technique, which may begin with a simple addition such as ‘Let’s take this step by step,’ can significantly improve the model’s reasoning on multi-step problems. It’s a testament to the subtle yet potent art of prompt engineering, which is rapidly becoming an indispensable skill for those working closely with LLMs.
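As a rough illustration, the difference between a plain prompt and a chain-of-thought prompt can be as small as one appended sentence. The helper below is hypothetical and only builds the prompt string; it does not call any model, and the question is an invented example.

```python
def build_prompt(question, chain_of_thought=False):
    """Format a question, optionally adding a step-by-step reasoning cue."""
    prompt = f"Q: {question}\nA:"
    if chain_of_thought:
        # The cue nudges the model to write out intermediate reasoning.
        prompt += " Let's take this step by step."
    return prompt

question = "A train leaves at 9:40 and arrives at 11:15. How long is the trip?"

plain_prompt = build_prompt(question)
cot_prompt = build_prompt(question, chain_of_thought=True)

print(plain_prompt)
print()
print(cot_prompt)
```

In practice, both variants would be sent to the same model and the answers compared on a set of test questions to see whether the cue helps for the task at hand.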

Recent news from the field includes insights from experts like Hanna Hajishirzi, who shared the latest advancements in language models during a luncheon keynote. Her remarks emphasized the fluency of these models and their expanding role in diverse applications, highlighting both their societal impact and economic implications.

As we keep investigating the boundaries of language models, it’s evident that combining fine-tuning and prompt-tuning techniques is essential for unlocking the full capability of these systems in specialized tasks. Through ongoing research and experimentation, the future of LLMs is poised to be as dynamic and exciting as the systems themselves.

Evaluating LLMs: NLU and NLG Tasks

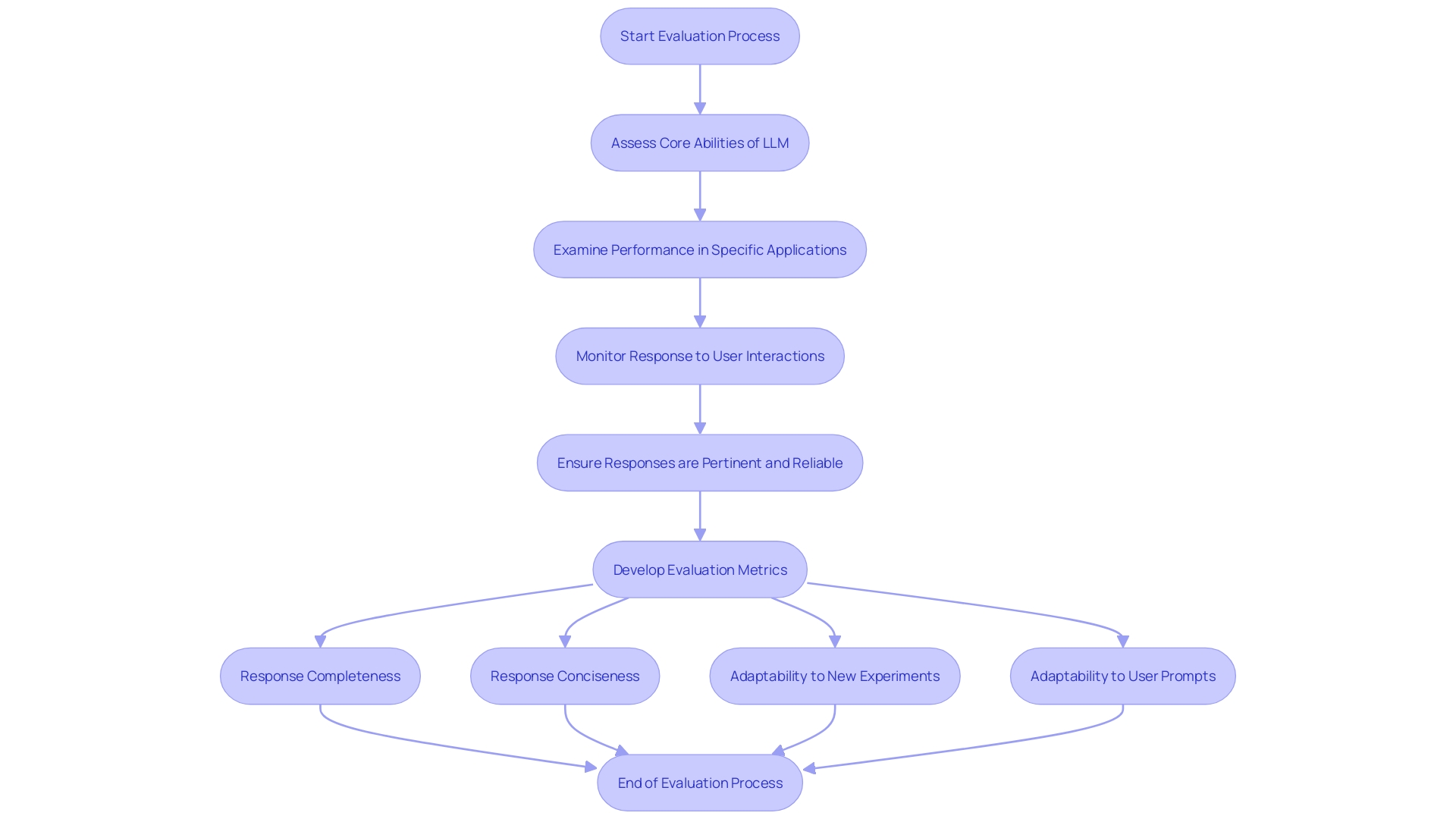

To leverage the full capabilities of Large Language Models (LLMs), a thorough evaluation process is essential. This involves not only assessing the core abilities of the model itself but also examining its performance within specific applications and in response to user interactions. For instance, platforms such as Yoodli employ language models for speech analysis and feedback generation, which underlines the importance of high-quality, relevant, and reliable model responses for a good user experience. Similarly, monitoring AI systems, such as those applied in healthcare, is crucial for ensuring reliability, compliance, and patient safety. The evaluation criteria must include metrics that cover response completeness and conciseness, and the system’s ability to adapt to new experiments and user prompts.

A robust set of metrics is essential for scoring an LLM’s outputs; the scores act as a barometer for its effectiveness in understanding and generating natural language. These metrics should flag anomalies and enable the comparison of output quality across various LLM configurations. As the technology evolves and user expectations increase, it becomes even more critical to have these evaluation tools in place. For example, recent research has emphasized the persuasive ability of LLMs, particularly when they are given personalized information, a factor that can greatly affect their impact. Therefore, ongoing monitoring and structured assessment are crucial for the responsible deployment of language models in any field, ensuring they stay effective, secure, and in line with user requirements.
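One way to make such metrics concrete is sketched below. The two scoring functions are simplified stand-ins invented for illustration (keyword coverage for completeness, a word budget for conciseness); real evaluation suites typically combine human review, reference-based metrics, or model-based graders. The example responses and configuration names are hypothetical.

```python
def completeness(response, required_points):
    """Fraction of required key phrases that appear in the response."""
    text = response.lower()
    hits = sum(1 for point in required_points if point.lower() in text)
    return hits / len(required_points)

def conciseness(response, max_words):
    """1.0 within the word budget, decreasing as the response overruns it."""
    word_count = len(response.split())
    return 1.0 if word_count <= max_words else max_words / word_count

def score(response, required_points, max_words):
    """Bundle both metrics so different configurations can be compared."""
    return {
        "completeness": completeness(response, required_points),
        "conciseness": conciseness(response, max_words),
    }

# Hypothetical outputs from two LLM configurations for the same user prompt.
required = ["password", "login page", "link"]
outputs = {
    "config_a": "Reset your password from the login page using the emailed link.",
    "config_b": "You can reset your password.",
}

results = {name: score(text, required, max_words=15) for name, text in outputs.items()}
for name, metrics in results.items():
    print(name, metrics)
```

Scoring every configuration with the same functions is what makes the outputs comparable, and a sudden drop in either number is a simple signal that a response deserves a closer look.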

Challenges and Ethical Considerations in LLMs

Powerful language models have made a significant impact in the digital realm with their capacity to comprehend and produce text that resembles human language, influencing various industries ranging from online retail to customer support. However, with great power comes great responsibility, and the implementation of language models is not without its challenges and ethical considerations. One of the key issues is the potential for bias within these models, which can be a reflection of biased training data. This bias can have far-reaching consequences, influencing decisions and perpetuating stereotypes in sensitive applications.

As emphasized in a survey on the fairness of LLMs in e-commerce, bias is not just a theoretical concern but a practical challenge that needs to be addressed. The study highlights the significance of transparency throughout the entire LLM pipeline, from initial data collection to final use. To reduce the risks associated with bias in language models, recent studies suggest various strategies, such as knowledge editing, which adjusts the knowledge stored in a language model to reduce biased outputs.

Moreover, the potential for malicious use of LLMs cannot be ignored. The flexibility of these models means they could be used to create disinformation or even cyber threats. Addressing these challenges requires a comprehensive approach, encompassing strong data analysis techniques, diligent monitoring of algorithms, and ethical principles for use.

Insights from practical case studies, such as those presented by LaPlume on large-scale web-based data analysis, provide valuable lessons for handling large language models. These include data cleaning, analysis, and visualization techniques that are essential for ensuring the integrity of the models. Moreover, research and innovations showcased at conferences such as EMNLP (Empirical Methods in Natural Language Processing) demonstrate a community committed to responsibly advancing language models.

With the right combination of research, ethics, and technical safeguards, the challenges posed by LLMs can be effectively managed, ensuring these powerful tools are used for the betterment of society while minimizing harm.

Conclusion

In conclusion, Large Language Models (LLMs) have revolutionized natural language processing and business problem-solving. These models possess intricate components that enable them to understand and generate human language accurately. The architecture of LLMs, built upon layers that process and produce language patterns, is a foundational element.

Training data, fine-tuning, and complementary techniques such as prompt engineering and retrieval-augmented generation further refine the models’ capabilities.

By dissecting these components, we enhance our comprehension of LLMs and pave the way for more effective and safe applications. LLMs excel in tasks such as natural language understanding, generation, and facilitating dynamic interactions. Their wide range of applications includes areas like smart education, cybersecurity, and public health.

Embedding layers and attention mechanisms form the foundation of LLMs, allowing them to understand and generate language effectively. The fine-tuning and prompt-tuning techniques further customize LLMs for specific domains and tasks. Evaluating LLMs is crucial to ensure high-quality and relevant outputs, with metrics covering response completeness, conciseness, and adaptability.

However, challenges and ethical considerations, such as bias and malicious use, must be addressed. Transparency, bias mitigation strategies, and robust safeguards are necessary to responsibly deploy LLMs. Continuous experimentation and staying up-to-date with the latest developments are essential for leveraging the potential of LLMs effectively and responsibly.

In summary, LLMs are powerful tools that have practical applications across various domains. Understanding their components, training techniques, architecture, and evaluation processes is key to harnessing their full potential. By addressing challenges and ethical considerations, we can ensure the responsible deployment of LLMs for the benefit of society.