Introduction

In the rapidly evolving landscape of artificial intelligence, LLM-powered autonomous agents are emerging as a groundbreaking innovation. Leveraging large language models (LLMs), these agents can operate independently and execute complex tasks with remarkable efficiency. By understanding and generating human-like text, they seamlessly interact with users and integrate various specialized tools to address intricate queries.

This modular approach not only enhances their versatility but also optimizes their ability to automate workflows and provide insightful solutions. From autonomous driving software to sophisticated enterprise applications, the potential applications of these agents are vast and transformative. This article delves into the key components, integration techniques, and real-world applications of LLM-powered agents, offering a comprehensive guide to understanding their impact and future potential.

What Are LLM-Powered Autonomous Agents?

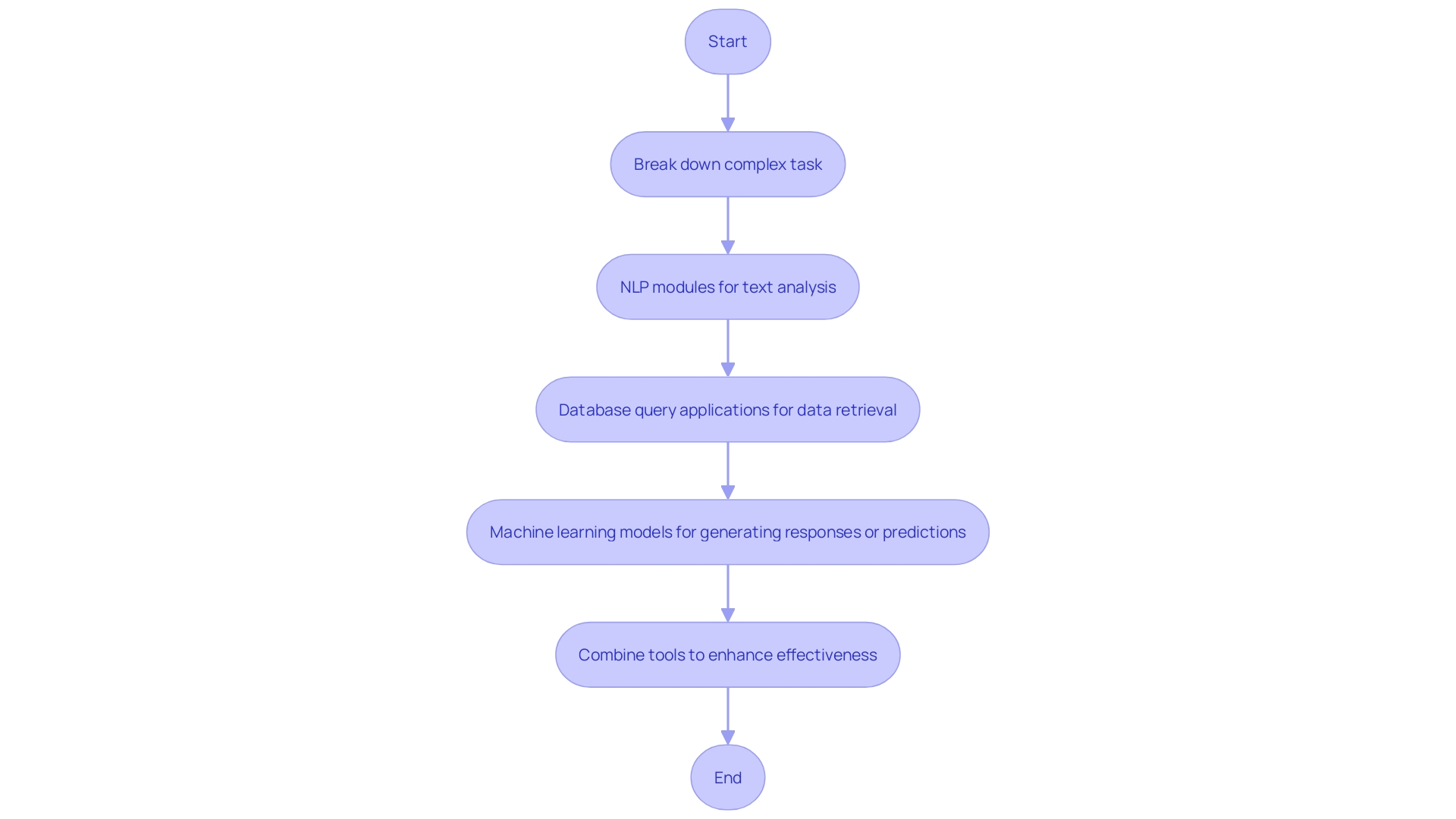

LLM-powered autonomous agents represent a significant advancement in AI technology, using large language models (LLMs) to operate independently and carry out intricate tasks. They can understand and generate human-like text, which allows them to interact fluidly with users and other systems. A key characteristic of these agents is their ability to combine various tools, each designed for a specific purpose, into their workflows. For instance, an agent can use an NLP module to analyze text and extract relevant information, a database query tool to retrieve specific data, and a machine learning model to generate text or make predictions from input data.

These tools are the building blocks that let agents produce thorough responses to user queries. By breaking complex tasks into manageable steps and selecting the appropriate tool for each step, agents can address and resolve problems efficiently. This modular approach improves not only the agents’ versatility but also their effectiveness at automating workflows, processing natural language queries, and delivering useful insights.

A notable application of LLMs in autonomous systems is seen in companies like Ghost, which uses multi-modal large language models (MLLMs) to develop autonomous driving software. These models employ human-like reasoning to navigate intricate driving situations, demonstrating the potential of LLM-powered systems in various fields.

Key Components of LLM-Powered Agents

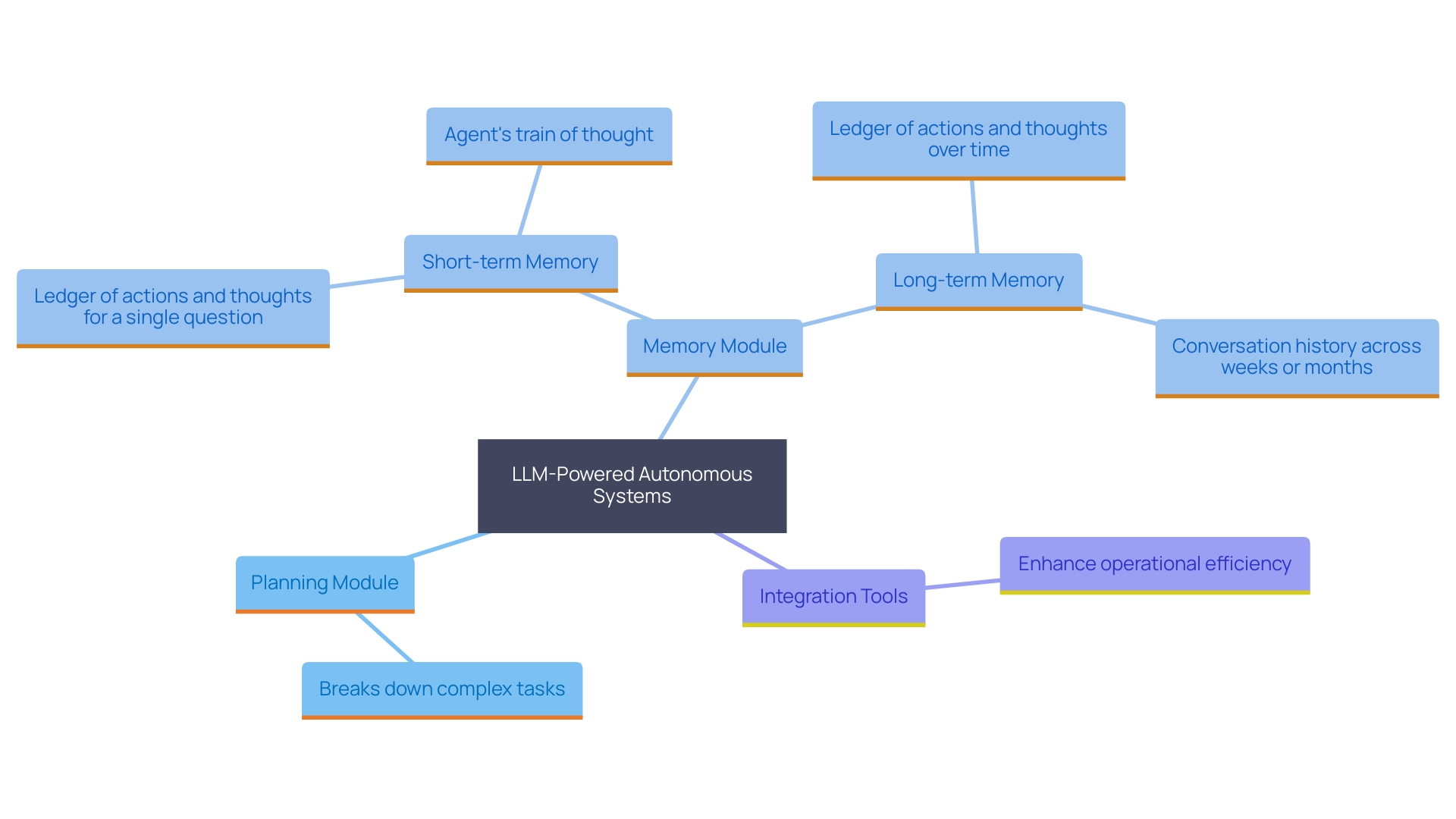

The functionality of LLM-powered autonomous agents hinges on several key components working together. Central to these agents is the planning module, which breaks complex questions down into manageable tasks so that the agent can address each part systematically. Complementing it is the memory module, divided into short-term and long-term memory. Short-term memory captures the agent’s immediate actions and thoughts in response to a user’s query, essentially forming a ‘train of thought.’ Long-term memory, meanwhile, records interactions and events over extended periods, building a history the agent can draw on for more informed responses.
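To make the two memory tiers concrete, here is a minimal Python sketch. The `AgentMemory` class and its methods are hypothetical names chosen for illustration. The keyword-based `recall` is a deliberate simplification; real agents more often retrieve long-term memories by embedding similarity.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical two-tier agent memory (illustrative only)."""

    # Short-term: the "train of thought" for the query currently being handled.
    short_term: list[str] = field(default_factory=list)
    # Long-term: a persistent record of completed interactions.
    long_term: list[dict] = field(default_factory=list)

    def think(self, thought: str) -> None:
        """Record an intermediate thought or action for the current query."""
        self.short_term.append(thought)

    def finish_query(self, query: str, answer: str) -> None:
        """Copy the finished episode into long-term memory, then clear
        the scratchpad so the next query starts fresh."""
        self.long_term.append(
            {"query": query, "answer": answer, "steps": list(self.short_term)}
        )
        self.short_term.clear()

    def recall(self, keyword: str) -> list[dict]:
        """Naive keyword search over past interactions."""
        return [e for e in self.long_term if keyword.lower() in e["query"].lower()]


memory = AgentMemory()
memory.think("User asks about last quarter's sales; need the sales table.")
memory.think("Query the database, then summarize the result.")
memory.finish_query("Summarize last quarter's sales", "Summary goes here.")
print(memory.recall("sales"))
```

Keeping the scratchpad separate from the long-term log means each new query starts with a clean train of thought, while earlier episodes remain available for lookup.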

Tool integration further extends what these agents can do and lets them operate in dynamic environments. The paper ‘On the Prospects of Incorporating Large Language Models in Automated Planning and Scheduling’ underscores the growing importance of LLMs in planning and scheduling, highlighting their potential when combined with traditional symbolic planners. This neuro-symbolic approach pairs the generative capabilities of LLMs with the precision of classical planning techniques, promising more efficient and scalable autonomous systems.
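One common way to realize this pairing is to let the LLM propose a plan and have a symbolic checker verify it, feeding any error back to the model. The sketch below assumes simple STRIPS-style actions (preconditions, add effects, delete effects). `propose` stands in for an LLM call, and every name here is hypothetical. Real systems typically express the domain in a planning language such as PDDL rather than Python objects.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Action:
    """A STRIPS-style action: it may run only if its preconditions hold."""
    name: str
    preconditions: frozenset
    add_effects: frozenset
    del_effects: frozenset = frozenset()


def validate_plan(state: set, plan: list, goal: set) -> tuple:
    """Symbolic check: simulate the plan and report the first failure."""
    current = set(state)
    for i, action in enumerate(plan):
        missing = action.preconditions - current
        if missing:
            return False, f"step {i} ({action.name}) is missing {sorted(missing)}"
        current = (current - action.del_effects) | action.add_effects
    if not goal <= current:
        return False, f"goal not reached; missing {sorted(goal - current)}"
    return True, "ok"


def plan_with_verification(propose: Callable[[str], list], state: set, goal: set,
                           max_tries: int = 3) -> Optional[list]:
    """The LLM proposes, the checker verifies, and errors are fed back as hints."""
    feedback = ""
    for _ in range(max_tries):
        plan = propose(feedback)  # in practice: prompt an LLM with the feedback
        ok, feedback = validate_plan(state, plan, goal)
        if ok:
            return plan
    return None


# Toy domain: move a box from the floor to a shelf.
pick_up = Action("pick_up", frozenset({"hand_empty", "box_on_floor"}),
                 frozenset({"holding_box"}), frozenset({"hand_empty", "box_on_floor"}))
place = Action("place_on_shelf", frozenset({"holding_box"}),
               frozenset({"box_on_shelf", "hand_empty"}), frozenset({"holding_box"}))

# Stub "LLM": the first answer has the steps in the wrong order, the second is correct.
answers = iter([[place, pick_up], [pick_up, place]])
plan = plan_with_verification(lambda feedback: next(answers),
                              {"hand_empty", "box_on_floor"}, {"box_on_shelf"})
print([a.name for a in plan])  # ['pick_up', 'place_on_shelf']
```

The generative model handles the open-ended part (suggesting a plan), while the checker guarantees that any accepted plan is actually executable in the modeled domain.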

Planning Module

The planning module plays a central role in managing activities and supporting informed decisions. It lets agents break intricate assignments into smaller, more manageable stages and formulate effective strategies for carrying them out. Essential techniques within this module include:

- Task Decomposition Techniques: Large, complex objectives are broken down into smaller, actionable tasks, each small enough to be handled in a single step and to produce a well-defined outcome. According to the paper ‘ADaPT: As-Needed Decomposition and Planning with Language Models’, task decomposition is crucial for letting AI systems manage and complete tasks efficiently, improving both productivity and accuracy. ADaPT in particular decomposes a task only when the model fails to complete it directly.

- Reflection and Self-Improvement Techniques: These techniques enable agents to evaluate their performance continuously and adapt their strategies for improved outcomes. The Reflection Pattern article highlights how iterative self-assessment can significantly enhance the quality of AI outputs. The process involves the AI generating content, critiquing it, and refining it in cycles until a satisfactory level of quality is achieved.
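Both techniques can be sketched as small control loops around model calls. In the sketch below, `solve_as_needed` tries a task directly and decomposes it only on failure, which is the as-needed idea behind ADaPT. This simplified version assumes every subtask must succeed, whereas the paper also considers plans where any one alternative suffices. `reflect` implements a generate, critique, refine cycle. The executor, decomposer, critic, and reviser are hypothetical stand-ins for LLM prompts.

```python
from typing import Callable, Optional

# Hypothetical stand-ins for LLM calls; each would be a prompt in a real agent.
Execute = Callable[[str], Optional[str]]  # a result, or None if the attempt fails
Decompose = Callable[[str], list]         # splits a task into subtasks


def solve_as_needed(task: str, execute: Execute, decompose: Decompose,
                    depth: int = 0, max_depth: int = 3) -> list:
    """Try the task directly; decompose it only if direct execution fails."""
    result = execute(task)
    if result is not None:
        return [result]
    if depth >= max_depth:
        raise RuntimeError(f"could not complete task: {task!r}")
    results = []
    for subtask in decompose(task):  # assumes every subtask must succeed
        results.extend(solve_as_needed(subtask, execute, decompose, depth + 1, max_depth))
    return results


def reflect(draft: str, critique: Callable[[str], Optional[str]],
            revise: Callable[[str, str], str], max_rounds: int = 3) -> str:
    """Generate -> critique -> refine, stopping once the critic is satisfied."""
    for _ in range(max_rounds):
        feedback = critique(draft)
        if feedback is None:  # no complaints left
            break
        draft = revise(draft, feedback)
    return draft


def toy_execute(task: str) -> Optional[str]:
    # Pretend the model can only handle tasks without " and " in them.
    return None if " and " in task else f"done: {task}"


def toy_decompose(task: str) -> list:
    return task.split(" and ")


def toy_critique(draft: str) -> Optional[str]:
    return None if "Summary:" in draft else "add a summary line"


def toy_revise(draft: str, feedback: str) -> str:
    return draft + "\nSummary: trip booked."


print(solve_as_needed("book a flight and reserve a hotel", toy_execute, toy_decompose))
# ['done: book a flight', 'done: reserve a hotel']
print(reflect("Itinerary draft", toy_critique, toy_revise))
```

The `max_depth` and `max_rounds` limits matter in practice. Without them, a model that keeps failing or keeps finding fault would loop indefinitely.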

In practical applications such as those developed by the Korea Institute of Machinery and Materials (KIMM), LLM-based AI is used to automate sequences of activities in manufacturing processes. The technology lets robots understand user commands and autonomously generate and execute the necessary actions, demonstrating the real-world impact of effective task planning and decomposition.

Memory Module

The memory module is crucial for retaining and retrieving information, enabling context-aware interactions. Key aspects include:

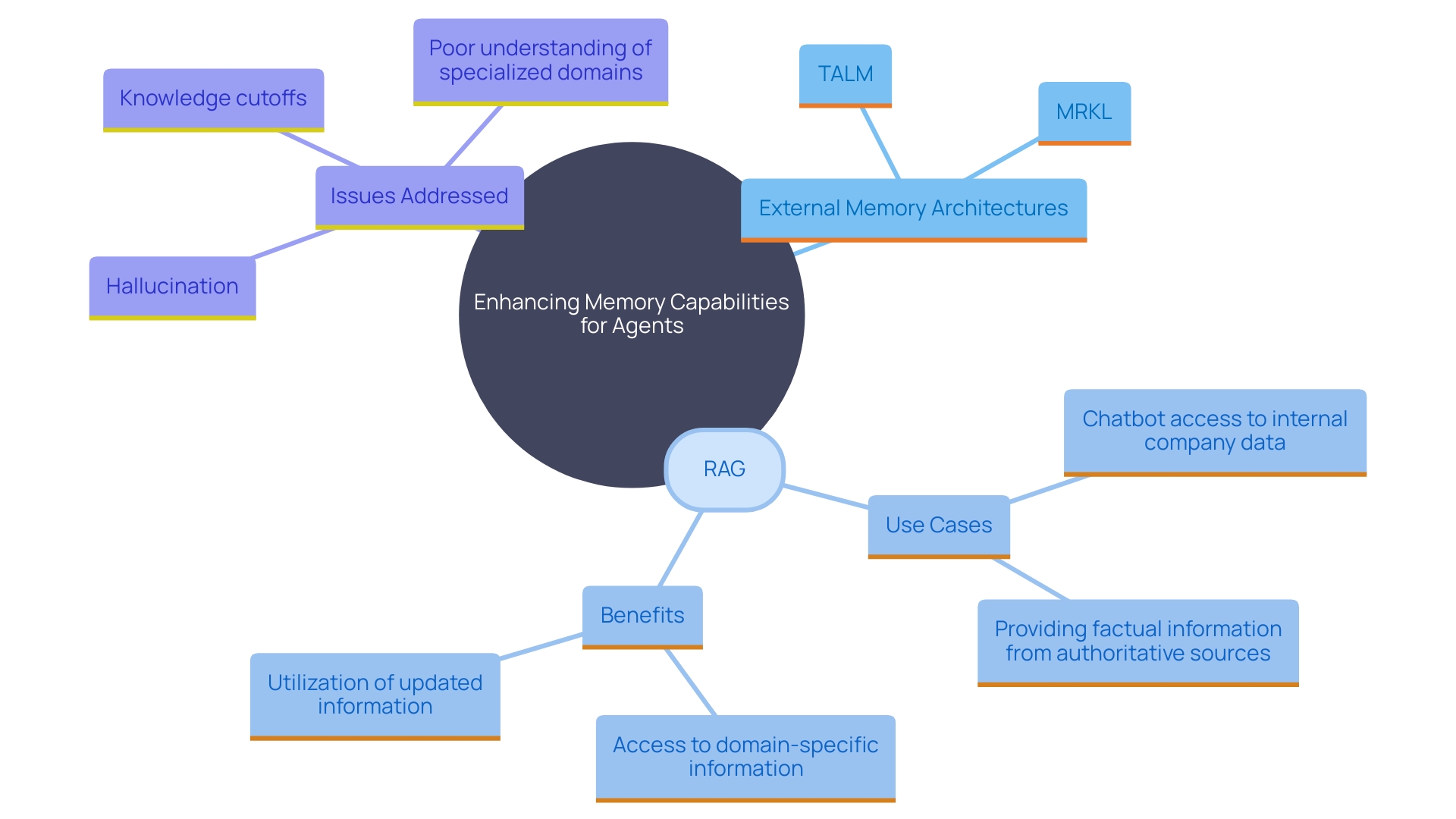

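- External Memory and Tool-Augmented Architectures (e.g., MRKL, TALM): These systems let agents store and recall relevant information outside the model itself. The effect is similar to an analyst who remembers which tables in a large data warehouse hold the data they need and goes straight to them, instead of searching the whole warehouse again. This avoids a repetitive, brute-force search every time the data needs updating. Strictly speaking, MRKL and TALM route queries to external modules and tools rather than acting as memory stores, but they rest on the same idea: knowledge the agent needs can live outside the model and be fetched on demand.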

- Retrieval-Augmented Generation (RAG): This method combines retrieval of stored information, such as documents or records of past interactions, with the model’s generative capabilities, producing more accurate and better-grounded responses. Think of RAG as a collaboration between two experts: one with broad general knowledge (the generator) and one who fetches the most relevant documents (the retriever). Using similarity search, the retriever scans a large collection and selects the most pertinent passages, which the generator then uses to craft a more informed response.
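The retriever half of RAG can be sketched with nothing but the standard library. This version assumes a toy bag-of-words "embedding" and cosine similarity. Real systems use a learned embedding model and a vector index, and they pass the assembled prompt to an LLM, which is not shown. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; real RAG systems use a learned embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """The retriever: rank stored documents by similarity to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, documents: list, k: int = 2) -> str:
    """Assemble the context that the generator (an LLM, not shown) would receive."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within five business days.",
    "The office is closed on public holidays.",
    "Refund requests must include an order number.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

Note that the third document is not retrieved even though it is relevant, because "refund" and "refunds" are different tokens to this toy embedding. Learned embeddings largely avoid this kind of miss, which is one reason they are preferred in practice.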

Tool Use and Integration

Incorporating advanced tools significantly expands the abilities of Large Language Model (LLM) systems. Key techniques and integrations include:

-

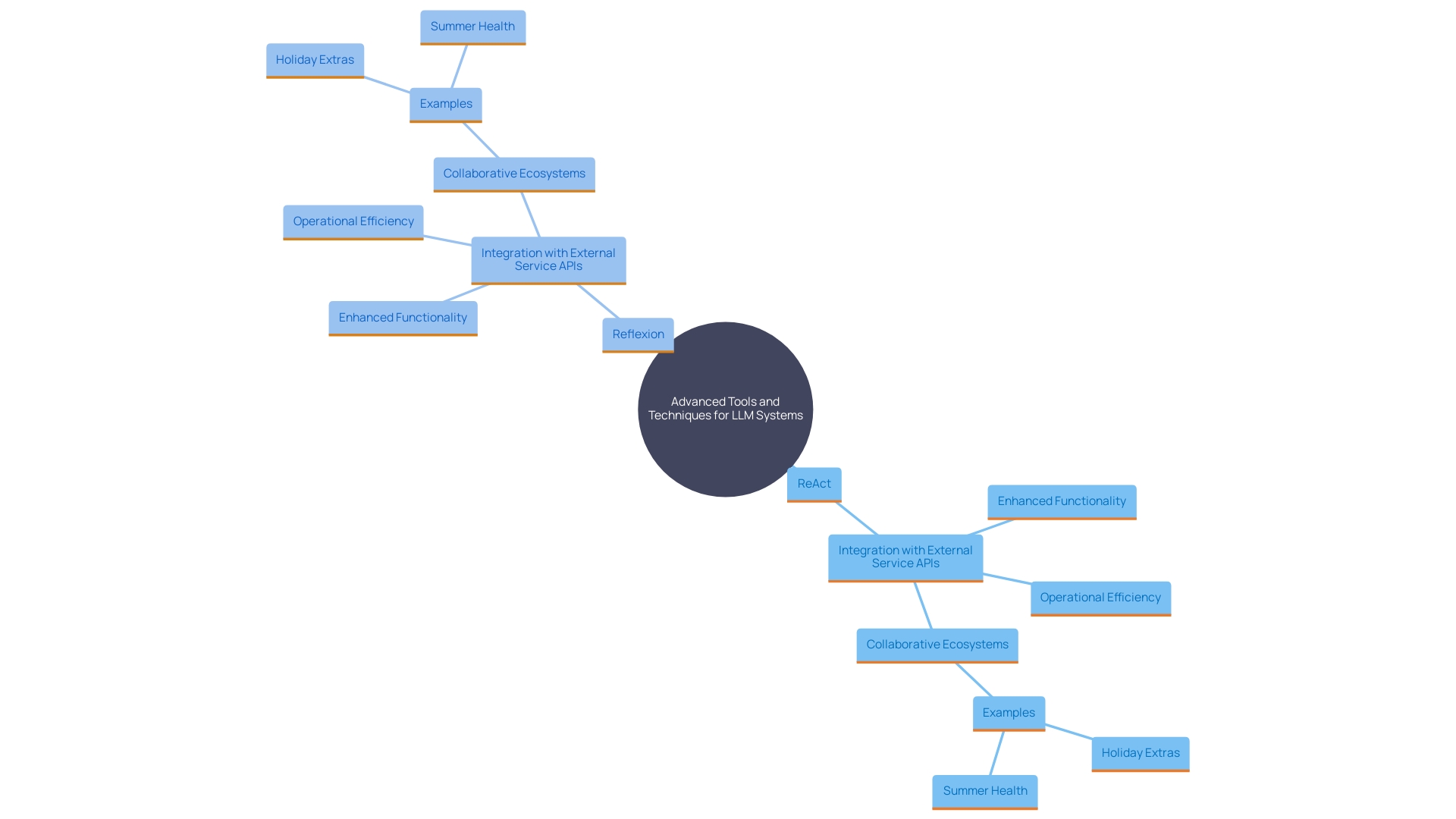

ReAct and Reflexion Techniques: These methods enable LLM agents to dynamically adapt to changing inputs, enhancing their ability to provide contextually relevant responses. By leveraging the React AI agent model, large language models can seamlessly combine reasoning and actionable steps. This method not only simplifies complex data analysis activities but also guarantees more precise and insightful results, especially when handling varied datasets.

- External Service APIs and Integration: Connecting LLMs to third-party services and resources extends their functionality. For example, Holiday Extras has benefited from integrating such tools to handle multilingual marketing and data-driven workflows. Integrations like these streamline processes, reduce the burden of repetitive tasks, and free employees to focus on strategic work. Another example is Summer Health, where integrated tools have eased the administrative load on medical professionals, letting them spend more time on patient care.
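The ReAct pattern and tool integration come together in a single loop. The model emits a thought and an action, the agent runs the named tool, and the tool's output is appended as an observation before the next model call. The sketch below assumes a plain-text `Action: tool[input]` format and uses a scripted function in place of a real LLM. The tool names, the inventory figure, and the unit price are hypothetical. In a real agent the tools would wrap external APIs or databases.

```python
import math
import re
from typing import Callable, Optional

# Hypothetical tools; a real agent would wrap third-party APIs or databases here.
TOOLS: dict = {
    "inventory": lambda item: {"widget": "42"}.get(item, "unknown"),
    "multiply": lambda args: str(math.prod(int(x) for x in args.split(","))),
}

ACTION = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question: str, llm: Callable[[str], str], tools: dict,
          max_steps: int = 5) -> Optional[str]:
    """Thought -> Action -> Observation loop in the style of ReAct."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # the model writes a thought plus an action
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION.search(step)
        if match is None:
            transcript += "Observation: no valid action found\n"
            continue
        name, arg = match.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"  # fed into the next step
    return None


# Scripted stand-in for an LLM, replaying one plausible trajectory.
SCRIPT = [
    "Thought: I need the current stock level.\nAction: inventory[widget]",
    "Thought: Each unit costs 3, so multiply.\nAction: multiply[42,3]",
    "Thought: I can answer now.\nFinal Answer: 126",
]


def scripted_llm(transcript: str) -> str:
    return SCRIPT[transcript.count("Observation:")]


print(react("What is the total value of widgets in stock?", scripted_llm, TOOLS))
```

Because the full transcript is passed back on every call, the model always sees its earlier reasoning alongside the real tool outputs. This grounding is what lets ReAct-style agents correct course mid-task.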

These integrations not only enhance the operational efficiency of LLM-powered systems but also foster a collaborative ecosystem by making advanced technologies accessible and user-friendly. The continuous open-sourcing of projects and engagement with the research community further drive innovation and practical applications across diverse domains.

Challenges and Limitations

Despite their potential, LLM-powered agents face several challenges that can impact their efficiency:

- Limited Context Capacity: An agent’s ability to understand and respond to queries is bounded by the model’s context length. LLMs are stateless: they retain nothing between calls, so the relevant conversation history must be resent within the context window on every request. When that history outgrows the window, older content has to be dropped or summarized, which can hurt the quality and coherence of responses in complex or lengthy interactions.

- Long-Term Planning and Adaptability: Agents may struggle with tasks that require foresight and adjustment over extended periods. While LLM-based autonomous agents can understand natural language and reason about tasks, their ability to handle long-term planning is still evolving. Memory modules, both short-term and long-term, play a crucial role in helping these agents keep track of interactions and adapt over time.

- Natural Language Interface Challenges: Interpreting complex queries can lead to misunderstandings and inaccuracies. The sophistication of natural language interfaces has improved significantly, yet challenges remain in accurately processing and responding to intricate or ambiguous questions. This is particularly evident in scenarios requiring detailed and nuanced understanding, where even minor inaccuracies can lead to significant misunderstandings.
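Because the model itself is stateless, the calling code decides what history to resend on each request. A common baseline is to keep the system prompt and as many of the most recent turns as fit a token budget. The sketch below assumes a crude whitespace token count and a role/content message format. Both are simplifications, and the function names are hypothetical. Real code should use the model's own tokenizer and may summarize dropped turns instead of discarding them.

```python
def count_tokens(text: str) -> int:
    """Crude whitespace count; real code should use the model's own tokenizer."""
    return len(text.split())


def fit_history(system_prompt: str, history: list, budget: int) -> list:
    """Build the message list for one stateless model call.

    The system prompt is always kept. Recent turns are then added, newest
    first, until the next one would exceed the token budget.
    """
    used = count_tokens(system_prompt)
    kept = []
    for turn in reversed(history):
        cost = count_tokens(turn["content"])
        if used + cost > budget:
            break  # this turn and everything older is dropped
        kept.append(turn)
        used += cost
    return [{"role": "system", "content": system_prompt}] + kept[::-1]


history = [
    {"role": "user", "content": "Plan a three day trip to Lisbon"},
    {"role": "assistant", "content": "Day one: Alfama and the castle"},
    {"role": "user", "content": "Make day two cheaper"},
]
messages = fit_history("You are a travel assistant.", history, budget=15)
print([m["content"] for m in messages])
```

Here the budget is too small to keep the original request, so the model never sees that the trip is to Lisbon. This is exactly the kind of coherence loss described above, and it is why long-term memory or summarization is often layered on top of simple truncation.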

Research on personal LLM agents has examined their capabilities, efficiency, and security, highlighting both their promise and the hurdles that remain. For instance, while LLMs can assist with a wide range of tasks, they are resource-intensive to run. Ensuring that these agents are secure and reliable in their interactions with users remains a critical area of focus.

Applications and Future Directions

The versatility of LLM-powered autonomous agents unlocks a myriad of applications across various domains:

- Enterprise Applications (e.g., Financial Analysis, E-commerce): These agents streamline operations and enhance decision-making processes. For instance, Amazon leverages LLM technology to improve customer service by enabling chatbots to understand and process natural language, thus providing quick and relevant responses. This integration not only boosts customer satisfaction but also improves operational efficiency.

- Behavioral Simulations and Economic Studies: Using agents as simulated participants to model and analyze human behavior in economic settings can offer valuable insights. For example, agents can be given distinct personas that encode behavioral traits and be prompted with strategies tailored to a specific use case. This makes it possible to run flexible experiments that vary in application and complexity.

- Advanced Business Automation: Automating repetitive tasks and improving operational efficiency is a key benefit. With agents built on large language models, businesses can automate policy execution through a closed control loop for intent deployment. In this loop the agent continuously monitors the system, analyzes whether the stated intent is being met, plans corrective actions, and executes them, keeping the workflow running smoothly.
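The closed control loop described above follows the classic monitor, analyze, plan, execute pattern from autonomic computing. The sketch below assumes a toy system whose latency equals load divided by replica count, and an intent expressed as a metric ceiling. The planning step, where an LLM agent would reason about possible remedies, is replaced by a fixed rule. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    """A declarative goal, e.g. 'keep latency at or below 250 ms'."""
    metric: str
    max_value: float


def monitor(system: dict) -> dict:
    # Toy model: latency falls as load is spread over more replicas.
    return {"latency_ms": system["load"] / system["replicas"]}


def violates(metrics: dict, intent: Intent) -> bool:
    return metrics[intent.metric] > intent.max_value


def plan_action(metrics: dict, intent: Intent) -> str:
    # This is where an LLM agent would reason about remedies; a fixed rule here.
    return "scale_out"


def execute(system: dict, action: str) -> None:
    if action == "scale_out":
        system["replicas"] += 1


def control_loop(system: dict, intent: Intent, max_iterations: int = 10) -> list:
    """Monitor -> Analyze -> Plan -> Execute until the intent is satisfied."""
    log = []
    for _ in range(max_iterations):
        metrics = monitor(system)              # Monitor
        if not violates(metrics, intent):      # Analyze
            log.append(f"ok: {intent.metric}={metrics[intent.metric]:.0f}")
            break
        action = plan_action(metrics, intent)  # Plan
        execute(system, action)                # Execute
        log.append(action)
    return log


system = {"load": 600.0, "replicas": 1}
print(control_loop(system, Intent("latency_ms", 250.0)))
# ['scale_out', 'scale_out', 'ok: latency_ms=200']
```

Keeping the intent declarative means the loop states what must hold, not how to achieve it. The planner can then be swapped from a fixed rule to an LLM without changing the rest of the loop.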

These applications demonstrate the potential of LLM-powered agents to transform organizational strategies and operational processes, making them increasingly valuable tools in the modern business landscape.

Conclusion

The exploration of LLM-powered autonomous agents reveals their transformative potential across various sectors. By leveraging advanced large language models, these agents can independently manage intricate tasks, engage in meaningful interactions, and integrate specialized tools to enhance their functionality. The modularity of their design allows for efficient problem-solving, making them invaluable assets in automating workflows and improving productivity.

Key components such as planning and memory modules play a crucial role in the agents’ ability to break down complex tasks and retain relevant information. This structured approach not only facilitates informed decision-making but also enhances the agents’ adaptability in dynamic environments. The integration of external tools further amplifies their capabilities, providing solutions that streamline operations and reduce the burden of repetitive tasks.

Despite the remarkable advancements, challenges remain, including context limitations and the complexities of natural language processing. Addressing these hurdles will be essential for maximizing the effectiveness of LLM-powered agents. The future of these technologies promises exciting possibilities, from enhancing enterprise applications to automating advanced business processes, ultimately reshaping how organizations operate and interact with their environments.

Embracing these innovations will empower businesses to unlock new levels of efficiency and insight, paving the way for a more automated and intelligent future.