Overview

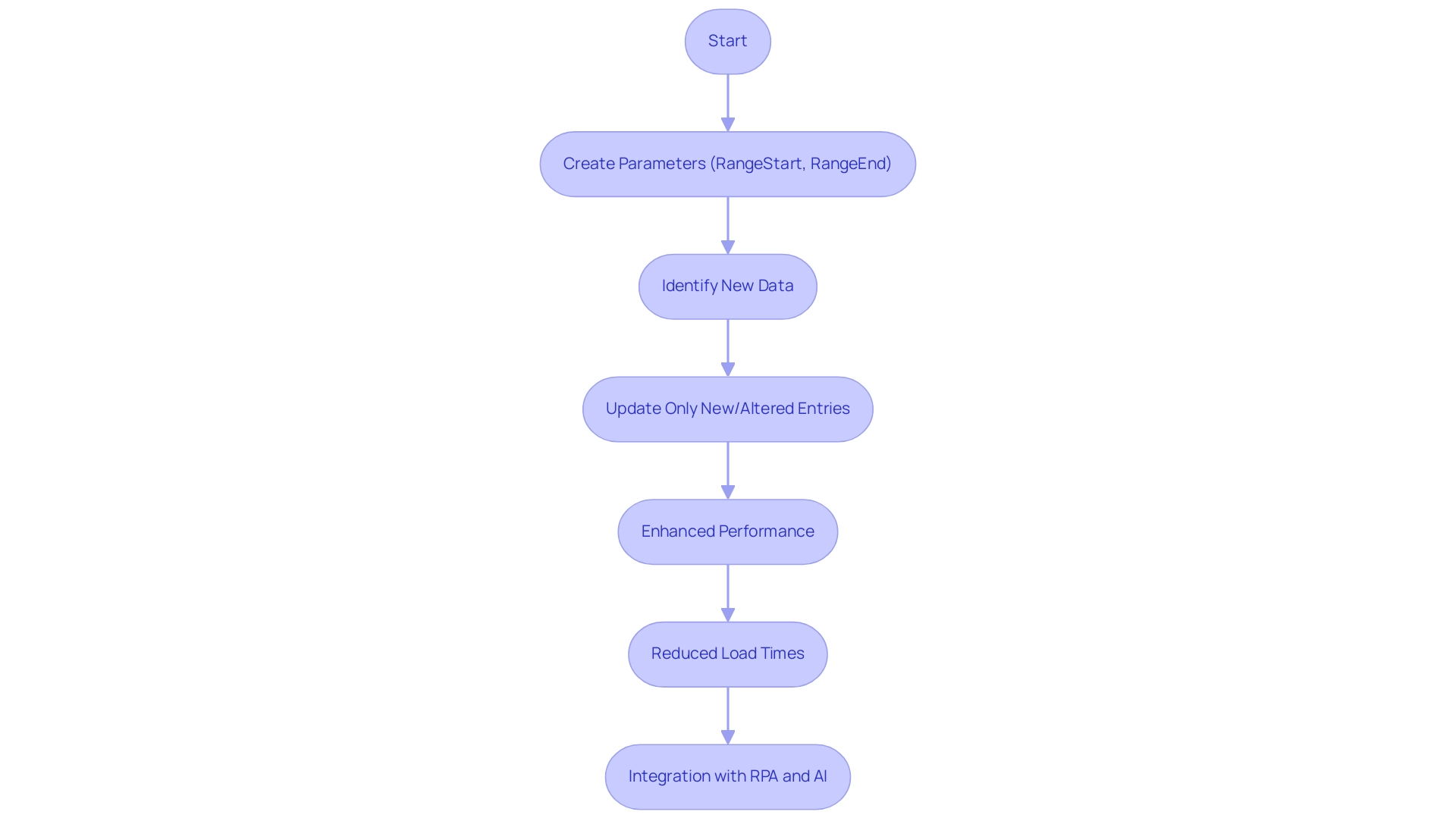

To configure Power Query to refresh only new data, users should establish parameters like RangeStart and RangeEnd to filter the dataset effectively, enabling incremental updates that enhance performance and reduce load times. The article outlines a step-by-step guide emphasizing the importance of these parameters and the integration of Robotic Process Automation (RPA) to automate and streamline the refresh process, ultimately improving operational efficiency and decision-making.

Introduction

In the fast-evolving landscape of data management, organizations are increasingly turning to incremental refresh in Power Query as a game-changing solution for optimizing their data workflows. This approach allows businesses to refresh only new or modified data, dramatically improving performance and reducing load times, especially when dealing with extensive datasets.

By harnessing the power of incremental refresh, organizations can not only streamline their data processes but also enhance their decision-making capabilities with timely access to relevant information. As the article explores, implementing this technique alongside Robotic Process Automation (RPA) can further elevate operational efficiency, enabling teams to focus on strategic initiatives rather than getting bogged down in repetitive tasks.

With practical guidance, troubleshooting tips, and best practices, this comprehensive guide equips organizations with the knowledge needed to leverage incremental refresh effectively and drive business growth.

Understanding Incremental Refresh in Power Query

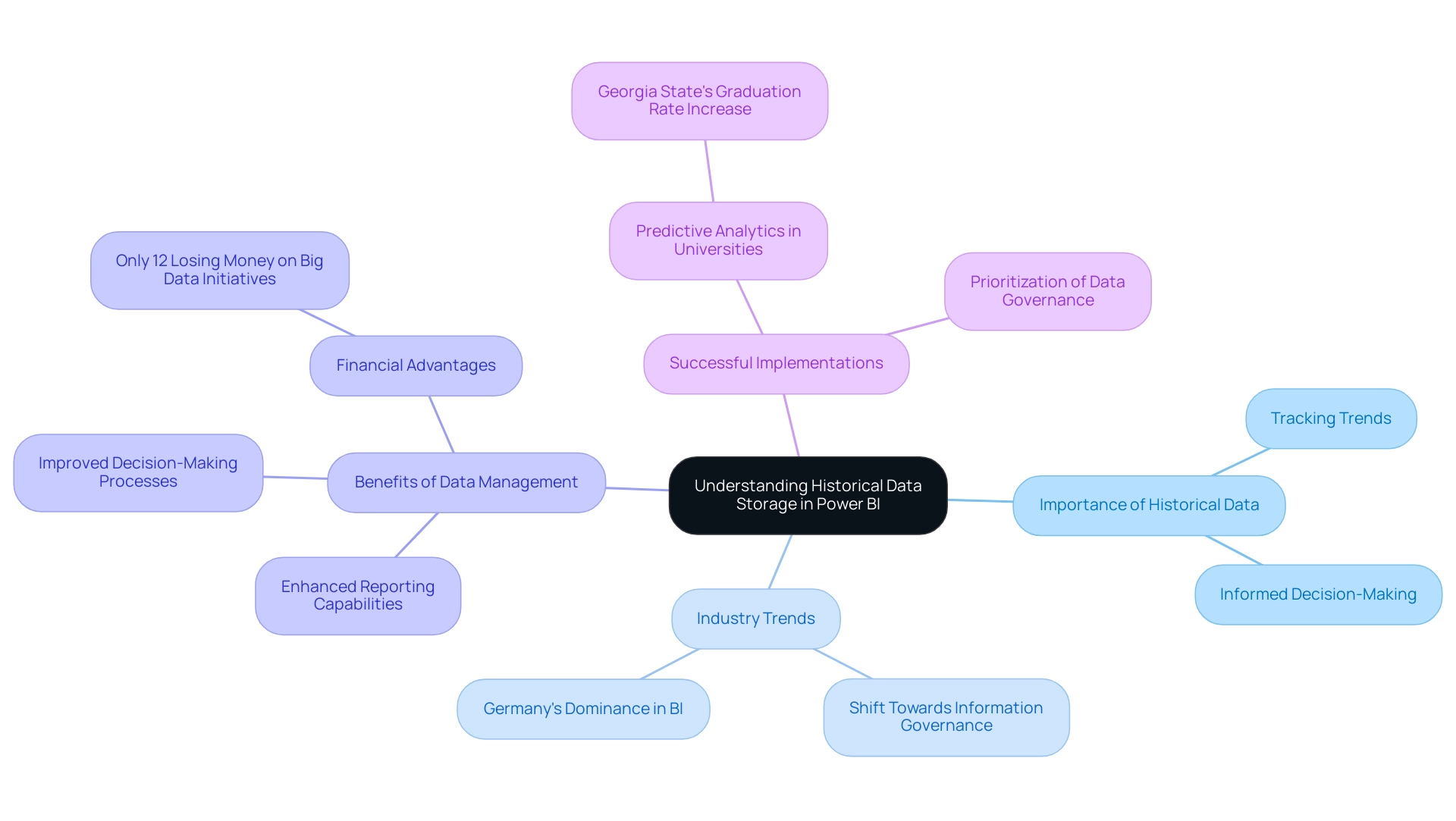

Incremental refresh changes how organizations keep large datasets current: instead of reloading the entire dataset, each refresh loads only new or changed rows. This is particularly advantageous for large datasets, as it significantly reduces load times and enhances overall performance. For instance, a case study titled ‘How to Set Up Incremental Refresh in Power BI’ walks through how a table is split into partitions, with the resulting partitions depending on the settings applied during setup.

This practical guidance illustrates the advantages of incremental refresh in real-world situations. To get the most from it, it is crucial to understand its mechanics, which rest on two parameters: RangeStart and RangeEnd. These Date/Time parameters define the window of data to load, which in turn determines how much history is retained and which rows count as new.
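To make the windowing idea concrete, here is a minimal Python sketch (an illustration of the logic, not Power Query M code). It assumes a hypothetical policy that keeps a fixed number of days of history and reloads only the most recent days; the function name and the day counts are invented for the example, and Power BI's own partitioning works on whole periods rather than this simplified arithmetic.

```python
from datetime import date, timedelta

def refresh_windows(today, archive_days, refresh_days):
    """Return (archive_start, refresh_start, end) for a rolling policy.

    Rows dated in [archive_start, refresh_start) are kept but not reloaded.
    Rows dated in [refresh_start, end) are reloaded on every refresh.
    """
    end = today + timedelta(days=1)  # exclusive upper bound
    refresh_start = end - timedelta(days=refresh_days)
    archive_start = end - timedelta(days=archive_days)
    return archive_start, refresh_start, end

# Hypothetical policy: keep 365 days of history, reload the last 10 days.
archive_start, refresh_start, end = refresh_windows(date(2024, 6, 30), 365, 10)
print(refresh_start, end)
```

Everything older than `refresh_start` stays untouched, which is where the time savings come from.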

As pointed out by Marina Pigol, Content Manager at Alpha Serve:

“Having fewer records to update decreases the overall memory and other database resources utilized in Power BI to finish the update.”

This not only streamlines processing but also gives users consistent access to the most current information without unnecessary delays. Organizations applying incremental refresh have reportedly seen update durations fall by up to 70%, which highlights its effectiveness for large datasets. Pairing it with RPA can further reduce manual errors and free up team resources for more strategic tasks.

Furthermore, recent advancements enable automatic page refresh capabilities for reports utilizing models with incremental refresh, enhancing the immediacy of visibility. By integrating these strategies with Robotic Process Automation (RPA) and tailored AI solutions, organizations can optimize their workflows, drive greater operational efficiency, and leverage Business Intelligence to make informed decisions. Customized AI solutions can particularly aid in examining patterns and forecasting future trends, ultimately overcoming technology implementation challenges and promoting business growth.

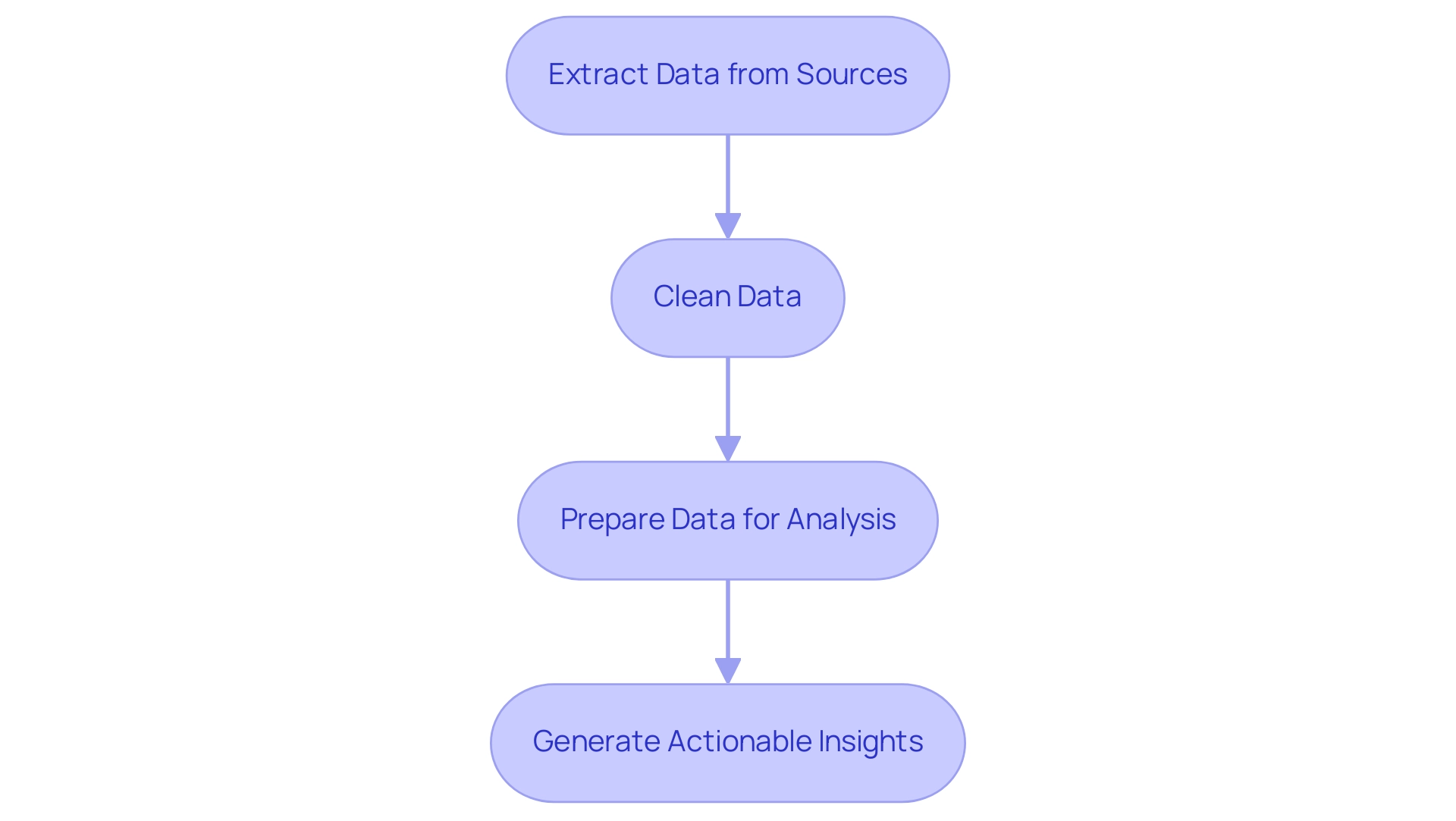

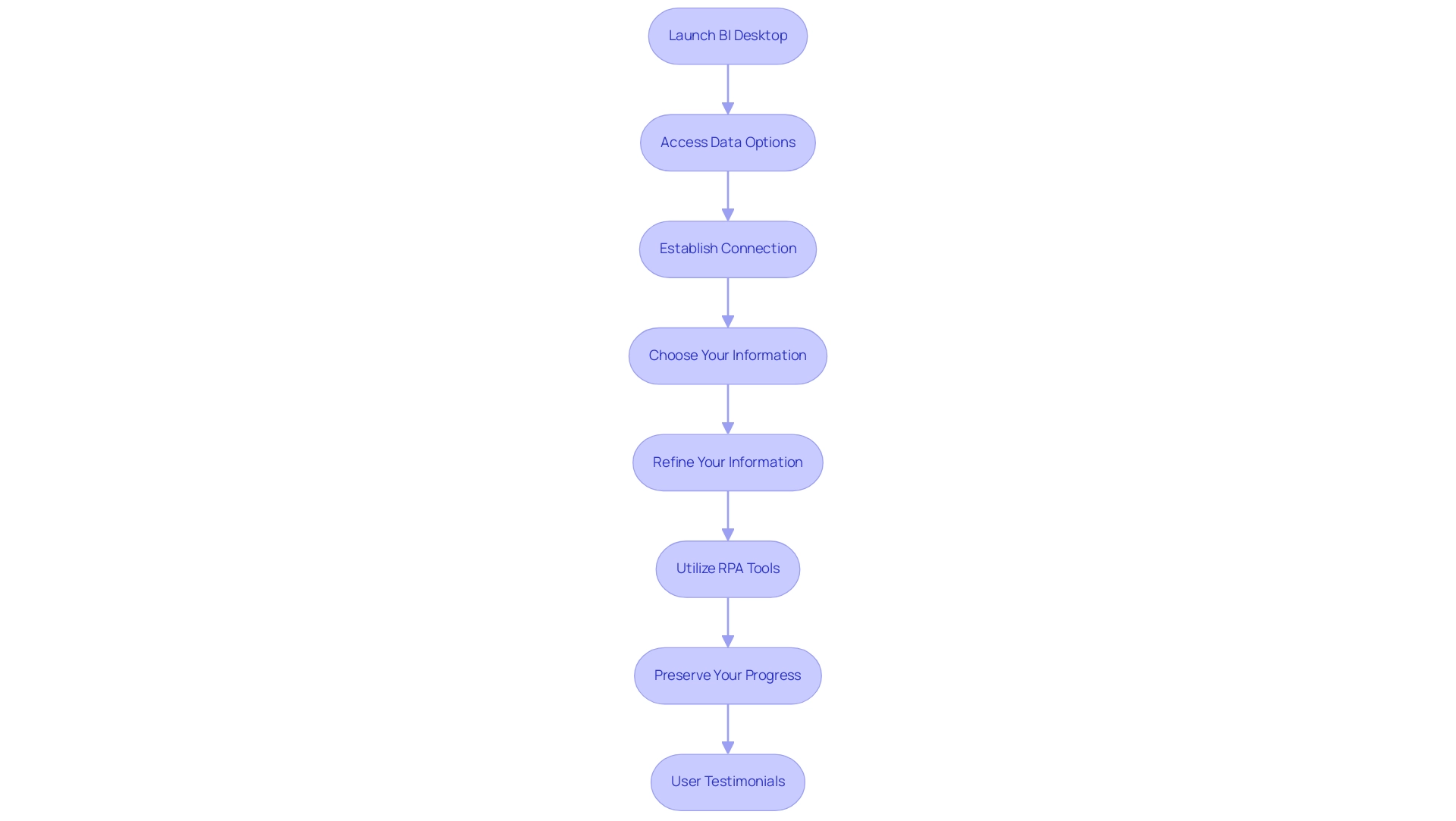

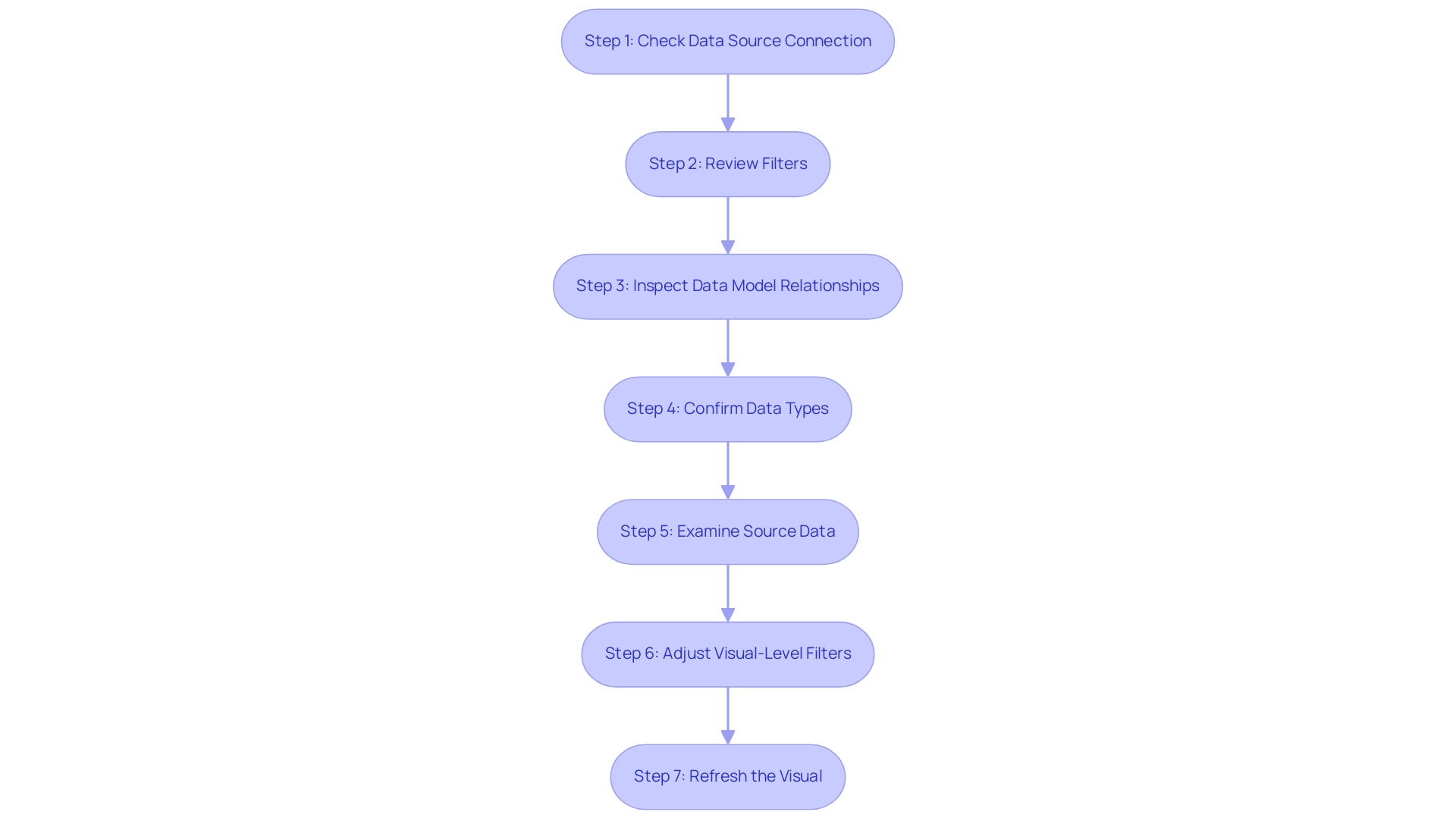

Step-by-Step Guide to Configuring Incremental Refresh

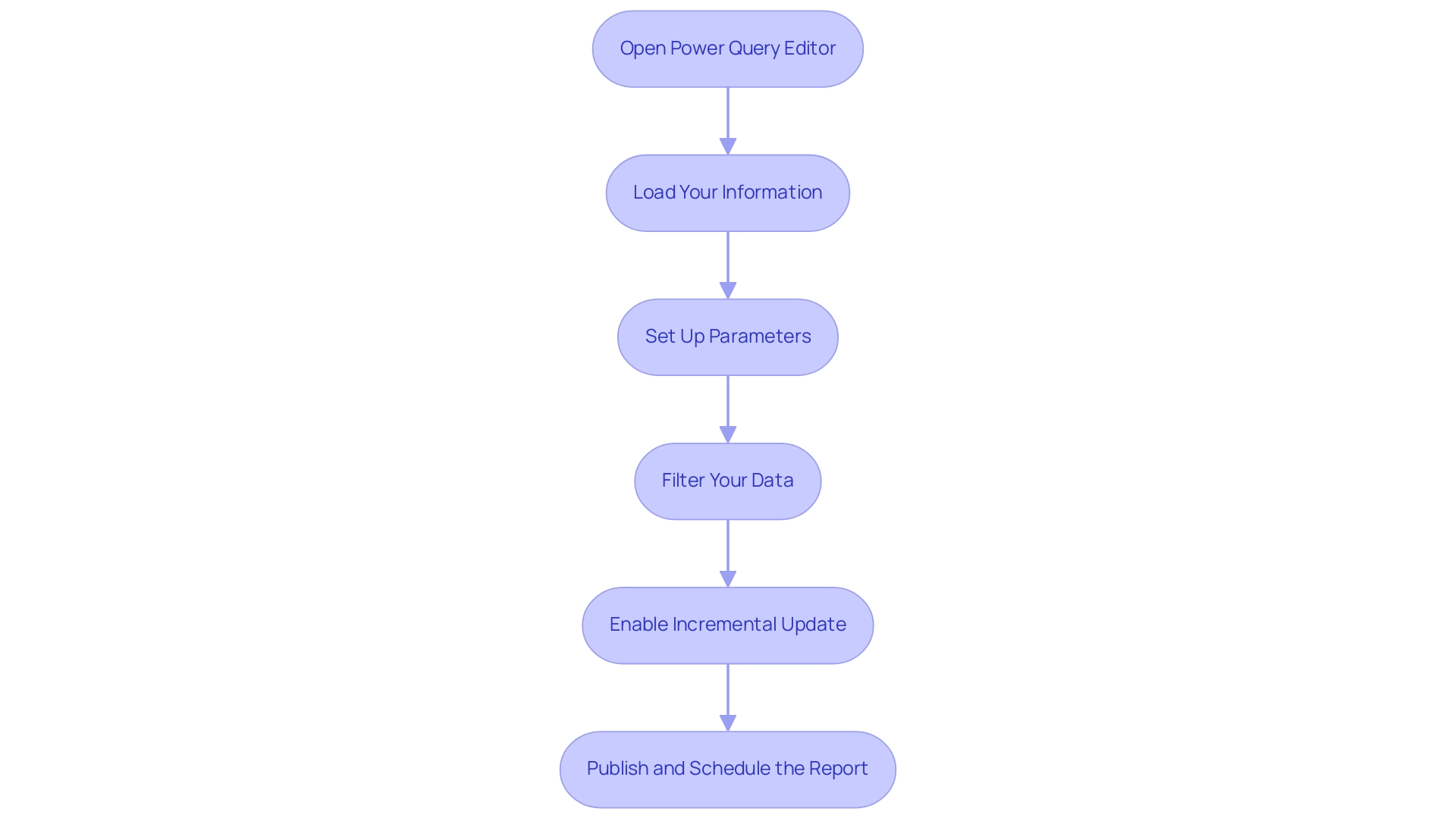

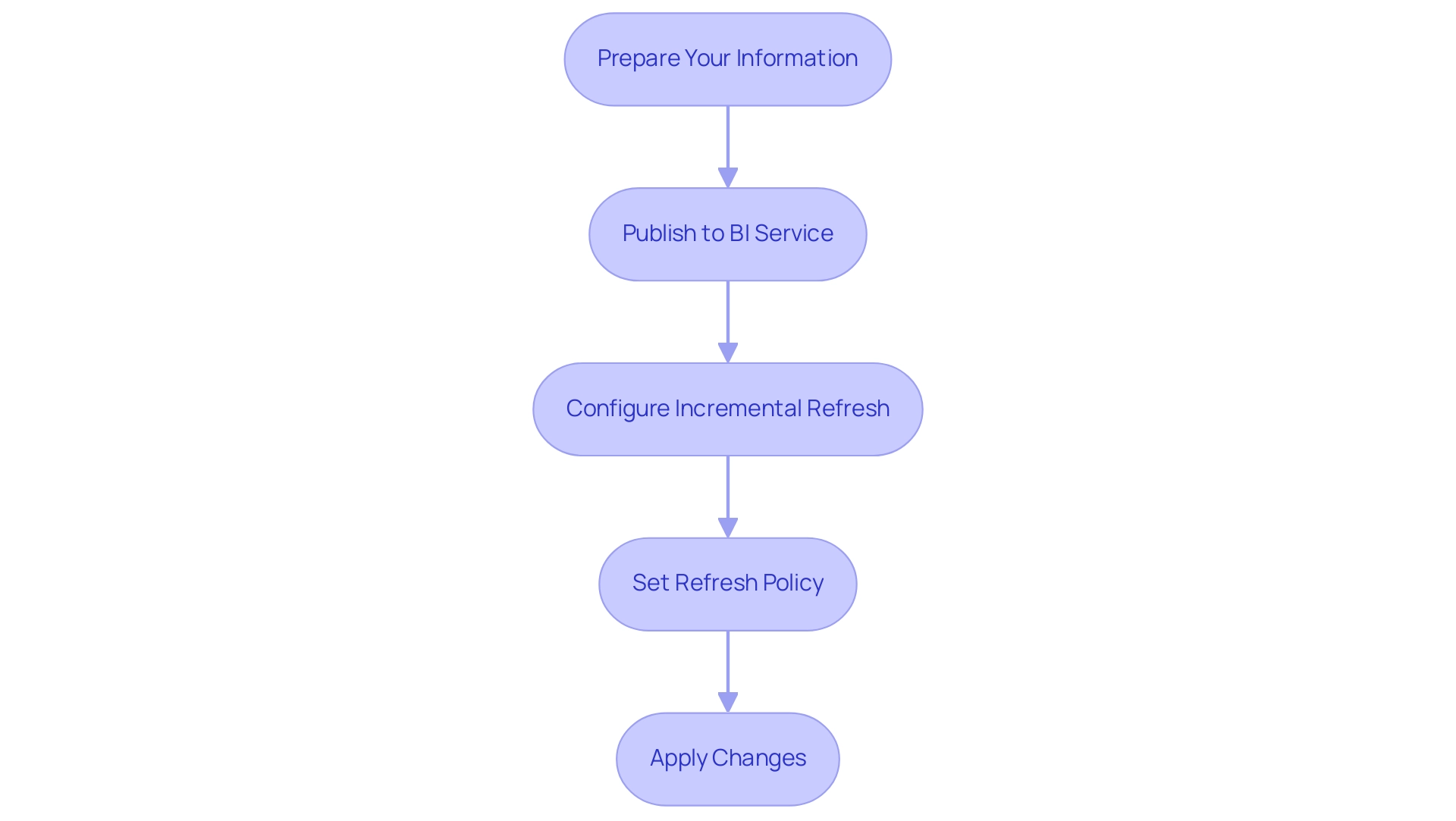

Setting up incremental refresh for a Power Query table can greatly improve your data management strategy and align with wider operational efficiency objectives supported by Robotic Process Automation (RPA). Follow these steps to establish an efficient refresh process:

- Open Power Query Editor: In Power BI Desktop, select ‘Transform data’ on the Home tab to open the Power Query Editor. Incremental refresh policies are a Power BI feature; Excel workbooks can use Power Query, but they do not support incremental refresh policies.

- Load Your Data: Connect to the source table that you intend to configure for incremental refresh.

- Set Up Parameters: Under ‘Manage Parameters’, create two parameters of type Date/Time. The names are reserved and case-sensitive:

  - RangeStart: This parameter denotes the start of the date range you wish to load.

  - RangeEnd: This parameter denotes the end of the date range, guiding Power Query in identifying which records require refreshing.

- Filter Your Data: Use these parameters to filter the table's date column. Keep rows where the date is on or after RangeStart and before RangeEnd. Include the boundary on only one side so that a row falling exactly on a boundary is not loaded into two partitions.

- Enable Incremental Refresh: After applying your changes, right-click the table in Power BI Desktop and select ‘Incremental refresh’, then choose how much history to archive and how much recent data to refresh. This is vital because, as observed by analyst Manikanta Gudivaka, it minimizes the volume of data transferred and processed, enhancing performance and lowering resource usage. For example, with a policy that refreshes the last 10 days, each subsequent refresh reloads only those 10 days. By incorporating RPA into this step, you can automate the refresh scheduling, further decreasing manual effort and the potential for errors.

- Publish and Schedule the Report: After configuring your settings, publish your report to the Power BI Service. The policy takes effect in the service, so it is crucial to schedule the refresh there; subsequent refreshes then load only new data. Using RPA here can help automate the scheduling process, ensuring prompt updates without human involvement.
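The filter described in the steps above can be sketched in Python as a half-open date test (an illustration of the logic only, not M code). The sample rows and the `in_range` helper are hypothetical; the point is that a row sitting exactly on a boundary belongs to one window, never two.

```python
from datetime import datetime

# Hypothetical sample rows: (order_id, order_date).
rows = [
    (1, datetime(2024, 1, 31, 23, 59)),
    (2, datetime(2024, 2, 1, 0, 0)),
    (3, datetime(2024, 2, 15, 12, 0)),
    (4, datetime(2024, 3, 1, 0, 0)),
]

def in_range(ts, range_start, range_end):
    """Half-open test: include the start boundary, exclude the end boundary."""
    return range_start <= ts < range_end

range_start = datetime(2024, 2, 1)
range_end = datetime(2024, 3, 1)

february = [oid for oid, ts in rows if in_range(ts, range_start, range_end)]
march = [oid for oid, ts in rows if in_range(ts, range_end, datetime(2024, 4, 1))]

print(february)  # [2, 3]
print(march)     # [4]
```

Row 4 falls exactly on the boundary and lands only in the March window, so no row is counted twice.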

By adopting these measures, you can simplify your data refresh processes, incorporate RPA to automate manual tasks, and concentrate on the most pertinent details while enhancing performance. Query folding matters here: when the RangeStart and RangeEnd filter is pushed back to the source, only the matching rows are transferred and processed during each refresh. If the filter cannot fold, the source may return every row before Power Query filters them, which undermines the benefit. This method not only improves efficiency but also conforms to best practices for data management in Power BI, ensuring that your operations stay agile and responsive in a rapidly evolving AI landscape.
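The difference that query folding makes can be illustrated with a small Python sketch that uses an in-memory SQLite table as a stand-in for the source. The table and column names are hypothetical, and this is an analogy rather than what Power Query does internally: in the folded case the date filter reaches the source as a WHERE clause, while in the unfolded case every row is fetched first.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, order_date TEXT)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(1, "2024-01-15"), (2, "2024-02-03"), (3, "2024-02-20"), (4, "2024-03-02")],
)

range_start, range_end = "2024-02-01", "2024-03-01"

# Folded: the filter travels to the source, so only matching rows come back.
folded = conn.execute(
    "SELECT id FROM sales WHERE order_date >= ? AND order_date < ? ORDER BY id",
    (range_start, range_end),
).fetchall()

# Not folded: every row is fetched, then filtered locally.
all_rows = conn.execute("SELECT id, order_date FROM sales ORDER BY id").fetchall()
local = [(i,) for i, d in all_rows if range_start <= d < range_end]

print(len(all_rows), len(folded))  # rows transferred without and with folding
```

Both approaches return the same rows; the folded query simply moves far less data to get there.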

By utilizing RPA, you not only reduce errors but also free up your team to focus on strategic tasks that drive business growth.

Best Practices for Using Incremental Refresh

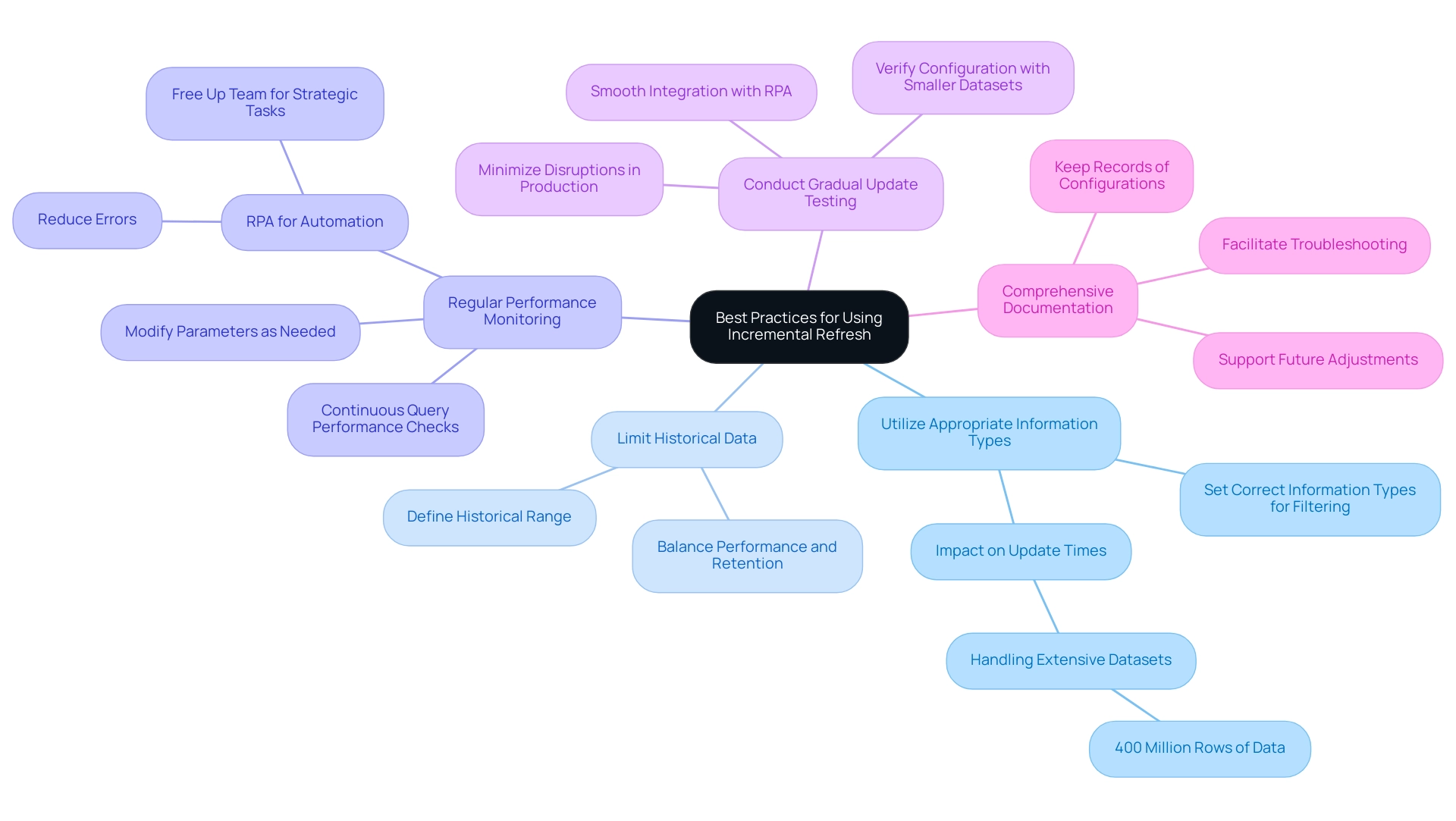

To maximize the benefits of incremental refresh in Power Query and enhance operational efficiency through automation, consider the following best practices:

- Use Appropriate Data Types: Ensure that filtering fields, especially date fields, are set to the correct data types. This is essential for effective filtering and greatly affects refresh times on extensive datasets, such as a source table containing 400 million rows covering the past 2 years.

- Limit Historical Data: Define a judicious historical range when configuring incremental refresh parameters. Balancing performance with data retention needs is essential, as excessive history can hinder overall performance.

- Regular Performance Monitoring: Continuously monitor the performance of your queries. If you experience slowdowns, adjust your parameters or optimize your data sources accordingly. This proactive approach sustains efficient data retrieval, and RPA can help automate the monitoring, reducing the risk of errors and freeing up your team for more strategic tasks.

- Test Incremental Refresh First: Before enabling incremental refresh in a production environment, verify your configuration using a smaller dataset. This confirms that the setup functions as intended, minimizes disruptions at rollout, and allows for smoother integration with RPA solutions.

- Comprehensive Documentation: Keep thorough records of your incremental refresh configurations and any changes made. This resource is invaluable for troubleshooting and for making future adjustments with confidence, and it eases RPA integration.

By following these best practices, you can greatly improve your experience with incremental refresh, ensuring that Power Query loads only new data and that your data management processes remain both efficient and effective. As Soheil Bakhshi noted, “I hope you enjoyed reading this long blog and find it helpful,” emphasizing the importance of thorough guidance in data management.

Furthermore, the Detect data changes option lets you concentrate refresh efforts on the specific partitions that have changed, further enhancing performance. It’s also important to note the limitations of using incremental refresh with REST API data sources, as highlighted in a case study discussing potential challenges with query folding, which is crucial for efficient data retrieval. This awareness will help you navigate the complexities of data management more effectively, particularly in a rapidly evolving AI landscape where manual, repetitive tasks can significantly slow down operations.
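The idea of refreshing only the partitions that have changed can be sketched in Python as follows. This is a simplified illustration, not the service's implementation: it assumes each row carries a hypothetical last-updated timestamp and that the maximum value seen per partition was recorded at the previous refresh.

```python
# Hypothetical source rows: (partition_key, last_updated) as ISO strings.
source_rows = [
    ("2024-01", "2024-01-31T10:00"),
    ("2024-02", "2024-03-05T08:30"),  # a February row was edited in March
    ("2024-03", "2024-03-31T23:00"),
]

# Maximum last_updated recorded per partition at the previous refresh.
previous_max = {
    "2024-01": "2024-01-31T10:00",
    "2024-02": "2024-02-29T18:00",
    "2024-03": "2024-03-31T23:00",
}

def partitions_to_refresh(rows, seen):
    """Return partitions whose newest last_updated is later than last time."""
    current = {}
    for key, updated in rows:
        current[key] = max(updated, current.get(key, ""))
    return sorted(k for k, v in current.items() if v > seen.get(k, ""))

changed = partitions_to_refresh(source_rows, previous_max)
print(changed)  # ['2024-02']
```

Only the February partition is reloaded, even though it lies outside the most recent period.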

By implementing RPA, you can streamline these workflows, enhance accuracy, and ultimately drive better business outcomes.

Troubleshooting Common Issues with Incremental Refresh

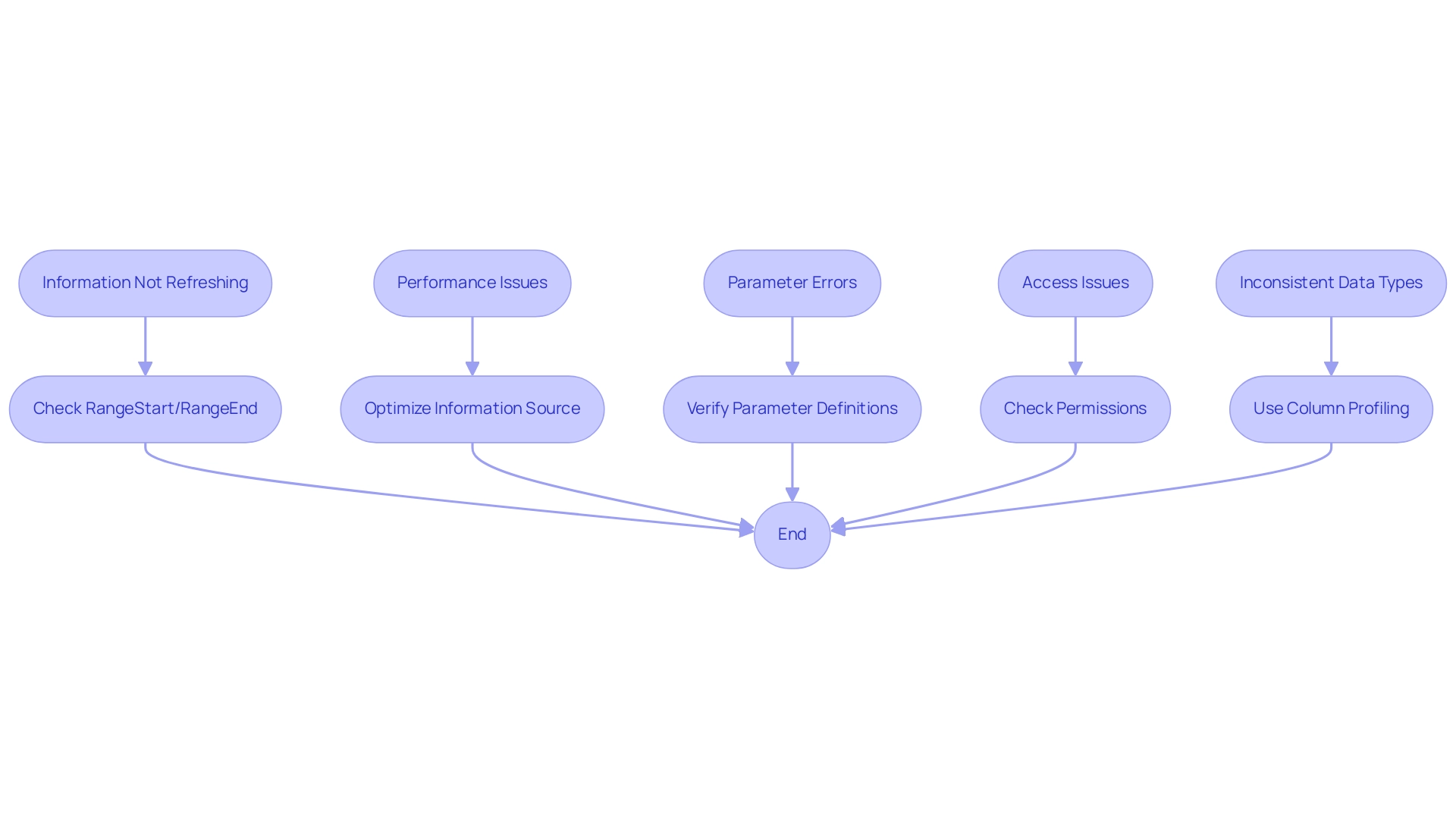

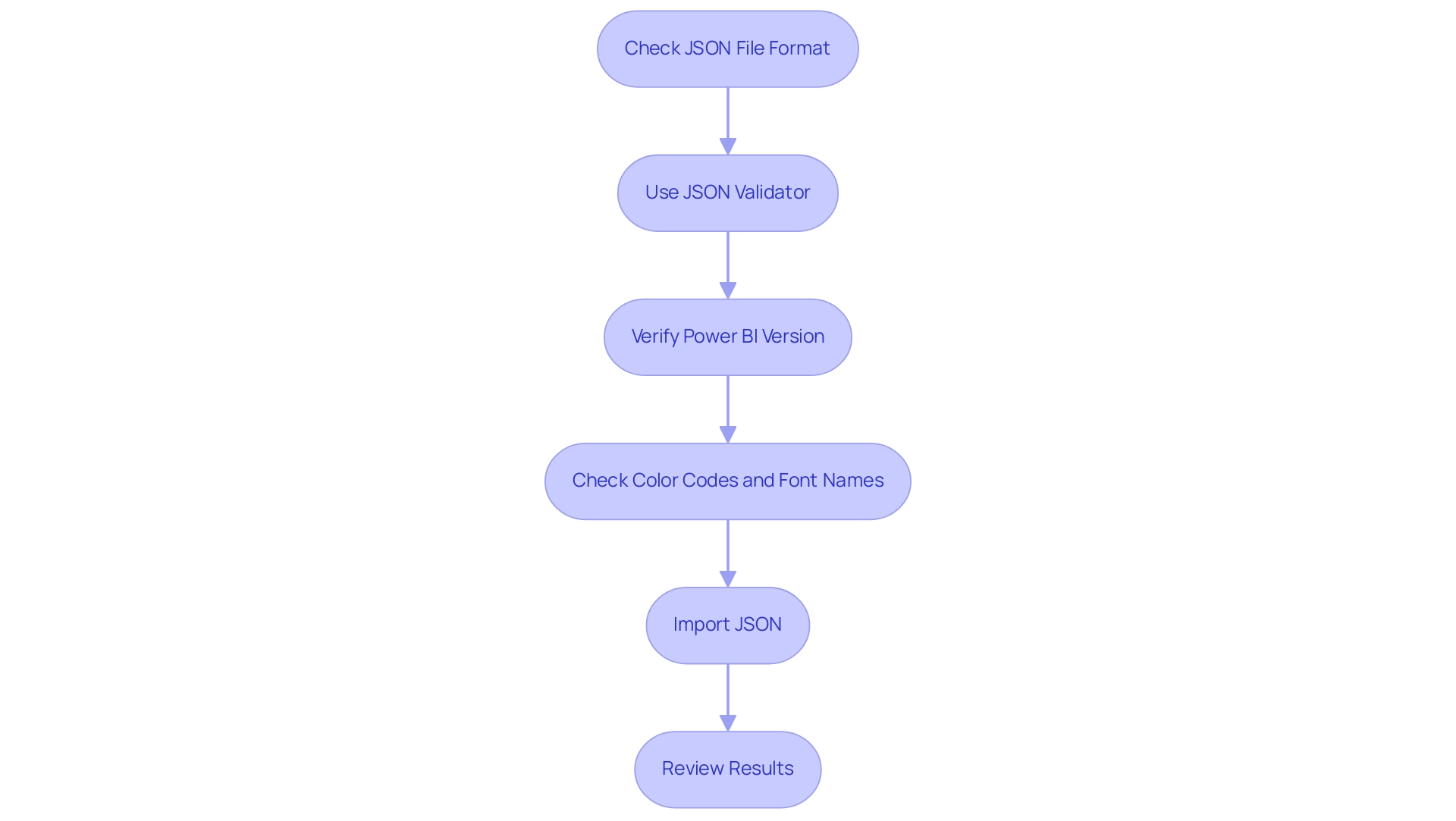

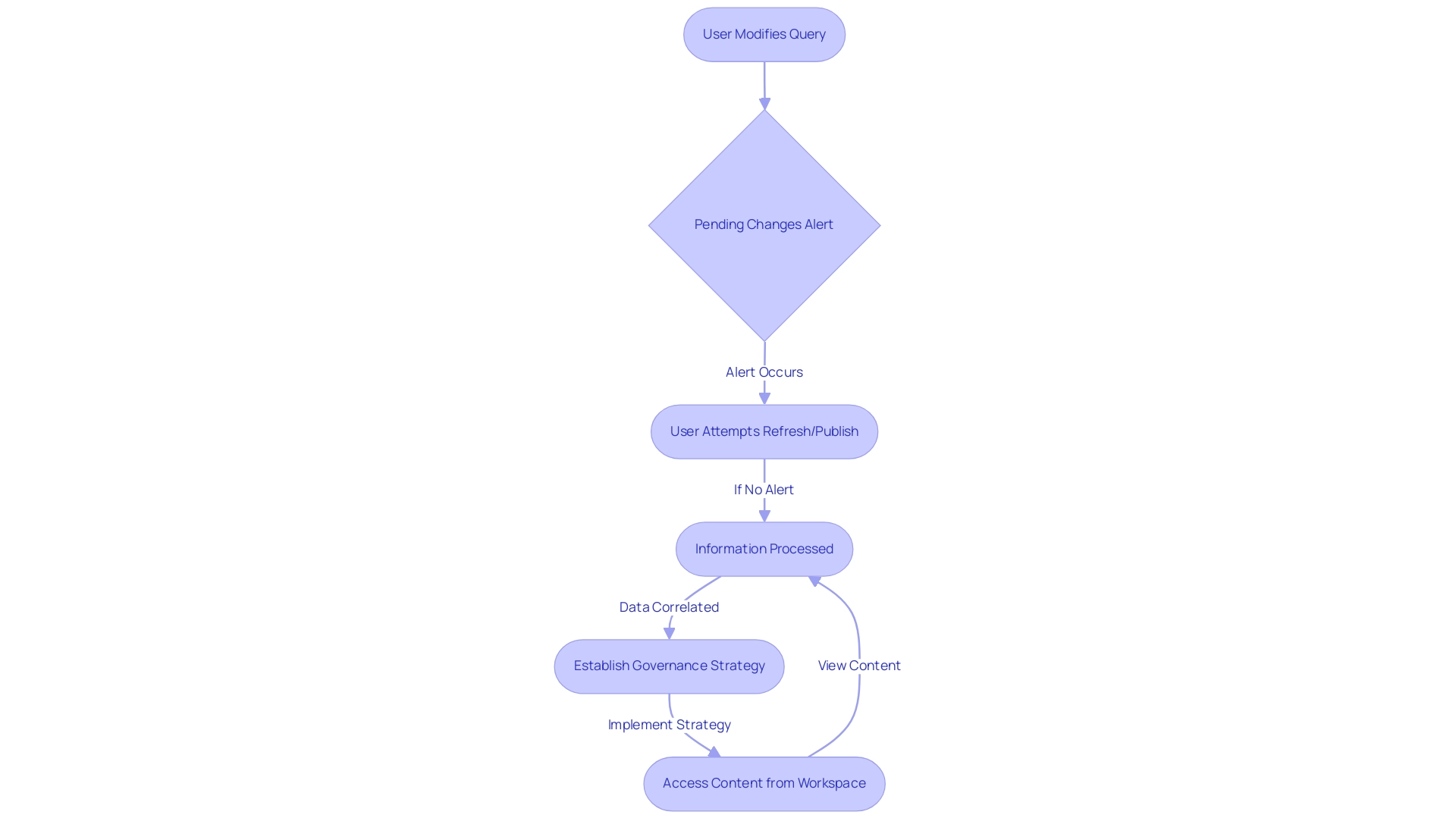

When setting up incremental updates in Power BI, users may encounter several common challenges that can hinder their efficiency. Here are some actionable troubleshooting tips to enhance your refresh experience while overcoming these obstacles:

-

Information Not Refreshing as Expected: If new information fails to appear post-refresh, it’s crucial to verify the RangeStart and RangeEnd parameters.

Misconfiguration here can easily lead to missing updates. Additionally, ensure that the information source itself is current and accessible, as outdated information can impede your ability to leverage insights effectively. -

Performance Issues: Slow update rates can be frustrating, especially in a data-rich environment where timely insights are vital.

Consider optimizing your information source or reducing the volume of historical records retained. This is especially vital for users handling large datasets, like the 25 million records that have caused some to encounter time-out errors during updates. By improving information sources, you can greatly enhance update performance and ensure your team can concentrate on more strategic, value-adding tasks. -

Parameter Errors: Pay close attention to how parameters are defined and referenced in your filters.

Even minor typos or misconfigurations can lead to substantial errors during updates, derailing your efforts and wasting valuable time. Implementing RPA solutions can help automate these checks, reducing the likelihood of human error and streamlining the process of managing parameters. -

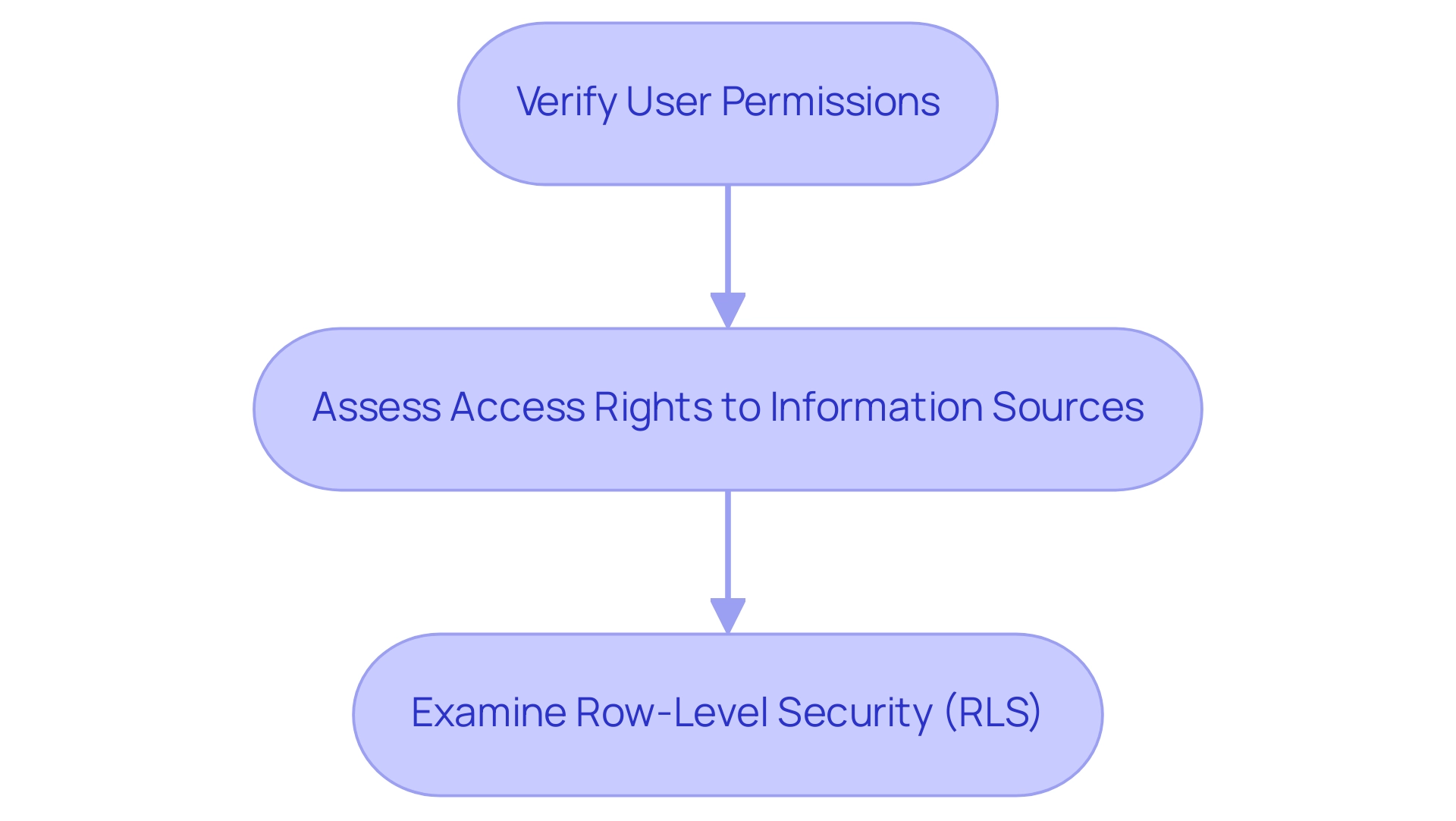

Access Issues: Permission problems can hinder information updates.

Ensure that your Power BI Service account has the necessary access rights to the data sources involved. Without appropriate permissions, update attempts may fail, highlighting the importance of streamlined access management in your operations. -

Inconsistent Data Types: Data type mismatches across your dataset can disrupt filtering and prevent the incremental update from executing properly.

As Selina Zhu from the Community Support Team advises,In my experience, the information shown consists of only the first 1000 rows, while errors may lurk in subsequent rows. Using ‘Column profiling based on the entire dataset‘ can help identify any discrepancies, particularly with date formats.

This method is crucial for handling inconsistent information types that could disrupt your update process.
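The risk of profiling only the first 1000 rows can be shown with a short Python sketch. The column contents and the `invalid_dates` helper are hypothetical; the example simply contrasts a check on the preview with a check on the full column.

```python
from datetime import datetime

def invalid_dates(values, fmt="%Y-%m-%d"):
    """Return (row_index, value) for every value that does not parse as a date."""
    bad = []
    for i, v in enumerate(values):
        try:
            datetime.strptime(v, fmt)
        except (TypeError, ValueError):
            bad.append((i, v))
    return bad

# Hypothetical column: 1,500 values with one badly formatted date at row 1,200.
column = ["2024-01-01"] * 1500
column[1200] = "01/13/2024"

print(invalid_dates(column[:1000]))  # preview only: []
print(invalid_dates(column))         # full scan: [(1200, '01/13/2024')]
```

The preview looks clean, while the full scan finds the row that would break a date filter.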

Additionally, consider the proactive measure of enabling Large Model Storage Format for models exceeding 1 GB published to Premium capacities.

This can improve update performance and prevent size limit challenges, ensuring smoother update operations for larger models.

By proactively addressing these common issues and leveraging RPA to automate repetitive tasks, users can troubleshoot effectively, ensuring a smoother gradual update process.

This not only enhances operational efficiency but also empowers teams to extract meaningful insights from Power BI dashboards, driving data-driven decision-making that fosters business growth. Furthermore, integrating customized AI solutions can offer extra assistance in optimizing information handling and analysis, further alleviating the competitive disadvantage of struggling to extract insights.

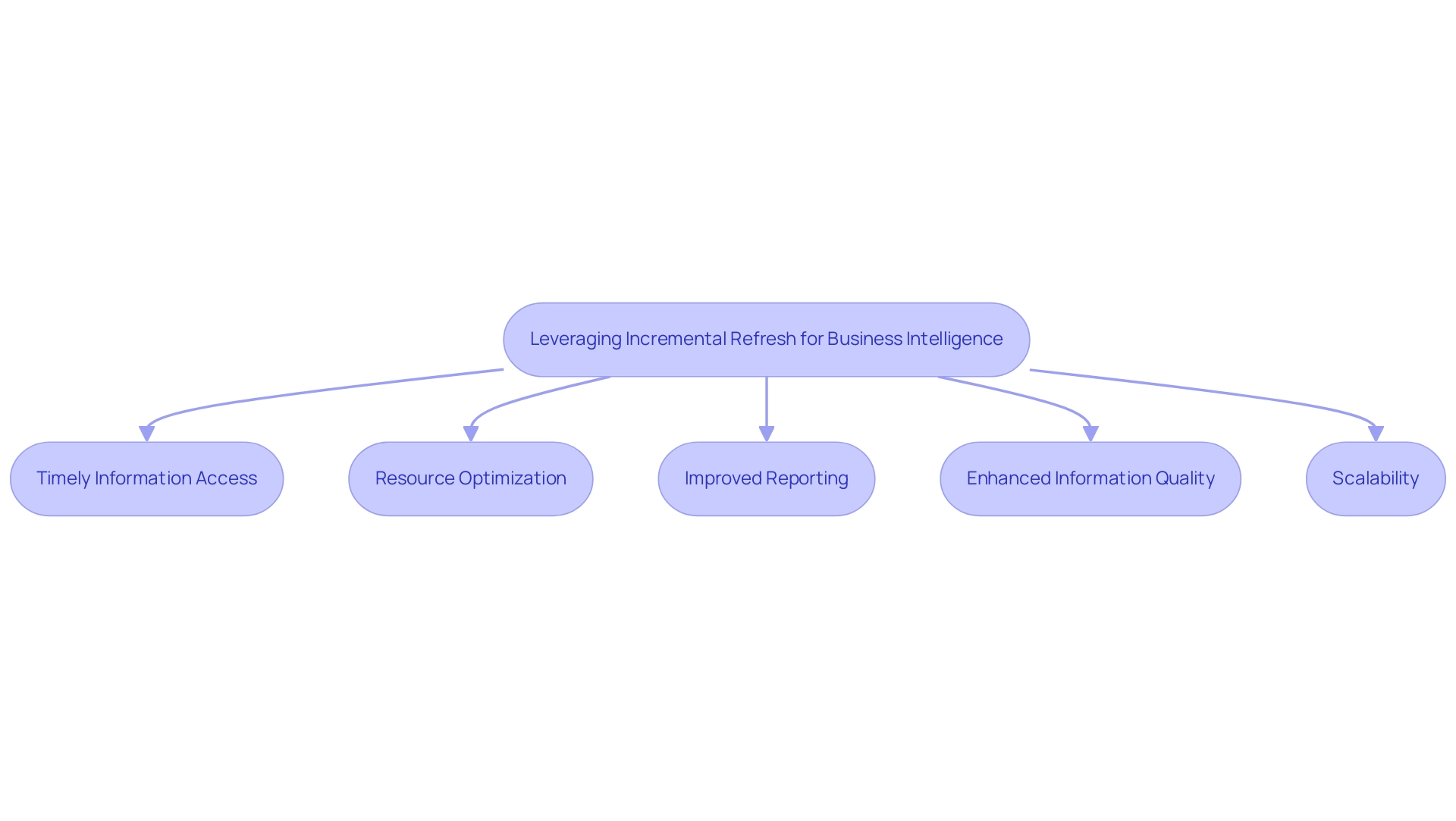

Leveraging Incremental Refresh for Business Intelligence

Applying gradual updates is an effective approach that can greatly enhance your organization’s business intelligence abilities, particularly when combined with Robotic Process Automation (RPA) to streamline manual workflows. Here’s how:

- Timely Information Access: Because each refresh loads only new data, decision-makers get the most current insights sooner, fostering informed choices that drive success.

This is especially advantageous when utilizing large quantities of information obtained from ServiceNow, as incremental updates can conserve time and resources in the information update process. Moreover, RPA can automate these update tasks, ensuring timely updates without manual intervention, thereby reducing the potential for errors.

- Resource Optimization: Gradual updating reduces the pressure on information sources and processing resources.

This efficiency allows teams to concentrate on analysis and deriving insights rather than getting bogged down in data management tasks. For example, a refresh that processes approximately 30,000 rows instead of the full table shows the kind of resource savings achievable. Moreover, RPA can simplify the scheduling process, improving resource utilization even more.

Enabling the large semantic model storage format setting can also improve refresh performance for models exceeding 1 GB, ensuring that organizations can handle larger datasets effectively.

- Improved Reporting: The speed of refreshes associated with incremental updates enables quicker report generation, equipping stakeholders with timely insights to influence business strategies effectively.

By integrating RPA, organizations can automate report generation, ensuring that the most recent information is always available for decision-making, thus freeing up team members to focus on strategic initiatives.

- Enhanced Information Quality: By prioritizing new information, organizations can implement robust validation processes, ensuring that the details used for decision-making are both accurate and reliable.

RPA can aid in overseeing information quality during update processes, further boosting confidence in the information. This proactive approach helps mitigate the risks associated with manual information handling, which often leads to errors and inconsistencies.

- Scalability: As organizations grow, gradual updates enable scalable information management practices that can effortlessly adjust to rising quantities without compromising performance.

Continuous learning, as highlighted by The Knowledge Academy, is essential here; whether you are a beginner or looking to advance your Business Intelligence Reporting skills, their diverse courses have you covered. RPA facilitates scalability by automating repetitive tasks, enabling teams to concentrate on strategic initiatives.

By using incremental refresh alongside RPA, organizations can modernize their data management processes so that each refresh loads only new data, which results in improved operational efficiency and better-informed business decisions.

For instance, when a model is expected to grow beyond 1 GB, you can publish it with its incremental refresh policy while it is still within the upload size limit and enable the large model storage format before the first refresh in the service. The initial refresh then loads the full history, and later refreshes process only the recent partitions, which optimizes performance and resource use.

Furthermore, integrating tailored AI solutions can further enhance RPA’s capabilities, ensuring that organizations stay ahead in the rapidly evolving AI landscape.

Conclusion

Implementing incremental refresh in Power Query undeniably transforms data management practices, allowing organizations to optimize performance and streamline workflows. By refreshing only the new or modified data, businesses can significantly reduce load times and resource consumption, which is particularly beneficial when handling large datasets. The incorporation of Robotic Process Automation (RPA) amplifies these advantages, automating repetitive tasks and providing teams with the freedom to focus on strategic initiatives that drive growth.

The step-by-step guide offered herein equips organizations with the necessary tools to configure incremental refresh effectively, ensuring timely access to the most relevant data. Adopting best practices, such as maintaining accurate data types and conducting regular performance monitoring, further enhances the efficiency of data refresh processes. Moreover, by proactively addressing common troubleshooting issues, teams can maintain smooth operations and foster a data-driven culture.

Ultimately, leveraging incremental refresh alongside RPA not only enhances operational efficiency but also empowers organizations to make informed decisions based on timely insights. As businesses continue to evolve in an increasingly data-centric world, adopting these innovative approaches will be crucial for maintaining a competitive edge. Embracing these strategies today positions organizations for success tomorrow, ensuring they can navigate the complexities of data management with agility and confidence.

Overview

To create and manage Power Query relationships effectively, one must understand the types of connections (one-to-one, one-to-many, and many-to-many) and follow a structured approach in the Query Editor to establish and maintain these relationships. The article provides a comprehensive guide that includes step-by-step instructions for creating relationships, troubleshooting common issues, and implementing best practices, emphasizing the importance of automation and documentation to enhance operational efficiency and data integrity.

Introduction

In the realm of data management, mastering relationships within Power Query is not just a technical necessity but a strategic advantage that can elevate operational efficiency. Understanding how different tables interact is fundamental to crafting a robust data model that can drive insightful business decisions. This article delves into the intricacies of establishing and managing these relationships, providing a comprehensive guide that encompasses everything from basic configurations to advanced troubleshooting techniques.

By embracing best practices and leveraging automation tools like Robotic Process Automation (RPA), organizations can streamline their data processes, mitigate common issues, and ultimately empower their teams to focus on strategic initiatives rather than repetitive tasks.

As the data landscape continues to evolve, equipping oneself with these skills will be crucial for harnessing the full potential of data analytics and fostering sustainable business growth.

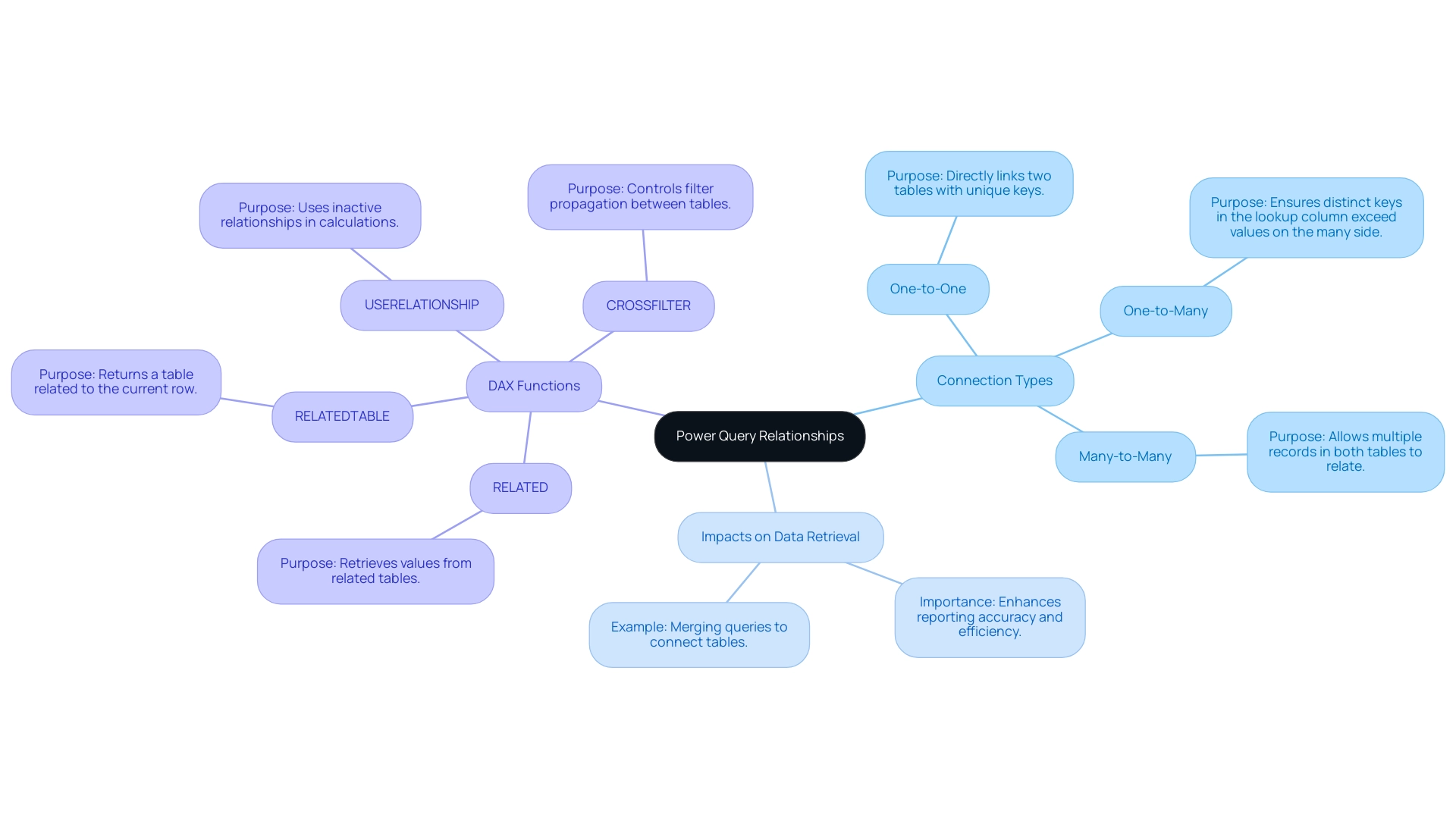

Understanding Relationships in Power Query: A Foundation for Data Management

In Power Query and the data model it feeds, relationships govern how data from different tables interacts, and understanding them is essential for building a sturdy data model that makes effective use of Business Intelligence. There are three primary types of relationships: one-to-one, one-to-many, and many-to-many.

Each type serves a distinct purpose and significantly impacts how data is retrieved and presented in reports. For example, in a one-to-many relationship, each key appears only once in the lookup (one-side) column, while the same key can appear many times on the many side; this uniqueness is what makes lookups unambiguous. Jorge Pinho, a recognized Solution Sage, emphasizes practical application by stating:

“It is possible! In Query Editor, go to the Sales table and use ‘Merge Queries’ to connect to the Dimension table by the Location Key column. This will bring the columns from the Dimension Table to the Sales table.”

This capability exemplifies how merging related tables can enhance your queries and address challenges such as time-consuming report creation and data inconsistencies.
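What a merge of this kind produces can be sketched in Python as a left outer join. This is an illustration under assumptions, not Power Query's implementation: the rows, the `LocationKey` spelling, and the `merge_left` helper are hypothetical, and the helper expects a non-empty right-hand table with unique keys.

```python
sales = [
    {"SaleID": 101, "LocationKey": 1, "Amount": 250},
    {"SaleID": 102, "LocationKey": 2, "Amount": 90},
    {"SaleID": 103, "LocationKey": 9, "Amount": 40},  # no matching location
]
locations = [
    {"LocationKey": 1, "City": "Lisbon"},
    {"LocationKey": 2, "City": "Porto"},
]

def merge_left(left, right, key):
    """Left outer join: keep every left row, add right-hand columns when matched."""
    lookup = {row[key]: row for row in right}
    extra_columns = [c for c in right[0] if c != key]
    merged = []
    for row in left:
        match = lookup.get(row[key], {})
        merged.append({**row, **{c: match.get(c) for c in extra_columns}})
    return merged

merged = merge_left(sales, locations, "LocationKey")
for row in merged:
    print(row["SaleID"], row["City"])
```

Sale 103 keeps its row but gets an empty City, which is how unmatched keys typically surface after a left outer merge.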

Furthermore, integrating Robotic Process Automation (RPA) solutions can significantly alleviate task repetition fatigue and staffing shortages, enhancing overall operational efficiency. Moreover, several DAX functions help you work with relationships in Power BI, including:

- RELATED

- RELATEDTABLE

- USERELATIONSHIP

- CROSSFILTER

These functions operate on relationships in the data model, helping you retrieve related values and control filter propagation. Power BI also offers three connectivity modes:

- Import

- DirectQuery

- Live Connection

The mode you choose affects how data is accessed and how relationships can be used.

As you become acquainted with these concepts, you will be well-prepared to create effective queries that harness the full potential of your information, driving informed decision-making and ultimately fostering business growth.

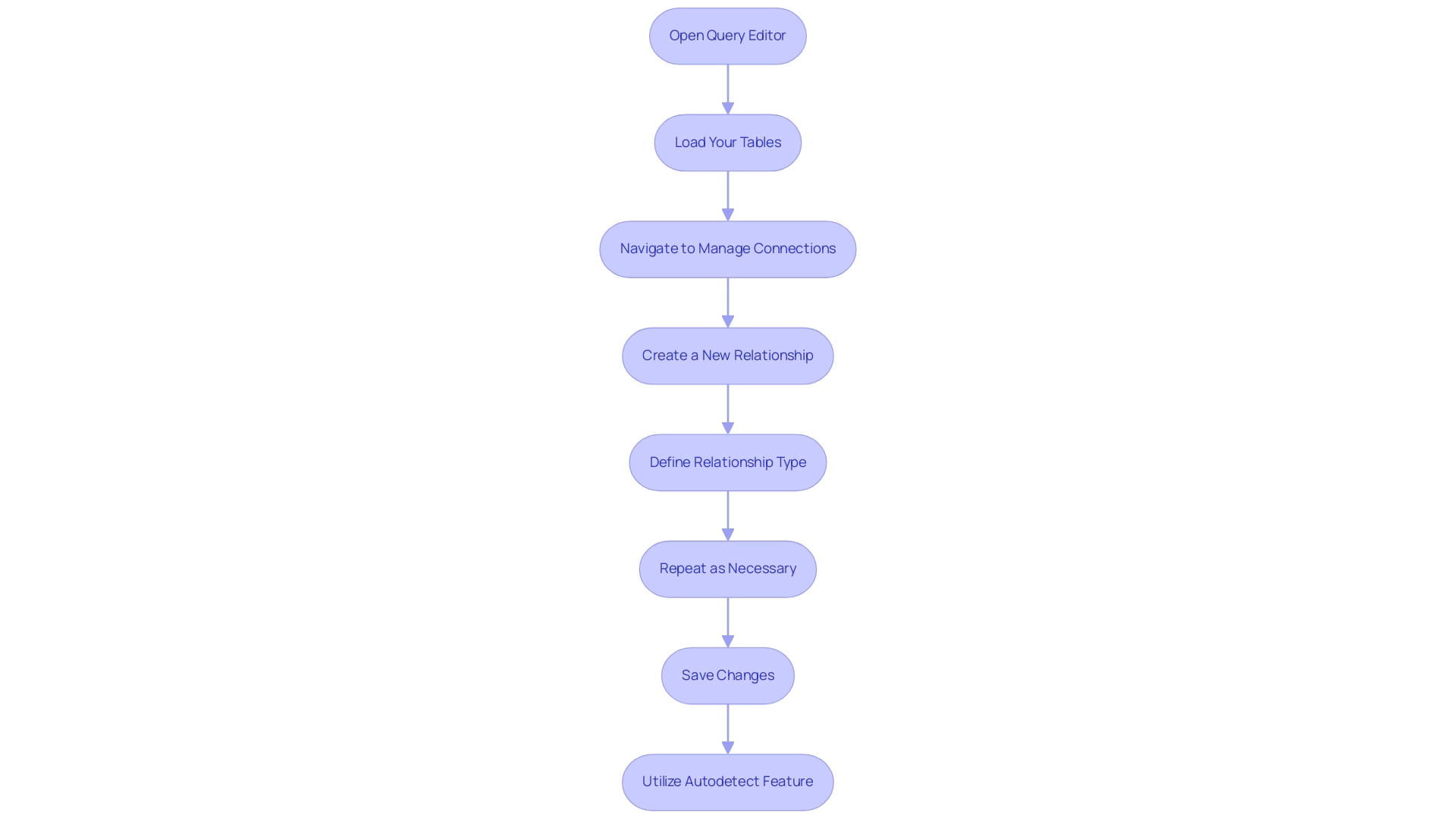

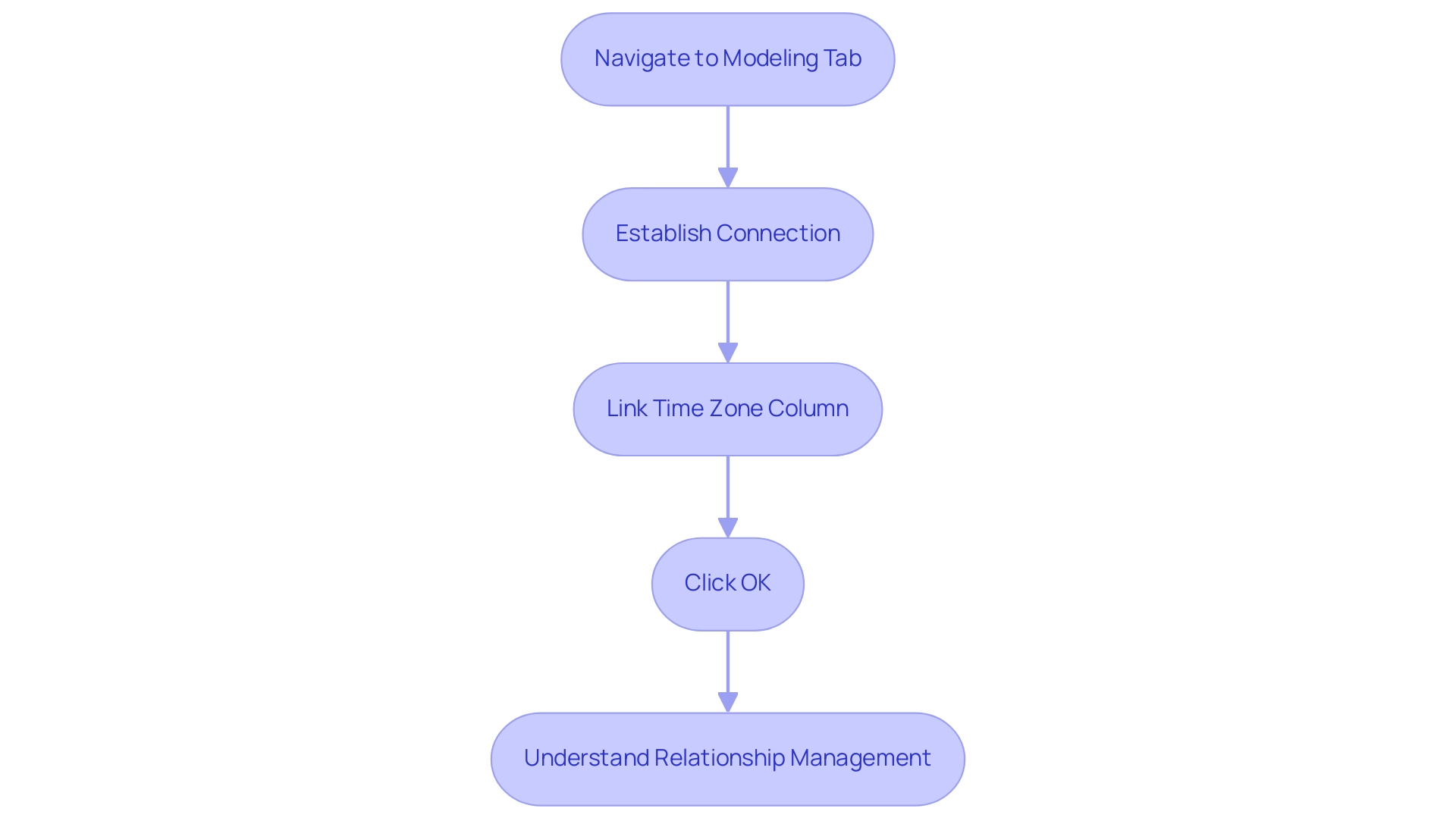

Step-by-Step Guide to Creating and Managing Power Query Relationships

- Open Query Editor: Begin by launching Excel and navigating to the Data tab. Select ‘Get Data’ and choose ‘Launch Power Query Editor’ (in Power BI Desktop, select ‘Transform data’ on the Home tab). The editor is a powerful tool for preparing your tables in a landscape where manual workflows can bog down operations. Automating this preparation with Robotic Process Automation (RPA) can significantly reduce the time spent on it.

- Load Your Tables: Import the tables you plan to relate, then load them into the data model (‘Close & Load To...’ with the Data Model option in Excel, or ‘Close & Apply’ in Power BI Desktop). For instance, consider a ProjectTickets table whose Submitted By and Opened By columns each refer to another table. This foundational step is crucial for effective modeling and lets your team focus on strategic tasks instead of repetitive handling.

- Open the Relationships Dialog: Relationships are defined in the data model rather than in the Power Query Editor itself. In Excel, select ‘Relationships’ on the Data tab; in Power BI Desktop, select ‘Manage relationships’ on the Modeling tab. RPA can streamline the management of these relationships, ensuring consistency and precision in your models.

- Create a New Relationship: Click ‘New’ to start. Choose the tables you want to link and select the columns that will function as primary and foreign keys. This step is crucial for precise relationships that drive actionable insights. Automating this process can minimize errors and save valuable time.

- Define Relationship Type: In Power BI Desktop, specify the cardinality, either one-to-one or one-to-many, based on the nature of your data. Then click ‘OK’ to create the relationship. RPA tools can help identify and recommend relationship types based on patterns in your data.

- Repeat as Necessary: Add further relationships as needed for your data model, ensuring a comprehensive structure that can support advanced analytics. Automating this step can help maintain a consistent approach.

- Save Changes: After establishing all necessary relationships, save the workbook or report. RPA can also automate saving and refreshing, keeping your data up to date without manual intervention.

Using the Autodetect feature in Power BI Desktop can streamline this process, especially when both columns share the same name, making relationship creation even more efficient. As emphasized in the case study titled ‘Building a Model in Power BI,’ understanding the relationships between tables is crucial to avoiding mistakes and achieving effective modeling. The insights gained will empower you to leverage DAX expressions for intricate metrics, enhancing your overall analytical capabilities in conjunction with RPA.
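The name-matching part of relationship detection can be sketched in Python. This is a deliberately simplified illustration, not how Power BI's Autodetect actually works (the real feature also inspects the data), and the table and column names are hypothetical.

```python
from itertools import combinations

# Hypothetical schema: table name -> column names.
tables = {
    "Sales": ["SaleID", "LocationKey", "Amount"],
    "Locations": ["LocationKey", "City"],
    "Products": ["ProductKey", "Name"],
}

def candidate_relationships(schema):
    """Suggest (table_a, table_b, column) wherever two tables share a column name."""
    found = []
    for (a, cols_a), (b, cols_b) in combinations(schema.items(), 2):
        for col in sorted(set(cols_a) & set(cols_b)):
            found.append((a, b, col))
    return found

candidates = candidate_relationships(tables)
print(candidates)  # [('Sales', 'Locations', 'LocationKey')]
```

A shared name is only a hint, so each suggestion should still be checked against the data before it is relied on.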

However, be mindful of potential pitfalls, as noted by analyst Ricardo: ‘At first I did what you suggested, and it worked for creating the summary table, but broke the visualizations I already had in the report, because the fields they used were no longer present.’ This highlights the significance of careful management of power query relationships to maintain the integrity of your reports, thereby enhancing operational efficiency. In the context of the rapidly evolving AI landscape, leveraging RPA not only simplifies these processes but also helps navigate the complexities of information management, allowing you to focus on deriving insights that drive business growth.

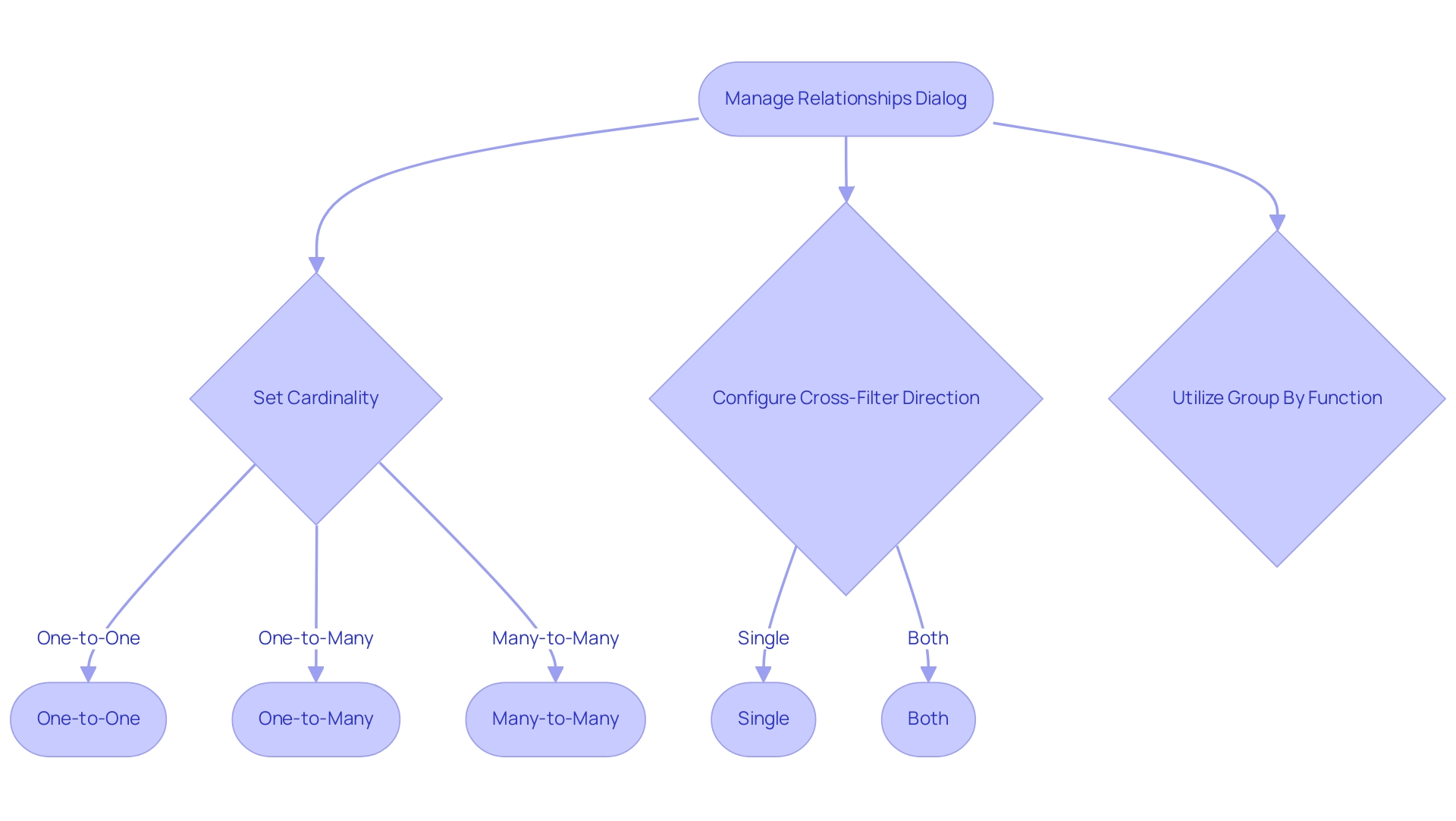

Configuring Advanced Relationship Properties in Power Query

To configure advanced relationship properties in Power BI, open the ‘Manage Relationships’ dialog. There you can define the cardinality (one-to-one, one-to-many, or many-to-many) based on your tables’ structure. Understanding these settings is essential, for example when a fact table references 100 different categories, because they determine how tables relate and affect report outcomes.

Failing to understand these settings can leave your business at a competitive disadvantage when trying to extract meaningful insights from your information.
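One way to reason about cardinality is to check whether each key column contains duplicates. The Python sketch below illustrates that test; the helper names and sample keys are hypothetical, and it is not a reproduction of how Power BI infers cardinality.

```python
def is_unique(keys):
    """True when no key value is repeated."""
    keys = list(keys)
    return len(set(keys)) == len(keys)

def cardinality(from_keys, to_keys):
    """Classify a relationship by the uniqueness of each key column."""
    from_unique, to_unique = is_unique(from_keys), is_unique(to_keys)
    if from_unique and to_unique:
        return "one-to-one"
    if from_unique:
        return "one-to-many"
    if to_unique:
        return "many-to-one"
    return "many-to-many"

location_keys = [1, 2, 3]        # lookup table: each key appears once
sales_keys = [1, 1, 2, 3, 3, 3]  # fact table: keys repeat

print(cardinality(location_keys, sales_keys))  # one-to-many
```

If a column expected to be unique turns out to contain duplicates, the relationship is effectively many-to-many, which is a common cause of duplicated totals.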

Moreover, the cross-filter direction setting significantly impacts how filters propagate across related tables. By selecting ‘Both’, you enable filters to flow in both directions, which can be useful for complex models with interdependencies. Use it deliberately, though: bidirectional filtering can introduce ambiguous filter paths and slow queries, so choosing the right direction for each relationship is what improves analysis accuracy and query performance.
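The effect of cross-filter direction can be illustrated with a toy Python model. It is a simplification with hypothetical tables, not the engine's actual behavior: in the single direction a filter on the lookup table narrows the fact table, and only with both directions do the fact rows narrow the lookup table in return.

```python
locations = [
    {"LocationKey": 1, "City": "Lisbon"},
    {"LocationKey": 2, "City": "Porto"},
]
sales = [
    {"SaleID": 101, "LocationKey": 1},
    {"SaleID": 102, "LocationKey": 1},
]

def sales_for_city(city):
    """One-to-many direction: a filter on Locations narrows Sales."""
    keys = {loc["LocationKey"] for loc in locations if loc["City"] == city}
    return [s["SaleID"] for s in sales if s["LocationKey"] in keys]

def visible_cities(direction):
    """With 'both', Sales rows also narrow Locations; with 'single' they do not."""
    if direction == "both":
        keys = {s["LocationKey"] for s in sales}
        return [loc["City"] for loc in locations if loc["LocationKey"] in keys]
    return [loc["City"] for loc in locations]

print(sales_for_city("Lisbon"))    # [101, 102]
print(visible_cities("single"))    # ['Lisbon', 'Porto']
print(visible_cities("both"))      # ['Lisbon']
```

With the single direction, Porto still appears in a city list even though it has no sales; with both directions it drops out.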

For example, in a case study named ‘Configuring Connection Options,’ it was noted that Power BI Desktop automatically sets relationship options based on column data. You can then adjust those settings for your specific needs, ensuring relationships accurately reflect the data being analyzed. In addition, the Group By function in Power Query can summarize a table to the level of detail needed before it is related to other tables.

Rob Miles’s ‘Begin to Code: Building Apps and Games in the Cloud’ is cited here for the significance of understanding how data values connect. In summary, by concentrating on cardinality, cross-filter direction, and features like Group By, you can strengthen your relationships, which leads to improved accuracy and more insightful analytics. Moreover, combining strong BI with RPA solutions like EMMA RPA and Power Automate can enhance operational efficiency, facilitating informed decision-making that promotes business growth and innovation.

Consider exploring these RPA tools to enhance your information management processes and overcome the challenges of time-consuming report creation and inconsistencies.

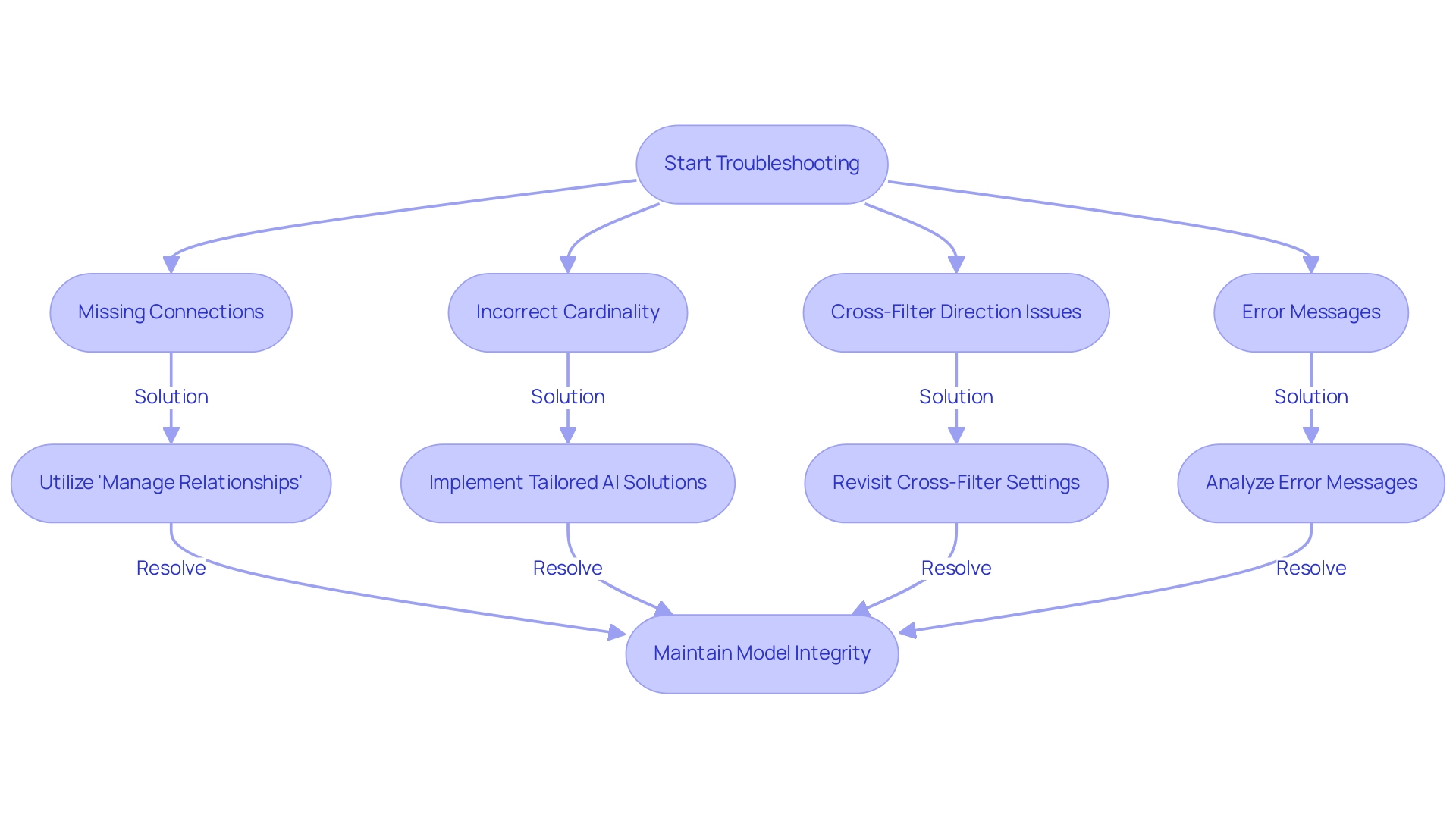

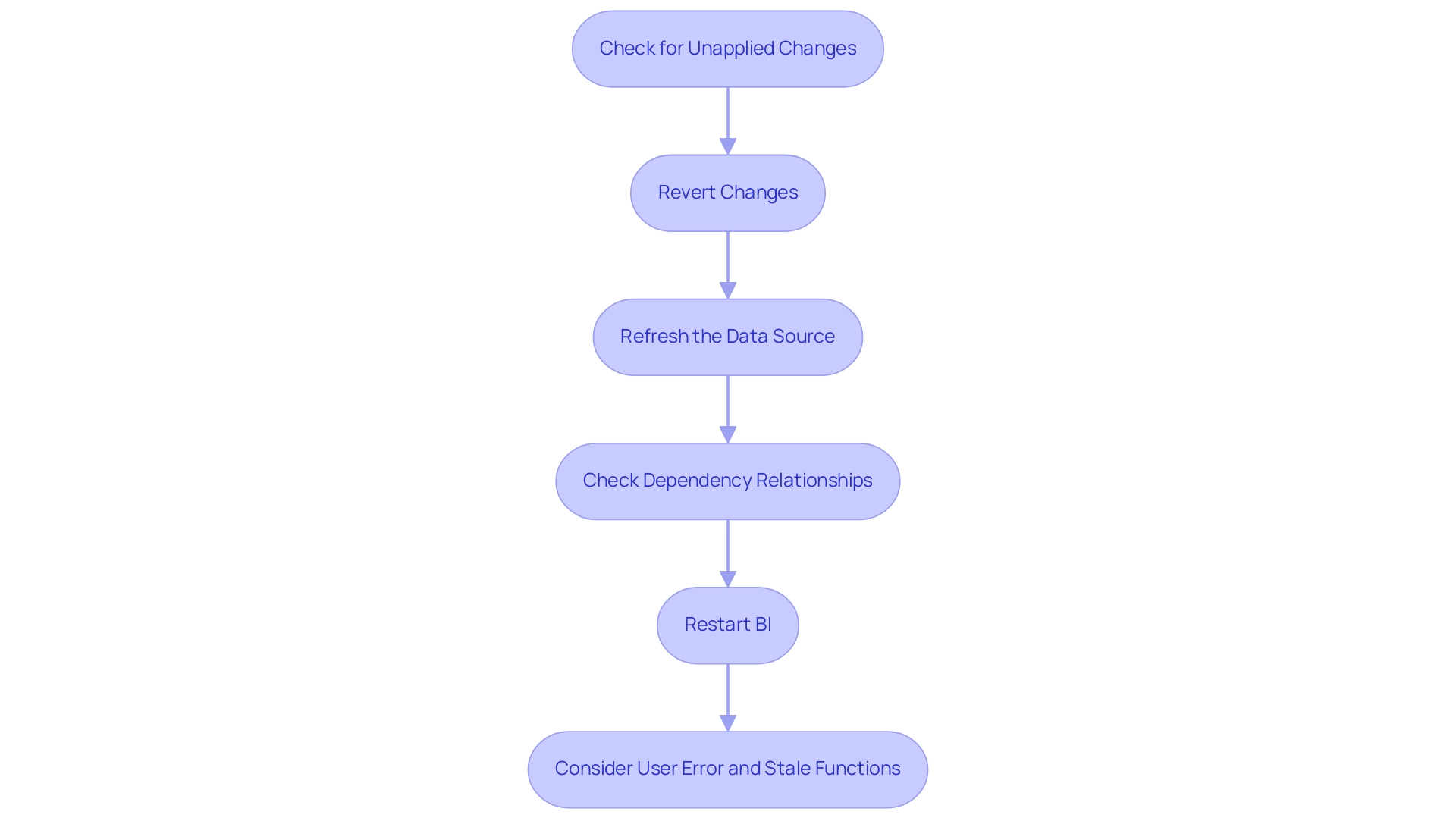

Troubleshooting Common Issues in Power Query Relationships

Navigating power query relationships can present several challenges, but effectively addressing these can enhance your information management capabilities and operational efficiency. Here are some typical problems you may face:

- Missing Connections: If your data is not showing as expected, ensure that relationships are properly established. Use the ‘Manage Relationships’ feature to confirm that all necessary relationships are in place. This proactive step can be complemented by Robotic Process Automation (RPA) to automate routine checks, ensuring data integrity and saving valuable time.

- Incorrect Cardinality: The cardinality of a relationship dictates how rows in one table relate to rows in another. Getting it right is vital; an incorrect setting can lead to missing or duplicated data, compromising your analysis. Tailored AI solutions can assist in identifying patterns and anomalies in your data, helping you rectify these issues swiftly.

- Cross-Filter Direction Issues: Filters may not behave as expected if the cross-filter direction isn’t correctly configured. Revisiting these settings and making necessary adjustments can effectively resolve filtering problems, empowering you to make informed decisions based on precise information.

- Error Messages: Pay close attention to any error messages that arise during information loading. These messages often provide valuable insights into underlying issues that need addressing. Employing Business Intelligence tools can assist you in converting these insights into practical strategies, especially in addressing difficulties related to inadequate master information quality.
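A quick check that often explains missing data is to look for keys in the fact table that have no match in the related table. The Python sketch below shows the idea; the helper name and sample keys are hypothetical.

```python
def orphan_keys(fact_keys, dimension_keys):
    """Keys used in the fact table that have no match in the dimension table."""
    return sorted(set(fact_keys) - set(dimension_keys))

fact_keys = [1, 1, 2, 7, 9]   # keys referenced by fact rows
dimension_keys = [1, 2, 3]    # keys available in the lookup table

orphans = orphan_keys(fact_keys, dimension_keys)
print(orphans)  # [7, 9]
```

Rows with orphaned keys usually appear under a blank category in reports, so an empty result here is a useful sanity check.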

Furthermore, Power Query can face challenges with columns that contain mixed types, such as text, numbers, and nested tables within a single column, complicating relationship management and data integrity. Moreover, the lack of built-in password protection for query code presents a potential security risk.
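Mixed types in a single column can be surfaced with a simple profile of the values. The Python sketch below is an illustration with a hypothetical column, where a dictionary stands in for a nested table.

```python
from collections import Counter

def type_profile(values):
    """Count how many values of each type a column contains."""
    return Counter(type(v).__name__ for v in values)

# Hypothetical column mixing numbers, text, a missing value, and a nested record.
column = [10, "12", 7.5, None, 3, {"nested": "table"}]

profile = type_profile(column)
print(dict(profile))
```

A column intended as a relationship key should show a single type here; anything else is worth converting or cleaning before the tables are related.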

To improve collaboration and information management through power query relationships, consider strategies such as using OneDrive or SharePoint, adopting Power BI dataflows, and documenting changes, as emphasized in the case study titled ‘Solutions for Better Collaboration.’ These methods can centralize transformation processes and enhance version control, facilitating better teamwork and ensuring that your operational strategies are aligned with your business objectives.

By implementing these troubleshooting strategies and best practices, you can maintain the integrity of your model. As noted by Paul D. Brown, a Community Champion:

“Thanks for that very comprehensive explanation. It all makes sense of course.”

This reflects the importance of understanding and applying best practices in your relationships to ensure optimal performance and drive innovation.

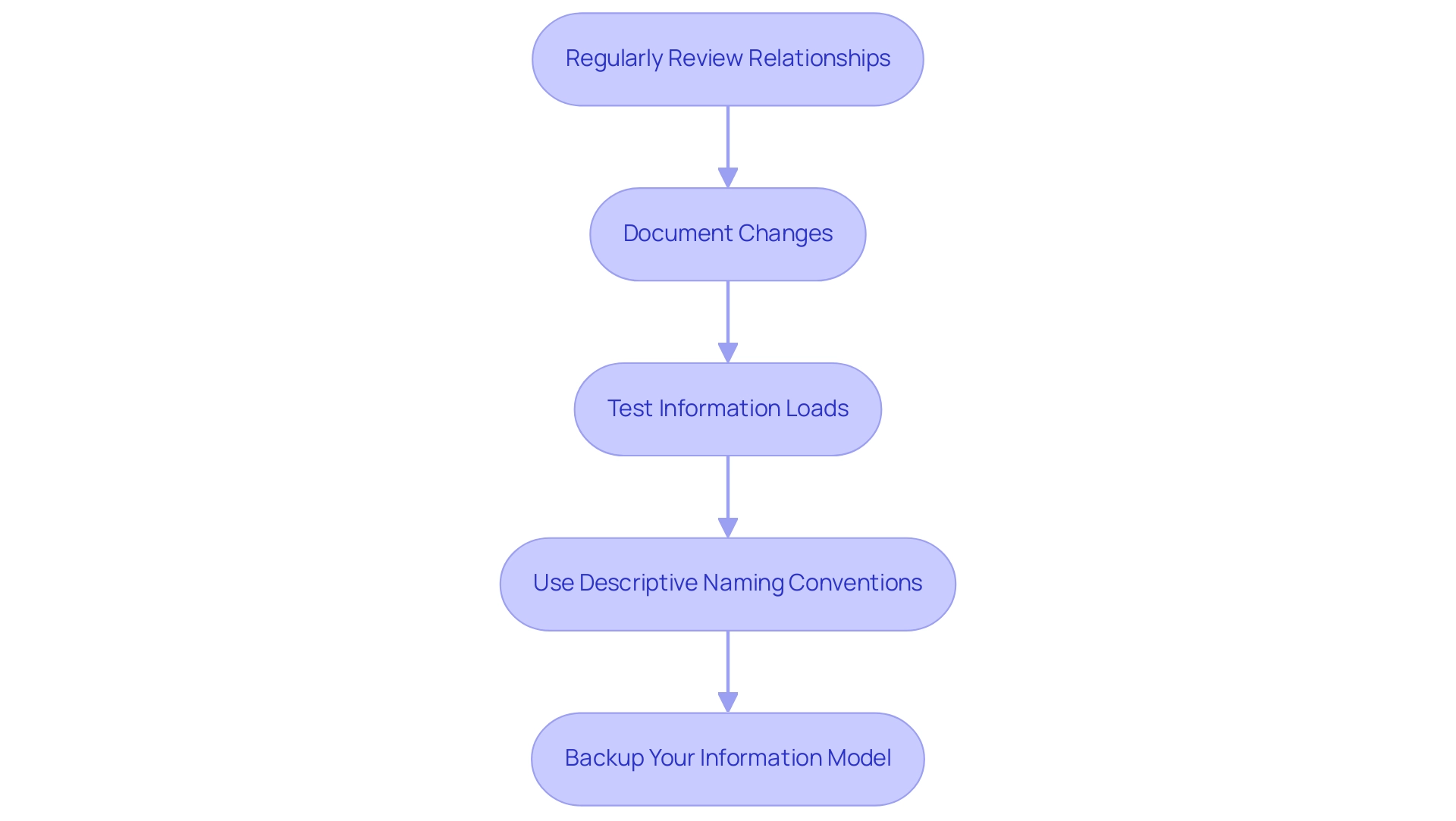

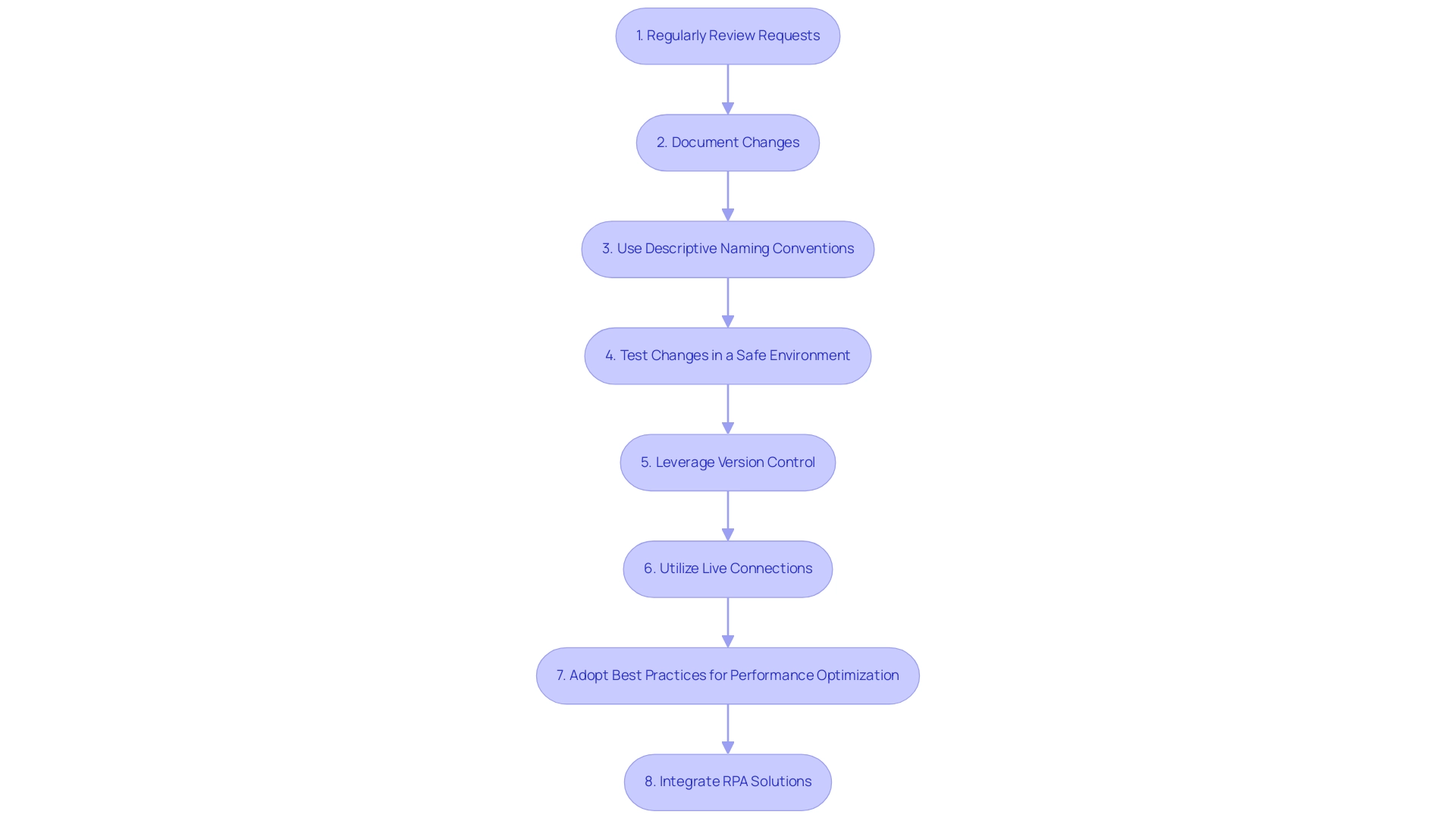

Best Practices for Maintaining Relationships During Data Source Changes

- Regularly Review Relationships: Following any change to a source, it is crucial to revisit the ‘Manage Relationships’ dialog. This step ensures that all connections remain valid and functional, preventing potential disruptions in your analysis. By incorporating Robotic Process Automation (RPA), you can automate this review process, such as scheduling regular checks to confirm that connections are intact, boosting efficiency and allowing your team to focus on strategic tasks. As indicated in statistical practices, larger effect sizes necessitate smaller sample sizes to attain the same power, emphasizing the significance of preserving precise relationships.

- Document Changes: Maintaining a thorough record of alterations made to information sources and relationships is crucial. This documentation enhances transparency and facilitates troubleshooting. Utilizing Business Intelligence tools can assist this process by offering insightful analytics on changes over time, as highlighted by the National Institutes of Health’s emphasis on preserving information integrity and reproducibility. RPA can also be used to automate the documentation process, ensuring that all changes are recorded promptly and accurately.

- Test Information Loads: After implementing changes, perform test loads to identify any discrepancies or issues that may arise. This testing phase is essential for confirming that your updates have not compromised the integrity of the information. Employing RPA can automate this testing, ensuring consistent validation of your workflows. For instance, RPA can run predefined tests that check for consistency and accuracy after each update.

- Use Descriptive Naming Conventions: Adopting clear and descriptive naming conventions for tables and relationships simplifies the identification of elements during updates, reducing the likelihood of errors. This practice supports a more organized information environment, further enhanced by tailored AI solutions that can suggest optimal naming practices based on your context, ensuring that names are intuitive and consistent.

- Backup Your Information Model: Prior to executing significant changes, always create a backup of your information model. This precautionary measure safeguards against data loss, ensuring you can revert to a stable version if necessary. Implementing automated backup processes through RPA can enhance your operational efficiency, allowing backups to occur at scheduled intervals without manual intervention.

By adhering to these best practices, you can significantly enhance the integrity and effectiveness of your relationships in Power Query. This approach aligns with current trends in statistical tools, as highlighted in the case study ‘Trends in Statistical Tools and Analysis,’ where organizations increasingly rely on advanced statistical analysis and automation to improve operational efficiency.
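The "Test Information Loads" practice can be made concrete with a small integrity check run after each load. The following is a minimal sketch in Python rather than Power Query M, and it only illustrates the logic. The table contents, the column name `CustomerID`, and the helper names `find_orphan_keys` and `find_duplicate_keys` are hypothetical and not part of any Power Query or Power BI API.

```python
def find_orphan_keys(fact_rows, dim_rows, fact_key, dim_key):
    """Return sorted fact-side key values that have no match on the dimension side."""
    dim_keys = {row[dim_key] for row in dim_rows}
    return sorted({row[fact_key] for row in fact_rows} - dim_keys)


def find_duplicate_keys(dim_rows, dim_key):
    """Return key values that occur more than once on the 'one' side of a relationship."""
    seen, duplicates = set(), set()
    for row in dim_rows:
        value = row[dim_key]
        if value in seen:
            duplicates.add(value)
        seen.add(value)
    return sorted(duplicates)


# Hypothetical sample data: a Customers dimension and an Orders fact table.
customers = [
    {"CustomerID": 1, "Name": "Avery"},
    {"CustomerID": 2, "Name": "Blake"},
    {"CustomerID": 2, "Name": "Blake (duplicate)"},
]
orders = [
    {"OrderID": 100, "CustomerID": 1},
    {"OrderID": 101, "CustomerID": 3},  # no matching customer
]

orphans = find_orphan_keys(orders, customers, "CustomerID", "CustomerID")
duplicates = find_duplicate_keys(customers, "CustomerID")
print("Orphan keys:", orphans)
print("Duplicate keys:", duplicates)
```

Orphan keys point to fact rows that a one-to-many relationship would leave unmatched. Duplicate keys on the "one" side are a common reason a relationship silently turns into many-to-many after a source change.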

Conclusion

Understanding and managing relationships in Power Query is a pivotal element in optimizing data management and enhancing operational efficiency. By mastering the types of relationships—one-to-one, one-to-many, and many-to-many—organizations can construct solid data models that facilitate insightful analysis and informed decision-making. Implementing best practices such as:

- Regular reviews

- Thorough documentation

- Utilizing automation tools like Robotic Process Automation (RPA)

can streamline these processes, allowing teams to focus on strategic initiatives rather than repetitive tasks.

Moreover, configuring advanced relationship properties and troubleshooting common issues is essential for maintaining data integrity and performance. By addressing challenges such as:

- Incorrect cardinality

- Cross-filter direction

businesses can ensure their data remains accurate and reliable. The integration of RPA not only alleviates the burden of manual oversight but also enhances the ability to adapt to changes in data sources seamlessly.

In a rapidly evolving data landscape, equipping teams with the knowledge and tools to effectively manage relationships within Power Query will empower them to harness the full potential of data analytics. Ultimately, this strategic focus on data relationships will drive sustainable business growth, ensuring that organizations remain competitive and capable of making data-driven decisions that foster innovation and operational excellence.

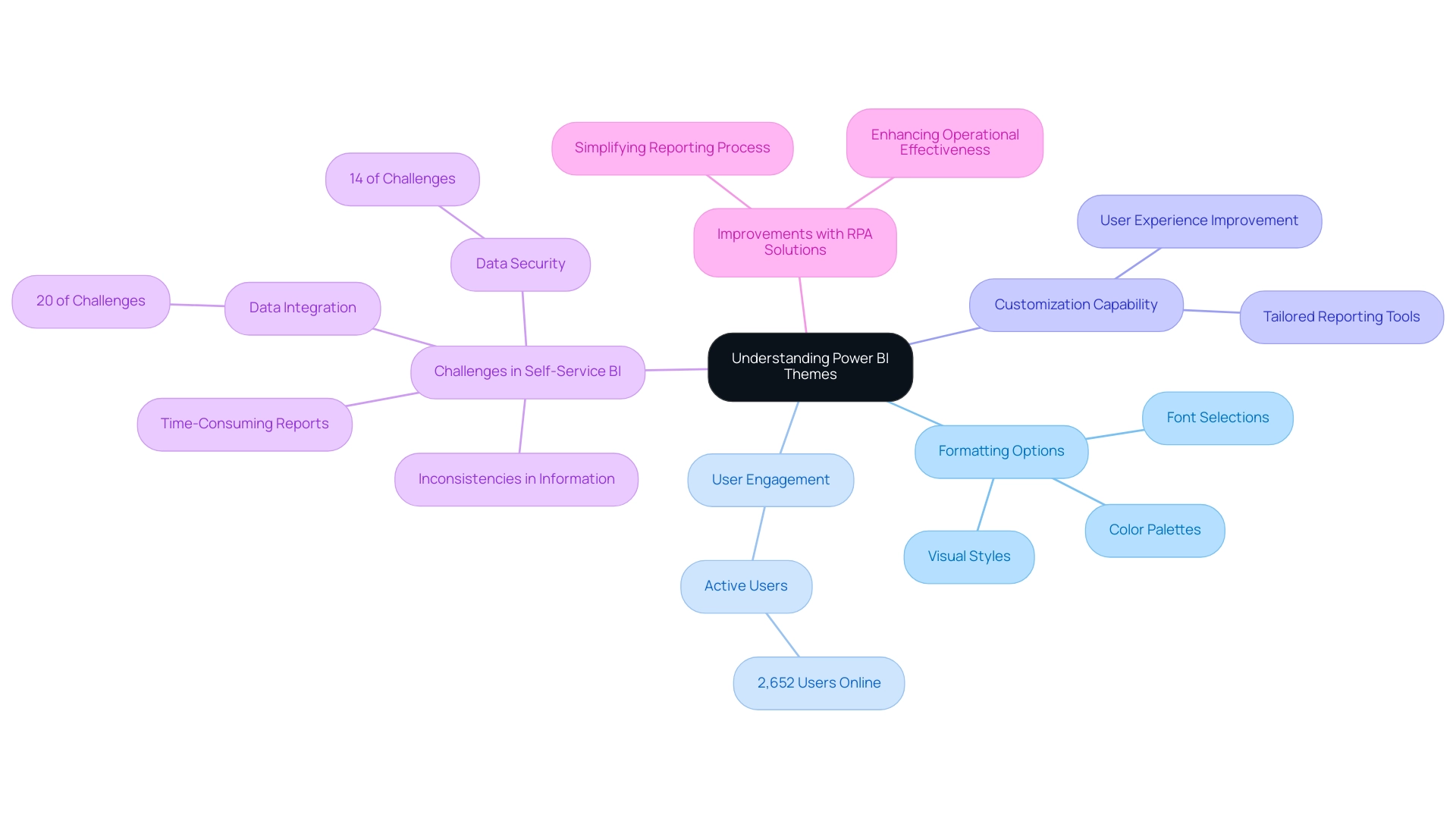

Overview:

Navigating the Power BI Visual Marketplace involves a straightforward process that allows users to enhance their data visualizations with custom visuals tailored for various reporting needs. The article outlines a step-by-step guide for accessing, installing, and customizing these visuals, emphasizing their role in transforming complex data into clear insights that facilitate effective decision-making and storytelling.

Introduction

In the realm of data visualization, the Power BI Visual Marketplace stands out as an invaluable resource for organizations seeking to elevate their reporting capabilities. This vibrant hub is filled with custom visuals that not only enhance the presentation of data but also tackle common operational challenges such as time-consuming report creation and data inconsistencies.

With offerings from both Microsoft and innovative third-party developers, users can access a diverse range of tools designed to transform complex datasets into clear, actionable insights. As the demand for personalized data solutions continues to rise, understanding how to navigate this marketplace is essential for unlocking the full potential of Power BI, ensuring that data storytelling resonates with stakeholders and drives informed decision-making.

Introduction to the Power BI Visual Marketplace

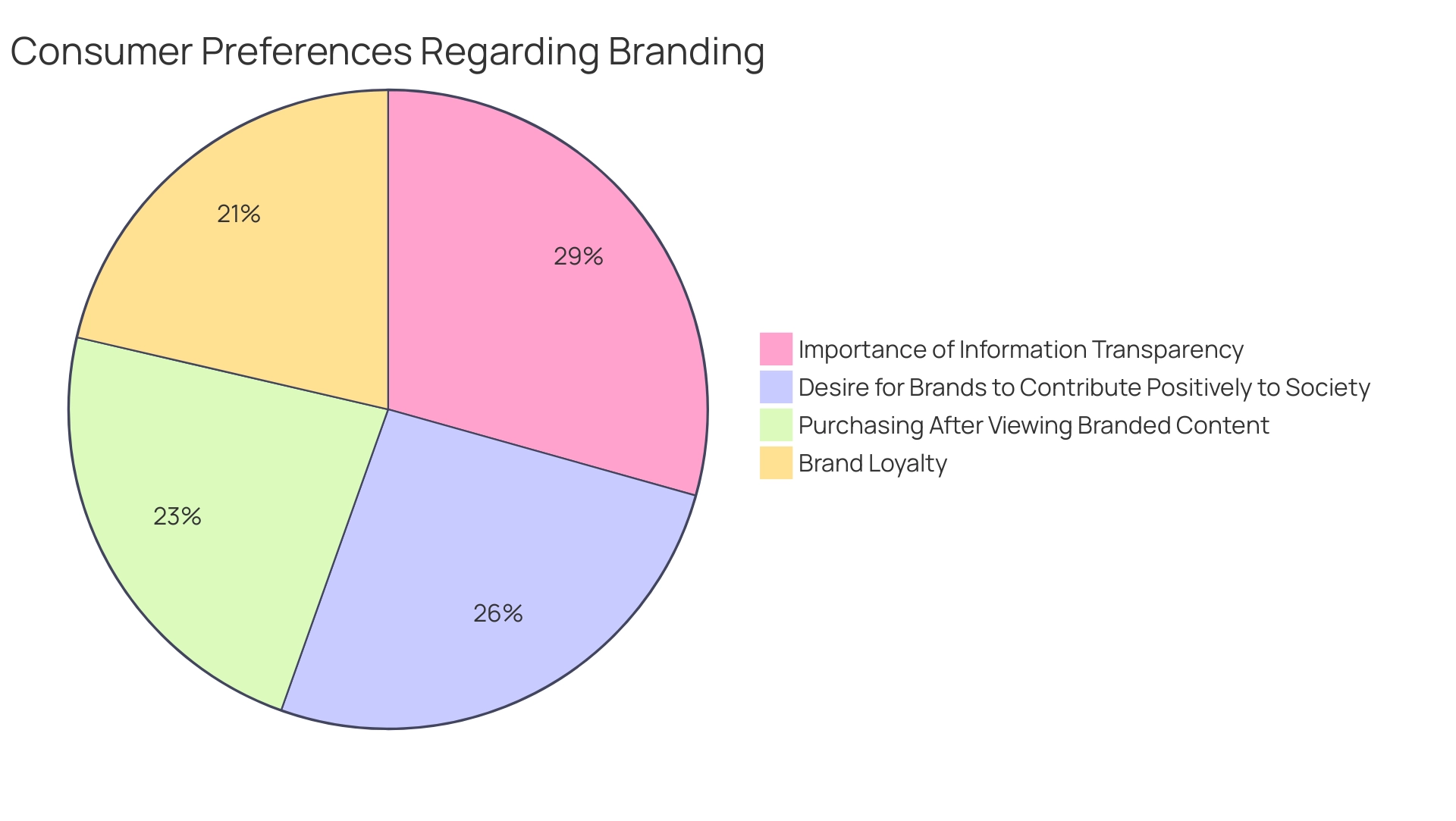

The Power BI Visual Marketplace serves as a dynamic hub for custom visuals designed to enhance your data visualization capabilities. This extensive repository showcases a collection of visuals crafted by Microsoft, alongside innovative offerings from third-party developers, such as KPI by Powerviz, which boasts over 100 prebuilt KPI templates and 40 chart variations. Users can greatly extend functionality beyond the standard visualizations, tackling common challenges such as time-consuming report creation and data inconsistencies.

Importantly, these visuals also offer actionable guidance, transforming complex information into clear insights that drive decision-making. Each visual is tailored to enhance specific aspects of information presentation, whether for interactive dashboards, comprehensive reports, or captivating presentations. By learning to navigate the Power BI Visual Marketplace efficiently, you can unlock the full potential of your Business Intelligence experience, ensuring that your storytelling through information resonates with your audience.

Enterprise Account Director Kevin Scott emphasizes this potential, stating, ‘In this step-by-step video, I showcase how one of my customers leveraged HG Insights’ Technology Intelligence to uncover a $200M opportunity in the Business Intelligence market.’ Recent trends indicate remarkable growth in custom visuals within the Power BI Visual Marketplace, reflecting a broader industry shift towards personalized and impactful visualization solutions. Additionally, the Finance & Insurance, Manufacturing, and Public Administration sectors collectively account for 62% of the overall market spending on BI software, highlighting the significant demand and opportunity for BI solutions.

Embracing these tools not only enhances your reporting capabilities but also fosters a data-driven culture that drives business growth.

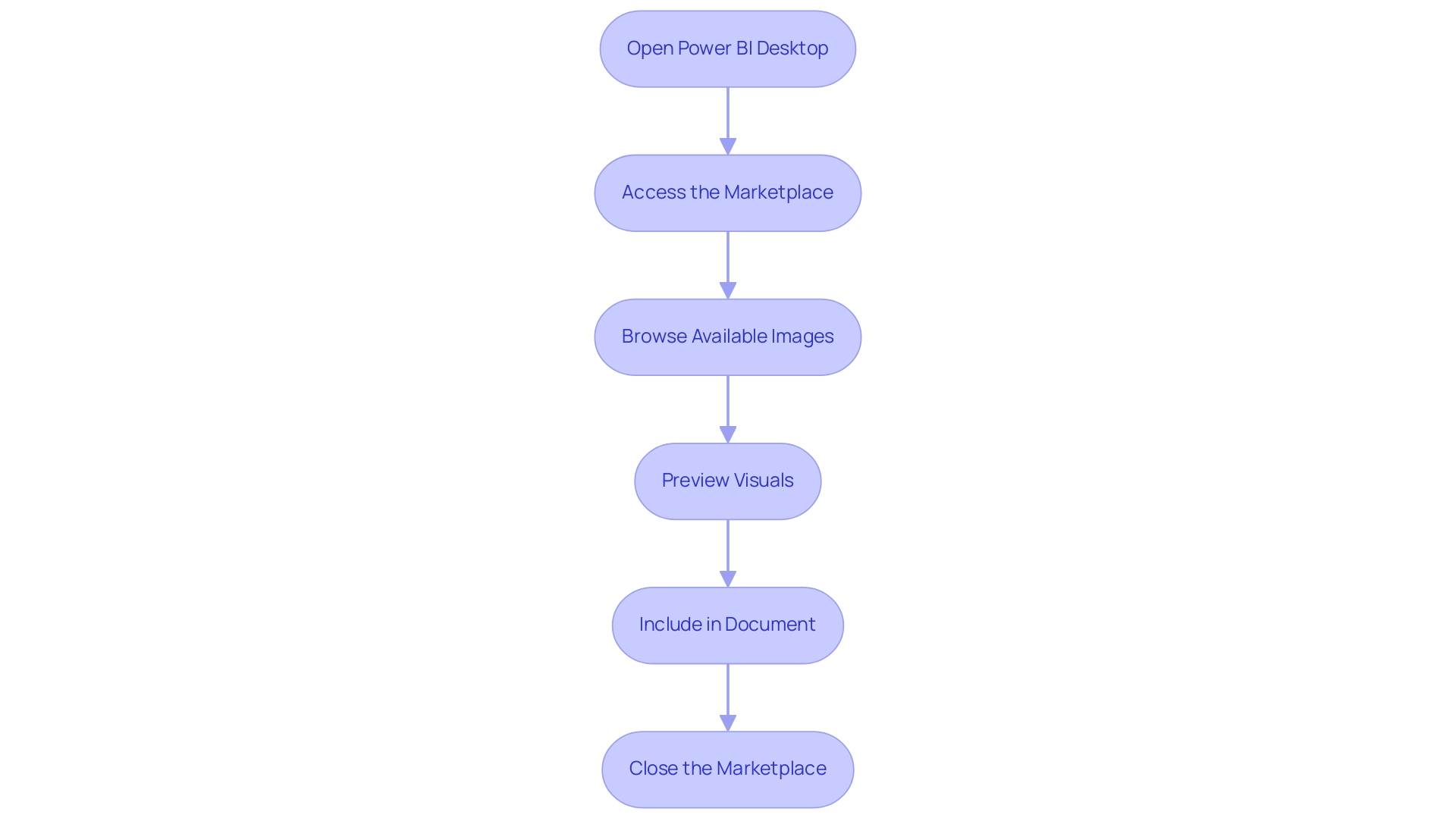

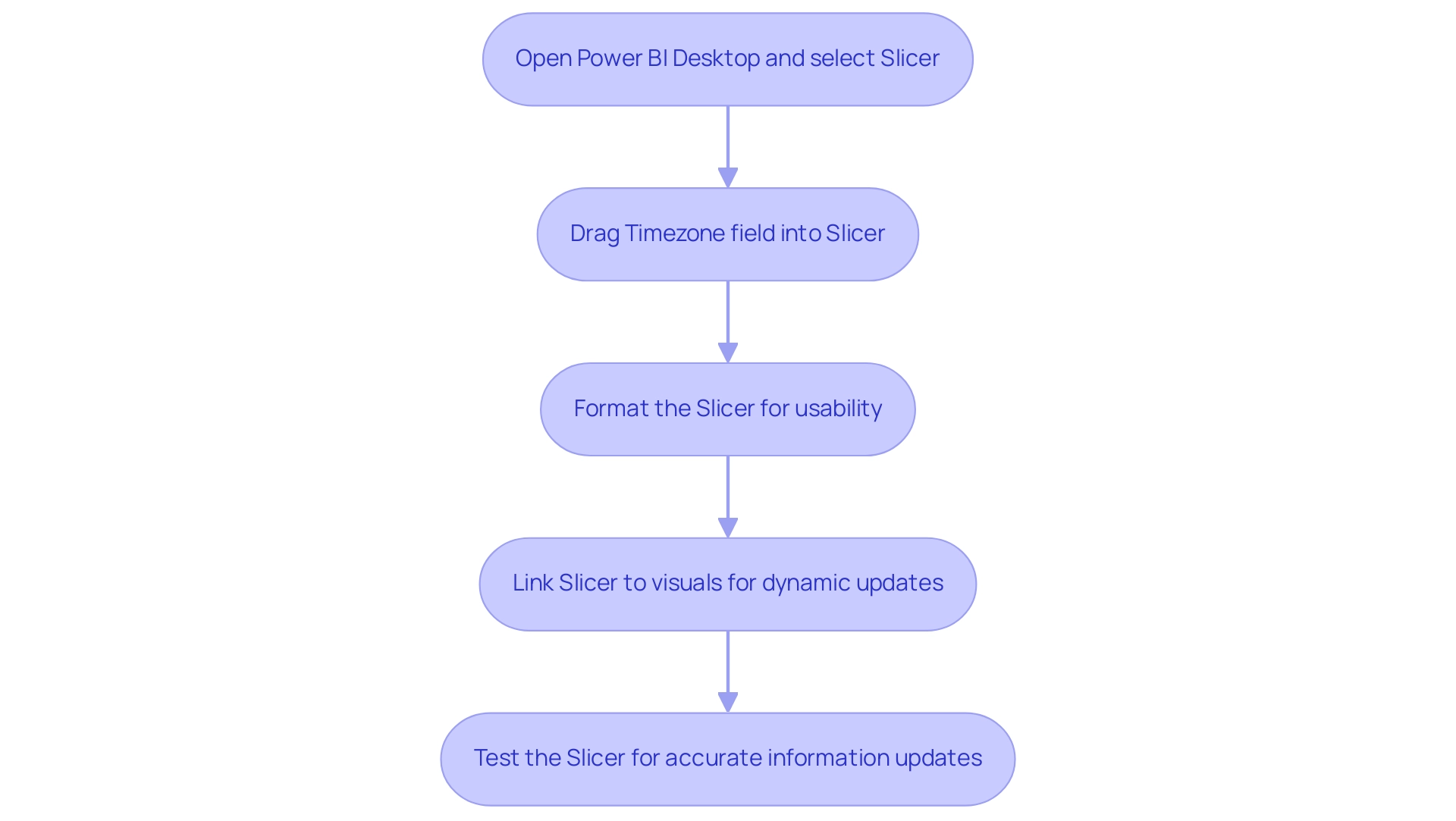

Step-by-Step Guide to Navigating the Marketplace

Navigating the Power BI Visual Marketplace is a straightforward process that opens up a world of interactive data presentation and storytelling. By leveraging Business Intelligence tools, you can combat challenges such as time-consuming report creation and data inconsistencies, which often hinder operational efficiency. Furthermore, incorporating RPA solutions such as EMMA and Automation can further streamline these processes.

Follow these steps to enhance your reports:

- Open Power BI Desktop: Begin by launching the application on your computer, setting the stage for your data exploration.

- Access the Marketplace: In the ‘Visualizations’ pane on the right-hand side, click the three dots (ellipsis) at the bottom and choose the option to get more visuals; this opens the marketplace.

- Browse Available Visuals: Take your time to explore the categories, or use the search bar to pinpoint specific visuals tailored to your objectives.

- Preview Visuals: Click on any visual that catches your interest to view detailed information, including screenshots, descriptions, and user ratings. This insight will help you choose the visuals that best fit your narrative.

- Add to Report: Once you find a visual that aligns with your vision, click ‘Add’ to integrate it into your report.

- Close the Marketplace: After adding your selected visuals, close the marketplace to return to your report, prepared to present your information in a compelling manner.

By effectively utilizing the Power BI Visual Marketplace, along with RPA solutions, you can transform raw information into impactful narratives that drive meaningful action and address common challenges in information management. Remember the insightful words of Darya Yatchenko, Lead Technical Writer:

“Remember, data visualization is not just about presenting information, but also about telling a story and driving meaningful action.”

Furthermore, consider the value proposition of acquiring Power BI Pro with Microsoft 365 applications for one low price per user, ensuring you have access to industry-leading security.

Should you need assistance, technical support for Power BI can be accessed through Microsoft Fabric, providing essential resources for your navigation. Embrace these tools to elevate your operational efficiency and lead your organization toward data-driven decision-making.

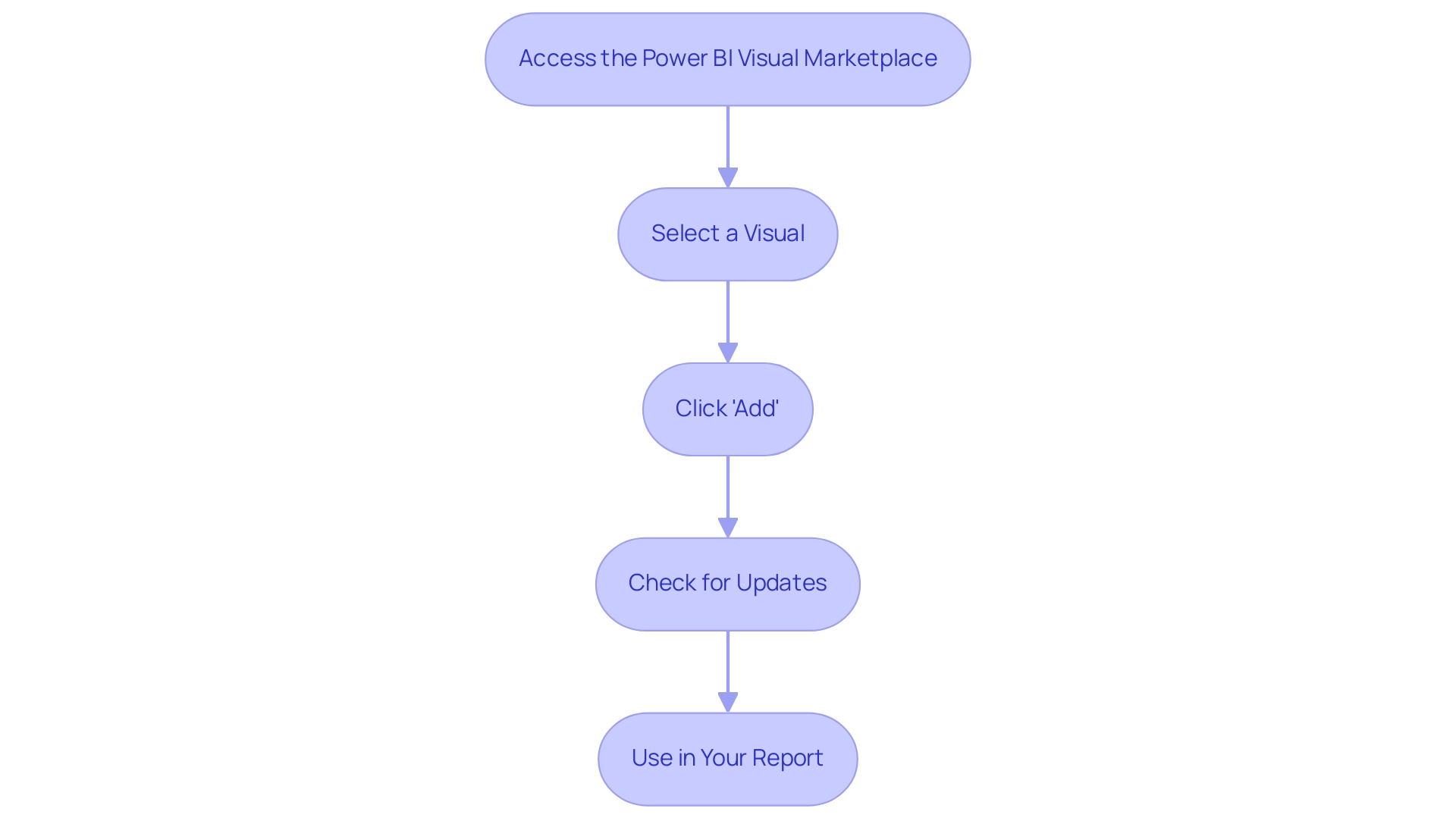

Installing Visuals from the Power BI Marketplace

Installing visuals from the Power BI Marketplace can be a straightforward process when following these essential steps:

- Access the Power BI visual marketplace: Begin by navigating to the Visualizations pane to open the Power BI visual marketplace, where a variety of powerful tools await your exploration.

- Select a Visual: Browse through the diverse selection and click on any visual that piques your interest to view its detailed features and functionalities.

- Click ‘Add’: Once you’ve reviewed the visual, simply click the ‘Add’ button to seamlessly integrate it into your Power BI workspace.

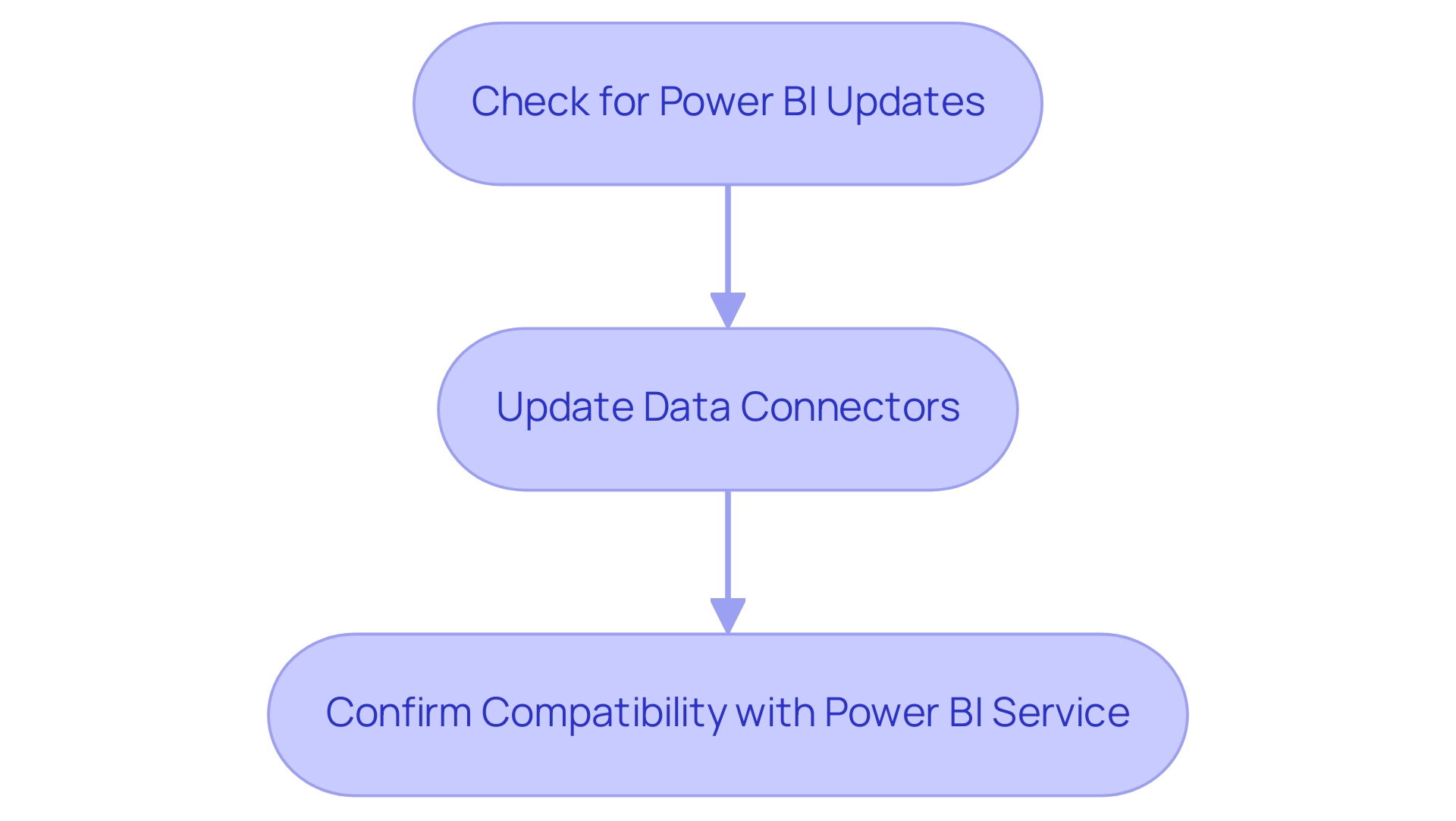

- Check for Updates: Keeping your graphics current is crucial; check for updates regularly through the ‘Manage graphics’ option in the Visualizations pane to ensure optimal performance and access to new features.

- Use in Your Report: After installation, your new visual will appear in the Visualizations pane, ready to enhance your reports and engage your audience.

By utilizing visuals from the Power BI Visual Marketplace, organizations like the Red Cross have transformed their fundraising strategies and gained deeper insights into donor behavior. The Red Cross encountered difficulties in handling donor information and evaluating the success of their fundraising campaigns, but with the integration of Power BI, they enhanced campaign performance and support efficiency through improved donor insights.

Additionally, the ‘Drill Down Timeline PRO’ feature allows users to conduct detailed examinations of timelines with customizable interactions, showcasing the versatility of BI visuals. As Ryan Majidimehr emphasizes, “We hope that these exciting new capabilities enable you to create and migrate even more BI semantic models to Direct Lake mode…” This highlights the significant potential for real-time analytics and AI integration that can drive effective decision-making and storytelling in your presentations.

Additionally, our BI services provide a 3-Day BI Sprint, which enables quick development of professionally crafted documents, and the General Management App, which offers thorough management and intelligent evaluations. With tools like RPA optimizing your processes and customized AI solutions improving information quality, you can overcome implementation challenges and utilize Business Intelligence effectively for informed decision-making.

Customizing Your Power BI Visuals

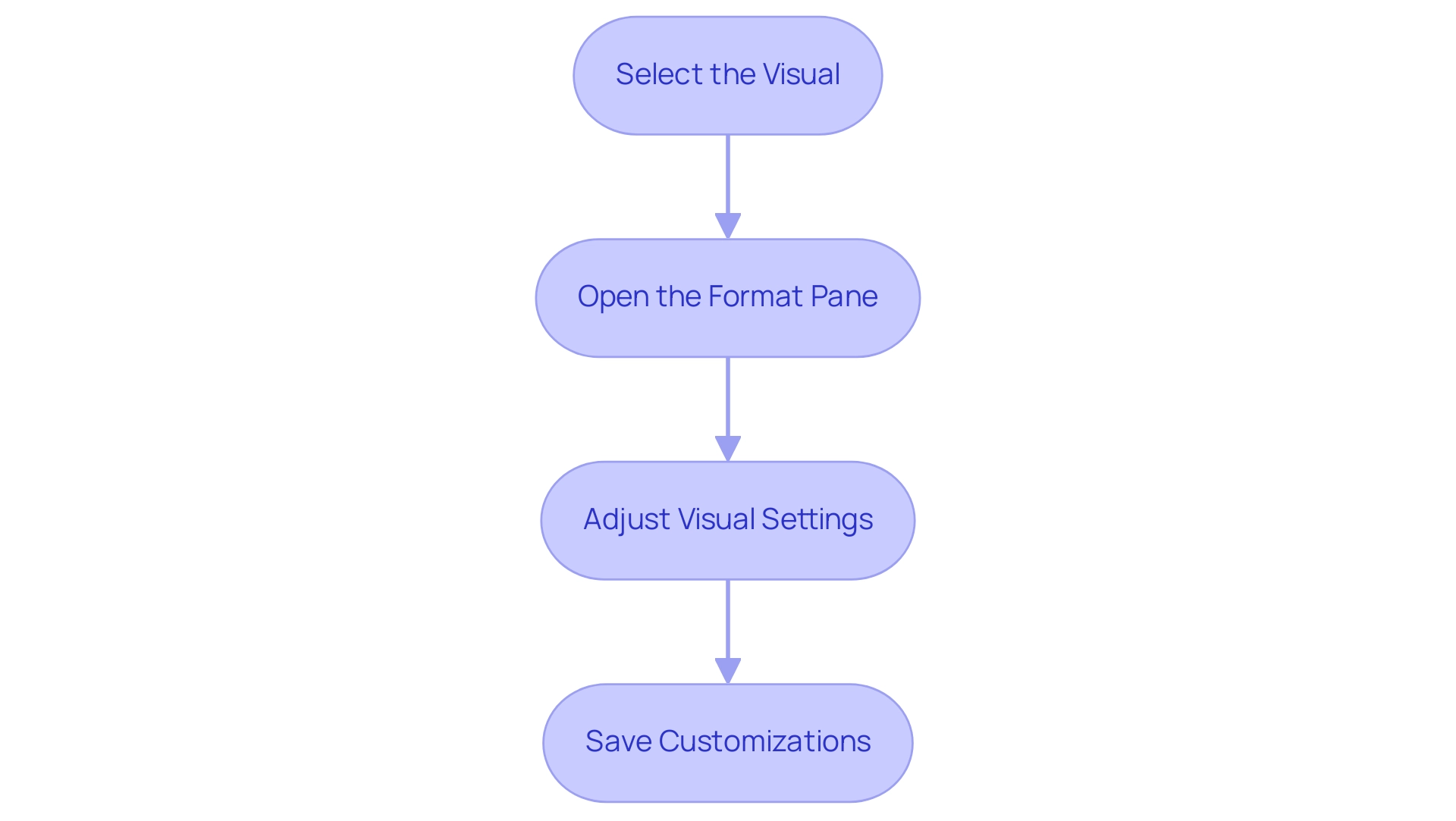

Customizing your Power BI visuals from the Power BI Visual Marketplace is essential for creating impactful reports that drive actionable insights. Here’s a streamlined approach to enhance your reports:

- Select the Visual: Start by clicking on the visual you want to customize within your document. This foundational step allows you to focus your modifications where they matter most.

- Open the Format Pane: Navigate to the Visualizations pane and click the paint roller icon. This action opens a range of formatting options, providing you with the tools needed to personalize your visual effectively.

- Adjust Visual Settings: Dive into the settings to modify aspects like colors, labels, titles, and data fields. This is your opportunity to tailor the visual to meet your specific reporting requirements. For example, the customization of visuals is facilitated in BI mobile applications for iOS and Android tablets, ensuring your documents are accessible on various devices. Don’t hesitate to experiment with various styles to discover which combinations yield the best results for your audience.

- Save Customizations: After making your desired changes, remember to save your report. This step ensures that all customizations are retained and available the next time you open or present the report.

To illustrate the impact of customization, consider the case study of the Chiclet Slicer, which can be found in the Power BI visual marketplace. This tool acts as an interactive filter that enhances the visual appeal of documents. By using chiclet slicers, users can filter information with different colors, layouts, and images, effectively streamlining exploration and analysis. However, numerous organizations encounter obstacles like information inconsistencies across documents and a deficiency of actionable direction, which can diminish the effectiveness of their insights.

Utilizing our 3-Day BI Sprint can speed up your document creation process, while the General Management App offers thorough management and intelligent reviews to tackle these challenges. By adhering to these steps and employing these powerful tools, you can significantly improve the impact of your documents, making information storytelling more captivating and informative.

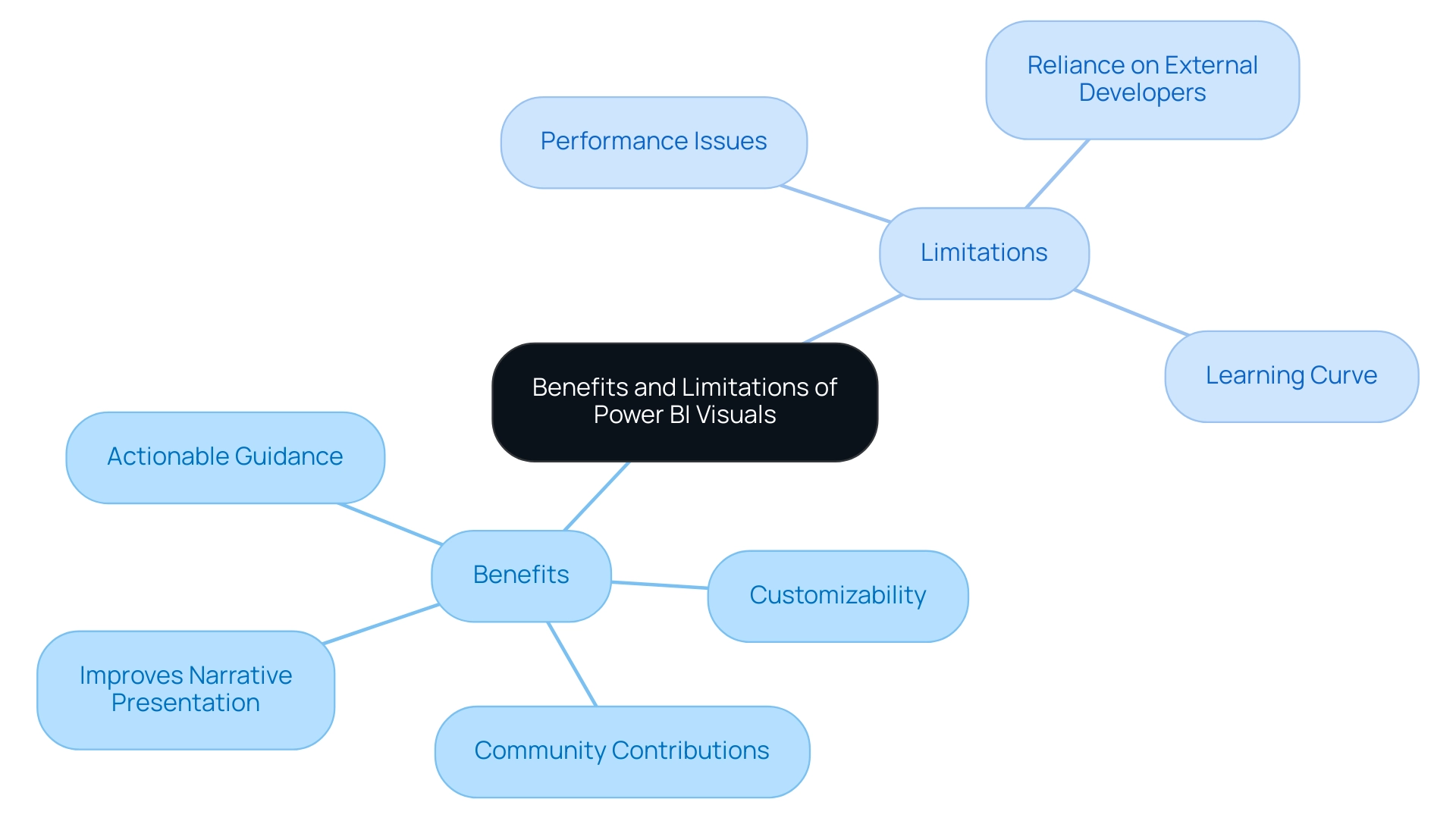

Benefits and Limitations of Power BI Visuals

Using visuals from the Power BI Visual Marketplace can greatly improve narrative presentation while tackling typical operational efficiency issues. Custom visuals can transform information into engaging narratives, making it easier for stakeholders to grasp key insights quickly, which matters given how time-consuming report creation often is. Furthermore, the range of available options enables customized presentations that connect with varied audiences and helps address inconsistencies.

Community contributions bring innovative solutions to the table, fostering a dynamic ecosystem that continuously evolves to meet users’ needs.

A case study titled Benefits of Custom Charts in Power BI highlights that while Power BI’s default charts are versatile, custom charts enhance storytelling, providing specialized visualizations that improve engagement and brand consistency. Importantly, these custom representations can also provide actionable guidance, assisting stakeholders in understanding the next steps based on the data presented. This addresses the frequent issue where documents are filled with numbers and graphs yet lack clear direction.

However, there are limitations to consider. Some custom graphics, particularly complex ones, may impact report performance, leading to slower load times and a less responsive user experience. Additionally, reliance on external developers poses risks, especially if support ceases or updates are not provided, potentially leaving organizations with outdated tools.

The adoption of new visuals may also require users to invest time in learning how to effectively use and customize them, which could temporarily hinder productivity.

Recent updates to BI in April 2024 have introduced new features that may address some of these limitations, providing advanced functionalities to enhance visualizations. Daniel, a BI Super User, shared insights on the limitations of using these offerings:

“Regarding both questions, unfortunately not. I’d love to see both also… Not ideal outcomes but might give you some ideas.”

This emphasizes the significance of tackling specific issues through updates and enhancements to the visual listings in the Power BI Visual Marketplace, including governance strategies that can reduce inconsistencies.

In summary, while personalized graphics in Business Intelligence offer thrilling opportunities for improved information presentation and actionable insights, it is essential to navigate their limitations carefully to ensure optimal performance and user satisfaction, while also implementing governance strategies to maintain information integrity.

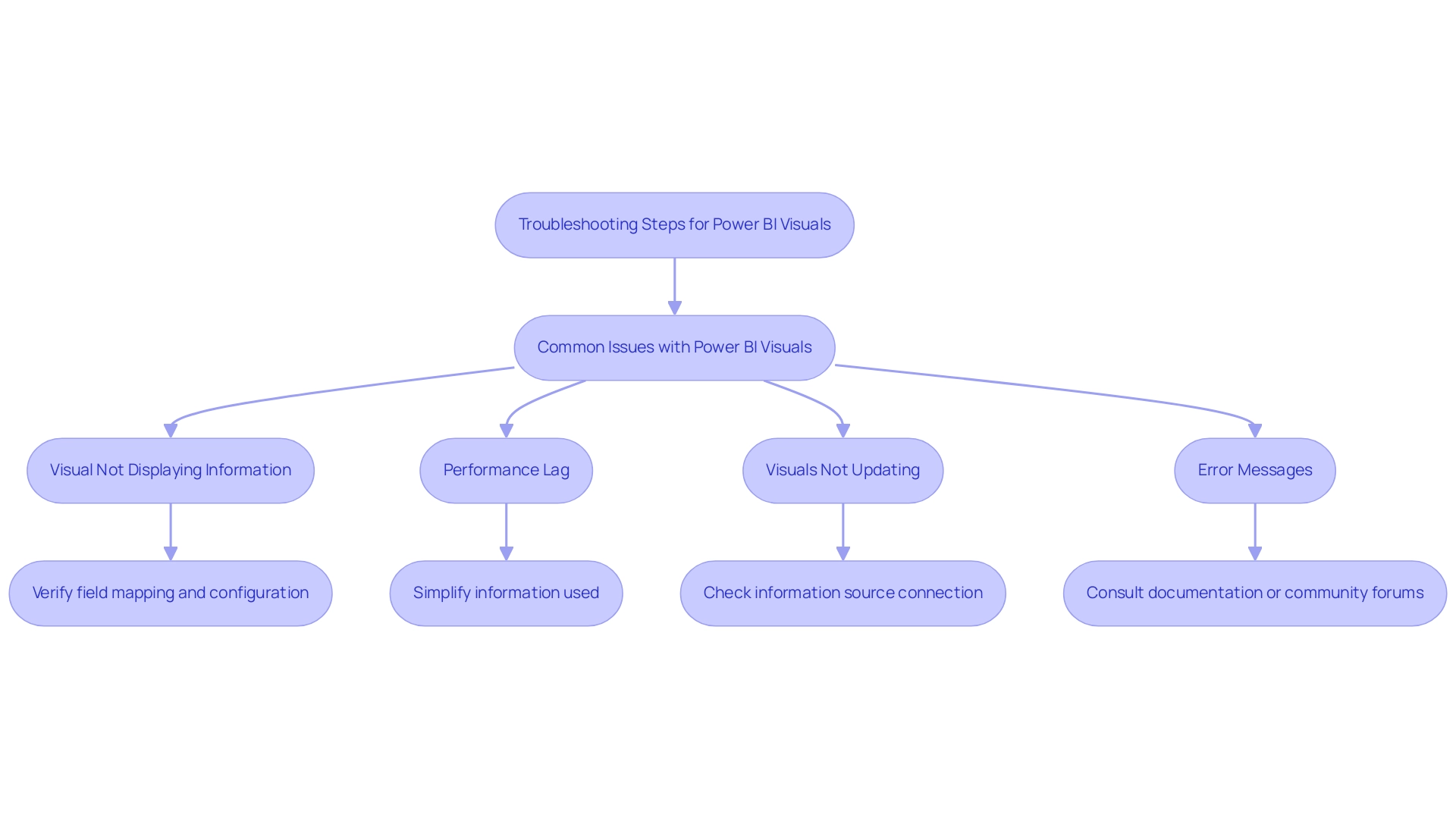

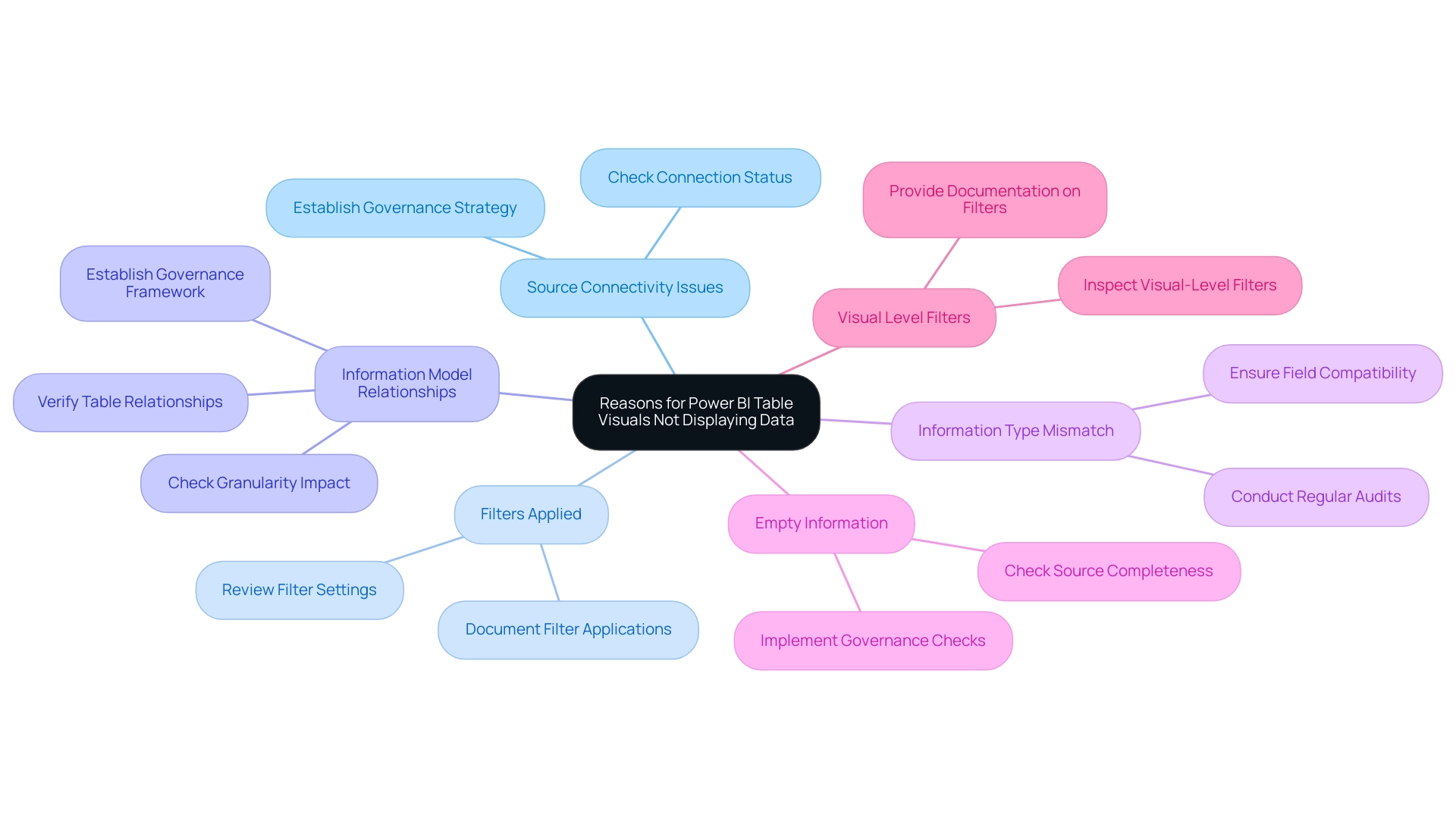

Troubleshooting Common Issues with Power BI Visuals

When utilizing Power BI visuals, you may encounter several common issues that can hinder your operational efficiency. Here’s how to effectively troubleshoot them, enhancing your data presentation and storytelling capabilities while considering the role of Robotic Process Automation (RPA) and tailored AI solutions in streamlining these processes:

- Visual Not Displaying Information: Start by verifying that the fields are accurately mapped within the visual settings. Ensure your information model is properly configured to avoid discrepancies. With over 15,000 related questions on StackOverflow about Power BI, it’s evident that many users face similar challenges. Implementing RPA can automate information preparation tasks, reducing the risk of mistakes in mapping.

- Performance Lag: If you experience delays in visual loading, consider simplifying the information being utilized. Decreasing the quantity of points shown can significantly improve performance, making visuals more responsive. This is particularly important as Power BI is recognized for its user-friendliness compared to Tableau, impacting performance positively for less skilled users. Tailored AI solutions can also enhance queries, further improving loading times.

- Visuals Not Updating: If your visuals fail to refresh, double-check that your information source is correctly connected. Remember to refresh your dataset within Power BI to reflect any changes. As Rico Zhou, a Community Support expert, advises, “To configure data refresh, you may need to install a gateway.” Automating this process through RPA can ensure that your visuals are always up-to-date without manual intervention.

- Error Messages: Pay attention to any error messages that arise, as they often contain valuable insights into the underlying issues. For specific troubleshooting tips, consult the visual’s documentation or engage with community forums, where shared experiences can guide you through solutions. Leveraging AI can help in analyzing error patterns and suggesting potential fixes, enhancing your troubleshooting efficiency.

By addressing these common challenges and integrating RPA and AI solutions, you can not only optimize your experience with the Power BI visual marketplace but also enhance your organization’s ability to extract actionable insights, ultimately driving growth and innovation.

Conclusion

Unlocking the full potential of the Power BI Visual Marketplace can transform the way organizations approach data visualization and reporting. By leveraging custom visuals, users can enhance their storytelling capabilities, turning complex datasets into clear and actionable insights that resonate with stakeholders. The marketplace offers a diverse range of options, allowing for tailored presentations that address common challenges such as time-consuming report creation and data inconsistencies.

Navigating this vibrant hub is straightforward, empowering users to easily install, customize, and integrate visuals into their reports. With clear steps and practical guidance, organizations can overcome hurdles and streamline their data management processes. Moreover, the integration of Robotic Process Automation and tailored AI solutions can further enhance operational efficiency, ensuring that data remains accurate and up-to-date.

While the benefits of utilizing custom visuals are significant, it is essential to remain aware of potential limitations and challenges. By implementing effective governance strategies and troubleshooting common issues, organizations can maximize the performance of their Power BI experience. Ultimately, embracing the Power BI Visual Marketplace is not just about improving reporting capabilities; it is about fostering a data-driven culture that drives informed decision-making and fuels business growth. The time to elevate data storytelling is now—unlock the power of visualization and lead your organization toward a more insightful future.

Overview:

The article focuses on how to effectively use Power Query to compare two tables and return differences, emphasizing the importance of this process for data analysis and decision-making. It outlines a step-by-step guide that includes accessing data transformation, merging queries, and applying various techniques to identify discrepancies, thereby enhancing operational efficiency and ensuring information accuracy.

Introduction

In the realm of data analysis, the ability to compare tables effectively is not just a skill; it’s a necessity for organizations striving for accuracy and informed decision-making. Power Query emerges as a powerful ally in this endeavor, enabling users to seamlessly connect, combine, and transform data from diverse sources.

As businesses grapple with the complexities of maintaining data integrity amidst an ever-expanding array of datasets, the integration of Robotic Process Automation (RPA) becomes a game-changer, minimizing manual tasks and enhancing operational efficiency.

This guide will explore the intricacies of utilizing Power Query to:

- Identify discrepancies

- Streamline workflows

- Foster a culture of accuracy

Together, these capabilities empower organizations to transform raw data into actionable insights that drive innovation and growth.

Introduction to Comparing Tables with Power Query

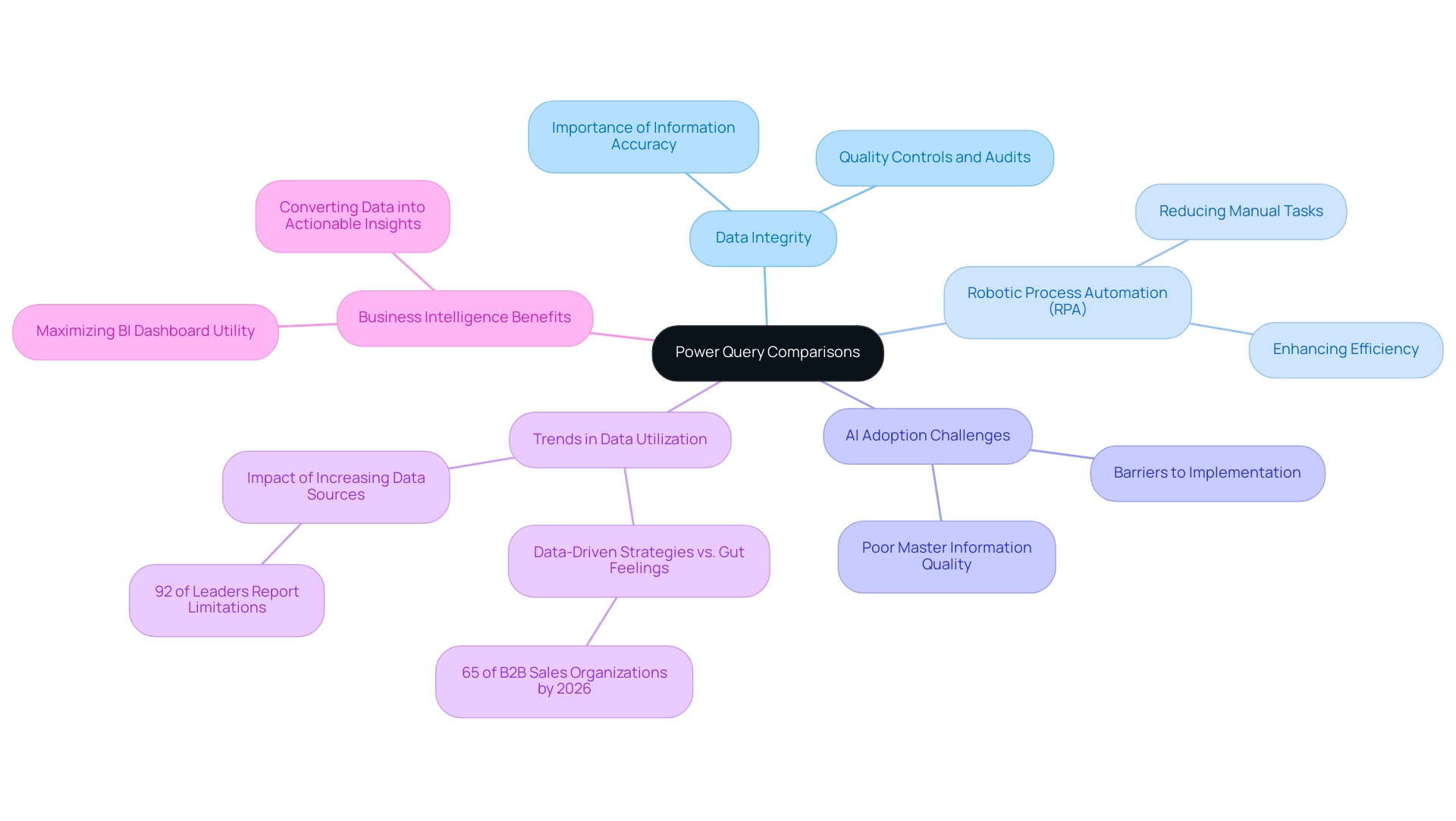

Power Query stands out as an invaluable asset within Microsoft Excel and Power BI, enabling users to connect, combine, and transform data from a multitude of sources. The ability to compare two tables in Power Query and return their differences is essential in data analysis, particularly for identifying discrepancies, changes, or updates between datasets. This process is crucial for safeguarding data accuracy and integrity, which directly influences effective decision-making and bolsters operational efficiency.

By incorporating Robotic Process Automation (RPA) into your workflows, you can reduce manual, repetitive tasks, thereby enhancing efficiency and enabling your team to focus on high-value analysis tasks. Supporting those involved in misconduct investigations is vital, as it emphasizes the importance of information integrity in maintaining trust and accountability within organizations. Moreover, ethical considerations in information reliability, such as responsible use and privacy protection, are critical to ensuring fair decision-making.

It is also essential to address challenges related to poor master data quality and barriers to AI adoption, as these factors can hinder the implementation and use of data-driven strategies. As a testament to the increasing importance of evidence-based strategies, it is anticipated that by 2026, 65% of B2B sales organizations will replace instinct-based decisions with decisions grounded in data analysis. Furthermore, a remarkable 92% of business leaders indicate that the growing number of data sources has obstructed their organizations’ progress.

This guide will explore how to use Power Query to compare two tables and return differences effectively, enabling you to improve your analysis capabilities. For instance, a case study on maintaining information accuracy highlights the implementation of quality controls and regular audits, supporting ongoing employee training and real-time validation. Moreover, tackling the challenges of time-consuming report creation and the absence of actionable guidance from BI dashboards is essential for maximizing the benefits of Business Intelligence.

By promoting a culture of precision and dependability in your operations, you can manage the intricacies of information examination with assurance, utilizing customized AI solutions and Business Intelligence to convert unrefined information into actionable insights that propel growth and innovation.

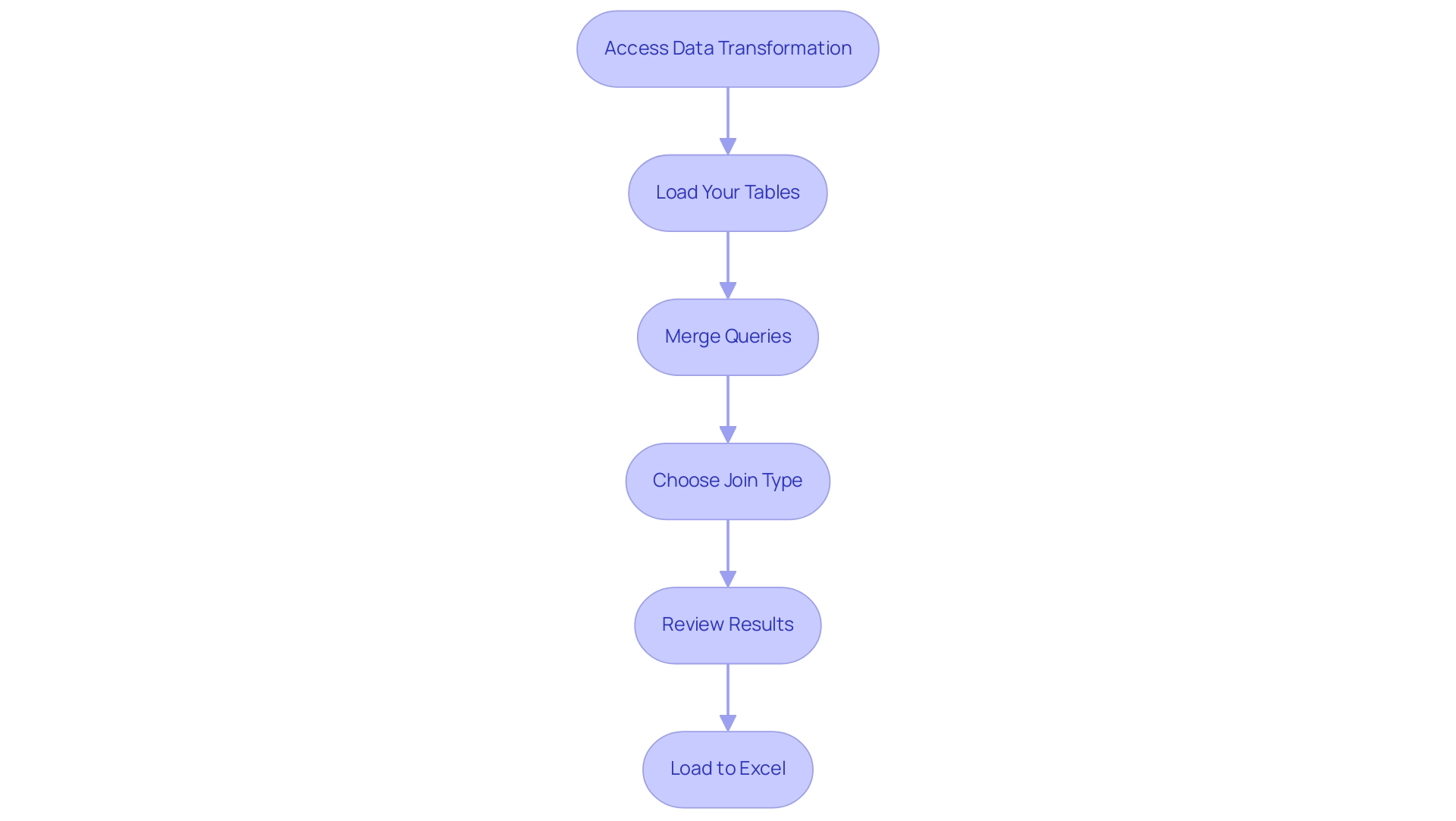

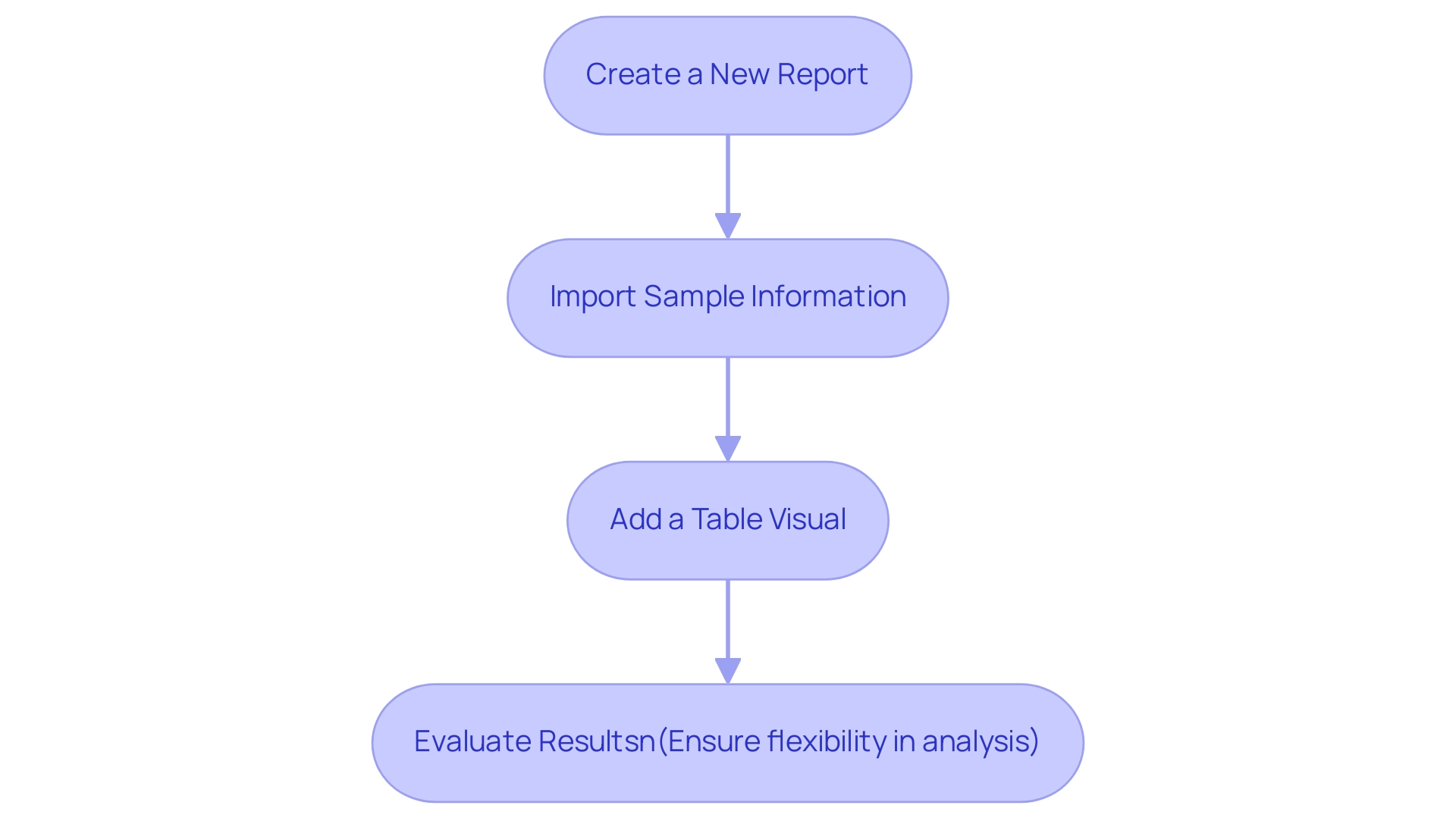

Step-by-Step Guide to Comparing Two Tables in Power Query

- Access Power Query: Start by going to the ‘Data’ tab in Excel and choosing ‘Get Data’ to open Power Query. This tool enables seamless data manipulation and analysis, saving users valuable time. By combining Power Query with Robotic Process Automation (RPA), you can further streamline your workflows, reducing manual tasks and improving operational efficiency. Manual, repetitive tasks can significantly slow down your operations and waste time and resources, which RPA can mitigate.

- Load Your Tables: Import the two tables you wish to compare. Power Query can import from multiple sources, including Excel files, databases, and external data sources. This flexibility streamlines data preparation and can save hours when importing content from the web, especially when automated through RPA.

- Merge Queries: In the Power Query interface, select one of your tables. Head to the ‘Home’ tab and click ‘Merge Queries.’ Then select the second table and specify the matching columns so the two tables align correctly. Leveraging RPA can automate this merging process, ensuring accuracy and speed, which is crucial in overcoming technology implementation challenges.

- Choose Join Type: Opt for the join type that best fits your comparison. For instance, selecting ‘Left Anti Join’ identifies records present in the first table but absent from the second. This kind of comparison is crucial for extracting actionable insights, a key aspect of Business Intelligence.

- Review Results: After merging, review the results in the Power Query editor. Use the filtering and sorting features to gain a clearer understanding of the discrepancies between your tables. This step is essential for identifying missing data and understanding data distribution, ultimately driving informed decision-making.

- Load to Excel: Once the comparison meets your expectations, load the results back into Excel, either into an existing worksheet or a new one. Being able to choose the destination range improves your reporting and evaluation workflow. As engineer and enthusiast Shruti M. notes, with this data transformation tool you save a significant amount of time by executing many functions with just a few clicks.

By adhering to these steps and incorporating RPA and Business Intelligence principles, you can revolutionize your information evaluation process, save hours, and enable informed decision-making. In the context of the rapidly evolving AI landscape, these tailored solutions are essential for addressing specific business needs and challenges.
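To make the ‘Left Anti Join’ step concrete, here is a minimal sketch of the same logic in Python. It is not Power Query M. The sample tables, the `ID` key column, and the helper name `left_anti_join` are hypothetical and chosen only for illustration.

```python
def left_anti_join(left_rows, right_rows, key):
    """Return rows from left_rows whose key value does not occur in right_rows."""
    right_keys = {row[key] for row in right_rows}
    return [row for row in left_rows if row[key] not in right_keys]


# Hypothetical sample tables keyed by "ID".
table_a = [
    {"ID": 1, "Amount": 50},
    {"ID": 2, "Amount": 75},
    {"ID": 3, "Amount": 20},
]
table_b = [
    {"ID": 1, "Amount": 50},
    {"ID": 3, "Amount": 25},
    {"ID": 4, "Amount": 10},
]

# Rows present in A but missing from B, and the reverse direction.
only_in_a = left_anti_join(table_a, table_b, "ID")
only_in_b = left_anti_join(table_b, table_a, "ID")
print("Only in A:", only_in_a)
print("Only in B:", only_in_b)
```

Running the join in both directions covers rows missing from either side. Note that an anti join on the key alone does not detect rows whose key matches but whose other values differ, such as ID 3 in this sample.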

Methods for Identifying Differences Between Tables

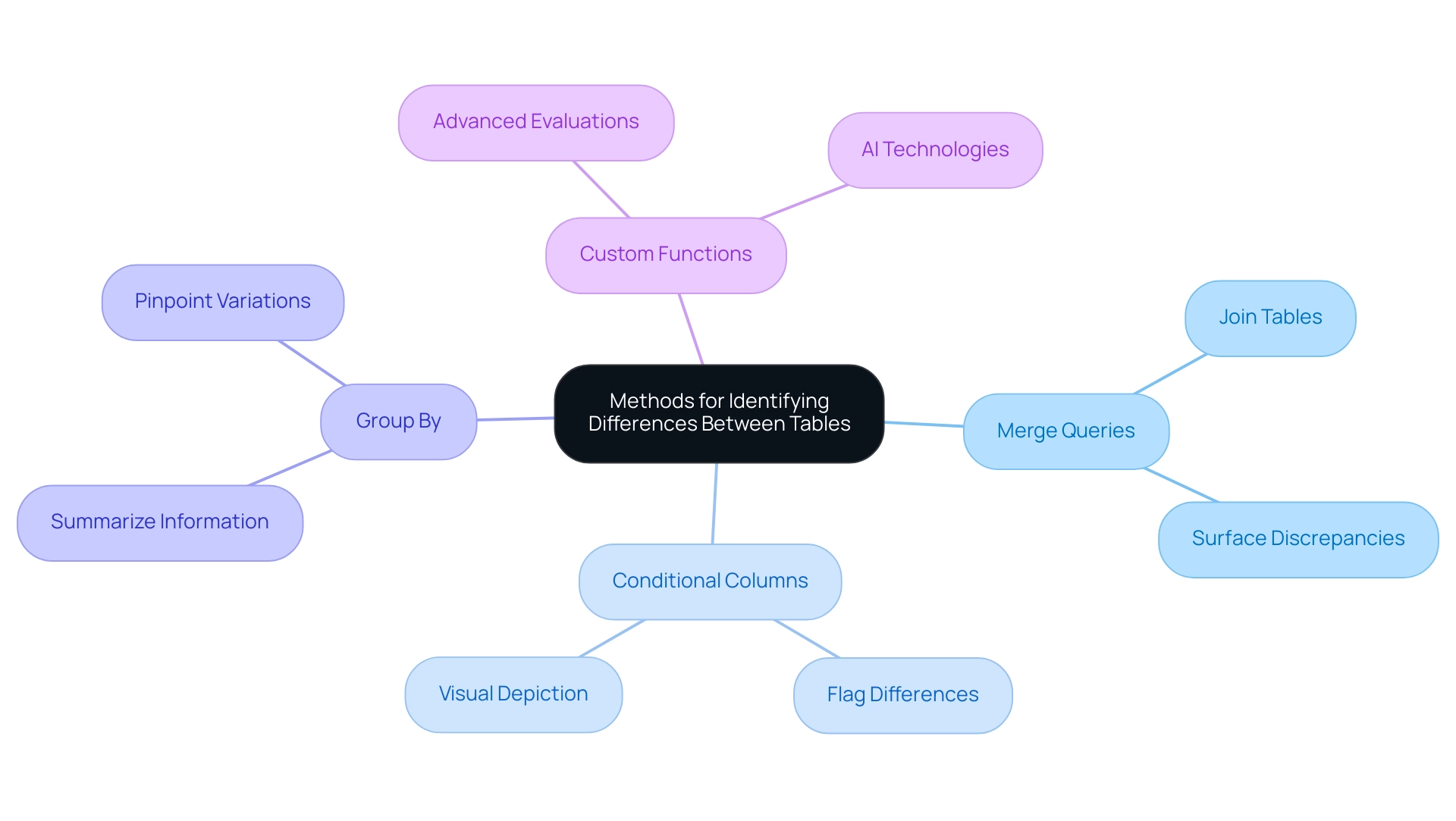

Power Query equips users with several powerful techniques for identifying discrepancies between tables, enabling efficient data analysis and accuracy in reporting:

- Merge Queries: This feature allows you to join tables based on shared columns, effectively surfacing discrepancies in the merged output. It streamlines comparing two tables and returning their differences, so that inconsistencies are readily apparent. As Amy Gilmour, Content Marketing Manager, notes, “Mobile measurement for app marketing can seem overwhelming with multiple platforms, channels, ad networks, campaigns, and now next-generation tools and solutions to manage.” This complexity is mirrored in the challenges of analysis, highlighting the need for robust tools like Power Query and our Power BI services, which enhance reporting and provide actionable insights.

- Conditional Columns: You can create conditional columns to flag differences according to specific criteria. This visual cue simplifies the identification of changes when you compare two tables.

- Group By: The ‘Group By’ option allows you to summarize information and pinpoint variations in aggregated results when comparing two tables. This method is especially useful for spotting trends and irregularities within your datasets, akin to how the recent case study ‘The Flow of Time: India Budget 2025’ examines the Union Budget introduced by Finance Minister Nirmala Sitharaman for FY 2025-26, concentrating on key areas and consequences that necessitate accurate evaluation, in line with the significance of business intelligence in fostering growth.

- Custom Functions: For those more experienced with Power Query, writing custom M functions can help with comparison scenarios that the built-in options do not cover. This flexibility enables more advanced evaluations that meet particular operational requirements, further improved by utilizing AI technologies, including customized Small Language Models for effective information examination and increased privacy, along with GenAI Workshops for practical training.

These methods not only enhance your ability to analyze information effectively but also ensure accuracy in your findings, fostering a more robust decision-making process. In the context of the recent Union Budget statistics, precise information examination is crucial for understanding financial implications and making informed decisions, while automation through RPA can streamline manual workflows by reducing repetitive tasks and errors, thereby enhancing overall operational efficiency.
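The flagging and grouping ideas above can be sketched together. The following Python example (again an illustration of the logic, not Power Query M) labels each key the way a conditional column might and then summarizes the labels the way a ‘Group By’ step would. The sample data, the status labels, and the function name `flag_differences` are hypothetical, and the sketch assumes the key column is unique within each table.

```python
from collections import Counter


def flag_differences(old_rows, new_rows, key):
    """Label each key as Added, Removed, Changed, or Unchanged."""
    old_by_key = {row[key]: row for row in old_rows}
    new_by_key = {row[key]: row for row in new_rows}
    report = []
    for k in sorted(old_by_key.keys() | new_by_key.keys()):
        if k not in new_by_key:
            status = "Removed"
        elif k not in old_by_key:
            status = "Added"
        elif old_by_key[k] != new_by_key[k]:
            status = "Changed"
        else:
            status = "Unchanged"
        report.append({key: k, "Status": status})
    return report


# Hypothetical sample tables keyed by "ID" (assumed unique per table).
old_table = [
    {"ID": 1, "Amount": 50},
    {"ID": 2, "Amount": 75},
    {"ID": 3, "Amount": 20},
]
new_table = [
    {"ID": 1, "Amount": 50},
    {"ID": 3, "Amount": 25},
    {"ID": 4, "Amount": 10},
]

report = flag_differences(old_table, new_table, "ID")
# Group-by style summary: how many keys fall under each status.
summary = Counter(row["Status"] for row in report)
for row in report:
    print(row)
print(dict(summary))
```

Unlike a key-only anti join, this comparison also catches rows whose key exists in both tables but whose values differ.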

Troubleshooting Common Issues in Table Comparisons

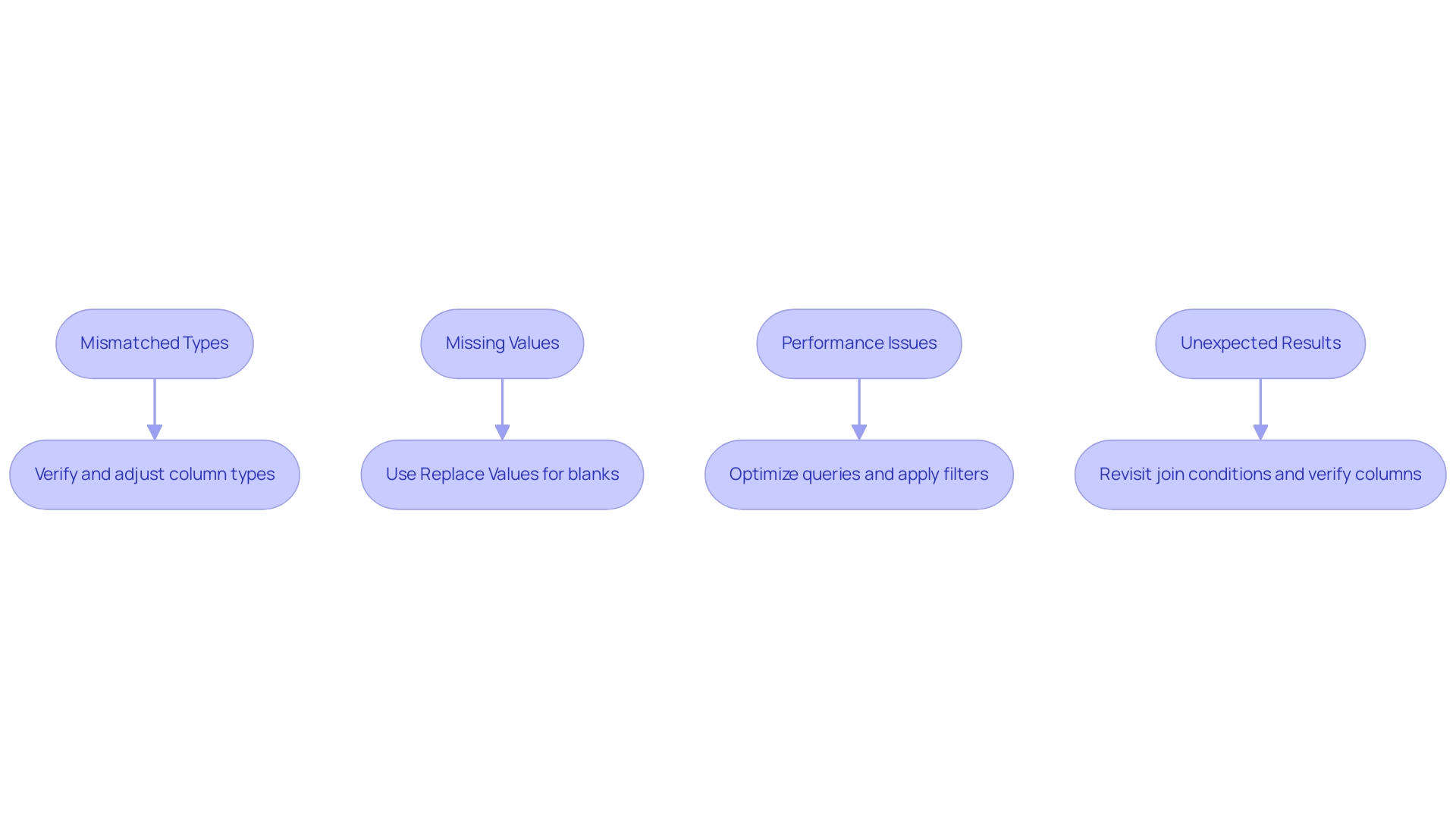

When utilizing Power Query to compare tables, several common challenges may arise that require attention:

- Mismatched Types: One of the most frequent issues is mismatched data types between columns. According to recent statistics, nearly 30% of data analysis projects experience setbacks due to type mismatches. To ensure accurate results when using Power Query to compare two tables and return differences, verify that the data types of the compared columns match. In Power Query, you can adjust types by selecting the relevant column and choosing the appropriate type from the ‘Transform’ tab. Additionally, Robotic Process Automation (RPA) can be employed to automate the correction of mismatched data types, significantly reducing the time spent on manual adjustments. This proactive step helps prevent errors that could distort evaluation outcomes.

- Missing Values: Missing values in one of the tables can significantly affect the comparison results. To address this, use the ‘Replace Values’ feature to handle blanks or null entries consistently. This ensures that your analysis reflects the complete dataset, minimizing spurious discrepancies and supporting informed decision-making through Business Intelligence. Customized AI solutions can also help forecast and fill in absent values based on historical trends, further improving data integrity.

- Performance Issues: Handling extensive datasets can result in performance bottlenecks in Power Query. As noted in recent news, out-of-memory errors can result from memory-intensive operations on large tables. To avoid these errors, filter your data as early as possible, ideally at the source or in the first query steps, and simplify your queries to reduce processing time. RPA can complement these adjustments by automating the surrounding manual workflows.

- Unexpected Results: If a comparison returns surprising differences, revisit your join conditions and verify that you have selected the correct columns for comparison. Sound join logic is essential for accurate and meaningful outcomes.
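The type, missing-value, and filtering fixes above can be sketched as a short preparation query that runs before any merge. This is a sketch under stated assumptions: the table `Raw`, its columns, the default value `0`, and the filter condition are all hypothetical.

```powerquery
let
    // Hypothetical input: ID arrived as text and one Amount is null
    Raw = #table({"ID", "Amount"}, {{"1", 100}, {"2", null}, {"3", 300}}),

    // Mismatched types: align column types so join keys compare equal across tables
    Typed = Table.TransformColumnTypes(Raw, {{"ID", Int64.Type}, {"Amount", type number}}),

    // Missing values: replace nulls with an agreed default (0 is an assumption here)
    NoNulls = Table.ReplaceValue(Typed, null, 0, Replacer.ReplaceValue, {"Amount"}),

    // Performance: filter early so later steps process fewer rows
    Filtered = Table.SelectRows(NoNulls, each [ID] >= 2)
in
    Filtered
```

Applying the same type transformations to both tables before merging is usually the simplest way to rule out type mismatches as the cause of unexpected results.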

Incorporating these strategies improves the reliability of your comparisons when you use Power Query to compare two tables and return differences. As Mark Smallcombe observes, the application is intended to operate effortlessly within the Microsoft ecosystem, especially with Excel and Power BI. Moreover, a case study on tools that improve productivity for financial analysts emphasizes how mastering data manipulation tools, along with SQL and Power Pivot, greatly enhances data handling abilities.

By utilizing these tools effectively alongside RPA and customized AI solutions, users can overcome common challenges in information evaluation and improve their operational efficiency.

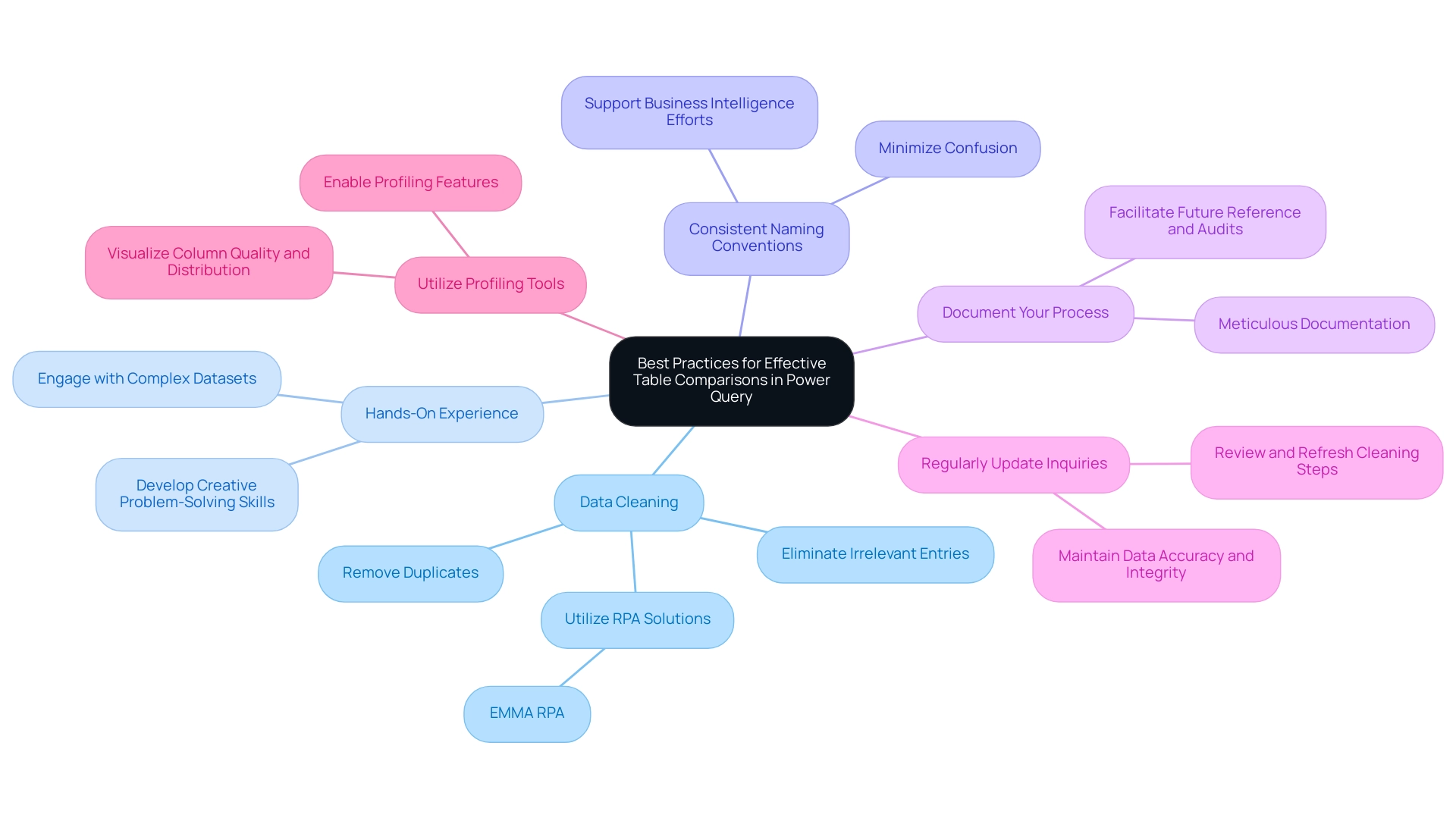

Best Practices for Effective Table Comparisons in Power Query

To achieve effective table comparisons in Power Query, it’s essential to adhere to several best practices that enhance the accuracy and reliability of your analysis:

- Data Cleaning: Prior to any comparisons, ensure your data is thoroughly cleaned. This includes removing duplicates and irrelevant entries, which significantly enhances the accuracy of your results. As Neeraj Sharma states, “Remember, clean information is the foundation of accurate and reliable insights.” Poor master data quality can impede your ability to derive meaningful insights, making this step critical. Consider utilizing RPA solutions like EMMA RPA to automate cleaning processes, ensuring efficiency and accuracy.

- Hands-On Experience: Mastering Power Query involves hands-on experience with messy datasets. Engaging with complex data challenges can develop your creative problem-solving skills, which are crucial for effective manipulation and comparison and for overcoming barriers to AI adoption. RPA tools can assist in managing these datasets more effectively.

- Consistent Naming Conventions: Establish and maintain consistent naming conventions for columns across all tables. This practice minimizes confusion and ensures that comparisons are straightforward and reliable, supporting Business Intelligence efforts.

- Document Your Process: Meticulously document every step you take and all transformations applied during your data manipulation. This documentation is advantageous for future reference and necessary for audits, offering clear insight into your processes, which is essential in tackling operational challenges.

- Regularly Update Queries: Data sources often change, so it is important to routinely review and refresh your data cleaning steps in Power Query. This ensures that your comparisons remain precise and reflect the most current data, maintaining the accuracy and integrity that drive growth and innovation. RPA solutions can simplify this updating process.

- Utilize Profiling Tools: Before altering data in Power Query, enable the data profiling features to gain insight into your dataset. These tools offer visualizations that help you understand column quality, column distribution, and column profile statistics, guiding your cleaning and comparison processes. This aligns with the goal of transforming raw data into actionable insights.
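Several of the practices above (consistent naming, removing duplicates, and profiling) have direct M equivalents. The following is a minimal sketch, assuming a hypothetical table `Raw` with inconsistently named columns and one duplicated row.

```powerquery
let
    // Hypothetical input with inconsistent column names and a duplicate row
    Raw = #table({"Customer ID", "amount "}, {{1, 100}, {1, 100}, {2, 200}}),

    // Consistent naming: rename columns to the convention used by the other table
    Renamed = Table.RenameColumns(Raw, {{"Customer ID", "CustomerID"}, {"amount ", "Amount"}}),

    // Data cleaning: drop exact duplicate rows
    Deduped = Table.Distinct(Renamed),

    // Profiling: per-column statistics such as Min, Max, Count, NullCount, DistinctCount
    Profile = Table.Profile(Deduped)
in
    Profile
```

Returning `Deduped` instead of `Profile` gives the cleaned table itself; the profile query is best kept as a separate, non-loaded query used only for inspection.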

By implementing these best practices and leveraging RPA technologies, you can significantly improve how you use Power Query to compare two tables and return differences, make effective use of Business Intelligence, and gain more accurate insights from your analysis to foster operational efficiency and growth.

Conclusion

The ability to compare tables effectively using Power Query is a fundamental skill that empowers organizations to enhance data integrity and drive informed decision-making. By leveraging this powerful tool, users can seamlessly identify discrepancies, streamline workflows, and foster a culture of accuracy within their operations. The integration of Robotic Process Automation (RPA) further amplifies these benefits, minimizing manual tasks and allowing teams to focus on high-value analyses.

Throughout the guide, various methods for identifying differences between tables have been explored, including:

1. Merging queries

2. Utilizing conditional columns

3. Employing custom functions

These techniques not only simplify the process of data comparison but also ensure accuracy in reporting, which is critical for effective business intelligence. Additionally, troubleshooting common challenges such as mismatched data types and missing values is essential for maintaining the reliability of data analysis outcomes.

By adhering to best practices such as:

– Data cleaning

– Maintaining consistent naming conventions

– Regularly updating queries

organizations can significantly enhance their data comparison processes. Implementing these strategies, alongside tailored AI solutions and RPA, will not only improve operational efficiency but also transform raw data into actionable insights that fuel innovation and growth.

In a data-driven landscape, the ability to effectively compare and analyze datasets is no longer just an advantage; it is a necessity for organizations seeking to thrive. Embracing Power Query and its capabilities positions businesses to navigate complexities with confidence, ultimately leading to better decision-making and sustained success.

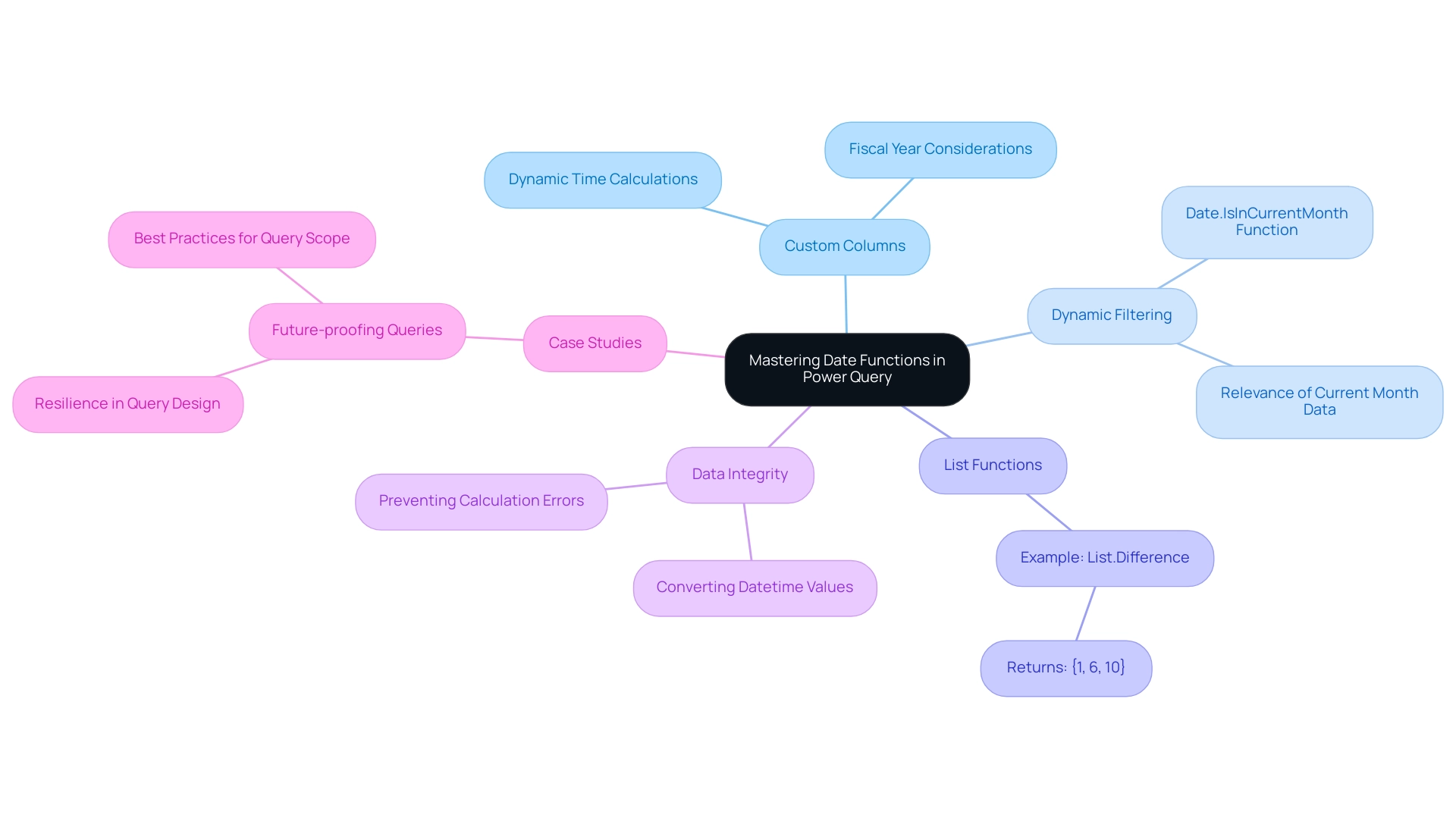

Overview:

The article focuses on mastering the Power Query date functions, which are essential tools for effectively managing and analyzing temporal data within business intelligence contexts. It supports this by detailing various functions like Date.From and Date.AddDays, illustrating their practical applications in transforming datasets for insightful analysis, thus enhancing operational efficiency and informed decision-making.

Introduction

In a world where data reigns supreme, the ability to transform raw information into actionable insights is crucial for businesses striving for operational excellence. Power Query emerges as a powerful ally in this endeavor, offering users the tools to seamlessly connect, clean, and prepare data for analysis without the need for extensive programming skills.

As organizations grapple with the complexities of data management amidst an ever-evolving technological landscape, understanding how to leverage Power Query becomes essential.

This article delves into the multifaceted capabilities of Power Query, particularly its date functions, and explores practical applications that enhance business intelligence. By mastering these techniques, users can navigate the intricacies of data analysis, enabling informed decision-making and strategic growth in a data-driven environment.

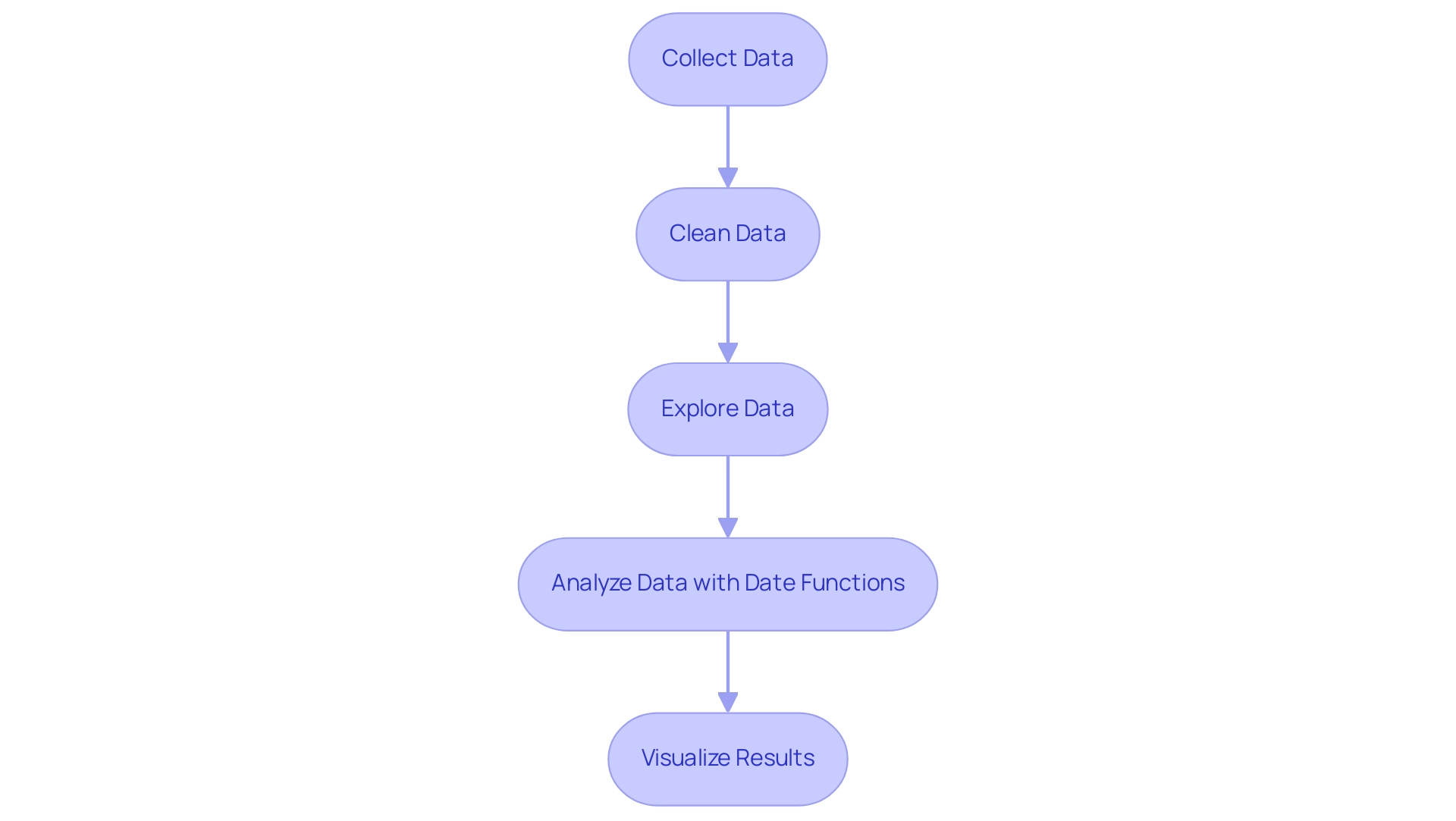

Introduction to Power Query: Unlocking Data Transformation

Power Query stands out as a strong solution for data connection, transformation, and preparation, enabling users to extract data from various sources, clean it, and ready it for analysis without extensive coding expertise. In today’s overwhelming AI landscape, businesses often struggle to identify the right tools to extract meaningful insights from their data. Power Query, along with BI services, offers a means to tackle these challenges efficiently.

For example, consider the monthly revenues recorded: 50000, 70000, 62000, 71000, 75000, 73000, 78000, 75000, 60000, 62000, 55000, and 50000. By employing data transformation tools, businesses can examine these figures to gain insights into financial performance and trends, which is essential in today’s information-abundant environment. The integration of BI services enhances this process, offering efficient reporting and actionable insights through features like:

- The 3-Day BI Sprint for rapid report creation

- The General Management App for comprehensive management

A strong understanding of Power Query is crucial for anyone keen to improve their data processes and boost operational efficiency. As Salvatore Cagliari, a specialist in Data Analytics and Business Intelligence, highlights, ‘On the Business side, the tool is essential for converting information into actionable insights.’ In this segment, we will explore the foundational aspects of data transformation, focusing on Power Query’s intuitive user interface and key features, while also referencing a case study on calculating total annual profit in BI.

This will illustrate how users can total profit columns from datasets generated in SQL Server to effectively analyze financial information. By mastering this tool, you will harness the ability to transform unrefined information into actionable insights, paving the way for informed decision-making and strategic planning, ultimately driving business growth.
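As a small illustration of totaling a column, the monthly revenue figures listed earlier can be summarized with M list functions. This is only a sketch: the figures are entered as a literal list here, whereas in practice they would come from a column of a loaded table (for example via a hypothetical `Sales[Revenue]` reference).

```powerquery
let
    // Monthly revenues from the example above, entered as a literal list
    Revenues = {50000, 70000, 62000, 71000, 75000, 73000, 78000, 75000, 60000, 62000, 55000, 50000},

    Summary = [
        Total = List.Sum(Revenues),        // 781000
        Average = List.Average(Revenues),  // about 65083.33
        Best = List.Max(Revenues),         // 78000
        Worst = List.Min(Revenues)         // 50000
    ]
in
    Summary
```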

Exploring Power Query Date Functions: A Comprehensive Guide

Power Query offers an extensive array of date functions that are essential for managing temporal data effectively, supporting the broader objective of improving Business Intelligence (BI) and operational efficiency. Among the most significant are Date.From, which converts a value into a date, and Date.AddDays, which adds a specified number of days to a given date. These functions speed up analysis by enabling quick adjustments to date-related data.

For instance, the Date.ToText function, given a full day-name format string, formats June 9, 2024, as ‘Sunday’, illustrating its practical utility in generating insights quickly. Additional essential functions include Date.Year, Date.Month, and Date.Day, which extract individual date components and let analysts tailor their datasets for precise analysis. As Rick de Groot of BI Gorilla points out, ‘BI Gorilla is a blog about DAX, Query and BI… to help you advance,’ emphasizing the importance of these tools in improving analysis capabilities.

Understanding Power Query’s date functions not only assists in cleaning and filtering date-related data but also improves the overall handling of datasets to meet various analytical needs. This is particularly significant in tackling the lengthy report generation and inconsistencies frequently encountered when working with insights from BI dashboards. A practical illustration can be observed in the case study titled ‘Conclusion on Statistical Measures,’ which showcases the significance of fundamental statistical measures in analysis through the creation of a dataset in SQL Server and its subsequent examination in Business Intelligence.

By exploring these functions further, we will highlight their practical applications in streamlining information transformation workflows, ultimately leading to more insightful analysis and decision-making.
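The functions named above can be tried in a blank query. The sketch below uses June 9, 2024 as the sample date; the record field names are illustrative only, and the "en-US" culture is an assumption chosen so the day name comes back in English.

```powerquery
let
    SampleDate = #date(2024, 6, 9),

    Results = [
        // Date.From converts a value (here a datetime) to a date
        FromDateTime = Date.From(#datetime(2024, 6, 9, 14, 30, 0)),  // #date(2024, 6, 9)

        // Date.AddDays shifts a date by a number of days
        Plus30 = Date.AddDays(SampleDate, 30),                       // #date(2024, 7, 9)

        // Date.ToText with the "dddd" format returns the full day name
        DayName = Date.ToText(SampleDate, "dddd", "en-US"),          // "Sunday"

        // Component extraction
        Year = Date.Year(SampleDate),                                // 2024
        Month = Date.Month(SampleDate),                              // 6
        Day = Date.Day(SampleDate)                                   // 9
    ]
in
    Results
```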

Practical Applications: Using Date Functions in Power Query

Exploring the practical applications of the Power Query date function reveals its potential to significantly transform data analysis, particularly in the context of navigating the overwhelming AI landscape. For example, consider a dataset containing sales transactions with a timestamp column. By utilizing Date.AddDays, you can create a new column that calculates the sales date plus 30 days, instrumental in forecasting future sales trends and aligning with your business goals.

This proactive approach not only enhances planning and stock management but also exemplifies how tailored AI solutions, such as predictive analytics tools, can cut through complexity to deliver targeted technologies for operational efficiency. Moreover, the Date.Year function allows you to filter records by specific years, streamlining the generation of year-over-year comparisons. Such comparisons are crucial for evaluating sales performance and recognizing growth patterns, highlighting the significance of Business Intelligence in transforming raw data into actionable insights. As Omid Motamedisedeh aptly puts it, these techniques are for “anyone who wants to learn how to use functions in Power Query to transform and analyze information,” which underscores their value in the face of overwhelming options.

By mastering these techniques, you empower yourself to manipulate information effectively, leading to improved decision-making within your organization.
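The two scenarios in this section, adding 30 days to a sale date and filtering by year, can be sketched as follows. The `Sales` table, its column names, and the new `FollowUpDate` column are hypothetical; substitute your own query and columns.

```powerquery
let
    // Hypothetical sales transactions with a timestamp column
    Sales = #table(
        type table [SaleDate = datetime, Amount = number],
        {
            {#datetime(2023, 11, 15, 9, 0, 0), 120},
            {#datetime(2024, 1, 20, 13, 30, 0), 250},
            {#datetime(2024, 3, 5, 16, 45, 0), 90}
        }
    ),

    // New column: the sale date plus 30 days, stored as a date
    WithFollowUp = Table.AddColumn(
        Sales,
        "FollowUpDate",
        each Date.AddDays(Date.From([SaleDate]), 30),
        type date
    ),

    // Keep only 2024 records, for example as one side of a year-over-year comparison
    Sales2024 = Table.SelectRows(WithFollowUp, each Date.Year([SaleDate]) = 2024)
in
    Sales2024
```

With the sample rows, the 2023 transaction is filtered out and the two 2024 transactions remain, each with its follow-up date.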