Overview

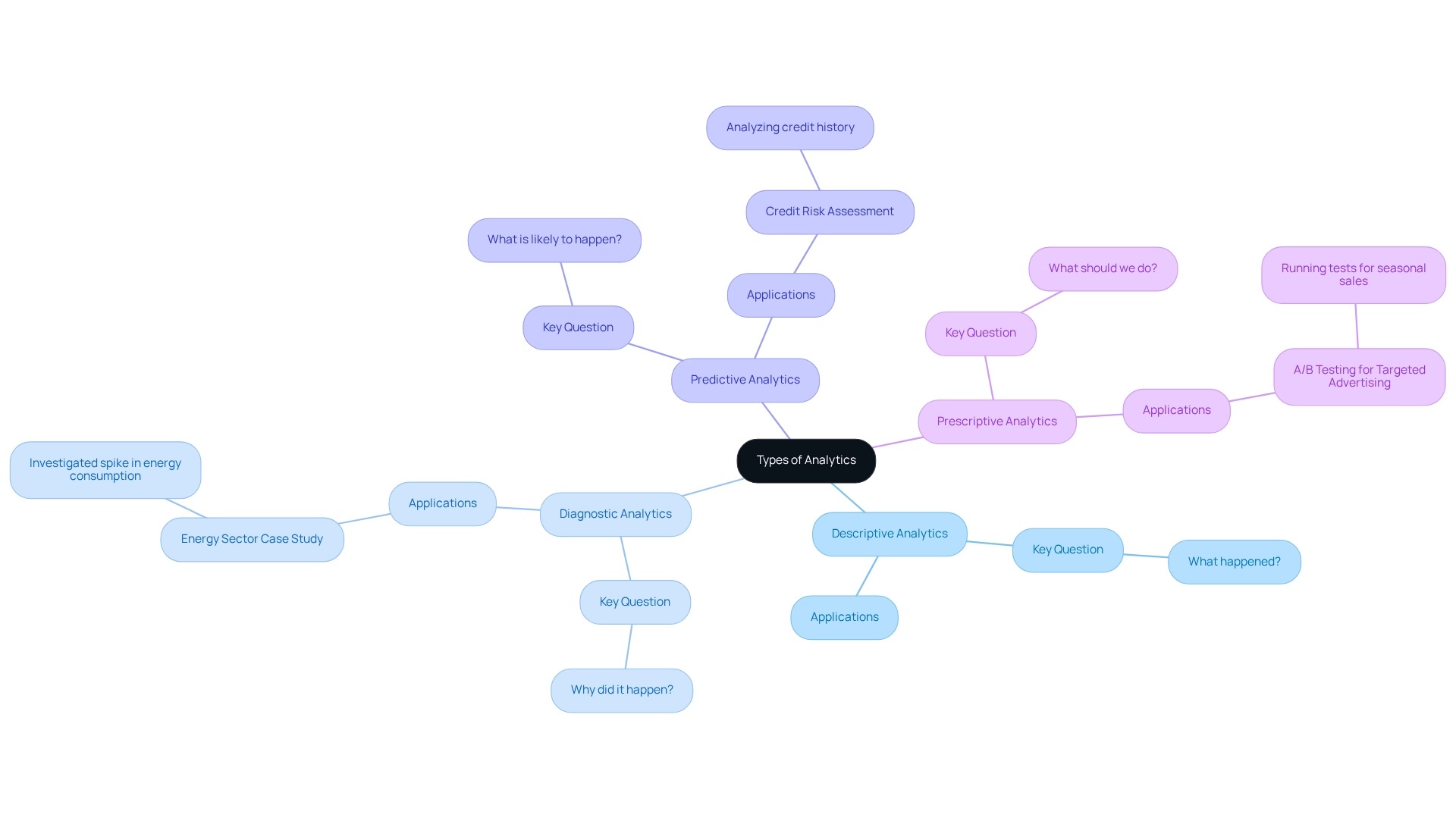

The three primary areas of analytics—descriptive, diagnostic, and predictive—play distinct roles in understanding and leveraging data for organizational decision-making.

- Descriptive analytics summarizes historical data, providing a foundation for insight.

- Diagnostic analytics delves into the reasons behind past outcomes, uncovering valuable lessons.

- Predictive analytics, on the other hand, forecasts future trends, allowing organizations to anticipate shifts in the market.

Together, these analytics enhance operational efficiency and inform strategic initiatives, ultimately driving better decision-making.

Consider how these analytics can be integrated into your current practices to elevate your organization’s performance.

Introduction

In a world increasingly driven by data, analytics has become a cornerstone of operational success for organizations in every sector. From understanding historical trends to predicting future outcomes, the various forms of analytics (descriptive, diagnostic, predictive, and prescriptive) give businesses powerful tools for making informed decisions. As the landscape evolves, integrating these types of analytics not only enhances efficiency but also fosters a culture of continuous improvement.

With the rise of AI and automation, organizations are now better equipped to navigate the complexities of vast data landscapes, driving innovation and strategic growth. This article delves into the multifaceted role of analytics in shaping operational efficiency and explores the trends that are redefining how businesses leverage data for competitive advantage.

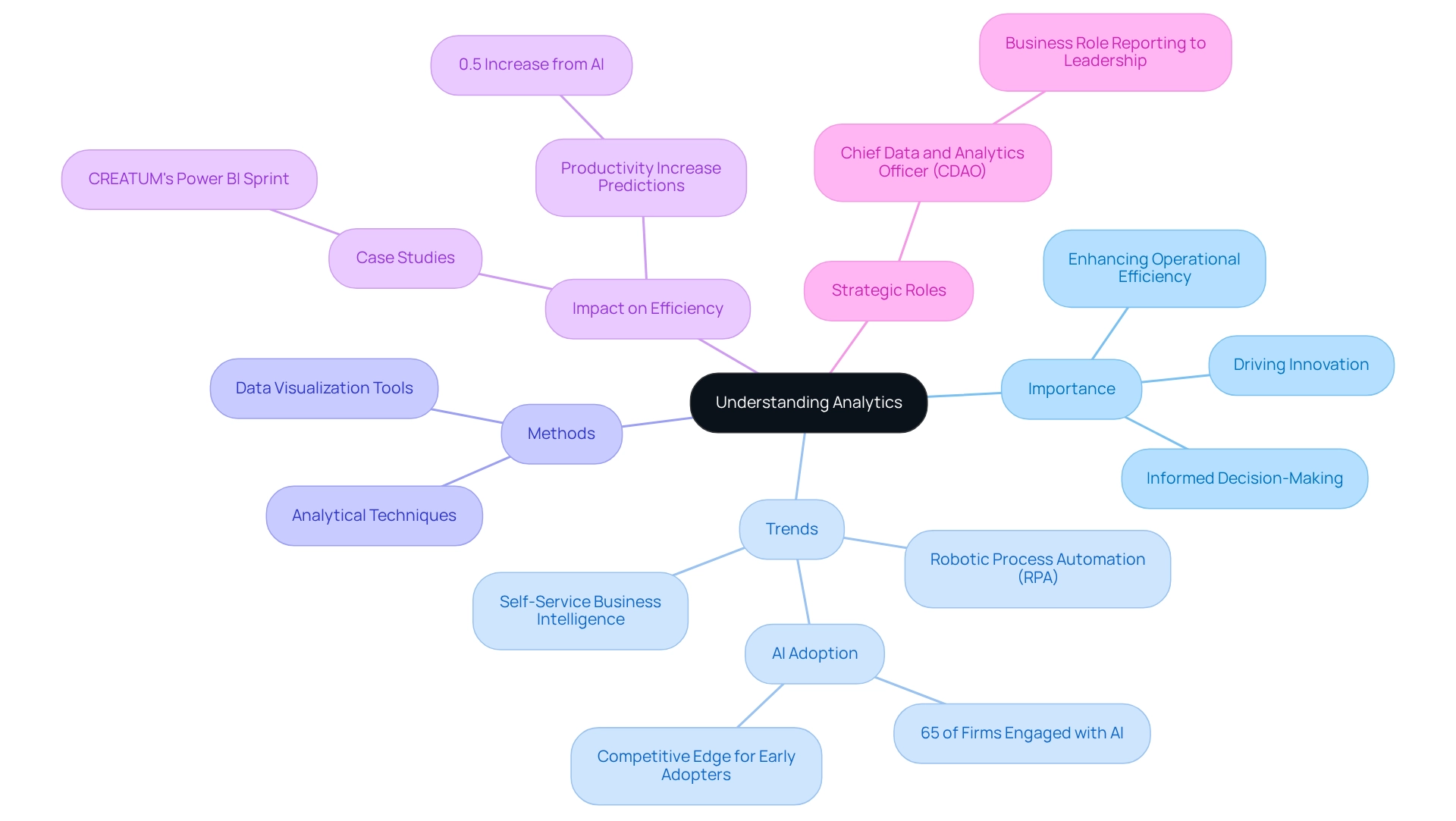

Understanding Analytics: An Overview

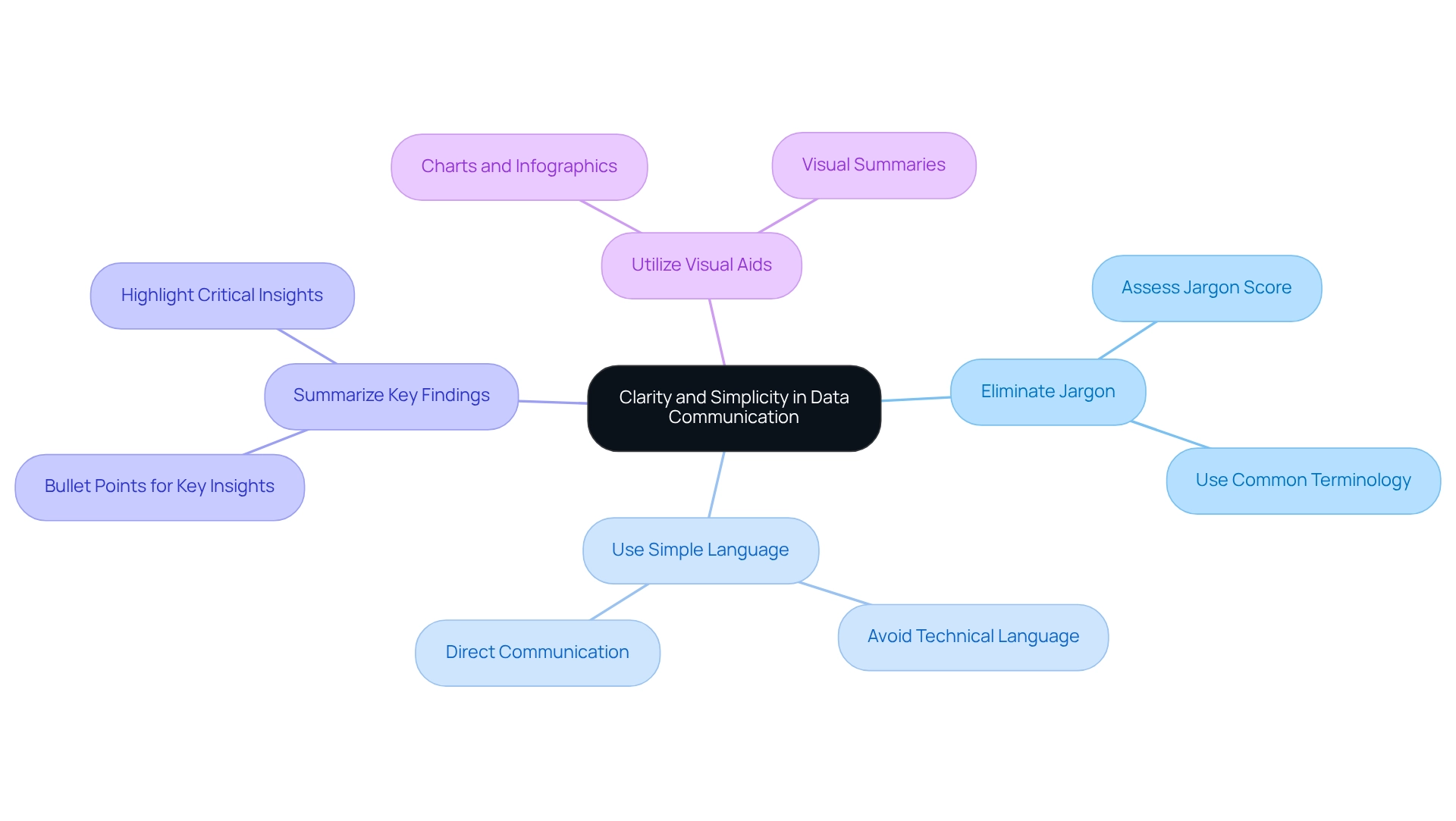

Analytics is the systematic computational analysis of data and statistics, a crucial process for organizations navigating large and complex data landscapes. By applying various analytical methods, organizations can identify patterns, trends, and relationships in their data and make better-informed decisions. In 2025, data analysis is central to operational efficiency and a key driver of innovation and strategic goal achievement.

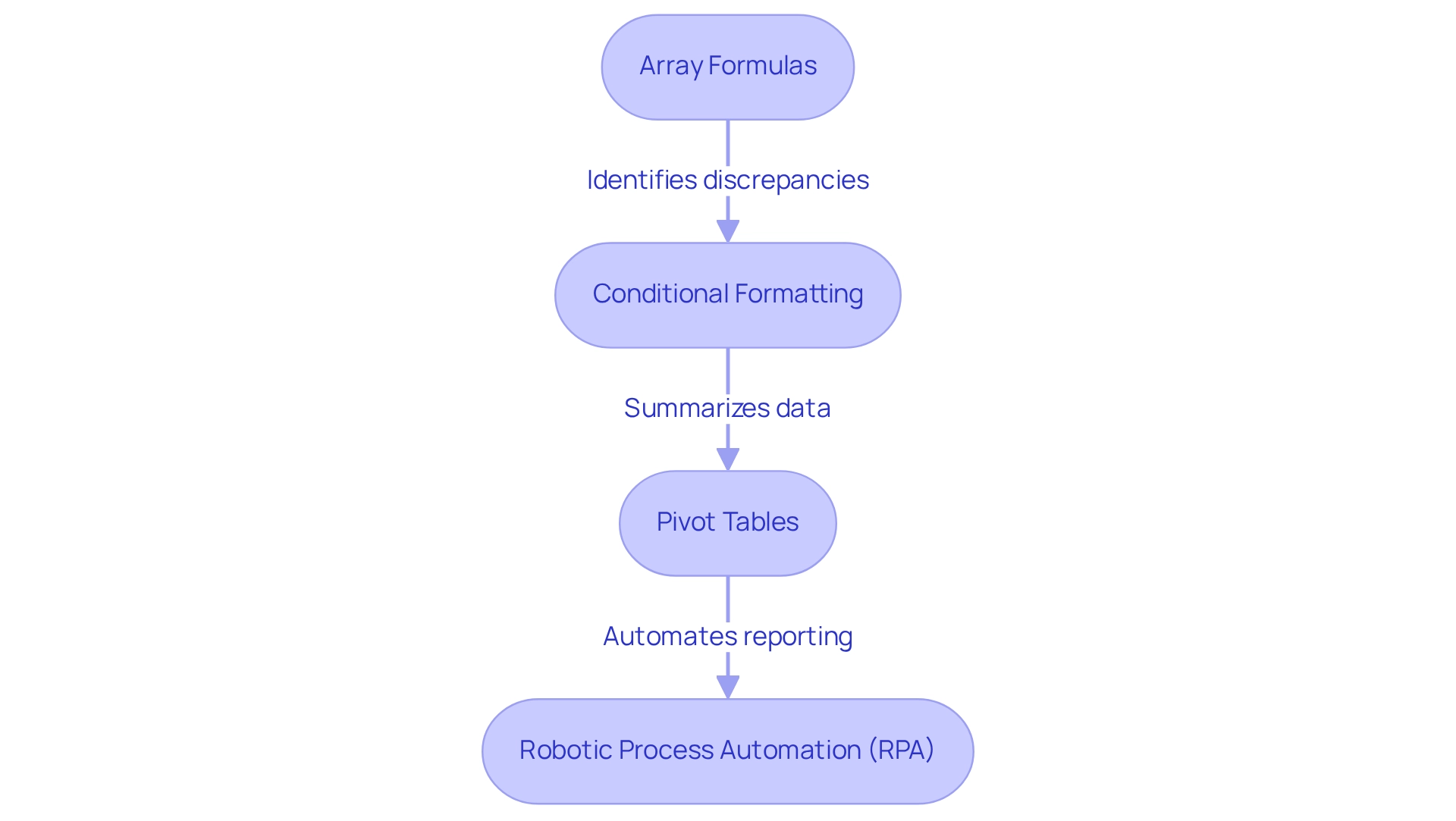

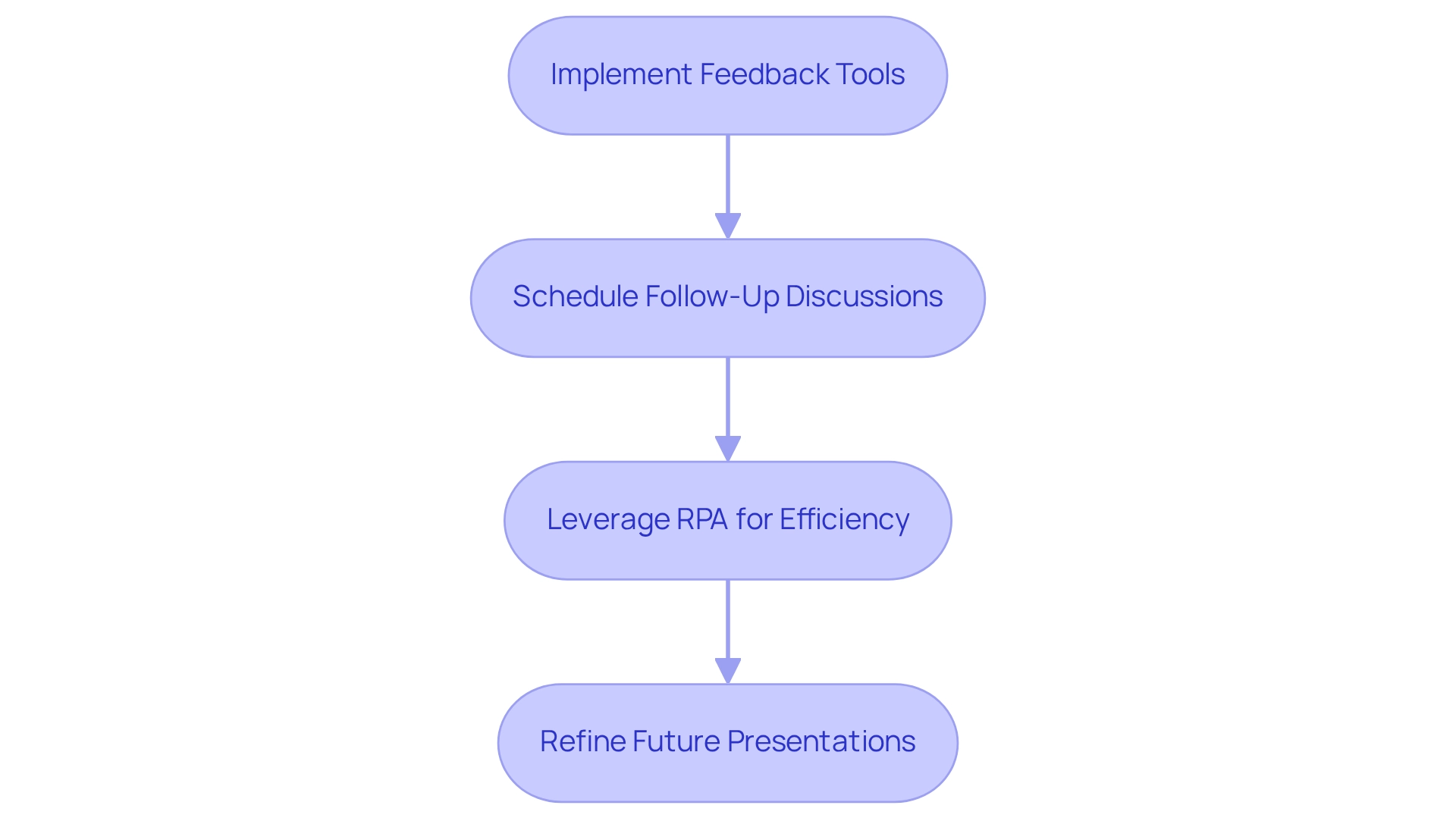

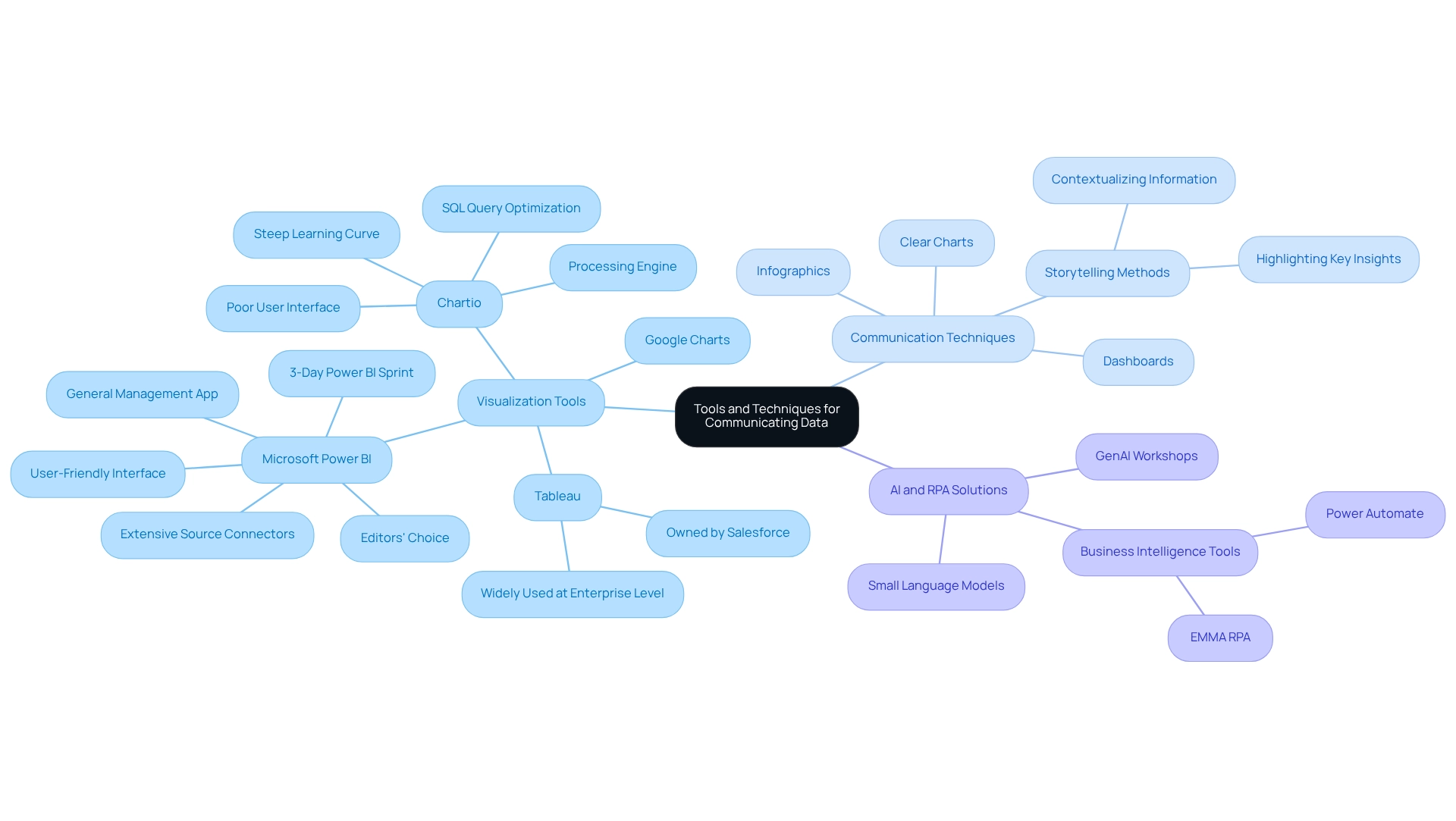

Current trends reveal a significant shift towards self-service business intelligence tools, which simplify performance tracking and empower organizations to swiftly monitor relevant performance indicators. This evolution allows teams to focus on strategic initiatives rather than being bogged down by manual data analysis. Moreover, the integration of Robotic Process Automation (RPA) can further enhance operational efficiency by automating manual workflows, thereby freeing valuable resources for more strategic tasks.

The impact of data analysis on operational efficiency is also reflected in adoption figures: 65% of firms currently use AI in their data and analytics work, and early adopters report a competitive edge. This trend underscores the need for businesses to adapt quickly to the evolving data landscape if they are to thrive in an increasingly data-driven environment.

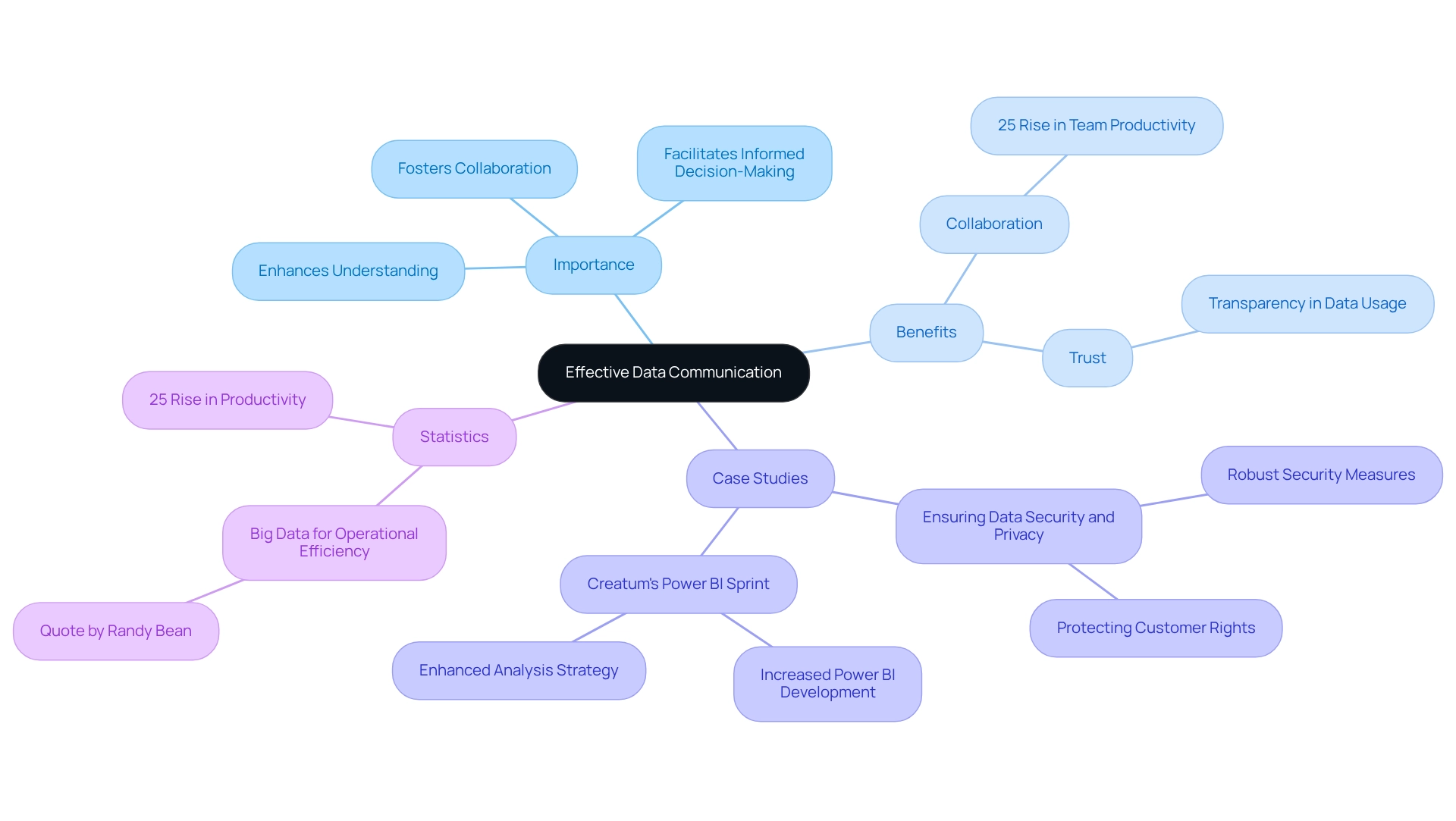

MIT economist Daron Acemoglu predicts that AI may raise productivity by about 0.5% over the next decade, which underlines the value of integrating AI into analytical strategies. Real-world examples show how organizations are turning raw data into actionable insights. For example, Creatum’s Power BI Sprint has significantly strengthened the analytical capabilities of clients such as PALFINGER, enabling them to produce professional reports and put their insights to effective use.

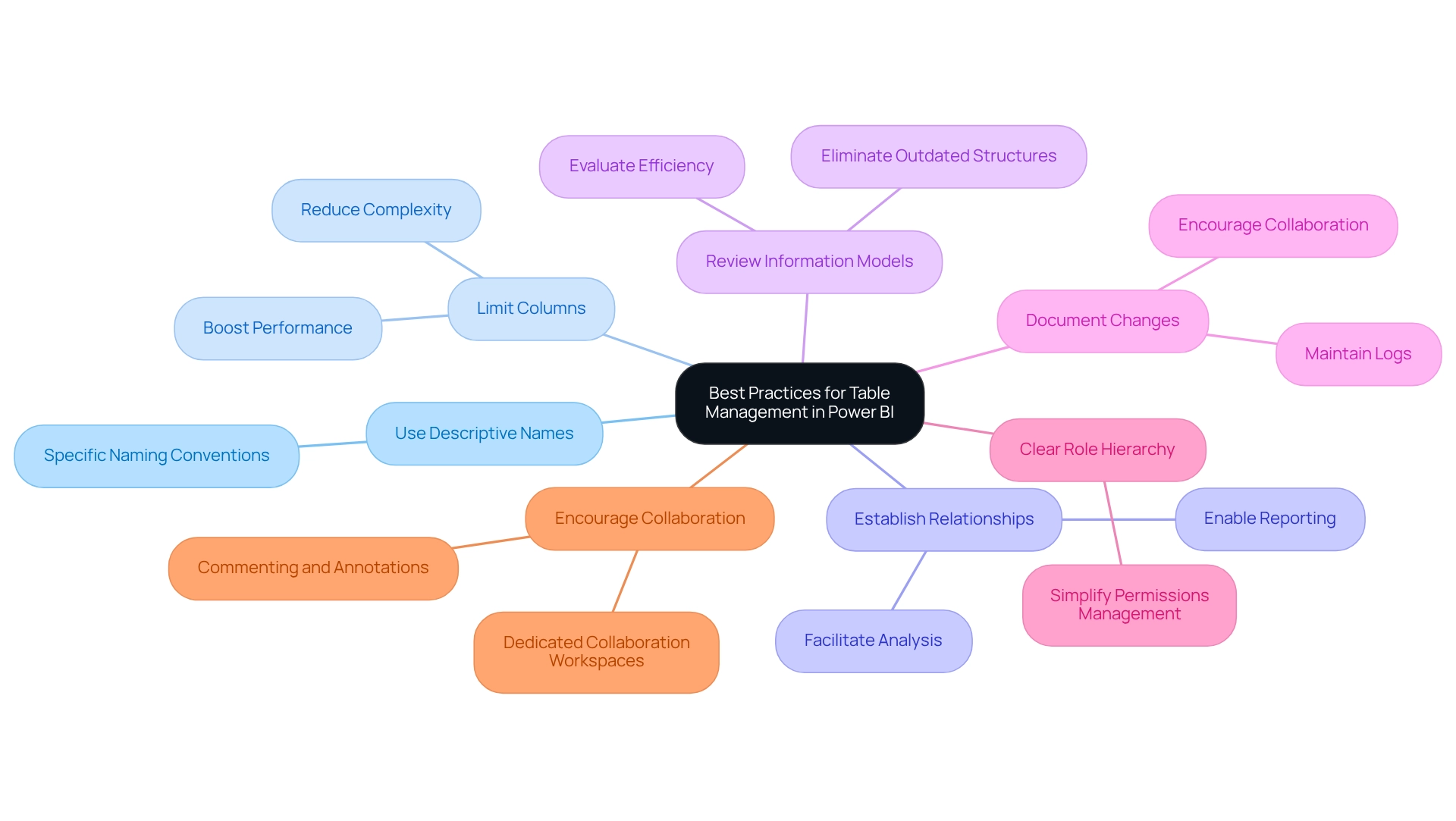

By establishing robust assessment frameworks, companies can enhance data quality and optimize operations, ultimately fostering growth and innovation. As Randy emphasizes, the role of Chief Data and Analytics Officer (CDAO) should be a commercial position reporting to organizational leadership, reflecting the growing importance of data management in achieving strategic objectives. As the landscape continues to evolve, the role of data analysis in operational efficiency will remain vital, empowering organizations to make informed decisions that align with their strategic goals.

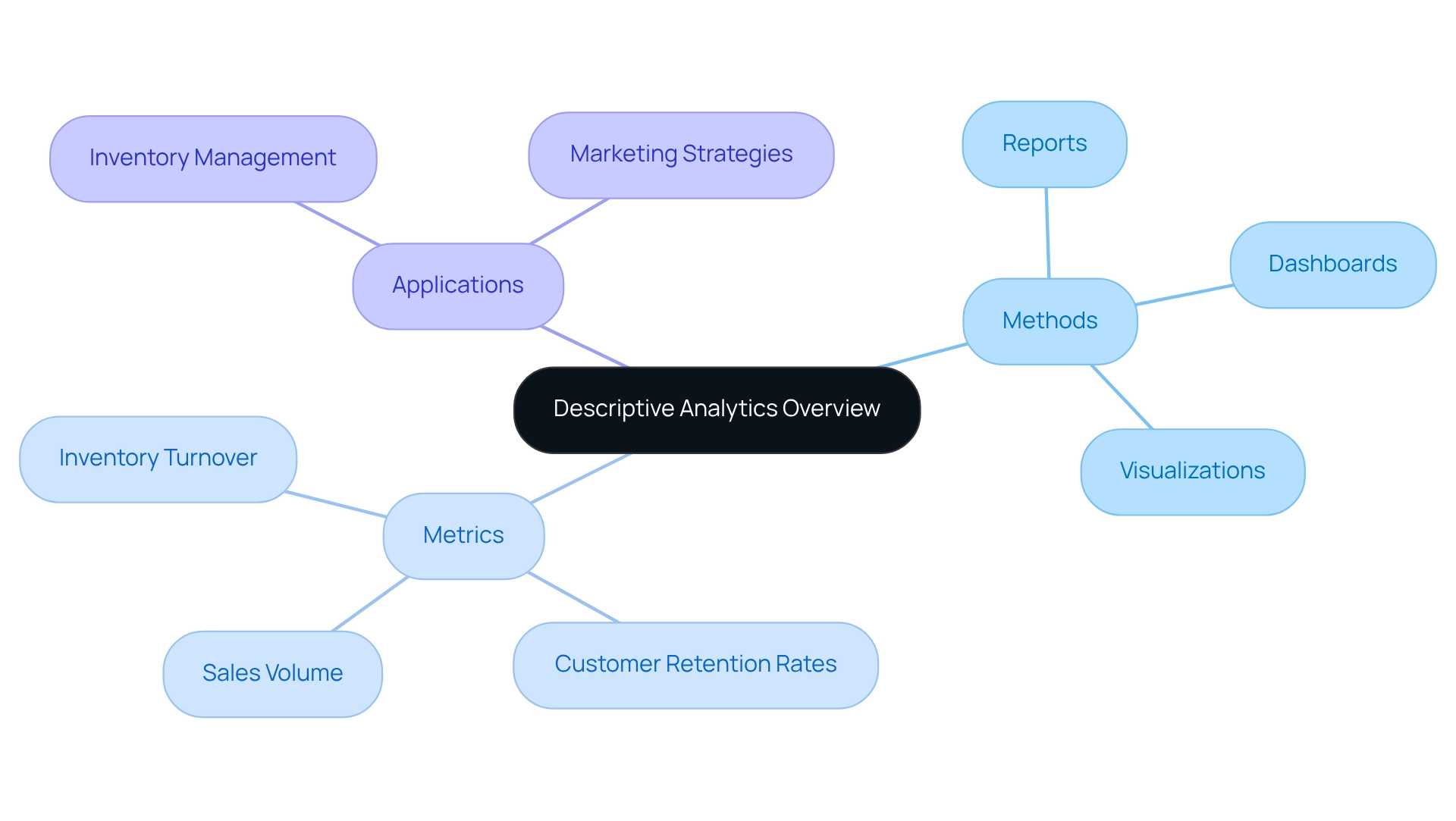

Descriptive Analytics: Summarizing Historical Data

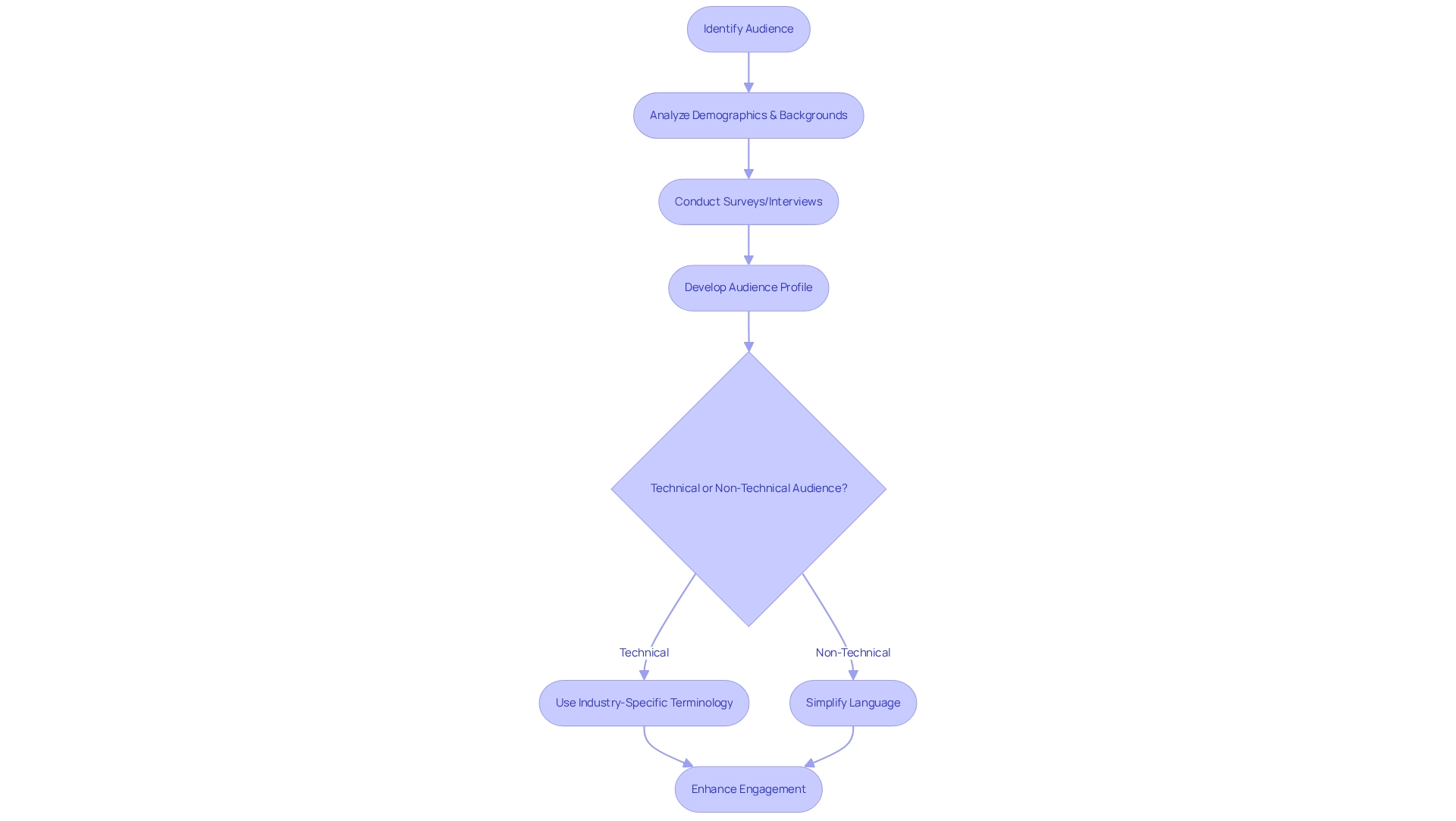

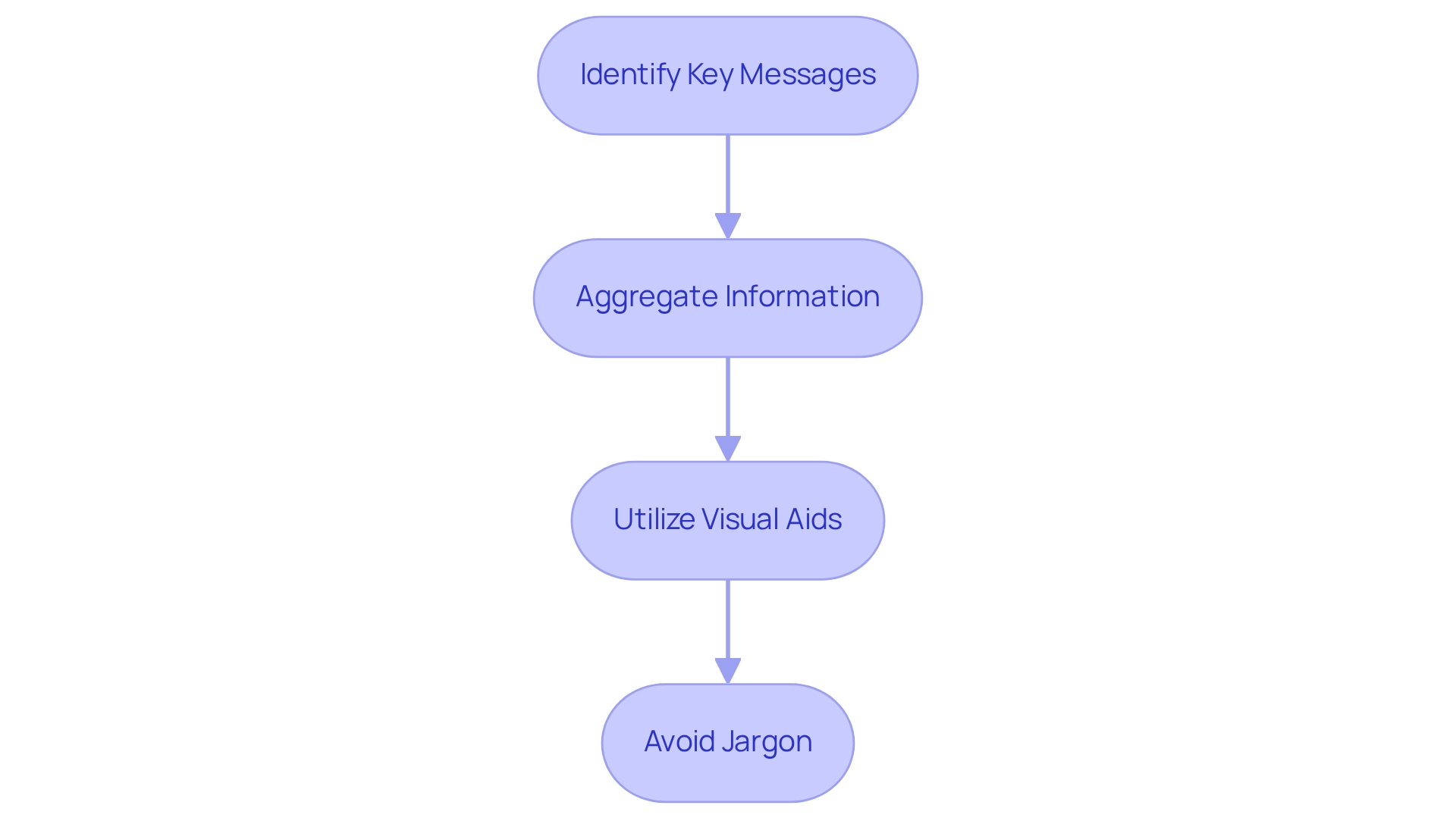

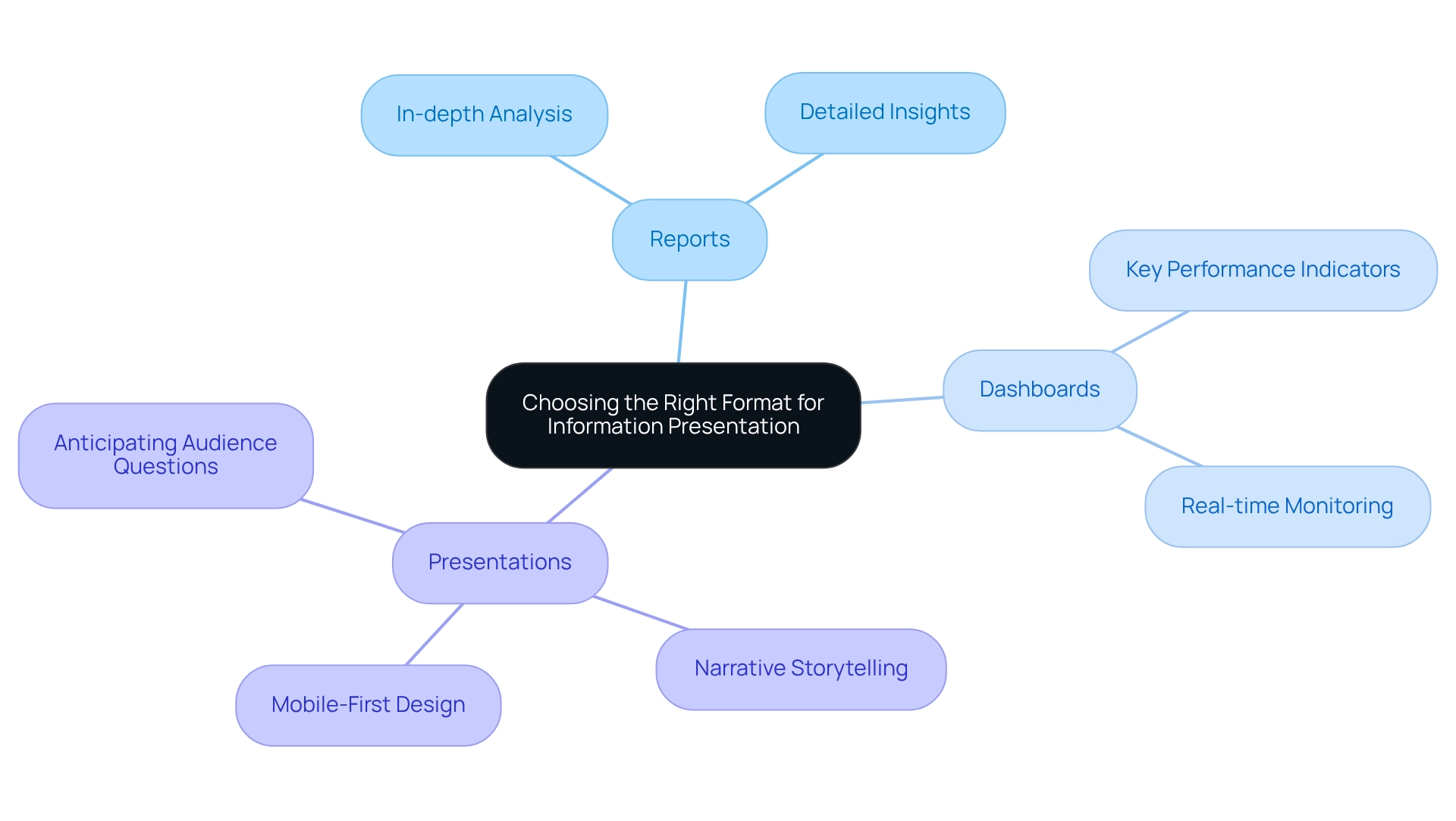

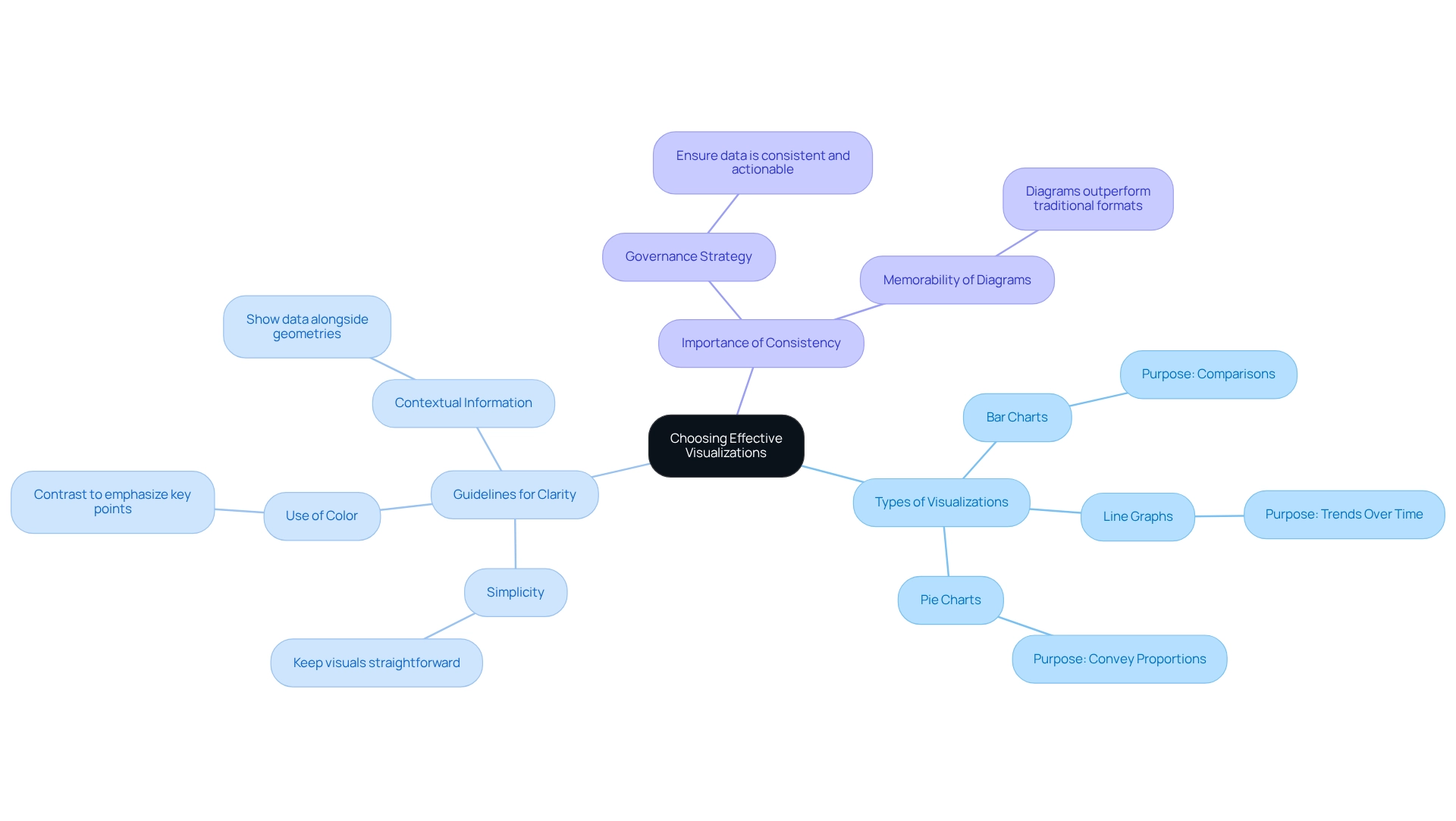

Descriptive analysis plays a vital role in interpreting historical information, enabling companies to summarize past occurrences and obtain actionable insights. This analytical method employs various metrics and visual representations, including reports, dashboards, and visualizations, to present information in an accessible manner. For instance, a retail firm may utilize descriptive analysis to examine sales data from prior years, uncovering seasonal patterns and consumer buying behaviors.

Such insights empower organizations to make informed decisions regarding inventory management and marketing strategies.
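As a minimal sketch of the retail example above, the Python snippet below groups hypothetical monthly sales totals by calendar month and averages them across years, which is one simple way to surface a seasonal peak. The data, the `(date, amount)` record shape, and the function name are illustrative assumptions, not part of any specific tool.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def average_sales_by_month(records):
    """Average (date, amount) sales records per calendar month across all years."""
    by_month = defaultdict(list)
    for day, amount in records:
        by_month[day.month].append(amount)
    return {month: mean(amounts) for month, amounts in sorted(by_month.items())}


# Hypothetical monthly sales totals for two consecutive seasons.
sales = [
    (date(2023, 11, 1), 120_000), (date(2023, 12, 1), 180_000), (date(2024, 1, 1), 90_000),
    (date(2024, 11, 1), 130_000), (date(2024, 12, 1), 200_000), (date(2025, 1, 1), 95_000),
]

monthly_averages = average_sales_by_month(sales)
peak_month = max(monthly_averages, key=monthly_averages.get)
print(monthly_averages)
print(peak_month)  # 12 (December)
```

In practice this summary would usually come from a BI tool or a dataframe library; the point is only that descriptive analysis aggregates what has already happened.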

In 2025, the metrics most commonly used in descriptive analysis include key performance indicators (KPIs) such as sales volume, customer retention rates, and inventory turnover. Descriptive analysis has also improved the precision of market trend assessments by almost 20%, enabling companies to adjust their strategies and capitalize on new opportunities.
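Two of the KPIs named above have widely used textbook formulas, sketched below. Organizations often adapt these definitions (for example, measuring inventory at cost rather than retail value), so treat the function names and inputs as illustrative assumptions rather than a standard API.

```python
def retention_rate(customers_at_start, customers_at_end, new_customers):
    """Customer retention rate in percent: (end - new) / start * 100."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return 100 * (customers_at_end - new_customers) / customers_at_start


def inventory_turnover(cost_of_goods_sold, opening_inventory, closing_inventory):
    """Inventory turnover: cost of goods sold divided by average inventory."""
    average_inventory = (opening_inventory + closing_inventory) / 2
    if average_inventory <= 0:
        raise ValueError("average inventory must be positive")
    return cost_of_goods_sold / average_inventory


# Hypothetical figures for one reporting period.
print(retention_rate(customers_at_start=1_000, customers_at_end=1_050, new_customers=150))  # 90.0
print(inventory_turnover(cost_of_goods_sold=600_000, opening_inventory=110_000, closing_inventory=90_000))  # 6.0
```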

Catherine Cote, Marketing Coordinator at Harvard Business School Online, states, “Data analysis is a valuable tool for businesses aiming to increase revenue, enhance products, and retain customers.” This underscores the significance of descriptive analysis in addressing operational efficiency challenges, as it provides the insights necessary for optimizing processes and improving decision-making.

The rapid adoption of analytics dashboards, which has grown by over 45% among leading firms, illustrates the effectiveness of descriptive analytics. These dashboards, particularly those built with Power BI services, offer a centralized platform for data visualization, streamlining decision-making and improving oversight of market dynamics. The 3-Day Power BI Sprint enables organizations to quickly create professionally designed reports that support this process.

Additionally, the General Management App enhances comprehensive management and smart reviews, further supporting effective decision-making.

Companies employing descriptive data analysis for inventory management have reported substantial benefits. For example, retailers can analyze previous sales information to optimize stock levels, ensuring that popular items are readily available while minimizing excess inventory. This strategic approach not only enhances operational efficiency but also contributes to improved customer satisfaction.

As the Internet of Things (IoT) continues to expand, with projections indicating 20.3 billion connected devices generating 79.4 zettabytes of information by 2025, the significance of descriptive analytics will only increase. Businesses that effectively harness these insights, alongside leveraging Robotic Process Automation (RPA) to automate manual workflows, will be better positioned to navigate the complexities of the market and drive growth, particularly in managing the vast amounts of data generated by IoT devices. To explore how our actions portfolio can assist your organization, we invite you to book a free consultation.

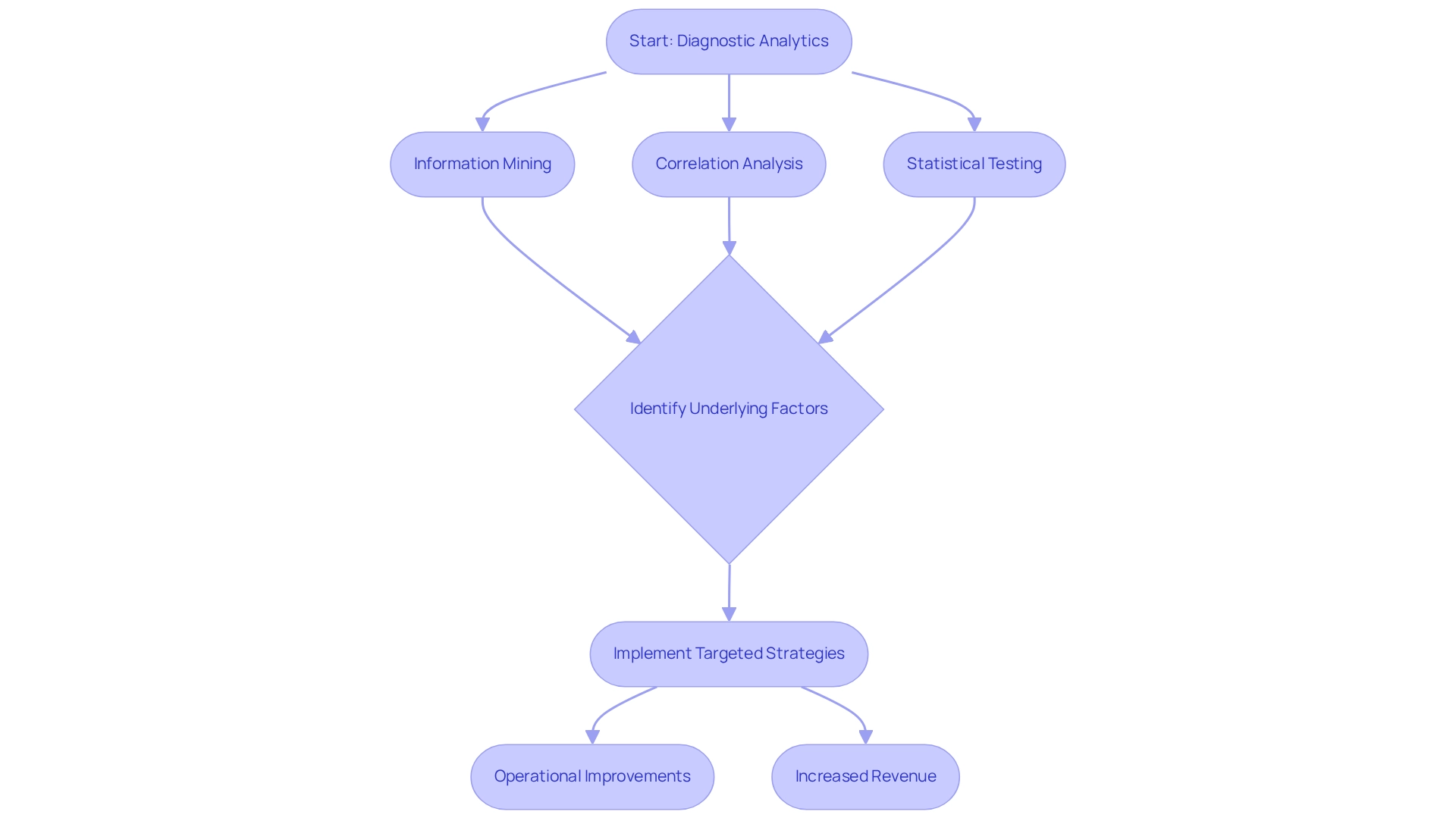

Diagnostic Analytics: Uncovering the ‘Why’ Behind Data

Diagnostic analysis goes beyond descriptive analysis by examining the reasons behind past results, answering critical questions such as ‘Why did this occur?’ and ‘What factors contributed to this result?’ It draws on techniques including data mining, correlation analysis, and statistical testing to uncover actionable insights.

For example, when a company experiences a sudden decline in sales, diagnostic evaluations can pinpoint whether the downturn stems from shifts in consumer behavior, heightened competition, or operational inefficiencies.
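Correlation analysis, one of the diagnostic techniques listed above, can be sketched as follows for the sales-decline example: compute the Pearson correlation between weekly sales and each candidate factor, then rank the factors by strength. The factor names and figures are hypothetical, and a strong correlation only flags a lead for further investigation; it does not prove the cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly sales during a decline, next to three candidate factors.
weekly_sales = [100, 96, 90, 85, 80, 74]
factors = {
    "competitor_promotions": [0, 1, 2, 3, 4, 5],
    "late_deliveries": [3, 2, 4, 3, 2, 4],
    "marketing_spend": [10, 10, 11, 10, 11, 10],
}

# Rank factors by the strength (absolute value) of their correlation with sales.
ranked = sorted(factors, key=lambda name: abs(pearson(factors[name], weekly_sales)), reverse=True)
for name in ranked:
    print(f"{name}: r = {pearson(factors[name], weekly_sales):+.2f}")
```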

Diagnostic analysis is especially significant in 2025. It plays a vital role in improving operational efficiency, with studies indicating potential revenue gains of up to 20% in marketing and sales processes. Organizations using diagnostic analytics can identify the common factors behind performance issues, such as customer dissatisfaction or supply chain disruptions, and implement targeted strategies to address them.

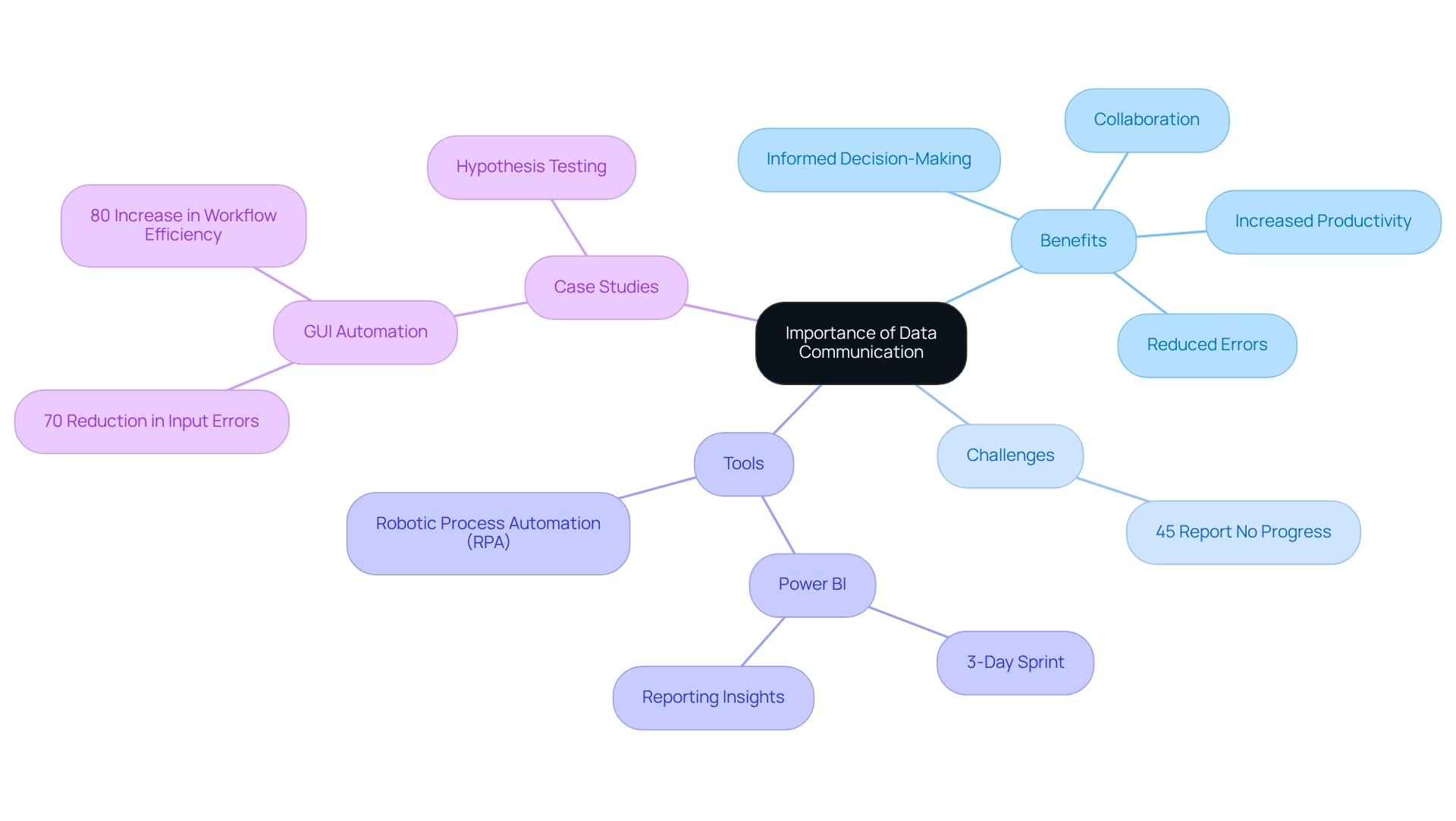

A compelling case study illustrates this point: a mid-sized firm faced challenges like manual entry errors, sluggish software testing, and difficulties in integrating legacy systems without APIs. By adopting GUI automation, the company significantly improved efficiency by automating data entry, software testing, and legacy system integration. This initiative resulted in a 70% reduction in data entry errors, a 50% acceleration in testing processes, and an 80% enhancement in workflow efficiency, achieving ROI within six months.

Such examples show how identifying the root causes of inefficiency, the core task of diagnostic analysis, can lead to substantial business outcomes.

Expert opinions further affirm the importance of this analytical method. Industry experts assert that diagnostic analysis is essential for uncovering insights that facilitate informed decision-making and operational improvements. Techniques like root cause analysis are crucial for understanding the underlying issues affecting performance, empowering organizations to devise effective solutions.
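Root cause analysis covers many methods; one simple, commonly used companion technique is Pareto analysis, swapped in here as an illustration. The sketch below counts hypothetical recorded causes of failed orders and returns the smallest set of most frequent causes that together explain at least 80% of incidents, which is typically where targeted fixes pay off first. The function name, threshold, and data are assumptions for illustration.

```python
from collections import Counter


def pareto_vital_few(causes, threshold=0.8):
    """Return the most frequent causes that together account for at least `threshold` of incidents."""
    counts = Counter(causes)
    total = sum(counts.values())
    vital, covered = [], 0
    for cause, count in counts.most_common():
        vital.append(cause)
        covered += count
        if covered / total >= threshold:
            break
    return vital


# Hypothetical log of root causes recorded for failed orders (100 incidents).
incidents = (["manual entry error"] * 45 + ["legacy system timeout"] * 30
             + ["missing stock"] * 15 + ["courier delay"] * 7 + ["other"] * 3)
print(pareto_vital_few(incidents))  # ['manual entry error', 'legacy system timeout', 'missing stock']
```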

In an environment abundant with data, the ability to extract meaningful insights through diagnostic analysis is critical for enterprises aiming to thrive in a competitive landscape.

Additionally, the Analytics & Insights team at Seer employs both diagnostic and predictive analysis to enhance marketing strategies for clients, showcasing how diagnostic analysis fits within a broader analytical framework. Furthermore, AI platforms in healthcare enhance physicians’ access to the latest research and treatment methods, demonstrating the extensive impact of data analysis across various sectors. In 2025, the common elements identified through diagnostic analysis will continue to play a vital role in addressing operational inefficiencies, ensuring companies maintain their competitive edge.

Moreover, the implementation of Robotic Process Automation (RPA) can further streamline these processes, enabling organizations to automate repetitive tasks and focus on strategic initiatives.

Predictive Analytics: Forecasting Future Trends

Predictive analysis harnesses historical information, statistical algorithms, and machine learning methods to forecast future results, playing a pivotal role in shaping business strategies. By meticulously examining patterns and trends from past data, organizations can make informed predictions about upcoming events. For example, financial institutions increasingly rely on predictive modeling to evaluate the likelihood of loan defaults, leveraging historical borrower behavior to refine decision-making processes.
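To make the loan-default example concrete, here is a minimal sketch using logistic regression, a common (but by no means the only) choice for estimating a probability of default. It is trained with plain batch gradient descent on a tiny, hypothetical history with two assumed features; real credit models rely on far more data, proper validation, and regulatory review.

```python
from math import exp


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + exp(-z))
    e = exp(z)
    return e / (1.0 + e)


def train_logistic(features, labels, learning_rate=0.1, epochs=5_000):
    """Fit logistic-regression weights and bias with batch gradient descent on log-loss."""
    n, n_features = len(features), len(features[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(features, labels):
            # Prediction error for one borrower: predicted probability minus actual outcome.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def default_probability(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical history: [debt-to-income ratio, missed payments last year] -> defaulted (1) or not (0).
history = [[0.10, 0], [0.20, 0], [0.25, 1], [0.30, 0], [0.60, 2], [0.70, 3], [0.80, 2], [0.90, 4]]
defaulted = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic(history, defaulted)
p_low = default_probability(weights, bias, [0.15, 0])
p_high = default_probability(weights, bias, [0.85, 3])
print(f"low-risk applicant: {p_low:.2f}, high-risk applicant: {p_high:.2f}")
```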

By 2025, the adoption of predictive data analysis across various sectors has surged, with an impressive 76% of organizations integrating these technologies into their operations. This growth is primarily fueled by the escalating reliance on big data technologies and the demand for tailored solutions that tackle specific business challenges. The accuracy rates of predictive modeling systems have also improved significantly, with many organizations reporting precision levels exceeding 85% in their forecasts.

Expert insights underscore the importance of predictive analysis in risk management, where organizations can proactively identify potential risks and implement mitigation strategies. For instance, companies in the finance sector are utilizing predictive analysis to enhance their risk assessment frameworks, enabling them to navigate uncertainties more effectively.

Current trends indicate that predictive analysis is not only revolutionizing financial institutions but also extending its impact into supply chain management, empowering manufacturers to anticipate demand and optimize inventory levels. Organizations like Planet Fitness have recently partnered with Coherent Solutions to leverage advanced data analysis for enhancing customer insights, showcasing the flexibility of predictive modeling across diverse industries.

Common statistical algorithms employed in predictive modeling include:

- Regression analysis

- Decision trees

- Neural networks

Each of these contributes to the robustness of predictive models. As companies continue to embrace these technologies, the potential of predictive analysis to forecast future trends and shape strategic decision-making will only expand. As Coherent Solutions states, “Coherent Solutions is your reliable tech partner providing sophisticated information analysis services to reveal historical insights, patterns, and relationships that guide strategic decision-making.”
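Of the three algorithm families listed, regression is the simplest to show. The sketch below fits an ordinary least-squares trend line to hypothetical quarterly demand and extrapolates one quarter ahead; a decision tree or neural network would take the place of `fit_line` (a hypothetical helper, not a library function) at the cost of more code and more data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical quarterly demand (units) for quarters 1 to 4.
quarters = [1, 2, 3, 4]
demand = [200, 220, 240, 260]

slope, intercept = fit_line(quarters, demand)
print(slope, intercept)         # 20.0 180.0
print(slope * 5 + intercept)    # 280.0, the forecast for quarter 5
```

Real demand is rarely this linear, which is why the text pairs regression with more flexible models.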

Moreover, the predictive analytics market is expected to grow substantially, driven by the increasing adoption of big data technologies and demand for customized solutions, which highlights how dynamic this sector is. By integrating predictive insights with Robotic Process Automation (RPA) and customized AI solutions, enterprises can improve operational efficiency and make data-driven decisions that propel business growth.

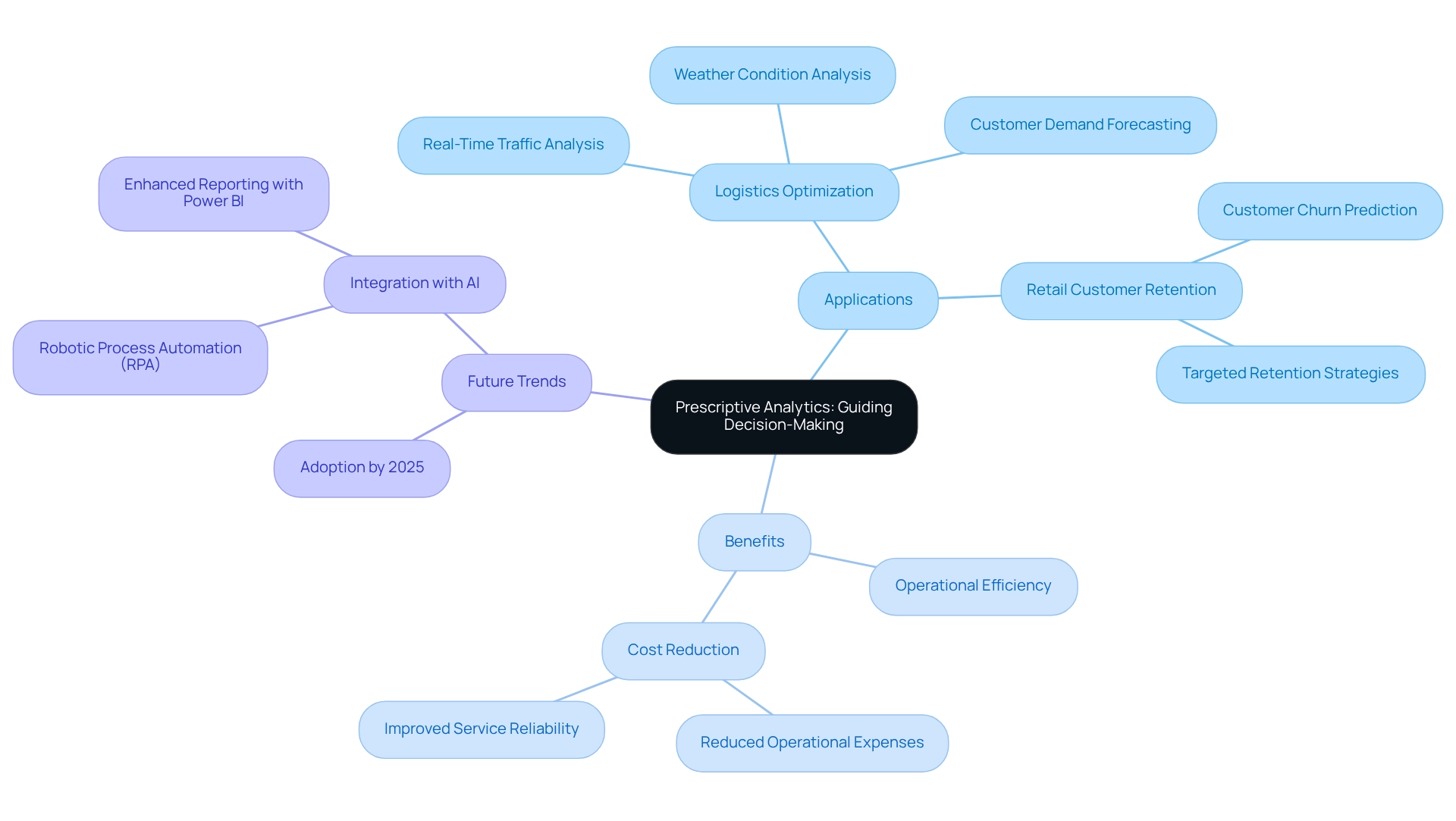

Prescriptive Analytics: Guiding Decision-Making

Prescriptive analysis represents the pinnacle of analytical capabilities, delivering actionable recommendations derived from comprehensive data examination. By leveraging insights from the three primary areas of analytics—descriptive, diagnostic, and predictive—organizations can identify the most effective courses of action. For example, in the logistics sector, prescriptive methods can optimize delivery routes by analyzing real-time traffic patterns, weather conditions, and customer demand.

This sophisticated analytical approach not only streamlines operations but also significantly enhances decision-making processes.
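The route-optimization example can be sketched in its simplest form: given current travel times between a depot and a few delivery stops, try every stop order and recommend the cheapest round trip. The stop names and minutes are hypothetical, and exhaustive search only works for a handful of stops; production routing tools typically rely on heuristics or mathematical programming instead.

```python
from itertools import permutations


def best_route(depot, stops, travel_minutes):
    """Exhaustively try every stop order; return the cheapest round trip from the depot."""
    best_order, best_cost = None, float("inf")
    for order in permutations(stops):
        path = (depot, *order, depot)
        cost = sum(travel_minutes[(a, b)] for a, b in zip(path, path[1:]))
        if cost < best_cost:
            best_order, best_cost = order, cost
    return best_order, best_cost


# Hypothetical travel times in minutes, already adjusted for current traffic and weather.
edges = {("D", "A"): 10, ("D", "B"): 15, ("D", "C"): 20,
         ("A", "B"): 35, ("A", "C"): 25, ("B", "C"): 30}
# Assume travel time is the same in both directions.
travel_minutes = {**edges, **{(b, a): t for (a, b), t in edges.items()}}

order, minutes = best_route("D", ["A", "B", "C"], travel_minutes)
print(order, minutes)  # ('A', 'C', 'B') 80
```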

Looking ahead to 2025, the adoption of prescriptive data analysis in logistics is anticipated to increase. Studies indicate that firms utilizing these insights can reduce operational expenses by up to 15% while improving service reliability. This is particularly vital as businesses strive to maintain competitiveness in a data-driven environment. Additionally, organizations can track supplier performance and adjust procurement strategies through data analysis, further decreasing costs and enhancing service reliability.

Moreover, prescriptive data analysis empowers organizations to make informed decisions by providing tailored recommendations. For instance, it can propose inventory adjustments based on predictive models of customer behavior, ensuring that supply aligns with demand efficiently. A notable case study involves a retail company that successfully implemented prescriptive analytics to anticipate customer churn.

By identifying at-risk clients and recommending customized retention strategies, the firm significantly reduced churn rates, illustrating the tangible benefits of this analytical approach.
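The inventory-adjustment idea mentioned above can be sketched as a small prescriptive step on top of a forecast: take a predicted daily demand (assumed to come from a predictive model), add safety stock using the common z × σ × √(lead time) approximation, and recommend ordering whatever the stock on hand does not cover. The SKU names, figures, and the 1.65 service factor (roughly a 95% service level under a normal-demand assumption) are illustrative assumptions.

```python
from math import ceil, sqrt


def recommend_order(forecast_daily_demand, demand_std_dev, lead_time_days, on_hand, service_z=1.65):
    """Suggest how many units to order so stock covers forecast lead-time demand plus safety stock.

    Safety stock uses the common z * sigma * sqrt(lead time) approximation, which assumes
    independent, roughly normally distributed daily demand.
    """
    safety_stock = service_z * demand_std_dev * sqrt(lead_time_days)
    target_stock = forecast_daily_demand * lead_time_days + safety_stock
    # Never recommend a negative order; surplus stock simply means "order nothing".
    return max(0, ceil(target_stock - on_hand))


# Hypothetical SKUs: (forecast units/day, demand std dev units/day, lead time in days, units on hand).
skus = {
    "winter-jacket": (40, 9, 4, 100),
    "sun-hat": (5, 2, 4, 60),
}
for sku, params in skus.items():
    print(sku, recommend_order(*params))
```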

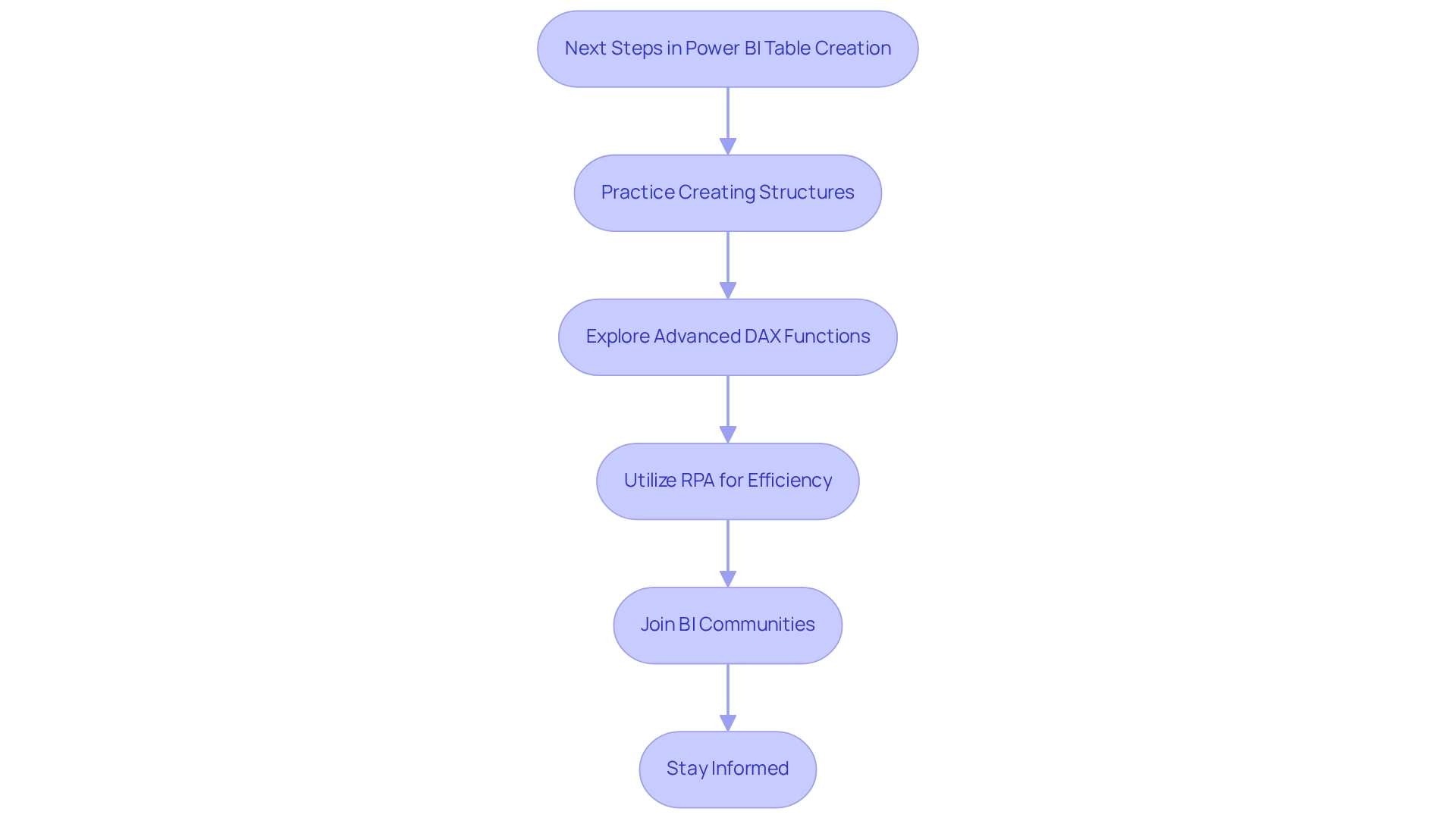

To enhance reporting and derive actionable insights, integrating Power BI services can be crucial. The 3-Day Power BI Sprint enables organizations to swiftly generate professionally designed reports, while the General Management App facilitates comprehensive management and intelligent reviews. Additionally, employing AI through Small Language Models and GenAI Workshops can improve information quality and provide practical training, ensuring that teams are equipped to effectively utilize these advanced insights.

Expert opinions underscore the need to combine data science, domain expertise, and business acumen to implement prescriptive insights successfully. Davenport emphasizes that executing and using prescriptive analysis effectively requires this unique skill set. Prescriptive models also need continuous improvement: maintaining data quality keeps them accurate and relevant, ultimately driving operational efficiency and strategic decision-making in 2025 and beyond.

Furthermore, adopting Robotic Process Automation (RPA) can automate manual workflows, further boosting operational efficiency in an ever-evolving AI landscape, allowing teams to concentrate on more strategic, value-adding tasks.

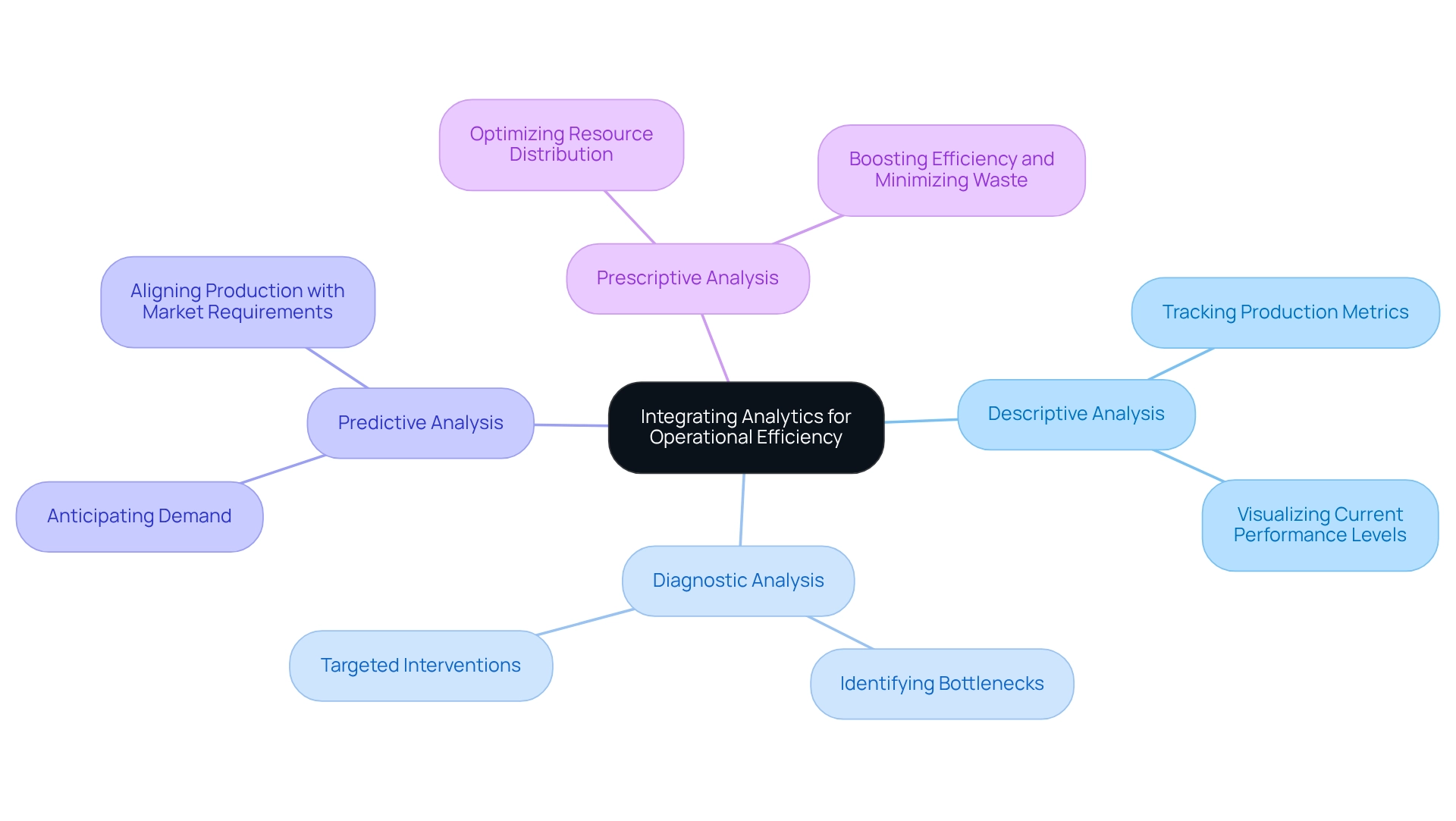

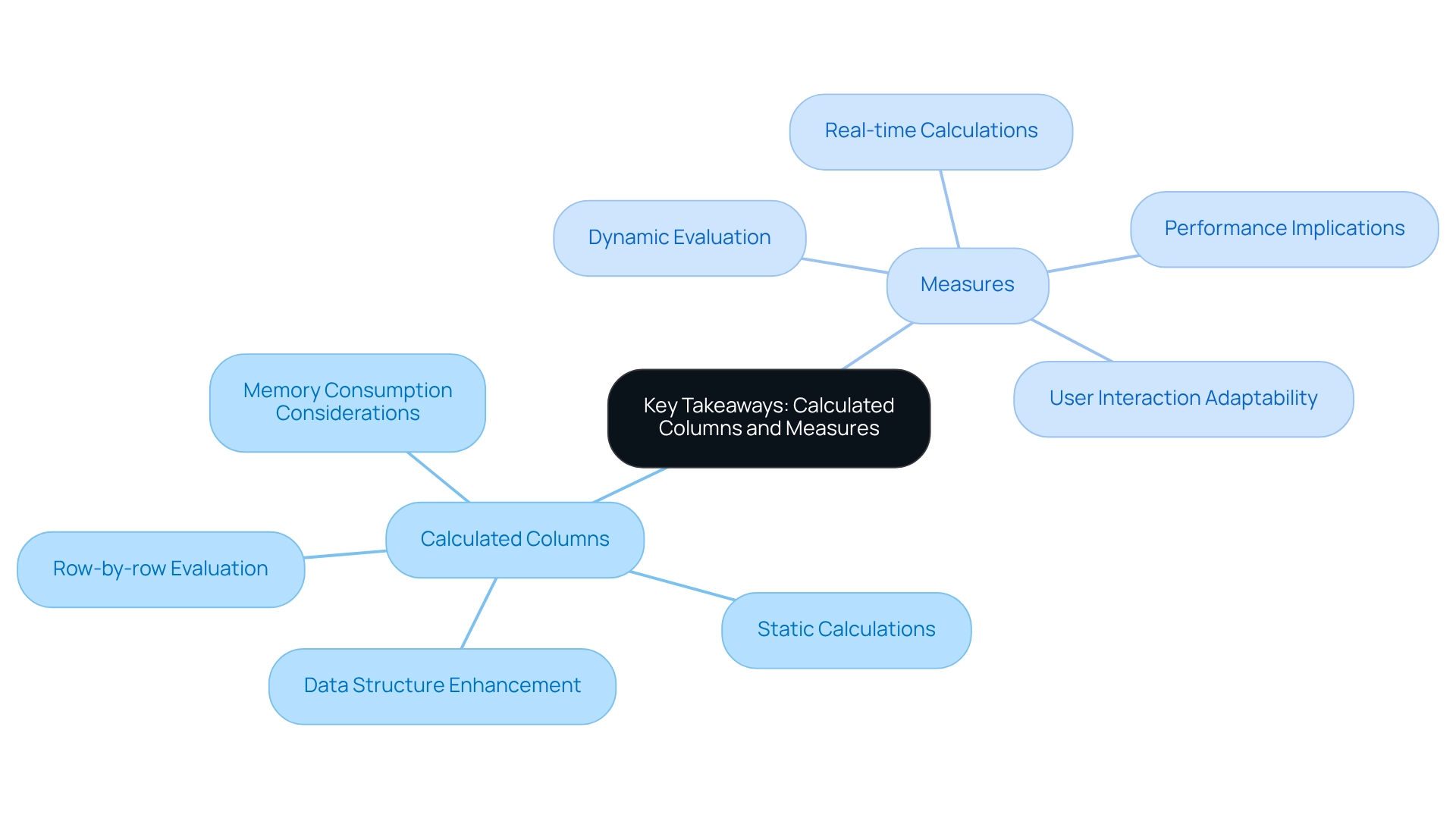

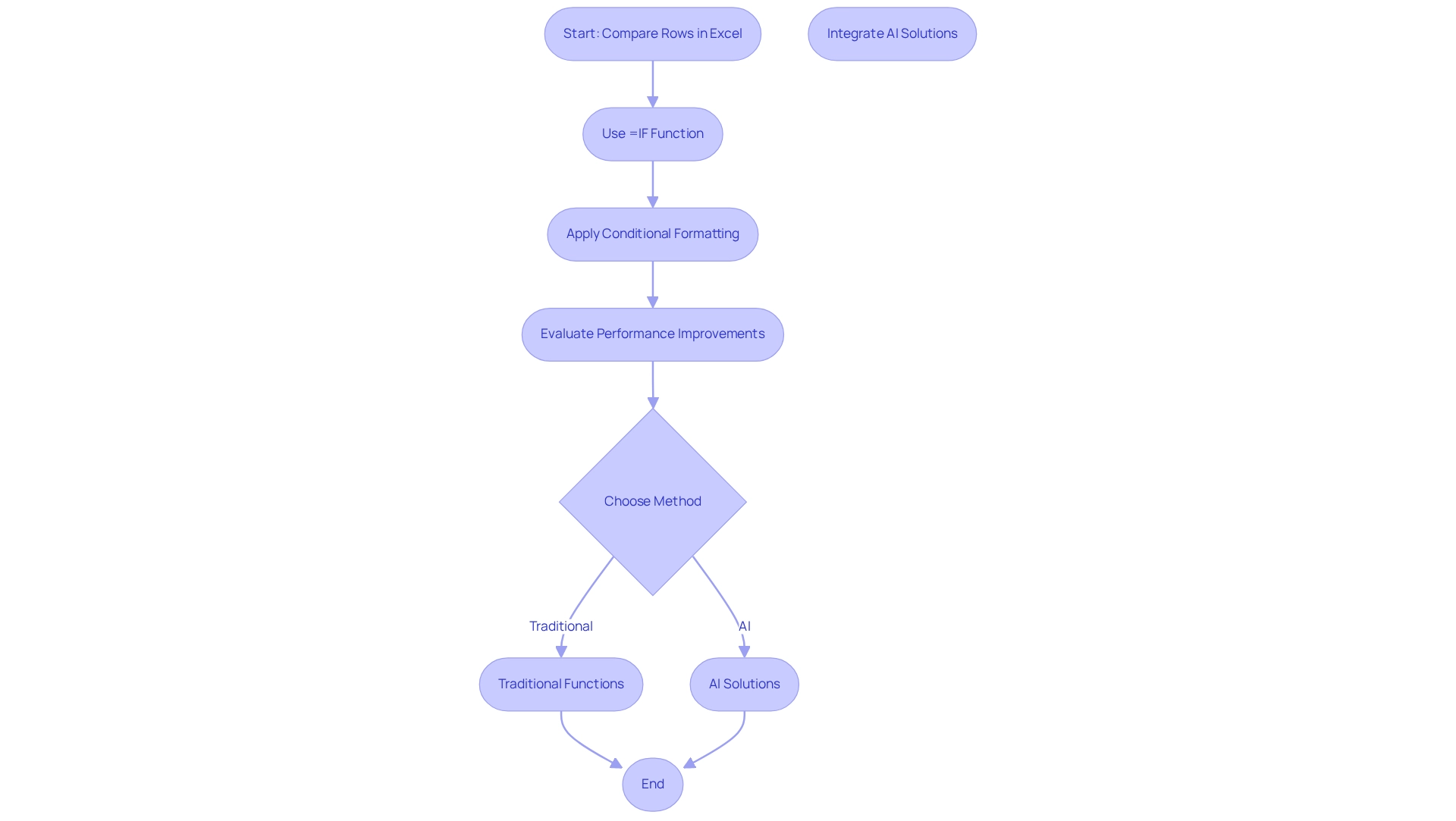

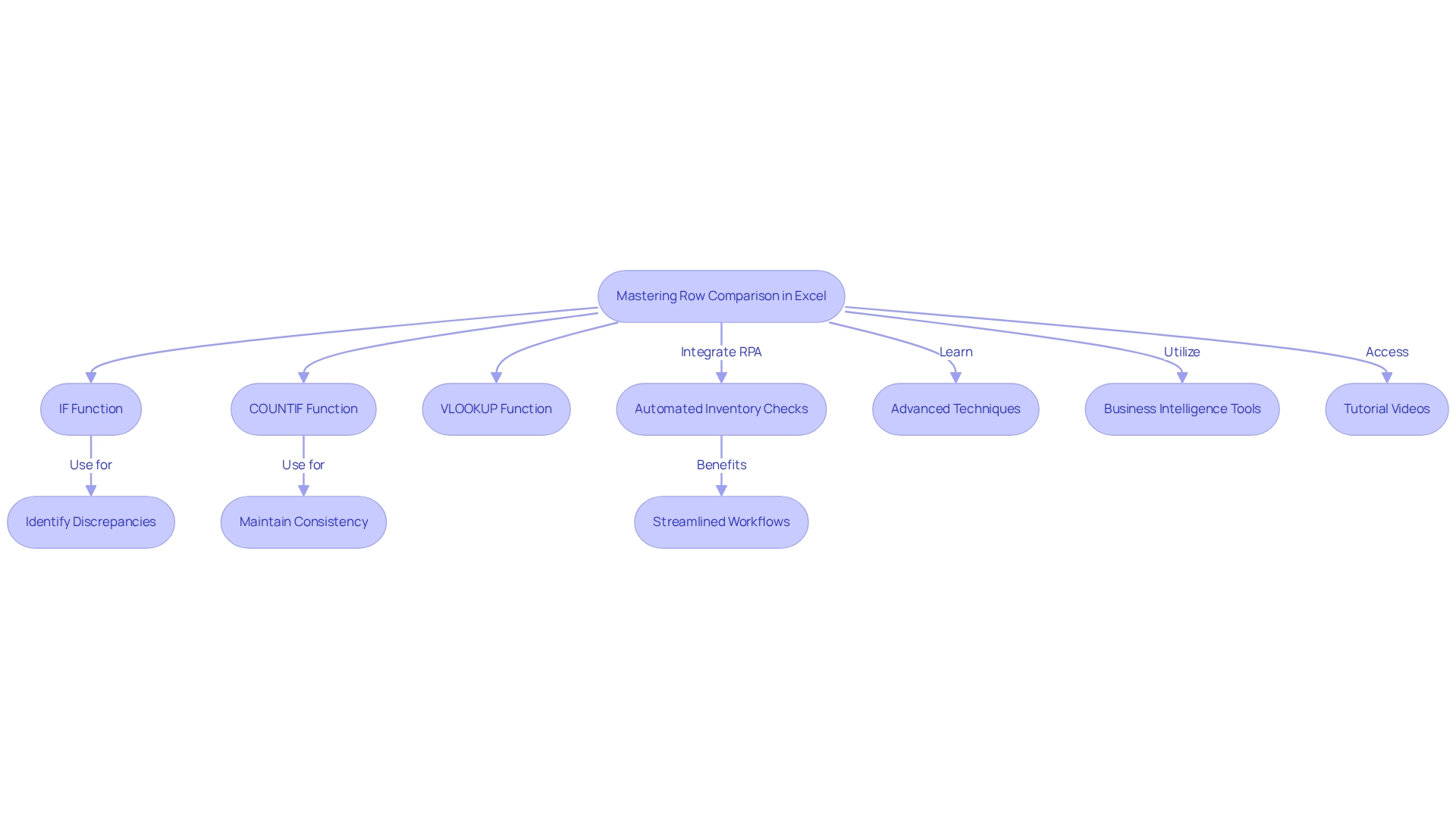

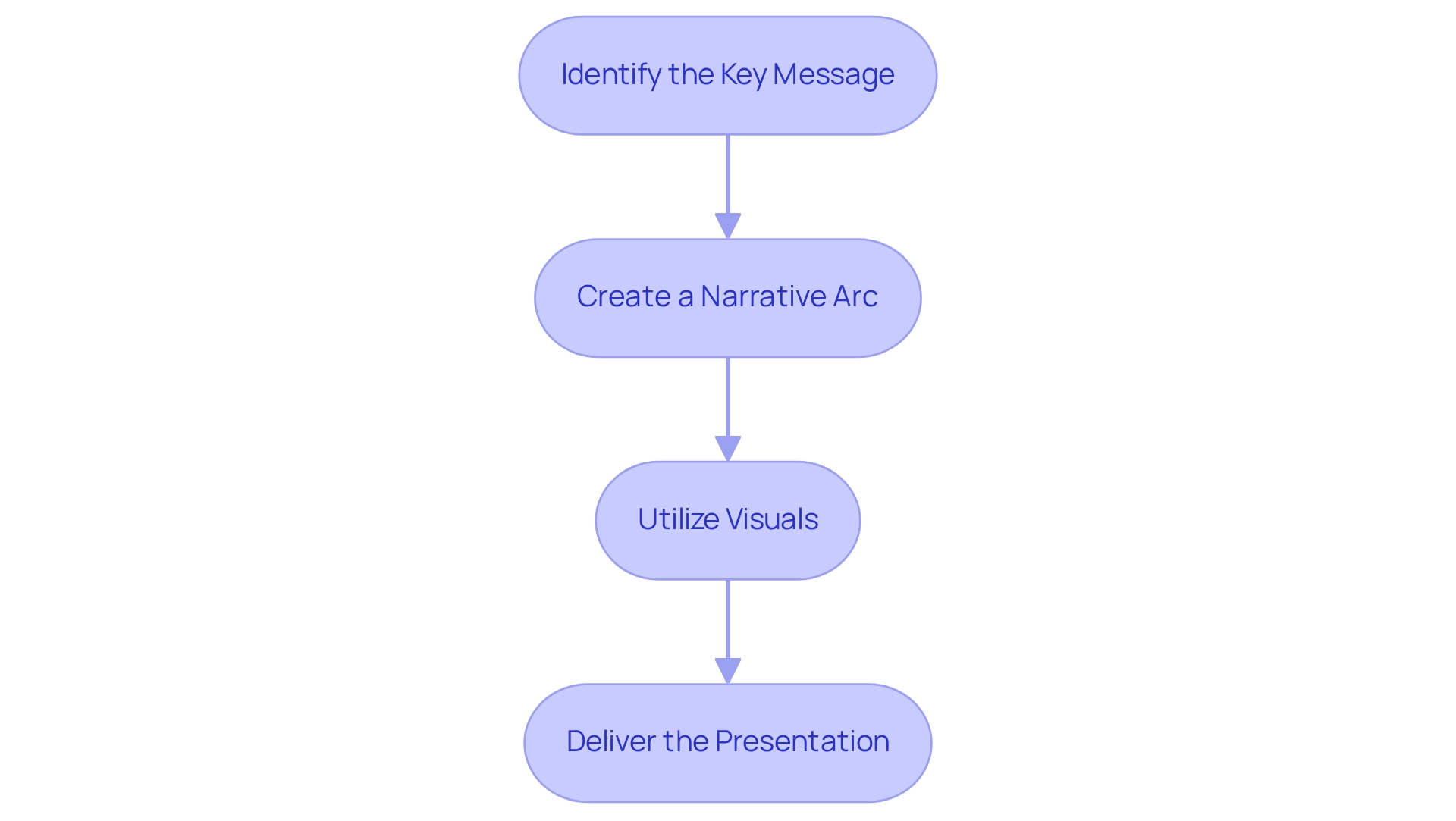

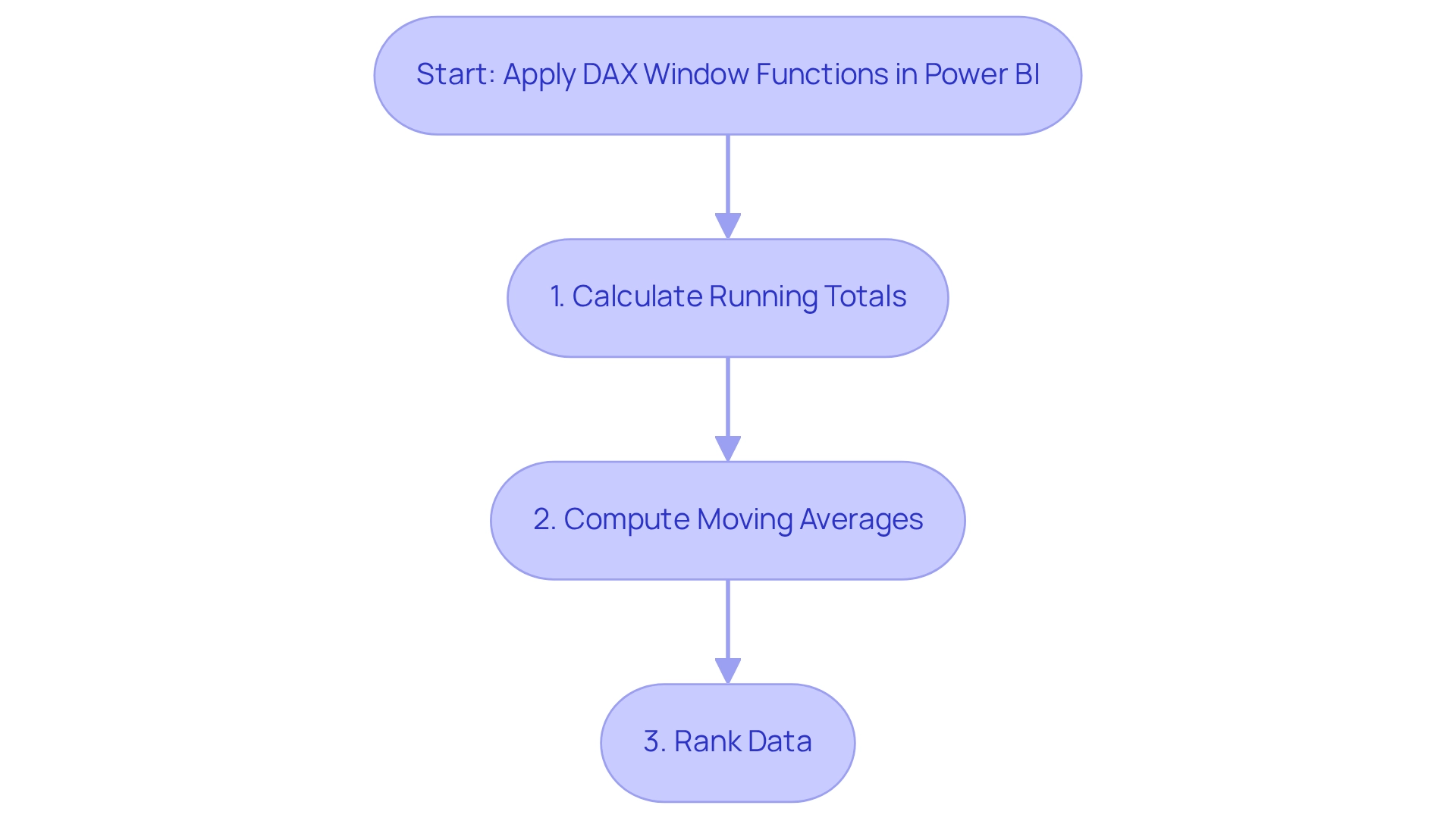

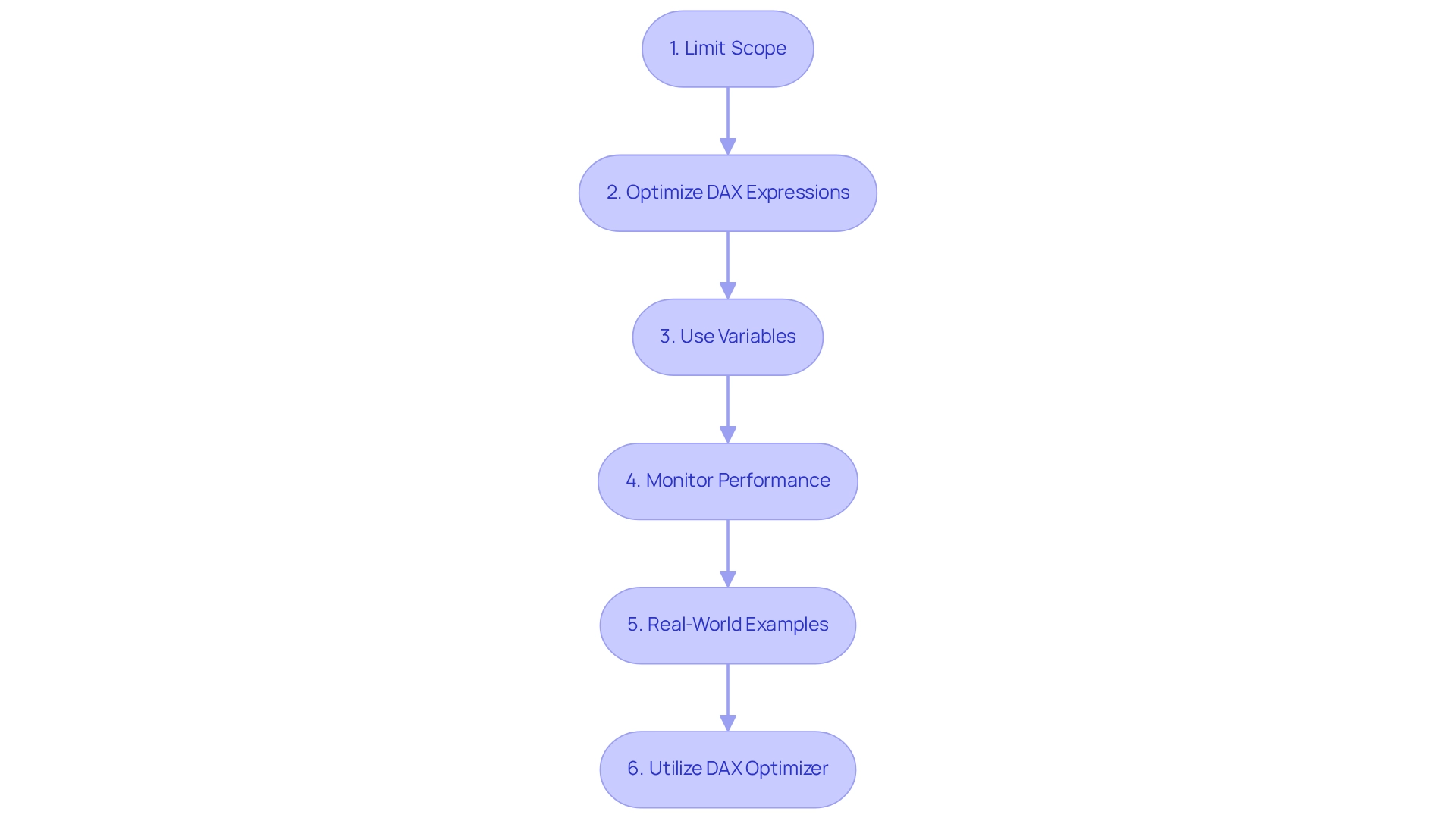

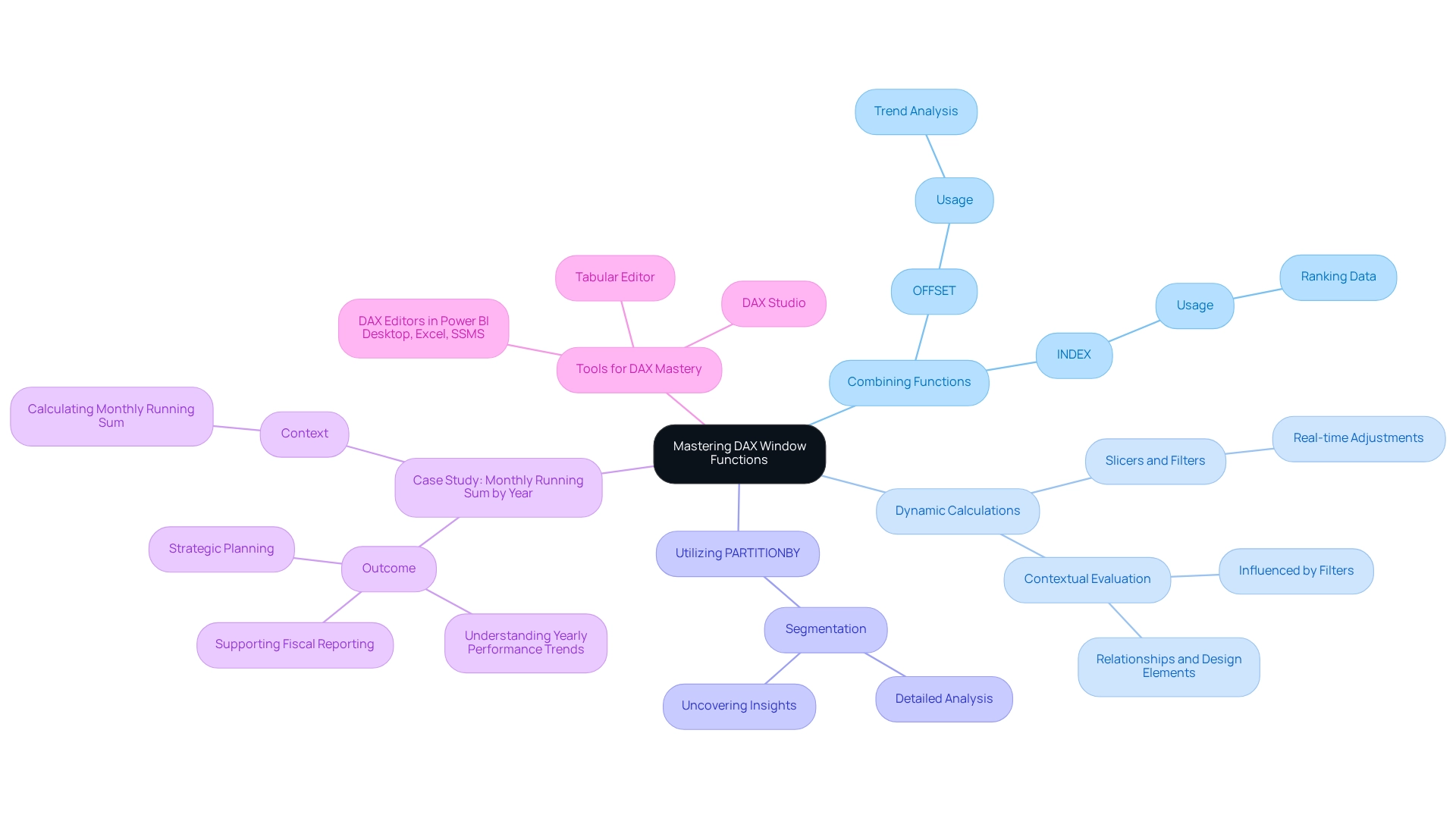

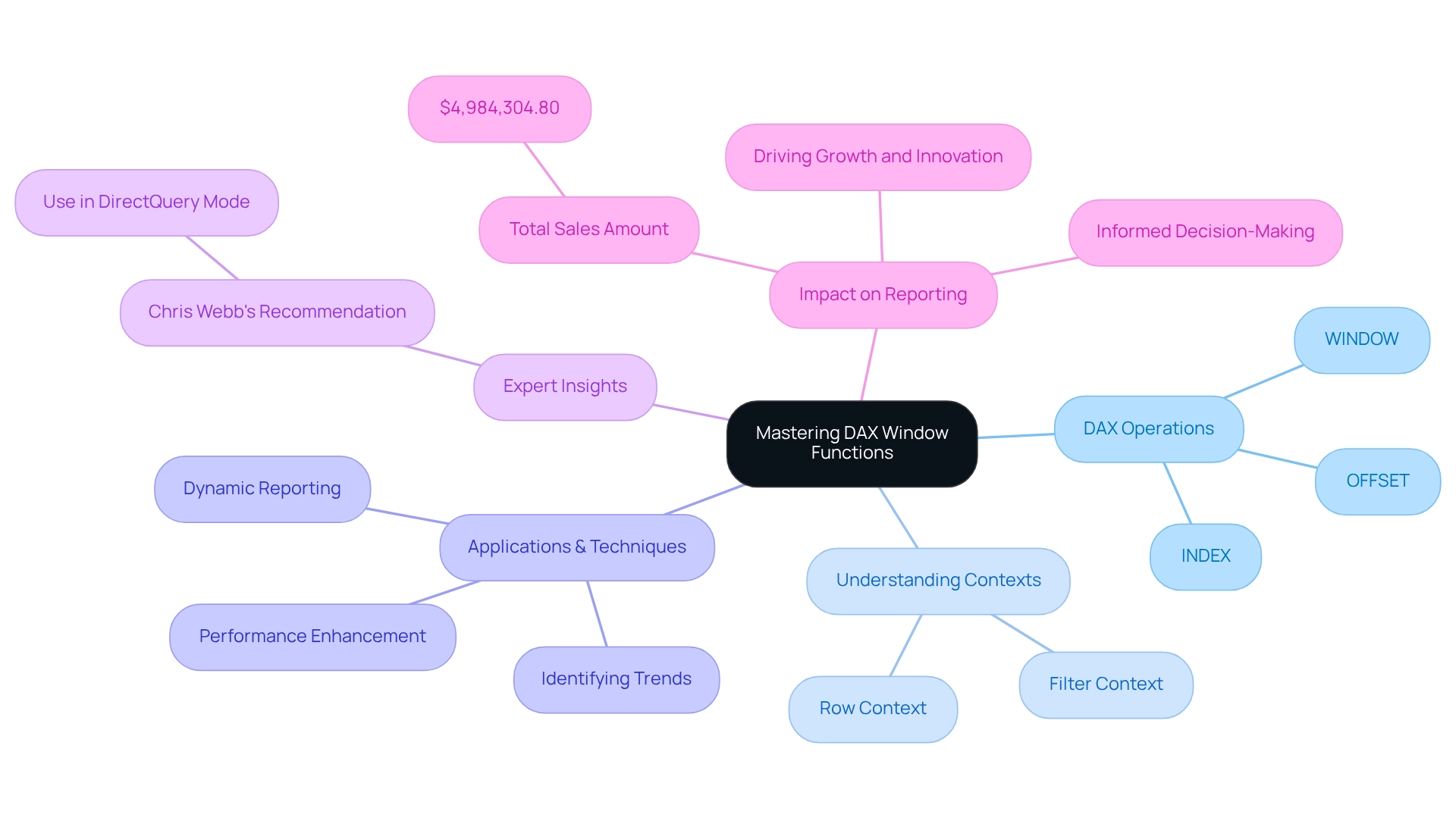

Integrating Analytics for Operational Efficiency

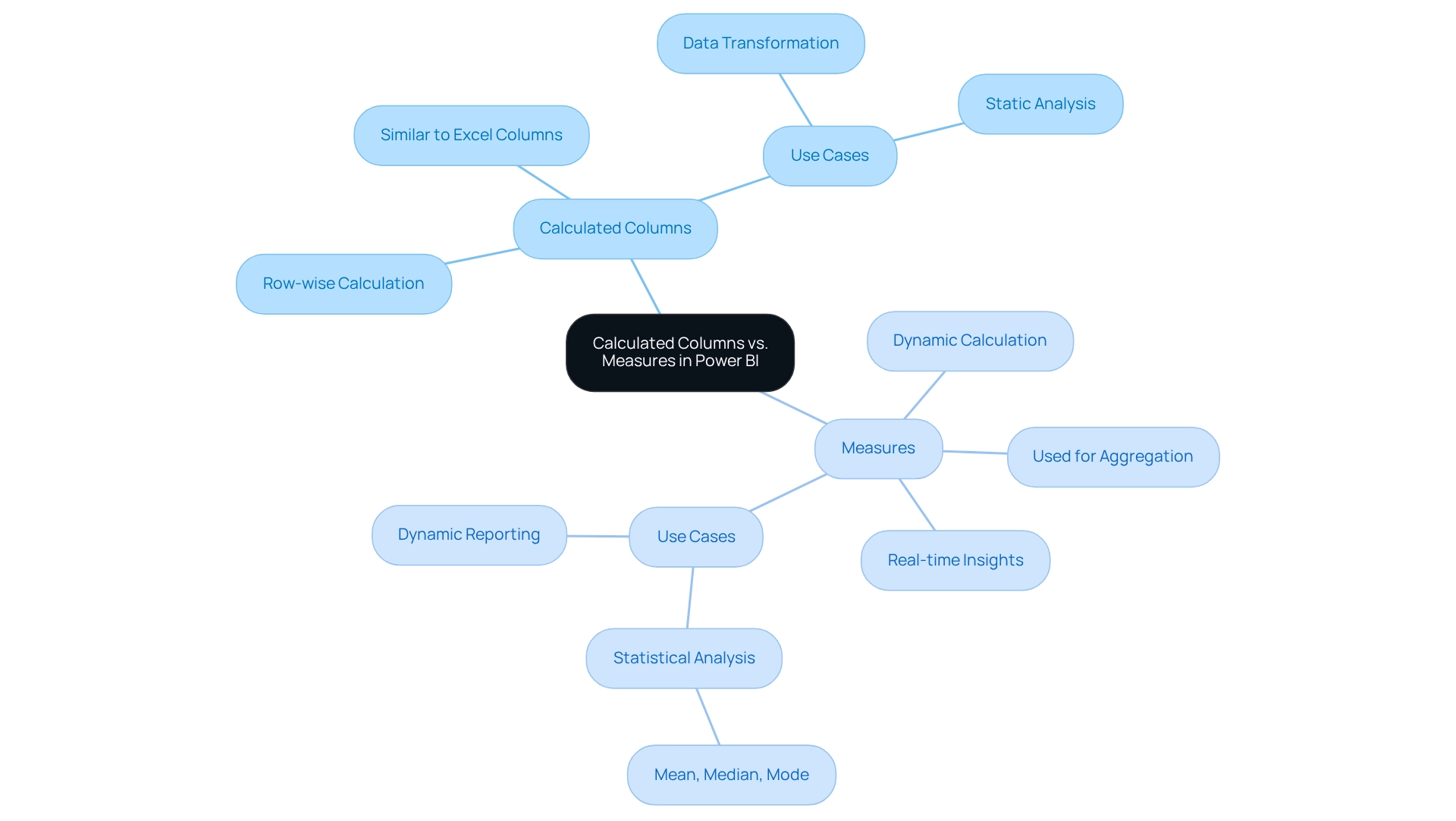

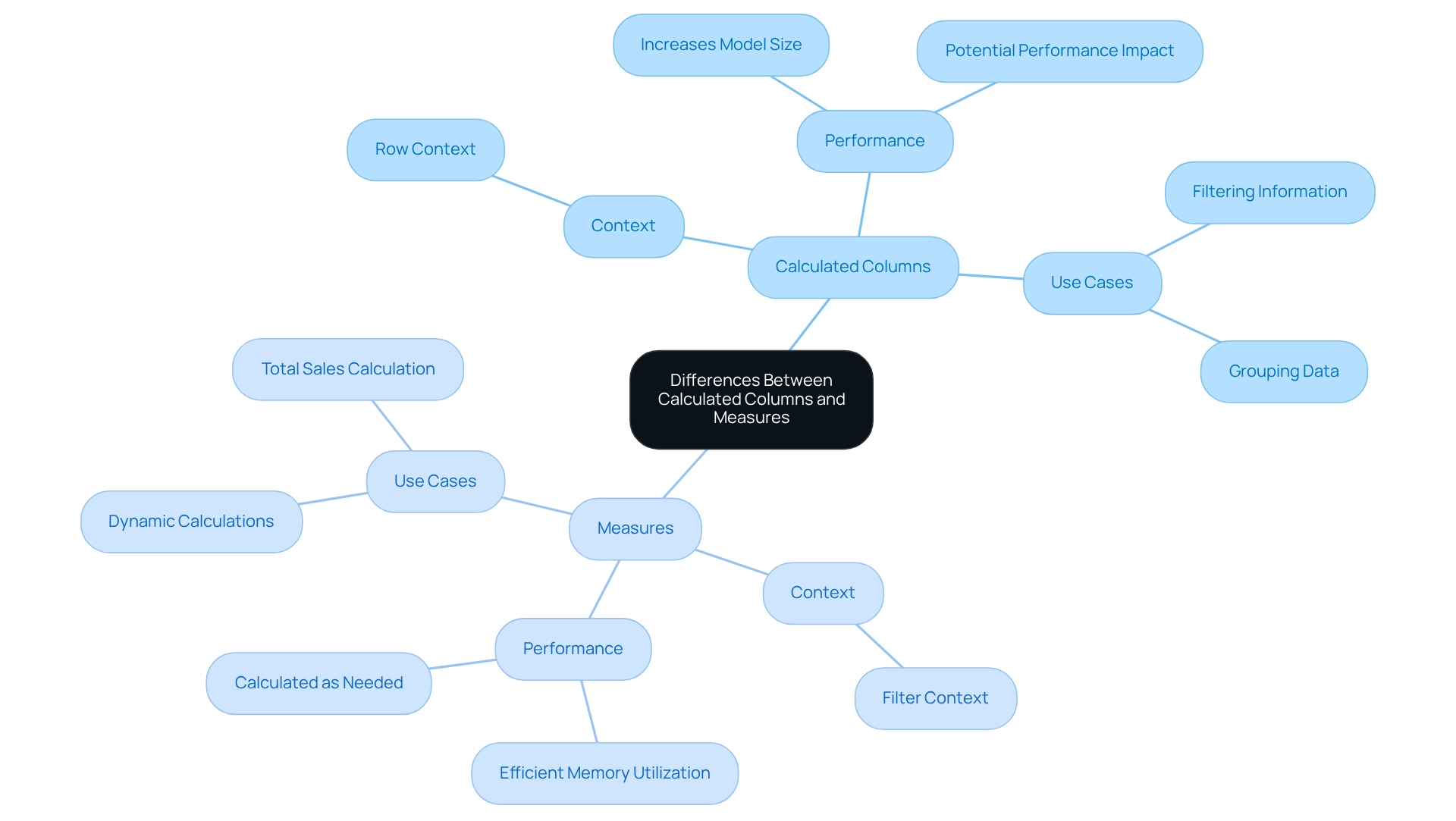

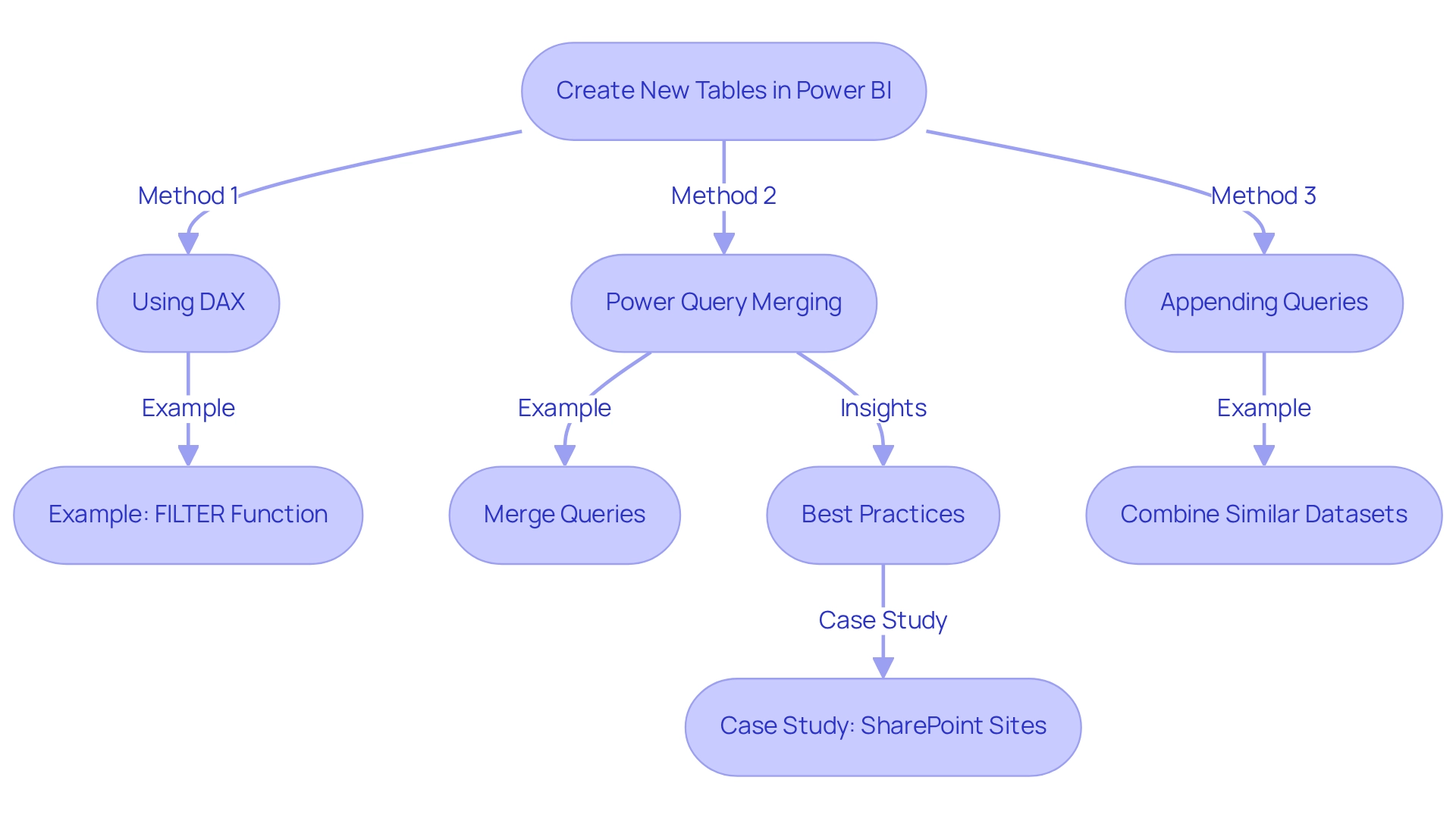

Integrating the different types of analytics (descriptive, diagnostic, predictive, and prescriptive) forms the backbone of a comprehensive analytics framework that helps organizations unlock deeper insights into their operations. This approach not only helps identify inefficiencies but also supports evidence-based strategies that can significantly improve operational effectiveness.

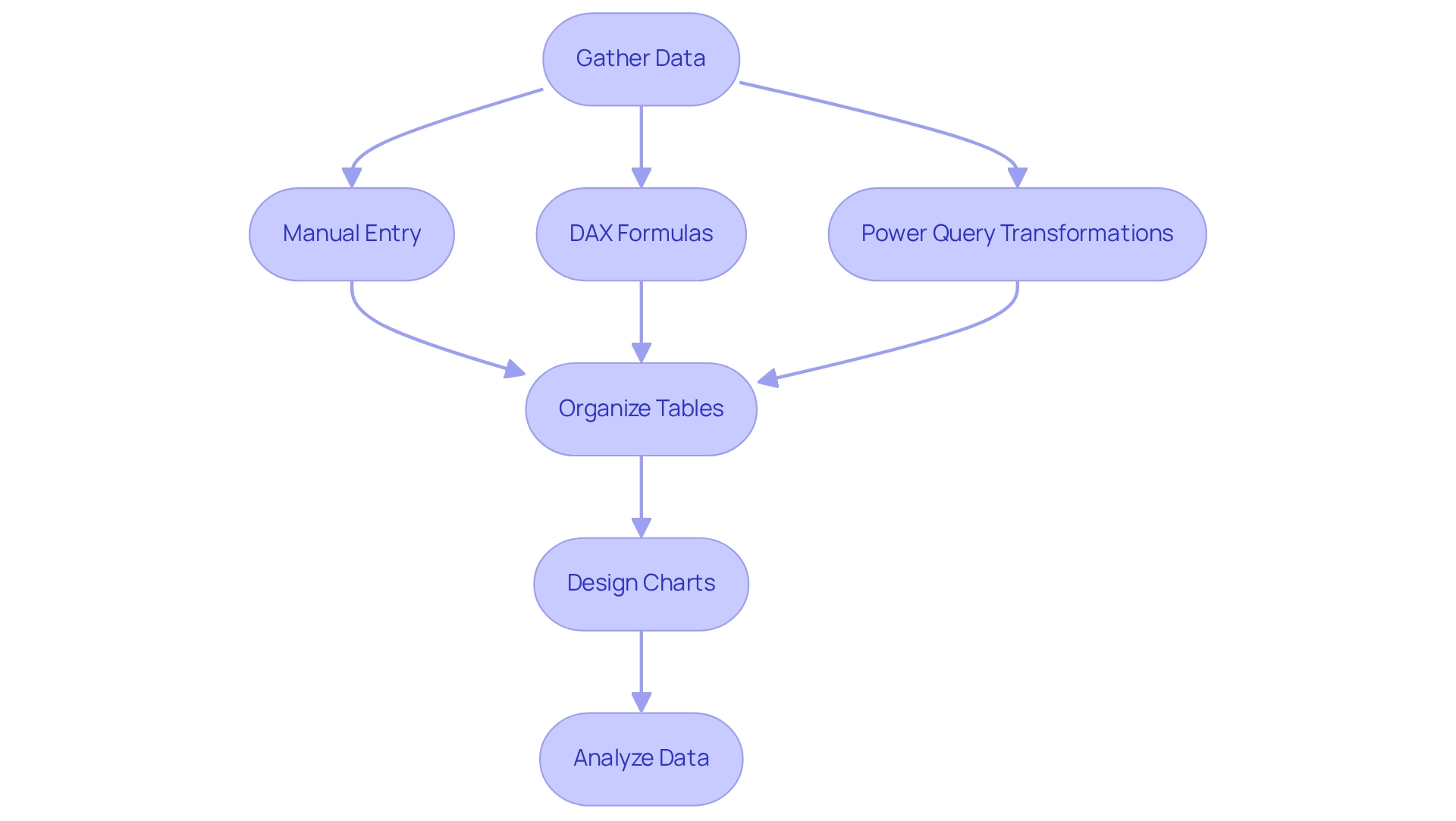

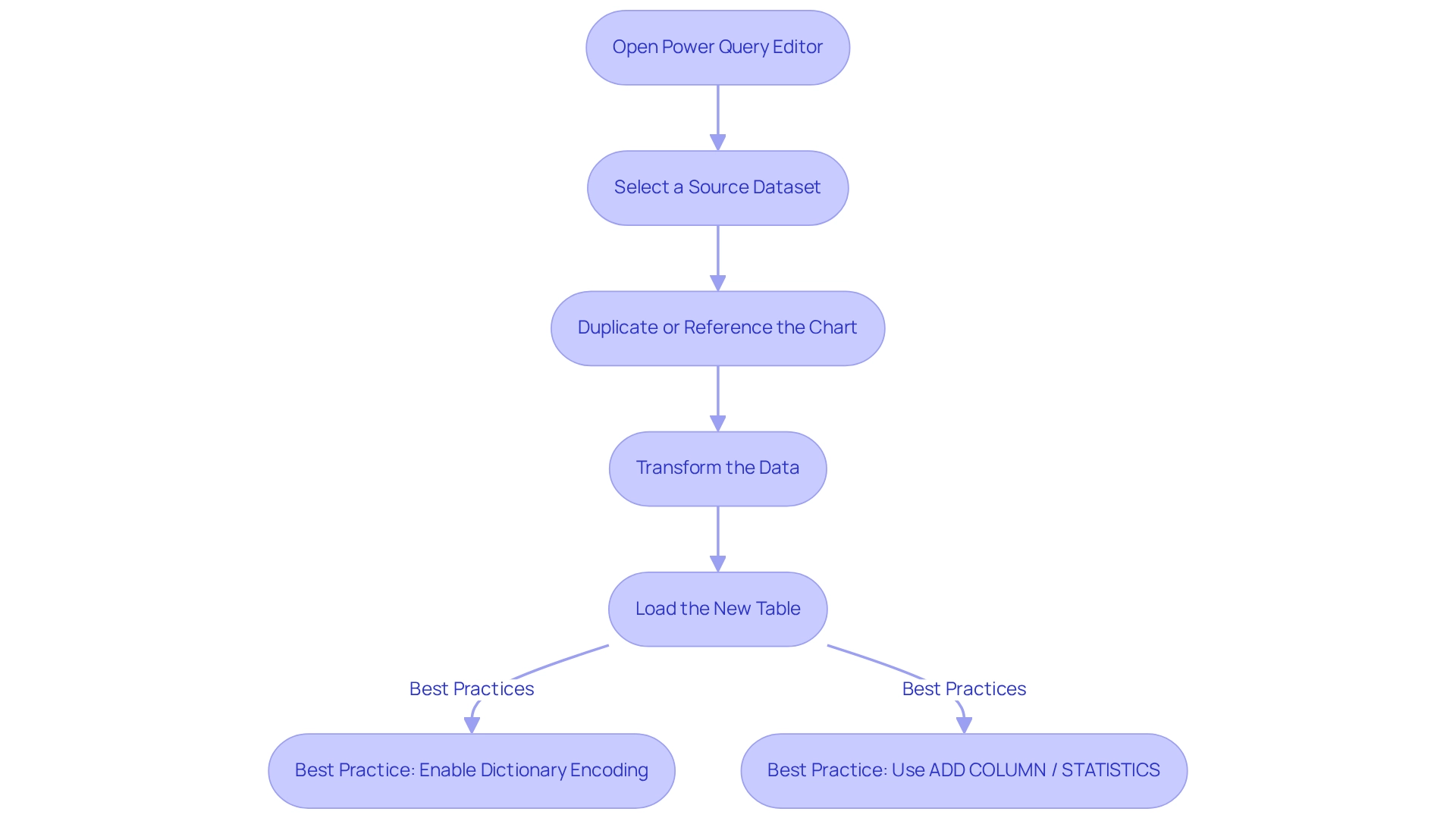

Consider a mid-sized company grappling with manual data entry errors, slow software testing, and difficulties integrating outdated systems. By automating data entry, software testing, and legacy system integration through GUI automation, the company improved its operational efficiency. Using descriptive analysis, it tracked production metrics and visualized current performance levels.

Employing diagnostic analysis allowed the identification of specific bottlenecks, leading to targeted interventions. Predictive analysis enabled the company to anticipate demand accurately, ensuring production aligned with market requirements. Ultimately, prescriptive analytics provided practical recommendations for optimizing resource distribution, effectively boosting efficiency and minimizing waste.

The combination of these analytics forms is increasingly crucial, especially as the number of Internet of Things (IoT) devices is projected to rise from 16 billion in 2023 to 20.3 billion by 2025, generating an impressive 79.4 zettabytes of data. This surge presents both opportunities and challenges for businesses, which must effectively manage and analyze the complex information generated to remain competitive. Organizations that adeptly navigate this information landscape will be better positioned to leverage insights for operational improvements.
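The device projection above implies the following growth rates, computed directly from the two quoted figures (the annual rate assumes steady compound growth between 2023 and 2025):

$$
\frac{20.3 - 16}{16} = 0.26875 \approx 26.9\%\ \text{in total}, \qquad \left(\frac{20.3}{16}\right)^{1/2} - 1 \approx 0.126 \approx 12.6\%\ \text{per year}
$$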

However, a report indicates that 61% of employees perceive their organization’s information analysis approach as siloed, potentially leading to missed collaboration opportunities. Customized solutions, such as Robotic Process Automation (RPA), can help dismantle these barriers, ensuring information flows seamlessly between departments and enhancing overall efficiency.

Practical examples illustrate the success of a comprehensive analytical framework. Companies that have effectively integrated various forms of analysis report improved decision-making processes and enhanced operational efficiency. For instance, the aforementioned mid-sized firm reduced entry mistakes by 70%, accelerated testing procedures by 50%, and increased workflow productivity by 80% through automation.

Aligning data initiatives with broader business objectives also reduces the risk of failure: studies show that 70% of data projects do not achieve their intended outcomes because of misalignment. This alignment is vital for ensuring that analytical efforts contribute significant value to strategic goals.

In conclusion, incorporating diverse forms of analysis not only enhances operational efficiency but also fosters a culture of continuous improvement, enabling organizations to adapt swiftly to evolving market conditions and promote sustainable growth.

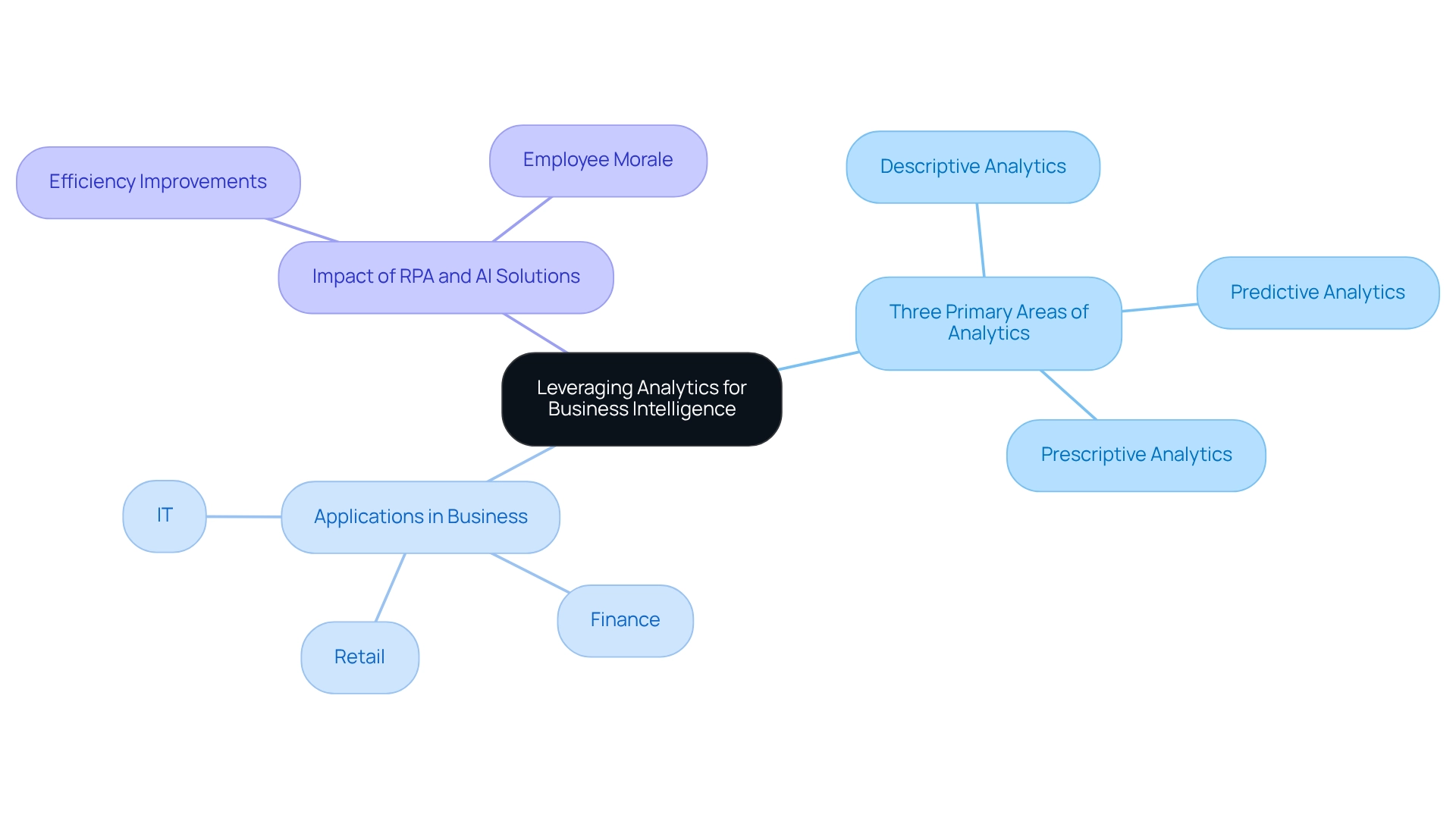

Leveraging Analytics for Business Intelligence

Analytics is a fundamental pillar of business intelligence (BI), offering essential insights that help organizations make informed choices. Data analysis enables businesses to reveal trends, evaluate performance, and direct strategic initiatives. For instance, retail chains analyze customer purchasing behavior to guide inventory management and marketing strategies.

This analytical approach not only improves operational efficiency but also builds a competitive advantage in the marketplace, particularly when paired with Robotic Process Automation (RPA) solutions such as EMMA RPA and Microsoft Power Automate. These tools improve efficiency and employee morale by automating repetitive tasks: EMMA RPA specifically tackles task-repetition fatigue, while Microsoft Power Automate helps alleviate staffing shortages, enabling organizations to concentrate on strategic initiatives.

Edge computing also plays a vital role in monitoring safety risks and improving project management. Meanwhile, demand for data engineers is expected to grow by 50% each year, which shows how important analytics skills have become across the sector. According to IBM, the finance and insurance, professional services, and IT sectors account for 59% of job demand in data science and analytics.

Organizations are increasingly embracing cloud-based BI solutions, such as the Power BI services provided by Creatum, which offer scalability and adaptability. A recent testimonial from Sascha Rudloff, Team Leader of IT and Process Management at PALFINGER Tail Lifts GMBH, emphasizes the transformative influence of Creatum’s Power BI Sprint. This solution not only provided a readily usable Power BI report but also accelerated their analysis strategy. This success narrative illustrates how sophisticated analysis and customized AI solutions, including Small Language Models and GenAI Workshops, can enhance information quality and address governance challenges in business reporting, ultimately improving decision-making.

As organizations embrace more sophisticated analysis, they become better equipped to respond to market dynamics and promote sustainable growth. This aligns with our emphasis on implementing Robotic Process Automation (RPA) and customized AI solutions. To explore how these solutions can benefit your company, book a free consultation today.

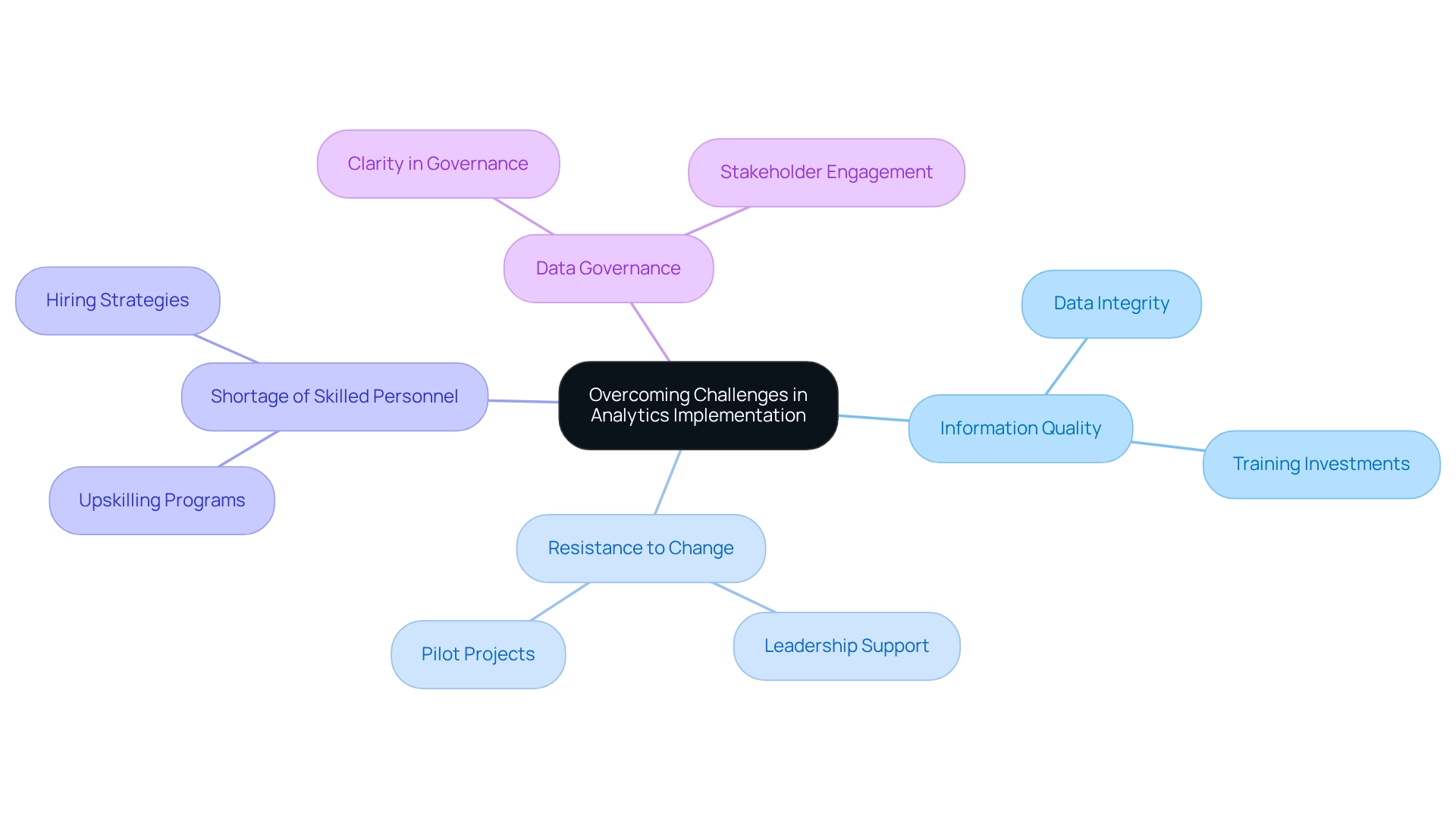

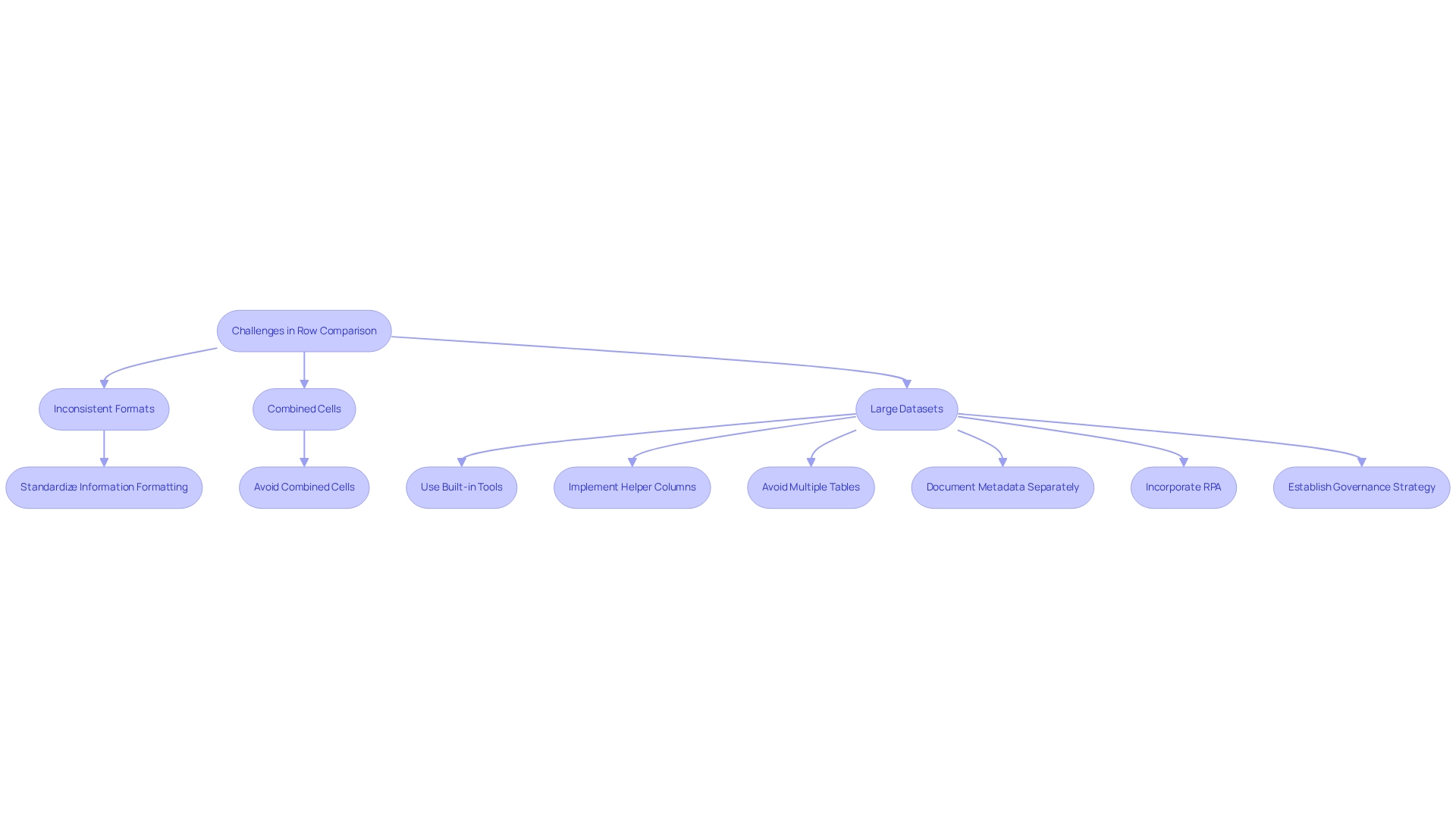

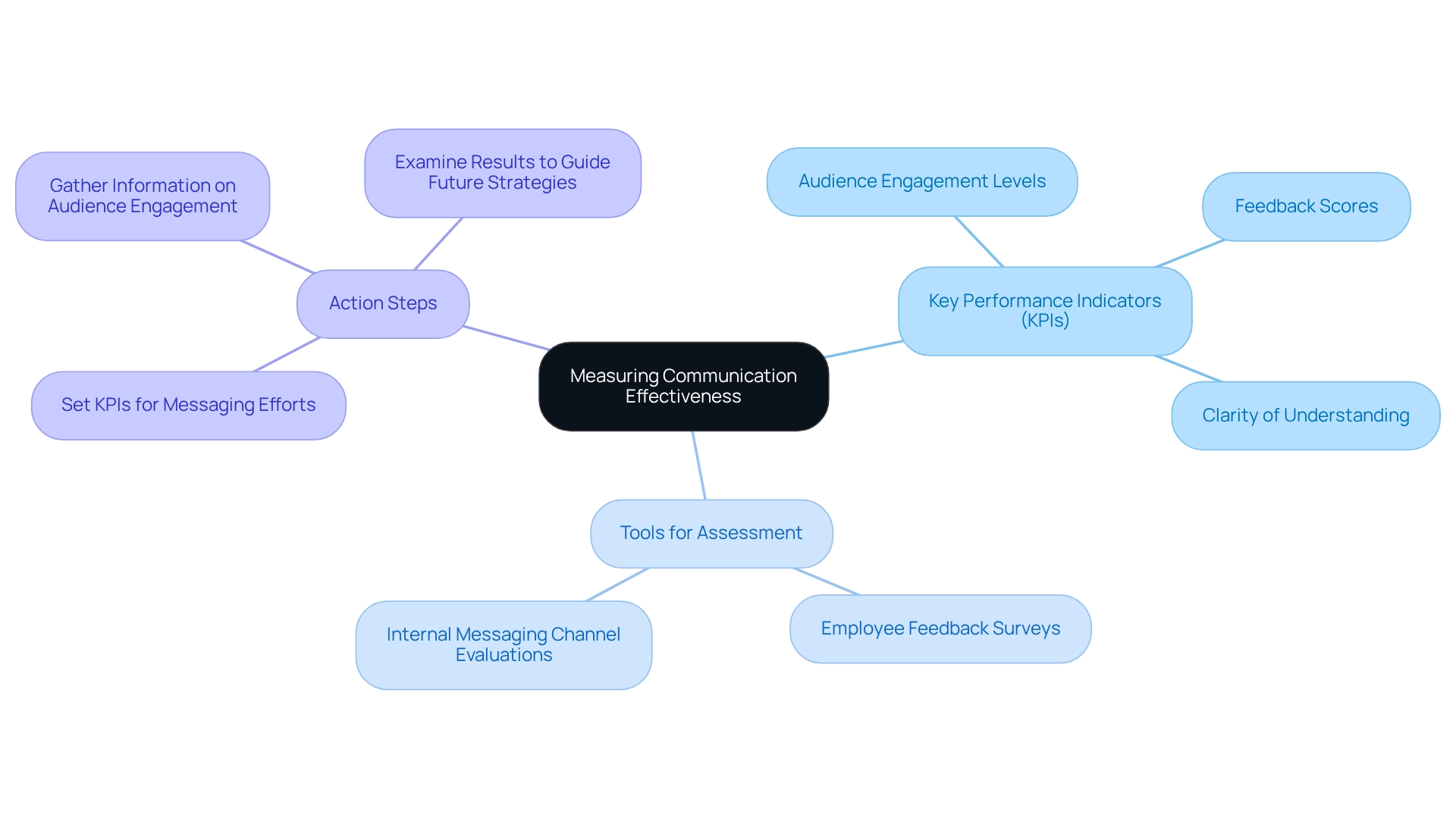

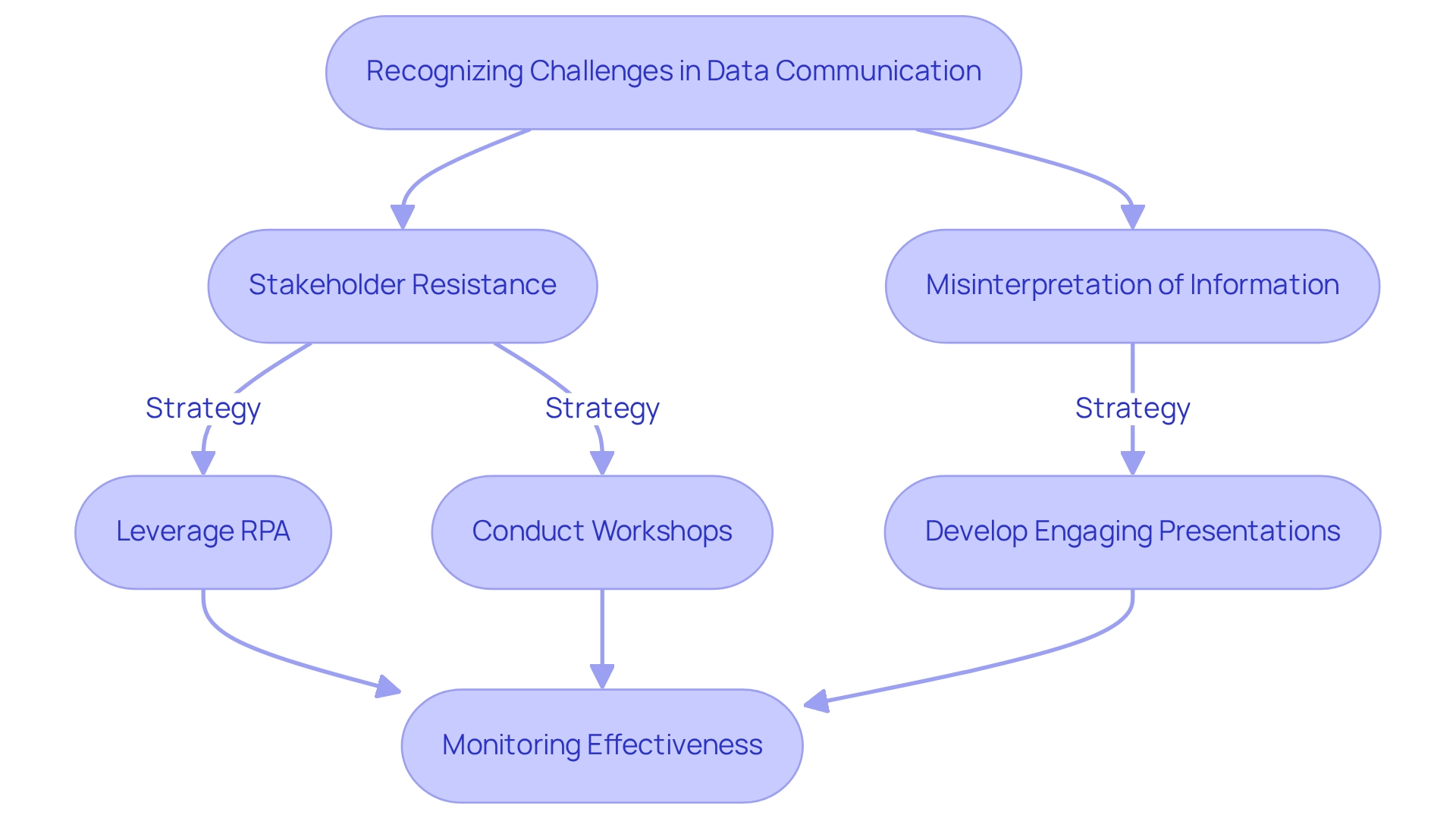

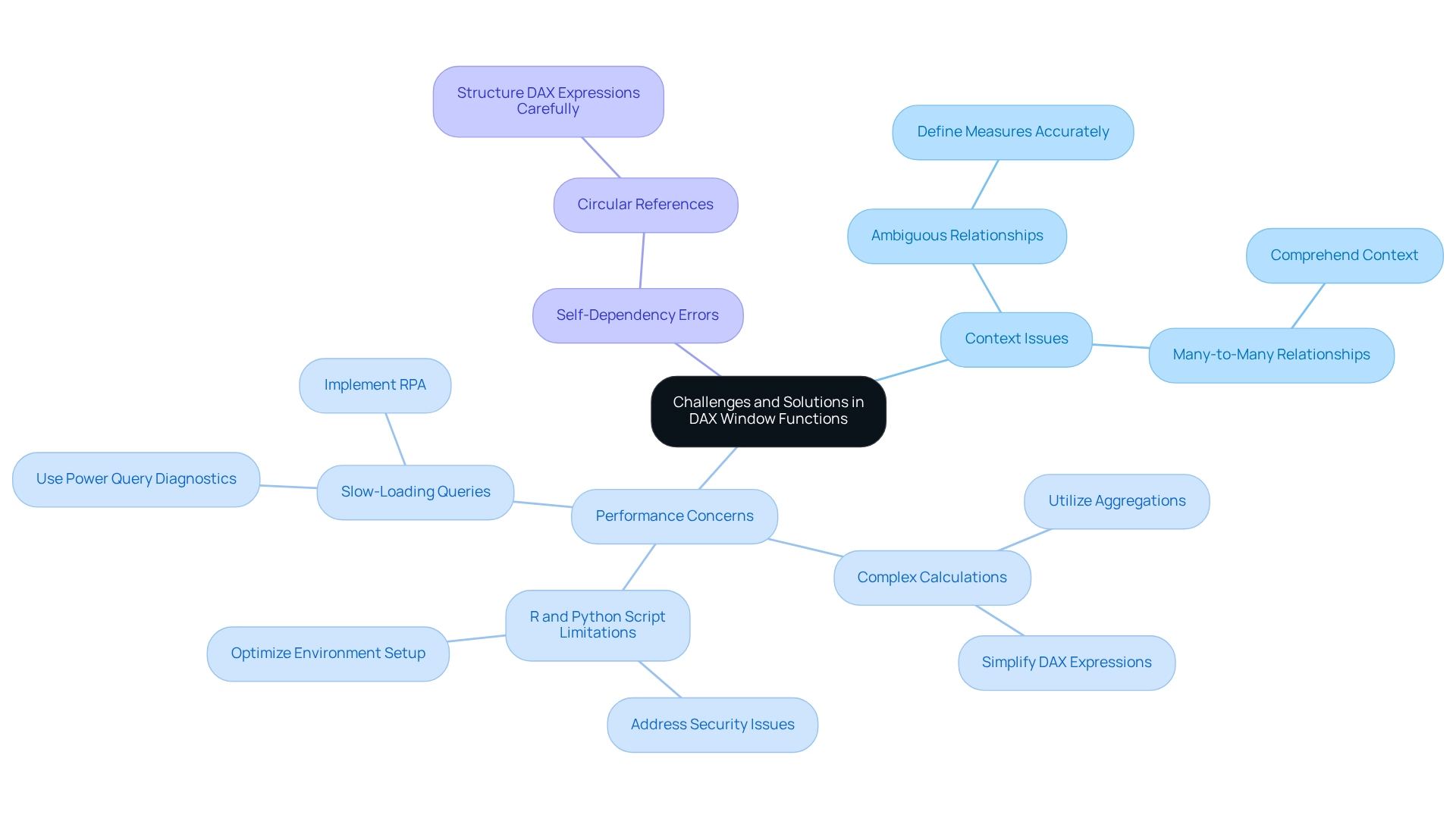

Overcoming Challenges in Analytics Implementation

Integrating analytics into a company often runs into significant challenges, particularly around data quality, resistance to change, and a shortage of skilled personnel. As we approach 2025, data quality is increasingly critical to analytics success; disorganized data can undermine even the most well-intentioned AI implementation strategy. To overcome these challenges, organizations must foster a strong culture of data-driven decision-making.

This necessitates not only investing in training to bolster skills but also ensuring data integrity at every level of the organization. Leadership is pivotal in this transformation. By championing analytics adoption through pilot projects and highlighting success stories, leaders can effectively illustrate the tangible benefits of data-driven strategies. For instance, organizations that have successfully cultivated a data-driven culture often report enhanced operational efficiency and improved decision-making capabilities.

The integration of generative AI technologies, such as multi-agent AI systems and graph neural networks, has the potential to transform operational processes, with practical applications that strengthen core competencies. By 2026, over three-quarters of businesses’ standard data architectures are expected to incorporate streaming data and event processing, underscoring the urgency for organizations to adapt. However, many initiatives falter because an internal focus fails to deliver substantial value, as recent discussions about the challenges surrounding data products have highlighted. This calls for a renewed commitment to aligning data initiatives with broader business objectives.

As Amani Undru, a BI & Data Strategist at ThoughtSpot, observed, “In contrast, just 11% of planners feel they are ahead of the competition, emphasizing the urgency for them to take action.”

Common obstacles in data implementation include a lack of clarity in data governance and insufficient stakeholder engagement. By addressing these issues head-on and leveraging expert insights, organizations can overcome barriers and unlock the full potential of their analytics initiatives. The adoption of Robotic Process Automation (RPA) can streamline workflows, enhancing efficiency and minimizing errors, while customized AI solutions can assist organizations in identifying the right technologies that align with their specific business goals.

Ultimately, fostering a culture that prioritizes data-driven decision-making is essential for driving growth and innovation in today’s data-rich landscape. Tailored solutions that enhance information quality and facilitate AI implementation are crucial in this endeavor.

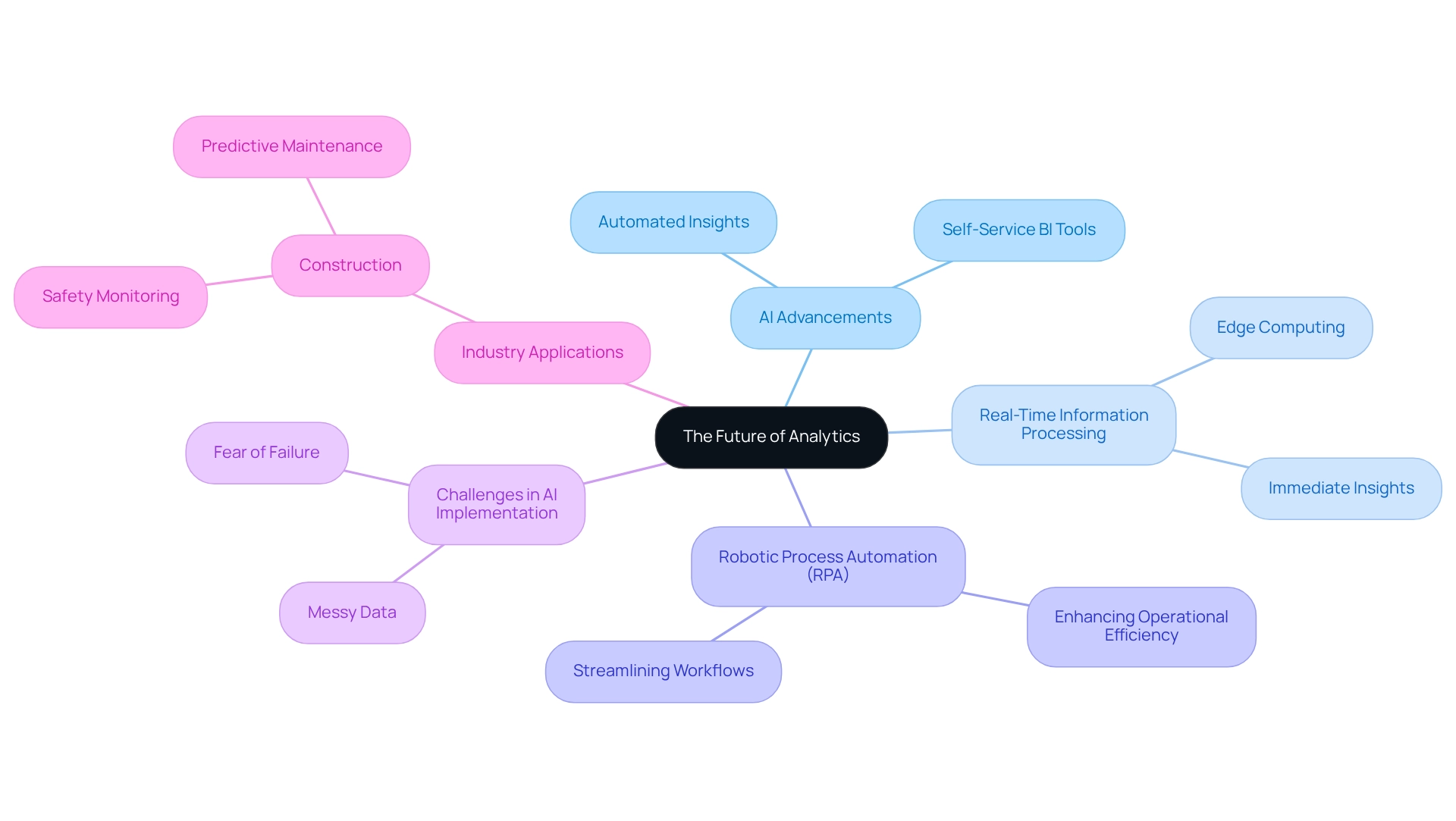

The Future of Analytics: Trends and Innovations

The analytics landscape is on the verge of a significant transformation, driven primarily by advances in artificial intelligence, machine learning, and big data technologies. Key trends such as augmented analytics and real-time data processing are emerging as crucial influences that will reshape how companies use data. However, obstacles such as messy data and fear of failure can derail AI implementation plans, so companies need to tackle these challenges directly.

For instance, Robotic Process Automation (RPA) from Creatum GmbH can significantly streamline manual workflows, enhancing operational efficiency by reducing errors and freeing up teams for more strategic tasks. The incorporation of AI into data analysis tools is allowing companies to automate insights, greatly improving their decision-making abilities. As companies embrace these innovations, they can anticipate enhanced operational efficiency and a stronger competitive stance in their sectors.

In 2025, the influence of AI on data analysis will be significant, with a remarkable transition towards self-service business intelligence (BI) tools that streamline performance monitoring. This evolution is underscored by the fact that 48% of early adopters in the U.S. and Canada cite increased data-analyst efficiency as their primary motivation for embracing generative AI. Additionally, contemporary self-service BI tools enhance performance monitoring by elucidating the distinctions between KPIs and metrics, enabling entities to assess success more efficiently.

Real-time data processing will also shape the future of analytics, especially in industries that need immediate insights, such as construction. Edge computing, for example, processes data close to where it is generated, which enables quicker decisions and improves project management through better safety oversight and predictive maintenance.
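One way to picture processing data close to its source is an edge device that reduces raw sensor readings to a compact summary and flags threshold breaches locally, so only the summary and alerts need to travel upstream. The sketch below is a hypothetical illustration; the sensor type, units, and alert threshold are assumptions, not taken from any particular edge platform.

```python
from statistics import mean


def summarize_at_edge(readings, alert_threshold):
    """Reduce raw sensor readings to a compact summary plus any threshold breaches.

    The idea is that only this summary leaves the device, not every raw reading.
    """
    if not readings:
        raise ValueError("no readings to summarize")
    alerts = [(index, value) for index, value in enumerate(readings) if value > alert_threshold]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": alerts,
    }


# Hypothetical vibration readings (mm/s) from equipment on a construction site.
readings = [2.1, 2.3, 2.2, 7.8, 2.4, 2.2]
summary = summarize_at_edge(readings, alert_threshold=6.0)
print(summary)
```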

As organizations keep utilizing augmented analytics for innovation, they will not only improve their information quality but also ease the complexities related to AI implementation. By embracing these emerging trends, including tailored AI solutions and RPA from Creatum GmbH, businesses can transform raw data into actionable insights, driving growth and fostering a culture of informed decision-making. To see how these technologies can be applied in real-world scenarios, explore our case studies that highlight successful implementations.

Conclusion

Integrating various forms of analytics—descriptive, diagnostic, predictive, and prescriptive—profoundly enhances operational efficiency and fosters a culture of continuous improvement within organizations. Leveraging these diverse analytical approaches enables businesses to identify inefficiencies and implement data-driven strategies that propel growth and innovation. As organizations increasingly adopt self-service BI tools and embrace AI technologies, the transformation of raw data into actionable insights becomes more accessible, allowing teams to make informed decisions swiftly.

Moreover, the importance of cultivating a data-driven culture cannot be overstated. Leadership plays a critical role in championing analytics adoption, ensuring that data integrity and quality are prioritized across all levels. By addressing common challenges—such as data governance and stakeholder engagement—organizations can unlock the full potential of their analytics initiatives. This enables them to navigate the complexities of a rapidly evolving data landscape and remain competitive.

Looking ahead, the future of analytics is poised for significant advancements driven by innovations in AI and machine learning. The emergence of augmented analytics and real-time data processing will redefine how organizations utilize data, enhancing decision-making capabilities and operational efficiency. By embracing these trends and leveraging tailored solutions, businesses can improve their analytics frameworks and drive sustainable growth in an increasingly data-centric world. In this evolving landscape, the ability to harness analytics effectively will be a key differentiator for organizations aiming to thrive in the years to come.

Frequently Asked Questions

What is analytics and why is it important for organizations?

Analytics is the systematic examination of data and statistics, helping organizations navigate complex data landscapes. It is crucial for informed decision-making, improving operational efficiency, driving innovation, and achieving strategic goals.

What are the current trends in data analysis for businesses?

There is a significant shift towards self-service business intelligence tools that simplify performance tracking. Additionally, the integration of Robotic Process Automation (RPA) helps automate manual workflows, allowing teams to focus on strategic tasks.

How does data analysis impact operational efficiency?

Data analysis improves operational efficiency by identifying patterns and trends, which helps organizations make informed decisions. Case studies show that companies using AI in data analytics gain a competitive edge, with potential productivity increases predicted by experts.

What role does descriptive analysis play in business decision-making?

Descriptive analysis interprets historical data to summarize past occurrences and provide actionable insights. It uses metrics and visual representations to help organizations understand trends, such as sales patterns, which can inform inventory management and marketing strategies.

What are the key metrics used in descriptive analysis?

Common metrics in descriptive analysis include key performance indicators (KPIs) such as sales volume, customer retention rates, and inventory turnover.
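As a rough illustration, the sketch below computes these three KPIs in Python using common textbook definitions. Inventory turnover is cost of goods sold divided by average inventory, and customer retention rate is the customers kept (end count minus new customers) as a share of the starting count. These definitions are assumptions, since organizations sometimes define the KPIs differently, and the function names and figures are hypothetical.

```python
def inventory_turnover(cogs: float, beginning_inventory: float, ending_inventory: float) -> float:
    """Cost of goods sold divided by the average inventory held during the period."""
    average_inventory = (beginning_inventory + ending_inventory) / 2
    if average_inventory == 0:
        raise ValueError("average inventory must be non-zero")
    return cogs / average_inventory


def customer_retention_rate(start_customers: int, end_customers: int, new_customers: int) -> float:
    """Percentage of the customers present at the start who were still customers at the end."""
    if start_customers == 0:
        raise ValueError("start_customers must be non-zero")
    # Multiply before dividing to keep the arithmetic exact for whole-number inputs.
    return (end_customers - new_customers) * 100 / start_customers


def sales_volume(units_by_order: list) -> int:
    """Total units sold across all orders in the period."""
    return sum(units_by_order)


if __name__ == "__main__":
    print(inventory_turnover(cogs=500_000, beginning_inventory=90_000, ending_inventory=110_000))  # 5.0
    print(customer_retention_rate(start_customers=200, end_customers=210, new_customers=30))  # 90.0
    print(sales_volume([3, 5, 2]))  # 10
```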

How has the adoption of analytics dashboards impacted firms?

The use of analytics dashboards has surged by over 45% among leading firms, providing a centralized platform for data visualization that streamlines decision-making and enhances oversight of market dynamics.

What is diagnostic analysis and how does it differ from descriptive analysis?

Diagnostic analysis goes beyond descriptive analysis by exploring the reasons behind past results. It employs techniques such as data mining and correlation analysis to answer questions about performance issues, such as a decline in sales.
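A minimal sketch of correlation analysis in Python, assuming hypothetical weekly figures for stockout days and sales. A strongly negative Pearson coefficient flags stockouts as a candidate explanation for a sales decline. It does not prove causation, which still needs further investigation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 items)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


stockout_days = [0, 1, 2, 3, 4]        # hypothetical: days out of stock per week
weekly_sales = [100, 95, 88, 80, 71]   # hypothetical: units sold per week
print(round(pearson(stockout_days, weekly_sales), 3))  # strongly negative
```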

What benefits can organizations gain from diagnostic analysis?

Organizations can potentially boost revenue by up to 20% in marketing and sales processes through diagnostic analysis. It helps identify common factors leading to performance issues, allowing targeted strategies to be implemented.

Can you provide an example of how diagnostic analysis has improved efficiency?

A mid-sized firm improved efficiency by adopting GUI automation to address manual entry errors and sluggish processes, resulting in a 70% reduction in data entry errors and a 50% acceleration in testing processes.

What is the future significance of data analysis in business?

As data continues to grow, the importance of data analysis will increase, enabling organizations to extract meaningful insights and maintain a competitive edge. The integration of RPA will further streamline processes, allowing a focus on strategic initiatives.

Overview

This article delves into best practices for effectively handling null values in DAX. Understanding and managing ‘BLANK’ entries is crucial for ensuring accurate data analysis. Essential DAX functions such as ISBLANK and COALESCE are detailed to provide clarity on their application. Additionally, strategic approaches like proactive checks and effective visualization methods are discussed, collectively enhancing data quality and informing decision-making processes.

Introduction

In the realm of data analysis, managing null values is a critical yet often overlooked aspect that can significantly influence the integrity and accuracy of insights derived from datasets. Represented as ‘BLANK’ in DAX, these null values demand careful handling to avoid pitfalls that distort calculations and mislead decision-makers. As organizations increasingly rely on data-driven strategies, understanding the nuances of null values becomes essential for data professionals aiming to enhance their analytical capabilities.

This article delves into the complexities surrounding null values in DAX, exploring best practices, key functions, and advanced techniques that empower analysts to effectively manage these challenges. By mastering these concepts, professionals can ensure their analyses remain robust and reliable. Ultimately, this drives informed decision-making and fosters growth in today’s competitive landscape.

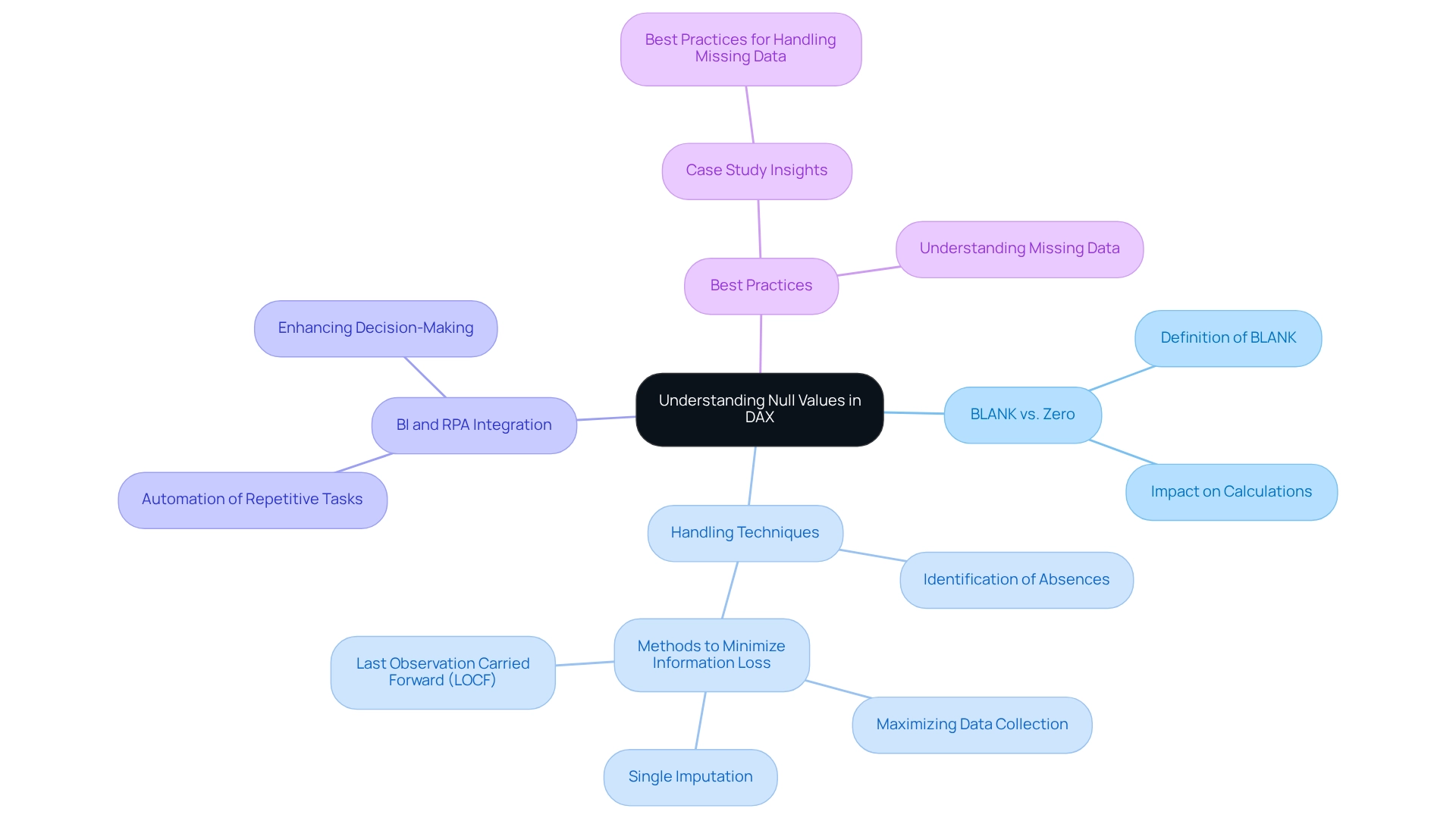

Understanding Null Values in DAX: A Primer

In DAX, null entries are represented as ‘BLANK,’ a distinction that significantly influences analysis. DAX treats BLANK differently from other values, so data professionals need to understand how it is interpreted. Mishandling BLANK can lead to unexpected results in calculations, which is why appropriate handling techniques matter.

Familiarity with BLANK is essential for avoiding common pitfalls in analysis. It is equally important to distinguish an empty entry from a zero, because they mean different things. A null signifies the absence of information, while zero is a numeric value that takes part in calculations and aggregations; for example, counting a missing day of sales as zero lowers an average, while skipping it does not. Recognizing these distinctions keeps evaluations accurate and reliable and improves the quality of the insights derived from the data.
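To make the difference concrete, the Python sketch below uses `None` as a stand-in for BLANK (an analogy only; this is not DAX) and averages the same hypothetical week of sales under two policies: skipping missing days, and counting them as zero.

```python
def average_skipping_missing(values):
    """Average only the values that are present; None means 'no data'."""
    present = [v for v in values if v is not None]
    if not present:
        return None  # nothing to average: report 'missing' instead of inventing 0
    return sum(present) / len(present)


def average_treating_missing_as_zero(values):
    """Average after replacing every None with 0."""
    filled = [0 if v is None else v for v in values]
    return sum(filled) / len(filled) if filled else None


daily_sales = [120, None, 80, None, 100]  # hypothetical; two days were never recorded
print(average_skipping_missing(daily_sales))          # 100.0
print(average_treating_missing_as_zero(daily_sales))  # 60.0
```

With this sample, skipping the missing days gives 100.0 while treating them as zero gives 60.0, even though no day with zero sales was ever recorded.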

In today’s data-rich environment, Business Intelligence tools can help organizations tackle challenges such as poor master data quality and barriers to AI adoption. By implementing RPA solutions, companies can automate repetitive tasks, increasing efficiency and allowing professionals to focus on extracting actionable insights. This synergy between BI and RPA drives operational efficiency and supports informed decision-making that promotes growth and innovation.

According to a case study titled ‘Best Practices for Handling Missing Information,’ it is crucial to identify the type of missingness and select appropriate handling methods to minimize information loss. Mishandling missing data can significantly reduce the effectiveness of an evaluation, particularly in trials. Recent discussions emphasize that maximizing data collection during study design is the best way to address missing values, because single imputation and Last Observation Carried Forward (LOCF) methods may introduce bias.
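For readers unfamiliar with LOCF, here is a minimal Python sketch of the technique on hypothetical measurements (`None` marks a missed observation). It shows one mechanism behind the bias the case study warns about: if the underlying values keep declining after the last observation, LOCF freezes them at that last value.

```python
def locf(values):
    """Last Observation Carried Forward: replace each None with the most recent observed value."""
    filled = []
    last = None
    for v in values:
        if v is not None:
            last = v
        # A leading None stays None because there is nothing to carry forward yet.
        filled.append(last)
    return filled


scores = [10, 8, None, None]  # hypothetical; the true trend may have kept falling
print(locf(scores))           # [10, 8, 8, 8]
```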

As Nasima Tamboli, a Freelance Software Engineer and Data Science Enthusiast, notes, “Consider the potential biases and implications of the selected method on your evaluation.” This perspective highlights the importance of understanding both how DAX handles nulls and the broader implications of missing data in evaluation, especially when supported by robust BI and RPA frameworks.

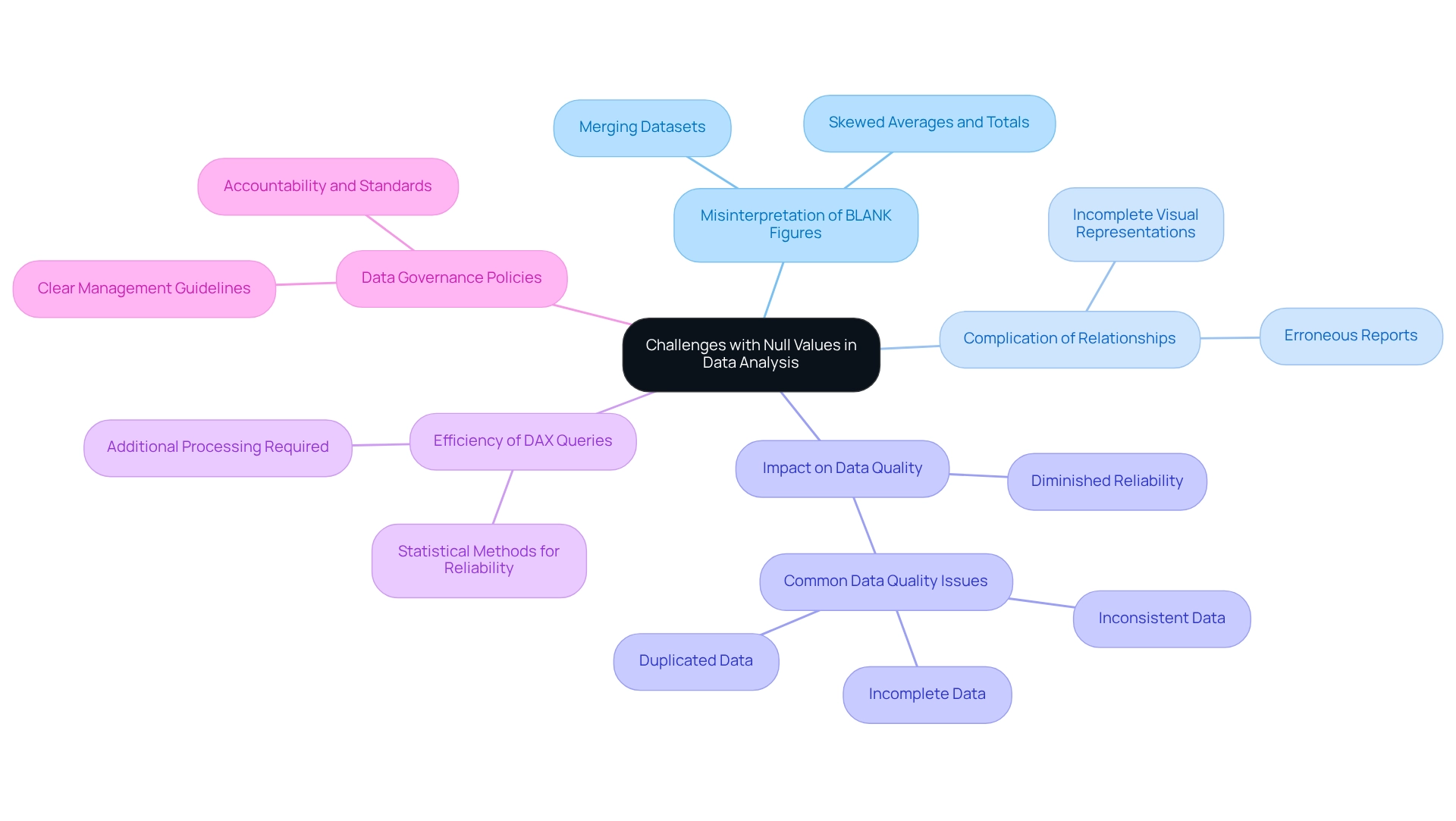

Common Challenges with Null Values in Data Analysis

Data experts frequently encounter significant challenges when managing null values in DAX during their analyses. A common issue is the misinterpretation of BLANK values in calculations, which can distort results. For instance, merging datasets that contain BLANK entries can produce misleading averages or totals, ultimately skewing insights.
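The merge problem can be sketched in Python with hypothetical regional sales and targets (this illustrates the general issue, not DAX behavior). A left join leaves the "West" region without a target; treating that gap as zero shrinks the denominator and makes the team appear to beat its target, while comparing only matched rows and reporting the excluded one gives an honest figure.

```python
def left_join_targets(sales_by_region, targets):
    """Attach each region's target; regions with no target get None (a gap created by the merge)."""
    return [(region, sales, targets.get(region)) for region, sales in sales_by_region.items()]


def attainment_naive(rows):
    """Total sales over total target, silently counting a missing target as 0."""
    total_sales = sum(sales for _, sales, _ in rows)
    total_target = sum(target or 0 for _, _, target in rows)
    return total_sales / total_target


def attainment_matched(rows):
    """Compare only regions that have a target, and report the regions left out."""
    matched = [(sales, target) for _, sales, target in rows if target is not None]
    excluded = [region for region, _, target in rows if target is None]
    ratio = sum(s for s, _ in matched) / sum(t for _, t in matched)
    return ratio, excluded


rows = left_join_targets({"North": 100, "South": 80, "West": 50}, {"North": 120, "South": 90})
print(round(attainment_naive(rows), 3))  # above 1.0: looks like the target was beaten
print(attainment_matched(rows))          # below 1.0, with 'West' flagged as excluded
```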

Moreover, absent entries complicate relationships among data, particularly in models where certain fields are expected to contain information. This complexity can result in incomplete visual representations and erroneous reports, undermining the reliability of the analysis.

The implications of missing entries extend beyond misinterpretation; they can also reduce the precision of results. Statistics indicate that a considerable percentage of data professionals report difficulties with null values in DAX, with many noting that these challenges diminish data quality and reliability. This concern is especially critical in a data-rich environment where organizations rely on Business Intelligence (BI) to transform raw data into actionable insights that drive growth and innovation.

Employing robust statistical methods can enhance the reliability of results, making it imperative for professionals to address these challenges effectively. Furthermore, the presence of missing entries can impede the efficiency of DAX queries, necessitating additional processing to manage these situations.

As Diego Vallarino, PhD, aptly notes, ‘Comprehending entropy is crucial for revealing the potential of information assessment,’ underscoring the importance of grasping the complexities introduced by absent elements. Organizations that have established clear information governance policies have successfully navigated these challenges. By defining how data should be managed and ensuring adherence to standards, they have improved their handling of null values in DAX, leading to more accurate and reliable analyses.

This enhancement is essential for leveraging RPA solutions, such as EMMA RPA and Power Automate, which aim to automate repetitive tasks and boost operational efficiency.

For example, implementing these guidelines has empowered organizations to directly confront prevalent quality issues, such as incomplete and inconsistent data, which obstruct successful AI adoption. Understanding these challenges is crucial for professionals striving to implement effective solutions and elevate the overall quality of their data-driven insights. Additionally, clarity in reporting how absent data was managed is vital for building trust in findings and comprehending the methodologies behind results.

Creatum GmbH recognizes that difficulties in extracting valuable insights can position companies at a competitive disadvantage, making it essential to address these quality challenges.

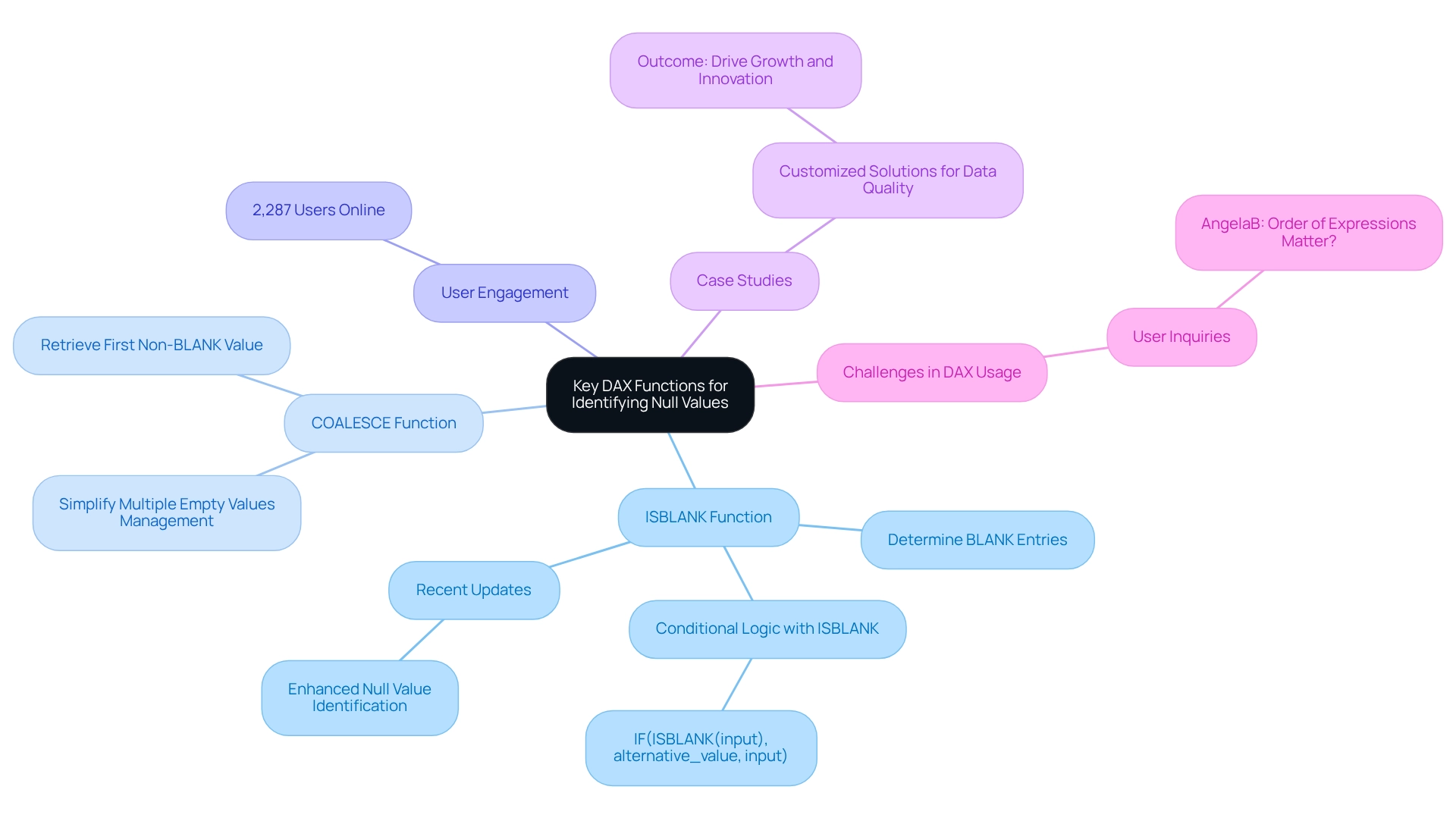

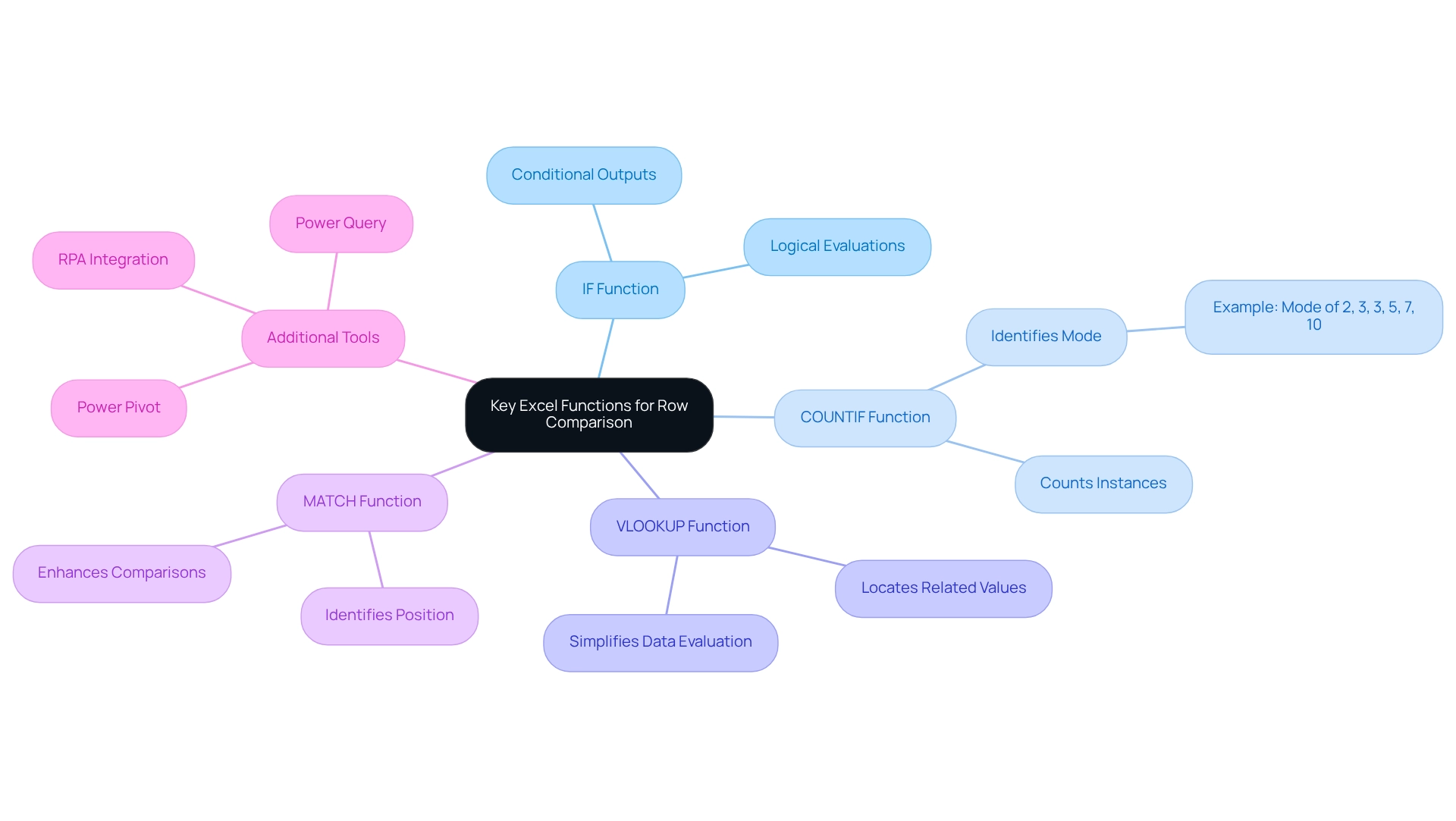

Key DAX Functions for Identifying Null Values

DAX functions play a crucial role in efficiently identifying and addressing missing entries, or null values, within datasets, an essential part of improving data quality, particularly in Business Intelligence and RPA implementations at Creatum GmbH. The ISBLANK function is a fundamental tool: it returns TRUE if a value is BLANK and FALSE otherwise. This makes it especially useful in conditional statements that exclude or substitute empty entries in calculations.

For example, a common application involves using IF in conjunction with ISBLANK to formulate more intricate logic for managing empty values. A typical structure is IF(ISBLANK(input), alternative_value, input), which provides a default option when an empty entry is encountered, ensuring that computations remain robust and meaningful.
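Outside Power BI, the same pattern can be sketched in Python, with `None` standing in for BLANK (an analogy, not DAX semantics). The helper name `default_if_blank` is hypothetical. Note the explicit `is None` test: the shortcut `value or alternative` would also replace a legitimate zero, which is exactly the BLANK-versus-zero confusion described above.

```python
def default_if_blank(value, alternative):
    """Python analogue of IF(ISBLANK(value), alternative, value)."""
    # Test for None explicitly; `value or alternative` would wrongly replace 0 as well.
    return alternative if value is None else value


print(default_if_blank(None, 0))  # 0: the missing value is replaced
print(default_if_blank(0, 5))     # 0: a real zero is kept
print(default_if_blank(7, 5))     # 7
```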

In addition to ISBLANK, the COALESCE function returns the first argument that does not evaluate to BLANK. This simplifies calculations that must cope with several potentially empty values and helps improve data quality. These functions come up frequently in the Fabric community forums, which had 2,287 users online at the time of writing.
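The idea behind COALESCE can be sketched in Python as "return the first argument that is not `None`". This is an illustrative analogue, not the DAX implementation, and the price fields in the usage example are hypothetical.

```python
def coalesce(*values):
    """Return the first argument that is not None, or None if every argument is None."""
    for value in values:
        if value is not None:
            return value
    return None


contract_price = None  # hypothetical: no negotiated price for this customer
list_price = 49.0      # hypothetical fallback
print(coalesce(contract_price, list_price, 0.0))  # 49.0
```

Ordering the arguments from most to least specific (negotiated price, then list price, then a final default) is what makes this pattern useful.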

Moreover, recent discussions within the community underscore the importance of grasping the nuances of these functions, particularly the recent updates to the ISBLANK function that augment its capability in identifying null values in DAX. As Angela, a frequent visitor, noted, “Thank you for continuing to help with this. I tried that edit but same result, it’s returning all as ‘null’. Does the order of expressions matter?” This inquiry reflects the real-world challenges users encounter when navigating DAX functions. Case studies illustrate how organizations, including Creatum GmbH, have successfully leveraged the ISBLANK function to enhance information quality, ultimately driving growth and innovation.

By mastering these DAX functions, analytics professionals can significantly elevate their analytical capabilities and ensure more precise decision-making, directly contributing to their organization’s success. Furthermore, addressing the challenges associated with poor master data quality is vital for unlocking data-driven insights that enhance operational efficiency.

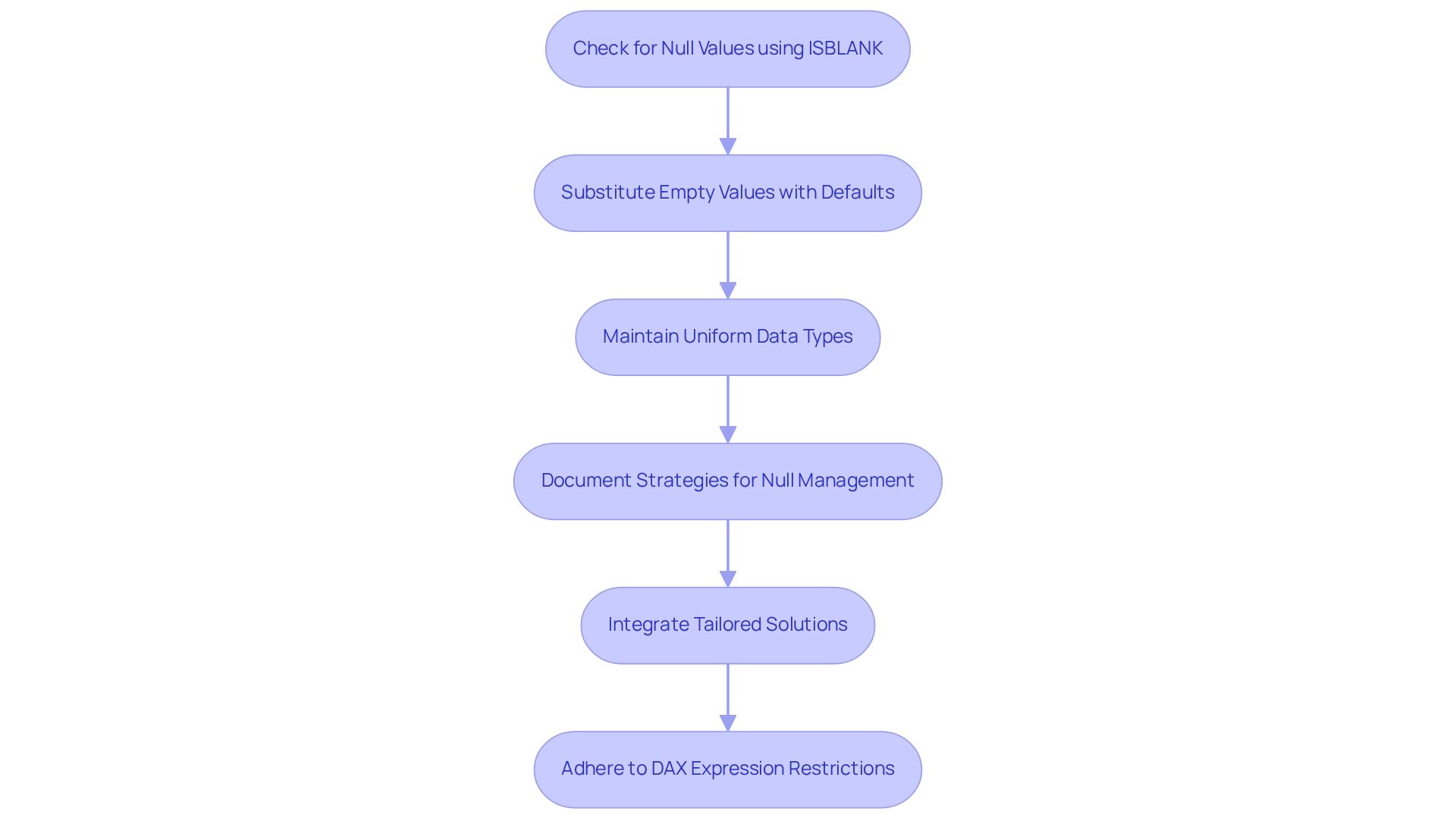

Best Practices for Managing Null Values in DAX

Effectively managing empty entries, or null values in DAX, requires a strategic approach grounded in best practices. Begin by leveraging the ISBLANK function to check for empty entries before executing calculations. This proactive measure not only prevents errors but also guarantees the accuracy of your analysis—an essential aspect in a data-rich environment where extracting meaningful insights can be particularly challenging.

Moreover, consider substituting empty values with meaningful defaults using the IF function or COALESCE. Such practices enhance the interpretability of your reports, rendering the information more accessible to stakeholders and promoting informed decision-making that drives growth and innovation.

Maintaining uniform data types across your model is crucial for minimizing empty values. For example, ensure that numeric fields do not contain text entries that could inadvertently result in BLANK outputs. Additionally, documenting your strategies for managing null values within your DAX code significantly enhances readability and maintainability.

This transparency facilitates comprehension of your logic by others, fostering collaboration and knowledge sharing—key elements for overcoming barriers to AI adoption in organizations.
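One way to keep numeric fields numeric is to validate them before loading. The Python sketch below (a hypothetical helper, not a Power BI feature) parses a raw column, keeps genuinely empty cells as `None`, and reports text that cannot be parsed instead of letting it silently become a blank.

```python
def parse_numeric_column(raw_values):
    """Split a raw column into parsed numbers and a list of (row, value) pairs that failed to parse."""
    parsed, rejected = [], []
    for row, raw in enumerate(raw_values):
        # Truly empty cells are kept as missing (None).
        if raw is None or (isinstance(raw, str) and raw.strip() == ""):
            parsed.append(None)
            continue
        try:
            parsed.append(float(raw))
        except (TypeError, ValueError):
            # Text in a numeric field is recorded so someone can fix it at the source.
            parsed.append(None)
            rejected.append((row, raw))
    return parsed, rejected


values, problems = parse_numeric_column(["12.5", "", "n/a", 7, None, "3"])
print(values)    # [12.5, None, None, 7.0, None, 3.0]
print(problems)  # [(2, 'n/a')]
```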

Integrating these best practices not only streamlines your DAX procedures but also aligns with the broader goal of enhancing information quality and fostering innovation within your organization. Tailored solutions, such as EMMA RPA and Power Automate from Creatum GmbH, play a pivotal role in this context, as they enhance information quality and streamline AI implementation, ultimately nurturing growth and innovation.

As Yogana S. aptly states, “Viewing information validation as the sentinel of analytical integrity, it’s akin to fortifying the foundations of a building.” This perspective underscores the importance of efficiently managing empty entries. Furthermore, the case study titled ‘Enhancing Data Validation Abilities’ illustrates the significance of data validation methods for data analysts, demonstrating how addressing missing entries contributes to generating reliable analytical outcomes.

It is also important to note that restrictions exist on DAX expressions permitted in measures and calculated columns, making the handling of null values essential in DAX calculations. By adhering to these best practices, you can ensure the reliability of your analyses and cultivate a culture of continuous improvement, ultimately positioning your organization for success in a competitive landscape. To discover how Creatum GmbH can assist you in enhancing your Business Intelligence and RPA capabilities, schedule a free consultation today.

Visualizing Null Values: Techniques for Clear Reporting

Effectively representing missing data, such as BLANK values in DAX, is essential for clear and informative reporting, particularly when using Business Intelligence to support decision-making. One effective method is conditional formatting in Power BI, which highlights cells containing empty entries so they are easy to identify. As noted by Marlous Hall from the Leeds Institute of Cardiovascular & Metabolic Medicine, ‘Conditional formatting is vital for highlighting important metrics, such as missing entries, which can greatly influence decision-making.’

This aligns with our Power BI offerings at Creatum GmbH, ensuring effective reporting and information consistency. Our 3-Day Power BI Sprint facilitates swift report generation, while the General Management App provides thorough management solutions.

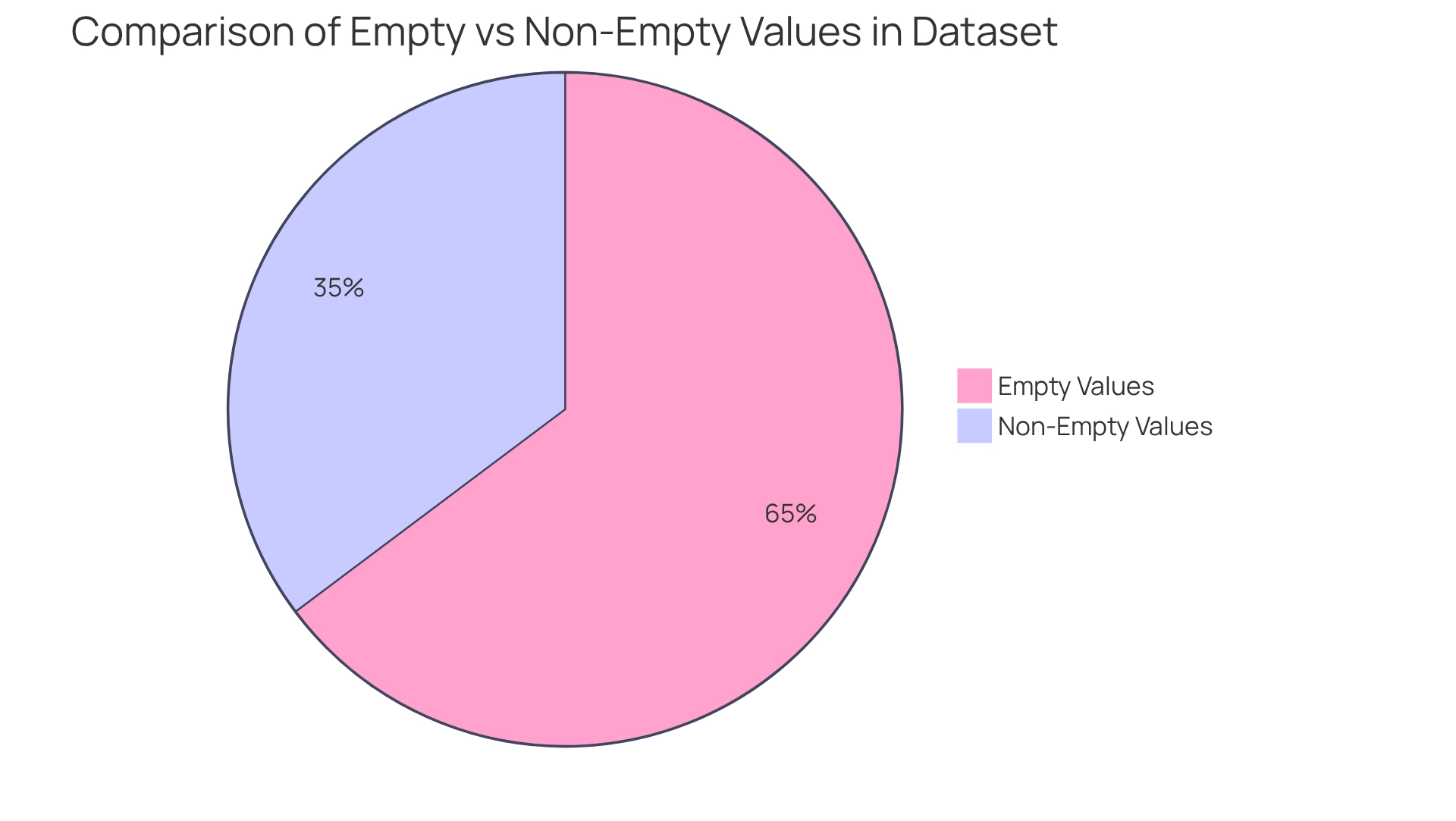

Moreover, adding labels or annotations to charts gives stakeholders context about where entries are missing and why that matters. Another useful method is building visualizations dedicated to missing values. For instance, a bar chart contrasting the number of empty and non-empty values in each field can vividly show how missing information affects overall trends.

This approach highlights the magnitude of the problem and supports a closer examination of data reliability, which is vital for informed decision-making. In a dataset containing 86 fields, addressing missing entries becomes even more critical, because unforeseen gaps can cause significant insights to be overlooked. Recent findings revealed a total of 158 intersections indicating null codes in diagnostic fields, underscoring the importance of accurately reporting empty entries.
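The data behind such a bar chart is just a blank/non-blank count per field. A minimal Python sketch, assuming records are dictionaries and that a missing key counts the same as an explicit `None` (both assumptions; the field names are hypothetical):

```python
def blank_counts(records, fields):
    """For each field, return (blank, non_blank) counts; the pairs can feed a stacked bar chart."""
    counts = {}
    for field in fields:
        # r.get(field) is None both for an explicit None and for a missing key.
        blank = sum(1 for r in records if r.get(field) is None)
        counts[field] = (blank, len(records) - blank)
    return counts


records = [
    {"diagnosis": "A10", "age": 54},
    {"diagnosis": None, "age": 61},
    {"age": None},
]
print(blank_counts(records, ["diagnosis", "age"]))  # {'diagnosis': (2, 1), 'age': (1, 2)}
```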

Furthermore, incorporating legends or descriptive notes in visualizations to explain how missing data is managed is essential. This level of transparency enhances the audience’s understanding of the information and fosters trust in the insights presented. By applying these methods, information specialists can significantly improve the transparency and impact of their reporting on missing entries, ultimately enabling companies to derive meaningful insights from their data and foster growth.

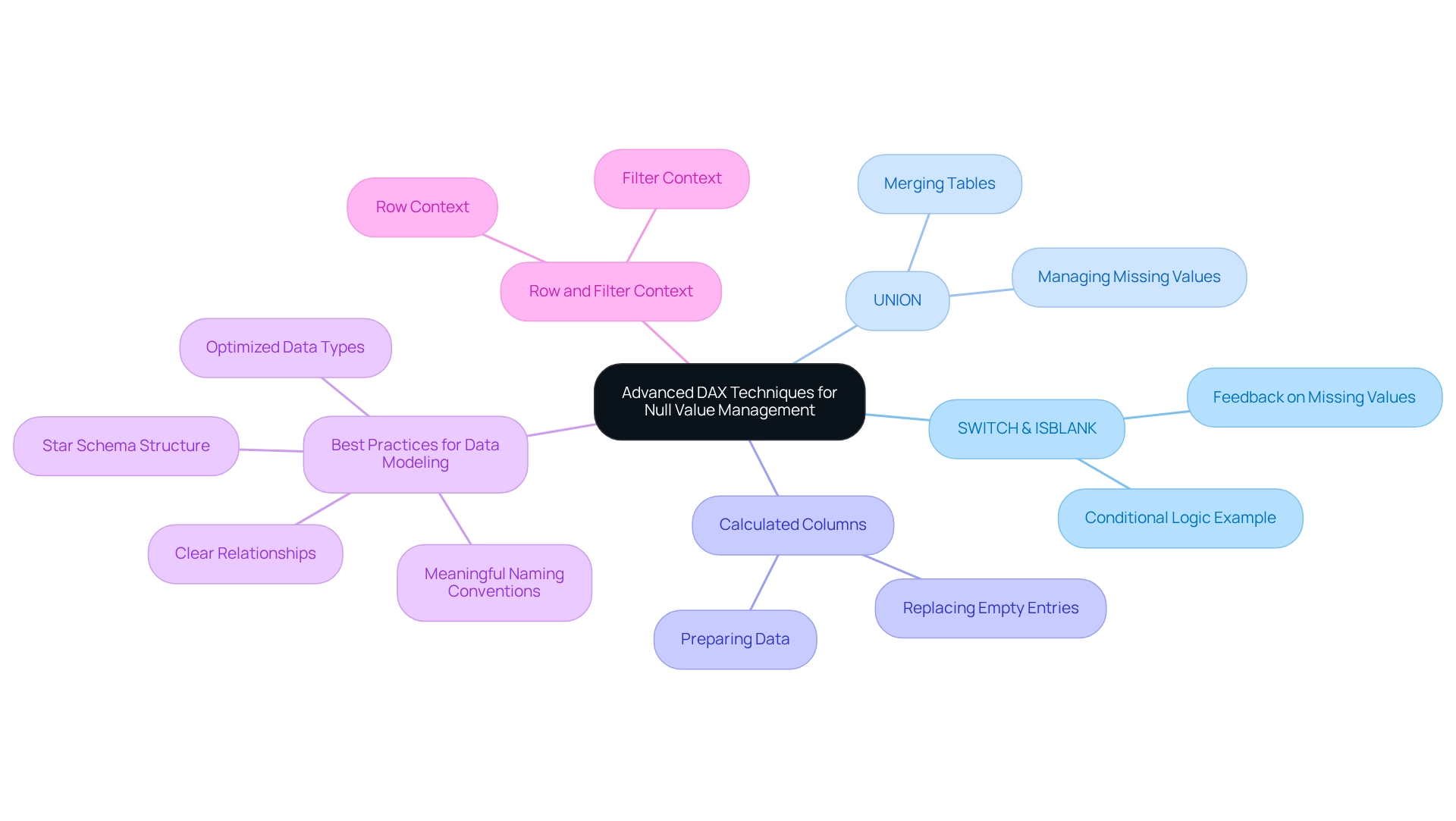

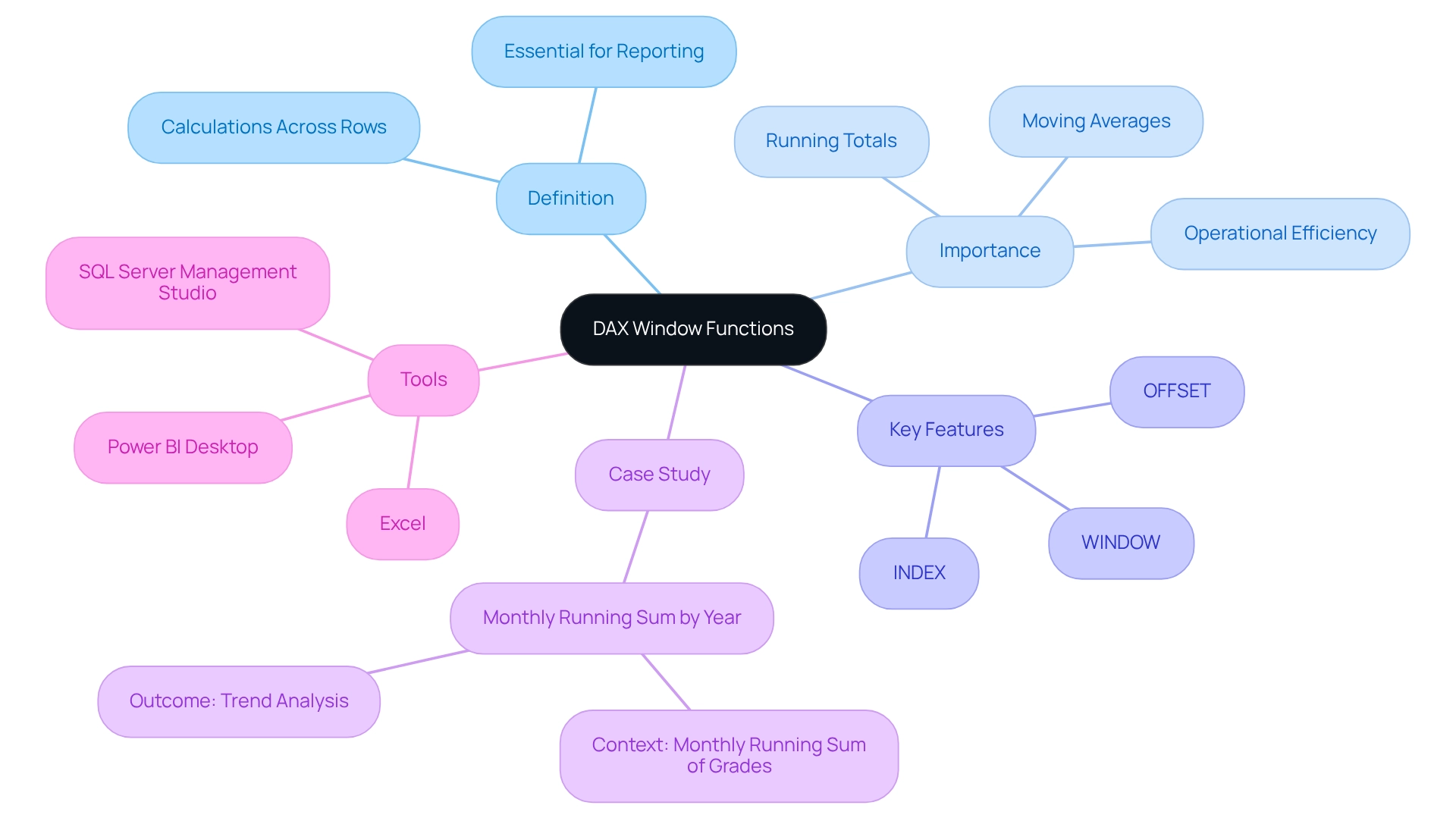

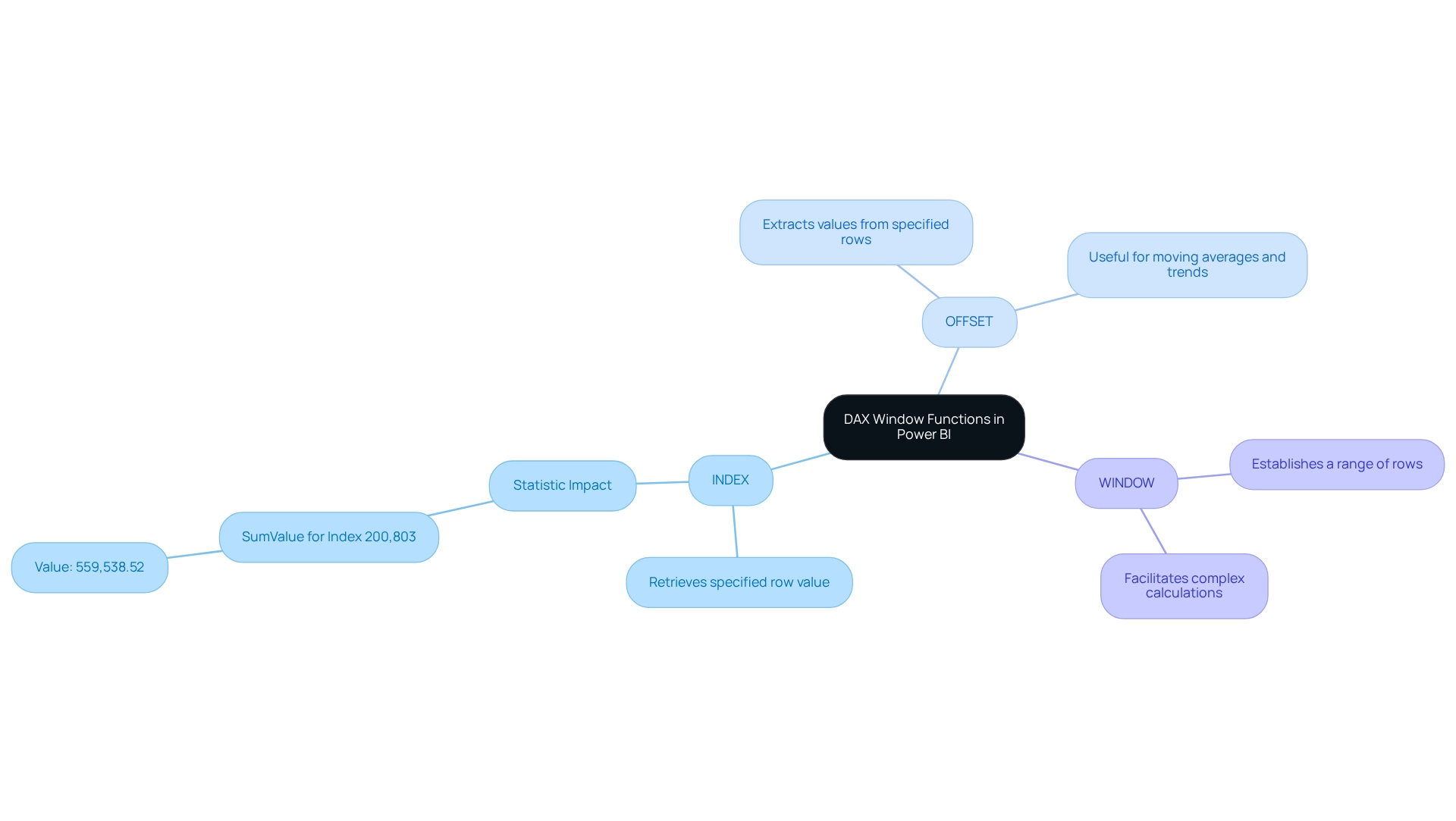

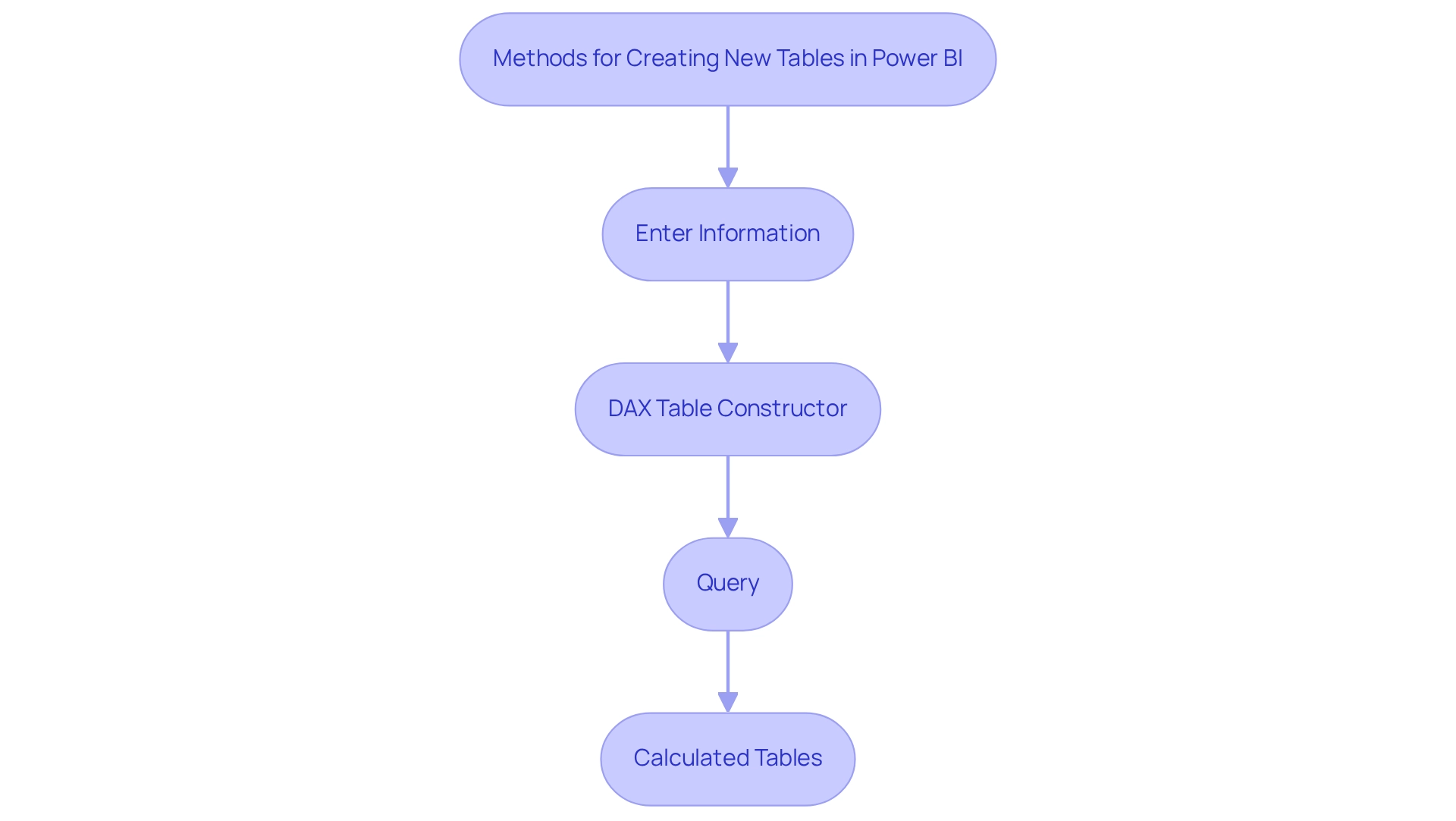

Advanced DAX Techniques for Transforming Null Values

For experienced users, a range of DAX methods can be employed to transform empty entries effectively, a critical aspect of using Business Intelligence for operational efficiency. A powerful approach combines the SWITCH function with ISBLANK to build more intricate conditional logic. For instance, the expression SWITCH(TRUE(), ISBLANK(value1), "Value is missing", ISBLANK(value2), "Another value is missing", "All values present") reports which specific value is absent, improving the clarity of your analysis and helping to surface inconsistencies.
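The SWITCH(TRUE(), ...) pattern evaluates its conditions in order and returns the result of the first one that is true. The Python sketch below mirrors that logic (an analogue for illustration, with `None` standing in for BLANK). It also shows that order matters: when both values are missing, only the first message is returned.

```python
def describe_missing(value1, value2):
    """Mirror of SWITCH(TRUE(), ISBLANK(value1), ..., ISBLANK(value2), ..., "All values present")."""
    checks = [
        (value1 is None, "Value is missing"),
        (value2 is None, "Another value is missing"),
    ]
    for condition, message in checks:
        if condition:  # the first true condition wins, as in SWITCH(TRUE(), ...)
            return message
    return "All values present"  # the trailing default


print(describe_missing(None, None))  # Value is missing
print(describe_missing(1, None))     # Another value is missing
print(describe_missing(1, 2))        # All values present
```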

Moreover, the UNION function can combine tables that have the same number of columns into a single table, so that missing values from several sources can be handled in one place, allowing for more flexible data modeling. This is particularly advantageous where data integrity is paramount, such as when extracting significant insights from Power BI dashboards. Additionally, calculated columns can prepare data by replacing empty entries with meaningful values before they are used in measures.

This practice ensures that analyses are grounded in reliable information, ultimately leading to more accurate insights that drive growth and innovation.

Integrating these advanced DAX techniques not only streamlines the management of absent entries but also aligns with best practices for data modeling. Case studies underscore the importance of meaningful naming conventions, clear relationships, and optimized types. The star schema, consisting of a central fact table linked to various dimension tables, provides a structured framework that enhances the management of empty values within data models. Understanding row context, which pertains to the current row in a calculated column, and filter context, which relates to filters in reports, is crucial for effectively addressing null values in DAX.

As Amitabh Saxena notes, “Power Query M is straightforward and efficient in addressing issues with the coalesce operator,” highlighting the value of employing simple yet powerful tools in data transformation. By adopting these strategies, professionals can significantly boost the performance and maintainability of their Power BI models, effectively overcoming the challenges of time-consuming report creation and ensuring actionable insights for informed decision-making.

To further enhance operational efficiency, consider exploring our RPA solutions, including EMMA RPA and Power Automate, which can automate repetitive tasks and improve overall productivity. Don’t let the competitive disadvantage of not utilizing Business Intelligence impede your business—schedule a free consultation today to discover how we can assist you in transforming your data into actionable insights.

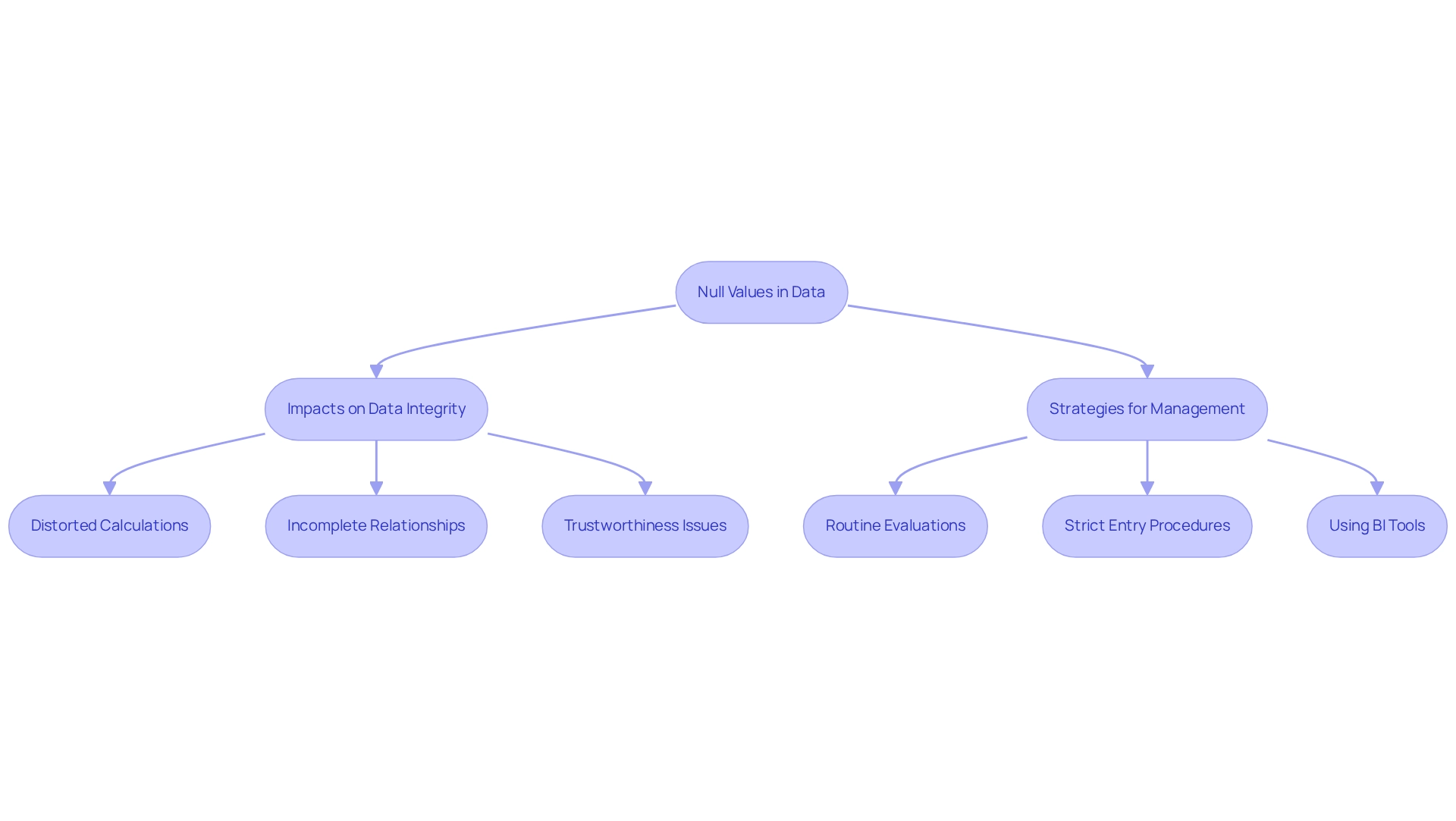

Impact of Null Values on Data Integrity and Analysis

Null entries (BLANK values in DAX) pose a significant threat to data integrity and can severely distort analysis outcomes. If left unaddressed, they can lead to biased results, because calculations may skip or misinterpret them. For instance, combining datasets that contain gaps can distort averages and totals, producing deceptive insights that mislead decision-making.

Moreover, empty entries complicate relationships among information, resulting in incomplete or flawed visual representations that fail to accurately depict the underlying data.

To maintain data integrity, it is essential to implement robust strategies for recognizing and handling missing entries throughout the data lifecycle. This involves routine data quality reviews to identify and manage omissions proactively. Additionally, strict data entry procedures can significantly reduce the occurrence of empty values, keeping the data trustworthy and usable.

Utilizing Business Intelligence tools enhances these processes by providing insights into information quality and facilitating better management practices.
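A strict entry procedure can be as simple as rejecting records whose required fields are empty. The Python sketch below assumes a hypothetical schema of required fields; it treats `None`, a missing key, and whitespace-only text as empty, but deliberately accepts a numeric zero.

```python
REQUIRED_FIELDS = ("customer_id", "order_date", "amount")  # hypothetical schema


def validate_entry(record, required=REQUIRED_FIELDS):
    """Return the required fields that are missing or empty; an empty list means the record passes."""
    problems = []
    for field in required:
        value = record.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            problems.append(field)
    return problems


print(validate_entry({"customer_id": "C1", "order_date": "2024-01-05", "amount": 10}))  # []
print(validate_entry({"customer_id": " ", "amount": 0}))  # ['customer_id', 'order_date']
```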

Experts emphasize that mishandling absent values can undermine the trustworthiness of evaluations, underscoring the necessity for clarity in managing incomplete information. As Taran Kaur states, “Being open not only aids in establishing confidence in your results, but it also demonstrates that you’ve carefully reflected on how absent information might influence your evaluation.” By categorizing absent information into Missing Completely at Random (MCAR), Missing at Random (MAR), and Missing Not at Random (MNAR), specialists can gain clearer insights into the effects of null in DAX values and select appropriate techniques for examination and imputation.

This classification not only helps mitigate potential biases but also enhances the overall quality of insights derived from the data. Furthermore, conducting sensitivity evaluations to assess the robustness of results related to the MAR assumption is crucial, as suggested by the National Research Council. Care must also be taken with single imputation techniques such as EM, which can underestimate standard errors and overestimate precision, creating a misleading sense of power in evaluations. Recent studies focus on the handling of absent values in ordinal data, emphasizing ongoing research and its significance to current methods in information evaluation.
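A small simulation on synthetic data can make these points concrete. The Python sketch below draws hypothetical measurements, removes 30% of them completely at random (MCAR), separately hides every value above 60 (MNAR), and then fills the MCAR gaps with the observed mean. Mean imputation is used here as a simpler stand-in for single imputation in general, not as the EM method named above. The MCAR mean stays close to the truth, the MNAR mean is biased downward, and the imputed data shows a smaller spread than the observed values, which is why standard errors computed from it come out too small.

```python
import random
from statistics import mean, pstdev

rng = random.Random(42)  # fixed seed so the sketch is reproducible
complete = [rng.gauss(50, 10) for _ in range(10_000)]  # synthetic "true" measurements

# MCAR: each value independently has a 30% chance of going missing.
mcar_mask = [rng.random() < 0.3 for _ in complete]
mcar_observed = [v for v, missing in zip(complete, mcar_mask) if not missing]

# MNAR: values above 60 are never recorded, so missingness depends on the value itself.
mnar_observed = [v for v in complete if v <= 60]

# Single (mean) imputation of the MCAR gaps: every gap receives the same value.
fill = mean(mcar_observed)
mean_imputed = [fill if missing else v for v, missing in zip(complete, mcar_mask)]

print(f"true mean    {mean(complete):.2f}")
print(f"MCAR mean    {mean(mcar_observed):.2f}  (close to the truth)")
print(f"MNAR mean    {mean(mnar_observed):.2f}  (biased downward)")
print(f"observed sd  {pstdev(mcar_observed):.2f}")
print(f"imputed sd   {pstdev(mean_imputed):.2f}  (spread understated)")
```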

By incorporating these factors and employing RPA solutions like EMMA RPA and Power Automate from Creatum GmbH to streamline information management tasks, professionals can significantly enhance the integrity and reliability of their analyses, ultimately fostering business growth and innovation. Companies that fail to effectively extract valuable insights from their information risk falling behind their competitors in today’s data-driven environment.

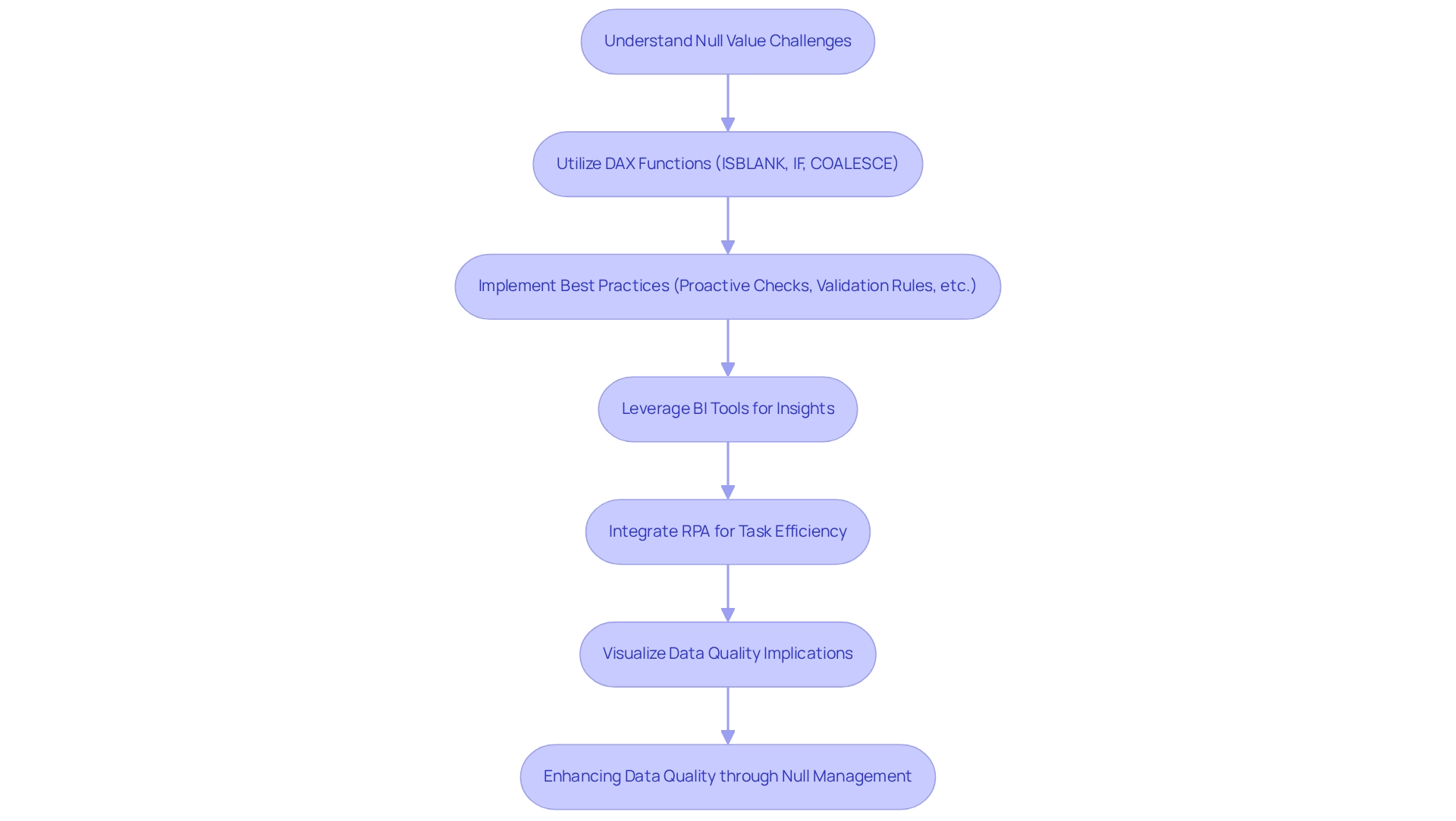

Key Takeaways: Enhancing Data Quality with Null Management

To improve data quality through effective missing-value management, data specialists must first understand the complexities of handling null values in DAX and the challenges they present. Essential DAX functions such as ISBLANK, IF, and COALESCE are vital tools for identifying and managing these nulls. By applying best practices such as proactive checks, equality checks, validation rules, profiling, and completeness checks, organizations can improve the accuracy of their analyses and mitigate the risks of poor-quality data, which IBM estimated cost the U.S. economy $3.1 trillion in 2016.

Furthermore, the integration of Business Intelligence (BI) and Robotic Process Automation (RPA) is pivotal in fostering data-driven insights and enhancing operational efficiency. Leveraging BI tools enables organizations to convert raw data into actionable insights that inform decision-making and promote growth. RPA solutions, including EMMA RPA and Power Automate, streamline repetitive tasks, thereby improving information quality and freeing up resources for more strategic initiatives.

Effective visualization methods are equally crucial in communicating the implications of absent entries to stakeholders, ensuring that the significance of information quality is acknowledged across the organization. As Chien remarked, “It is not only important to have the board’s attention in DQ improvement, but also for it to be a sustainable practice.” A case study focusing on trend monitoring illustrates how organizations can utilize time series evaluation to detect anomalies and oversee information quality over time, specifically addressing issues related to null values in DAX while distinguishing between normal fluctuations and genuine problems.
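The case study does not specify its method, so the Python sketch below swaps in a simple, commonly used rule as an assumption: flag a day whose null rate exceeds the mean of the previous `window` days by more than `k` standard deviations. The daily rates are hypothetical. Ordinary day-to-day variation stays below the threshold, while the jump to 15% on day 8 is flagged.

```python
from statistics import mean, pstdev


def flag_null_rate_anomalies(daily_null_rates, window=7, k=3.0):
    """Return indices of days whose null rate exceeds the trailing-window mean by more than k std devs."""
    flagged = []
    for i in range(window, len(daily_null_rates)):
        history = daily_null_rates[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        threshold = mu + k * max(sigma, 1e-9)  # guard against a perfectly flat history
        if daily_null_rates[i] > threshold:
            flagged.append(i)
    return flagged


rates = [0.02, 0.03, 0.02, 0.025, 0.03, 0.02, 0.025, 0.03, 0.15, 0.025]  # hypothetical
print(flag_null_rate_anomalies(rates))  # [8]
```

A trailing window keeps the baseline adaptive, but a flagged spike also inflates the baseline for the following days, so production monitoring would typically exclude flagged days from later windows.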

By embracing these strategies, data professionals can substantially enhance the integrity and reliability of their analyses, ultimately driving improved decision-making and operational efficiency.

Conclusion

Managing null values in DAX is essential for ensuring data integrity and accuracy in analysis. This article highlights the critical distinctions between null values and other data types, emphasizing the unique handling required for ‘BLANK’ values to prevent misleading insights. By utilizing key DAX functions such as ISBLANK, IF, and COALESCE, data professionals can effectively identify and manage nulls, thereby enhancing the quality of their analyses.

Moreover, integrating best practices and advanced techniques, including conditional formatting in reporting and the SWITCH function for conditional logic, empowers analysts to visualize and address null values transparently. This proactive approach mitigates the risks associated with data quality issues and fosters trust in the insights derived from data.

Ultimately, effective null management is not merely a technical necessity; it is a strategic imperative that drives informed decision-making and operational efficiency. As organizations increasingly rely on data to guide their strategies, mastering the complexities of null values is crucial for data professionals aiming to deliver reliable and actionable insights. By prioritizing data quality and utilizing appropriate tools, businesses can position themselves for success in today’s competitive landscape, ensuring they harness the full potential of their data assets.

Frequently Asked Questions

What does ‘BLANK’ represent in DAX?

In DAX, ‘BLANK’ represents null entries, which are treated distinctly compared to other data types. Understanding how BLANK is interpreted is crucial for accurate data analysis.

Why is it important to understand the difference between an empty entry and zero in DAX?

An empty entry signifies the absence of information, while zero represents a numeric value. Misinterpreting these can lead to inaccuracies in calculations and aggregations.

How can poor handling of BLANK values affect data analysis?

Mismanagement of BLANK values can lead to unexpected outcomes in calculations, distorted results, misleading averages, and ultimately undermine the reliability of the analysis.

What are the challenges associated with managing null values in DAX?

Data experts often face difficulties with the misinterpretation of BLANK figures, complications in data relationships, and the overall precision of analyses, which can diminish data quality and reliability.

What role do Business Intelligence (BI) tools play in addressing information quality challenges?

BI tools help organizations tackle issues related to poor master data quality, enabling them to transform raw data into actionable insights that drive growth and innovation.

What methods can be employed to handle missing information effectively?

Identifying the type of absence and selecting appropriate handling methods, such as maximizing information collection during study design, can minimize information loss and bias.

Why is clarity in reporting how absent data was managed important?

Clarity in reporting is vital for building trust in findings and understanding the methodologies behind results, which enhances the credibility of the analysis.

How can organizations improve their handling of null values in DAX?

Establishing clear information governance policies and adhering to defined data management standards can improve the handling of null values, leading to more accurate and reliable analyses.

Overview

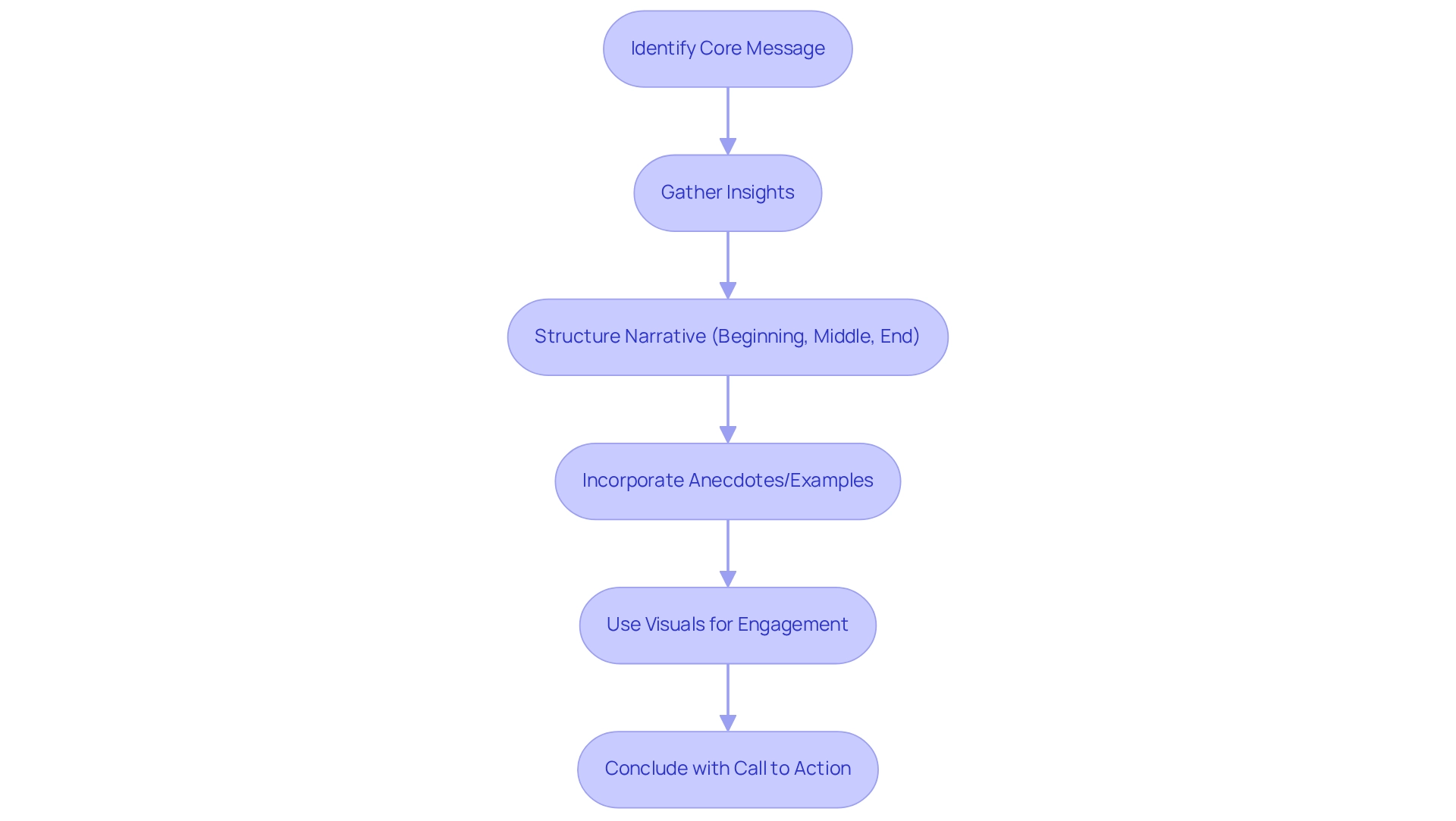

Diagnostic analytics addresses essential questions about the underlying causes of past events and trends. For instance, it seeks to understand why sales have declined or what factors have influenced customer churn. Analyzing historical data is crucial for uncovering these root causes, ultimately enhancing decision-making processes.

A compelling illustration of this can be seen in the successful case study of Canadian Tire, which effectively utilized AI-driven insights to boost sales. This example underscores the value of diagnostic analytics in driving informed business strategies.

Introduction

In the rapidly evolving landscape of data analytics, diagnostic analytics stands out as an essential tool for organizations aiming to grasp the complexities of their operations. By investigating the reasons behind historical events, this analytical approach not only addresses the crucial question of ‘why’ but also empowers businesses to enhance decision-making and operational efficiency. As industries contend with an ever-growing volume of data—projected to reach an astonishing 79.4 zettabytes by 2025—leveraging diagnostic analytics becomes vital for pinpointing root causes of issues and uncovering actionable insights.

The applications of diagnostic analytics are extensive and transformative, ranging from optimizing sales strategies to improving patient care in healthcare settings. This article delves into the core aspects of diagnostic analytics, exploring its methodologies, real-world applications, and the challenges organizations encounter during implementation. Ultimately, it underscores the pivotal role of diagnostic analytics in driving growth and innovation across various sectors.

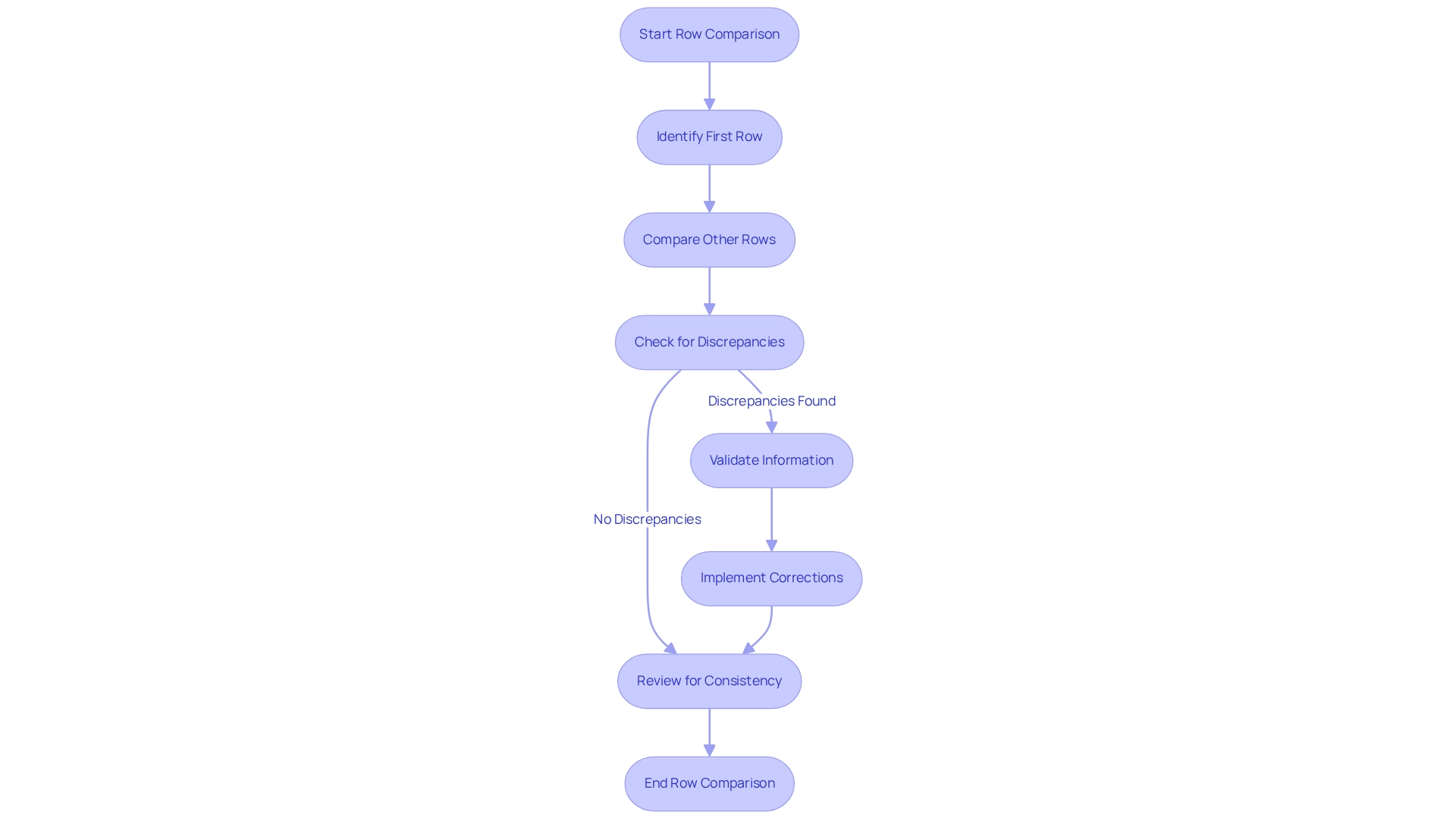

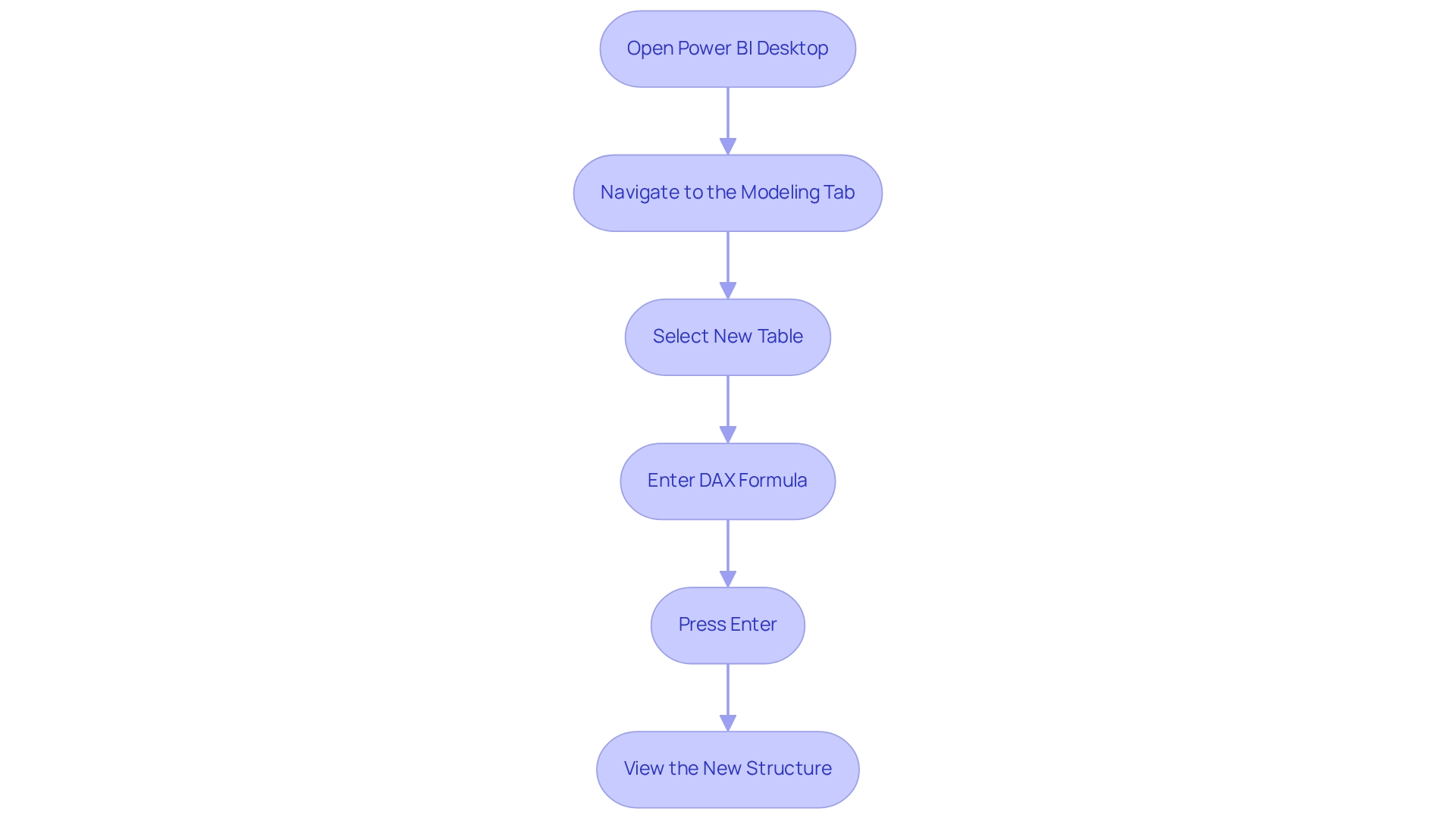

Understanding Diagnostic Analytics: Definition and Purpose

Diagnostic analytics is a vital branch of analytics that examines the reasons behind past occurrences or trends. It answers the question ‘Why did this happen?’ By scrutinizing historical data, organizations can reveal the root causes of issues, which is necessary for informed decision-making and better future outcomes. This approach is particularly significant in today’s data-rich landscape, where the number of connected devices is projected to grow from 16 billion in 2023 to 20.3 billion by 2025, generating an estimated 79.4 zettabytes of data.

Such vast amounts of information require robust analytical frameworks to extract practical insights, underscoring the need for effective diagnostic analysis. Diagnostic analysis is central to operational efficiency because it lets organizations pinpoint inefficiencies and tackle challenges proactively. For instance, Canadian Tire used AI-driven insights to analyze the purchasing behaviors of new pet owners, achieving a nearly 20% increase in sales by identifying cross-selling opportunities.

This case illustrates how diagnostic analytics can convert raw data into strategic insights that propel revenue growth. Experts assert that Robotic Process Automation (RPA) solutions, such as EMMA RPA and Microsoft Power Automate from Creatum GmbH, can significantly boost efficiency and employee morale by automating manual workflows. Understanding why past events happened is precisely what diagnostic analytics contributes, and it is essential for organizations striving to refine their operations. By integrating tailored AI solutions and Business Intelligence tools, organizations can ensure that their decisions are informed by historical performance and trends.

Power BI services, including the 3-Day Sprint for rapid report generation, further improve reporting and actionable insights, addressing inconsistencies and governance challenges in organizational reporting. Experts emphasize that understanding the reasons behind past events is crucial in commercial analysis, because it lays the groundwork for future achievements and innovation. In summary, diagnostic analytics serves as a cornerstone for organizations aiming to improve operational efficiency and navigate the complexities of their data environments.

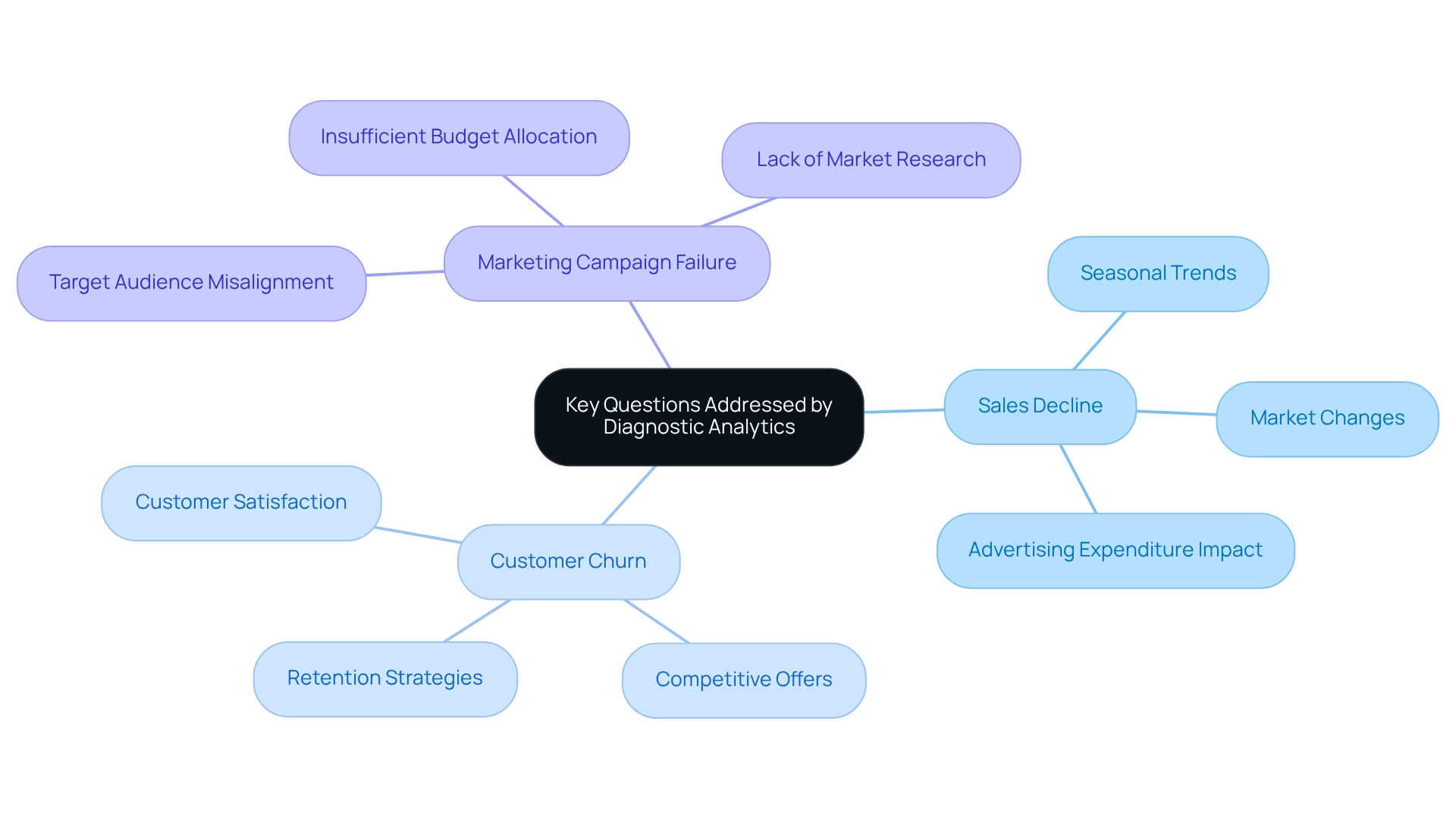

Key Questions Addressed by Diagnostic Analytics

Diagnostic analytics helps explain why organizational performance changed. Key inquiries include:

- ‘Why did sales decline during a specific period?’

- ‘What factors contributed to increased customer churn?’

- ‘Why did a marketing campaign fail to generate expected results?’

By systematically addressing these questions, organizations can uncover underlying issues and formulate targeted strategies to prevent recurrence.

For instance, companies often utilize regression analysis to pinpoint outliers that may skew the relationship between variables, such as the correlation between advertising expenditure and sales results. This analytical approach not only clarifies these relationships but also enhances decision-making processes.

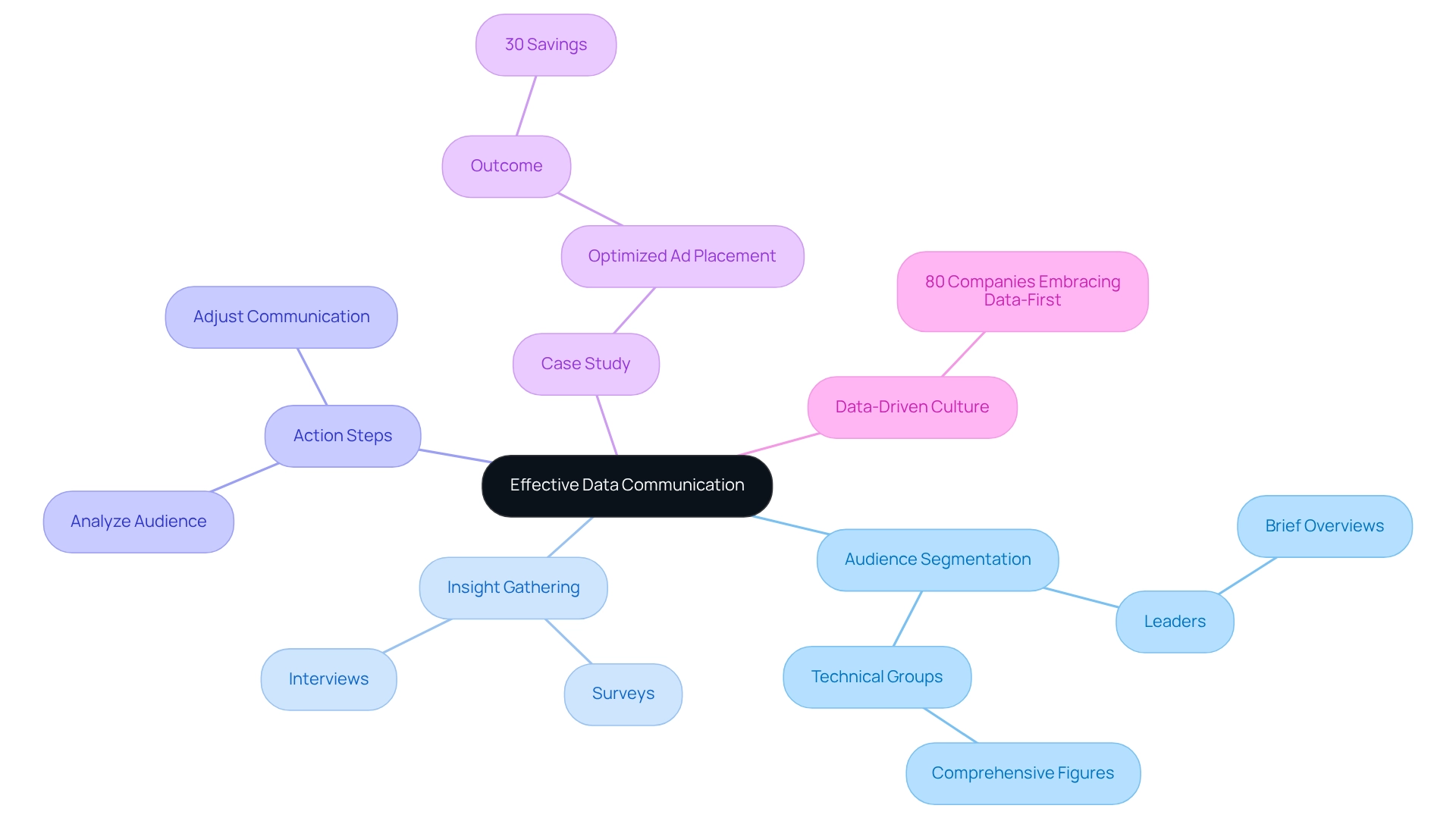

The impact of diagnostic analytics on organizational performance is significant. Organizations that harness its insights effectively, particularly through Business Intelligence tools, can improve customer retention, which matters because retaining existing customers is generally more cost-effective than acquiring new ones. This principle underscores the importance of understanding customer behavior through diagnostic analysis, which Robotic Process Automation (RPA) can support by streamlining data collection and preparation.

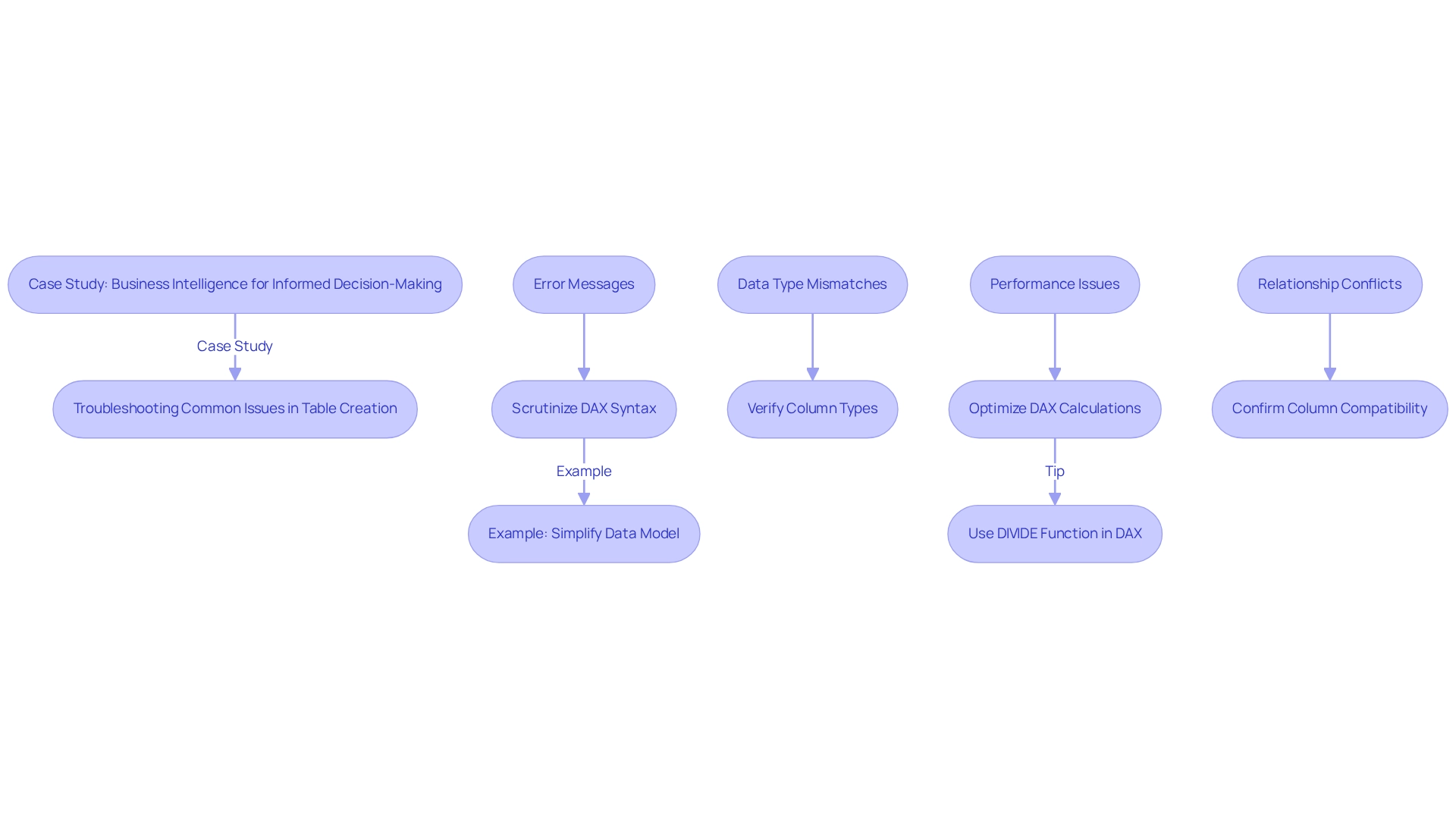

Moreover, case studies indicate that companies facing sales declines have successfully used diagnostic analysis to identify root causes, yielding actionable insights that improve performance. However, it is crucial to address challenges such as distinguishing correlation from causation and ensuring data quality. The demand for advanced analytical skills further complicates the effective application of these techniques.

As Catherine Cote, Marketing Coordinator, states, “Do you want to become a data-driven professional? Explore our eight-week course and our three-course Credential of Readiness (Core) program to deepen your analytical skills and apply them to real-world business problems.” By overcoming these obstacles, organizations can unlock the full potential of diagnostic analytics, ultimately fostering growth and innovation in their operations.

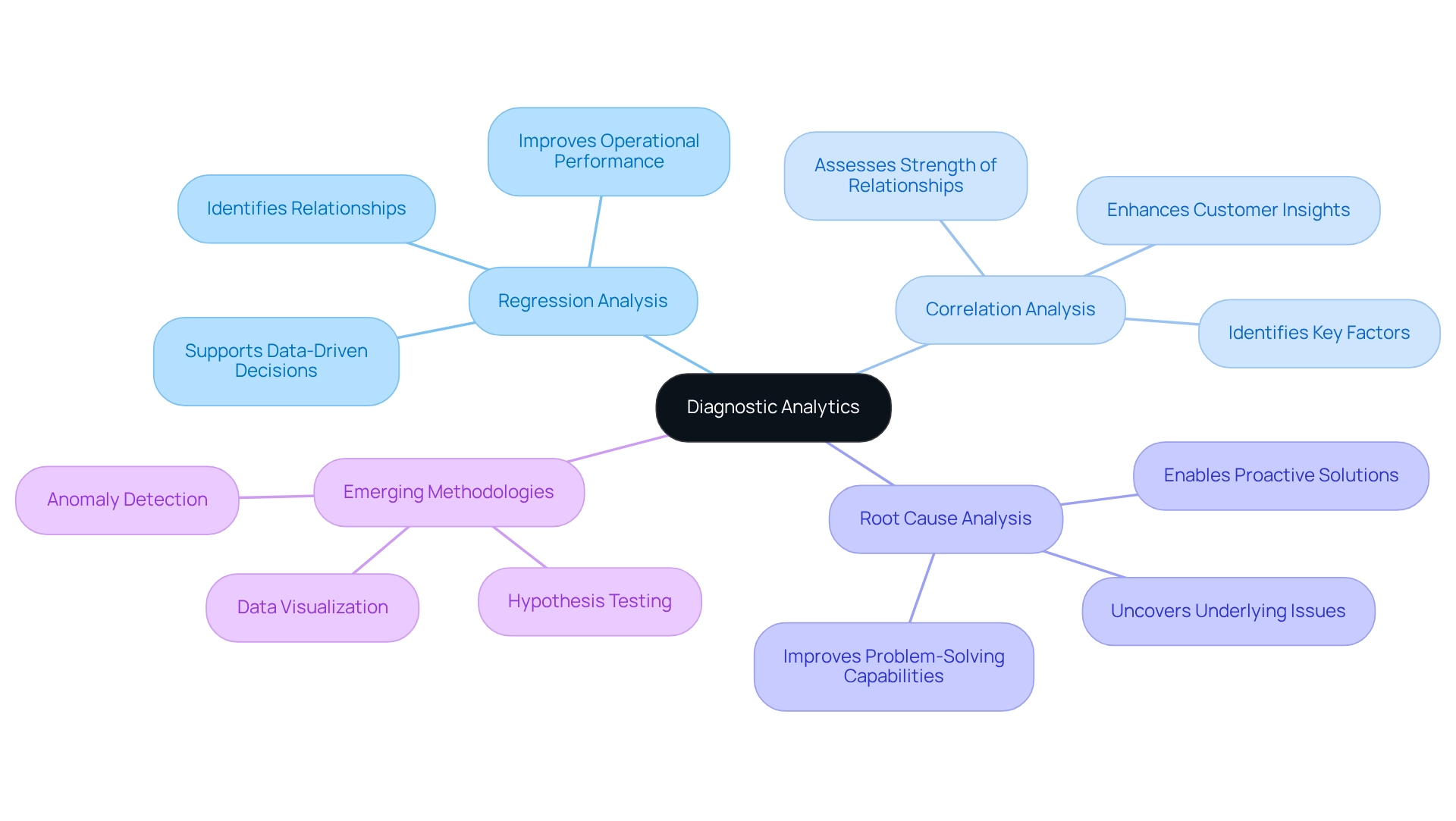

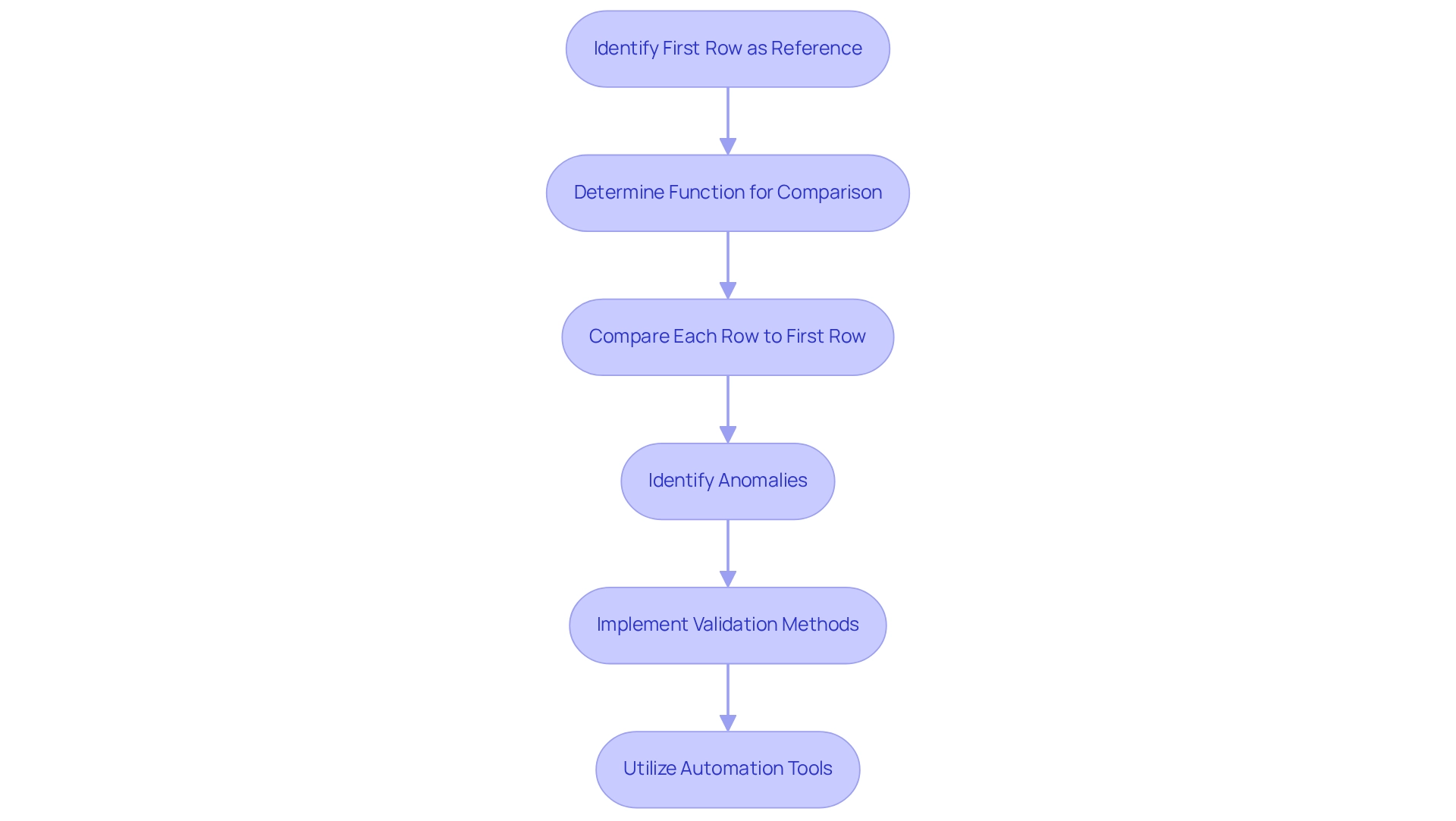

Techniques and Methodologies in Diagnostic Analytics

In today’s overwhelming AI landscape, it helps to be clear about what diagnostic analytics actually does: it answers the question “Why did it happen?” using a range of techniques for extracting insight from data. Among the most prominent methodologies are:

- Regression analysis

- Correlation analysis

- Root cause analysis

These can be enhanced through tailored AI solutions from Creatum GmbH that are aligned with specific business goals.

Regression analysis identifies relationships between variables, allowing organizations to understand how a change in one factor is associated with a change in another. The technique has gained traction in recent years, reflecting a growing reliance on data-driven decision-making. As Jan Hammond, a Harvard Business School Professor, states, “Regression enables us to obtain insights into the structure of that relationship and offers measures of how well the information aligns with that relationship.”

Organizations that use regression analysis can replace intuition with empirical evidence, leading to more informed decisions and improved operational performance.

Correlation analysis complements regression by assessing the strength and direction of relationships between variables. This technique is particularly valuable for organizations seeking actionable insights, as it helps identify which factors are most closely linked to desired outcomes. Numerous enterprises have effectively utilized correlation analysis to enhance their strategies and deepen customer insight, ultimately fostering innovation.

Root cause analysis digs deeper into the data to uncover the underlying reasons for specific outcomes. It is crucial for identifying business problems and implementing effective solutions, and organizations that apply it systematically are better positioned to address issues proactively rather than reactively.
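Root cause analysis covers many tools (5 Whys, fishbone diagrams, fault trees). One that translates directly into code is Pareto analysis, which sorts recorded causes by frequency to find the “vital few” that explain most incidents. The sketch below assumes a hypothetical log of delivery-failure causes and an 80% cutoff; both are illustrative choices.

```python
from collections import Counter

# Hypothetical log of root causes recorded for 100 failed deliveries.
incident_causes = (
    ["wrong address"] * 42 + ["carrier delay"] * 27 + ["out of stock"] * 15
    + ["damaged package"] * 9 + ["payment issue"] * 7
)

def vital_few(causes, cutoff=0.8):
    """Pareto analysis: walk causes from most to least frequent and return
    (cause, count, cumulative %) until the cumulative share reaches `cutoff`."""
    counts = Counter(causes).most_common()
    total = sum(count for _, count in counts)
    selected, cumulative = [], 0
    for cause, count in counts:
        cumulative += count
        selected.append((cause, count, round(100 * cumulative / total, 1)))
        if cumulative / total >= cutoff:
            break
    return selected

for cause, count, cum_pct in vital_few(incident_causes):
    print(f"{cause:16s} {count:3d}  cumulative {cum_pct}%")
```

In this toy log, three causes account for 84% of failures, so corrective effort would start there.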

As we approach 2025, diagnostic analytics continues to evolve, with methodologies such as hypothesis testing, anomaly detection, and data visualization becoming increasingly common. Visualization plays a crucial role in simplifying intricate relationships, helping stakeholders grasp insights quickly. Over the past decade, companies have become more reliant on data than ever, which underscores the significance of these methodologies for operational efficiency and organizational growth.

Conversely, companies that struggle to extract meaningful insights may find themselves at a competitive disadvantage.
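Hypothesis testing asks whether an observed difference is larger than chance alone would plausibly produce. As one example, here is a minimal two-sided permutation test for a difference in means in plain Python. The conversion figures and the `permutation_test` helper are hypothetical, and other tests (such as Welch’s t-test) are equally common.

```python
import random

def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.
    Returns (observed difference, approximate p-value)."""
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign values to the two groups
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / (len(pooled) - n_a)
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for float ties
            extreme += 1
    # The +1 terms keep the estimate away from an impossible p = 0.
    return observed, (extreme + 1) / (n_resamples + 1)

# Hypothetical daily conversion rates (%) during a campaign and a baseline period.
campaign = [3.1, 3.4, 2.9, 3.6, 3.3, 3.5, 3.2]
baseline = [2.8, 2.7, 3.0, 2.6, 2.9, 2.8, 2.7]
diff, p = permutation_test(campaign, baseline)
print(f"difference = {diff:.2f} pts, p = {p:.4f}")
```

A small p-value suggests the campaign period genuinely differs from the baseline; it does not explain why, which is where the other diagnostic techniques come in.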

Case studies illustrate the effectiveness of these techniques. For instance, the case study ‘Improving Business Decisions through Information Analysis’ shows how data analysis improves decision-making by replacing intuition with factual evidence. Organizations that have applied regression and correlation analysis report significant improvements in their decision-making, giving them a competitive advantage in their markets.

By applying these diagnostic methods together with Business Intelligence, companies can convert raw data into actionable insights that promote growth and innovation.

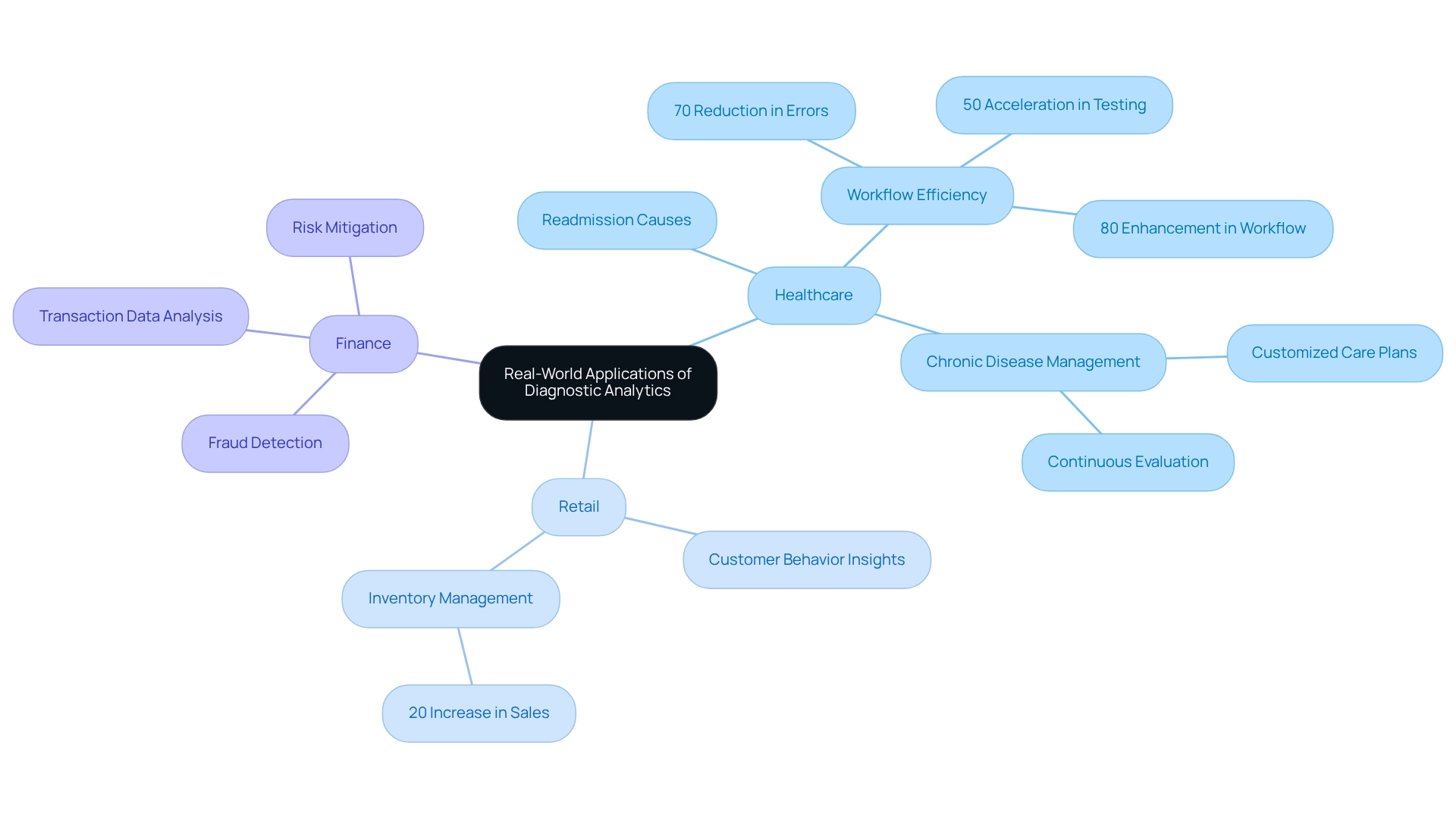

Real-World Applications of Diagnostic Analytics Across Industries

Across industries, diagnostic analytics answers critical questions that drive significant improvements in operational efficiency and decision-making. In healthcare, for example, it is instrumental in identifying the underlying causes of patient readmissions. By thoroughly examining patient data, hospitals can uncover the specific factors contributing to readmissions and implement targeted interventions that improve care quality and patient outcomes.
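A common first step in this kind of investigation is a drill-down: compute the readmission rate for each value of a candidate factor and look for groups that stand out. The sketch below assumes hypothetical discharge records with two illustrative attributes (`weekday`, `follow_up_booked`); it is not drawn from any real hospital dataset.

```python
from collections import defaultdict
from typing import NamedTuple

class Discharge(NamedTuple):
    weekday: str
    follow_up_booked: bool
    readmitted_30d: bool

# Hypothetical discharge records.
records = [
    Discharge("Fri", False, True), Discharge("Fri", False, True),
    Discharge("Fri", True, False), Discharge("Mon", True, False),
    Discharge("Mon", True, False), Discharge("Mon", False, True),
    Discharge("Tue", True, False), Discharge("Wed", False, False),
    Discharge("Thu", True, False), Discharge("Fri", False, False),
]

def readmission_rate_by(records, field):
    """Group records by one attribute and return the 30-day readmission rate per group."""
    totals, readmits = defaultdict(int), defaultdict(int)
    for record in records:
        group = getattr(record, field)
        totals[group] += 1
        readmits[group] += record.readmitted_30d  # True counts as 1
    return {group: readmits[group] / totals[group] for group in totals}

print(readmission_rate_by(records, "follow_up_booked"))
print(readmission_rate_by(records, "weekday"))
```

In this toy data, patients without a booked follow-up are readmitted at 60% versus 0% for those with one, which would make follow-up scheduling a factor worth examining on a larger sample.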

A case study from Creatum GmbH describes how a mid-sized healthcare firm improved efficiency by automating data entry and software testing through GUI automation. The implementation reduced data entry errors by 70%, accelerated testing by 50%, and delivered an 80% improvement in workflow efficiency. These measurable outcomes underscore the impact of Creatum’s technology solutions on operational efficiency in healthcare services.

Moreover, personalized chronic disease management is significantly improved through the analysis of patient information, facilitating the development of customized care plans tailored to individual needs. Continuous evaluation, driven by patient feedback data, fosters a culture of excellence in healthcare delivery, ultimately elevating patient care standards.

In the retail sector, data analysis is equally transformative. Businesses use these techniques to understand customer behavior, and the answers to diagnostic questions inform inventory management strategies. For instance, Canadian Tire used AI-driven analysis to scrutinize purchasing behavior, leading to a 20% increase in sales. This demonstrates how understanding customer preferences through diagnostic analysis can help optimize stock levels and minimize surplus inventory, enhancing profitability.
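Cross-selling opportunities like these are often surfaced with market basket analysis. The sketch below computes lift for every pair of items bought together, where lift above 1 means two items appear together more often than independent purchasing would predict. The baskets are hypothetical, and this is a generic technique, not a description of the analysis Canadian Tire or its vendor actually performed.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets.
baskets = [
    {"pet bed", "dog food", "leash"},
    {"pet bed", "dog food"},
    {"pet bed", "leash"},
    {"dog food"},
    {"garden hose"},
    {"pet bed", "dog food", "chew toy"},
    {"garden hose", "leash"},
    {"dog food", "chew toy"},
]

def pair_lift(baskets):
    """Return {(item_a, item_b): lift} for every pair bought together at least once.
    lift = P(A and B) / (P(A) * P(B)) = count(A, B) * n / (count(A) * count(B))."""
    n = len(baskets)
    item_counts = Counter(item for basket in baskets for item in basket)
    pair_counts = Counter(
        pair for basket in baskets for pair in combinations(sorted(basket), 2)
    )
    return {
        (a, b): count * n / (item_counts[a] * item_counts[b])
        for (a, b), count in pair_counts.items()
    }

for pair, lift in sorted(pair_lift(baskets).items(), key=lambda kv: kv[1], reverse=True):
    print(pair, round(lift, 2))
```

On real data, pairs are usually also filtered by a minimum support so that rare coincidences do not produce inflated lift values.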

Diagnostic analysis also plays a crucial role in finance, enabling organizations to examine transaction data and reveal fraud patterns. By identifying anomalies, financial institutions can mitigate risks, protect their operations, and maintain customer confidence. As Trinity Cyrus, Marketing Coordinator, notes, ‘Prescriptive analysis is used to recommend actions to optimize outcomes,’ a reminder that diagnostic work sits within a broader spectrum of analytics supporting informed decision-making.
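As a minimal sketch of anomaly detection on transaction amounts, the function below uses the modified z-score, which is based on the median and the median absolute deviation (MAD) so that one extreme value does not distort the baseline. The 0.6745 constant and 3.5 cutoff follow a widely used rule of thumb; the transactions and the `mad_anomalies` name are hypothetical, and production fraud systems rely on far richer features.

```python
from statistics import median

def mad_anomalies(amounts, threshold=3.5):
    """Return indices of values whose modified z-score,
    0.6745 * |x - median| / MAD, exceeds `threshold`."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:  # all (or most) values identical: no spread to measure against
        return []
    return [i for i, a in enumerate(amounts) if 0.6745 * abs(a - med) / mad > threshold]

# Hypothetical card transactions (in EUR) for one customer.
transactions = [42.0, 18.5, 61.2, 35.0, 27.9, 2450.0, 44.1, 39.8, 22.0, 51.3]
print(mad_anomalies(transactions))  # -> [5]
```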

These examples highlight the versatility of diagnostic methods and their ability to improve operational efficiency across sectors as diverse as healthcare, retail, and finance. Tailored solutions that improve data quality and streamline AI implementation help businesses put these insights to work for growth and innovation.

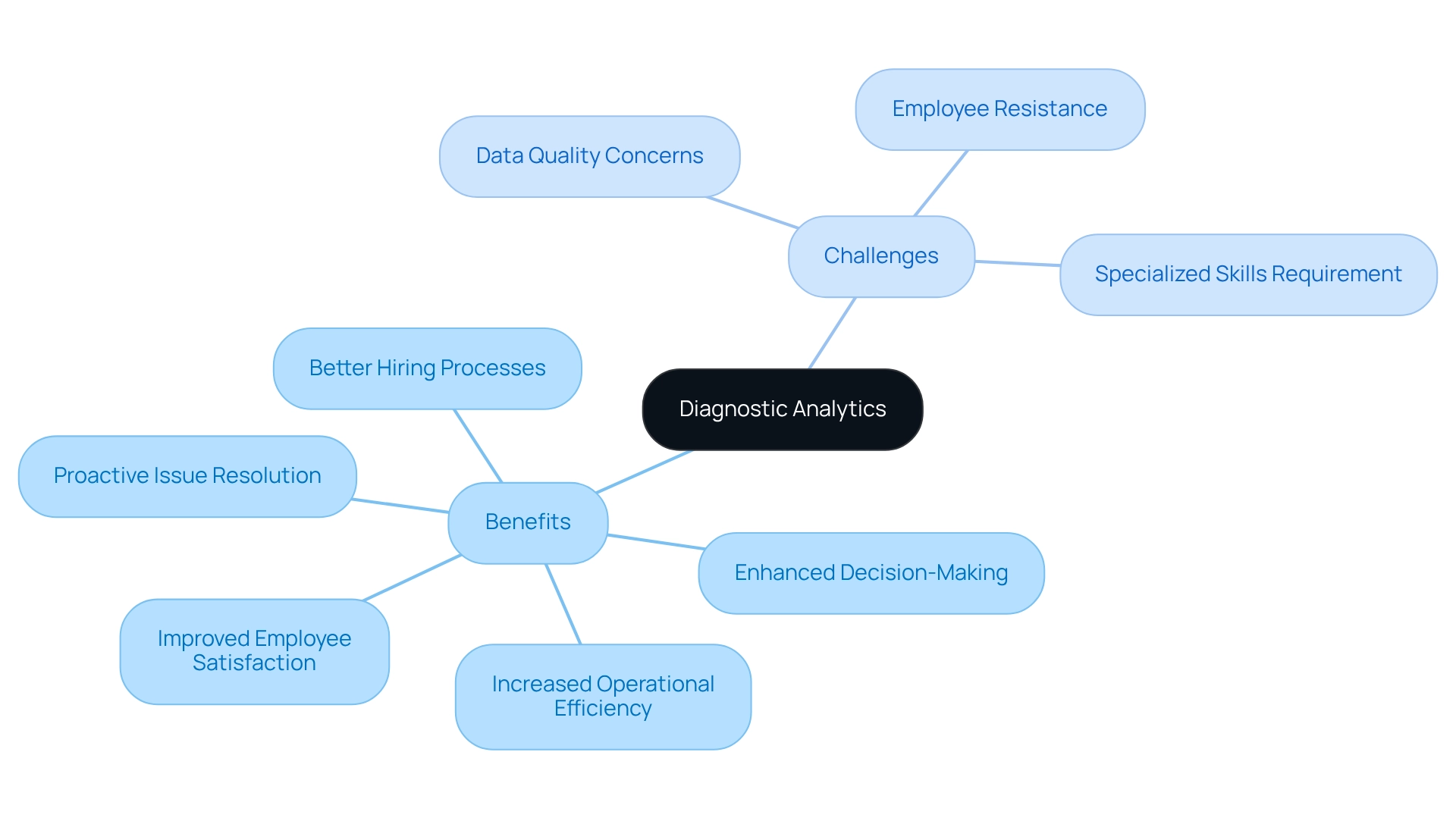

Benefits and Challenges of Implementing Diagnostic Analytics

Applying diagnostic analytics offers numerous advantages, including better decision-making, greater operational efficiency, and the proactive identification and resolution of issues. Organizations that leverage data analysis gain a deeper understanding of their performance, empowering them to make informed, timely decisions that promote growth. As one expert noted, “Data analysis allows business leaders to make informed, timely decisions and gain greater insights into their operations and clients.”

A pivotal strength of diagnostic analytics is its ability to uncover the root causes of performance issues. However, the path to effective implementation is fraught with challenges. Data quality is often a significant barrier, as inaccurate or incomplete data can lead to misleading conclusions.
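Because data quality is often the first obstacle, a quick audit before any diagnostic work can prevent misleading conclusions later. The sketch below counts missing required fields and exact duplicate rows in a list of records; the field names, the records, and the `audit` helper are hypothetical.

```python
def audit(rows, required):
    """Report basic data-quality problems in a list of dict records:
    missing (None or empty) required fields and exact duplicate rows."""
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in required
    }
    seen, duplicates = set(), 0
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent, hashable fingerprint
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicates": duplicates}

# Hypothetical order records.
orders = [
    {"order_id": 1, "region": "North", "amount": 120.0},
    {"order_id": 2, "region": "", "amount": 80.0},
    {"order_id": 3, "region": "South", "amount": None},
    {"order_id": 1, "region": "North", "amount": 120.0},  # exact duplicate
]
print(audit(orders, ["region", "amount"]))
```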

Moreover, employee resistance to change can hinder the adoption of new analytical processes, as staff may be reluctant to abandon familiar practices. The need for specialized skills further complicates matters, as organizations may struggle to find or develop talent proficient in diagnostic analysis. To realize the full benefits of diagnostic analytics, organizations must be clear about the “why” questions it is meant to answer.

To navigate these challenges, organizations can employ Robotic Process Automation (RPA) to streamline data collection and processing, thereby improving data quality and minimizing the time spent on manual tasks. Additionally, customized AI solutions can help businesses navigate the rapidly evolving technological landscape, ensuring they adopt tools aligned with their specific requirements. Business Intelligence can then transform raw data into actionable insights, facilitating informed decisions that drive growth and innovation.

Canadian Tire’s experience illustrates the potential of these strategies. By using ThoughtSpot AI-Powered Analytics to examine the purchasing behavior of new pet owners, the company identified cross-selling opportunities, resulting in a 20% increase in sales in its pets department. This case underscores the importance of addressing data quality issues and fostering a culture open to change, as both are vital for realizing the full benefits of data analysis.

Furthermore, organizations that apply diagnostic analytics to workforce data may see improvements in employee satisfaction and retention, since such analysis can strengthen hiring by identifying the traits of successful candidates. As companies navigate the complexities of implementing diagnostic analytics, focusing on these advantages while addressing the associated challenges will be essential to unlocking its full potential.

Diagnostic Analytics vs. Other Analytics Types: A Comparative Analysis

In the analytics landscape, diagnostic analytics plays a pivotal role, and comparing it with descriptive, predictive, and prescriptive analytics clarifies the question it answers. While descriptive analytics answers ‘What happened?’, diagnostic analytics goes deeper, asking ‘Why did it happen?’.

This deeper analysis is essential for organizations seeking to understand the underlying causes of trends and anomalies in their data. In today’s overwhelming AI landscape, identifying the right solutions can be daunting, and that difficulty can keep companies from making informed choices, highlighting the need for customized solutions from Creatum GmbH.

As Akash Jha observes, “By utilizing the correct kind of analysis at the suitable phase, companies can obtain valuable insights, make informed decisions, and ultimately propel success in an increasingly competitive and data-driven environment.” Predictive analysis, on the other hand, uses historical information to forecast future results, assisting businesses in anticipating potential challenges and opportunities. Meanwhile, prescriptive analysis advances this approach by recommending specific actions to achieve desired outcomes based on data examination and various scenarios.

Understanding these distinctions is crucial for organizations selecting the most appropriate analytical approach for their needs. For instance, a financial organization uses predictive analysis to assess credit risk, examining applicants’ credit histories to forecast the probability of loan default. In contrast, an energy company used diagnostic analysis to investigate a sudden rise in energy usage.

By analyzing consumption data and customer behavior, they determined whether the increase stemmed from seasonal changes or inefficiencies in their distribution system.
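The energy company’s question can be sketched as a comparison against two baselines: last month and the same month a year earlier. If usage rose month over month but matches last year’s level, seasonality is a plausible explanation; if it is well above both, something else needs investigating. The function, the 5% tolerance, and the figures are illustrative assumptions, not the company’s actual method.

```python
def diagnose_usage_spike(current, previous_month, same_month_last_year, tolerance=0.05):
    """Classify a usage change using month-over-month (MoM) and
    year-over-year (YoY) relative differences."""
    mom = (current - previous_month) / previous_month
    yoy = (current - same_month_last_year) / same_month_last_year
    if mom <= tolerance:
        return "no significant increase"
    if abs(yoy) <= tolerance:
        # Rise versus last month, but in line with last year: a seasonal pattern.
        return "consistent with seasonal pattern"
    # Rise versus both baselines: seasonality alone does not explain it.
    return "unexplained by seasonality: investigate distribution inefficiencies"

# Hypothetical consumption figures (MWh).
print(diagnose_usage_spike(current=1180, previous_month=950, same_month_last_year=1150))
print(diagnose_usage_spike(current=1180, previous_month=950, same_month_last_year=960))
```

A real analysis would also adjust for weather, customer growth, and tariff changes before drawing conclusions.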

Prescriptive analysis, in turn, can provide actionable recommendations, such as proposing A/B tests for targeted advertising, which can significantly improve marketing effectiveness. This comparison shows that diagnostic analytics not only strengthens predictive and prescriptive methods but also serves as a fundamental component of the decision-making process. By integrating tailored AI solutions from Creatum GmbH with the power of Business Intelligence, organizations can make informed decisions and foster growth in an increasingly competitive landscape.

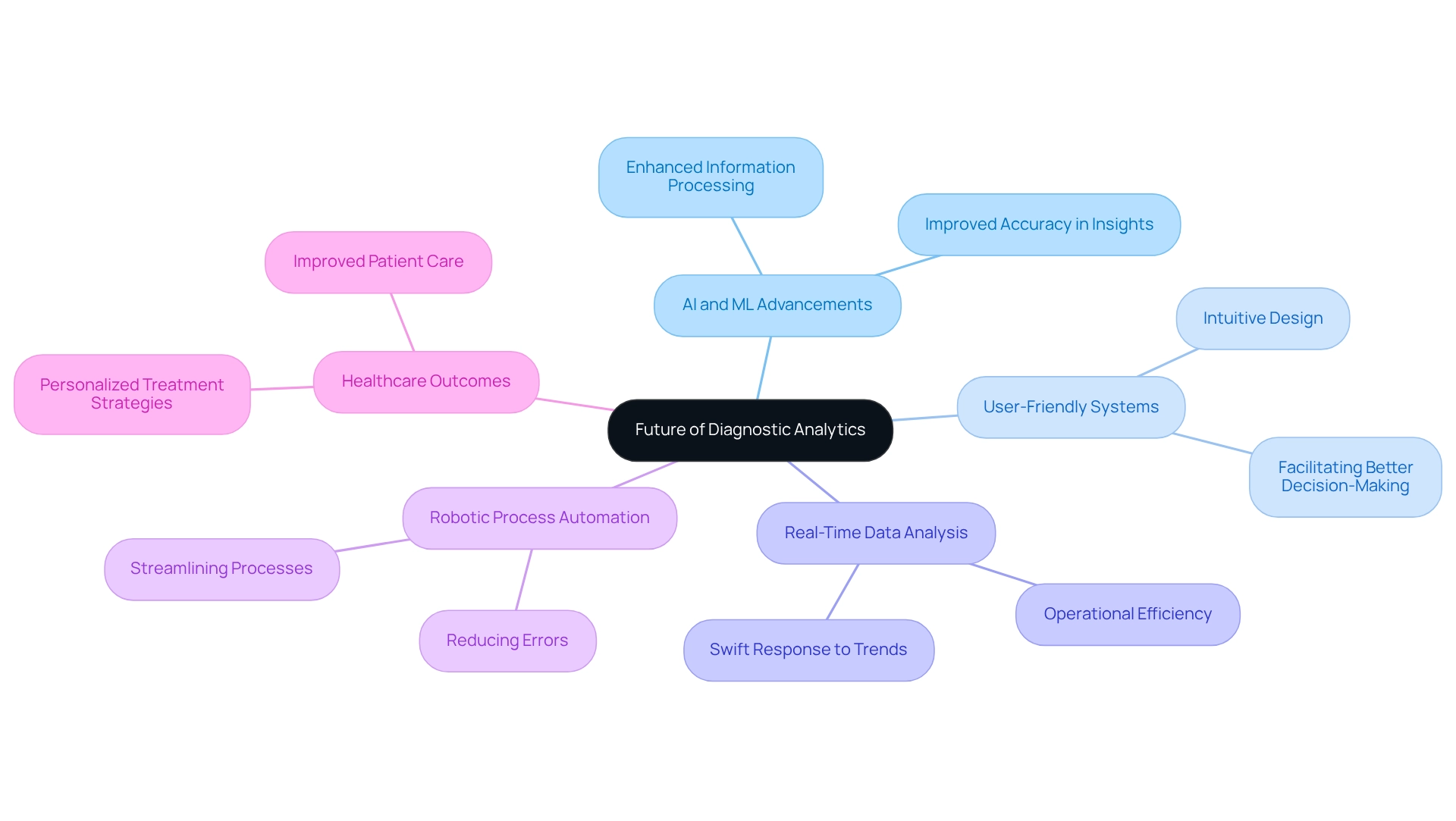

The Future of Diagnostic Analytics: Trends and Innovations

The future of diagnostic analytics is on the brink of significant transformation, driven by advances in artificial intelligence (AI) and machine learning (ML). These technologies are set to strengthen data processing capabilities, improving the accuracy of insights and decisions. For instance, healthcare organizations using accessible big data analytics tools have reported better analysis and decision-making, ultimately improving patient outcomes.

A study titled ‘Perceived Ease of Use in Big Data Analytics Adoption’ underscores the need for intuitive, user-friendly systems, since such tools support better data analysis and decision-making, thereby improving patient outcomes and healthcare delivery.