Overview

Calculating standard deviation in Power BI is a critical skill for data professionals. Power BI provides two DAX functions for it: STDEV.P for population data and STDEV.S for sample data. This article walks through the calculation step by step and stresses three points: understanding the data context, keeping the data clean, and visualizing the result effectively. Following these guidelines helps users derive accurate insights that support sound decisions.

Introduction

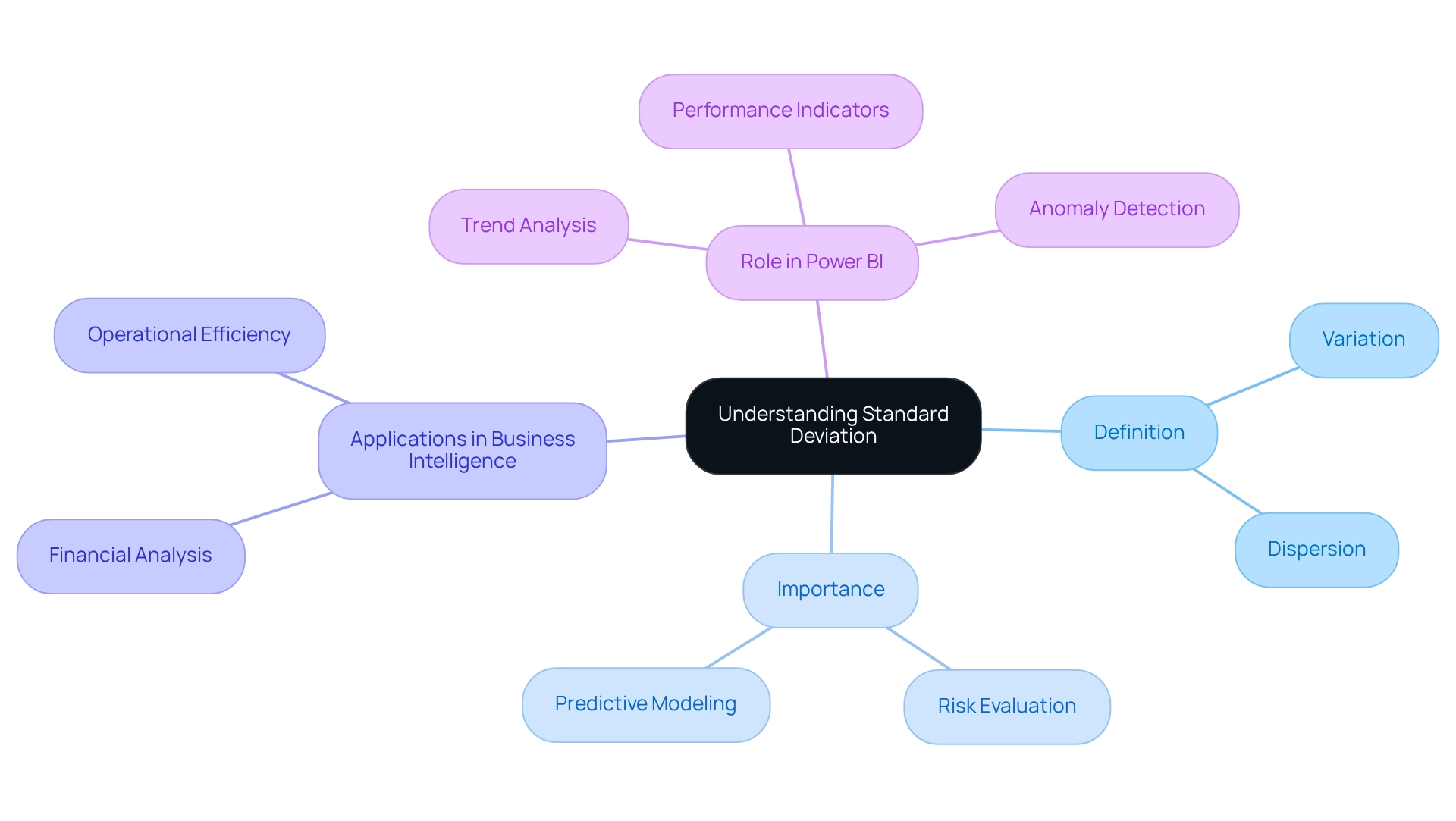

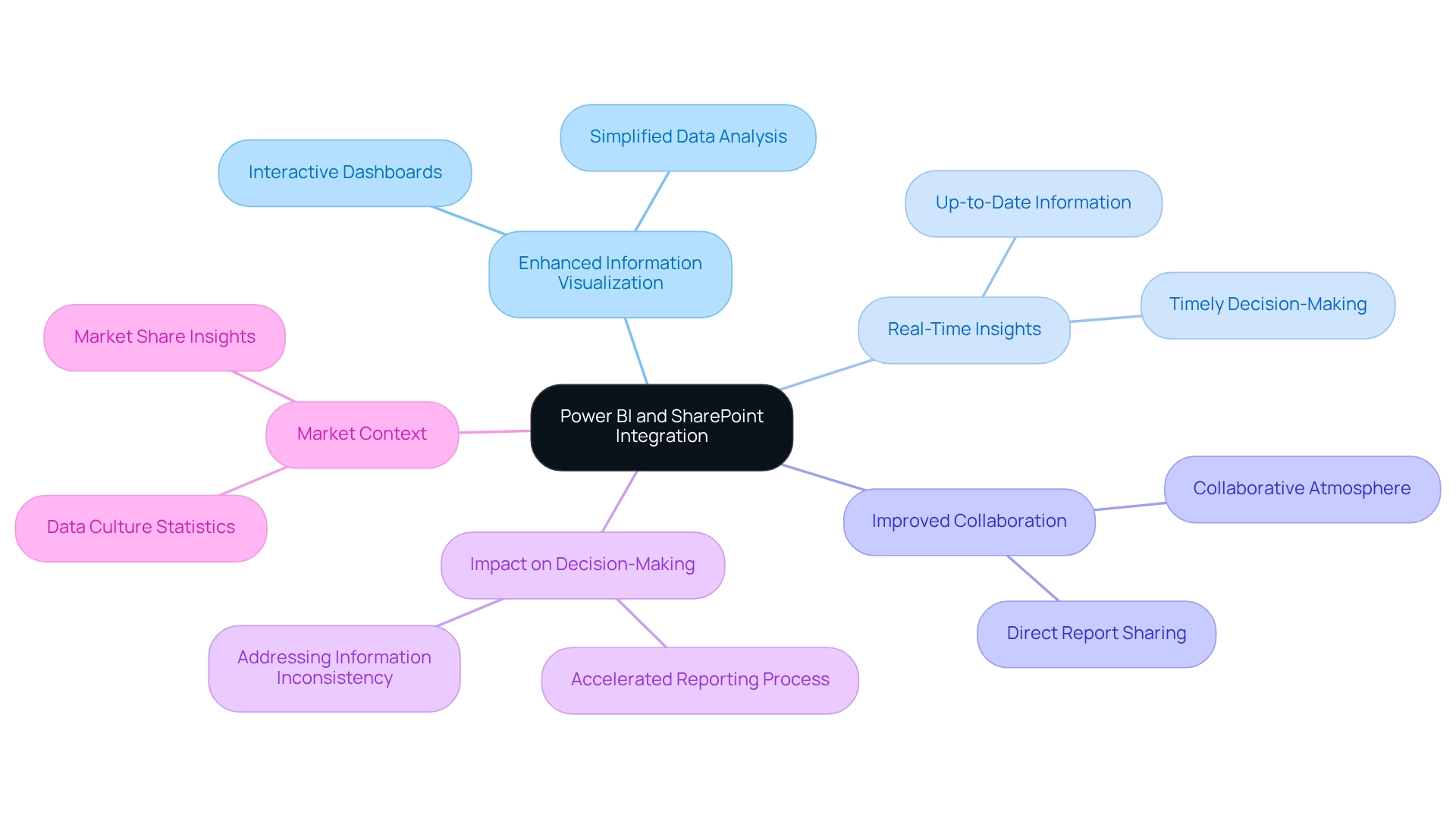

In data analysis, understanding standard deviation is crucial for extracting valuable insights from complex datasets. This statistical measure quantifies the variability within data and is an essential tool in risk assessment and predictive modeling. As businesses increasingly depend on data-driven decisions, mastering standard deviation becomes imperative for evaluating performance metrics and identifying trends.

Within the context of Power BI, organizations can leverage the power of standard deviation to enhance reporting capabilities, streamline operations, and propel strategic initiatives. This article explores the significance of standard deviation, provides practical guidance on its calculation using DAX functions, and examines its real-world applications across various sectors. Ultimately, it empowers businesses to navigate the intricacies of data with confidence.

Understanding Standard Deviation: Definition and Importance

Standard deviation is a statistical measure that quantifies the variation, or dispersion, within a dataset. A small standard deviation means the values are grouped closely around the mean, indicating consistency. A large standard deviation means the values spread over a wider range, indicating greater variability. The measure is essential in analysis because it helps evaluate the reliability of data and plays a vital role in risk evaluation and in predictive modeling based on historical trends.

The interquartile range (IQR), the spread of the middle 50% of values (the third quartile minus the first quartile), offers additional context for understanding variability alongside standard deviation.
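The Python sketch below contrasts the two measures using only the standard-library `statistics` module and a hypothetical list of values. It shows that a single extreme value inflates the standard deviation far more than the IQR. Python is used here only to illustrate the arithmetic outside Power BI.

```python
import statistics

def spread_summary(values):
    """Return (population standard deviation, interquartile range) for a list of numbers."""
    # method="inclusive" treats the list as the complete population when placing quartiles
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return statistics.pstdev(values), q3 - q1

baseline = [10, 12, 13, 14, 15, 16, 18]  # hypothetical values
with_outlier = baseline + [60]           # same values plus one extreme point

sd_a, iqr_a = spread_summary(baseline)
sd_b, iqr_b = spread_summary(with_outlier)
print(f"baseline:     SD={sd_a:.2f}  IQR={iqr_a:.2f}")
print(f"with outlier: SD={sd_b:.2f}  IQR={iqr_b:.2f}")
```

With these numbers, adding the outlier raises the standard deviation from about 2.45 to about 15.38. The IQR only moves from 3.00 to 3.75, which is why the two measures are worth reading together.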

In Power BI, a solid understanding of standard deviation is crucial for analyzing trends accurately and making informed decisions. Companies can use it to assess performance indicators, recognize anomalies, and gauge forecasting precision. Its importance grows as organizations increasingly depend on accurate metrics to direct strategic initiatives.
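The anomaly-detection idea can be sketched in a few lines of Python. The function below flags values whose z-score (the distance from the mean, measured in standard deviations) exceeds a threshold. The order counts and the threshold of 2 are hypothetical choices for illustration. They are not Power BI defaults.

```python
import statistics

def flag_anomalies(values, threshold=2.0):
    """Return the values whose z-score (distance from the mean in standard deviations) exceeds threshold."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return []  # no spread at all, so no value can stand out
    return [v for v in values if abs(v - mean) / sd > threshold]

daily_orders = [120, 118, 125, 122, 119, 121, 180, 123]  # hypothetical daily order counts
print(flag_anomalies(daily_orders))
```

In this example, only the spike of 180 orders is flagged. In practice, the threshold should reflect how many false alarms the business can tolerate.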

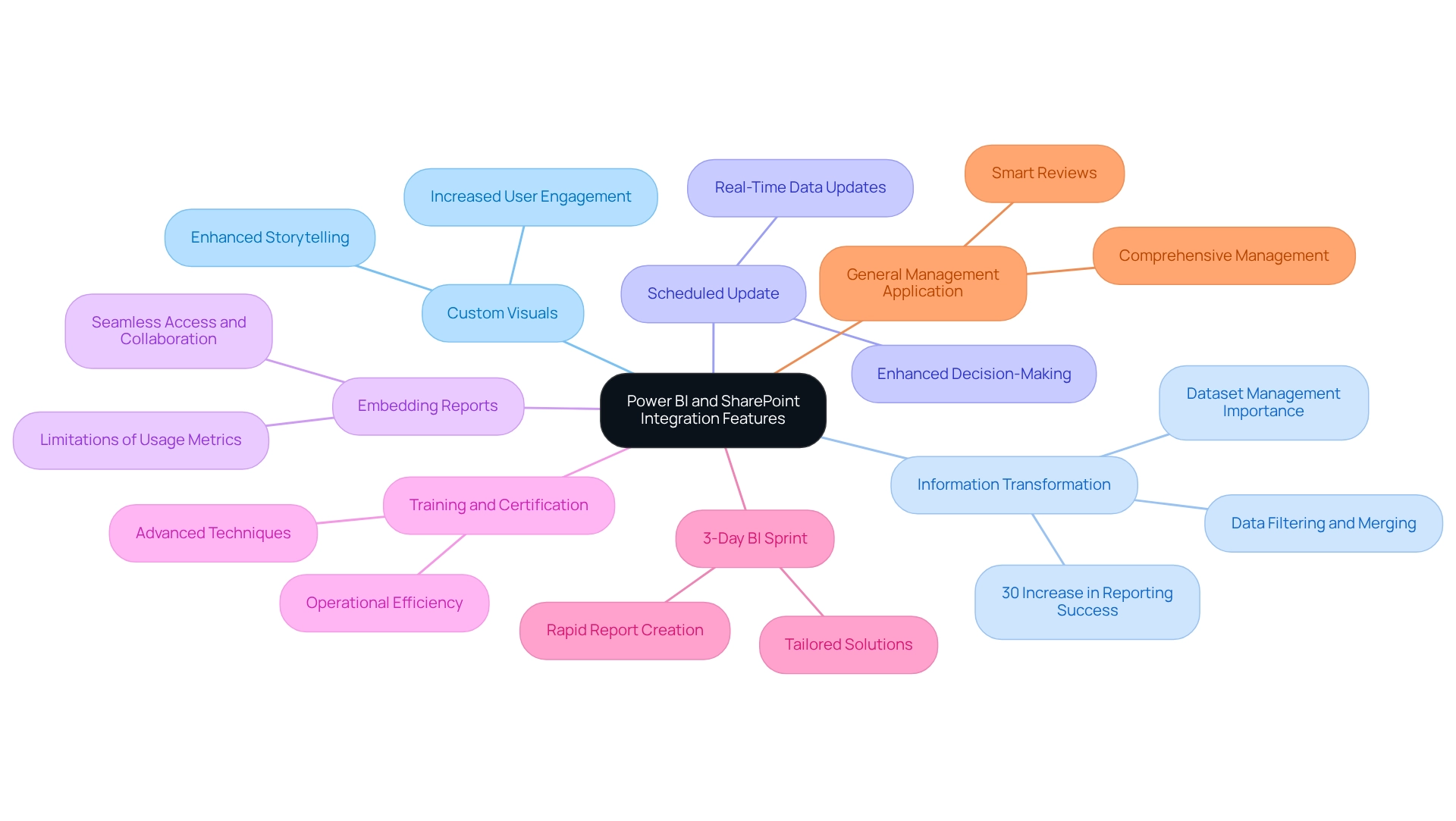

Creatum GmbH’s Power BI services, including the 3-Day Power BI Sprint and the General Management App, help organizations enhance their reporting capabilities and obtain actionable insights. These services support the rapid creation of professionally designed reports while addressing data inconsistency and governance challenges, which leads to better decision-making.

Practitioners emphasize that understanding standard deviation in Power BI is essential for effective decision-making in business intelligence. They also stress the importance of understanding its limits. As Alex Dos Diaz points out, “Standard deviation calculates all uncertainty as risk, even when it’s in the investor’s favor, such as above-average returns.” In other words, standard deviation measures dispersion in both directions and does not separate favorable deviations from unfavorable ones. Keep this in mind when using it to measure uncertainty and support decisions.

Practical applications of standard deviation in business decision-making include financial analysis, where it helps investors evaluate risk and return profiles. They also include operational efficiency reviews, where it helps identify process variations.

Case studies illustrate the effect standard deviation can have on data insights. For instance, in the case analysis titled ‘Business Intelligence for Insights,’ companies that used standard deviation effectively in their analytical frameworks reported better data quality and stronger decision-making. Feedback from customers who have engaged with Creatum’s Power BI Sprint likewise points to significant improvements in their data evaluation capabilities. Incorporating standard deviation in Power BI clarifies underlying patterns and helps organizations navigate an information-rich environment with confidence.

To explore how Creatum GmbH can enhance your reporting and decision-making processes, schedule a complimentary consultation today.

Step-by-Step Guide to Calculating Standard Deviation in Power BI

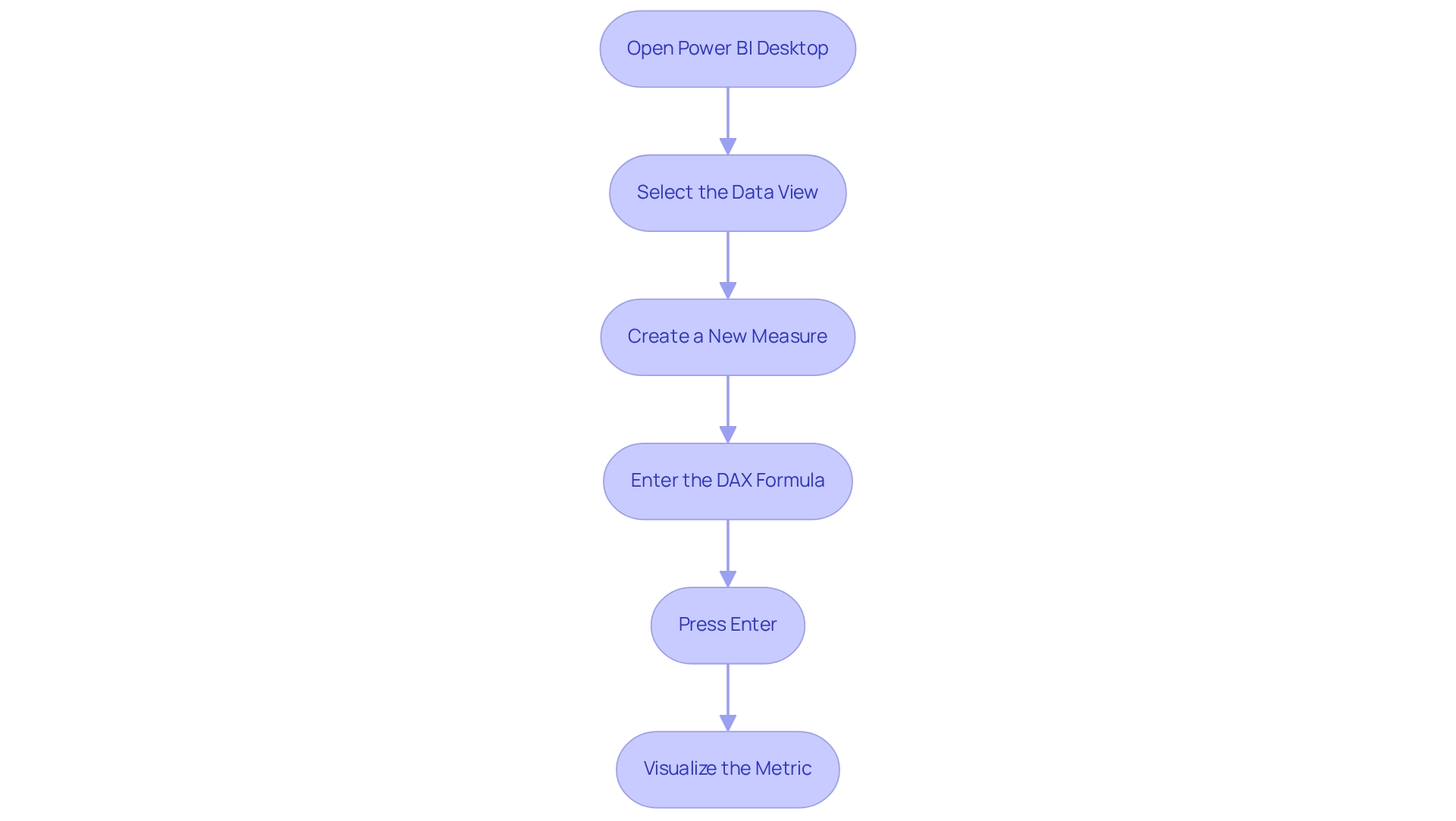

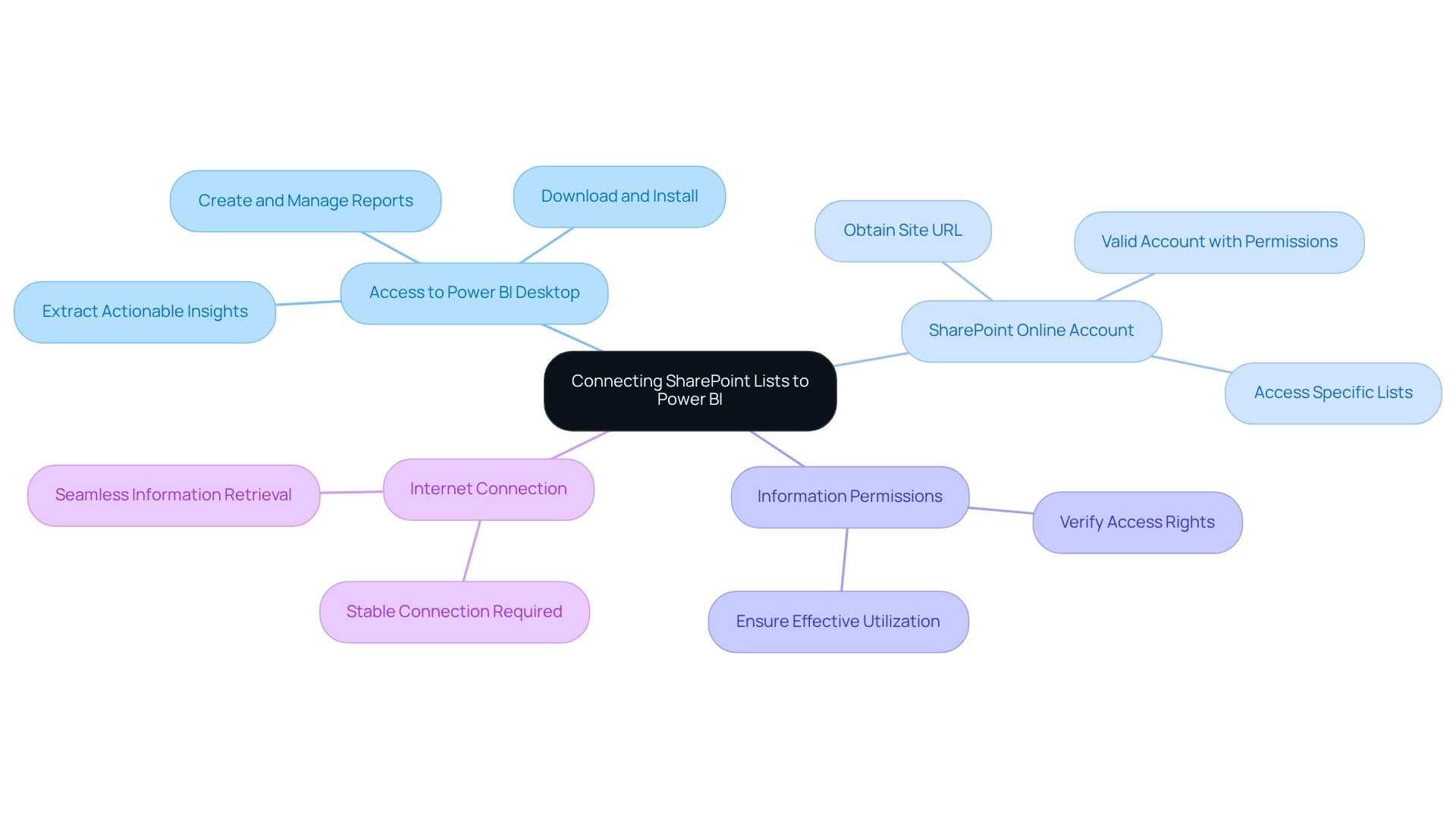

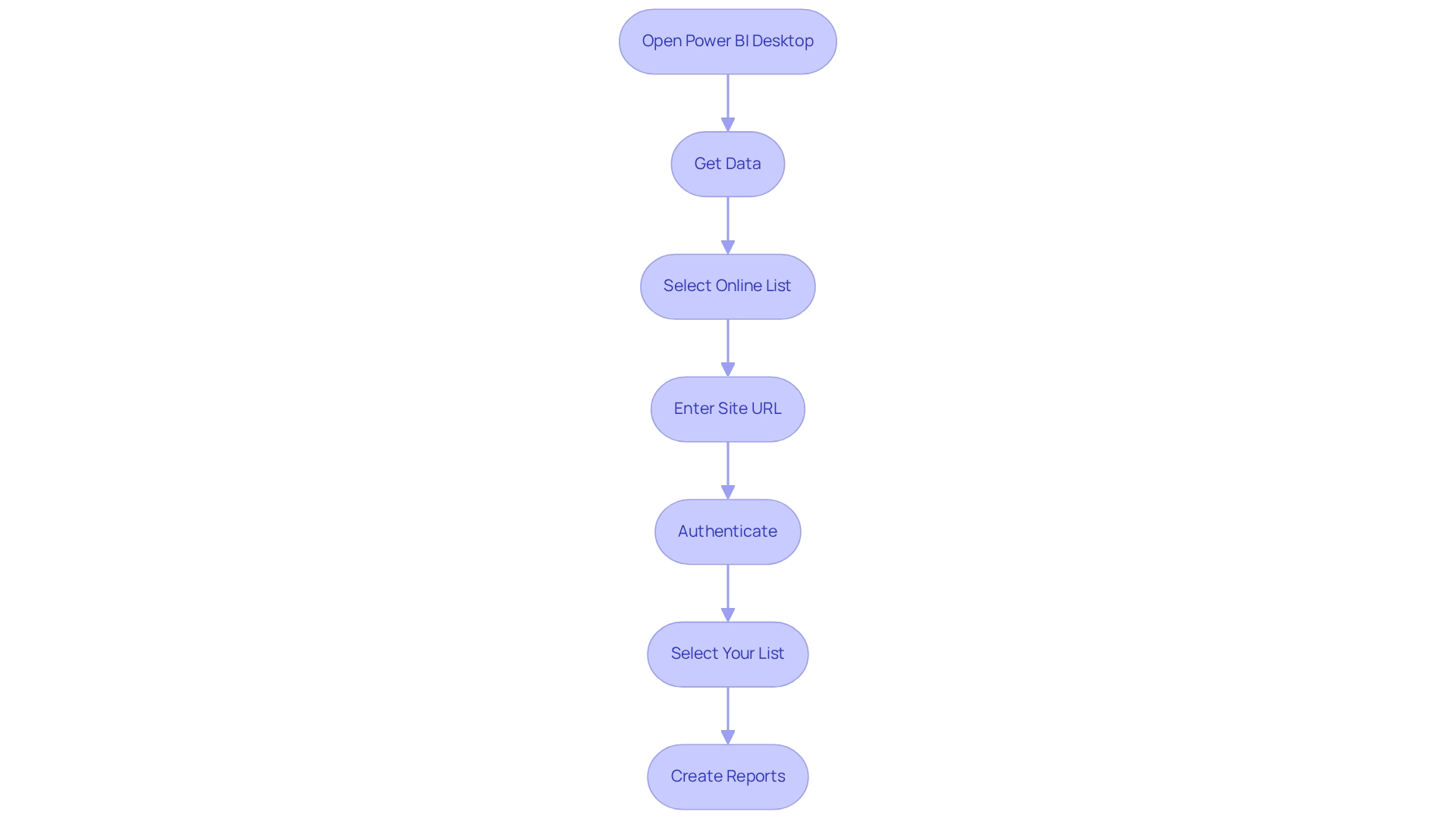

Calculating the standard deviation in Power BI is a straightforward process that can significantly strengthen your data analysis, particularly when using Business Intelligence for informed decision-making. Follow these steps to calculate it with DAX functions:

- Open Power BI Desktop: Start by launching the application and loading your dataset.

- Select the Data View: Click on the table icon located in the left sidebar to navigate to the Data view.

- Create a New Measure: Go to the ‘Modeling’ tab and select ‘New Measure’ to initiate the creation of a new calculation.

- Enter the DAX Formula: In the formula bar, enter the DAX formula for standard deviation, creating one measure per formula. For population standard deviation, use:

  `StdDev_Pop = STDEV.P('YourTable'[YourColumn])`

  For sample standard deviation, use:

  `StdDev_Sample = STDEV.S('YourTable'[YourColumn])`

- Press Enter: After entering the formula, press Enter to finalize the measure.

- Visualize the Metric: Drag the new measure into a visual to examine the standard deviation of your dataset.
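You can sanity-check the two measures outside Power BI by exporting the column and comparing the results against Python's standard library:

- `statistics.pstdev` divides by N, which is the population definition that STDEV.P uses.
- `statistics.stdev` divides by N − 1, which is the sample definition that STDEV.S uses.

The values below are hypothetical stand-ins for 'YourTable'[YourColumn].

```python
import statistics

# Hypothetical values exported from 'YourTable'[YourColumn]
values = [4, 8, 6, 5, 3, 7]

std_pop = statistics.pstdev(values)    # divides by N, the population definition (STDEV.P)
std_sample = statistics.stdev(values)  # divides by N - 1, the sample definition (STDEV.S)

print(f"STDEV.P equivalent: {std_pop:.4f}")
print(f"STDEV.S equivalent: {std_sample:.4f}")
```

If the numbers in your report differ from these checks, look first at filters applied in the visual. A measure is evaluated in the visual's filter context, so it may be computed over fewer rows than the full export.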

Power BI is increasingly popular for data analysis, and its user satisfaction ratings reflect this: Power BI scores 4.7 out of 5, ahead of Tableau at 4.4 and Excel at 3.8. As organizations continue to recognize the value of data-driven insights, demand for skilled business intelligence professionals is rising.

Demand for Business Intelligence experts, especially analytics specialists, is growing quickly. Analytics specialist ranks #3 on Glassdoor’s Top 50 Jobs in America list, with a median salary of $102,000. Mastering the DAX functions for standard deviation in Power BI positions you to extract deeper insights from your data and drive informed decisions. Robotic Process Automation (RPA) can further improve operational efficiency by automating repetitive tasks. This addresses issues such as time-consuming report generation and inconsistent data.

Together, these capabilities help your organization stay competitive and efficient while turning raw data into actionable insights. The governance market was valued at $3.02 billion in 2022, underscoring the growing significance of governance for analysis tools like Power BI.

Utilizing DAX Functions for Standard Deviation in Power BI

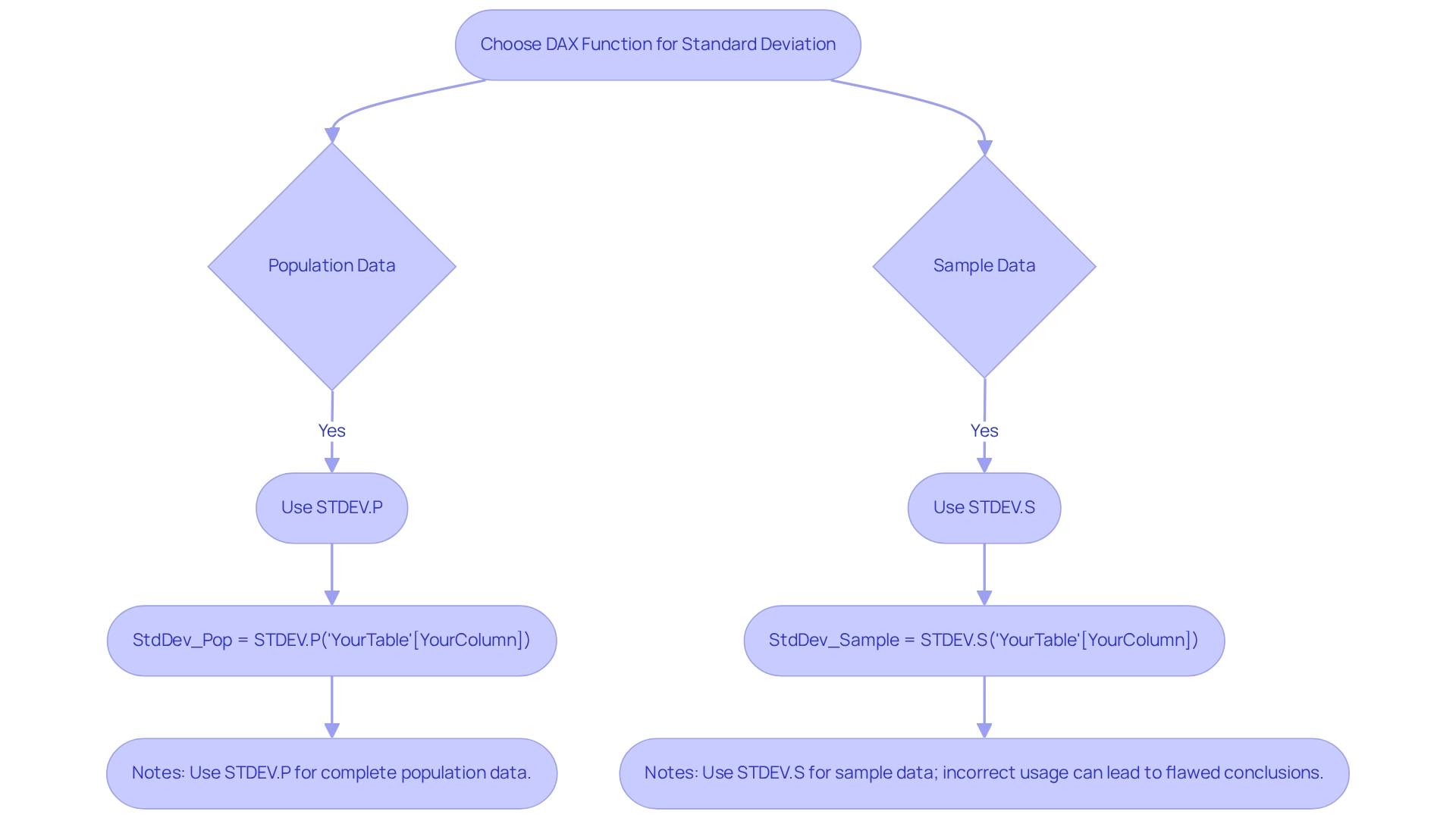

Power BI uses DAX (Data Analysis Expressions) for a wide range of calculations, including measures of variability. These measures are essential for understanding data and supporting analytics-driven decisions. The two primary functions for calculating standard deviation are:

- STDEV.P: Calculates the standard deviation of an entire population. Use it when you have data for the complete group under examination. The syntax is:

  `StdDev_Pop = STDEV.P('YourTable'[YourColumn])`

- STDEV.S: Estimates the standard deviation from a sample of the population. Use it when your dataset is a subset of a larger group. The syntax is:

  `StdDev_Sample = STDEV.S('YourTable'[YourColumn])`

Applying the right function is vital for precise measures of variability, which in turn support effective analysis and operational efficiency. A reported value, such as a population standard deviation of approximately 8.896, is meaningful only alongside the dataset's mean and units, and choosing the wrong function shifts that figure. Understood this way, variability can significantly influence strategic decisions, especially in a data-rich environment where insights drive growth and innovation.

In practice, utilizing STDEV.P and STDEV.S can yield different insights based on the context of your data. For example, a case study on statistical guidelines in biomedical journals revealed that numerous articles misreported statistical measures, leading to flawed conclusions and impacting data-driven decisions in that field. This emphasizes the necessity for accurate standard deviation calculations to enhance the reliability of published research and improve operational outcomes.
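How far apart can STDEV.P and STDEV.S be? For the same n values, the sample result equals the population result multiplied by √(n/(n−1)). The gap therefore matters for small datasets and fades for large ones. The Python sketch below computes that factor and checks the identity on a small hypothetical list.

```python
import math
import statistics

def sample_to_population_ratio(n):
    """STDEV.S divided by STDEV.P for the same n values: sqrt(n / (n - 1))."""
    return math.sqrt(n / (n - 1))

for n in (5, 30, 1000):
    print(f"n={n}: STDEV.S is {sample_to_population_ratio(n):.4f} x STDEV.P")

# Confirm the identity on a concrete hypothetical list
data = [2, 4, 4, 4, 5, 5, 7, 9]
ratio = statistics.stdev(data) / statistics.pstdev(data)
assert math.isclose(ratio, sample_to_population_ratio(len(data)))
```

With 5 values, STDEV.S is about 11.8% larger than STDEV.P. With 1,000 values, the difference shrinks to about 0.05%.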

Practitioners also stress that the tooling itself shortens time to insight. As Ram Ravichandran, Google Analytics Manager, remarked, “An invaluable tool for analysts, the platform’s self-driven analytics reduce time to insights significantly.” Within that tooling, the choice between STDEV.P and STDEV.S can greatly affect the result. Before selecting a function, establish whether your data covers the whole population or only a sample. Mastering these DAX functions keeps analyses robust and reliable, driving growth and innovation. It also helps overcome challenges such as time-consuming report creation and inconsistent data.

At Creatum GmbH, we underscore the importance of these analytical tools in our Business Intelligence and Robotic Process Automation solutions. To explore how our services can enhance your data-driven decision-making, we invite you to book a free consultation.

Troubleshooting Common Issues in Standard Deviation Calculations

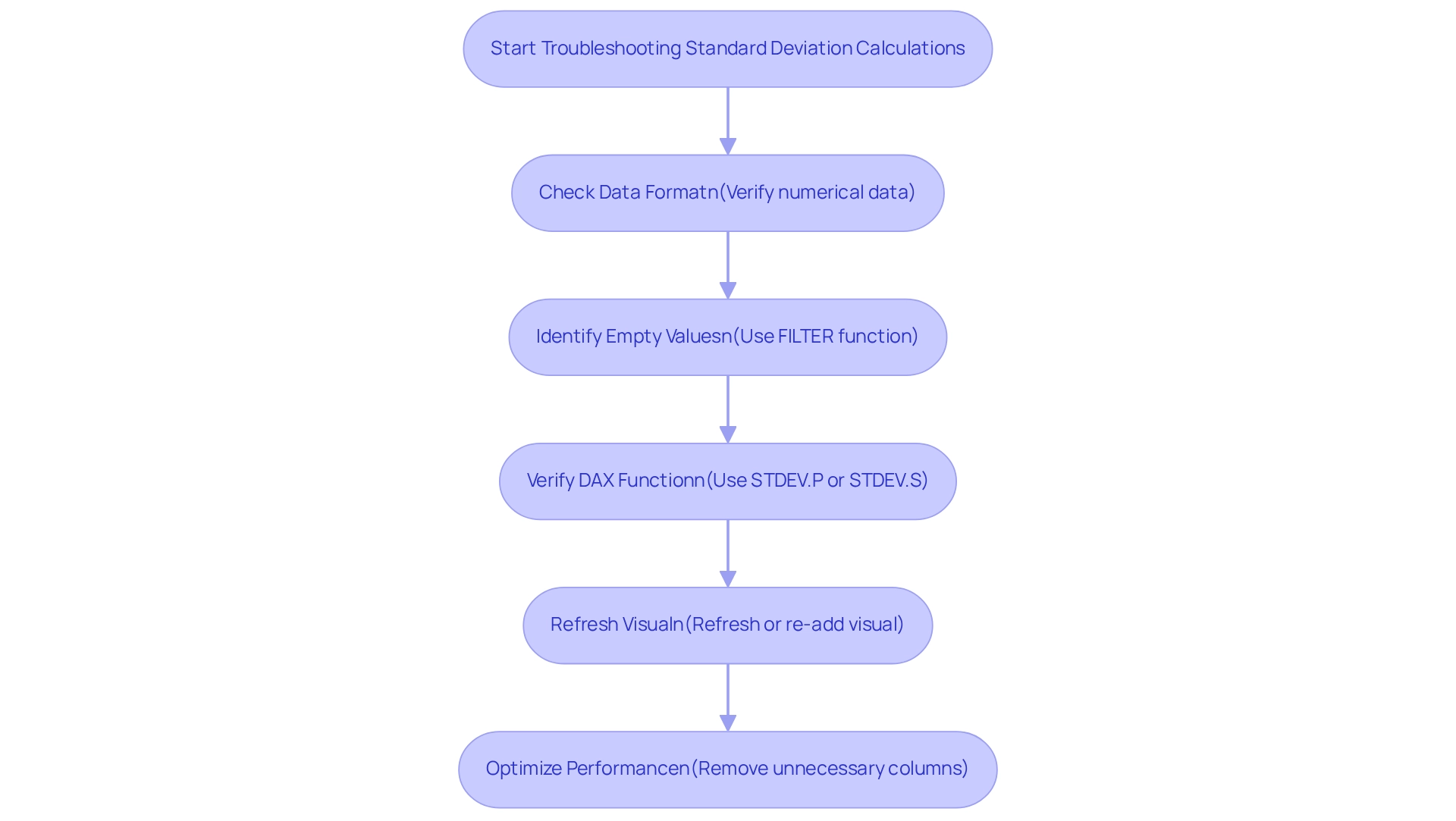

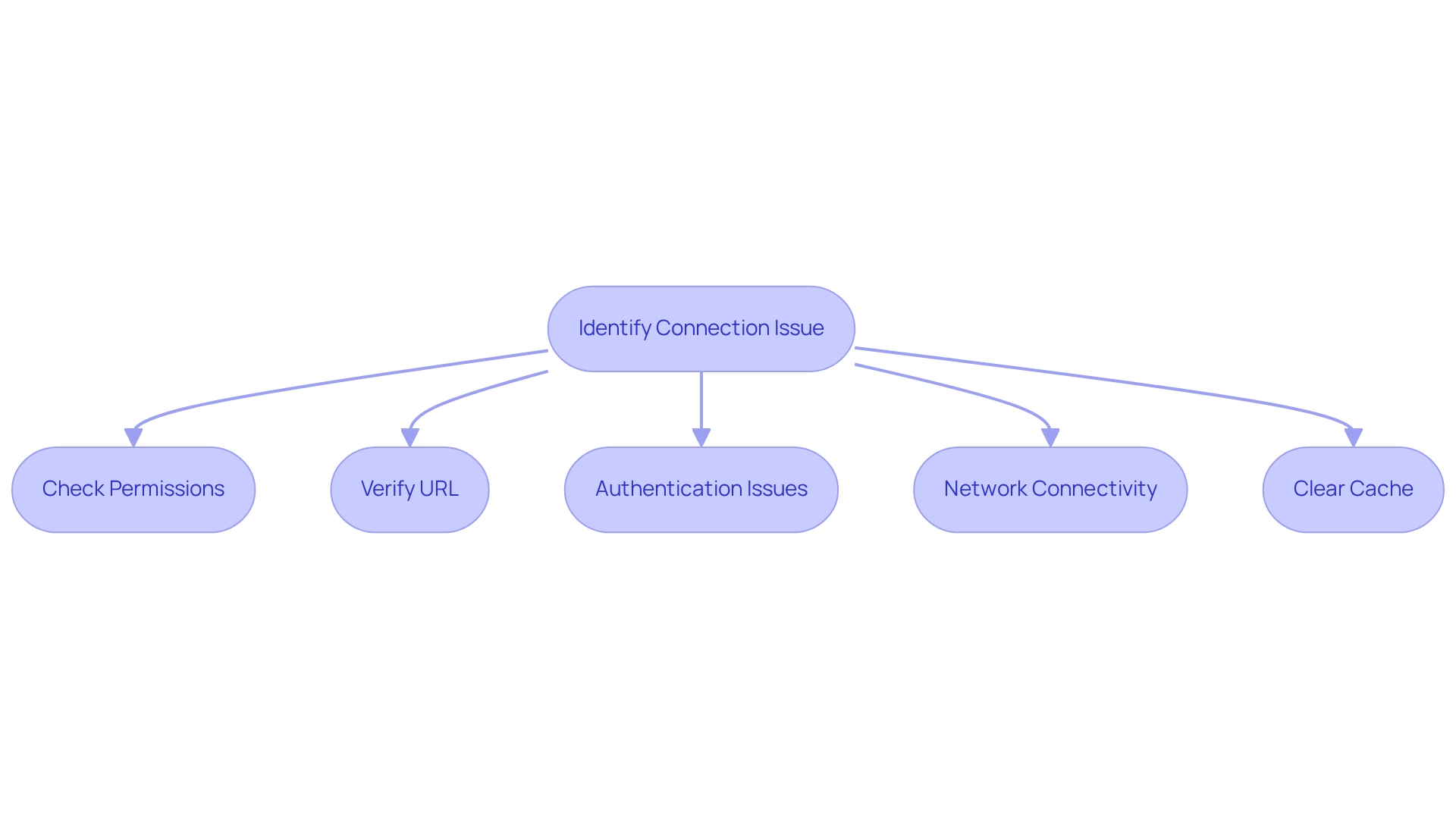

Calculating standard deviation in Power BI presents several challenges that users must navigate to get precise results. This is particularly important when aiming to improve operational efficiency through data-driven insights with Creatum GmbH’s solutions.

Incorrect Formats: Confirm that the column you are analyzing contains numeric data. If the data type is set incorrectly, for example as text, Power BI cannot compute the standard deviation. Always verify the data type settings in the model to ensure they match your analysis requirements.

Empty Values: STDEV.P and STDEV.S skip blank rows in the column. However, missing values that were loaded as zeros or other placeholder numbers are counted as real data and can severely distort the result. In that case, use the FILTER function to exclude them, for example `StdDev_Clean = STDEVX.S(FILTER('YourTable', 'YourTable'[YourColumn] <> 0), 'YourTable'[YourColumn])`. This step is crucial for keeping your statistical calculations sound and your insights actionable.
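Placeholder zeros are a common way for missing values to slip into a calculation. The Python sketch below uses hypothetical readings to show how much these placeholders can inflate a sample standard deviation. Excluding them before computing is the same idea as filtering the table before the measure aggregates it.

```python
import statistics

# Hypothetical export where missing readings were stored as 0.0 instead of blank
readings = [52.0, 49.5, 0.0, 51.0, 50.5, 0.0, 48.0]

with_placeholders = statistics.stdev(readings)  # zeros counted as real values
placeholders_removed = statistics.stdev([r for r in readings if r != 0.0])  # zeros excluded first

print(f"with placeholders:    {with_placeholders:.2f}")
print(f"placeholders removed: {placeholders_removed:.2f}")
```

Only filter out zeros when zero is genuinely a placeholder. If zero is a valid reading in your data, it must stay in the calculation.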

Using the Wrong Function: Selecting the correct DAX function is vital. Ensure you are using STDEV.P for population data and STDEV.S for sample data. Misapplication of these functions can lead to incorrect standard deviation results, skewing your analysis and potentially hindering informed decision-making.

Visual Not Refreshing: If a visual does not reflect the newly calculated measure, refresh the data or re-add the visual to the report canvas. This ensures that all changes appear correctly in your visualizations, enabling effective storytelling and operational insights.

Performance Issues: Large datasets can create performance bottlenecks that slow down calculations. To improve performance, optimize your data model by removing unnecessary columns or using aggregations. This speeds up computation and improves the overall experience in Power BI, in line with the RPA goal of simplifying workflows.

By addressing these common issues, users can calculate standard deviation in Power BI more reliably, which leads to sounder analysis and decisions. Proactive management of Power BI strengthens an organization’s ability to extract meaningful insights. This includes regular performance tuning and robust data modeling, and forward-looking enterprises make these practices a priority. Used effectively, Business Intelligence tools turn raw data into actionable insights that foster growth and innovation.

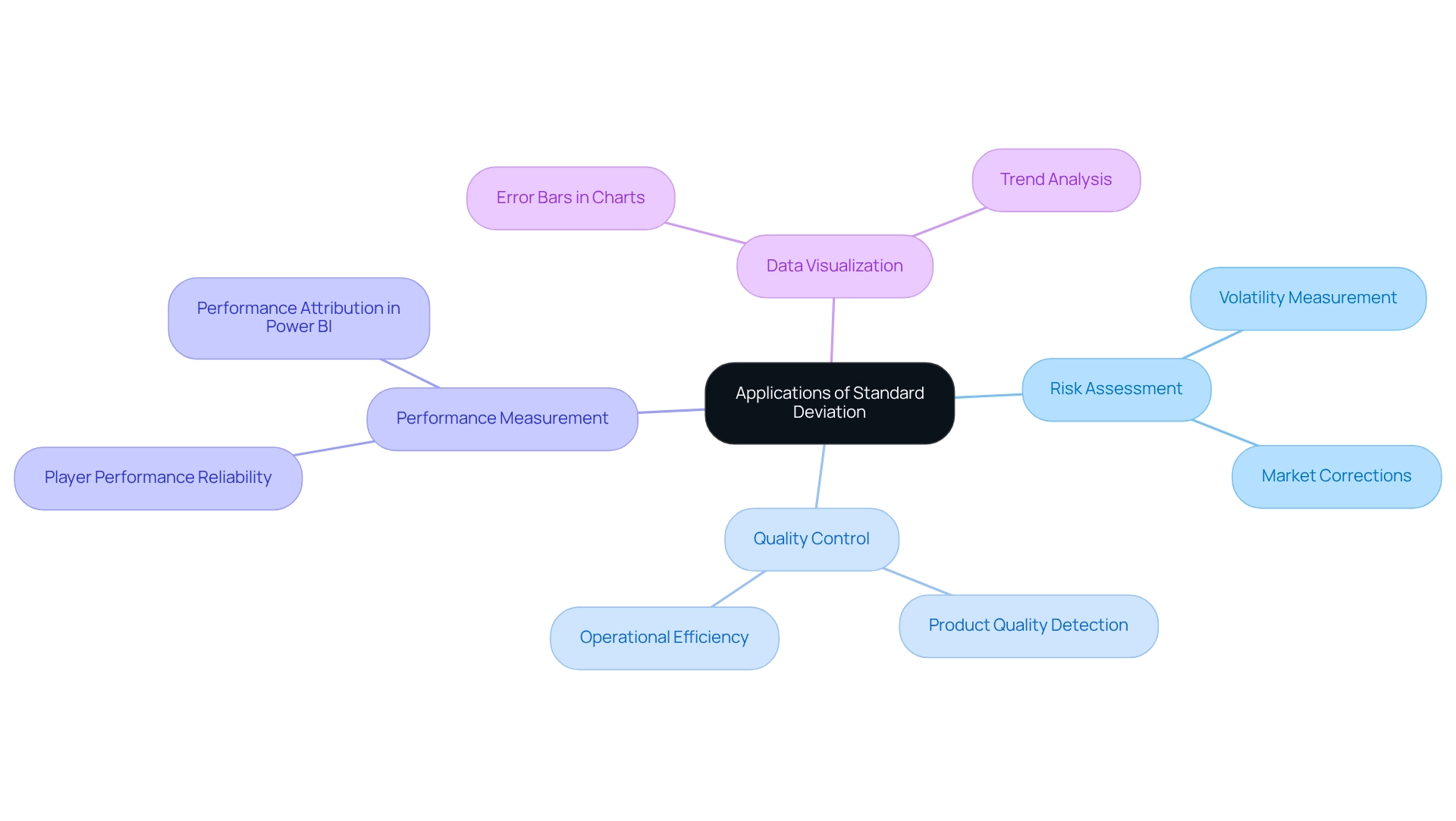

Applications of Standard Deviation in Data Analysis and Visualization

Standard deviation plays a crucial role in data analysis and visualization, with applications spanning multiple domains.

Risk Assessment: In finance, standard deviation is a key metric for the volatility of asset prices. Many financial models and investment strategies depend on measuring volatility accurately to balance risk and reward. A higher standard deviation of returns signals greater risk, so investors use it to gauge potential fluctuations in their portfolios. Historical analysis suggests that periods of elevated variability often precede market corrections, which makes the measure useful for proactive investment approaches. A case study titled ‘Historical Trends and Market Corrections’ reports that investors who tracked these statistical indicators were better equipped to adjust their strategies. This highlights the role of variability in investment decisions.
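The following Python sketch uses hypothetical closing prices and simple period-over-period returns. It shows how the standard deviation of returns separates a steady asset from a volatile one. Real risk models usually add refinements that this example skips, such as log returns or annualization.

```python
import statistics

def period_returns(prices):
    """Simple returns between consecutive prices: (new - old) / old."""
    return [(new - old) / old for old, new in zip(prices, prices[1:])]

# Hypothetical closing prices for two assets over the same six days
prices = {
    "steady": [100, 101, 100.5, 101.5, 102, 101.8],
    "volatile": [100, 108, 97, 110, 95, 104],
}

volatility = {name: statistics.stdev(period_returns(p)) for name, p in prices.items()}
for name, vol in volatility.items():
    print(f"{name}: standard deviation of daily returns = {vol:.2%}")
```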

Quality Control: In manufacturing, standard deviation helps detect variation in product quality. Manufacturers often set acceptable ranges as a number of standard deviations around the mean. Products that fall outside these ranges signal potential quality issues. This improves product reliability and reduces waste and rework, driving operational efficiency.
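The following Python sketch derives an acceptable range from a hypothetical stable production run and flags measurements outside it. It uses mean ± 3 standard deviations as a simplified version of control-chart limits. Formal Shewhart charts usually estimate sigma from subgroup or moving ranges instead.

```python
import statistics

def control_limits(baseline, k=3):
    """Return (lower, upper) limits at mean +/- k sample standard deviations of a baseline run."""
    mean = statistics.mean(baseline)
    sd = statistics.stdev(baseline)
    return mean - k * sd, mean + k * sd

def out_of_range(measurements, limits):
    """Return the measurements that fall outside the (lower, upper) limits."""
    lower, upper = limits
    return [m for m in measurements if not lower <= m <= upper]

# Hypothetical part diameters in millimetres from a stable production run
baseline = [10.01, 9.99, 10.02, 10.00, 9.98, 10.01, 10.00, 9.99]
limits = control_limits(baseline)
flagged = out_of_range([10.00, 10.03, 10.12, 9.97], limits)
print(limits, flagged)
```

Here the limits come out at roughly 9.961 mm to 10.039 mm, so only the 10.12 mm part is flagged.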

Performance Measurement: In sports analytics, standard deviation is used to assess how consistent a player’s performance is. A lower standard deviation indicates more consistent performance. This helps coaches and managers make strategic choices about player usage and development. As the AccountingInsights Team notes, in performance attribution, standard deviation helps pinpoint the sources of return variability. This further underlines its importance in performance assessment.
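In the hypothetical Python example below, Players A and B have the same average of 23 points per game but very different consistency. Ranking players by standard deviation brings out that difference, which the average alone hides.

```python
import statistics

# Hypothetical points per game for three players over the same five games
scoring = {
    "Player A": [22, 24, 21, 23, 25],
    "Player B": [10, 35, 18, 40, 12],
    "Player C": [18, 20, 30, 15, 22],
}

# Lower standard deviation means more consistent output, regardless of the average
by_consistency = sorted(scoring, key=lambda player: statistics.stdev(scoring[player]))
print(by_consistency)
```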

Data Visualization: In Power BI, standard deviation can be shown with error bars in charts, which convey how spread out the data points are. This helps stakeholders make informed choices based on observed trends and understand potential risks and opportunities more clearly.
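One common choice is to draw error bars at the mean plus and minus one standard deviation. The Python sketch below computes those bounds for hypothetical regional sales. In a Power BI report, the same bounds would typically be expressed as upper and lower measures in the error bar options. That is an assumption about your report design rather than a fixed recipe.

```python
import statistics

def error_bar_bounds(values, k=1.0):
    """Return (mean, lower, upper) for an error bar spanning mean +/- k sample standard deviations."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return mean, mean - k * sd, mean + k * sd

# Hypothetical monthly sales by region
regions = {"North": [120, 130, 125, 135], "South": [90, 150, 110, 130]}
for region, values in regions.items():
    mean, low, high = error_bar_bounds(values)
    print(f"{region}: mean {mean:.1f}, bar from {low:.1f} to {high:.1f}")
```

The two regions have similar averages, but the South bar is roughly four times wider. A chart without error bars would hide that difference.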

Incorporating standard deviation into data analysis improves the precision of insights. It also enables organizations to make data-informed choices that align with their strategic objectives, ultimately fostering growth and innovation.

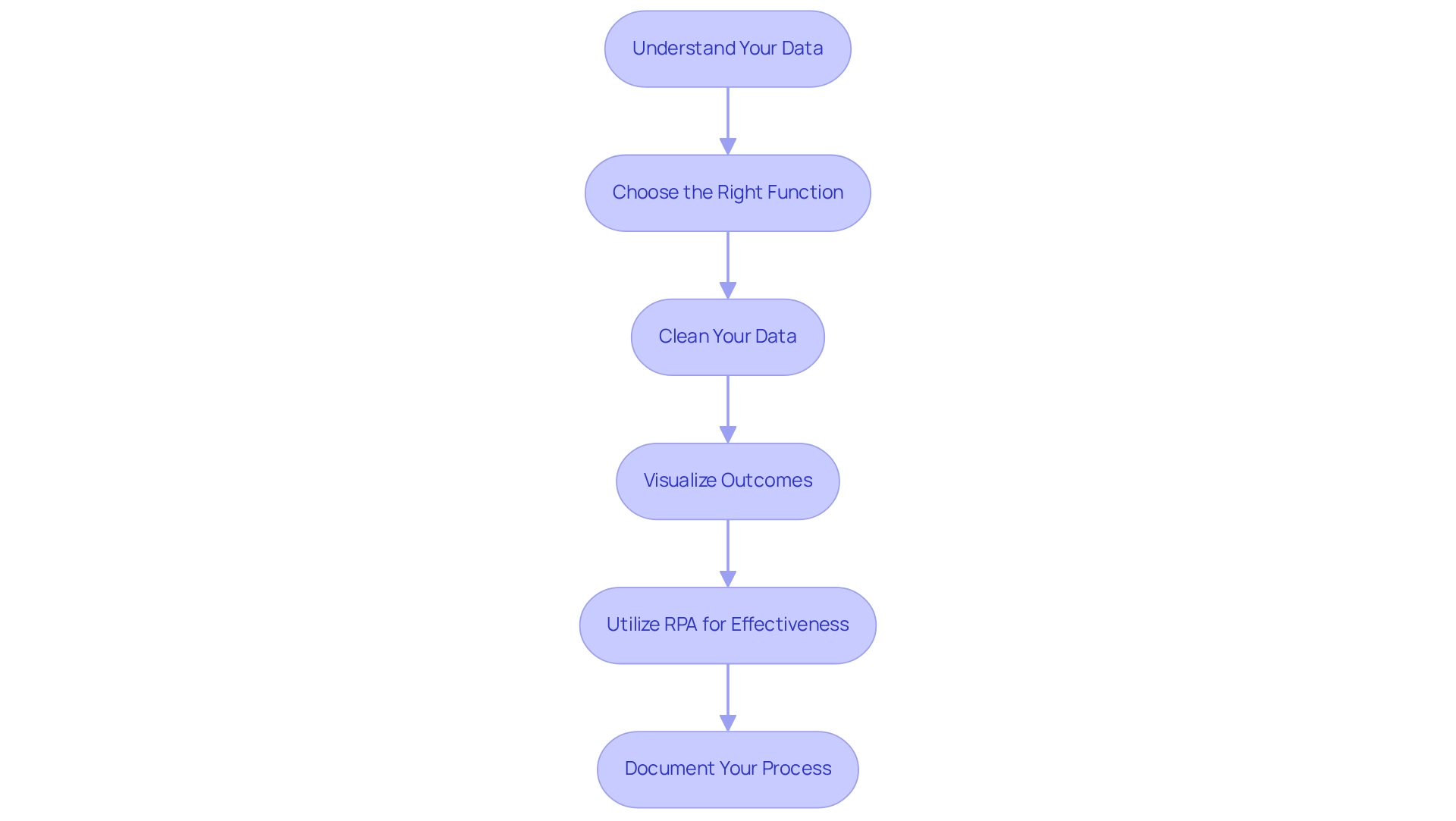

Best Practices for Implementing Standard Deviation in Power BI

To effectively implement standard deviation in Power BI, consider the following best practices:

- Understand Your Data: A thorough understanding of your dataset and the context of your analysis is crucial because it allows more accurate interpretations and insights. In today’s information-rich environment, Business Intelligence (BI) is essential for turning raw data into actionable insights that foster growth and innovation.

- Choose the Right Function: Selecting the appropriate function is essential for accurate calculations. Use STDEV.P for population data and STDEV.S for sample data so that your results reflect the correct statistical context, which is vital for informed decision-making.

- Clean Your Data: Data cleaning is vital for accurate standard deviation calculations. Investigate outliers and remove points that are genuinely erroneous, since they can distort your results. Techniques such as filtering, normalization, and validation checks can significantly improve data quality and lead to more reliable analyses. For instance, a case study titled ‘Baseball Team Age Analysis’ examined a group of 25 players. It found an average age of 30.68 years and a standard deviation of 6.09 years, showing how well-managed data produces meaningful insights. This step is crucial for overcoming the inconsistencies that frequently appear in Power BI dashboards, particularly when analyzing standard deviation.

- Visualize Outcomes: Clear representation is essential for conveying variability. Use visuals such as scatter plots or line charts with error bars to show standard deviation, so that stakeholders can easily grasp what the data implies. This aligns with BI’s goal of presenting information in an actionable, insightful way.

- Utilize RPA for Efficiency: Robotic Process Automation (RPA) can greatly improve your data evaluation process. Tools like EMMA RPA and Power Automate automate repetitive data collection and cleaning tasks. This frees analysts to concentrate on deriving insights rather than getting bogged down in manual processes.

- Document Your Process: Keep thorough documentation of your calculations and the reasons behind your choices. This promotes transparency and gives you and your colleagues a useful reference for future reviews. Standard deviation also underpins related measures such as the z-score, which expresses a value as a number of standard deviations from the mean. As Jim put it in a lighter vein, “Congratulations! May you continue to increase your survival Z-score!”
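The ‘Baseball Team Age Analysis’ case study reports a mean age of 30.68 years and a standard deviation of 6.09 years. A short worked calculation in Python with those figures shows how the two numbers are read together. It computes the one- and two-standard-deviation bands and the z-score of a hypothetical 40-year-old player.

```python
MEAN_AGE = 30.68  # years, from the case study
SD_AGE = 6.09     # years, from the case study

def band(k):
    """Age range within k standard deviations of the mean, rounded to 2 decimals."""
    return round(MEAN_AGE - k * SD_AGE, 2), round(MEAN_AGE + k * SD_AGE, 2)

def z_score(age):
    """How many standard deviations an age lies from the team mean."""
    return round((age - MEAN_AGE) / SD_AGE, 2)

print("within 1 SD:", band(1))
print("within 2 SD:", band(2))
print("z-score of a 40-year-old:", z_score(40))  # hypothetical player
```

For a roughly bell-shaped distribution, about 68% of players would fall between 24.59 and 36.77 years and about 95% between 18.5 and 42.86 years. With only 25 players, treat those proportions as approximate.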

Integrating these best practices will improve the precision and efficiency of your Power BI analysis, particularly when calculating standard deviation, resulting in better-informed decisions. Combining domain expertise with statistical understanding is equally essential for interpreting data correctly, as evidence-based medicine does with clinical expertise. By leveraging BI and RPA, organizations can automate repetitive tasks and improve operational efficiency, driving business growth.

To learn more about how our solutions can help you achieve operational excellence, book a free consultation with Creatum GmbH today.

Conclusion

Understanding standard deviation is fundamental for any organization aiming to harness the power of data analysis in today’s competitive landscape. This article has explored the essential role standard deviation plays in quantifying data variability, assessing risk, and enhancing decision-making processes. From its calculation using DAX functions in Power BI to its applications in various sectors such as finance, manufacturing, and sports analytics, the significance of standard deviation cannot be overstated.

By mastering the techniques for calculating standard deviation and implementing best practices in Power BI, organizations can improve the accuracy and reliability of their data insights. This improvement supports informed decision-making and strategic planning, ultimately driving growth and innovation. The challenges associated with standard deviation calculations can be effectively navigated through proactive data management and the integration of automation tools, ensuring that businesses remain agile and data-driven.

In conclusion, embracing the principles of standard deviation equips organizations with the necessary tools to interpret complex datasets confidently. As businesses continue to rely on data-driven insights, understanding and applying standard deviation will be pivotal in unlocking the full potential of their analytics capabilities. This fosters a culture of informed decision-making that leads to sustainable success.

Frequently Asked Questions

What is standard deviation and why is it important?

Standard deviation is a statistical measure that quantifies the variation or dispersion within a dataset. It indicates how closely data points are grouped around the average. A small standard deviation signifies consistency, while a large standard deviation indicates greater variability. It is essential for evaluating the reliability of information, risk evaluation, and predictive modeling based on historical trends.

What does the interquartile range (IQR) represent?

The interquartile range (IQR) signifies the central 50% of values in a dataset, providing additional context for understanding variability alongside standard deviation.

How is standard deviation used in Power BI?

In Power BI, understanding standard deviation is crucial for analyzing trends and making informed decisions. It helps companies assess performance indicators, recognize anomalies, and improve forecasting precision.

What services does Creatum GmbH offer related to Power BI?

Creatum GmbH offers Power BI services, including the 3-Day Power BI Sprint and the General Management App, which help organizations enhance their reporting capabilities and obtain actionable insights while addressing data inconsistency and governance challenges.

How does standard deviation relate to business decision-making?

Standard deviation helps in financial analysis by assisting investors in evaluating risk and return profiles, as well as in operational efficiency evaluations to identify process variations. It plays a vital role in measuring uncertainty and facilitating better decision-making.

What are some practical applications of statistical variation in business?

Practical applications include financial analysis for evaluating risk and return profiles, operational efficiency evaluations to identify process variations, and enhancing decision-making abilities through better quality information.

How can standard deviation be calculated in Power BI?

To calculate standard deviation in Power BI, follow these steps:
1. Open Power BI Desktop and load your dataset.
2. Select the Data view.
3. Create a new measure from the ‘Modeling’ tab.
4. Enter the appropriate DAX formula. For population standard deviation: `StdDev_Pop = STDEV.P('YourTable'[YourColumn])`. For sample standard deviation: `StdDev_Sample = STDEV.S('YourTable'[YourColumn])`.
5. Press Enter to finalize the measure.
6. Add the measure to a visual in your report.

What is the user satisfaction rating for Power BI compared to its competitors?

Power BI has a user satisfaction rating of 4.7 out of 5, which surpasses competitors like Tableau (4.4) and Excel (3.8).

What is the significance of governance in relation to analysis tools like Power BI?

The governance market was valued at $3.02 billion in 2022, highlighting the growing importance of governance in data analysis and decision-making processes using tools like Power BI.

Overview

Direct Query in Power BI represents a transformative connectivity mode, enabling users to access real-time data directly from the source without the need for importing. This capability significantly enhances operational efficiency and empowers timely decision-making.

Notably, this approach facilitates immediate insights and minimizes manual data handling, making it an attractive option for organizations. However, it is crucial to recognize that there are performance considerations and limitations that must be navigated to fully leverage its advantages.

Organizations that embrace Direct Query can unlock a new level of data-driven decision-making, but they must remain vigilant about the challenges it presents. By understanding these dynamics, businesses can optimize their data strategies and drive impactful results.

Introduction

In today’s landscape of data-driven decision-making, organizations are increasingly leveraging innovative tools to enhance their analytical capabilities. One such tool is Direct Query in Power BI, a feature that allows users to connect directly to their data sources. This ensures access to real-time insights without the cumbersome need for data imports. Such an approach streamlines reporting processes and significantly alleviates the operational burdens tied to data management.

Yet, despite these compelling benefits—ranging from improved decision-making speed to enhanced efficiency—organizations must also navigate certain challenges. Understanding the optimal timing and methods for implementing Direct Query can revolutionize data analysis, empowering businesses to respond swiftly to market changes and optimize operations in an ever-evolving landscape.

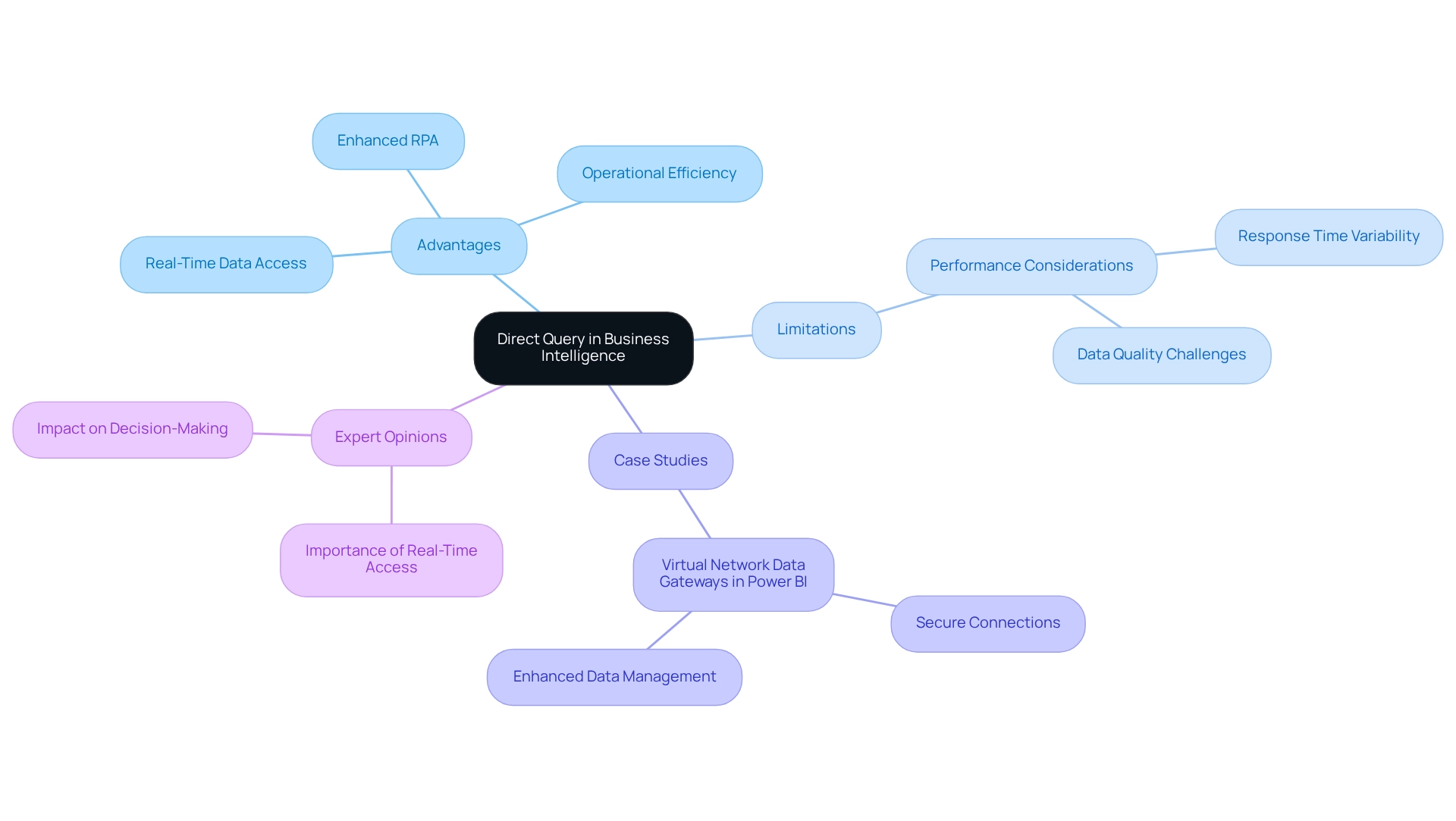

Understanding Direct Query: An Introduction

Direct Query is a connectivity mode in Power BI that lets users connect directly to data sources without importing the data into Power BI’s internal storage. Power BI sends queries to the underlying data source each time a report is accessed or refreshed, so users always work with the most current data available. This is particularly valuable for organizations that need real-time analysis and reporting, because it removes the need for scheduled data imports. Combined with Robotic Process Automation (RPA), it can also support the automation of manual reporting workflows.
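The difference between import mode and Direct Query can be modeled in a few lines of Python with the standard-library `sqlite3` module. An import keeps a copy of the rows, while a direct query goes back to the source every time. This is a conceptual sketch with a hypothetical `sales` table. It does not describe how Power BI's engine or its query caching work internally.

```python
import sqlite3

# Hypothetical source system: an in-memory SQLite database with a sales table
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (amount REAL)")
source.executemany("INSERT INTO sales VALUES (?)", [(100.0,), (250.0,)])

# Import-style: copy the rows once, then answer questions from the copy
snapshot = [amount for (amount,) in source.execute("SELECT amount FROM sales")]

def import_total():
    return sum(snapshot)

# Direct Query-style: send the query to the source every time it is needed
def direct_total():
    return source.execute("SELECT SUM(amount) FROM sales").fetchone()[0]

# New data arrives in the source after the import
source.execute("INSERT INTO sales VALUES (75.0)")

print("import:", import_total())        # 350.0, stale until the next refresh
print("direct query:", direct_total())  # 425.0, includes the new row
```

The trade-off shows up in the same sketch: the import answers from local memory, while every direct query costs a round trip to the source.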

RPA not only streamlines these workflows but also reduces errors, freeing your team to focus on more strategic, value-adding tasks.

However, while Direct Query offers substantial advantages, it also poses certain limitations and performance considerations. For instance, the response time for queries can vary based on the performance of the underlying data source and the complexity of the executed queries. Recent advancements in Power BI have focused on refining these interactions, with improvements aimed at enhancing the speed and reliability of direct connections.

It is important to note that it can take up to 10 minutes beyond the configured time for files to appear in the specified folder, a crucial factor for organizations reliant on prompt data access.

Organizations that use real-time data retrieval can make decisions faster because they see changes as they happen. This helps them respond quickly to market fluctuations. Some reports cite reductions in reporting time of as much as 50% compared with traditional import methods, though results depend heavily on the data source and report design. In a rapidly evolving AI landscape, timely data access can greatly improve operational efficiency and support strategic decision-making.

A notable case study involves the implementation of virtual network data gateways in Power BI, enabling secure connections to services within an Azure virtual network. This setup not only enhances data management capabilities but also ensures that organizations can maintain compliance and security while accessing real-time information, further supporting the integration of RPA solutions and customized AI technologies.

Expert opinions underscore the importance of real-time data access in business intelligence. Analysts assert that having immediate access to up-to-date information is critical for informed decision-making, particularly in fast-paced industries. As one analyst remarked, ‘Real-time data access transforms how companies operate, enabling them to pivot quickly and capitalize on new opportunities.’

In conclusion, Direct Query in Power BI is an essential tool for organizations that want to work with real-time data, driving efficiency and informed decision-making in an increasingly data-driven landscape. It also supports RPA initiatives and efforts to address poor master data quality.

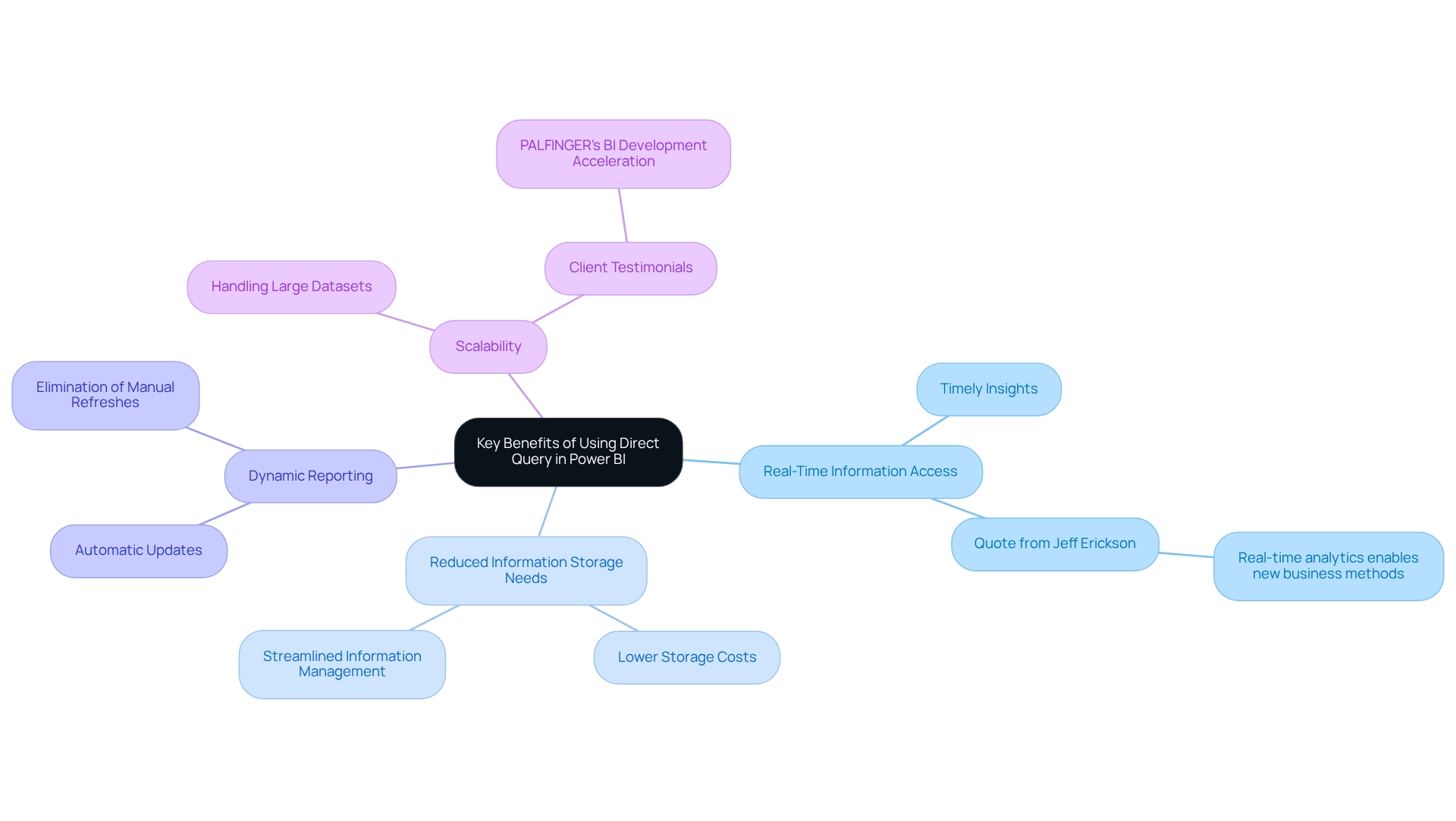

Key Benefits of Using Direct Query in Power BI

The primary benefits of utilizing Direct Query in Power BI are significant and multifaceted:

- Real-Time Information Access: Direct Query allows users to access the most current information directly from the source, which is crucial for scenarios where timely insights are essential. This capability enables organizations to respond swiftly to changing conditions, enhancing operational agility. As Jeff Erickson, a Tech Content Strategist, observes, “Unlike conventional analytics, real-time analytics is about more than guiding future choices; it allows entirely new methods of conducting business by enabling teams to act instantly.” This is particularly relevant in the context of Business Intelligence, as the ability to derive actionable insights quickly can drive growth and innovation.

- Reduced Information Storage Needs: Because data is not stored within Power BI, organizations can lower their storage costs. Direct Query also avoids the overhead of loading and refreshing very large datasets in the model, making it a cost-effective option for many companies. RPA solutions that automate repetitive tasks improve efficiency further, letting teams focus on strategic analysis rather than data management.

- Dynamic Reporting: With real-time access, users can create dynamic reports that automatically reflect changes in the underlying data. This eliminates the need for manual dataset refreshes, so decision-makers always have the latest insights, which is vital for informed decision-making. It also eases the challenges of time-consuming report creation and inconsistent data, allowing a smoother analytical process.

- Scalability: Direct Query can work with datasets that exceed the memory limits of a Power BI model, because the data stays in the source. Organizations can therefore analyze large volumes of data without being constrained by model size, although query speed then depends on the source system. Clients such as PALFINGER report significant acceleration in their BI development through Creatum’s Power BI Sprint service. The service delivered a ready-to-use Power BI report and strengthened their overall data analysis strategy.

Some reports indicate that improved data accessibility can raise overall research accuracy by 20%. Domino’s Pizza illustrates the power of real-time analytics: after introducing its ‘Domino’s Tracker’ system, the company reported a 25% increase in customer satisfaction and sales. Such examples show why Direct Query is a strategic resource for companies seeking to optimize their data analysis capabilities and improve operational effectiveness.

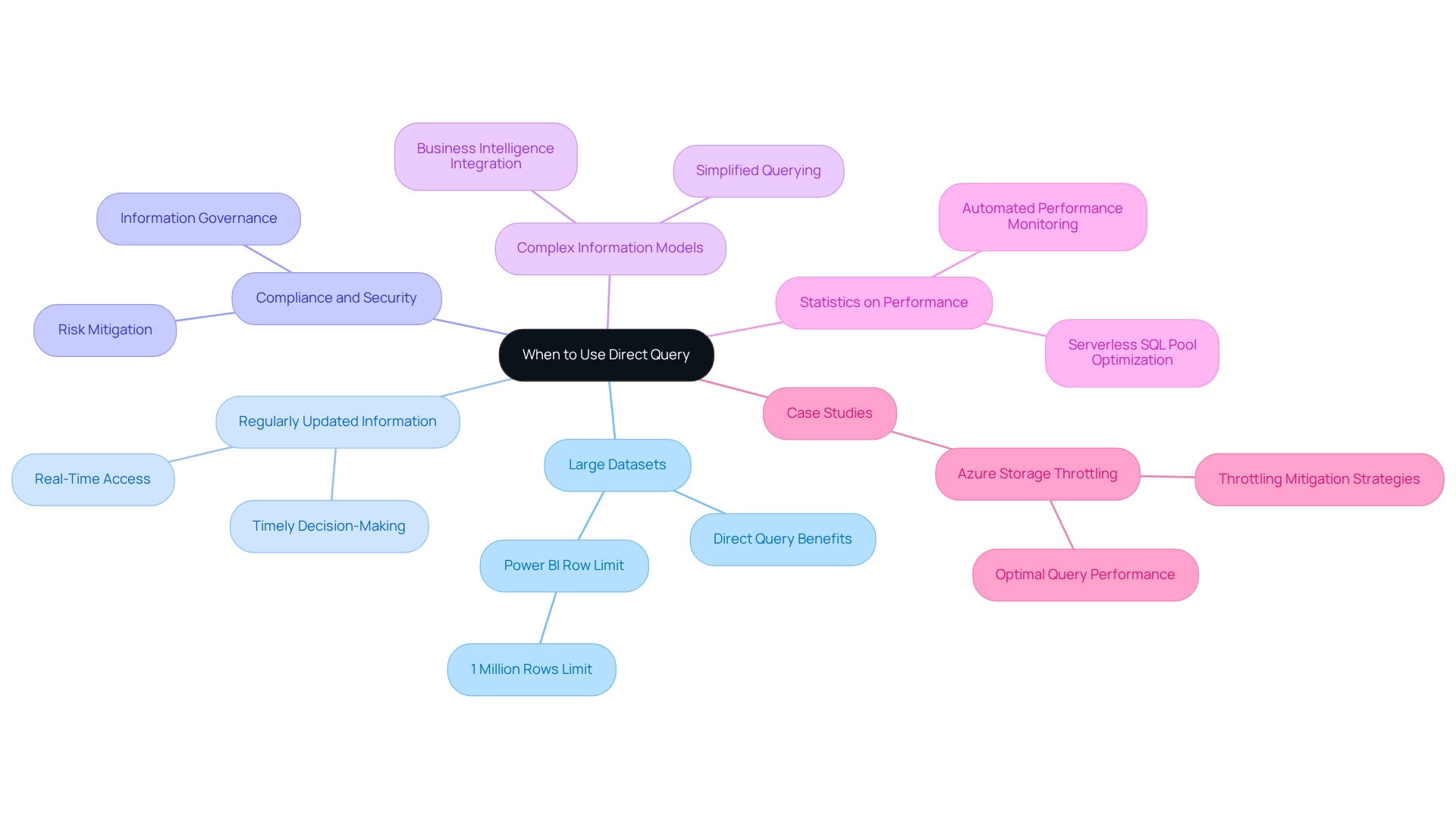

When to Use Direct Query: Ideal Scenarios

Direct Query is especially beneficial in several critical situations:

- Large Datasets: For organizations whose datasets exceed Power BI’s import limits, Direct Query enables analysis without the constraints of in-memory storage. However, as v-jingzhang from Community Support points out, a fixed limit of 1 million rows applies to the number of rows that any single query can return from the underlying source. This capability is vital for sectors like healthcare and finance, where data volumes can be considerable. Robotic Process Automation (RPA) can further streamline these processes by automating manual workflows that would otherwise consume valuable time.

- Frequently Updated Data: Companies operating in dynamic environments, such as financial markets or inventory control, gain substantial advantages from real-time access to data. Immediate insights enable timely decisions that can significantly improve operational efficiency. By integrating RPA, organizations can automate the retrieval and processing of this data, improving responsiveness and reducing manual workload, which is essential for maintaining a competitive advantage.

- Compliance and Security Mandates: Firms subject to strict data governance policies find Direct Query particularly advantageous. Because sensitive data stays in its original location, the risk of breaches is reduced and adherence to regulations is easier to ensure, which is crucial for sectors like banking and healthcare. RPA can assist by monitoring compliance and automating reporting processes, further strengthening security and easing technology implementation challenges.

- Complex Data Models: For intricate data structures that require regular updates, Direct Query simplifies the querying process. Rather than relying on periodic imports, it queries the data source directly, streamlining operations and improving accuracy. Business Intelligence tools can then provide deeper insights into these complex models, supporting better decision-making and helping overcome the hurdles of technology implementation.

- Query Performance: Query efficiency also depends on the source engine. For example, serverless SQL pools speed up retrieval by skipping row groups that cannot match the query’s filter conditions, so less data has to be read. This efficiency is vital for organizations that rely on large datasets for their analytics. RPA can help automate performance monitoring, keeping systems running at peak efficiency with minimal manual oversight.

- Case Studies: For instance, when addressing Azure Storage throttling, organizations have implemented strategies to avoid overloading storage accounts during query execution. This approach reduces the impact of throttling and sustains query performance, demonstrating the practical advantages of direct access in real-world applications. By combining RPA with Direct Query, companies can further improve their operational effectiveness and data management, showing how automation can reduce manual effort and enhance overall performance.
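The row-group skipping that serverless SQL pools perform is a form of predicate pushdown. A columnar file stores minimum and maximum statistics for each row group, and the engine reads only the row groups whose range could satisfy the filter. The sketch below models this in plain Python. The `RowGroup` class, the `scan_with_pushdown` helper and the sample data are hypothetical, and none of it reflects the actual internals of Azure Synapse or any Parquet library.

```python
from dataclasses import dataclass, field


@dataclass
class RowGroup:
    """Hypothetical row group: one column's values plus min/max statistics."""
    values: list[int]
    min_value: int = field(init=False)
    max_value: int = field(init=False)

    def __post_init__(self) -> None:
        # Statistics are computed once, when the row group is "written".
        self.min_value = min(self.values)
        self.max_value = max(self.values)


def scan_with_pushdown(groups: list[RowGroup], low: int, high: int) -> tuple[list[int], int]:
    """Return values in [low, high] and how many row groups were actually read."""
    matches: list[int] = []
    groups_read = 0
    for group in groups:
        # Skip the whole group when its [min, max] range cannot overlap the filter.
        if group.max_value < low or group.min_value > high:
            continue
        groups_read += 1
        matches.extend(v for v in group.values if low <= v <= high)
    return matches, groups_read


# Three row groups, e.g. order IDs written in ascending batches.
groups = [RowGroup(list(range(0, 100))),
          RowGroup(list(range(100, 200))),
          RowGroup(list(range(200, 300)))]
rows, read = scan_with_pushdown(groups, 150, 160)
print(len(rows), read)  # 11 1
```

The skipping works well only when the data is physically ordered, or at least clustered, on the filtered column. If values were scattered randomly across row groups, every group's min/max range would overlap the filter and nothing could be skipped.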

By understanding these scenarios, organizations can make better use of Direct Query in Business Intelligence to improve their analytics capabilities and support informed decision-making. Additionally, the upcoming Microsoft Fabric Community Conference 2025, taking place from March 31 to April 2 in Las Vegas, Nevada, offers professionals an opportunity to explore advancements in data analytics and network with industry leaders.

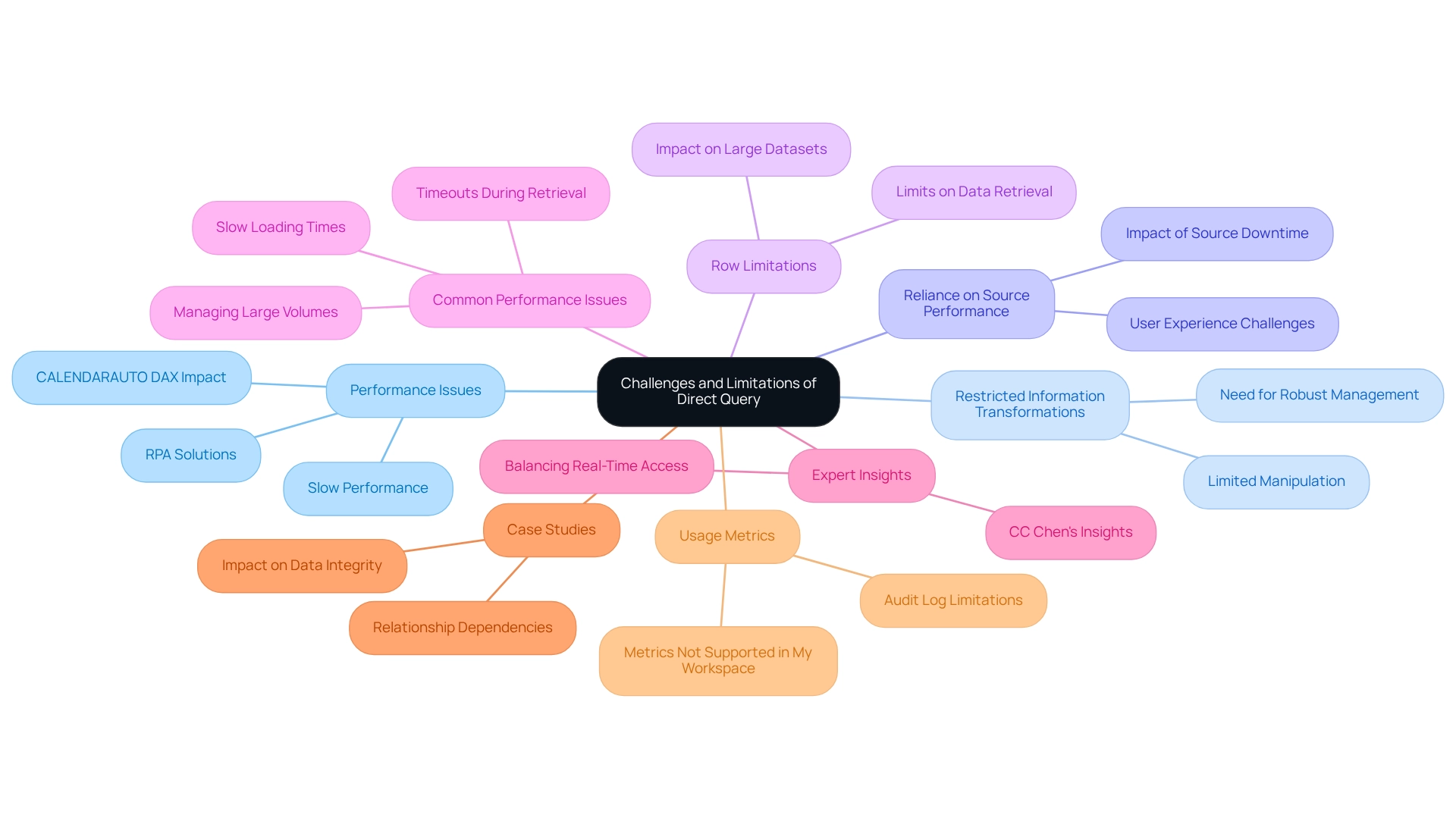

Challenges and Limitations of Direct Query

While Direct Query in Power BI offers notable advantages, it is crucial to acknowledge the accompanying challenges and limitations:

- Performance Issues: A primary concern with Direct Query is slower performance, particularly when the underlying data source is not optimized for efficient query execution. Each interaction with a report can send multiple queries to the source, causing considerable delays in response times, especially during peak usage. The CALENDARAUTO DAX function, which scans all date and date/time columns in the model to determine the earliest and latest stored date values, can also affect performance if not managed properly. These performance challenges can hinder the effectiveness of business intelligence initiatives, making it essential for organizations to adopt solutions like RPA from Creatum GmbH to streamline workflows and enhance operational efficiency.

- Restricted Data Transformations: With Direct Query, transformation capabilities in Power Query are limited. Unlike imported data, which can be manipulated extensively, Direct Query restricts the complexity of the transformations that can be applied, because each step must be translatable into a query the source can run. This constraint can impede the ability to derive deeper insights, underscoring the need for robust data management practices that ensure high-quality master records.

- Reliance on Source Performance: The effectiveness of Direct Query reports is closely tied to the performance of the underlying source. If the source is slow or experiences downtime, the Power BI user experience suffers directly, potentially leading to frustration and decreased productivity. Organizations must address these challenges, and understand what Direct Query entails, to use its insights effectively and make informed decisions.

- Row Limitations: Direct Query imposes limits on the number of rows that can be retrieved in a single request. This limitation can significantly affect the analysis of large datasets, as users may find themselves unable to access all essential information for comprehensive reporting.

- Common Performance Issues: Organizations frequently encounter performance challenges when using Direct Query, including slow loading times, timeouts during data retrieval, and difficulty managing large data volumes. Such issues can undermine the effectiveness of business intelligence initiatives. Optimization techniques such as removing unused columns, filtering data, and enabling incremental refresh can help alleviate these challenges, resulting in a more efficient and scalable Power BI model.

- Expert Insights: Information management specialists emphasize that while Direct Query can facilitate real-time information access, it is vital to weigh these advantages against the potential performance limitations. CC Chen states, “In this article, I’ll be sharing my recent insights into analytical information processing — focusing on Business Intelligence dashboards and the…” This highlights the importance of balancing real-time access with performance factors, crucial for organizations striving to enhance their decision-making processes.

- Case Studies: A significant case analysis on relationship dependencies illustrates how changes in one component can affect others, resulting in circular relationships that compromise information integrity. Understanding these dependencies is essential for managing the complexities associated with real-time data access, as they can greatly influence analysis and reporting.

- Usage Metrics: Usage metrics reports are not available in My Workspace, which restricts monitoring and reporting on the use of any reports published there, including Direct Query reports.
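The row limit connects directly to the advice on aggregations. An intermediate result that returns every detail row can exceed the cap, whereas grouping at the source returns only one row per group. The sketch below simulates that check in Python. The 1,000,000 figure is the per-query limit cited earlier in this article. The `run_source_query` function, the `RowLimitExceeded` error and the sample data are hypothetical stand-ins, not Power BI APIs.

```python
from collections import defaultdict

ROW_LIMIT = 1_000_000  # per-query limit cited earlier in this article


class RowLimitExceeded(Exception):
    """Raised when a simulated source query returns more rows than allowed."""


def run_source_query(rows: list[tuple[str, float]], aggregate: bool) -> list[tuple[str, float]]:
    """Simulate sending a query to the source and enforcing the row limit.

    With aggregate=False every detail row is returned; with aggregate=True
    the source sums amounts per region and returns one row per region.
    """
    if aggregate:
        totals: dict[str, float] = defaultdict(float)
        for region, amount in rows:
            totals[region] += amount
        result = sorted(totals.items())
    else:
        result = list(rows)
    if len(result) > ROW_LIMIT:
        raise RowLimitExceeded(f"{len(result):,} rows exceeds the {ROW_LIMIT:,}-row limit")
    return result


# 1.2 million hypothetical sales rows spread across four regions.
regions = ["North", "South", "East", "West"]
sales = [(regions[i % 4], 1.0) for i in range(1_200_000)]

try:
    run_source_query(sales, aggregate=False)
except RowLimitExceeded as err:
    print("detail query failed:", err)

print(run_source_query(sales, aggregate=True))
```

The detail query fails, but the grouped query succeeds because it returns four rows. This is the same reasoning behind pushing aggregations down to the source.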

In summary, while Direct Query offers the promise of real-time data retrieval, organizations must navigate its limitations thoughtfully to ensure optimal performance and data integrity. By leveraging Creatum GmbH’s Business Intelligence and RPA solutions, such as EMMA RPA and Microsoft Power Automate, businesses can address these challenges and foster growth through informed decision-making.

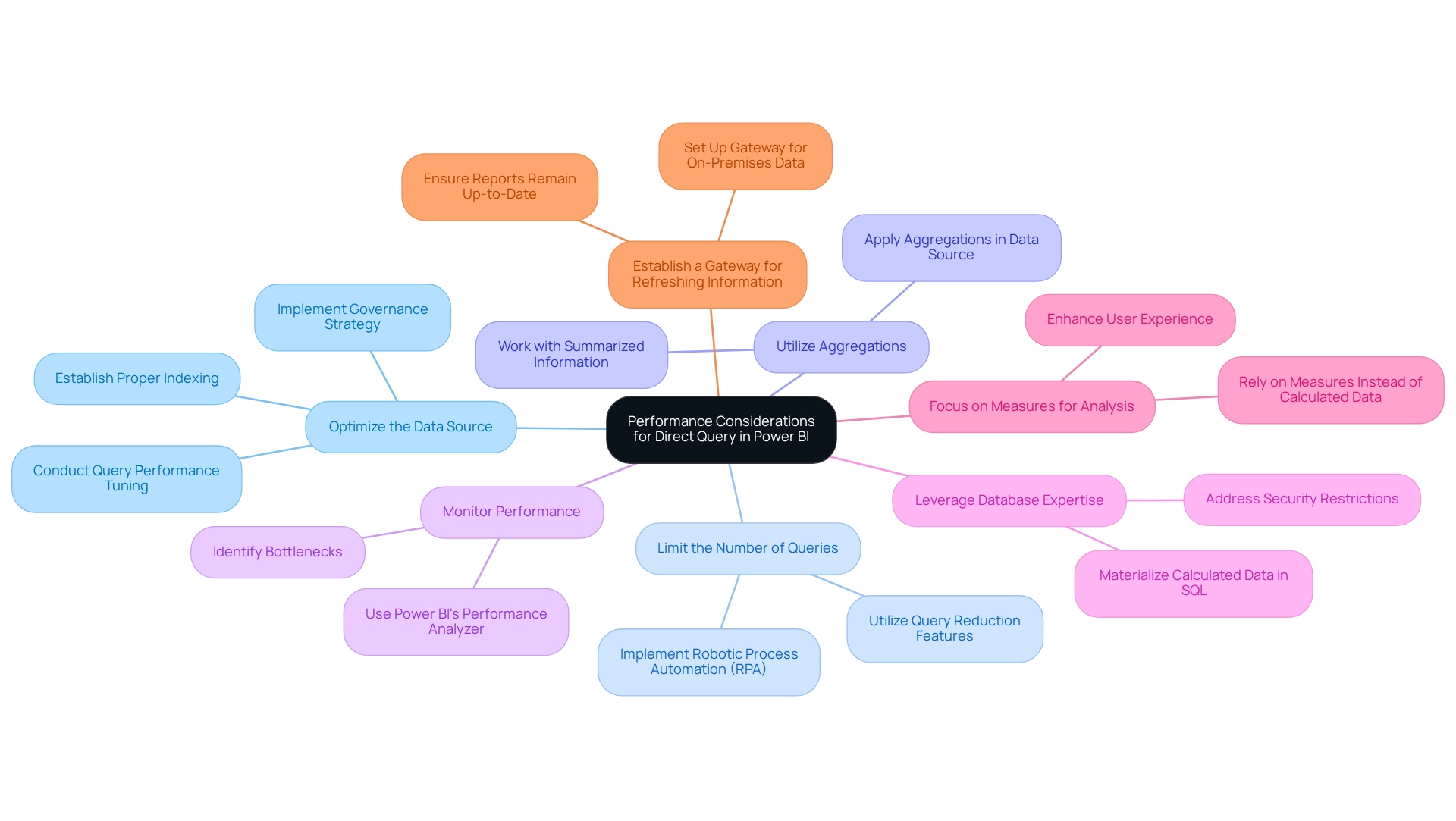

Performance Considerations for Direct Query

To enhance performance when utilizing Direct Query in Power BI, several key strategies should be implemented:

- Optimize the Data Source: Ensure that the underlying database is carefully optimized, with proper indexing and query performance tuning, which can significantly reduce response times and improve overall efficiency. This optimization is also crucial for addressing the inconsistencies that frequently arise from poorly managed data sources. Furthermore, a governance strategy can help preserve data integrity and consistency across reports.

- Limit the Number of Queries: Utilize features like query reduction to decrease the frequency of requests sent to the source. This practice not only streamlines performance but also alleviates the load on the database, leading to faster report generation and allowing teams to focus on leveraging insights rather than spending excessive time on report creation. Robotic Process Automation (RPA) can also be utilized to automate repetitive tasks, further enhancing efficiency.

- Utilize Aggregations: Applying aggregations within the data source can significantly reduce the amount of data being queried. This is especially advantageous for large datasets, as the report works with summarized data instead of raw detail rows, which improves performance and yields clearer, actionable insights.

- Monitor Performance: Regularly evaluate the performance of real-time reports using tools like Power BI’s Performance Analyzer. This proactive monitoring aids in recognizing bottlenecks and areas for enhancement, ensuring that reports operate seamlessly and effectively, which is vital for preserving stakeholder confidence in the information presented.

- Leverage Database Expertise: A case study on Direct Query implementation identifies database expertise as crucial for optimizing performance. It outlines the challenges encountered when implementing DirectQuery, especially in environments with security restrictions that require on-premises data storage, including the need for database proficiency and the difficulty of achieving consistent data refresh across visuals. Achieving good performance with DirectQuery is complex and may require materializing calculated data in SQL, which could negatively affect the user experience.

- Focus on Measures for Analysis: Experts suggest relying on measures rather than materializing calculated data in SQL. As David Cenna, a certified expert, notes from a data-import perspective, “Therefore, @Sam.McKay has always recommended to rely most of the times on measures in order to achieve diverse analysis.” This approach simplifies the analysis process and improves the user experience by preserving performance.

- Set Up a Gateway for On-Premises Data: When publishing reports created with DirectQuery to the Power BI service, an on-premises data gateway must be configured so that the service can query on-premises sources. This requirement is critical for ensuring that the reports remain up to date and perform well.
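To see why indexing matters for a Direct Query source, compare the query plan for the same filter before and after an index exists. The sketch below uses Python’s built-in `sqlite3` module as a stand-in for the production database. The `sales` table and the `idx_sales_date` index are hypothetical. The exact plan wording varies between SQLite versions, so the comments describe the plan only loosely.

```python
import sqlite3


def plan_for(conn: sqlite3.Connection, sql: str, params: tuple) -> str:
    """Return SQLite's query-plan description for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each plan row has four columns; the last one is the human-readable detail.
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (order_date, amount) VALUES (?, ?)",
    [(f"2024-01-{day:02d}", day * 10.0) for day in range(1, 29)],
)

query = "SELECT SUM(amount) FROM sales WHERE order_date = ?"
before = plan_for(conn, query, ("2024-01-15",))

# The kind of index a DBA would add for a column that report filters hit often.
conn.execute("CREATE INDEX idx_sales_date ON sales (order_date)")
after = plan_for(conn, query, ("2024-01-15",))

print("before:", before)  # a full table scan
print("after: ", after)   # a search using idx_sales_date
print(conn.execute(query, ("2024-01-15",)).fetchone()[0])  # 150.0
```

On a 28-row table the difference is invisible. On a source serving Direct Query visuals, however, turning a full scan into an index search on every slicer change is exactly the tuning the first item above refers to.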

By implementing these approaches, organizations can greatly improve the effectiveness of Direct Query in BI, resulting in more efficient data management and better decision-making. Moreover, incremental refresh can ensure that only new rows, for example a few thousand per day, are retrieved at each refresh, further reducing the load on the source.
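Incremental refresh works by filtering the source query on two datetime parameters, `RangeStart` and `RangeEnd`, so that each refresh fetches only the rows inside the current window. Those two parameter names are the ones Power BI requires. Everything else below (the `rows_to_refresh` helper, the daily window and the sample timestamps) is a hypothetical Python illustration of the filter logic, not the service’s implementation. Note the half-open interval: one boundary is inclusive and the other exclusive, so a row that falls exactly on a boundary is never loaded twice.

```python
from datetime import datetime, timedelta


def rows_to_refresh(rows: list[dict], range_start: datetime, range_end: datetime) -> list[dict]:
    """Keep only rows whose timestamp falls in [range_start, range_end).

    Mirrors the filter shape >= RangeStart and < RangeEnd, so adjacent
    refresh windows never overlap.
    """
    return [r for r in rows if range_start <= r["order_ts"] < range_end]


# Hypothetical source table: one order every 6 hours over three days.
base = datetime(2024, 3, 1)
source = [{"order_id": i, "order_ts": base + timedelta(hours=6 * i)} for i in range(12)]

# Daily refresh window covering 2 March only.
window_start = datetime(2024, 3, 2)
window_end = window_start + timedelta(days=1)

new_rows = rows_to_refresh(source, window_start, window_end)
print([r["order_id"] for r in new_rows])  # [4, 5, 6, 7]
```

Order 8, timestamped exactly at midnight on 3 March, is excluded here and belongs to the next day’s window instead.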

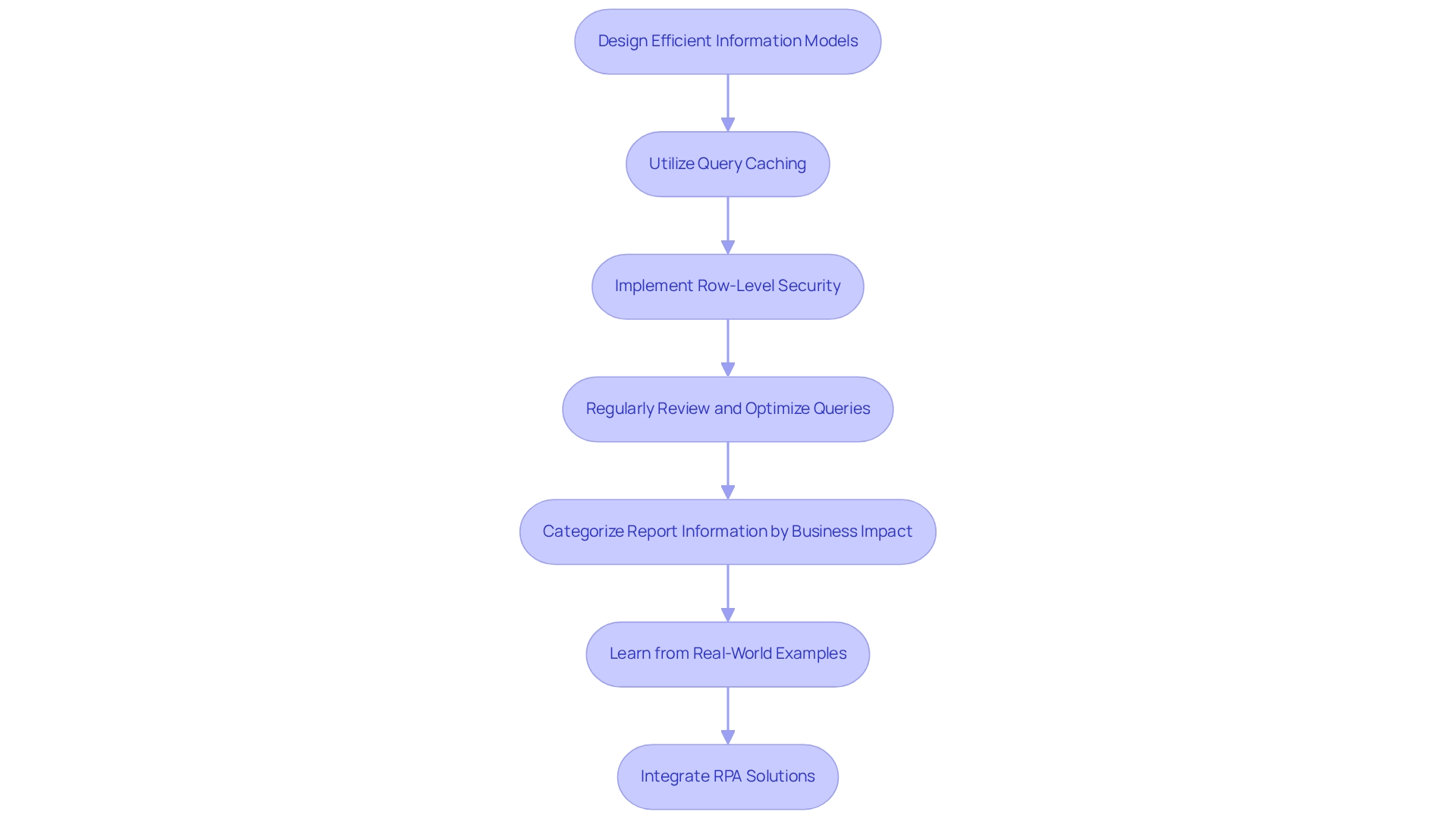

Best Practices for Optimizing Direct Query Usage

To maximize the effectiveness of Direct Query in Power BI, consider these best practices:

- Design Efficient Data Models: Organize your models to reduce complexity, and make sure only essential data is queried. This approach streamlines performance and improves the user experience, in line with the demand for tailored solutions in today’s AI landscape.

- Utilize Query Caching: Take advantage of Power BI’s query caching capabilities to significantly boost performance. By storing results, you reduce the number of queries sent to the information source, resulting in quicker report loading times and enhanced responsiveness. This efficiency is vital for extracting significant insights from your data.

- Implement Row-Level Security: Establish row-level security to manage access to information at a granular level. This practice ensures users only view data relevant to their roles, enhancing security and optimizing performance by limiting the data processed during queries, thereby supporting operational efficiency.

- Regularly Review and Optimize Queries: Make it a routine to analyze the queries your Direct Query reports send to the source. Identifying and addressing inefficiencies can lead to substantial performance improvements, ensuring that your reports run smoothly and effectively. This is crucial for leveraging insights from Power BI dashboards.

- Categorize Report Information by Business Impact: Use sensitivity labels to categorize report content based on its business impact. This strategy not only raises user awareness about information security but also aids in prioritizing access and management strategies, reinforcing the significance of BI in driving insight-driven decisions.

- Learn from Real-World Examples: Reflect on the case study regarding Azure Storage Throttling, which underscores the importance of implementing strategies to avoid overloading the storage account during query execution. By doing so, organizations can mitigate throttling effects and enhance overall query performance, showcasing the practical outcomes of effective BI practices.

- Integrate RPA Solutions: Consider incorporating Robotic Process Automation (RPA) to automate repetitive tasks related to information management and reporting. This integration can significantly enhance operational efficiency, allowing teams to focus on strategic decision-making instead of mundane tasks.
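Query caching and query reduction rest on the same idea: do not send the source a query it has just answered. The sketch below shows a minimal time-to-live (TTL) cache in Python in front of a hypothetical `fetch_from_source` function. It illustrates the trade-off involved (fewer round trips in exchange for results that can be up to `ttl_seconds` stale). It is not how Power BI’s own cache is implemented, and the `QueryCache` class is invented for illustration.

```python
import time
from typing import Callable


class QueryCache:
    """Minimal TTL cache keyed by query text (illustrative only)."""

    def __init__(self, fetch: Callable[[str], list], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, list]] = {}
        self.source_calls = 0

    def run(self, query: str) -> list:
        now = self._clock()
        cached = self._entries.get(query)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # fresh enough: no round trip to the source
        self.source_calls += 1
        result = self._fetch(query)
        self._entries[query] = (now, result)
        return result


def fetch_from_source(query: str) -> list:
    """Hypothetical stand-in for a round trip to the Direct Query source."""
    return [("total", 42)]


# A fake clock makes the expiry behaviour deterministic.
fake_now = [0.0]
cache = QueryCache(fetch_from_source, ttl_seconds=60, clock=lambda: fake_now[0])

cache.run("SELECT SUM(amount) FROM sales")   # miss: goes to the source
cache.run("SELECT SUM(amount) FROM sales")   # hit: served from the cache
fake_now[0] = 61.0
cache.run("SELECT SUM(amount) FROM sales")   # expired: goes to the source again
print(cache.source_calls)  # 2
```

A longer TTL means fewer source queries but staler numbers. Choosing it is a business decision about how "real-time" a given report really needs to be.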

As Tim Radney remarked, “Thanks for this post; it was a good read,” emphasizing the value of sharing best practices within the community. By adhering to these best practices, organizations can improve their use of Direct Query in Business Intelligence, resulting in enhanced operational efficiency and more insightful analysis. Staying informed about best practices, as discussed in the author’s recent presentation at the PASS Community Summit, is essential for continued success in effectively utilizing BI.

Creatum GmbH is dedicated to assisting companies in overcoming these challenges and harnessing the full capabilities of their information.

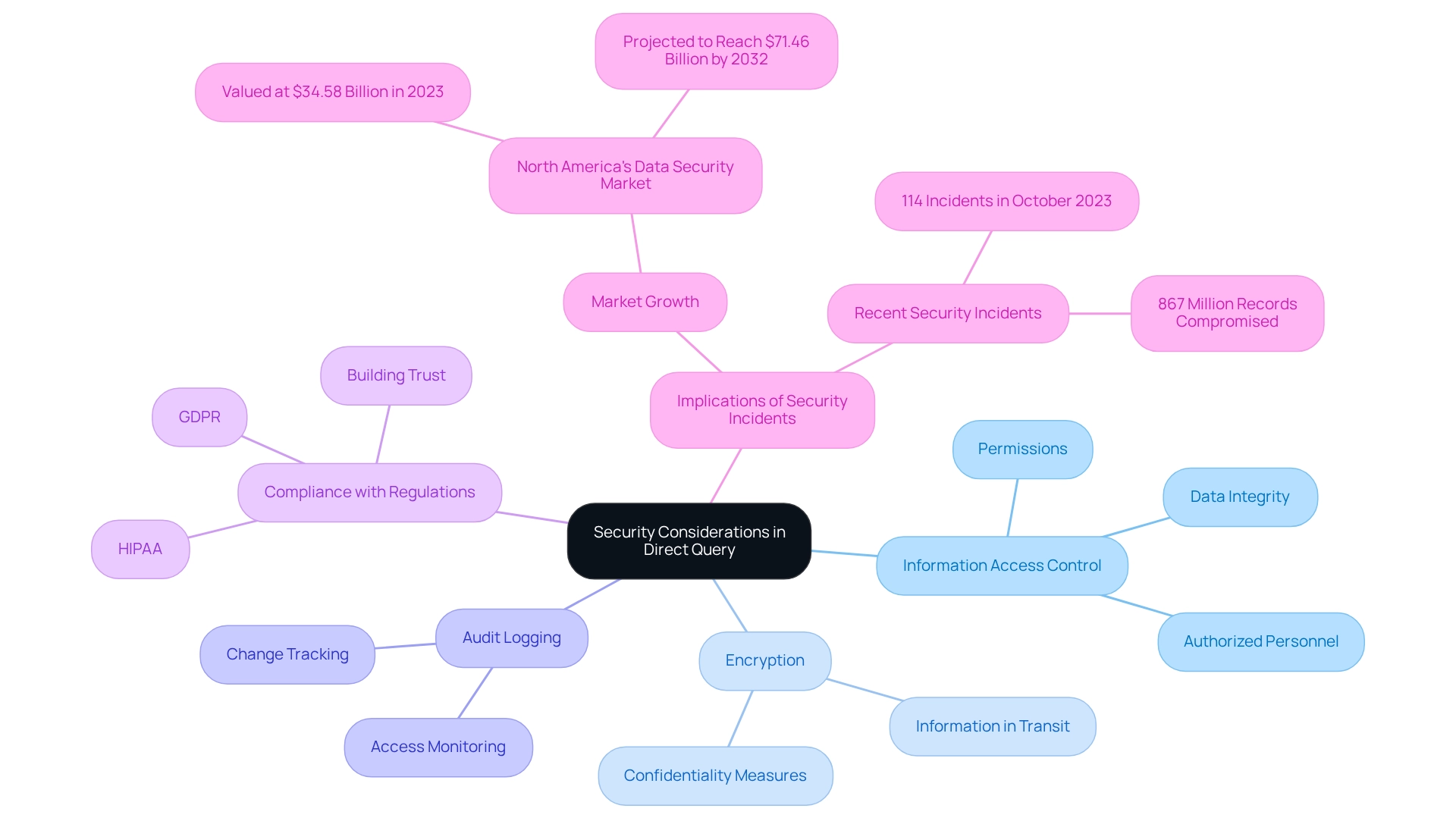

Security Considerations in Direct Query

When utilizing Direct Query in Power BI, organizations must prioritize several critical security aspects to safeguard their data while effectively leveraging insights:

- Information Access Control: Implementing strict access controls to the underlying information sources is crucial. This involves setting appropriate permissions for users accessing reports in Power BI, ensuring that only authorized personnel can view or manipulate sensitive information. Such practices not only protect information but also preserve the integrity of the insights obtained.

- Encryption: Implementing encryption for information in transit is vital. This measure safeguards sensitive information from unauthorized access during query execution, thereby preserving the confidentiality and integrity of the information being processed. In an information-rich environment, the emphasis should be on actionable insights rather than merely raw data.

- Audit Logging: Strong audit logging systems are essential for monitoring access and changes to the source. This practice provides a thorough account of who accessed which information and when, promoting accountability and transparency in information management. Such transparency is critical for building trust in the insights generated from Power BI dashboards.

- Compliance with Regulations: Organizations must ensure that their use of Direct Query aligns with relevant information protection regulations, such as GDPR and HIPAA. Masha Komnenic, an Information Security and Data Privacy Specialist, emphasizes the importance of compliance, stating, “Implementing effective security measures is not just about protecting information; it’s about building trust with stakeholders through transparency and accountability.” Adhering to these regulations not only reduces legal risks but also enhances stakeholder trust by demonstrating a commitment to information privacy and security.
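Access control and row-level security both amount to applying a per-user filter before any data reaches the report. In Power BI this is configured as roles with DAX filter expressions. The Python sketch below only models the idea, using a hypothetical user-to-region mapping, and it denies access by default to users who have no role.

```python
# Hypothetical role table: which regions each user may see.
USER_REGIONS: dict[str, set[str]] = {
    "ana@example.com": {"North"},
    "ben@example.com": {"North", "South"},
}

SALES = [
    {"region": "North", "amount": 120},
    {"region": "South", "amount": 80},
    {"region": "East", "amount": 50},
]


def visible_rows(user: str, rows: list[dict]) -> list[dict]:
    """Return only the rows the user is entitled to see.

    Users missing from USER_REGIONS get an empty result (deny by default)
    rather than an unfiltered one.
    """
    allowed = USER_REGIONS.get(user, set())
    return [row for row in rows if row["region"] in allowed]


print(sum(r["amount"] for r in visible_rows("ana@example.com", SALES)))  # 120
print(sum(r["amount"] for r in visible_rows("ben@example.com", SALES)))  # 200
print(visible_rows("eve@example.com", SALES))                            # []
```

Defaulting to an empty result rather than to everything is the safer design choice. A missing role assignment then shows up as a blank report instead of a data leak.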

Security incidents are rising: 114 publicly disclosed cases in October 2023 alone compromised over 867 million records valued at over $5 billion. Organizations using Direct Query therefore need proactive measures that strengthen their security strategies. The North American information security market, valued at $34.58 billion in 2023, is expected to grow considerably, reflecting an increasing need for effective risk management solutions. For organizations adopting Direct Query in Business Intelligence, this means investing in robust access control and encryption so that their data remains secure and compliant, supporting informed decision-making that fosters growth and innovation.

Moreover, organizations must recognize that inefficient utilization of Business Intelligence can lead to a competitive disadvantage, as time-consuming report generation and information inconsistencies obstruct the capacity to obtain actionable insights from Power BI dashboards. Creatum GmbH is committed to assisting organizations in navigating these challenges and enhancing their operational efficiency.

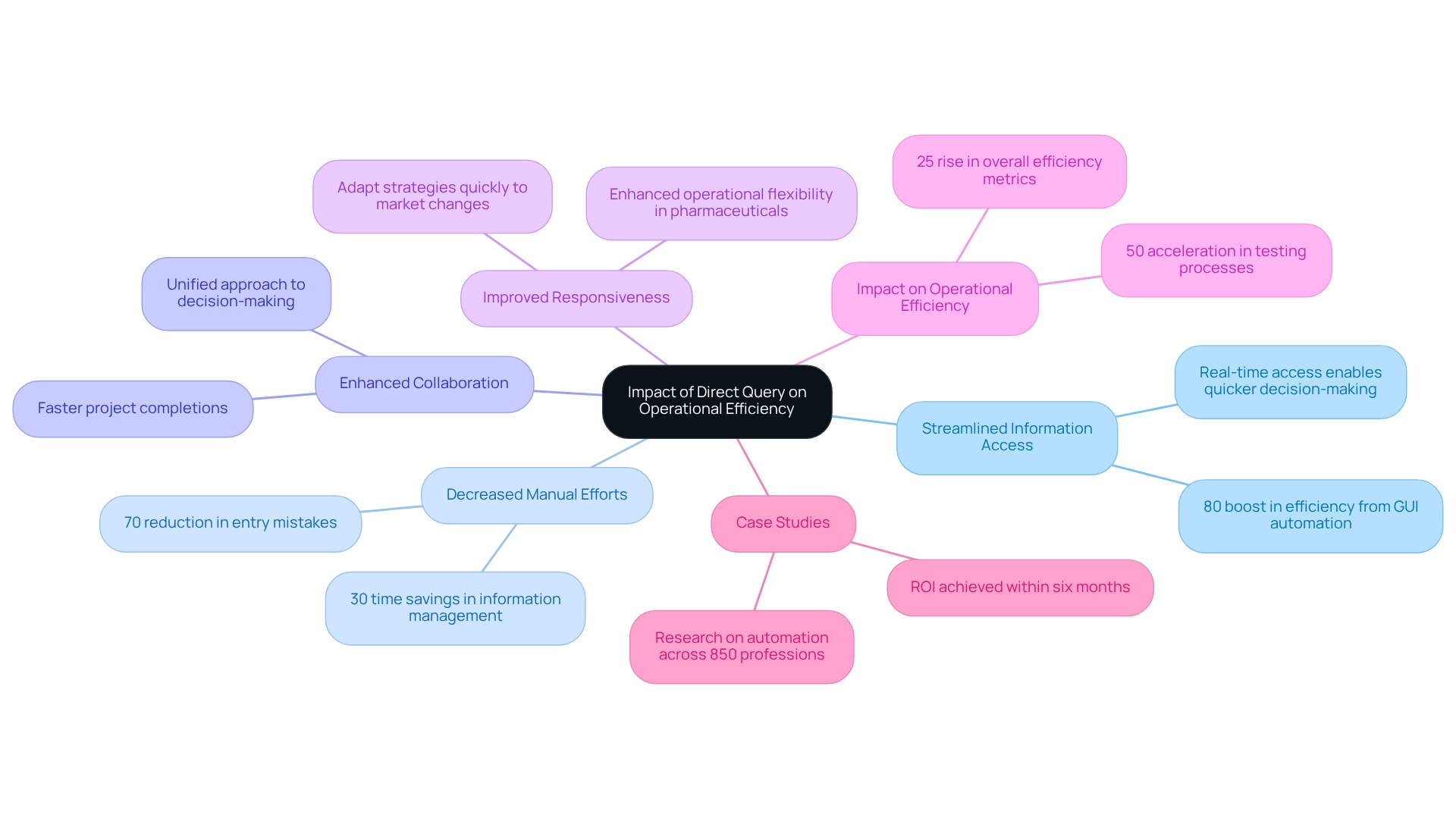

The Impact of Direct Query on Operational Efficiency

Direct Query can significantly enhance operational efficiency in various ways:

- Streamlined Information Access: Direct query allows for real-time access to information, enabling quicker decision-making and significantly decreasing the time invested in information preparation. This immediacy empowers organizations to act swiftly on insights, which is crucial in today’s fast-paced business environment. By simplifying information access, organizations can propel growth and innovation, aligning with their mission to improve operational efficiency. A recent case study illustrated how a mid-sized healthcare firm faced obstacles like manual entry mistakes and sluggish software testing, but enhanced its operational workflows by adopting GUI automation, resulting in an 80% boost in efficiency.

- Decreased Manual Efforts: By automating information retrieval, this method reduces the necessity for manual imports. This shift enables teams to focus on analysis instead of information management, resulting in significant time savings. Industry specialists have observed that organizations utilizing direct query can save up to 30% of the time they previously dedicated to information management. This efficiency is especially significant considering the environmental impact of information processing, as training one large language model can emit approximately 315 tons of carbon dioxide. In the case study, the healthcare company decreased entry mistakes by 70% through automation, demonstrating the effectiveness of such solutions.

- Enhanced Collaboration: With current data readily available, teams can collaborate more effectively, because all stakeholders work from the same information, fostering a unified approach to decision-making. Operations managers have indicated that the streamlined data access provided by Direct Query has led to better team alignment and quicker project completion. Testimonials from clients like NSB GROUP highlight how Creatum GmbH’s technology solutions have driven business growth through enhanced collaboration.

- Improved Responsiveness: Organizations can respond more quickly to changing business conditions and market demands. By leveraging real-time insights, they can adapt strategies and operations promptly. For example, a recent case study emphasized how a pharmaceutical firm enhanced its operational flexibility by incorporating direct query, which enabled it to modify its supply chain strategies in reaction to market changes. This adaptability is essential, especially as pharmaceutical companies must implement quality checks and ensure explainability when integrating new technologies.

- Impact on Operational Efficiency: Adopting real-time data access has been shown to improve operational efficiency considerably. According to recent statistics, organizations using real-time data access have seen a 25% rise in overall efficiency metrics, attributed to lower latency and the ability to make informed decisions quickly. As Michael Chui, a partner at McKinsey, stated, ‘The moment to take action is now,’ highlighting the urgency of embracing technologies such as Direct Query to enhance operational efficiency. The case study on GUI automation also reported a 50% acceleration in testing processes, further illustrating the benefits of automation.

- Case Studies: An extensive examination of automation possibilities across 850 professions showed that organizations using real-time data access not only improved their information retrieval but also optimized their overall operational processes. This research underscores the importance of adopting such technologies to stay competitive in an evolving landscape. The transformative effect of Creatum’s Power BI Sprint service has also been highlighted in client testimonials, demonstrating accelerated analytics and reporting success. Notably, the healthcare company achieved a return on investment (ROI) within six months of implementing these solutions.

In summary, Direct Query not only streamlines data access and decision-making but also plays a pivotal role in driving operational efficiency, making it an essential tool for organizations aiming to thrive in 2025 and beyond.

Conclusion

The implementation of Direct Query in Power BI stands out as a transformative solution for organizations aiming to elevate their data analytics capabilities. By providing real-time access to data, Direct Query empowers businesses to swiftly respond to market changes and operational demands, ultimately driving enhanced decision-making and operational efficiency. The capability to connect directly to data sources eliminates cumbersome data imports, streamlining reporting processes while reducing manual errors and freeing up valuable time for strategic analysis.

However, while the benefits of Direct Query are compelling, it is crucial to recognize and address the associated challenges, including performance issues and limitations on data transformations. To fully harness the potential of Direct Query, organizations must adopt best practices such as optimizing data sources and implementing robust security measures. By doing so, they can mitigate risks and ensure data integrity is maintained, fostering a more informed decision-making environment.

Ultimately, embracing Direct Query in Power BI positions organizations to thrive in an increasingly data-driven landscape. As they leverage real-time insights and automated processes, businesses can enhance collaboration, improve responsiveness, and achieve significant gains in operational efficiency. The journey to effective data utilization begins with a thorough understanding and implementation of Direct Query, paving the way for sustained growth and innovation in the years to come.

Frequently Asked Questions

What is Direct Query in Business Intelligence (BI)?

Direct Query is a connectivity mode in Business Intelligence that allows users to connect directly to data sources without importing data into BI’s internal storage, enabling real-time access to the most current information.

What are the advantages of using Direct Query?

The advantages of Direct Query include real-time information access, reduced information storage needs, dynamic reporting, and scalability for handling large datasets.

How does Direct Query enhance operational efficiency?

Direct Query eliminates the need for periodic data refreshes, allowing organizations to analyze real-time information, automate workflows through Robotic Process Automation (RPA), and reduce errors, ultimately enabling teams to focus on strategic tasks.

What are the performance considerations when using Direct Query?

The response time for queries can vary depending on the performance of the underlying data source and the complexity of the executed queries, which may affect overall performance.

How does Direct Query impact decision-making processes in organizations?

Organizations leveraging real-time retrieval through Direct Query can achieve faster decision-making processes, with some studies indicating a reduction in reporting times by up to 50% compared to traditional import methods.

What is the significance of real-time data access in business intelligence?

Real-time data access is critical for informed decision-making, particularly in fast-paced industries, as it allows companies to pivot quickly and capitalize on new opportunities.

What role does RPA play in conjunction with Direct Query?

RPA streamlines workflows and reduces errors, enhancing the effectiveness of Direct Query by allowing teams to focus on more strategic, value-adding tasks.

Can Direct Query handle large datasets effectively?

Yes, Direct Query is designed to manage extensive datasets that may exceed the memory thresholds of BI tools, making it suitable for information-heavy operations.

What are some real-world examples of organizations benefiting from Direct Query?

Companies like Domino’s Pizza have reported significant improvements in customer satisfaction and sales after implementing real-time analytics systems, demonstrating the strategic value of Direct Query in optimizing data analysis capabilities.

How does Direct Query ensure compliance and security?

The implementation of virtual network data gateways in Power BI enables secure connections to services within an Azure virtual network, enhancing data management capabilities while maintaining compliance and security.

Overview

Diagnostic analytics can be broadly categorized into two distinct areas:

- Anomaly detection

- What-if analysis

Each category serves a unique purpose in the realm of understanding past events and informing future decisions. Anomaly detection is crucial as it identifies unusual patterns that demand immediate attention, ensuring that organizations can respond swiftly to potential issues. On the other hand, what-if analysis empowers organizations to simulate various scenarios, allowing them to predict outcomes effectively. This capability not only enhances operational efficiency but also supports strategic planning. By leveraging these analytical tools, organizations can make informed decisions that drive success.

Introduction

In the dynamic realm of data analytics, diagnostic analytics emerges as an essential tool for organizations seeking to comprehend the ‘why’ behind historical events and trends. This analytical approach transcends simple data summarization, offering profound insights that foster informed decision-making and enhance operational efficiency.

With businesses increasingly acknowledging its critical role—particularly in industries such as healthcare and finance—the need for integrating advanced technologies and methodologies becomes paramount.

As the global market for diagnostic analytics is poised for significant growth, grasping its applications, challenges, and implementation strategies is crucial for organizations aiming to effectively leverage data in a competitive landscape.

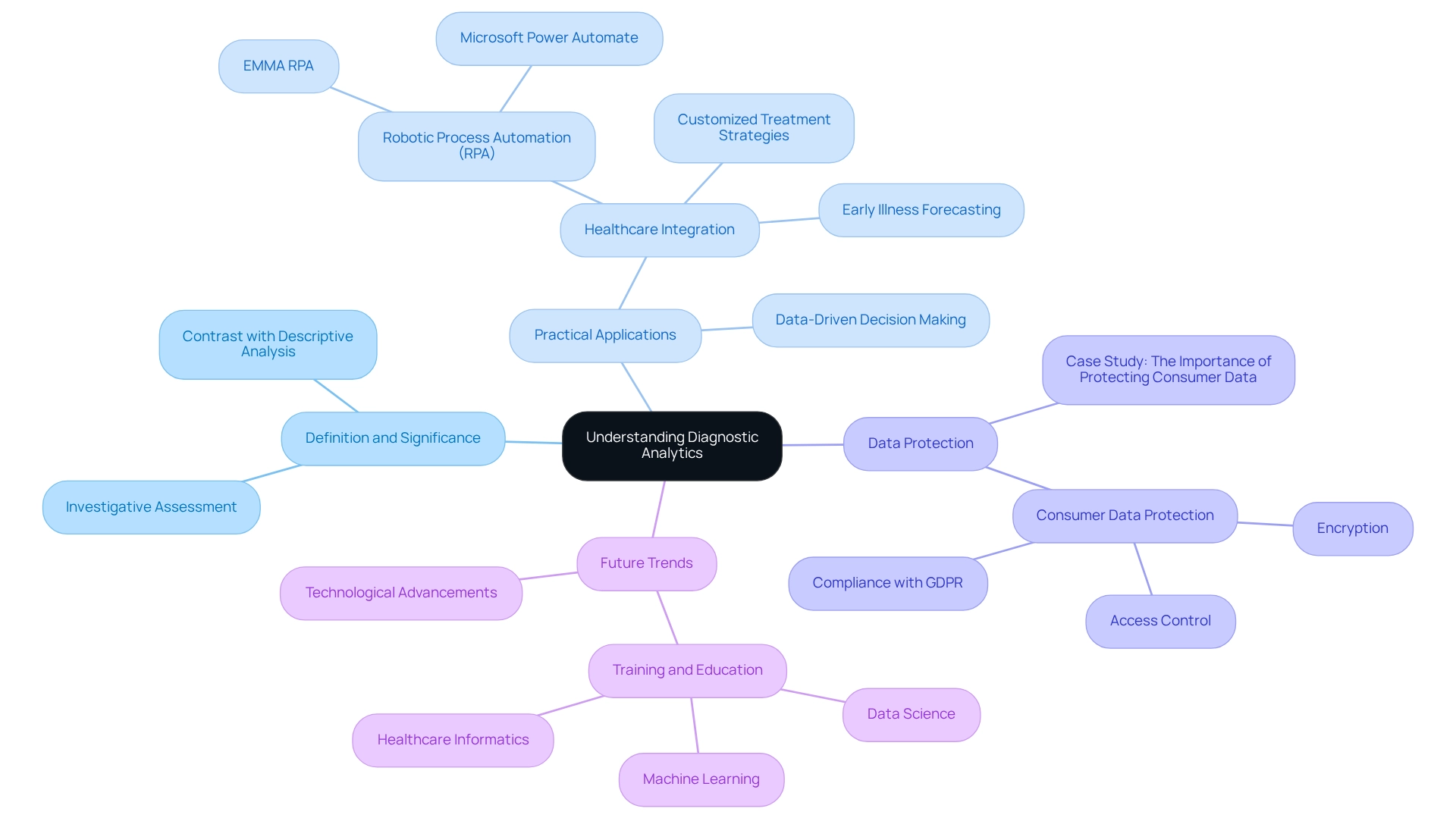

Understanding Diagnostic Analytics: An Overview

Diagnostic analytics is a pivotal field that examines the underlying reasons for past events and trends. Unlike descriptive analytics, which merely summarizes historical data, it provides deeper insight into why specific results occurred. This analytical approach is essential for organizations aiming to enhance operational efficiency and make informed, data-driven decisions.

As we look toward 2025, the diagnostic analytics landscape is evolving rapidly, with organizations increasingly recognizing its value in optimizing operations. The global Internet of Medical Things market is projected to reach $286.77 billion, underscoring the growing importance of analytics in healthcare and operational efficiency. By pinpointing the root causes of inefficiencies, businesses can tackle issues effectively and refine their strategic planning processes.

Healthcare organizations, for instance, are combining diagnostic insights with Robotic Process Automation (RPA) tools like EMMA RPA and Microsoft Power Automate. These technologies improve patient outcomes through early illness forecasting and tailored treatment strategies, showcasing their transformative potential.

There are numerous practical examples of firms using analytical tools to inform decision-making. Organizations that have established robust data protection measures, such as encryption and access control, have seen significant improvements in operational efficiency while ensuring compliance with regulations like GDPR. This highlights the dual benefit of diagnostic analytics: improving operational processes while safeguarding consumer data.

The case study titled “The Importance of Protecting Consumer Data” illustrates how these measures can mitigate risks and bolster operational effectiveness.

Industry leaders assert that diagnostic analysis is vital for informed decision-making. Dmytro Tymofiiev, Delivery Manager at SPD Technology, emphasizes, “Sometimes it makes sense to assist your healthcare professionals in acquiring further education in science related to information, machine learning, or healthcare informatics to gain a deeper comprehension of the complexities of analysis.” Investing in knowledge is crucial as organizations navigate the intricacies of data analysis, ultimately leading to improved outcomes.

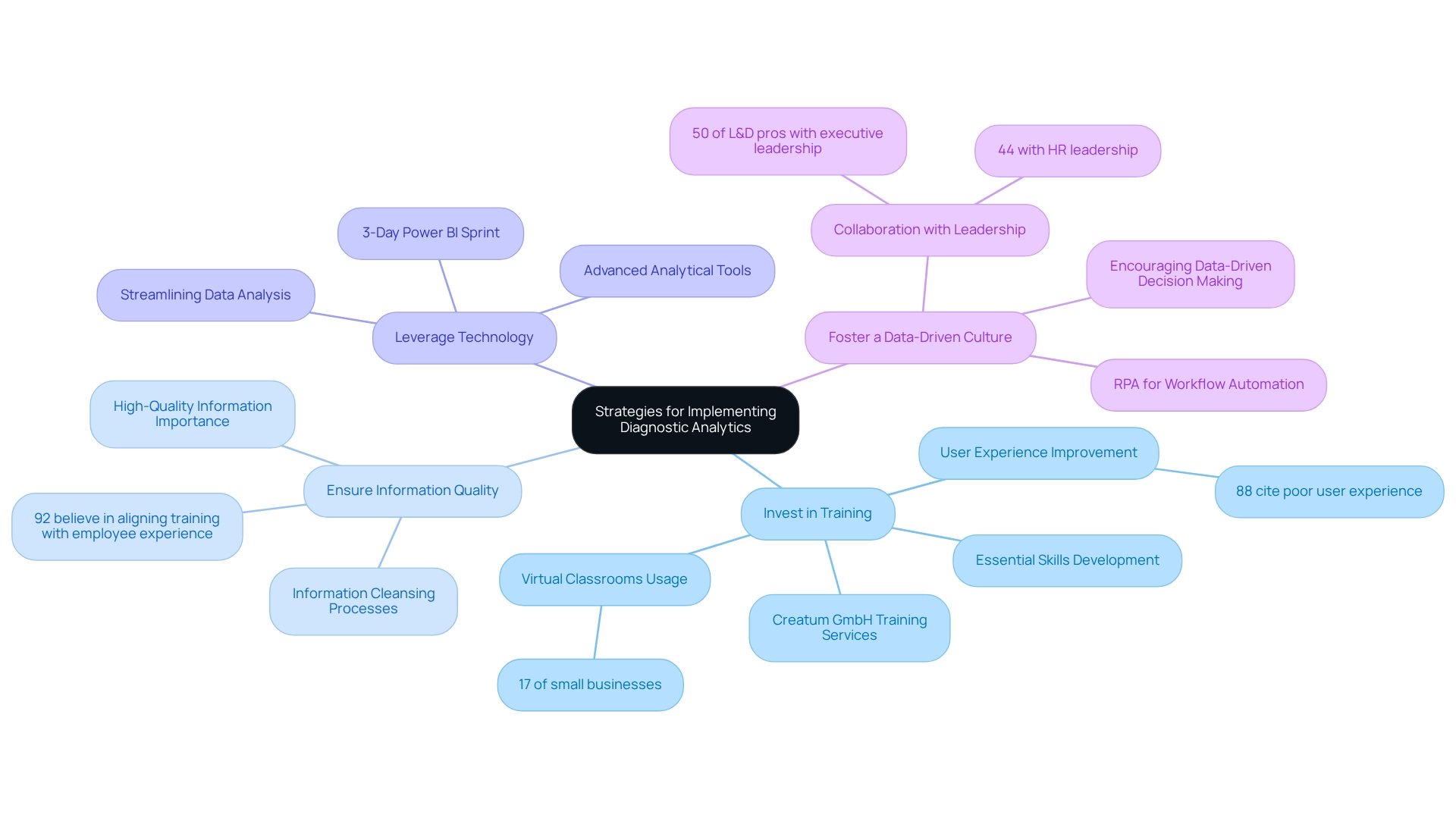

In conclusion, diagnostic analytics not only helps explain past performance but also plays a critical role in shaping future strategy. By going beyond descriptive analytics, it enables organizations to make informed choices that improve operational efficiency and foster innovation. Current trends in diagnostic analytics for 2025 indicate a sustained focus on integrating advanced technologies, such as RPA, together with training, further amplifying its impact on operational efficiency.

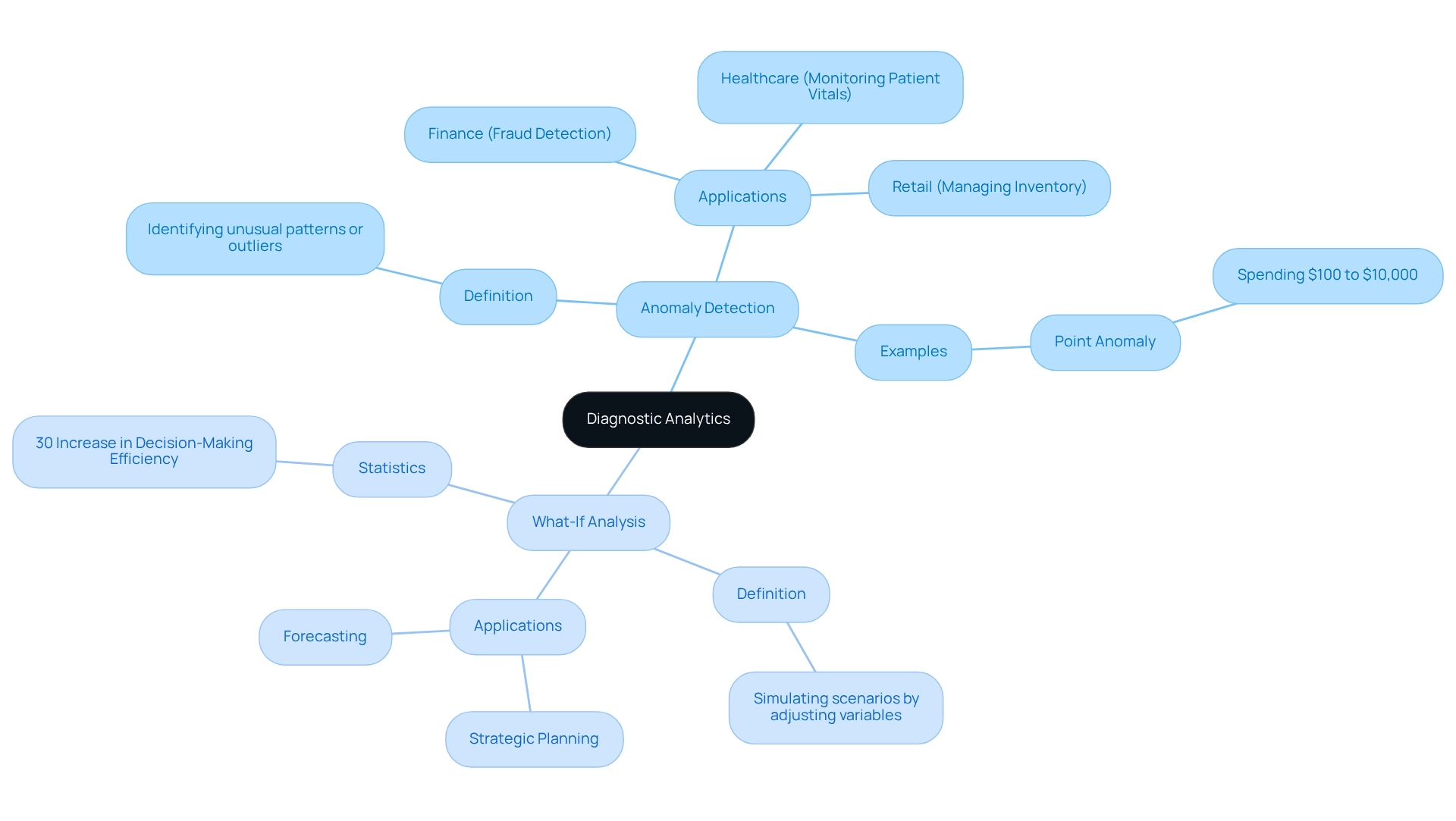

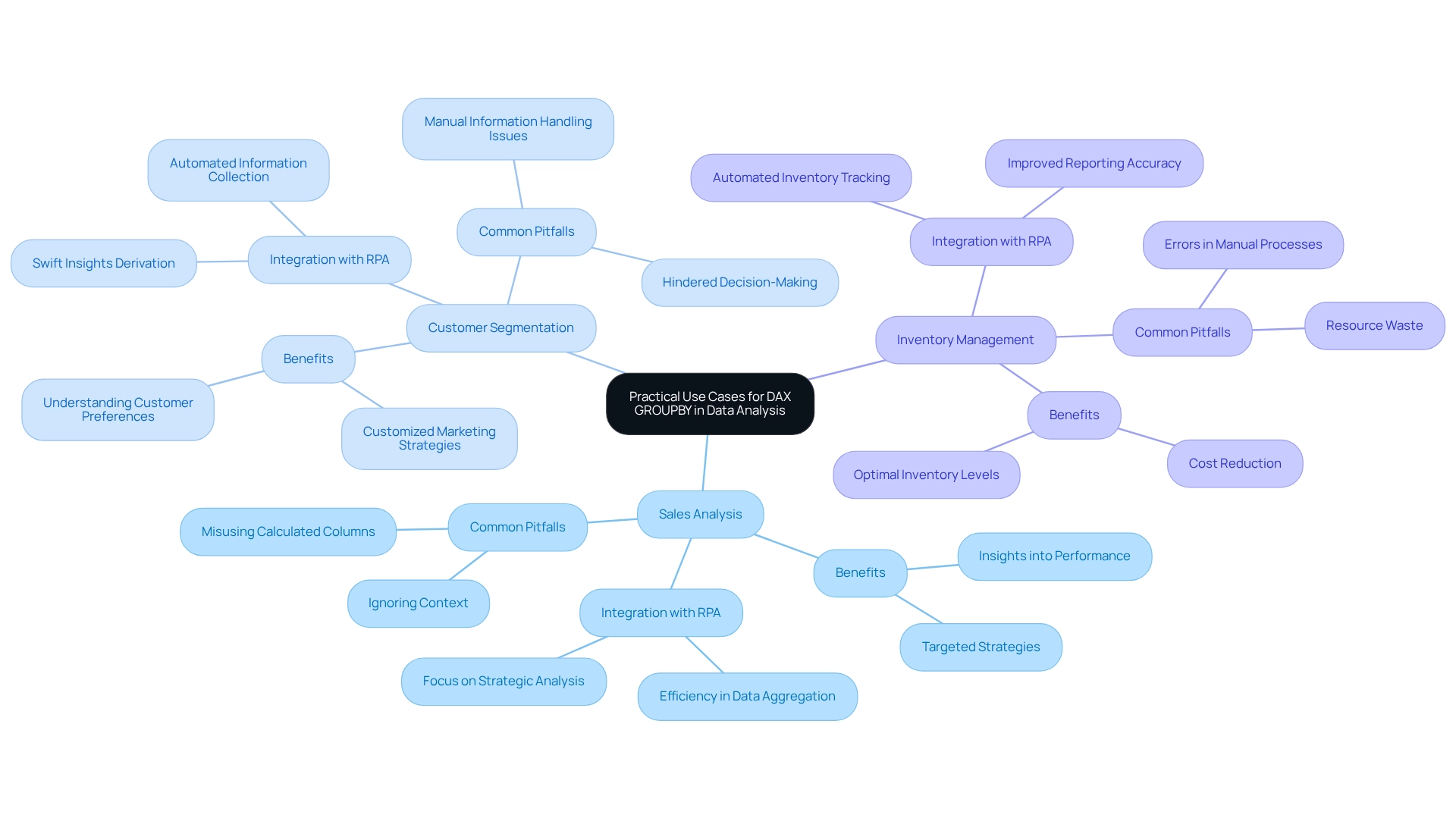

Exploring the Two Broad Categories of Diagnostic Analytics

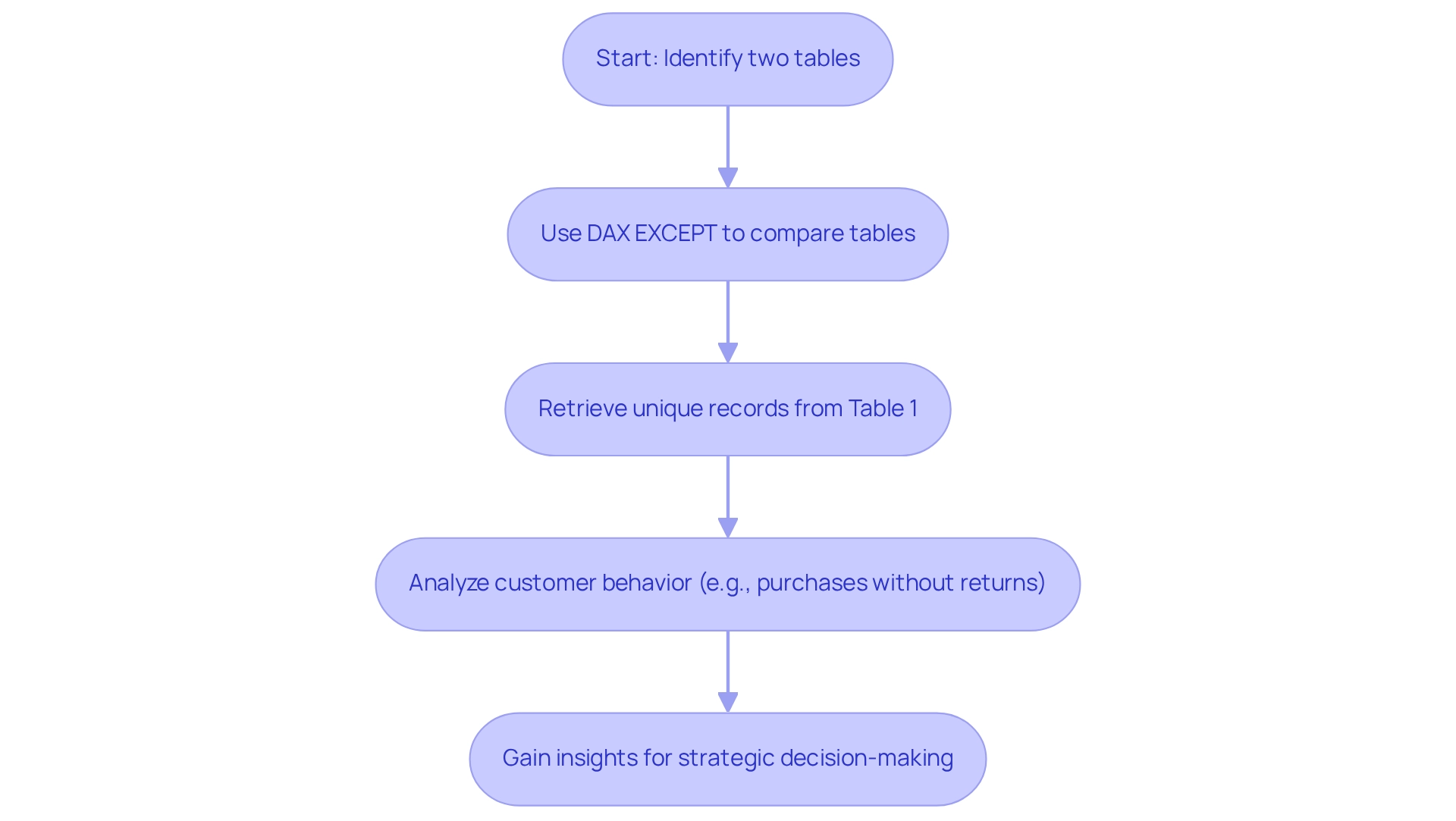

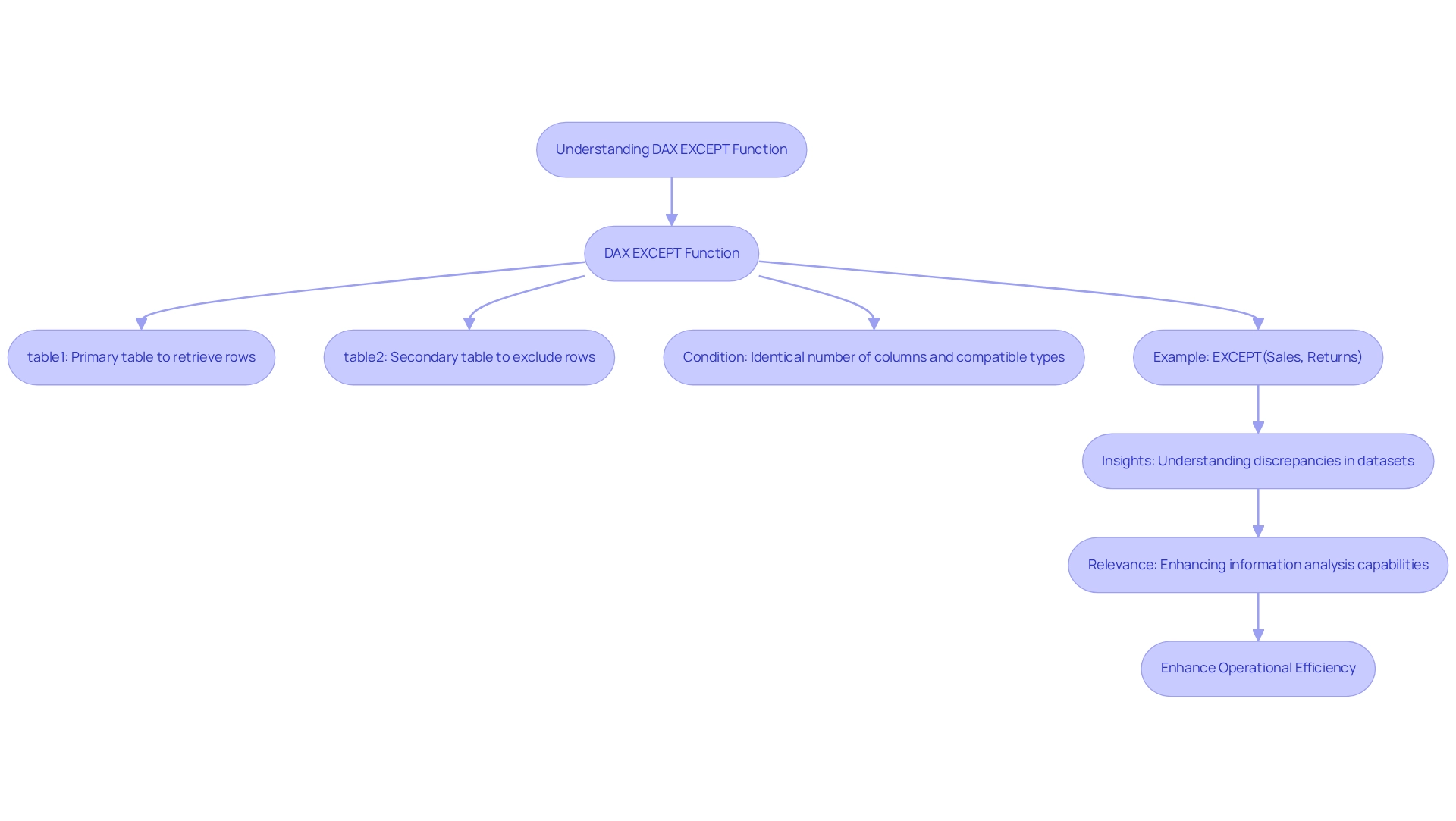

Diagnostic analytics can be divided into two primary types: anomaly detection and what-if analysis. Anomaly detection is essential for identifying unusual patterns or outliers in information that deviate from expected behavior. For instance, a consumer typically spending $100 on groceries suddenly spending $10,000 exemplifies a point anomaly that warrants immediate investigation.
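The grocery example can be made concrete with a simple point-anomaly check. Score each new transaction against the customer’s own history, and flag it when it lies far from typical behavior. The sketch below uses the modified z-score, which is based on the median and the median absolute deviation (MAD), so a single extreme value cannot distort it much. It applies the commonly used cut-off of 3.5. The spending history is invented for illustration, and a real system would tune the threshold for its own use case.

```python
from statistics import median


def modified_z_score(value: float, history: list[float]) -> float:
    """Robust z-score of `value` relative to `history` (median/MAD based)."""
    med = median(history)
    mad = median([abs(x - med) for x in history])
    if mad == 0:
        # All history values identical: any different value is maximally unusual.
        return 0.0 if value == med else float("inf")
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return 0.6745 * (value - med) / mad


def is_point_anomaly(value: float, history: list[float], threshold: float = 3.5) -> bool:
    return abs(modified_z_score(value, history)) > threshold


# Hypothetical weekly grocery spend for one customer.
history = [92.0, 105.0, 98.0, 110.0, 101.0, 95.0, 99.0, 104.0]

print(is_point_anomaly(107.0, history))     # False: an ordinary week
print(is_point_anomaly(10_000.0, history))  # True: the $10,000 purchase
```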

This technique is crucial across various industries, including finance for fraud detection, healthcare for monitoring patient vitals, and retail for managing inventory. By utilizing real-time information ingestion—such as Tinybird’s method for sales transactions—organizations can identify anomalies rapidly and initiate alerts, thereby improving operational responsiveness.
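In a streaming setting the full history is not available up front, so the statistics have to be updated as each transaction arrives. The sketch below maintains a running mean and variance with Welford’s algorithm. It raises an alert when a value lies more than a chosen number of standard deviations from the mean seen so far. This is a generic, hypothetical illustration in Python: it does not use or describe Tinybird’s API, and the warm-up length and threshold are assumptions to tune.

```python
import math
from dataclasses import dataclass


@dataclass
class StreamingDetector:
    """Online z-score detector using Welford's running mean/variance."""
    threshold: float = 4.0   # alert when |z| exceeds this many standard deviations
    warmup: int = 10         # observations required before alerts are allowed
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0          # running sum of squared deviations from the mean

    def observe(self, value: float) -> bool:
        """Score `value` against past data, then fold it into the statistics."""
        alert = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.threshold:
                alert = True
        # Welford update. Anomalies are included here; a production system
        # might exclude them so one outlier does not inflate the variance.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return alert


detector = StreamingDetector()
stream = [100, 102, 98, 101, 99, 103, 97, 100, 102, 98, 101, 5_000, 99]
alerts = [i for i, amount in enumerate(stream) if detector.observe(amount)]
print(alerts)  # [11]
```

Because it keeps only three numbers per stream, this kind of detector can run per customer or per product at ingestion time and trigger an alert the moment a transaction arrives.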

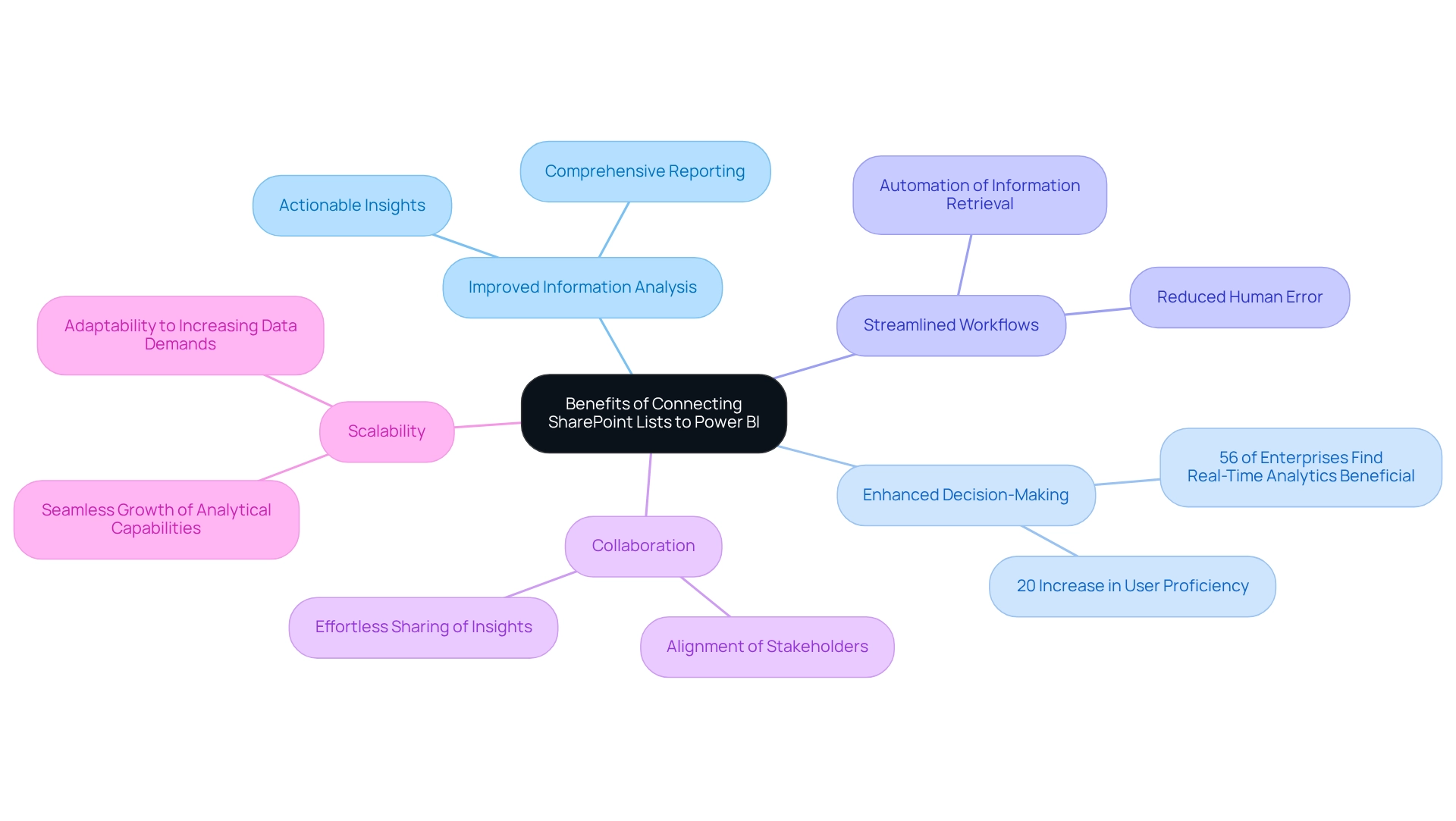

Moreover, the integration of Power BI services can significantly enhance reporting and provide actionable insights. With features like the 3-Day Power BI Sprint, businesses can swiftly create professionally designed reports that facilitate better decision-making. This becomes particularly important when addressing data inconsistency and governance challenges in business reporting, ultimately leading to improved operational efficiency.

Conversely, what-if analysis lets businesses simulate scenarios by adjusting key variables and observing the potential outcomes. This method is invaluable for strategic planning and forecasting, because it allows organizations to evaluate the impact of decisions before executing them. Recent studies report that organizations using what-if analysis make decisions more efficiently, with those incorporating it into their operational planning processes seeing up to a 30% increase in efficiency.

The distinction between anomaly detection and what-if analysis matters because their objectives differ: anomaly detection identifies issues that require immediate attention, while what-if analysis supports proactive planning by exploring possible future scenarios. Content creator Bex Tuychiev makes a related point about how these topics are communicated: “I enjoy writing in-depth articles on AI and ML with a touch of sarcasm because you need to do something to make them a little less tedious.” Content in this field needs to be engaging as well as informative.

AI solutions such as Small Language Models, together with GenAI Workshops for training personnel, can further strengthen companies’ ability to address data quality challenges. Case studies such as a two-step outlier detection approach for weekly and monthly data, which combines the Generalized Extreme Studentized Deviate (GESD) test with an adjusted box-plot method, show these statistical techniques applied in practice. Such examples illustrate how organizations can use these tools to strengthen their analytical capabilities and make informed decisions, driving operational efficiency and strategic growth.
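The GESD test named above can be sketched in a few dozen lines. This follows Rosner’s generalized ESD procedure: repeatedly remove the observation farthest from the mean, compare each test statistic R_i with a critical value λ_i, and report as outliers the observations up to the largest i with R_i > λ_i. It does not reproduce the case study’s two-step method or its adjusted box plot. To stay dependency-free, the Student’s t quantile is found by bisection on a closed-form CDF series valid for integer degrees of freedom (as given in Abramowitz and Stegun, chapter 26); in practice `scipy.stats.t.ppf` would be the usual choice. The function names, alpha = 0.05, and the sample data are assumptions for illustration.

```python
import math
import statistics


def t_abs_prob(t: float, df: int) -> float:
    """P(|T| <= t) for Student's t with integer df (closed-form series)."""
    theta = math.atan(abs(t) / math.sqrt(df))
    sin_t, cos2 = math.sin(theta), math.cos(theta) ** 2
    series = 0.0
    if df % 2 == 1:
        term = math.cos(theta)
        for k in range(1, (df - 1) // 2 + 1):   # no terms when df == 1
            series += term
            term *= cos2 * (2 * k) / (2 * k + 1)
        return 2 / math.pi * (theta + sin_t * series)
    term = 1.0
    for k in range(1, df // 2 + 1):
        series += term
        term *= cos2 * (2 * k - 1) / (2 * k)
    return sin_t * series


def t_ppf(p: float, df: int) -> float:
    """p-quantile of Student's t for 0.5 < p < 1, found by bisection."""
    target = 2 * p - 1               # P(|T| <= q) when q is the p-quantile
    lo, hi = 0.0, 1.0
    while t_abs_prob(hi, df) < target:
        hi *= 2
    for _ in range(100):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if t_abs_prob(mid, df) < target else (lo, mid)
    return (lo + hi) / 2


def generalized_esd(values, max_outliers: int, alpha: float = 0.05) -> list:
    """Rosner's generalized ESD test; returns the detected outliers."""
    x = list(values)
    n = len(x)
    if not 1 <= max_outliers <= n - 2:
        raise ValueError("max_outliers must be between 1 and n - 2")
    removed, n_outliers = [], 0
    for i in range(1, max_outliers + 1):
        mean, sd = statistics.fmean(x), statistics.stdev(x)
        if sd == 0:
            break
        idx = max(range(len(x)), key=lambda j: abs(x[j] - mean))
        r_i = abs(x[idx] - mean) / sd                       # test statistic R_i
        t = t_ppf(1 - alpha / (2 * (n - i + 1)), n - i - 1)
        lam = (n - i) * t / math.sqrt((n - i - 1 + t * t) * (n - i + 1))
        removed.append(x.pop(idx))
        if r_i > lam:
            n_outliers = i   # largest i so far with R_i > lambda_i
    return removed[:n_outliers]


weekly_sales = [120, 125, 118, 130, 122, 127, 119, 124, 500, 121]
print(generalized_esd(weekly_sales, max_outliers=2))  # [500]
```

With `max_outliers=1` the procedure reduces to a two-sided Grubbs’ test, since λ_1 equals the Grubbs critical value.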

Additionally, the adoption of Robotic Process Automation (RPA) can streamline manual workflows, allowing teams to focus on more strategic, value-adding work, thereby enhancing overall productivity in a rapidly evolving AI landscape.

Category One: Identifying Anomalies and Outliers

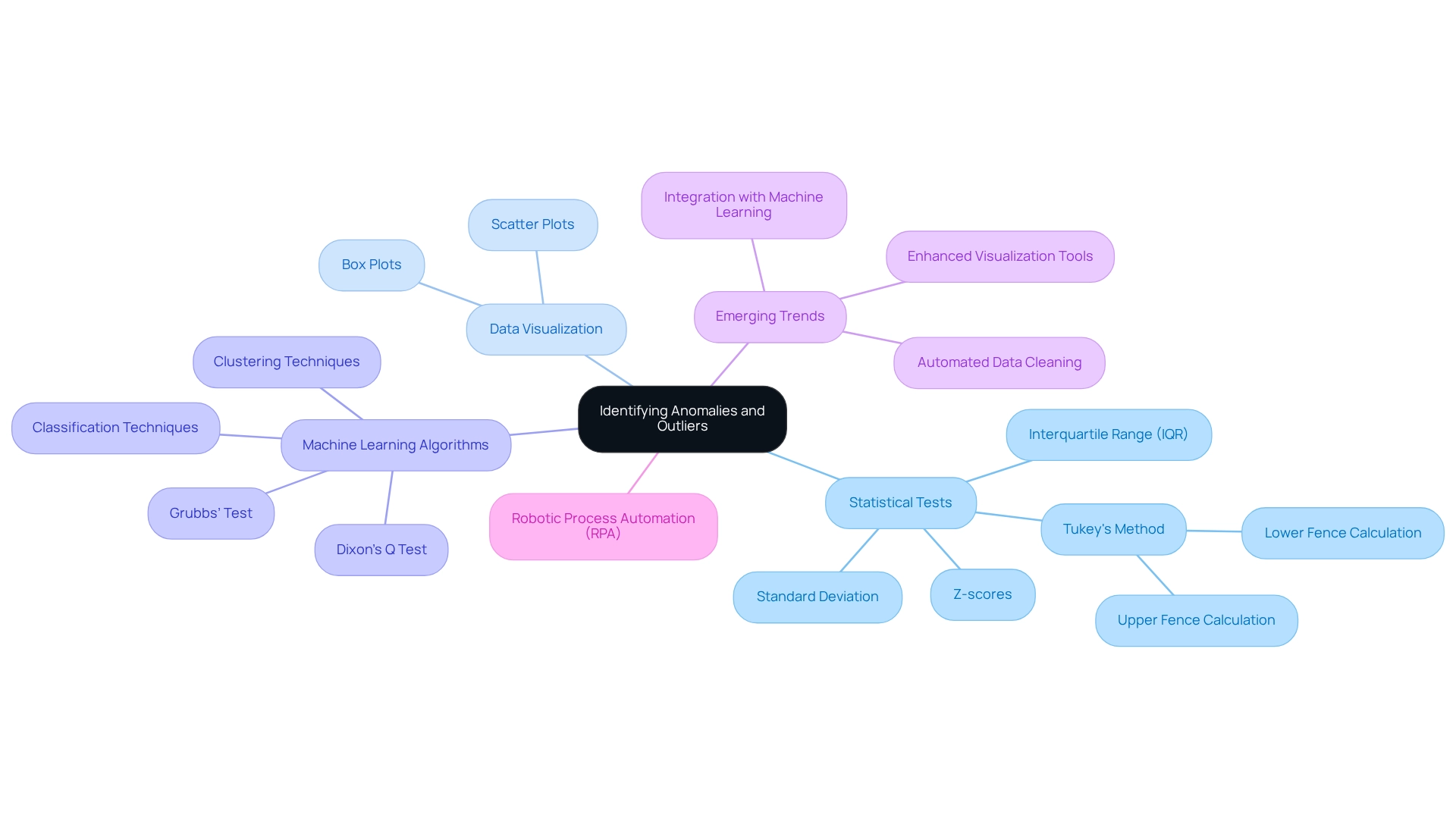

Recognizing anomalies and outliers is essential for preserving data integrity and operational efficiency. This process combines statistical methods and visualization techniques to pinpoint data points that diverge significantly from expected patterns. Key techniques include:

- Statistical Tests: Techniques such as z-scores and the interquartile range (IQR) form the foundation of outlier detection. Tukey’s method, for example, defines the lower and upper fences as lower fence = Q1 − k × IQR and upper fence = Q3 + k × IQR, where k is typically set to 1.5 for moderate outliers and 3 for extreme ones. Standard deviation plays a similar role: under the empirical rule, about 99.7% of normally distributed values lie within three standard deviations of the mean, so values beyond that range are flagged as potential outliers. These methods let analysts gauge how far an observation lies from the center of the data, effectively identifying possible anomalies.

- Data Visualization: Visualization tools like box plots and scatter plots are pivotal in anomaly detection. These graphical representations let analysts spot irregularities quickly by providing clear visual context. For instance, box plots show the distribution of values and highlight outliers, while scatter plots can reveal clusters and gaps in the data that may indicate anomalies.

- Machine Learning Algorithms: The integration of machine learning has transformed anomaly detection. Clustering and classification algorithms can automatically identify outliers in large datasets, significantly boosting efficiency. Classical statistical tests remain in use alongside them: Grubbs’ test and Dixon’s Q test are applied in manufacturing quality control to detect defective measurements, minimizing waste and enhancing quality assurance. As Andrew Rukhin notes, “this really transforms to a t distribution,” underscoring the statistical foundations of these tests.
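The z-score and Tukey-fence rules from the list above can be compared side by side. This minimal sketch uses Python’s standard `statistics` module; the function names, the three-standard-deviation cut-off, and the sample weekly sales figures are illustrative assumptions. Quartile conventions differ between tools, so fences computed elsewhere may differ slightly.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Values more than `threshold` population standard deviations from the mean."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / sd > threshold]


def tukey_fences(values, k=1.5):
    """Lower and upper fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def tukey_outliers(values, k=1.5):
    """Values outside Tukey's fences."""
    lower, upper = tukey_fences(values, k)
    return [v for v in values if v < lower or v > upper]


weekly_sales = [120, 125, 118, 130, 122, 127, 119, 124, 500, 121]
print(tukey_fences(weekly_sales))     # (110.875, 135.875)
print(tukey_outliers(weekly_sales))   # [500]
print(zscore_outliers(weekly_sales))  # []
```

The empty z-score result is expected rather than a bug. With the population standard deviation, no value in a sample of n can lie more than √(n − 1) standard deviations from the mean, so with 10 values a strict three-sigma rule can never fire. The outlier also inflates the very standard deviation used to judge it, while the quartile-based fences are barely affected by it.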

Alongside these conventional techniques, Small Language Models can enhance data analysis and improve data quality, while GenAI Workshops can give teams the training needed to implement these technologies successfully. Robotic Process Automation (RPA) can also streamline manual workflows, allowing organizations to focus on strategic initiatives rather than repetitive tasks. Emerging trends in outlier detection, such as machine learning for automated data cleaning and advanced visualization tools, reinforce the effectiveness of these methods.

As data scientists continue to explore these advancements, the consensus is clear: combining statistical methods, machine learning algorithms, and RPA not only enhances anomaly detection but also enables companies to tackle underlying issues, such as operational inefficiencies and data quality challenges.

Category Two: Performing What-If Analysis

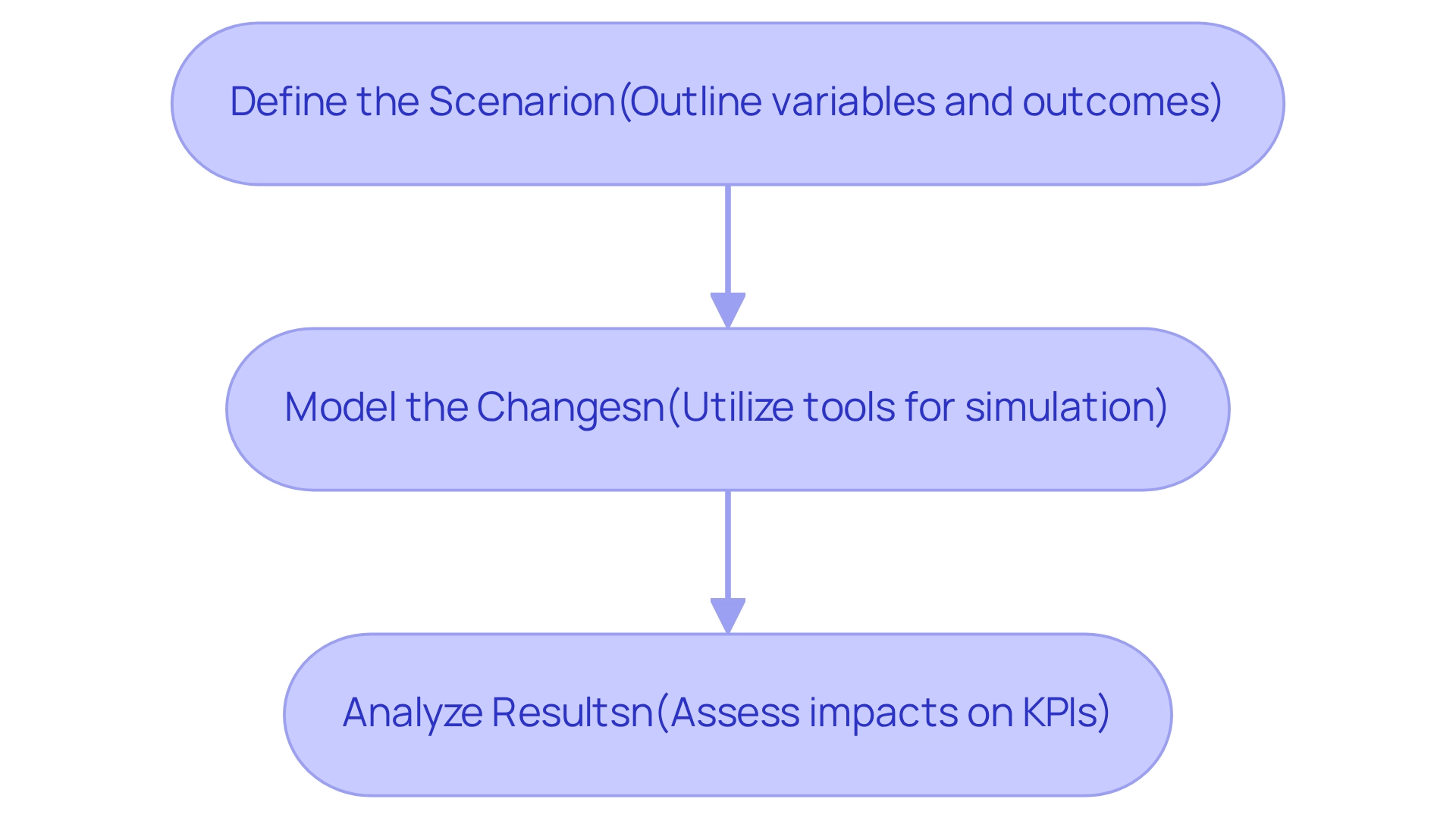

What-if analysis is a powerful technique that involves altering input variables to evaluate their impact on outcomes. It is widely used in financial modeling, risk management, and operational planning, helping organizations navigate uncertainty. The key steps in performing what-if analysis are:

- Define the Scenario: Begin by clearly outlining the variables that will be modified and the anticipated outcomes. This step is crucial for setting the parameters of the analysis.

- Model the Changes: Utilize spreadsheet software or specialized analytics tools, potentially enhanced by Robotic Process Automation (RPA), to simulate various scenarios. This modeling allows for a structured approach to understanding potential outcomes based on different inputs, streamlining the workflow and reducing manual effort.

- Analyze Results: After modeling, assess how the changes influence key performance indicators (KPIs). This examination is essential for making informed choices that align with institutional objectives, particularly in a swiftly changing AI environment where data-driven insights are crucial.
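The three steps can be sketched in code for a deliberately simple profit model. Everything here is hypothetical: the drivers (units, price, unit cost, fixed cost), the baseline values, and the scenarios are invented for illustration, and a real model would replace `kpis` with the organization’s own logic.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Drivers:
    """Hypothetical input variables of a simple profit model."""
    units: float
    price: float
    unit_cost: float
    fixed_cost: float


def kpis(d: Drivers) -> dict:
    """Derive the KPIs examined in the analysis step from the drivers."""
    revenue = d.units * d.price
    profit = revenue - d.units * d.unit_cost - d.fixed_cost
    return {"revenue": revenue, "profit": profit, "margin": profit / revenue}


def compare(baseline: Drivers, scenarios: dict) -> list:
    """Apply each scenario's overrides and report the profit change vs. baseline."""
    base_profit = kpis(baseline)["profit"]
    rows = []
    for name, overrides in scenarios.items():
        result = kpis(replace(baseline, **overrides))  # model the change
        rows.append((name, result["profit"], result["profit"] - base_profit, result["margin"]))
    return rows


# Define the scenarios as overrides of the baseline drivers (assumed values).
baseline = Drivers(units=10_000, price=20.0, unit_cost=12.0, fixed_cost=50_000)
scenarios = {
    "baseline": {},
    "price +5%, volume -3%": {"price": baseline.price * 1.05, "units": baseline.units * 0.97},
    "unit cost +10%": {"unit_cost": baseline.unit_cost * 1.10},
}

# Analyze how each change moves the KPIs.
for name, profit, change, margin in compare(baseline, scenarios):
    print(f"{name:<22} profit={profit:>8,.0f}  change={change:>+8,.0f}  margin={margin:.1%}")
```

In this toy model the price scenario lifts profit from 30,000 to 37,300, while a 10% rise in unit cost cuts it to 18,000. Expressing scenarios as overrides of a frozen baseline keeps every scenario traceable to exactly the variables it changes.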

What-if analysis helps organizations prepare for a range of future scenarios and refine their strategies to withstand market fluctuations. Recent statistics suggest that around 70% of organizations use what-if analysis in their financial modeling, underscoring its importance in strategic planning. The predictive models behind such analyses can also be evaluated quantitatively. For example, an R² score of 0.82 indicates that a model explains about 82% of the variance in the outcome it forecasts.
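R² compares a model’s squared prediction errors with the spread of the actual values around their mean: R² = 1 − SS_res / SS_tot. A minimal sketch with made-up actuals and forecasts follows. The function name and numbers are illustrative assumptions, not the model behind the 0.82 figure.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))  # unexplained error
    ss_tot = sum((a - mean) ** 2 for a in actual)                  # total variation
    if ss_tot == 0:
        raise ValueError("actual values have no variance")
    return 1 - ss_res / ss_tot


actual = [10, 12, 14, 16]
forecast = [11, 12, 13, 16]
print(round(r_squared(actual, forecast), 2))  # 0.9
```

A score is only as meaningful as the data it was computed on; R² measured on data the model was not fitted to is the more honest check of forecasting ability.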

Expert insights highlight the importance of scenario modeling in operational planning, with financial analysts emphasizing that effective scenario modeling leads to more robust decision-making frameworks. As noted by P8, scenario modeling serves as an adjunct to traditional analysis, enhancing the overall decision-making process. As companies increasingly adopt advanced analytics, combining what-if analysis with AI and machine learning is expected to improve predictive capabilities, enabling real-time data processing and more tailored customer experiences.

Case studies demonstrate the practical use of what-if analysis in financial modeling. For example, companies that have implemented this technique report improved risk management strategies, enabling them to identify vulnerabilities and adapt to changing market conditions. The evolution of influence analysis, which is expected to integrate more deeply with AI and machine learning, will further extend the capabilities of what-if analysis.

As 2025 progresses, the use of what-if analysis for business planning continues to expand, with companies increasingly acknowledging its importance in promoting sustainable growth and corporate responsibility. What-if analysis (WIA) techniques also facilitate collaborative analyses, allowing stakeholders to reason through decisions together. Tools such as Power BI can further improve reporting and actionable insights, ensuring that organizations are prepared to make informed choices based on thorough analysis.

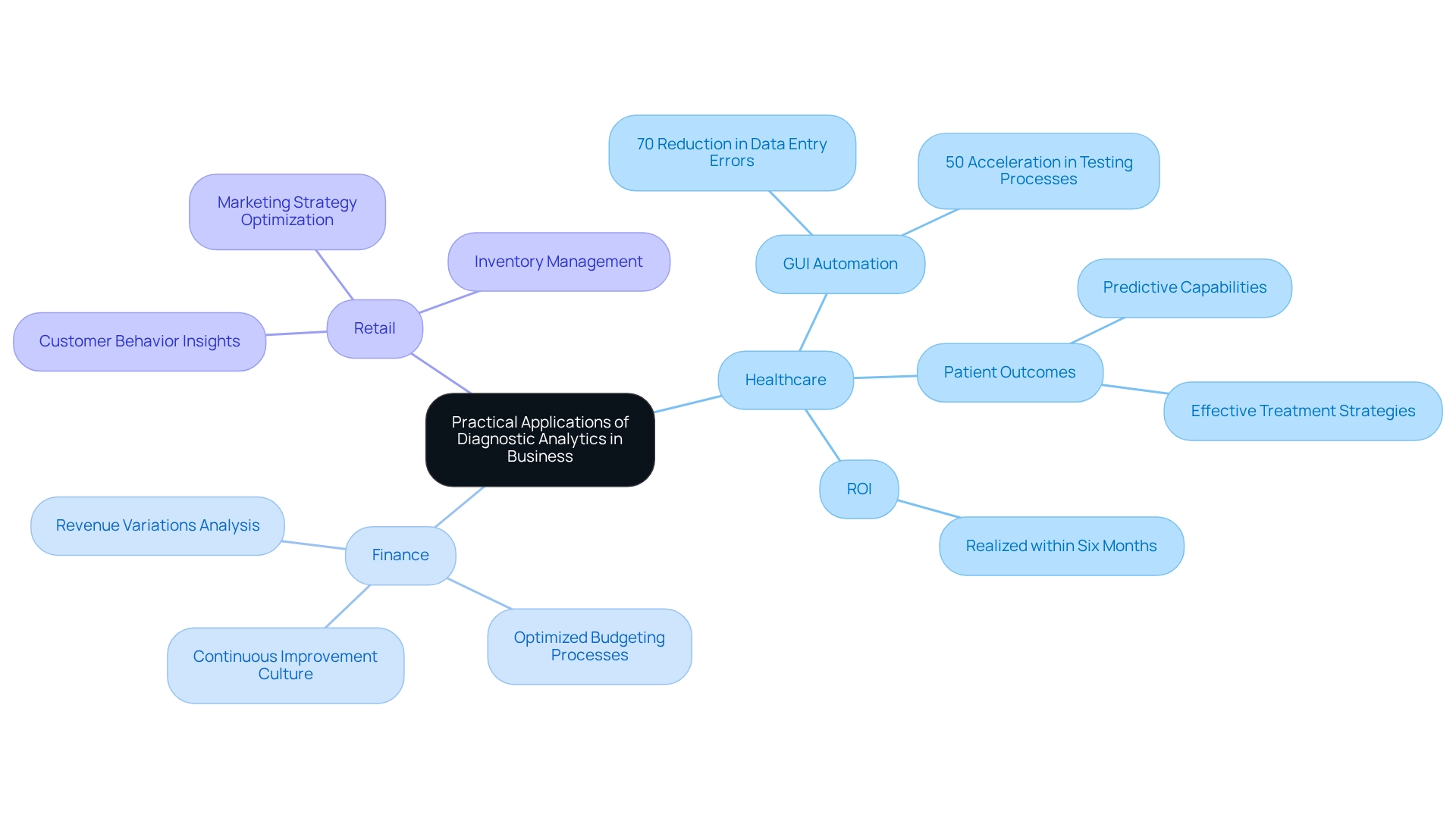

Practical Applications of Diagnostic Analytics in Business

Diagnostic analytics plays a pivotal role in operational efficiency and strategic decision-making across many sectors. Its applications include:

- Healthcare: By analyzing trends in patient data, healthcare providers can significantly improve treatment outcomes and reduce readmission rates. For instance, a mid-sized healthcare company implemented GUI automation to streamline data entry and software testing, achieving a 70% reduction in data entry errors and a 50% acceleration in testing processes. This automation improved assessment precision and helped healthcare professionals anticipate illnesses and identify high-risk patients more effectively. A study involving 37 patients demonstrated the efficacy of these methods in deriving positive and negative likelihood ratios, leading to more precise recommendations for confirmatory tests and better management of patient outcomes. Prescriptive analysis, in turn, develops effective treatment strategies and suggests measures to improve patient outcomes, ultimately raising operational efficiency in healthcare institutions. Notably, the ROI from these implementations was realized within six months, underscoring the effectiveness of GUI automation.

- Finance: In the financial sector, diagnostic assessments are crucial for uncovering the root causes of revenue variations. By analyzing historical financial performance, organizations can optimize budgeting processes and make informed decisions that enhance profitability. Incorporating these insights fosters a culture of continuous improvement, enabling companies to adapt quickly to evolving market conditions.

- Retail: Understanding customer behavior is vital for crafting effective marketing strategies and optimizing inventory management. Retail experts emphasize the importance of utilizing analytical tools to gain insights into customer preferences and purchasing behaviors. This data-driven approach not only enhances customer satisfaction but also drives sales growth.
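The likelihood ratios mentioned in the healthcare example are derived from a test’s sensitivity and specificity: LR+ = sensitivity / (1 − specificity) and LR− = (1 − sensitivity) / specificity. Multiplying a patient’s pre-test odds by a likelihood ratio gives the post-test odds, which is what makes the ratios useful for deciding on confirmatory tests. The sketch below uses hypothetical 2×2 counts and a hypothetical 20% pre-test probability; they are not data from the 37-patient study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical diagnostic-test counts against a reference standard."""
    tp: int  # test positive, condition present
    fp: int  # test positive, condition absent
    fn: int  # test negative, condition present
    tn: int  # test negative, condition absent

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def likelihood_ratios(self) -> tuple:
        """Return (LR+, LR-)."""
        sens, spec = self.sensitivity, self.specificity
        return sens / (1 - spec), (1 - sens) / spec


def post_test_probability(pre_test_prob: float, lr: float) -> float:
    """Convert probability to odds, apply the likelihood ratio, convert back."""
    odds = pre_test_prob / (1 - pre_test_prob) * lr
    return odds / (1 + odds)


counts = TwoByTwo(tp=40, fp=20, fn=10, tn=80)
lr_pos, lr_neg = counts.likelihood_ratios()
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")     # LR+ = 4.00, LR- = 0.25
print(f"{post_test_probability(0.20, lr_pos):.0%}")  # 50%
```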

Through analytical assessments, organizations in these fields can make informed, data-driven choices that lead to enhanced efficiency and profitability. In today’s data-rich environment, the impact of these analytics transcends operational improvements, significantly enhancing financial performance. As Craig R. Denegar noted, the use of statistics is essential for establishing certainty in diagnosis, further highlighting the importance of robust analytical frameworks in achieving these results.

Moreover, case studies such as “Enhancing Diagnostics and Predictions through AI and Machine Learning” illustrate how AI and machine learning bolster diagnostic accuracy and predictive capabilities, resulting in more effective healthcare solutions. Client testimonials from entities like NSB GROUP and Hellmann Marine Solutions underscore the transformative impact of Creatum GmbH’s technology solutions in improving operational efficiency and fostering business growth. This is particularly evident through services like the 3-Day Power BI Sprint, which facilitates rapid report generation and actionable insights. The Power BI services offer features such as a comprehensive management application and a systematic approach to reporting, ensuring consistency and clarity in decision-making.

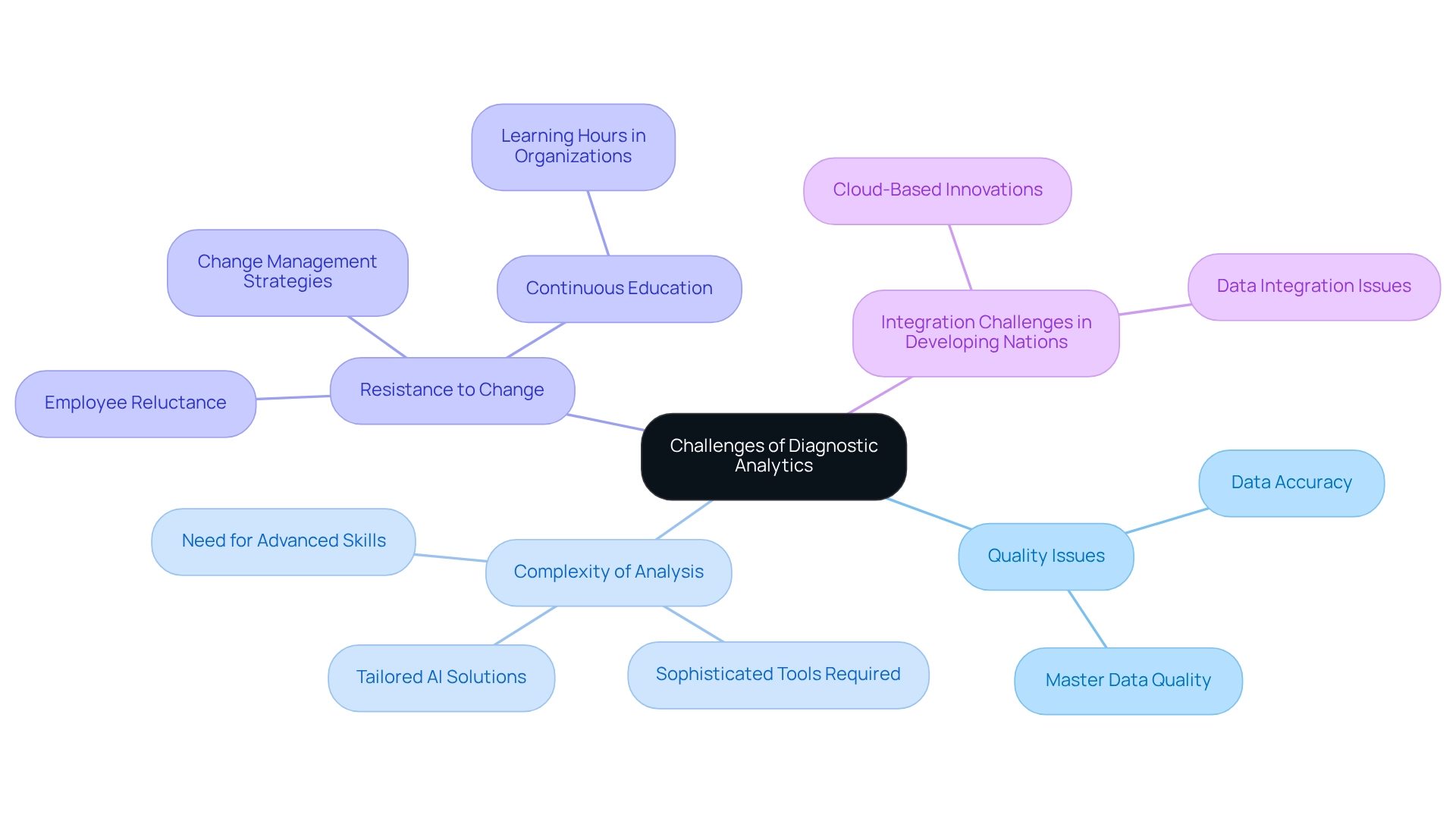

Challenges and Limitations of Diagnostic Analytics

While diagnostic analytics provides essential insights, companies frequently face significant challenges that can hinder its effective implementation.

- Quality Issues: The accuracy and comprehensiveness of data are crucial. In 2025, organizations continue to grapple with data quality challenges, where incorrect or inadequate information can lead to misleading conclusions, ultimately affecting decision-making processes. Poor master data quality can severely impede the success of both RPA and Business Intelligence initiatives, making it vital to address these foundational issues.

- Complexity of Analysis: The analytical landscape is becoming increasingly intricate, necessitating advanced statistical skills and sophisticated tools. This complexity can serve as a barrier for many organizations, limiting their ability to leverage analytical insights effectively. Tailored AI solutions can simplify this landscape, providing targeted technologies that align with specific business objectives and challenges, thereby enhancing analytical capabilities.

- Resistance to Change: Employees may be reluctant to embrace new analytical methods, which can slow progress. Effective change management strategies are essential to ease this transition and foster a culture of data-driven decision-making. Organizations can overcome this resistance by promoting continuous education and skill development in data science, similar to practices at leading firms like Amazon, which mandate ongoing ‘learning hours’ for analysts.

Addressing these challenges is crucial for organizations aiming to maximize the benefits of diagnostic analysis. For instance, a case study on transforming public health management illustrates how analytical techniques can analyze diverse data sources to identify disease outbreaks before they escalate. This capability helps healthcare organizations manage public health emergencies more effectively and shows how the strategic application of RPA and Business Intelligence can help tackle data quality issues.

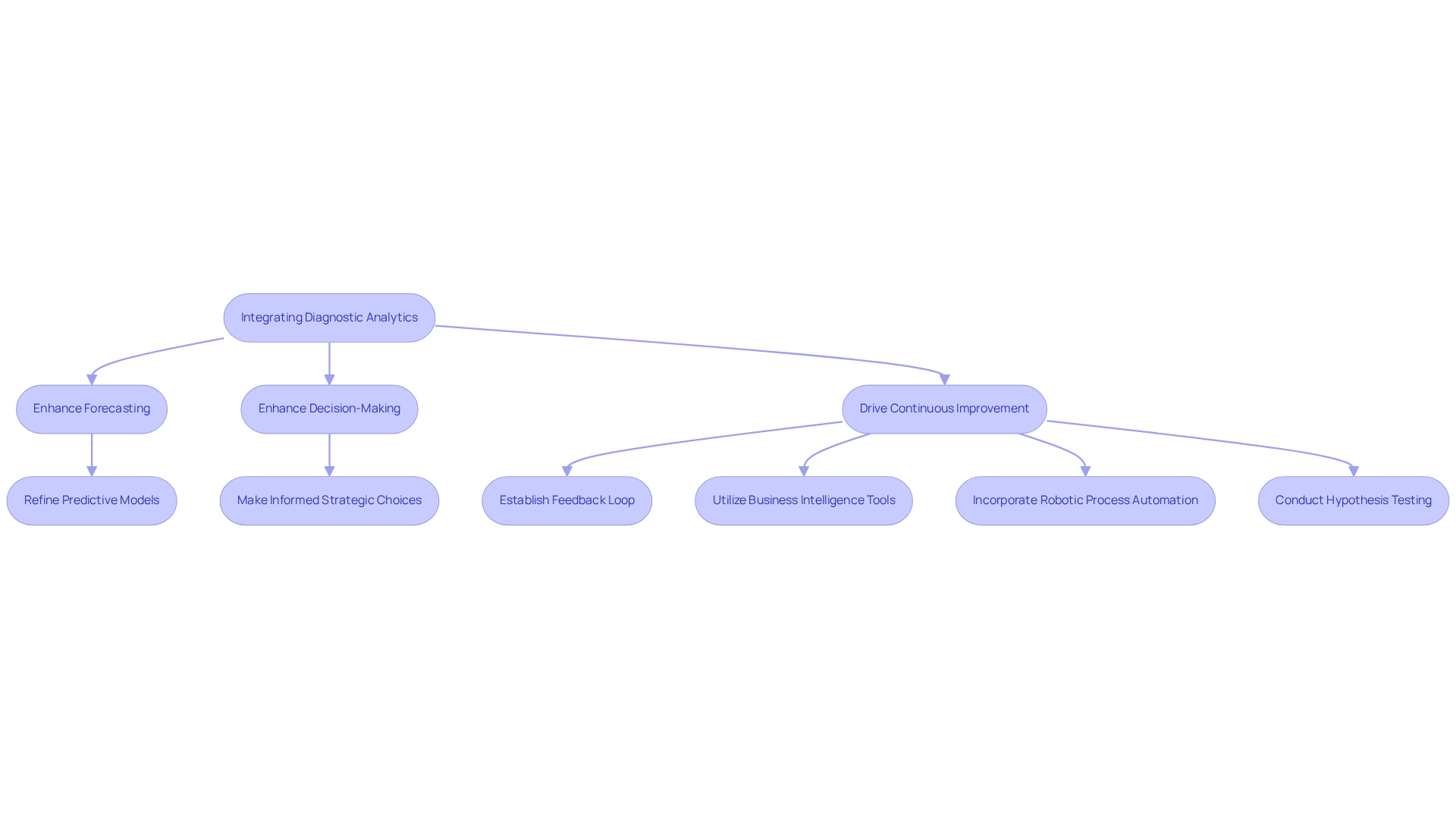

Moreover, retail leaders have successfully used diagnostic assessments to understand customer purchasing patterns and refine their marketing strategies. Such examples underscore the importance of addressing analytical complexity and data quality to improve the effectiveness of diagnostic analysis. As organizations navigate these challenges, expert insights suggest that fostering a culture of continuous learning and upskilling in analytics can significantly reduce resistance and improve analytical capabilities.