Introduction

The size of an AI model has become a critical factor in its performance and applications. Organizations increasingly recognize that the number of parameters in a model affects not only its ability to learn and adapt but also its operational efficiency and cost.

As businesses strive to harness the power of AI, understanding the intricate balance between model size and architecture becomes essential. This article delves into the nuances of AI model sizes, exploring:

- Their historical evolution

- The trade-offs involved in selection

- The innovative trends shaping the future

By navigating these complexities, companies can unlock the full potential of AI, streamline their workflows, and drive meaningful growth in an ever-competitive landscape.

Defining AI Model Size: A Fundamental Overview

AI model size is measured by the number of parameters (weights) in a machine learning model, which play a crucial role in its capacity to learn from data and produce accurate predictions. Generally, larger models can identify more complex patterns within datasets, enhancing predictive capabilities. However, model size is not the whole story: the architecture of the model significantly influences how effectively those parameters are used.
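To make the idea of a parameter count concrete, here is a minimal sketch that counts the weights and biases of a simple fully connected network. The two layer configurations are hypothetical and chosen only to show that architecture, not just input and output size, drives the total.

```python
def dense_layer_params(n_in: int, n_out: int) -> int:
    """Parameters of one fully connected layer: n_in * n_out weights plus n_out biases."""
    return n_in * n_out + n_out


def mlp_params(layer_widths: list[int]) -> int:
    """Total parameters of a fully connected network with the given layer widths."""
    return sum(
        dense_layer_params(n_in, n_out)
        for n_in, n_out in zip(layer_widths, layer_widths[1:])
    )


# Two hypothetical architectures with the same input (784) and output (10) widths.
wide_shallow = [784, 512, 10]
narrow_deep = [784, 128, 128, 128, 10]

print(mlp_params(wide_shallow))  # 407050
print(mlp_params(narrow_deep))   # 134794
```

The deeper network here has roughly a third of the parameters of the wider one. Which of the two performs better on a given task is an empirical question that the count alone does not answer.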

Recent advancements in AI architectures have shown that optimized structures can enhance performance metrics, making it essential for entities to consider AI model size and architecture when implementing AI strategies. By leveraging Small Language Models (SLMs), businesses can achieve efficient data analysis that is secure and cost-effective, addressing issues of poor master data quality while overcoming the challenges of AI adoption. Additionally, our GenAI Workshops provide hands-on training, empowering teams to create tailored AI solutions that fit their specific needs.

As Mark Webster notes, ‘52% of experts believe automation will displace jobs and create new ones,’ highlighting the implications of AI adoption on workforce dynamics. With 43% of businesses planning to reduce their workforce due to technological integration, it becomes imperative for organizations to adapt and harness AI effectively. The study titled ‘Consumer Perspectives on AI’ reveals a mix of optimism and skepticism among consumers regarding AI technologies, underscoring the need for better communication and education around AI.

By choosing an appropriate architecture and optimizing the AI model size, companies can improve operational efficiency and manage the challenges of AI adoption in a swiftly changing technological environment.


The Implications of Model Size on AI Performance and Applications

Model size plays a pivotal role in determining an AI system’s performance capabilities, especially when considering the integration of Robotic Process Automation (RPA) into workflows. Larger systems excel in complex tasks such as natural language processing and image recognition; GPT-3, with 175 billion parameters, is known for outstanding language generation. However, this enhanced capability brings increased computational demands and operational costs. ChatGPT’s initial budget, for instance, was reportedly set at €100 million annually.
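As a rough back-of-the-envelope illustration of why a 175-billion-parameter model is demanding to run, the sketch below estimates the memory needed just to hold the weights at a few common numeric precisions. The byte sizes per parameter are standard, but the calculation ignores activations, caches, and optimizer state, and the 7-billion-parameter comparison model is a hypothetical added for contrast.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory in gigabytes (10^9 bytes) needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


GPT3_PARAMS = 175_000_000_000        # parameter count cited in the text
SMALL_MODEL_PARAMS = 7_000_000_000   # hypothetical smaller model for comparison

for dtype in BYTES_PER_PARAM:
    large = weight_memory_gb(GPT3_PARAMS, dtype)
    small = weight_memory_gb(SMALL_MODEL_PARAMS, dtype)
    print(f"{dtype}: large model {large:.0f} GB, small model {small:.0f} GB")
```

At 16-bit precision the larger model needs about 350 GB for weights alone, which is more than a single typical accelerator holds, while the hypothetical smaller model needs about 14 GB.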

In contrast, smaller models offer efficiency and speed, making them well suited to real-time applications, although this may involve some compromise in accuracy. As Thomas Scialom, a research scientist at Meta, suggests, ‘We can put more compute in the smaller models, and in the larger models, we will have better designs with the same recipe in five years’ time.’ This viewpoint encourages organizations to reassess their model selection strategy, especially regarding RPA, which can optimize manual workflows, reduce errors, and allow teams to focus on more strategic, value-enhancing tasks.

Choosing the future we wish to simulate is both a functional and spiritual choice about our identity, highlighting the importance of aligning model capabilities with organizational values and goals. Furthermore, President Macron’s advocacy for open-source options in France highlights the increasing importance of accessible AI technologies. Insights from Jeff Wittich further suggest that optimizing LLM performance with CPUs may challenge the conventional reliance on high-end hardware for AI tasks, paving the way for more affordable computing options.

By thoughtfully evaluating their particular needs and operational objectives, businesses can select a model size that balances performance with resource efficiency, resulting in more effective AI integration and improved business productivity. Smaller systems can also be particularly cost-effective, allowing organizations to implement RPA solutions without incurring substantial operational costs.

A Historical Perspective on AI Model Size Evolution

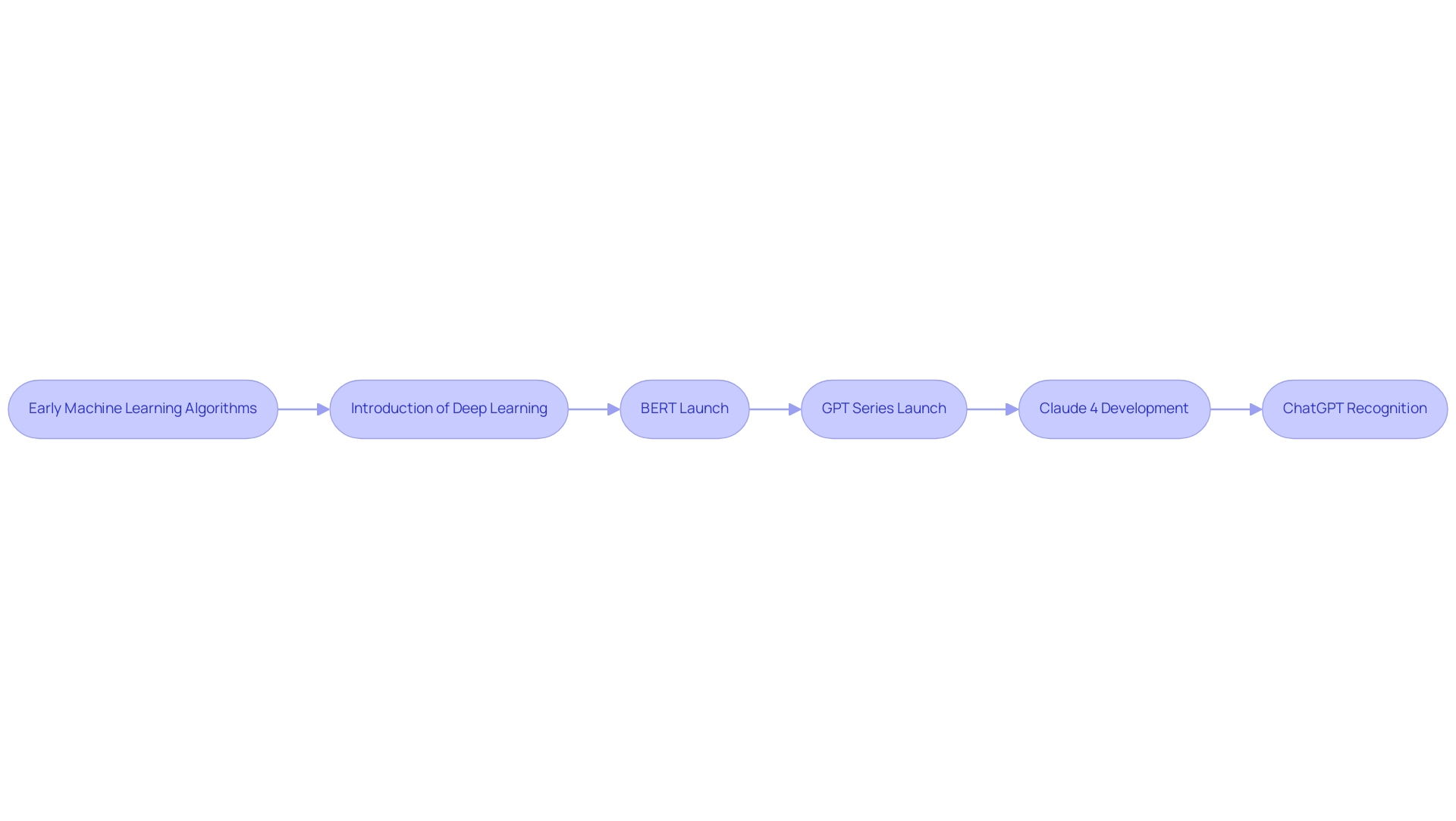

The evolution of AI model sizes began with early machine learning algorithms that had only a small number of parameters. As computational power increased and large datasets became accessible, AI systems expanded considerably. The introduction of deep learning marked a transformative moment, enabling the development of neural networks with millions of parameters.

This shift has been pivotal, allowing AI systems to tackle increasingly complex tasks. From 2010 to 2024, the quantity of parameters in prominent systems has skyrocketed, with trends indicating a clear move toward even larger frameworks. Notable examples include Google’s BERT and OpenAI’s GPT series, which have set new benchmarks in natural language processing.

Claude 4, developed by Anthropic, further illustrates the rapid growth trajectory of AI models. This evolution reflects not just technological progress but also the growing expectation that AI systems perform sophisticated functions, driving innovations that are shaping the future of artificial intelligence. In this context, Robotic Process Automation (RPA) can help streamline workflows by automating manual, repetitive tasks, thereby boosting efficiency, reducing errors, and freeing teams for more strategic, value-adding work.

This enables entities to navigate the overwhelming options in the AI landscape effectively. As Steve Polyak poignantly remarked, ‘Before we work on artificial intelligence, why don’t we do something about natural stupidity?’ This quote serves as a reminder of the challenges faced in AI development.

Dr. Alan D. Thompson emphasizes the importance of aligning AI advancements with practical applications, including tailored AI solutions that can help organizations identify the right technologies to meet their specific business goals. For example, implementing AI-driven analytics tools can transform raw data into actionable insights, enabling informed decision-making. A tangible illustration of this evolution is OpenAI’s ChatGPT, which has gained widespread use and recognition for producing coherent and contextually appropriate responses, highlighting the practical effects of larger models and improved system capabilities.

Navigating Trade-offs: Costs and Resources in Model Size Selection

Choosing a suitable AI model size is a nuanced decision that involves several trade-offs, particularly in the context of enhancing operational efficiency through RPA. Manual, repetitive tasks can significantly slow down operations, leading to wasted time and resources, which RPA aims to alleviate by automating these workflows. Larger models, while capable of delivering enhanced performance, demand computational resources that grow with model size, leading to increased hardware and energy costs.

OpenAI’s recent pricing adjustment to one-fifth of one cent for approximately 750 words of output marks a significant reduction, reshaping the financial landscape for companies leveraging AI. This pricing shift has prompted organizations, including Latitude, to reassess their AI strategies. A Latitude spokesperson remarked, ‘I think it’s fair to say that it’s definitely a huge change we’re excited to see happen in the industry and we’re constantly evaluating how we can deliver the best experience to users.’
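To make the quoted price concrete, here is a small sketch that scales it to a given volume of output. It assumes pricing is strictly linear in word count and uses the approximation of 750 words per priced unit given above; the function name and the example volume are hypothetical.

```python
PRICE_CENTS_PER_UNIT = 0.2  # one-fifth of one cent, as quoted above
WORDS_PER_UNIT = 750        # approximate words per priced unit, as quoted above


def output_cost_cents(words: int) -> float:
    """Estimated cost in cents for generating `words` words, assuming linear pricing."""
    return words / WORDS_PER_UNIT * PRICE_CENTS_PER_UNIT


# Hypothetical workload: one million generated words.
print(f"{output_cost_cents(1_000_000):.2f} cents")
```

Under these assumptions, a million words of output costs a little under 270 cents, which helps explain why a price cut of this kind prompts companies to revisit their AI budgets.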

Such changes prompt companies to reassess their strategies, especially as they aim to complement AI systems with RPA to automate manual workflows and enhance productivity. Smaller models, on the other hand, are typically cheaper and quicker to train, albeit often at the cost of reduced accuracy and capability. Organizations must carefully weigh their budget constraints, infrastructure capabilities, and specific application requirements to identify the model size that aligns with their strategic objectives.

Customized AI approaches can specifically assist organizations in navigating the overwhelming AI landscape by providing targeted technologies that align with their business objectives. This consideration is particularly vital as they navigate the trade-offs between larger and smaller AI model sizes in 2024, while also exploring customized AI solutions and harnessing Business Intelligence to drive actionable insights and informed decision-making.

Future Trends: Innovations and Efficiency in AI Model Sizes

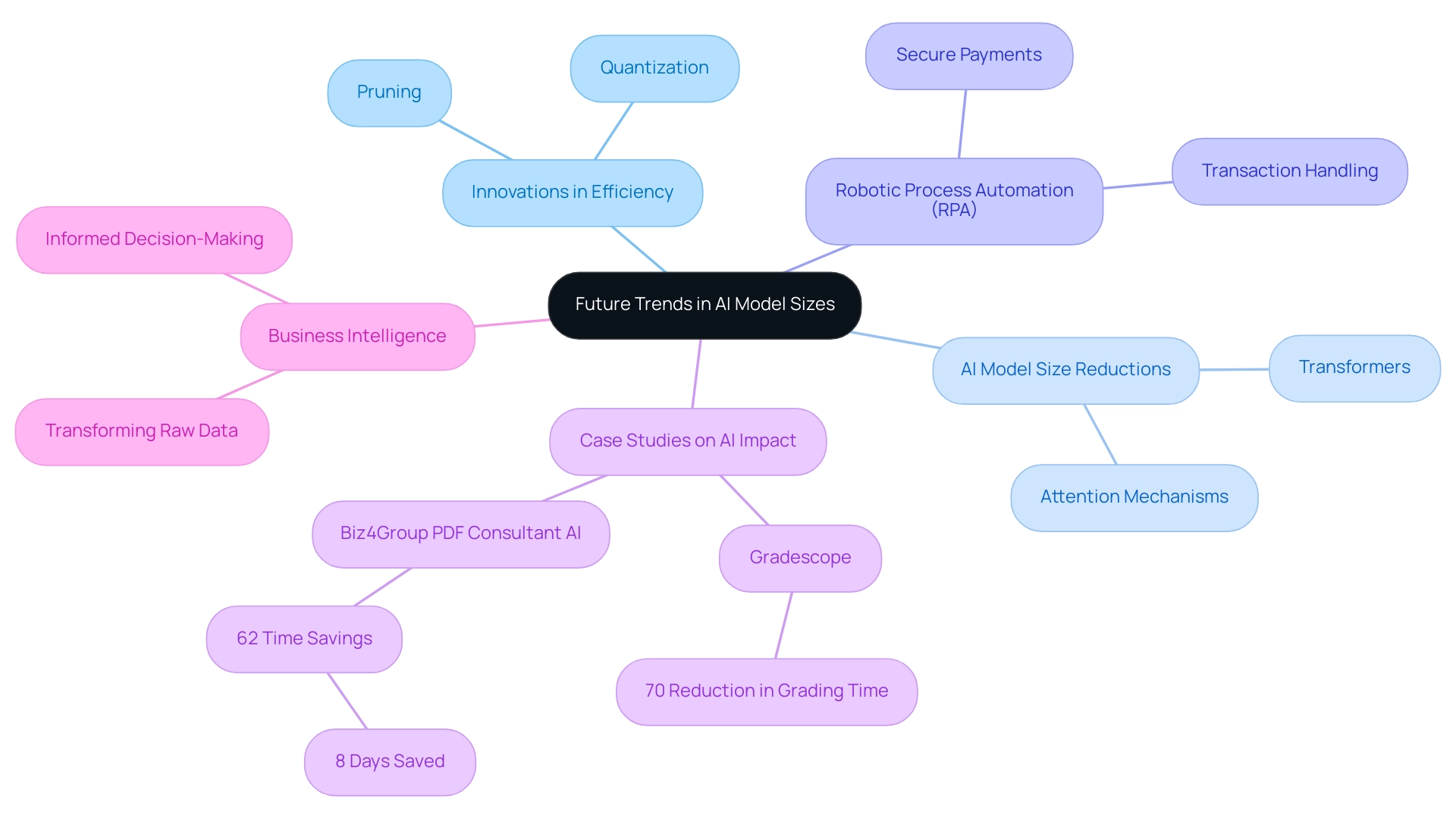

The landscape of AI models is on the brink of transformative change, fueled by innovations in efficiency. Methods like pruning (removing weights that contribute little) and quantization (storing weights at lower numeric precision) are becoming increasingly essential, allowing companies to reduce model size while largely preserving performance. More broadly, research indicates that educators using AI-powered tools like Gradescope have achieved a 70% reduction in grading time, showcasing the potential of AI to streamline operations.
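The two techniques named above can be sketched on a toy list of weights. This is a simplified illustration, assuming magnitude-based pruning and symmetric 8-bit quantization; production toolkits apply these per layer or per channel and usually fine-tune afterwards. All function names and the example weights are hypothetical.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in q_weights]


weights = [0.02, -1.27, 0.5, -0.01, 0.8, 0.03]  # toy example values
pruned = magnitude_prune(weights, sparsity=0.5)
q_weights, scale = quantize_int8(pruned)
restored = dequantize(q_weights, scale)

print(pruned)     # [0.0, -1.27, 0.5, 0.0, 0.8, 0.0]
print(q_weights)  # [0, -127, 50, 0, 80, 0]
```

Pruning makes half the weights zero, so they can be stored sparsely, and quantization stores each remaining weight in one byte instead of four. The restored values differ from the pruned ones by at most half the quantization step.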

In parallel, leveraging Robotic Process Automation (RPA) can further automate manual workflows, enhancing operational efficiency in an increasingly complex AI landscape. Sanjeev Verma, Founder & CEO, emphasizes that AI technologies handle transactions smoothly, ensuring secure payments and processing refunds without hassle, highlighting their operational efficiency. Furthermore, advancements in model architectures, particularly with transformers and attention mechanisms, are leading to the development of smaller models capable of executing complex tasks with fewer parameters.

The Biz4Group PDF Consultant AI case study demonstrates how AI innovations can save users 62% of their time on long-form content, translating to 8 days saved and increased productivity among working professionals. By referencing legal advisory platforms, we see that AI provides accessible and reliable counsel across various sectors, further broadening the discussion on its impact. As entities aim to enhance their AI systems, grasping these trends and their consequences will be crucial.

Additionally, the role of Business Intelligence in transforming raw data into actionable insights cannot be overstated, as it enables informed decision-making that drives growth and innovation. Businesses often face challenges in identifying the right AI solutions amidst the rapidly evolving landscape, making it crucial to embrace tailored AI solutions alongside RPA. By unlocking the full potential of RPA and AI technologies while managing costs and resources adeptly, organizations can set the stage for enhanced operational efficiency in the years to come.

Conclusion

AI model size is a pivotal aspect that significantly influences performance, operational efficiency, and cost-effectiveness in the realm of artificial intelligence. As explored throughout this article, understanding the relationship between model size and architecture is essential for organizations looking to leverage AI effectively. The historical evolution of AI models illustrates a remarkable journey towards increased complexity and capability, with larger models demonstrating enhanced performance in tasks such as natural language processing and image recognition. However, this comes at a cost, necessitating a careful evaluation of resources and operational needs.

The trade-offs between larger and smaller models are critical for organizations aiming to enhance their workflows through AI integration. While larger models may offer superior capabilities, they also demand substantial computational resources and higher operational costs. Conversely, smaller models can provide quicker and more budget-friendly solutions, albeit with some compromises in accuracy. Businesses must strategically assess their unique requirements to select the model size that aligns with their goals, ensuring that they strike the right balance between performance and resource efficiency.

Looking ahead, the future of AI model sizes is marked by innovation and a focus on efficiency. Techniques such as model pruning and quantization are emerging as effective methods to streamline models without sacrificing their capabilities. By harnessing the advancements in AI technology and integrating Robotic Process Automation, organizations can enhance productivity and operational efficiency. As the landscape continues to evolve, embracing tailored AI solutions will be essential for navigating the complexities of AI adoption and unlocking the full potential of these transformative technologies. Through informed decision-making and strategic implementation, organizations can position themselves to thrive in an increasingly competitive environment.
