Introduction

In the rapidly evolving landscape of data management, organizations are increasingly turning to Power BI dataflows as a powerful solution to streamline their data integration and reporting processes. This innovative feature not only simplifies the connection and transformation of data from multiple sources but also enhances operational efficiency by minimizing redundancy and fostering collaboration among teams.

Through the strategic utilization of dataflows, businesses can overcome common challenges related to data handling, paving the way for insightful reporting and data-driven decision-making.

By exploring best practices, common pitfalls, and integration strategies, organizations can unlock the full potential of Power BI, ensuring they remain competitive in today’s data-driven environment.

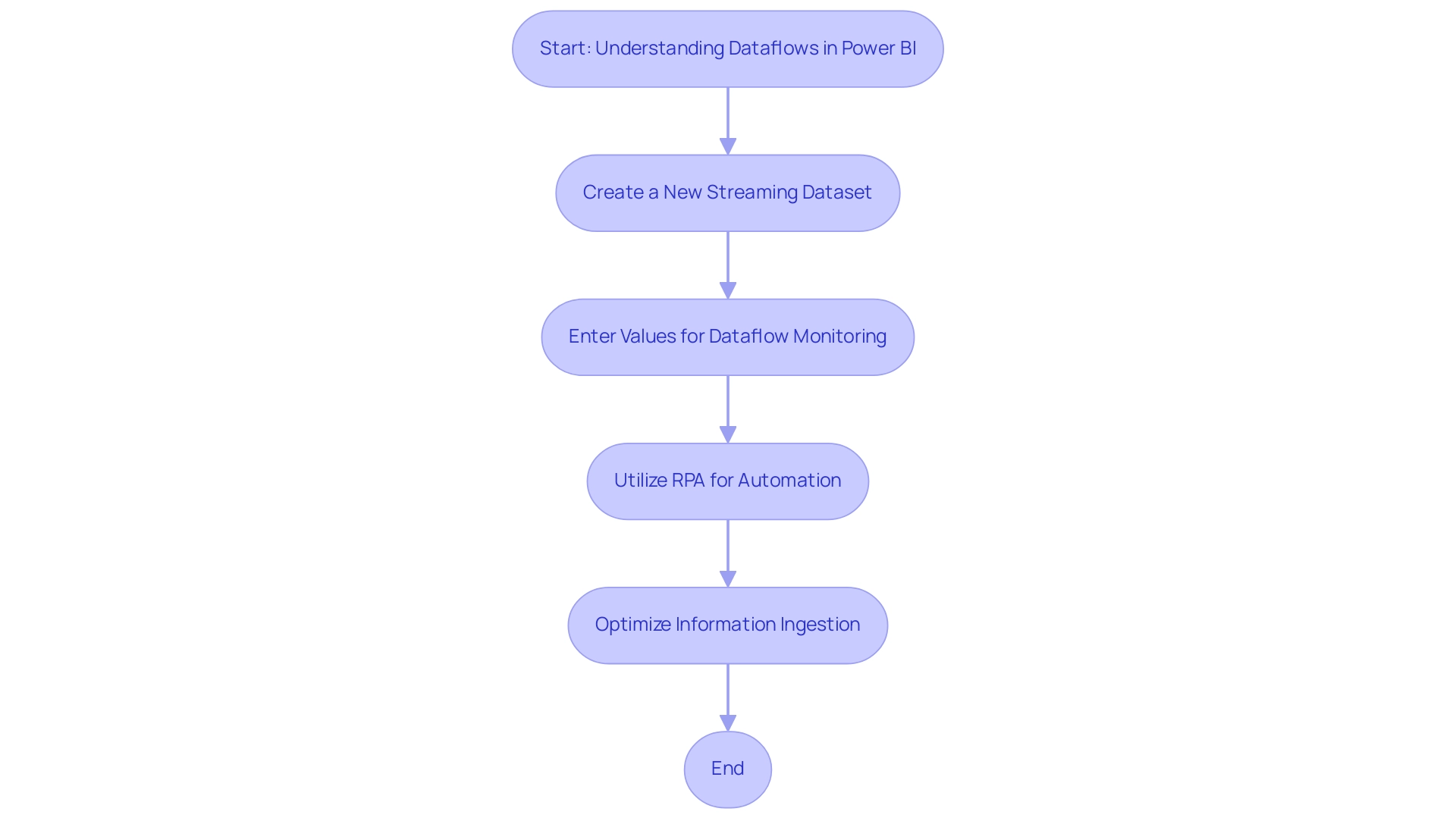

Understanding Dataflows in Power BI

Dataflows in Power BI let users connect to, transform, and load data from various sources into a shared repository, addressing common obstacles in technology implementation. This capability not only improves data management but also establishes the foundation for insightful reporting and analysis. Using Power Query, dataflows define transformation steps that can be reused across multiple Power BI reports, ensuring consistency and efficiency in data handling.

Wisdom Wu, a Community Support expert, emphasizes, ‘Based on my learning, you can use a Power BI semantic model to build a dataflow monitoring report. First, create a new streaming dataset. Then, enter the following values.’

This practical insight emphasizes the necessity of mastering dataflows for effective data management. Additionally, a recent case study titled ‘Detecting Orphaned Information Streams’ highlights user challenges in developing processes to identify orphaned information streams, revealing the critical need for timely implementation to avoid setbacks. The Fabric community’s report of 2,392 users online reflects a growing interest in utilizing these tools effectively.

Furthermore, the experience of a user who was sidetracked for 1 year, 9 months, and 7 days before building their solution illustrates the hurdles organizations may encounter in adopting dataflows. By mastering these capabilities, organizations can optimize their information ingestion processes, minimize redundancy, and foster greater collaboration among teams. This strategic approach not only streamlines information preparation but also positions businesses to make informed decisions based on dependable information, enhancing operational efficiency and driving innovation.

To further improve reporting and actionable insights, our Power BI services include:

- A 3-Day BI Sprint for rapid creation of reports

- A General Management Application for thorough oversight and intelligent evaluations

Additionally, leveraging RPA can automate repetitive tasks within this workflow, significantly improving efficiency and reducing errors, allowing teams to focus on strategic initiatives.

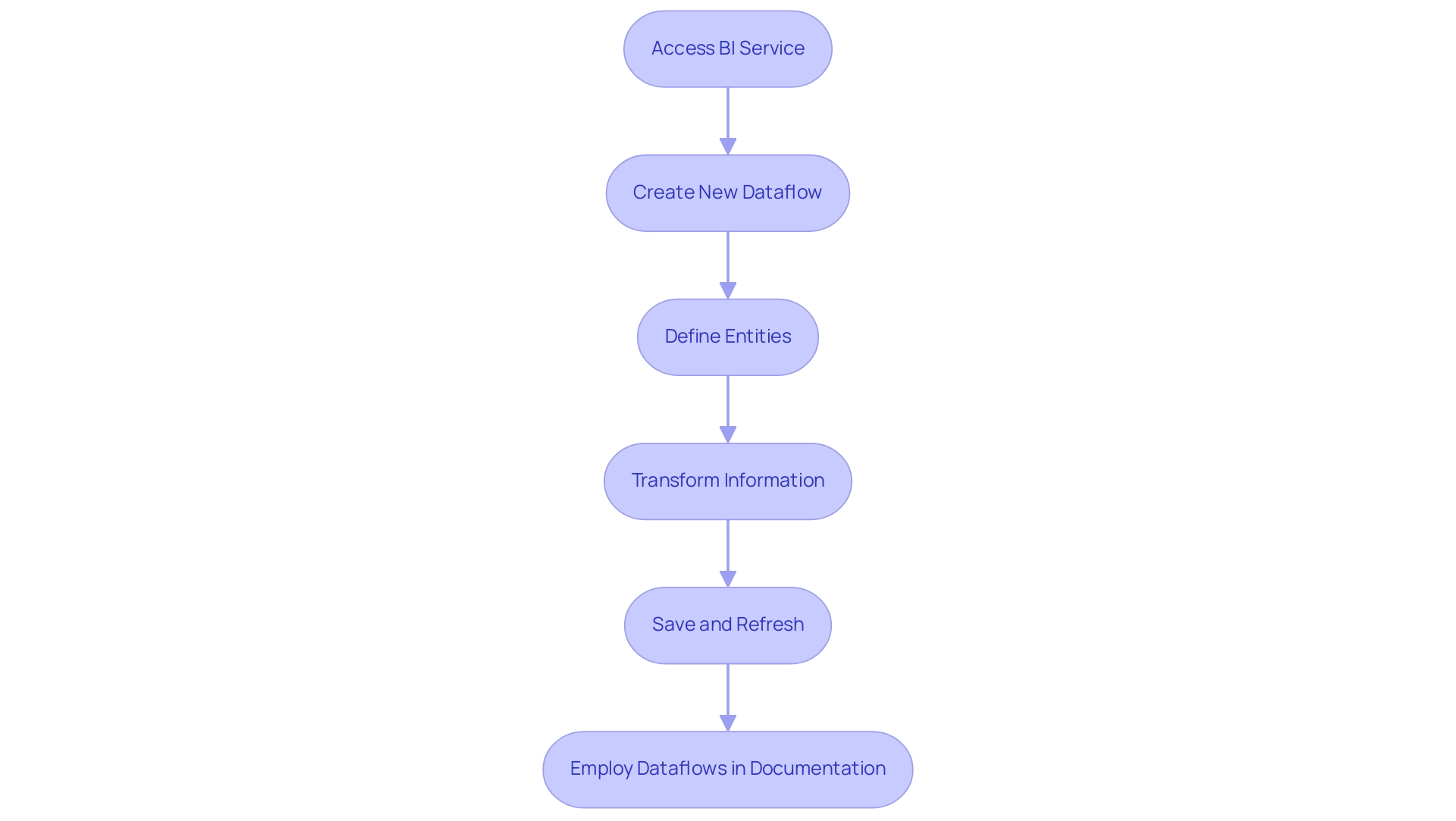

Step-by-Step Guide to Creating Dataflows

- Access the Power BI Service: Begin by signing in to your Power BI account and navigating to the workspace where you want to create your dataflow. Make sure you have the right access, because certain features, such as computed tables, are available only to Premium subscribers.

- Create a New Dataflow: Click ‘Create’ and select ‘Dataflow’ from the dropdown menu. This step establishes the foundation for your data integration efforts and helps address the common challenge of time-consuming report creation.

- Define Entities: In the Power Query editor, connect to your data sources. Select ‘Add new entities’ to incorporate multiple sources efficiently. Defining entities accurately is crucial to prevent inconsistencies and common mistakes that can occur when creating dataflows.

- Transform Data: Use Power Query to apply the transformations your data needs. This might involve filtering out unnecessary rows, merging datasets for comprehensive analysis, and organizing the data according to your reporting needs. Remember that for computed tables, transformations execute on the data in Power BI dataflows storage, not on the external source itself.

- Save and Refresh: Once your transformations are complete, save the dataflow. Establish a refresh schedule so that your data stays current and reflects changes in the underlying source. This is essential for preserving the accuracy of your reports and supporting ongoing improvements in operational efficiency.

- Use Dataflows in Reports: Once you have established your dataflows, they can be used as a data source in your Power BI reports. This integration ensures consistent data usage across reports, enhancing the overall efficiency of your business intelligence efforts. Avishek Ghosh, an analytics expert, emphasizes that understanding these foundational aspects can greatly influence your analytical capabilities in Power BI. Furthermore, as emphasized in the case study on creating computed tables, once activated, any transformations on the computed table are performed on the data in Power BI dataflows storage, not on the external data source.
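The filter, merge, and organize transformations named in the steps above can be illustrated outside Power BI. What follows is a minimal sketch in plain Python, not Power Query M and not how Power BI executes a dataflow internally. The table names, column names, and sample rows are all hypothetical.

```python
# Hypothetical sample entities. In a real dataflow these would come from
# the data sources connected in the Power Query editor.
ORDERS = [
    {"order_id": 1, "customer_id": "C1", "amount": 120.0, "status": "shipped"},
    {"order_id": 2, "customer_id": "C2", "amount": 35.5, "status": "cancelled"},
    {"order_id": 3, "customer_id": "C1", "amount": 80.0, "status": "shipped"},
]
CUSTOMERS = [
    {"customer_id": "C1", "region": "North"},
    {"customer_id": "C2", "region": "South"},
]


def transform(orders, customers):
    """Filter, merge, and organize rows, in that order."""
    # 1. Filter: drop rows the report does not need (cancelled orders here).
    kept = [o for o in orders if o["status"] != "cancelled"]

    # 2. Merge: left-join each order to its customer's region.
    region_by_customer = {c["customer_id"]: c["region"] for c in customers}
    merged = [
        {**o, "region": region_by_customer.get(o["customer_id"])} for o in kept
    ]

    # 3. Organize: keep only the reporting columns, largest amount first.
    shaped = [
        {"order_id": r["order_id"], "region": r["region"], "amount": r["amount"]}
        for r in merged
    ]
    return sorted(shaped, key=lambda r: r["amount"], reverse=True)


result = transform(ORDERS, CUSTOMERS)
```

Keeping each transformation as a separate, named step mirrors how applied steps are listed in a query, which makes it easier to see where a row was dropped or a column was added.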

As Nikola perceptively pointed out, while it may appear intricate initially, each topic can be examined further in subsequent articles, laying the groundwork for deeper comprehension and mastery of dataflows in Power BI. By following these steps, you will not only streamline your data integration processes but also position your organization to leverage real-time insights effectively, driving growth and innovation. Furthermore, integrating RPA solutions such as EMMA RPA and Power Automate can significantly enhance operational efficiency, allowing your team to focus on strategic initiatives rather than repetitive tasks.

Remember, failing to leverage Business Intelligence can leave your organization at a competitive disadvantage in today’s data-driven landscape.

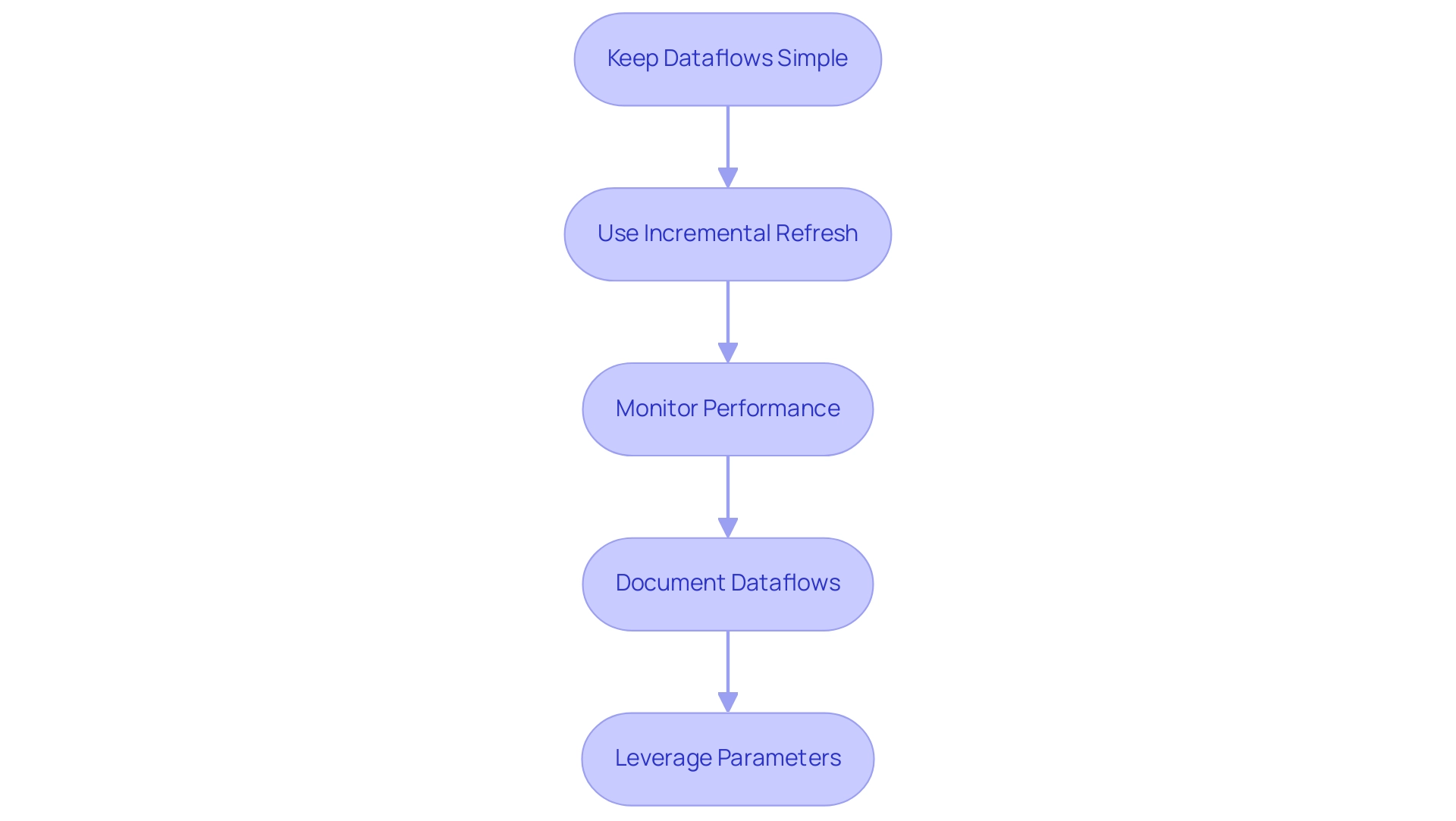

Best Practices for Optimizing Power BI Dataflows

- Keep Dataflows Simple: Streamline your dataflows and avoid overly complex transformations. Break down large processes into smaller, manageable components. This not only enhances performance but also simplifies troubleshooting and maintenance, ensuring a user-friendly approach akin to solutions like Power Automate and EMMA RPA, which are both AI-powered and designed for accessible automation.

- Use Incremental Refresh: For organizations dealing with extensive datasets, implementing incremental refresh is crucial. This approach refreshes only new or modified data, significantly reducing load times. According to a case study titled “Incremental Refresh,” this strategy optimizes data loading and reduces unnecessary data processing, ultimately enhancing operational efficiency and supporting risk-free ROI assessment.

- Monitor Performance: Regularly evaluate the efficiency of your dataflows. Tracking usage patterns and refresh times enables you to identify bottlenecks and optimize processes effectively. Ongoing performance monitoring helps your dataflows stay efficient and responsive to shifting demands.

- Document Dataflows: Maintain comprehensive documentation of your dataflow processes. Clear documentation aids in troubleshooting and serves as a valuable resource for onboarding new team members, fostering a collaborative environment and enhancing productivity.

- Leverage Parameters: Use parameters to create adaptable dataflows that can switch between different environments or datasets. This flexibility reduces the need for extensive modifications, allowing for quicker adjustments to meet evolving business needs.
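Two of the practices above, incremental refresh and parameters, combine naturally: a refresh that only touches rows inside a datetime window, with the window supplied as parameters per environment. The sketch below shows that idea in plain Python under stated assumptions. The names `rows_in_window`, `PARAMS`, and `modified_at` are hypothetical, and Power BI manages its own refresh partitions rather than running code like this.

```python
from datetime import datetime


def rows_in_window(rows, range_start, range_end, field="modified_at"):
    """Return rows whose datetime field falls in [range_start, range_end)."""
    return [r for r in rows if range_start <= r[field] < range_end]


# Hypothetical source rows, each carrying the datetime field that an
# incremental refresh needs in order to decide what is new or changed.
SALES = [
    {"id": 1, "modified_at": datetime(2024, 1, 5)},
    {"id": 2, "modified_at": datetime(2024, 2, 10)},
    {"id": 3, "modified_at": datetime(2024, 2, 20)},
]

# Hypothetical parameter sets: the same logic runs in every environment,
# and only the parameter values differ.
PARAMS = {
    "dev": {"range_start": datetime(2024, 2, 15), "range_end": datetime(2024, 3, 1)},
    "prod": {"range_start": datetime(2024, 2, 1), "range_end": datetime(2024, 3, 1)},
}


def refresh(environment):
    """Select only the rows inside the environment's refresh window."""
    window = PARAMS[environment]
    return rows_in_window(SALES, window["range_start"], window["range_end"])


dev_ids = [r["id"] for r in refresh("dev")]
prod_ids = [r["id"] for r in refresh("prod")]
```

The half-open window (start included, end excluded) is a deliberate choice in this sketch: adjacent windows never overlap, so a row is never loaded twice.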

As m_alireza, a Solution Specialist, points out, the choice between a shared dataset and a data flow -> dataset model can impact efficiency. He notes, “Once you grow larger, our team personally found issues with the golden dataset approach as well. It soon became unmanageable as more tables were added. Refresh times were becoming too slow.” This highlights the importance of considering the organization’s size and governance when designing effective dataflows. Additionally, the recommendations in this section are informed by the expertise of Aaron Stark, who holds 7 Microsoft Certifications, underscoring the credibility of these best practices and the significance of RPA and business intelligence in driving data-driven insights for operational growth.

For further insights and personalized guidance, we encourage you to book a free consultation to explore how EMMA RPA and Power Automate can transform your business operations.

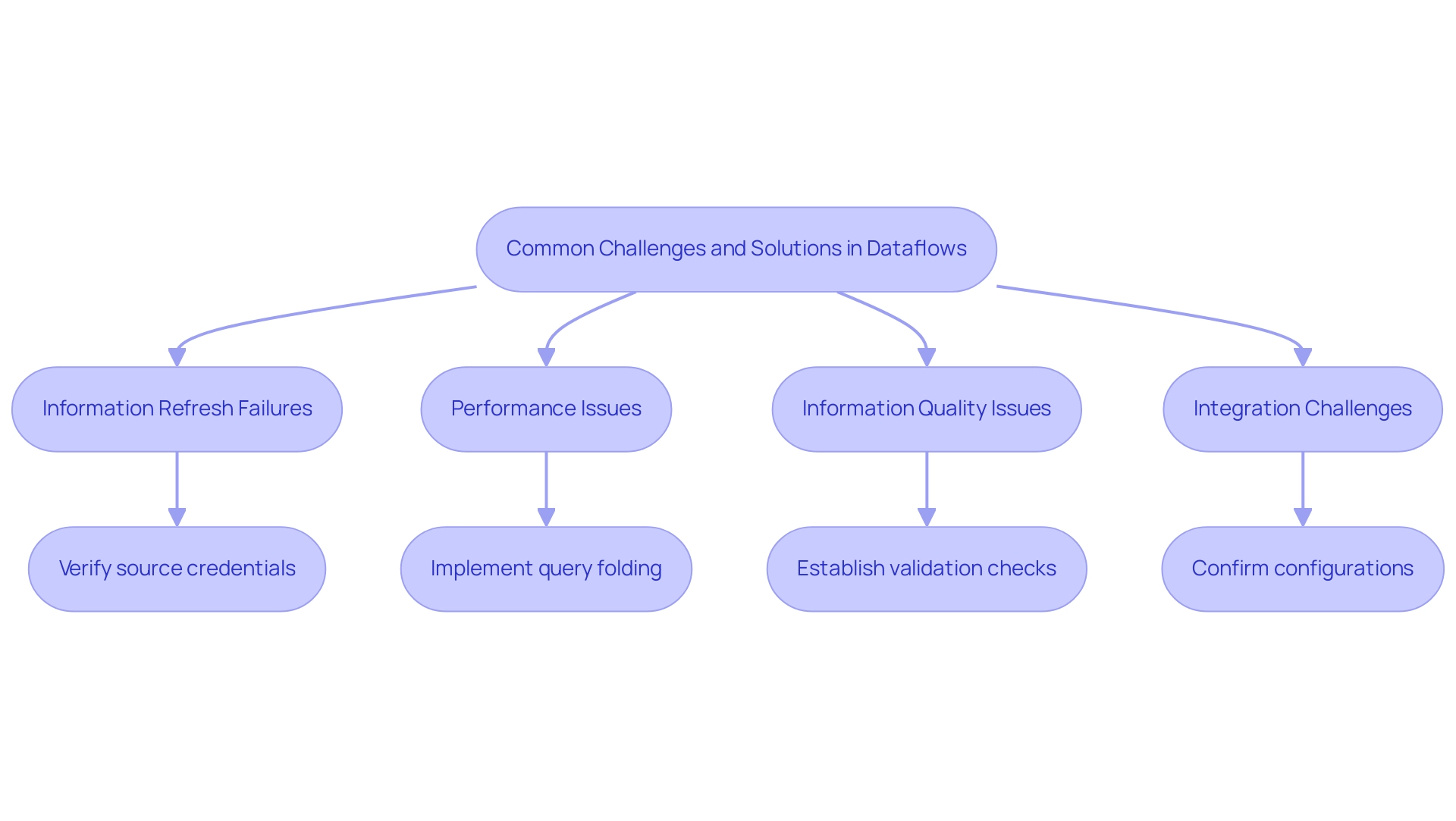

Common Challenges and Solutions in Dataflows

- Data Refresh Failures: When a dataflow refresh fails, first verify your source credentials. Ensure they are current and that the source data is both accessible and correctly formatted. Misconfigured dataflows are a frequent cause of refresh failures, which makes this initial check crucial. Furthermore, Usage Metrics can offer insights into how your dashboards and reports are being used across your organization. As a Microsoft employee notes, ‘If you have edit access to that dashboard or report, or have a Pro license, you can use Usage Metrics to discover how those dashboards and reports are being used, by whom, and for what purpose.’ Understanding this usage can help identify potential issues leading to refresh failures and enable your team to automate responses effectively.

- Performance Issues: Slow dataflows can greatly impede operational efficiency. To tackle this, concentrate on optimizing transformations and minimizing the amount of data processed. Implementing query folding, where feasible, can greatly enhance performance. As Marcus Wegener, a Full Stack Power BI Engineer at BI or DIE, emphasizes, maximizing information utility often hinges on these performance optimizations. By integrating RPA into your workflow, you can automate routine performance checks and adjustments, streamlining operations further while reducing errors and allowing your team to concentrate on more strategic initiatives.

- Data Quality Issues: Inconsistent data can lead to flawed reports and decision-making. To combat this, establish robust validation checks within your dataflows. These checks help identify and correct errors early in the processing pipeline, ensuring higher-quality outputs. Leveraging RPA can automate these validation processes, improving data integrity and reliability.

- Integration Challenges: Integrating various services with dataflows can present compatibility issues. To prevent disruptions, confirm that all configurations are correct and compatible with the services you’re integrating. Use Power BI’s extensive documentation and community forums for tailored guidance and solutions. Additionally, adopting a customized AI solution can help navigate these integration challenges, aligning technologies with your specific business goals and challenges in the rapidly evolving AI landscape.
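The validation checks recommended above for data quality can be prototyped before they are built into a dataflow. The following is a minimal sketch in plain Python, assuming two simple rules: required columns must be present and non-empty, and numeric columns must hold numbers. The function name, rule set, and sample rows are hypothetical.

```python
def validate_rows(rows, required, numeric):
    """Split rows into (valid_rows, errors).

    errors is a list of (row_index, [problem descriptions]) so that bad
    rows can be reported early instead of silently reaching a report.
    """
    valid, errors = [], []
    for index, row in enumerate(rows):
        problems = []
        # Rule 1: every required column must be present and non-empty.
        for column in required:
            if row.get(column) in (None, ""):
                problems.append(f"missing {column}")
        # Rule 2: numeric columns, when present, must hold int or float.
        for column in numeric:
            value = row.get(column)
            if value is not None and not isinstance(value, (int, float)):
                problems.append(f"non-numeric {column}")
        if problems:
            errors.append((index, problems))
        else:
            valid.append(row)
    return valid, errors


# Hypothetical input with one clean row and two problem rows.
ROWS = [
    {"customer_id": "C1", "amount": 10.0},
    {"customer_id": "", "amount": 5},
    {"customer_id": "C3", "amount": "n/a"},
]

valid, errors = validate_rows(
    ROWS, required=["customer_id", "amount"], numeric=["amount"]
)
```

Returning the rejected rows with their reasons, rather than dropping them, keeps the check auditable: the error list can feed a notification or a data quality report.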

Furthermore, the Automatic Refresh Attempts Tracking feature in Power BI provides valuable insights into refresh history, allowing users to visualize all refresh attempts and understand the reasons behind delays or failures. This functionality is crucial for improving the management of dataset refreshes and addressing common challenges effectively, ultimately enhancing your operational efficiency and enabling informed decision-making through actionable insights.
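Once refresh history has been exported, a short script can surface the delays and failure reasons described above. This is a minimal sketch, assuming each refresh attempt is available as a record with start time, end time, status, and error message. The record layout and the `Success` / `Failed` status values are hypothetical and are not the format Power BI itself uses.

```python
from collections import Counter
from datetime import datetime

# Hypothetical exported refresh attempts.
HISTORY = [
    {"start": datetime(2024, 1, 1, 6, 0), "end": datetime(2024, 1, 1, 6, 4),
     "status": "Success", "error": None},
    {"start": datetime(2024, 1, 2, 6, 0), "end": datetime(2024, 1, 2, 6, 1),
     "status": "Failed", "error": "Credentials expired"},
    {"start": datetime(2024, 1, 3, 6, 0), "end": datetime(2024, 1, 3, 6, 10),
     "status": "Success", "error": None},
    {"start": datetime(2024, 1, 4, 6, 0), "end": datetime(2024, 1, 4, 6, 2),
     "status": "Failed", "error": "Credentials expired"},
]


def summarize(history):
    """Summarize refresh attempts: failure counts and success durations."""
    durations = [
        (r["end"] - r["start"]).total_seconds()
        for r in history
        if r["status"] == "Success"
    ]
    failures = Counter(r["error"] for r in history if r["status"] == "Failed")
    return {
        "attempts": len(history),
        "failed": sum(failures.values()),
        "avg_success_seconds": sum(durations) / len(durations) if durations else None,
        "slowest_success_seconds": max(durations, default=None),
        "top_failure_reason": failures.most_common(1)[0][0] if failures else None,
    }


summary = summarize(HISTORY)
```

In this sample, the repeated failure reason points straight back to the first troubleshooting step in this section: checking source credentials.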

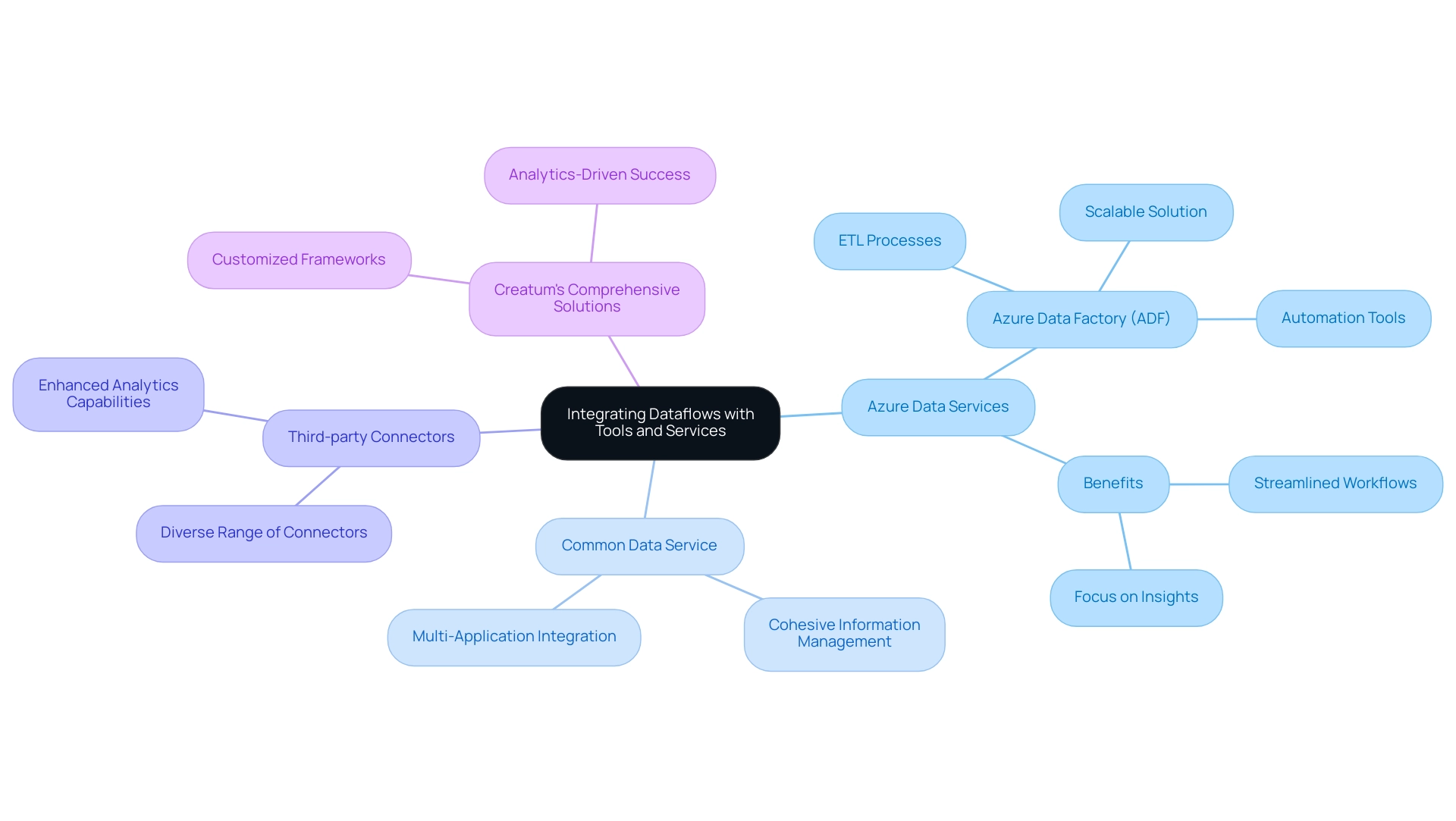

Integrating Dataflows with Other Tools and Services

Integrating various tools and services with dataflows is essential for maximizing your Power BI experience and driving data-driven decisions, especially during our 3-Day BI Sprint. Here are key integrations to consider:

- Azure Data Services: Use Azure Data Factory (ADF) to orchestrate data movement and transformations before loading into Power BI. This cloud-based service streamlines extract, transform, and load (ETL) processes and accommodates complex data integration scenarios. With ADF, you gain a scalable solution that facilitates cloud migrations and improves your overall data architecture.

- Power Automate: Use Power Automate to streamline workflows involving dataflows, such as triggering refreshes or sending notifications when updates occur.

As noted by Ben Sack, a Program Manager, “These new features free valuable time and resources previously spent extracting and unifying information from different sources, enabling your team to focus on turning information into actionable insights.”

This automation enhances efficiency and enables your team to obtain valuable insights from the information, especially during the intensive 3-Day BI Sprint.

- Common Data Service: Integrating with the Common Data Service enhances data management across multiple applications, ensuring a cohesive approach to handling your data assets.

- Third-party Connectors: Use the diverse range of connectors available in Power BI to import data from external sources, creating a comprehensive ecosystem that enhances your analytics capabilities.

- Creatum’s Comprehensive Solutions: Keep in mind that Creatum provides customized frameworks and extensive solutions tailored to fulfill your distinct business needs for analytics-driven success, especially through our 3-Day BI Sprint.
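The refresh triggering mentioned in this section can also be done with a direct HTTP request. The sketch below only builds such a request and does not send it. The endpoint shape (`/groups/{groupId}/dataflows/{dataflowId}/refreshes` with a `notifyOption` body) reflects my understanding of the Power BI REST API and should be treated as an assumption to verify against the official reference. The function name, IDs, and token are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed base URL of the Power BI REST API; verify before use.
API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(group_id, dataflow_id, access_token,
                          notify_option="MailOnFailure"):
    """Build (but do not send) a POST request that asks for a dataflow refresh."""
    url = f"{API_ROOT}/groups/{group_id}/dataflows/{dataflow_id}/refreshes"
    body = json.dumps({"notifyOption": notify_option}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values; real calls need a workspace ID, a dataflow ID,
# and a valid access token obtained separately.
request = build_refresh_request("WORKSPACE_ID", "DATAFLOW_ID", "ACCESS_TOKEN")

# Sending is left out on purpose, since it requires real credentials:
# urllib.request.urlopen(request)
```

Separating request construction from sending keeps the sketch testable offline and makes it simple to log or review exactly what would be sent.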

As we approach 2024 and you work to combine Power BI processes with Azure services, keep in mind that a minimum of 100 rows of historical data is necessary for effective regression model analysis, so that your predictive insights are robust and trustworthy. Additionally, incremental refresh is supported for Power BI datasets and dataflows, which further optimizes data handling; it requires Power BI Premium and a datetime field in the result set.

Conclusion

Harnessing the full potential of Power BI dataflows can significantly transform an organization’s data management and reporting processes. By understanding and implementing the foundational aspects of dataflows, businesses can streamline their data integration efforts, reduce redundancy, and foster collaboration among teams. The step-by-step guide provided illustrates how to create effective dataflows, ensuring that users can navigate the complexities of data integration with confidence.

Moreover, adopting best practices such as keeping dataflows simple, utilizing incremental refresh, and maintaining thorough documentation can optimize performance and enhance operational efficiency. Addressing common challenges, including data refresh failures and quality issues, with proactive solutions ensures that organizations can maintain reliable data for informed decision-making.

Integrating dataflows with other tools and services, such as Azure Data Services and Power Automate, further amplifies the capabilities of Power BI, enabling teams to automate workflows and focus on deriving actionable insights. As organizations continue to embrace data-driven strategies, mastering Power BI dataflows will be a key differentiator in enhancing competitiveness and driving growth in a rapidly evolving landscape.