Introduction

In the rapidly evolving landscape of artificial intelligence, large language models (LLMs) are at the forefront of innovation, promising to reshape industries and enhance operational efficiency. These advanced systems, designed to understand and generate human-like text, hold immense potential for transforming processes, particularly in sectors like healthcare and customer service.

As organizations grapple with the complexities of integrating AI into their workflows, understanding the fundamentals of LLMs becomes paramount. This article delves into the intricacies of LLMs, offering practical insights on building, evaluating, and deploying these powerful tools effectively.

By harnessing the capabilities of LLMs, operational leaders can not only streamline their processes but also drive significant improvements in service quality and customer satisfaction.

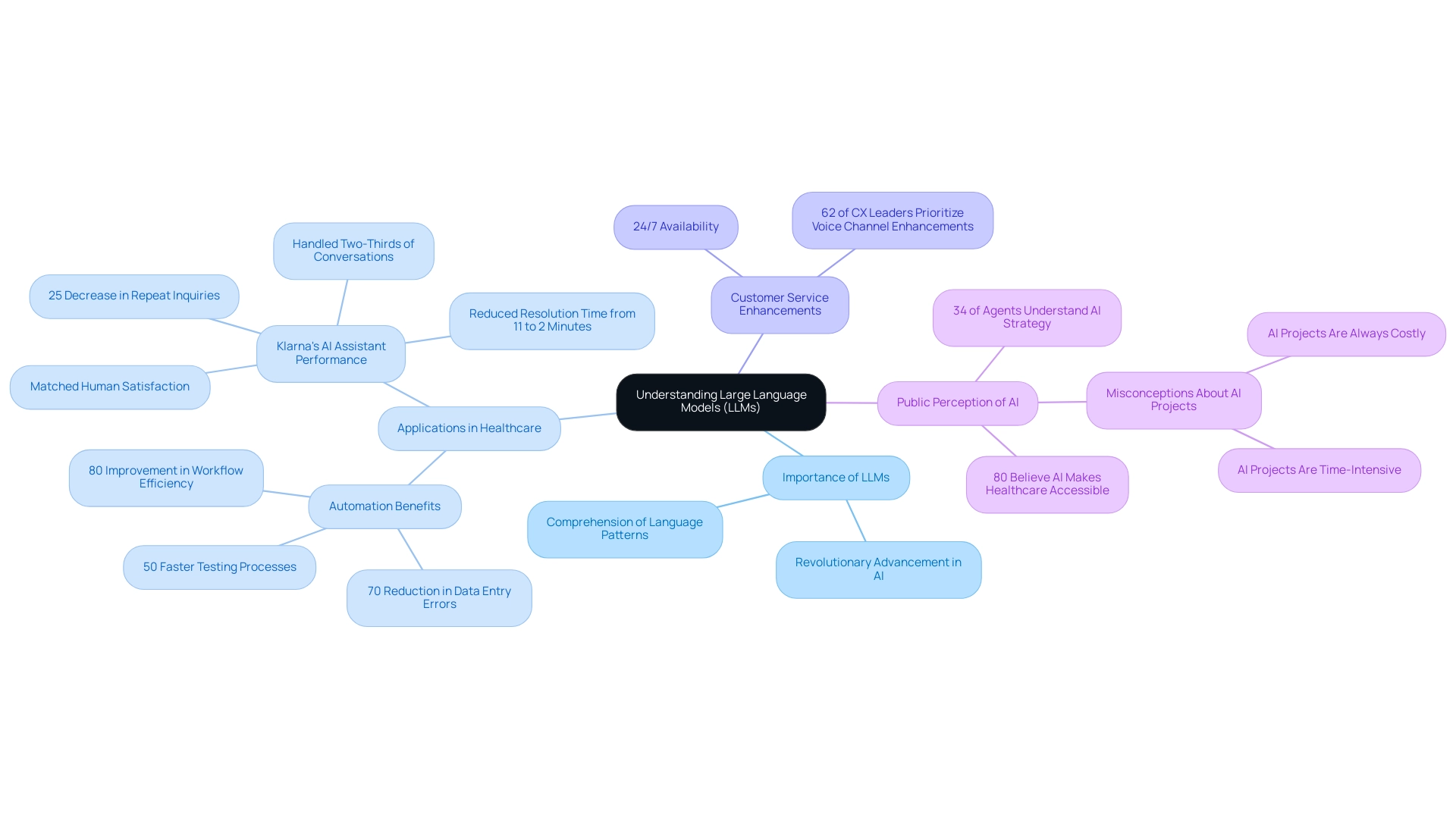

Understanding Large Language Models: Basics and Importance

Large language models (LLMs) are AI systems engineered to comprehend and generate human-like text. They are trained on extensive datasets, which lets them pick up language patterns, context, and the subtle nuances that shape communication. Understanding how LLMs work is essential to applying them across different sectors, especially for improving customer service and streamlining analysis.

In healthcare, automation has proven to be a game-changer. For instance, a mid-sized firm encountered considerable obstacles, including frequent manual information entry mistakes, sluggish software testing methods, and challenges in integrating outdated systems without APIs. By implementing GUI automation to address these issues, they achieved remarkable results:

- A 70% reduction in data entry errors

- A 50% acceleration in testing processes

- An 80% improvement in overall workflow efficiency

The firm also realized a return on investment within just six months.

Such measurable outcomes highlight the operational efficiency that automation can bring to healthcare services. Recent trends indicate that as many as 80% of Americans believe AI can make healthcare more accessible and affordable, reflecting a broader acceptance of AI innovations. However, only 34% of customer service agents understand their department’s AI strategy, showcasing a crucial gap in knowledge that operational leaders must address. Furthermore, in 2024, half of support teams identified 24/7 availability as the primary advantage of integrating AI into customer service frameworks.

As Zendesk indicates, CX leaders who observe high ROI on their support tools are 62% more inclined to prioritize improving their voice channel with speech analytics, voice AI, and natural language processing. For operational leaders, acknowledging the transformative power of large language models (LLMs) and automation is the crucial first step toward using their capabilities effectively. The success of initiatives like Klarna’s AI Assistant, which quickly handled two-thirds of customer service conversations while matching human agents in satisfaction, exemplifies how automating routine tasks not only boosts efficiency but also enhances service quality.

By overcoming perceptions of complexity and cost, organizations can leverage these technologies for strategic management and competitive advantage in the healthcare sector. Addressing common misconceptions about AI adoption, such as the belief that AI projects are always time-intensive and costly, is crucial for fostering a more informed and proactive approach to integrating AI solutions.

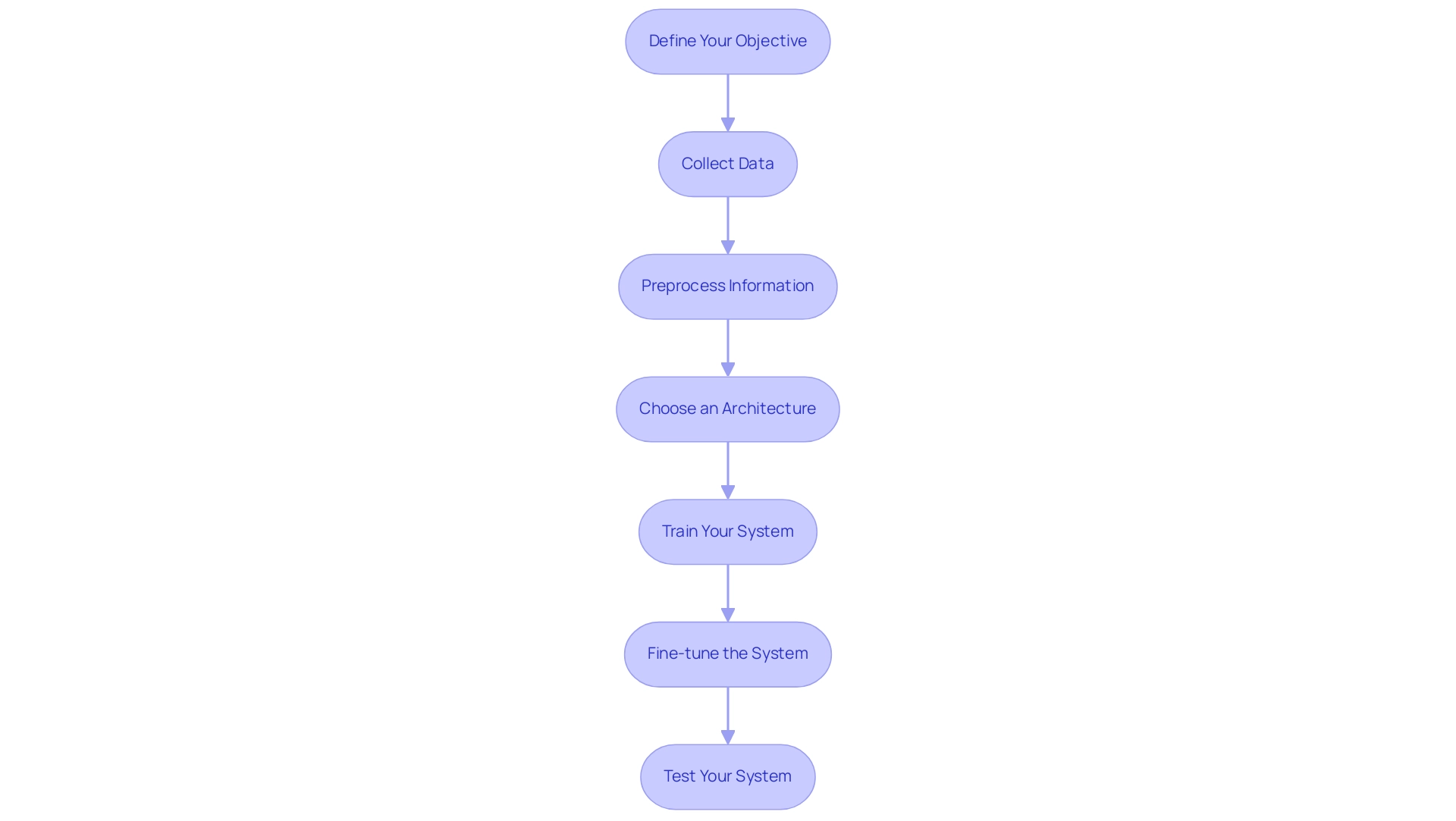

Step-by-Step Guide to Building Your Own Large Language Model

- Define Your Objective: Begin by clearly identifying the specific task your large language model will address, such as text generation, sentiment analysis, or conversational AI. This foundational step ensures that your system aligns with the desired outcomes and can integrate effectively with RPA to enhance operational efficiency.

- Collect Data: Assemble a diverse dataset that is relevant to your defined objective. Data sources may include books, scholarly articles, web pages, and proprietary databases, providing a rich tapestry of information for your model. It’s important to note that pretraining typically has a significantly larger number of candidate points than instruction-tuning, emphasizing the importance of comprehensive collection.

- Preprocess Information: Clean and format the collected information to prepare it for training. This process involves removing irrelevant content, managing missing values, and tokenizing the text to enhance understanding. By leveraging RPA, you can automate this data preparation phase, significantly reducing manual effort and errors. RPA is particularly beneficial in minimizing the risk of human error during this critical step.

- Choose an Architecture: Select an appropriate architecture for your system, such as the popular Transformer framework, which has been widely adopted for its efficiency and effectiveness in handling language tasks. Investigating current models, such as GLaM introduced by Du et al. (2022), can guide your architectural decision.

- Train Your System: Use a robust machine learning framework, such as TensorFlow or PyTorch, to train your model on the preprocessed dataset. This phase is crucial as it lays the groundwork for your model’s ability to understand and generate language effectively. The integration of Business Intelligence tools can provide insights into the training process, ensuring alignment with your strategic goals. However, the rapidly evolving AI landscape can make it challenging to identify the right solutions, so remain adaptable throughout this journey.

- Fine-tune the System: After initial training, refine your system by adjusting hyperparameters and conducting additional training sessions. This iterative process is essential for enhancing performance and ensuring it meets its objectives.

- Test Your System: Finally, assess your system’s output against the defined objectives. Testing is vital to verify the model’s accuracy and reliability, establishing confidence in its real-world application. Additionally, consider the case study by Xia et al. (2024) on Gradient-Based Utility for Data Mixing, which utilized a gradient-based utility to assign values to individual auxiliary data points, improving the efficiency of utility assignment and facilitating better integration of auxiliary data into training. This case study highlights the importance of continuous improvement and adaptation in the AI development process.
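The preprocessing step above (removing irrelevant content, handling missing values, tokenizing) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production pipeline: the helper names `clean_text`, `tokenize`, `build_vocab`, and `encode` are hypothetical, the sample records are invented, and real LLM projects typically use a trained subword tokenizer rather than the simple word-level split shown here.

```python
import re

def clean_text(raw):
    """Strip HTML-like tags and collapse whitespace; missing input becomes ''."""
    if raw is None:
        return ""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", no_tags).strip()

def tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text.lower())

def build_vocab(token_lists):
    """Map each distinct token to an integer id; id 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for tokens in token_lists:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab

def encode(tokens, vocab):
    """Convert tokens to ids, falling back to the unknown id for unseen tokens."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]

# Invented sample records, including a missing value and a blank entry.
records = ["<p>Patients  value fast replies.</p>", None, "   ", "Fast replies build trust!"]

cleaned = [c for c in (clean_text(r) for r in records) if c]  # drop empty records
token_lists = [tokenize(c) for c in cleaned]
vocab = build_vocab(token_lists)
ids = [encode(t, vocab) for t in token_lists]

print(cleaned)
print(ids)
```

The integer ids are what a training framework such as TensorFlow or PyTorch would consume in the training step.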

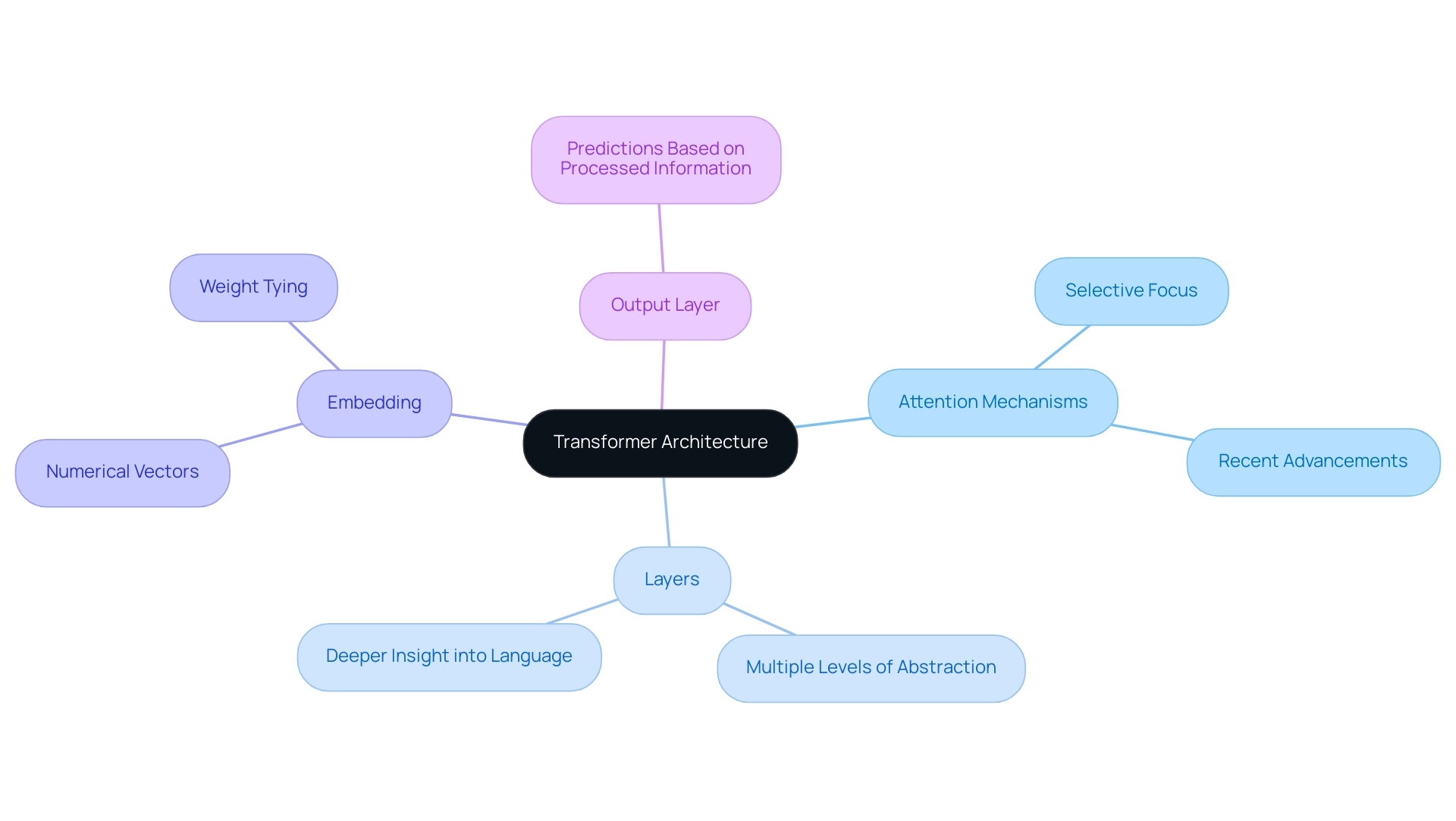

Key Components and Architecture of Large Language Models

Large language models predominantly use the Transformer architecture, a framework composed of several critical components that enhance performance and understanding. Key elements include:

- Attention Mechanisms: These mechanisms enable the model to selectively focus on relevant segments of the input text, significantly improving comprehension and contextual relevance. Recent advancements emphasize the significance of attention in processing information, with new research continually enhancing these capabilities.

- Layers: The architecture is structured in multiple layers, allowing for data to be processed at various levels of abstraction. This stratification facilitates deeper insight into the complexities of language.

- Embedding: Words are transformed into numerical vectors through embedding techniques, which capture their semantic meanings and relationships. This transformation is vital for the system’s ability to interpret context accurately. Notably, the embedding matrix M and the un-embedding matrix W are sometimes required to be transposes of each other, a practice known as weight tying.

- Output Layer: Ultimately, the output layer produces predictions based on the processed information, fulfilling the system’s function.
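To make the attention mechanism concrete, here is a minimal sketch of scaled dot-product attention, the form commonly used in Transformers. It is written in plain Python for readability and assumes a single attention head with no learned projection matrices; the function names and the toy input are illustrative only.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention: three 2-dimensional token vectors attend to each other.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, which is the "selective focus" described above.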

Comprehending these components is essential for troubleshooting and enhancing performance. Alongside Robotic Process Automation (RPA), which streamlines manual tasks and enhances operational efficiency, these AI advancements can drive significant improvements in business operations. RPA not only reduces errors but also frees up teams to focus on strategic, value-adding tasks, addressing the critical need for efficiency in operations.

The case study titled ‘Summary of Transformations in Transformers’ illustrates that input text undergoes a series of transformations: from words to tokens, then to word embeddings with positional encoding, followed by linear transformations, self-attention, and feed-forward networks. This process lets a model either build compressed representations of the input (in the encoder) or predict subsequent words (in the decoder). As the field of AI evolves, grasping the significance of these components alongside leveraging RPA will empower directors to implement cutting-edge strategies in their operations.
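The first and last steps of that pipeline can be sketched as follows: token ids are looked up in an embedding matrix M, a positional encoding is added, and a hidden vector is later scored against the vocabulary using the transpose of M (weight tying). This is a sketch under assumptions: the sinusoidal encoding is the scheme from the original Transformer paper rather than something the case study specifies, and the tiny matrix and hidden vector are invented for illustration.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    pe = []
    for i in range(d_model):
        angle = position / (10000 ** (2 * (i // 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def embed(token_ids, M):
    """Look up each token's row in M and add the encoding for its position."""
    d_model = len(M[0])
    return [
        [e + p for e, p in zip(M[t], positional_encoding(pos, d_model))]
        for pos, t in enumerate(token_ids)
    ]

def tied_logits(hidden, M):
    """Weight tying: the un-embedding is M transposed, so each vocabulary
    entry is scored by the dot product of its embedding row with `hidden`."""
    return [sum(h * m for h, m in zip(hidden, row)) for row in M]

# Invented embedding matrix: 3 vocabulary entries, 4 dimensions each.
M = [
    [0.1, 0.0, 0.0, 0.2],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

vectors = embed([1, 2, 1], M)                    # token 1 appears at positions 0 and 2
logits = tied_logits([0.0, 2.0, 0.0, 0.0], M)    # hypothetical final hidden state
predicted = logits.index(max(logits))

print(vectors[0], vectors[2])
print(logits, predicted)
```

Note that the same token receives different vectors at positions 0 and 2, which is how the model distinguishes word order.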

Moreover, failing to leverage Business Intelligence can leave organizations at a competitive disadvantage, as it hinders informed decision-making. As Prashant Ram emphasizes, continuous learning is essential in this rapidly changing landscape, with DAASST achieving 100% accuracy in distinguishing between different contamination times serving as a testament to the potential of AI when effectively harnessed.

Evaluating the Performance of Your Large Language Model

Assessing the performance of your large language model (LLM) is essential to ensure it meets user expectations and achieves the desired outcomes. Here are some effective methods to consider:

- Accuracy Metrics: Employ precision, recall, and F1 score, supported by tools such as the confusion matrix, the AUC-ROC curve, and cross-validation, to gauge predictive accuracy. These metrics provide quantitative insights into how well your system performs, enabling informed adjustments.

- Human Evaluation: Engaging human reviewers is vital for assessing the quality of the generated text. This qualitative approach assists in recognizing nuances that automated metrics might miss, thereby improving the overall performance of the system.

- A/B Testing: Implement A/B testing to compare your system’s performance against a baseline. This method allows you to measure improvements and understand the impact of any changes made to your model.

- Feedback Loops: Establish continuous feedback loops by incorporating user feedback. This practice not only refines outputs but also ensures that the system evolves in line with user needs and expectations.

- Latency Metrics: Measure the time your system takes to process input and generate output. Lower latency improves user satisfaction and makes it easier to handle multiple concurrent requests.

Sound evaluation methods keep your LLM effective and responsive, ultimately leading to improved user satisfaction. Some qualities remain hard to measure, however. As one practitioner in the field puts it:

‘A potential solution would be to fine-tune a custom LLM for evaluation or provide extremely clear rubrics for in-context learning, and for this reason, I believe bias is the hardest metric of all to implement.’

This highlights the intricacy involved in assessing linguistic systems and the need for strong evaluation practices to attain optimal performance.
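Two of the quantitative checks listed above, accuracy metrics and latency, can be sketched with the Python standard library alone. The labels, predictions, and the stand-in "model" (a lambda that upper-cases text) are invented for illustration; in practice you would pass your own evaluation data and inference function.

```python
import statistics
import time

def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def median_latency(fn, inputs):
    """Time each call to `fn` and return the median duration in seconds."""
    durations = []
    for item in inputs:
        start = time.perf_counter()
        fn(item)
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)

# Invented labels: 1 = relevant answer, 0 = not relevant.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)

# Stand-in for a model call; replace with your own inference function.
latency = median_latency(lambda text: text.upper(), ["hello", "world", "again"])

print(round(precision, 3), round(recall, 3), round(f1, 3))
print(f"median latency: {latency:.6f} s")
```

The median is used here instead of the mean so that a single slow request does not dominate the figure; tracking a high percentile as well is a common complement.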

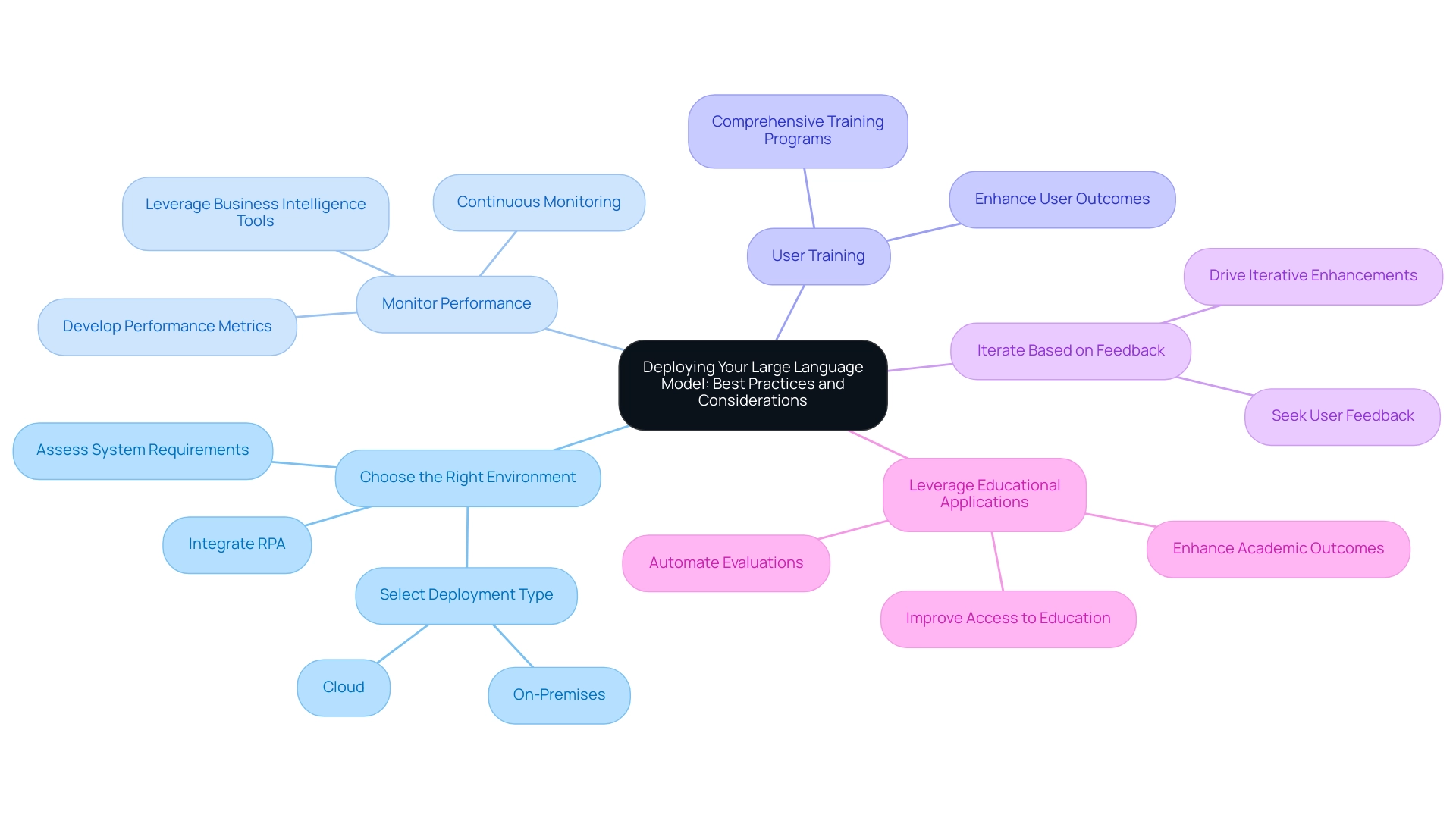

Deploying Your Large Language Model: Best Practices and Considerations

To successfully deploy your large language model (LLM) while enhancing operational efficiency, consider these best practices:

- Choose the Right Environment: Carefully assess your system’s requirements and select a deployment environment that aligns with those needs, whether cloud or on-premises. With recent statistics indicating that the cost and effort associated with fine-tuning LLMs have significantly decreased, deploying a large language model is now more feasible than ever. Integrating Robotic Process Automation (RPA) can streamline this selection process, allowing for more efficient workflows and reducing the potential for errors.

- Monitor Performance: Continuously monitor the performance of your LLM post-deployment. This vigilance enables the early identification of any issues, ensuring optimal operation. Business Intelligence tools can help surface insights from performance metrics, enhancing decision-making capabilities.

- User Training: Equip users with comprehensive training to ensure they can effectively utilize the system within their workflows. Well-informed users can significantly enhance the outcomes of AI integration, contributing to overall business productivity.

- Iterate Based on Feedback: Actively seek user feedback to drive iterative enhancements to your system. This user-centered approach not only enhances the utility of your LLM but also assists in overcoming technology implementation challenges.

- Leverage Educational Applications: Consider AI solutions’ capabilities to automate evaluations and improve access to education, especially when deploying LLMs in educational contexts. This can significantly enhance both efficiency and effectiveness in learning environments, demonstrating the versatility of tailored AI solutions.

By following these strategies, you position your LLM for effective integration into operational processes, maximizing potential benefits while minimizing risks. As noted by Anthropic, ‘While LLMs hold a lot of promise, they have significant inherent safety issues which need to be worked on.’ These best practices act as an essential measure in reducing the harms of these systems while maximizing their advantages. The support from organizations like Anthropic, Google, and Microsoft emphasizes the importance of collaboration and comprehensive strategies for responsible development and deployment, highlighting the need for ongoing dialogue to address risks associated with language models.

Conclusion

Harnessing the power of large language models (LLMs) presents a transformative opportunity for organizations aiming to enhance efficiency and service quality. By understanding the fundamentals of LLMs, from their architecture to the nuances of deployment, operational leaders can strategically integrate these advanced tools into their workflows. The insights shared highlight the critical steps involved in building, evaluating, and deploying LLMs, emphasizing the importance of clear objectives, comprehensive data collection, and ongoing performance monitoring.

The case studies and statistics presented illustrate the tangible benefits that LLMs can deliver across various sectors, particularly in healthcare and customer service. With the right approach, organizations can overcome common misconceptions about AI implementation, such as complexity and cost, and instead focus on the significant returns these technologies can offer. As the landscape of AI continues to evolve, staying informed and adaptable will be key to leveraging LLMs effectively.

Ultimately, the integration of LLMs, when executed with thoughtfulness and precision, has the potential to not only streamline operations but also elevate customer experiences. Embracing these innovative solutions can position organizations for sustainable growth and competitive advantage in an increasingly digital world. Now is the time to take decisive action and unlock the full potential of large language models in operational strategies.