Overview:

Power BI refresh limits are crucial for data analysts to understand, as they dictate how frequently datasets can be updated: Pro users are limited to eight refreshes daily, while Premium users are allowed up to 48. The article details the types of refresh available (full, incremental, and scheduled) and emphasizes the importance of strategic planning to optimize refresh operations within these constraints, ultimately enhancing the reliability and efficiency of business intelligence reporting.

Introduction

In the dynamic landscape of data management, ensuring that reports and dashboards in Power BI reflect the most current information is paramount. As organizations increasingly rely on data-driven insights to inform strategic decisions, understanding the intricacies of data refresh becomes essential. This article delves into the various methods of data refresh, including:

- Full Refresh

- Incremental Refresh

- Scheduled Refresh

The article also highlights the implications of licensing limitations and the importance of optimizing refresh strategies. By exploring common challenges such as data refresh failures and best practices for troubleshooting, organizations can enhance their operational efficiency and unlock the full potential of their data. With practical solutions and expert insights, this guide aims to empower data analysts and decision-makers to navigate the complexities of Power BI, ensuring that actionable insights are always at their fingertips.

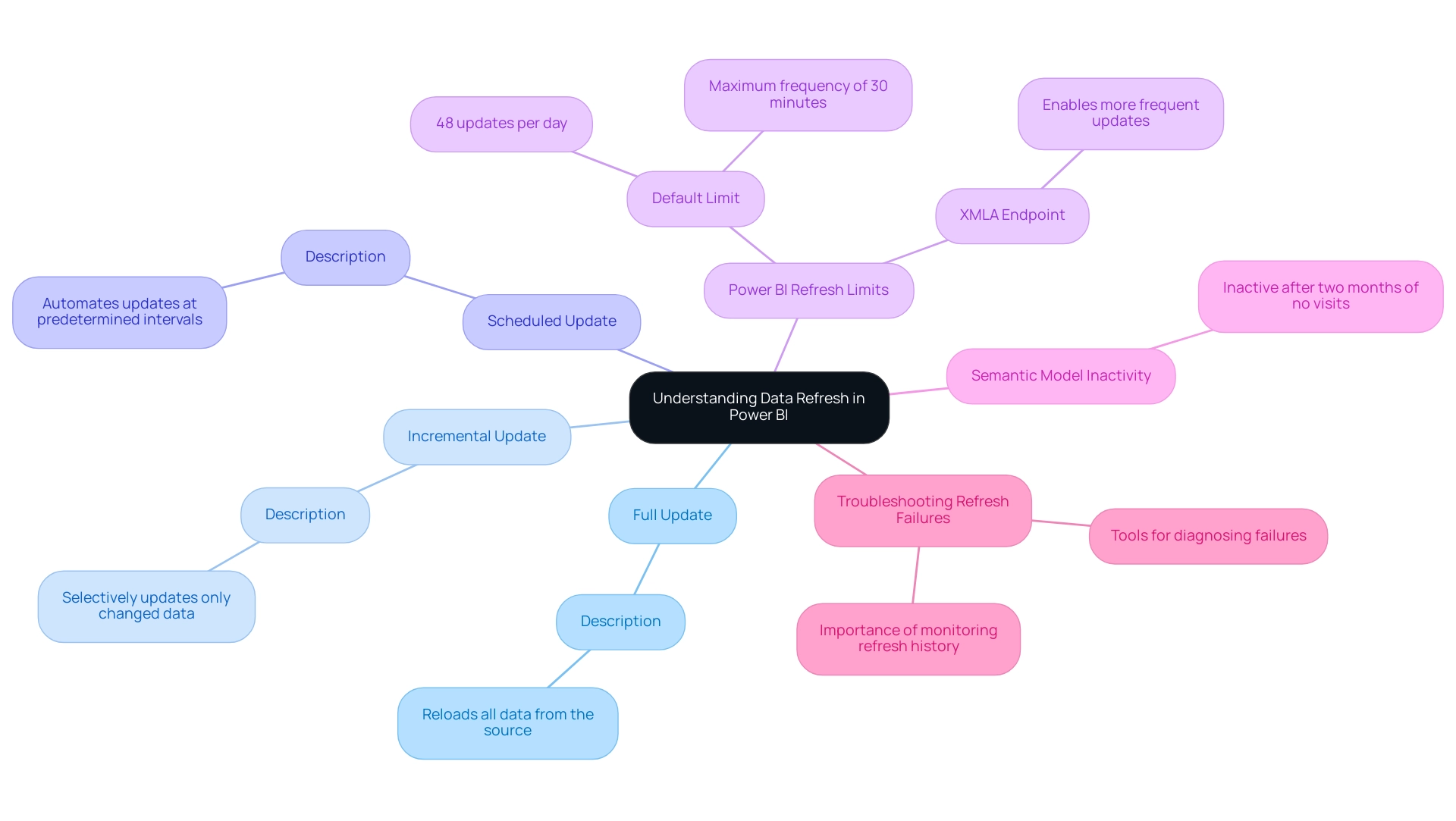

Understanding Data Refresh in Power BI

Data refresh in Power BI is essential for guaranteeing that reports and dashboards show the most current information available, a key component of effective Business Intelligence. There are three primary types of data refresh:

- Full Refresh: reloads all data from the source for a comprehensive update.

- Incremental Refresh: updates only the data that has changed since the last refresh, improving efficiency and performance.

- Scheduled Refresh: automates the refresh at predetermined intervals to maintain consistency without manual intervention.

In 2024, understanding these strategies is crucial, because Power BI users must work within the Power BI refresh limits. Under a Premium license, the default restriction is 48 scheduled refreshes per day, which works out to a minimum interval of 30 minutes between refreshes.

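To go beyond the 48-per-day limit, you must enable the XMLA endpoint, because refresh operations issued through it are not counted against that limit. Additionally, Power BI treats a semantic model as inactive when no user has visited any dashboard or report built on it for two months, and it then pauses scheduled refresh for that model, so rarely used models should be checked to confirm that refresh is still running. Organizations can greatly benefit from RPA tools that diagnose failure issues, as highlighted in the case study titled ‘Troubleshooting Refresh Failures.’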

Consistent oversight of update history and quick response to failures can greatly enhance the dependability of information updating procedures. Ultimately, this understanding empowers analysts and Directors of Operations Efficiency to select the most appropriate update strategy customized to their dataset needs and performance factors, resulting in more dependable and efficient information management, fostering growth and innovation.
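To make the schedule arithmetic behind the daily refresh quota concrete, the following minimal sketch spreads a number of refreshes evenly across 24 hours. It assumes scheduled refresh times fall on half-hour marks, and the function name `evenly_spaced_schedule` is hypothetical rather than part of any Power BI tooling.

```python
def evenly_spaced_schedule(refreshes_per_day: int) -> list[str]:
    """Return HH:MM slots spread evenly over 24 hours, starting at midnight."""
    if not 1 <= refreshes_per_day <= 48:
        raise ValueError("expected between 1 and 48 refreshes per day")

    # Ideal gap between refreshes, in minutes.
    interval = (24 * 60) // refreshes_per_day
    # Assumption: slots must sit on half-hour marks, so round the gap
    # down to a multiple of 30 minutes.
    interval -= interval % 30

    slots = []
    for i in range(refreshes_per_day):
        minutes = i * interval
        slots.append(f"{minutes // 60:02d}:{minutes % 60:02d}")
    return slots


if __name__ == "__main__":
    print(evenly_spaced_schedule(8))
```

With a quota of eight refreshes this gives one slot every three hours, and with 48 it gives one slot every 30 minutes, which matches the minimum interval described earlier.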

Exploring Refresh Limitations in Power BI

Power BI enforces refresh limits to maintain optimal performance levels, and these limits can significantly affect how quickly fresh data reaches your reports. For Pro users, the maximum dataset size is capped at 1 GB, whereas Premium users can manage significantly larger datasets, with a maximum size of up to 400 GB. However, to use these large datasets, they must be published to a workspace assigned to Premium capacity.

Furthermore, the update frequency differs significantly between the two licenses:

- Pro users can update their datasets up to eight times daily.

- Premium users experience a considerable enhancement, permitting updates up to 48 times per day.

These constraints emphasize the need for careful planning of models and refresh schedules around the Power BI refresh limits, both to enhance operational efficiency and to prevent performance bottlenecks. Furthermore, as Ice from Community Support notes, the maximum number of columns allowed in a dataset, across all tables in the dataset, is 16,000 columns.
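The limits quoted in this section can be collected into a small pre-publication check. The sketch below is only an illustration: the figures are the ones stated above (8 or 48 refreshes per day, 1 GB or 400 GB, 16,000 columns), while the names `LicenseLimits`, `LIMITS`, and `check_plan` are hypothetical and not part of any Power BI SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LicenseLimits:
    max_refreshes_per_day: int
    max_dataset_gb: float


# Figures taken from the article text; adjust if your tenant differs.
LIMITS = {
    "pro": LicenseLimits(max_refreshes_per_day=8, max_dataset_gb=1),
    "premium": LicenseLimits(max_refreshes_per_day=48, max_dataset_gb=400),
}
MAX_COLUMNS = 16_000  # across all tables in the dataset


def check_plan(license_name: str, refreshes_per_day: int,
               dataset_gb: float, total_columns: int) -> list[str]:
    """Return a list of human-readable problems; an empty list means the plan fits."""
    limits = LIMITS[license_name.lower()]
    problems = []
    if refreshes_per_day > limits.max_refreshes_per_day:
        problems.append(
            f"{refreshes_per_day} refreshes/day exceeds {limits.max_refreshes_per_day}")
    if dataset_gb > limits.max_dataset_gb:
        problems.append(
            f"{dataset_gb} GB exceeds {limits.max_dataset_gb} GB")
    if total_columns > MAX_COLUMNS:
        problems.append(
            f"{total_columns} columns exceeds {MAX_COLUMNS}")
    return problems


if __name__ == "__main__":
    print(check_plan("pro", 12, 2, 200))
```

Running a check like this before publishing makes it obvious when a planned schedule or model would only fit on Premium capacity.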

This statistic exemplifies the importance of understanding dataset structure and limitations when optimizing your BI environment. Addressing these challenges is essential for providing clear, actionable insights to stakeholders; however, many reports often lack specific guidance on the next steps, which can lead to confusion. To combat this, implementing best practices for report design and ensuring that insights are clearly articulated can help stakeholders understand how to act on the information presented.

Furthermore, while Power BI provides strong security features, restrictions on row-level security for certain data sources may raise concerns about data security and collaboration among team members. This emphasizes the need for a comprehensive governance strategy to manage inconsistencies and build trust in the reports, so that stakeholders can depend on the insights generated.

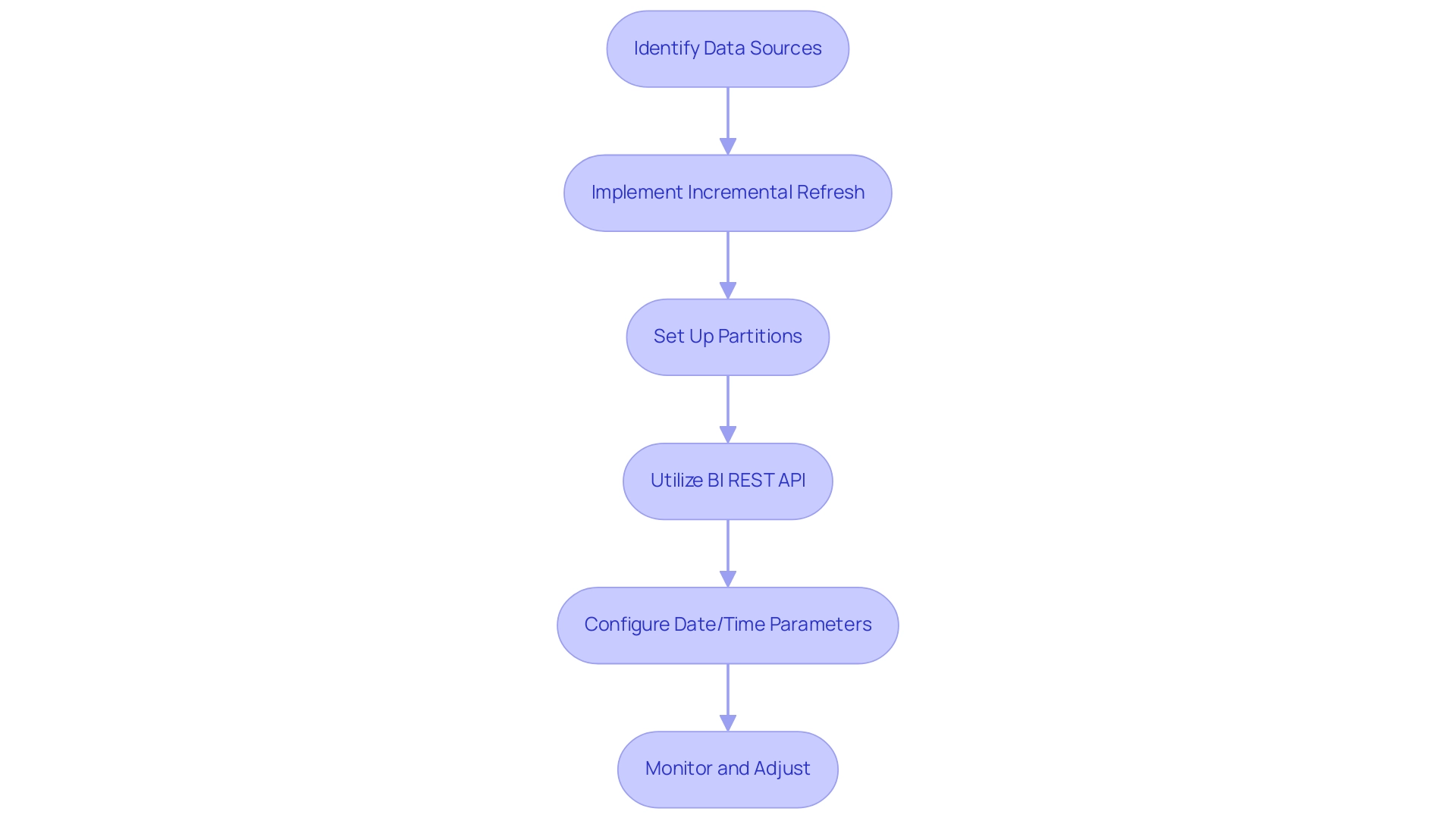

Optimizing Data Refresh Strategies

To make refreshes in Power BI more efficient, implement Incremental Refresh, which also helps you stay within the Power BI refresh limits. This method greatly reduces the amount of data processed during each refresh operation, which is particularly beneficial for large datasets where only a portion of the data changes frequently. Crucially, when incremental refresh is combined with real-time data, all partitions must query data from a single source.

By concentrating on the most pertinent information, organizations can improve efficiency and performance, tackling common challenges such as time-consuming report creation and inconsistencies. Marina Pigol, Content Manager at Alpha Serve, highlights this aspect:

Reduce Resource Usage: possessing less information to update decreases the total memory and other database resources utilized in BI to finalize the update.

The incremental refresh window typically covers only a short recent period, such as the previous 1 day, so each refresh touches a small slice of the data rather than the full history.
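The idea of refreshing only a recent window can be sketched in a few lines. This example assumes that "the previous 1 day" means the previous full calendar day and that each row carries a `modified` timestamp; the function names and the row shape are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta


def incremental_window(now: datetime, days: int = 1) -> tuple[datetime, datetime]:
    """Return (range_start, range_end) covering the previous `days` full days."""
    range_end = datetime(now.year, now.month, now.day)  # midnight at the start of today
    range_start = range_end - timedelta(days=days)
    return range_start, range_end


def rows_to_refresh(rows: list[dict], range_start: datetime,
                    range_end: datetime) -> list[dict]:
    """Keep rows inside the half-open window [range_start, range_end)."""
    # The half-open interval stops a row that sits exactly on a boundary
    # from being picked up by two adjacent windows.
    return [r for r in rows if range_start <= r["modified"] < range_end]


if __name__ == "__main__":
    start, end = incremental_window(datetime(2024, 5, 10, 9, 30))
    sample = [
        {"id": 1, "modified": datetime(2024, 5, 8, 23, 59)},
        {"id": 2, "modified": datetime(2024, 5, 9, 12, 0)},
        {"id": 3, "modified": datetime(2024, 5, 10, 0, 0)},
    ]
    print([r["id"] for r in rows_to_refresh(sample, start, end)])
```

Only the rows inside the window are reprocessed, which is where the savings in memory and refresh time come from.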

Additionally, the Power BI REST API provides programmatic control over the refresh process, allowing analysts to start refresh operations on demand or to automate them based on specific triggers. For instance, the case study titled ‘Configuring Incremental Refresh in Power BI Desktop’ highlights how creating two date/time parameters, RangeStart and RangeEnd, can filter data to specified periods. This method ensures that only the relevant data is processed during each refresh, improving performance and giving organizations more effective and actionable insights.
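As a rough illustration of an on-demand trigger, the sketch below builds (but does not send) the HTTP request for the Power BI REST API's dataset refresh endpoint, using only the Python standard library. The workspace ID, dataset ID, and access token are placeholders, the helper name `build_refresh_request` is hypothetical, and acquiring the token is outside the scope of this sketch.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(group_id: str, dataset_id: str, access_token: str,
                          notify_option: str = "MailOnFailure") -> urllib.request.Request:
    """Build a POST request that asks the service to start a dataset refresh."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    body = json.dumps({"notifyOption": notify_option}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder values; replace them with real IDs and a valid token.
    req = build_refresh_request("WORKSPACE_ID", "DATASET_ID", "TOKEN")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
    # The service answers asynchronously, so the refresh outcome has to be
    # read from the refresh history afterwards.
```

Keeping request construction separate from sending makes the call easy to inspect and to plug into a scheduler or an event-driven trigger. Note that refreshes started this way may still count toward the daily limits of the license.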

Applying these strategies not only leads to quicker refresh times but also enhances overall performance, making them crucial for any organization aiming to optimize its data management processes while adhering to Power BI refresh limits. Moreover, RPA tools such as EMMA RPA and Power Automate can further improve operational efficiency, enabling businesses to automate repetitive tasks and concentrate on obtaining actionable insights from their data. In today’s competitive landscape, the lack of data-driven insights can place organizations at a disadvantage, making it imperative to adopt these strategies.

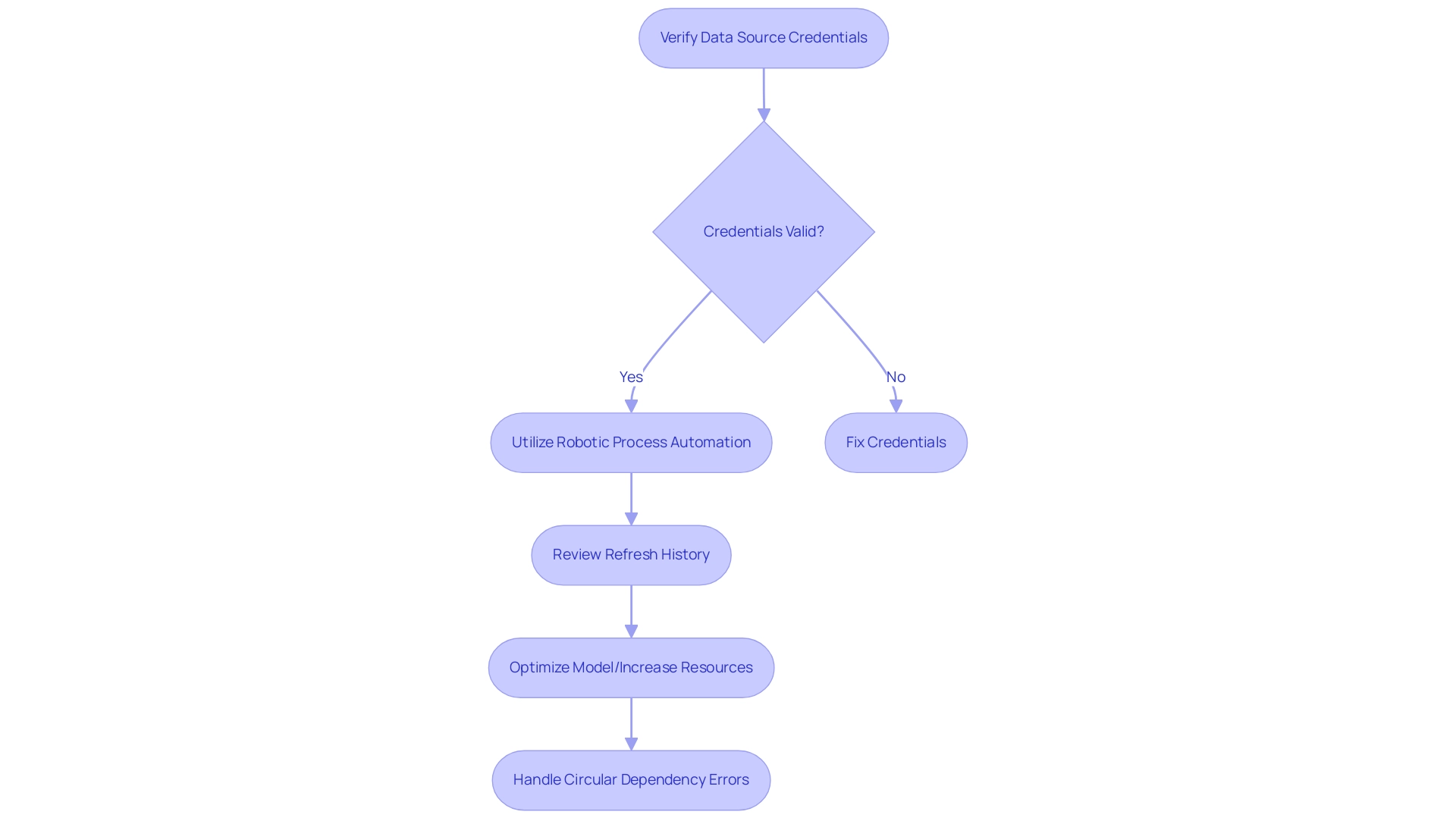

Troubleshooting Data Refresh Issues

Data refresh problems in Power BI frequently appear as timeouts and failures caused by changes in data sources, memory limitations, or service disruptions. Notably, refresh operations can fail when the Power BI Embedded service is paused, requiring manual re-enabling every Monday. To tackle these issues, first verify the data source credentials: ensuring they are properly set up can prevent many common problems.

Utilizing Robotic Process Automation (RPA) can optimize your workflow, improving efficiency and minimizing mistakes in the information management task. Moreover, customized AI solutions can assist in enhancing information quality and automating the detection of problems in the update procedure. In situations where refreshes are timing out, optimizing the model or increasing the resources allocated for the refresh process can make a significant difference.

Regularly reviewing the refresh history in the Power BI service is essential; it lets you identify patterns and recurring issues and take proactive measures to prevent disruptions in reporting. According to a recent survey, 73% of BI users report encountering connection issues, highlighting the significance of these troubleshooting steps in maintaining operational efficiency. Additionally, as Uzi2019, a BI Super User, notes,

If you make any changes in your BI desktop file (add a new chart, new column to your table, any DAX), you have to publish every time to BI service.
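Returning to the refresh history review described above, the sketch below shows one way to look for patterns once the history has been exported as a list of records. It assumes each record has a `status` field (for example "Completed" or "Failed") and an ISO 8601 `startTime`, similar to what the Power BI REST API returns for refresh history; the function names and this record shape are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime


def summarize_history(entries: list[dict]) -> dict:
    """Count refreshes and failures and compute the failure rate."""
    total = len(entries)
    failed = sum(1 for e in entries if e.get("status") == "Failed")
    return {
        "total": total,
        "failed": failed,
        "failure_rate": failed / total if total else 0.0,
    }


def failures_by_hour(entries: list[dict]) -> dict[int, int]:
    """Group failed refreshes by the hour (0-23) in which they started."""
    hours = Counter()
    for e in entries:
        if e.get("status") == "Failed":
            # Replace a trailing "Z" so older Python versions can parse the timestamp.
            started = datetime.fromisoformat(e["startTime"].replace("Z", "+00:00"))
            hours[started.hour] += 1
    return dict(hours)


if __name__ == "__main__":
    history = [
        {"status": "Failed", "startTime": "2024-05-08T06:00:12Z"},
        {"status": "Completed", "startTime": "2024-05-08T12:00:05Z"},
        {"status": "Failed", "startTime": "2024-05-09T06:00:09Z"},
        {"status": "Completed", "startTime": "2024-05-09T12:00:03Z"},
    ]
    print(summarize_history(history))
    print(failures_by_hour(history))
```

A cluster of failures in one hour, as in the sample data, often points to a contended source system or an overlapping job at that time rather than to a problem in the model itself.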

This highlights the need for careful management of your Power BI environment, so that you stay within the Power BI refresh limits while keeping refreshes seamless and dependable. A practical example of a common challenge is the circular dependency error that can occur during a model refresh when the SummarizeColumns function is used inside measures.

Identifying and modifying CalculateTables that use SummarizeColumns can resolve this circular dependency error, often requiring the use of Tabular Editor for batch changes. By addressing these challenges and leveraging Business Intelligence, along with tailored AI solutions, organizations can transform their information into actionable insights, driving growth and innovation.

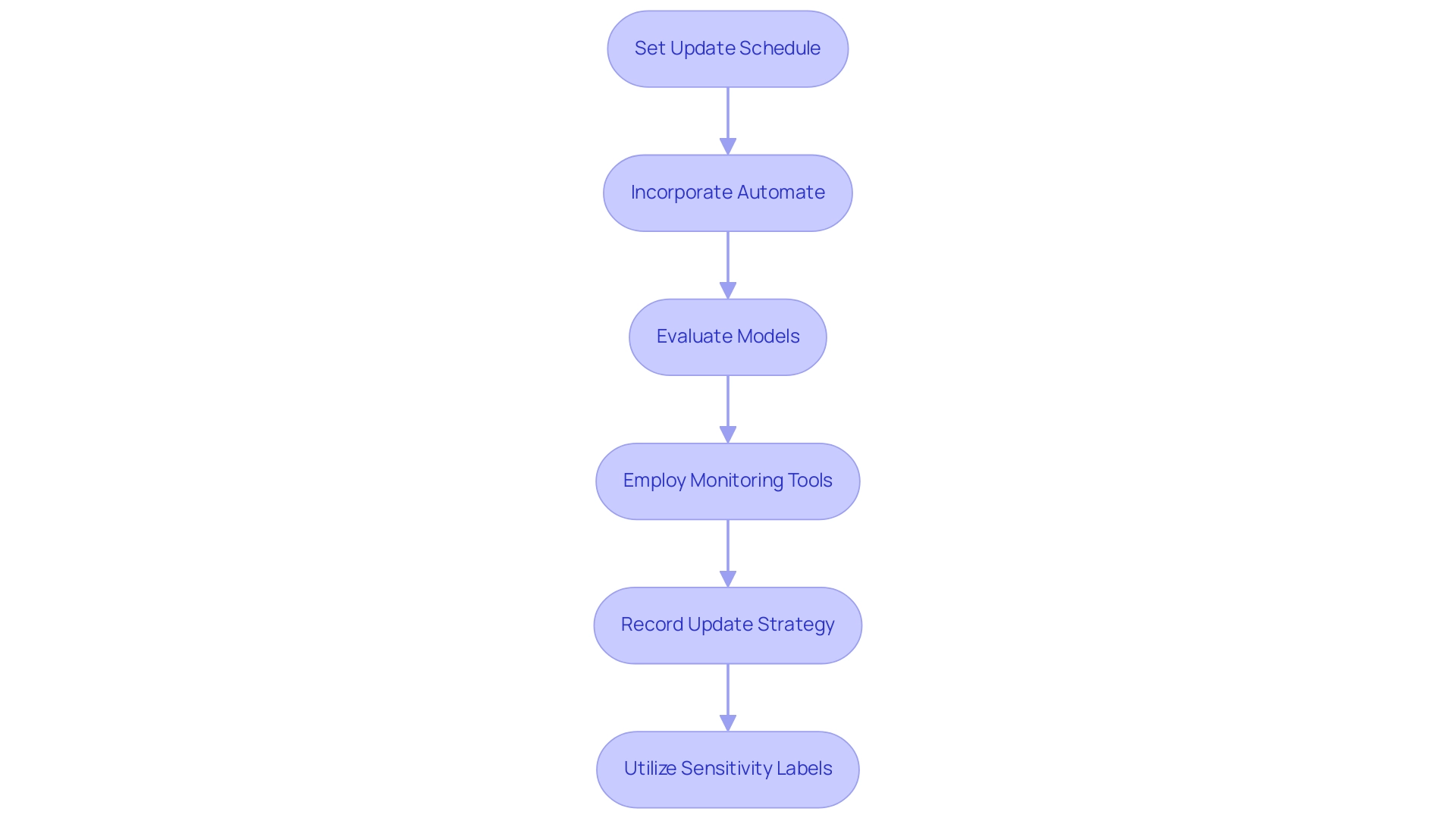

Best Practices for Managing Data Refresh

To manage data refresh in Power BI efficiently, it is crucial to set up a refresh schedule that aligns with your business requirements and adheres to the Power BI refresh limits. This strategic approach ensures that your reports remain current and relevant, enhancing reporting efficiency. By incorporating Power Automate into your workflow, you can streamline repetitive refresh-related tasks, greatly decreasing the time and effort needed for manual updates.

Power Automate not only simplifies these tasks but also enables a risk-free ROI evaluation, as you only pay if the task is automated as intended. Routine evaluations and enhancements of your models further contribute to a streamlined workflow, alleviating unnecessary load. Employing elements such as bookmarks, drillthrough pages, and tooltips can decrease the volume of information loaded on a page, enhancing update procedures.

Furthermore, monitoring tools offer real-time insights into refresh performance, allowing you to set up alerts for any failures or delays in the refresh process. As industry expert Jeff Shields notes, “I wonder if you could ZIP them and read them into a dataflow from a OneDrive/SharePoint folder.” This innovative approach, together with the features of Power Automate, can improve data management while preserving efficiency.

Documenting your refresh strategy and any changes to it promotes clarity in your data management practices. A case study on classifying report data with sensitivity labels demonstrates efficient data management in Power BI, ensuring suitable handling of sensitive material. By leveraging Power Automate’s features for data management, you can not only safeguard data integrity but also empower your organization to navigate the complexities associated with Power BI refresh limits effectively, driving growth and innovation.

Conclusion

Ensuring that Power BI reports and dashboards are consistently up-to-date is vital for effective data management and decision-making. The article outlines essential strategies for data refresh, including:

- Full Refresh

- Incremental Refresh

- Scheduled Refresh

Each caters to different organizational needs. Understanding the licensing limitations and optimizing refresh strategies can significantly enhance operational efficiency, enabling teams to leverage data insights more effectively.

Addressing common challenges such as data refresh failures is critical for maintaining the reliability of reporting processes. Implementing best practices for troubleshooting and utilizing tools like RPA can streamline workflows and reduce errors, ultimately leading to more actionable insights. Regular monitoring of refresh history and adapting data models ensures that organizations are well-equipped to manage their data refresh processes effectively.

By adopting these strategies and best practices, organizations can unlock the full potential of their data, driving growth and innovation. Empowering data analysts and decision-makers with the knowledge to navigate the complexities of Power BI not only enhances data accuracy but also fosters a culture of data-driven decision-making within the organization. Now is the time to prioritize effective data refresh strategies to stay ahead in today’s competitive landscape.