Overview

The article outlines DAX best practices for efficient data analysis, emphasizing principles such as clarity, consistency, performance awareness, and effective use of variables. These practices are supported by techniques that improve DAX performance, such as optimizing data models and avoiding common pitfalls, which together drive operational efficiency and improve analytical capabilities in platforms like Power BI.

Introduction

In the realm of data analysis, DAX (Data Analysis Expressions) stands as a cornerstone for professionals looking to elevate their operational efficiency and drive actionable insights. As organizations grapple with the complexities of data management, mastering the fundamental principles and best practices of DAX becomes not just beneficial but essential.

This article is a practical guide to key strategies for:

1. Optimizing DAX performance

2. Leveraging variables

3. Refining data models

By embracing these techniques, analysts can streamline their workflows, reduce errors, and make their analyses clearer, leading to smarter decision-making. Practical examples and expert insights throughout help teams work with their data confidently and precisely.

Fundamental Principles of DAX Best Practices

DAX (Data Analysis Expressions) is the formula language used for modeling and analysis in platforms like Power BI and Excel. For analysts facing time-consuming report creation, data inconsistencies, and a lack of actionable guidance, mastering DAX best practices is one of the most effective ways to boost operational efficiency. Here are the key principles to guide your DAX journey:

- Clarity and Simplicity: Strive for clear, concise formulas. Complex expressions invite errors and are harder for others to understand; simple ones are easier to review, maintain, and trust.

- Consistency: Use uniform naming conventions and formatting throughout your DAX code. This improves readability, minimizes confusion, and lets team members follow your logic with ease.

- Documentation: Invest time in commenting your code. Well-documented code, particularly for intricate formulas, makes future revisions easier and supports collaboration by helping others interpret your work.

- Performance Awareness: Prioritize performance when writing DAX formulas. Efficient code speeds up calculations and makes reports more responsive.

- Testing and Validation: Regularly verify that your DAX formulas return the expected results. Consistent testing catches errors early and preserves the integrity of your analysis.
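As a small illustration of the clarity, consistency, and documentation principles, the sketch below formats a measure with one argument per line and a comment on each step. The table and column names (Sales, Sales[Quantity], Sales[Net Price]) are hypothetical placeholders for your own model, not part of any specific dataset.

```dax
-- Sales Amount: total revenue in the current filter context.
-- Assumes a hypothetical Sales table with Quantity and Net Price columns.
Sales Amount =
SUMX (
    Sales,                                -- iterate the Sales table row by row
    Sales[Quantity] * Sales[Net Price]    -- revenue for the current row
)
```

Using a single comment style and a single indentation pattern across all measures is itself an application of the consistency principle.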

To illustrate these principles, consider the case study on regression analysis, in which DAX functions such as LINEST and LINESTX are used to model relationships between variables. Beyond supporting predictive modeling and trend analysis, it shows why clarity and simplicity matter: a regression formula is only useful if others can interpret it.
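For readers who want to try the regression idea, here is a minimal sketch of a measure that fits a straight line to daily sales with LINESTX and returns its slope. It assumes a hypothetical 'Date' table related to Sales and an existing [Sales Amount] measure. LINESTX returns a one-row table whose columns include Slope1 and Intercept; converting the date to a number for the x-values is an assumption made here to keep the input explicitly numeric.

```dax
-- Daily sales trend: slope of a least-squares line fitted to daily sales.
-- Assumes a hypothetical 'Date' table related to Sales and a [Sales Amount] measure.
Sales Trend Slope =
VAR DaysWithSales =
    FILTER (
        VALUES ( 'Date'[Date] ),               -- one row per visible date
        NOT ISBLANK ( [Sales Amount] )         -- skip days without sales
    )
VAR Fit =
    LINESTX (
        DaysWithSales,
        [Sales Amount],                        -- y: sales on that day
        CONVERT ( 'Date'[Date], DOUBLE )       -- x: the date as a number
    )
RETURN
    MAXX ( Fit, [Slope1] )                     -- estimated change in sales per day
```

Splitting the formula into named variables keeps each step (which rows, which fit, which result) readable on its own.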

By embracing DAX best practices, you can strengthen your analysis capabilities, drive data-based insights, and improve operational efficiency in your organization. Integrating Robotic Process Automation (RPA) can further streamline your workflows by automating repetitive tasks, freeing up time for more strategic analysis. As Walter Shields states in his work on launching a data analytics career, “For anyone looking to excel in data analysis, mastering DAX best practices is crucial.”

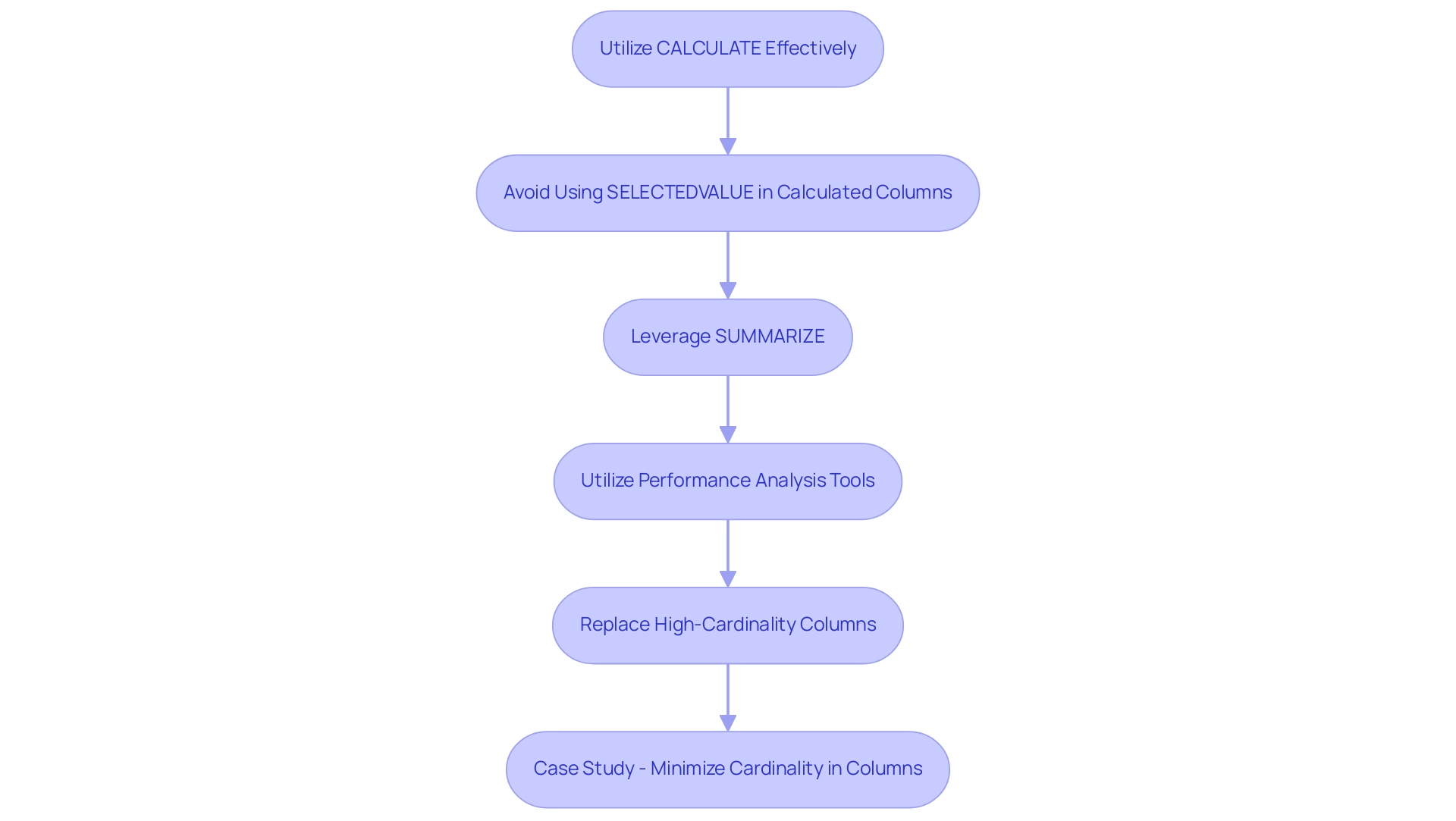

Techniques for Optimizing DAX Code for Performance

To improve the efficiency of your DAX code, consider implementing the following techniques:

- Use CALCULATE Deliberately: CALCULATE is a powerful tool for modifying filter context, but it has a cost. When it runs inside an iterator, it triggers a context transition for every row, which can be expensive on large tables. Reserve it for calculations that genuinely need a modified filter context.

- Reduce Row Context: Streamlining row context can significantly improve performance. Use iterator functions (such as SUMX and FILTER) judiciously, and avoid unnecessary row-by-row calculations on large tables, which slow processing.

- Avoid Using SELECTEDVALUE in Calculated Columns: SELECTEDVALUE reads the current filter context, so it belongs in measures. Calculated columns are computed at data refresh, without report or slicer filters, so SELECTEDVALUE in a column cannot react to user selections and usually returns blank or its alternate result.

- Leverage SUMMARIZE: Use SUMMARIZE to group rows into a summary table before iterating. Iterators and context transitions then run over fewer rows, reducing the volume of data DAX must process.

- Limit the Use of DISTINCT: Although DISTINCT is helpful for removing duplicates, frequent use can reduce efficiency. Use it only when it is actually needed.

- Use Performance Analysis Tools: Performance Analyzer in Power BI Desktop records how long each visual takes to render and how long its DAX query runs. Monitoring these timings regularly helps identify bottlenecks and areas for improvement.

- Replace High-Cardinality Columns: Replacing high-cardinality columns, such as GUIDs, with integer surrogate keys can significantly improve your models. Compact keys with fewer distinct values reduce model complexity and speed up processing.

- Case Study – Minimize Cardinality in Columns: A practical optimization is reducing the number of distinct values in a column. For instance, using the LEFT function to shorten product names can lower cardinality and speed up processing, provided the shortened values still distinguish the items you need to analyze.
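The sketch below illustrates the CALCULATE and SUMMARIZE techniques above: grouping first, so the iterator (and the context transition triggered by the measure reference) runs once per group rather than once per fact row. It assumes hypothetical Sales and Customer tables, with a Customer[Region] column and an existing [Sales Amount] measure. Adding computed columns with ADDCOLUMNS around SUMMARIZE, rather than inside it, follows commonly recommended guidance.

```dax
-- Assumes hypothetical Sales and Customer tables related on a customer key,
-- and an existing [Sales Amount] measure.

-- Measure: average sales per region. SUMMARIZE returns one row per region
-- that has sales, so [Sales Amount] is evaluated once per region,
-- not once per Sales row.
Avg Sales per Region =
AVERAGEX (
    SUMMARIZE ( Sales, Customer[Region] ),
    [Sales Amount]
)

-- Calculated table: the same grouping, with the computed column added by
-- ADDCOLUMNS rather than inside SUMMARIZE.
Region Summary =
ADDCOLUMNS (
    SUMMARIZE ( Sales, Customer[Region] ),
    "Region Sales", [Sales Amount]
)
```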

These techniques are essential for optimizing DAX performance and adhering to DAX best practices, ensuring that your operations run smoothly and effectively. As noted by expert Priyanka P Amte, mastering DAX optimization can lead to faster queries in Power BI, allowing for more responsive and insightful data analysis.
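To show where SELECTEDVALUE belongs, here is a minimal measure-based sketch. It assumes a hypothetical disconnected table 'Target Pct' with a single Pct column used as a slicer; because the logic lives in measures, it responds to the user's selection at query time, which a calculated column cannot do.

```dax
-- Returns the selected growth target, or 10% when the slicer does not have
-- exactly one value selected (nothing or several values selected).
Selected Target Pct =
SELECTEDVALUE ( 'Target Pct'[Pct], 0.10 )

-- Sales target based on the current selection.
Sales Target =
[Sales Amount] * ( 1 + [Selected Target Pct] )
```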

Leveraging Variables to Enhance DAX Formulas

Using variables is one of the most effective DAX best practices for improving the efficiency of your formulas, especially in Business Intelligence work. Here’s how to leverage them:

- Simplify Complex Processes: Variables let you break intricate calculations into smaller, more manageable steps. This improves clarity and makes your logic easier for others to follow, which is vital for teams working toward data-driven insights.

- Improve Performance: Storing intermediate results in variables reduces repeated calculations, which can yield substantial improvements in complex expressions. A relevant case study found that without DAX variables, the execution time for a Sales Amount measure rose to 1198 ms, illustrating the cost of repeated computation.

- Enhance Readability: Well-named variables turn formulas into self-explanatory scripts, easing comprehension and collaboration and reducing the time spent creating and reviewing reports.

- Reduce Repetition: When a computation appears several times in a formula, storing its result in a variable removes the redundancy, streamlining the formula and improving efficiency.

- Scope Control: A variable's value is computed in the evaluation context where it is defined and then behaves as a constant, even inside a later CALCULATE. Defining each variable where its context is correct helps prevent errors in your measures, which makes careful scoping a DAX best practice in its own right.
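A minimal sketch of these ideas, assuming a hypothetical 'Date' table marked as a date table and an existing [Sales Amount] measure: each intermediate result is computed once, stored in a named variable, and reused. Without variables, the prior-year CALCULATE expression would have to be written twice, once in the numerator and once in the denominator.

```dax
-- Year-over-year growth. Each variable is computed once and reused below.
Sales Growth % =
VAR CurrentSales = [Sales Amount]
VAR PreviousSales =
    CALCULATE ( [Sales Amount], SAMEPERIODLASTYEAR ( 'Date'[Date] ) )
RETURN
    DIVIDE ( CurrentSales - PreviousSales, PreviousSales )   -- BLANK when PreviousSales is 0 or blank
```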

Additionally, RPA solutions such as EMMA RPA and Power Automate can complement your DAX work.

By automating repetitive tasks around your reports, these tools can help mitigate staffing shortages and improve consistency, allowing for more reliable insights.

As noted by Zhengdong Xu, Community Support,

Just as @Alex87 said, using variables is important. Variables can enhance performance by avoiding redundant calculations.

This underscores the crucial role variables play in optimizing DAX formulas and improving operational efficiency.
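The Scope Control point has a practical consequence worth seeing in code: because a variable is evaluated where it is defined, wrapping it in CALCULATE afterward does not re-filter it. The sketch assumes a hypothetical Product table with a Color column and an existing [Sales Amount] measure.

```dax
-- Pitfall: TotalSales is evaluated before CALCULATE applies its filter,
-- so this measure returns total sales, not sales of red products.
Red Sales (Incorrect) =
VAR TotalSales = [Sales Amount]
RETURN
    CALCULATE ( TotalSales, Product[Color] = "Red" )

-- Correct: the filter is applied while [Sales Amount] is evaluated.
Red Sales =
CALCULATE ( [Sales Amount], Product[Color] = "Red" )
```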


Engaging with the Fabric community can also provide valuable insights and discussions about DAX variables and their applications.

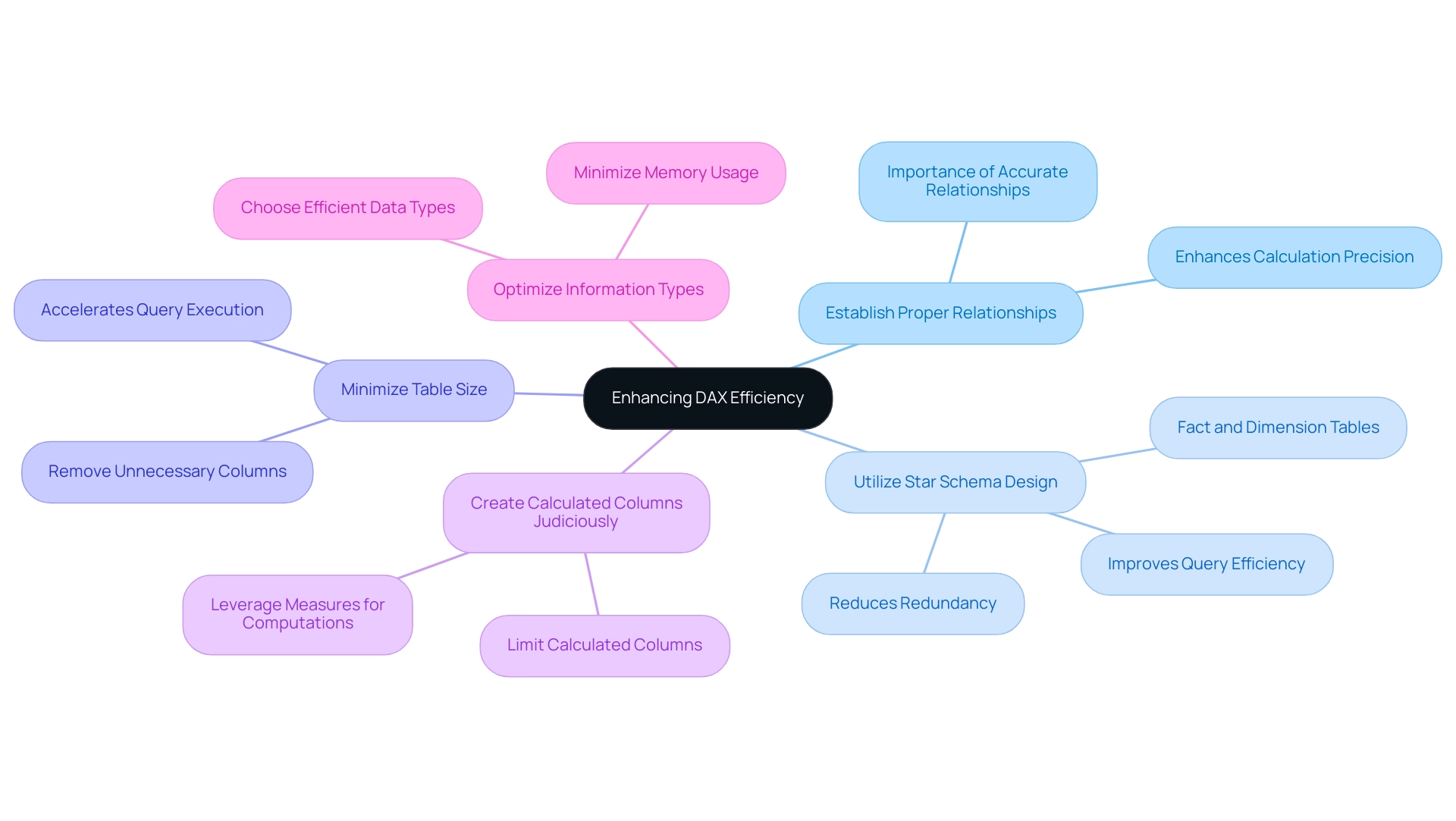

The Role of Data Modeling in DAX Efficiency

Effective data modeling is essential for maximizing DAX efficiency and turning insights into impactful business decisions. Here are key strategies to improve your data models:

- Establish Proper Relationships: Defining accurate relationships between tables is crucial for precise calculations. Well-structured relationships protect the integrity of your analyses and support actionable reports.

- Utilize Star Schema Design: Whenever possible, implement a star schema. This design separates data into fact tables (events and their numeric values) and dimension tables (descriptive attributes), which keeps relationships simple, filter behavior predictable, and queries fast. Following this framework also simplifies your data model, in line with the objectives of the 3-Day Power BI Sprint.

- Minimize Table Size: Remove columns and rows you do not need. Smaller tables use less memory and are faster to scan, keeping your model clear and your queries quick.

- Create Calculated Columns Judiciously: Limit calculated columns and prefer measures where possible. Calculated columns are computed at refresh and stored in the model, increasing its size, whereas measures are computed at query time and respond to the current filters, which usually makes for leaner, clearer models.

- Optimize Data Types: Choose the most efficient data type for each column, for example whole numbers rather than text for numeric codes and keys. Appropriate types reduce memory usage and improve overall efficiency.
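To make the calculated-column versus measure distinction concrete, here are two ways to compute line revenue on the same hypothetical Sales table. The `Sales[Line Amount] = ...` notation is a common documentation convention for calculated columns; in Power BI Desktop you would select the Sales table and enter only `Line Amount = ...`.

```dax
-- Calculated column on Sales: computed row by row at refresh and
-- stored in the model, so it adds to model size.
Sales[Line Amount] = Sales[Quantity] * Sales[Net Price]

-- Measure: computed at query time in the current filter context;
-- nothing extra is stored.
Sales Amount =
SUMX ( Sales, Sales[Quantity] * Sales[Net Price] )
```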

Incorporating these best practices improves the clarity and scalability of your Power BI data models and leads to faster queries and more effective reporting. This supports better decision-making and growth, helping your organization turn Power BI dashboards into actionable insights. Additionally, during our 3-Day Power BI Sprint, we will help you apply these strategies to create a fully functional, professionally designed report tailored to your needs.

You can also use this report as a template for future projects, ensuring a professional design from the start. Moreover, our training workshops will provide further insights into these practices, enhancing your team’s capability to utilize Power BI effectively.

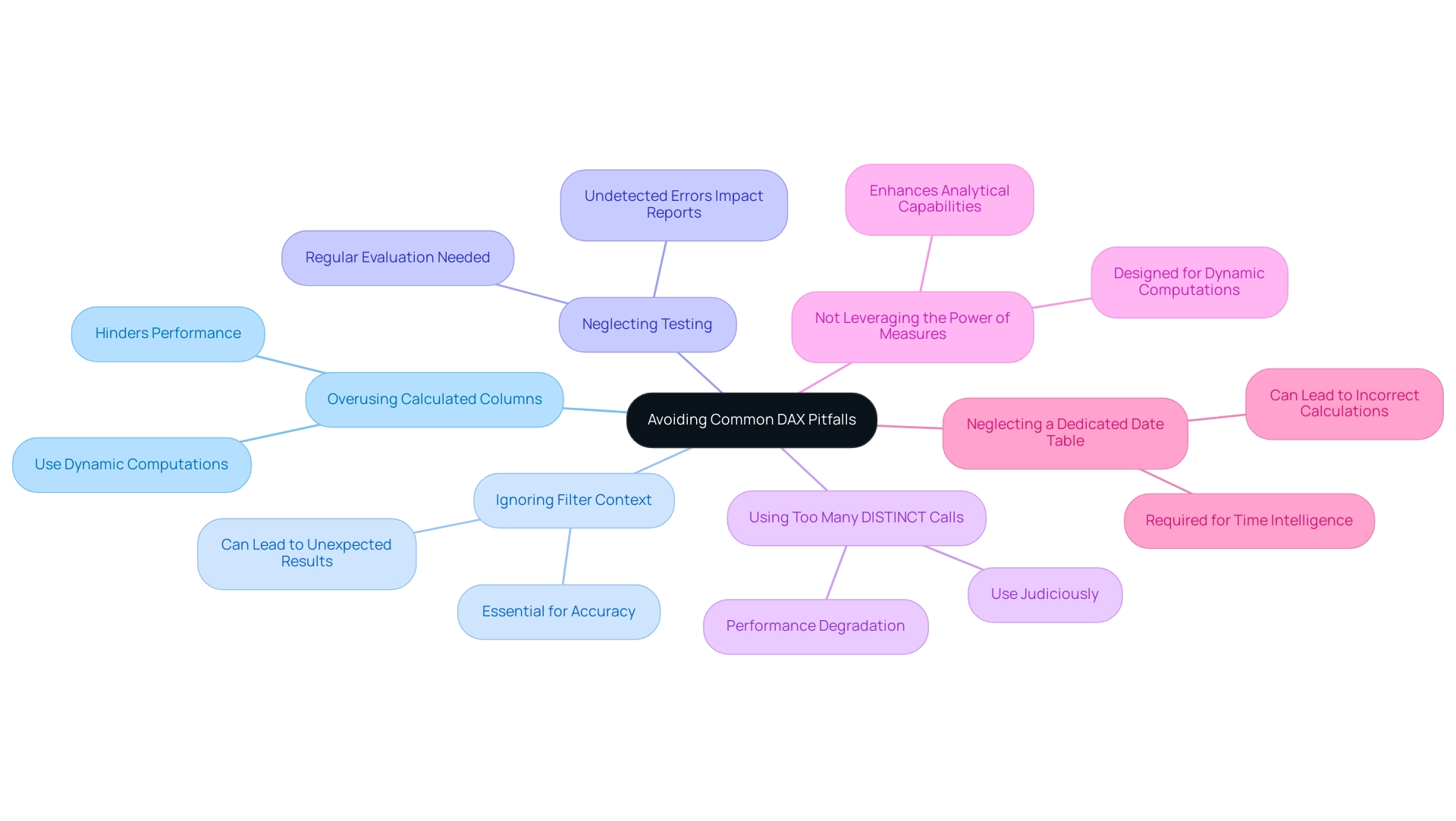

Avoiding Common DAX Pitfalls and Anti-Patterns

To get the most out of DAX, be mindful of these common pitfalls:

- Overusing Calculated Columns: Excessive reliance on calculated columns can significantly hinder performance. When feasible, use measures, which are designed for dynamic computations and keep the model lean.

- Ignoring Filter Context: Every measure is evaluated in the filter context set by visuals, slicers, and CALCULATE. Misunderstanding it leads to unexpected results and undermines the accuracy of your reports.

- Neglecting Testing: Regularly evaluate your DAX formulas. Deploying untested formulas risks undetected errors and slow reports, both of which undermine the user experience and decision-making.

- Using Too Many DISTINCT Calls: Overusing DISTINCT can degrade performance. Use it judiciously to maintain processing speed.

- Not Leveraging the Power of Measures: Measures are designed for dynamic computations, and neglecting them limits what your DAX can do. Using measures improves both your analytical capabilities and the accuracy of your reports.

- Neglecting a Dedicated Date Table: Time intelligence functions such as TOTALYTD and SAMEPERIODLASTYEAR expect a dedicated date table with one row per day and no gaps, ideally marked as a date table. Without one, time-based calculations can return incorrect results.
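The sketch below addresses the date table and filter context pitfalls, using hypothetical names and an assumed date range. It builds a calculated date table with CALENDAR (relate it to Sales and mark it as a date table before relying on time intelligence), a year-to-date measure that depends on it, and a share-of-total measure that removes only the Product filters from the filter context.

```dax
-- Calculated table: one row per day, no gaps. Adjust the range to your data.
Date =
ADDCOLUMNS (
    CALENDAR ( DATE ( 2020, 1, 1 ), DATE ( 2025, 12, 31 ) ),
    "Year", YEAR ( [Date] ),
    "Month Number", MONTH ( [Date] ),
    "Month", FORMAT ( [Date], "mmm" )
)

-- Year-to-date sales; requires the Date table above, related to Sales.
Sales YTD =
TOTALYTD ( [Sales Amount], 'Date'[Date] )

-- Share of all products: ALL ( Product ) removes filters on Product only,
-- so filters on other tables (such as Date) still apply.
Pct of All Products =
DIVIDE (
    [Sales Amount],
    CALCULATE ( [Sales Amount], ALL ( Product ) )
)
```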

Integrating RPA into your reporting workflows can automate manual processes such as data entry, report generation, and data validation, eliminating repetitive tasks and reducing the potential for human error. This allows your team to focus on strategic analysis and decision-making. As Mazhar Shakeel aptly states,

Remember, Power BI is a tool that rewards careful planning and thoughtful design.

By adhering to DAX best practices and avoiding common pitfalls, such as those discussed in the case studies on advancing DAX skills, you can foster a more effective DAX environment, improve operational efficiency, and enhance the overall quality of your insights.

Conclusion

Mastering DAX is a transformative step for any organization looking to enhance its data analysis capabilities. By adhering to the fundamental principles of:

- Clarity

- Consistency

- Documentation

- Performance awareness

- Rigorous testing

analysts can craft DAX formulas that are not only effective but also easy to understand and maintain. These best practices lay the groundwork for more efficient workflows and help mitigate common data management challenges.

Optimizing DAX performance requires strategic techniques, such as:

- Judicious use of the CALCULATE function

- Reducing row context

- Leveraging summary tables

By implementing these strategies, analysts can significantly enhance the speed and responsiveness of their reports, allowing for quicker decision-making and more impactful insights. Additionally, incorporating variables into DAX formulas streamlines complex calculations, reduces redundancy, and improves readability, further elevating operational efficiency.

Effective data modeling is equally crucial in maximizing DAX performance. Establishing proper relationships, adopting a star schema design, and optimizing data types are essential steps that lead to clearer, faster, and more actionable reports. Recognizing and avoiding common pitfalls, such as overusing calculated columns and neglecting performance testing, empowers analysts to create robust and efficient DAX environments.

In conclusion, mastering DAX is not merely a technical skill; it is a strategic advantage that enables organizations to navigate the complexities of data analysis with confidence. By embracing these principles, techniques, and best practices, teams can unlock the full potential of their data, driving informed decisions and fostering a culture of data-driven excellence.