Overview

Integrating Delta Lake with Azure Synapse enhances data management by combining Delta Lake’s advanced capabilities with Azure Synapse’s analytics features, facilitating real-time insights and operational efficiency. The article outlines a step-by-step setup process and highlights best practices, such as effective partitioning and the use of Robotic Process Automation (RPA), to streamline workflows and improve data handling while addressing common integration challenges.

Introduction

In the rapidly evolving landscape of data management, organizations are increasingly turning to advanced technologies to optimize their operations and extract actionable insights. The integration of Delta Lake with Azure Synapse represents a powerful synergy that enhances the reliability and efficiency of data lakes. By leveraging features such as:

- ACID transactions

- schema enforcement

- time travel capabilities

businesses can manage extensive datasets with confidence. This article delves into the critical aspects of this integration, offering a comprehensive guide that not only covers setup and troubleshooting but also highlights best practices and advanced features. As companies strive to harness the full potential of their data, understanding the nuances of Delta Lake and Azure Synapse becomes essential for driving strategic decision-making and fostering innovation.

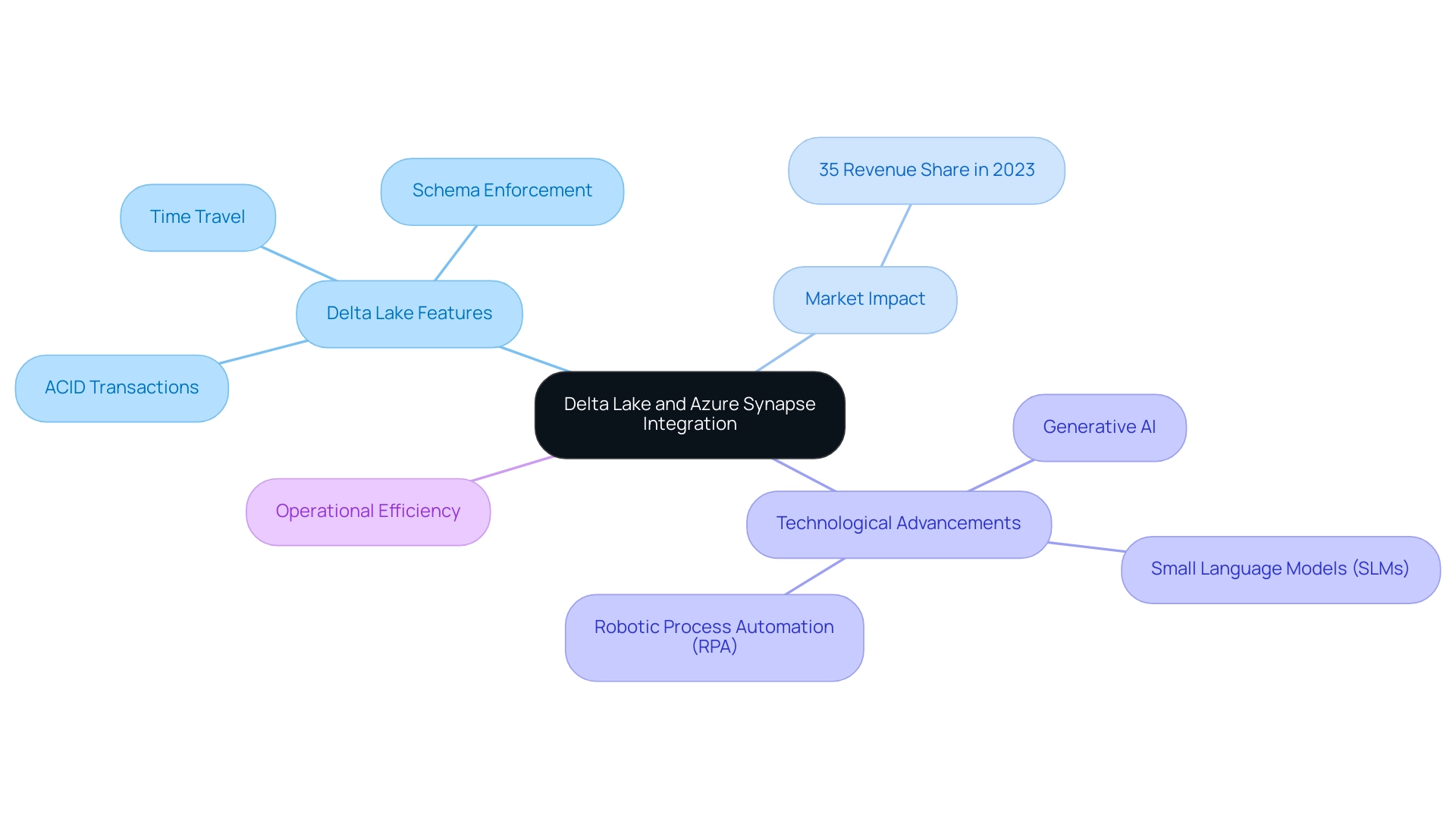

Understanding Delta Lake and Azure Synapse Integration

Delta Lake is an open-source storage layer that improves the reliability of data lakes by letting users manage large datasets with ACID transactions, schema enforcement, and time travel. According to recent reports, the data lake market in the United States accounted for approximately 35% of revenue share in 2023, illustrating its growth and relevance in today’s analytics-driven landscape. Technological advancement in North America, along with the presence of leading data lake providers, further strengthens this market.

Azure Synapse, which combines big data and data warehousing into a single analytics experience, becomes even more powerful when paired with Delta Lake. Together, the two platforms support real-time analytics, helping organizations extract actionable insights from their data efficiently. As firms increasingly adopt the principles of data mesh (decentralizing ownership and fostering collaboration across teams), understanding how Delta Lake integrates with Azure Synapse is vital.

This combination not only streamlines workflows but also enhances operational efficiency, positioning businesses to use their data strategically in a competitive market. Furthermore, the integration of Generative AI and Small Language Models (SLMs) in data engineering exemplifies how AI tools are transforming data management workflows by automating tasks, improving user interactions, and offering better privacy and cost-effectiveness. Additionally, Robotic Process Automation (RPA) can automate manual workflows, reducing errors and freeing teams for more strategic tasks.

These advancements address the challenge of poor master data quality and underline the need for organizations to stay informed about progress in data management that supports the integration of Delta Lake with Azure Synapse.

Step-by-Step Setup of Delta Lake in Azure Synapse

-

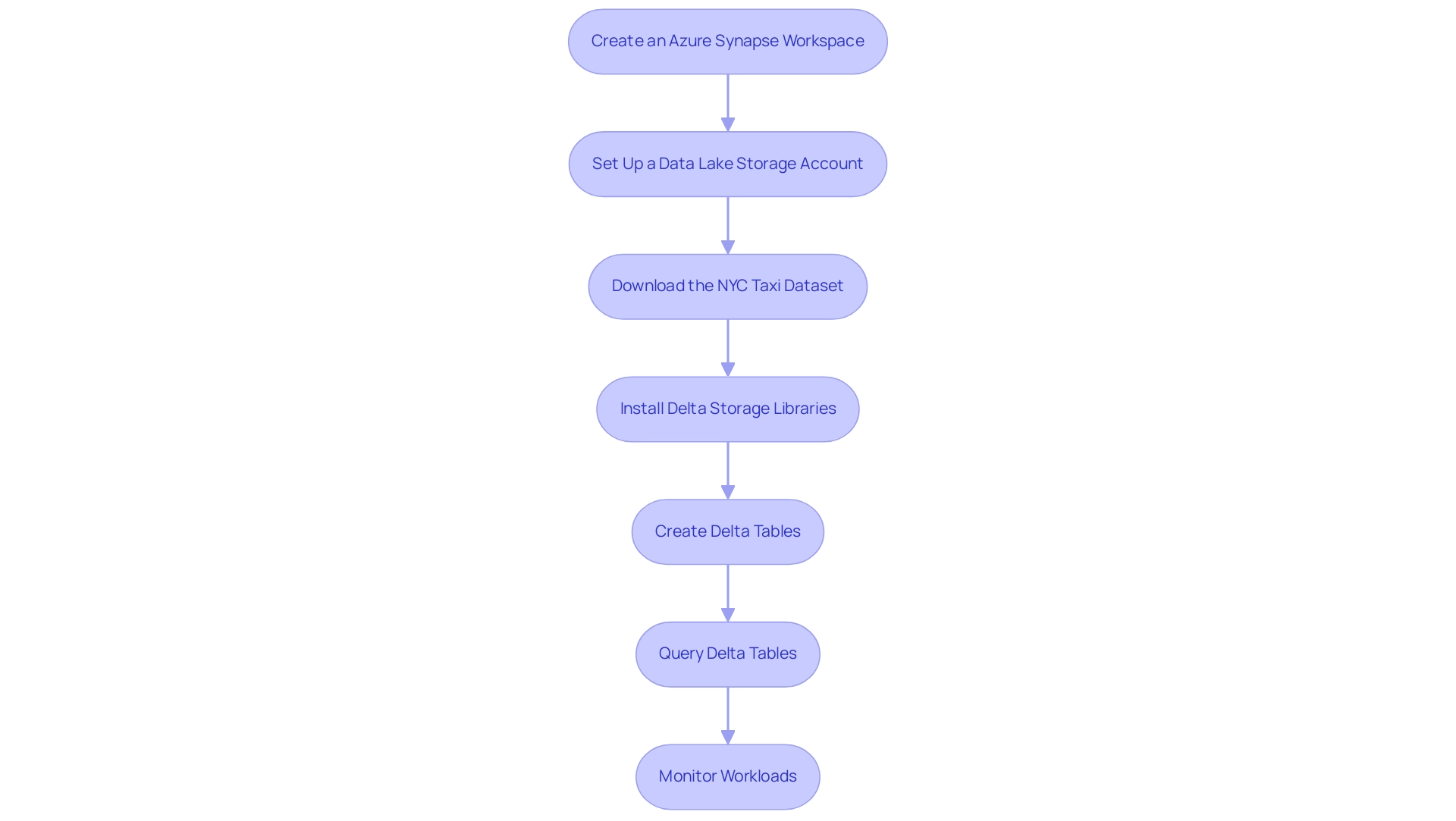

Create an Azure Synapse Workspace: Begin by logging into the Azure portal. Initiate a new Synapse workspace, selecting the optimal region and resource group that aligns with your operational needs. This foundational step ensures that you have a dedicated environment for your management tasks, crucial for leveraging Robotic Process Automation (RPA) to streamline operations by automating the setup of workflows.

- Set Up a Data Lake Storage Account: Create a new Azure Data Lake Storage Gen2 account to store your data. Make sure it is linked to your Synapse workspace so that reads and writes work smoothly, which in turn supports workflow automation and operational efficiency. RPA can automate data ingestion, reducing manual input and errors.

- Download the NYC Taxi Dataset: Download the NYC Taxi – green trip dataset, rename it to NYCTripSmall.parquet, and upload it to the primary storage account in Synapse Studio. This dataset serves as a practical example for your implementation, demonstrating how structured data can drive actionable insights. Automating the upload through RPA can save time and minimize human error.

- Install Delta Lake Libraries: In Synapse Studio, navigate to the Manage hub, choose Apache Spark pools, and install the Delta Lake libraries required for your Spark environment. Recent Synapse Spark runtimes typically ship with Delta Lake preinstalled, so check the version bundled with your runtime before adding packages. These libraries enable advanced data management capabilities in Azure Synapse and support RPA initiatives aimed at reducing manual tasks and improving accessibility. According to Azure specialists, using the latest Delta Lake libraries supported by your runtime ensures optimal performance and compatibility with your workflows, thereby enhancing RPA effectiveness.

- Create Delta Tables: Use Spark SQL or the DataFrame API to create Delta tables in your data lake. Define the schema carefully and load your data into these tables. Structuring your data correctly lays the groundwork for efficient querying and manipulation, which is key for business intelligence. RPA can automate schema definition and data loading, helping ensure consistency and accuracy.

- Query Delta Tables: Use Azure Synapse’s SQL capabilities to run queries against your Delta tables. This integration lets you combine the strengths of both platforms, optimizing performance and extending your analytical capabilities in support of data-driven decision-making. RPA can automate recurring queries, delivering insights without manual intervention.

- Monitor Workloads: Continuously monitor workloads within Azure Synapse to maintain performance and troubleshoot issues. Use the STATS_DATE() function to check when statistics were last updated, so that stale statistics can be refreshed. As data scientist Moez Ali points out, Azure Synapse supports hybrid cloud environments by enabling seamless integration between on-premises systems and the cloud, making it a robust choice for modern data management. To get the most out of Azure Synapse, follow best practices such as optimizing storage formats, managing compute resources, implementing security measures, and monitoring workloads. RPA can automate these monitoring tasks, allowing your team to focus on strategic initiatives.
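The table-creation and query steps above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not a definitive implementation: the storage account `mystorageacct`, the container `data`, and the folder names are hypothetical placeholders, and `spark` is assumed to be the session that a Synapse notebook provides.

```python
def abfss_uri(container: str, account: str, path: str) -> str:
    """Build an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def parquet_to_delta(spark, source_uri: str, target_uri: str) -> None:
    """Read a Parquet file and rewrite it as a Delta table stored at target_uri."""
    df = spark.read.parquet(source_uri)
    df.write.format("delta").mode("overwrite").save(target_uri)


def serverless_delta_query(account: str, container: str, path: str, top: int = 10) -> str:
    """Return a T-SQL statement that reads a Delta folder from a serverless SQL pool."""
    url = f"https://{account}.dfs.core.windows.net/{container}/{path.strip('/')}/"
    return (
        f"SELECT TOP {top} * "
        f"FROM OPENROWSET(BULK '{url}', FORMAT = 'DELTA') AS rows"
    )


# Hypothetical names; replace them with your own storage account and container.
ACCOUNT, CONTAINER = "mystorageacct", "data"
source = abfss_uri(CONTAINER, ACCOUNT, "raw/NYCTripSmall.parquet")
target = abfss_uri(CONTAINER, ACCOUNT, "delta/nyc_trips")

# In a Synapse notebook, where `spark` already exists, you would run:
# parquet_to_delta(spark, source, target)

# The generated statement can be pasted into a serverless SQL script.
print(serverless_delta_query(ACCOUNT, CONTAINER, "delta/nyc_trips"))
```

Passing `spark` in as a parameter keeps the helpers importable outside a notebook; only `parquet_to_delta` actually needs a running Spark session.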

Optimizing Performance: Best Practices for Delta Lake in Azure Synapse

-

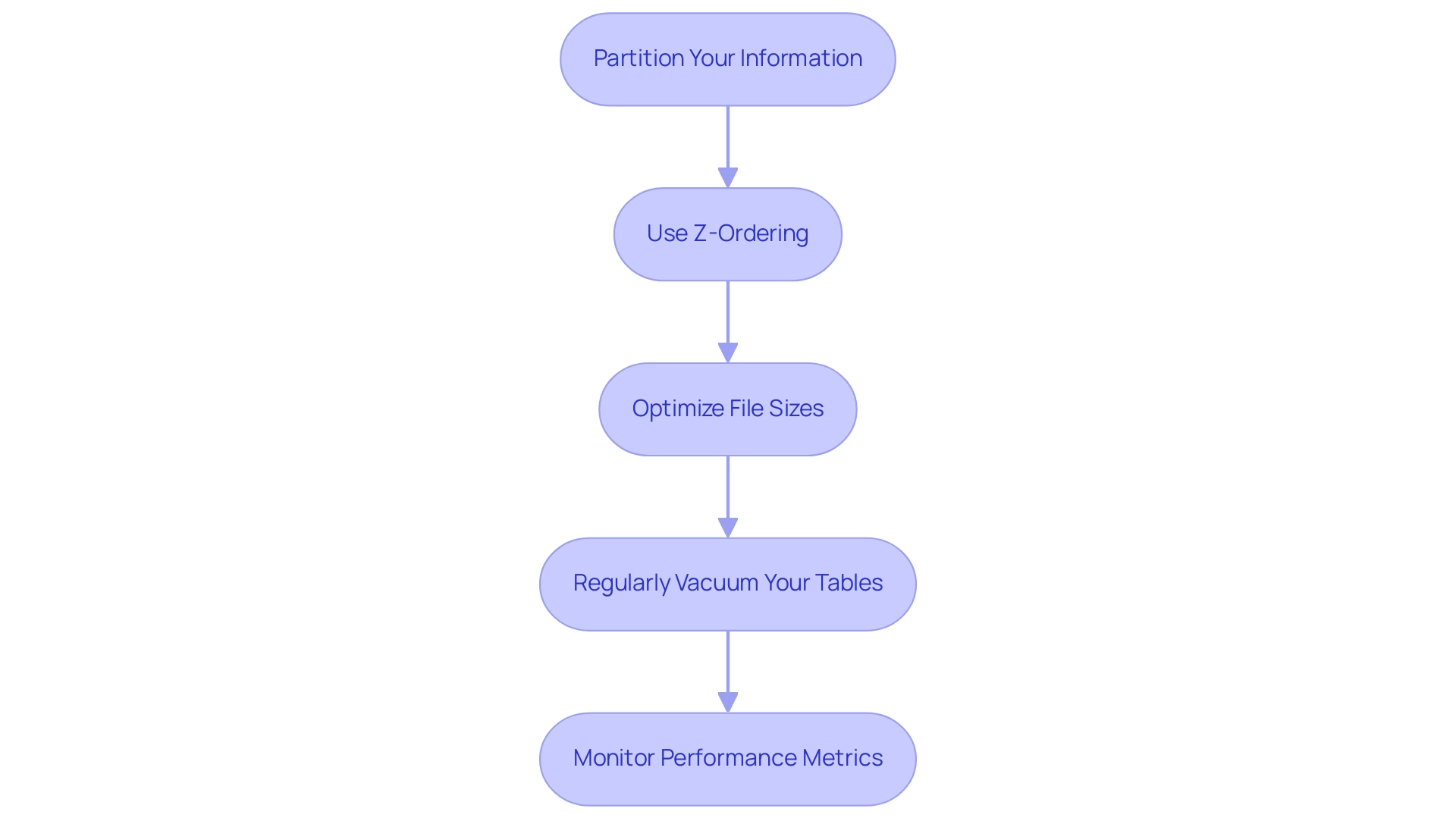

Partition Your Information: Strategically organizing your information into partitions based on access patterns is essential for enhancing query performance. By implementing targeted partitions, you can significantly reduce the volume scanned during queries, leading to faster results. Utilizing the OPTIMIZE command allows you to specify partitions directly with a WHERE clause, ensuring that the underlying information in the Delta table remains intact while still optimizing performance. This strategic approach reflects the efficiency improvements provided by Robotic Process Automation (RPA), which can automate manual information management tasks and free your team for higher-level analytical work, ultimately decreasing the likelihood of errors.

- Use Z-Ordering: Applying Z-ordering to columns that are frequently queried together can substantially improve retrieval speeds. This technique reorganizes the data by column values so that related rows are stored in the same files, which lets queries skip files that cannot contain matching rows. Because RPA tools can streamline data retrieval processes, combining Z-ordering with automation can yield further gains in operational efficiency.

- Optimize File Sizes: Keeping Delta file sizes between 128 MB and 1 GB strikes a good balance between read and write performance. Following this guideline supports efficient data management and better performance during operations. RPA can help maintain these file sizes by automating regular checks and adjustments, which helps prevent human error and protects overall data integrity.

- Regularly Vacuum Your Tables: Running the VACUUM command is crucial for managing storage and maintaining performance. It deletes data files that are no longer referenced by the table and are older than the retention period, which frees up space and keeps your data environment streamlined. Keep in mind that versions whose files have been vacuumed are no longer available for time travel. Automating this process through RPA can save time and reduce the potential for human error, so your team can redirect its efforts toward more strategic tasks.

- Monitor Performance Metrics: Use Azure Synapse’s monitoring tools to track performance metrics continuously. By evaluating these metrics regularly, you can pinpoint bottlenecks and make proactive changes that improve the efficiency of your implementation. Recent updates, such as the operation metrics for SQL UPDATE, DELETE, and MERGE commands introduced in Delta Lake 2.1.0, return DataFrames with operation metadata, which simplifies logging and tracking for developers. Integrating RPA to automate monitoring and reporting can further support data-driven decision-making. Finally, remember that Delta Lake 2.0.0 requires a minimum Synapse Runtime of Apache Spark 3.3 to function effectively. In the rapidly evolving AI landscape, pairing tailored AI solutions with RPA can further strengthen your data management strategy and keep it aligned with your specific business goals and challenges.
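The partitioning, Z-ordering, and vacuuming practices above can be expressed as Delta Lake SQL statements. The helpers below are a minimal sketch that only builds those statements; the table name `nyc_trips`, the partition column `pickup_date`, and the Z-order columns are assumptions chosen for illustration. The 168-hour retention shown is Delta Lake's default of seven days.

```python
from typing import Optional, Sequence


def optimize_sql(table: str, where: Optional[str] = None,
                 zorder_by: Sequence[str] = ()) -> str:
    """Build an OPTIMIZE statement, optionally limited to partitions and Z-ordered."""
    parts = [f"OPTIMIZE {table}"]
    if where:
        # The predicate may only reference partition columns.
        parts.append(f"WHERE {where}")
    if zorder_by:
        parts.append(f"ZORDER BY ({', '.join(zorder_by)})")
    return " ".join(parts)


def vacuum_sql(table: str, retain_hours: int = 168, dry_run: bool = False) -> str:
    """Build a VACUUM statement; DRY RUN lists files without deleting them."""
    statement = f"VACUUM {table} RETAIN {retain_hours} HOURS"
    return f"{statement} DRY RUN" if dry_run else statement


# Hypothetical table and column names, for illustration only.
statements = [
    optimize_sql(
        "nyc_trips",
        where="pickup_date >= '2023-01-01'",
        zorder_by=["PULocationID", "DOLocationID"],
    ),
    vacuum_sql("nyc_trips", dry_run=True),  # preview what would be removed
    vacuum_sql("nyc_trips"),
]

for statement in statements:
    print(statement)
    # In a Synapse notebook you would execute it with: spark.sql(statement)
```

Running the dry run first is a cautious habit: it shows which files would be deleted before any version history is actually lost.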

Troubleshooting Common Issues in Delta Lake and Azure Synapse Integration

-

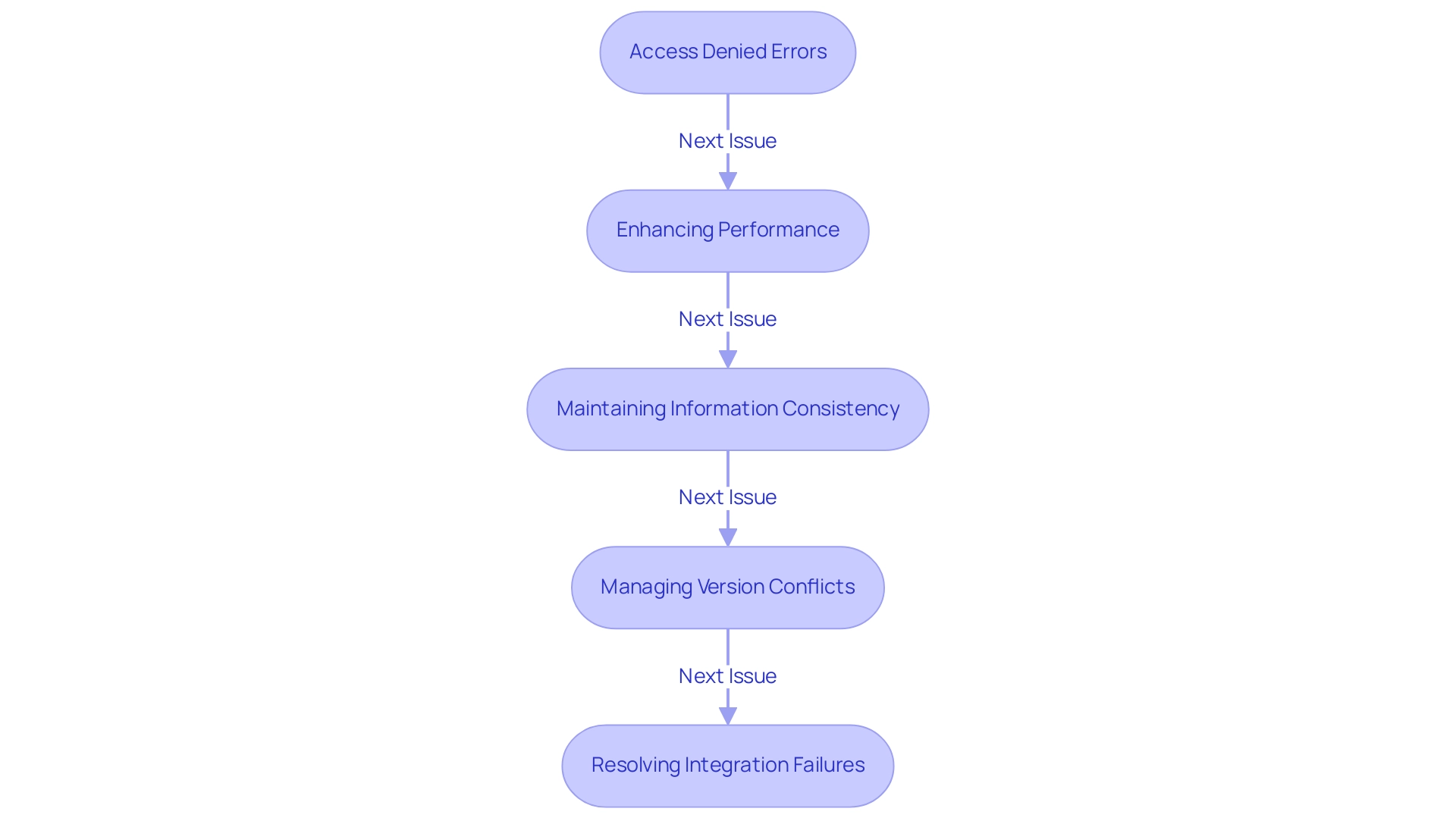

Addressing Access Denied Errors: To resolve access denied errors in Azure Synapse, it’s crucial to verify that your workspace has the appropriate permissions to interact with the delta lake synapse and the Data Lake Storage account. Begin by auditing the IAM roles and access policies associated with your Synapse workspace. Abdennacer Lachiheb emphasizes the importance of ensuring that all configurations are correct, stating,

Try to set this spark conf to false:

spark.databricks.delta.formatCheck.enabledfalse.

This adjustment can enhance access reliability. Additionally, with recent Microsoft Entra authentication changes causing queries to fail after one hour, consider implementing a solution that maintains active connections without rebuilding your setup from scratch, leveraging RPA to automate these monitoring processes. -

Enhancing Performance: If you notice that your queries are running slower than expected, take a close look at your partitioning strategy. Effective partitioning is essential for enhancing structured tables, and employing Business Intelligence tools can offer insights into usage patterns. Regularly reviewing and refining this aspect can significantly improve query performance, thus enhancing overall operational efficiency. Additionally, not utilizing these tools can leave your business at a competitive disadvantage due to missed insights. Moreover, be aware of the potential for buffer overflow attacks, as understanding this feasibility can help in securing your information management practices.

- Maintaining Data Consistency: To combat consistency errors, use the MERGE command in Delta Lake. This command updates matching records and inserts new ones in a single atomic operation, keeping your tables consistent and accurate. Consistency is essential, particularly where data integrity is paramount, and RPA can help automate these consistency checks.

- Managing Version Conflicts: If you face version conflicts within your datasets, a robust version control strategy is essential. Regularly purging older versions of data can prevent conflicts and streamline management, improving the efficiency of your operations. Tailored AI solutions can help identify and resolve potential conflicts before they escalate, keeping your data management practices aligned with your business goals.

- Resolving Integration Failures: Lastly, if you encounter integration failures, examine your network configuration and Spark environment settings. Misconfigurations can cause integration issues that degrade the performance of your workflows, so aligning these settings with best practices will reduce failures. For instance, token expiration can cause errors during query execution; switching to a service principal or managed identity for long-running queries is recommended to avoid such issues, and this can also be automated through RPA solutions.
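As an illustration of the MERGE-based consistency fix described above, the sketch below performs an upsert with the Delta Lake Python API (`DeltaTable` from the `delta-spark` package). It is a minimal sketch under stated assumptions: the key column `trip_id` and the aliases `t` and `s` are hypothetical, and `spark` and `updates_df` are assumed to come from your notebook.

```python
from typing import Sequence


def merge_condition(keys: Sequence[str], target: str = "t", source: str = "s") -> str:
    """Build the join predicate that matches source rows to target rows."""
    if not keys:
        raise ValueError("at least one key column is required")
    return " AND ".join(f"{target}.{key} = {source}.{key}" for key in keys)


def upsert(spark, updates_df, target_path: str, keys: Sequence[str]) -> None:
    """Update matching rows and insert new ones in one atomic MERGE."""
    # Imported here so merge_condition stays usable without delta-spark installed.
    from delta.tables import DeltaTable

    (
        DeltaTable.forPath(spark, target_path).alias("t")
        .merge(updates_df.alias("s"), merge_condition(keys))
        .whenMatchedUpdateAll()
        .whenNotMatchedInsertAll()
        .execute()
    )


# Hypothetical key column, for illustration only.
print(merge_condition(["trip_id"]))
# In a Synapse notebook: upsert(spark, updates_df, target_path, ["trip_id"])
```

Choosing the key columns carefully matters: if several source rows match the same target row, the merge can fail or produce ambiguous results, so deduplicating `updates_df` on the keys beforehand is a sensible precaution.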

Exploring Advanced Features of Delta Lake in Azure Synapse

Time Travel: Delta Lake lets you query earlier versions of your data, which supports rollback and auditing. By using the VERSION AS OF syntax in your SQL queries, you can access historical records, which is invaluable for compliance and verification processes.
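A minimal sketch of time travel from a notebook, assuming `spark` is the notebook session and that the table path or name you pass in is your own. The `versionAsOf` and `timestampAsOf` reader options are part of Delta Lake; the helper names are made up for this example.

```python
def read_version(spark, path: str, version: int):
    """Load the table as it existed at a specific commit version."""
    return spark.read.format("delta").option("versionAsOf", version).load(path)


def read_as_of(spark, path: str, timestamp: str):
    """Load the table as it existed at a timestamp such as '2023-06-01 00:00:00'."""
    return spark.read.format("delta").option("timestampAsOf", timestamp).load(path)


def version_as_of_sql(table: str, version: int) -> str:
    """Build the equivalent SQL query using the VERSION AS OF syntax."""
    if version < 0:
        raise ValueError("version must be non-negative")
    return f"SELECT * FROM {table} VERSION AS OF {version}"


# Hypothetical table name, for illustration only.
print(version_as_of_sql("nyc_trips", 0))
```

Running `DESCRIBE HISTORY` on the table lists the available versions and their timestamps, which helps when choosing a version to read.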

Versioning: Keeping a comprehensive history of changes to your tables is crucial for maintaining compliance and ensuring integrity. This versioning capability lets you track changes efficiently, improving accountability within your data management practices.

Schema Evolution: Delta Lake’s support for schema evolution lets you add new columns or alter column types without interrupting existing workloads. This flexibility streamlines table management and lets your data structures adapt to evolving business needs.
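A minimal sketch of the added-column case, assuming `df` is a Spark DataFrame whose schema is a superset of the existing table's schema. The `mergeSchema` write option is part of Delta Lake; the helper names are hypothetical. Changing a column's type is a different situation and generally requires rewriting the table, which this sketch does not cover.

```python
from typing import List, Sequence


def added_columns(existing: Sequence[str], incoming: Sequence[str]) -> List[str]:
    """Return the incoming column names that the existing table does not have yet."""
    known = set(existing)
    return [name for name in incoming if name not in known]


def append_with_schema_evolution(df, path: str) -> None:
    """Append rows and let Delta Lake add any new columns to the table schema."""
    (
        df.write.format("delta")
        .mode("append")
        .option("mergeSchema", "true")
        .save(path)
    )


# Reviewing the difference before writing makes schema changes deliberate.
print(added_columns(["trip_id", "fare"], ["trip_id", "fare", "tip_amount"]))
```

Without `mergeSchema`, Delta Lake's schema enforcement rejects an append whose columns do not match the table, so the option is an explicit opt-in to evolution.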

Change Data Capture (CDC): Implementing CDC is essential for tracking changes in your data and synchronizing them efficiently with other systems. This capability keeps your data up to date and accurate across platforms, which is essential for operational efficiency.
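One way to implement this in Delta Lake is its Change Data Feed feature; treating that as the mechanism meant here is an assumption, since the article does not name one. The sketch below enables the feed on a table and reads row-level changes from a starting version. The table name is hypothetical and `spark` is assumed to be the notebook session.

```python
def enable_change_feed_sql(table: str) -> str:
    """Build the statement that turns on the Change Data Feed for a table."""
    return f"ALTER TABLE {table} SET TBLPROPERTIES (delta.enableChangeDataFeed = true)"


def read_changes(spark, path: str, starting_version: int):
    """Read row-level changes recorded since starting_version (inclusive)."""
    return (
        spark.read.format("delta")
        .option("readChangeFeed", "true")
        .option("startingVersion", starting_version)
        .load(path)
    )


# Hypothetical table name, for illustration only.
print(enable_change_feed_sql("nyc_trips"))
```

Changes are only recorded from the version at which the feed was enabled, so the starting version passed to `read_changes` should not predate that point.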

Streaming Capabilities: Use Delta Lake’s strong support for streaming data to build real-time analytics applications. This functionality lets you react quickly to incoming data, improving your decision-making. Dileep Raj Narayan Thumula highlights that understanding data distribution is essential for query optimization, which makes these streaming capabilities even more significant in your operational strategy.

Access Control and Security: Delta tables also support effective access control and security management through Databricks’ built-in features, ensuring that your data remains protected while permitting access based on roles and responsibilities.

Vector Search Support: In Q3, Databricks introduced a gated Public Preview for Vector Search support in Databricks SQL, improving data querying and analysis capabilities that can complement Delta tables, particularly in complex data environments.

Case Study on Extended Statistics Querying: An example using a sales_data table illustrates how to compute statistics for specific columns, such as product_name and quantity_sold, and how to query extended statistics to verify the results. This process helps you understand the distribution of your data, which is vital for query optimization, with statistics such as min, max, and distinct count being the most relevant.

Integrating RPA for Enhanced Efficiency: By automating manual workflows with Robotic Process Automation (RPA), organizations can significantly reduce the time spent on repetitive tasks, freeing up resources for strategic data management. For example, a firm that adopted RPA to manage data entry tasks alongside Delta Lake reported a 30% decrease in mistakes and a significant boost in team productivity. This synergy between Delta Lake and RPA within the Azure Synapse environment not only enhances operational efficiency but also drives actionable insights, allowing your team to focus on high-value projects amid the rapidly evolving AI landscape.

Moreover, RPA addresses challenges within this landscape by ensuring that data processes are streamlined and less prone to human error.

Conclusion

The integration of Delta Lake with Azure Synapse offers organizations a transformative approach to data management, enabling them to harness the full potential of their data assets. By leveraging features such as ACID transactions, schema enforcement, and time travel capabilities, businesses can ensure data reliability and integrity, which are essential for informed decision-making.

The step-by-step setup guide provided emphasizes the importance of:

1. Creating a dedicated environment

2. Automating workflows through Robotic Process Automation (RPA)

3. Optimizing data performance

Implementing best practices like:

– Data partitioning

– Z-ordering

– Regular monitoring

not only enhances query performance but also streamlines operations, allowing teams to focus on strategic initiatives rather than repetitive tasks.

Addressing common challenges such as:

– Access errors

– Performance issues

– Data consistency

is crucial for maintaining an efficient data ecosystem. By utilizing advanced features like time travel, schema evolution, and change data capture, organizations can ensure compliance and adapt swiftly to evolving business needs. The integration of RPA further amplifies these benefits, driving operational efficiency and minimizing errors.

In conclusion, embracing the synergy between Delta Lake and Azure Synapse equips organizations with the tools necessary to thrive in a data-driven world. By prioritizing effective data management strategies and leveraging automation, businesses can not only enhance their operational efficiency but also foster innovation and strategic growth in an increasingly competitive landscape.