Overview

The article confronts the critical issue of duplicate values in datasets, which obstruct the establishment of relationships between tables. This challenge can result in significant operational inefficiencies and erroneous reporting. It underscores the necessity of implementing unique identifiers and conducting regular data maintenance.

Automation tools, such as Robotic Process Automation (RPA), play a pivotal role in managing duplicates effectively. By enhancing data integrity, organizations can facilitate improved decision-making processes, ultimately driving better outcomes.

Introduction

In the intricate realm of data management, duplicate values present a formidable challenge that can undermine the very foundation of effective data relationships. As organizations increasingly depend on data-driven insights to steer strategic decisions, the importance of maintaining data integrity cannot be overstated. Duplicate entries not only lead to operational inefficiencies but also threaten the accuracy of reporting, ultimately skewing outcomes and hindering growth.

This article explores the complexities of managing duplicate values, examining their causes, impacts, and effective strategies for identification and removal. By grasping these dynamics, organizations can implement best practices that enhance data quality, streamline operations, and foster informed decision-making in an ever-evolving digital landscape.

Understanding the Duplicate Values Dilemma in Data Relationships

Duplicate values in information sets pose significant challenges when establishing relationships between tables. For instance, when both a Sales Data Table and a Price Data Table contain non-unique product IDs, the system may generate an error indicating that the relationship cannot be created due to these duplicates. This issue is particularly common in various information handling systems, including Excel and Power BI, where unique identifiers are crucial for accurate linkage.

As Subha Airan-Javia, MD, emphasizes, effective information management is essential for operational efficiency, underscoring the need for organizations to proactively address these challenges.

In the pursuit of enhancing operational efficiency in healthcare through GUI automation, organizations can automate the identification of repeated values, significantly reducing manual efforts and errors. This automation is vital for organizations aiming for improved data integrity, allowing them to extract meaningful insights without the impediment of inconsistencies. For example, a medium-sized firm that implemented GUI automation achieved a remarkable 70% reduction in entry errors and accelerated testing processes by 50%.

Consider a scenario where repeated product IDs exist in both tables. Without unique identifiers, the system lacks the necessary information to accurately relate the two tables, resulting in an inability to create the relationship due to duplicate values. This inefficiency can severely impede decision-making processes. The overall record count for Saccharomyces cerevisiae stands at 68,236, highlighting the prevalence of repeated values in information handling systems and their potential impact on data integrity.
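The scenario above can be checked before attempting to relate the tables. The following is a minimal sketch, not a feature of Excel or Power BI: the sample rows, the `duplicate_keys` helper, and the `product_id` column name are all hypothetical, and the rule it applies (at least one side of the relationship must be unique on the key) is the usual one-to-many assumption.

```python
from collections import Counter


def duplicate_keys(rows, key):
    """Return {key value: count} for every value that appears more than once."""
    counts = Counter(row[key] for row in rows)
    return {value: n for value, n in counts.items() if n > 1}


# Hypothetical sample data standing in for the Sales and Price tables.
sales = [
    {"product_id": "P1", "units": 3},
    {"product_id": "P2", "units": 1},
    {"product_id": "P1", "units": 5},
]
prices = [
    {"product_id": "P1", "price": 9.99},
    {"product_id": "P2", "price": 4.50},
    {"product_id": "P2", "price": 4.75},
]

sales_dupes = duplicate_keys(sales, "product_id")
price_dupes = duplicate_keys(prices, "product_id")

# Assumption: a one-to-many relationship needs one side to be unique on the key.
can_relate = not sales_dupes or not price_dupes
print(sales_dupes, price_dupes, can_relate)
```

Here both tables repeat a product ID, so neither can serve as the unique side of the relationship until its duplicates are resolved.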

Moreover, a case study titled ‘Systemic Hazards of Information Overload in EMRs’ identifies information overload and dispersion as significant risks in electronic medical records (EMRs), obstructing clinicians’ ability to locate relevant information effectively. This study advocates for the reengineering of documentation systems to mitigate these hazards, suggesting collaborative documentation models and adaptable systems to enhance information management.

To tackle the issue of repeated values, it is imperative first to acknowledge their presence in your information sets. This foundational step lays the groundwork for implementing effective strategies to eliminate duplicates, ultimately facilitating the successful establishment of relationships and improving the overall quality of your management systems. Leveraging AI, such as Small Language Models designed for efficient information analysis and GenAI workshops for practical training, can further enhance quality and training, providing a comprehensive strategy for operational efficiency.

Causes of Duplicate Values in Data Sets

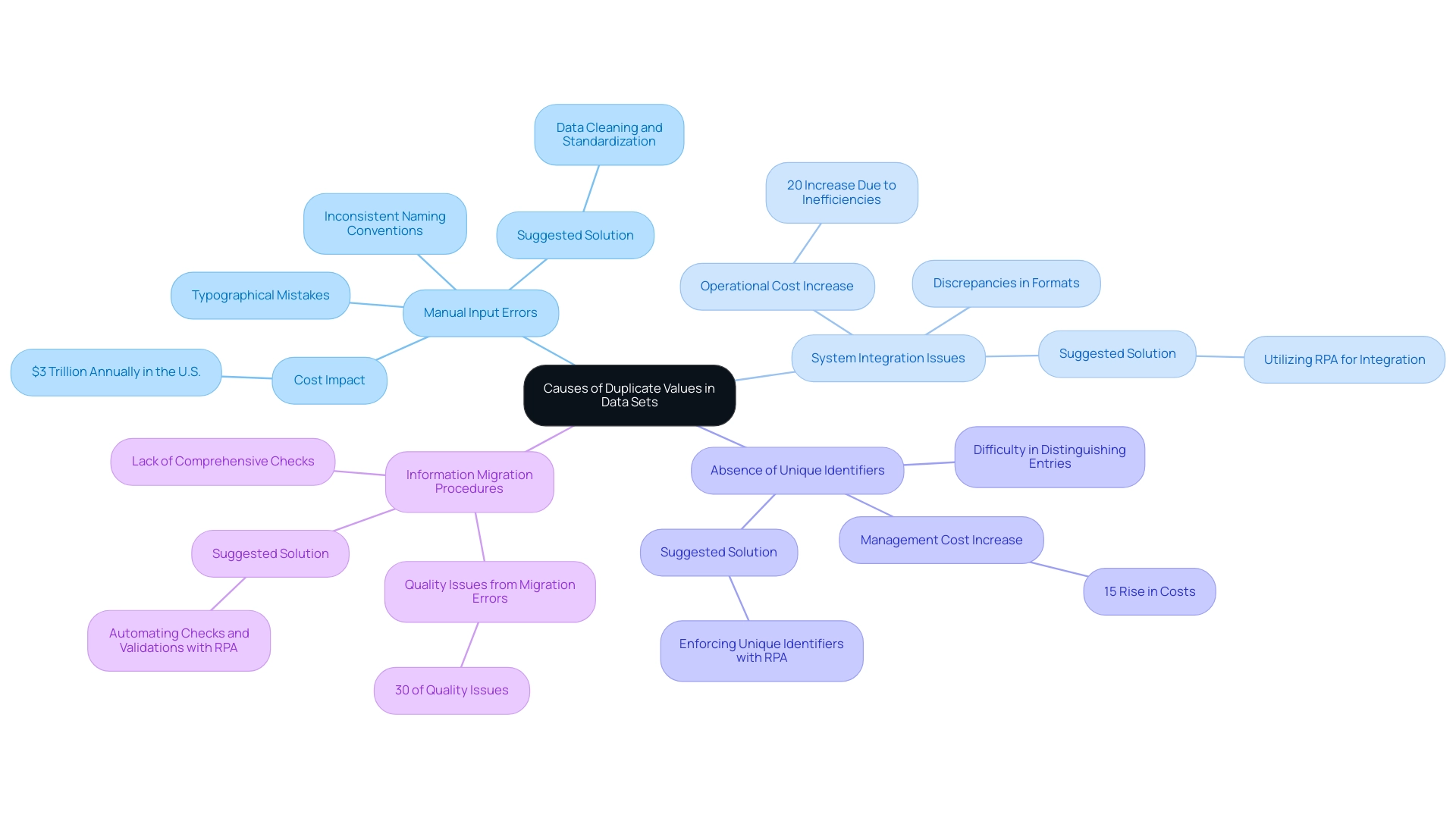

Duplicate values in databases can arise from numerous sources, each contributing to a decline in quality. Key causes include:

- Manual Input Errors: Human mistakes during the entry process significantly contribute to duplicates. Common errors, such as typographical mistakes or inconsistent naming conventions, can inadvertently create multiple entries for the same entity. Recent studies indicate that manual information entry errors can cost organizations up to $3 trillion annually in the U.S. alone. As Kin-Sang Lam, a Managed Care Pharmacist, emphasizes, “In my experience, it is essential to clean and standardize information to ensure it is reliable for use.”

- System Integration Issues: Integrating information from disparate systems often reveals discrepancies in formats or identifiers, leading to duplicated records. When systems do not align, the complexities of information integration can result in significant quality issues. A case study on industry employment trends highlights that improper integration can cause a 20% increase in operational costs due to inefficiencies stemming from duplicate records. Utilizing RPA can automate these integration processes, ensuring consistent formats and minimizing errors, thereby enhancing overall quality.

- Absence of Unique Identifiers: Without designated unique identifiers, distinguishing between different entries becomes problematic. Duplicate key values then accumulate, relationships between tables cannot be established, and the integrity of the information set is undermined. The absence of unique identifiers has been linked to a 15% rise in management costs, underscoring the necessity for clear governance. RPA can assist in identifying and enforcing unique identifiers across systems, ensuring that integrity is maintained.

- Information Migration Procedures: During information transfer between systems, duplicates may unintentionally be created if comprehensive checks and validation measures are not established. A lack of oversight during these migrations can compromise quality, leading to an estimated 30% of quality issues stemming from migration errors. RPA tools can simplify these migration processes by automating checks and validations, thereby improving information integrity and minimizing the chances of duplicates.

Understanding these causes is essential for developing robust information handling strategies. By proactively addressing these challenges and ensuring executive focus on information quality initiatives, organizations can significantly minimize redundancies, thus enhancing the overall quality and usability of their information. Moreover, in a rapidly evolving AI environment, incorporating RPA as a strategic element in operations can assist in managing complexities, enhance efficiency, and support business objectives.
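Duplicates caused by typographical variation and inconsistent naming often survive an exact-match check. As an illustrative sketch only, the snippet below groups entries by a normalized form; the `normalize` rule (lowercase, strip punctuation, collapse whitespace) and the sample names are assumptions, and real datasets may need stricter or looser matching.

```python
import re
from collections import defaultdict


def normalize(name):
    """Lowercase the name and collapse punctuation and whitespace runs to one space."""
    return re.sub(r"[^a-z0-9]+", " ", name.lower()).strip()


def group_variants(names):
    """Return {normalized form: [original spellings]} for forms seen more than once."""
    groups = defaultdict(list)
    for name in names:
        groups[normalize(name)].append(name)
    return {key: spellings for key, spellings in groups.items() if len(spellings) > 1}


# Hypothetical entries typed by different people or exported from different systems.
entries = ["Acme Corp.", "ACME  corp", " acme corp", "Globex Inc"]
variants = group_variants(entries)
print(variants)
```

Grouping rather than deleting keeps the original spellings visible, so a reviewer can decide which record to retain.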

Effective Strategies for Identifying and Removing Duplicates

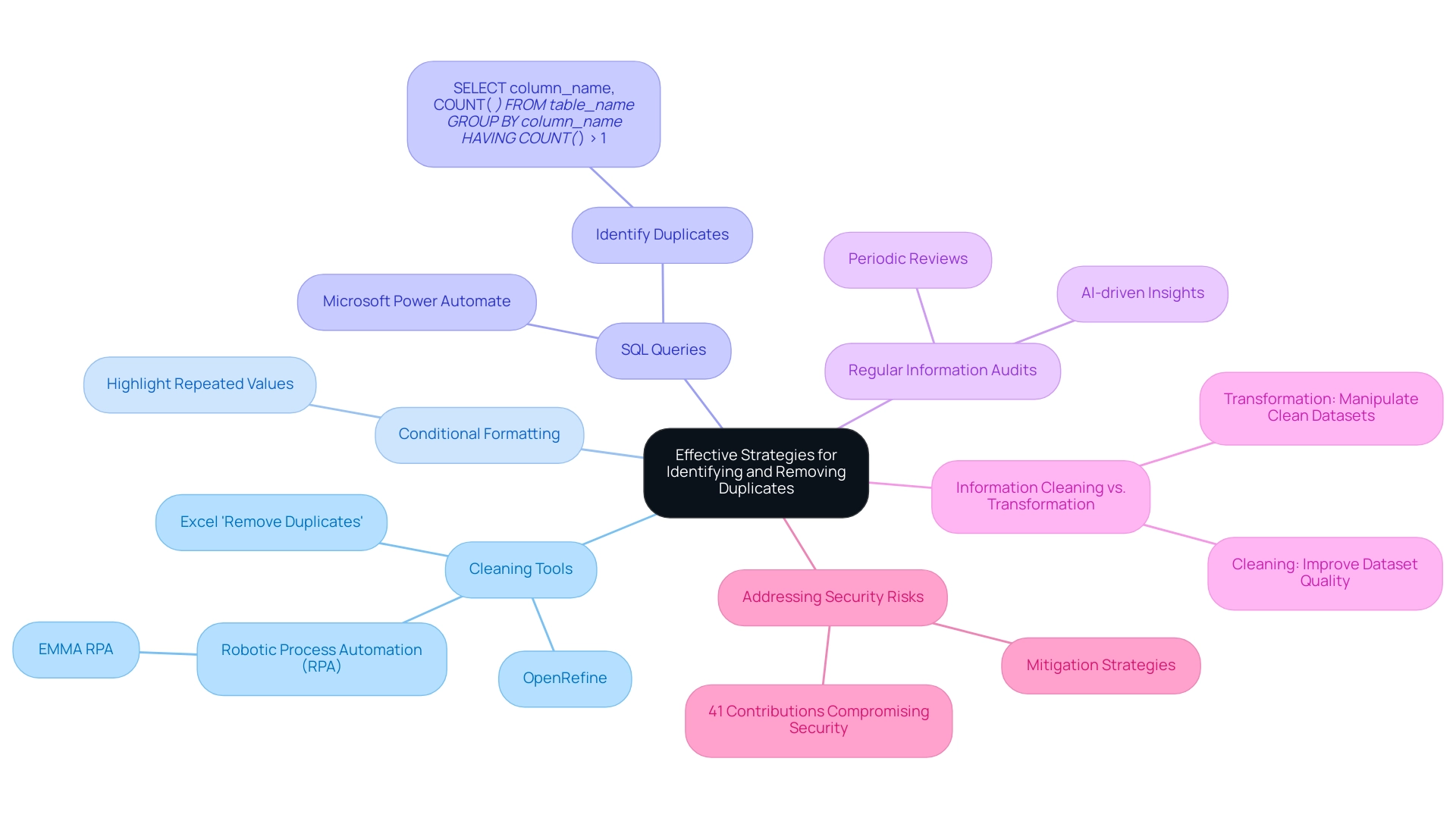

To effectively identify and remove repetitions from your datasets, consider implementing the following strategies:

- Utilize Cleaning Tools: Employ specialized software for cleansing, such as OpenRefine or Excel’s built-in ‘Remove Duplicates’ feature. These tools automate the identification and elimination of repetitions, significantly enhancing operational efficiency. As Rui Gao, a data analyst, points out, [cleaning technology can enhance information quality](https://pmc.ncbi.nlm.nih.gov/articles/PMC10557005) and offers more precise and realistic target information than the original content. Furthermore, integrating Robotic Process Automation (RPA) solutions like EMMA RPA can streamline these processes by automating routine tasks. This allows teams to concentrate on more strategic initiatives, thereby improving employee morale by reducing repetitive workloads.

- Implement Conditional Formatting: In Excel, leverage conditional formatting to highlight repeated values. This visual aid makes it easier to spot and handle repetitions by hand, so that redundant entries do not go unnoticed.

- SQL Queries for Database Management: For those working within database environments, SQL queries serve as a powerful means to identify repetitions. For instance, the query `SELECT column_name, COUNT(*) FROM table_name GROUP BY column_name HAVING COUNT(*) > 1;` lists every value that appears more than once along with its count, enabling prompt action to clean your information. Moreover, Microsoft Power Automate can automate SQL queries, enhancing management efficiency and freeing up resources for higher-value tasks.

- Regular Information Audits: Conducting periodic reviews of your information is crucial. These audits assist in recognizing and correcting duplicates before they develop into major problems, ensuring ongoing integrity and usability. Utilizing AI-driven insights can further enhance the audit procedure and improve information quality.

- Understand the Distinction Between Information Cleaning and Information Transformation: Information cleaning focuses on improving dataset quality, while information transformation manipulates clean datasets for analysis. Keeping the two apart leads to more effective strategies for maintaining information integrity.

- Address Security Risks: It is important to note that 41 contributions from team members compromising security for speed can lead to significant risks. Effective cleaning strategies, potentially supported by RPA, can mitigate these risks by ensuring that only accurate and reliable information is utilized.

By adopting these strategies and leveraging RPA tools, organizations can maintain cleaner datasets, so that relationships between tables can be created without being blocked by duplicate values. This leads to improved overall governance. Furthermore, the case study titled ‘Recommendations for Effective Information Governance’ emphasizes the importance of establishing a unified governance platform, which can enhance the quality of real-world information and foster better collaboration among stakeholders.
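The `GROUP BY ... HAVING COUNT(*) > 1` pattern described in this section can be tried end to end with Python's built-in `sqlite3` module. This is a sketch under stated assumptions: the `products` table, its columns, and the sample rows are hypothetical, and the cleanup step (keep the earliest row per key, using SQLite's implicit `rowid`) is one possible policy rather than a recommendation from the sources cited here.

```python
import sqlite3

# In-memory database with a hypothetical products table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (product_id TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("P1", "Widget"), ("P2", "Gadget"), ("P1", "Widget"), ("P3", "Gizmo")],
)

# Identify key values that occur more than once.
duplicates = conn.execute(
    "SELECT product_id, COUNT(*) FROM products "
    "GROUP BY product_id HAVING COUNT(*) > 1"
).fetchall()
print(duplicates)

# Assumed policy: keep the first-inserted row per product_id, delete the rest.
conn.execute(
    "DELETE FROM products WHERE rowid NOT IN "
    "(SELECT MIN(rowid) FROM products GROUP BY product_id)"
)
remaining = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(remaining)
```

Running the identification query before the delete gives a record of what was removed, which is useful input for the periodic audits mentioned above.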

Impact of Duplicate Values on Data Relationships and Operational Efficiency

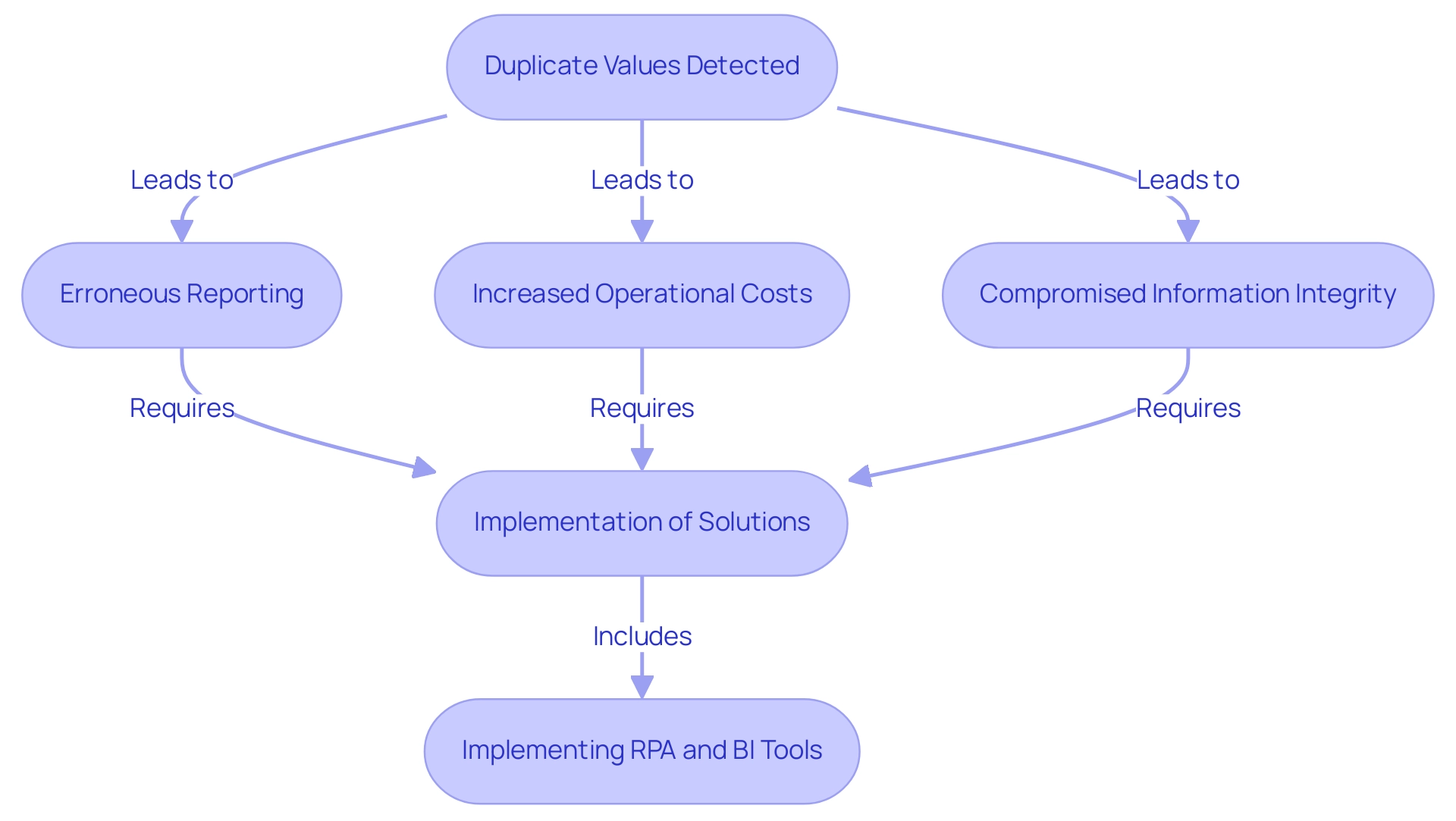

Unresolved duplicate values prevent relationships from being established, which can significantly undermine information relationships and overall operational effectiveness across organizations. A critical consequence is erroneous reporting, where repeated data inflates metrics and produces misleading reports, ultimately distorting decision-making processes. As industry experts emphasize, the ramifications of inaccurate reporting extend beyond misguided strategies to missed opportunities, adversely affecting the organization’s bottom line.
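To make the metric inflation concrete, here is a small worked sketch. The tables, the `revenue` helper, and the figures are all hypothetical; the point is only that a duplicated row on the lookup side causes matching sales rows to be counted once per duplicate.

```python
# Hypothetical data: the price table accidentally contains P1 twice.
sales = [
    {"product_id": "P1", "units": 10},
    {"product_id": "P2", "units": 4},
]
prices = [
    {"product_id": "P1", "price": 2.0},
    {"product_id": "P1", "price": 2.0},  # duplicate row
    {"product_id": "P2", "price": 5.0},
]


def revenue(sales_rows, price_rows):
    """Join sales to prices on product_id and sum units * price over every match."""
    total = 0.0
    for sale in sales_rows:
        for price in price_rows:
            if sale["product_id"] == price["product_id"]:
                total += sale["units"] * price["price"]
    return total


inflated = revenue(sales, prices)

# Keep one price row per product_id (the last one seen) and recompute.
unique_prices = list({row["product_id"]: row for row in prices}.values())
correct = revenue(sales, unique_prices)

print(inflated, correct)
```

With the duplicate present, the P1 sale is matched twice, so the reported total is 60.0 instead of 40.0.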

A striking example of the necessity for information integrity is the €1.2 billion fine levied against Meta Ireland for privacy violations, highlighting the severe repercussions of inadequate management. Additionally, the presence of duplicate records can lead to increased operational costs; many firms overlook these inefficiencies, often facing unnecessary record reconciliation efforts that waste time and resources. Implementing Robotic Process Automation (RPA) can streamline these tasks, enhancing efficiency, minimizing errors, and freeing up resources for more strategic initiatives.

Moreover, compromised information integrity emerges as a pressing concern: when duplicate values prevent relationships from being formed, trust in the data diminishes, leading to hesitance in utilizing insights derived from this information. As Kyra O’Reilly aptly states, “Duplicate invoices in some cases can be potentially fraudulent, so this contributes towards your duties to fraud prevention.”

Recognizing the various sources of duplication, such as human error or automated processes, enables organizations to devise effective strategies for keeping key fields free of duplicates, so that relationships can be formed and information managed more efficiently.

For instance, information duplication may occur from saving multiple versions of files or unforeseen incidents like power outages during backup operations. By leveraging Business Intelligence tools alongside RPA, organizations can prioritize data cleaning and oversight practices, ensuring the uniqueness of entries and ultimately safeguarding the integrity and reliability of operational insights. Furthermore, customized AI solutions can enhance these processes by providing targeted technologies that address specific business challenges, thereby improving overall operational efficiency.

Best Practices for Creating Relationships in Data Management

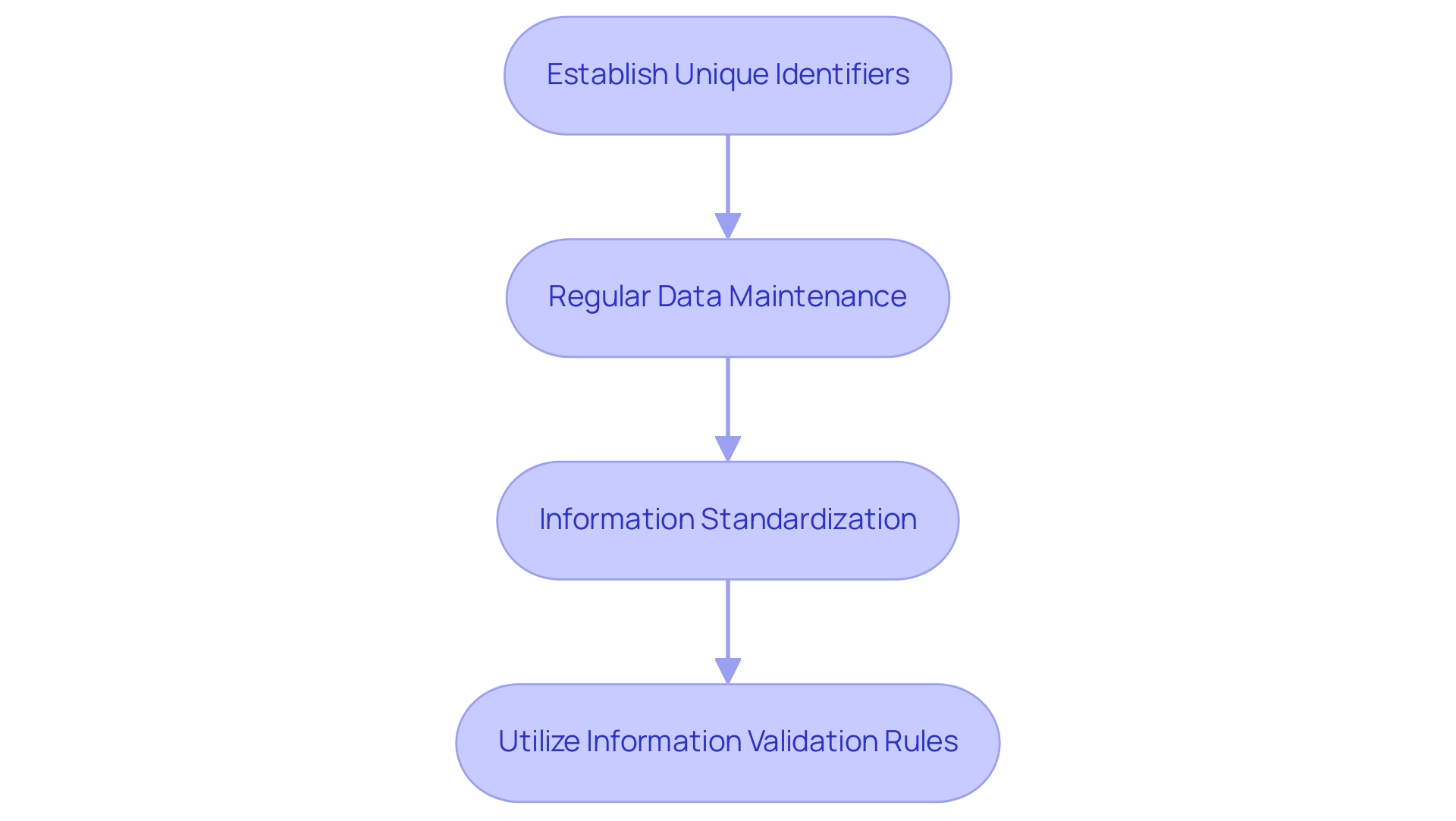

To establish robust relationships within information management systems, organizations should implement the following best practices:

- Establish Unique Identifiers: Each table should have an identifier that is unique for every record, so that duplicate key values cannot block a relationship. This is vital, as one URI should correspond to no more than one entity globally, facilitating seamless integration. Universally unique identifiers (UUIDs) are a common method for generating these unique dataset identifiers, although they can be cumbersome to use.

- Regular Data Maintenance: Routine checks and maintenance are essential to catch duplicate values before they disrupt relational integrity. Statistics indicate that organizations prioritizing regular information maintenance reduce issues related to information by up to 30%. By implementing a proactive information maintenance strategy, organizations can avoid costly disruptions and enhance operational efficiency, aided by Robotic Process Automation (RPA) to automate these routine tasks.

- Information Standardization: Standardizing entry processes minimizes variations that can result in redundancies. Consistent naming conventions and formats across datasets are vital in maintaining quality. Leveraging Business Intelligence tools can aid in monitoring these standards and ensuring compliance across the board.

- Utilize Information Validation Rules: Implementing validation rules in entry systems is an effective way to reject duplicate values at the point of entry. As pointed out by Simson Garfinkel, “It’s easy to create a unique identifier, but it’s challenging to choose one that individuals appreciate using,” emphasizing the significance of careful identifier selection.
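Two of the practices above, generated unique identifiers and validation rules at entry time, can be combined in a short sketch using Python's standard `uuid` and `sqlite3` modules. The `customers` table, the choice of email as the business key, and the `add_customer` helper are hypothetical; the lowercase-and-trim step is an assumed standardization rule, not a universal one.

```python
import sqlite3
import uuid

conn = sqlite3.connect(":memory:")
# The UNIQUE constraint acts as the validation rule: the database itself
# rejects a second row with the same email.
conn.execute(
    "CREATE TABLE customers ("
    " customer_id TEXT PRIMARY KEY,"
    " email TEXT NOT NULL UNIQUE)"
)


def add_customer(email):
    """Insert a customer; return the new UUID, or None if the email already exists."""
    customer_id = str(uuid.uuid4())
    standardized = email.strip().lower()  # assumed standardization rule
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO customers VALUES (?, ?)", (customer_id, standardized)
            )
    except sqlite3.IntegrityError:
        return None
    return customer_id


first = add_customer("ana@example.com")
second = add_customer(" Ana@Example.com ")  # same address, different formatting
print(first is not None, second)
```

Enforcing uniqueness in the schema rather than only in application code means every entry path, including bulk imports, is held to the same rule.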

Alongside these practices, organizations can benefit from customized AI solutions that examine patterns and automate intricate decision-making activities. These solutions can enhance information handling by offering insights that promote operational efficiency and informed decisions.

A notable example of effective information oversight practices can be observed in the case study of UXCam, which offers tools for session replay, heatmaps, and user journey analysis. By leveraging these insights through Business Intelligence, product teams can identify user frustrations and optimize their onboarding methods, ultimately enhancing user experience and improving feature adoption. Moreover, UXCam’s integration of RPA in their data management workflows demonstrates how automation can streamline processes, further mitigating technology implementation challenges.

By adhering to these best practices and incorporating tailored AI solutions, organizations can significantly enhance their data management capabilities and ensure the integrity of their data relationships, ultimately driving efficiency and informed decision-making in a rapidly evolving AI landscape.

Conclusion

Duplicate values in data management pose a significant challenge that organizations cannot afford to ignore. The complexities surrounding these duplicates—stemming from causes such as manual entry errors and system integration issues—underscore the necessity for proactive measures. Implementing effective strategies for identifying and removing duplicates enhances data integrity, streamlines operations, and prevents costly inaccuracies in reporting.

Best practices such as maintaining unique identifiers, conducting regular data audits, and leveraging data cleaning tools are essential steps toward achieving a reliable data management system. Furthermore, integrating Robotic Process Automation (RPA) and tailored AI solutions can bolster these efforts, automating routine tasks and improving the overall quality of data handling.

Ultimately, the commitment to addressing duplicate values safeguards the integrity of data relationships and empowers organizations to harness the full potential of their data-driven insights. By prioritizing data quality and adopting a structured approach to data management, organizations can effectively navigate the complexities of the digital landscape, paving the way for informed decision-making and sustainable growth.