Overview

Mastering data management in Power BI requires a thorough understanding of the platform’s inherent data limits, which, if exceeded, can lead to significant performance issues. Consider the implications: how might your current practices be impacting efficiency?

Effective strategies are essential to navigate these challenges:

- Utilizing DirectQuery for real-time data access is a powerful approach.

- Optimizing dataset size and following best practices, such as limiting the number of dashboard visuals, are critical for maintaining efficiency.

By adopting these strategies, organizations can ensure they derive actionable insights from their data.

Introduction

In the rapidly evolving landscape of data analytics, Power BI has emerged as a formidable tool for organizations eager to harness their data’s potential. Getting the most from it, however, depends on understanding its data limits. Strict dataset size restrictions and other constraints can impede reporting and analysis, so users need a clear grasp of these parameters to avoid common pitfalls.

Consider the constraints of DirectQuery mode and the intricacies of data management best practices. Organizations must adopt strategic approaches to effectively leverage their data. As the demand for data-driven decision-making intensifies, recognizing and addressing these limitations becomes essential in transforming raw data into actionable insights that propel growth and operational excellence.

Understanding Data Limits in Power BI

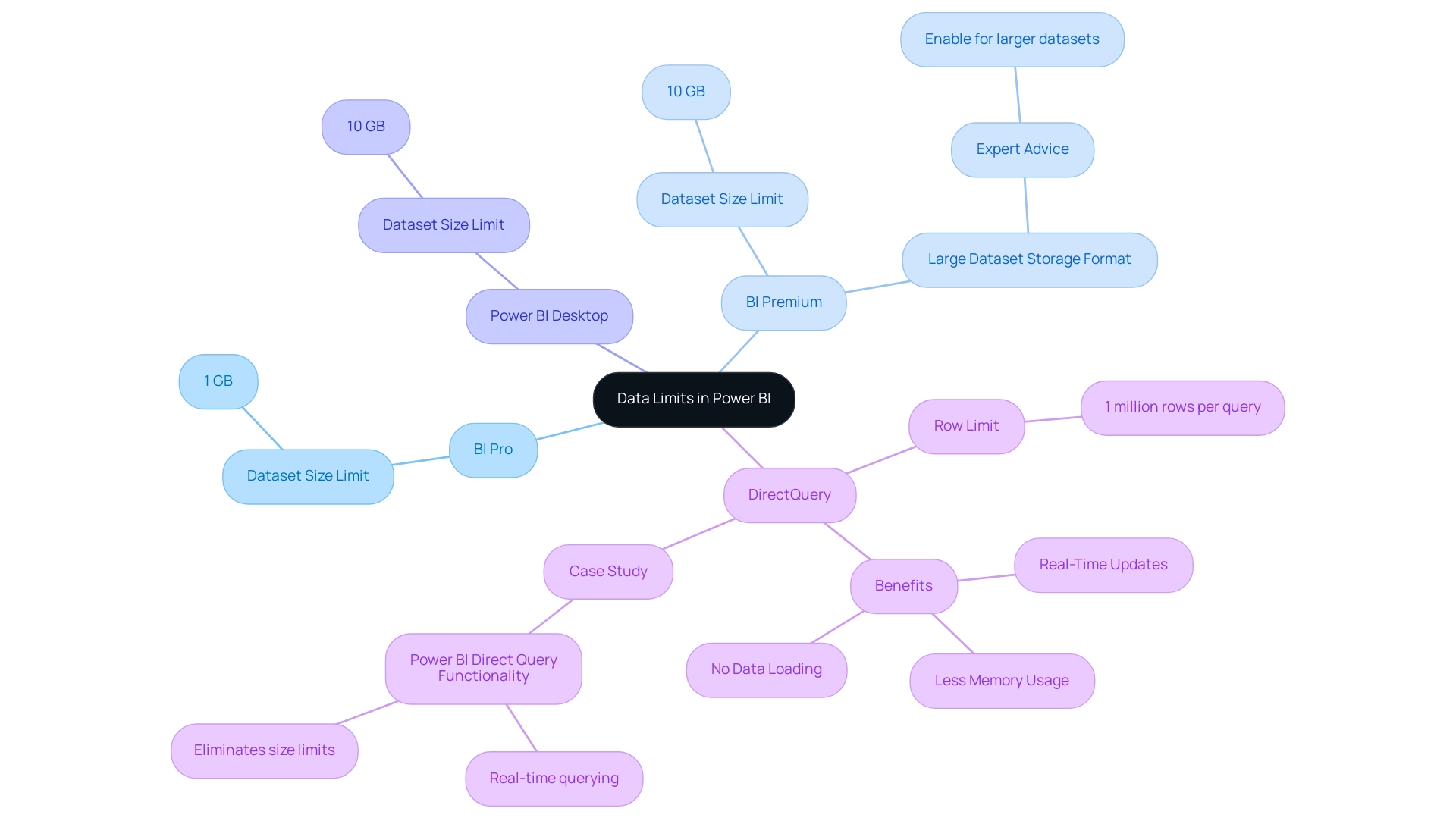

Power BI sets critical data limits that users must consider to maintain optimal performance and efficiency. As of 2025, Power BI Pro limits each dataset to 1 GB, while Power BI Premium accommodates larger datasets, and models uploaded from Power BI Desktop to Premium can be up to 10 GB.
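To make these thresholds concrete, here is a minimal Python sketch that checks an estimated model size against the figures quoted above. The helper names (`fits_limit`, `headroom_gb`) and tier keys are hypothetical. The limits are hard-coded from this article, not read from any Power BI API, and real limits vary with the SKU and the large dataset storage format setting.

```python
# Hypothetical helper: per-dataset limits hard-coded from the figures above
# (1 GB for Pro, 10 GB upload for Premium). Real limits depend on SKU and
# settings such as the large dataset storage format.
LIMITS_GB = {
    "pro": 1.0,
    "premium": 10.0,
}


def _limit_for(tier: str) -> float:
    """Look up the size limit for a tier name (case-insensitive)."""
    try:
        return LIMITS_GB[tier.lower()]
    except KeyError:
        raise ValueError(f"unknown tier: {tier!r}") from None


def fits_limit(model_size_gb: float, tier: str) -> bool:
    """Return True if the estimated model size is within the tier's limit."""
    return model_size_gb <= _limit_for(tier)


def headroom_gb(model_size_gb: float, tier: str) -> float:
    """Remaining capacity in GB; a negative value means the model is over the limit."""
    return _limit_for(tier) - model_size_gb
```

For example, a 2.5 GB model fails the Pro check but passes the Premium one, with 7.5 GB to spare.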

When using DirectQuery mode, note that each query sent to the source can return at most 1 million rows. DirectQuery uses less memory than Import mode because it does not store the data in memory. A case study titled “Power BI Direct Query Functionality” describes how DirectQuery lets Power BI query sources in real time without first loading the data into memory.
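A quick way to anticipate the row limit is to bound the result size of a grouped query. If a visual groups by several columns, the query cannot return more rows than the product of those columns' distinct counts. The Python sketch below applies that bound. The function names and distinct counts are hypothetical, and the constant comes from the figure cited above.

```python
from math import prod
from typing import Mapping

DIRECTQUERY_ROW_LIMIT = 1_000_000  # per-query row limit cited in the text


def max_result_rows(distinct_counts: Mapping[str, int]) -> int:
    """Upper bound on rows returned by a query grouped by these columns.

    Each output row is one combination of group-by values, so the result
    cannot exceed the product of the distinct counts. Grouping by nothing
    yields a single total row.
    """
    return prod(distinct_counts.values()) if distinct_counts else 1


def may_exceed_limit(distinct_counts: Mapping[str, int],
                     limit: int = DIRECTQUERY_ROW_LIMIT) -> bool:
    """True if the bound exceeds the limit (the actual result may be smaller)."""
    return max_result_rows(distinct_counts) > limit


# Hypothetical visual grouped by customer and month over three years.
print(may_exceed_limit({"customer": 50_000, "month": 36}))  # bound is 1,800,000
print(may_exceed_limit({"region": 12, "month": 36}))        # bound is 432
```

Because the bound is an upper limit, a `False` result guarantees the query stays under the limit. A `True` result only signals a risk, since not every combination of values necessarily occurs in the data.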

This approach sidesteps dataset size limits, keeps reports up to date, and minimizes memory usage, which makes it well suited to large datasets, although the per-query row limit still applies. Even so, many organizations struggle to turn their data into insights because report creation is time-consuming and figures are inconsistent across reports. For datasets stored in Premium, Pratyasha Samal, a Memorable Member of the Power BI community, advises: “Enable the Large dataset storage format option to use datasets in BI Premium that are larger than the default limit.”

Understanding these limits is essential for efficient data management and reporting, because data that exceeds Power BI’s limits can cause errors and degrade performance. By tackling these challenges with Business Intelligence and RPA solutions such as EMMA RPA and Automate, Creatum GmbH helps convert raw information into actionable insights, driving growth and operational efficiency.

Common Challenges When Data Exceeds Limits

In 2025, Power BI users face significant challenges when their data surpasses the platform’s limits, and poor master data quality compounds them. A critical issue is the inability to export large datasets, which can severely hinder reporting and analysis. This limitation often forces users to find other ways to share insights, such as building reports in Power BI and exporting them as PDF or PowerPoint files for offline distribution, which also helps where internet access is unreliable.

Another prevalent obstacle is the ‘Visual Has Exceeded the Available Resources’ error. This occurs when a visual attempts to process more information than Power BI can accommodate, leading to disruptions in workflows and considerable frustration among users. Such errors not only impede productivity but also require a deeper understanding of how to manage and optimize data within the platform.

Organizations struggling with inconsistent, incomplete, or inaccurate data face additional challenges in effectively adopting AI solutions, further complicating their data management strategies. The perception that AI projects are time-consuming, costly, and difficult to implement often discourages organizations from fully embracing these technologies.

As organizations increasingly depend on data-driven decision-making, users must navigate these limitations skillfully. Power BI Premium offers a maximum storage capacity of 100 TB, yet in data-rich environments even this ceiling can be approached quickly. Understanding data management and integration in Power BI is therefore essential for optimizing operational efficiency and ensuring that teams can focus on strategic, value-enhancing activities.

It is also important to recognize that Power BI is not a dedicated data-cleansing tool. Apart from the transformations available in Power Query, it assumes that the data it retrieves is already of high quality. This assumption can make data integrity hard to maintain, particularly with large datasets. As Miguel Myers, Leader of the BI Core team, remarked, ‘This year, BI has introduced visuals like Date Picker by Powerviz, Advanced Table, and Beeswarm visuals that provide numerous advanced options and customizations.’

These advancements highlight the need for users to manage data quality proactively to fully leverage Power BI’s features. By incorporating Robotic Process Automation (RPA) and tailored AI solutions, organizations can streamline their workflows, improve data quality, and support informed decision-making, positioning themselves at the forefront of technological opportunities.

Effective Workarounds for Data Limitations

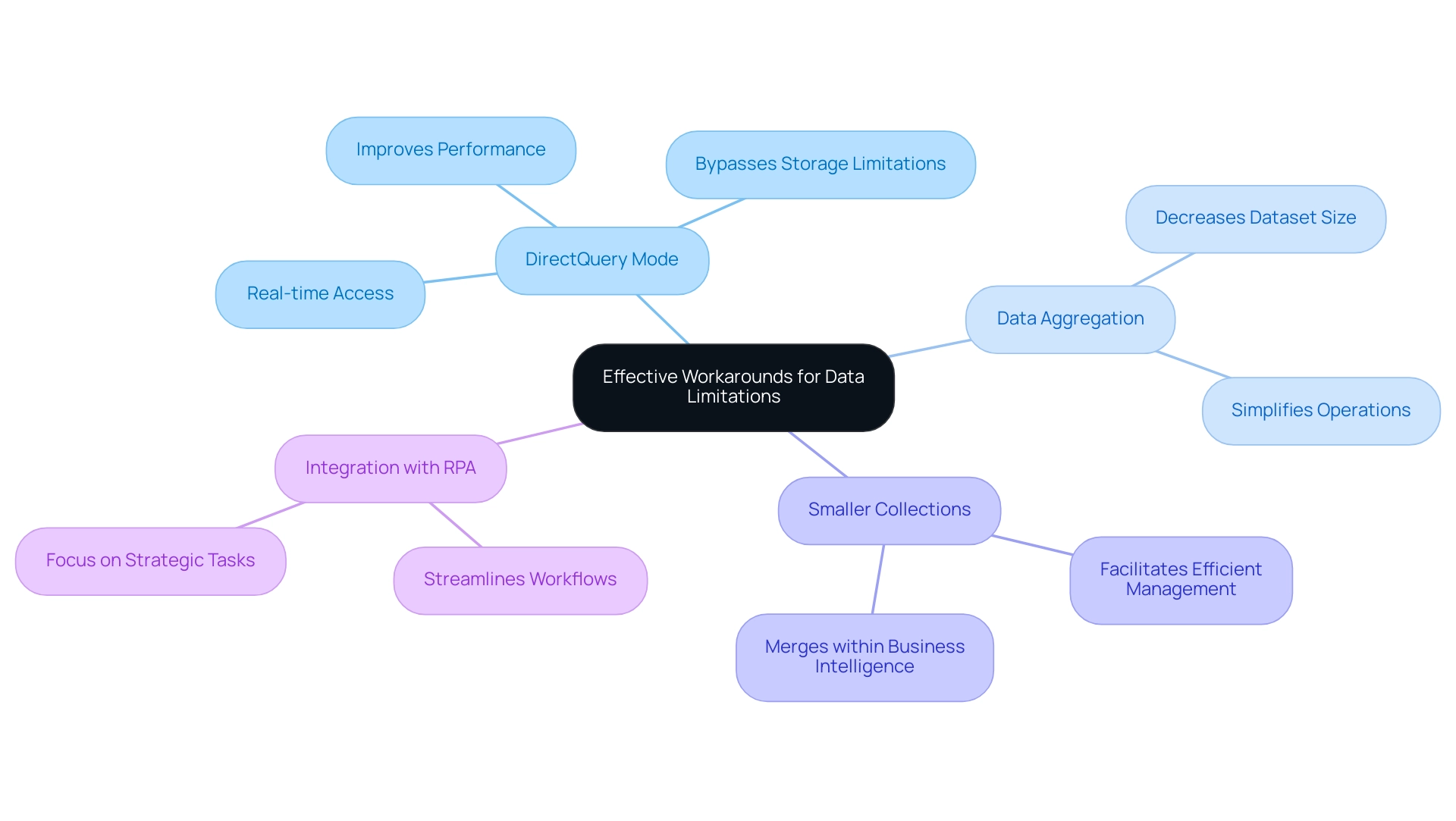

To work within the platform’s data constraints, users can adopt several effective workarounds that align with the principles of Business Intelligence and Robotic Process Automation (RPA). One of the most useful is DirectQuery mode, which provides real-time access without importing large datasets into Power BI. Because data is queried directly from the source as needed, this method bypasses storage limitations (Power BI Premium subscribers have a maximum storage capacity of 100 TB) and keeps reports current, supporting operational efficiency.

Users can also aggregate data before import. This can substantially reduce dataset size and help keep it within the limits Power BI imposes. Splitting data into several smaller datasets that are combined within Power BI also makes management and analysis more efficient, addressing common issues such as time-consuming report generation and inconsistent figures.
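Pre-aggregation can be done in whatever tool prepares the data before it reaches Power BI. The following minimal Python sketch collapses transaction-level rows to one row per month and product. The sample data, the column layout, and the monthly grain are all assumptions for illustration. This only works if reports never need detail below that grain.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction-level rows: (order_date, product, amount)
transactions = [
    (date(2025, 1, 3), "A", 120.0),
    (date(2025, 1, 17), "A", 80.0),
    (date(2025, 1, 20), "B", 50.0),
    (date(2025, 2, 2), "A", 40.0),
]


def aggregate_monthly(rows):
    """Collapse transactions to one row per (year, month, product) with summed amounts."""
    totals = defaultdict(float)
    for order_date, product, amount in rows:
        totals[(order_date.year, order_date.month, product)] += amount
    # Sort so the output order is deterministic before it is exported for import.
    return [(year, month, product, total)
            for (year, month, product), total in sorted(totals.items())]


monthly = aggregate_monthly(transactions)
for row in monthly:
    print(row)
```

Here four source rows become three. On real transaction tables, where many rows share a month and product, the reduction is usually far larger.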

These strategies not only help users stay within Power BI’s limits but can also improve performance. As Alberto Ferrari notes, “It’s packed with detailed explanations and practical examples that make complex concepts much easier to grasp.” Understanding Power BI’s limitations is essential for organizations working with large datasets, especially when their data exceeds the platform’s constraints.

The case study ‘Understanding Power BI’s Limitations’ shows that performance can suffer as data volumes grow beyond Power BI’s limits. By implementing these workarounds, businesses can keep access to real-time information seamless, driving better decision-making and operational efficiency while adhering to governance and compliance standards. Integrating RPA solutions can further streamline workflows, allowing teams to focus on strategic tasks that add value to the organization.

Best Practices for Efficient Data Management in Power BI

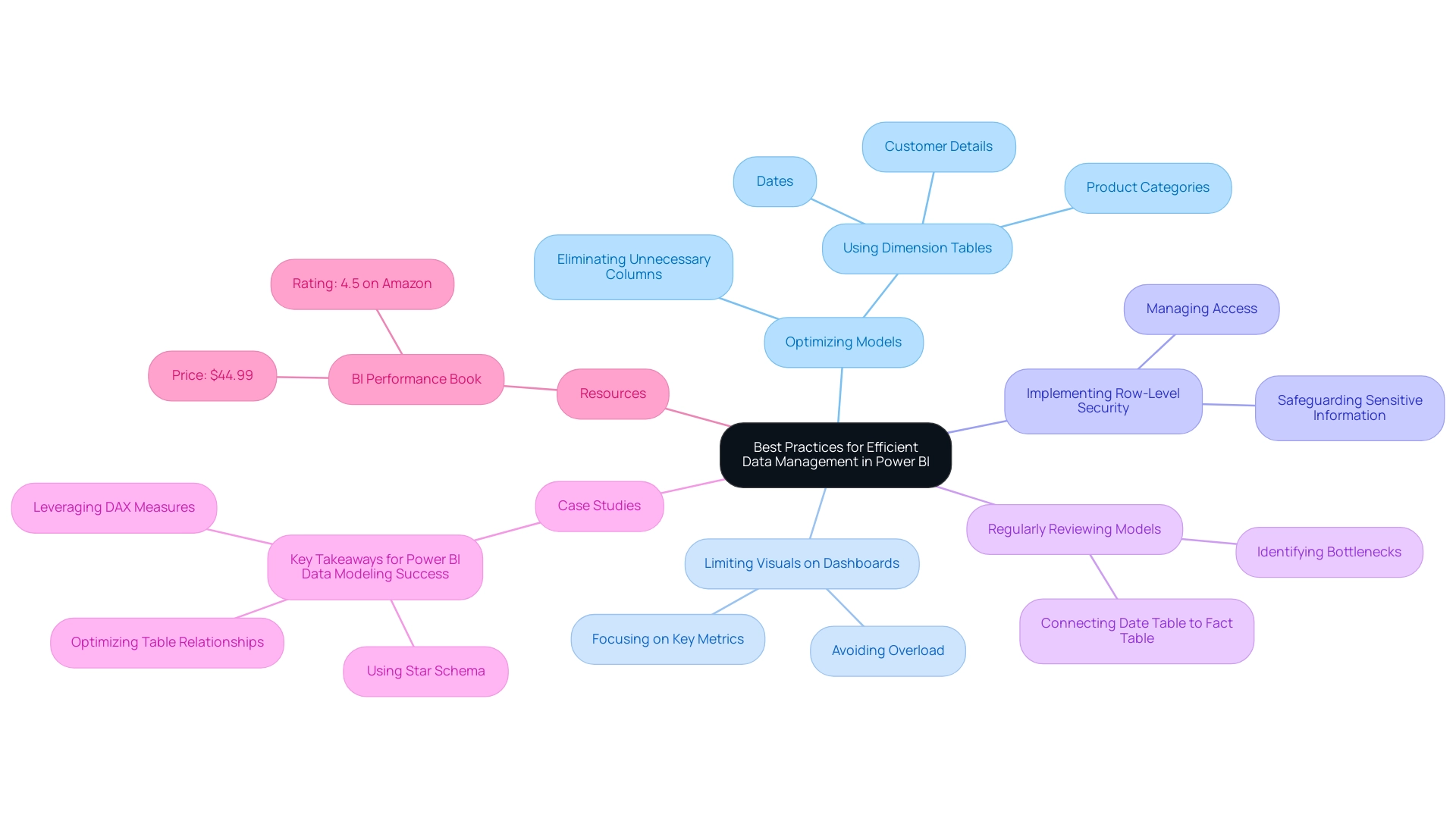

Efficient data management in Power BI depends on several best practices that can significantly improve performance and usability, particularly when addressing common challenges such as time-consuming report creation, inconsistent data, and a lack of actionable guidance. One of the most important is optimizing data models by removing unnecessary columns and tables, which can substantially reduce dataset size. Dimension tables, which hold descriptive information such as customer details, product categories, and dates, are central to this optimization.

This practice not only streamlines the model but also speeds up processing, enabling quicker insights.
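Columns can often be trimmed before the data is ever imported. The following Python sketch copies a CSV extract while keeping only the columns a report actually uses. The function name `keep_columns` and the sample column names are hypothetical. Inside Power BI, the equivalent step would normally be done in Power Query.

```python
import csv
import io


def keep_columns(src, dst, columns):
    """Copy CSV text from src to dst, keeping only the listed columns in the given order."""
    reader = csv.DictReader(src)
    available = reader.fieldnames or []
    missing = [c for c in columns if c not in available]
    if missing:
        raise ValueError(f"columns not found: {missing}")
    # extrasaction="ignore" drops every column not listed in `columns`.
    writer = csv.DictWriter(dst, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)


# Hypothetical extract with two columns the report never uses.
raw = io.StringIO(
    "OrderID,CustomerID,Amount,Comment,InternalNote\n"
    "1,C1,100,hello,x\n"
    "2,C2,50,,y\n"
)
out = io.StringIO()
keep_columns(raw, out, ["OrderID", "CustomerID", "Amount"])
print(out.getvalue())
```

Failing loudly on a missing column is a deliberate choice. It surfaces upstream schema changes instead of silently importing an incomplete model.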

Another critical aspect is limiting the number of visuals on dashboards. Overloading dashboards with excessive visuals can overwhelm the system and degrade performance. Instead, focusing on key metrics and insights ensures that users can interpret information easily, free from unnecessary distractions.

As Szymon Dybczak, an esteemed community contributor, noted, “It allowed me to pinpoint which visuals or queries were the bottlenecks,” underscoring the value of identifying which visuals slow a report down.

Implementing row-level security (RLS) is essential for managing access effectively while maintaining optimal performance. RLS allows organizations to control who can access specific information, ensuring that sensitive details are safeguarded without compromising the overall efficiency of the reporting system.
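Conceptually, each RLS role acts as a row filter. When a user belongs to several roles, Power BI combines those roles additively, so the user sees any row that at least one role allows. The Python sketch below models only that idea. In Power BI, role filters are defined as DAX expressions, not Python. The role names, users, and data are hypothetical, and the choice to show nothing to users with no role is this sketch's assumption.

```python
# Conceptual model of row-level security: each role is a predicate on rows.
# Role names, users, and data below are hypothetical.
sales = [
    {"region": "West", "amount": 100},
    {"region": "East", "amount": 250},
    {"region": "West", "amount": 75},
]

ROLE_FILTERS = {
    "WestManagers": lambda row: row["region"] == "West",
    "EastManagers": lambda row: row["region"] == "East",
}

USER_ROLES = {
    "alice@example.com": ["WestManagers"],
    "bob@example.com": ["WestManagers", "EastManagers"],
}


def visible_rows(user, rows):
    """Return the rows a user may see: the union of what their roles allow."""
    filters = [ROLE_FILTERS[role] for role in USER_ROLES.get(user, [])]
    # any() over an empty list is False, so a user with no roles sees nothing.
    return [row for row in rows if any(f(row) for f in filters)]


for user in ("alice@example.com", "bob@example.com", "nobody@example.com"):
    print(user, sum(r["amount"] for r in visible_rows(user, sales)))
```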

Regularly reviewing and refining models is vital for sustained management efficiency. This practice enables users to identify and address potential bottlenecks, ensuring that information remains relevant and actionable. For instance, connecting the Date Table to the Fact Table using the date field facilitates time-based analysis, enhancing the depth of insights derived from the information.
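The Date Table pattern can be illustrated outside Power BI. The following Python sketch builds a calendar with one row per day, then groups fact rows by month through a lookup on the date field, mirroring a one-to-many relationship from the date table to the fact table. The function names, column names, and sample facts are hypothetical. In Power BI itself, you would create the date table, mark it as a date table, and relate it to the fact table in the model.

```python
from datetime import date, timedelta


def build_date_table(start, end):
    """One row per calendar day from start to end, inclusive."""
    rows = []
    day = start
    while day <= end:
        rows.append({
            "Date": day,
            "Year": day.year,
            "Month": day.month,
            "Quarter": (day.month - 1) // 3 + 1,
        })
        day += timedelta(days=1)
    return rows


def total_by_month(fact_rows, date_table):
    """Join facts to the date table on Date, then sum Amount per (Year, Month)."""
    by_date = {d["Date"]: d for d in date_table}
    totals = {}
    for fact in fact_rows:
        d = by_date[fact["Date"]]  # raises KeyError if the date table has a gap
        key = (d["Year"], d["Month"])
        totals[key] = totals.get(key, 0) + fact["Amount"]
    return totals


dates = build_date_table(date(2025, 1, 1), date(2025, 3, 31))
facts = [
    {"Date": date(2025, 1, 5), "Amount": 100},
    {"Date": date(2025, 1, 20), "Amount": 50},
    {"Date": date(2025, 3, 2), "Amount": 30},
]
monthly_totals = total_by_month(facts, dates)
print(len(dates), monthly_totals)
```

A continuous date table, with no missing days, is what makes this lookup safe. The sketch raises an error on any fact date that falls outside the calendar, rather than dropping that row silently.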

Real-world applications of these best practices have demonstrated significant performance improvements. A case study titled ‘Key Takeaways for BI Data Modeling Success’ illustrates how implementing a Star Schema, optimizing table relationships, and leveraging DAX Measures can lead to enhanced performance, easier reporting, and improved analysis capabilities. These strategies are not merely theoretical; they have been proven to yield tangible results across various organizational contexts.

As data management practices continue to evolve, resources like the BI performance book offer practical guidance on performance tuning for real-world applications. With a rating of 4.5 on Amazon, it serves as a useful guide for professionals seeking to improve their Power BI experience. By adopting these best practices, organizations can transform how they handle data, boosting efficiency and informed decision-making. They may also consider the key advantages of the General Management App: comprehensive oversight, Microsoft Copilot integration, custom dashboards, seamless data integration, predictive analytics, and expert training.

Evolving Strategies for Data Management Success

As data challenges grow more complex, organizations must proactively refine their management strategies. Staying informed about Power BI updates and new features is crucial for improving how data is handled. For instance, the Fabric Monitoring Hub now includes a semantic model refresh detail page, giving organizations deeper insight into their refresh activities and thereby improving operational efficiency.

Moreover, with data centers consuming over 1% of worldwide electricity, efficient data management also helps lower operating costs and environmental impact.

To further enhance operational efficiency, organizations can use Robotic Process Automation (RPA) to automate manual workflows, significantly reducing the time spent on repetitive tasks. This not only boosts efficiency but also minimizes errors, allowing teams to concentrate on strategic, value-adding work. Consistent training and development programs are vital for instilling best practices and familiarizing team members with emerging trends in data management and RPA.

Studies suggest that organizations investing in training see a notable rise in data management efficiency, with statistics indicating that well-trained teams can improve reporting accuracy by as much as 30%.

Advanced tools such as Inforiver Super Filter can further strengthen data management by offering sophisticated filtering options, including time-period selectors and various date filtering modes. By fostering a culture of continuous improvement and incorporating customized AI solutions from Creatum GmbH, organizations can better prepare for future data challenges and make full use of Power BI’s capabilities. Real-world implementations, such as AccoMASTERDATA, illustrate how improved master data management can streamline processes and lead to smarter business decisions.

As Patrick LeBlanc, Principal Program Manager, emphasizes, “We highly value your feedback, so please share your thoughts using the feedback forum.” As we progress through 2025, it is imperative for organizations to adapt their strategies, ensuring they remain competitive and capable of extracting actionable insights from their data. For more information on how Creatum GmbH can assist you in overcoming technology implementation challenges, please visit our website.

Conclusion

Power BI’s effectiveness relies on a comprehensive understanding of its data limits, which are crucial for maintaining optimal performance and efficiency. Users face dataset size restrictions and challenges posed by modes like DirectQuery; navigating these complexities is essential to prevent errors that could disrupt reporting and analysis. Strategies such as:

- Leveraging DirectQuery for real-time data access

- Aggregating data before import

- Creating smaller datasets

are vital for optimizing performance within Power BI’s constraints.

Organizations must prioritize best practices for data management, including:

- Optimizing data models

- Limiting visuals on dashboards

- Implementing row-level security

These practices not only enhance usability but also promote a clearer understanding of data insights. As the landscape of data management evolves, staying informed about updates in Power BI and integrating Robotic Process Automation can significantly streamline workflows and minimize errors. This enables teams to focus on strategic initiatives.

As the demand for data-driven decision-making intensifies, organizations must proactively adapt their data management strategies. By addressing the limitations of Power BI and implementing effective workarounds and best practices, businesses can transform raw data into actionable insights, driving growth and operational excellence. Embracing these approaches ensures that organizations remain competitive and fully leverage the capabilities of their data analytics tools, ultimately paving the way for informed decision-making and sustained success.