Overview

This article delves into effective methods for locating data within specific rows of datasets, underscoring the critical role of structured information and efficient retrieval techniques. It asserts that tools such as SQL and Python, coupled with strategic indexing and row selection methods, markedly improve data access efficiency. Evidence of enhanced operational performance, alongside compelling case studies, illustrates the practical application of these techniques. Such insights are invaluable for professionals seeking to optimize their data management strategies.

Introduction

In a world increasingly driven by data, the ability to efficiently locate and retrieve information is paramount for organizations striving for operational excellence. The architecture of datasets, particularly the arrangement of data in rows and columns, serves as the foundation for effective data management and analysis. As businesses grapple with vast amounts of information, the significance of structured data becomes undeniable. Not only does it streamline retrieval processes, but it also enhances decision-making capabilities.

This article delves into the intricacies of data organization, the essential tools and languages for data retrieval, and advanced techniques that can transform raw data into actionable insights. By exploring these elements, organizations can unlock the full potential of their data, ensuring they remain competitive in an ever-evolving landscape.

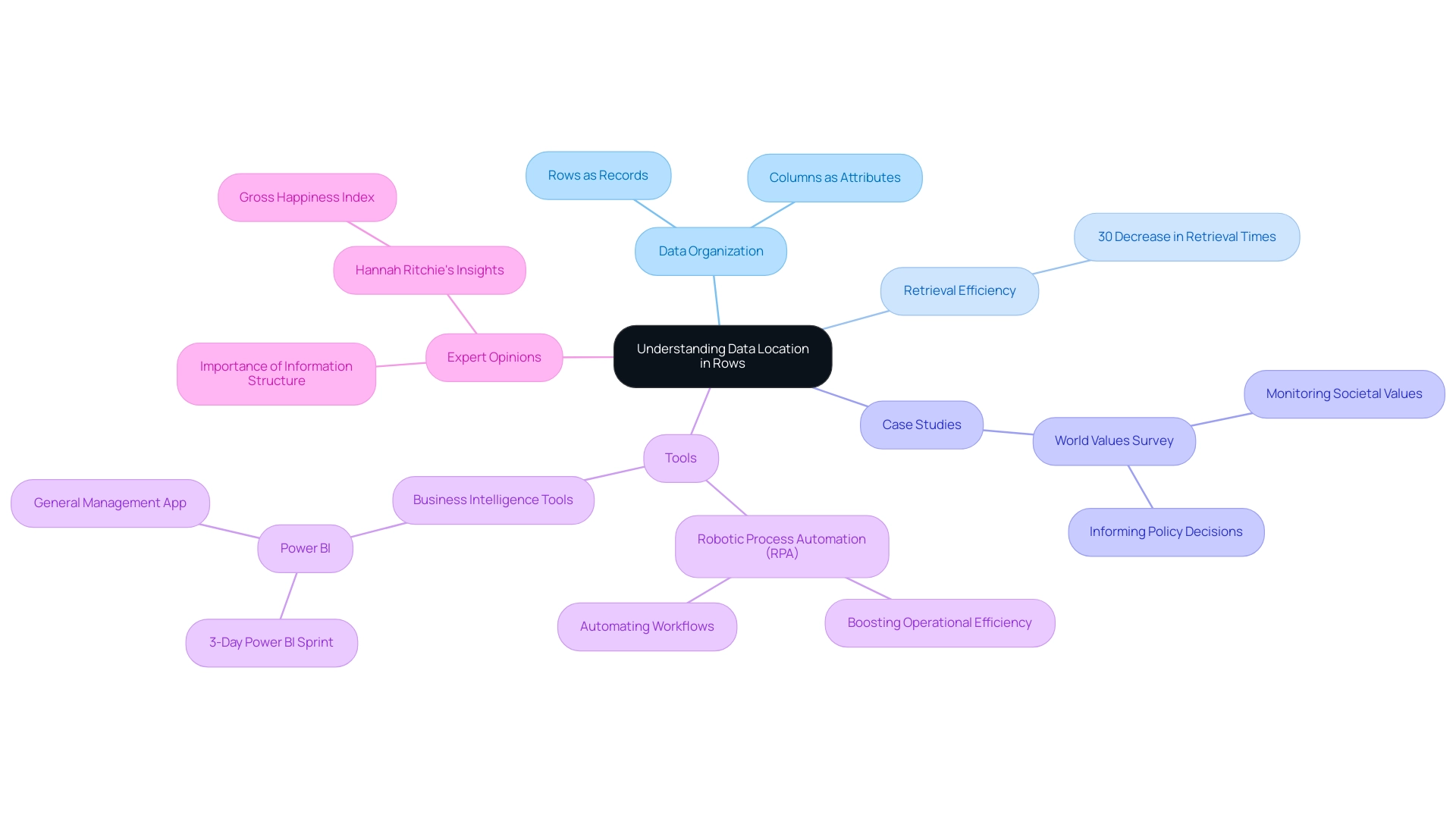

Understanding Data Location in Rows

Data location in rows is a fundamental aspect of how information is organized within datasets, typically presented in a tabular format. Each row represents a distinct record, while the columns hold the attributes of that record. Understanding this framework is vital for efficient access, because it determines which methods can be used to locate data in a particular row: by position, by a key value, or by a condition on one or more columns.

For instance, in a customer database, each row may encapsulate details about an individual, with columns specifying their name, address, and purchase history. This careful arrangement not only streamlines querying processes but also improves information manipulation capabilities, allowing users to extract relevant information swiftly and accurately.
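The row-and-column layout described above can be sketched in plain Python. The customer records, column names, and the `find_row` helper are hypothetical and exist only for illustration; the point is that a row can be located either by its position or by the value in one of its columns.

```python
# Hypothetical customer table: each dict is one row, each key is a column.
customers = [
    {"customer_id": 101, "name": "Ada Park", "city": "Leeds", "purchases": 3},
    {"customer_id": 102, "name": "Ben Ortiz", "city": "Porto", "purchases": 7},
    {"customer_id": 103, "name": "Chloe Wu", "city": "Lyon", "purchases": 1},
]


def find_row(rows, column, value):
    """Return the first row whose `column` equals `value`, or None."""
    return next((row for row in rows if row.get(column) == value), None)


# Locate a row by position (the third record) and by a column value.
third_row = customers[2]
row_102 = find_row(customers, "customer_id", 102)

print(third_row["name"])
print(row_102["city"])
```

Scanning every row like this is fine for small tables; the indexing techniques discussed later exist to avoid that scan on large ones.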

Recent trends suggest that organizations emphasizing data organization see a significant improvement in retrieval efficiency. Studies demonstrate that well-structured datasets can decrease retrieval times by as much as 30%. The Integrated Postsecondary Education Data System (IPEDS), which gathers data from educational institutions, illustrates the importance of structured data in educational contexts.

Moreover, case studies such as the World Values Survey illustrate the importance of systematic information organization. By conducting representative national surveys, they effectively monitor societal values and beliefs, demonstrating how organized information can inform social research and policy decisions. Their structured approach to information collection leads to more reliable insights, reinforcing the necessity of organization.

Incorporating Robotic Process Automation (RPA) from Creatum GmbH can further enhance these processes by automating manual workflows, thereby boosting operational efficiency and reducing errors. This allows teams to focus on more strategic, value-adding tasks. Furthermore, utilizing Business Intelligence tools such as Power BI from Creatum GmbH can revolutionize reporting, offering actionable insights that facilitate informed decision-making.

The 3-Day Power BI Sprint enables organizations to quickly create professionally designed reports, while the General Management App offers comprehensive management and smart reviews, addressing inconsistencies and governance challenges.

Expert opinions underscore the necessity of understanding data structure for optimal retrieval. As articulated by industry leaders, a well-defined information architecture not only facilitates efficient access but also enhances the overall quality of the insights derived from the data. Moreover, as Hannah Ritchie observed, Bhutan’s ‘Gross National Happiness Index’ exemplifies how organized data is essential for assessing societal well-being, which further supports the case for understanding data structure.

In summary, the significance of information arrangement in datasets cannot be overstated; it is a crucial element that directly impacts access effectiveness and the capability to extract actionable insights.

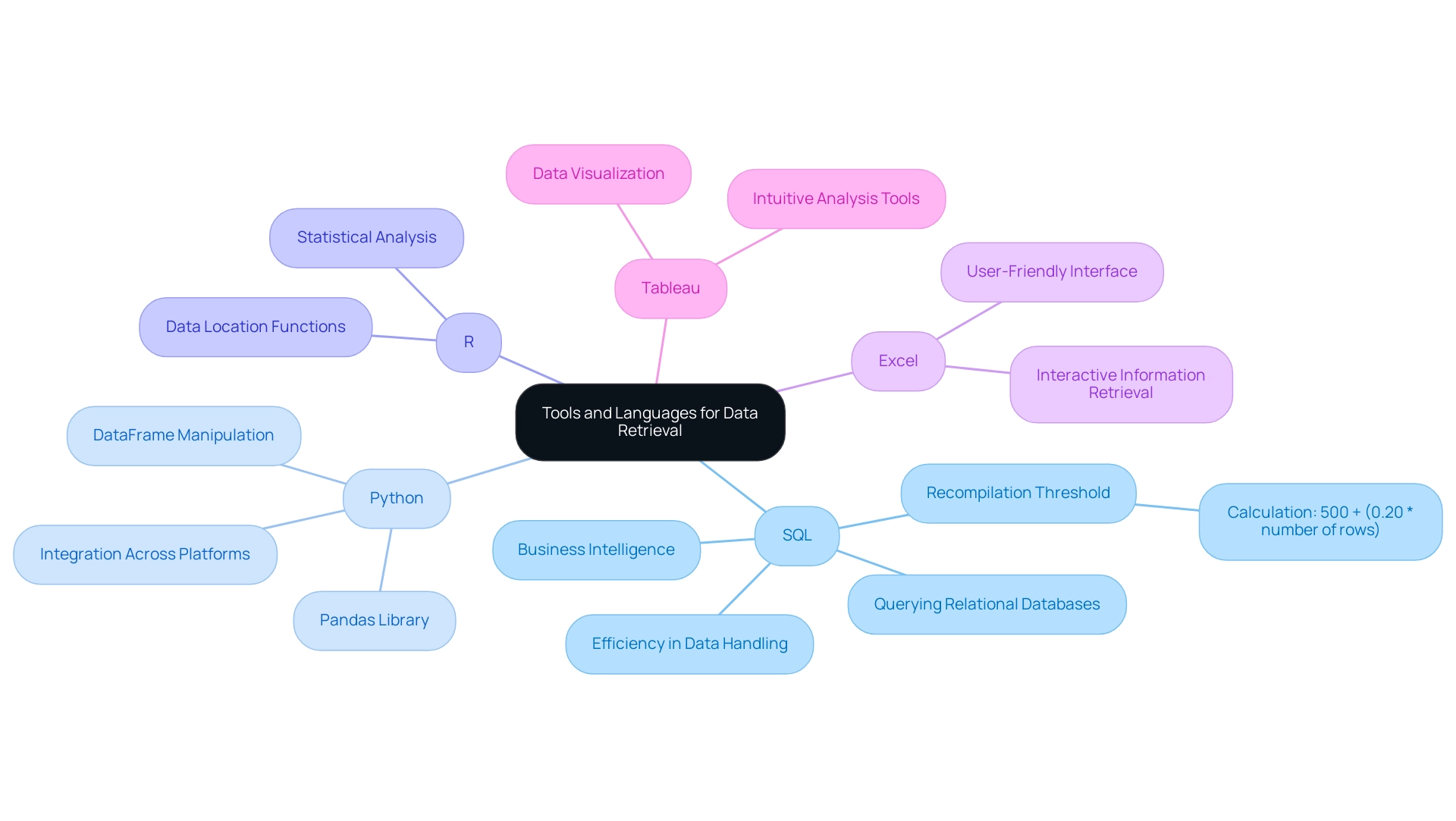

Tools and Languages for Data Retrieval

Various programming languages and tools are used for data retrieval, each offering distinct features and capabilities. SQL (Structured Query Language) is the cornerstone for querying relational databases and the most direct way to locate data in a particular row, typically through a WHERE clause on a key or other column. It is known for its efficiency in selecting, inserting, updating, and deleting data, and as of 2025 it continues to dominate data retrieval, with a significant percentage of organizations relying on it for their database management needs.
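As a minimal sketch of a SQL row lookup, the example below uses Python's built-in sqlite3 module as a stand-in for a relational database. The `customers` table, its columns, and the sample values are assumptions made for illustration.

```python
import sqlite3

# In-memory SQLite database standing in for a relational database.
conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets columns be read by name
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT)"
)
conn.executemany(
    "INSERT INTO customers (customer_id, name, city) VALUES (?, ?, ?)",
    [(101, "Ada Park", "Leeds"), (102, "Ben Ortiz", "Porto"), (103, "Chloe Wu", "Lyon")],
)

# The WHERE clause locates the row; the ? placeholder passes the value
# as a parameter instead of pasting it into the SQL text.
row = conn.execute(
    "SELECT customer_id, name, city FROM customers WHERE customer_id = ?",
    (102,),
).fetchone()

print(dict(row))
```

Passing the value as a parameter rather than formatting it into the query string is the usual way to avoid SQL injection and quoting mistakes.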

In SQL Server 2014, the recompilation threshold for permanent tables is calculated as 500 + (0.20 * number of rows). This calculation highlights the importance of efficient information handling in the context of Business Intelligence (BI) initiatives.
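To make the arithmetic concrete, the sketch below evaluates the formula exactly as quoted above for a few table sizes. The function name is hypothetical and is not a SQL Server API; any special handling for very small tables or other SQL Server versions is not modeled here.

```python
def recompilation_threshold(row_count: int) -> float:
    """Apply the quoted formula: 500 + (0.20 * number of rows)."""
    return 500 + 0.20 * row_count


# Example: 10,000 rows gives 500 + 2,000 = 2,500.
for rows in (1_000, 10_000, 1_000_000):
    print(f"{rows:>9} rows -> threshold {recompilation_threshold(rows):,.0f}")
```

Because the second term scales with the row count, the threshold grows roughly in proportion to table size once the table is large.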

Python has emerged as a formidable contender, particularly with the Pandas library, which offers robust data manipulation capabilities. Users can filter DataFrames and locate data in a particular row by label, by position, or by condition. This flexibility makes Python an excellent choice for those requiring comprehensive analysis. Its versatility is further enhanced by compatibility with a wide range of data sources, allowing integration and manipulation across platforms, which is crucial for organizations seeking actionable insights.

R, primarily recognized for its statistical analysis prowess, also provides effective functions that can locate data in a particular row based on defined conditions. This capability is particularly valuable in data-heavy environments where statistical insights are paramount, supporting the drive for data-driven decision-making.

Alongside these programming languages, tools like Microsoft Excel and visualization platforms such as Tableau enable interactive information retrieval and analysis. These tools cater to individuals without extensive programming expertise, allowing them to extract insights through intuitive interfaces, thus democratizing access to BI.

The choice among SQL, Python, and R often hinges on the specific needs of the organization. SQL excels in structured environments, while Python and R offer greater flexibility for complex manipulation tasks. Within SQL itself, design choices also matter: for instance, creating a computed column for a complex expression can lead to better cardinality estimates and therefore more efficient query plans.

As businesses increasingly seek to harness the power of information, understanding the strengths and applications of these tools becomes essential for optimizing retrieval processes. However, navigating the overwhelming AI landscape presents challenges, such as the need for tailored solutions to effectively address specific business needs. A case study named ‘Business Intelligence Empowerment’ illustrates how the entity has assisted companies in converting unprocessed information into actionable insights, enabling informed decision-making and fostering growth.

Regularly monitoring and updating SQL Server statistics is crucial to ensure optimal performance and accuracy in database management. RPA solutions can further enhance operational productivity by automating repetitive tasks, allowing entities to focus on strategic initiatives. As Rajendra Gupta, a recognized Database Specialist and Architect, states, “Based on my contribution to the SQL Server community, I have been recognized as the prestigious Best Author of the Year continuously in 2019, 2020, and 2021 at SQLShack and the MSSQLTIPS champions award in 2020.”

This underscores the ongoing significance and necessity of SQL in the evolving environment of information acquisition, especially as organizations navigate the overwhelming AI landscape and strive for operational excellence.

Indexing Techniques for Efficient Data Access

Indexing serves as a pivotal method in database administration, significantly enhancing the speed and efficiency of information access operations. By establishing indexes on specific columns, databases can quickly pinpoint rows that meet query criteria, thereby eliminating the need to scan the entire dataset. Among the most widely used indexing techniques are B-tree indexing and hash indexing.

B-tree indexing organizes data in a balanced tree structure, facilitating efficient searches, while hash indexing employs a hash function to directly map keys to specific locations, optimizing access times.
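The difference between the two approaches can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not how a database engine implements either structure: a dict stands in for a hash index, and a sorted list searched with `bisect` stands in for a B-tree (a real B-tree is a multi-level balanced tree, but it likewise keeps keys in order). The rows and helper names are hypothetical.

```python
from bisect import bisect_left, bisect_right

# Hypothetical rows stored in arbitrary order: (customer_id, name).
rows = [(105, "Eve"), (101, "Ada"), (104, "Dan"), (102, "Ben"), (103, "Chloe")]

# Hash-style index: key -> row position. Suited to exact-match lookups.
hash_index = {customer_id: pos for pos, (customer_id, _) in enumerate(rows)}

# Ordered index: sorted (key, row position) pairs, searched by bisection.
ordered_index = sorted((customer_id, pos) for pos, (customer_id, _) in enumerate(rows))
ordered_keys = [key for key, _ in ordered_index]


def lookup_exact(customer_id):
    """Exact-match lookup through the hash-style index."""
    pos = hash_index.get(customer_id)
    return rows[pos] if pos is not None else None


def lookup_range(low, high):
    """Rows with low <= customer_id <= high, returned in key order."""
    start = bisect_left(ordered_keys, low)
    end = bisect_right(ordered_keys, high)
    return [rows[pos] for _, pos in ordered_index[start:end]]


exact = lookup_exact(104)
in_range = lookup_range(102, 104)
print(exact)
print(in_range)
```

The hash-style index answers "which row has this key?" directly but has no notion of order, whereas the ordered index can also serve range conditions such as "IDs between 102 and 104".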

The impact of indexing on information retrieval performance is substantial. Statistics indicate that after implementing indexing, CPU load on database servers can decrease from 50-60% to merely 10-20%, showcasing a remarkable improvement in performance. Moreover, insights from 280 engineers and managers reveal a significant shift-left approach aimed at reducing wasted engineering time, highlighting the critical importance of effective indexing strategies.

In terms of operational effectiveness, leveraging Robotic Process Automation (RPA) from Creatum GmbH can further enhance these indexing methods by automating repetitive tasks associated with information management. RPA streamlines workflows, minimizes errors, and liberates valuable resources, enabling teams to concentrate on strategic initiatives that foster business growth. This is especially vital in the fast-paced AI landscape, where organizations must adapt to emerging technologies and methodologies.

Current trends in indexing techniques underscore the necessity of adapting to evolving data landscapes. As Devesh Poojari notes, “Non-clustered indexes are ideal for optimizing frequently queried columns that are not part of the clustered index,” which makes them an important tool for improving query efficiency.

Additionally, Digma’s capabilities in identifying endpoints generating a high volume of database queries can offer organizations insights into potential inefficiencies within their access layers, further reinforcing the significance of effective indexing strategies.

Case studies, such as ‘Understanding Index Fragmentation,’ illustrate that indexes can become fragmented due to modifications, which may lead to slower query performance and increased disk I/O. It is advisable to rebuild indexes when fragmentation surpasses 30% to sustain optimal performance, although this threshold may vary based on the specific database system in use.

In practical terms, a well-indexed database can retrieve customer records based on their ID in milliseconds, demonstrating the efficiency of locating data in a particular row. In contrast, a non-indexed search could take considerably longer, underscoring the essential role of indexing in operational efficiency. As businesses navigate an information-rich environment, employing effective indexing techniques alongside RPA from Creatum GmbH will be crucial for transforming raw data into actionable insights.
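One way to observe the effect of an index is to ask the database how it plans to execute a lookup. The sketch below uses SQLite through Python's sqlite3 module as a stand-in for a production database; the table, the index name `idx_customers_id`, and the `query_plan` helper are assumptions for illustration, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The id column is deliberately not a primary key, so no index exists yet.
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(i, f"customer-{i}", "Leeds") for i in range(1, 1001)],
)


def query_plan(connection, sql, params=()):
    """Return the human-readable detail column of EXPLAIN QUERY PLAN."""
    plan_rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(plan_row[-1] for plan_row in plan_rows)


lookup = "SELECT id, name, city FROM customers WHERE id = ?"

plan_before = query_plan(conn, lookup, (500,))  # expected: a full table scan
conn.execute("CREATE INDEX idx_customers_id ON customers (id)")
plan_after = query_plan(conn, lookup, (500,))   # expected: a search using the index

print("before:", plan_before)
print("after: ", plan_after)
```

Before the index exists the plan reports a scan of the whole table; afterwards it reports a search that names the index, which is the behavior that keeps single-row lookups fast as the table grows.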

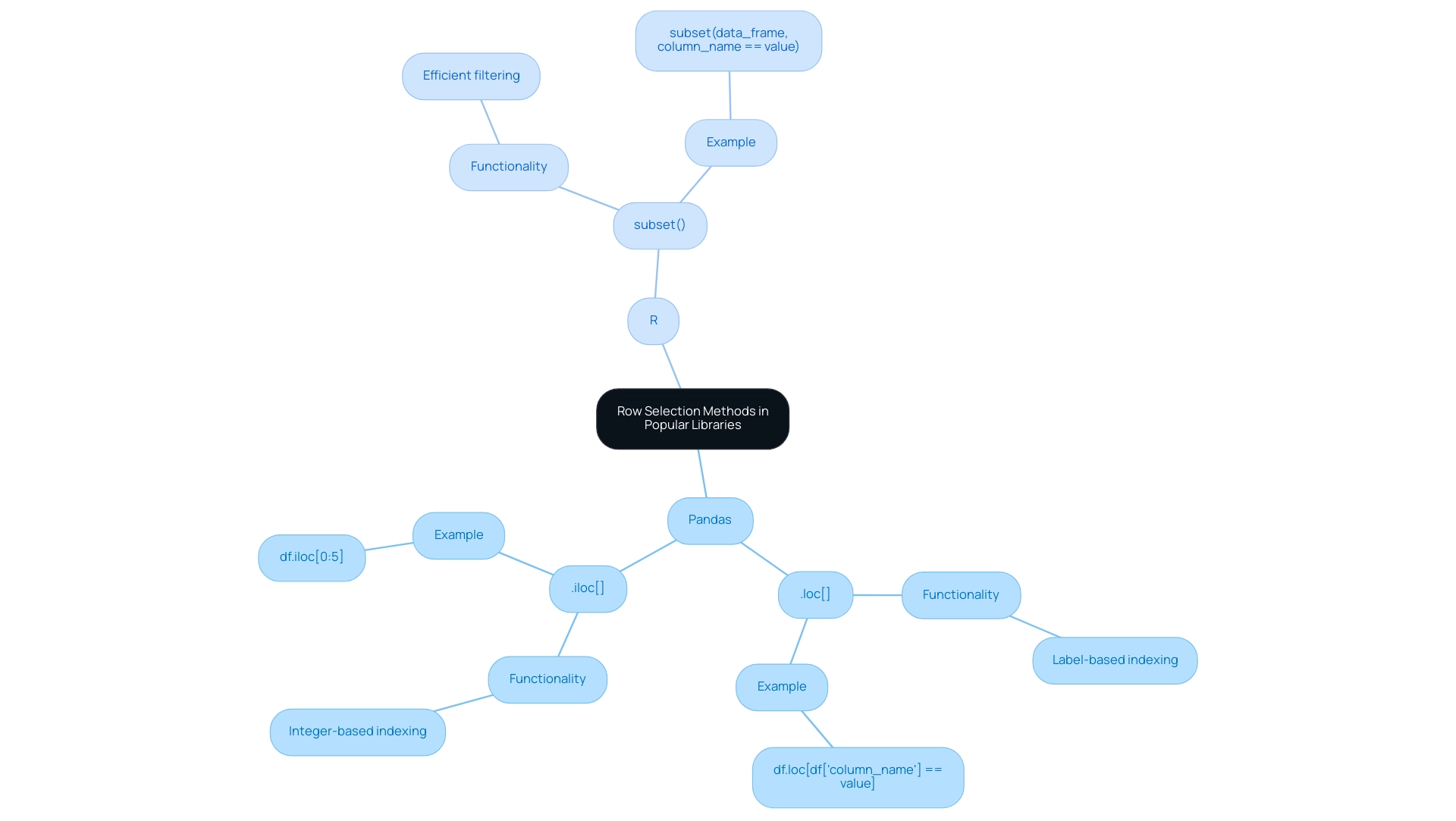

Row Selection Methods in Popular Libraries

Popular data manipulation libraries provide a range of methods for selecting rows based on specific criteria, which is crucial for effective analysis. In Python’s Pandas library, the .loc[] and .iloc[] indexers are the essential tools for label-based and integer-position-based selection, respectively. For instance, df.loc[df['column_name'] == value] retrieves all rows where the specified column matches a given value, which streamlines filtering a dataset by condition.
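The following sketch shows the selection styles side by side. The DataFrame contents are hypothetical; the index labels are customer IDs chosen to differ from the row positions so that the contrast between .loc[] and .iloc[] is visible.

```python
import pandas as pd

# Hypothetical data; the index labels are customer IDs, not positions.
df = pd.DataFrame(
    {
        "name": ["Ada", "Ben", "Chloe", "Dan"],
        "city": ["Leeds", "Porto", "Lyon", "Porto"],
        "purchases": [3, 7, 1, 4],
    },
    index=[101, 102, 103, 104],
)

by_label = df.loc[102]                         # row whose index label is 102
by_position = df.iloc[0]                       # first row, whatever its label
by_condition = df.loc[df["city"] == "Porto"]   # rows selected by a boolean mask
subset = df.loc[df["purchases"] > 2, ["name", "city"]]  # rows and columns together

print(by_label["name"], by_position["name"])
print(by_condition)
print(subset)
```

A single label or position returns one row as a Series, while a boolean mask returns a DataFrame containing every matching row.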

Similarly, R offers the subset() function for filtering data frames. For example, subset(data_frame, column_name == value) plays a role similar to the Pandas .loc[] expression above. These row selection techniques are easy to use and make it more efficient to extract data from large datasets, thereby facilitating informed decision-making.

Recent updates in information manipulation libraries underscore the increasing significance of these methods. For example, the rising usage statistics of Pandas and R reflect their popularity among analytics professionals, with Pandas particularly favored for its intuitive syntax and powerful capabilities. Expert opinions indicate that mastering these row selection methods can significantly enhance productivity in analysis tasks, enabling teams to concentrate on deriving insights rather than becoming mired in retrieval processes.

As noted by DataQuest, “knowing both also makes you a more adaptable job candidate if you’re seeking a position in the science field.”

Case studies illustrate the practical application of these techniques. For example, participants in the ‘Python & Data 1’ tutorial gained hands-on experience with information manipulation, learning to generate descriptive statistics and visualize information using tools like Google Colab and Jupyter. This training emphasizes the importance of understanding row selection techniques in both Pandas and R, as these skills are essential for anyone aspiring to excel in analysis.

Notably, no prior knowledge of coding is required for the Stata tutorials, showcasing the accessibility of learning manipulation. Furthermore, the tutorial covers fundamental Python syntax and statistical modeling techniques, reinforcing the relevance of the discussed methods in a practical learning environment.

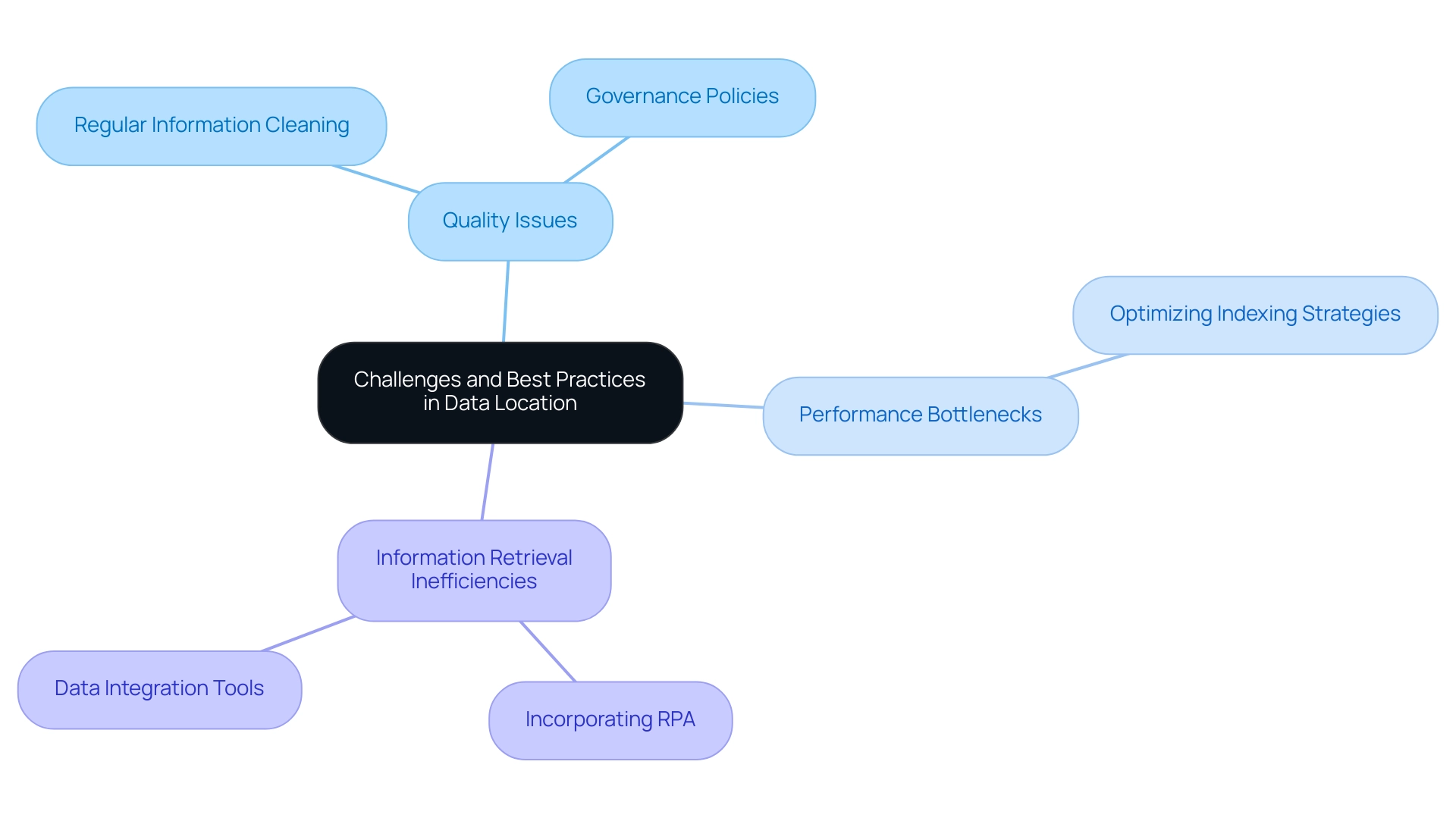

Challenges and Best Practices in Data Location

Effectively navigating large and complex datasets poses significant challenges, primarily stemming from data quality issues such as missing or inconsistent values. These issues can severely impede precise retrieval, leading to inefficiencies and potential errors in decision-making. Additionally, performance bottlenecks frequently emerge when querying extensive tables that lack appropriate indexing, hindering access to essential information.

To mitigate these challenges, organizations must adopt best practices that prioritize quality and governance. Regular information cleaning is crucial for maintaining dataset integrity, while clear governance policies establish accountability and standards for management. Monitoring information is vital for sustaining quality, as it aids in the early detection of errors and inconsistencies, ensuring that data remains clean and usable for analysis and decision-making.
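A minimal cleaning pass with Pandas might look like the sketch below. The sample data and the specific rules (trimming whitespace, normalizing capitalization, dropping rows with a missing city, removing duplicates) are assumptions chosen for illustration; which rules are appropriate depends on the governance policy in place.

```python
import pandas as pd

# Hypothetical raw data with a missing value, stray whitespace,
# inconsistent capitalization, and a record that is a duplicate once normalized.
raw = pd.DataFrame(
    {
        "customer_id": [101, 102, 102, 103, 104],
        "city": ["Leeds", " porto", "Porto", None, "LYON"],
    }
)

# Monitoring step: count missing values per column before changing anything.
missing_per_column = raw.isna().sum()

# Cleaning step: normalize text, then drop incomplete and duplicate rows.
cleaned = raw.copy()
cleaned["city"] = cleaned["city"].str.strip().str.title()
cleaned = cleaned.dropna(subset=["city"]).drop_duplicates()

print(missing_per_column)
print(cleaned)
```

Normalizing before deduplicating matters here: the two records for customer 102 only become identical after whitespace and capitalization are made consistent.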

Optimizing indexing strategies is equally essential; for instance, routinely reviewing and updating indexes based on actual query patterns can substantially enhance retrieval performance. This proactive approach guarantees that information remains both accessible and reliable.

Incorporating Robotic Process Automation (RPA) into these practices can further bolster operational efficiency. By automating repetitive tasks associated with information management, RPA minimizes human error and accelerates processing, allowing teams to focus on strategic initiatives. A notable case study highlights the management of unstructured information, where organizations often encounter content that deviates from standardized formats.

By leveraging integration tools alongside RPA to transform unstructured information into structured records, organizations can uncover valuable insights that might otherwise remain hidden. This practice not only improves information quality but also streamlines the retrieval process, illustrating the significant impact of effective governance on operational efficiency.

Looking ahead to 2025, the challenges of data retrieval continue to evolve. Experts note that poor data quality can lead to substantial financial repercussions, averaging around $15 million annually, as highlighted by author Idan Novogroder. Thus, implementing robust quality measures is not merely a best practice; it is a strategic necessity for organizations striving to thrive in a data-driven environment. Furthermore, automation through RPA can significantly mitigate quality issues by reducing human error, underscoring the imperative for organizations to harness technology in their management practices.

An exemplary case of effective information management is the UK Cystic Fibrosis Registry, which maintains records on nearly all individuals with Cystic Fibrosis in the UK, underscoring the importance of structured information practices.

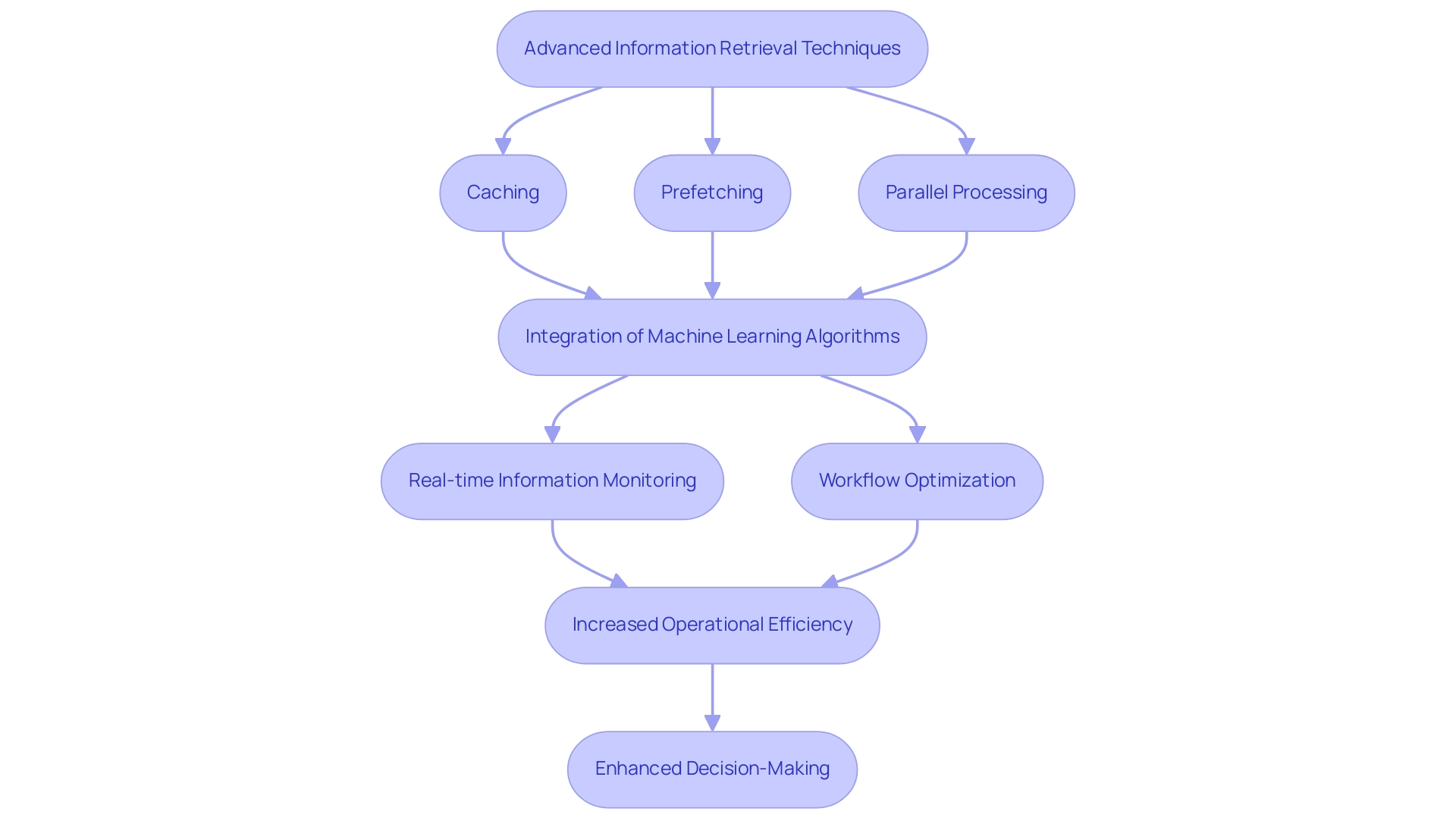

Advanced Techniques for Data Retrieval

Advanced information retrieval techniques are pivotal in enhancing access efficiency and effectiveness. Techniques such as caching, prefetching, and parallel processing enable systems to retrieve information swiftly while minimizing latency. For instance, caching frequently accessed information in memory significantly reduces the need for repeated database queries, resulting in faster response times.
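In-memory caching can be sketched with Python's standard `functools.lru_cache`. The lookup table and the `fetch_customer_name` function are hypothetical stand-ins for a real database query; the counter exists only to show how many simulated queries actually ran.

```python
from functools import lru_cache

# Stand-in for a database table; a real system would issue a query here.
_FAKE_TABLE = {101: "Ada", 102: "Ben", 103: "Chloe"}
query_count = 0


@lru_cache(maxsize=128)
def fetch_customer_name(customer_id):
    """Simulate one database round trip per uncached call."""
    global query_count
    query_count += 1
    return _FAKE_TABLE.get(customer_id)


first = fetch_customer_name(102)   # cache miss: runs the simulated query
second = fetch_customer_name(102)  # cache hit: served from memory
info = fetch_customer_name.cache_info()

print(first, second, query_count, info)
```

The trade-off is staleness: if the underlying row changes, the cached value remains until it is evicted or the cache is cleared with `fetch_customer_name.cache_clear()`.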

Prefetching, by contrast, anticipates what users will need next based on historical behavior and loads it in advance, so that the relevant data is already available when it is requested.
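A very simple form of prefetching can be sketched as follows. The prediction rule used here is an assumption: it guesses that a reader who asks for one page of rows will ask for the next page afterwards, which is a much cruder model than one learned from historical behavior. All names and data are hypothetical, and the prefetch runs inline rather than in the background to keep the example deterministic.

```python
PAGE_SIZE = 3
ROWS = [f"row-{i}" for i in range(12)]  # stand-in for a large table
loads = []        # records every simulated trip to storage
page_cache = {}   # page number -> list of rows


def load_page(page):
    """Simulate reading one page of rows from slow storage."""
    loads.append(page)
    start = page * PAGE_SIZE
    return ROWS[start:start + PAGE_SIZE]


def get_page(page):
    """Return a page, then prefetch the next one, assuming sequential reads."""
    if page not in page_cache:
        page_cache[page] = load_page(page)
    result = page_cache[page]
    next_page = page + 1
    if next_page * PAGE_SIZE < len(ROWS) and next_page not in page_cache:
        # A real system would typically do this asynchronously.
        page_cache[next_page] = load_page(next_page)
    return result


first_page = get_page(0)   # loads page 0 and prefetches page 1
second_page = get_page(1)  # already cached; prefetches page 2

print(first_page, second_page, loads)
```

The second request is answered from memory because the first request already fetched it; the cost is extra work whenever the guess about the next request turns out to be wrong.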

The integration of machine learning algorithms further optimizes predictive information retrieval, allowing systems to adaptively refine access patterns based on usage trends. This not only improves overall system performance but also enhances decision-making capabilities by providing timely insights. Organizations that have adopted robust information quality procedures, including customized AI solutions from Creatum GmbH, such as Small Language Models and GenAI Workshops, have reported enhanced operational agility and decision-making effectiveness. This highlights the essential role of real-time information monitoring in today’s information-rich environment.

As Gorkem Sevinc emphasizes, “Robust information quality processes and strategies are crucial for overseeing the entire lifecycle management from collection to analysis, highlighting the importance of information literacy.”

A significant case study is DDMR’s quality enhancement initiatives, which focused on improving operational performance through advanced information retrieval techniques. DDMR implemented specific strategies such as real-time information monitoring and workflow optimization, alongside leveraging Robotic Process Automation (RPA) to automate manual tasks. Following these initiatives, DDMR experienced a notable increase in revenue and operational efficiency, demonstrating the tangible benefits of investing in advanced information management practices, supported by the solutions offered by Creatum GmbH.

As we progress deeper into 2025, trends in advanced data retrieval techniques continue to evolve, with an increasing emphasis on machine learning applications and the effectiveness of caching and prefetching strategies. These advancements streamline workflows and empower organizations to make informed decisions based on high-quality, readily accessible data. Dynamic pricing optimization, which adjusts prices in response to market movements, is one example of the broader applications that depend on effective data access techniques.

Key Takeaways and Actionable Insights

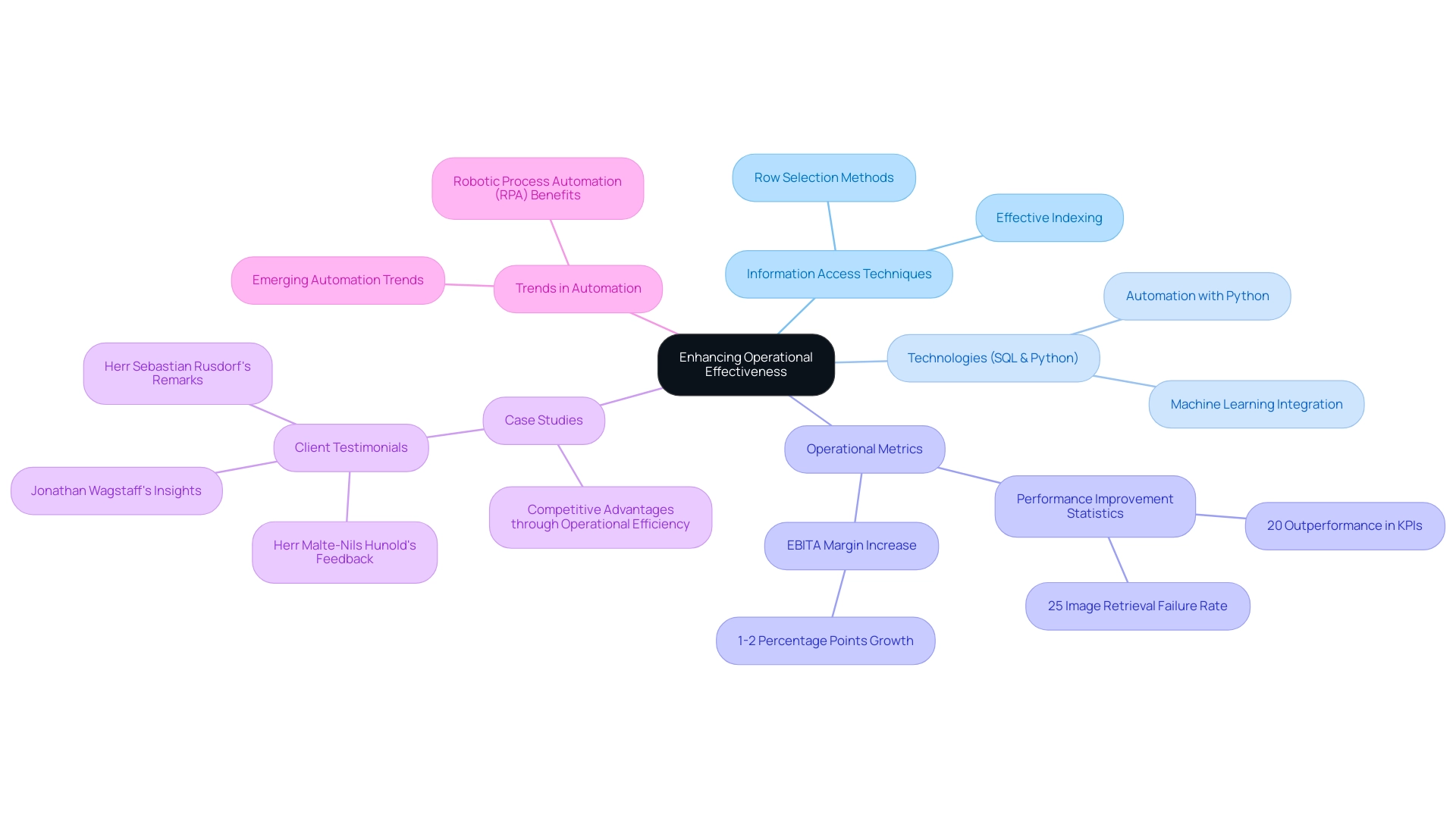

Enhancing operational effectiveness hinges on a solid grasp of data access techniques, and in particular of the methods that can be used to locate data in a particular row. Tools and languages such as SQL and Python offer robust capabilities for this, but using them well requires understanding the underlying mechanisms. For instance, effective indexing can drastically improve access speeds, and the row selection methods in popular libraries can significantly enhance efficiency.

Organizations that prioritize information collection are likely to observe substantial improvements in their operational metrics. Research indicates that companies with mature information governance outperform their peers by 20% in key performance indicators, underscoring the essential role of effective management. Furthermore, one notable statistic indicates that researchers searching for medical images fail to locate the desired image in nearly 25% of instances, highlighting the need for efficient retrieval techniques across contexts.

As illustrated in a recent case study, distributors focusing on operational excellence can capture a larger market share, potentially increasing EBITA margins by one to two percentage points. This exemplifies the direct correlation between operational efficiency and financial performance.

Techniques for locating data in a particular row matter because they underpin informed decision-making and innovation. For example, Jonathan Wagstaff, Director of Market Intelligence at DCC Technology, noted that automating intricate processing tasks through Python scripts has liberated substantial time for teams, resulting in swift improvements in ROI. This demonstrates how effective data manipulation not only enhances efficiency but also drives strategic initiatives.

Additionally, Herr Malte-Nils Hunold from NSB GROUP stated, “Creatum GmbH’s solutions have transformed our operational workflows,” while Herr Sebastian Rusdorf from Hellmann Marine Solutions remarked, “The impact of Creatum GmbH’s technology on our business intelligence development has been significant.”

As we progress through 2025, data manipulation tools continue to evolve, with a growing emphasis on automation and machine learning capabilities. Organizations leveraging SQL and Python for data manipulation are better positioned to navigate management complexities, ultimately unlocking actionable insights that propel growth. By adopting best practices and advanced retrieval techniques, businesses can fully harness their data’s potential, leading to enhanced operational effectiveness and competitive advantages.

Moreover, leveraging Robotic Process Automation (RPA) to automate manual workflows can significantly enhance operational efficiency in a rapidly evolving AI landscape, as highlighted by our clients’ experiences. RPA not only reduces errors but also frees up teams for more strategic, value-adding work. Addressing the lack of representation of diverse communities and practices in data management can further enhance understanding and effectiveness in data retrieval strategies.

Conclusion

The intricate landscape of data management underscores the critical importance of efficient data retrieval methods. Understanding the organization of data in rows and columns is foundational for optimizing how information is accessed and manipulated. By leveraging tools like SQL and Python, organizations can enhance their data retrieval processes, significantly improving operational efficiency and decision-making capabilities.

Effective indexing techniques and robust row selection methods are essential for minimizing retrieval times and maximizing data accessibility. The implementation of these practices not only streamlines workflows but also enhances the overall quality of insights derived from data. Organizations prioritizing data governance and quality management demonstrate superior performance metrics, showcasing the tangible benefits of investing in structured data practices.

Moreover, the integration of advanced techniques such as caching, prefetching, and machine learning further empowers businesses to adapt to evolving data landscapes. Embracing Robotic Process Automation (RPA) allows organizations to automate repetitive tasks, thereby reducing errors and freeing up resources for strategic initiatives.

As the data-driven environment continues to evolve, the ability to effectively manage and retrieve data will be paramount for organizations seeking to thrive. By adopting best practices and advanced methodologies in data retrieval, businesses can unlock the full potential of their data, driving innovation and maintaining a competitive edge in their respective markets.

Frequently Asked Questions

What is the significance of data location in rows within datasets?

Data location in rows is fundamental for organizing information in datasets, typically in a tabular format where each row represents a distinct record and columns denote various attributes. This structure enhances efficient access to information.

How does organized data impact information retrieval efficiency?

Well-structured datasets can significantly enhance retrieval efficiency; studies indicate that they can decrease retrieval times by as much as 30%. This organization facilitates quicker querying and easier data manipulation.

Can you provide an example of how structured information is used in practice?

The Integrated Postsecondary Education Data System (IPEDS) collects structured data from educational institutions, which supports effective decision-making in educational contexts.

What role do case studies play in demonstrating the importance of information organization?

Case studies like the World Values Survey illustrate the impact of systematic information organization by monitoring societal values and beliefs, leading to more reliable insights that inform social research and policy decisions.

How can Robotic Process Automation (RPA) improve data management processes?

Incorporating RPA can automate manual workflows, boosting operational efficiency and reducing errors, allowing teams to focus on strategic tasks rather than repetitive ones.

What tools are recommended for enhancing reporting and decision-making?

Business Intelligence tools such as Power BI can revolutionize reporting by providing actionable insights. The 3-Day Power BI Sprint helps organizations quickly create professionally designed reports.

Why is understanding information structure important for optimal data retrieval?

A well-defined information architecture facilitates efficient access to data and enhances the quality of insights derived from it, as emphasized by industry experts.

What programming languages and tools are commonly used for information retrieval?

SQL is the cornerstone for querying relational databases, while Python (especially with the Pandas library) and R are popular for data manipulation and statistical analysis. Tools like Microsoft Excel and Tableau also enable interactive data retrieval without extensive programming knowledge.

How do organizations decide which programming language or tool to use for data retrieval?

The choice often depends on the specific needs of the organization; SQL is preferred for structured environments, while Python and R offer flexibility for complex tasks.

What are the challenges organizations face in navigating the AI landscape for information acquisition?

Organizations need tailored solutions to address specific business needs amidst the overwhelming AI landscape, highlighting the importance of understanding the strengths and applications of various tools.