Introduction

In a world where data is the lifeblood of modern organizations, navigating the complexities of data management has never been more crucial. Delta Lake emerges as a game-changing solution, offering a robust open-source storage layer that enhances the reliability and efficiency of data lakes. By facilitating ACID transactions and enabling seamless integration of streaming and batch data processing, Delta Lake empowers businesses to harness their data effectively.

Combined with Robotic Process Automation (RPA), Delta Lake lets organizations automate tedious workflows, minimize errors, and allocate resources to higher-value tasks. As the demand for real-time analytics grows, understanding Delta Lake’s capabilities becomes essential for anyone looking to gain a competitive edge in an increasingly data-driven landscape.

This article delves into the transformative power of Delta Lake, exploring its practical applications, key features, and how it can revolutionize data management strategies in conjunction with RPA.

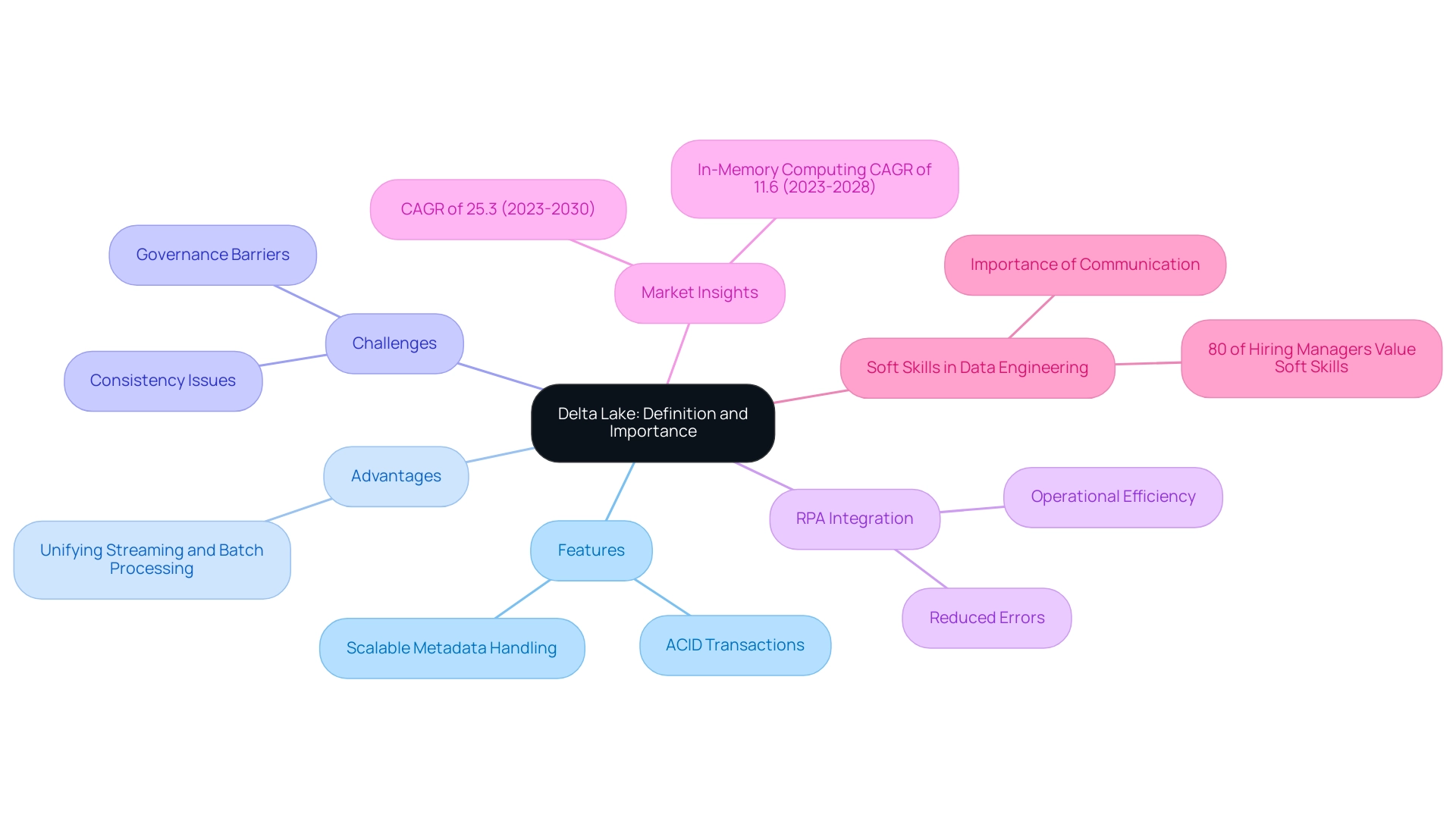

Understanding Delta Lake: Definition and Importance

Delta Lake is an open-source storage layer that improves the reliability and efficiency of data lakes, which makes it valuable for organizations seeking to optimize data management through advanced technologies. By providing ACID transactions and scalable metadata handling, it keeps data consistent and accessible. One of its primary advantages is that it unifies streaming and batch data processing on the same tables, which is essential for organizations seeking to make full use of their data.

Combined with Robotic Process Automation (RPA), Delta Lake lets businesses automate manual workflows, reducing errors and freeing up team resources, which improves operational efficiency in a rapidly evolving AI landscape. The challenges of traditional data lakes, such as ensuring consistency and governance, are significant barriers to deriving actionable insights. As Paul Stein, Chief Scientific Officer at Rolls-Royce, notes,

- “We generate tens of terabytes of information on each simulation of one of our jet engines.”

- “We then have to use some pretty sophisticated computer techniques to look into that massive dataset and visualize whether that particular product we’ve designed is good or bad.”

This underscores the need for frameworks that not only support complex data operations but also safeguard data integrity and reliability. With an anticipated CAGR of 25.3% for storage technologies from 2023 to 2030, organizations that use Delta Lake alongside RPA stand to gain a competitive advantage in data management, resulting in better decision-making and greater organizational agility.

Additionally, as one case analysis emphasizes, soft skills such as communication are increasingly essential for engineers, who must be able to clearly articulate the advantages of RPA and how it improves operational efficiency. To explore how RPA can transform your data management processes, consider implementing tailored RPA solutions that align with your business goals.

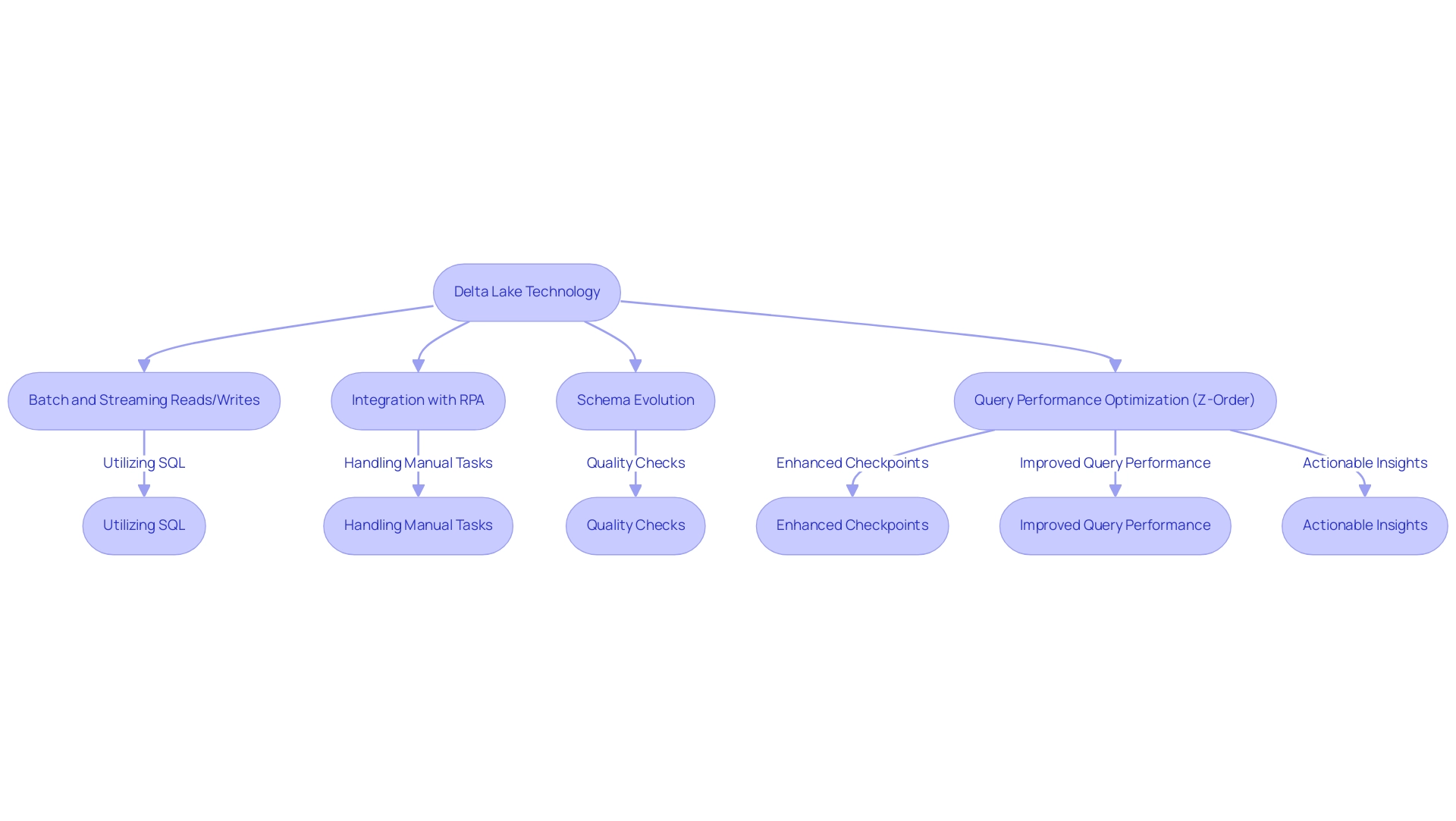

Practical Applications: Reading and Writing Data in Delta Lake

Delta Lake handles both batch and streaming reads and writes against the same tables. Data is stored as Parquet files alongside a transaction log, and it can be queried through SQL or processed with frameworks such as Apache Spark. As organizations face rising demand for real-time analytics and the challenge of choosing the right AI solutions, integrating Delta Lake with Robotic Process Automation (RPA) can streamline workflows, improve efficiency, and lower operational costs.

This integration addresses the challenges posed by manual, repetitive tasks, allowing teams to focus on strategic initiatives. As expert Fernando De Nitto notes, ‘You should partition your information as much as you can since this operation is presented as a best practice in the official Delta Lake documentation.’ Partitioning still calls for judgment: the Delta Lake documentation also advises against partitioning on very high-cardinality columns, because many small partitions produce many small files. Alongside sensible partitioning, schema enforcement, schema evolution, and quality checks during writes significantly improve the reliability of data management.
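To make the partitioning advice concrete, here is a minimal sketch of the Hive-style `column=value` directory layout that Spark-based Delta writers conventionally use, plus the partition pruning it enables. It is a simplified, hypothetical illustration: the function names `partition_rows` and `prune` are invented for this example, and real writers also escape special characters in values, write Parquet files inside each directory, and record partition values in the transaction log.

```python
from collections import defaultdict


def partition_rows(rows, partition_col):
    """Group rows into Hive-style partition directories such as 'date=2024-01-01'.

    Hypothetical helper for illustration only; not a Delta Lake API.
    """
    partitions = defaultdict(list)
    for row in rows:
        directory = f"{partition_col}={row[partition_col]}"
        # The partition value is encoded in the directory name, so this
        # sketch drops it from the row that would go into the data file.
        partitions[directory].append(
            {k: v for k, v in row.items() if k != partition_col}
        )
    return dict(partitions)


def prune(partitions, partition_col, value):
    """Partition pruning: keep only the directory an equality filter can match."""
    directory = f"{partition_col}={value}"
    return {directory: partitions[directory]} if directory in partitions else {}
```

Every distinct value of the partition column becomes its own directory, which is why a query filtering on that column can skip whole directories, and also why partitioning on a column with millions of distinct values leaves you with many tiny files.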

Structured Streaming workloads without low-latency requirements can also enable enhanced checkpoints, which helps organizations process data efficiently. Moreover, combining Business Intelligence with Delta Lake can turn stored data into actionable insights, supporting informed decision-making that accelerates growth and innovation. For a deeper look at Delta Lake’s transaction log, see ‘Diving into Delta Lake: Unpacking the Transaction Log v2’.
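The transaction log is also what lets one table serve both batch and streaming readers: a batch query reads the current snapshot, while an incremental reader only needs the commits made since the last version it processed. The sketch below models that idea with a simplified in-memory log. The commit format and the `read_increment` function are hypothetical; a real Delta streaming source reads the `_delta_log` files and tracks its progress in the Structured Streaming checkpoint location.

```python
def read_increment(commits, last_processed_version):
    """Return data files added after last_processed_version, plus the new high-water mark.

    commits[v] is the list of actions in table version v; each action is a
    simplified, hypothetical dict such as {"add": "part-0.parquet"} or
    {"remove": "part-0.parquet"}.
    """
    new_files = []
    for version in range(last_processed_version + 1, len(commits)):
        for action in commits[version]:
            # Only newly added files are emitted. This sketch ignores "remove"
            # actions, which a real Delta streaming source treats specially.
            if "add" in action:
                new_files.append(action["add"])
    latest_version = len(commits) - 1
    return new_files, latest_version
```

Calling it again with the returned version picks up only the commits made since the previous call, which is the essence of an incremental (streaming) read over the same log that batch queries use.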

Z-Order optimization is another practical example: it improves query performance by physically co-locating related data in the same files based on the values of selected columns, which in one reported example cut execution time from 4.51 seconds to 0.6 seconds. By combining Delta Lake’s capabilities with RPA and Business Intelligence, organizations can run data pipelines that need near real-time updates, keeping analytics aligned with the most current data available.
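Z-ordering is based on a space-filling curve: the bits of several column values are interleaved into one key, and rows are sorted by that key so that rows close in every chosen column end up near each other. Files written in that order have tighter per-file min/max ranges, which lets the engine skip files during queries. The sketch below computes a classic two-column Morton (Z-order) code for non-negative integers. It is a conceptual illustration only: the function names are hypothetical, and the production `OPTIMIZE ... ZORDER BY` implementation differs in detail (for instance in how it maps arbitrary column values to integers before interleaving).

```python
def interleave_bits(x, y, bits=16):
    """Compute a 2-D Morton (Z-order) code from two non-negative integers.

    Bit i of x goes to position 2*i and bit i of y to position 2*i + 1,
    so points that are close in both dimensions get nearby codes.
    """
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (2 * i)
        code |= ((y >> i) & 1) << (2 * i + 1)
    return code


def z_order(rows, col_x, col_y):
    """Sort rows by the Morton code of two non-negative integer columns."""
    return sorted(rows, key=lambda r: interleave_bits(r[col_x], r[col_y]))
```

For example, the points (0, 0), (1, 0), (0, 1), and (1, 1) receive codes 0, 1, 2, and 3, tracing the small "Z" shape that gives the technique its name.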

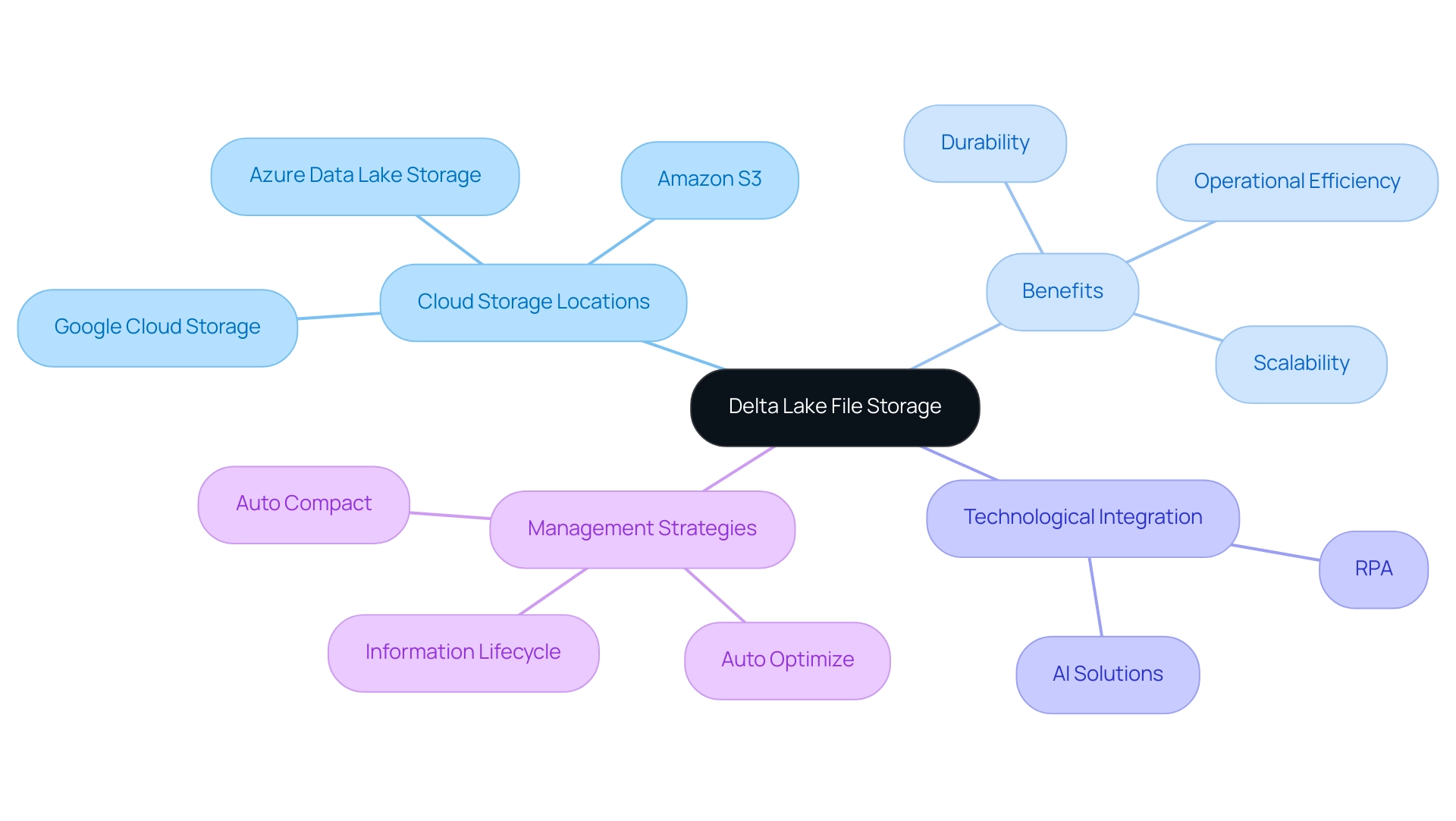

Where Are Delta Lake Files Stored? Understanding Storage Locations

Delta Lake table files are typically stored in cloud object storage such as Amazon S3, Azure Data Lake Storage, and Google Cloud Storage. Cloud infrastructure provides scalable, durable storage and simplifies the management of large datasets. For instance, queries that process large amounts of data (100 GB+) benefit from acceleration, highlighting the efficiency gains achievable with cloud storage.
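Whichever object store holds the table, a Delta table has the same layout: data files plus a `_delta_log` directory whose commit files are named with the table version zero-padded to 20 digits, each containing newline-delimited JSON with one action per line (for example `add`, `remove`, `metaData`, `protocol`, or `commitInfo`). The sketch below builds a commit file path and extracts the added and removed data files from a commit’s contents. The helper names and the bucket path used in the usage note are hypothetical, and the sample actions in real logs carry more fields than this sketch reads.

```python
import json


def commit_path(table_root, version):
    """Location of the JSON commit file for one table version.

    Commit files live in the table's _delta_log directory and are named with
    the version number zero-padded to 20 digits.
    """
    return f"{table_root.rstrip('/')}/_delta_log/{version:020d}.json"


def summarize_commit(commit_text):
    """Return (added_paths, removed_paths) from one commit file's contents.

    Each non-empty line is a JSON object holding a single action.
    """
    added, removed = [], []
    for line in commit_text.splitlines():
        if not line.strip():
            continue
        action = json.loads(line)
        if "add" in action:
            added.append(action["add"]["path"])
        elif "remove" in action:
            removed.append(action["remove"]["path"])
        # Metadata, protocol, and commit-info actions are skipped in this sketch.
    return added, removed
```

For a hypothetical table at `s3://my-bucket/events`, `commit_path("s3://my-bucket/events", 3)` points at the fourth commit (version 3) inside `s3://my-bucket/events/_delta_log/`. Knowing this layout is what makes backup, replication, and access-control policies for a table straightforward to reason about.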

Even at extreme scale, where a single table can exceed 400 PB, organizations can manage the complexity of their operations on this foundation. Integrating Robotic Process Automation (RPA) into these workflows lets businesses automate manual processes, significantly reducing errors and freeing team resources for more strategic, value-adding work in a rapidly evolving AI landscape. Each Delta table is a directory of data files plus a `_delta_log` subdirectory holding its transaction log, and this structure enables efficient management and querying of datasets, which is why it pays to understand where a table’s files are stored.

This knowledge is pivotal for organizations because it directly affects data accessibility, compliance, and backup strategies. Delta Lake’s architecture also helps users manage the data lifecycle, keeping data securely stored and retrievable when needed. Features such as Auto Optimize and Auto Compact dynamically adjust partition sizes during writes and look for opportunities to compact small files, improving overall management efficiency.
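Compaction is essentially a bin-packing problem: many small files are grouped and rewritten as fewer, larger ones. The sketch below plans such a rewrite with a simple first-fit-decreasing heuristic. It is a toy model under stated assumptions (the function name, the sizes, and the target size are hypothetical) and not Delta Lake’s actual auto-compaction logic, which also weighs factors such as partitions and minimum file counts.

```python
def plan_compaction(file_sizes, target_size):
    """Greedily group small files into bins no larger than target_size.

    Files already at or above target_size are left alone. This is a toy
    first-fit-decreasing plan, not Delta Lake's auto-compaction algorithm.
    """
    small = sorted((s for s in file_sizes if s < target_size), reverse=True)
    bins = []  # each bin is a list of file sizes to rewrite as one file
    for size in small:
        for b in bins:
            if sum(b) + size <= target_size:
                b.append(size)
                break
        else:
            # No existing bin has room, so start a new one.
            bins.append([size])
    # A bin holding a single file gains nothing from being rewritten.
    return [b for b in bins if len(b) > 1]
```

With file sizes of 10, 20, 30, 40, and 200 (say, in MB) and a target of 64, the plan rewrites the four small files as two files (40 + 20 and 30 + 10) and leaves the 200 MB file untouched.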

As ByteDance/TikTok highlights, ‘In our scenario, the performance challenges are huge. The maximum volume of a single table reaches 400PB+, the daily volume increase is at the PB level, and the total volume reaches the EB level.’ This underscores the essential role of strong data management strategies and RPA in driving insight-led decisions and operational efficiency for business growth. Tailored AI solutions can complement RPA by providing targeted technologies that address specific operational challenges.

With cloud storage use for Delta Lake expected to grow in 2024, the benefits of adopting these technologies become clearer: better operational efficiency and a practical answer to modern data management challenges.

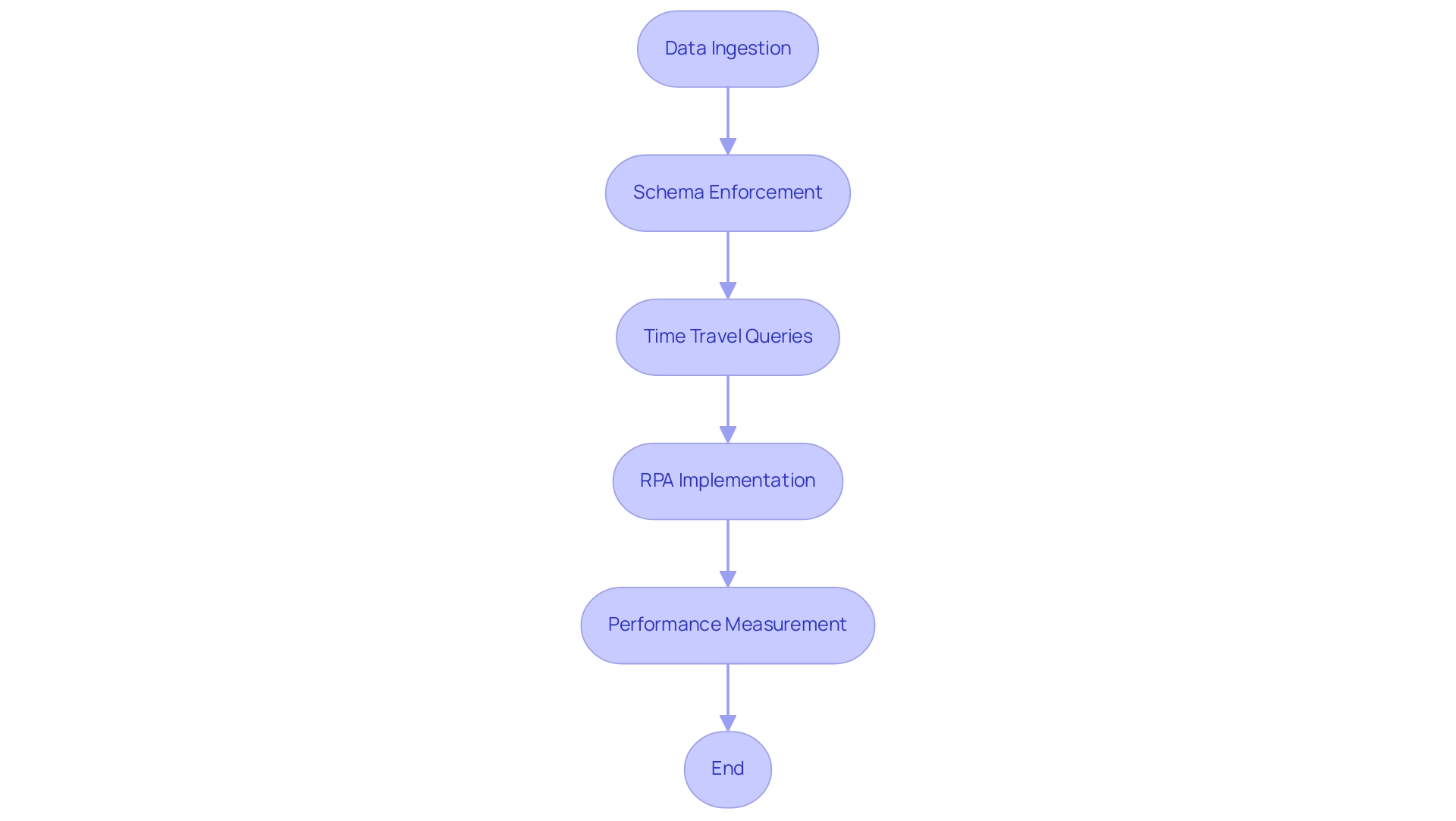

Delta Lake and Apache Spark: A Powerful Combination

Delta Lake is designed to integrate closely with Apache Spark, enabling organizations to run complex data workflows. Using Spark’s distributed computing capabilities, users can manage very large datasets stored in Delta tables. Features such as schema enforcement and time travel further strengthen this combination for analytics.
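Schema enforcement means a write is rejected, as a whole, when its data does not fit the table’s schema: Delta Lake refuses incoming columns that the table does not have and values whose types conflict with the table, while columns missing from the incoming data are filled with nulls. The sketch below models that rule in plain Python. The `enforce_schema` function and the representation of a schema as a mapping from column name to Python type are hypothetical simplifications; Delta Lake itself checks Spark data types.

```python
def enforce_schema(schema, rows):
    """Validate a whole batch against a table schema before 'writing' it.

    schema maps column name -> Python type (a hypothetical simplification).
    Any violation raises, so no part of the batch is accepted.
    """
    for i, row in enumerate(rows):
        extra = set(row) - set(schema)
        if extra:
            raise ValueError(f"row {i}: unexpected columns {sorted(extra)}")
        for col, expected in schema.items():
            value = row.get(col)
            # Missing or null values are allowed (treated as nullable columns).
            if value is not None and not isinstance(value, expected):
                raise TypeError(
                    f"row {i}: column {col!r} expects {expected.__name__}"
                )
    return rows
```

Because validation runs over the entire batch before anything is returned, a single bad row blocks the whole write, mirroring the all-or-nothing behavior that keeps Delta tables consistent. Adding a genuinely new column is a deliberate schema evolution step rather than something that slips in through an ordinary write.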

The ability to query historical table versions enables deeper analysis while preserving data integrity during operations. It also helps address common challenges in getting insights from Power BI dashboards, such as time-consuming report creation and data inconsistencies. By implementing Robotic Process Automation (RPA), companies can automate these manual tasks, reducing errors and freeing teams to focus on strategic initiatives. In one reported measurement, trigger execution took 1887 ms, though figures like this vary widely with cluster size and workload.

With recent advancements like Auto Optimize and Auto Compaction, which are now default in Databricks Runtime 9.1 LTS and above, organizations can better support real-time analytics and data transformation processes. This matters in a rapidly evolving AI landscape, where tailored solutions can help navigate complexity. As Alex Ott pointed out, ‘The previous ones lack this functionality, it exists only in DB version,’ highlighting that these optimizations depended on the Databricks distribution.

Similarly, the case study ‘Stack vs Queue in Organization’ shows how understanding the differences between stack and queue structures helps in choosing the right structure for a given use case. Together, these improvements generate actionable business insights, making the combination of Delta Lake, Apache Spark, and RPA a strategic asset for operational efficiency and growth.

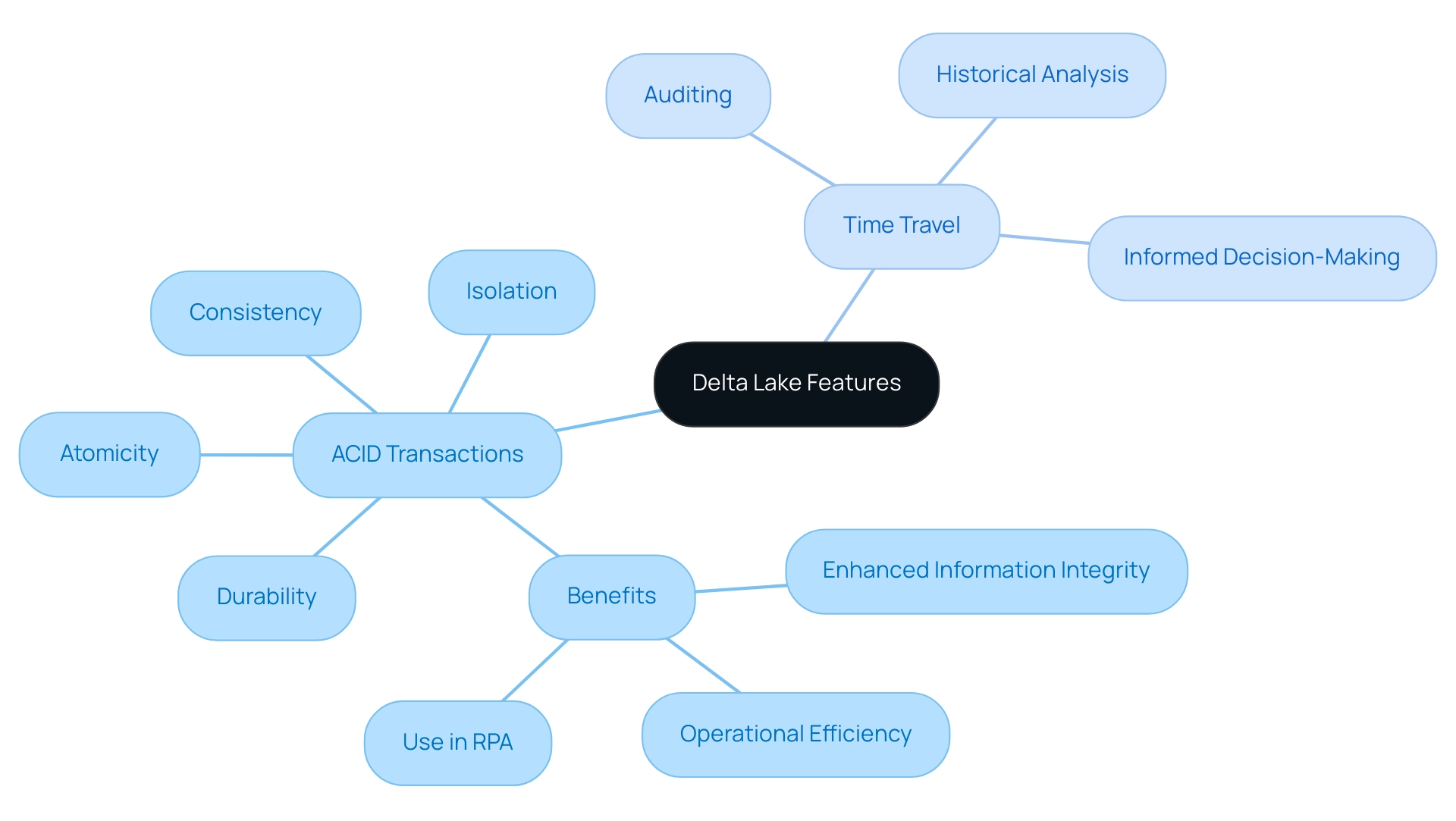

Key Features of Delta Lake: ACID Transactions and Time Travel

Delta Lake’s support for ACID transactions (Atomicity, Consistency, Isolation, and Durability) ensures that each operation either completes fully or not at all, preserving data integrity even during concurrent writes or system failures. This reliability is essential for companies that need consistent processes, especially as Robotic Process Automation (RPA) streamlines manual workflows, minimizes mistakes, and lets team members focus on more strategic, value-adding tasks. Recent statistics indicate that 75% of organizations implementing ACID transactions reported improved data integrity and operational efficiency in 2024.
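Delta Lake achieves this with optimistic concurrency control: a writer reads the latest table version, prepares its changes, and then tries to create the commit for the next version. The Delta protocol relies on the storage layer (or a coordinating service) to guarantee that only one writer can create a given commit file, so a writer that loses the race must re-check for conflicts and retry. The in-memory model below sketches that mechanism. `ToyDeltaLog` is a hypothetical class for illustration, not Delta Lake’s API, and real Delta Lake additionally checks whether the winning commit actually conflicts with the loser’s changes before retrying automatically.

```python
class ToyDeltaLog:
    """In-memory model of optimistic commits to a transaction log.

    Each version may be written exactly once (put-if-absent). Hypothetical
    class for illustration only; not Delta Lake's API.
    """

    def __init__(self):
        self.commits = {}  # version -> list of actions

    def latest_version(self):
        """Highest committed version, or -1 for an empty table."""
        return max(self.commits, default=-1)

    def try_commit(self, read_version, actions):
        """Commit actions as read_version + 1; return the new version, or None on conflict."""
        new_version = read_version + 1
        if new_version in self.commits:
            return None  # another writer committed this version first
        # The whole action list is stored in one step, so a commit is all-or-nothing.
        self.commits[new_version] = list(actions)
        return new_version
```

In use, two writers that both read version -1 race to create version 0: the first succeeds, the second gets `None`, re-reads `latest_version()`, and commits as version 1.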

According to industry expert Franco Patano,

ANALYZE is the best return on your compute investment, as it benefits all types of queries, without a lot of pre-planning on the DBA’s part.

Moreover, a case study on the Budibase platform shows how Delta Lake’s ACID transactions enabled smooth data operations, allowing teams to produce actionable insights. Delta Lake’s time travel feature also lets users access and query historical versions of a table, making it valuable for auditing and detailed historical analysis.

This functionality not only improves data quality but also supports informed decision-making based on an exact record of how the data looked at any given version. By combining these capabilities with tailored AI solutions, organizations can strengthen their governance practices and drive operational efficiency, positioning themselves for sustained success in an increasingly data-driven landscape. The relevance of these features extends to emerging technologies such as blockchain, which likewise depend on data integrity and careful data management.
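Time travel works because every table version can be reconstructed by replaying the transaction log up to that version: `add` actions bring data files into the table and `remove` actions take them out. In Delta Lake this is exposed through interfaces such as SQL `VERSION AS OF` and the `versionAsOf` reader option; the plain-Python sketch below only models the replay itself, using a simplified, hypothetical action format. Real tables also use checkpoints to avoid replaying every commit, and time travel only reaches versions whose data files have not yet been removed by `VACUUM`.

```python
def snapshot_at(commits, version):
    """Return the set of live data files as of `version` by replaying the log.

    commits[v] is the list of actions in version v, each a simplified,
    hypothetical dict like {"add": "path"} or {"remove": "path"}.
    """
    if not 0 <= version < len(commits):
        raise ValueError(f"version {version} does not exist")
    live = set()
    for actions in commits[: version + 1]:
        for action in actions:
            if "add" in action:
                live.add(action["add"])
            elif "remove" in action:
                # Removed files stay on storage (until vacuumed), so older
                # versions can still be read; they just leave this snapshot.
                live.discard(action["remove"])
    return live
```

Replaying to an earlier version simply stops sooner, which is why an auditor can ask what the table contained before a given update without any copies having been made in advance.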

As businesses navigate the rapidly evolving AI landscape, tailored AI solutions can help identify the right technologies that align with specific business goals and challenges.

Conclusion

Delta Lake stands out as a pivotal solution for organizations striving to optimize their data management strategies. By enabling ACID transactions and facilitating the integration of streaming and batch data processing, it addresses the inherent challenges of traditional data lakes. The synergy between Delta Lake and Robotic Process Automation (RPA) not only automates repetitive tasks but also enhances operational efficiency, empowering teams to focus on strategic initiatives that drive growth.

The practical applications of Delta Lake, from seamless data reads and writes to its compatibility with cloud storage and Apache Spark, underscore its versatility in managing vast datasets. Features like schema enforcement and time travel further enhance data integrity and accessibility, ensuring organizations can derive actionable insights swiftly. As businesses increasingly demand real-time analytics, leveraging Delta Lake alongside RPA will be crucial for maintaining a competitive edge in a data-driven marketplace.

In conclusion, the transformative power of Delta Lake, coupled with RPA, positions organizations to navigate the complexities of modern data management effectively. By embracing these technologies, businesses can enhance their decision-making processes, improve data governance, and ultimately achieve greater operational efficiency. As the landscape continues to evolve, investing in robust data management frameworks will be key to unlocking future growth and innovation.