Overview

The best practices for using the Power BI Databricks connector focus on optimizing performance through effective integration and data management techniques. The article outlines essential strategies such as utilizing DirectQuery mode for real-time data access, transforming data within Power BI, and monitoring performance metrics, which collectively enhance efficiency and decision-making capabilities in organizations leveraging these tools.

Introduction

In the rapidly evolving landscape of data analytics, the integration of Power BI with Azure Databricks emerges as a game-changer for organizations striving to harness the full potential of their data. This powerful combination not only facilitates seamless analysis of vast datasets but also enhances the visualization capabilities that are essential for informed decision-making.

By connecting these two platforms, businesses can unlock actionable insights and streamline their analytics processes, ultimately driving better outcomes. As organizations navigate the complexities of this integration, understanding the essential requirements, overcoming common challenges, and applying best practices will be crucial for maximizing performance and operational efficiency.

This article delves into the intricacies of Power BI and Databricks integration, offering valuable insights and strategies to empower users in their data-driven journey.

Understanding the Power BI and Databricks Integration

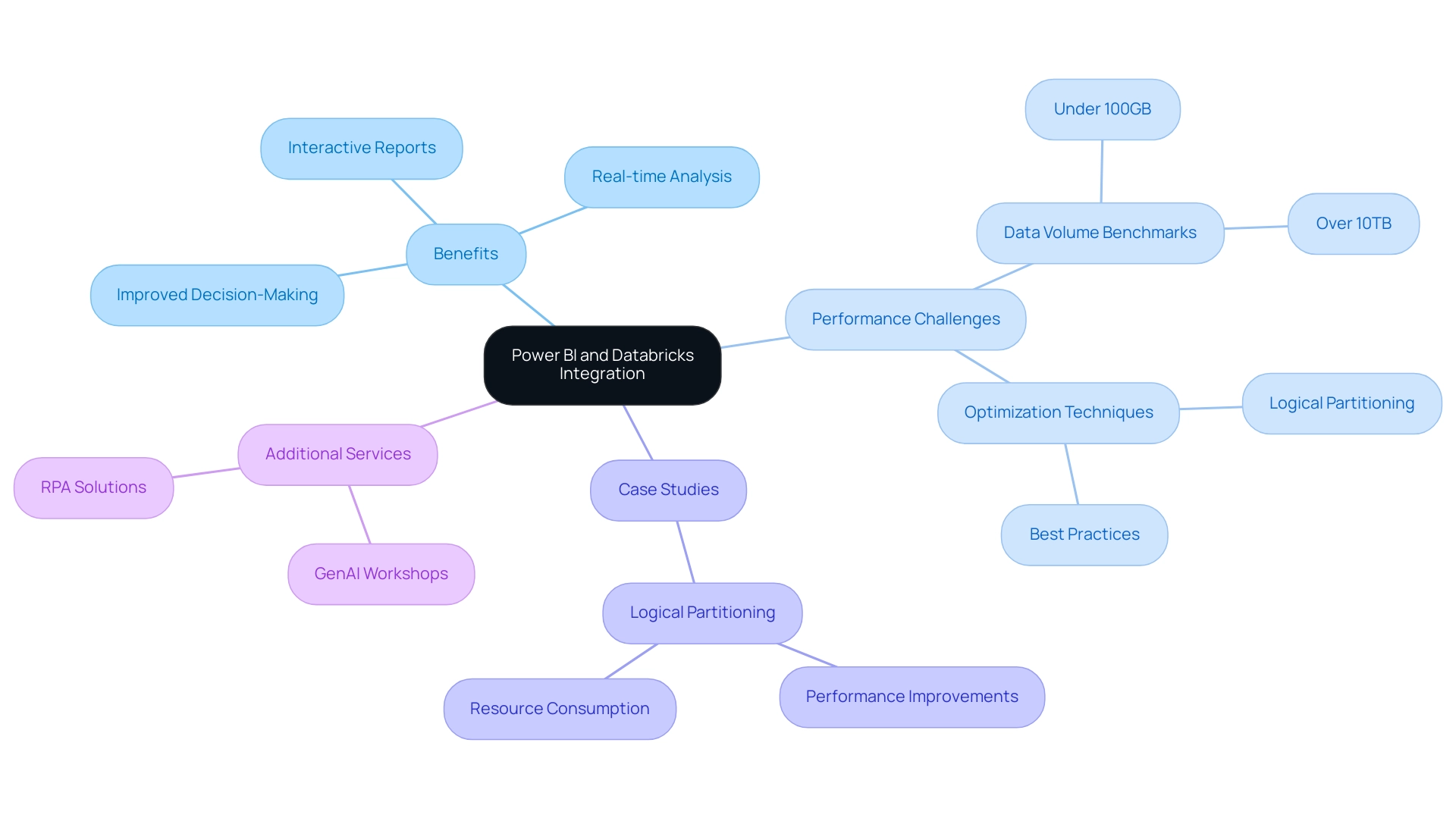

Combining Power BI with Azure Databricks unlocks significant potential for organizations that need analytics and visualization over large datasets. Power BI lets users create interactive reports and dashboards, while Databricks serves as a unified platform that simplifies data engineering and machine learning. The Power BI Databricks connector links the two, so organizations can analyze large datasets housed in Databricks and generate actionable insights quickly.

In fact, with our Power BI services, including a focused 3-Day Sprint for report creation, individuals can quickly create professionally designed reports that improve information accessibility and enable real-time analysis. This timely access empowers individuals to make informed decisions based on the latest information, ultimately driving better business outcomes. Recent benchmarks highlight that while Direct Lake outperforms SQL warehouse endpoints for data volumes under 100GB, challenges arise with larger datasets, particularly those exceeding 10TB.

Consequently, understanding these performance dynamics is crucial for optimizing the integration process. Moreover, the repository provides reusable samples and best practices for enhancing BI solutions on SQL, offering practical guidance for users. A notable case study on logical partitioning in BI illustrates how this technique enhances performance and manageability of large datasets, demonstrating significant improvements in refresh times and resource consumption when comparing partitioned and non-partitioned tables.

As David Kaminski notes, ‘Here’s a breakdown of the latest Microsoft Fabric updates and why they matter for your business,’ underscoring the relevance of staying informed about advancements in these technologies. Overall, the combination of Power BI and Databricks not only streamlines the analytics process but also significantly enhances the decision-making capabilities of organizations. Our GenAI Workshops and customized AI solutions, including Small Language Models, focus on improving data quality and offering practical training to support ongoing improvement and operational efficiency.

Additionally, our RPA solutions address task repetition fatigue, further enhancing operational efficiency and employee morale.

Essential Requirements for Connecting Power BI to Databricks

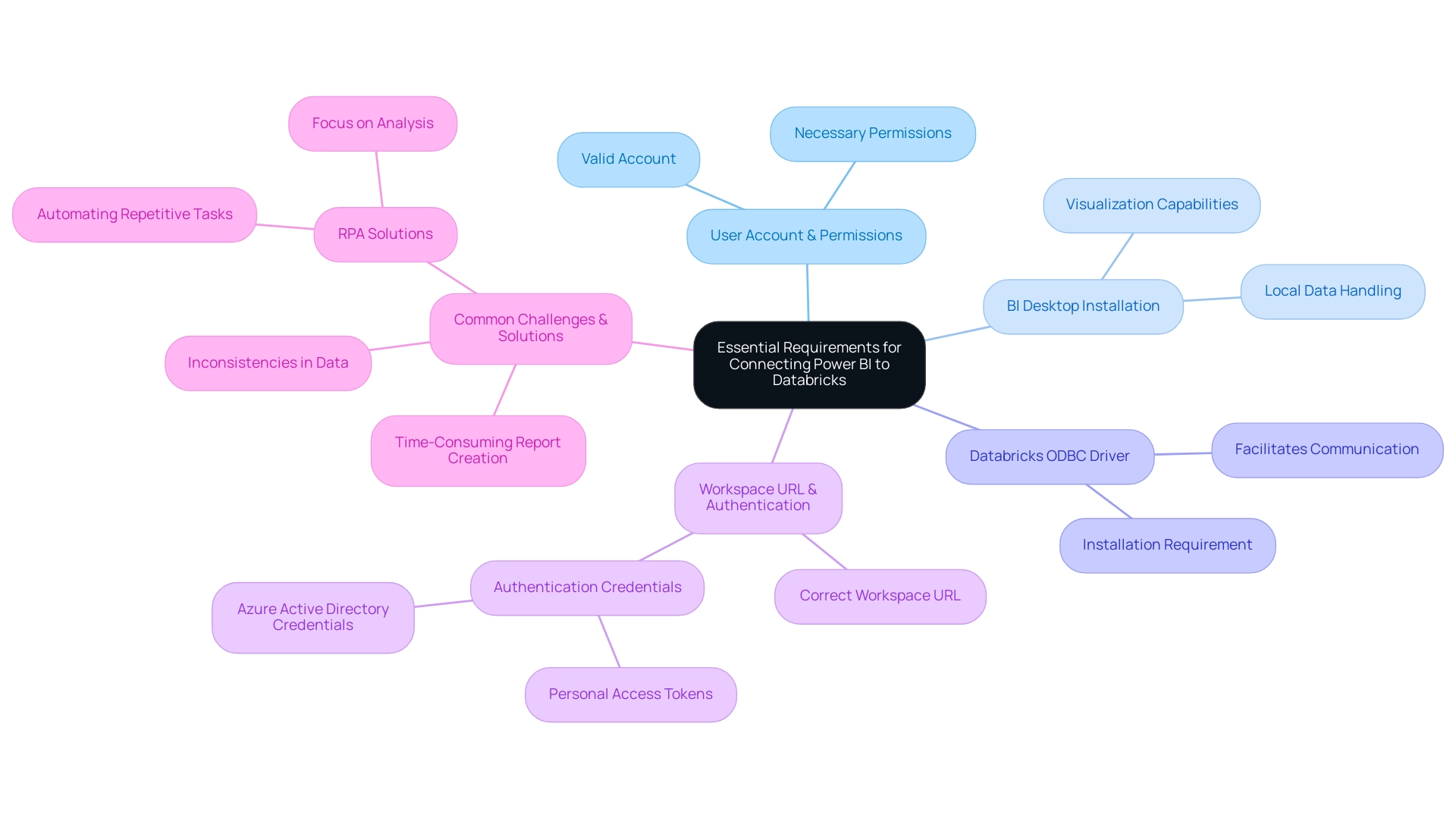

For a smooth connection between Power BI and Databricks, several prerequisites must be in place. First and foremost, users need a valid Databricks account with the permissions required to access the relevant data. Additionally, Power BI Desktop must be installed on the local machine for local data handling and visualization.

Another important component is the Databricks ODBC driver, which handles communication between the Power BI Databricks connector and Databricks; make sure it is installed before attempting to connect. Users also need the correct workspace URL and appropriate authentication credentials, either a personal access token or Azure Active Directory (now Microsoft Entra ID) credentials, to establish a secure connection. Confirming these foundational requirements up front streamlines the integration process and significantly reduces potential complications, so users can leverage the full capabilities of both platforms.
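The prerequisites above can be checked before any connection attempt. The following is a minimal sketch, not the connector's own logic: the function name `build_odbc_connection_string` is hypothetical, the HTTP path is an additional input that the article does not mention but that Databricks ODBC connections typically require, and the driver name and key names assume a standard Simba Spark ODBC driver installation with personal access token authentication.

```python
from urllib.parse import urlparse


def build_odbc_connection_string(workspace_url: str, http_path: str, token: str) -> str:
    """Validate the connection inputs and return an ODBC connection string.

    Hypothetical helper: it only assembles and checks values, it does not
    open a connection.
    """
    # Accept either a bare host name or a full https:// URL.
    url = workspace_url if "://" in workspace_url else f"https://{workspace_url}"
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError("workspace URL must be an https address")
    if not http_path.startswith("/"):
        raise ValueError("HTTP path should start with '/'")
    if not token.strip():
        raise ValueError("a personal access token is required")

    parts = {
        "Driver": "Simba Spark ODBC Driver",  # assumed name; check your installation
        "Host": parsed.hostname,
        "Port": "443",
        "HTTPPath": http_path,
        "SSL": "1",
        "ThriftTransport": "2",  # HTTP transport
        "AuthMech": "3",         # user name / password mechanism
        "UID": "token",          # literal word "token" when using a personal access token
        "PWD": token,
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(build_odbc_connection_string(
        "https://adb-1234567890123456.7.azuredatabricks.net/",
        "/sql/1.0/warehouses/abc123",
        "dapi-example-token",
    ))
```

Failing fast on a malformed URL or an empty token surfaces the most common authentication and connectivity mistakes before they appear as opaque driver errors.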

It’s critical to recognize that without addressing common challenges such as time-consuming report creation and inconsistencies, organizations may struggle to derive actionable insights from their information. Moreover, integrating RPA solutions can enhance operational efficiency by automating repetitive tasks, allowing teams to focus on analysis rather than report generation. As Gus Santaella, Sr. Director of Information, noted, ‘Sigma has become almost like a verb here,’ highlighting the importance of effective information integration practices.

Additionally, with over 540 hands-on courses available through DataCamp, professionals can enhance their skills in utilizing these tools effectively. Participating in gatherings such as the forthcoming PASS Community Summit also provides important perspectives on current trends and educational opportunities connected to BI integration. To advance, organizations should prioritize implementing RPA alongside these integrations to transform raw information into actionable insights that drive business growth.

Overcoming Common Challenges in Power BI-Databricks Connection

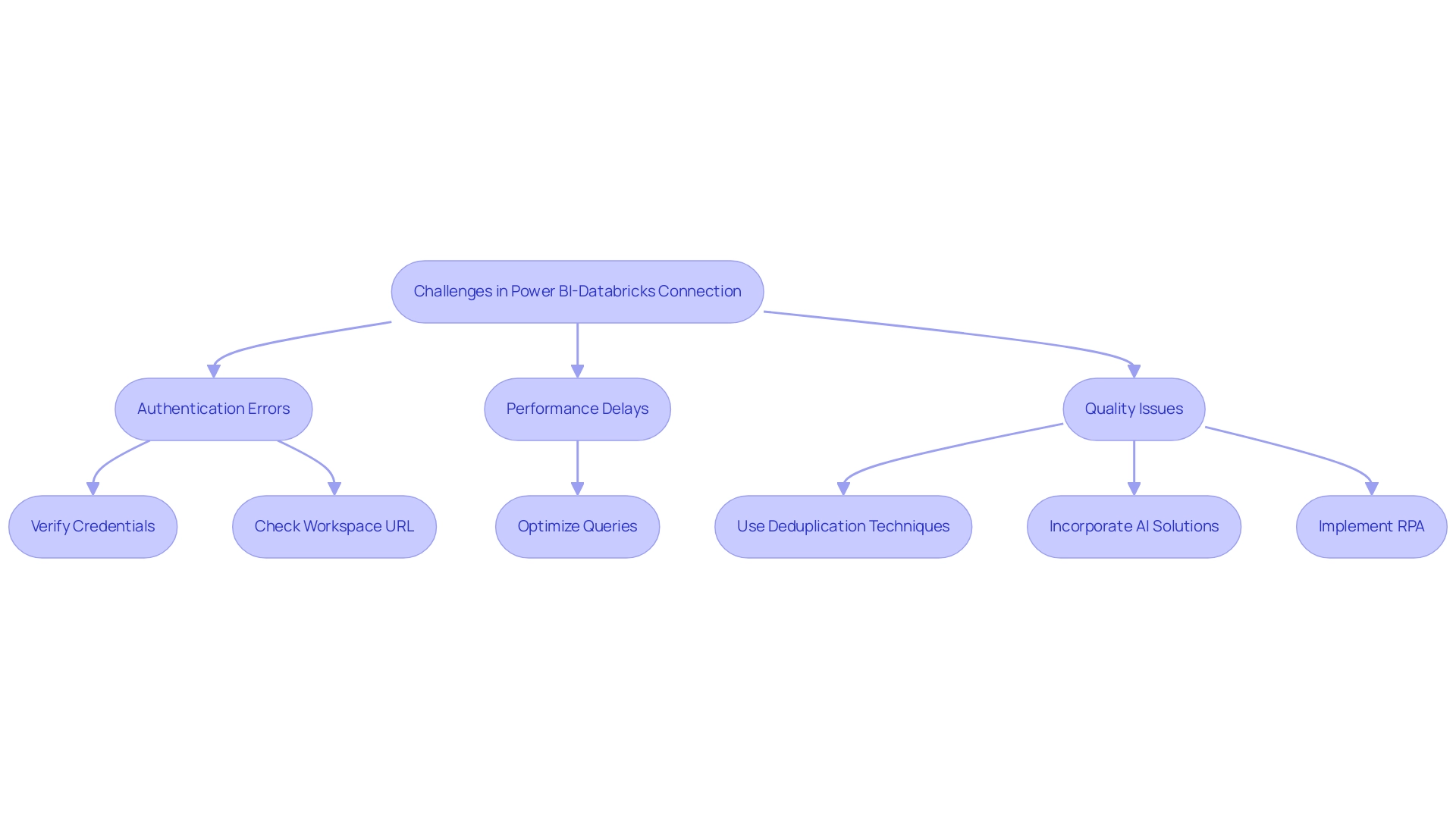

Integrating Power BI with Databricks can present several notable challenges, particularly around authentication errors and connectivity issues. Authentication errors in particular can significantly hinder the user experience. Additionally, the cost of operating a cluster is just over $11/hour, which highlights the financial implications of performance issues.

To mitigate these challenges, it is essential to meticulously verify authentication credentials and confirm that the correct workspace URL is used. Users frequently experience performance delays; in such situations, optimizing queries in Databricks is essential for improving retrieval speeds. Furthermore, keeping the ODBC driver current can prevent compatibility issues.

For those struggling with refreshes, it’s beneficial to configure scheduled refresh in Power BI so that reports remain current. Data quality also matters, since poor master data quality can result in ineffective operations; to this end, Databricks provides several techniques for deduplication, including:

- MERGE

- distinct()

- dropDuplicates()

- ranking windows

These techniques can be crucial in preserving information integrity. By incorporating tailored AI solutions, organizations can better navigate these challenges, optimizing their integration processes and enhancing decision-making capabilities.
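To illustrate the ranking-window technique from the list above, here is a minimal sketch that keeps only the most recent row per key using `ROW_NUMBER()`. It runs against an in-memory SQLite database purely as a stand-in so the example is self-contained; the table name `customers`, its columns, and the function `latest_per_key` are hypothetical. In Databricks, the same pattern would be written in Databricks SQL or with PySpark window functions, while `distinct()` and `dropDuplicates()` cover the simpler case of exact duplicates.

```python
import sqlite3


def latest_per_key(rows):
    """Return one row per customer_id, keeping the latest updated_at.

    Uses SQLite (3.25 or newer for window functions) as a stand-in engine.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (customer_id INTEGER, email TEXT, updated_at TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)

    # Rank rows within each customer_id, newest first, then keep rank 1.
    query = """
        SELECT customer_id, email, updated_at
        FROM (
            SELECT *,
                   ROW_NUMBER() OVER (
                       PARTITION BY customer_id
                       ORDER BY updated_at DESC
                   ) AS rn
            FROM customers
        )
        WHERE rn = 1
        ORDER BY customer_id
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    sample = [
        (1, "a@old.example", "2024-01-01"),
        (1, "a@new.example", "2024-03-01"),  # newer version of customer 1
        (2, "b@example.com", "2024-02-01"),
        (2, "b@example.com", "2024-02-01"),  # exact duplicate
    ]
    for row in latest_per_key(sample):
        print(row)
```

A ranking window is the better fit when duplicates differ in some columns and a rule (here, "latest wins") decides which version survives.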

Additionally, incorporating Robotic Process Automation (RPA) allows organizations to automate repetitive tasks involved in information management, thereby enhancing efficiency and freeing up resources for more strategic initiatives. Proactively addressing these challenges allows users to significantly improve their integration experience with the Power BI Databricks connector, unlocking the full potential of Power BI and Databricks. As one expert noted, ‘Quarantining allows transformations to insert good information while storing bad information separately for later review,’ emphasizing the importance of managing quality throughout the integration process.

Furthermore, business intelligence can transform raw data into actionable insights that drive growth and innovation. The Time Travel functionality of Delta Lake lets users query or manually revert to earlier versions of a table, offering a practical way to correct inaccuracies introduced during integration.
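As a rough sketch of how Time Travel might be used in practice, the helper below assembles the Delta Lake SQL statements for inspecting a table's history, previewing an earlier version, and restoring it. The function `time_travel_statements` and the table name in the usage example are hypothetical; the statements themselves would be run in a Databricks notebook or SQL editor, which is outside the scope of this example.

```python
import re

# Allow simple one-, two-, or three-part names such as catalog.schema.table.
_TABLE_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*){0,2}$")


def time_travel_statements(table: str, version: int) -> dict:
    """Build Delta Lake SQL for reviewing and reverting a table version."""
    if not _TABLE_NAME.match(table):
        raise ValueError(f"unexpected table name: {table!r}")
    if version < 0:
        raise ValueError("version must be zero or greater")
    return {
        # List the available versions and the operations that produced them.
        "history": f"DESCRIBE HISTORY {table}",
        # Read the table as it was at the chosen version, without changing it.
        "preview": f"SELECT * FROM {table} VERSION AS OF {version}",
        # Roll the table back to the chosen version.
        "restore": f"RESTORE TABLE {table} TO VERSION AS OF {version}",
    }


if __name__ == "__main__":
    for name, sql in time_travel_statements("sales.orders", 12).items():
        print(f"{name}: {sql}")
```

Previewing a version before restoring it is a cautious default, since a restore changes what Power BI reports will read on their next refresh.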

Best Practices for Optimizing Power BI with Databricks

To fully harness the potential of the Power BI Databricks connector, implementing the following best practices is essential for optimizing performance.

-

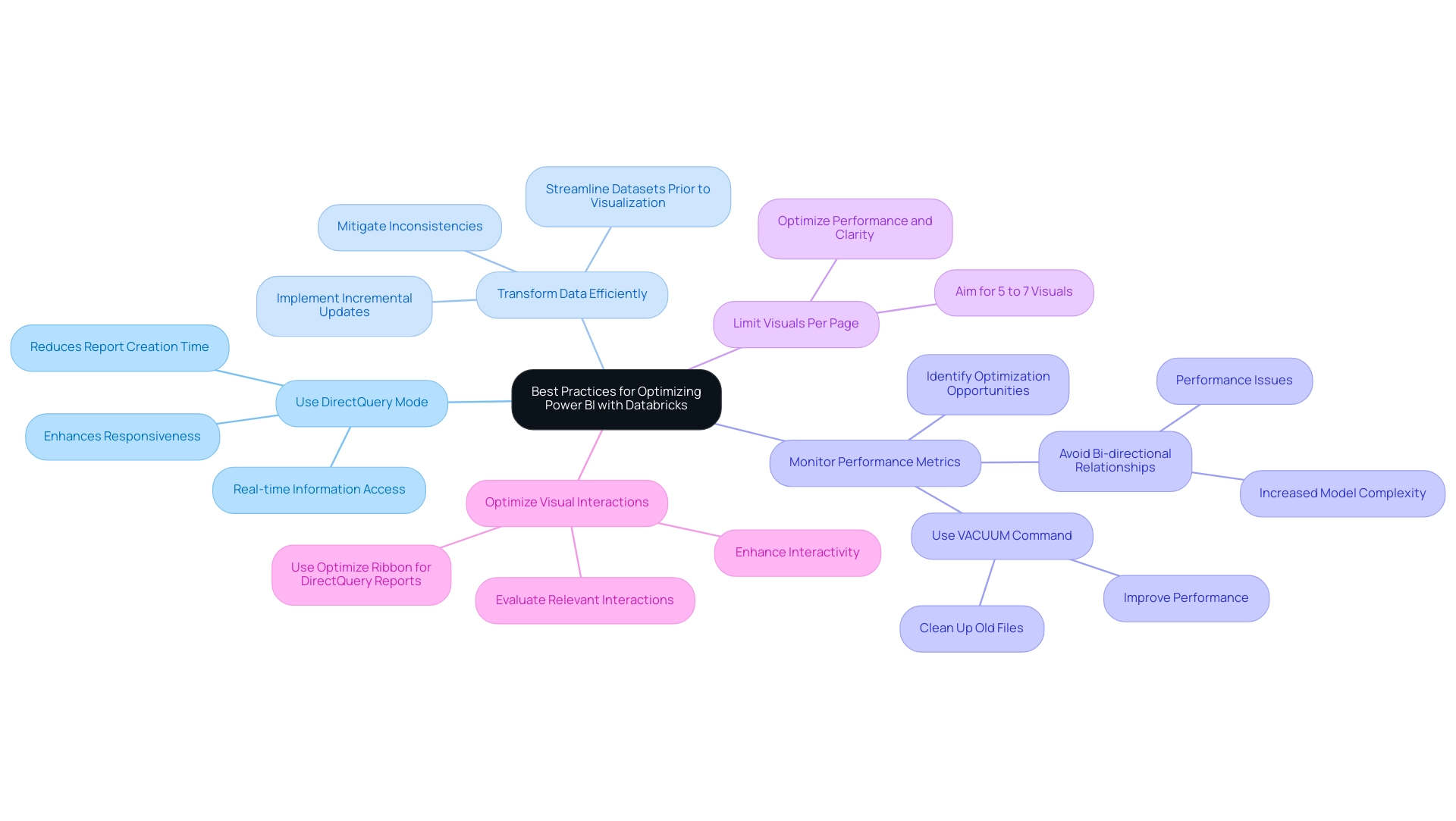

Utilize the Power BI Databricks connector in DirectQuery mode, which enables real-time information access, ensuring that users work with the latest insights without the need to import it into BI. This approach not only enhances responsiveness but also aligns with best practices for information analysis, addressing the common challenge of time-consuming report creation.

-

Focus on information transformation within Power BI by utilizing the Power BI Databricks connector to streamline datasets prior to visualization. This step reduces the workload on Databricks, enabling efficient information handling and mitigating inconsistencies. Additionally, implementing incremental updates can significantly enhance performance, especially when managing larger collections.

-

Regularly monitor and analyze performance metrics, which is vital for identifying further optimization opportunities. As emphasized by Jaylen from Community Support, it’s crucial to avoid bi-directional relationships unless absolutely necessary, as they can lead to performance degradation and increased model complexity. Furthermore, using the VACUUM command can help clean up old files and improve performance, which is a critical aspect of maintaining an efficient system.

-

Aim for a maximum of five to seven visuals per report page to optimize performance and ensure clarity, addressing the lack of actionable guidance often experienced.

-

Consider the case study on optimizing visual interactions; every visual interacts with others on the page through cross-filtering or cross-highlighting. Evaluating relevant interactions is crucial, and for DirectQuery reports, using the Optimize Ribbon can enhance interactivity.
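The VACUUM step mentioned in the list above can be sketched as a small helper that builds the statement and guards against an overly short retention window. This is an illustration under stated assumptions: `vacuum_statement` is a hypothetical function, the table name is a placeholder, and the 168-hour floor reflects Delta Lake's default seven-day retention threshold. The resulting statement would be run in Databricks, not in Power BI.

```python
DEFAULT_RETENTION_HOURS = 168  # Delta Lake's default threshold (7 days)


def vacuum_statement(table: str, retain_hours: int = DEFAULT_RETENTION_HOURS,
                     dry_run: bool = True) -> str:
    """Build a Delta Lake VACUUM statement for the given table.

    dry_run=True only lists the files that would be deleted, which is a
    safer first step than deleting immediately.
    """
    if not table or any(ch.isspace() or ch == ";" for ch in table):
        raise ValueError(f"unexpected table name: {table!r}")
    if retain_hours < DEFAULT_RETENTION_HOURS:
        # Shorter windows are rejected here; Delta blocks them by default too.
        raise ValueError("retention below 168 hours risks removing files still in use")
    statement = f"VACUUM {table} RETAIN {retain_hours} HOURS"
    if dry_run:
        statement += " DRY RUN"
    return statement


if __name__ == "__main__":
    print(vacuum_statement("sales.orders"))                 # preview only
    print(vacuum_statement("sales.orders", dry_run=False))  # actual cleanup
```

Note that VACUUM removes the files that older table versions depend on, so versions older than the retention window can no longer be queried or restored afterwards.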

By following these best practices, individuals can effectively improve their analytical capabilities, leading to more informed and strategic decision-making that drives growth and innovation. Moreover, integrating RPA tools such as EMMA RPA and Automate can further streamline processes, reduce task repetition, and mitigate the competitive disadvantages associated with lacking data-driven insights.

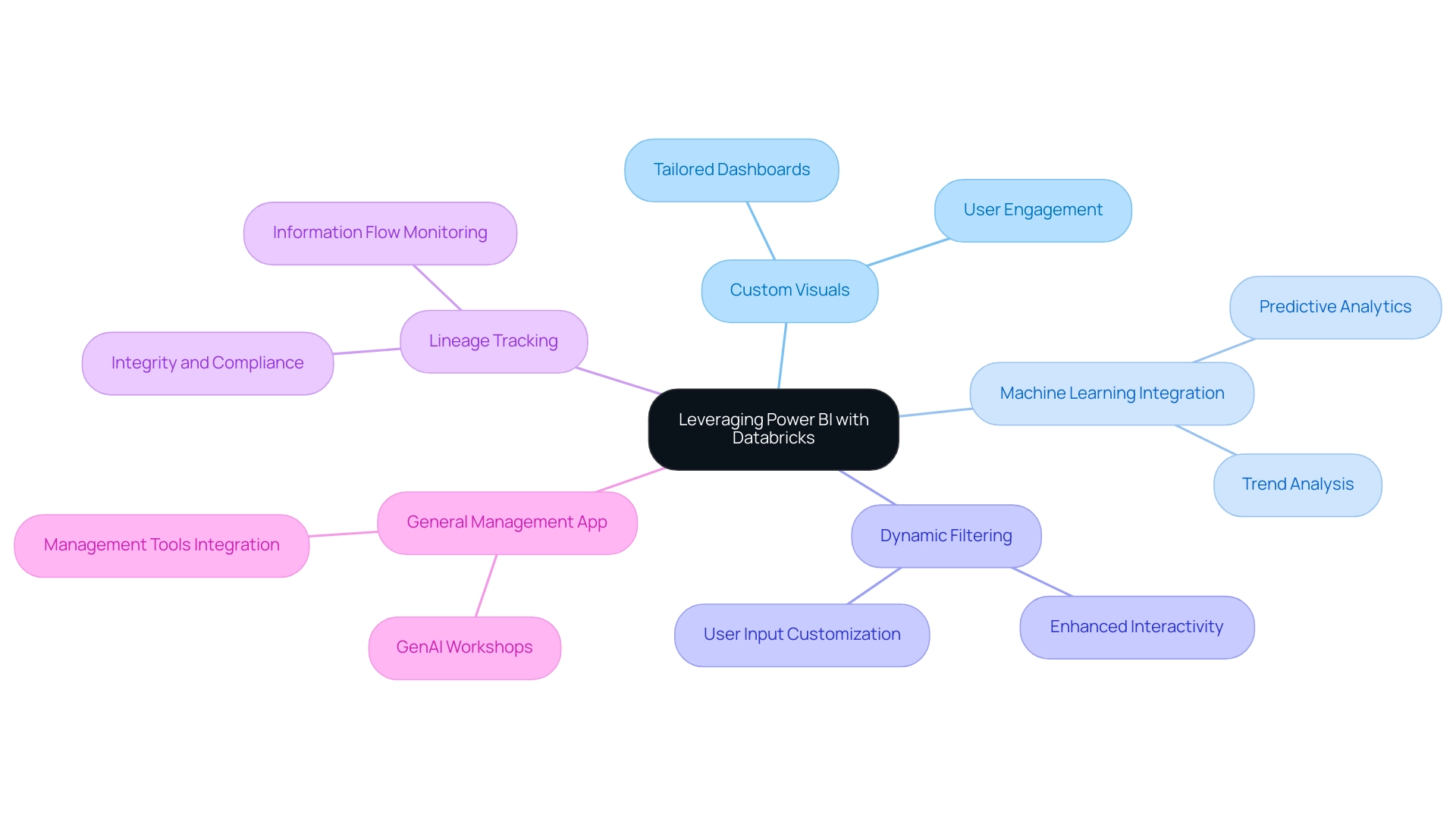

Advanced Techniques for Leveraging Power BI with Databricks

To utilize the advanced features of Power BI alongside Azure Databricks, users can apply a range of sophisticated techniques that tackle common issues such as time-consuming report generation, data inconsistencies, and the need for a strong governance strategy. One effective method is utilizing custom visuals within Power BI to craft dashboards tailored to specific business requirements, enhancing user engagement and providing clear, actionable guidance. Furthermore, integrating machine learning models created in Databricks into Power BI reports through the Power BI Databricks connector enables organizations to leverage predictive analytics, facilitating deeper insights into trends and patterns crucial for operational efficiency.

Accurate forecasts rely on adequate historical data to identify these patterns, underscoring the need for organizations to gather and analyze relevant data over time. Another key technique involves parameters in Power BI, which let users filter data dynamically based on their input, improving interactivity and the user experience. Moreover, implementing data lineage tracking is essential for monitoring the flow of data throughout analytics processes, ensuring both integrity and compliance with regulatory standards.

The General Management App enhances these capabilities by integrating Microsoft Copilot and providing comprehensive management tools that specifically address the challenges of time-consuming report creation and the need for clear guidance. It provides functionalities such as Small Language Models for enhanced data quality and GenAI Workshops that equip users with the skills to optimize insights. A practical example of these techniques can be seen in the case study titled ‘Cost Management and Resource Monitoring,’ where Azure Monitor and Microsoft Cost Management were utilized to analyze resource telemetry and manage cloud spending, maximizing performance and reliability while controlling costs across the Azure environment.

By leveraging these advanced techniques and tools, organizations empower users to extract maximum value from their data and enhance their overall analytical capabilities, a necessity in today’s data-driven landscape. As industry expert Bharat Ruparel put it, ‘This is a really good article Kyle. Good to see you doing great things at Databricks.’ The integration of the Power BI Databricks connector represents a significant step forward in operational efficiency.

Conclusion

Integrating Power BI with Azure Databricks represents a transformative opportunity for organizations eager to enhance their data analytics and visualization capabilities. This article has explored the essential requirements for establishing a successful connection, including:

- The importance of having a valid Databricks account

- The necessary ODBC driver

- The correct authentication credentials

By ensuring these foundational elements are in place, organizations can streamline their integration processes and effectively leverage the strengths of both platforms.

The discussion has also addressed common challenges encountered during the integration, such as:

- Authentication errors

- Connectivity issues

Addressing both calls for meticulous verification of credentials and connection details, along with optimization of queries. Furthermore, implementing best practices, like utilizing DirectQuery mode and focusing on data transformation, can significantly enhance performance and user experience. By adopting these strategies, organizations can not only overcome obstacles but also unlock actionable insights that drive informed decision-making.

In conclusion, the synergy between Power BI and Databricks is a powerful catalyst for innovation and efficiency in data analytics. By embracing advanced techniques, such as machine learning integration and custom visuals, organizations can further enhance their analytical capabilities and maintain a competitive edge. As the landscape of data analytics continues to evolve, staying informed about technological advancements and best practices is crucial for maximizing the potential of this integration, ultimately leading to better business outcomes and strategic growth.