Overview

This article gives an overview of the MERGE statement in Spark SQL, covering its syntax, best practices, and advanced techniques for data manipulation. It explains how Delta Lake and Robotic Process Automation (RPA) can improve the efficiency and performance of merge pipelines, outlines strategies for optimizing merge operations, and addresses common challenges. Professionals looking to improve their data handling will find practical guidance they can apply to their current workflows.

Introduction

In data manipulation, the MERGE statement in Spark SQL is a powerful and versatile tool. It lets organizations perform conditional updates, inserts, and deletes in a single statement. As businesses rely more on data-driven insights, integrating Robotic Process Automation (RPA) into these operations improves efficiency and reduces human error. MERGE runs on table formats that support it, most notably Delta Lake, which is built to handle very large datasets efficiently.

This article examines the MERGE statement in detail, covering its syntax, best practices, and advanced techniques. With a solid understanding of join types, setup requirements, and troubleshooting strategies, organizations can use Spark SQL to its full potential and navigate the complexities of today’s data landscape effectively.

Understanding the MERGE Statement in Spark SQL

The MERGE statement in Spark SQL performs conditional updates, inserts, and deletes in a single, unified operation, which makes data manipulation considerably more efficient. By incorporating Robotic Process Automation (RPA), organizations can automate these operations, reducing manual intervention and minimizing errors. The benefits are especially clear with Delta Lake, which is optimized for managing large datasets.

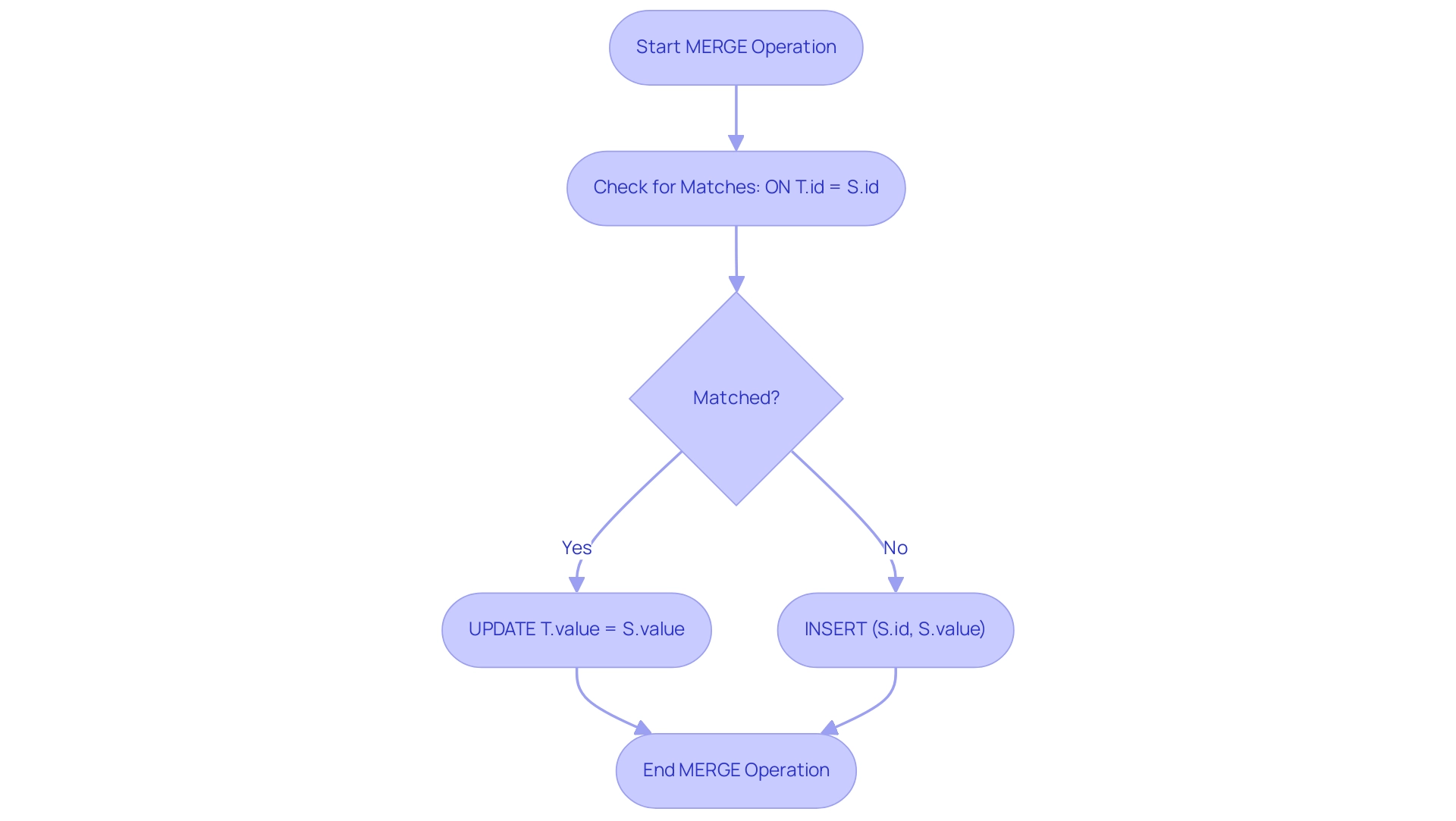

The syntax for a MERGE operation is as follows:

```sql
MERGE INTO target_table AS T
USING source_table AS S
ON T.id = S.id
WHEN MATCHED THEN
  UPDATE SET T.value = S.value
WHEN NOT MATCHED THEN
  INSERT (id, value) VALUES (S.id, S.value);
```

This statement evaluates the ON condition to match rows in the source table against rows in the target table, then applies the action specified for each case: matched rows are updated, and source rows with no match are inserted. In environments with large data volumes, such as merging 30 to 50 million staging records into a target table with billions of rows, merge operations can be slow, and Delta Lake’s capabilities can yield significant performance improvements. Cost-based optimization (CBO) also matters for Spark SQL merge operations, because it uses table statistics to produce more efficient execution plans. Case studies comparing recommendations for improving Spark SQL merge performance find that many complex solutions often fall short, while simple, foundational practices are frequently overlooked.
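To make the matching logic concrete, here is a minimal pure-Python model of the upsert above, assuming both tables fit in memory as dicts keyed by `id`. It only illustrates the semantics of `WHEN MATCHED` and `WHEN NOT MATCHED`; it is not how Spark or Delta Lake executes MERGE, and the function name `merge_upsert` is hypothetical.

```python
# Conceptual model of:
#   MERGE INTO target USING source ON T.id = S.id
#   WHEN MATCHED THEN UPDATE ... WHEN NOT MATCHED THEN INSERT ...
# Tables are plain dicts {id: value}; this is NOT Spark's execution strategy.


def merge_upsert(target: dict, source: dict) -> dict:
    """Apply source rows to target in place and return per-action row counts."""
    updated = inserted = 0
    for key, value in source.items():
        if key in target:
            # WHEN MATCHED THEN UPDATE SET T.value = S.value
            target[key] = value
            updated += 1
        else:
            # WHEN NOT MATCHED THEN INSERT (id, value) VALUES (S.id, S.value)
            target[key] = value
            inserted += 1
    return {"updated": updated, "inserted": inserted}


if __name__ == "__main__":
    target = {1: "a", 2: "b"}
    source = {2: "B", 3: "c"}
    print(merge_upsert(target, source))  # {'updated': 1, 'inserted': 1}
    print(target)                        # {1: 'a', 2: 'B', 3: 'c'}
```

Because dict keys are unique, this model never sees two source rows for the same `id`; real source tables can, which is why deduplication matters before a merge.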

Alex Ott, an experienced user, put it succinctly: “But you can pull necessary information using the History on the Delta table.” The table history (available through DESCRIBE HISTORY) records each MERGE along with operation metrics such as the number of rows inserted, updated, and deleted. This simple, foundational tool streamlines troubleshooting and is a better starting point than an elaborate workaround.
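The history Alex Ott mentions can be read with `DESCRIBE HISTORY`, whose `operationMetrics` column holds string-valued counters for each MERGE. The sketch below is a hypothetical helper, not a Delta Lake API. It converts those counters to numbers and computes how many unchanged rows were rewritten per updated or deleted row. A high ratio hints that the MERGE rewrote far more files than it needed to. The metric names are the ones Delta Lake reports for MERGE (`numTargetRowsInserted`, `numTargetRowsUpdated`, `numTargetRowsDeleted`, `numTargetRowsCopied`), but it's worth checking them against your Delta version.

```python
# Hypothetical helper: summarize one MERGE entry's operationMetrics map.
# In a Delta-enabled PySpark session the map could be fetched with, e.g.:
#   row = spark.sql("DESCRIBE HISTORY target_table LIMIT 1").collect()[0]
#   if row["operation"] == "MERGE": metrics = row["operationMetrics"]


def summarize_merge_metrics(operation_metrics: dict) -> dict:
    def count(name: str) -> int:
        # Values arrive as strings; metrics that are absent count as 0
        return int(operation_metrics.get(name, "0"))

    inserted = count("numTargetRowsInserted")
    updated = count("numTargetRowsUpdated")
    deleted = count("numTargetRowsDeleted")
    copied = count("numTargetRowsCopied")  # untouched rows rewritten with changed files
    modified = updated + deleted
    return {
        "inserted": inserted,
        "updated": updated,
        "deleted": deleted,
        "copied": copied,
        # Rows copied per row actually modified: a rough write-amplification signal
        "copied_per_modified": copied / modified if modified else 0.0,
    }
```

For instance, made-up metrics of 5 updated rows and 995 copied rows give a ratio of 199.0. Adding a more selective ON condition or improving data layout usually brings such a ratio down.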

By focusing on these essential strategies and integrating RPA to automate data manipulation processes, organizations can markedly improve their operational efficiency.

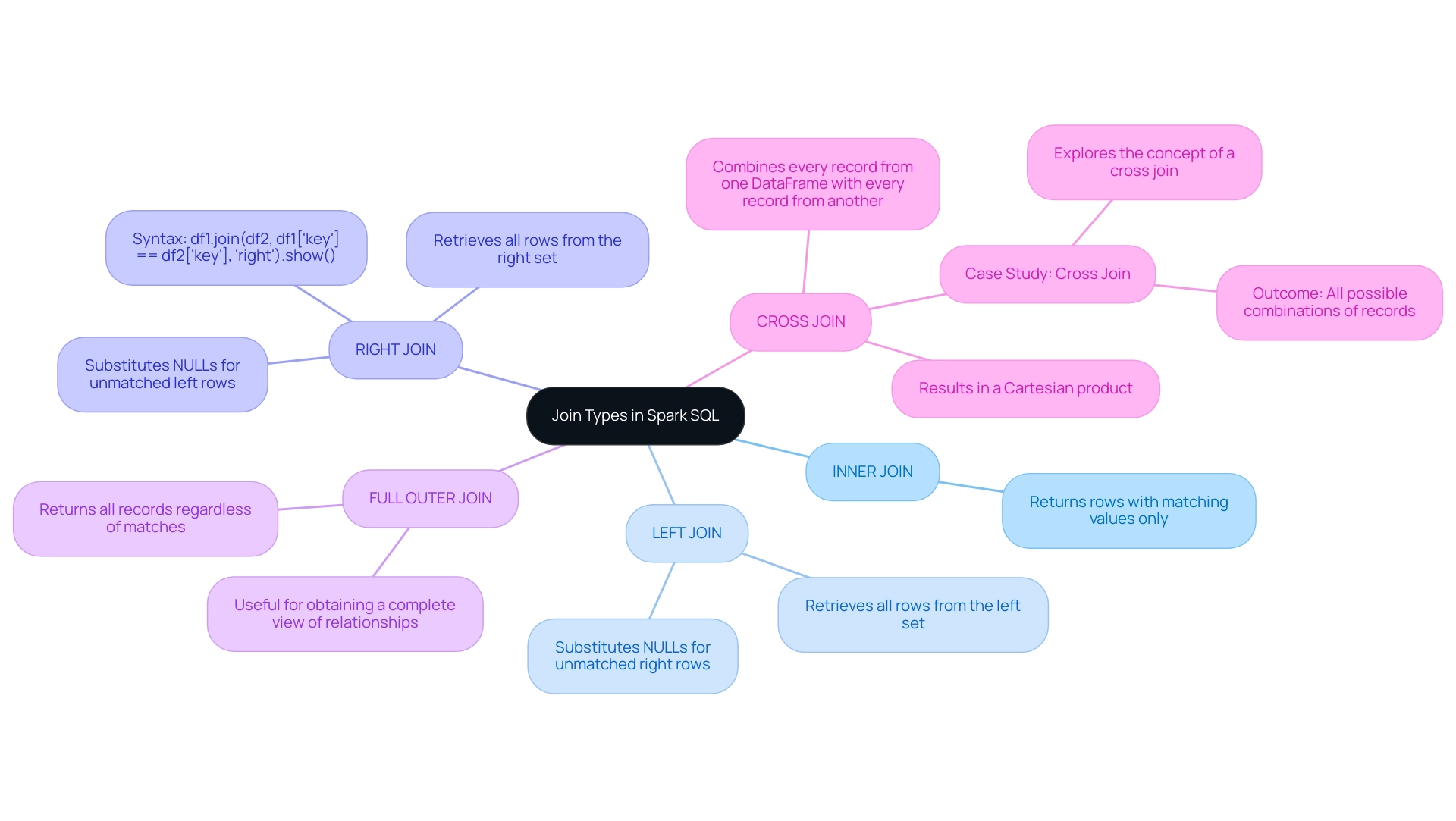

Exploring Join Types in Spark SQL

Spark SQL offers several join types, each suited to a specific data manipulation task. Understanding them is essential for writing MERGE statements, because the ON clause of a MERGE matches source and target rows much as a join condition does, and it must capture the intended relationship accurately. Alex Ott stresses the importance of choosing appropriate file formats, noting that features such as data skipping and Bloom filters can significantly improve join performance by reducing the number of files read during an operation.

The key join types include:

- INNER JOIN: Returns only the rows with matching key values in both tables, so the result contains only related records.

- LEFT JOIN: Returns all rows from the left table along with the matching rows from the right table, filling in NULLs where the right table has no match. Every row from the left table is preserved.

- RIGHT JOIN: Conversely, returns all rows from the right table along with the matching rows from the left table, filling in NULLs for unmatched left-side rows. In PySpark, a right outer join is written as `df1.join(df2, df1['key'] == df2['key'], 'right').show()`.

- FULL OUTER JOIN: Returns all records from both tables, whether or not they have a match on the other side. This is useful for obtaining a complete view of the relationships between two datasets.

A cross join, in which every record from one DataFrame is paired with every record from another, completes the picture. Understanding the distinctions among these join types helps you retrieve exactly the data you need and improves the performance and efficiency of operations that use Spark SQL’s merge functionality.
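The join types above can be modeled in a few lines of plain Python, assuming each table is a dict from key to value and `None` stands in for SQL NULL. This is a conceptual sketch, not how Spark executes joins. The helper `classify_for_merge` (a hypothetical name) shows how MERGE reuses the same ideas. `WHEN MATCHED` acts on inner-join keys, while `WHEN NOT MATCHED` acts on source rows with no target match. The `WHEN NOT MATCHED BY SOURCE` clause, supported in recent Delta Lake versions, acts on target rows with no source match.

```python
# Conceptual model of join types on tables shaped {key: value}.
# None plays the role of SQL NULL for the side that has no match.


def join_tables(left: dict, right: dict, how: str) -> list:
    if how == "inner":
        keys = [k for k in left if k in right]
    elif how == "left":
        keys = list(left)
    elif how == "right":
        keys = list(right)
    elif how == "full":
        keys = list(left) + [k for k in right if k not in left]
    else:
        raise ValueError(f"unsupported join type: {how}")
    return [(k, left.get(k), right.get(k)) for k in keys]


def cross_join(left: dict, right: dict) -> list:
    # Every left key paired with every right key: len(left) * len(right) rows
    return [(lk, rk) for lk in left for rk in right]


def classify_for_merge(target: dict, source: dict) -> dict:
    # Which keys each MERGE clause would act on
    return {
        "matched": [k for k in source if k in target],                 # WHEN MATCHED
        "not_matched": [k for k in source if k not in target],         # WHEN NOT MATCHED
        "not_matched_by_source": [k for k in target if k not in source],
    }
```

With `left = {1: "a", 2: "b"}` and `right = {2: "x", 3: "y"}`, the inner join keeps only key 2, the full outer join keeps keys 1, 2, and 3, and the cross join produces four pairs.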

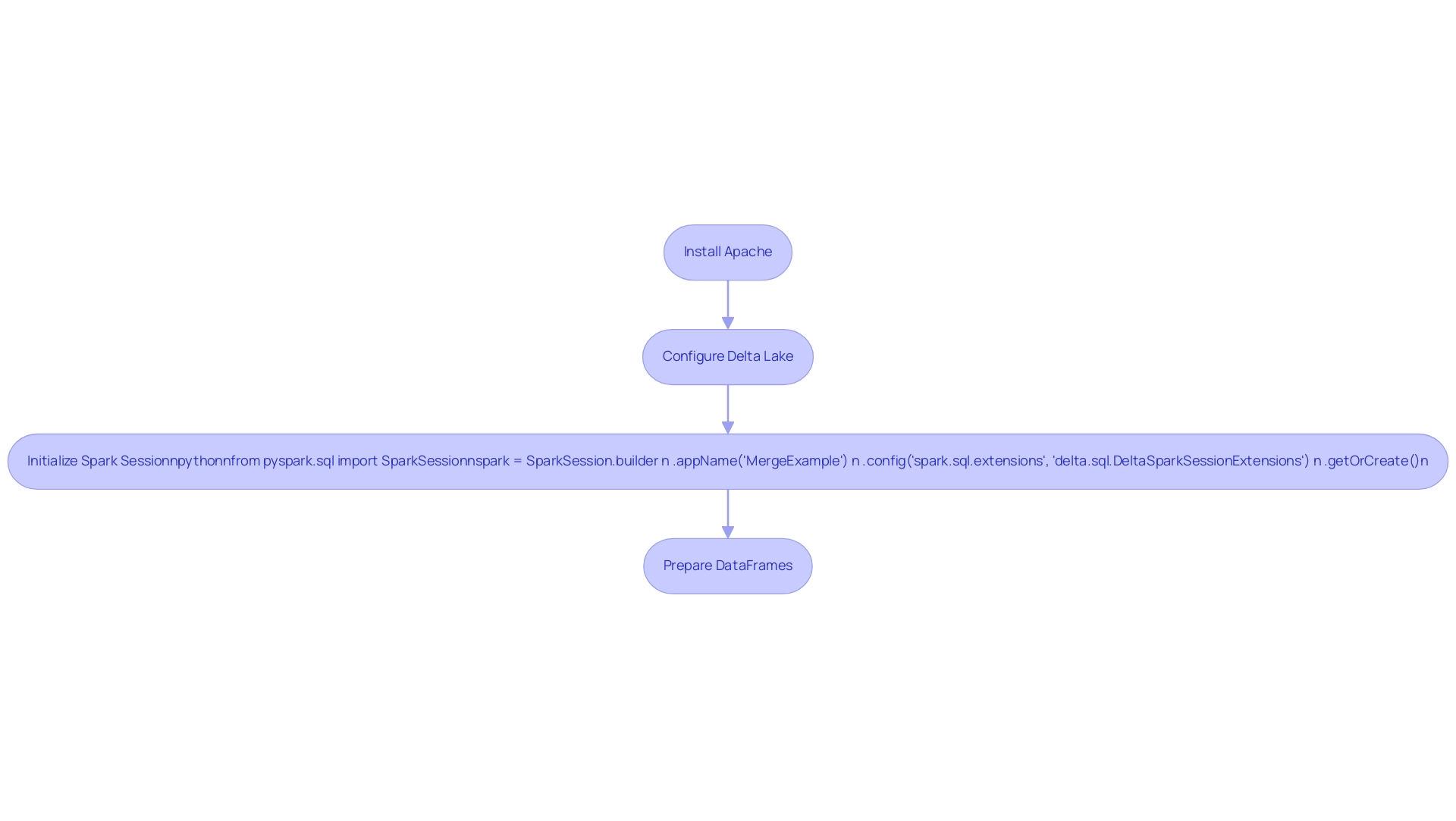

Setting Up Your Spark SQL Environment for MERGE Operations

To execute MERGE operations effectively in Spark SQL, you need a robust setup. It includes several key components:

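- Apache Spark: Install Apache Spark and make sure it is correctly configured in your development environment.

- Delta Lake: Use Delta Lake as your table format. MERGE INTO works only on table formats that implement it, such as Delta Lake (Apache Iceberg and Apache Hudi also support it), and Delta’s ACID transactions make merges reliable.

- Spark Session: Initialize your Spark session with Delta support. With the `delta-spark` pip package installed, the following code registers the Delta SQL extension and catalog:

  ```python
  from pyspark.sql import SparkSession
  from delta import configure_spark_with_delta_pip  # from the delta-spark package

  # Register Delta Lake's SQL extension (enables MERGE INTO) and its catalog
  builder = (
      SparkSession.builder.appName("MergeExample")
      .config("spark.sql.extensions", "io.delta.sql.DeltaSparkSessionExtension")
      .config("spark.sql.catalog.spark_catalog", "org.apache.spark.sql.delta.catalog.DeltaCatalog")
  )
  spark = configure_spark_with_delta_pip(builder).getOrCreate()
  ```

- Data Preparation: Before executing the MERGE statement, prepare your source and target tables by loading them into DataFrames.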

Incorporating Robotic Process Automation (RPA) into this setup can significantly streamline Spark SQL merge operations. For instance, RPA can automate preparation steps, ensuring that DataFrames are consistently loaded and ready for processing without manual intervention. This approach not only reduces the potential for human error but also accelerates workflow, allowing your team to focus on more strategic tasks.

This setup lets you use Spark SQL for advanced data manipulation while meeting the growing demand for operational efficiency in a rapidly evolving AI landscape. Organizations are increasingly adopting tools like Spark SQL and Delta Lake, a trend reflected in the Analytics as a Service (AaaS) market, which is projected to reach $68.9 billion by 2028. Against this backdrop, RPA can further strengthen data-driven insights and operational efficiency for business growth. According to Deloitte, “MarkWide Research is a trusted partner that provides us with the market insights we need to make informed decisions.”

This underscores the value of reliable tools and insights in an evolving data landscape. Google’s dominance in web analytics, with a 30.35% market share, likewise shows how heavily organizations rely on robust analytics solutions. Adopting platforms such as Spark SQL and Delta Lake helps them remain competitive in this expanding market.

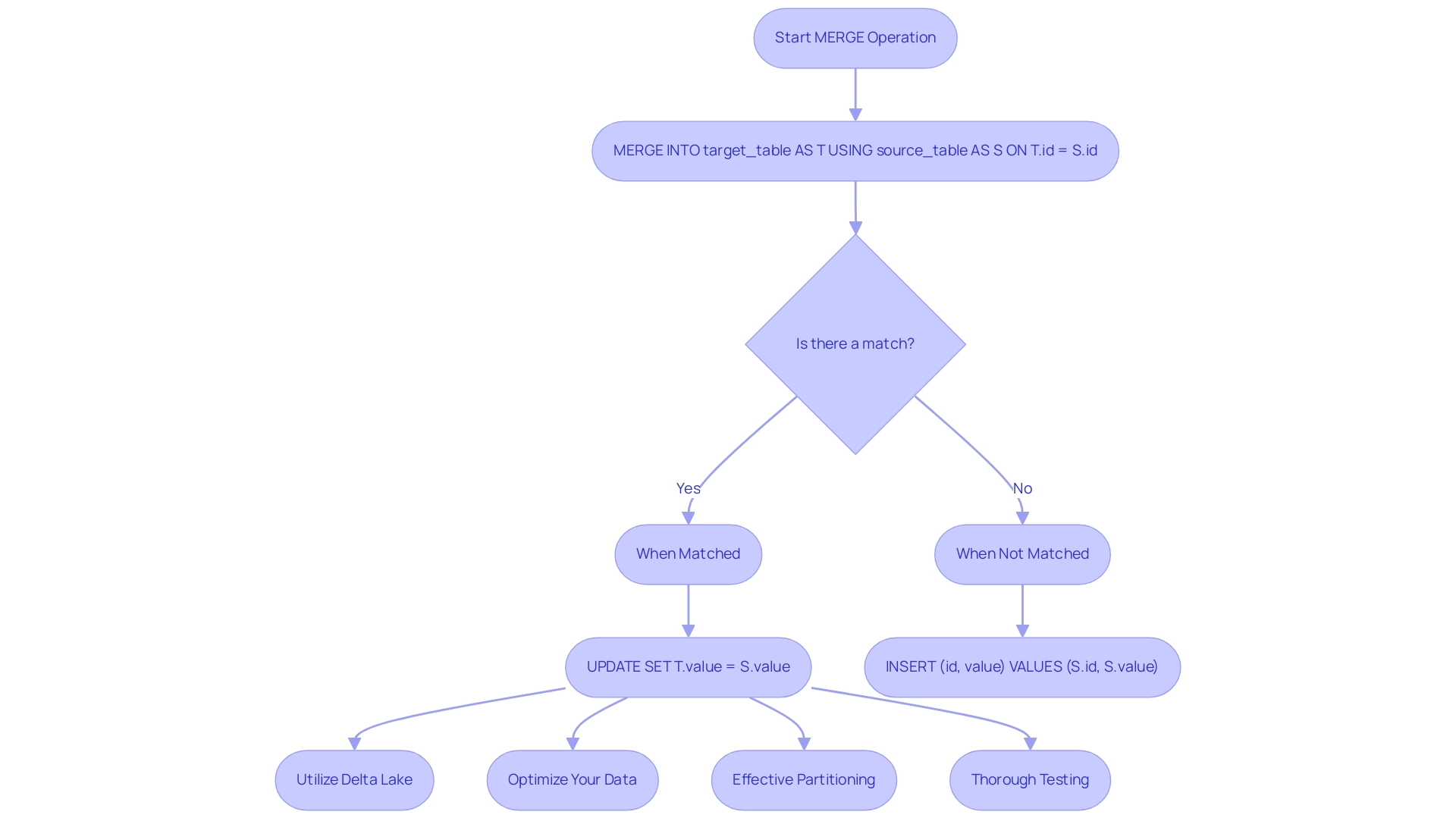

Executing MERGE Operations: Syntax and Best Practices

To perform a MERGE operation in Spark SQL, you can follow this syntax:

```sql
MERGE INTO target_table AS T
USING source_table AS S
ON T.id = S.id
WHEN MATCHED THEN
  UPDATE SET T.value = S.value
WHEN NOT MATCHED THEN
  INSERT (id, value) VALUES (S.id, S.value);
```

Best Practices:

- Utilize Delta Lake: For optimal performance, store your tables in Delta format. As Prashanth Babu Velanati Venkata, Lead Product Specialist, explains, “Delta Lake supports ACID transactions and unifies batch and streaming paradigms, simplifying delete/insert/update operations on incremental information.”

- Optimize Your Data: Run the OPTIMIZE command on Delta tables regularly. It compacts many small files into fewer, larger ones, which improves read and write performance on large datasets and reduces the number of files a MERGE has to scan. Well-maintained tables also support Business Intelligence work, enabling better data management and informed decision-making.

- Effective Partitioning: Partition tables by frequently queried columns to improve efficiency and reduce processing time. Including a predicate on the partition column in the MERGE ON condition lets Delta Lake skip partitions the source cannot affect. Combined with RPA, this strategy streamlines workflows and reduces the manual effort of repetitive tasks.

- Thorough Testing: Before running MERGE statements on large datasets, test them on smaller samples to verify accuracy and performance. Precision matters in a rapidly evolving AI landscape, and testing catches mistakes before they reach production tables.
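The partitioning advice can be applied directly in the MERGE condition. The helper below is a hypothetical sketch, not a Spark or Delta Lake API. It generates the upsert statement shown earlier and can optionally add a predicate on the target’s partition column so Delta Lake can skip partitions the source cannot match. Table and column names such as `event_date` are placeholders.

```python
from typing import Optional, Sequence


def _quote(value) -> str:
    # Render a SQL string literal, doubling any embedded single quotes
    return "'" + str(value).replace("'", "''") + "'"


def build_merge_sql(target: str, source: str, key: str, columns: Sequence[str],
                    partition_col: Optional[str] = None,
                    partition_values: Optional[Sequence] = None) -> str:
    """Build an upsert MERGE statement (hypothetical helper).

    If partition_col/partition_values are given, a predicate on the target's
    partition column is added to ON so other partitions can be skipped.
    partition_values must cover every partition the source can match: existing
    rows outside them would be treated as NOT MATCHED and inserted again.
    """
    on = f"T.{key} = S.{key}"
    if partition_col and partition_values:
        literals = ", ".join(_quote(v) for v in partition_values)
        on += f" AND T.{partition_col} IN ({literals})"
    set_clause = ", ".join(f"T.{c} = S.{c}" for c in columns)
    all_cols = [key, *columns]
    return (
        f"MERGE INTO {target} AS T\n"
        f"USING {source} AS S\n"
        f"ON {on}\n"
        f"WHEN MATCHED THEN UPDATE SET {set_clause}\n"
        f"WHEN NOT MATCHED THEN INSERT ({', '.join(all_cols)}) "
        f"VALUES ({', '.join('S.' + c for c in all_cols)})"
    )
```

In a Delta-enabled session, the generated text could be passed to `spark.sql(...)`. Building `partition_values` from the distinct partition values actually present in the source keeps the extra predicate safe.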

Also consider pre-bucketing and pre-sorting tables in Spark, which colocates joinable rows. This minimizes shuffling during joins, which speeds up operations significantly and lets large joins run on smaller clusters without out-of-memory errors. Using RPA to automate the surrounding manual workflows makes merge operations in Spark SQL more efficient still. These practices are widely relevant, since over 50% of Fortune 500 firms use Databricks.
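Why bucketing removes the shuffle can be shown with a small model. Assume both tables were bucketed by the join key with the same hash function and bucket count. Rows with equal keys then always share a bucket id, so bucket *i* of one table only ever needs bucket *i* of the other. The sketch below uses CRC32 purely for illustration (Spark derives bucket ids from a Murmur3 hash), and all names are hypothetical.

```python
import zlib
from collections import defaultdict

NUM_BUCKETS = 4  # illustrative; Spark uses a Murmur3-based hash, not CRC32


def bucket_of(key: str, num_buckets: int = NUM_BUCKETS) -> int:
    # Deterministic hash, so the same key always maps to the same bucket id
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def bucketize(rows, num_buckets: int = NUM_BUCKETS):
    # "Write time": group (key, value) rows into buckets once, ahead of any join
    buckets = defaultdict(list)
    for key, value in rows:
        buckets[bucket_of(key, num_buckets)].append((key, value))
    return buckets


def bucketed_inner_join(left_rows, right_rows, num_buckets: int = NUM_BUCKETS):
    left_b = bucketize(left_rows, num_buckets)
    right_b = bucketize(right_rows, num_buckets)
    result = []
    # Each bucket pair is joined independently: no row ever moves to
    # another bucket, which is the shuffle a bucketed layout avoids.
    for b in range(num_buckets):
        index = defaultdict(list)
        for k, v in right_b.get(b, []):
            index[k].append(v)
        for k, lv in left_b.get(b, []):
            for rv in index.get(k, []):
                result.append((k, lv, rv))
    return sorted(result)
```

The bucket-by-bucket result matches a naive all-pairs join. The saving comes from never comparing rows across buckets.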

Tailored AI solutions can further enhance this process by providing specific technologies that align with your business goals, ensuring both performance and reliability while driving operational efficiency.

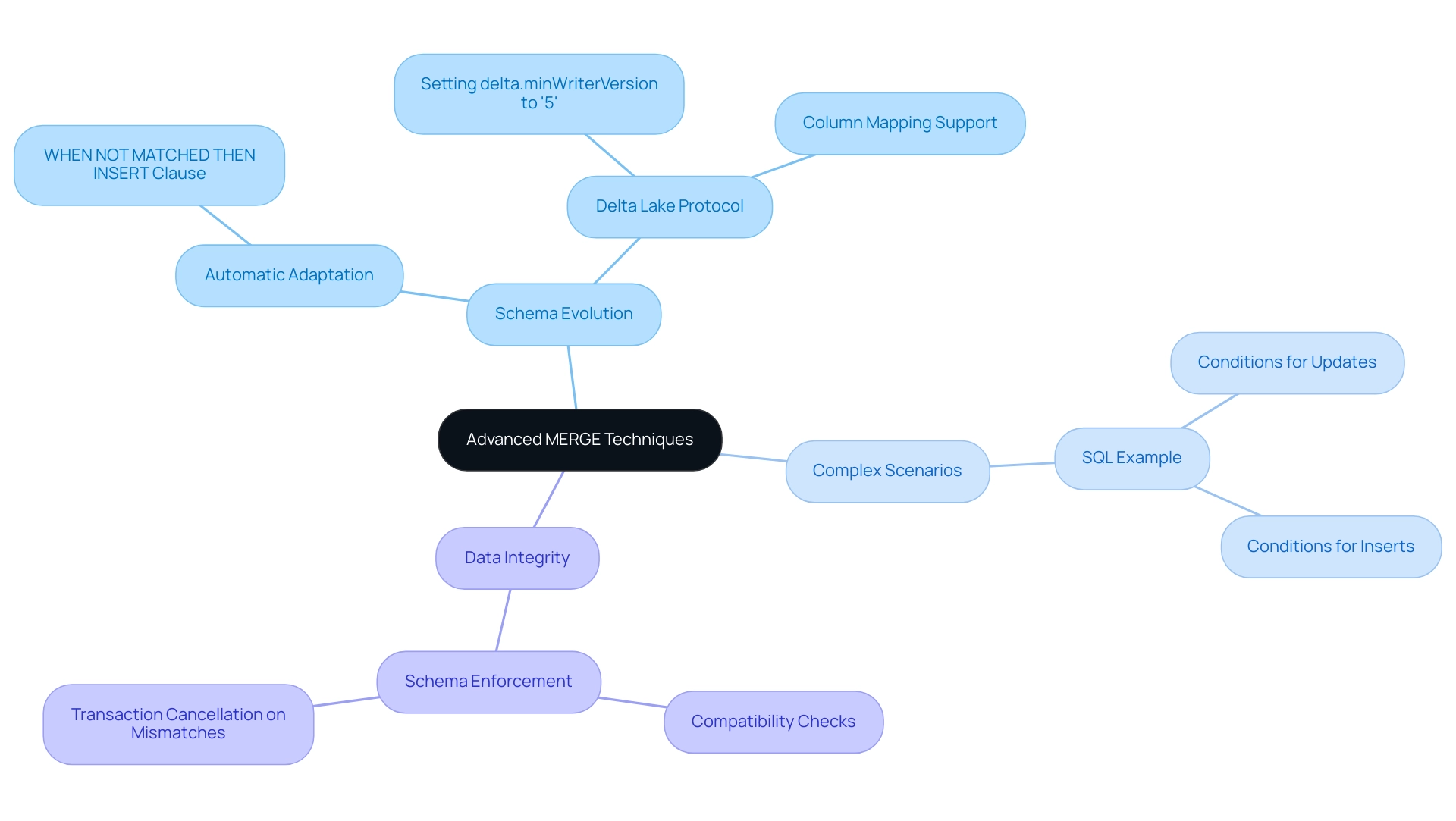

Advanced MERGE Techniques: Schema Evolution and Complex Scenarios

Spark SQL’s MERGE INTO also supports more advanced data manipulation, particularly managing schema evolution and handling complex scenarios.

Schema Evolution: This feature lets the target table’s schema adapt automatically when the source gains new columns. To enable it for MERGE in Delta Lake, set `spark.databricks.delta.schema.autoMerge.enabled` to `true` and use the `UPDATE SET *` and `INSERT *` actions, which add source columns that are missing from the target. Separately, advanced features such as column mapping (used to rename and drop columns) require upgrading the Delta Lake protocol, for example by setting `delta.minReaderVersion` to ‘2’ and `delta.minWriterVersion` to ‘5’, so that your operations stay both flexible and effective.
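The effect of schema evolution on a MERGE with `UPDATE SET *` and `INSERT *` can be modeled in plain Python, assuming rows are dicts and the target schema is an ordered list of column names. This is a conceptual sketch with a hypothetical function name, not Delta Lake’s implementation. Columns that appear only in the source are appended to the target schema. Existing target rows get `None` (NULL) for them. A matched update leaves target-only columns unchanged.

```python
# Conceptual model of MERGE ... UPDATE SET * / INSERT * with schema evolution
# enabled. Rows are dicts; NULL is represented by None.


def merge_with_schema_evolution(target_rows, target_cols, source_rows, key="id"):
    """Upsert source into target, evolving the schema; returns (cols, rows)."""
    new_cols = list(target_cols)
    for row in source_rows:
        for col in row:
            if col not in new_cols:
                new_cols.append(col)  # evolve: append a column the target lacks
    # Existing rows gain NULLs for any newly added columns
    by_key = {r[key]: {c: r.get(c) for c in new_cols} for r in target_rows}
    for row in source_rows:
        # Matched rows start from the target row, unmatched ones from all NULLs
        merged = by_key.get(row[key], {c: None for c in new_cols})
        merged.update(row)  # UPDATE SET * / INSERT *: copy every source column
        by_key[row[key]] = merged
    return new_cols, list(by_key.values())
```

For example, merging a source with an extra `region` column into a target with `id` and `value` yields the schema `["id", "value", "region"]`. In the result, untouched target rows carry `region = None`.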

Complex Scenarios: In situations that require intricate logic for updates and inserts, the MERGE statement can be expanded to accommodate multiple conditions. Consider the following SQL example:

```sql
-- Assumes target_table already has status and new_column columns
MERGE INTO target_table AS T
USING source_table AS S
ON T.id = S.id
WHEN MATCHED AND T.status = 'inactive' THEN
  UPDATE SET T.value = S.value
WHEN NOT MATCHED AND S.new_column IS NOT NULL THEN
  INSERT (id, value, new_column) VALUES (S.id, S.value, S.new_column);
```

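In this example, a matched row is updated only if its status is ‘inactive’, and a source row without a match is inserted only if its new_column value is not NULL. Rows that satisfy neither condition are left unchanged. Conditional clauses like these give fine-grained control over data manipulation in Spark SQL.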
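The clause-by-clause behavior of the conditional MERGE above can be traced with a small pure-Python model, assuming rows are dicts keyed by `id` and `None` stands for NULL. It illustrates the semantics only. The function name is hypothetical, and Spark evaluates these clauses as part of a distributed join, not a loop.

```python
# Model of the conditional MERGE above. target: {id: row}, source: {id: row}.


def conditional_merge(target: dict, source: dict) -> dict:
    for key, s in source.items():
        t = target.get(key)
        if t is not None:
            # WHEN MATCHED AND T.status = 'inactive' THEN UPDATE SET T.value = S.value
            if t.get("status") == "inactive":
                t["value"] = s["value"]
            # matched but active: no clause applies, row left unchanged
        elif s.get("new_column") is not None:
            # WHEN NOT MATCHED AND S.new_column IS NOT NULL THEN INSERT ...
            # status is not in the INSERT column list, so it becomes NULL
            target[key] = {"value": s["value"], "new_column": s["new_column"], "status": None}
        # unmatched with NULL new_column: no clause applies, nothing inserted
    return target
```

Running it on a target with one inactive and one active row shows that only the inactive row picks up the new value. Only source rows with a non-NULL `new_column` are inserted.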

Delta Lake’s schema enforcement shows these techniques at work. A recent case study demonstrates that Delta Lake checks every new write against the target table’s schema and rejects the transaction when it detects a mismatch. This protects data integrity by preventing incompatible data from being written and avoiding potential corruption or loss. By combining these advanced techniques, organizations can handle complex data scenarios with greater precision and effectiveness.
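The enforcement rule can be sketched as a validation step that runs before anything is written, so a bad batch is rejected as a whole. The model assumes a schema given as a mapping from column name to Python type. It follows Delta Lake’s documented behavior in broad strokes: extra columns and mismatched types are rejected, while missing columns are filled with NULL. All names here are hypothetical, and this is not Delta’s code.

```python
# Conceptual model of schema enforcement: validate the whole batch first,
# then append, so a rejected write leaves the table untouched.


class SchemaMismatchError(ValueError):
    """Raised when incoming rows do not fit the target schema."""


def enforce_schema(target_schema: dict, rows: list) -> None:
    for i, row in enumerate(rows):
        extra = set(row) - set(target_schema)
        if extra:
            raise SchemaMismatchError(f"row {i}: columns not in target: {sorted(extra)}")
        for col, value in row.items():
            expected = target_schema[col]
            # None (NULL) is allowed; otherwise the type must match
            if value is not None and not isinstance(value, expected):
                raise SchemaMismatchError(
                    f"row {i}: column {col!r} expects {expected.__name__}, "
                    f"got {type(value).__name__}")


def write(table: list, target_schema: dict, rows: list) -> None:
    enforce_schema(target_schema, rows)  # raises before anything is appended
    # Columns missing from a row are stored as None, i.e. NULL
    table.extend({c: r.get(c) for c in target_schema} for r in rows)
```

Checking the full batch before appending is what gives the all-or-nothing behavior. A mismatch in the last row still prevents the first rows from landing.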

The acknowledgment “We’d also like to thank Mukul Murthy and Pranav Anand for their contributions to this blog” reflects the collaborative effort behind a deeper understanding of these advanced techniques.

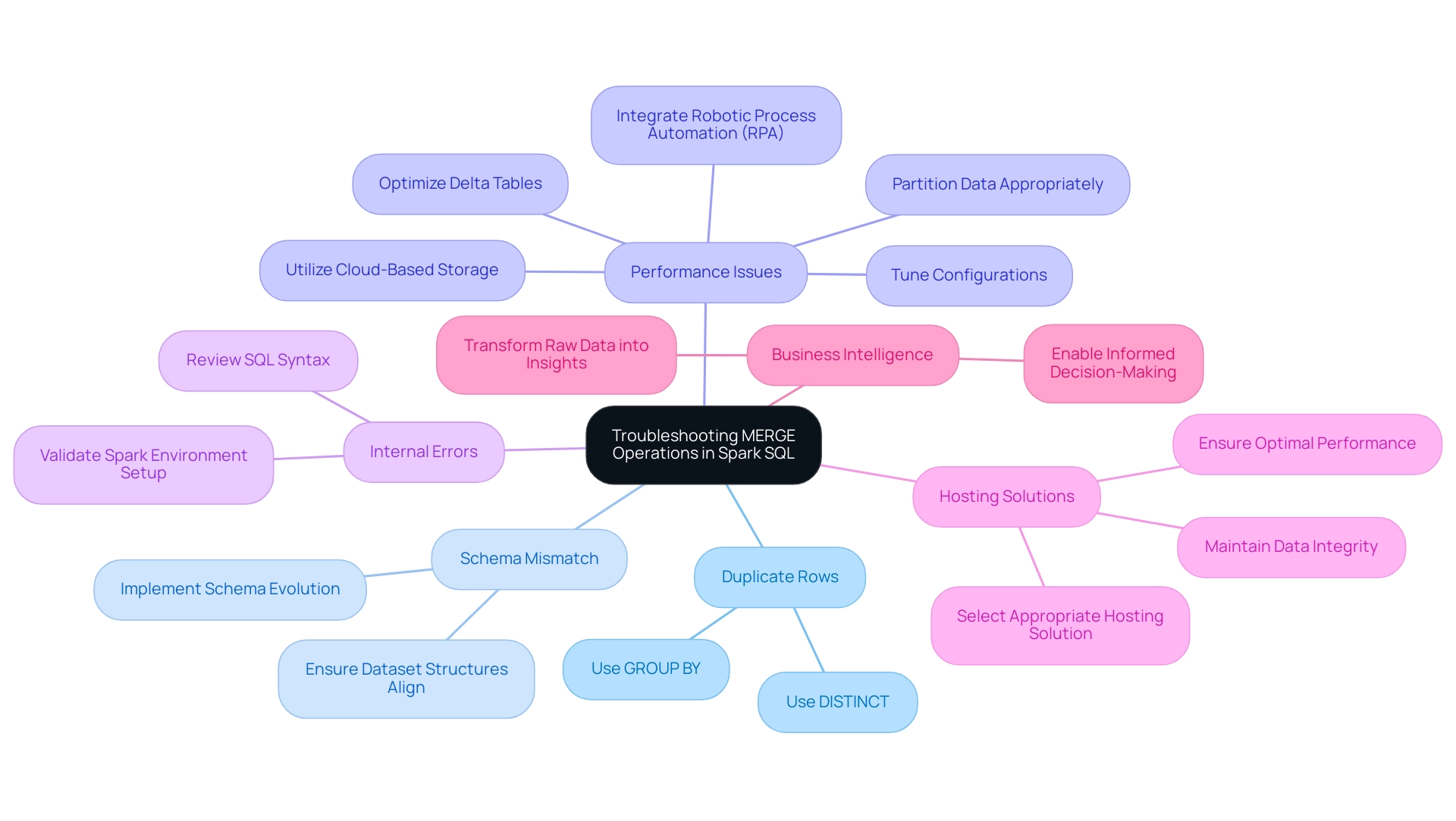

Troubleshooting MERGE Operations: Common Challenges and Solutions

MERGE operations in Spark SQL can present several challenges that, if left unaddressed, hinder data processing efficiency. The most common issues are:

- Duplicate Rows: A primary obstacle is duplicate rows in the source dataset. If several source rows match the same target row, the update is ambiguous and Delta Lake fails the MERGE. To prevent this, remove duplicates with DISTINCT or GROUP BY before running a Spark SQL merge.

- Schema Mismatch: Another frequent issue is schema incompatibility between the source and target tables. Make sure the dataset structures align; where the structure legitimately changes, schema evolution can accommodate it.

- Performance Issues: Slow MERGE operations usually point to underlying inefficiencies. To improve performance, optimize your Delta tables, tune configurations, or partition your data appropriately. These steps can significantly reduce execution time and improve operational efficiency. Integrating Robotic Process Automation (RPA) can also automate repetitive, time-consuming manual tasks, further streamlining workflows in a rapidly evolving AI landscape. Cloud-based storage adds scalability and cost-effectiveness, making it an appealing choice for hosting merged datasets.

- Internal Errors: If internal errors occur, carefully review your SQL syntax and validate your Spark environment setup. Misconfigurations can cause unexpected failures, so make sure all parameters are correctly defined.
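DISTINCT only removes rows that are identical in every column. When source rows share a key but differ in other columns, one row per key has to be chosen. A common choice is the most recent row, which in Spark SQL is often written as `ROW_NUMBER() OVER (PARTITION BY id ORDER BY updated_at DESC) = 1`. The pure-Python sketch below models that rule, assuming rows are dicts with hypothetical `id` and `updated_at` fields.

```python
# Keep one source row per key before a MERGE: the one with the largest
# order_by value. Models ROW_NUMBER() OVER (PARTITION BY key ORDER BY order_by DESC) = 1.


def latest_per_key(rows, key="id", order_by="updated_at"):
    best = {}
    for row in rows:
        k = row[key]
        # Strictly greater, so on ties the first row seen is kept
        # (ROW_NUMBER itself gives no guarantee which tied row wins)
        if k not in best or row[order_by] > best[k][order_by]:
            best[k] = row
    return list(best.values())
```

After this step each key appears at most once, so no target row can be matched by more than one source row.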

Addressing these challenges proactively makes your Spark SQL merge operations more reliable and improves overall processing efficiency. Business Intelligence can then turn the resulting data into actionable insights that support informed decisions, growth, and innovation. A case study titled “Hosting Merged Information” underscores the importance of choosing an appropriate hosting solution to maintain optimal performance, scalability, and integrity.

This case study illustrates how the right environment can mitigate the issues discussed above. Tailored AI solutions also help address these challenges by offering targeted technologies aligned with specific business needs. Practitioners such as Syed Danish, who describes himself as “a data science and machine learning enthusiast,” reflect the growing importance of mastering these operations for effective data management.

Conclusion

The MERGE statement in Spark SQL emerges as an essential tool for organizations seeking to elevate their data manipulation capabilities. By facilitating conditional updates, inserts, and deletes, it streamlines operations and reduces human error, particularly when integrated with Robotic Process Automation (RPA) and Delta Lake. Mastering the syntax, best practices, and advanced techniques related to the MERGE operation is crucial for effectively navigating the intricacies of modern data management.

Executing MERGE operations successfully requires understanding the underlying join types and establishing a robust Spark SQL environment. By harnessing the strengths of Delta Lake, organizations can improve performance and ensure data integrity. Recognizing common challenges, such as duplicate rows and schema mismatches, also provides valuable guidance for troubleshooting and maintaining operational efficiency.

Ultimately, mastering the MERGE statement and its related practices empowers businesses with the necessary tools to leverage data-driven insights effectively. As the realm of data analytics evolves, organizations that prioritize these strategies will not only improve their operational efficiency but also maintain a competitive edge in an increasingly data-centric landscape. Embracing these methodologies transcends technical necessity; it represents a strategic imperative for fostering growth and innovation.