Overview

Maximizing operational efficiency in Azure Synapse Pipelines is crucial for organizations seeking to enhance their productivity. Three best practices are essential:

- Automation through Robotic Process Automation (RPA)

- Optimizing resource management

- Leveraging advanced features for data integration

These approaches reduce errors and improve processing speeds and decision-making capabilities. Ultimately, these enhancements drive productivity and growth within organizations.

Consider how these strategies can transform your operations. By adopting automation and optimizing resource management, you can streamline processes and reduce the likelihood of errors. The advanced features of Azure Synapse Pipelines provide powerful tools for data integration, enabling smarter decision-making.

In conclusion, embracing these best practices is not just beneficial; it is imperative for organizations aiming to thrive in today’s competitive landscape. Take action now to leverage these strategies and propel your organization toward greater efficiency and success.

Introduction

In the quest for operational excellence, organizations are increasingly turning to Azure Synapse Pipelines—an advanced tool engineered to optimize data integration and analytics processes. By minimizing resource wastage and enhancing productivity through automation, businesses can streamline workflows and achieve quicker insights.

Furthermore, the integration of Robotic Process Automation (RPA) amplifies these benefits, enabling companies to automate repetitive tasks, reduce errors, and redirect human resources toward strategic initiatives.

This article delves into the intricacies of maximizing operational efficiency within Azure Synapse, exploring:

- Common challenges

- Best practices

- Real-world success stories

Together, these illustrate the transformative potential of this platform in a data-driven landscape.

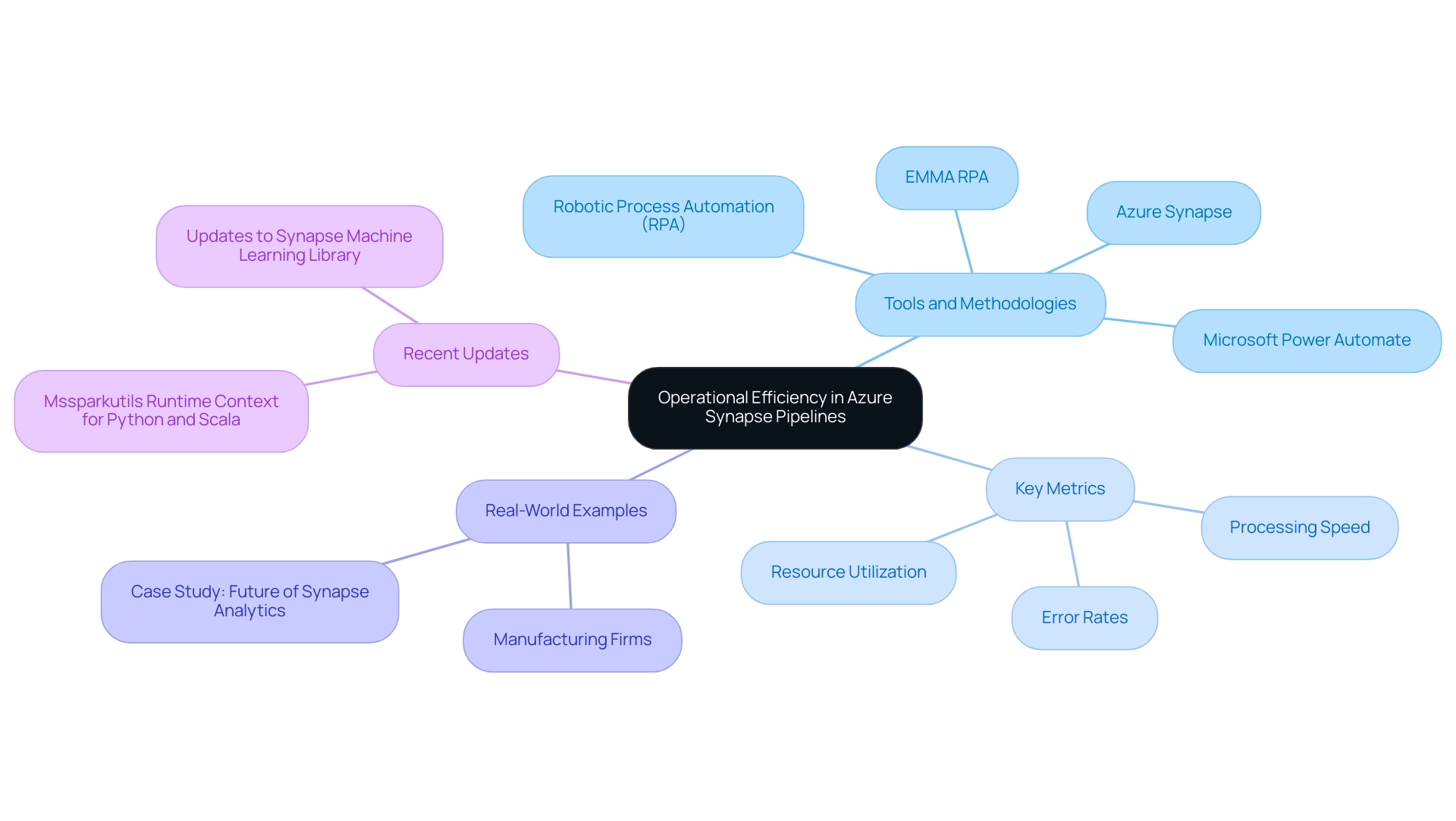

Understanding Operational Efficiency in Azure Synapse Pipelines

Operational efficiency in Synapse pipelines hinges on the ability to execute integration and analytics processes while minimizing wasted resources and time. It emerges from the strategic application of tools and methodologies designed to streamline workflows, reduce manual intervention, and enhance overall productivity. By leveraging Azure Synapse alongside Robotic Process Automation (RPA) solutions like EMMA RPA and Microsoft Power Automate, organizations can automate critical tasks, including data ingestion, transformation, and analysis. The result? Quicker insights and more informed decision-making.

Key metrics for evaluating performance encompass processing speed, resource utilization, and error rates. These metrics are essential, as they collectively contribute to a robust functional framework. For instance, manufacturing firms have effectively utilized Synapse to improve supply chain visibility and streamline production processes, demonstrating the platform’s capacity to foster effectiveness.

Recent statistics reveal that these firms have experienced substantial enhancements in their work processes since implementing Synapse, particularly through the integration of RPA. This not only boosts productivity but also elevates employee morale by alleviating the burden of repetitive tasks.
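The three metrics named above (processing speed, resource utilization, and error rate) can be computed from run records along the following lines. This is a minimal sketch, not a Synapse API: the `PipelineRun` record and all of its field names are hypothetical, and in practice the values would come from whatever monitoring export your workspace provides.

```python
from dataclasses import dataclass


@dataclass
class PipelineRun:
    """One pipeline run. All field names are hypothetical, for illustration only."""
    rows_processed: int
    duration_seconds: float
    succeeded: bool
    compute_seconds_used: float       # compute time actually consumed
    compute_seconds_allocated: float  # compute time that was reserved


def summarize(runs: list[PipelineRun]) -> dict[str, float]:
    """Aggregate processing speed, resource utilization, and error rate."""
    if not runs:
        raise ValueError("at least one run is required")
    total_rows = sum(r.rows_processed for r in runs)
    total_time = sum(r.duration_seconds for r in runs)
    used = sum(r.compute_seconds_used for r in runs)
    allocated = sum(r.compute_seconds_allocated for r in runs)
    failed = sum(1 for r in runs if not r.succeeded)
    return {
        # Rows handled per second of wall-clock run time.
        "rows_per_second": total_rows / total_time if total_time else 0.0,
        # Share of reserved compute that was actually used (0.0 to 1.0).
        "resource_utilization": used / allocated if allocated else 0.0,
        # Share of runs that failed.
        "error_rate": failed / len(runs),
    }
```

Tracking these three numbers together matters because they trade off against each other: a pipeline can look fast while leaving most of its reserved compute idle, or look efficient while failing often.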

Moreover, recent updates to the Synapse Machine Learning library simplify the creation of scalable machine learning pipelines, empowering organizations to maximize performance in data integration processes. The introduction of the MSSparkUtils runtime context for Python and Scala enhances Synapse’s versatility, enabling teams to streamline workflows effectively.

As Devendra Goyal emphasizes, ‘Microsoft Fabric for Data Science: Advanced ML Model capabilities are vital for organizations aiming to improve their performance.’ This insight underscores the importance of leveraging advanced features within Synapse, alongside customized AI solutions, to achieve optimal results.

Real-world examples illustrate the transformative impact of Synapse on operational efficiency. Organizations adopting Synapse Analytics Services are not only staying ahead in data analytics but are also reaping the benefits of a unified platform that fosters growth and innovation. As highlighted in the case study titled ‘Future of Synapse Analytics Services,’ the outlook is promising, with continuous improvements expected as Microsoft invests in enhancing its capabilities.

In summary, boosting productivity in Synapse pipelines is crucial for organizations striving to excel in a data-driven environment. By focusing on automation through RPA, efficient resource management, and the use of advanced features, businesses can achieve significant enhancements in their data integration and analytics processes.

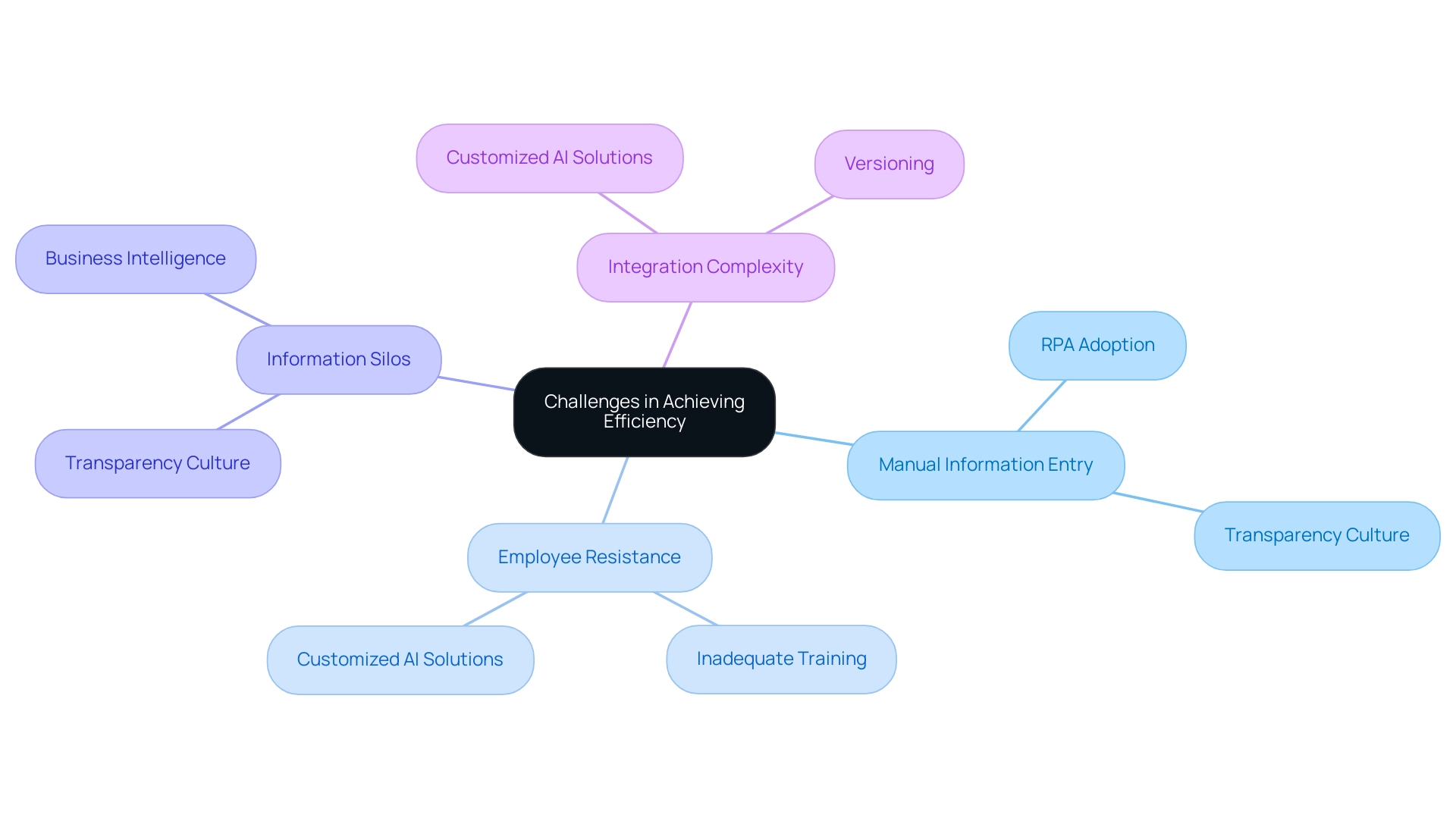

Identifying Common Challenges in Achieving Efficiency

Organizations frequently grapple with numerous challenges when striving for operational efficiency in Synapse pipelines. A significant hurdle is manual data entry, which is not only time-consuming but also highly susceptible to errors. Studies indicate that mistakes in manual data entry can account for up to 30% of inaccuracies in organizations. This inefficiency is compounded by employee resistance to adopting new technologies, often stemming from a lack of familiarity and comfort with innovative tools.

Inadequate training further exacerbates these issues, as employees may struggle to leverage the full potential of Azure Synapse without proper guidance. Furthermore, data silos present a formidable barrier, preventing seamless sharing across departments and stifling collaboration. The complexity of integrating diverse data sources into a cohesive system adds another layer of difficulty, making it challenging for organizations to achieve a unified view of their data landscape.

Recognizing these challenges is crucial for developing effective strategies to overcome them. For instance, fostering a culture of transparency around data can significantly enhance collaboration and trust, enabling teams to access and use insights more effectively. The same accessibility underpins effective observability.

Moreover, the adoption of Robotic Process Automation (RPA) can streamline manual workflows, significantly reducing errors and freeing up employees for more strategic tasks. By automating repetitive processes, organizations can enhance operational effectiveness and drive data-driven insights critical for business growth. A case study on a mid-sized company demonstrates this: by implementing GUI automation, they reduced entry errors by 70%, accelerated testing processes by 50%, and enhanced workflow productivity by 80%, achieving ROI within six months.

Furthermore, customized AI solutions can assist organizations in navigating the rapidly evolving AI landscape, ensuring they identify the appropriate technologies that align with their specific business requirements. The adoption of Business Intelligence is equally important, as it transforms raw information into actionable insights, enabling informed decision-making that drives growth and innovation.

Additionally, the adoption of versioning—a practice projected to be embraced by 60% of organizations by 2027—can facilitate better tracking of lineage and enable error rollbacks, thereby improving overall management practices. This emphasizes the significance of mastering tools and techniques for effective version control in data environments.

Fivetran’s Hybrid Deployment enables organizations to select whether their pipelines are processed within Fivetran’s secure network or their own environment, offering a practical example of how businesses can secure their data pipelines while enhancing productivity. By tackling these typical challenges, organizations can improve their productivity and fully utilize the features of Synapse pipelines.

Creatum GmbH is dedicated to assisting organizations in overcoming these challenges and attaining excellence in their operations.

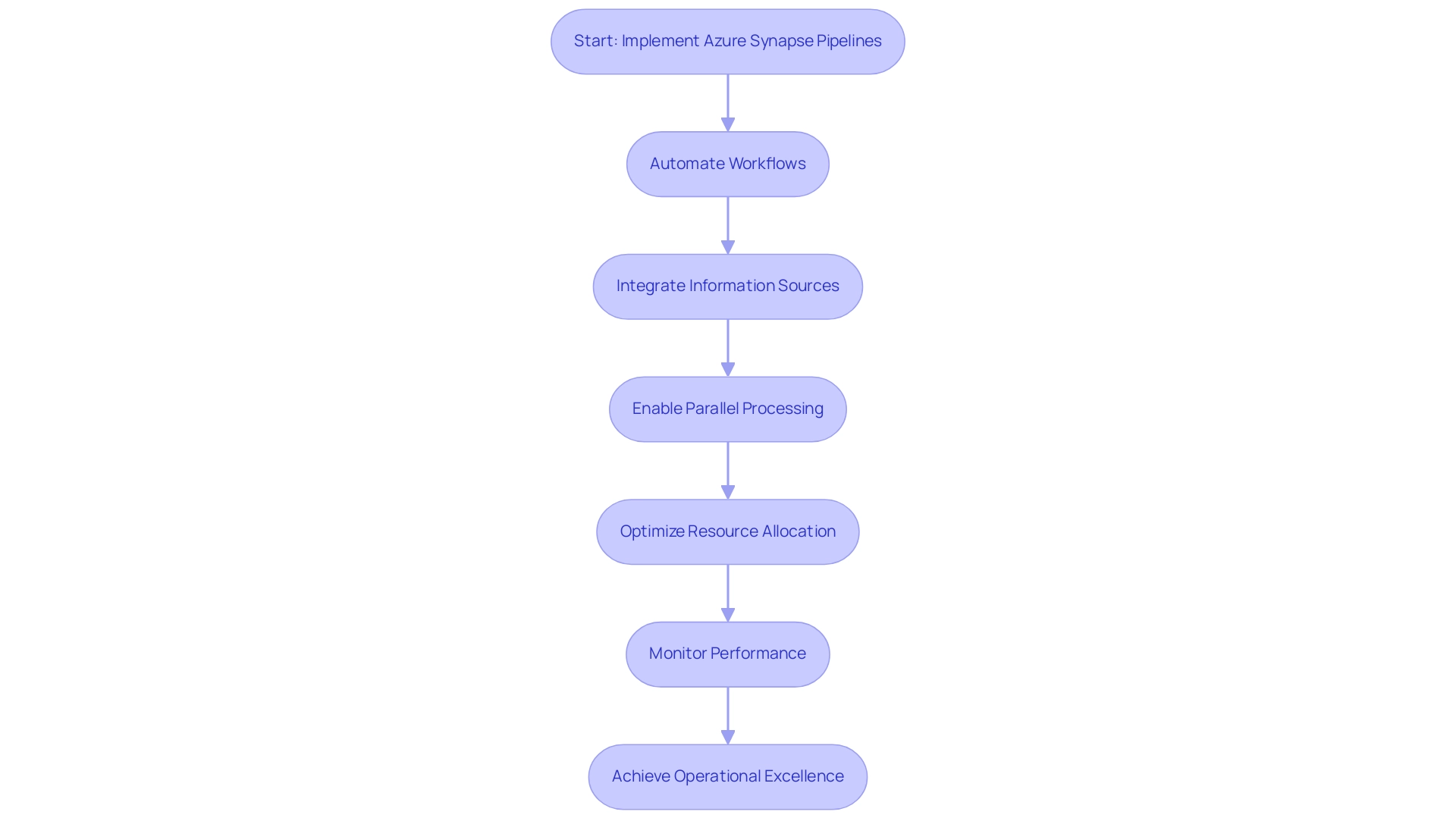

Leveraging Azure Synapse Pipelines for Enhanced Efficiency

Synapse pipelines in Azure present a robust framework for automating workflows, significantly enhancing operational efficiency. One of the standout features is the orchestration of complex data integration tasks, streamlining ETL processes and enabling real-time performance monitoring. By leveraging built-in connectors, organizations can integrate diverse data sources, minimizing the time and effort required for manual handling.

The platform’s support for parallel processing is particularly noteworthy, allowing multiple tasks to execute simultaneously. This capability accelerates processing times and optimizes resource utilization, crucial for effective cost management. Organizations that have implemented these strategies report improved performance while maintaining budgetary control, showcasing the dual benefits of efficiency and cost management.
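To make the idea of parallel execution concrete, the sketch below groups activities into "waves" based on their dependencies: everything in one wave has no unmet dependencies and could run at the same time. It is a plain-Python illustration of the scheduling idea, not how Synapse itself is implemented, and the activity names and the `depends_on` mapping are hypothetical.

```python
def parallel_waves(depends_on: dict[str, list[str]]) -> list[list[str]]:
    """Group activities into waves; activities in the same wave can run concurrently."""
    remaining = {name: set(deps) for name, deps in depends_on.items()}
    unknown = {d for deps in remaining.values() for d in deps} - set(remaining)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")

    waves: list[list[str]] = []
    done: set[str] = set()
    while remaining:
        # An activity is ready once every activity it depends on has finished.
        ready = sorted(n for n, deps in remaining.items() if deps <= done)
        if not ready:
            raise ValueError("dependency cycle detected")
        waves.append(ready)
        done.update(ready)
        for name in ready:
            del remaining[name]
    return waves
```

For example, two independent ingestion activities land in the first wave, while a join that needs both of them waits for the second. The fewer waves a pipeline needs, the more of its work can overlap.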

Furthermore, the automation of information workflows utilizing Synapse has been linked to considerable enhancements in operational excellence. As highlighted by industry experts, adopting automated workflows is essential for thriving in data-driven environments. A recent case study demonstrated how a company successfully scaled its operations and managed costs through dynamic resource scaling and effective use of cost management tools within Azure Synapse.

These strategies included optimizing resource allocation based on workload demands, allowing for better financial oversight. In 2025, statistics indicate that organizations utilizing automation in their workflows have experienced a marked increase in efficiency, with many reporting reduced error rates and improved accuracy. Notably, in serverless SQL pools a SELECT statement can trigger the automatic creation of missing statistics, which gives the query optimizer better information for planning. The platform’s tools for data cleansing and standardization ensure that the content being processed is both accurate and consistent, supporting both ETL and ELT processing paradigms.

By implementing Synapse pipelines, businesses can create a more agile and responsive information environment, ultimately driving growth and innovation while allowing teams to focus on strategic, value-adding activities. Utilizing AI via Small Language Models and engaging in GenAI Workshops can further improve information quality and efficiency. As Sangeet Vashishtha aptly stated, “The findings suggest that adopting automated workflows is a vital step toward operational excellence in data-driven environments.”

Additionally, in dedicated SQL pools, DBCC SHOW_STATISTICS provides summary and detailed information about the distribution of values in a statistics object, offering further insight into how data is distributed within Synapse.

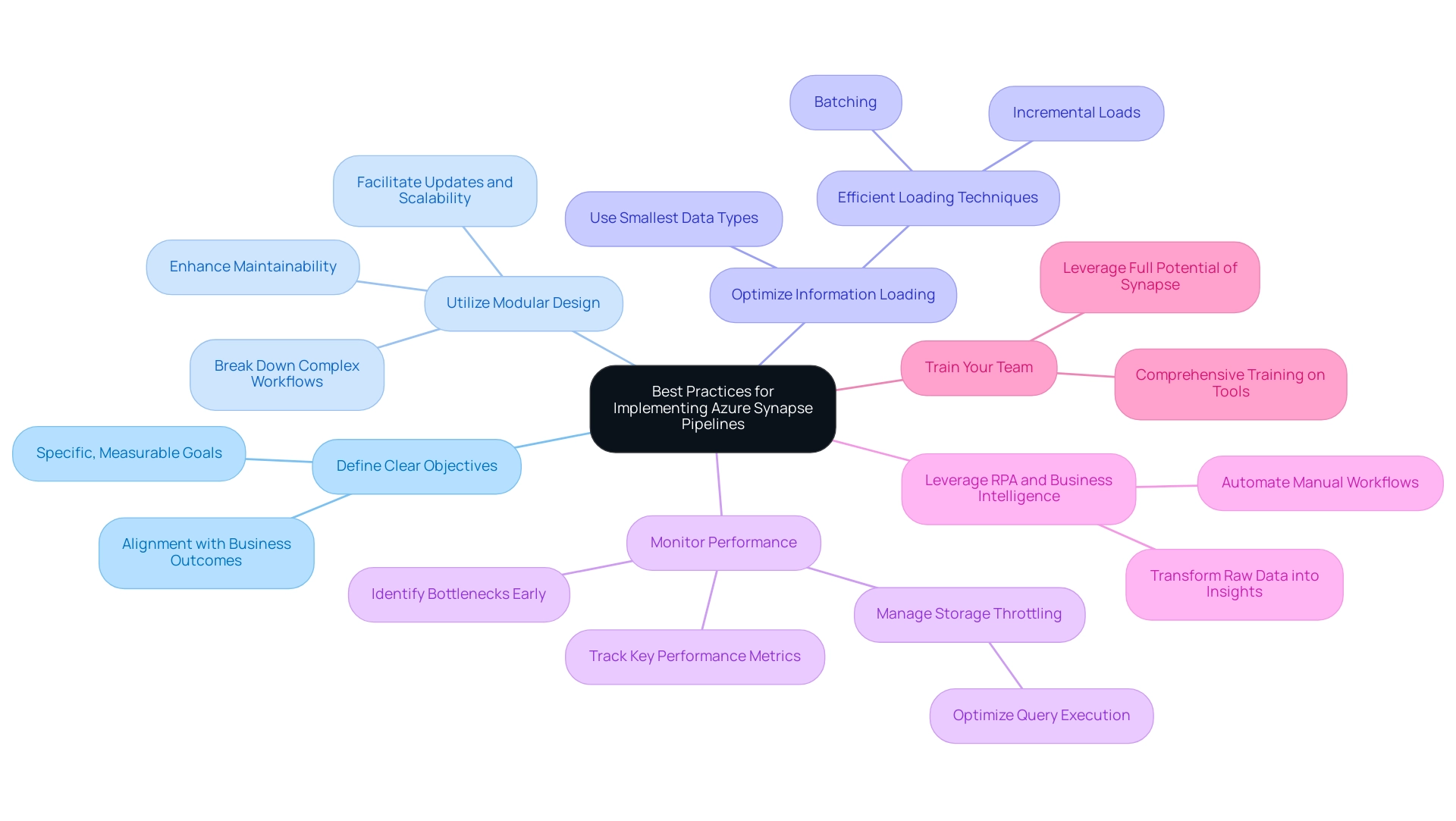

Best Practices for Implementing Azure Synapse Pipelines

To implement Azure Synapse Pipelines effectively, organizations must adhere to several best practices:

- Define Clear Objectives: Establish specific, measurable goals for your data pipelines. This clarity will guide the design and execution of your workflows, ensuring alignment with broader business outcomes.

- Utilize Modular Design: Embrace a modular approach by breaking down complex workflows into smaller, reusable components. This not only enhances maintainability but also facilitates easier updates and scalability, ultimately improving workflow efficiency in Synapse Pipelines.

- Optimize Data Loading: Implement efficient data loading techniques, such as batching and incremental loads. These strategies significantly reduce processing time and resource consumption, leading to quicker data availability and enhanced performance. Additionally, using the smallest data types possible can further improve query performance and concurrency.

- Monitor Performance: Regularly track key performance metrics of your Synapse Pipelines. Recognizing bottlenecks early allows you to adjust resource allocation proactively, ensuring consistent performance and reliable data access. Understanding and controlling storage throttling, as illustrated in the Azure Storage Throttling case study, can improve query execution and sustain performance when accessing data through serverless SQL pools.

- Leverage RPA and Business Intelligence: Integrate Robotic Process Automation (RPA) to automate manual workflows, thereby enhancing operational efficiency and reducing errors. RPA can streamline repetitive tasks, freeing your team to focus on strategic initiatives that add value. Furthermore, harnessing Business Intelligence tools enables the transformation of raw data into actionable insights, driving informed decision-making that supports business growth.

- Train Your Team: Invest in comprehensive training for your team members on Azure Synapse tools. A well-informed team can leverage the full potential of Synapse Pipelines to drive operational efficiency and innovation.
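The batching and incremental-load techniques from the list above can be sketched as follows. This is an illustrative outline under stated assumptions: rows are plain dictionaries with a hypothetical `modified_at` timestamp, and `write_batch` stands in for whatever sink your pipeline writes to. It is not a Synapse API.

```python
from datetime import datetime
from typing import Callable, Iterator


def rows_since(rows: list[dict], watermark: datetime) -> list[dict]:
    """Incremental load: keep only rows modified after the last watermark."""
    return [r for r in rows if r["modified_at"] > watermark]


def batches(rows: list[dict], size: int) -> Iterator[list[dict]]:
    """Batching: yield fixed-size chunks so each write handles a bounded amount of data."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def incremental_load(
    rows: list[dict],
    watermark: datetime,
    size: int,
    write_batch: Callable[[list[dict]], None],
) -> datetime:
    """Write rows newer than the watermark in batches; return the advanced watermark."""
    new_rows = sorted(rows_since(rows, watermark), key=lambda r: r["modified_at"])
    for batch in batches(new_rows, size):
        write_batch(batch)
    # If nothing changed, the watermark stays where it was.
    return new_rows[-1]["modified_at"] if new_rows else watermark
```

Persisting the returned watermark between runs is what makes the load incremental: the next run skips everything already written instead of reprocessing the full source.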

In 2025, it is anticipated that 80% of analytics governance efforts will concentrate on achieving concrete business outcomes, underscoring the significance of these best practices. As Punitkumar Harsur, a Data Engineer, observed, ‘Data warehouses are an optimal choice to store historical data in a more cost-effective manner.’ By implementing these approaches, organizations can enhance their data management capabilities and achieve significant advancements in productivity.

Integrating Robotic Process Automation with Synapse Pipelines

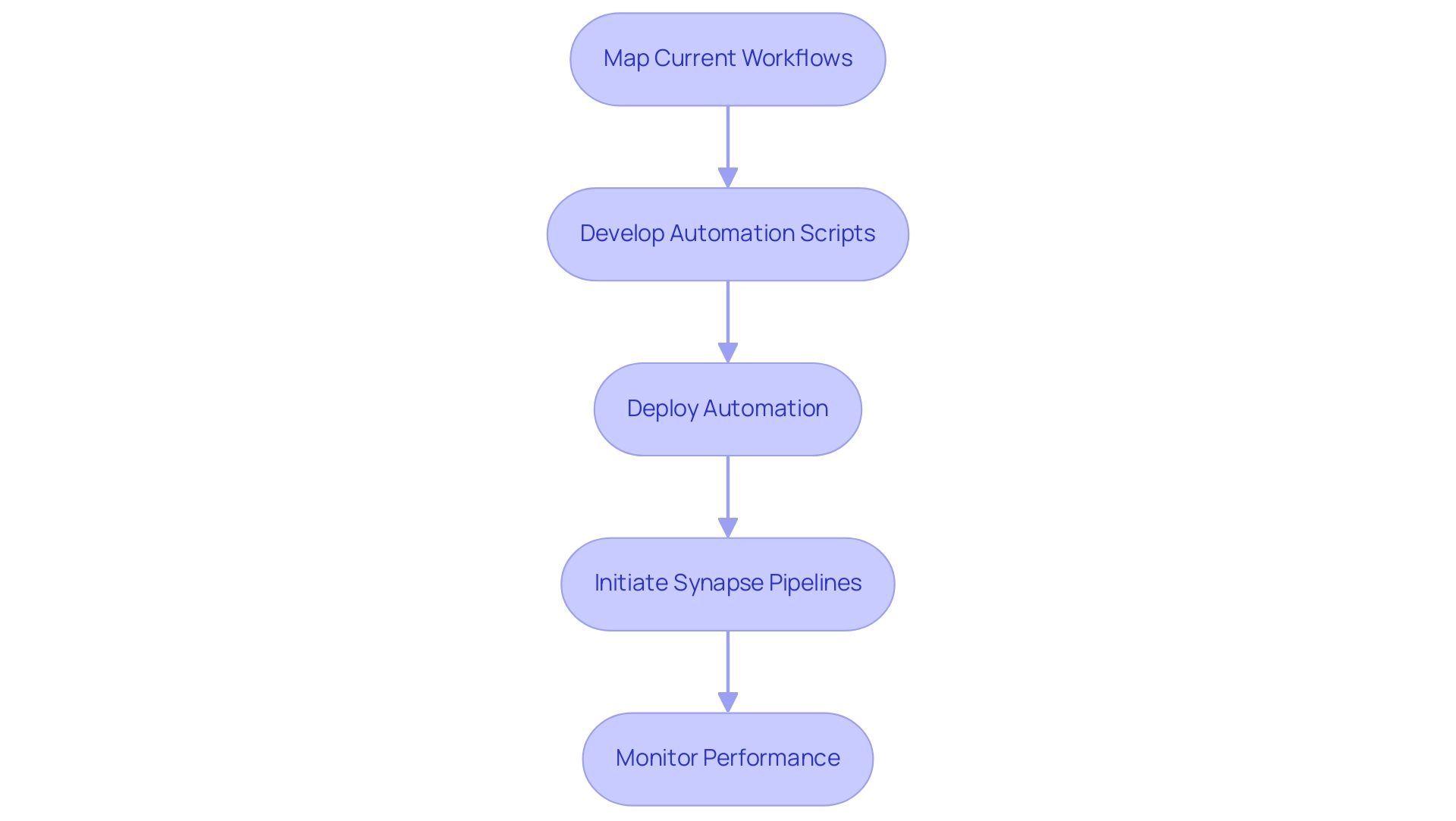

Incorporating Robotic Process Automation (RPA) with Synapse pipelines significantly enhances operational productivity by automating repetitive tasks that typically consume valuable time and resources. A compelling case study from Creatum GmbH exemplifies this, showcasing how a mid-sized company improved efficiency by automating data entry, software testing, and legacy system integration through GUI automation. This strategy effectively addressed challenges like manual input errors and sluggish software testing, culminating in an impressive 70% reduction in entry mistakes and a 50% acceleration in testing processes.

RPA excels in streamlining input, validation, and reporting processes, empowering human resources to concentrate on strategic initiatives that propel business growth. By automating these workflows, organizations can achieve a significant reduction in errors, enhancing accuracy and reliability. The case study further highlighted that the execution of GUI automation involved meticulous mapping of current workflows, followed by the deployment of automation scripts, resulting in an 80% improvement in workflow performance, with a return on investment realized within just six months.

Moreover, RPA can initiate Synapse pipelines in response to specific events, enabling a seamless transfer of information throughout the system without manual intervention. This functionality not only expedites processes but also bolsters overall productivity, allowing teams to swiftly adapt to changing business requirements.

As organizations increasingly recognize the benefits of RPA, expert insights suggest that the integration of RPA with Synapse pipelines will be crucial for maximizing performance in 2025 and beyond. This synergy not only simplifies data management but also equips businesses to thrive in a rapidly evolving digital landscape. Notably, over 90% of C-level executives utilizing intelligent automation believe their organizations excel at managing change in response to shifting business trends, underscoring the strategic significance of RPA in today’s business environment.

Enhancing Data Quality and Insights for Operational Success

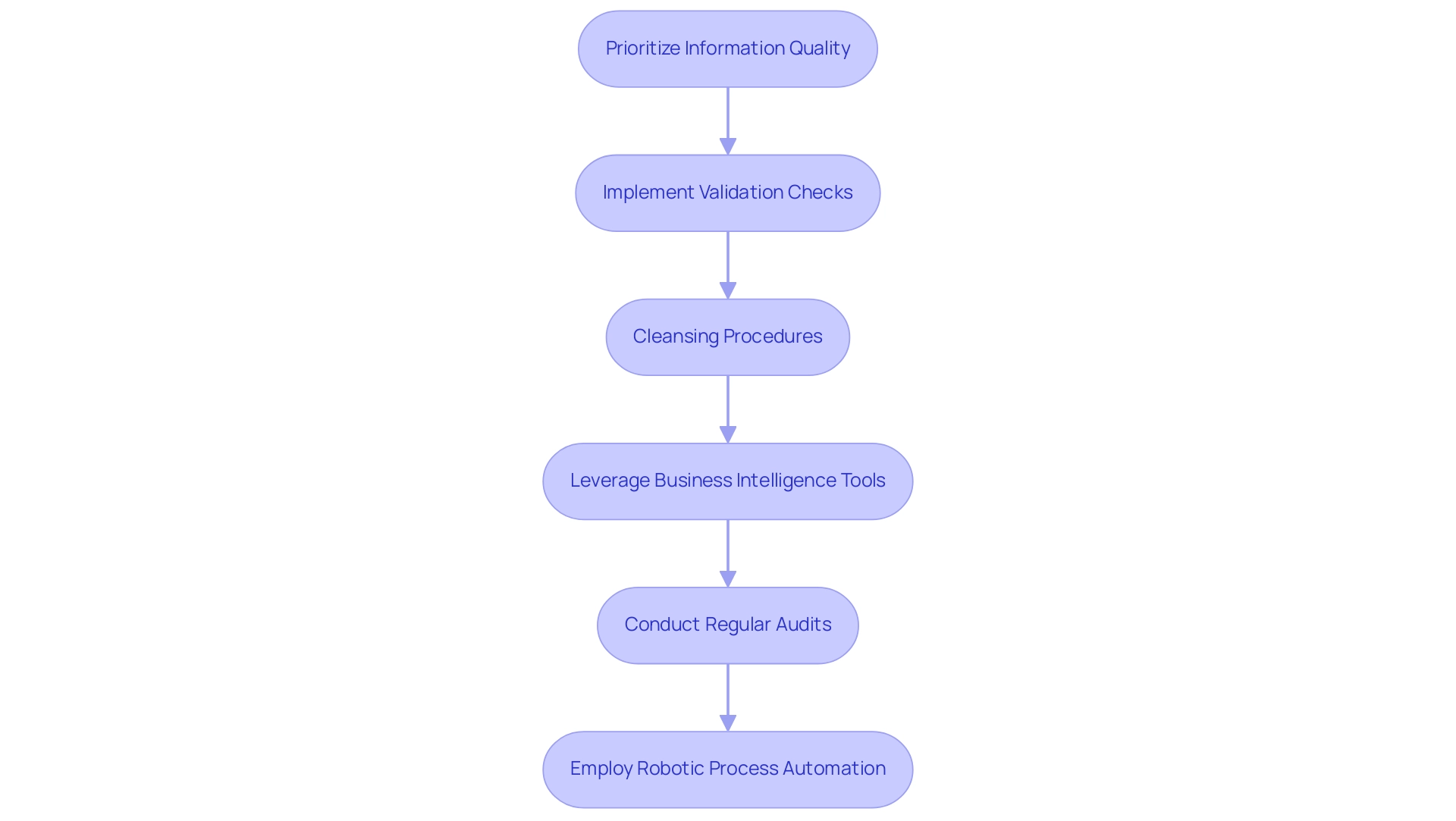

To achieve maximum operational efficiency, organizations must prioritize data quality. By 2025, the emphasis is expected to shift toward building resilient systems that guarantee consistent, trusted, and AI-ready data flows, thereby transforming insights into a competitive advantage. Inadequate data quality poses significant compliance risks, including hefty penalties and potential legal actions. Thus, it is essential to implement robust validation checks and cleansing procedures within Synapse pipelines to ensure that only accurate and reliable data is processed.

This approach not only mitigates compliance risks but also bolsters the overall integrity of information-driven decision-making.
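A validation and cleansing step of the kind described above might look like the following. This is a minimal sketch: the record fields (`customer_id`, `email`, `amount`) and the specific rules are hypothetical examples, and a real pipeline would define rules that match its own schema.

```python
def _text(value) -> str:
    """Convert a value to trimmed text, treating None as empty."""
    return "" if value is None else str(value).strip()


def cleanse(record: dict) -> dict:
    """Standardize a record: trim whitespace and normalize email casing."""
    return {
        "customer_id": _text(record.get("customer_id")),
        "email": _text(record.get("email")).lower(),
        "amount": record.get("amount"),
    }


def validate(record: dict) -> list[str]:
    """Return a list of problems found in an already-cleansed record."""
    errors = []
    if not record["customer_id"]:
        errors.append("customer_id is missing")
    if "@" not in record["email"]:
        errors.append("email is malformed")
    amount = record["amount"]
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors


def split_valid(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Cleanse every record, then separate valid rows from rejected ones."""
    valid, rejected = [], []
    for raw in records:
        clean = cleanse(raw)
        problems = validate(clean)
        if problems:
            # Keep the raw record and the reasons so rejects can be audited.
            rejected.append((raw, problems))
        else:
            valid.append(clean)
    return valid, rejected
```

Routing rejected rows to a separate store with their reasons, rather than silently dropping them, is what makes later quality audits possible.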

Leveraging advanced business intelligence tools within Azure Synapse can greatly enhance insights, empowering organizations to extract actionable intelligence that propels strategic initiatives. For example, conducting regular audits of information quality metrics can uncover critical areas for improvement, ensuring consistency and trustworthiness. A case study on information time-to-value reveals that a high volume of errors in transformations or excessive manual cleanup underscores the need for a solid quality framework.

Moreover, expert insights highlight the significance of validation and cleansing processes. Antonio Cotroneo of Precisely states, ‘New AI-powered innovations in the Precisely Data Integrity Suite help you enhance productivity, maximize the ROI of data investments, and make confident, data-driven decisions.’ By prioritizing these practices, organizations can refine their processes, leading to improved outcomes and increased productivity.

Additionally, employing Robotic Process Automation (RPA) can streamline manual workflows, further enhancing performance in a swiftly evolving AI landscape. As this landscape progresses, integrating AI-powered innovations from Creatum GmbH, such as Small Language Models and GenAI Workshops, will further elevate data quality, maximizing returns on data investments and empowering teams to make confident, data-driven decisions.

Continuous Improvement: Monitoring and Adapting for Long-Term Efficiency

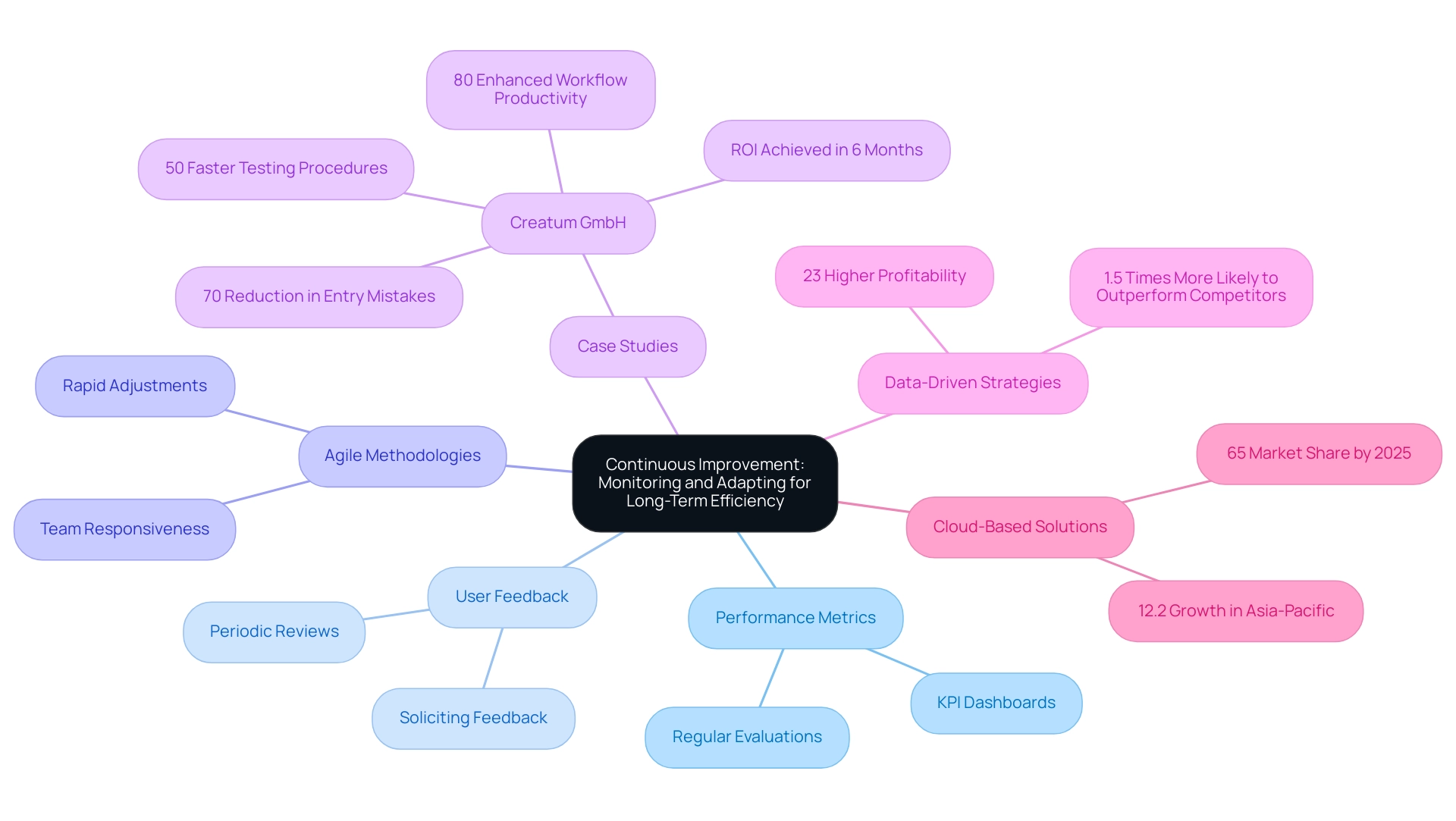

Continuous enhancement is crucial for maintaining and improving efficiency within Synapse pipelines. Organizations must implement a structured framework that includes regular reviews of performance metrics and actively solicits user feedback. This can be achieved by establishing dashboards that visualize key performance indicators (KPIs) and conducting periodic evaluations of pipeline effectiveness.

By fostering a culture of continuous improvement, organizations can swiftly adapt to emerging challenges, optimize their processes, and secure long-term success.
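A periodic KPI review can be partly automated by comparing each metric's latest value with its own history. The sketch below flags any KPI whose most recent reading is noticeably worse than the average of earlier readings. It assumes lower values are better (as with run duration or error rate), and the KPI names and the 20% tolerance are hypothetical choices, not recommendations.

```python
from statistics import mean


def flag_regressions(history: dict[str, list[float]], tolerance: float = 0.2) -> list[str]:
    """Return KPIs whose latest value exceeds the mean of earlier values by more than `tolerance`.

    Assumes lower is better for every KPI passed in.
    """
    flagged = []
    for name, values in history.items():
        if len(values) < 2:
            continue  # not enough history to form a baseline
        baseline = mean(values[:-1])
        if values[-1] > baseline * (1 + tolerance):
            flagged.append(name)
    return flagged
```

A check like this does not replace a dashboard, but it turns the review into a short list of metrics that actually moved, which keeps recurring evaluations focused.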

Adopting agile methodologies further supports rapid adjustments to evolving business needs, ensuring teams can respond effectively to changes in the business landscape. Notably, companies utilizing analytics in their performance processes are 1.5 times more likely to surpass their competitors across key metrics, highlighting the essential role of information-driven strategies in achieving operational excellence. Moreover, organizations implementing data-driven strategies demonstrate 23% higher profitability, reinforcing the importance of these approaches.

To illustrate the impact of continuous improvement practices, consider the Creatum GmbH case study of a mid-sized healthcare company. This organization successfully integrated GUI automation to streamline operations. Before this implementation, the company faced challenges such as manual data entry errors, slow software testing, and difficulty integrating outdated systems without APIs. By automating data entry, software testing, and legacy system integration, the company reduced entry mistakes by 70%, accelerated testing procedures by 50%, and enhanced workflow productivity by 80%.

This case exemplifies how leveraging Robotic Process Automation (RPA) can enhance efficiency, particularly in a rapidly evolving AI landscape. The return on investment (ROI) was achieved within six months, demonstrating the effectiveness of the implemented solutions.

The digital transformation in performance management is accelerating, with cloud-based enterprise performance management (EPM) solutions projected to capture a 65% market share by 2025. This shift not only enhances data management capabilities but also empowers organizations to effectively monitor performance metrics within Synapse pipelines. The relevance of this trend is further underscored by the case study titled “Projections for Performance Management Software Adoption,” which highlights the growing adoption rates of cloud-based solutions.

In 2025, businesses using AI for task management are expected to report a 40% reduction in administrative workload and a 20% improvement in employee satisfaction, as noted by writer Trudi Saul. This illustrates the tangible advantages of integrating advanced technologies into functional frameworks. By focusing on performance metrics and continuous improvement strategies, organizations can maximize their operational efficiency and drive sustainable growth.

Conclusion

Maximizing operational efficiency through Azure Synapse Pipelines represents a transformative approach for organizations aiming to thrive in a data-driven landscape. By understanding and addressing common challenges—such as manual data entry and data silos—businesses can implement strategies that enhance collaboration and streamline workflows. The integration of Robotic Process Automation (RPA) has proven particularly effective, enabling organizations to automate repetitive tasks, reduce errors, and reallocate human resources to more strategic initiatives.

Best practices for implementing Azure Synapse Pipelines further underscore the importance of defining clear objectives, utilizing modular designs, and optimizing data loading techniques. These strategies not only improve efficiency but also foster a culture of continuous improvement, ensuring that organizations can adapt to changing demands and maintain a competitive edge. By leveraging advanced features within Azure Synapse and integrating RPA, companies can enhance data quality and insights, driving informed decision-making that fuels growth and innovation.

As industries evolve, the focus on operational excellence will become increasingly critical. Organizations that embrace these methodologies and technologies will not only see immediate improvements in their data integration and analytics processes but will also position themselves for long-term success in an ever-changing digital landscape. The future of operational efficiency lies in the hands of those willing to invest in automation, continuous improvement, and data-driven strategies—ultimately transforming challenges into opportunities for growth.

Frequently Asked Questions

What is the main focus of operational efficiency in Synapse pipelines?

The main focus is on executing integration and analytics processes while minimizing resource and time waste through the strategic application of tools and methodologies.

How can organizations enhance operational efficiency in Synapse pipelines?

Organizations can enhance effectiveness by leveraging Azure Synapse alongside Robotic Process Automation (RPA) solutions, automating tasks such as data ingestion, transformation, and analysis.

What key metrics are used to evaluate performance in Synapse pipelines?

Key metrics include processing speed, resource utilization, and error rates, which collectively contribute to a robust functional framework.

What benefits have manufacturing firms experienced by implementing Synapse?

Manufacturing firms have improved supply chain visibility, streamlined production processes, and experienced substantial enhancements in work processes, particularly through RPA integration.

What recent updates have been made to the Synapse Machine Learning library?

Recent updates simplify the creation of scalable machine learning pipelines and introduce the MSSparkUtils runtime context for Python and Scala, enhancing Synapse’s versatility.

What challenges do organizations face in achieving operational efficiency in Synapse pipelines?

Challenges include manual data entry, employee resistance to new technologies, inadequate training, data silos, and the complexity of integrating diverse data sources.

How can Robotic Process Automation (RPA) help organizations?

RPA can streamline manual workflows, reduce errors, and free up employees for more strategic tasks, enhancing operational effectiveness and driving data-driven insights.

What is the significance of adopting Business Intelligence in organizations?

Business Intelligence transforms raw information into actionable insights, enabling informed decision-making that drives growth and innovation.

What role does versioning play in managing data environments?

Versioning facilitates better tracking of lineage, enables error rollbacks, and improves overall management practices, with a projected adoption rate of 60% by 2027.

How does Fivetran’s Hybrid Deployment benefit organizations?

It allows organizations to choose whether their pipelines are processed within Fivetran’s secure network or their own environment, enhancing data security and productivity.