Overview

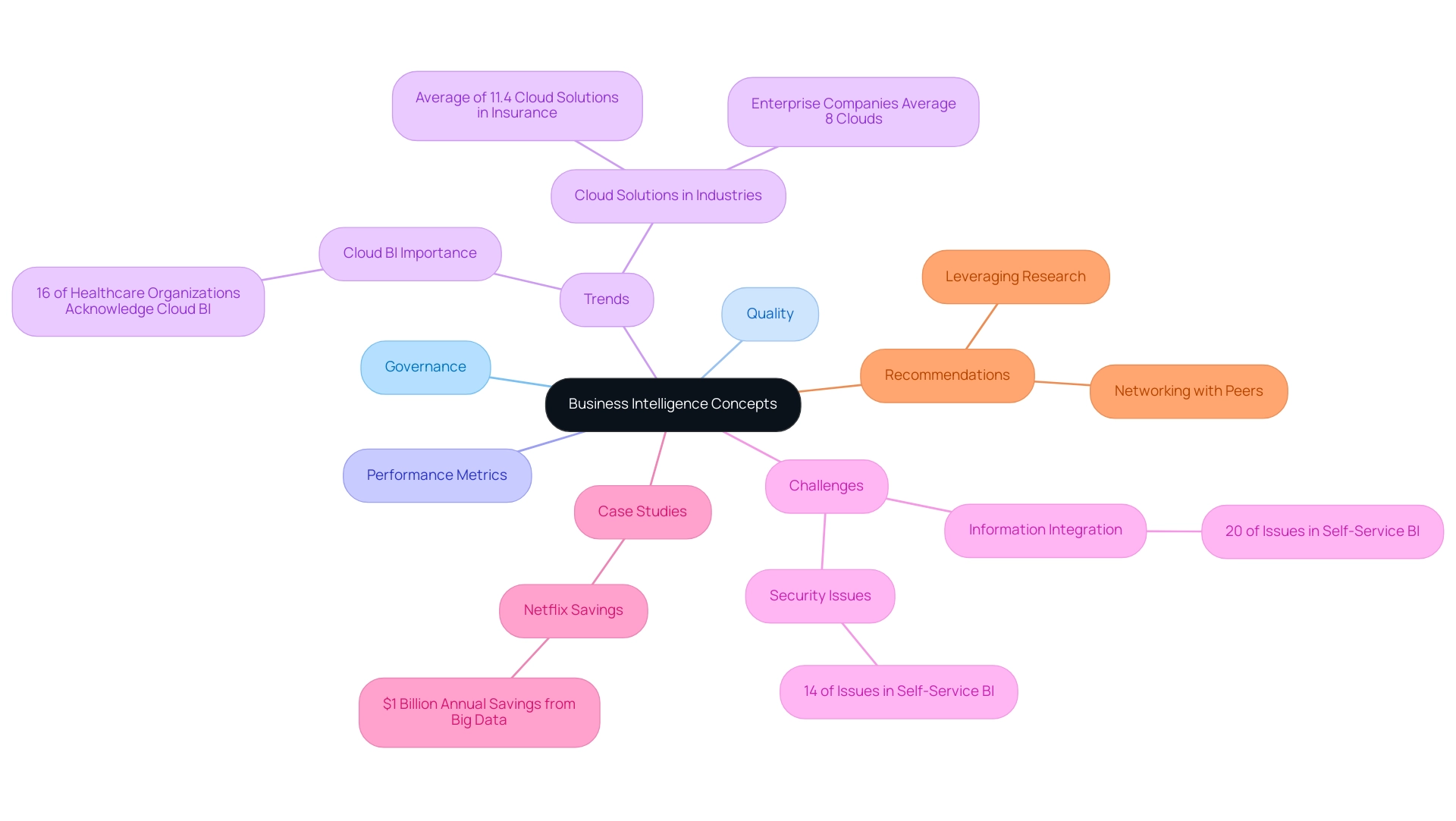

SSAS performance monitoring in Power BI is essential for operational efficiency and timely report generation. By tracking key metrics such as query execution times and report load durations, organizations can identify performance bottlenecks and support data-driven decision-making. This, in turn, drives business growth and improves the user experience through real-time insights.

Consider the alternative: without visibility into these metrics, how can a business trust the speed and reliability of the reports it relies on? By prioritizing SSAS monitoring, companies can get more value from their data, leading to better operational outcomes and strategic advantages.

In short, robust SSAS performance monitoring is not optional for organizations that want to thrive in a data-driven landscape. Acting now helps ensure that reporting processes are optimized and aligned with business objectives.

Introduction

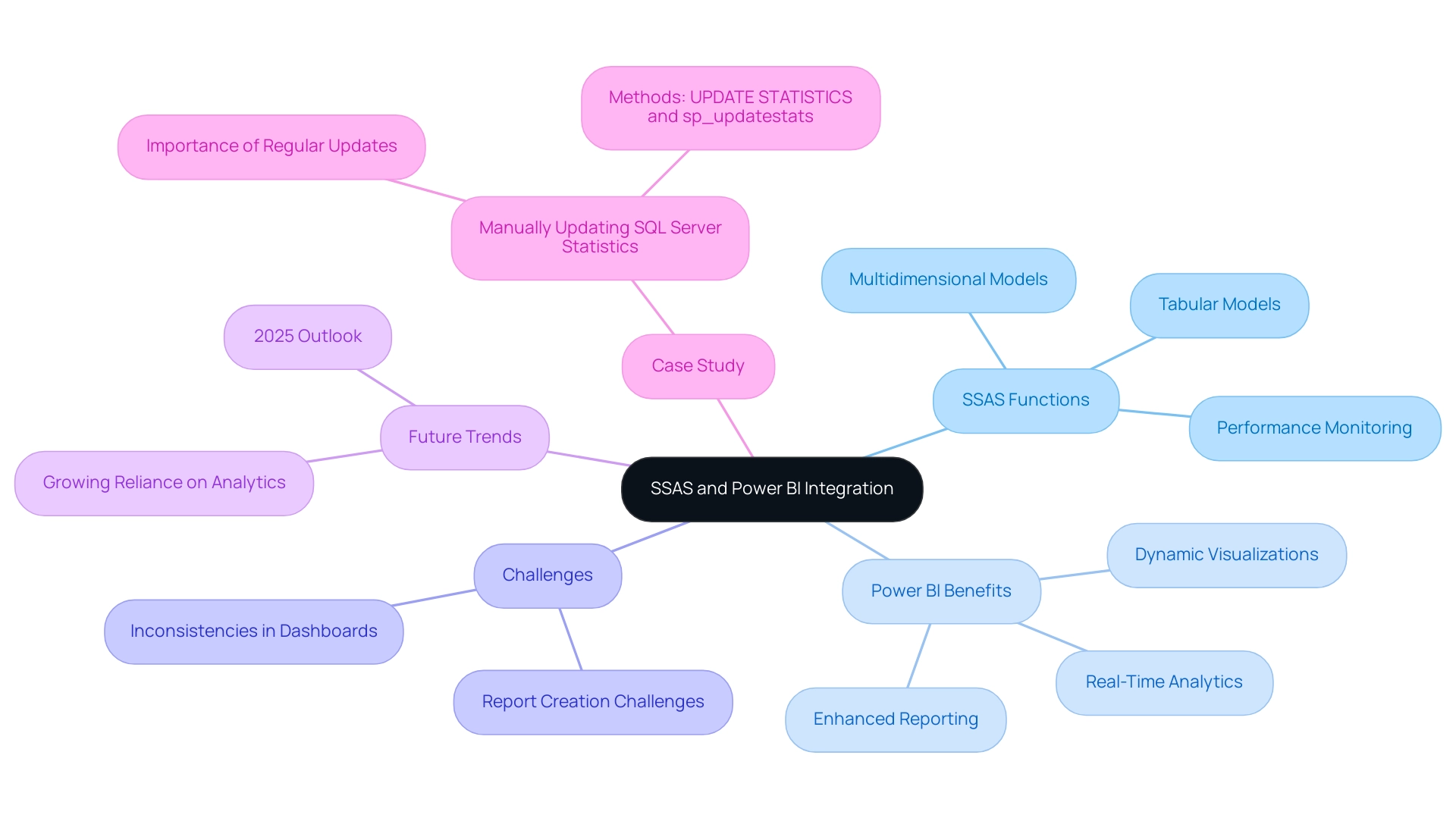

In the dynamic landscape of data analytics, the integration of SQL Server Analysis Services (SSAS) with Power BI stands as a pivotal advancement for organizations aiming to refine their decision-making processes. This robust combination not only facilitates the development of sophisticated data models but also empowers real-time analytics, enabling businesses to fully harness their data’s potential. As companies increasingly depend on data-driven insights to sustain a competitive edge, grasping the complexities of SSAS and Power BI becomes imperative.

From optimizing performance to resolving common integration challenges, organizations can markedly enhance their operational efficiency by embracing best practices and leveraging cutting-edge technologies. This article explores the essential elements of SSAS and Power BI integration, providing valuable insights and strategies for businesses striving to excel in an ever-evolving digital landscape.

Understanding SQL Server Analysis Services and Power BI Integration

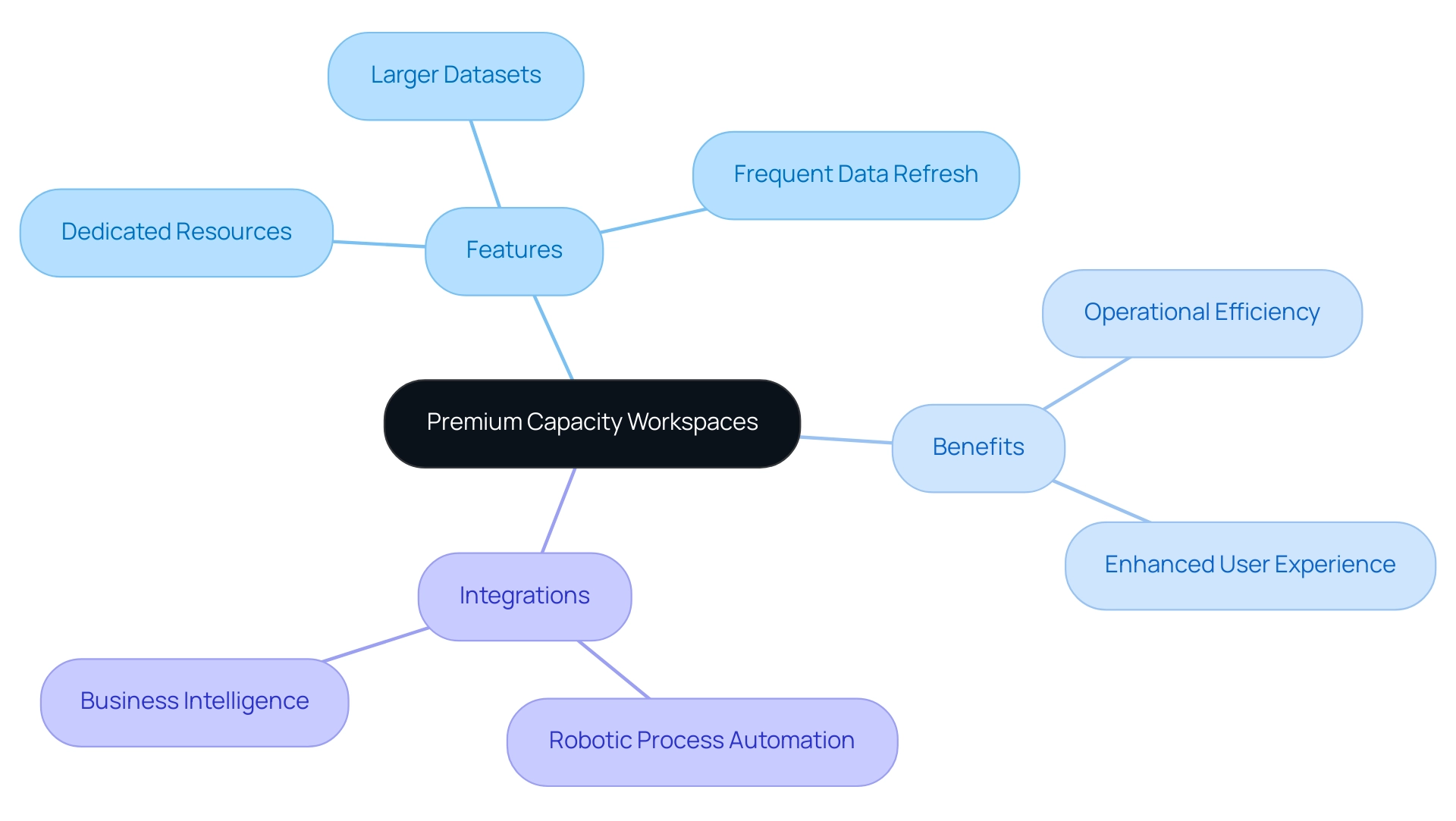

SQL Server Analysis Services (SSAS) is an analytical engine designed to support decision-making and business analytics. It lets users build both multidimensional and tabular data models, which can be connected to Power BI to enhance reporting and analytics. This integration is also what makes SSAS performance monitoring through Power BI possible, enabling organizations to get the full value from their data.

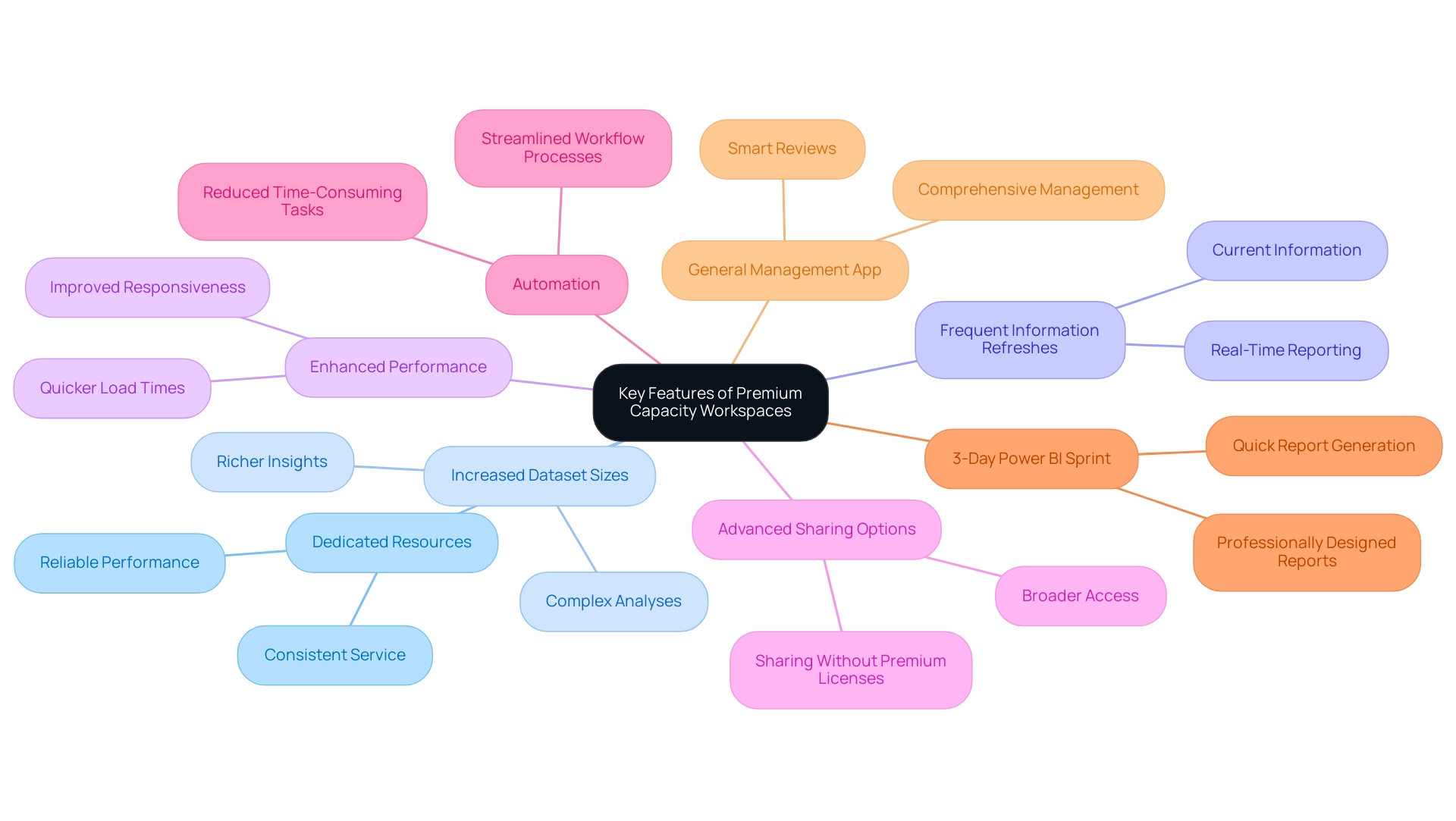

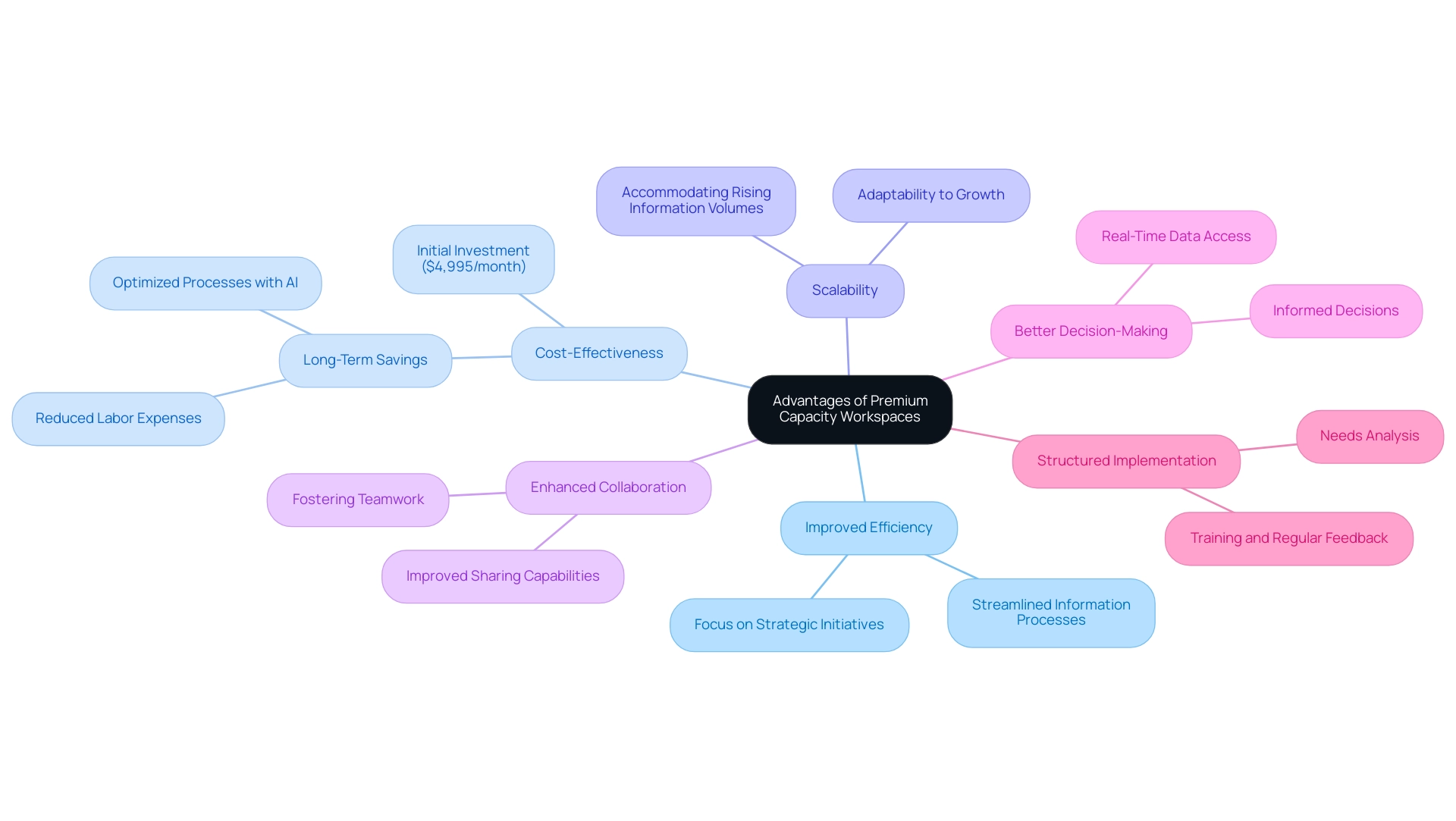

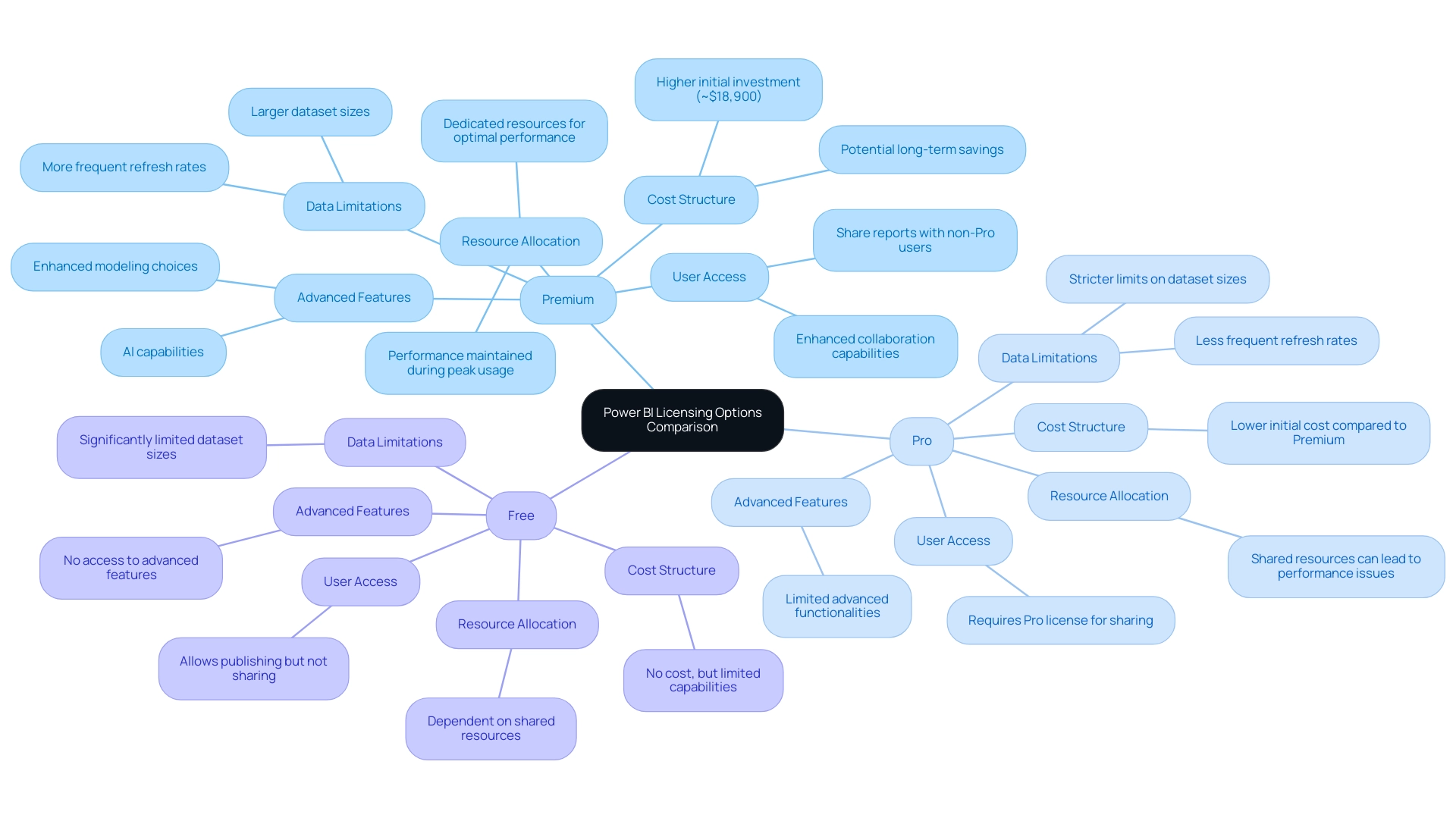

By connecting Power BI to SSAS, users can query large datasets efficiently, enabling complex analyses and dynamic visualizations. Notably, the 3-Day Power BI Sprint offers a rapid way to produce professionally designed reports, improving the speed and effectiveness of reporting. Additionally, the General Management App provides comprehensive management and intelligent reviews, ensuring that actionable insights are readily available for informed decision-making.

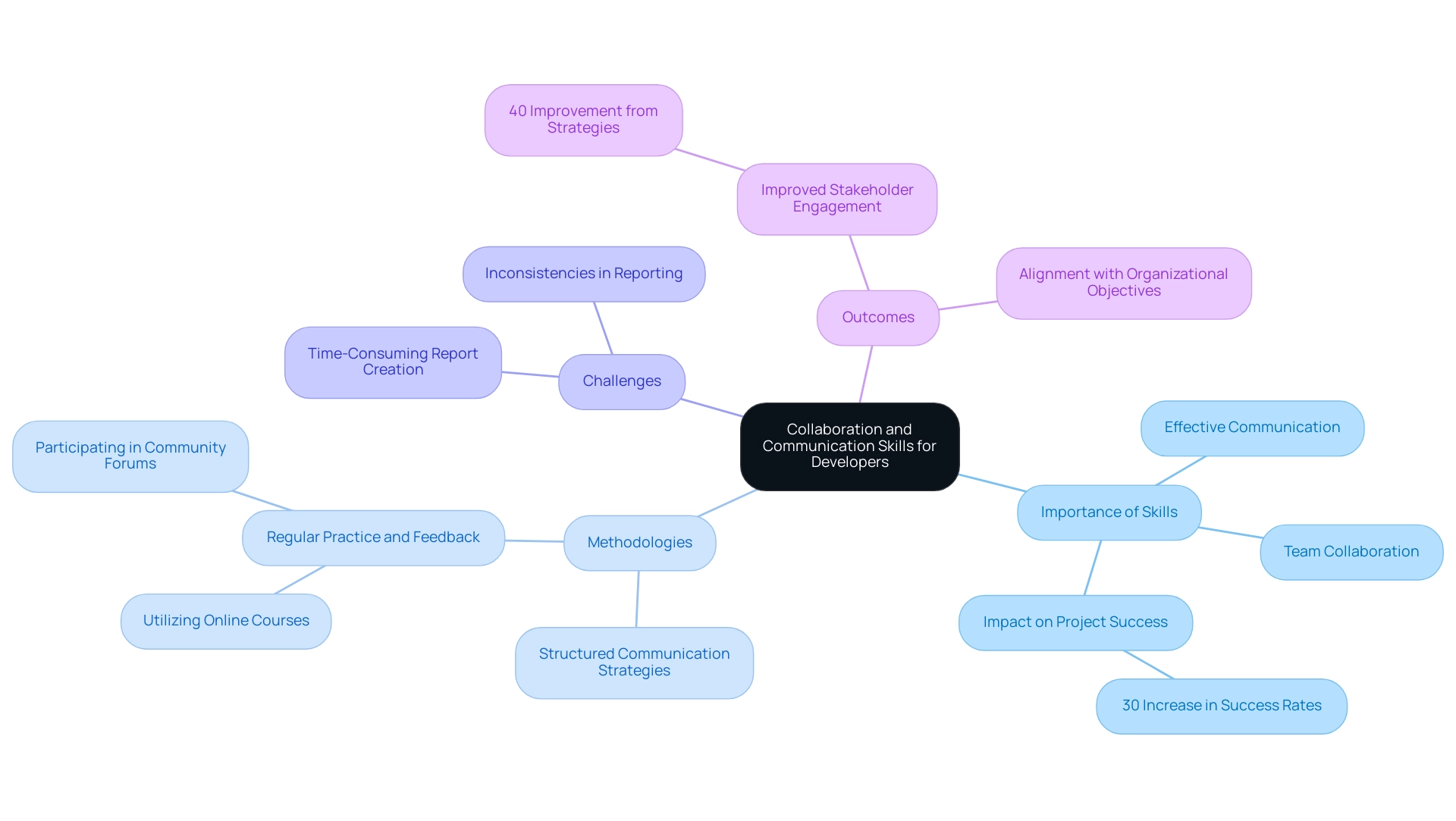

This integration supports real-time analytics, making SSAS performance monitoring in Power BI a valuable asset for organizations that want to strengthen data-driven decision-making. Moreover, with Power Automate, businesses can streamline workflows, backed by risk-free ROI assessments and professional execution for operational efficiency. However, challenges remain in extracting insights from Power BI dashboards, particularly the time-consuming nature of report creation and data inconsistencies that can hinder effective decision-making.

As part of our offerings, we present an Actions portfolio and encourage you to schedule a free consultation to explore how we can help you overcome these challenges. As Paul Turley, a Microsoft Data Platform MVP, notes, ‘Same best practice guidelines… different scale, and different focus,’ emphasizing that these practices adapt across diverse organizational contexts. Looking ahead to 2025, integrating SSAS with Power BI is increasingly relevant: a significant portion of Microsoft SQL Server Analysis Services customers are medium to large enterprises, including 1,036 companies with between 1,000 and 4,999 employees.

This trend underscores the growing reliance on robust analytics solutions to drive business success. Relatedly, a case study on manually refreshing SQL Server statistics shows why statistics should be updated routinely: the query optimizer needs current data distribution details to maintain optimal query efficiency.

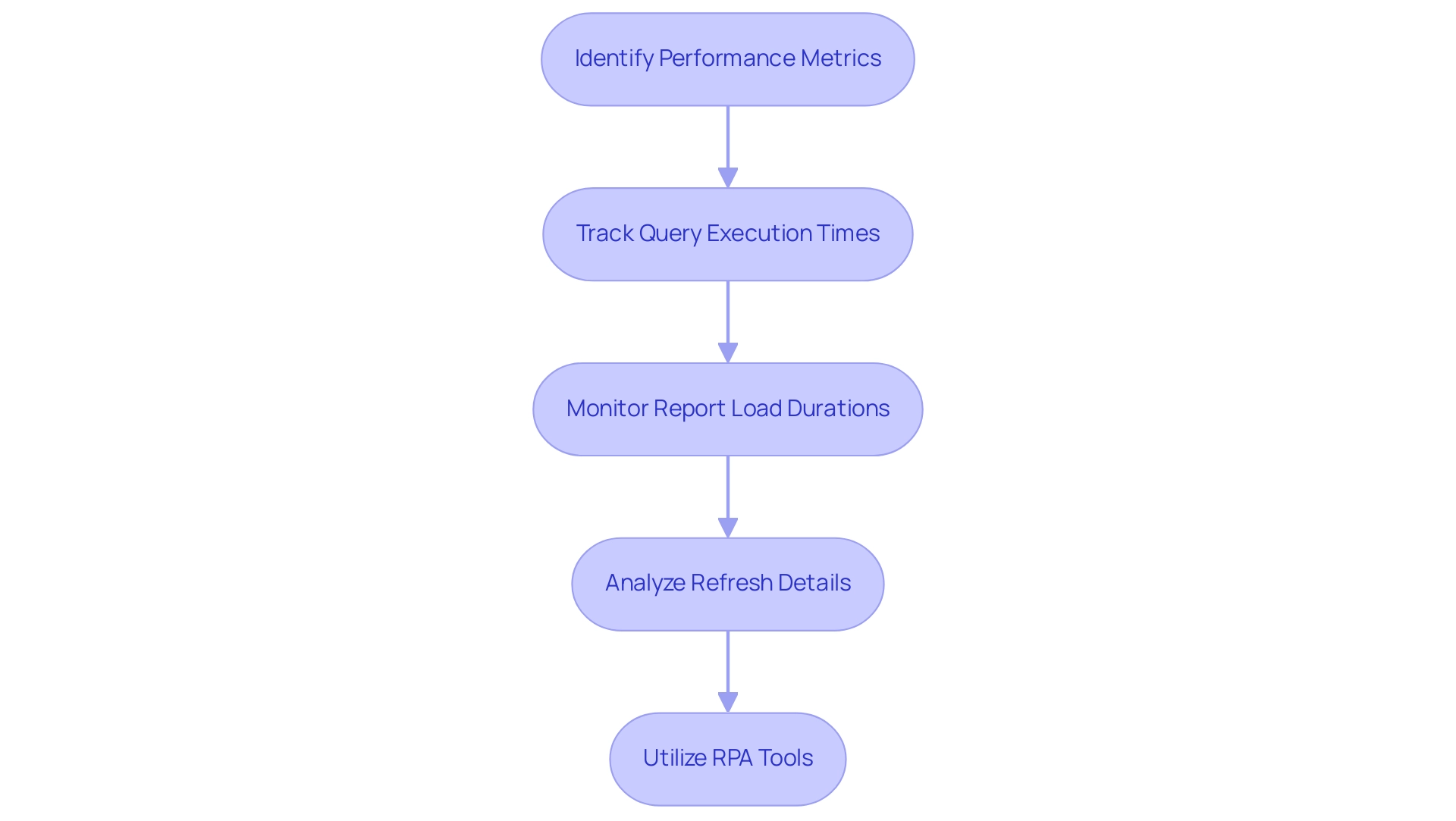

The Importance of Performance Monitoring in Power BI

Monitoring SSAS performance in Power BI is crucial for ensuring that reports are generated quickly and efficiently, particularly when data-driven insights are vital to staying competitive. By tracking performance metrics such as query execution times and report load durations, organizations can quickly identify issues that degrade the user experience. This is especially important when SQL Server Analysis Services (SSAS) is the data source, because monitoring helps pinpoint the efficiency bottlenecks that may arise.
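As a minimal sketch of what tracking report load durations can look like, the Python snippet below summarizes per-visual durations and flags visuals whose typical load time exceeds a threshold. It assumes the timings have already been collected (for example, from Power BI Desktop's Performance Analyzer) and reshaped into simple `(visual, duration_ms)` pairs; that record shape, the sample values, and the 1,000 ms threshold are illustrative assumptions.

```python
from statistics import median, quantiles

# Hypothetical per-visual timings: (visual name, duration in milliseconds).
timings = [
    ("Sales by Region", 420.0),
    ("Sales by Region", 460.0),
    ("Top Products", 1850.0),
    ("Top Products", 1720.0),
    ("KPI Card", 90.0),
    ("KPI Card", 110.0),
]

def summarize(records, threshold_ms=1000.0):
    """Return the overall median and p95, plus visuals whose median exceeds threshold_ms."""
    durations = [d for _, d in records]
    # quantiles(n=20) returns 19 cut points; the last one approximates the 95th percentile.
    p95 = quantiles(durations, n=20, method="inclusive")[-1]
    per_visual = {}
    for name, d in records:
        per_visual.setdefault(name, []).append(d)
    # Use each visual's median so a single cold-cache run does not dominate.
    slow = sorted(name for name, ds in per_visual.items() if median(ds) > threshold_ms)
    return {"median_ms": median(durations), "p95_ms": p95, "slow_visuals": slow}

print(summarize(timings))
```

Comparing a visual's median against the threshold, rather than a single run, keeps one unusually slow or fast load from deciding the result.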

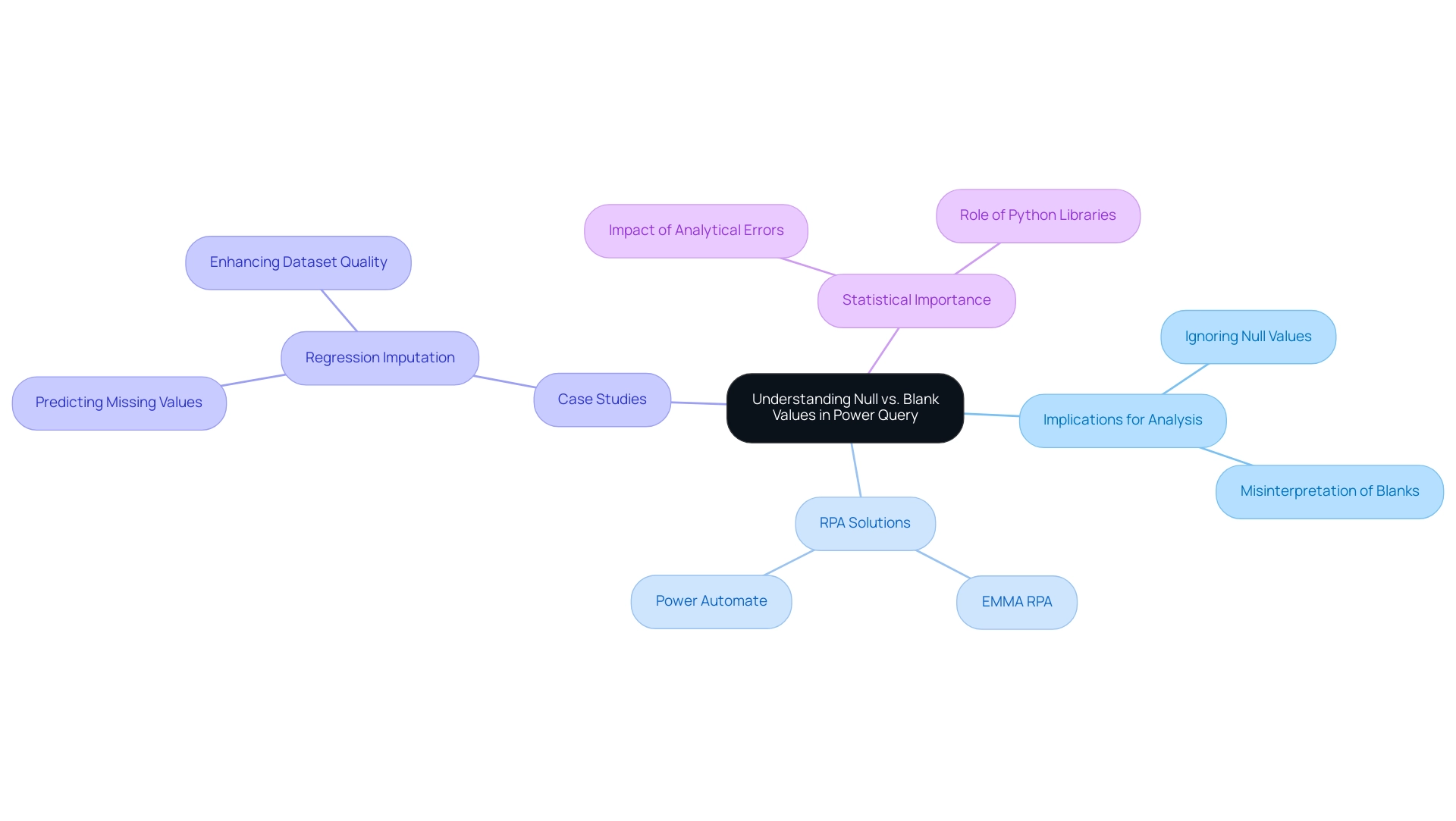

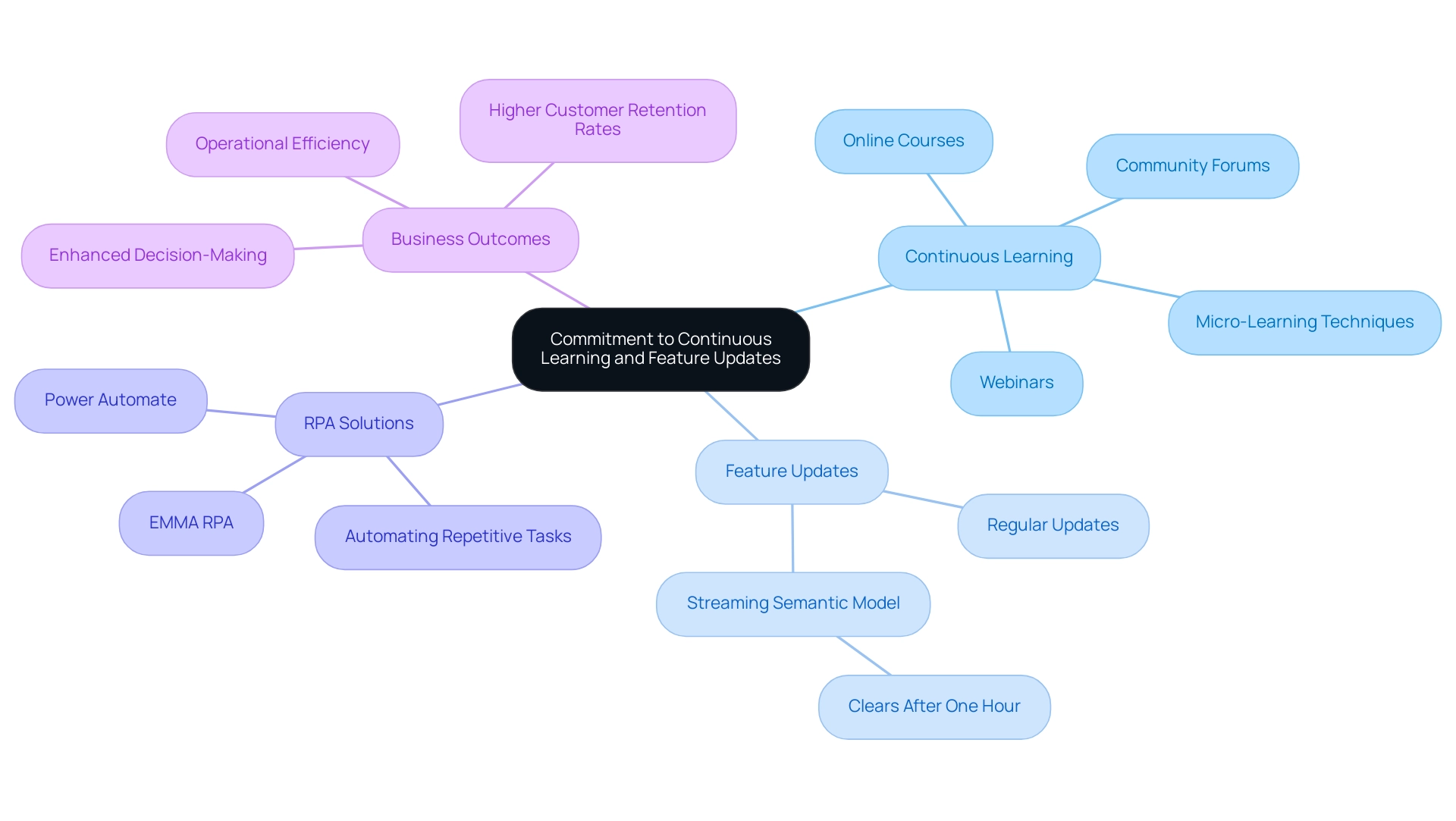

Effective SSAS performance monitoring in Power BI not only improves report responsiveness but also lets organizations base decisions on real-time data insights, driving business growth. Incorporating RPA solutions such as EMMA RPA and Power Automate can further streamline processes, reduce repetitive tasks, and improve operational efficiency. The new semantic model refresh detail page also supports monitoring: it shows comprehensive details of each refresh, including capacity, gateway, start and end times, and error details.

As Hubert Ferenc, Practice Lead of the Platform at TTMS, highlights, ‘the appropriate tools can fulfill all operational requirements flawlessly,’ underscoring the importance of selecting efficient tools for tracking outcomes. In 2025, as organizations refine their data-focused strategies, these metrics matter more than ever. A recent case study shows how the Fabric Monitoring Hub’s detailed insights help users troubleshoot and optimize their semantic model refresh processes, further emphasizing the role of robust monitoring in achieving operational excellence.
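Refresh details such as start time, end time, and status can also be summarized in a script. The sketch below assumes refresh records shaped like the JSON returned by the Power BI REST API's refresh-history endpoint, with `startTime`, `endTime`, and `status` fields; the sample records are invented, and fetching them (authentication and the HTTP call) is deliberately left out.

```python
import json
from datetime import datetime

# Invented sample shaped like a refresh-history response (assumed field names).
SAMPLE = """
{"value": [
  {"status": "Completed", "startTime": "2024-05-01T02:00:00Z", "endTime": "2024-05-01T02:12:30Z"},
  {"status": "Failed",    "startTime": "2024-05-02T02:00:00Z", "endTime": "2024-05-02T02:03:00Z"},
  {"status": "Completed", "startTime": "2024-05-03T02:00:00Z", "endTime": "2024-05-03T02:20:00Z"}
]}
"""

def parse_ts(value):
    # Replace a trailing "Z" so datetime.fromisoformat also works on older Python versions.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))

def summarize_refreshes(payload):
    """Return the duration in minutes of each completed refresh and the number of failures."""
    records = json.loads(payload)["value"]
    durations = []
    failures = 0
    for rec in records:
        if rec["status"] == "Failed":
            failures += 1
        elif rec["status"] == "Completed" and rec.get("endTime"):
            delta = parse_ts(rec["endTime"]) - parse_ts(rec["startTime"])
            durations.append(delta.total_seconds() / 60)
    return durations, failures

durations, failures = summarize_refreshes(SAMPLE)
print(f"completed: {durations} min, failed: {failures}")
```

Tracking refresh durations over time makes gradual slowdowns visible before they turn into missed refresh windows.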

Employing RPA tools alongside BI not only improves reporting efficiency but also provides a comprehensive framework for addressing the challenges of information management and operational workflows.

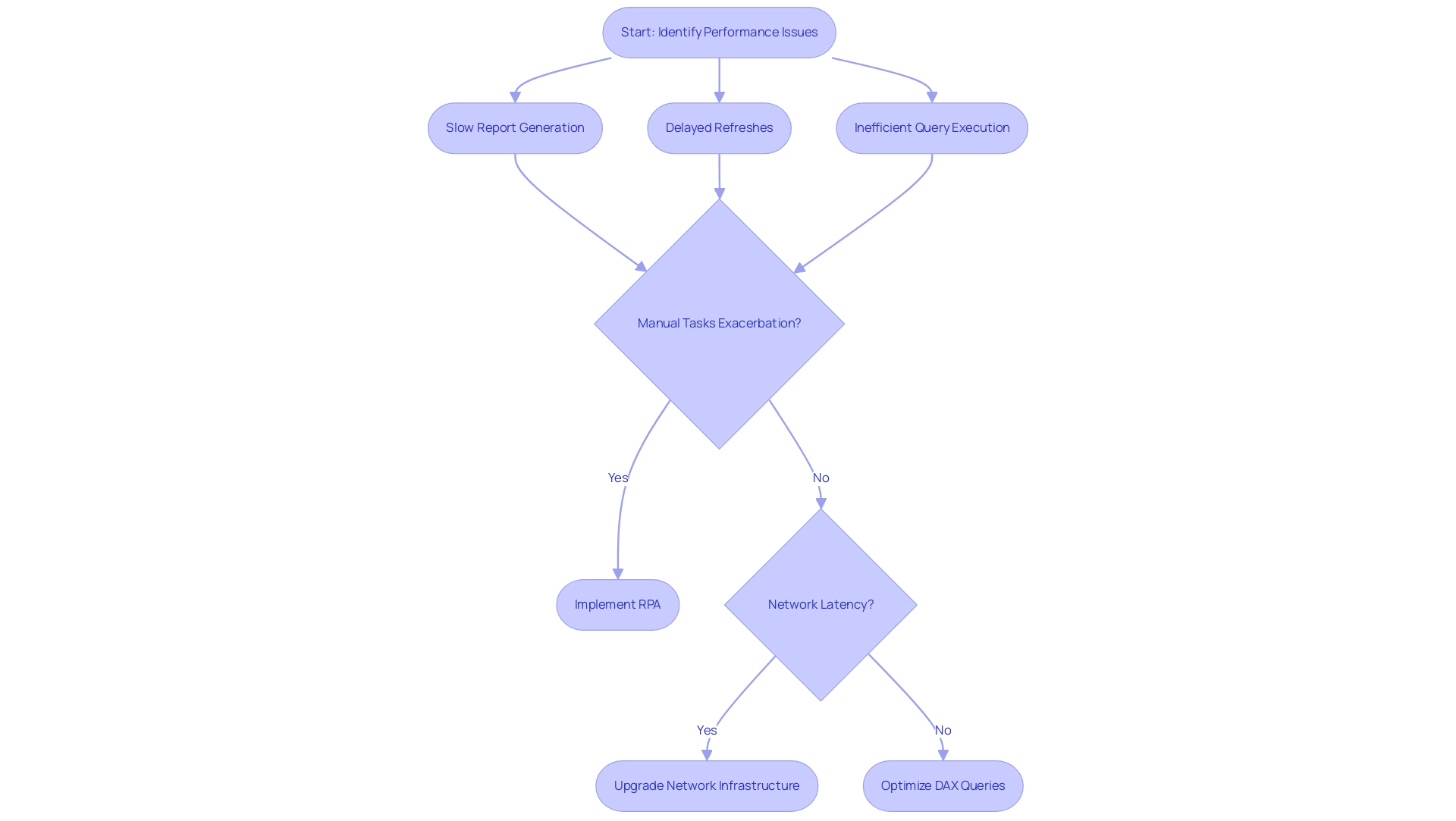

Identifying Common Performance Issues with SSAS in Power BI

Integrating SQL Server Analysis Services with Power BI raises several performance challenges that organizations must monitor and address. Among the most prevalent are:

- Slow report generation

- Delayed refreshes

- Inefficient query execution

Manual, repetitive tasks significantly exacerbate these challenges, leading to wasted time and resources.

For instance, reports that use complex DAX queries often have long load times, particularly when the underlying model is not optimized. In one analysis, users reported final queries returning hundreds of thousands of records, underscoring the need for efficient data handling. Robotic Process Automation (RPA) can help streamline these workflows by reducing manual tasks and improving operational efficiency.

Network latency is another factor that hurts performance, especially when SSAS data is retrieved from a remote server. This latency can significantly delay data retrieval and complicate the reporting process. Identifying these bottlenecks early is essential for organizations that want to improve their BI solutions through performance monitoring.
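One rough way to quantify network latency between a client or gateway machine and the SSAS server is to time TCP connections to the server's port. The sketch below assumes a default SSAS instance listening on TCP port 2383; named instances and HTTP (msmdpump) access use other ports, and connection time is only a proxy for full query round-trip time.

```python
import socket
import time

def connect_latencies_ms(host, port=2383, attempts=5, timeout=2.0):
    """Time `attempts` TCP connections to host:port and return each duration in milliseconds.

    Port 2383 is assumed here as the default for a default SSAS instance;
    adjust it for named instances or custom configurations.
    """
    results = []
    for _ in range(attempts):
        start = time.perf_counter()
        # create_connection completes the TCP handshake; closing right away is enough to time it.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        results.append((time.perf_counter() - start) * 1000)
    return results
```

For example, calling `connect_latencies_ms("ssas.example.internal")` (a hypothetical host name) from the gateway machine returns five timings; consistently high or erratic values point to the network rather than the model.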

By implementing optimizations such as simplifying DAX queries or upgrading network infrastructure, businesses can significantly enhance report efficiency. Moreover, customized AI solutions can improve analytics and Business Intelligence functionality, aligning with the organization’s aim of enhancing operational efficiency.

Expert opinions emphasize the significance of monitoring and adjusting the ‘Is Available in MDX’ property, as disabling it can affect the efficiency of operations like DISTINCT COUNT, MIN, and MAX on columns. As Anders noted in a recent discussion, ‘setting this to false on all columns would mean none of the columns would be available for the Excel users, right?’ This highlights the need for a balanced approach that considers both developer access and user experience, making it a critical consideration for operational efficiency.

A follow-up evaluation also found that the BI solution performed considerably better after Analysis Services was introduced, implying that the BI tool had previously been used in a way it was not intended for. Case studies illustrate similar challenges: one user compared the performance of the SUM and SUMX functions in their measures and concluded that SUMX performed better in their specific scenario, despite initial concerns that it would be slower than SUM. Such insights help organizations address performance issues and improve their BI reports.

By leveraging RPA and BI, businesses can drive data-driven insights and operational efficiency, avoiding the competitive disadvantage that comes from struggling to extract meaningful insights from their data.

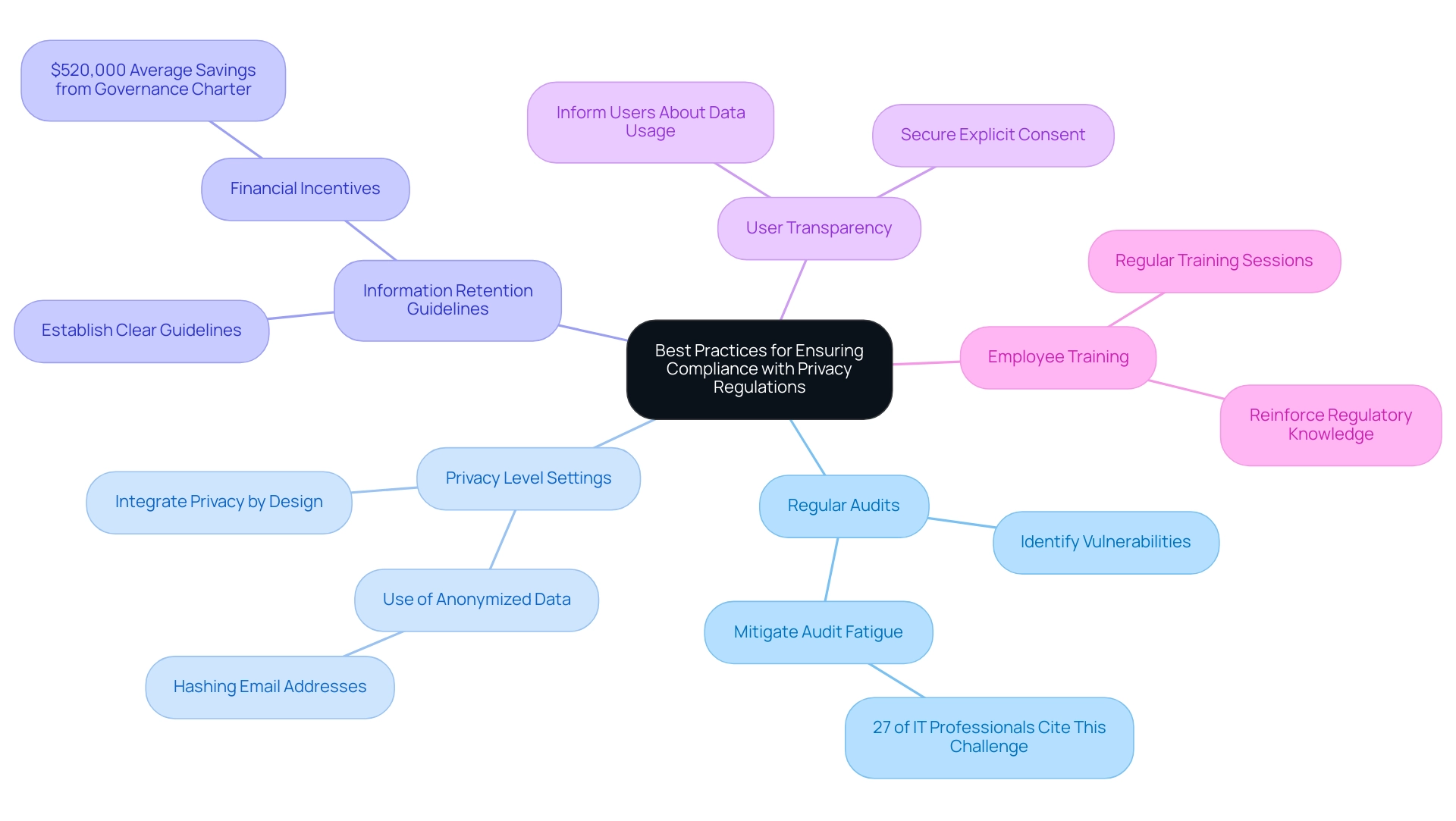

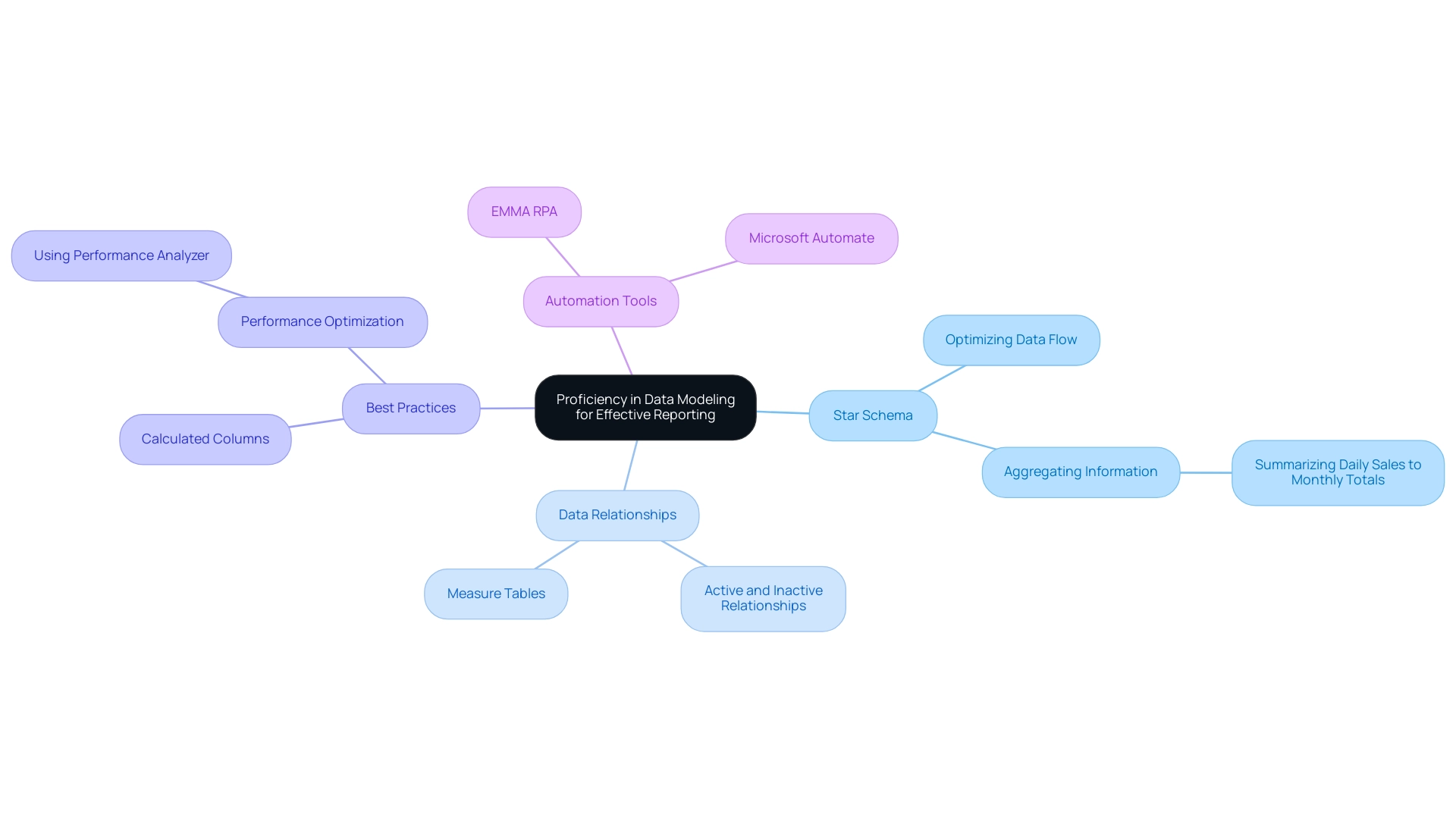

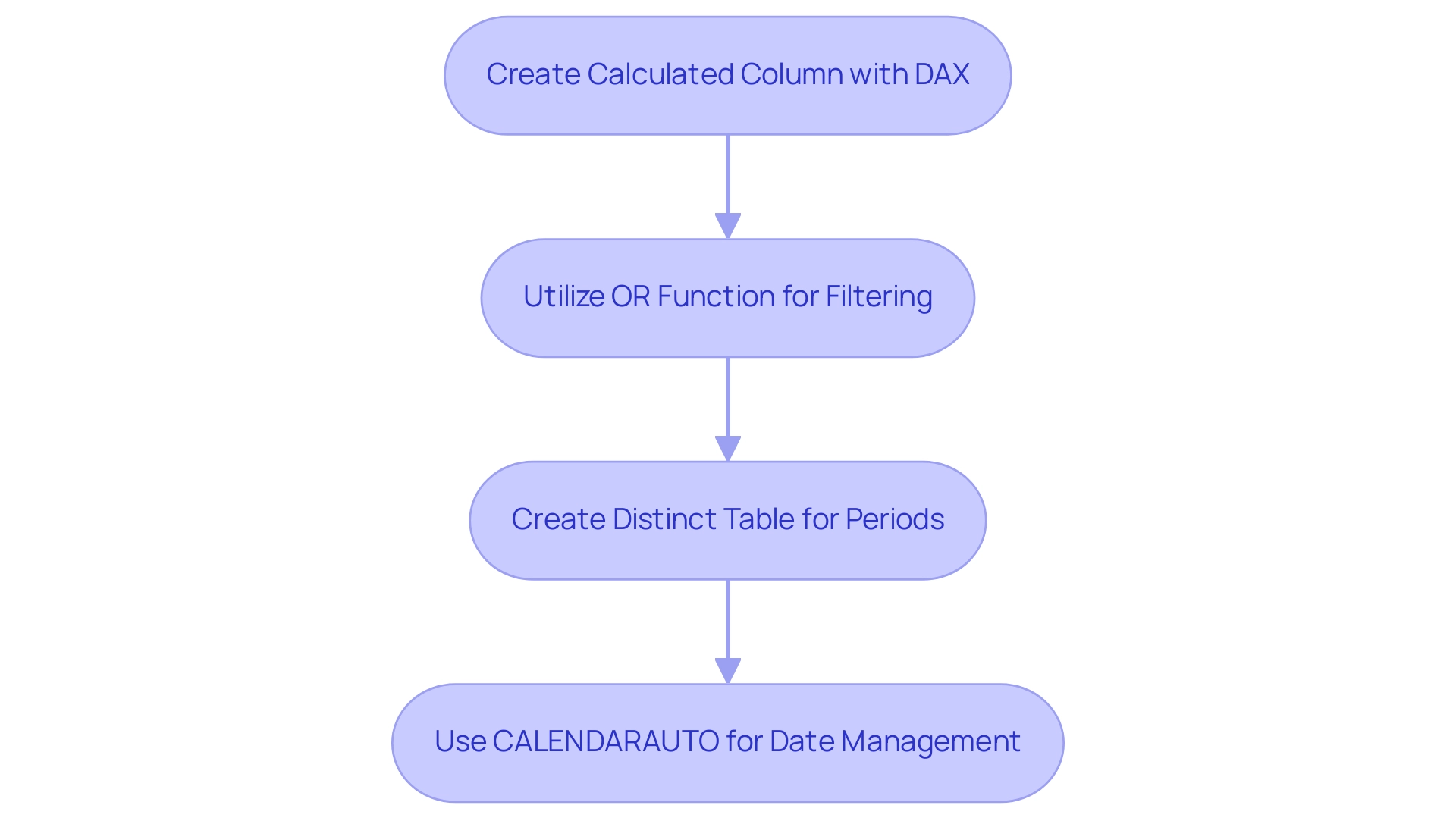

Best Practices for Optimizing SSAS Performance in Power BI

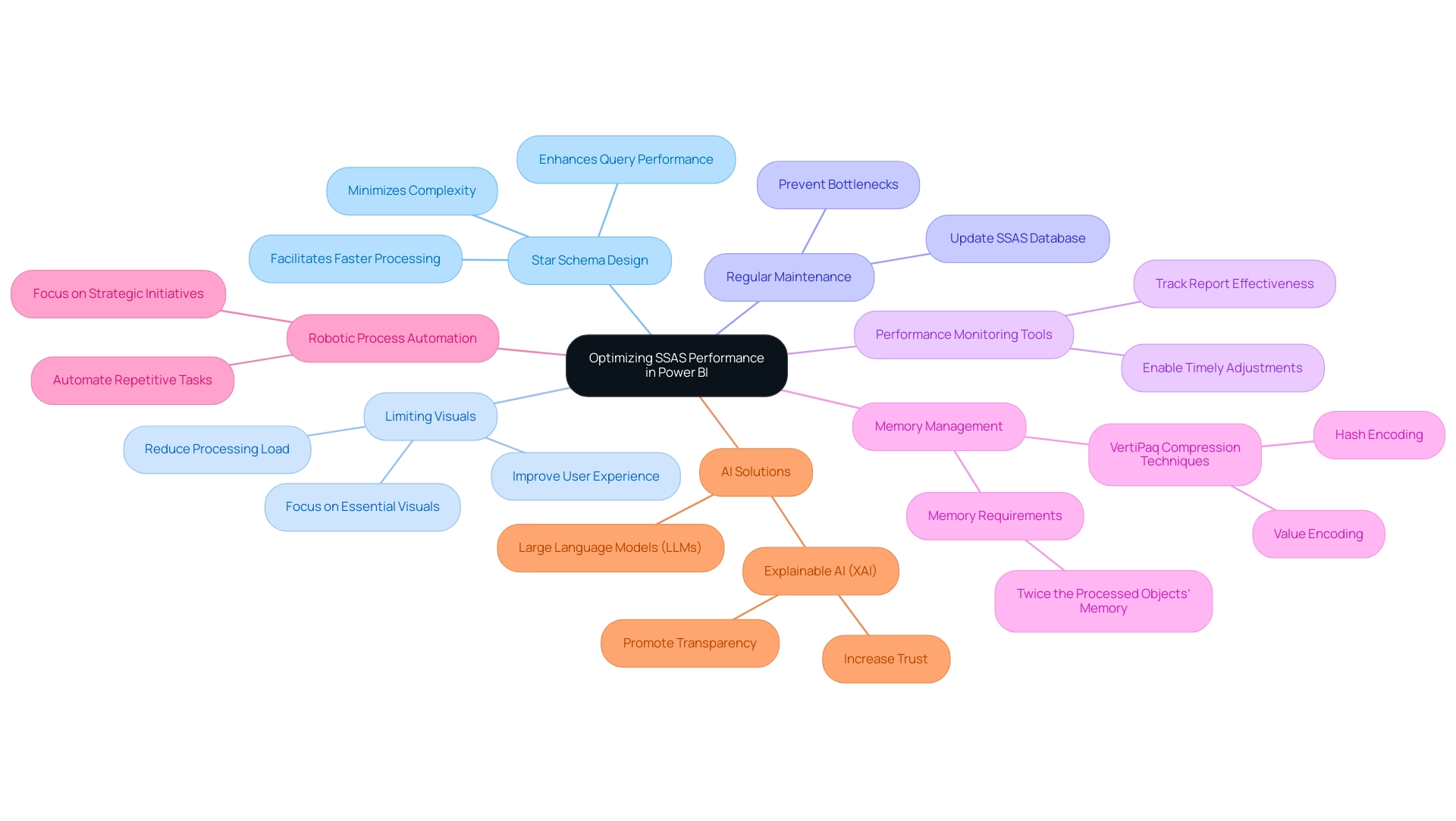

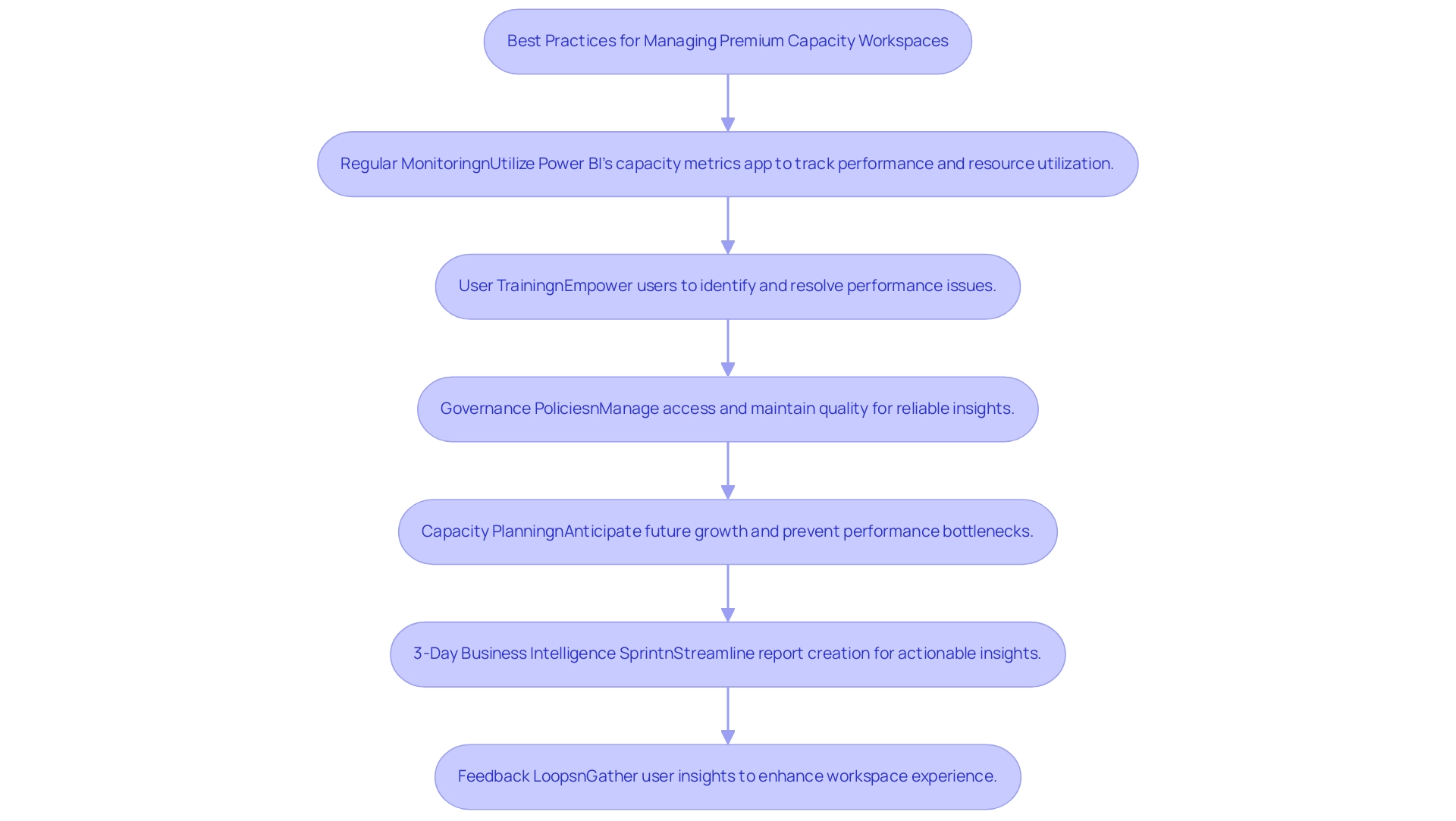

To improve data analysis performance in Power BI, organizations should adopt a set of best practices for optimizing and monitoring SSAS. A critical first step is sound SSAS model design, preferring a star schema wherever feasible. A star schema relates fact tables directly to dimension tables, which keeps the model simple and typically improves query performance and retrieval times.

Utilizing a star schema can lead to a more efficient model, facilitating faster processing and better scalability.
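To make the star-schema idea concrete, the sketch below splits a flat, denormalized sales table into a product dimension with integer surrogate keys and a narrow fact table that references it. The column names and rows are invented; in practice this shaping usually happens in the source database, an ETL process, or Power Query rather than in Python.

```python
# Flat rows as they might arrive from a source system (invented example data).
flat_sales = [
    {"date": "2024-01-05", "product": "Widget", "category": "Hardware", "qty": 3, "amount": 29.97},
    {"date": "2024-01-05", "product": "Gadget", "category": "Hardware", "qty": 1, "amount": 4.50},
    {"date": "2024-01-06", "product": "Widget", "category": "Hardware", "qty": 2, "amount": 19.98},
]

def to_star(rows):
    """Split flat rows into a product dimension and a fact table keyed by product_key."""
    dim_product = {}  # (product, category) -> surrogate key
    facts = []
    for row in rows:
        natural_key = (row["product"], row["category"])
        # Assign the next integer surrogate key the first time a product is seen.
        key = dim_product.setdefault(natural_key, len(dim_product) + 1)
        facts.append({"date": row["date"], "product_key": key,
                      "qty": row["qty"], "amount": row["amount"]})
    dimension = [{"product_key": k, "product": p, "category": c}
                 for (p, c), k in dim_product.items()]
    return dimension, facts

dimension, facts = to_star(flat_sales)
print(dimension)
print(facts)
```

The fact table keeps only keys and numeric columns, while descriptive text lives once per product in the small dimension table.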

Another vital strategy is limiting the number of visuals per report. By focusing on essential visuals and using pre-aggregated data, organizations can substantially reduce processing load, resulting in faster report generation and a better user experience. Regular processing and maintenance of the SSAS database are equally important for effective performance monitoring; outdated data can create bottlenecks and inefficiencies that hinder functionality.

Moreover, performance monitoring tools are essential for tracking and analyzing report performance. They provide the insights needed to adjust the model in time, keeping it optimized for current needs. It also helps to understand how the engine stores data; as industry experts note, “Every column included in an AS tabular model will be compressed and stored by the Vertipaq engine using either one of these techniques: value encoding or hash encoding.”

This compression is vital for both query performance and memory management. Notably, during processing, VertiPaq could require roughly twice the memory of the objects being processed, so servers need enough headroom to handle it.
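The two encodings named in that quote can be illustrated with a toy model. The sketch below is not VertiPaq's actual implementation: value encoding is approximated as storing each integer as an offset from the column minimum so it fits in fewer bits, and hash (dictionary) encoding as replacing each distinct value with a small integer ID.

```python
def value_encode(values):
    """Toy value encoding: store each integer as an offset from the column minimum."""
    base = min(values)
    offsets = [v - base for v in values]
    # Bits needed to store the largest offset (at least 1 bit).
    bits = max(max(offsets).bit_length(), 1)
    return base, offsets, bits

def dictionary_encode(values):
    """Toy hash/dictionary encoding: map each distinct value to an integer ID."""
    dictionary = {}
    ids = [dictionary.setdefault(v, len(dictionary)) for v in values]
    return list(dictionary), ids

print(value_encode([1_000_000, 1_000_003, 1_000_007]))
# (1000000, [0, 3, 7], 3)
print(dictionary_encode(["Hardware", "Toys", "Hardware", "Garden"]))
# (['Hardware', 'Toys', 'Garden'], [0, 1, 0, 2])
```

A raw value such as 1,000,007 needs 20 bits, while its offset fits in 3; similarly, a text column with few distinct values shrinks to small integer IDs plus one dictionary, which is why low-cardinality columns compress well.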

Incorporating Robotic Process Automation (RPA) can further boost operational efficiency by automating repetitive tasks, allowing teams to concentrate on more strategic initiatives. Recent advancements, such as the Power Fabric update, exemplify the benefits of integrating information processes within Power BI. This update has streamlined workflows, improved information accessibility, and enhanced visualization capabilities, ultimately supporting better decision-making for organizations.

Additionally, leveraging AI solutions through GenAI workshops can enhance data quality and improve training methods. Integrating Explainable AI (XAI) and Large Language Models (LLMs) can further transform operational efficiency by increasing trust and promoting transparency in data handling and analysis. By adopting these best practices, companies can ensure that their analytics efficiency in Business Intelligence is not only enhanced but also aligned with their strategic objectives for 2025.

Troubleshooting Common SSAS and Power BI Integration Issues

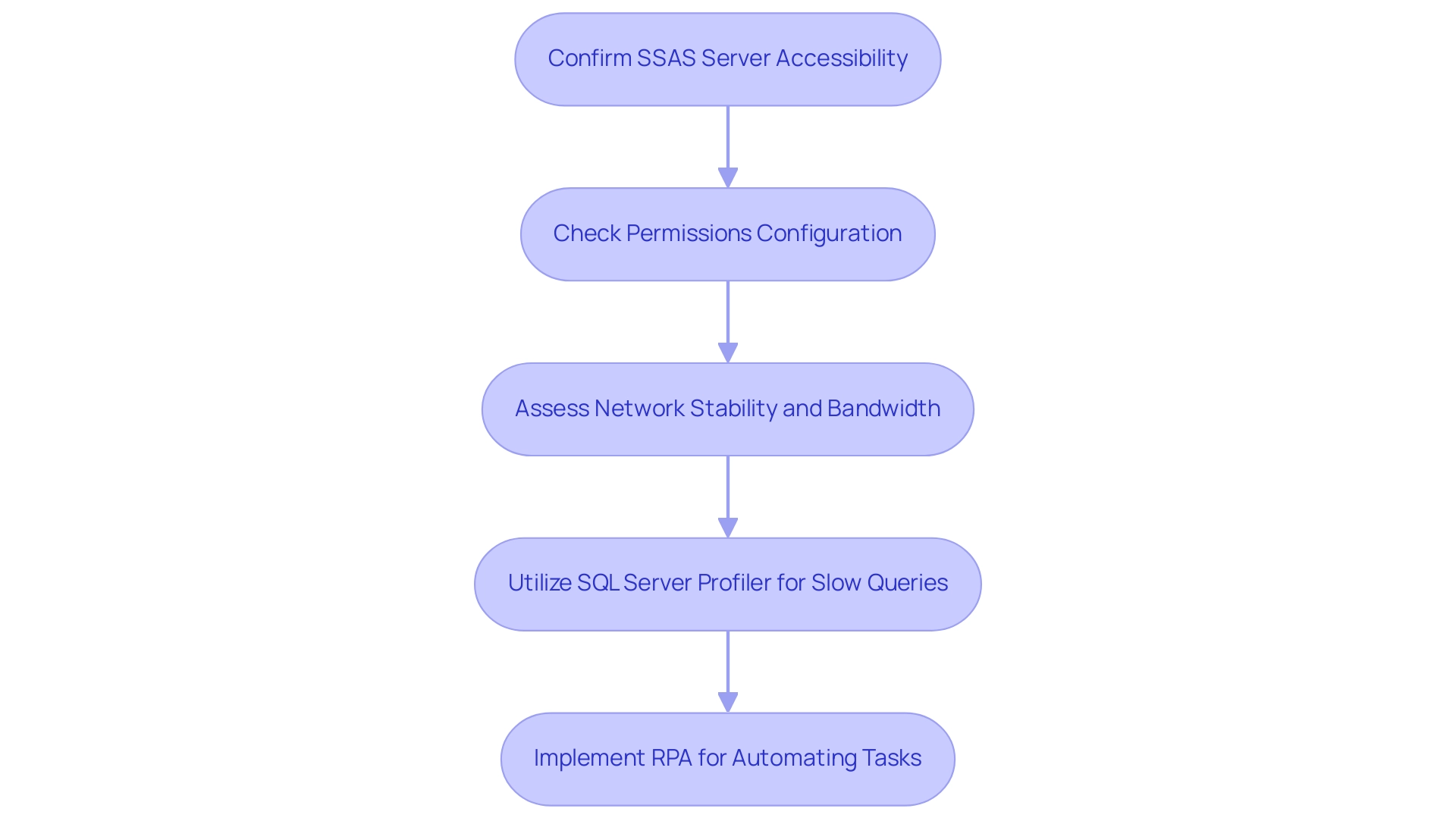

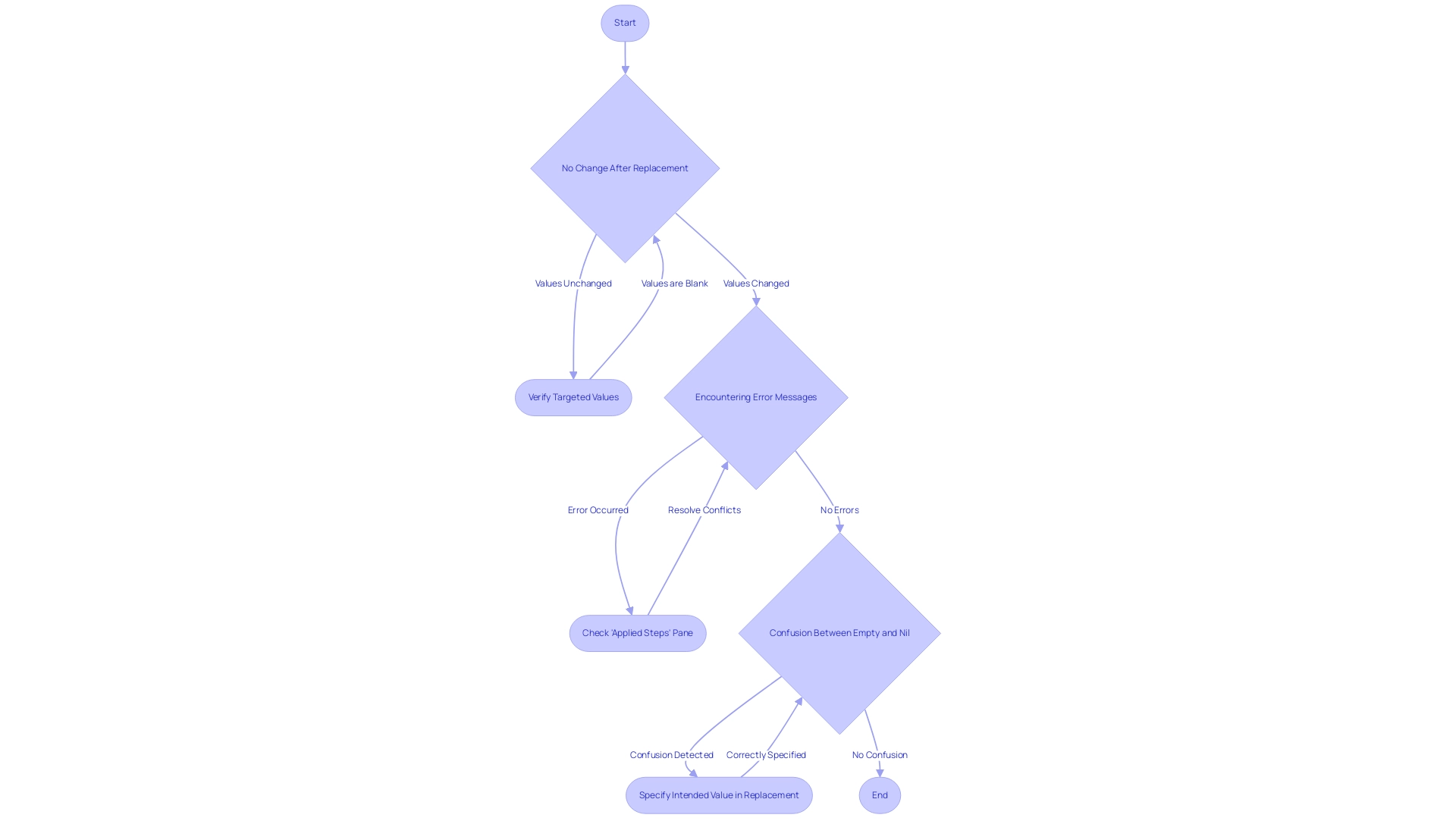

Troubleshooting integration issues between SQL Server Analysis Services and Power BI is a key part of SSAS performance monitoring and of maintaining operational efficiency. Frequent problems such as connection timeouts, authentication failures, and slow query execution can significantly hinder productivity. Manual, repetitive tasks compound these issues, causing delays and wasting valuable time and resources.

To effectively address these integration challenges, it is essential to confirm that the SSAS server is accessible and that all necessary permissions are configured correctly. Stability in network conditions and sufficient bandwidth are also critical; fluctuations can greatly affect functionality.

Tools such as SQL Server Profiler can help identify slow queries and performance bottlenecks. This analysis guides targeted adjustments to data models or Power BI reports, improving overall efficiency. Moreover, Robotic Process Automation (RPA) can streamline workflows by automating repetitive tasks, letting your team focus on strategic initiatives that drive growth.
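Once a SQL Server Profiler trace has been saved, ranking queries by duration is a quick first pass at finding bottlenecks. The sketch below assumes the trace was exported to CSV with `EventClass`, `TextData`, and `Duration` columns and that `Duration` is in milliseconds; column names, event labels, and units depend on how the trace was captured and exported, so check them against your own file.

```python
import csv
import io

# Invented excerpt of a trace exported to CSV (column names and event labels are assumptions).
TRACE_CSV = """EventClass,TextData,Duration
Query Begin,EVALUATE 'Sales',
Query End,EVALUATE 'Sales',5230
Query End,"EVALUATE TOPN(10, 'Product')",180
Query End,"EVALUATE SUMMARIZE('Sales', 'Date'[Year])",2410
"""

def slowest_queries(csv_text, top_n=2):
    """Return (duration_ms, query_text) pairs for the slowest 'Query End' events."""
    reader = csv.DictReader(io.StringIO(csv_text))
    ends = [
        (int(row["Duration"]), row["TextData"])
        for row in reader
        # Only end events carry the total duration of a query.
        if row["EventClass"] == "Query End" and row["Duration"]
    ]
    return sorted(ends, reverse=True)[:top_n]

for duration, query in slowest_queries(TRACE_CSV):
    print(f"{duration:>6} ms  {query}")
```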

For instance, RPA can automate the extraction and transformation processes, significantly reducing the time spent on manual handling.

Recent statistics indicate that a considerable percentage of organizations experience common SSAS connection issues, with authentication failures among the leading causes of integration problems. A case study titled “Information Transformation Performance Comparison” compares the efficiency of various transformation methods, offering guidance on choosing the best approach for reporting and analysis needs. The same case study also highlights visibility limitations: loading DB tables over Visual Studio in Excel shows measures, while loading via a server connection only displays values until December 2024.

Furthermore, experiments comparing data loads and transformations between CSV files and a Fabric lakehouse have produced up-to-date performance comparisons. As Paul Turley, a Microsoft Data Platform MVP and Principal Consultant for 3Cloud Solutions, states, “All models and reports will use managed information and will be certified by the business.” This underscores the importance of maintaining data integrity throughout the integration process, leading to more reliable insights and better-informed decisions.

To further enhance your operations, our tailored AI solutions can identify specific integration challenges and provide targeted recommendations. For those who want to learn more about these topics, the code MSCUST provides a $150 discount on conference registration.

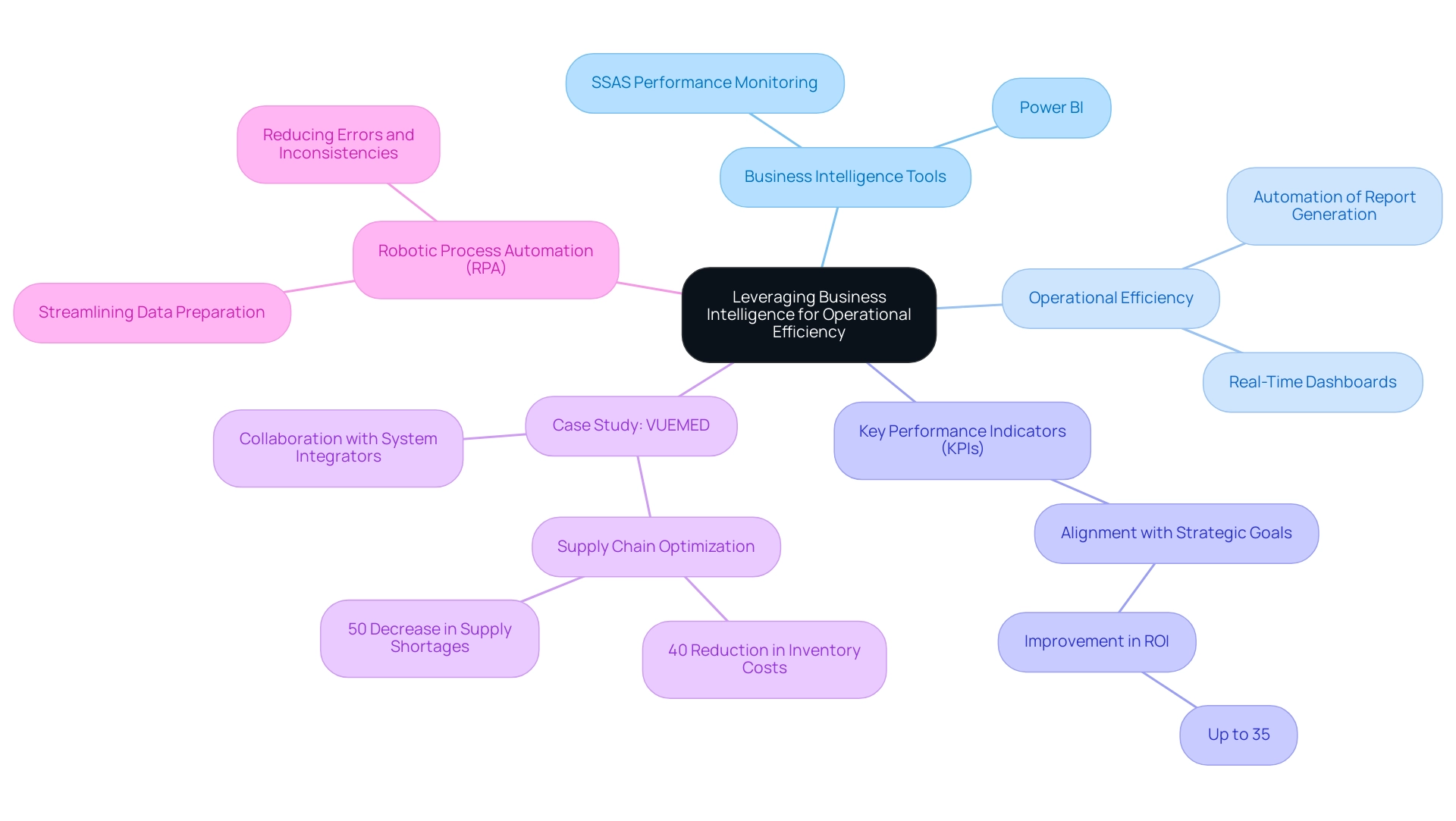

Leveraging Business Intelligence for Operational Efficiency

Business intelligence tools such as SSAS and Power BI can significantly improve operational efficiency by turning raw data into actionable insights, empowering organizations to make informed decisions that drive growth and innovation. Combined with Robotic Process Automation (RPA), these tools help identify inefficiencies within business processes, enabling targeted improvements and the automation of labor-intensive report generation.

For instance, real-time dashboards deliver critical insights into key performance indicators (KPIs), enabling teams to adjust strategies and operations quickly. Aligning KPIs with strategic business goals has been reported to improve ROI by as much as 35%. Moreover, RPA can streamline data preparation, reducing errors and inconsistencies and freeing teams to focus on analysis rather than data entry.

As Mykhailo Kulyk notes, ‘When evaluating the best BI tools in 2025, many organizations lean towards solutions that offer flexibility, real-time analytics, and deep integration capabilities.’ This is crucial as organizations navigate the complexities of a rapidly evolving business landscape. A notable case study is VUEMED, which utilized BI to optimize their supply chain operations. By analyzing usage patterns and integrating real-time monitoring into their inventory management processes, they achieved a remarkable 40% reduction in inventory costs and a 50% decrease in supply shortages over nine months.

Their success was further supported by collaboration with system integrators and data analysts, demonstrating the effectiveness of combining BI with IoT and agile methodologies. This underscores the benefits of business intelligence and RPA for operational efficiency in 2025, particularly for producing actionable, regularly updated reports, including SSAS performance monitoring reports in Power BI, that are easy to interpret visually. Furthermore, as organizations face an overwhelming AI landscape, tailored AI solutions can help them choose the right technologies for actionable insights.

Conclusion

Integrating SQL Server Analysis Services (SSAS) with Power BI offers a transformative opportunity for organizations aiming to elevate their data analytics and decision-making capabilities. By harnessing SSAS, businesses can develop sophisticated data models that seamlessly connect with Power BI, facilitating efficient querying and dynamic reporting. This integration not only enables real-time analytics but also empowers organizations to monitor performance effectively, ensuring that insights are timely and actionable.

However, as highlighted throughout the article, organizations must confront common performance challenges linked to this integration. From slow report generation to inefficient query execution, recognizing and resolving these issues is essential for maximizing the benefits of SSAS and Power BI. Implementing best practices, such as:

- adopting star schema designs

- optimizing DAX queries

- utilizing performance monitoring tools

can significantly enhance operational efficiency and user experience.

Ultimately, the combination of SSAS and Power BI represents a pivotal strategy for organizations striving to remain competitive in a data-driven landscape. By embracing a proactive approach to performance monitoring and troubleshooting, businesses can unlock the full potential of their data, driving growth and innovation. As the digital landscape continues to evolve, investing in these advanced analytics solutions will be crucial for achieving long-term success and operational excellence.

Overview

This article underscores the critical importance of effectively managing the first row in data sources. This row serves as the title row, containing essential labels that define the data columns. Proper management of this row is paramount, as it enhances clarity and minimizes errors in data processing. Such diligence ultimately leads to improved decision-making and operational efficiency. Furthermore, the integration of automation tools, such as Robotic Process Automation (RPA), supports this endeavor, streamlining processes and driving better outcomes.

Introduction

In the realm of data management, the significance of the first row in any dataset is paramount. This crucial header row not only sets the stage for understanding the data it encapsulates but also guides users through the intricacies of columns filled with vital information. As organizations navigate the complexities of data analysis and reporting, the clarity provided by a well-structured header becomes essential.

In today’s fast-paced digital landscape, where Robotic Process Automation (RPA) is transforming operational workflows, effectively managing this foundational element can dramatically enhance data accuracy and decision-making. Consider the common pitfalls, such as header duplication, alongside innovative techniques for leveraging automation. The journey toward mastering data management begins with recognizing the power of that first row.

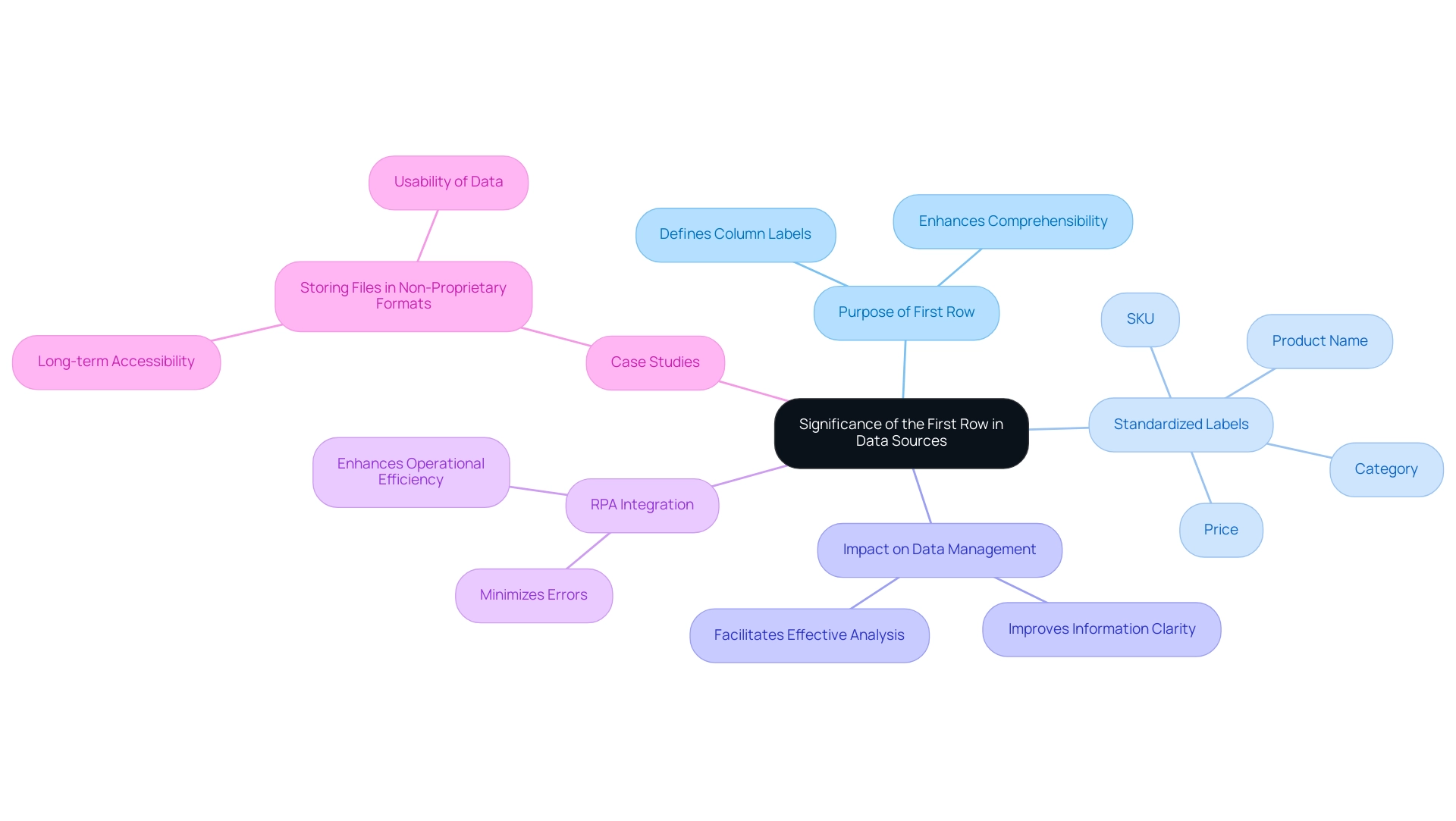

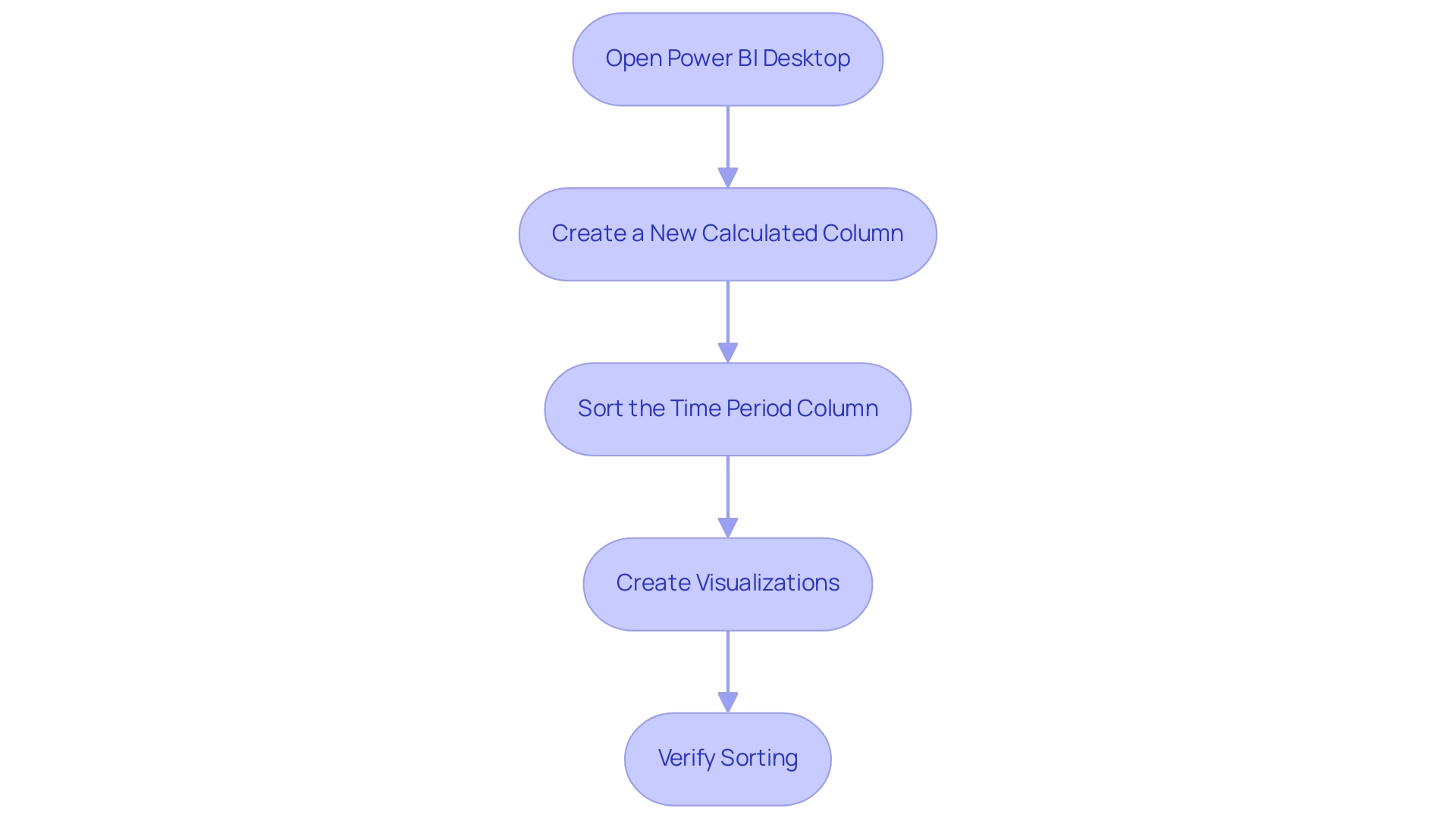

1. Understanding the Significance of the First Row in Data Sources

The first row in a data source, whether it is a spreadsheet or a database, is essential as it typically serves as the title row. This first row contains the labels that define each column, enabling users to grasp the essence of the information presented. For instance, in a sales dataset, the first row might include crucial labels such as ‘Date’, ‘Product’, ‘Quantity’, and ‘Price’.

Proper management of the first row in a data source is vital for ensuring that information is easily comprehensible and modifiable, which in turn fosters more effective analysis and reporting.
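In code, most CSV readers treat that first row as the schema. With Python's standard `csv` module, for example, `csv.DictReader` takes its field names from the first row by default, so every later value is addressed by its header label. The sales data below is invented to match the example labels above.

```python
import csv
import io

# Invented sample whose first row holds the header labels.
SALES_CSV = """Date,Product,Quantity,Price
2024-01-05,Widget,3,9.99
2024-01-06,Gadget,5,4.50
"""

reader = csv.DictReader(io.StringIO(SALES_CSV))
print(reader.fieldnames)  # ['Date', 'Product', 'Quantity', 'Price']

# Each row is a dict keyed by the header labels, so the meaning of every value is explicit.
revenue = sum(int(row["Quantity"]) * float(row["Price"]) for row in reader)
print(f"{revenue:.2f}")  # 52.47
```

If the first row were missing or contained data instead of labels, `DictReader` would silently use those values as keys, which is exactly the kind of ambiguity described below.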

In today’s rapidly evolving AI landscape, leveraging Robotic Process Automation (RPA) to automate manual workflows is crucial for enhancing operational efficiency. RPA can significantly minimize errors and liberate team resources for more strategic tasks, making it a formidable tool in management. The significance of a distinct title in the first row cannot be overstated; without it, information can become ambiguous, leading to processing errors that ultimately impact decision-making.

As noted by Kara H. Woo from the Information School at the University of Washington, “It is important to ensure that the process is as error-free and repetitive-stress-injury-free as possible.” This underscores the need for high-quality header rows (the first row in a data source) to reduce confusion and, together with RPA, enhance operational efficiency.

Recent studies have shown that standardized header labels in the first row of a data source, such as ‘SKU’, ‘Product Name’, ‘Category’, and ‘Price’, make an inventory database clearer and improve overall data management practices. Moreover, implementing RPA alongside multi-column statistics can significantly boost performance when multiple columns are accessed together. Taken together, this suggests that when data is structured with clear headers, users can apply analytical tools more effectively, resulting in better insights and decisions.

In practical terms, unclear headers can lead to interpretation problems, as case studies on storing files in non-proprietary formats illustrate; those studies show that standard file formats improve accessibility and ensure the long-term usability of data. Similarly, rather than highlighting questionable data in a spreadsheet, adding an indicator variable in a separate column makes the flag explicit and improves data handling.
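The indicator-variable approach is easy to apply in code. This sketch adds a hypothetical `price_flagged` column instead of relying on cell highlighting; the flagging rule (a non-positive price is questionable) is an invented example, not a general standard.

```python
rows = [
    {"SKU": "A-100", "Price": "9.99"},
    {"SKU": "A-101", "Price": "-4.50"},
    {"SKU": "A-102", "Price": "0"},
]

def add_price_flag(records):
    """Return copies of records with an explicit 0/1 'price_flagged' indicator column."""
    flagged = []
    for rec in records:
        price = float(rec["Price"])
        # Hypothetical rule: a non-positive price is questionable and needs review.
        flagged.append({**rec, "price_flagged": int(price <= 0)})
    return flagged

for rec in add_price_flag(rows):
    print(rec)
```

Because the flag is an ordinary column, it survives export to CSV and can be filtered or counted by any downstream tool, unlike cell formatting.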

Consequently, the quality of title rows, particularly the first row in a data source, directly influences the effectiveness of information analysis. It is essential for organizations to prioritize this aspect in their information management strategies while utilizing RPA to drive insight-driven decisions and enhance operational efficiency.

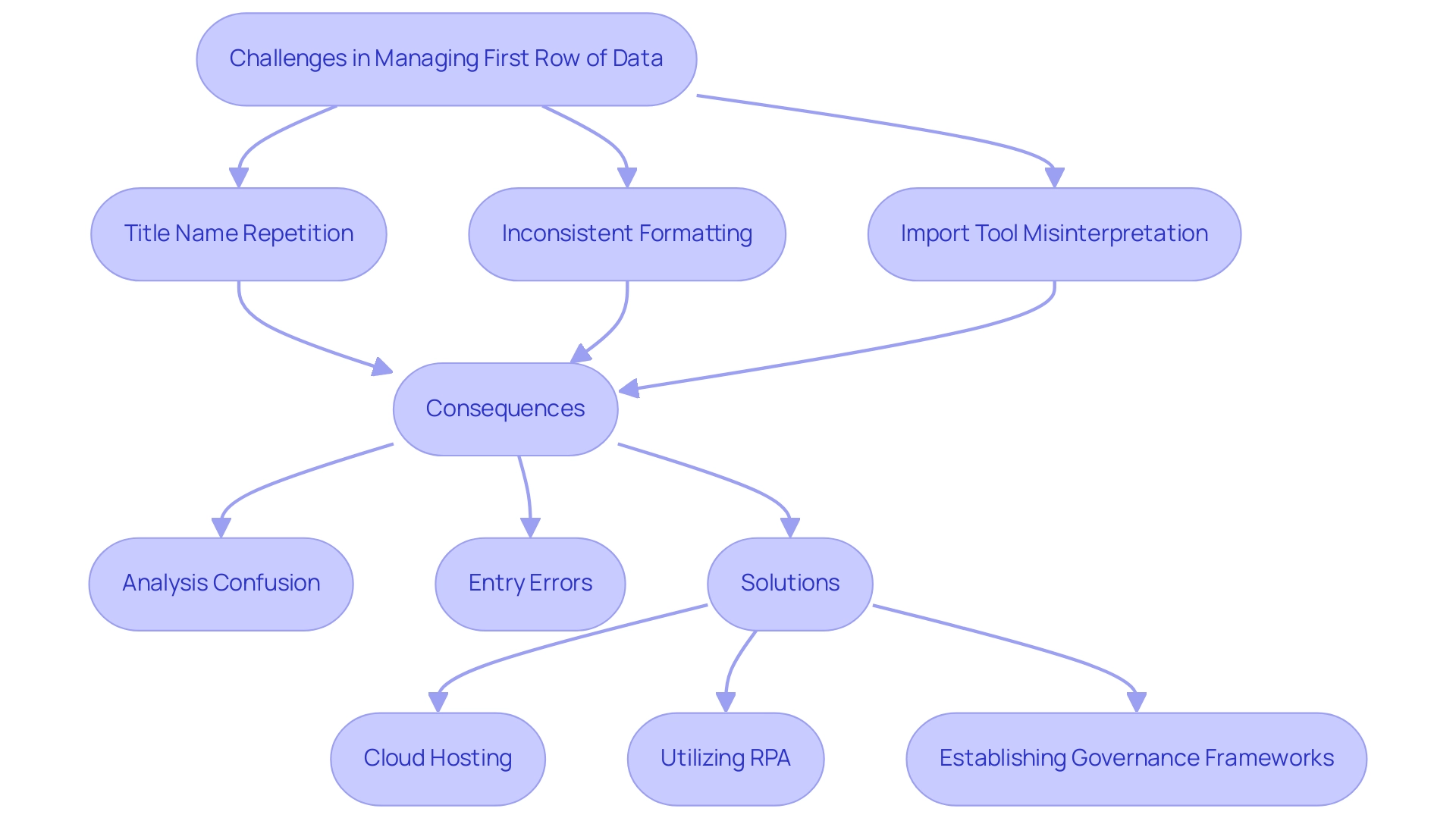

2. Common Challenges in Managing the First Row of Data

Managing the first row in a data source presents significant challenges that impact overall integrity and analysis. A common issue arises from the unintentional repetition of title names, leading to confusion during analysis and potentially incorrect conclusions. For example, if a dataset contains multiple columns labeled ‘Date’ and ‘date’, processing tools may treat these as distinct entities, complicating queries and analyses.

This inconsistency can result in critical errors, as emphasized by industry experts who stress the importance of maintaining uniform formatting.
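A simple check can catch the ‘Date’/‘date’ problem before data is loaded. The sketch below groups header labels after trimming whitespace and lowercasing them, and reports any label that occurs more than once under that normalization; treating case and surrounding spaces as insignificant is a policy assumption, not a universal rule.

```python
from collections import defaultdict

def find_conflicting_headers(headers):
    """Map each normalized label to its original spellings when it occurs more than once."""
    groups = defaultdict(list)
    for label in headers:
        # Normalize by trimming whitespace and ignoring case.
        groups[label.strip().lower()].append(label)
    return {key: labels for key, labels in groups.items() if len(labels) > 1}

print(find_conflicting_headers(["Date", "Product", "date ", "Price"]))
# {'date': ['Date', 'date ']}
```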

As organizations navigate 2025, they encounter additional hurdles, such as inconsistent formatting across datasets. Variations in date formats, capitalization, and even spacing can hinder effective processing. Moreover, import tools may misinterpret the first row in a data source, failing to recognize it as a header row, which can lead to inaccurate parsing and subsequent analysis mistakes.
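Python's standard `csv.Sniffer` offers one heuristic for the “is the first row a header?” question: `has_header()` compares the first row with the rows beneath it, column by column, by type and length. It is only a heuristic and can be fooled (for example, by data where every column is free text), so the sketch treats its answer as a hint to confirm rather than a guarantee. The sample data is invented.

```python
import csv

WITH_HEADER = """Date,Product,Quantity,Price
2024-01-05,Widget,3,9.99
2024-01-06,Gadget,5,4.50
2024-01-07,Socket,2,7.25
"""

WITHOUT_HEADER = """2024-01-05,Widget,3,9.99
2024-01-06,Gadget,5,4.50
2024-01-07,Socket,2,7.25
"""

def first_row_looks_like_header(sample):
    """Ask csv.Sniffer whether the first row of a CSV sample appears to be a header."""
    return csv.Sniffer().has_header(sample)

print(first_row_looks_like_header(WITH_HEADER))     # True
print(first_row_looks_like_header(WITHOUT_HEADER))  # False
```

An import script can use a negative answer to stop and ask for confirmation instead of silently treating the first data row as column names.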

Recent statistics indicate that approximately 30% of entry errors are linked to header row issues, underscoring the need for robust management practices.

To illustrate these challenges, consider the case of a logistics provider grappling with information silos. The CIO, Daragh Mahon, emphasized the necessity of isolating the correct information to derive actionable insights, stating, “By hosting all relevant information in the cloud, companies can capture and store information and leverage AI and machine learning technology for quick analysis to inform decision-making.” By adopting a cloud-first strategy and utilizing Robotic Process Automation (RPA), including tools like Power Automate, the organization centralized its information storage, facilitating quicker analysis through AI and machine learning technologies.

This approach not only addressed problems associated with repetition but also enhanced overall information quality.

Understanding these challenges is essential for organizations striving to adopt best practices in information entry and oversight. By prioritizing clarity and consistency in header oversight and employing RPA tools to automate manual workflows, such as information entry and reporting processes, businesses can significantly reduce the risk of input errors and enhance the reliability of their analysis efforts. Furthermore, establishing governance frameworks and ensuring leadership buy-in, as proposed by Pat Romano, can further strengthen an organization’s information management strategy, driving insight-driven conclusions and supporting business growth.

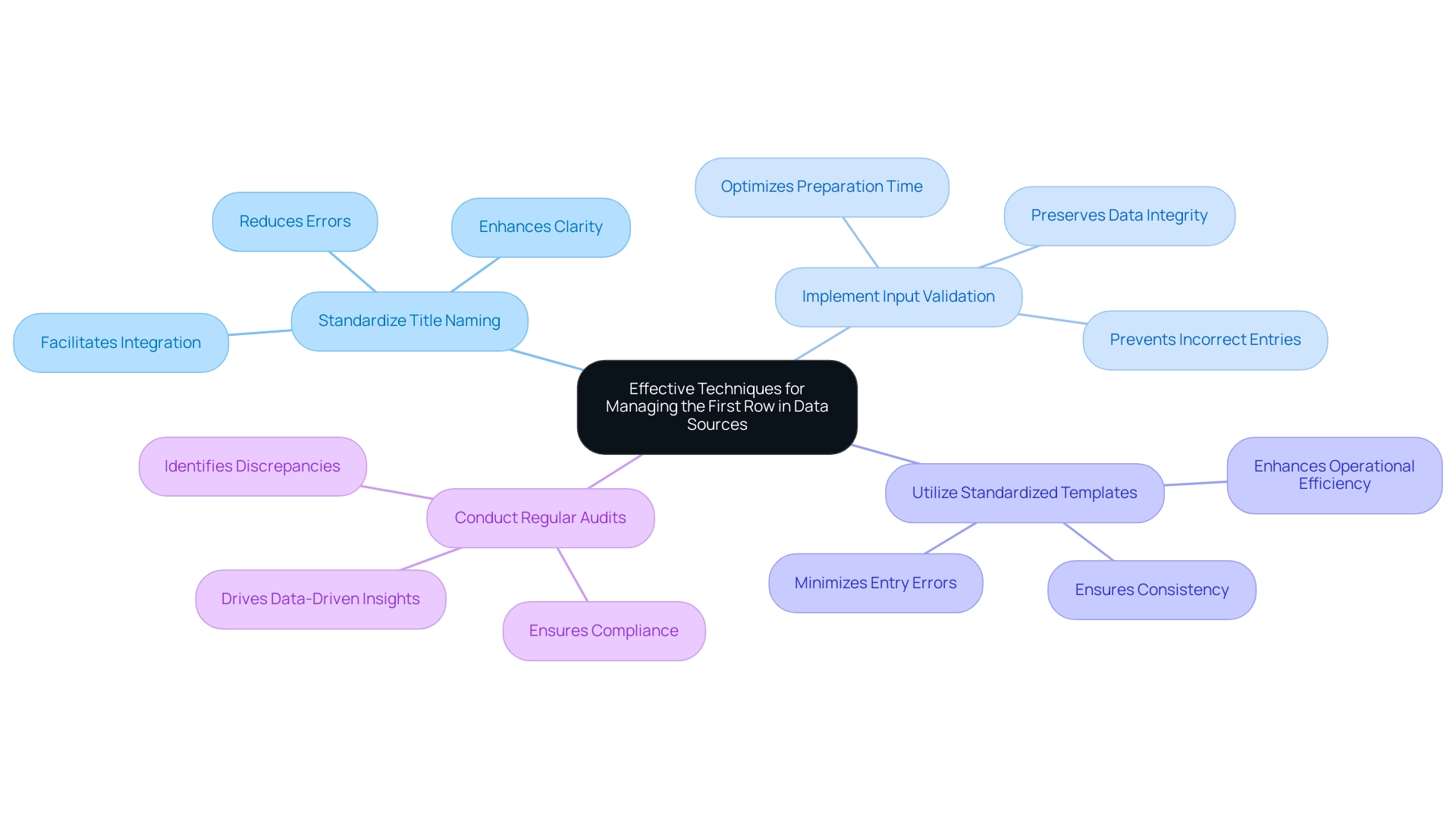

3. Effective Techniques for Managing the First Row in Data Sources

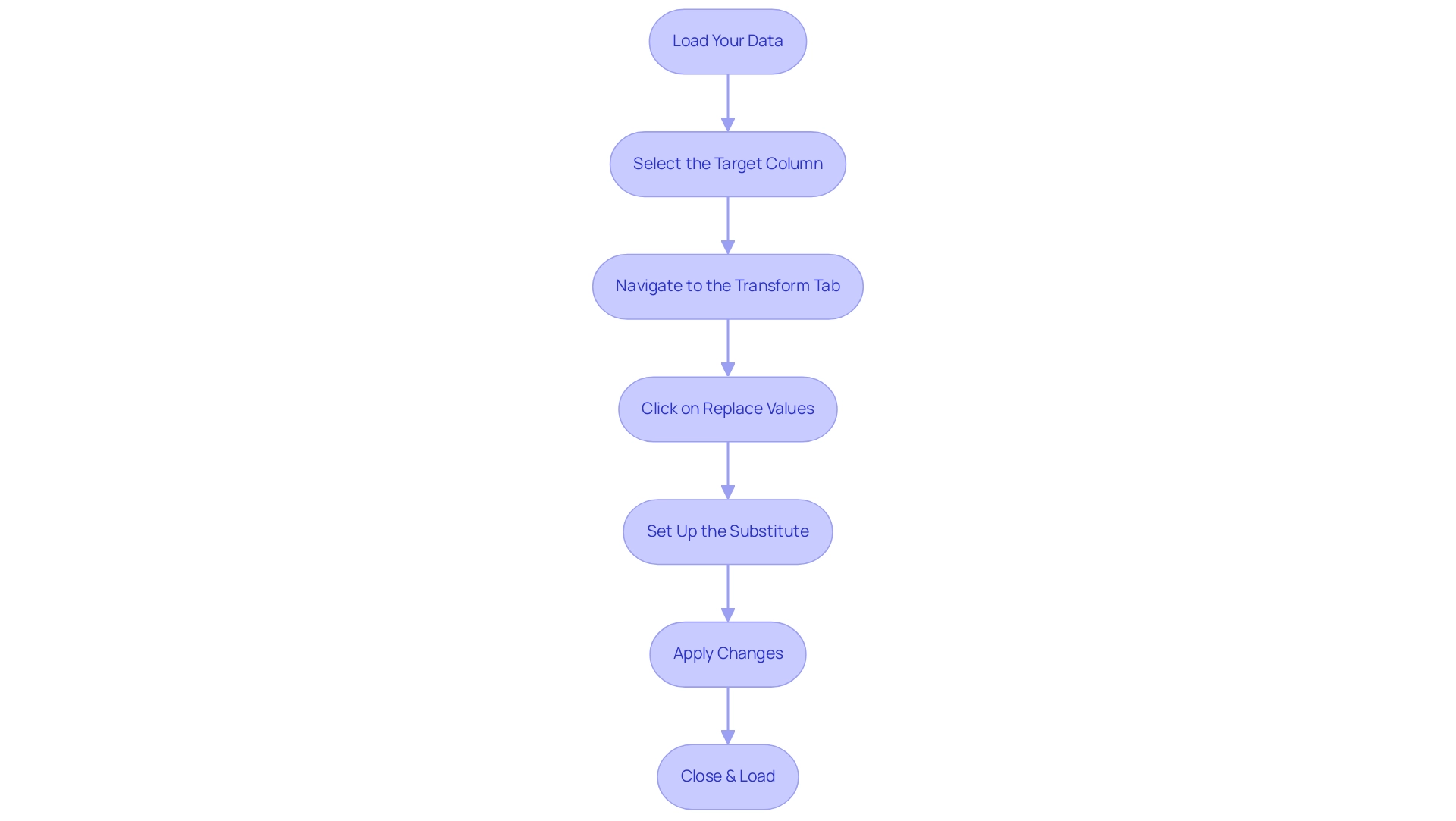

To effectively manage the first row in data sources and leverage technology for operational efficiency, organizations must adopt the following techniques:

- Standardize Title Naming: Establish unique, consistently formatted header names across all datasets. Avoid spaces and special characters, which can cause confusion and processing mistakes. Uniform header names improve clarity and simplify data integration and analysis, which matters all the more when RPA automates data management tasks.

- Implement Input Validation: Use validation rules to ensure that only correct entries are permitted in the first row of a data source. This practice is vital for preserving data integrity, because incorrect headers can compromise the entire dataset. As Cindy Turner, SAS Insights Editor, notes, “Most of them spend 50 to 80 percent of their model development time on preparation alone,” underscoring the value of accuracy from the outset, which RPA solutions like EMMA RPA and Power Automate can help optimize.

- Utilize Standardized Templates: Create and use standardized data-entry templates with predefined headers. This minimizes the risk of errors and ensures consistency across datasets, making data easier to manage and analyze and improving operational efficiency in a rapidly evolving AI landscape.

- Conduct Regular Audits: Implement a routine audit process to check compliance with established header standards. Regular audits help identify and correct discrepancies quickly, keeping data trustworthy and usable for analysis. Combined with Business Intelligence tools, these audits can drive insights that propel business growth, and RPA tools can automate parts of the auditing process for efficiency and accuracy.
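The naming and audit steps above can be sketched together: normalize each header to a consistent form without spaces or special characters, then compare the result against a standard template. The snake_case convention and the `EXPECTED_HEADERS` template are hypothetical choices for illustration; use whatever convention your organization standardizes on.

```python
import re

# Hypothetical standard template for a sales file.
EXPECTED_HEADERS = ["date", "product", "quantity", "unit_price"]

def normalize_header(label):
    """Lowercase, trim, and collapse runs of non-alphanumeric characters into '_'."""
    return re.sub(r"[^0-9a-z]+", "_", label.strip().lower()).strip("_")

def audit_headers(headers, expected=EXPECTED_HEADERS):
    """Report template headers that are missing and normalized headers that are unexpected."""
    normalized = [normalize_header(h) for h in headers]
    return {
        "missing": [h for h in expected if h not in normalized],
        "unexpected": [h for h in normalized if h not in expected],
    }

print(audit_headers(["Date", " Product ", "Qty", "Unit Price ($)"]))
# {'missing': ['quantity'], 'unexpected': ['qty']}
```

Running such a check on every incoming file turns the audit into a routine step, and its report tells a reviewer exactly which labels deviate from the template.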

By applying these techniques, organizations can significantly simplify their information handling processes. Effective header oversight not only enhances the reliability of analysis but also contributes to better decision-making and a higher return on investment in information initiatives. This structured approach safeguards integrity and confidentiality, ultimately driving growth and innovation.

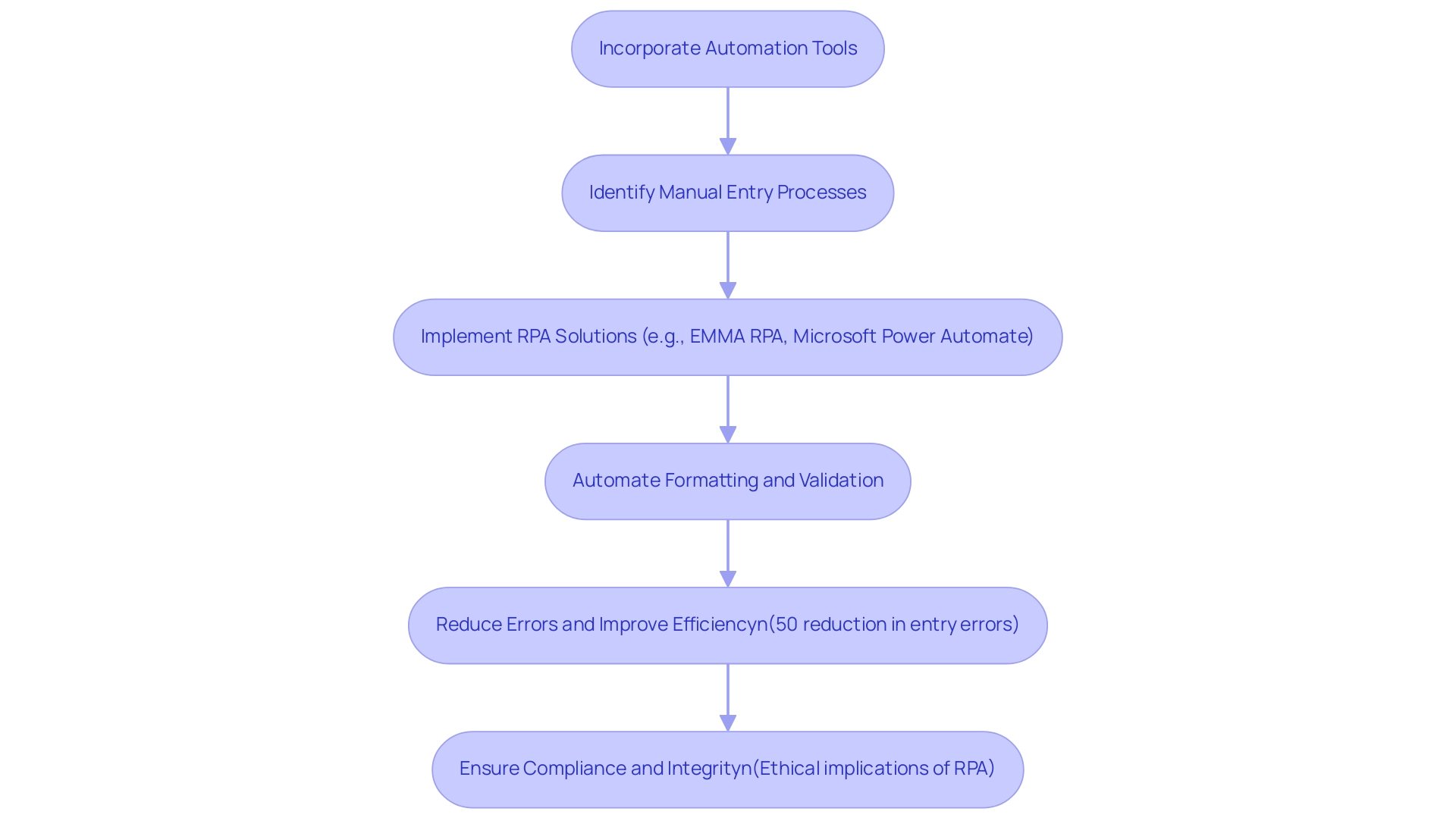

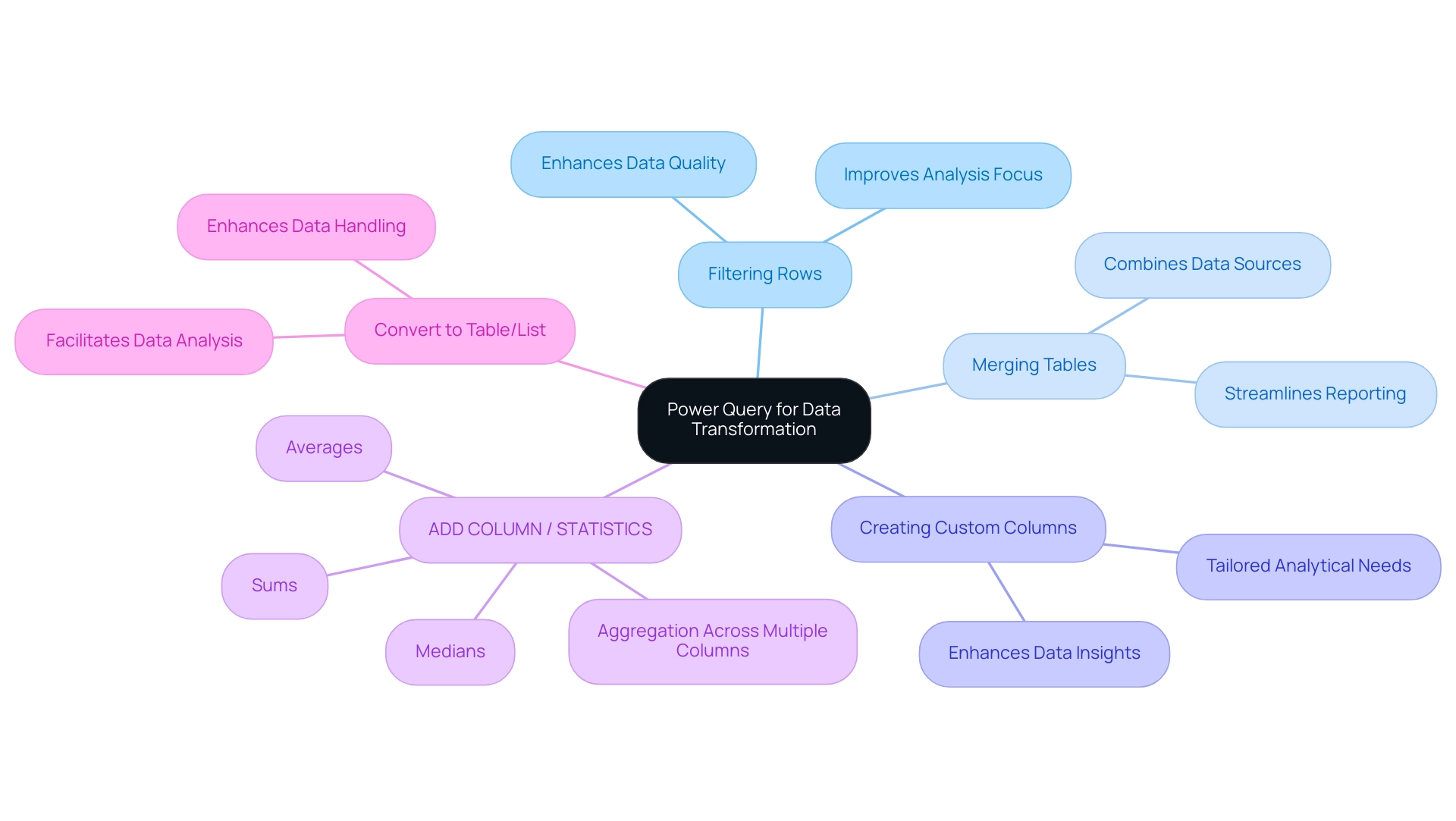

4. Leveraging Automation for Efficient Data Row Management

Incorporating automation tools into information management processes significantly enhances efficiency and accuracy. Robotic Process Automation (RPA) solutions, such as EMMA RPA and Microsoft Power Automate, streamline the formatting and validation of information upon import. This effectively reduces the time spent on manual entry and minimizes the potential for errors. For instance, RPA tools can be programmed to identify and rectify duplicate headers or inconsistent formatting before finalizing the information.
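As a minimal sketch of the kind of rule an RPA flow or import script might apply, the function below makes duplicate headers unique by appending a numeric suffix instead of silently dropping columns. It is plain Python, not an EMMA RPA or Power Automate action, and the `_2`, `_3` suffix format is an assumption.

```python
def dedupe_headers(headers):
    """Return headers made unique by suffixing case-insensitive repeats with _2, _3, ..."""
    seen = {}
    result = []
    for label in headers:
        clean = label.strip()
        key = clean.lower()
        count = seen.get(key, 0) + 1
        seen[key] = count
        # Keep the first occurrence as-is; rename later repeats.
        result.append(clean if count == 1 else f"{clean}_{count}")
    return result

print(dedupe_headers(["Date", "Price", "date", "Price"]))
# ['Date', 'Price', 'date_2', 'Price_2']
```

A production version would also check that a generated name such as `date_2` does not collide with a column that already exists.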

This proactive approach ensures that information is consistently structured, which is crucial for effective analysis and reporting. The application of such automation not only enhances operational efficiency but also leads to substantial improvements in information accuracy. Statistics indicate that organizations leveraging RPA can reduce errors in entry by up to 50%, showcasing the technology’s potential to transform management practices.

Among RPA adopters, robots could contribute as much as 52% of work capacity, further emphasizing the significant impact of RPA on operational efficiency. As Dmitriy Malets observes, ‘RPA tools can be programmed to adhere to regulatory compliance standards, ensuring that all processes are compliant.’ This highlights the importance of automation in preserving information integrity. Furthermore, a case study on a mid-sized company demonstrates how automating repetitive tasks through GUI automation resulted in a 70% decrease in entry mistakes and an 80% enhancement in workflow efficiency, underscoring the quantifiable results of such implementations.

The company faced challenges like manual information entry errors and slow software testing, which were effectively addressed through automation. However, it is essential to consider the ethical implications of RPA, particularly regarding employment and information privacy, as these factors will increasingly shape the landscape of automation. With industry organizations striving to set standards for RPA implementations, the future of information handling is poised for a revolution propelled by automation, ensuring that best practices and security are prioritized.

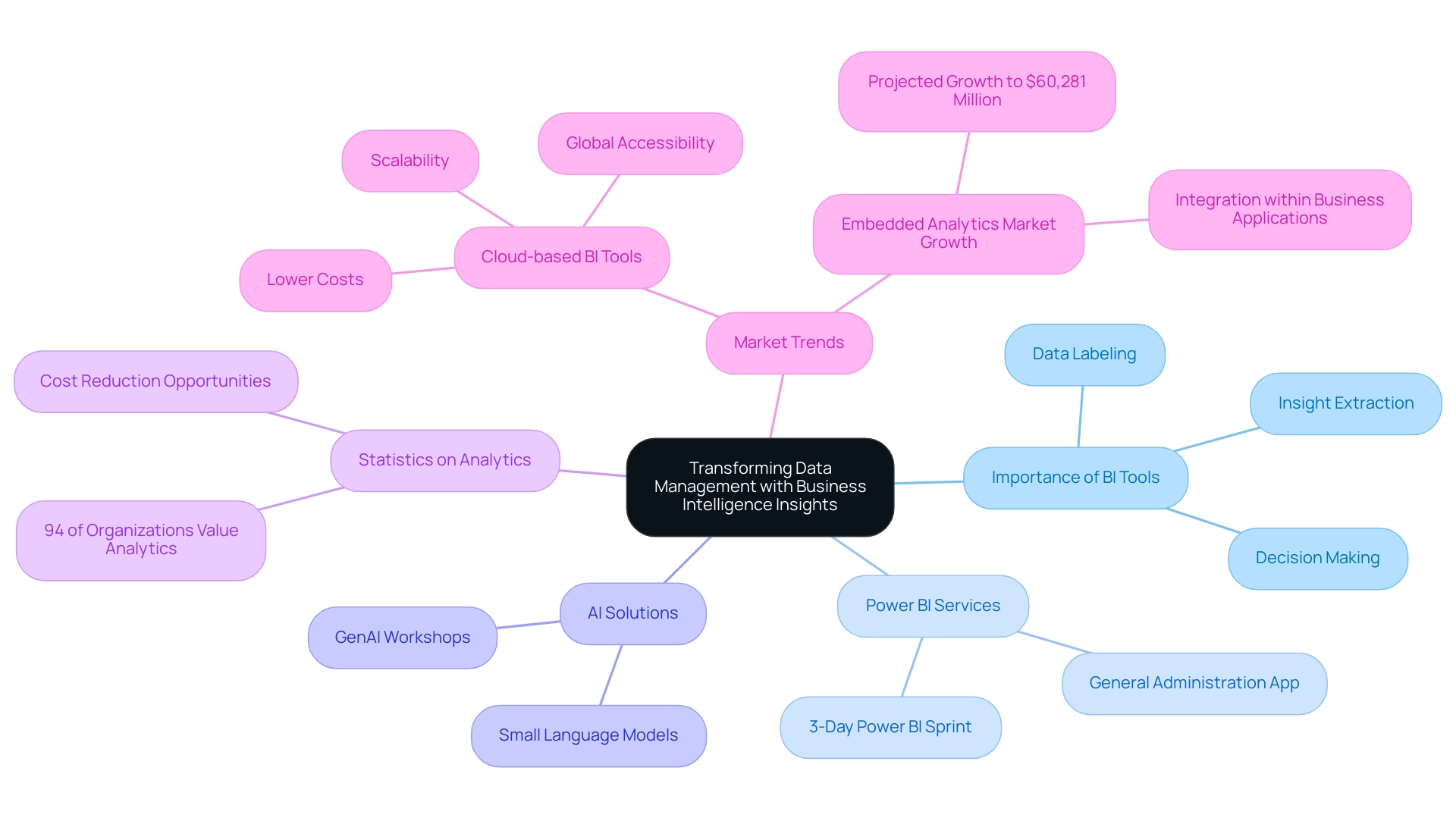

5. Transforming Data Management with Business Intelligence Insights

Business Intelligence (BI) tools are pivotal in modernizing data management, and they depend heavily on the header row of a dataset. Properly labeling and formatting the first row of a data source lets organizations harness the full potential of BI tools and extract valuable insights. For instance, BI dashboards visualize trends using the categories defined by the header row, which helps teams make informed decisions.

Moreover, our Power BI services simplify reporting through offerings like the 3-Day Power BI Sprint, which enables the quick creation of professional reports that improve consistency and provide actionable guidance. Additionally, the General Management App offers comprehensive oversight and smart reviews, further supporting operational efficiency. Prioritizing effective management of the first row in a data source not only strengthens an organization's data strategy but also drives better operational insights and strategic growth.

As Ivan Blagojevic points out, ‘94% of organizations regard analytics solutions as essential for their growth,’ highlighting the crucial role of well-organized data in attaining business goals. Furthermore, our customized AI solutions, including Small Language Models for efficient data analysis and GenAI Workshops for practical training, can help organizations navigate the overwhelming AI landscape and address challenges such as poor master data quality and perceived complexity. BI tools can also help organizations identify bottlenecks and reveal opportunities for cost reduction, underscoring the practical advantages of efficient data handling.

The growing popularity of cloud-based BI tools reinforces this trend, offering lower costs, scalability, and global data accessibility, all essential for modern enterprises aiming to optimize their data management practices. Additionally, the embedded analytics market was projected to reach $60,281 million by 2023, signaling a shift toward more integrated data solutions within enterprise applications and reinforcing the relevance of BI tools in today's data-driven environment.

Conclusion

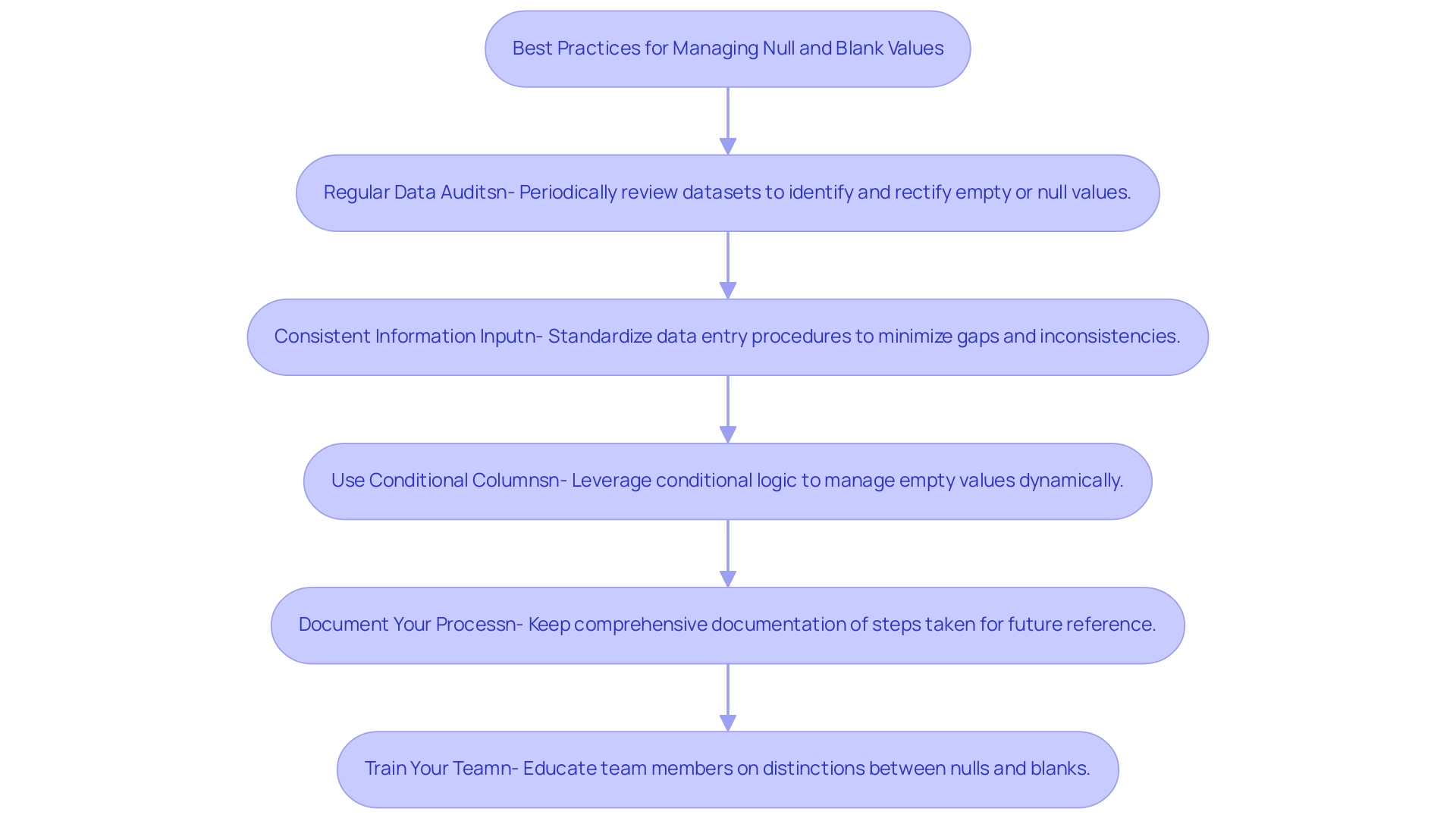

The management of the first row in data sources represents a foundational aspect of effective data management that cannot be overlooked. This header row serves as a critical guide for interpreting and analyzing data, with its clarity directly influencing data accuracy and decision-making processes. By standardizing header naming conventions, implementing data validation, and utilizing templates for consistency, organizations can mitigate common challenges such as header duplication and inconsistent formatting—issues that are known to result in significant data entry errors.

Moreover, the integration of Robotic Process Automation (RPA) into data management practices enhances efficiency by automating repetitive tasks, reducing manual entry errors, and ensuring compliance with regulatory standards. The transformative power of RPA, combined with Business Intelligence (BI) tools, allows organizations to unlock valuable insights from their data, driving informed decision-making and strategic growth.

In a rapidly evolving digital landscape, prioritizing the meticulous management of the first row not only safeguards data integrity but also positions organizations to harness the full potential of their data assets. As businesses continue to navigate the complexities of data analysis, recognizing and optimizing the role of the header row will be essential for achieving operational excellence and fostering innovation in data-driven environments.

Overview

This article delves into mastering the SUMMARIZE function in DAX, highlighting its essential role in crafting summary tables that streamline data analysis and reporting. It presents a detailed examination of the syntax, practical applications, and best practices for effectively employing SUMMARIZE. By illustrating its impact on operational efficiency and decision-making within business intelligence contexts, the article provides valuable insights for professionals seeking to enhance their analytical capabilities.

Introduction

In the realm of data analysis, distilling vast amounts of information into actionable insights is more crucial than ever. The SUMMARIZE function in DAX emerges as a powerful ally for analysts, enabling the creation of summary tables that streamline the aggregation process, akin to SQL’s GROUP BY clause. As organizations increasingly turn to Business Intelligence solutions to navigate the complexities of data, understanding how to leverage this function becomes essential. With its capacity to enhance reporting efficiency and clarity, SUMMARIZE not only addresses common challenges such as time-consuming report generation but also plays a pivotal role in driving strategic decision-making. As the landscape of data analytics continues to evolve, mastering DAX functions like SUMMARIZE will be key to unlocking the full potential of data-driven insights.

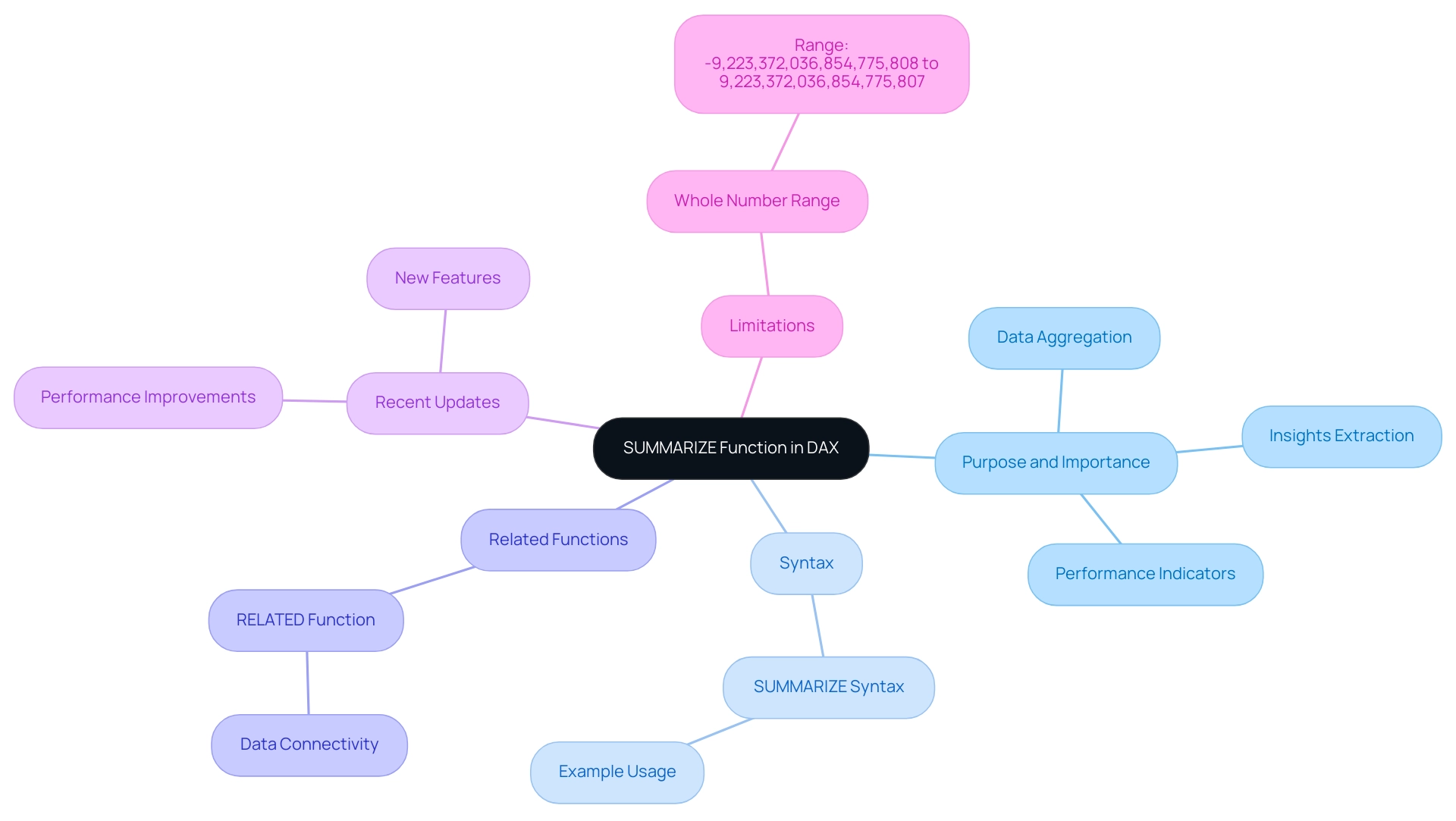

Understanding the SUMMARIZE Function in DAX

The SUMMARIZE function in DAX generates summary tables by grouping data on designated columns. Functioning much like a GROUP BY clause in SQL, it lets users aggregate data efficiently. Its basic syntax is as follows:

SUMMARIZE(<table>, <groupBy_columnName>[, <groupBy_columnName>]…)

In today’s data-driven environment, extracting meaningful insights quickly is essential for maintaining a competitive edge. SUMMARIZE is especially useful when data must be summarized for reporting, allowing insights to be extracted rapidly from large datasets. As we look ahead to 2025, organizations increasingly depend on Business Intelligence to drive growth and innovation, underscoring the expanding importance of DAX in analytics and its rising adoption across industries.

According to some estimates, DAX functions, including SUMMARIZE, are used in over 70% of analysis tasks, highlighting their essential role in effective data management and operational efficiency.

It’s important to note that whole numbers in DAX must fall within the range -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807 (the range of a signed 64-bit integer), a limitation to keep in mind when working with DAX.

Understanding SUMMARIZE is vital for anyone aiming to harness DAX for analysis, as it lays the groundwork for more intricate calculations and manipulations. For instance, it can be used to build summary reports that highlight key performance indicators, supporting informed decision-making. This addresses the need for practical guidance on issues such as time-consuming report creation and inconsistent data.

Moreover, the RELATED function retrieves a value from a related table by following an existing relationship in the Power BI model. It complements SUMMARIZE by letting users pull relevant values from other tables, enriching the resulting summary reports.

Recent updates in 2025 have further enhanced the capabilities of the SUMMARIZE function, making it even more robust for those looking to summarize in DAX. These updates include improved performance and additional features that streamline the summarization process. Real-world examples demonstrate its effectiveness in generating concise reports that drive strategic insights, solidifying its importance in analysis and the ongoing evolution of tailored AI solutions.

In addition, RPA solutions like EMMA RPA and Power Automate can significantly enhance operational efficiency by automating repetitive tasks involved in preparation and reporting. This not only decreases the time spent on these tasks but also minimizes errors, allowing organizations to concentrate on deriving actionable insights from their information. As businesses navigate the overwhelming AI landscape, tailored AI solutions can assist them in identifying the right technologies that align with their specific needs, ensuring they leverage Business Intelligence effectively for informed decision-making.

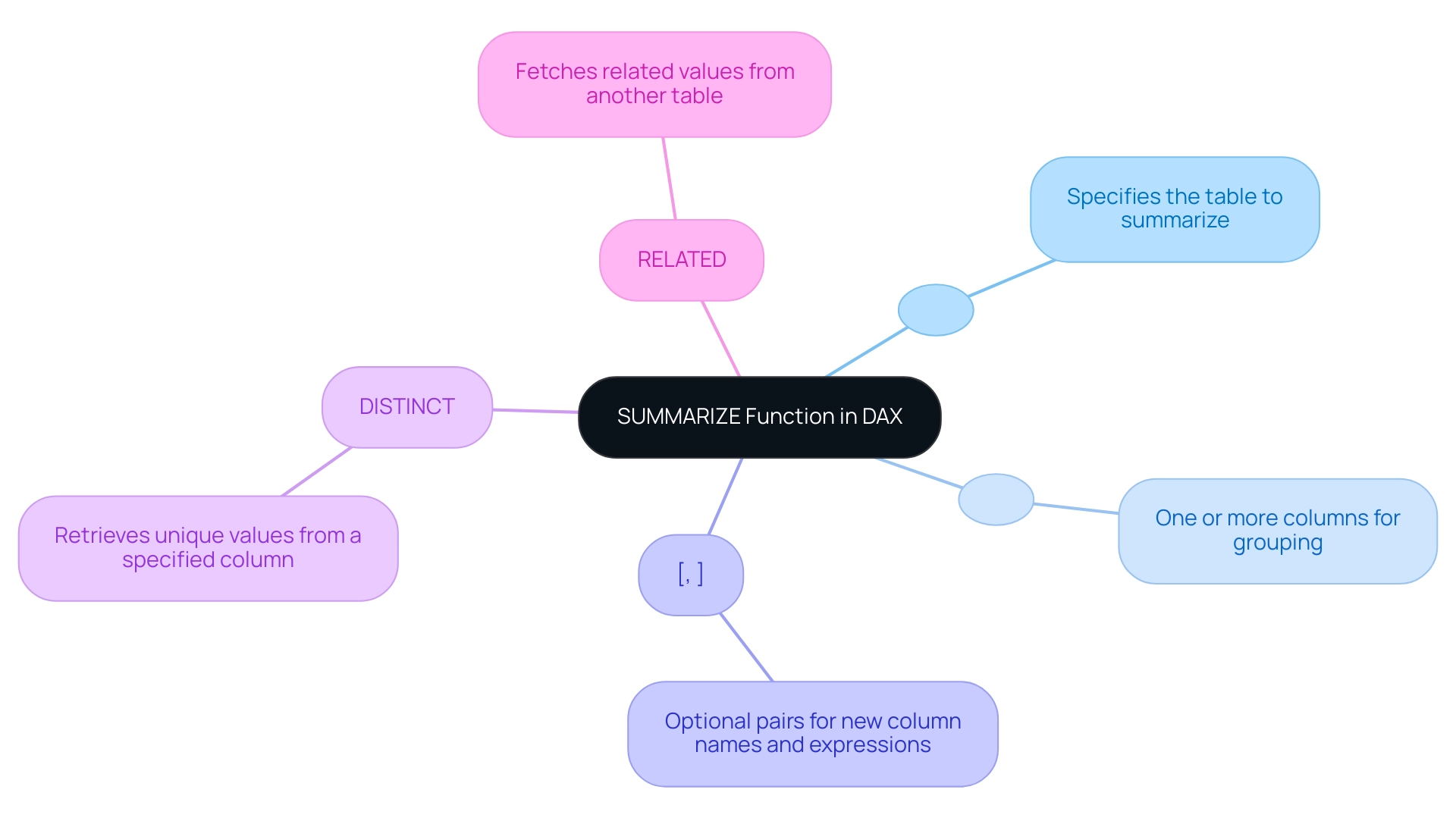

Syntax and Parameters of SUMMARIZE

The SUMMARIZE function takes a specific set of parameters and operates within the broader context of DAX queries, which are used to retrieve, filter, and analyze data in Power BI. It supports custom calculations and aggregations, which makes it valuable for summarization and for tackling challenges such as time-consuming report creation and inconsistent results. Below is a detailed breakdown of its syntax:

SUMMARIZE(<table>, <groupBy_columnName>[, <groupBy_columnName>]…, [<name>, <expression>]…)

- <table>: This parameter specifies the table you wish to summarize.

- <groupBy_columnName>: You can include one or more columns that will serve as the basis for grouping the information.

- [<name>, <expression>]: These are optional pairs that define a new column name alongside the DAX expression that computes its value.

For instance, consider a sales table where you want to summarize sales by product category. The DAX expression would look like this:

SUMMARIZE(Sales, Sales[Category], "Total Sales", SUM(Sales[Amount]))

This expression returns a new table that groups sales by category and calculates the total sales for each category, giving decision-makers a compact BI view of the data. Mastering these parameters is essential for using SUMMARIZE effectively and deriving meaningful insights that drive business growth and innovation.
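To make the semantics concrete, here is a small Python model of what the expression above returns. It is not DAX and says nothing about how the engine evaluates queries; the `sales` rows are made-up sample data and `summarize_sum` is a hypothetical helper that groups by one column and sums another.

```python
from collections import defaultdict

# Made-up rows standing in for the Sales table.
sales = [
    {"Category": "Bikes", "Amount": 1200.0},
    {"Category": "Accessories", "Amount": 35.0},
    {"Category": "Bikes", "Amount": 800.0},
    {"Category": "Clothing", "Amount": 60.0},
    {"Category": "Accessories", "Amount": 15.0},
]


def summarize_sum(rows, group_col, value_col, name):
    """Model of SUMMARIZE(Sales, Sales[Category], "Total Sales", SUM(Sales[Amount])):
    one output row per distinct group value, plus a named aggregate column."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_col]] += row[value_col]
    return [{group_col: key, name: total} for key, total in totals.items()]


for out in summarize_sum(sales, "Category", "Amount", "Total Sales"):
    print(out)
```

Each category appears exactly once in the result, so the grouping column already behaves like a distinct list of categories.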

The DISTINCT function is also worth understanding: it returns the unique values of a specified column. Note that SUMMARIZE already returns each combination of grouping-column values only once, so a product category never appears twice in its output. DISTINCT(Sales[Category]) is useful on its own when you need just the list of unique categories without any aggregation.

Additionally, consider how RELATED can work alongside SUMMARIZE. Evaluated in a row context, RELATED returns a value from another table along an existing many-to-one relationship, which enables deeper analysis in reports. For example, if you summarize sales by category and also want to include related product details, RELATED can add that depth. SUMMARIZE can also group directly by a column of a related table, such as Product[Category], when Sales is related to Product.
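The following Python sketch models the two ideas above under simple assumptions: a hypothetical `product` table on the one side of a relationship keyed by `ProductKey`, and a `sales` table on the many side. The `related` helper mimics RELATED in a Sales row context, and `total_by_related_category` mimics grouping Sales by `Product[Category]`. The data and helper names are illustrative only.

```python
from collections import defaultdict

# Hypothetical Product table (one side), keyed by ProductKey.
product = {
    1: {"ProductName": "Road Bike", "Category": "Bikes"},
    2: {"ProductName": "Helmet", "Category": "Accessories"},
    3: {"ProductName": "Mountain Bike", "Category": "Bikes"},
}

# Hypothetical Sales table (many side); each row points at one product.
sales = [
    {"ProductKey": 1, "Amount": 1200.0},
    {"ProductKey": 2, "Amount": 35.0},
    {"ProductKey": 3, "Amount": 800.0},
    {"ProductKey": 2, "Amount": 15.0},
]


def related(row, column):
    """Mimic RELATED(Product[column]) in a Sales row context: follow the
    many-to-one relationship and return exactly one value."""
    return product[row["ProductKey"]][column]


def total_by_related_category(rows):
    """Mimic SUMMARIZE(Sales, Product[Category], "Total Sales", SUM(Sales[Amount]))."""
    totals = defaultdict(float)
    for row in rows:
        totals[related(row, "Category")] += row["Amount"]
    return dict(totals)


print(total_by_related_category(sales))
```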

RPA (Robotic Process Automation) can further enhance the efficiency of utilizing DAX capabilities by automating the report generation process. This approach not only reduces the time spent on manual tasks but also minimizes the risk of errors, effectively addressing staffing shortages and outdated systems. By integrating DAX with RPA, businesses can streamline their analysis workflows, ensuring that insights are generated quickly and accurately.

As Yana Khare, a student, aptly puts it, “Happiness can be found even in the darkest of times if one remembers to turn on the light.” This quote resonates with the journey of mastering DAX capabilities and RPA, reminding us that comprehending these tools can illuminate the path to insightful analysis.

Interpreting Return Values from SUMMARIZE

SUMMARIZE creates a summary table based on the specified groupBy_columnName arguments and any additional calculated columns defined in the name/expression pairs. The output is structured as a table in which each row represents a unique combination of the grouped columns, accompanied by the aggregated values as specified.

For example, consider the following DAX expression:

SUMMARIZE(Sales, Sales[Category], "Total Sales", SUM(Sales[Amount]))

This command produces a table that lists each product category alongside its corresponding total sales amount. Understanding the output of SUMMARIZE is essential for effective visualization and interpretation in DAX, empowering users to derive actionable insights that support informed decision-making.
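When more than one grouping column is supplied, each output row is one combination of values that actually occurs in the table. The Python model below illustrates this with made-up `Region` and `Category` columns (not part of the original example). Note that no row is produced for a combination, such as South/Accessories, that has no sales.

```python
from collections import defaultdict

# Made-up sales rows with two grouping columns.
sales = [
    {"Region": "North", "Category": "Bikes", "Amount": 500.0},
    {"Region": "North", "Category": "Bikes", "Amount": 250.0},
    {"Region": "North", "Category": "Accessories", "Amount": 40.0},
    {"Region": "South", "Category": "Bikes", "Amount": 300.0},
]


def summarize(rows, group_cols, name, value_col):
    """Group by every column in group_cols and sum value_col into a column called name."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[col] for col in group_cols)  # one key per existing combination
        totals[key] += row[value_col]
    return [{**dict(zip(group_cols, key)), name: total} for key, total in totals.items()]


for out in summarize(sales, ["Region", "Category"], "Total Sales", "Amount"):
    print(out)
```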

To enhance your reporting capabilities, our Power BI services ensure efficient reporting and consistency. We offer a 3-Day Power BI Sprint that enables the quick creation of professionally designed reports, alongside a General Management App for comprehensive management and smart reviews. However, challenges such as time-consuming report creation and inconsistencies can hinder effective decision-making.

Regular updates to Power BI tools enhance user experience and system stability, making it essential for organizations to stay current with these improvements. Additionally, the Power Query Editor in Power BI allows data to be cleansed, shaped, and enriched before loading, ensuring quality for analysis. This aligns with our focus on improving data quality through AI solutions, including Small Language Models and GenAI Workshops, which offer hands-on training and tailor-made AI tools for enhanced analysis.

A case study titled “Speeding Up DAX Query Execution” underscores the significance of optimizing DAX queries. By simplifying measures and avoiding unnecessary aggregations, organizations can enhance query performance, leading to faster execution times and more efficient analysis in Power BI. As Utpal Kar aptly states, “Measurements should be simplified, surplus-to-requirements aggregations avoided, and relationships should be optimized.”

This method not only streamlines information processing but also enhances the overall quality of insights derived from the information. It reinforces the importance of Business Intelligence and RPA in driving insight-driven analysis and operational efficiency for business growth. Moreover, cooperation with stakeholders is essential for developing high-performing, scalable information solutions, ensuring that the implementation of tools is effective and aligned with organizational objectives.

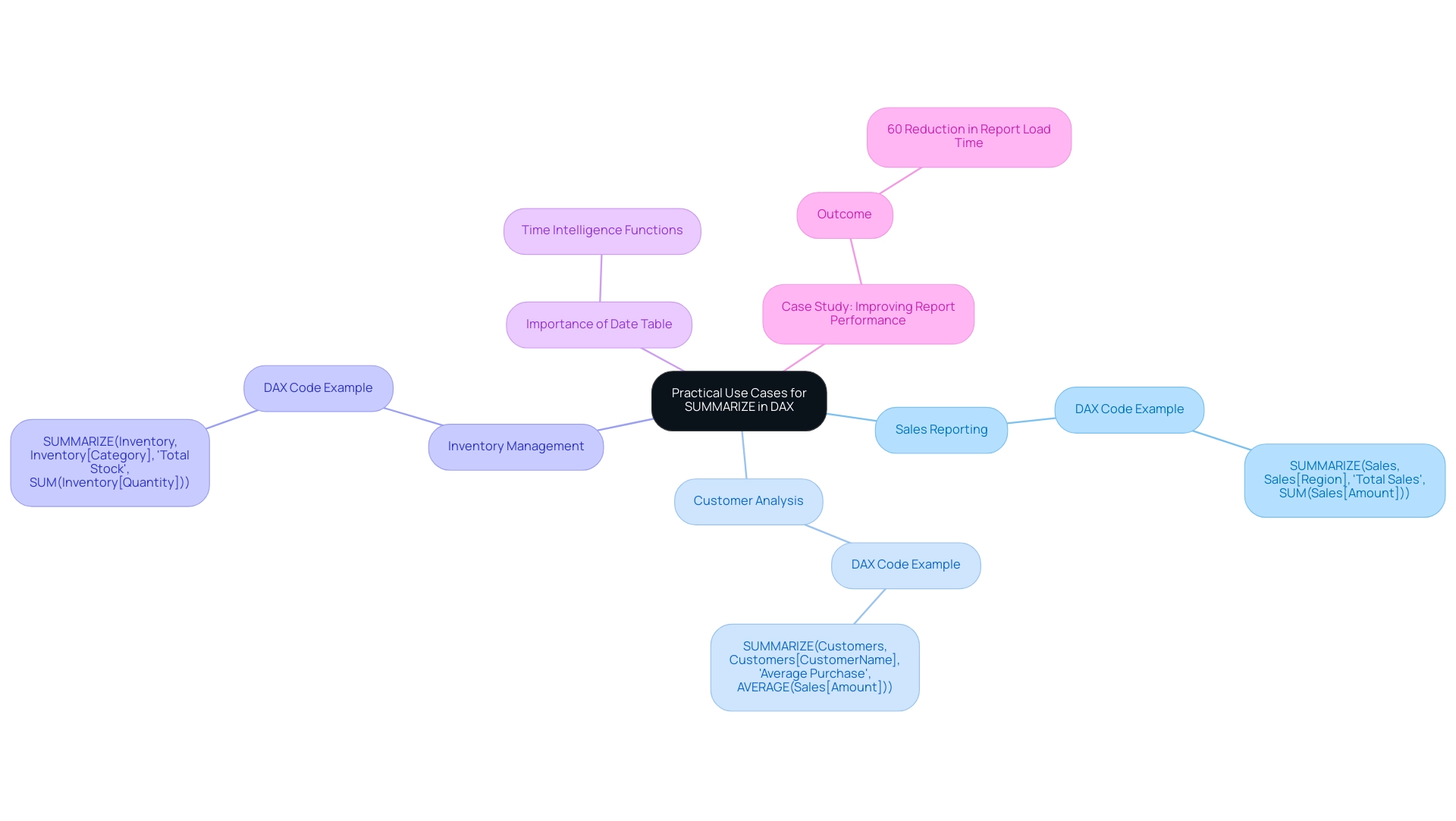

Practical Use Cases for SUMMARIZE in DAX

The SUMMARIZE function in DAX is a powerful tool for summarizing data across many practical scenarios, significantly enhancing analysis capabilities while addressing key data-handling challenges. Consider these pivotal applications:

- Sales Reporting: By summarizing sales information by region or product line, organizations gain invaluable insights into performance metrics. For instance:

SUMMARIZE(Sales, Sales[Region], "Total Sales", SUM(Sales[Amount]))

This method enables businesses to pinpoint high-performing areas, effectively tackling the common challenge of time-consuming report creation and allowing for strategic adjustments.

- Customer Analysis: Understanding customer behavior is essential for driving sales. The SUMMARIZE function can group customer data to calculate average purchase amounts per customer, revealing insights into spending patterns:

SUMMARIZE(Customers, Customers[CustomerName], "Average Purchase", AVERAGE(Sales[Amount]))

This analysis is instrumental in tailoring marketing efforts to boost customer engagement and overcome data inconsistencies.

- Inventory Management: Effective inventory management is crucial for operational efficiency. By summarizing stock levels by product category, businesses can swiftly identify low stock items:

SUMMARIZE(Inventory, Inventory[Category], "Total Stock", SUM(Inventory[Quantity]))

This functionality supports the maintenance of optimal stock levels and the prevention of stockouts, showcasing how RPA can streamline these processes.
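The three use cases can be mirrored in plain Python to sanity-check expected results before building visuals. This is an illustrative model only. The sample rows are invented, the customer name is stored directly on the sales rows for brevity (in the DAX example it lives in a related Customers table), and `LOW_STOCK_THRESHOLD` is a hypothetical reorder level that the text above does not specify.

```python
from collections import defaultdict
from statistics import mean

# Invented sample rows.
sales = [
    {"Region": "North", "CustomerName": "Contoso", "Amount": 100.0},
    {"Region": "North", "CustomerName": "Contoso", "Amount": 300.0},
    {"Region": "South", "CustomerName": "Fabrikam", "Amount": 50.0},
]
inventory = [
    {"Category": "Bikes", "Quantity": 4},
    {"Category": "Bikes", "Quantity": 3},
    {"Category": "Helmets", "Quantity": 40},
]
LOW_STOCK_THRESHOLD = 10  # hypothetical reorder level


def group_values(rows, key, value):
    """Collect the values of one column for each distinct value of the key column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return groups


def total_by(rows, key, value):
    return {k: sum(v) for k, v in group_values(rows, key, value).items()}


def average_by(rows, key, value):
    return {k: mean(v) for k, v in group_values(rows, key, value).items()}


region_totals = total_by(sales, "Region", "Amount")          # Sales Reporting
avg_purchase = average_by(sales, "CustomerName", "Amount")   # Customer Analysis
stock = total_by(inventory, "Category", "Quantity")          # Inventory Management
low_stock = [cat for cat, qty in stock.items() if qty < LOW_STOCK_THRESHOLD]

print(region_totals, avg_purchase, low_stock)
```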

These examples illustrate the adaptability of SUMMARIZE, making it an essential resource for analysts using Power BI or Excel. As organizations increasingly rely on data-driven decision-making in 2025, the ability to summarize and analyze data efficiently with DAX will be paramount. A well-organized Date table is also crucial for time intelligence functions in DAX, ensuring precise calculations over time.

A recent case study highlighted how one organization improved report performance by 60% by replacing complex nested iterators with straightforward measures and aggregated tables built with SUMMARIZE. The change produced a more responsive dashboard, underscoring the value of mastering summarization in DAX to improve operational efficiency and support informed decision-making. Furthermore, RPA solutions such as EMMA RPA and Power Automate can automate the summarization process, minimizing manual effort and making these analyses more effective.

By synergizing Business Intelligence strategies with RPA, businesses can unlock the full potential of their data, transforming insights into actionable results.

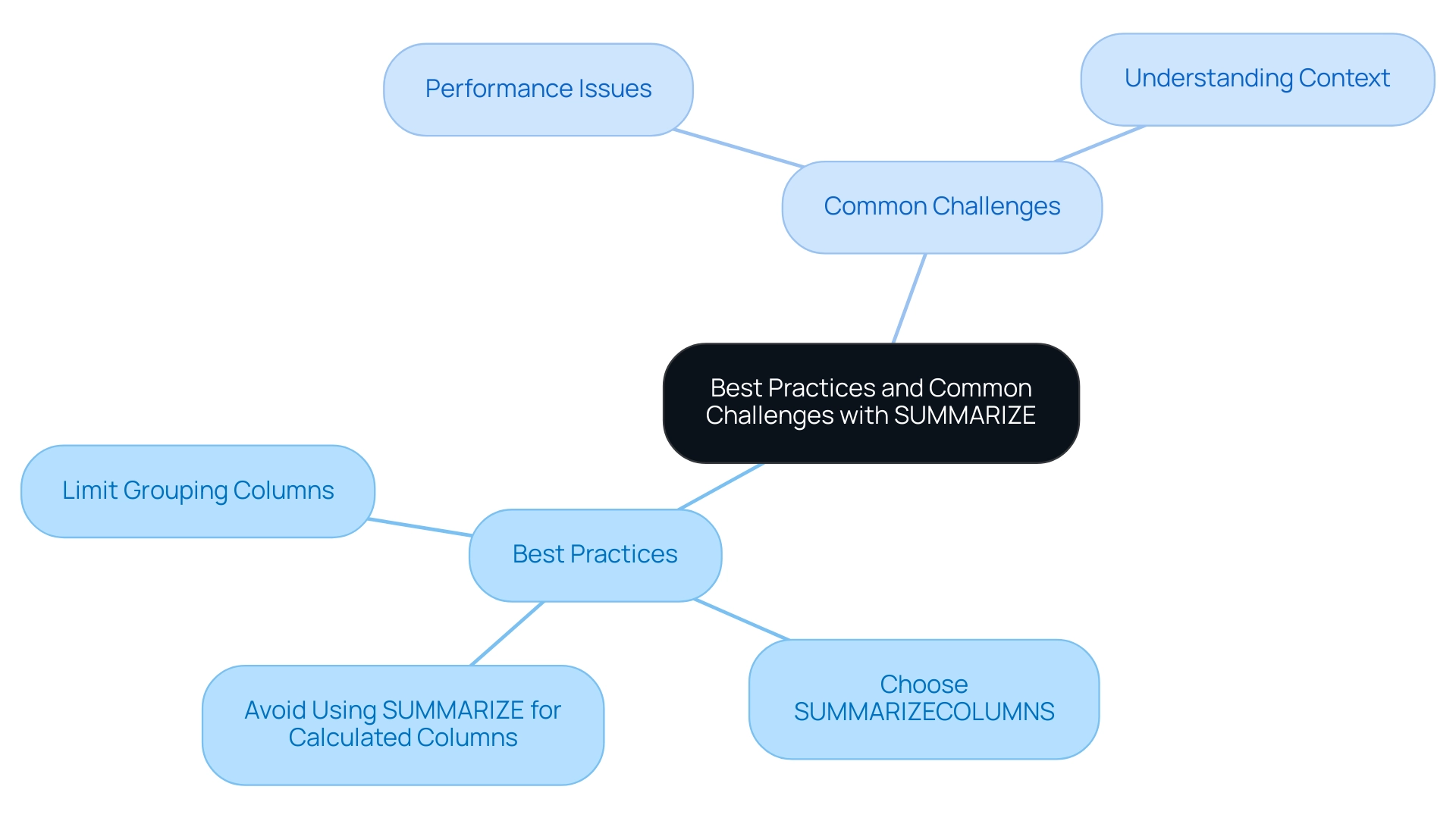

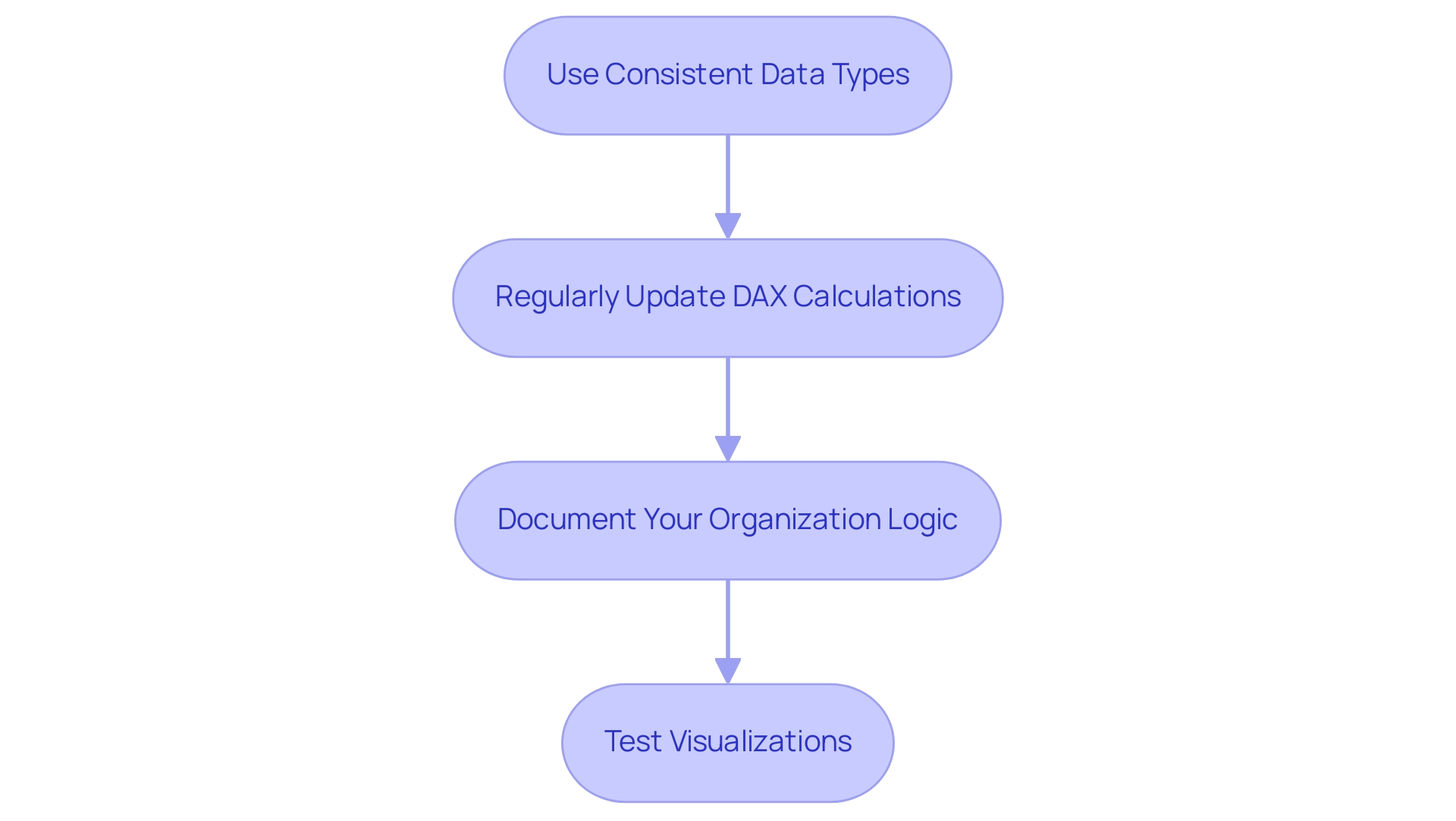

Best Practices and Common Challenges with SUMMARIZE

When utilizing the SUMMARIZE function in DAX, adhering to best practices can significantly enhance performance and clarity, particularly when leveraging Business Intelligence tools like Power BI for data-driven insights.

- Limit the Number of Grouping Columns: Excessive groupBy_columnName arguments can lead to performance degradation. Concentrate on the most pertinent columns to streamline calculations and facilitate quicker insights that drive business growth.

- Choose SUMMARIZECOLUMNS: For better performance, SUMMARIZECOLUMNS is often recommended over SUMMARIZE, as it is optimized for querying and typically executes faster. This eases some of the burden of time-consuming report creation and enables efficient analysis.

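- Avoid Using SUMMARIZE to Compute New Columns: Instead of adding name/expression pairs inside SUMMARIZE, use SUMMARIZE only for grouping and wrap it in ADDCOLUMNS to add the computed columns. This improves both clarity and performance, keeping your model efficient and actionable.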

RPA solutions are crucial for automating repetitive information processes, greatly improving the effectiveness of DAX operations. By integrating RPA, businesses can streamline data handling, reduce manual errors, and free up valuable resources for more strategic tasks.

Common challenges encountered when using the SUMMARIZE function include:

- Performance Issues: Working with large datasets can hinder calculation speeds. Implementing filtering and aggregations judiciously is crucial to maintain efficiency, which is vital for operational effectiveness when summarizing in DAX.

- Understanding Context: It is essential to recognize that summarizing in DAX operates within a context-sensitive framework. A thorough comprehension of row and filter context is necessary to avoid unexpected results, especially when aiming for actionable insights from Power BI dashboards.

In the context of training and expertise, the ‘Improving Real World RAG Systems’ course has received a commendable rating of 4.7, showcasing the quality of training available for mastering DAX capabilities. As Eusebio Kickel aptly noted, “You have a way of making even the most challenging topics seem approachable and understandable,” which resonates with the common challenges faced by users in making data-driven decisions.

Furthermore, the case study on the ‘Power BI Training Partnership’ demonstrates how effective training can empower businesses to improve their analytical skills and make informed, insight-driven decisions through effective visualizations and dashboards. It emphasizes the practical use of the summarization tool in real-life situations, especially in addressing inconsistencies in information.

It’s also important to note that restrictions exist on DAX expressions permitted in measures and calculated columns, which can influence how users utilize the summarization capability. Recent advancements allow users to add new measures to the model directly from the DAX query view, enabling them to test changes without affecting the model until they choose to update it.

By implementing these best practices and remaining cognizant of potential challenges, users can effectively summarize in DAX, elevating their data analysis capabilities and ultimately leading to more informed decision-making and the transformation of raw data into actionable insights.

Conclusion

The SUMMARIZE function in DAX is a cornerstone for effective data analysis, enabling the creation of summary tables that distill vast datasets into manageable insights. By leveraging its capabilities, analysts can group and aggregate data efficiently, akin to SQL’s GROUP BY clause. This streamlines report generation and enhances operational efficiency. Notably, this function is essential for producing concise reports that highlight key performance indicators and plays a crucial role in driving informed decision-making within organizations.

Understanding the nuances of the SUMMARIZE function, including its syntax and practical applications, empowers users to tackle common challenges such as time-consuming report creation and data inconsistencies. Implementing best practices—like limiting the number of grouping columns and considering alternatives like SUMMARIZECOLUMNS—allows analysts to optimize performance and clarity in their data models.

Moreover, integrating RPA solutions further enhances the functionality of DAX, automating repetitive tasks and enabling analysts to focus on deriving actionable insights. As organizations navigate the complexities of data in an increasingly AI-driven landscape, mastering tools like the SUMMARIZE function is pivotal in unlocking the full potential of data-driven decision-making.

In conclusion, the SUMMARIZE function stands out as an indispensable tool in the realm of data analytics. Its ability to facilitate efficient summarization and analysis positions it at the forefront of Business Intelligence strategies. As data continues to grow in volume and complexity, harnessing the capabilities of DAX functions remains essential for organizations striving to maintain a competitive edge and drive strategic growth.

Overview

This article delves into the effective use of the PREVIOUSMONTH DAX function in Power BI, a crucial tool for time-based data analysis. It serves as a comprehensive guide, detailing the function’s syntax and its practical applications within business intelligence. Additionally, it addresses common challenges users may encounter and offers advanced techniques to enhance analytical capabilities. Mastering these tools is emphasized as a means to significantly improve reporting efficiency and decision-making processes.

Introduction

In the realm of data analysis, mastering the intricacies of Data Analysis Expressions (DAX) is pivotal for professionals seeking to unlock the full potential of their data. Among its many capabilities, time intelligence functions, such as PREVIOUSMONTH, stand out as essential tools for performing insightful temporal analyses. This article delves into the significance of DAX functions, particularly how the PREVIOUSMONTH function can transform reporting practices within business intelligence. By enabling comparisons across different time frames, analysts can effectively track trends, streamline reporting processes, and make informed decisions that drive organizational success.

Furthermore, with the integration of Robotic Process Automation (RPA), these functionalities become even more powerful. Automating repetitive tasks allows analysts to focus on strategic insights rather than manual data compilation. As organizations strive for greater efficiency and accuracy in their data analysis, understanding and applying these advanced techniques is crucial for staying ahead in a competitive landscape.

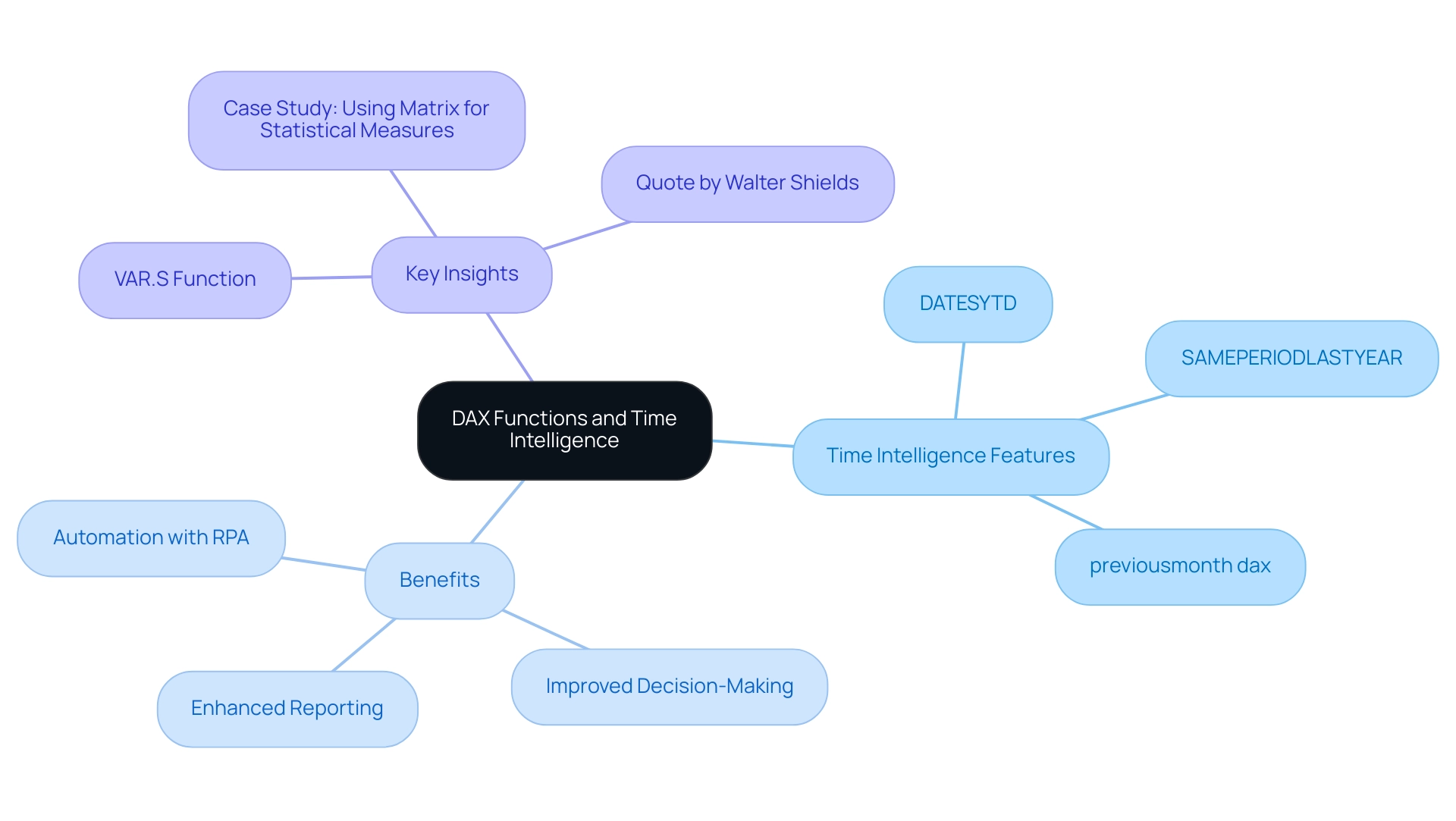

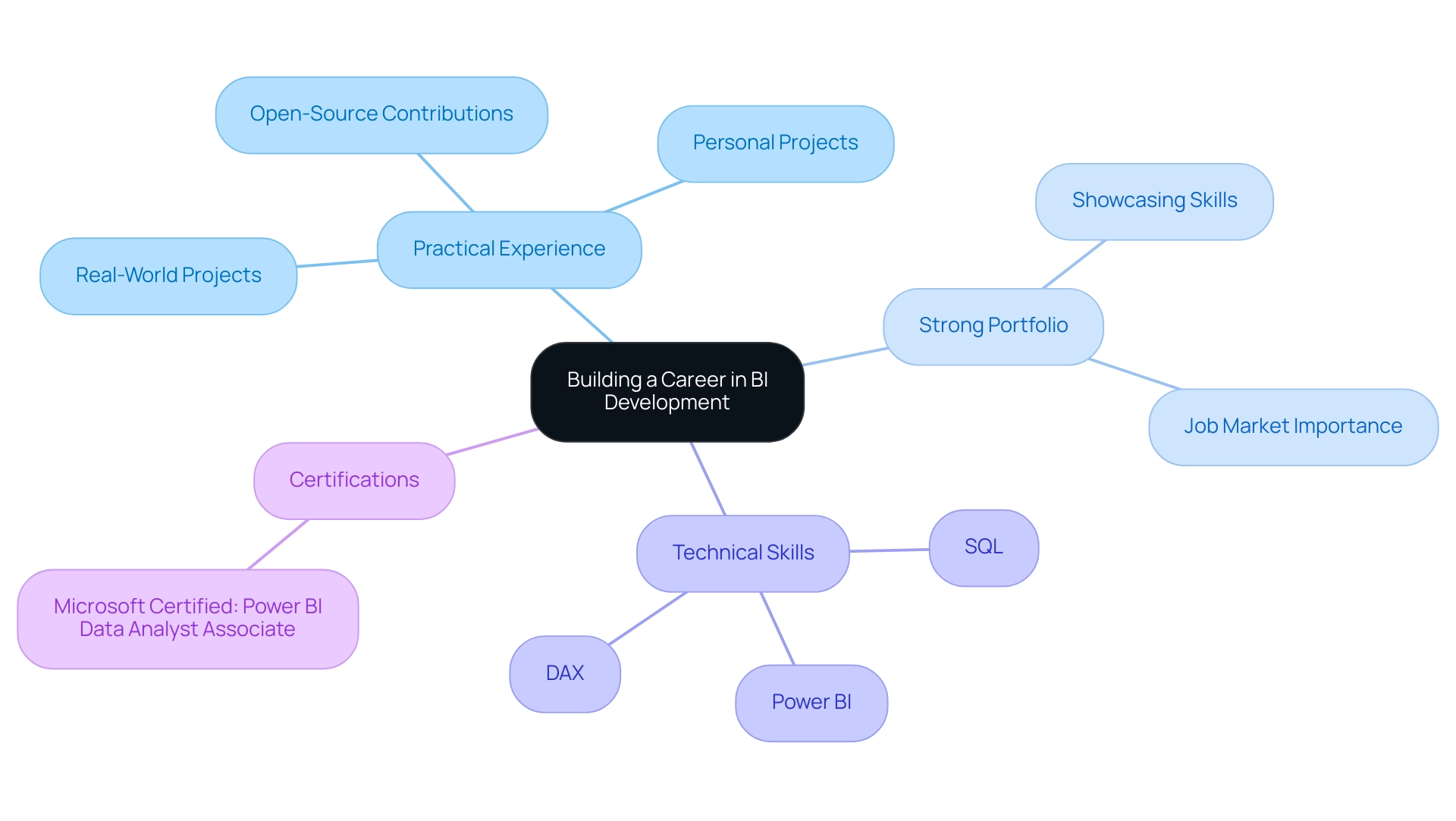

Understanding DAX Functions and Time Intelligence

Data Analysis Expressions (DAX) is an essential formula language used in Power BI and other Microsoft platforms for data modeling and analysis. A vital component of DAX is time intelligence, which lets users perform calculations involving dates and compare periods such as months, quarters, and years. Proficiency in DAX and its time intelligence functions is crucial for deriving actionable insights from data and overcoming common reporting challenges, such as time-consuming report creation and inconsistencies that lead to confusion and mistrust in the information presented.

Notable time intelligence functions include:

- DATESYTD

- SAMEPERIODLASTYEAR

- PREVIOUSMONTH

Each serves a distinct purpose, enabling analysts to monitor and evaluate trends effectively. Outside time intelligence, the VAR.S function returns the variance of a sample population, further extending the analytical capabilities of DAX.
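As a rough mental model, time intelligence functions take the dates in the current filter context and return a different set of dates. The Python sketch below imitates DATESYTD (from January 1 up to the last date in context, assuming a calendar year ending December 31) and SAMEPERIODLASTYEAR (each date shifted back one year) over a complete calendar. It is a simplification, not the DAX engine: mapping February 29 to February 28 is an assumption made here, and the DAX functions work on the dates that exist in the model's date column.

```python
from datetime import date, timedelta


def dates_ytd(context_dates):
    """Imitate DATESYTD: every day from Jan 1 of the last context date's year up to that date."""
    last = max(context_dates)
    start = date(last.year, 1, 1)
    return [start + timedelta(days=i) for i in range((last - start).days + 1)]


def same_period_last_year(context_dates):
    """Imitate SAMEPERIODLASTYEAR: shift each date back one year (Feb 29 -> Feb 28)."""
    shifted = set()
    for d in context_dates:
        try:
            shifted.add(d.replace(year=d.year - 1))
        except ValueError:  # Feb 29 has no counterpart in a non-leap year
            shifted.add(date(d.year - 1, 2, 28))
    return sorted(shifted)


march_2024 = [date(2024, 3, 1) + timedelta(days=i) for i in range(31)]
print(len(dates_ytd(march_2024)))  # number of days from Jan 1 to Mar 31, 2024
print(same_period_last_year([date(2024, 2, 29)]))
```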

Walter Shields, in his work on starting a career in analytics from the ground up, emphasizes that ‘mastering DAX operations is essential for anyone aiming to excel in analysis.’ By mastering time intelligence functions, analysts can significantly streamline their reporting processes, reduce the time spent on report creation, and improve consistency across reports. This leads to better decision-making and more impactful presentations. Furthermore, integrating RPA solutions can automate repetitive report-generation tasks, allowing analysts to concentrate on interpreting data rather than compiling it.

Consider the case study titled ‘Using Matrix for Statistical Measures.’ This illustrates how to utilize the Matrix visualization in Power BI to present various statistical metrics, demonstrating how analysts can leverage DAX techniques alongside RPA to enhance their presentation abilities while ensuring that stakeholders receive clear, actionable advice.

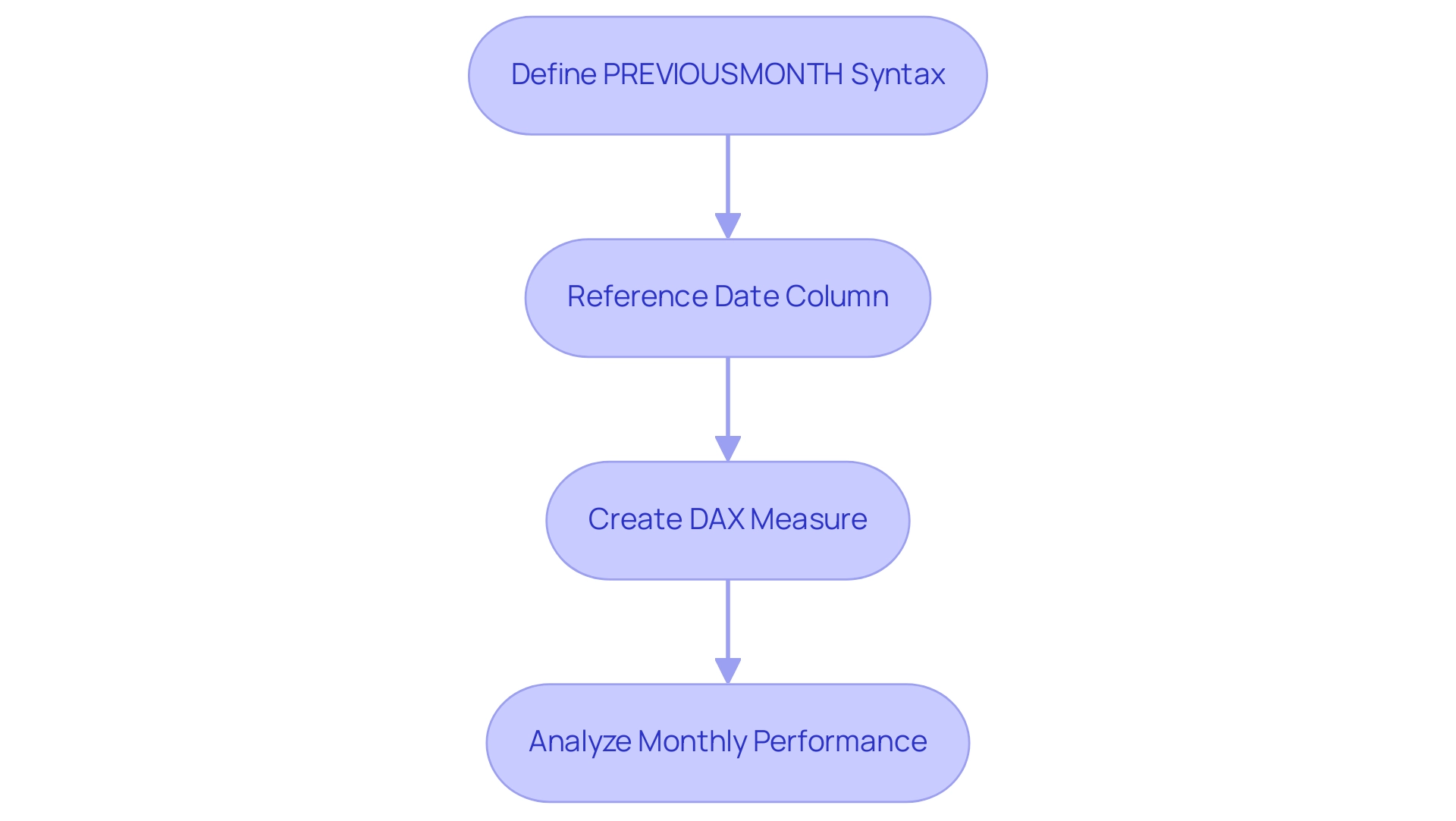

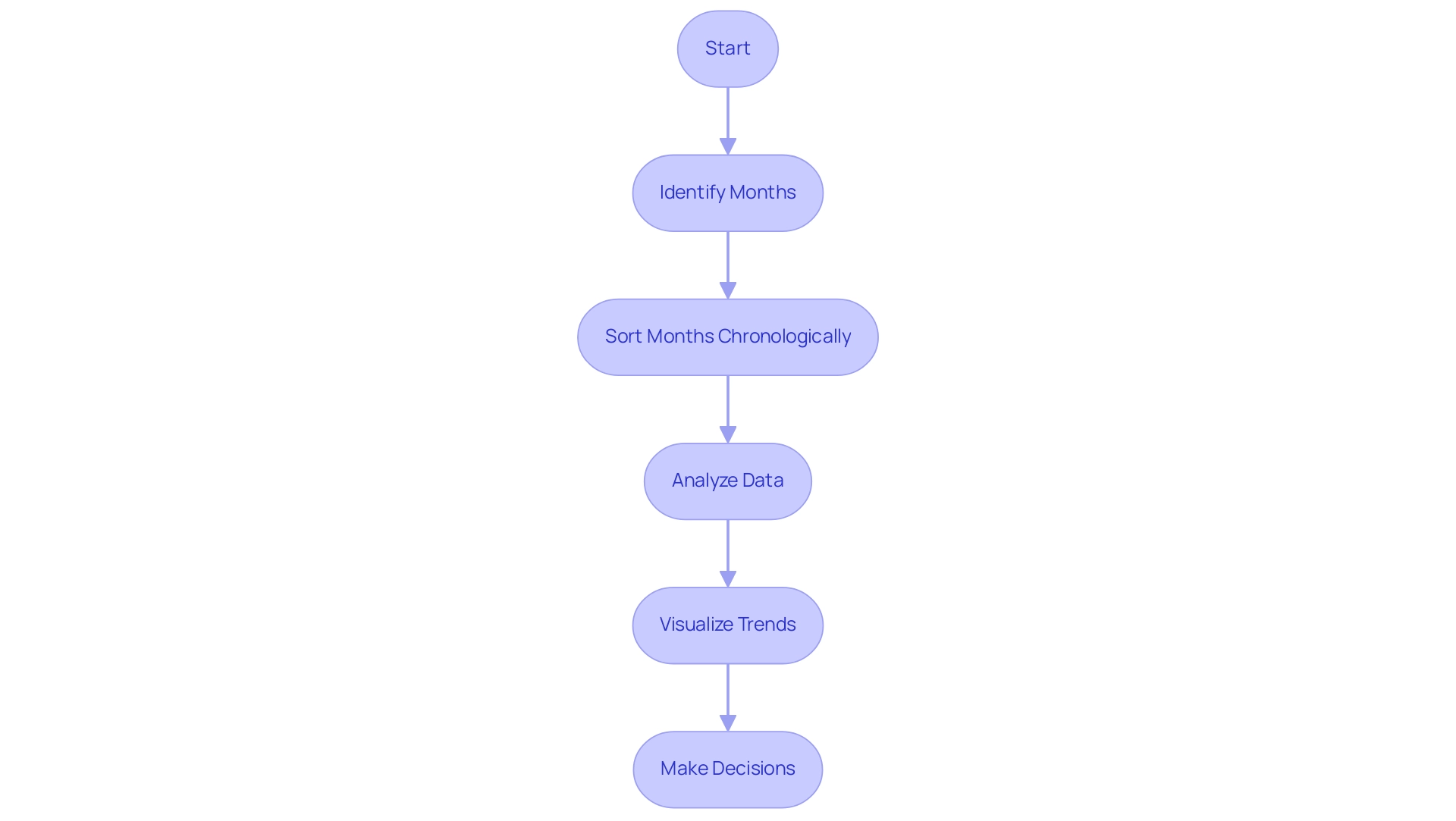

Exploring the PREVIOUSMONTH DAX Function: Syntax and Usage

The syntax for the PREVIOUSMONTH DAX formula is straightforward, enabling effective analysis of temporal data and facilitating essential data-driven insights for operational efficiency. The function is defined as follows:

PREVIOUSMONTH(<Dates>)

Here, <Dates> is typically a reference to a column containing date values. The function returns a single-column table of all dates from the month before the first date in that column in the current context. For instance, if you have a date column named ‘Sales[Date]’, you can create a DAX measure that returns total sales for the previous month with the following code:

Total Sales Previous Month = CALCULATE(SUM(Sales[Amount]), PREVIOUSMONTH(Sales[Date]))

This measure dynamically computes the total sales for the month preceding the current context defined by the date filter applied in your report. Using PREVIOUSMONTH lets analysts evaluate monthly performance and build a nuanced understanding of sales trends. Recent discussions among data analysts emphasize the function’s simplicity and effectiveness, as noted by Janet: “Ok, I figured it out. It’s actually pretty simple; you just explicitly tell PBI what to do.”
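The Python model below sketches what `Total Sales Previous Month` computes, for intuition only. It assumes, as described above, that the reference point is the first date in the current context and that the returned dates come from a complete date column (`date_table`, a hypothetical calendar for 2024). `previous_month` and `total_sales_previous_month` are illustrative helpers, not DAX functions, and `None` stands in for a BLANK result.

```python
from datetime import date, timedelta

# Hypothetical complete date table covering calendar year 2024.
date_table = [date(2024, 1, 1) + timedelta(days=i) for i in range(366)]

# Made-up sales rows.
sales = [
    {"Date": date(2024, 2, 10), "Amount": 100.0},
    {"Date": date(2024, 2, 28), "Amount": 50.0},
    {"Date": date(2024, 3, 5), "Amount": 75.0},
]


def previous_month(context_dates, dates_column):
    """Return the dates in dates_column that fall in the month before the first context date."""
    first = min(context_dates)
    year, month = (first.year - 1, 12) if first.month == 1 else (first.year, first.month - 1)
    return [d for d in dates_column if d.year == year and d.month == month]


def total_sales_previous_month(rows, context_dates):
    """Sum Amount over the previous month's dates; None mimics a BLANK result."""
    prev = set(previous_month(context_dates, date_table))
    amounts = [r["Amount"] for r in rows if r["Date"] in prev]
    return sum(amounts) if amounts else None


march = [d for d in date_table if d.month == 3]
print(total_sales_previous_month(sales, march))
```

A January selection returns `None` here because the hypothetical date table has no December 2023 rows, which mirrors how gaps in a date table lead to blank results.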

Moreover, this functionality addresses common challenges in leveraging insights from Power BI dashboards, such as time-consuming report creation and inconsistencies. By streamlining the reporting process, it ensures uniformity in analysis, ultimately contributing to improved operational efficiency.

A case study on calculating order counts for the current and previous months demonstrates the practical application of this function for examining sales data. Some users have also raised concerns about its effectiveness, which highlights the importance of maintaining proper relationships and column references in DAX formulas. In the broader context of Business Intelligence, applying PREVIOUSMONTH illustrates how analytical techniques can drive growth and innovation by transforming raw data into actionable insights.

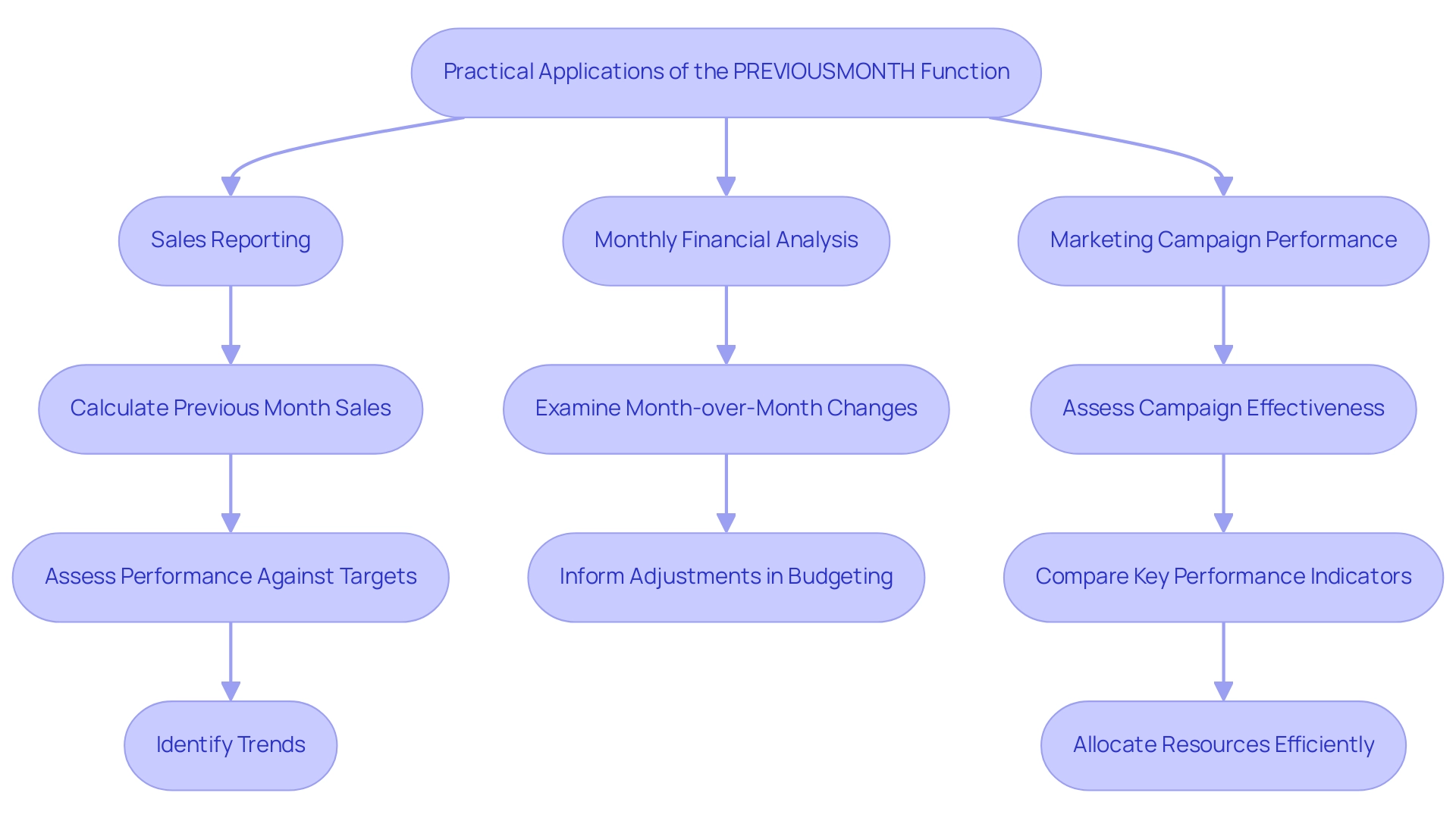

Practical Applications of the PREVIOUSMONTH Function in Business Intelligence

The PREVIOUSMONTH capability emerges as a crucial asset in diverse business intelligence scenarios, significantly enhancing analytical capabilities and decision-making processes, particularly when integrated with Robotic Process Automation (RPA). Notable applications include:

- Sales Reporting: By calculating previous-month sales figures with PREVIOUSMONTH, organizations can assess performance against established targets. For instance, a retail business may combine this function with RPA to improve data gathering and reporting, contrasting last month’s sales with current figures to identify emerging trends that could influence inventory and sales strategies. RPA not only automates these processes, minimizing the risk of human error, but also frees team members to focus on strategic initiatives rather than manual entry.

- Monthly Financial Analysis: Financial analysts can use PREVIOUSMONTH to examine month-over-month changes in critical metrics such as revenue, expenses, and profit margins. This analysis, enhanced by RPA for automating data aggregation, provides insights into the overall financial health of the organization, informing adjustments in budgeting and forecasting. Recent studies underscore the capacity of individual investors to time the market, highlighting the necessity of thorough data evaluation for informed decision-making. By reducing errors in data handling, RPA contributes to more accurate financial assessments.

- Marketing Campaign Performance: Marketers can assess the effectiveness of their campaigns by comparing key performance indicators, such as leads generated or conversion rates, from the previous month with current results. Integrating RPA here enables seamless data extraction from various platforms, ensuring that resources are allocated efficiently based on comparative analysis. This automation lessens the risk of oversight, empowering teams to direct their efforts more effectively.
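Month-over-month comparisons like these usually reduce to one formula: (current - previous) / previous. In DAX this is often written as a measure along the lines of DIVIDE([Total Sales] - [Total Sales Previous Month], [Total Sales Previous Month]), where [Total Sales] is a hypothetical base measure. The Python sketch below reproduces only that arithmetic, returning `None` where DIVIDE would return BLANK (a zero or missing previous value).

```python
from typing import Optional


def mom_change(current: float, previous: Optional[float]) -> Optional[float]:
    """Relative month-over-month change; None mirrors DAX DIVIDE returning BLANK."""
    if not previous:  # covers None (blank) and 0
        return None
    return (current - previous) / previous


print(mom_change(120.0, 100.0))  # 20% growth
print(mom_change(80.0, 100.0))   # 20% decline
print(mom_change(50.0, 0.0))     # no previous-month value to compare against
```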

Moreover, analyzing a sample of 3,036 investors reveals that understanding investor flows can enhance predictions of market trends. The case study titled “Bear Market Predictions Using Investor Flows” illustrates how a significant negative difference in flows between favorable and unfavorable periods nearly doubles the likelihood of negative market returns in the subsequent month. This insight can be further refined through RPA, which streamlines the information gathering process, simplifying the analysis of investor behavior and trends.

By integrating the previous month capability into their reports and employing RPA, organizations not only facilitate more informed decision-making but also bolster strategic planning efforts. In a rapidly evolving AI landscape, RPA stands as an essential tool for navigating complexities, minimizing errors, and ultimately driving operational efficiency.

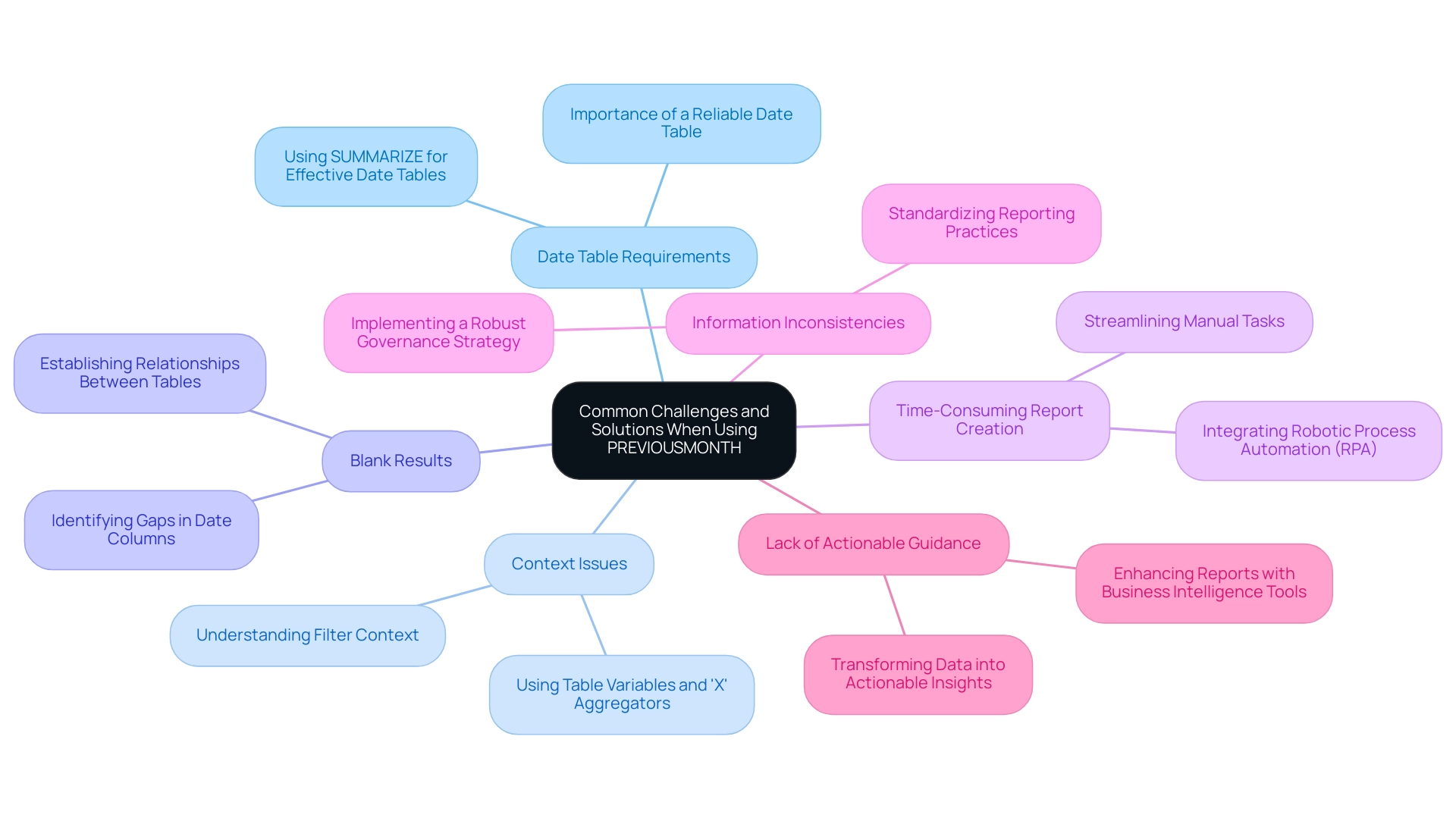

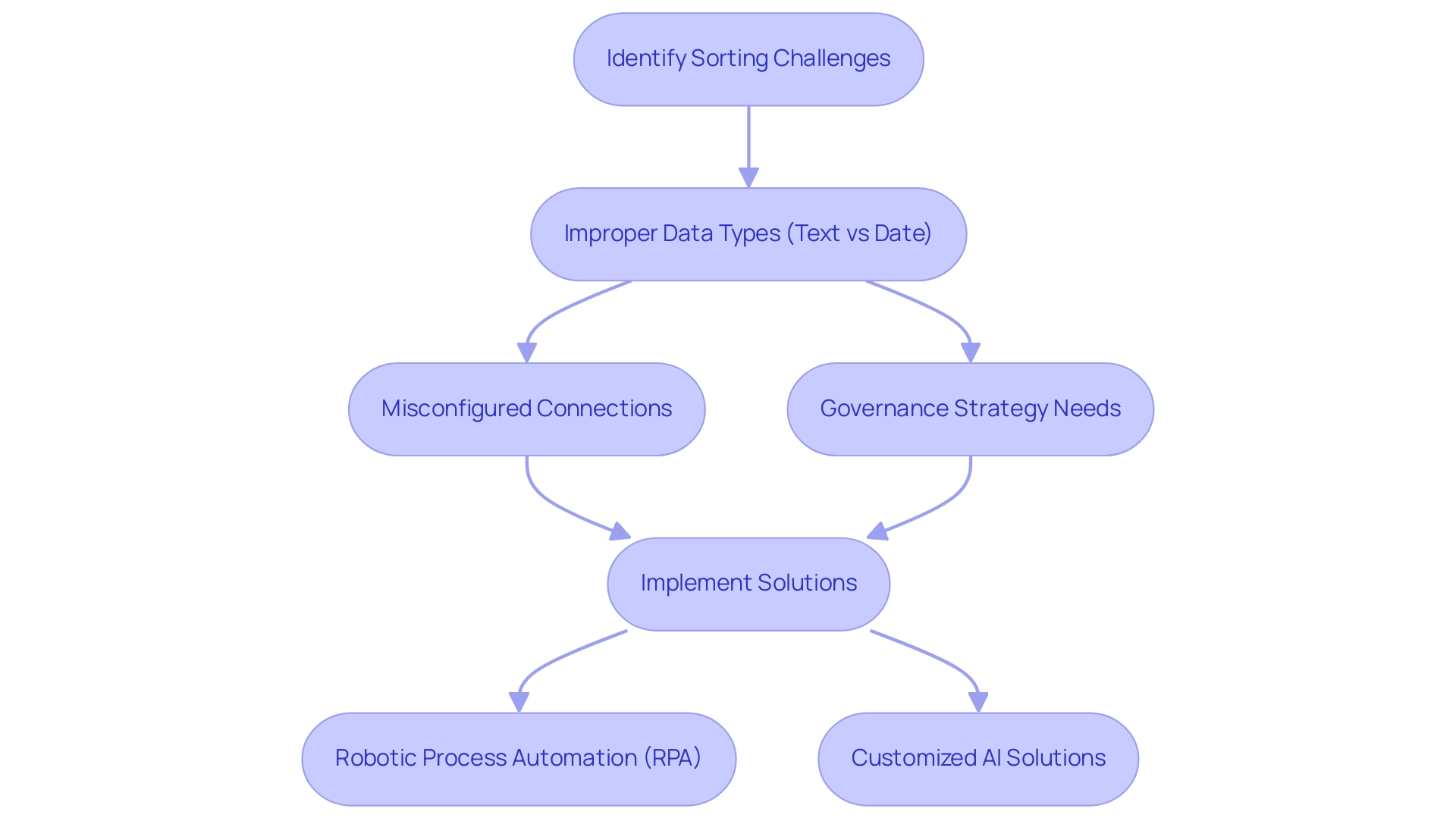

Common Challenges and Solutions When Using PREVIOUSMONTH

While the PREVIOUSMONTH function serves as a powerful tool for time-based data analysis, users must navigate certain challenges effectively:

- Date Table Requirements: A well-organized date table is crucial for applying PREVIOUSMONTH accurately. The function depends on a continuous and complete date range; without one, users may encounter inaccurate results or blanks. Establishing a reliable date table significantly improves the precision of your analysis. As highlighted in the case study ‘Key Concepts in DAX for Power BI’, understanding the granularity of fact tables and employing functions such as SUMMARIZE can facilitate the creation of effective date tables that support PREVIOUSMONTH.

- Context Issues: Understanding the filter context in which PREVIOUSMONTH is evaluated is essential. If your report includes slicers or other filters, the output may not match expectations. To mitigate this, consider table variables and ‘X’ iterator functions such as SUMX, which often prove more effective than relying solely on CALCULATE to modify the filter context. This helps ensure that the intended data is represented in the results.

- Blank Results: PREVIOUSMONTH calculations sometimes return blanks. This typically arises from gaps in the date column or from a filter context that does not suit the calculation. Creating a relationship between your date table and the fact table can resolve these issues and enable accurate month-over-month comparisons. According to Mitchell Pearson, a Data Platform Consultant at Pragmatic Works, “A well-defined date table not only supports time-based functions like previousmonth DAX but also enhances overall reporting accuracy.”

- Time-Consuming Report Creation: Many users spend more time building reports than extracting insights from Power BI dashboards. Manual, repetitive tasks are a major cause, shifting focus from analysis to report construction and wasting valuable resources. Integrating Robotic Process Automation (RPA) into your workflow can streamline these tasks, freeing time for strategic analysis and decision-making.

- Information Inconsistencies: Discrepancies across reports caused by a lack of governance create confusion and erode trust in the information presented. A robust governance framework standardizes reporting practices, reduces discrepancies, and ensures the integrity and reliability that confident insights depend on.

- Lack of Actionable Guidance: Reports full of numbers and graphs often fail to deliver clear, actionable guidance, leaving stakeholders unsure of next steps. Targeted Business Intelligence tools can turn raw data into actionable insights that foster growth and informed decision-making.
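The date-table requirement described in the first challenge can be illustrated with a minimal Python sketch. In Power BI itself a date table is usually created with the DAX CALENDAR or CALENDARAUTO functions and marked as a date table; the hypothetical helpers below only demonstrate the underlying property (one row for every day, with no gaps) and how to list the days missing from a fact table's date column.

```python
from datetime import date, timedelta


def build_date_table(start: date, end: date) -> list[date]:
    """Return one entry per calendar day from start to end, inclusive (no gaps)."""
    span = (end - start).days
    return [start + timedelta(days=offset) for offset in range(span + 1)]


def find_gaps(fact_dates: list[date]) -> list[date]:
    """Return the days missing between the earliest and latest date in fact_dates."""
    present = set(fact_dates)
    calendar = build_date_table(min(present), max(present))
    return [day for day in calendar if day not in present]


# Example: sales recorded on Jan 1, Jan 2 and Jan 5 leave Jan 3 and Jan 4 uncovered.
sales_dates = [date(2024, 1, 1), date(2024, 1, 2), date(2024, 1, 5)]
print(find_gaps(sales_dates))  # → [datetime.date(2024, 1, 3), datetime.date(2024, 1, 4)]
```

A separate calendar covers every day even when no sales occurred, which is one reason time intelligence calculations are pointed at a date table rather than at the fact table's own date column.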

By proactively addressing these common obstacles, including the impact of manual processes and the need for governance strategies, users can fully leverage the PREVIOUSMONTH function and strengthen their overall data analysis skills within Power BI.

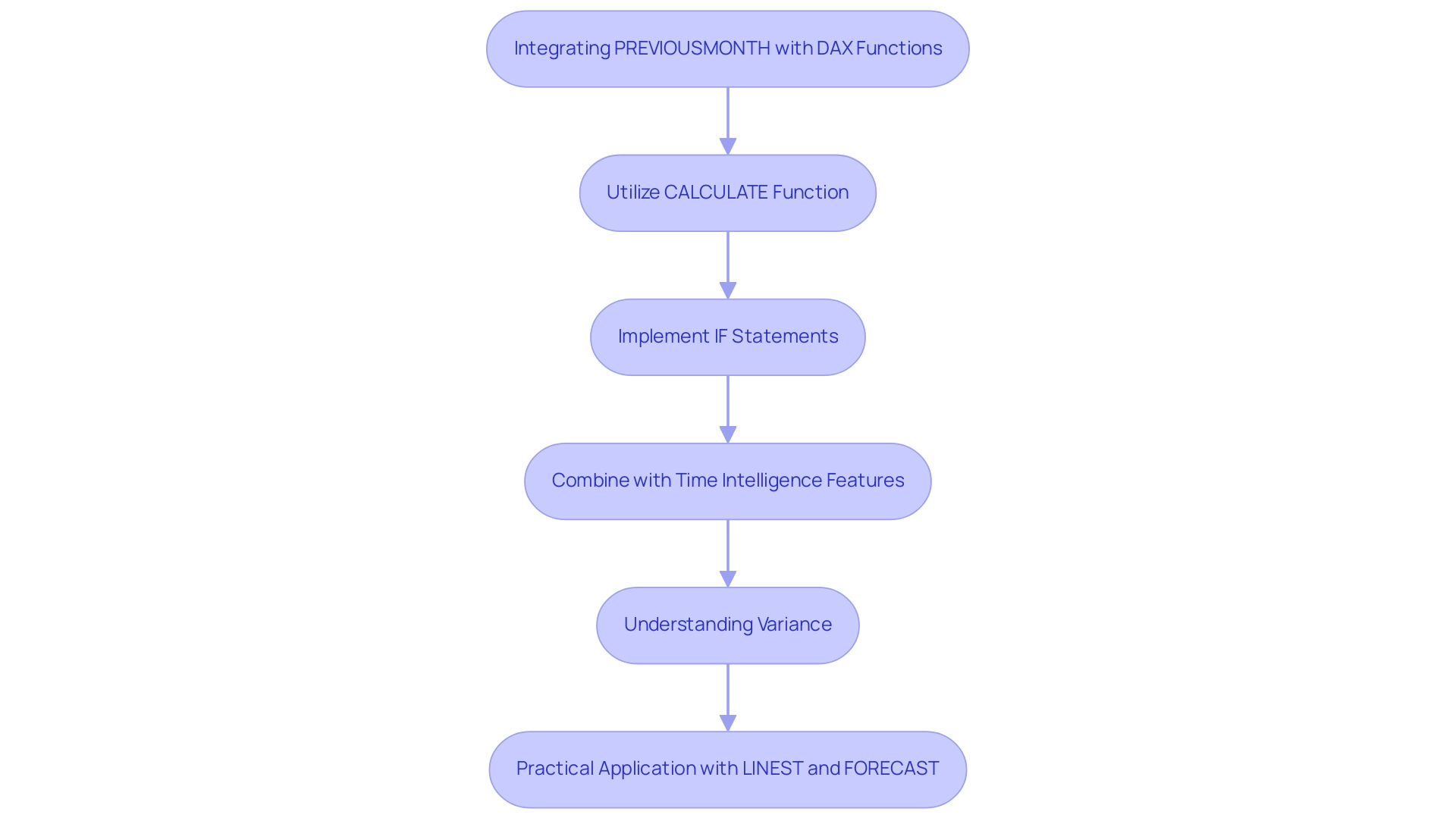

Advanced Techniques: Combining PREVIOUSMONTH with Other DAX Functions

To enhance your analytical capabilities in Power BI, combine PREVIOUSMONTH with other DAX functions. This approach is especially effective when manual processes are streamlined through Robotic Process Automation (RPA): by automating tasks such as report generation and data cleaning, analysts can concentrate on strategic decision-making. Consider these advanced techniques:

- Utilize the CALCULATE function: Adjust the filter context while applying PREVIOUSMONTH. In the example below, Sales[Amount] and 'Date'[Date] are placeholder names for your sales column and the date column of your date table:

Sales Growth = SUM(Sales[Amount]) - CALCULATE(SUM(Sales[Amount]), PREVIOUSMONTH('Date'[Date]))

This measure subtracts the previous month's sales from the current month's sales, giving a clear view of performance trends and supporting data-driven decision-making.

- IF Statements: Use conditional logic to handle periods where data is missing. In this example, Sales[Amount] and 'Date'[Date] are placeholder names for your sales column and date-table column:

Previous Month Sales =
VAR PrevSales = CALCULATE(SUM(Sales[Amount]), PREVIOUSMONTH('Date'[Date]))
RETURN IF(ISBLANK(PrevSales), 0, PrevSales)

If sales data for the previous month is absent, the measure returns zero instead of a blank, which keeps reports clearer and minimizes inconsistencies. Storing the CALCULATE result in a variable also avoids evaluating it twice.

- Time Intelligence Functions: Combine PREVIOUSMONTH with other time intelligence functions, such as DATESYTD or SAMEPERIODLASTYEAR, to compare several time frames in one report and give decision-makers richer context.

- Understanding Variance: The VAR.S function returns the variance of a sample (VAR.P returns the variance of an entire population), which is useful for analyzing variability before drawing conclusions.

- Practical Application: As illustrated in a case study on regression, DAX offers functions such as LINEST and LINESTX for linear regression, modeling the relationship between a dependent variable and one or more independent variables. This supports predictive modeling, trend analysis, and forecasting based on historical data, further strengthening your analysis and operational efficiency.
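The logic of the CALCULATE and IF measures in this list can be checked outside Power BI with a small Python sketch. It assumes a filter context covering a single month, where PREVIOUSMONTH selects every day of the preceding calendar month; the helper names are hypothetical, sales rows are (date, amount) tuples, and None stands in for a DAX BLANK.

```python
from datetime import date, timedelta


def previous_month_range(day: date) -> tuple[date, date]:
    """First and last day of the calendar month before `day`."""
    last_of_previous = day.replace(day=1) - timedelta(days=1)
    return last_of_previous.replace(day=1), last_of_previous


def month_range(day: date) -> tuple[date, date]:
    """First and last day of the calendar month containing `day`."""
    first = day.replace(day=1)
    # Jumping 32 days past the 1st always lands in the next month.
    first_of_next = (first + timedelta(days=32)).replace(day=1)
    return first, first_of_next - timedelta(days=1)


def total(sales, start: date, end: date):
    """Sum amounts dated within [start, end]; None mimics a BLANK when no rows match."""
    amounts = [amount for when, amount in sales if start <= when <= end]
    return sum(amounts) if amounts else None


def previous_month_sales(sales, day: date) -> float:
    """Mirrors IF(ISBLANK(PrevSales), 0, PrevSales): a blank previous month becomes 0."""
    value = total(sales, *previous_month_range(day))
    return 0 if value is None else value


def sales_growth(sales, day: date) -> float:
    """Current month total minus previous month total."""
    current = total(sales, *month_range(day))
    return (0 if current is None else current) - previous_month_sales(sales, day)


sales = [(date(2024, 1, 15), 100.0), (date(2024, 1, 20), 50.0), (date(2024, 2, 3), 200.0)]
print(sales_growth(sales, date(2024, 2, 10)))  # → 50.0
```

Note how leap years and year boundaries fall out of the date arithmetic: the month before March 2024 ends on February 29, and the month before January 2024 is December 2023.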

As Aaron, the Lead Power BI instructor at Maven Analytics, emphasizes, “Trust me; you won’t want to miss it!” By mastering these advanced DAX techniques in conjunction with RPA, analysts can uncover deeper insights and drive impactful business decisions, ultimately fortifying operational efficiency and strategic planning within your organization.

Conclusion

The exploration of Data Analysis Expressions (DAX) and its time intelligence functions, particularly the PREVIOUSMONTH function, underscores their vital role in enhancing data analysis and reporting practices. By facilitating comparisons across different time periods, this function empowers analysts to track performance trends, streamline reporting processes, and make informed decisions that drive organizational success. Moreover, the integration of Robotic Process Automation (RPA) significantly amplifies these capabilities, enabling analysts to concentrate on strategic insights rather than the manual compilation of data.

Practical applications of the PREVIOUSMONTH function span various domains, including:

- sales reporting

- financial analysis

- marketing performance evaluation

Harnessing this function allows organizations to gain valuable insights that inform strategy and operational efficiency. However, to fully leverage its potential, challenges such as the need for a well-structured date table, context issues, and potential data inconsistencies must be addressed.

Incorporating advanced techniques, such as combining the PREVIOUSMONTH function with other DAX functions, enhances analytical capabilities and supports robust decision-making. As the landscape of data analysis continues to evolve, mastering DAX functions and integrating RPA will be essential for professionals aiming to unlock the full potential of their data. Embracing these tools not only streamlines processes but also fosters a culture of data-driven decision-making, positioning organizations for sustained growth and innovation.

Overview

This article gives an overview of the MERGE statement in Spark SQL, covering its syntax, best practices, and advanced techniques for data manipulation. It outlines strategies for optimizing merge operations with Delta Lake, automating them with Robotic Process Automation (RPA), and addressing common challenges, all aimed at improving operational efficiency and performance. Professionals seeking to improve their data handling capabilities will find actionable insights that can transform their current practices.

Introduction

In the realm of data manipulation, the MERGE statement in Spark SQL emerges as a powerful and versatile tool. It enables organizations to perform conditional updates, inserts, and deletes seamlessly. As businesses increasingly rely on data-driven insights, integrating Robotic Process Automation (RPA) into these operations not only enhances efficiency but also minimizes human error. When paired with Delta Lake, the MERGE statement becomes even more formidable, optimizing the handling of massive datasets.

This article delves into the intricacies of the MERGE statement, exploring its syntax, best practices, and advanced techniques that can elevate data operations to new heights. By gaining a comprehensive understanding of join types, setup requirements, and troubleshooting strategies, organizations can harness the full potential of Spark SQL. This knowledge is crucial for navigating the complexities of today’s data landscape effectively.

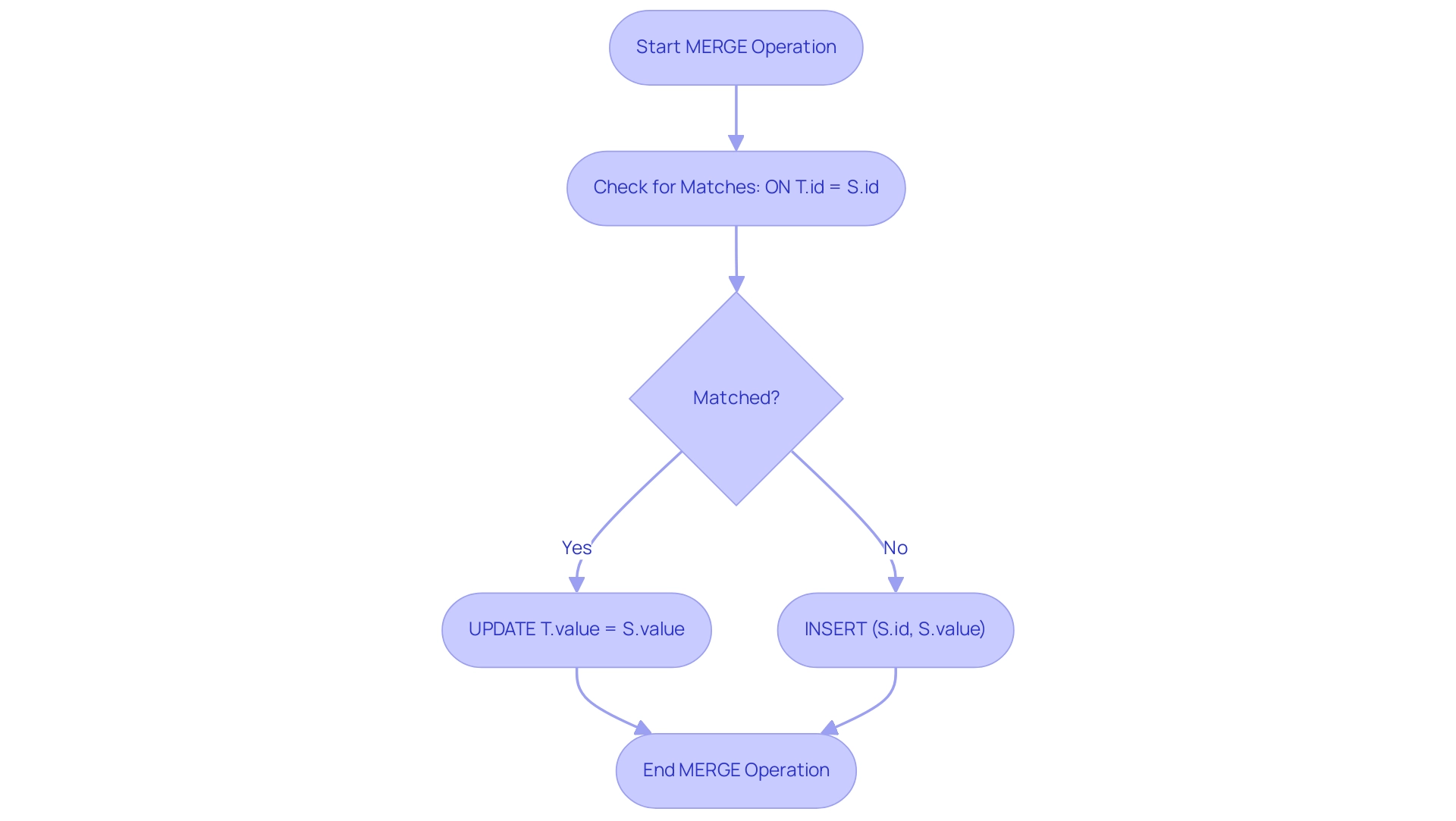

Understanding the MERGE Statement in Spark SQL

The MERGE statement in Spark SQL is a powerful tool for executing conditional updates, inserts, and deletes in a single operation, greatly improving the efficiency of data manipulation. By incorporating Robotic Process Automation (RPA), organizations can automate these operations, reducing manual intervention and minimizing errors. This effectiveness is especially evident with Delta Lake, which optimizes the management of large datasets.

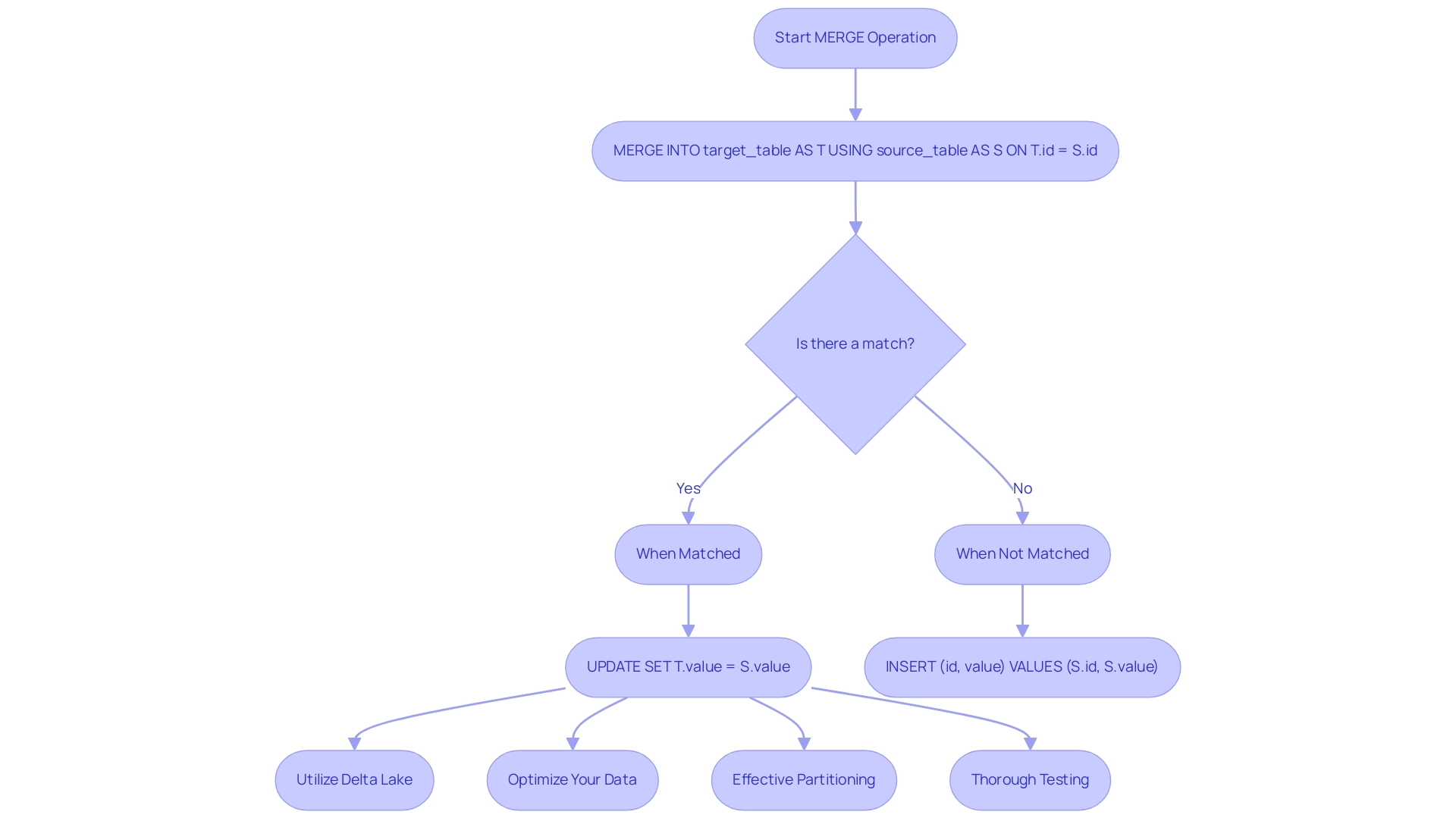

The syntax for a MERGE operation is as follows:

MERGE INTO target_table AS T
USING source_table AS S
ON T.id = S.id
WHEN MATCHED THEN
  UPDATE SET T.value = S.value
WHEN NOT MATCHED THEN
  INSERT (id, value) VALUES (S.id, S.value);

This command evaluates the ON condition to match rows between the target and source tables and then applies the corresponding action to each row. In environments handling large volumes of data, such as merging 30 to 50 million staging records into a target table of billions of records, merges can be slow, and Delta Lake's capabilities can yield significant performance improvements. Cost-based optimization (CBO) also matters for Spark SQL merge operations, because it uses table statistics to build more efficient execution plans. As case studies analyzing recommendations for Spark SQL merge performance show, many complex solutions fall short, while simple, foundational practices are frequently overlooked.
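The row-level effect of the MERGE statement above can be modelled in a few lines of plain Python. This is a sketch for intuition only, not Spark or Delta Lake code: the hypothetical merge_into helper treats the target as a dict keyed by id and assumes each id appears at most once in the source.

```python
def merge_into(target: dict, source: list[tuple]) -> tuple[dict, int, int]:
    """Plain-Python model of:
        MERGE INTO target AS T USING source AS S ON T.id = S.id
        WHEN MATCHED THEN UPDATE SET T.value = S.value
        WHEN NOT MATCHED THEN INSERT (id, value) VALUES (S.id, S.value)

    target maps id -> value; source is a list of (id, value) rows in which
    each id is assumed to appear at most once.
    """
    merged = dict(target)  # copy so the caller's target is not modified
    updated = inserted = 0
    for row_id, value in source:
        if row_id in merged:
            merged[row_id] = value  # WHEN MATCHED: overwrite the existing value
            updated += 1
        else:
            merged[row_id] = value  # WHEN NOT MATCHED: add a new row
            inserted += 1
    # Target rows without a matching source row are left unchanged.
    return merged, updated, inserted


merged, updated, inserted = merge_into({1: "a", 2: "b"}, [(2, "B"), (3, "c")])
print(merged, updated, inserted)  # → {1: 'a', 2: 'B', 3: 'c'} 1 1
```

Both branches write the same value here because the statement updates and inserts the same column; the counters make the distinction between the two clauses visible.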

Alex Ott, an experienced user, succinctly stated, “But you can pull necessary information using the History on the Delta table.” Delta table history (available through DESCRIBE HISTORY) not only streamlines operations but also underscores the value of foundational practices over ineffective complex solutions.

By focusing on these essential strategies and using RPA to automate data manipulation processes, organizations can markedly enhance their operational efficiency.

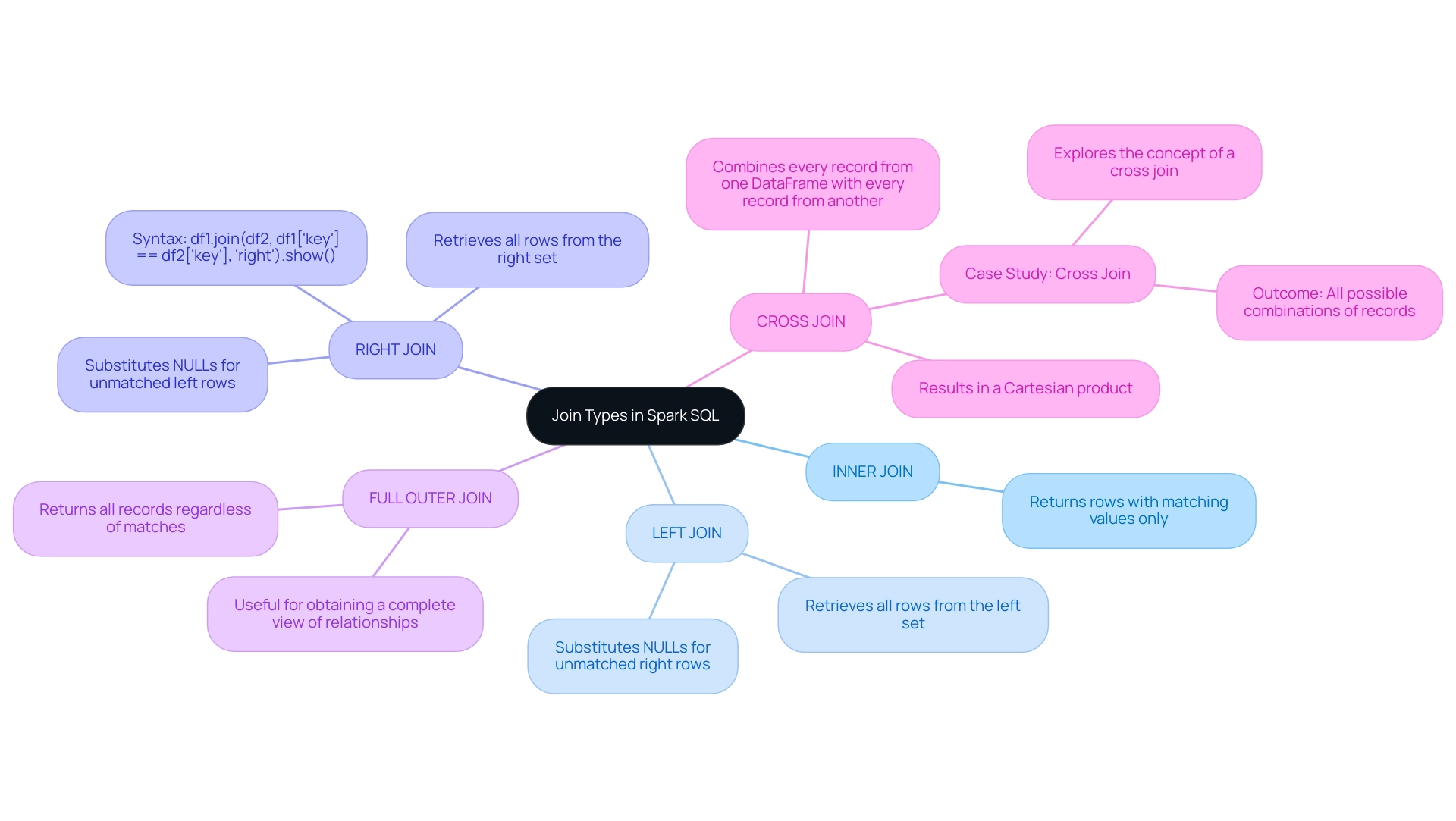

Exploring Join Types in Spark SQL

In Spark SQL, several join types are available, each suited to a specific purpose in data manipulation. A solid understanding of these joins is essential for writing MERGE statements that capture the intended relationships accurately. Alex Ott underscores the importance of choosing appropriate file formats, noting that features such as data skipping and Bloom filters can significantly improve join performance by reducing the number of files read during operations.

The key join types include:

- INNER JOIN: Returns only the rows with matching values in both tables, so only related records appear in the result set.

- LEFT JOIN: Returns all rows from the left table along with the matched rows from the right table, filling the right-side columns with NULLs where no match exists, so all left-side data is preserved.

- RIGHT JOIN: Conversely, returns all rows from the right table along with the matched rows from the left table, filling the left-side columns with NULLs where no match exists. In the PySpark DataFrame API, a right outer join is written as:

df1.join(df2, df1['key'] == df2['key'], 'right').show()

- FULL OUTER JOIN: Returns all records from both tables, whether or not they have a match, which is useful for obtaining a complete view of the relationships.

A related type is the cross join, which pairs every record of one DataFrame with every record of another. Understanding the distinctions among these join types not only aids precise data retrieval but also improves the performance and efficiency of operations that use Spark SQL's merge functionality.
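The row-level behaviour of these join types can be sketched in plain Python. This illustrates the semantics only and is not Spark code: the hypothetical join and cross_join helpers operate on lists of (key, value) tuples, and None stands in for SQL NULL.

```python
from itertools import product


def join(left, right, how="inner"):
    """Join two lists of (key, value) rows on key; None plays the role of SQL NULL.

    how is one of "inner", "left", "right" or "full" (full outer join).
    """
    if how not in ("inner", "left", "right", "full"):
        raise ValueError(f"unsupported join type: {how}")
    right_by_key = {}
    for key, value in right:
        right_by_key.setdefault(key, []).append(value)
    left_keys = {key for key, _ in left}

    rows = []
    for key, left_value in left:
        matches = right_by_key.get(key, [])
        # Matched keys appear in every join type.
        rows.extend((key, left_value, right_value) for right_value in matches)
        # Unmatched left rows survive only in left and full outer joins.
        if not matches and how in ("left", "full"):
            rows.append((key, left_value, None))
    # Unmatched right rows survive only in right and full outer joins.
    if how in ("right", "full"):
        rows.extend((key, None, value) for key, value in right if key not in left_keys)
    return rows


def cross_join(left, right):
    """Pair every left row with every right row."""
    return list(product(left, right))


left = [(1, "a"), (2, "b")]
right = [(2, "x"), (3, "y")]
print(join(left, right, "full"))  # → [(1, 'a', None), (2, 'b', 'x'), (3, None, 'y')]
```

A cross join of two tables with m and n rows always yields m × n rows, which is why it is rarely what a MERGE condition should produce.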

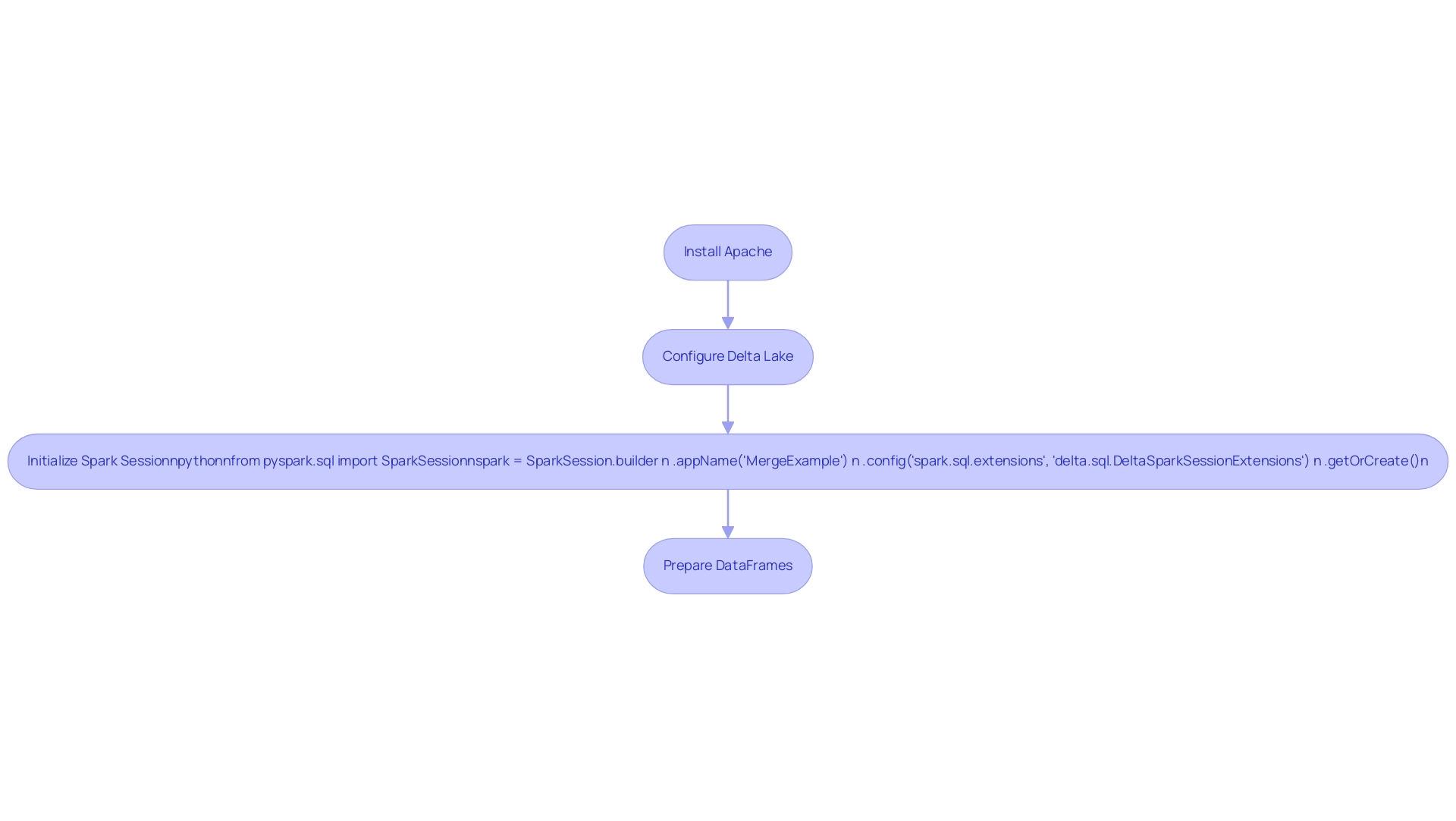

Setting Up Your Spark SQL Environment for MERGE Operations

To effectively execute MERGE operations in Spark SQL, establishing a robust setup is essential. This setup includes several key components:

- Apache Spark: Install Apache Spark and make sure it is correctly configured in your development environment.

- Delta Lake: Use Delta Lake as your storage format. In this setup, MERGE operations require Delta tables; plain Parquet or CSV tables do not support MERGE INTO. Delta Lake also adds ACID transactions, which improves reliability.

- Spark Session: Initialize your Spark session with Delta support. The configuration below assumes the Delta Lake package (for example, delta-spark) is available on the classpath:

from pyspark.sql import SparkSession

# Register Delta Lake's SQL extension and catalog so MERGE INTO works on Delta tables.
spark = (
    SparkSession.builder
    .appName("MergeExample")
    .config("spark.sql.extensions", "io.delta.sql.DeltaSparkSessionExtension")
    .config("spark.sql.catalog.spark_catalog", "org.apache.spark.sql.delta.catalog.DeltaCatalog")
    .getOrCreate()
)

- Data Preparation: Before executing the MERGE statement, prepare your source and target tables by loading them into DataFrames.
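One preparation step worth automating: Delta Lake rejects a MERGE in which several source rows match and try to modify the same target row, so sources with repeated keys are usually deduplicated first. The hypothetical latest_per_key helper below sketches that rule in plain Python, assuming each row is a dict with id and updated_at fields; in PySpark the same step is typically done with a window function such as row_number over a partition by id.

```python
def latest_per_key(rows):
    """Keep a single row per id: the one with the greatest updated_at value.

    rows is a list of dicts with hypothetical "id" and "updated_at" fields.
    """
    latest = {}
    for row in rows:
        kept = latest.get(row["id"])
        if kept is None or row["updated_at"] > kept["updated_at"]:
            latest[row["id"]] = row
    return list(latest.values())


source = [
    {"id": 1, "updated_at": 1, "value": "old"},
    {"id": 1, "updated_at": 2, "value": "new"},
    {"id": 2, "updated_at": 1, "value": "x"},
]
print([row["value"] for row in latest_per_key(source)])  # → ['new', 'x']
```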

Incorporating Robotic Process Automation (RPA) into this setup can significantly streamline Spark SQL merge operations. For instance, RPA can automate preparation steps, ensuring that DataFrames are consistently loaded and ready for processing without manual intervention. This approach not only reduces the potential for human error but also accelerates workflow, allowing your team to focus on more strategic tasks.

This setup lets you use Spark SQL for advanced data manipulation while meeting the growing demand for operational efficiency in a rapidly evolving AI landscape. As organizations adopt tools like Spark SQL and Delta Lake, driven by the Analytics as a Service (AaaS) market's projected growth to $68.9 billion by 2028, RPA can further enhance data-driven insights and operational efficiency for business growth. According to Deloitte, “MarkWide Research is a trusted partner that provides us with the market insights we need to make informed decisions.”

This underscores the importance of reliable tools and insights in an evolving data landscape. Furthermore, Google's dominance in web analytics, with a 30.35% market share, highlights the need for organizations to implement robust analytics solutions such as Spark SQL and Delta Lake to remain competitive in this expanding market.

Executing MERGE Operations: Syntax and Best Practices

To perform a MERGE operation in Spark SQL, you can follow this syntax:

MERGE INTO target_table AS T
USING source_table AS S
ON T.id = S.id
WHEN MATCHED THEN
  UPDATE SET T.value = S.value
WHEN NOT MATCHED THEN
  INSERT (id, value) VALUES (S.id, S.value);

Best Practices:

- Utilize Delta Lake: For optimal performance, ensure your tables are in Delta format. As Prashanth Babu Velanati Venkata, Lead Product Specialist, notes, “Delta Lake supports ACID transactions and unifies batch and streaming paradigms, simplifying delete/insert/update operations on incremental information.”