Overview

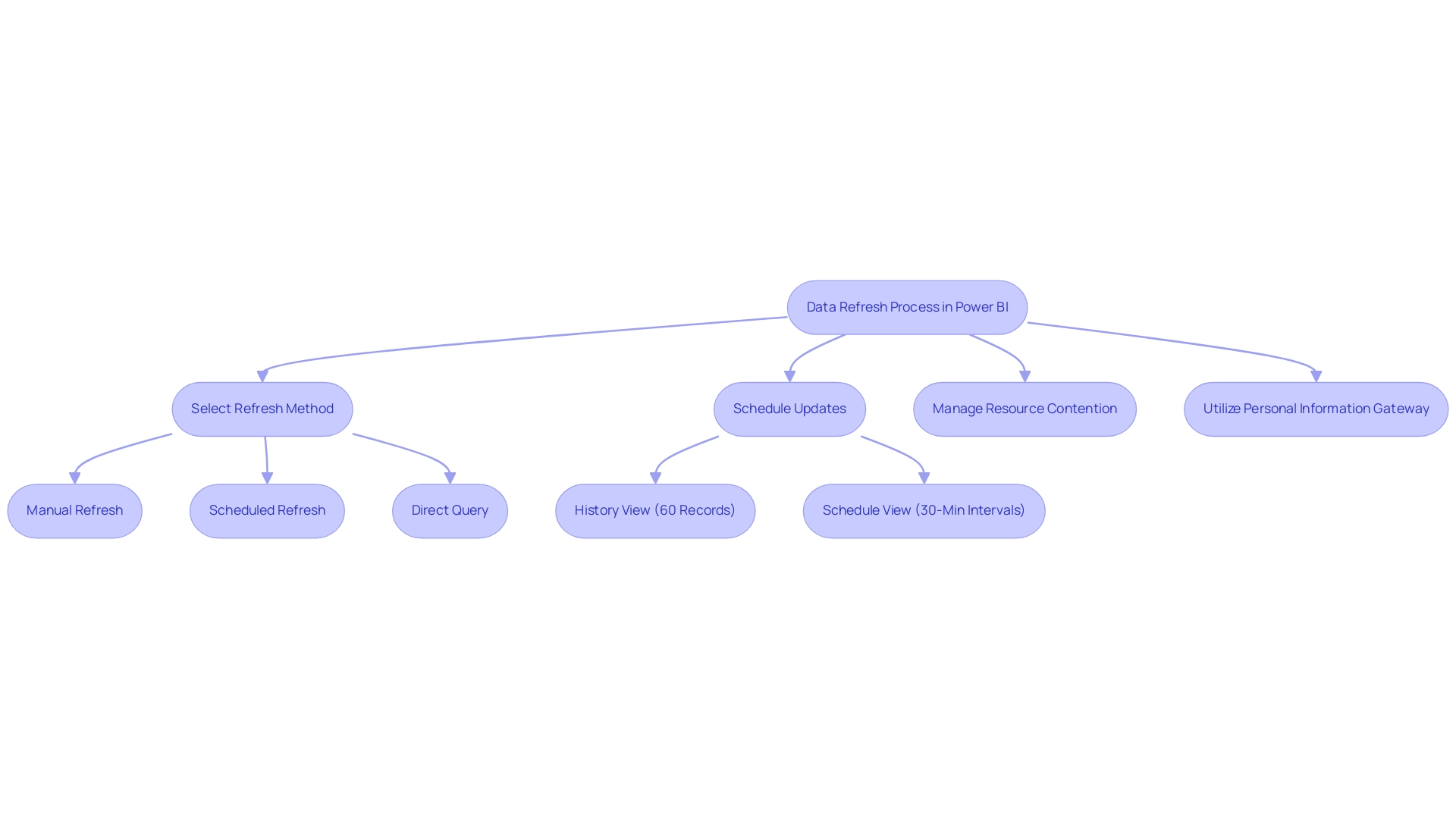

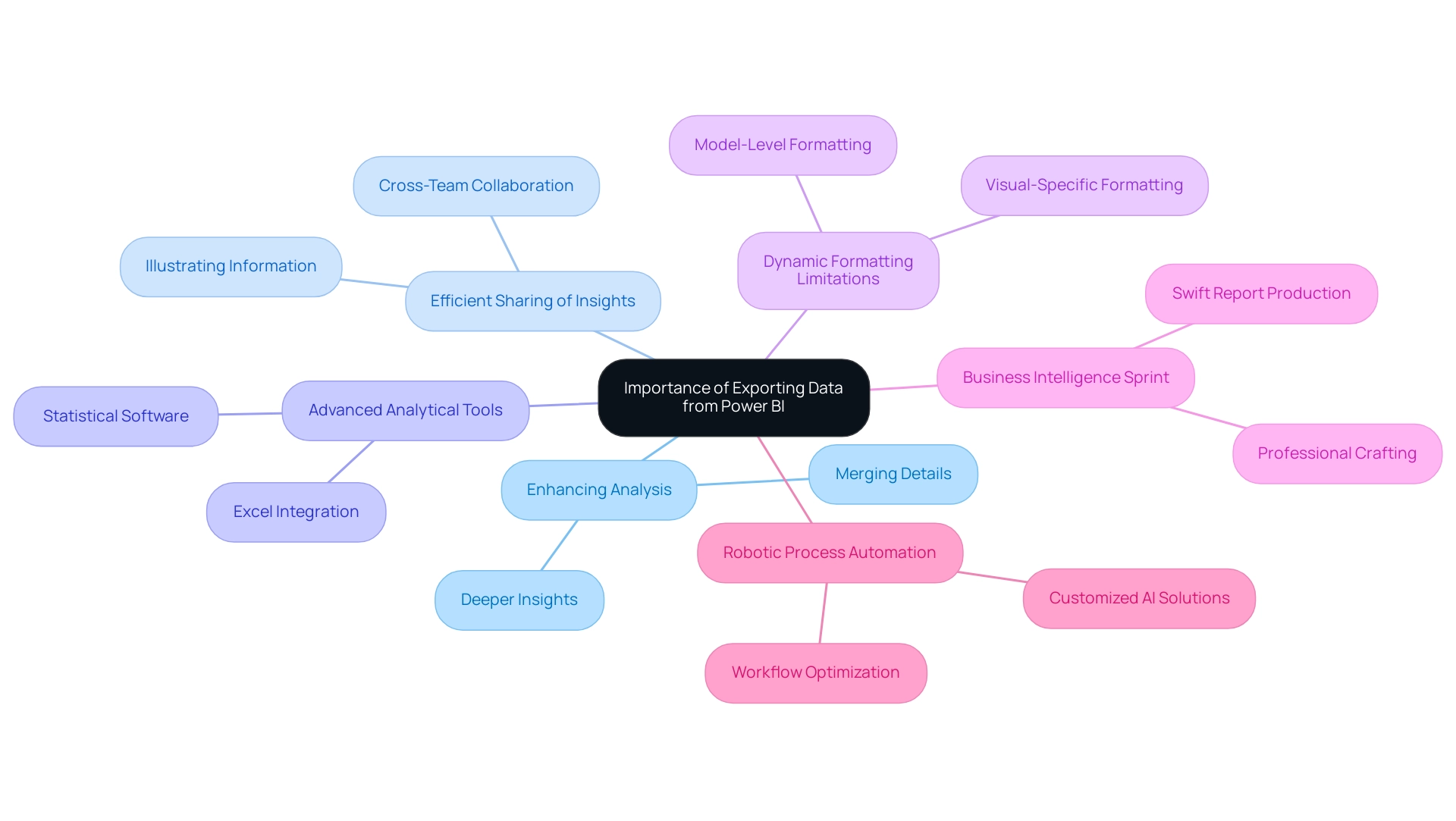

To update Power BI data effectively, users can choose between full updates, which replace all current information, and incremental updates, which modify only the changed content, thereby optimizing performance and reducing resource usage. The article emphasizes the importance of selecting the right update method based on operational needs and highlights best practices, such as automating processes with Robotic Process Automation (RPA) and monitoring refresh performance, to ensure reliable and timely access to accurate data.

Introduction

In the dynamic landscape of data-driven decision-making, ensuring that insights are timely and accurate is paramount for organizations striving to maintain a competitive edge. Power BI offers robust solutions for data refresh, enabling users to harness the latest information from their source systems effectively.

This article covers how data refresh works in Power BI and explores the main refresh methods:

- Manual refresh

- Scheduled refresh

- On-demand refresh

It also provides practical steps for setting up automatic refresh, addresses common troubleshooting challenges, and outlines best practices that can significantly enhance operational efficiency.

By understanding these concepts and implementing effective strategies, organizations can transform their data management processes, ultimately driving growth and innovation.

Understanding Data Refresh in Power BI

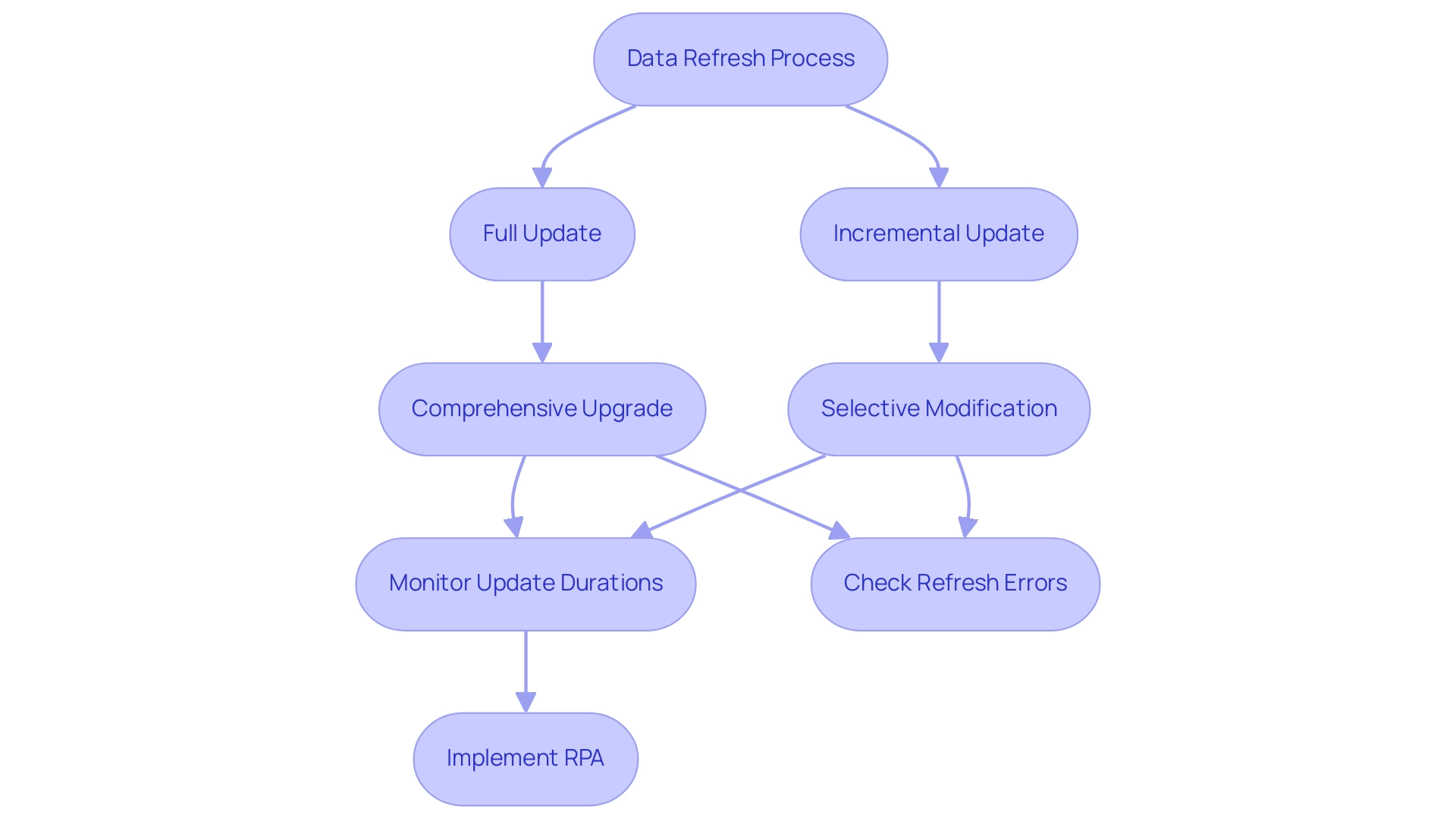

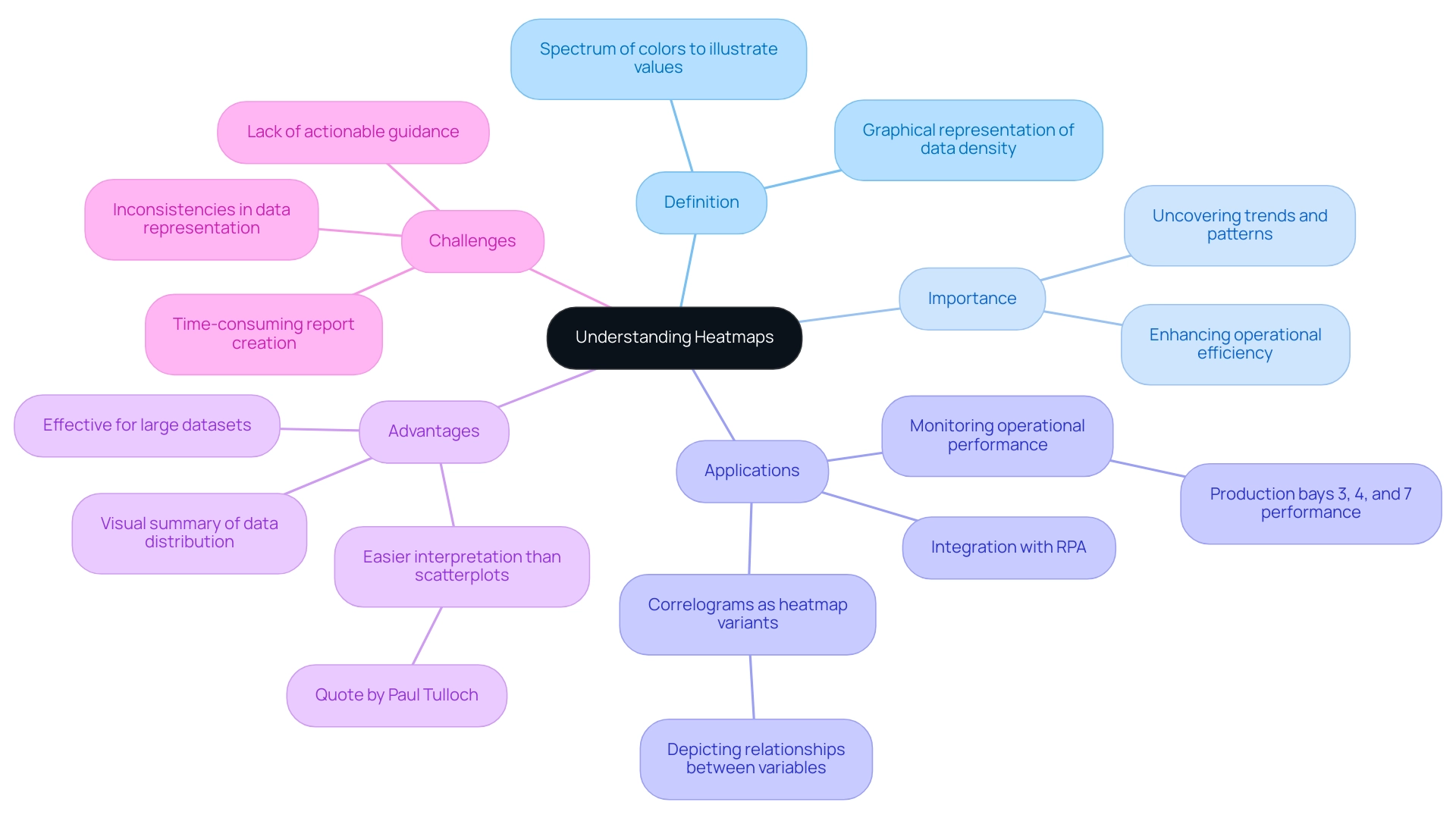

Data refresh is the process that keeps your Power BI reports and dashboards in step with the most recent information from source systems, which is what makes informed decision-making possible. It primarily revolves around two types of updates:

- Full update

- Incremental update

A full update discards the current data and reloads everything from the source, while an incremental update loads only the data that has changed since the previous update.
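The difference can be sketched in a few lines of Python. This is a toy model, not Power BI's actual engine: the `source` and `model` dictionaries, the `modified` timestamps, and both function names are assumptions made purely for illustration.

```python
from datetime import datetime

def full_refresh(source):
    """Discard the existing model and reload every row from the source."""
    return {key: dict(row) for key, row in source.items()}

def incremental_refresh(model, source, last_refresh):
    """Reload only rows modified after the previous refresh; keep the rest."""
    updated = dict(model)
    changed = 0
    for key, row in source.items():
        if row["modified"] > last_refresh:
            updated[key] = dict(row)
            changed += 1
    return updated, changed

# Hypothetical source data: row 2 was edited and row 3 was added in March.
source = {
    1: {"amount": 100, "modified": datetime(2024, 1, 1)},
    2: {"amount": 250, "modified": datetime(2024, 3, 5)},
    3: {"amount": 75, "modified": datetime(2024, 3, 6)},
}
# Hypothetical model contents as of the previous refresh.
model = {
    1: {"amount": 100, "modified": datetime(2024, 1, 1)},
    2: {"amount": 200, "modified": datetime(2024, 2, 1)},
}
last_refresh = datetime(2024, 3, 1)

full = full_refresh(source)
incremental, changed = incremental_refresh(model, source, last_refresh)
```

Both approaches end with the same data here, but the incremental path touches two rows instead of three. Note that this sketch does not handle rows deleted at the source, which a real incremental strategy would also need to consider.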

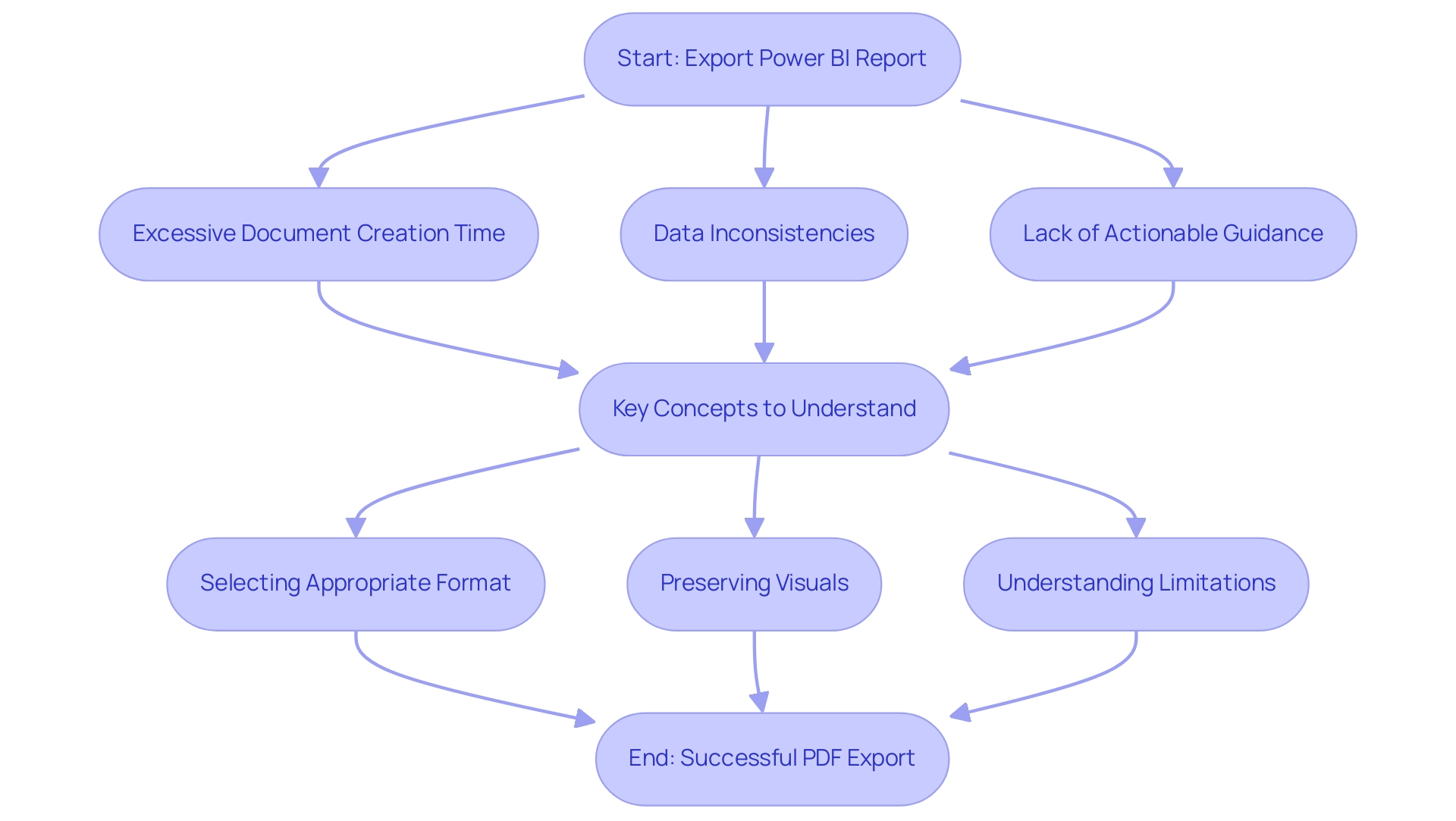

Understanding these distinctions is crucial for effective data management, particularly given the challenges organizations face, such as time-consuming report creation and data inconsistencies. As emphasized in recent discussions, it can take Power BI up to 60 minutes to update a semantic model when using the ‘Refresh now’ option. A delay of that size shows why choosing a suitable update strategy matters for performance.

As mjmowle noted, ‘We have BI Premium per-user licenses, and while we have found that up to 48 refreshes can be assigned in a 24-hour period, they still appear to be limited to 30-minute intervals.’ This offers a real-world view of how refresh schedules are constrained in Power BI. Users should also monitor refresh durations closely: if refreshes take longer than two hours, consider moving the model to Power BI Premium or implementing incremental refresh for larger datasets.
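The two numbers in that quote are consistent with each other: 24 hours at two slots per hour gives exactly 48 half-hour slots. A minimal sketch of that arithmetic follows; the function name is an assumption for illustration.

```python
from datetime import datetime, timedelta

def half_hour_slots():
    """Return every half-hour time of day as an 'HH:MM' string."""
    start = datetime(2000, 1, 1)  # arbitrary date; only the time part is used
    return [
        (start + timedelta(minutes=30 * i)).strftime("%H:%M")
        for i in range(48)
    ]

slots = half_hour_slots()
```

In other words, a schedule that uses all 48 refreshes already fills every 30-minute slot in the day.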

Additionally, users can periodically check for refresh errors and review the refresh history of semantic models to monitor their status. By incorporating Robotic Process Automation (RPA) into your strategy, you can automate repetitive data management tasks, which improves operational efficiency and makes it easier to extract meaningful insights. Getting acquainted with these concepts will help you use Power BI more effectively, improving the accuracy of your insights and supporting operational growth.

Exploring Refresh Methods: Manual, Scheduled, and On-Demand

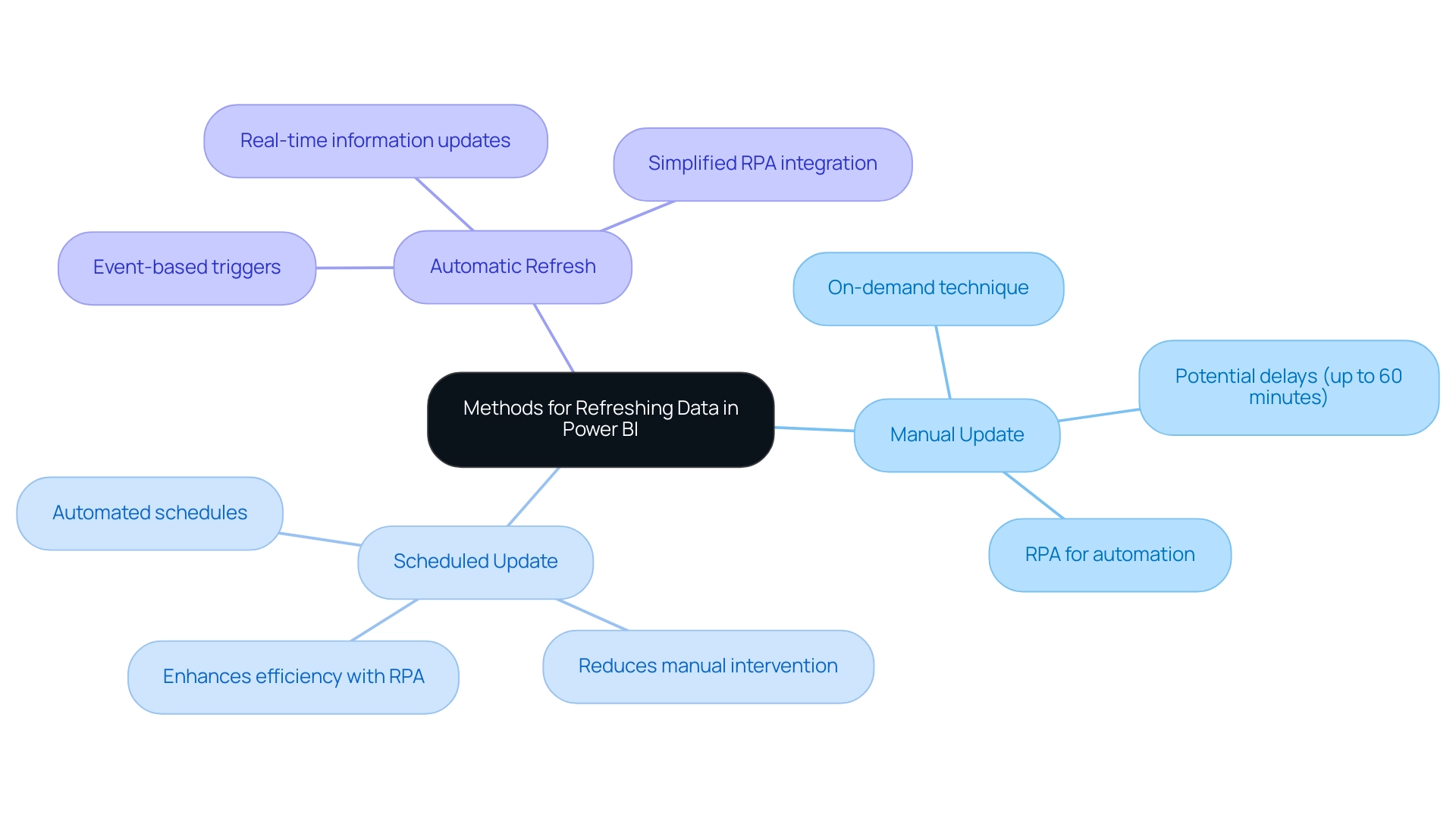

Power BI provides three main approaches to refreshing data: manual, scheduled, and on-demand. Each suits different operational requirements, and together they support data-driven insights and operational efficiency.

- Manual Refresh: This approach gives users total control, letting them refresh their reports at their discretion. Clicking the ‘Refresh’ button in either Power BI Desktop or the Power BI Service immediately pulls in the most recent information, so teams can make informed choices based on up-to-date data. As Da Data Guy puts it when stressing the value of understanding each step: “I concentrate on crafting high-quality articles that deconstruct each phase of the procedure so you can follow along and learn as well.”

- Scheduled Refresh: Ideal for keeping reports up to date without user involvement, scheduled refresh lets you set specific times for automatic updates. This addresses typical obstacles such as lengthy report generation and data discrepancies. Incremental refresh improves on this further by reducing the amount of data that has to be reloaded, resulting in quicker refresh times. Organizations commonly schedule refreshes several times a day to keep their reporting accurate. Scheduled refresh also remains relevant as data platforms evolve: a community question dated July 2024 asks how to automatically refresh a Power BI report linked to a Fabric Lakehouse. Incorporating RPA tools such as EMMA RPA and Power Automate can further optimize these processes, improving efficiency and easing staffing shortages.

- On-Demand Refresh: This approach suits ad hoc reporting needs, allowing users to refresh data immediately in response to urgent requirements. It provides flexibility to adapt to changing circumstances and is particularly useful in dynamic operational environments, ensuring that leaders can respond swiftly to new insights. Organizations can also use RPA to trigger these refreshes automatically, reducing manual workloads and improving employee morale.

A practical example can be seen in the case study of deploying a personal data gateway. When an enterprise data gateway is unavailable, users can manage their own semantic models without sharing data sources, although personal gateways have limitations. Selecting the appropriate refresh approach depends on your reporting requirements and on how often your data changes.

Best practice is to evaluate your operational needs and adjust the refresh strategy accordingly, while also considering how RPA can simplify these procedures and help convert raw information into actionable insights that foster growth and innovation.

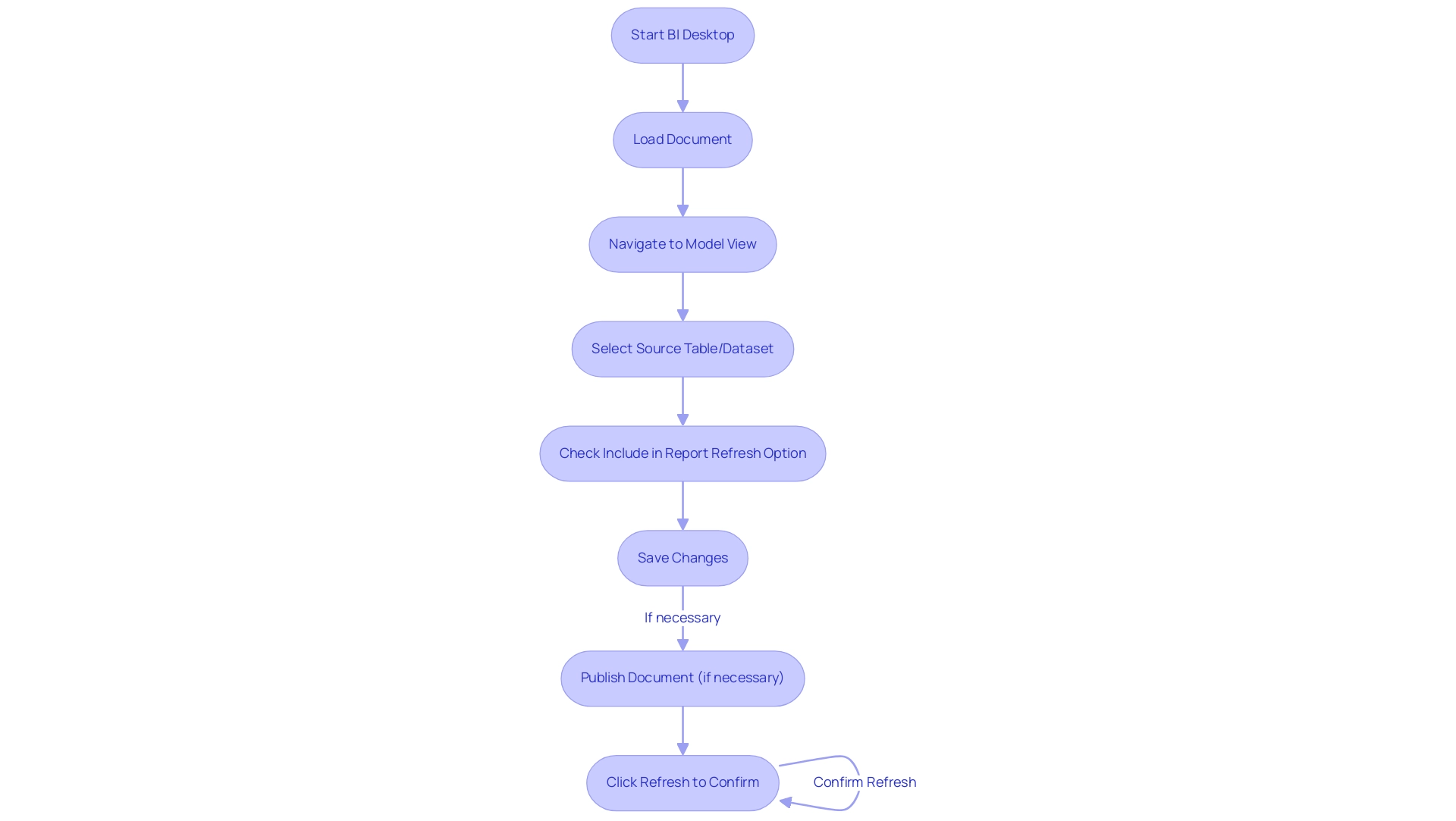

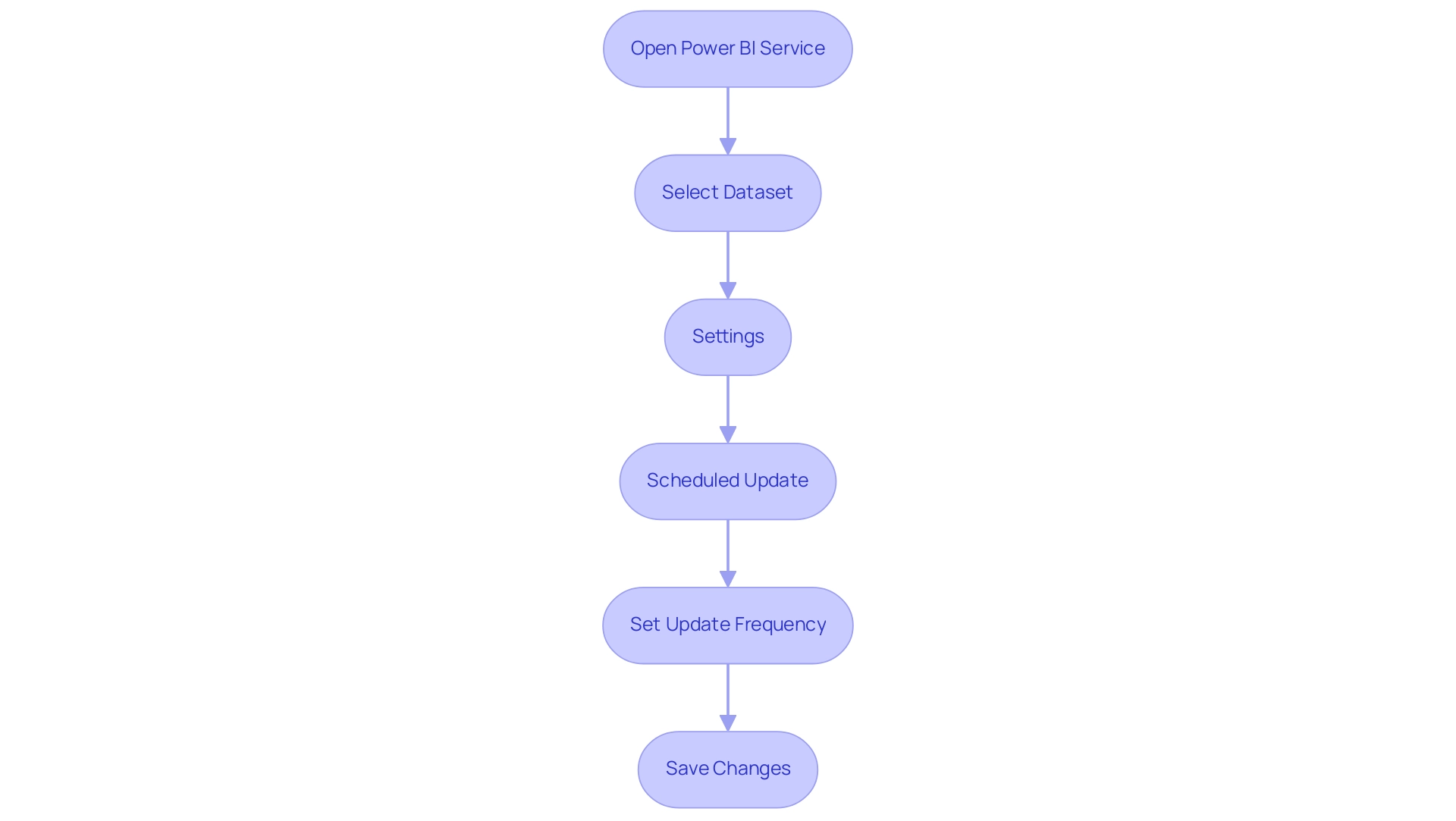

Setting Up Automatic Data Refresh in Power BI

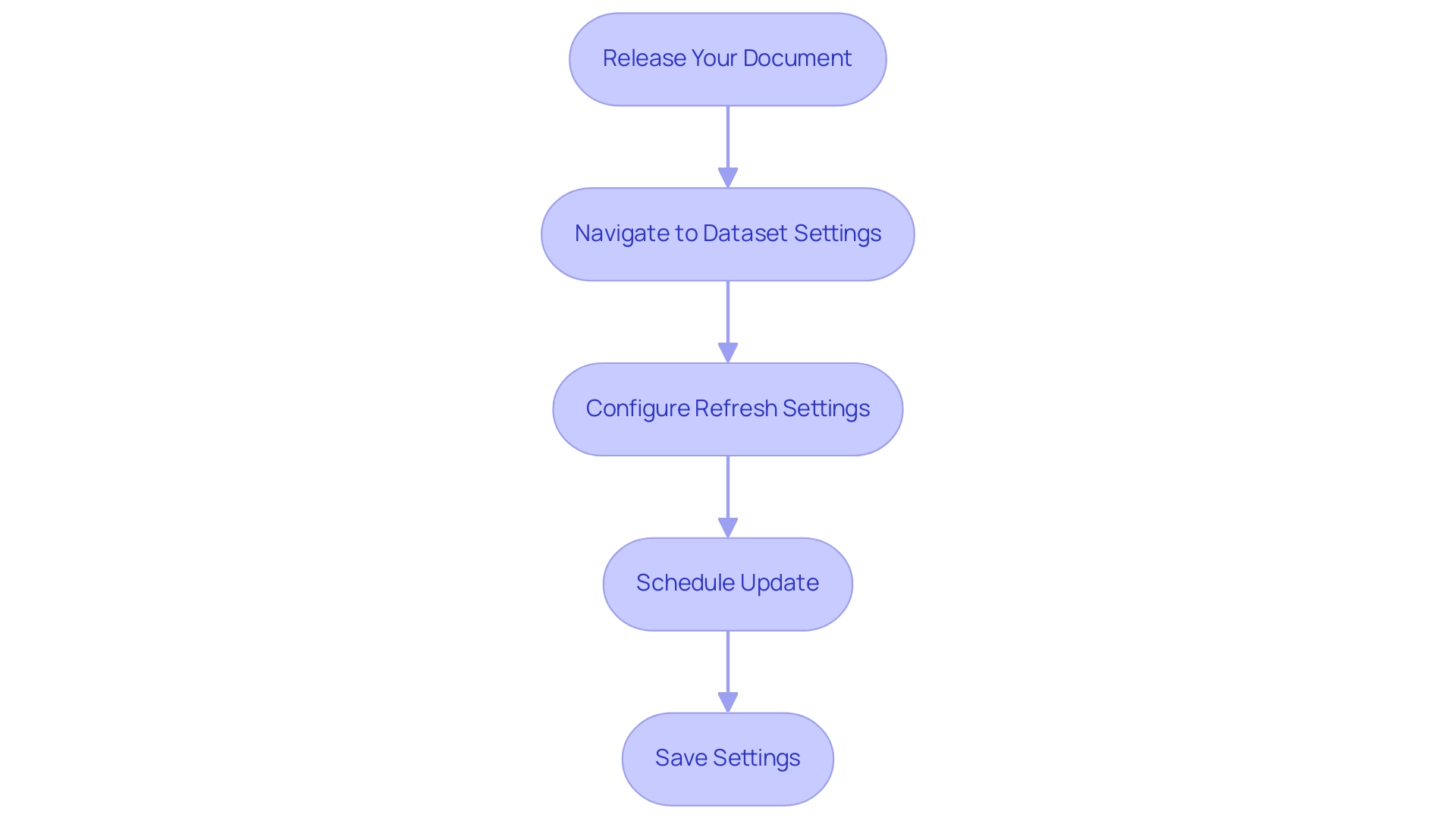

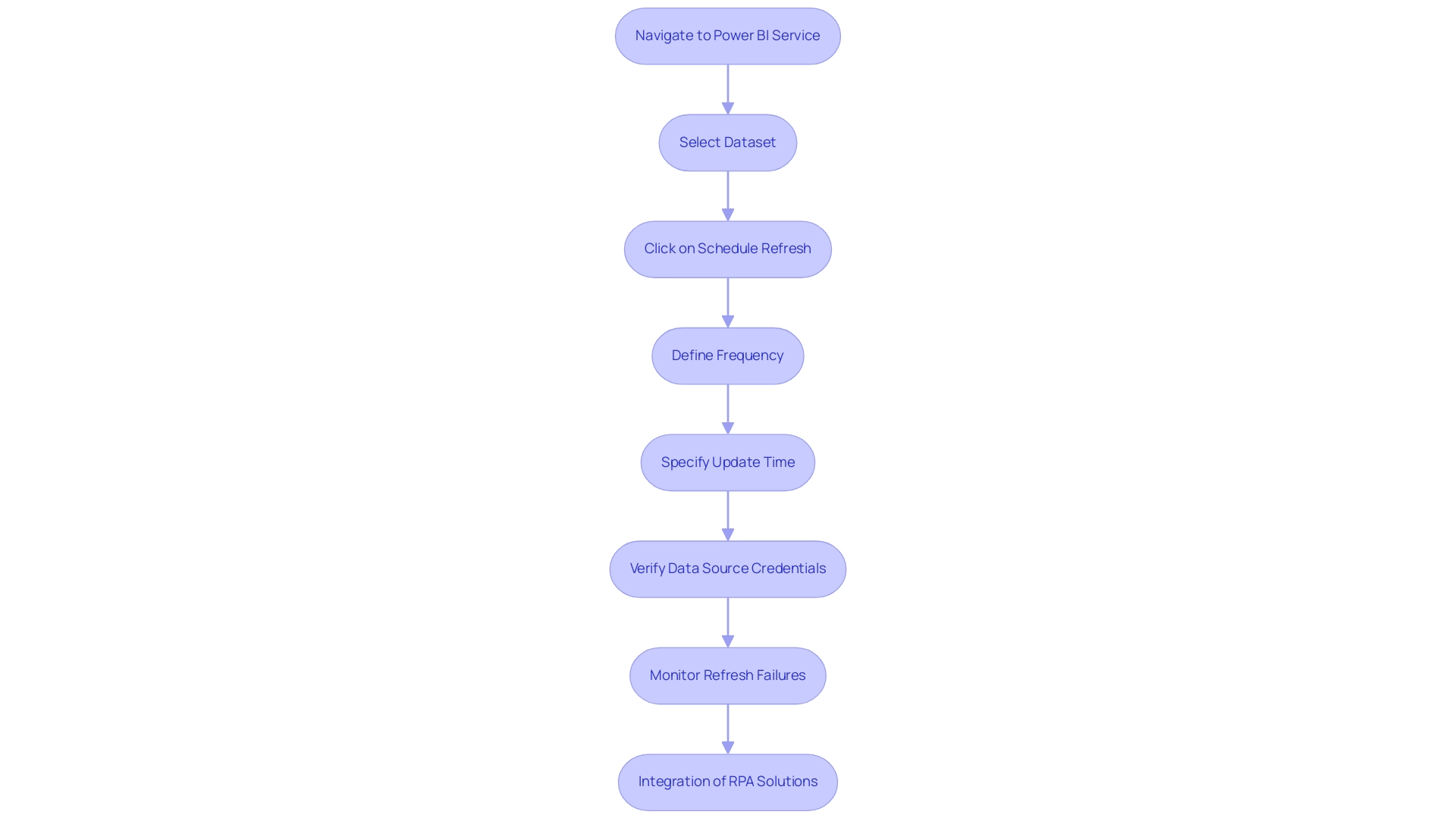

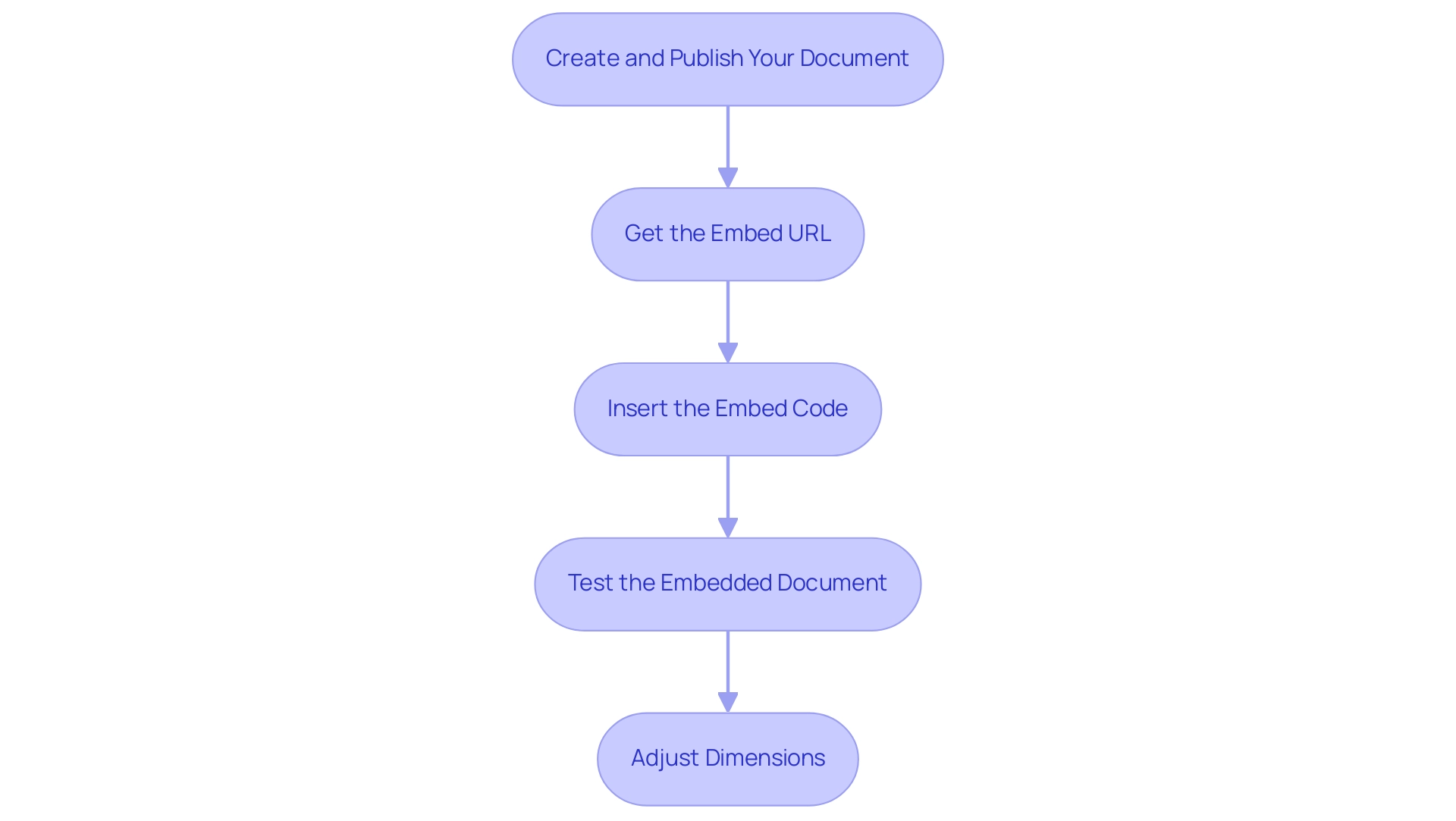

Setting up automatic data refresh in Power BI is crucial for keeping insights current and supporting informed decision-making, and it addresses issues such as time-intensive report creation and data discrepancies. Follow these steps to streamline the process:

-

Release Your Document: Start by confirming your BI analysis is shared with the BI Service.

This step is crucial as it enables the renewal functionality, allowing you to leverage the full potential of Business Intelligence. -

Navigate to Dataset Settings: In the Power BI Service, access ‘My Workspace’ or the relevant workspace housing your report. Click on ‘Datasets’ to locate the dataset you wish to refresh automatically.

This guarantees that your information remains consistent and reliable. -

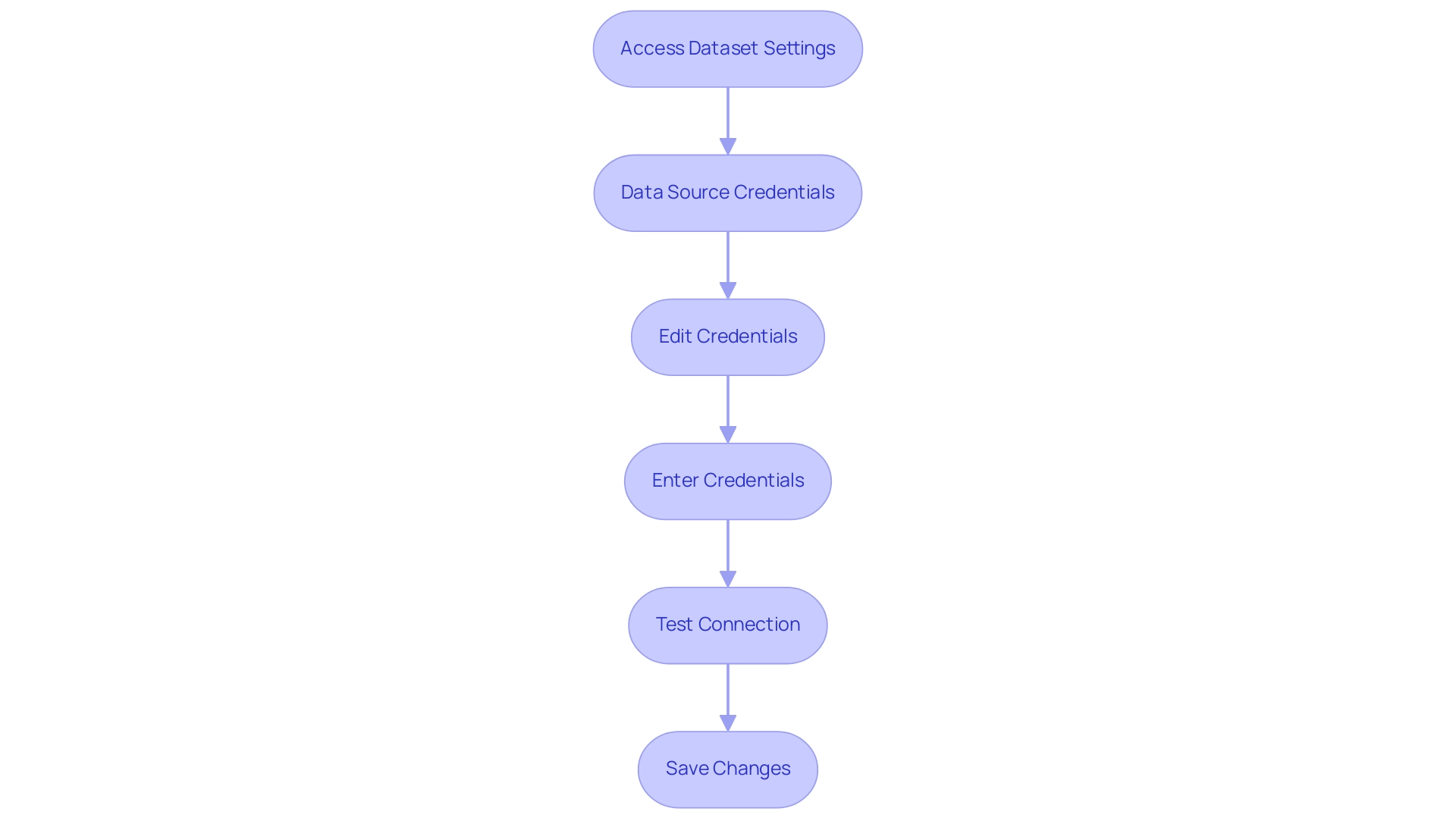

Configure Refresh Settings: Click the ellipsis (…) next to the dataset and select ‘Settings’. Within the ‘Data source credentials’ section, confirm that your credentials are correctly configured to avoid any disruptions.

This step is vital to prevent inconsistencies across reports, which can often lead to confusion and mistrust. -

Schedule Update: Scroll to the ‘Scheduled update’ section. Activate the ‘Keep information updated’ toggle and define your preferred refresh frequency and time zone.

It is important to note that to view report metrics for all dashboards or reports in a workspace, filters must be removed from the report. This customization ensures the data aligns with your operational needs and provides clear, actionable guidance. -

Save Settings: Conclude by clicking ‘Apply’ to save your settings.

Your dataset is now set up to update automatically, enabling you to focus on analysis rather than manual updates.
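The same schedule can in principle be set programmatically. The sketch below only builds and validates a request body; it does not call any service. The field names (`enabled`, `days`, `times`, `localTimeZoneId`) reflect my reading of the Power BI REST API's refresh schedule operation and should be checked against the current documentation, and the helper name `build_refresh_schedule` is hypothetical.

```python
import json

VALID_DAYS = {
    "Monday", "Tuesday", "Wednesday", "Thursday",
    "Friday", "Saturday", "Sunday",
}

def build_refresh_schedule(days, times, time_zone="UTC"):
    """Build a refresh schedule body, rejecting days or times that are invalid."""
    for day in days:
        if day not in VALID_DAYS:
            raise ValueError(f"unknown day: {day}")
    for slot in times:
        hours, minutes = slot.split(":")
        # Scheduled refreshes are limited to the hour or the half hour.
        if not 0 <= int(hours) <= 23 or minutes not in ("00", "30"):
            raise ValueError(f"time must be on the hour or half hour: {slot}")
    return {
        "value": {
            "enabled": True,
            "days": list(days),
            "times": list(times),
            "localTimeZoneId": time_zone,  # assumed field name, see lead-in
        }
    }

payload = build_refresh_schedule(["Monday", "Thursday"], ["07:00", "16:30"])
print(json.dumps(payload, indent=2))
```

Validating the times locally mirrors the 30-minute interval constraint described earlier, so a mistake such as `07:15` is caught before any request is sent.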

Recent user feedback indicates that many Power BI users find the documentation complex and often tailored for software engineers rather than end users. This highlights the need for clear, practical instructions, as expressed by frequent visitor wolf, who lamented, “I got so lost today!”

By following these straightforward steps, you can reduce common frustrations and make full use of Power BI’s automatic refresh features. Doing so not only improves efficiency but is also increasingly standard practice among Power BI users who need their insights kept current.

Furthermore, incorporating RPA solutions like EMMA RPA and Power Automate can further enhance your reporting processes, alleviating the burden of manual report creation and ensuring information consistency. A case study titled ‘Configuring Scheduled Refresh in BI’ emphasizes that establishing connectivity between Power BI and its data sources is a prerequisite for configuring scheduled refreshes and keeping information current.

Troubleshooting Data Refresh Issues in Power BI

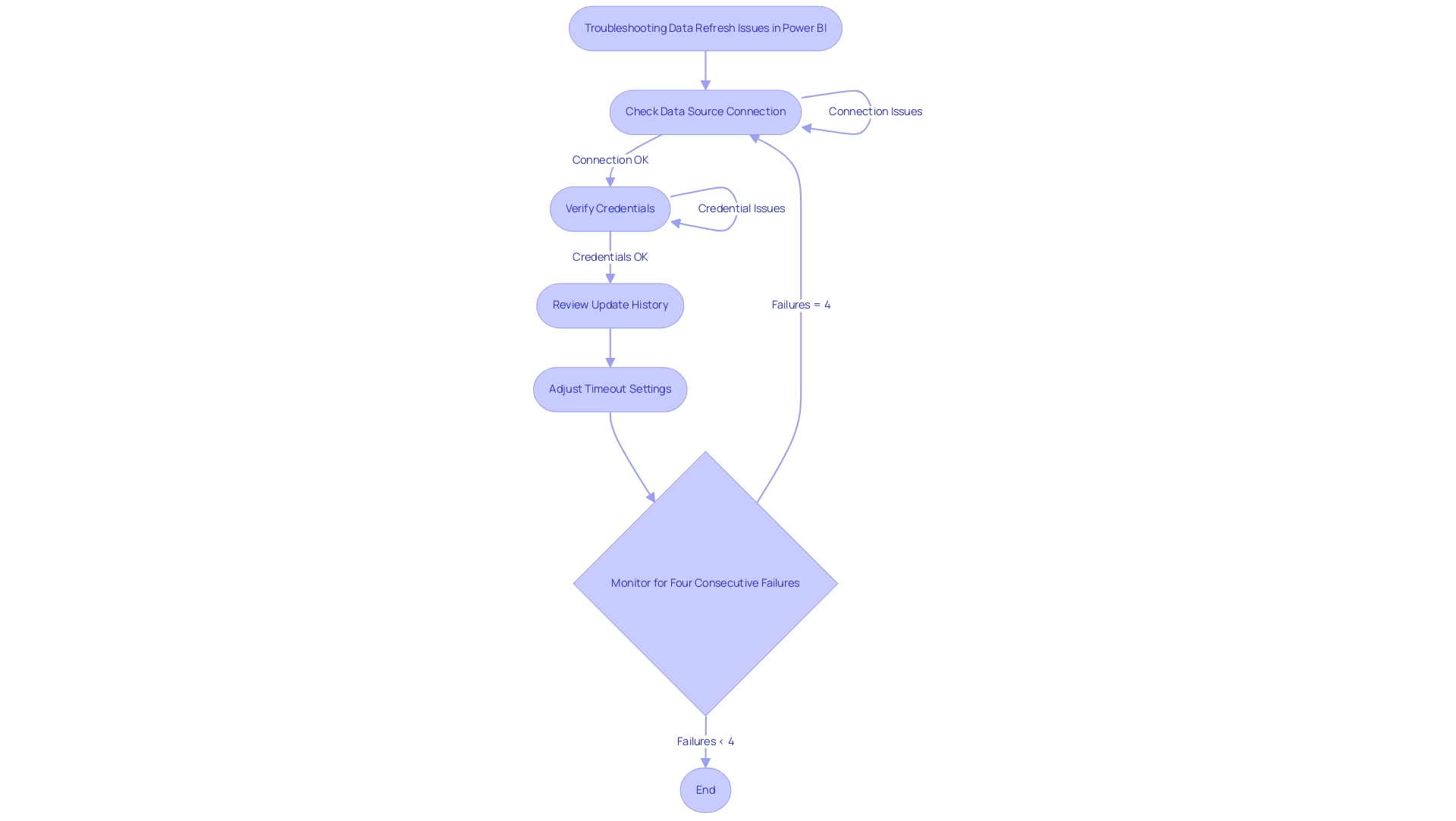

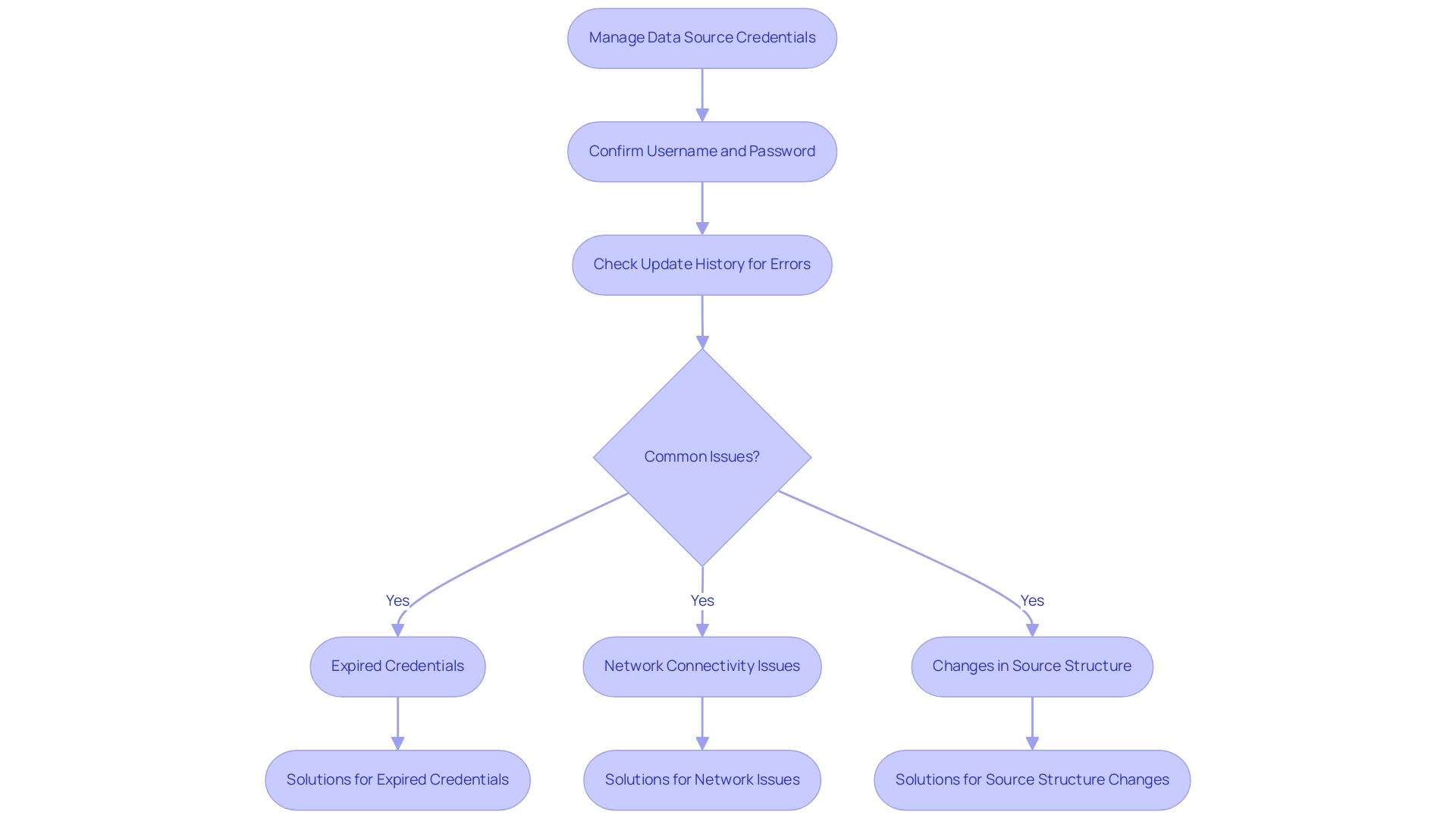

Data refresh in Power BI can often present challenges such as connection errors, credential issues, and timeout errors, which can hinder operational efficiency. Here are strategic steps to troubleshoot these common issues effectively while leveraging Business Intelligence and Robotic Process Automation (RPA) for informed decision-making:

- Check Data Source Connection: First and foremost, ensure that your data source is accessible. Network issues can frequently interrupt the connection, so verify that your information source is online and reachable. This foundational step is crucial for unlocking the full potential of your BI tools.

- Verify Credentials: Navigate to the dataset settings in Power BI Service to confirm that your data source credentials are accurate and have not expired. Keeping current credentials prevents failures and ensures a smooth workflow.

- Review Refresh History: Use the refresh history feature to review past refresh runs. It surfaces the error messages that explain what went wrong during a refresh, and understanding these errors is vital for improving operational efficiency and productivity.

- Adjust Timeout Settings: If you encounter timeout errors, consider increasing the timeout settings for your data source. Additionally, optimizing your queries can significantly reduce execution time, leading to smoother refresh operations. Efficient query management is a key component in maximizing the effectiveness of your data management strategy.

Moreover, it’s important to recognize that manual, repetitive tasks can significantly slow down your operations and lead to wasted time and resources. By integrating RPA into your data management processes, you can automate these repetitive tasks, enhancing overall efficiency and allowing your team to focus on more strategic initiatives.

It’s essential to note that Power BI deactivates your refresh schedule after four consecutive failures, underscoring the importance of proactive monitoring. As BI Super User Uzi 2019 emphasizes, “If you make any changes in your BI desktop file (add a new chart, new column to your table, any DAX), you have to publish every time to BI service.” This reinforces the need for careful management of your Power BI environment.
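Proactive monitoring of the four-failure rule can be sketched as a simple check over refresh history. The history format below (a newest-first list of entries with a `status` field holding `"Completed"` or `"Failed"`) is an assumption for illustration, as are the function names; adapt it to however you export your refresh history.

```python
def consecutive_failures(history):
    """Count failed refreshes at the start of a newest-first history list."""
    count = 0
    for entry in history:
        if entry["status"] != "Failed":
            break
        count += 1
    return count

def schedule_at_risk(history, limit=4):
    """True when one more failure would reach the disable limit."""
    return consecutive_failures(history) >= limit - 1

# Hypothetical history, newest entry first.
history = [
    {"status": "Failed"},
    {"status": "Failed"},
    {"status": "Failed"},
    {"status": "Completed"},
    {"status": "Failed"},
]

streak = consecutive_failures(history)
at_risk = schedule_at_risk(history)
```

Only the unbroken run of failures at the top of the history counts, so the older failure after the successful run is ignored. Alerting one failure before the limit leaves time to fix credentials or connectivity before the schedule is switched off.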

Additionally, in cases where DirectQuery sources with enforced primary keys are used, adjusting the Assume Referential Integrity setting can optimize query performance. This adjustment can accelerate query execution by utilizing inner joins instead of slower outer joins, further enhancing the efficiency of your update operations.
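Why this setting is only safe when referential integrity really holds can be shown with a toy join. The tables and function names below are hypothetical and the joins are plain Python, not what Power BI generates; the point is that inner and outer joins agree only while every foreign key has a match.

```python
def left_outer_join(sales, products):
    """Keep every sales row; unmatched product ids yield None."""
    return [(s["order"], products.get(s["product_id"])) for s in sales]

def inner_join(sales, products):
    """Keep only sales rows whose product id exists in products."""
    return [
        (s["order"], products[s["product_id"]])
        for s in sales
        if s["product_id"] in products
    ]

products = {10: "Bike", 20: "Helmet"}
sales = [
    {"order": 1, "product_id": 10},
    {"order": 2, "product_id": 20},
    {"order": 3, "product_id": 10},
]

# With referential integrity, both joins return the same rows.
same_result = inner_join(sales, products) == left_outer_join(sales, products)

# An orphaned row (product 99 does not exist) breaks that equivalence.
sales_with_orphan = sales + [{"order": 4, "product_id": 99}]
inner_rows = inner_join(sales_with_orphan, products)
outer_rows = left_outer_join(sales_with_orphan, products)
```

If the source contains orphaned keys, the faster inner join silently drops those rows, so verify the data before enabling the setting.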

By applying these troubleshooting measures and monitoring your refresh status closely, you can address typical refresh problems and keep your operations running smoothly, ultimately fostering growth and innovation.

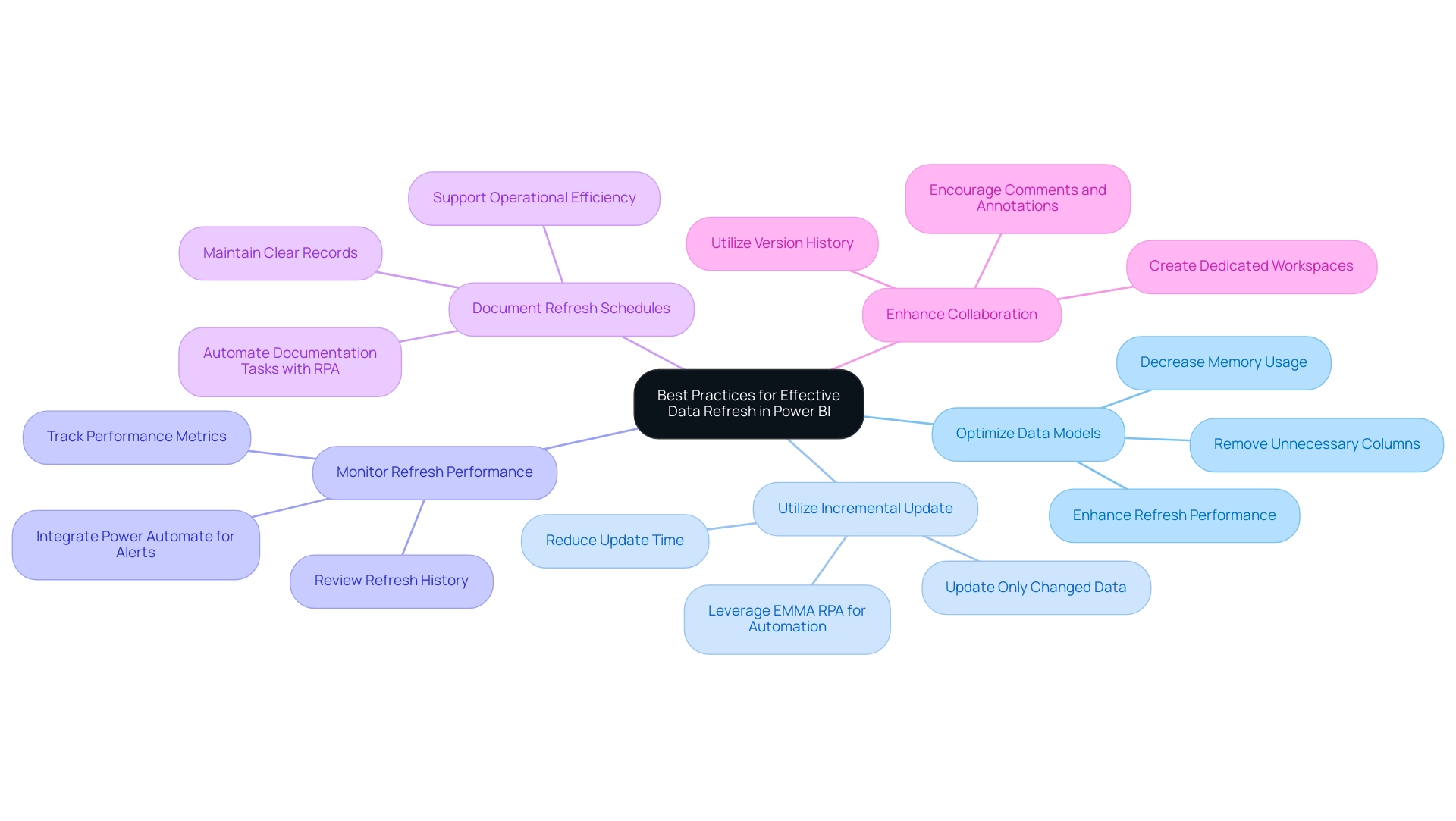

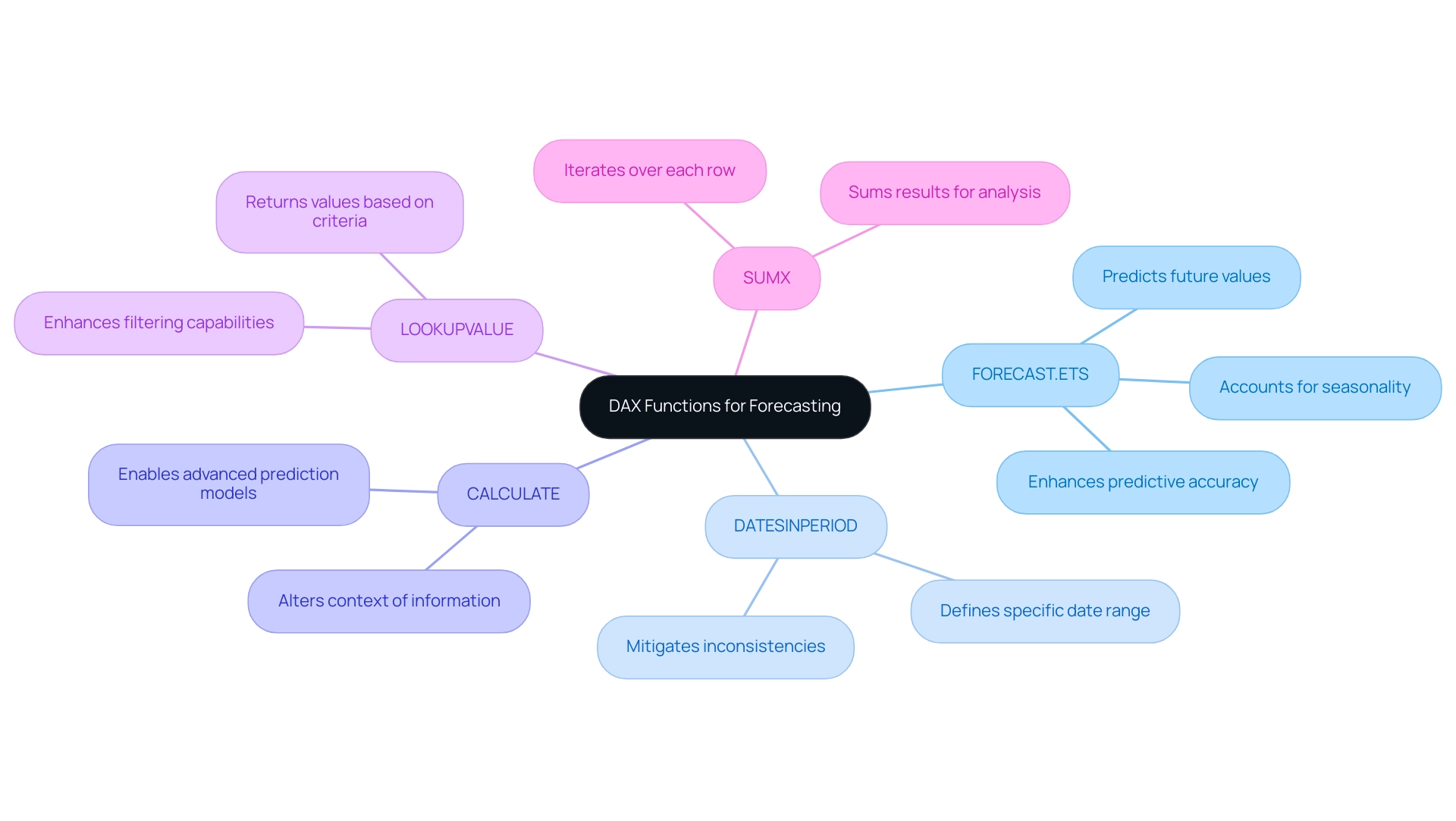

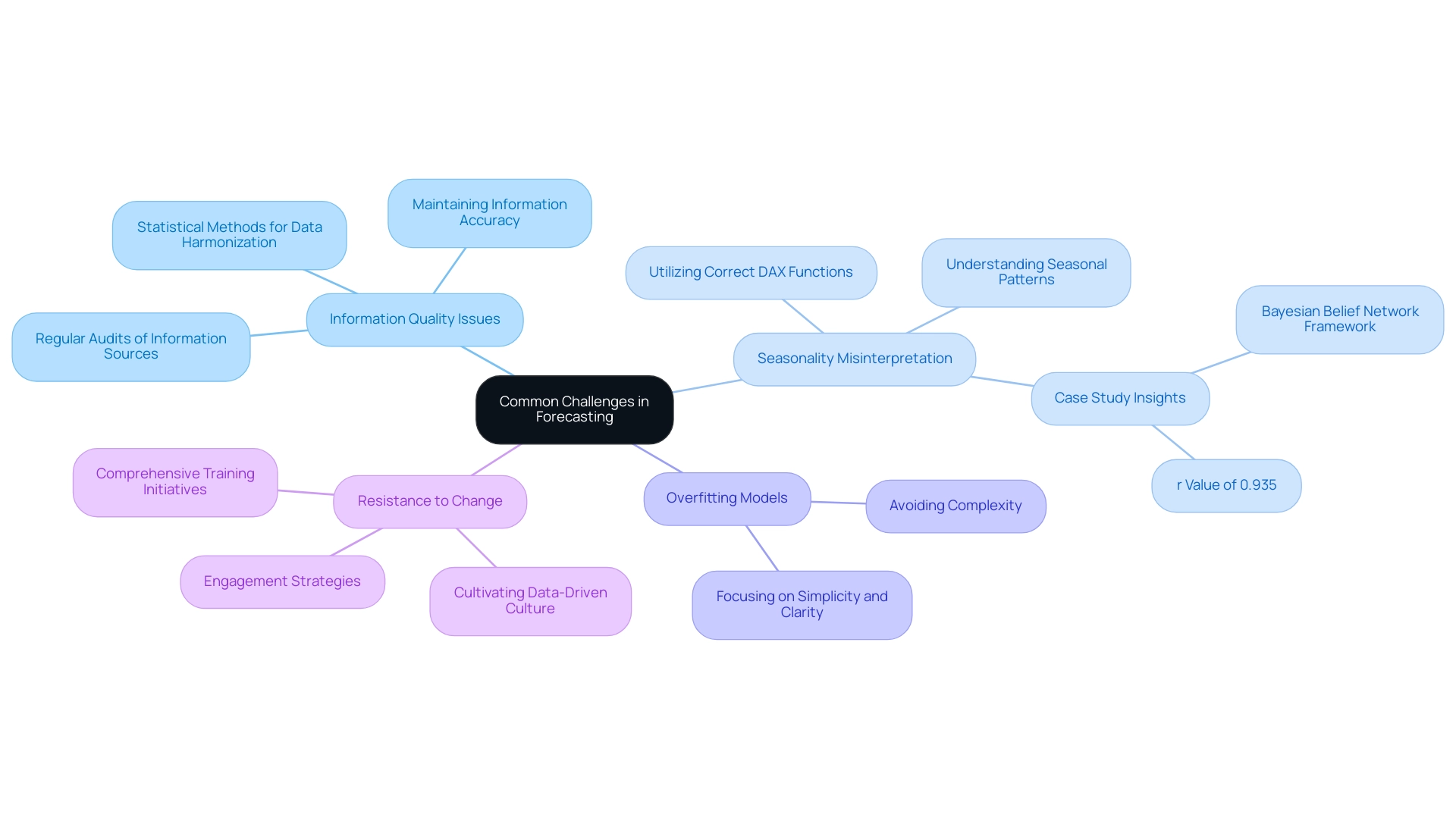

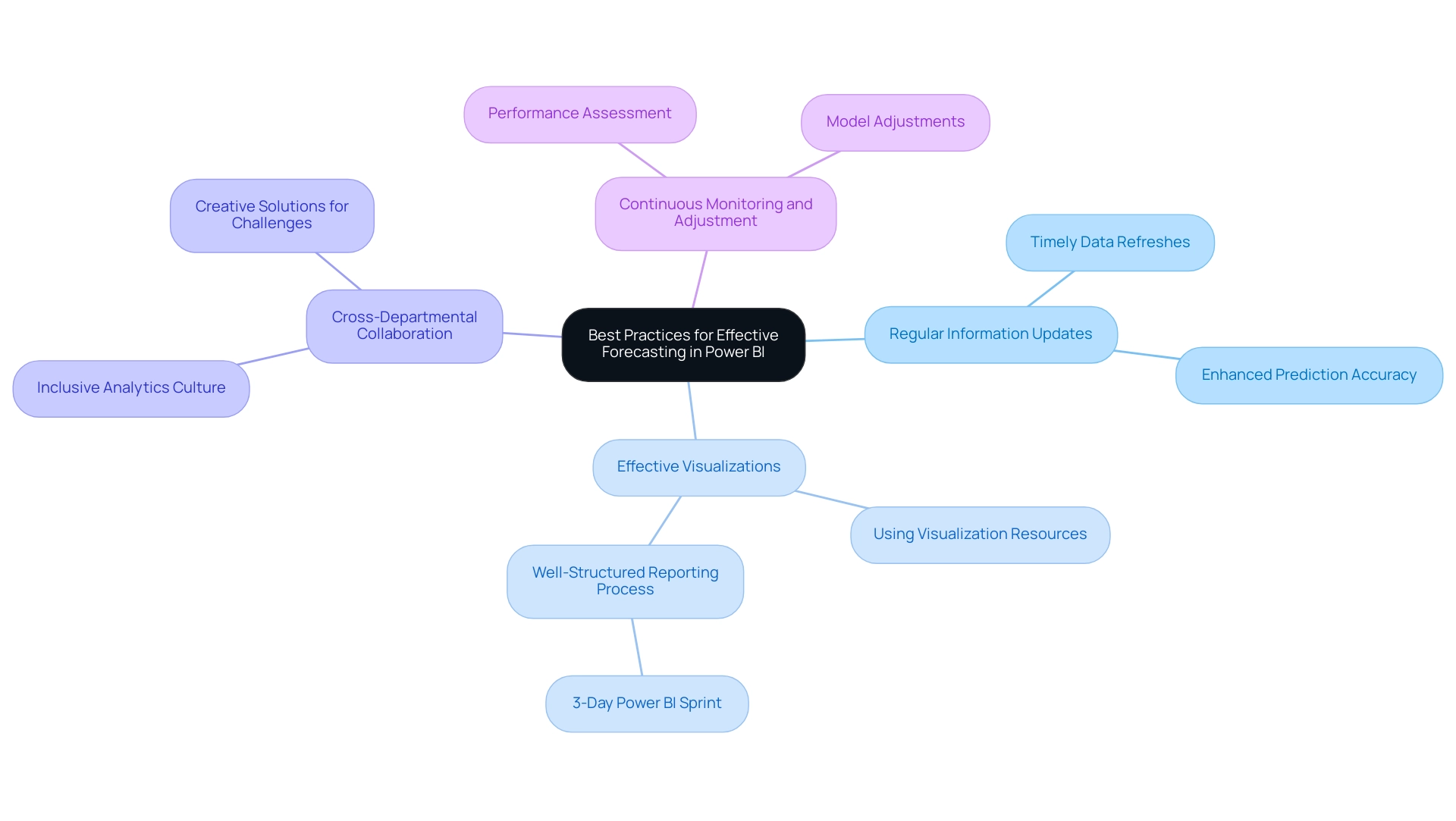

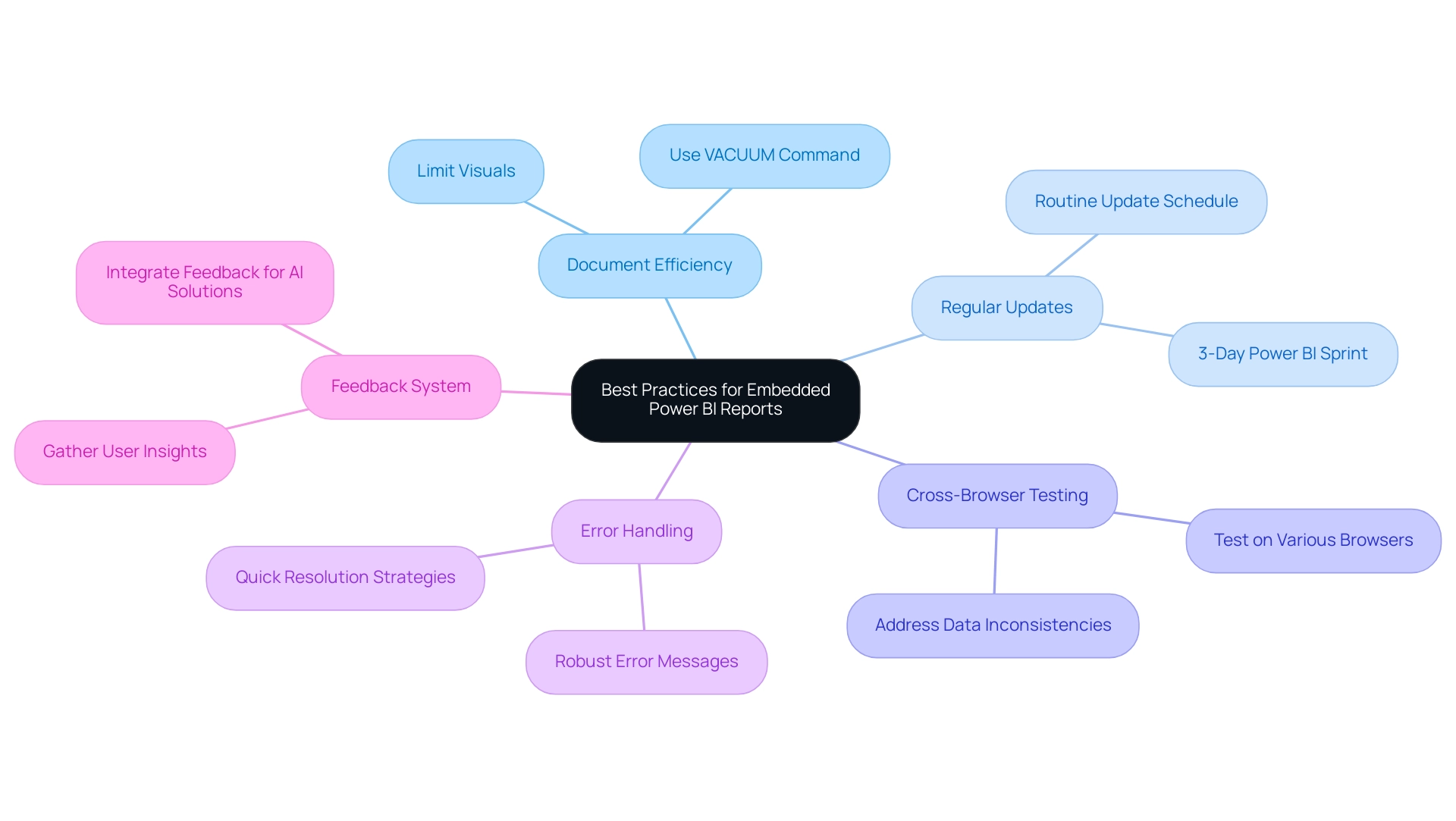

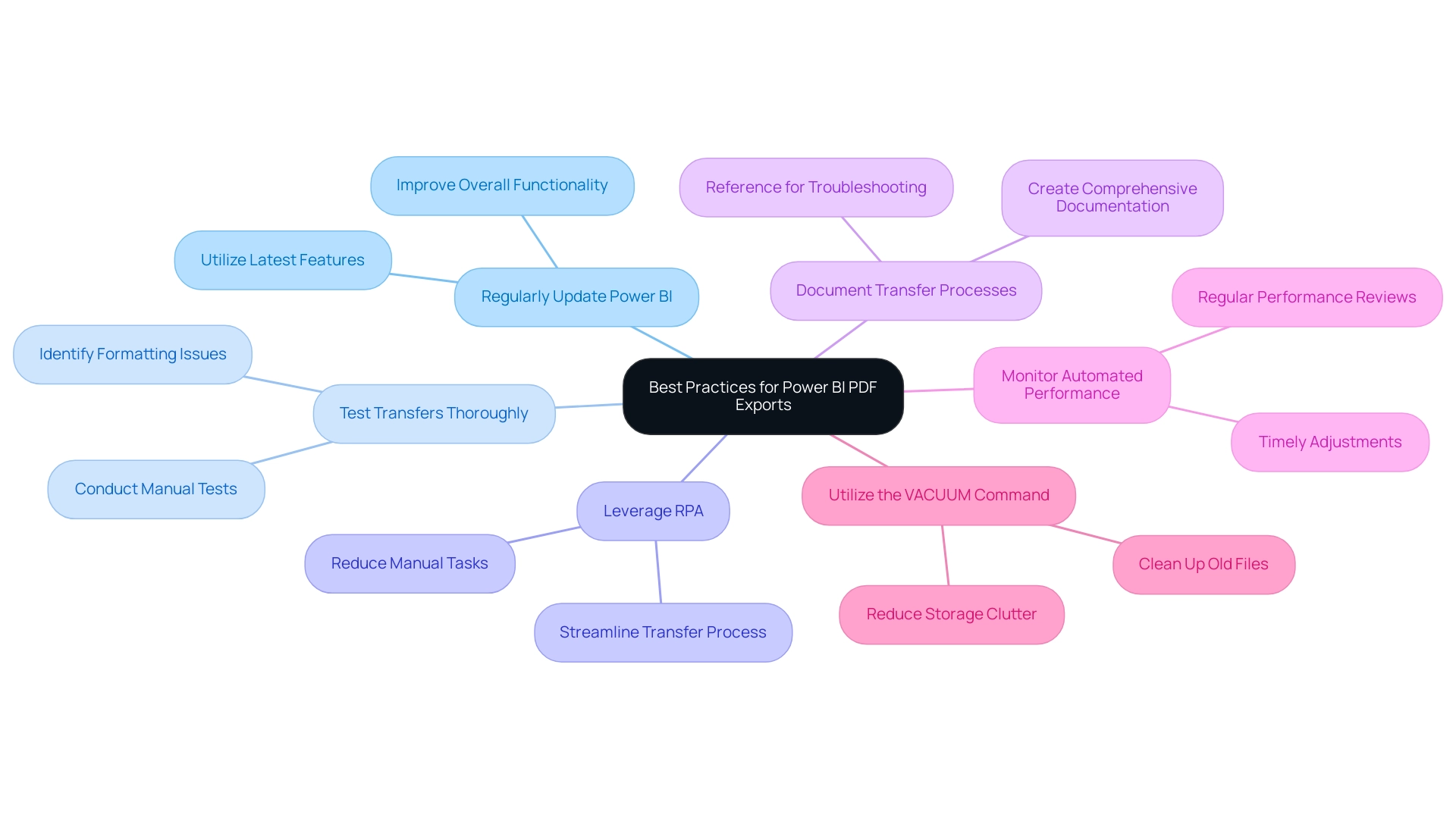

Best Practices for Effective Data Refresh in Power BI

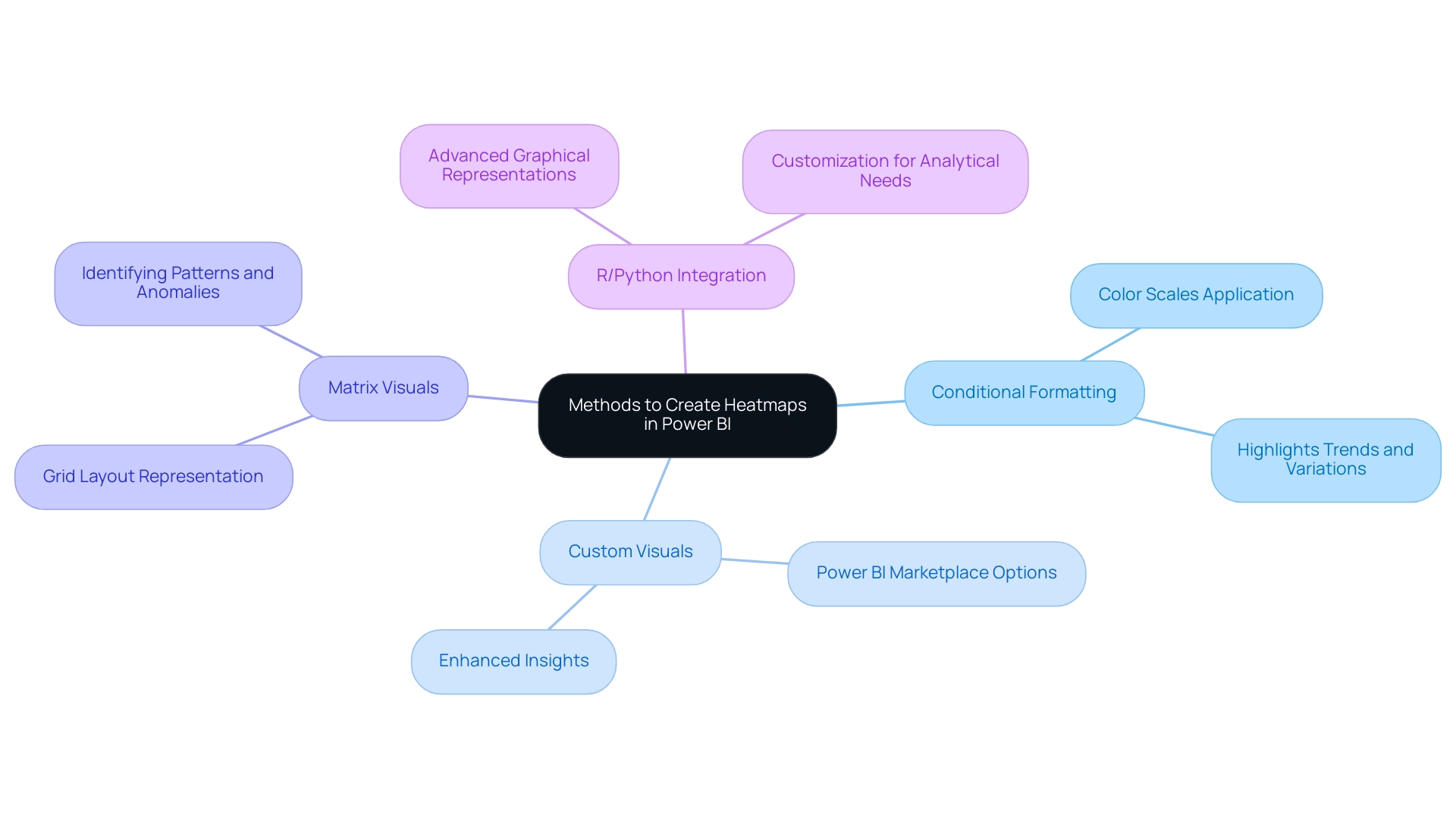

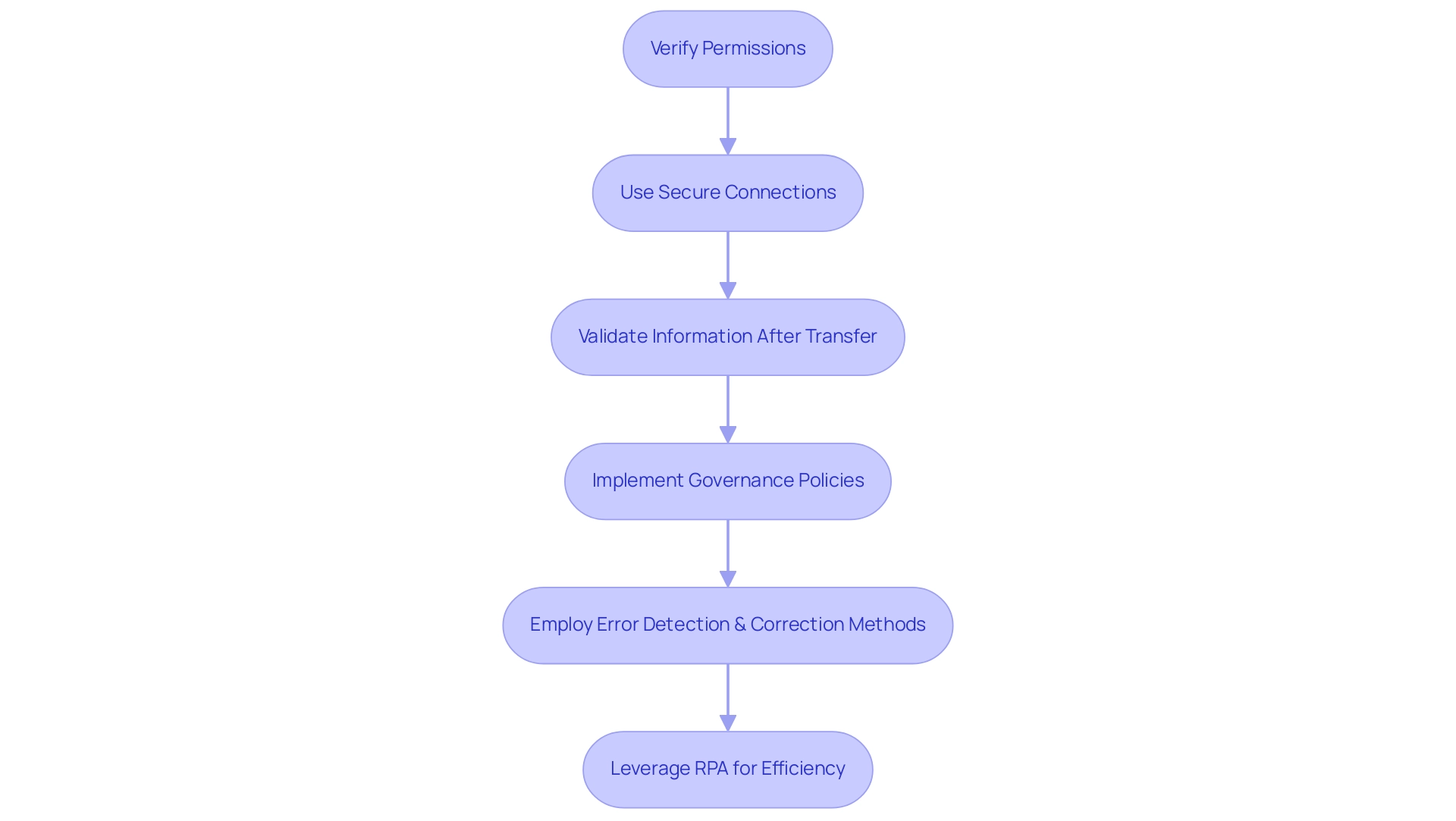

To ensure effective data refresh in Power BI, consider implementing the following best practices:

-

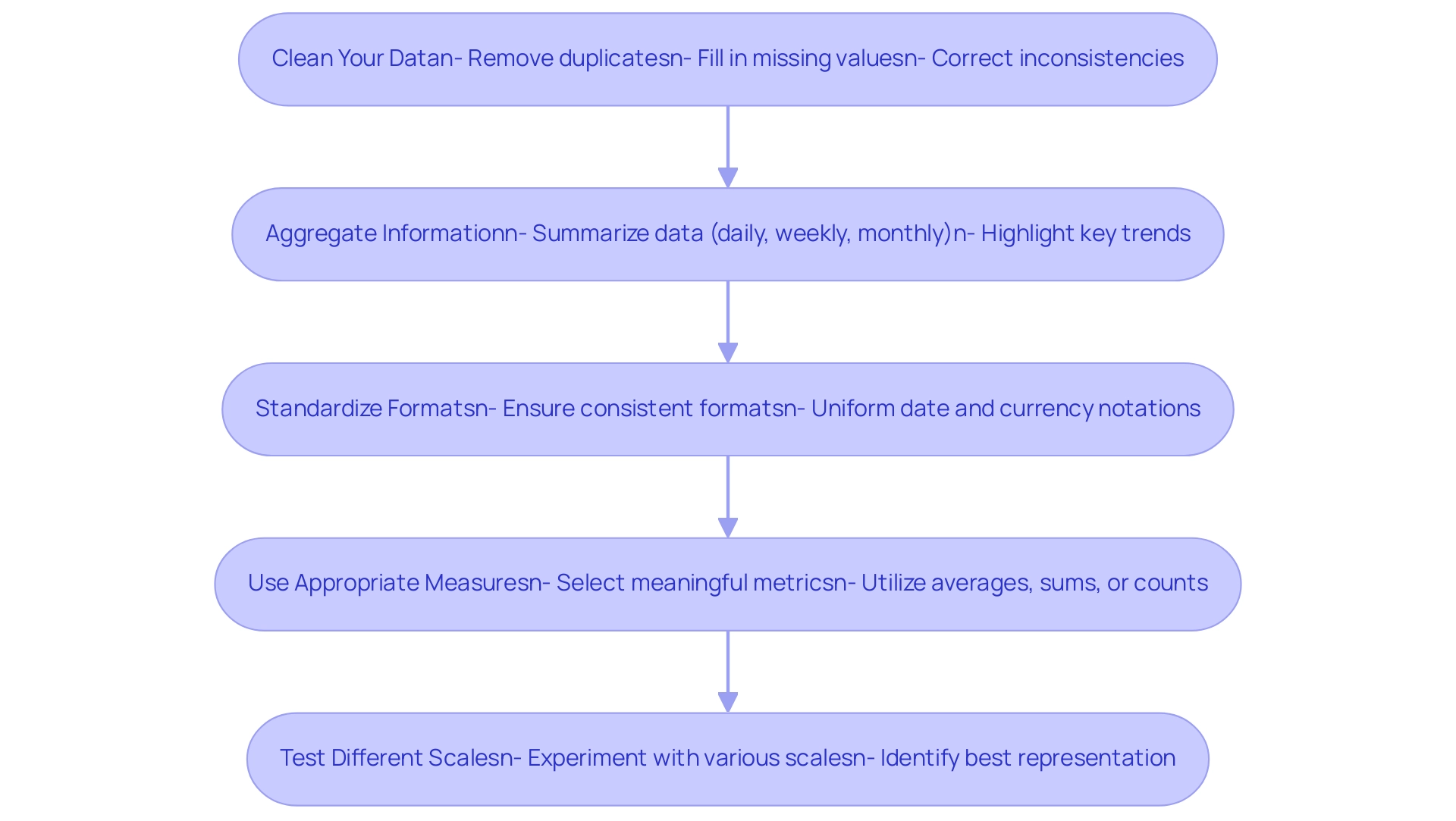

Optimize Data Models: Streamlining your data models is crucial for enhancing refresh performance. Begin by removing unnecessary columns and tables that do not add value to your reports. This not only decreases memory usage but also accelerates the update process. It is essential to remember that during a complete update, your system needs twice the amount of memory that the semantic model requires, making optimization critical for efficient information handling.

-

Utilize Incremental Update: For large datasets, adopting incremental update is a game-changer. This technique shows you how to update Power BI data by modifying only the information that has altered, significantly reducing update time and resource usage. Recent findings show that this approach can lead to substantial efficiency gains in information handling, which can be further enhanced by utilizing EMMA RPA to automate repetitive tasks, such as entry and report generation, ensuring that your team can focus on strategic analysis.

-

Monitor Refresh Performance: Regularly reviewing refresh history and performance metrics is vital. By keeping an eye on these metrics, you can proactively identify and address any issues that may arise, which is essential for understanding how to update Power BI data and ensuring consistent and reliable data availability. Integrating Power Automate can streamline this monitoring process, making it easier to track performance metrics automatically and alert your team to any anomalies in real time.

-

Document Refresh Schedules: Maintaining a well-documented record of your refresh schedules and settings is essential for consistency and easy reference. This practice supports operational efficiency and aids in maintaining a clear overview of your management processes, especially when combined with RPA solutions that automate documentation tasks, reducing the administrative burden on your team.
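The memory rule mentioned under model optimization lends itself to a quick back-of-the-envelope check. The sketch below applies the "twice the model size" figure from the text; the capacity limit is a hypothetical number you would replace with your own, and both function names are assumptions.

```python
def peak_refresh_memory_gb(model_size_gb, overhead_factor=2.0):
    """Estimate peak memory for a full refresh using the 2x rule of thumb."""
    return model_size_gb * overhead_factor

def fits_in_capacity(model_size_gb, capacity_limit_gb):
    """Check whether a full refresh is expected to fit in the given limit."""
    return peak_refresh_memory_gb(model_size_gb) <= capacity_limit_gb

# Hypothetical example: a 3 GB model against a 5 GB memory limit.
peak = peak_refresh_memory_gb(3)
fits = fits_in_capacity(3, 5)
```

A model that fits comfortably at rest can still fail during a full refresh, which is one reason trimming unused columns or switching to incremental refresh pays off.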

In the context of collaboration, encouraging comments and annotations within reports enhances teamwork. The case study focusing on collaborative practices illustrates that implementing dedicated workspaces and utilizing version history not only encourages meaningful discussions but also preserves a record of changes. By utilizing EMMA RPA and Power Automate, organizations can enhance these collaborative practices by automating notifications for updates and ensuring that all team members have access to the most recent information, ultimately improving the quality of shared insights.

Moreover, as PaulDBrown, a Community Champion, mentions, “If the Excel file is stored on OneDrive and you configure the source to link to the online file, the update will be automatic… so you can utilize the report online with current information, and thus don’t need to concern yourself with manually updating.” This practical advice emphasizes the value of using technology, such as EMMA RPA and Power Automate, to streamline data refresh and ensure that high-quality data is consistently available for informed decision-making. By adhering to these best practices, you can significantly enhance your Power BI experience and drive operational efficiency.

Conclusion

In the realm of data-driven decision-making, the significance of effective data refresh methods in Power BI cannot be overstated. This article has explored the key strategies for refreshing data, each serving specific operational needs:

- Manual refresh

- Scheduled refresh

- On-demand refresh

Understanding the nuances of full and incremental refreshes is essential, as it enables organizations to optimize performance and maintain accurate reporting.

Setting up automatic data refresh is a crucial step in ensuring timely insights. By following straightforward steps for configuration and integrating Robotic Process Automation, organizations can streamline their reporting processes and alleviate the burdens of manual updates. Proactive troubleshooting of common data refresh issues ensures smooth operations, minimizing disruptions that can hinder efficiency.

Implementing best practices, such as optimizing data models and leveraging incremental refresh, can dramatically enhance the performance of Power BI reports. Regular monitoring of refresh performance and documenting schedules contribute to a sustainable data management strategy that supports operational efficiency.

Ultimately, by mastering these data refresh techniques and strategies, organizations are empowered to harness the full potential of their data, driving informed decision-making and fostering innovation. Embracing these practices not only enhances data accuracy but also positions organizations to thrive in an increasingly competitive landscape, transforming challenges into opportunities for growth.

Overview

The article provides a step-by-step guide on how to use the ‘Include in Report Refresh’ feature in Power BI, which allows users to specify which data sources are updated during a report refresh. This is crucial for maintaining data accuracy and operational efficiency, as the article emphasizes the importance of timely updates and offers best practices to optimize the refresh process while mitigating common issues.

Introduction

In the dynamic landscape of data analytics, the ability to maintain up-to-date and accurate reports is paramount for informed decision-making. Power BI offers a robust framework for report refresh, ensuring that stakeholders have access to the latest insights.

However, navigating the intricacies of data refresh processes can present challenges that, if left unaddressed, may hinder operational efficiency. This article delves into the essential components of report refresh in Power BI, from understanding the mechanics of scheduled updates to troubleshooting common issues.

By exploring best practices and practical solutions, organizations can enhance their reporting capabilities, streamline workflows, and ultimately drive strategic growth through data-driven insights.

Understanding Report Refresh in Power BI

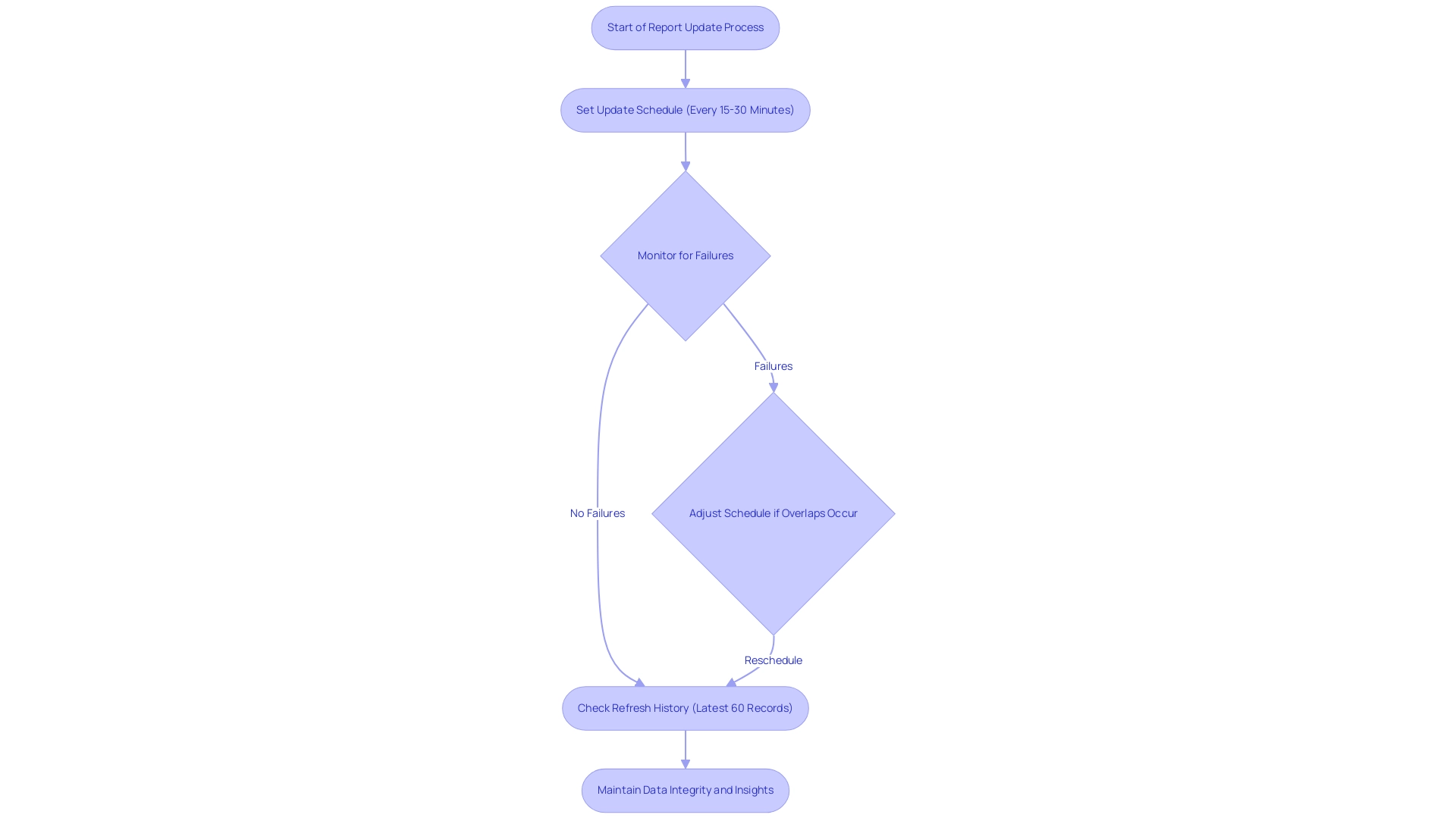

In Power BI, report refresh is vital for ensuring your reports display the most recent information. It is fundamental to maintaining data integrity, as it guarantees that changes in underlying sources are accurately represented in your visualizations. As mjmowle, an advocate for information precision, noted, “With BI Premium, you can establish update schedules as often as every 15 minutes…”, emphasizing the flexibility available to users.

However, while up to 48 refreshes can be assigned within a 24-hour period, they remain confined to 30-minute intervals, so schedules need to be planned deliberately. Power BI also disables refresh schedules after four successive failures, which makes monitoring refresh runs essential for preserving data integrity. Without clear, actionable steps, stakeholders can be left uncertain about what to do next, which reduces the value of the information presented.

The effect of timely data updates on report accuracy cannot be overstated; it directly influences effective data analysis and informed decision-making. Recent updates to Power BI’s refresh features, including a history view showing the latest 60 records of scheduled refreshes, enhance this process further. Moreover, case studies like ‘Example of Refresh Time Slot Analysis’ reveal how overlapping refresh events can occur when one refresh exceeds its designated time slot, prompting administrators to adjust schedules accordingly and prevent resource contention.
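A time slot analysis of this kind can be approximated with a short script. The sketch below assumes you have a list of refresh runs with a name, a start time, and a duration in minutes (all hypothetical values here) and flags any run that is still going when the next one starts.

```python
from datetime import datetime, timedelta

def find_overlaps(runs):
    """Return (earlier, later) name pairs where a run overlaps the next one.

    runs is a list of (name, start, duration_minutes) tuples. Each run is
    compared only with the run that starts immediately after it.
    """
    ordered = sorted(runs, key=lambda run: run[1])
    overlaps = []
    for current, following in zip(ordered, ordered[1:]):
        end = current[1] + timedelta(minutes=current[2])
        if end > following[1]:
            overlaps.append((current[0], following[0]))
    return overlaps

# Hypothetical refresh runs on a single morning.
runs = [
    ("Sales", datetime(2024, 5, 1, 8, 0), 45),
    ("Inventory", datetime(2024, 5, 1, 8, 30), 10),
    ("Finance", datetime(2024, 5, 1, 9, 0), 20),
]

overlaps = find_overlaps(runs)
```

Here the Sales refresh runs past 08:30 and collides with Inventory, which suggests either moving Inventory to a later slot or shortening the Sales refresh.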

By prioritizing document updates and utilizing Automation software for streamlined workflow efficiency, organizations can ensure their analytics are strong, dependable, and indicative of the latest trends. Moreover, through our no-risk consultation process, we evaluate your automation requirements, calculate ROI, and implement the automation professionally, enabling you to concentrate on utilizing insights instead of dealing with document creation.

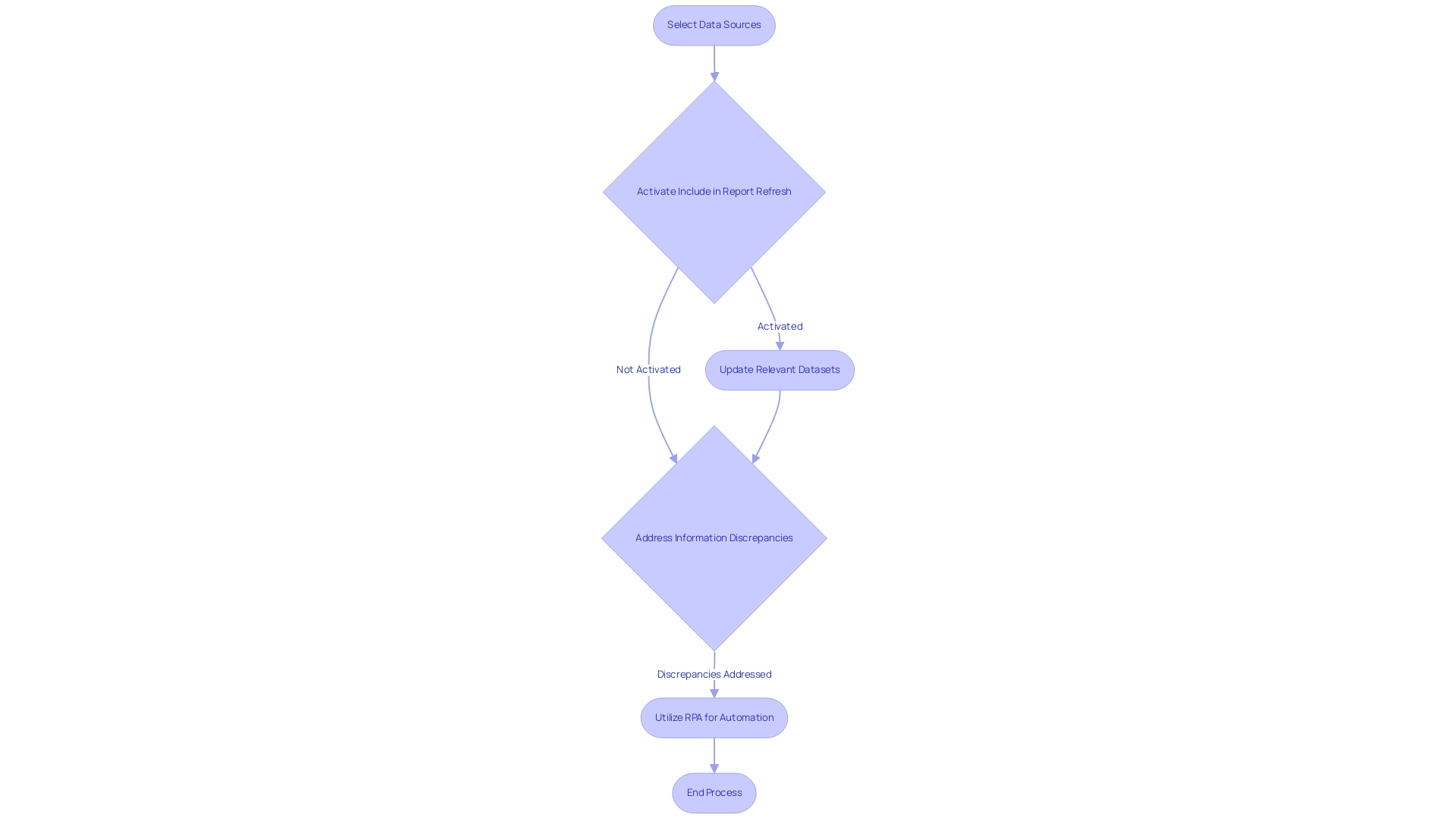

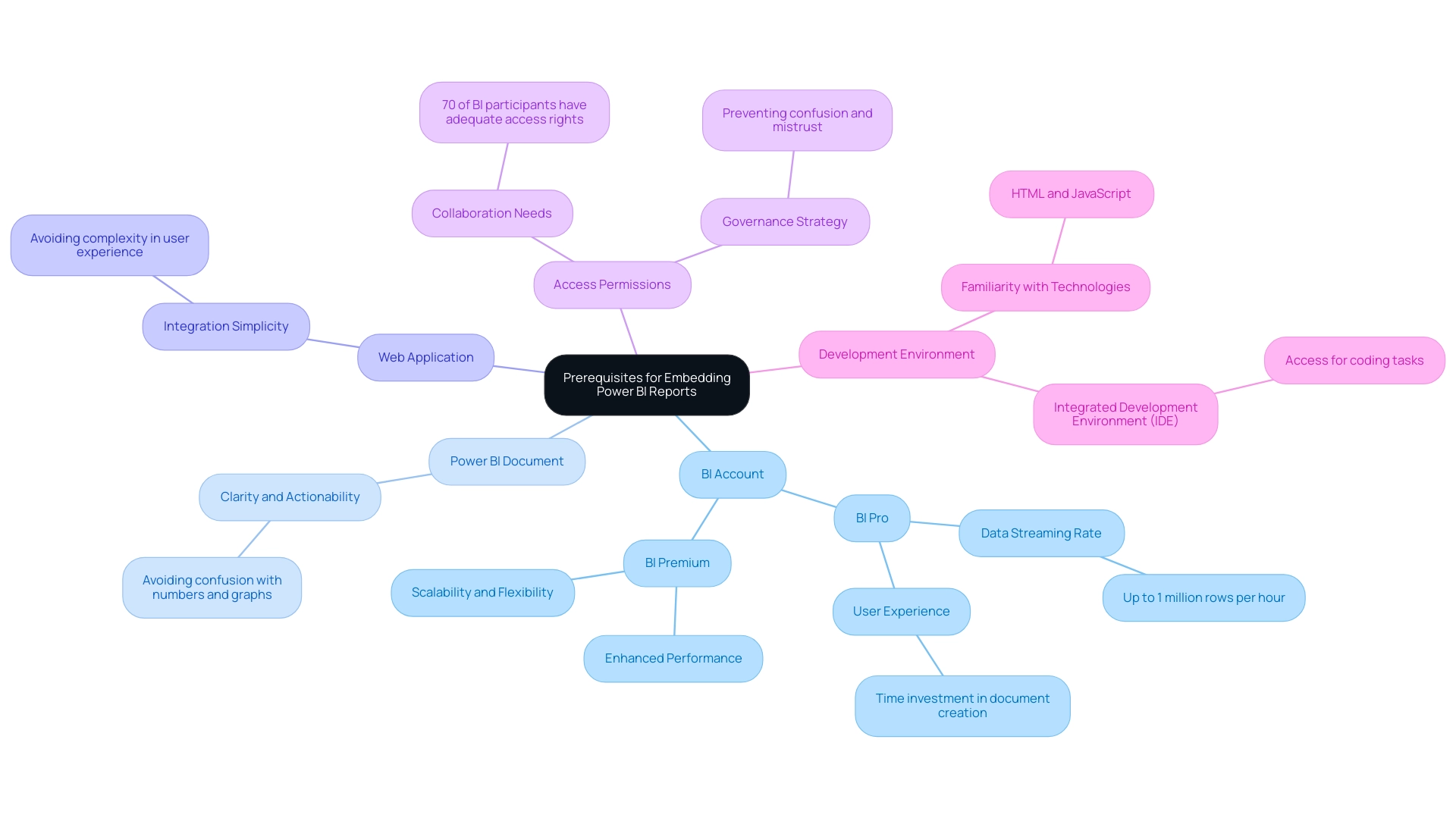

What Does ‘Include in Report Refresh’ Mean?

‘Include in Report Refresh’ is a Power BI feature that lets users decide which data sources are refreshed during a report refresh. With the option enabled for every relevant query, all pertinent datasets are updated together, giving a comprehensive and current view. This is particularly advantageous for Directors of Operations Efficiency overseeing numerous information sources, and RPA can simplify the refresh process further, improving operational effectiveness and enabling teams to concentrate on strategic initiatives.

Nonetheless, obstacles like information discrepancies and lengthy documentation processes can impede efficient decision-making. Usage metrics summaries generally update each day with new information, making it essential for operations leaders to remain in sync with the latest insights. If a document or dashboard is not shown, it likely hasn’t been utilized in over 90 days, highlighting the significance of keeping documents updated.

Denis Davydov notes that ‘to include in report refresh, it is used to maintain the old information; you will not extract the information from the source, and you can use it to join with another query if it is old information and you don’t keep fetching the information every time you perform a refresh.’ This capability not only enhances operational efficiency but also supports informed decision-making across the organization. Moreover, the integration of RPA solutions can help automate the report creation process, reducing errors and freeing up teams for more strategic work, ultimately driving business growth.
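The behavior described in that quote can be modeled in a few lines: queries flagged for refresh are reloaded from the source, while the others keep whatever was loaded last time. This is a conceptual sketch only; the query names, the `cache` structure, and the `refresh_report` function are assumptions, not Power BI internals.

```python
def refresh_report(queries, source, cache):
    """Reload flagged queries from the source; keep cached rows for the rest."""
    result = {}
    for name, include_in_refresh in queries.items():
        if include_in_refresh:
            result[name] = list(source[name])
        else:
            result[name] = cache[name]
    return result

# Hypothetical queries: Sales is refreshed, the archived budget is not.
queries = {"Sales": True, "Budget2020": False}
source = {"Sales": [120, 130, 140], "Budget2020": [999]}
cache = {"Sales": [120, 130], "Budget2020": [500]}

refreshed = refresh_report(queries, source, cache)
```

Excluding static or historical queries this way avoids fetching the same unchanged data on every refresh, while the excluded data stays available for joins with other queries.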

The case study on Admin Control Over Usage Metrics illustrates how BI administrators can enable or disable usage metrics, ensuring organizations manage data privacy and compliance while leveraging usage metrics for performance insights.

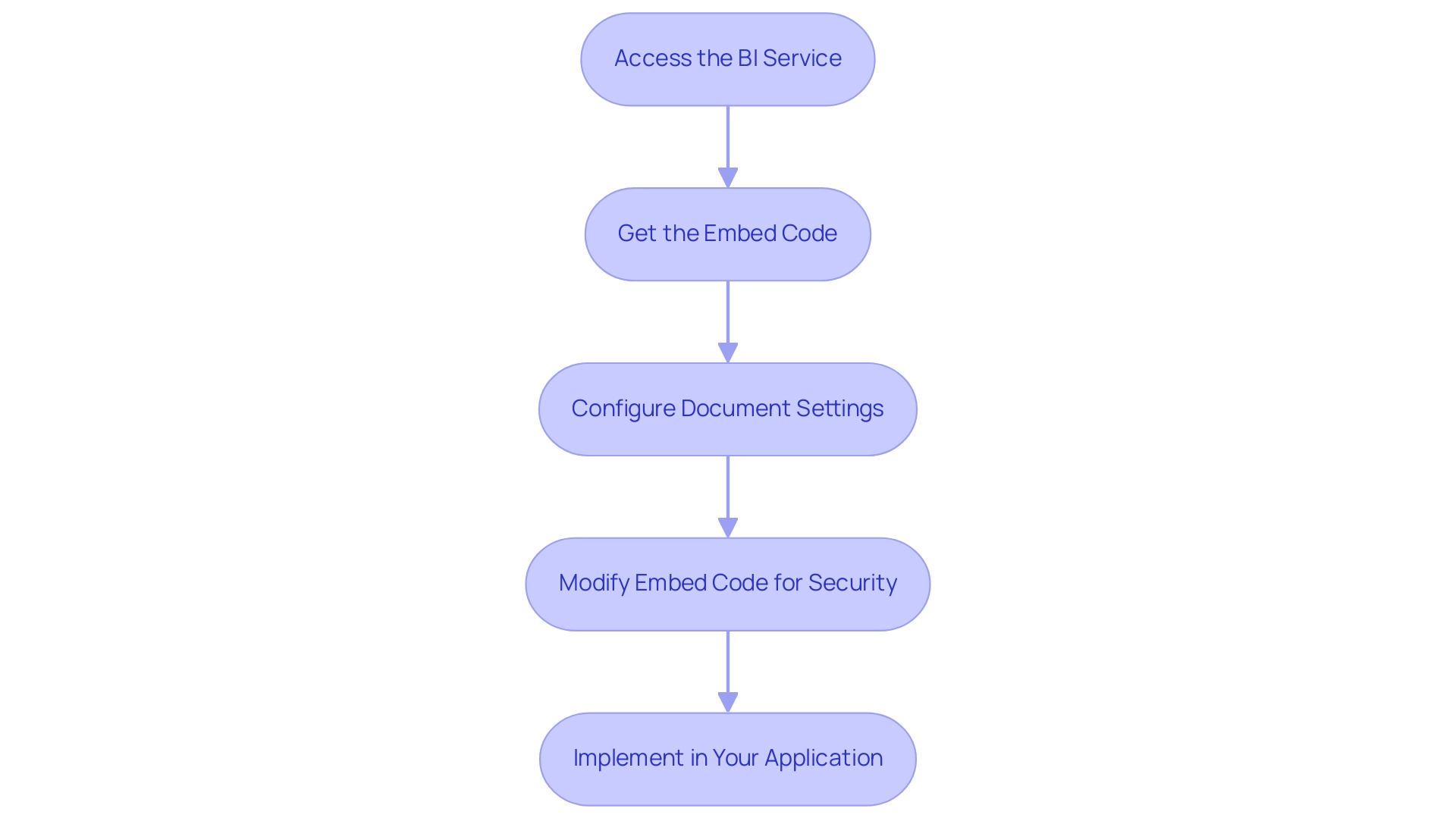

Step-by-Step Guide to Enable ‘Include in Report Refresh’

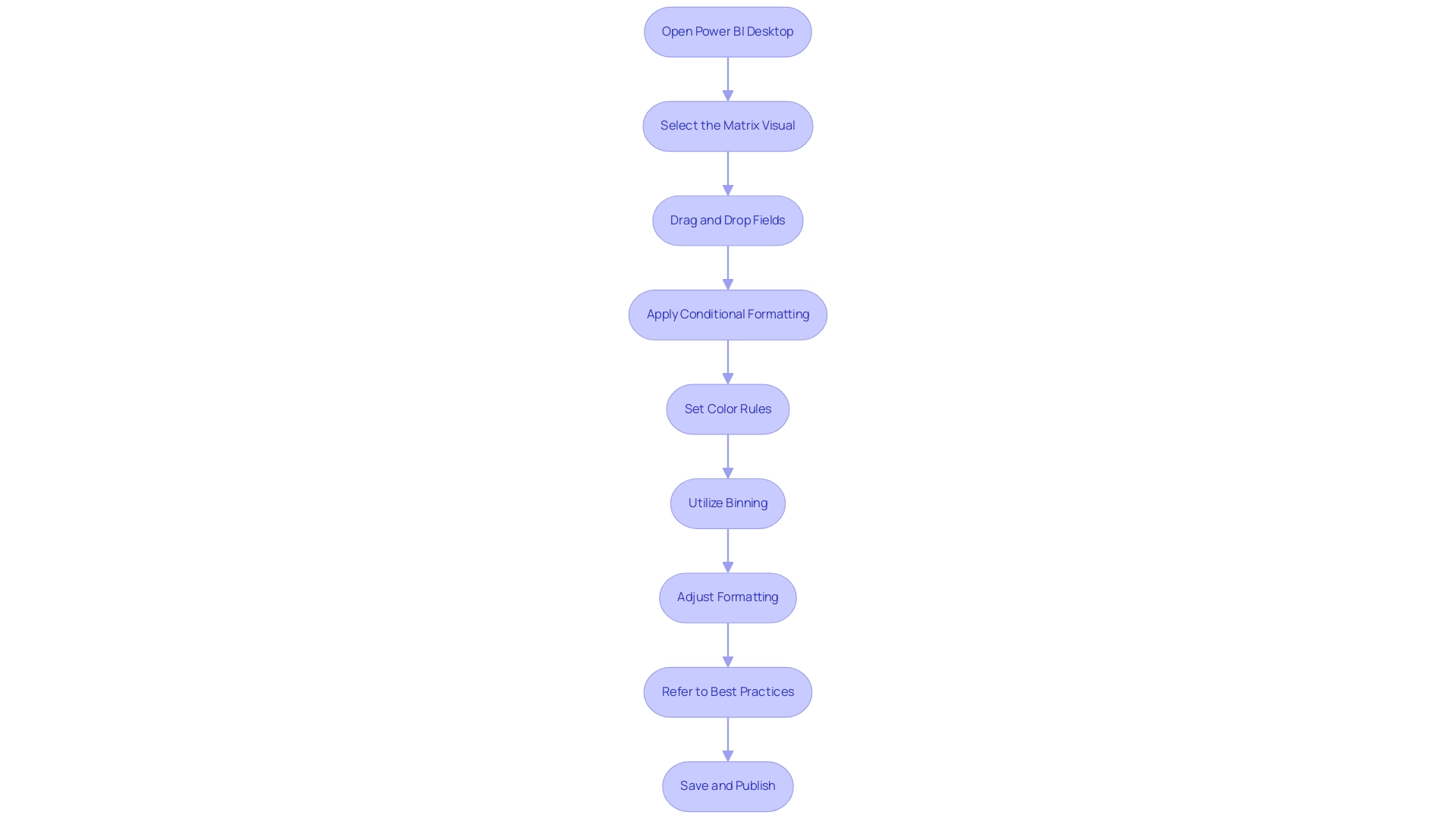

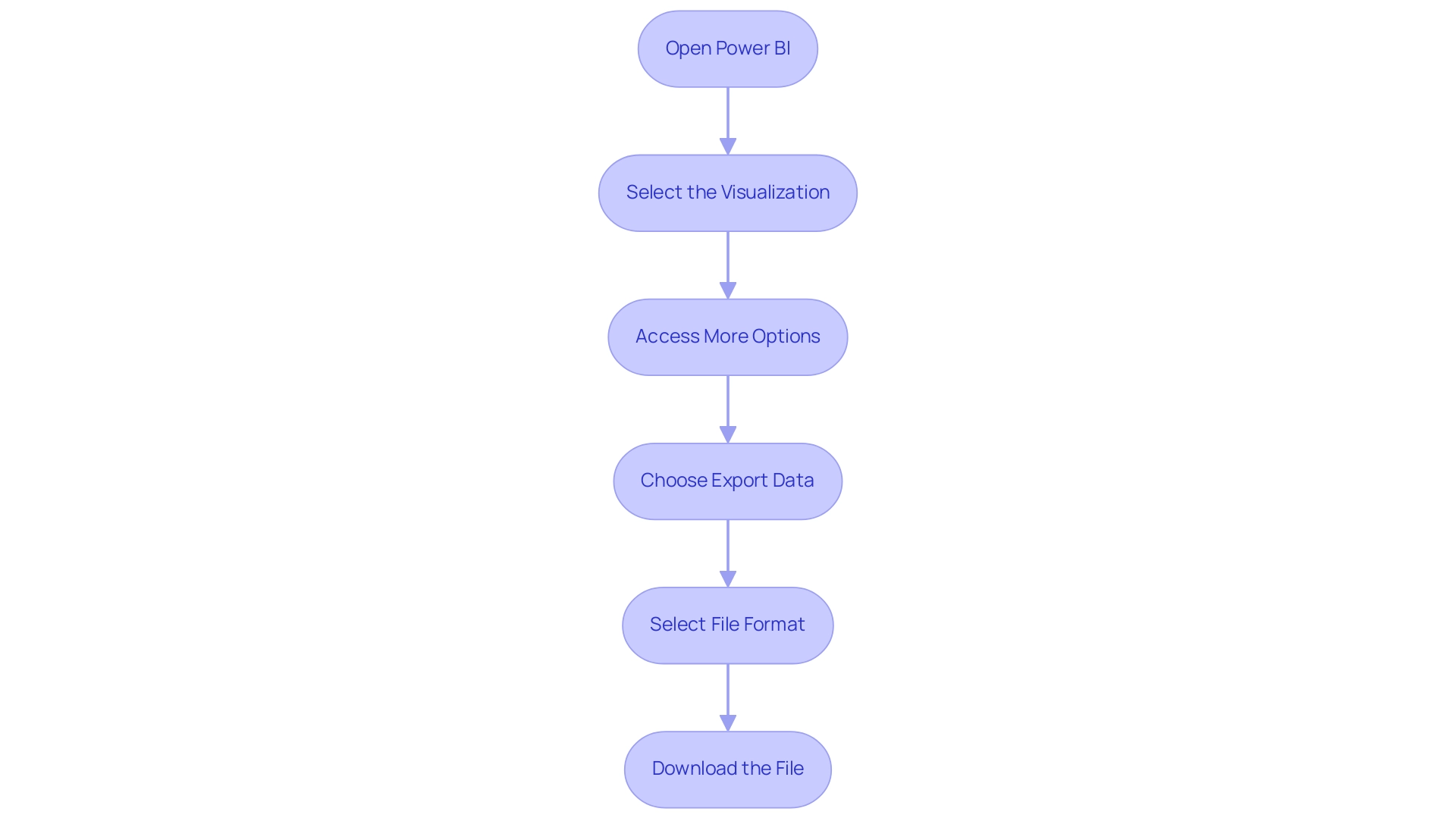

To enable the ‘Include in Report Refresh’ feature in Power BI, follow these steps:

- Start Power BI Desktop and open the report you intend to modify.

- Open the Power Query Editor (‘Transform data’ on the ‘Home’ tab). In current versions of Power BI Desktop, this is where the option is found, rather than in the ‘Model’ view.

- In the Queries pane, right-click the query you want to include in the refresh and make sure ‘Include in report refresh’ is checked.

- Apply and save your changes and, if necessary, publish the report to the Power BI Service to make it accessible.

- To confirm that the refresh operates as designed, go to the ‘Home’ tab and click ‘Refresh’, making sure that the chosen data sources are updated appropriately.

By following these steps, you improve your reports’ precision and relevance, tackling common issues like inconsistencies and ensuring your insights are timely and actionable. This alignment with the latest information trends and insights supports informed decision-making that drives operational efficiency and business growth. Furthermore, think about checking the 12-minute tutorial on creating date tables in BI, which can further enhance your information management processes.

As Iason Prassides emphasizes, mastering features like DAX in Power BI is crucial for customizing and optimizing key performance indicators (KPIs), especially when managing data refresh settings. Moreover, Douglas Rocha’s case study on utilizing statistical measures in Power BI demonstrates how these concepts can be applied in real-world situations, emphasizing the significance of incorporating strong statistical analysis into your reports. To further enhance operational efficiency, consider leveraging RPA solutions like EMMA RPA and Power Automate, which can automate repetitive tasks and improve overall workflow, ensuring that your data-driven insights are not only accurate but also actionable.

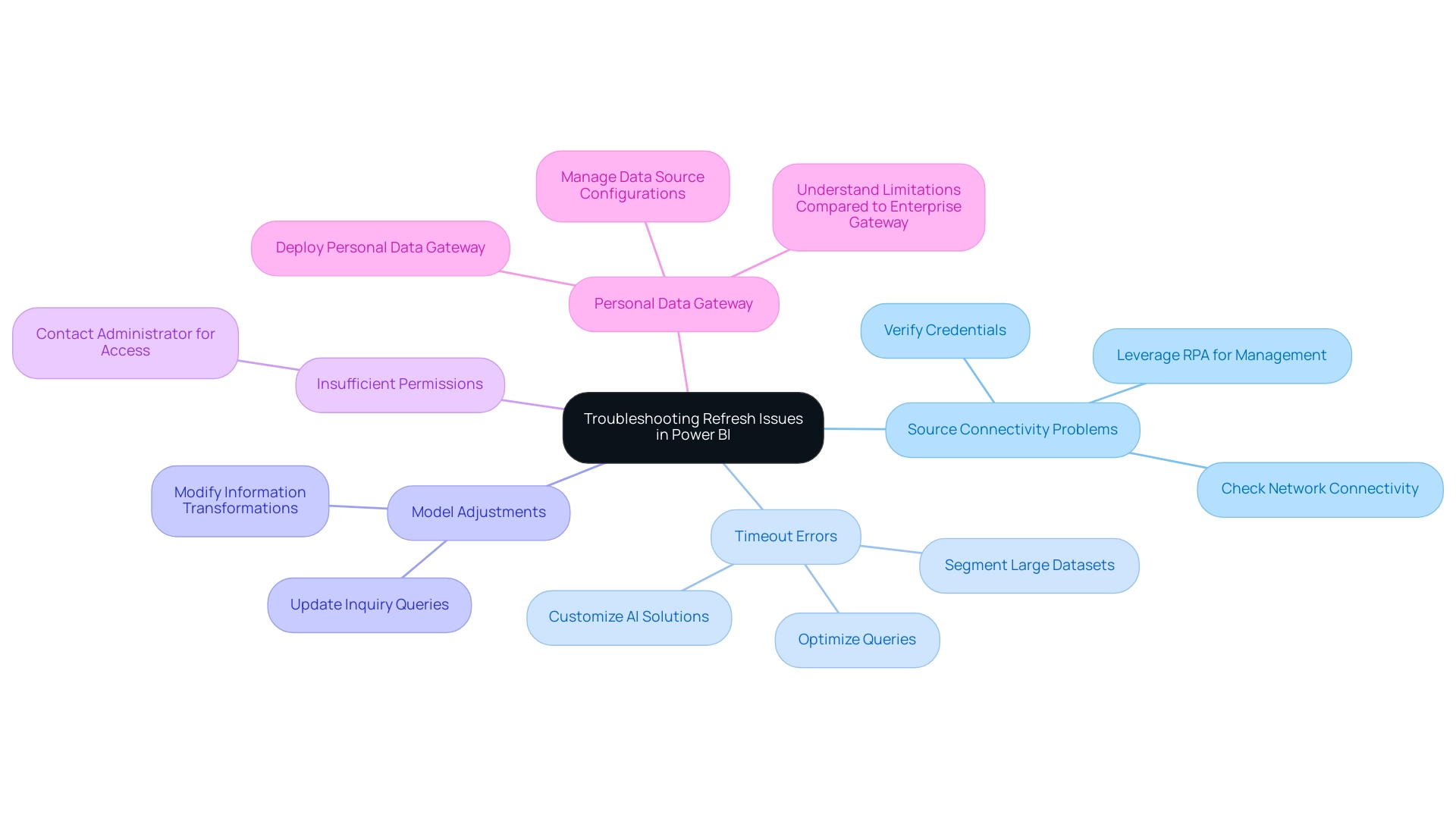

Troubleshooting Common Refresh Issues in Power BI

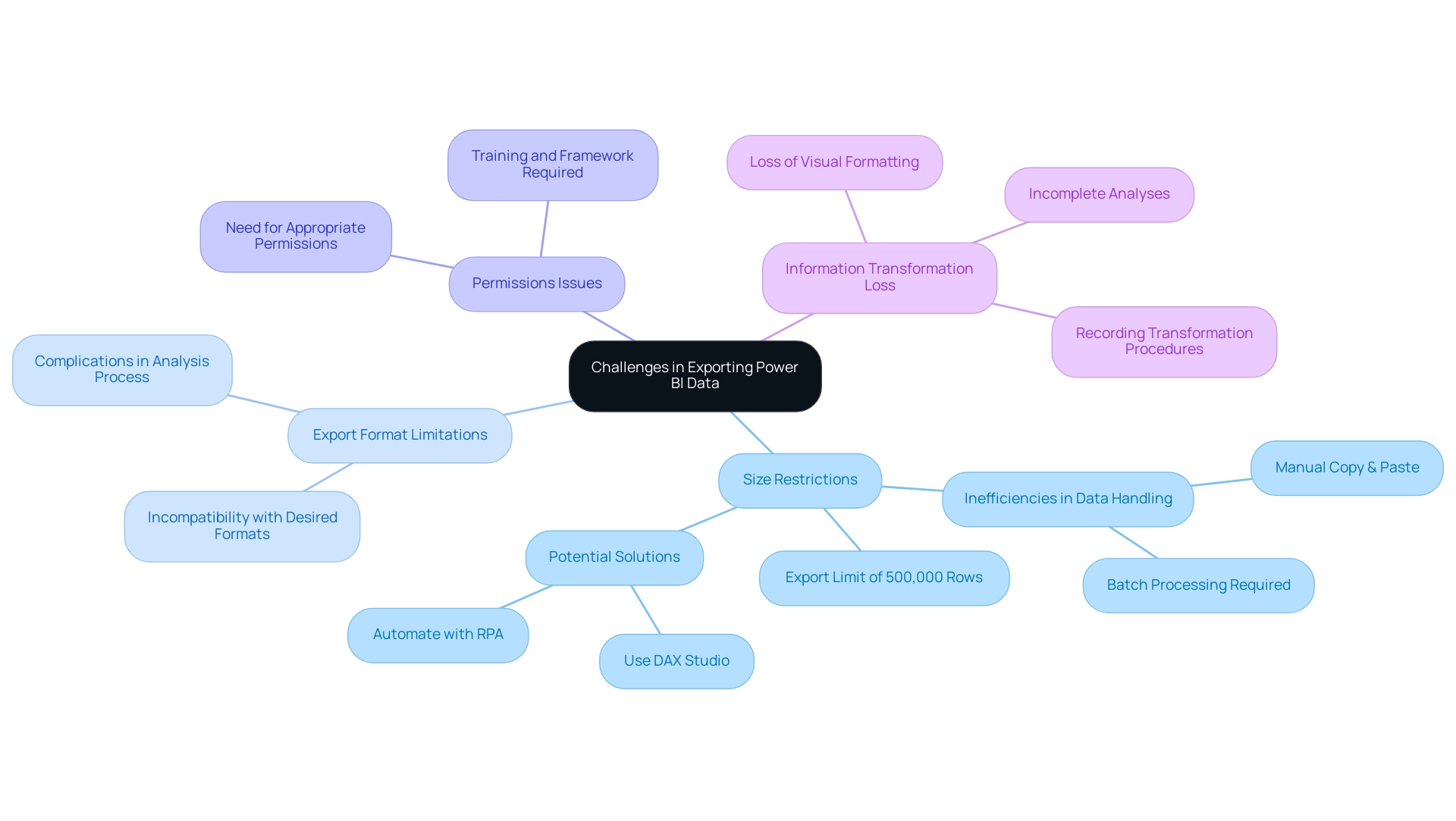

When working with Power BI, it is important to be aware of several common refresh issues, as these can hinder efficiency and lead to wasted time and resources. Here are key challenges and effective solutions:

- Source Connectivity Problems: Ensuring that all sources are accessible and credentials are correctly configured is crucial. If issues arise, check network connectivity and permissions. Keep in mind that Power BI may deem a semantic model inactive after just two months of inactivity, so maintaining regular user engagement with your dashboards is essential. By proactively managing access and connectivity, you can leverage Robotic Process Automation (RPA) to streamline these efforts and prevent interruptions in your information flow, reducing the impact of manual, repetitive tasks.

- Timeout Errors: Extended refresh times can lead to timeout errors. To address this, consider optimizing your queries or segmenting large datasets into smaller, more manageable chunks, which improves performance and reliability. Customized AI solutions can also help align these processes with your business objectives.

- Model Adjustments: Modifications to the underlying data structure require corresponding updates to your queries. Examine and adjust your data transformations to match the updated model, ensuring consistency and precision in your reports.

- Insufficient Permissions: Lack of necessary permissions can obstruct access to data sources. If you encounter this issue, contact your administrator to secure the required access, enabling seamless data retrieval and allowing your team to focus on more strategic tasks.

- Personal Data Gateway: For users without access to an enterprise gateway, deploying a personal data gateway can be a viable solution for managing data source configurations directly. While it has limitations compared to an enterprise gateway, it allows individual management of semantic models, helping to mitigate connectivity issues.
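The advice on timeout errors, segmenting a large dataset into smaller chunks, is often applied by date range. The sketch below splits an inclusive date range into consecutive windows that could each be loaded separately; the function name and the four-day window are assumptions for illustration.

```python
from datetime import date, timedelta

def date_chunks(start, end, days_per_chunk):
    """Split the inclusive range [start, end] into consecutive date windows."""
    if days_per_chunk < 1:
        raise ValueError("days_per_chunk must be at least 1")
    chunks = []
    cursor = start
    while cursor <= end:
        chunk_end = min(cursor + timedelta(days=days_per_chunk - 1), end)
        chunks.append((cursor, chunk_end))
        cursor = chunk_end + timedelta(days=1)
    return chunks

# Hypothetical ten-day load split into windows of at most four days.
chunks = date_chunks(date(2024, 1, 1), date(2024, 1, 10), 4)
```

Each window can then be queried on its own, so no single request has to scan the full history and risk hitting the timeout.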

By identifying these common challenges and implementing the suggested troubleshooting strategies, you can effectively resolve refresh problems and ensure that your reports remain accurate and up-to-date. Additionally, difficulty in extracting meaningful insights from your Power BI dashboards can leave your business at a competitive disadvantage. Consider the suggestion of industry expert Jeff Shields, who points to creative approaches to data connectivity: “I wonder if you could ZIP them and read them into a dataflow from a OneDrive/SharePoint folder.” This illustrates the potential for innovative solutions in optimizing your Power BI experience.

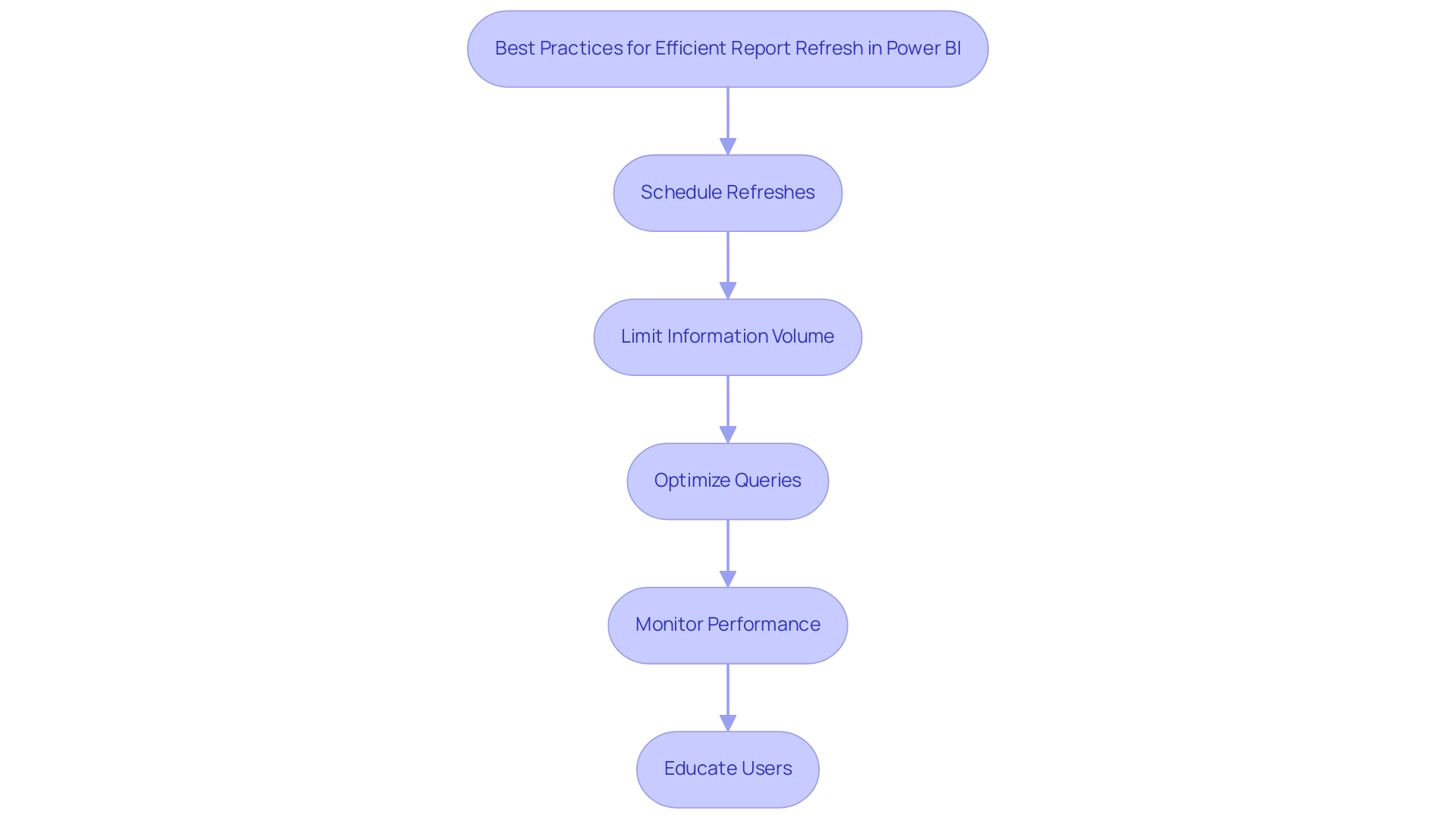

Best Practices for Efficient Report Refresh in Power BI

To optimize your refresh processes in Power BI, implementing the following best practices will significantly enhance efficiency while leveraging the power of Robotic Process Automation (RPA):

- Schedule Refreshes: Run scheduled refreshes during off-peak hours to minimize the performance impact on users accessing reports. This not only improves performance but also enhances user experience, in line with RPA’s goal of streamlining operations.

- Limit Data Volume: Include only the essential data in your reports. Using filters to limit data where possible can dramatically decrease the amount processed during refreshes, leading to quicker load times. For example, replacing a Matrix visual with a Table visual can decrease load time by around 15 seconds, showing the effect of visual optimization on performance.

- Optimize Queries: Carefully review your queries for efficiency. Leveraging query folding and avoiding complex transformations can prevent unnecessary delays in refresh times. As experts advise, “Before joining columns, always clean your information by trimming whitespace and ensuring the values are in a compatible format.” This practice heads off issues that can arise during data processing and minimizes errors that stem from unoptimized queries.

- Monitor Performance: Regularly evaluate performance metrics in the Power BI Service to identify bottlenecks or areas that need improvement. This proactive approach helps maintain optimal performance levels. For example, understanding row, query, and filter context in DAX can be challenging, but simplifying your explanations and using clear examples can demonstrate context behavior effectively, enhancing operational efficiency and reducing wasted resources.

- Educate Users: Provide training to users on best practices for interacting with reports. This helps minimize unnecessary refresh requests, sustaining overall system performance and ensuring that your organization can leverage insights effectively. By addressing common challenges associated with manual workflows, such as time-consuming report creation and data inconsistencies, you can further enhance the effectiveness of your reporting processes.

By adopting these strategies, you can significantly enhance the efficiency of your report refresh processes, ensuring that your Power BI reports remain timely and reliable while also integrating the principles of RPA and Business Intelligence, thus driving data-driven insights that contribute to your business growth and strategic objectives.
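The advice about cleaning values before joining columns can be demonstrated with a small example. The data and the `clean_key` helper below are hypothetical, and treating "compatible format" as upper-casing is my own assumption; in Power Query the equivalent cleanup is typically done with functions such as `Text.Trim`.

```python
def clean_key(value):
    """Normalize a join key: trim surrounding whitespace and unify case."""
    return str(value).strip().upper()

# Hypothetical lookup table and fact rows with inconsistent keys.
customers = {" c001 ": "Contoso", "C002": "Fabrikam"}
orders = [("C001", 250), ("c002 ", 90), ("C003", 40)]

# Joining on the raw keys finds no matches because of spacing and case.
raw_matched = [
    (customers[customer_id], amount)
    for customer_id, amount in orders
    if customer_id in customers
]

# Joining on cleaned keys recovers the rows that genuinely match.
clean_customers = {clean_key(key): name for key, name in customers.items()}
matched = [
    (clean_customers[clean_key(customer_id)], amount)
    for customer_id, amount in orders
    if clean_key(customer_id) in clean_customers
]
```

The order for `C003` stays unmatched in both cases, which is correct: cleaning fixes formatting mismatches but does not invent missing customers.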

Conclusion

Maintaining up-to-date and accurate reports in Power BI is crucial for effective decision-making and operational efficiency. The article highlighted essential elements of the report refresh process, emphasizing the importance of scheduled updates and the utility of features like ‘Include in Report Refresh.’ By implementing a strategic approach to refresh scheduling, organizations can ensure that their reports reflect the latest data, thus enhancing the integrity of their insights.

Common challenges, such as data source connectivity issues and timeout errors, can disrupt the refresh process. However, the article provided practical solutions to troubleshoot these issues effectively. By prioritizing regular monitoring and optimizing data queries, teams can mitigate potential disruptions, ensuring that their data remains reliable and actionable.

Incorporating best practices, such as limiting data volume and educating users, can further streamline the report refresh process. These strategies not only improve performance but also empower teams to focus on strategic initiatives rather than administrative tasks. Ultimately, by leveraging the capabilities of Power BI and adopting a proactive approach to report refresh, organizations can drive growth through timely, data-driven insights that support informed decision-making and operational excellence.

Overview

To share Power BI reports outside your organization, several methods are available, including using the “Publish to Web” feature, exporting to PDF or PowerPoint, and sharing directly via the Power BI Service. Each method has unique considerations regarding security, interactivity, and audience needs, which are crucial for ensuring effective collaboration while protecting sensitive information.

Introduction

In the realm of data-driven decision-making, sharing insights from Power BI reports can be a game-changer for organizations seeking to enhance collaboration and transparency. With a myriad of methods available, from public links to secure email updates, each approach presents unique advantages and considerations that can significantly impact how data is utilized.

As businesses navigate the complexities of external sharing, understanding the nuances of permissions, roles, and best practices becomes essential. This article delves into effective strategies for sharing Power BI reports, empowering organizations to overcome common challenges while ensuring data security and fostering informed decision-making.

By exploring practical solutions and real-world examples, leaders can unlock the full potential of their data, driving growth and innovation in an increasingly competitive landscape.

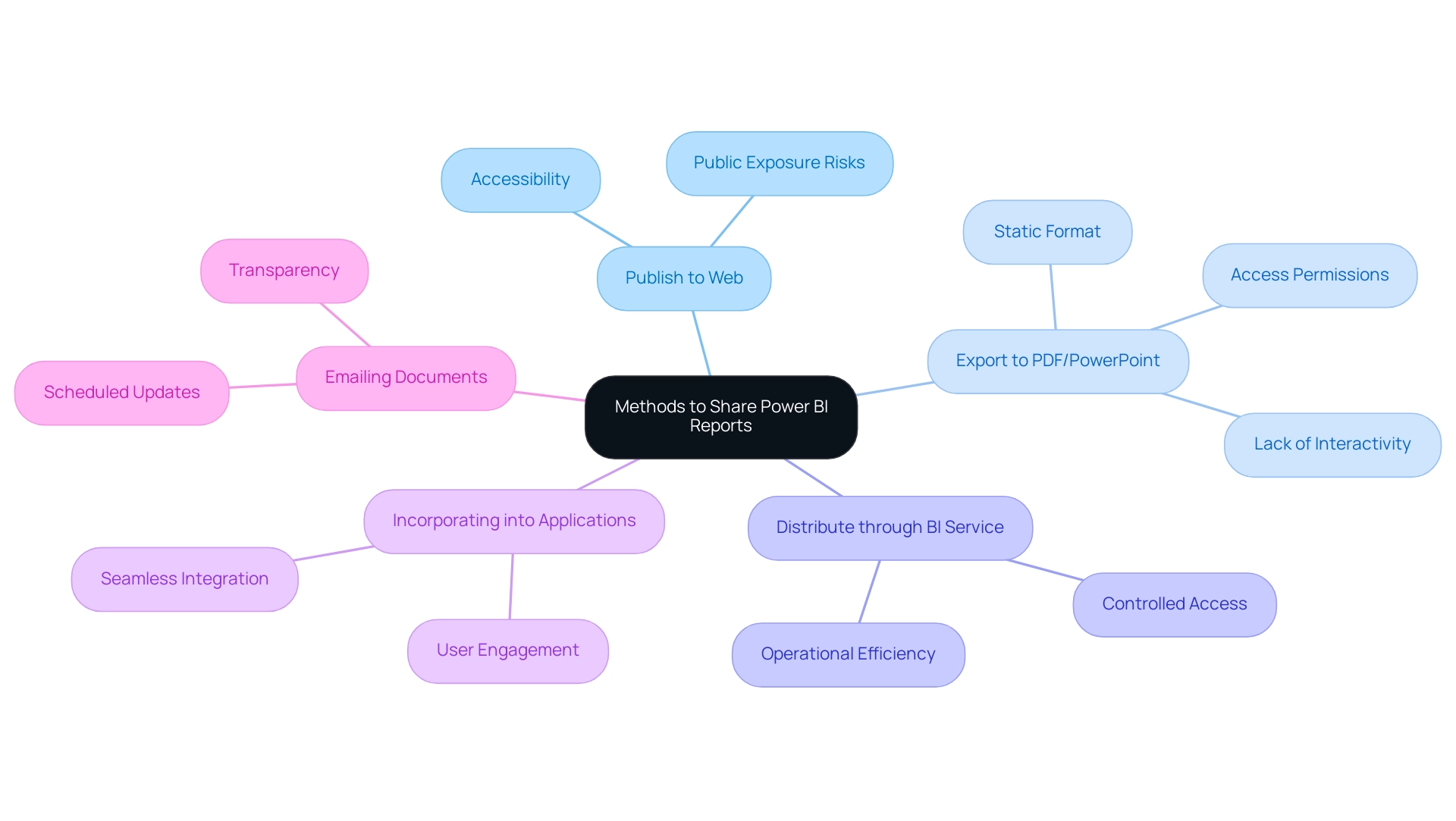

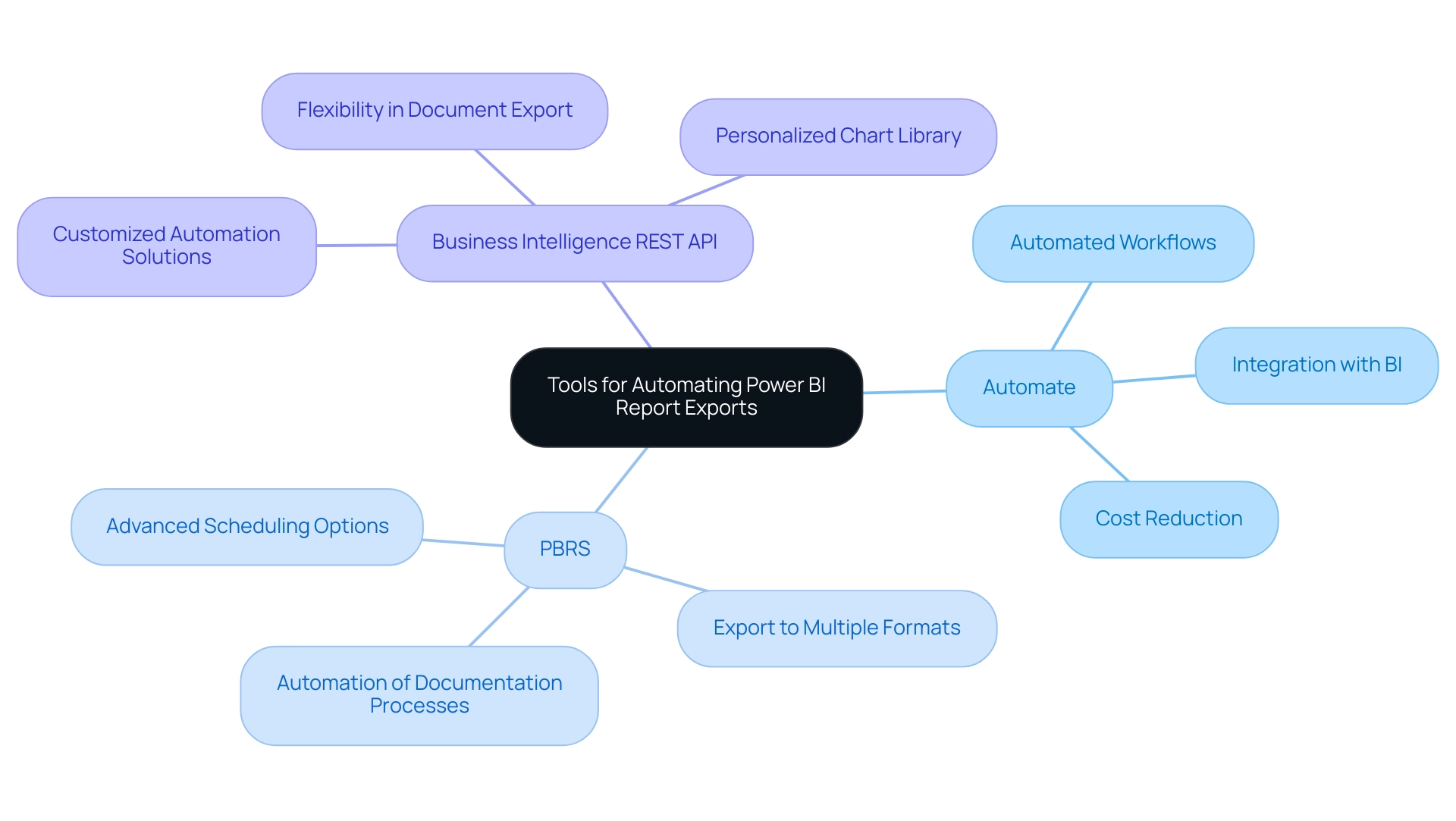

Exploring Different Methods to Share Power BI Reports

There are several ways to share a Power BI report outside your organization, each tailored to different needs and scenarios. Here’s a breakdown of the primary options available:

- Publish to Web: This feature creates a public link to your report, making it accessible to anyone. While convenient, this method exposes your data to the public, so it is not suitable for sensitive content.

- Export to PDF or PowerPoint: Exporting your report to PDF or PowerPoint is an effective way to share a static copy. However, this option lacks interactivity, limiting engagement with the data. Note that recipients still require access to the report to see it within the presentation software, and the same permissions apply as in Power BI.

- Distribute through the Power BI Service: For recipients who use Power BI, reports can be shared directly through the service. By adding individuals or groups, you retain control over who views the reports, ensuring that sensitive information stays secure. This method enhances operational efficiency by streamlining how data-driven decisions are made within your organization.

- Embed in Applications: Organizations can improve the user experience by integrating Power BI reports directly into custom applications using the Power BI API. This integration lets users engage with reports within familiar interfaces, without additional navigation.

- Email Subscriptions: The ‘Subscribe’ feature sends scheduled email updates of your reports to external users with Power BI accounts. This approach keeps stakeholders updated without requiring them to open the Power BI service, fostering a culture of transparency and ongoing information sharing.

Each sharing method has unique use cases and considerations. For example, using Power BI shared datasets can centralize your data model across multiple reports, improving consistency and reducing redundancy, both of which help address data inconsistencies and time-consuming report creation.

Moreover, by effectively addressing these challenges, organizations can leverage insights from BI dashboards to drive growth and innovation.

A pertinent case study is the creation and sharing of apps in Power BI, which enables report designers to share collections of reports and dashboards with specific user groups while maintaining control over the content.

As Grant Gamble, proprietor of G Com Solutions, highlights, “I operate a firm named G Com Solutions, focusing on BI training and consultancy, assisting businesses in mastering analytics.”

This mastery can significantly impact how effectively you share insights, leading to informed decision-making that drives growth and innovation.

Therefore, carefully evaluate your audience and information type when selecting the most appropriate sharing method.

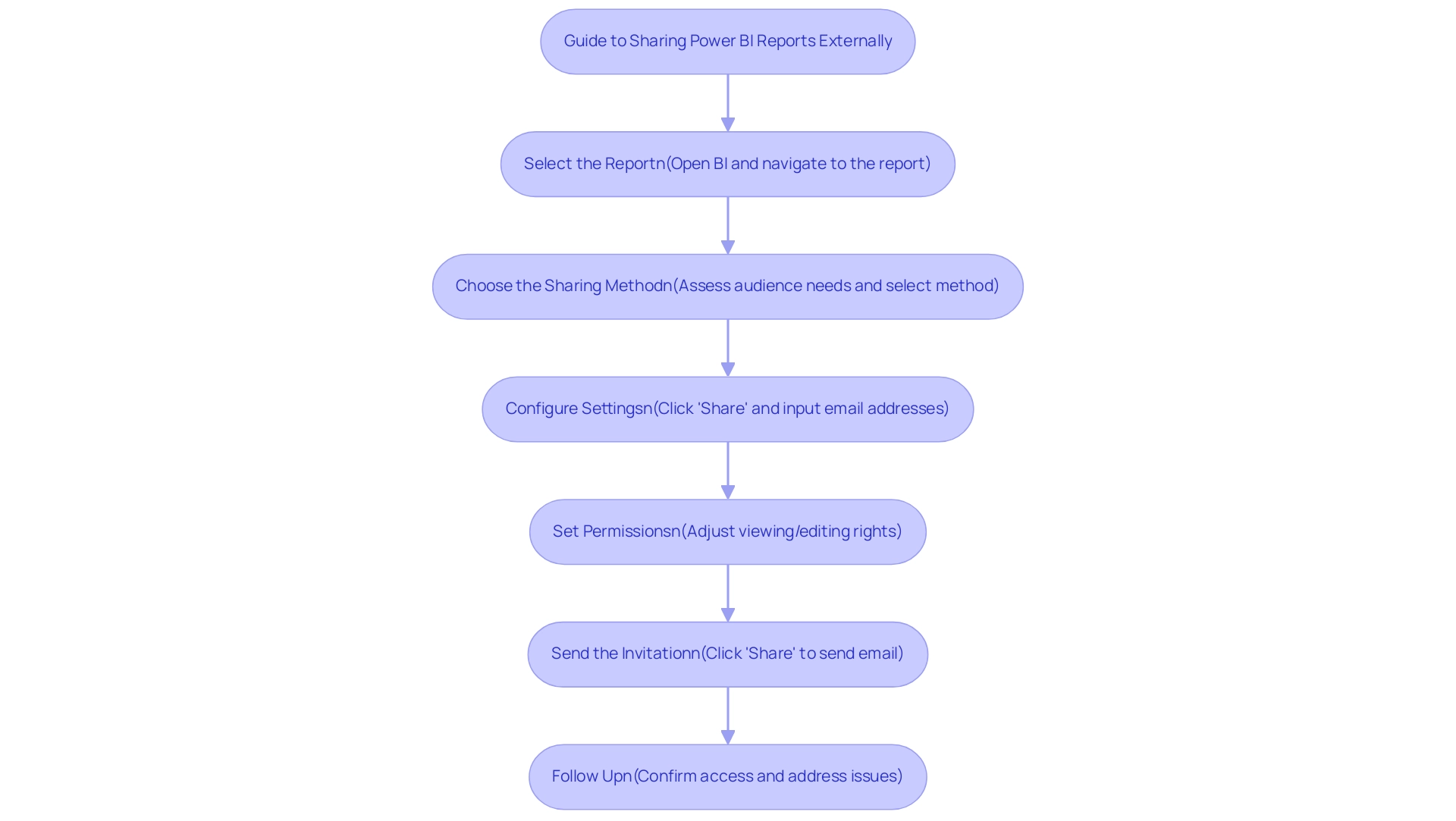

Step-by-Step Guide to Sharing Power BI Reports Externally

Sharing Power BI reports outside your organization can greatly improve collaboration and data accessibility, yet it frequently presents challenges such as time-consuming report creation, data inconsistencies, and a lack of actionable guidance. To help you navigate this process effectively, here’s a straightforward guide:

- Select the Report: Begin by opening Power BI and navigating to the specific report you wish to share, mindful of the time spent on report construction versus leveraging insights from dashboards.

- Choose the Sharing Method: Assess your audience’s needs and decide on the most suitable sharing method; options include Publish to Web or sharing directly via the Power BI Service. A thoughtful choice here can minimize inconsistencies, which a robust governance strategy can further address by ensuring accuracy and consistency across reports.

- Configure Settings: If you are sharing through the Power BI Service, click the ‘Share’ button. Enter the email addresses of the external recipients, ensuring they have Power BI accounts, which helps reduce confusion around access.

- Set Permissions: Carefully adjust permissions to define what recipients can do with the report, such as viewing or editing. This practice ensures alignment with your project charter and helps meet key performance indicators effectively. As noted by industry professionals, this strategic alignment is crucial for achieving desired outcomes and providing clear, actionable guidance for stakeholders. Also make sure that reports state clear next steps, so stakeholders understand how to respond to the information provided.

- Send the Invitation: Once everything is set, click ‘Share’ to send an email invitation granting access to the report.

- Follow Up: Confirm with your recipients that they received the invitation and can access the report as intended, addressing any issues proactively. Keep in mind that documents not accessed within the 90-day data retention period will not be visible, which makes prompt follow-up important.

Insights from case studies, such as the use of workspaces in Power BI, demonstrate how collaborative areas improve sharing and permissions management. By following these steps, you can share Power BI reports outside your organization and collaborate with external stakeholders while overcoming the common challenges associated with external sharing.

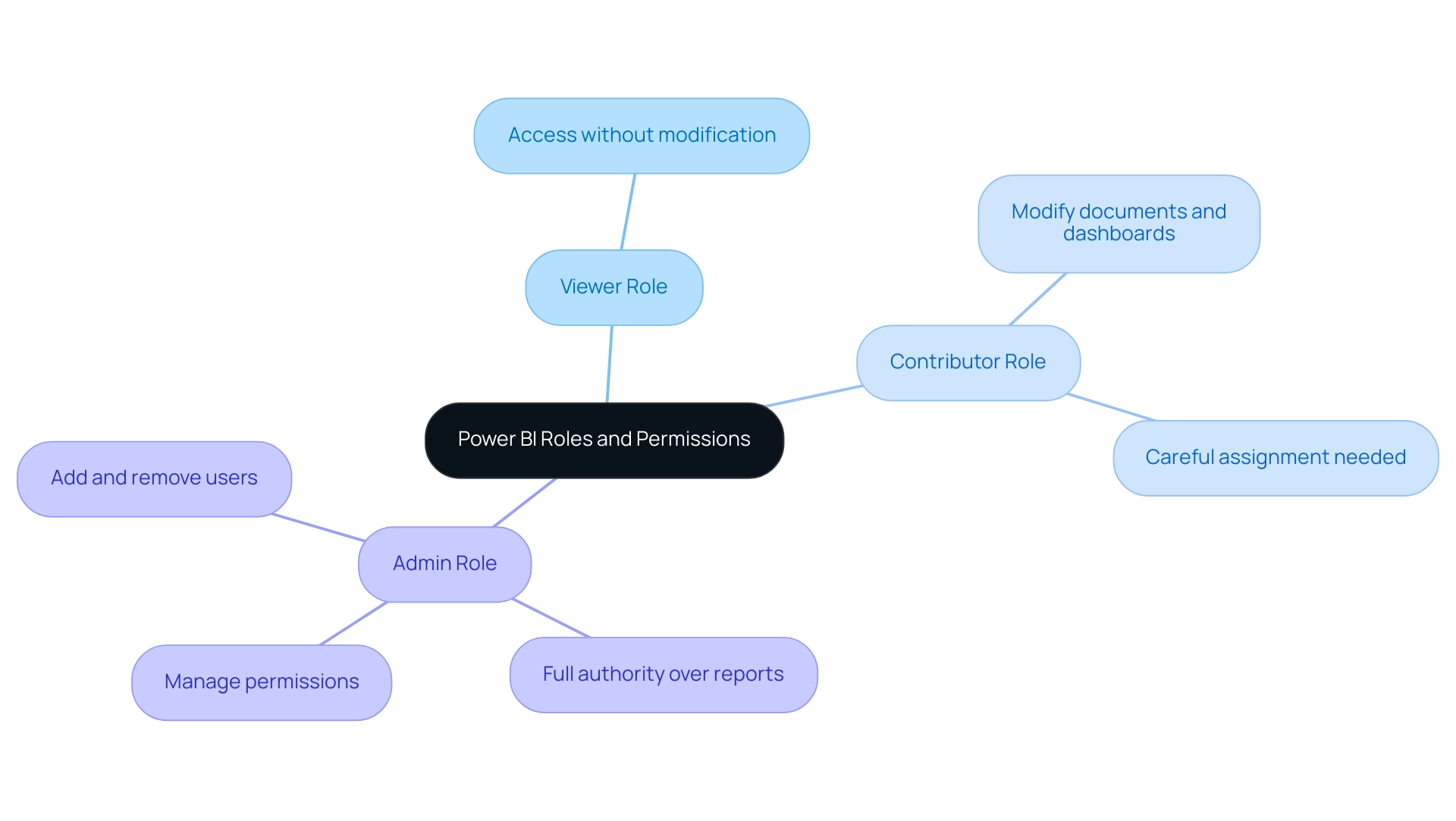

Understanding Permissions and Roles for Effective Sharing

Understanding the different permissions and roles in Power BI is crucial for sharing reports outside the organization efficiently and for using insights to improve business operations. Here’s a breakdown of the key roles:

- Viewer Role: This role lets individuals access reports without modifying them, making it appropriate for external stakeholders who need to see information without being able to change it.

- Contributor Role: Contributors can modify reports and dashboards, so it’s essential to assign this role carefully, especially to external participants. Limiting this access helps maintain control over the content while enabling collaboration.

- Admin Role: Admins possess full authority over reports and can manage permissions comprehensively. As noted, “This category can carry out all functions of the previous types as well as add and remove individuals, including other admins.” This role should typically be reserved for internal personnel to safeguard sensitive information.

Furthermore, when administrators turn off usage metrics for their whole organization, they can opt to remove all existing analyses and dashboard tiles. This demonstrates the significance of managing roles and permissions effectively.

To set permissions, navigate to the report settings in Power BI and adjust user roles as needed. Regularly reviewing these permissions is crucial to ensure that only authorized individuals have access, particularly when sharing reports that contain sensitive data outside the organization.
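A periodic access review like the one described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field names `emailAddress` and `groupUserAccessRight` are assumed to resemble what a workspace user listing might return, the `Member` role is included alongside the roles discussed above, and the sample users and domains are hypothetical.

```python
from typing import Iterable

# Roles ranked from least to most privileged. Unknown role names are treated
# like Viewer here, which is an assumption you may want to tighten.
ROLE_RANK = {"Viewer": 0, "Contributor": 1, "Member": 2, "Admin": 3}


def flag_external_elevated(users: Iterable[dict], internal_domain: str) -> list:
    """Return users outside `internal_domain` whose role is above Viewer."""
    flagged = []
    suffix = "@" + internal_domain.lower()
    for user in users:
        email = user.get("emailAddress", "").lower()
        role = user.get("groupUserAccessRight", "Viewer")
        is_external = not email.endswith(suffix)
        if is_external and ROLE_RANK.get(role, 0) > ROLE_RANK["Viewer"]:
            flagged.append({"email": email, "role": role})
    return flagged


# Hypothetical sample data, not real users.
sample_users = [
    {"emailAddress": "ana@contoso.com", "groupUserAccessRight": "Admin"},
    {"emailAddress": "partner@fabrikam.com", "groupUserAccessRight": "Contributor"},
    {"emailAddress": "client@fabrikam.com", "groupUserAccessRight": "Viewer"},
]

for entry in flag_external_elevated(sample_users, "contoso.com"):
    print(f"Review: {entry['email']} holds {entry['role']}")
```

Running a check like this on a schedule gives reviewers a short list of external accounts whose access goes beyond viewing, which is where the text above recommends the most caution.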

A relevant case study emphasizes that IT leaders often encounter challenges with BI workspace sprawl and seek solutions to maintain security within their Microsoft 365 environment. By employing customized provisioning templates, organizations can effectively manage and secure their environments.

In conjunction with our 3-Day BI Sprint, we tackle these challenges directly by producing a fully functional, professionally designed document on a subject of your choice. This service not only allows you to focus on utilizing insights but also provides a template for future projects, ensuring a professional design from the start. By maintaining a structured approach to user roles and leveraging our expertise, organizations can optimize their BI sharing effectiveness while enhancing security and overcoming challenges in utilizing insights from dashboards.

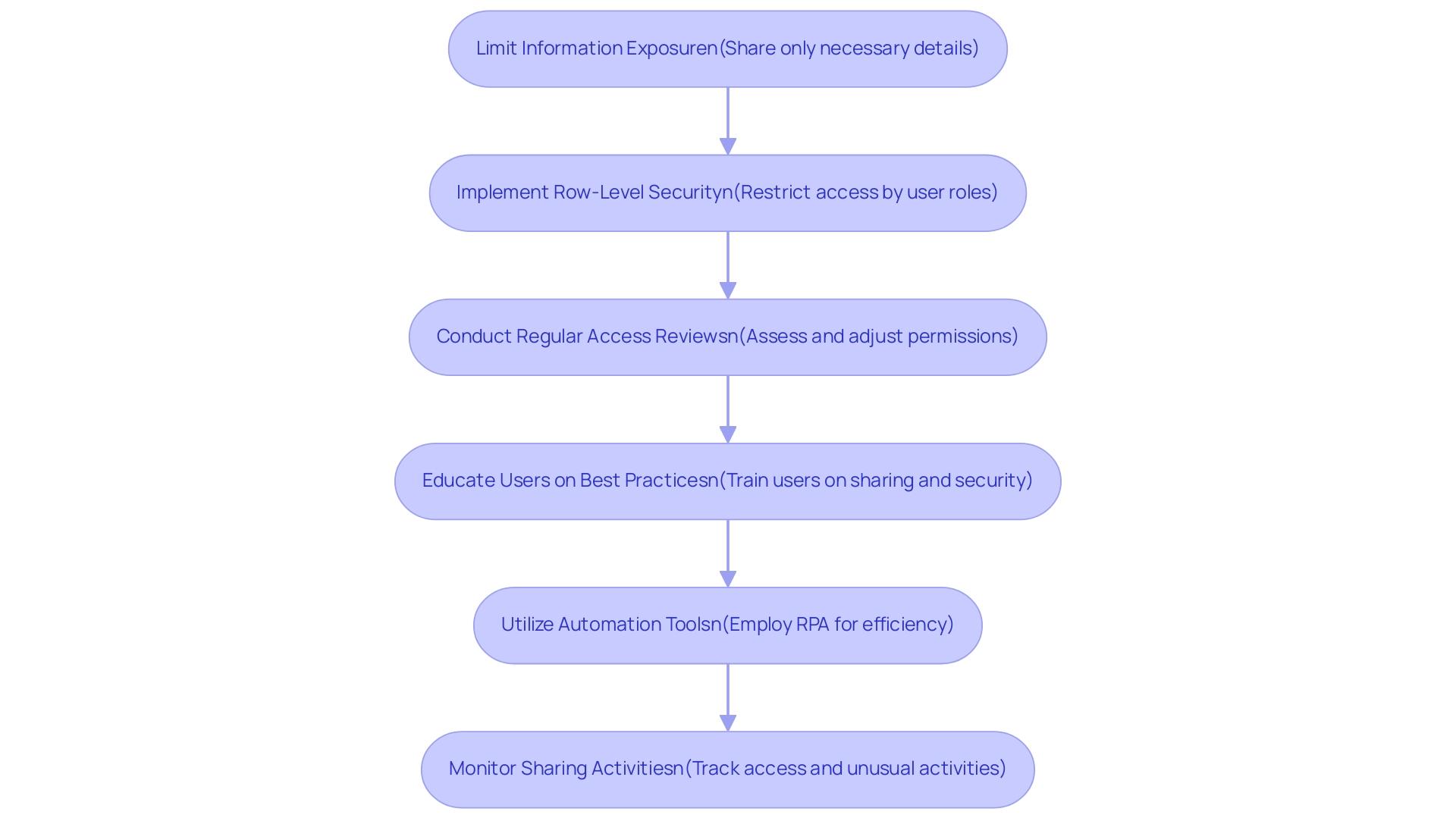

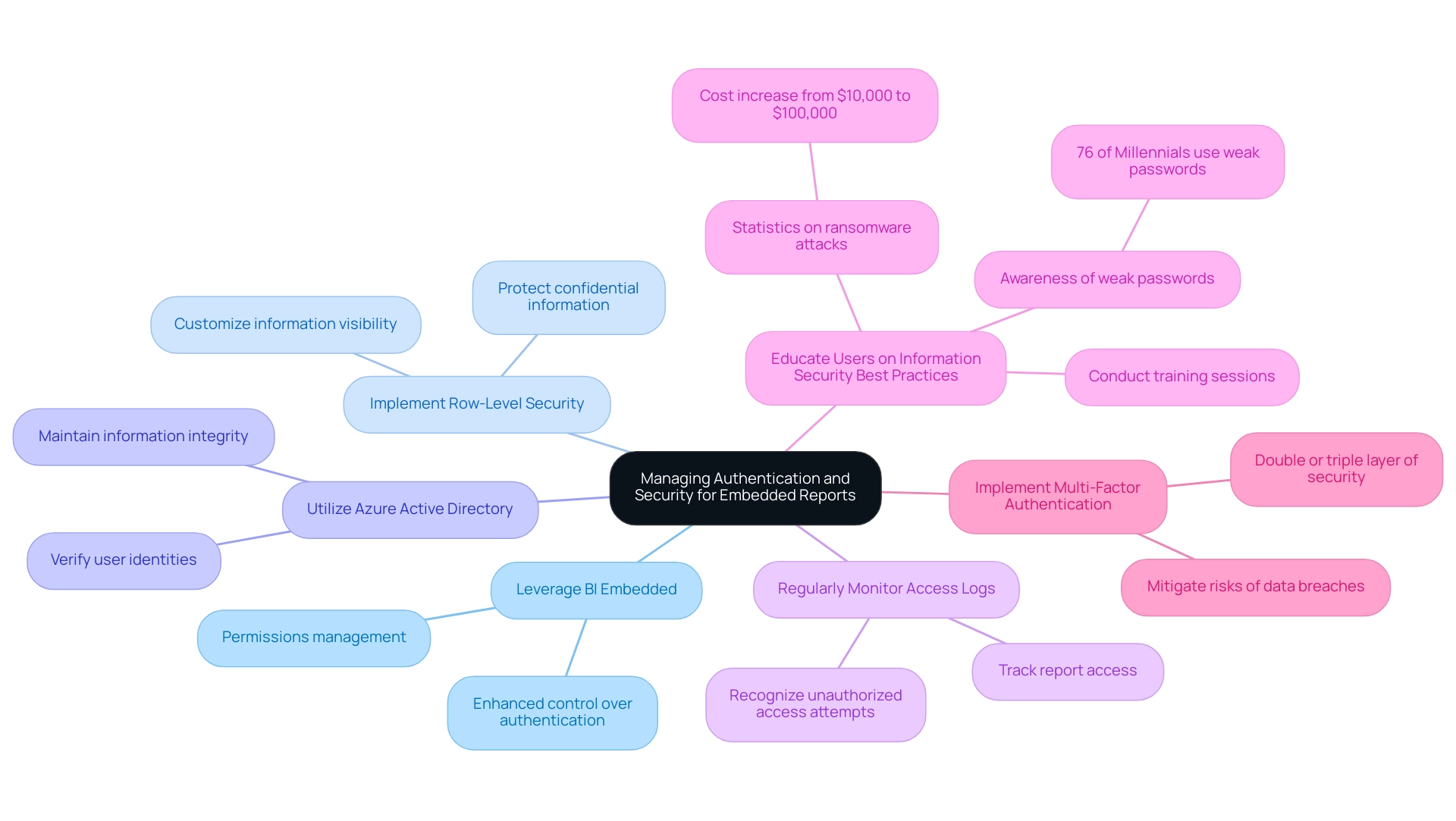

Best Practices for Sharing Power BI Reports Safely

To effectively share Power BI reports while ensuring security, consider these essential best practices:

- Limit Information Exposure: Share only the details required for the report’s specific purpose. Be cautious to exclude sensitive or confidential information that is not essential.

- Implement Row-Level Security: Utilize row-level security to restrict access according to user roles, ensuring users can only view information pertinent to them. This approach not only mitigates risks but also improves governance, addressing challenges often faced in leveraging insights from Power BI dashboards.

- Conduct Regular Access Reviews: Periodically assess who has access to your reports, adjusting permissions as necessary, particularly after shifts in team structure or project requirements. Such vigilance is crucial, especially in light of the statistic that indicates around 46% of U.S. employees share passwords for work accounts, which can lead to security vulnerabilities. Additionally, it is important to note that less than 5% of current corporate information sharing programs can identify and locate trusted sources, highlighting the challenges faced in effective sharing.

- Educate Users on Best Practices: Provide comprehensive training for users on information sharing and security best practices, cultivating a culture of awareness and accountability regarding data handling. As noted by experts, information quality refers to “the overall utility of a dataset(s) as a function of its ability to be easily processed and analyzed for other uses.” This underscores the importance of ensuring the information is not only shared but also of high quality, which is essential for driving growth and innovation.

- Utilize Automation Tools: Consider employing Robotic Process Automation (RPA) applications such as EMMA RPA and Power Automate to streamline the report creation process. Automating repetitive tasks can significantly reduce the time and effort required, addressing challenges such as data inconsistencies and enhancing operational efficiency.

- Monitor Sharing Activities: Use Power BI’s integrated monitoring tools to track access and identify any unusual activity. This proactive approach can help you respond swiftly to potential security issues.

By adhering to these best practices and integrating automation solutions, you can significantly enhance the security of your Power BI reports when sharing them with external stakeholders. Embracing capacity building and training initiatives, particularly in regions like the Global South, can help integrate robust information sharing practices into organizational culture, paving the way for sustainable information use. In addition, understanding the policy environment, as demonstrated in the case study “Navigating the Sharing Policy Landscape,” is essential for successful sharing initiatives, ensuring compliance and addressing risks while leveraging opportunities for collaboration.
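To make the row-level security idea from the list above concrete, here is a conceptual Python sketch. It is not how Power BI implements the feature (there, the rule is defined as a role filter on the data model); it only illustrates the effect of such a rule. The user addresses, regions, and amounts are hypothetical.

```python
# Hypothetical mapping of user to the regions that user may see.
USER_REGIONS = {
    "north.manager@example.com": {"North"},
    "exec@example.com": {"North", "South"},
}

# Hypothetical fact rows standing in for a report's underlying table.
SALES_ROWS = [
    {"region": "North", "amount": 120},
    {"region": "South", "amount": 80},
    {"region": "North", "amount": 45},
]


def visible_rows(user: str, rows: list) -> list:
    """Return only the rows whose region the user is allowed to see."""
    allowed = USER_REGIONS.get(user, set())  # unknown users see nothing
    return [row for row in rows if row["region"] in allowed]


for user in ("north.manager@example.com", "exec@example.com", "guest@example.com"):
    total = sum(row["amount"] for row in visible_rows(user, SALES_ROWS))
    print(f"{user}: {total}")
```

The design point is that every viewer opens the same report, but the filter is applied per user, so an external stakeholder only ever sees the slice of data assigned to them.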

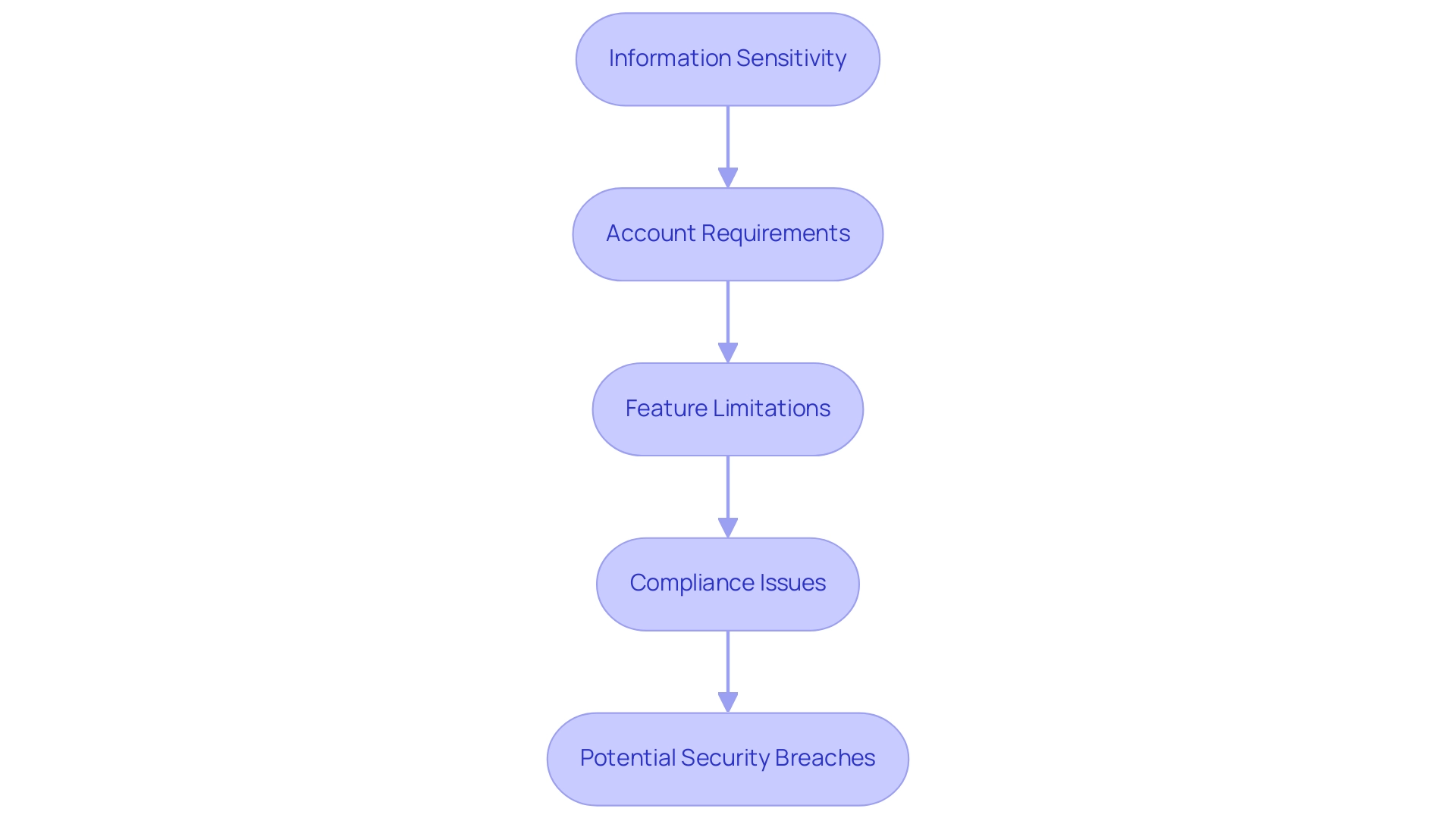

Navigating Limitations and Security Concerns in Report Sharing

When distributing Power BI documents, it’s essential to address the related limitations and security issues effectively, especially in the context of streamlining document creation and improving information governance. Here are several key considerations:

- Information Sensitivity: Evaluate the sensitivity of the information in your reports before sharing. Public sharing methods, such as ‘Publish to Web’, can expose confidential information to a broad audience, which makes them unsuitable for sensitive data, especially where no governance strategy is in place.

- Account Requirements: Be aware that external individuals may need to create Power BI accounts to access shared reports. This requirement can pose accessibility challenges and limit the reach of your insights, consuming time that could be spent on deriving actionable insights.

- Feature Limitations: Certain features may be restricted when sharing reports externally. For instance, some interactive capabilities might not function as intended, impacting user experience and engagement and thereby hindering effective decision-making.

- Compliance Issues: Ensure that your sharing practices align with organizational policies and legal regulations related to information protection and privacy. With 72% of businesses now utilizing compliance solutions for privacy, adhering to these guidelines is paramount. The challenges in privacy compliance are considerable; only 25% of companies surveyed can fulfill the obligation to notify authorities of a breach within 72 hours, which shows the need for strong frameworks. The average cost of a data breach is around $3.60 million, which further underlines the importance of compliance.

- Potential Security Breaches: Regularly monitor your shared reports for any unauthorized access. If security breaches are detected, it’s critical to take immediate action to mitigate risks. Masha Komnenic, an Information Security and Data Privacy Specialist, emphasizes the importance of vigilance, noting that effective compliance frameworks are essential for safeguarding sensitive data.

By understanding these limitations and security concerns, you can share Power BI reports outside the organization more effectively and securely, while leveraging insights for informed decision-making and driving growth.

Conclusion

Sharing Power BI reports effectively can transform organizational collaboration and data-driven decision-making. By exploring various methods such as:

- Publishing to the web

- Exporting to PDF

- Utilizing the Power BI Service

- Embedding reports

- Leveraging email subscriptions

organizations can tailor their sharing strategies to meet specific needs while maintaining data security.

Understanding permissions and roles is equally crucial. Assigning the right access levels—whether as a viewer, contributor, or admin—ensures that sensitive information is safeguarded while allowing necessary collaboration. Regular reviews of these permissions help maintain a secure environment, especially as team dynamics evolve.

Implementing best practices enhances the safety and effectiveness of report sharing. Key steps include:

- Limiting data exposure

- Utilizing row-level security

- Educating users about data handling

Moreover, leveraging automation tools can streamline report creation, reduce inconsistencies, and enhance operational efficiency.

Navigating the limitations and security concerns surrounding report sharing is vital for successful data governance. By being mindful of data sensitivity, compliance issues, and user access requirements, organizations can mitigate risks and enhance their overall approach to data sharing.

In conclusion, by adopting these strategies and best practices, organizations can unlock the full potential of their Power BI reports. This proactive approach not only fosters informed decision-making but also drives growth and innovation, positioning businesses for success in a competitive landscape. Embrace these insights to ensure that data sharing becomes a powerful ally in achieving operational efficiency and strategic objectives.

Overview

To update a Power BI dashboard with new data, users can either manually refresh their data sources or set up scheduled refreshes to automate this process, ensuring reports reflect the latest information. The article emphasizes the importance of understanding different data source capabilities and configuring refresh settings correctly, as these practices enhance data reliability and streamline reporting efficiency.

Introduction

In the ever-evolving landscape of data management, Power BI stands out as a powerful ally for organizations striving to harness their data effectively. By understanding the intricacies of data sources, refresh capabilities, and automation techniques, businesses can transform their reporting processes and drive operational efficiency.

This article delves into essential strategies for optimizing Power BI dashboards, including:

- Setting up scheduled refreshes

- Troubleshooting data source credentials

With practical insights and best practices, readers will discover how to elevate their data reporting capabilities, ensuring that their dashboards not only reflect real-time information but also empower decision-making and foster innovation. Embracing these techniques will pave the way for organizations to navigate the complexities of data management with confidence and agility.

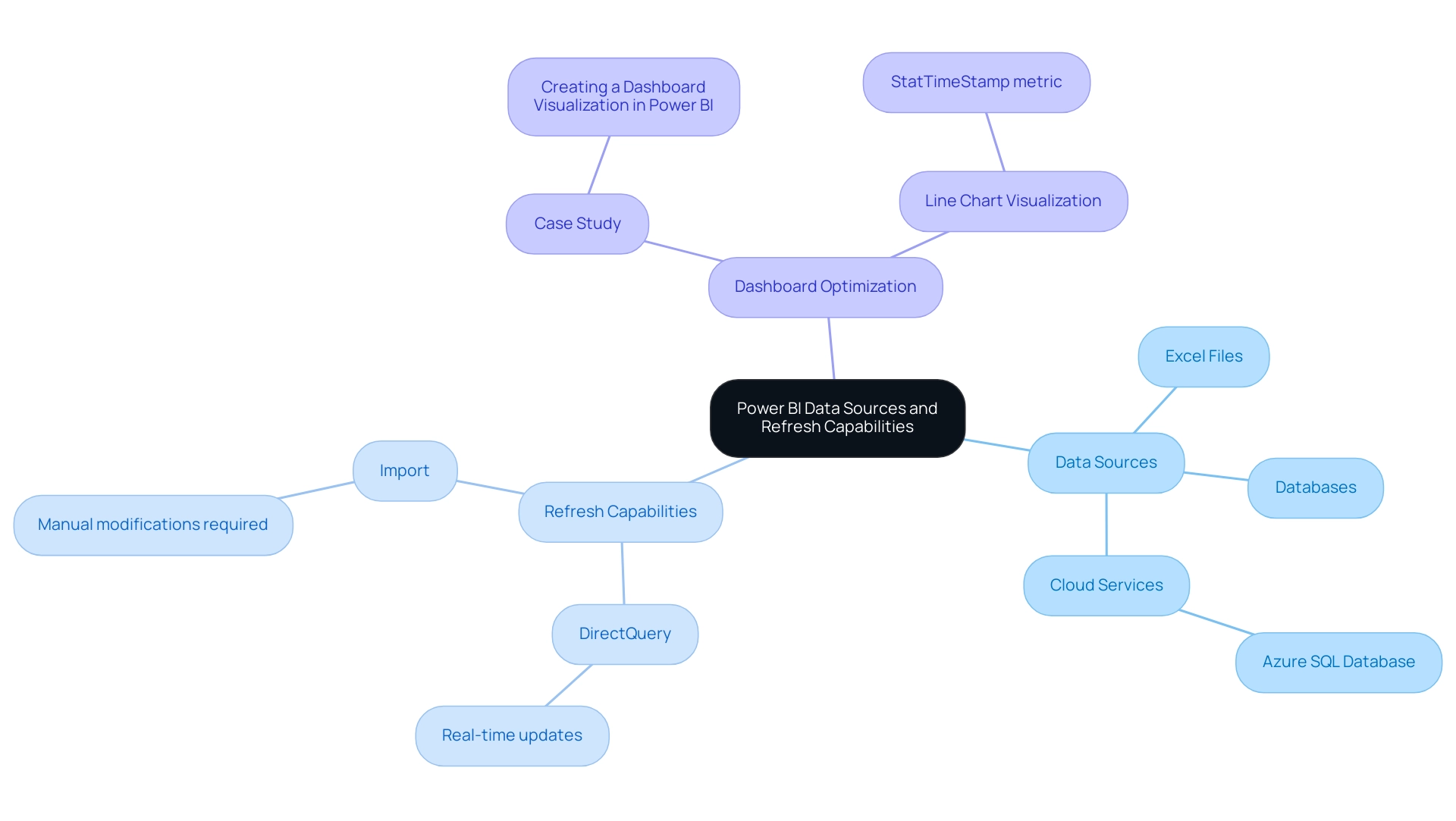

Understanding Data Sources and Refresh Capabilities in Power BI

Power BI can connect to a wide range of data sources, including Excel files, databases, and cloud services. Understanding how each source refreshes is essential for keeping your dashboard up to date, especially during our 3-Day Power BI Sprint, which enables the swift production of professionally crafted reports. Different data sources have different refresh capabilities; for example, cloud platforms like Azure SQL Database can support near real-time updates, while data from Excel files usually requires a manual refresh.

As mentioned by Fitrianingrum Seto, a software engineer with over 15 years of experience, “Comprehending information sources is vital for maximizing the efficiency of your reporting tools.” To enhance your dashboard performance, it is crucial to understand the kinds of connections available in Power BI, including:

- DirectQuery

- Import

Moreover, leveraging our General Management App can provide comprehensive management capabilities and smart reviews, further enhancing your reporting process.

A practical example of dashboard optimization is demonstrated in the case study titled ‘Creating a Dashboard Visualization in Power BI,’ where a line chart visualization is created using the StatTimeStamp metric. This process highlights the significance of choosing suitable measurements and ensuring visibility, ultimately enabling effective analysis. By unlocking the power of Business Intelligence and incorporating RPA for streamlined workflows, you can overcome challenges such as time-consuming report creation, inconsistencies, and lack of actionable guidance, ensuring that your dashboards remain relevant, actionable, and aligned with your operational efficiency goals.

Additionally, explore our Actions portfolio and schedule a free consultation to discover how we can further assist you in enhancing your reporting capabilities.

Setting Up Scheduled Refreshes for Your Power BI Dashboard

Configuring scheduled refreshes in Power BI is a straightforward way to update a dashboard with new data without ongoing manual intervention, which is crucial for leveraging Business Intelligence effectively. Begin by navigating to the Power BI Service and selecting your dataset. Within the settings menu, click on ‘Schedule Refresh.’

This is where you define the frequency of your refreshes; options include daily, weekly, or customized intervals to suit your operational needs. You can also specify the exact time of each refresh to align with your workflow, ensuring that your reports are always based on the latest information. It’s essential to verify that your data source credentials are up to date; obsolete credentials can cause refresh failures, which interrupt your data flow and lead to report generation problems and data inconsistencies.

Refresh failures may also occur due to gateway issues, so it’s advisable to monitor these aspects closely. Once you’ve configured your settings, Power BI handles the rest, automatically refreshing your dashboard according to the schedule you’ve established. This automation not only saves you time but also enhances the reliability of your data.

As highlighted by mjmowle, Power BI Premium users can schedule up to 48 refreshes in a 24-hour period, but they are limited to 30-minute intervals. This distinction is crucial for understanding the capabilities and constraints of scheduled refreshes. Furthermore, a user with Power BI Premium Per User licenses noted similar limitations, prompting questions about whether the 15-minute refresh option was exclusive to Premium Capacity licenses.
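The scheduling constraints described above (slots on 30-minute boundaries, at most 48 per day) can be checked before a schedule is ever entered. The following Python sketch generates refresh times and enforces both limits. The function name and the shape of the returned dictionary are illustrative assumptions, loosely modeled on a refresh schedule definition, and should be verified against the official documentation before being used with any API.

```python
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"]


def build_refresh_schedule(start_hour, end_hour, step_minutes=30, max_per_day=48):
    """Build a schedule with refresh times from start_hour up to (not including) end_hour."""
    if not 0 <= start_hour < end_hour <= 24:
        raise ValueError("expected 0 <= start_hour < end_hour <= 24")
    # Slots fall on 30-minute boundaries, so finer steps such as 15 are rejected.
    if step_minutes <= 0 or step_minutes % 30 != 0:
        raise ValueError("step_minutes must be a positive multiple of 30")

    times = []
    minute_of_day = start_hour * 60
    while minute_of_day < end_hour * 60:
        times.append(f"{minute_of_day // 60:02d}:{minute_of_day % 60:02d}")
        minute_of_day += step_minutes

    # max_per_day defaults to the 48 mentioned above; lower it if your
    # license allows fewer scheduled refreshes.
    if len(times) > max_per_day:
        raise ValueError(f"{len(times)} refreshes exceed the daily limit of {max_per_day}")

    # Illustrative payload shape (an assumption, not a confirmed contract).
    return {
        "value": {
            "enabled": True,
            "days": WEEKDAYS,
            "times": times,
            "localTimeZoneId": "UTC",
        }
    }


schedule = build_refresh_schedule(8, 18, step_minutes=60)
print(schedule["value"]["times"])
```

Validating the schedule in code first makes it easy to see, for instance, that a 15-minute cadence is not expressible as a scheduled refresh under the constraints quoted above.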

To further enhance your reporting procedures and address the challenges of inconsistencies, consider integrating RPA solutions like EMMA RPA and Power Automate. These tools can automate repetitive tasks, ensuring that your insights are not only timely but also actionable, ultimately driving operational efficiency and helping you avoid the competitive disadvantage associated with a lack of data-driven insights.

Managing Data Source Credentials and Troubleshooting Refresh Issues

To manage data source credentials in Power BI, navigate to the dataset settings within the Power BI Service. Confirm that you have the correct username and password for each data source. If you experience refresh issues, examine the refresh history for error messages that may offer insight into the problem.

Common pitfalls include:

- Expired credentials

- Network connectivity challenges

- Changes in the source structure

Moreover, as highlighted in our discussions on the importance of Business Intelligence, these issues can hinder your ability to leverage insights effectively. Keep in mind that it can take up to 24 hours for new usage information to be imported, which may also contribute to perceived refresh issues.
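Examining refresh history for error messages, as suggested above, is easy to automate once the history is available as data. The Python sketch below summarizes failed refreshes from a history payload. The field names (`status`, `startTime`, `endTime`, `serviceExceptionJson`) are assumed to resemble what a refresh history listing returns, and the sample entries and the error code `CredentialsExpired` are hypothetical.

```python
import json
from datetime import datetime

# Hypothetical sample data shaped like a refresh history listing.
SAMPLE_HISTORY = {
    "value": [
        {
            "status": "Completed",
            "startTime": "2024-05-01T06:00:00Z",
            "endTime": "2024-05-01T06:04:30Z",
        },
        {
            "status": "Failed",
            "startTime": "2024-05-02T06:00:00Z",
            "endTime": "2024-05-02T06:00:40Z",
            "serviceExceptionJson": json.dumps({"errorCode": "CredentialsExpired"}),
        },
    ]
}


def parse_time(value: str) -> datetime:
    """Parse an ISO 8601 timestamp that ends with 'Z' (UTC)."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def summarize_failures(history: dict) -> list:
    """Return start time, duration in seconds, and error code for each failed refresh."""
    failures = []
    for entry in history.get("value", []):
        if entry.get("status") != "Failed":
            continue
        details = json.loads(entry.get("serviceExceptionJson") or "{}")
        duration = parse_time(entry["endTime"]) - parse_time(entry["startTime"])
        failures.append(
            {
                "started": entry["startTime"],
                "seconds": int(duration.total_seconds()),
                "error": details.get("errorCode", "unknown"),
            }
        )
    return failures


for failure in summarize_failures(SAMPLE_HISTORY):
    print(failure)
```

A very short duration on a failed refresh, as in the sample, often points to a problem that occurs before any data is read, such as the expired credentials or connectivity issues listed above.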

For instance, as PowerBINoob24 noted,

'Figured it out. There was an issue with the path to the files in BI Desktop. Once that was corrected and published, I had to delete the old files from the workspace because republishing did not overwrite the file path with the correct path.'

To further enhance your reporting and operational efficiency, consider utilizing RPA solutions like EMMA RPA and Power Automate.

These tools can automate repetitive tasks associated with management and reporting, significantly reducing the time spent on manual processes and minimizing errors. Regularly review version history to track changes made to reports and dashboards, and address issues promptly so that your dashboards keep receiving new data.

By proactively managing your credentials and staying attuned to these common challenges, you can significantly minimize disruptions and ensure a seamless refresh process, which keeps your reporting effective. Understanding compliance and security standards, as demonstrated in our case studies on usage metrics in national/regional clouds, can also inform your data management strategies, positioning your organization for growth and innovation.

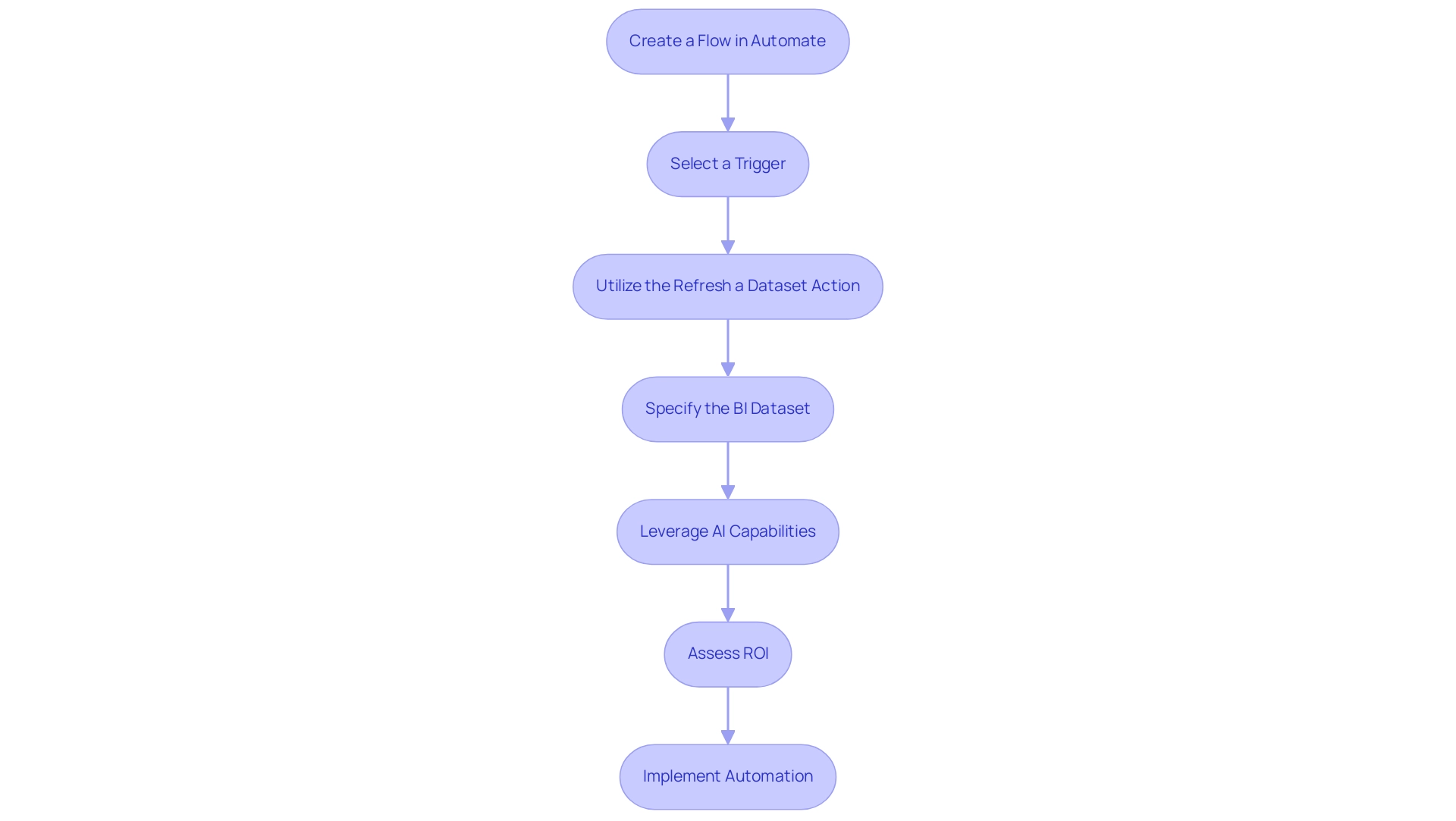

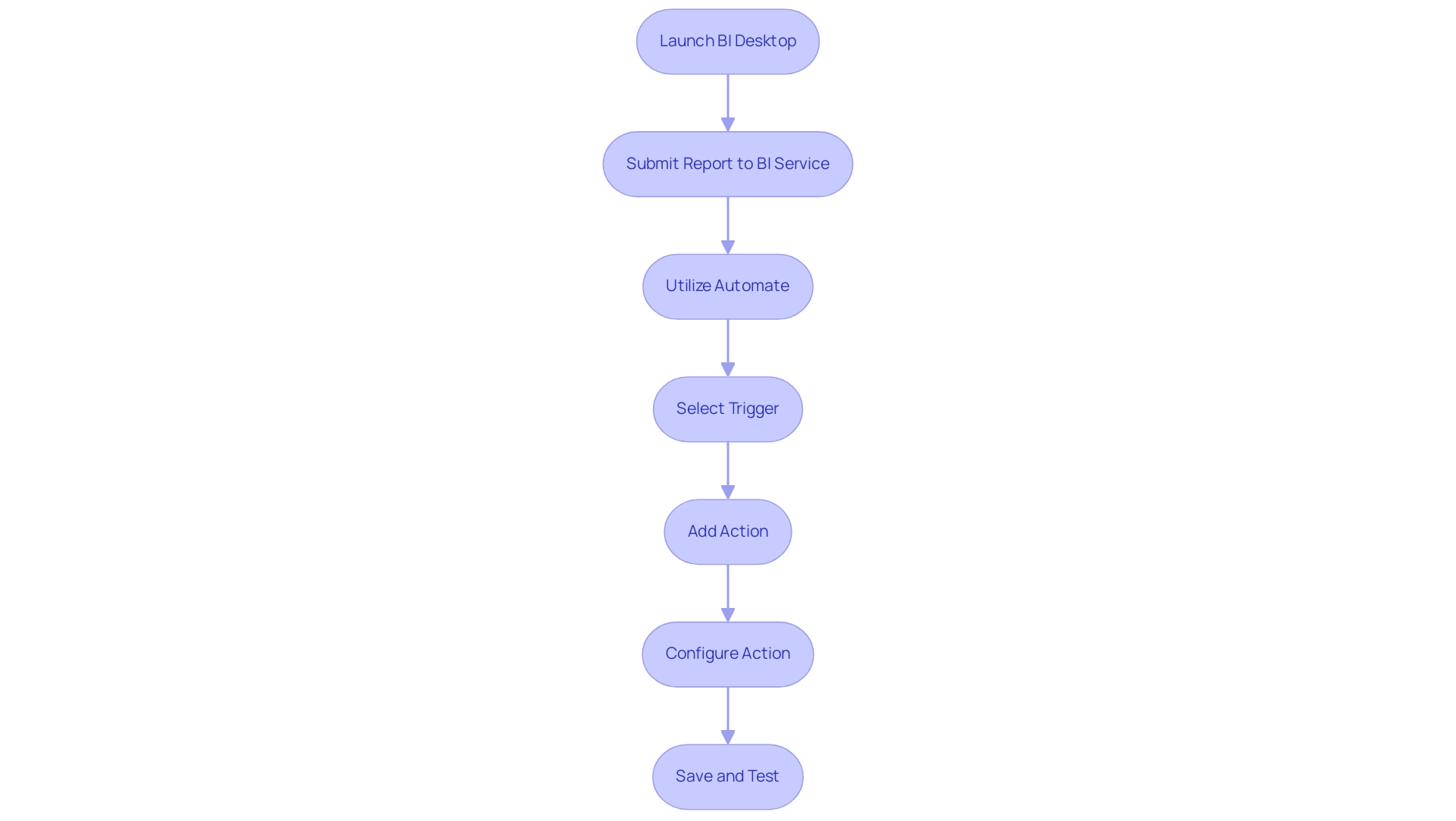

Automating Dashboard Updates with Power Automate

To automate your Power BI dashboard updates, the first step is to create a flow in Power Automate. Begin by selecting a trigger that aligns with your operational needs, such as a scheduled time or an event from another application. Next, use the ‘Refresh a dataset’ action to specify the exact Power BI dataset you wish to refresh.

This automation not only saves valuable time but also updates your Power BI dashboard with new data whenever it becomes available, significantly enhancing operational efficiency. By leveraging Power Automate’s AI-driven capabilities, you can streamline workflows and execute automation risk-free, as our approach involves a thorough ROI assessment before implementation. Understanding Power Automate is essential for creating seamless workflows and maximizing the benefits of your BI tools.

For example, in a case study on Power Automate integration with Power BI, managers were able to receive customized reports by establishing flows that filter information based on their department, demonstrating the practical use of this automation. As Taymour Ahmed, a Business Intelligence Professional, highlights, ‘The capability to automate processes in BI is not merely a convenience; it’s a strategic edge that allows organizations to react promptly to evolving information requirements.’

Furthermore, understanding the three types of flows in Power Automate (Automated Flows, Instant Flows, and Scheduled Flows) can further optimize how you update your Power BI dashboard with new data, making your reporting more responsive and tailored to your needs.
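For teams that prefer scripts to flows, a dataset refresh can also be requested programmatically. Note that this swaps in a different technique from the Power Automate flow described above: the Python sketch below only builds (and does not send) an HTTP request aimed at the Power BI REST API refresh endpoint. The workspace ID, dataset ID, and token are hypothetical placeholders, and the endpoint path and `notifyOption` value should be confirmed against the official API reference.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(group_id: str, dataset_id: str, access_token: str):
    """Build a POST request that asks the service to refresh one dataset."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    body = json.dumps({"notifyOption": "MailOnFailure"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder identifiers for illustration only.
request = build_refresh_request("my-workspace-id", "my-dataset-id", "my-token")
print(request.get_method(), request.full_url)

# Sending is left out on purpose; with a valid token it would look like:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

A script like this can be run by any scheduler or event handler, playing the same role as the trigger and the ‘Refresh a dataset’ action in a flow.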

Additionally, we offer a free consultation to help you assess your automation needs and demonstrate how accessible automation can benefit your organization.

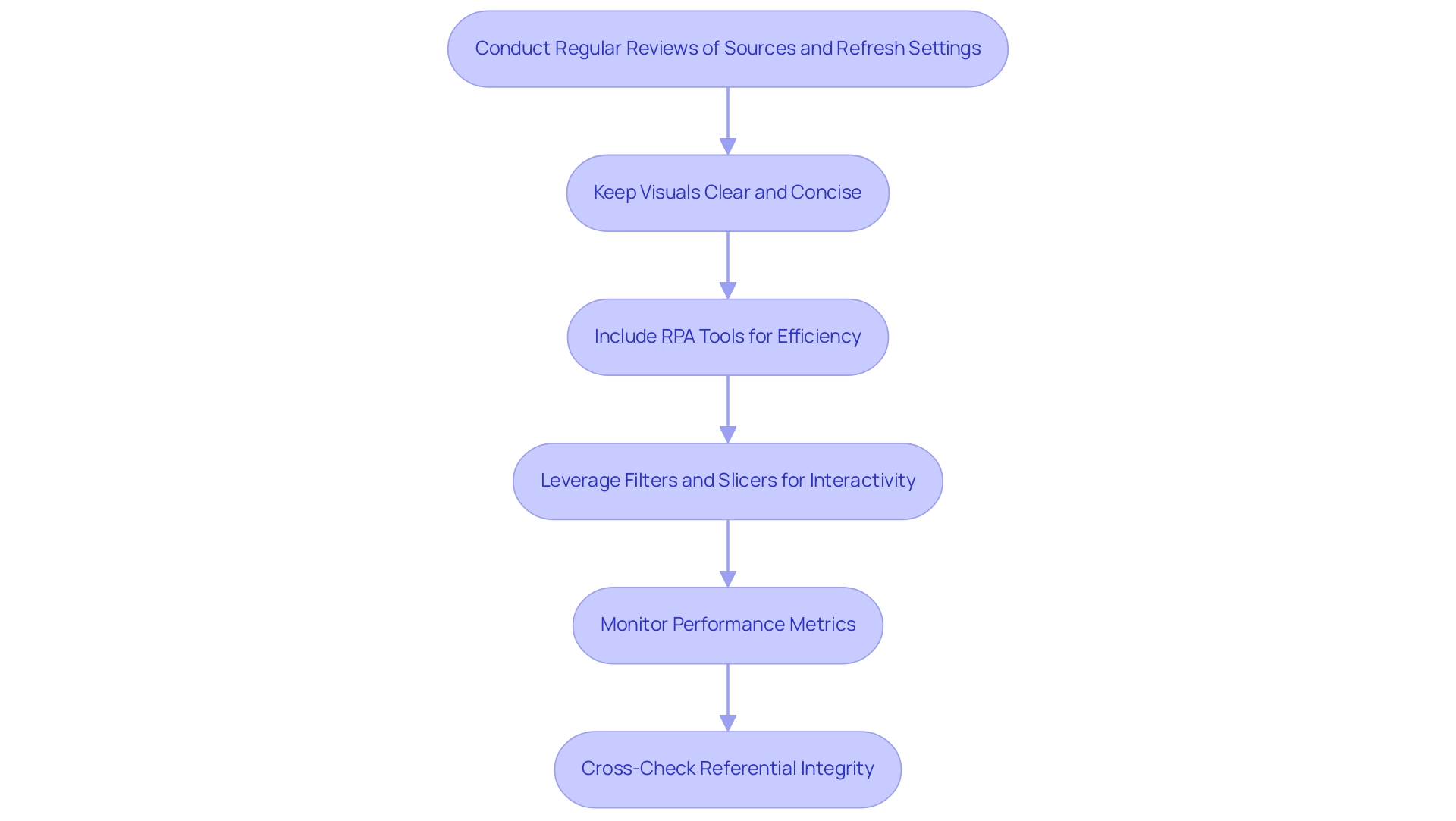

Best Practices for Maintaining and Optimizing Power BI Dashboards

To effectively maintain and optimize your Power BI dashboards, conduct regular reviews of your data sources and refresh settings, ensuring they are aligned with your business objectives. In today’s dynamic environment, leveraging Business Intelligence is crucial for transforming raw information into actionable insights that drive growth and innovation. A key trend is to keep visuals clear and concise, as clutter can lead to user confusion and diminish engagement; reducing the number of visuals in Power BI reports can significantly decrease query load and enhance performance.

Furthermore, consider Robotic Process Automation (RPA) tools such as EMMA RPA and Power Automate to simplify repetitive tasks associated with report creation, tackling issues of time consumption and information inconsistencies. For instance, EMMA RPA can automate the extraction and transformation of information, while Power Automate can facilitate seamless integration between various applications, enhancing efficiency. Leverage filters and slicers to boost interactivity, enhancing the overall user experience.

Monitoring performance metrics is essential; identify any slowdowns and modify your model accordingly to maintain responsiveness. Network latency also has a significant effect on performance: placing your data sources, gateways, and Power BI capacity in the same region can improve service responsiveness, as demonstrated by case studies that reduced latency problems through strategic location. For example, a recent case study demonstrated that a company reduced report generation time by 50% after implementing RPA solutions, showcasing the tangible benefits of these technologies.

Furthermore, it is important to cross-check referential integrity for relationships in DirectQuery sources to optimize query performance. Continuous learning and adaptation remain key to keeping Power BI dashboards updated with new data effectively.

Conclusion

Optimizing Power BI dashboards is a strategic imperative for organizations aiming to leverage their data for operational excellence. By understanding data sources and refresh capabilities, businesses can ensure that their reports are both accurate and timely. Scheduled refreshes automate the data update process, reducing manual intervention and enhancing reliability, while effective management of data source credentials minimizes disruptions and maintains the integrity of reporting.

Incorporating tools like Power Automate and RPA solutions can significantly streamline workflows and automate repetitive tasks, providing a competitive advantage in today’s data-driven landscape. Best practices, such as:

- Regular reviews of data sources

- Maintaining clear visuals

- Monitoring performance metrics

are essential for ensuring dashboards remain relevant and user-friendly.

Ultimately, embracing these strategies not only empowers organizations to make informed decisions but also fosters a culture of innovation and agility. By navigating the complexities of data management with confidence, businesses can transform their reporting capabilities into a powerful tool for driving growth and operational efficiency. Now is the time to harness the full potential of Power BI and unlock actionable insights that propel your organization forward.

Overview

To refresh Power BI effectively, users can utilize three main methods: manual updates, scheduled updates, and automatic refreshes, each tailored to specific operational needs. The article illustrates that understanding these methods, along with best practices and the integration of Robotic Process Automation (RPA), is crucial for maintaining data accuracy and operational efficiency, thereby empowering users to make informed decisions based on timely insights.

Introduction

In the realm of data-driven decision-making, the ability to refresh and manage data effectively is paramount for any organization aiming to enhance operational efficiency. Power BI stands out as a powerful tool that not only simplifies the complexities of data reporting but also ensures that insights are timely and actionable.

As businesses increasingly rely on accurate data to inform their strategies, understanding the nuances of data refresh methods becomes essential. This article delves into the various techniques available in Power BI for refreshing data—manual, scheduled, and automatic—while highlighting best practices and troubleshooting tips that empower users to maintain seamless operations.

By mastering these processes, organizations can unlock the full potential of their data, driving growth and innovation in an ever-evolving landscape.

Understanding Data Refresh in Power BI

Data refresh in Power BI is the process of updating your reports and dashboards with the latest information from your data sources, so that the insights you derive are both current and accurate. In the context of enhancing operational efficiency, our BI services provide a streamlined approach to reporting, featuring a 3-Day BI Sprint designed to quickly create professionally crafted reports that address common challenges. Because analyzing datasets in Microsoft Excel can become complex and slow beyond about a million rows, Power BI offers robust solutions to simplify this task.

The platform lets users choose among several refresh methods, so they can pick the approach that best fits their workflow and operational needs. Understanding how refresh works is essential for managing reports efficiently and for making decisions based on the most current information available. The frequency of refreshes also matters: the more promptly data is updated, the more dependable the resulting insights.

The refresh history view in Power BI shows the 60 most recent records for each scheduled refresh, while the schedule view divides scheduling details into 30-minute time slots, which helps in managing resource contention. As organizations increasingly adopt Power BI, with 97% of Fortune 500 companies reportedly using it, understanding refresh techniques becomes crucial for sustaining competitive advantage. Furthermore, where an enterprise data gateway is unavailable, users can deploy a personal data gateway to manage their semantic models.

Although personal gateways have limitations, they let users manage data source configurations without sharing them with others. This underscores the importance of data quality and of tools such as Power Automate for efficient management, which ultimately support insight-driven decision-making and operational effectiveness for business growth. Additionally, explore our Actions portfolio to discover tailored solutions that can further enhance your BI experience, and don’t hesitate to book a free consultation to discuss how we can support your business needs.

Methods for Refreshing Data in Power BI: Manual, Scheduled, and Automatic

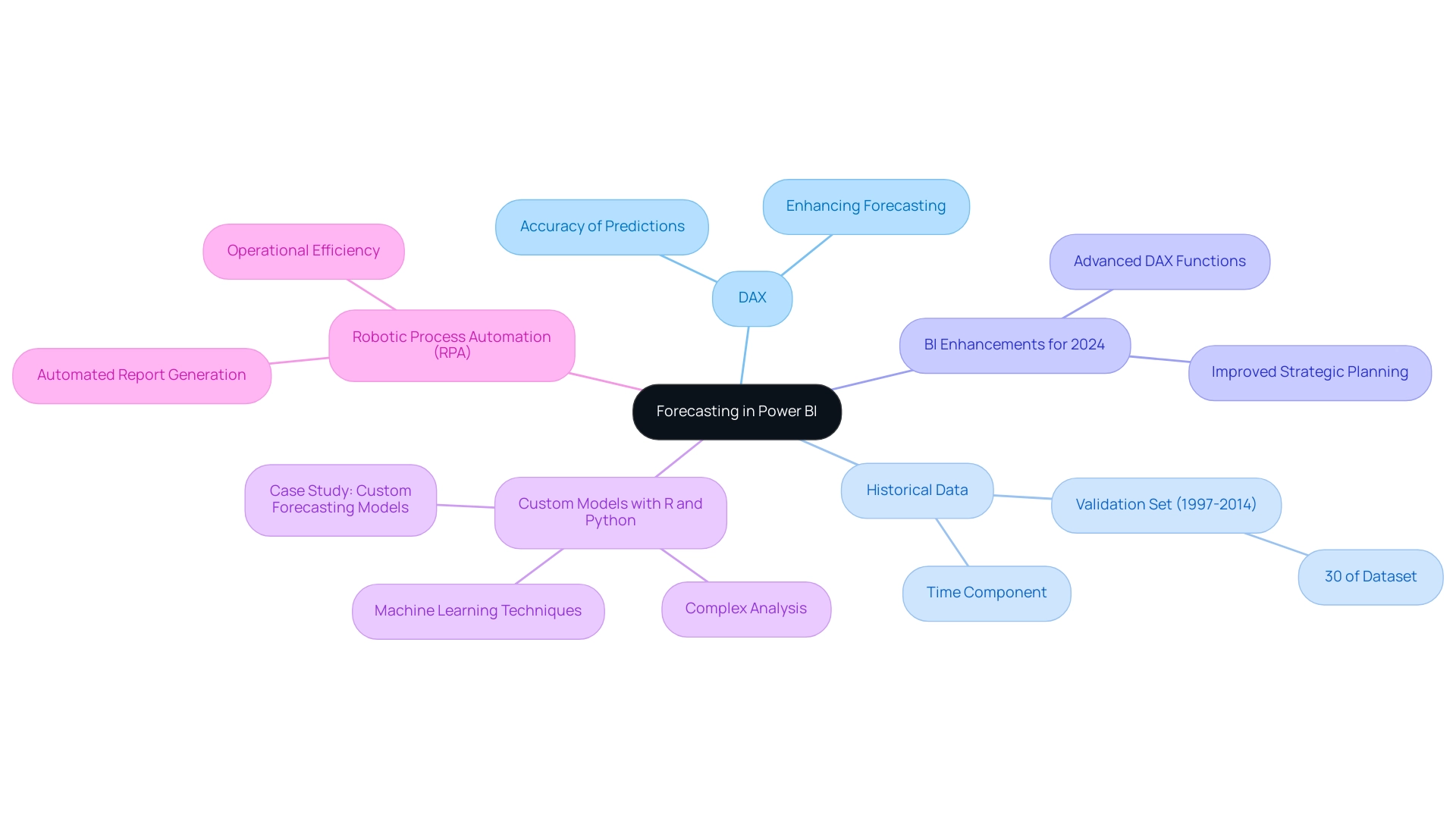

Power BI offers three main approaches for refreshing data, each suited to particular operational requirements, and each can be enhanced with Robotic Process Automation (RPA):

- Manual Refresh: This on-demand method lets users update data whenever required. Clicking the ‘Refresh’ button in Power BI Desktop retrieves the latest information from the sources. Although a manual refresh is triggered immediately, it can still involve delays; for instance, refreshing a semantic model can take up to 60 minutes using the ‘Refresh now’ option. Accounting for this potential delay is important for operational planning, and RPA can automate the repetitive tasks surrounding manual refreshes, saving time and reducing the likelihood of human error.

- Scheduled Refresh: This feature lets users set refreshes to run automatically at designated times. Scheduled refreshes keep reports regularly updated and reduce the need for manual intervention while preserving data accuracy. Combined with RPA to handle related tasks, refresh schedules can significantly enhance efficiency, allowing team members to focus on more strategic initiatives.

- Automatic Refresh: For datasets hosted in the Power BI service, refreshes can be triggered automatically by specific triggers or events. This method is particularly advantageous for real-time scenarios, where near-instant updates keep your reports relevant and actionable. RPA can also simplify the work of setting up these triggers, so that data management stays efficient without manual oversight.
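For the event-driven case, one common way to trigger a refresh from a script or automation flow is the Power BI REST API. The following is a minimal Python sketch, assuming you already hold a valid access token and know the workspace and dataset IDs. The function name `build_refresh_request` and the placeholder values are illustrative and do not come from this article. The sketch only builds the request so that it can be inspected safely; sending it is left to the caller.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(group_id: str, dataset_id: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks the service to queue a refresh."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    # notifyOption controls e-mail notification; MailOnFailure is one documented value.
    body = json.dumps({"notifyOption": "MailOnFailure"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder identifiers: replace with real values before sending.
    req = build_refresh_request("<workspace-id>", "<dataset-id>", "<access-token>")
    print(req.get_method(), req.full_url)
    # To actually queue the refresh, pass the request to urllib.request.urlopen(req).
```

An RPA tool or a Power Automate flow could issue the same call whenever an upstream event occurs, for example after a nightly data load completes.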

To illustrate a practical application, consider the case of deploying a personal data gateway. Where an enterprise data gateway is unavailable, users can deploy a personal data gateway to manage their semantic models without sharing data sources. While personal gateways have limitations, they allow users to manage data source configurations directly without needing to add data source definitions.

Incorporating RPA into this process could further simplify configurations, improve information management, and reduce mistakes.

By understanding and applying these refresh methods alongside RPA, you can maintain accurate and timely reports, thereby boosting operational efficiency. As Dhyanendra Singh Rathore puts it, “If everything looks as expected, then we will update the production dataset.” Choosing the appropriate refresh strategy therefore not only simplifies operations but also keeps your data usable and dependable, supporting business growth through informed decision-making and freeing teams to focus on more strategic tasks.

Step-by-Step Guide to Setting Up Scheduled Refresh in Power BI

Setting up a scheduled refresh in Power BI is a simple yet essential procedure that greatly improves the efficiency of your data management. Keeping your reports in active use also matters, because Power BI considers a semantic model inactive after two months of inactivity, at which point its scheduled refresh is paused. Frequent refreshes help address the typical difficulties of time-consuming report creation, data inconsistencies, and dashboards that lack actionable insights.

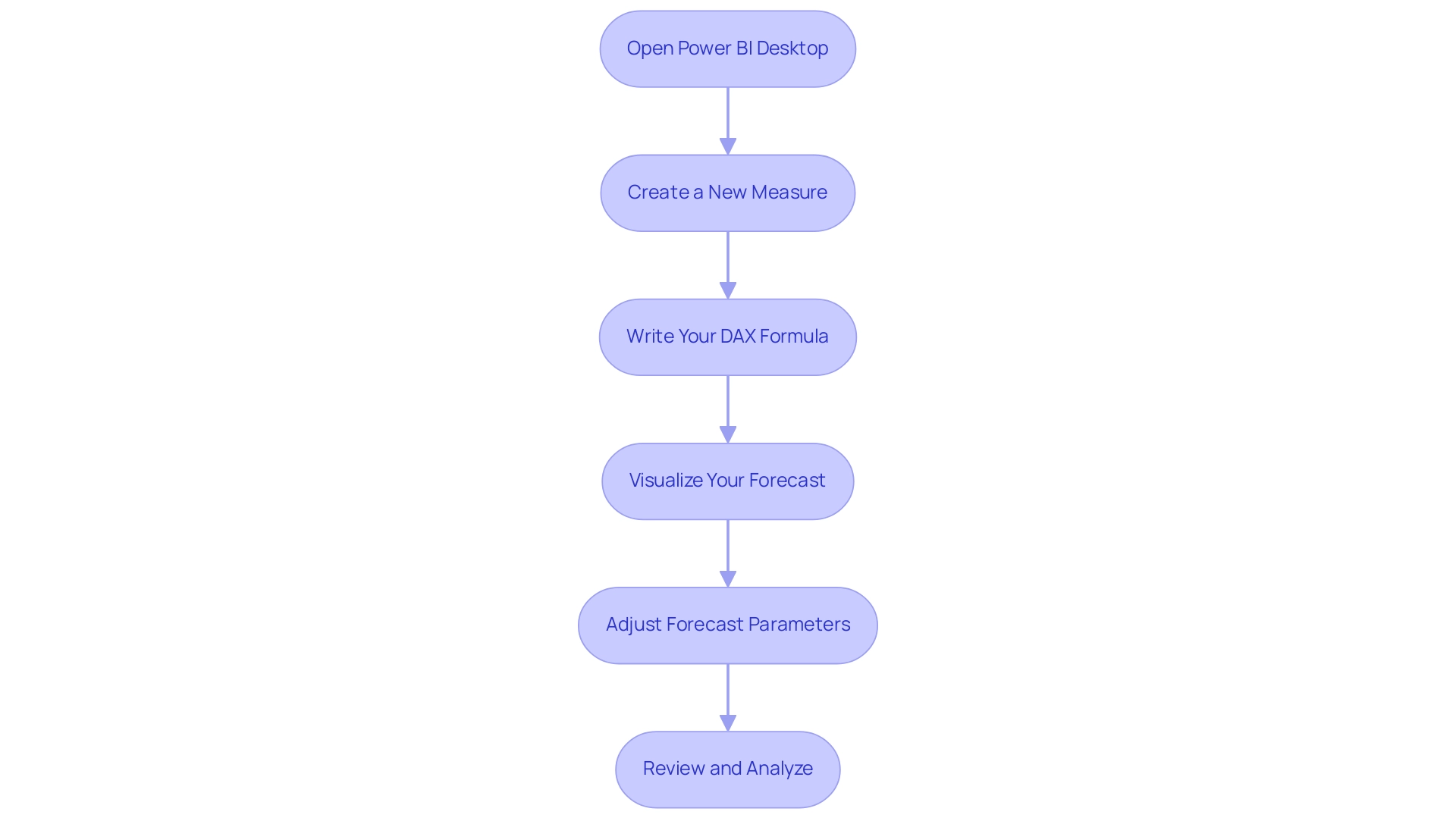

To automate your data update effectively and ensure your reports are always current, follow these steps:

- Open Power BI Service: Begin by logging into your Power BI account and navigating to the workspace containing your desired dataset.

- Select Dataset: Locate the dataset for which you want to arrange an update. Click on the ‘More options’ (represented by three dots) adjacent to it.

- Settings: Select ‘Settings’ to access the dataset settings page.

- Scheduled Refresh: In the ‘Scheduled refresh’ section, toggle the switch to ‘On’ to activate this feature.

- Set Refresh Frequency: Choose how often the refresh should run (options include daily or weekly) and specify the appropriate time zone.

- Save Changes: Finally, click ‘Apply’ to save your configurations.

By following these steps, you can automate your data refresh and keep your reports relevant and accurate. Mussarat Nosheen emphasizes the need for a gateway when sources are on-premises: ‘For on-premises data sources, setting up a data gateway is crucial.’ A gateway keeps the Power BI service connected to those sources throughout the refresh.
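The same schedule can also be expressed as data, which is useful when many datasets need identical settings. The sketch below builds the JSON body that the Power BI REST API accepts for a refresh schedule update (a PATCH to `.../datasets/{datasetId}/refreshSchedule`). The helper name `build_schedule_payload` is hypothetical, and the half-hour check is an assumption that mirrors the 30-minute choices offered in the service's settings page rather than a documented API rule.

```python
import re

VALID_DAYS = {"Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"}
# Assumption: times fall on the hour or half hour, as in the settings page.
HALF_HOUR = re.compile(r"^([01]\d|2[0-3]):(00|30)$")


def build_schedule_payload(days, times, time_zone="UTC"):
    """Return the request body for a refresh schedule, validating inputs first."""
    bad_days = [d for d in days if d not in VALID_DAYS]
    if bad_days:
        raise ValueError(f"unknown day names: {bad_days}")
    bad_times = [t for t in times if not HALF_HOUR.match(t)]
    if bad_times:
        raise ValueError(f"times must be HH:MM on a half-hour boundary: {bad_times}")
    return {
        "value": {
            "enabled": True,
            "days": list(days),
            "times": sorted(set(times)),  # drop duplicates, keep chronological order
            "localTimeZoneId": time_zone,
        }
    }


if __name__ == "__main__":
    # Example: refresh on weekdays at 06:00 and 12:30.
    weekdays = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"]
    print(build_schedule_payload(weekdays, ["06:00", "12:30"]))
```

Validating the payload before sending it catches typos such as `07:15` early, instead of discovering them through a rejected request.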

Furthermore, following optimal procedures, as emphasized in the case study ‘Best Practices for Scheduled Update in BI,’ can result in quicker update times and enhanced performance. Simplifying information models and implementing incremental refresh are proactive strategies that not only mitigate common issues, such as source connection errors and refresh timeouts but also align with broader efforts to enhance operational efficiency through Business Intelligence and RPA.

Moreover, utilizing our ‘3-Day BI Sprint’ can accelerate the development of professionally crafted documents, while the ‘General Management App’ offers extensive oversight and intelligent evaluations, further improving your reporting abilities. By addressing these challenges, you can unlock the full potential of your information and drive informed decision-making.

Configuring Data Source Credentials for Successful Refresh

Configuring data source credentials correctly is crucial for refreshing Power BI reliably and keeping reports accurate, both of which are vital for extracting actionable insights and driving business growth. In today’s information-rich environment, companies that struggle with time-consuming report creation and data inconsistencies face a competitive disadvantage. Precise reporting is essential, as demonstrated by the mean profit of 8,833 units and a median profit of 7,000 units, highlighting the necessity for trustworthy information management.

Moreover, integrating Robotic Process Automation (RPA) solutions can streamline the process of information handling, significantly reducing the time spent on report generation and minimizing inconsistencies. Follow these steps to establish a secure connection:

- Access Dataset Settings: In the Power BI service, locate your dataset and select ‘Settings’.

- Data Source Credentials: Scroll to the ‘Data source credentials’ section to view your current settings.

- Edit Credentials: Click on ‘Edit credentials’ and select the appropriate authentication method, such as Basic or OAuth.

- Enter Credentials: Input your username and password, or any other required information, then click ‘Sign In’.

- Test Connection: After entering your credentials, select ‘Test connection’ to confirm that Power BI can successfully access your source.

- Save Changes: Once the connection is validated, make sure to save your changes.

Implementing Azure Active Directory (AAD) for authentication not only simplifies credential management but also enhances security through features like Single Sign-On and conditional access policies. For instance, the case study titled ‘Using Azure Active Directory (AAD) for Authentication’ illustrates how organizations leveraging AAD have benefited from improved security and streamlined credential processes.

As Harris Amjad notes, “Descriptive statistics also help us understand the world around us.” This is particularly relevant here, as effective credential management directly contributes to operational efficiency and accurate reporting. Addressing these challenges and following the steps above helps your scheduled refreshes run smoothly, enabling you to deliver accurate and timely reports that drive informed decision-making and innovation.

Best Practices and Troubleshooting for Power BI Data Refresh

To enhance the efficiency of your Power BI data refresh process and leverage the full power of Business Intelligence, implementing the following best practices is essential:

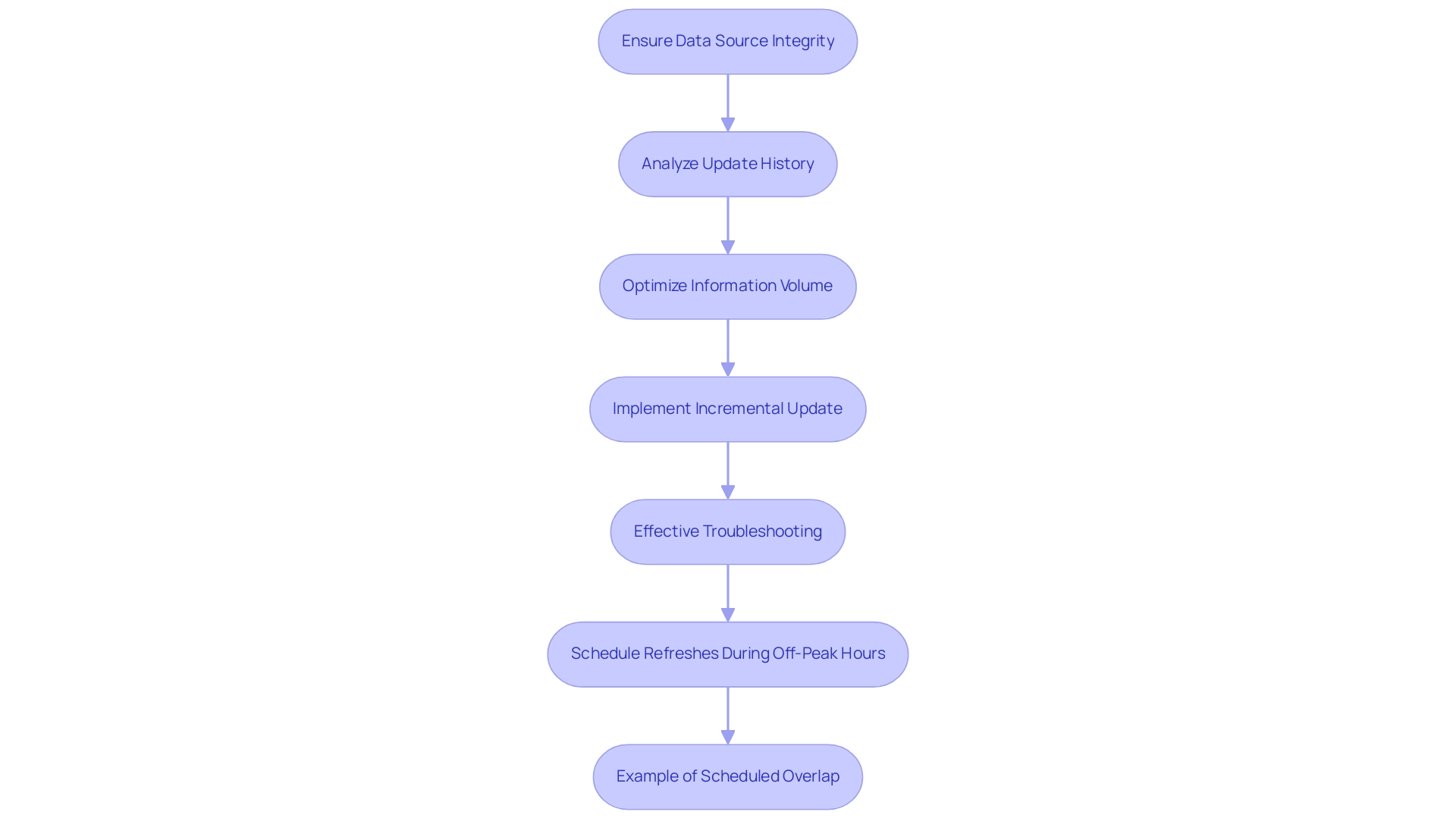

- Ensure Data Source Integrity: Regularly verify that your data sources are reliable and current. This reduces the risk of update errors and ensures a seamless upgrade, fostering data-driven decision-making that is crucial for maintaining a competitive edge in today’s market.

- Analyze Update History: Conduct a routine review of the update history to identify any failures or recurring issues that may hinder performance, ensuring that your team can tackle inefficiencies promptly.

- Optimize Information Volume: Limit the information volume being retrieved during update operations. This approach not only enhances performance but also decreases the chances of overlaps, which can result in inefficiencies in scheduled operations, a common challenge for many organizations.

- Implement Incremental Update: For extensive datasets, leveraging incremental update techniques allows you to modify only the information that has changed, significantly improving update times and reducing resource consumption—an essential strategy for operational efficiency.

- Effective Troubleshooting: In the event of update failures, verify data source credentials, ensure accessibility of data sources, and thoroughly review error messages from the update history for insight on how to resolve issues.
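To make the incremental idea concrete, here is a small conceptual sketch in Python. It is not how the Power BI service implements incremental refresh; it only illustrates the principle of reloading rows inside a date window while leaving older rows untouched. In Power BI itself the window is defined by the `RangeStart` and `RangeEnd` parameters in Power Query. The row layout (`id`, `order_date`, `amount`) and the function name are hypothetical.

```python
from datetime import date


def incremental_refresh(stored, source, range_start, range_end):
    """Replace only rows inside [range_start, range_end); keep everything else as stored."""

    def in_window(row):
        return range_start <= row["order_date"] < range_end

    kept = [row for row in stored if not in_window(row)]   # historical rows, not reloaded
    fresh = [row for row in source if in_window(row)]      # rows re-read from the source
    return sorted(kept + fresh, key=lambda row: (row["order_date"], row["id"]))


if __name__ == "__main__":
    stored = [
        {"id": 1, "order_date": date(2024, 1, 5), "amount": 10},
        {"id": 2, "order_date": date(2024, 3, 2), "amount": 20},
    ]
    source = [
        {"id": 1, "order_date": date(2024, 1, 5), "amount": 10},
        {"id": 2, "order_date": date(2024, 3, 2), "amount": 25},  # corrected value
        {"id": 3, "order_date": date(2024, 3, 9), "amount": 30},  # new row
    ]
    # Only March is reloaded; the January row is never re-read.
    for row in incremental_refresh(stored, source, date(2024, 3, 1), date(2024, 4, 1)):
        print(row)
```

The half-open window (start included, end excluded) avoids loading a boundary row twice when consecutive windows are refreshed.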

Additionally, it is crucial to schedule refreshes during off-peak hours to enhance performance. For instance, scheduled update #2 takes an average of over 48 minutes to complete, often causing it to overflow into the next time slot. isUzu 2019, a BI Super User, emphasizes, “If you make any changes in your BI desktop file (add a new chart, new column to your table, any DAX), you have to publish every time to BI service.”

This highlights one of the challenges encountered during the refresh process. Additionally, the case study titled “Example of Scheduled Overlap” illustrates the real-world implications of overlapping refreshes, where an administrator is prompted to contact the owners of the scheduled refresh to reschedule, thereby improving the efficiency of refresh operations. By adopting these best practices, including the integration of RPA tools such as EMMA RPA or Microsoft Power Automate, and staying alert to common troubleshooting strategies, you can refresh Power BI more efficiently and effectively, ultimately driving growth and innovation in your organization.
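Reviewing refresh history can be partly automated. The sketch below summarizes a list of refresh records, counting failures and flagging runs that exceed a 30-minute slot, which is the situation described for the refresh averaging over 48 minutes. The record fields (`status`, `startTime`, `endTime`) are assumed to follow the shape returned by the Power BI REST API's refresh history endpoint, and the sample data is invented for illustration.

```python
from datetime import datetime
from statistics import mean

SLOT_MINUTES = 30  # width of one slot in the schedule view


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 timestamp, accepting a trailing 'Z' for UTC."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def summarize_history(records):
    """Summarize refresh records: run count, failures, mean duration, slot overruns."""
    durations = []
    failures = 0
    for rec in records:
        if rec.get("status") == "Failed":
            failures += 1
        # Runs still in progress have no end time and are skipped for timing.
        if rec.get("startTime") and rec.get("endTime"):
            delta = parse_ts(rec["endTime"]) - parse_ts(rec["startTime"])
            durations.append(delta.total_seconds() / 60)
    return {
        "runs": len(records),
        "failures": failures,
        "mean_minutes": round(mean(durations), 1) if durations else None,
        "overruns": sum(1 for d in durations if d > SLOT_MINUTES),
    }


if __name__ == "__main__":
    sample = [
        {"status": "Completed", "startTime": "2024-01-01T02:00:00Z", "endTime": "2024-01-01T02:48:00Z"},
        {"status": "Failed", "startTime": "2024-01-02T02:00:00Z", "endTime": "2024-01-02T02:10:00Z"},
        {"status": "Completed", "startTime": "2024-01-03T02:00:00Z", "endTime": "2024-01-03T02:20:00Z"},
    ]
    print(summarize_history(sample))
```

A non-zero `overruns` count suggests that the refresh should be moved to a quieter slot or that the dataset is a candidate for incremental refresh.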

Conclusion

Mastering data refresh techniques in Power BI is crucial for organizations striving to enhance operational efficiency and drive informed decision-making. The article has explored the importance of timely and accurate data refresh processes, highlighting three primary methods:

- Manual refresh

- Scheduled refresh

- Automatic refresh

Each method has its unique advantages, allowing users to select the most suitable approach based on their specific needs and workflow.

Setting up scheduled refreshes not only automates the update process but also ensures reports remain relevant and actionable. By following the outlined steps and best practices, such as configuring data source credentials and implementing incremental refresh, organizations can significantly improve their reporting capabilities. Additionally, integrating Robotic Process Automation can streamline these processes further, reducing the burden of manual tasks and enhancing data quality.

Ultimately, effective data management through Power BI empowers organizations to unlock the full potential of their data, leading to better insights, strategic growth, and a competitive edge in the market. Embracing these techniques and best practices will pave the way for a more efficient, data-driven future, where insights are readily available to support operational excellence and innovation.

Overview