Overview

The article provides a comprehensive guide on how to use the DISTINCTCOUNTNOBLANK function in Power BI, emphasizing its role in counting unique values while excluding blank entries for accurate data analysis. It outlines practical implementation steps, common troubleshooting issues, and performance optimization strategies, demonstrating how mastering this function enhances operational efficiency and data-driven decision-making in business intelligence contexts.

Introduction

In the realm of data analytics, the ability to extract meaningful insights from vast datasets is paramount, especially for organizations striving for operational excellence. The DISTINCTCOUNTNOBLANK function in Power BI emerges as a powerful tool, enabling users to accurately count unique values while filtering out blank entries that can skew results. This function is not just a technical detail; it represents a critical capability for ensuring data integrity and enhancing the quality of business intelligence.

As companies navigate the complexities of data analysis, mastering DISTINCTCOUNTNOBLANK becomes essential for unlocking the full potential of their reports. This article explores its:

– Definition

– Practical applications

– Troubleshooting tips

– Performance optimization strategies

Together, these topics help professionals use the function effectively and drive informed decision-making within their organizations.

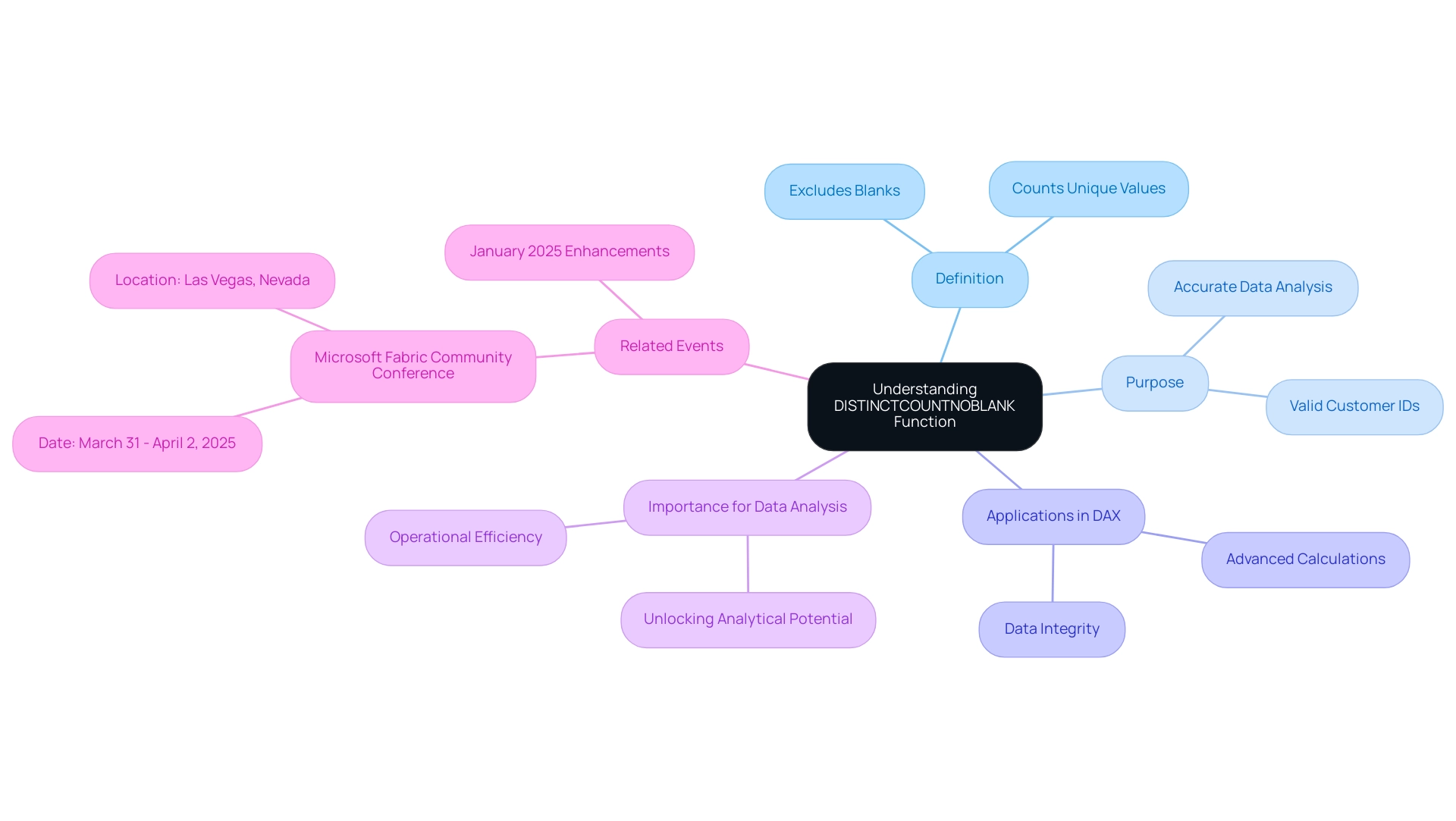

Understanding DISTINCTCOUNTNOBLANK: Definition and Purpose

The DISTINCTCOUNTNOBLANK function in Power BI counts the unique values in a column while excluding blank entries. This capability is essential for accurate data analysis, for example when counting unique customer IDs in a sales report. With DISTINCTCOUNTNOBLANK, only valid, non-empty IDs are counted, so the result reflects the customers actually present in your dataset.

This function is part of Data Analysis Expressions (DAX), the language that enables users to create advanced calculations within Power BI. Mastering its use is vital for unlocking the full analytical potential of your reports, especially as Power BI continues to evolve with updates like the January 2025 enhancements across Reporting, Modeling, and Data Connectivity. Moreover, our Power BI services, including the 3-Day Power BI Sprint, allow for rapid creation of professionally designed reports, while the General Management App supports comprehensive management and smart reviews to enhance operational efficiency.

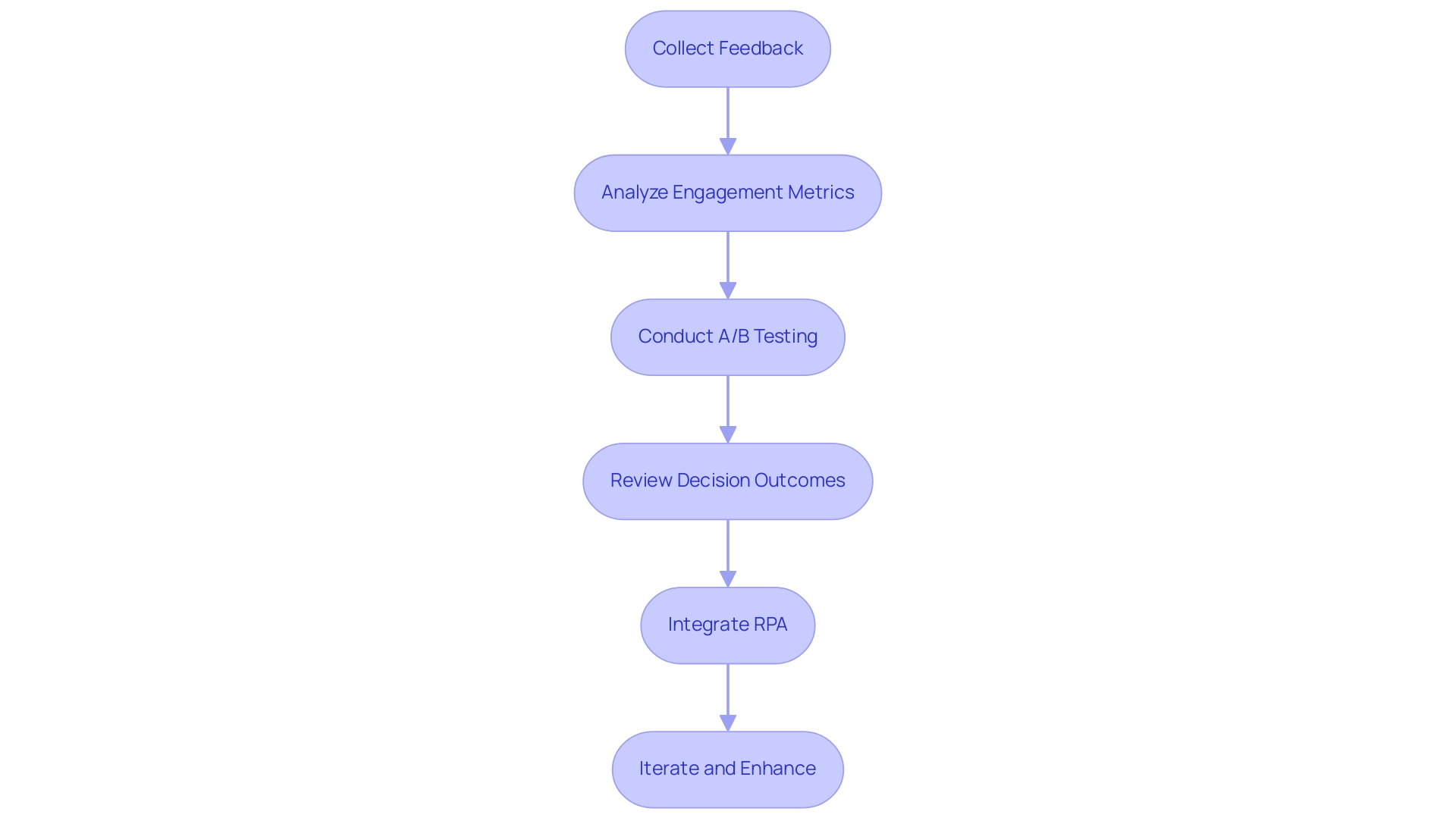

As we get ready for the Microsoft Fabric Community Conference from March 31 to April 2, 2025, in Las Vegas, Nevada, attendees can explore DAX capabilities and best practices more thoroughly. Additionally, feedback from professionals like Wisdom Wu emphasizes the importance of precise data analysis for effectively accessing usage metrics and highlights how integrating RPA solutions like EMMA can help overcome outdated systems, reinforcing the critical role of business intelligence in driving data-driven insights and operational efficiency for business growth.

Practical Applications: How to Implement DISTINCTCOUNTNOBLANK in Power BI

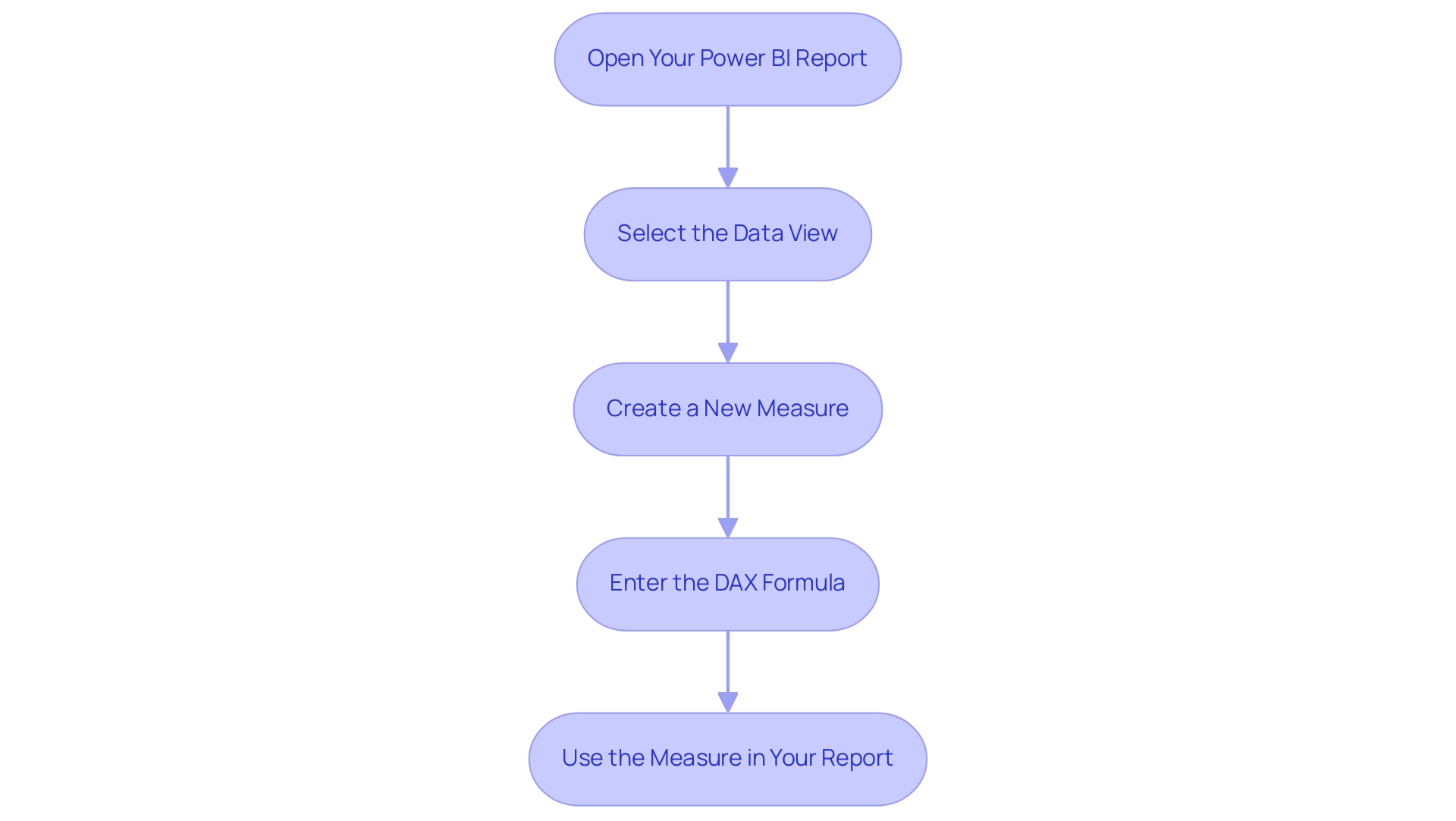

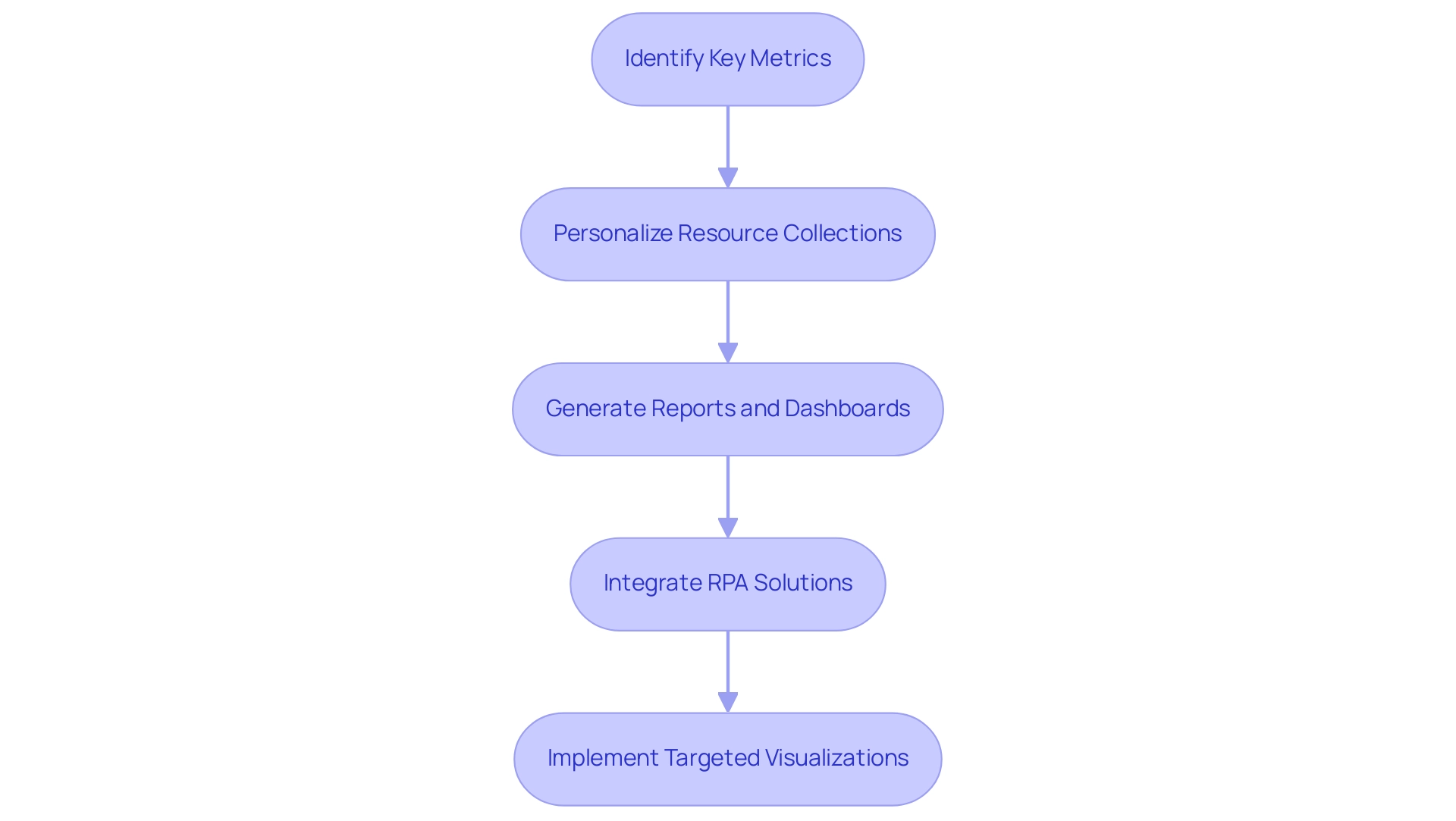

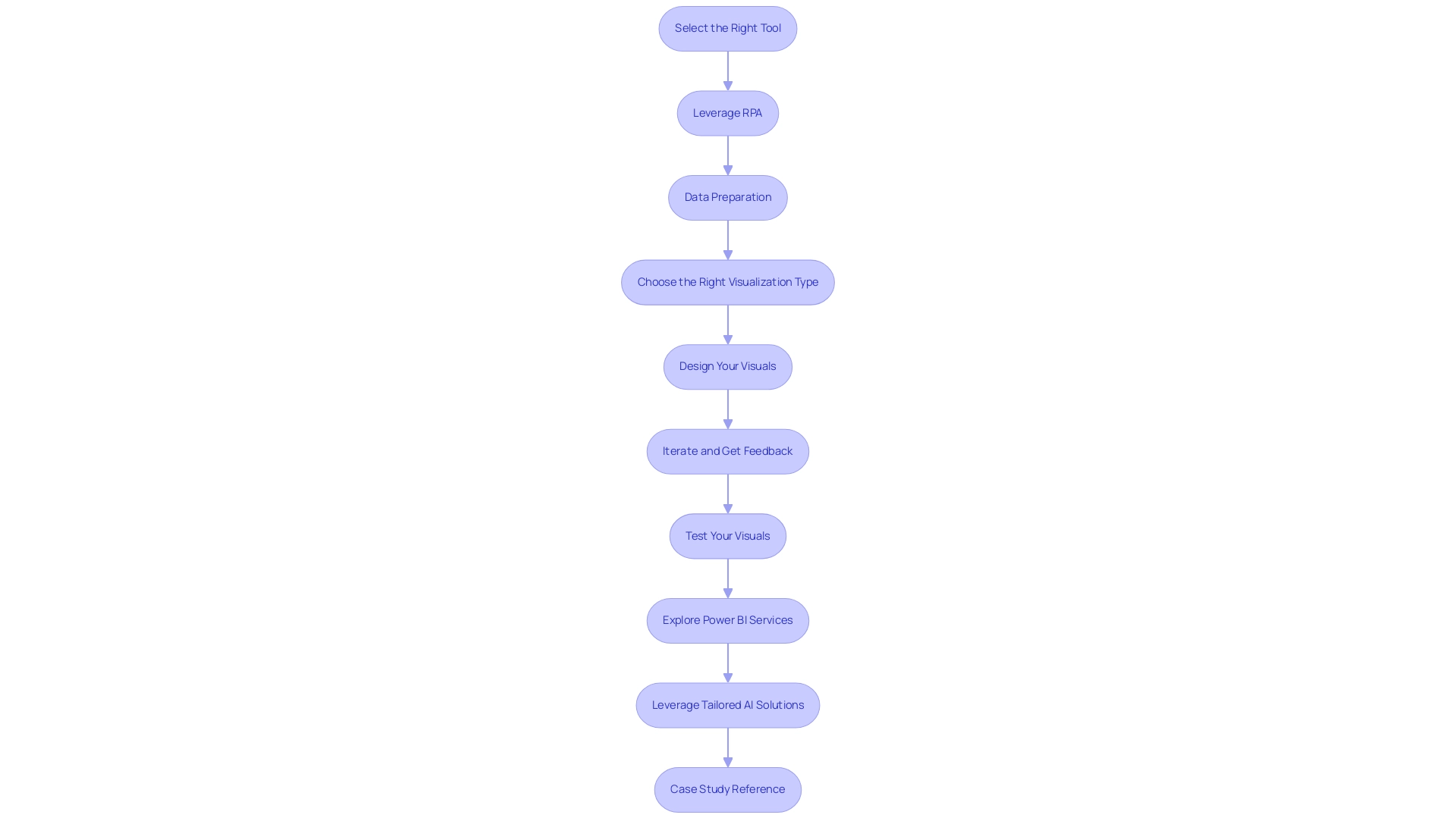

To effectively implement the DISTINCTCOUNTNOBLANK function in Power BI and harness the power of Business Intelligence for informed decision-making, follow these structured steps:

- Open Your Power BI Report: Launch Power BI Desktop and open the report you wish to modify.

- Select the Data View: Navigate to the Data view, where you can access your tables and columns.

- Create a New Measure: In the Fields pane, right-click on the table where you want the measure created and select ‘New measure’.

- Enter the DAX Formula: In the formula bar, input the DAX expression:

UniqueCount = DISTINCTCOUNTNOBLANK(TableName[ColumnName])

Be sure to replace TableName and ColumnName with the actual names relevant to your dataset.

- Use the Measure in Your Report: Once your measure is created, you can incorporate it into your report visuals. For instance, if you’re analyzing sales data, this measure will count the unique customers who made purchases, ignoring any blank entries.

As Nirmal Pant states, “The distinctcountnoblank function is a powerful tool in Power BI for scenarios where you need to perform a distinct count while ignoring any blank values.” This highlights the significance of employing this feature for precise information analysis, particularly in an environment where obstacles like lengthy report creation and inconsistencies can hinder operational efficiency.

Incorporating RPA solutions can significantly enhance this process. For instance, automating the data preparation steps can decrease the time spent on report creation, enabling you to concentrate on analyzing the insights obtained from your data. RPA can also assist in maintaining data consistency, which is essential when using functions such as DISTINCTCOUNTNOBLANK.

For a practical application, consider a dataset from the Global Super Store, where you may have customer orders with some missing product names. By using the formula Distinct Products Sold = DISTINCTCOUNTNOBLANK('Global Super Store'[Product Name]), you can effectively count the distinct products sold while ignoring any blanks. In this case, the operation counted four distinct products, showcasing its utility in real-world scenarios and highlighting how BI can drive growth and innovation by providing actionable insights.
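The difference between a plain distinct count and one that skips blanks can be illustrated outside Power BI. The following is a minimal Python sketch, not DAX: the product names are made-up sample data, blanks are modeled as None, and the two helper functions are hypothetical stand-ins that only mimic how DISTINCTCOUNT and DISTINCTCOUNTNOBLANK treat blank entries.

```python
def distinct_count(values):
    """Mimic DISTINCTCOUNT: a blank (None) is counted as one distinct value."""
    return len(set(values))


def distinct_count_no_blank(values):
    """Mimic DISTINCTCOUNTNOBLANK: blanks (None) are dropped before counting."""
    return len({v for v in values if v is not None})


# Hypothetical order rows; None marks a missing product name.
product_names = ["Chair", "Desk", None, "Lamp", "Chair", None, "Bookcase", "Desk"]

with_blank = distinct_count(product_names)              # blank counts as a value
without_blank = distinct_count_no_blank(product_names)  # blank is ignored

print(with_blank, without_blank)
```

With this sample data the blank-aware count is one lower than the plain distinct count, which is the gap the DAX function is meant to close.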

Additionally, as of November 23, 2023, the order amount in Hyderabad was 110. Examining such information with the specified method can offer insights into customer purchasing behavior, assisting you in making informed operational choices in your position as a Director of Operations Efficiency.

By following these steps and incorporating RPA into your workflow, you can use DISTINCTCOUNTNOBLANK effectively and improve your analysis capabilities in Power BI. The function not only makes your counts more precise but also helps your reports reflect your data accurately, in line with best practices in DAX usage, and it eases common challenges encountered in BI reporting.

Troubleshooting DISTINCTCOUNTNOBLANK: Common Issues and Solutions

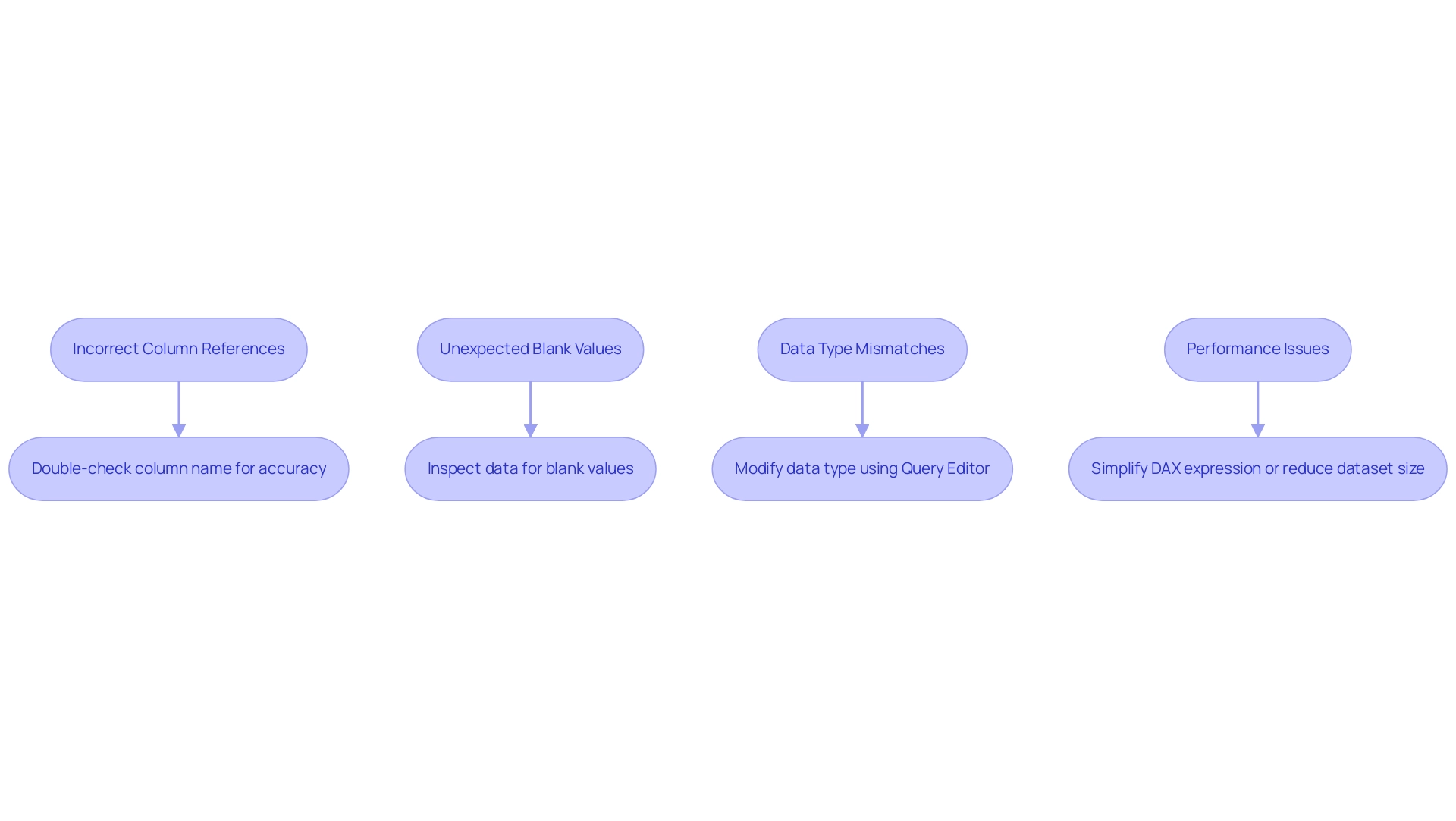

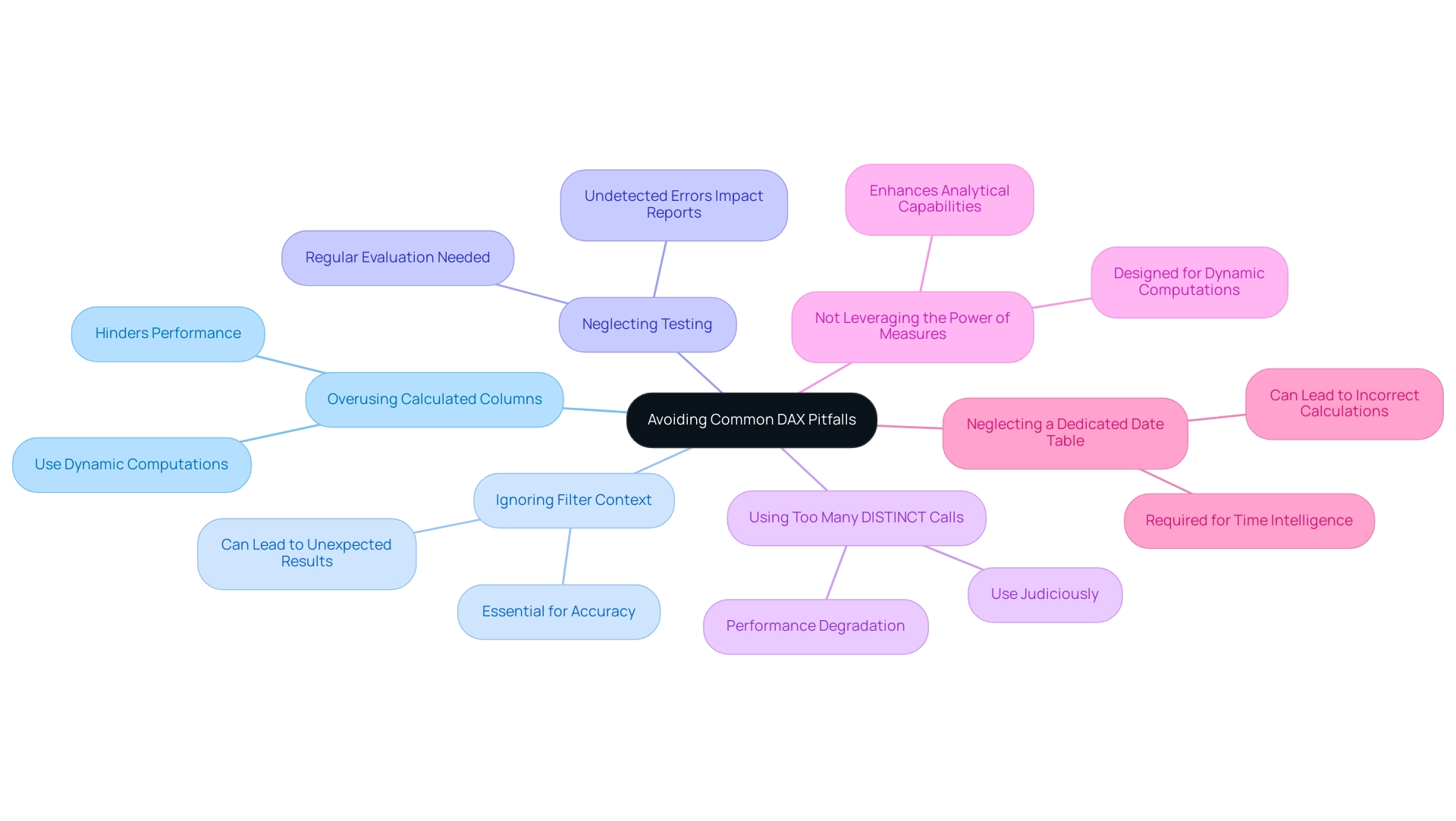

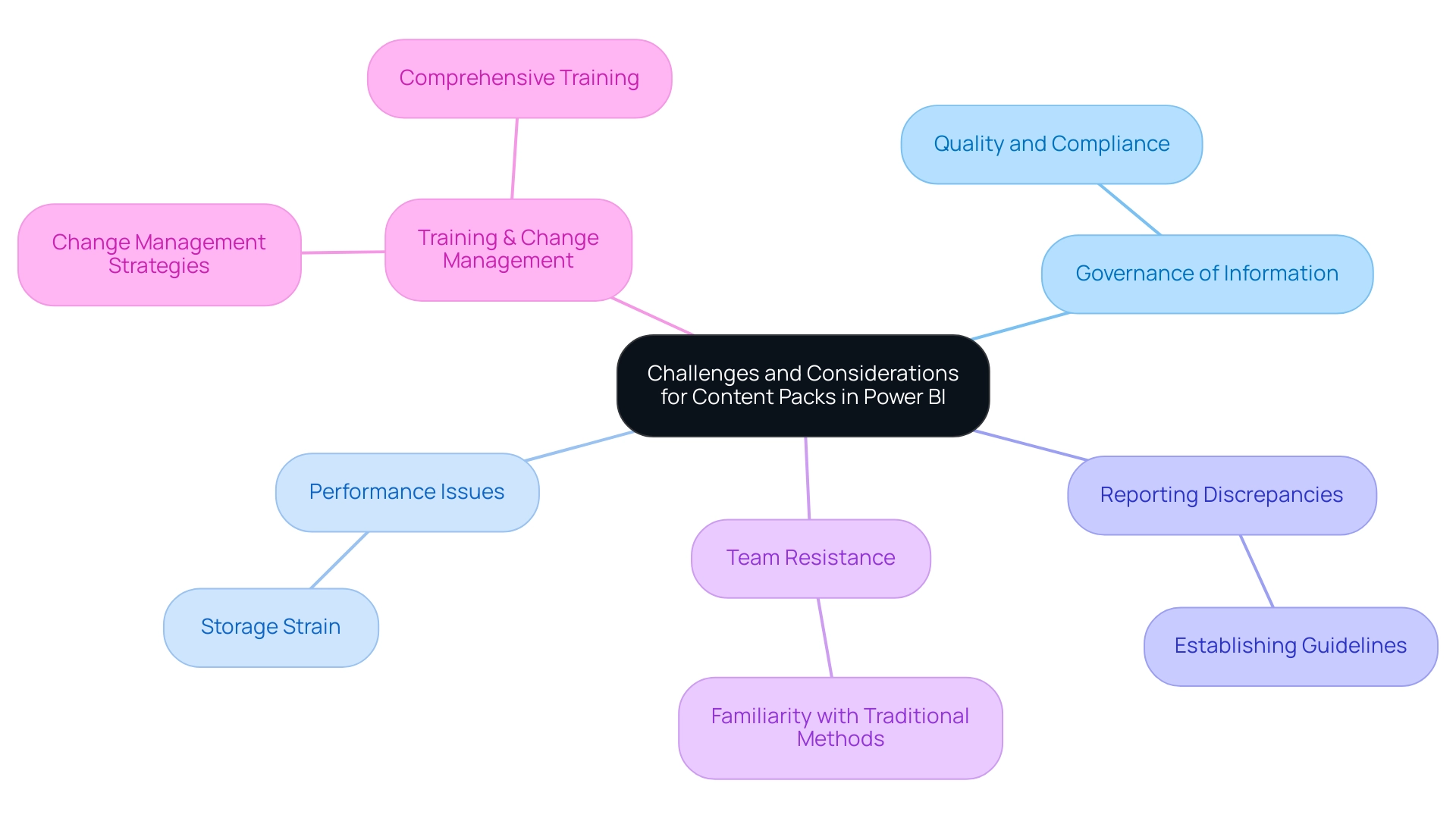

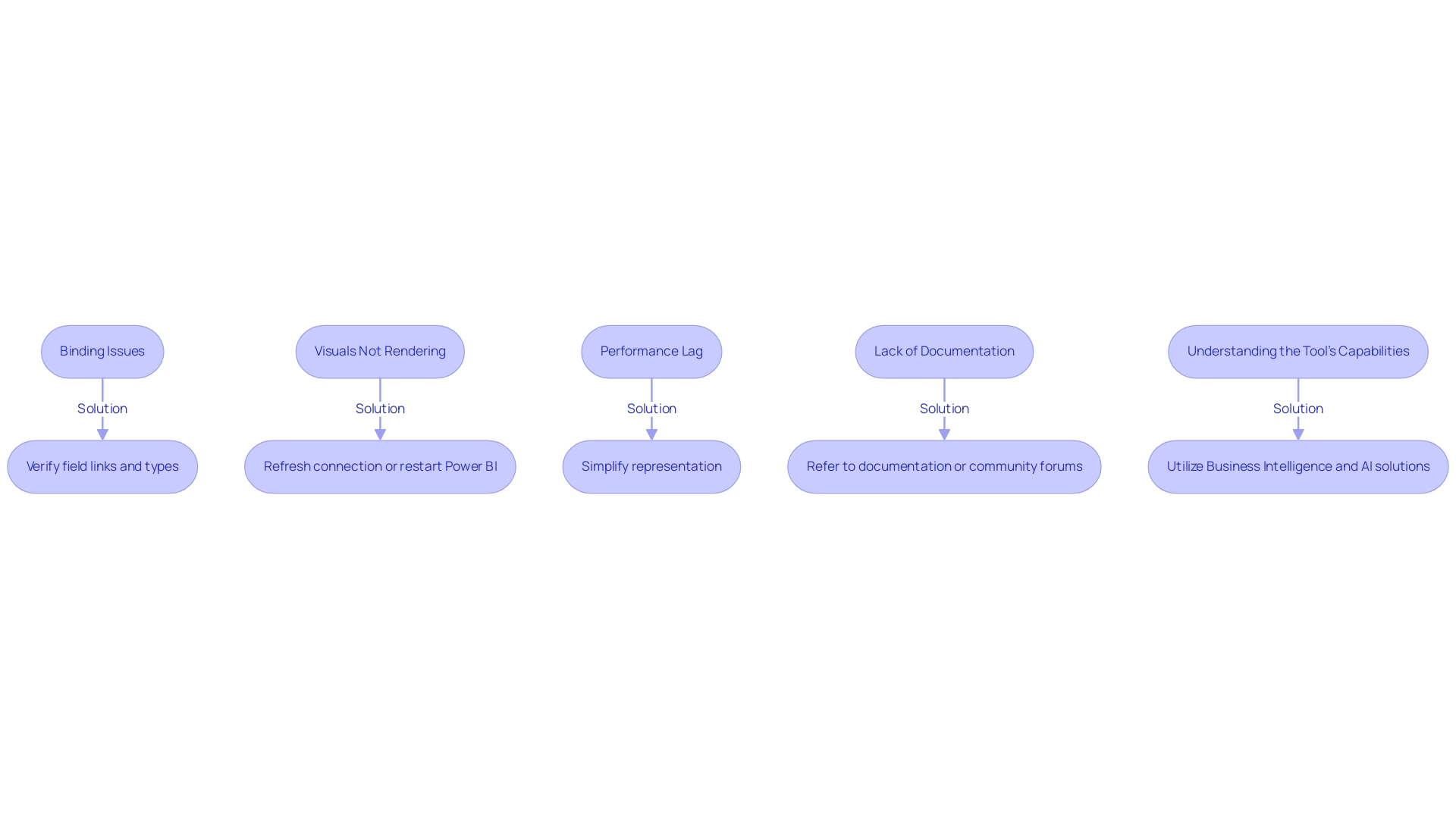

When utilizing the DISTINCTCOUNTNOBLANK function, users may face several typical challenges that can impede their data analysis efforts, particularly in the context of leveraging Business Intelligence effectively:

- Incorrect Column References: It’s crucial to ensure that the column referenced in the DISTINCTCOUNTNOBLANK function is accurately spelled and that it exists within the specified table. In the event of an error, double-check the column name for accuracy. This aligns with the need for accurate information management, a critical step in overcoming implementation challenges.

- Unexpected Blank Values: If the count yields unexpected results, verify the presence of blank values in your data. Use the ‘Data View’ feature to inspect and confirm the actual entries, ensuring that your analysis is based on clean data, which is essential for informed decision-making.

- Data Type Mismatches: The function may not work as intended if the column’s data type is incompatible. Ensure the column is formatted as either text or numeric. If needed, change the data type in the Power Query Editor before applying the function. This step is vital for maintaining operational efficiency and maximizing the effectiveness of your BI tools.

- Performance Issues: Should you encounter slow report performance, check whether DISTINCTCOUNTNOBLANK is used inside a complex calculation. Simplifying the DAX expression or reducing the dataset size can often improve performance significantly, addressing potential bottlenecks in your reporting process.
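When a count looks too high, one thing worth checking is whether entries that look empty are really blank. The sketch below is a Python illustration under stated assumptions: blanks are modeled as None, the customer IDs are invented, and profile_column is a hypothetical helper. It separates true blanks from empty strings and whitespace-only text, which may still be counted as distinct values.

```python
from collections import Counter


def profile_column(values):
    """Classify each entry as blank (None), empty string, whitespace-only, or a real value."""
    kinds = Counter()
    for v in values:
        if v is None:
            kinds["blank"] += 1
        elif isinstance(v, str) and v == "":
            kinds["empty_string"] += 1
        elif isinstance(v, str) and v.strip() == "":
            kinds["whitespace_only"] += 1
        else:
            kinds["value"] += 1
    return dict(kinds)


# Hypothetical customer ID column exported from a report table.
customer_ids = ["C001", None, "", "   ", "C002", "C001"]
profile = profile_column(customer_ids)
print(profile)
```

If the profile shows empty strings or whitespace-only entries, cleaning them in the data preparation step before the measure is evaluated is one way to bring the count in line with expectations.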

As Nirmal Pant notes, “The distinctcountnoblank function is an essential tool in BI for scenarios that require a distinct count while ignoring blank values.” By recognizing these common issues and implementing the suggested solutions, users can navigate challenges with confidence, ultimately enhancing their experience and effectiveness in Power BI.

Furthermore, Robotic Process Automation (RPA) can help automate repetitive data tasks related to these challenges. For instance, RPA can be used to clean data by automatically identifying and removing blank entries, ensuring that the dataset is ready for analysis without manual intervention. This not only improves efficiency but also allows teams to focus on deriving strategic insights from the data.

A case study named “Utilizing a specific counting method in Business Intelligence” illustrates how applying DISTINCTCOUNTNOBLANK to a dataset of customer orders precisely calculated the number of unique products sold, giving a total of 4 unique products when blanks were omitted. The analysis led to improved inventory management and sales strategies, demonstrating how effective application of DISTINCTCOUNTNOBLANK can enhance operational efficiency and turn BI insights into business growth. Furthermore, instructions for visualizing the distinct count in Power BI can guide users in creating a new measure and adding it to their reports.

Performance Considerations: Optimizing DISTINCTCOUNTNOBLANK Usage

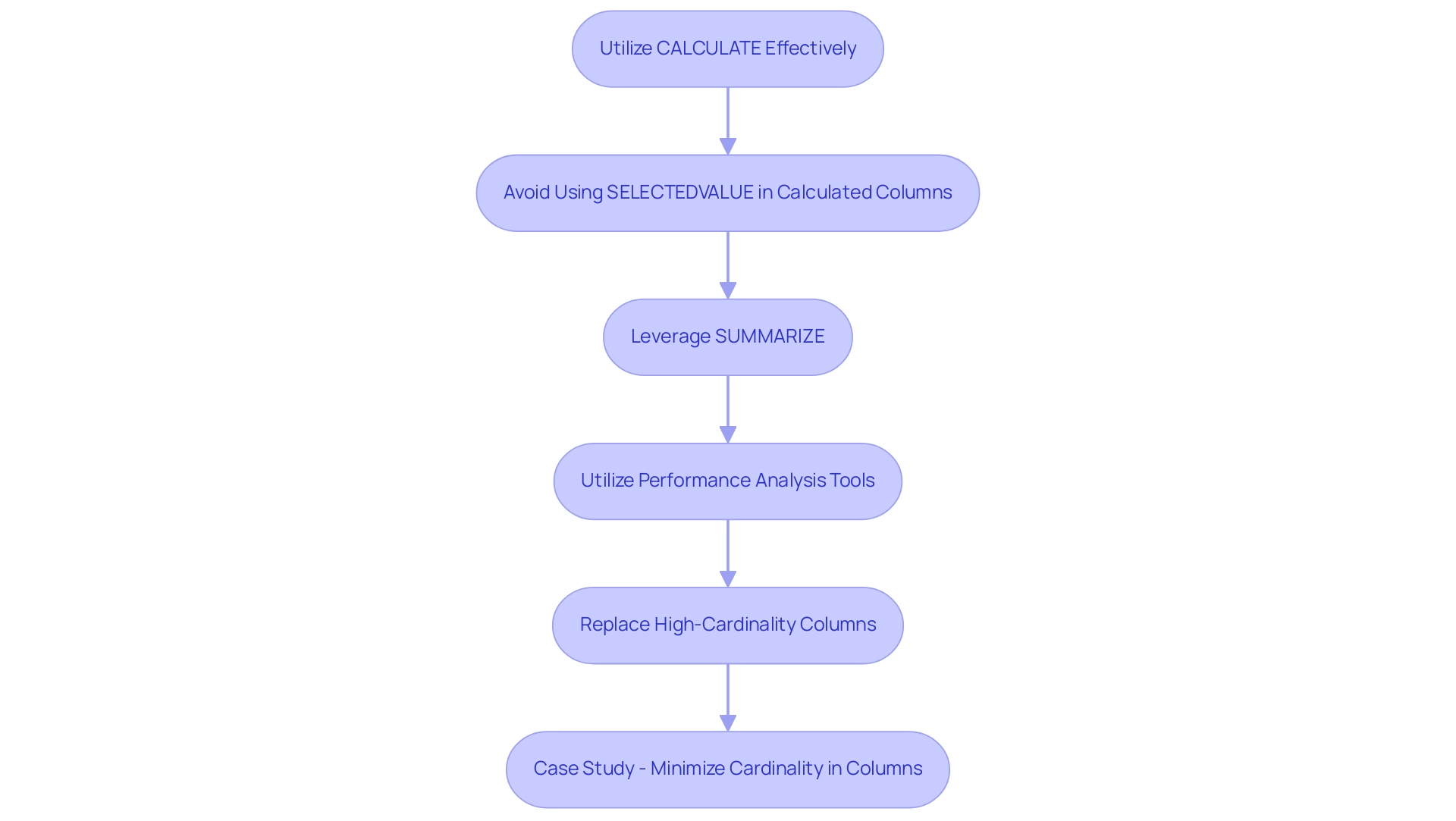

To optimize the DISTINCTCOUNTNOBLANK function in Power BI effectively, consider the following performance enhancement strategies that align with the goals of Business Intelligence and operational efficiency, while also addressing common challenges faced in leveraging insights from Power BI dashboards:

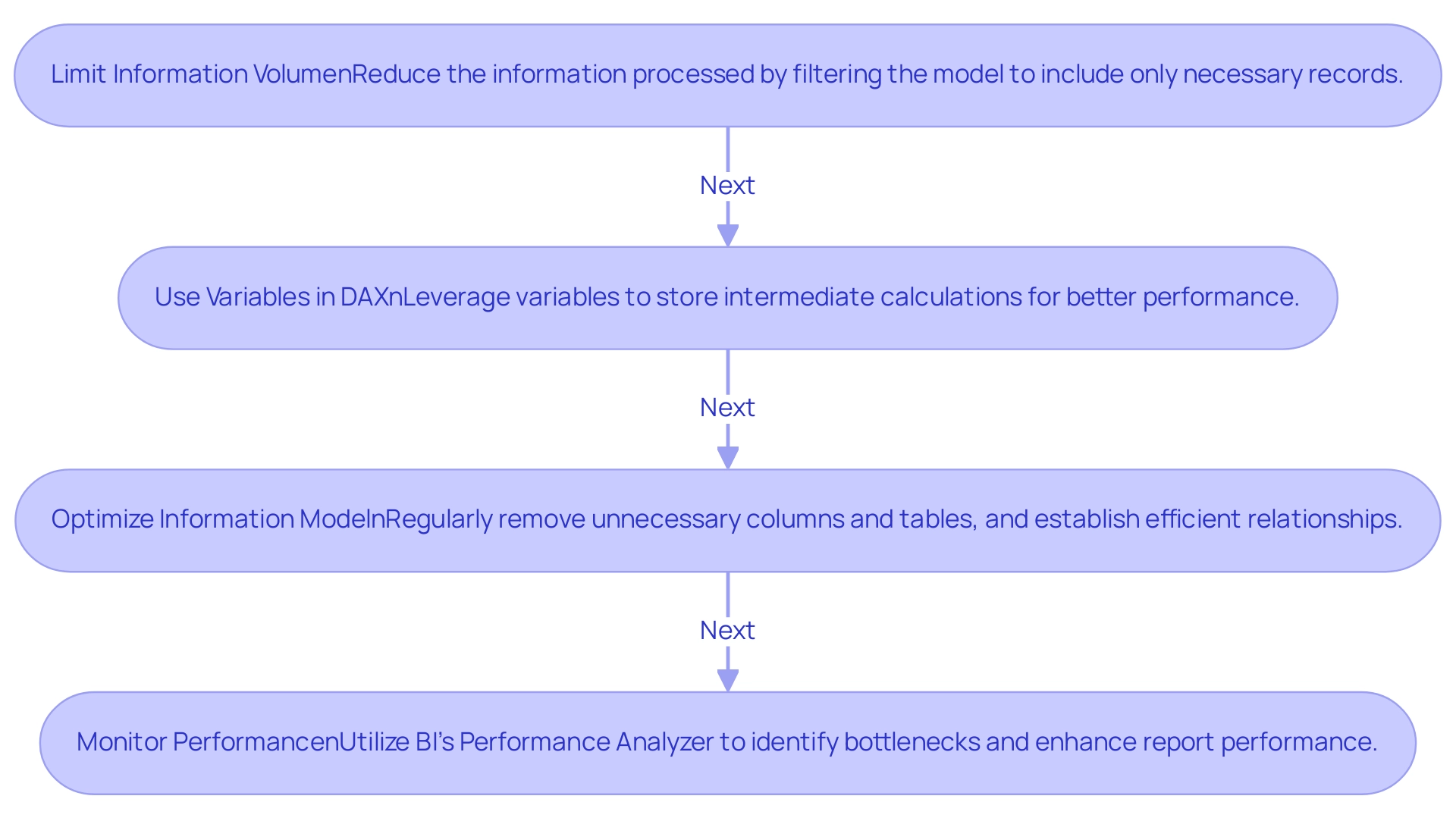

- Limit Data Volume: Reducing the amount of data being processed is crucial for enhancing performance. Filter your model to include only the records that are necessary before applying the DISTINCTCOUNTNOBLANK function. This step not only speeds up calculations but also minimizes resource consumption.

- Use Variables in DAX: When constructing complex DAX measures, use variables to store intermediate calculations. A variable is evaluated once and can be referenced several times, which simplifies your formulas and can improve performance by avoiding repeated evaluation of the same expression. This method reflects the operational efficiencies pursued through RPA, tackling task repetition fatigue, especially in report generation and management.

- Optimize the Data Model: An optimally structured data model is essential. Regularly remove unnecessary columns and tables, and ensure that relationships are established efficiently. A streamlined data model minimizes calculation complexity and enhances performance across the board. As mentioned by lbendlin, ‘For import mode data sources, your only option is to optimize the DAX code.’ For DirectQuery data sources, you can also examine the SQL code produced by the queries and apply optimizations at the source (indexes, statistics etc). This optimization supports the BI goal of transforming raw data into actionable insights, addressing the issues of inconsistencies.

- Monitor Performance: Utilize Power BI’s Performance Analyzer to pinpoint bottlenecks in your reports. This tool offers insights into loading times for each visual, empowering you to make informed adjustments and enhancements. Furthermore, consider the case study titled ‘Use Native Queries When Possible,’ which illustrates how leveraging the query engine of the data source directly can speed up query execution and utilize optimizations in the underlying database, thereby improving overall performance. This proactive monitoring helps ensure that your BI strategies remain relevant and impactful.

Implementing these strategies will not only improve the performance of your reports using DISTINCTCOUNTNOBLANK but also elevate the overall efficiency of your Power BI reports, enabling you to deliver insights more effectively and drive growth and innovation through informed decision-making.

Conclusion

Harnessing the power of the DISTINCTCOUNTNOBLANK function is essential for organizations aiming to enhance their data analysis capabilities. By accurately counting unique values while excluding blanks, this function ensures that reports reflect true data integrity. The practical applications outlined illustrate how to implement this function effectively, empowering professionals to derive actionable insights from their datasets.

As challenges arise in data analysis, recognizing common issues and applying troubleshooting strategies becomes crucial. Addressing:

- Incorrect column references

- Unexpected blank values

- Data type mismatches

will lead to more reliable results. Moreover, the integration of Robotic Process Automation can streamline these processes, allowing teams to focus on strategic insights rather than repetitive tasks.

Performance optimization strategies further enhance the efficacy of DISTINCTCOUNTNOBLANK. By:

- Limiting data volume

- Utilizing variables in DAX

- Regularly optimizing the data model

users can ensure efficient report generation and insightful analysis. Monitoring performance with tools like Power BI’s Performance Analyzer allows organizations to proactively identify and resolve bottlenecks.

Ultimately, mastering the DISTINCTCOUNTNOBLANK function not only elevates data accuracy but also drives operational efficiency. As organizations embrace these capabilities, they position themselves to make informed decisions that propel growth and innovation. Now is the time to leverage these insights and transform data challenges into opportunities for success.

Overview

Integrating Delta Lake with Azure Synapse enhances data management by combining Delta Lake’s advanced capabilities with Azure Synapse’s analytics features, facilitating real-time insights and operational efficiency. The article outlines a step-by-step setup process and highlights best practices, such as effective partitioning and the use of Robotic Process Automation (RPA), to streamline workflows and improve data handling while addressing common integration challenges.

Introduction

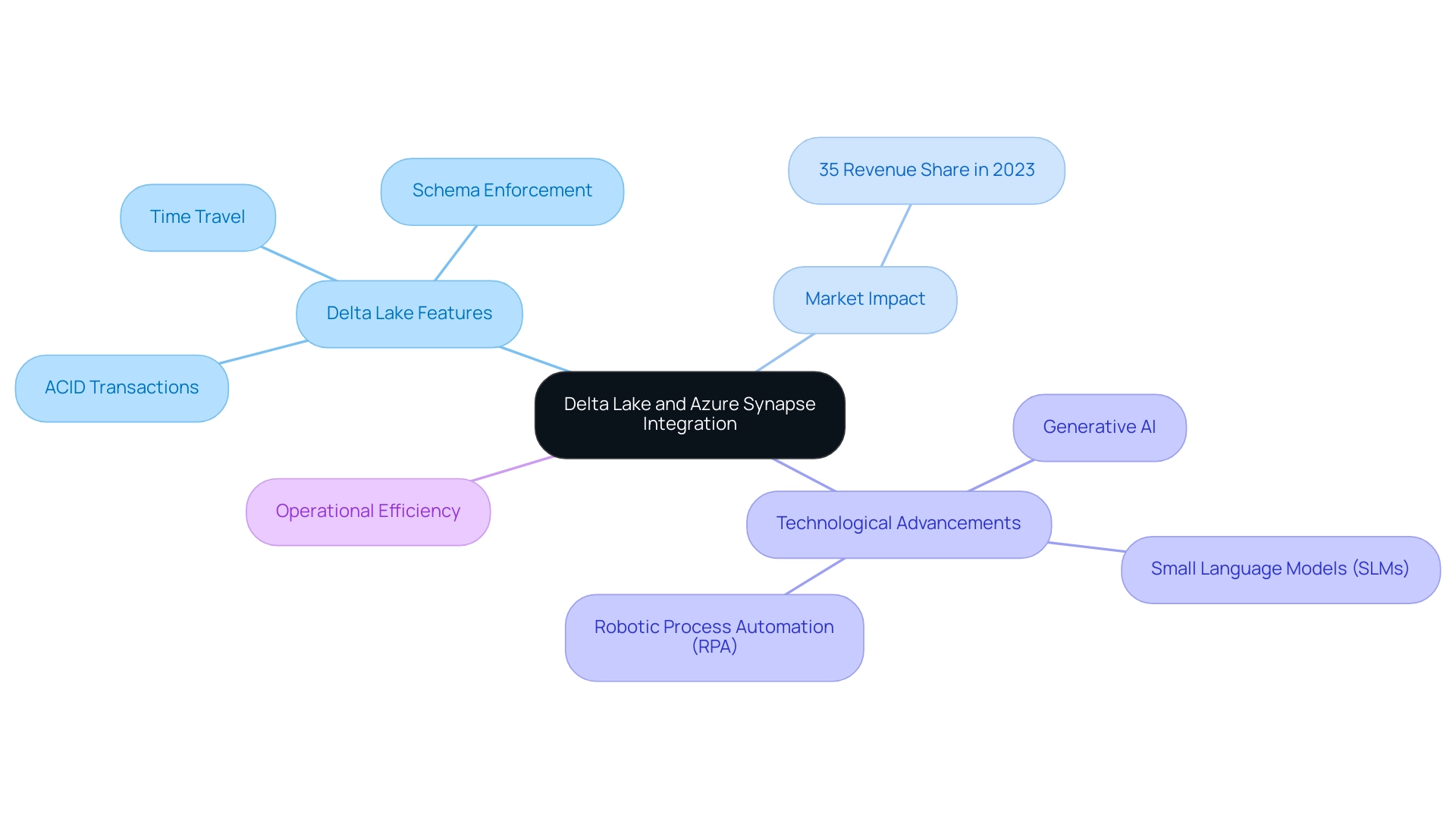

In the rapidly evolving landscape of data management, organizations are increasingly turning to advanced technologies to optimize their operations and extract actionable insights. The integration of Delta Lake with Azure Synapse represents a powerful synergy that enhances the reliability and efficiency of data lakes. By leveraging features such as:

- ACID transactions

- schema enforcement

- time travel capabilities

businesses can manage extensive datasets with confidence. This article delves into the critical aspects of this integration, offering a comprehensive guide that not only covers setup and troubleshooting but also highlights best practices and advanced features. As companies strive to harness the full potential of their data, understanding the nuances of Delta Lake and Azure Synapse becomes essential for driving strategic decision-making and fostering innovation.

Understanding Delta Lake and Azure Synapse Integration

Delta Lake is an open-source storage layer that improves the reliability of data lakes by allowing users to manage extensive datasets with ACID transactions, schema enforcement, and time travel features. According to recent reports, the data lake market in the United States accounted for approximately 35% of revenue share in 2023, illustrating its significant growth and relevance in today’s analytics-driven landscape. The technological advancement found in North America, along with the presence of leading data lake providers, further strengthens this market development.

Azure Synapse, which combines big data and data warehousing into a cohesive analytics experience, becomes even more powerful when paired with Delta Lake. The synergy between these two platforms facilitates real-time analytics, empowering organizations to extract actionable insights from their data efficiently. As firms increasingly adopt the principles of data mesh, decentralizing ownership and fostering collaboration across teams, understanding the integration of Delta Lake with Azure Synapse is vital.

This combination not only streamlines workflows but also significantly enhances operational efficiency, positioning businesses to leverage their data strategically in a competitive market. Furthermore, the integration of Generative AI and Small Language Models (SLMs) in data engineering exemplifies how AI tools are transforming workflows in data management, automating tasks, improving user interactions, and ensuring enhanced privacy and cost-effectiveness. Additionally, leveraging Robotic Process Automation (RPA) can further automate manual workflows, reducing errors and freeing teams for more strategic tasks.

These advancements tackle the challenges of poor master data quality, emphasizing the need for organizations to stay informed about recent progress in data management that facilitates the integration of Delta Lake and Azure Synapse.

Step-by-Step Setup of Delta Lake in Azure Synapse

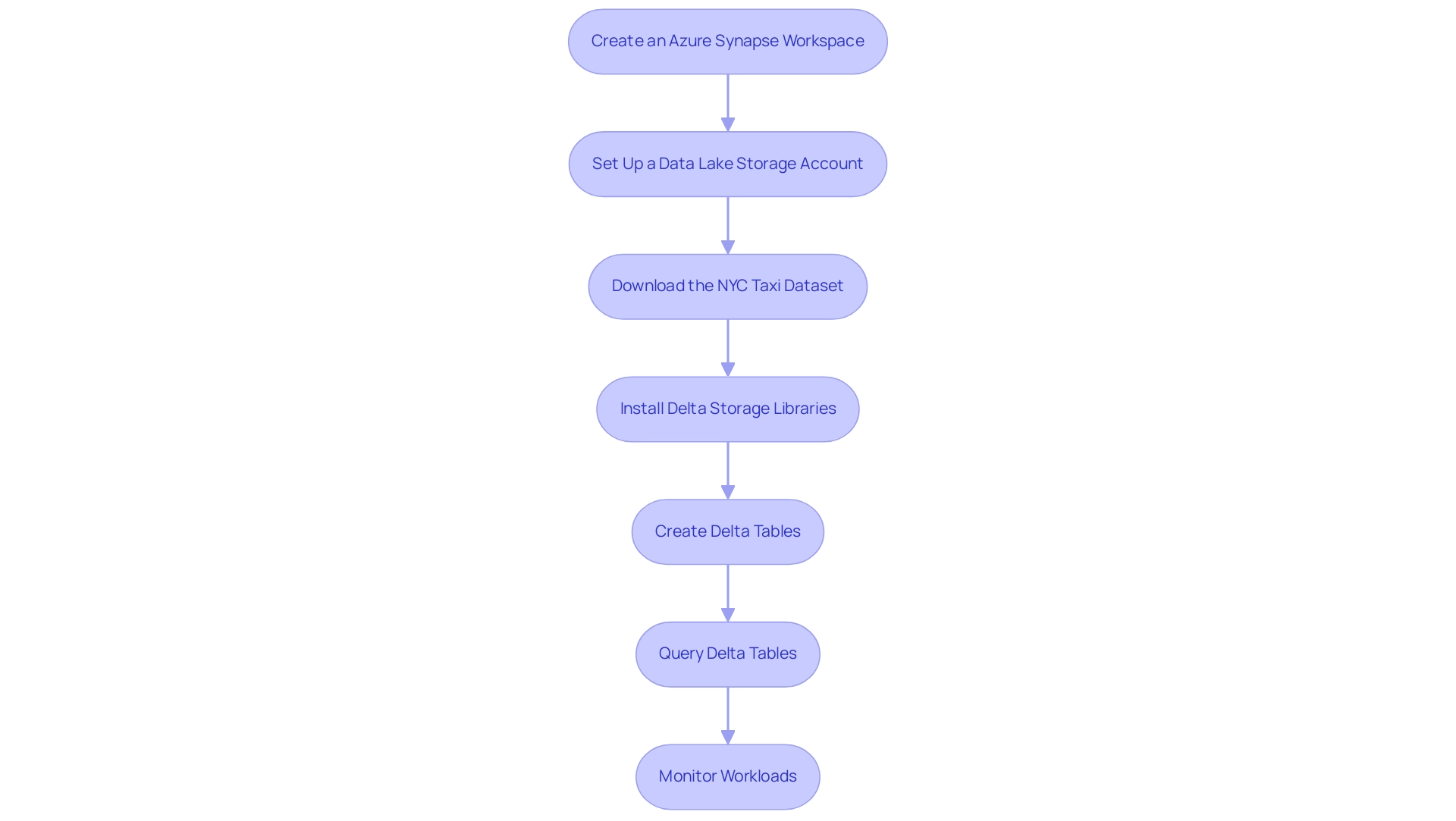

- Create an Azure Synapse Workspace: Begin by logging into the Azure portal. Create a new Synapse workspace, selecting the region and resource group that align with your operational needs. This foundational step gives you a dedicated environment for your data management tasks, which is crucial for leveraging Robotic Process Automation (RPA) to streamline operations by automating the setup of workflows.

- Set Up a Data Lake Storage Account: Establish a new Azure Data Lake Storage Gen2 account. This account will store your data. Ensure it is linked to your Azure Synapse workspace to facilitate smooth operations and retrieval, thereby enhancing workflow automation and operational efficiency. RPA can automate the data ingestion processes, reducing manual input and errors.

- Download the NYC Taxi Dataset: Download the NYC Taxi – green trip dataset, rename it to NYCTripSmall.parquet, and upload it to the primary storage account in Synapse Studio. This dataset will serve as a practical example for your implementation, demonstrating how structured data can drive actionable insights. Automating this data upload process through RPA can save time and minimize human error.

- Install Delta Lake Libraries: Within Synapse Studio, navigate to the Manage hub. Choose Apache Spark pools and install the Delta Lake libraries required for your Spark environment. This installation is essential for enabling advanced data management capabilities in Azure Synapse, supporting RPA initiatives aimed at reducing manual tasks and enhancing accessibility. According to Azure specialists, using the latest Delta Lake libraries ensures optimal performance and compatibility with your workflows, thereby enhancing RPA effectiveness.

- Create Delta Tables: Use Spark SQL or DataFrame APIs to create Delta tables in your data lake. Carefully define the schema and load your data into these tables. By structuring your data correctly, you lay the groundwork for efficient querying and manipulation, key for enhancing business intelligence. RPA can automate the schema definition and data loading processes, ensuring consistency and accuracy.

- Query Delta Tables: Use Azure Synapse’s SQL capabilities to run queries on your Delta tables. This integration allows you to harness the strengths of both platforms, optimizing performance and enhancing your analytical capabilities, in line with your goal of data-driven decision-making. RPA can facilitate automated querying processes, delivering insights without manual intervention.

- Monitor Workloads: Continuously monitor workloads within Azure Synapse to maintain optimal performance and troubleshoot any potential issues. Use the STATS_DATE() function to check when statistics were last refreshed, ensuring your data remains current and actionable. As pointed out by data scientist Moez Ali, Azure Synapse supports hybrid cloud environments by enabling seamless integration between on-premises systems and the cloud, making it a robust choice for modern data management. To maximize the benefits of Azure Synapse, users should follow best practices such as optimizing storage formats, managing compute resources, implementing security measures, and monitoring workloads. RPA can automate these monitoring tasks, allowing your team to focus on strategic initiatives.
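The table-creation step above can be sketched as a Spark SQL statement assembled in Python. This is a minimal illustration under stated assumptions: the table name, the column list, and the storage path are hypothetical placeholders, and create_delta_table_sql is a helper invented for this example. In a Synapse Spark notebook the resulting string would typically be passed to spark.sql(...).

```python
def create_delta_table_sql(table, columns, location):
    """Build a Spark SQL CREATE TABLE ... USING DELTA statement.

    Identifiers are interpolated as-is, so only trusted names should be passed in.
    """
    column_defs = ", ".join(f"{name} {dtype}" for name, dtype in columns)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} ({column_defs}) "
        f"USING DELTA LOCATION '{location}'"
    )


# Hypothetical schema and storage path; replace with your own values.
columns = [("VendorID", "INT"), ("trip_distance", "DOUBLE"), ("fare_amount", "DOUBLE")]
location = "abfss://data@examplestorage.dfs.core.windows.net/delta/nyc_trips"

statement = create_delta_table_sql("nyc_trips", columns, location)
print(statement)
```

Keeping the statement in one helper makes the schema explicit and reviewable before anything is written to the lake.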

Optimizing Performance: Best Practices for Delta Lake in Azure Synapse

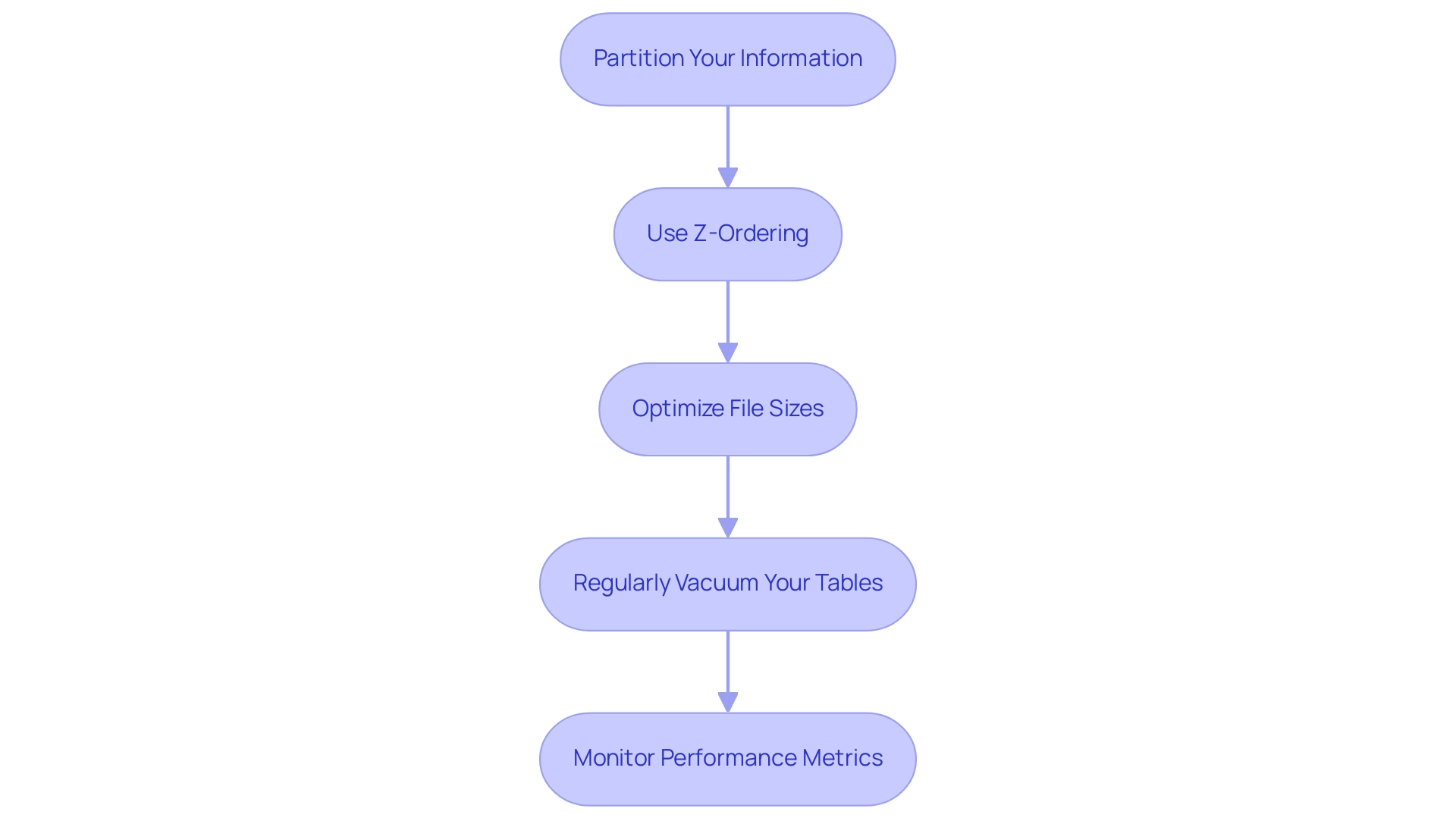

- Partition Your Data: Organizing your data into partitions based on access patterns is essential for query performance. Well-chosen partitions significantly reduce the volume of data scanned during queries, leading to faster results. The OPTIMIZE command accepts a WHERE clause that restricts compaction to specific partitions; it rewrites files for better performance while leaving the data in the Delta table unchanged. This approach mirrors the efficiency improvements of Robotic Process Automation (RPA), which can automate manual data management tasks, free your team for higher-level analytical work, and reduce the likelihood of errors.

- Use Z-Ordering: Applying Z-ordering to columns that are frequently queried together can greatly improve retrieval speeds. This technique reorganizes the data based on column values, allowing for more efficient access and minimizing the need for extensive scanning. Because RPA tools can streamline data retrieval processes, combining Z-ordering with automation can lead to even greater operational efficiency, allowing your team to focus on strategic initiatives.

- Optimize File Sizes: Keeping Delta file sizes between 128 MB and 1 GB strikes a good balance between read and write performance. Following this guideline supports efficient data management and better performance during operations. RPA can help maintain these file sizes by automating regular checks and adjustments, which helps prevent human error and enhances overall data integrity.

- Regularly Vacuum Your Tables: Running the VACUUM command is crucial for managing storage and maintaining performance. By removing old versions of data, you free up space, which enhances overall system performance and keeps your data environment streamlined. Automating this process through RPA can save time and reduce the potential for human error, so your team can redirect their efforts toward more strategic tasks.

- Monitor Performance Metrics: Use Azure Synapse’s monitoring tools to continuously track performance metrics. By consistently evaluating these metrics, you can pinpoint bottlenecks and areas for improvement, enabling proactive changes that improve the efficiency of your implementation. Recent updates, such as the operation metrics for SQL UPDATE, DELETE, and MERGE commands introduced in Delta Lake 2.1.0, return DataFrames with operation metadata, simplifying logging and tracking for developers. Additionally, integrating RPA to automate monitoring and reporting can support a more data-driven decision-making environment. Finally, remember that Delta Lake 2.0.0 requires a minimum Synapse Runtime of Apache Spark 3.3 to function effectively. In the context of the rapidly evolving AI landscape, tailored AI solutions alongside RPA can further enhance your data management strategies, ensuring they align with your specific business goals and challenges.
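The maintenance commands in the practices above can be sketched as statement builders in Python. This is an illustrative sketch only: the table and column names are hypothetical, optimize_sql and vacuum_sql are helpers invented here, and the 168-hour default simply mirrors Delta Lake's usual seven-day retention period. The strings would typically be run with spark.sql(...) on a Spark pool whose Delta Lake version supports OPTIMIZE and ZORDER BY.

```python
def optimize_sql(table, partition_filter=None, zorder_columns=()):
    """Build an OPTIMIZE statement, optionally limited to partitions and Z-ordered."""
    statement = f"OPTIMIZE {table}"
    if partition_filter:
        # The filter should reference partition columns only.
        statement += f" WHERE {partition_filter}"
    if zorder_columns:
        statement += f" ZORDER BY ({', '.join(zorder_columns)})"
    return statement


def vacuum_sql(table, retain_hours=168):
    """Build a VACUUM statement that keeps files newer than retain_hours."""
    return f"VACUUM {table} RETAIN {retain_hours} HOURS"


# Hypothetical table partitioned by event_date.
print(optimize_sql("events", "event_date >= '2024-01-01'", ["customer_id", "product_id"]))
print(vacuum_sql("events"))
```

Shortening the retention window below the default removes the ability to time travel to older versions, so it is a setting to change deliberately.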

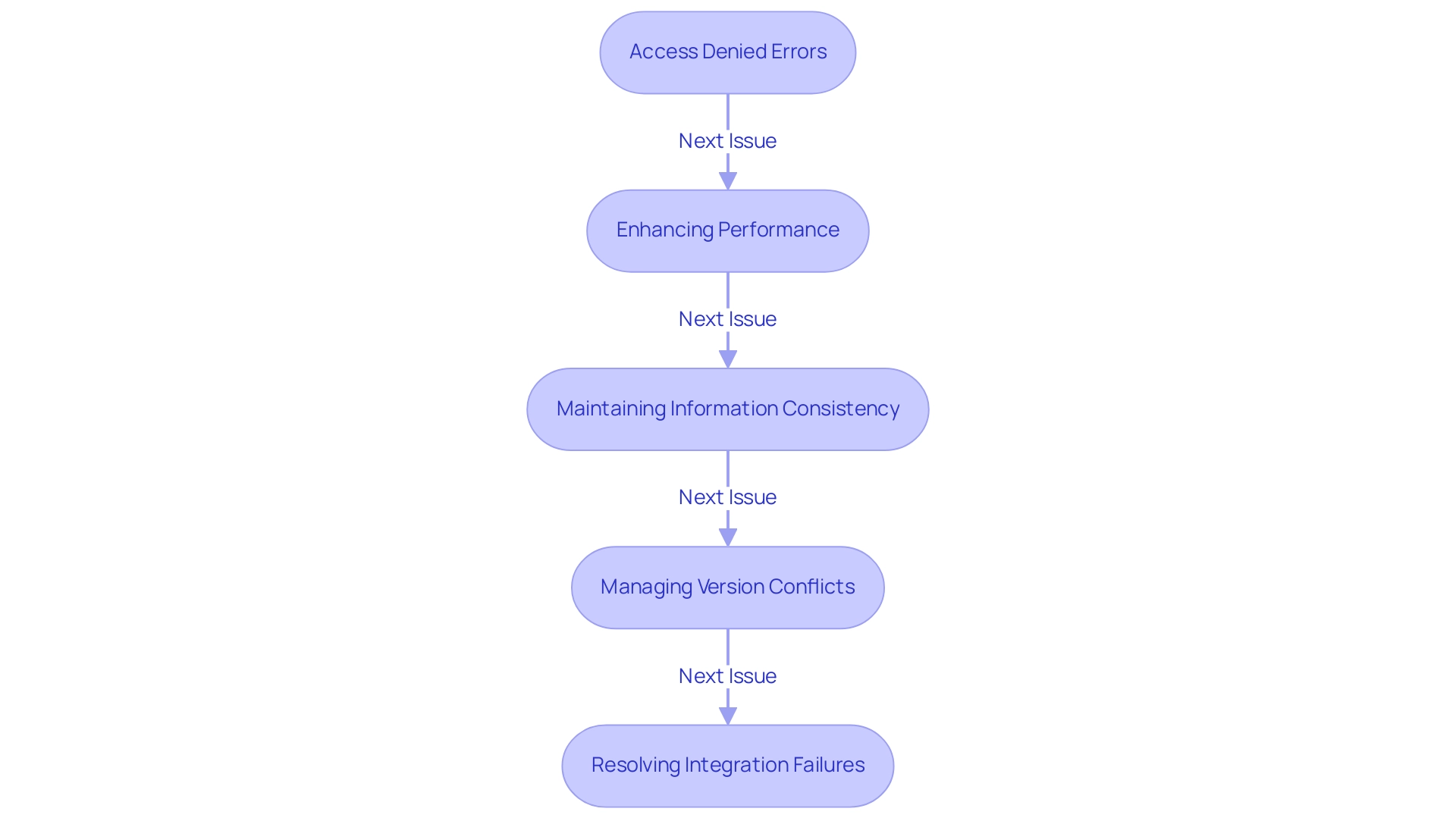

Troubleshooting Common Issues in Delta Lake and Azure Synapse Integration

- Addressing Access Denied Errors: To resolve access denied errors in Azure Synapse, verify that your workspace has the appropriate permissions to interact with the Delta tables and the Data Lake Storage account. Begin by auditing the IAM roles and access policies associated with your Synapse workspace. Abdennacer Lachiheb emphasizes the importance of ensuring that all configurations are correct, stating, “Try to set this spark conf to false: spark.databricks.delta.formatCheck.enabled false.” This adjustment can enhance access reliability. Additionally, with recent Microsoft Entra authentication changes causing queries to fail after one hour, consider implementing a solution that maintains active connections without rebuilding your setup from scratch, leveraging RPA to automate these monitoring processes.

- Enhancing Performance: If your queries are running slower than expected, take a close look at your partitioning strategy. Effective partitioning is essential for well-performing Delta tables, and Business Intelligence tools can offer insights into usage patterns. Regularly reviewing and refining this aspect can significantly improve query performance and overall operational efficiency. Not using these tools can leave your business at a competitive disadvantage due to missed insights. Separately, stay aware of security risks such as buffer overflow attacks when securing your data management practices.

- Maintaining Data Consistency: To combat consistency errors, use the MERGE command in Delta Lake. This command updates existing records and inserts new ones in a single operation, keeping your tables consistent and accurate. Consistency is essential, particularly in settings where data integrity is paramount, and integrating RPA can assist in automating these consistency checks.

- Managing Version Conflicts: If you face version conflicts within your datasets, implementing a robust version control strategy is essential. Regularly purging older versions of data can prevent conflicts and streamline management processes, improving the efficiency of your operations. Tailored AI solutions can assist in identifying and resolving potential conflicts before they escalate, ensuring that your data management practices align with your business goals.

- Resolving Integration Failures: Lastly, should you encounter integration failures, examine your network configurations and Spark environment settings. Misconfigurations can lead to integration issues that hinder the performance of your workflows. Ensuring that these settings align with best practices will mitigate potential failures. For instance, token expiration can lead to errors during query execution; switching to a service principal or managed identity for long-running queries is recommended to avoid such issues, which can also be automated through RPA solutions.
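The MERGE-based consistency fix mentioned above can be sketched as an upsert statement built in Python. The table names, the key column, and the merge_upsert_sql helper are hypothetical and exist only for illustration; the statement uses the UPDATE SET * and INSERT * shorthand, which assumes the source and target share column names.

```python
def merge_upsert_sql(target, source, key_columns):
    """Build a MERGE statement that updates matching rows and inserts new ones."""
    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_columns)
    return (
        f"MERGE INTO {target} AS t USING {source} AS s ON {on_clause} "
        "WHEN MATCHED THEN UPDATE SET * "
        "WHEN NOT MATCHED THEN INSERT *"
    )


# Hypothetical target table and staging table keyed by customer_id.
statement = merge_upsert_sql("customers", "customer_updates", ["customer_id"])
print(statement)
```

Because the whole upsert runs as one transaction, readers see either the old state or the new one, never a partially applied update.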

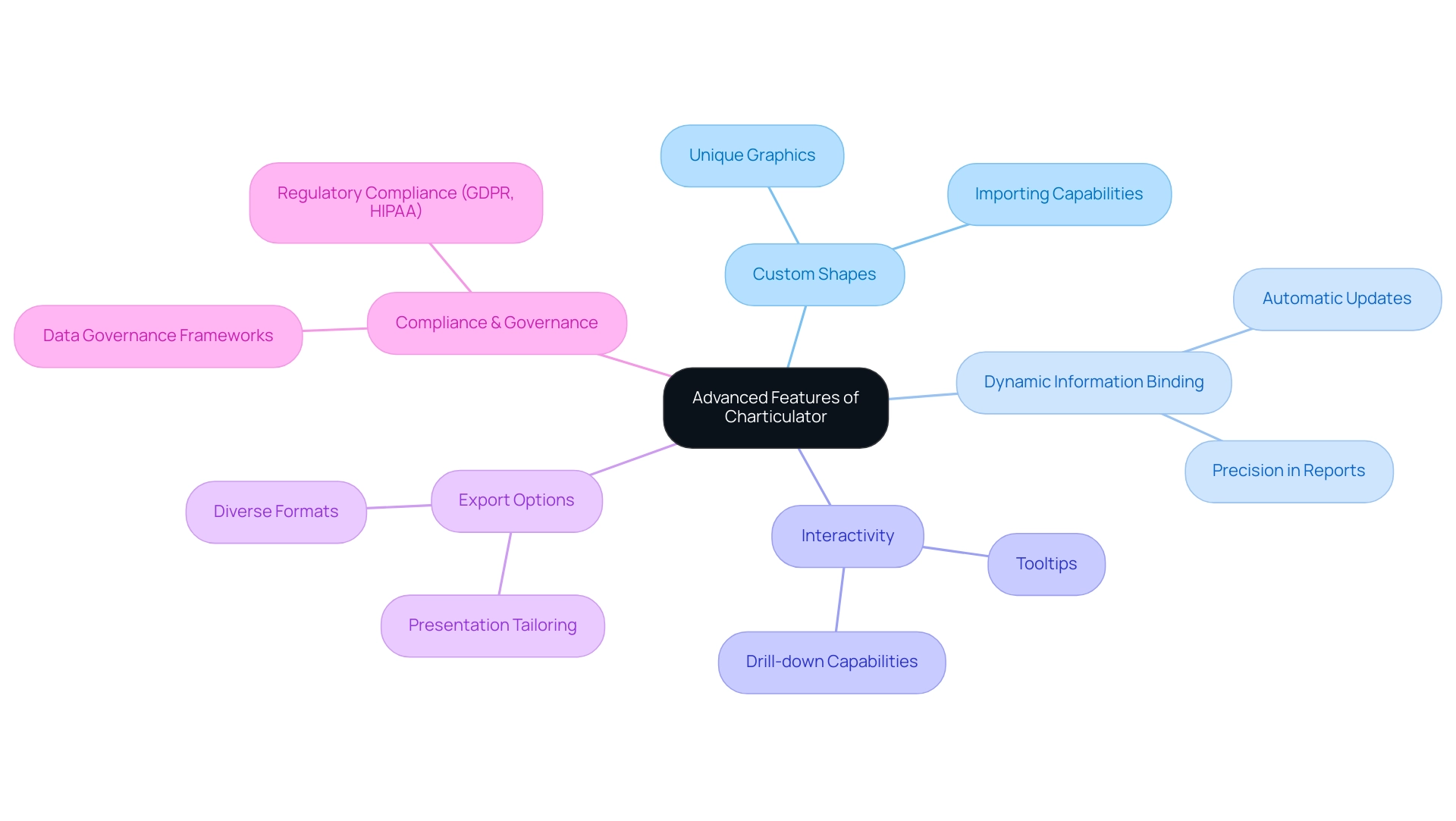

Exploring Advanced Features of Delta Lake in Azure Synapse

Time Travel: Delta Lake allows you to query earlier versions of your data, which supports rollback and auditing. By using the VERSION AS OF syntax in your SQL queries, you can access historical records, which is invaluable for compliance and verification processes.
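A time travel query can be sketched as follows. This Python helper is hypothetical and only assembles the SQL text; the table name and version number are placeholders, and whether the VERSION AS OF and TIMESTAMP AS OF clauses are accepted in SQL depends on the Delta Lake and Spark versions of your pool.

```python
def time_travel_sql(table, version=None, timestamp=None):
    """Build a query against an earlier table state, by version or by timestamp."""
    if (version is None) == (timestamp is None):
        raise ValueError("Provide exactly one of version or timestamp")
    if version is not None:
        return f"SELECT * FROM {table} VERSION AS OF {int(version)}"
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


# Hypothetical table; version 3 is an arbitrary example.
print(time_travel_sql("sales_data", version=3))
print(time_travel_sql("sales_data", timestamp="2024-01-01 00:00:00"))
```

Querying by version is handy for reproducing a specific write, while querying by timestamp suits audit questions phrased in terms of dates.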

Versioning: Keeping a comprehensive history of changes in your tables is crucial for maintaining compliance and ensuring data integrity. This versioning capability enables you to monitor changes efficiently, improving accountability within your data management practices.

Schema Evolution: Delta Lake supports schema evolution, allowing you to add new columns or alter types without interruption. This flexibility streamlines data management and lets your table structures adapt to evolving business needs.

Change Data Capture (CDC): Implementing CDC is essential for tracking changes in your data, allowing for efficient synchronization with other systems. This capability keeps your data up to date and accurate across platforms, which is essential for operational efficiency.

Streaming Capabilities: Use Delta Lake’s strong support for streaming data to create real-time analytics applications. This functionality lets you react quickly to incoming data, enhancing your decision-making processes. Dileep Raj Narayan Thumula highlights that understanding the distribution of data is essential for query optimization, making these streaming capabilities even more significant in your operational strategies.

Access Control and Security: Delta Tables also facilitate effective access regulation and security management through Databricks’ built-in features, ensuring that your information remains safeguarded while permitting necessary access based on roles and responsibilities.

Vector Search Support: In Q3, Databricks introduced a gated Public Preview for Vector Search support in Databricks SQL, improving the capabilities of information querying and analysis, which can complement the features of these tables, particularly in complex information environments.

Case Study on Extended Statistics Querying: An example using a sales_data table illustrates how to compute statistics for specific columns, such as product_name and quantity_sold, and how to query extended statistics to verify results. This process aids in comprehending the distribution of information, which is vital for query optimization, with specific statistics like min, max, and distinct count being emphasized.

Integrating RPA for Enhanced Efficiency: By automating manual workflows with Robotic Process Automation (RPA), organizations can significantly reduce the time spent on repetitive tasks, freeing up resources for strategic data management. For example, a firm that adopted RPA to manage data entry tasks alongside Delta Lake reported a 30% decrease in mistakes and a significant boost in team productivity. This synergy between Delta Lake and RPA within the Azure Synapse environment not only enhances operational efficiency but also drives actionable insights, allowing your team to focus on high-value projects amidst the rapidly evolving AI landscape.

Moreover, RPA addresses challenges within this landscape by ensuring that data processes are streamlined and less prone to human error.

Conclusion

The integration of Delta Lake with Azure Synapse offers organizations a transformative approach to data management, enabling them to harness the full potential of their data assets. By leveraging features such as ACID transactions, schema enforcement, and time travel capabilities, businesses can ensure data reliability and integrity, which are essential for informed decision-making.

The step-by-step setup guide provided emphasizes the importance of:

1. Creating a dedicated environment

2. Automating workflows through Robotic Process Automation (RPA)

3. Optimizing data performance

Implementing best practices like:

– Data partitioning

– Z-ordering

– Regular monitoring

not only enhances query performance but also streamlines operations, allowing teams to focus on strategic initiatives rather than repetitive tasks.

Addressing common challenges such as:

– Access errors

– Performance issues

– Data consistency

is crucial for maintaining an efficient data ecosystem. By utilizing advanced features like time travel, schema evolution, and change data capture, organizations can ensure compliance and adapt swiftly to evolving business needs. The integration of RPA further amplifies these benefits, driving operational efficiency and minimizing errors.

In conclusion, embracing the synergy between Delta Lake and Azure Synapse equips organizations with the tools necessary to thrive in a data-driven world. By prioritizing effective data management strategies and leveraging automation, businesses can not only enhance their operational efficiency but also foster innovation and strategic growth in an increasingly competitive landscape.

Overview

The article “Mastering DAX ISNULL Functions: An In-Depth Tutorial for Data Analysts” focuses on how the DAX ISNULL function can be effectively utilized to manage null values in data analysis, enhancing data integrity and reporting accuracy. It supports this by outlining practical applications, best practices, and troubleshooting tips for ISNULL, emphasizing that proper handling of null values is crucial for reliable analytics outcomes and informed decision-making in business intelligence contexts.

Introduction

In the world of data analysis, understanding how to effectively manage null values is paramount for ensuring accuracy and integrity in reporting. As organizations increasingly rely on powerful tools like DAX and Power BI, the challenge of addressing missing data can significantly impact decision-making processes.

By utilizing functions such as ISNULL, analysts can replace null entries with meaningful alternatives, enhancing the clarity of reports and fostering a data-driven culture. This article delves into the importance of recognizing and addressing null values, offering practical applications and best practices that empower data professionals to optimize their workflows and drive operational efficiency.

By mastering these strategies, organizations can transform raw data into actionable insights, paving the way for informed decisions and sustained growth.

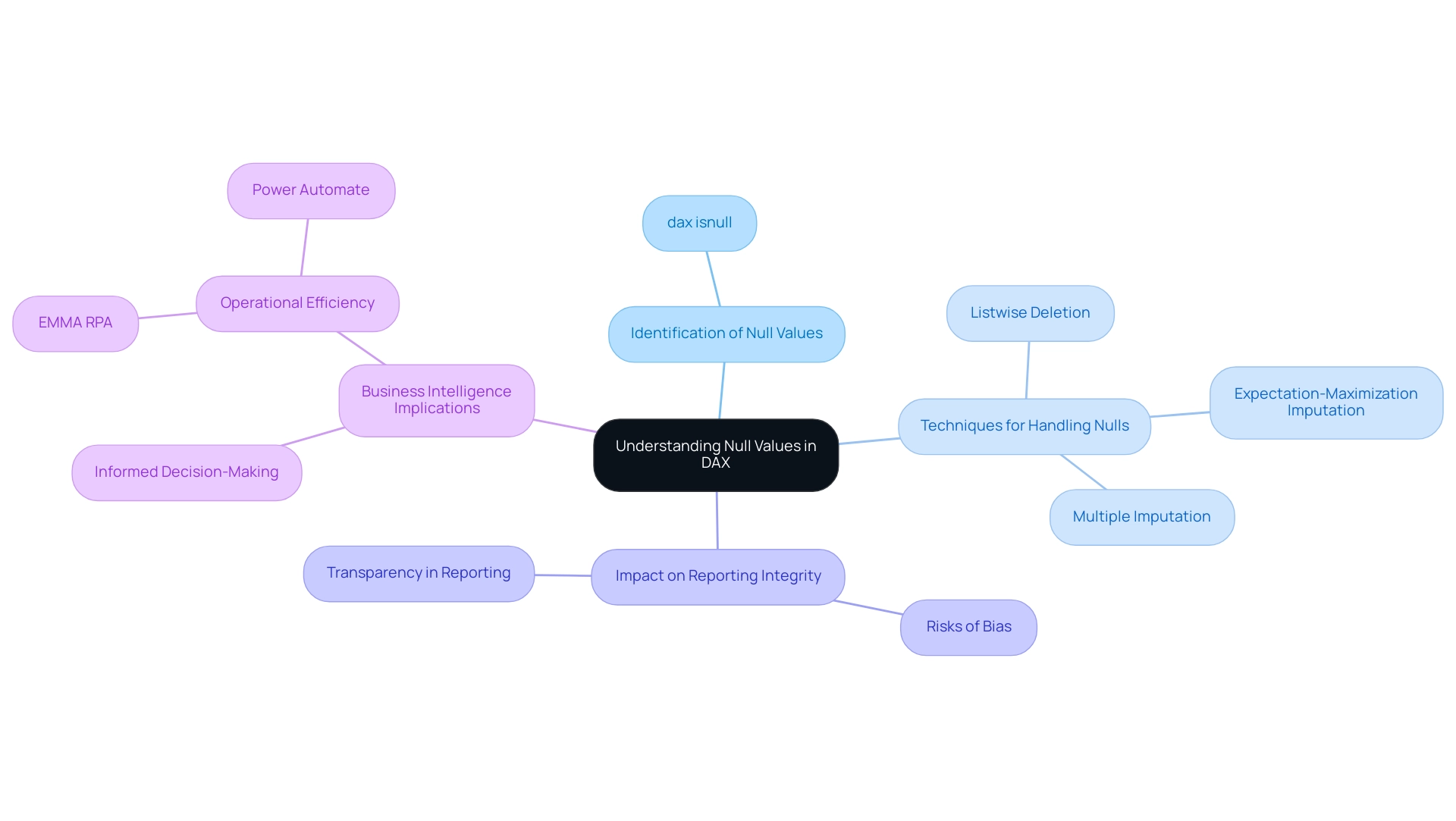

Understanding Null Values in DAX: A Foundation for Data Analysis

In DAX, a null value marks the absence of information in a specific field, and understanding it is essential for precise reporting. DAX represents nulls as BLANK and has no built-in function named ISNULL; the check that plays that role is ISBLANK, so "ISNULL" in this article refers to that blank-checking pattern. Null values become a significant challenge when they are left unchecked in the data behind Power BI dashboards. Neglecting to address these gaps can lead to misleading conclusions, particularly during summarization, where calculations affected by absent entries may yield unexpected results.
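As a minimal sketch of how absent entries can change a summary, the measures below compare an average that skips blanks with one that treats them as zero. The Sales table and its SalesAmount column are assumed names used only for illustration.

```dax
Average Sale (blanks skipped) =
    -- Hypothetical measure on an assumed Sales[SalesAmount] column.
    -- AVERAGE ignores blank rows, so they do not pull the mean down.
    AVERAGE ( Sales[SalesAmount] )

Average Sale (blanks as zero) =
    -- COALESCE turns each blank into 0, so those rows are included
    -- in the average and lower the result.
    AVERAGEX ( Sales, COALESCE ( Sales[SalesAmount], 0 ) )

Blank Sales Rows =
    -- Counting the gaps makes the difference between the two averages visible.
    COUNTBLANK ( Sales[SalesAmount] )
```

Placing the two averages side by side in a report shows at a glance how much the missing entries influence the result.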

The expectation-maximization imputation technique, for example, can be time-consuming, particularly with large datasets containing a significant portion of missing information. Listwise deletion poses its own risks, potentially biasing estimates if information is not missing completely at random (MCAR). Recognizing and managing null values not only enhances the integrity of reports and dashboards but also fosters informed decision-making, a critical aspect of driving growth through Business Intelligence and RPA.

As mentioned by Hyun Kang from Chung-Ang University College of Medicine, ‘In general, multiple imputation is a good approach when analyzing datasets with absent information.’ Furthermore, best practices for reporting results emphasize the necessity of transparency in managing absent information, significantly enhancing the credibility of analyses. By adopting these practices, organizations can not only enhance their information management strategies but also build trust in their findings, thus empowering their operations and fostering a culture driven by insights.

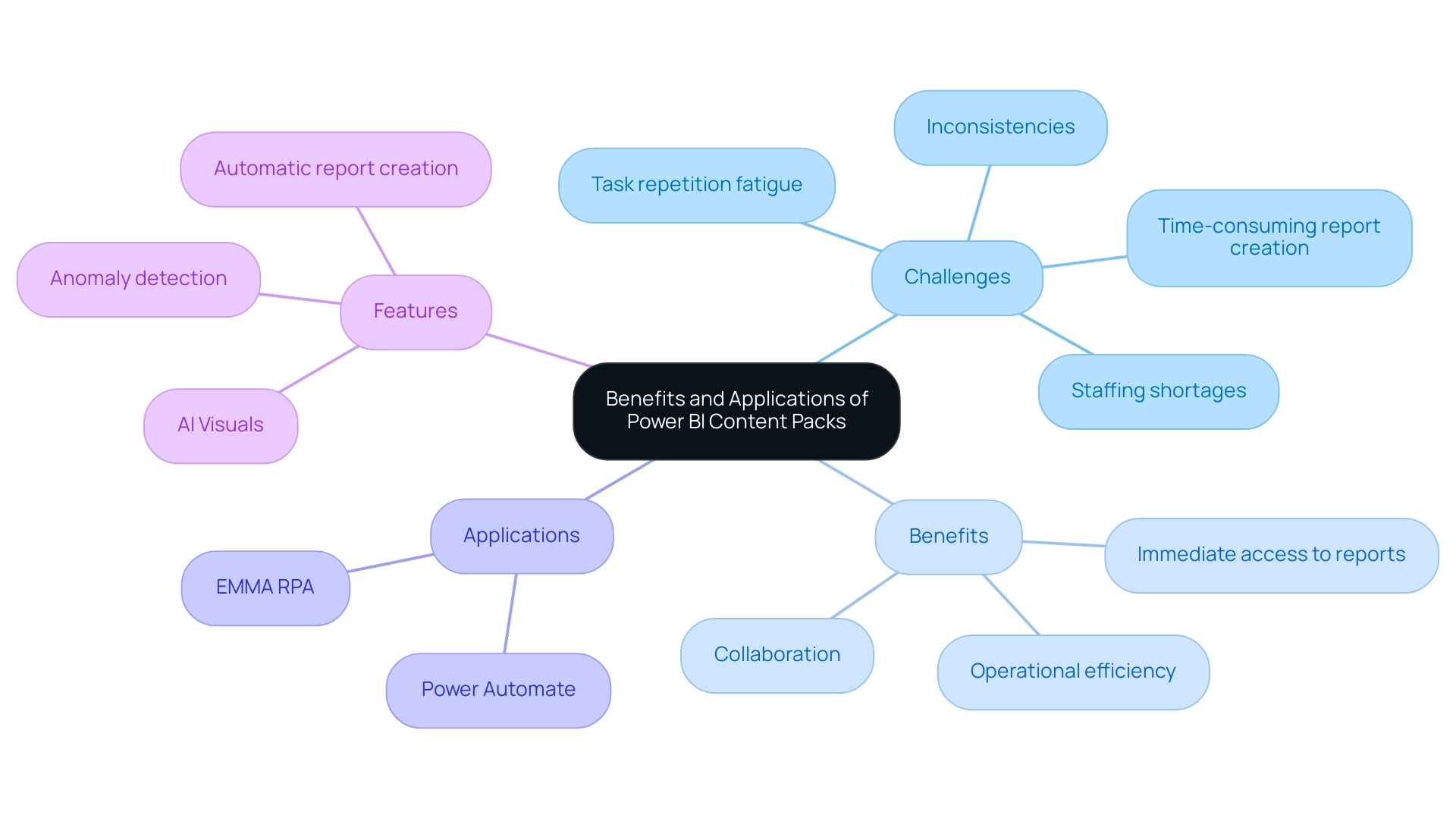

Failing to extract meaningful insights from information can leave businesses at a competitive disadvantage, underscoring the transformative power of BI in turning raw information into actionable insights. Integrating specific RPA solutions, such as EMMA RPA and Power Automate, can further streamline processes and enhance operational efficiency.

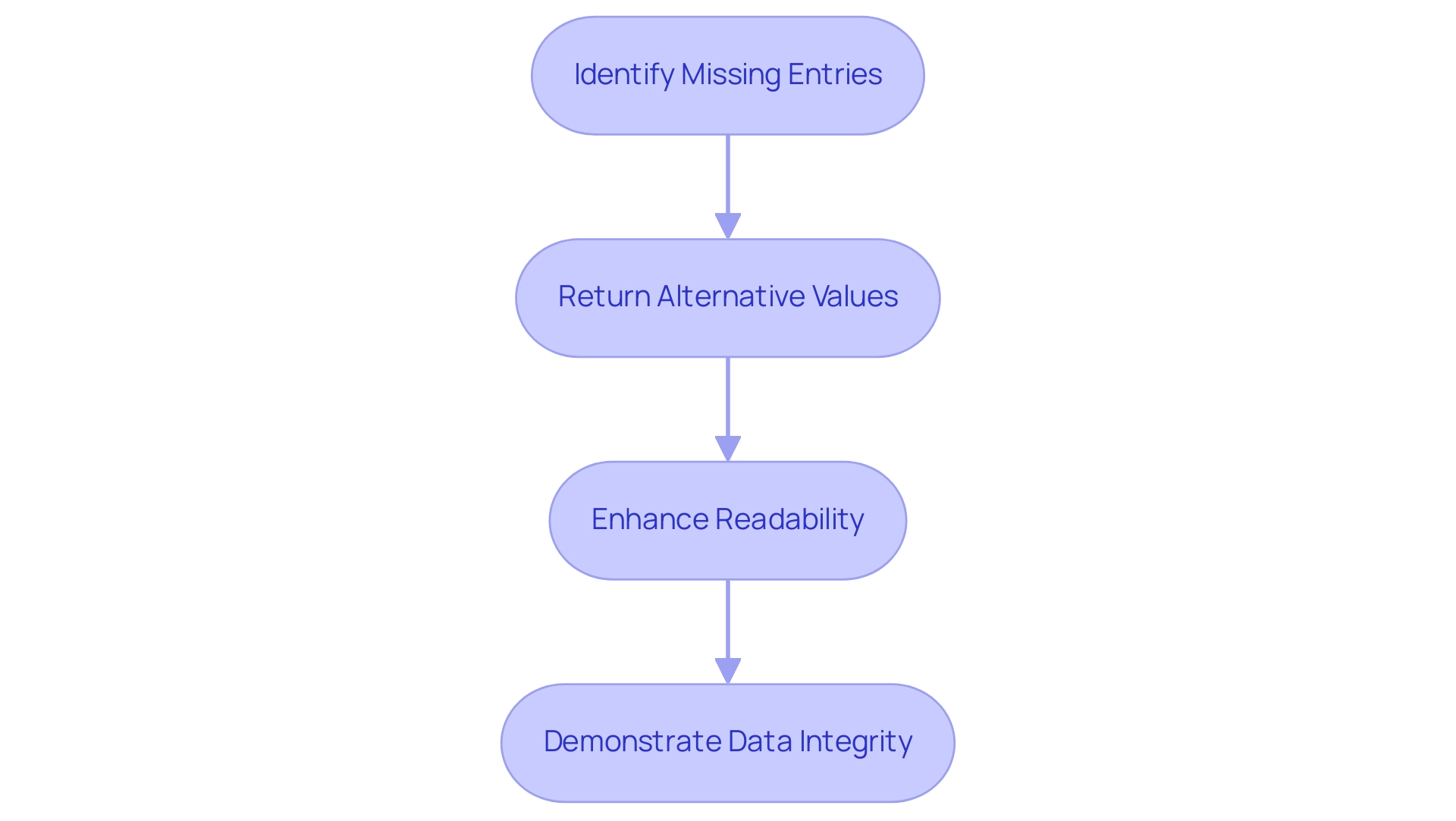

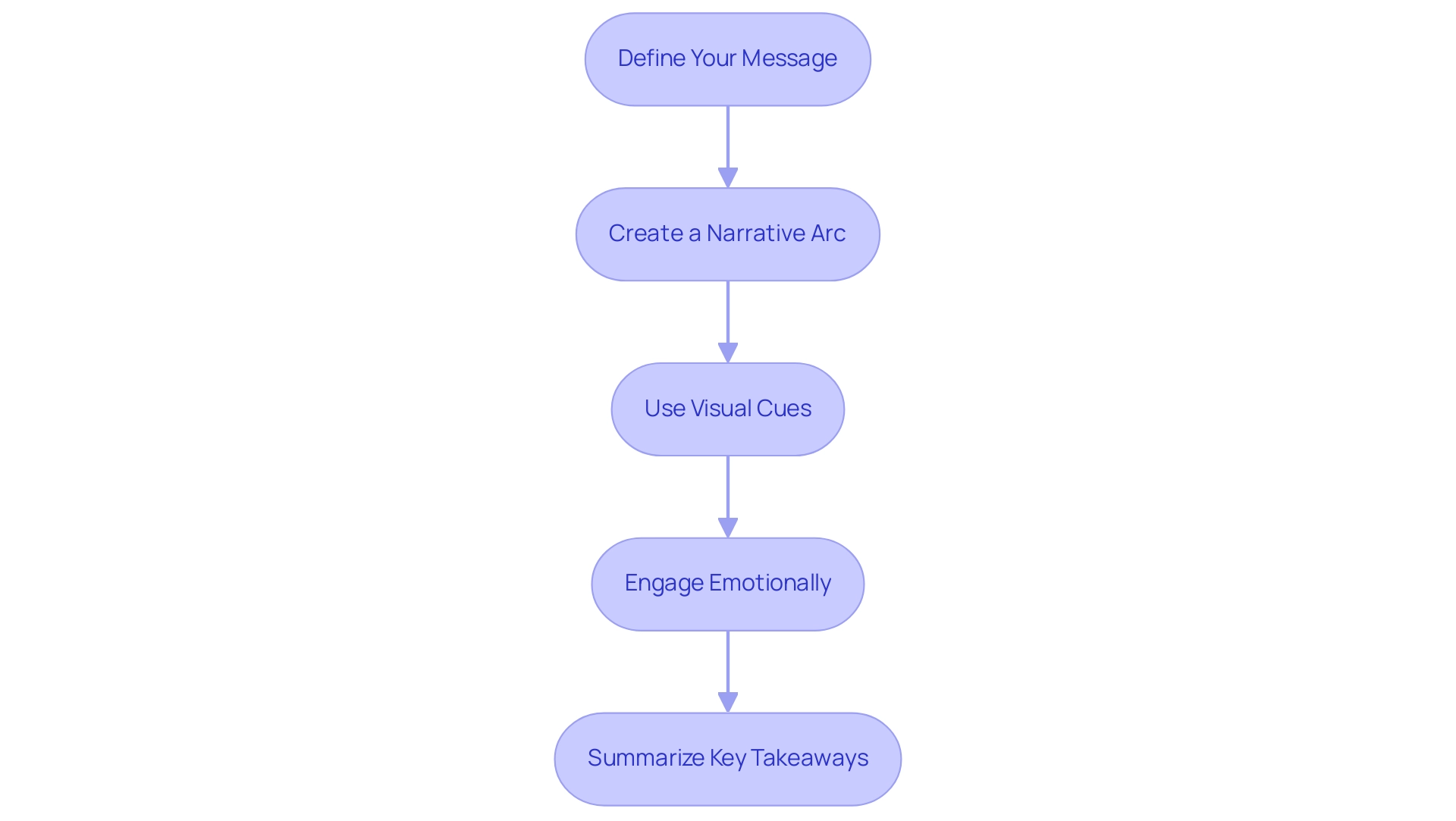

Practical Applications of ISNULL in DAX: Enhancing Data Integrity

A null check such as ISBLANK serves a critical purpose in DAX: it identifies null values and allows you to return an alternative in their place. This capability is essential for maintaining information integrity, particularly in reports where clear, trustworthy insights are paramount. For instance, in a sales report, if a customer ID is missing, displaying ‘Unknown Customer’ instead of leaving the field blank ensures a more comprehensive understanding of the data.

DAX has no function named ISNULL; the two-argument replacement pattern the name suggests is written COALESCE(<expression>, <alternate_value>), or equivalently IF(ISBLANK(<expression>), <alternate_value>, <expression>). Here’s a structured approach to implementing it effectively:

- Identify Missing Entries: Begin by using ISBLANK to find any missing entries within your dataset. This step is crucial for ensuring your data is complete and reliable.

- Return Alternative Values: Determine what alternative value should be displayed when a missing value is encountered. An example implementation could be:

DAX

SalesPerson = IF(ISBLANK(Sales[SalesPerson]), "Unknown", Sales[SalesPerson]) -- ISBLANK is DAX's blank check; DAX has no ISNULL

- Enhance Readability: By replacing nulls with descriptive text, you significantly improve the readability of your reports and dashboards, making them more user-friendly and actionable.

- Demonstrate Data Integrity: Handling nulls explicitly not only clarifies the information but also fosters trust among users, as they can comprehend the insights derived from your reports. This practice is essential in the ongoing monitoring and enhancement of ETL pipelines, ensuring integrity across analytics processes.

However, organizations often face challenges in leveraging insights from Power BI dashboards, such as time-consuming report creation and inconsistencies in information. Integrating Robotic Process Automation (RPA) can be a game-changer in this regard, automating repetitive tasks and streamlining the preparation process for DAX functions like ISNULL. This not only enhances operational efficiency but also allows analysts to focus on deriving actionable insights.

A recent study conducted by Precisely and Drexel University’s LeBow College of Business highlighted that organizations prioritizing information integrity saw improved efficiency and cost-cutting benefits. Specifically, 70% of respondents noted that maintaining information integrity led to more reliable analytics outcomes.

By efficiently combining null-handling functions with RPA, analysts can enhance their workflows, presenting clearer and more precise datasets to stakeholders while reinforcing the integrity of their analytics. As one analyst mentioned, “Managing missing entries with precision is vital for precise reporting and decision-making,” highlighting the significance of effective practices in generating insights and enhancing operational efficiency.

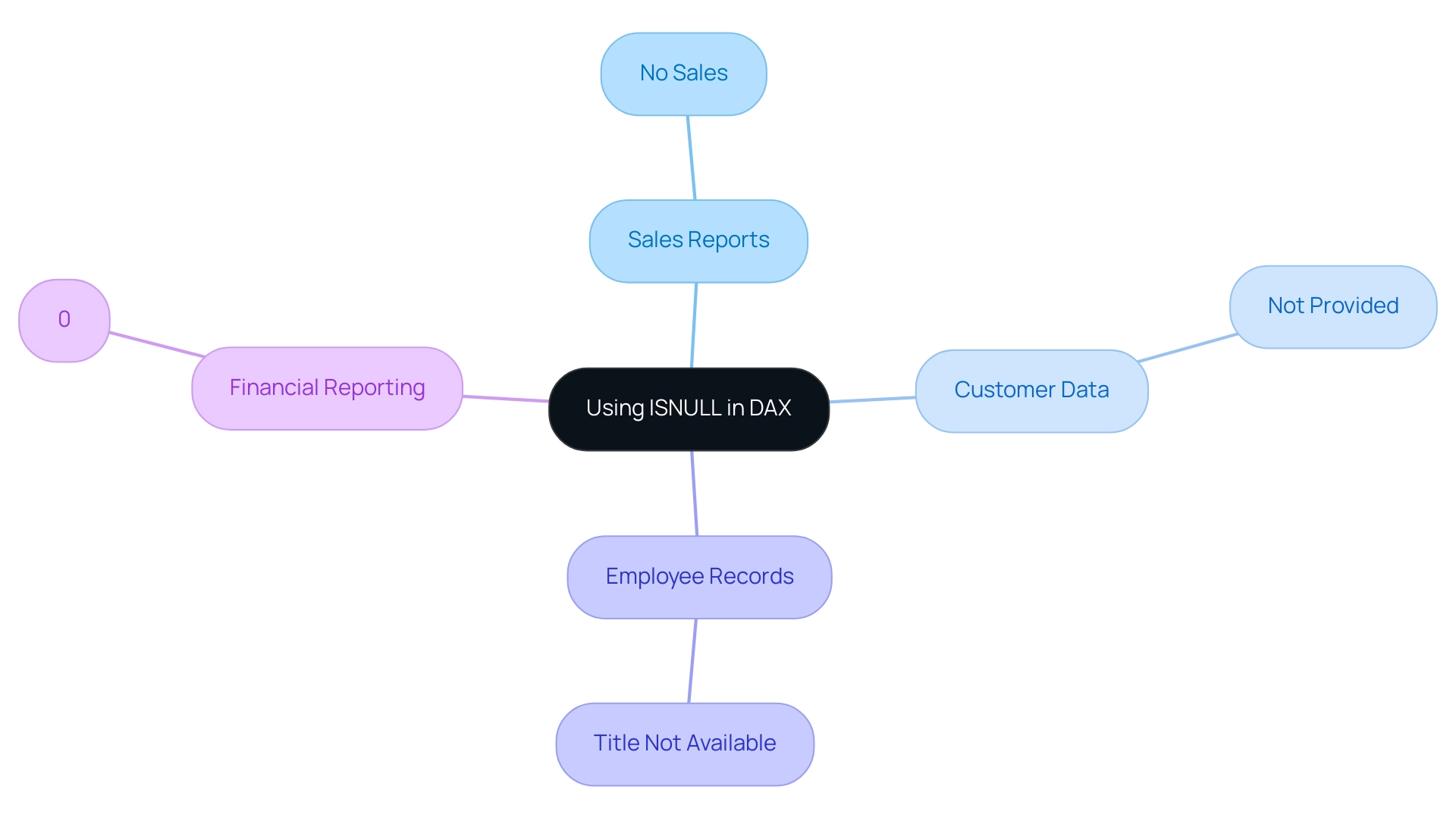

Common Scenarios for Using ISNULL in DAX

Analysts frequently encounter difficulties with null values in different contexts, which makes DAX ISNULL a crucial instrument for efficient information management. With more than 200 hours of learning and guidance from Generative AI specialists, comprehending functions like this one is vital for improving information integrity—particularly when tackling inadequate master information quality and the obstacles to AI implementation in organizations. Here are several scenarios where ISNULL can be particularly beneficial:

- Sales Reports: Null values in sales information may indicate a lack of transactions for specific products. By using ISNULL, you can present ‘No Sales’ instead of leaving this field blank, enhancing clarity for stakeholders:

DAX

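SalesStatus = IF(ISBLANK(Sales[SalesAmount]), "No Sales", FORMAT(Sales[SalesAmount], "0.00")) -- FORMAT keeps both branches text; a calculated column cannot mix text and numbers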

- Customer Data: Missing contact information in customer databases can lead to incomplete records. Applying ISNULL allows you to replace these gaps with placeholders, ensuring that your data remains comprehensive:

DAX

ContactEmail = IF(ISBLANK(Customers[Email]), "Not Provided", Customers[Email])

- Employee Records: In HR datasets, null entries for job titles can misrepresent employee roles. Using ISNULL can help fill in default values, maintaining accuracy in reporting:

DAX

JobTitle = IF(ISBLANK(Employees[Title]), "Title Not Available", Employees[Title])

- Financial Reporting: Null entries for budget categories can lead to skewed analyses. Implementing ISNULL ensures that your financial reports are clear and informative:

DAX

Budget = IF(ISBLANK(Finances[BudgetAmount]), 0, Finances[BudgetAmount])

These scenarios illustrate how the ISNULL function not only enhances data integrity but also ensures that your reports are both comprehensive and user-friendly. As mentioned by Katie Wampole, Research Data Curator, “Managing absent entries effectively is essential for preserving the quality of analysis.” Considering that a notable portion of sales reports might have null values, using COALESCE can result in more dependable analysis outcomes, consistent with best practices in information management.
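The COALESCE alternative mentioned above can be sketched as follows. This is an illustrative rewrite of two of the scenarios, not a required pattern; the column names with the "(Coalesce)" suffix are hypothetical, and the tables are the ones assumed in the earlier examples.

```dax
Budget (Coalesce) =
    -- Hypothetical calculated column on the assumed Finances table.
    -- COALESCE returns the first argument that is not BLANK.
    COALESCE ( Finances[BudgetAmount], 0 )

ContactEmail (Coalesce) =
    -- Same idea for text; note that an empty string "" is not BLANK,
    -- so it is returned as-is rather than replaced.
    COALESCE ( Customers[Email], "Not Provided" )
```

COALESCE avoids repeating the column reference, which keeps the expression shorter than the IF and ISBLANK combination when the replacement logic is this simple.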

Moreover, utilizing Small Language Models can deliver customized AI solutions that improve the use of null-handling DAX functions, while GenAI Workshops provide practical training for teams to efficiently incorporate these methods into their management workflows. By tackling these challenges and utilizing AI tools, organizations can overcome barriers to AI adoption and enhance information quality. Comparable to scikit-learn's SimpleImputer, which can substitute missing entries with the mean, mode, median, or a constant, a null check with a replacement value offers an uncomplicated method for handling gaps in DAX.

By integrating these practices into your Power BI services, you can enhance reporting efficiency and gain actionable insights.

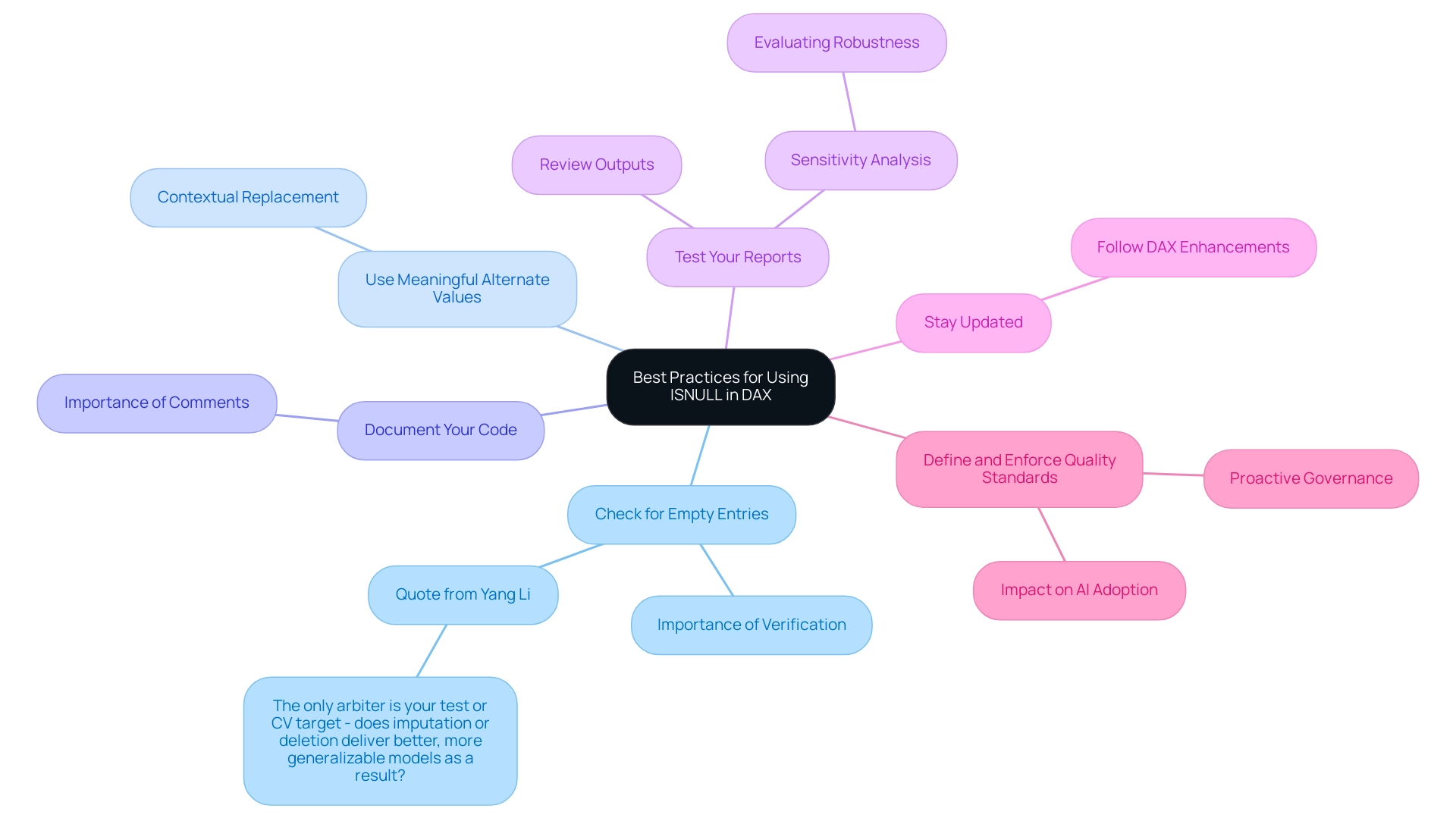

Best Practices for Using ISNULL in DAX

To maximize the effectiveness of the ISNULL function in DAX, adopting the following best practices is essential, particularly in light of the challenges posed by poor master data quality and the barriers to AI adoption in organizations:

- Always Check for Empty Entries: Before conducting any calculations, use ISBLANK (DAX has no built-in ISNULL function) to verify whether empty entries exist. This step is critical to avoid skewed results that could mislead stakeholders. As Yang Li states, “The only arbiter is your test or CV target – does imputation or deletion deliver better, more generalizable models as a result?” This quote highlights the significance of rigorously testing various information handling methods to ensure reliable insights.

- Use Meaningful Alternate Values: When replacing nulls, select substitute values that offer context. This method significantly improves the clarity of reports, making information more interpretable and decision-making more effective.

- Document Your Code: It’s vital to comment clearly on your use of the function within DAX expressions. This practice not only helps your future self in understanding the logic behind your code but also supports other team members who may work with your reports, fostering a collaborative environment focused on quality.

- Test Your Reports: After applying null checks, thoroughly review your reports to ensure that outputs align with expectations and that substitutions are relevant and accurate. Performing sensitivity analysis can assist in evaluating the robustness of your findings against assumptions made about absent information, ultimately leading to improved business intelligence.

- Stay Updated: Keep abreast of DAX updates and enhancements. As new functions emerge, they may present more efficient methods for managing null values, improving your analysis workflows and supporting your organization’s data-driven goals. This adaptability is crucial for overcoming the challenges of integrating AI into existing processes.

- Define and Enforce Quality Standards: A proactive approach to governance includes defining and enforcing clear and consistent quality standards. This practice is crucial to guarantee that information handling techniques, including the application of null-checking, result in dependable and actionable insights that enhance operational efficiency. Enhanced information quality not only improves reporting but also establishes a strong basis for successful AI adoption.

By adhering to these best practices, analysts can ensure that their use of null checks not only meets industry standards but also enhances the overall quality and reliability of their analyses, ultimately empowering organizations to leverage insights for growth and innovation. Effective data handling is integral to unlocking the full potential of Business Intelligence, enabling informed decision-making that propels business success.
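As a rough illustration of checking for empty entries before calculating and documenting the logic, the measure below guards a ratio with a blank check and explains each branch in comments. The measure name and the Sales[Quantity] column are assumptions made for this sketch.

```dax
Average Unit Price =
    -- Hypothetical measure; Sales[SalesAmount] and Sales[Quantity] are assumed columns.
    VAR TotalAmount = SUM ( Sales[SalesAmount] )
    VAR TotalQuantity = SUM ( Sales[Quantity] )
    RETURN
        IF (
            -- SUM returns BLANK when no row in the current filter has a quantity.
            ISBLANK ( TotalQuantity ),
            -- Show nothing rather than a misleading 0.
            BLANK (),
            -- DIVIDE returns BLANK instead of an error on division by zero.
            DIVIDE ( TotalAmount, TotalQuantity )
        )
```

Storing the intermediate totals in variables keeps the check and the calculation readable, and the comments record why a blank result is preferred over a zero.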

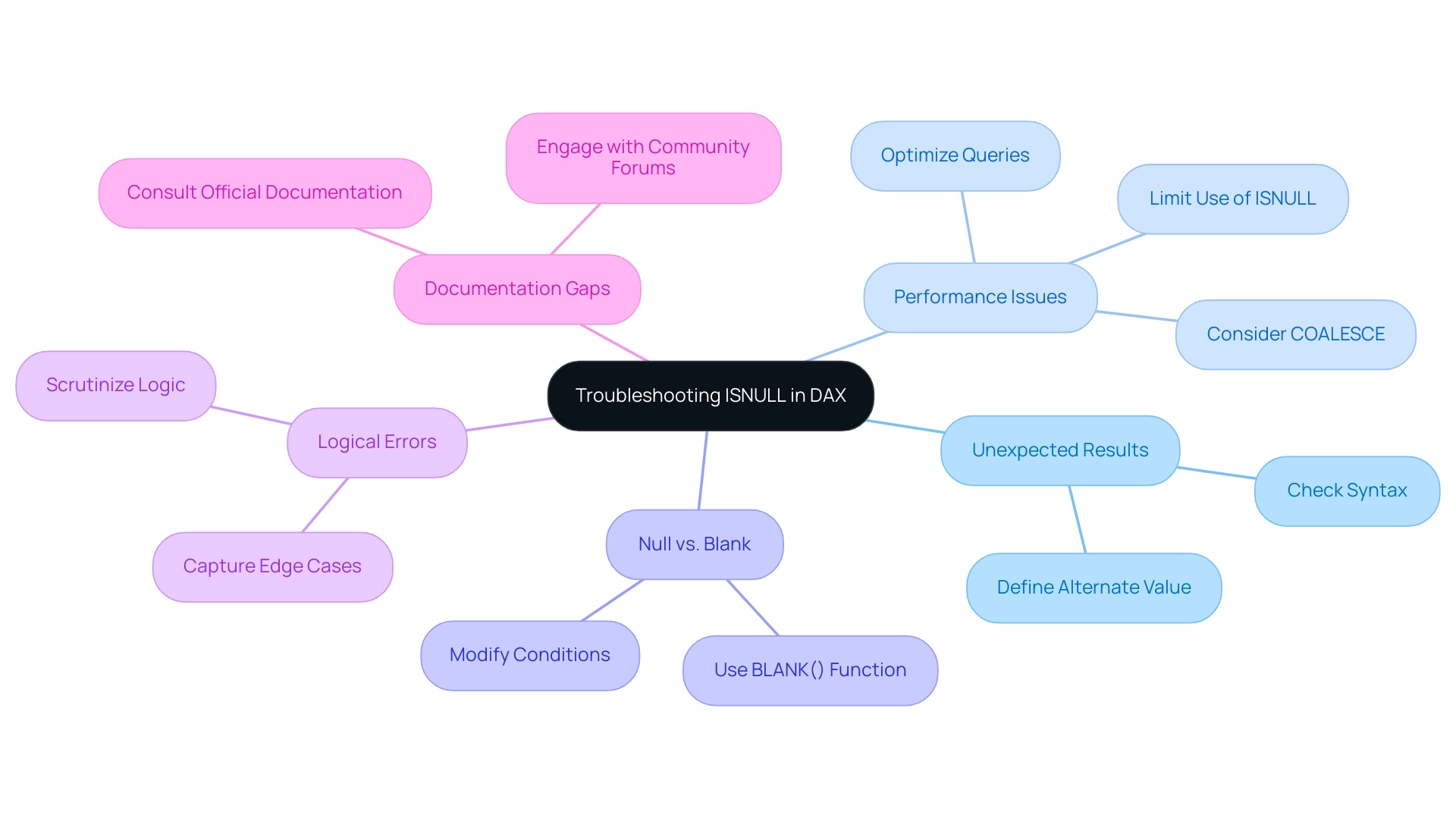

Troubleshooting Common Issues with ISNULL in DAX

When using null checks in DAX, analysts may encounter several common challenges that reflect broader issues in technology implementation. Here’s how to effectively troubleshoot them:

- Unexpected Results: If the output seems incorrect, double-check the syntax of your null-check expression. Ensure that both the expression and the alternate value are accurately defined to avoid misinterpretations. This is particularly vital in a landscape where tailored AI solutions can streamline the identification of such errors.

- Performance Issues: In large datasets, dependence on null-check functions can significantly hinder performance. For instance, a user reported a drastic reduction in runtime from 25.2 hours to 102 seconds by optimizing a SQL query that used ISNULL in conjunction with OVER. As Steve A. notes, “Watch out if using a null-check function with a subquery as the replacement_value. Seems like it runs the subquery even if the check_expression value is not NULL which doesn’t help performance.” Limit null checks to essential cases and consider alternatives like COALESCE, which can enhance efficiency, particularly in data-rich environments. RPA can also be leveraged here to automate repetitive data checks, further improving performance.

- Null vs. Blank: DAX converts nulls from the data source to BLANK, but BLANK is not the same as an empty string or a zero. ISBLANK returns TRUE only for BLANK, whereas a comparison with the BLANK() function using = also matches empty strings and zero. Use ISBLANK or the strict == operator when the distinction matters, and modify your conditions accordingly to ensure accurate results. This distinction can be crucial for leveraging Business Intelligence effectively to gain actionable insights.

- Logical Errors: Scrutinize the logic applied in your DAX expressions and make sure your null checks cover all scenarios. Additional conditions may be necessary to capture edge cases effectively, which is vital for overcoming common challenges in report creation and ensuring information consistency. RPA tools can assist in automating logic checks to enhance accuracy.

- Documentation Gaps: If uncertainties arise regarding how this function integrates with other DAX functions, consulting official documentation or engaging with community forums can yield valuable insights and enhance your understanding. This proactive approach is essential in navigating the complexities of technology implementation.
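The blank-versus-empty distinction from the list above can be made concrete with three calculated columns. This is a sketch on an assumed Customers[Email] text column; the column names are hypothetical.

```dax
Email Is Blank =
    -- Hypothetical calculated column on an assumed Customers table.
    -- ISBLANK is TRUE only for BLANK; an empty string "" is not BLANK.
    ISBLANK ( Customers[Email] )

Email Is Blank Or Empty =
    -- The = comparison with BLANK() is also TRUE for "" in a text column
    -- (and would be TRUE for 0 in a numeric column).
    Customers[Email] = BLANK ()

Email Is Strictly Blank =
    -- The strict == operator matches BLANK only, like ISBLANK.
    Customers[Email] == BLANK ()
```

Comparing these columns row by row is a quick way to find out whether unexpected results come from true blanks or from empty strings loaded from the source.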

Additionally, with the upcoming event on DAX handling of Boolean expressions scheduled for February 18-20, 2025, in Phoenix, analysts may find it advantageous to attend for further insights and networking opportunities. Such events can provide critical knowledge that aids in overcoming specific challenges related to technology implementation.

By proactively addressing these common issues, data analysts can significantly improve their proficiency with the ISNULL function, leading to more efficient data analysis and optimal performance. Furthermore, just as addressing a blockage in a ganged switch requires systematic troubleshooting, so too does mastering DAX functions demand a thorough approach to problem-solving.

Conclusion

Addressing null values in data analysis is not just a technical requirement; it is a fundamental necessity for ensuring the reliability and clarity of insights derived from data. Throughout this article, the critical role of the ISNULL function in DAX has been underscored, highlighting its ability to transform incomplete datasets into comprehensive reports. By replacing null entries with meaningful alternatives, organizations can significantly enhance the integrity of their analytics, thereby fostering informed decision-making.

The practical applications of ISNULL in various scenarios, from sales reports to financial analyses, illustrate its versatility in improving data quality. Best practices include:

- Checking for null values

- Using contextually relevant alternatives

- Documenting code

These practices are essential for maximizing the effectiveness of this function. Additionally, understanding and troubleshooting common issues with ISNULL ensures analysts can maintain optimal performance and accuracy in their reports.

Ultimately, embracing these strategies not only empowers data professionals to streamline their workflows but also contributes to a robust data-driven culture within organizations. By effectively managing null values, businesses can harness the full potential of their data, paving the way for informed strategies and sustained growth in an increasingly competitive landscape.

Overview

To delete a Power BI workspace, users must follow a straightforward step-by-step process that includes signing into their account, navigating to the workspace section, and confirming the deletion after backing up necessary data. The article emphasizes the importance of understanding the implications of deletion, such as potential data loss and access changes, which underscores the need for careful consideration and communication with team members before proceeding.

Introduction

In the dynamic landscape of data analytics, Power BI workspaces emerge as critical hubs for collaboration and insight generation. Understanding how to effectively navigate these environments can significantly enhance operational efficiency and empower teams to make informed decisions.

From the essential steps of deleting a workspace to the best practices for maintaining data integrity, this article delves into the intricacies of Power BI workspace management. It highlights the importance of strategic planning, robust data governance, and proactive communication, ensuring organizations can harness the full potential of their data while mitigating risks associated with workspace changes.

By exploring these key considerations, professionals can transform challenges into opportunities, paving the way for improved business intelligence and streamlined operations.

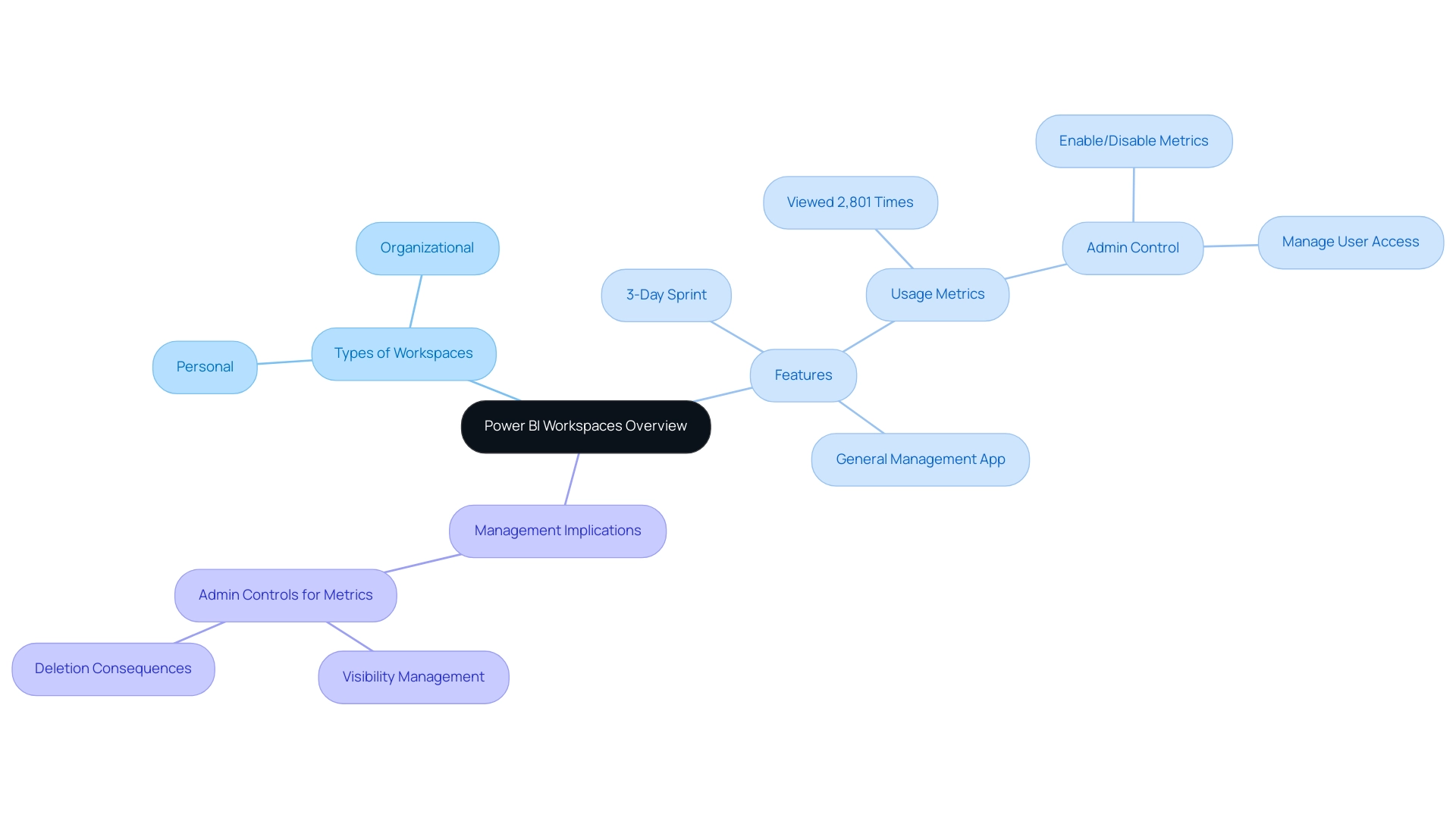

Understanding Power BI Workspaces: An Overview

Power BI workspaces act as collaborative environments where users can develop, manage, and distribute reports and dashboards efficiently, making them vital for improving reporting and obtaining actionable insights. Each workspace serves as a dedicated container for related projects, facilitating seamless teamwork and enhancing project efficiency. A clear understanding of how a workspace is structured is crucial, as it directly impacts how data is organized, accessed, and shared among users.

Notably, the usage metrics feature has been viewed 2,801 times, underscoring its relevance in managing work environments. Workspaces are classified into two primary types:

- Personal

- Organizational

Personal workspaces remain private, accessible solely to their creators, while organizational workspaces encourage collaboration by being shared among team members.

Additionally, the 3-Day Power BI Sprint allows for the rapid creation of professionally designed reports, addressing the challenge of time-consuming report generation. The General Management App further enhances operational efficiency by providing comprehensive management tools and smart reviews, enabling teams to streamline their processes effectively. However, it is important to note that there are concerns regarding the accessibility of Usage Metrics Reports, as only an admin can now provide this information that was previously available to all users.

Getting to know the nuances of these workspaces not only helps in enhancing collaboration but also gives you the understanding necessary to navigate the implications of workspace management, including the irreversible effects of deleting a Power BI workspace and the content it contains. According to the case study on Admin Controls for Usage Metrics, administrators can manage access to usage metrics, enabling or disabling metrics for specific users and managing data visibility. Recognizing these dynamics is essential for optimizing your use of Power BI and ensuring that collaborative efforts yield the desired insights.

To further explore how our solutions can address your unique challenges, consider booking a free consultation and discovering the potential of our actions portfolio tailored to enhance your business intelligence capabilities.

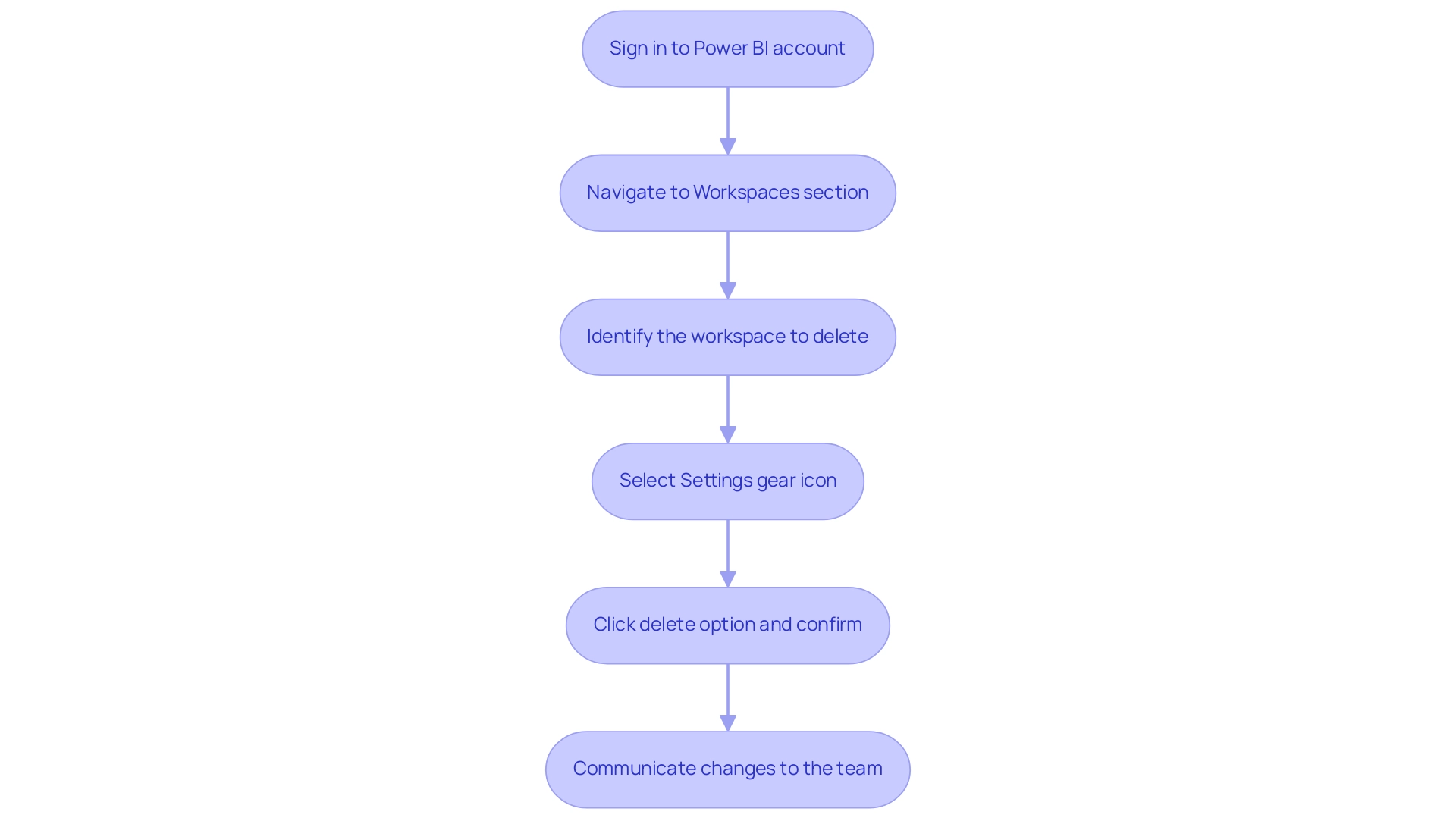

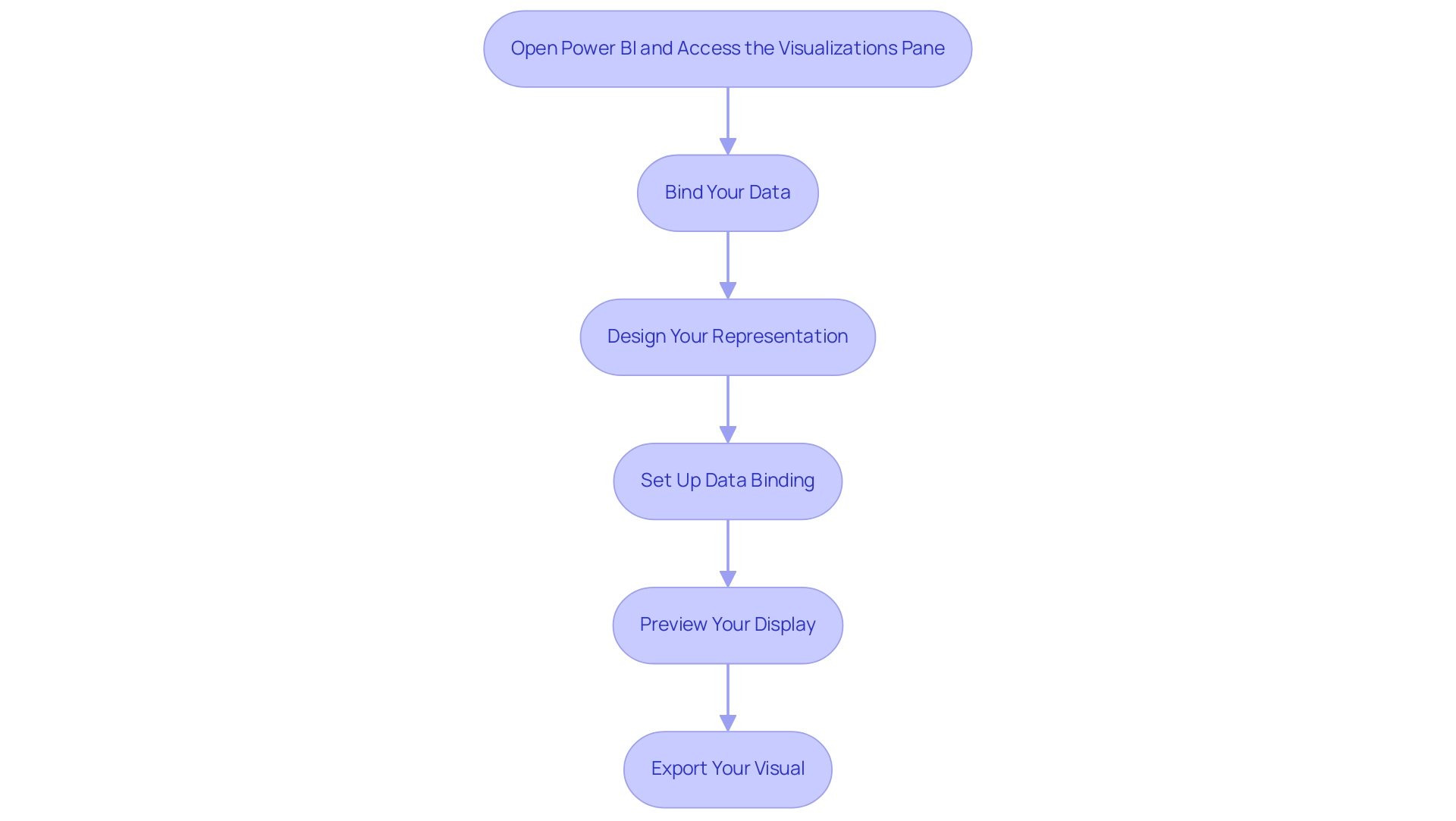

Step-by-Step Process to Delete a Power BI Workspace

To delete a Power BI workspace while maintaining operational efficiency, follow these steps:

- Sign in to your Power BI account.

- Navigate to the Workspaces section located in the left-hand menu.

- Identify the workspace you intend to delete and click it to open it.

- Select the Settings gear icon positioned in the top right corner of the workspace.

- Scroll down to the bottom of the settings page and click the option to delete the workspace. Confirm the deletion by entering the workspace name as prompted, then click Delete.

- After the deletion, it is crucial to communicate the changes to your team to prevent any potential confusion.

While the removal process is straightforward, recovering from a mistaken deletion is not. As Power BI professional PVO3 noted, “Unfortunately, I don’t think there will be an easy solution for this.”

This highlights the significance of grasping the implications of such actions, particularly considering that a workspace is treated as inactive if no reports or dashboards have been accessed in the past 60 days. Therefore, monitoring activity in the workspace before deletion is essential.

Additionally, RPA solutions such as EMMA RPA and Power Automate can be utilized to automate repetitive tasks associated with workspace management, enhancing operational efficiency. After removing the semantic model, users should refresh their browser and generate a new usage metrics report in the Power BI service to ensure precise tracking. Furthermore, it’s vital to recognize that Power BI is also offered in separate national/regional clouds, which comply with local regulations while offering the same security and privacy as the global version.

This case study illustrates how usage metrics are available in specific national/regional clouds, ensuring compliance with local data residency and access regulations. Being proactive in informing your team about these changes can mitigate disruptions and enhance overall operational efficiency, reflecting the transformative power of Business Intelligence and RPA in driving data-driven decision-making.

Key Considerations Before Deleting a Workspace

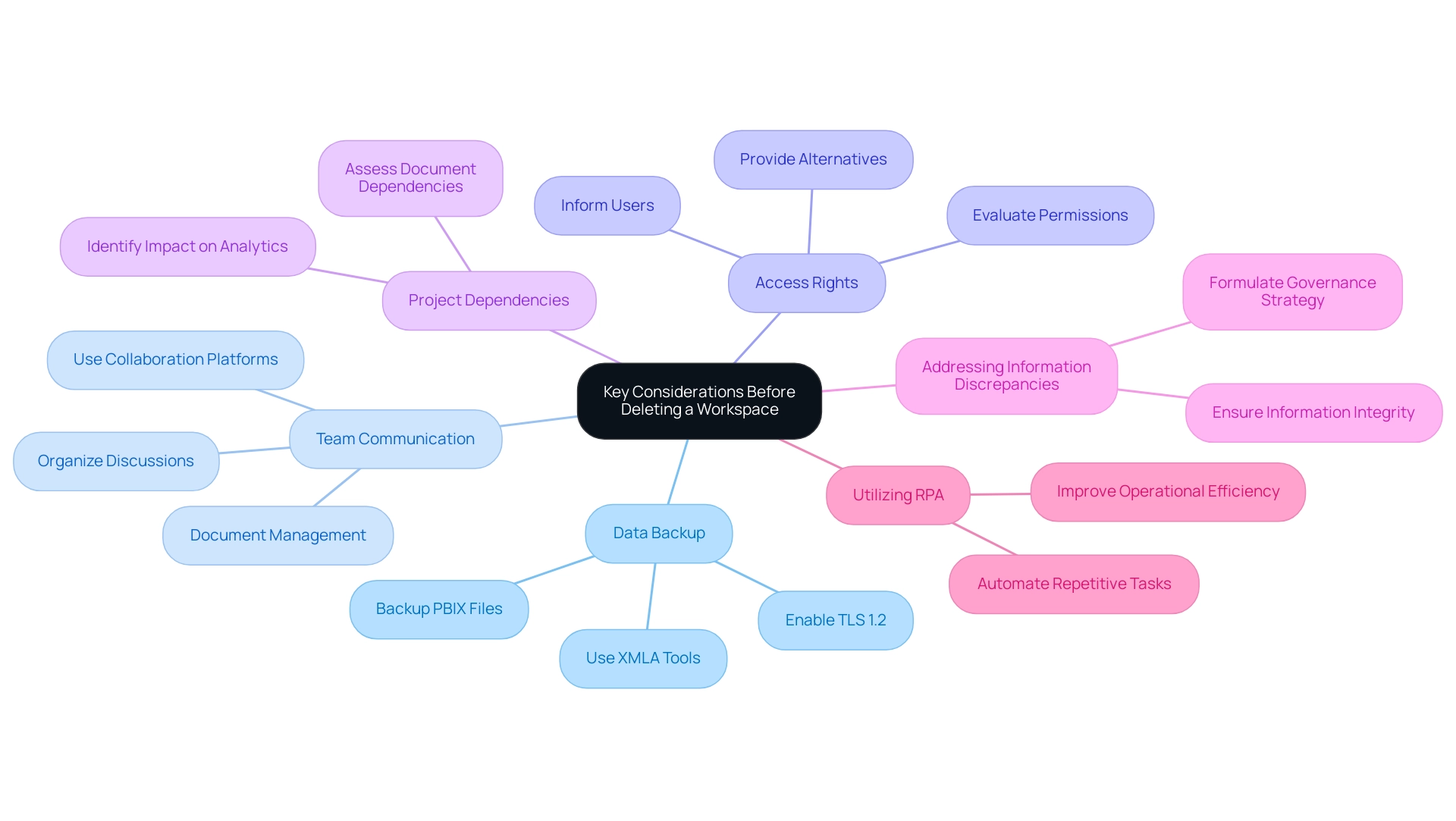

Before deleting a Power BI workspace, it is essential to consider several significant factors:

- Data Backup: Prioritize backing up all reports and datasets within the workspace. Utilize XMLA-based tools such as SQL Server Management Studio (SSMS) to create secure backups; Power BI automatically generates a backup folder when a storage account is attached to a workspace. According to the case study on the Backup and Restore Process, these operations depend on the semantic models residing on Premium or PPU capacity, and backup files are stored in a designated folder. This step is vital to prevent information loss and ensure continuity in your reporting capabilities. In an era where extracting meaningful insights is critical, ensuring your data integrity is the first step towards leveraging Business Intelligence effectively. Additionally, enabling TLS 1.2 or higher for encryption settings during the backup process is essential for securing your data.

- Team Communication: Clear communication among team members is essential before any workspace deletion. Informing the team helps mitigate confusion and avoids disruptions in ongoing projects. As emphasized by Johanna, a Continued Contributor, using a collaboration platform like Microsoft Teams can facilitate effective communication regarding such changes, allowing for organized discussions and documentation management. Liu Yang also emphasized that the ‘Power BI Service’ connector enables users to build reports without being able to remove the dataset, reinforcing the significance of maintaining backups in Desktop files. Regular communication also helps with the frequent issue of time-intensive report preparation by ensuring that everyone is aligned on how data is used.

- Access Rights: Perform a comprehensive evaluation of the existing access permissions to the workspace. Deleting the Power BI workspace revokes access for all users, potentially impacting their work. Ensuring that everyone is aware of this change and has alternative access options is critical to maintain operational efficiency.

- Project Dependencies: Assess any dependencies that other reports or dashboards may have on the workspace. Deleting the Power BI workspace could adversely affect their functionality, leading to unexpected issues in your analytics and reporting processes. A lack of actionable guidance in reports can be a considerable obstacle, and understanding these dependencies helps preserve clarity and direction.

- Addressing Information Discrepancies: It is also essential to consider the confusion and mistrust that discrepancies across reports can cause. Formulating a governance strategy helps guarantee information integrity and trustworthiness, which is vital for efficient decision-making.

- Utilizing RPA: Think about how Robotic Process Automation (RPA) can ease the difficulties encountered in the context of Business Intelligence. By automating repetitive tasks, RPA can simplify report creation and improve operational efficiency, enabling your team to concentrate on deriving actionable insights instead of being hindered by manual processes.

By addressing these factors, you not only protect your information but also enhance team effectiveness and uphold project integrity throughout the process. Remember, always create a backup of the PBIX file before making any modifications, and consider the ramifications of your actions on the broader BI environment. In a landscape where data-driven insights fuel growth, these steps are essential for empowering your organization and ensuring informed decision-making.

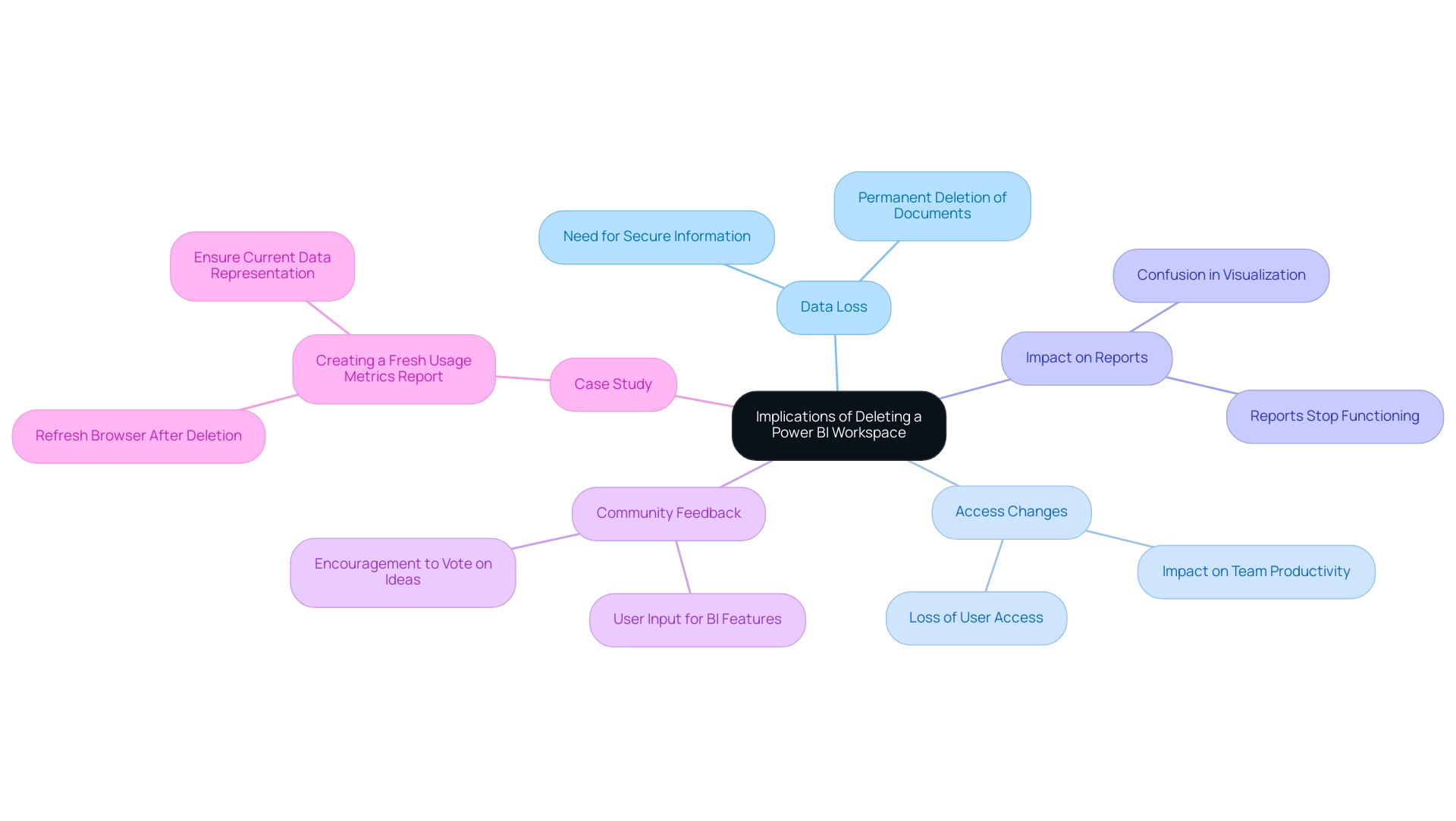

Implications of Deleting a Power BI Workspace

Deleting a Power BI workspace carries significant implications that every Director of Operations Efficiency should be aware of:

- Data Loss: Deleting a workspace results in the permanent deletion of all associated reports, dashboards, and datasets. Considering the frequent issue of investing too much time in report preparation instead of utilizing insights, it is essential to secure any important information before moving forward with removal to reduce this risk. Moreover, having clear actionable guidance on what to do with the information before you delete the Power BI workspace can streamline the process and ensure that critical insights are not lost.

- Access Changes: Users will lose access to the workspace and any shared content, potentially leading to disruptions in ongoing projects and hindering collaborative efforts. This can significantly affect team productivity and overall information accessibility, compounding the challenges of maintaining consistent information across reports. A well-defined governance strategy can assist in managing user access and preventing such disruptions.

- Impact on Reports: Reports and dashboards associated with the deleted workspace will stop functioning, which can create confusion or mistakes in visualization. As noted by Season from the Community Support Team, once a workspace has been deleted for 90 days, its status changes to ‘Removing,’ and the associated datasets will no longer appear in the ‘Refresh Summary’ of the Admin portal. This emphasizes the importance of having a governance framework to manage information dependencies efficiently.

- Community Feedback: Community members are encouraged to vote on ideas for Microsoft to consider concerning Power BI features, underscoring the significance of user input in enhancing management practices and tackling common challenges.

- Case Study: After the removal of a semantic model, users are advised to refresh their browsers and create a new usage metrics report in the Power BI service. This practice ensures that the metrics report reflects the most up-to-date data available, facilitating informed decision-making and precise reporting.

Being aware of these implications enables you to navigate the complexities of managing BI environments effectively, ensuring minimal disruption and maintaining operational efficiency while addressing the prevalent challenges in data governance and actionable insights.

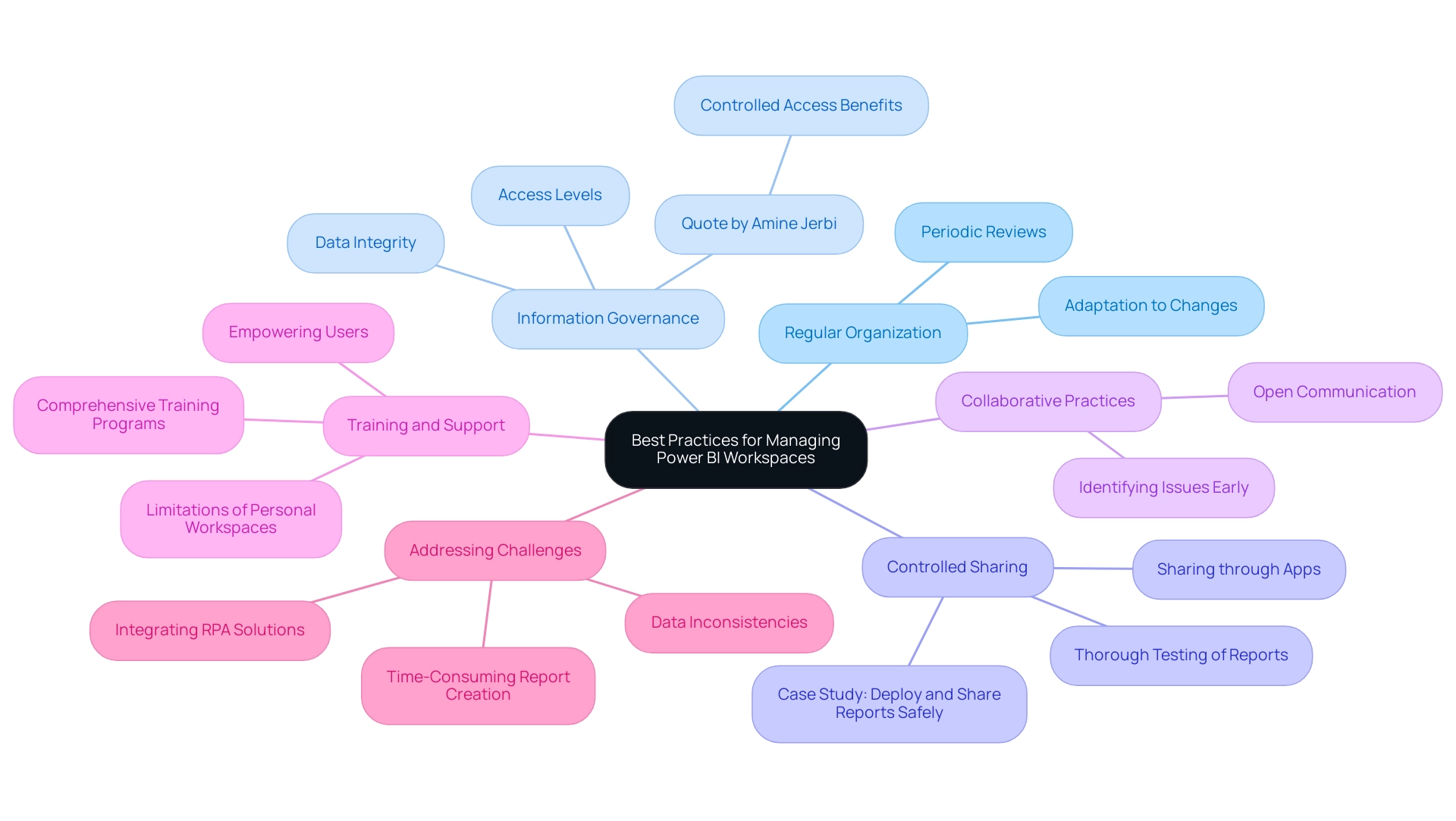

Best Practices for Managing Power BI Workspaces

To effectively manage Power BI workspaces, implementing the following best practices is essential:

-

Regular Organization: Schedule periodic reviews of your workspaces to keep them organized and efficient. This practice ensures that your workspaces remain relevant to your current projects and organizational goals, adapting to any changes in your operational landscape. Leveraging Business Intelligence tools effectively can help streamline this process and enhance your decision-making capabilities.

-

Information Governance: Establish robust information governance policies that dictate access levels and promote integrity. By clearly defining who can access what, you safeguard sensitive information and enhance overall information security. As Amine Jerbi aptly states, > Having the same item in two different environments with limited access helps recover it, emphasizing the value of controlled access in data management. With 3,202 users currently online, the scale of Power BI usage highlights the necessity for stringent governance measures to avoid inconsistencies that could hinder insights.

-

Controlled Sharing: The case study titled “Deploy and Share Reports Safely” illustrates the importance of thoroughly testing reports before deployment and sharing them through Apps or collaborative environments. This approach minimizes the risk of disseminating incorrect information and enhances data security, reinforcing the need for controlled sharing practices to ensure reliable insights that drive operational decisions.

- Collaborative Practices: Encourage open communication among team members regarding workspace usage and updates. Regular collaboration helps identify potential issues early and promotes a shared understanding of workspace functionality, ultimately leading to more effective usage and fostering a culture of data-driven decision-making.

- Training and Support: Invest in comprehensive training for team members on workspace management and best practices. Well-informed users are better equipped to navigate the complexities of Power BI, enhancing overall efficiency and productivity. This emphasis on training not only empowers your team but also aligns with the recent trend of integrating workspace management best practices into daily operations. Additionally, it is crucial to acknowledge that personal workspaces have limitations in sharing content and cannot publish Power BI apps, which are essential for content distribution within organizations.

- Addressing Challenges: It is vital to recognize the challenges associated with leveraging insights from BI dashboards, such as time-consuming report creation, data inconsistencies, and the lack of actionable guidance. By implementing the best practices outlined above, organizations can mitigate these challenges, ensuring that their BI environments are well-managed, secure, and aligned with their operational objectives, which can help prevent the need to delete a Power BI workspace. Moreover, integrating RPA solutions can automate repetitive tasks, further enhancing efficiency and effectiveness in workspace management.

By following these guidelines and integrating RPA solutions alongside BI practices, organizations can ensure their Power BI environments are well-managed, secure, and aligned with their operational objectives, ultimately driving growth and innovation.
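The governance point above, clearly defining who can access what, can be illustrated with a small audit sketch. It is only an illustration under stated assumptions: the four role names are the standard Power BI workspace roles, but the `policy` and `assignments` dictionaries, the user names, and the `find_over_privileged` helper are hypothetical, and a real audit would read role assignments from your tenant rather than from hard-coded data.

```python
# Power BI workspace roles, ordered from least to most privileged.
ROLE_RANK = {"Viewer": 0, "Contributor": 1, "Member": 2, "Admin": 3}


def find_over_privileged(assignments, policy):
    """Return (user, actual_role, allowed_role) for users above their policy maximum."""
    findings = []
    for user, role in assignments.items():
        # Users missing from the policy default to the least-privileged role.
        allowed = policy.get(user, "Viewer")
        if ROLE_RANK[role] > ROLE_RANK[allowed]:
            findings.append((user, role, allowed))
    return sorted(findings)


# Hypothetical policy (maximum role per user) and current workspace assignments.
policy = {"alice": "Admin", "bob": "Contributor"}
assignments = {"alice": "Admin", "bob": "Member", "carol": "Contributor"}

issues = find_over_privileged(assignments, policy)
for user, actual, allowed in issues:
    print(f"{user}: has {actual}, policy allows at most {allowed}")
```

Defaulting unknown users to Viewer is a deliberate least-privilege choice in this sketch; a different default may suit organizations with other policies.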

Conclusion

Power BI workspaces are indispensable for fostering collaboration and driving insightful decision-making within organizations. Understanding the nuances of workspace management—ranging from the deletion process to the implications of such actions—enables teams to maintain data integrity and operational efficiency. By prioritizing data backup, ensuring clear communication, and conducting thorough assessments of access rights and project dependencies, organizations can safeguard their data while minimizing disruption.

Implementing best practices such as regular organization, robust data governance, and controlled sharing further enhances the effectiveness of Power BI workspaces. These strategies not only streamline processes but also empower teams to focus on deriving actionable insights rather than getting bogged down by administrative challenges. Additionally, leveraging Robotic Process Automation can significantly alleviate the burden of repetitive tasks, allowing teams to dedicate their efforts to strategic initiatives that drive growth.

In an era where data-driven decision-making is paramount, adopting a proactive approach to managing Power BI workspaces is essential. By embracing these practices, organizations can transform potential challenges into opportunities, ensuring that their Power BI environments contribute meaningfully to their business intelligence objectives. The path to operational excellence lies in the thoughtful management of data resources, paving the way for informed decisions that propel organizations forward.

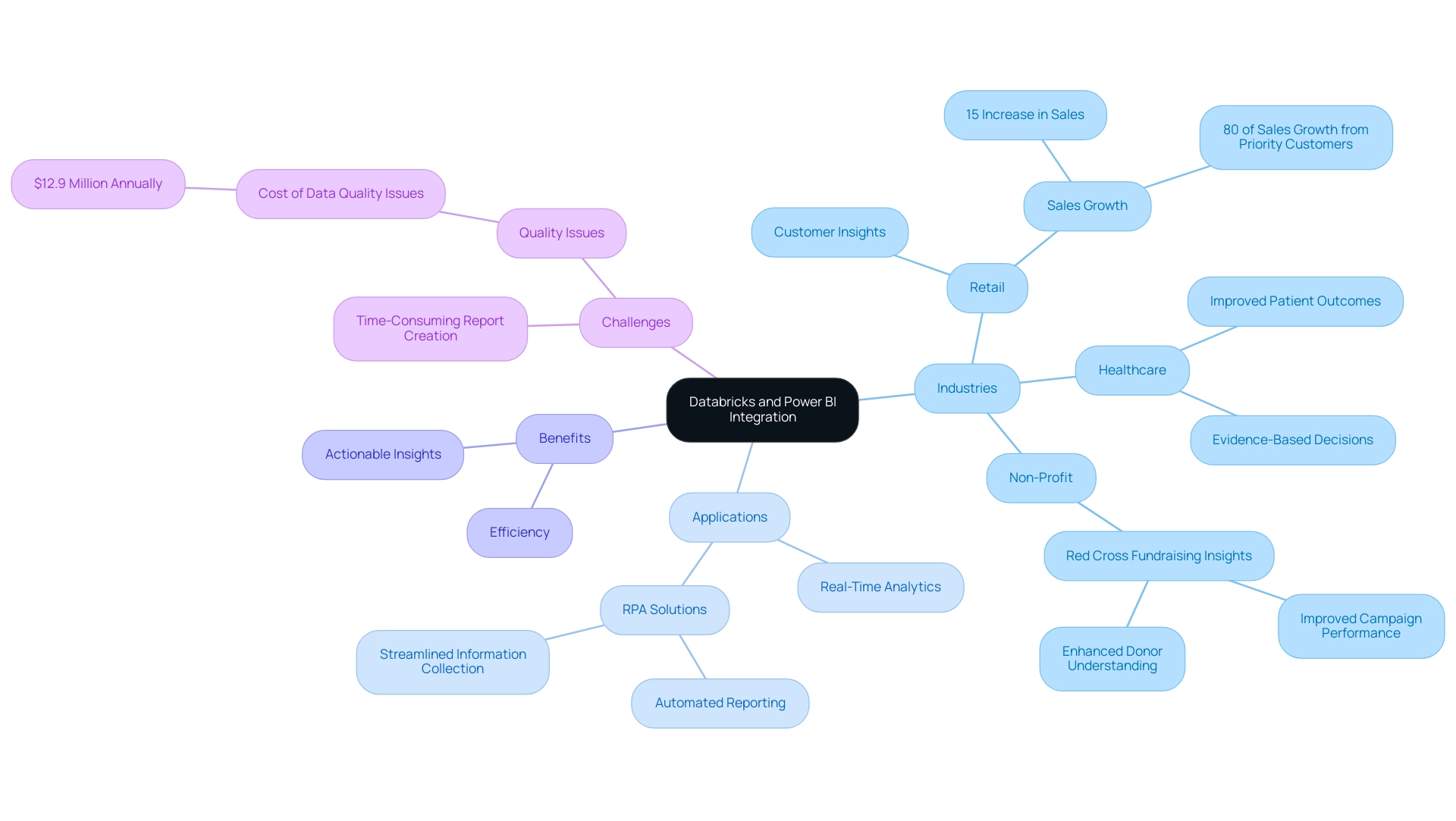

Overview

Databricks and Power BI integration enhances analytics capabilities by enabling organizations to visualize and analyze large datasets efficiently, ultimately improving decision-making and operational efficiency. The article discusses how this integration addresses challenges such as data latency and report creation time while showcasing real-world applications that demonstrate significant business benefits, such as increased sales and improved patient outcomes.

Introduction

In the rapidly evolving landscape of data analytics, the integration of Databricks and Power BI stands out as a game-changer for organizations seeking to harness the full potential of their data. By combining the robust capabilities of Databricks—known for its powerful big data processing and machine learning features—with the dynamic visualization tools of Power BI, businesses can unlock actionable insights that drive strategic decision-making.

However, this integration is not without its challenges; issues such as data latency, security concerns, and the complexities of report generation can impede progress. Yet, with the right strategies and tools, including Robotic Process Automation (RPA), organizations can streamline their workflows, enhance operational efficiency, and ultimately transform their data into a competitive advantage.

This article delves into best practices, real-world applications, and practical solutions to empower organizations in their journey towards data-driven excellence.

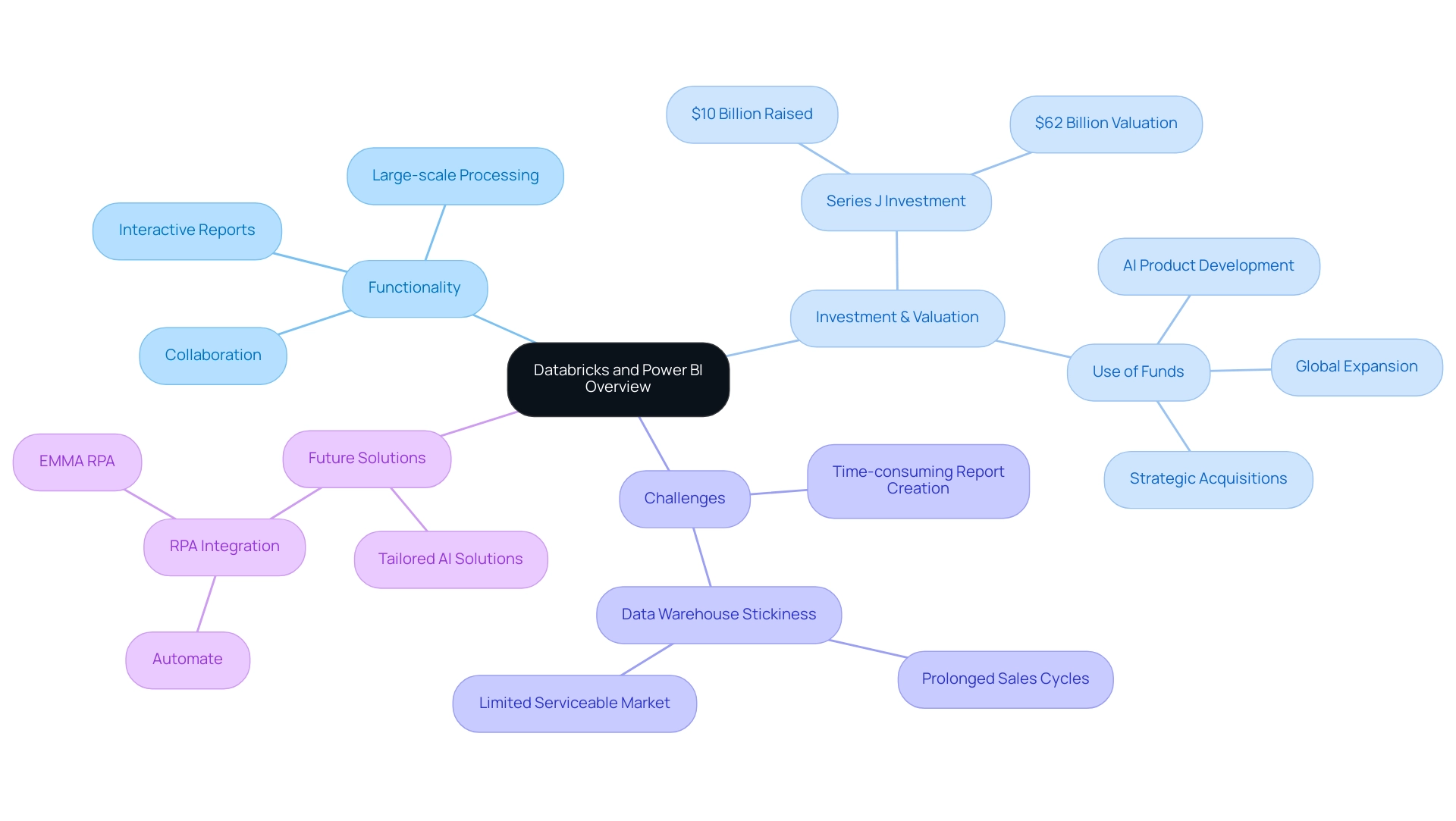

Understanding Databricks and Power BI: A Comprehensive Overview

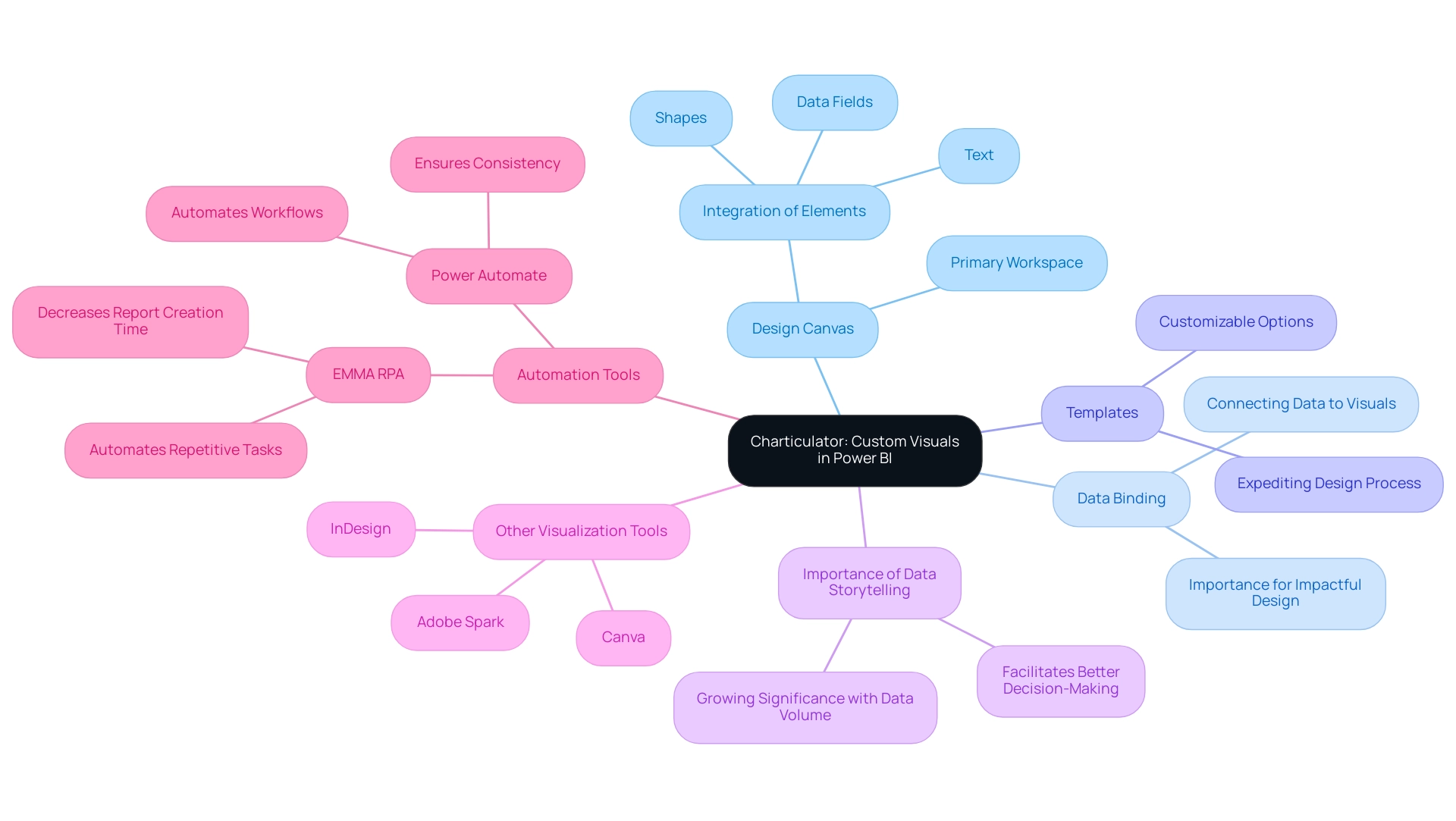

Databricks is a unified analytics platform intended to simplify large-scale data processing and machine learning projects, offering a strong foundation for Business Intelligence. By leveraging Apache Spark, it fosters collaboration between data scientists and data engineers within a cloud-based ecosystem, enabling them to perform large-scale analytics efficiently. The company raised a $10 billion Series J investment at a $62 billion valuation as of December 17, 2024, underscoring its financial strength and market position.

As noted by Sacra, ‘Databricks closed its Series J funding round in December 2024, raising $10 billion in non-dilutive financing, with $8.6 billion completed to date.’ Power BI, meanwhile, distinguishes itself as a leading business analytics application that enables users to visualize data and share insights across the organization. By connecting to a wide range of data sources, including Databricks, it facilitates the creation of interactive reports and dashboards that enhance insight-driven decision-making.

However, challenges persist in leveraging insights effectively, such as time-consuming report creation and data inconsistencies. The integration of Databricks and Power BI not only enables companies to analyze large datasets but also transforms the way they visualize insights, ultimately enhancing business intelligence and operational efficiency. Additionally, RPA solutions, such as EMMA RPA and Automate, can automate repetitive tasks, enhancing efficiency and employee morale.

As the AI landscape continues to evolve, tailored solutions that address these challenges can empower organizations to cut through the noise and fully leverage their data for growth.

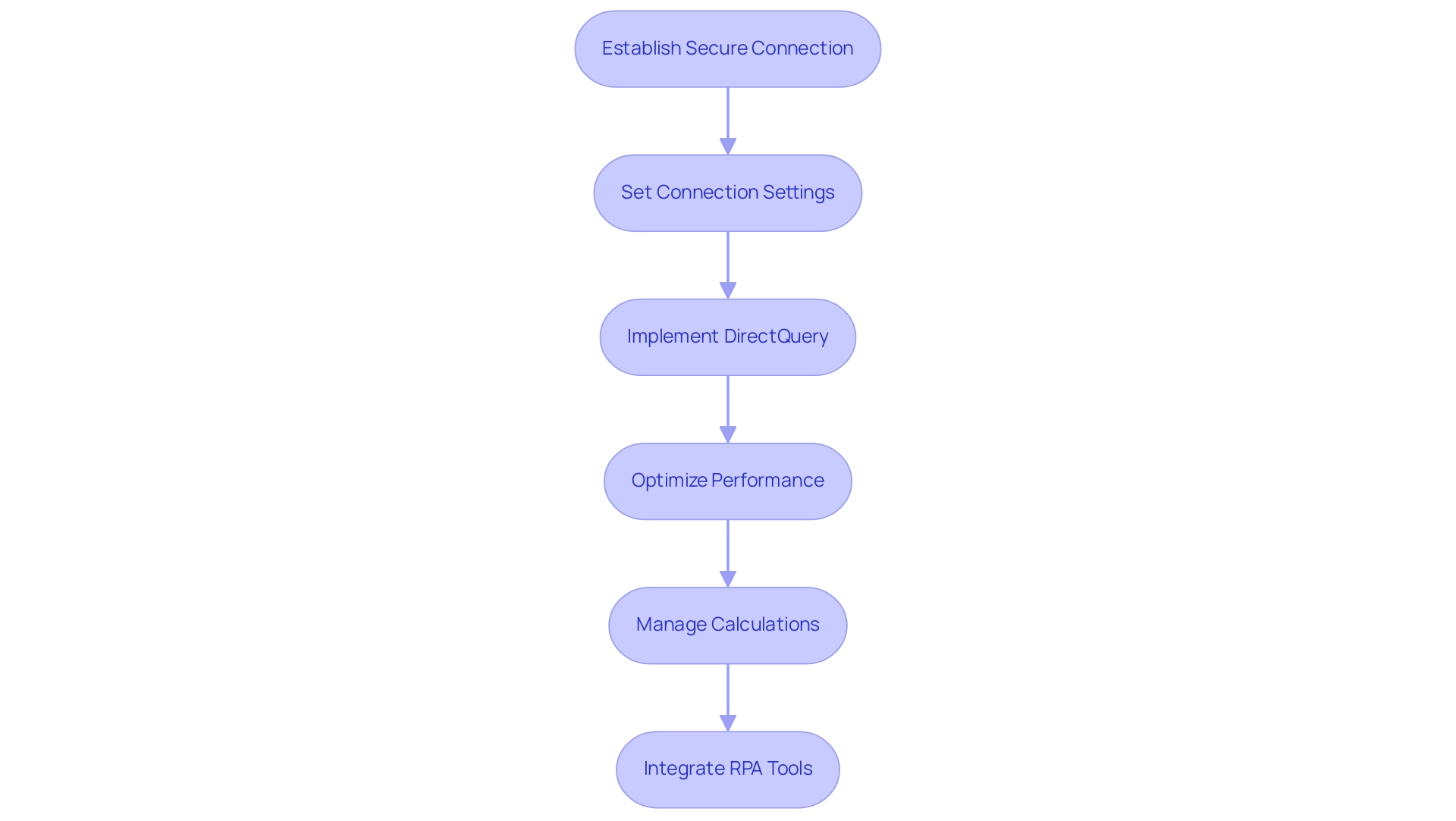

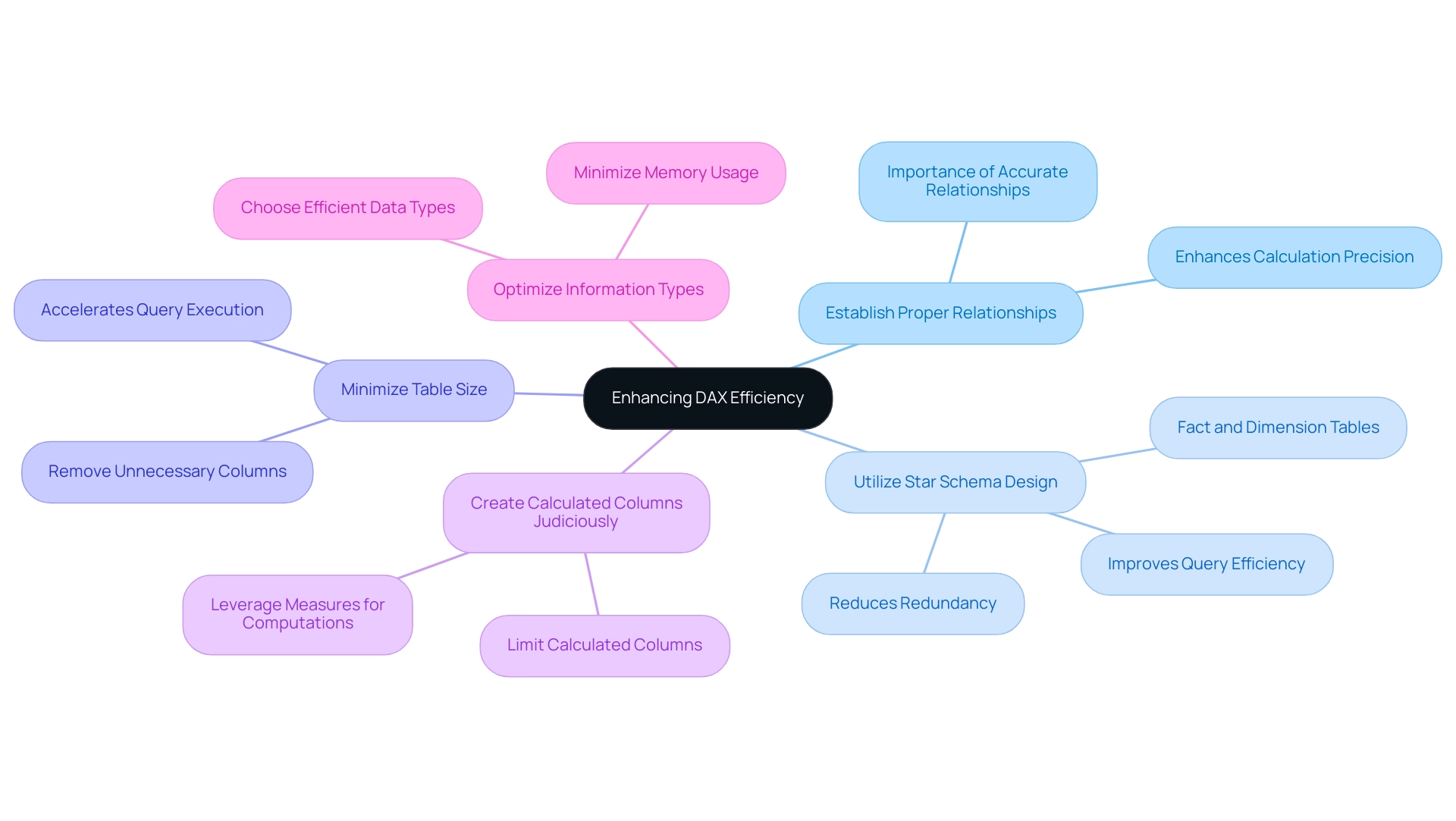

Integrating Databricks with Power BI: Best Practices and Techniques

To integrate Azure Databricks with Power BI and make full use of its analytical capabilities, the first step is to establish a secure connection through Azure. The Azure Databricks connector within Power BI lets users connect directly to their Databricks workspace, which helps address time-consuming report creation. It is vital to configure the connection settings precisely, including the cluster configuration, to ensure good performance and data consistency.

Notably, the Azure Databricks connector restricts the number of imported rows to the Row Limit established previously, which underscores the need for careful data management. Using DirectQuery lets reports query the source directly, giving teams access to current data without manual dataset refreshes. Moreover, performance optimization techniques, such as caching frequently used queries and using materialized views in Databricks, can significantly enhance operational efficiency.

As illustrated in the case study on ‘Understanding Lazy Evaluation,’ avoiding unnecessary recomputation by saving computed results for reuse can lead to optimized performance. Additionally, to manage calculations effectively in BI, it is advisable to prefer calculated columns over measures when necessary and to optimize DAX queries with simpler functions. Furthermore, integrating RPA tools like EMMA RPA and Automate can streamline these processes, reduce manual workloads, and ultimately improve employee morale by allowing staff to focus on more strategic tasks.

As noted by Jules Damji, “Often, small things make a huge difference, hence the adage that some of the best ideas are simple!”

By adhering to these best practices and leveraging the right RPA solutions, organizations can achieve a seamless integration that not only enhances their analytical capabilities but also empowers informed decision-making, ultimately driving business growth and innovation.

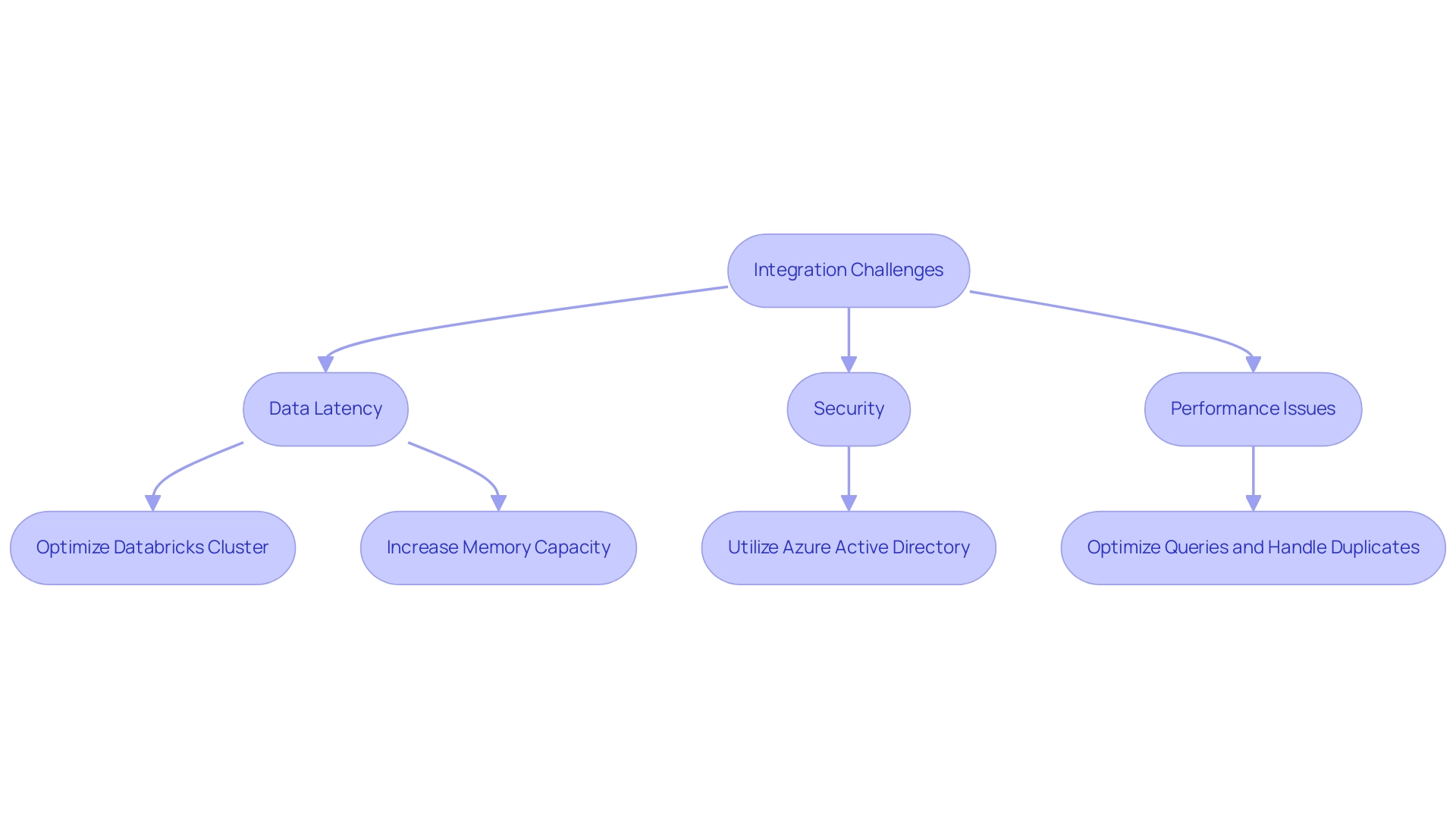

Challenges and Solutions in Databricks and Power BI Integration

Integrating Databricks with Power BI can present considerable difficulties, especially regarding data latency, security, and performance. Addressing these challenges is essential for enhancing operational efficiency. Data latency is a common hurdle, often stemming from suboptimal connections that delay report generation.

To combat this, ensure your Databricks cluster is adequately sized and configured to handle the expected workload effectively. Yalan Wu, a community support expert, emphasizes the importance of resource allocation, stating, “Power BI runs on memory. Thus, the larger the collection of information, the more memory it will need. Make sure you have plenty of memory, and that you are on a 64-bit system.”

This insight is vital for optimizing performance in data-heavy environments. Moreover, leveraging Delta Lake’s ACID compliance at the table level ensures reliable information handling, which is crucial for maintaining integrity during integration processes.

Security remains a paramount concern; using Azure Active Directory (now Microsoft Entra ID) for authentication can significantly strengthen access protection. Performance issues frequently arise from complex queries and duplicate records, which can be mitigated by using the duplicate-handling features of Databricks and Power BI and by applying query optimization techniques. The classic trade-off between throughput and latency further complicates performance, as highlighted in case studies where technologies such as Photon and Delta Lake were developed to improve both metrics.

For example, organizations have used RPA to automate repetitive data preparation tasks, greatly decreasing the time needed for report creation and reducing mistakes. By proactively tackling these challenges with RPA and Business Intelligence strategies, companies can significantly improve their integration experience, resulting in more efficient data management and informed decision-making.
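Duplicate records, named above as a frequent source of performance problems, can be removed before data reaches a report. The following sketch shows one simple approach in plain Python, assuming that a record is a duplicate when its key fields match an earlier record; the `drop_duplicates` helper, the `order_id` key, and the sample orders are hypothetical, and in practice the same step would more likely be done in Databricks or Power Query.

```python
from typing import Dict, Iterable, List, Sequence


def drop_duplicates(rows: Iterable[Dict], key_fields: Sequence[str]) -> List[Dict]:
    """Keep the first row seen for each key, preserving the input order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row[field] for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Made-up sample data; the third row repeats the first.
orders = [
    {"order_id": 1, "customer": "A", "amount": 10.0},
    {"order_id": 2, "customer": "B", "amount": 25.0},
    {"order_id": 1, "customer": "A", "amount": 10.0},
]

clean = drop_duplicates(orders, key_fields=("order_id",))
print(len(orders), "rows in,", len(clean), "rows out")
```

Keeping the first occurrence is an arbitrary choice here; if later records should win (for example, corrected amounts), the rows would need to be ordered accordingly before deduplication.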

Real-World Applications of Databricks and Power BI Integration

Organizations across diverse industries are leveraging the combined power of Databricks and Power BI to enhance their analytics capabilities. A notable example includes a retail company that effectively utilized this integration to analyze customer purchasing patterns, leading to a remarkable 15% increase in sales within just one quarter. This surge can be attributed to their customized marketing strategies, driven by real-time information insights.

In fact, PepsiCo’s priority customers accounted for 80% of the product’s sales growth in the first 12 weeks after launch, showcasing the significant impact of analytics in retail. However, challenges such as time-consuming report creation and inconsistencies often hinder effective insights from Power BI dashboards. As Lara Rachidi, a Solutions Architect, observes, ‘Quality problems in information cost the typical enterprise $12.9 million annually,’ highlighting the significance of integrity in these processes.

To alleviate these challenges, RPA solutions can automate repetitive tasks, streamlining data collection and reporting processes, thus enhancing overall efficiency. In the healthcare industry, another organization used BI dashboards built on Databricks and Power BI to analyze treatment data, enabling evidence-based decisions that greatly improved patient outcomes. Moreover, the Red Cross implemented Power BI for enhanced fundraising insights, improving campaign performance and donor understanding.

These real-world applications underscore how the synergy of these advanced tools, along with RPA solutions, not only fosters operational improvements but also provides a strategic edge in today’s competitive landscape, empowering organizations to transform raw data into actionable insights that drive growth and innovation.

Conclusion

Harnessing the integration of Databricks and Power BI can profoundly transform an organization’s approach to data analytics. By leveraging Databricks’ powerful big data processing alongside Power BI’s dynamic visualization capabilities, businesses can unlock actionable insights that drive strategic decision-making. However, challenges such as data latency, security, and report generation complexities must be navigated effectively to realize the full potential of this integration.

Implementing best practices—like establishing secure connections, optimizing performance, and employing RPA tools—can significantly enhance operational efficiency. Organizations that prioritize these strategies can streamline workflows, reduce manual workloads, and empower their teams to focus on strategic initiatives rather than repetitive tasks. Real-world applications demonstrate that when these platforms are effectively integrated, they can lead to substantial improvements in:

- Sales

- Patient outcomes

- Operational performance

In an era where data-driven decision-making is crucial for competitive advantage, embracing the integration of Databricks and Power BI is not just a technical upgrade; it is a strategic imperative. By proactively addressing the challenges and leveraging the capabilities of these powerful tools, organizations can transform their data into a formidable asset, ultimately fostering innovation and growth in a rapidly evolving business landscape.

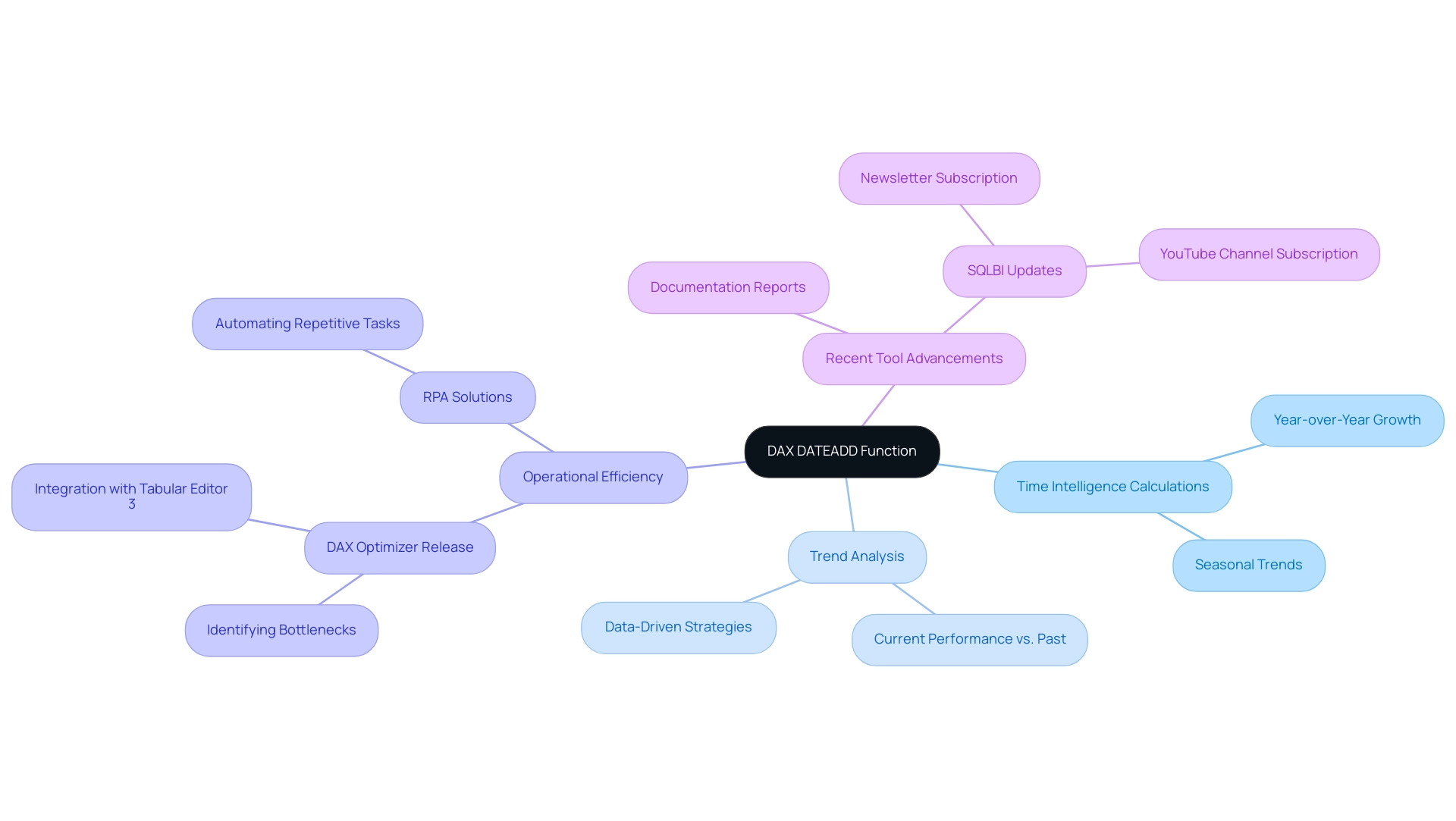

Overview

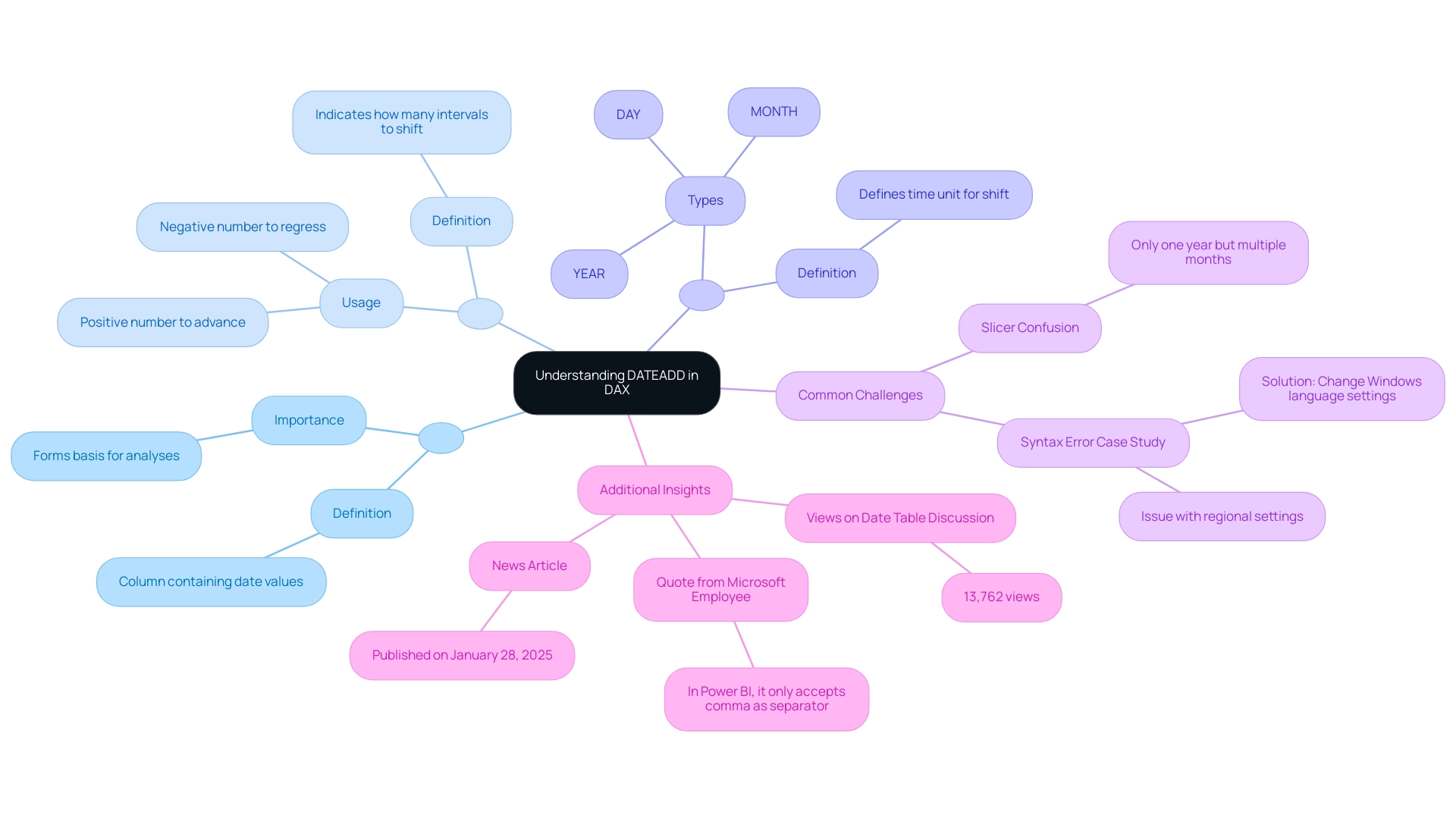

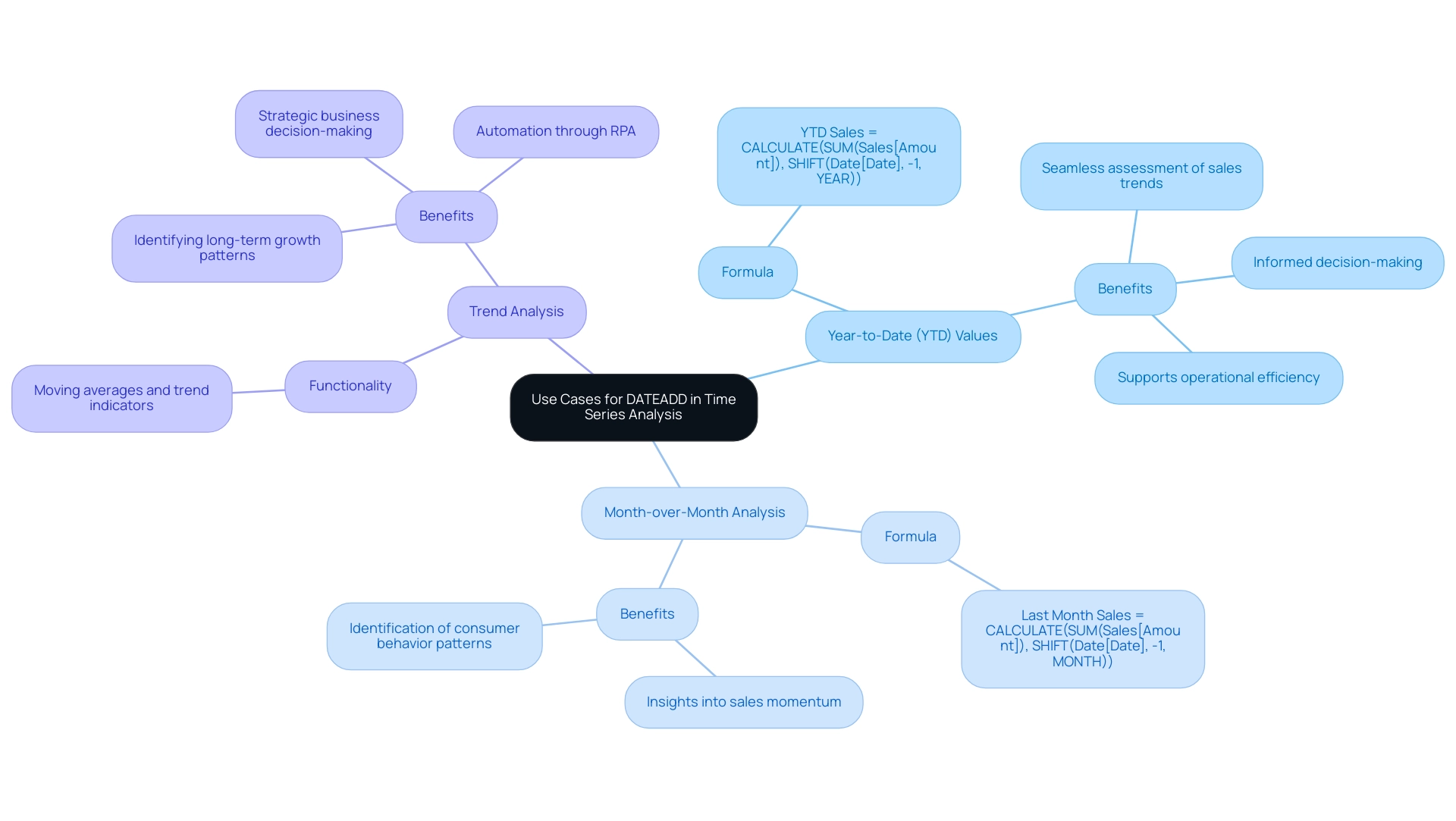

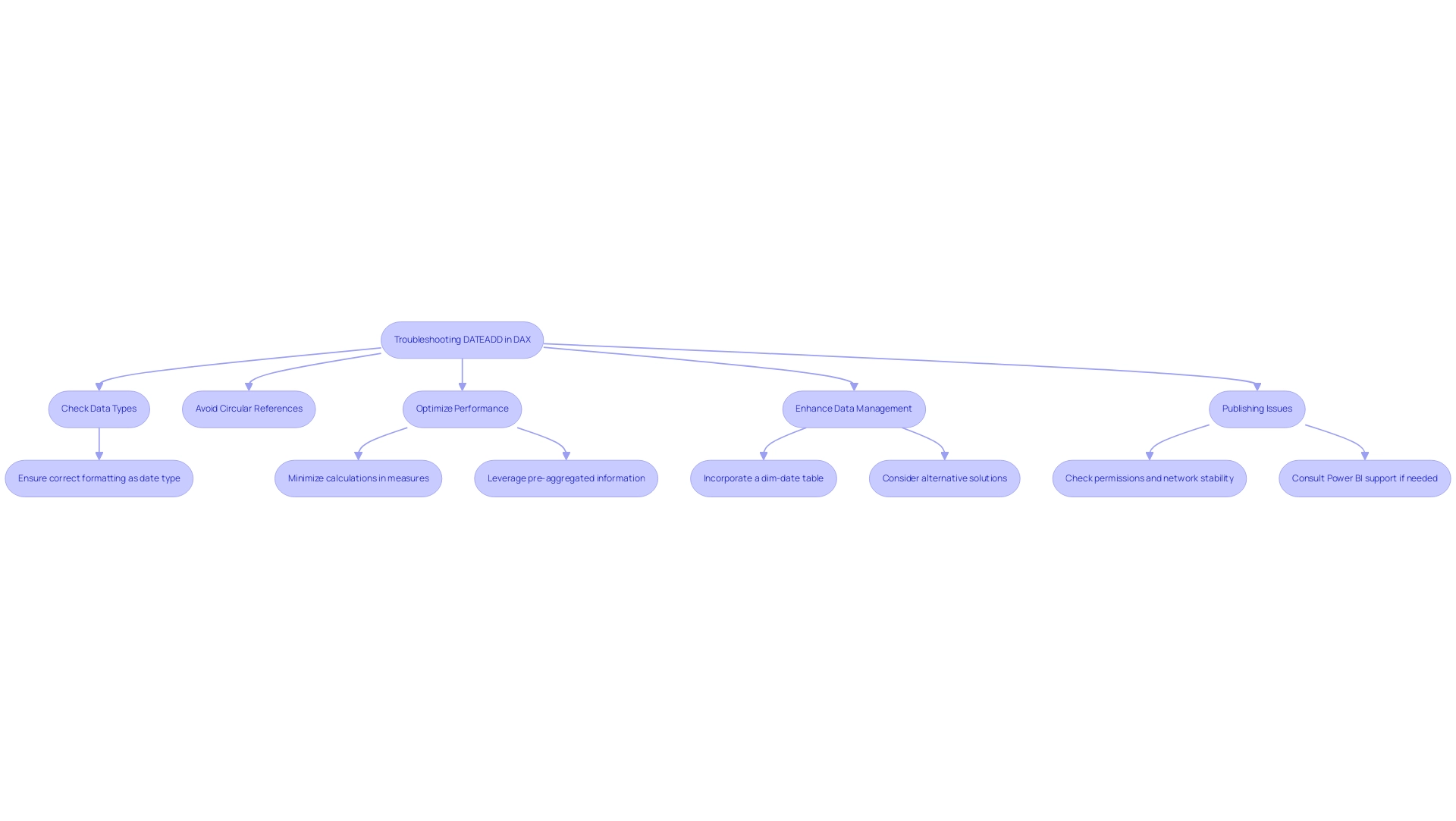

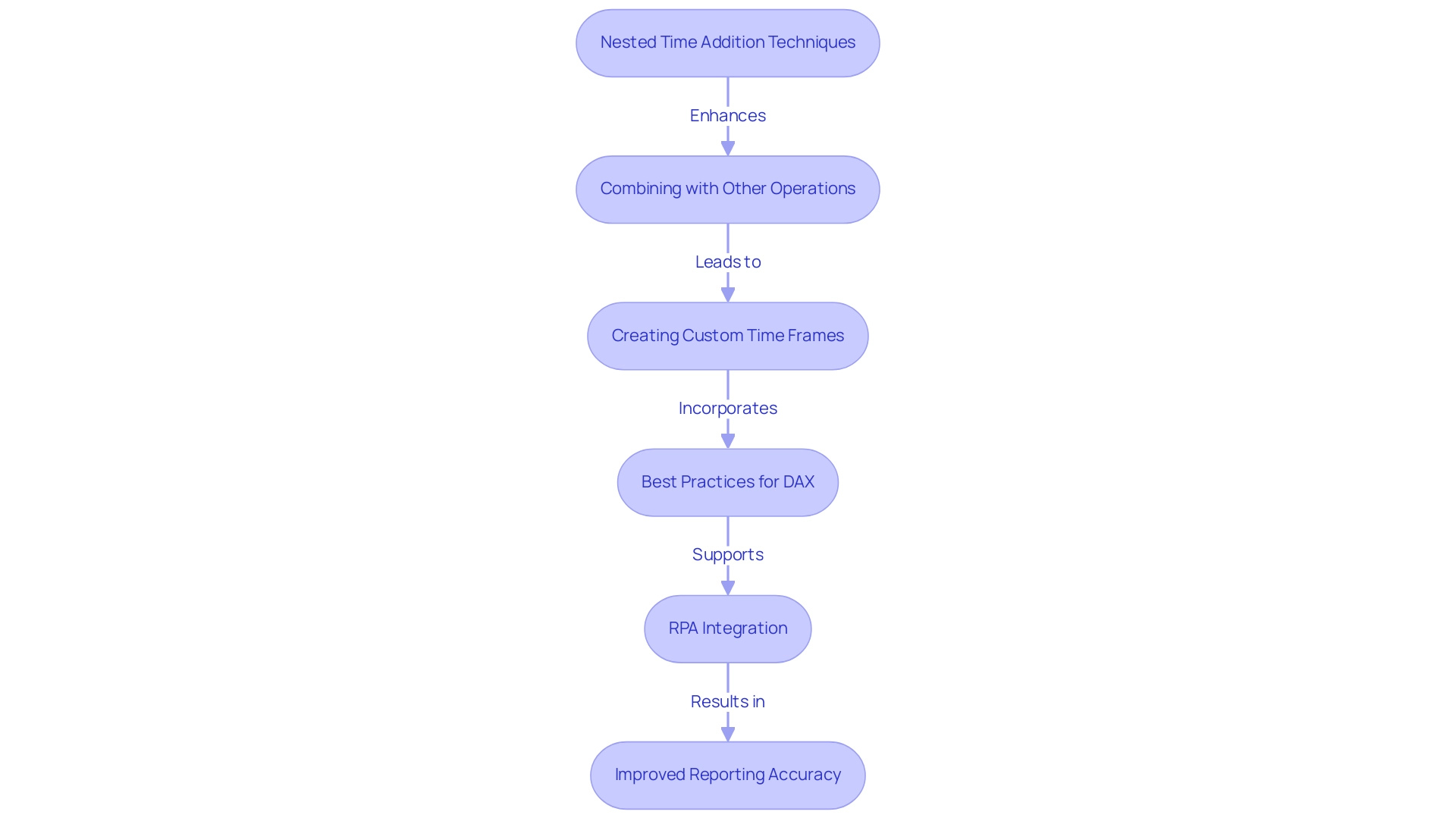

The article provides a comprehensive guide on how to use the DAX DATEADD function, particularly focusing on its application in time intelligence calculations to enhance data analysis and reporting. It explains the syntax, common use cases, troubleshooting strategies, and advanced techniques, illustrating how mastering DATEADD can significantly improve operational efficiency and facilitate insightful decision-making through effective date manipulation.

Introduction