Overview

Replacing null values with blank entries in Power Query is essential for improving data quality and ensuring accurate analysis and reporting. The article outlines a step-by-step guide for this process, emphasizing the importance of understanding the differences between null and blank values, and provides troubleshooting tips and best practices to maintain data integrity and operational efficiency.

Introduction

In the realm of data analysis, the distinction between null and blank values in Power Query can be the linchpin for achieving accurate and reliable results. While null values indicate a complete absence of data, blank values represent empty strings that can often be more manageable in reporting contexts.

However, the challenges posed by poor master data quality can complicate the handling of these values, leading to inconsistencies that undermine decision-making processes. Organizations that grasp these nuances are better equipped to enhance their data integrity and operational efficiency.

This article delves into practical strategies for addressing null and blank values, offering step-by-step guides and troubleshooting tips that empower users to optimize their data workflows. By embracing these insights, businesses can not only improve their data quality but also foster a culture of informed decision-making that drives growth in today’s competitive landscape.

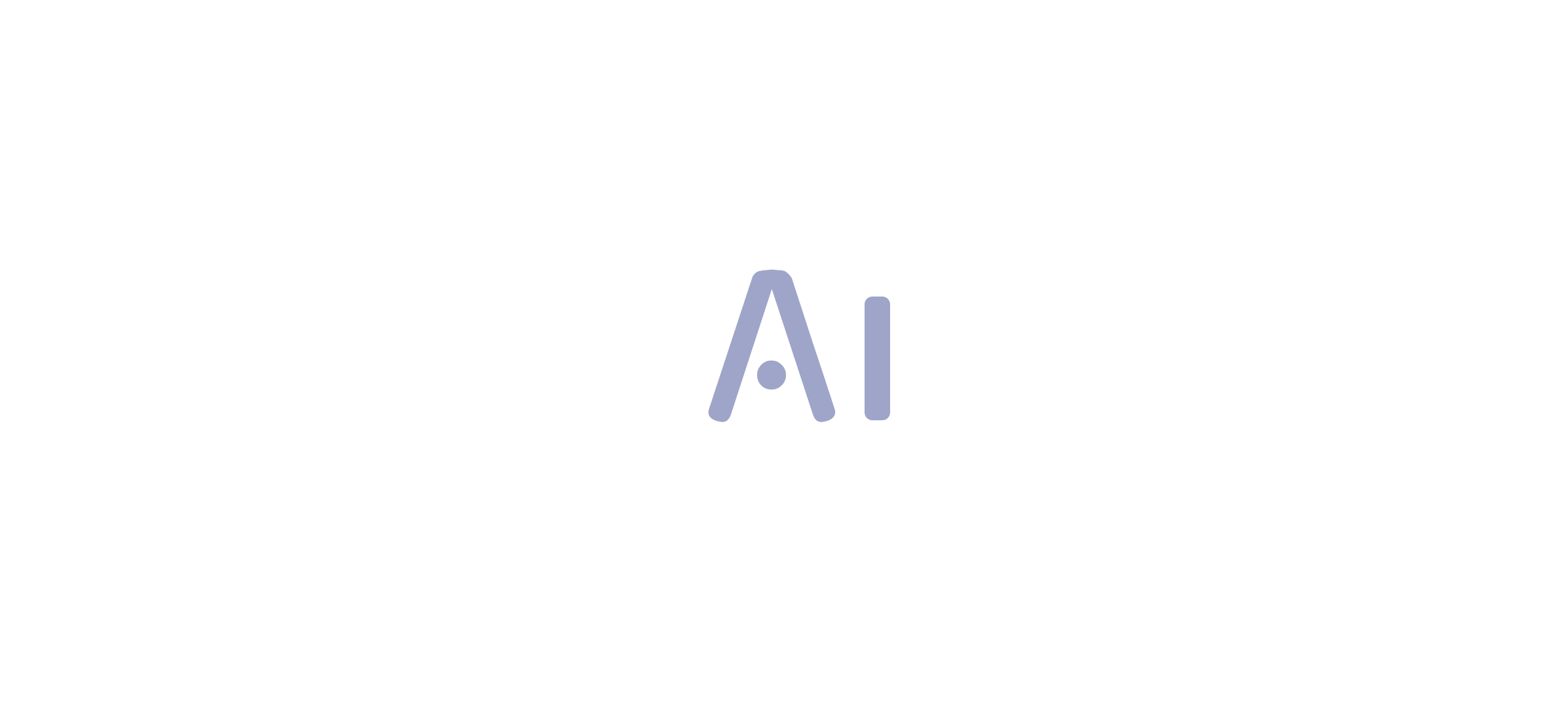

Understanding Null vs. Blank Values in Power Query

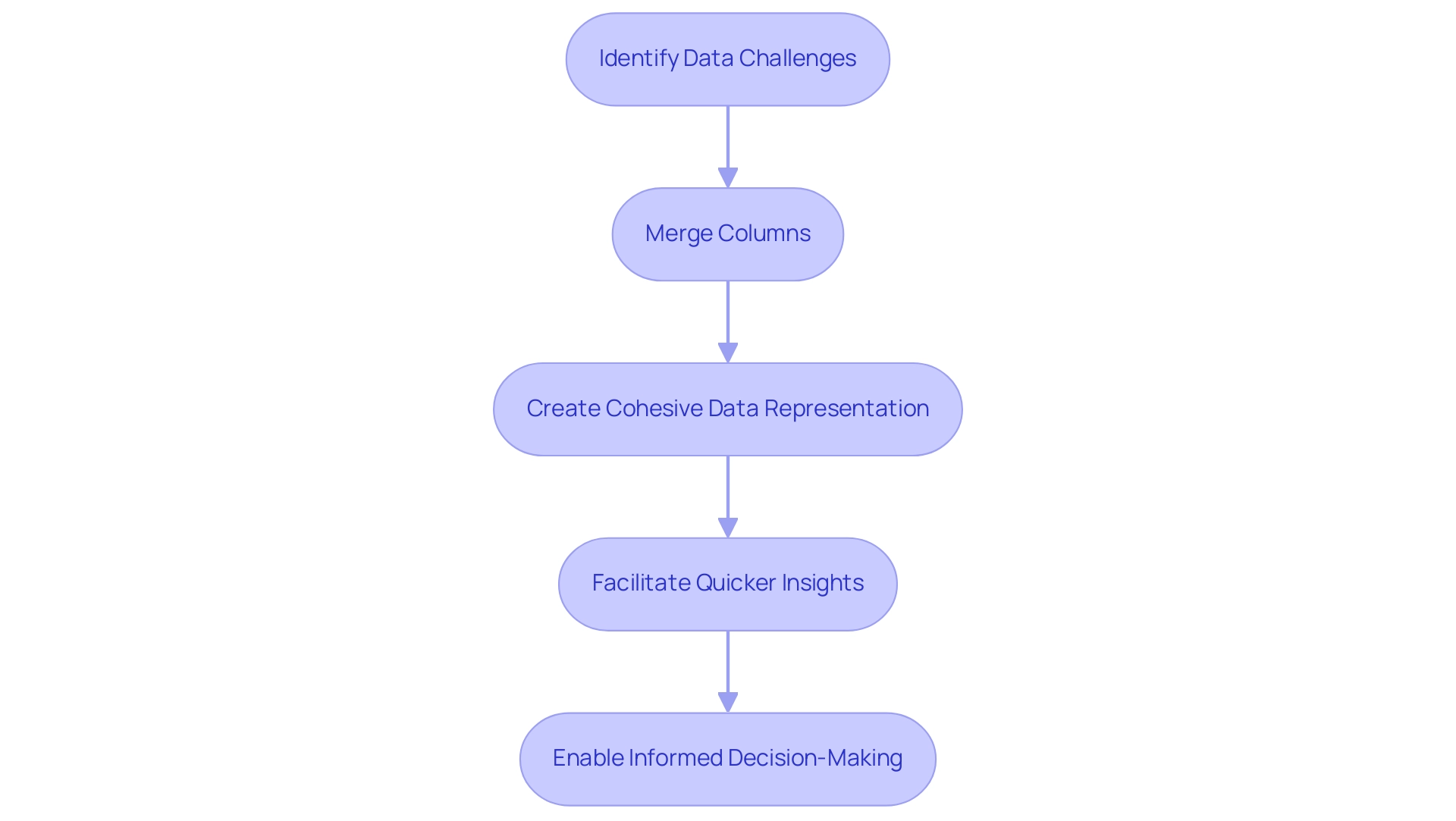

In Power Query, a null entry signifies the complete absence of a value, while a blank entry is a text string that contains no characters. Grasping this difference is crucial, because nulls propagate through many operations (for example, concatenating text with null returns null), which can produce unexpected results and undermine the dependability of your findings. This challenge is exacerbated by poor master data quality, which can hinder effective Business Intelligence and RPA adoption.

Poor master data quality can significantly complicate the handling of null and blank entries, as inconsistent source records tend to increase the number of nulls, further affecting analysis and decision-making. Quality issues of this kind have a measurable effect on statistical outcomes: in one cited example, applying winsorization gives a mean (SD) of 3.2 (0.84). Conversely, blank values are processed as empty strings, which can sometimes be more manageable in reporting contexts.

By replacing null with blank in Power Query for datasets where clarity is paramount, you can enhance consistency and ensure your reports accurately convey the absence of data. Continuous monitoring and predictive data quality checks are crucial for detecting and resolving these issues early, thereby maintaining the integrity of your analysis and fostering operational efficiency. Recognizing these differences equips you to navigate your data transformation processes effectively.
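The difference between null and blank can be seen directly in M. The following is a minimal sketch, not taken from the original article; the step names are illustrative only. It can be pasted into a blank query in the Advanced Editor.

```powerquery
let
    // null and "" are different values, so this comparison is false
    NullEqualsBlank = (null = ""),
    // null propagates through text concatenation, so the result is null
    ConcatWithNull  = "Region: " & null,
    // a blank is ordinary text, so concatenation behaves as expected
    ConcatWithBlank = "Region: " & "",
    // a blank is text with zero characters
    BlankLength     = Text.Length("")
in
    [
        NullEqualsBlank = NullEqualsBlank,
        ConcatWithNull  = ConcatWithNull,
        ConcatWithBlank = ConcatWithBlank,
        BlankLength     = BlankLength
    ]
```

The second field is the one that most often surprises report authors: a single null in a concatenated label blanks out the whole result, which is one reason blanks can be easier to work with in reporting.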

Jong Hae Kim aptly notes,

The presence of missing data reduces the information available to be analyzed, compromising the statistical power of the study, and eventually the reliability of its results.

This highlights the necessity of addressing null entries proactively to maintain the robustness of your findings and drive growth through informed decision-making. Furthermore, a case study on Visual Analogue Scale (VAS) Data demonstrates the real-world implications of managing missing entries and outliers.

In that study, proper handling of missing data prevented bias in statistical estimates and so made the findings more reliable. Reliable data also supports AI adoption in your organization: addressing data quality issues is essential for accurate analysis and critical for maintaining a competitive edge in today’s data-driven environment.

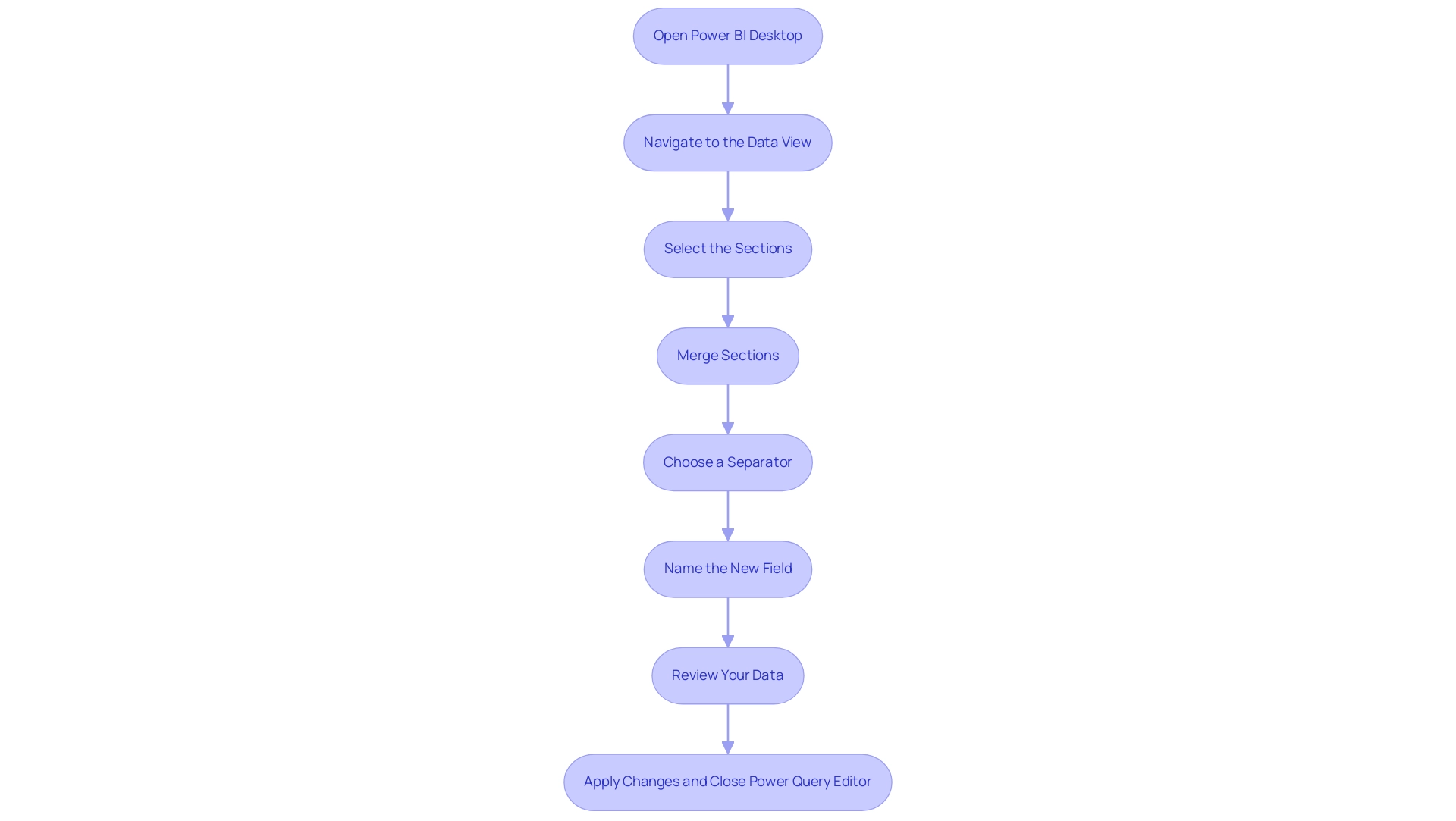

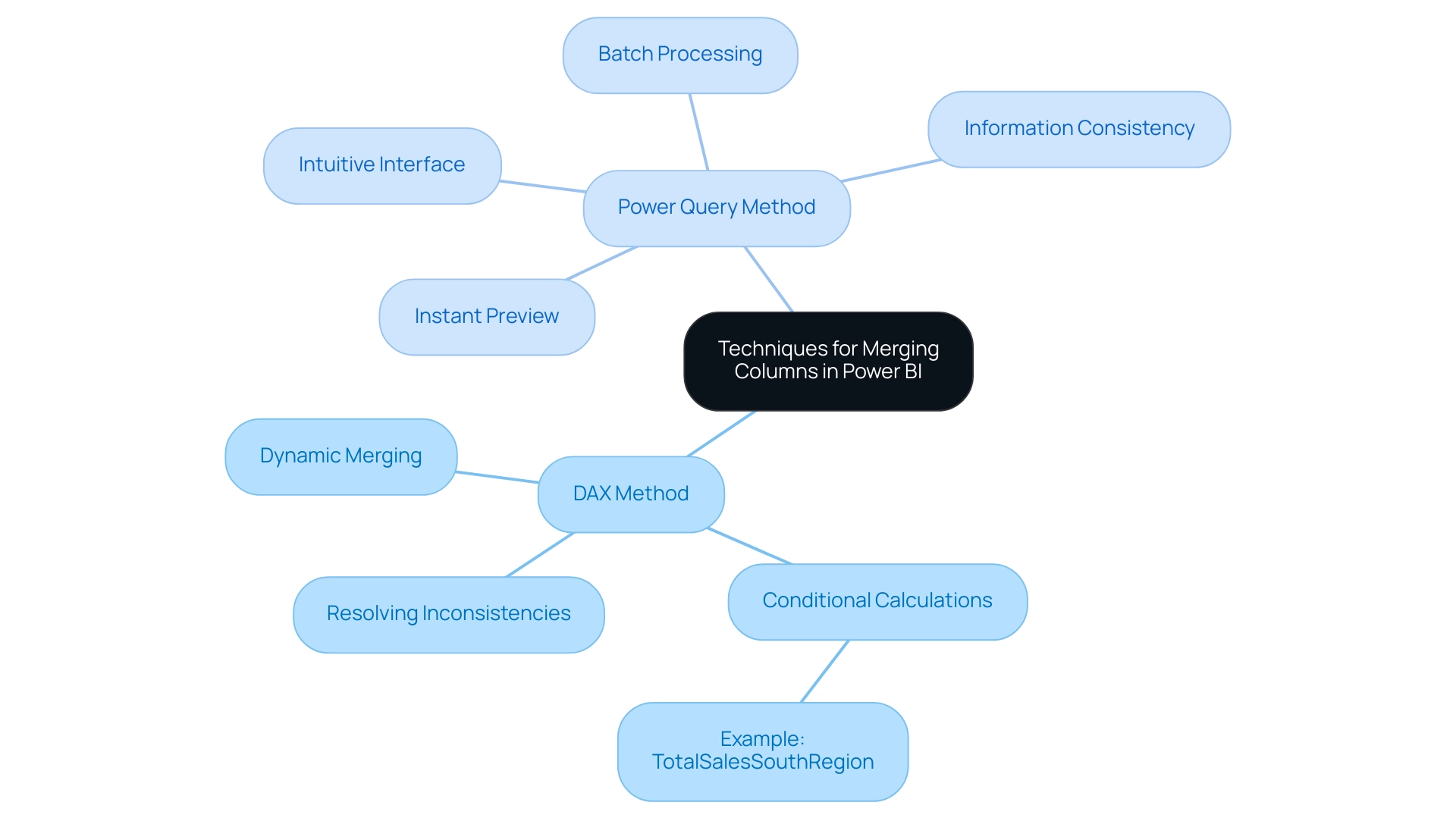

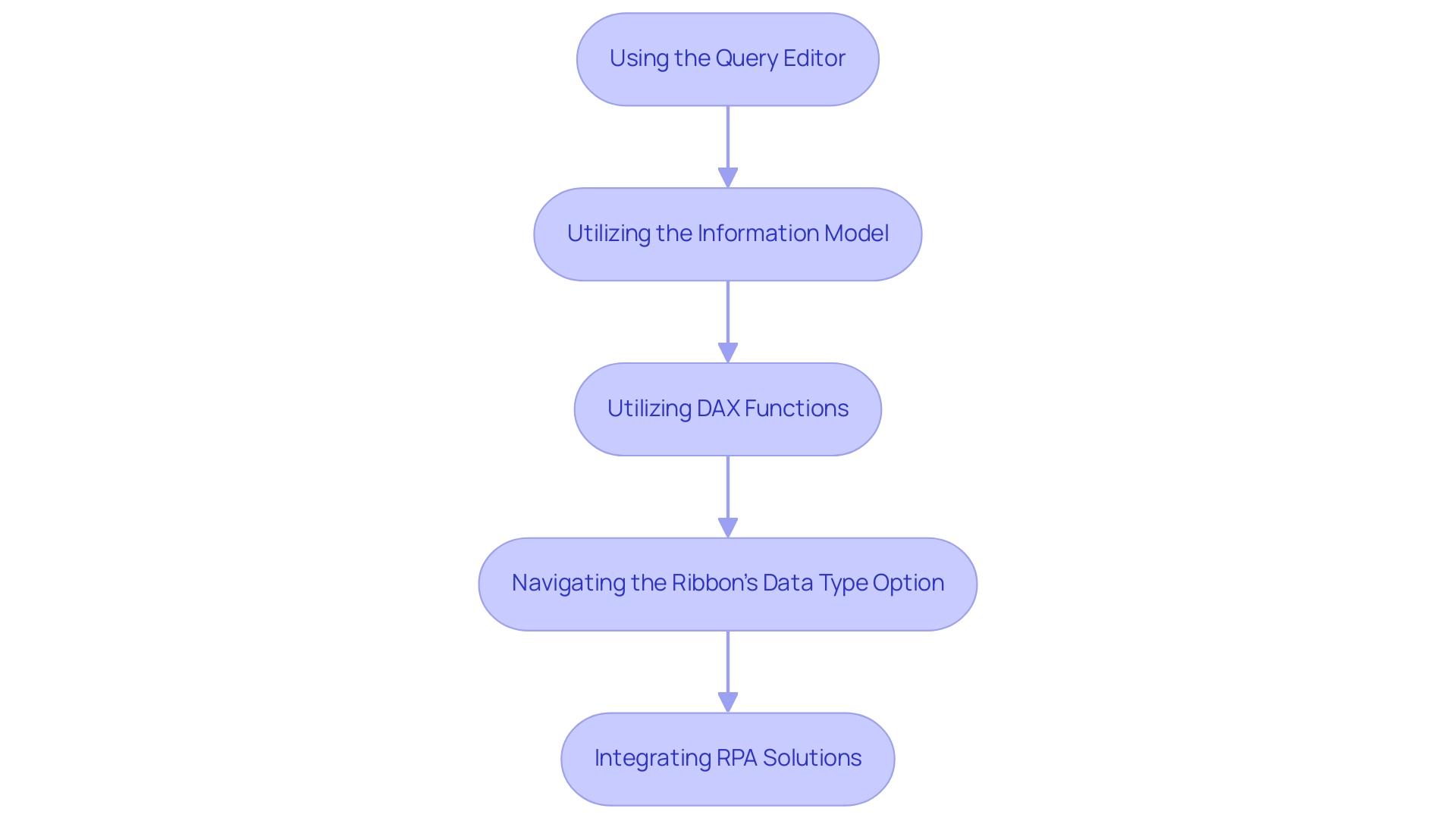

Step-by-Step Guide to Replacing Null with Blank in Power Query

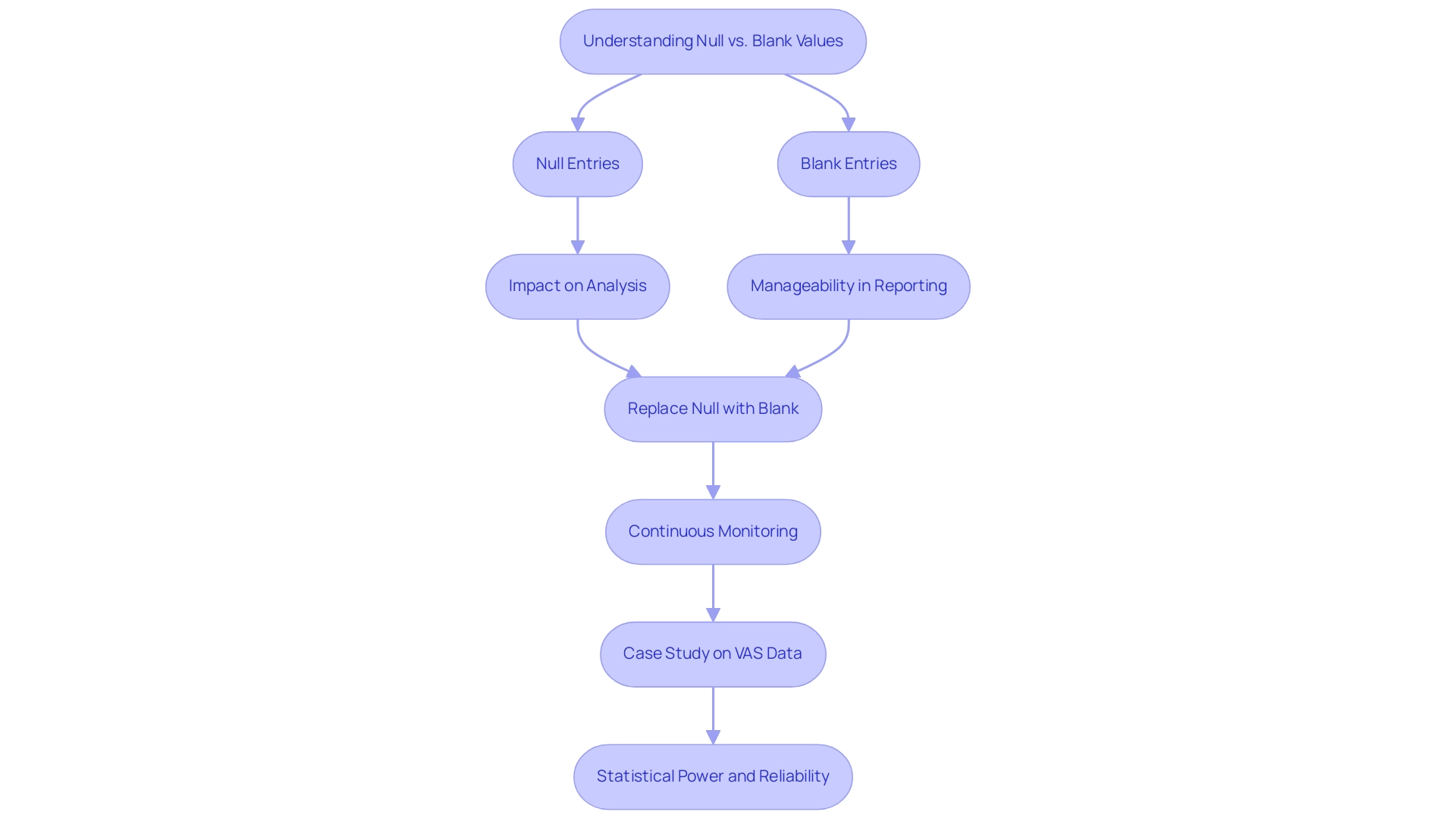

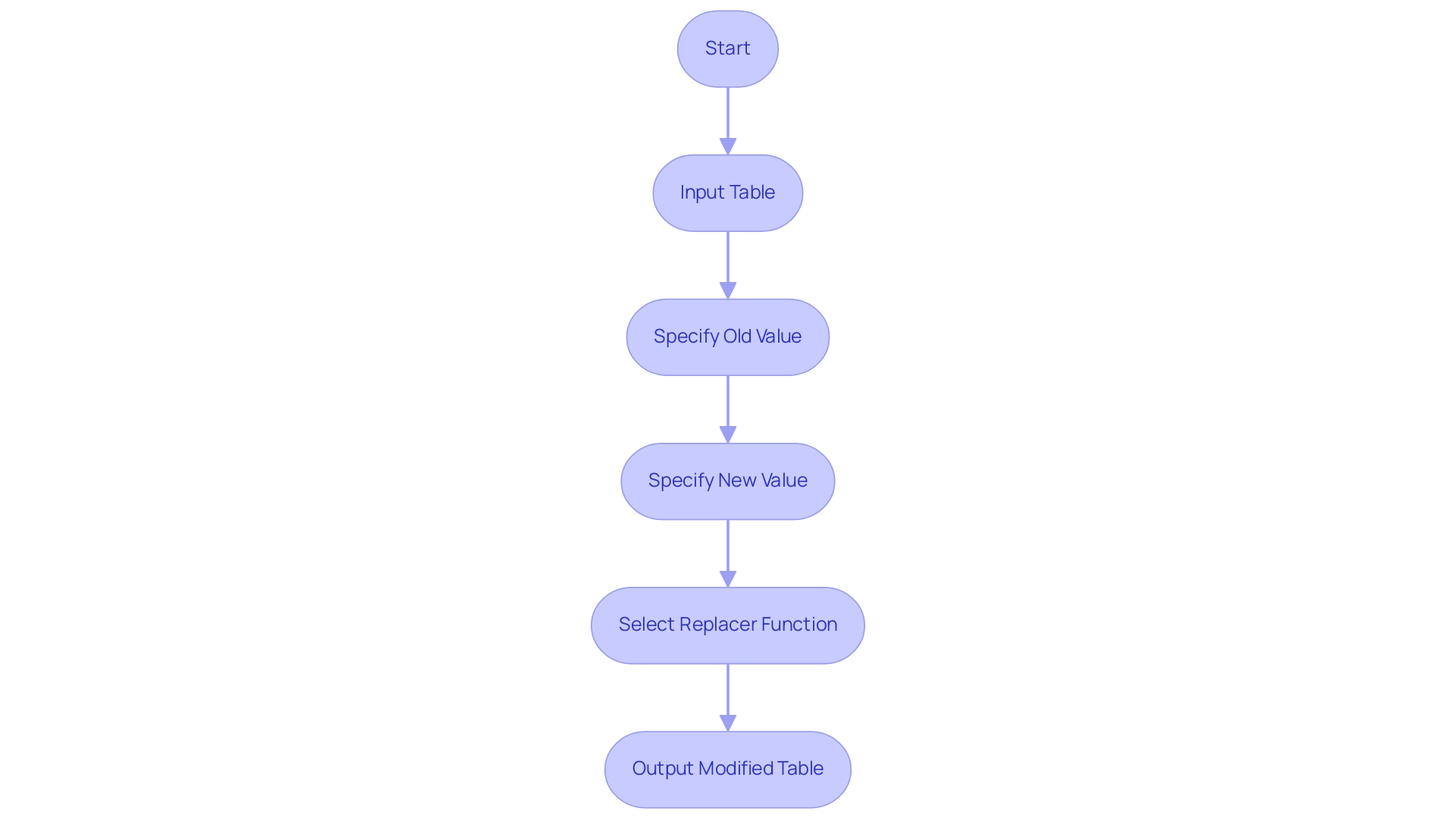

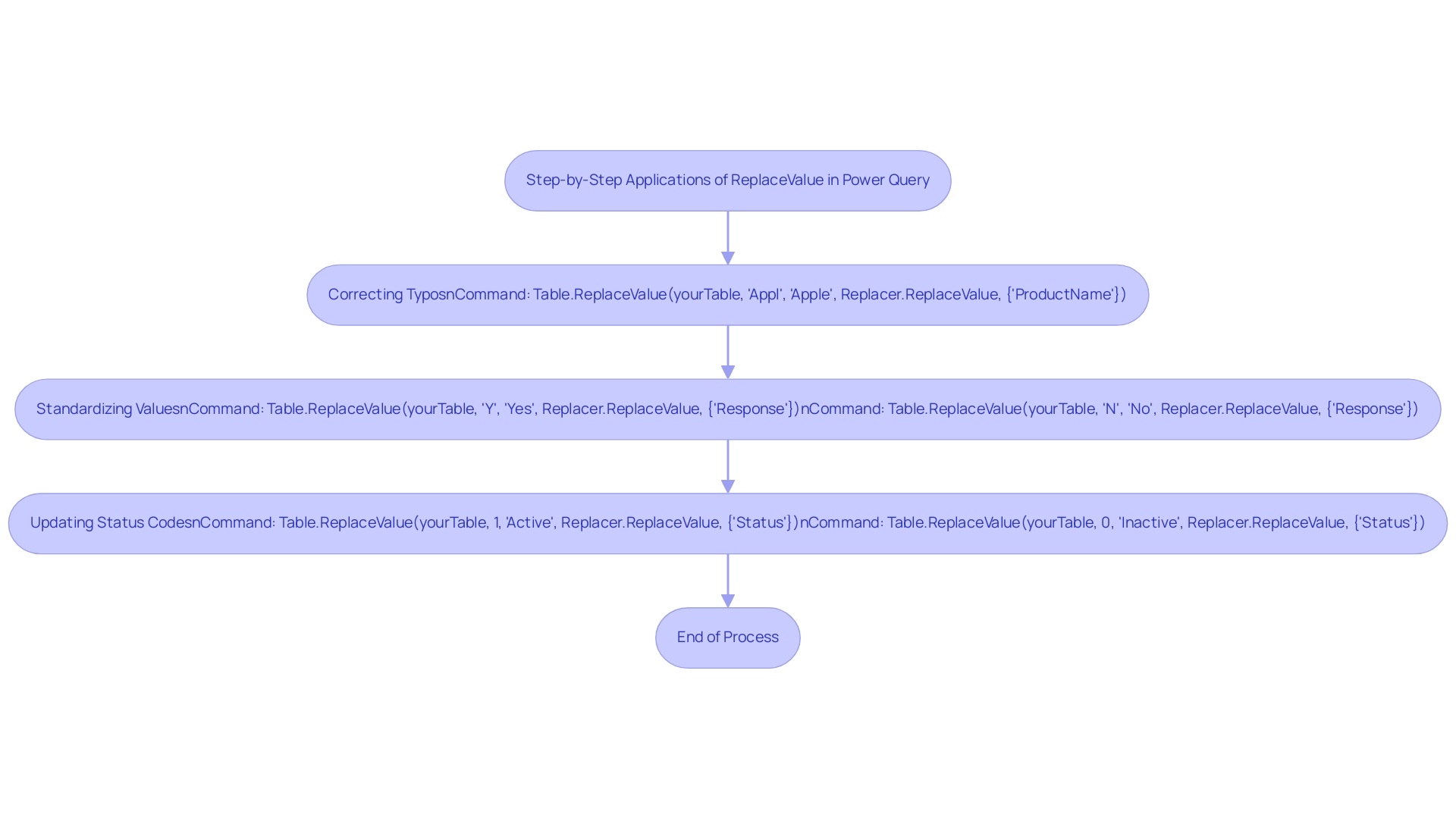

To effectively replace null values with blank values in Power Query, follow this straightforward guide:

- Load Your Data: Begin by launching Excel and navigating to the Data tab. Choose ‘Get Data’ to import your dataset, then select ‘Transform Data’ to open it in the Power Query Editor.

- Identify the Column: Pinpoint the specific column that contains the null entries you wish to address by clicking on its header.

- Open the ‘Transform’ Tab: Within the Power Query Editor, locate and click on the ‘Transform’ tab to access various data manipulation options.

- Select ‘Replace Values’: Click on ‘Replace Values.’ In the dialog box that appears, enter null (lowercase, without quotes) in the ‘Value To Find’ field and leave the ‘Replace With’ field empty to denote a blank entry.

- Execute the Replacement: Confirm the action by clicking ‘OK.’ Power Query will substitute all null entries in your chosen column with blank entries, enhancing the overall quality of your dataset.

- Close & Load Your Query: To finalize your modifications, return to Excel by clicking ‘Close & Load’ in the Home tab.
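The steps above correspond to a single `Table.ReplaceValue` call in M. The sketch below assumes a small hypothetical table with a `Region` column; in a real query, `Source` would be your own data source step and the column name would be the one you selected.

```powerquery
let
    // Hypothetical sample data standing in for your imported dataset
    Source = #table(
        type table [Customer = text, Region = nullable text],
        {
            {"Alice", "North"},
            {"Bob",   null},
            {"Chen",  "East"}
        }
    ),
    // Replace null with an empty text value in the Region column only
    ReplacedNulls = Table.ReplaceValue(
        Source,
        null,
        "",
        Replacer.ReplaceValue,
        {"Region"}
    )
in
    ReplacedNulls
```

Viewing the step in the Advanced Editor after using the dialog is a quick way to confirm that the replacement targets null itself rather than the text "null".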

By adhering to these steps, you can replace nulls with blanks in Power Query, which will significantly improve your dataset’s usability for analysis and reporting. As noted by the professional gdecome, “I figured out my problem when trying to enter a function with more than one parameter… Make sure to structure your function calls correctly for optimal results.” This insight emphasizes a common challenge encountered during transformation that can influence the effectiveness of your cleaning process.

Furthermore, a case study on improving Table.Profile functionality demonstrates how a user overcame challenges in profiling, ultimately enhancing their analysis capabilities. This approach not only streamlines your information cleaning process but also enhances the reliability of your analysis results.

The 2,429 views this subject has attracted show how many users need to handle null entries efficiently in Power Query.

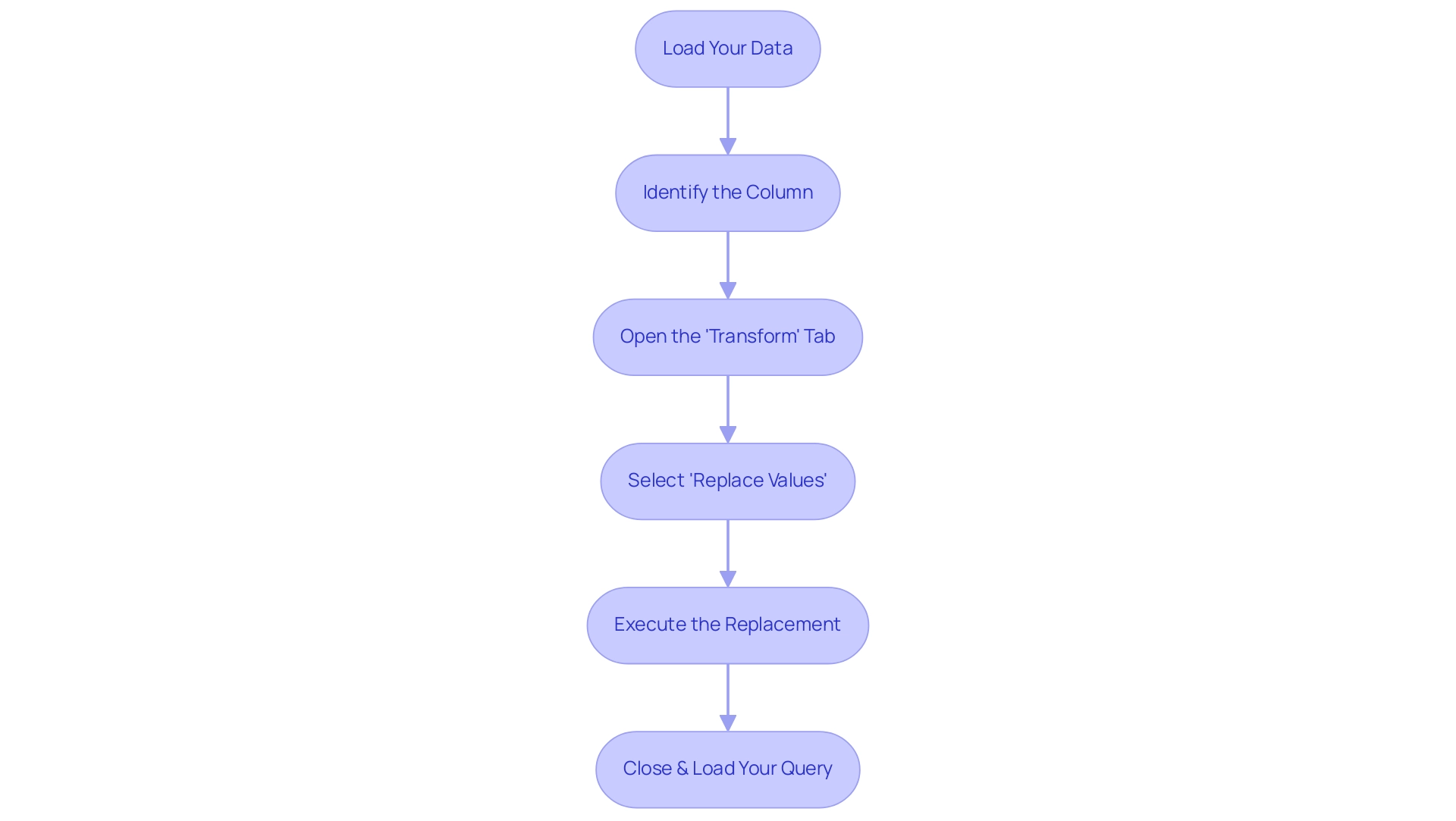

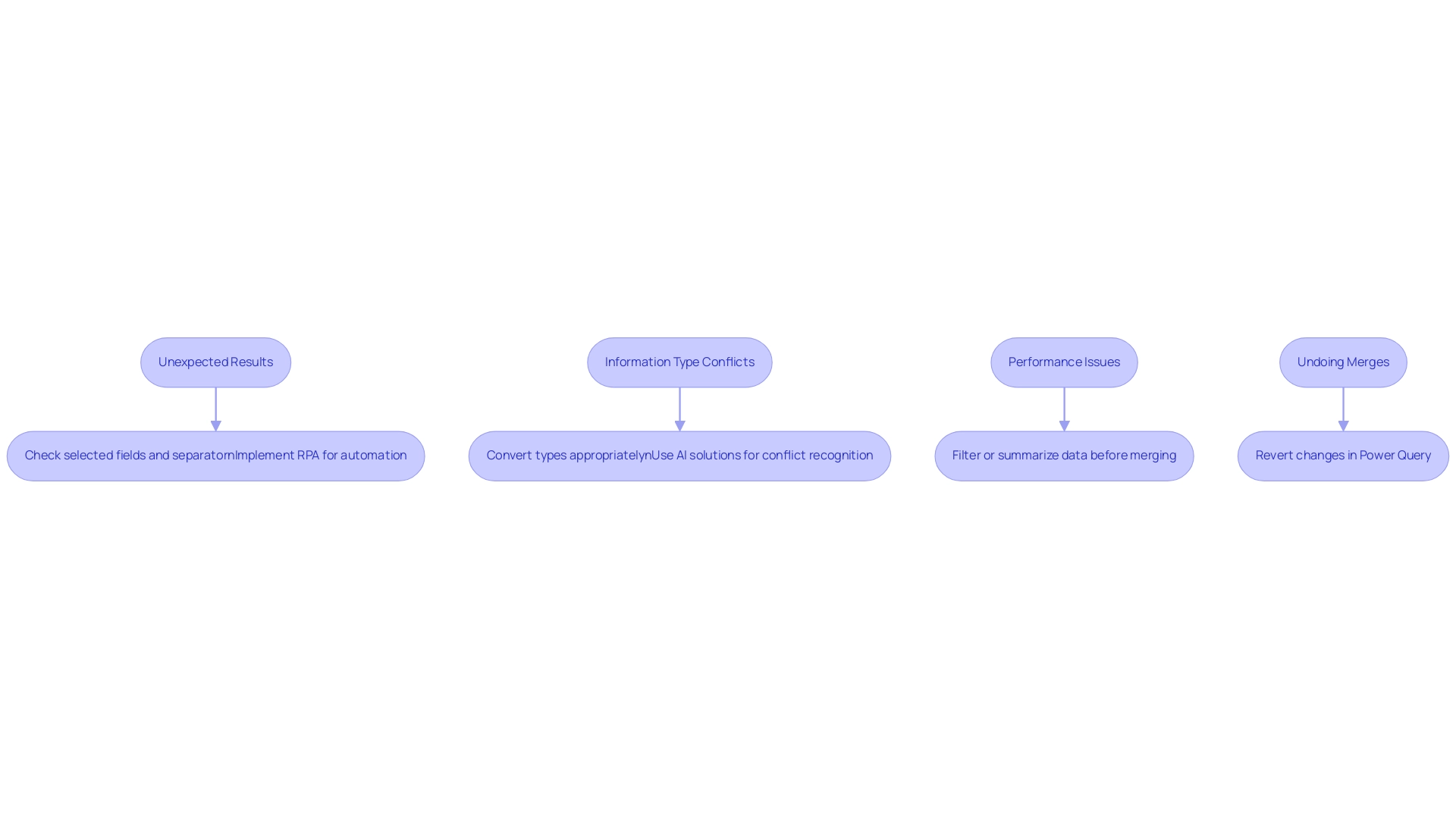

Troubleshooting Common Issues in Value Replacement

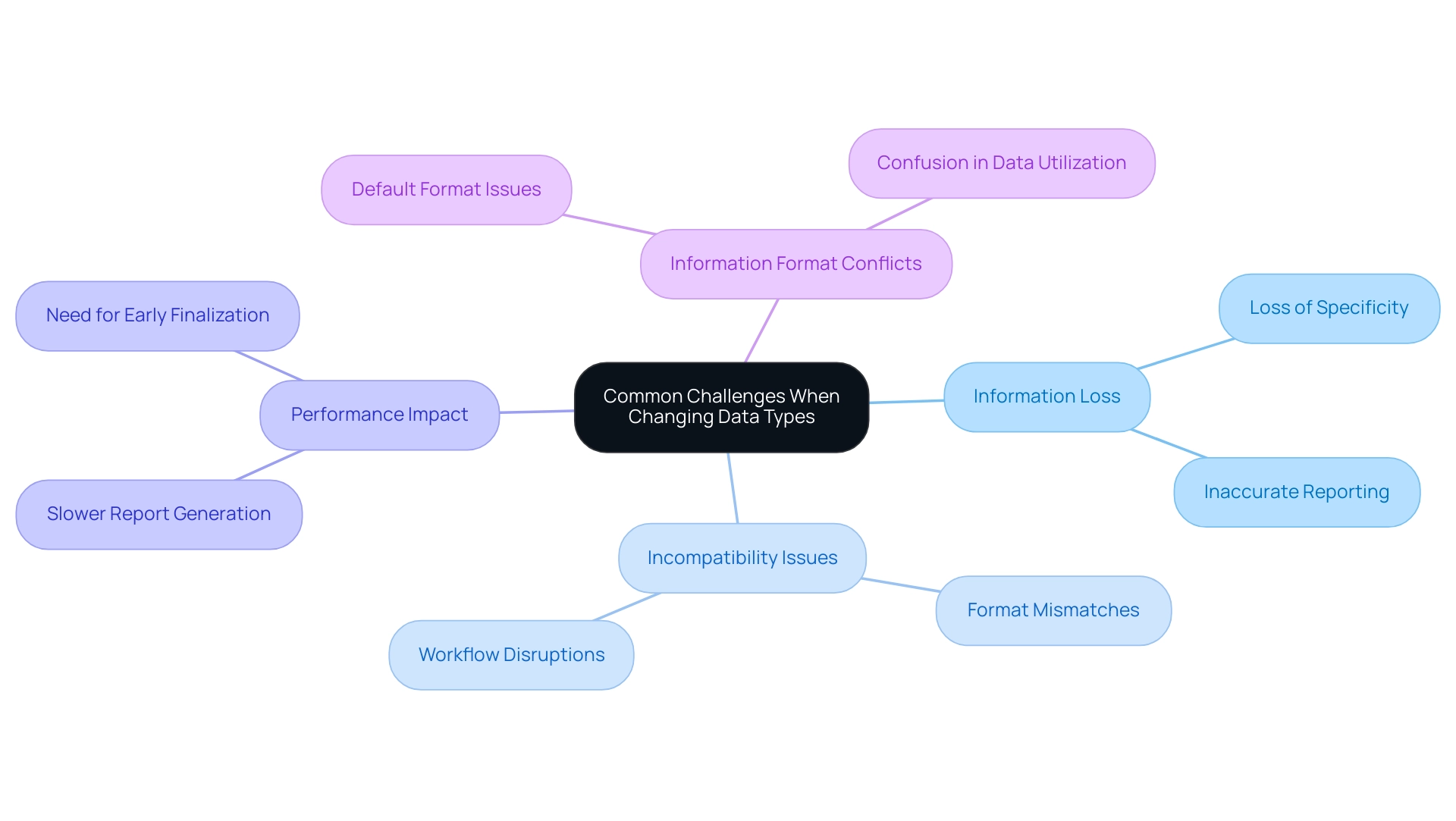

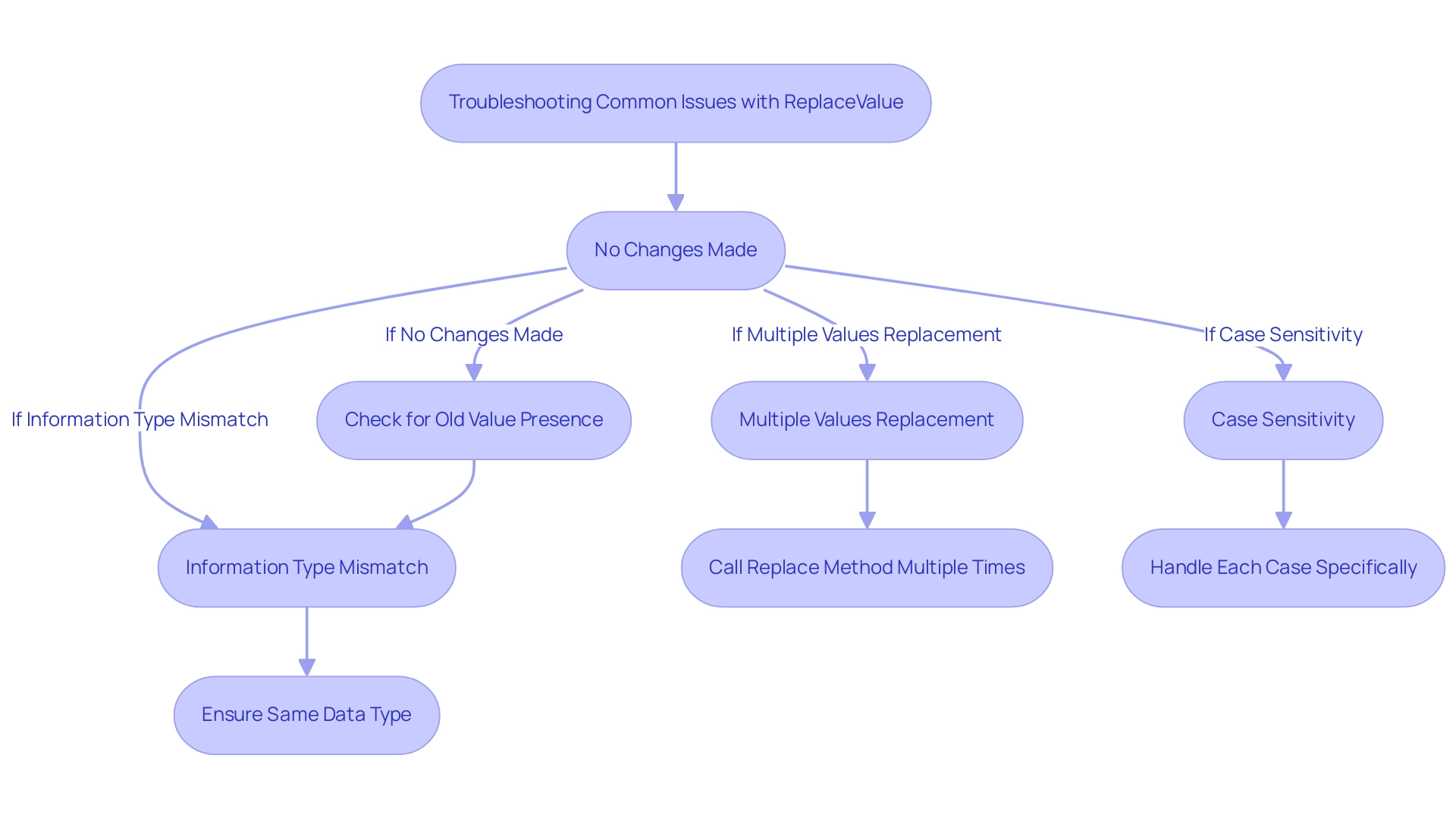

While it may seem straightforward to replace null with blank in Power Query during BI and data manipulation, users often encounter challenges that impede their data transformation processes. By leveraging Robotic Process Automation (RPA), organizations can automate these manual workflows, enhancing operational efficiency in the face of a rapidly evolving AI landscape. Here are common issues and solutions:

- Issue: No changes observed after replacement.

- Solution: Check that null is entered in lowercase in the ‘Value To Find’ field. Power Query’s M language is case-sensitive, so entries such as ‘Null’ or ‘NULL’ may be treated as text and will not match true null values.

- Issue: Unexpected blank values in other rows.

- Solution: Review your data to identify unintended blank entries. Filtering the column can help you examine the values visually.

- Issue: Performance issues with large datasets.

- Solution: If sluggish performance arises, consider filtering your dataset before applying transformations. This approach can significantly reduce the volume of data Power Query processes at a time, thereby enhancing overall efficiency.
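When a replacement appears to do nothing, it can help to count what the column actually contains. The following is a diagnostic sketch under stated assumptions: the sample table and the `Region` column are hypothetical, and the three counts distinguish true nulls, blanks, and cells that hold the literal text "null" in any casing.

```powerquery
let
    // Hypothetical column containing a mix of problem values
    Source = #table(
        type table [Region = nullable text],
        {{"North"}, {null}, {""}, {"null"}, {"NULL"}}
    ),
    Values = Source[Region],
    // Cells that hold a true null value
    TrueNulls = List.Count(List.Select(Values, each _ = null)),
    // Cells that hold an empty text value
    Blanks = List.Count(List.Select(Values, each _ = "")),
    // Cells that hold the word "null" as text, regardless of casing
    TextNulls = List.Count(
        List.Select(Values, each _ <> null and Text.Lower(_) = "null")
    )
in
    [TrueNulls = TrueNulls, Blanks = Blanks, TextNulls = TextNulls]
```

If `TextNulls` is greater than zero, the source system is exporting the word "null" as text, and the replacement needs to target that text rather than the null value.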

To further streamline these processes, consider utilizing RPA tools such as UiPath or Automation Anywhere, which can automate the repetitive tasks associated with data cleansing and transformation. By proactively addressing these potential issues and implementing the suggested solutions, you can troubleshoot effectively, ensuring a seamless workflow and maintaining high data quality. This is essential, particularly considering the challenges of inadequate master data emphasized in industry studies.

As Tracy Rock noted in 2022, 26% of small businesses that encountered cyberattacks lost between $250,000 and $500,000, emphasizing the financial consequences of inadequate data management. Furthermore, 20% of respondents either had not tested their disaster recovery plans or lacked them completely, highlighting the necessity of proactive data management strategies. Remember, overlooking issues in data processing can lead to significant setbacks, as evidenced by NASA’s loss of the $125 million Mars Climate Orbiter due to a mismatch between metric and imperial units.

By leveraging RPA and BI to drive data-driven insights, you can not only enhance operational efficiency but also reduce errors and free up your team for more strategic, value-adding work that fosters business growth.

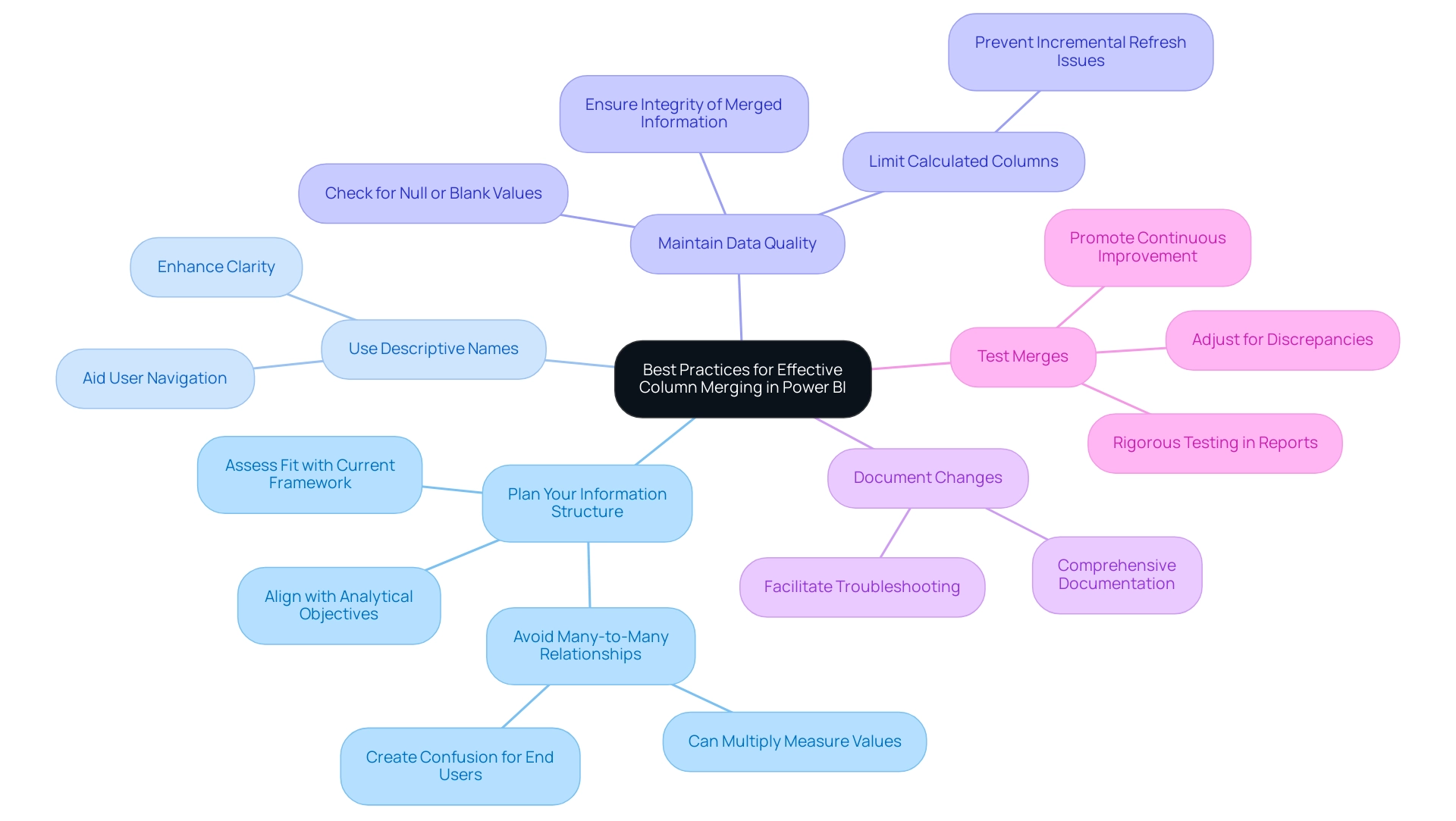

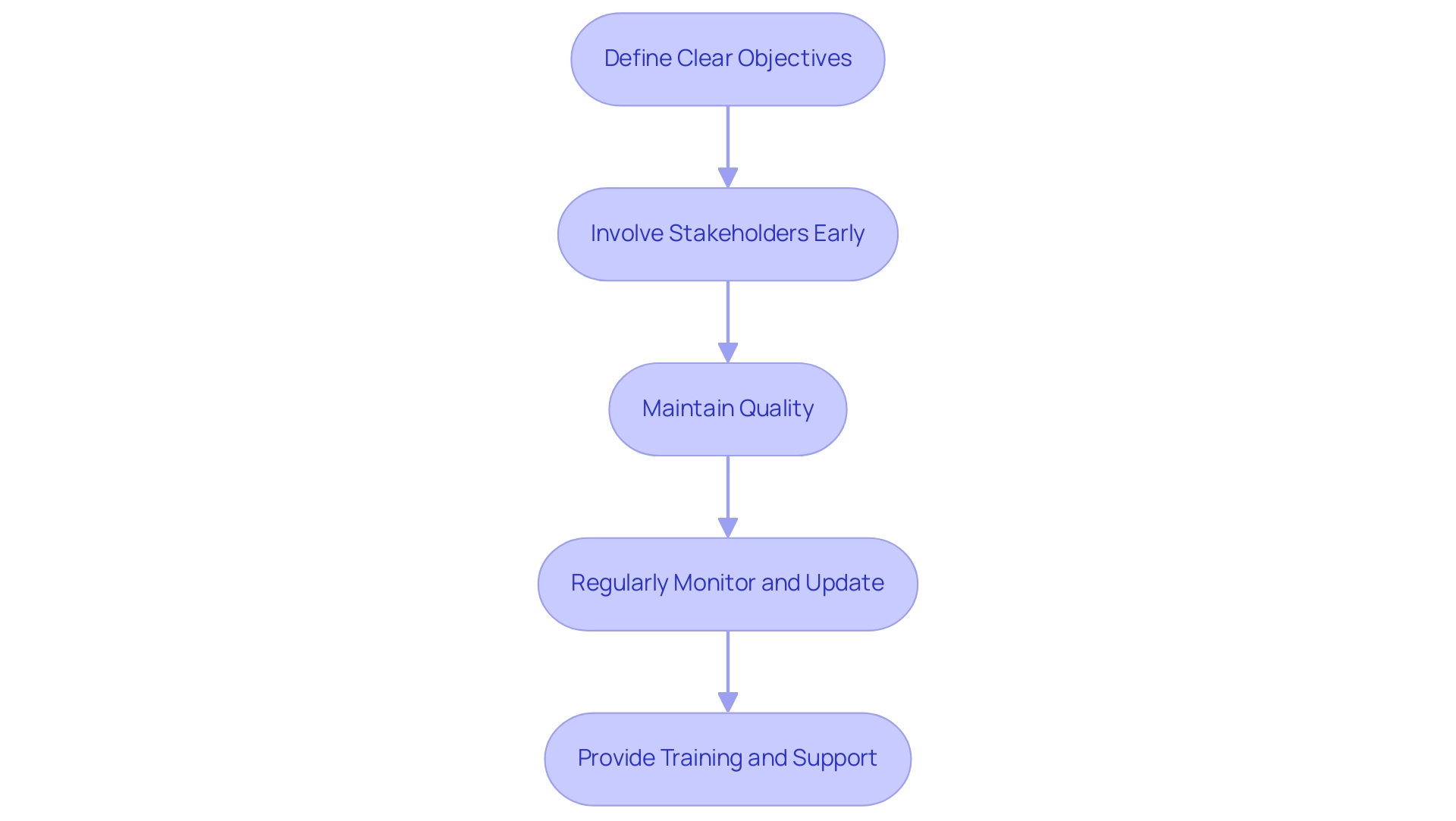

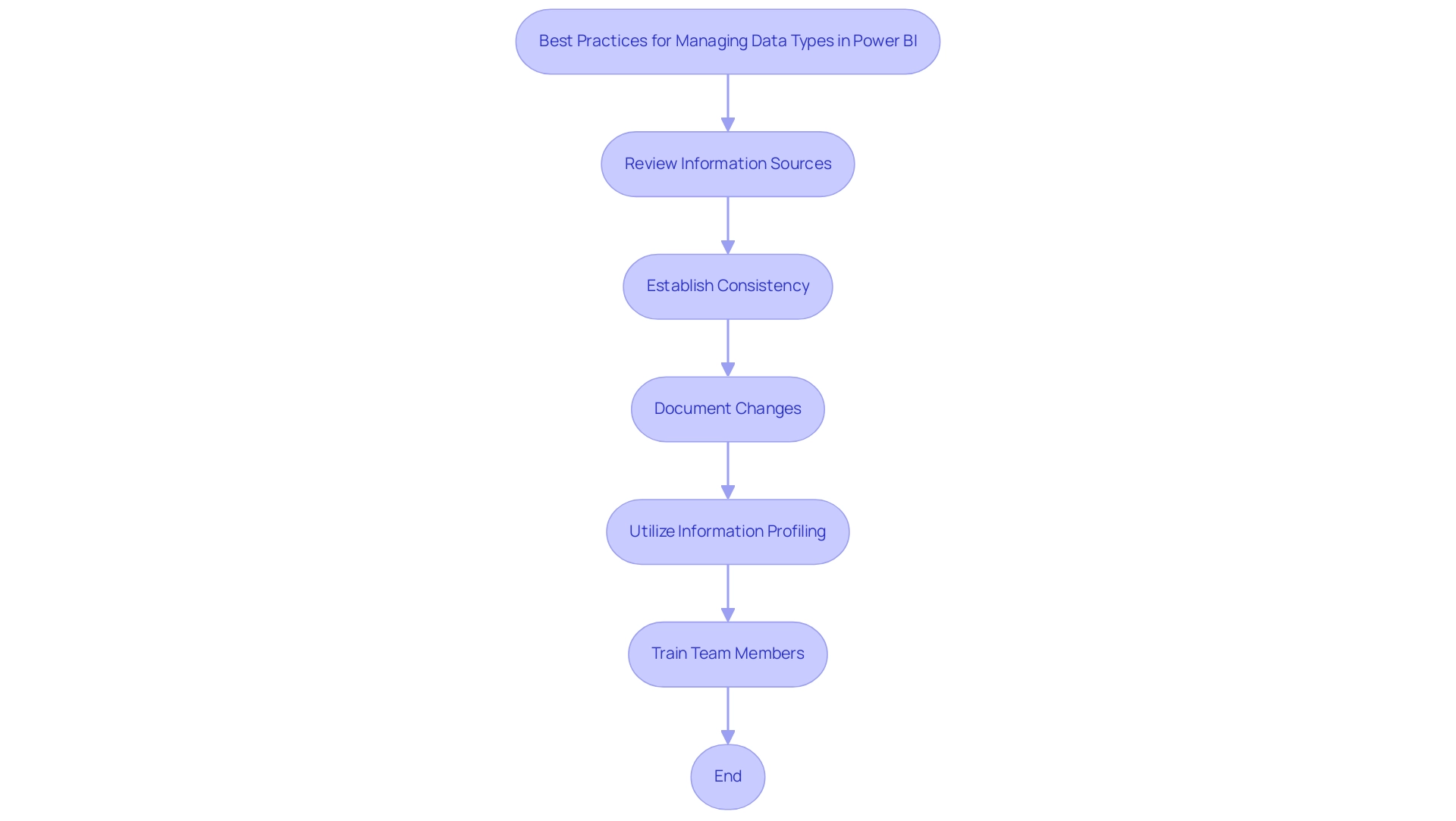

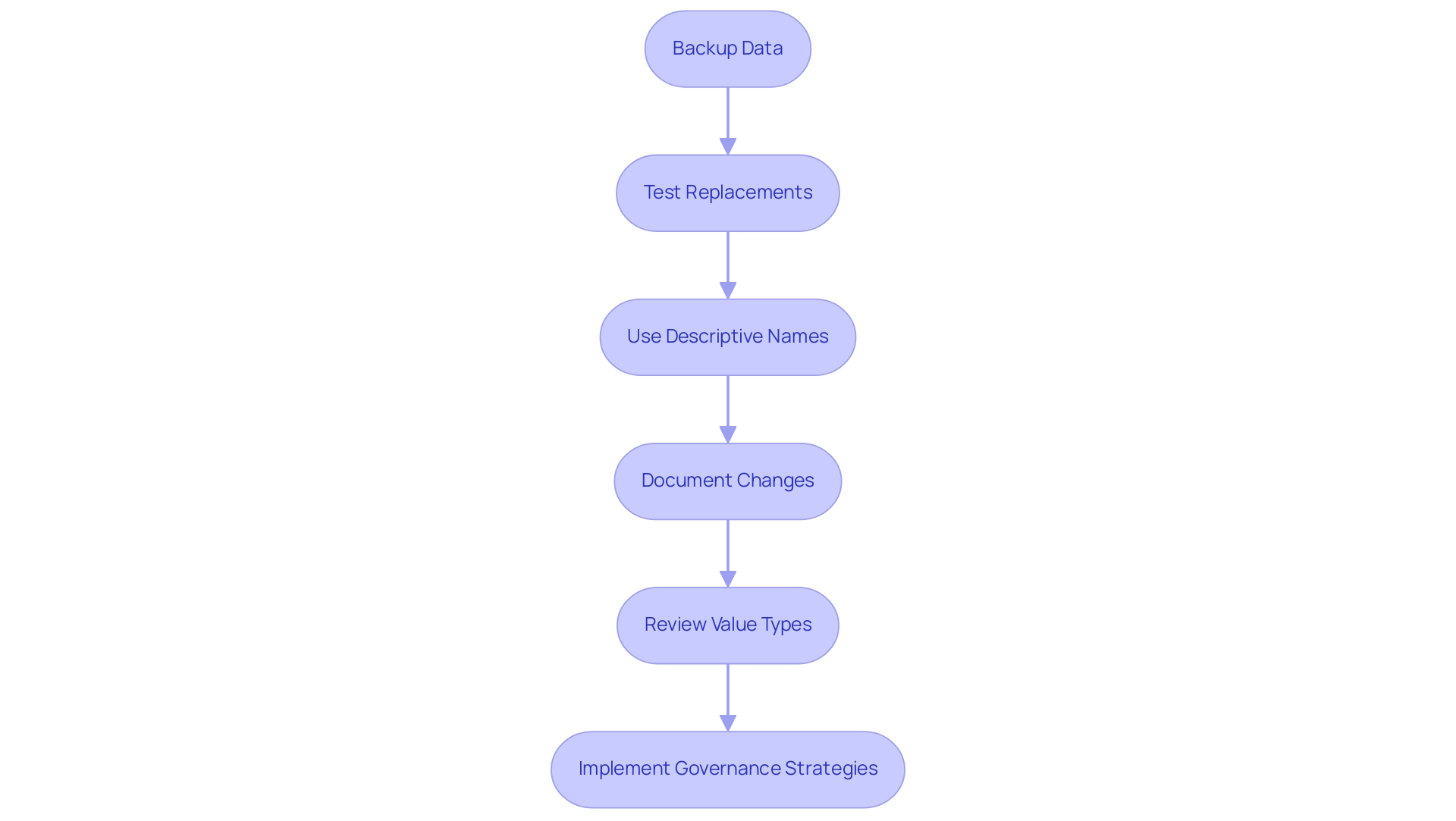

Best Practices for Handling Null and Blank Values in Power Query

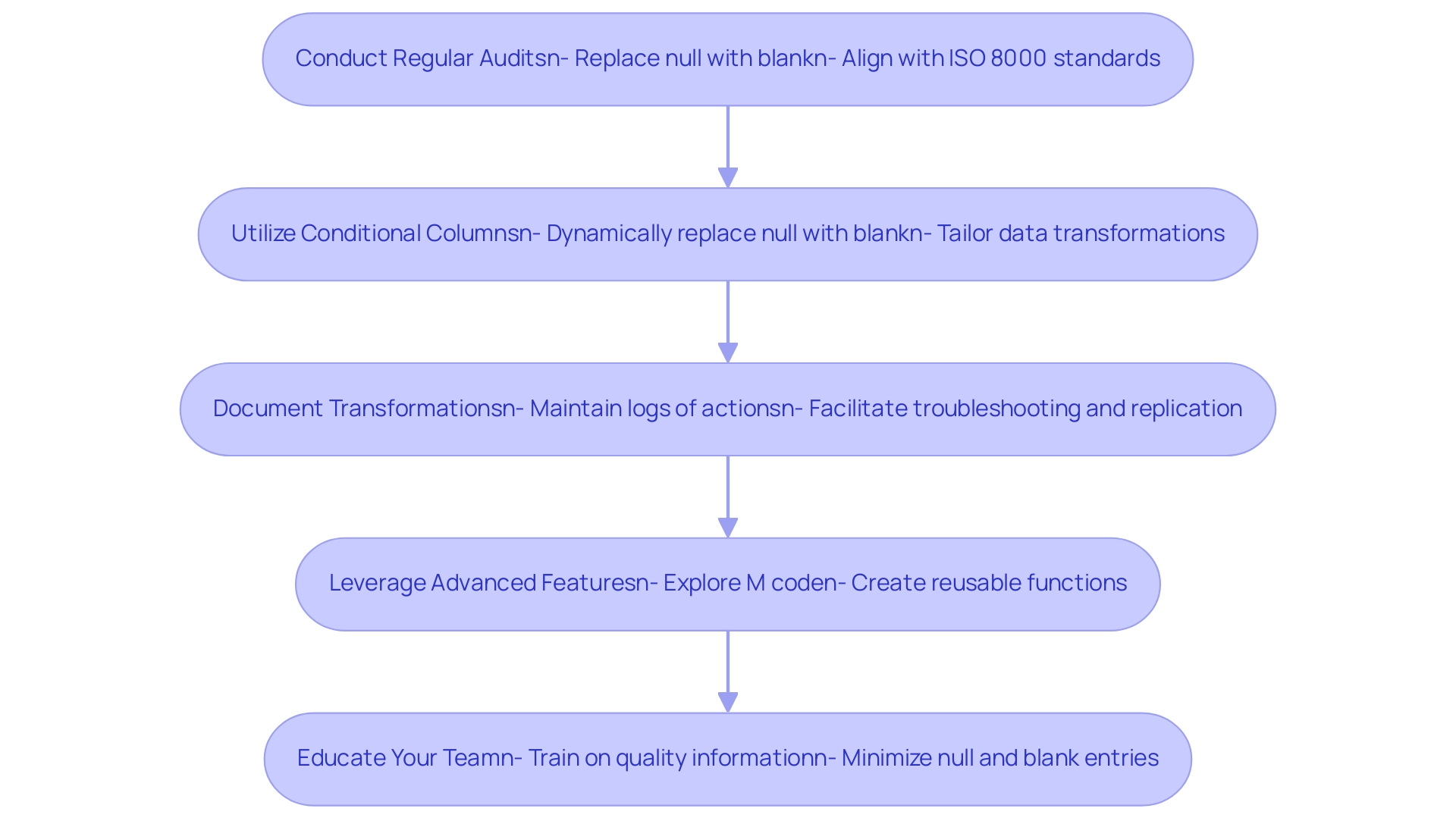

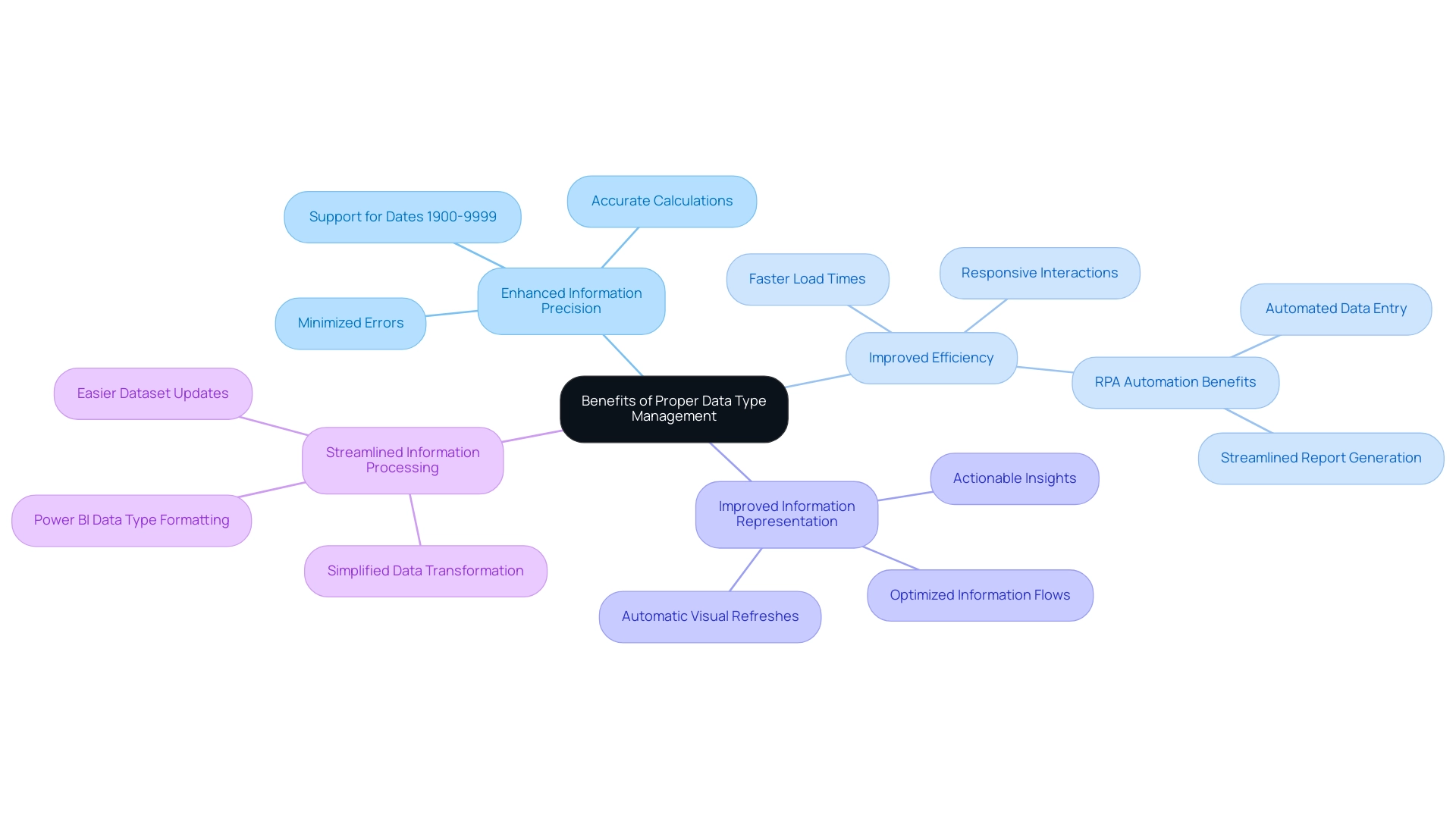

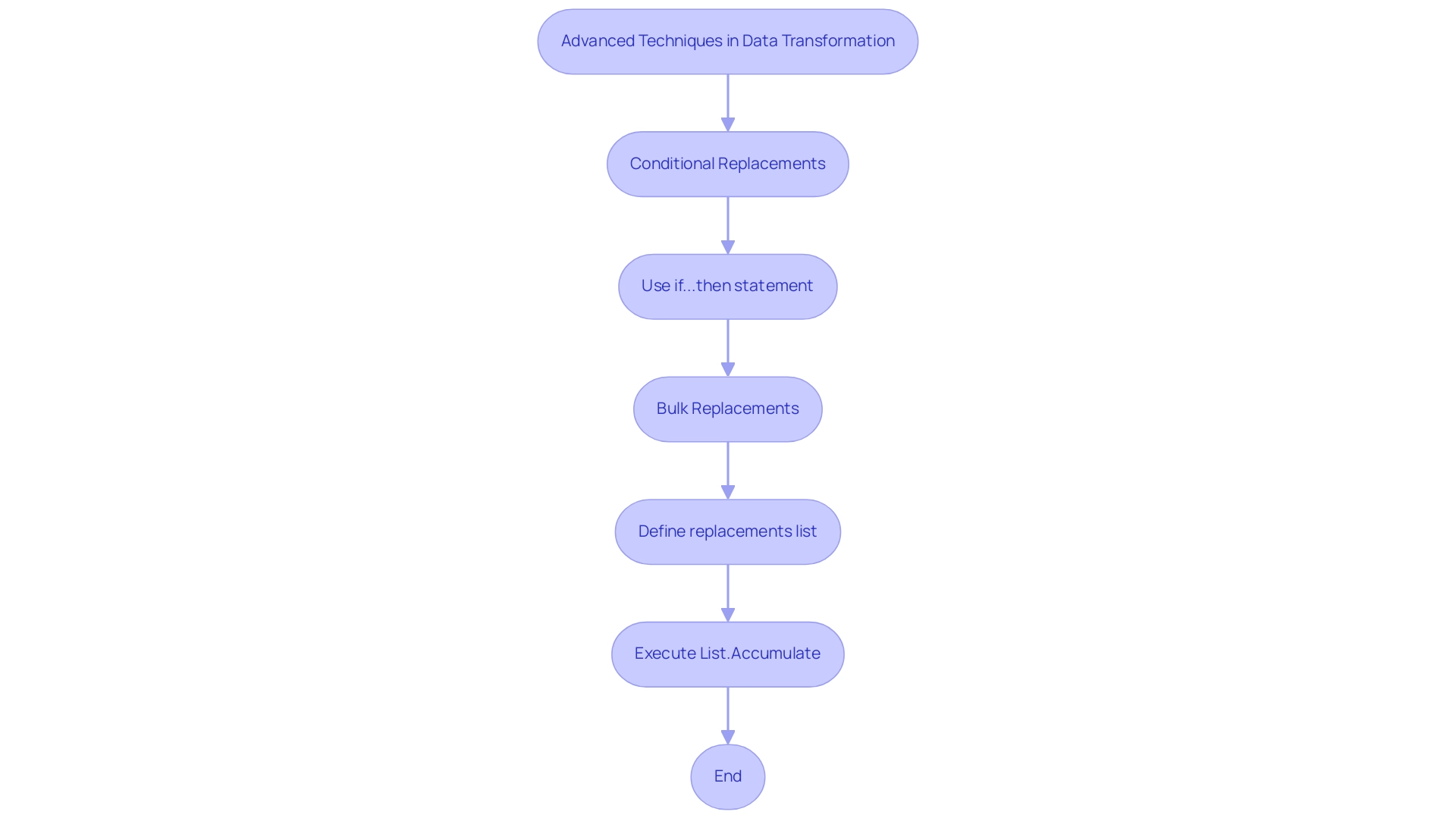

To ensure data integrity and streamline your workflows in Power Query, consider the following best practices, all of which can be further enhanced by leveraging our 3-Day Power BI Sprint service:

- Conduct Regular Audits: Establish a routine check for null and blank values, replacing nulls with blanks in Power Query where appropriate and aligning with standards such as ISO 8000 for data quality. This proactive approach helps preserve the overall quality of your data. As emphasized by Anja Whiteacre, Senior Consultant at RevGen, “If your organization is struggling to make the most of your BI tools, contact us to schedule a quick chat with one of our experts or visit our Technology Services site to learn more.”

- Utilize Conditional Columns: Implement conditional columns that return a blank whenever the source value is null, enabling more adaptive data transformations tailored to your needs. This method can be further refined during the 3-Day BI Sprint, ensuring a professional finish on your reports.

- Document Transformations: Maintain thorough logs of the steps performed within Power Query. This documentation not only aids in troubleshooting but also facilitates the replication of successful processes in future projects, contributing to a streamlined workflow.

- Leverage Advanced Features: Explore Power Query’s advanced functionality, including M code, to tackle complex scenarios in which nulls must be replaced with blanks. With M you can create reusable functions that apply the same transformation across different queries, saving time and centralizing transformation logic.

- Educate Your Team: Foster a culture of integrity by training your team on the significance of data quality. Minimizing null and blank entries at the source reduces the amount of cleanup needed in Power Query and creates a more dependable data environment. This training can be part of our comprehensive BI services, which also include custom dashboards and advanced analytics, ensuring your team is well-equipped to uphold data integrity.
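The conditional-column and reusable-function practices above can be sketched in M as follows. This is an illustration under stated assumptions, not a definitive implementation: the table, the column names, and the function name `ReplaceNullWithBlank` are all hypothetical.

```powerquery
let
    // Hypothetical sample data; replace with your own query step
    Source = #table(
        type table [Customer = text, Region = nullable text, Segment = nullable text],
        {
            {"Alice", "North", null},
            {"Bob",   null,    "Retail"}
        }
    ),

    // Reusable function: replaces null with "" in the listed columns
    ReplaceNullWithBlank = (tbl as table, columnNames as list) as table =>
        Table.ReplaceValue(tbl, null, "", Replacer.ReplaceValue, columnNames),

    // Apply the same logic to several columns in one step
    Cleaned = ReplaceNullWithBlank(Source, {"Region", "Segment"}),

    // Conditional column: leaves the original column untouched
    // and adds a cleaned copy alongside it
    WithRegionLabel = Table.AddColumn(
        Source,
        "RegionLabel",
        each if [Region] = null then "" else [Region],
        type text
    )
in
    [Cleaned = Cleaned, WithRegionLabel = WithRegionLabel]
```

Saving the function as its own query lets other queries call it by name, which keeps the replacement logic in one place. The conditional column is the safer choice when the original nulls still need to be visible for auditing.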

By implementing these best practices and utilizing our services, you will significantly enhance your data management strategies, drive operational efficiency through Robotic Process Automation, and achieve more dependable analyses, all while adhering to the guidelines set forth by ISO 8000. Our 3-Day BI Sprint not only promises a fully functional report tailored to your needs but also provides a template for future projects, enhancing your reporting capabilities.

Additional Resources for Power Query Users

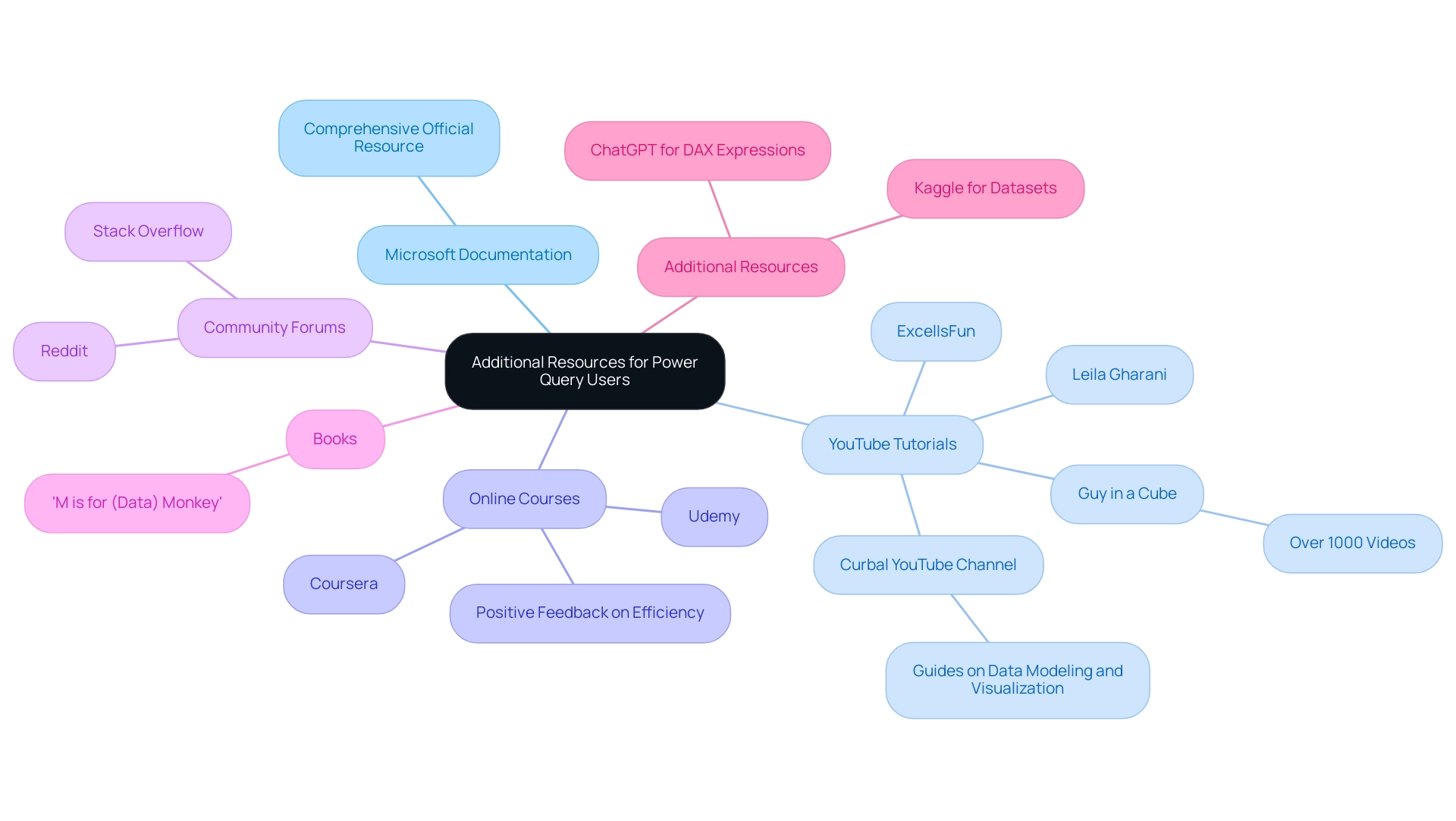

To enhance your expertise in Power Query, a variety of valuable resources are available that cater to different learning styles and preferences:

- Microsoft Power Query Documentation: This comprehensive official resource covers all features of Power Query, making it a foundational tool for both beginners and advanced users.

- YouTube Tutorials: Channels like the ‘Curbal YouTube Channel,’ owned by Ruth Pozuelo Martinez, offer valuable guides on modeling and visualization, assisting subscribers in staying updated on the newest BI features and improving their skills through brief, practical tutorials. Notably, ‘Guy in a Cube’ has uploaded more than a thousand videos, offering a vast array of content for learners. Furthermore, ‘ExcelIsFun’ and ‘Leila Gharani’ provide captivating video demonstrations that explain data transformation techniques.

- Online Courses: Platforms such as Coursera and Udemy offer organized courses that promote a deeper comprehension of data processing and manipulation, with numerous courses earning favorable feedback for their efficiency. Studies show that online courses significantly boost retention and comprehension in technical subjects.

- Community Forums: Engaging with the Power Query community on platforms like Stack Overflow and Reddit allows for interactive learning; you can ask questions, share experiences, and gain insights from fellow users who face similar challenges.

- Books: Titles such as ‘M is for (Data) Monkey’ offer comprehensive insights into Power Query and its applications, making them an excellent complement to hands-on learning.

- Additional Resources: For further enhancement of your skills, consider utilizing ChatGPT for DAX expressions and Kaggle for datasets, which are valuable tools for practical application.

As Reid Havens, a Microsoft Data Platform MVP, highlights, ‘Investing in quality resources is vital for mastering information manipulation tools such as Data Transformation.’ By exploring these resources, you can empower yourself to deepen your understanding of Power Query and enhance your data manipulation skills effectively.

Conclusion

Understanding and effectively managing null and blank values in Power Query is crucial for ensuring data integrity and driving informed decision-making. The distinction between these two types of values can significantly impact data analysis outcomes, making it essential to address them proactively. By implementing practical strategies such as:

- Regular audits

- Conditional columns

- Detailed documentation of transformations

organizations can streamline their data workflows and enhance the reliability of their analyses.

Moreover, troubleshooting common issues and leveraging advanced features of Power Query can further bolster efforts to maintain high data quality. The integration of Robotic Process Automation (RPA) into these processes not only automates repetitive tasks but also frees up valuable resources for more strategic initiatives. This holistic approach to data management not only mitigates risks associated with poor data quality but also positions organizations to thrive in a competitive landscape.

Investing in continuous learning through various resources, including:

- Online courses

- Community forums

- Official documentation

empowers teams to stay ahead of the curve. By fostering a culture of data integrity and equipping staff with the necessary skills, organizations can ensure that they are not only maintaining data quality but also leveraging it to drive growth and operational efficiency. Ultimately, addressing null and blank values with diligence and strategy lays the groundwork for a data-driven future, enabling more effective and insightful business decisions.

Overview

Related tables in Power BI are essential for creating complex data models that facilitate insightful analysis and reporting by establishing connections between different datasets. The article emphasizes that these relationships enhance data integration and performance, enabling users to derive actionable insights and streamline report creation through functions like RELATED and LOOKUP, while also addressing operational challenges with automation tools like Robotic Process Automation (RPA).

Introduction

In the realm of data analytics, Power BI stands out as a powerful tool that transforms raw data into actionable insights. At the heart of this transformation lies the concept of related tables, which facilitate intricate data relationships and enable organizations to create comprehensive data models. As businesses grapple with the complexities of data integration and reporting, understanding how to effectively utilize these relationships becomes paramount.

By mastering key functions like LOOKUP and RELATED, and embracing best practices for data management, teams can streamline their reporting processes, enhance operational efficiency, and drive strategic decision-making.

This article delves into the essential aspects of related tables in Power BI, exploring their applications, the importance of establishing robust relationships, and the strategies that can optimize performance, ultimately empowering organizations to harness the full potential of their data.

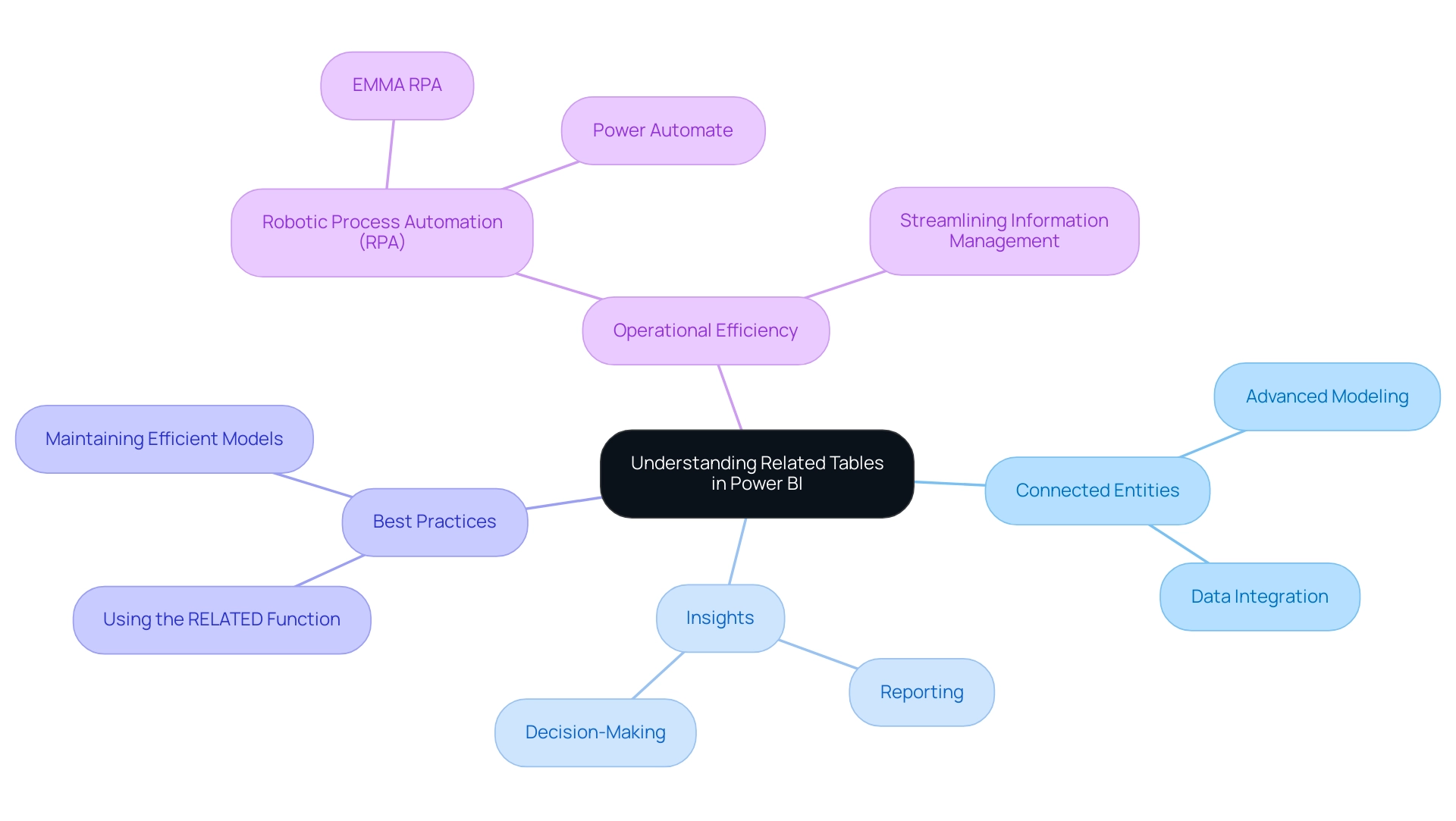

Understanding Related Tables in Power BI: An Overview

In Power BI, related tables act as the foundation of advanced data modeling, establishing clear relationships that enable users to build complex models. Power BI is widely recognized for its visualization capabilities; one course on it, just 3 hours long, has drawn 63.7K views. These relationships are essential for smooth data integration, allowing users to derive insights from various sources and form a comprehensive view of their datasets.

This unified approach not only enhances the depth of analysis but also facilitates more insightful reporting and strategic decision-making, addressing the challenges of time-consuming report creation and inconsistent data. The significance of related tables is further highlighted by the insights of Iason Prassides, who stresses,

Learn to use DAX in BI to customize and optimize your KPIs.

By strategically applying the RELATED function, users can traverse complex relationships across multiple tables, ensuring that only the most pertinent information is incorporated, which ultimately improves report performance.

Adhering to best practices, as detailed in the case study titled ‘Best Practices for Using the RELATED Function’, assists in maintaining efficient models and improves report performance in BI. Additionally, integrating Robotic Process Automation (RPA) tools like EMMA RPA and Power Automate can significantly streamline information management processes. These tools automate repetitive tasks, reduce errors, and enhance operational efficiency, thereby allowing teams to concentrate on deriving actionable insights from the information.

This strategic application of related datasets, combined with RPA solutions, not only drives operational efficiency but also paves the way for actionable insights that propel business intelligence forward.

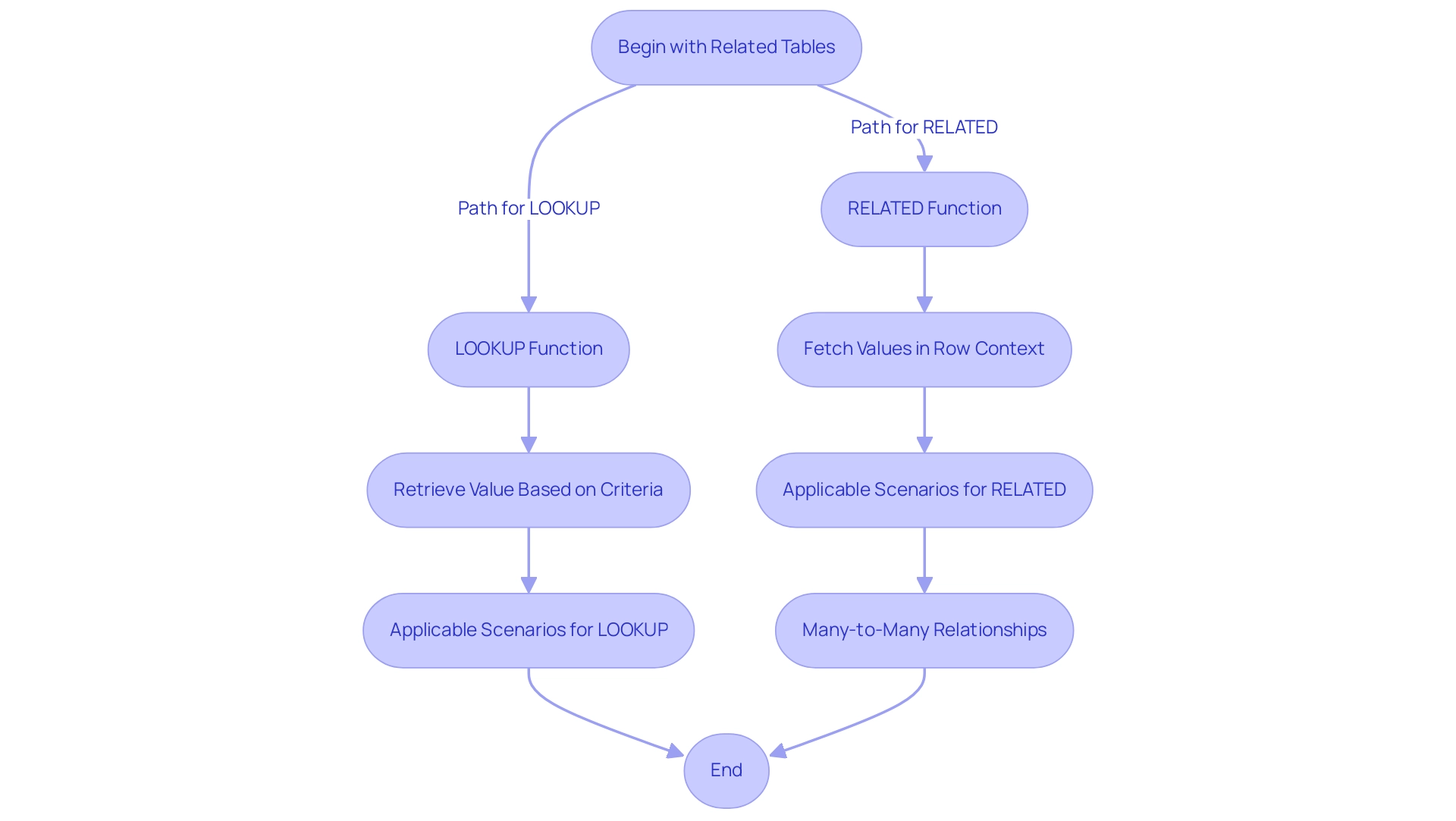

Key Functions for Managing Related Tables: LOOKUP and RELATED

The LOOKUP and RELATED functions are crucial for managing related tables in Power BI, enabling users to unlock the full potential of their data relationships. LOOKUPVALUE (the DAX function referred to here as LOOKUP) retrieves a value from another table based on specified search criteria, while RELATED fetches a value from a related table within a row context and relies on an existing relationship. For instance, consider a sales table linked to a products table; by using these functions, users can integrate product details into their sales reports, enriching their analysis and driving informed decision-making.

Furthermore, the significance of Business Intelligence and RPA, including tools like EMMA RPA and Power Automate, cannot be overstated in this context, as they tackle challenges such as:

- Time-consuming report generation

- Information inconsistencies

- Task repetition fatigue

- Staffing shortages

Addressing these challenges ultimately enhances operational efficiency. Several techniques in Power BI can achieve results similar to the VLOOKUP formula in Excel, and the right one depends on the specific scenario, so it is worth understanding the options. Mastering these functions not only improves the ability to create dynamic reports but also enables users to visualize intricate connections between related tables.
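One VLOOKUP-like technique sits on the Power Query side rather than in DAX: merging two queries. The sketch below is a swapped-in illustration of that approach, not an example of RELATED or LOOKUPVALUE, and the `Sales` and `Products` tables are hypothetical.

```powerquery
let
    // Hypothetical fact table; ProductID 999 has no match in Products
    Sales = #table(
        type table [OrderID = Int64.Type, ProductID = Int64.Type, Quantity = Int64.Type],
        {{1, 101, 2}, {2, 102, 1}, {3, 999, 5}}
    ),
    // Hypothetical lookup table
    Products = #table(
        type table [ProductID = Int64.Type, ProductName = text],
        {{101, "Keyboard"}, {102, "Mouse"}}
    ),
    // Left outer join keeps every sales row, matched or not
    Merged = Table.NestedJoin(
        Sales, {"ProductID"},
        Products, {"ProductID"},
        "Product",
        JoinKind.LeftOuter
    ),
    // Pull the product name out of the nested table column
    Expanded = Table.ExpandTableColumn(Merged, "Product", {"ProductName"}, {"ProductName"})
in
    Expanded
```

Rows without a match come back with a null product name, so a merge like this is often followed by a null check or a null-to-blank replacement before the data reaches the report.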

A practical example is illustrated in the case study titled ‘Using DAX LOOKUPVALUE Function in Power BI,’ demonstrating how the DAX LOOKUPVALUE function can successfully perform lookups across tables, especially in scenarios where RELATED cannot be used, such as many-to-many relationships. This flexibility is crucial for effective data management. As Olga Cheban, a Technical Content Writer at Coupler.io, aptly states,

I’ve been writing about information, tech solutions, and business for years, and this passion never fades.

This sentiment emphasizes the value of engaging thoroughly with Power BI’s functionality and using RPA solutions to build a strong understanding of data management.

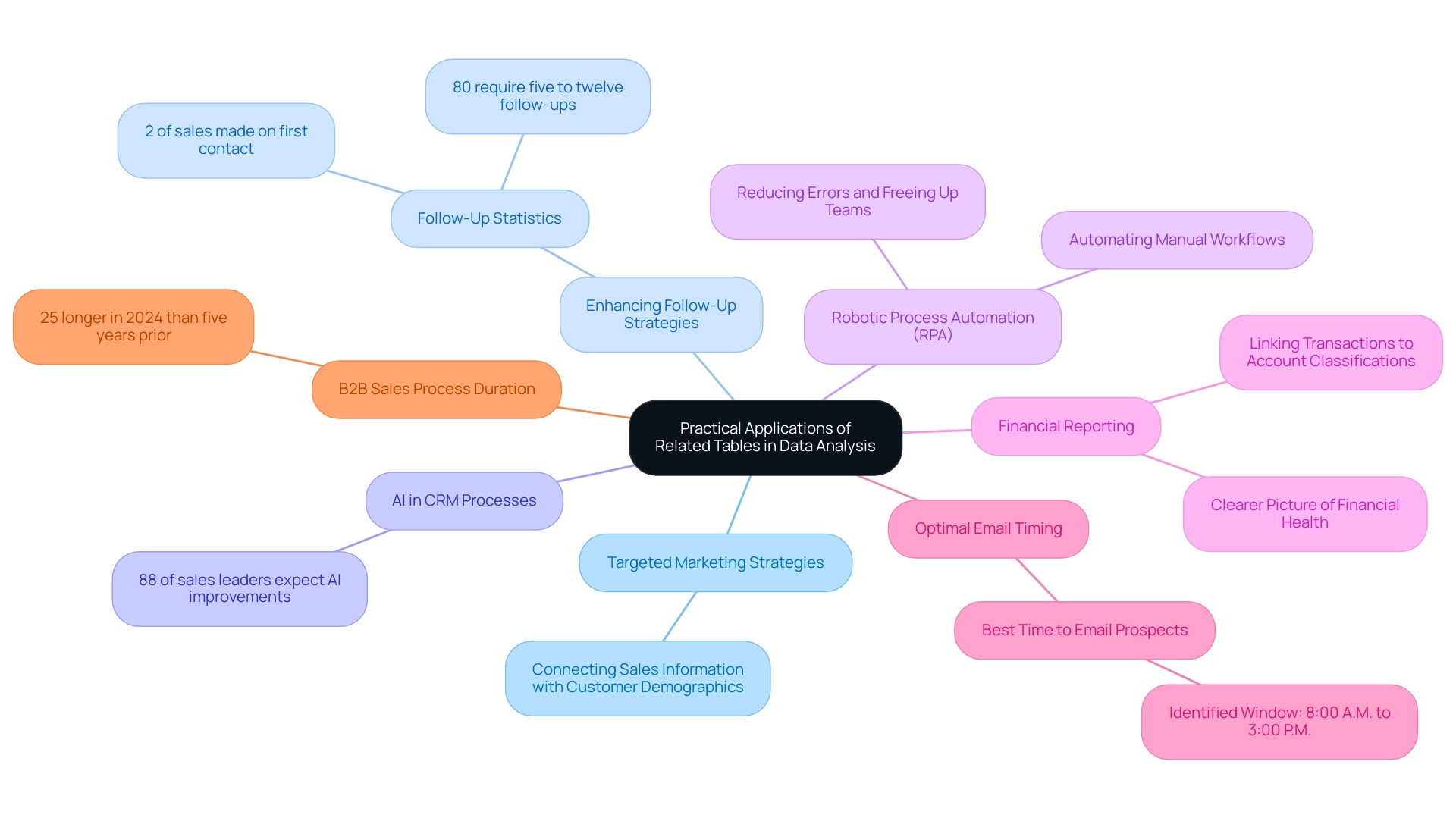

Practical Applications of Related Tables in Data Analysis

Related tables play a crucial role in analysis within Power BI, with many applications that can drive business success. By connecting sales data with customer demographics, businesses can develop targeted marketing strategies that significantly enhance engagement and conversion rates. As noted by sales executive Michael Brown, ‘Only 2% of sales are made on the first contact, while 80% require five to twelve follow-ups.’

This highlights the value of using data to sharpen follow-up strategies. Moreover, with 88% of sales leaders anticipating AI to improve their CRM processes in the coming two years, incorporating AI into data management practices becomes increasingly essential for operational efficiency. The challenges in leveraging insights from Power BI dashboards, such as time-consuming report creation and inconsistencies, can be addressed through Robotic Process Automation (RPA).

By automating manual workflows, RPA not only reduces errors but also frees up teams to focus on strategic, value-adding tasks rather than repetitive ones. In another application, related tables can enhance financial reporting by linking transactions to specific account classifications. This integration provides organizations with a clearer picture of their financial health, allowing for more informed decision-making.

Additionally, understanding optimal email timing, identified as between 8:00 A.M. and 3:00 P.M., can significantly enhance engagement rates with prospects, further illustrating the importance of timing in marketing strategies. Recent findings suggest that the typical B2B sales process required 25% more time in 2024 compared to five years prior, highlighting the need for effective data management in handling these complexities. By leveraging related tables effectively and incorporating RPA, organizations can significantly enhance their reporting capabilities, making data-driven decisions that align with their strategic goals and ultimately improve overall sales performance.

Moreover, tailored AI solutions can assist in navigating the overwhelming AI landscape, ensuring that businesses adopt the right technologies that align with their specific goals.

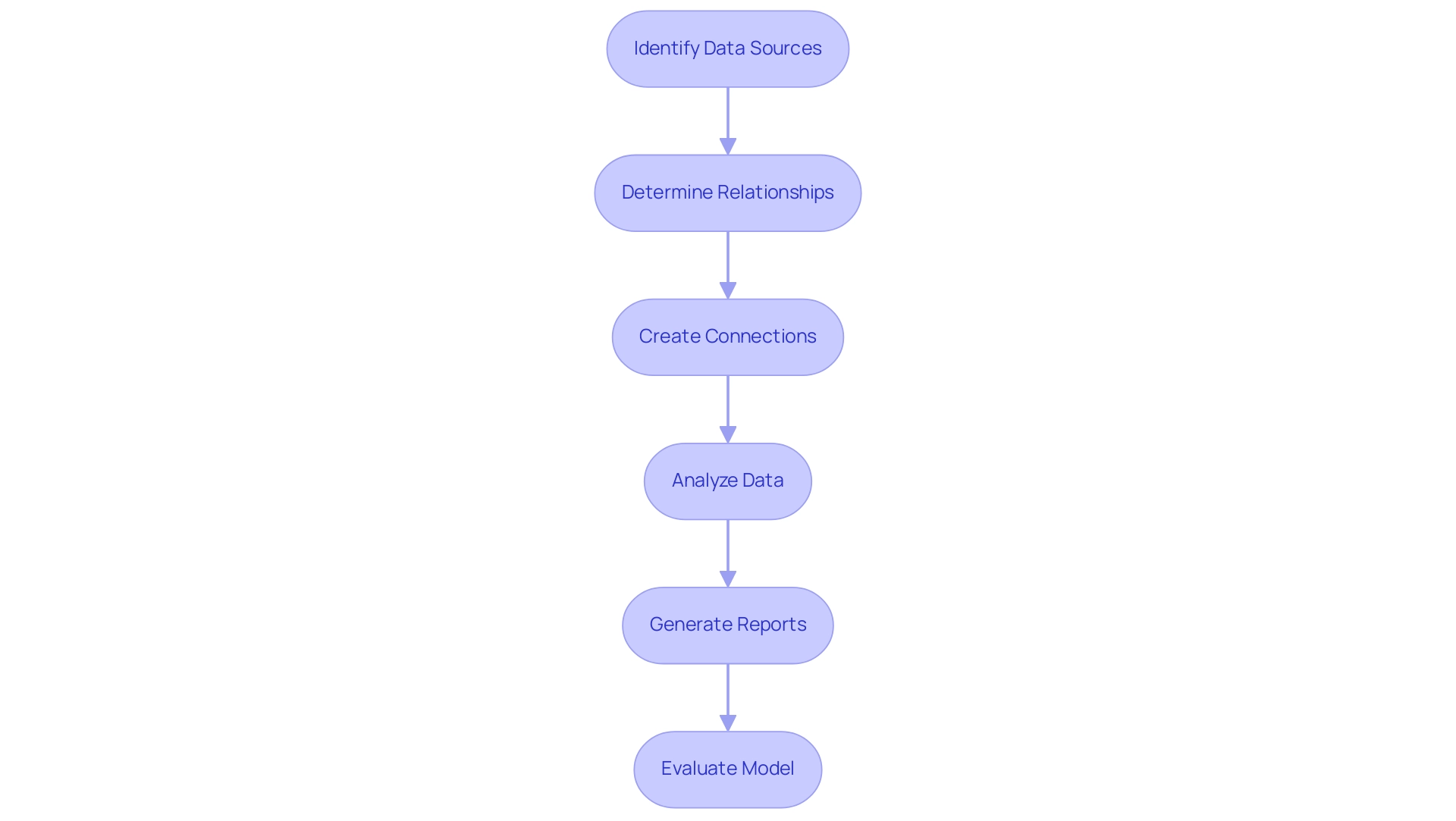

Establishing Relationships: The Backbone of Data Modeling

Creating relationships between tables is a fundamental aspect of efficient data modeling in Power BI, and it is emphasized in our 3-Day Power BI Sprint. These relationships determine how data in one table interacts with data in another, facilitating the creation of insightful and meaningful reports. For instance, a one-to-many relationship between a customer table and an orders table enables comprehensive analysis of all orders linked to a specific customer.

This capability is essential for deriving actionable insights from interconnected datasets, ultimately streamlining report creation and utilization for impactful decision-making. In just three days, we promise to create a fully functional, professionally designed report on a topic of your choice, allowing you to focus on utilizing the insights. Furthermore, you can use this report as a template for future projects, ensuring a professional design from the start.

Frequent model reviews are essential to ensure that these relationships remain tuned to user objectives. As noted by modeling experts, ‘In many cases, issues and errors are not discovered until the process is running,’ which underscores the importance of proactive management of relationships. By understanding how to establish and maintain relationships between related tables, users can significantly enhance data integrity and reliability in their analyses, leading to better decision-making and strategic outcomes.

Additionally, with a strong foundation in statistics, professionals can expect to enhance their job prospects; those skilled in machine learning, for instance, can earn an average salary of close to $113,000 per year. This emphasizes the practical advantages of mastering modeling techniques, as shown in the case study on expanding job opportunities in science roles, where statistical analysis skills are increasingly sought after.

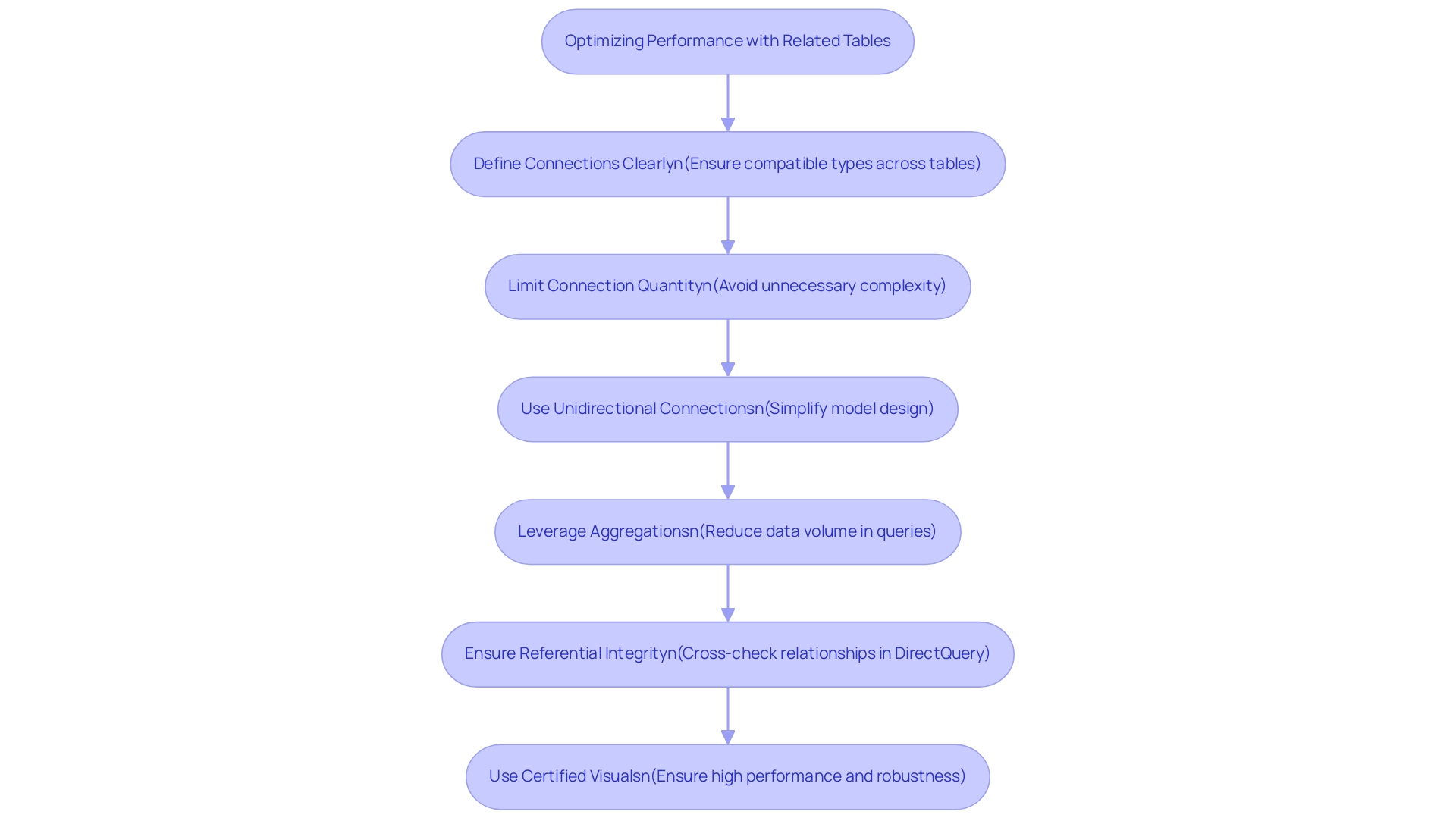

Optimizing Performance with Related Tables: Best Practices

To achieve optimal performance with related tables in Power BI, it is essential for users to adopt several strategic best practices while being aware of common challenges. One significant issue is the time-consuming nature of report creation, which can detract from leveraging insights effectively. The lack of a governance strategy can exacerbate inconsistencies, leading to confusion and mistrust in the data presented.

Ensuring that relationships are precisely defined, with compatible data types across related tables, helps to reduce errors and improve query performance. It is also advisable to limit the number of relationships to avoid unnecessary complexity, as overly intricate models can degrade performance. Bi-directional relationships carry particular risk here, so favoring single-direction relationships can simplify the model and enhance operational efficiency.

Additionally, leveraging aggregations can dramatically enhance performance by reducing the volume of data processed in queries. It is also crucial to cross-check referential integrity for relationships in DirectQuery sources to optimize query performance. The use of Power BI-certified visuals ensures high performance and robustness, further supporting the effectiveness of these practices.
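Some of these checks can be run in Power Query before the tables reach the model. The sketch below assumes hypothetical `Customers` and `Orders` tables; it aligns the key data types, confirms the key on the "one" side is unique, and counts order rows whose customer does not exist.

```powerquery
let
    // Hypothetical "one" side of a one-to-many relationship
    Customers = #table(
        type table [CustomerID = Int64.Type, Name = text],
        {{1, "Alice"}, {2, "Bob"}}
    ),
    // Hypothetical "many" side, with the key stored as text by mistake
    Orders = #table(
        type table [OrderID = Int64.Type, CustomerID = text],
        {{10, "1"}, {11, "1"}, {12, "2"}}
    ),
    // Align the key data type with the Customers table
    OrdersTyped = Table.TransformColumnTypes(Orders, {{"CustomerID", Int64.Type}}),
    // True when no CustomerID value repeats in Customers
    KeyIsUnique = Table.IsDistinct(Customers, {"CustomerID"}),
    // Order rows that point at a customer missing from Customers
    OrphanOrders = Table.SelectRows(
        OrdersTyped,
        each not List.Contains(Customers[CustomerID], [CustomerID])
    )
in
    [KeyIsUnique = KeyIsUnique, OrphanOrderCount = Table.RowCount(OrphanOrders)]
```

A unique key and an orphan count of zero are reasonable preconditions for a clean one-to-many relationship. On large tables a merge with an anti join would likely scale better than `List.Contains`, which is used here only for readability.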

A practical case study demonstrating the successful categorization of report information using sensitivity labels illustrates how effectively managing information not only aids in security but also optimizes performance. For instance, implementing a clear governance framework can guide users in establishing data accuracy and consistency across reports. As Markus, a frequent visitor, noted, ‘Thank you for all your suggestions everyone, I will look into it further, but this answer confirms my thoughts and gives me some new ideas.’

By implementing these best practices and establishing a governance strategy, users can overcome the challenges faced in report creation and significantly enhance the efficiency and robustness of their Power BI reports, particularly those built on related tables, ultimately driving data-driven insights for better decision-making.

Conclusion

Harnessing the power of related tables in Power BI is essential for organizations aiming to transform their data into valuable insights. By establishing defined relationships, users can create sophisticated data models that enhance reporting accuracy and decision-making. Key functions like LOOKUP and RELATED play a crucial role in managing these relationships, allowing for seamless integration of data across multiple tables. This capability not only enriches data analysis but also streamlines the reporting process, making it easier for teams to derive actionable insights.

The practical applications of related tables extend beyond mere data management. By linking diverse datasets, businesses can refine their marketing strategies, improve customer engagement, and enhance financial reporting. Moreover, the integration of Robotic Process Automation (RPA) tools can automate repetitive tasks, reducing errors and freeing up valuable time for strategic initiatives. As organizations navigate the complexities of data integration, embracing these technologies and practices will be instrumental in achieving operational efficiency and driving business success.

Establishing and maintaining strong relationships within data models is foundational for effective analysis. Regular audits, adherence to best practices, and a focus on optimizing performance can significantly improve data integrity and reliability. As organizations continue to leverage the capabilities of Power BI and related tables, they position themselves to make informed, data-driven decisions that align with their strategic goals. Now is the time to embrace these practices and unlock the full potential of data analytics for enhanced operational excellence.

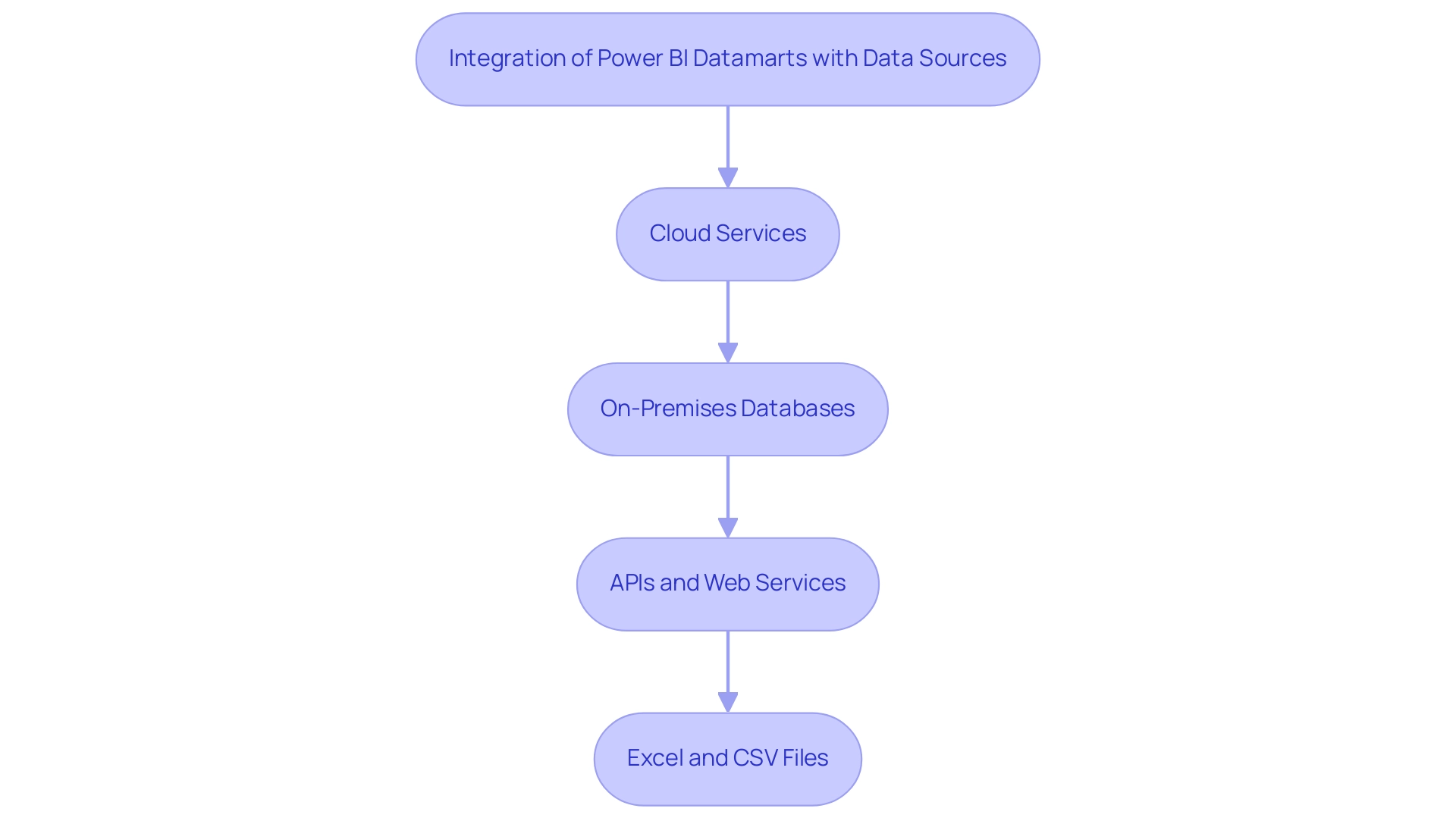

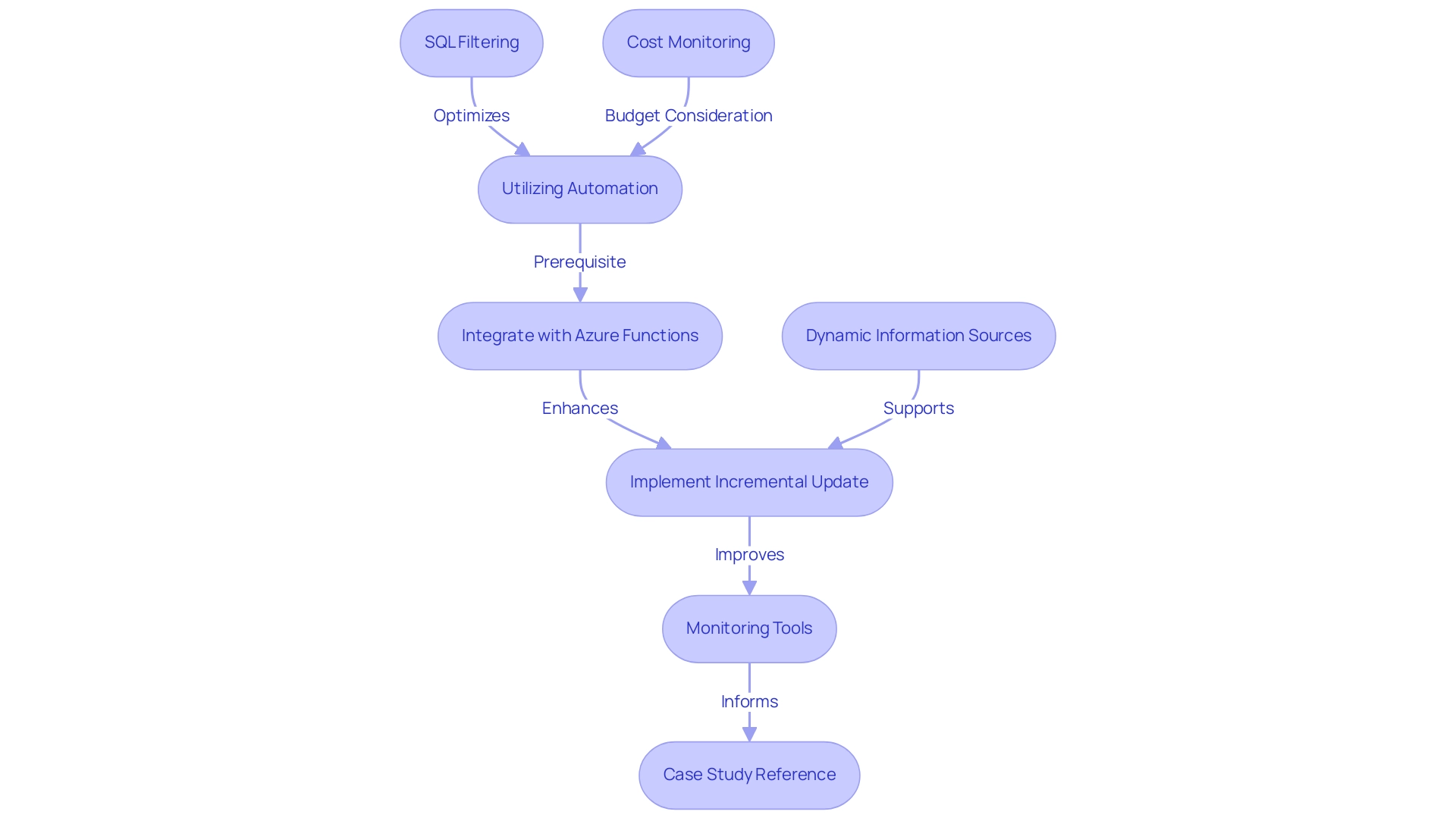

Overview

Setting up data refresh in Power BI involves a systematic approach that includes choosing the right refresh method, configuring settings, and monitoring updates to ensure accurate reporting. The article outlines a step-by-step guide detailing the process of initiating manual, scheduled, or automatic updates, emphasizing the importance of maintaining data integrity for informed decision-making and highlighting best practices to optimize refresh efficiency.

Introduction

In the dynamic landscape of data analytics, ensuring that reports and dashboards reflect the latest insights is essential for informed decision-making. Power BI stands out as a powerful tool in this realm, offering a variety of data refresh options designed to meet diverse organizational needs. From manual to automatic refreshes, each method plays a critical role in maintaining data integrity and operational efficiency.

However, many organizations struggle with effectively implementing these strategies, resulting in missed opportunities for actionable insights. By exploring best practices, troubleshooting techniques, and innovative automation solutions, organizations can enhance their data refresh processes, ensuring that they not only keep pace with the rapid flow of information but also leverage it to drive strategic growth.

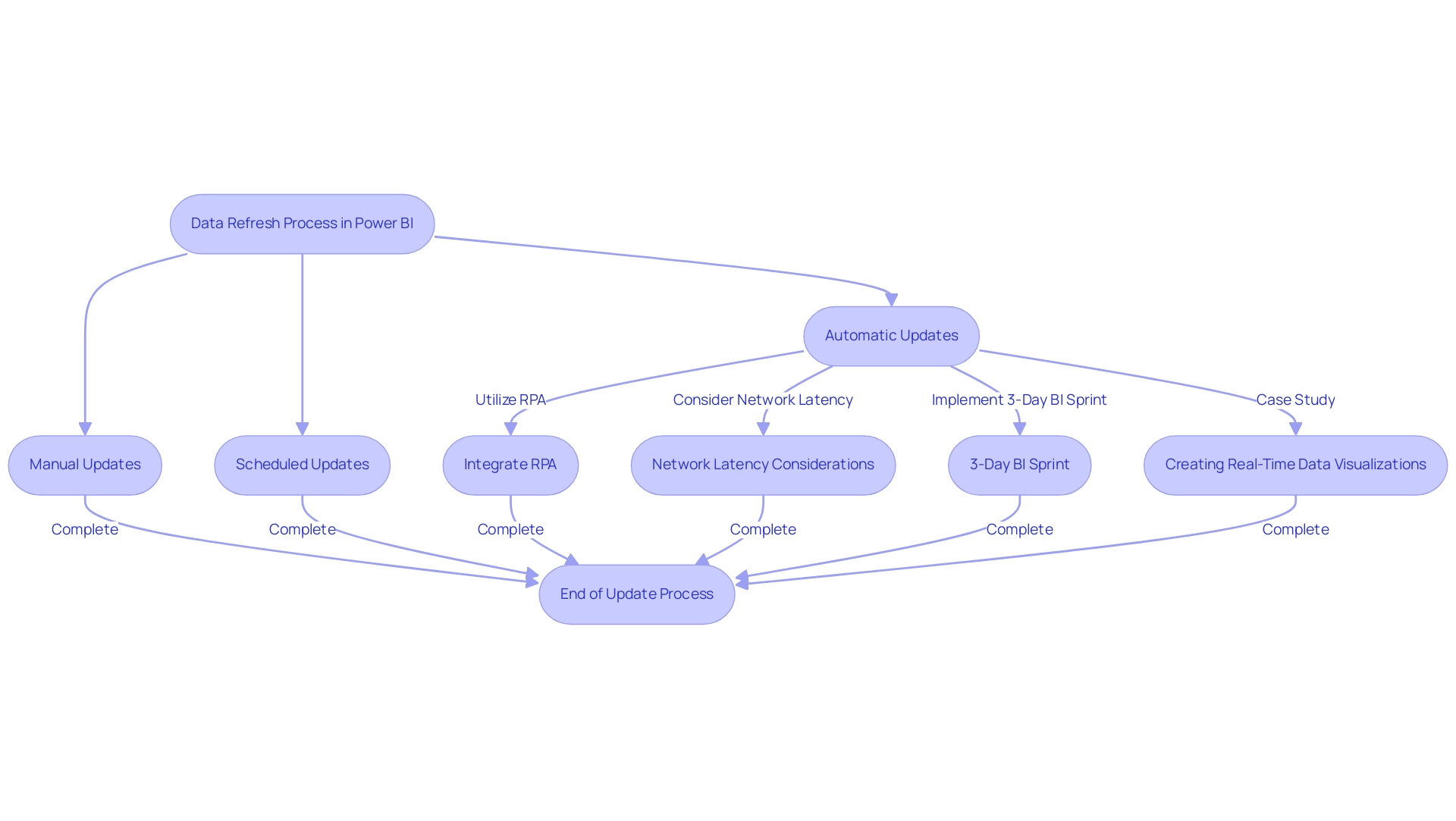

Understanding Data Refresh in Power BI

Data refresh in Power BI updates your reports and dashboards with the most recent data from your sources, ensuring that your visualizations reflect current insights. This is not just a technical requirement; it’s essential for accurate reporting and strategic decision-making. By preserving the integrity of your insights, you enable your organization to make informed choices based on the most recent available information.

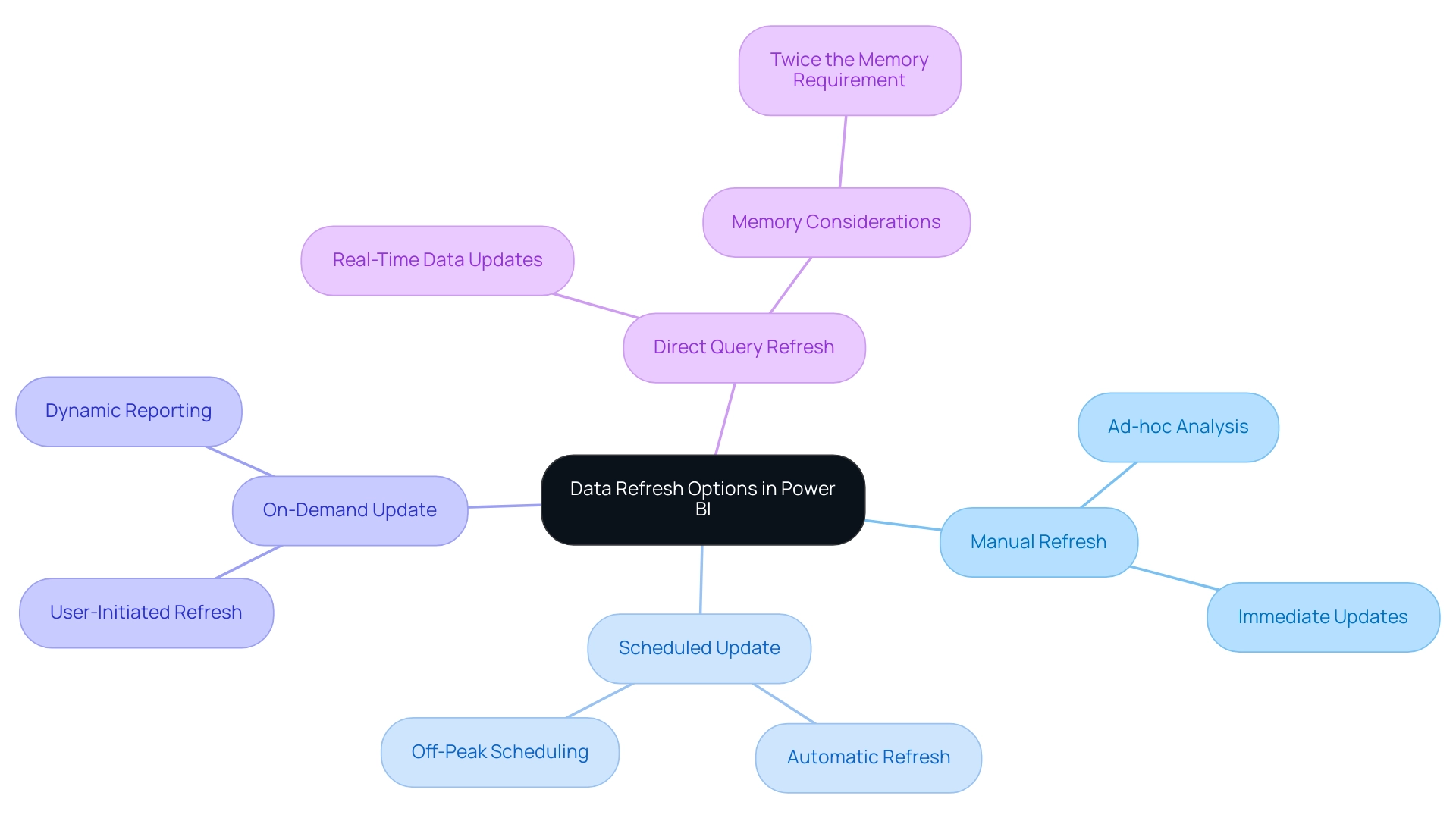

Power BI provides various update options designed for different situations, including:

- Manual updates

- Scheduled updates

- Automatic updates

Each method addresses specific operational needs, allowing you to optimize your reporting practices effectively.

Moreover, the integration of Robotic Process Automation (RPA) can significantly enhance the efficiency of your reporting processes, reducing the time spent on manual tasks and minimizing errors. As you traverse the intricate environment of information management, utilizing customized AI solutions can enhance your operations, ensuring that the technologies you adopt correspond with your business objectives.

Information integrity is crucial; as shown by recent findings, 26% of organizations attain only a 50% update rate, while 58% fall below 25%. This highlights the need for a strong refresh strategy that improves the reliability of your insights and promotes a data-driven culture within your organization. Moreover, Power BI can send refresh failure notifications to your email, ensuring that you are quickly informed of any issues that may affect your information integrity.

Additionally, it is important to consider network latency, as it can impact update performance; therefore, it is advised to keep sources, gateways, and BI clusters in the same region to minimize delays. To demonstrate efficient information update methods, consider the 3-Day BI Sprint, which enables the swift production of expertly crafted reports, and the General Management App that offers extensive oversight and intelligent evaluations. A practical example of successful implementation can be seen in the case study titled ‘Creating Real-Time Visualizations,’ where organizations successfully utilized Power BI to refresh data and enhance agility and responsiveness in decision-making.

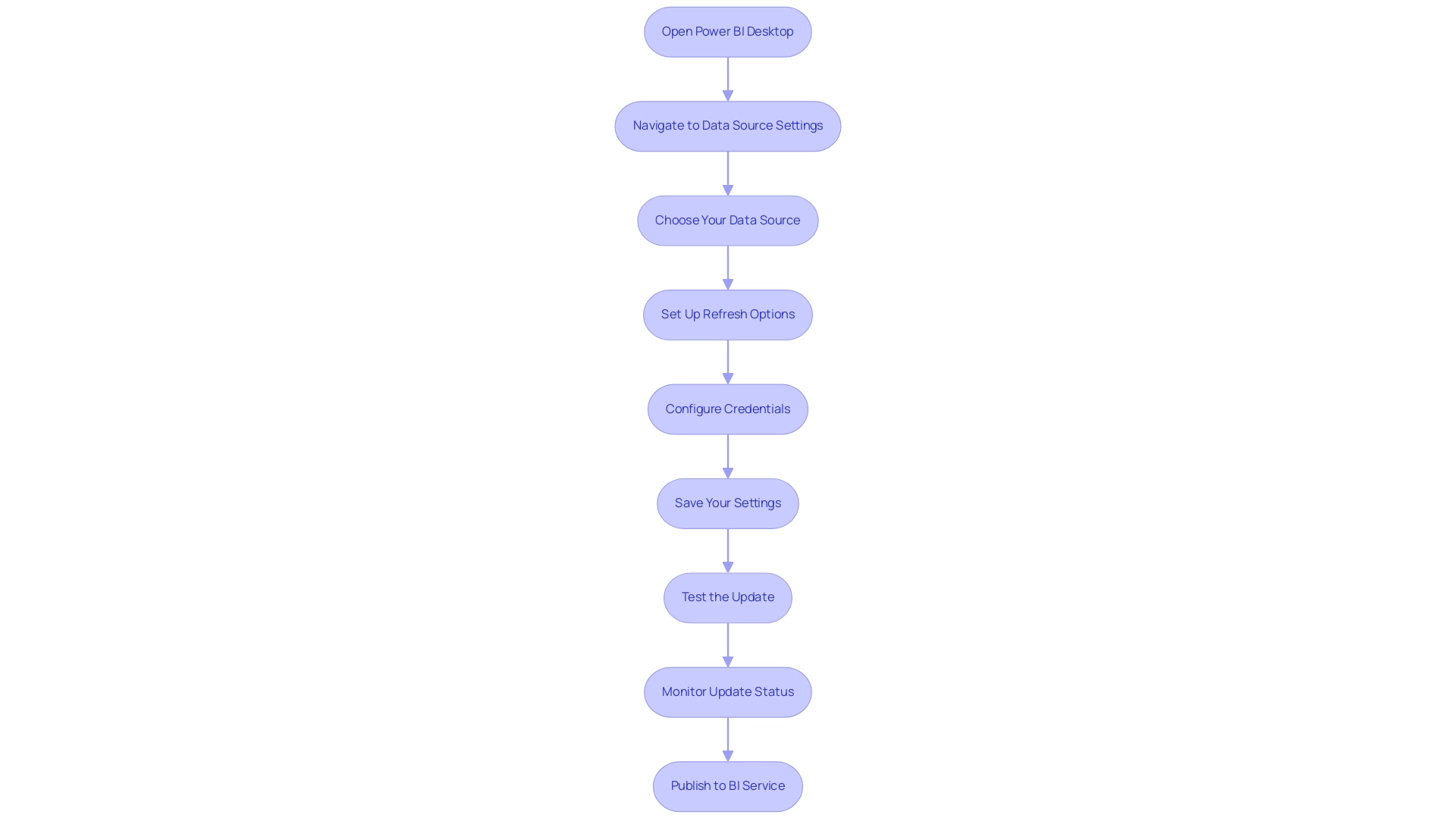

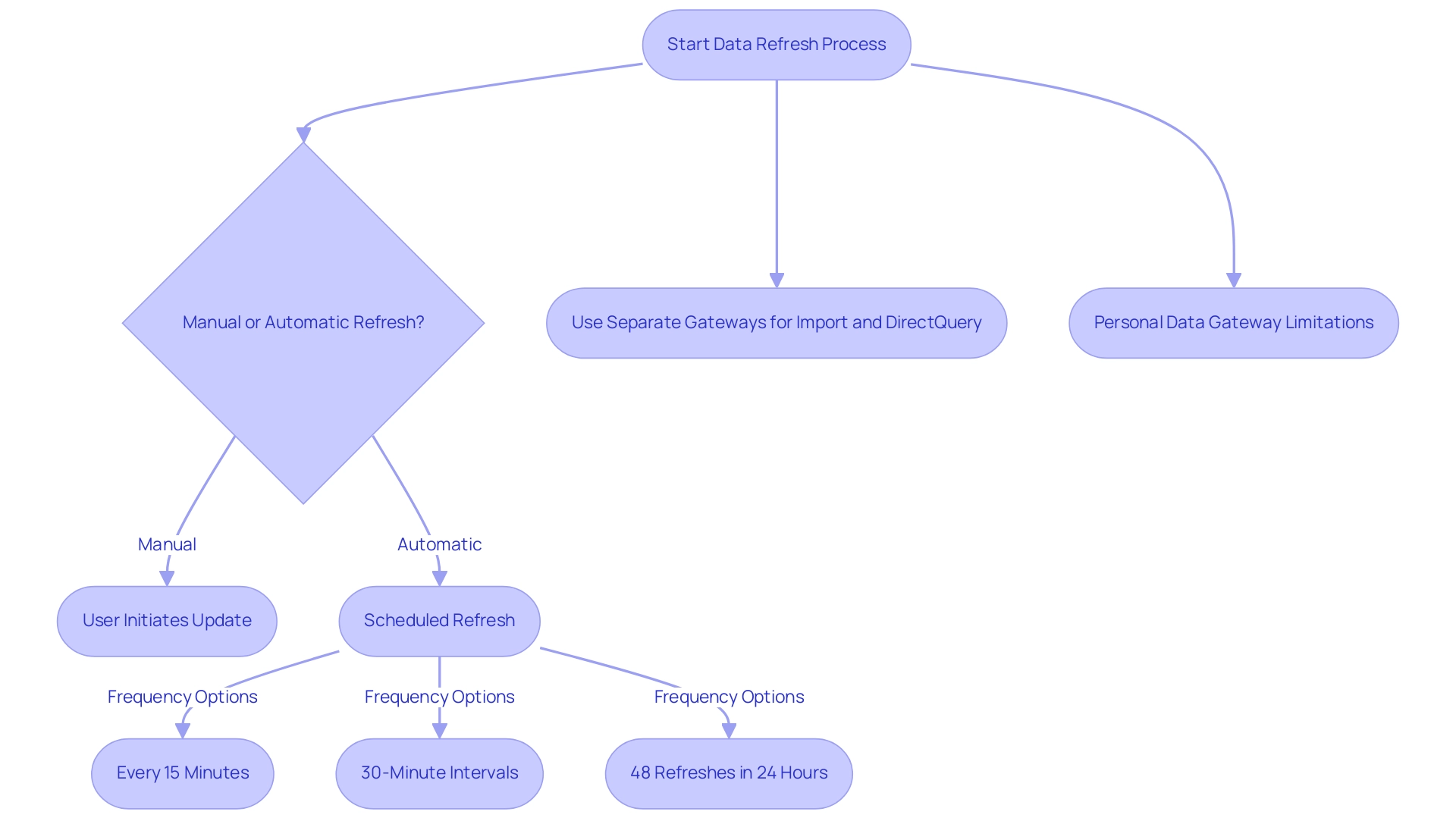

Step-by-Step Guide to Setting Up Data Refresh in Power BI

- Open Power BI Desktop: Launch the application and load the report you wish to work with.

- Navigate to Data Source Settings: Access the ‘File’ menu, then select ‘Options and settings,’ followed by ‘Data source settings.’

- Choose Your Data Source: Identify and select the specific data source that requires refreshing.

- Set Up Refresh Options: Use the ‘Scheduled refresh’ settings to define your preferred refresh frequency, choosing daily, weekly, or custom intervals. Note that scheduled refresh applies to semantic models published to the Power BI Service. Power BI Premium allows up to 48 refreshes per day, which works out to one every 30 minutes. However, as noted by mjmowle, the number of refreshes and the 30-minute spacing available to you in a 24-hour period depend on your license type.

- Configure Credentials: Make sure to enter the correct authentication details for your data source to avoid connectivity issues during refreshes.

- Save Your Settings: Click ‘Apply’ to ensure your configurations are saved.

- Test the Refresh: Manually initiate a test by clicking ‘Refresh’ to confirm that your settings are functioning correctly.

- Monitor Refresh Status: After configuring your refresh, regularly check the refresh status and history of your semantic models to catch errors and confirm that each cycle completes successfully. This step is essential for overcoming challenges associated with time-consuming report creation and inconsistencies, as it ensures your information remains precise and current.

- Publish to the Power BI Service: Once you’re confident in the refresh configuration, publish your report to the Power BI Service, allowing others to access the most recent information.
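The refresh-frequency limits in the steps above can be sketched as a small check. This is a minimal illustration, not Power BI's own validation: the `DAILY_LIMITS` table, the license labels, and the function names are hypothetical, the 48-per-day figure comes from the text, and the 8-per-day figure for Pro is an assumption you should confirm against your own license terms.

```python
from datetime import time

# Assumed daily limits: 48 comes from the text above; 8 for Pro is an
# assumption to verify for your tenant. The labels are hypothetical.
DAILY_LIMITS = {"premium": 48, "pro": 8}


def half_hour_slots():
    """Return every half-hour start time in a 24-hour day (48 slots)."""
    return [time(hour=h, minute=m) for h in range(24) for m in (0, 30)]


def validate_schedule(slots, license_type):
    """Return a list of problems found in a proposed refresh schedule."""
    problems = []
    limit = DAILY_LIMITS[license_type]
    if len(slots) > limit:
        problems.append(f"{len(slots)} slots exceed the daily limit of {limit}")
    if len(set(slots)) != len(slots):
        problems.append("duplicate time slots")
    for slot in slots:
        # Slots are expected on the hour or half hour.
        if slot.minute not in (0, 30) or slot.second != 0:
            problems.append(f"{slot} is not on a 30-minute boundary")
    return problems


if __name__ == "__main__":
    every_half_hour = half_hour_slots()
    print(len(every_half_hour))                           # 48
    print(validate_schedule(every_half_hour, "premium"))  # []
    print(validate_schedule(every_half_hour, "pro"))      # one problem reported
```

Running a check like this before entering a schedule makes it obvious when a plan that fits a Premium capacity would be rejected or truncated under a more restrictive license.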

By following these steps, you can ensure that your Power BI reports refresh consistently, providing accurate insights to support decision-making and drive business growth. Moreover, think about utilizing RPA solutions such as EMMA RPA or Power Automate to streamline the refresh process, improving efficiency and minimizing manual involvement. Be aware of possible challenges with BI tools, such as a steep learning curve and integration issues; consider structured training and ongoing education programs designed for Power BI to enhance your proficiency with its refresh capabilities.

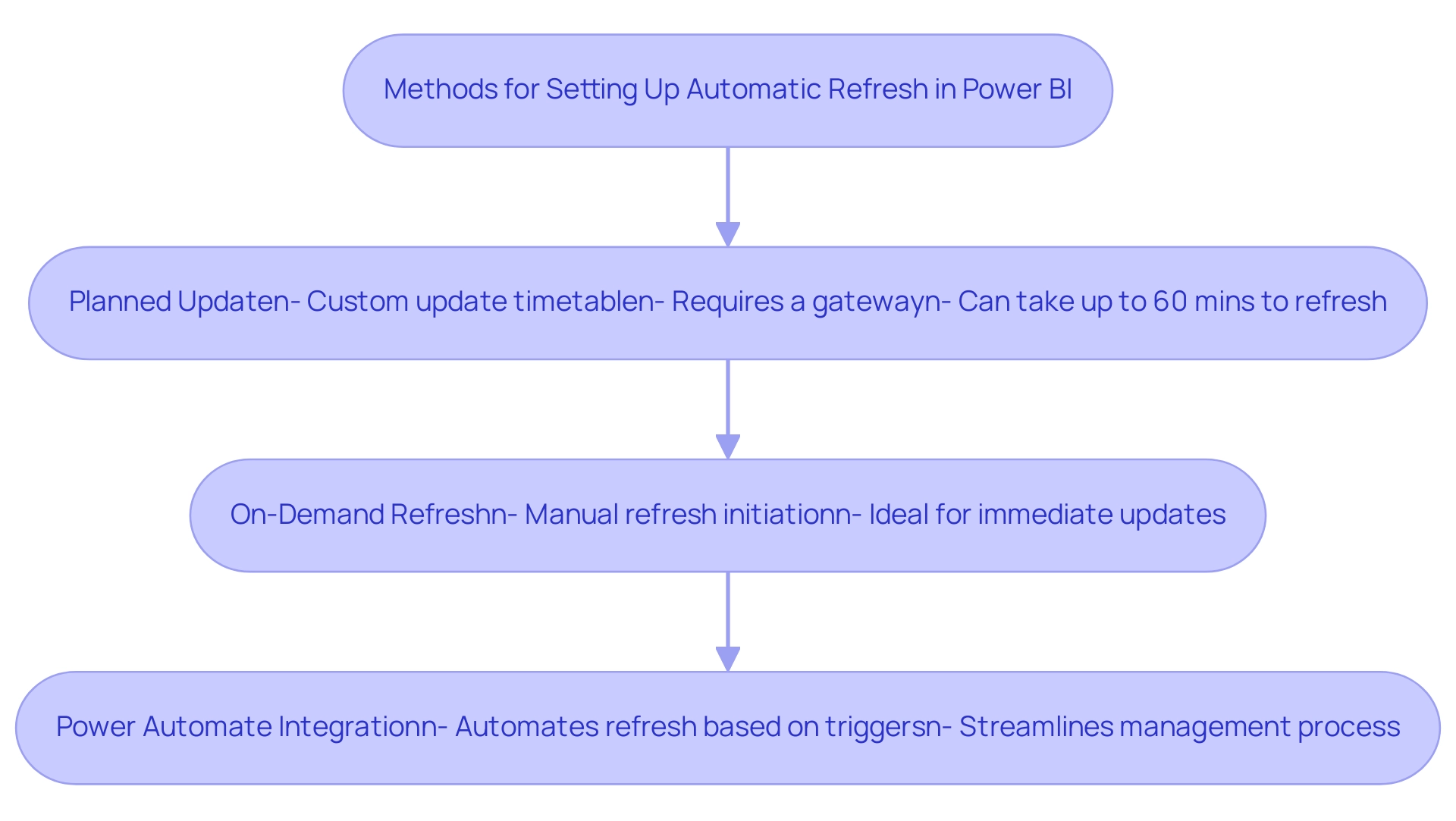

Exploring Different Types of Data Refresh Options

Power BI provides a range of data refresh options, each tailored to meet different reporting needs:

- Manual Refresh: This user-initiated option is ideal for ad-hoc analysis, allowing for immediate updates when specific insights are required.

- Scheduled Refresh: By automatically refreshing data at specified intervals, this approach is crucial for frequently updated reports, ensuring stakeholders have access to the most recent details without manual intervention. It is recommended to schedule these refreshes during off-peak times to optimize performance.

- On-Demand Refresh: This adaptable method allows users to refresh data at their discretion, making it especially beneficial for dynamic reporting situations where timing is crucial.

- Direct Query Refresh: For compatible data sources, this method queries the source directly, providing near real-time data and ensuring that users always interact with the most current information available. As a separate technical consideration, a full refresh of a semantic model needs about twice the amount of memory the model itself requires.
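The memory point in the last item is simple arithmetic, sketched below. The function names and the 10 GB capacity figure are hypothetical; only the factor of two comes from the text, and real memory use also depends on the model and the queries running at the time.

```python
def full_refresh_memory_gb(model_size_gb, factor=2.0):
    """Estimate peak memory for a full refresh as a multiple of model size.

    The default factor of 2 reflects the rule of thumb stated above.
    """
    return model_size_gb * factor


def fits_in_capacity(model_size_gb, capacity_limit_gb):
    """Return True when the estimated peak stays within the capacity limit."""
    return full_refresh_memory_gb(model_size_gb) <= capacity_limit_gb


if __name__ == "__main__":
    # Hypothetical numbers: a 6 GB model on a capacity with a 10 GB limit.
    print(full_refresh_memory_gb(6))   # 12.0
    print(fits_in_capacity(6, 10))     # False
    print(fits_in_capacity(4, 10))     # True
```

A model that looks comfortably small at rest can still fail to refresh, which is one reason the size-reduction and incremental refresh practices matter.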

Comprehending these varied options enables organizations to choose the most appropriate update method to refresh data in Power BI based on their reporting needs. However, many organizations struggle with a lack of data-driven insights, which can leave them at a competitive disadvantage. By applying these improvement strategies, organizations can enhance their reporting capabilities and drive informed decision-making.

Alongside these strategies, our 3-Day BI Sprint can expedite the report creation process, while the General Management App supports comprehensive management and smart reviews. As Sayantoni Das notes,

Power Query is a commonly utilized ETL (Extract, Transform, Load) tool, underscoring its essential role in the analytics landscape.

Additionally, adopting best practices for data transformation in BI, such as maintaining clear documentation, prioritizing information quality, and optimizing models, can further enhance these refresh strategies.

The case study titled “Best Practices for Transforming Data in Business Intelligence” illustrates that organizations can achieve improved business outcomes by adhering to these practices. By implementing these approaches, organizations can maximize the value of their advanced analytics investments and leverage Power Automate for streamlined workflow automation, ensuring a risk-free ROI assessment and professional execution.

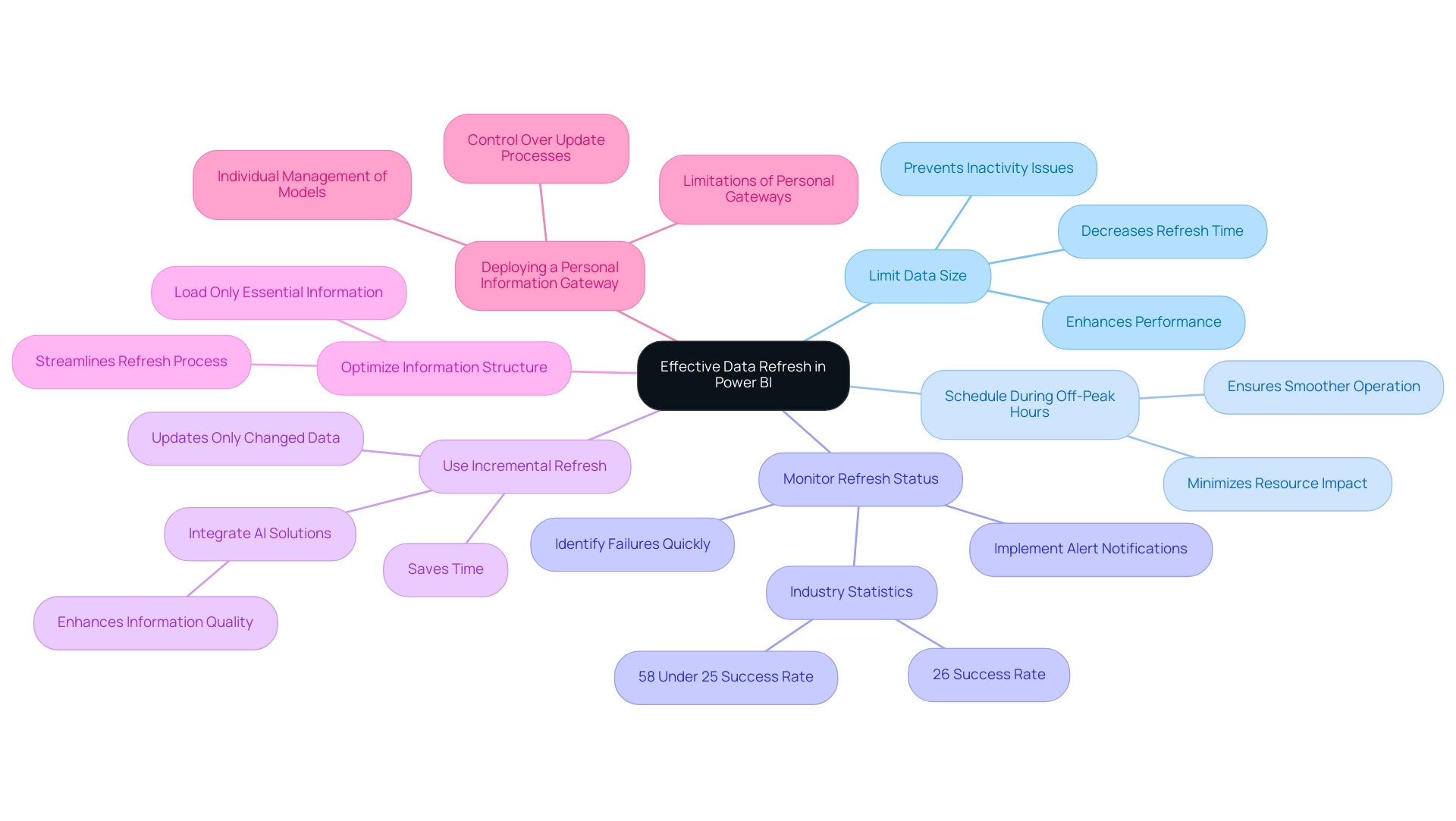

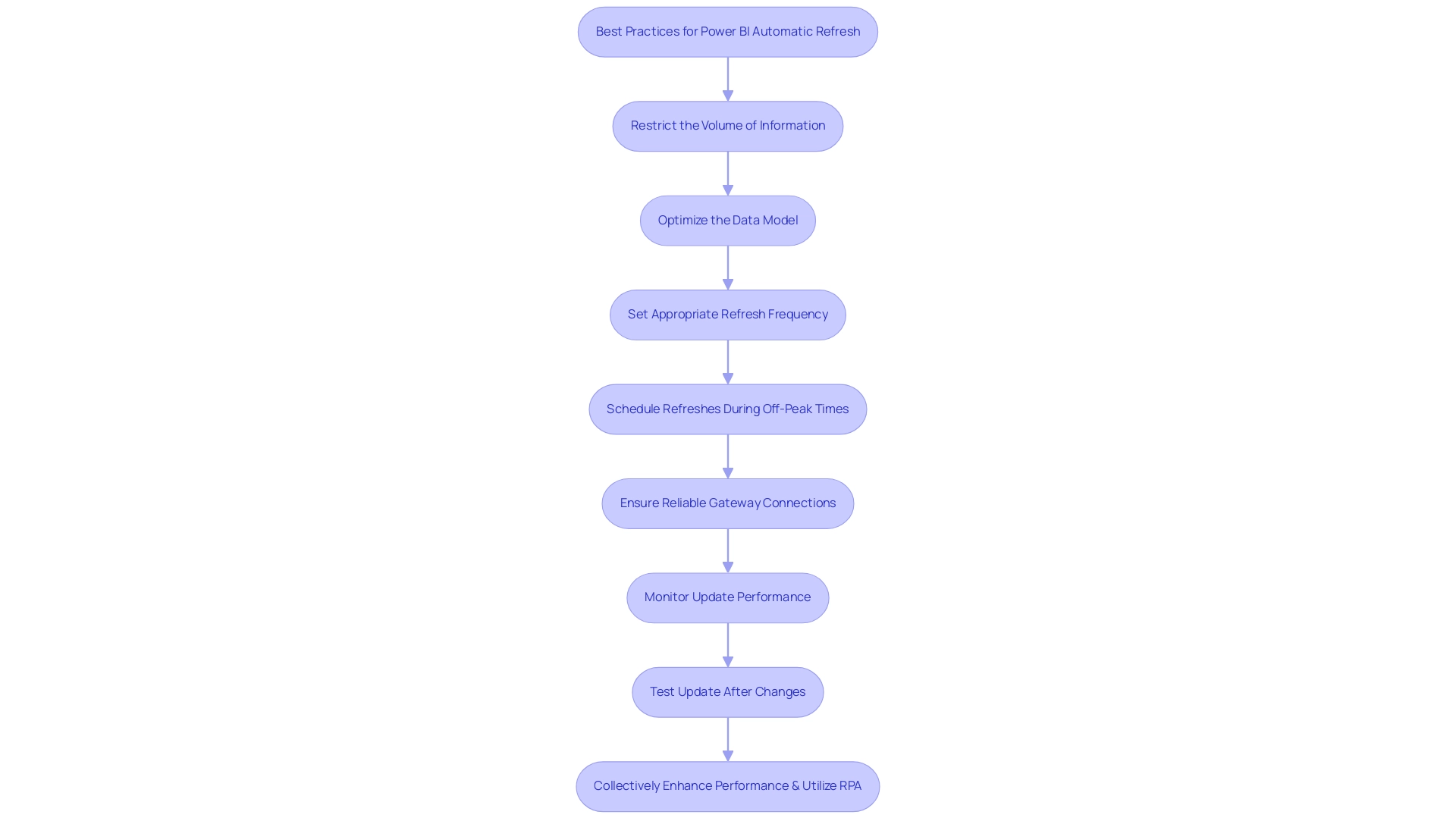

Best Practices for Effective Data Refresh in Power BI

- Limit Data Size: Start by reducing the amount of data you refresh. A smaller dataset not only enhances performance but also decreases the time required for each refresh cycle. Regular engagement matters as well: Power BI deems a semantic model inactive after two months of inactivity.

- Schedule During Off-Peak Hours: To further optimize performance, schedule data refreshes during off-peak hours. By doing so, you minimize the impact on system resources and ensure a smoother operation.

- Monitor Refresh Status: Regular observation of refresh history is essential. This practice enables you to quickly identify any failures or delays, allowing for timely responses. According to industry statistics, ‘26% of organizations encounter update issues at a 50% success rate, while 58% report problems occurring under 25%.’ Implementing alert notifications for refresh failures can significantly enhance responsiveness to these challenges, especially when leveraging Robotic Process Automation (RPA) to automate manual workflows and improve operational efficiency.

- Use Incremental Refresh: For those managing large datasets, incremental refresh is a game changer. This technique updates only the information that has changed, rather than refreshing the entire dataset, leading to substantial time savings and improved performance. Additionally, consider integrating AI solutions, such as Small Language Models, which can enhance information quality by providing tailored analyses that address inconsistencies in master information.

- Optimize the Data Model: An effective data model is essential for shortening refresh durations. Ensure that only essential information is loaded into your model. This not only streamlines the refresh process but also improves overall performance.

- Case Study – Deploying a Personal Gateway: For users without access to an enterprise gateway, deploying a personal gateway can be a practical solution. This permits individual management of semantic models, enabling users to handle source configurations independently. While personal gateways have limitations, they enable users to maintain control over their refresh processes, aligning with the best practices discussed.
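The incremental refresh idea from the list above can be sketched in a few lines. This is a toy model rather than Power BI's implementation: rows are plain dictionaries, and the `range_start` and `range_end` arguments are named loosely after the RangeStart and RangeEnd parameters that Power BI uses for incremental refresh. Only rows inside the window are reloaded from the source; everything older is kept as stored.

```python
from datetime import date


def in_window(row_date, range_start, range_end):
    # Half-open interval, so a row on a boundary belongs to exactly one window.
    return range_start <= row_date < range_end


def incremental_refresh(stored_rows, source_rows, range_start, range_end):
    """Keep stored rows outside the window and reload rows inside it."""
    kept = [r for r in stored_rows
            if not in_window(r["date"], range_start, range_end)]
    reloaded = [r for r in source_rows
                if in_window(r["date"], range_start, range_end)]
    return sorted(kept + reloaded, key=lambda r: r["date"])


if __name__ == "__main__":
    stored = [
        {"date": date(2024, 1, 10), "amount": 100},
        {"date": date(2024, 6, 5), "amount": 50},
    ]
    source = [
        {"date": date(2024, 1, 10), "amount": 999},  # outside the window
        {"date": date(2024, 6, 5), "amount": 55},    # corrected value
        {"date": date(2024, 6, 20), "amount": 70},   # new row
    ]
    result = incremental_refresh(stored, source,
                                 date(2024, 6, 1), date(2024, 7, 1))
    print([r["amount"] for r in result])  # [100, 55, 70]
```

The January row keeps its stored value even though the source differs, which shows the trade-off: the window has to be wide enough to cover every period in which source data can still change.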

By adhering to these best practices and considering automation strategies, you can significantly enhance the reliability and efficiency of your Power BI refresh processes, ultimately driving better decision-making and operational effectiveness. To discover more about how our AI solutions can specifically tackle your quality challenges, schedule a free consultation today.

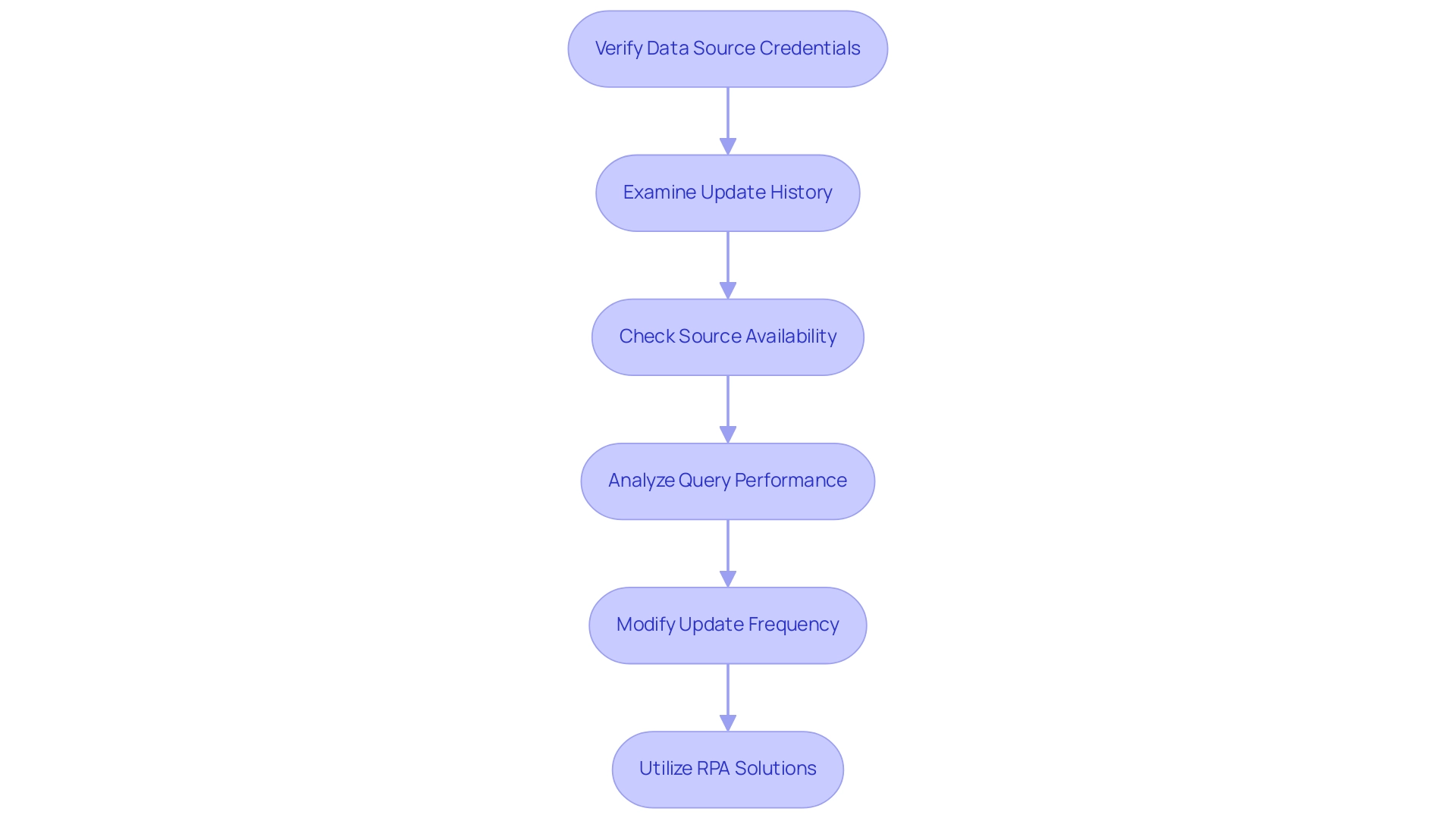

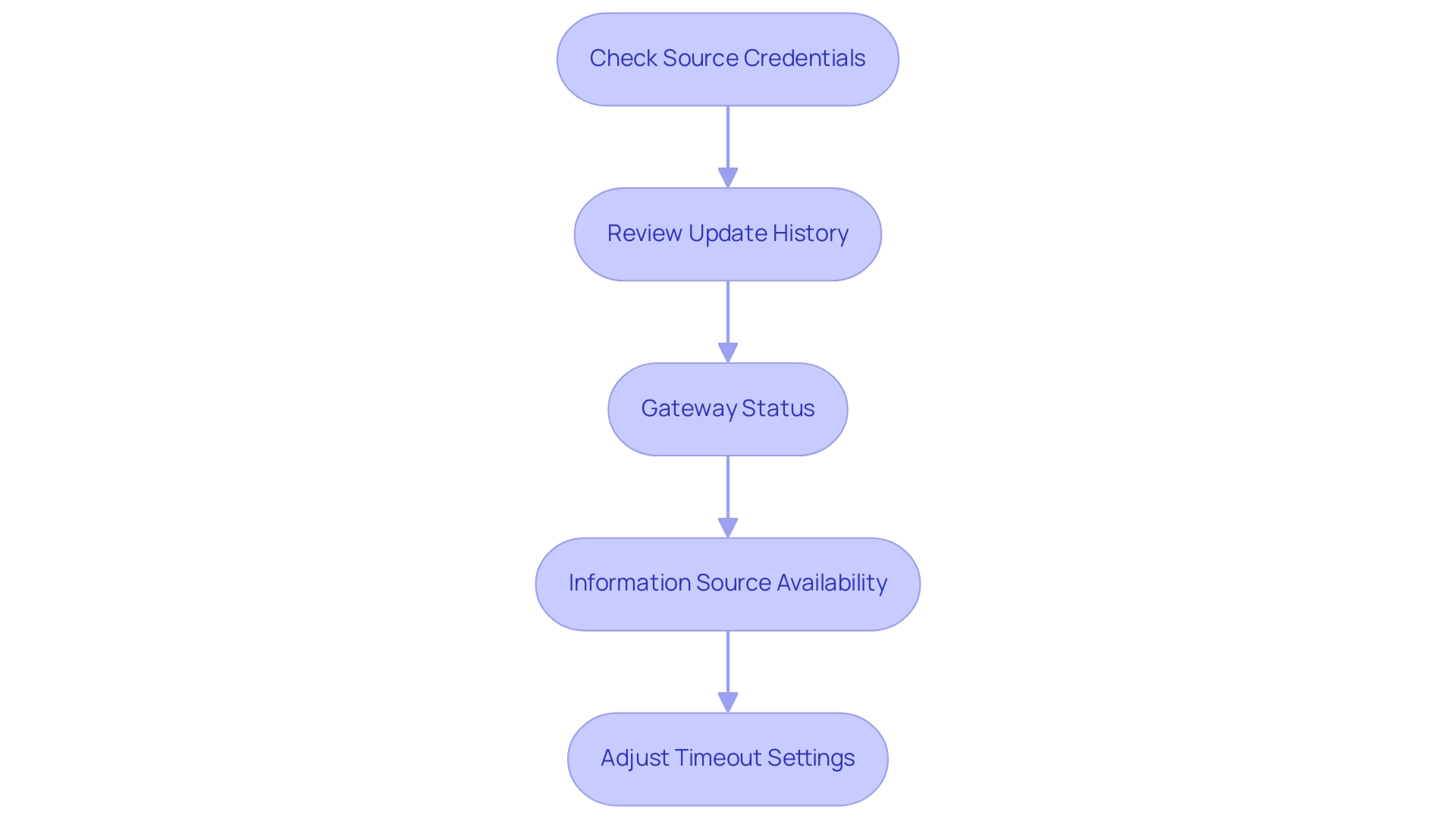

Troubleshooting Data Refresh Issues in Power BI

- Verify Data Source Credentials: Start by confirming that the credentials for your data source are accurate and have not expired. This foundational step is vital, as incorrect credentials can lead to failed refresh attempts, ultimately affecting your ability to extract meaningful insights essential for informed decision-making.

- Examine Refresh History: Utilize the refresh history feature in the Power BI Service to pinpoint any error messages or recurring patterns. This insight can help identify specific issues that need addressing, allowing you to improve operational efficiency in report generation.

- Check Source Availability: Ensure that your data source is online and reachable during scheduled refresh periods. Downtime or network issues can significantly impact refresh success rates, posing challenges in leveraging your Power BI dashboards effectively.

- Analyze Query Performance: If you encounter sluggish refresh times, it’s essential to review the performance of the queries within your data model. Optimizing queries can lead to more efficient refresh operations, reducing timeouts and enhancing the overall user experience with your business intelligence tools.

- Modify Refresh Frequency: For those experiencing frequent refresh failures, consider adjusting the refresh frequency. Allocating more time for processing can alleviate pressure on your models, particularly during high-demand periods. For instance, you might retain two years of information and configure incremental refresh to update only the last three months.

- Utilize RPA Solutions: Think about incorporating RPA solutions such as EMMA RPA and Power Automate to handle routine information management tasks. This can streamline the refresh process, reduce human error, and enhance overall operational efficiency.
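Examining refresh history, as suggested above, lends itself to a small script once the history has been exported. The sketch below assumes each entry is a dictionary with `status`, `startTime`, and `endTime` fields; those names are modeled on the shape of the refresh history returned by the Power BI REST API but should be treated as assumptions and checked against your actual export. The 30-minute threshold is likewise hypothetical.

```python
from datetime import datetime


def parse_ts(value):
    # Map a trailing "Z" to an explicit UTC offset, which older Python
    # versions of datetime.fromisoformat require.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def summarize_history(entries, slow_minutes=30):
    """Split refresh history into failures and slow (but successful) runs."""
    failed, slow = [], []
    for entry in entries:
        if entry["status"] == "Failed":
            failed.append(entry)
            continue
        if entry.get("endTime"):
            delta = parse_ts(entry["endTime"]) - parse_ts(entry["startTime"])
            minutes = delta.total_seconds() / 60
            if minutes > slow_minutes:
                slow.append((entry, minutes))
    rate = len(failed) / len(entries) if entries else 0.0
    return {"failure_rate": rate, "failed": failed, "slow": slow}


if __name__ == "__main__":
    history = [
        {"status": "Completed", "startTime": "2024-05-01T02:00:00Z",
         "endTime": "2024-05-01T02:12:00Z"},
        {"status": "Failed", "startTime": "2024-05-02T02:00:00Z",
         "endTime": "2024-05-02T02:01:00Z"},
        {"status": "Completed", "startTime": "2024-05-03T02:00:00Z",
         "endTime": "2024-05-03T02:45:00Z"},
        {"status": "Completed", "startTime": "2024-05-04T02:00:00Z",
         "endTime": "2024-05-04T02:10:00Z"},
    ]
    summary = summarize_history(history)
    print(summary["failure_rate"])                 # 0.25
    print([m for _, m in summary["slow"]])         # [45.0]
```

Separating outright failures from slow runs helps decide which troubleshooting step applies: credentials and availability for the former, query performance and model size for the latter.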

By implementing these key troubleshooting strategies, you can effectively manage and resolve common data updating issues in Power BI. As v-angzheng-MSFT notes, ‘The table will update if changes to the APPLIED STEPS pane are detected.’ There is no way to prevent an update from occurring if you modify a step. This emphasizes the significance of comprehending how changes affect update processes.

Additionally, consider the case study regarding scheduled refresh time-outs, which indicates that reducing the size or complexity of semantic models can help avoid time-outs. By integrating RPA solutions alongside these practices, the success rate of your troubleshooting efforts will improve, facilitating smoother operations and enhanced data insights, ultimately driving growth and innovation within your organization.

Conclusion

Ensuring that data refresh processes in Power BI are robust and efficient is critical for organizations striving to harness the full potential of their analytics. By understanding the various data refresh options—manual, scheduled, on-demand, and direct query—organizations can tailor their strategies to meet specific reporting needs. Each method serves a distinct purpose, facilitating timely access to the most current insights, which is essential for informed decision-making.

Implementing best practices, such as:

- Limiting data size

- Scheduling refreshes during off-peak hours

- Monitoring refresh statuses

can significantly enhance the reliability of data updates. Utilizing techniques like incremental refresh allows for more efficient data management, while integrating automation solutions such as Robotic Process Automation can streamline workflows and minimize human error.

Moreover, troubleshooting common refresh issues, from verifying data source credentials to optimizing query performance, empowers teams to maintain seamless operations. As organizations embrace these strategies, they position themselves to not only improve data integrity but also foster a culture of data-driven decision-making that drives strategic growth.

In today’s fast-paced business environment, the ability to leverage up-to-date data effectively can set organizations apart from the competition. By prioritizing these data refresh strategies, organizations can ensure they are equipped to respond swiftly to changing insights and market dynamics, ultimately paving the way for sustained success.

Overview

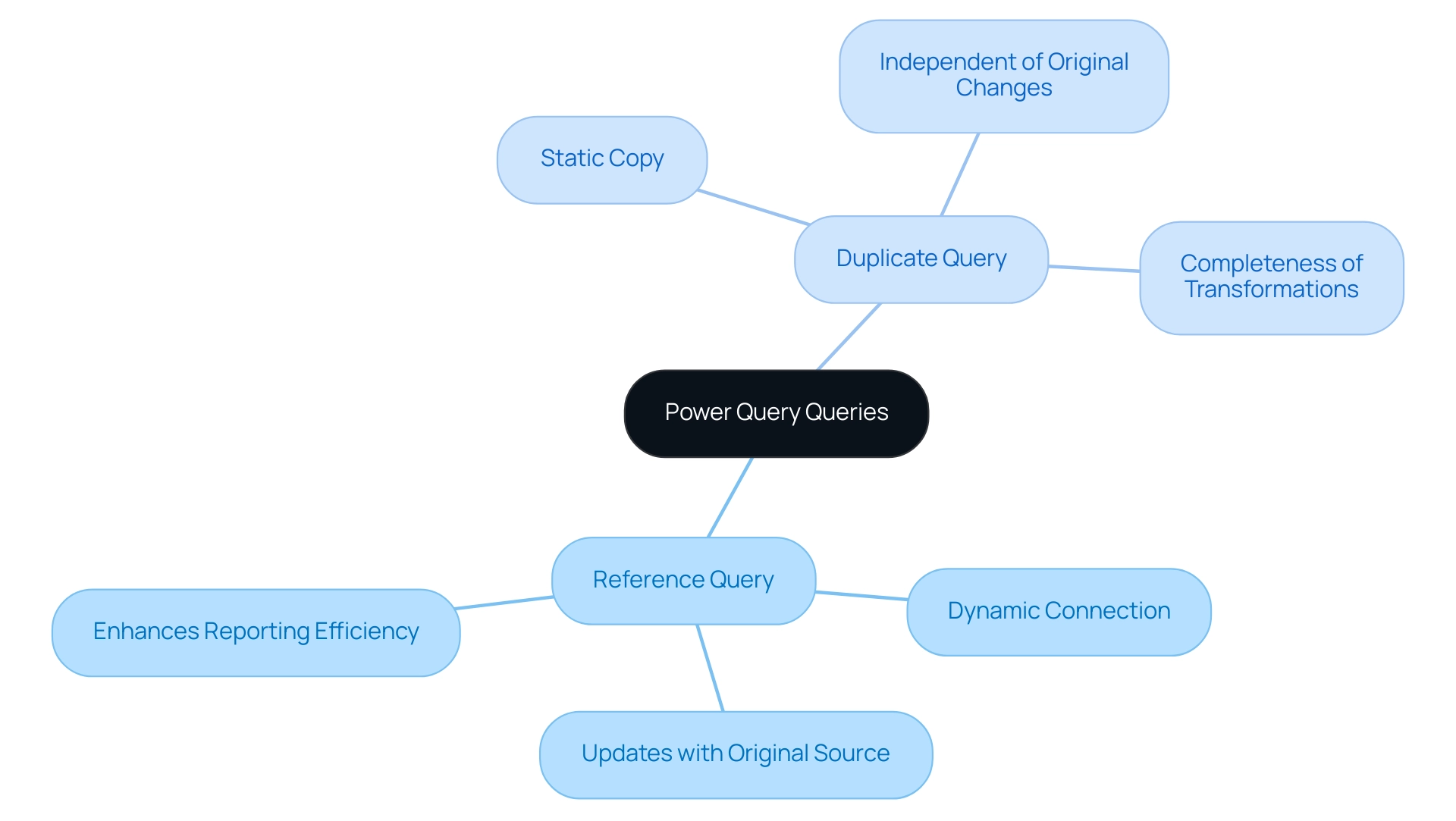

The article focuses on the differences between reference and duplicate queries in Power Query, highlighting their implications for data management and reporting efficiency. It explains that reference queries maintain a dynamic connection to the original query, so changes to it flow through automatically, while duplicate queries create independent copies that do not pick up later changes, which can hinder efficiency and increase resource consumption. Understanding these distinctions is crucial for optimizing workflows and ensuring accurate reporting in business intelligence contexts.

Introduction

In the evolving landscape of data management, understanding the nuances between reference and duplicate queries in Power Query is pivotal for optimizing operational efficiency. As organizations strive to harness the full potential of their data, these query types emerge as essential tools in the arsenal of Business Intelligence.

- Reference queries provide a dynamic connection to original datasets, ensuring that reports reflect the most current information.

- Duplicate queries allow for safe experimentation without altering the source data.

By mastering these concepts, organizations can streamline workflows, enhance reporting capabilities, and ultimately drive informed decision-making. This article delves into the practical applications, performance considerations, and best practices that empower users to leverage these queries effectively, transforming data challenges into opportunities for growth and innovation.

Understanding Reference and Duplicate Queries in Power Query

In Power Query, a reference query acts as a dynamic connection to the original query, enabling users to create a new query that mirrors any changes made to the original. This means that as the original evolves, the reference query updates with it, enhancing efficiency and accuracy in the reporting process, both key components in leveraging Business Intelligence for operational growth. Unlocking the power of Business Intelligence is essential for transforming raw information into actionable insights that drive informed decision-making.

Conversely, a duplicate query generates a complete copy of the original, encompassing all transformations applied. The copy is independent: it stays the same regardless of later changes made to the original query. Understanding the distinction between reference and duplicate queries is vital for optimizing workflows and ensuring information integrity, particularly considering the challenges many encounter, such as time-consuming report creation, inconsistencies, and lack of actionable guidance.
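The contrast between the two query types can be illustrated with a toy model. This is an analogy written in Python, not Power Query itself: the `Query` class, its methods, and the sample data are all hypothetical, and a "step" is simply a function applied to a list of rows.

```python
class Query:
    """A toy query: a source (raw rows or another Query) plus ordered steps."""

    def __init__(self, source, steps=None):
        self.source = source
        self.steps = list(steps or [])

    def evaluate(self):
        if isinstance(self.source, Query):
            rows = self.source.evaluate()
        else:
            rows = list(self.source)
        for step in self.steps:
            rows = step(rows)
        return rows

    def duplicate(self):
        # Copies the source and the steps as they are right now; later
        # edits to this query are not seen by the copy.
        return Query(self.source, self.steps)

    def reference(self):
        # Starts from this query's output, so later edits flow through.
        return Query(self)


if __name__ == "__main__":
    raw = [1, 2, 3, 4]
    base = Query(raw, [lambda rows: [r for r in rows if r > 1]])
    dup = base.duplicate()
    ref = base.reference()

    # Add a step to the original query after the copies were made.
    base.steps.append(lambda rows: [r * 10 for r in rows])

    print(base.evaluate())  # [20, 30, 40]
    print(ref.evaluate())   # [20, 30, 40]
    print(dup.evaluate())   # [2, 3, 4]
```

The reference picks up the step added to the original, while the duplicate continues to reflect the steps that existed when it was created.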

As Johnny Winter wisely noted in the Fabric community,

It makes sense in this scenario to generate a query that brings in the complete table, and then compose a series of reference queries to transform the information into a better format for reporting.

For example, a junior analyst employed both reference and duplicate queries to improve information management abilities, demonstrating the practical advantages of comprehending these query types.

Furthermore, understanding query dependencies can significantly enhance the management of multiple machine learning models in Power BI, streamlining data management and empowering users to leverage the full potential of Power Query. Additionally, our RPA solutions can further enhance operational efficiency by automating repetitive tasks, allowing you to focus on strategic decision-making. Book a free consultation to learn more about how RPA can transform your operations.

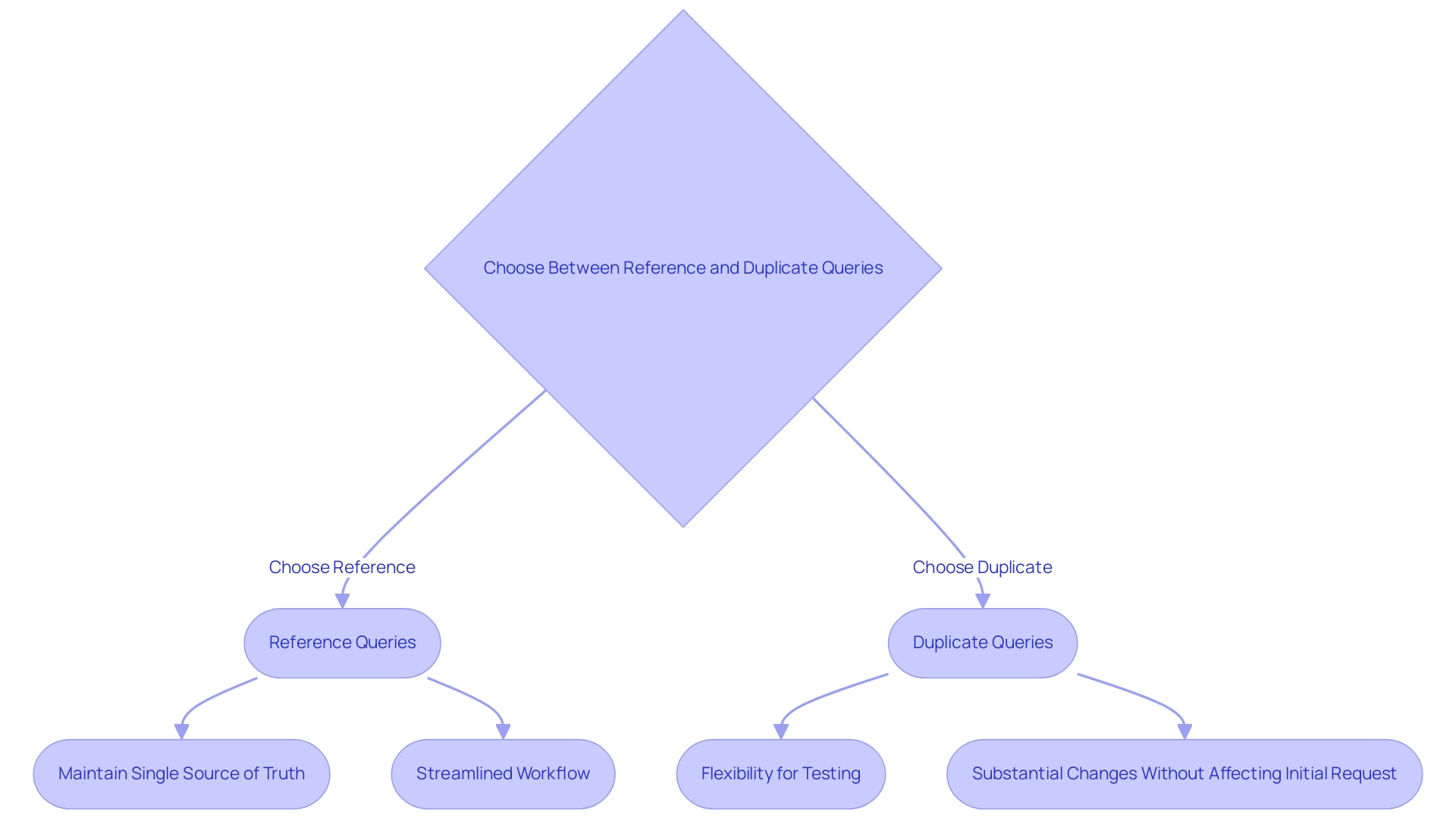

Practical Differences: When to Use Reference vs Duplicate Queries

When choosing between reference and duplicate queries, it’s essential to carefully evaluate your objectives. When aiming to maintain a single source of truth, you should consider reference queries as your go-to option. This method ensures that any updates to the original query are automatically reflected in your reports, which is crucial for scenarios where freshness is paramount.

As emphasized by Pete, a Super User,

If you can develop a core query that includes all generic transformations, then use this for each subset of unique transformations, you will reduce the number of areas that require adjustments.

This approach not only streamlines your workflow but also enhances efficiency in ongoing reporting processes, crucially supporting your Business Intelligence efforts. Additionally, figures from Ovid and Covidence indicate a specificity of 100%, highlighting the significance of precise information management in your queries.

On the other hand, using a duplicate query is advantageous when substantial changes to the information structure or transformations are necessary without affecting the original query. This flexibility is particularly valuable for testing new transformations or creating variations of a report based on the same dataset. The two techniques also differ in how they use processing power and memory: duplicating a query creates a separate object in memory, while referencing a query functions like a pointer, thereby saving resources and avoiding circular references.
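Because a reference points at another query, a set of queries forms a dependency graph, and a circular reference is a cycle in that graph. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to compute an evaluation order and detect cycles. The query names and helper functions are hypothetical and are not part of Power Query.

```python
from graphlib import CycleError, TopologicalSorter


def evaluation_order(dependencies):
    """Return query names ordered so each query follows the ones it references.

    `dependencies` maps a query name to the set of queries it references.
    """
    return list(TopologicalSorter(dependencies).static_order())


def has_circular_reference(dependencies):
    """Return True when the references form a cycle."""
    try:
        evaluation_order(dependencies)
    except CycleError:
        return True
    return False


if __name__ == "__main__":
    # One core query referenced by two reporting queries.
    queries = {
        "SalesBase": set(),
        "SalesByRegion": {"SalesBase"},
        "SalesByMonth": {"SalesBase"},
    }
    print(evaluation_order(queries)[0])      # SalesBase
    print(has_circular_reference(queries))   # False

    # Two queries that reference each other cannot be evaluated.
    broken = {"A": {"B"}, "B": {"A"}}
    print(has_circular_reference(broken))    # True
```

Keeping references flowing in one direction, from a core query outward to reporting queries, is what keeps the graph free of cycles.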

Furthermore, incorporating Robotic Process Automation (RPA) can notably improve the efficiency of these processes by automating repetitive tasks related to queries, enabling your team to concentrate on more strategic decision-making. It’s also important to acknowledge the limitations concerning the emphasis on sources from the Ovid platform and the incapacity to assess certain software due to file size constraints, which can create obstacles in analysis. Tackling these challenges, such as time-consuming report creation and inconsistencies, will enable you to make informed decisions that enhance your analysis strategies in Power Query and Power BI, ultimately driving growth and innovation in your operations.

Use Cases: Real-World Applications of Reference and Duplicate Queries

A reference query is a powerful tool in business reporting, especially when handling sales reports that need real-time information from regularly updated sources, like a database monitoring customer orders. For instance, with SKF having 17,000 distributor locations worldwide, using a reference query ensures that reports consistently reflect the most current sales figures across a vast network, empowering decision-makers with timely insights. As Andy Morris, Principal Product Marketing Specialist, notes, “The online experience should mirror a good trip experience, but the company had no visibility into the voice of the customer.”

This highlights the necessity for real-time information in sales reporting to enhance customer experience and drive operational efficiency. To support this, Robotic Process Automation (RPA) can be implemented to simplify information gathering and reporting processes, reducing errors and freeing up resources for more strategic tasks. On the other hand, when analyzing historical sales information, such as figures from the previous quarter, a duplicate query provides a strategic benefit without altering the original query.

This method enables focused analysis while preserving the integrity of ongoing sales information. However, organizations frequently face challenges with inadequate master data quality, which can obstruct efficient use of both query types. The rise in big data adoption from 17% in 2015 to 53% in 2017, along with 97.2% of firms investing in big data and AI by 2018, indicates a significant movement towards advanced analytics.

By utilizing both reference and duplicate queries in contemporary reporting methods, along with strong Business Intelligence solutions, organizations can enhance their reporting capabilities, tackle issues of inadequate master data quality, and ultimately achieve superior business results through informed decision-making.

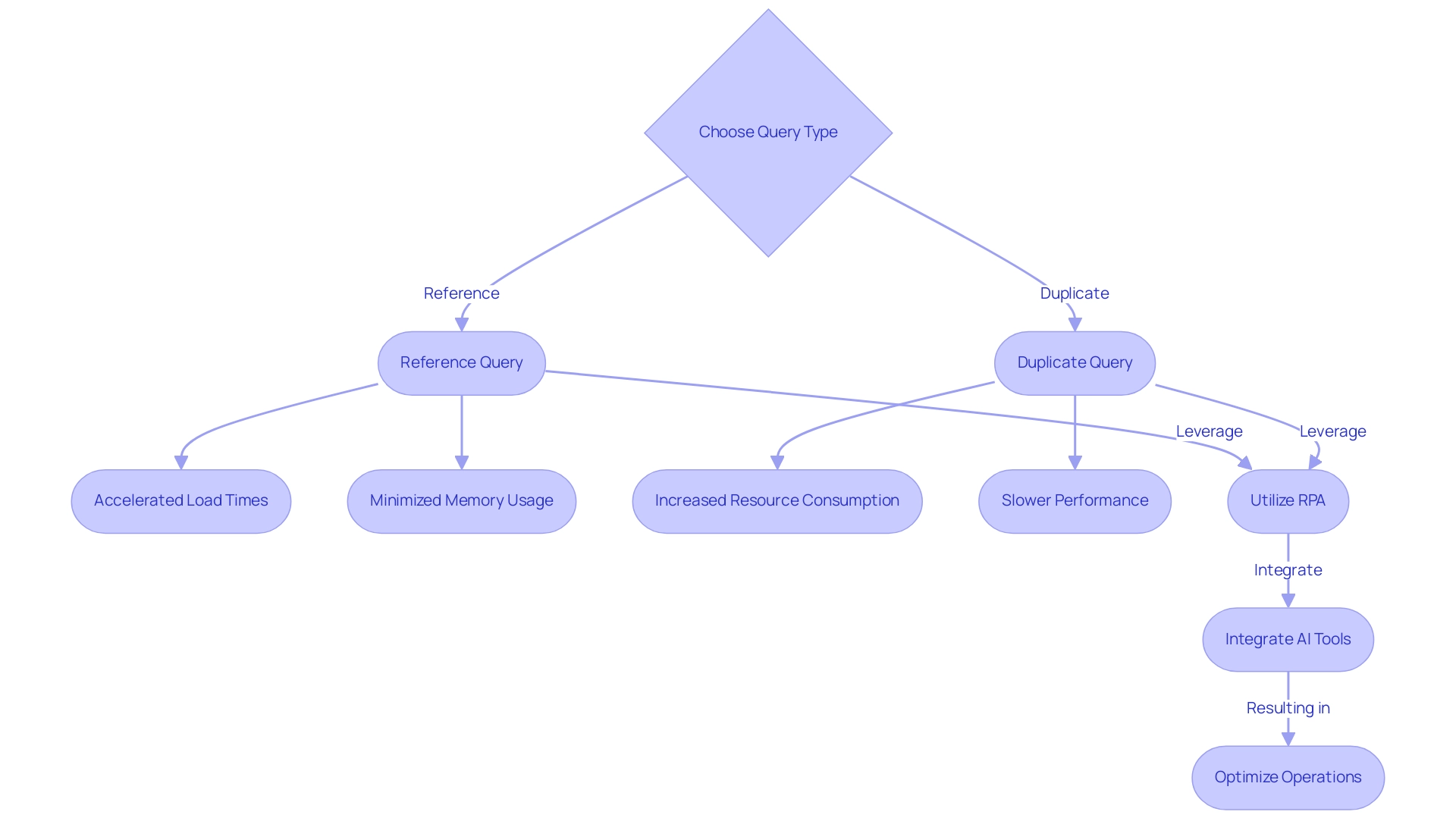

Performance Considerations: Efficiency of Reference vs Duplicate Queries

When assessing performance, reference queries emerge as the more efficient choice, mainly because they do not replicate information; rather, they merely refer to the original query. This method can significantly accelerate load times and minimize memory usage, particularly when dealing with larger datasets. By utilizing Robotic Process Automation (RPA) alongside these queries, organizations can automate manual workflows, resulting in significant cost savings and enabling teams to concentrate on strategic tasks.

For example, automating information entry and report generation can decrease labor expenses and mistakes. Conversely, duplicate queries tend to consume more resources since they generate a complete copy of the query, which can result in slower performance, especially if multiple duplicates are created. Recent findings suggest that organizations can enhance their information handling capabilities by integrating tailored AI solutions, which address challenges in a rapidly evolving technological landscape.

As emphasized in the case study ‘Common Challenges and Solutions When Working with Queries,’ implementing strategies like RPA can effectively address issues such as mismatches and performance bottlenecks. By prioritizing reference queries for large datasets or intricate transformations, organizations can optimize operations, decrease resource usage, and ultimately improve overall efficiency. Furthermore, utilizing Business Intelligence tools in conjunction with RPA and AI can assist organizations in deriving actionable insights from their information, fostering informed decision-making.

As BI Analyst Pablo Genero notes,

While these small tips have little or no impact on performance, they can be beneficial in optimizing the workflow.

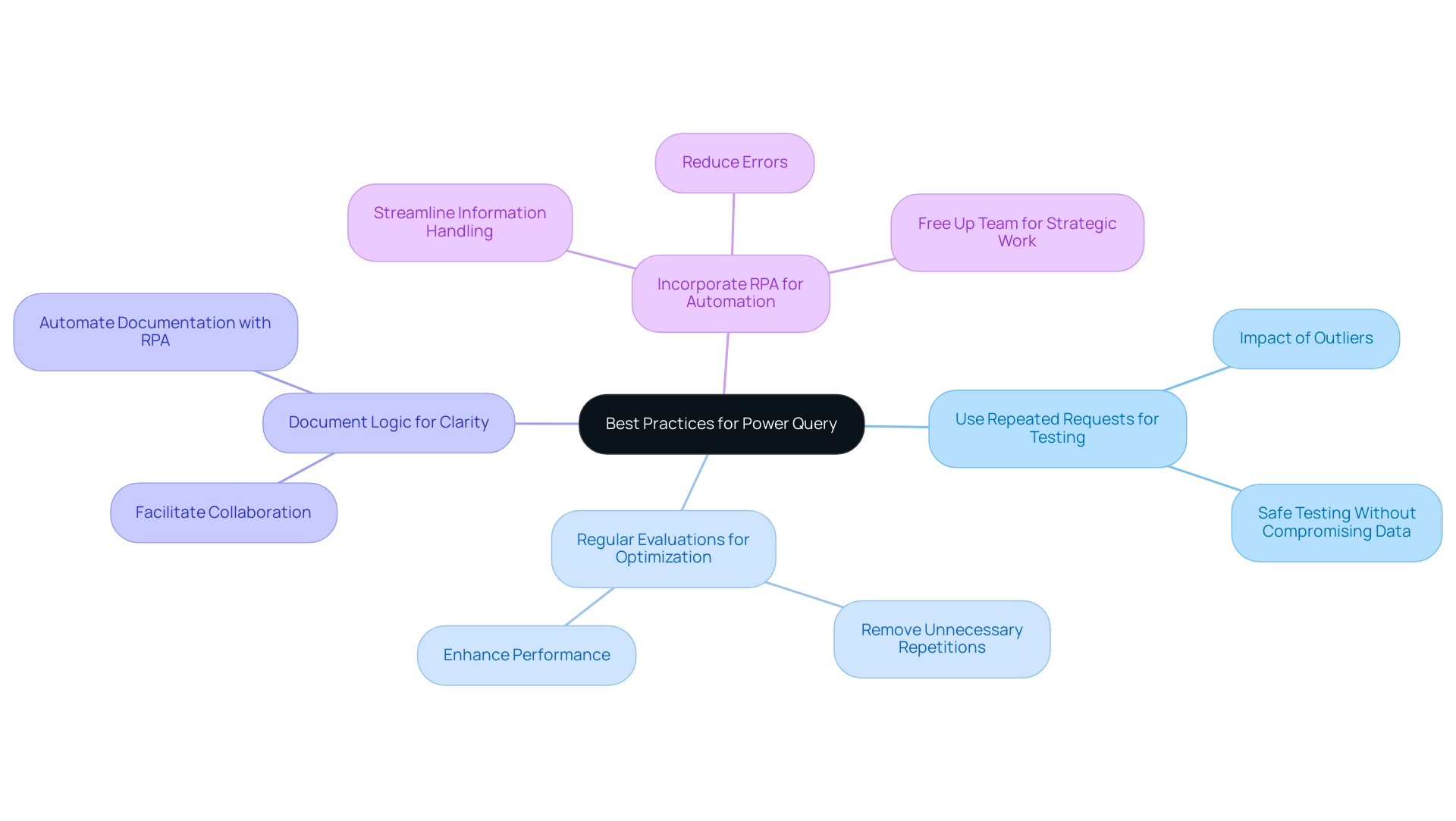

Best Practices for Using Reference and Duplicate Queries in Power Query

To enhance the efficiency of your queries in Power Query, apply each query type where it fits best. Details in the data matter too; for instance, in analyzing vehicles sold for over 180 thousand Reais, identifying outliers can significantly influence decision-making and information transformation processes. Keep the following practices in mind:

- Use reference queries for dynamic reports that necessitate real-time updates, ensuring your information remains current and pertinent.

- Use duplicate queries when experimenting with new transformations, allowing for safe testing without compromising the integrity of your original query.

- Conduct regular evaluations of your queries to optimize performance, actively removing unnecessary duplicates that may hinder efficiency.
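A regular review for unnecessary duplicates can be partly mechanical. The sketch below assumes you have listed each query's applied steps as text, for example by copying them from the Applied Steps pane; the function name and sample queries are hypothetical. Queries whose step lists are identical are flagged as candidates to consolidate.

```python
from collections import defaultdict


def find_redundant_queries(queries):
    """Group query names whose ordered step lists are identical.

    `queries` maps a query name to its ordered list of step descriptions.
    Returns one sorted list of names per group of identical queries.
    """
    groups = defaultdict(list)
    for name, steps in queries.items():
        groups[tuple(steps)].append(name)
    return [sorted(names) for names in groups.values() if len(names) > 1]


if __name__ == "__main__":
    queries = {
        "Sales": ["Source", "Filter 2024"],
        "Sales (2)": ["Source", "Filter 2024"],
        "Customers": ["Source", "Remove nulls"],
    }
    print(find_redundant_queries(queries))  # [['Sales', 'Sales (2)']]
```

A flagged pair is only a candidate: an identical duplicate may still be intentional if it is about to be modified for an experiment.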

By incorporating Robotic Process Automation (RPA) into this process, you can automate these reviews and streamline information handling, ultimately enhancing operational efficiency while reducing errors and freeing up your team for more strategic, value-adding work.

The article “Comparing semantic model performance in Fabric and Power BI: Report & semantic model performance comparing the same information in Import mode, DirectQuery and Direct Lake” by Paul Turley, Microsoft Data Platform MVP, can provide further insights into optimizing your processes. One more practice rounds out the list:

- Thoroughly document your logic to enhance clarity and facilitate seamless collaboration with team members. RPA can also play a vital role in automating the documentation processes, ensuring that your team has easy access to updated query logic.

By adhering to these best practices, you can significantly enhance your data transformation processes, leading to improved operational efficiency and more informed decision-making.

Additionally, the case study on Semantic Model Performance in Fabric and Power BI illustrates how performance variances can inform future BI architecture decisions and guide effective RPA implementation, especially when choosing between reference and duplicate queries.

Conclusion

Understanding the distinctions between reference and duplicate queries in Power Query is essential for effective data management. Reference queries provide a dynamic link to original datasets, ensuring that reports reflect the most current information. This capability is vital for maintaining a single source of truth and facilitating informed decision-making. Conversely, duplicate queries allow for experimentation with data transformations without affecting the original dataset, making them ideal for safe testing and focused analysis.

The practical applications of these queries are significant:

- Reference queries empower organizations to generate real-time reports.

- Duplicate queries enable in-depth analysis of historical data.

By effectively leveraging both types, organizations can navigate data complexities and enhance their reporting capabilities.

Performance-wise, reference queries are generally more efficient, conserving resources and minimizing load times. Prioritizing these queries can streamline operations and boost productivity. Additionally, the integration of Robotic Process Automation (RPA) can further optimize data handling by automating routine tasks, allowing teams to focus on strategic initiatives.

In conclusion, mastering reference and duplicate queries is a strategic advantage that organizations should embrace. By utilizing the strengths of each query type and implementing best practices, organizations can improve data integrity, drive informed decision-making, and transform challenges into opportunities for growth and innovation.

Overview

Power BI Dataflows and Datasets serve distinct but complementary roles in business intelligence, with Dataflows focusing on data preparation and transformation, while Datasets emphasize data consumption and visualization. The article highlights that Dataflows enable centralized data management and reusability, making them ideal for complex data transformations and collaborative environments, whereas Datasets facilitate ease of use and rapid analysis, essential for effective reporting and decision-making.

Introduction

In the ever-evolving landscape of data management and analytics, organizations are increasingly turning to Power BI as a robust solution for harnessing the power of their data. At the heart of this platform lie Dataflows and Datasets, two essential components that facilitate seamless data preparation, transformation, and analysis. Understanding the distinct roles and benefits of these tools is crucial for any organization aiming to enhance operational efficiency and drive informed decision-making.

As the demand for data-driven insights continues to rise, particularly in a world where self-service analytics is becoming the norm, leveraging Power BI effectively can unlock significant competitive advantages. This article delves into the intricacies of Dataflows and Datasets, exploring their features, best practices, and the strategic scenarios in which they can be most beneficial, all while highlighting the importance of integrating innovative solutions like Robotic Process Automation (RPA) to streamline workflows and optimize performance.

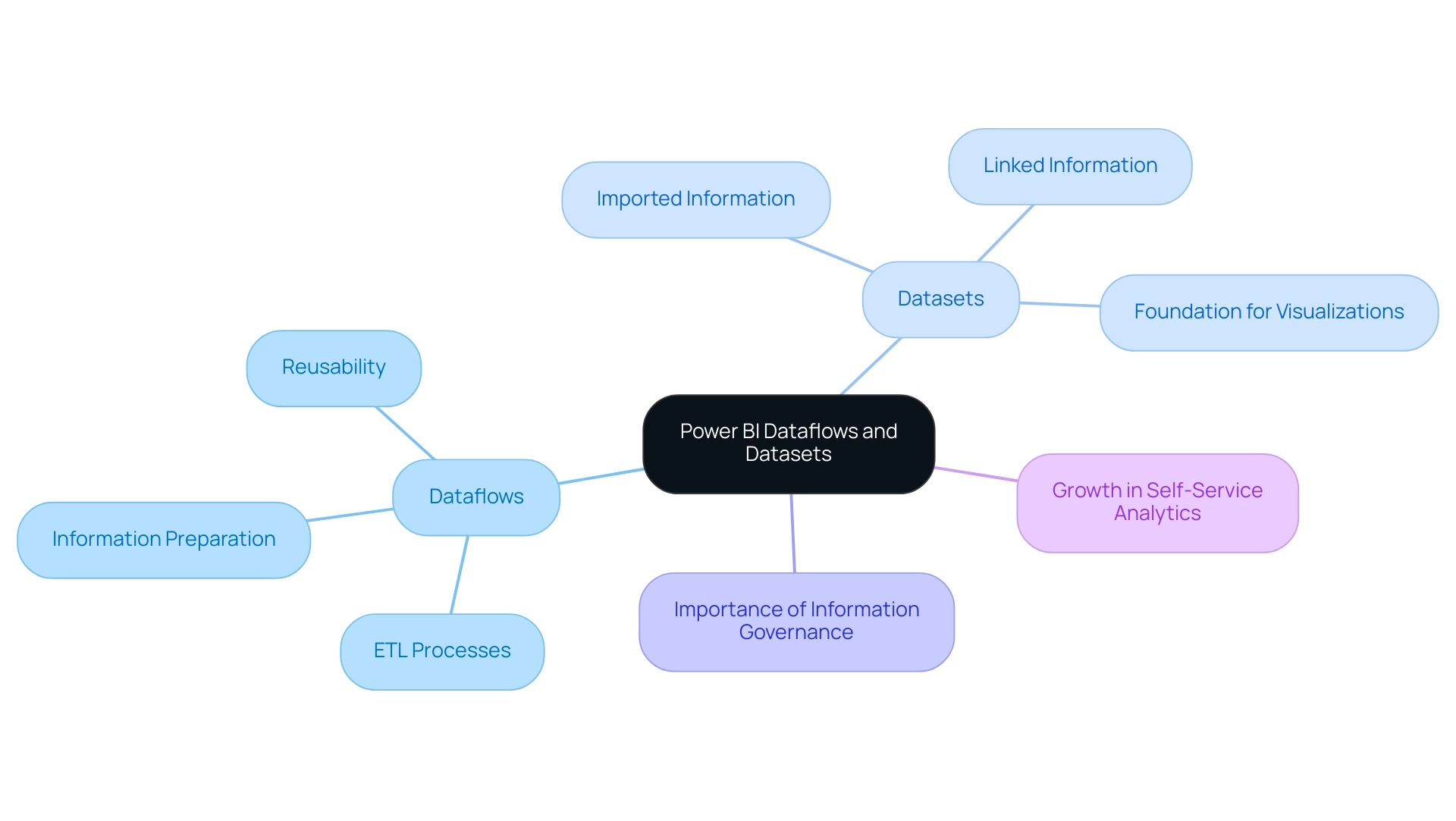

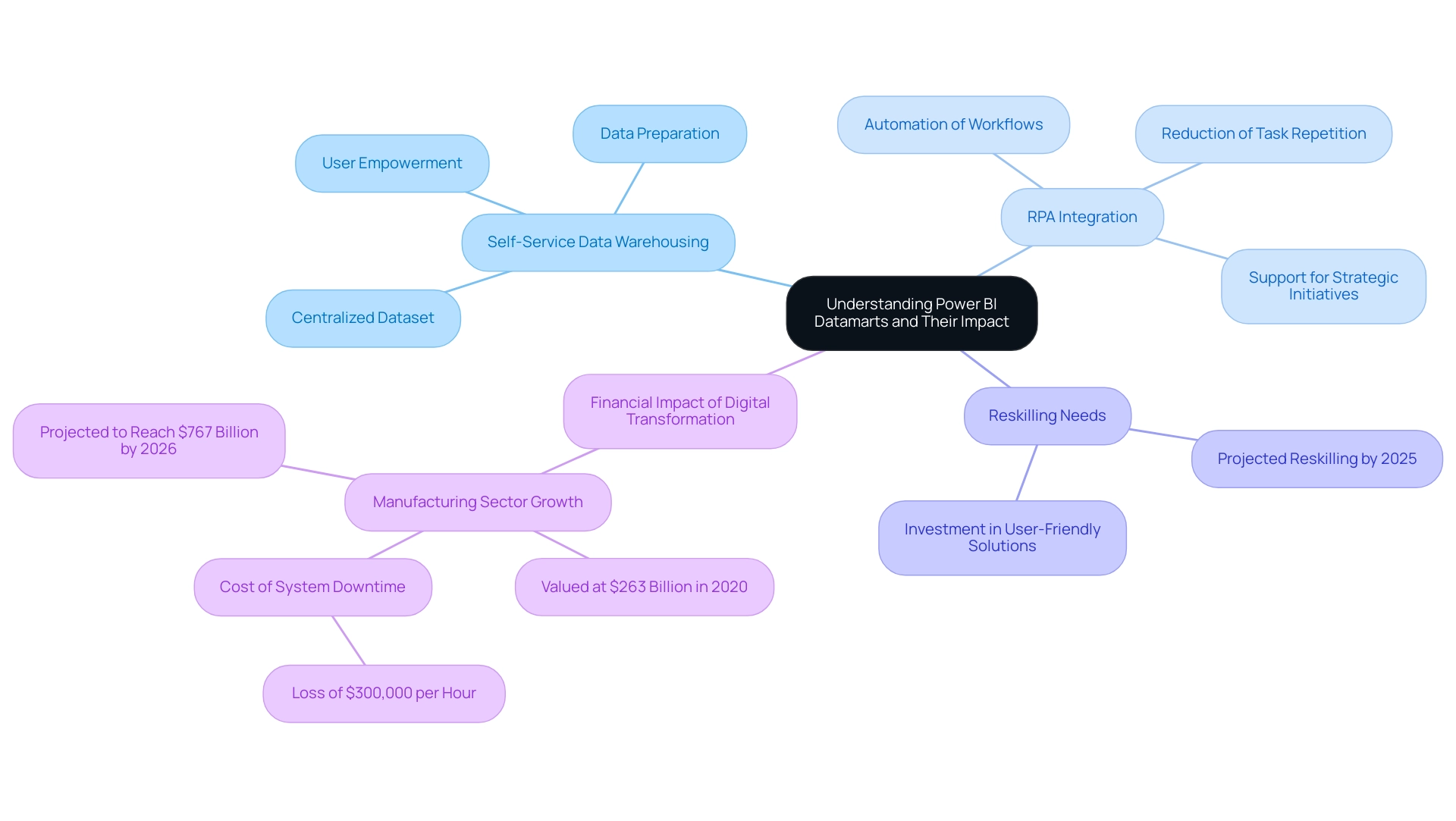

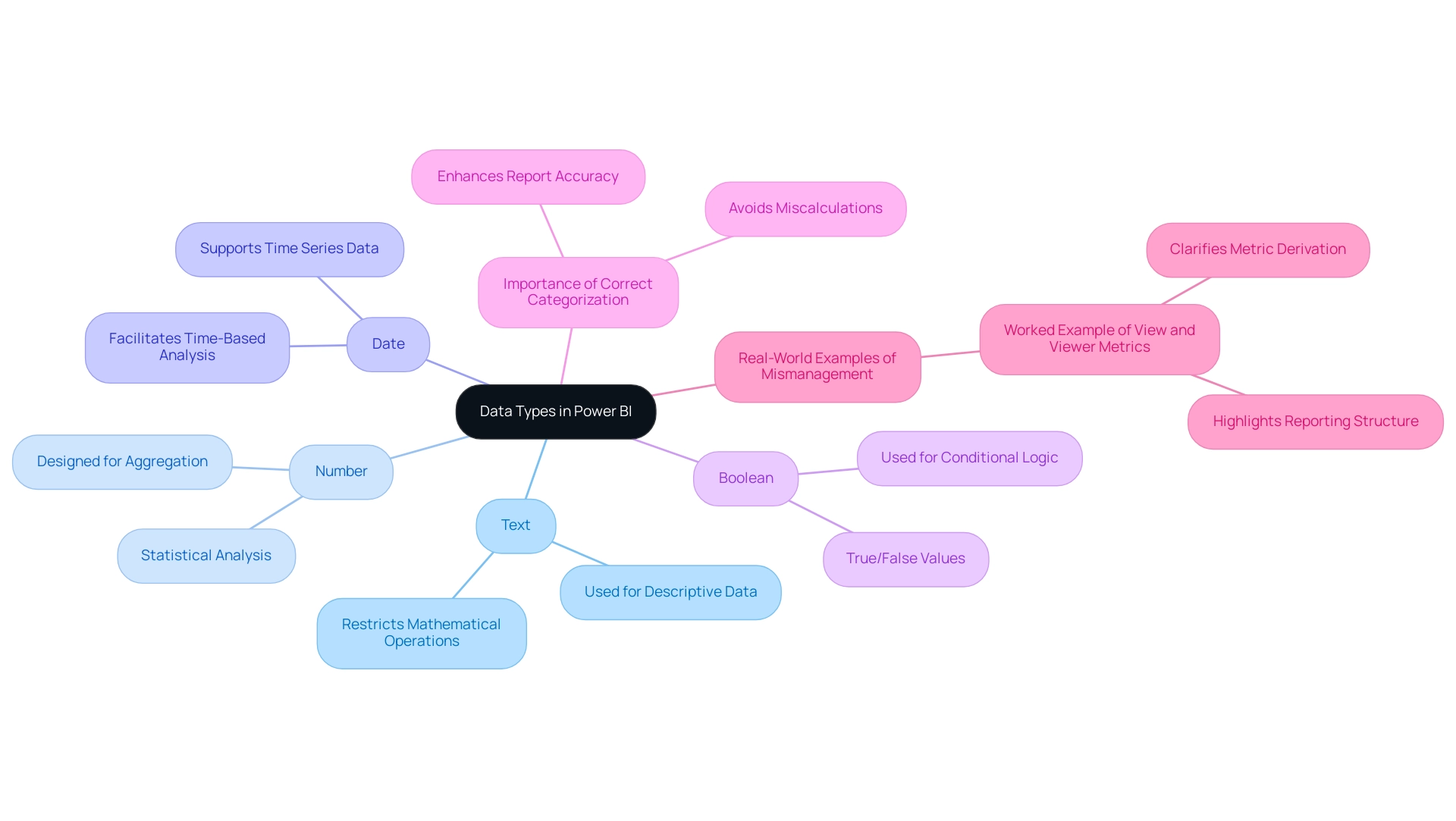

Understanding Power BI Dataflows and Datasets

Dataflows and Datasets are crucial components that improve information management and analytics within the Power BI environment. Dataflows are specifically designed for data preparation and transformation, enabling users to connect to a variety of sources and to cleanse and shape the data before it enters Power BI. This centralized method of managing ETL (Extract, Transform, Load) processes not only streamlines workflows but also encourages reusability across various reports and dashboards, ultimately enhancing efficiency in management.

As the need for data engineers is projected to grow at a rate of 50% annually, effective tools like Dataflows become increasingly crucial for organizations aiming to meet this requirement.

In contrast, Datasets are collections of data that have either been imported into or connected to within Power BI. These Datasets serve as the foundation for developing visualizations and reports, organizing the information in a way that is easily analyzable by users. While Dataflows handle the essential preparatory work needed for effective data management, Datasets focus on the consumption and analysis of that data.
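The division of labor described above, preparing data once and consuming it many times, can be sketched with plain functions. This is an analogy only: `sales_dataflow` stands in for a Dataflow, the two consumer functions stand in for Datasets built on it, and all names and sample rows are hypothetical.

```python
def sales_dataflow(raw_rows):
    """Preparation: drop rows without an amount and normalize region names."""
    cleaned = []
    for row in raw_rows:
        if row.get("amount") is None:
            continue
        cleaned.append({
            "region": row["region"].strip().title(),
            "amount": float(row["amount"]),
        })
    return cleaned


def totals_by_region(prepared_rows):
    """Consumption: aggregate the prepared rows for a summary report."""
    totals = {}
    for row in prepared_rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


def large_orders(prepared_rows, threshold):
    """Consumption: a second report reusing the same prepared rows."""
    return [row for row in prepared_rows if row["amount"] >= threshold]


if __name__ == "__main__":
    raw = [
        {"region": " north ", "amount": "120.5"},
        {"region": "NORTH", "amount": 80},
        {"region": "south", "amount": None},
        {"region": "south", "amount": 40},
    ]
    prepared = sales_dataflow(raw)      # cleaned once
    print(totals_by_region(prepared))   # {'North': 200.5, 'South': 40.0}
    print(large_orders(prepared, 100))  # [{'region': 'North', 'amount': 120.5}]
```

Because both reports read the same prepared rows, a fix to the cleaning logic is made in one place and reaches every consumer.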

Together, they create a powerful synergy within the BI framework, enhancing the overall effectiveness of data-driven decision-making. As Saumya Dubey noted, “Choosing between Power BI and Tableau depends on your organisation’s specific needs and budget,” highlighting the importance of understanding these tools within broader BI strategies.

Moreover, as we navigate the challenges of today’s information-rich environment, organizations can unlock the power of Business Intelligence to transform raw information into actionable insights, enabling informed decision-making that drives growth and innovation. Investing in solutions like RPA can further enhance operational efficiency by streamlining manual processes and freeing up teams for more strategic work. Additionally, with the anticipated growth in self-service analytics, whereby 50% of analytics queries were expected to be generated using search or natural language processing by 2023, understanding the differences between Power BI Dataflows and Datasets has never been more critical.

Organizations that prioritize information governance and real-time analytics are poised to outperform their peers financially, underscoring the relevance of these tools in modern BI practices. To back these initiatives, our services encompass the 3-Day BI Sprint for quick report generation and the General Management App for extensive management solutions, ensuring that businesses can effectively utilize the full capabilities of their information.

Key Features and Benefits of Power BI Dataflows vs. Datasets

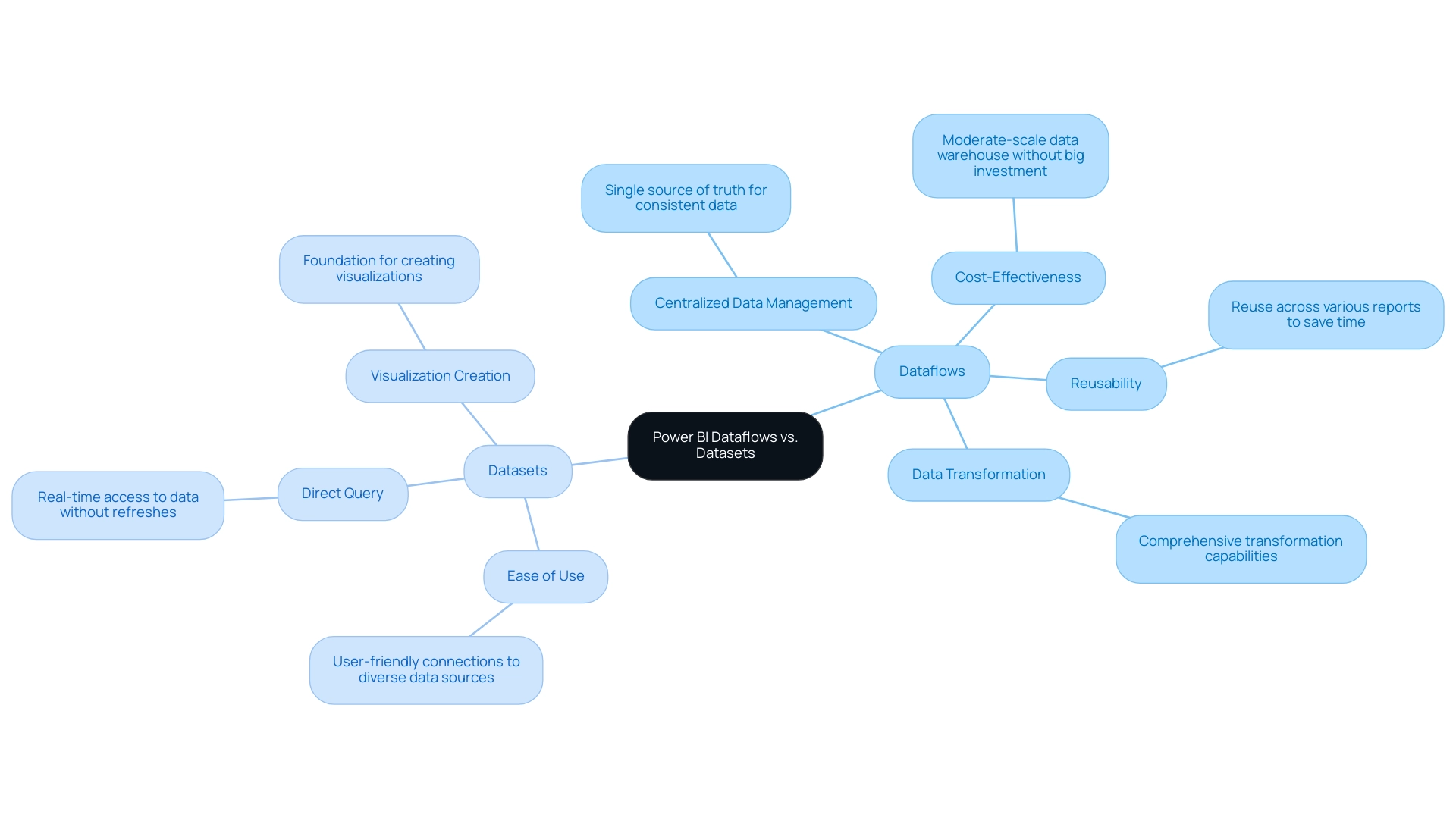

When evaluating Power BI Dataflows and Datasets, several crucial features and benefits stand out:

- Dataflows:

- Centralized Data Management: Dataflows establish a single source of truth, ensuring consistent data across multiple reports. This centralized approach enhances data governance and quality, vital for fostering a robust data culture, which is essential for overcoming challenges in leveraging insights from Power BI dashboards as highlighted in Matthew Roche’s blog series on building a data culture.

- Cost-Effectiveness: Dataflows can create a moderate-scale data warehouse without a substantial investment, making them a financially viable option for organizations looking to optimize their data management and improve operational efficiency through Business Intelligence.

- Reusability: Once a Dataflow is established, it can be reused across various reports, significantly reducing redundancy and saving valuable time for teams. This capability is particularly beneficial in multi-developer environments where collaboration is key, addressing the challenge of time-consuming report creation.

- Data Transformation: Dataflows provide comprehensive transformation capabilities that efficiently handle complex data preparation tasks before integration with Power BI. This functionality is crucial for organizations aiming to streamline their data management processes without substantial investment, thus facilitating informed decision-making.

- Datasets:

- Ease of Use: Datasets are designed for user-friendliness, allowing for straightforward connections to diverse data sources. This accessibility promotes quick analyses, making it easier for stakeholders to derive insights rapidly, addressing the lack of actionable guidance.

- DirectQuery: In DirectQuery mode, Datasets query the underlying source at report time rather than storing an imported copy, so reports reflect current data without scheduled data refreshes. This feature is crucial for organizations requiring up-to-the-minute information to inform their decisions, thereby enhancing operational efficiency.

- Visualization Creation: Serving as the foundation for creating visualizations, Datasets are essential for report building. They enable users to present insights efficiently, converting raw data into actionable intelligence that fosters growth and innovation.
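The split between preparing data once and consuming it many times can be sketched in a few lines of Python. This is a conceptual illustration only, not Power BI's API: `RAW_ROWS`, `prepare`, `sales_by_region`, and `row_count` are hypothetical names, with `prepare` standing in for a Dataflow and the two report functions standing in for Datasets that read its output.

```python
# Hypothetical raw rows, standing in for an extract from a source system.
RAW_ROWS = [
    {"region": " north ", "amount": "120.50"},
    {"region": "South", "amount": None},
    {"region": "north", "amount": "79.50"},
]


def prepare(rows):
    """Shared preparation step (the Dataflow role): trim, normalize, convert types."""
    cleaned = []
    for row in rows:
        cleaned.append({
            "region": row["region"].strip().title(),
            # Treating a missing amount as 0.0 is an illustrative choice only.
            "amount": float(row["amount"]) if row["amount"] is not None else 0.0,
        })
    return cleaned


# Prepared once, then consumed by every downstream model (the Dataset role).
PREPARED = prepare(RAW_ROWS)


def sales_by_region(rows):
    """First consumer: total amount per region."""
    totals = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


def row_count(rows):
    """Second consumer: a simple record count over the same prepared data."""
    return len(rows)


print(sales_by_region(PREPARED))
print(row_count(PREPARED))
```

Because both consumers read `PREPARED`, a change to the cleaning rules is made in one place and reaches every report, which is the consistency benefit described for Dataflows above.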

Integrating RPA solutions with Power BI Dataflows and Datasets can further improve operational efficiency by automating data gathering and reporting procedures. This synergy not only addresses staffing shortages but also mitigates the impact of outdated systems, allowing organizations to focus on strategic initiatives. In essence, Dataflows excel in data preparation and reusability, enabling organizations to manage their data landscape efficiently, while Datasets focus on ease of use and visualization capabilities, making them indispensable for effective reporting and analytics.

As Paul Turley, a Microsoft Data Platform MVP, notes, comparing the performance of semantic models in both Fabric and Power BI, specifically in Import mode, DirectQuery, and Direct Lake, illustrates the nuanced advantages each approach brings to business intelligence.

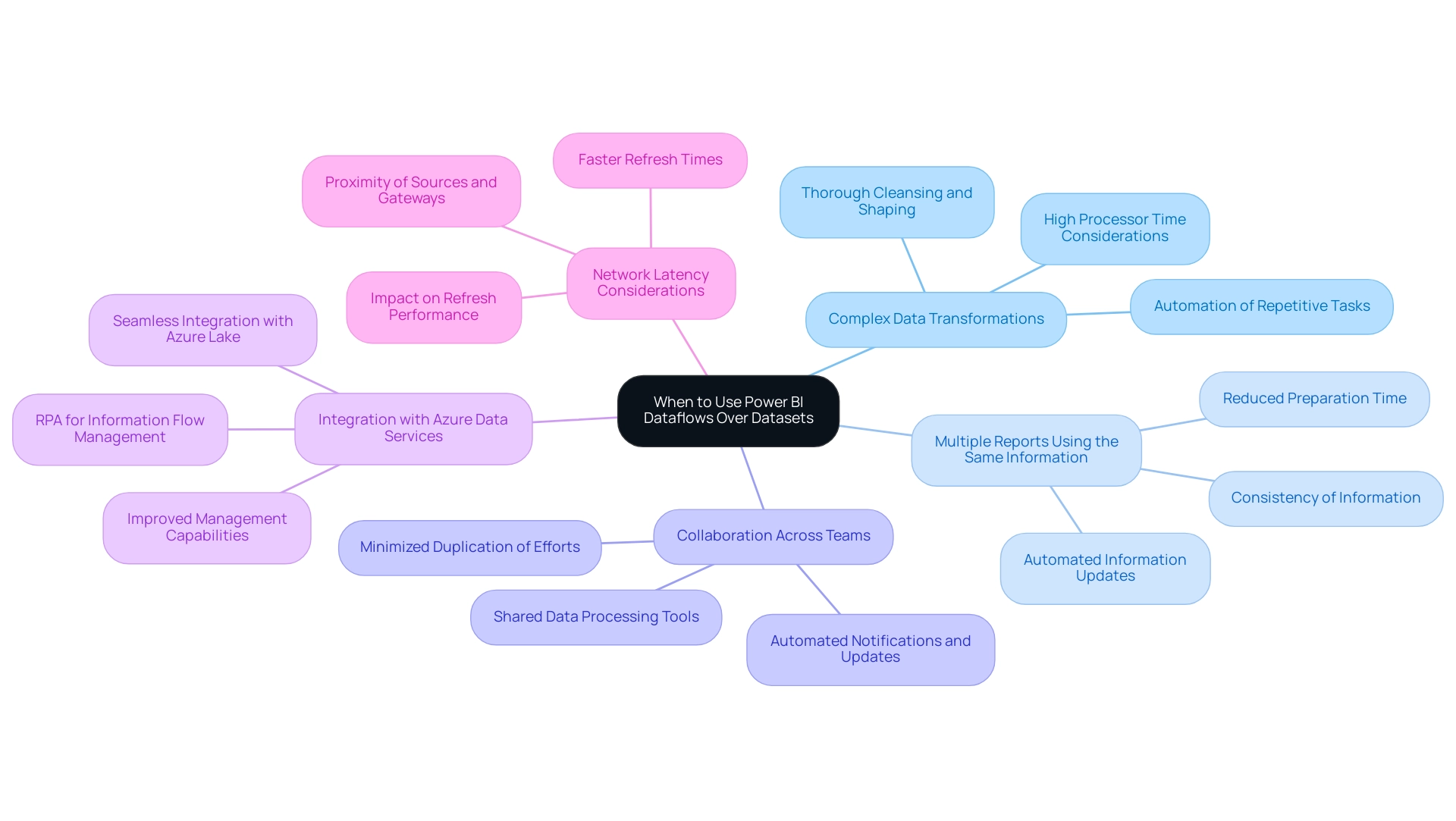

When to Use Power BI Dataflows Over Datasets

Power BI Dataflows present unique advantages in several critical scenarios that align with the pressing need for operational efficiency and actionable insights, particularly when integrated with Robotic Process Automation (RPA):

- Complex Data Transformations: For organizations with intricate data transformation requirements, Dataflows provide a framework for thoroughly cleansing and shaping data prior to reporting. This matters because high processor time can result from the number of applied steps or the type of transformations being made. As Nikola states, “You create a dataflow in the Power BI Service!”, emphasizing the practicality of Dataflows in reducing time-consuming report creation. Furthermore, RPA can automate repetitive data preparation tasks, enhancing overall efficiency.

- Multiple Reports Using the Same Data: When various reports rely on the same foundational data, a Dataflow lets you define a single entity that can be reused across those reports. This ensures consistency and significantly reduces the time spent preparing data for reporting, addressing the inconsistencies that can hamper decision-making. RPA can further streamline this process by automating data updates and report generation.

- Collaboration Across Teams: In collaborative environments where different teams manage various reports, Dataflows enhance teamwork by providing access to shared, prepared data. This approach minimizes duplication of effort and fosters synergy among departments, facilitating smoother operational processes. RPA can also support collaboration by automating notifications and updates across teams.

- Integration with Azure Data Services: Organizations that use Azure services for storage can take full advantage of Dataflows, which integrate with Azure Data Lake Storage and other cloud platforms, improving data management capabilities and supporting better insights. RPA can assist in managing data flows between different services, ensuring seamless operations.

- Network Latency Considerations: Network latency can affect refresh performance, as illustrated in the case study titled “Network Latency and Dataflow Performance.” Reducing latency by placing sources and gateways near the Power BI cluster can lead to quicker refresh times and a better user experience, which supports prompt decision-making.

In contrast, Datasets are ideal for rapid analyses and straightforward reporting tasks, particularly when the data does not require extensive preparation. This distinction is crucial for optimizing operational efficiency and ensuring that resources are allocated effectively in your reporting processes, ultimately empowering your organization to harness the full potential of Business Intelligence. Companies that find it difficult to derive valuable insights risk lagging behind their rivals, making the integration of RPA and Dataflows not only advantageous but crucial for ongoing growth and innovation.

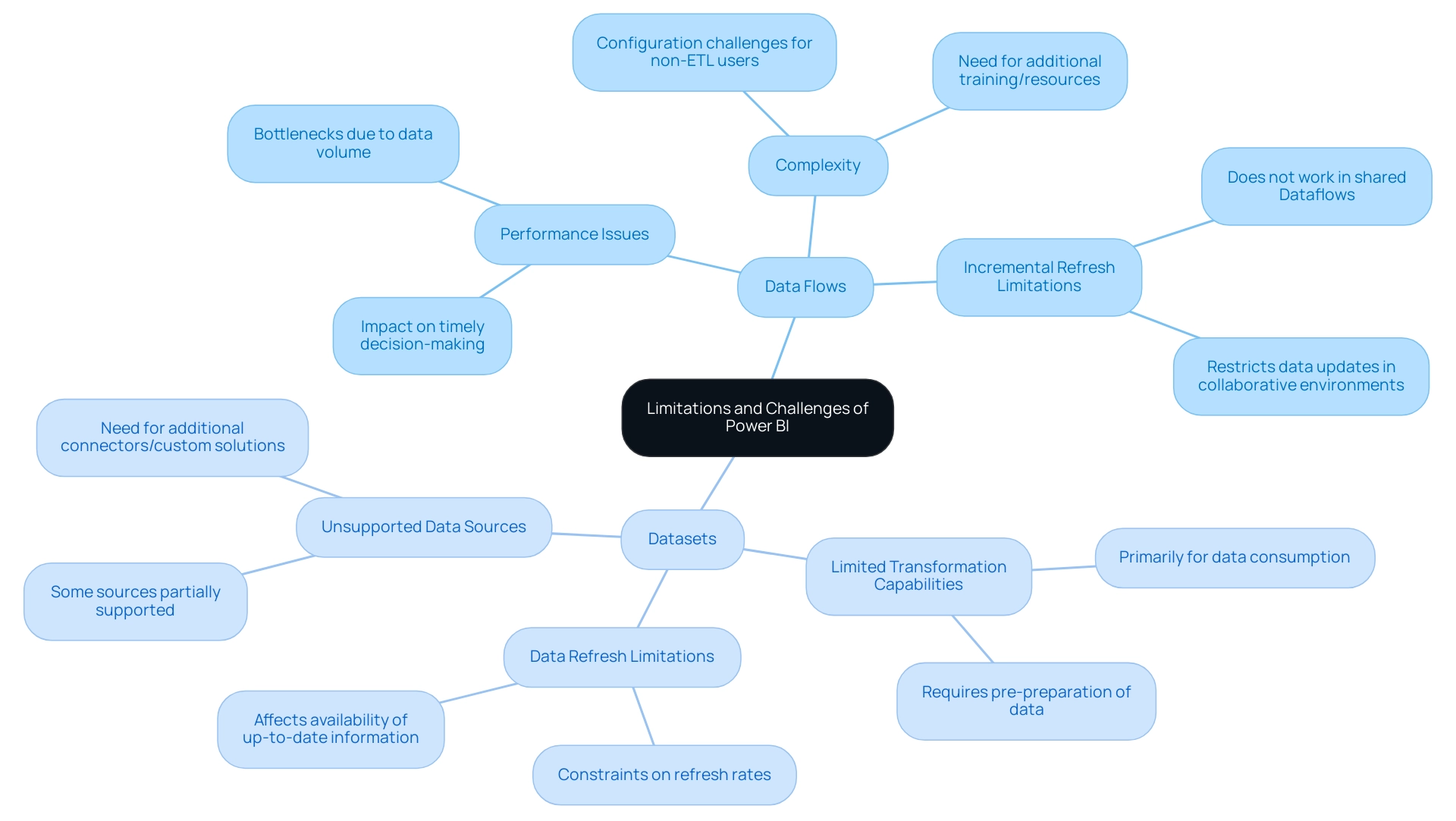

Limitations and Challenges of Power BI Dataflows and Datasets

While Power BI Dataflows and Datasets provide substantial advantages for business intelligence processes, they are not without their limitations that can impact operational efficiency:

- Dataflows:

- Complexity: Configuring Dataflows can pose challenges, especially for users who are not well-versed in ETL (Extract, Transform, Load) processes. This complexity often necessitates additional training or resources, straining operational efficiency, especially in environments overwhelmed by AI options. Tailored AI solutions can simplify these processes, offering user-friendly interfaces and guided setups that reduce the learning curve.

- Performance Issues: Performance bottlenecks may arise due to data volume and transformation intricacies, hindering the speed of data processing and impacting timely decision-making.

- Incremental Refresh Limitations: Incremental refresh for Dataflows is not available in shared capacity; it requires a workspace on Premium capacity. This can significantly restrict data updates and management efficiency in collaborative environments.

- Datasets:

- Limited Transformation Capabilities: Datasets are primarily for data consumption, lacking the robust transformation capabilities of Dataflows. This limitation may require organizations to prepare their information in advance, complicating workflows and making it harder to harness actionable insights. Tailored AI solutions can enhance transformation capabilities, allowing for more flexible data preparation.

- Data Refresh Limitations: Datasets often face constraints on refresh rates, particularly with large data volumes, affecting the availability of up-to-date information for analysis and decision-making. Custom solutions can help mitigate these limitations by optimizing refresh strategies.

- Unsupported Data Sources: Some data sources may be unsupported or only partially supported by Power Query, necessitating additional connectors or custom solutions, complicating the data integration process further.

Recognizing these limitations is vital for organizations aiming to effectively strategize their data management efforts and mitigate risks associated with these challenges. Mark Smallcombe’s insights emphasize the necessity of leveraging strengths while navigating the complexities inherent in BI:

“In my extensive work with Query, I’ve encountered various limitations and challenges, but also numerous ways to leverage its strengths effectively.”

Furthermore, comparing Power BI to Tableau reveals that while Power BI is generally more affordable and user-friendly for non-technical users, Tableau is often credited with stronger performance on large datasets and broader compatibility. Understanding these dynamics will empower organizations to navigate the overwhelming AI landscape and optimize their use of Business Intelligence tools for informed decision-making and operational efficiency. Additionally, integrating Robotic Process Automation (RPA) can further streamline workflows, reduce manual tasks, and enhance overall operational efficiency, allowing teams to focus on strategic initiatives.

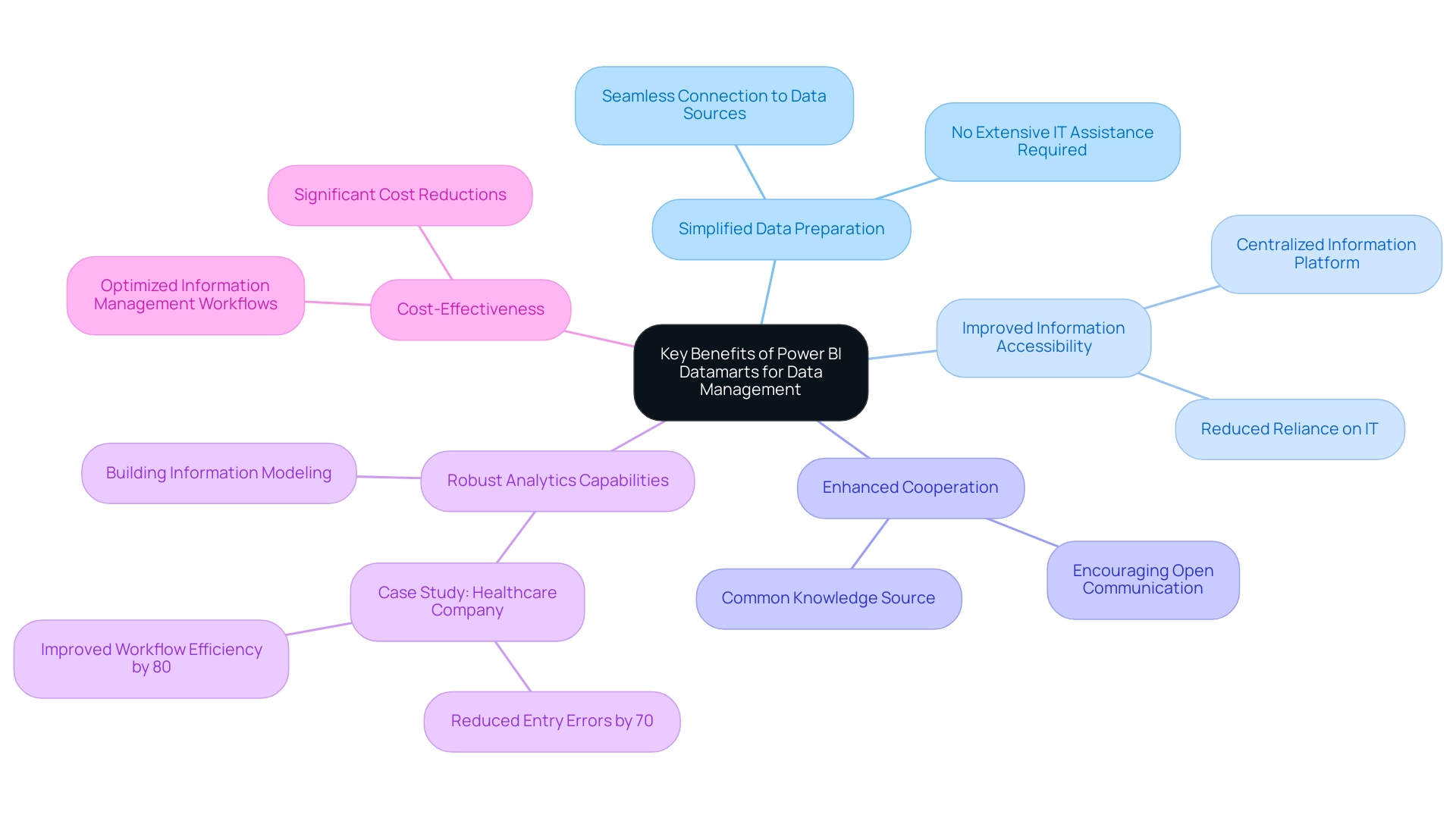

Best Practices for Utilizing Power BI Dataflows and Datasets

To fully harness the potential of Power BI Dataflows and Datasets, implementing the following best practices is essential:

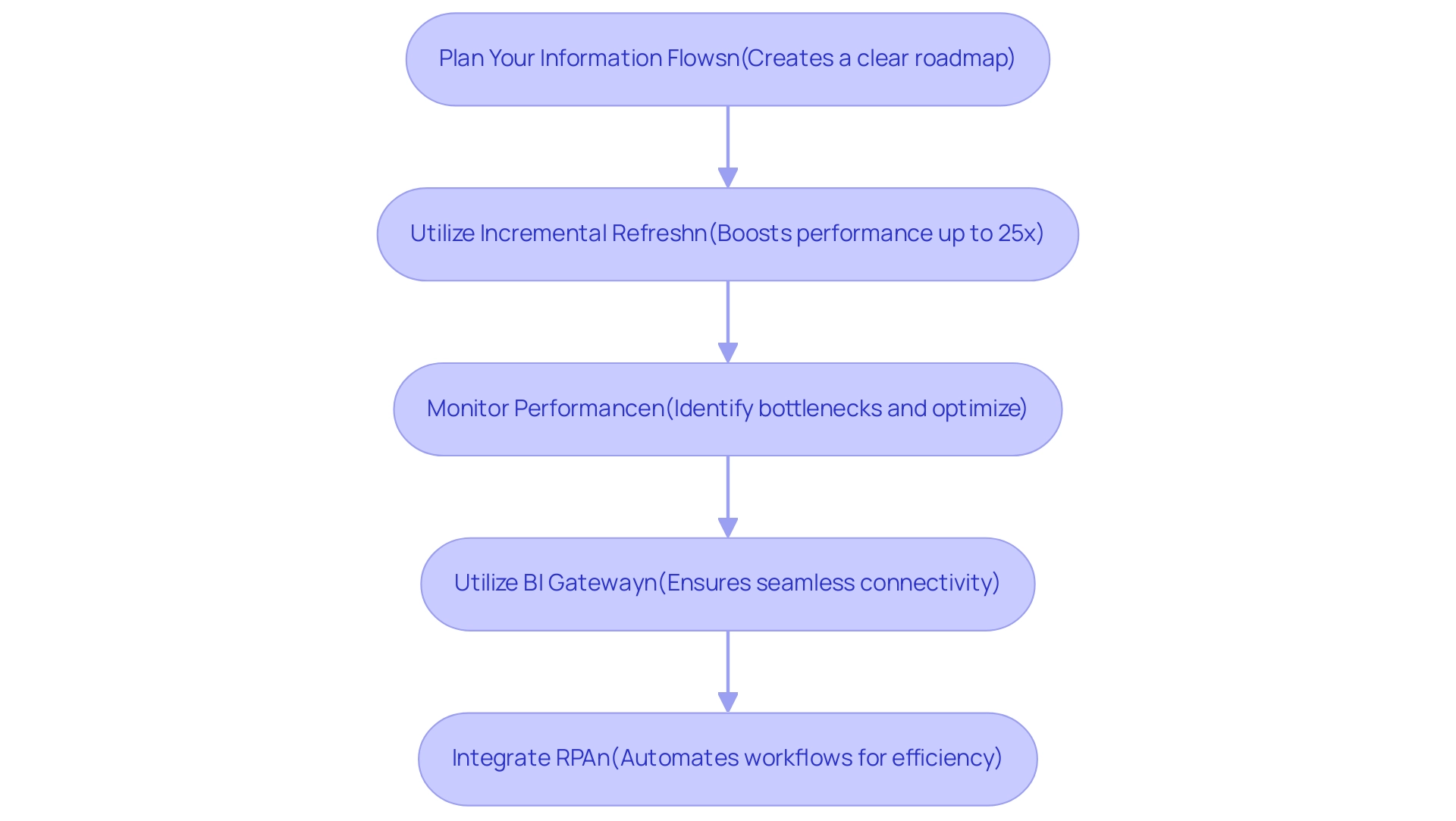

- Plan Your Dataflows: A strategic approach begins with outlining your sources and the transformations they need. This preparatory phase creates a clear roadmap that streamlines the build process and supports data governance.

- Utilize Incremental Refresh: For large datasets, incremental refresh can significantly boost performance. It reloads only new or changed data rather than the full history, which reduces load times during refreshes and gives quicker access to fresh insights. Separately, the enhanced compute engine can potentially improve dataflow performance up to 25x, so the two are worth considering together.

- Monitor Performance: Consistent performance tracking of both Dataflows and Datasets is vital. By regularly evaluating them, organizations can swiftly identify bottlenecks and optimize configurations, ensuring that data management remains agile and effective.

- Document Your Dataflows and Datasets: Maintaining comprehensive documentation of your Dataflows and Datasets is critical. Clear annotations regarding their purposes and transformation steps facilitate collaboration among team members and streamline future updates, which is a key aspect of effective data governance.

- Utilize the Power BI Gateway: To ensure uninterrupted refreshes and reliable connectivity between on-premises sources and the Power BI service, using the on-premises data gateway is highly recommended. It connects on-premises data sources to the service, promoting a more cohesive environment.
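The idea behind incremental refresh can be illustrated with a small Python sketch. It is a conceptual model under stated assumptions, not how Power BI implements or exposes the feature (there it is configured in the service rather than coded by hand). The monthly partitioning, the function names `months_to_refresh` and `incremental_refresh`, and the sample rows are all hypothetical.

```python
from datetime import date


def month_key(d):
    """Partition key for a date, e.g. 2024-02."""
    return f"{d.year:04d}-{d.month:02d}"


def months_to_refresh(today, window_months):
    """Return the keys of the most recent `window_months` months, oldest first."""
    keys = []
    year, month = today.year, today.month
    for _ in range(window_months):
        keys.append(f"{year:04d}-{month:02d}")
        month -= 1
        if month == 0:
            year, month = year - 1, 12
    return list(reversed(keys))


def incremental_refresh(stored, source_rows, today, window_months):
    """Reload only partitions inside the window; leave older ones untouched."""
    refreshed = dict(stored)  # historical partitions are carried over as-is
    for key in months_to_refresh(today, window_months):
        refreshed[key] = [r for r in source_rows if month_key(r["date"]) == key]
    return refreshed


# Previously loaded partitions (hypothetical data).
stored = {
    "2023-11": [{"date": date(2023, 11, 5), "amount": 10}],
    "2024-01": [{"date": date(2024, 1, 9), "amount": 20}],
}
# Current state of the source; the November row changed but is outside the window.
source_rows = [
    {"date": date(2023, 11, 5), "amount": 999},
    {"date": date(2024, 1, 9), "amount": 25},
    {"date": date(2024, 2, 3), "amount": 30},
]

result = incremental_refresh(stored, source_rows, date(2024, 2, 15), 2)
print(sorted(result))
```

With a two-month window, only the January and February partitions are rebuilt from the source, and the November partition keeps its previously loaded rows. That is the trade-off to plan for: refreshes are faster, but changes to data older than the window are not picked up until a full refresh.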

By adhering to these best practices, organizations can significantly improve their management capabilities, resulting in enhanced reporting and more informed decision-making processes. Furthermore, the 3-Day Business Intelligence Sprint allows teams to create fully functional, professionally designed reports quickly, enabling a focus on actionable insights rather than the intricacies of report creation. For example, a production firm successfully optimized its procedures by centralizing its components list through Dataflows, decreasing information retrieval time by 30%—a testament to the transformative effect of effective planning and execution in BI environments. Additionally, by integrating RPA into these practices, organizations can automate manual workflows, further enhancing operational efficiency. RPA can specifically streamline data entry and reporting processes in Power BI, allowing teams to focus on strategic initiatives that drive business growth.

Conclusion

Power BI Dataflows and Datasets are pivotal in optimizing data management and analytics, enabling organizations to transform raw data into actionable insights. Dataflows serve as a powerful tool for data preparation and transformation, streamlining ETL processes and ensuring a centralized source of truth. This capability not only enhances data governance but also promotes reusability across various reports, significantly improving efficiency. On the other hand, Datasets facilitate easy access and analysis of data, serving as the foundation for insightful visualizations and reports. Together, these components create a synergistic effect that empowers organizations to make informed decisions and drive growth.

While both Dataflows and Datasets offer distinct advantages, their effective integration with Robotic Process Automation (RPA) can further enhance operational efficiency. By automating repetitive tasks and ensuring timely data updates, RPA complements the functionalities of Power BI, allowing teams to focus on strategic initiatives that foster innovation. Organizations that prioritize best practices—such as:

- Planning dataflows

- Utilizing incremental refresh

- Monitoring performance

can maximize the benefits of these tools, thereby positioning themselves for success in a data-driven landscape.

In conclusion, embracing Power BI Dataflows and Datasets, coupled with innovative solutions like RPA, equips organizations with the capabilities needed to thrive in an increasingly competitive environment. By harnessing the full potential of their data, businesses can not only enhance operational efficiency but also unlock significant competitive advantages that drive sustained growth and informed decision-making. As the demand for data-driven insights continues to rise, the strategic application of these tools is essential for organizations aspiring to lead in their respective industries.

Overview