Overview:

The article provides a step-by-step guide on how to copy conditional formatting in Power BI, emphasizing its importance for enhancing data visualization and communication of insights. It supports this by detailing the process involved, troubleshooting common issues, and sharing best practices that ensure effective and consistent application of conditional formatting across visuals, ultimately improving operational efficiency and user engagement.

Introduction

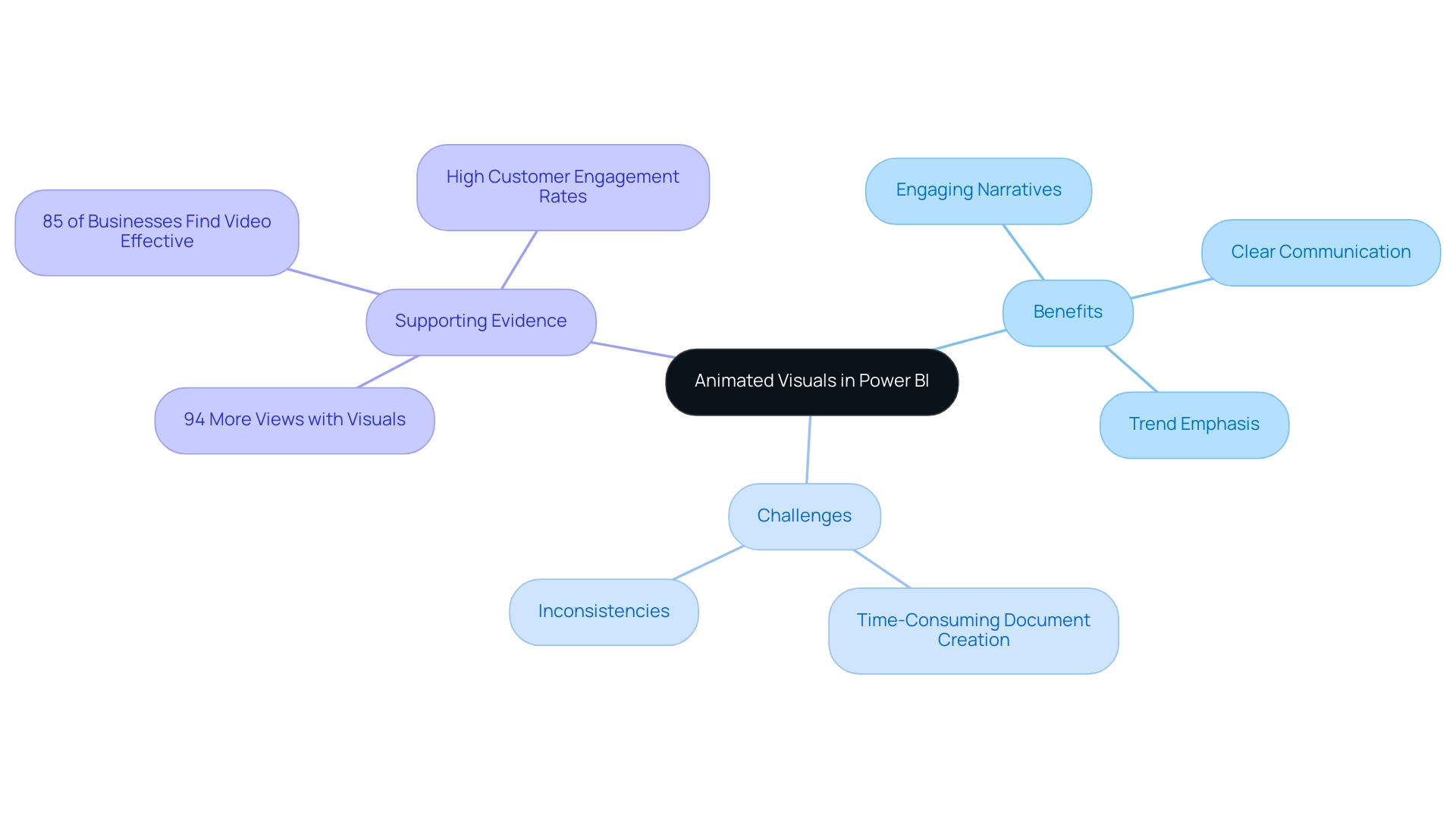

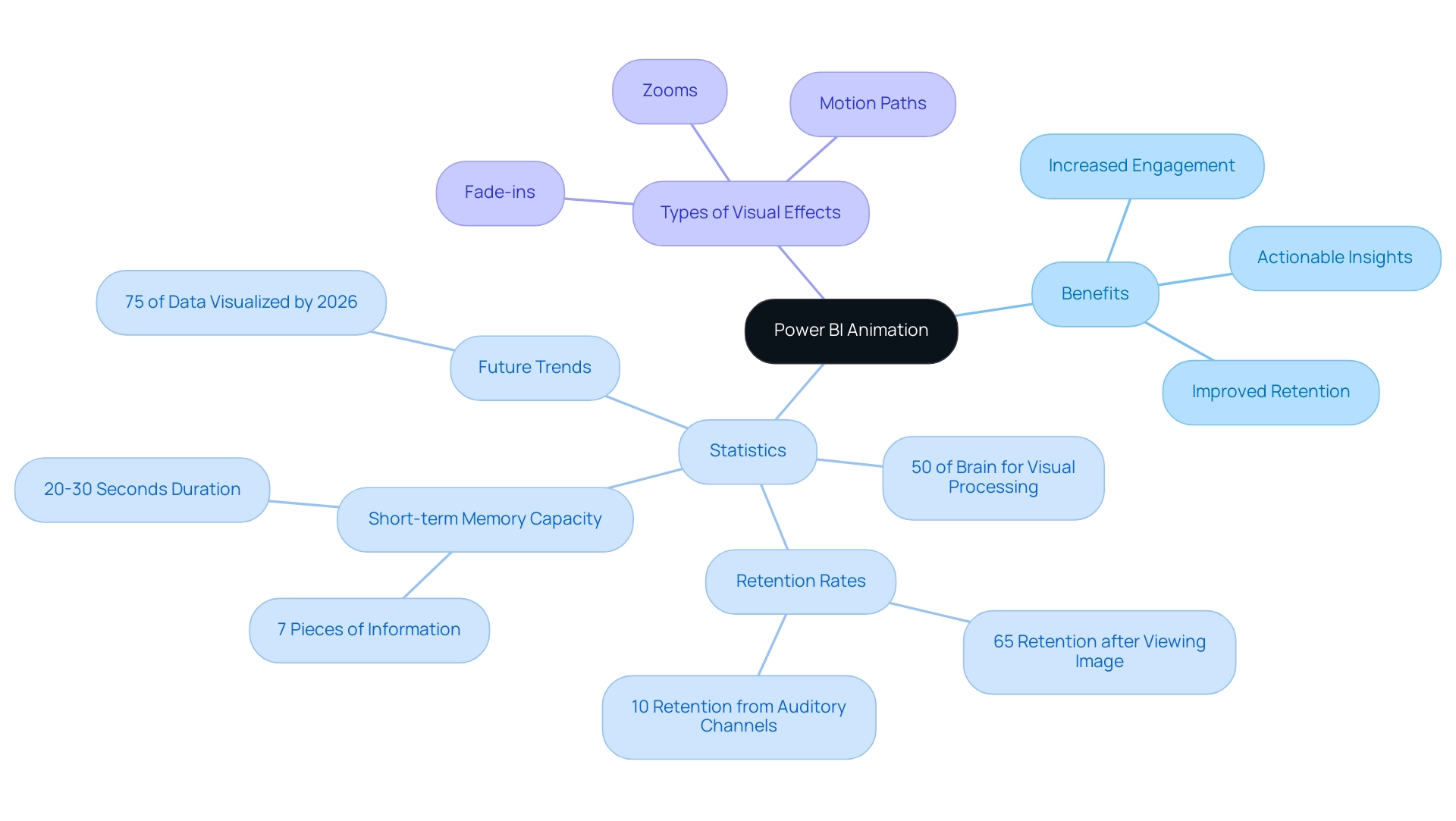

In the dynamic landscape of data visualization, conditional formatting in Power BI emerges as a game-changer, enabling organizations to transform raw data into compelling narratives. By applying visual cues that highlight trends and critical metrics, users can create reports that not only inform but also engage stakeholders effectively.

As businesses strive to enhance their operational efficiency and decision-making processes, understanding the nuances of conditional formatting becomes essential. This article delves into practical strategies for mastering this feature, offering:

- A step-by-step guide

- Troubleshooting tips

- Advanced techniques that ensure data integrity and accessibility

Whether navigating common challenges or exploring best practices, readers will discover actionable insights that elevate their data storytelling and drive impactful results in their organizations.

Understanding Conditional Formatting in Power BI

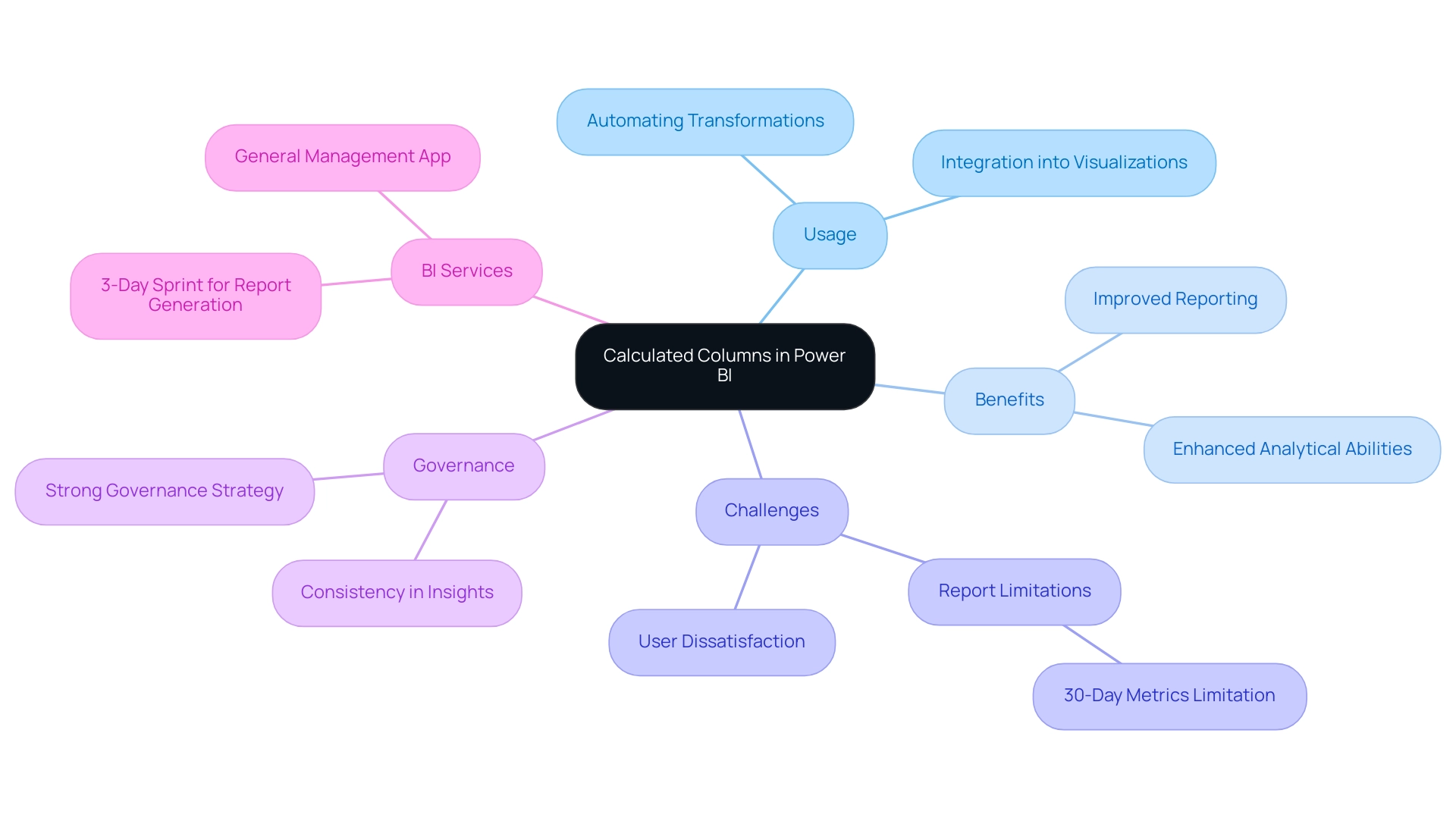

Conditional formatting in Power BI lets users enhance their data visualization by applying visual cues that highlight trends, outliers, and critical metrics. The feature alters the appearance of visuals according to defined conditions, such as values exceeding a specific threshold, making outputs both more informative and more intuitive. For instance, consider the Admin Monitoring Workspace, which uses conditional formatting to give organizations a comprehensive view of their inventory and usage metrics.

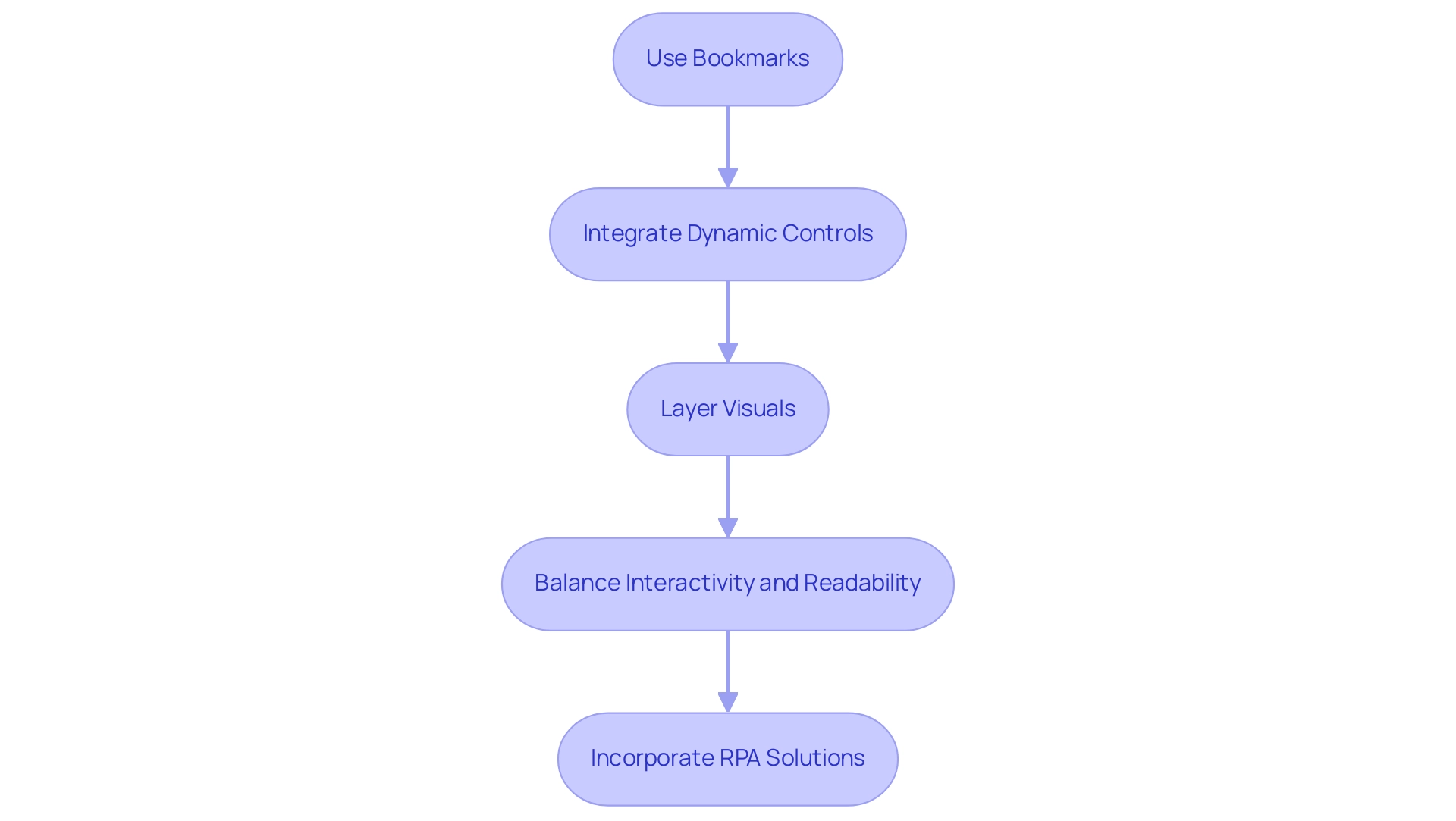

This capability directly tackles common challenges in utilizing insights from Power BI dashboards, such as time-consuming document creation and information inconsistencies. By integrating RPA solutions, organizations can automate repetitive tasks, further streamlining report generation and enhancing operational efficiency. The workspace updates automatically as new content is added, reflecting ongoing customer feedback and ensuring its relevance.

As a result, users can effectively communicate insights and engage stakeholders with clear, actionable information narratives. Notably, the blog post discussing these features garnered 124,433 views, indicating significant interest in the topic. As pawlowski6132 noted, ‘It’s not 90 days.

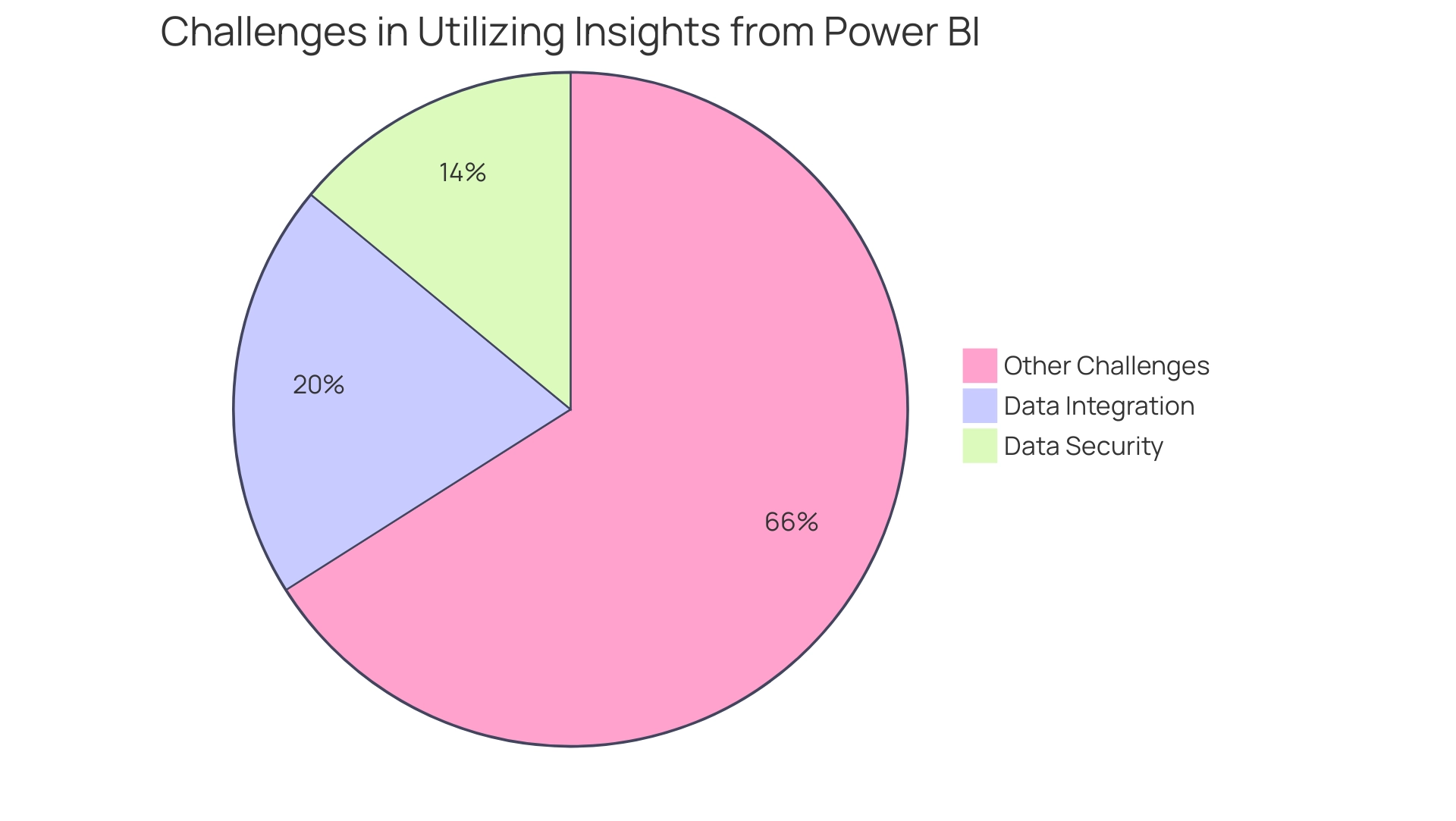

It’s 30 days. The question is, how to obtain as much as desired, highlighting the urgency of effective information management. Furthermore, with data integration and data security challenges representing 20% and 14% respectively, understanding and utilizing conditional styles, particularly power bi copy conditional formatting, is crucial for anyone aiming to elevate their data storytelling capabilities in 2024, especially as Power BI continues to evolve and incorporate user-driven enhancements.

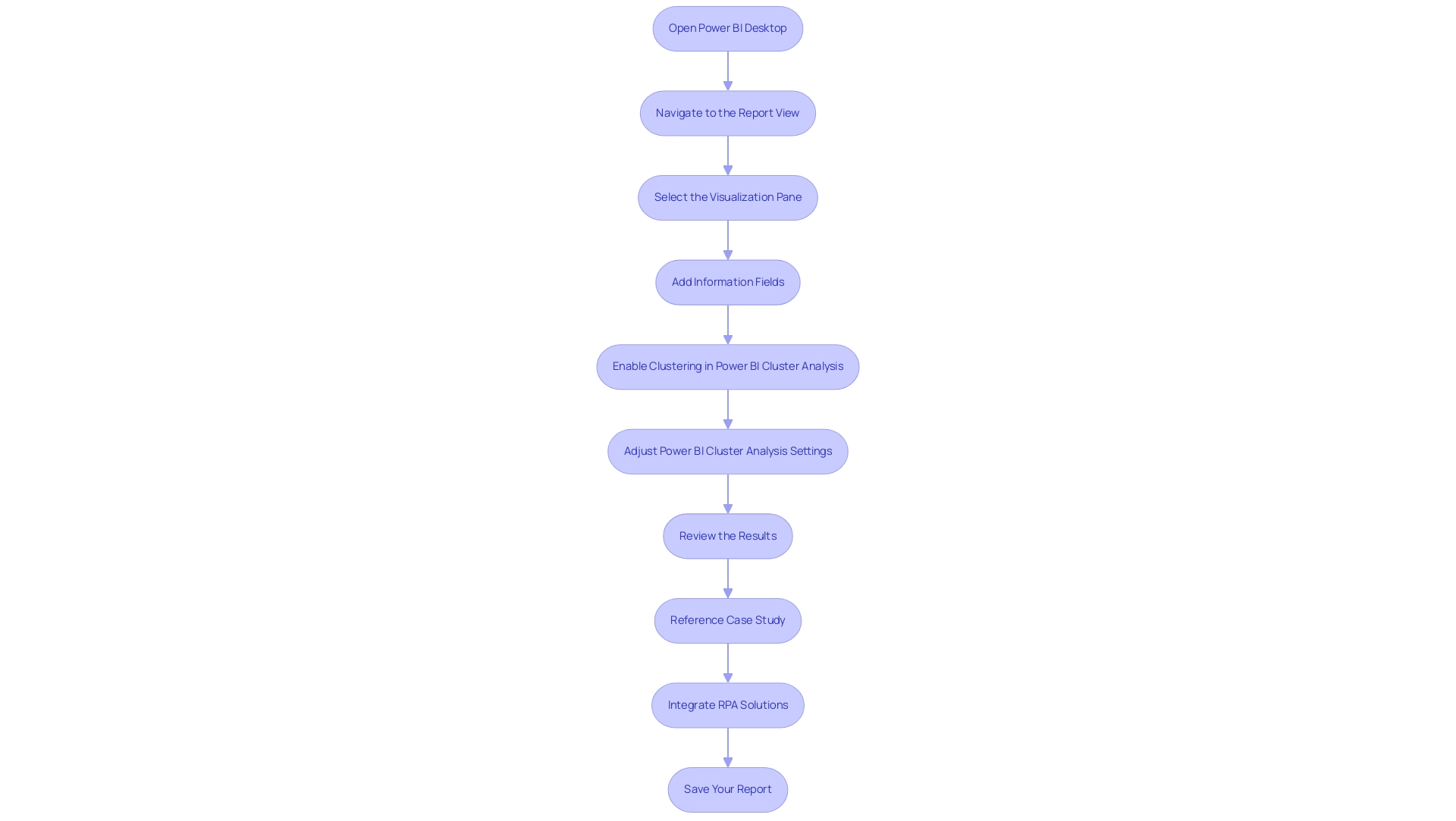

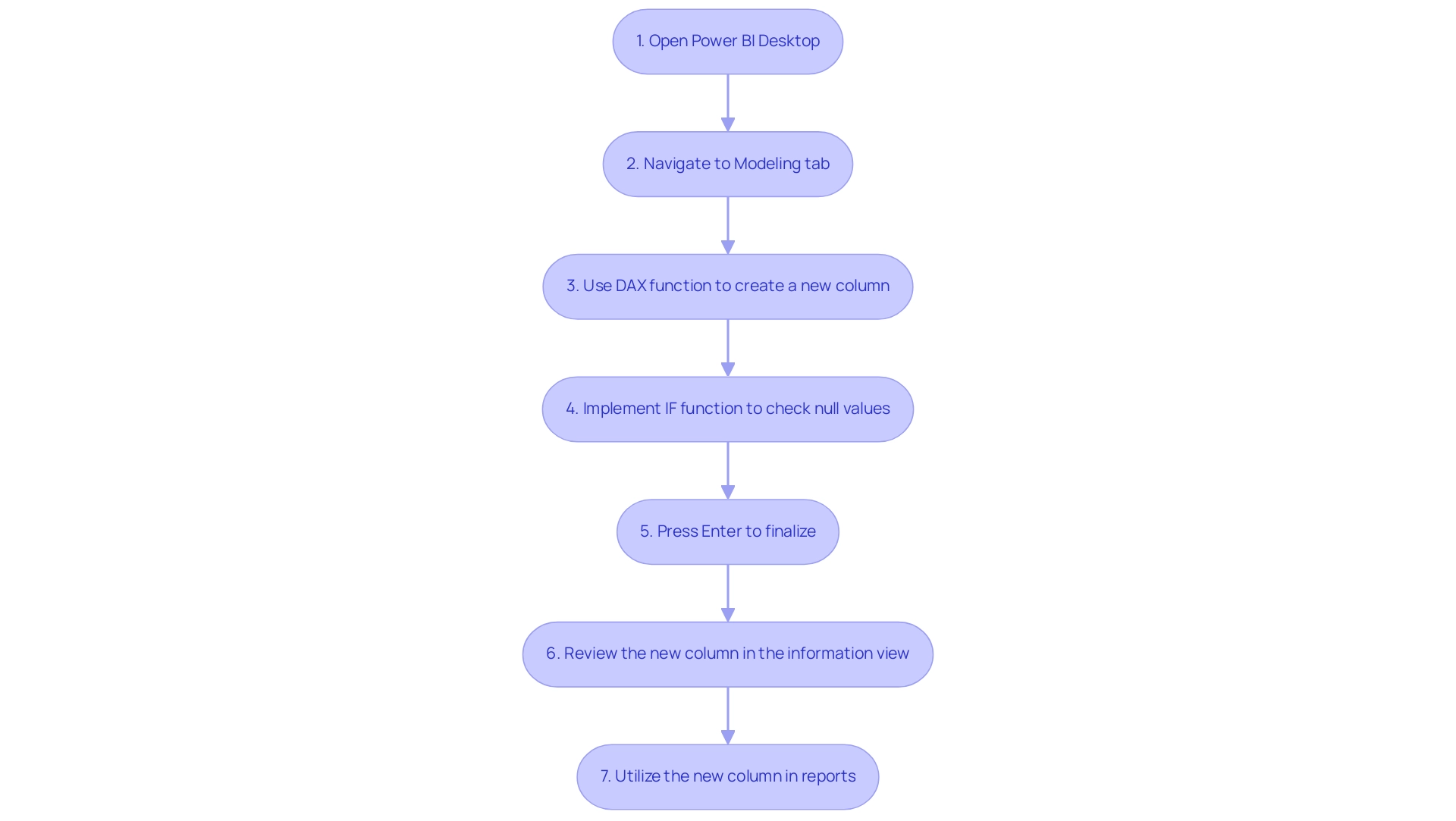

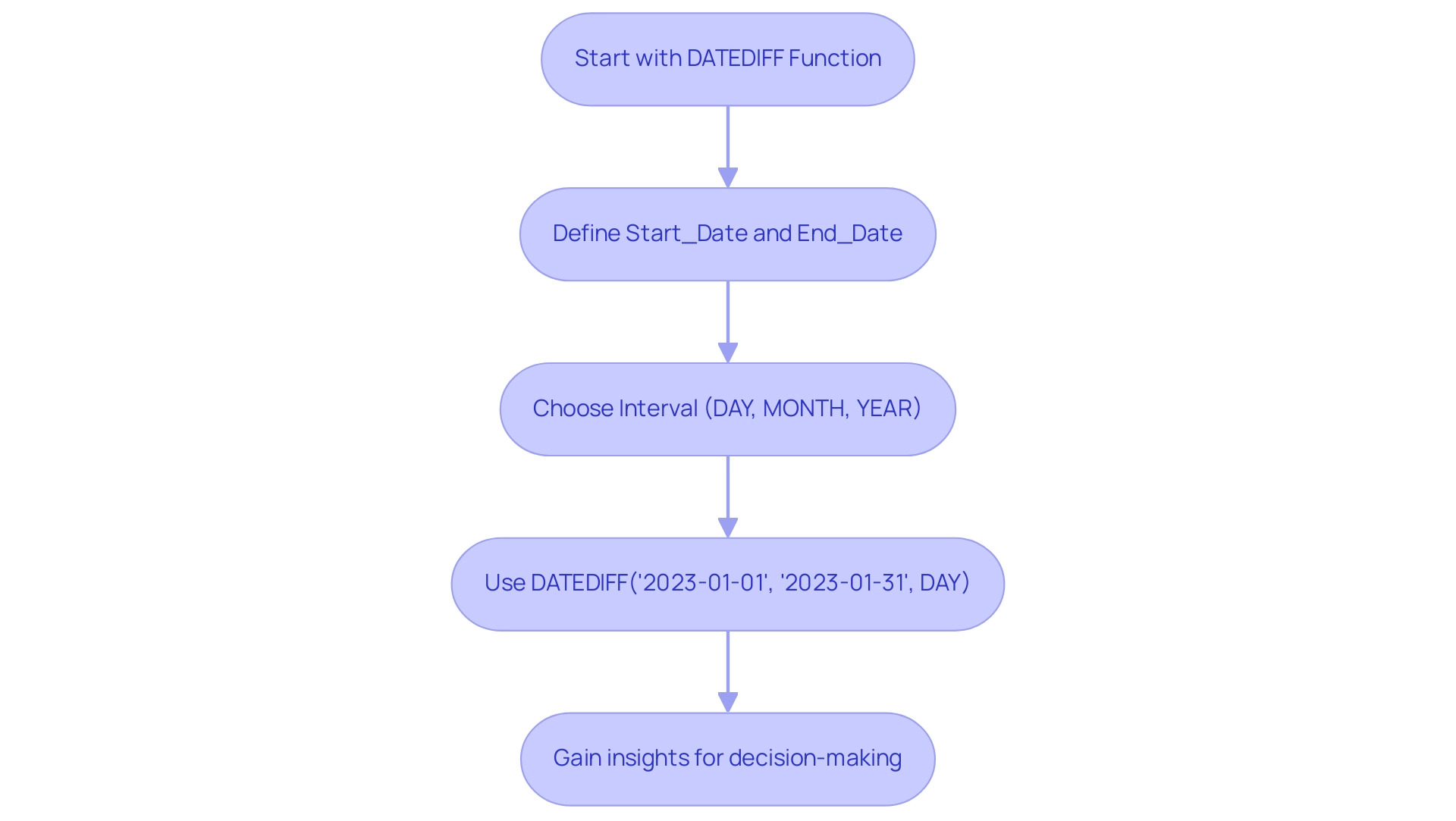

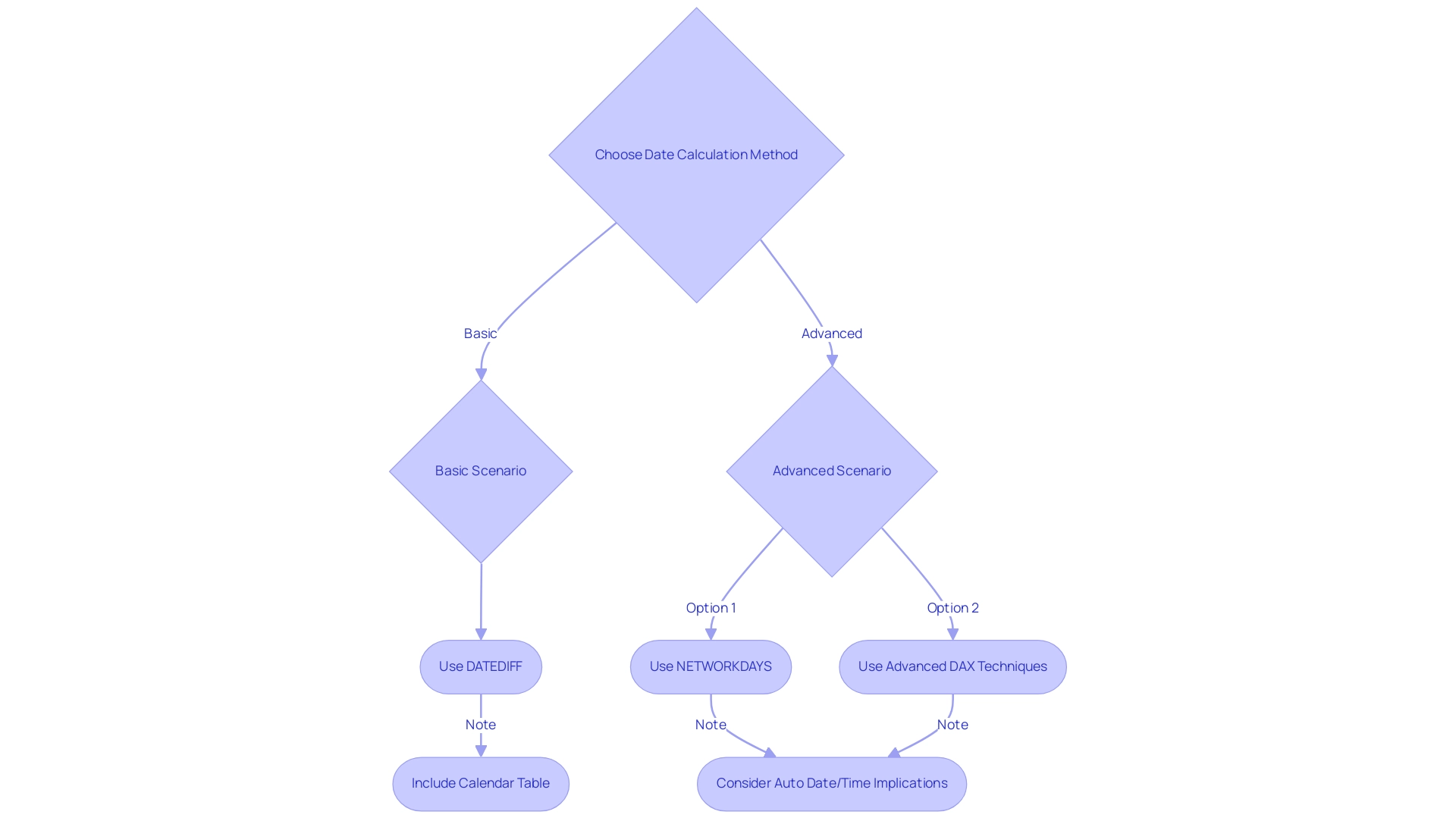

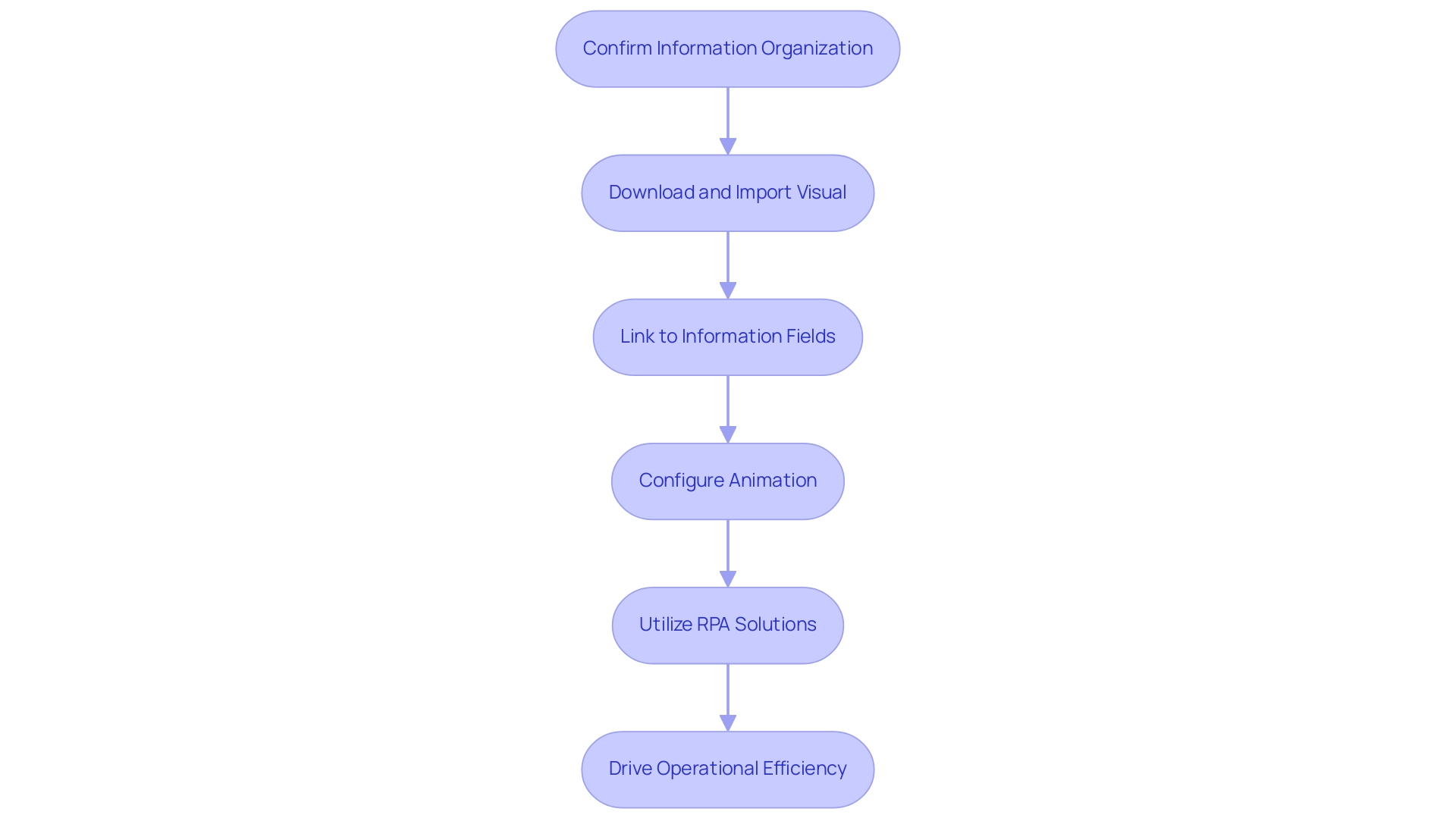

Step-by-Step Guide to Copying Conditional Formatting in Power BI

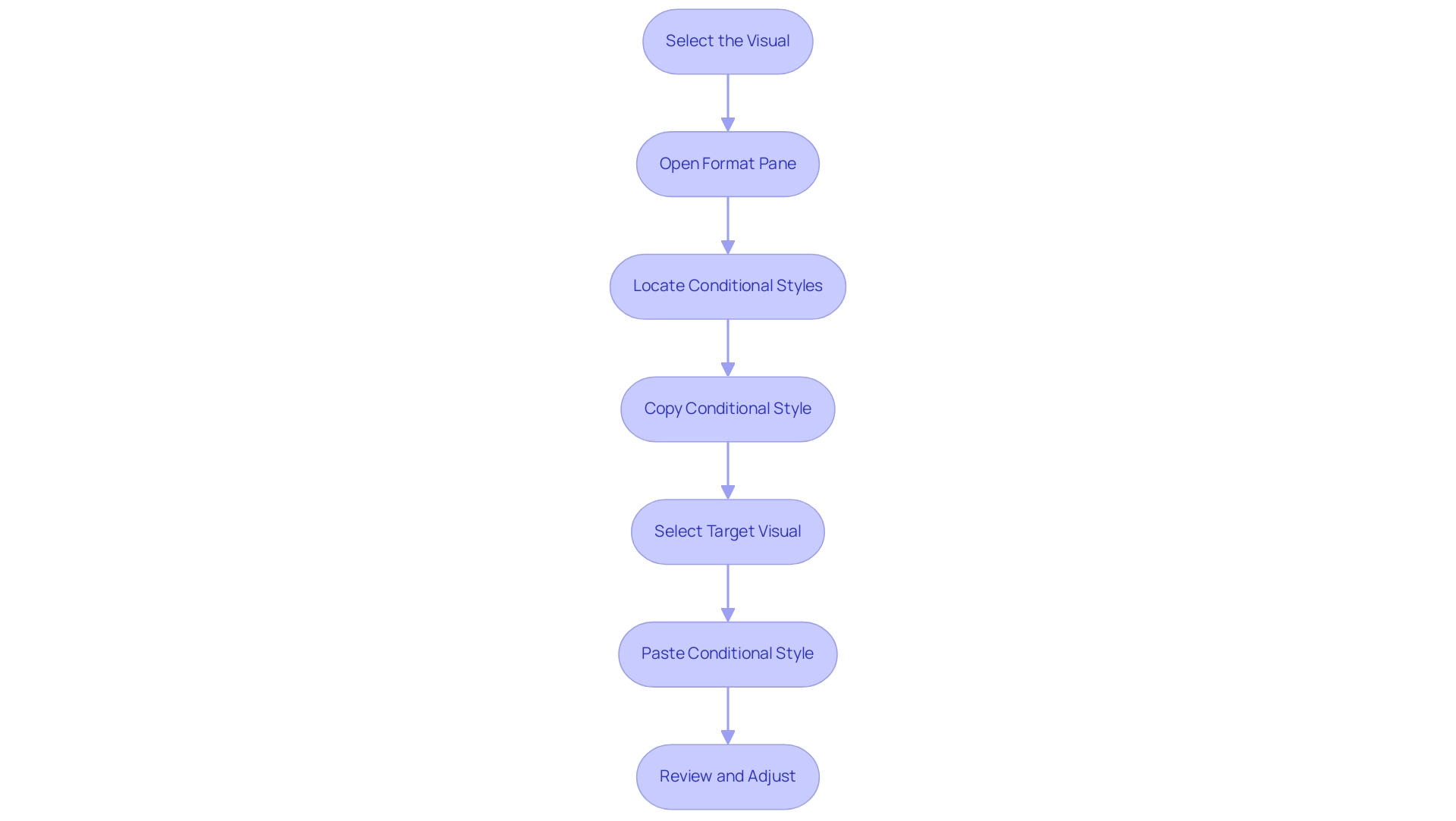

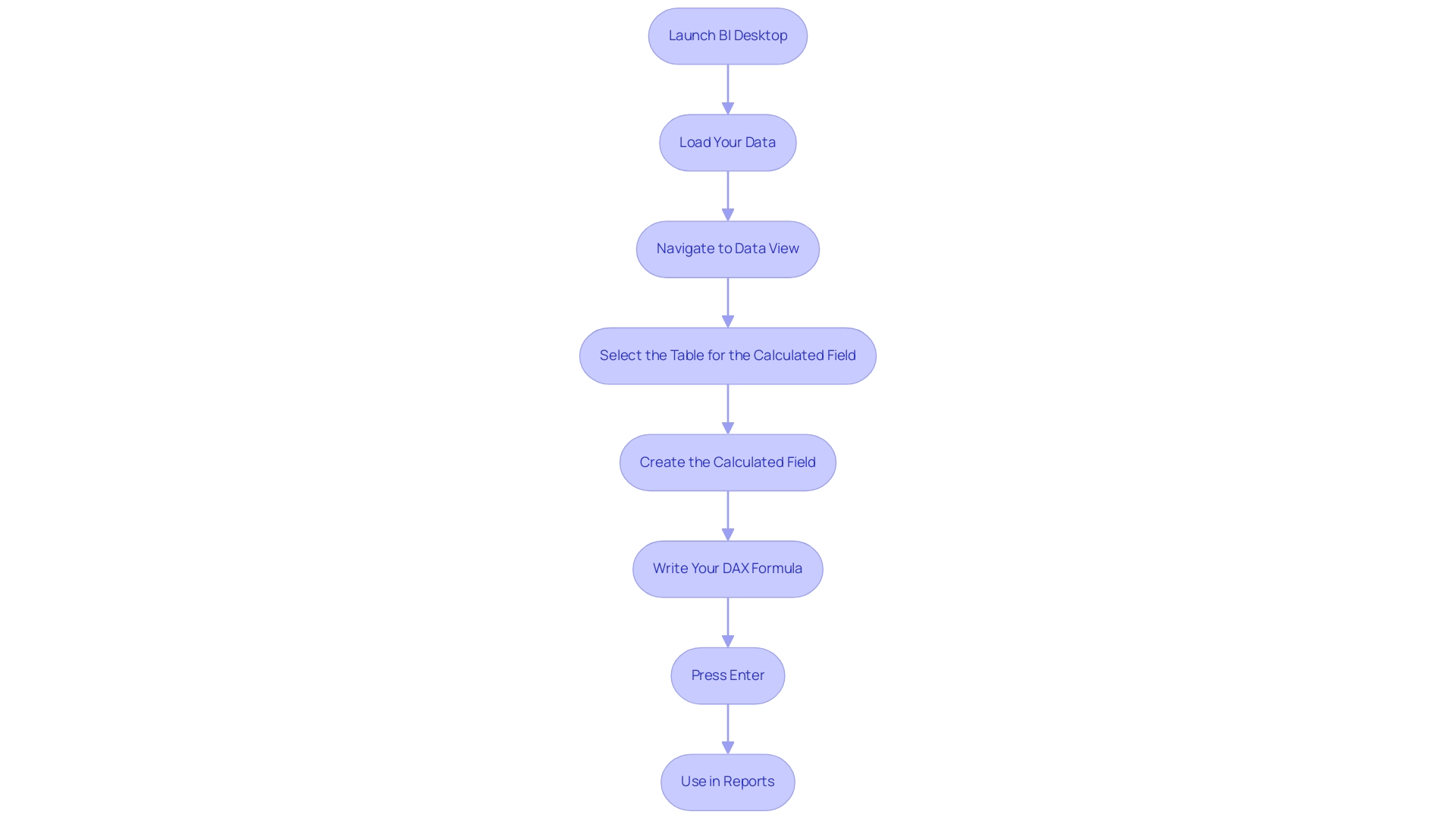

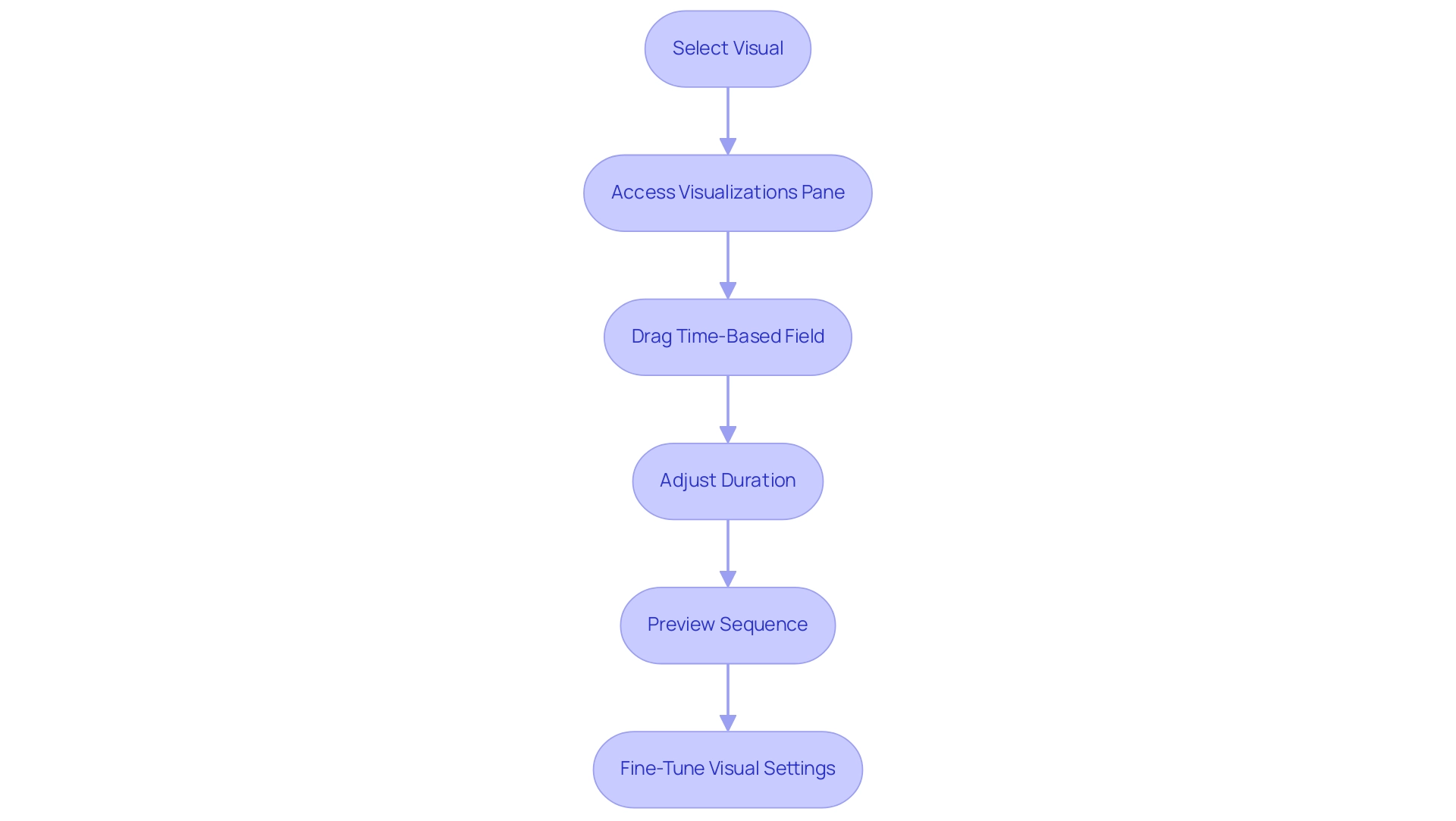

- Select the Visual: Begin by clicking on the visual whose conditional formatting you want to copy.

- Open Format Pane: Next, navigate to the Visualizations pane and select the paint roller icon to access the customization options.

- Locate Conditional Formatting: Scroll down until you find the ‘Conditional formatting’ section within the pane.

- Copy the Formatting: Use the ‘Copy’ option located next to the specific conditional formatting settings you wish to replicate.

- Select Target Visual: Click on the visual where you want to apply the copied formatting.

- Paste the Formatting: In the format pane of the target visual, select ‘Conditional formatting’ and then choose the ‘Paste’ option to apply the copied settings.

- Review and Adjust: Finally, examine the visual to confirm that the layout reflects your intentions. Make any necessary adjustments to achieve the desired appearance.

Implementing these steps not only streamlines your reporting process but also aligns with the principles of Robotic Process Automation (RPA) to enhance operational efficiency in a rapidly evolving AI landscape. By automating manual styling tasks, you reduce errors and free your team for more strategic activities, driving data-driven insights crucial for business growth. With over 4,538 views on this topic, it’s clear that effective structuring is essential for enhancing communication through visuals. As Scott Sugar aptly states, ‘The ability to communicate with people, regardless of distance or location, is one of the best things about tech.’ Furthermore, by recognizing unused reports through metrics, as emphasized in the ‘Unused Reports Count’ case study, RPA can assist in directing your efforts toward the most pertinent visuals, thereby improving decision-making and operational efficiency.

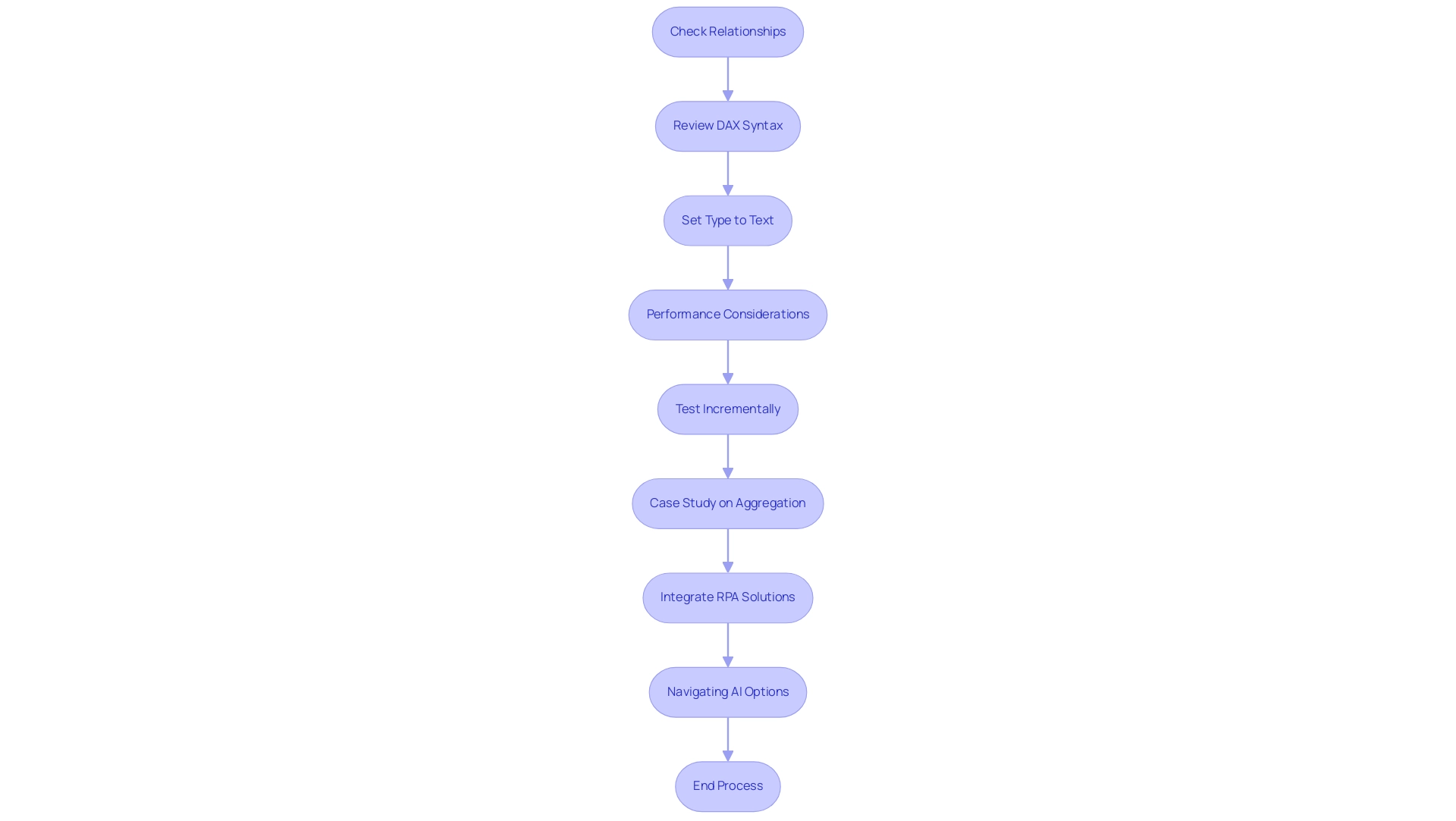

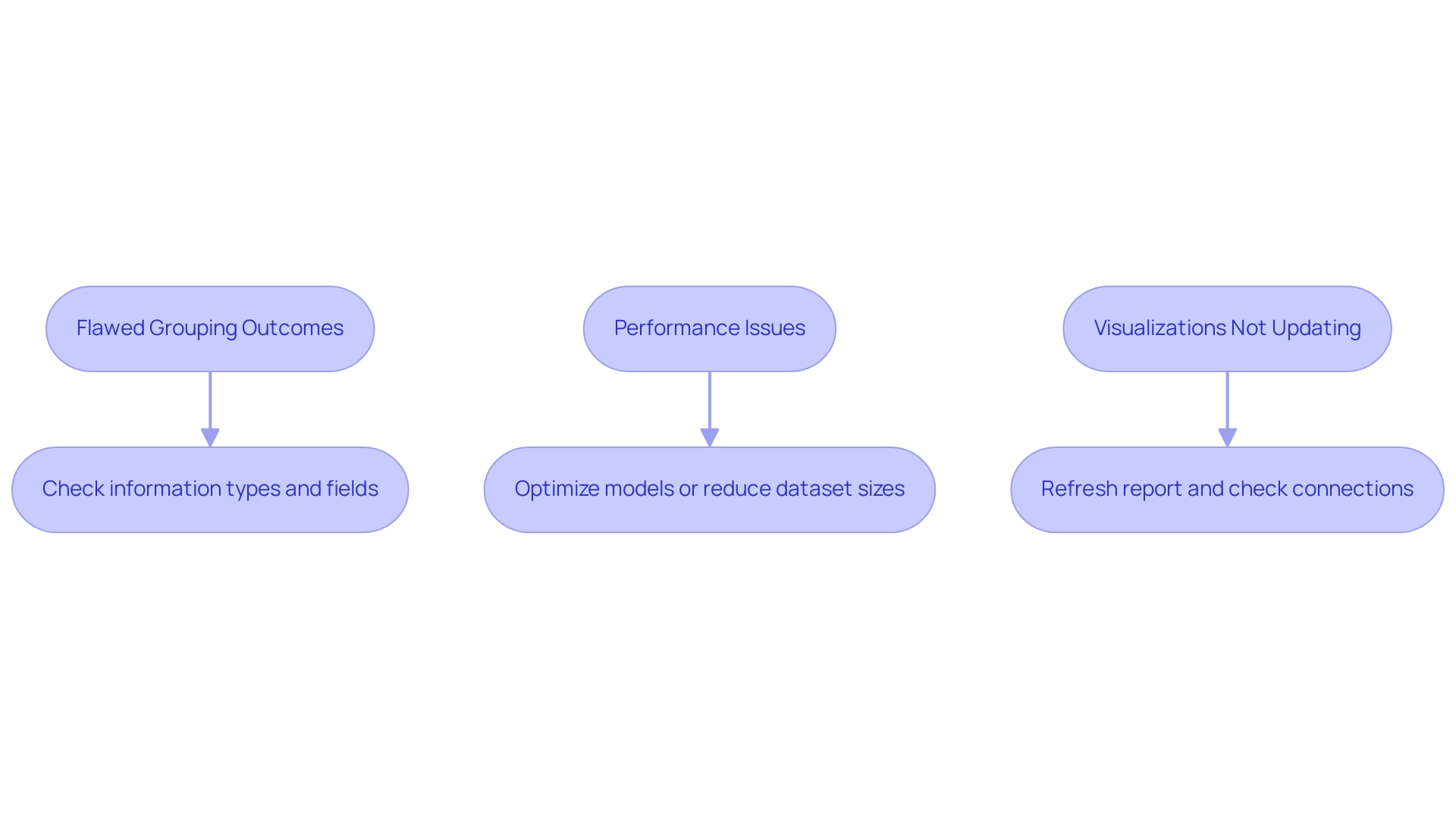

Troubleshooting Common Issues with Conditional Formatting Copying

-

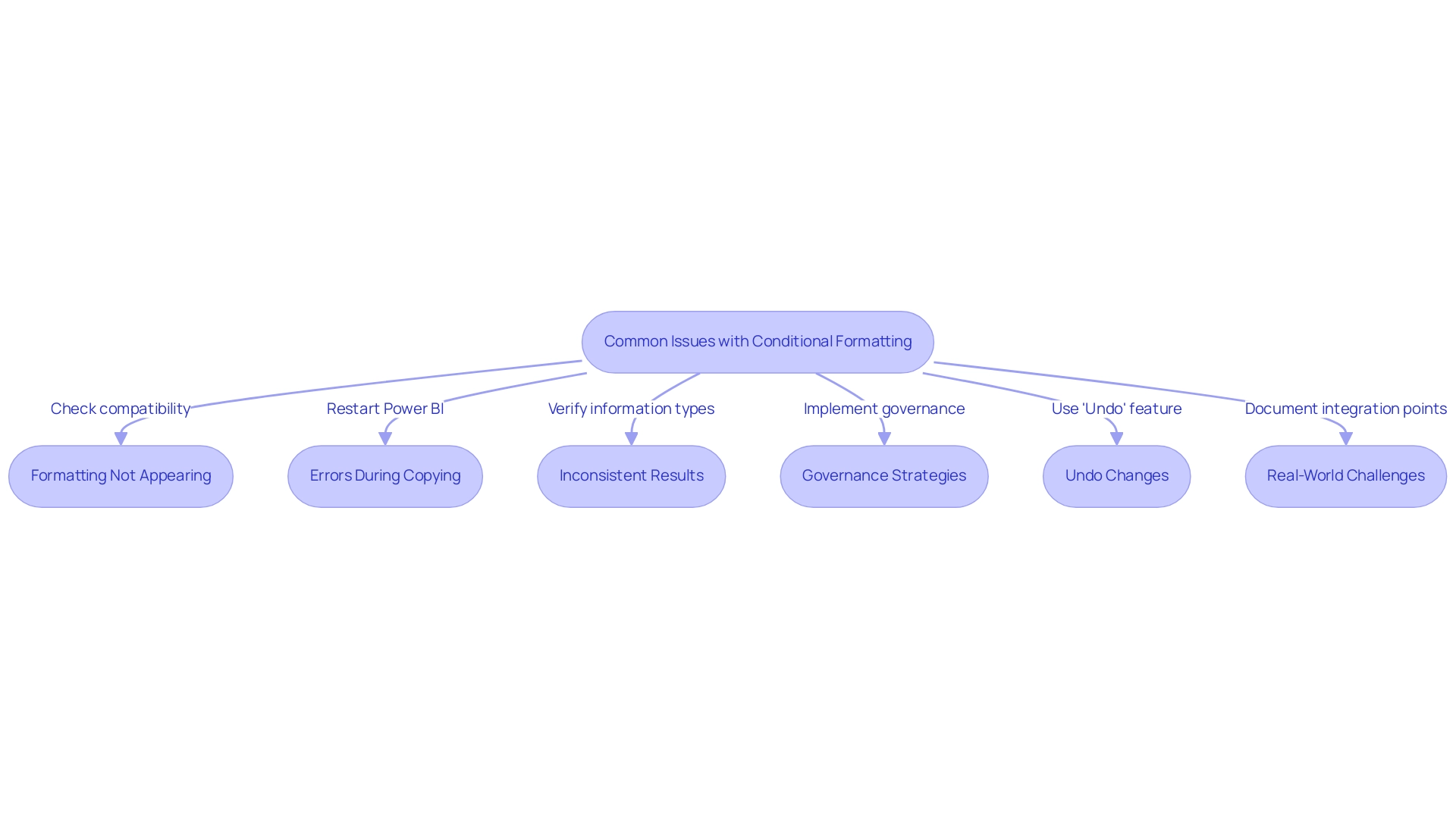

Formatting Not Appearing: When copied formatting does not appear, it’s crucial to check that both visuals are compatible and support the same type of formatting. This compatibility is essential to ensure that your desired design, such as power bi copy conditional formatting, is reflected accurately across different visuals. As Rajendra Ongole noted, ambiguous relationships can lead to incorrect results, making data verification even more critical in this context.

-

Errors During Copying: Should you encounter errors during the copying process, a simple yet effective first step is to restart Power BI or check for any available updates. Keeping your software up-to-date can often resolve unexpected issues, streamline your workflow, and help with the common challenge of time-consuming report creation, particularly when utilizing power bi copy conditional formatting.

Inconsistent Results: If you observe that the layout looks different across visuals, take the time to confirm that the information types and field settings align perfectly. Inconsistent information types can lead to unexpected results, making this verification a key step in maintaining visual integrity and ensuring accurate insights driven by information, particularly when applying power bi copy conditional formatting. Given that combining sales information monthly instead of daily can significantly affect reporting trends, using Power BI to copy conditional formatting is vital for ensuring accurate formatting.

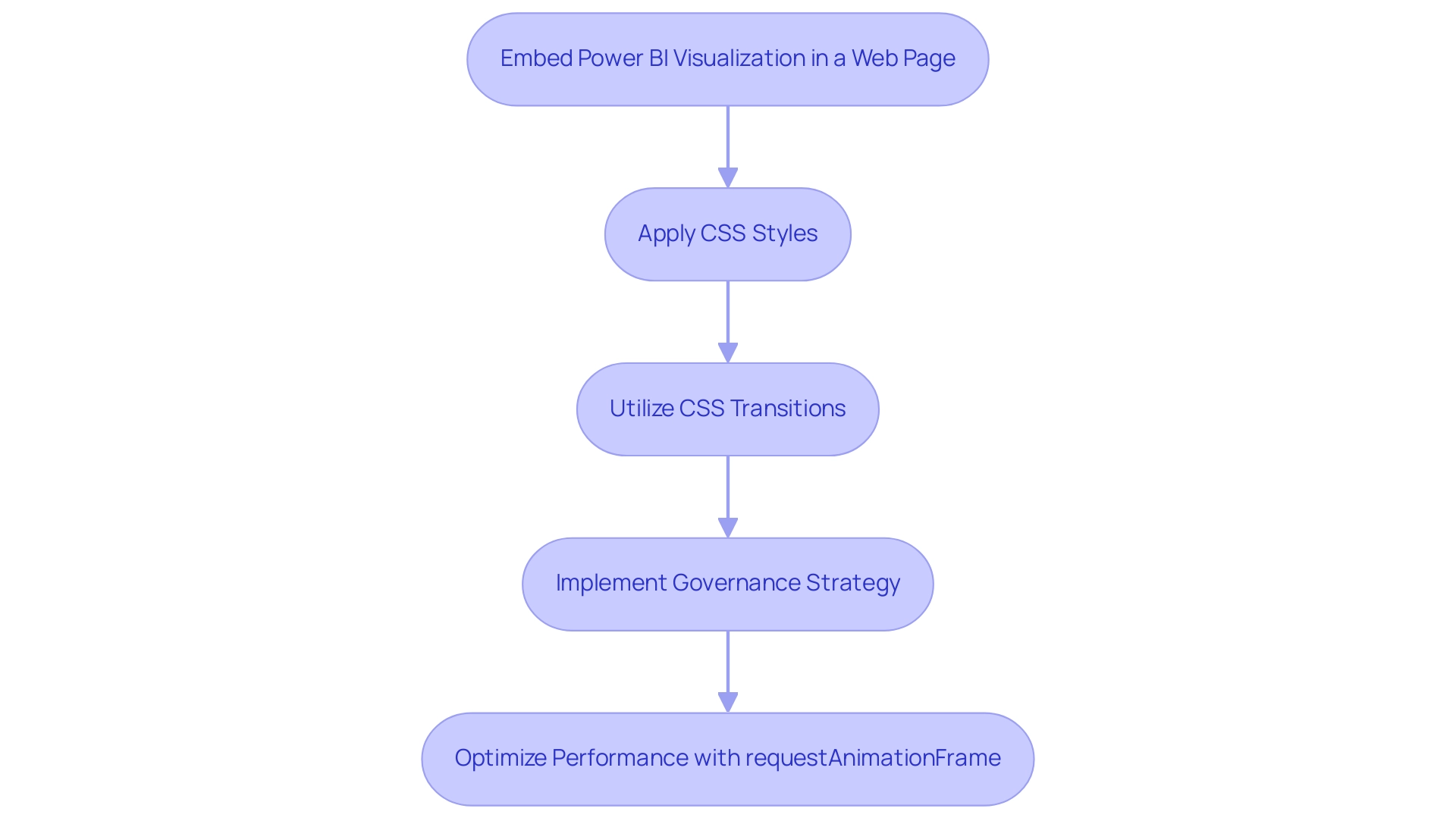

Governance Strategies: To reduce inconsistencies, it’s essential to implement a strong governance strategy. This method can assist in upholding information integrity across reports and encourage confidence in the insights obtained from BI dashboards. Without proper governance, organizations may face challenges in achieving reliable and actionable insights.

- Undo Changes: In cases where you accidentally overwrite your formatting, don’t forget that the ‘Undo’ feature (Ctrl + Z) is your ally. This quick action can revert your visuals back to their previous state, saving you from potential frustrations and ensuring your work is preserved.

- Real-World Challenges: Additionally, it’s important to consider the integration challenges highlighted in the case study titled ‘Integration and Connectivity Challenges.’ As organizations expand, combining BI with external systems can result in challenges such as API modifications and inconsistent formats. Documenting integration points and utilizing intermediate storage can enhance flexibility and scalability in Power BI environments, thereby addressing challenges in leveraging insights effectively. Moreover, stakeholders should ensure that documents not only present information but also provide clear, actionable guidance to facilitate informed decision-making.

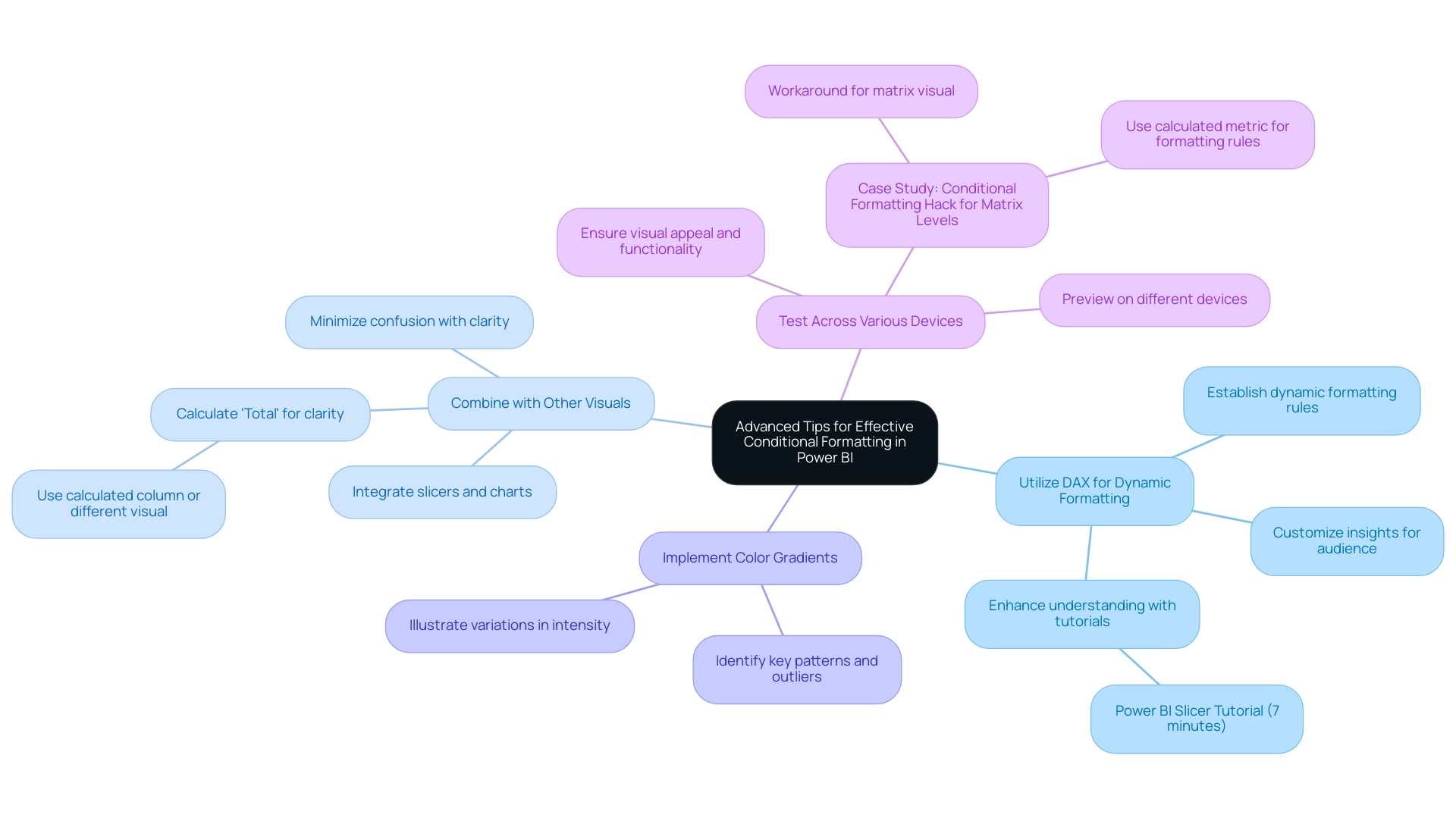

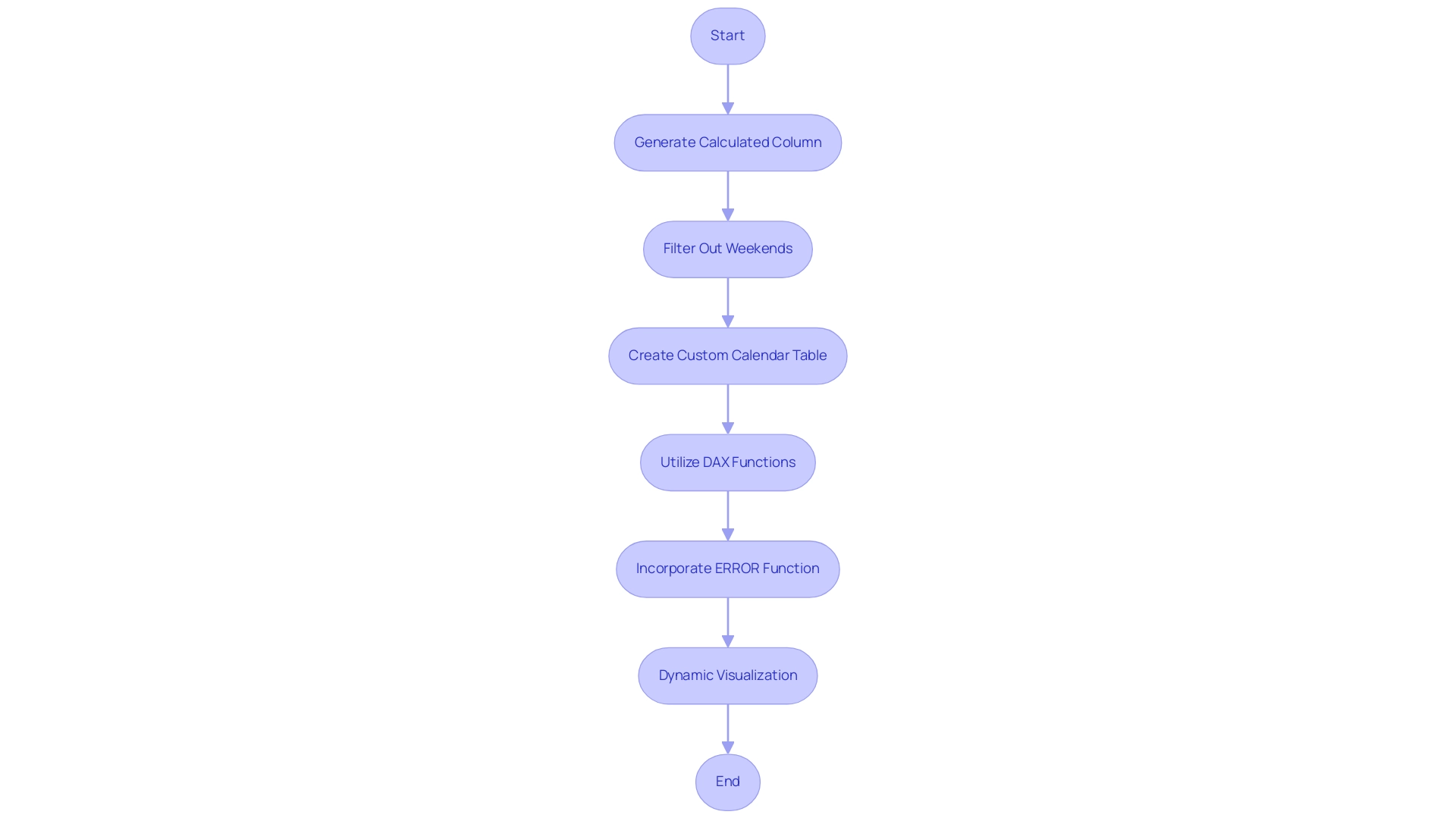

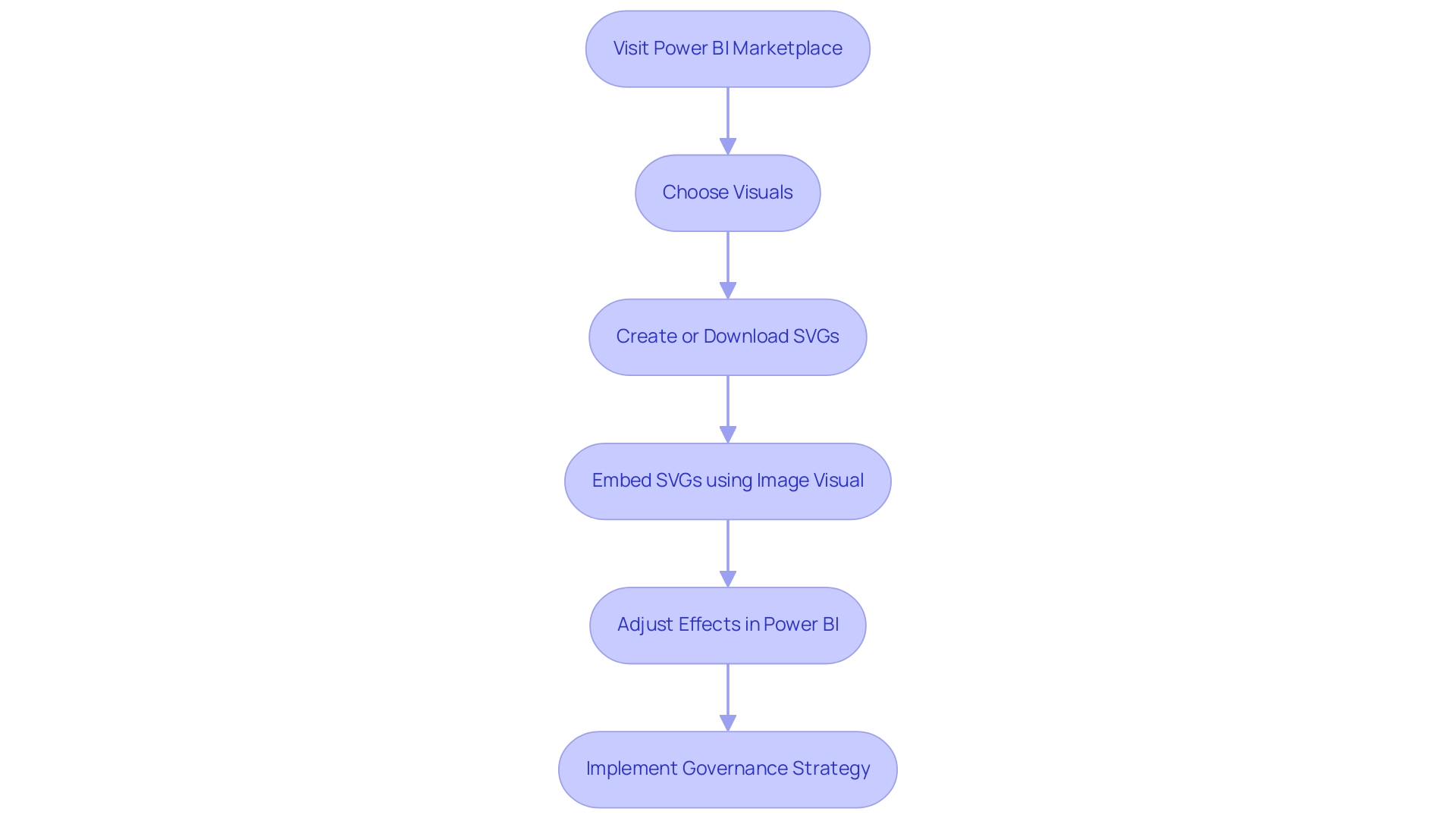

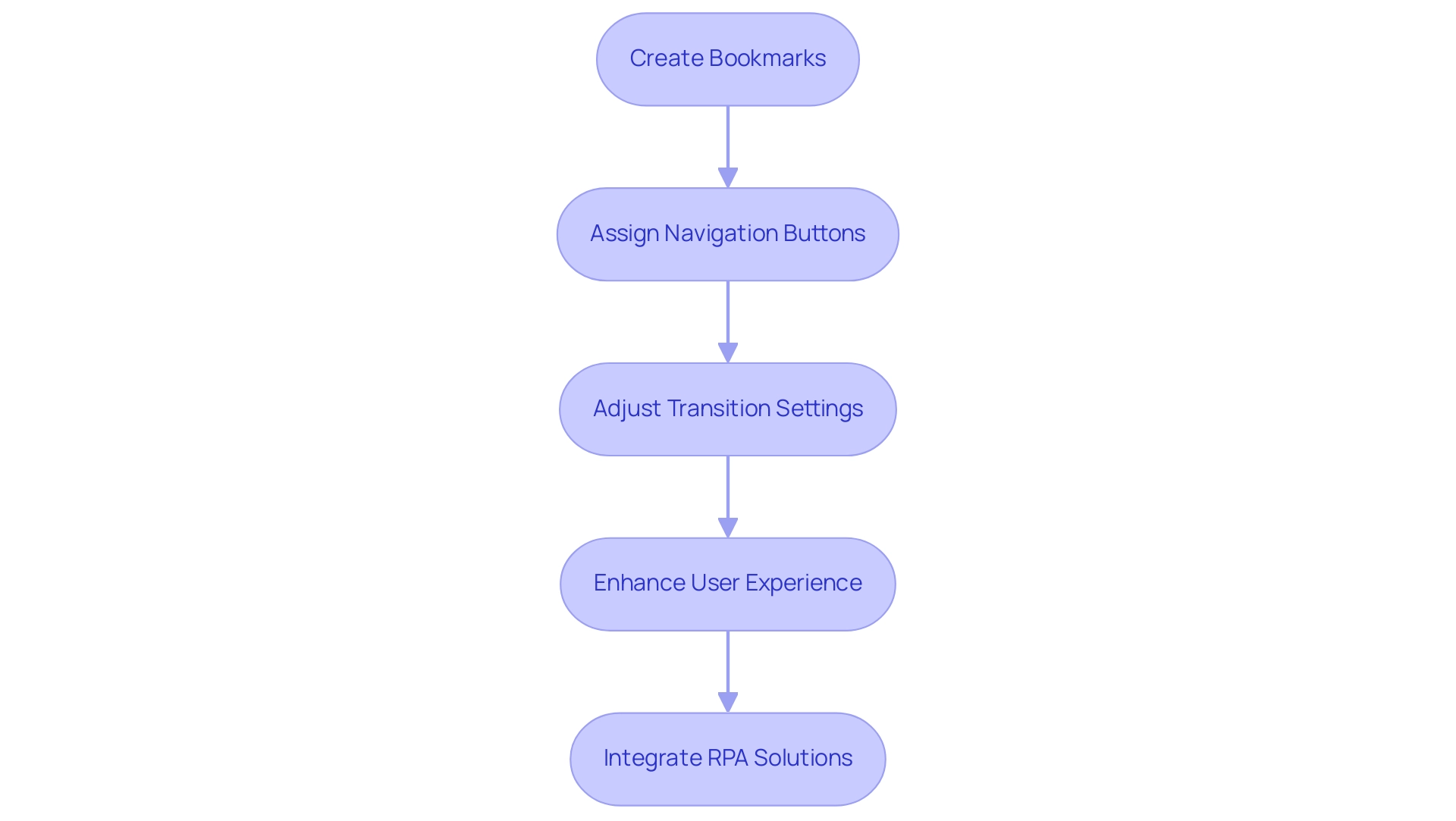

Advanced Tips for Effective Conditional Formatting in Power BI

- Utilize DAX for Dynamic Formatting: Use DAX expressions to define dynamic formatting rules, so that conditional formatting can respond to complex conditions. This produces reports that communicate information effectively and emphasize the insights most relevant to your audience. By dedicating just 7 minutes to the ‘Power BI Slicer Tutorial’, you can significantly enhance your understanding of these techniques, addressing the common challenge of time-consuming report creation.

- Combine with Other Visuals: Pair conditional formatting with additional visuals, such as slicers and charts, to build a cohesive and comprehensive narrative. This integration makes your summaries clearer, allowing stakeholders to understand the narrative quickly and minimizing confusion arising from inconsistencies. When dealing with total hierarchies, remember to calculate ‘Total’ as a calculated column or use a different visual for clarity. Implementing a governance strategy can further mitigate inconsistencies by ensuring integrity across reports.

- Implement Color Gradients: Employ color gradients to illustrate variations in intensity. This technique simplifies the visualization of ranges and trends, making it easier for viewers to identify key patterns and outliers in your data, ultimately providing the actionable guidance that stakeholders often seek.

- Test Across Various Devices: To ensure your reports remain effective and appealing, always preview them on different devices. This step confirms that your conditional formatting stays visually compelling and functional across all viewing platforms.

As Fowmy aptly states, ‘Next time you aim to elevate the visual appeal of your BI visuals, keep this handy technique in mind to breathe new life into your visuals.’

Furthermore, consider the case study titled ‘Power BI Copy Conditional Formatting for Matrix Levels’, which showcases a practical workaround for applying copied conditional formatting at different levels of a matrix visual. This case study illustrates how these techniques can greatly improve the efficiency and visual appeal of your BI analysis, while also emphasizing the significance of a governance strategy in ensuring consistency.
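As an illustration of DAX-driven formatting, the sketch below defines a measure that returns a color code. The table and column `Sales[Amount]`, the threshold, and the hex colors are all hypothetical placeholders, not values from this article. The idea is that one measure can hold the rule, so the same rule can be reused on several visuals instead of being recreated on each.

```dax
Sales Color =
VAR Threshold = 100000                      -- hypothetical threshold; adjust to your data
VAR CurrentSales = SUM ( Sales[Amount] )    -- hypothetical table and column
RETURN
    SWITCH (
        TRUE (),
        ISBLANK ( CurrentSales ), BLANK (),               -- no data: leave default formatting
        CurrentSales >= Threshold, "#2E7D32",             -- at or above target
        CurrentSales >= Threshold * 0.5, "#F9A825",       -- at least half of target
        "#C62828"                                         -- below half of target
    )
```

To use a measure like this, open the conditional formatting dialog for the field you want to color, choose ‘Field value’ as the format style, and select the measure. Changing the threshold in the measure then updates every visual that references it.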

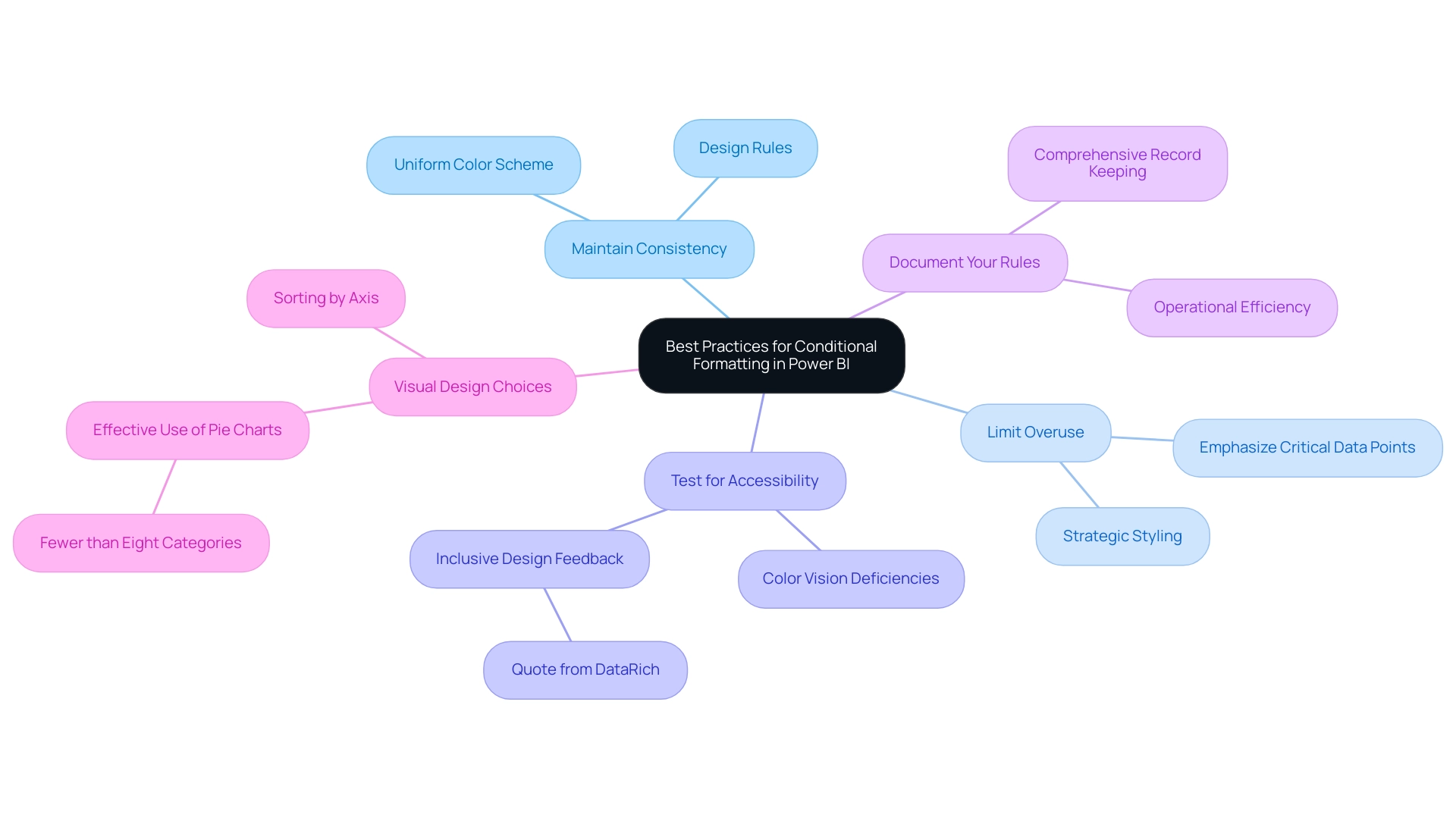

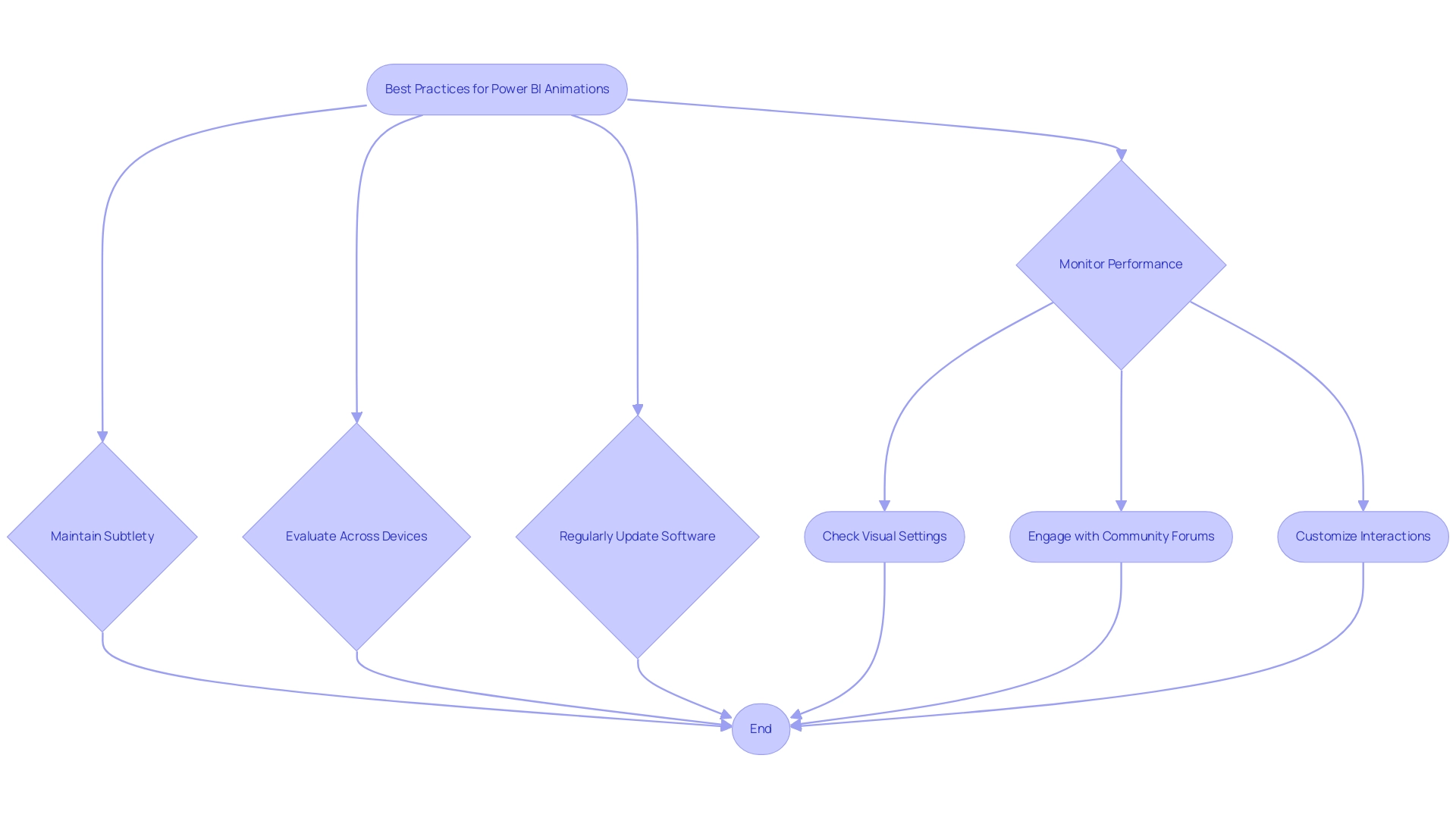

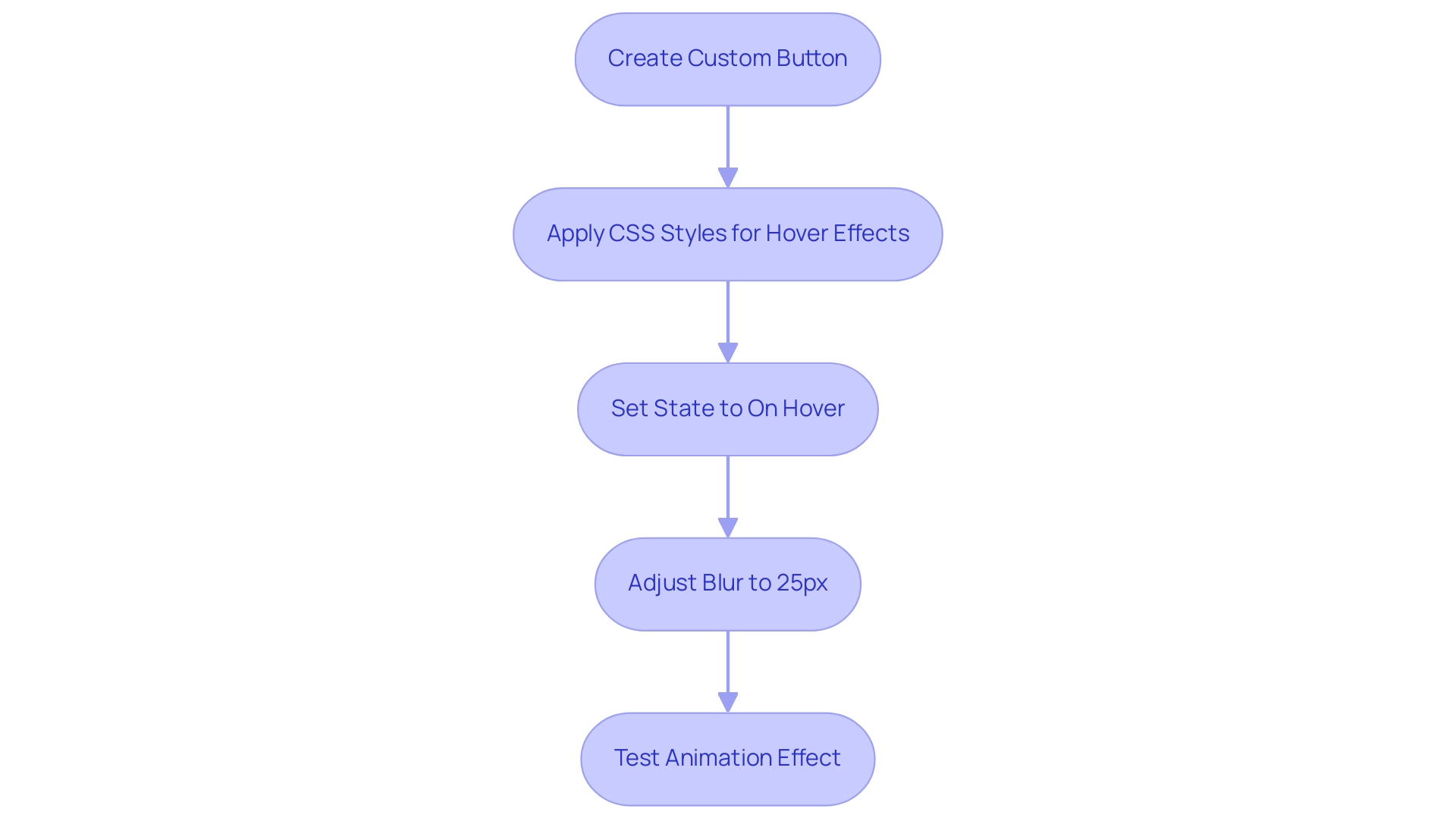

Best Practices for Using Conditional Formatting in Power BI

- Maintain Consistency: Establishing a uniform color scheme and design rules across all visuals is vital for creating a cohesive report, especially when you’re working towards a polished final product in your 3-Day Power BI Sprint. Consistency aids user understanding, enabling stakeholders to interpret information more effectively.

- Limit Overuse: While conditional formatting can be a powerful tool, excessive use can clutter your visuals and overwhelm users. Apply formatting strategically to emphasize critical data points, ensuring that the most important information stands out without creating confusion. This approach enhances the actionable insights that Business Intelligence aims to deliver.

- Test for Accessibility: Consider the accessibility of your color choices to accommodate all users, including those with color vision deficiencies. For instance, the feedback from DataRich, a new member, highlights the importance of inclusive design: ‘As someone who is partially color-blind, I appreciate the mention of that.’ This insight emphasizes the need for careful color choice in your reports to maximize the effectiveness of your BI tools.

- Document Your Rules: Keeping a comprehensive record of the conditional formatting rules you apply is essential for maintaining consistency, especially when updating reports. This practice not only enhances operational efficiency but also helps users navigate dashboards that rely on copied conditional formatting. For instance, sorting by axis can help users quickly find specific categories within many options. The case study titled ‘Help the User’ illustrated that supportive features such as pop-ups and documentation greatly enhance user confidence and effectiveness. Also remember that pie charts are most effective when there are fewer than eight categories, which can guide your visual design choices and ensure that your actionable insights are clearly communicated.

By following these best practices, you can make the most of the fully functional report created during your 3-Day Power BI Sprint. That report can also serve as a template for future projects, ensuring a professional design from the start, particularly when its conditional formatting is copied to new visuals.
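One possible way to combine the accessibility and documentation practices above is to keep the rules in a small table inside the model, so the thresholds and colors are recorded in one place. The sketch below is an assumption-laden illustration: the table name `Formatting Rules`, its columns, the thresholds, and `Sales[Amount]` are hypothetical, and the colors are taken from the Okabe-Ito palette, which is commonly recommended for readers with color vision deficiencies. The two definitions are created separately, one as a calculated table and one as a measure.

```dax
-- Calculated table (create via New table): one row per documented rule.
Formatting Rules =
DATATABLE (
    "Rule", STRING,
    "MinValue", INTEGER,
    "Color", STRING,
    {
        { "Low", 0, "#D55E00" },
        { "Medium", 50000, "#E69F00" },
        { "High", 100000, "#0072B2" }
    }
)

-- Measure (create via New measure): picks the color of the highest rule
-- whose MinValue does not exceed the current value.
Rule Color =
VAR CurrentValue = SUM ( Sales[Amount] )    -- hypothetical table and column
VAR MatchingRule =
    TOPN (
        1,
        FILTER ( 'Formatting Rules', 'Formatting Rules'[MinValue] <= CurrentValue ),
        'Formatting Rules'[MinValue], DESC
    )
RETURN
    IF (
        ISBLANK ( CurrentValue ),
        BLANK (),
        MAXX ( MatchingRule, 'Formatting Rules'[Color] )
    )
```

Because the rules live in a table, they can be shown on a documentation page of the report and edited without touching each visual.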

Conclusion

Harnessing the power of conditional formatting in Power BI is essential for transforming raw data into meaningful insights. By applying visual cues that highlight trends and critical metrics, organizations can create reports that not only engage stakeholders but also drive informed decision-making. The step-by-step guide provided offers a clear pathway to effectively copy and apply conditional formatting, while troubleshooting tips ensure that users can navigate common challenges with ease.

Advanced techniques, such as utilizing DAX for dynamic formatting and integrating color gradients, further enhance the storytelling capabilities of Power BI reports. Adhering to best practices—like maintaining consistency, limiting overuse, and testing for accessibility—ensures that the visuals remain clear and impactful. Documenting formatting rules also facilitates ongoing improvements and streamlines future reporting efforts.

As Power BI continues to evolve, mastering conditional formatting will empower organizations to elevate their data visualization efforts. By embracing these strategies, users can unlock the full potential of their data, ultimately fostering a culture of data-driven decision-making that can lead to substantial operational efficiencies and competitive advantages. Now is the time to implement these insights and transform how data narratives are communicated within organizations.

Overview:

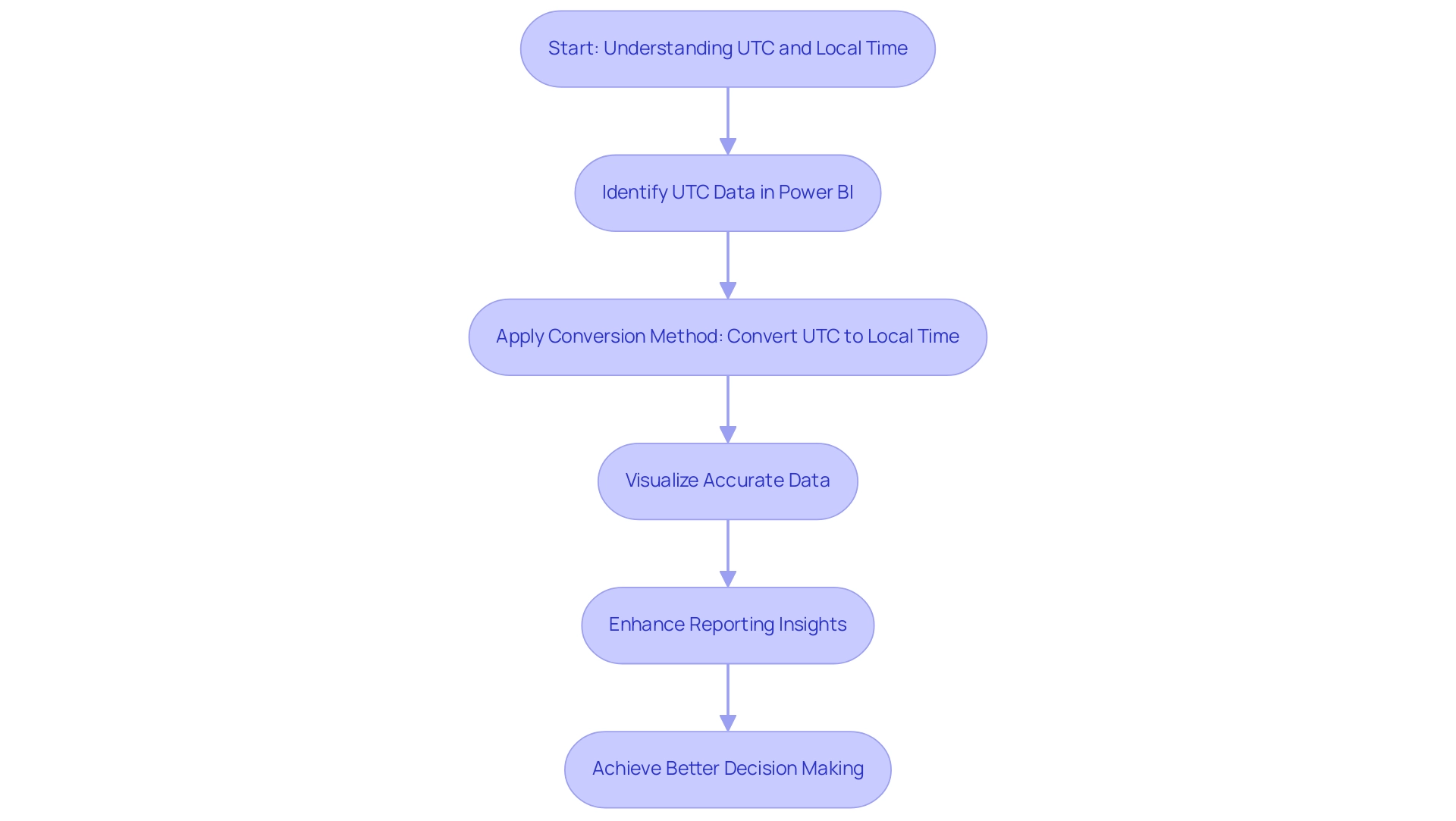

To convert UTC to local time in Power BI using DAX, users should follow a systematic step-by-step approach that includes creating a new column and applying the appropriate DAX formula to adjust for local time offsets. The article emphasizes that mastering this conversion process is essential for accurate data analysis and reporting, particularly in light of challenges such as Daylight Saving Time adjustments and the need for precise temporal information to enhance decision-making.

Introduction

In the realm of data analysis, the ability to accurately convert Coordinated Universal Time (UTC) to local time is not just a technical necessity; it’s a strategic advantage. As organizations increasingly rely on tools like Power BI for decision-making, understanding the nuances of time conversion becomes vital to ensure that insights drawn from data are precise and actionable.

The complexities introduced by local time variations and Daylight Saving Time (DST) can hinder effective reporting, leading to potential misinterpretations that impact business outcomes. This article delves into the essential methods and DAX functions required for seamless UTC to local time conversion, while also addressing common challenges faced by professionals in this space.

By mastering these techniques and leveraging automation solutions, organizations can enhance their operational efficiency and harness the full potential of their data analytics capabilities.

Understanding UTC and Local Time Conversion in Power BI

Coordinated Universal Time (UTC) serves as the global standard for regulating clocks and schedules, remaining consistent throughout the year without seasonal adjustments. This reliability makes UTC an invaluable reference for data analysis, as noted in the Wikipedia page about UTC±00:00. Nevertheless, local times vary between regions and are affected by changes like Daylight Saving Time (DST), complicating data interpretation and creating challenges such as lengthy report generation and inconsistencies in figures.

For professionals utilizing Power BI, it is crucial to convert UTC to local time with DAX so that visualizations accurately reflect the intended moment for analysis and reporting. Rajani Vepa emphasizes that mastering this conversion is foundational for achieving precise insights in your reports and dashboards, ultimately enhancing decision-making processes. Moreover, the historical background of time standards, such as the shift from Greenwich Mean Time (GMT) to UTC, shows how timekeeping has developed and why it matters in data analysis.

By leveraging Business Intelligence and RPA solutions like EMMA RPA and Power Automate, organizations can automate repetitive tasks and address these challenges, improving operational efficiency and driving growth through actionable insights. Failing to extract meaningful insights can leave businesses at a competitive disadvantage, underscoring the urgency of utilizing these tools effectively.

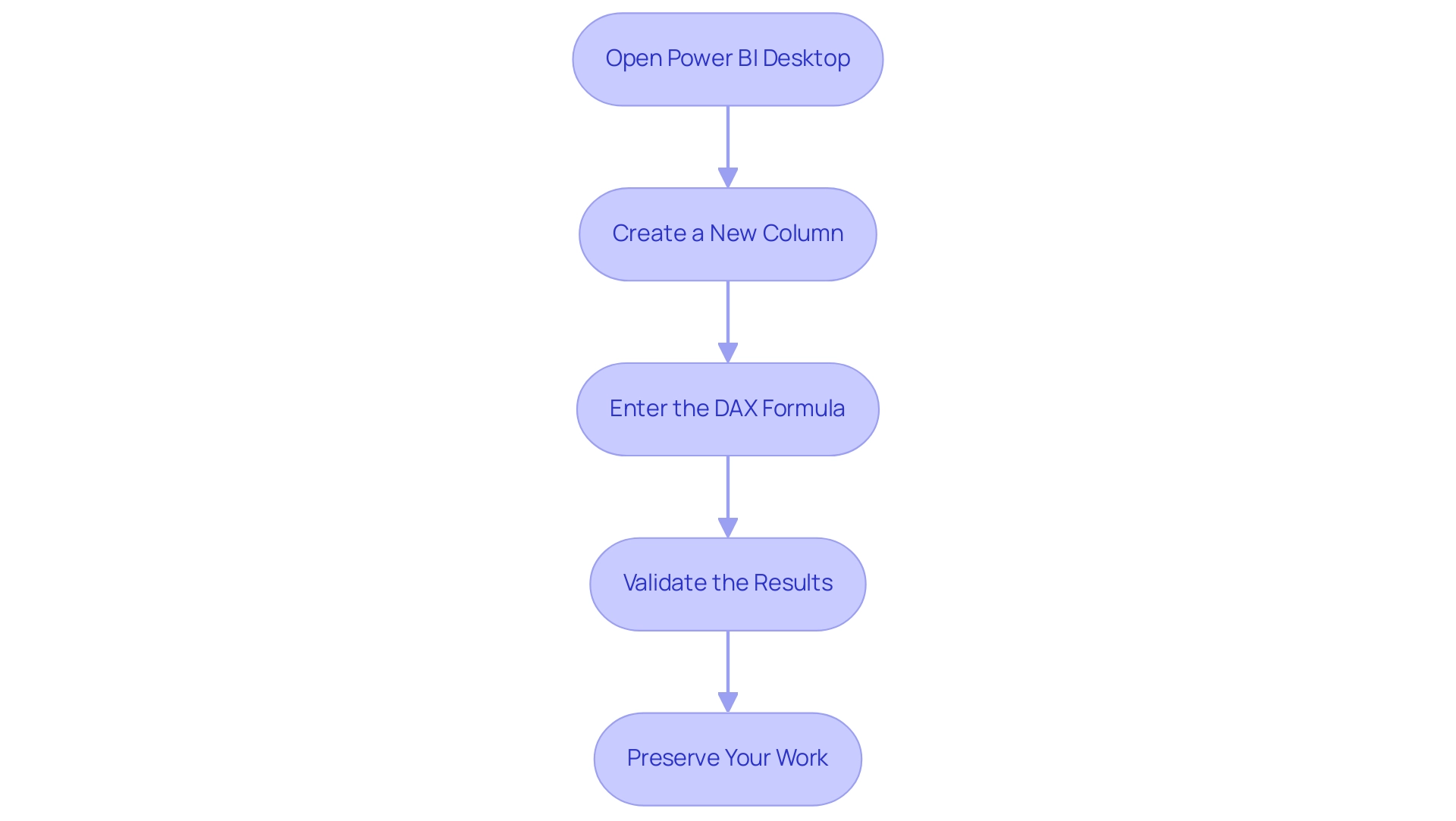

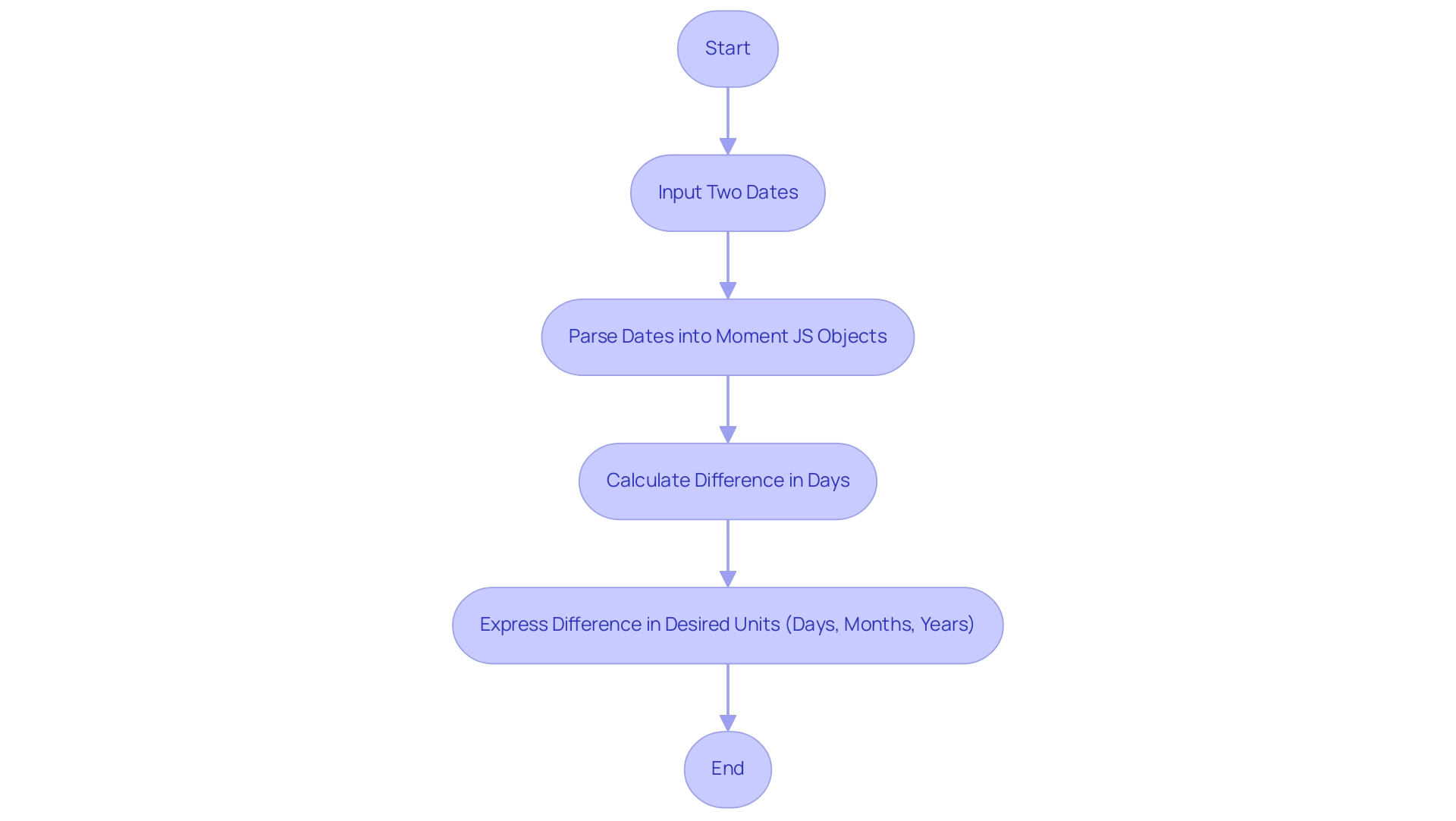

Step-by-Step Guide to Converting UTC to Local Time Using DAX

The procedure for converting UTC to local time with DAX is simple yet essential, and it strengthens your analysis, particularly in light of possible delays of up to 24 hours in importing new usage information. Prompt data analysis is crucial for operational efficiency, so follow this step-by-step guide to ensure precision in your reporting:

- Open Power BI Desktop: Start your Power BI application and load the dataset that contains your UTC timestamps.

- Create a New Column: Navigate to the Data view, select your target table, and then click on ‘Modeling’ followed by ‘New Column’.

- Enter the DAX Formula: Input the following DAX formula to convert UTC to local time:

```dax
LocalTime = [UTCDateTime] + TIME(OffsetHours, 0, 0)
```

Here, replace `OffsetHours` with the number of hours your local time zone is ahead of UTC. `TIME` expects a non-negative hour, so for zones behind UTC, subtract the `TIME(...)` value instead of adding it.

- Validate the Results: Once the new column is created, review it to confirm that the conversion has been executed correctly. Visualizing this information in your reports can help ensure its accuracy. Remember, to see all workspace usage metrics, you may need to remove the default filter applied to the report.

- Preserve Your Work: Ultimately, remember to store your Power BI file to protect the modifications you’ve made.
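The fixed-offset step above can also be written with a variable, which keeps the offset in one place and handles zones behind UTC without switching between adding and subtracting. This is a minimal sketch: the table name `Events` and the offset of -5 hours are assumptions chosen for illustration.

```dax
LocalTime =
VAR OffsetHours = -5    -- assumed example: 5 hours behind UTC; replace with your zone's offset
RETURN
    -- DAX stores date/time values as days, so one hour is 1/24 of a day
    Events[UTCDateTime] + OffsetHours / 24
```

With this form, a UTC value of 12:00 becomes 07:00 local time for an offset of -5, and 14:00 for an offset of +2.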

By mastering this process, you enhance your analytical toolkit, enabling more effective decision-making based on precise local temporal information. This mastery is crucial, particularly considering the typical challenges organizations encounter, such as spending more time on creating reports than utilizing insights from Power BI dashboards, resulting in confusion and mistrust in information. To enhance operational efficiency, consider incorporating RPA solutions to automate the report creation process, allowing your team to focus on analysis rather than information compilation.

Additionally, implementing governance strategies can help ensure consistency across reports, addressing one of the key challenges highlighted in the context. As illustrated by the case study on report usage metrics with Private Links, organizations face challenges in data reporting when metrics are not accurately captured. As Igor Plotnikov, a BI/Data Engineer, noted, ‘Hope I managed to expand your arsenal in tackling this challenge.’

Embracing these techniques positions you for success in your operational efficiency goals.

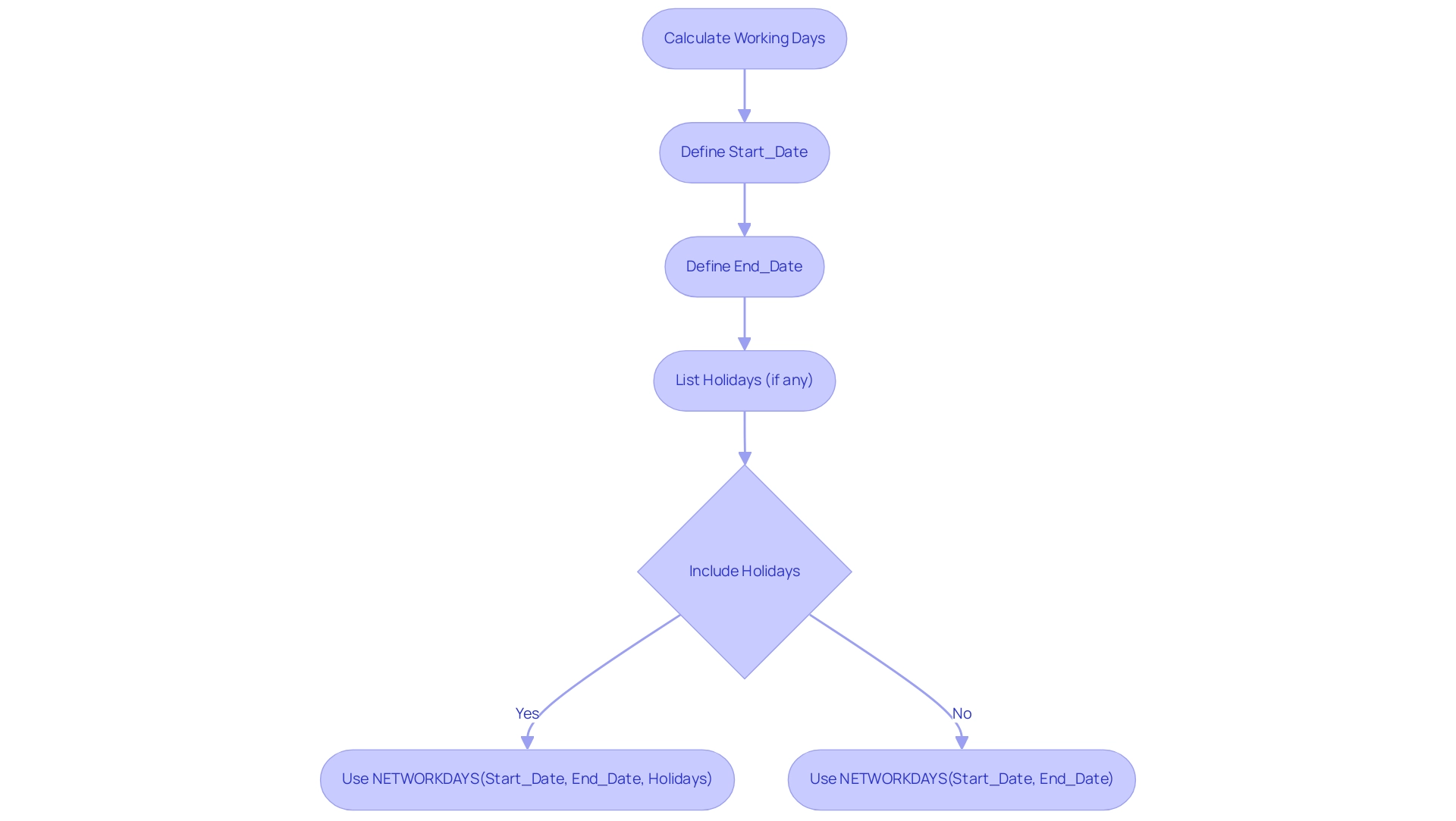

Navigating Daylight Saving Time in Time Conversion

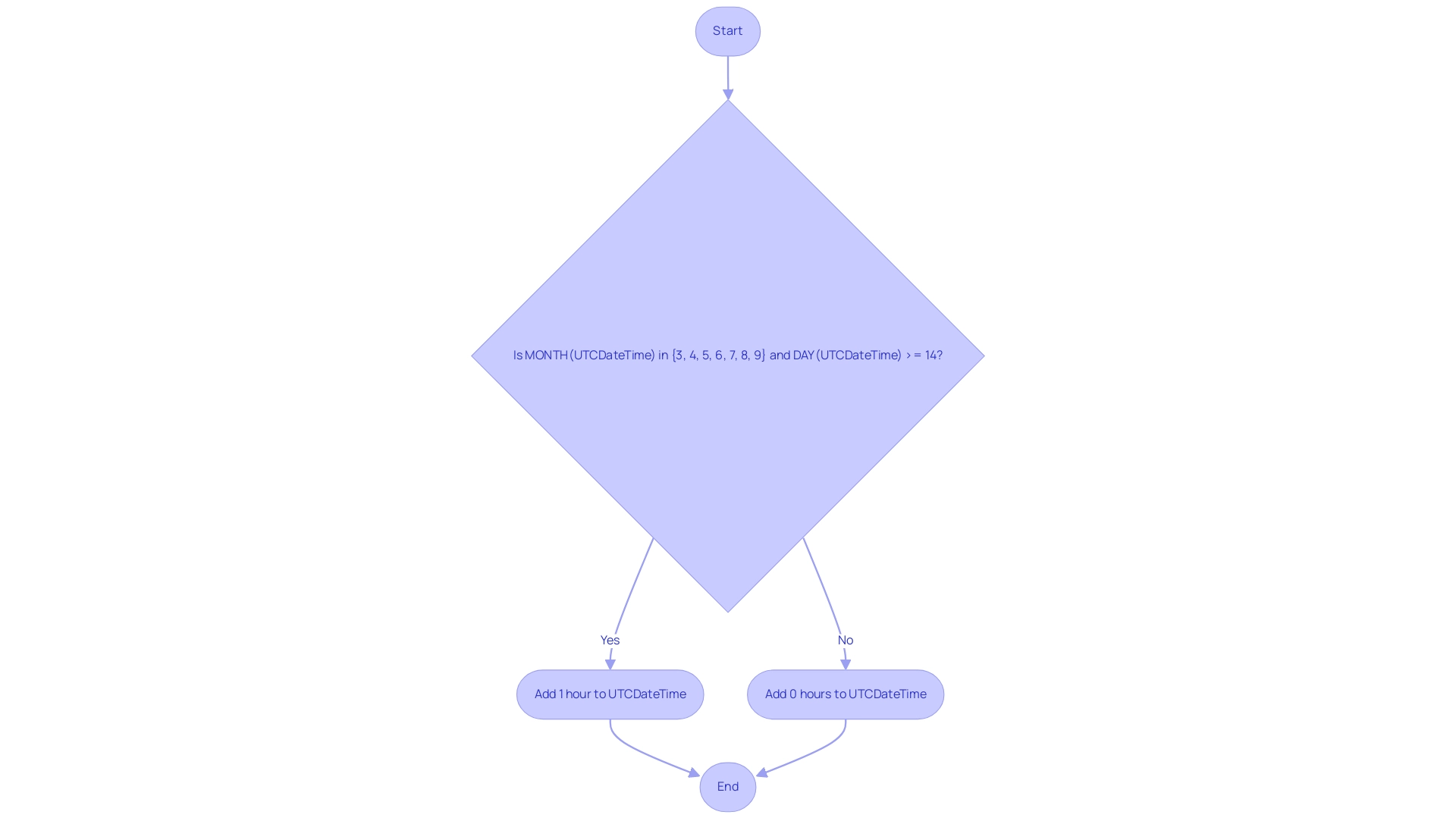

Daylight Saving Time (DST) complicates the conversion of UTC to local time in DAX, because the required offset shifts during the year. To handle this in your DAX calculations, use conditional logic. The following DAX formula illustrates a simple adjustment for DST:

```dax
LocalTime =
IF (
    MONTH ( [UTCDateTime] ) IN { 4, 5, 6, 7, 8, 9 }
        || ( MONTH ( [UTCDateTime] ) = 3 && DAY ( [UTCDateTime] ) >= 14 ),
    [UTCDateTime] + TIME ( OffsetHours + 1, 0, 0 ),  -- DST: one extra hour
    [UTCDateTime] + TIME ( OffsetHours, 0, 0 )       -- standard time
)
```

This formula treats dates from March 14 through September 30 as falling within the DST period and adds one hour to the offset for them. It is only an approximation: actual DST start and end dates differ by region and year, so adjust the months and days to match the rules that apply to your data.
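A month-based check like the one above only approximates the DST window. As a hedged sketch of a more precise variant, the calculated column below assumes United States rules (DST begins at 2:00 local time on the second Sunday in March and ends at 2:00 local time on the first Sunday in November) and a standard offset of -5 hours. The table name `Events` and the offset are hypothetical, and other regions need different boundary dates.

```dax
LocalTimeDst =
VAR UtcValue = Events[UTCDateTime]        -- hypothetical table and column
VAR StdOffsetHours = -5                   -- assumed standard offset (US Eastern)
VAR Yr = YEAR ( UtcValue )
VAR Mar1 = DATE ( Yr, 3, 1 )
VAR Nov1 = DATE ( Yr, 11, 1 )
-- WEEKDAY ( d, 1 ) returns 1 for Sunday through 7 for Saturday
VAR SecondSundayMar = Mar1 + MOD ( 8 - WEEKDAY ( Mar1, 1 ), 7 ) + 7
VAR FirstSundayNov = Nov1 + MOD ( 8 - WEEKDAY ( Nov1, 1 ), 7 )
-- Express both boundaries in UTC: 2:00 standard time at the start,
-- 2:00 daylight time (1:00 standard time) at the end
VAR DstStartUtc = SecondSundayMar + ( 2 - StdOffsetHours ) / 24
VAR DstEndUtc = FirstSundayNov + ( 1 - StdOffsetHours ) / 24
VAR IsDst = UtcValue >= DstStartUtc && UtcValue < DstEndUtc
RETURN
    UtcValue + ( StdOffsetHours + IF ( IsDst, 1, 0 ) ) / 24
```

Comparing against boundaries expressed in UTC avoids ambiguity around the repeated hour when clocks move back. For 2024, these variables resolve to March 10 and November 3, which match the published US dates.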

As noted by Dr. Adam Spira, “fortunately, most people can acclimate to the time change within a week or so,” indicating that while adjustments are necessary, they are manageable. Furthermore, comprehending the cost linked to the DST shift, as projected in the S30 Table, emphasizes the financial consequences of these modifications in reporting. The S30 Table contains a file size of 3.8KB, emphasizing the importance of managing information efficiently when dealing with DST.

A practical example can be seen in the case study of Hawaii, which does not observe DST; this study found no significant impacts on mortality rates, illustrating the complexities and effects of DST on analysis. This understanding is essential, particularly when considering the implications of DST on information accuracy and reporting efficiency. In a rapidly evolving AI landscape, embracing Robotic Process Automation (RPA) can streamline these manual data handling challenges, including automating the DST adjustment process and enhancing report creation efficiency, thereby allowing your team to focus on strategic, value-adding activities.

Furthermore, by automating these processes, organizations can mitigate the financial impacts associated with DST adjustments, ultimately driving better operational efficiency.

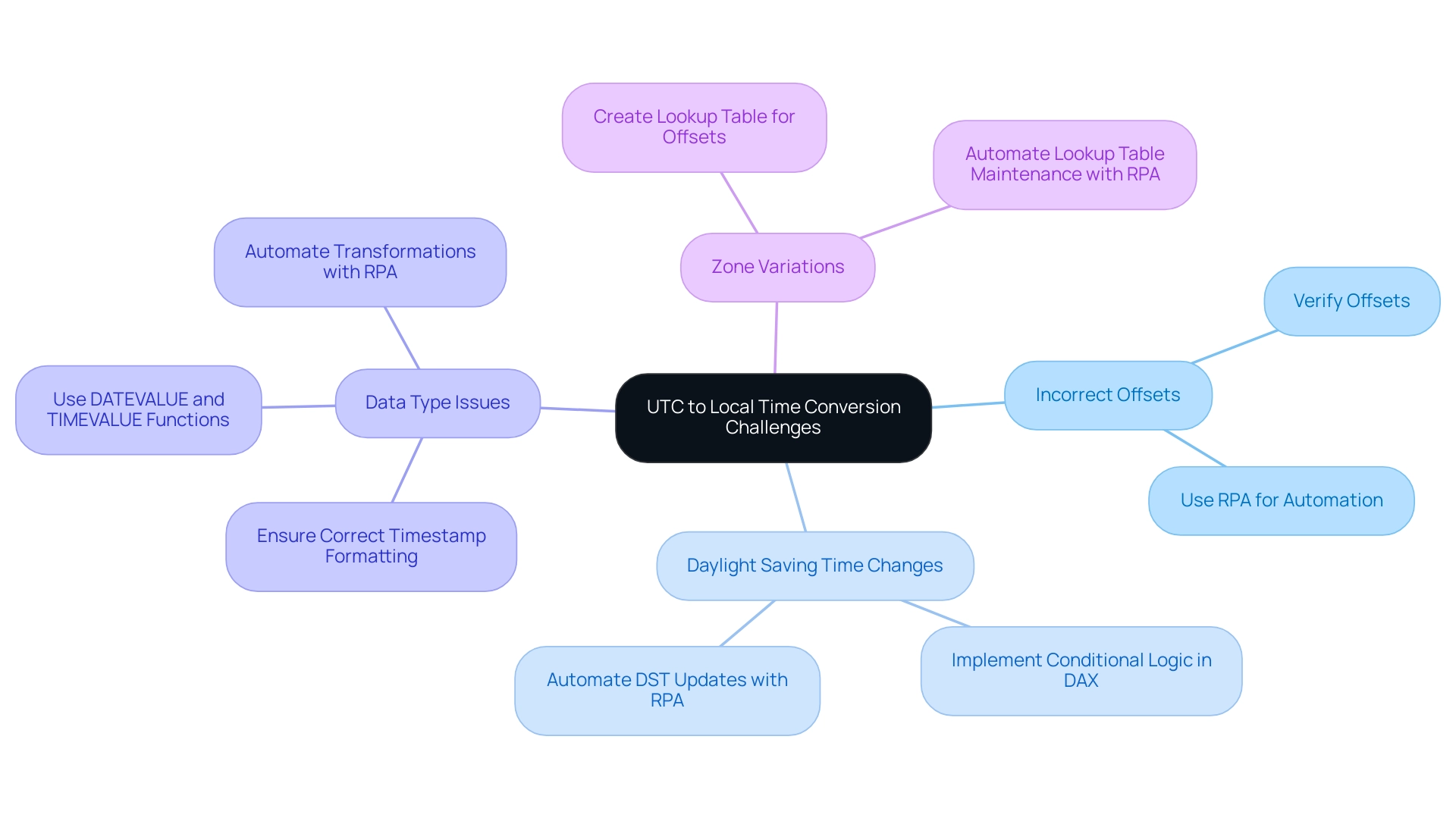

Common Challenges and Solutions in UTC to Local Time Conversion

Converting UTC to local time might appear simple, but it often presents several challenges that require careful consideration:

- Incorrect Offsets: Always check the offset hours for accuracy. Use a reliable source to verify your local zone’s current offset from UTC, as it can change with DST and with local rule changes. A flawed time conversion can cause substantial problems; for example, with a bounce rate of 50.9%, ensuring accurate data analysis is essential to maintain user engagement. Robotic Process Automation (RPA) can assist in automating these checks by integrating with dependable time zone databases, greatly minimizing human error and enhancing operational efficiency.

- Daylight Saving Time (DST) Changes: For regions that observe DST, your DAX formulas need to account for these seasonal adjustments. Implement conditional logic within your calculations to adjust offsets automatically based on the time of year, ensuring accurate UTC to local time conversion. The significance of accuracy in these calculations is emphasized by the fact that 48% of users abandon carts due to unexpected costs, highlighting the need for precise information management to avoid operational pitfalls. RPA can facilitate this process by automatically updating and applying DST rules across all relevant datasets, ensuring consistency and reliability.

- Data Type Issues: Confirm that your UTC timestamps are stored as date/time values. If timestamps are stored as text, use the `DATEVALUE` or `TIMEVALUE` functions to convert them before applying the necessary adjustments, preventing potential errors in your analysis. Authenticity in information is as crucial as in reviews, where focusing on quality can yield better outcomes than simply accumulating a large volume of information. Automating these transformations through RPA not only improves data integrity but also optimizes your workflow, enabling your team to concentrate on more strategic tasks.

- Zone Variations: When handling data across several regions, it can be advantageous to establish a lookup table that links each location with its corresponding UTC offset and DST rules. This makes the conversion more manageable for diverse datasets. As VWO is relied upon by numerous top brands, ensuring precise time conversion can improve the dependability of your analysis, directly affecting operational efficiency. By implementing RPA, you can automate the upkeep of this lookup table, ensuring it remains current without manual intervention and freeing your team to focus on more strategic initiatives.
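The lookup-table approach described above could look like the following calculated column. This is a sketch under assumptions: `TimeZones` is a hypothetical table with one row per location and a `UtcOffsetHours` column, and `Events` is a hypothetical fact table with `Location` and `UTCDateTime` columns. It covers fixed offsets only; DST rules would need additional columns and logic.

```dax
LocalTime =
VAR OffsetHours =
    LOOKUPVALUE (
        TimeZones[UtcOffsetHours],              -- value to return
        TimeZones[Location], Events[Location]   -- match on the row's location
    )
RETURN
    IF (
        ISBLANK ( OffsetHours ),
        BLANK (),                               -- unknown location: leave empty rather than guess
        Events[UTCDateTime] + OffsetHours / 24
    )
```

Returning a blank for unmatched locations makes gaps in the lookup table visible in the report instead of silently showing UTC as if it were local time. If the two tables are related in the model, `RELATED` is an alternative to `LOOKUPVALUE`.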

As you address these challenges, keep in mind that accuracy and dependability are essential for effective conversion, directly influencing the quality of your information analysis. Embracing RPA in this context not only enhances efficiency but also empowers your team to focus on strategic initiatives that drive business growth.

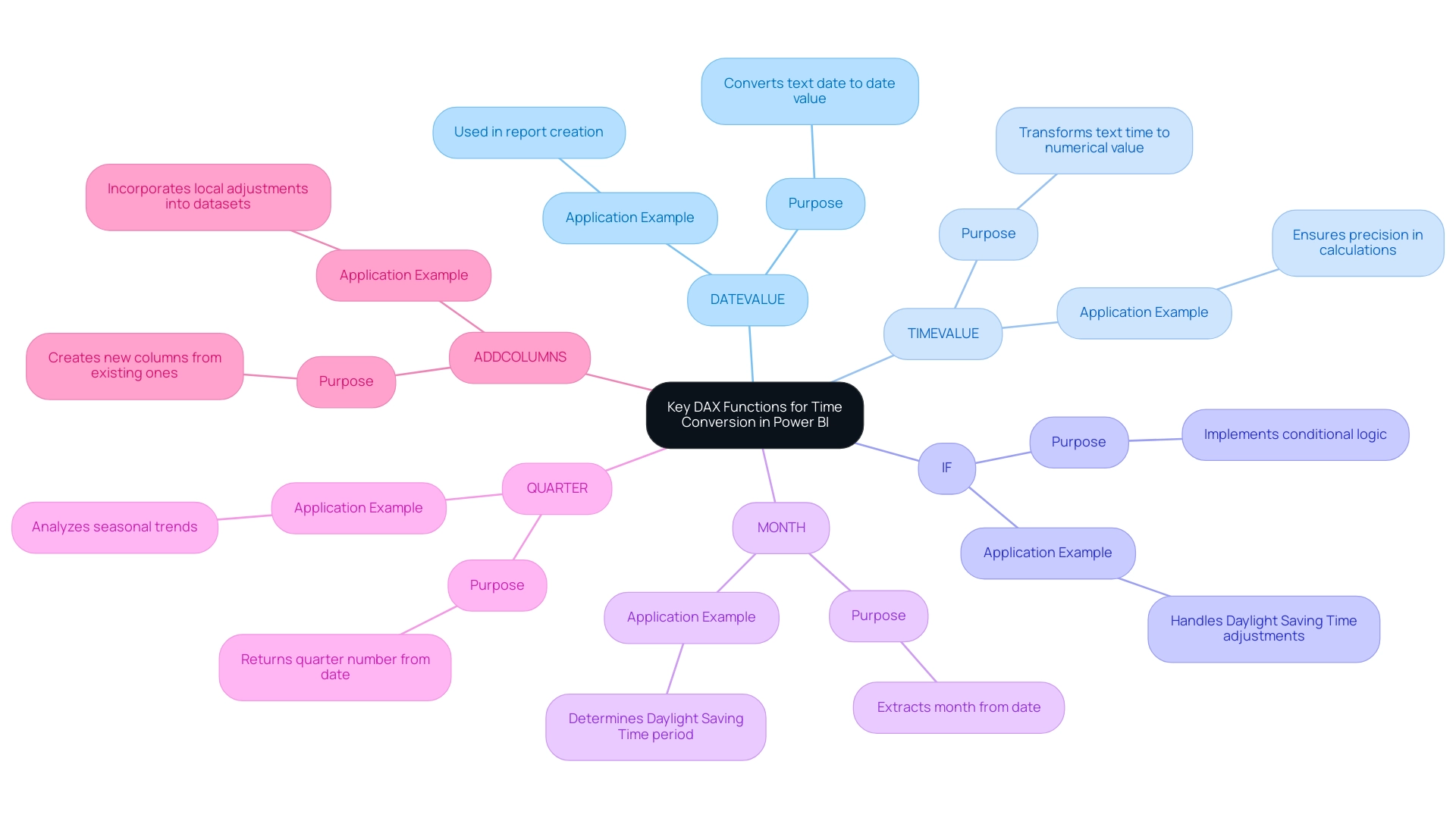

Key DAX Functions for Time Conversion in Power BI

To successfully convert UTC to local time in Power BI, it is essential to understand and utilize several DAX functions that facilitate accurate time calculations, ultimately enhancing your operational efficiency and data-driven insights:

- DATEVALUE: This function is invaluable as it converts a date presented in text format into a proper date value, ensuring that subsequent calculations are performed correctly and reducing time spent on report creation.

- TIMEVALUE: Likewise, TIMEVALUE converts a time expressed as text into a proper time value, which is essential for precise temporal calculations and for resolving inconsistencies in data.

- IF: The IF function allows for the implementation of conditional logic, enabling you to accommodate variations such as Daylight Saving Time adjustments effectively, which can often lead to inconsistencies in reporting.

- MONTH: This function extracts the month from a date, helping determine whether a particular date falls within the Daylight Saving Time period, thus providing clarity and actionable guidance for stakeholders.

- QUARTER: The QUARTER() function can also be beneficial for understanding seasonal trends in your data, which may influence temporal adjustments and overall analytical insights.

- ADDCOLUMNS: By utilizing ADDCOLUMNS, you can create new columns derived from existing ones, making it easy to incorporate local adjustments directly into your dataset, streamlining your reporting process.
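As an example of the DATEVALUE and TIMEVALUE entries above, the calculated column below rebuilds a date/time value from a text timestamp. It assumes a hypothetical column `Events[UTCText]` whose values always follow the fixed pattern `YYYY-MM-DD HH:MM:SS`; other layouts would need different `LEFT` and `MID` positions, and the parsing of date text can depend on the model's locale.

```dax
UTCDateTime =
VAR DatePart = LEFT ( Events[UTCText], 10 )      -- e.g. "2024-03-10"
VAR TimePart = MID ( Events[UTCText], 12, 8 )    -- e.g. "07:30:00"
RETURN
    DATEVALUE ( DatePart ) + TIMEVALUE ( TimePart )
```

Once the column holds a true date/time value, the offset and DST calculations can be applied to it directly.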

For example, consider a scenario where you calculate the total sales amount for the Western region in January 2022 using a DAX formula. This practical application showcases how these functions can be utilized effectively in real-world scenarios, enhancing your ability to leverage insights from Power BI dashboards.
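A minimal sketch of that scenario is shown below. The table `Sales` and its columns `Amount`, `Region`, and `OrderDate`, as well as the region label "Western", are hypothetical names used only for illustration.

```dax
Western Sales Jan 2022 =
CALCULATE (
    SUM ( Sales[Amount] ),
    Sales[Region] = "Western",
    -- half-open date range keeps every moment of January 31 in scope
    Sales[OrderDate] >= DATE ( 2022, 1, 1 )
        && Sales[OrderDate] < DATE ( 2022, 2, 1 )
)
```

If `OrderDate` is stored in UTC, a sale made late on January 31 local time may fall into February, which is one reason to convert timestamps before filtering by date.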

Incorporating Robotic Process Automation (RPA) alongside these DAX functions can further enhance operational efficiency by automating repetitive reporting tasks, allowing your team to concentrate on analysis rather than input. Mastering these functions not only lets you convert UTC to local time with greater precision but also enhances your overall analysis capabilities, addressing the common challenge of report creation.

Additionally, having a robust governance strategy in place is crucial to mitigate inconsistencies. This strategy ensures that data is accurate and reliable, providing stakeholders with clear, actionable guidance based on well-governed data.

As Sovan Pattnaik, a Technical Specialist in Data and Analytics, notes, “This is very helpful. Thank you so much!” This sentiment reflects the growing acknowledgment of the significance of these functions in enhancing user proficiency with DAX, particularly in the context of conversions.

With the recent updates in DAX functions for 2024, taking the time to familiarize yourself with these tools will significantly elevate your operational efficiency in Power BI.

Conclusion

Mastering the conversion of Coordinated Universal Time (UTC) to local time in Power BI is more than just a technical skill; it is a cornerstone for effective data analysis and decision-making. Understanding the nuances of UTC, local time variations, and Daylight Saving Time (DST) is essential for ensuring that reports are accurate and insights are actionable. By following the outlined step-by-step guide and utilizing key DAX functions, professionals can enhance their reporting capabilities and overcome common challenges associated with time conversion.

The integration of Robotic Process Automation (RPA) into these processes provides a significant advantage, enabling organizations to automate repetitive tasks, reduce human error, and maintain data integrity. This not only streamlines workflows but also allows teams to focus on strategic initiatives that drive business growth. The case studies and examples highlighted throughout the article underscore the importance of accuracy and reliability in time conversion, directly impacting the quality of data analysis and operational efficiency.

As organizations embrace these techniques and tools, they position themselves to leverage their data analytics capabilities fully. The combination of mastering DAX functions, implementing effective governance strategies, and automating processes through RPA creates a robust framework for successful time conversion. This is a crucial step toward making informed decisions that propel operational efficiency and enhance overall business performance. Embracing these practices ensures that organizations remain competitive in a data-driven landscape, turning challenges into opportunities for growth and efficiency.

Overview:

The article provides a comprehensive step-by-step guide on performing cluster analysis in Power BI, detailing the importance of clustering methods and their applications in extracting actionable insights from data. It emphasizes that effective clustering, supported by techniques such as K-Means and Hierarchical Clustering, enhances decision-making and operational efficiency, as evidenced by case studies demonstrating significant improvements in business performance.

Introduction

In the realm of data analysis, clustering emerges as a transformative technique that empowers organizations to uncover hidden patterns and relationships within their datasets. By grouping similar data points, companies can drive strategic decisions that enhance operational efficiency and foster growth.

With the increasing complexity of data landscapes, mastering clustering methods such as:

- K-Means

- Hierarchical Clustering

- DBSCAN

in Power BI is not just advantageous; it’s essential.

This article delves into the fundamentals of clustering, providing a comprehensive guide on executing cluster analysis in Power BI, exploring various methods, and outlining best practices for successful implementation. By equipping decision-makers with actionable insights and practical strategies, organizations can harness the full potential of their data, paving the way for informed decision-making and sustained business success.

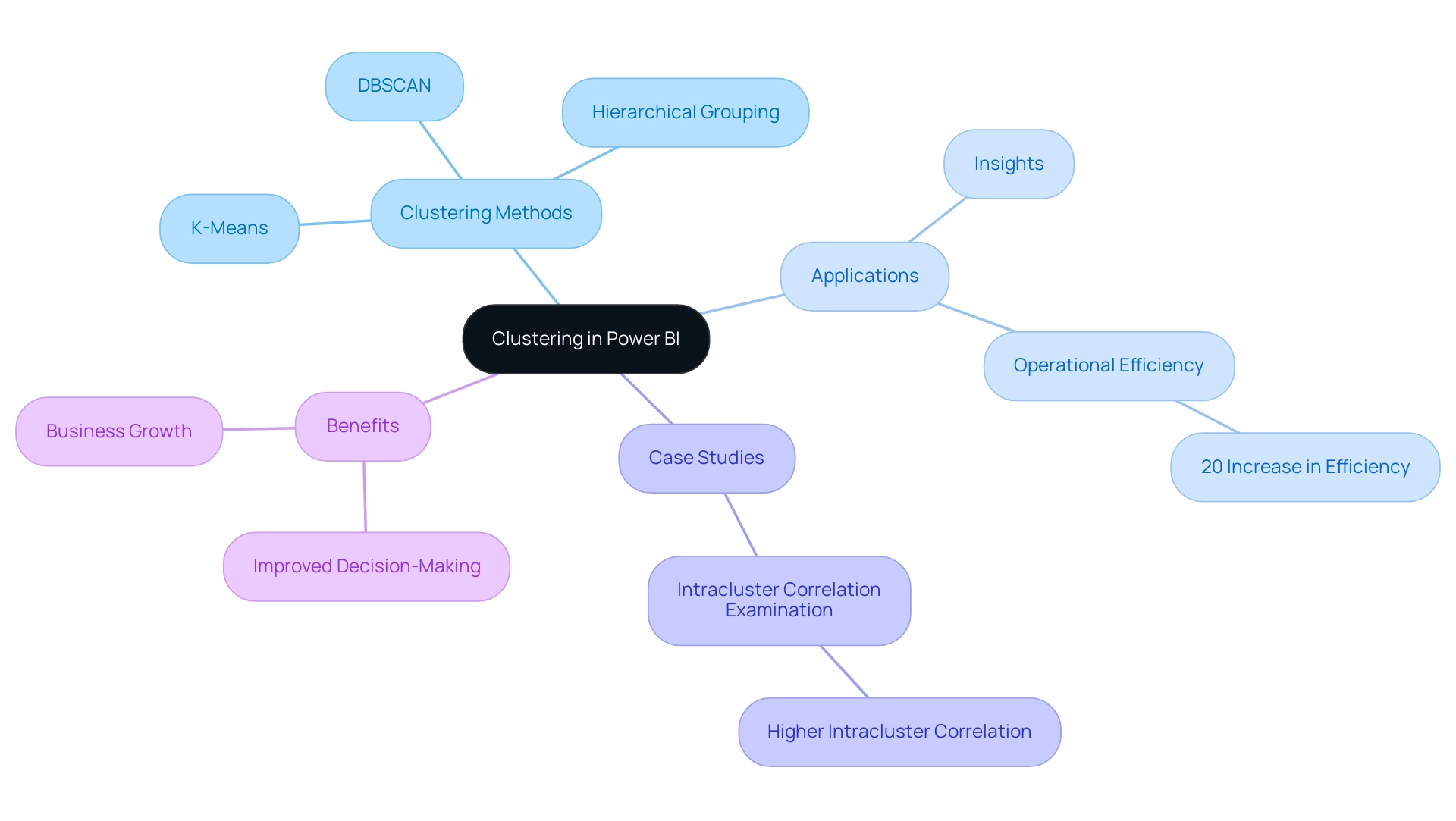

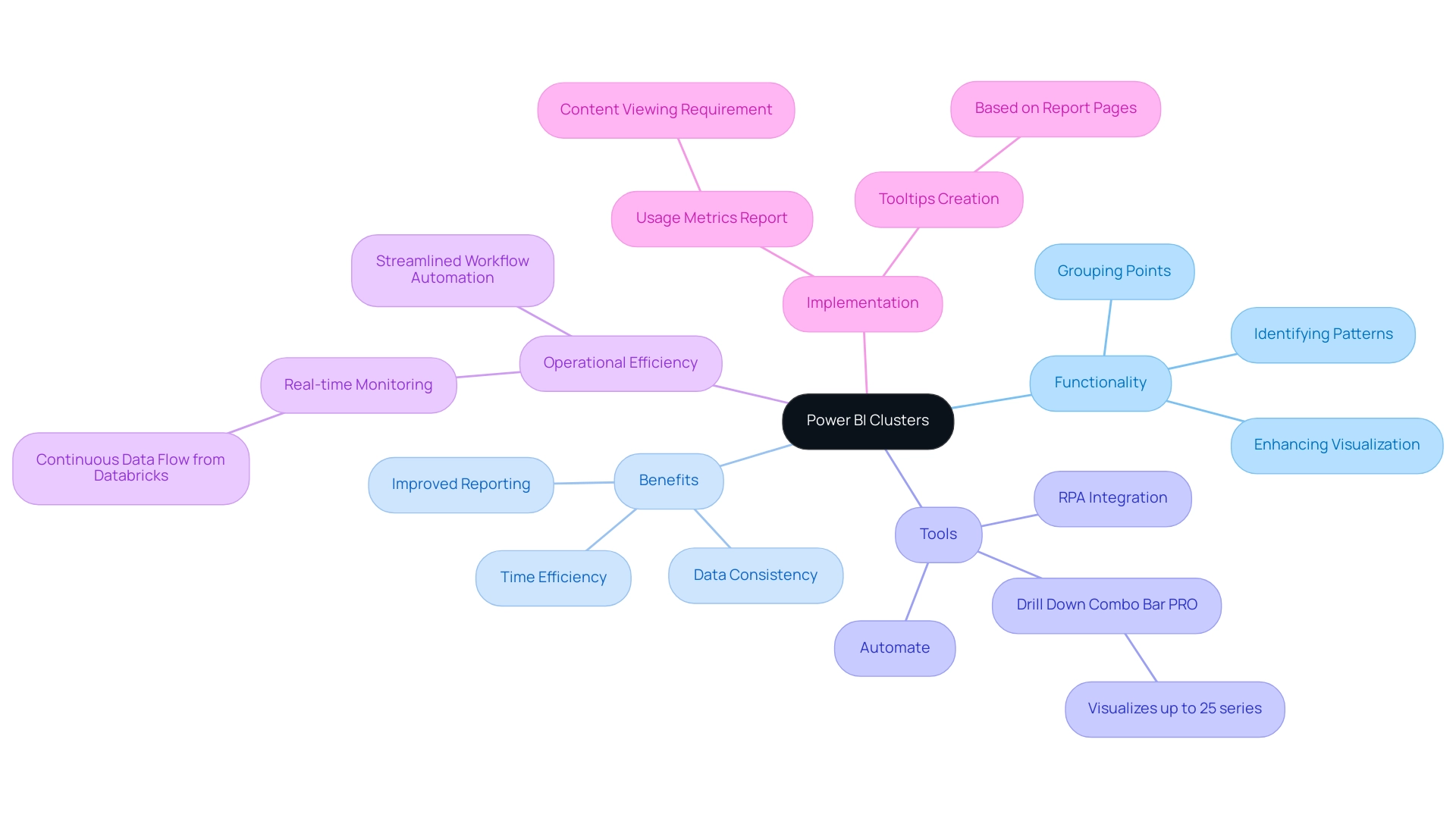

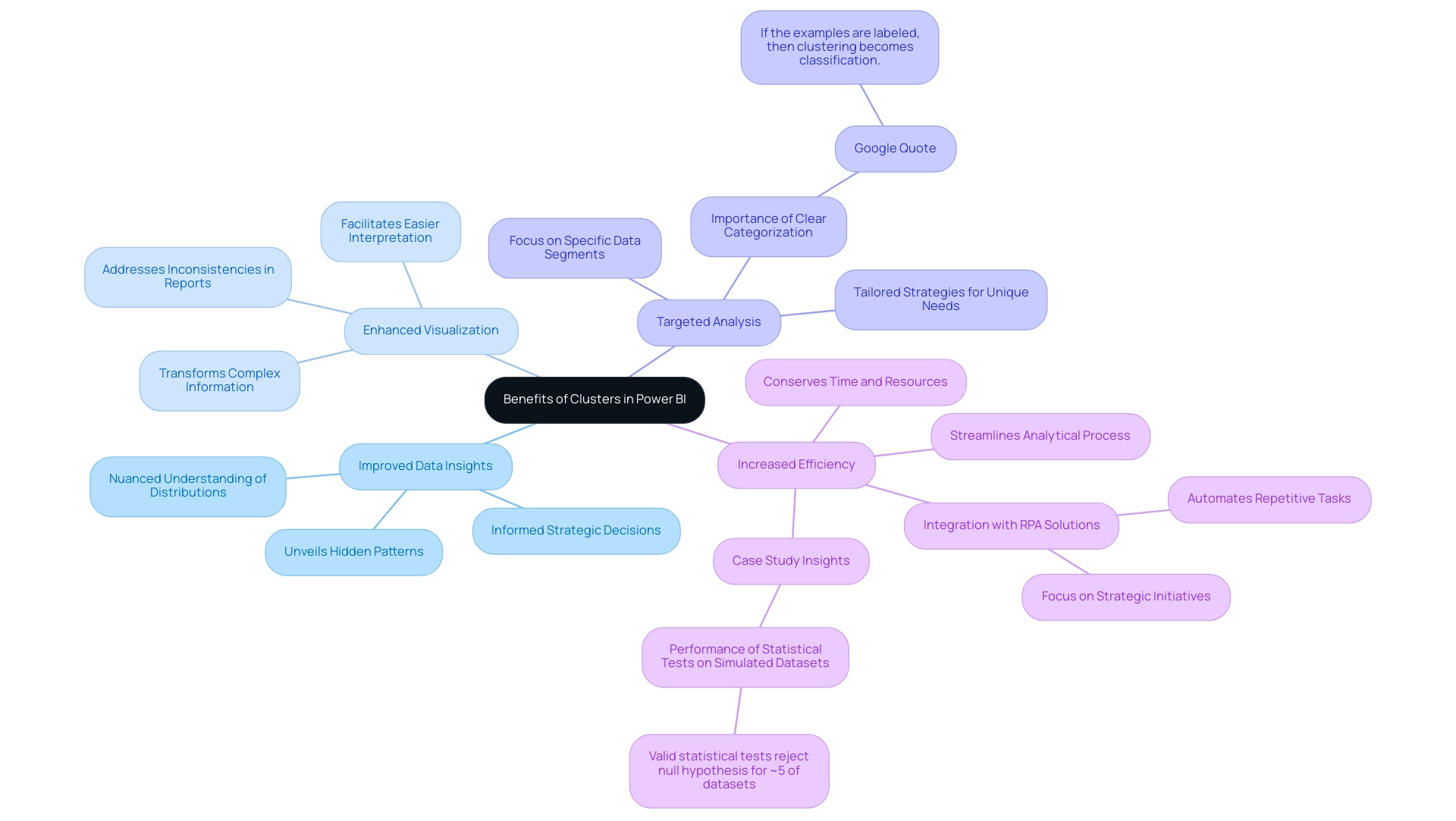

Understanding Clustering: The Basics

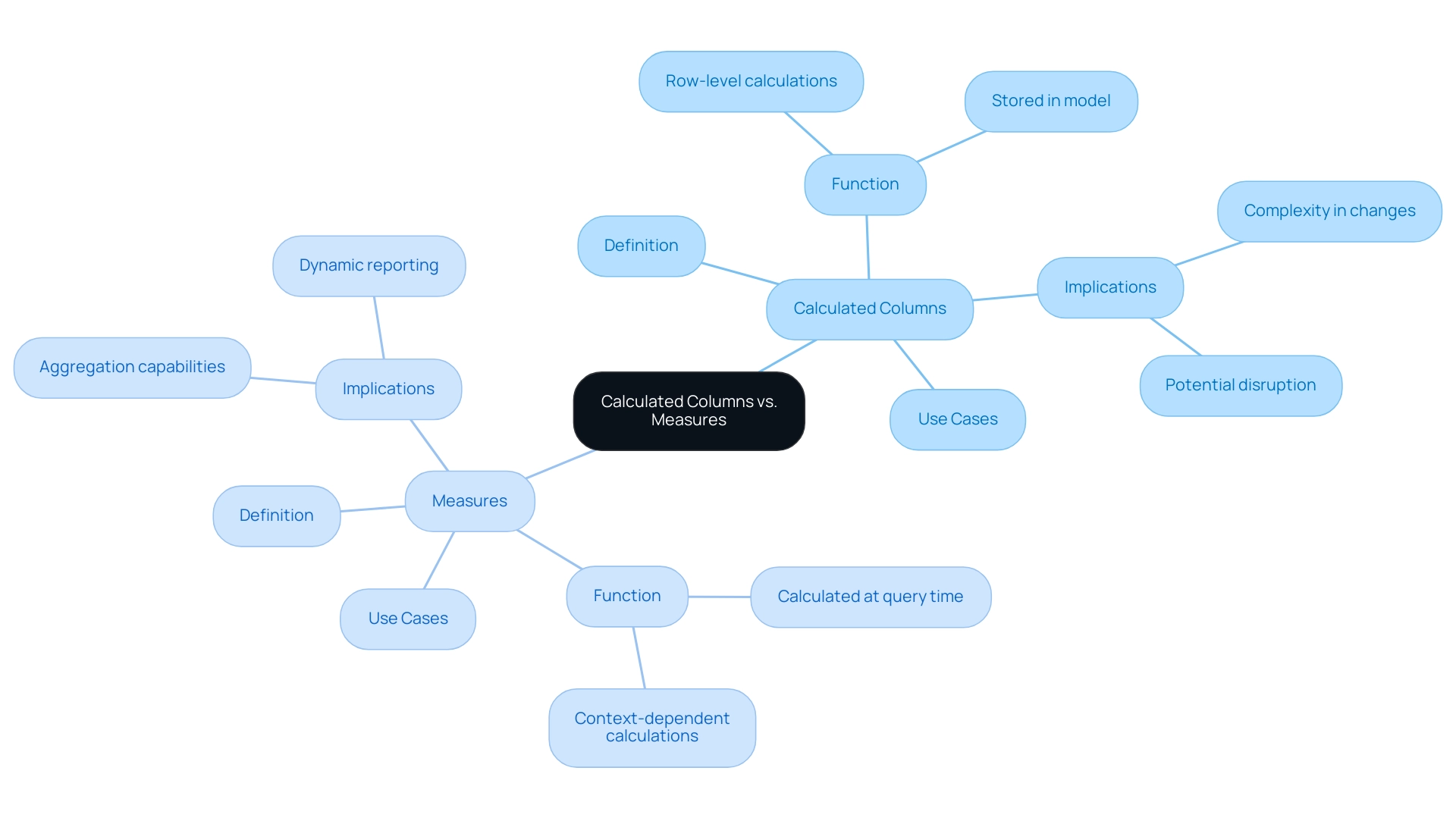

Clustering is a powerful data analysis method that underpins Power BI cluster analysis: it groups similar data points based on specific attributes, enabling organizations to uncover hidden patterns and structures within their datasets. As Zyzanski et al. emphasize, understanding the characteristics of clustered data is crucial for accurate analysis, because it clarifies how points relate to each other within clusters.

By applying clustering methods like K-Means, Hierarchical Clustering, and DBSCAN in Power BI cluster analysis, decision-makers can improve their visualizations, making relationships between data points clearer and more accessible, which is essential for data-driven insights and operational efficiency. Recent progress in clustering methods, especially in 2024, highlights their growing importance in data analysis. For example, the case study titled ‘Intracluster Correlation Examination’ illustrated that a higher intracluster correlation signifies a more pronounced clustering effect, which is essential for understanding the implications of clustering in statistical analysis.

As Saurav Kaushik, a passionate data science enthusiast, notes, “Clustering techniques are vital for uncovering complex relationships and driving insightful choices.”

Furthermore, a testimonial from a leading retail firm showcased how applying cluster analysis in their BI strategy led to a 20% increase in operational efficiency. This foundational technique not only aids in understanding data but also empowers organizations to use Business Intelligence to make informed, insight-driven decisions.

Cluster analysis in Power BI strengthens analytical capabilities, allowing organizations to explore their data with confidence and precision and ultimately fostering business growth.

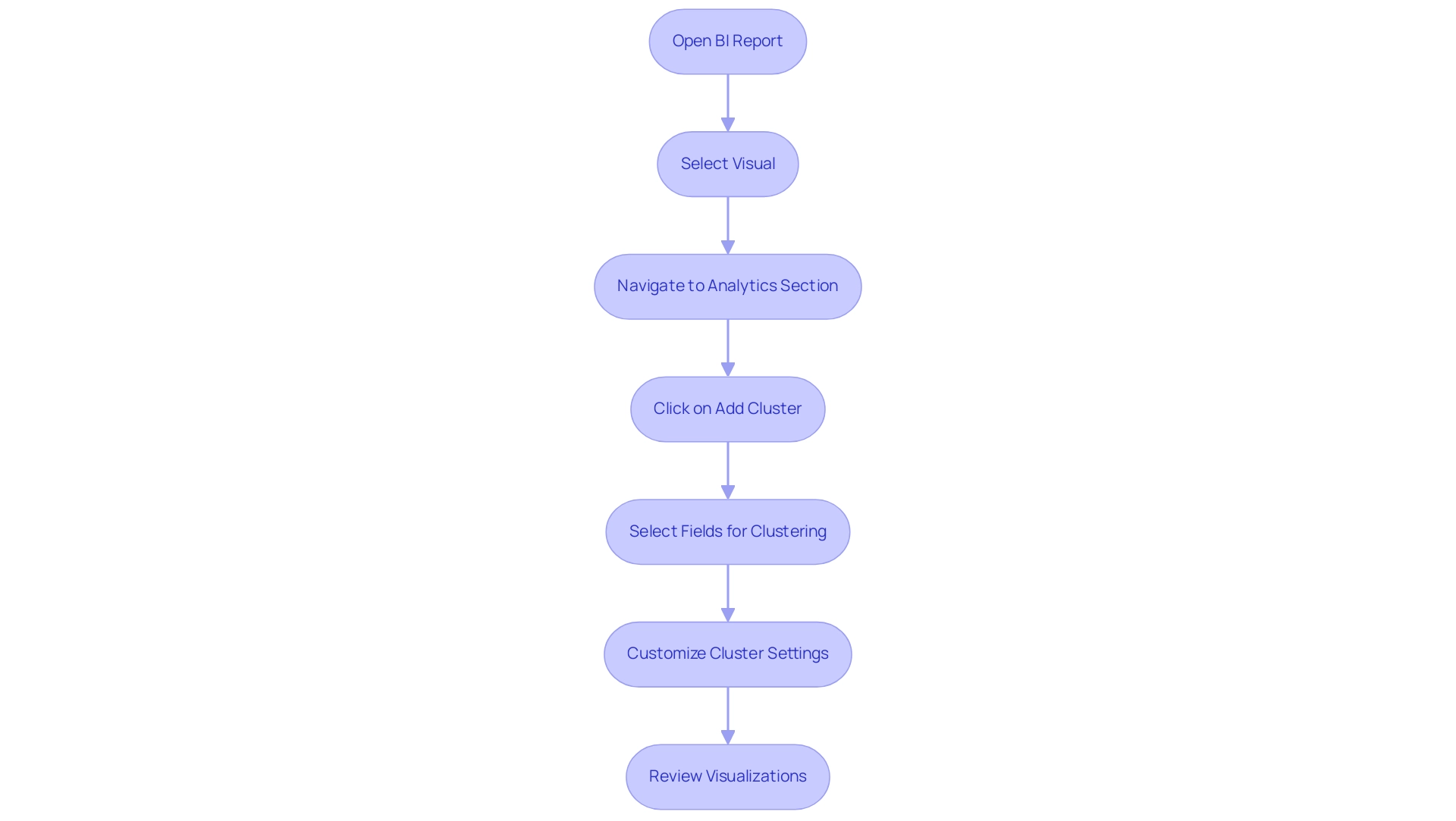

Step-by-Step Guide to Performing Cluster Analysis in Power BI

- Open Power BI Desktop: Launch Power BI Desktop and load your dataset, making sure all the data you need is available. Working from a complete dataset is what lets Business Intelligence support informed decisions rather than leaving you short of actionable information.

- Navigate to the Report View: Switch to Report view, where you create and edit visualizations.

- Select the Visualization Pane: Choose a scatter chart or another visualization that supports clustering.

- Add Data Fields: Drag the relevant fields onto the X and Y axes of your scatter chart, setting the stage for a view that can highlight operational efficiencies.

- Enable Clustering: With the scatter chart selected, open More options (...) on the visual and choose ‘Automatically find clusters’. Power BI then groups your data points based on their similarity, which makes the data easier to interpret. Note that HDBSCAN can effectively identify groupings when all features show a within-feature difference corresponding to Cohen’s values of 0.

- Adjust Cluster Settings: Fine-tune the clustering settings, in particular the number of clusters, which Power BI detects automatically unless you set it yourself. Aligning these settings with your objectives improves the accuracy of your findings and keeps the conclusions relevant and actionable.

- Review the Results: Analyze the clusters generated in your visualization and interpret the insights they provide. As noted by Jason Himmelstein, “Selecting the correct data type is important as it influences how your visual calculations can be used in your charts and affects available formatting options for further customization.” Choosing data types carefully is therefore crucial for effective clustering and visualization.

- Reference Case Study: Consider the case study titled “Statistical Power and Accuracy in Power BI Cluster Analysis,” which defined statistical power in the context of cluster analysis and used silhouette scores to show how effectively various methods detect true subgroups. It illustrates the value of robust methods for obtaining dependable results, especially when the goal is improving operational efficiency through Business Intelligence.

- Integrate RPA Solutions: To further enhance operational efficiency, consider integrating RPA solutions such as EMMA RPA and Power Automate. These tools can automate repetitive tasks and streamline your data processes, so you can concentrate on analysis and decision-making.

- Save Your Report: When you’re satisfied with your analysis, save your Power BI report for future reference and sharing, so your findings are preserved for stakeholders and can inform strategic decisions.

Exploring Clustering Methods in Power BI

Power BI presents a diverse array of clustering methods tailored to meet various analytical needs, playing a crucial role in enhancing Business Intelligence:

- K-Means Clustering: This widely used method partitions larger datasets into K distinct clusters based on distance metrics. Users specify the number of clusters, which makes it especially effective when quick insight is needed. As companies look to data-driven insights for growth, a rising number of publications on K-Means clustering highlights its lasting significance in data analysis. K-Means results can be validated with metrics such as the Adjusted Rand index and normalized mutual information, both used for external validation of clustering results.

- Hierarchical Clustering: This technique constructs a dendrogram, or tree of clusters, offering a nuanced view of the relationships within the data. It is especially useful for smaller datasets where the complexity of relationships warrants detailed examination. Expert insights emphasize its strength in uncovering complex structures that may not be evident through other techniques. Tripti Jain, a Business Analyst at Paytm, observes that preparing data for clustering in Power BI involves key steps such as using Power Query Editor to clean data, addressing missing values and inconsistencies, transforming categorical variables into numerical ones, and aggregating data for different analysis levels.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): DBSCAN stands out for its ability to identify clusters of varying shapes and sizes, making it a robust choice when the dataset contains noise. It is particularly advantageous where traditional clustering techniques falter, because it distinguishes dense regions from noise.

- Python Integration: For those seeking advanced analytical capabilities, Power BI’s integration with Python lets users draw on additional clustering libraries, such as scikit-learn. This makes it possible to implement custom clustering methods for analyses that need more tailored solutions.
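To make the K-Means idea concrete, here is a minimal sketch of the assign-and-update loop in plain Python. It is an illustration only, not Power BI's built-in clustering and not scikit-learn's implementation (which is what you would normally call from a Python script). The function names, the sample points, and the deterministic farthest-first initialization are all assumptions chosen to keep the example small and reproducible.

```python
import math


def farthest_first(points, k):
    """Pick k starting centroids: the first point, then repeatedly the
    point that lies farthest from every centroid chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(nxt)
    return centroids


def kmeans(points, k, iterations=100):
    """Cluster points (tuples of floats) into k groups with Lloyd's algorithm."""
    centroids = farthest_first(points, k)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                # Leave the centroid of an empty cluster where it was.
                new_centroids.append(centroids[c])
        if new_centroids == centroids:
            break  # Converged: no centroid moved.
        centroids = new_centroids
    return labels, centroids


# Hypothetical sample data: two visibly separate groups.
points = [(1.0, 1.1), (1.2, 0.9), (0.8, 1.0), (8.0, 8.2), (8.3, 7.9), (7.9, 8.1)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

On real data, a library implementation is usually the better choice because it handles initialization, empty clusters, and performance more carefully than this sketch does.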

Alongside these clustering techniques, RPA solutions such as EMMA RPA and Power Automate can significantly enhance operational efficiency. These tools automate repetitive tasks, addressing issues such as time-consuming report creation and data inconsistencies, so teams can concentrate on strategic decision-making. Understanding and using these clustering methods alongside RPA helps operations efficiency directors harness the full potential of Power BI cluster analysis.

This, in turn, enables data-driven decision-making and ultimately fosters business growth and innovation.

Preparing Your Data for Effective Clustering

To prepare your data for effective clustering and improve operational efficiency, follow these essential steps:

- Data Cleaning: Start by removing duplicates, addressing missing values, and correcting inconsistencies. Clean data is essential and yields more precise clustering outcomes. Techniques such as fuzzy matching, provided by the fuzzywuzzy library, are useful for identifying and removing near-duplicates, further improving data quality. Recent studies emphasize that efficient data preparation can greatly improve clustering accuracy, making it a crucial first step.

- Feature Selection: Identify and select the features that contribute to meaningful clusters. Features that add no value can obscure important patterns and lead to misleading results.

- Normalization: Scale your data so that all features contribute equally to the clustering process. This is particularly vital for distance-based methods such as K-Means, where the relative scale of features can dramatically influence results. As Lokesh B. emphasizes, scaling features to similar scales (e.g., mean 0, standard deviation 1 for standardization) prevents features with wider ranges from dominating distance calculations. This ensures a fair comparison across features and improves cluster formation.

- Exploratory Data Analysis (EDA): Conduct EDA to understand your dataset’s distribution and relationships. Visualizations can reveal hidden patterns and guide your feature selection, ensuring a more informed approach that aligns with your business goals.

- Define Objectives: Clearly outline your clustering objectives. Knowing what you intend to accomplish will guide your preparation and analysis and align your efforts with your overall business strategy. Using RPA to automate manual workflows improves efficiency and lets your team focus on strategic initiatives, and addressing data quality challenges through RPA can help lower barriers to AI adoption by ensuring your data is not only clean but also actionable. The rapid growth of machine learning underlines the value of Power BI cluster analysis for business understanding and operational effectiveness: it turns cleaned data into actionable insights that support informed decisions.
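The cleaning and scaling steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the function names and the sample rows are hypothetical, and it handles exact duplicates only (near-duplicate matching would need a fuzzy-matching library, which is not shown here).

```python
import statistics


def clean_rows(rows):
    """Drop rows with missing values and exact duplicates, keeping order."""
    seen = set()
    cleaned = []
    for row in rows:
        if any(value is None for value in row):
            continue  # Skip rows with a missing value.
        if row in seen:
            continue  # Skip exact duplicates.
        seen.add(row)
        cleaned.append(row)
    return cleaned


def standardize(column):
    """Rescale a numeric column to mean 0 and standard deviation 1."""
    mean = statistics.fmean(column)
    stdev = statistics.pstdev(column)
    if stdev == 0:
        # A constant column cannot be scaled; map it to zeros.
        return [0.0 for _ in column]
    return [(value - mean) / stdev for value in column]


# Hypothetical rows of (revenue, visits); one duplicate, one missing value.
rows = [(1200.0, 3.0), (1500.0, 5.0), (1500.0, 5.0), (900.0, None), (3000.0, 9.0)]
cleaned = clean_rows(rows)

# Standardize column by column, then reassemble the rows.
scaled_columns = [standardize(list(col)) for col in zip(*cleaned)]
scaled_rows = list(zip(*scaled_columns))
print(len(cleaned), "rows kept")
```

After scaling, revenue (in the thousands) and visits (single digits) sit on comparable scales, so neither dominates a distance calculation.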

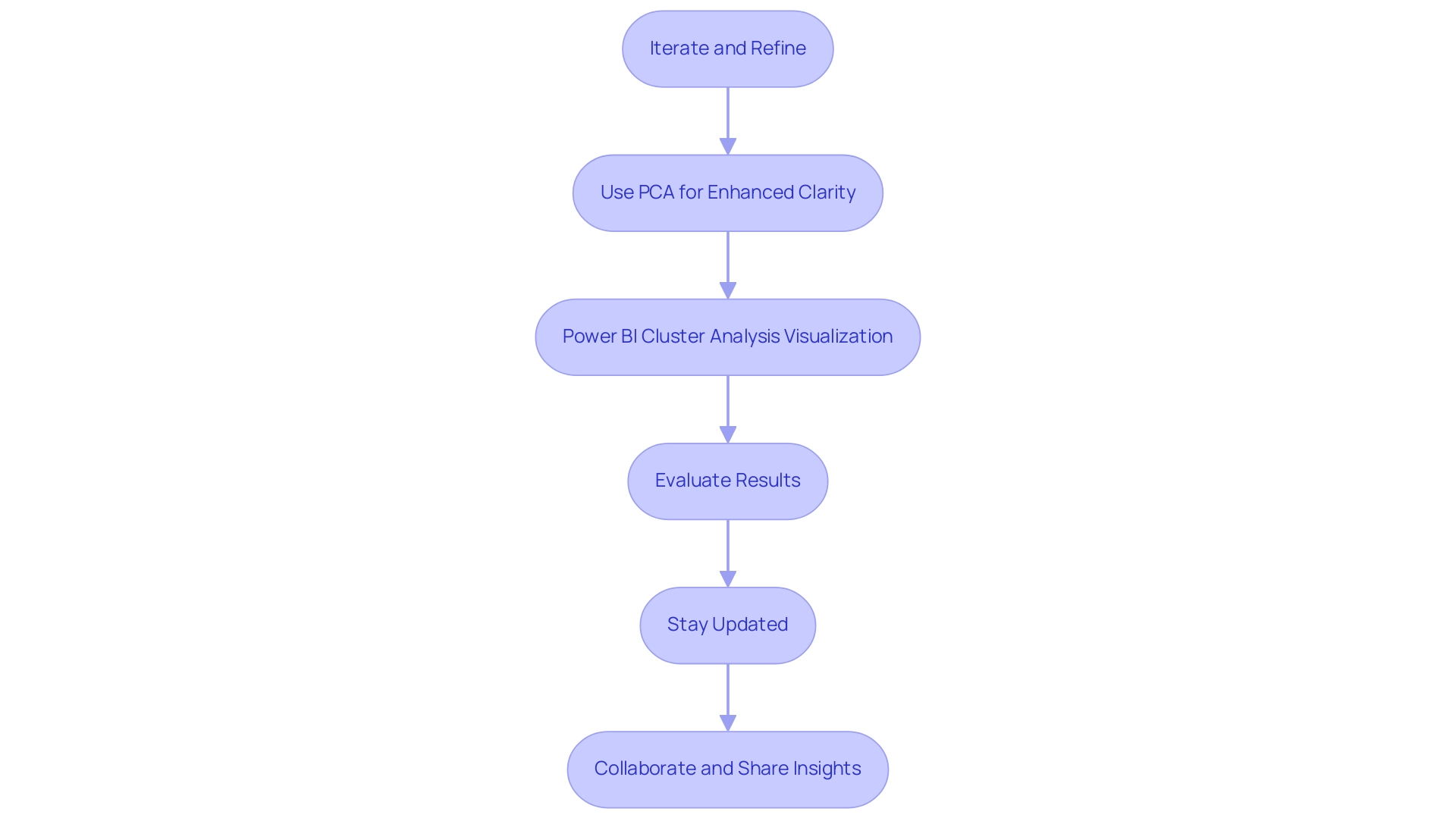

Best Practices for Successful Cluster Analysis

To achieve successful cluster analysis, consider implementing the following best practices to tackle challenges related to data quality and enhance your AI adoption journey:

- Iterate and Refine: Embrace the iterative nature of clustering. Experiment with various methods and parameters to find the best fit for your dataset, improving cluster quality and surfacing inconsistencies that stem from poor master data quality, such as incomplete or flawed entries.

- Use PCA for Enhanced Clarity: Apply Principal Component Analysis (PCA) to reduce redundancy among variables before clustering. This leads to better-defined clusters and clearer insights, which is crucial for effective decision-making.

- Visualize Your Clusters: Visualization is essential for judging how clear your clustering results are. Use scatter plots and decomposition trees to check how distinct your clusters are, so you can make informed adjustments as needed.

- Evaluate Results: Use robust metrics such as silhouette scores and the Davies-Bouldin index to assess clustering performance. These metrics indicate how appropriate and effective your clustering solution is. For instance, an adjusted Rand index of 1 indicates a perfect match between two groupings, while a value of 0 suggests chance-level agreement. The gap statistic is another powerful tool: it compares within-cluster dispersion to the dispersion expected under a reference distribution, guiding you toward the optimal number of clusters and helping you avoid both underfitting and overfitting.

- Stay Updated: The landscape of data analysis and AI is ever-evolving. Regularly update your knowledge of new clustering techniques and enhancements in Power BI cluster analysis to expand your analytical capabilities. This ongoing learning helps reduce uncertainty about integrating AI and counters the common perception that AI projects are time-intensive and costly.

- Collaborate and Share Insights: Foster a collaborative environment by working with your team to share findings from your cluster analysis. As highlighted by data expert Say, “You could run a few algorithms for determining the best number of clusters and see how your Gaussian model compares.” A consensus on the number of clusters indicates strong differentiation in the dataset. Such collaboration deepens your understanding and supports more effective decision-making, helping you overcome perceived barriers to AI adoption.
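As a rough illustration of the silhouette score mentioned in the list above, the sketch below computes the mean silhouette coefficient with the standard library only. The function name and sample data are assumptions; in practice a library routine would normally be used.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (ranges from -1 to 1)."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")

    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # Usual convention for singleton clusters.
            continue
        # a: mean distance to the other members of the point's own cluster
        # (the point's zero distance to itself does not change the sum).
        a = sum(math.dist(point, other) for other in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster.
        b = min(
            sum(math.dist(point, other) for other in members) / len(members)
            for other_label, members in clusters.items()
            if other_label != label
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


# Hypothetical data: two tight, well-separated groups.
points = [(1.0, 1.1), (1.2, 0.9), (0.8, 1.0), (8.0, 8.2), (8.3, 7.9), (7.9, 8.1)]
good = silhouette_score(points, [0, 0, 0, 1, 1, 1])
bad = silhouette_score(points, [0, 1, 0, 1, 0, 1])
print(round(good, 3), round(bad, 3))
```

A labelling that matches the visible groups scores close to 1, while a labelling that mixes the groups scores much lower, which is the signal to revisit the method or the number of clusters.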

Conclusion

Harnessing the power of clustering techniques in Power BI is essential for organizations looking to unlock the full potential of their data. By utilizing methods such as K-Means, Hierarchical Clustering, and DBSCAN, businesses can reveal hidden patterns and relationships that drive informed decision-making. The step-by-step guide provided illustrates how to effectively implement cluster analysis, enabling teams to visualize and interpret data with greater clarity.

Preparing data for clustering is equally critical, with best practices emphasizing the importance of:

- Data cleaning

- Feature selection

- Normalization

These foundational steps ensure that the insights derived from clustering are both accurate and actionable. Moreover, incorporating advanced techniques like Principal Component Analysis (PCA) and leveraging automation through RPA can significantly enhance operational efficiency and streamline processes.

Ultimately, the effective use of clustering in Power BI not only aids in data interpretation but also empowers organizations to make strategic decisions that foster growth and innovation. By embracing these practices and staying updated with evolving techniques, decision-makers can drive their organizations toward a future where data-driven insights are at the forefront of operational success.

Overview:

To connect Power BI to Databricks, users must ensure they have an active Databricks account, the latest version of Power BI Desktop, a configured cluster, appropriate access permissions, and the correct connection details. The article provides a step-by-step guide that outlines the prerequisites and procedures, emphasizes using DirectQuery mode for real-time data access, and recommends best practices for optimizing the integration.

Introduction

In the dynamic landscape of data analytics, the ability to connect Power BI with Databricks is a game-changer for organizations seeking to enhance their operational efficiency and data-driven decision-making. This integration not only streamlines reporting processes but also empowers teams with real-time insights and robust data visualization capabilities.

As businesses navigate the complexities of data management, understanding the prerequisites, best practices, and troubleshooting techniques becomes essential. This article serves as a comprehensive guide, providing practical steps and strategies to ensure a seamless connection between Power BI and Databricks, ultimately driving innovation and growth in an increasingly competitive environment.

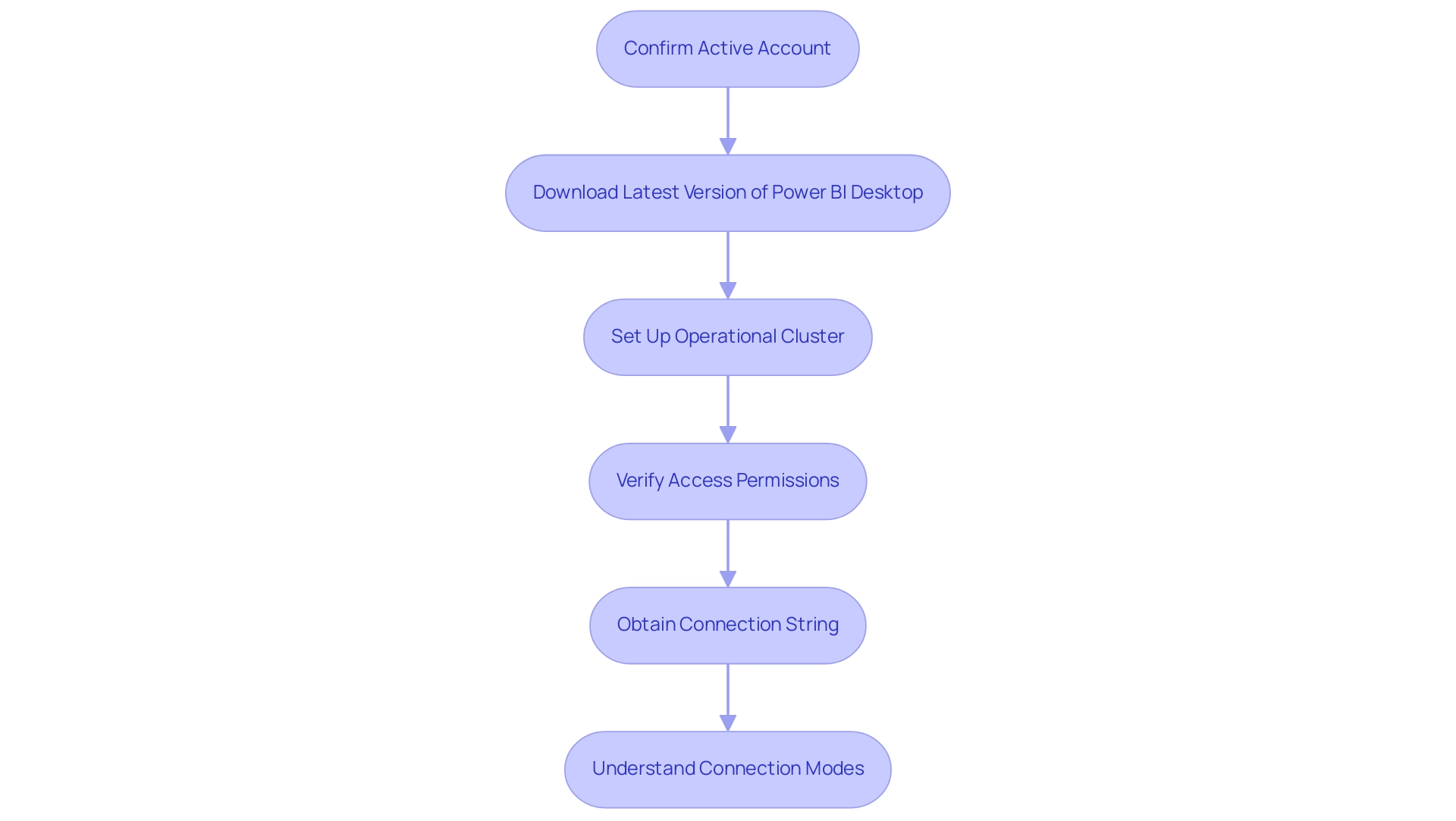

Getting Started: Prerequisites for Connecting Power BI to Databricks

To establish a connection between Power BI and Databricks, and to fully leverage Business Intelligence and RPA for operational efficiency, make sure the following prerequisites are in place:

- Active Databricks Account: Confirm that you have an active Databricks account. If you are new, consider signing up for a trial to explore its capabilities.

- Latest Version of Power BI Desktop: Download and install the latest version of Power BI Desktop from the Microsoft website to ensure compatibility and access to new features.

- Configured Cluster: Set up a Databricks cluster and make sure it is running. The cluster executes your queries, and a compute cluster can be added in as little as 5 minutes.

- Appropriate Access Permissions: Verify that you have the permissions needed to access both the workspace and the specific data you plan to analyze.

- Connection Details: Obtain the connection details for your Databricks instance (the server hostname and HTTP path), which are required to establish the link during configuration.

Understanding the connection modes is crucial as well. As Albert Wang noted, “Shared-no-isolation mode does not support unity catalog,” which is vital to consider when setting up your integration.

For a practical example, consider the case study titled “Power BI connect to Databricks,” which illustrates how users can connect Power BI to Azure Databricks clusters and SQL warehouses. This integration lets users publish reports to the Power BI service and enables single sign-on (SSO) using Microsoft Entra ID credentials, streamlining the reporting process.

It’s also important to recognize the challenges of data extraction, such as task repetition fatigue and outdated systems, and how RPA solutions can mitigate them. Lastly, it is worth reviewing the available comparison between the Azure Databricks connector and the Databricks connector for Power BI, as this can help you make informed choices about your integration strategy. By addressing these prerequisites, you can streamline the connection process and avoid common issues, paving the way for a seamless integration.

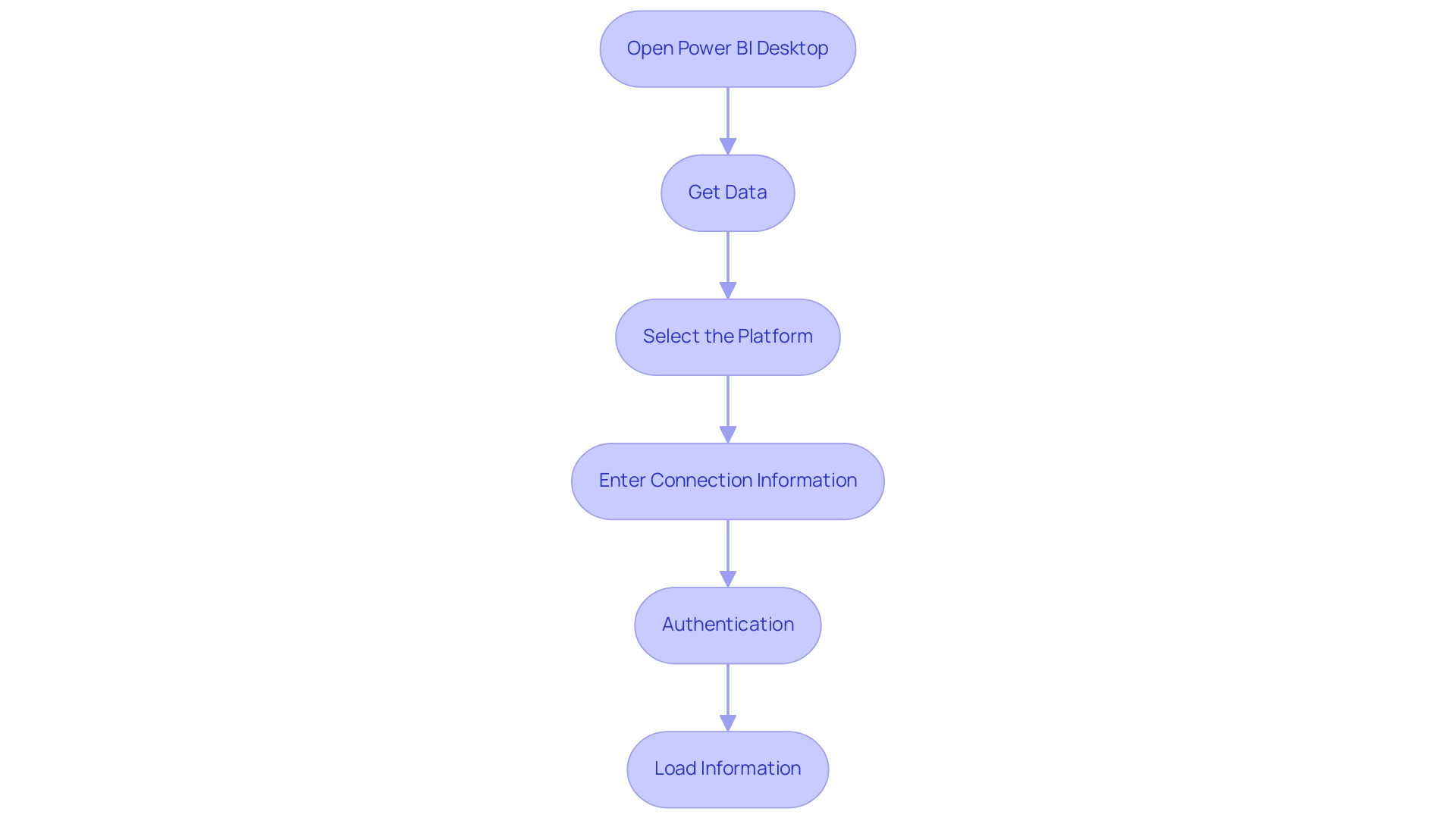

Step-by-Step Guide to Establishing the Connection

To connect Power BI to Databricks and enhance your data analytics capabilities while leveraging Robotic Process Automation (RPA) for operational efficiency, follow these steps:

- Open Power BI Desktop: Start by launching the application on your device.

- Get Data: Navigate to the ‘Home’ tab and select ‘Get Data’. Click on ‘More…’ to access the expanded Get Data window.

- Select Databricks: In the search bar of the Get Data window, type ‘Databricks’, choose the connector from the list, and click ‘Connect’.

- Enter Connection Information: You will be prompted to provide the necessary connection details:

  - Server Hostname: Enter the server hostname associated with your Databricks account.

  - HTTP Path: Input the HTTP path corresponding to your Databricks cluster.

  - Authentication: Choose the authentication method that suits your setup (typically, the Token method is recommended) and input your access token for secure access.

- Load Data: Once connected, select the tables or datasets you wish to import into Power BI and click ‘Load’.

It’s important to note that the connector limits the number of imported rows to the Row Limit that was set earlier, which is a crucial operational constraint to consider during your information import process.

By integrating RPA into this workflow, you can automate repetitive data import tasks, significantly reducing the time spent on manual handling and minimizing the risk of errors. For example, RPA can be used to schedule routine data imports or monitor data flows, so that your analytics are consistently based on the most up-to-date data.

By following these steps, you will connect Power BI to Databricks and create a strong link between your BI layer and the data platform. This integration not only streamlines your analytics workflow but also improves security and access management, especially with support for Databricks on AWS with Power BI through SSO using Microsoft Entra ID. This lets your team focus on deriving insights rather than managing governance, ultimately improving operational efficiency through automation.

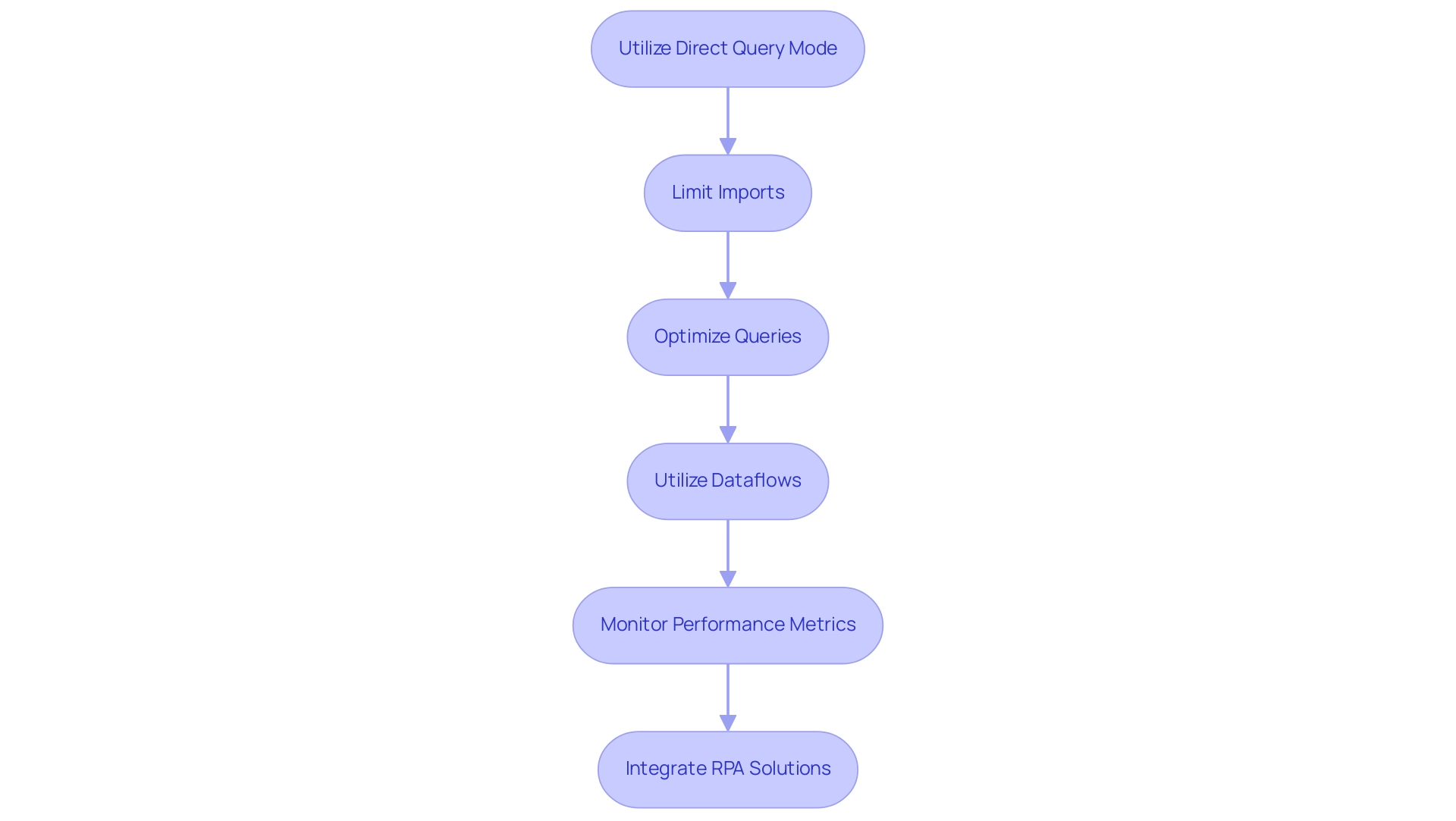

Best Practices for Optimizing Power BI and Databricks Integration

To achieve optimal integration of Power BI with Databricks, consider the following best practices that can significantly enhance your workflow and address common challenges:

- Utilize DirectQuery Mode: Whenever possible, use DirectQuery to enable real-time access to data without importing large datasets into Power BI. This streamlines operations and ensures that your reports reflect the most current data. Notably, when Power BI is connected to Databricks, datasets can be published in as little as 10 to 20 seconds.

- Limit Imports: Import only the data your analyses require. This improves performance and minimizes load times, leading to a smoother user experience and faster report creation.

- Optimize Queries: Write efficient queries in Databricks to reduce processing time and conserve resources. Well-structured queries can dramatically improve report performance.

- Utilize Dataflows: Use Power BI dataflows to preprocess your data before visualization. This simplifies reporting and improves data management, supporting more actionable guidance for decision-making.

- Monitor Performance Metrics: Regularly review performance indicators in both Power BI and Databricks to identify potential bottlenecks. Continuous monitoring allows timely intervention and keeps performance on track.

- Integrate RPA Solutions: Consider leveraging Robotic Process Automation (RPA) to streamline data management and reporting tasks. RPA can automate repetitive processes, reducing manual errors and freeing your team to focus on strategic analysis and decision-making.

Applying these best practices will improve the efficiency of your Power BI and Databricks integration and contribute to a more agile and responsive data environment. As noted by esteemed contributor Szymon Dybczak, “strategic approaches to query performance can yield significant improvements,” making it worthwhile to adopt these practices in everyday operations. Additionally, as organizations transition from traditional methods to digital solutions, strategic planning becomes crucial to maximizing the benefits of digital adoption.

This integration ultimately supports the drive for data-driven insights that are vital for informed decision-making.

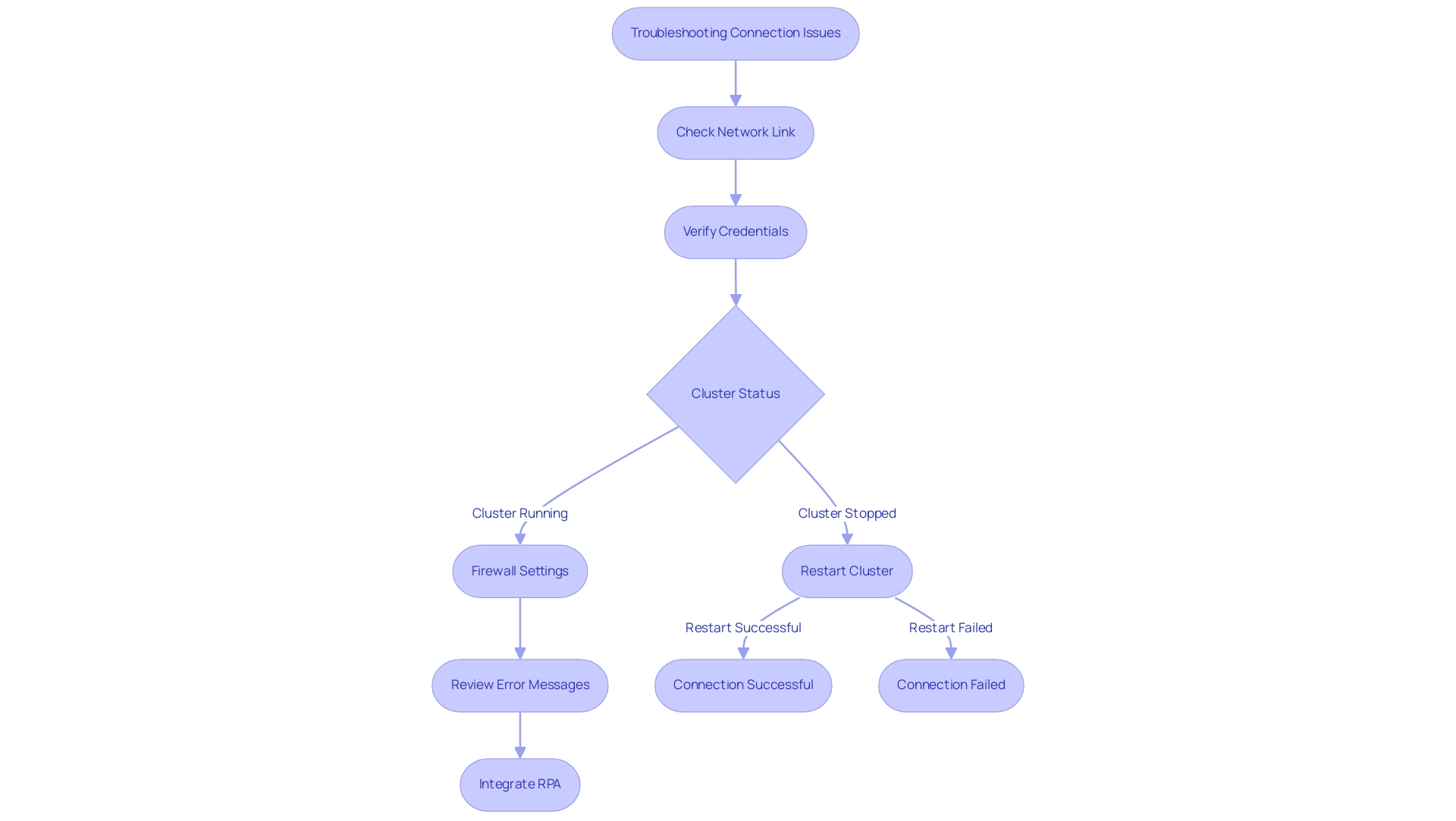

Troubleshooting Common Connection Issues

When connecting Power BI to Databricks, problems can be frustrating, particularly when your focus is on improving operational efficiency through informed decision-making. The following troubleshooting tips, together with Robotic Process Automation (RPA) and customized AI solutions, can help you resolve frequent issues while improving your reporting processes:

- Check Network Connection: A stable internet connection is essential for Power BI to connect to Databricks. Make sure your network is working properly to avoid time-consuming disruptions.

- Verify Credentials: Double-check that you’re entering the correct server hostname, HTTP path, and authentication token. A small error can lead to connection failures. Note that a personal access token with a lifetime of 7 is required for Power BI authentication.

- Cluster Status: Confirm that your cluster is actively running; if it’s stopped or terminated, restart it to establish a fresh connection.

- Firewall Settings: Ensure your firewall configuration allows Power BI to connect to Databricks, and adjust it if necessary.

- Review Error Messages: Pay close attention to any error messages during your connection attempt, as they can provide valuable insights into the underlying issues.
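The "Verify Credentials" tip above can be partly automated with a small pre-flight check before you paste values into the connection dialog. The sketch below is a hypothetical helper, not part of Power BI or Databricks: the class, field names, and placeholder values are illustrative, and the rules it applies (no empty values, no stray whitespace, no scheme or path in the hostname) are assumptions about common copy-and-paste mistakes rather than documented requirements.

```python
from dataclasses import dataclass


@dataclass
class ConnectionSettings:
    # Illustrative field names mirroring the three values to double-check.
    server_hostname: str
    http_path: str
    access_token: str


def find_problems(settings):
    """Return a list of human-readable problems found in the settings."""
    problems = []
    for name in ("server_hostname", "http_path", "access_token"):
        value = getattr(settings, name)
        if not value:
            problems.append(f"{name} is empty")
        elif value != value.strip():
            problems.append(f"{name} has leading or trailing whitespace")
    host = settings.server_hostname.strip()
    # Assumption: the hostname field expects a bare host name.
    if "://" in host:
        problems.append("server_hostname should not include a scheme such as https://")
    elif "/" in host:
        problems.append("server_hostname should not include a path")
    return problems


# Placeholder values only; substitute the details from your own workspace.
pasted = ConnectionSettings(
    server_hostname="https://example-workspace.example.net ",
    http_path="/sql/placeholder/path",
    access_token="",
)
for problem in find_problems(pasted):
    print("-", problem)
```

A check like this only catches formatting slips. An expired token, a stopped cluster, or a blocked port will still surface as a connection error in Power BI itself.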

Additionally, integrating RPA can automate the monitoring of these connections, allowing your team to focus on strategic tasks rather than troubleshooting.

Recent guidance from community support, such as Liu Yang, emphasizes the importance of updating Power BI Desktop. Users experiencing freezes should consider uninstalling and reinstalling Power BI Desktop, especially since Microsoft’s engineers are currently addressing known issues with the application. You can download the latest version directly from the official Microsoft Download Center.

For those still facing problems, a workaround is to go to File > Options and Settings > Options > Preview features and untick ‘Power BI Desktop infrastructure update.’ Alternatively, setting ‘Load Tables simultaneously’ and clearing the cache in Power BI Desktop can also improve stability. One user resolved their issues by uninstalling Power BI Desktop and installing a previous version, which may help others experiencing similar problems with the latest updates.

By following these troubleshooting steps and considering RPA and AI solutions, you can resolve common connection issues and successfully connect Power BI to Databricks, ultimately enhancing your operational efficiency and driving better business outcomes.

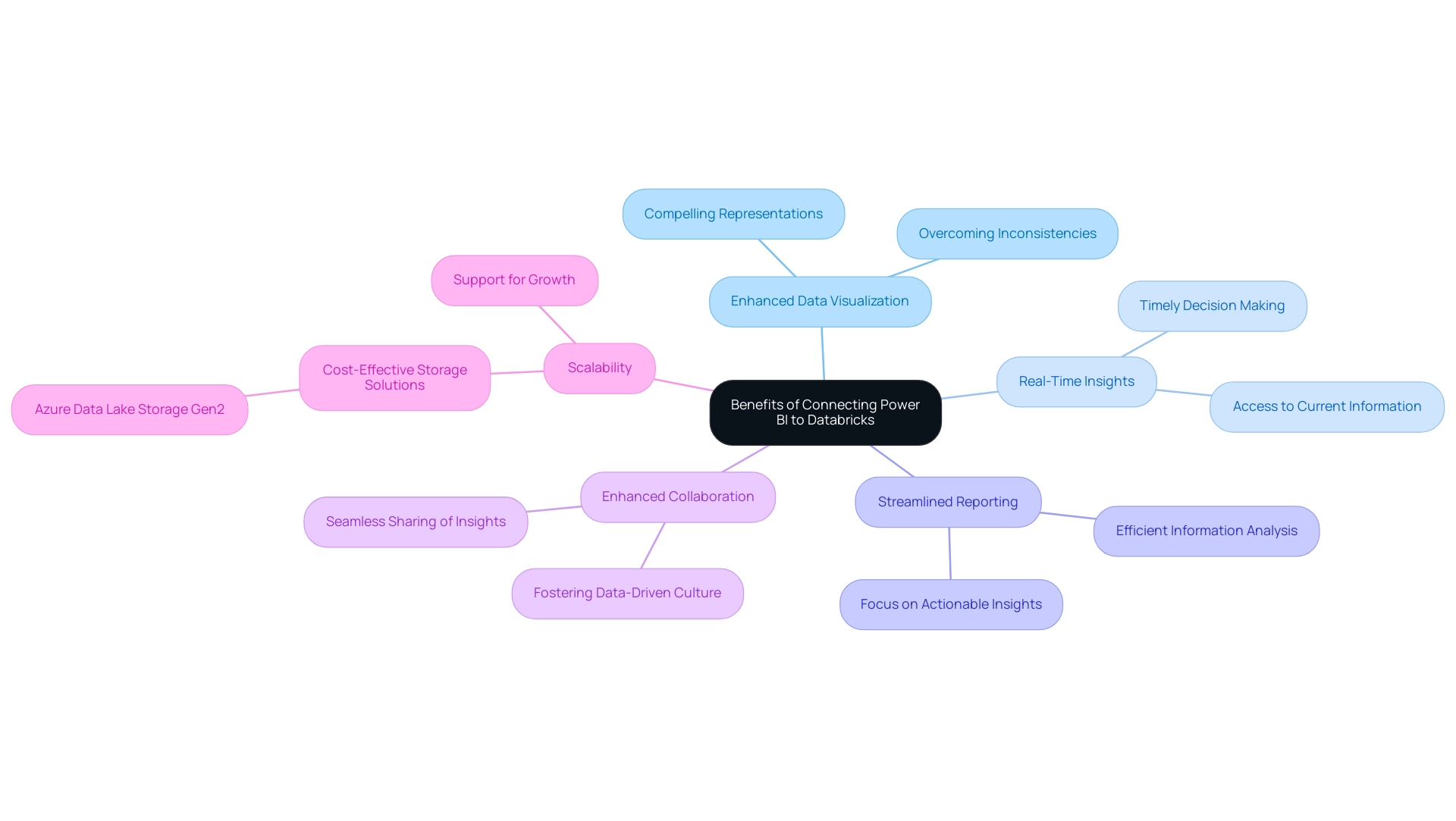

Benefits of Connecting Power BI to Databricks

Combining Power BI with Databricks unlocks a range of benefits that can significantly enhance your organization’s analytics capabilities and address common challenges in drawing insights from dashboards:

- Enhanced Data Visualization: With Power BI connected to Databricks, users can apply Power BI’s visualization capabilities to complex datasets and create compelling representations of them. This visual clarity helps stakeholders interpret data quickly and efficiently.

- Real-Time Insights: The integration facilitates access to real-time information, empowering stakeholders to make timely decisions based on the most current details available. This immediacy is crucial in today’s fast-paced business environment, helping you navigate the challenges of time-consuming report creation.

- Streamlined Reporting: By combining the strengths of both tools, reporting processes become more straightforward. This synergy enables efficient information analysis, reducing the time spent on compiling reports and increasing focus on actionable insights.

- Enhanced Collaboration: With combined information sources, teams can work together more effectively, sharing insights and analyses seamlessly. This fosters a culture of data-driven decision-making throughout the organization, essential for driving growth and innovation.

- Scalability: The integration supports scalable data solutions, ensuring that as your organization grows, your analytics capabilities can expand without compromise. Azure Data Lake Storage Gen2 exemplifies this scalability, offering a cost-effective solution for storing unstructured and semi-structured data and facilitating self-service queries without predefined schemas.

Incorporating RPA solutions alongside the Power BI and Databricks integration can further enhance operational efficiency. RPA can automate repetitive tasks involved in data collection and reporting, allowing teams to concentrate on analysis and decision-making.

By harnessing these advantages, organizations can maximize their analytics efforts, ultimately driving improved business outcomes. Notably, starter warehouses are now serverless by default, further enhancing the efficiency of this integration and allowing for a more streamlined approach to data management. Additionally, the billing system tables will remain available at no additional cost across clouds, including one year of free retention, ensuring a cost-effective pathway to enhanced analytics.

As you prepare for the integration, consider reaching out to your Databricks account team for information on participating in private or gated public previews, which can provide early access to new features and functionalities.

Conclusion

Connecting Power BI with Databricks offers significant advantages that can transform an organization’s approach to data analytics. By ensuring that the prerequisites are met, such as having an active Databricks account and the latest version of Power BI, teams can establish a seamless connection that not only enhances data visualization but also supports real-time insights. This foundation allows organizations to navigate the complexities of data management with confidence.

Implementing best practices, like leveraging DirectQuery mode and optimizing queries, further enhances the integration, enabling teams to work more efficiently and effectively. Regularly monitoring performance metrics and troubleshooting common issues ensures that the connection remains robust, allowing for uninterrupted access to critical data. The integration of Robotic Process Automation (RPA) also streamlines repetitive tasks, freeing up valuable time for strategic analysis.

Ultimately, the combination of Power BI and Databricks not only improves operational efficiency but also fosters a culture of collaboration and data-driven decision-making. By embracing this integration, organizations can unlock the full potential of their data, driving innovation and growth in an increasingly competitive landscape. The time to enhance data analytics capabilities is now, and the pathway is clear: connect, optimize, and thrive.

Overview:

To create Power BI conditional columns that effectively handle null values, users should follow a step-by-step process that includes defining conditions using DAX functions to ensure data integrity and improve analysis. The article supports this by detailing the importance of addressing null values, highlighting their potential to distort insights, and providing practical steps to create conditional columns that enhance operational efficiency and decision-making.

Introduction

In the dynamic landscape of data analysis, leveraging conditional columns in Power BI emerges as a game-changing strategy for organizations striving to enhance operational efficiency. These powerful tools allow users to transform raw data into actionable insights by categorizing and managing information effectively, particularly when dealing with the pervasive issue of null values.

As data integrity becomes increasingly vital in decision-making, understanding how to implement these conditional columns can significantly improve data workflows and analysis accuracy. With the right techniques, organizations can not only mitigate the challenges posed by incomplete data but also harness the full potential of their data assets, paving the way for informed strategies and sustained growth in a competitive environment.

This article delves into the practical applications of conditional columns, offering step-by-step guidance and best practices to empower teams in their data management journey.

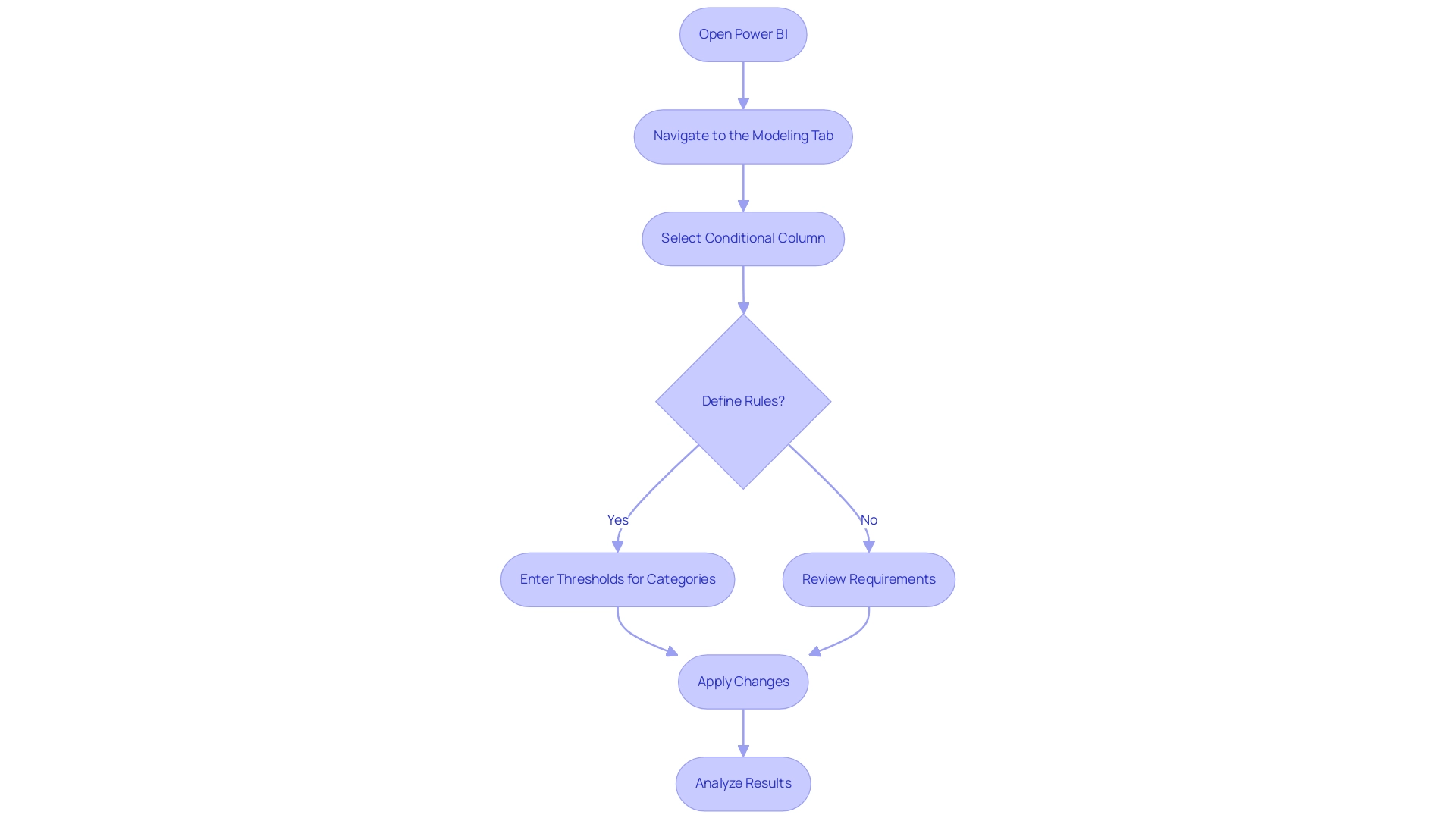

Understanding Conditional Columns in Power BI

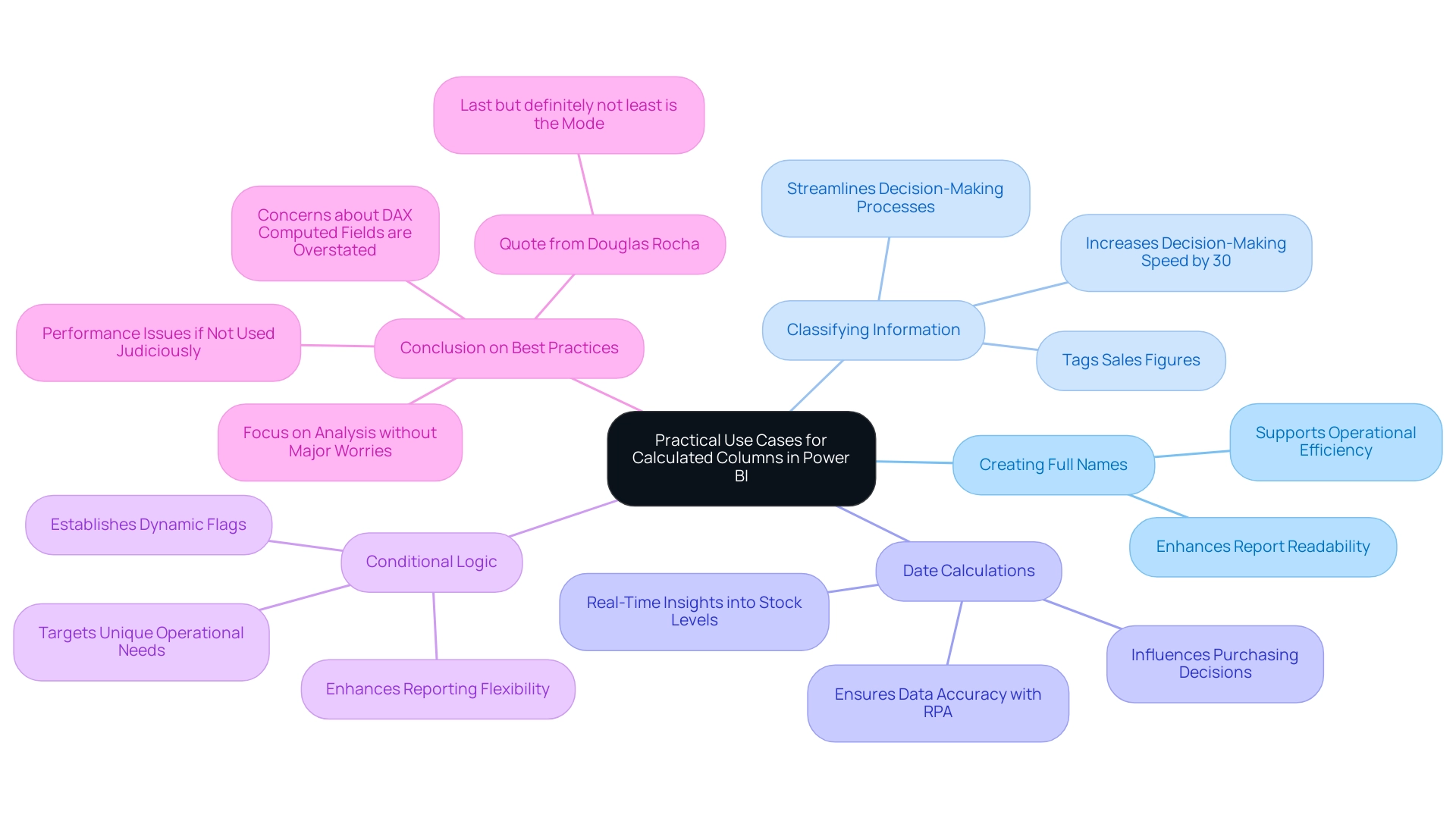

Conditional columns in Power BI enable users to derive new insights from existing data through specified rules. For instance, categorizing sales figures into ‘High’, ‘Medium’, or ‘Low’ based on defined thresholds enhances interpretability, and adding an explicit rule for missing or null values keeps those rows from being silently misclassified. This functionality is valuable for Directors of Operations Efficiency seeking to improve data accuracy and reliability.
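As a minimal sketch of the categorization described above, the calculated column below assumes a hypothetical `Sales` table with a numeric `Amount` column; the table name, column name, and the 1000 and 5000 thresholds are illustrative assumptions, not values from this article.

```dax
-- Calculated column on a hypothetical Sales table with a numeric [Amount] column.
-- The thresholds (1000 and 5000) are illustrative only.
Sales Category =
SWITCH(
    TRUE(),
    ISBLANK(Sales[Amount]), "No Data",  -- test for nulls first
    Sales[Amount] >= 5000, "High",
    Sales[Amount] >= 1000, "Medium",
    "Low"
)
```

The blank check comes first on purpose: DAX treats a blank as zero in numeric comparisons, so without that branch a row with a missing amount would fall through to ‘Low’.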

To create these conditional columns, navigate to the ‘Modeling’ tab within the Power BI interface and select New Column. Mastering this feature is not merely a technical skill; it represents a strategic advantage in your analytics toolkit. With 54% of companies prioritizing cloud computing and business intelligence to enhance their data strategies, the adept use of conditional columns can help organizations use their resources more efficiently.

Furthermore, as the number of IoT devices is expected to rise to 20.3 billion by 2025, generating vast streams of data, robust data management strategies, including conditional columns, become essential for extracting actionable insights. The global big data analytics market, valued at $271.83 billion in 2022, highlights the increasing demand for skilled analysis. Our 3-Day BI Sprint can expedite your mastery of these essential features, while the General Management App offers comprehensive management tools to enhance operational efficiency.

As we investigate effective methods for handling null entries, understanding how conditional columns in Power BI treat nulls will be crucial for optimizing workflows and improving operational efficiency, and for tackling data inconsistencies and the absence of actionable guidance.

Challenges of Handling Null Values in Power BI

Null entries in Power BI can significantly distort analysis, leading to misleading insights and incorrect conclusions. In today’s information-rich environment, where approximately 30% of datasets contain null values, understanding the implications of these gaps is crucial for effective management. These common challenges include:

- Difficulties in aggregation

- Inaccuracies in calculations

- Complications in visualization

For instance, a null sales figure can skew average calculations or result in incomplete reports, ultimately hindering decision-making. By unlocking the power of Business Intelligence, you can transform raw data into actionable insights that drive growth and innovation. Using Power Query, examine your dataset to find fields with null entries, enabling focused remediation.
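To make the effect on averages concrete, here is a small sketch of three measures, again assuming a hypothetical `Sales` table with a numeric `Amount` column. Each definition would be entered as its own measure.

```dax
-- Measure: how many rows have no Amount at all.
Blank Amount Count = COUNTBLANK(Sales[Amount])

-- Measure: AVERAGE skips blank cells, so only rows with a value are counted.
Avg Amount Ignoring Blanks = AVERAGE(Sales[Amount])

-- Measure: dividing by every row treats each blank as a zero-value sale.
Avg Amount Blanks As Zero = DIVIDE(SUM(Sales[Amount]), COUNTROWS(Sales))
```

When the first measure is greater than zero, the other two will generally differ. Which one is "right" depends on whether a missing figure means "no sale" or "not yet recorded", which is a business decision rather than a technical one.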

Recognizing these challenges underscores the importance of creating conditional columns in Power BI that manage nulls explicitly. Another approach is to treat missing entries as a machine learning problem, where a portion of the original data is used to predict the missing values. This improves the dataset’s completeness, but because the filled-in values are estimates, it may reduce the reliability of your analysis and calls for a careful assessment of your results.

As the Statsig Team aptly states, “Interpreting results requires understanding what the numbers represent,” reinforcing the critical need for transparency about analysis limitations. Incorporating RPA solutions like EMMA RPA and Power Automate can streamline the process of managing null values, automating cleansing and enhancing operational efficiency. By recognizing and tackling these common issues, you enhance your analysis processes to produce more dependable and actionable insights, ultimately boosting operational efficiency and informed decision-making.

In a competitive landscape, failing to extract meaningful insights from your information can leave your business at a disadvantage, making it imperative to tackle these challenges head-on.

Step-by-Step Guide to Creating Conditional Columns for Null Values

To create conditional columns that effectively handle null values in Power BI and mitigate challenges like time-consuming report creation and data inconsistencies, follow this comprehensive step-by-step guide:

- Open Power BI Desktop and load your data model to begin the process.

- Navigate to the Modeling tab and select New Column to initiate the creation of a new column.

- In the formula bar, use the DAX function `IF` to define your conditions. For instance:

  `new column = IF(ISBLANK([ExistingColumn]), "No Data", [ExistingColumn])`

  This formula checks whether the value in `ExistingColumn` is blank (null). If it is, ‘No Data’ is assigned; otherwise, the original value is retained, ensuring that your data remains intact and actionable.

- Press Enter to finalize the creation of the new column.

- Examine your newly created column in the Data view to confirm that it functions as intended, ensuring precision in your analyses.

- Utilize this new column in your reports or for additional calculations as required to improve the quality of your insights.
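The `IF`/`ISBLANK` pattern from the steps above can also be written with `COALESCE`, which returns the first argument that is not blank. The sketch below assumes a hypothetical `Sales` table with a text column `Region` and a numeric column `Amount`. One caveat worth checking in your own model: a calculated column generally needs a single data type, so mixing a text label such as "No Data" with a numeric column in the same `IF` may be rejected or coerced to text.

```dax
-- Text column: replace blanks with a label. Both branches are text.
Region Clean = COALESCE(Sales[Region], "No Data")

-- Numeric column: default to 0 so the result stays numeric and can be summed.
-- Whether 0 is an acceptable stand-in for "missing" is an assumption to confirm.
Amount Clean = COALESCE(Sales[Amount], 0)
```

Each definition is entered as a separate calculated column. For numeric data where 0 would be misleading, a separate text flag column is often a safer choice than overwriting the value.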

By mastering these steps, you can effectively manage null values in a Power BI conditional column, which will lead to more accurate and meaningful analyses. This approach not only enhances your current analysis but also establishes a foundation for advanced strategies, including dynamic targets for KPIs in Power BI, customized through DAX formulas based on various conditions.

Moreover, a robust governance strategy is essential to prevent inconsistencies and ensure that your insights are reliable. Without such governance, businesses risk encountering a competitive disadvantage, as they may struggle to extract meaningful insights from their information, ultimately hindering informed decision-making. As emphasized in industry discussions, precise information handling is crucial for calculating profit margins, which are determined by dividing profit by total sales, underscoring the importance of maintaining integrity.
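The profit margin calculation mentioned above (profit divided by total sales) is a case where null handling matters, because a blank or zero denominator would otherwise produce an error or an infinite value. A minimal sketch, assuming hypothetical `Profit` and `Amount` columns on a `Sales` table:

```dax
-- Measure: DIVIDE returns BLANK instead of an error when total sales is 0 or blank.
Profit Margin =
DIVIDE(
    SUM(Sales[Profit]),
    SUM(Sales[Amount])
)
```

`DIVIDE` also accepts an optional third argument if you would rather show a specific alternate result, such as 0, when the denominator is missing.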

For further assistance, engage with the BI community for support, and consider exploring case studies like ‘How to Create a Dashboard on BI,’ which illustrates the practical application of these concepts by detailing the process of opening a report, editing it, pinning visualizations, and creating new dashboards tailored for sharing.

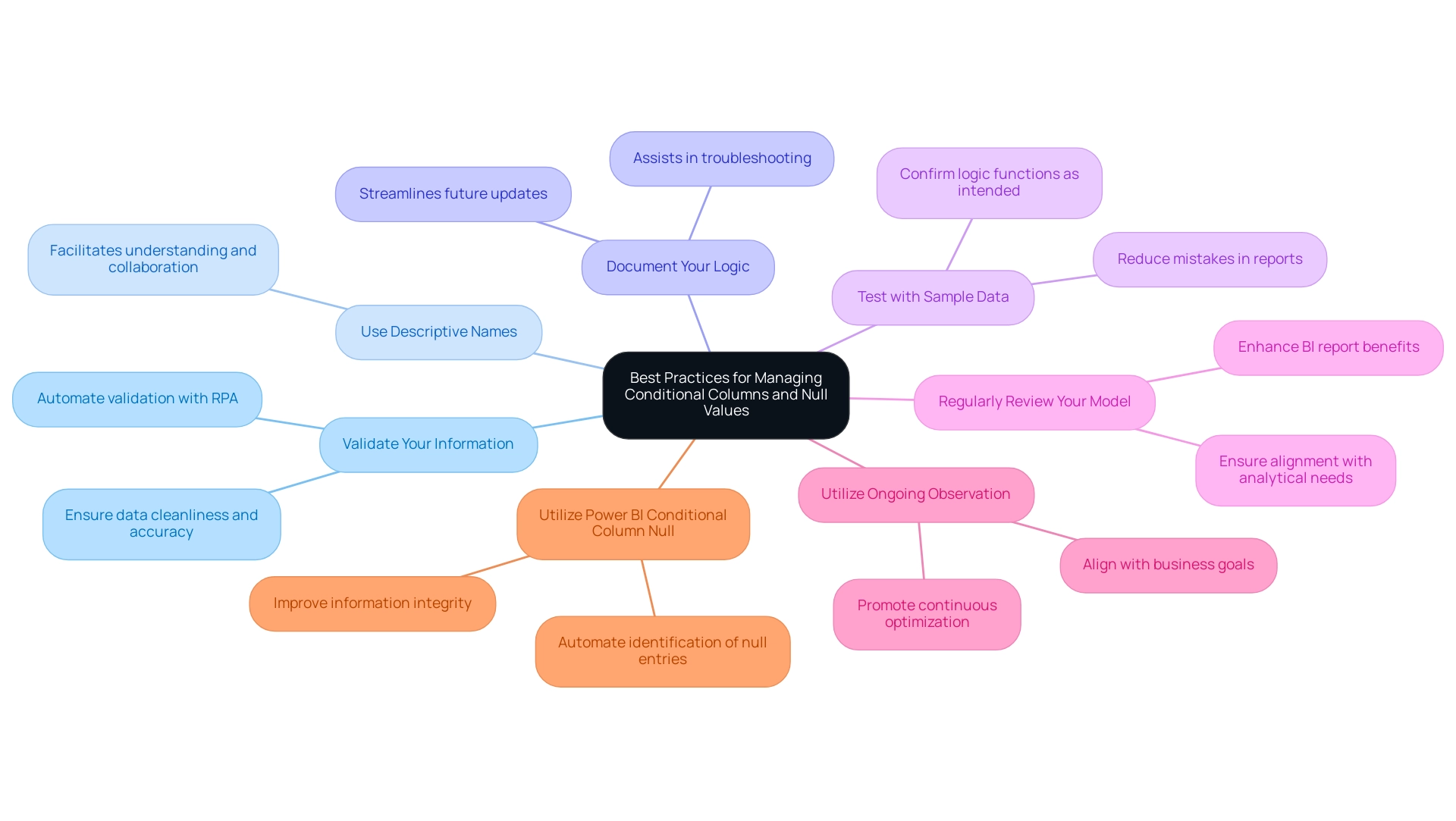

Best Practices for Managing Conditional Columns and Null Values

When working with conditional columns and addressing null values in Power BI, it’s essential to apply best practices that improve your data management capabilities while utilizing Robotic Process Automation (RPA) to optimize manual workflows. RPA can significantly reduce the time spent on repetitive tasks, allowing your team to focus on strategic initiatives. According to recent findings, switching to Delta Tables in Databricks has led to immediate improvements in query times, underscoring the impact of effective data management on performance.

Here are crucial strategies to consider:

- Always Validate Your Data: Before creating conditional columns, ensure that your data is clean and accurately reflects the insights you aim to derive. Accurate data is the basis of efficient analysis, and RPA can assist in automating validation processes, tackling issues connected to poor master data quality.

- Use Descriptive Names: Assign descriptive names to your new columns. This practice aids in quickly understanding their purpose, facilitating better collaboration and communication across your team.

- Document Your Logic: Maintain clear documentation on the logic applied in your conditional columns. This not only streamlines future updates but also assists in troubleshooting when issues arise, ensuring that your data-driven insights are actionable.

- Test with Sample Data: Before deploying your logic across larger datasets, test it with a small sample. This method enables you to confirm that your logic functions as anticipated, diminishing the likelihood of mistakes in your reports.

- Regularly Review Your Model: As your information evolves, it’s important to revisit your conditional columns to ensure they continue to meet your analytical needs. Ongoing observation and enhancement, potentially aided by RPA, are crucial for maximizing the benefit of your BI reports.

- Utilize Ongoing Observation: Establishing a system for ongoing observation, as emphasized in the case study on BI, guarantees alignment with business goals and promotes a culture of continual optimization. This is essential for conquering obstacles linked to inadequate master information quality and impediments to AI implementation.

- Use Conditional Columns to Manage Null Entries: Conditional columns in Power BI can be used to identify and manage null entries in your datasets. For example, a conditional column, or an RPA tool working upstream of the report, can be set up to substitute empty entries with predefined defaults or to flag records for review, thereby improving data integrity and operational efficiency.
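As one way to flag records for review rather than overwrite them, the sketch below adds a text column that marks any row missing a required field. The `Sales` table and the `Amount` and `CustomerID` columns are hypothetical names chosen for illustration.

```dax
-- Calculated column: mark rows where either required field is blank.
Needs Review =
IF(
    ISBLANK(Sales[Amount]) || ISBLANK(Sales[CustomerID]),
    "Review",
    "OK"
)
```

Because the original values are left untouched, this column can be used as a filter or slicer in a report, and the underlying gaps remain visible for whoever corrects the source data.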

By following these practices and incorporating RPA where relevant, you can greatly enhance your information management skills, ensuring that your Power BI reports are not only precise but also insightful, thereby promoting informed decision-making within your organization.

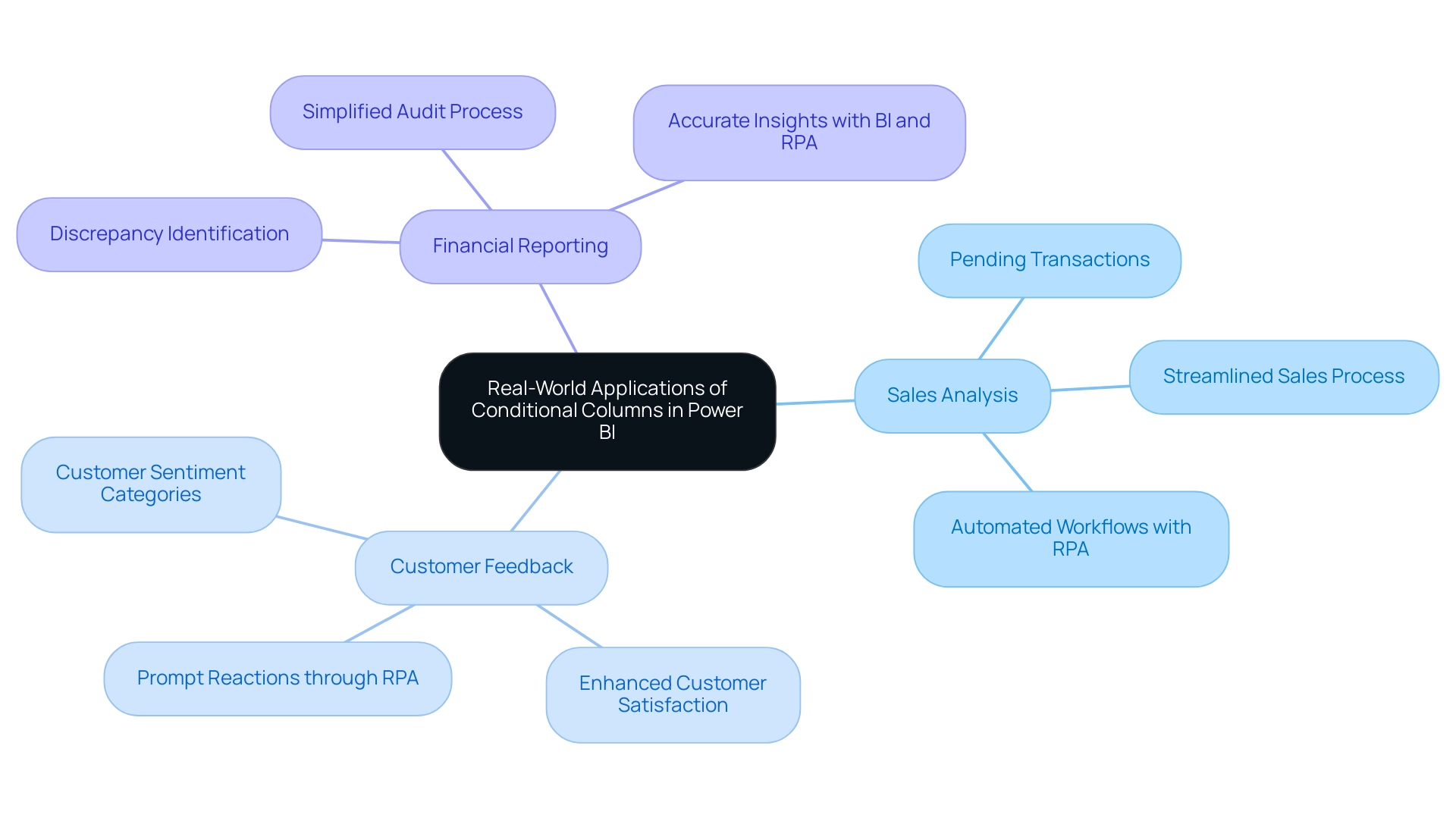

Real-World Applications of Conditional Columns in Power BI

Conditional fields are powerful tools that can significantly improve information operations across various sectors, especially when integrated with Robotic Process Automation (RPA) and Business Intelligence (BI) solutions. Here are several impactful applications:

- Sales Analysis: A retail organization effectively utilizes conditional columns to categorize its sales data, designating entries with null values as ‘Pending’. This approach not only facilitates the tracking of incomplete transactions but also ensures that teams can swiftly address any issues, leading to a more streamlined sales process. By automating these workflows using RPA, the organization can further reduce manual oversight and enhance operational efficiency through the use of tools like the General Management App.

- Customer Feedback: In the service industry, a company has harnessed the power of conditional columns to analyze customer sentiment. By categorizing feedback into ‘Positive’, ‘Neutral’, or ‘Negative’, the organization gains valuable insights into customer perceptions and can respond appropriately to enhance satisfaction, even when some feedback entries are null. Incorporating RPA can streamline feedback classification, supporting prompt responses and better customer interaction, in line with customized AI solutions that meet particular business requirements.

- Financial Reporting: Financial institutions benefit from conditional columns in Power BI by flagging missing data in expense reports. This proactive identification of discrepancies during audits simplifies the review process and enhances data integrity and compliance. Utilizing BI and RPA together can streamline reporting workflows, ensuring accurate insights with minimal manual intervention. The 3-Day BI Sprint can be especially impactful in swiftly generating these reports.
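Two of the scenarios above can be sketched in DAX as follows. The table and column names (`Sales[Amount]`, `Expenses[Amount]`) and the ‘Recorded’ label are assumptions made for illustration; the organizations described may have implemented this differently.

```dax
-- Calculated column (sales analysis): label rows with no amount as "Pending".
Sales Status = IF(ISBLANK(Sales[Amount]), "Pending", "Recorded")

-- Measure (financial reporting): count expense rows that are missing an amount.
Expenses Missing Amount =
COUNTROWS(
    FILTER(Expenses, ISBLANK(Expenses[Amount]))
)
```

The measure returns blank rather than 0 when no rows are missing, which keeps an audit card visual empty until there is something to investigate.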

With more than 2000 satisfied clients, Hevo shows how useful conditional fields can be in optimizing operations. By implementing conditional columns effectively, organizations can transform their reporting capabilities. As mentioned by Joleen Bothma, a Data Science Consultant, the ability to customize visual elements in BI—such as using imported icons for conditional formatting—further enriches the data representation.

Notably, the case study titled ‘Changing Color Based on Value in Power BI’ illustrates how users can click the function icon in the Data Colors section to set conditions and define color ranges based on field values. Ultimately, these strategies lead to improved decision-making and operational efficiency, positioning businesses for success in today’s rapidly evolving data-driven landscape.

Conclusion

Harnessing the power of conditional columns in Power BI is pivotal for organizations striving to maintain data integrity and derive actionable insights from their datasets. By effectively managing null values and establishing clear categorizations within data, businesses can enhance the accuracy of their analyses and streamline their decision-making processes. The step-by-step guidance provided illustrates how easy it is to implement these conditional columns, ensuring that data remains intact and actionable, even when faced with gaps.

Moreover, adopting best practices in data management, such as validating data, utilizing descriptive naming conventions, and documenting logic, can significantly improve the reliability of insights. Integrating Robotic Process Automation (RPA) into these workflows not only mitigates the challenges posed by null values but also frees up valuable resources for strategic initiatives. The real-world applications discussed highlight how various sectors are successfully leveraging conditional columns for sales analysis, customer feedback, and financial reporting, ultimately driving operational efficiency.