Introduction

In the realm of data analysis, Power BI stands out as a powerful tool that empowers organizations to transform raw data into actionable insights. At the heart of this transformation lies the effective use of sample data, which not only accelerates the learning curve for new users but also enhances the quality of reports and dashboards.

By leveraging pre-defined datasets, businesses can bypass the hurdles of data collection and inconsistencies, allowing them to focus on what truly matters—extracting meaningful trends and insights that drive strategic decisions.

As organizations increasingly recognize the value of data-driven approaches, understanding the best practices for utilizing sample data becomes essential. This article delves into the myriad benefits of sample data in Power BI, offering practical strategies to optimize its use and ultimately elevate decision-making processes across various sectors.

Understanding Sample Data in Power BI: A Key Component for Effective Analysis

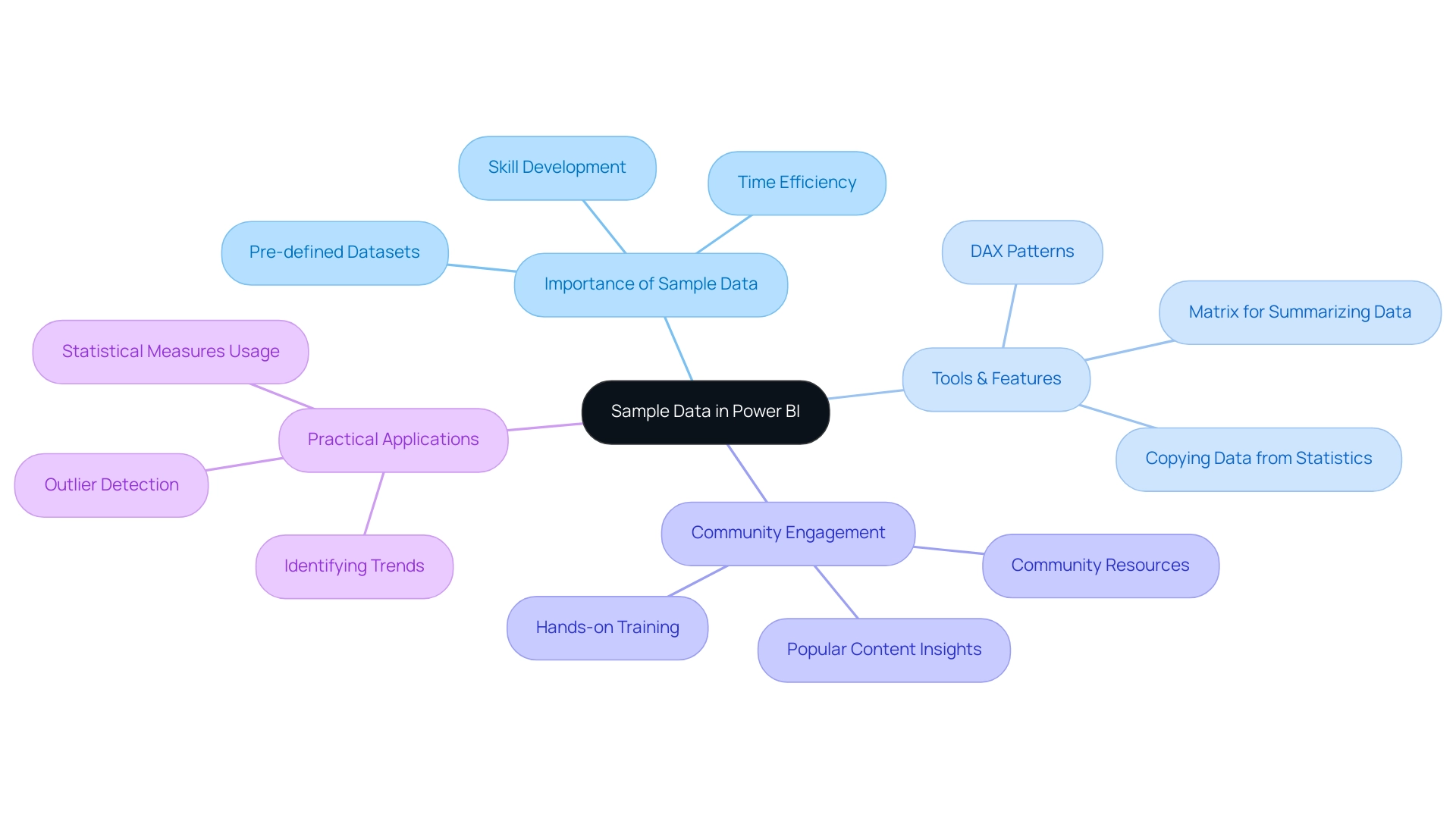

In Power BI, sample data is a valuable resource for users, especially while they are learning data management and insight generation. With pre-defined datasets, newcomers can explore the software's features without first having to collect their own data. This immediate access builds skill in data manipulation and visualization. It also helps with two common problems: time-consuming report creation and data inconsistencies, which are often made worse by the absence of a governance strategy.

As users learn to create insightful reports with sample data, they become better at drawing clear, actionable guidance from their analyses. This addresses the common problem of reports that are full of figures but lack clarity.

Recent improvements and resources further empower users. Examples include the ability to copy data from column statistics and the DAX Patterns repository for calculating statistical measures. These tools streamline work with datasets and strengthen analytical capabilities, which matters to directors focused on operational efficiency. As Greg Deckler emphasizes, community engagement is key to effective learning, and the Power BI community's resources, including hands-on training sessions, exemplify this approach.

Notably, a case study on basic statistical measures shows how users can summarize data without extensive technical knowledge. With these measures they can spot patterns and anomalies, such as outliers among cars sold for more than 180 thousand Reais. Used this way, sample data clarifies complex ideas and supports informed choices in real-world situations.
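You can reproduce the case study's summary outside Power BI as a quick sanity check. The sketch below uses Python's standard `statistics` module. The car prices are invented sample values in thousands of Reais, and `summarize` and `above_threshold` are hypothetical helpers. Only the 180 threshold comes from the case study, and a fixed cutoff is just one simple way to flag outliers.

```python
import statistics

# Hypothetical sample: car sale prices in thousands of Reais (made-up values).
prices = [45, 52, 52, 61, 70, 75, 88, 95, 190, 240]


def summarize(values):
    """Return the basic statistical measures discussed in the case study."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }


def above_threshold(values, threshold):
    """Return values strictly greater than the threshold (candidate outliers)."""
    return [v for v in values if v > threshold]


summary = summarize(prices)
outliers = above_threshold(prices, 180)  # 180 thousand Reais, per the case study

for name, value in summary.items():
    print(f"{name}: {value:.1f}")
print("outliers:", outliers)
```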

Use Cases of Sample Data: Enhancing Power BI Dashboards and Reports

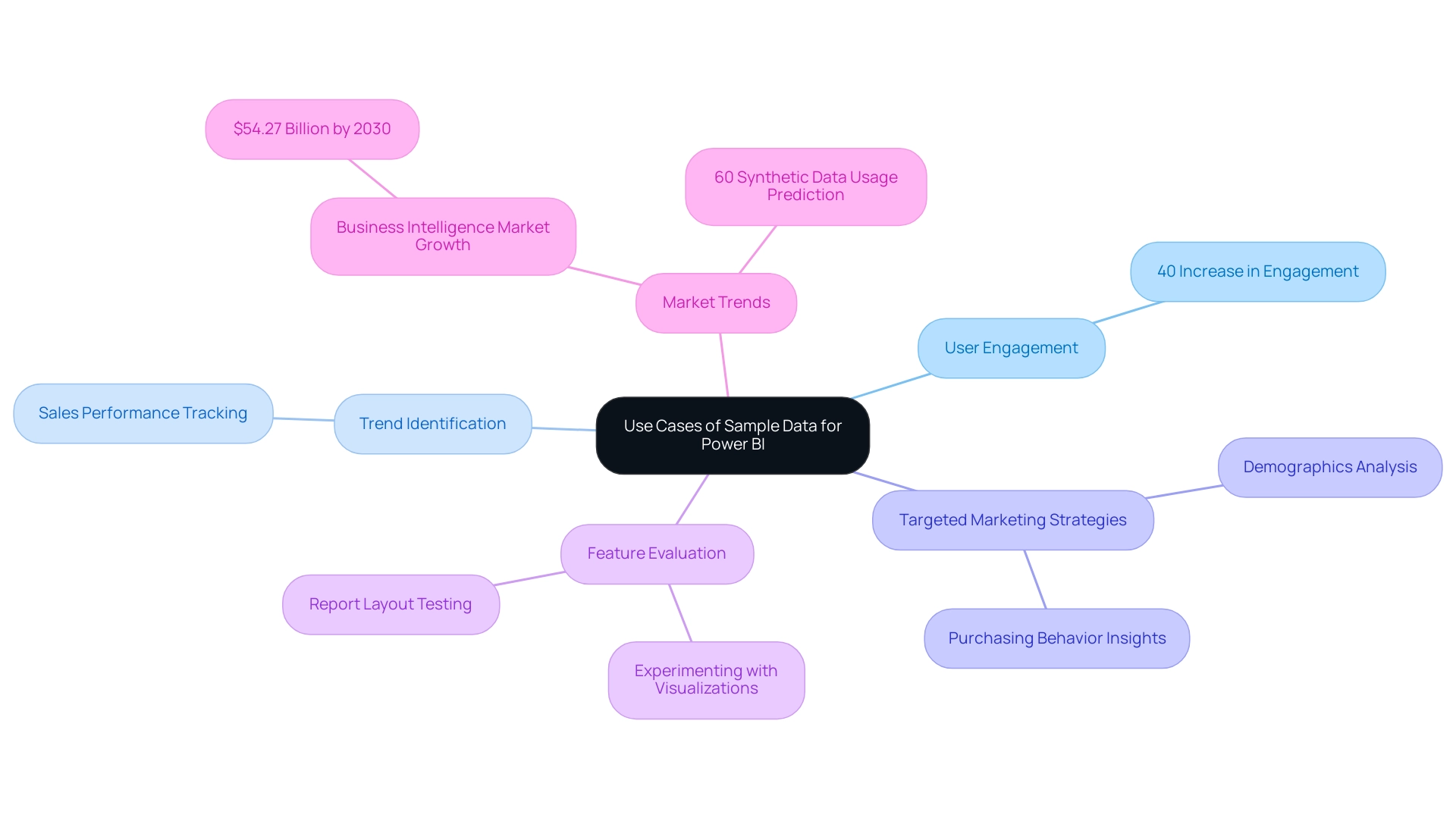

Sample data in Power BI offers many ways to improve dashboards and reports and to strengthen analytical capabilities. Some statistics indicate that dashboards built with sample data can increase user engagement by as much as 40%. With test sales figures, for example, organizations can build dynamic visualizations that track sales performance over time. These visuals help stakeholders identify trends and make informed, strategic decisions.

Sample customer data, in turn, lets companies examine demographics and purchasing behavior, which supports targeted marketing strategies. Sample data is also useful for evaluating new features. Users can try different visualizations and report layouts without risking the integrity of live data. This encourages innovation and speeds up the development of business intelligence solutions.
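The code below is a minimal Python sketch of the "test sales figures" idea. It generates a reproducible synthetic sales table and aggregates it by month, which is the shape a sales-over-time visual needs. The schema (`order_id`, `order_date`, `region`, `amount`), the value ranges and the year 2024 are illustrative assumptions. They do not describe any Power BI sample dataset.

```python
import random
from collections import defaultdict
from datetime import date


def make_sample_sales(n_rows, seed=42):
    """Generate a hypothetical sales table as a list of dicts.

    A fixed seed makes the sample reproducible, so report layouts can be
    compared against exactly the same data every time.
    """
    rng = random.Random(seed)
    regions = ["North", "South", "East", "West"]
    rows = []
    for i in range(n_rows):
        rows.append({
            "order_id": i + 1,
            "order_date": date(2024, rng.randint(1, 12), rng.randint(1, 28)),
            "region": rng.choice(regions),
            "amount": round(rng.uniform(50, 500), 2),
        })
    return rows


def monthly_totals(rows):
    """Sum the amount by (year, month), sorted chronologically."""
    totals = defaultdict(float)
    for row in rows:
        key = (row["order_date"].year, row["order_date"].month)
        totals[key] += row["amount"]
    return dict(sorted(totals.items()))


sales = make_sample_sales(200)
for (year, month), total in monthly_totals(sales).items():
    print(f"{year}-{month:02d}: {total:,.2f}")
```

The key design choice is the fixed random seed. Every layout experiment runs against identical data, so any difference between report versions comes from the report and not from the sample.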

The recent example of Databricks, which filed for its initial public offering (IPO), shows how much innovative data management and analytics matter in a competitive landscape. The business intelligence market is expected to reach $54.27 billion by 2030, so sample data in Power BI becomes increasingly important for organizations that want to stay competitive. As industry experts put it, ‘The business intelligence market is evolving rapidly, and organizations must adapt to stay ahead.’

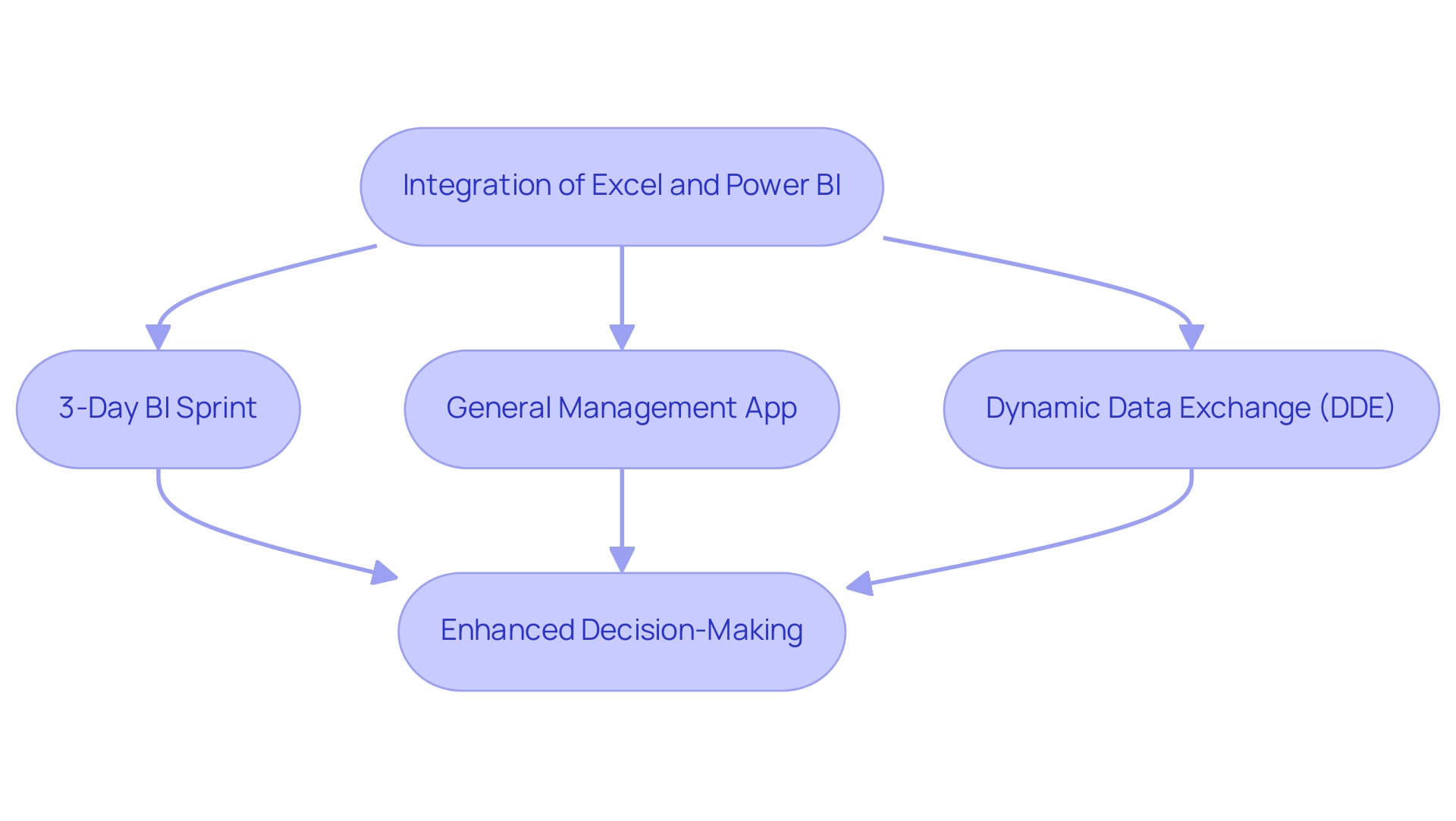

Gartner has also predicted that by 2024, 60% of the data used to develop AI and analytics projects would be synthetically generated, which makes effective data management more critical than ever. Good use of sample data in Power BI helps organizations streamline their BI processes and deliver insights that drive strategic initiatives and improve performance. Our 3-Day BI Sprint can help you quickly create professionally designed reports, and the General Management App provides comprehensive management and smart reviews.

To explore how these features can benefit your organization, book a free consultation today.

Best Practices for Utilizing Sample Data in Power BI

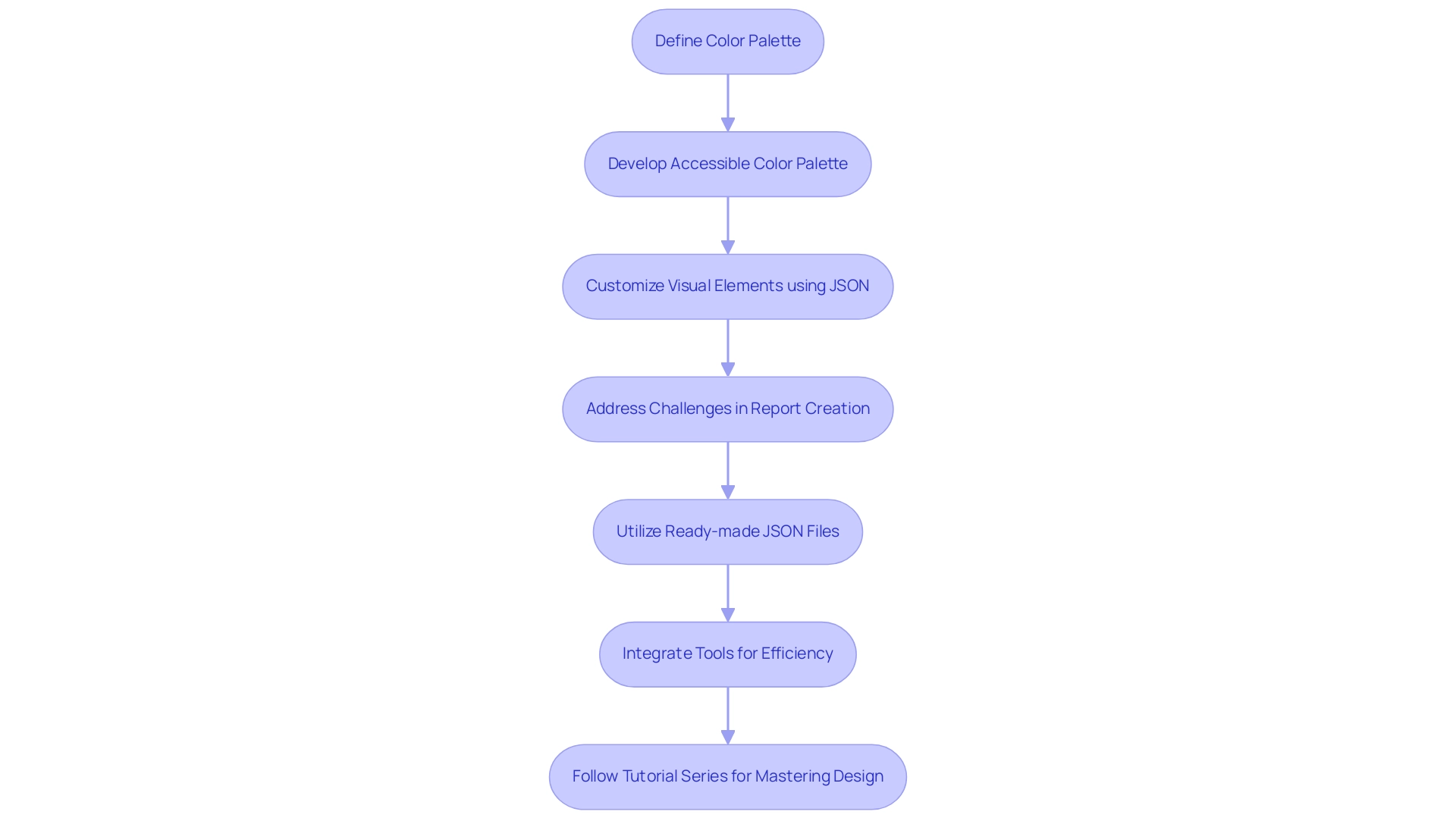

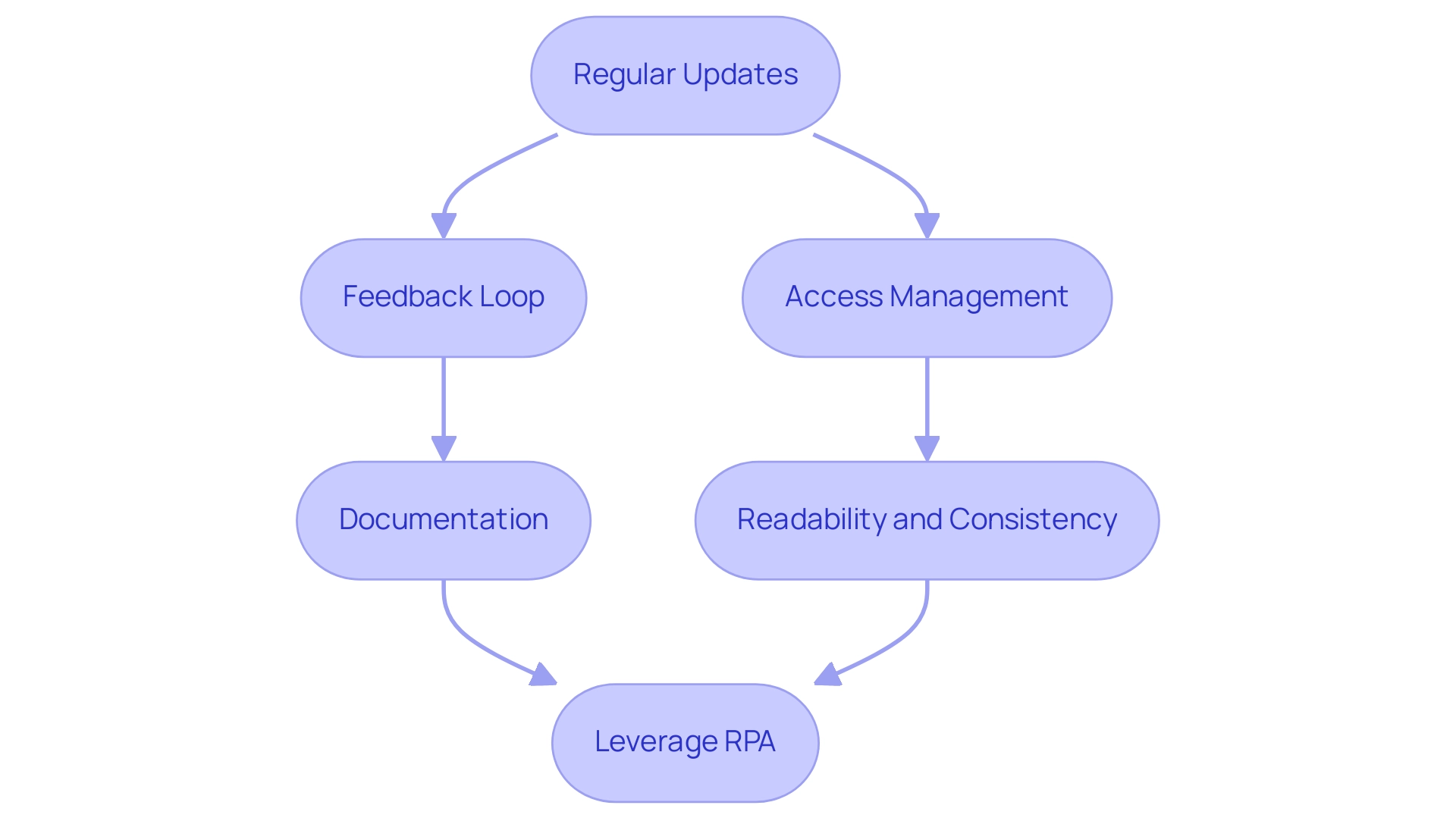

To get the most out of sample data in Power BI and leverage the power of Business Intelligence, follow these best practices:

-

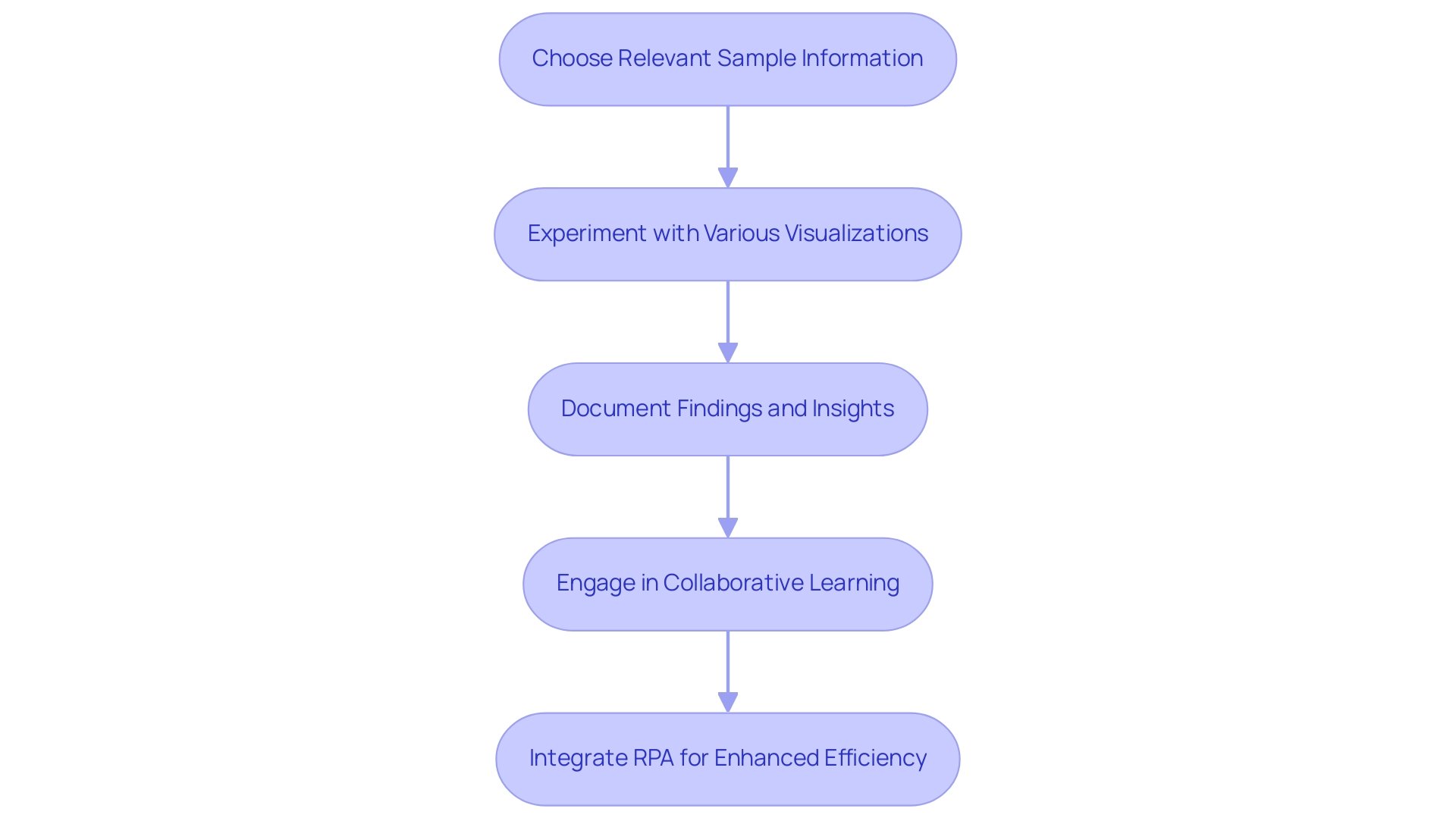

Choose Relevant Sample Information: Opt for datasets that closely mirror upcoming production information. This alignment facilitates a clearer understanding of final document functionality and aesthetics, paving the way for more effective operational insights.

-

Experiment with Various Visualizations: Utilize example information to test a range of visualization methods. This experimentation can unveil the most impactful methods for presenting insights effectively, addressing the common challenge of report creation that can be time-consuming.

-

Document Findings and Insights: As you explore representative information, meticulously record observations regarding successful strategies and pitfalls. Such records will serve as invaluable references during the analysis of actual information and can help mitigate inconsistencies.

-

Engage in Collaborative Learning: Foster a collaborative environment by sharing insights and findings with colleagues. This exchange fosters a culture of learning and ongoing enhancement in utilizing sample information and promotes growth through informed decision-making.

-

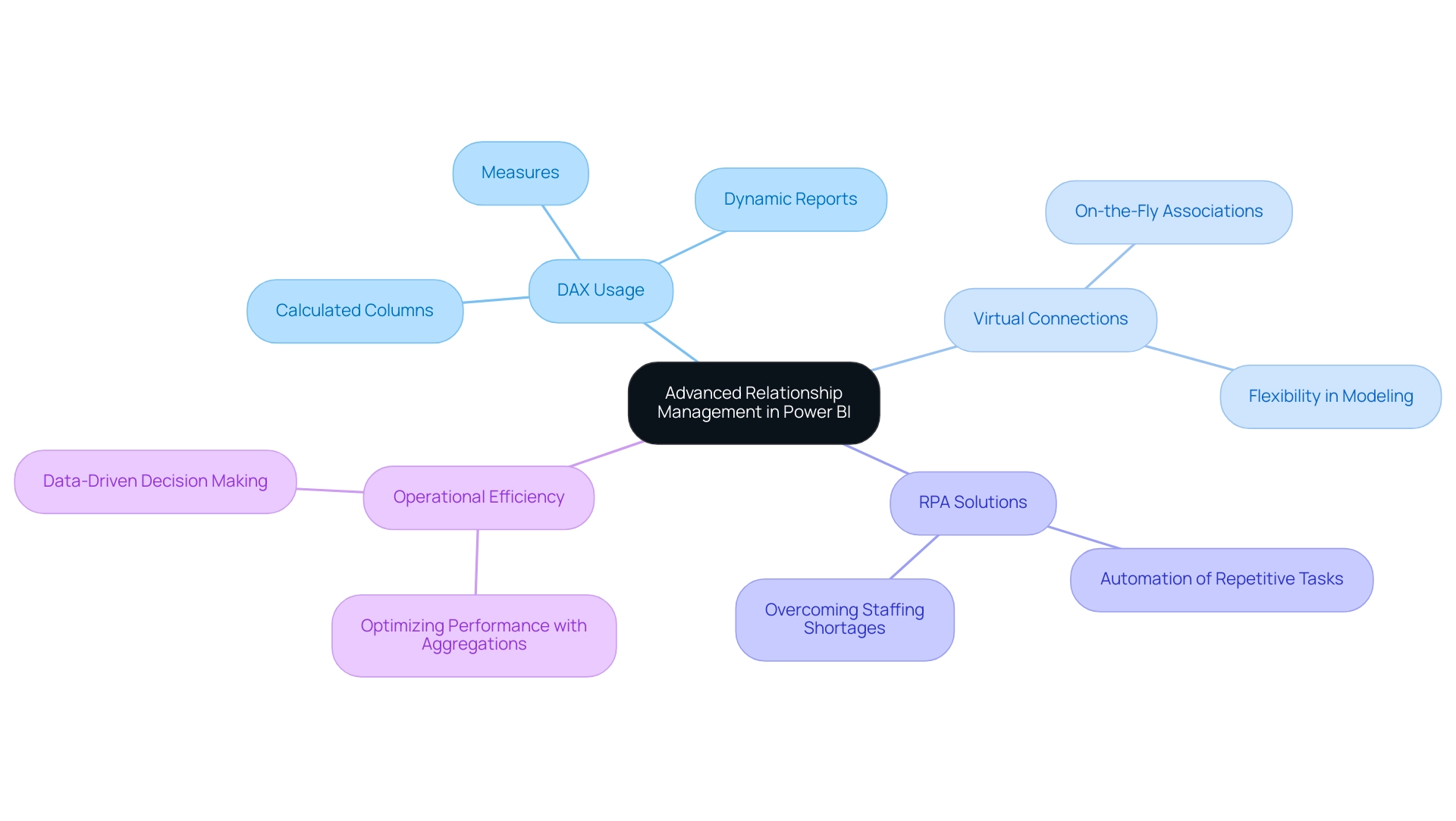

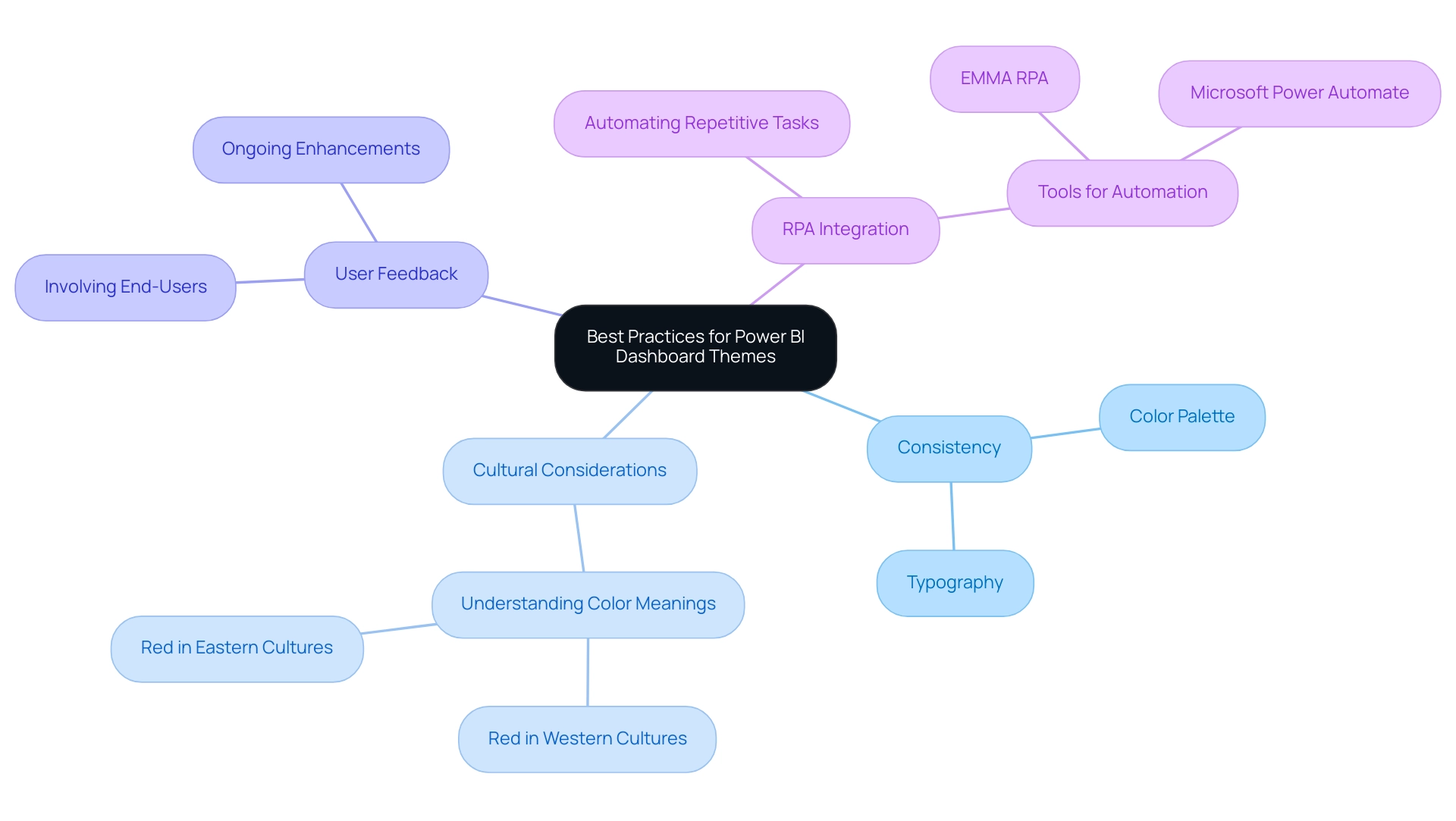

Integrate RPA for Enhanced Efficiency: Consider how Robotic Process Automation (RPA) can complement these best practices by automating repetitive tasks, thus improving overall efficiency in information management and report generation.
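One way to act on the first practice is to check that a sample dataset has the same columns and types as the production data it stands in for, before you build anything on it. The sketch below compares two schemas written as plain Python dictionaries. The column names and type labels are hypothetical. In a real project they would come from your own source metadata.

```python
def compare_schemas(sample, production):
    """Compare two {column_name: type_name} dicts.

    Returns the columns missing from the sample, the columns that exist only
    in the sample, and the shared columns whose declared types differ.
    """
    missing = sorted(set(production) - set(sample))
    extra = sorted(set(sample) - set(production))
    type_mismatch = sorted(
        col for col in set(sample) & set(production)
        if sample[col] != production[col]
    )
    return {"missing": missing, "extra": extra, "type_mismatch": type_mismatch}


# Hypothetical schemas for illustration only.
production_schema = {"OrderID": "int", "OrderDate": "date", "Region": "text", "Amount": "decimal"}
sample_schema = {"OrderID": "int", "OrderDate": "text", "Amount": "decimal", "Notes": "text"}

report = compare_schemas(sample_schema, production_schema)
print(report)
```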

Following these practices helps users become much more proficient in Power BI and build dashboards that present insights effectively and drive informed decision-making. A case study titled ‘Building the Dashboard’ illustrates this approach. Plotting calculated measures such as the mean, median, mode and standard deviation in a column chart reveals how the data is distributed and where its outliers are, which improves analytical clarity. For instance, cars sold for more than 180 thousand Reais stand out as outliers, which shows why identifying such values matters in analysis. As Brahmareddy aptly states,

This not only reduced the information size but also made the subsequent analysis much quicker.

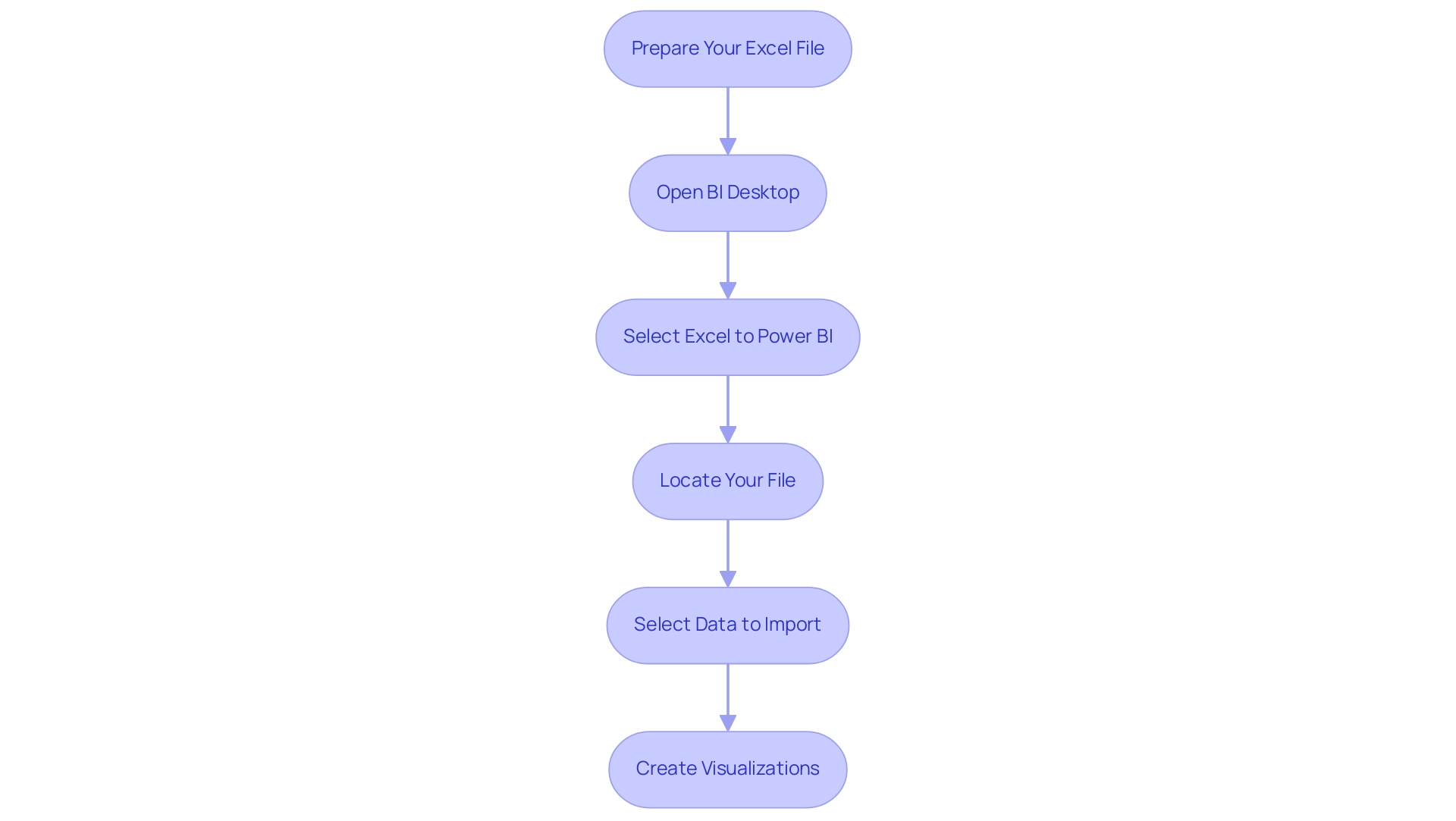

To upload Excel files to the Power BI service, users sign in, navigate to their workspace and select the upload option. Applying these strategies improves how sample data is used in Power BI and also increases user engagement and efficiency within BI projects. Failing to use Business Intelligence effectively can leave your business at a competitive disadvantage, which makes these practices all the more important.

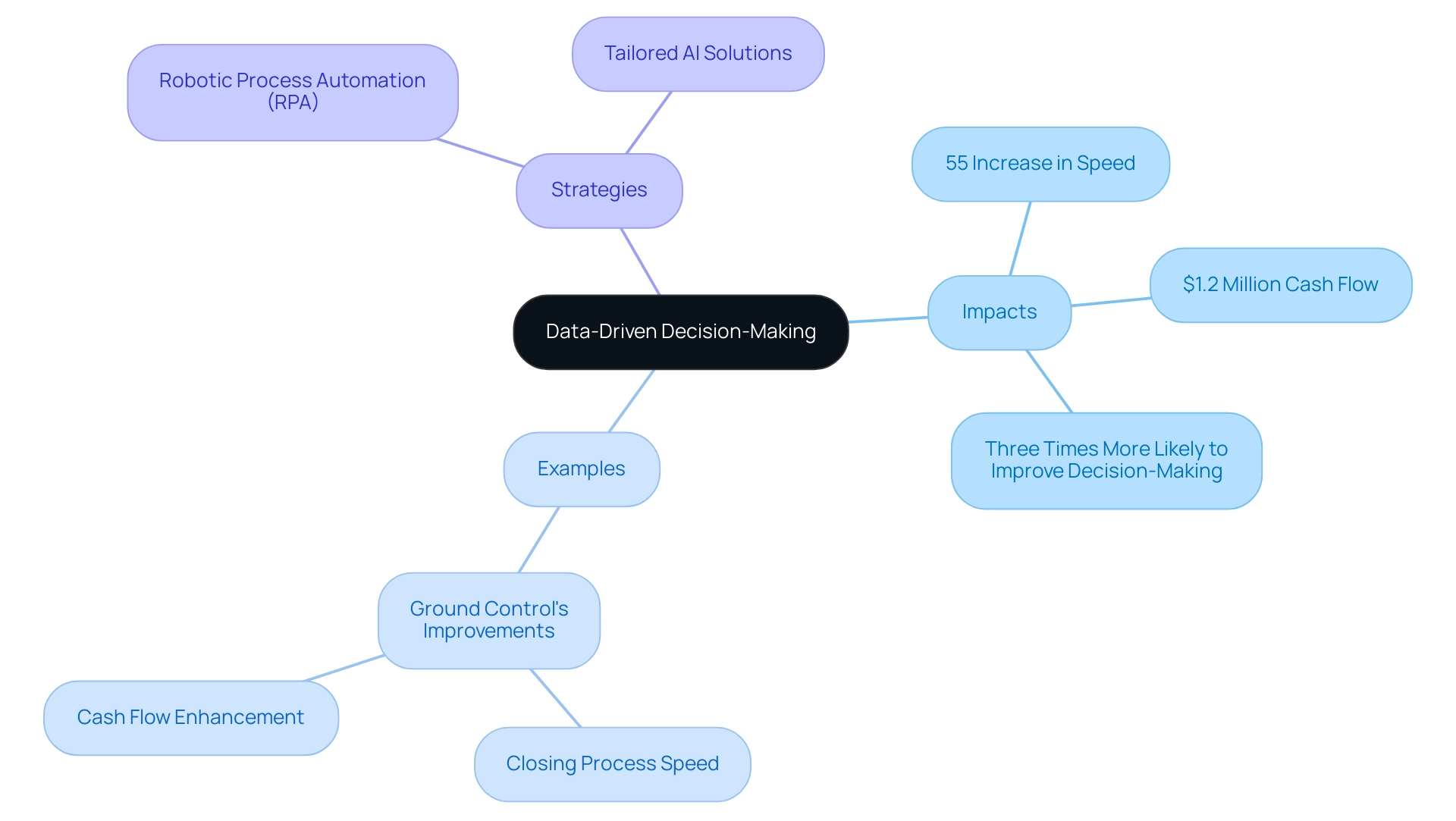

Measuring the Impact of Sample Data on Decision-Making

Sample data in Power BI has a significant, measurable effect on the quality of decisions. Used effectively, it helps organizations produce thorough reports that reveal essential insights into business performance, emerging trends and strategic opportunities. Decision-makers can then base their strategies on solid analysis rather than on intuition alone.

For instance, Ground Control implemented strategies that made its closing process 55% faster and freed up $1.2 million in cash flow, a tangible benefit of data-driven decision-making. Organizations that consistently build sample data into their Power BI processes also develop a culture of informed decision-making, which ultimately improves operational efficiency and overall effectiveness. As marketing specialist Tim Stobierski notes, ‘Data-driven strategies not only streamline processes but also empower organizations to make informed decisions that drive success.’

A recent PwC survey also shows that organizations that prioritize data-driven strategies are three times more likely than their counterparts to see notable improvements in decision-making. The infographic ‘Data Mesh and Data Fabric – From Theory to Application’ further illustrates this shift toward data-centric operations, covering modern approaches to data management and what they mean for business success. To improve productivity further, Robotic Process Automation (RPA) can streamline repetitive tasks and reduce the manual processes that often hinder operational flow.

Tailored AI solutions can also help identify the right technologies to address specific business challenges, ultimately driving operational excellence. We encourage you to explore how these solutions can transform your operations and lead to significant improvements in performance.

Conclusion

Harnessing the power of sample data in Power BI is a game-changer for organizations striving to enhance their data analysis capabilities. By utilizing pre-defined datasets, users can streamline their learning process, overcome common challenges, and create meaningful insights that drive strategic decisions. The practical applications of sample data—from improving user engagement in dashboards to facilitating innovative testing—underscore its value in a competitive business landscape.

Best practices such as selecting relevant datasets and engaging in collaborative learning can make Power BI projects significantly more effective. By documenting findings and experimenting with different visualizations, organizations can create reports that reflect accurate data and also tell a compelling story. Case studies show the results in measurable improvements in decision-making quality, with substantial gains in operational efficiency and financial performance.

As the business intelligence market continues to evolve, the integration of sample data into analytical processes will be essential for organizations seeking to maintain a competitive edge. Embracing these strategies not only fosters a culture of data-driven decision-making but also empowers teams to navigate complexities with confidence. Prioritizing the use of sample data in Power BI is not just a technical enhancement; it is a strategic imperative that positions organizations for success in an increasingly data-centric world.

Introduction

In the realm of data analytics, understanding cardinality is not just a technical necessity; it is a cornerstone for achieving operational excellence in Power BI. As organizations grapple with the complexities of data relationships, mastering the nuances of cardinality becomes crucial for transforming raw data into actionable insights. With the potential for misconfigured relationships leading to inefficiencies and confusion, it is essential to navigate these challenges with a strategic approach.

By examining the three types of cardinality (one-to-one, one-to-many and many-to-many), users can strengthen their data modeling. Their reports will then convey accurate information and support informed decision-making. As the data landscape continues to evolve, robust governance strategies and sound best practices will unlock the full potential of Power BI, helping organizations use their data more effectively and drive meaningful results.

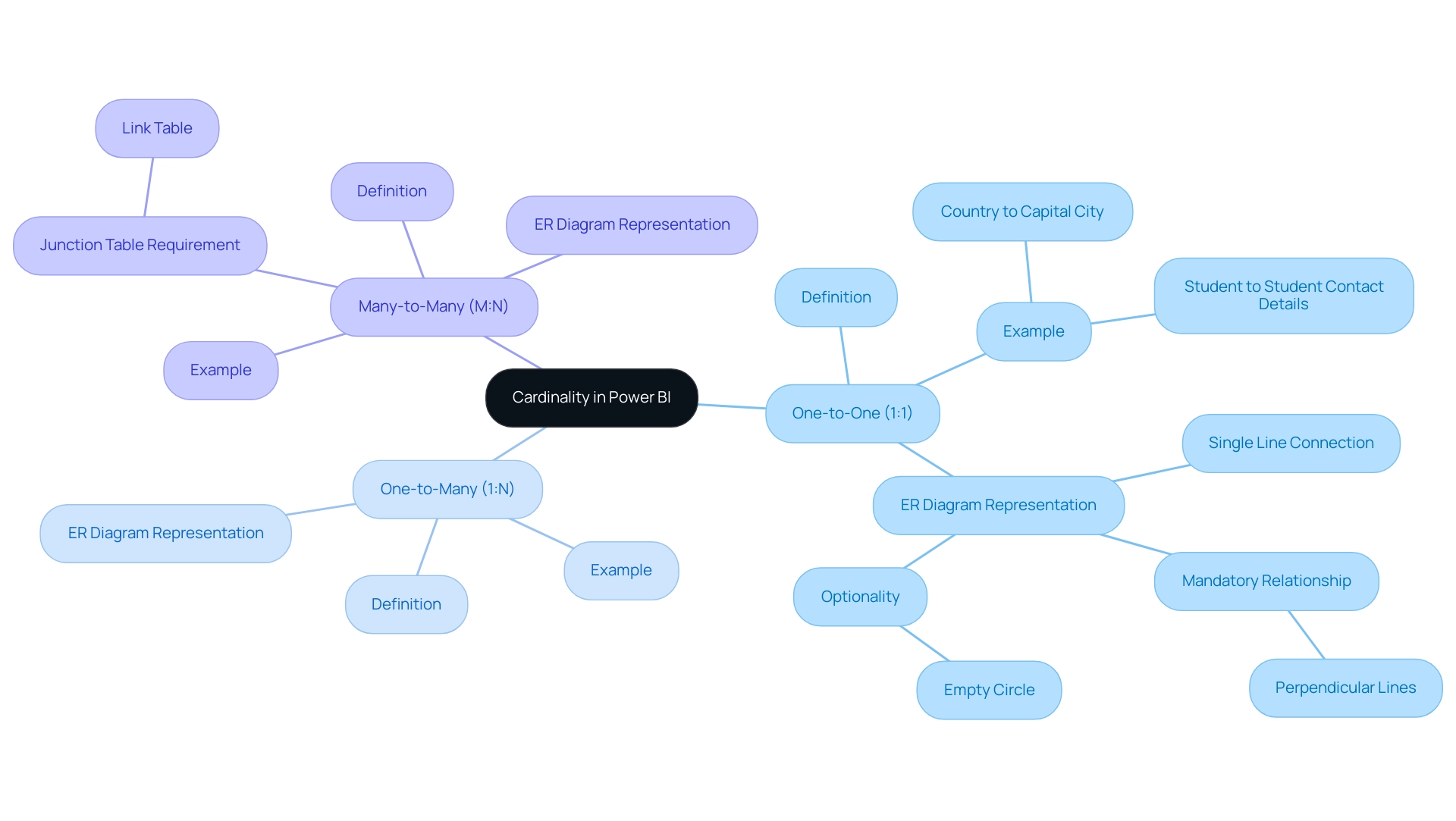

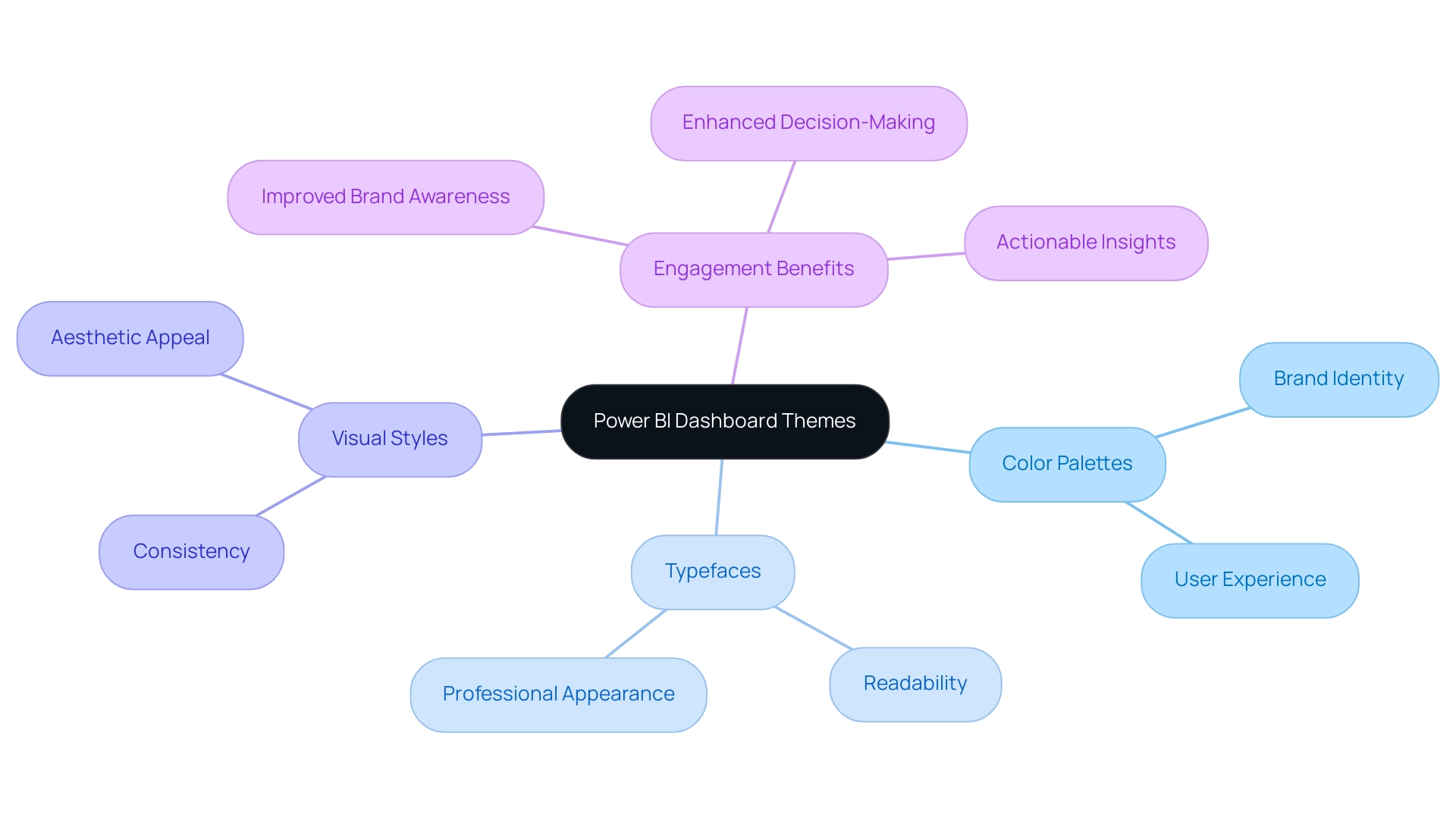

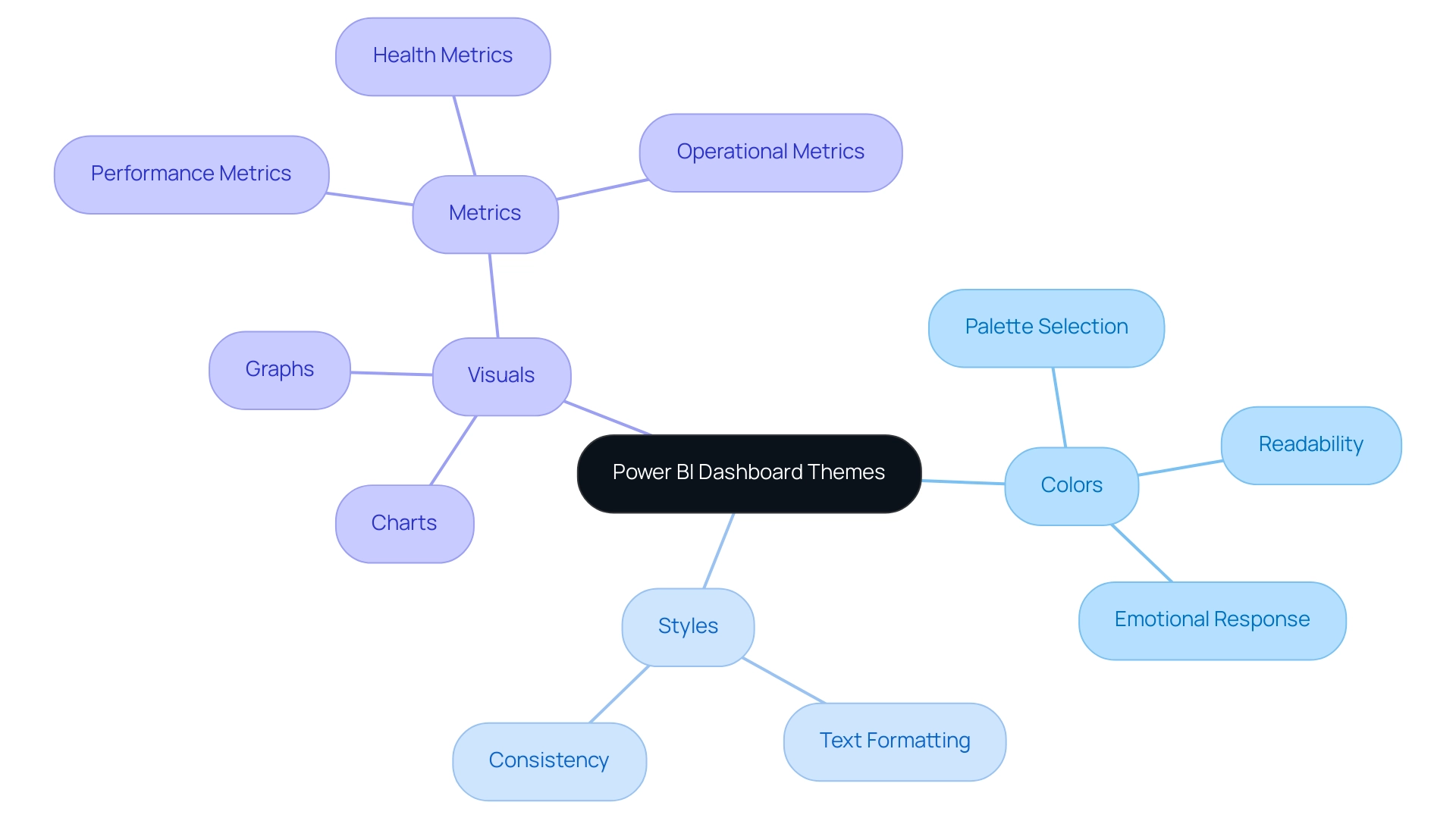

Understanding Cardinality in Power BI

In Power BI, cardinality describes the uniqueness of values in the columns that relate two tables, and it shapes how those tables interact. Understanding it is essential for building effective models, because it directly affects the accuracy of aggregations and how insights appear in reports. However, many users spend too much time creating reports instead of using the insights in their Power BI dashboards.

This common problem stems from inconsistencies across reports and a lack of actionable guidance, which can cause confusion and slow down decisions. A governance strategy is essential for addressing these inconsistencies and keeping data management dependable. Relationship cardinality in Power BI falls into three primary types:

- One-to-one

- One-to-many

- Many-to-many

Each type affects how data behaves and what results a report returns. Users who master these fundamentals and focus on actionable insights can model much more effectively, which leads to more accurate and influential analysis. As Joleen Bothma, a Data Science Consultant, notes,

By following these guidelines, you can significantly improve the efficiency and scalability of your BI reports, making them more effective tools for business analysis.

Her advice treats relationships and cardinality as a practical foundation for analytical success in Power BI, not just a theoretical idea. Power BI also lacks a native box plot visual, which limits some distribution-focused visualizations. Following data modeling best practices is essential for more efficient reports and easier report creation. These practices include understanding relationships, cardinality and cross-filter direction.

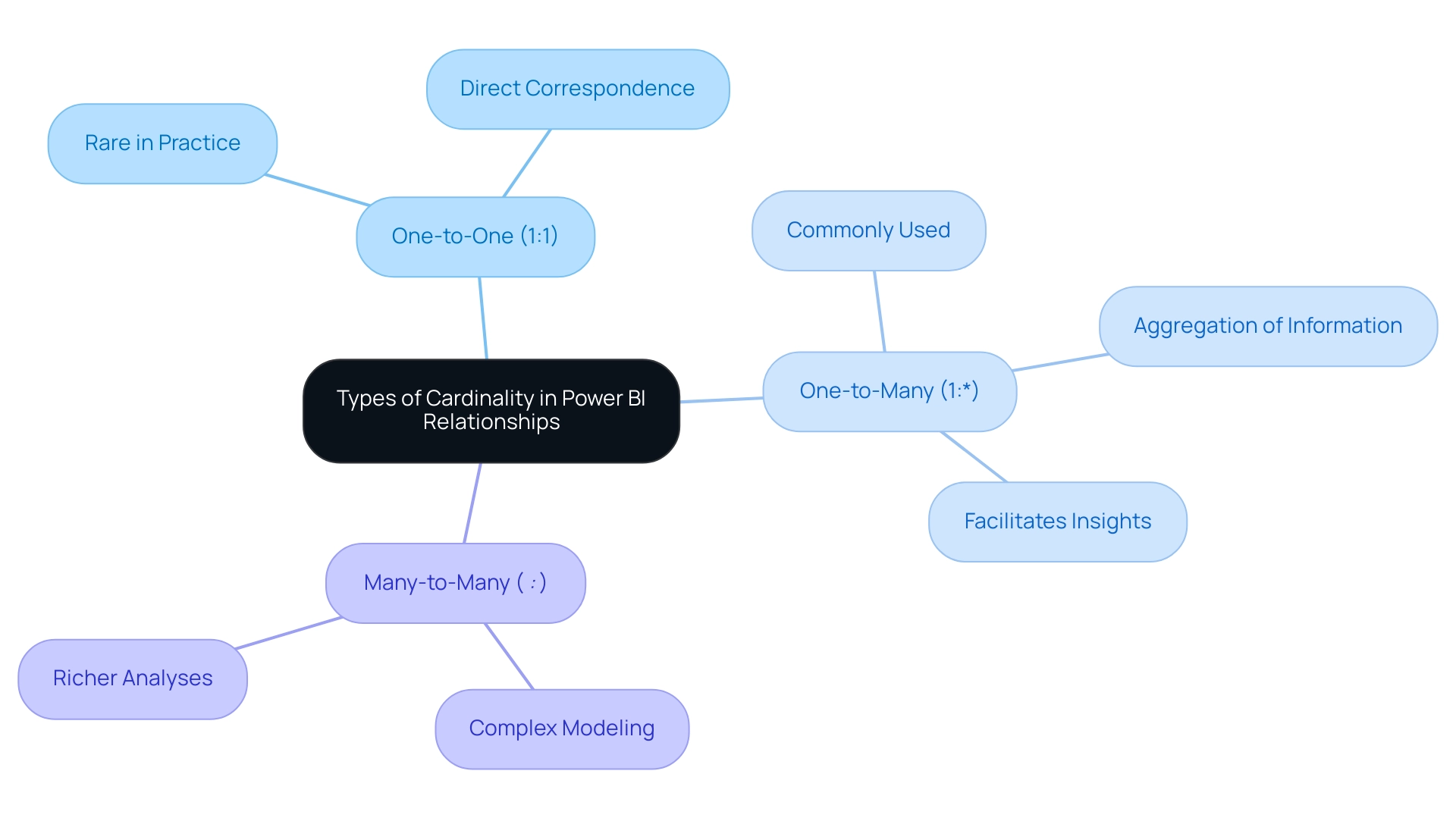

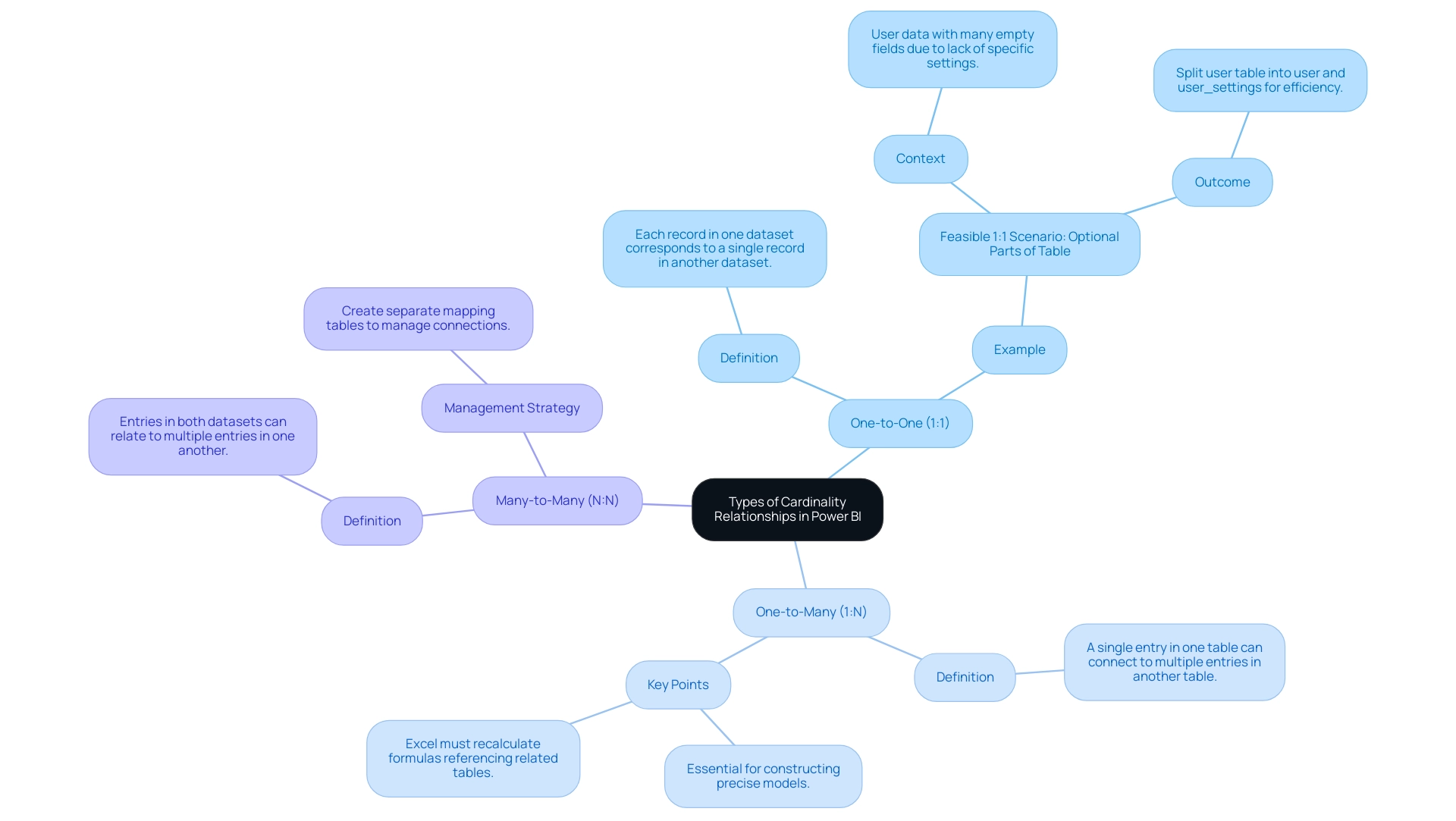

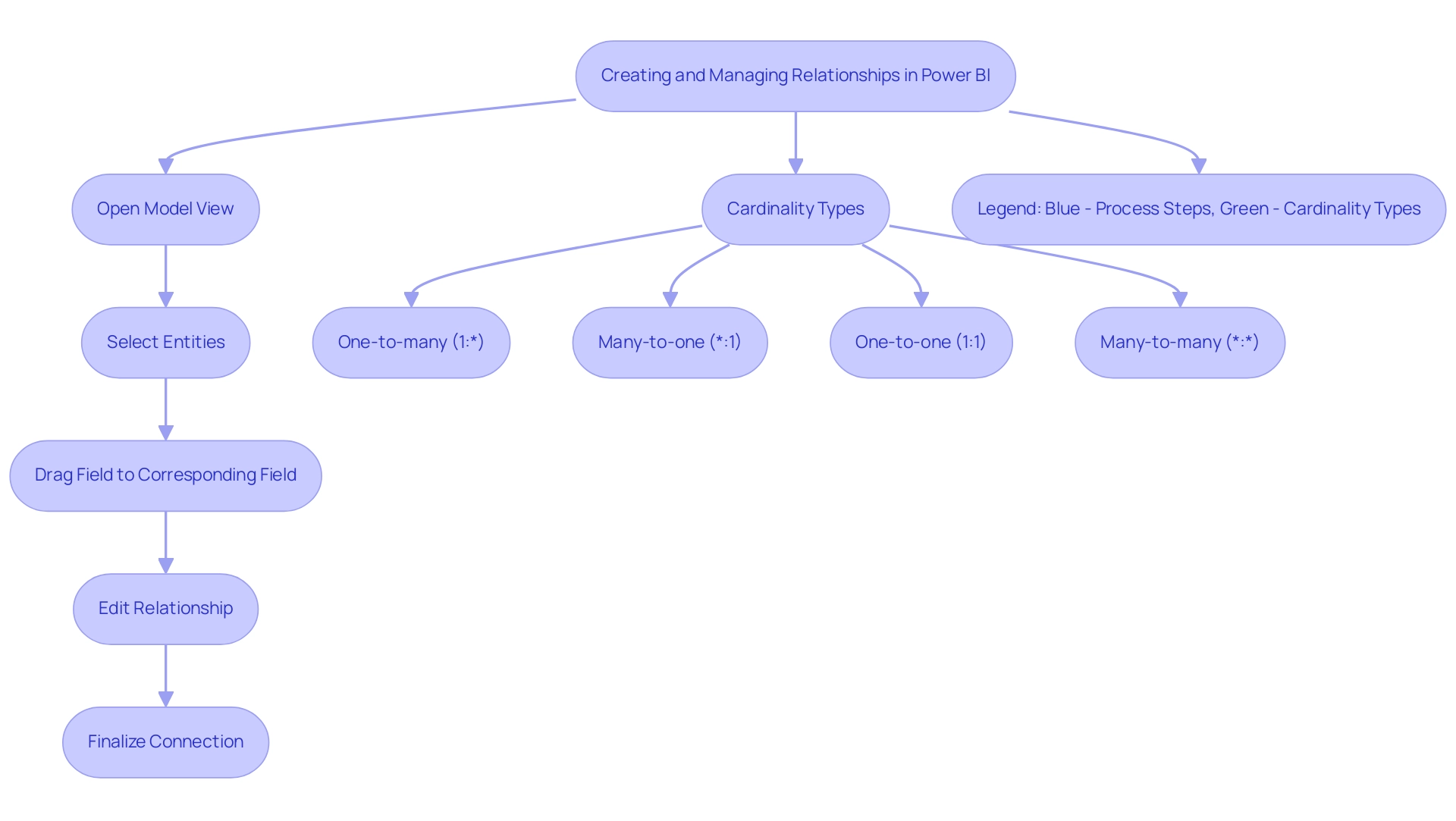

Types of Cardinality in Power BI Relationships

Power BI supports three primary relationship cardinalities: one-to-one (1:1), one-to-many (1:*) and many-to-many (*:*). In a one-to-one relationship, each row in one table corresponds to exactly one row in the other, which is relatively rare in practice. The one-to-many relationship is far more common: one row in a table can be associated with multiple rows in another table.

This arrangement is particularly useful for aggregating data and drawing insights, which eases the common problem of time-consuming report creation. Many-to-many relationships, by contrast, let multiple rows in one table connect with multiple rows in another. They make the model more complex but enable richer analyses. Understanding cardinality is essential for building and examining models accurately, especially because organizations often run into data inconsistencies that cause confusion.
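The rule behind these labels is simple: a table can be the "one" side of a relationship only if its key values are unique. The sketch below applies that rule to two key columns given as Python lists. It is a simplified illustration based on that rule alone, not Power BI's actual relationship-autodetect logic, and the sample keys are made up.

```python
def is_unique(keys):
    """A column can serve as the 'one' side only if no value repeats."""
    return len(set(keys)) == len(keys)


def classify_relationship(left_keys, right_keys):
    """Infer relationship cardinality from the uniqueness of each key column."""
    left_one, right_one = is_unique(left_keys), is_unique(right_keys)
    if left_one and right_one:
        return "one-to-one (1:1)"
    if left_one:
        return "one-to-many (1:*)"
    if right_one:
        return "many-to-one (*:1)"
    return "many-to-many (*:*)"


# Hypothetical key columns.
customer_ids = [1, 2, 3]
customer_profile_ids = [1, 2, 3]
product_ids = [10, 20, 30]
sales_product_ids = [10, 10, 20, 30, 30]
budget_categories = ["Bikes", "Bikes", "Helmets"]
sales_categories = ["Bikes", "Helmets", "Helmets"]

print(classify_relationship(customer_ids, customer_profile_ids))   # one-to-one (1:1)
print(classify_relationship(product_ids, sales_product_ids))       # one-to-many (1:*)
print(classify_relationship(budget_categories, sales_categories))  # many-to-many (*:*)
```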

This confusion can be worsened by a lack of governance strategy, which is essential for maintaining integrity and trust. As Kta aptly points out,

In relational databases, we have relations among the tables. These relations can be one-to-one, one-to-many or many-to-many.

Cardinality in Power BI describes exactly these relationship types. The term also applies to individual columns. A PERSON_ID column, for example, is likely to have very high cardinality, with a different value in every row. Understanding cardinality is crucial for defining relationships accurately. Accurate relationships keep the model aligned with business requirements and make it easier to extract the actionable insights that effective analytics and operational efficiency depend on.
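You can measure column cardinality directly as the number of distinct values, often together with the ratio of distinct values to rows. A ratio near 1.0, as with a PERSON_ID column, marks a very high-cardinality column. Such columns generally compress less well in Power BI's in-memory engine. The helper and both sample columns below are hypothetical.

```python
def column_cardinality(values):
    """Return the number of distinct values and the distinct-to-row ratio."""
    if not values:
        return 0, 0.0
    distinct = len(set(values))
    return distinct, distinct / len(values)


person_ids = list(range(1001, 1011))  # hypothetical IDs, every row distinct
countries = ["BR", "BR", "US", "BR", "US", "PT", "BR", "US", "BR", "PT"]

print(column_cardinality(person_ids))  # (10, 1.0)
print(column_cardinality(countries))   # (3, 0.3)
```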

As the case study titled ‘Defining Measurements Based on Business Rules’ demonstrates, business rules and the nature of the entities involved determine cardinality, and this is key to aligning the database structure with business needs. In today's data-rich environment, failing to extract meaningful insights can put your business at a competitive disadvantage. Understanding cardinality is therefore essential for getting the most out of your data.

The Impact of Cardinality on Data Modeling and Performance

Cardinality plays a pivotal role in data modeling efficiency and overall performance, especially for organizations facing poor master data quality and inefficient reporting processes. When relationship cardinality is configured incorrectly, data retrieval can become much less efficient, which lengthens processing times and slows reports. This matters especially to directors focused on operational efficiency, given the time-consuming report creation and inconsistencies that a missing governance strategy can cause.

Organizations also often meet barriers to AI adoption, such as uncertainty about how to integrate AI into existing processes and fears of high costs and complexity. These barriers can make data quality and reporting efficiency even worse. As industry guidance points out, a model relationship is limited when there's no guaranteed ‘one’ side. This typically happens with many-to-many relationships or with relationships that cross source groups.

This limitation complicates data management, because limited relationships use INNER JOIN semantics for table joins and restrict the use of certain DAX functions. Defining cardinality correctly is therefore crucial for healthy relationships, faster queries and more responsive dashboards. A thorough understanding of cardinality also helps prevent the duplication and integrity issues that often stem from mismanaged relationships.
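INNER JOIN semantics have a practical effect when fact rows have no match on the other side. Power BI's documentation describes regular relationships as adding a blank row for such unmatched values. Limited relationships do not add it, so those rows disappear from grouped results. The sketch below imitates both behaviors in plain Python with hypothetical tables. It models the outcome, not how the engine executes queries.

```python
from collections import defaultdict

# Hypothetical tables: ProductID -> Category, and (ProductID, Amount) sales rows.
products = {1: "Bikes", 2: "Helmets"}
sales = [(1, 100.0), (2, 40.0), (3, 25.0)]  # ProductID 3 has no matching product


def total_by_category(sales_rows, product_lookup, inner_join):
    """Sum sales by category, imitating regular vs. limited relationship behavior."""
    totals = defaultdict(float)
    for product_id, amount in sales_rows:
        category = product_lookup.get(product_id)
        if category is None:
            if inner_join:
                continue  # INNER JOIN semantics: the unmatched row is dropped
            category = "(Blank)"  # regular relationship: grouped under a blank member
        totals[category] += amount
    return dict(totals)


regular = total_by_category(sales, products, inner_join=False)
limited = total_by_category(sales, products, inner_join=True)
print("regular:", regular, "total:", sum(regular.values()))
print("limited:", limited, "total:", sum(limited.values()))
```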

By addressing these challenges, including those related to AI integration, Power BI users can improve their reporting. The recent addition of the read/write XMLA endpoint to Power BI is a notable milestone that bridges IT-managed workloads and self-service BI, and it underlines the importance of effective modeling practices. Users who master cardinality and overcome obstacles to AI adoption improve both their operational efficiency and their decision-making, and they make more effective use of their insights.

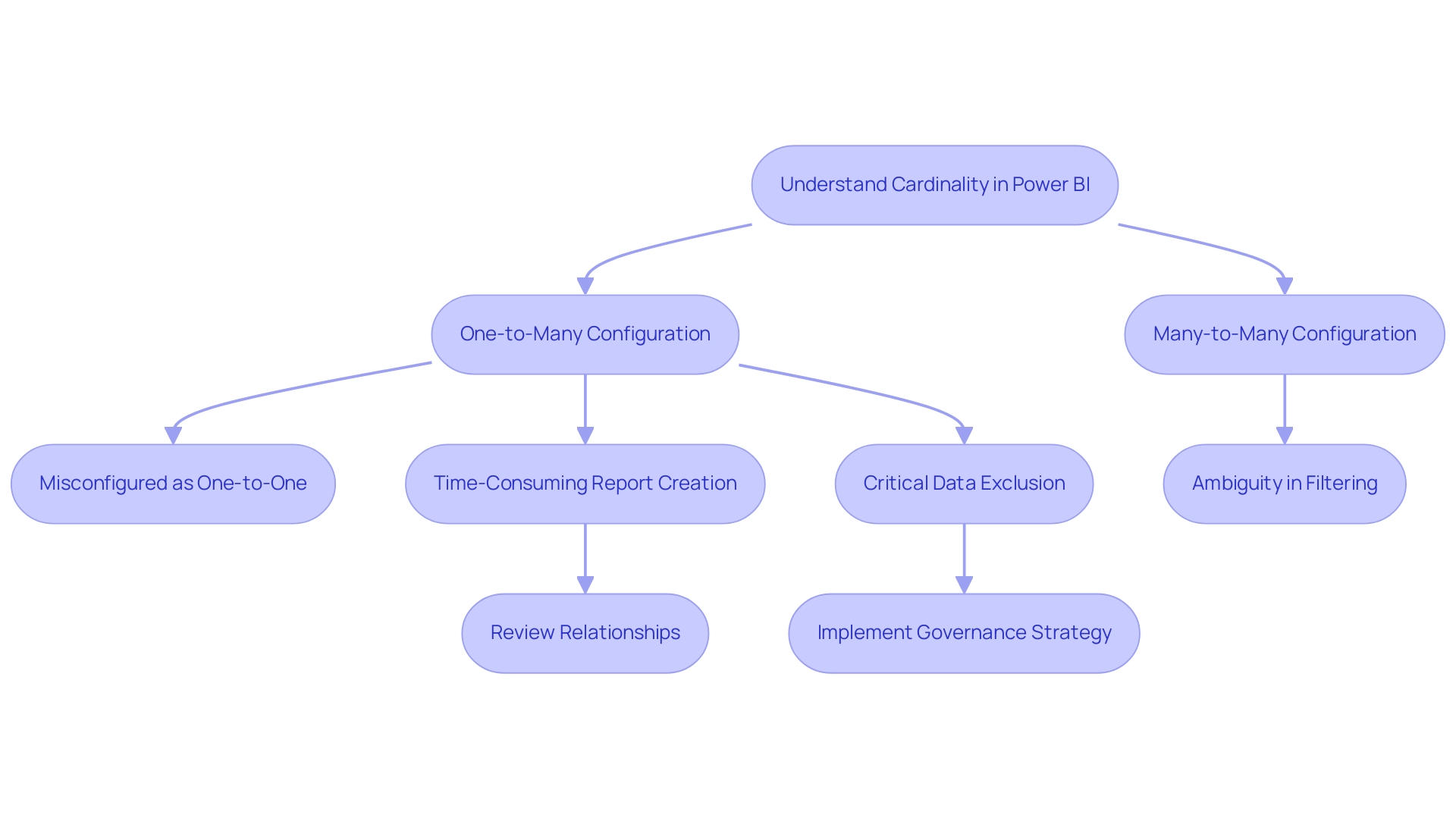

Common Cardinality Issues in Power BI

Understanding cardinality helps address common problems such as misconfigured one-to-many and many-to-many relationships, which pose significant challenges for analysis. These misconfigurations make report creation time-consuming, so users spend more time building reports than extracting valuable insights. For instance, when a relationship is mistakenly configured as one-to-one instead of one-to-many, critical data points may be left out of reports, which distorts the analysis.

Many-to-many relationships also introduce ambiguity and complicate filtering, which makes actionable insights harder to derive. One user recently raised concerns about Power BI automatically choosing a many-to-many (n-to-n) relationship and asked how empty cells in other rows affect the analysis. Cases like this show why cardinality settings need to be understood to prevent misinterpretations and data inconsistencies.

Users need to review their relationships carefully so that they are defined precisely. Otherwise, data integrity can be severely compromised and effective decision-making impeded. The lack of a robust governance strategy can make these issues worse, which leads to further inconsistencies across reports and erodes trust in the data. As Farhan Ahmed, a Community Champion, pointed out: ‘Can’t filter the NULL value in Query Edit in Table 1? I don’t think this value will be used in your fact table.’

His advice shows the value of tackling common pitfalls in Power BI proactively.
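If the key column contains several empty values, that could explain the automatic n-to-n choice. Repeated blanks make the column non-unique, so neither table qualifies as the "one" side. The sketch below uses a hypothetical `table1` with a `Code` column. It shows that dropping null keys restores uniqueness, in the spirit of the advice to filter NULL values in the query editor.

```python
def has_unique_keys(keys):
    """True if no key value (None included) appears more than once."""
    return len(set(keys)) == len(keys)


def drop_null_keys(rows, key):
    """Keep only rows whose key is not None, similar in spirit to filtering nulls."""
    return [row for row in rows if row[key] is not None]


# Hypothetical lookup table in which two rows have an empty Code.
table1 = [
    {"Code": "A", "Label": "Alpha"},
    {"Code": "B", "Label": "Beta"},
    {"Code": None, "Label": ""},
    {"Code": None, "Label": ""},
]

keys_before = [row["Code"] for row in table1]
keys_after = [row["Code"] for row in drop_null_keys(table1, "Code")]

print("unique before:", has_unique_keys(keys_before))  # unique before: False
print("unique after:", has_unique_keys(keys_after))    # unique after: True
```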

Reports full of numbers and graphs but lacking clear, actionable guidance can also leave stakeholders unsure of the next steps. The case study titled ‘Creating Connections in BI’ shows that relationships can be created automatically or manually. Autodetected relationships are convenient but sometimes flawed. By maintaining robust data models and staying aware of these issues, users can strengthen their analytical capabilities and improve operational efficiency. The focus then shifts from creating reports to using insights.

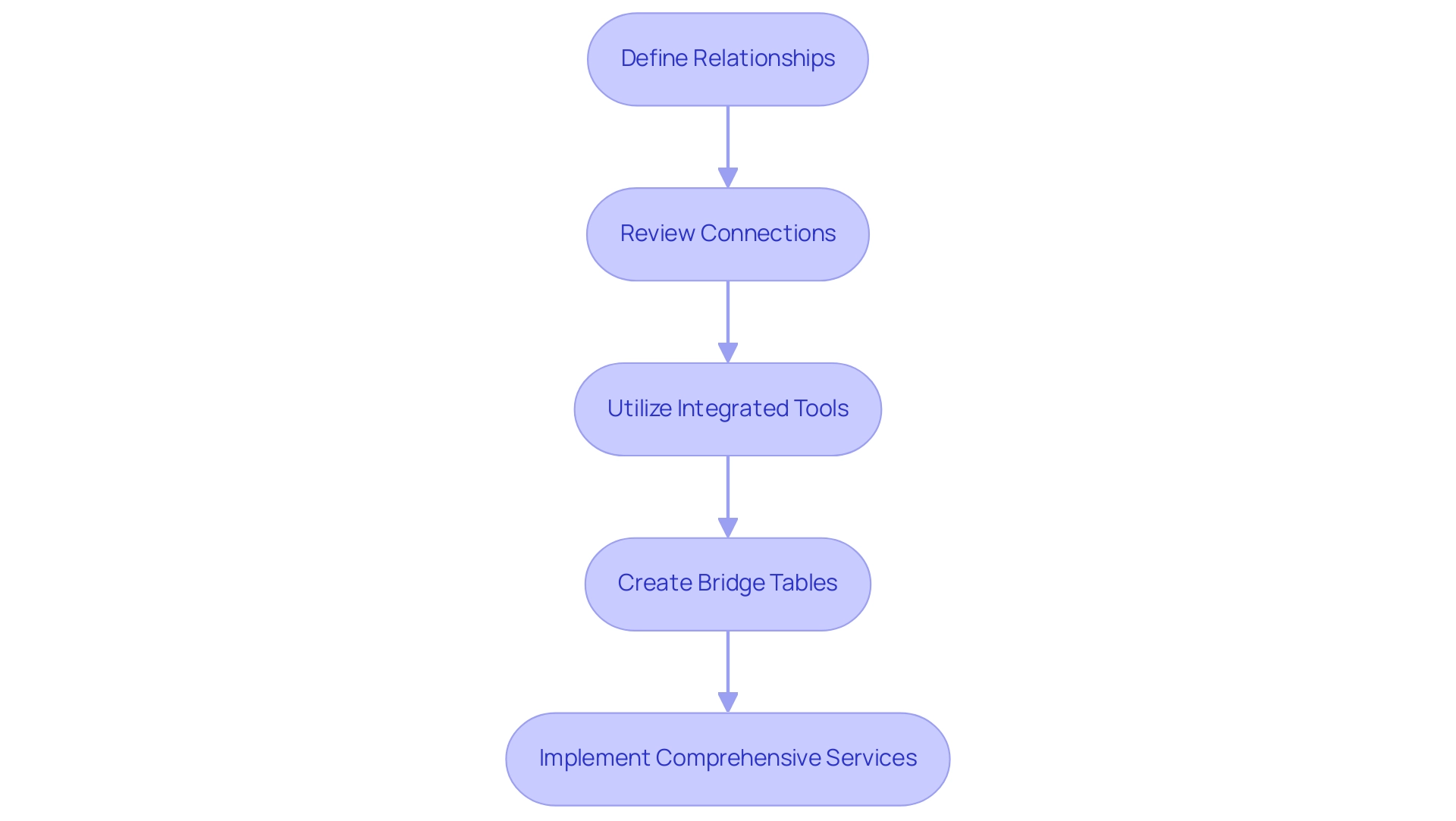

Strategies for Managing Cardinality in Power BI

Managing cardinality (the number of unique values) in Power BI starts with defining relationships precisely, so that they match the intended analytical outcomes. As Nikola Ilic aptly states, “The biggest opponent is referred to as the number of elements!” This underscores the difficulties that high cardinality creates in data management.

Review and update these relationships regularly, especially as data evolves, so that they stay accurate and relevant. Discussions about cardinality in Power BI have drawn 5,917 views, a clear sign of how many users are interested in this topic. Power BI's built-in tools for visualizing and managing relationships, such as Model view, can help reveal potential issues before they hurt performance.

One highly effective strategy is to create bridge tables for many-to-many relationships. Bridge tables simplify complex models and can significantly improve performance. Our 3-Day BI Sprint helps you tackle these challenges by producing professional reports focused on actionable insights, so your team can use its data effectively. Our Comprehensive Power BI Services also include custom dashboards, integration, expert training and the bonus of using your report as a template for future projects, which can significantly streamline your reporting processes.
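A bridge table holds each shared key value exactly once, so each original table can relate to it many-to-one. One many-to-many relationship becomes two one-to-many relationships. The Python sketch below builds such a bridge from two hypothetical tables that share a non-unique `Category` column. In Power BI, you would typically build the same table in Power Query or as a DAX calculated table.

```python
# Hypothetical tables that share a non-unique Category column.
budget = [
    {"Category": "Bikes", "Month": 1, "Budget": 1000},
    {"Category": "Bikes", "Month": 2, "Budget": 1200},
    {"Category": "Helmets", "Month": 1, "Budget": 300},
]
products = [
    {"ProductID": 1, "Category": "Bikes"},
    {"ProductID": 2, "Category": "Bikes"},
    {"ProductID": 3, "Category": "Helmets"},
    {"ProductID": 4, "Category": "Gloves"},
]


def build_bridge(tables, key):
    """Collect every distinct key value from all tables, each exactly once."""
    return sorted({row[key] for table in tables for row in table})


def relates_many_to_one(table, bridge, key):
    """True if every row's key exists in the bridge, i.e. table (*) -> bridge (1)."""
    bridge_keys = set(bridge)
    return all(row[key] in bridge_keys for row in table)


def budget_by_category(bridge, budget_rows):
    """Total budget per bridge category; categories with no budget report 0."""
    totals = {category: 0 for category in bridge}
    for row in budget_rows:
        totals[row["Category"]] += row["Budget"]
    return totals


bridge = build_bridge([budget, products], key="Category")
print(bridge)  # ['Bikes', 'Gloves', 'Helmets']
print(relates_many_to_one(budget, bridge, "Category"))    # True
print(relates_many_to_one(products, bridge, "Category"))  # True
print(budget_by_category(bridge, budget))  # {'Bikes': 2200, 'Gloves': 0, 'Helmets': 300}
```

The bridge contains every category from either table, so totals can also be reported for categories that exist on only one side. Here, `Gloves` has products but no budget.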

By implementing these strategies and utilizing our services, users can foster efficient and effective data models that not only support their analytical goals but also drive insightful outcomes.

Conclusion

Understanding cardinality in Power BI is crucial for building effective data models and improving operational efficiency. By grasping the nuances of one-to-one, one-to-many and many-to-many relationships, users can create more accurate and insightful reports. Misconfigured cardinality can lead to inefficiencies, confusion and wasted time, which is why a robust governance strategy is necessary to maintain data integrity.

Addressing common cardinality issues is essential for preventing data misinterpretations and ensuring that reports deliver actionable insights. By meticulously reviewing relationships and leveraging Power BI’s tools, organizations can overcome these challenges and enhance their analytical capabilities. Implementing strategies such as utilizing bridge tables can simplify complex data models, leading to improved performance and faster decision-making.

Ultimately, mastering cardinality not only optimizes data relationships but also empowers organizations to leverage their data more effectively. This foundational knowledge equips users to transform raw data into meaningful insights, driving operational excellence and fostering informed decision-making in an increasingly data-driven landscape. Embracing these principles will enable organizations to unlock the full potential of Power BI and achieve significant business results.

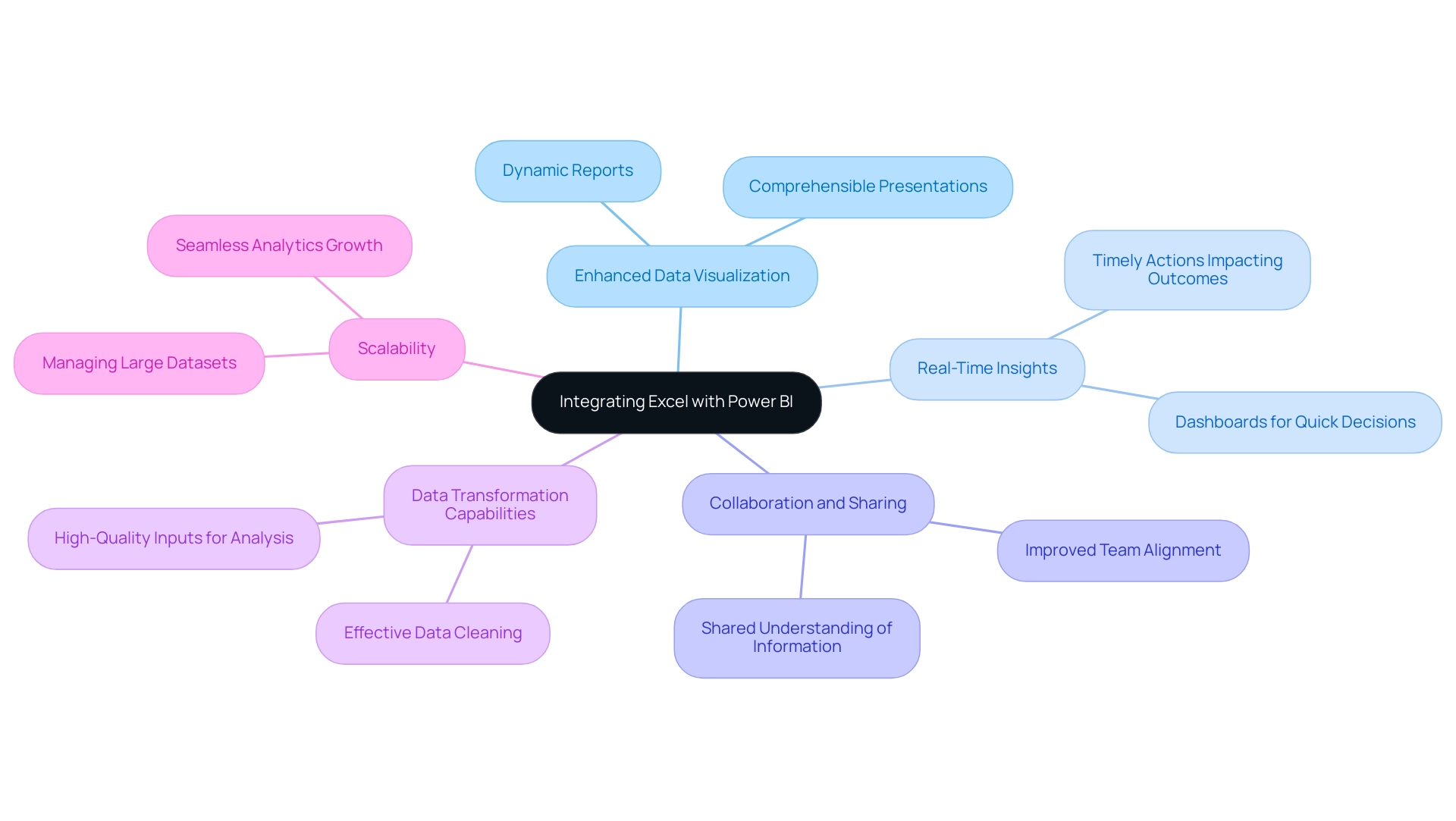

Introduction

In a world where data is the new currency, the ability to transform raw information into actionable insights is paramount for organizations striving for operational excellence. Semantic models in Power BI emerge as a transformative solution, bridging the gap between complex data structures and user-friendly analytics. By defining clear relationships among data entities, these models not only streamline reporting processes but also empower users to harness the full potential of their data.

As organizations grapple with challenges such as time-consuming report creation and data inconsistencies, understanding and implementing semantic models becomes essential for driving informed decision-making and enhancing efficiency. This article delves into the myriad benefits of semantic models, practical strategies for building and managing them, and best practices for integration, ultimately equipping organizations with the tools necessary to thrive in today’s data-driven landscape.

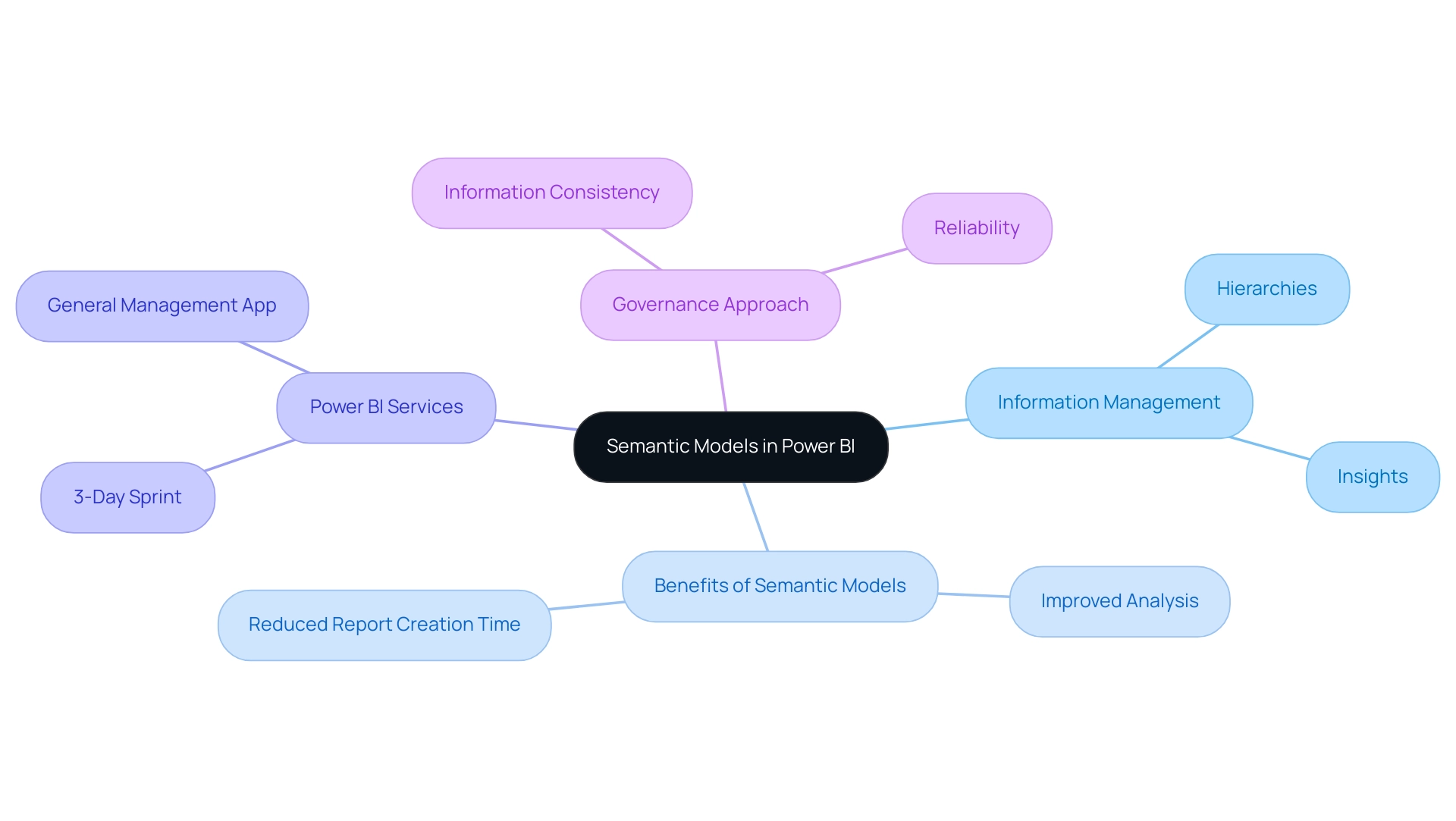

Understanding Semantic Models in Power BI

Semantic models in Power BI are a crucial element of data management: organized structures that define the relationships between data entities. They act as an intermediary layer that simplifies complex structures and lets users work with their data more intuitively. Semantic models arrange data into clear hierarchies and meaningful relationships. This greatly improves analysis and reporting and addresses common problems that operations directors face, including the absence of actionable guidance.

As a result, valuable insights are easier to extract and less time goes into report creation. As Ibarrau, a knowledgeable Super User, observes, ‘You might be able to obtain that information but with the BI Rest API only… in that manner, you could gain insights into the usage for creating new reports.’ His view underlines how central semantic models are to unlocking Power BI's full capabilities, since they turn raw data into practical insights that support informed decision-making.
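The core idea of a semantic layer is that definitions live in one place and every report reuses them. The toy `SemanticModel` class below is a hypothetical Python illustration, not a Power BI API. It stores shared rows and named measures, and it evaluates a measure under simple equality filters, much as a visual applies a filter before aggregating. The data and the `Margin %` measure are made up.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Row = Dict[str, object]
Measure = Callable[[List[Row]], float]


@dataclass
class SemanticModel:
    """Toy semantic layer: shared rows plus centrally defined measures (hypothetical)."""
    rows: List[Row]
    measures: Dict[str, Measure] = field(default_factory=dict)

    def define(self, name: str, fn: Measure) -> None:
        """Register a measure once so every consumer uses the same logic."""
        self.measures[name] = fn

    def evaluate(self, name: str, **filters: object) -> float:
        """Keep rows matching every equality filter, then compute the measure."""
        selected = [r for r in self.rows if all(r.get(k) == v for k, v in filters.items())]
        return self.measures[name](selected)


def margin_pct(rows: List[Row]) -> float:
    sales = sum(r["Amount"] for r in rows)
    cost = sum(r["Cost"] for r in rows)
    return (sales - cost) / sales if sales else 0.0


model = SemanticModel(rows=[
    {"Region": "North", "Amount": 100.0, "Cost": 60.0},
    {"Region": "North", "Amount": 200.0, "Cost": 120.0},
    {"Region": "South", "Amount": 50.0, "Cost": 45.0},
])
model.define("Total Sales", lambda rows: sum(r["Amount"] for r in rows))
model.define("Margin %", margin_pct)

# Two different "reports" ask the same question and get the same answer,
# because the definition lives in the model rather than in each report.
sales_report_value = model.evaluate("Margin %", Region="North")
finance_report_value = model.evaluate("Margin %", Region="North")
print(model.evaluate("Total Sales"), sales_report_value, finance_report_value)
```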

Our Power BI services also include a 3-Day Sprint for quickly creating professionally designed reports, along with the General Management App for comprehensive management and smart reviews. Creating a semantic model from an Excel workbook or CSV file automatically produces a model with imported tables, which simplifies model creation. Case studies of usage metrics in national/regional clouds show how semantic models operate within compliance requirements while improving analytical capabilities.

A strong governance approach also keeps data consistent and reliable, which makes semantic models even more valuable. Any organization that wants stronger analytical capabilities, and wants to move beyond outdated systems through intelligent automation, needs to understand and apply semantic models in Power BI effectively.

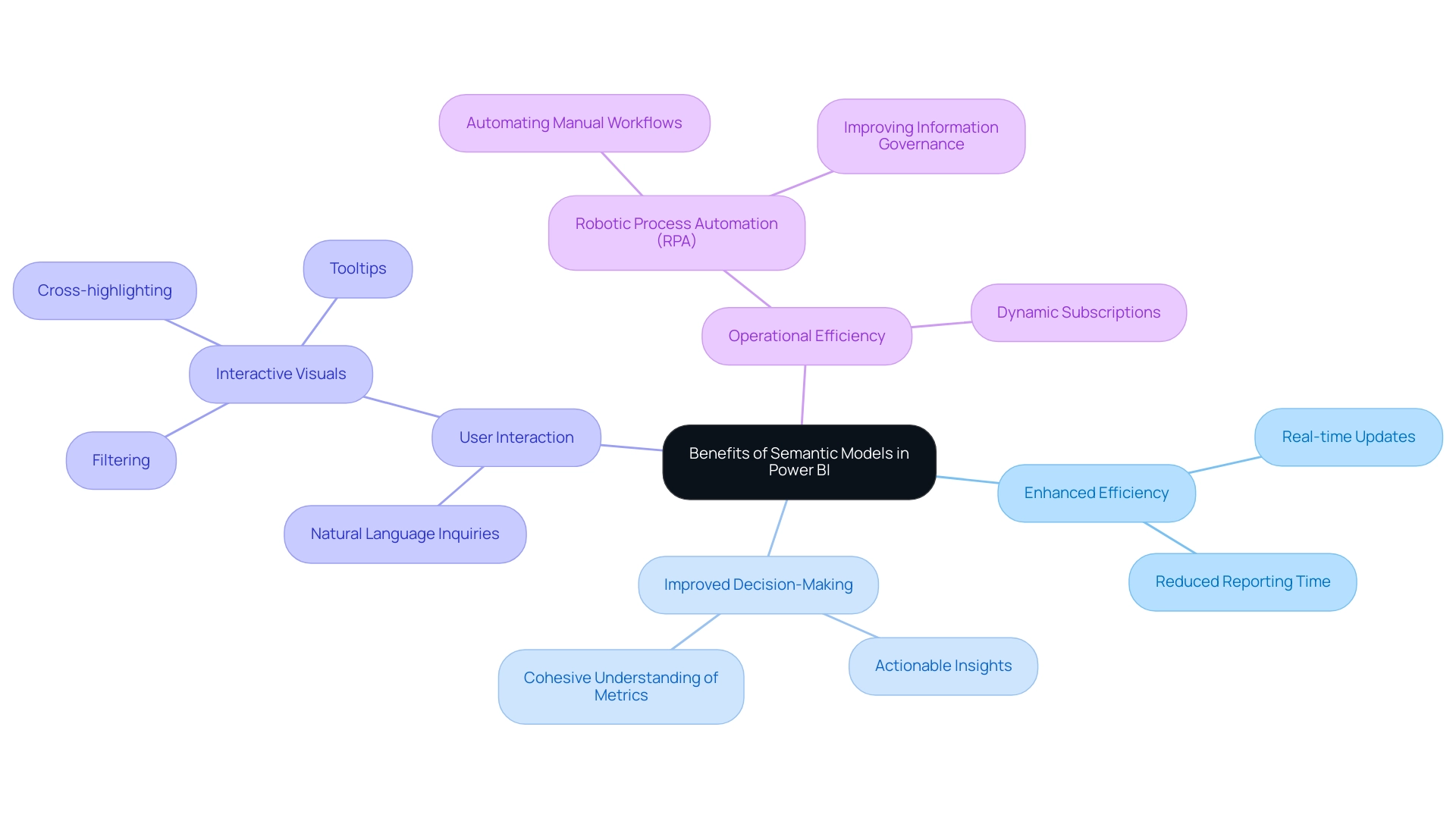

Benefits of Implementing Semantic Models in Power BI

Semantic models in Power BI bring many advantages that significantly improve organizational efficiency and decision-making. They tackle challenges such as time-consuming report creation, data inconsistencies and the absence of actionable guidance by establishing a clear structure and defining relationships within the data. Users across departments then share consistent interpretations of the data, which minimizes discrepancies and builds a common understanding of business metrics.

Semantic models also give users advanced analytical capabilities, such as natural language queries. As Jason Himmelstein aptly notes,

Users can now update their visuals in real time, interact with their information through BI’s standard features such as filtering, cross-highlighting, and tooltips.

This interactivity not only streamlines the reporting process but also accelerates insight generation, allowing for more informed decision-making.

To set up an environment for monitoring and improving the quality of semantic models in Power BI, organizations can follow the deployment steps outlined in the Best Practice Analyzer (BPA) case study, which include:

- Creating a new Lakehouse

- Establishing a Data Engineering Environment

- Integrating the semantic-link-labs library
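These steps prepare a notebook environment for semantic-link-labs, which provides the actual Best Practice Analyzer. The sketch below does not use that library. It only illustrates the underlying idea of rule-based checks over model metadata. The `Column` record is hypothetical, and the two simplified rules are loosely modeled on common BPA guidance: avoid floating-point data types and hide foreign key columns.

```python
from dataclasses import dataclass


@dataclass
class Column:
    """Hypothetical, minimal metadata for one model column."""
    table: str
    name: str
    data_type: str
    is_hidden: bool = False
    is_foreign_key: bool = False


def check_model(columns):
    """Return (table, column, message) findings for two simplified rules."""
    findings = []
    for col in columns:
        if col.data_type == "double":
            findings.append((col.table, col.name, "Avoid floating-point data types; consider decimal"))
        if col.is_foreign_key and not col.is_hidden:
            findings.append((col.table, col.name, "Hide foreign key columns"))
    return findings


model_columns = [
    Column("Sales", "ProductKey", "int64", is_foreign_key=True),
    Column("Sales", "Amount", "double"),
    Column("Product", "ProductKey", "int64"),
]

for table, column, message in check_model(model_columns):
    print(f"{table}[{column}]: {message}")
```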

Robotic Process Automation (RPA) can also greatly improve operational efficiency by automating manual workflows. It eases the burden of report creation and data governance and frees teams to concentrate on strategic, value-adding tasks. With over 200 hours of learning material available, users can deepen their understanding of these models and how to implement them.

The inclusion of dynamic subscriptions in BI also improves the efficiency of these frameworks, enabling prompt updates and enhanced information management. As organizations adopt these frameworks alongside RPA, they position themselves to drive business success through enhanced accessibility and deeper analytical capabilities.

Building and Managing Power BI Semantic Models

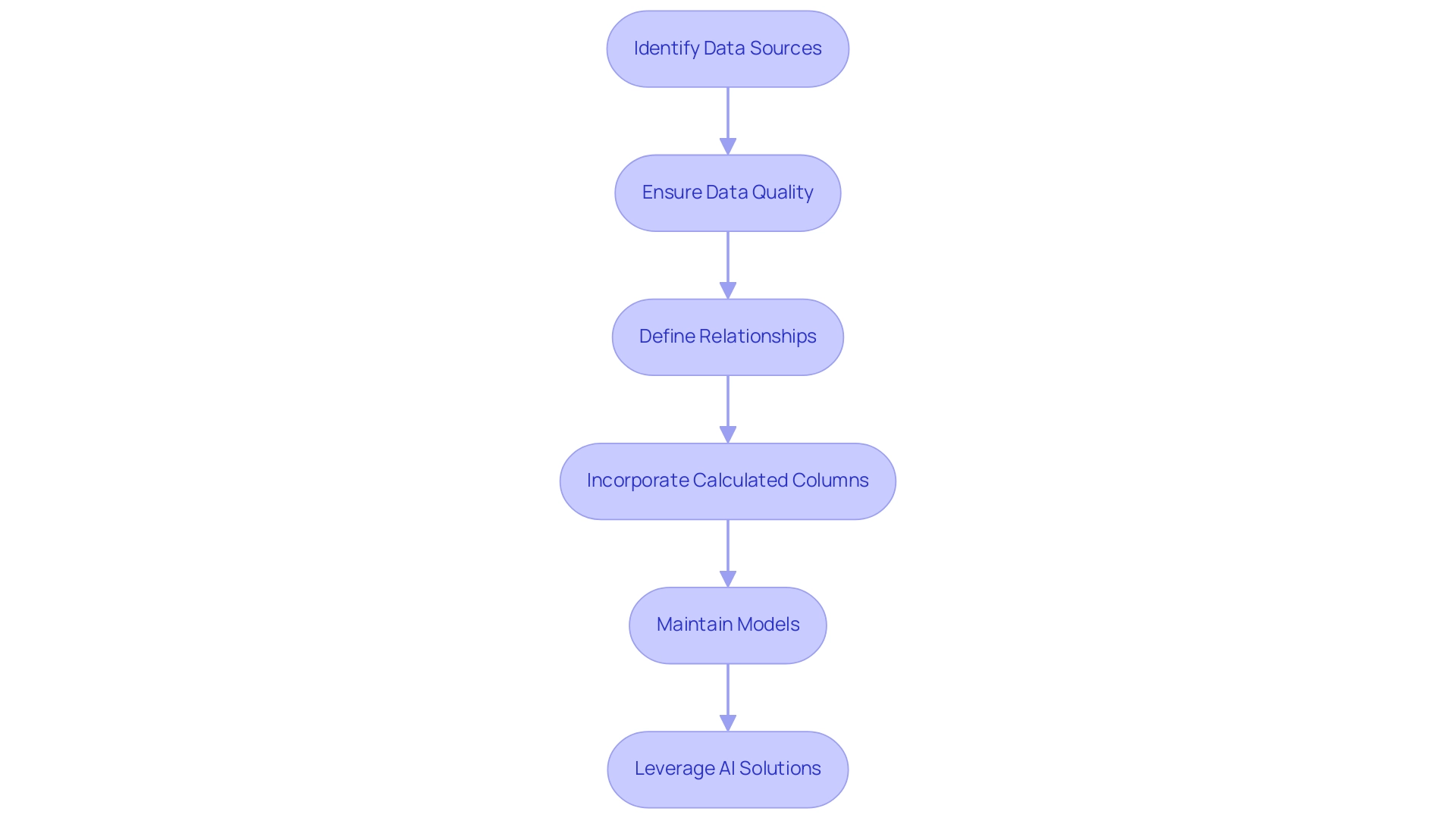

Building and managing semantic models in Power BI is a strategic process that helps organizations use their data effectively. In today's data-rich environment, Business Intelligence and RPA are vital for generating data-driven insights, especially since many businesses struggle with a lack of actionable insights. The tutorial on building a machine learning model in Power BI is a practical example. Its first crucial step is identifying and connecting to suitable data sources.

This step ensures that the data is clean and well-structured, which prevents inconsistencies during report creation. Next, defining the relationships between entities creates a logical framework that accurately reflects how the data interacts. In the case study titled ‘Tracking Training Status,’ users monitor the machine learning model's training through the Power BI interface, which shows whether the model is queued, training or successfully trained.

Incorporating calculated columns and measures is vital, as these elements provide valuable insights that drive decision-making. Furthermore, consistent upkeep of the semantic models in Power BI must not be neglected; regularly examining and refreshing the models guarantees that they adjust to any alterations in information sources or changing business requirements. Our Power BI services, including the 3-Day Power BI Sprint, offer organizations rapid pathways to create professionally designed reports while the General Management App ensures comprehensive management and smart reviews.
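Calculated columns and measures are easiest to tell apart side by side. In this hypothetical Python sketch, `LineMargin` plays the role of a calculated column, computed once per row and stored. `margin_pct` plays the role of a measure, computed on demand over whichever rows the current filter selects. The columns and figures are invented.

```python
# Hypothetical sales rows.
sales = [
    {"Region": "North", "Quantity": 2, "UnitPrice": 10.0, "UnitCost": 6.0},
    {"Region": "North", "Quantity": 1, "UnitPrice": 50.0, "UnitCost": 30.0},
    {"Region": "South", "Quantity": 4, "UnitPrice": 5.0, "UnitCost": 4.0},
]

# "Calculated column": evaluated once per row and stored with the row.
for row in sales:
    row["LineMargin"] = row["Quantity"] * (row["UnitPrice"] - row["UnitCost"])


# "Measure": evaluated on demand over the rows currently in scope.
def margin_pct(rows):
    revenue = sum(r["Quantity"] * r["UnitPrice"] for r in rows)
    margin = sum(r["LineMargin"] for r in rows)
    return margin / revenue if revenue else 0.0


north = [r for r in sales if r["Region"] == "North"]
print(f"North margin %: {margin_pct(north):.1%}")
print(f"Total margin %: {margin_pct(sales):.1%}")
```

Margin % is a ratio of sums, not an average of per-row ratios. That is why it belongs in a measure: the correct value depends on which rows are in scope.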

By leveraging AI solutions such as Small Language Models, which improve data quality through efficient analysis, and GenAI Workshops, which provide hands-on training, organizations can address poor master data quality. As Ayushi Trivedi, Technical Content Editor at Analytics Vidhya, states, ‘I love building the bridge between the technology and the learner,’ emphasizing the importance of connecting technology with practical applications. By following these steps and using these AI solutions, organizations can build robust semantic models that greatly improve their analytical capabilities, paving the way for informed strategic decisions.

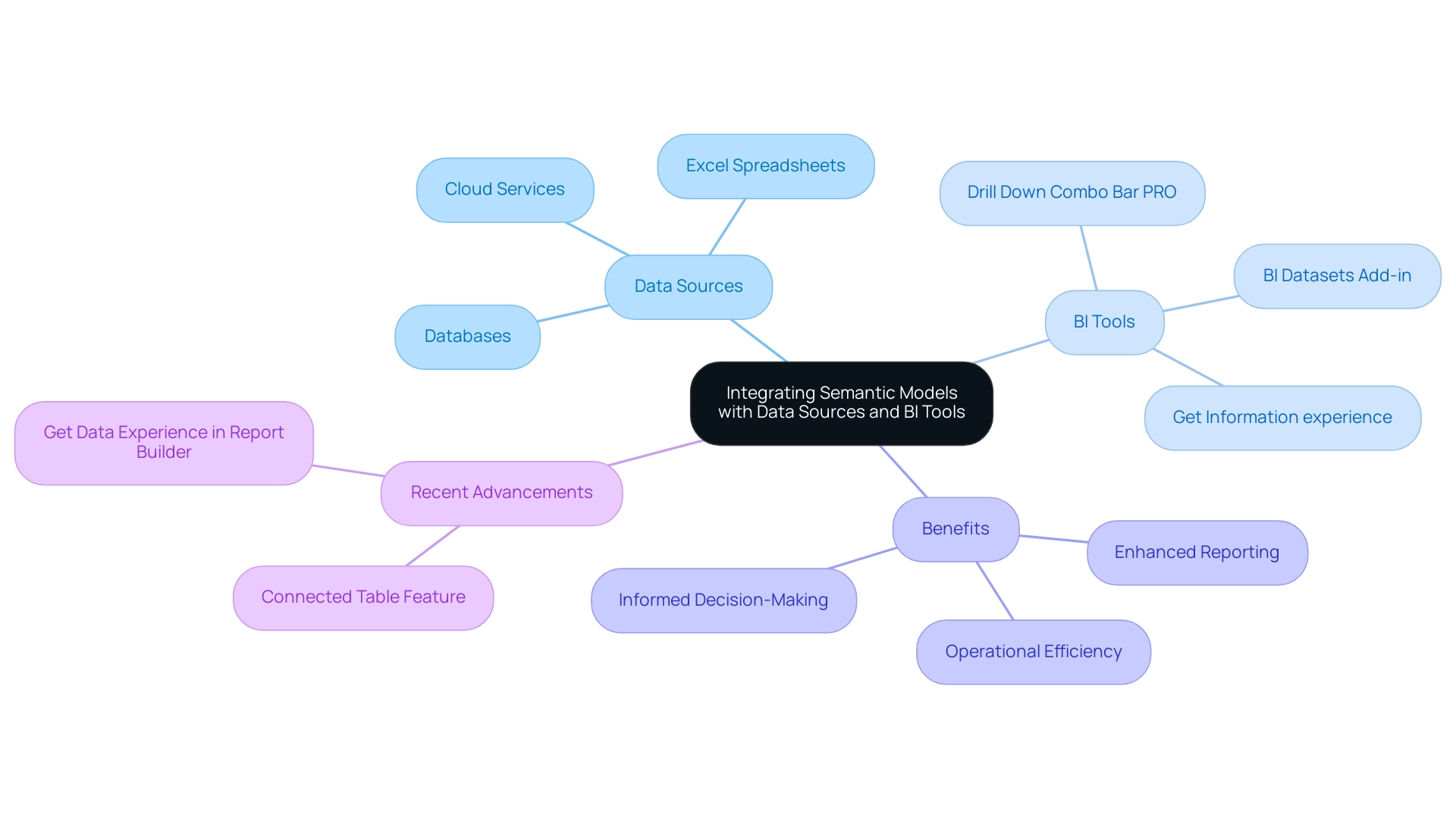

Integrating Semantic Models with Data Sources and BI Tools

Integrating semantic models with a wide array of data sources and business intelligence tools is essential for extending Power BI’s capabilities. In today’s data-rich environment, the ability to unlock actionable insights through Business Intelligence is key to maintaining a competitive edge. Users can connect Power BI to numerous data sources, including databases, Excel spreadsheets, and various cloud services, so that semantic models stay up to date with the most current data available.

Notably, the Drill Down Combo Bar PRO custom visual can display up to 25 series, showcasing Power BI’s robust analytical capabilities. Combining Power BI with other business intelligence solutions greatly improves reporting and the user experience, tackling issues like time-consuming report generation and data inconsistencies. This seamless connection supports comprehensive analysis and visualization, empowering organizations to make informed decisions based on a holistic view of their data landscape.

Recent advancements, such as the connected table feature in the Power BI Datasets add-in for Excel, make it easier to bring Power BI data into Excel workbooks. Likewise, the Get Data experience in Power BI Report Builder lets users create reports from more than 100 data sources, improving performance and usability. As a result, users can monitor their business and get quick answers through rich dashboards accessible on any device.

Furthermore, RPA solutions such as EMMA RPA and Power Automate can automate repetitive tasks, further improving operational efficiency and letting teams concentrate on strategic initiatives. As Arun Ulag noted regarding the upcoming Microsoft Fabric Conference 2025 in Las Vegas, advances in BI tool integration are poised to transform how businesses manage and analyze their data, further promoting growth and operational efficiency. To learn more about how these solutions can benefit your operations, book a free consultation today.

Challenges and Best Practices for Power BI Semantic Models

Navigating the complexities of semantic models in Power BI can present significant challenges, such as data quality issues, user resistance to change, and intricate model designs. To tackle these hurdles, organizations must prioritize robust data governance so that all data remains accurate and current. Our 3-Day Power BI Sprint is designed to help you create professional reports quickly while addressing these challenges, so you can use insights effectively for impactful decisions.

A key feature of this service is the template bonus: a professionally designed report that can be reused for future projects. As Amine Jerbi noted, semantic models grow in size after refreshes, and if the workspace capacity is not increased, performance can slow down. In such cases, incremental refresh, which reloads only recent data rather than the entire table, is a viable solution.

It is also crucial to simplify models by removing unnecessary columns, tables, or complex calculations to shorten refresh times. The value of cleaning datasets is further highlighted by the recent Power BI Dev Dash case study, where contestants demonstrated the impact of this practice. Fostering a culture of teamwork and providing extensive training can also greatly reduce resistance to new tools and processes, in line with our commitment to expert education in our BI services.
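Incremental refresh in Power BI is driven by two DateTime parameters named `RangeStart` and `RangeEnd` that filter the source query, so the service reloads only recent partitions instead of the whole table. The Python below is a simplified sketch of that filtering logic, not the service's implementation. The "refresh the last N days" policy and the function names are illustrative assumptions. The key detail it shows is using one inclusive and one exclusive bound, so a row that sits exactly on a boundary is loaded once.

```python
from datetime import date, datetime, time, timedelta


def in_partition(row_time, range_start, range_end):
    """Mirror the RangeStart/RangeEnd filter: start inclusive, end exclusive.

    Using one inclusive and one exclusive bound means a row that falls exactly
    on a boundary belongs to exactly one partition, so it is never loaded twice.
    """
    return range_start <= row_time < range_end


def refresh_window(today, refresh_days):
    """Return (start, end) covering the last `refresh_days` whole days, today included."""
    if refresh_days < 1:
        raise ValueError("refresh_days must be at least 1")
    start = datetime.combine(today - timedelta(days=refresh_days - 1), time.min)
    end = datetime.combine(today + timedelta(days=1), time.min)
    return start, end


def rows_to_reload(row_times, today, refresh_days):
    """Rows an incremental refresh would reload; older rows stay in archived partitions."""
    start, end = refresh_window(today, refresh_days)
    return [t for t in row_times if in_partition(t, start, end)]


rows = [
    datetime(2024, 3, 7, 23, 59),  # before the window: left untouched
    datetime(2024, 3, 8, 0, 0),    # exactly on the window start: reloaded
    datetime(2024, 3, 10, 12, 0),  # today: reloaded
]
print(rows_to_reload(rows, date(2024, 3, 10), refresh_days=3))
```

Because only the rows inside the window are reloaded, refresh time depends on the size of the recent window rather than on the full history, which is why incremental refresh helps as models grow.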

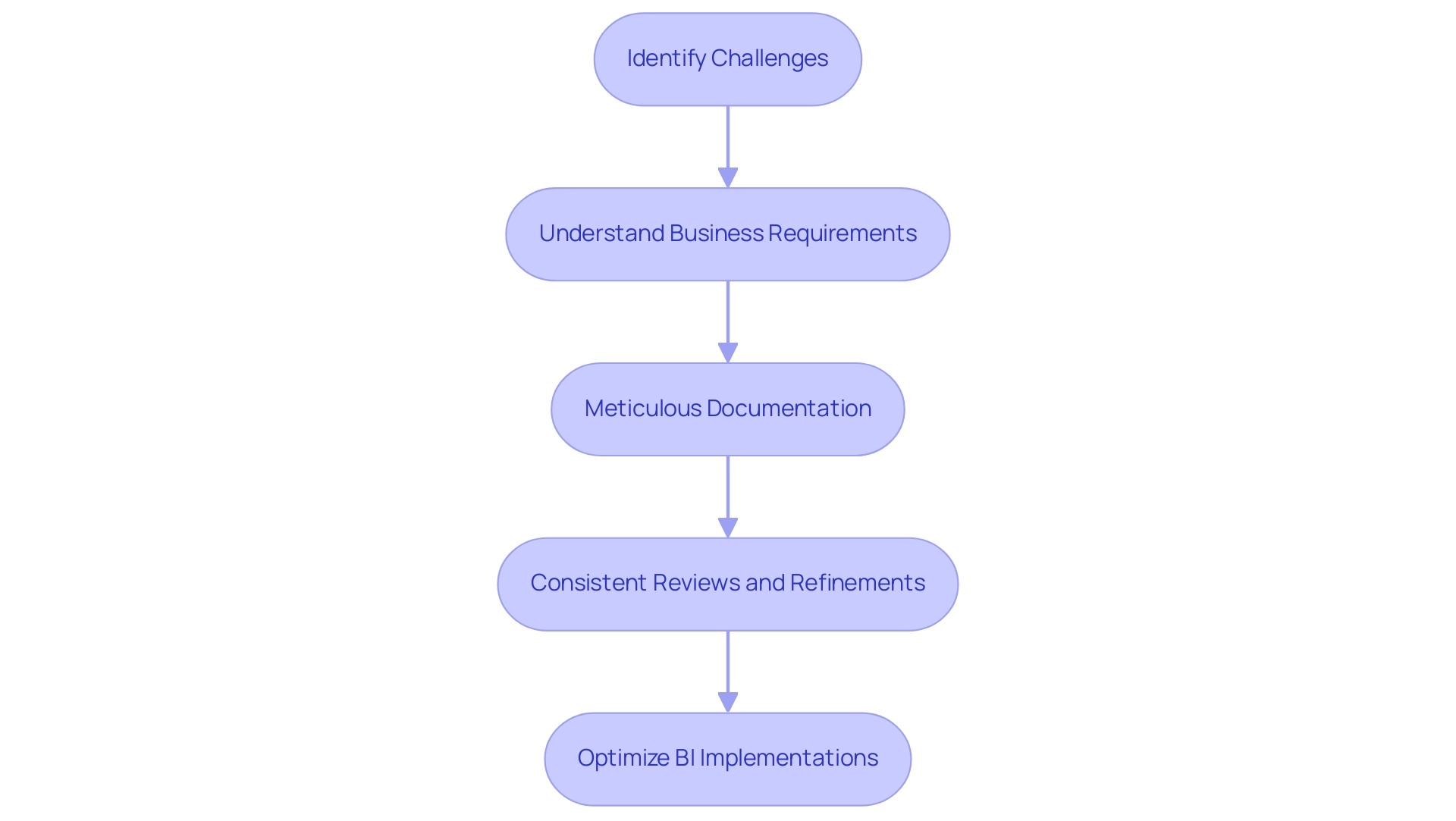

Best practices for developing effective semantic models in Power BI begin with:

- A thorough understanding of business requirements

- Meticulous documentation

- Consistent reviews and refinements of the model

These proactive measures not only address existing challenges but also empower organizations to optimize their BI implementations, ultimately driving greater efficiency and productivity. The Power BI Dev Dash case study exemplifies this approach, highlighting how Angelica’s strong technical skills contrasted with Allison’s focus on polished presentation, showcasing the importance of both technical proficiency and design in achieving success.

Conclusion

Implementing semantic models in Power BI is not just a strategic initiative; it is a crucial step toward operational excellence in a data-driven world. By establishing clear relationships among data entities, these models streamline the reporting process and minimize inconsistencies, enabling users across all departments to make informed decisions with confidence. The insights gleaned from semantic models empower organizations to enhance their analytics capabilities, ultimately transforming raw data into actionable information that drives business success.

Moreover, the integration of semantic models with various data sources and BI tools amplifies their effectiveness, allowing organizations to leverage real-time data and advanced analytics features. This seamless connectivity not only enhances the user experience but also fosters a culture of collaboration and innovation within the organization. By adopting best practices in building and managing these models, companies can navigate challenges such as data quality issues and user resistance, positioning themselves for sustained growth and competitive advantage.

In conclusion, embracing semantic models in Power BI is essential for organizations aiming to thrive in today’s complex data landscape. By investing in these structured frameworks and prioritizing data governance, organizations can unlock the full potential of their data, driving efficiency, enhancing decision-making, and ultimately achieving operational excellence. The journey toward effective data management starts now—equip your organization with the tools and strategies to harness the power of semantic models and pave the way for future success.

Introduction

In the dynamic landscape of data-driven decision-making, the installation and effective use of Power BI can significantly transform how organizations analyze and visualize their data. With the right prerequisites in place, users can unlock the full potential of this powerful tool, streamlining report creation and enhancing data consistency.

This article serves as a comprehensive guide, detailing everything from:

- The essential system requirements

- Installation steps

- Troubleshooting common issues

- Understanding the different versions of Power BI

By following these practical insights, organizations can not only overcome initial hurdles but also harness actionable insights that drive operational efficiency and informed decision-making. Whether for individual users or large enterprises, the journey to mastering Power BI begins with a solid foundation and a strategic approach to setup and configuration.

Essential Prerequisites for Installing Power BI

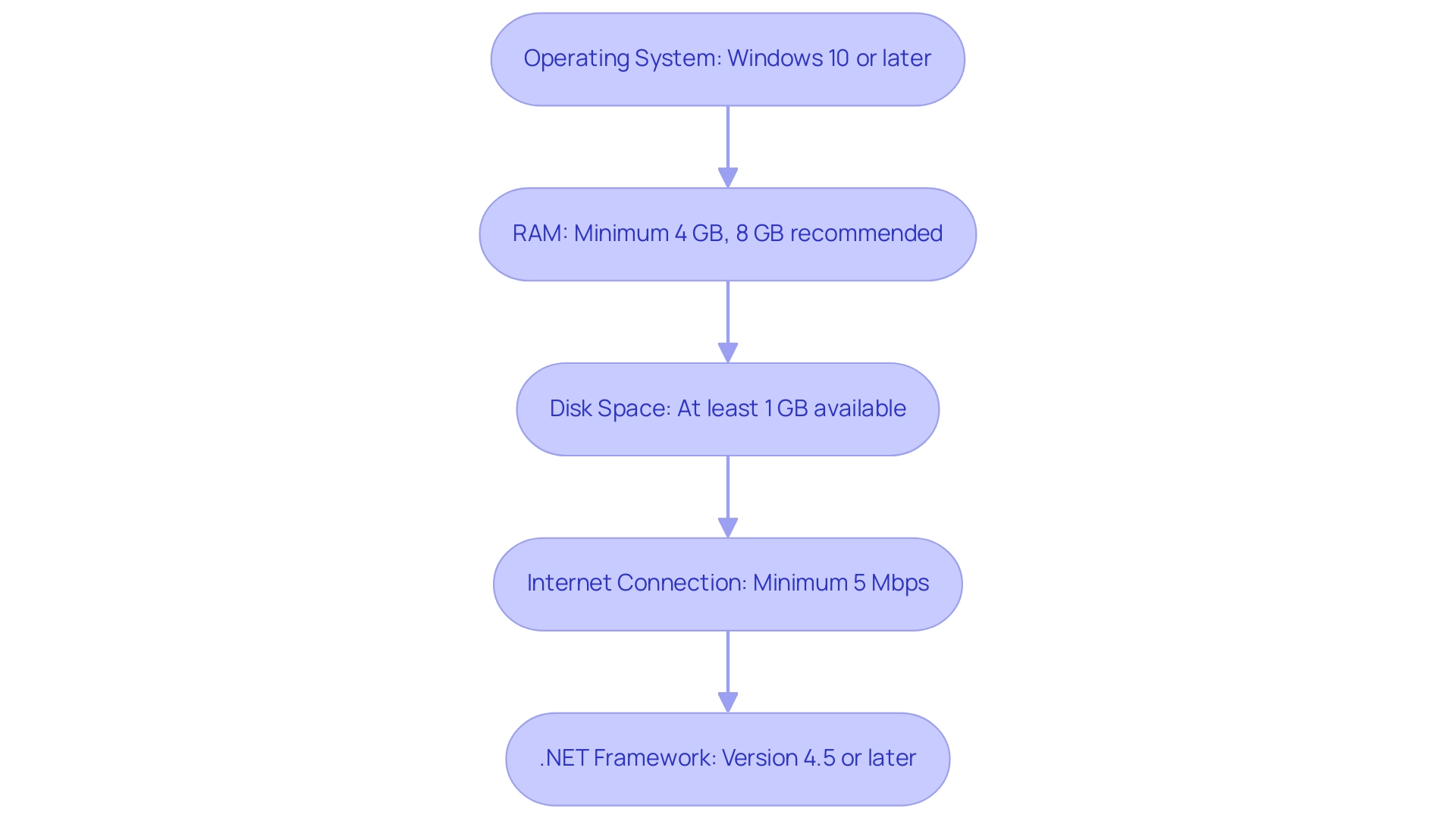

Before starting the Power BI installation, confirm that your system meets the following prerequisites. Addressing common challenges such as time-consuming report creation, data consistency, and a lack of actionable insights begins with the right foundation:

- Operating System: Power BI Desktop requires Windows 10 or later, or Windows Server 2016 or later.

- RAM: While a minimum of 4 GB of RAM will suffice, 8 GB or more is advisable for significantly better performance, especially with larger datasets, and helps avoid delays in report generation.

- Disk Space: Ensure that at least 1 GB of disk space is available for the installation. For optimal performance, a solid-state drive (SSD) is recommended, particularly for larger models, to speed up data access and processing.

- Internet Connection: A reliable internet connection of at least 5 Mbps is needed to download Power BI Desktop and use its online features efficiently, reducing interruptions during report creation.

- .NET Framework: Confirm that .NET Framework 4.5 or later is installed, as Power BI Desktop depends on it to run with its full feature set.
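The checklist above can be turned into a quick pre-flight check. The sketch below is a hypothetical helper, not a Microsoft tool. It encodes the thresholds stated in this article (which you should confirm against Microsoft's current system requirements), and the `SystemSpec` fields are assumptions that you would fill in by hand or from your own inventory tool. Only `free_disk_gb` reads the real machine, via Python's `shutil.disk_usage`. Windows Server is not modeled.

```python
import shutil
from dataclasses import dataclass

# Thresholds taken from the prerequisites listed above; confirm them against
# Microsoft's current system requirements before relying on this check.
MIN_WINDOWS_VERSION = 10
MIN_RAM_GB = 4
RECOMMENDED_RAM_GB = 8
MIN_FREE_DISK_GB = 1
MIN_DOTNET = (4, 5)


@dataclass
class SystemSpec:
    """Hypothetical description of a machine (fields are illustrative)."""
    is_windows: bool
    windows_version: int   # desktop Windows major version, e.g. 10 or 11
    ram_gb: float
    free_disk_gb: float
    dotnet_version: tuple  # e.g. (4, 8) for .NET Framework 4.8


def free_disk_gb(path="."):
    """Free space on the drive holding `path`, in GB."""
    return shutil.disk_usage(path).free / 1024 ** 3


def check_prerequisites(spec):
    """Return (problems, warnings): problems block installation, warnings affect performance."""
    problems, warnings = [], []
    if not spec.is_windows:
        problems.append("Power BI Desktop needs Windows (on a Mac, install Windows first)")
    elif spec.windows_version < MIN_WINDOWS_VERSION:
        problems.append(f"Windows {spec.windows_version} is older than Windows {MIN_WINDOWS_VERSION}")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"{spec.ram_gb} GB RAM is below the {MIN_RAM_GB} GB minimum")
    elif spec.ram_gb < RECOMMENDED_RAM_GB:
        warnings.append(f"{spec.ram_gb} GB RAM works, but {RECOMMENDED_RAM_GB} GB or more is advisable")
    if spec.free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"only {spec.free_disk_gb:.1f} GB free; at least {MIN_FREE_DISK_GB} GB is needed")
    if tuple(spec.dotnet_version) < MIN_DOTNET:
        problems.append(".NET Framework 4.5 or later is required")
    return problems, warnings


laptop = SystemSpec(is_windows=True, windows_version=10, ram_gb=4,
                    free_disk_gb=free_disk_gb(), dotnet_version=(4, 8))
print(check_prerequisites(laptop))
```

Separating blocking problems from performance warnings reflects the article's distinction between the minimum (4 GB RAM) and the recommended (8 GB) configuration.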

By ensuring these prerequisites are met, you can avoid potential issues during installation and make better use of Power BI’s insights. Meeting these requirements streamlines the installation and supports clearer, actionable guidance in your reports, leading to better decision-making. According to industry expert Andre, content creators who opt for an i7 CPU, 16 GB of RAM, an SSD, and a 64-bit operating system will see a notable performance boost, especially when handling complex models with over 100 million rows.

For compatibility, note that a MacBook can run Power BI Desktop only if Windows is installed on it. Additionally, while the Surface Pro 3 is suitable for smaller models, performance improves significantly with a desktop CPU when handling larger datasets. This preparation sets the stage for a successful experience with Power BI, reducing report creation time, improving the clarity of your insights, and ultimately addressing the lack of actionable guidance in your reports.

Step-by-Step Guide to Installing Power BI

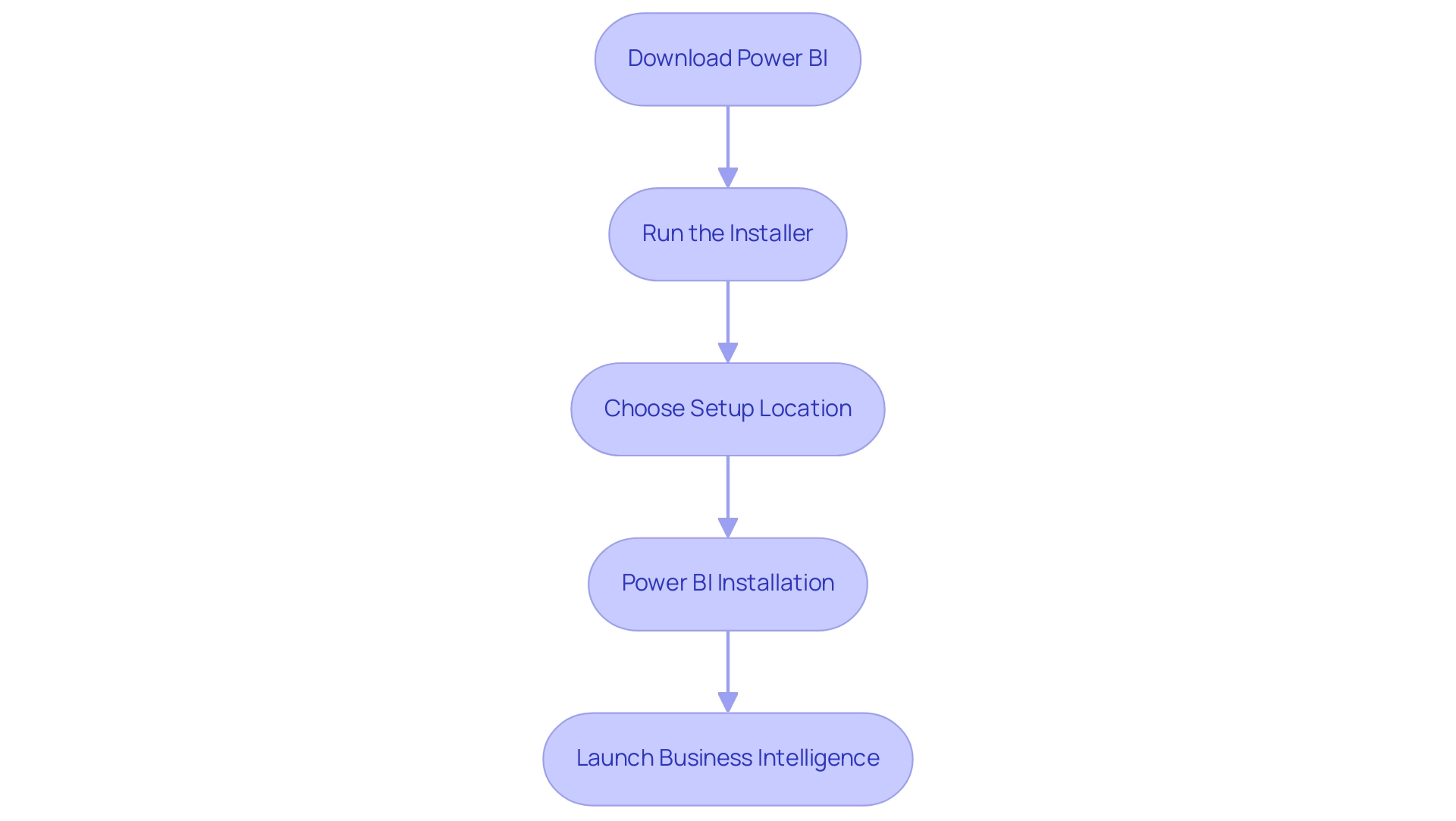

To install Power BI Desktop and unlock its data visualization capabilities, follow these steps:

- Download Power BI: Navigate to the official Microsoft Power BI website and click on the ‘Download’ button for Power BI Desktop. This step is critical as it ensures you have the most recent version, which is vital for optimal performance.

- Run the Installer: Once the download completes, locate the installer file in your Downloads folder and double-click it to start the setup process.

- Accept the License Agreement: Carefully read through the Microsoft Software License Terms. Check the box to indicate your acceptance and click ‘Install’ to proceed.

- Choose Installation Location: If prompted, select your preferred installation location or keep the default, which is the best choice for most users.

- Complete the Installation: Wait while the installation finishes. A confirmation message indicates that Power BI Desktop has been installed successfully.

- Launch Power BI Desktop: Click ‘Finish’ to exit the installer, then find Power BI Desktop in your Start menu and launch it.

Congratulations on completing the Power BI installation! With demand for business intelligence solutions growing (the Netherlands, for example, is investing $1.2 billion in such technologies), effective use of Power BI can significantly improve your operational efficiency. And by using Robotic Process Automation (RPA) alongside Business Intelligence, you can accelerate the automation of manual workflows, reducing errors and freeing your team for more strategic tasks.

For example, automating data extraction with RPA can streamline the preparation phase, making it easier to visualize insights in Power BI. The revised Snowflake connector, which improves performance by reducing unnecessary metadata queries, can further speed up data loading. In addition, the ability to connect Dynamics 365 data to Power BI enables AI-driven analyses, demonstrating the tool’s adaptability to diverse business requirements.

However, users may still face challenges such as time-consuming report creation, data inconsistencies, and a lack of actionable guidance when drawing insights from Power BI dashboards. As Jurriaan Amesz, Lead Product Owner at ABN AMRO Bank, states,

Business Intelligence and Azure … provided us with the performance for hundreds of concurrent users handling tens of billions of records.

This powerful tool is now at your fingertips!

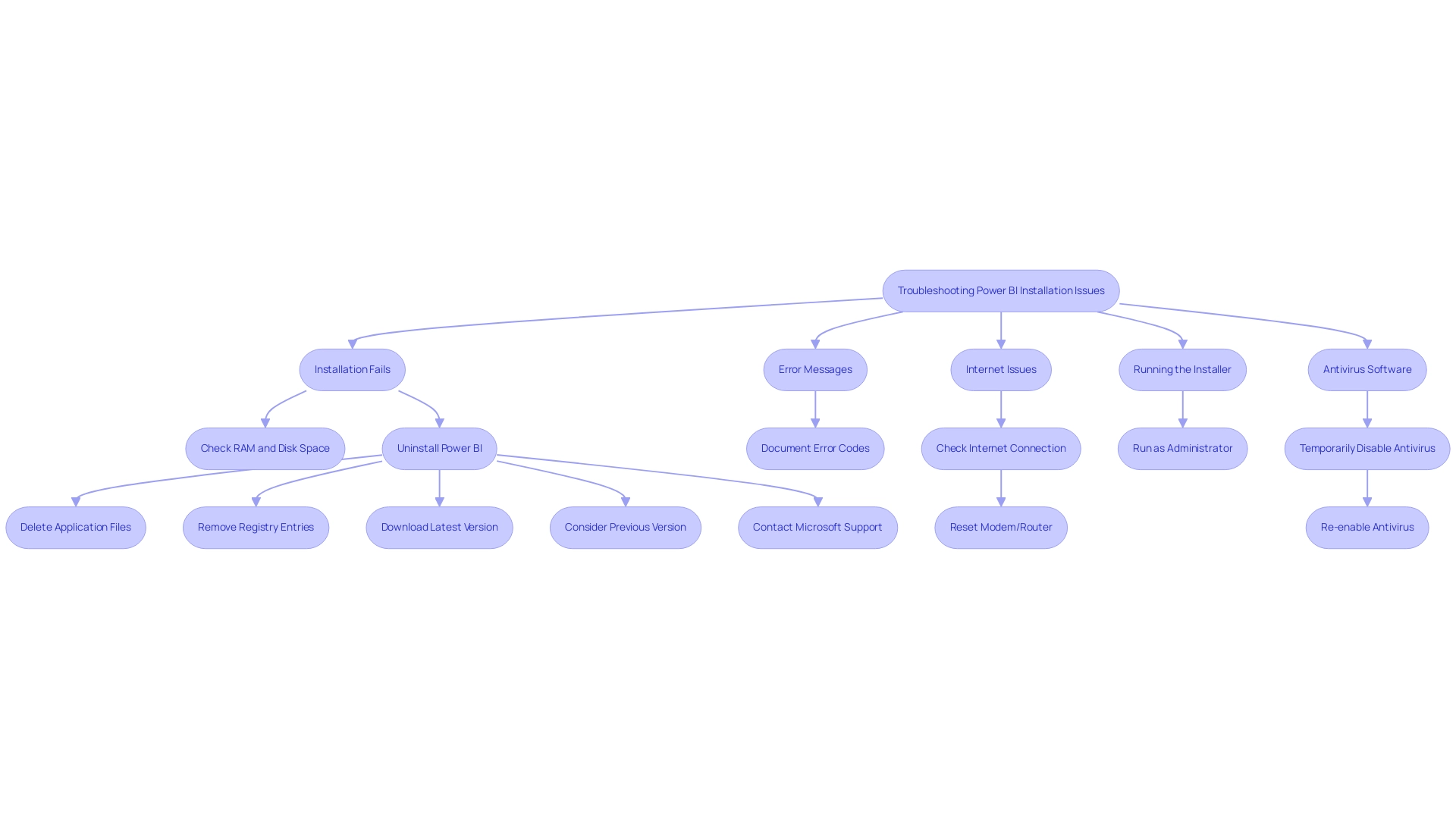

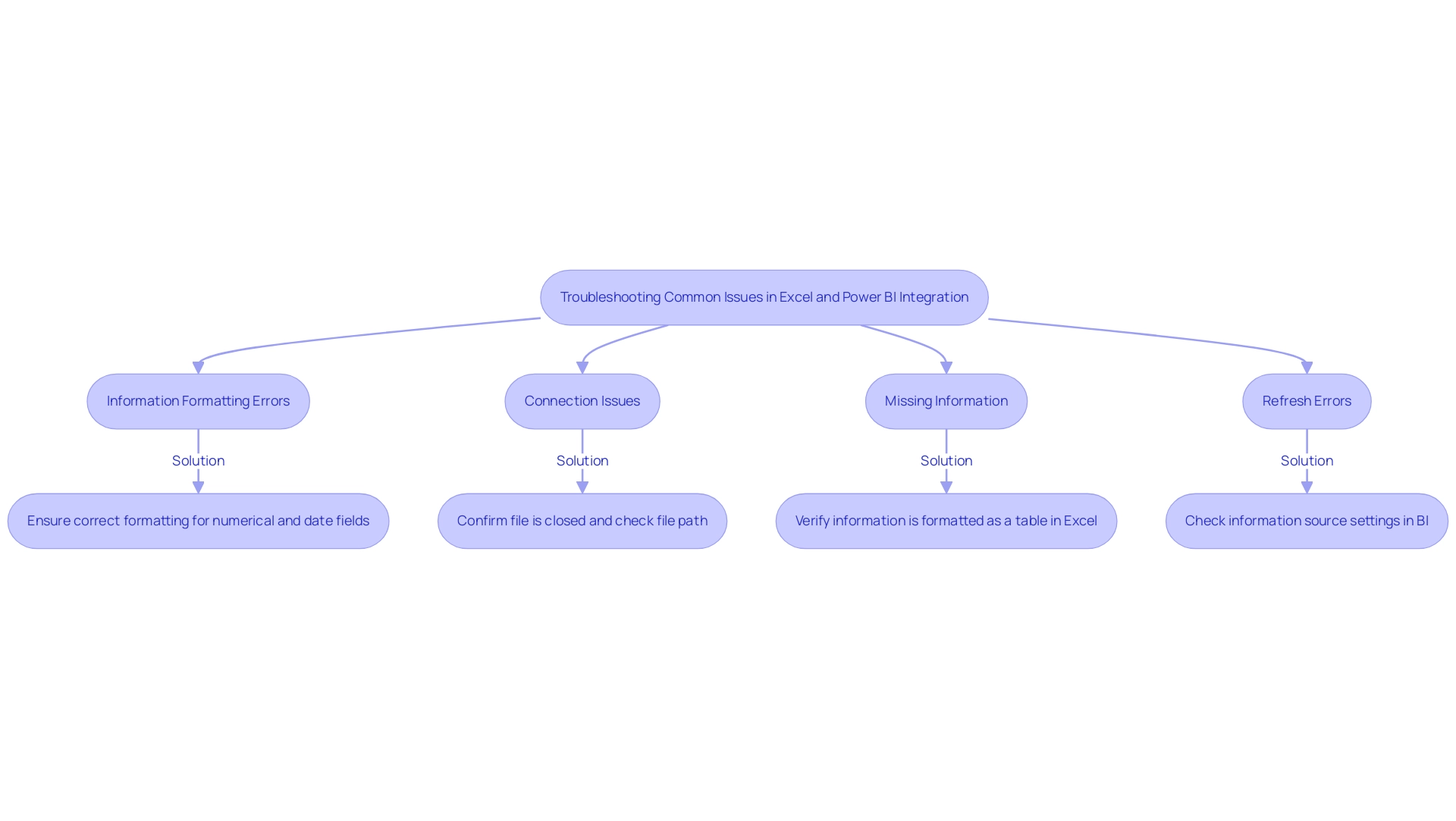

Troubleshooting Common Installation Issues

Running into problems during the Power BI installation can be frustrating, but the right troubleshooting steps usually fix them. Many users face the same challenges (related community discussions have over 2,044 views), so here are some actionable solutions for a smooth setup:

- Installation Fails: Verify that your system meets all the prerequisites for Power BI. Insufficient disk space or RAM can cause setup failures, so make sure you have at least 2 GB of RAM and enough free disk space for the installation.

- Error Messages: Pay close attention to any error messages that appear during setup. Note the specific error codes; searching for them online often leads to solutions tailored to your situation.

- Internet Issues: If your download fails, check your internet connection, since a stable connection is crucial for downloading large applications like Power BI. If problems persist, try restarting your modem or router.

- Running the Installer: The installation requires elevated privileges. Right-click the installer and choose ‘Run as administrator’ so the setup has the permissions it needs to modify system files.

- Antivirus Software: Antivirus programs occasionally block installations. Temporarily disabling your antivirus software during the installation can help, but remember to re-enable it afterward for your security.

In addition, organizations seeking to improve operational efficiency should consider how deploying BI Pixie in Azure can strengthen data security and scalability. Robotic Process Automation (RPA) can also streamline report generation, reducing the time spent creating reports and minimizing data inconsistencies. If these steps do not resolve your issues, consider contacting Microsoft support for further assistance with your Power BI installation.

As Eason from the Community Support Team advises, if problems persist it is important to uninstall Power BI Desktop completely:

- Locate the application directory at C:\Program Files\Microsoft Power BI Desktop\bin and delete all files within it.

- Then open Registry Editor and remove any related keys under HKEY_LOCAL_MACHINE\SOFTWARE, HKEY_CURRENT_USER\SOFTWARE, and HKEY_USERS\.DEFAULT\Software.
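The file-cleanup step can be scripted so you can review what would be deleted before anything is removed. The sketch below is a hypothetical helper that defaults to a dry run. The default path assumes the standard install folder, C:\Program Files\Microsoft Power BI Desktop\bin, so adjust it if yours differs. The registry step is deliberately left to Registry Editor, because deleting the wrong keys can damage Windows.

```python
from pathlib import Path

# Assumed default install folder; adjust if Power BI Desktop was installed elsewhere.
DEFAULT_BIN_DIR = Path(r"C:\Program Files\Microsoft Power BI Desktop\bin")


def leftover_files(bin_dir=DEFAULT_BIN_DIR):
    """List every file left under the application folder (recursively)."""
    bin_dir = Path(bin_dir)
    if not bin_dir.is_dir():
        return []
    return sorted(p for p in bin_dir.rglob("*") if p.is_file())


def remove_leftovers(bin_dir=DEFAULT_BIN_DIR, dry_run=True):
    """Delete leftover files; with dry_run=True (the default) only report them.

    Only files are deleted; empty subfolders are left in place. Run from an
    elevated prompt, because Program Files is write-protected for normal users.
    """
    files = leftover_files(bin_dir)
    if not dry_run:
        for f in files:
            f.unlink()
    return files


for path in remove_leftovers():  # dry run: only lists what would be deleted
    print(path)
```

Review the dry-run output first, then call `remove_leftovers(dry_run=False)` once you are sure the folder contains only Power BI Desktop files.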

Then download the latest version of Power BI Desktop from the Download Center, or from the Microsoft Store if you’re using Windows 10. If your computer can’t run the latest version, consider reverting to an earlier release, such as the November 2021 update.

The case study on Power BI admin controls for usage metrics shows how administrators can manage setup issues and user information, protecting sensitive details while still enabling the analysis of usage metrics. These troubleshooting suggestions help you handle typical installation problems and ensure a smoother setup with Power BI, while also addressing the challenges of report creation and governance.

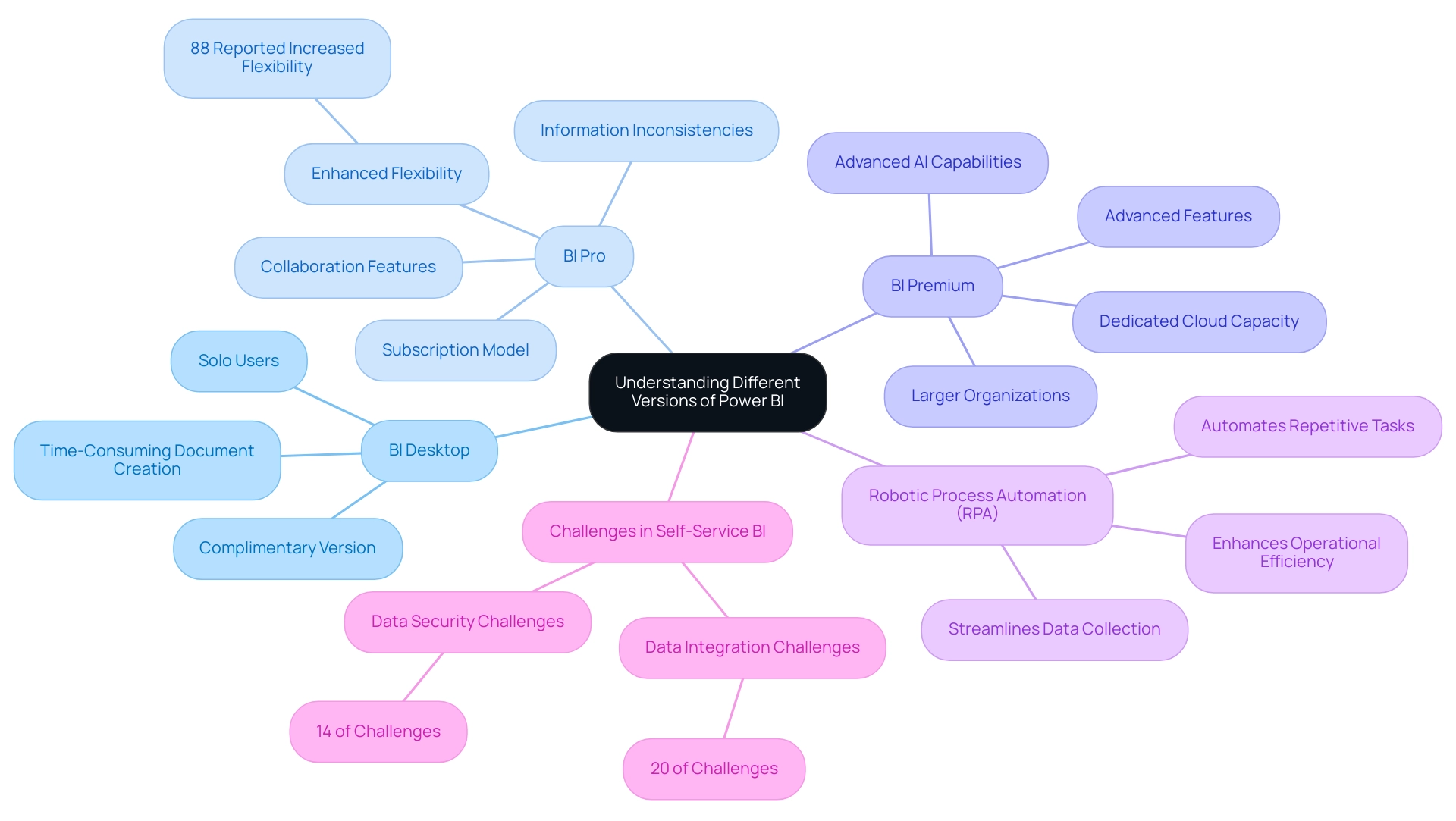

Understanding Different Versions of Power BI

Power BI comes in several versions tailored to different user requirements, enabling organizations to use their data effectively and overcome challenges in deriving actionable insights.

- Power BI Desktop: This free version is ideal for individual users who want to build visuals and reports on their own. It is an excellent starting point for those new to business intelligence, though users may find report creation time-consuming.

- Power BI Pro: A subscription-based license that enables collaboration and report sharing across teams and organizations. Pro boosts team efficiency by enabling scheduled refreshes and supporting larger datasets, which is vital in dynamic business environments. In one survey, 88% of organizations using cloud-based BI reported greater flexibility in accessing and analyzing data from any location at any time, highlighting the benefits of Power BI Pro. However, data inconsistencies may still arise without proper management strategies.

- Power BI Premium: Designed for larger organizations, this version provides advanced features such as dedicated cloud capacity and better performance for large datasets. Premium also supports greater data storage and advanced AI capabilities, making it a strong choice for enterprises seeking deeper insights from their data.

This emphasis on AI reflects the growing importance of advanced analytics in the business intelligence landscape.

Alongside Business Intelligence, implementing Robotic Process Automation (RPA) can significantly improve operational efficiency. RPA tools such as EMMA RPA and Microsoft Power Automate can automate repetitive tasks, reducing task-repetition fatigue and easing staffing shortages. By streamlining data collection and report generation, RPA lets teams focus on analyzing insights rather than getting bogged down in manual work.

Choosing the right version of Power BI is essential to maximizing its potential. By aligning the chosen version with operational needs and budget constraints, organizations can make full use of Power BI’s capabilities. Addressing challenges in self-service business intelligence, such as data integration and security, is also essential for successful adoption, especially in light of the BFSI sector’s projected growth in the business intelligence market from 2023 to 2032.

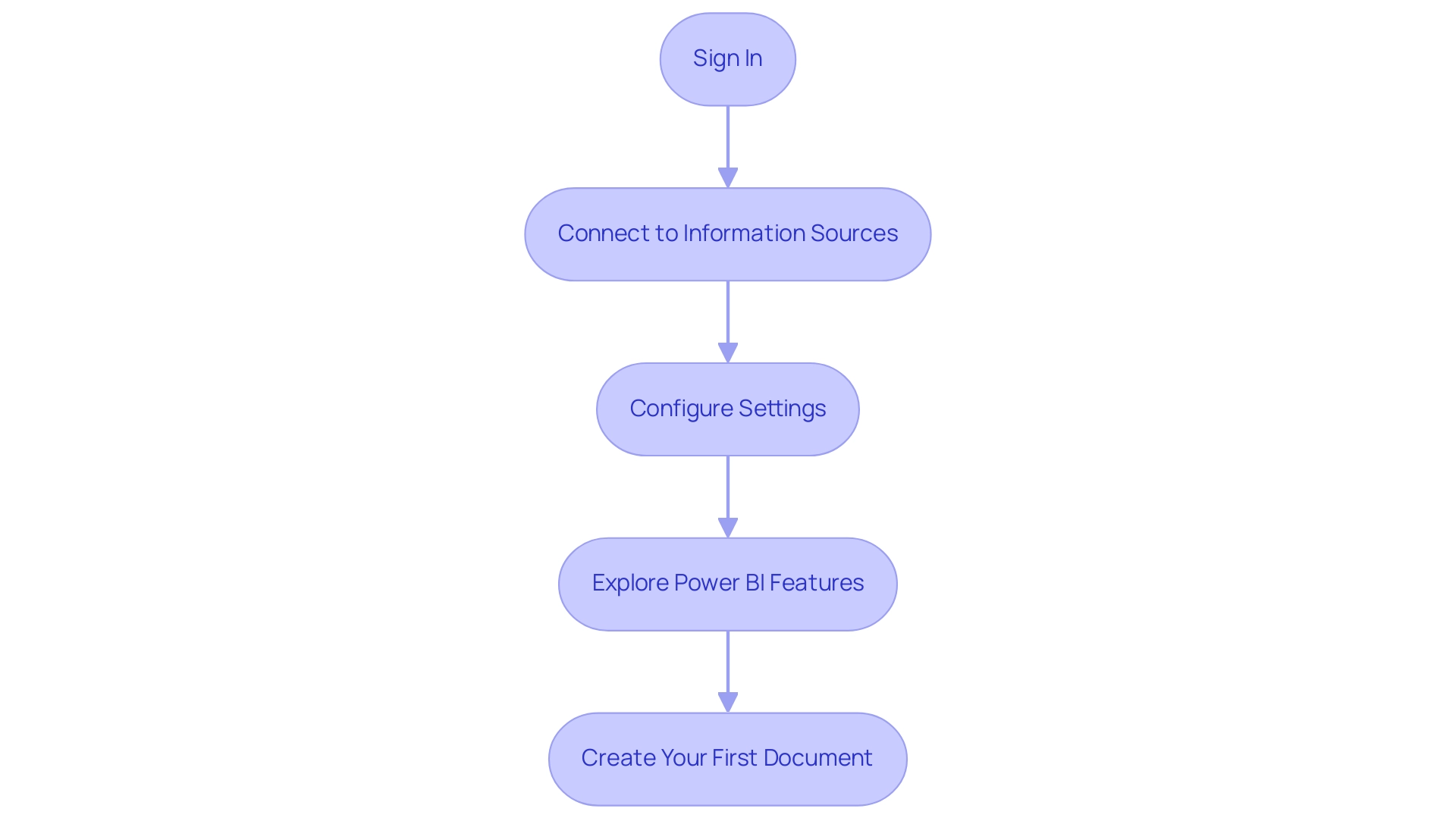

Post-Installation Setup and Configuration for Power BI

Once you have installed Power BI Desktop, follow these steps to set it up effectively:

- Sign In: Launch the application and sign in with your work or school Microsoft account. If you don’t already have one, you can create an account during this process.

- Connect to Data Sources: Use the ‘Get Data’ feature to connect to data sources such as Excel, SQL Server, and online services that fit your needs.

- Configure Settings: From the ‘File’ menu, select ‘Options and settings’ > ‘Options’. Here you can adjust preferences such as Data Load options and regional settings to optimize performance.

- Explore Power BI Features: Spend some time getting familiar with the interface. Explore dashboards, reports, and datasets to understand how each can support your analysis.

- Create Your First Report: Start with a simple report. This hands-on experience will show you how Power BI’s features meet your specific data requirements.

Once the installation and these steps are complete, you will be well equipped to use Power BI for comprehensive analysis and visualization. In a data-rich environment, Business Intelligence plays a crucial role in operational efficiency, and organizations must overcome challenges like time-consuming report creation and data inconsistencies to use insights effectively. With the market for social business intelligence projected to reach $25,886.8 million by 2024, it is crucial that your team be adept at tools like Power BI and RPA solutions such as EMMA RPA and Power Automate.

With the BFSI sector predicted to experience the fastest growth in business intelligence from 2023 to 2032, the relevance of effective tools cannot be overstated. Notably, user adoption rates can vary significantly; 26% of users achieve full utilization at 50% capacity, while 58% remain below 25%. This underscores the importance of a thorough setup process and adequate user training to ensure effective data-driven decision-making.

Additionally, a recent case study showcased how the narrative visual with Copilot can be embedded in applications, streamlining workflows by allowing organizations to refresh data with user-specific information, further enhancing operational efficiency and data usability.

Conclusion

Ensuring a successful installation and effective use of Power BI is pivotal for organizations aiming to harness the power of data-driven decision-making. By first confirming that system prerequisites are met, users can streamline the installation process and enhance overall performance. The step-by-step guide provided offers a clear pathway to successfully installing Power BI, empowering users to unlock its robust capabilities and drive operational efficiency.

Addressing common installation issues through proactive troubleshooting can prevent disruptions and facilitate a smoother setup experience. Understanding the distinctions between Power BI versions allows organizations to choose the right fit for their specific needs, ensuring they maximize the tool’s potential. Moreover, post-installation setup steps, such as connecting to data sources and configuring settings, are crucial for leveraging Power BI’s full range of features.

Ultimately, by following these guidelines, organizations can overcome initial hurdles and fully embrace the transformative capabilities of Power BI. This not only leads to improved report creation and data consistency but also fosters a culture of informed decision-making that is essential for thriving in today’s competitive landscape. Embracing Power BI as part of the organizational strategy will pave the way for deeper insights and enhanced operational efficiency, making it a valuable asset in the journey towards data excellence.

Introduction

In an age where data drives decision-making and operational efficiency, the ability to maintain up-to-date insights is crucial for organizations striving to stay competitive. Scheduled refresh in Power BI emerges as a powerful solution, allowing teams to automate data updates and ensure reports reflect the latest information. This functionality not only minimizes the risk of human error but also enhances consistency across reports—two significant challenges that often hinder effective data management.

By delving into the intricacies of scheduled refresh, from prerequisites to troubleshooting common issues, organizations can unlock the full potential of their data analytics efforts. Embracing best practices and leveraging innovative tools such as Robotic Process Automation (RPA) can further streamline these processes, empowering teams to focus on strategic initiatives rather than time-consuming manual tasks.

As businesses prepare for the evolving landscape of business intelligence, understanding and optimizing scheduled refresh capabilities in Power BI is essential for informed decision-making and sustained growth.

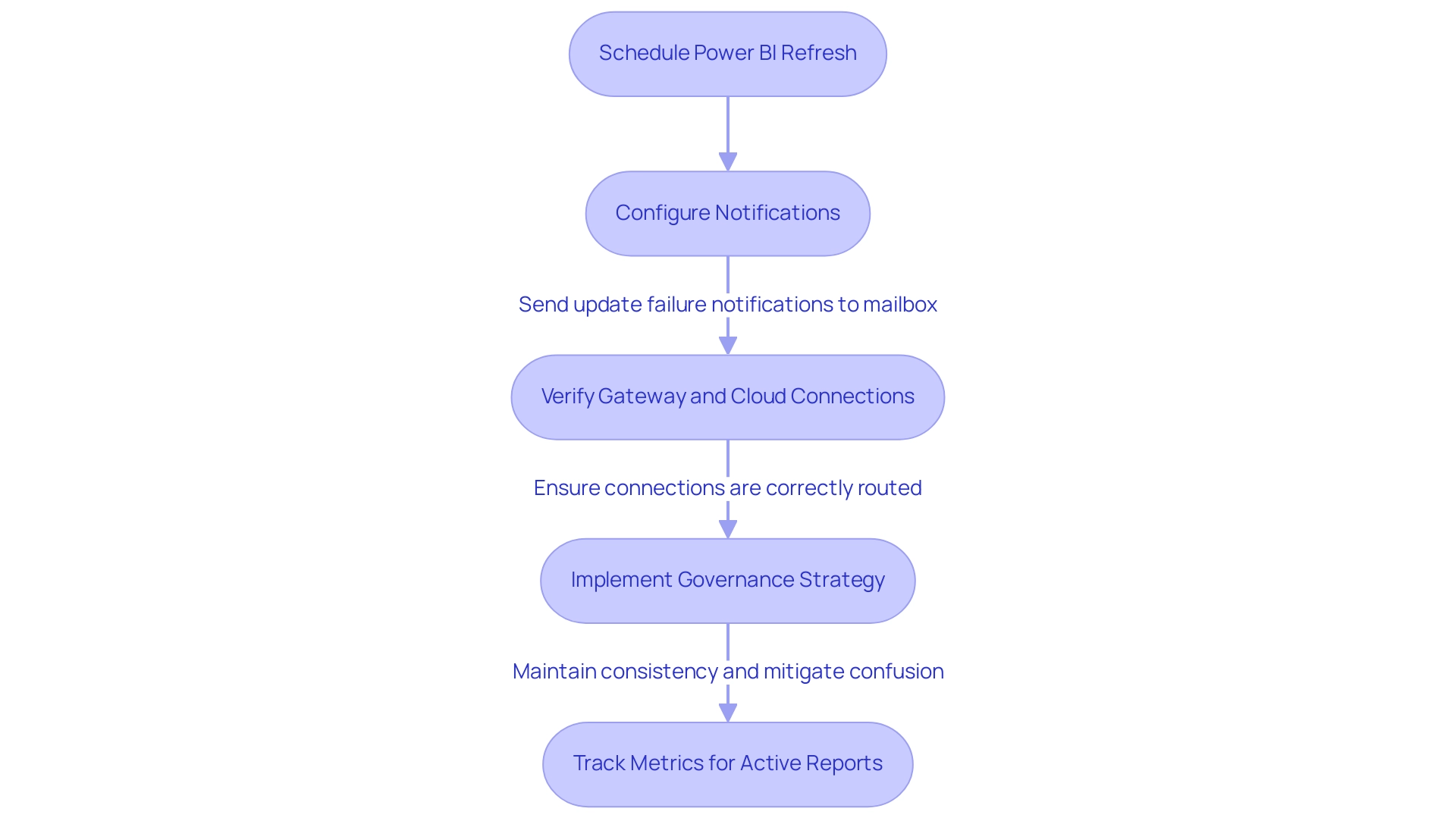

Understanding Scheduled Refresh in Power BI

The ability to schedule refreshes in Power BI is vital: it updates datasets automatically at defined intervals, so reports reflect the most current information. This is especially valuable for businesses that rely on timely data analysis for informed decision-making. Scheduling refreshes removes the need for manual updates, significantly reducing the risk of errors and improving consistency across reports. Both are key challenges that stem from time-consuming report creation and data inconsistencies.

Note that Power BI can take up to 60 minutes to refresh a semantic model even when you use the ‘Refresh now’ option, so scheduling refreshes ahead of when reports are needed saves time.

Configuring Power BI to send refresh failure notifications directly to your mailbox also helps you address issues promptly. It is equally important to verify that the data source mappings under ‘Gateway and cloud connections’ are configured and applied correctly, as this affects the overall effectiveness of your refresh strategy. A strong governance strategy is essential to maintain consistency and avoid confusion, addressing common problems with report accuracy.

As industry expert Sal Alcaraz points out, organizations with a Premium license can run up to 48 scheduled refreshes per day, and enabling the XMLA endpoint can raise this limit. Understanding how scheduled refresh works is essential for getting the most out of Power BI, particularly as companies adopt the newest refresh enhancements and features in 2024. The General Management App further strengthens your reporting strategy with comprehensive management, custom dashboards, and seamless data integration, tackling the frequent problem of reports that lack actionable guidance.
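When planning a schedule, it helps to spread refresh times evenly within your license's daily limit. The sketch below is a hypothetical helper. It uses the Premium figure of 48 refreshes per day cited above and 8 per day for Pro, which matches Microsoft's documented Pro limit; check the current limits for your capacity. It also assumes refresh times are chosen on 30-minute boundaries, which is why 48 is the maximum number of slots in a day.

```python
# Daily scheduled-refresh limits: 48 for Premium as cited above, 8 for Pro.
# Check the current limits for your license or capacity before relying on them.
DAILY_REFRESH_LIMITS = {"Pro": 8, "Premium": 48}
SLOTS_PER_DAY = 48  # assumption: refresh times are picked on 30-minute boundaries


def evenly_spaced_slots(refreshes_per_day, license_type="Pro"):
    """Spread refresh times across a day as evenly as 30-minute slots allow."""
    limit = DAILY_REFRESH_LIMITS[license_type]
    if not 1 <= refreshes_per_day <= limit:
        raise ValueError(f"{license_type} allows 1 to {limit} scheduled refreshes per day")
    step = SLOTS_PER_DAY // refreshes_per_day  # whole 30-minute slots between refreshes
    times = []
    for i in range(refreshes_per_day):
        minutes = i * step * 30
        times.append(f"{minutes // 60:02d}:{minutes % 60:02d}")
    return times


print(evenly_spaced_slots(8))             # eight times, every 3 hours from 00:00 to 21:00
print(evenly_spaced_slots(5, "Premium"))  # ['00:00', '04:30', '09:00', '13:30', '18:00']
```

Rounding the spacing down to whole slots keeps every time inside the same day; with counts that do not divide 48 evenly, the last gap before midnight is simply a little longer.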

A case study titled ‘Active Reports and Viewer Counts’ demonstrates the effectiveness of regular refreshes: metrics such as total views and total viewers were tracked, leading to better report management and higher user engagement. This shows the significant effect a well-executed refresh strategy can have on data accuracy and operational efficiency, reinforcing the importance of timely data analysis in business intelligence.

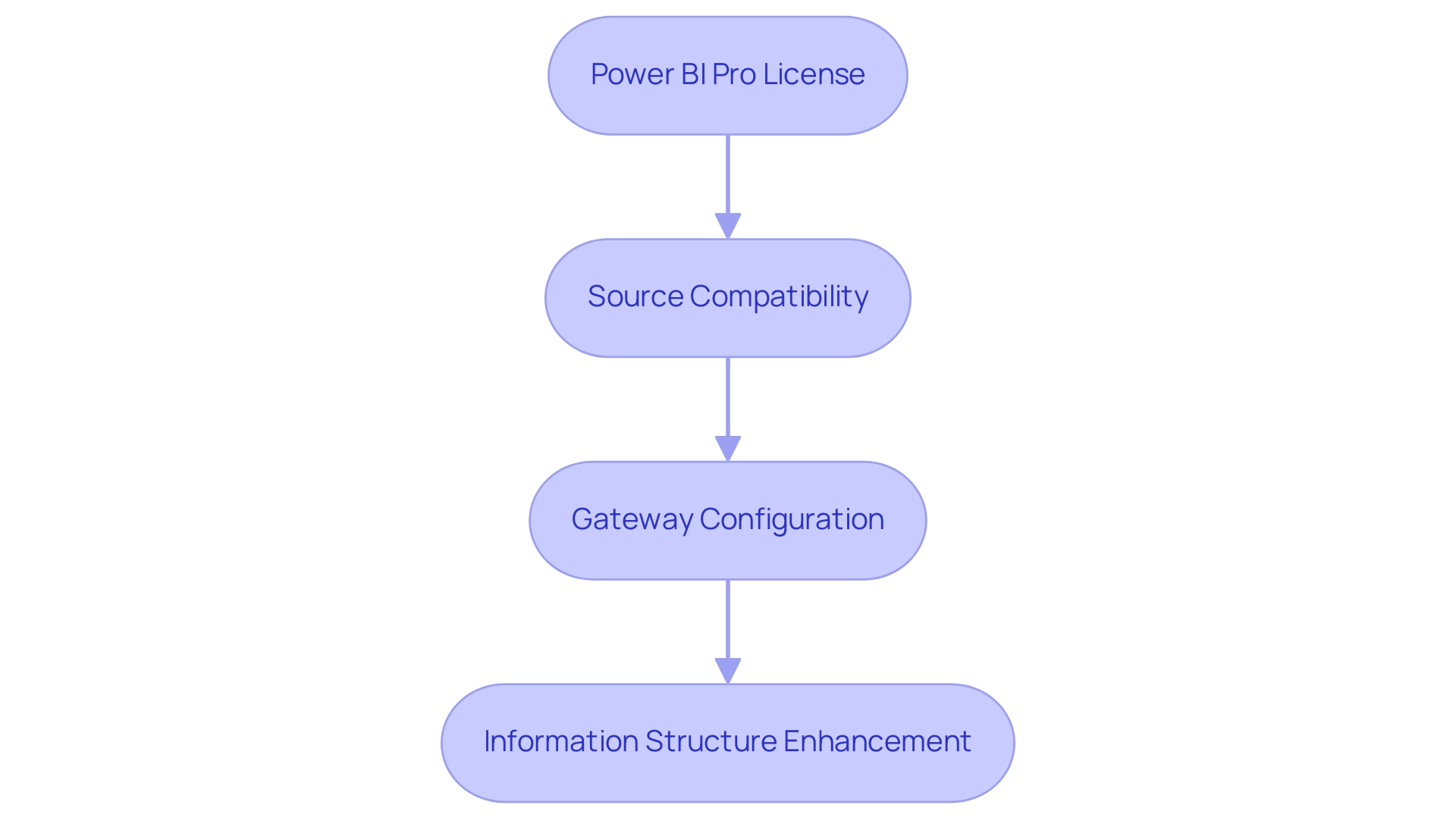

Prerequisites for Setting Up Scheduled Refresh

To set up scheduled refresh in Power BI, make sure the following prerequisites are met:

- Licensing: A Power BI Pro license is required to schedule refreshes in the Power BI service. Be mindful that a license left inactive for an extended period, such as six months, may not be put to effective use.

- Source Compatibility: Verify that your data sources support scheduled refresh. Commonly supported sources include Azure SQL Database, SharePoint Online, and SQL Server.

- Gateway Configuration: For on-premises data sources, make sure an on-premises data gateway is configured. The gateway moves data between your on-premises sources and Power BI.

- Data Model Optimization: Optimize your data model for performance. Larger datasets lead to longer refresh times, so efficient modeling is key.

By meeting these prerequisites, you will be well equipped to schedule Power BI refreshes, improving operational efficiency and addressing both time-consuming report creation and the inconsistencies that can undermine trust in your insights. For more actionable guidance, consider Power BI features such as data alerts and dashboards that surface insights as your data changes. Integrating RPA tools can also automate repetitive data-handling tasks, letting your team focus on analysis rather than report creation. As experts note, many of the capabilities discussed here are not available to Fabric administrators, which underscores the need for the right licensing and setup. Organizations that have met these requirements report significant improvements in their data management processes, supporting data-driven decisions that drive growth and innovation.

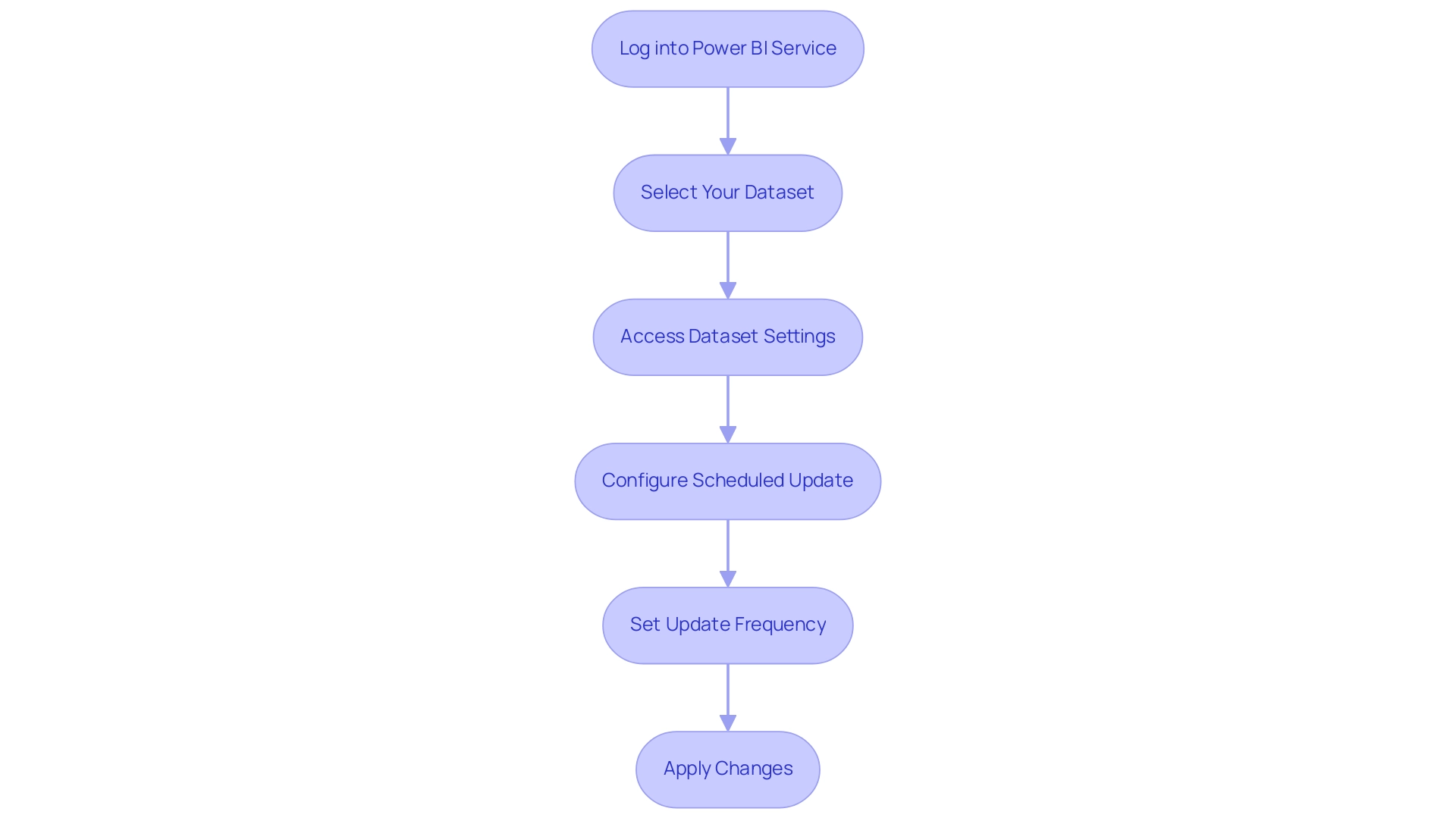

Step-by-Step Guide to Configuring Scheduled Refresh in Power BI Service

Configuring scheduled refresh in the Power BI service is essential for keeping reports up to date, which strengthens your ability to use data-driven insights for operational efficiency. RPA solutions can help automate the scheduling and management of these refreshes, further streamlining the process. Follow these steps for a seamless setup:

- Log into Power BI Service: Begin by navigating to the Power BI Service at https://app.powerbi.com and logging in with your credentials.

- Select Your Dataset: In the left navigation pane, click ‘Datasets’ to locate the dataset you plan to refresh.

- Access Dataset Settings: Click the ellipsis (…) next to the selected dataset, then choose ‘Settings’ to open the configuration options.

- Configure Scheduled Refresh: Expand the ‘Scheduled refresh’ section and set the ‘Keep your data up to date’ toggle to ‘On’ to enable automatic refresh.

- Set Refresh Frequency: Choose a daily or weekly frequency, select the appropriate time zone, and add the times at which the refresh should run.

- Apply Changes: Click ‘Apply’ to save your settings. In this section you can also enable email notifications for refresh failures, so you are alerted promptly.
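The same settings can also be applied programmatically through the Power BI REST API's "Update Refresh Schedule In Group" operation. The sketch below only builds the PATCH request with Python's `urllib` and does not send it. Obtaining an access token (for example through Microsoft Entra ID) is out of scope, the workspace and dataset IDs are placeholders, and the field names follow the API reference as we understand it, so verify them against the current documentation before use.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_schedule_request(group_id, dataset_id, days, times,
                                   time_zone="UTC", notify_on_failure=True,
                                   access_token="<access-token>"):
    """Build (but do not send) a PATCH request that updates a dataset's refresh schedule."""
    body = {
        "value": {
            "enabled": True,               # same effect as the 'Keep your data up to date' toggle
            "days": list(days),            # e.g. ["Monday", "Wednesday"]
            "times": list(times),          # "HH:MM" strings, e.g. ["07:00", "16:30"]
            "localTimeZoneId": time_zone,  # Windows time zone ID, e.g. "UTC"
            "notifyOption": "MailOnFailure" if notify_on_failure else "NoNotification",
        }
    }
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshSchedule"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {access_token}",
                 "Content-Type": "application/json"},
        method="PATCH",
    )


request = build_refresh_schedule_request(
    "my-workspace-id", "my-dataset-id",  # hypothetical placeholders
    days=["Monday", "Wednesday", "Friday"], times=["07:00", "16:30"])
print(request.get_method(), request.full_url)
# To actually send it, pass the request to urllib.request.urlopen() with a real token.
```

Keeping request construction separate from sending makes it easy to review the payload (or log it) before changing a production schedule.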

Following these steps schedules refresh for your dataset, so your reports reflect the most recent data available. This proactive approach streamlines report creation and minimizes inconsistencies, both common challenges for operations directors. Remember that a semantic model can use only a single gateway connection, so make sure all required data source definitions are included in that gateway.
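Because a semantic model can use only one gateway connection, a quick pre-check is to confirm that every source the model needs is defined in that gateway. The sketch below is hypothetical. It represents each source as a `(type, server, database)` tuple and compares them case-insensitively, which is a simplification of how the service actually matches connection details.

```python
def _key(source):
    """Normalize a (source_type, server, database) tuple for case-insensitive comparison."""
    return tuple(part.strip().lower() for part in source)


def sources_missing_from_gateway(model_sources, gateway_sources):
    """Return the model's sources that the chosen gateway does not define.

    Because a semantic model can use only one gateway connection, every
    source the model needs must appear in that gateway's definitions.
    """
    defined = {_key(s) for s in gateway_sources}
    return [s for s in model_sources if _key(s) not in defined]


model = [("SQL", "sql01", "Sales"), ("SQL", "sql02", "Finance"),
         ("File", r"\\share\data", "budget.xlsx")]
gateway = [("sql", "SQL01", "sales"), ("File", r"\\share\data", "budget.xlsx")]
print(sources_missing_from_gateway(model, gateway))  # [('SQL', 'sql02', 'Finance')]
```

Any source this returns needs to be added to the same gateway before scheduled refresh can succeed for the whole model.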

For new data integrations, it is advisable to use the certified Supermetrics connector, which helps streamline the refresh process. Keep in mind that Power BI deactivates a refresh schedule after four consecutive failures, so monitor your refresh settings to keep performance consistent.
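Since a schedule is deactivated after four consecutive failures, it is worth catching a failure streak early. The sketch below assumes you already have the refresh history as a newest-first list of dictionaries, for example from the REST API's refresh-history endpoint. The status strings ("Completed", "Failed", and "Unknown" for a refresh still in progress) are modeled on that API and should be checked against its reference. The `schedule_at_risk` threshold of one failure before the limit is an illustrative choice.

```python
# Power BI deactivates a refresh schedule after this many consecutive failures.
FAILURE_LIMIT = 4


def consecutive_failures(history):
    """Count failures at the top of a newest-first refresh history.

    Each entry is a dict with a "status" key. "Unknown" (a refresh still in
    progress) is skipped; any other non-failure status ends the streak.
    """
    streak = 0
    for entry in history:
        status = entry.get("status")
        if status == "Unknown":
            continue
        if status != "Failed":
            break
        streak += 1
    return streak


def schedule_at_risk(history, limit=FAILURE_LIMIT):
    """True when one more failure would reach the deactivation limit (or it already has)."""
    return consecutive_failures(history) >= limit - 1


history = [{"status": "Failed"}, {"status": "Failed"},
           {"status": "Failed"}, {"status": "Completed"}]
print(consecutive_failures(history), schedule_at_risk(history))  # 3 True
```

Running a check like this after each scheduled window gives you a chance to fix credentials or connectivity before the schedule is switched off.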

As Denys Arkharov, a BI Engineer at Coupler.io, states, “A notable achievement in my career was my involvement in creating Risk Indicators for Ukraine’s ProZorro platform, a project that led to my work being incorporated into the Ukrainian Law on Public Procurement.” This underlines the importance of efficient data management in BI. Moreover, the case study on using the Power BI gateway for on-premises data sources shows how mapping the gateway’s data source to the report’s semantic model makes it possible to schedule refreshes for reports that rely on on-premises data.

Businesses that struggle to leverage these data-driven insights often find themselves at a competitive disadvantage, making the integration of RPA solutions crucial for optimizing data management and operational efficiency.

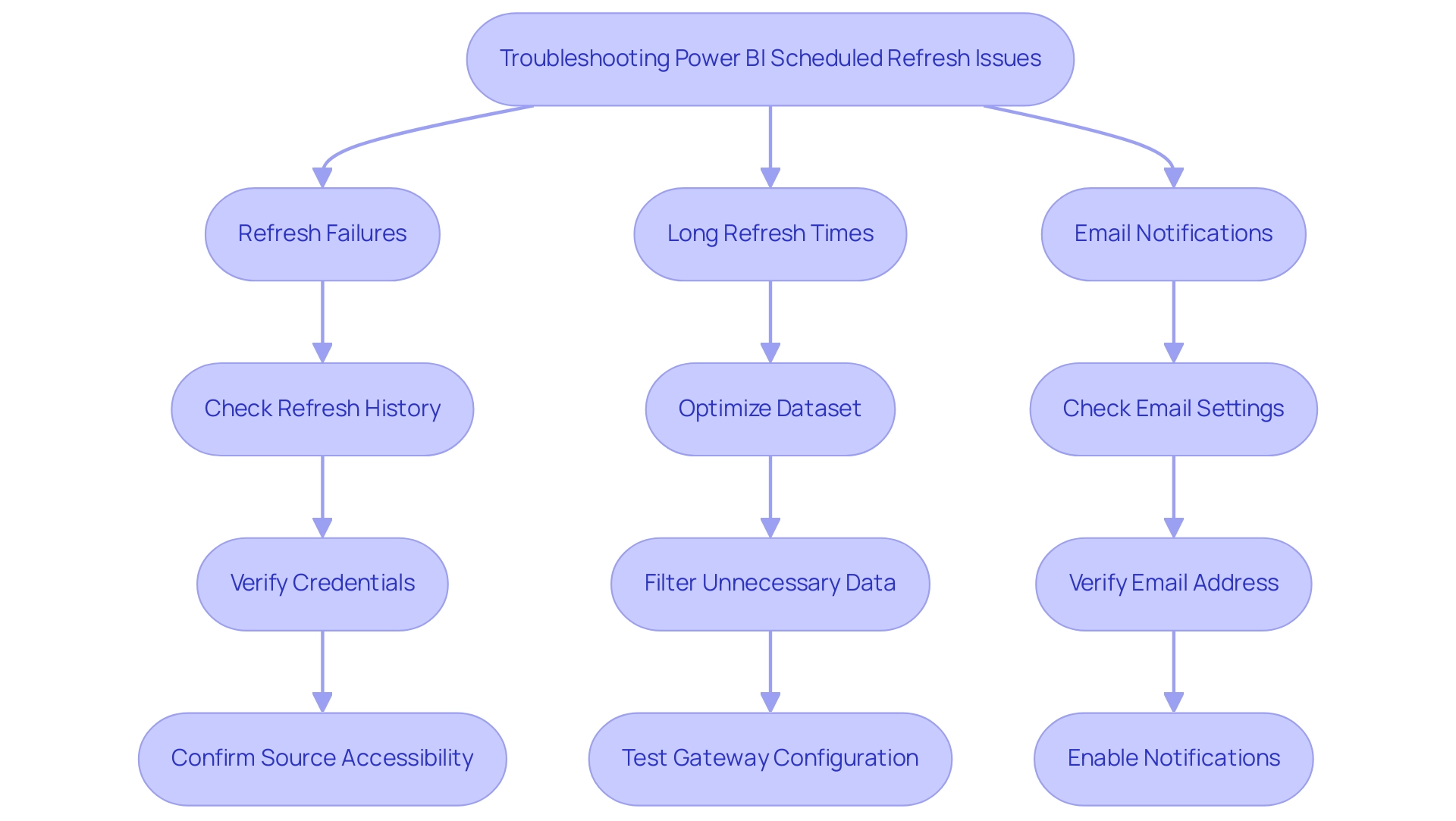

Troubleshooting Common Issues with Scheduled Refresh

When managing scheduled refreshes in Power BI, several common issues may arise, but with the right strategies you can troubleshoot them effectively:

-

Refresh Failures: If a scheduled refresh fails, the first step is to examine the error message in the refresh history. Often, such failures arise from invalid credentials or source connectivity issues. Confirm that your credentials are accurate and that the information source is accessible. Rena, from Community Support, emphasizes a crucial concern:

“When did the last scheduled refresh and the last manual refresh each occur? When was SQL Server (the count: 4,232,405) updated? Is it possible that the scheduled refresh completed before the data in SQL Server was updated?”

Being aware of these nuances helps clarify the situation and underscores the importance of monitoring SQL Server updates relative to the scheduled Power BI refresh, particularly where the lack of a governance strategy can lead to inconsistencies, confusion, and mistrust.

- Long Refresh Times: If your refreshes take longer than expected, optimize your model, for example by reducing the dataset size or filtering out unnecessary data. Also make sure your On-premises Data Gateway is properly configured and up to date. Regularly testing refresh scenarios is a best practice that helps prevent last-minute issues; the case study on Best Practices for Power BI Update Operations emphasizes that proactive management and regular testing significantly improve the reliability and efficiency of scheduled refreshes. In a data-rich environment, managing these processes efficiently is vital to avoid time-consuming report creation and to realize the full potential of Business Intelligence for informed decision-making.

- Email Notifications: If you are not receiving notifications for refresh failures, you cannot respond promptly. Verify that the email settings in the dataset configuration are correct, that the email address is valid, and that notifications are enabled. These steps keep decision-making agile and reporting accurate, turning raw data into actionable insights that drive growth and innovation. Because inconsistencies in data can breed misunderstanding and distrust, it is important to address these problems proactively.
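Rena's question in the refresh-failures item comes down to timing: a refresh can succeed and still show old numbers if it ran before the source finished loading. The sketch below is a minimal, hypothetical check, assuming you know when the refresh started and when the source (for example SQL Server) was last updated. It conservatively compares against the refresh start, and it suggests the next half-hour slot after the load, assuming schedule times are picked on the hour or half hour and that a 15-minute buffer is enough (both assumptions to adjust).

```python
from datetime import datetime, timedelta


def may_have_missed_update(refresh_start: datetime, source_updated: datetime) -> bool:
    """True if the source changed at or after the refresh began reading it."""
    return source_updated >= refresh_start


def next_refresh_slot(source_updated: datetime, buffer_minutes: int = 15) -> datetime:
    """Earliest :00 or :30 slot at least `buffer_minutes` after the source load."""
    earliest = source_updated + timedelta(minutes=buffer_minutes)
    minutes_past = earliest.minute % 30
    slot = earliest.replace(second=0, microsecond=0)
    # Round up to the next half-hour boundary unless already exactly on one.
    if minutes_past or earliest.second or earliest.microsecond:
        slot += timedelta(minutes=30 - minutes_past)
    return slot


refresh_start = datetime(2024, 5, 1, 6, 30)
source_updated = datetime(2024, 5, 1, 6, 40)
print(may_have_missed_update(refresh_start, source_updated))  # True
print(next_refresh_slot(source_updated).strftime("%H:%M"))    # 07:00
```

Here the source load finished ten minutes after the refresh began, so the refresh likely read stale data; moving the schedule to 07:00 would give the load time to complete.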

Understanding these frequent issues and their resolutions will help you schedule Power BI refreshes efficiently, improving data accuracy and operational effectiveness.
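The email-notification setting from the troubleshooting list can also be managed programmatically. The Power BI REST API has an Update Refresh Schedule operation (a PATCH to `.../groups/{groupId}/datasets/{datasetId}/refreshSchedule`) whose body carries days, times, a time zone, and a `notifyOption` such as "MailOnFailure". The sketch below only builds and validates that body; it does not send it, and authentication is out of scope. The helper name, the UTC default, and the half-hour validation are assumptions, so check them against the official API reference before relying on this.

```python
import json

VALID_DAYS = {"Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"}


def refresh_schedule_body(days, times, time_zone="UTC", notify_on_failure=True):
    """Build a request body for the Update Refresh Schedule call (not sent here)."""
    for day in days:
        if day not in VALID_DAYS:
            raise ValueError(f"unknown day: {day}")
    for value in times:
        hours, minutes = (int(part) for part in value.split(":"))
        # Assumed constraint: schedule times fall on the hour or half hour.
        if not (0 <= hours < 24 and minutes in (0, 30)):
            raise ValueError(f"time must be on the hour or half hour: {value}")
    return {
        "value": {
            "enabled": True,
            "days": list(days),
            "times": list(times),
            "localTimeZoneId": time_zone,
            "notifyOption": "MailOnFailure" if notify_on_failure else "NoNotification",
        }
    }


# Off-peak example: early-morning refreshes with failure emails enabled.
body = refresh_schedule_body(["Monday", "Thursday"], ["02:00", "02:30"])
print(json.dumps(body, indent=2))
```

Keeping `notifyOption` explicit in the body makes it harder for failure emails to be silently switched off when a schedule is edited in bulk.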

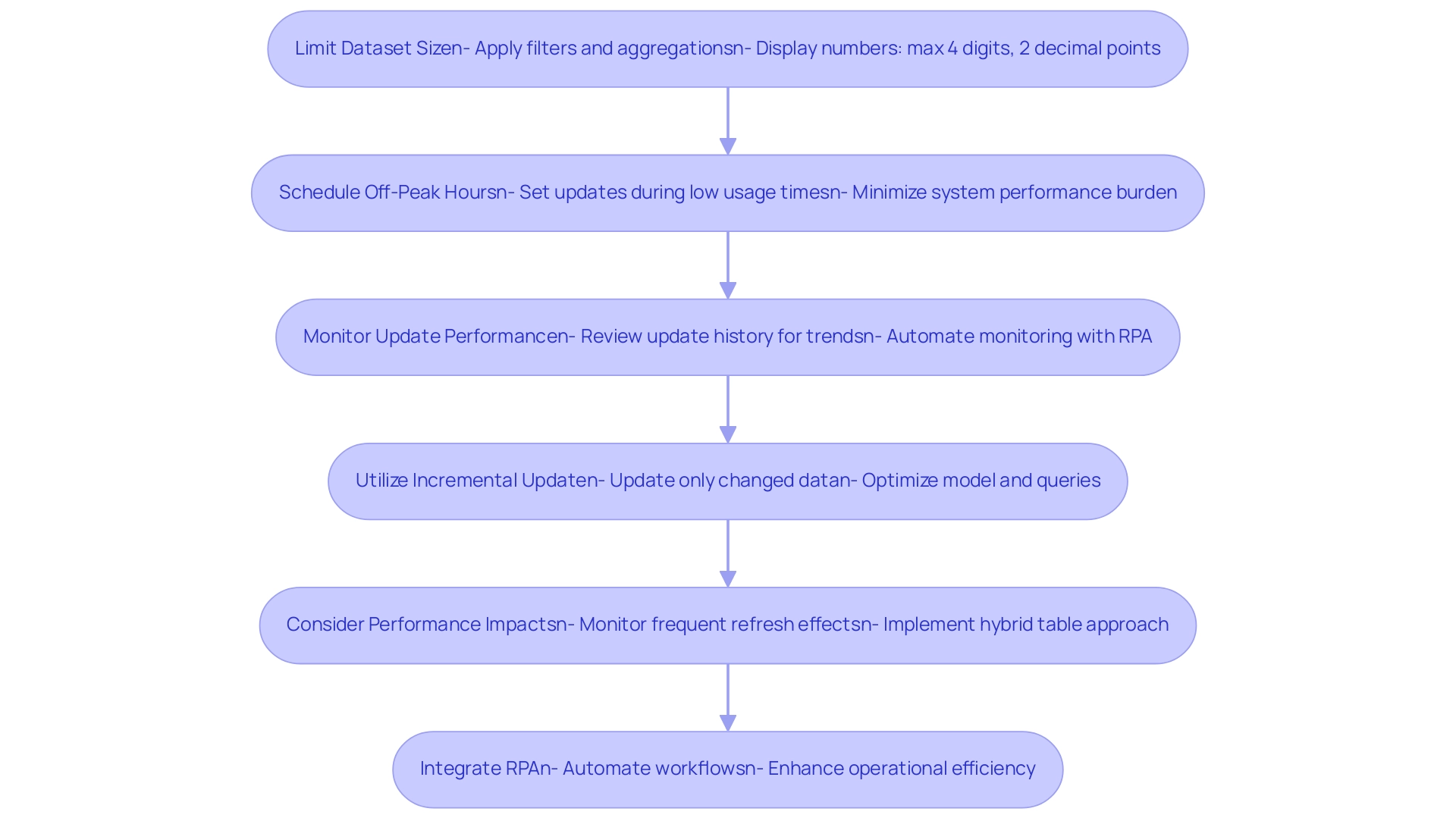

Best Practices for Optimizing Scheduled Refresh in Power BI

To make your scheduled refreshes in Power BI more efficient and reduce manual, repetitive tasks, consider the following best practices, using Robotic Process Automation (RPA) to automate workflows where possible:

- Limit Dataset Size: Streamline your refresh processes by reducing the amount of data refreshed at each interval, for example by applying filters or aggregating data wherever possible.

Smaller datasets refresh faster and improve overall performance. In addition, limiting displayed numbers to four digits and two decimal places improves readability and consistency across reports.

- Schedule Off-Peak Hours: Set your refresh schedules for off-peak hours.

This timing reduces the load on the system and leaves sufficient resources for other critical tasks, leading to smoother operations.

- Monitor Refresh Performance: Consistently review the refresh history to identify trends in refresh durations and any failures.

Examining this information lets you make informed choices about model management and refresh strategies, ultimately improving performance.

By integrating RPA, this monitoring can be automated, freeing your team to focus on strategic initiatives and reducing the errors associated with manual oversight.

- Use Incremental Refresh: For larger datasets, consider incremental refresh.

This technique reloads only the most recent data, the partitions that may have changed since the last refresh, rather than the entire dataset, which improves efficiency.

As community expert Dino Tao highlights, it is essential to monitor and optimize your model and queries to mitigate performance issues that can arise from frequent intraday refreshes.

- Consider Performance Impacts: Be mindful of the performance cost of frequent intraday refreshes, especially with larger datasets.

Dino Tao emphasizes the importance of keeping historical and real-time data segments consistent, suggesting that a hybrid table approach can maintain this consistency without significant performance penalties.
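The incremental refresh and hybrid-table practices above both rest on date-range partitions. In Power BI the filter is expressed with the `RangeStart` and `RangeEnd` parameters, typically with the start inclusive and the end exclusive so no row lands in two partitions, and the service generates the partitions from a refresh policy. The Python below only illustrates that logic under assumptions: daily partitions, a hypothetical `order_date` field, and a hypothetical `refresh_days` setting. In a hybrid table, the newest window would be served through DirectQuery rather than imported.

```python
from datetime import date, timedelta


def in_range(value: date, range_start: date, range_end: date) -> bool:
    # Start inclusive, end exclusive, mirroring the RangeStart/RangeEnd filter.
    return range_start <= value < range_end


def refresh_windows(refresh_date: date, refresh_days: int):
    """Daily (start, end) windows for the incremental range, oldest first.

    The last window covers refresh_date itself; older (archived)
    partitions are not reloaded.
    """
    return [
        (refresh_date - timedelta(days=n), refresh_date - timedelta(days=n - 1))
        for n in range(refresh_days - 1, -1, -1)
    ]


def rows_to_reload(rows, refresh_date: date, refresh_days: int):
    windows = refresh_windows(refresh_date, refresh_days)
    return [
        row for row in rows
        if any(in_range(row["order_date"], start, end) for start, end in windows)
    ]


sales = [
    {"order_date": date(2024, 4, 1), "amount": 120.0},   # archived, not reloaded
    {"order_date": date(2024, 5, 9), "amount": 80.0},
    {"order_date": date(2024, 5, 10), "amount": 45.5},
]
recent = rows_to_reload(sales, refresh_date=date(2024, 5, 10), refresh_days=3)
print([row["order_date"].isoformat() for row in recent])  # ['2024-05-09', '2024-05-10']
```

Only the two recent rows are reprocessed; the April row stays in its archived partition, which is where the time savings on large datasets come from.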

Real-World Example: Consider the case study of using an Enterprise Data Gateway, which connects a semantic model to on-premises data sources.

With proper configuration and permissions, gateway administrators can manage data source definitions effectively, ensuring that the semantic model can access the sources it needs and optimizing refresh performance.

By adopting these best practices and leveraging RPA, you can significantly improve the performance and reliability of your scheduled Power BI refreshes.

This integration leads to more effective data management and informed decision-making, empowering your operations with timely and accurate insights.

Additionally, as the AI landscape evolves, RPA can adapt to these changes, further enhancing operational efficiency and reducing the burden of manual workflows.
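The refresh-history monitoring recommended above is the kind of check an RPA or scheduled script can run for you. The refresh history (in the service, or through the REST API's refresh history call) reports each refresh's status and start and end times; the sketch below assumes those have already been parsed into dictionaries with hypothetical keys `status`, `start`, and `end`. The 1.5× median threshold for a "slow" refresh is an arbitrary assumption to tune.

```python
from datetime import datetime
from statistics import median


def duration_minutes(entry):
    return (entry["end"] - entry["start"]).total_seconds() / 60


def slow_refreshes(history, factor=1.5):
    """Completed refreshes that took more than `factor` times the median duration."""
    completed = [e for e in history if e["status"] == "Completed"]
    if not completed:
        return []
    typical = median(duration_minutes(e) for e in completed)
    return [e for e in completed if duration_minutes(e) > factor * typical]


def failure_rate(history):
    """Share of finished refreshes that failed."""
    finished = [e for e in history if e["status"] in ("Completed", "Failed")]
    if not finished:
        return 0.0
    return sum(e["status"] == "Failed" for e in finished) / len(finished)


history = [
    {"status": "Completed", "start": datetime(2024, 5, 10, 2, 0), "end": datetime(2024, 5, 10, 2, 40)},
    {"status": "Completed", "start": datetime(2024, 5, 9, 2, 0), "end": datetime(2024, 5, 9, 2, 12)},
    {"status": "Failed", "start": datetime(2024, 5, 8, 2, 0), "end": datetime(2024, 5, 8, 2, 5)},
    {"status": "Completed", "start": datetime(2024, 5, 7, 2, 0), "end": datetime(2024, 5, 7, 2, 10)},
]
for entry in slow_refreshes(history):
    print("slow:", entry["start"].isoformat(), duration_minutes(entry), "min")
print("failure rate:", failure_rate(history))
```

Using the median rather than the mean keeps one very slow run from hiding others; a rising failure rate or repeated slow runs are signals to revisit dataset size or the schedule.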

Conclusion

Implementing scheduled refresh in Power BI is a vital step toward keeping organizations agile and data-driven in decision-making. By automating data updates, teams can reduce the potential for human error and improve the consistency of reports, addressing common challenges in data management. The prerequisites for setting up a scheduled refresh include:

- Having a Power BI Pro license

- Compatible data sources

These prerequisites provide a solid foundation for optimizing data operations.

Following a structured approach to configuring scheduled refresh not only streamlines the reporting process but also lets teams focus on strategic initiatives rather than repetitive tasks. Troubleshooting common issues, such as refresh failures and long refresh times, is essential for maintaining a reliable data environment. By adopting best practices, including limiting dataset size and scheduling refreshes during off-peak hours, organizations can significantly improve the efficiency of their data operations.

Incorporating innovative tools like Robotic Process Automation (RPA) further empowers teams to optimize their workflows, ensuring that data-driven insights are readily available for informed decision-making. Embracing these strategies will not only improve operational efficiency but also foster a culture of accurate data reporting, ultimately driving growth and innovation within the organization. As the landscape of business intelligence continues to evolve, mastering scheduled refresh capabilities in Power BI will be crucial for sustaining a competitive edge.

Introduction

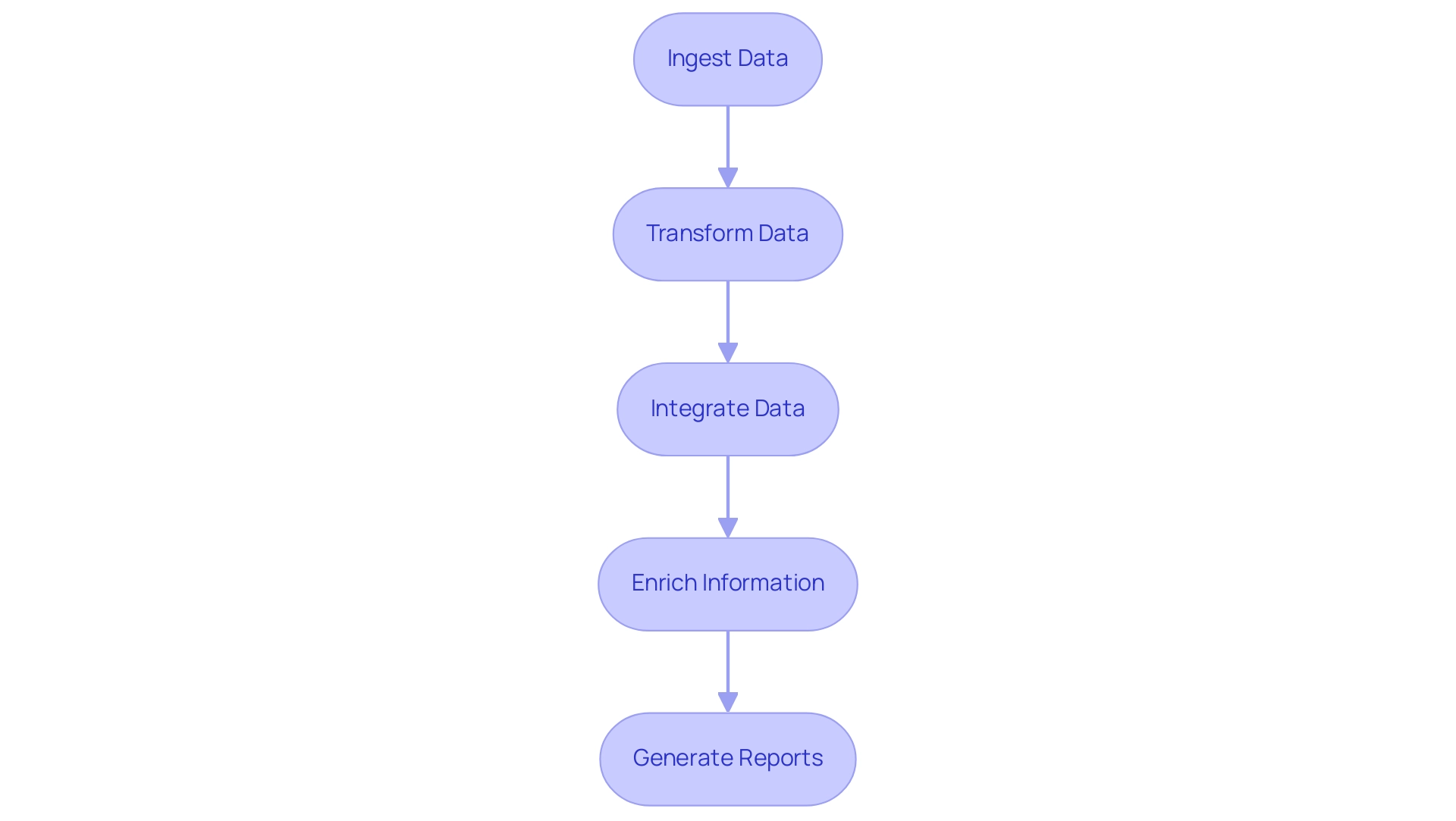

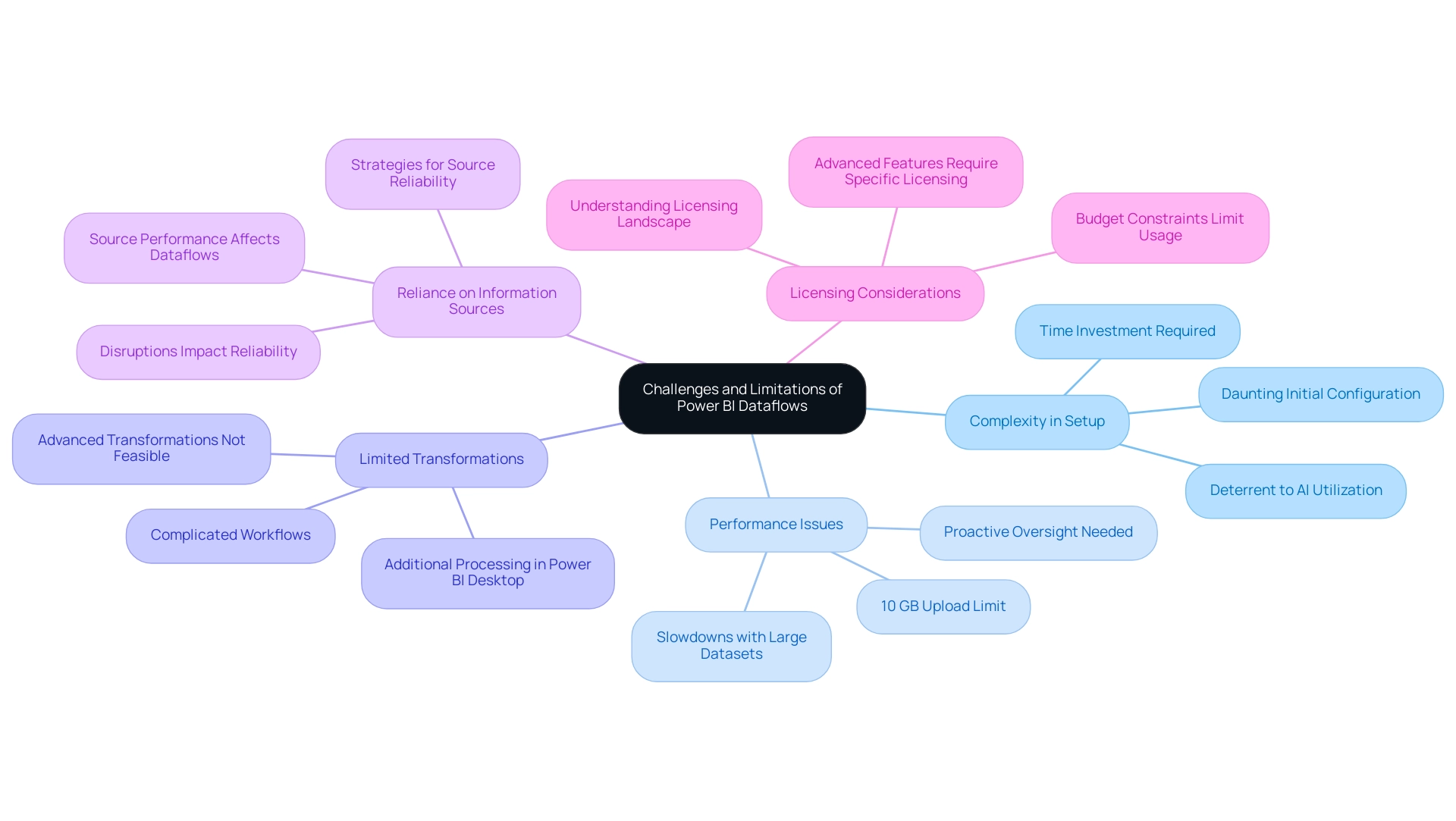

In the rapidly evolving landscape of data management, Power BI Dataflows emerge as a transformative solution for organizations seeking to harness their data effectively. As businesses grapple with the complexities of data preparation and analysis, these cloud-based tools offer a streamlined approach to ingesting, transforming, and integrating data, paving the way for insightful reporting.

However, the journey to optimizing Power BI Dataflows is not without its challenges. From establishing clear roles to implementing best practices, organizations must navigate a series of strategic steps to unlock the full potential of their data initiatives.

This article delves into the essentials of Power BI Dataflows, providing a comprehensive guide to creating, managing, and maximizing their benefits, ultimately empowering teams to make informed decisions and drive operational efficiency.

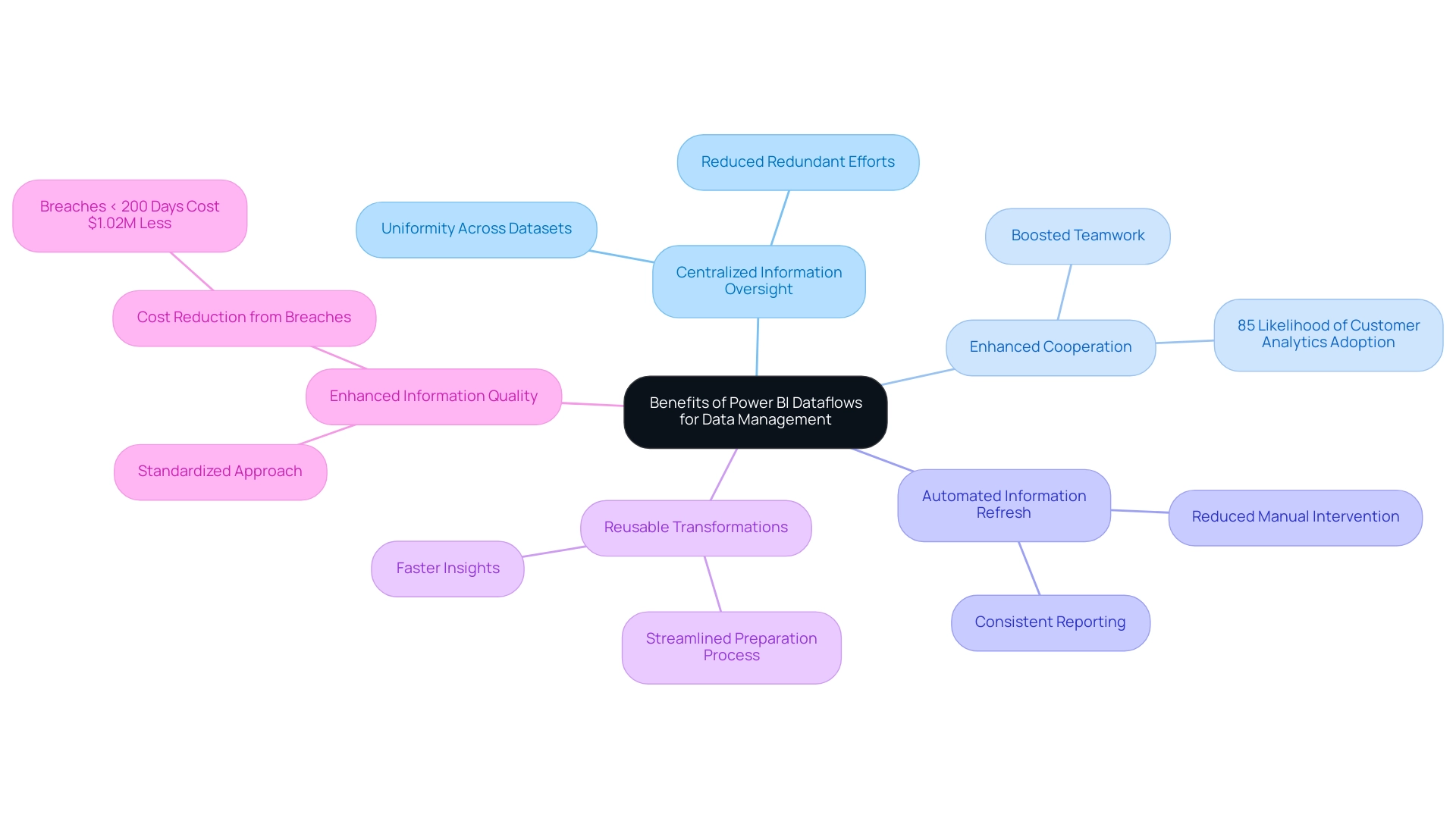

Introduction to Power BI Dataflows: What You Need to Know

Power BI dataflows act as an effective cloud-based data preparation tool, allowing users to efficiently ingest, transform, integrate, and enrich data for insightful reporting and analysis. In today’s data-rich environment, failing to extract meaningful insights can leave your business at a competitive disadvantage. Establishing roles and responsibilities is essential for a successful BI initiative, as it ensures stakeholder involvement and clarity throughout the data handling process.

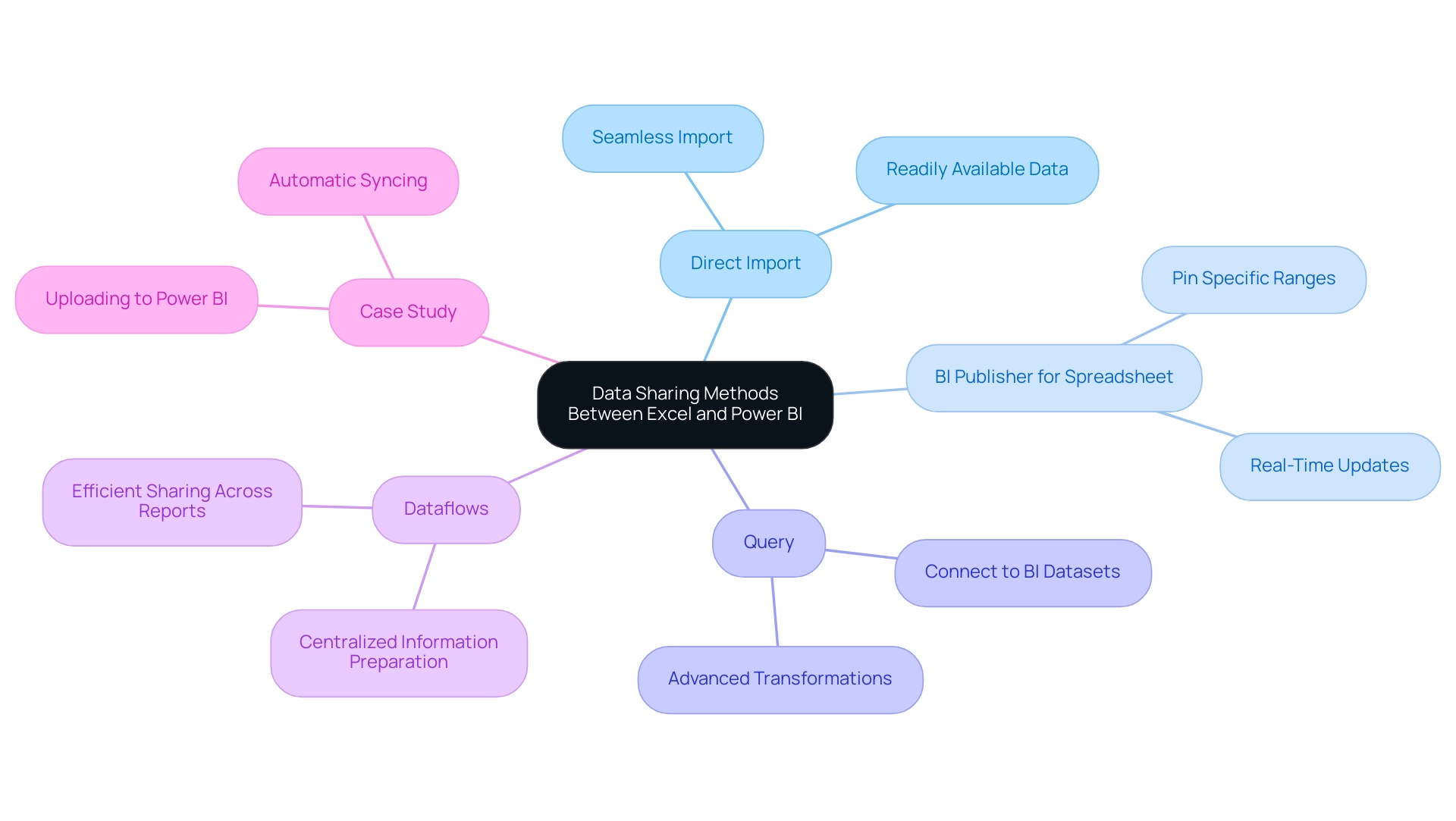

By focusing on data preparation tasks, dataflows enable reusable transformations, ensuring consistency across reports. Their place within the Power BI ecosystem is essential: they automate preparation processes, significantly reducing manual effort and improving collaboration among teams. Moreover, the challenges associated with leveraging insights from BI dashboards, such as time-consuming report creation and data inconsistencies, can be addressed through our tailored BI services.

Notably, our 3-Day Business Intelligence Sprint allows for rapid development of professionally designed reports, while the General Management App enhances overall management and review processes. As Tajammul Pangarkar, CMO at Prudour Pvt Ltd, aptly notes, ‘Understanding tech trends is essential for organizations to utilize tools like BI effectively.’ Using Power Query, Power BI dataflows let users apply familiar transformation techniques without extensive technical knowledge, empowering organizations to use their data more effectively.

This process of transforming raw data into actionable insights is central to informed decision-making. Furthermore, with Power Automate, businesses can streamline workflow automation, ensuring a risk-free ROI assessment and professional execution. Notably, 80% of organizations using cloud-based BI have seen improved scalability, highlighting the impact of Power BI dataflows on operational efficiency and informed decision-making.

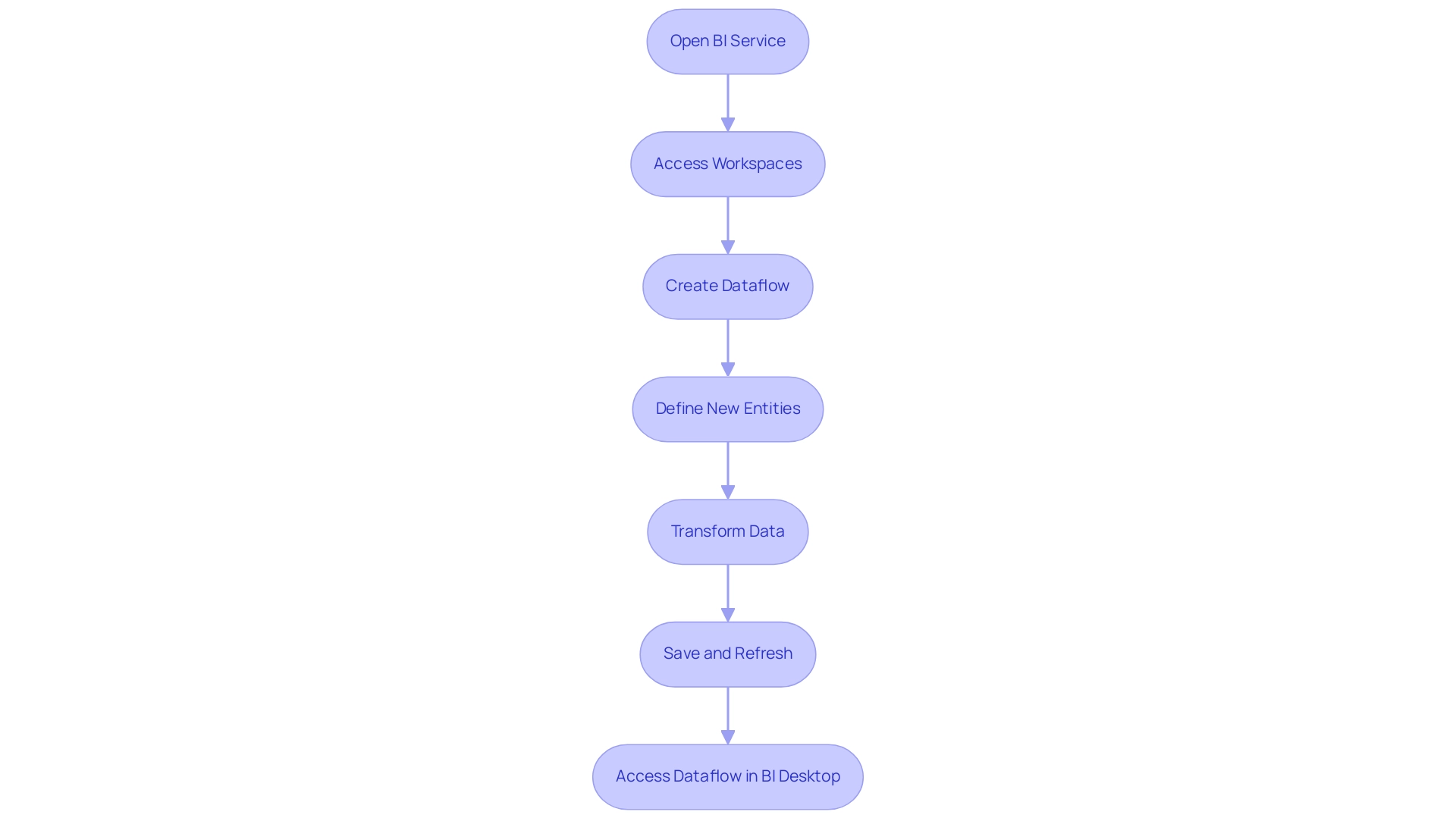

Step-by-Step Guide to Creating and Managing Power BI Dataflows

Creating a Power BI dataflow is a simple procedure that helps organizations manage their data efficiently, and it pairs well with Robotic Process Automation (RPA) to streamline manual workflows. Together they boost operational efficiency and strengthen the data-driven insights crucial for business growth by reducing errors and freeing team resources for more strategic tasks. Follow these steps to set up your dataflow:

- Open Power BI Service: Navigate to https://app.powerbi.com and log in with your credentials.

- Access Workspaces: In the left pane, click ‘Workspaces’ and select the workspace you want to use.

- Create Dataflow: Click the ‘Create’ button, then choose ‘Dataflow’ from the options.

- Define New Entities: Select ‘Add new entities’ and choose your data source, whether SQL Server, Excel, or another option.

- Transform Data: Use the Power Query Editor to apply transformations, such as filtering rows, renaming columns, or merging tables, to shape the data to your needs.

- Save and Refresh: Save your Dataflow and establish a refresh schedule to keep your information current.

- Access the Dataflow in Power BI Desktop: You can now connect to this dataflow from Power BI Desktop to create insightful reports.
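The Transform Data step mentions filtering rows, renaming columns, and merging tables. In a real dataflow these steps are written in Power Query (M) through the editor, where the corresponding functions include `Table.SelectRows`, `Table.RenameColumns`, and `Table.NestedJoin`. The Python below is only a sketch of what those three operations do to a table, using lists of dictionaries; the table and column names are hypothetical, and unmatched rows in the left join get `None` for the new columns, much as Power Query would show null.

```python
def filter_rows(rows, predicate):
    # Power Query counterpart: Table.SelectRows
    return [row for row in rows if predicate(row)]


def rename_columns(rows, mapping):
    # Power Query counterpart: Table.RenameColumns
    return [{mapping.get(col, col): value for col, value in row.items()} for row in rows]


def merge_left(left, right, key):
    # Power Query counterpart: Table.NestedJoin with a left outer join,
    # flattened here to one output row per match.
    right_cols = sorted({col for row in right for col in row if col != key})
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    merged = []
    for row in left:
        for match in index.get(row[key], [None]):
            combined = dict(row)
            for col in right_cols:
                combined[col] = match.get(col) if match is not None else None
            merged.append(combined)
    return merged


orders = [
    {"OrderID": 1, "CustID": "A", "Amt": 120.0},
    {"OrderID": 2, "CustID": "B", "Amt": 0.0},
    {"OrderID": 3, "CustID": "C", "Amt": 75.0},
]
customers = [
    {"CustomerID": "A", "Region": "North"},
    {"CustomerID": "B", "Region": "South"},
]

step1 = filter_rows(orders, lambda row: row["Amt"] > 0)       # drop zero-value orders
step2 = rename_columns(step1, {"CustID": "CustomerID"})        # align key names
result = merge_left(step2, customers, "CustomerID")            # add Region
print(result)
```

Because each step takes a table and returns a new one, the sequence reads like the applied-steps list in the Power Query Editor.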

By following these steps, users can create Power BI dataflows that improve data oversight and align with RPA strategies for greater operational efficiency. According to Inkwood Research, the BFSI sector is expected to see the fastest growth in the business intelligence market from 2023 to 2032, making efficient data handling in tools such as Power BI crucial for operational success. With more than 10,000 firms in the 1,000 to 4,999 employee range using Power BI, its scalability and adaptability are clear, offering a strong solution for mid-sized organizations seeking to improve their processes.

Additionally, as organizations navigate the challenges of a rapidly evolving AI landscape, the importance of data management tools for maximizing operational efficiency becomes increasingly apparent.

Best Practices for Optimizing Your Power BI Dataflows

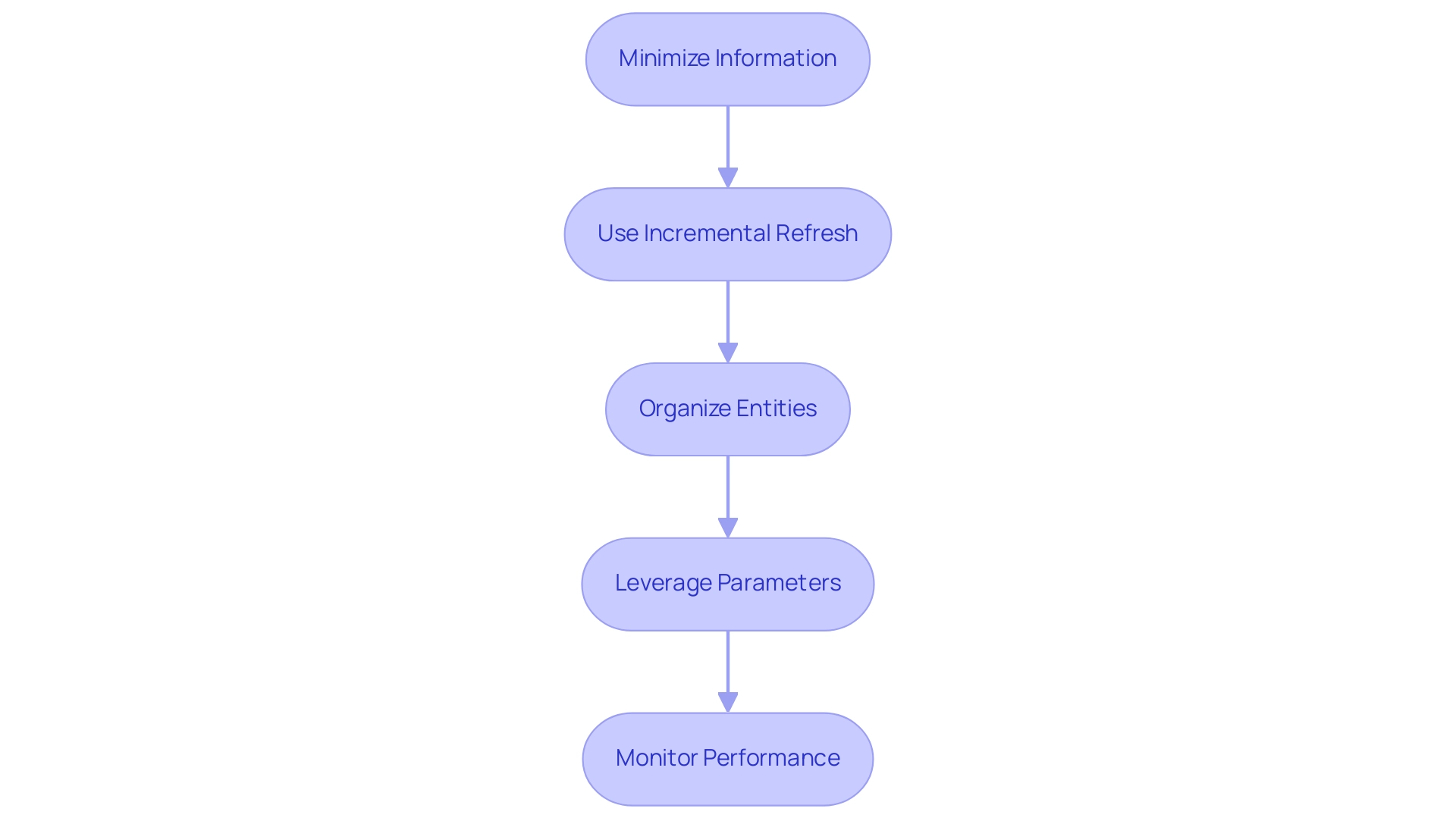

To improve the performance of your Power BI dataflows and take advantage of Robotic Process Automation (RPA), implement the following best practices:

- Minimize Information: Extract only the data your reports actually require. This improves performance and reduces load times, leading to more efficient operations.

RPA can automate the extraction process so that only the necessary data is pulled, which is especially important as spending on non-cloud infrastructure is projected to grow at a CAGR of 2.3% over the 2021-2026 period.

- Use Incremental Refresh: Incremental refresh in Power BI dataflows lets you manage large datasets more effectively by reloading only the data that has changed. RPA can automate the incremental refresh process, significantly cutting processing time and system resource usage, which makes it a vital strategy for organizations handling large volumes of data.

- Organize Entities: Structuring your dataflows with clear naming conventions and logical groupings makes them easier to manage and collaborate on. RPA can help maintain this organization by automating updates and adjustments, reducing the likelihood of errors. Leading marketers are 1.6 times more likely to believe that open access to data results in higher performance, highlighting the benefits of organized structures.

- Leverage Parameters: Use parameters for dynamic filtering of data sources in your dataflows. RPA can extend this adaptability by automating parameter adjustments based on real-time data analysis, allowing dataflow configurations that adjust to varying project requirements without significant rework.

- Monitor Performance: Regularly review dataflow performance metrics to identify and troubleshoot bottlenecks or inefficiencies. RPA can play a key role in continuous monitoring and alerting, ensuring proactive oversight. As 85% of Singaporean business leaders recognize, effective data handling can significantly improve decision-making and reduce the risks linked to operational delays. Monitoring performance can also help avoid the high costs of prolonged data breaches, which average $1.02 million more for incidents lasting beyond 200 days.
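The Minimize Information and Leverage Parameters practices combine naturally: select only the columns a report needs, and filter by parameter values instead of hard-coding them. In a Power BI dataflow you would do this in Power Query with parameters; the sketch below uses Python's built-in `sqlite3` with an in-memory table purely to show the principle. The `sales` table, its columns, and the `region`/`since` parameters are hypothetical. Column names come from an allow-list because SQL placeholders can bind values but not identifiers.

```python
import sqlite3

# Hypothetical parameter values; in a dataflow these would be Power Query parameters.
PARAMS = {"region": "North", "since": "2024-01-01"}
NEEDED_COLUMNS = ("order_date", "region", "amount")  # "notes" is deliberately left out
ALLOWED_COLUMNS = {"order_id", "order_date", "region", "amount", "notes"}


def extract(conn, region, since, columns=NEEDED_COLUMNS):
    """Fetch only the requested columns, filtered by bound parameter values."""
    if not set(columns) <= ALLOWED_COLUMNS:
        raise ValueError("unexpected column requested")
    sql = (
        f"SELECT {', '.join(columns)} FROM sales "
        "WHERE region = ? AND order_date >= ? ORDER BY order_date"
    )
    return conn.execute(sql, (region, since)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (order_id INTEGER, order_date TEXT, region TEXT, amount REAL, notes TEXT)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        (1, "2023-12-30", "North", 50.0, "old"),
        (2, "2024-02-14", "North", 120.0, "long free-text comment"),
        (3, "2024-03-01", "South", 75.0, ""),
        (4, "2024-03-05", "North", 30.0, ""),
    ],
)
rows = extract(conn, PARAMS["region"], PARAMS["since"])
print(rows)  # [('2024-02-14', 'North', 120.0), ('2024-03-05', 'North', 30.0)]
```

Changing the region or start date then means editing a parameter value rather than rewriting the query, which is the kind of adjustment an RPA workflow could make automatically.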