Introduction

Validating AI models is a complex and evolving process that requires rigorous strategies to ensure their reliability and ethical integrity. From data quality and overfitting to model interpretability and selecting the right validation techniques, there are numerous challenges to overcome. This article explores the key components of AI model validation, validation techniques used by experts, the impact of data quality on validation, the balance between overfitting and generalization, the importance of interpretability, the distinction between validation and testing, industry examples of AI validation, tools and resources for validation, and future directions and challenges in AI validation.

By understanding and addressing these complexities, businesses can develop trustworthy and dependable AI solutions that meet high standards of accuracy and effectiveness in a rapidly evolving digital landscape.

Challenges in Validating AI Models

Ensuring the reliability and ethical integrity of AI systems demands rigorous validation strategies, given their intricate and evolving nature. Here are some complexities faced in validating AI systems:

- Data Quality and Integrity: High-quality data and data integrity are the foundation of effective AI models. As Felix Naumann’s research highlights, establishing a comprehensive quality framework is crucial. Such a framework should cover the facets that influence information quality as well as the dimensions along which quality itself is measured. Five key facets have been identified as essential for evaluating information quality and establishing a quality profile.

- Overfitting vs. Generalization: Striking the right balance between fitting the training data and generalizing to new, unseen examples is delicate. Overfitting is a common pitfall in which algorithms perform well on training data but fail to predict accurately on new data. Suitable validation techniques, such as cross-validation, are vital for addressing this challenge.

- Interpretability: The opaque nature of many AI models hinders accountability. Understanding and explaining the decision-making process is essential, especially when considering the ethical implications of AI.

- Choosing Appropriate Validation Techniques: Given the wide variety of AI applications, there is no one-size-fits-all approach. Techniques like cross-validation or holdout validation must be tailored to the specific requirements of each AI model.

The concerns surrounding AI are not just theoretical; recent collaborations between EPFL’s LIONS and UPenn researchers have highlighted the susceptibility of AI technology to subtle adversarial attacks. This underscores the importance of enhancing AI robustness to ensure secure and reliable systems.

Moreover, as the data-driven science boom continues, the quest for accuracy, validity, and reproducibility in AI becomes more pressing. We have seen improvements in machine-learning methods over time, but without rigorous validation, the reliability of these advancements cannot be taken for granted.

As the field evolves, the parallels drawn from case studies, like the ones examining California’s wildfire risks and AI’s origins, remind us of the importance of vigilance in maintaining standards for AI safety and efficacy. In essence, validating AI algorithms is an ongoing endeavor that requires a multifaceted approach to address its inherent complexities.

Key Components of AI Model Validation

Validating AI models is a complex process that demands careful scrutiny to ensure their dependability and efficiency in real-world scenarios. It starts with Data Preparation, a crucial step in which data is preprocessed and cleaned to eliminate noise and handle missing values, laying the foundation of high-quality data that accurate training requires.

Next, defining Model Performance Metrics is crucial. It’s not just about accuracy; precision, recall, and other relevant metrics must be carefully selected to evaluate the performance of the system thoroughly. These metrics act as benchmarks, akin to ‘exam questions’ for the AI system, testing it across various competencies like language and context understanding.
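To make the point about looking beyond accuracy concrete, here is a minimal sketch that computes accuracy, precision, recall, and F1 for a binary classifier using only the Python standard library. The function names and the toy labels are illustrative assumptions, not taken from any particular framework.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 as a dictionary."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy, imbalanced labels (hypothetical): 2 positives out of 10 samples.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

On this toy data the accuracy looks high even though half of the positive cases are missed, which is exactly why recall and precision are reported alongside it.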

Testing and Evaluation is where the rubber meets the road. Thorough testing using different validation techniques ensures that the model performs consistently across different scenarios, reflecting the unpredictable nature of real-world applications. This is where we move beyond standardized benchmarks to a more nuanced and tailored evaluation, such as the customizable suite offered by LightEval.

Lastly, Model Interpretability cannot be overlooked. It is crucial to apply techniques that improve the explainability of the system, enabling stakeholders to trust and understand the decision-making process behind the AI’s conclusions. As professionals in the field have noted, model evaluation is a developing discipline, vital for understanding the capabilities and tendencies of AI systems. These evaluations go beyond safety, providing a comprehensive overview of AI system properties.

Incorporating these elements into validation is not a one-time event but a continuous commitment to preserving the integrity and reliability of AI systems. As in-depth investigations and interviews in wildfire risk management have shown, understanding and mitigating catastrophic risks is an unpredictable and intricate task, much like ensuring the safety and effectiveness of AI systems in a rapidly changing digital environment.

Validation Techniques for AI Models

Assessing AI systems for performance and reliability is crucial in guaranteeing their effectiveness. Various techniques are used by experts for this purpose:

- Cross-Validation: This technique partitions the data into several subsets (folds). The AI algorithm is trained and tested over multiple rounds, each time holding out a different fold, enabling a comprehensive assessment of its reliability across various combinations of data.

- Holdout Validation: In this approach, data is divided into two separate sets: one for training the AI system and another for validation. The system is then evaluated on the validation set, which it has not seen during training.

- Bootstrapping: This involves drawing multiple resamples from the original dataset, typically with replacement, and training or evaluating the system on each. The results are then aggregated to give a robust estimate of performance that accounts for variability within the data.

- A/B Testing: A method for comparing different versions of the same approach to identify the most effective one. Each variant is subjected to the same conditions and evaluated against predetermined criteria.
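The splitting and resampling techniques listed above can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions: the function names, the default fractions, and the toy per-sample results are hypothetical, and a production project would more likely rely on an established library.

```python
import random
import statistics


def holdout_split(n_samples, test_fraction=0.2, seed=0):
    """Shuffle sample indices and split them into train and validation sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = max(1, int(round(n_samples * test_fraction)))
    return indices[n_test:], indices[:n_test]


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample is held out exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, folds[i]


def bootstrap_estimate(values, statistic=statistics.mean,
                       n_resamples=1000, seed=0):
    """Resample with replacement; return mean and spread of the statistic."""
    rng = random.Random(seed)
    n = len(values)
    estimates = []
    for _ in range(n_resamples):
        sample = [values[rng.randrange(n)] for _ in range(n)]
        estimates.append(statistic(sample))
    return statistics.mean(estimates), statistics.stdev(estimates)


train_idx, test_idx = holdout_split(10, test_fraction=0.3)
folds = list(k_fold_indices(10, k=5))

# Hypothetical per-sample correctness (1 = correct prediction).
per_sample_correct = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1]
mean_acc, spread = bootstrap_estimate(per_sample_correct)
print(f"bootstrap accuracy ~ {mean_acc:.2f} +/- {spread:.2f}")
```

The bootstrap spread gives a rough sense of how much the accuracy estimate would vary if a different sample of the same size had been collected.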

The use of these techniques in real-world applications can have profound impacts. For instance, D-ID’s collaboration with Shiran Mlamdovsky Somech to raise awareness about domestic violence in Israel leveraged AI to animate photos of victims, bringing a powerful and emotive element to the campaign. Meanwhile, advancements such as Webtap.ai demonstrate the practical applications of AI in automating web extraction, showcasing the importance of validation in developing tools that can be trusted to perform as expected in various industries.

Data Quality and Its Impact on AI Validation

Ensuring the quality of the data used to train and test AI models is crucial to the overall effectiveness of these systems. Algorithms are highly sensitive to data accuracy: even minor errors can skew results and lead to incorrect conclusions. Thorough data management practices, including careful preprocessing, cleaning, and validation, must therefore be built into the workflow. Choosing suitable algorithms for analysis is likewise a crucial step that requires thoughtful deliberation. As the data-driven science boom continues, the sheer volume and complexity of available datasets underscore the need for vigilance against data quality issues. Recent court decisions have reaffirmed unrestricted access to public information, providing legal support for organizations that gather and use such data for research and strategic business decisions. It is also crucial to maintain a cycle of ongoing quality monitoring and maintenance so that AI models adapt to evolving conditions and retain their integrity over time.

Overfitting and Generalization in AI Models

Finding a middle ground between overfitting and generalization is crucial in the validation of AI models, as it determines their dependability and efficiency in real-world applications. Overfitting is like memorizing an answer without understanding the question: it happens when a model is excessively complex and fits the training data too closely, which ironically undermines its ability to perform well on new, unseen data. Generalization, on the other hand, is the capacity of the model to apply acquired knowledge to new scenarios, demonstrating resilience beyond the initial dataset.

To combat overfitting and improve generalization, techniques such as regularization, early stopping, and complexity control are used. Regularization introduces a penalty for complexity, discouraging the model from replicating the training data too closely. Early stopping ends training before the model starts to memorize instead of generalize, and managing complexity means selecting an appropriately sized model architecture to avoid overfitting from the beginning.
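As a rough illustration of two of these ideas, the sketch below shows an L2-style penalty added to a loss value and a patience-based early-stopping rule applied to a list of validation losses. The function names, the `patience` and `lam` parameters, and the loss values are assumptions made for the example; real training loops would compute the losses from a model.

```python
def l2_penalized_loss(data_loss, weights, lam=0.01):
    """Add a penalty proportional to the squared weights (L2 regularization)."""
    return data_loss + lam * sum(w * w for w in weights)


def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: improving at first, then rising (overfitting).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(f"stop at epoch {stop_epoch}, keep weights from epoch {best_epoch}")
```

In practice the weights saved at the best epoch are the ones kept, since later epochs are where the model begins fitting noise in the training data.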

These efforts reflect the broader challenge in AI: ensuring safety and reliability across diverse scenarios and populations. For instance, facial recognition technology and medical imaging algorithms have demonstrated biases, performing inadequately for specific subgroups, with significant real-world consequences. As we strive to build AI systems that are genuinely secure and reliable, it is vital to design them with an awareness of the various environments they will encounter, such as those depicted in datasets like ImageNet and COCO.

A comprehensive approach to AI safety involves a trio of components: a world model, a safety specification, and a verifier. This framework aims to provide AI with high-assurance safety guarantees, ensuring it operates within acceptable bounds and does not lead to catastrophic outcomes. It is an ongoing endeavor in the AI community to improve these approaches and establish AI solutions that are not only robust but also in line with societal values and safety standards.

Interpretability and Explainability in AI Validation

Understanding and being able to articulate the rationale behind AI-driven decisions is paramount, particularly in areas where these decisions have significant consequences, such as healthcare and finance. Techniques such as feature importance analysis reveal which features of the data have the greatest impact on the model’s output, giving stakeholders a clear view of its decision-making process. In addition, model visualization can offer an intuitive understanding of complicated algorithms, while rule extraction converts the AI’s processing into rules that are understandable to humans, promoting confidence in these systems.

For instance, consider the application of AI in agricultural settings to identify crop diseases, which directly affects food safety and pricing. Here, the task is not just to build accurate models but also to explain their decisions in an understandable way, even when dealing with complex data patterns that differ significantly from those in typical datasets. The difficulty is compounded by varying hyperparameters that can sway the interpretation of results, highlighting the need for robust methods of evaluating these explanations.

Recent advancements highlight the importance and influence of forecasting in AI, where the capacity to anticipate future events has been improved by combining insights from various forecasting approaches and ‘superforecasters.’ This collective intelligence has been instrumental in guiding more informed decision-making processes in complex environments.

To provide a specific instance, a logistic regression model predicting customer purchase behavior based on age and income can be made transparent by visualizing its decision boundary and by quantifying the impact of each feature. This not only helps confirm accuracy but also supports adherence to regulatory standards and ethical norms. Understanding the influence of individual features, such as how a customer’s age might weigh more heavily on the probability of a purchase than their income, is crucial for stakeholders to trust and effectively use AI solutions.
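A minimal sketch of that logistic regression example follows. The coefficients and intercept are hypothetical values chosen for illustration (they are not fitted to any real data), and the features are assumed to be standardized. The odds ratio `exp(coefficient)` is one common way to quantify each feature's impact, and the decision boundary is the set of points where the predicted probability equals 0.5.

```python
import math

# Hypothetical coefficients for standardized features; not fitted to real data.
COEFFICIENTS = {"age": 0.8, "income": 0.3}
INTERCEPT = -0.5


def purchase_probability(features):
    """Logistic model: P(purchase) = sigmoid(intercept + sum(coef * value))."""
    z = INTERCEPT + sum(COEFFICIENTS[name] * value
                        for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def odds_ratios():
    """Multiplicative change in the odds per one-unit increase of a feature."""
    return {name: math.exp(coef) for name, coef in COEFFICIENTS.items()}


def decision_boundary_income(age):
    """Standardized income at which P(purchase) is exactly 0.5 for a given age."""
    return -(INTERCEPT + COEFFICIENTS["age"] * age) / COEFFICIENTS["income"]


ratios = odds_ratios()
boundary_income = decision_boundary_income(age=1.0)
p_on_boundary = purchase_probability({"age": 1.0, "income": boundary_income})
print(ratios)
print(f"P(purchase) on the boundary: {p_on_boundary:.2f}")
```

With these assumed coefficients, a one-unit increase in age multiplies the odds of a purchase by more than a one-unit increase in income does, which is the kind of statement stakeholders can check against domain knowledge.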

Validation vs Testing: Distinct Roles in AI Development

In AI system development, striking the right balance between validation and testing is crucial. Validation is a meticulous process in which the AI system’s performance, accuracy, and reliability are scrutinized to ensure they meet the desired benchmarks. This involves applying various validation techniques and interpreting the outcomes to confirm the model’s efficacy.

On the other hand, testing focuses on identifying and fixing defects within the AI system. This covers a range of tests: unit tests to evaluate individual components, integration tests to ensure seamless interaction between different parts, and tests to validate the overall functionality. These tests are not a one-off event but an ongoing effort at each stage of the project life cycle, helping ensure the AI operates as expected.

The significance of both validation and testing cannot be overstated. They instill confidence in the AI system’s performance and dependability. For instance, Kolena’s new framework for model quality demonstrates the importance of continuous testing, either scenario-level or unit tests, to measure the AI model’s performance and identify any potential underperformance causes.

Likewise, the AI Index Report underlines the mission of offering rigorously vetted data to help understand AI’s multifaceted nature. It serves as a reminder that robust validation and testing practices are indispensable for the transparency and reliability of AI systems. As AI technologies become increasingly integrated into products, as observed by companies worldwide, it is crucial that the marketed benefits, such as cost and time savings, are backed by scientifically accurate claims, ensuring that marketing promises align with actual performance.

Case Study: Industry Examples of AI Validation

Within the healthcare industry, AI systems are crucial for improving diagnostic accuracy and forecasting patient outcomes. To illustrate, consider AI’s role in refining clinical trial eligibility criteria. Ensuring the criteria are neither too narrow nor too broad is crucial to enroll an optimal number of participants, maintain manageable costs, and reduce variability. AI aids this process by estimating patient counts based on specific criteria, enhancing efficiency and precision.

In the financial sector, AI solutions have become essential tools for financial forecasting, fraud detection, and investment advice. The accuracy of these models and their adherence to regulatory standards are not merely advantageous but essential for real-world application.

Similarly, in manufacturing, the importance of AI for quality control, predictive maintenance, and process optimization cannot be overstated. Accurate prediction of faults and anomalies by AI systems is essential for avoiding costly downtime and enhancing operational efficiency.

The implementation of AI medical devices, like the Vectra 3D imaging solution, has transformed patient care by rapidly detecting signs of skin disease using a comprehensive database and advanced algorithms. Such technologies demonstrate the potential of AI to learn and execute tasks that traditionally required human expertise.

Furthermore, the importance of dataset diversity in AI development is paramount. The representation of diverse populations in health datasets helps ensure that AI systems are unbiased and equitable, resulting in more accurate performance across various patient groups. This is crucial for the safety and reliability of AI applications in all sectors, particularly healthcare.

The commitment to advancing AI in a manner that is transparent, safe, and efficient is shared by multidisciplinary teams of healthcare professionals. They ensure that AI applications meet high standards of accuracy and stability before being integrated into daily operations, as highlighted by Kleine and Larsen’s multidisciplinary task force approach.

By thoroughly validating AI systems, we can fully utilize their potential to tackle the issues faced by an aging population and overburdened healthcare systems, as outlined in recent reports and studies by the World Health Organization. AI’s capacity to act as an additional set of ‘eyes’ in medical screenings is just one example of how technology can enhance care quality while potentially reducing costs.

Tools and Resources for AI Model Validation

In the field of AI consulting, ensuring the validity and dependability of AI systems is essential. A suite of sophisticated tools and frameworks is available to practitioners to facilitate this process. Frameworks like TensorFlow, PyTorch, and scikit-learn provide strong capabilities for evaluating model accuracy. These include a variety of performance metrics, support for cross-validation, and provisions for hyperparameter tuning, all of which are critical for fine-tuning AI models to achieve optimal performance.

Data validation is another crucial step in the AI lifecycle. Libraries like Great Expectations and pandas-profiling provide comprehensive tools that aid in the thorough examination of data quality. They are crucial in identifying missing values, outliers, or inconsistencies that could potentially distort the predictions of the system.
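The kinds of checks such libraries automate can be sketched with the standard library alone. The example below is not the API of Great Expectations or pandas-profiling; it is a hypothetical helper that flags missing values and uses the common 1.5 x IQR rule to flag outliers in one numeric field. The field name and sample records are made up for illustration.

```python
import statistics


def data_quality_report(rows, field, iqr_factor=1.5):
    """Flag rows with a missing value or an IQR-rule outlier in `field`."""
    missing = [i for i, row in enumerate(rows) if row.get(field) is None]
    present = [(i, row[field]) for i, row in enumerate(rows)
               if row.get(field) is not None]
    outliers = []
    if len(present) >= 2:
        # Quartiles of the observed values (Python 3.8+).
        q1, _, q3 = statistics.quantiles([v for _, v in present], n=4)
        iqr = q3 - q1
        low, high = q1 - iqr_factor * iqr, q3 + iqr_factor * iqr
        outliers = [i for i, v in present if v < low or v > high]
    return {"missing": missing, "outliers": outliers}


# Hypothetical records: one missing age and one implausible age.
rows = [{"age": 34}, {"age": 29}, {"age": None}, {"age": 41},
        {"age": 38}, {"age": 31}, {"age": 36}, {"age": 250}]
report = data_quality_report(rows, "age")
print(report)
```

Flagged rows are candidates for review rather than automatic deletion, since an apparent outlier may be a legitimate but rare value.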

The interpretability of AI models is a topic of growing importance as businesses strive to understand the rationale behind predictions. Explainability tools like SHAP, LIME, and Captum provide techniques that illuminate the decision-making process of AI models, thereby fostering increased trust and transparency.

Having access to specialized datasets for verification also plays a vital role in assessing the performance of AI. Public datasets, like MNIST for image classification tasks or the UCI Machine Learning Repository for a wide array of domains, provide a benchmark for assessing the robustness of AI algorithms.

Adhering to best practices in AI and ML is critical for maintaining trust in these technologies. Openness in documenting and reporting all aspects of AI models, including data sets, AI systems, biases, and uncertainties, is crucial. This level of clarity is not just beneficial; it is a responsibility to ensure that AI applications are reliable and free from errors that could lead to incorrect conclusions or harmful outcomes.

It is important to remember that the adoption of ML methods comes with the responsibility of ensuring their validity, reproducibility, and generalizability. With the consensus of a diverse group of 19 researchers across various sciences, a set of guidelines known as REFORMS has been developed to aid in this process. It provides a structured approach for researchers, referees, and journals to uphold standards for transparency and reproducibility in scientific research involving ML.

To sum up, the verification of AI systems is a complex effort that necessitates the use of sophisticated tools, rigorous approaches, and a dedication to optimal methods. By leveraging these resources effectively, businesses can ensure that their AI solutions are not only powerful but also trustworthy and dependable.

Future Directions and Challenges in AI Validation

The future of AI validation is shaped by emerging complexities and requires a multifaceted approach. Ethical considerations take center stage: scrutinizing fairness and the absence of bias is an intricate task, comparable to evaluating safety standards in industries like energy, where subjectivity across varied metrics is common. This evaluation, akin to the meticulous case studies of California’s wildfire risks, underscores the dynamic nature of AI’s impact on society.

Regulatory compliance, too, is of paramount importance. In highly regulated sectors such as healthcare and finance, where standards are stringent, AI must comply with existing frameworks. This echoes the AI-specific recommendations for ethical requirements and principles outlined for trustworthy AI, suggesting a blueprint for adherence that stakeholders may employ.

Moreover, interdisciplinary collaboration has never been more crucial. As AI models change and adjust, experts from various fields, including ethicists and regulatory authorities, must come together to navigate the complex maze of AI verification challenges. This cooperative spirit is reflected in the collaborative efforts of the AI2050 Initiative, which seeks to tackle hard problems through a multidisciplinary lens.

Ongoing validation is also crucial, as AI solutions are not fixed; their effectiveness and significance can be as dynamic as the data they process. This ongoing diligence is reminiscent of Duolingo’s “Birdbrain” AI system, which uses machine learning combined with educational psychology to customize learning experiences. Such an approach to AI validation ensures that models remain robust and reliable over time.

In light of these directions and challenges, the path forward is one of relentless research, collaboration, and innovation. It is a journey marked by the recognition of AI’s potential and the prudent management of its risks, as highlighted by the detailed examination and reflection on AI systems by researchers using age-old mathematical techniques like Fourier analysis to decode the mysteries of neural networks.

Conclusion

In conclusion, validating AI models is a complex and evolving process that requires meticulous attention to detail and a multifaceted approach. The key components of AI model validation include data quality and integrity, striking the right balance between overfitting and generalization, model interpretability, and selecting the appropriate validation techniques. Data quality plays a crucial role in ensuring the reliability and effectiveness of AI models, and it requires comprehensive data management practices.

Overfitting and generalization must be carefully addressed to enhance the model’s reliability and robustness. Model interpretability is essential for understanding and explaining the decision-making process of AI models.

Validation techniques such as cross-validation, holdout validation, bootstrapping, and A/B testing are used to evaluate AI models’ performance and reliability. These techniques are tailored to suit the specific requirements of each AI model. Industry examples demonstrate the wide range of applications where AI validation is crucial, such as healthcare, finance, and manufacturing.

Various tools and resources, including frameworks, libraries, and specialized validation datasets, are available to facilitate the validation process.

The future of AI validation involves addressing emerging complexities, considering ethical considerations and regulatory compliance, fostering interdisciplinary collaboration, and continuously validating AI systems. It is a journey of relentless research, collaboration, and innovation to harness the full potential of AI while managing its risks. By embracing these challenges and implementing rigorous validation strategies, businesses can develop trustworthy and dependable AI solutions that meet high standards of accuracy and effectiveness in a rapidly evolving digital landscape.

Introduction

Blue Prism RPA is a powerful technology that is transforming business operations across industries. With its suite of capabilities, Blue Prism automates complex tasks, integrates artificial intelligence and machine learning, and provides data-driven insights. This article explores the core capabilities of Blue Prism RPA, its real-world applications, and the benefits of implementing this technology.

From healthcare to finance, retail to supply chain, organizations are leveraging Blue Prism RPA to enhance efficiency, improve security, and drive growth. Join us as we delve into the world of Blue Prism RPA and discover how it is revolutionizing industries and propelling organizations towards digital transformation.

Core Capabilities of Blue Prism RPA

Blue Prism RPA transforms business operations by providing a range of capabilities aimed at automating intricate, repetitive tasks and improving strategic business processes. At its core, the integration of artificial intelligence and machine learning enables organizations not only to automate routine tasks but also to make informed decisions based on data analytics. The technology is scalable to fit businesses of all sizes and flexible enough to evolve with changing demands, ensuring seamless integration with existing systems. Security is of utmost importance: Blue Prism provides strong encryption, stringent access controls, and comprehensive audit trails to maintain data integrity and compliance. With its analytics and reporting tools, businesses gain critical insights into their RPA initiatives, driving continuous improvement and operational excellence.

In practice, the application of RPA tools like Blue Prism can be observed in healthcare, where the digital assurance process is crucial. For example, within the NHS, the implementation of new digital advancements undergoes a thorough initial evaluation led by the Digital Service Team to ensure any novel innovation is secure, suitable, and compliant. This diligence is a testament to the necessity of robust RPA solutions in managing sensitive information and streamlining healthcare operations. In alignment with Industry 4.0, the integration of RPA into the operational fabric of organizations has shown significant enhancements in productivity, with existing applications demonstrating double-digit percentage improvements.

Moreover, embracing RPA is part of a larger trend where organizations join forces in Centers of Excellence to share best practices and successes with AI and machine learning implementations. Such collaborations exemplify how RPA, when combined with collective industry knowledge, propels enterprises towards digital transformation, offering them a competitive edge in their respective fields. As adoption accelerates, organizations like IBM emphasize the importance of leveraging hybrid cloud platforms and AI to revolutionize industries securely and efficiently, as evidenced by their widespread use in sectors ranging from financial services to healthcare.

Use Cases for Blue Prism RPA

Robotic Process Automation (RPA) technologies like Blue Prism are revolutionizing the way industries operate by automating a broad spectrum of tasks, leading to significant efficiency gains. In the financial services sector, RPA is transforming essential operations by handling financial transactions, streamlining payment processing, and enhancing fraud detection mechanisms. These applications not only expedite service delivery but also bolster security and compliance—a critical consideration for institutions like M&T Bank that are embracing digital transformation to maintain their competitive edge while safeguarding sensitive data.

The retail landscape is also being transformed by RPA’s ability to refine inventory control, accelerate order processing, and elevate customer service standards. These automation capabilities are crucial for retailers, as evidenced by companies like Blue Yonder, which is acquiring technology to tackle complex challenges in omnichannel returns and inventory management.

In the realm of finance and accounting, RPA is a game-changer, automating tasks such as invoice processing and financial report generation. This automation drives down maintenance time and costs while ensuring software remains efficient and secure, as per the insights from M&T Bank’s initiative to uphold Clean Code standards.

Customer Relationship Management (CRM) is another area where RPA stands out. By automating lead generation and customer onboarding, RPA enhances the CRM process, allowing for a level of personalization and responsiveness that aligns with Hiscox’s strategy to improve customer satisfaction.

Lastly, RPA is optimizing supply chain and logistics by managing orders, tracking inventory, and scheduling shipments, showcasing its versatility and impact across various operational facets. The integration of RPA into these sectors demonstrates its vast potential to streamline processes, reduce response times, and contribute to overall growth, as highlighted by the significant revenue growth and productivity improvements reported in recent studies.

Benefits of Implementing Blue Prism RPA

By leveraging the technology of Robotic Process Automation (RPA), an organization can propel its operational capabilities to new heights of efficiency and effectiveness. By automating a wide range of tasks, from simple to complex, RPA accelerates workflows, delivering rapid outcomes with a degree of precision that manual processes cannot match. This translates into substantial cost savings as it minimizes the need for human intervention, allowing employees to focus on more strategic initiatives that add value to the business.

Furthermore, the precision and uniformity of RPA’s task execution greatly reduce the possibility of human error, improving overall performance. This is particularly crucial in sectors such as healthcare, where accuracy is paramount. For instance, in the implementation of new digital innovations within the NHS, a thorough digital-assurance process was established to guarantee security, suitability, and compliance. This procedure requires initial evaluations and comprehensive surveys to determine whether the technology is compatible with current systems and meets rigorous criteria, a concept that mirrors the dependability of RPA in various applications.

From a security perspective, RPA’s strong framework safeguards sensitive data, supporting compliance with industry regulations and standards. This aspect of RPA is pivotal, as seen in the case of Blue Yonder’s acquisition of Doddle, where end-to-end solutions for omnichannel returns were sought to address complex supply chain challenges.

Furthermore, the scalability that RPA provides organizations cannot be overstated. It streamlines business processes, allowing companies to adjust and expand their operations to accommodate growing demands without compromising quality or performance. Industry 4.0 has shown that the integration of AI, connectivity, and digital twins can result in significant productivity improvements, and RPA is an essential part of this technological advancement.

Lastly, the financial impact of implementing intelligent automation is evidenced by a Forrester Research study, which found that revenue growth accounted for 73% of the overall Net Present Value (NPV) benefit, with a 5.4% compound annual growth rate (CAGR) over three years for a composite customer. This underscores the transformative potential of RPA in driving business growth and long-term success.
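To put the compound annual growth rate in perspective, the short sketch below shows the standard CAGR arithmetic. The study's underlying revenue figures are not given here, so the starting value of 100 is purely an assumed placeholder; the only input taken from the text is the 5.4% rate over three years.

```python
def cumulative_growth(rate, years):
    """Total growth implied by compounding `rate` once per year for `years`."""
    return (1.0 + rate) ** years - 1.0


def cagr(start_value, end_value, years):
    """Constant yearly rate that takes start_value to end_value in `years`."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


total = cumulative_growth(0.054, 3)
print(f"5.4% CAGR over 3 years = {total:.1%} cumulative growth")

# Round trip with an assumed starting value of 100 (placeholder, not study data).
end_value = 100 * (1.0 + total)
recovered_rate = cagr(100, end_value, 3)
```

In other words, a 5.4% CAGR sustained for three years corresponds to roughly 17% cumulative growth over the period.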

Case Studies: Real-World Applications of Blue Prism RPA

Exploring the transformative power of RPA in banking and finance, we can observe significant advancements through various implementations. At the forefront, M&T Bank, with its deep-rooted heritage, faced the digital transformation tide head-on. Recognizing the need for impeccable software quality and compliance, the bank set a benchmark with Clean Code standards, ensuring their systems’ robustness in performance and security.

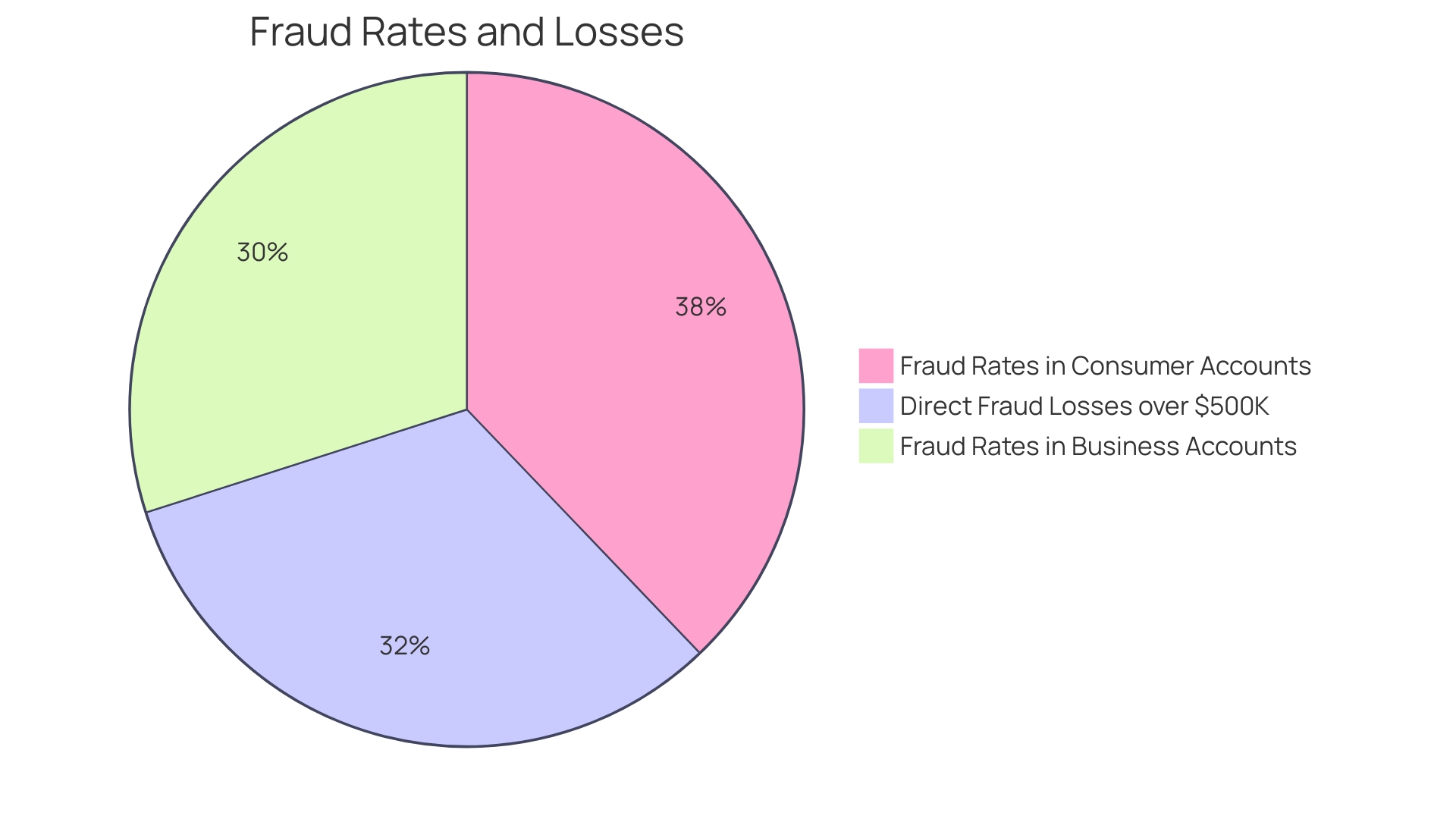

The financial sector’s digital shift also brings to light the stark reality of credit card fraud, which has alarmingly risen to an estimated 150 million incidents in 2023, equating to 5 fraud cases every second. To counteract this, institutions have leveraged technological innovations, adopting real-time transaction pre-authorization processes that enable instant fraud detection and prevention.

In this scenario, RPA stands out as a critical ally. For instance, Discover Financial Services harnessed Blue Prism RPA to refine their credit card application and fraud detection systems. The result was a leap in processing speed and precision, fostering a surge in employee productivity and customer contentment.

Similarly, asset management giant Schroders employed RPA to automate tasks such as data handling and compliance verification. This strategic move liberated hours of manual labor, enhancing data accuracy and freeing up the workforce to engage in more strategic pursuits.

These case studies exemplify the compelling impact of RPA in streamlining operations, bolstering security, and delivering customer excellence in the financial services industry. As adoption continues to accelerate, these technologies are set to redefine the landscape of digital banking and finance.

Conclusion

In conclusion, Blue Prism RPA is a powerful technology that transforms business operations. It automates tasks, integrates AI and machine learning, and provides data-driven insights. With real-world applications in healthcare, finance, retail, and supply chain, Blue Prism RPA streamlines processes, enhances fraud detection, improves customer service, automates finance and accounting tasks, and optimizes supply chain and logistics.

Implementing Blue Prism RPA brings numerous benefits, including accelerated workflows, reduced human error, data protection, compliance, scalability, and cost savings. Real-world case studies in banking and finance demonstrate the transformative power of RPA in enhancing software quality, fraud detection, and data handling, leading to improved performance and customer satisfaction.

In summary, Blue Prism RPA revolutionizes industries, propels organizations towards digital transformation, and offers a competitive edge. Its core capabilities, real-world applications, and benefits make it a powerful technology for enhancing efficiency, improving security, and driving growth. By embracing Blue Prism RPA, organizations can achieve new heights of operational capabilities and success.


Introduction

CRM automation is transforming the world of customer relationship management by leveraging advanced technology to automate essential yet repetitive tasks. This streamlined approach to sales and marketing improves operational efficiency and enhances productivity. Companies like Delivery Hero and St. James Winery have successfully implemented CRM automation, reducing employee downtime and maintaining high-quality production.

The integration of CRM systems consolidates scattered customer data into a single platform, leading to better data management and enhanced security. Sales automation specifically caters to manufacturing enterprises with complex product portfolios, saving valuable time and allowing companies to focus on sealing deals and fostering customer relationships. With the integration of generative AI into sales strategies, businesses can expect even stronger outcomes.

The significance of CRM and sales automation is further underscored by the recognition that human relationships and value-based selling remain integral to the industry’s success. While AI innovations enhance business growth, the core competencies of sales professionals continue to be highly valued in today’s dynamic marketplace.

What is CRM Automation?

CRM automation streamlines customer relationship management by using technology to automate tasks that are essential yet repetitive, such as data entry, lead nurturing, and client communication. This enables a more efficient approach to sales and marketing efforts. For instance, Delivery Hero, a global delivery platform, addressed the challenge of frequent employee lockouts by automating the identity verification and access restoration process. As a result, they significantly reduced the time employees were unable to work, enhancing overall operational efficiency. In the same way, St. James Winery used technology to uphold its standing as a top winery, underscoring the importance of technological progress in maintaining excellent production and business expansion.

Moreover, the integration of CRM systems plays a pivotal role in consolidating client data, previously scattered across various digital repositories, into a single, unified platform. This centralization is not just conducive to better data management; it is also a crucial step for enhancing data security. Sales automation particularly addresses the requirements of manufacturing companies with intricate product portfolios by reducing manual quoting mistakes and optimizing client engagement. By automating outbound sales processes, companies can save valuable time and direct their focus towards closing deals and fostering customer relationships.

In the context of modern business practices, automation tools for selling are indispensable for companies aiming to sell smarter and more effectively. These tools aid in identifying potential customers, handling contacts, and evaluating sales information, thus optimizing the sales procedure. As pointed out by industry experts, the incorporation of generative AI into business strategies has already begun to produce strong outcomes, with a widespread adoption on the horizon.

The importance of CRM and sales technology is further emphasized by insights from Pipedrive CEO Dominic Allon, who highlights that despite technological progress, the core of sales, namely human connections and value-driven selling, remains crucial to the industry’s success. The most recent trends in sales and marketing, as shown in industry reports, indicate that while AI advancements improve business growth, the fundamental skills of sales professionals remain highly valued in an ever-changing marketplace.

Benefits of CRM Automation

CRM automation equips sales and marketing teams with tools designed to enhance productivity and drive business growth. For instance, by leveraging CRM platforms, companies can closely monitor their team’s activities, including calls, emails, and texts, and compare them to established benchmarks. These insights can then be used to offer real-time suggestions and post-interaction reports with actionable recommendations to improve performance.

Rochester Electronics, a top supplier of semiconductors, utilized the power of CRM to integrate their tech stack, facilitating seamless collaboration across its global teams. Similarly, Delivery Hero effectively addressed the issue of account lockouts by automating the recovery process, which significantly reduced downtime and saved 200 hours per month.

With sales software, businesses can automate repetitive tasks, manage contacts, and streamline workflows, leading to more effective strategies and better customer relationships. This approach not only saves time but also allows sales teams to focus on more high-value interactions.

Moreover, statistics show a convincing trend towards workflow automation in business, with 94% of corporate executives favoring a unified platform for app integration and process automation. This change is apparent as more than a third of businesses have automated five or more departments, showing how widely automation has been adopted to stay competitive in the marketplace.

By establishing a well-defined sales process and tracking approach, CRM automation empowers companies to handle potential clients at scale and streamline their sales efforts. This is crucial for manufacturing enterprises with complex product portfolios, where representatives face challenges in managing client interactions and manual tasks simultaneously.

In summary, CRM automation offers a strategic advantage, empowering sales and marketing teams to work smarter, not harder, by automating essential elements of their operations and providing valuable data-driven insights to refine their processes and enhance customer engagement.

Saves Time and Increases Productivity

CRM technology is revolutionizing the sales and marketing landscape by streamlining tasks and enhancing productivity. Take Delivery Hero, a global leader in local delivery, grappling with the inefficiency of employees being locked out of accounts. They faced approximately 800 requests monthly, taking 35 minutes each to resolve, amounting to a considerable loss of productive time. By eliminating IT as a bottleneck and automating the recovery process, they significantly reduced the downtime of employees and improved operational efficiency.
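To make the scale of the Delivery Hero figures concrete, the arithmetic can be worked through in a few lines. This is only a back-of-the-envelope sketch using the two numbers quoted above (roughly 800 requests per month at 35 minutes each); it estimates the manual handling time those numbers imply and says nothing about how much of it automation actually recovered.

```python
# Figures quoted in the paragraph above (approximate).
requests_per_month = 800
minutes_per_request = 35

# Total manual handling time implied by those two figures.
total_minutes = requests_per_month * minutes_per_request
total_hours = total_minutes / 60

print(f"{total_minutes} minutes per month, about {total_hours:.0f} hours")
```

In other words, the quoted volume and resolution time add up to roughly 467 hours of manual handling each month.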

In the healthcare industry, where financial pressures and staffing challenges are widespread, the adoption of technology is being embraced to alleviate burdens on both clinical and administrative staff. A case in point is a large physician organization bogged down by manual reporting, requiring three business analysts full-time to manage. By automating this process, they were able to redirect their human resources to more strategic tasks.

In the same way, St. James Winery, the biggest and most honored winery in Missouri, has acknowledged the advantages of using technology to maintain their competitive advantage. By automating marketing and administrative functions, they have ensured that their quality remains consistent across competitions.

Recent advancements in AI technology have further heightened the impact of CRM automation on business growth, as noted by Pipedrive CEO Dominic Allon. Despite the increasing prevalence of AI, he stresses the ongoing significance of human connections and value-based selling. Moreover, successful CRM implementation involves consolidating scattered customer data into one platform, thereby ensuring better data security and accessibility.

Sales Automation is particularly vital for manufacturing enterprises with complex product portfolios. It reduces the risk of mistakes like delays and chargebacks, which arise from manual sales processes. Establishing a well-defined sales process and bolstering it with software can streamline operations, aiding businesses in selling more effectively and efficiently.

The statistics are clear: the business world is quickly embracing workflow automation. With 94% of corporate executives preferring unified platforms for app integration and process automation, and over a third of businesses having five or more automated departments, the trend is undeniable. Those who hesitate to adopt these advancements risk losing market share, underscoring the critical role of CRM automation in staying competitive.

Reduces the Chance of Human Error

CRM automation goes beyond simple data entry; it’s a strategic resource for increasing sales opportunities and enhancing customer service. By automating routine processes, CRM systems significantly reduce the risk of human error, thus preserving data integrity. For instance, Delivery Hero faced a recurring operational bottleneck with 800 monthly support requests that took an average of 35 minutes each to resolve. By automating their account recovery process, they eliminated the time-consuming manual verifications, streamlining operations and reducing downtime. Similarly, St. James Winery, renowned for their award-winning wines, uses technology to uphold their industry-leading position. As CRM systems evolve, they’re equipped to monitor sales and marketing activities, offering real-time guidance and post-interaction analytics. This degree of automation not only enhances efficiency but also helps ensure that every interaction with clients is informed and up to standard.

Improves Customer Satisfaction and Engagement

CRM automation is becoming an essential tool for delivering excellent client service and cultivating long-lasting relationships. The integration of automated communication capabilities is changing how businesses, like Kabannas hotels, interact with their clientele. By leveraging conversational AI across multiple platforms such as webchat, WhatsApp, and email, businesses can now provide round-the-clock personalized service, ensuring that every guest interaction is timely, relevant, and efficient.

With CRM automation, organizations are not simply meeting but exceeding client expectations in the digital era. The Engagement of the New Era report highlights a crucial shift in engagement, emphasizing the necessity for a seamless and tailored experience across various touchpoints. By employing automation tools, businesses are reshaping client outreach and communication, resulting in improved performance and satisfaction.

Backing this transformation, statistics show the influential effect of AI on service. More than 63% of businesses in the retail industry currently utilize AI to improve interactions with consumers, with a significant number automating up to 70% of requests from clients. This increase in AI implementation is motivated by the acknowledgment that favorable user experiences are crucial, as demonstrated by the 78% of shoppers who have terminated transactions due to inadequate service. Moreover, 64% of business owners are confident that AI will only enhance relationships with clients, with an overwhelming 90% of businesses investing in AI technologies.

The success stories of brands featured in the report’s hall of fame highlight the importance of combining technology with a human touch to create exceptional experiences. As client expectations continue to evolve alongside technology, businesses are tasked with staying ahead of the curve to maintain client loyalty and satisfaction. CRM automation, therefore, becomes more than a mere tool: it’s a strategic asset that empowers businesses to create a service experience that is both memorable and rewarding.

Key Functions to Automate in CRM

Organizations seeking to harness the full potential of CRM should prioritize automating key functions within their CRM systems. By focusing on tasks such as data collection, process standardization, and communication management, companies can significantly enhance their operational workflow and decision-making efficiency.

For instance, automating data collection and information flow, as illustrated by a department within the Faculty of Engineering and Design, allows managers to access and utilize data more rapidly, leading to more informed decision-making. Similarly, Delivery Hero’s approach to streamline account recovery processes for their extensive workforce exemplifies how automating routine IT tasks can reduce downtime and improve overall productivity.

Furthermore, as observed in Hiscox’s service teams, the use of technology can reduce repetitive work, such as handling client emails, by 28%, thereby enhancing both customer and employee satisfaction levels. This is critical for industries where a personalized touch is essential, demonstrating that automation does not compromise service quality but rather enhances it.

Recognizing the significance of establishing a distinct sales process, CRM can assist in outlining each stage from lead generation to deal closure. This structured approach ensures that all potential customer interactions are efficiently managed and nothing falls through the cracks, ultimately driving successful conversions.

Workflow digitalization statistics project a significant adoption rate among businesses by 2024, emphasizing the urgency for companies to embrace digitization or risk losing market share. With a staggering 94% of corporate executives preferring a unified platform for app integration and process streamlining, the trend towards streamlined business operations is undeniable.

To sum up, through the automation of crucial CRM functions (information gathering, sales process definition, and communication management), organizations can attain measurable enhancements in their business procedures, establishing the foundation for a more competitive and efficient operational model.

Contact Management

CRM automation makes contact management markedly more efficient. By consolidating client interactions and data in one platform, businesses can gather and update client information, handle communications, and keep an up-to-date record of client engagements. This not only streamlines the workflow but also ensures that customer data is accurate and readily available, enabling sales and marketing teams to respond promptly and effectively.

For example, Kabannas, a group of UK hotels, utilized technology to improve their guest experience by enabling travelers to communicate digitally at their convenience, far beyond the usual business hours. Likewise, Delivery Hero used technology to tackle the common problem of employees being unable to access their accounts. By implementing automated recovery procedures, they greatly reduced downtime, highlighting the effectiveness of automated systems in streamlining operational processes.

Moreover, St. James Winery’s achievement in the competitive wine sector is partially attributed to their embrace of automation, which has optimized their marketing process and added to their status as a prominent winery. This approach is echoed by recent trends: 88% of users interacted with chatbots in 2022, showcasing the growing reliance on automated systems for customer engagement.

Automating outbound sales processes is particularly beneficial as it saves significant time for sales teams, allowing them to concentrate on establishing relationships and finalizing sales. With 94% of executives favoring a unified platform for app integration and process streamlining, it’s clear that the adoption of such systems is becoming a business imperative.

Salesforce’s AI agent offering, Agentforce, highlights the movement towards automation in managing client relationships. Salesforce’s Atlas, a reasoning engine, is designed to emulate human thinking and planning, offering businesses a sophisticated tool for managing client interactions.

By embracing CRM technology, companies can not only improve their operational efficiency but also gain valuable insights into customer behavior and market trends, thereby informing strategic decisions and fostering business growth.

Lead Assignment and Management

CRM automation streamlines the sales environment, especially for manufacturing companies dealing with intricate product portfolios and demanding sales responsibilities. By automating lead assignment and management, CRM systems ensure that leads are efficiently distributed among representatives based on tailored criteria, facilitating prompt and effective follow-ups. This not only enhances the ability to track and score leads but also empowers teams to concentrate on the most promising prospects. Adopting CRM technology also minimizes the risk of human error: delays, chargebacks, returns, and ultimately dissatisfied customers become less of a concern, enabling sales representatives to concentrate on customer engagement and closing deals. The integration of advanced AI models like Salesforce’s xGen-Sales into CRM platforms further streamlines sales processes, enabling precision and speed in complex tasks. As the industry moves towards a more automated future, with 94% of corporate executives preferring a unified platform for app integration and process streamlining, the message is clear: adopting CRM automation is no longer a choice but a necessity to stay competitive and retain market share.
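As a rough illustration of what distributing leads "based on tailored criteria" can look like, the sketch below routes each lead to a representative by region and rotates through that region's representatives in turn (round-robin). The region names, representative names, the fallback queue, and the `build_assigner` helper are all hypothetical; real CRM platforms expose their own assignment rules, and this is not a description of any particular product.

```python
from itertools import cycle

# Hypothetical reps grouped by the region they cover.
REPS_BY_REGION = {
    "EMEA": ["alice", "bob"],
    "AMER": ["carol"],
}
FALLBACK_OWNER = "unassigned-queue"  # hypothetical catch-all for unmatched leads


def build_assigner(reps_by_region):
    """Return a function that assigns leads round-robin within each region."""
    rotations = {region: cycle(reps) for region, reps in reps_by_region.items()}

    def assign(lead):
        rotation = rotations.get(lead.get("region"))
        if rotation is None:
            return FALLBACK_OWNER  # no rep covers this region
        return next(rotation)  # next rep in this region's rotation

    return assign


assign = build_assigner(REPS_BY_REGION)
leads = [
    {"name": "Lead 1", "region": "EMEA"},
    {"name": "Lead 2", "region": "EMEA"},
    {"name": "Lead 3", "region": "AMER"},
    {"name": "Lead 4", "region": "EMEA"},
    {"name": "Lead 5", "region": "APAC"},
]
assignments = {lead["name"]: assign(lead) for lead in leads}
for name, owner in assignments.items():
    print(f"{name} -> {owner}")
```

Round-robin keeps workloads even within a region; other criteria (product line, deal size, rep capacity) could replace or extend the region lookup in the same structure.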

Task Management

CRM automation streamlines workflows by simplifying task management, thereby enhancing team collaboration. By automating routine tasks such as follow-ups, appointment scheduling, and deadline management, CRM systems keep work organized and prevent important tasks from being overlooked. Moreover, automated tracking, reminders, and alerts enhance accountability and help everyone adhere to their schedules. This shift toward automation is supported by a Zapier report which reveals that nearly all workers in small businesses encounter repetitive tasks that can be automated, leading to improved job satisfaction. Embracing workflow tools not only reduces manual errors but also fosters better communication and efficient work management across the organization.
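The reminder logic described above can be sketched in a few lines. The example below assumes a hypothetical task record with `title`, `due`, and `done` fields and a two-day reminder window; it simply selects open tasks that are overdue or due soon, which is the kind of check an automated alert would be built on.

```python
from datetime import date, timedelta


def tasks_needing_reminder(tasks, today, window_days=2):
    """Return open tasks that are overdue or due within the reminder window."""
    cutoff = today + timedelta(days=window_days)
    return [t for t in tasks if not t["done"] and t["due"] <= cutoff]


# Hypothetical task list and reference date, for illustration only.
today = date(2024, 3, 1)
tasks = [
    {"title": "Follow up with Acme", "due": date(2024, 3, 2), "done": False},
    {"title": "Send proposal", "due": date(2024, 3, 10), "done": False},
    {"title": "Book demo", "due": date(2024, 2, 27), "done": False},
    {"title": "Log call notes", "due": date(2024, 3, 1), "done": True},
]

reminders = tasks_needing_reminder(tasks, today)
for task in reminders:
    status = "overdue" if task["due"] < today else "due soon"
    print(f"{task['title']}: {status} ({task['due'].isoformat()})")
```

Completed tasks and tasks outside the window are skipped, so only items that need attention generate an alert.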

Document Management

Embracing CRM integration revolutionizes the conventional approach to document management by seamlessly incorporating it within the CRM system. This transition provides a centralized hub where contracts, proposals, presentations, and other vital documents are not only stored but are also organized for efficient retrieval. The power of CRM shines in its facilitation of effortless collaboration between team members, ensuring that important documents are easily accessible when needed.

From the early days of basic word processors to the modern era of sophisticated CRM platforms, document digitization has evolved significantly. It began as a simple digitization of document creation and has now become a crucial tool for enhancing business operations. By leveraging templates and macros, repetitive tasks are automated, freeing up valuable time and reducing the potential for errors.

In practice, companies such as Centroplan have reaped the benefits of automating their document management within Salesforce, streamlining their sales cycle and allowing their teams to focus on more productive activities. Similarly, academic departments, like the Department of Electronic & Electrical Engineering, have employed technology to track student engagement more effectively. On a bigger scope, organizations like Delivery Hero have utilized technology to overcome operational challenges, greatly reducing the downtime experienced by employees due to account lockouts.

Today, document automation stands as a game changer, particularly for SMBs and professional services, by offering the means to compete with larger counterparts. It’s not just about storing documents; it’s about improving efficiency and accuracy, which are essential in today’s fast-paced digital world.

Reporting and Analytics

CRM automation has transformed the way organizations manage their sales and marketing efforts. With its advanced reporting and analytics capabilities, it’s now possible to monitor key performance indicators (KPIs) seamlessly, gauging the impact of different campaigns and pinpointing opportunities for improvement. For instance, healthcare systems, facing unprecedented financial pressures and staffing challenges, have turned to CRM automation to alleviate the burden of manual reporting. Labor-intensive tasks that previously monopolized the time of business analysts can now be streamlined, freeing up resources and expediting decision-making.

Real-time analytics have become a game-changer, offering an eagle-eye view of sales funnels, engagement, and evolving market dynamics. This shift towards data empowerment is exemplified by the healthcare industry’s move to automate data sharing and comply with new federal rules on information blocking, reflecting the need for immediate access to digital health records.

Moreover, the integration of CRM platforms has solved the puzzle of scattered data. By consolidating client information previously housed in various digital repositories, businesses are protecting data security and integrity, which is critical in today’s digital landscape where data breaches are a constant threat. This consolidation not only improves data security, with encryption ensuring data remains unreadable to unauthorized parties, but also provides a cohesive, comprehensive view of client interactions.

According to the Sales and Marketing Report 2023/2024, the rise in AI-driven innovation in CRM has highlighted the equilibrium between technological efficiency and the indispensable worth of human relationships in sales. With these tools, businesses of all sizes, from startups to large enterprises, are equipped to navigate the complexities of today’s economic landscape, driving growth with precision and efficiency.

Integration with Other Applications

CRM automation becomes far more capable when connected to other systems, changing the way businesses engage with their clients. Integrating CRM with tools such as marketing platforms, email systems, support systems, and other business applications establishes an interconnected ecosystem. This synergy enables smoother data transfer, eliminating tedious manual entry and reconciliation. Most importantly, it offers a thorough view of client engagements across various touchpoints, empowering organizations to provide a consistent and customized client journey.

A testament to the power of such integrations is the success story of Virgin Money’s M-Track solution, which amalgamated data across diverse systems, including HR and social media, to furnish businesses with a holistic view of their operations. Similarly, APIs have emerged as linchpins in modern software development, allowing disparate programs to communicate effectively, which Google hails as the ‘crown jewel of software development.’ They are pivotal in cultivating an environment ripe for innovation, as they facilitate collaboration and enhance system capabilities.

API integration is not just a technical enhancement; it’s a strategic enabler. It removes data silos and coalesces functionality, which is crucial in a digital-first business landscape. Organizations leveraging API integration, like those consulting with firms renowned for their expertise in Salesforce, find themselves at the forefront, experiencing tangible growth and transformation. Essentially, when CRM is synchronized with other applications through API integration, it not only simplifies operations, but also fundamentally transforms the nature of customer relationship management.

Enhancing Lead Qualification and Sales Cycles

CRM automation has changed the sales landscape by enabling more effective lead qualification and speeding up the sales process. Automated tools, such as lead scoring systems and nurturing strategies, empower organizations to swiftly identify leads with the highest conversion potential. This prioritization directs sales and marketing efforts towards the most promising prospects, optimizing the use of valuable time and resources. For example, by using CRM data, sales teams can leverage prompts and business rules to identify the prime opportunities at any given moment. This strategic focus on qualified leads reduces the time spent on prospects less likely to yield results, thus streamlining the overall sales journey.
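A simple way to picture rule-based lead scoring is as a list of business rules, each worth a number of points, with leads ranked by their total. The rules, point values, and lead fields below are hypothetical and chosen only for illustration; actual scoring models vary by organization and CRM product.

```python
# Hypothetical scoring rules: (description, predicate, points).
RULES = [
    ("visited pricing page", lambda lead: lead["visited_pricing"], 30),
    ("100 or more employees", lambda lead: lead["employees"] >= 100, 25),
    ("replied to an email", lambda lead: lead["email_replies"] > 0, 20),
    ("requested a demo", lambda lead: lead["requested_demo"], 40),
]


def score(lead):
    """Sum the points of every rule the lead satisfies."""
    return sum(points for _, matches, points in RULES if matches(lead))


# Hypothetical leads, for illustration only.
leads = [
    {"name": "Lead A", "visited_pricing": True, "employees": 250,
     "email_replies": 0, "requested_demo": False},
    {"name": "Lead B", "visited_pricing": False, "employees": 20,
     "email_replies": 1, "requested_demo": False},
    {"name": "Lead C", "visited_pricing": True, "employees": 500,
     "email_replies": 2, "requested_demo": True},
]

# Highest-scoring leads first, so reps see the best prospects at the top.
ranked = sorted(leads, key=score, reverse=True)
for lead in ranked:
    print(f"{lead['name']}: {score(lead)} points")
```

Keeping the rules in a plain list makes it easy for a sales team to add, remove, or reweight criteria without touching the scoring function.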

Statistics from industry leaders illustrate the significance of rapid response and personalized communication in maintaining competitive service standards. For example, a specialist insurance company, Hiscox, faced challenges managing a growing influx of client emails. By implementing an automated system, they were able to decrease their repetitive workload by 28% while also improving response times, enhancing both client and employee satisfaction.

Furthermore, incorporating CRM with sales workflows is not only about time-saving; it’s about empowering sales teams to succeed. Sales tools offer valuable insights, effectively handle leads, and nurture client relationships. The goal is to assist sales teams in focusing on what really counts – establishing connections with clients and finalizing transactions. As the business world evolves and competition intensifies, the utilization of sales tools becomes indispensable in driving sales excellence and achieving business success.

Personalized Customer Communication

CRM automation is transforming the way businesses engage with their clients by providing individualized communication at scale. For instance, Capital One has leveraged CRM technology through Slack to enhance user experiences, while Benefit Cosmetics has employed WhatsApp to simplify their appointment scheduling procedure. This strategic use of technology enables the delivery of automated email campaigns, personalized recommendations, and content that is tailored to individual preferences and behaviors.

CRM automation enables organizations to access comprehensive client data and segment it to tailor messages that resonate with different groups. As seen with Benefit Cosmetics, this can lead to enhanced engagement and the ability to maintain personal connections, even in fast-paced digital environments. Such tools empower businesses to meet the expectations of digital-savvy individuals for fast, accessible services, while still offering the personal touch that nurtures loyalty and strengthens relationships.

Embracing CRM automation is also about selecting the appropriate system with the essential features to fulfill business objectives, such as contact management and support for a range of service channels. As reported, more than 63% of retail companies now use AI to enhance their service to clients. This adoption is motivated by the acknowledgment that a negative service experience can lead to abandoned transactions, highlighting the significance of responsive and effective communication channels facilitated by CRM solutions.

Furthermore, data analytics plays a vital role in CRM, providing the basis for focused marketing efforts. However, with the increasing volume of data known as ‘infobesity’, it’s crucial to focus on actionable data for personalization. This approach has been validated by the success of companies that have developed a common data model to underpin their CRM strategies, leading to quicker deployment of new use cases and the ability to proactively address service issues.

Essentially, CRM is not just about utilizing technology; it involves establishing a more personalized and responsive customer service experience that aligns with the overall business objectives and enhances the customer journey at every touchpoint.

Improved Cooperation Between Marketing and Sales

CRM automation is not only about technology; it’s about uniting marketing and sales teams to collaborate seamlessly. With the integration of marketing systems and CRM systems, companies like Kolleno are streamlining their multi-channel campaigns, ensuring a cohesive workflow despite the complexity of coordinating across various platforms. In the same way, Centroplan’s implementation of document automation in their Salesforce ecosystem is reducing the manual burden on their sales team, enabling them to concentrate on more strategic work and speed up their sales process.

Parkhotel Adler’s move to modernize their marketing strategies is a prime example of how CRM can address the challenges of segmentation and personalization in a customer database. This shift is not just a step toward efficiency but is also about adapting to the contemporary buying landscape, where decisions are made by committees rather than individuals. By using CRM systems effectively, businesses can engage multiple stakeholders, resulting in more comprehensive and successful sales strategies.

The implementation of CRM is strengthened by the trend towards AI, which is seen simultaneously as a priority and a challenge by marketers. AI’s role in managing and analyzing client data is pivotal, providing marketers with the tools to build better relationships and personalize client experiences. With trustworthy data, companies can ensure that their decisions are based on accurate information, leading to more effective marketing campaigns and improved lead conversion rates.

By implementing CRM technology, companies are not just aligning their internal departments but also positioning themselves for higher revenue by building stronger client relationships and optimizing processes. The collaboration between marketing and sales, enhanced by CRM technology, is changing the way businesses engage with their clientele and achieve growth.

Two-Way Flow of Customer Data

CRM automation goes beyond the simple exchange of data across departments, becoming a strategic synergy that strengthens both sales and marketing efforts. Through integrated systems, sales teams feed valuable customer insights directly into the marketing database, sparking customized campaigns that connect with specific audiences. Conversely, the wealth of data from marketing initiatives feeds back into sales strategies, giving representatives actionable intelligence for their next engagement.

Currys, a retail giant, experienced the transformative power of CRM firsthand. Hampered by an outdated marketing system burdened with manual processes, the company adopted automation to revitalize its client communication. The result? A more interactive, attentive connection with clients, delivered with accuracy and relevance.

In another showcase of CRM’s adaptability, a leading beauty brand leveraged ScheduleJS with Selligent CRM to revolutionize their scheduling system. This integration delivered an intuitive, efficient platform for managing beauty consultants’ appointments, demonstrating the capability of CRM to tailor solutions to specific business needs.

The CRM landscape is pulsating with innovation, as platforms evolve to offer real-time guidance and performance analysis. These systems are not only monitoring the traditional metrics but are also equipped to propose enhancements during interactions, creating a feedback loop of ongoing improvement.

This convergence of sales and marketing data into a unified CRM strategy is the bedrock for lifecycle automation, a concept that extends beyond mere task automation. It weaves engagement through every stage of the customer journey, nurturing a richer, more personalized experience. As a result, businesses are using this rich data source to strike a balance between attracting and retaining clients, with current clients often being the most profitable, contributing an astonishing 65% of a company’s revenue.

In essence, the integration of CRM automation tools like Zeotap CDP, which offers a flexible data ingestion model, is crucial for a comprehensive view of the customer. This aids in crafting marketing strategies that are not only effective but also deeply connected to the client’s evolving narrative. By breaking down data silos and leveraging a unified data strategy, businesses can anticipate and cater to client needs with unprecedented agility and personalization.

Using AI in CRM Automation

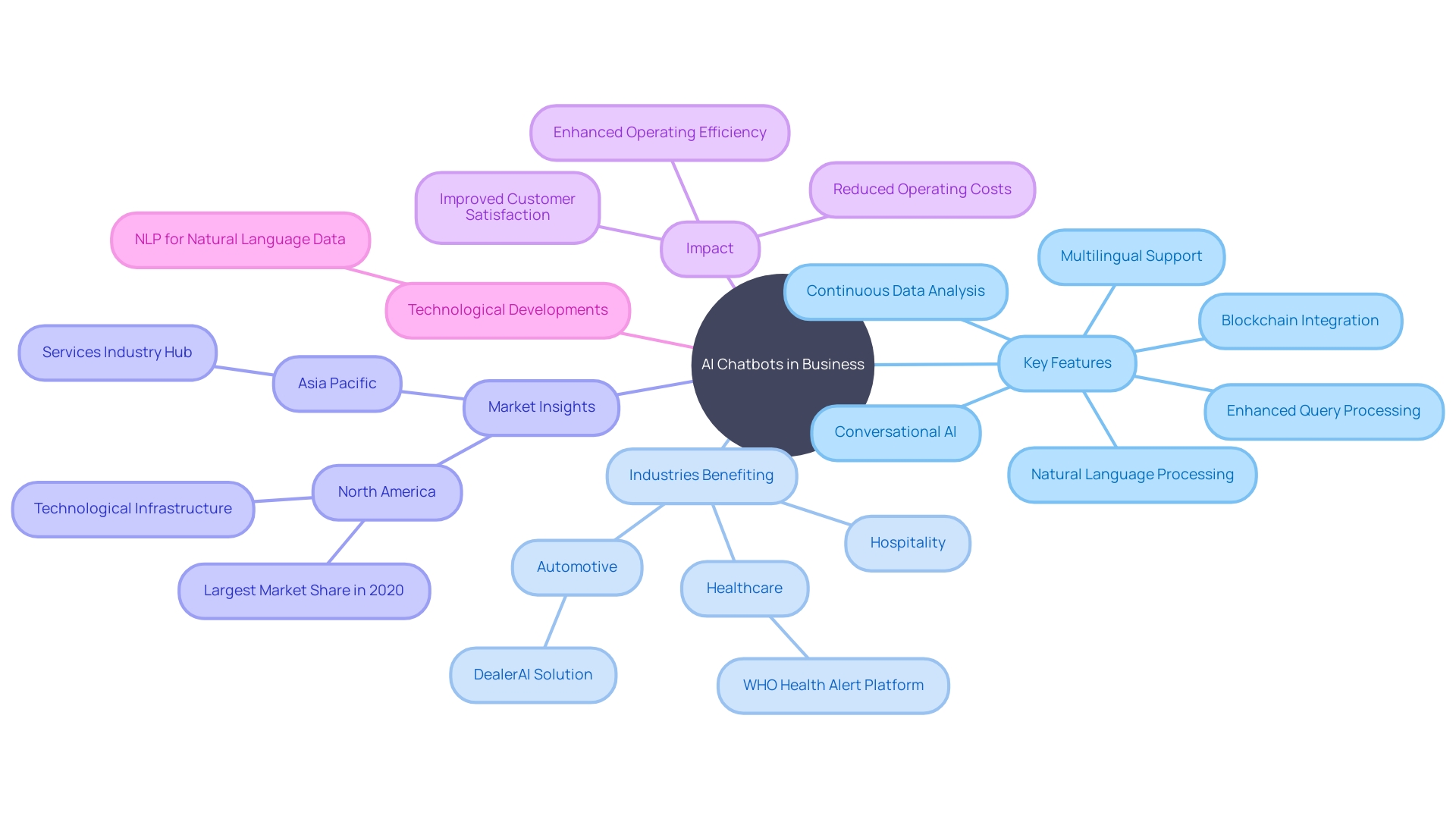

In the realm of CRM automation, the integration of Artificial Intelligence (AI) is revolutionizing how we interact with client data. AI’s capability to analyze vast datasets, recognize patterns, and project trends is invaluable for marketing and advertising professionals aiming to understand their target market better. With AI, tasks like lead scoring, client segmentation, and creating personalized suggestions become streamlined, greatly improving the effectiveness of marketing and business initiatives.

The experience of Traffic Builders, part of Unbound Group, underscores the power of data-driven strategies in the competitive B2C and B2B markets. Their award-winning approach to digital marketing and analytics, recognized consistently by Emerce 100, demonstrates the tangible benefits of harnessing data for operational success. Such expertise is now being translated into the world of CRM through AI, where predictive analytics can inform which leads are most likely to convert, what products they might buy, and the optimal times for engagement.
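To make the idea of lead scoring concrete, here is a minimal Python sketch. It is deliberately a simple rule-based weighted sum, not the predictive analytics described above, and it does not represent how any named company or CRM product scores leads. The `Lead` fields, the `WEIGHTS` values, and the sample leads are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    """A hypothetical lead record with a few engagement signals."""
    name: str
    email_opens: int
    site_visits: int
    requested_demo: bool


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"email_opens": 2, "site_visits": 3, "requested_demo": 20}


def score(lead: Lead) -> int:
    """Weighted sum of engagement signals; higher means more engaged."""
    return (
        WEIGHTS["email_opens"] * lead.email_opens
        + WEIGHTS["site_visits"] * lead.site_visits
        + WEIGHTS["requested_demo"] * int(lead.requested_demo)
    )


# Made-up sample data.
leads = [
    Lead("A", email_opens=5, site_visits=1, requested_demo=False),
    Lead("B", email_opens=1, site_visits=4, requested_demo=True),
]

# Rank leads from most to least engaged.
ranked = sorted(leads, key=score, reverse=True)
for lead in ranked:
    print(lead.name, score(lead))
```

In practice, an AI-driven system would presumably learn such weights from historical conversion data rather than fix them by hand; the sketch only shows the shape of the ranking step.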

AI is not just an efficiency tool; it’s a partner in innovation. With 34% of marketing and sales departments already adopting generative AI, the technology is fostering new levels of client interaction that were previously unattainable. In retail, more than 63% of companies are using AI to enhance client support, and with good reason. Given that 78% of consumers have abandoned a transaction because of inadequate service, the growing use of AI is a strategic response to a clear demand.

Experts advocate for AI as a co-pilot, enhancing human capabilities rather than replacing them. It’s a tool that can sift through email responses to categorize interest levels, summarize interactions with leads or clients, and even uncover new leads that fit the ideal client profile. This co-pilot approach ensures that while some jobs may evolve or be created, the fear of a ‘jobless future’ is largely unwarranted.

In essence, AI in CRM is not just about automating processes; it’s about elevating the customer experience, driving innovation, and securing a competitive edge in a rapidly advancing digital marketplace.

Real-World Examples of CRM Automation

CRM automation is transforming the way businesses operate, providing tangible advantages to companies of various sizes and industries. For instance, Delivery Hero, a prominent local delivery platform with operations in over 70 countries, encountered significant challenges with employees frequently locked out of their accounts. This issue resulted in around 800 monthly requests to IT, each taking an average of 35 minutes to resolve. By automating the recovery process, the IT service delivery team led by Dennis Zahrt, including members Slimani Ghaith and Dorina Ababii, successfully removed IT as a bottleneck, enhancing overall productivity.
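As a rough back-of-the-envelope check on the scale of this problem, the short Python sketch below multiplies the roughly 800 monthly requests by the 35-minute average resolution time. It assumes every request takes the average time and that the 35 minutes is time actually lost per case; the source confirms neither assumption, and the function name is purely illustrative.

```python
# Figures quoted in the text above.
MONTHLY_REQUESTS = 800      # account-lockout requests sent to IT per month
MINUTES_PER_REQUEST = 35    # average time to resolve one request


def monthly_hours(requests: int, minutes_each: float) -> float:
    """Return the total handling time for one month, in hours."""
    return requests * minutes_each / 60


hours = monthly_hours(MONTHLY_REQUESTS, MINUTES_PER_REQUEST)
print(f"Approximate time spent per month: {hours:.0f} hours")
```

Under those assumptions, the lockouts add up to roughly 467 hours per month, which gives a sense of why automating the recovery process was worthwhile.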

Likewise, St. James Winery, the biggest and most acclaimed winery in Missouri, has acknowledged the importance of using technology to uphold its leading status in the fiercely competitive wine sector. With over 50 years of history, the winery has consistently ranked among the top five gold medal-winning wineries in the country, a testament to their commitment to innovation and quality.

These case studies reflect the wider industry trend towards CRM automation. Recent statistics point to a surge in the adoption of automated processes, with 94% of corporate executives preferring a unified platform for app integration and process automation. Moreover, more than 33% of businesses have automated five or more departments, 45% of corporate teams are actively involved in automation, and 31% of organizations have automated at least one function. This growing reliance on automation demonstrates its vital role in maintaining a competitive edge and market share in today’s fast-paced business environment.

Best Practices for Implementing CRM Automation

Implementing CRM automation is akin to setting up a powerful engine in the heart of your sales and marketing operations. To ensure this engine runs smoothly and effectively, observing best practices is key. A shining example comes from Delivery Hero, which managed to streamline its account recovery process, reducing the time employees were locked out and unable to work. By automating the verification and access restoration process, they achieved significant time savings for their IT department.

Similarly, Hiscox, a specialist insurance company, found that their service teams were overwhelmed by a growing influx of emails from clients, leading to delayed responses. By automating their email handling, they not only improved response times by 28% but also enhanced both customer and employee satisfaction. This demonstrates that targeted CRM automation can alleviate pressure on service teams and deliver a more competitive and swift communication experience.

To achieve similar results, businesses should consider automating their outbound marketing process. This not only saves time by eliminating repetitive tasks but also allows teams to focus on what they do best: closing deals. Furthermore, establishing a clearly defined sales process, from acquiring potential customers to closing the deal, can make it easier to adopt automation tools.

When it comes to email marketing, it is worth reconsidering how automation is used in light of advances in data and technology. As today’s CRM and marketing platforms glean insights from professionals’ activities, they can provide real-time suggestions and post-interaction recommendations, all aimed at enhancing sales and marketing efforts.

Given this evidence, it’s not surprising that workflow automation has surged in recent years. With 94% of executives preferring a unified platform for app integration and process automation, the message is clear: those who do not embrace automated processes risk losing ground to competitors.

In sum, CRM automation best practices revolve around understanding your processes, identifying repetitive tasks, and deploying automation strategically to free up valuable resources, ultimately driving efficiency and growth.

Conclusion

In conclusion, CRM automation revolutionizes customer relationship management by streamlining sales and marketing efforts. It improves efficiency, productivity, and data management. Sales automation caters to manufacturing enterprises, saving time and focusing on customer relationships.

The integration of AI strengthens business outcomes while valuing human relationships.

CRM automation saves time, reduces errors, and enhances customer satisfaction. Workflow automation is being rapidly adopted, improving cooperation between marketing and sales teams. AI in CRM enables personalized communication and data-driven strategies.

Real-world examples show the tangible benefits of CRM automation, such as reduced downtime and increased productivity. Best practices include automating tasks, defining clear sales processes, and leveraging data and technology.

CRM automation offers a strategic advantage, empowering businesses to work smarter, achieve growth, and succeed in a competitive marketplace.

Introduction

Efficiency and effectiveness are essential for any organization’s success. In the realm of business process management, optimizing workflows is crucial to achieving operational excellence. This article explores the key attributes of an ideal business process, the benefits of effective business process management, and the tools and techniques that can drive process optimization.

From clearly defined goals and standardization to integration and automation, each aspect plays a vital role in enhancing productivity and delivering a superior customer experience. By streamlining operations, organizations can achieve cost savings, improved quality, and consistency, and ultimately position themselves for growth and success. With the right approach and tools, businesses can embark on a journey of continuous improvement, ensuring they stay agile and competitive in today’s dynamic business landscape.

What is a Business Process?

The core of a business lies in its carefully organized activities, which are sequenced to achieve a specific organizational objective. This structured sequence facilitates the smooth flow of information, resources, and materials through various organizational functions, including production, customer service, financial management, and human resources. A crucial element of this is the flowchart, a visual tool that outlines the step-by-step progression of these tasks, using symbols like arrows and diamonds to represent flow and decision points, respectively.

Within process optimization, mathematical models play a vital role in improving resource allocation and scheduling, the topics that previous research has tended to favor. Nevertheless, there is a recognized gap in handling the random elements inherent in many business processes, and data from process models or event logs remains notably underused. This underlines the need for a comprehensive approach that acknowledges the diverse nature of organizational procedures, similar to the intricate relationships within an ecosystem.

Innovations in software for business workflow improvement reflect this view, providing tools to examine business workflows for possible improvements. These solutions, such as Jira Work Management, underscore the importance of continual adaptation and improvement, even when the current processes suffice. They are instrumental in preventing operational mishaps, like overlooked tasks, by providing systematic task management and process-tracking features. Ultimately, incorporating these tools and strategies into operations can safeguard against inefficiencies and propel organizations towards their strategic goals.

Key Attributes of an Ideal Business Process

A strong business process must demonstrate several essential qualities to attain maximum effectiveness and efficiency. These include a consistent, enjoyable, and effective user experience, as illustrated by a company that chose a technical stack (Django, Tailwind, HTMX) best suited to their specific needs. By analyzing competitor traffic and revamping existing content, they crafted a strategic roadmap for new content to address targeted search intentions, refining articles as they gained traction to optimize conversions.

In a case study involving John Dee, a multi-staged upgrade journey was pivotal. Beginning with an initial analysis of current and future needs, a distinctive design level was established, followed by the exploration of various design alternatives. This meticulous approach was essential for tailoring the solution to the company’s specific challenges and growth trajectory.