Overview

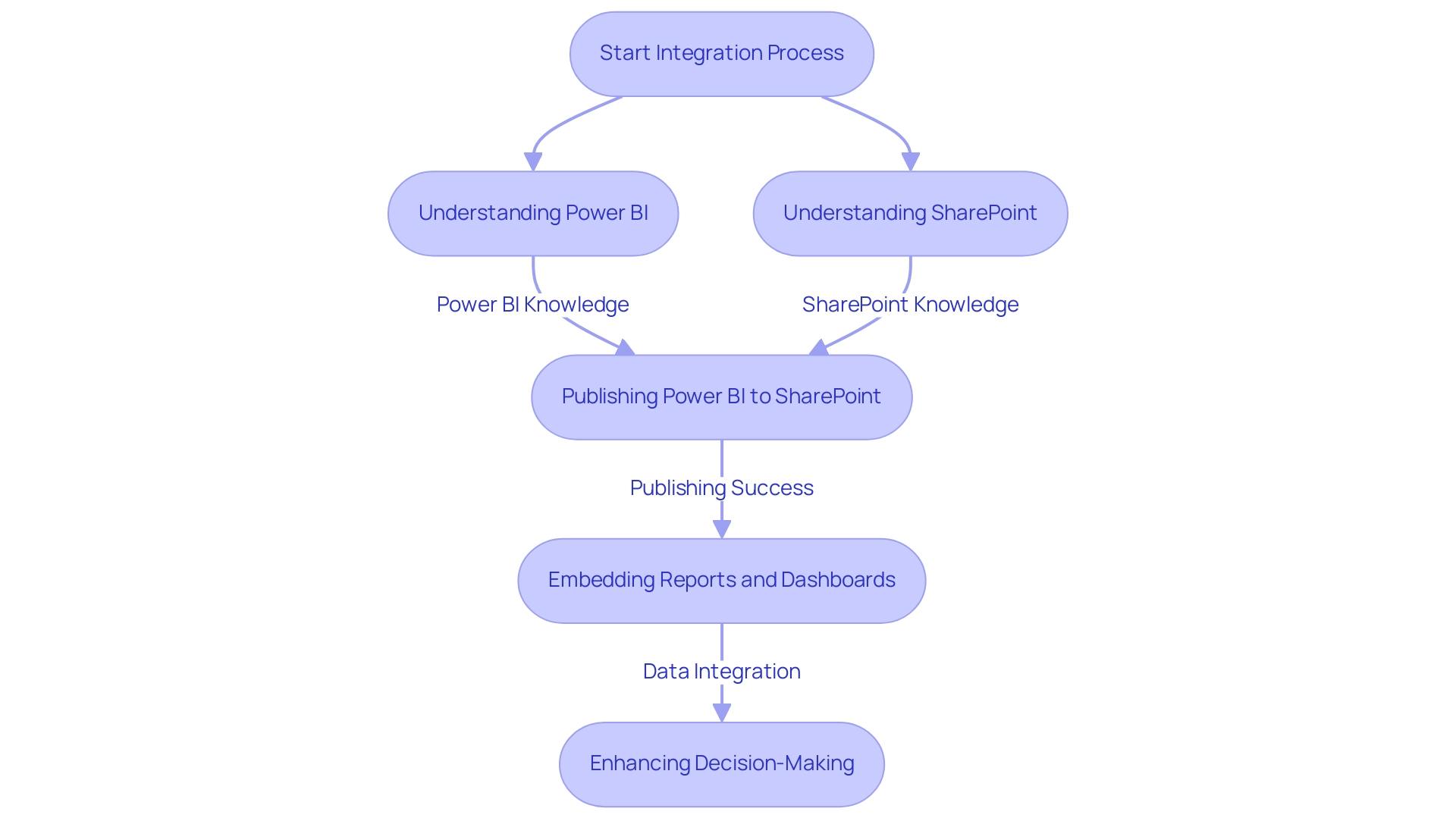

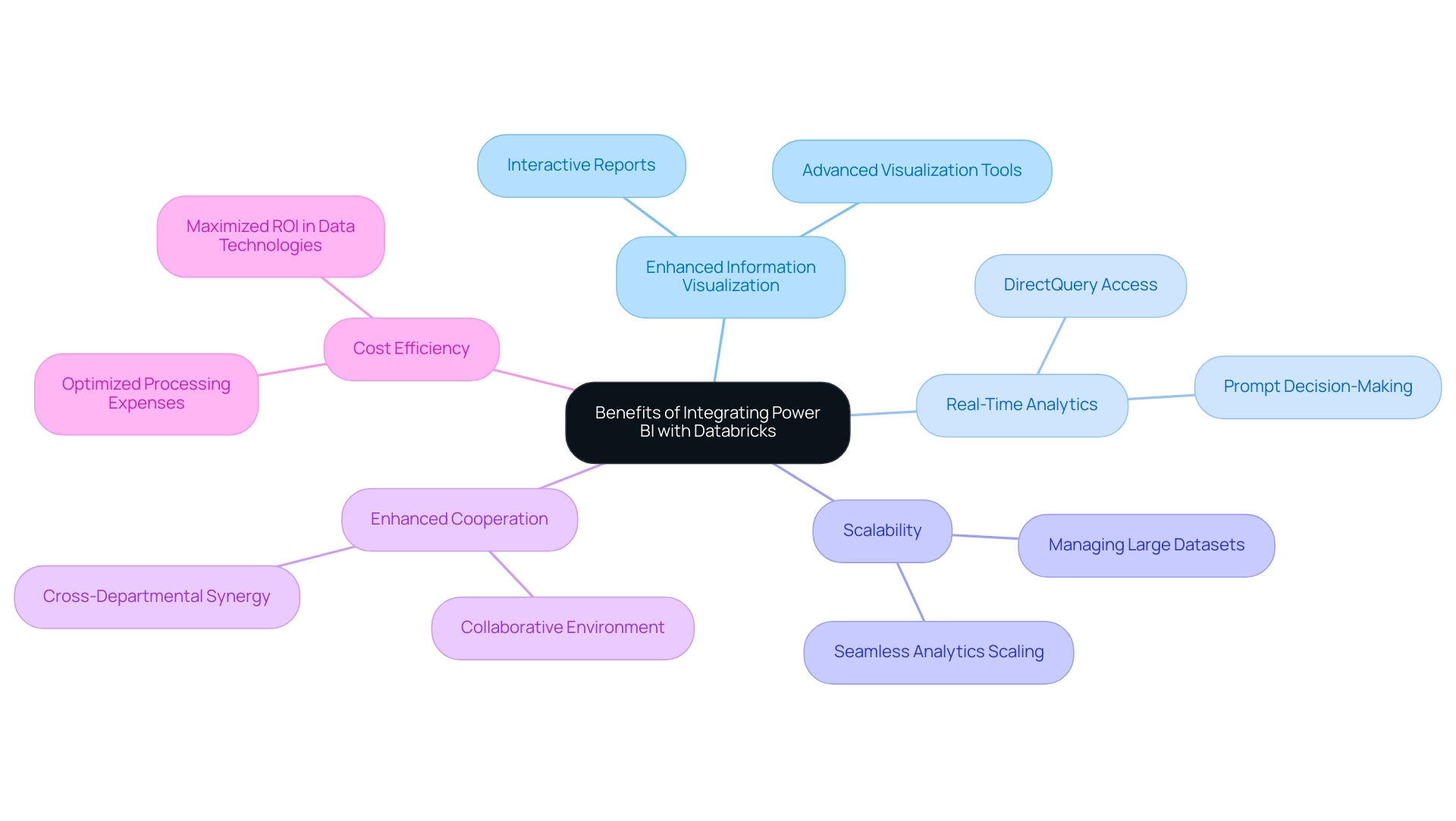

Publishing Power BI to SharePoint significantly enhances organizational collaboration and data accessibility, driving operational efficiency. This article outlines various methods for this integration, including:

- Embedding reports

- Managing permissions

It also covers the importance of user training and feedback for optimizing the experience, and of permission management for keeping access to insights secure. By adopting these strategies, organizations can unlock the full potential of their data and foster a culture of informed decision-making.

Introduction

In the dynamic realm of business analytics, the integration of Power BI and SharePoint is transforming how organizations visualize and share data. As companies increasingly seek to make informed decisions in real-time, this potent combination not only enhances data accessibility but also cultivates collaboration among teams.

With projections suggesting that 70% of organizations will depend on real-time analytics by 2025, grasping the best practices for effectively embedding Power BI reports into SharePoint becomes essential.

This article explores the diverse strategies businesses can employ to optimize this integration, addressing:

- Technical considerations

- Permission management

- User experience enhancements

The goal is to convert raw data into actionable insights that drive growth and innovation.

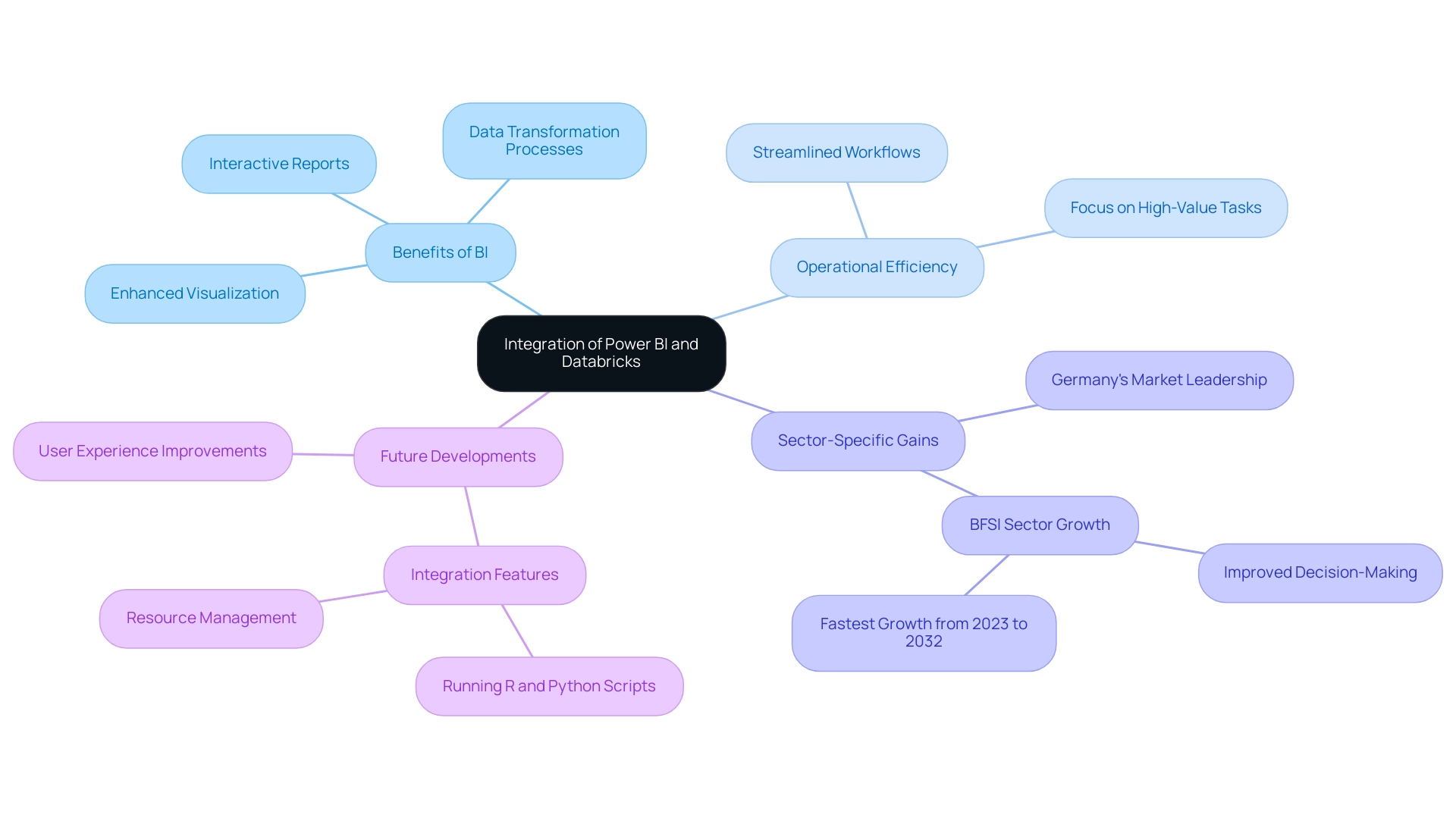

Understanding Power BI and SharePoint Integration

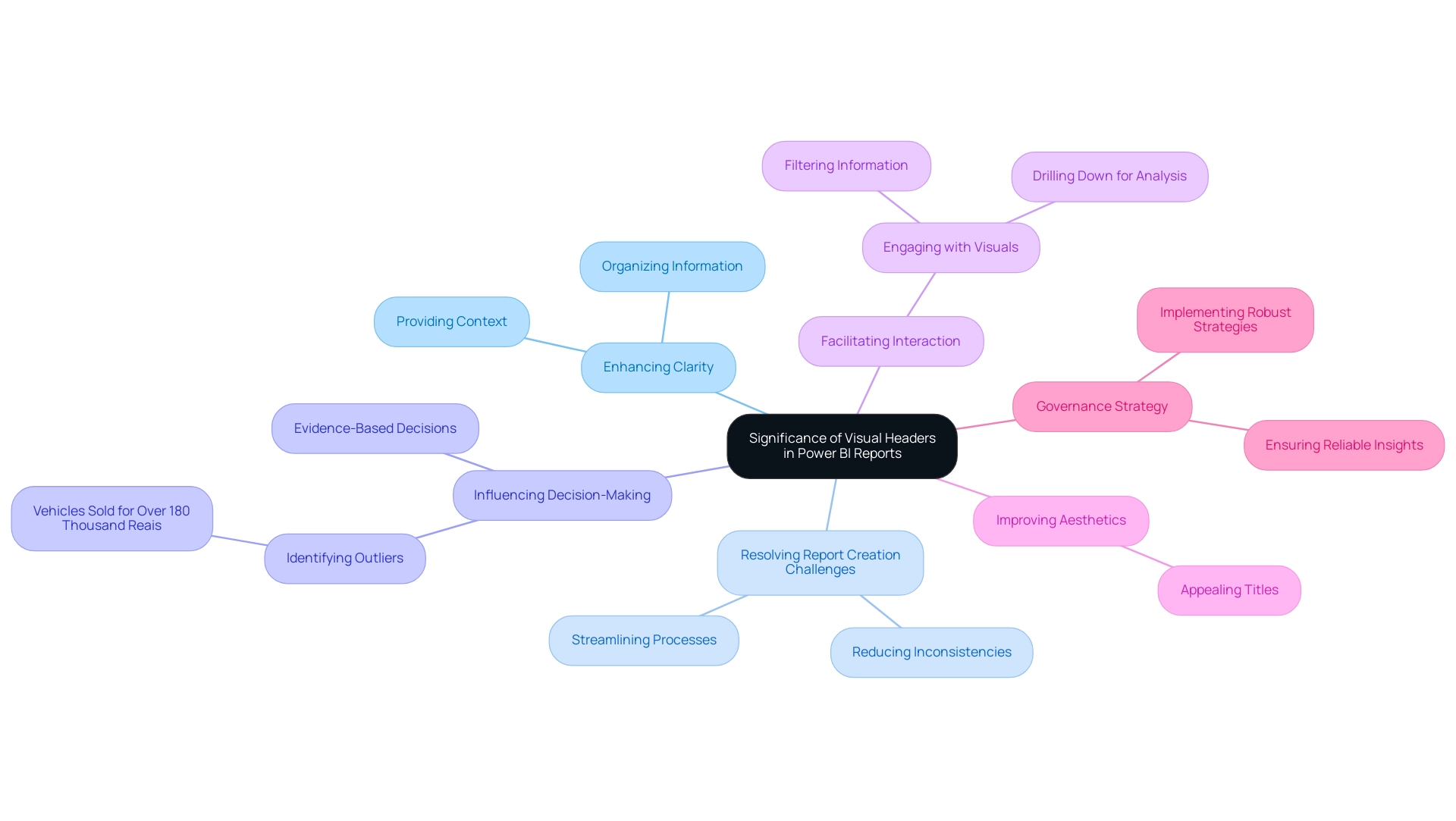

Power BI is a business analytics tool that lets users visualize data and share insights across their organizations. SharePoint, by contrast, is a collaboration platform that integrates closely with Microsoft Office. Publishing Power BI to SharePoint places interactive reports and dashboards directly on SharePoint pages, which strengthens data-driven decision-making.

This integration streamlines access to critical insights and fosters collaboration by embedding data within existing workflows, making it easier for teams to leverage information effectively.

As 2025 approaches, the business analytics landscape is evolving rapidly. Projections indicate that by 2025, 70% of organizations will use real-time analytics for decision-making, up from 40% in 2020. This trend underscores the growing reliance on tools like Power BI, which provide prompt access to actionable insights.

Organizations are also increasingly recognizing publishing Power BI to SharePoint as a valuable way to extend their analytical capabilities.

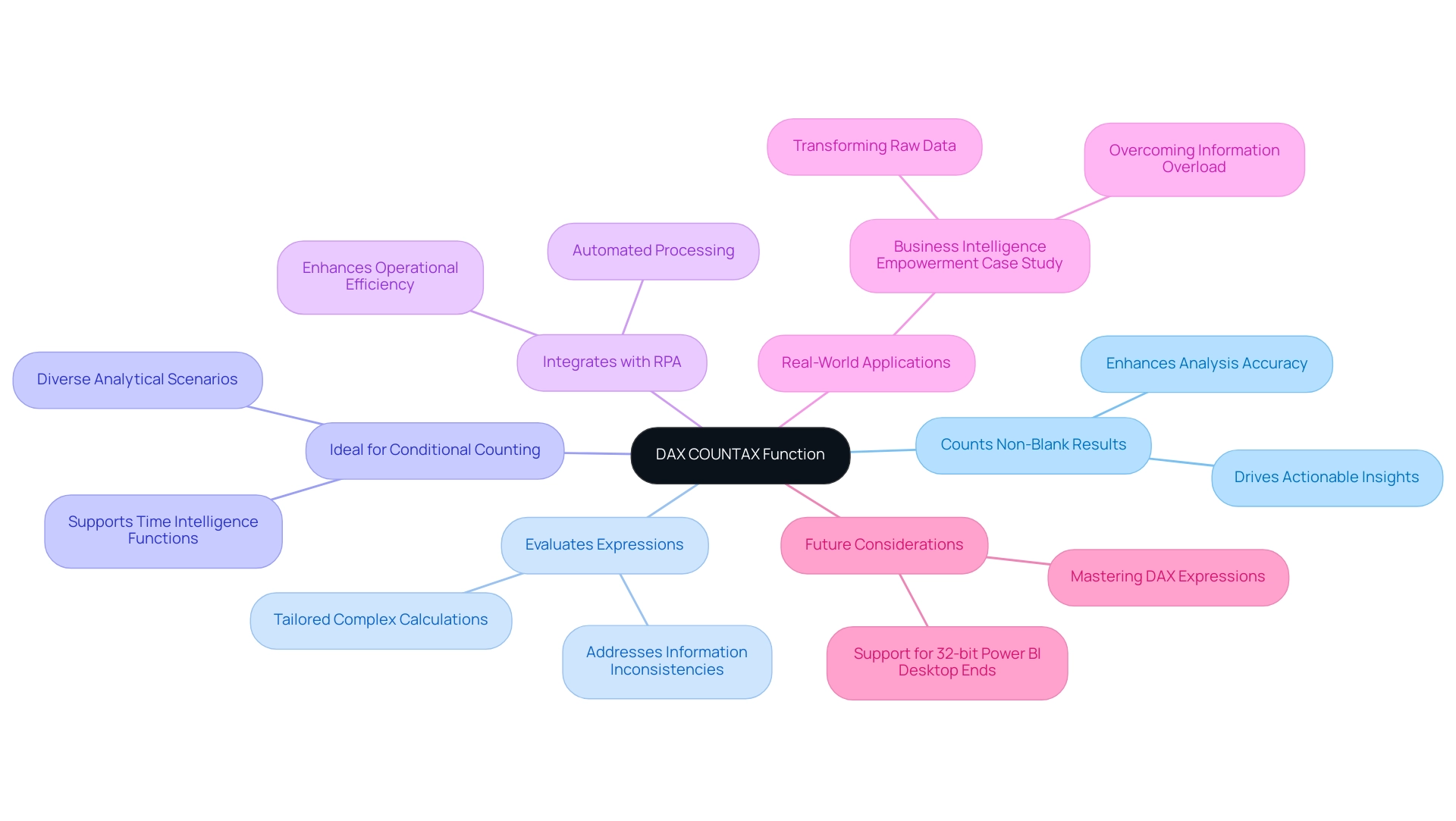

Case studies illustrate the impact of this integration. Organizations that publish Power BI reports to SharePoint report better information quality and improved decision-making, which in turn supports growth and innovation. The case study titled ‘Business Intelligence Empowerment’ is one example, showing how organizations turned raw data into actionable insights through this integration.

The ability to visualize data in a collaborative environment not only simplifies analysis but also encourages a culture of data-driven decision-making across teams.

In 2025, the combination of Power BI and SharePoint is expected to yield significant benefits, including better access to insights, streamlined workflows, and stronger team collaboration. As businesses navigate a data-rich environment, these tools will be crucial for extracting meaningful insights and making informed decisions, and organizations are increasingly prioritizing the integration for its potential to empower teams and drive strategic initiatives.

Moreover, with Power Automate and the EMMA RPA tool, organizations can automate workflows, backed by risk-free ROI assessments and professional execution, further improving operational efficiency. Automating repetitive tasks reduces staff fatigue and boosts employee morale, making it a key consideration for operational strategy. These developments highlight the growing importance of tools like Power BI and SharePoint in business analytics.

As one analyst put it, ‘Getting started is easy; building a clean model, however, is challenging,’ highlighting the complexity of creating clean data models during integration. To explore how these tools can benefit your organization, book a free consultation with us today.

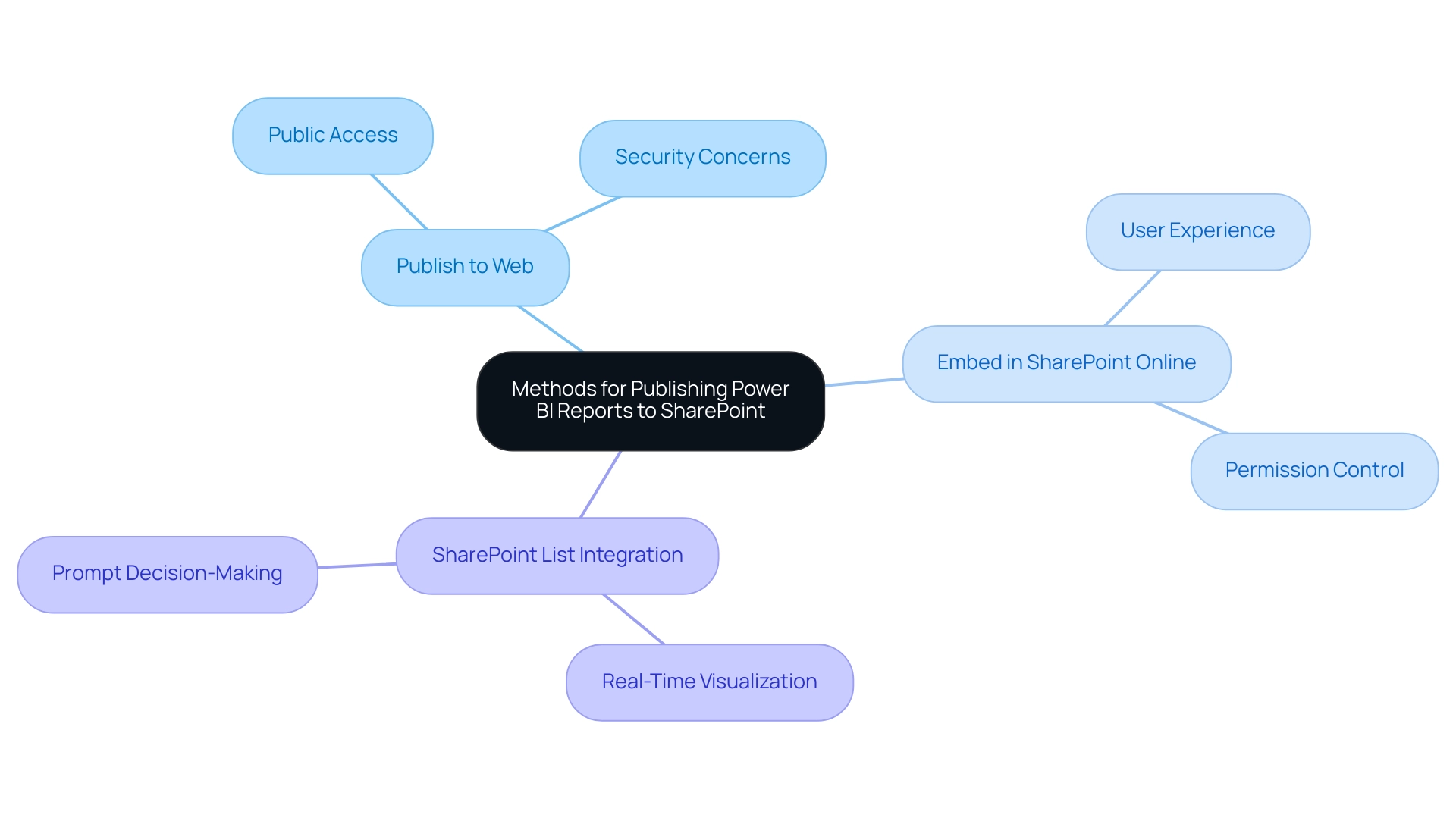

Methods for Publishing Power BI Reports to SharePoint

Understanding how to publish Power BI to SharePoint significantly enhances collaboration and data accessibility within organizations, driving operational efficiency through data-driven insights. Here are several effective methods to achieve this:

- Publish to Web: This method generates a public link and embed code for a report, which can then be embedded in a SharePoint page. It makes access easy, but use it with caution: anyone with the link can view the report, which can expose sensitive information.

- Embed in SharePoint Online: The Power BI web part embeds reports directly in SharePoint pages. It improves the user experience and, unlike Publish to Web, shows the report only to people who have the appropriate permissions, preserving security and integrity.

- SharePoint List Integration: Connecting Power BI directly to SharePoint lists lets teams visualize list data as it changes, making it a natural fit for organizations that manage information in SharePoint. Reports reflect the data as of the dataset's most recent refresh, so how "real-time" the view is depends on the refresh schedule.
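Because the first two routes differ mainly in who can open the link, a quick check before a URL goes onto a SharePoint page can catch an accidental public link. The sketch below assumes the URL shapes commonly seen in practice: Publish to Web links of the form `https://app.powerbi.com/view?r=<token>` and secure embed links of the form `https://app.powerbi.com/reportEmbed?reportId=<id>&...`. The function `classify_embed_url` is hypothetical, not part of any Power BI API, and it is only a heuristic, so verify the patterns against links from your own tenant.

```python
from urllib.parse import urlparse, parse_qs


def classify_embed_url(url: str) -> str:
    """Heuristically classify a Power BI link as 'public', 'secure', or 'unknown'.

    Hypothetical helper; the URL patterns are assumptions, not a documented contract.
    """
    parsed = urlparse(url)
    # Anything that is not HTTPS on the Power BI host is not something we recognize.
    if parsed.scheme != "https" or parsed.hostname != "app.powerbi.com":
        return "unknown"
    query = parse_qs(parsed.query)
    # Publish to Web: /view with an 'r' token; anyone holding the link can open it.
    if parsed.path == "/view" and "r" in query:
        return "public"
    # Secure embed (e.g. for the SharePoint web part): /reportEmbed with a reportId;
    # viewers must sign in and already have access to the report.
    if parsed.path == "/reportEmbed" and "reportId" in query:
        return "secure"
    return "unknown"


if __name__ == "__main__":
    for link in (
        "https://app.powerbi.com/view?r=abc123",
        "https://app.powerbi.com/reportEmbed?reportId=00000000-0000-0000-0000-000000000000&autoAuth=true",
    ):
        print(classify_embed_url(link), link)
```

A review step could refuse to publish a page whenever the result is "public" and the report contains sensitive data.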

In 2025, usage statistics for the Power BI web part in SharePoint show a growing trend, as organizations increasingly use it to strengthen their reporting. Best practices for sharing Power BI visuals on SharePoint include confirming that everyone has the required permissions, refreshing visuals regularly so they show current data, and using the semantic model version history feature to recover from mistakes made while editing. Version history lets users restore earlier versions of their semantic models, which builds trust in self-service data management.

As Patrick LeBlanc, Principal Program Manager, emphasizes, ‘We highly value your feedback, so please share your thoughts using the feedback forum,’ highlighting the importance of user input in optimizing these tools.

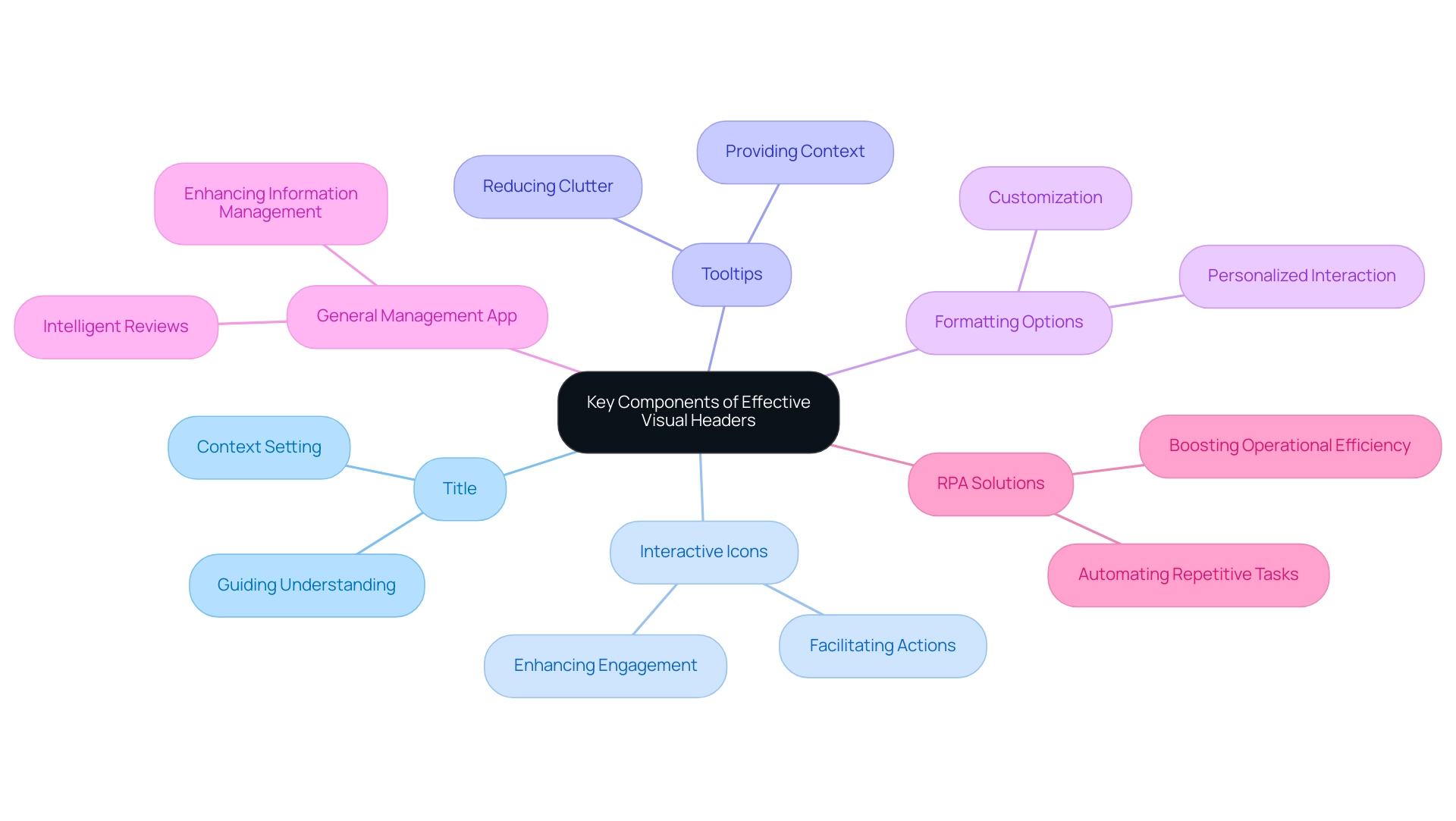

Additionally, the ‘3-Day Enhanced BI Sprint’ can speed up report creation so that teams can adapt quickly to changing data needs, and the ‘General Management App’ provides management tools for smart reviews and actionable insights. By adopting these methods and best practices, organizations can integrate Power BI with SharePoint to drive operational efficiency and informed decision-making.

Automation solutions streamline workflows further, backed by a risk-free ROI assessment and professional execution. With Power BI supporting report distribution and collaboration through various sharing methods and workspace features, teams can work together seamlessly, a practical demonstration of these technologies.

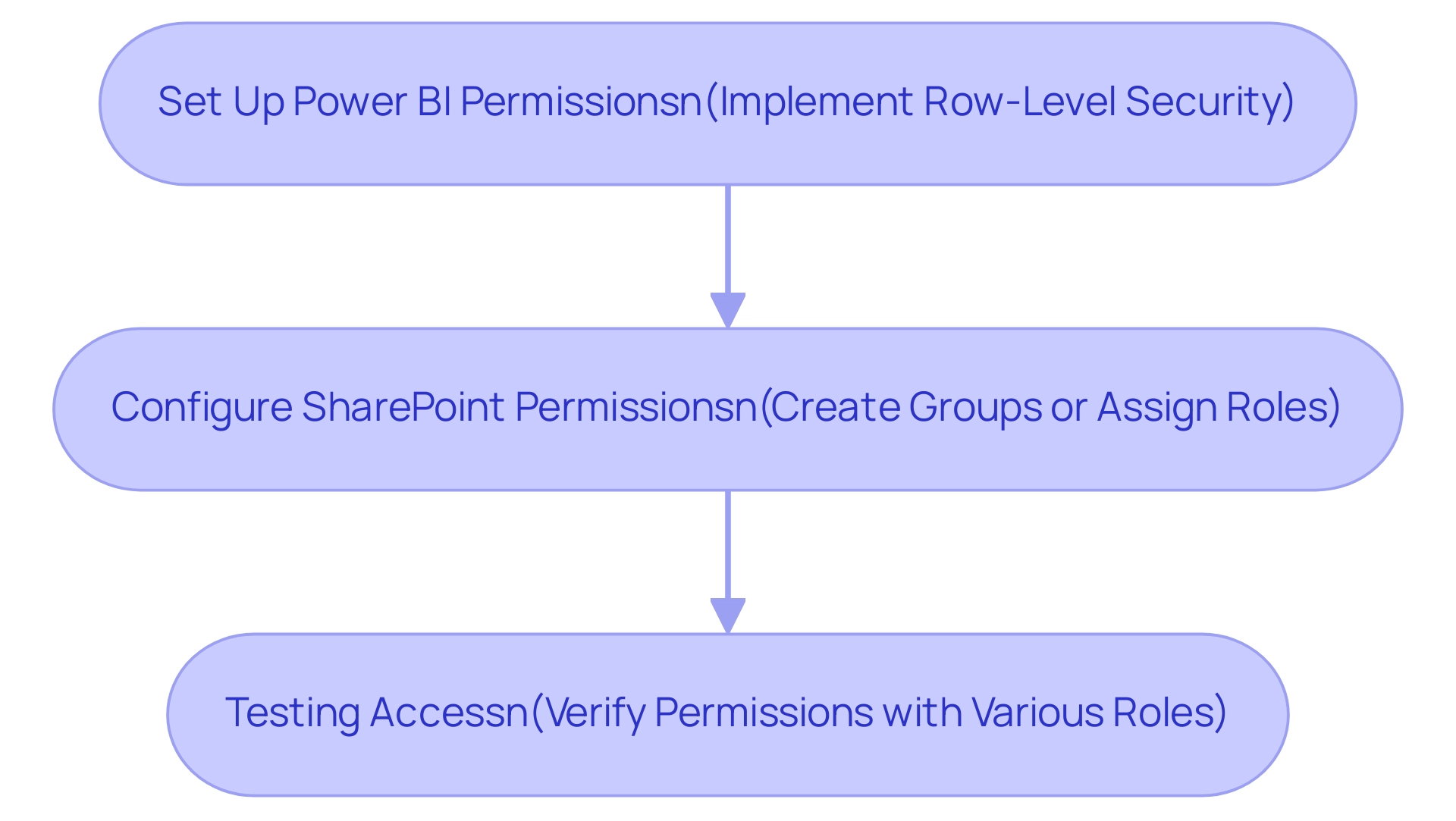

Managing Permissions and Access for Power BI Reports

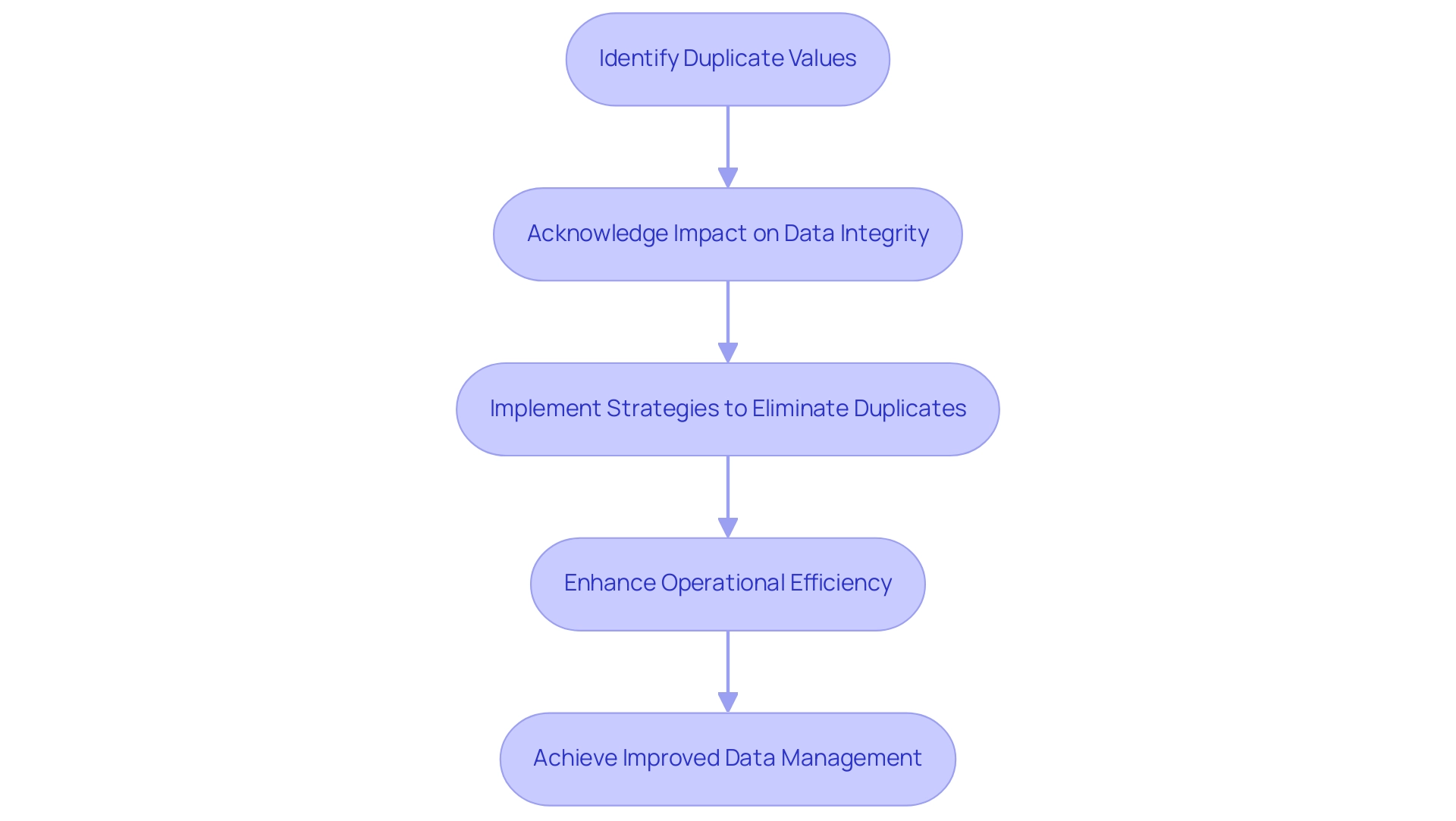

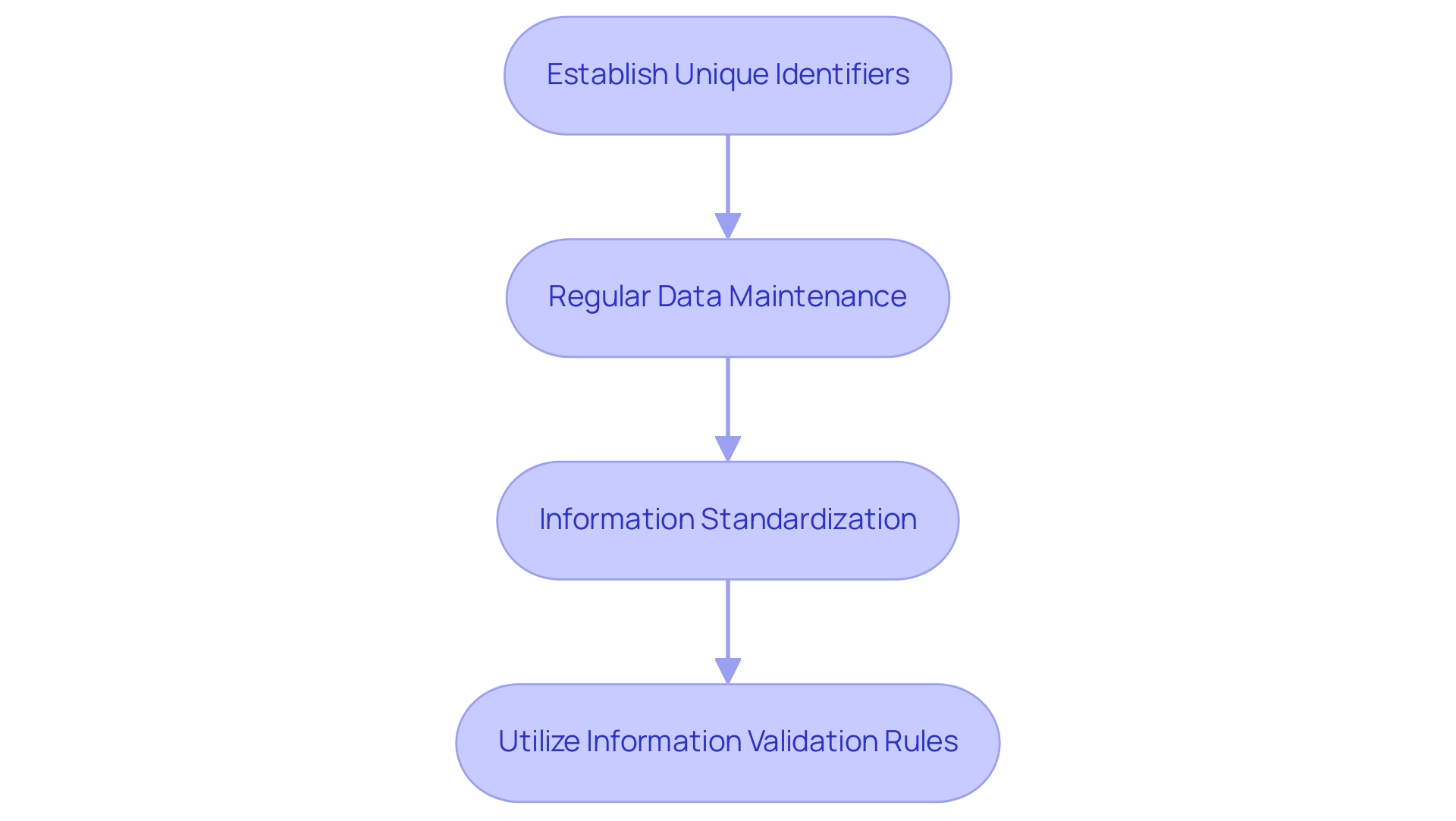

To effectively manage permissions for Power BI reports in SharePoint, follow this structured approach:

- Set Up Power BI Permissions: Start in the Power BI service and open the report's permission settings, where you can specify who can view or edit the report. Implement Row-Level Security (RLS) to restrict which rows each user can see, so that sensitive information is available only to authorized personnel. This is essential for data integrity and security in an environment where insights drive operational strategy. Because challenges such as time-consuming report creation and data inconsistencies already hinder decision-making, getting permissions right is all the more critical.

- Configure SharePoint Permissions: Next, make sure users have the SharePoint permissions needed to open the page where the report is embedded. This may involve creating specific groups or assigning roles that match your organizational structure. As organizations rely more heavily on data-driven decision-making, effective SharePoint permission management remains vital for data integrity and security in 2025. Integrating RPA solutions can also streamline these processes, reducing the burden on employees and improving operational efficiency.

- Testing Access: After setting permissions, test thoroughly by signing in as users with different roles to confirm that permissions are applied correctly and that users can access reports as intended. A case study on managing Business Intelligence permissions in SharePoint also highlighted the value of isolating gateways for different query types, which improves performance and prevents overload, particularly for startups that need low latency. This practice improves efficiency and keeps permission management streamlined.
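The RLS and access-testing steps can be illustrated with a small simulation. This is a conceptual Python sketch, not Power BI code: the user-to-region table, the `SalesRow` type, and the email addresses are all hypothetical. In Power BI itself the filter would be an RLS role rule on the Region column, and you would test it with the built-in "Test as role" option rather than a script.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SalesRow:
    region: str
    amount: float


# Hypothetical role mapping: which regions each signed-in user may see.
USER_REGIONS = {
    "ana@contoso.com": {"West"},
    "ben@contoso.com": {"East", "West"},
}

SAMPLE_ROWS = [
    SalesRow("East", 100.0),
    SalesRow("West", 250.0),
    SalesRow("North", 75.0),
]


def visible_rows(user: str, rows: list) -> list:
    """Row-level filter: keep only rows in the user's allowed regions.

    Users with no mapping get an empty set, so they see nothing (deny by default).
    """
    allowed = USER_REGIONS.get(user, set())
    return [row for row in rows if row.region in allowed]


def run_access_tests(rows: list) -> list:
    """'Sign in' as each test user and compare the regions they see with expectations."""
    expected = {
        "ana@contoso.com": {"West"},
        "ben@contoso.com": {"East", "West"},
        "guest@contoso.com": set(),  # unmapped user must see no rows
    }
    failures = []
    for user, regions in expected.items():
        seen = {row.region for row in visible_rows(user, rows)}
        if seen != regions:
            failures.append(f"{user}: expected {sorted(regions)}, got {sorted(seen)}")
    return failures


if __name__ == "__main__":
    print(run_access_tests(SAMPLE_ROWS) or "all access checks passed")
```

The deny-by-default choice mirrors the goal of the section: a user who was never assigned a role should see nothing rather than everything.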

As lbendlin aptly put it, “Pivot your thought process from BI structure to business needs.” By following these steps, organizations can manage their Power BI reports securely within SharePoint, giving users efficient access to data while protecting sensitive information. Our 3-Day BI Sprint can also help your team produce professional reports that not only inform decisions but also foster growth and innovation.

This sprint is designed to help you overcome the challenges of report creation and ensure that your insights are actionable and impactful.

Technical Considerations and Limitations of Embedding Power BI

When embedding Power BI reports in SharePoint, several critical technical aspects must be considered to ensure a seamless integration. This is particularly important for enhancing operational efficiency and leveraging business intelligence for data-driven insights:

- Licensing Requirements: To view embedded reports, users need a Power BI Pro or Premium Per User license, unless the report's workspace is hosted in Premium capacity. These requirements determine who can use Power BI's full capabilities within SharePoint, and they change as Microsoft expands its analytics offerings; the licensing adjustments that took effect on April 1, 2025, are one example, so it pays to stay aware of such changes.

- Data Refresh Limitations: Embedded reports may not refresh in real time; refresh behavior depends on the data source and configuration settings. Optimize your refresh settings so that users see the most current data. Understanding these limitations is vital in 2025, because stale data can undermine decision-making and the extraction of actionable insights. With approximately 18,918 users active in community forums, interest in overcoming these challenges and getting more out of the platform is strong.

- Time-Consuming Report Creation: Generating reports can be labor-intensive and require significant manual effort, delaying access to critical insights. Automation tools like RPA can streamline report generation by reducing the time spent on repetitive tasks.

- Accuracy and Consistency: Keeping reports accurate and consistent is a common challenge. Robust data governance practices help prevent discrepancies that would undermine the reliability of insights from Power BI dashboards.

- Browser Compatibility: Users must access SharePoint through a supported browser to avoid rendering issues with embedded reports. Making sure all team members use supported browsers reduces technical difficulties and improves the user experience.

- Technical Limitations: Embedding Power BI in SharePoint can impose limitations, such as restrictions on which visuals can be displayed or how interactive reports are. These limitations affect how you publish Power BI to SharePoint, so plan for them during integration to get the most from your business intelligence tools.

- Expert Opinions: Industry experts emphasize understanding these technical limitations to get the most from Power BI within SharePoint. As Charlie Phipps-Bennett noted, “Microsoft is betting big on the future of data analytics,” highlighting the need for organizations to stay informed about evolving capabilities and requirements.

- Consultation Services: Organizations looking to optimize their Power BI usage can benefit from consultations offered by Synapse, which assess current usage and explore cost-saving licensing alternatives. This tailored approach addresses specific business challenges while improving efficiency through RPA solutions.

- Case Studies on Enhancements: Over the past nine years, Microsoft has shipped more than 1,500 updates to Power BI, focused on user experience and functionality. These improvements, including AI-driven data modeling and automated insights, aim to give users smarter analytics tools and a more cohesive experience through deeper integration with Microsoft Fabric, positioning Power BI as a leading analytics solution.
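When scheduled refresh is not frequent enough, a refresh can also be triggered programmatically. The sketch below assumes the Power BI REST API's "refresh dataset in group" and "refresh history" endpoints under `https://api.powerbi.com/v1.0/myorg`; the group and dataset IDs and the access token are placeholders, and acquiring a token (for example with MSAL) is outside its scope. It only builds the requests and parses a response body, so nothing is sent.

```python
import json
import urllib.request

# Power BI REST API root for the caller's organization.
API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(group_id: str, dataset_id: str, access_token: str) -> urllib.request.Request:
    """Prepare, but do not send, a POST that queues a dataset refresh."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def build_history_url(group_id: str, dataset_id: str, top: int = 1) -> str:
    """URL for the latest refresh attempts (send as a GET with the same token)."""
    return f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes?$top={top}"


def latest_refresh_status(history: dict) -> str:
    """Return the status of the first entry in a refresh-history response body.

    Assumes the service lists the newest attempt first.
    """
    entries = history.get("value", [])
    if not entries:
        return "NoHistory"
    return entries[0].get("status", "Unknown")
```

To actually queue the refresh, pass the request to `urllib.request.urlopen`; polling `build_history_url` afterwards shows whether the attempt completed or failed.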

By addressing these aspects, including time-consuming report creation and data inconsistencies, organizations can better navigate the complexities of embedding Power BI in SharePoint and make full use of their analytics capabilities, ultimately driving business growth and operational efficiency.

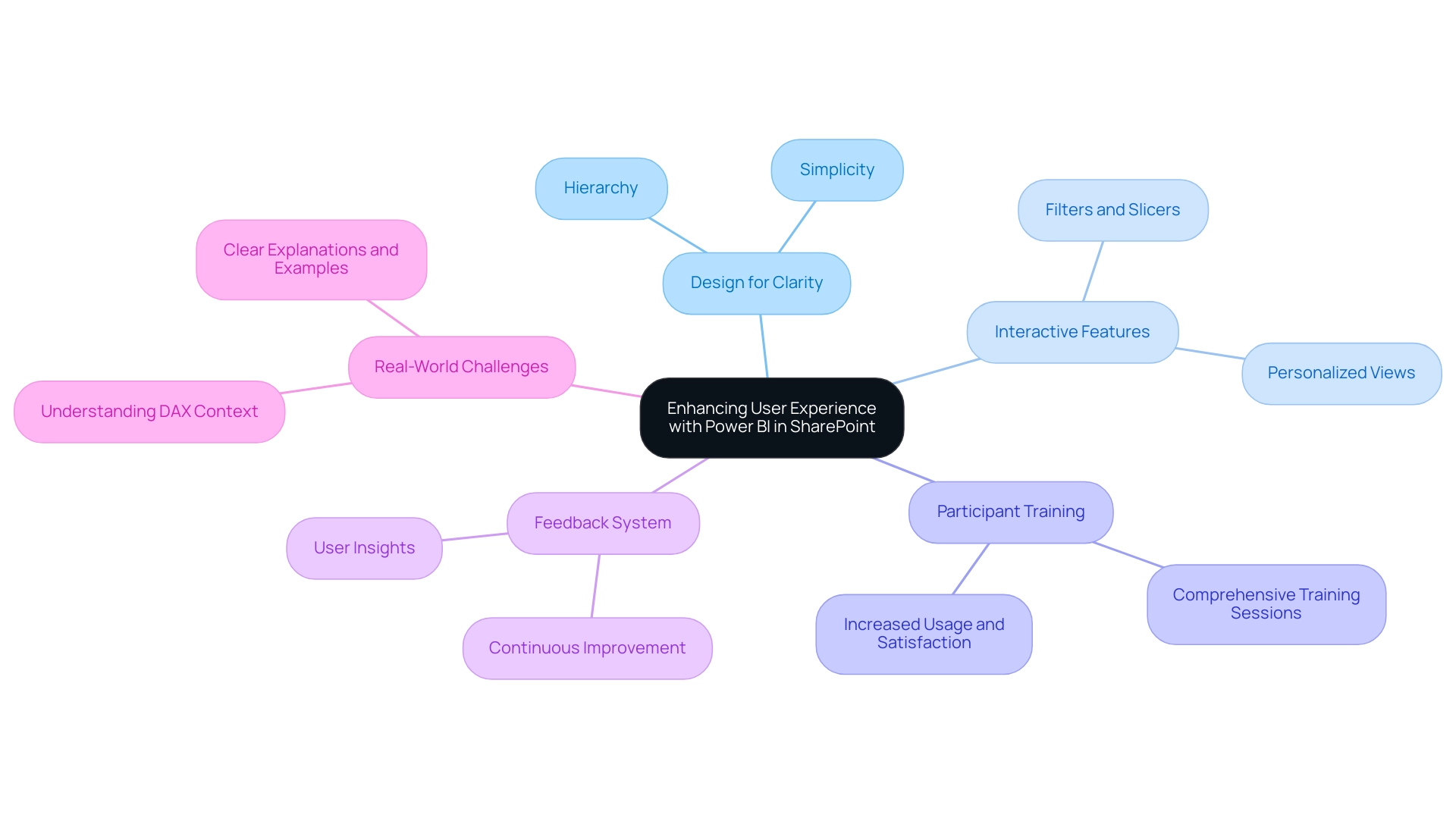

Best Practices for Enhancing User Experience with Power BI in SharePoint

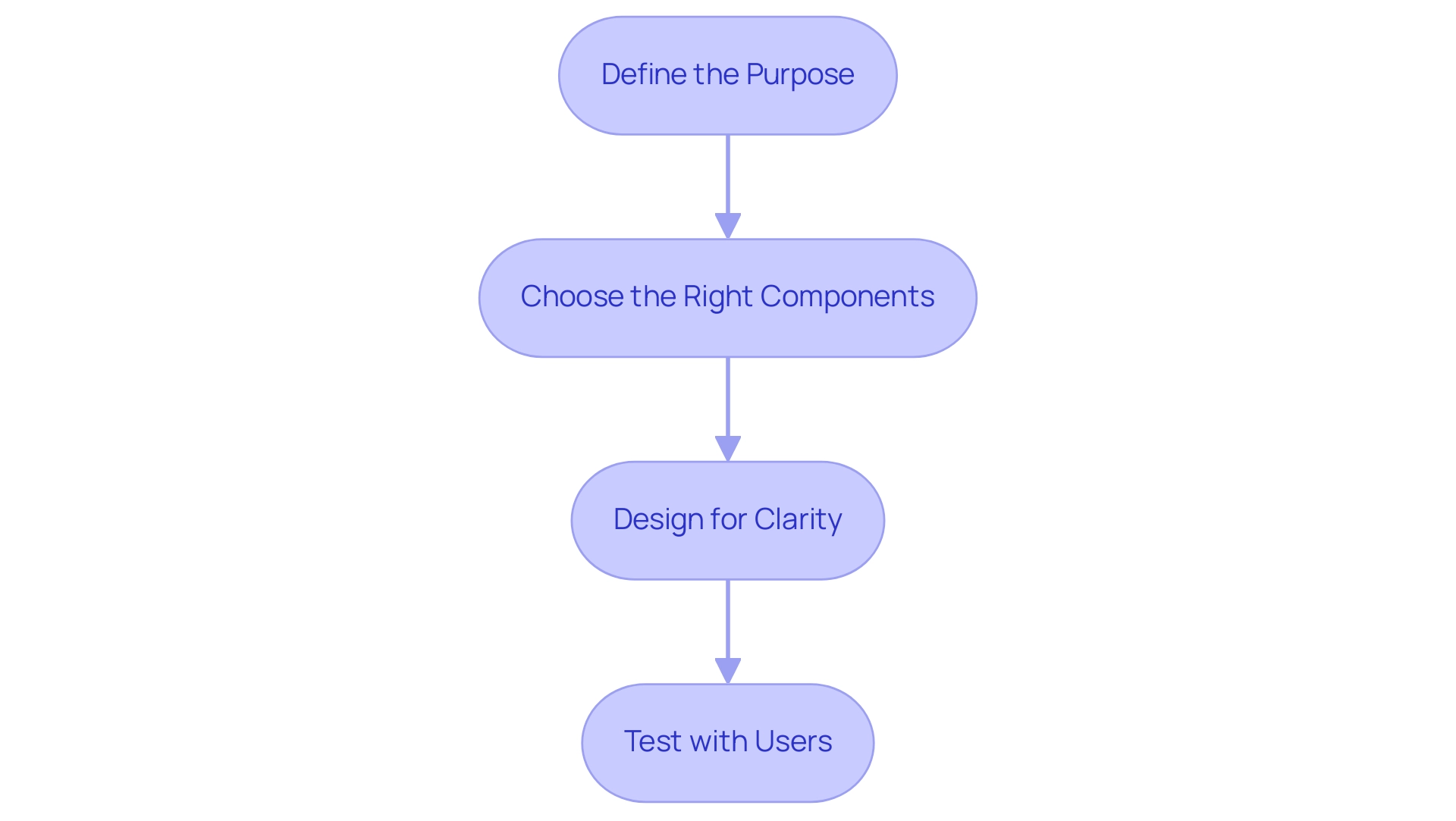

To optimize user experience with Power BI reports in SharePoint, adopting best practices is essential.

-

Design for Clarity: Prioritize clear and concise visuals that facilitate easy interpretation. Overwhelming individuals with excessive information can hinder comprehension; thus, simplicity is key to enhancing engagement. Establishing hierarchies further streamlines exploration and analysis within Power BI, allowing for effective navigation through intricate datasets while avoiding common pitfalls like inconsistencies. Clear visuals not only aid in understanding but also provide actionable guidance, empowering stakeholders to make informed decisions based on the information presented.

-

Interactive Features: Utilize filters and slicers to enable individuals to personalize their views. This interactivity enhances user experience and promotes deeper exploration of the information, addressing the lack of actionable advice often found in documents. By allowing individuals to engage with the data, they can derive insights tailored to their specific needs.

-

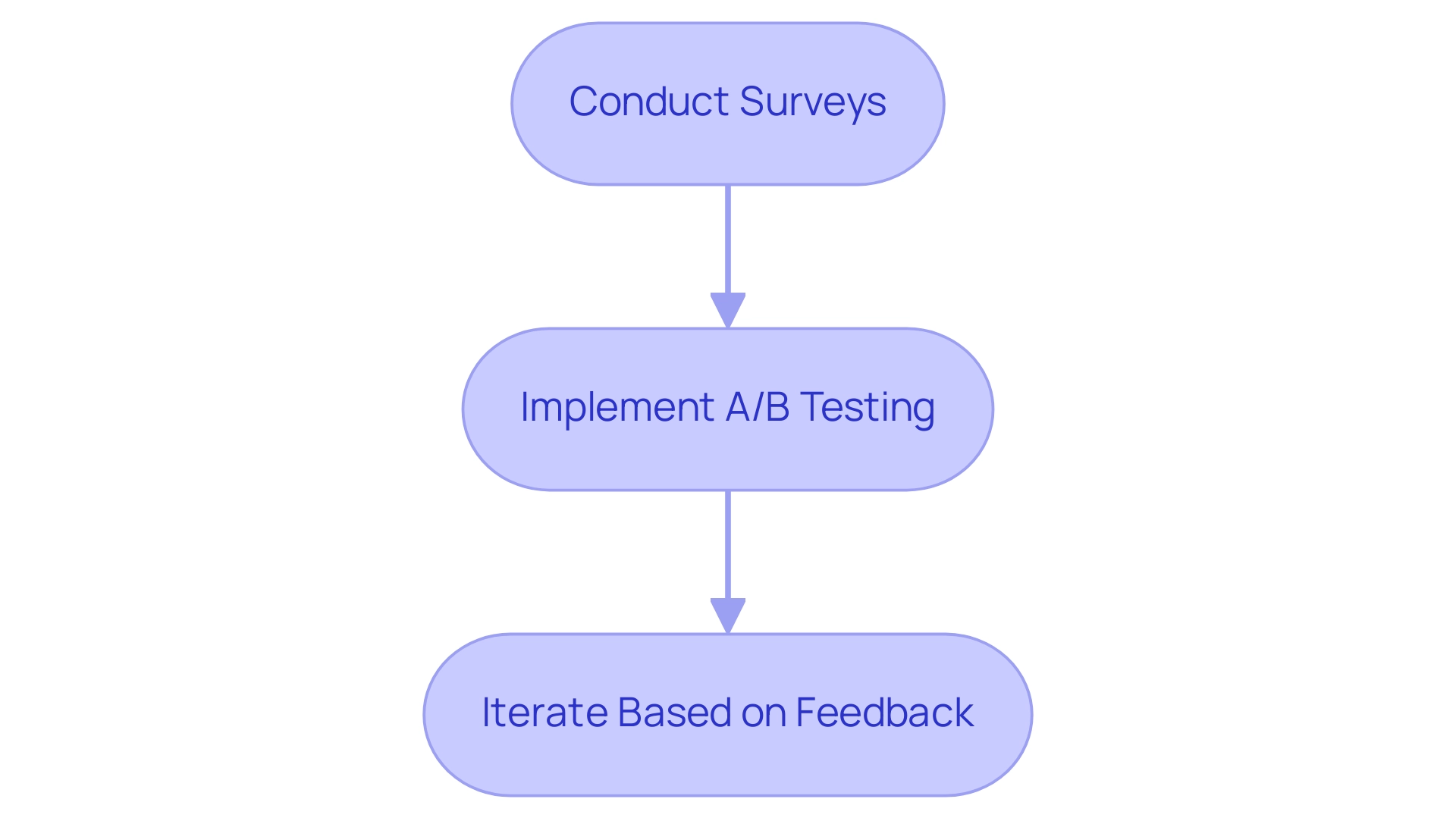

Participant Training: Conduct comprehensive training sessions to equip individuals with the necessary skills to navigate and utilize BI analysis effectively. Research shows that organizations investing in user training experience significant increases in usage and overall satisfaction. The interest in best practices for Power BI is evident; a recent LinkedIn post on the topic garnered over 110,000 impressions. Such training alleviates the common issue of excessive time spent on document creation instead of leveraging insights, ultimately leading to more actionable outcomes.

-

Feedback System: Establish a robust feedback system to gather insights from users regarding document usability. As Patrick LeBlanc, Principal Program Manager, stated, “We highly value your feedback, so please share your thoughts using the feedback forum.” This continuous feedback loop allows for timely adjustments and enhancements, ensuring that documents remain relevant and user-friendly, thereby enhancing operational efficiency. Integrating user feedback can result in clearer actionable guidance in future documents.

-

Real-World Challenges: Address common difficulties users face, such as understanding row, query, and filter context in DAX. Clear explanations and straightforward examples can assist users in grasping how context influences their information, ultimately improving their experience with BI presentations and fostering organizational growth. By clarifying these concepts, users can better understand how to derive actionable insights from their data.
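As one such simple example, the difference between row context and filter context can be shown with a rough Python analogy. This is not DAX and not how the Power BI engine works internally; the `SALES` table and both functions are hypothetical. `line_total` plays the role of a calculated column (it sees one row at a time), while `total_sales` behaves loosely like a SUMX-style measure: slicer selections first narrow the rows, then the expression is evaluated per row and summed.

```python
# Hypothetical toy Sales table: each dict is one row.
SALES = [
    {"region": "East", "qty": 2, "price": 10.0},
    {"region": "East", "qty": 1, "price": 30.0},
    {"region": "West", "qty": 5, "price": 4.0},
]


def line_total(row: dict) -> float:
    """Row context: the expression sees exactly one row (like a calculated column)."""
    return row["qty"] * row["price"]


def total_sales(rows: list, **filters) -> float:
    """Filter context, then iteration.

    1. Keep only rows matching the active filters (like slicer selections).
    2. Evaluate line_total in each remaining row's context and sum the results.
    """
    kept = [r for r in rows if all(r[col] == val for col, val in filters.items())]
    return sum(line_total(r) for r in kept)


if __name__ == "__main__":
    print(total_sales(SALES))                 # no slicer selection: all rows
    print(total_sales(SALES, region="East"))  # slicer set to East
```

The same `total_sales` definition returns different numbers depending only on which filters are active, which is the intuition users most often miss.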

By implementing these strategies, organizations can get more out of publishing Power BI to SharePoint, improving decision-making and operational efficiency. These practices also complement RPA solutions by streamlining processes and keeping data-driven insights readily accessible.

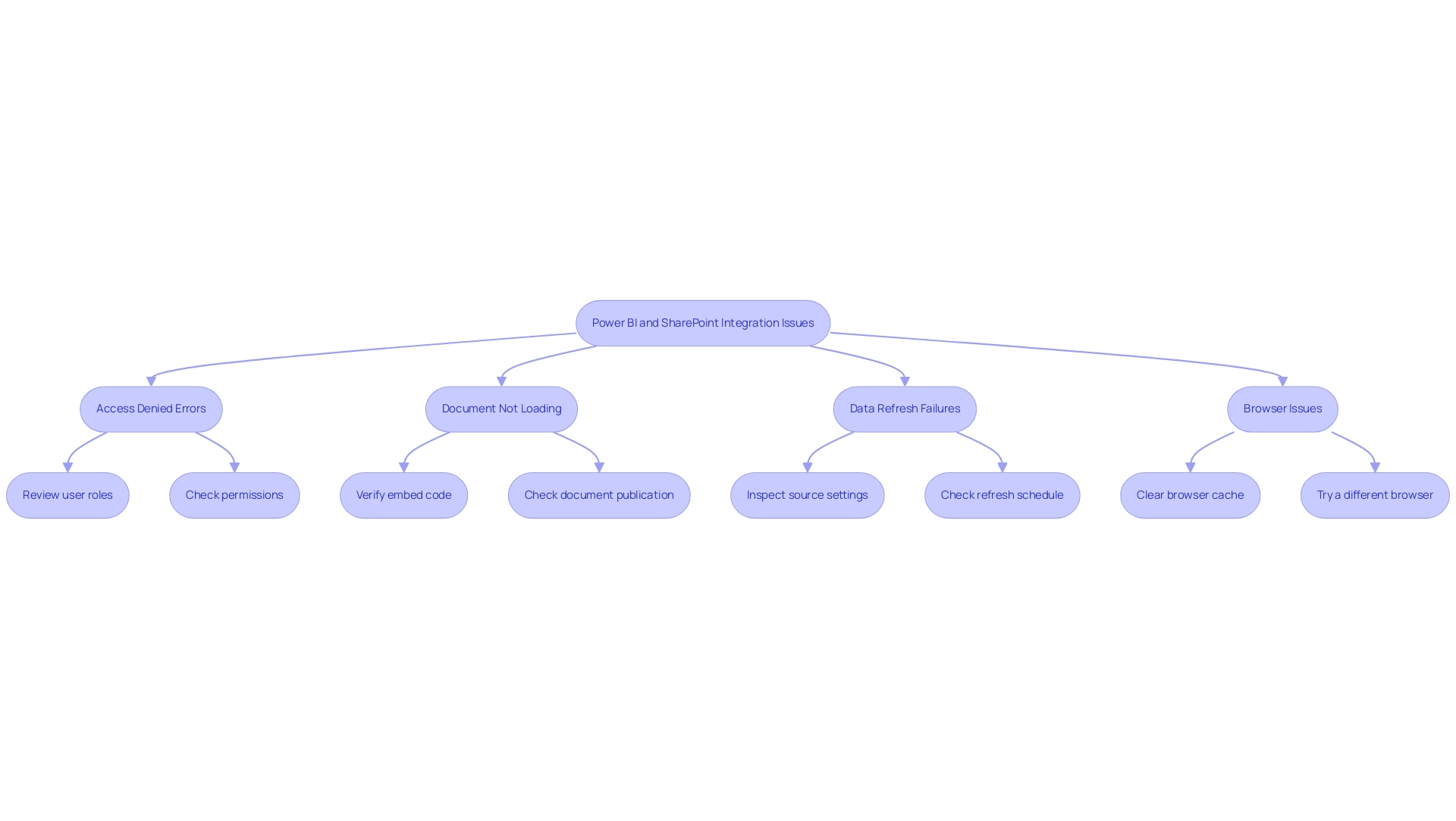

Troubleshooting Common Issues in Power BI and SharePoint Integration

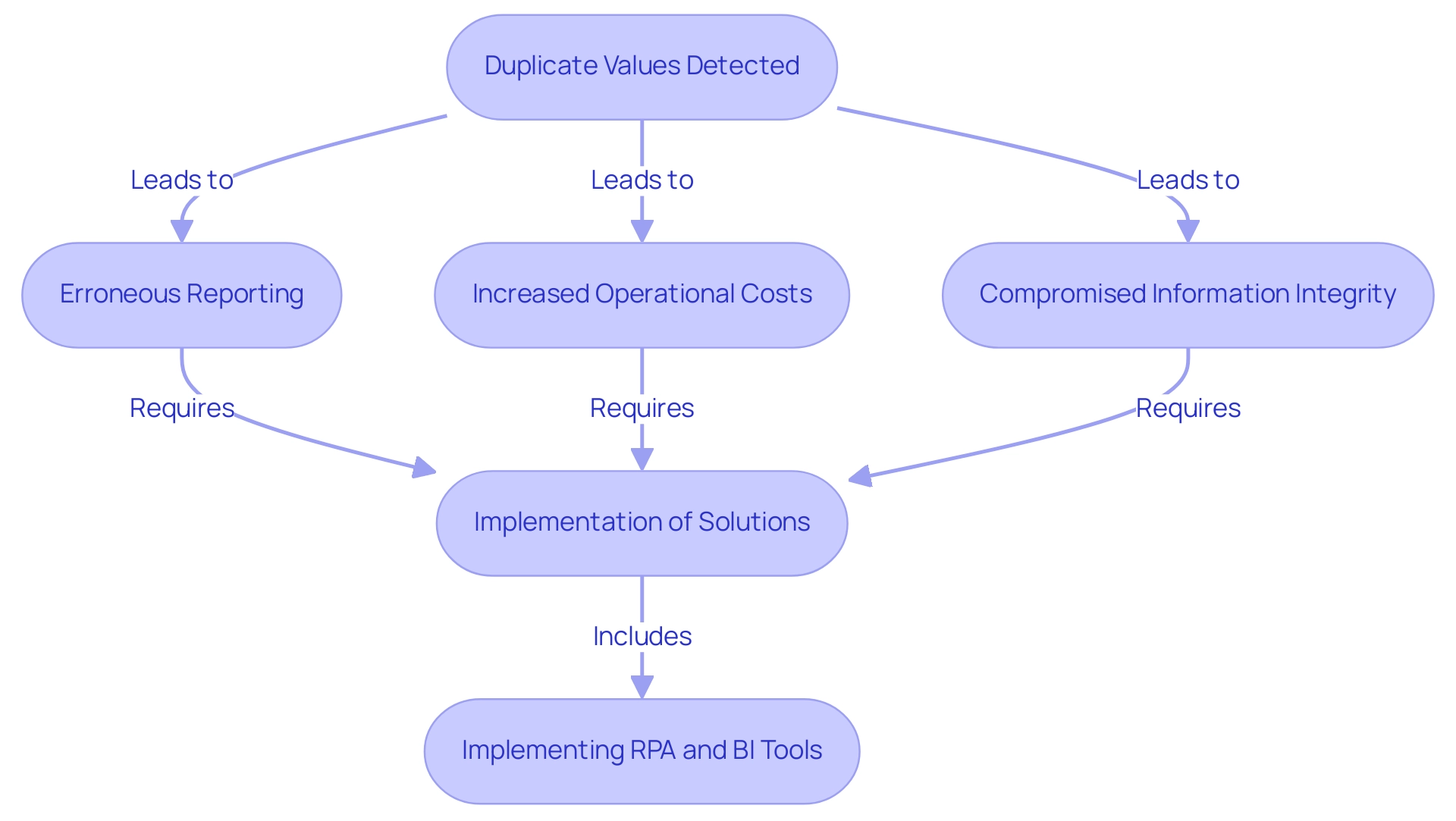

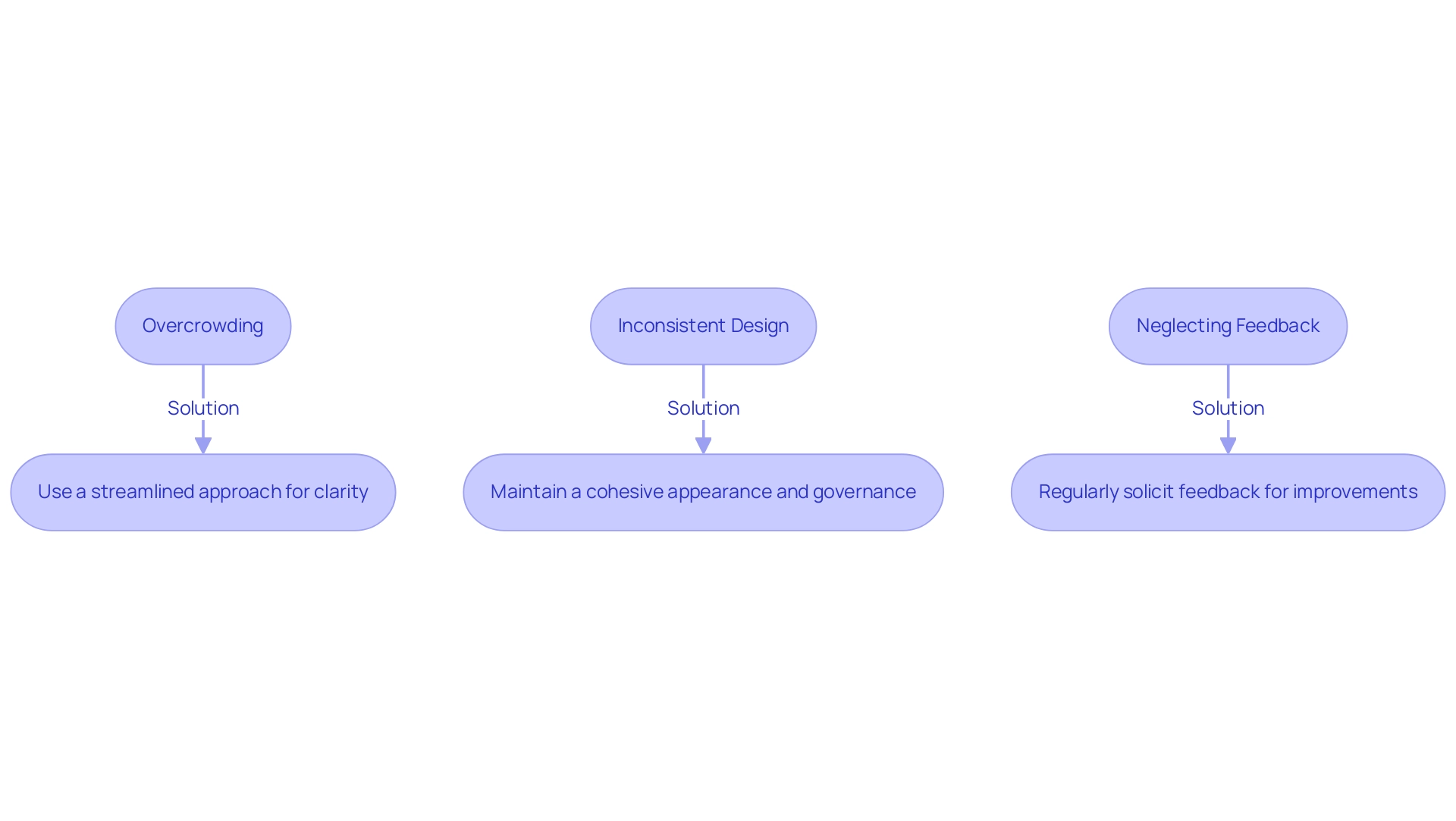

Integrating Power BI with SharePoint presents several challenges that can hinder effective data visualization and reporting when publishing reports. Understanding these challenges is the first step to overcoming them.

- Access Denied Errors: Approximately 25% of users encounter access denied errors when trying to view reports. To resolve them, make sure users have the appropriate permissions in both Power BI and SharePoint, and review user roles and access settings carefully to prevent these errors.

- Report Not Loading: If a report fails to load, verify that the embed code is correct and that the report has been published to the Power BI service. Double-checking these two points often resolves loading issues.

- Data Refresh Failures: Refresh failures interrupt the flow of information. If data is not updating as expected, check the data source settings and the refresh schedule in Power BI; correctly configured connections are essential for keeping reports current.

- Browser Issues: Loading problems can also be browser-related. Clearing the browser cache or trying a different browser often resolves them.
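For the "report not loading" case, part of the embed-code check can be automated. The sketch below uses Python's standard `html.parser` to confirm that a snippet contains exactly one `<iframe>` whose `src` is an HTTPS URL on `app.powerbi.com`; the function name `check_embed_snippet` and the expected host are assumptions for illustration. It cannot tell whether the report itself was published, which still has to be checked in the Power BI service.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class _IframeSrcParser(HTMLParser):
    """Collect the src attribute of every <iframe> start tag in a snippet."""

    def __init__(self):
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attrs is a list of (name, value).
        if tag == "iframe":
            self.srcs.append(dict(attrs).get("src"))


def check_embed_snippet(snippet: str, expected_host: str = "app.powerbi.com") -> list:
    """Return a list of problems found in an embed snippet (empty list means OK)."""
    parser = _IframeSrcParser()
    parser.feed(snippet)
    parser.close()
    if len(parser.srcs) != 1:
        return [f"expected exactly one <iframe>, found {len(parser.srcs)}"]
    src = parser.srcs[0]
    if not src:
        return ["iframe has no src attribute"]
    problems = []
    parsed = urlparse(src)
    if parsed.scheme != "https":
        problems.append("src is not HTTPS")
    if parsed.hostname != expected_host:
        problems.append(f"unexpected host: {parsed.hostname}")
    return problems


if __name__ == "__main__":
    snippet = ('<iframe title="Sales" width="800" height="600" '
               'src="https://app.powerbi.com/view?r=abc123" frameborder="0" '
               'allowFullScreen="true"></iframe>')
    print(check_embed_snippet(snippet) or "embed code looks structurally valid")
```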

A recent case study described businesses in data-rich environments that struggled to extract meaningful insights from their raw data. Time-consuming report creation and inconsistencies often left them without actionable guidance. By applying effective troubleshooting strategies, they turned their data into actionable insights that supported informed decision-making and operational efficiency.

This underscores the importance of addressing integration issues promptly to leverage the full potential of BI and SharePoint.

Moreover, Robotic Process Automation (RPA) can improve operational efficiency by automating repetitive data management tasks. Tools like EMMA RPA and Power Automate streamline processes, reduce repetitive-task fatigue, and help offset staffing shortages. As of 2025, Power BI is noted to process up to 30,000 rows of data efficiently without performance issues, making it a robust tool for data analysis.

However, refreshing datasets that run R or Python scripts requires a personal gateway, which can affect overall performance. Understanding these limitations and common problems is essential when publishing Power BI to SharePoint. As Mr. Pullen, a Microsoft employee, noted, “The data you want is available from Graph API – here https://graph.microsoft.com/v1.0/sites/{site-id}/lists/{list-id}/items/{item-id}/getActivitiesByInte...” This resource can be invaluable for troubleshooting data access issues.
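A related Microsoft Graph endpoint, the one that lists a SharePoint list's items, is often useful when checking what data a report should be seeing. The sketch below assumes `GET /sites/{site-id}/lists/{list-id}/items?expand=fields` and a JSON response with a `value` array whose items carry a `fields` object, plus an `@odata.nextLink` when more pages exist; the IDs are placeholders, and authentication and sending the request are left out. It is not the activities endpoint from the quote above.

```python
from typing import Optional
from urllib.parse import quote

GRAPH_ROOT = "https://graph.microsoft.com/v1.0"


def list_items_url(site_id: str, list_id: str) -> str:
    """Build the URL for a SharePoint list's items, with column values expanded.

    Site IDs typically look like 'hostname,site-guid,web-guid', so commas stay unescaped.
    """
    return (f"{GRAPH_ROOT}/sites/{quote(site_id, safe=',')}"
            f"/lists/{quote(list_id)}/items?expand=fields")


def extract_rows(page: dict) -> tuple:
    """Flatten one response page into column dicts and return the next-page link, if any."""
    rows = [item.get("fields", {}) for item in page.get("value", [])]
    next_link: Optional[str] = page.get("@odata.nextLink")
    return rows, next_link
```

Comparing these rows with what the embedded report shows can reveal whether a problem lies in the source list, the refresh, or permissions.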

Conclusion

The integration of Power BI and SharePoint is transforming how organizations approach data visualization and collaboration. By embedding interactive Power BI reports directly into SharePoint, businesses enhance accessibility to insights and cultivate a culture of data-driven decision-making. Exploring effective publishing methods, such as utilizing the Power BI web part and integrating SharePoint lists, underscores the importance of enabling real-time data visualization for timely decision-making.

Managing permissions and securing access to reports is crucial for maintaining data integrity. Structured permission management, combined with thorough access testing, helps ensure that sensitive information is available only to authorized personnel. As organizations rely more on real-time analytics, addressing technical considerations such as licensing requirements, data refresh limitations, and browser compatibility becomes essential for optimizing the user experience.

Best practices for enhancing user experience—including clear design, interactive features, and user training—play a vital role in maximizing the effectiveness of Power BI reports within SharePoint. Establishing a feedback mechanism allows organizations to continuously refine their reporting tools, ensuring relevance and usability.

As businesses prepare for the future, the integration of Power BI and SharePoint stands as a beacon for enhancing operational efficiency and driving growth. By embracing these tools and following best practices, organizations can transform raw data into actionable insights, paving the way for informed decision-making and innovation in an increasingly data-rich landscape. The time to harness the power of this integration is now, as it holds the potential to revolutionize how teams collaborate and derive insights from their data.

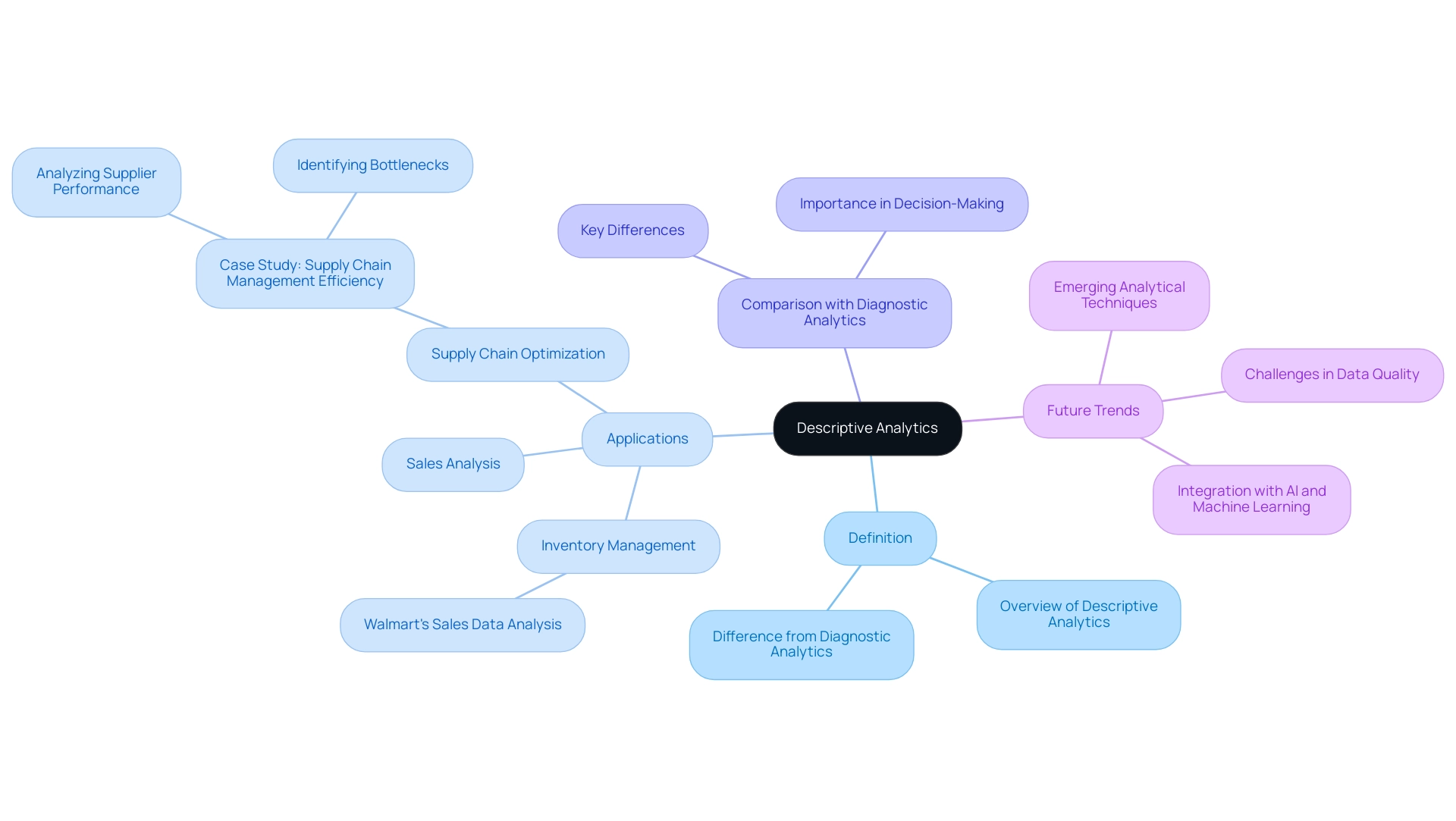

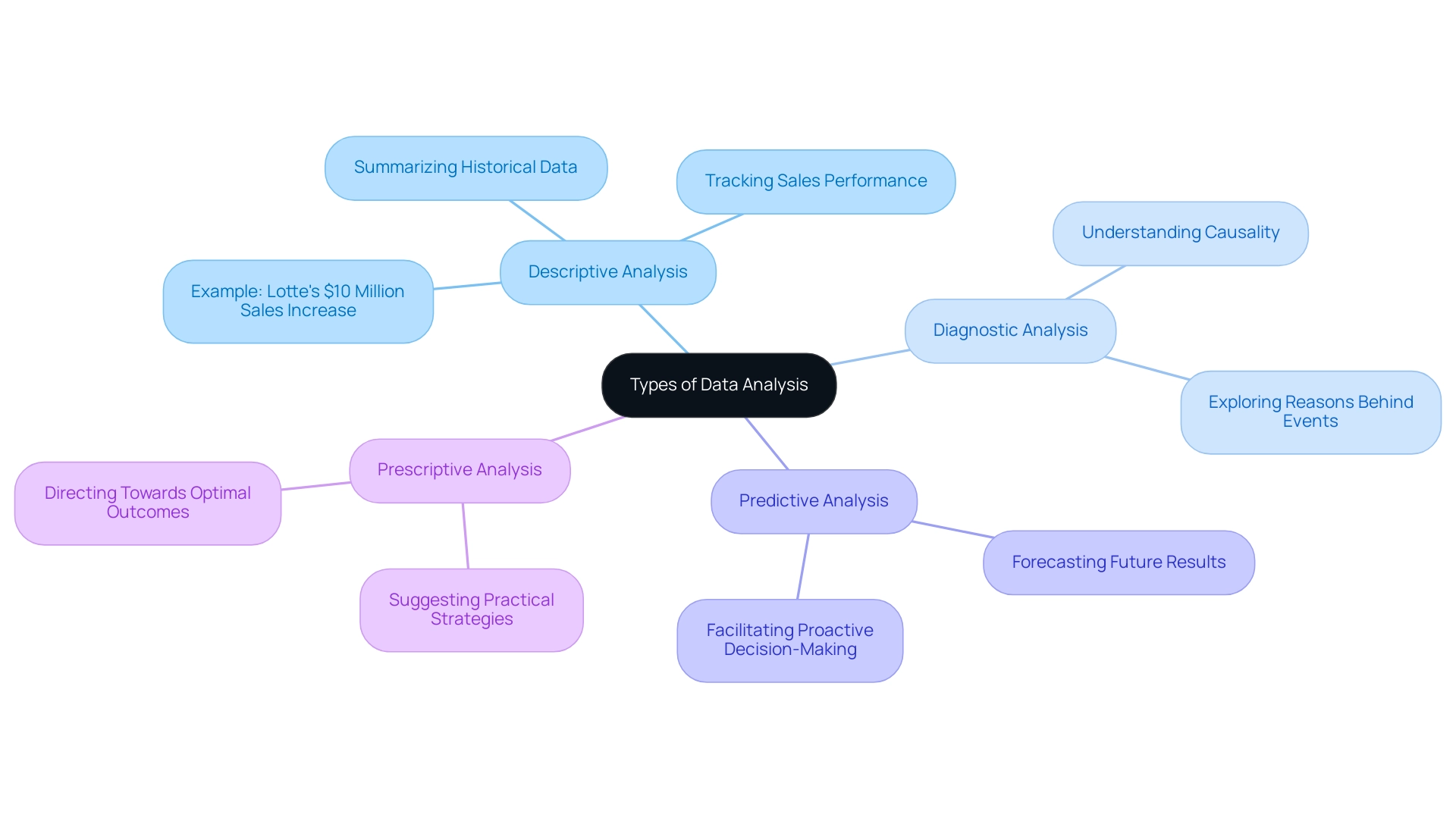

Overview

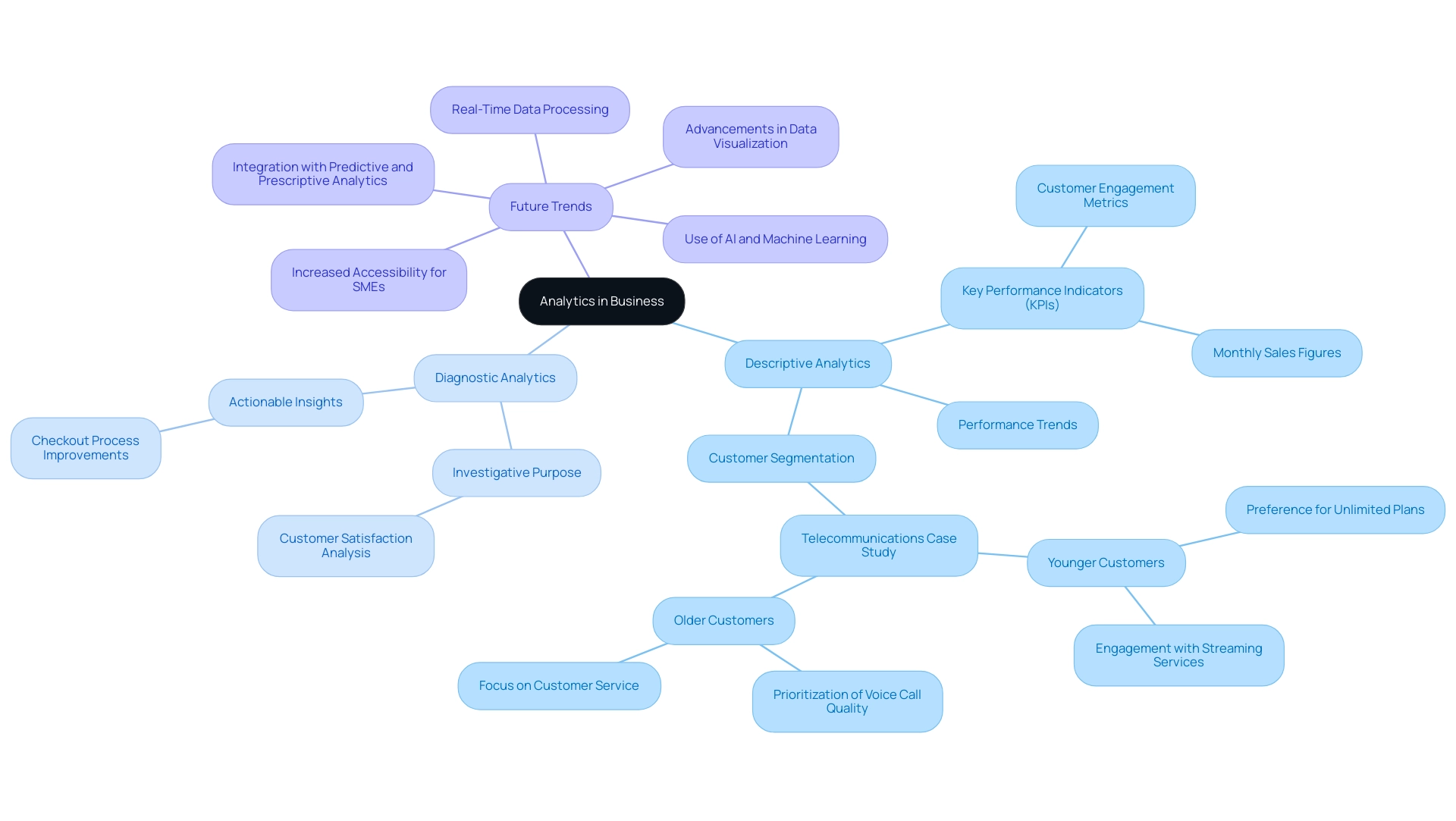

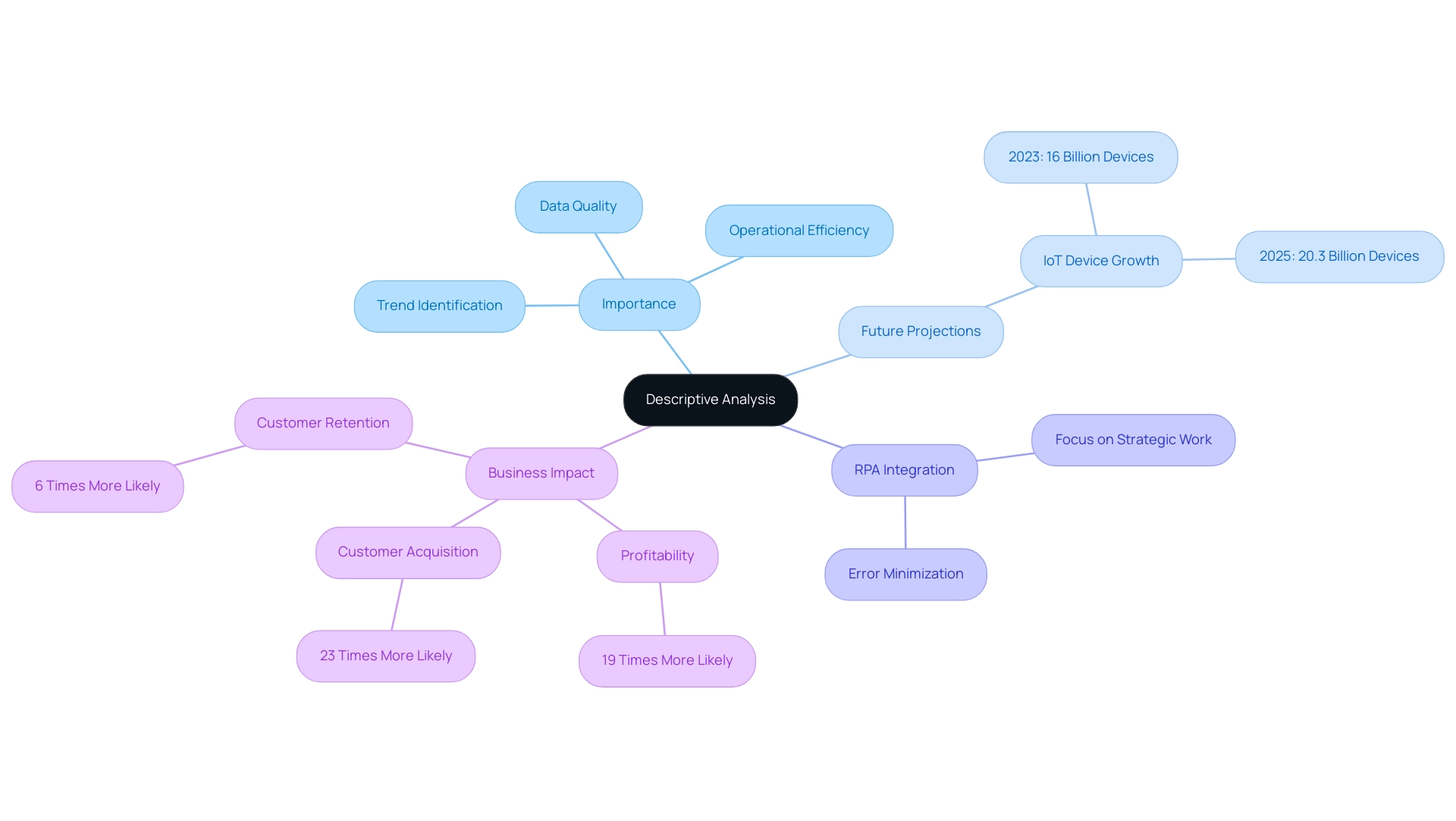

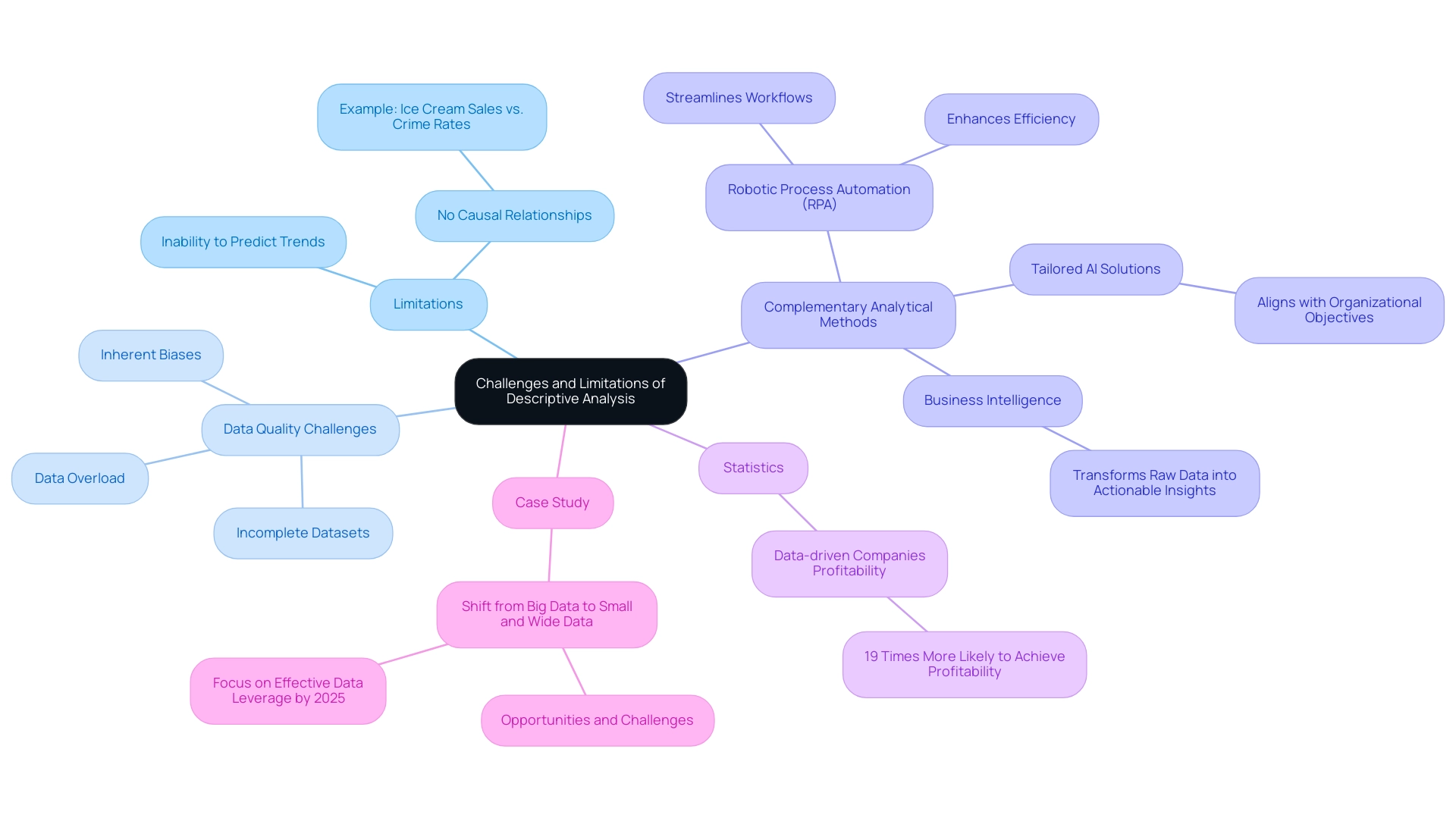

The key differences between diagnostic and descriptive analytics are rooted in their focus and methodologies. Descriptive analytics summarizes past data to answer the question, “What occurred?” In contrast, diagnostic analytics delves into the underlying reasons behind trends, addressing the inquiry, “Why did it happen?” This distinction is crucial for organizations seeking to leverage data effectively.

Descriptive analytics identifies patterns in historical data, such as sales trends, providing a clear snapshot of past performance. On the other hand, diagnostic analytics employs advanced techniques like regression analysis to uncover the root causes of performance changes. This deeper investigation enables organizations to make informed decisions and strategic adjustments, ultimately enhancing their operational effectiveness.

By understanding these differences, professionals can better utilize analytics to drive business success. Are you leveraging the right analytical approach to uncover insights and inform your strategies?

Introduction

In a world where data drives decision-making, understanding the nuances between diagnostic and descriptive analytics is essential for organizations aiming to enhance operational efficiency. Descriptive analytics provides a retrospective view, summarizing historical data to reveal trends and performance metrics. In contrast, diagnostic analytics delves deeper, uncovering the reasons behind those trends.

As automation technologies like Robotic Process Automation (RPA) become increasingly integrated into business processes, leveraging both analytics types empowers organizations to transform raw data into actionable insights.

This article explores the distinct roles of these analytics, their methodologies, and real-world applications, illustrating how businesses can harness their power to foster innovation and drive growth in an ever-evolving landscape.

Understanding the Basics of Diagnostic and Descriptive Analytics

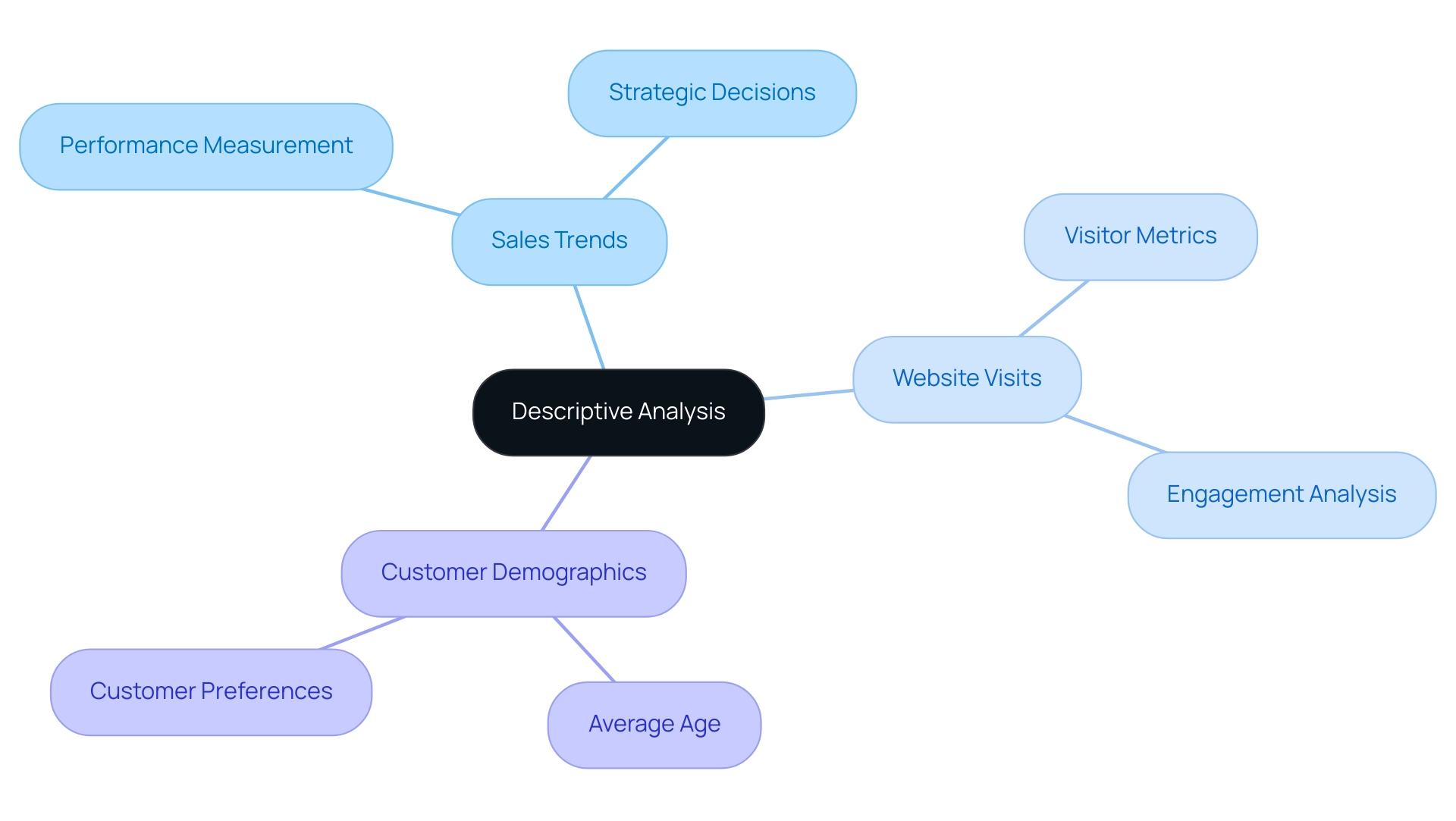

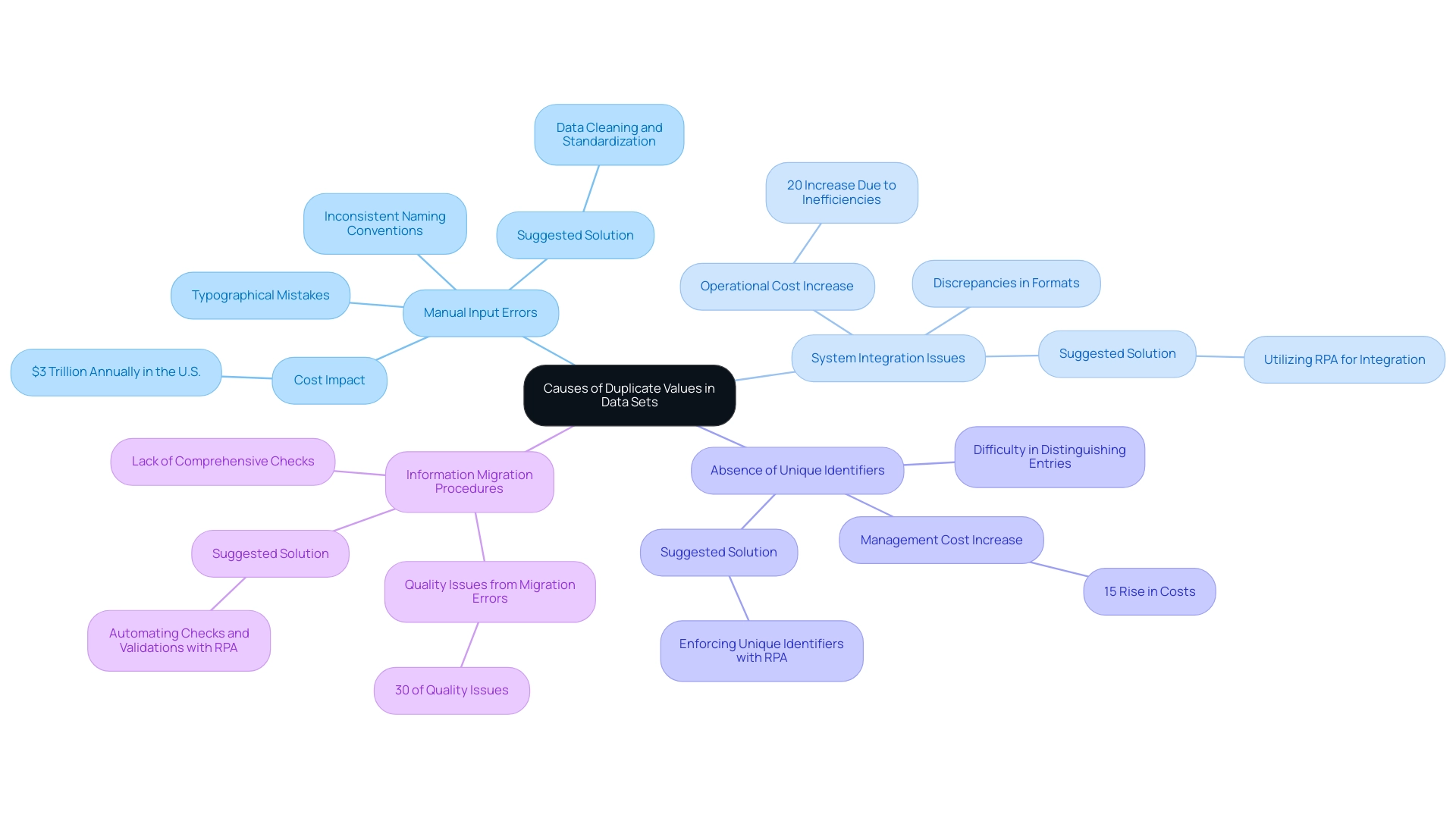

In an era of automation technologies such as Robotic Process Automation (RPA), diagnostic and descriptive analytics play distinct but complementary roles. Descriptive analytics summarizes past data, answering the essential question: ‘What occurred?’ It identifies trends and patterns in historical data so that organizations can understand their performance over time.

For instance, a retail firm may employ descriptive analysis to scrutinize sales data from the previous quarter, revealing peak sales periods and customer preferences.

Conversely, diagnostic analysis delves deeper to uncover the reasons behind these trends, addressing the question: ‘Why did it happen?’ This analysis identifies causal relationships and sequences within the data, which is vital for comprehending trends. For example, if sales drop in a specific month, diagnostic analysis can reveal underlying issues such as supply chain disruptions or shifts in consumer behavior.

As Eric Wilson, Director of Thought Leadership at The Institute of Business Forecasting (IBF), emphasizes, “Companies that utilize experienced demand planners opt for diagnostic analysis as it provides comprehensive insights into an issue and additional information to aid business decisions.”

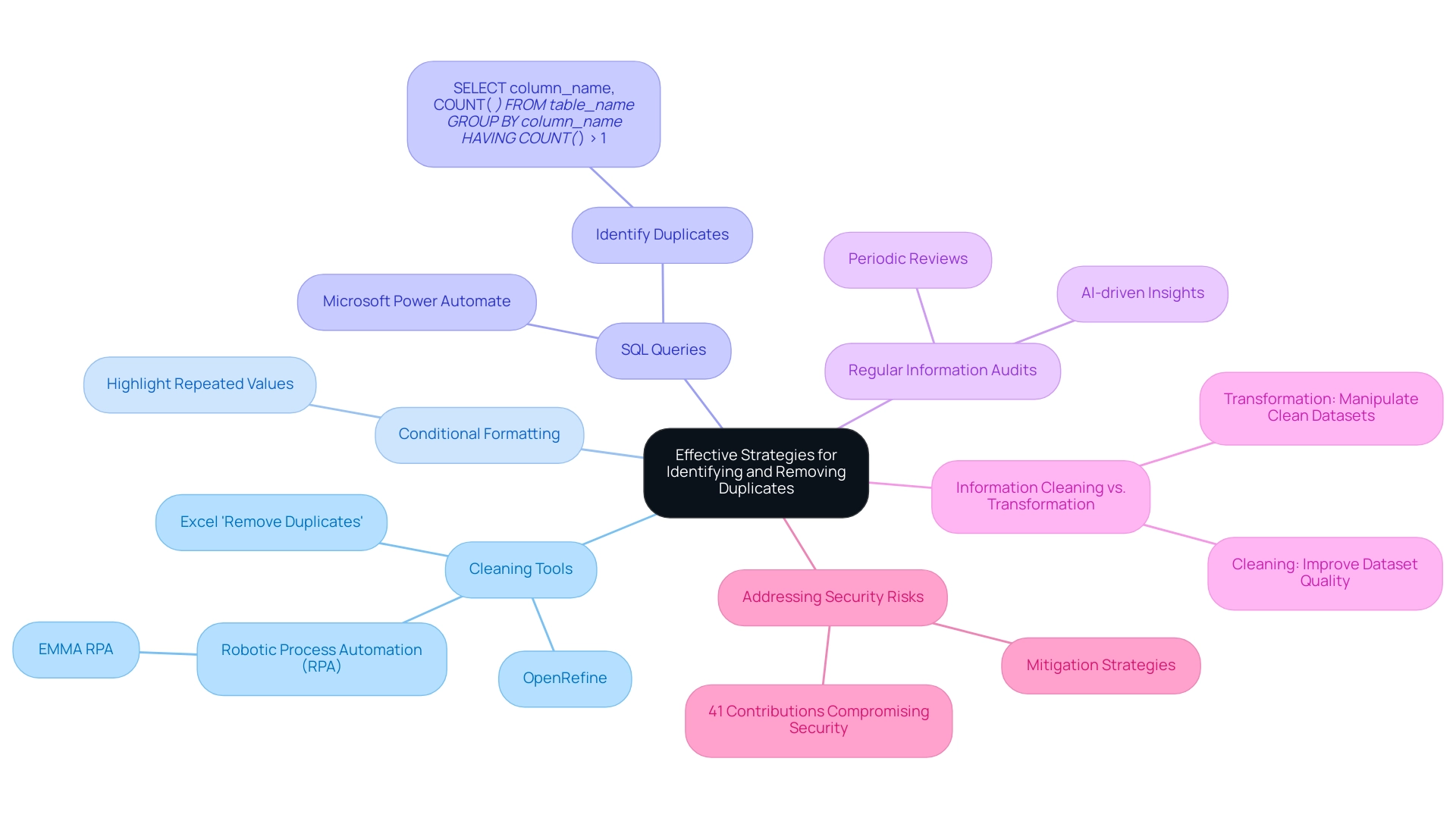

Understanding the distinctions between diagnostic and descriptive analytics is essential for effective decision-making. By 2025, organizations employing both types of data analysis, supported by innovative tools like EMMA RPA and Microsoft Power Automate, can significantly enhance operational efficiency and strategic planning. These RPA solutions not only minimize errors but also liberate teams to concentrate on more strategic, value-adding tasks, addressing the challenges posed by manual, repetitive workflows.

Recent findings indicate that companies using diagnostic analysis gain a detailed understanding of issues and more thorough information to support their decisions. This is especially critical in a data-rich environment where informed decision-making is paramount.

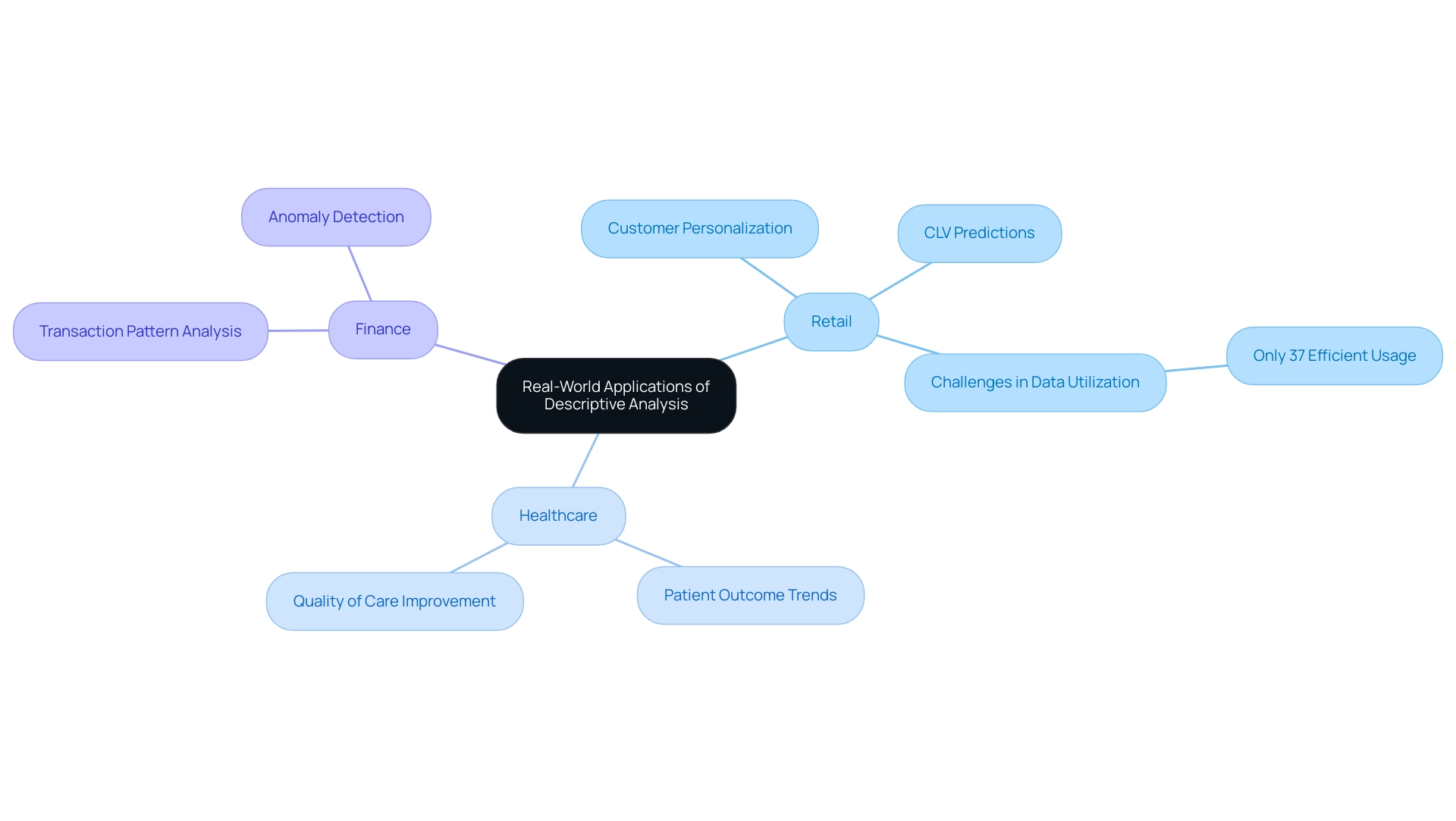

Real-world applications vividly illustrate these distinctions. For example, a manufacturing firm might use descriptive analytics to monitor production output while employing diagnostic analytics to investigate the causes of production delays. By integrating both approaches and leveraging RPA solutions, businesses can summarize their historical performance and derive actionable insights that drive future improvements.

Tools like Adobe Analytics facilitate the transformation of data into actionable insights, further enhancing analytical capabilities.

In summary, while descriptive analytics provides a snapshot of past events, diagnostic analytics offers the context needed to understand why those events occurred. This dual approach, supported by RPA and AI-driven enhancements, enables organizations to make data-driven decisions that improve operational efficiency and capture market opportunities. By reducing repetitive-task fatigue and improving employee morale, RPA tools also support organizations on their path to operational excellence.

The Role of Descriptive Analytics: What Happened?

Descriptive analytics is the foundational step in data analysis, providing a comprehensive overview of historical performance. Using statistical techniques, it summarizes data through averages, totals, and trends over time, which is what distinguishes it from diagnostic analytics. Walmart, for instance, uses descriptive analysis of past sales data to refine inventory requirements and improve store operations.

This method identifies peak sales periods and reveals overall growth trends, both crucial for businesses that want to understand their current market position while planning for the future.

As 2025 approaches, descriptive analysis is becoming even more significant as companies integrate artificial intelligence and machine learning into their evaluation processes, enabling more sophisticated predictive capabilities and better decision-making and operational efficiency. As industry expert Andy Morris asserts, “Diagnostic vs descriptive analytics provides vital information about a company’s performance,” which supports effective communication of performance metrics both internally and externally, an essential step in attracting potential investors and lenders.

Current best practices in descriptive analysis emphasize timely data collection and examination. Companies are encouraged to streamline these processes: research indicates that organizations spend an average of 30% of their analytics time on tasks related to diagnostic and descriptive analytics. Streamlining this work frees organizations to focus on strategic initiatives that drive growth, such as using Power BI services for better reporting and actionable insights, or deploying Robotic Process Automation (RPA) to automate manual workflows and improve operational efficiency.

RPA can significantly streamline workflows by automating repetitive tasks, reducing errors, and allowing team members to concentrate on more strategic work. Furthermore, leveraging Small Language Models and GenAI Workshops can enhance analytical capabilities by providing tailored AI solutions that improve quality and training processes.

Case studies show how diagnostic and descriptive analytics improve organizational performance. In supply chain management, for example, businesses analyze supplier performance, inventory levels, and transportation data to identify bottlenecks and inefficiencies. This proactive approach enables timely improvements that strengthen overall supply chain performance.
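A first pass at the bottleneck analysis above can be as simple as grouping delays by stage and averaging them. The shipment log and stage names below are hypothetical, and the sketch uses only the standard library. Flagging the stage with the highest average delay is still descriptive; explaining why that stage is slow is the diagnostic step that follows.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical shipment log: (stage, delay in hours versus plan).
SHIPMENT_DELAYS = [
    ("supplier", 2.0), ("supplier", 3.0),
    ("warehouse", 1.0), ("warehouse", 0.5),
    ("transport", 6.0), ("transport", 8.0),
]


def average_delay_by_stage(records: list) -> dict:
    """Group delays by stage and return the mean delay for each stage."""
    grouped = defaultdict(list)
    for stage, delay in records:
        grouped[stage].append(delay)
    return {stage: fmean(delays) for stage, delays in grouped.items()}


def find_bottleneck(records: list) -> str:
    """Return the stage with the highest average delay."""
    averages = average_delay_by_stage(records)
    return max(averages, key=averages.get)


if __name__ == "__main__":
    print(average_delay_by_stage(SHIPMENT_DELAYS))
    print("bottleneck:", find_bottleneck(SHIPMENT_DELAYS))
```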

By combining descriptive analysis with AI-powered solutions, and by understanding how it differs from diagnostic analytics, organizations can pinpoint specific areas for improvement and allocate resources efficiently.

As organizations navigate data-rich environments, the ability to summarize past information effectively becomes increasingly vital. These insights help organizations turn raw data into actionable intelligence, supporting informed decision-making and innovation. Evolving trends, particularly integration with AI and machine learning and customized Business Intelligence solutions, are set to change how companies use diagnostic and descriptive analytics, making both even more effective tools for operational efficiency.

Additionally, addressing the challenges of inadequate master data quality is crucial to ensuring that descriptive analysis yields accurate and reliable insights.

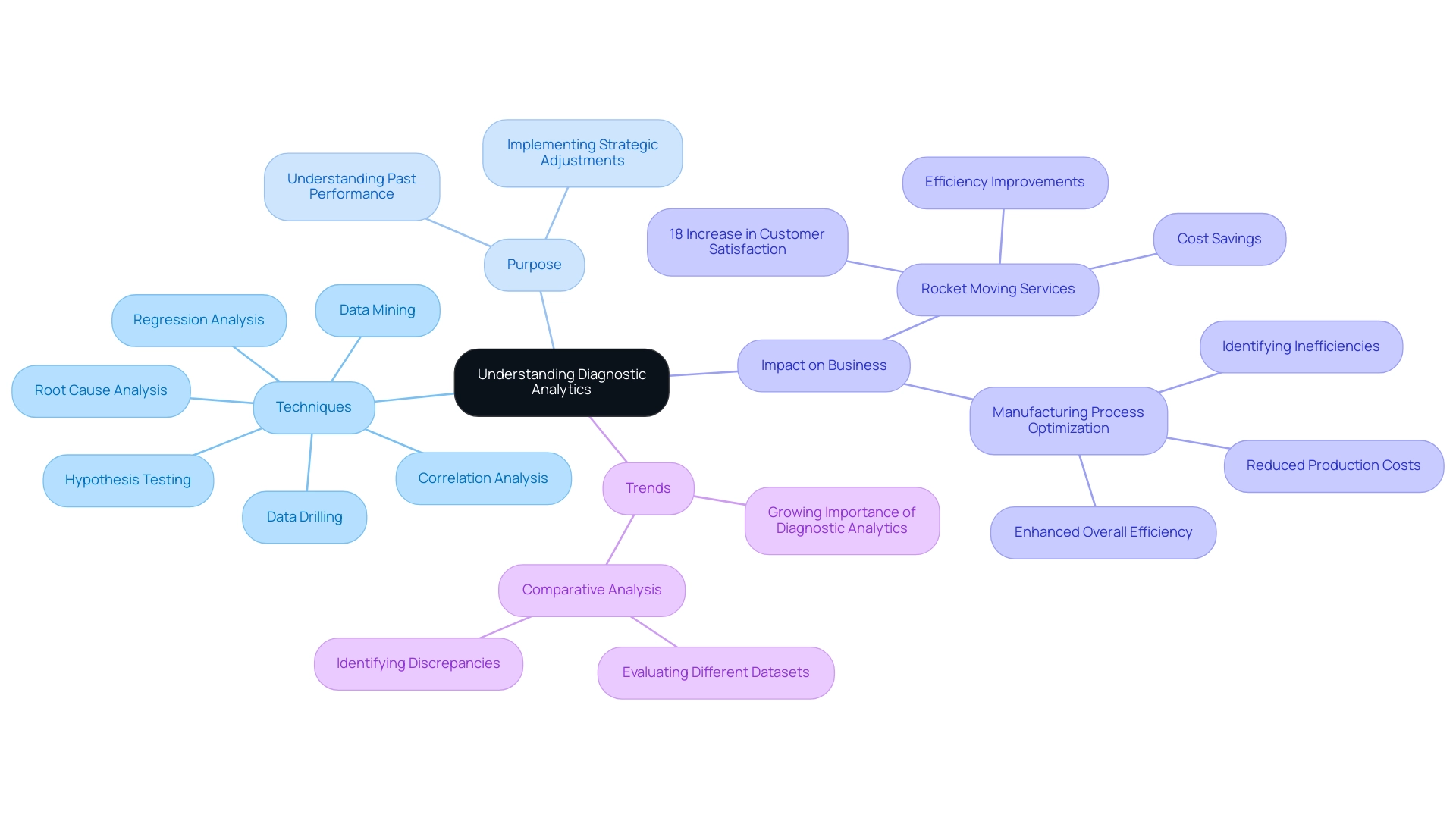

The Purpose of Diagnostic Analytics: Why Did It Happen?

The difference between diagnostic and descriptive analytics is the difference between merely reporting past information and digging deeper to uncover the root causes of performance variations. By leveraging advanced techniques such as:

- data mining

- correlation analysis

- regression analysis

- data drilling

- root cause analysis

organizations can reveal patterns and anomalies that clarify past performance. For instance, when a company faces a sudden decline in sales, diagnostic analysis can identify critical factors, such as shifts in customer preferences or adverse market conditions, that may have contributed to this downturn.

This analytical approach not only aids in pinpointing root causes but also empowers organizations to implement strategic adjustments effectively.
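The sales-decline example can be made concrete with a minimal sketch that ranks candidate drivers by their Pearson correlation with monthly sales. The driver names and figures are hypothetical. A strong correlation only flags a factor for further investigation; on its own, it does not establish the root cause.

```python
import math

# Hypothetical monthly figures covering the months around a sales decline.
sales = [120, 118, 121, 104, 97, 92]
drivers = {
    "competitor_promotions": [1, 1, 2, 4, 5, 6],
    "avg_delivery_days": [3, 3, 3, 3, 4, 3],
    "web_traffic": [900, 910, 905, 880, 870, 860],
}

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length, non-constant sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

def rank_drivers(target, candidates):
    """Return (name, r) pairs, strongest absolute correlation first."""
    scores = {name: pearson(values, target) for name, values in candidates.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)

for name, r in rank_drivers(sales, drivers):
    print(f"{name:>22}: r = {r:+.2f}")
```

In this made-up data, delivery times barely track the decline. An analyst would therefore prioritize the other two factors for a closer look.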

In the realm of operational efficiency, technologies like Robotic Process Automation (RPA) can significantly improve processes. Two examples are EMMA RPA, which offers intelligent automation capabilities, and Microsoft Power Automate, which is designed for workflow automation. Both take over manual workflows and free teams to focus on more strategic, value-adding work. This relieves repetitive-task fatigue and boosts employee morale. It also streamlines workflows, helping organizations adapt quickly to market changes.

The purpose of diagnostic analysis in business is multifaceted. It serves as a crucial tool for comprehending past events, allowing organizations to learn from experiences and refine operational strategies. A notable example is Rocket Moving Services, where implementing diagnostic analysis resulted in an 18% increase in customer satisfaction.

As Christopher Vardanyan, co-founder of Rocket Moving Services, stated: “Diagnostic analysis has played a key role in improving our local moving services. By examining customer feedback and operational data, we identified that utilizing larger trucks allowed us to accommodate more items in one trip, thus reducing the need for multiple trips. This adjustment led to shorter overall moving times and lower fuel and labor costs for both our company and our clients.”

This optimization not only enhanced efficiency but also yielded substantial cost savings for both the company and its clients.

Current trends in data extraction and correlation analysis show that diagnostic analytics, going beyond descriptive reporting, is increasingly important for identifying performance issues. Techniques such as hypothesis testing are increasingly used to uncover the reasons behind observed trends. For example, manufacturing firms use diagnostic analysis to find inefficiencies in their production processes, analyzing data to uncover the root causes of delays.

This leads to optimized processes, reduced production costs, and improved overall efficiency.
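Hypothesis testing can be illustrated with a permutation test. This is one common technique, chosen here for illustration, since the article does not name a specific test. The sketch asks whether the gap in average delays between two hypothetical production lines is larger than random reshuffling of the same measurements would typically produce. The function name and data are assumptions.

```python
import random

def mean(values):
    return sum(values) / len(values)

def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns (observed difference b - a, estimated p-value).
    """
    rng = random.Random(seed)
    observed = mean(group_b) - mean(group_a)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign measurements to the two groups
        diff = mean(pooled[n_a:]) - mean(pooled[:n_a])
        # A small tolerance keeps exact ties from being lost to rounding.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # The add-one correction avoids reporting an impossible p-value of exactly 0.
    return observed, (extreme + 1) / (n_permutations + 1)

# Hypothetical delay per batch (minutes) on two production lines.
line_a = [12, 15, 11, 14, 13, 16, 12, 15]
line_b = [18, 21, 17, 20, 22, 19, 18, 21]

difference, p_value = permutation_test(line_a, line_b)
print(f"Line B is slower by {difference:.1f} min on average (p-value {p_value:.4f})")
```

A small p-value suggests the difference is unlikely to be chance alone. Explaining *why* line B is slower still requires looking at what differs between the lines.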

In 2025, experts emphasize the value of distinguishing diagnostic from descriptive analytics when trying to understand business performance. Comparative analysis lets organizations evaluate different datasets or time periods to identify discrepancies and the potential causes of performance changes. Organizations that address the challenges of technology implementation can also use RPA to streamline operations and overcome barriers to efficiency.

This comprehensive approach not only enhances operational efficiency but also cultivates a culture of continuous improvement, ultimately driving growth and innovation.
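The comparative analysis described above can be sketched as a period-over-period check. The function name, the 10% threshold, and the quarterly figures are illustrative assumptions. The sketch only flags categories whose change is large enough to warrant a closer diagnostic look.

```python
def compare_periods(previous, current, threshold=0.10):
    """Flag categories whose relative change between two periods exceeds `threshold`."""
    flagged = {}
    for category in sorted(set(previous) | set(current)):
        before = previous.get(category, 0.0)
        after = current.get(category, 0.0)
        if before == 0:
            # A category that is new this period has no meaningful percentage change.
            change = float("inf") if after else 0.0
        else:
            change = (after - before) / before
        if abs(change) > threshold:
            flagged[category] = change
    return flagged

# Hypothetical quarterly sales by product line (in thousands).
q1 = {"Bikes": 200.0, "Helmets": 50.0, "Gloves": 30.0}
q2 = {"Bikes": 150.0, "Helmets": 52.0, "Gloves": 36.0}

for category, change in compare_periods(q1, q2).items():
    print(f"{category}: {change:+.0%}")
```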

Methodologies: How Descriptive and Diagnostic Analytics Differ

Descriptive analysis primarily relies on fundamental statistical techniques such as mean, median, and mode to provide a clear summary of information. These techniques are essential for presenting a snapshot of performance metrics, enabling organizations to swiftly recognize trends and patterns. For instance, a company might observe that sales figures peaked in a particular quarter.
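Here is a minimal sketch of these descriptive statistics. It summarizes hypothetical quarterly sales with Python's standard `statistics` module and identifies the peak quarter, mirroring the example above.

```python
from statistics import mean, median, mode

# Hypothetical quarterly sales (in thousands).
quarterly_sales = {"Q1": 410, "Q2": 455, "Q3": 520, "Q4": 455}

values = list(quarterly_sales.values())
summary = {
    "mean": mean(values),      # arithmetic average
    "median": median(values),  # middle value (average of the two middle values here)
    "mode": mode(values),      # most frequent value
}
peak = max(quarterly_sales, key=quarterly_sales.get)  # quarter with the highest sales

print(summary)
print(f"Sales peaked in {peak}")
```

These figures say *what* happened (sales peaked in Q3). They say nothing about *why*, which is where diagnostic methods come in.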

However, the true value of analytics becomes apparent when we transition to diagnostic analytics, which employs more sophisticated methodologies like regression analysis, clustering, and time-series analysis. These techniques delve deeper into the data, uncovering the underlying reasons for observed trends and answering critical questions such as ‘why did it happen?’ In today’s overwhelming AI landscape, businesses often struggle to pinpoint suitable analytical tools tailored to their unique needs, making it crucial to have solutions that cut through the noise.

A practical illustration of this distinction can be seen in the case of Canadian Tire, which achieved nearly a 20% increase in sales by leveraging AI-powered insights to examine purchasing behavior. This diagnostic approach enabled them to identify key factors driving customer purchases, such as effective marketing strategies and seasonal demand shifts. As we approach 2025, the methodologies employed in both descriptive and diagnostic analytics continue to evolve, with organizations increasingly adopting advanced statistical techniques to enhance their analytical capabilities.

For instance, regression analysis not only identifies relationships between variables but also aids in predicting future outcomes based on historical information. As Anik Sengupta, a Staff Engineer, notes, ‘Regression analysis identifies the relationship between a dependent variable and one or more independent variables.’ This predictive power is invaluable for organizations aiming to make informed decisions in a rapidly changing environment.
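The sketch below shows simple linear regression with a single predictor, fitted by ordinary least squares. It then uses the fitted line to forecast an outcome. The advertising-spend and revenue figures are hypothetical. A real analysis would also check residuals and uncertainty before trusting a forecast.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x (one predictor)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

ad_spend = [10, 20, 30, 40, 50]   # hypothetical independent variable (thousands)
revenue = [24, 46, 64, 86, 105]   # hypothetical dependent variable (thousands)

slope, intercept = fit_line(ad_spend, revenue)
forecast = intercept + slope * 60  # predicted revenue at a spend of 60
print(f"revenue ≈ {intercept:.2f} + {slope:.2f} × spend; forecast at 60: {forecast:.1f}")
```

With this data the fitted slope is about 2.02. Each extra unit of spend is associated with roughly two units of revenue, which is an association rather than proof of cause.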

Moreover, the current landscape underscores the importance of understanding these methodologies. Experts in the field emphasize that a robust analytical framework is crucial for organizations seeking to optimize their operations and drive growth. By distinguishing between diagnostic and descriptive analytics, organizations can better utilize their information, transforming raw details into actionable insights that guide strategic initiatives.

Furthermore, advancements in technology are facilitating real-time prescriptive analysis, further enhancing organizations’ abilities to examine and react to trends. Harnessing Business Intelligence alongside RPA solutions stands at the forefront of this evolution, as RPA can automate data collection and processing, driving data-driven insights and operational efficiency for sustained business growth.

Real-World Applications: Leveraging Descriptive and Diagnostic Analytics

Businesses use descriptive data analysis to create comprehensive reports covering key performance indicators (KPIs), such as monthly sales figures and customer engagement metrics. These reports let organizations monitor performance trends over time and give a clear picture of operational health. Diagnostic analysis, by contrast, serves a more investigative purpose. It allows companies to examine specific challenges, such as the causes behind a dip in customer satisfaction scores.

By carefully analyzing feedback data and correlating it with operational changes, businesses can identify actionable insights for targeted improvements.

In today’s overwhelming AI landscape, businesses often struggle to identify the right solutions that meet their specific needs. Tailored solutions can help cut through the noise to harness the power of Business Intelligence. For example, a telecommunications firm utilized descriptive analysis to segment its customer base, revealing distinct usage patterns and preferences across various demographic groups.

This analysis indicated that younger customers favored unlimited plans and used streaming services heavily. Older customers, by contrast, prioritized voice call quality and customer service. Such insights can guide focused marketing and service improvements, ultimately boosting operational efficiency and company growth. Adding diagnostic analytics on top of descriptive reporting can also substantially improve customer satisfaction.

A retail company, for example, might uncover that extended checkout times correlate with decreased customer satisfaction. By streamlining their checkout processes based on these findings, they can enhance the overall customer experience. This proactive approach not only addresses urgent issues but also nurtures long-term loyalty and satisfaction among clients.
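The telecommunications segmentation described earlier is, at its core, a group-and-summarize step. The sketch below uses hypothetical customer records and a single age cut-off at 35. A real segmentation would use the firm's own demographic bands and usage fields.

```python
from collections import Counter, defaultdict

# Hypothetical records: (age, plan, monthly streaming hours).
customers = [
    (24, "unlimited", 40), (29, "unlimited", 35), (31, "unlimited", 28),
    (52, "voice-plus", 4), (58, "voice-plus", 2), (63, "basic", 1),
]

def age_band(age):
    """Assumed two-band split; real segments would be defined by the business."""
    return "under 35" if age < 35 else "35 and over"

def segment(records):
    """Summarize the most common plan and average streaming hours per age band."""
    plans = defaultdict(Counter)
    hours = defaultdict(list)
    for age, plan, streaming in records:
        band = age_band(age)
        plans[band][plan] += 1
        hours[band].append(streaming)
    return {
        band: {
            "top_plan": plans[band].most_common(1)[0][0],
            "avg_streaming_hours": sum(hours[band]) / len(hours[band]),
        }
        for band in plans
    }

for band, profile in segment(customers).items():
    print(band, profile)
```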

As companies continue to adopt these analytical methods, real-time data processing and better data visualization will further support informed decision-making. Future trends in descriptive analysis include integration with predictive and prescriptive approaches, which will deepen the insights available. Notably, Narellan Pools increased sales while using just 70% of its media budget, demonstrating the impact of data analysis on sales performance.

Andy Morris, a Principal Product Marketing Specialist, emphasizes that ‘descriptive analysis provides vital information about a company’s performance.’ Descriptive analytics is also poised to become more accessible to small and medium-sized enterprises, enabling them to use data-driven insights to improve operational efficiency and customer satisfaction. To learn more about how tailored AI solutions, supported by BI and RPA, can address your business goals and challenges, reach out to us today.

Conclusion

Understanding the distinct yet complementary roles of descriptive and diagnostic analytics is crucial for organizations aiming to enhance operational efficiency and drive growth. Descriptive analytics offers a foundational overview of historical performance, enabling businesses to identify trends and metrics that inform current strategies. By employing techniques that summarize past data, organizations can gain a clearer understanding of their market position and operational health.

In contrast, diagnostic analytics delves deeper to uncover the reasons behind observed trends. This analytical approach allows businesses to identify root causes of performance fluctuations, facilitating informed decision-making and strategic adjustments. The integration of automation technologies, such as Robotic Process Automation (RPA), further enhances these analytical capabilities by streamlining workflows and reducing manual errors. This empowers teams to concentrate on more strategic initiatives.

Real-world applications of both analytics types underscore their significance in driving operational improvements and customer satisfaction. By leveraging data-driven insights, companies can not only address immediate challenges but also cultivate a culture of continuous improvement and innovation. As the analytics landscape evolves, organizations that effectively harness both descriptive and diagnostic analytics will be better equipped to navigate the complexities of a data-rich environment. This ultimately leads to sustained business growth and enhanced competitive advantage.

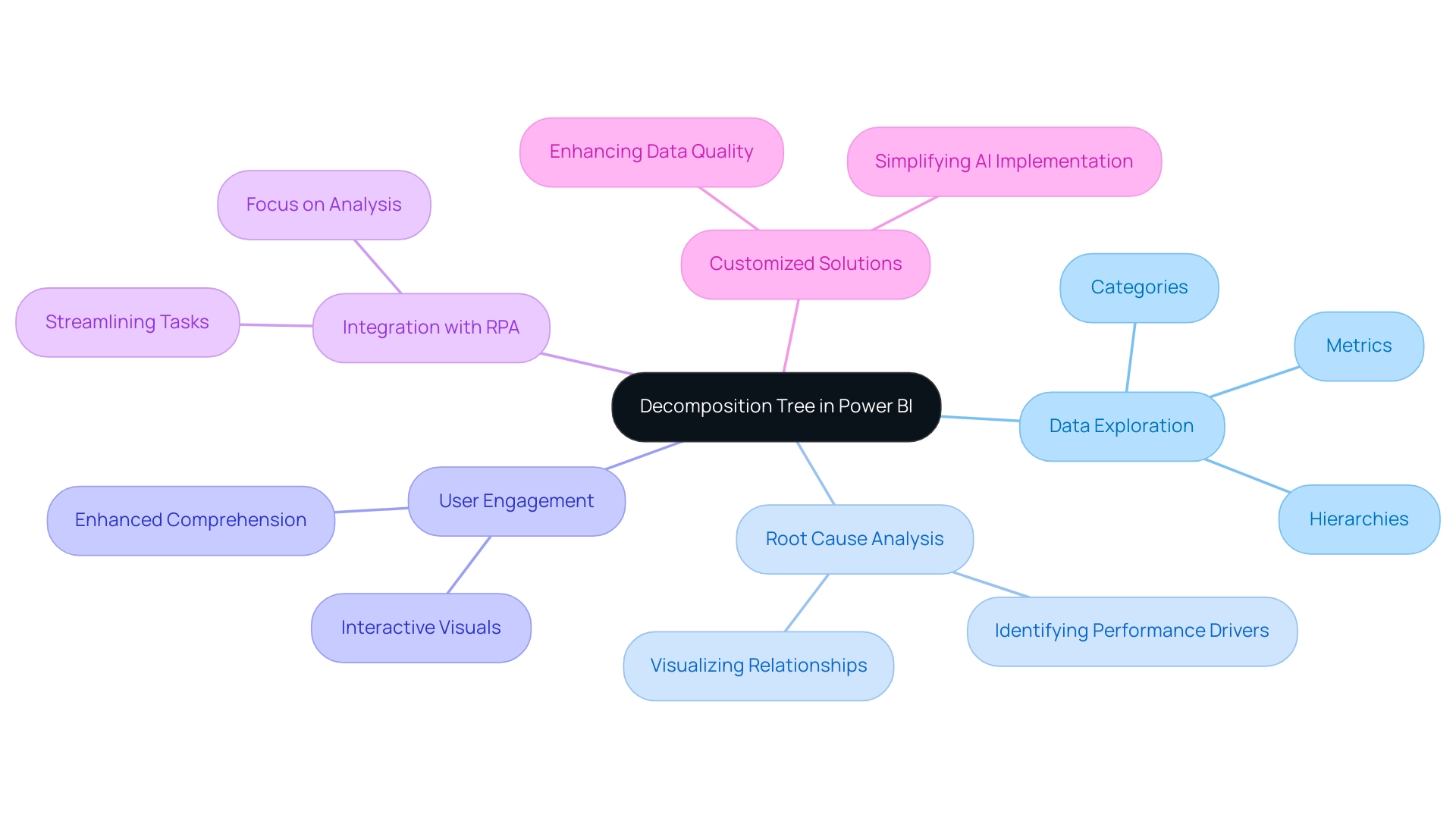

Overview

This article delves into mastering the decomposition tree in Power BI, highlighting its pivotal role as a powerful tool for interactive data analysis and root cause exploration. It illustrates how the decomposition tree elevates the understanding of complex datasets through structured visualizations and effective data segmentation. Ultimately, this capability aids organizations in making informed decisions and enhancing operational efficiency.

Introduction

In the realm of data analytics, the Decomposition Tree in Power BI emerges as a revolutionary tool that enables users to dissect complex datasets with unprecedented ease. This interactive visual not only simplifies the exploration of data hierarchies but also enhances the understanding of intricate relationships and trends that often elude traditional visualization methods. As organizations increasingly pivot towards data-driven decision-making, the Decomposition Tree stands out by facilitating root cause analysis and illuminating the performance drivers that shape business outcomes.

Given its growing significance in data visualization, this article explores the many ways the Decomposition Tree can transform raw data into actionable insights. Because it drives operational efficiency and fosters innovation, the tool is essential for any organization aiming to thrive in an increasingly data-centric world. Unlocking its full potential is crucial, whether through effective setup, strategic use of AI, or best practices for reporting. How is your organization leveraging data visualization to enhance decision-making?

Understanding the Decomposition Tree: An Overview

The decomposition tree in Power BI stands out as a revolutionary visual that lets users examine data across multiple dimensions. This interactive feature supports in-depth exploration of data hierarchies. Users can drill down into specific categories and uncover the underlying factors influencing their metrics. The visual is particularly useful for root cause analysis because it lays out intricate datasets in a way that reveals relationships and trends often obscured in conventional charts or tables.
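Conceptually, the decomposition tree takes one measure (the 'Analyze' field) and splits it level by level along an ordered list of dimensions (the 'Explain by' fields). The Python sketch below only illustrates that idea on hypothetical sales rows. It is not how Power BI implements the visual, and the `decompose` and `show` helpers are assumptions.

```python
from collections import defaultdict

# Hypothetical sales rows.
rows = [
    {"region": "North", "product": "Bikes", "channel": "Online", "sales": 120},
    {"region": "North", "product": "Bikes", "channel": "Store", "sales": 80},
    {"region": "North", "product": "Helmets", "channel": "Online", "sales": 30},
    {"region": "South", "product": "Bikes", "channel": "Store", "sales": 150},
    {"region": "South", "product": "Helmets", "channel": "Store", "sales": 20},
]

def decompose(rows, measure, dimensions):
    """Build {"total": ..., "children": {value: subtree}} along the given drill path."""
    node = {"total": sum(r[measure] for r in rows)}
    if dimensions:
        first, rest = dimensions[0], dimensions[1:]
        groups = defaultdict(list)
        for r in rows:
            groups[r[first]].append(r)
        children = {value: decompose(group, measure, rest) for value, group in groups.items()}
        # Order each level from largest to smallest contributor, as the visual does by default.
        node["children"] = dict(sorted(children.items(), key=lambda kv: kv[1]["total"], reverse=True))
    return node

def show(node, label="Total", depth=0):
    """Print the tree with one indentation level per drill step."""
    print(f"{'  ' * depth}{label}: {node['total']}")
    for value, child in node.get("children", {}).items():
        show(child, value, depth + 1)

tree = decompose(rows, "sales", ["region", "product"])
show(tree)
```

Changing the dimension list (for example to `["channel", "region"]`) mimics choosing a different drill path in the visual.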

In 2025, the latest trends in visualization tools underscore the growing importance of interactive visuals such as the decomposition tree. These tools not only increase user engagement but also improve comprehension. Segmenting data into manageable parts fosters a deeper understanding of performance drivers, ultimately leading to more informed decision-making.


The benefits of employing the decomposition tree in Power BI extend beyond mere visualization; they encompass enhanced information quality and streamlined AI implementation—both critical for fostering growth and innovation. By addressing the challenges of extracting meaningful insights from data, the decomposition tree in Power BI assists organizations in making informed decisions that bolster operational efficiency.

Moreover, integrating RPA solutions alongside Power BI can streamline repetitive tasks, so teams can focus on analysis rather than preparation. Tailored solutions, including Small Language Models and GenAI Workshops, further improve data quality and simplify AI implementation, in line with the organization’s mission to empower businesses. Practitioners see value in the visual for uncovering root causes of performance issues, though their feedback is often qualified. As Jaap put it: ‘I’ll still accept your solution, because it does work for the example I provided you.’

As organizations increasingly rely on data-driven strategies, the decomposition tree has become an essential tool in their analytics arsenal, helping them navigate complex datasets with clarity and precision. Note, however, that support for the visual within Q&A is limited, which may affect the user experience. Applied well, it can transform raw data into actionable insights, paving the way for strategic advances in operational efficiency while leveraging the power of Business Intelligence and RPA.

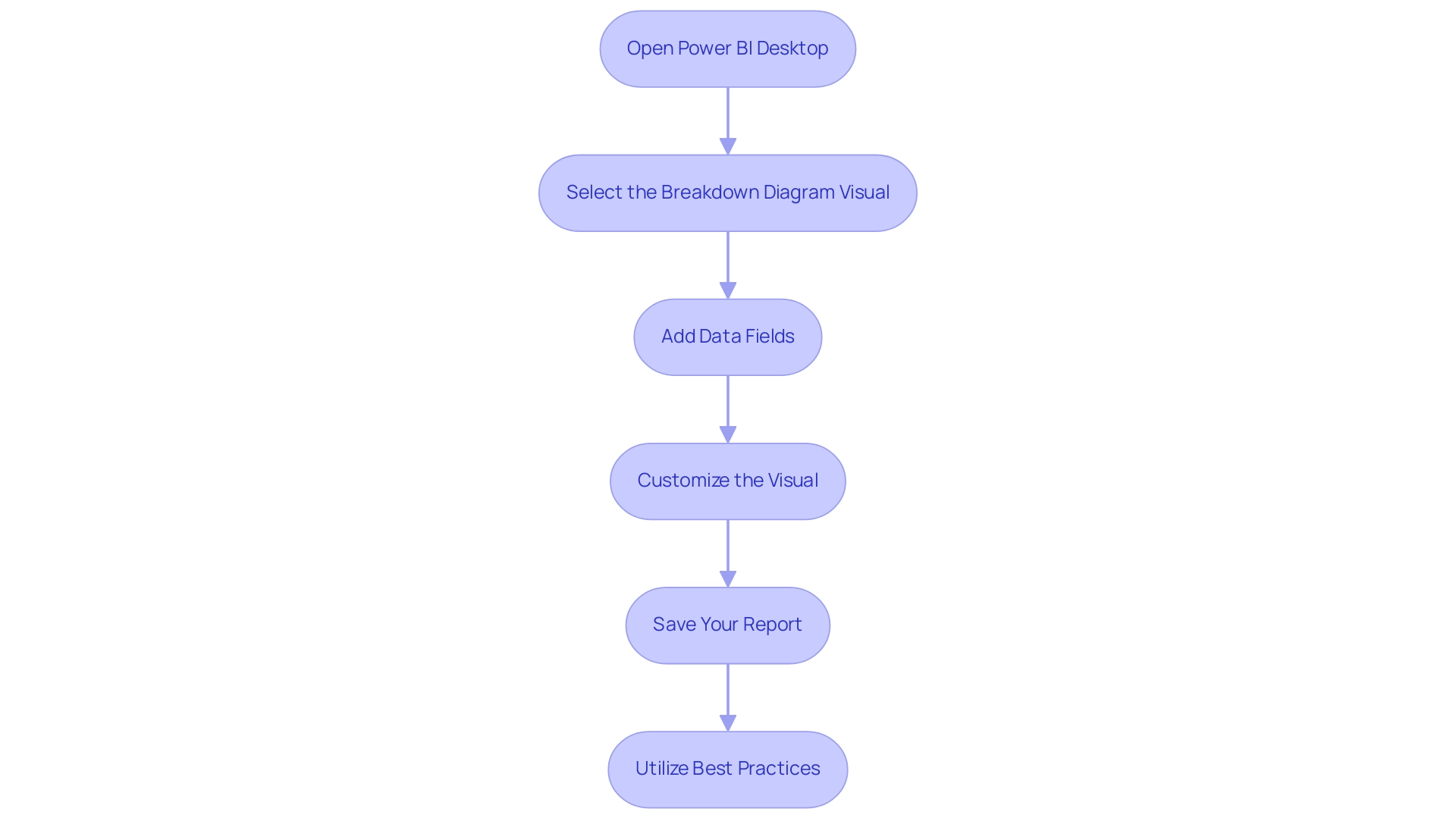

Setting Up the Decomposition Tree Visual in Power BI

Setting up the decomposition tree in Power BI is straightforward and can significantly enhance your data analysis capabilities. Where Business Intelligence is crucial for operational effectiveness, this tool becomes essential. Follow these steps to implement it:

- Open Power BI Desktop: Launch Power BI Desktop and open the report where you want to add the decomposition tree.

- Select the Decomposition Tree Visual: In the Visualizations pane, click the Decomposition tree icon to add it to your report canvas.

- Add Data Fields: Drag the measure you wish to analyze into the ‘Analyze’ field well. Incorporate dimensions into the ‘Explain by’ field to specify how you want to dissect the information.

- Customize the Visual: Tailor the visual settings for clarity and presentation. Adjust colors, labels, and other formatting options to ensure the visual is both informative and visually appealing.

- Save Your Report: After finalizing your setup, save your report to secure your modifications.

By following these steps, you can use the decomposition tree to gain a deeper understanding of the relationships in your data, promoting informed decision-making and encouraging innovation within your organization.

In 2025, user adoption rates for Power BI visuals have shown a significant increase, reflecting the growing recognition of the platform’s capabilities. Successful applications of the decomposition tree in Power BI have demonstrated its efficiency in providing detailed ad hoc analysis, enabling users to investigate information across various categories. A case study titled “Using Analysis Trees in Power BI” showcased how users could examine a single value through multiple dimensions, resulting in improved comprehension and practical insights.

The case study reports an anticipated value of 4.25x for this method, underscoring how efficiently the decomposition tree in Power BI enhances analysis capabilities.

To maximize the benefits of the decomposition tree in Power BI and overcome challenges such as time-consuming report creation and inconsistencies, consider these best practices:

- Ensure your information is well-structured.

- Utilize clear labeling for ease of interpretation.

- Regularly update your visuals to reflect current trends.

Additionally, integrating RPA solutions like EMMA RPA and Power Automate can further streamline your processes, enhancing operational efficiency. Professional guidance underscores the significance of personalization in visual arrangements, as customized visuals can greatly enhance user involvement and understanding.

As Super User amitchandak put it, “I doubt, Just check arrow keys can help.” The comment is a reminder to try every feature Power BI offers when exploring a visual in depth.

To learn more about how RPA can enhance your analytics efforts, consider scheduling a free consultation.

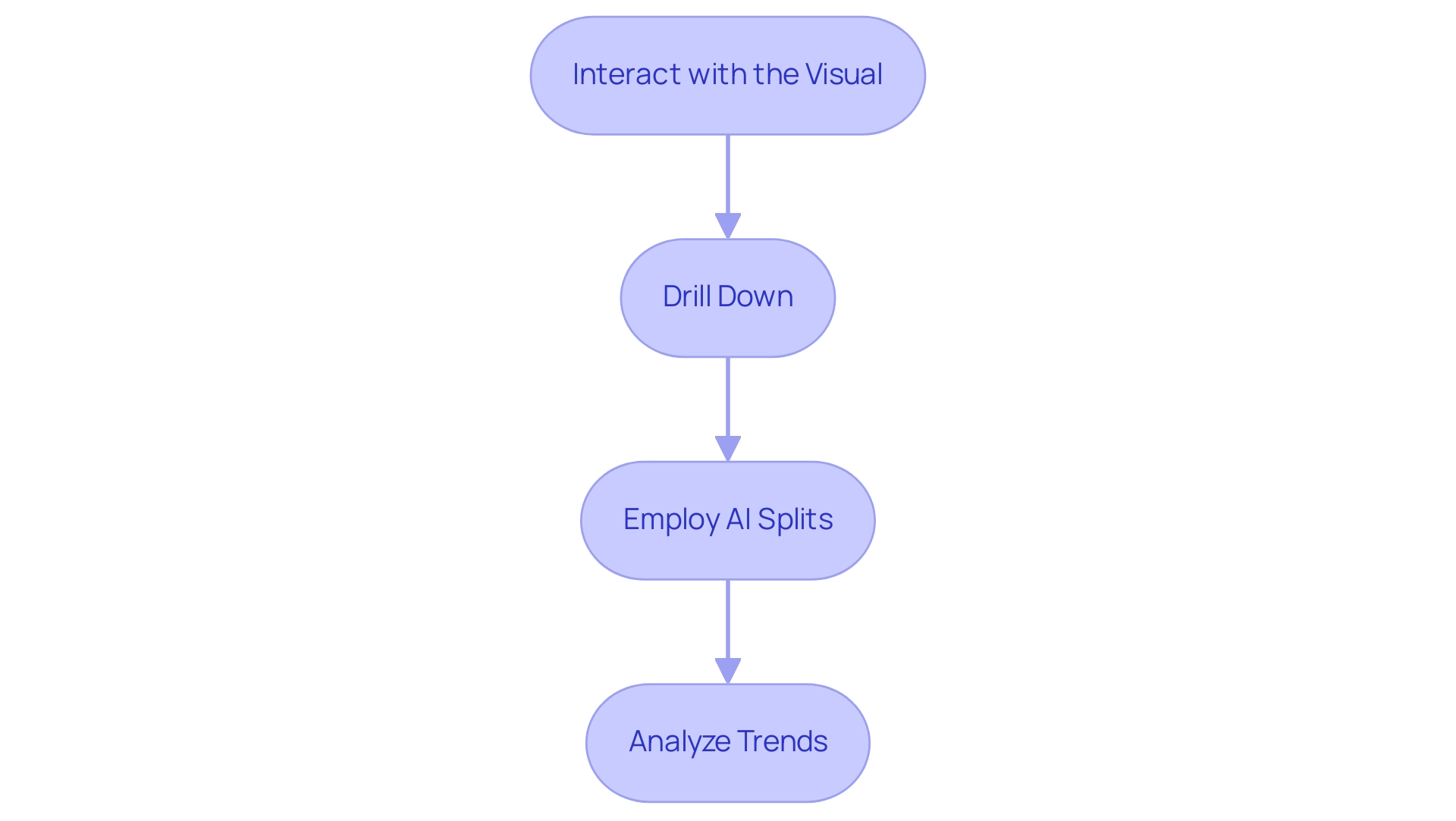

Exploring and Analyzing Data with the Decomposition Tree

Once the Decomposition Tree is established, you can effectively explore and analyze your data through the following steps:

- Interact with the Visual: Engage with the decomposition tree in Power BI by clicking its nodes to expand or collapse them. This interaction uncovers deeper layers of data, allowing for more detailed analysis and a clearer visual representation. This is essential for overcoming challenges related to poor master data quality.

- Drill Down: Utilize the drill-down feature to delve into specific dimensions. This capability enables you to understand how various factors influence your overall metrics, providing insights into the underlying drivers of performance. This supports data-driven decision-making, a critical aspect of leveraging insights from Power BI dashboards.

- Employ AI Splits: Take advantage of AI splits to automatically identify significant contributors to your data. These intelligent splits streamline your analysis, highlight critical areas that merit further exploration, and sharpen your decision-making. For broader barriers to effective AI adoption, our Small Language Models and GenAI workshops can help.

- Analyze Trends: As you navigate the data, actively look for patterns and trends. The decomposition tree's visual layout helps you spot anomalies or unforeseen outcomes that need prompt attention. This trend analysis is crucial for making informed, data-driven decisions in a rapidly evolving business landscape, further supported by our Business Intelligence solutions.

Furthermore, the decomposition tree can break down sales by product line, region, or customer segment, offering a thorough view of your data landscape. Mastering these techniques unlocks the full potential of the decomposition tree in Power BI and turns intricate datasets into practical understanding that enhances operational efficiency. Power Automate can also streamline your workflows so that findings from the decomposition tree are acted on without delay.

As Hans Levenbach aptly stated, “How Bad Data Will Beat a Good Forecast Every Time!!” This underscores the importance of ensuring data quality in your analyses. Moreover, utilizing dynamic formatting in your DAX measures can improve the clarity and usability of your visualizations. This ensures that users can easily interpret complex datasets and maximize their understanding.

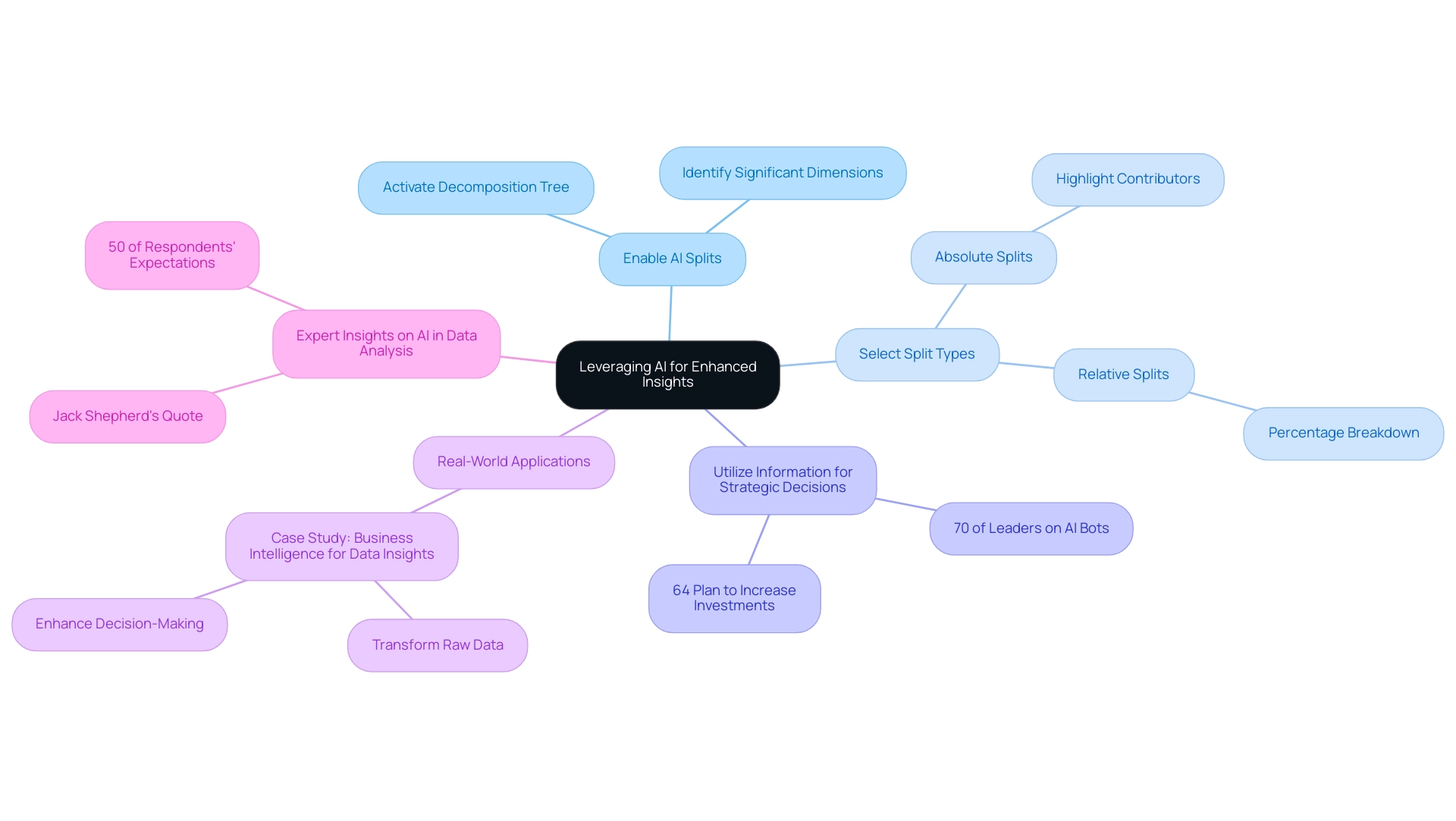

Leveraging AI for Enhanced Insights in the Decomposition Tree

To use AI effectively for deeper insight within the decomposition tree in Power BI, consider these key strategies:

- Enable AI Splits: Ensure that AI splits are activated when configuring the decomposition tree in Power BI. This powerful feature automatically identifies and suggests the most significant dimensions for analysis based on your dataset, streamlining the decision-making process.

- Select the Analysis Type: Tailor your analysis by choosing between absolute and relative modes. Absolute mode works with the raw values of the measure, surfacing the highest or lowest contributors and giving a clear view of performance extremes. Relative mode looks for values that stand out compared with the rest of the data in a column, which helps show how different factors compare against one another.

- Utilize Information for Strategic Decisions: The insights obtained from AI splits can significantly inform your business strategies. By identifying areas for improvement or uncovering new growth opportunities, organizations can make data-driven decisions that enhance operational efficiency. Notably, 70% of customer experience leaders recognize that AI bots are becoming adept at crafting highly personalized customer journeys, underscoring the importance of integrating AI into analytics. Furthermore, 64% of customer experience leaders plan to increase investments in evolving AI chatbots within the next year, highlighting the growing trend of investment in AI technologies.

- Real-World Applications: Consider the case study of a business that utilized AI-driven insights to transform raw information into actionable intelligence. This approach not only enhanced information quality but also enabled the organization to make informed decisions that drove growth and innovation. Leveraging AI can lead to significant enhancements in analytical capabilities, as evidenced by the increasing adoption of tailored AI solutions in the industry.

- Utilize Power BI Services: Take advantage of our 3-Day Power BI Sprint to quickly create professionally designed reports that improve your reporting and gain actionable insights. The General Management App offers comprehensive management and smart reviews, further supporting your operational efficiency.

- Expert Insights on AI in Data Analysis: As we move through 2025, understanding the role of AI splits in Power BI is crucial. Jack Shepherd, Co-Founder of Social Shepherd, observes that ‘UK AI adoption is not as high as in other regions, but the country is seeing a steady increase in adoption.’ Meanwhile, 50% of respondents report that AI meets their expectations, indicating growing confidence in these technologies. By harnessing AI splits effectively alongside Business Intelligence tools and Robotic Process Automation (RPA), businesses can enhance their analysis capabilities and gain a competitive edge in their markets. Consider also joining our GenAI Workshops for hands-on training and to explore Small Language Models for efficient data analysis tailored to your specific needs.

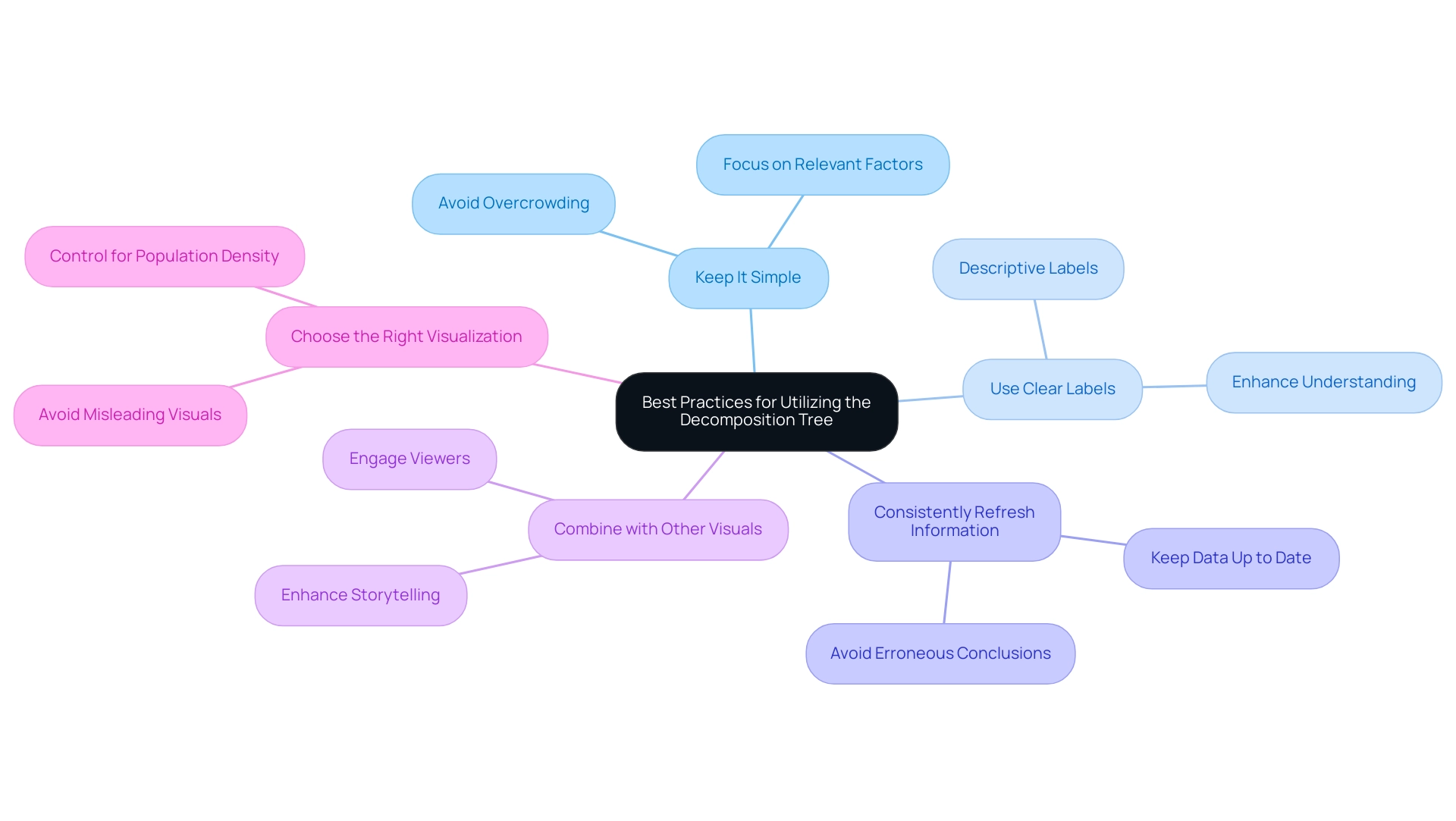

Best Practices for Utilizing the Decomposition Tree in Reports

To maximize the effectiveness of the Decomposition Tree in your reports, adhere to the following best practices:

- Keep It Simple: Avoid overcrowding the visual with excessive dimensions. Focus on the most relevant factors to sustain clarity and promote understanding, which is vital in a data-rich setting where valuable knowledge is essential for competitive advantage.

- Use Clear Labels: Descriptive and straightforward labels are crucial. As Edward R. Tufte stated, “the essential test of design is how well it assists the understanding of the content, not how stylish it is.” Clear labeling allows viewers to swiftly grasp the information being presented, greatly improving understanding and ensuring actionable direction from your Power BI dashboards.

- Consistently Refresh Data: Keep your data up to date so the conclusions drawn from the tree remain relevant and usable. Obsolete data can lead to erroneous conclusions, making it harder to extract the insights that promote growth and innovation.

- Combine with Other Visuals: Pair the decomposition tree with other visuals to provide a comprehensive view of your data. This not only enhances storytelling but also makes your reports more engaging and informative, supporting the goal of improving operational efficiency through data-driven insights.

- Choose the Right Visualization: Be mindful of the visualization methods you select. For instance, choropleth maps can be useful, but they may mislead if they are not normalized for population density or other factors. Choosing suitable visuals is crucial for accurate representation, which underscores the importance of using BI tools effectively.

Consider also integrating RPA solutions to automate data preparation and report generation for reports built around the decomposition tree. This can significantly reduce time spent on manual tasks, address inconsistencies, and improve the overall efficiency of working with the decomposition tree and other BI tools.

By applying these strategies, you can significantly enhance the clarity and impact of your visualizations, ultimately driving better decision-making and operational efficiency. The case study titled “Business Intelligence Empowerment” illustrates how organizations can extract meaningful insights through business intelligence, supporting informed decision-making and driving growth and innovation.

Conclusion

The Decomposition Tree in Power BI signifies a transformative leap in data visualization, enabling organizations to unravel complex datasets with unparalleled clarity and precision. Its interactive features facilitate root cause analysis, allowing users to identify the underlying factors influencing performance metrics. This tool not only enriches the exploration of data hierarchies but also fosters a deeper comprehension of trends and relationships often missed by traditional methods.

Setting up the Decomposition Tree is a seamless process, empowering users to tailor their data analysis effectively. By integrating AI splits and adhering to best practices—such as employing clear labels and maintaining simplicity in visuals—organizations can unlock the maximum insights from their data. The strategic deployment of this tool, in conjunction with complementary solutions like Robotic Process Automation, streamlines workflows and enhances operational efficiency.

Ultimately, the Decomposition Tree transcends the role of a mere visual tool; it stands as a crucial asset within an organization’s analytics arsenal. As the importance of data-driven decision-making escalates, harnessing the full potential of the Decomposition Tree can convert raw data into actionable insights, propelling innovation and strategic progress. Embracing this powerful visualization technique positions organizations for success in the dynamic landscape of data analytics, ensuring they remain competitive and well-informed.

Overview

This article delves into the effective summation of multiple columns in Power BI using DAX, spotlighting the critical roles of both the SUM and SUMX functions in achieving precise data analysis. By presenting clear examples and relevant use cases, it illustrates how these functions can significantly boost operational efficiency and enhance reporting accuracy. Ultimately, this leads to more informed decision-making, empowering professionals to leverage data effectively.

Introduction

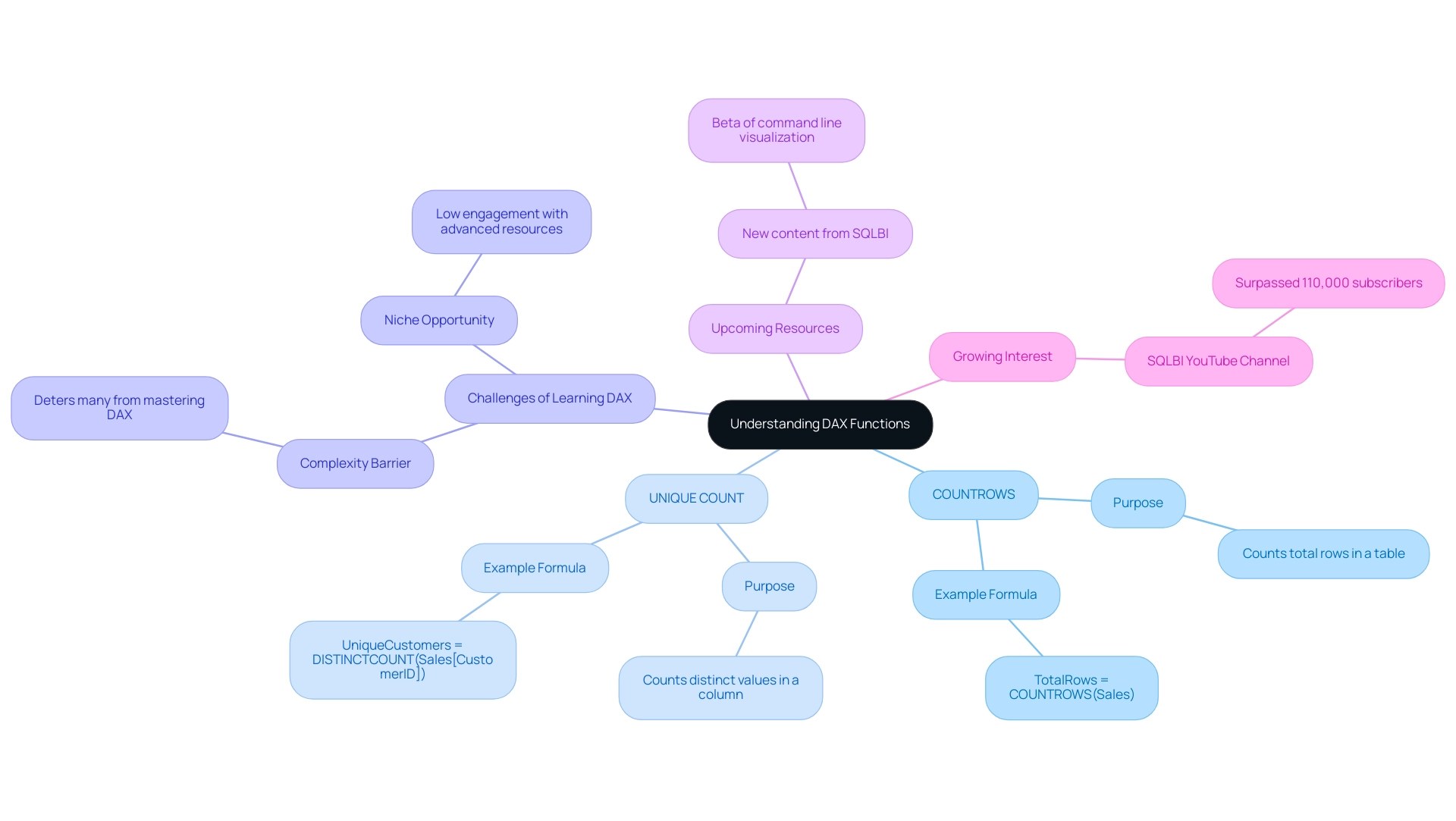

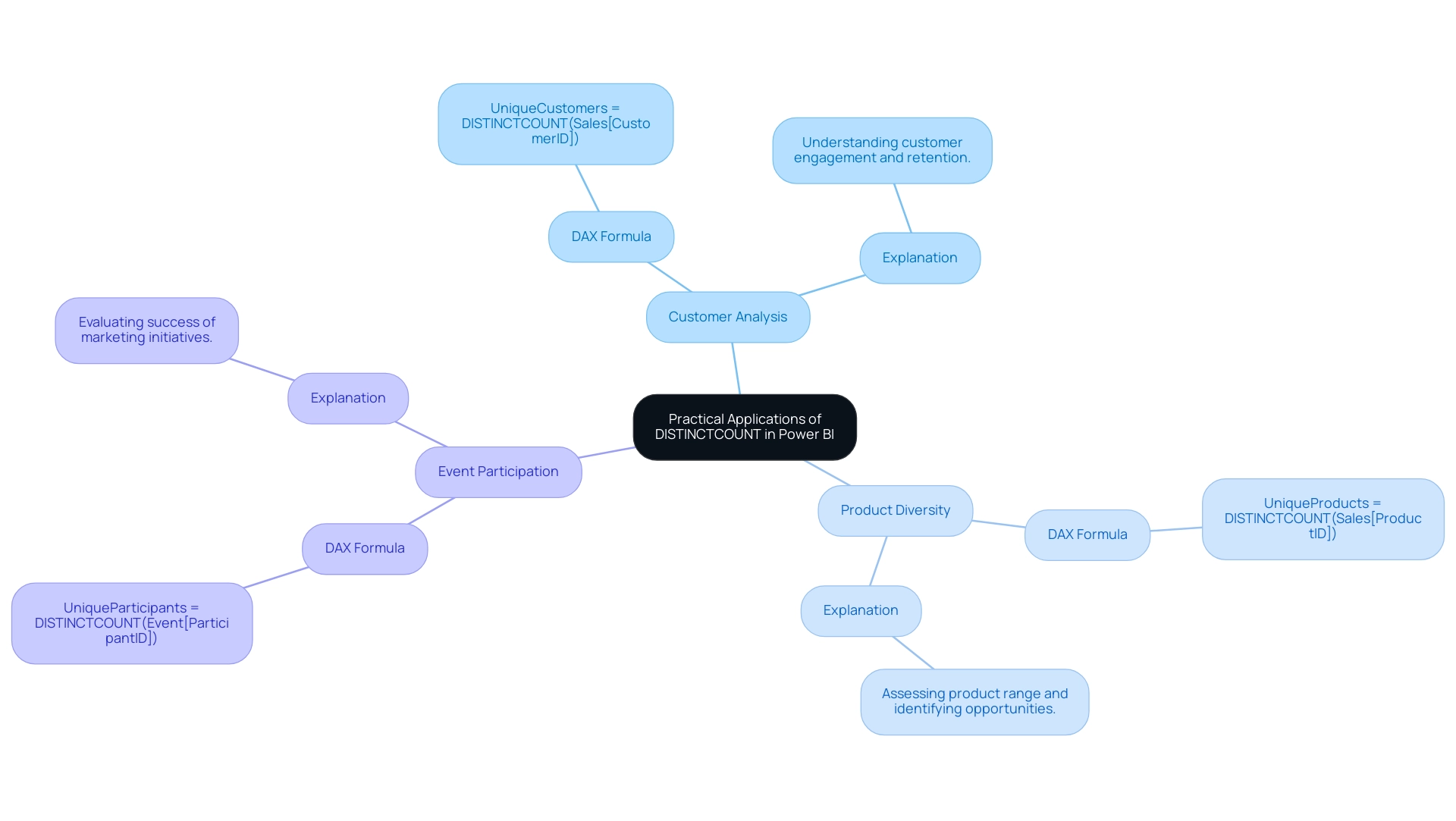

In the realm of data analysis, DAX (Data Analysis Expressions) stands as a cornerstone for maximizing the potential of Power BI. This powerful formula language empowers users to perform intricate calculations and derive actionable insights from their data. As organizations increasingly embrace data-driven decision-making, understanding DAX becomes essential—not merely for mastering its syntax, but for applying it effectively in real-world business contexts.

Consider the capabilities of DAX:

- Creating calculated columns

- Dynamic measures

- Summing multiple columns with precision

Mastery of DAX not only enhances reporting capabilities but also streamlines operational efficiency. As the landscape of business intelligence evolves, the necessity for professionals to harness the full power of DAX becomes clear. It is crucial for ensuring competitiveness in an increasingly data-centric world. Are you ready to elevate your data analysis skills and drive impactful decisions?

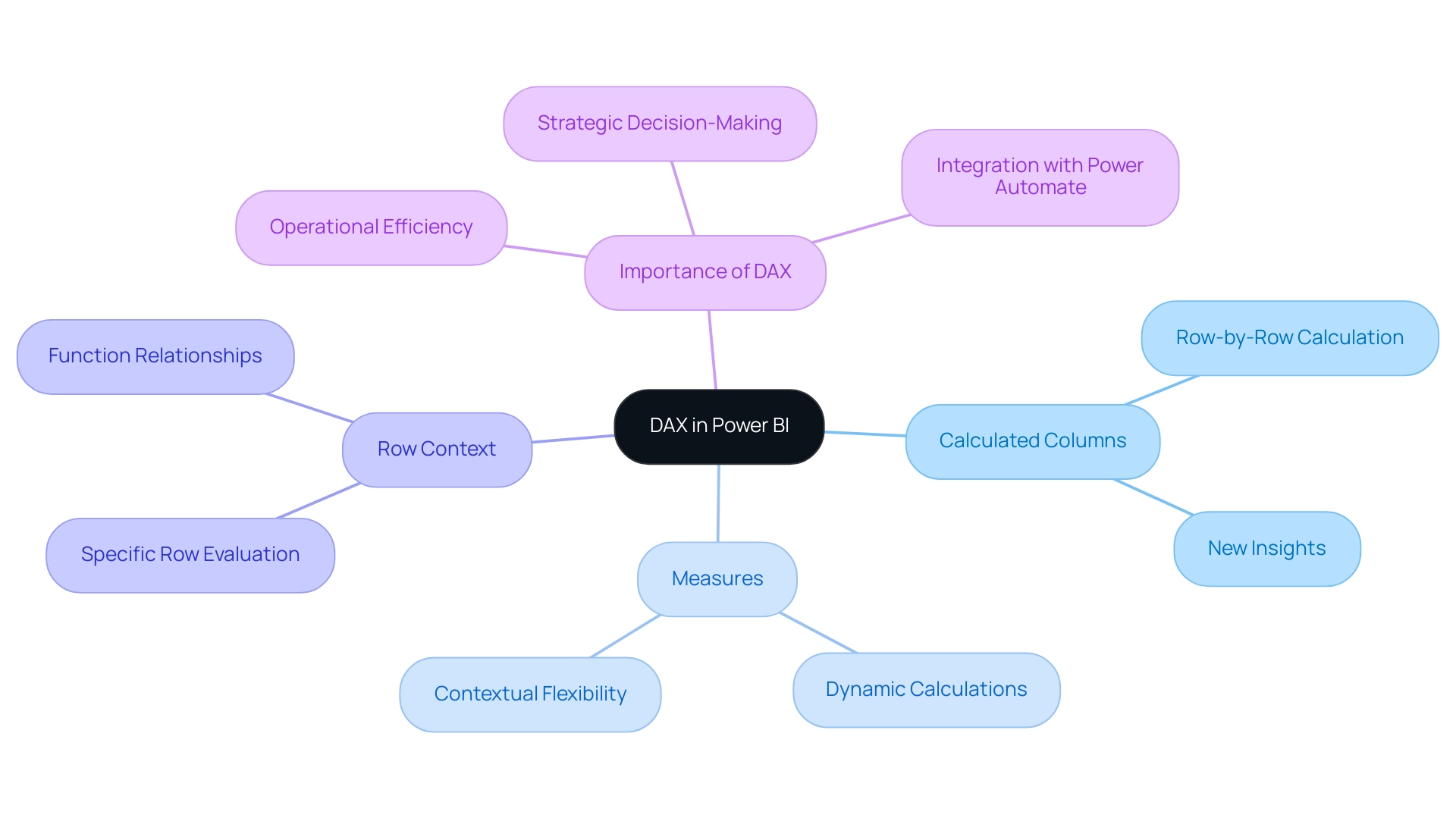

Understanding DAX: The Foundation for Power BI Calculations

DAX, or Data Analysis Expressions, is a powerful formula language integral to Power BI, enabling users to run sophisticated calculations and analyses. Its functions, operators, and constants let you build complex formulas tailored to specific data scenarios. Mastery of DAX is essential for getting the most from Power BI, particularly when summing multiple columns. Understanding DAX goes beyond syntax: it means knowing how to apply it in real-world business intelligence contexts.
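To illustrate summing multiple columns, the Python sketch below mimics two DAX patterns on a small hypothetical table. The first adds the results of SUM over each column. The second uses SUMX, which evaluates an expression row by row and then adds the results. The table, column names, and helper functions are hypothetical and only approximate DAX semantics; for example, they ignore BLANK handling and filter context.

```python
# Hypothetical Sales table, one dict per row.
rows = [
    {"online": 120.0, "store": 80.0, "quantity": 2, "unit_price": 15.0},
    {"online": 60.0, "store": 40.0, "quantity": 5, "unit_price": 4.0},
    {"online": 0.0, "store": 90.0, "quantity": 1, "unit_price": 90.0},
]

def sum_column(table, column):
    """Rough analogue of DAX SUM(Table[Column])."""
    return sum(row[column] for row in table)

def sumx(table, expression):
    """Rough analogue of DAX SUMX(Table, <expression>): evaluate per row, then add."""
    return sum(expression(row) for row in table)

# DAX: SUM(Sales[Online]) + SUM(Sales[Store])
total_a = sum_column(rows, "online") + sum_column(rows, "store")
# DAX: SUMX(Sales, Sales[Online] + Sales[Store])
total_b = sumx(rows, lambda r: r["online"] + r["store"])

# DAX: SUMX(Sales, Sales[Quantity] * Sales[UnitPrice]) gives true revenue...
revenue = sumx(rows, lambda r: r["quantity"] * r["unit_price"])
# ...whereas multiplying two column totals does not.
wrong = sum_column(rows, "quantity") * sum_column(rows, "unit_price")

print(total_a, total_b, revenue, wrong)
```

For plain addition the two patterns agree (both give 390 here). When the row-level expression involves multiplication, only the row-by-row SUMX form produces the correct total.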

Organizations leveraging DAX have reported significant improvements in operational efficiency, especially when paired with our Power BI services. For instance, the 3-Day Power BI Sprint empowers teams to quickly create professionally designed reports, enhancing reporting and providing actionable insights. Furthermore, our General Management App supports comprehensive management and smart reviews, further augmenting DAX’s capabilities in delivering clear, actionable guidance.

A recent case study highlighted the launch of a Semantic Model Refresh Detail Page, which provided extensive insights into refresh metrics, assisting in troubleshooting and enhancing updates. This illustrates how DAX can bolster the reliability of analysis processes, particularly when integrated with tools like Power Automate to streamline workflows and assess ROI risk-free.

The significance of DAX in analysis cannot be overstated. As of 2025, statistics indicate that a substantial percentage of Power BI users depend on DAX for their calculations, underscoring its critical role in effective management. Industry leaders assert that a solid understanding of DAX not only enhances reporting capabilities but also empowers teams to focus on strategic decision-making rather than manual information manipulation.

Moreover, leveraging Robotic Process Automation (RPA) alongside DAX can further enhance operational efficiency in a rapidly evolving AI landscape. By mastering these DAX concepts, you will establish a strong foundation for effectively using DAX to sum multiple columns in Power BI, ultimately driving better insights and operational efficiency. Furthermore, comprehending the distinctions between usage metrics and audit logs can offer greater insights into how DAX operates within the broader context of analysis and reporting.

Key Concepts of DAX:

- Calculated Columns: These are additional columns created in your model with DAX formulas and computed row by row. Their values are calculated when the data is refreshed and stored in the model, so the new information can be used anywhere a regular column can.

- Measures: In contrast to calculated columns, measures are calculated on the fly, at query time, according to the filters currently applied in the report (the filter context). This flexibility is crucial for interactive analysis.

- Row Context: Row context refers to the specific row currently being evaluated in a table. Calculated columns and iterator functions such as SUMX evaluate their expressions in row context, so understanding it is essential for predicting how a DAX formula will behave.
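The difference between a calculated column and a measure can be sketched with a plain-Python model. This is an illustration of the semantics only, not DAX and not how the Power BI engine works internally. The table, its columns, and the `region` filter are hypothetical.

```python
# Hypothetical sample data: each dict stands for one row of a Sales table.
sales = [
    {"region": "North", "quantity": 2, "price": 10.0},
    {"region": "South", "quantity": 1, "price": 25.0},
    {"region": "North", "quantity": 3, "price": 5.0},
]

# Calculated column: evaluated once per row (row context) and stored with the row.
for row in sales:
    row["line_total"] = row["quantity"] * row["price"]

# Measure: evaluated on demand over whatever rows the current filter context keeps.
def total_line_amount(rows, region=None):
    """Sum line_total over the rows that survive an optional region filter."""
    visible = [r for r in rows if region is None or r["region"] == region]
    return sum(r["line_total"] for r in visible)

print(total_line_amount(sales))           # no filter: 20 + 25 + 15 = 60.0
print(total_line_amount(sales, "North"))  # North filter: 20 + 15 = 35.0
```

The stored `line_total` values never change, while the measure returns a different answer for each filter. That is why measures suit report visuals and calculated columns suit values that belong to individual rows.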

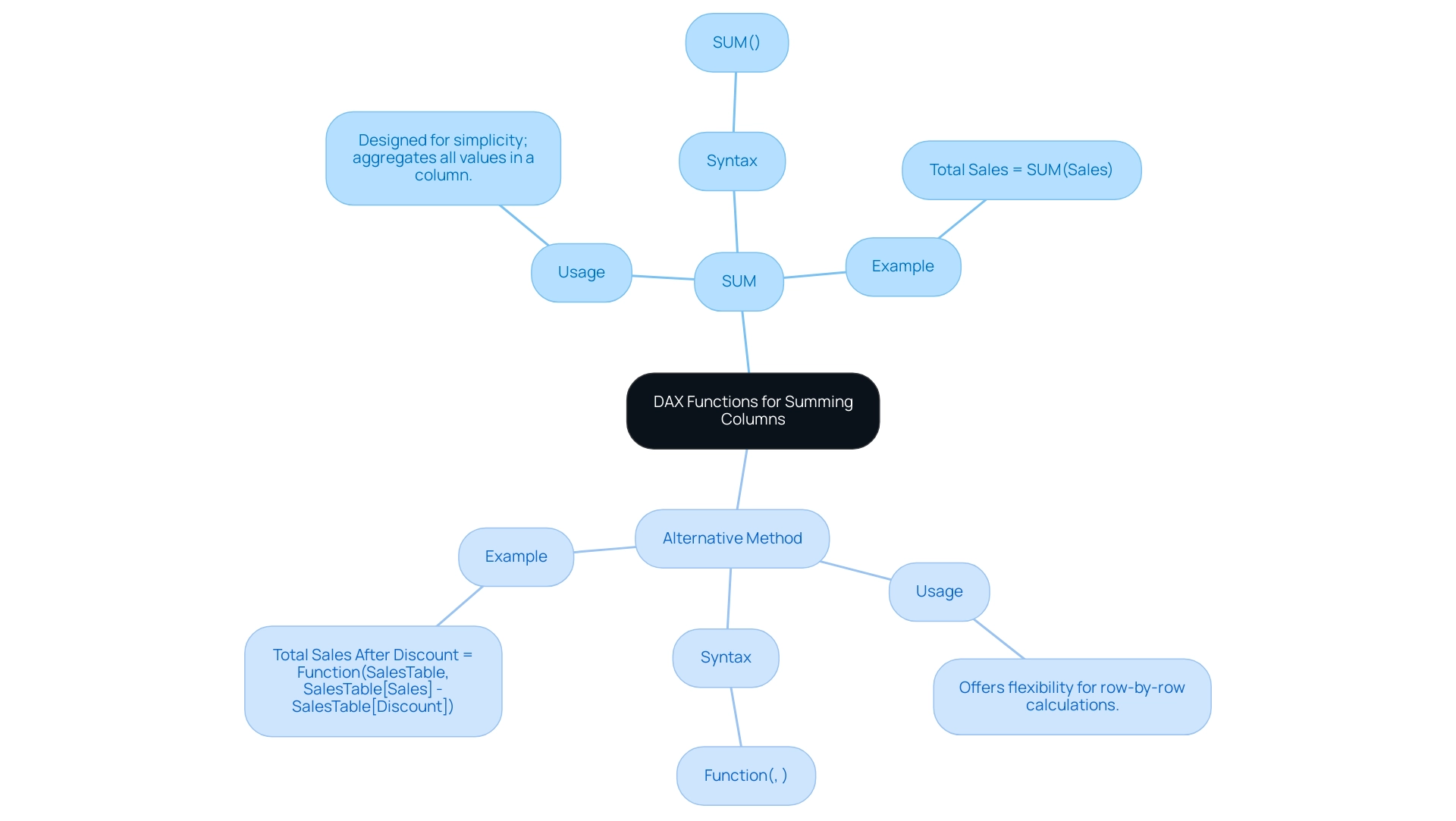

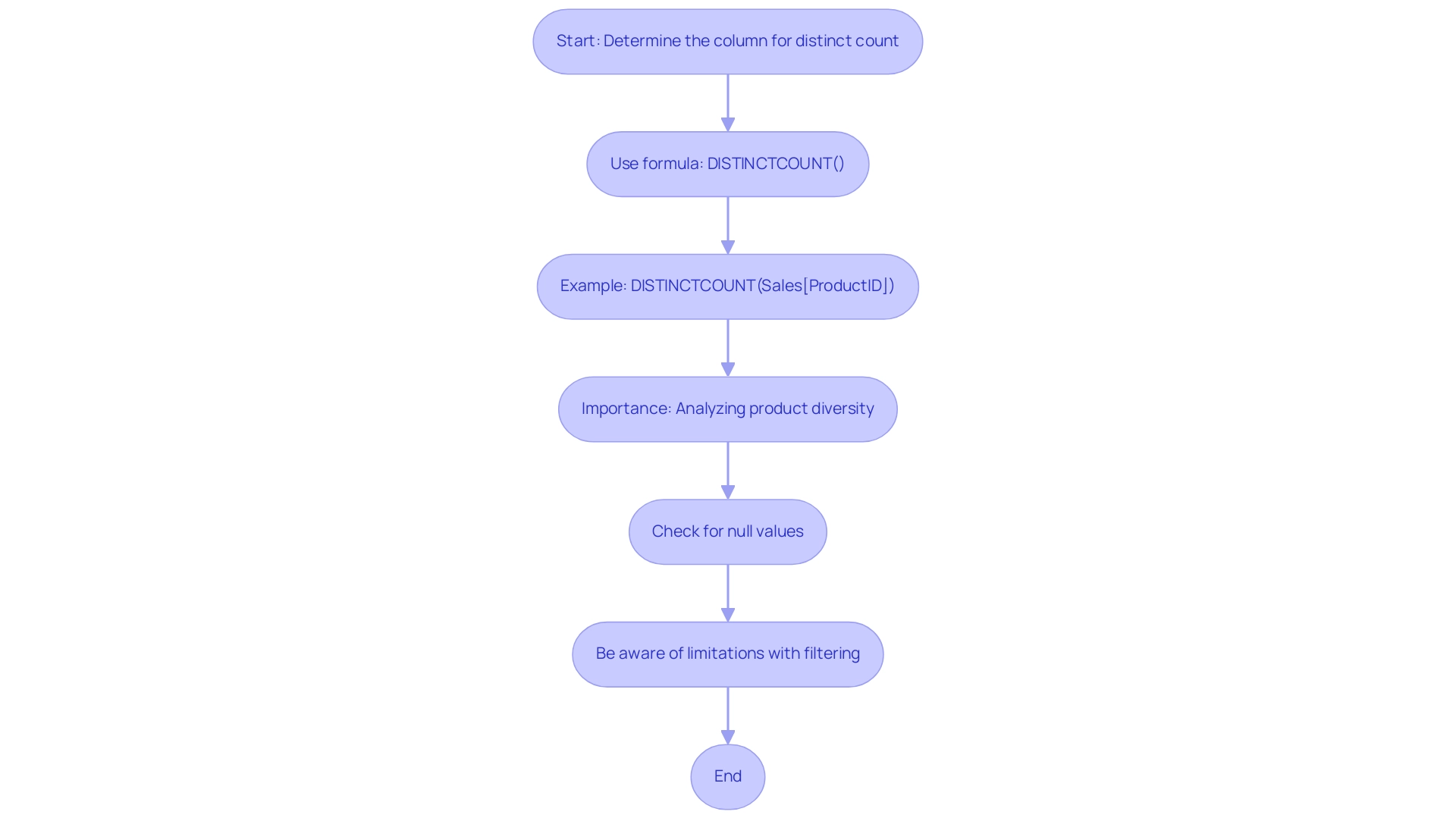

Key DAX Functions for Summing Columns: SUM vs. SUMX

In DAX, the two essential functions for adding values are SUM and SUMX. Understanding the distinction between them is crucial for effective data analysis in Power BI, particularly as organizations increasingly depend on data-driven insights to enhance operational efficiency and tackle challenges such as time-consuming report generation and data inconsistencies.

SUM Operation:

- Usage: The SUM operation is designed for simplicity; it aggregates all values within a single column.

- Syntax: SUM(<ColumnName>)

- Example: To calculate total sales from a column named Sales in a table also named Sales, you would write Total Sales = SUM(Sales[Sales]).

This function is particularly effective for straightforward summation tasks, making it a preferred choice for fundamental analytical requirements.

SUMX Function:

- Usage: In contrast, SUMX offers more flexibility by performing row-by-row calculations. It evaluates an expression for each row of a specified table and then sums the resulting values.

- Syntax: SUMX(<Table>, <Expression>)

- Example: To calculate total sales after applying a discount, you might write Total Sales After Discount = SUMX(SalesTable, SalesTable[Sales] - SalesTable[Discount]).

SUMX is ideal for calculations that combine several columns within each row or that otherwise depend on row context.

The choice between SUM and SUMX depends on the complexity of the calculation. For simple aggregation of one column, SUM suffices. SUMX is better suited to calculations that must evaluate an expression across several columns in each row. For a single column the two are interchangeable: SUM(Table[Column]) returns the same result as SUMX(Table, Table[Column]).
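A small plain-Python model helps make the two functions concrete. It illustrates the semantics only and is not Power BI code. The sample rows and the helper names `sum_column` and `sumx` are hypothetical.

```python
# Hypothetical rows of a SalesTable with Sales and Discount columns.
sales_table = [
    {"Sales": 100.0, "Discount": 10.0},
    {"Sales": 250.0, "Discount": 25.0},
    {"Sales": 80.0, "Discount": 0.0},
]

def sum_column(table, column):
    """Model of SUM(Table[Column]): aggregate the values of one column."""
    return sum(row[column] for row in table)

def sumx(table, expression):
    """Model of SUMX(Table, Expression): evaluate per row, then add the results."""
    return sum(expression(row) for row in table)

total_sales = sum_column(sales_table, "Sales")
after_discount = sumx(sales_table, lambda r: r["Sales"] - r["Discount"])

print(total_sales, after_discount)  # 430.0 395.0
```

Subtraction distributes over a sum, so here SUM(SalesTable[Sales]) - SUM(SalesTable[Discount]) would return the same 395. The two approaches diverge when the per-row expression multiplies or divides columns.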

Understanding the differences between these operations not only simplifies analysis but also enhances dashboard efficiency in Power BI. As organizations strive to transform raw data into actionable insights, mastering these functions becomes imperative. The efficient application of SUM and its alternatives can significantly mitigate issues such as data inconsistencies and prolonged report generation times, facilitating quicker and more informed decision-making.

As evidenced by the experiences of over 200 clients, individuals who can distinguish between SUM and SUMX are better equipped to choose the right function for their analytical needs, leading to more accurate and insightful outcomes. Case studies, such as 'Comparison of SUM and SUMX in Power BI,' illustrate that users who excel at these functions navigate the complexities of data analysis more effectively. As Zach Bobbitt, creator of Statology, emphasizes, ‘My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations,’ underscoring the importance of mastering these concepts in practical applications within the realms of Business Intelligence and Robotic Process Automation.

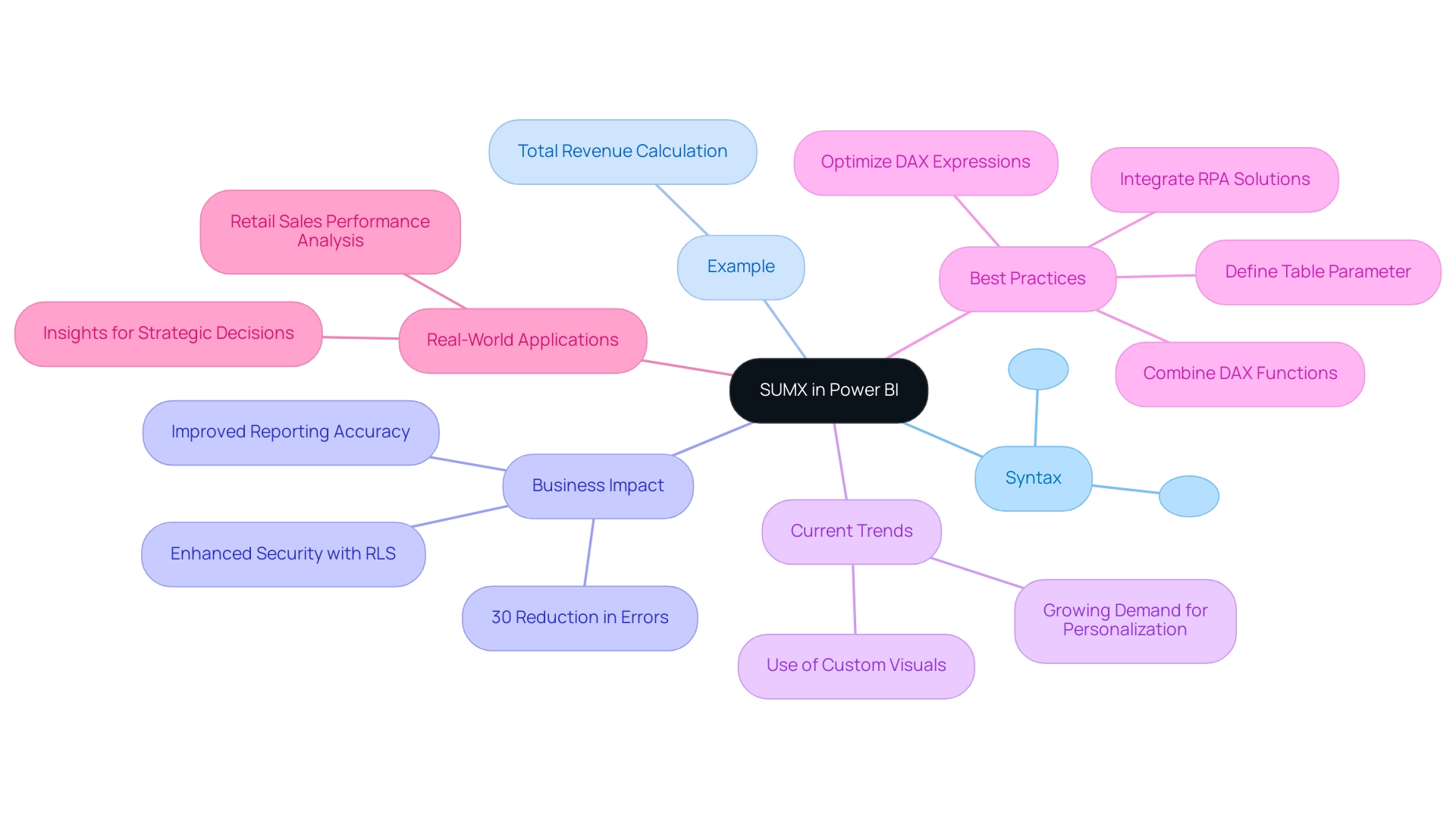

Exploring the Syntax and Parameters of SUMX in Power BI

The SUMX formula is a pivotal component in DAX, particularly for executing complex calculations within Power BI that drive business intelligence initiatives. Mastery of its syntax and parameters is essential for harnessing its full potential in data analysis and reporting. This is especially true in addressing challenges such as time-consuming report creation and data inconsistencies that many organizations encounter.

Syntax of SUMX:

SUMX(<Table>, <Expression>)

- <Table>: The table, or an expression that returns a table, over which the function iterates.

- <Expression>: The expression evaluated for each row of that table. Because it is evaluated in row context, it can refer to the columns of the current row.

Example of SUMX:

Consider a scenario where you have a table named ‘SalesData’ containing ‘Quantity’ and ‘Price’ columns. To compute the total revenue, the formula would be:

Total Revenue = SUMX(SalesData, SalesData[Quantity] * SalesData[Price])

This calculation multiplies the quantity by the price for each row, then sums the results to yield the total revenue.
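Why is the row-by-row step important? A plain-Python model of the SalesData example makes it clear. The rows are hypothetical. The comparison shows SUMX-style evaluation next to the tempting but wrong approach of multiplying two column totals.

```python
# Hypothetical SalesData rows with Quantity and Price columns.
sales_data = [
    {"Quantity": 2, "Price": 10.0},
    {"Quantity": 5, "Price": 3.0},
    {"Quantity": 1, "Price": 40.0},
]

# SUMX-style: multiply within each row, then sum the products.
total_revenue = sum(r["Quantity"] * r["Price"] for r in sales_data)

# Common mistake: multiplying two separate column totals.
naive_revenue = sum(r["Quantity"] for r in sales_data) * sum(r["Price"] for r in sales_data)

print(total_revenue)  # 20 + 15 + 40 = 75.0
print(naive_revenue)  # 8 * 53 = 424.0
```

Multiplication does not distribute over a sum. Only the per-row product gives the correct revenue.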

Business Impact of SUMX in Financial Reporting:

Utilizing the SUMX function can significantly enhance financial reporting accuracy and operational efficiency. Recent statistics reveal that organizations employing SUMX for complex calculations have experienced a notable improvement in information integrity and reporting efficiency, with a reported 30% reduction in errors compared to traditional methods. Furthermore, the implementation of Row-Level Security (RLS) restricts access for specific users, ensuring that only authorized personnel can view sensitive financial information, thereby bolstering the overall security and integrity of reports. This is particularly advantageous in addressing inconsistencies that can arise in reporting.

Current Trends in SUMX Usage:

In 2025, the trend towards utilizing SUMX for complex calculations in Power BI continues to grow, driven by the increasing demand for personalized data insights. Analysts are increasingly leveraging custom visuals in Power BI, allowing for tailored representations of data that enhance the interpretability of complex calculations. A case study on personalization and customization illustrates how custom visuals can significantly enhance the use of specific functions, enabling analysts to create bespoke visual representations that cater to specific business needs.

Best Practices for Using SUMX:

Industry experts recommend several best practices when employing SUMX:

- Keep the table argument no larger than necessary, because SUMX evaluates its expression once for every row it iterates over.

- Combine SUMX with other DAX functions to build more comprehensive calculations.

- Regularly review and optimize your DAX expressions to maintain efficiency.

- Consider integrating RPA solutions to automate repetitive data processing tasks, further enhancing the efficiency of your data analysis and reporting processes.

Real-World Applications of SUMX:

Case studies demonstrate the effectiveness of SUMX in various business contexts. For instance, a retail company used SUMX to analyze sales performance across different regions, which produced actionable insights that informed strategic decisions and improved overall profitability. As Praveen, a Digital Marketing Specialist, observes, ‘Mastering DAX expressions is crucial for enhancing operational efficiency and attaining significant outcomes in analysis.’

By understanding the syntax and practical applications of the SUMX function, users can unlock advanced analytical skills in Power BI, transforming raw information into valuable insights that drive business growth. For those seeking to advance their skills further, the Power BI Certification Training by PwC Academy is highly recommended.

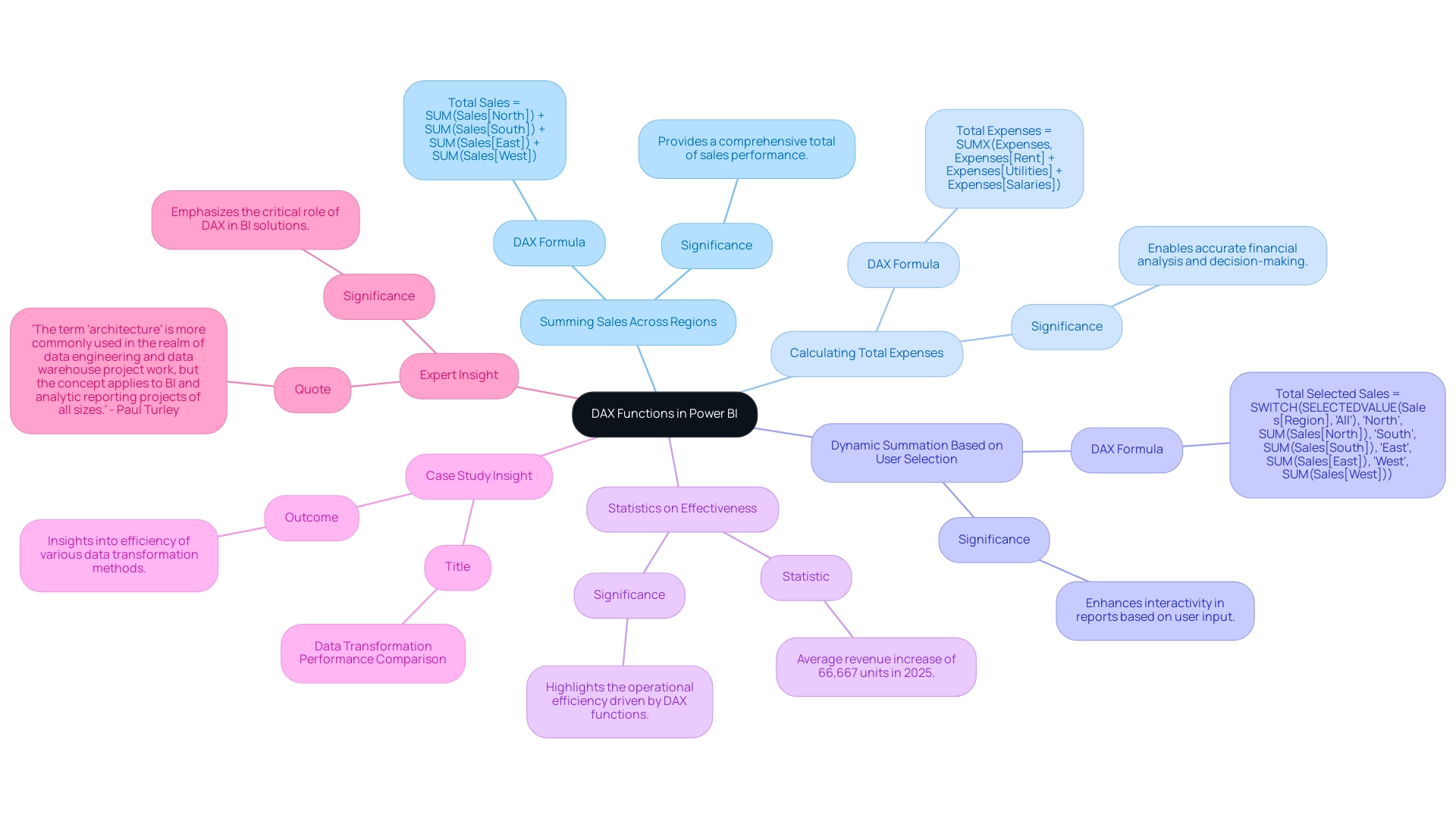

Practical Use Cases: Summing Multiple Columns in Power BI

Utilizing DAX functions to sum multiple columns can significantly elevate your analytical capabilities in Power BI, particularly in harnessing Business Intelligence for actionable insights. Here are several practical use cases that illustrate this functionality:

Use Case 1: Summing Sales Across Multiple Regions

When dealing with sales data distributed across various regions, you can efficiently aggregate these figures using the following DAX formula:

Total Sales = SUM(Sales[North]) + SUM(Sales[South]) + SUM(Sales[East]) + SUM(Sales[West])

This formula consolidates sales from all regions into a comprehensive total, providing a clear overview of overall performance. This clarity is essential for navigating the overwhelming AI landscape to identify effective solutions.
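Addition is associative and commutative, so two orders of summation give the same total. You can add the four regional column totals, or you can add the four regional values within each row and then sum the rows. The plain-Python sketch below shows this. It assumes a hypothetical wide table with one column per region, and the numbers are invented.

```python
# Hypothetical wide Sales table: one column per region.
regions = ["North", "South", "East", "West"]
sales = [
    {"North": 120, "South": 80, "East": 50, "West": 30},
    {"North": 60, "South": 40, "East": 70, "West": 90},
]

# Column-wise pattern: total each region column, then add the four totals.
column_then_add = sum(sum(row[r] for row in sales) for r in regions)

# Row-wise pattern: add the regions within each row, then sum the rows.
row_then_sum = sum(sum(row[r] for r in regions) for row in sales)

print(column_then_add, row_then_sum)  # 540 540
```

Choose between the two patterns for readability. For plain addition, the order does not change the result.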

Use Case 2: Calculating Total Expenses

In financial reporting, having a clear picture of total expenses is crucial. You can achieve this by summing multiple expense categories with:

Total Expenses = SUMX(Expenses, Expenses[Rent] + Expenses[Utilities] + Expenses[Salaries])

Here, SUMX iterates through each row of the Expenses table, allowing for a detailed summation of specified columns. This capability is vital for accurate financial analysis and decision-making that drives growth.
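Empty cells are a practical concern with a row-wise sum like this. In DAX, adding BLANK to a number treats the BLANK as zero, BLANK + BLANK stays BLANK, and SUMX skips rows whose result is BLANK. The plain-Python sketch below models those rules, with `None` standing in for BLANK. The rows and the helper names `blank_add` and `sumx_total` are hypothetical.

```python
from typing import Optional

# None stands in for a DAX BLANK (an empty cell); the rows are hypothetical.
expenses = [
    {"Rent": 1000.0, "Utilities": 150.0, "Salaries": 4000.0},
    {"Rent": 1000.0, "Utilities": None, "Salaries": 4200.0},
]

def blank_add(*values: Optional[float]) -> Optional[float]:
    """Model DAX addition: BLANK behaves like 0, and an all-BLANK sum stays BLANK."""
    present = [v for v in values if v is not None]
    return sum(present) if present else None

def sumx_total(rows) -> Optional[float]:
    """Model SUMX(Expenses, Rent + Utilities + Salaries), ignoring BLANK row results."""
    row_totals = (blank_add(r["Rent"], r["Utilities"], r["Salaries"]) for r in rows)
    return blank_add(*row_totals)

print(sumx_total(expenses))  # 5150 + 5200 = 10350.0
```

The missing Utilities value does not wipe out the second row. The result is the same one you would get by adding SUM over each expense column, since SUM also ignores blanks.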

Use Case 3: Dynamic Summation Based on User Selection

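DAX also allows for dynamic calculations based on user input, enhancing interactivity in reports. For example:

Total Selected Sales = SWITCH(SELECTEDVALUE(Sales[Region], "All"), "North", SUM(Sales[North]), "South", SUM(Sales[South]), "East", SUM(Sales[East]), "West", SUM(Sales[West]), SUM(Sales[North]) + SUM(Sales[South]) + SUM(Sales[East]) + SUM(Sales[West]))

This measure adjusts the total sales calculation according to the region selected by the user. SELECTEDVALUE returns "All" when no single region is selected. SWITCH would return BLANK when no listed value matches, so the final argument supplies the total for all regions in that case. The measure shows the flexibility and power of DAX in tailoring data insights to specific needs while overcoming common challenges in leveraging insights from Power BI dashboards.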
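The selection logic can be modelled in plain Python to check its behaviour before you build the report. SELECTEDVALUE returns the single distinct value visible in the current filter context, or the alternate result otherwise. SWITCH returns BLANK when nothing matches, unless an else result is supplied. This sketch supplies the all-region total as that else result, which is an assumption about the desired behaviour. The regional totals and the helper names are hypothetical.

```python
from typing import Dict, List

# Hypothetical per-region totals, i.e. what SUM(Sales[North]) etc. would return.
region_totals: Dict[str, float] = {"North": 180.0, "South": 120.0, "East": 120.0, "West": 120.0}

def selected_value(visible: List[str], alternate: str) -> str:
    """Model SELECTEDVALUE: the single distinct visible value, else the alternate."""
    distinct = set(visible)
    return distinct.pop() if len(distinct) == 1 else alternate

def total_selected_sales(visible: List[str]) -> float:
    """Model SWITCH with an else branch that returns the all-region total."""
    region = selected_value(visible, "All")
    if region in region_totals:
        return region_totals[region]
    return sum(region_totals.values())  # else branch; without it SWITCH yields BLANK

print(total_selected_sales(["North"]))             # 180.0
print(total_selected_sales(list(region_totals)))   # no slicer selection: 540.0
print(total_selected_sales(["North", "East"]))     # multiple selected: 540.0
```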

Statistics on Effectiveness

In 2025, organizations leveraging DAX to sum multiple columns reported an average revenue increase of 66,667 units. This statistic underscores the effectiveness of these functions in driving operational efficiency and informed decision-making. It emphasizes the significance of utilizing DAX to improve analysis and navigate the complexities of the AI landscape effectively.

Case Study Insight

A recent case study on data transformation performance compared various methods, including summing multiple columns with DAX. Its primary focus was on transformation, but it also showed that organizations employing DAX functions experienced improved reporting efficiency. This guidance is crucial for selecting optimal approaches for analysis needs, highlighting the importance of mastering DAX in the evolving landscape of business intelligence, especially in overcoming extraction and analysis challenges.

Expert Insight

As Paul Turley, Microsoft Data Platform MVP, states, “The term ‘architecture’ is more commonly used in the realm of data engineering and data warehouse project work, but the concept applies to BI and analytic reporting projects of all sizes.” This highlights the critical role that DAX functions play in the architecture of effective BI solutions, particularly as businesses strive to harness data-driven insights for growth amidst the overwhelming options in the AI landscape.

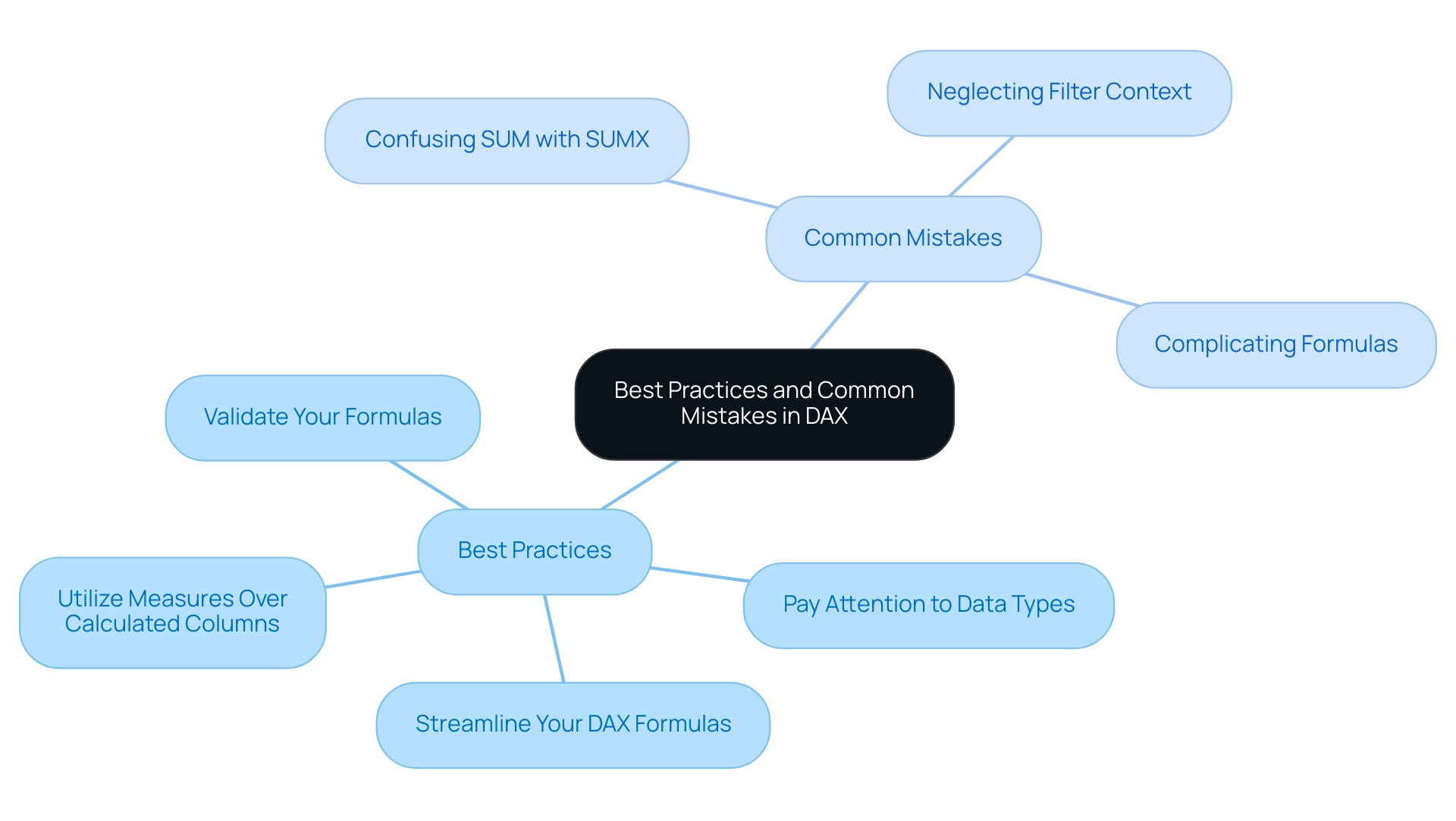

Best Practices and Common Mistakes in Summing Columns with DAX

When utilizing DAX for summing columns in Power BI, adhering to established best practices can significantly enhance your outcomes. This is particularly vital for driving data-driven insights and operational efficiency, both crucial for business growth. Here are essential recommendations:

Best Practices:

- Utilize Measures Over Calculated Columns: Measures are computed at query time rather than stored, which improves efficiency and prevents unnecessary growth in your model's size. In Power BI Desktop, the DAX query view can generate a DEFINE block listing all measures in the model, making it easier to review and manage them in one place.

- Pay Attention to Data Types: Ensure that the columns being summed are numeric. This precaution helps avoid errors arising from incompatible types, especially when integrating with tools like EMMA RPA, which assist in automating repetitive tasks.

- Streamline Your DAX Formulas: Simplifying your formulas can lead to better performance. Avoid convoluted calculations within your expressions to maintain clarity and efficiency, especially given the time-consuming nature of report creation that many face.

- Validate Your Formulas: Always test your DAX formulas with sample values to confirm they yield the expected results, ensuring accuracy in your calculations. Users can add new measures to the model directly from the DAX query view and test changes to existing measures without affecting the model until confirmed.

Common Mistakes:

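- Confusing SUM with SUMX: A frequent error is using SUM when row-by-row calculations are required. SUM can only aggregate a single column, so it cannot evaluate an expression such as Quantity * Price for each row. SUM(SalesData[Quantity]) * SUM(SalesData[Price]) multiplies two totals and generally gives a different, incorrect result from SUMX(SalesData, SalesData[Quantity] * SalesData[Price]).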

- Neglecting Filter Context: Ignoring how filter context impacts your calculations can result in unexpected totals. Always consider the influence of filters on your DAX expressions, which is vital for accurate reporting and data analysis.

- Complicating Formulas: Strive to keep your DAX expressions straightforward. Overly complex formulas not only increase the likelihood of errors but also complicate maintenance and understanding.