Overview

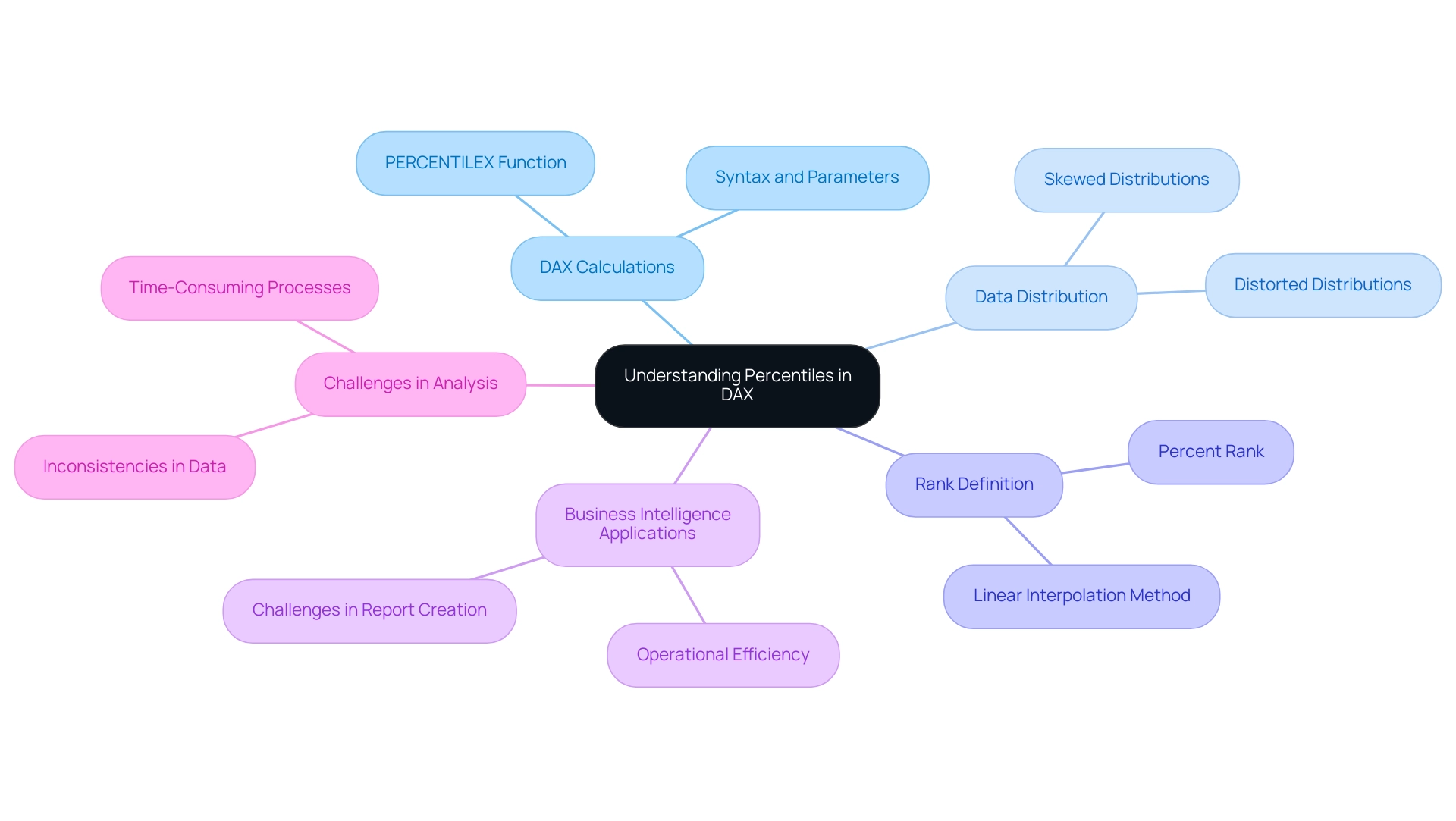

Power BI lets users compare dates with several techniques, including built-in and custom calendar tables, DAX functions, and visualizations, which make data analysis and reporting more efficient. The article argues that mastering these methods is key to addressing common challenges such as data inconsistencies and time-consuming report creation, so that organizations can derive actionable insights for informed decision-making.

Introduction

In the world of data analysis, the ability to compare dates effectively in Power BI is not just a technical skill; it is a vital component that can transform raw data into strategic insights. As organizations strive to harness the power of their data, understanding the nuances of date comparison becomes essential for identifying trends, forecasting outcomes, and making informed decisions.

With challenges such as time-consuming report generation and data inconsistencies, mastering date functions can empower users to navigate these complexities. This article delves into the critical aspects of date comparison in Power BI, exploring practical techniques, best practices, and the role of DAX functions in enhancing data analysis capabilities.

By unlocking these tools, decision-makers can drive operational efficiency and derive actionable insights that propel their organizations forward.

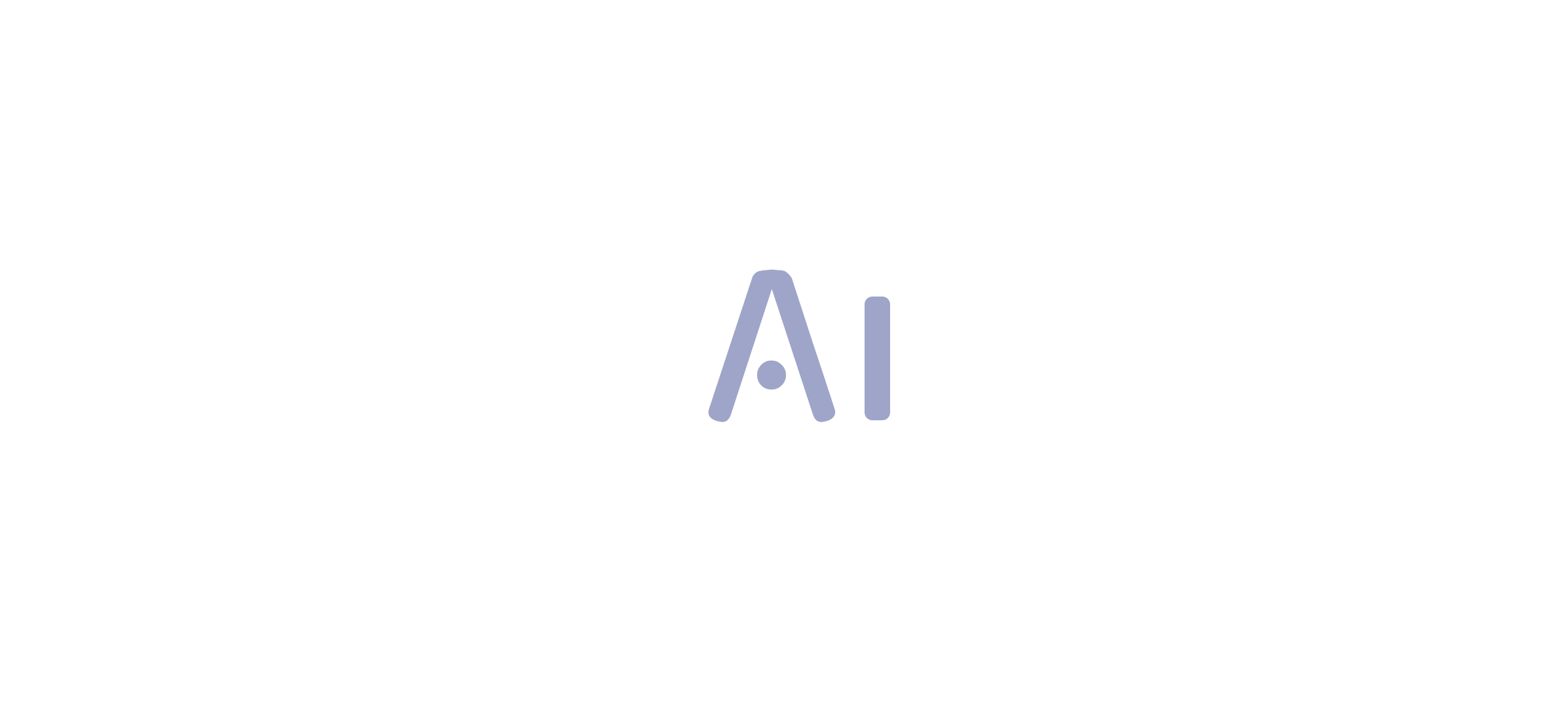

Understanding Date Comparison in Power BI

In Power BI, comparing dates is a fundamental capability that lets users analyze and contrast data across time periods. Mastering date functions is essential: it helps users identify trends, forecast accurately, and understand performance over time. This matters all the more given the obstacles organizations typically face, such as time-consuming report creation, data inconsistencies that erode trust, and reports that lack actionable guidance.

Key concepts for comparing dates in Power BI include:

- Relative dates, which define a period relative to the current date (for example, the last 30 days).

- Absolute dates, which refer to fixed points or ranges in time (for example, 1 to 31 January 2024).
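To make the distinction concrete, here is a small Python sketch (not DAX) that resolves each kind of range into a start and end date. The function names are hypothetical, and including the reference date in a relative window is an assumption of this sketch, not a statement about any particular Power BI filter setting.

```python
from datetime import date, timedelta


def relative_range(reference: date, days: int) -> tuple[date, date]:
    """Resolve 'the last N days' against a reference date (assumed to include that date)."""
    return reference - timedelta(days=days - 1), reference


def absolute_range(start: date, end: date) -> tuple[date, date]:
    """An absolute range is fixed: it does not move when the reference date changes."""
    return start, end


def in_range(day: date, bounds: tuple[date, date]) -> bool:
    """Inclusive check that a date falls inside a resolved range."""
    start, end = bounds
    return start <= day <= end


# The relative window moves with the reference date; the absolute one does not.
print(relative_range(date(2024, 3, 10), 7))  # covers 2024-03-04 through 2024-03-10
print(relative_range(date(2024, 3, 11), 7))  # covers 2024-03-05 through 2024-03-11
print(absolute_range(date(2024, 1, 1), date(2024, 1, 31)))
```

A report built on the relative range shows different rows each day, while the absolute range always returns the same period.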

Understanding this difference is essential for analyzing time-based data efficiently and for producing reports that offer actionable guidance. Recent trends underscore the growing importance of time intelligence in data analysis, as organizations increasingly recognize its value for informed decision-making.

For instance, the ‘total count’ in Power BI usage metrics represents the unique reports viewed over a 90-day period, which gives a picture of user engagement. The case study ‘Worked Example of View and Viewer Metrics’ shows how various users engage with numerous reports, how usage metrics are calculated, and what they reveal about user behavior. The platform also records the technology viewers use to open reports (PowerBI.com, mobile, or embedded), which further illuminates engagement patterns over time.
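The idea behind a count such as "unique reports viewed in the last 90 days" can be sketched in Python. The view log, its columns, and the `usage_summary` function are hypothetical; this illustrates a trailing-window distinct count, not how Power BI computes its usage metrics internally.

```python
from datetime import date, timedelta

# Hypothetical view log: (view date, report name, viewer). Not the usage-metrics schema.
VIEW_LOG = [
    (date(2024, 1, 5), "Sales", "ana"),
    (date(2024, 3, 1), "Sales", "ben"),
    (date(2024, 3, 2), "Finance", "ana"),
    (date(2024, 3, 2), "Sales", "ana"),
]


def usage_summary(log, as_of: date, window_days: int = 90) -> dict:
    """Count views, distinct reports and distinct viewers in a trailing window ending on as_of."""
    start = as_of - timedelta(days=window_days - 1)
    recent = [(day, report, viewer) for day, report, viewer in log if start <= day <= as_of]
    return {
        "views": len(recent),
        "distinct_reports": len({report for _, report, _ in recent}),
        "distinct_viewers": len({viewer for _, _, viewer in recent}),
    }


# On 10 April 2024 the 90-day window starts on 12 January, so the January view drops out.
print(usage_summary(VIEW_LOG, date(2024, 4, 10)))
# {'views': 3, 'distinct_reports': 2, 'distinct_viewers': 2}
```

Views count every opening, while distinct counts collapse repeat visits, which is why the two kinds of metric can tell different stories.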

By utilizing Power BI to compare dates, decision-makers can obtain practical knowledge that enhances operational efficiency. The General Management App specifically tackles these challenges by providing comprehensive management features, enhanced by Microsoft Copilot integration and predictive analytics, ensuring users can navigate and utilize information effectively for better outcomes.

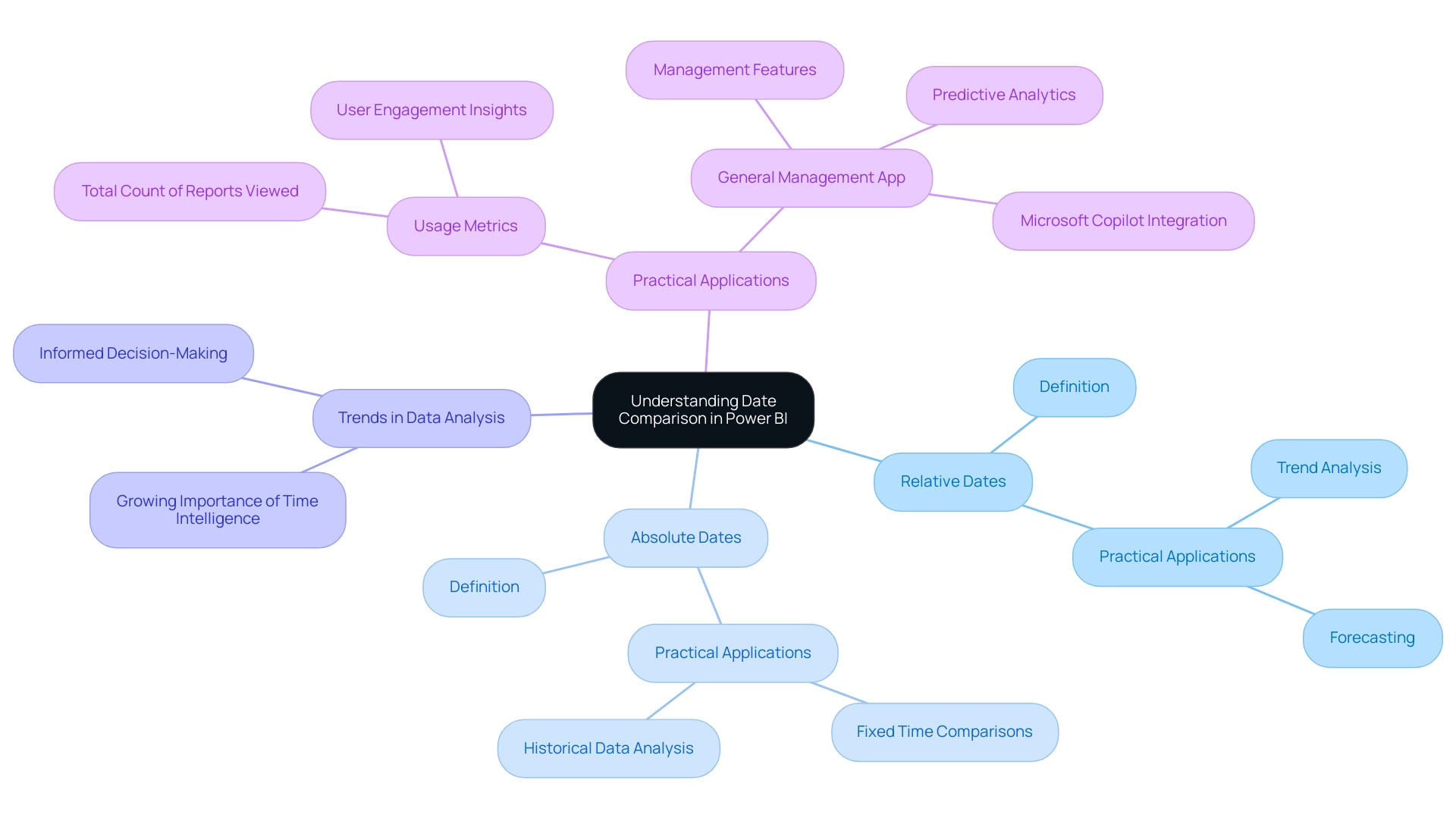

Creating Effective Date Tables for Comparison

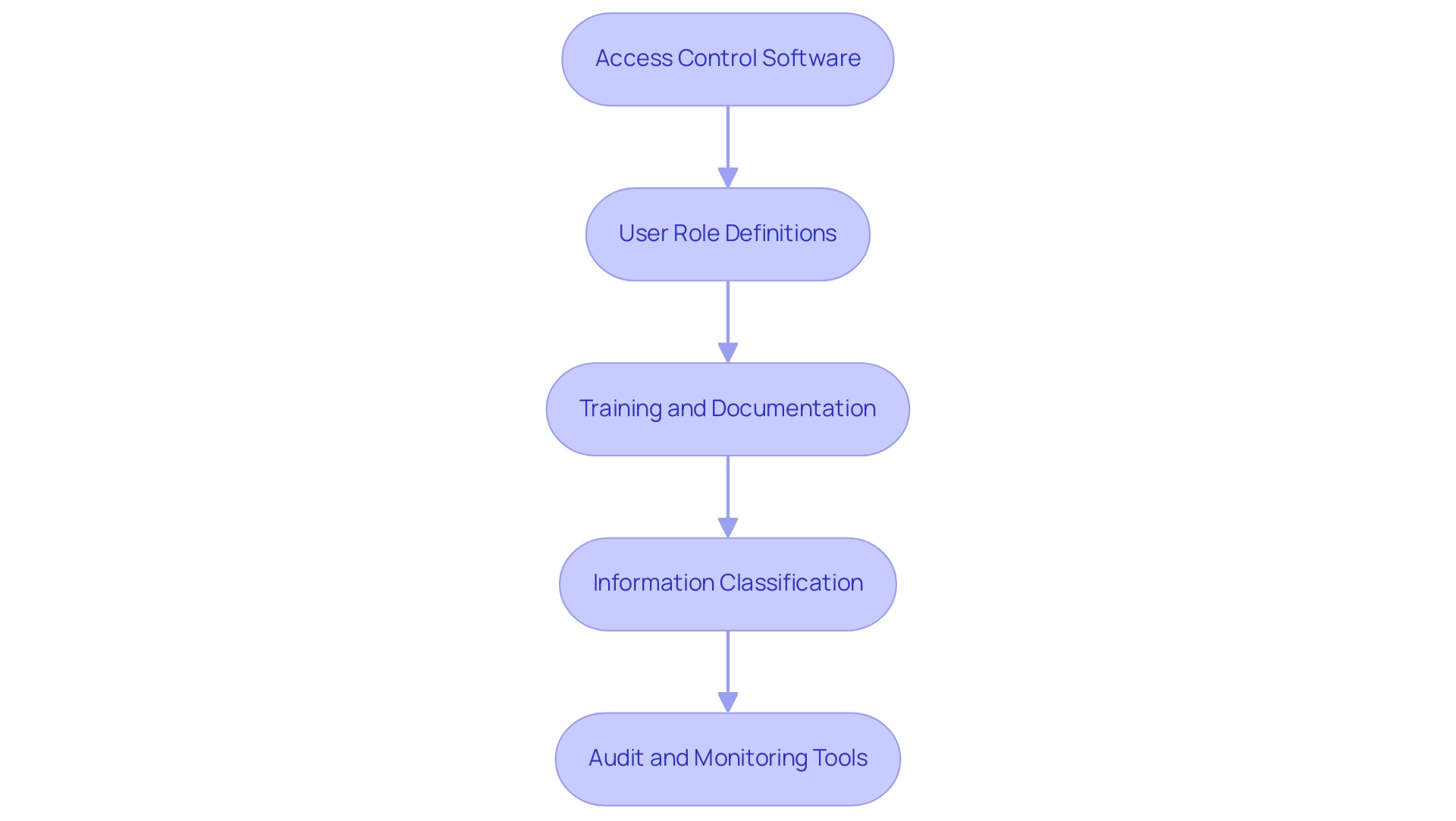

Creating an effective date table in Power BI is essential for comparing dates accurately in analysis and reporting. In today’s data-rich environment, the ability to extract meaningful insights from Power BI dashboards can significantly drive business growth and operational efficiency. There are two primary ways to build one: using the built-in CALENDAR function or constructing a custom date table.

Here’s a detailed guide to both approaches:

- Using the Built-in Calendar Function: In Power BI Desktop, open the ‘Modeling’ tab, select ‘New Table’, and enter the following formula:

  DateTable = CALENDAR(MIN(YourData[Date]), MAX(YourData[Date]))

  This creates one row for every date between the earliest and latest dates in your dataset, giving you a complete, gap-free range for date comparisons and helping mitigate challenges such as data inconsistencies and time-consuming report creation.

- Creating a Custom Calendar Table: Alternatively, you can build a calendar table manually in Excel, which allows greater customization. Make sure it includes key columns such as Year, Month, Quarter, and Weekday. This supports more granular analysis tailored to specific reporting needs and, through better data-driven insights, improves operational efficiency.

- Marking as a Date Table: Once your date table is ready, mark it as a date table in Power BI by selecting ‘Mark as Date Table’ and choosing the appropriate date column. This step matters because it lets Power BI apply its time intelligence functions correctly, which improves date comparisons and overall analysis.
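To show what the CALENDAR formula and the custom columns amount to, the following Python sketch (an illustration, not Power BI code) builds one row per day between the earliest and latest fact dates and adds Year, Month, Quarter, and Weekday columns. It also checks the properties a date column needs before the table can be marked as a date table: no blanks, unique values, and no gaps. The function names are hypothetical, and Weekday here uses ISO numbering (1 = Monday), which differs from the default of DAX's WEEKDAY function (1 = Sunday).

```python
from datetime import date, timedelta


def build_calendar(fact_dates: list[date]) -> list[dict]:
    """One row per day from the earliest to the latest fact date, like CALENDAR(MIN(...), MAX(...))."""
    start, end = min(fact_dates), max(fact_dates)
    rows = []
    current = start
    while current <= end:
        rows.append({
            "Date": current,
            "Year": current.year,
            "Month": current.month,
            "Quarter": (current.month - 1) // 3 + 1,
            "Weekday": current.isoweekday(),  # ISO numbering: 1 = Monday ... 7 = Sunday
        })
        current += timedelta(days=1)
    return rows


def is_valid_date_table(rows: list[dict]) -> bool:
    """Check the date column has no blanks, unique values and no gaps."""
    dates = [row.get("Date") for row in rows]
    if not dates or any(d is None for d in dates):
        return False
    if len(set(dates)) != len(dates):
        return False
    ordered = sorted(dates)
    return all(later - earlier == timedelta(days=1) for earlier, later in zip(ordered, ordered[1:]))


calendar_rows = build_calendar([date(2024, 3, 30), date(2024, 4, 2), date(2024, 3, 31)])
print(len(calendar_rows), is_valid_date_table(calendar_rows))  # 4 True
```

Because the range is generated rather than copied from the fact table, days with no transactions (here 1 April) still get a row.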

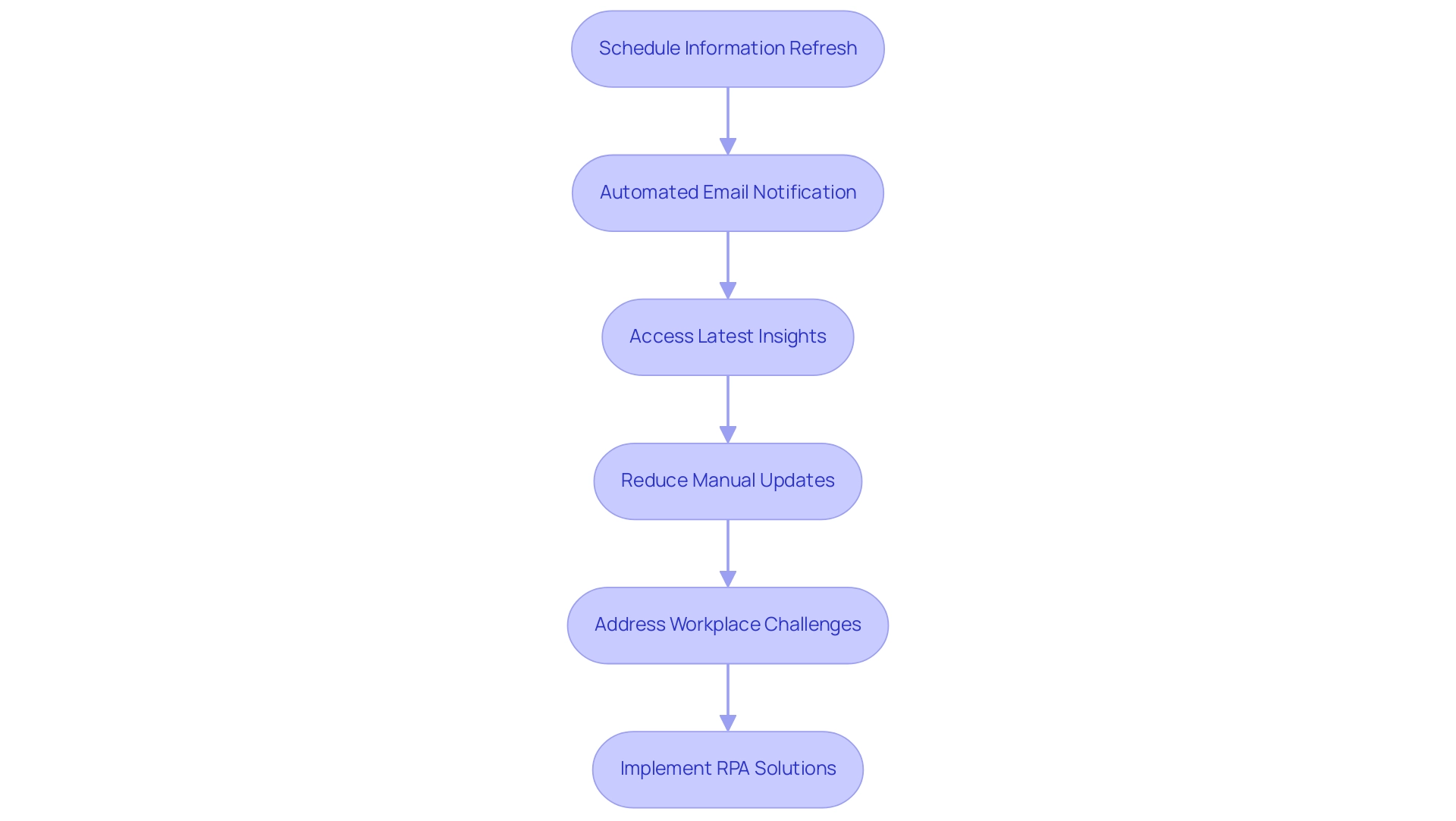

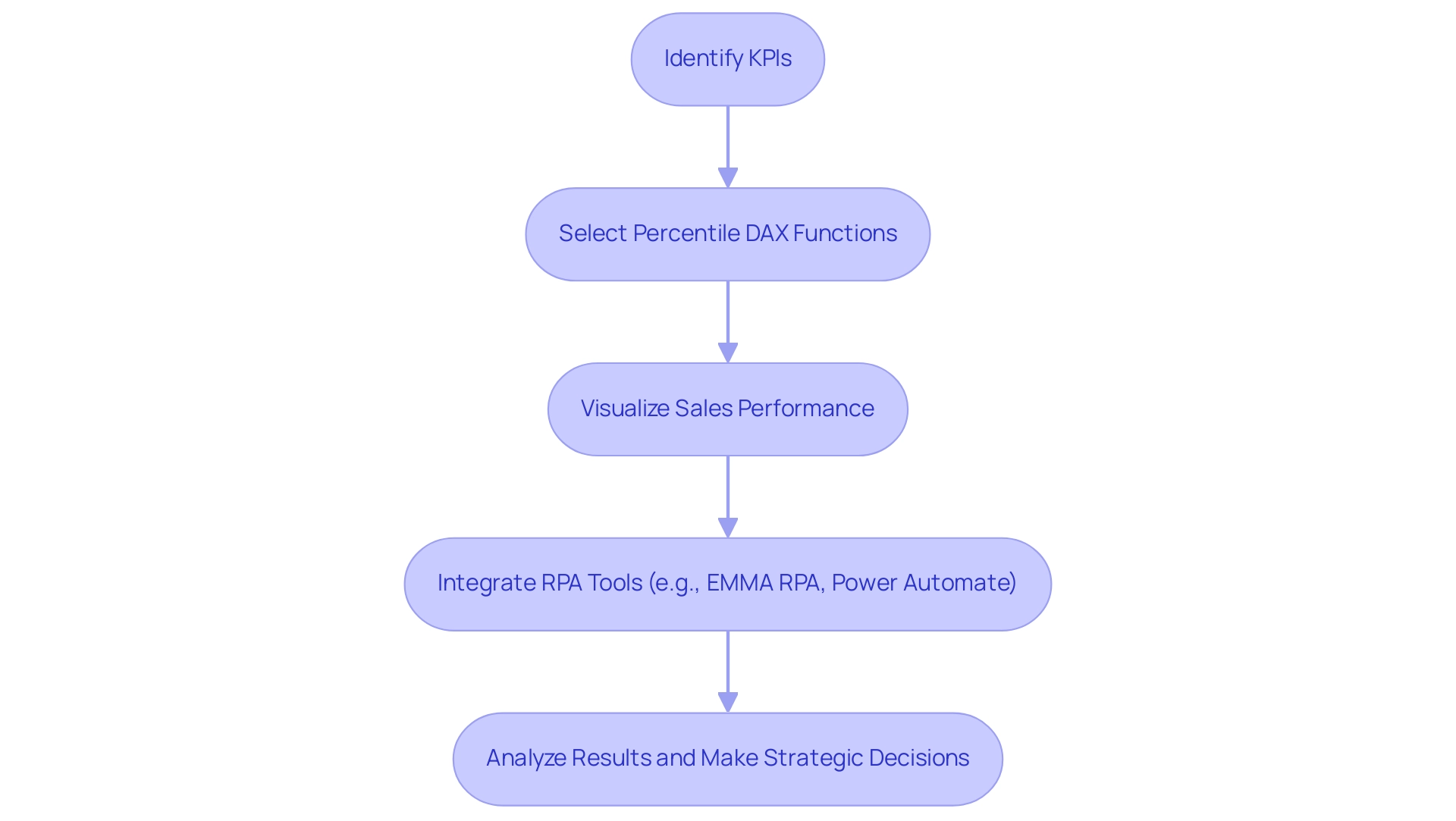

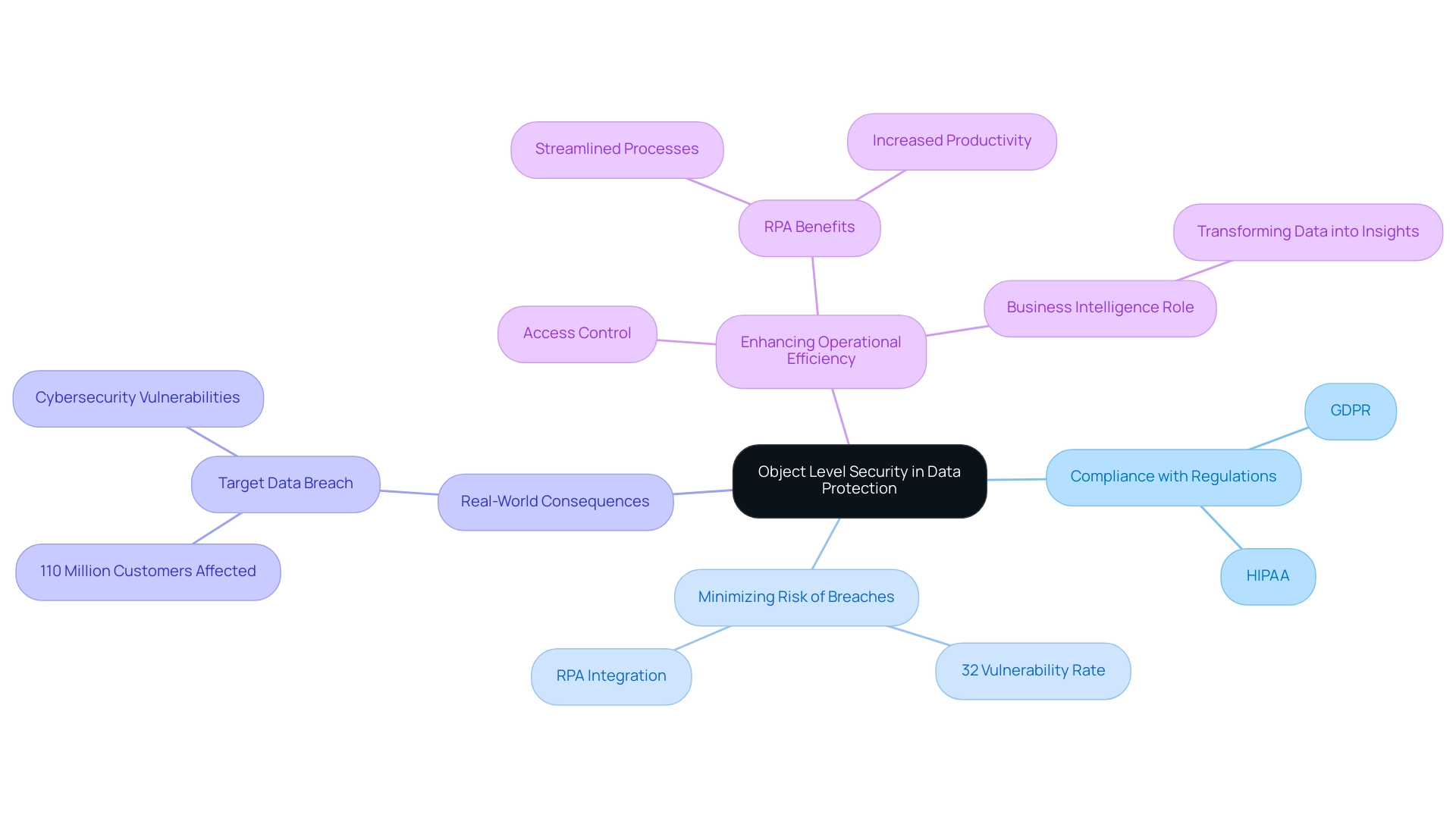

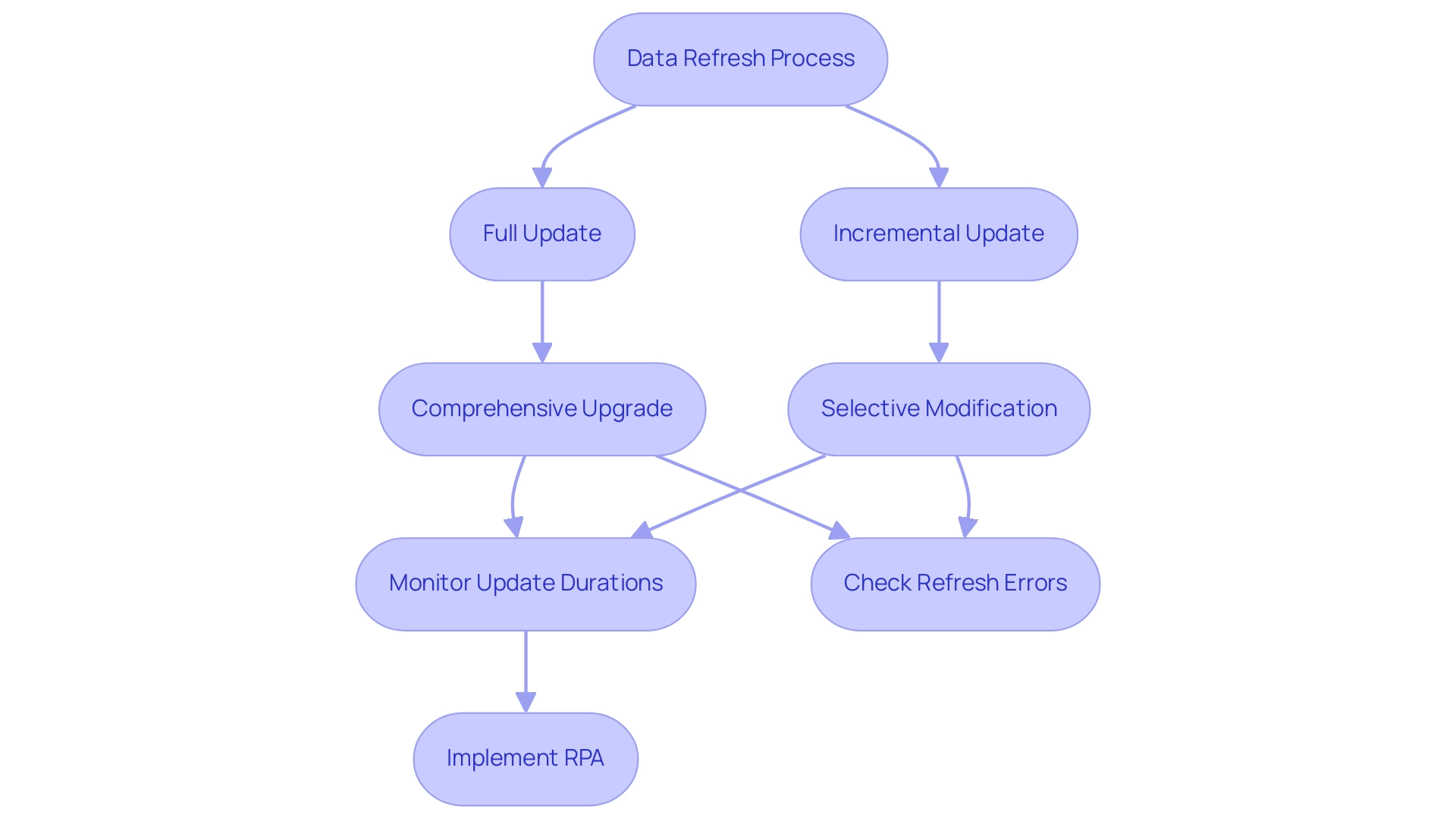

Well-designed date tables can greatly improve the interactivity and usability of reports, because they let Power BI compare dates consistently, which helps tackle the challenges many organizations face in using their data. Integrating Robotic Process Automation (RPA) can also streamline report generation, reducing the burden of staffing shortages and outdated systems. Case studies on optimizing visual interactions suggest that well-structured date tables make reports more relevant to their audience.

A Principal Consultant at 3Cloud Solutions who focuses on Business Intelligence and Power BI emphasizes that ‘the proper arrangement of time tables is foundational to unlocking the full potential of your insights.’ This content has received 2,394 views, a sign of broad interest in mastering date tables in Power BI.

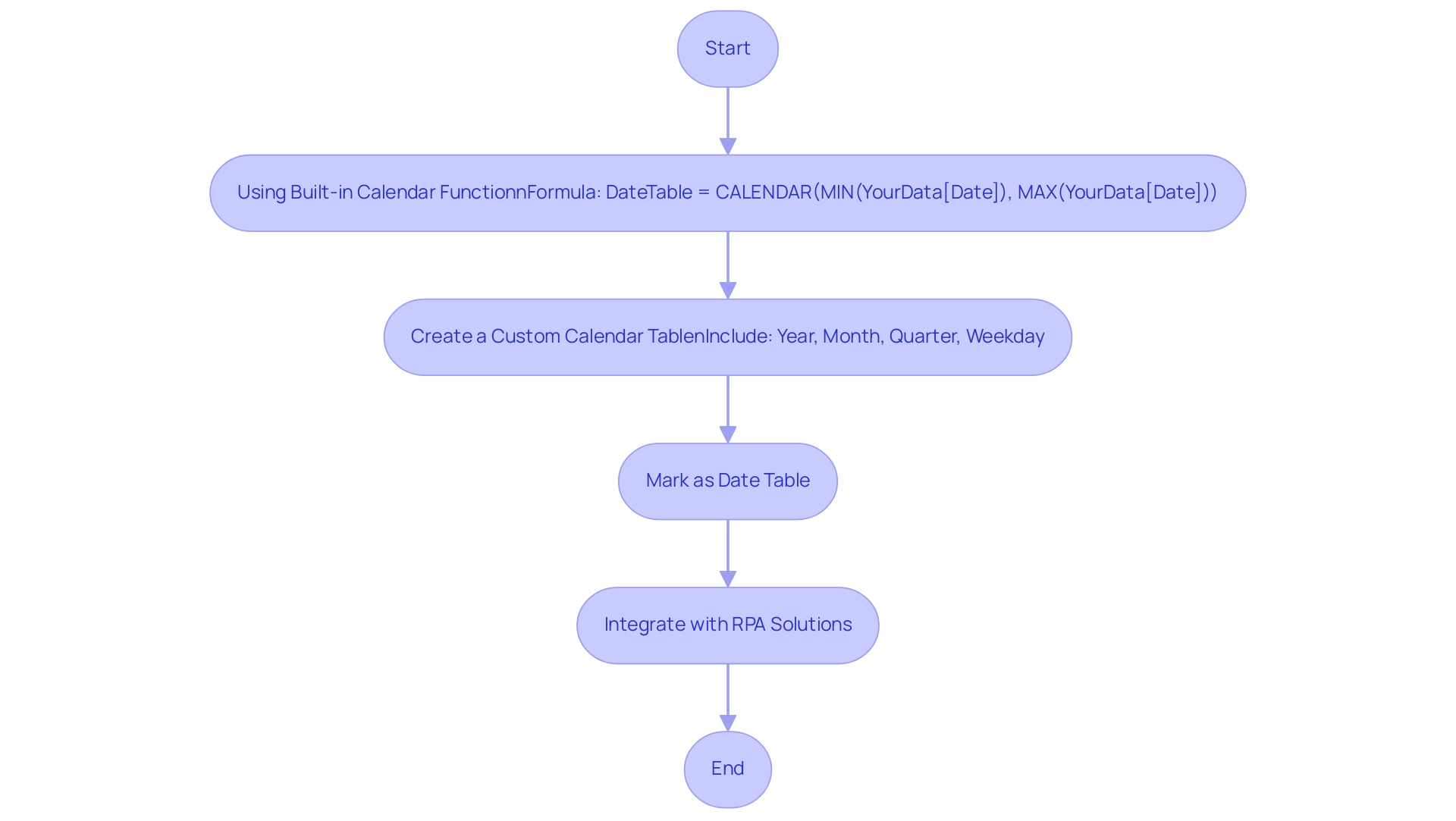

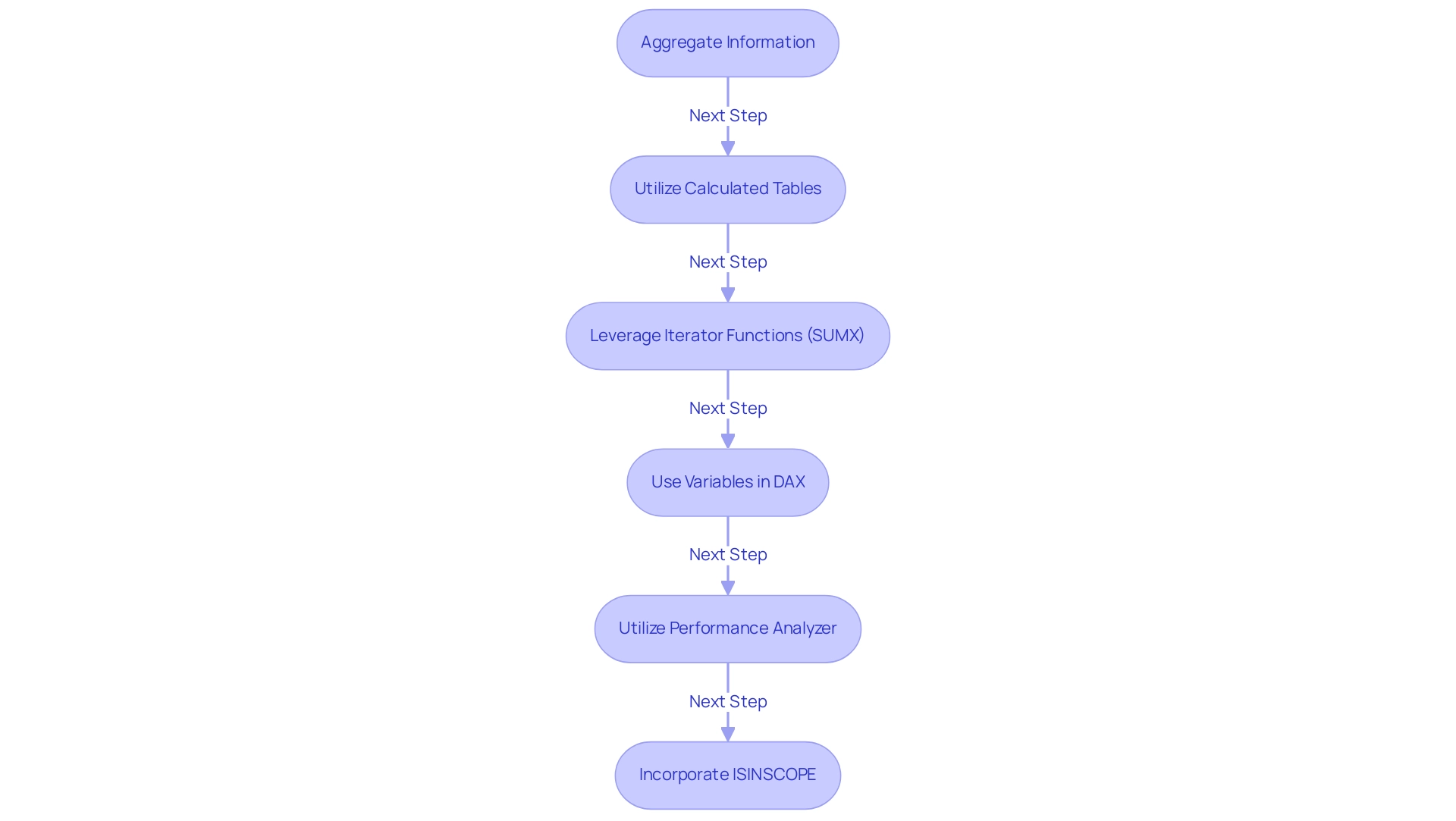

Leveraging DAX Functions for Date Comparison

DAX (Data Analysis Expressions) is the formula language of Power BI, and it enables advanced calculations, including date comparisons. However, not all DAX functions are supported or included in earlier versions of Power BI Desktop, Analysis Services, and Power Pivot in Excel, which can limit their use in some scenarios. These limitations can compound common challenges such as time-consuming report creation and data inconsistencies, and ultimately make it harder to produce actionable insights.

Several DAX functions are essential for comparing dates in Power BI:

- DATEDIFF: This function returns the number of interval boundaries between two dates. For instance, DATEDIFF(StartDate, EndDate, DAY) returns the number of days between the two dates, letting analysts measure time intervals and highlight trends over specific periods.

- DATEADD: This function shifts a set of dates by a specified interval. For example, DATEADD('Date Table'[Date], -1, MONTH) returns the dates in the current context shifted back by one month. Used as a filter inside CALCULATE, it lets you compare a measure with its value in the previous month and shows more clearly how performance has changed.

- YEAR, MONTH, DAY: These functions extract the corresponding part of a date and are useful building blocks for calculated columns that enable finer-grained comparisons.
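The interval logic behind these functions can be sketched in Python. The hypothetical `datediff` below models DATEDIFF's behavior of counting interval boundaries for DAY, MONTH, and YEAR, so 31 January to 1 February counts as one month. The hypothetical `shift_months` moves a single date by whole months, clamping to the end of shorter months. It is not a reimplementation of DATEADD, which shifts a whole set of dates in the filter context and has its own month-end rules.

```python
import calendar
from datetime import date


def datediff(start: date, end: date, interval: str) -> int:
    """Count interval boundaries crossed between two dates (negative if end precedes start)."""
    if interval == "DAY":
        return (end - start).days
    if interval == "MONTH":
        return (end.year - start.year) * 12 + (end.month - start.month)
    if interval == "YEAR":
        return end.year - start.year
    raise ValueError(f"unsupported interval: {interval}")


def shift_months(day: date, months: int) -> date:
    """Move a date by whole months, clamping to the last day of a shorter target month."""
    index = day.year * 12 + (day.month - 1) + months
    year, month_zero_based = divmod(index, 12)
    month = month_zero_based + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(day.day, last_day))


print(datediff(date(2024, 1, 31), date(2024, 2, 1), "MONTH"))  # 1: one month boundary crossed
print(shift_months(date(2024, 3, 31), -1))  # 2024-02-29
```

Counting boundaries rather than elapsed time is why a one-day gap can still register as a full month or year.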

Mastering these functions strengthens your analysis and addresses the challenges of report creation and clarity, making it possible to build dynamic reports that reflect evolving trends. As Joleen Bothma puts it, ‘Learn what DAX is and discover the fundamental DAX syntax and functions you’ll need to take your Power BI skills to the next level,’ which underlines the importance of understanding these tools.

Recent case studies also show the value of the RELATED function, which returns a value from a related table (the ‘one’ side of a relationship) for the current row. This is invaluable when a comparison draws on more than one table.

By using RELATED, analysts can draw insights from interconnected data, which directly addresses inconsistencies and delivers actionable findings that improve analysis, drive operational efficiency, and give stakeholders clearer guidance.
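Conceptually, RELATED follows a many-to-one relationship from the current row to the matching row in another table. The Python sketch below models that lookup with a dictionary keyed on the relationship column. The tables, column names, and the `related` and `total_by` helpers are hypothetical, and `None` stands in for the BLANK that DAX returns when there is no matching row.

```python
from datetime import date

# Hypothetical one-side table, keyed on the relationship column (Date).
DATE_TABLE = {
    date(2024, 1, 15): {"Year": 2024, "MonthName": "January"},
    date(2024, 2, 3): {"Year": 2024, "MonthName": "February"},
}

# Hypothetical many-side table: several sales rows can point at the same date.
SALES = [
    {"Date": date(2024, 1, 15), "Amount": 120.0},
    {"Date": date(2024, 2, 3), "Amount": 80.0},
    {"Date": date(2024, 2, 3), "Amount": 50.0},
]


def related(row: dict, lookup: dict, column: str):
    """Return `column` from the related row, or None (standing in for BLANK) if there is none."""
    match = lookup.get(row["Date"])
    return None if match is None else match[column]


def total_by(rows: list[dict], lookup: dict, column: str) -> dict:
    """Group amounts by a column fetched through the relationship."""
    totals: dict = {}
    for row in rows:
        key = related(row, lookup, column)
        totals[key] = totals.get(key, 0.0) + row["Amount"]
    return totals


print(total_by(SALES, DATE_TABLE, "MonthName"))  # {'January': 120.0, 'February': 130.0}
```

The lookup only works in the many-to-one direction: each sales row finds exactly one date row, while a date row may be shared by many sales.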

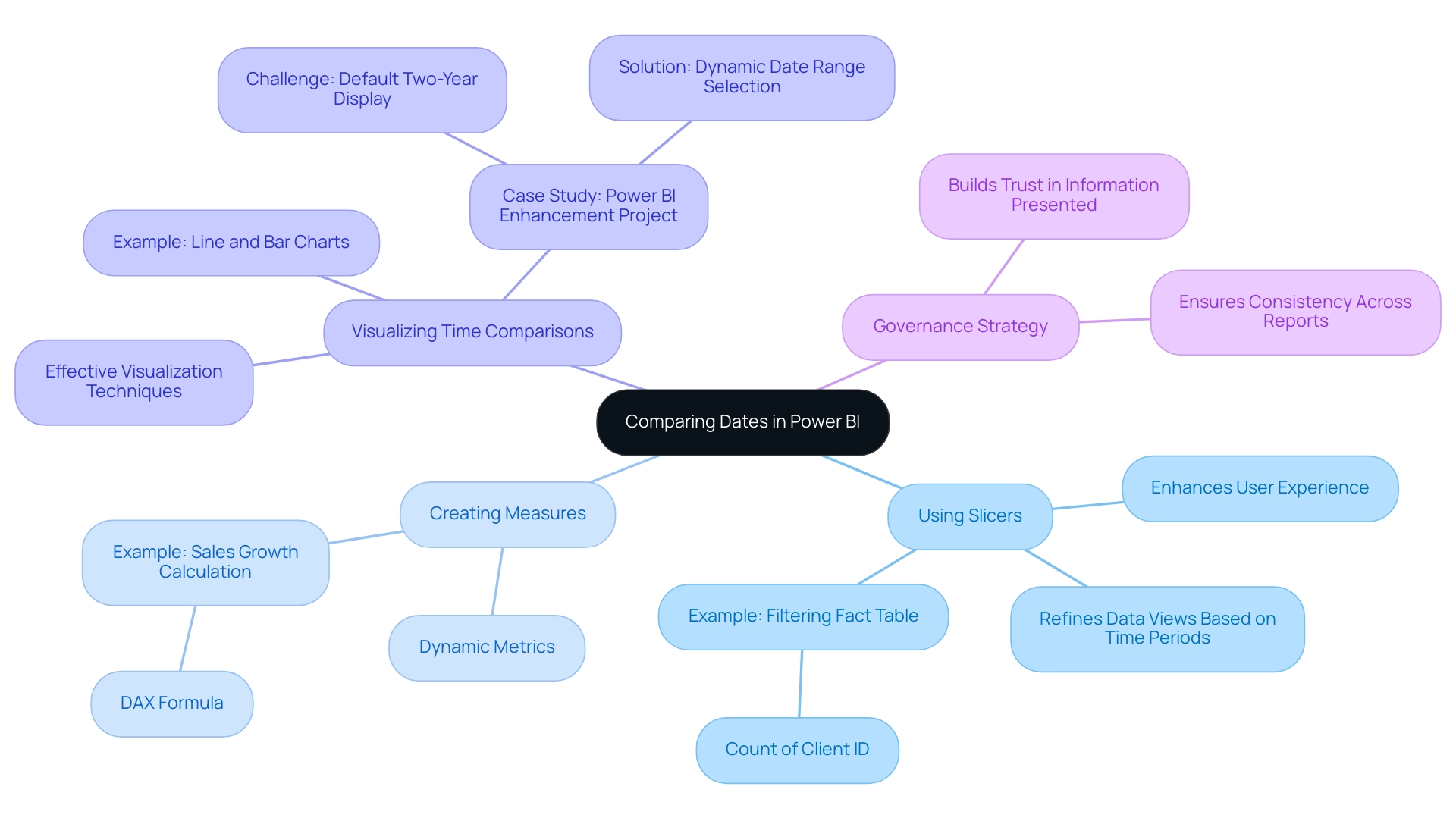

Practical Techniques for Comparing Dates in Power BI

To effectively compare dates in Power BI and enhance operational efficiency through actionable insights, several techniques stand out for improving user engagement and data analysis:

- Using Slicers: Date slicers let users filter data to specific time ranges seamlessly. This interactivity improves the user experience and can significantly increase report engagement. As a Data Insights Super User explains:

  The concept is to filter Fact Table for the rows that correspond to the time slicer and then calculate the count of Client ID in the context of the filtered Fact Table.

  This shows how slicers refine the data view to the period a user chooses. The same idea applies to usage analysis: the total count in usage metrics reflects distinct reports viewed over the past 90 days, which shows why efficient time filtering matters.

- Creating Measures: Build DAX measures to calculate performance metrics across time periods. For example, sales growth over the previous year can be calculated with the following measure, which uses DIVIDE so that a zero previous-year value returns a blank instead of an error:

  Sales Growth = DIVIDE(SUM(Sales[Current Year]) - SUM(Sales[Previous Year]), SUM(Sales[Previous Year]))

  Measures like this recalculate for whatever time period is selected, which makes date comparisons dynamic, offers insight into performance trends, and reduces time spent on manual report creation.

- Visualizing Time Comparisons: Line charts and bar charts work well for comparing periods. Placing the date field on the x-axis shows trends over time clearly, helping viewers grasp patterns quickly and making comparisons in reports more efficient. A relevant case study, the ‘Power BI Enhancement Project,’ describes how the Apps team needed to show the last two years of data by default while still letting users choose other time ranges. The solution used DAX and a calculated column to support dynamic range selection while preserving the default view, and two Power BI slicers now let users switch between the default and a custom date range seamlessly.
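The logic behind these techniques can be sketched in Python. The sketch covers three steps: filtering fact rows to a slicer's date range before counting distinct client IDs, a growth calculation that returns nothing (like DIVIDE's blank) when the previous value is zero, and a default two-year range that a custom selection overrides. All data and function names are hypothetical. Treating "the last two years" as a rolling window ending today is an assumption, since the case study may have used calendar years.

```python
from datetime import date
from typing import Optional

# Hypothetical fact rows: (order date, client id, amount).
FACT = [
    (date(2022, 6, 1), "C1", 100.0),
    (date(2023, 6, 1), "C1", 150.0),
    (date(2023, 7, 1), "C2", 50.0),
    (date(2024, 1, 10), "C3", 75.0),
]


def distinct_clients(fact, start: date, end: date) -> int:
    """Keep the rows inside the slicer range, then count distinct client IDs."""
    return len({client for day, client, _ in fact if start <= day <= end})


def growth(current: float, previous: float) -> Optional[float]:
    """(current - previous) / previous, returning None (DIVIDE's blank) when previous is 0."""
    if previous == 0:
        return None
    return (current - previous) / previous


def effective_range(today: date, custom: Optional[tuple[date, date]] = None) -> tuple[date, date]:
    """Use the user's custom range if given; otherwise default to a rolling two-year window."""
    if custom is not None:
        return custom
    try:
        start = today.replace(year=today.year - 2)
    except ValueError:  # 29 February has no counterpart two years earlier
        start = today.replace(year=today.year - 2, day=28)
    return start, today


print(distinct_clients(FACT, date(2023, 1, 1), date(2023, 12, 31)))  # 2
print(growth(150.0, 100.0), growth(10.0, 0.0))  # 0.5 None
print(effective_range(date(2024, 5, 1)))
```

Filtering first and counting second mirrors the quoted advice: the distinct count only ever sees rows inside the chosen period.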

It is also crucial to address the habit of spending more time building reports than using the insights that Power BI dashboards already provide. This imbalance leads to inefficiency and slows decision-making. A governance strategy helps keep reports consistent, which in turn builds trust in the information they present.

Together, these techniques simplify data analysis and build on recent advances in Power BI visualization, helping users extract valuable information and tackle the common problems of data inconsistencies and missing actionable guidance.

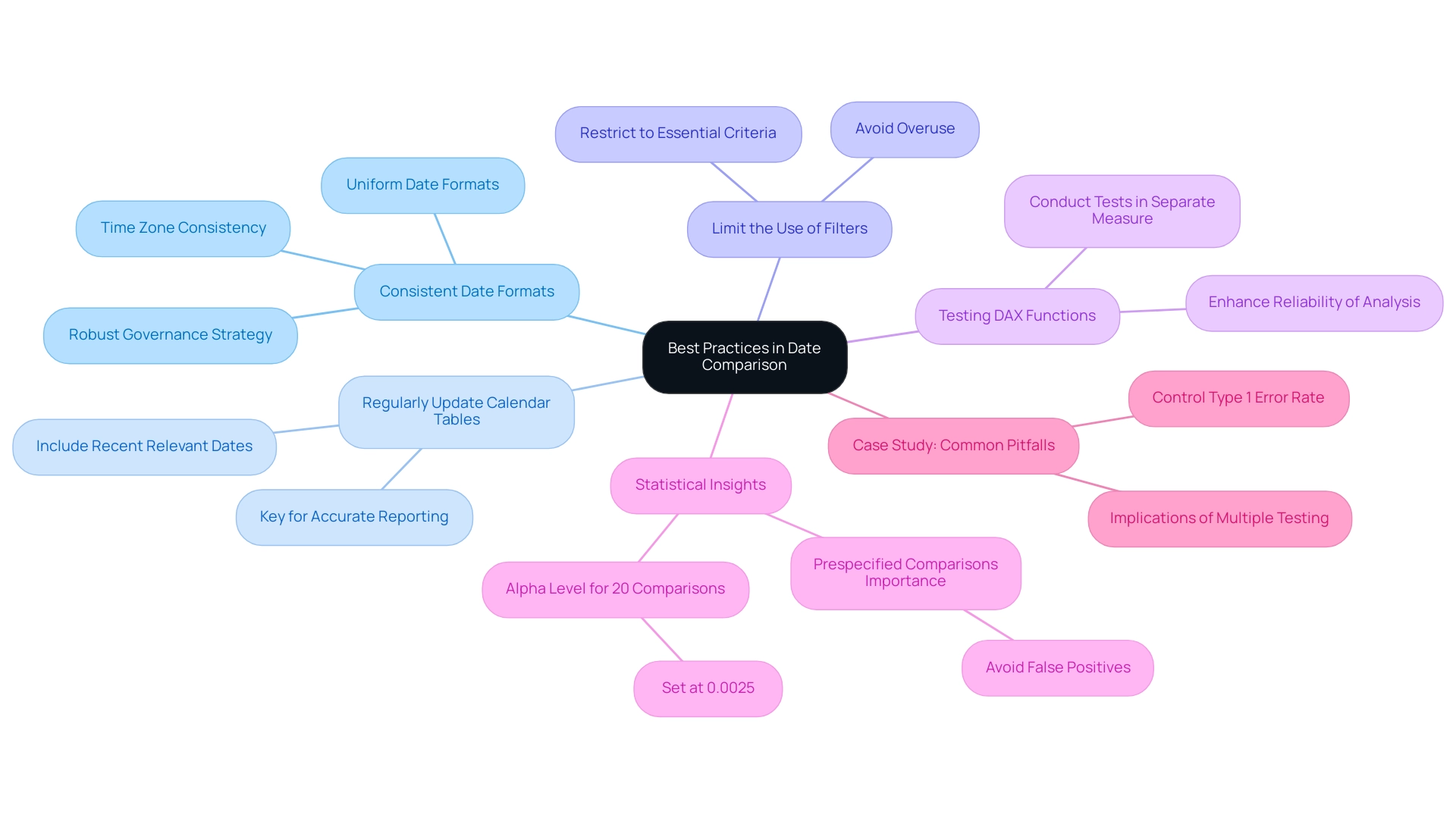

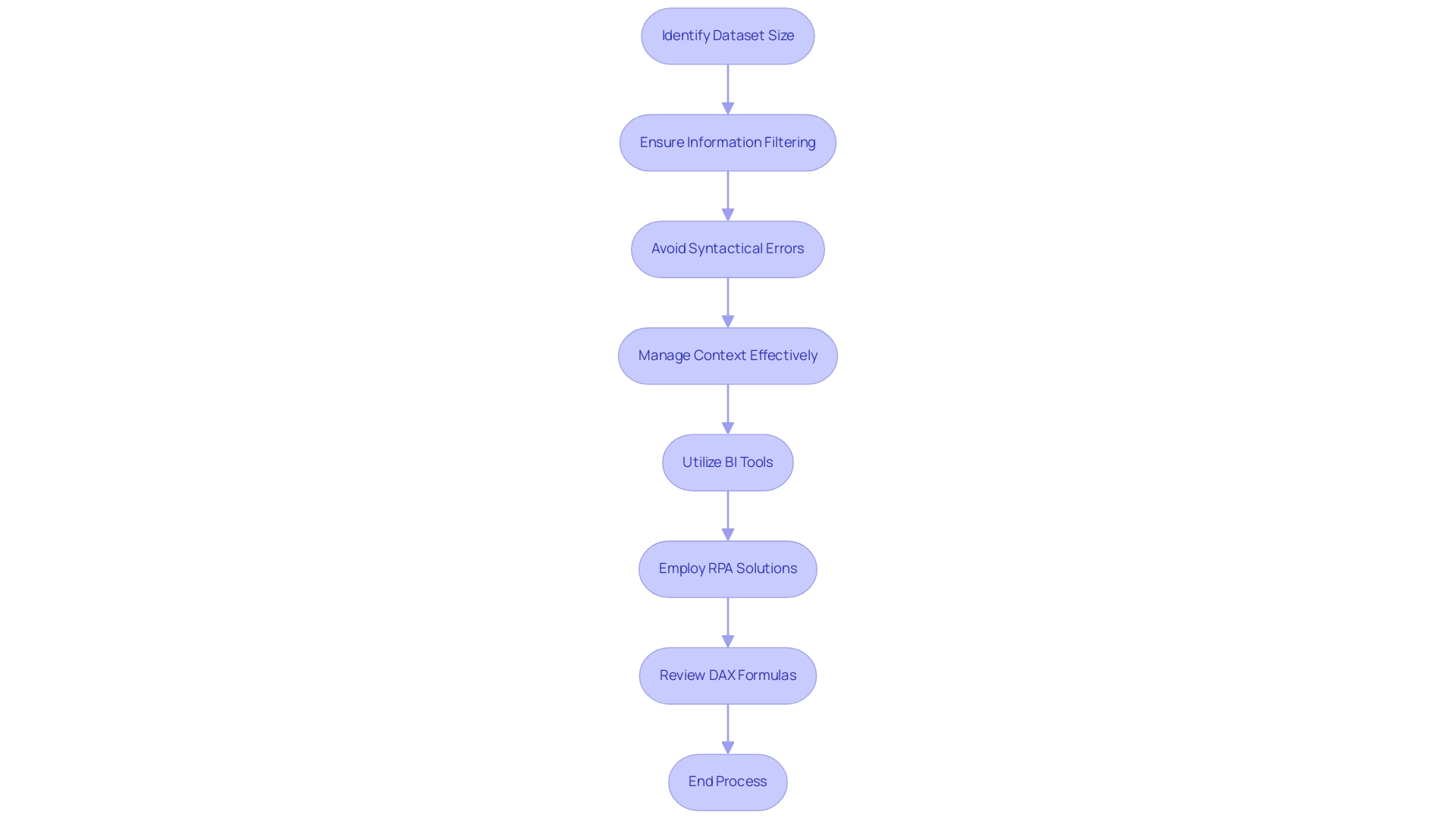

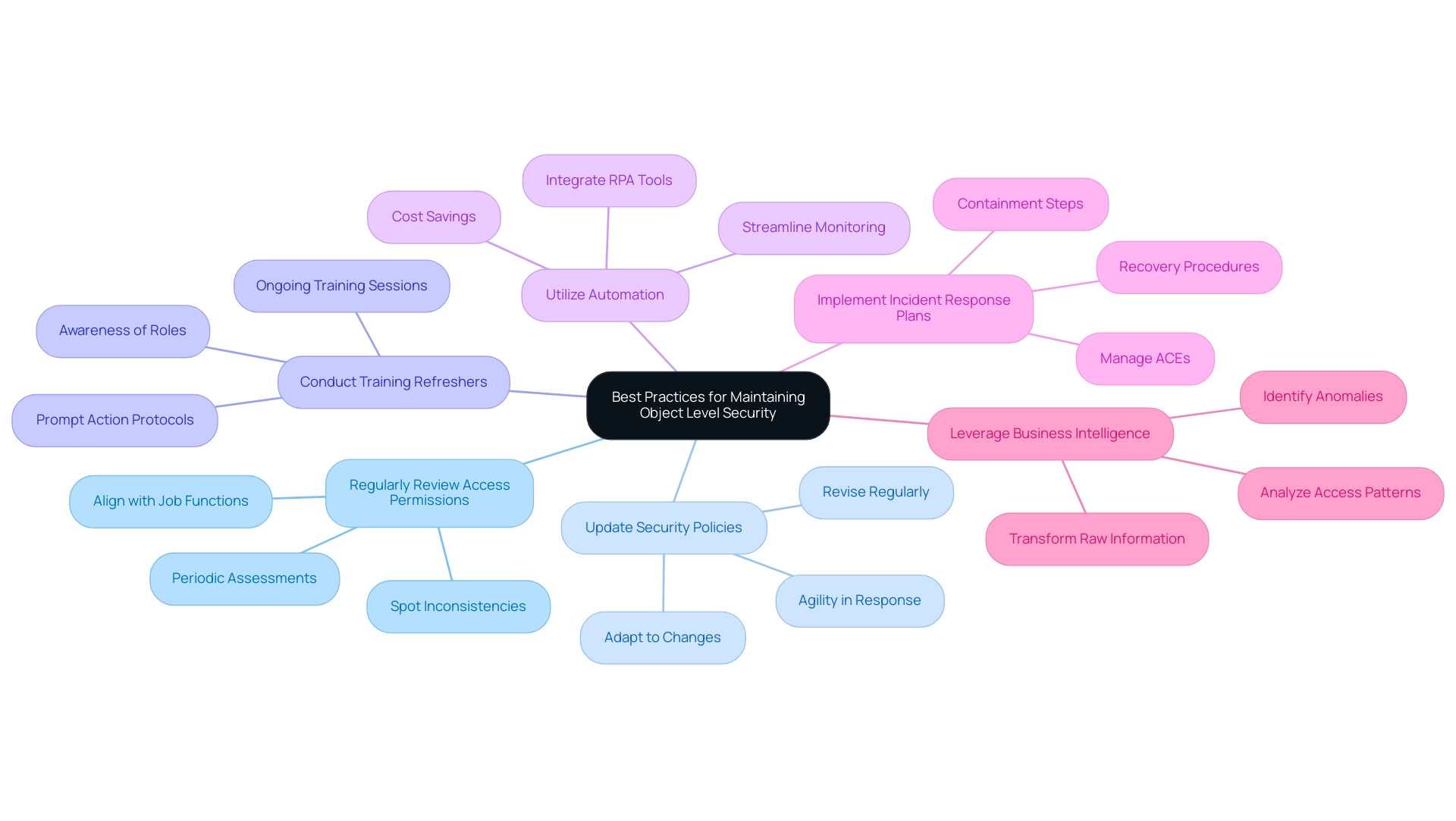

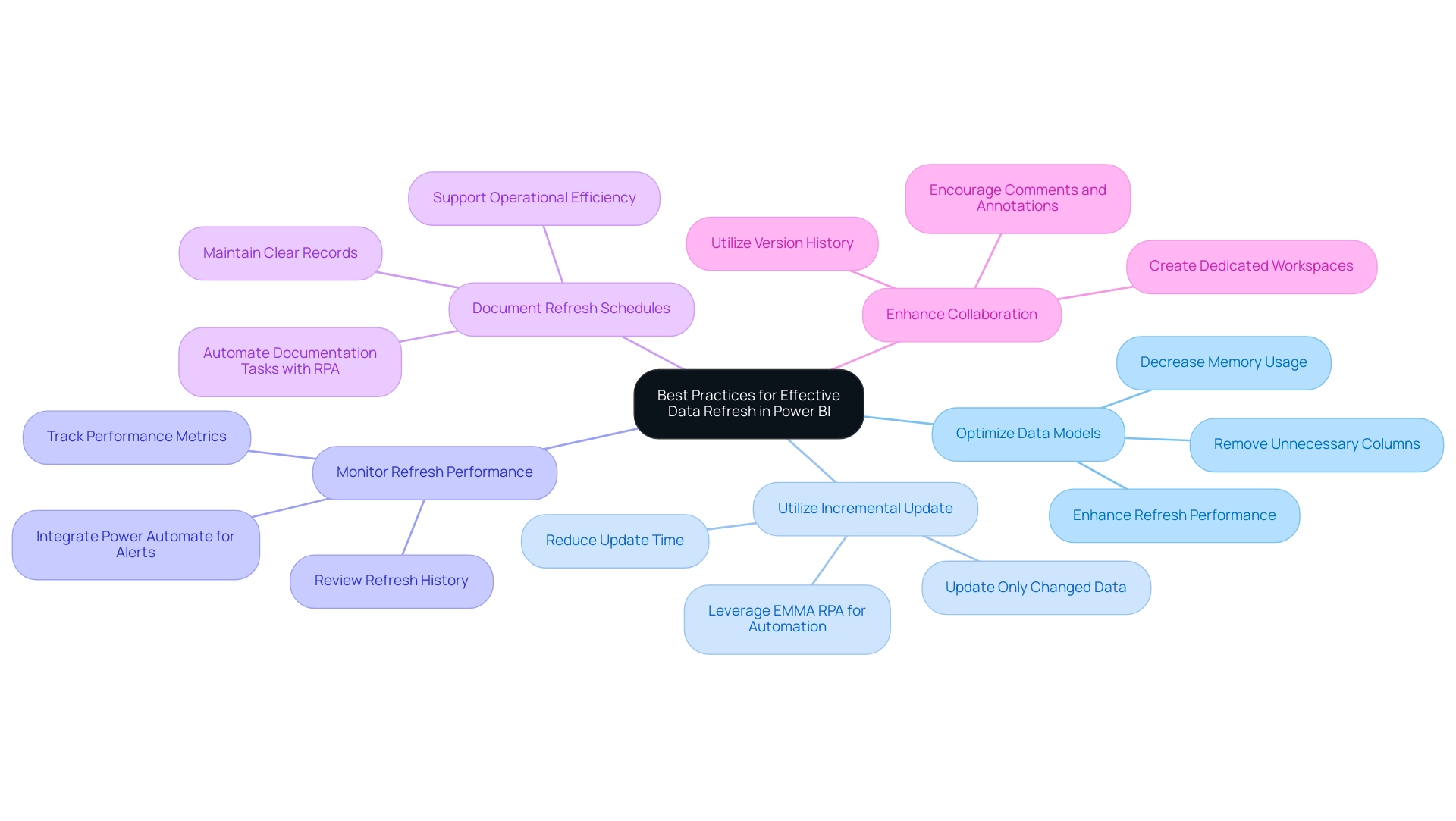

Best Practices and Common Pitfalls in Date Comparison

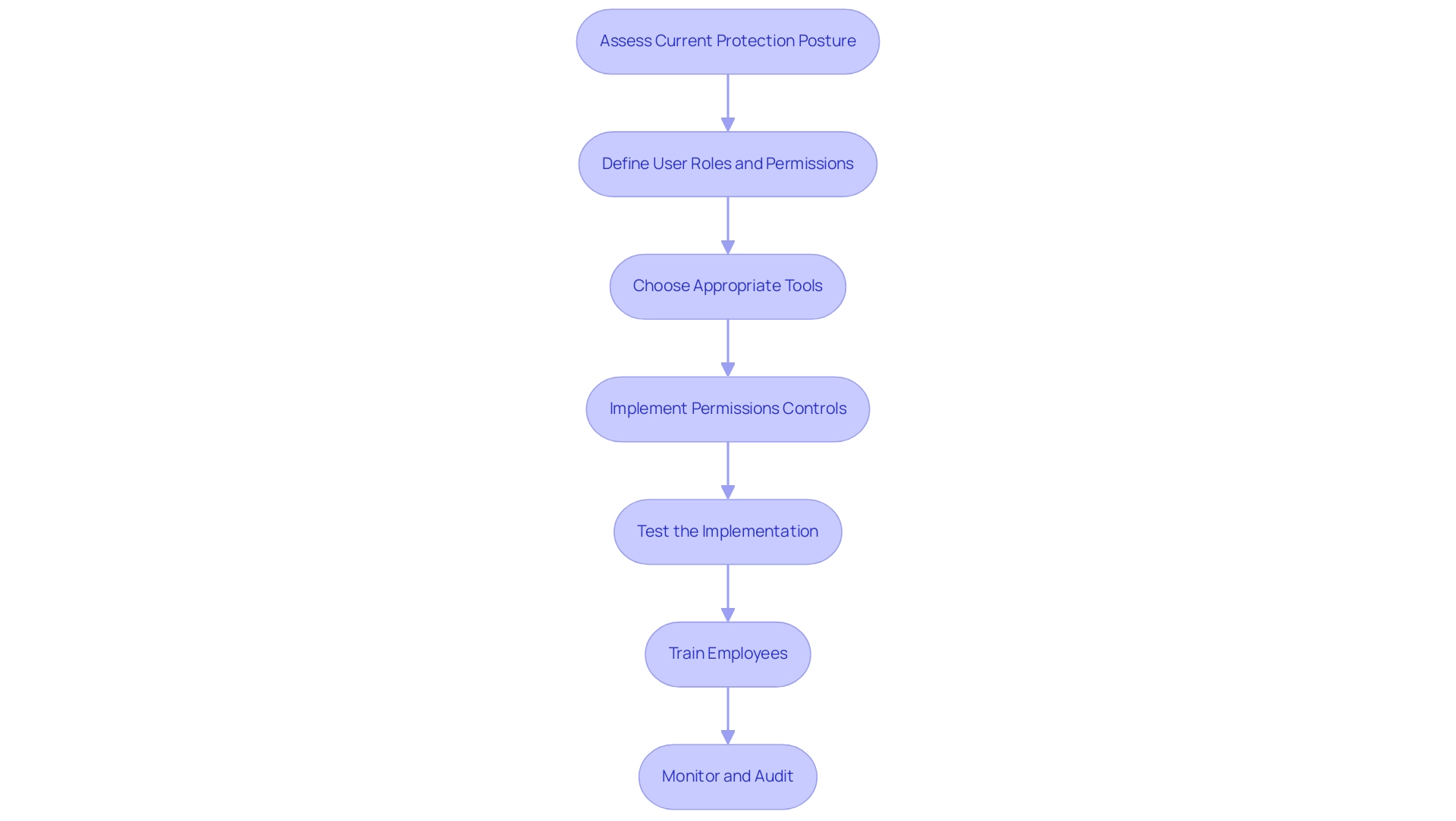

To optimize date comparisons in Power BI and effectively leverage insights from your dashboards, it is essential to follow these best practices:

- Consistent Date Formats: Keep date fields in a consistent data type and format across all tables to prevent errors during comparisons. Using the same time zone is equally important for accurate analysis, since it removes a common source of inconsistency. A robust governance strategy helps maintain these standards across reports.

- Regularly Update Calendar Tables: If you use custom calendar tables, update them regularly so that analyses include the most recent relevant dates, which is key to accurate reporting and decision-making.

- Limit the Use of Filters: Filters are useful for refining datasets, but overusing them can cause confusion and misinterpretation. Restrict filters to essential criteria to keep reports clear and give stakeholders actionable guidance.

- Test DAX Functions: Before using a DAX function in your reports, test it in a separate measure. This confirms that it returns the expected results, making your analysis more reliable and reducing time spent on report creation.
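Two of these practices lend themselves to a quick check. The Python sketch below uses hypothetical function names and fixed UTC offsets. It converts timestamps to UTC before taking their date, which shows how events recorded in different time zones can land on different days when compared by local date. It also lists fact dates that a calendar table does not yet cover.

```python
from datetime import date, datetime, timedelta, timezone


def utc_date(ts: datetime) -> date:
    """Convert an aware timestamp to UTC before taking its calendar date."""
    if ts.tzinfo is None:
        raise ValueError("naive timestamp: attach its source time zone first")
    return ts.astimezone(timezone.utc).date()


def missing_from_calendar(calendar_dates: set[date], fact_dates) -> list[date]:
    """Fact dates the calendar table does not cover yet (a sign it needs extending)."""
    return sorted({d for d in fact_dates if d not in calendar_dates})


# Two hypothetical events recorded with fixed offsets (UTC-5 and UTC+0).
new_york_event = datetime(2024, 3, 1, 23, 30, tzinfo=timezone(timedelta(hours=-5)))
london_event = datetime(2024, 3, 2, 3, 0, tzinfo=timezone.utc)

print(new_york_event.date(), london_event.date())  # 2024-03-01 2024-03-02 (local dates differ)
print(utc_date(new_york_event), utc_date(london_event))  # 2024-03-02 2024-03-02
print(missing_from_calendar({date(2024, 1, 1)}, [date(2024, 1, 1), date(2024, 1, 5)]))
```

Both events fall on the same UTC day, yet grouping by local date would put them in different days, exactly the kind of silent inconsistency a single time zone prevents.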

In the context of statistical analysis, Ranganathan P. stresses the importance of prespecifying comparisons to avoid serious problems: “It is to avoid these serious problems that all intended comparisons should be fully prespecified in the research protocol, with appropriate adjustments for multiple testing.” The principle is particularly relevant here: with 20 comparisons, a Bonferroni adjustment sets the significance level for each comparison at 0.05 / 20 = 0.0025 to keep false positives under control.
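The arithmetic behind that threshold can be checked directly. This Python sketch computes the Bonferroni-adjusted level and the chance of at least one false positive across 20 comparisons. The function names are hypothetical, and the family-wise formula assumes independent tests in which every null hypothesis is true.

```python
def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison significance level that keeps the overall level at `alpha`."""
    return alpha / comparisons


def family_wise_error(alpha_per_test: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent tests when every null is true."""
    return 1 - (1 - alpha_per_test) ** comparisons


adjusted = bonferroni_alpha(0.05, 20)
print(round(adjusted, 4))                         # 0.0025
print(round(family_wise_error(0.05, 20), 3))      # 0.642 without adjustment
print(round(family_wise_error(adjusted, 20), 3))  # 0.049 with adjustment
```

Without the adjustment, 20 comparisons at the 0.05 level give roughly a 64% chance of at least one spurious "significant" result.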

The case study titled “Common pitfalls in statistical analysis: The perils of multiple testing” highlights the implications of multiple testing in data analysis. It underscores the necessity of prespecified comparisons to mitigate the risk of false-positive findings, reinforcing the importance of these best practices.

By unlocking the power of Business Intelligence, you can turn raw data into practical knowledge that supports informed decisions and drives growth and innovation. Integrating RPA solutions can also improve operational efficiency by automating repetitive tasks, so your team can focus on using information rather than generating reports. Following these guidelines will make your date comparisons in Power BI more reliable and effective while addressing common obstacles to using insights.

Conclusion

Mastering date comparison in Power BI is an indispensable skill that can significantly enhance data analysis and decision-making capabilities. By understanding the fundamental concepts of date comparison, such as relative and absolute dates, users can identify trends and generate insights that drive operational efficiency. The article highlighted practical techniques, including:

- The creation of effective date tables

- The utilization of DAX functions

These techniques empower users to perform advanced calculations and comparisons seamlessly.

The article also recommended best practices, including:

- Maintaining consistent date formats

- Regularly updating date tables

These practices keep analyses accurate and relevant. It is also crucial to limit the use of filters to avoid confusion and to test DAX functions rigorously so that the resulting insights are reliable. By addressing common pitfalls and following these guidelines, organizations can transform raw data into strategic insights that support informed decision-making.

Ultimately, leveraging the full potential of date comparison in Power BI not only helps in overcoming challenges like data inconsistencies and time-consuming report generation but also paves the way for actionable insights that propel organizations forward. Embracing these techniques and best practices will lead to improved data analysis, fostering a culture of data-driven decision-making that is essential in today’s fast-paced business environment.

Overview

Power BI comparison visuals are essential for effective data analysis, enabling organizations to accurately examine and contrast various data points, thereby enhancing operational efficiency and informed decision-making. The article emphasizes best practices such as simplicity in design, understanding the audience, and ensuring data quality, which collectively support the effective use of these visuals in driving actionable insights and addressing common pitfalls in data representation.

Introduction

In the dynamic landscape of data analytics, Power BI comparison visuals have emerged as essential tools for organizations seeking to harness the power of data-driven insights. These visuals, ranging from bar charts to scatter plots, not only simplify the process of analyzing complex datasets but also enhance decision-making capabilities by providing clear representations of trends and relationships.

As businesses increasingly turn to Business Intelligence solutions—projected to reach over half a million deployments in 2024—the importance of mastering these comparison visuals cannot be overstated. This article delves into the various types of comparison visuals available in Power BI, best practices for their design, and the critical role of data quality and user feedback in ensuring effective data communication.

By understanding these elements, organizations can unlock the full potential of Power BI, driving operational efficiency and strategic growth in an ever-evolving market.

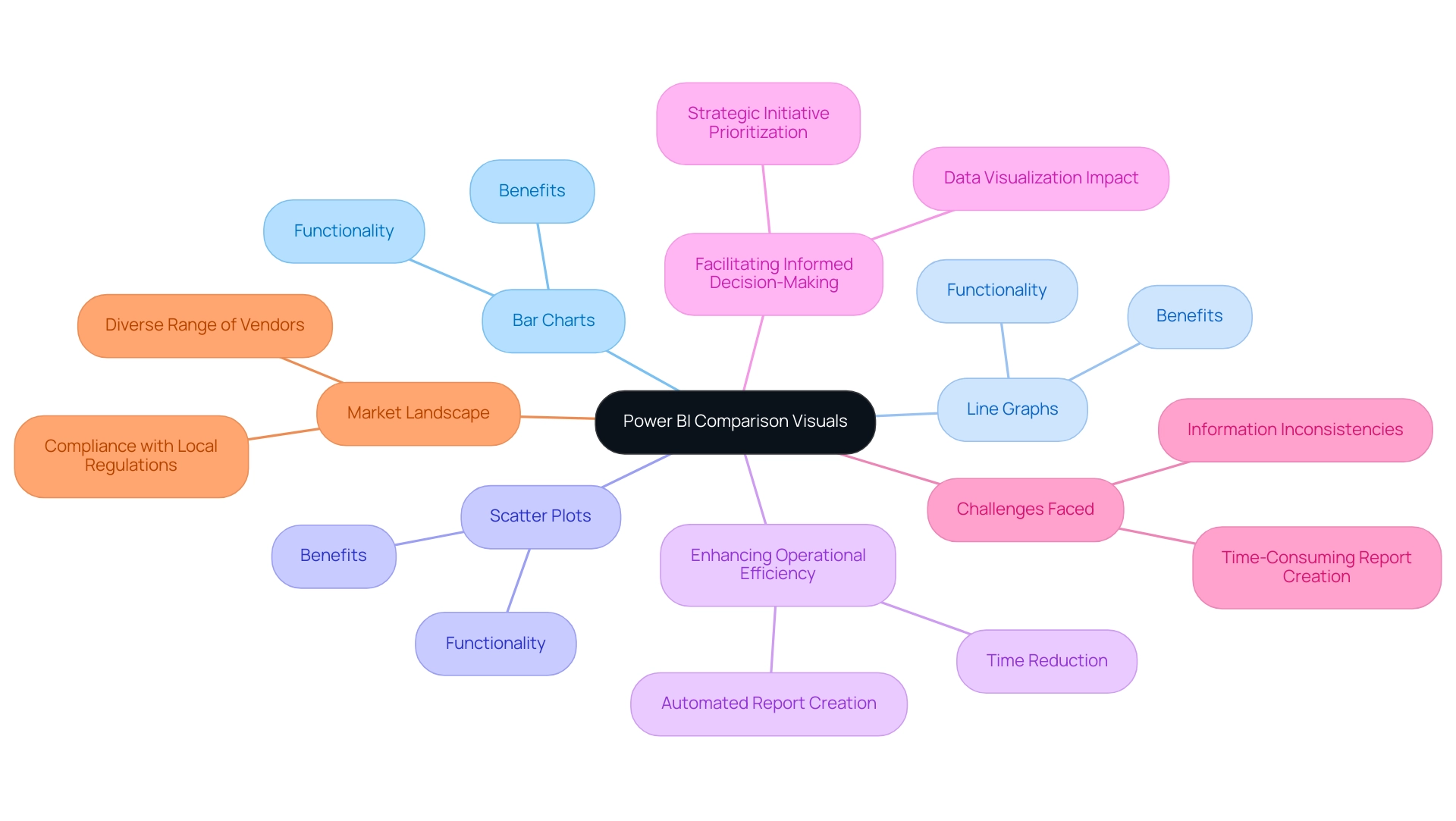

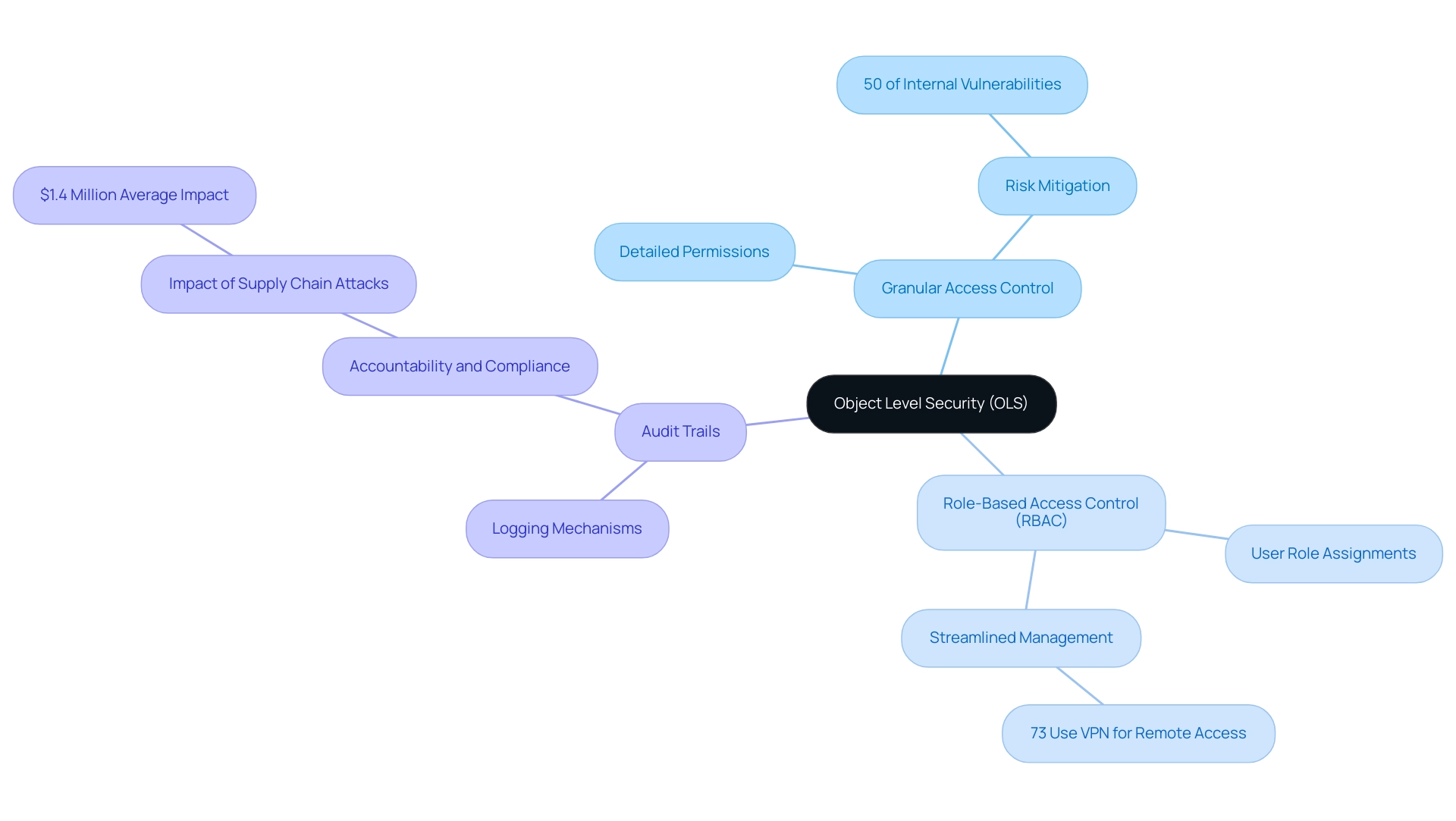

Understanding Power BI Comparison Visuals

Power BI comparison visuals are effective tools for examining and contrasting data points accurately. Formats such as bar charts, line graphs, and scatter plots give a clear view of data relationships, trends, and performance metrics, making these visuals indispensable for organizations aiming to improve operational efficiency. In 2024, approximately 587,000 companies are deploying Business Intelligence solutions, reflecting a growing reliance on data-driven strategies.

This growth underlines the role of Power BI comparison visuals in improving operational efficiency. Used well, these visuals let organizations quickly pinpoint discrepancies, monitor progress against targets, and make informed decisions. For instance, the case study ‘Viewing Content for Usage Metrics Correlation’ notes that users must open content in their workspace before its data is processed for the Usage Metrics Report, illustrating a practical application of these visuals.

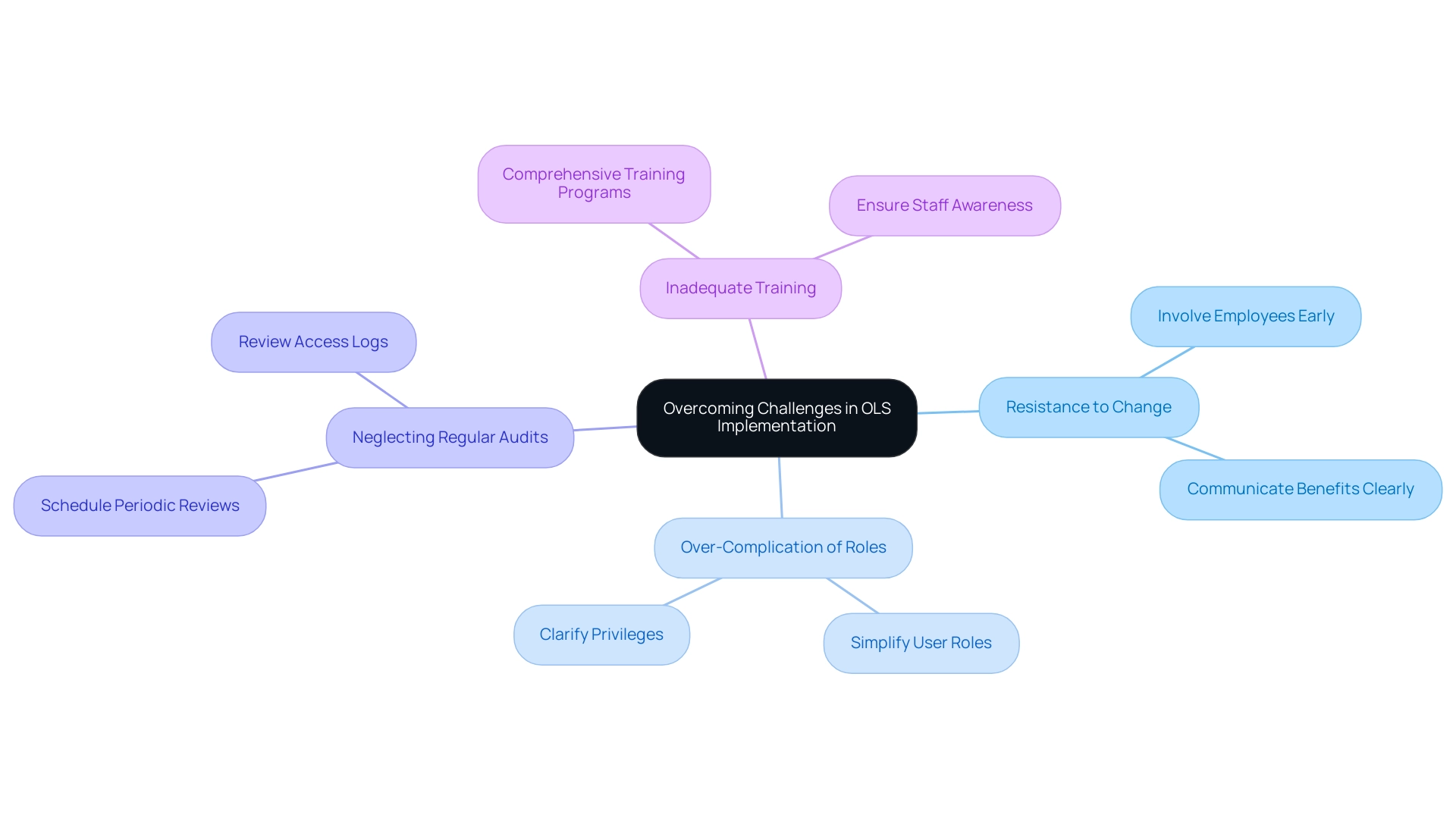

Additionally, integrating Robotic Process Automation (RPA) can further enhance these processes, allowing for automated report creation and reducing the time spent on manual tasks. However, challenges such as time-consuming report creation and information inconsistencies can hinder the effective use of BI insights. As emphasized by industry specialists, the capability to visualize information clearly influences decision-making effectiveness, allowing teams to prioritize strategic initiatives instead of maneuvering through complexity.

Furthermore, the Business Intelligence market, with its many vendors, keeps evolving, which reinforces the need for organizations to adopt advanced data visualization tools such as Power BI to stay competitive and compliant with local regulations. Key offerings of BI services, including the ‘3-Day BI Sprint’ for rapid report creation and the ‘General Management App’ for comprehensive oversight, help address these challenges. The platform also records the technology a viewer used to open a report (PowerBI.com, mobile, or embedded), which underscores how versatile Power BI is in meeting diverse organizational needs.

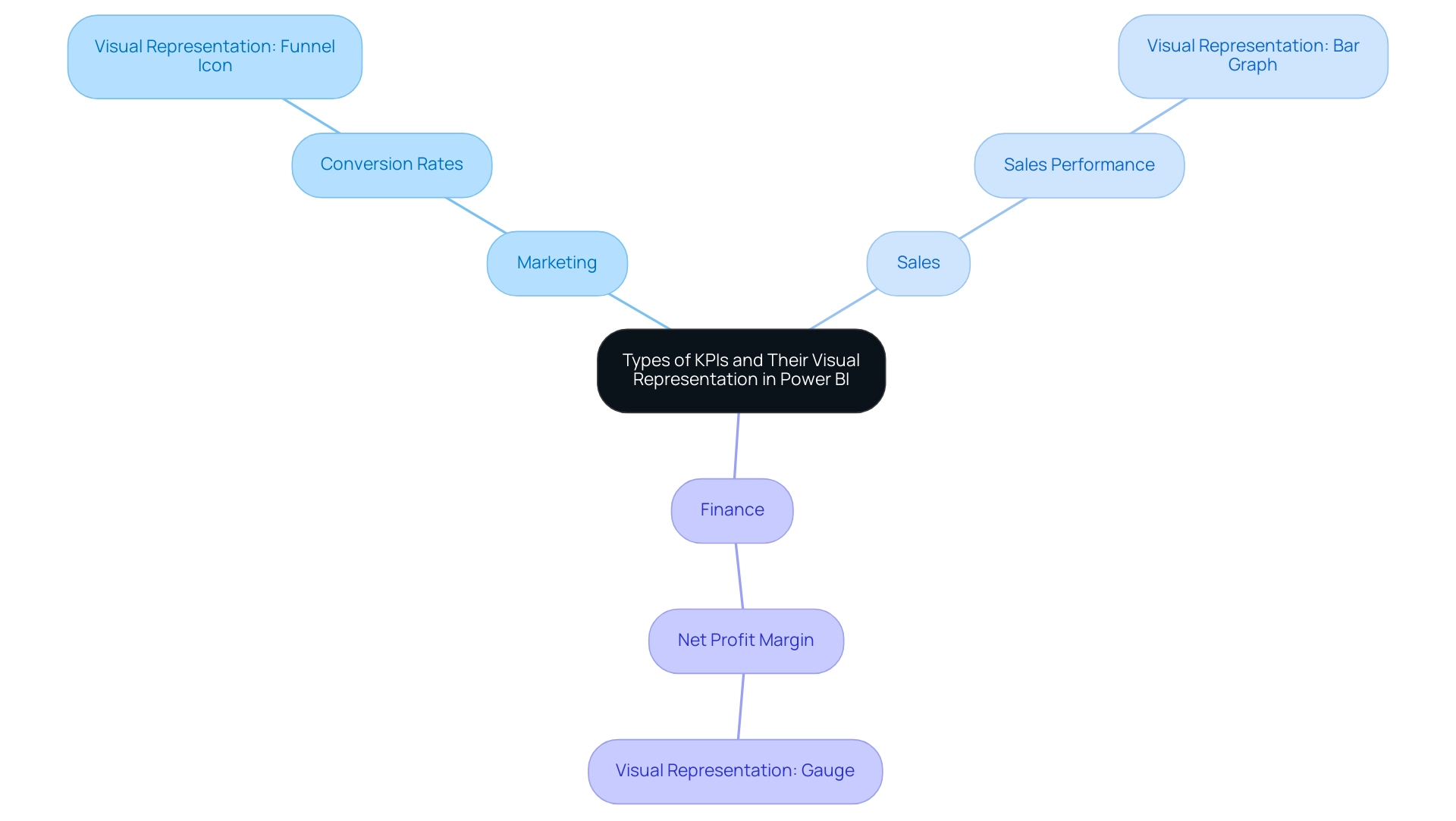

Types of Comparison Visuals in Power BI

Power BI comparison visuals offer a diverse array of tools, each designed for distinct analytical tasks, which is crucial for driving data-driven insights in an increasingly competitive landscape. The primary types include:

- Bar and Column Charts: These charts excel at comparing values across categories. They show differences in size clearly, support quick decisions, and speed up report generation by letting users visualize key metrics without extensive manual data handling.

- Line Charts: Ideal for tracking trends over time, line charts compare values across intervals. They let users see historical trends at a glance, which saves time in report creation and improves decision-making.

- Scatter Plots: These visuals reveal correlations between two variables and expose relationships that might otherwise stay hidden. By highlighting these correlations, scatter plots make the guidance derived from BI tools more actionable and support better-informed strategic decisions.

- Matrix Visuals: A grid-like layout enables multi-dimensional comparisons, which is valuable for complex analytical tasks. Presenting information in a structured, easy-to-read format also helps reduce inconsistencies.

In a recent project, our finalized leads totaled 12, a reminder of how much effective data visualization matters for decision-making and for addressing issues such as inconsistencies. As Satyam Chaturvedi, Digital Marketing Manager at Arka Softwares, puts it, “I love to spend my time studying the latest market insights,” which reflects the importance of understanding information in today’s market. Network charts, which visualize relationships between data points, are particularly useful for hierarchical structures but can become overwhelming if not designed with clarity in mind.

Comprehending these choices enables users to customize their visualizations effectively, ensuring alignment with the specific insights they seek to extract from their information. Furthermore, integrating RPA solutions can streamline the visualization process by automating repetitive tasks, thereby maximizing the potential of Business Intelligence and RPA in fostering business growth.

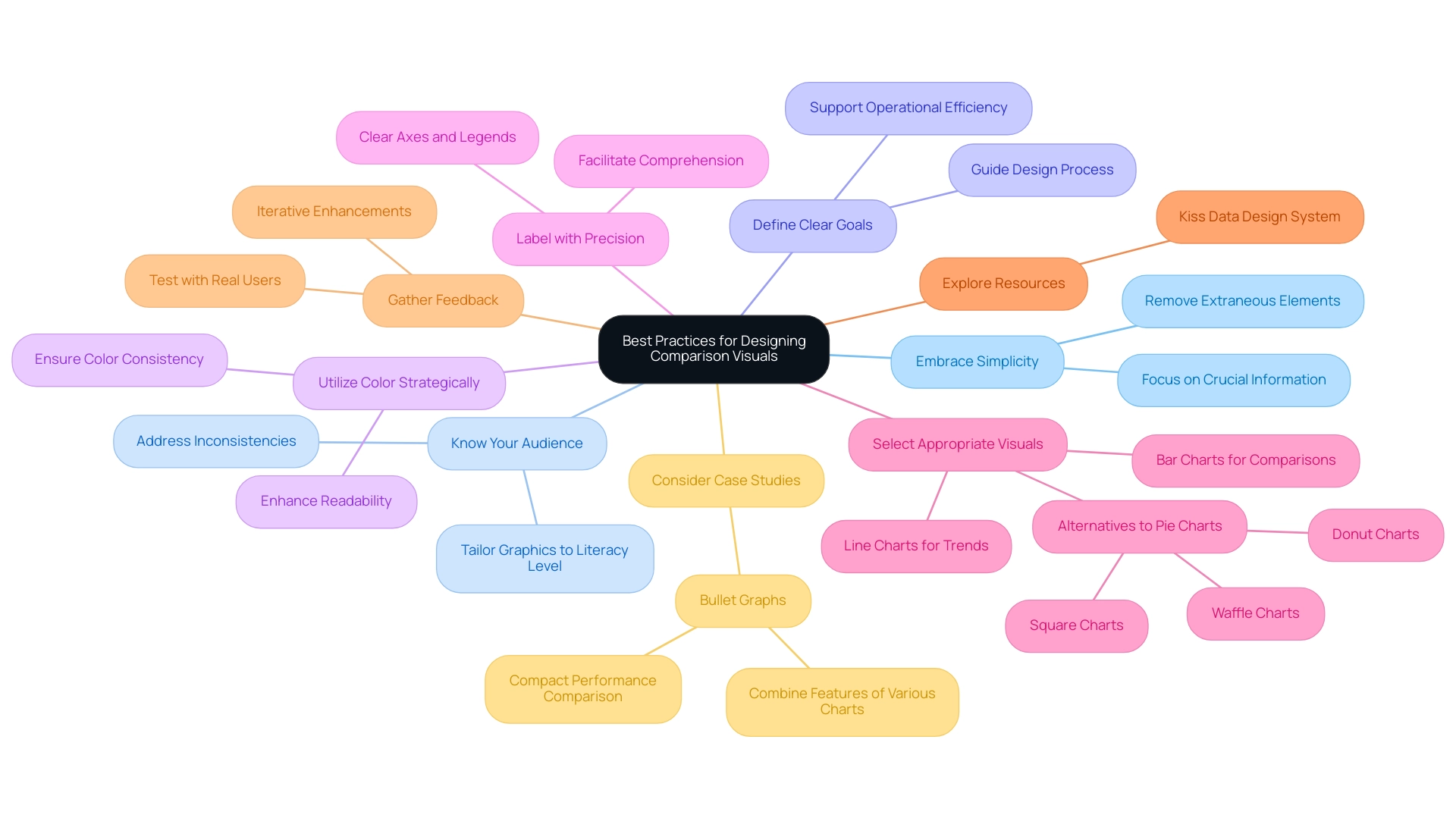

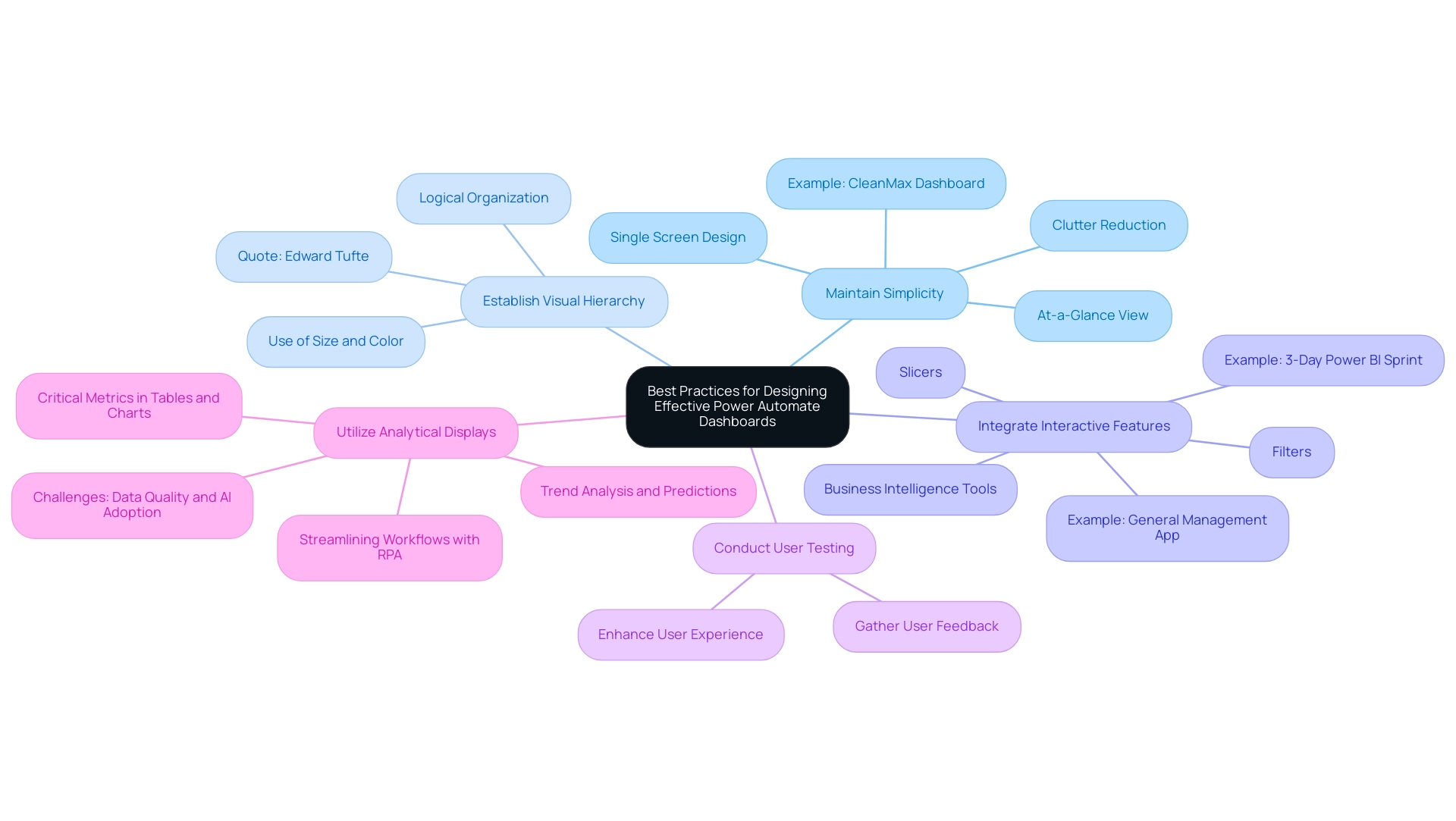

Best Practices for Designing Comparison Visuals

To create impactful Power BI comparison visuals, several best practices should be adopted:

- Embrace Simplicity: A minimalist approach is crucial. Focus on the essential information and remove extraneous elements that may distract or confuse the viewer. This aligns with recent studies on the importance of simplicity in visualization design, particularly in Business Intelligence, where clarity drives better decision-making.

- Know Your Audience: Understand your audience’s data literacy and tailor your visuals to their level, so that the information is accessible and actionable. This is especially important for overcoming challenges such as inconsistencies in Power BI dashboards.

- Define Clear Goals: Clear objectives for each visualization guide the design process and ensure the visual communicates the intended message. They also support the broader aim of using BI for operational efficiency and growth.

- Use Color Strategically: A thoughtful color scheme improves readability and distinguishes data series. Be consistent and make every color serve a clear purpose so the audience is not overwhelmed; well-chosen colors guide the viewer’s focus.

- Label with Precision: Clearly label all axes, legends, and data points. This helps viewers interpret the visual correctly and avoids the confusion that arises when reports lack actionable guidance.

- Select Appropriate Visuals: Choosing the right visual type is essential for conveying information accurately. Line charts suit trends over time, while bar charts suit categorical comparisons. Pie charts are often criticized as inefficient; Edward Tufte argues that differences in angle make precise comparisons difficult. Consider alternatives such as donut, square, or waffle charts for clearer representation.

- Explore Resources: The Kiss Data Design System is a free data visualization kit that can help you build effective visuals and make your BI work more efficient.

- Gather Feedback: Test your visuals with real users. Their feedback confirms whether the visuals convey the intended message and drives iterative improvements. This practice is central to creating effective BI visuals in 2024 that meet the needs of diverse audiences and follow contemporary design standards.

- Consider Case Studies: Bullet graphs, for instance, combine features of thermometer charts, progress bars, and target indicators to display performance against benchmarks, offering a compact way to compare actual performance with expectations.

Additionally, integrating Robotic Process Automation (RPA) can significantly enhance the efficiency of your reporting processes. RPA can automate repetitive tasks such as report generation, thereby alleviating time-consuming challenges and staffing shortages. By leveraging RPA alongside these best practices, you can streamline operations and improve the overall effectiveness of your BI representations.

By integrating these best practices and considering the role of RPA, you can enhance the clarity and effectiveness of your Power BI comparison visuals, leading to better storytelling, informed decision-making, and ultimately driving growth through robust Business Intelligence strategies.

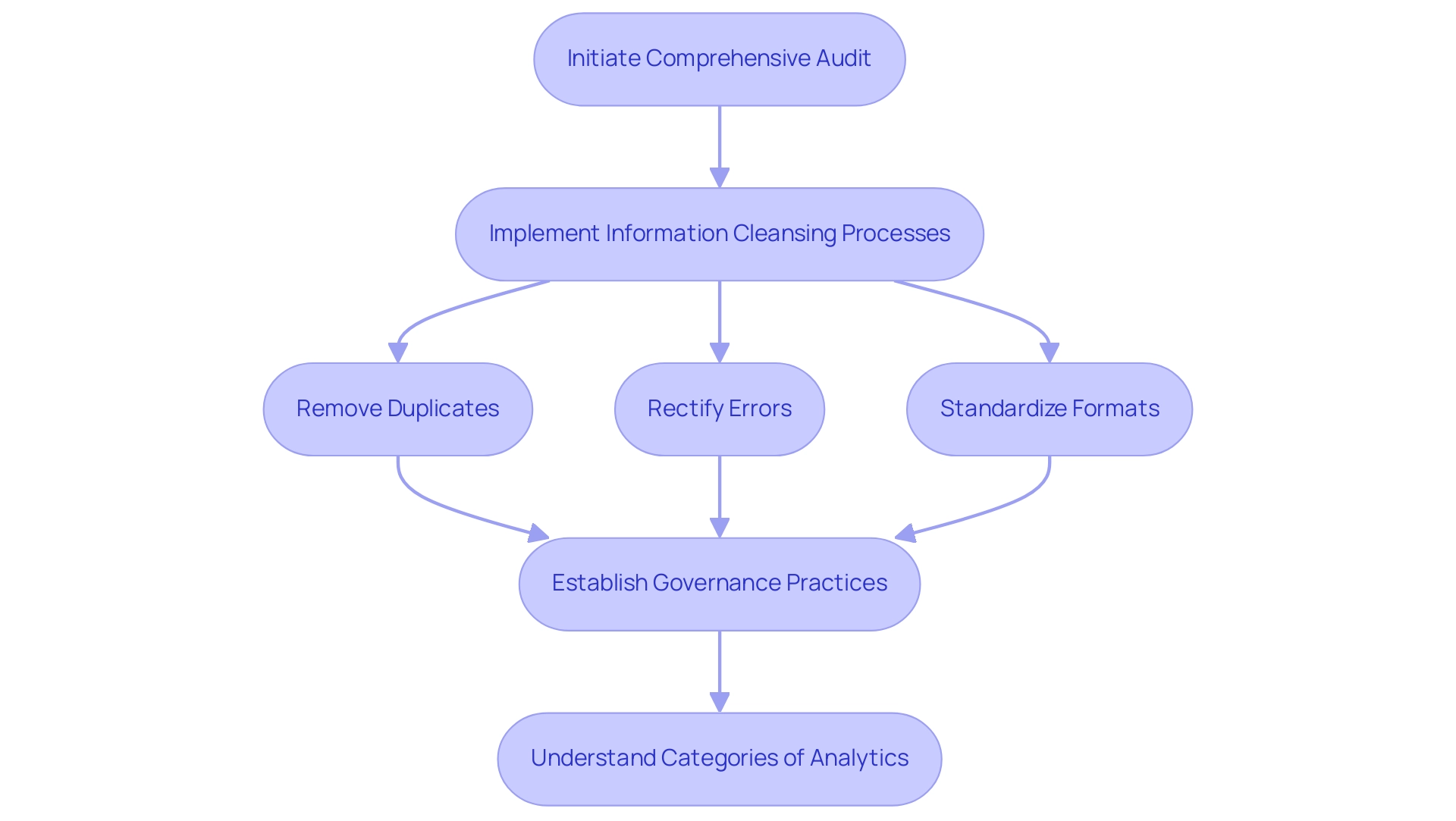

Ensuring Data Quality for Effective Comparisons

For organizations to use Power BI comparison visuals effectively, data quality must come first. A comprehensive data audit is the first step, allowing teams to pinpoint inaccuracies and inconsistencies in their datasets. The next step is to implement data cleansing processes.

This involves:

- Removing duplicates

- Rectifying errors

- Standardizing formats to enhance overall reliability
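As an illustration of these three steps applied to dates, the Python sketch below standardizes date strings, sets aside values it cannot parse instead of guessing, and drops rows that become duplicates once formats agree. The accepted formats, the case-insensitive treatment of customer names, and the function names are all assumptions made for the example.

```python
from datetime import date, datetime
from typing import Optional

# Assumed source formats, tried in order: an ambiguous value such as "03/04/2024"
# is read with the first format that matches (here day/month/year).
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text: str) -> Optional[date]:
    """Standardize a date string, or return None so the value can be corrected by a person."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


def cleanse(rows):
    """Standardize formats, set aside unparseable rows, and drop duplicates that result."""
    seen, clean, rejected = set(), [], []
    for raw_date, customer in rows:
        parsed = parse_date(raw_date)
        if parsed is None:
            rejected.append((raw_date, customer))
            continue
        key = (parsed, customer.strip().upper())  # assumed rule: names compare case-insensitively
        if key not in seen:
            seen.add(key)
            clean.append(key)
    return clean, rejected


rows = [
    ("2024-03-01", "acme"),
    ("01/03/2024", "ACME "),    # same record in another format: a duplicate once standardized
    ("2024-03-02", "Globex"),
    ("31/02/2024", "Initech"),  # 31 February does not exist
]
clean, rejected = cleanse(rows)
print(len(clean), rejected)  # 2 [('31/02/2024', 'Initech')]
```

Standardizing before deduplicating matters: the first two rows only look different until their formats agree.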

Moreover, establishing robust governance practices is crucial; these frameworks should clearly define accountability for information quality and outline maintenance protocols. As Lior Solomon, VP of Data at Data, emphasizes, ‘secure, compliant, and reliable information is critical for the success of AI initiatives’.

He further notes that information quality must be prioritized throughout the model development life cycle. Furthermore, comprehending the four categories of analytics—descriptive, diagnostic, predictive, and prescriptive—can offer a wider view on how quality influences various analytical methods. Notably, a recent survey revealed that over 450 analytics professionals identified significant quality issues within organizations, underscoring the necessity of effective governance.

To combat the lack of data-driven insights that can leave organizations at a competitive disadvantage, our 3-Day Power BI Sprint offers a quick start to creating professional reports that leverage insights for actionable business outcomes. This sprint not only assists in enhancing quality but also tackles obstacles to AI adoption by ensuring that the information used in Power BI comparison visuals is both dependable and actionable. Additionally, incorporating RPA solutions can simplify information management processes, enhancing operational efficiency and allowing organizations to confidently depend on the insights gained from their comparison representations, ultimately facilitating more informed and strategic decision-making.

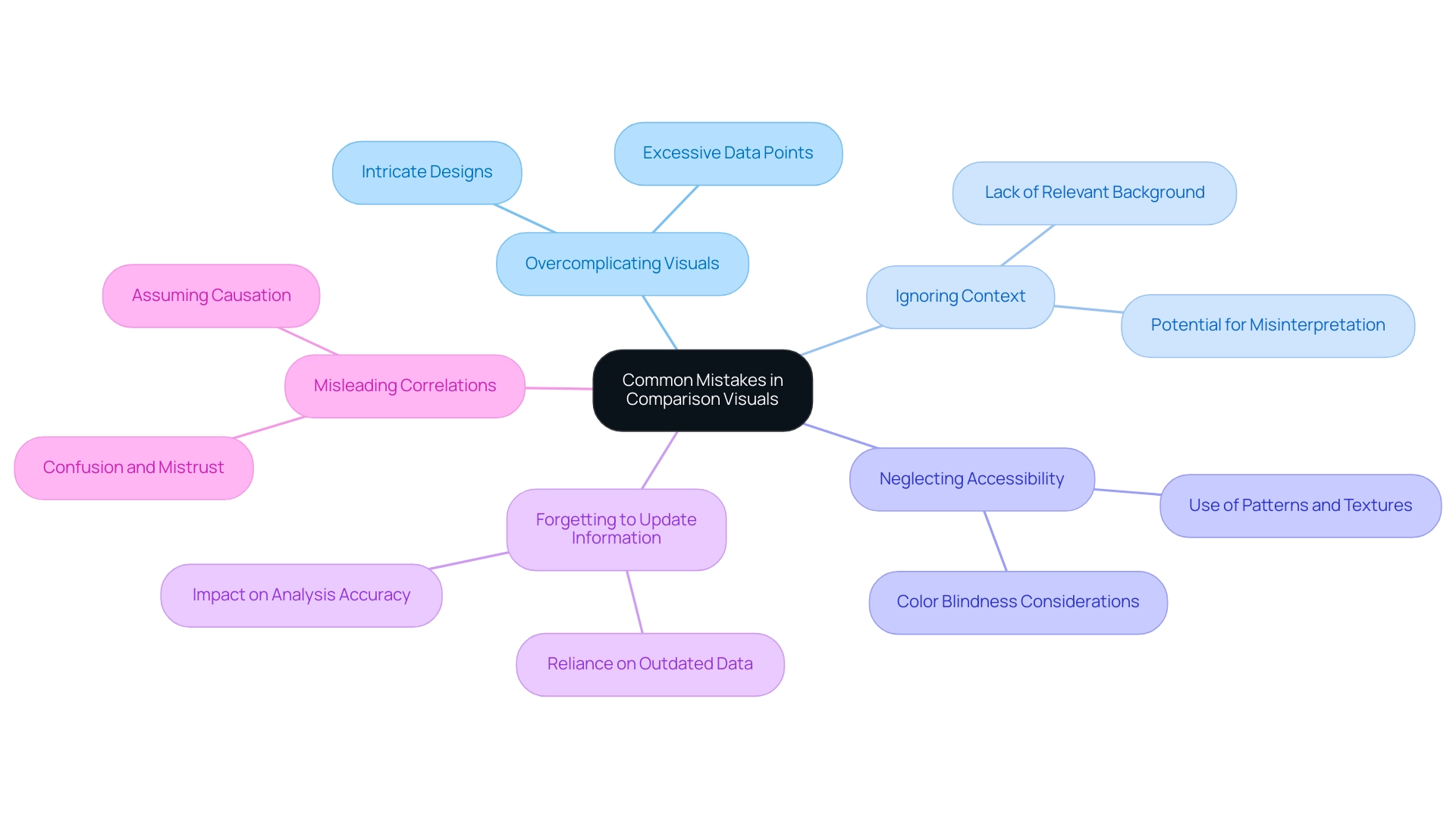

Avoiding Common Mistakes in Comparison Visuals

When utilizing comparison visuals in Power BI, it’s crucial to steer clear of several common mistakes that can hinder effective data communication, especially in an environment where leveraging insights is paramount for operational efficiency:

- Overcomplicating Visuals: Too many data points or overly intricate designs overwhelm viewers and obscure the message. Visuals should convey essential information clearly and efficiently, especially when time-consuming report creation is already taking time away from actionable insights.

- Ignoring Context: Context is vital for accurate interpretation. Without relevant background information, users may misread the data and reach flawed conclusions. As Edward Tufte aptly states:

  Graphical excellence is that which gives to the viewer the greatest number of ideas in the shortest time with the least ink in the smallest space.

  This underlines the need for clarity and context in visual storytelling, particularly given the inconsistencies that often arise without a governance strategy.

- Neglecting Accessibility: Accessibility should be a primary consideration in data visualization. Make visuals understandable to everyone, including people with color blindness; adding patterns or textures alongside colors can significantly improve accessibility and the user experience.

- Forgetting to Update Information: Outdated data distorts analysis and leads to incorrect conclusions. Refresh data sources regularly so the insights drawn from your visuals stay accurate and relevant.

- Misleading Correlations: A correlation shown in a visual can lead users to assume causation between unrelated factors. This distorts their understanding, especially when reports are full of numbers but lack clear, actionable guidance, and that gap can leave stakeholders confused and mistrustful, undermining the value of the insights presented.
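To see how easily a shared trend produces a convincing correlation, the Python sketch below computes Pearson's correlation coefficient for two hypothetical series that simply both grow over the same five years. The series and the `pearson` helper are made up for illustration. The coefficient comes out close to 1 even though neither series plausibly causes the other.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical yearly figures with no causal link; both just grow over time.
app_downloads_k = [1, 2, 3, 4, 5]
office_coffee_orders = [10, 12, 15, 15, 19]

print(round(pearson(app_downloads_k, office_coffee_orders), 2))  # 0.97
```

Plotted side by side, these two series would look tightly linked, which is why a visual showing correlation should state whether any causal mechanism is known.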

A related case study, “Omitting Baseline and Truncating Scale,” shows how common misleading axes are in political and sports visualizations, where omitting the baseline or truncating the scale creates patterns or trends that are not really there. Being aware of this problem helps ensure that visualizations represent the details accurately.
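The distortion from a truncated axis is easy to quantify. In this Python sketch, the `apparent_ratio` helper and the values are hypothetical. Two bars of 98 and 100 look nearly equal on an axis that starts at zero, but when the axis starts at 95 the shorter bar is drawn at only 60% of the taller one's height.

```python
def apparent_ratio(shorter: float, taller: float, axis_start: float = 0.0) -> float:
    """Ratio of drawn bar heights when the value axis starts at axis_start."""
    return (shorter - axis_start) / (taller - axis_start)


print(apparent_ratio(98, 100))      # 0.98: bars look nearly equal
print(apparent_ratio(98, 100, 95))  # 0.6: the truncated axis exaggerates the gap
```

A 2% difference in the data becomes a 40% difference on screen, which is why bar charts in particular should start at zero.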

Coupler.io is used by more than 24,000 companies worldwide, a sign of how widely organizations depend on data visualization to support decision-making. A well-designed dashboard prioritizes important variables and uses appropriate visualization techniques to improve readability, comprehension, and engagement. Conversely, failing to act on insights from BI dashboards can put a business at a competitive disadvantage, which makes these challenges urgent to address.

By steering clear of these frequent traps, you can create more effective and impactful BI representations, such as Power BI comparison visuals, that drive data-driven insights and operational efficiency.

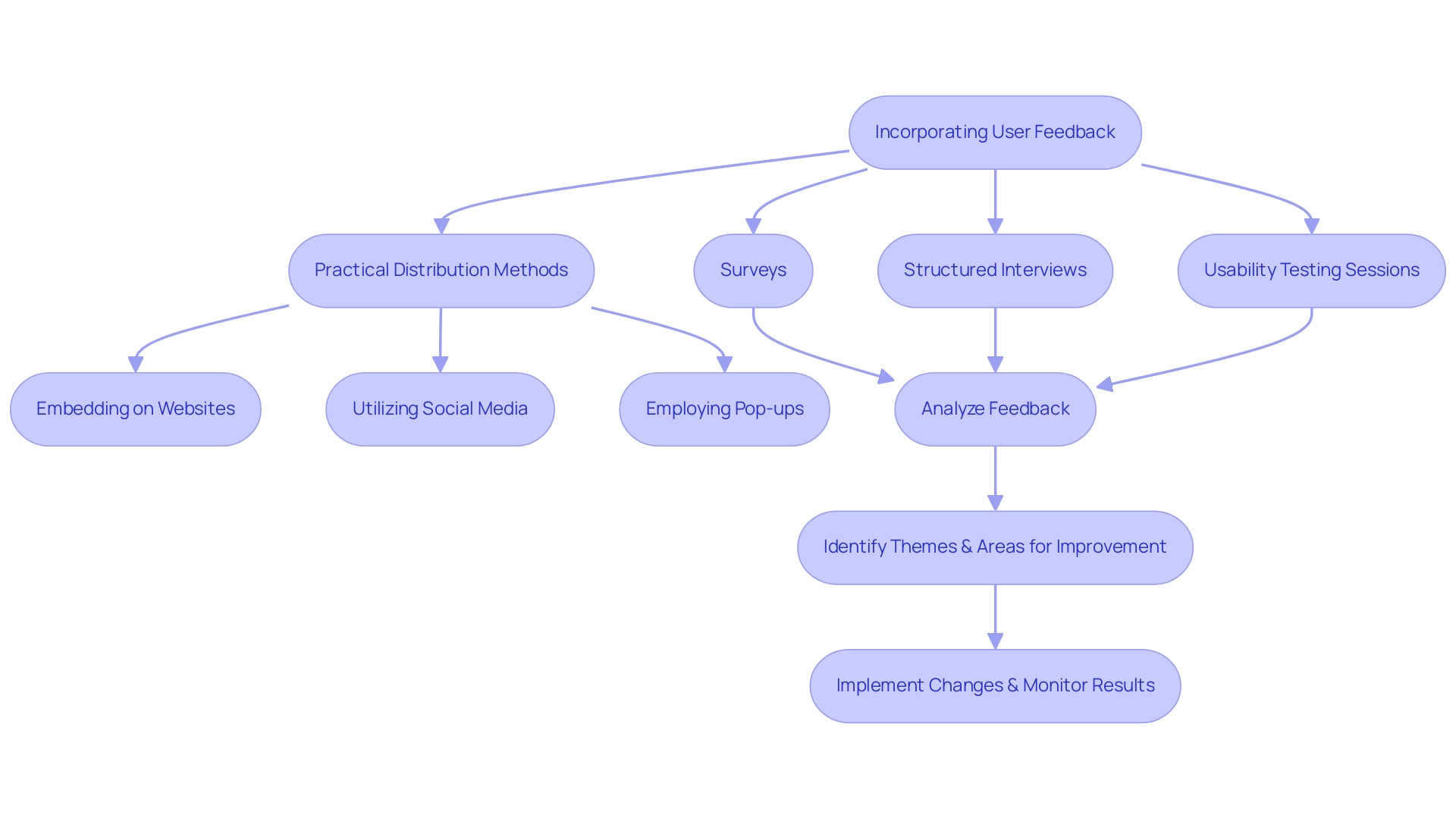

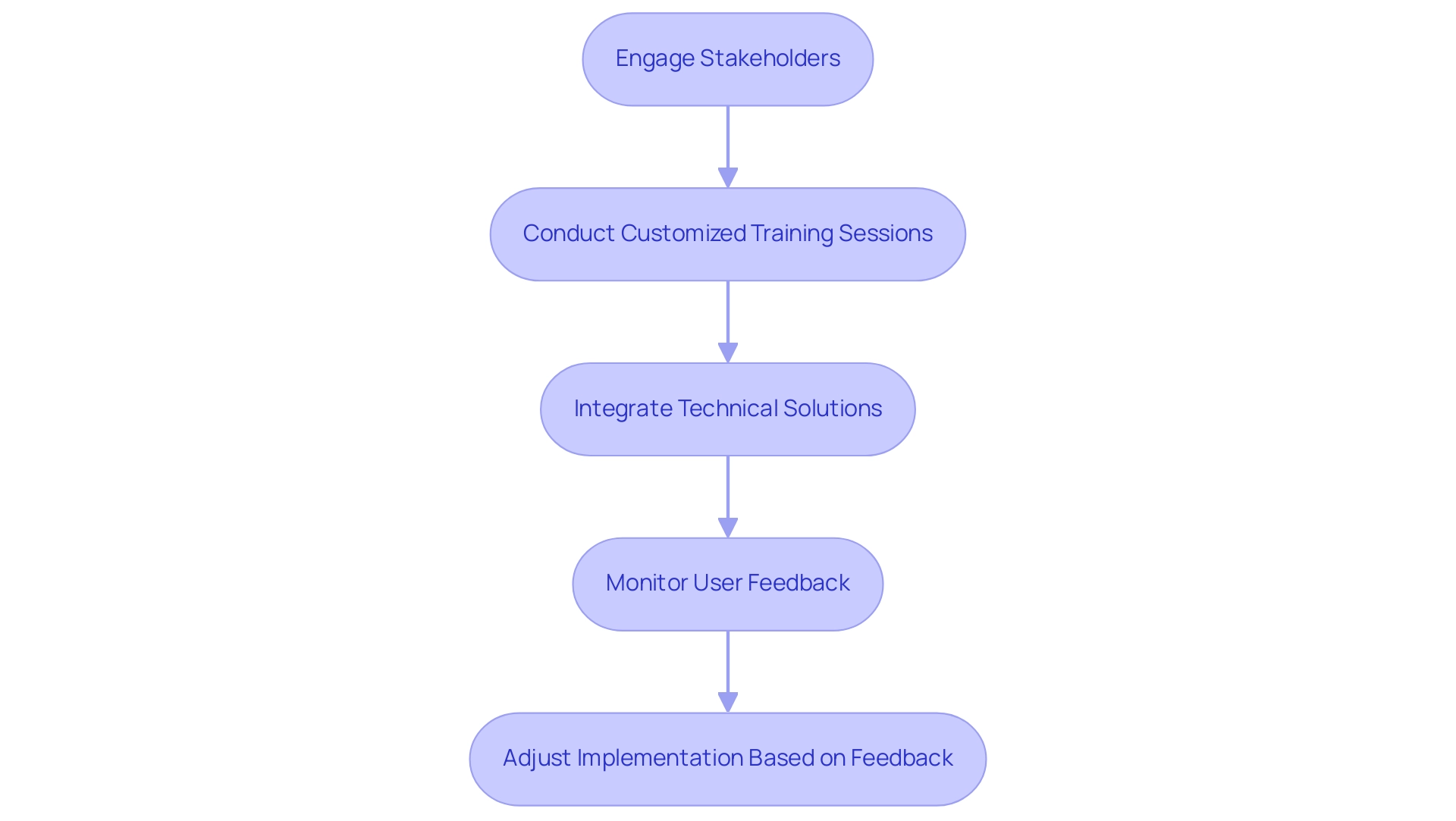

Incorporating User Feedback for Continuous Improvement

Incorporating feedback from participants is essential for the continuous improvement of Power BI comparison visuals. Creating regular feedback loops promotes a deeper understanding of experiences and challenges related to these visuals, particularly in addressing the common pitfalls of time-consuming report creation and data inconsistencies. To effectively gather insights, employ a variety of methods such as:

- Surveys

- Structured interviews

- Usability testing sessions

Notably, a case study by Hotjar illustrated that addressing feedback can lead to a remarkable 40% increase in conversion rates, highlighting the potential impact of insights on operational efficiency. Analyzing the resultant feedback can illuminate common themes and pinpoint areas ripe for improvement, thereby directly addressing the challenges in leveraging insights from Power BI dashboards. In a case study titled ‘Challenges in Gathering and Interpreting Feedback,’ it was revealed that despite inherent difficulties—such as individuals’ varying willingness to provide insights and the qualitative nature of the data—effective feedback mechanisms are essential for Product Managers.

By actively involving participants throughout the design process and implementing a governance strategy to ensure consistency of information, organizations can guarantee that their Power BI comparison visuals not only adapt to evolving needs but also significantly enhance information-driven decision-making and operational efficiency. Customized AI solutions can also play an essential role in this process, assisting in optimizing analysis and delivering actionable insights. Practical methods for distributing surveys and questionnaires include:

- Embedding them on websites

- Utilizing social media channels

- Employing pop-ups

In every case, keep surveys concise and relevant to the research goals.

As Syed Balkhi aptly notes,

You can collect feedback and other relevant data at any point in the UX design process.

This continuous improvement practice is crucial in creating visuals that resonate with users, drive satisfaction, and ultimately leverage the power of Business Intelligence and RPA for informed decision-making and enhanced productivity.

Conclusion

Power BI comparison visuals are indispensable tools that empower organizations to navigate the complexities of data analysis with clarity and precision. By utilizing various types of visuals, such as bar charts, line graphs, and scatter plots, businesses can uncover insights, monitor performance, and make informed decisions that drive operational efficiency. The article emphasizes the importance of mastering these tools as the demand for Business Intelligence solutions continues to grow, with projections indicating over half a million deployments by 2024.

Effective design practices play a critical role in maximizing the impact of comparison visuals. Embracing simplicity, understanding the audience, and ensuring data quality are fundamental to creating visuals that facilitate actionable insights. Additionally, gathering user feedback is essential for continuous improvement, allowing organizations to adapt their visualizations to meet evolving needs and enhance data-driven decision-making.

Ultimately, the successful integration of Power BI comparison visuals can transform how organizations leverage data, leading to strategic growth and competitive advantage. By prioritizing best practices in design and data quality, businesses can harness the full potential of their data, ensuring that insights are not only accessible but also actionable. As the landscape of data analytics continues to evolve, the ability to effectively communicate insights through powerful visuals will remain a key driver of success in the modern business environment.

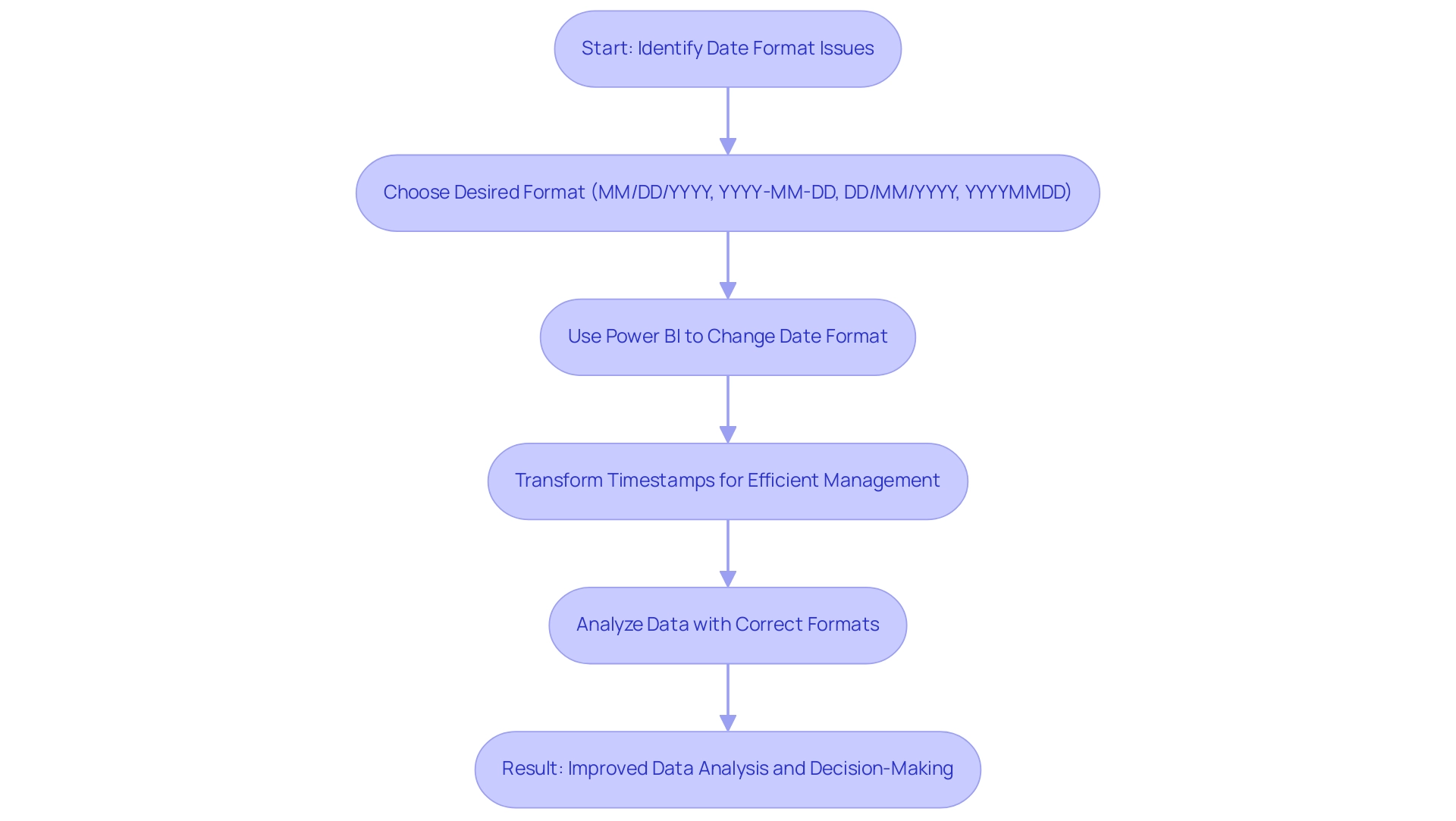

Overview

To change the date format in Power BI to DD/MM/YYYY, users should follow a step-by-step process that includes selecting the date column, ensuring its data type is set to ‘Date’, and applying the custom format. The article emphasizes the importance of proper date formatting for accurate data analysis and decision-making, highlighting that incorrect formats can lead to misinterpretations and inefficiencies in reporting.

Introduction

Navigating the complexities of date formatting in Power BI is essential for organizations seeking to enhance their data analysis capabilities. With various formats like:

- MM/DD/YYYY

- DD/MM/YYYY

- YYYY-MM-DD

the choice of date format can significantly influence how data is interpreted, sorted, and filtered. Missteps in date formatting can lead to confusion and erroneous insights, which can ultimately derail informed decision-making.

This article delves into the intricacies of date formats in Power BI, providing a comprehensive guide on best practices, common challenges, and advanced techniques to ensure accurate and efficient data management. By understanding and implementing these strategies, organizations can leverage the full potential of their data, driving growth and innovation in an increasingly data-driven world.

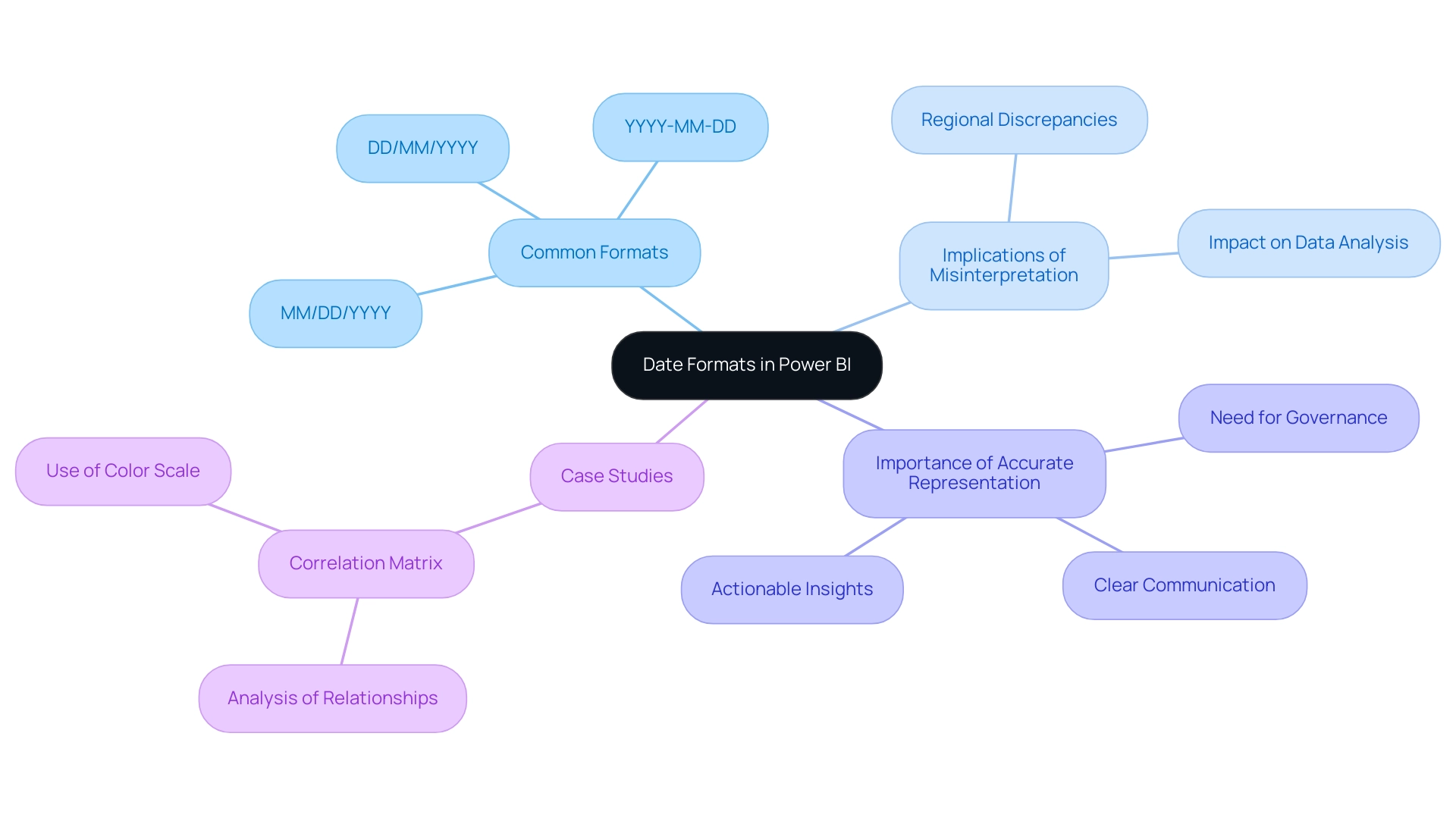

Understanding Date Formats in Power BI

In Power BI, the choice of date format plays a crucial role in how information is interpreted and displayed, which directly affects operational efficiency. Common layouts such as MM/DD/YYYY and YYYY-MM-DD have distinct implications for calculating, sorting, and filtering date-related information. For organizations operating in regions where DD/MM/YYYY is the standard, changing the Power BI date format to DD/MM/YYYY avoids confusion and reduces mistakes during analysis.

Improper date formatting can lead to misinterpretations that distort insights and decision-making, especially in a data-rich environment where extracting actionable insights is crucial for business growth. As has been noted, ‘Handling timestamps as several elements instead of a single one facilitates their management and guarantees that reports are both precise and user-friendly.’ Moreover, converting dates into a single string format such as YYYYMMDD can make sorting and processing easier.

For example, the case study titled ‘Converting Dates to YYYYMMDD Format’ shows how this method streamlines the management of date information, keeping values in correct chronological order and simplifying data administration. Organizations that adopted the YYYYMMDD structure reported more efficient analysis and fewer errors in date interpretation, addressing the common issues of time-consuming report generation and data inconsistencies. RPA solutions such as EMMA RPA and Automate can also streamline date formatting, further improving efficiency and accuracy in data management.
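To make the YYYYMMDD idea concrete, here is a minimal Python sketch, an illustration outside Power BI rather than Power BI’s own mechanism. It encodes dates as YYYYMMDD keys, decodes them again, and shows that sorting the keys as plain numbers or as zero-padded text yields chronological order. The function names are hypothetical.

```python
from datetime import date

def to_yyyymmdd(d: date) -> int:
    """Encode a date as an integer key such as 20240307 (hypothetical helper)."""
    return d.year * 10000 + d.month * 100 + d.day

def from_yyyymmdd(key: int) -> date:
    """Decode an integer YYYYMMDD key back into a date (hypothetical helper)."""
    return date(key // 10000, (key // 100) % 100, key % 100)

dates = [date(2024, 3, 7), date(2023, 12, 25), date(2024, 1, 15)]
keys = [to_yyyymmdd(d) for d in dates]

# Sorting the keys numerically gives chronological order...
print(sorted(keys))  # [20231225, 20240115, 20240307]

# ...and so does sorting their text form, because every part is zero-padded.
print(sorted(str(k) for k in keys))

# Round trip: decoding a key restores the original date.
print(from_yyyymmdd(20240307))  # 2024-03-07
```

The design choice is that the largest unit (year) comes first, so a simple numeric or text comparison matches calendar order.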

Understanding and correctly applying these formats, together with RPA tools, is a fundamental step toward effective data analysis in BI, enabling operations directors to use insights that foster growth and innovation.

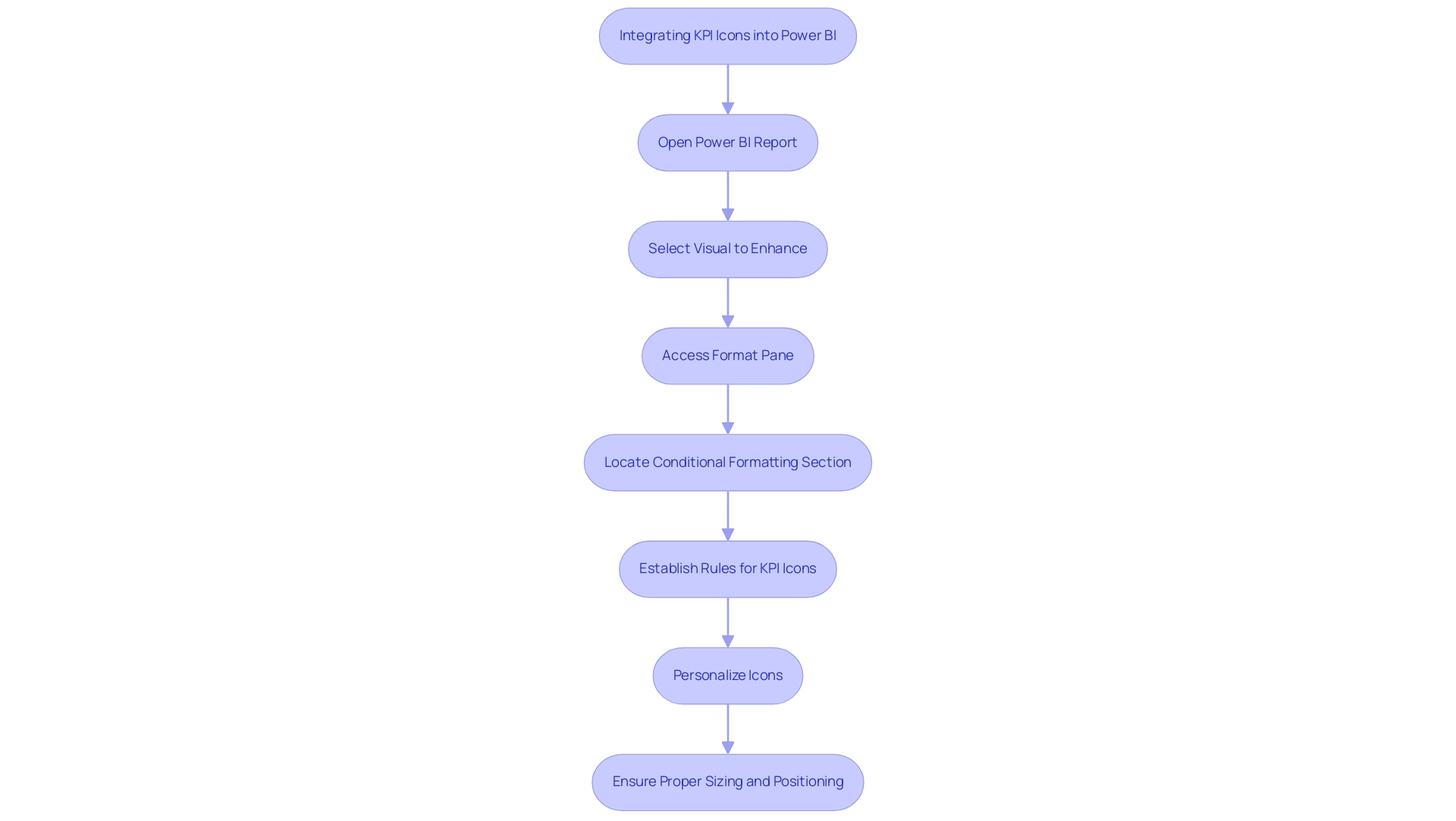

Step-by-Step Guide to Changing Date Format to DD/MM/YYYY

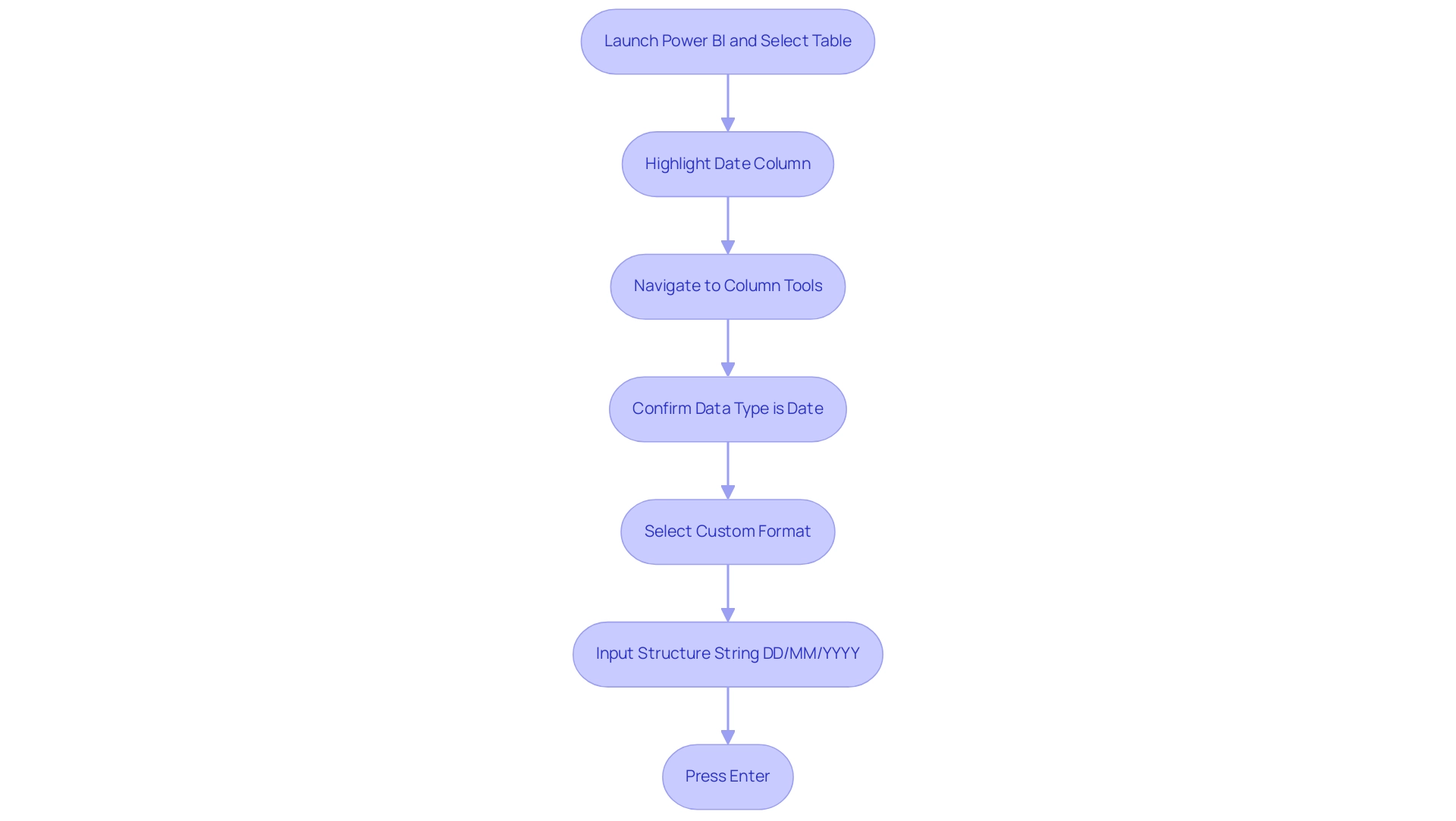

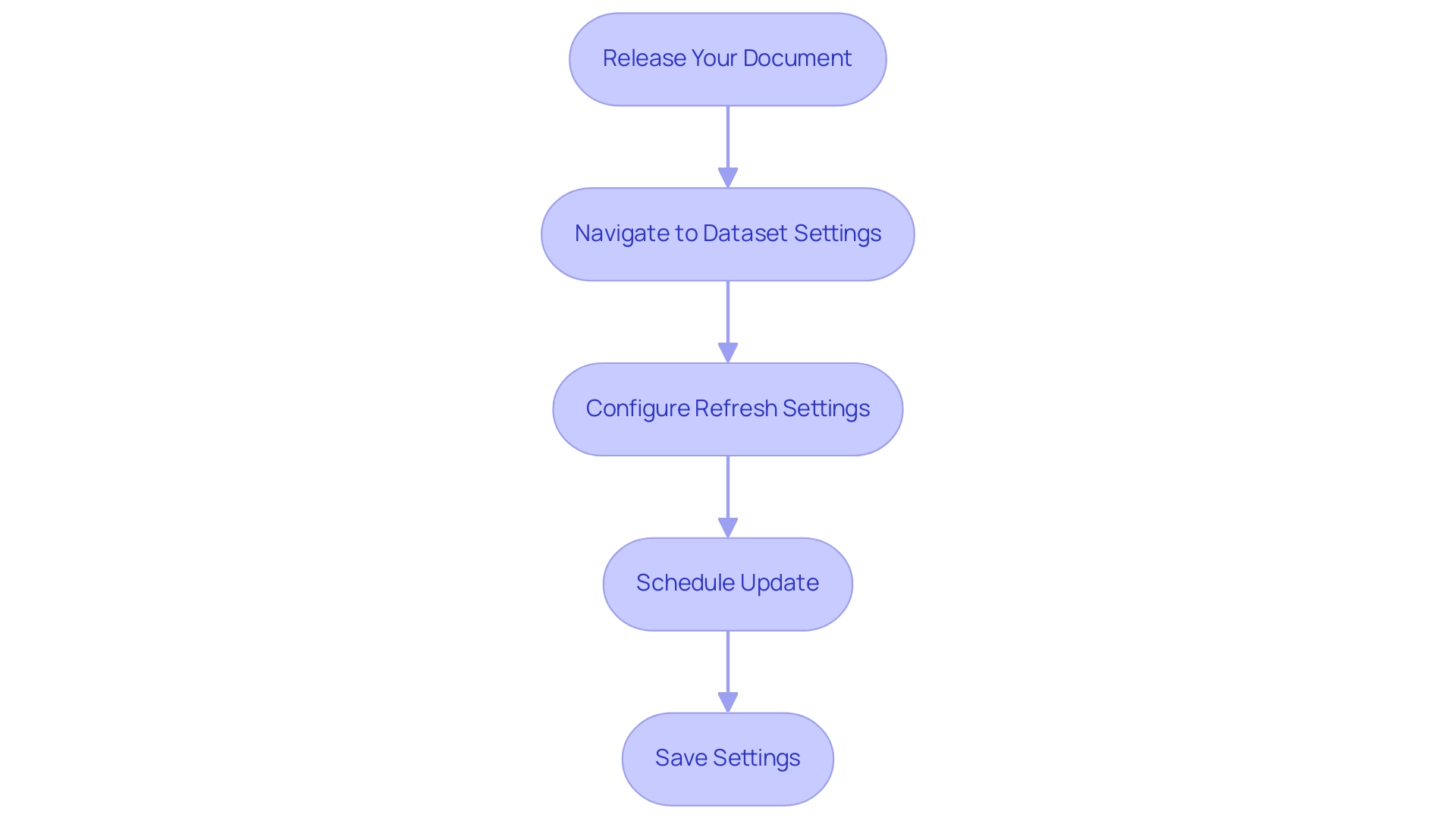

To change the date format to DD/MM/YYYY in Power BI, adhere to these steps for a seamless adjustment:

- Launch your Power BI report and select the table containing the column you intend to format.

- In the ‘Fields’ pane, click on the column that displays the date to highlight it.

- Navigate to the ‘Column tools’ tab located in the ribbon at the top of the window.

- In the ‘Data type’ dropdown, confirm that the data type is set to ‘Date’. The column must contain values that Power BI can recognize as dates (with a year, month, and day); otherwise it will be treated as text.

- Next, locate the ‘Format’ dropdown menu and opt for ‘Custom’.

- Enter the format string dd/mm/yyyy.

- Press ‘Enter’ to apply your changes.

After finishing these steps, your dates will consistently display in the DD/MM/YYYY arrangement throughout your report. This reflects best practices emphasized in community discussions, which stress converting dates stored as text into recognized Date values for optimal functionality in BI.
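Why the column must be a true Date rather than text can be sketched in Python (an analogy, not Power BI itself): text in DD/MM/YYYY form sorts character by character, so the day digits decide the order, while real date values sort chronologically and can still be formatted as DD/MM/YYYY purely for display. The sample values are hypothetical.

```python
from datetime import datetime

# Hypothetical sample values stored as DD/MM/YYYY text.
text_dates = ["15/01/2024", "07/03/2023", "25/12/2023"]

# Sorting text compares character by character, so the day digits win.
print(sorted(text_dates))
# ['07/03/2023', '15/01/2024', '25/12/2023']  (not chronological)

# Parse into real date values first, then sort by those values.
parsed = [datetime.strptime(t, "%d/%m/%Y").date() for t in text_dates]
parsed.sort()

# Apply the DD/MM/YYYY format only for display.
print([d.strftime("%d/%m/%Y") for d in parsed])
# ['07/03/2023', '25/12/2023', '15/01/2024']
```

The display format is the same in both lists; only the underlying type changes the order.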

For example, a case study on creating a Calendar Table in Power BI emphasized that a recognized date column is essential for building relationships with sales data, especially when the source has a ‘Period’ column formatted as whole numbers. Community expert Jimmy801 advised @DanielPasalic that the first step is to turn Period into a real date: add a custom column called ‘Date’ and insert this formula, which appends "01" as the day to the Period value:

Date.FromText(Text.From([Period]) & "01", "de-DE")

This creates a Date column holding the first day of each month. Once this new column is in your data model, you can apply DAX date functions such as YEAR and MONTH to it. Storing periods as true date values not only improves your analysis but also keeps your reports accurate and dependable.
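The Power Query formula above turns a whole-number period into the first day of its month. The same idea can be sketched in Python, assuming (the source does not show sample values) that Period holds year-and-month numbers such as 202403. The function name is hypothetical.

```python
from datetime import date

def period_to_date(period: int) -> date:
    """Turn a YYYYMM whole number (e.g. 202403) into the first day of that month.

    Assumes the YYYYMM layout; this is a hypothetical helper for illustration.
    """
    year, month = divmod(period, 100)
    if not 1 <= month <= 12:
        raise ValueError(f"not a valid YYYYMM period: {period}")
    return date(year, month, 1)

print(period_to_date(202403))  # 2024-03-01
```

Rejecting month values outside 1 to 12 surfaces bad source rows early instead of producing silently wrong dates.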

Common Challenges and Solutions in Date Formatting

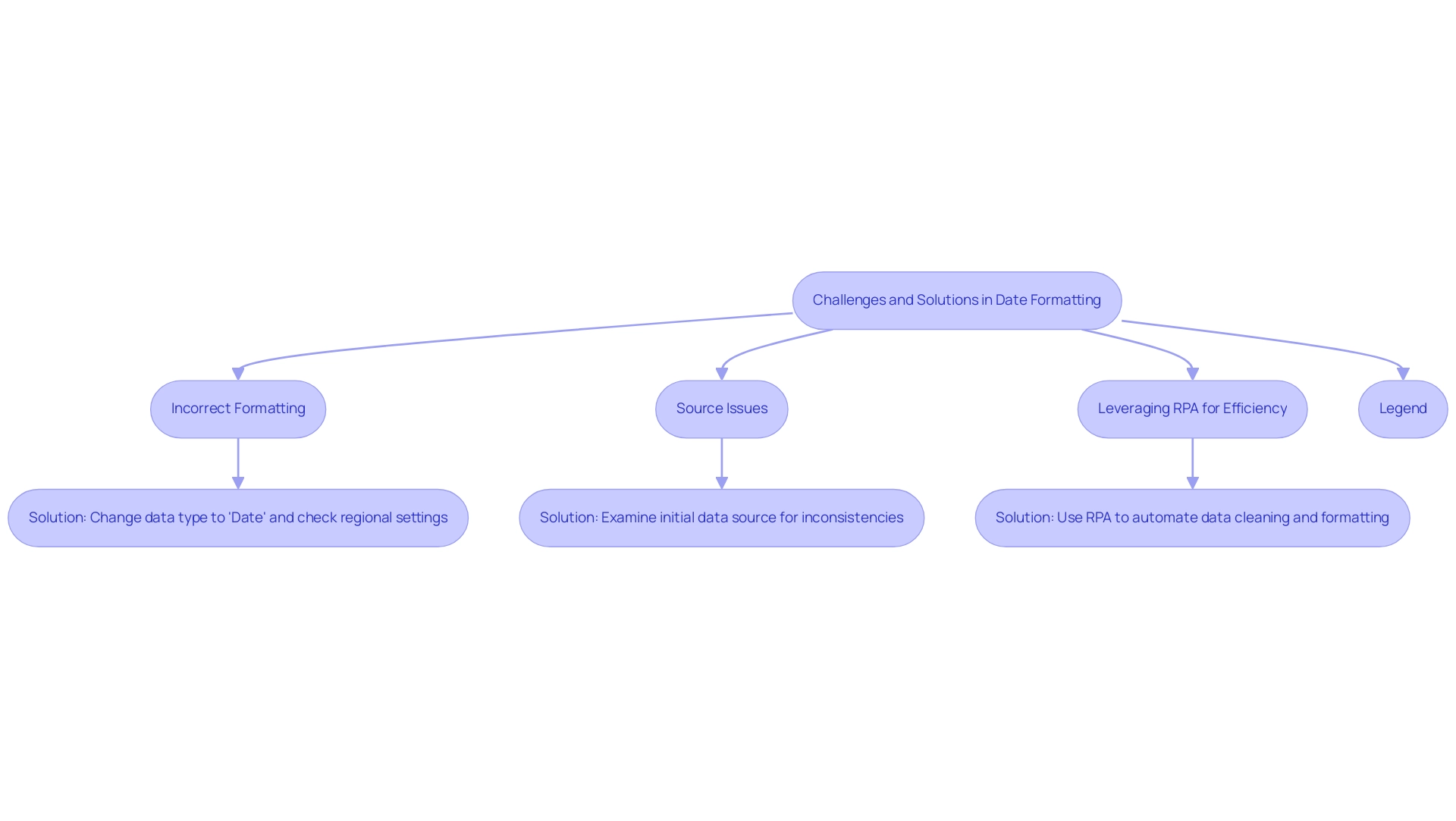

When navigating the intricacies of date formatting in BI tools, users often face several challenges that can hinder their workflow:

- Incorrect Formatting: One of the most common issues is that dates do not display as expected. First, make sure the column’s data type is ‘Date’ rather than ‘Text’, because a custom format such as DD/MM/YYYY can only be applied to a true date column. Regional settings can also cause conflicts, so check them under ‘File’ > ‘Options and settings’ > ‘Options’ > ‘Regional Settings’. As community champion az38 points out, there are two places to check, the Global settings and the Current File settings:

@Mengerdahl where exactly did you try to change local? There are 2 places – Global and Current File local settings. With current file local settings English (United States) 04/01/10 00:00:00 was recognized as 2010 year for me.

This shows how strongly these settings influence the way dates are interpreted, and why consistent, accurate formatting can be hard to achieve.

- Source Issues: If dates still do not display in the preferred format, examine the original source for valid date values. Data imported from Excel or CSV files may contain inconsistencies that need cleaning before the DD/MM/YYYY format can be applied. This relates to the broader challenge of poor master data quality, which can complicate the adoption of AI solutions in organizations seeking reliable insights.

- Leveraging RPA for Efficiency: To streamline the formatting process, organizations can use Robotic Process Automation (RPA) to automate data cleaning and formatting tasks. This improves efficiency and reduces human error, freeing teams to concentrate on more strategic initiatives.

Beyond these challenges, users increasingly want Microsoft to improve date handling in BI visuals, with more flexibility and choice in formatting. A relevant case study titled ‘Custom Format Request’ illustrates this frustration: a user wanted a custom format such as ‘Mon, Oct 14,’18’. This underscores the need for better solutions as businesses strive to use Business Intelligence for informed decision-making. The upcoming February 2025 BI update promises new features that may relieve some of these formatting challenges. By addressing these challenges proactively and staying informed about updates, users can work with date formatting in Business Intelligence more smoothly.
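The regional-settings problem in az38’s example can be reproduced in a few lines of Python. This uses Python’s strptime with explicit patterns, not Power BI’s parser; the point is only that the same text yields two different dates depending on whether the month or the day is assumed to come first.

```python
from datetime import datetime

raw = "04/01/10 00:00:00"  # the value from az38's example

# Month-first reading, as with English (United States) settings.
us = datetime.strptime(raw, "%m/%d/%y %H:%M:%S")

# Day-first reading, as in locales that write dates as DD/MM/YY.
day_first = datetime.strptime(raw, "%d/%m/%y %H:%M:%S")

print(us.date())         # 2010-04-01
print(day_first.date())  # 2010-01-04
```

Neither result is an error, which is why a wrong locale setting often goes unnoticed until totals by month look off.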

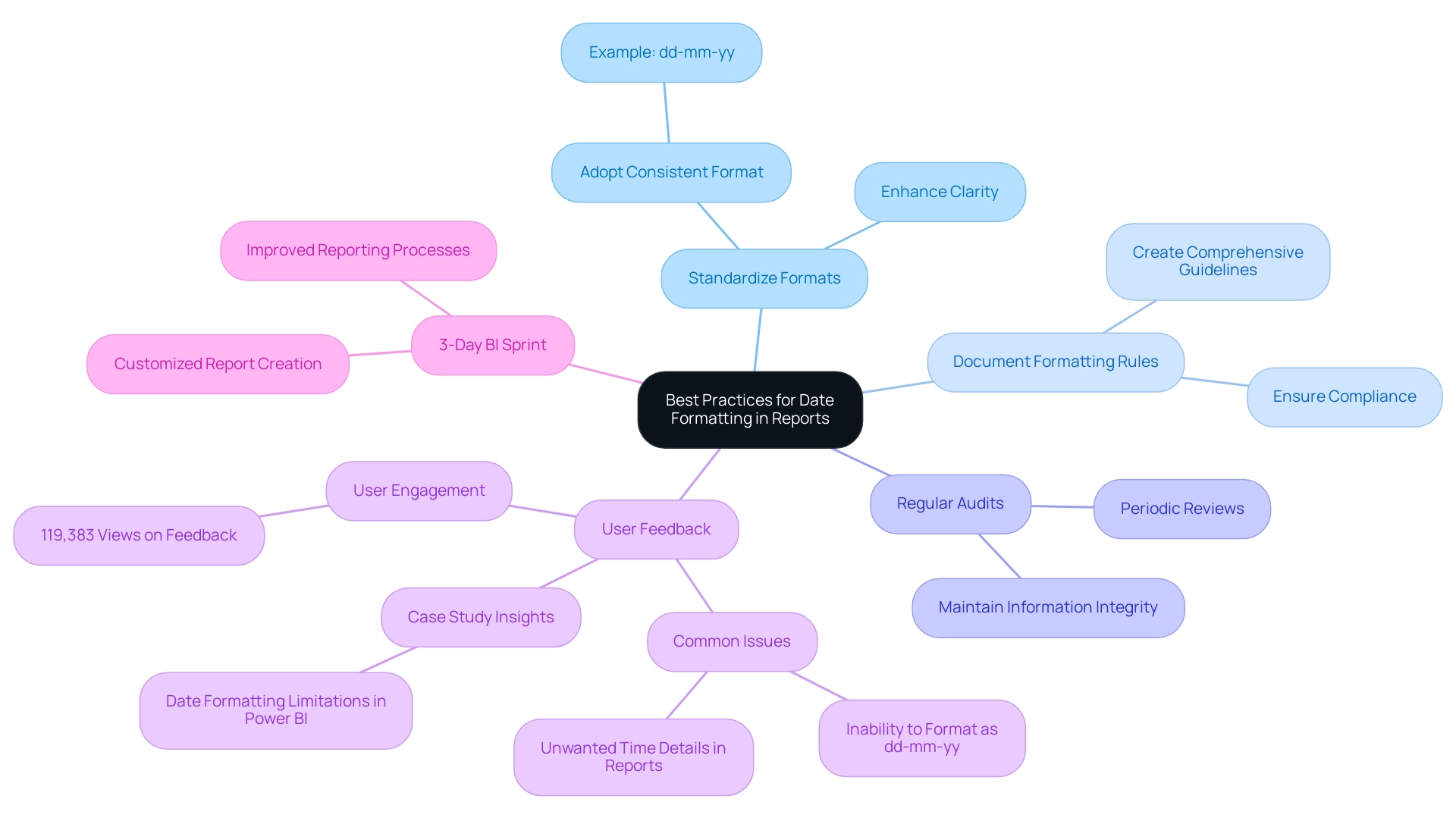

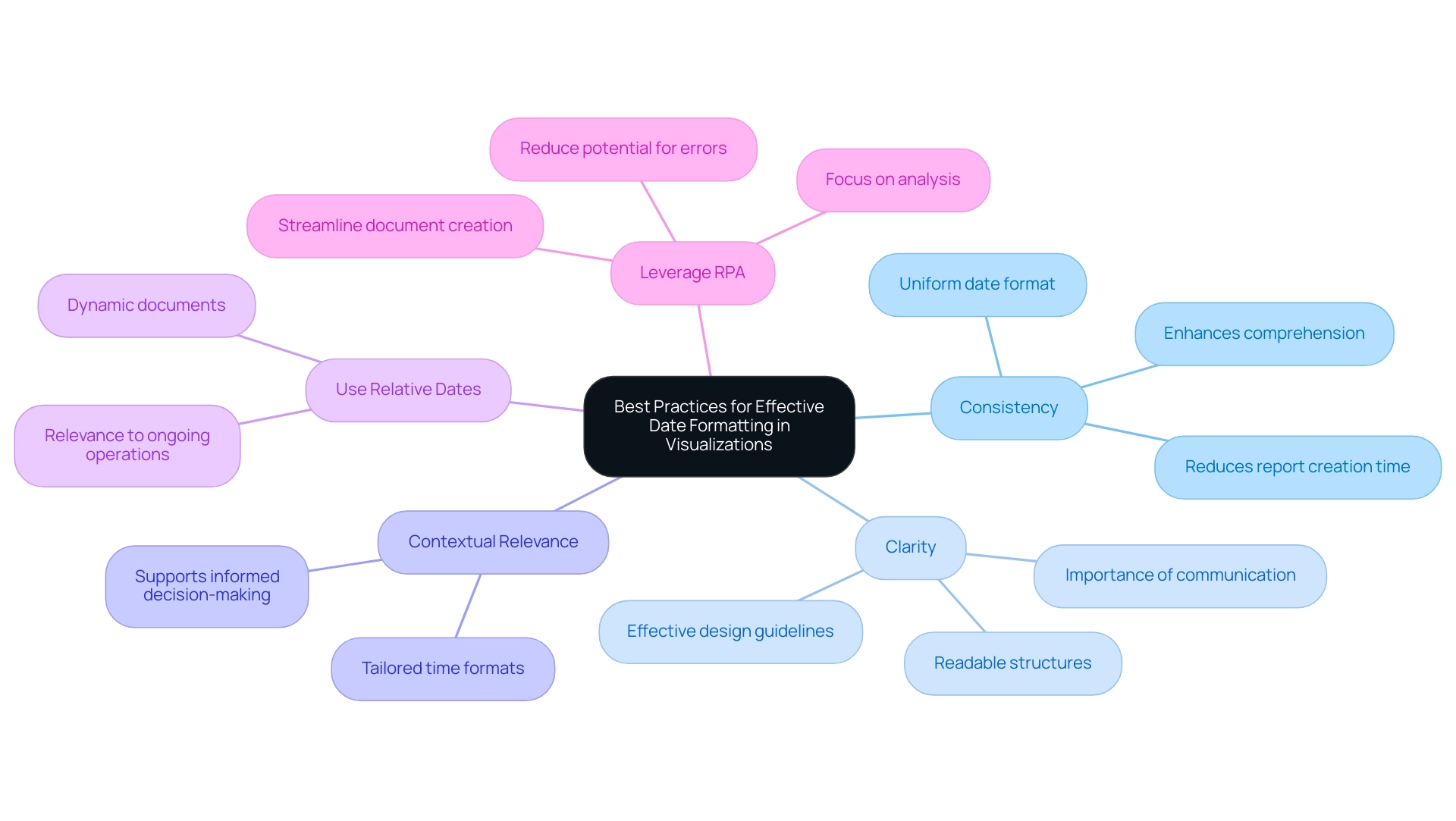

Best Practices for Consistent Date Formatting in Reports

To ensure consistent date formatting across your Power BI reports and maximize the effectiveness of your insights, implement the following best practices:

- Standardize Formats: Adopt a date format that aligns with your organization’s standards, such as dd-mm-yy, and apply it uniformly across all reports. This approach not only fosters familiarity but also reduces confusion among users, ultimately enhancing the clarity of your reports.

- Document Formatting Rules: Create a comprehensive document of date formatting guidelines, and make sure all report creators know and follow them. This is crucial for consistency and compliance across your organization’s reporting.

- Regular Audits: Review reports periodically to verify that they follow the established date formatting standards. This maintains data integrity and makes reports clearer for stakeholders, so the insights they contain are easier to use.

A common complaint concerns the limits of Power BI’s formatting options when changing dates to DD/MM/YYYY. For instance, one user was frustrated that, although the data view displayed dates correctly, the report view added unwanted time details. The thread received 119,383 views, reflecting widespread concern about date formatting inconsistencies.

To tackle these challenges, our 3-Day BI Sprint service provides a customized approach to report creation, ensuring that date formatting and other essential elements are expertly managed. Clients have reported significant improvements in their reporting processes after using our service, demonstrating its effectiveness in resolving similar issues.

The case study titled “Date Formatting Limitations in BI” further illustrates this issue, describing how other users echoed the same frustrations and discussed potential workarounds. These insights underline the need for organizations to establish and enforce consistent date formats in their reporting processes. By following these best practices and considering our 3-Day BI Sprint, organizations can improve the quality and clarity of their business intelligence reports, leading to more impactful decision-making.

Advanced Techniques for Date Formatting in Power BI

To harness advanced date formatting techniques in Power BI effectively, consider implementing the following strategies:

- Employing DAX for Custom Formats: Dynamic date formatting can be achieved through calculated columns using DAX. For example, the following expression (where 'YourTable'[YourDateColumn] is a placeholder for your own table and date column) returns each date as DD/MM/YYYY text:

FormattedDate = FORMAT('YourTable'[YourDateColumn], "dd/mm/yyyy")

Because FORMAT returns text, keep the original date column for sorting, filtering, and date calculations. This approach improves readability and supports deeper analysis, a crucial factor in using Business Intelligence for operational efficiency.

- Transformations in Power Query: During data loading, Power Query provides strong tools for changing date representations. On the ‘Transform’ tab you can change a column’s data type before the data reaches your report, and the ‘Using Locale’ option under ‘Change Type’ lets you state which regional format the source text uses. This step significantly influences how data is interpreted and keeps large collections consistent. A relevant case study named “Changing Column Structure for Dates in BI” illustrates how changing a date column’s structure affects interpretations and calculations, using the conversion from ‘MM/DD/YYYY’ to DD/MM/YYYY as its example. Changing the format of multiple columns at once in Power Query saves time and ensures consistency across large datasets, addressing common challenges in report creation.

- Managing Time Zones: When your data includes multiple time zones, adjust date and time values accordingly. A calculated column that takes the user’s location into account produces accurate local times. This keeps your reports relevant and improves the user experience by presenting information in the right context, a key aspect of driving data-driven insights.
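The time-zone adjustment can be sketched in Python as an analogy for such a calculated column. The region names and their offsets are hypothetical, and fixed offsets ignore daylight saving time; a real model would need a time-zone database (for example Python’s zoneinfo module) to handle DST correctly.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical mapping from a user's region to a fixed UTC offset.
# Fixed offsets ignore daylight saving time.
REGION_OFFSETS = {
    "London": timedelta(hours=0),
    "New York": timedelta(hours=-5),
    "Mumbai": timedelta(hours=5, minutes=30),
}

def to_local(utc_value: datetime, region: str) -> datetime:
    """Convert a timezone-aware UTC datetime to the region's local time."""
    return utc_value.astimezone(timezone(REGION_OFFSETS[region]))

event = datetime(2024, 3, 1, 2, 30, tzinfo=timezone.utc)
for region in REGION_OFFSETS:
    print(region, to_local(event, region).strftime("%d/%m/%Y %H:%M"))
```

Note that the date itself can change: this 02:30 UTC event falls on 29/02/2024 at 21:30 for a New York user, so date-level totals depend on where the conversion happens.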

Moreover, incorporating RPA solutions can simplify the process of formatting and report creation in BI, alleviating the strain of manual modifications and reducing mistakes. By automating repetitive tasks, RPA enhances operational efficiency and allows teams to focus on more strategic initiatives.

To monitor report performance effectively, consider setting the max item for a ‘Top N’ filter to larger than what users would need, for example, 10,000. This can help identify and address bottlenecks in your reports.

Vikas Srivastava succinctly advises:

Efficiently manipulate timestamps in BI with these practical tips: Ensure proper formats, create dedicated temporal tables, leverage DAX functions, customize calculations, adapt to fiscal calendars, utilize relative filters, visualize trends, and optimize performance for enhanced analytics.

By following these guidelines, you can navigate the complexities of date formatting in Power BI, including changing the date format to DD/MM/YYYY, and keep your data presentations accurate and clear while unlocking the full potential of Business Intelligence and RPA in your operations.

Conclusion

Understanding the complexities of date formatting in Power BI is essential for organizations aiming to optimize their data analysis processes. By adopting the correct date formats, such as:

- MM/DD/YYYY

- DD/MM/YYYY

- YYYY-MM-DD

businesses can significantly enhance operational efficiency and reduce the risk of misinterpretation that can lead to erroneous insights. Implementing best practices, such as standardizing formats and documenting rules, ensures consistency across reports, facilitating clearer communication of insights to stakeholders.

Organizations face common challenges in date formatting, including:

- Incorrect settings

- Data source inconsistencies

Addressing these issues through regular audits and leveraging tools like Robotic Process Automation (RPA) can streamline processes and mitigate human error. Advanced techniques, including the use of DAX for custom formats and transformations in Power Query, provide additional layers of control and accuracy, allowing for tailored date presentations that meet specific analytical needs.

Ultimately, mastering date formatting in Power BI is not just about aesthetics; it is a foundational step in driving informed decision-making and fostering growth. By understanding the nuances of date handling and implementing effective strategies, organizations can unlock the full potential of their data, leading to improved insights, enhanced reporting capabilities, and a competitive edge in an increasingly data-driven landscape.

Overview

Power Automate enables users to efficiently export data to Excel by automating workflows and minimizing manual errors through a structured step-by-step process. The article supports this by outlining specific actions, such as creating flows and mapping information, while emphasizing the benefits of reduced mistakes and increased productivity, demonstrated through real-world case studies and best practices.

Introduction

In the ever-evolving landscape of business technology, automation has emerged as a critical driver of efficiency and productivity. Power Automate stands at the forefront of this revolution, offering organizations a robust platform to streamline workflows and minimize manual tasks without requiring extensive coding knowledge.

With its ability to integrate seamlessly with various applications and services, Power Automate not only enhances operational efficiency but also empowers users to leverage data analytics for informed decision-making.

As businesses increasingly turn to automation to navigate the complexities of data management and operational processes, understanding the capabilities of Power Automate becomes essential.

This article delves into the intricacies of Power Automate, providing a comprehensive guide on its functionalities, best practices, and the transformative impact it can have on modern organizations.

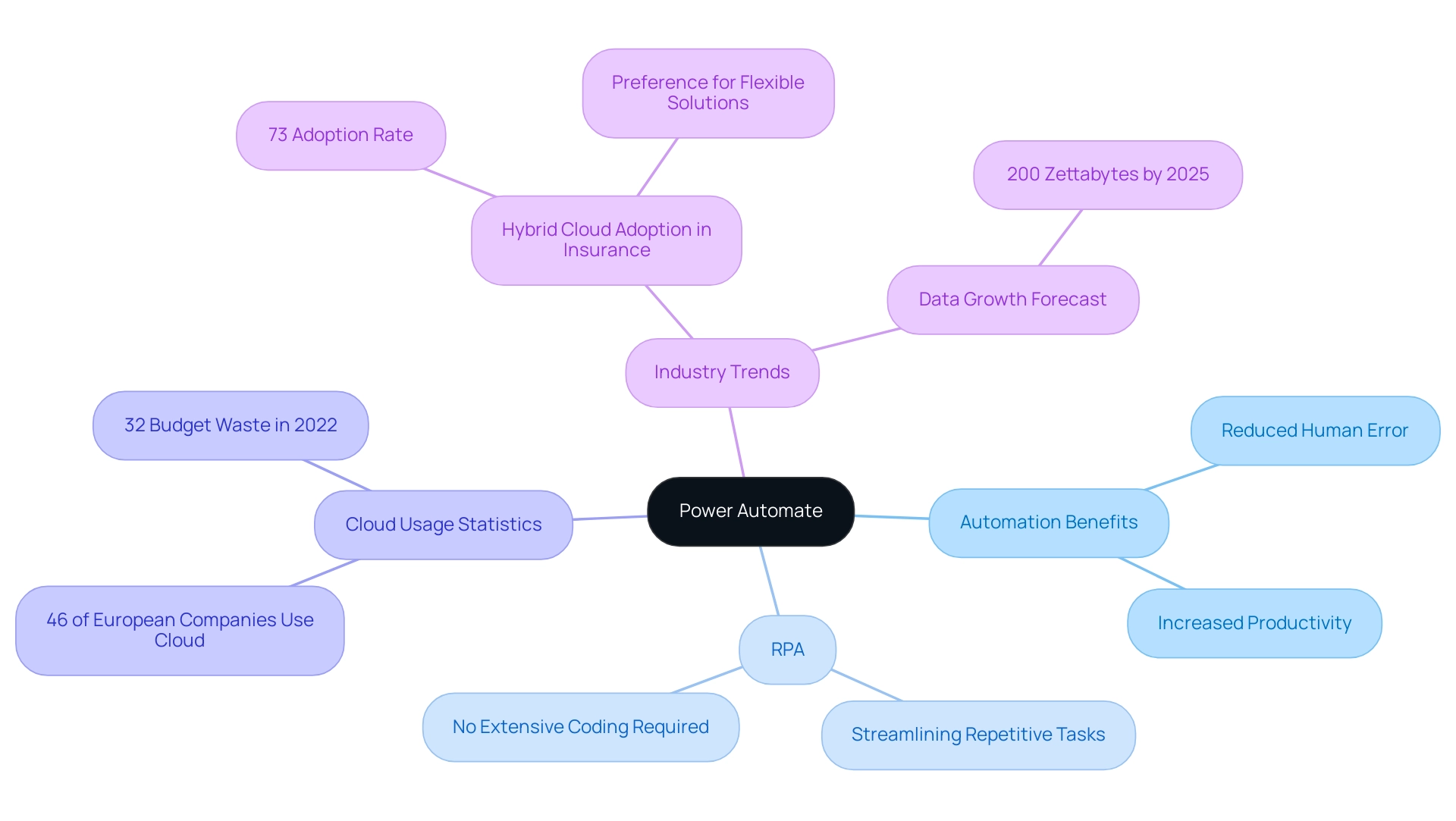

Understanding Power Automate: The Key to Automation

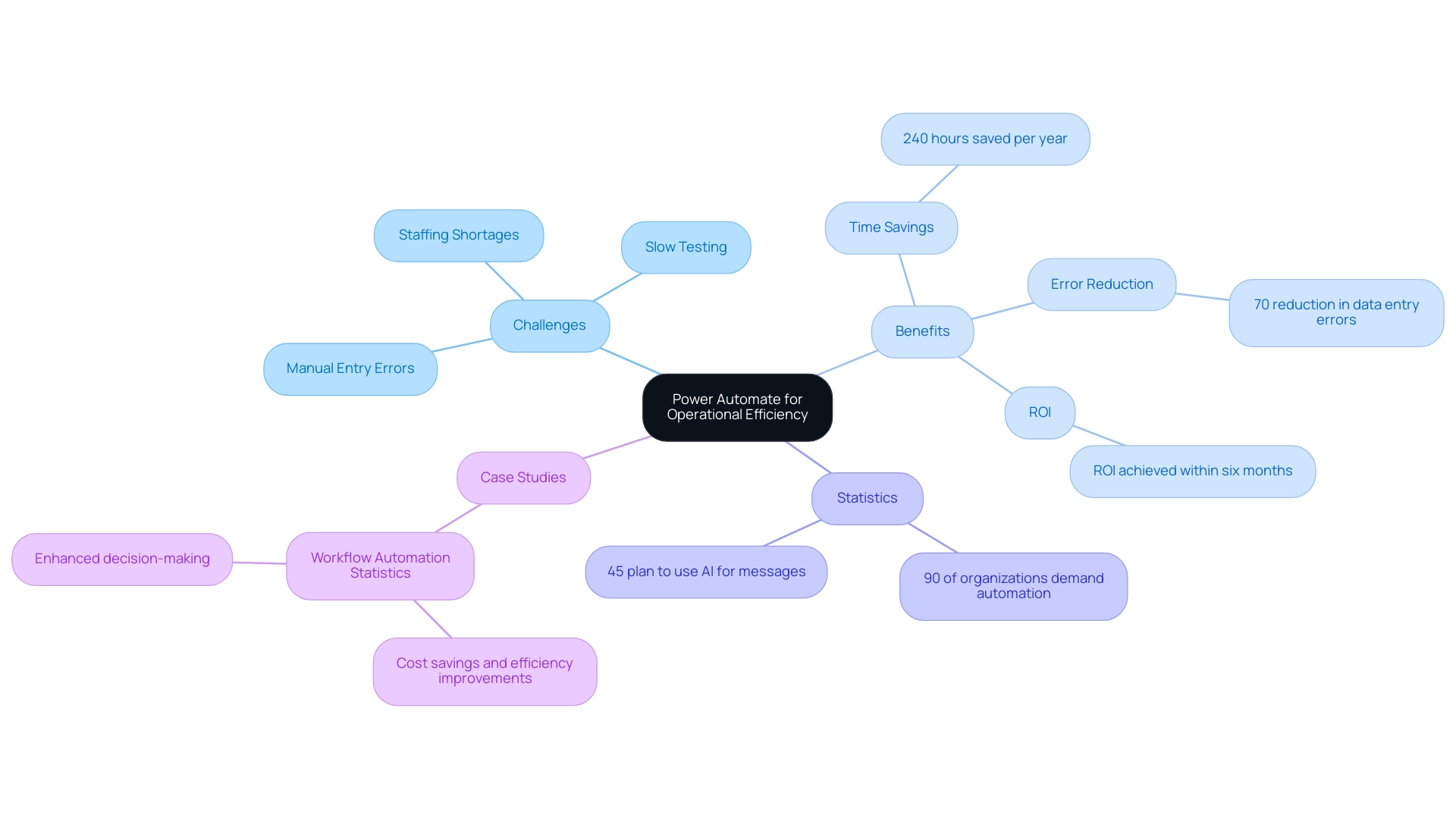

Power Automate is a cloud-based platform for automating workflows between diverse applications and services, including exporting data to Excel. It lets users streamline repetitive tasks without extensive coding expertise, improving business productivity through Robotic Process Automation (RPA). With pre-built templates and a wide range of connectors, organizations can design automated workflows, such as exports to Excel, that save significant time and reduce the potential for human error.

As emphasized in a recent case study, a mid-sized company enhanced productivity by automating information entry and legacy system integration, decreasing entry mistakes by 70% and boosting workflow productivity by 80%. This underscores the practical benefits of RPA in real-world applications. Additionally, tailored AI solutions can further enhance these processes by identifying the right technologies that align with specific business needs, thereby complementing the capabilities of RPA.

Moreover, as Thales observes,


46% of European companies keep all their data in the cloud,

highlighting the growing dependence on cloud solutions for operational effectiveness. However, with 32% of cloud budgets wasted in 2022, there is growing demand for efficient automation tools like Power Automate for tasks such as exporting to Excel. The insurance industry, where 73% of organizations prefer a hybrid cloud model, illustrates a broader shift toward flexible cloud solutions that can improve operational efficiency.

With forecasts suggesting that the world will store 200 zettabytes of data by 2025, understanding automation tools becomes increasingly important. By combining this tool with Business Intelligence, organizations can transform raw data into actionable insights, increase productivity, reduce operational costs, and make informed decisions that foster growth and innovation.

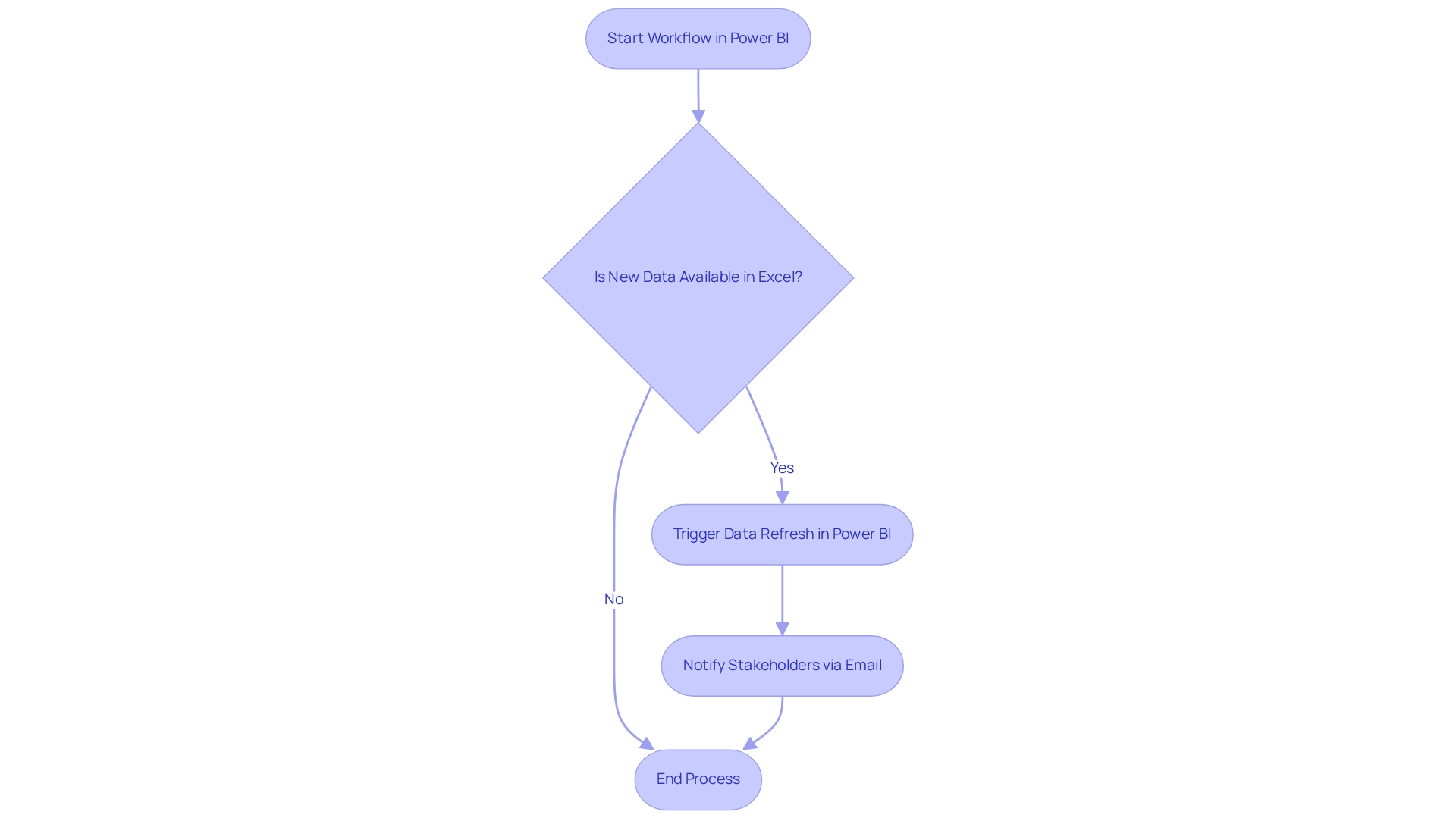

Step-by-Step Process for Exporting Data to Excel

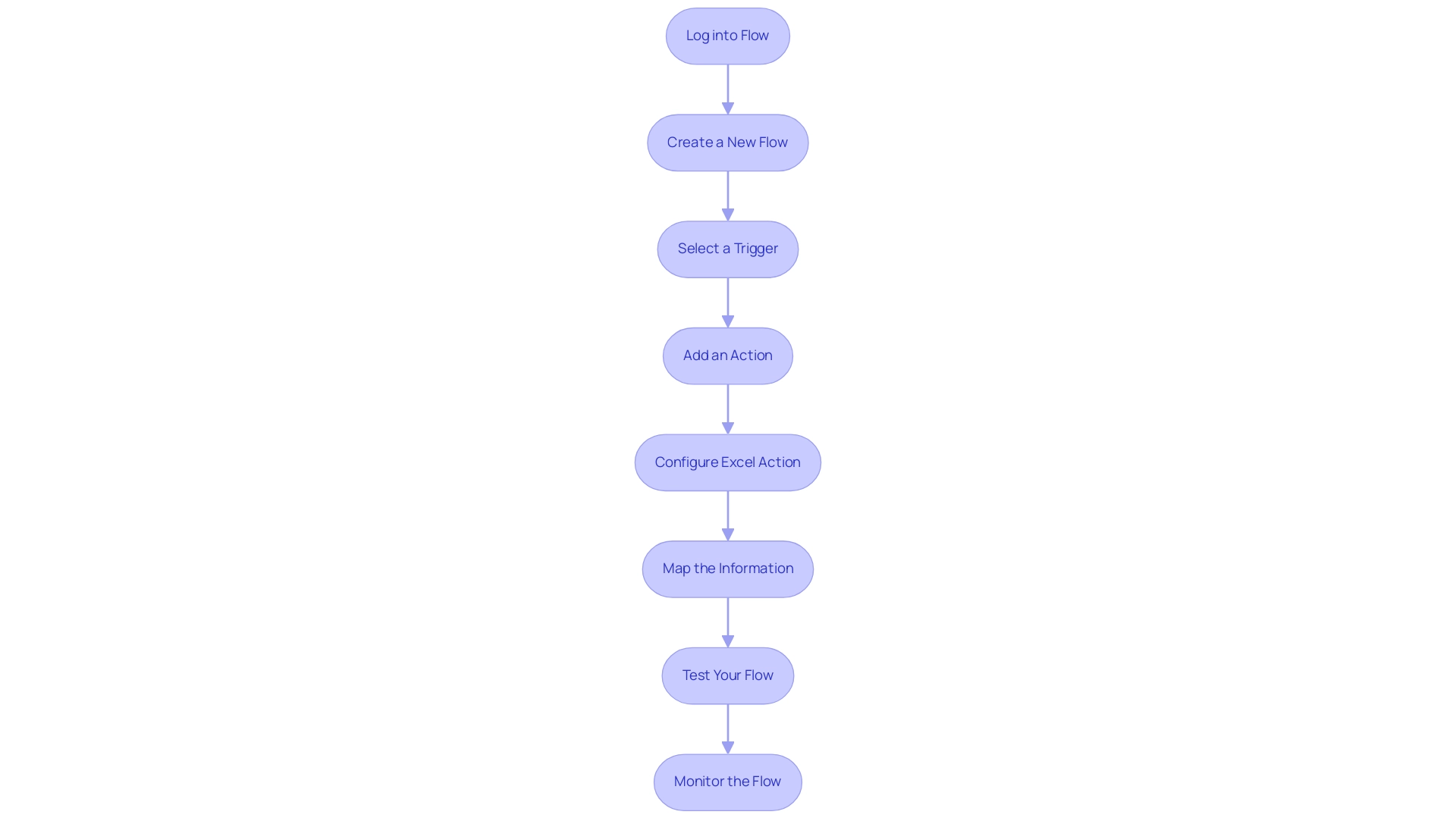

Exporting data to Excel with Power Automate is a straightforward process that improves operational efficiency and uses Robotic Process Automation (RPA) to simplify manual workflows, minimize errors, and free your team for more strategic tasks. Note that an Excel worksheet is limited to 1,048,576 rows, which can affect how you manage large exports. Follow these steps:

- Log into Flow: Begin by accessing your account at flow.microsoft.com.

- Create a New Flow: Click on ‘Create’ and select either ‘Instant flow’ or ‘Scheduled flow’ according to your specific requirements.

- Select a Trigger: Choose an appropriate trigger that will set off the flow, such as ‘When an item is created’ in SharePoint, ensuring that the process responds to real-time events.

- Add an Action: Click ‘New step’, search for ‘Excel’, and choose ‘Add a row into a table’. This action is the core of the export: it writes the trigger’s data into your workbook automatically, removing the manual copying that often introduces errors.

- Configure Excel Action: Specify the location of your Excel file and select the table that will receive the data. The data in the workbook must be formatted as an Excel table for it to be available here.

- Map the Information: Align the information fields from your trigger to the corresponding columns in your Excel table, ensuring accurate representation.

- Test Your Flow: Save your flow and execute a test to confirm that the information exports correctly and meets your operational needs.

- Monitor the Flow: Finally, review the run history to ensure successful execution, and troubleshoot any issues that may arise, as common errors can impede the efficiency of information export workflows.
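If an export might approach the 1,048,576-row worksheet limit mentioned above, it helps to plan how the rows will be split before building the flow. The following Python sketch is a planning aid, not a Power Automate feature; the function name is hypothetical, and it assumes one header row per worksheet.

```python
EXCEL_MAX_ROWS = 1_048_576  # rows per worksheet, including the header row

def split_for_excel(rows, header_rows=1, max_rows=EXCEL_MAX_ROWS):
    """Split data rows into chunks that each fit on one worksheet (hypothetical helper)."""
    capacity = max_rows - header_rows  # data rows left after the header
    return [rows[i:i + capacity] for i in range(0, len(rows), capacity)]

# Example: 2.5 million data rows need three worksheets.
data = range(2_500_000)
chunks = split_for_excel(data)
print(len(chunks), [len(c) for c in chunks])
```

Here the first two chunks each hold 1,048,575 data rows and the last holds the remaining 402,850.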

Utilizing tools like Flow allows for seamless integration of information, which is crucial in today’s information-rich environment. As Uber stated, ‘We knew we needed an accurate and reliable source of real-time flight data… we’re confident that OAG is the right partner.’ This emphasizes the significance of having reliable information sources in your operations.

Furthermore, a case study on collecting case overview statistics in Process Mining highlighted metrics such as new events, active cases, case duration, and utilization, showing the practical benefits of using this tool for information management. By following this guide, you can use Power Automate and RPA to simplify your Excel exports, improve business efficiency, and gain a competitive edge through data-driven insights.

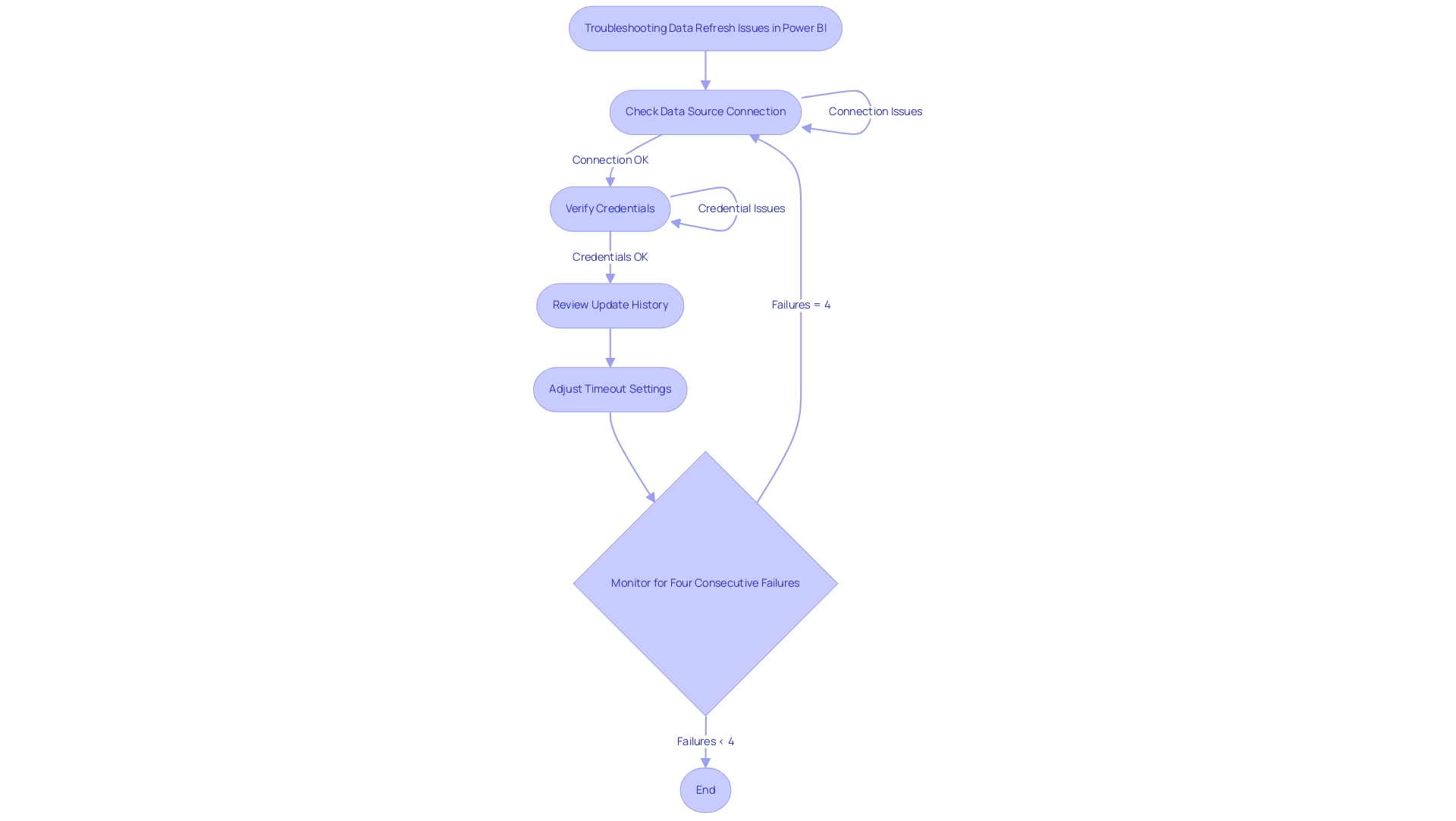

Troubleshooting Common Export Issues

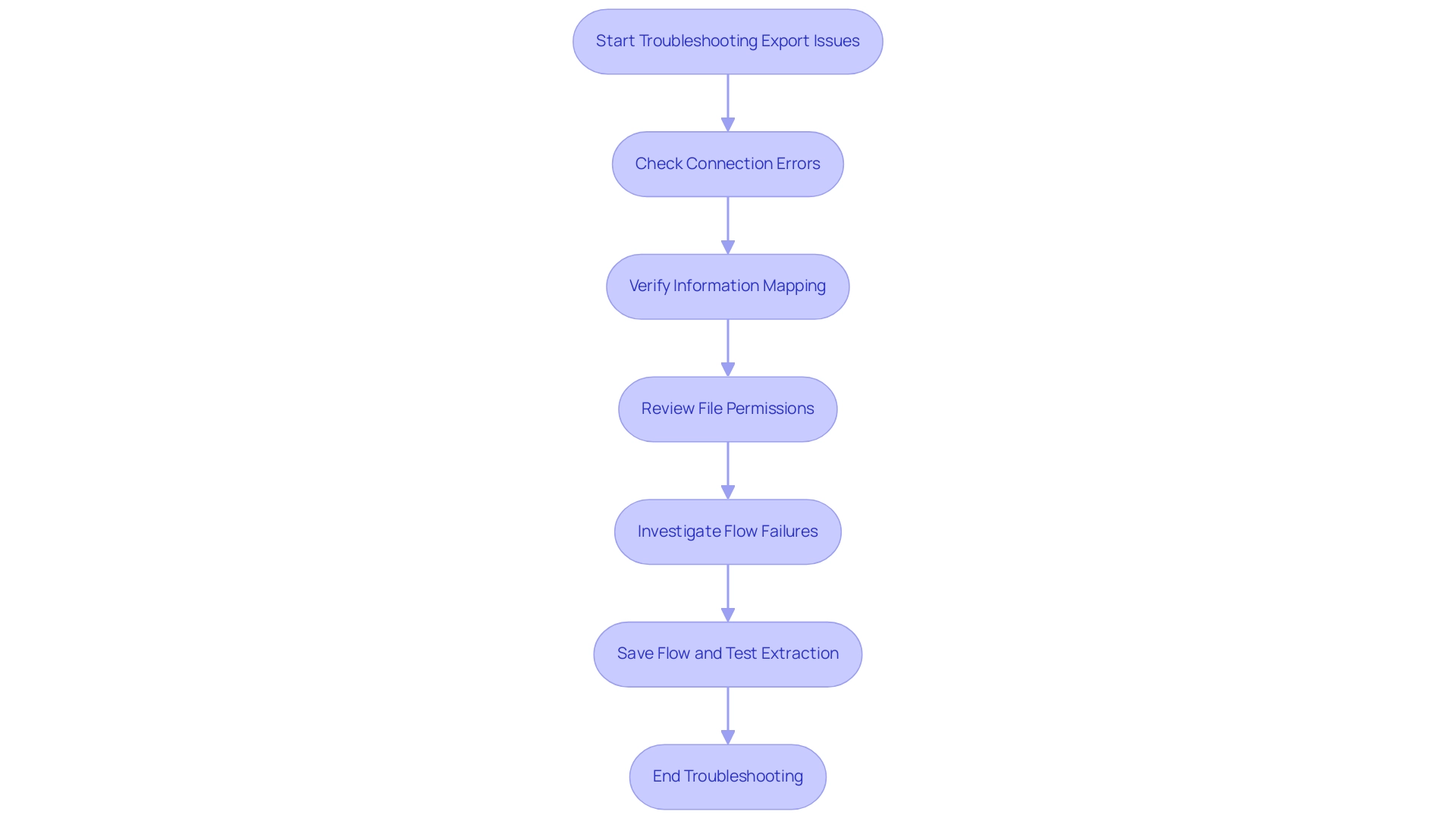

Automated exports are effective, but users frequently run into obstacles that interrupt the process. Understanding these common issues is crucial for successful automation:

- Connection Errors: A frequent hurdle is making sure the connection to Excel is configured correctly. Verify authentication settings and permissions to avoid disruption.

Troubleshooting is complicated by the fact that Power Automate reports 500 errors thrown by connectors as BadGateway errors. Overcoming these connectivity challenges is vital for keeping automated Excel exports running efficiently.

- Information Mapping Issues: When data fails to appear in Excel, check that the fields are mapped correctly. Confirm that the column names in the Excel table match the data being sent. Accurate mapping is a critical part of using Business Intelligence for informed decision-making.

- File Permissions: Make sure you have the rights needed to write to the Excel file. If the file is stored in OneDrive or SharePoint, review its sharing settings to maintain access. Resolving permission issues can significantly improve the reliability of automated exports to Excel.

- Flow Failures: When a flow fails, consult the run history for the specific error message, which can guide adjustments so the flow produces the intended output. Using RPA effectively involves continuous monitoring and refinement: save the flow and test the export again to confirm that all configurations are set correctly and the data arrives as intended.

Tim Weinzapfel, an expert in Automation and Analytics, has discussed these issues, stating:

A big thanks to Colin Tomb for inquiring on this and I’m hoping this is useful.

Such community contributions are valuable as organizations work through Excel exports in Power Automate. Additionally, when this process creates a CSV file in SharePoint, the filename includes the current date in ‘MM-dd-yyyy’ format, which helps keep exported data organized.

To illustrate the impact of resolving these challenges, consider Stratos Wealth Partners, which implemented Encodian to streamline client agreement management. The result was improved productivity and operational advancement, highlighting the advantages of tackling export challenges and using RPA and Business Intelligence for business growth. Tailored AI solutions can provide further support in automating these processes, reducing errors and freeing teams for more strategic work.
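Two of the checks above, field mapping and the dated filename, can be sketched in Python for use when debugging a flow’s output. The payload, column names, and helper functions are hypothetical, and this is not how Power Automate performs mapping internally. Inside a flow, the date stamp would typically come from an expression such as formatDateTime(utcNow(), 'MM-dd-yyyy').

```python
from datetime import date

def check_mapping(payload: dict, table_columns: list) -> dict:
    """Compare field names sent by a flow with the Excel table's column names."""
    sent = set(payload)
    expected = set(table_columns)
    return {
        "missing_in_payload": sorted(expected - sent),  # columns that get no data
        "unknown_columns": sorted(sent - expected),     # fields with no matching column
    }

def dated_csv_name(prefix: str, day: date) -> str:
    """Build a filename stamped with the date in MM-dd-yyyy order."""
    return f"{prefix}_{day:%m-%d-%Y}.csv"

# Hypothetical trigger output and table header with a casing mismatch.
payload = {"Title": "Order 42", "Amount": 120.5, "Createdon": "2024-03-07"}
columns = ["Title", "Amount", "CreatedOn"]

print(check_mapping(payload, columns))
print(dated_csv_name("export", date(2024, 3, 7)))  # export_03-07-2024.csv
```

The mapping check flags the casing difference between "Createdon" and "CreatedOn", the kind of small mismatch that makes data silently fail to appear.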

Best Practices for Efficient Use of Power Automate

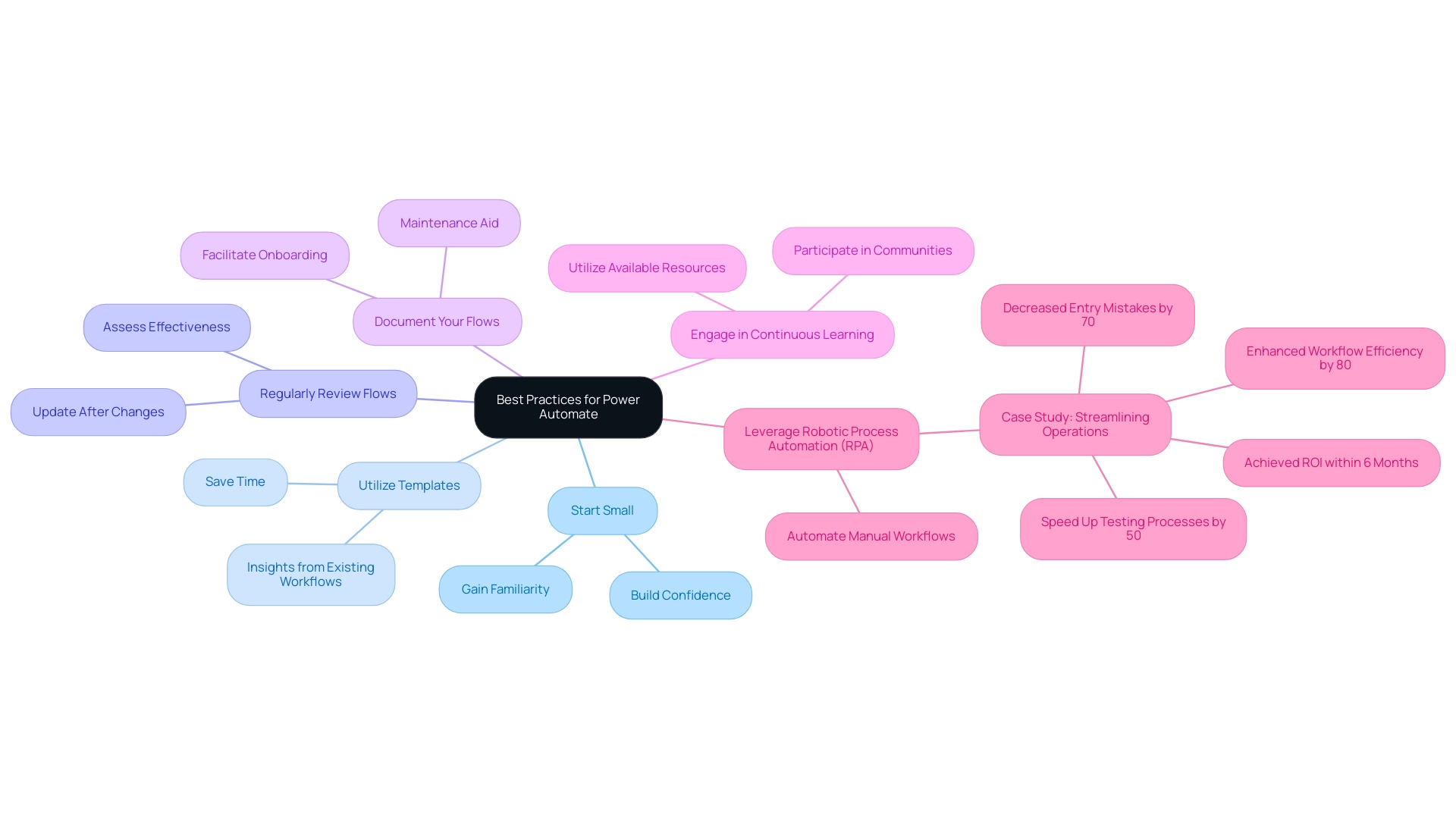

To maximize efficiency with Power Automate, implementing the following best practices can significantly enhance your workflows:

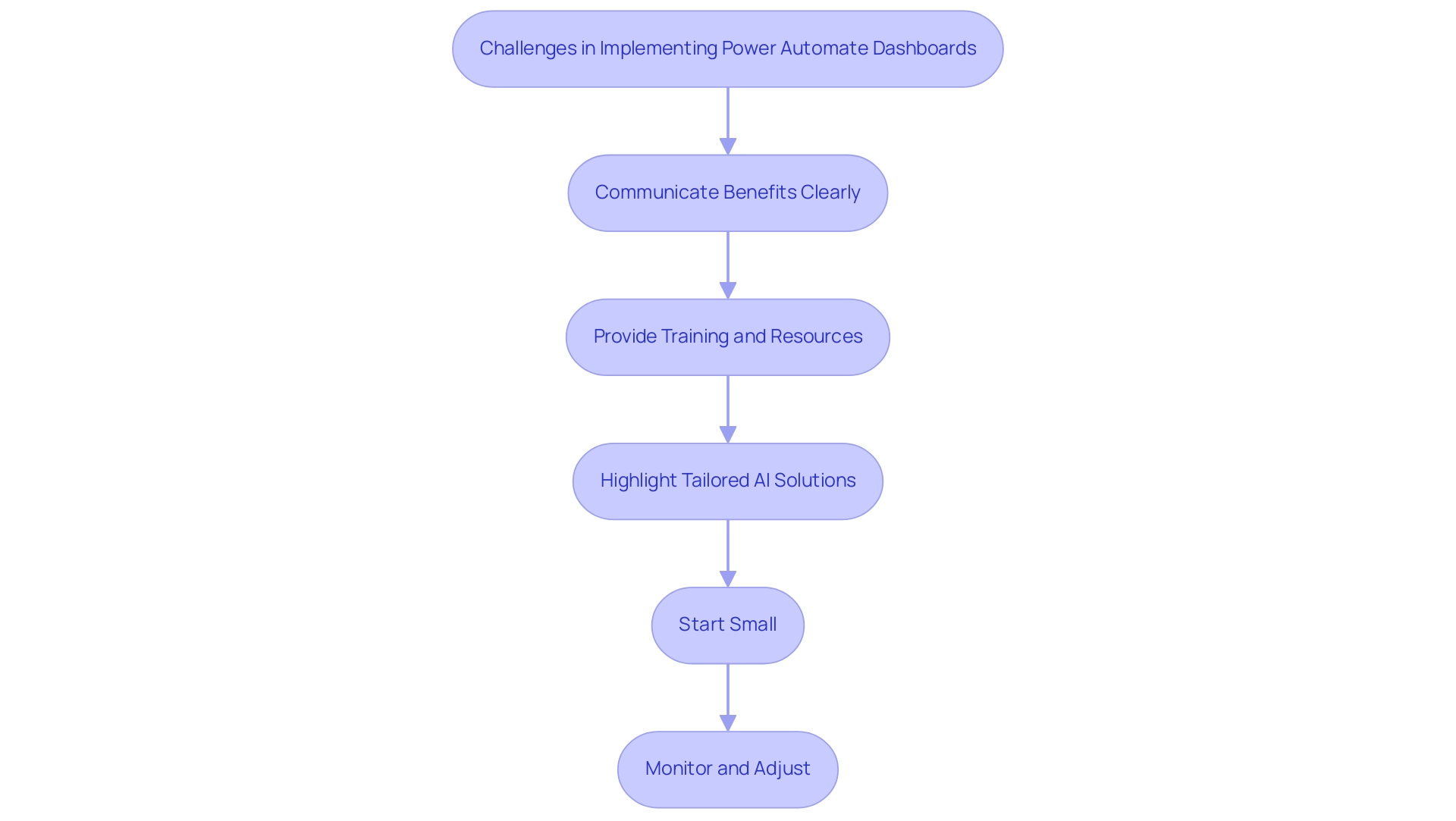

- Start Small: Initiate your journey with simple automations to gain familiarity with the tool. This approach allows you to build confidence before advancing to more complex workflows.

- Utilize Templates: Take advantage of Power Automate’s many pre-built templates, especially those for exporting data to Excel. Templates save time and demonstrate best practices drawn from existing workflows, making them a valuable resource for efficiency.

- Regularly Review Flows: Consistently assess and update your flows to ensure they remain effective and relevant, particularly after changes in source information or connected applications. This proactive approach can prevent potential bottlenecks and inefficiencies.

- Document Your Flows: Thorough documentation of your flows, including their purposes and operational details, is crucial. This practice aids in maintenance and facilitates onboarding for new team members, ensuring continuity and clarity in operations.

- Engage in Continuous Learning: Keep abreast of the latest features and best practices by actively participating in Power Automate communities and utilizing available resources. As Cornellius Yudha Wijaya, a data science assistant manager, aptly states,

AI has become a tool that certainly helps our data analysis process.

This sentiment highlights the transformative potential of automation tools in optimizing workflows and improving operational effectiveness.

Moreover, using Robotic Process Automation (RPA) to automate manual workflows can further improve operational performance in today’s rapidly evolving AI landscape. A pertinent case study, ‘Streamlining Operations with GUI Automation’, describes how a mid-sized company boosted productivity by automating data entry, software testing, and legacy system integration. The company reduced entry errors by 70%, sped up testing by 50%, and improved workflow efficiency by 80%, achieving ROI within 6 months.

This example shows how tailored AI solutions and Business Intelligence can drive informed decision-making and enhance productivity.

Additionally, for a table containing 2 million rows, statistics are updated after every 44,721 modifications, which underscores the importance of keeping information accurate for effective automation. Business Intelligence plays a crucial role in transforming this raw data into actionable insights, enabling organizations to make informed decisions that drive growth. By following these best practices, organizations can significantly strengthen their automation initiatives, making full use of Power Automate's export to Excel while adapting to evolving operational requirements.
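This sketch assumes the 44,721 figure comes from SQL Server's automatic statistics update threshold. In recent versions, that threshold is the smaller of 500 + 20% of the rows and √(1000 × rows) modifications. The Python below reproduces that arithmetic under this assumption:

```python
import math


def stats_update_threshold(rows: int) -> int:
    """Modifications before statistics refresh (assumed SQL Server dynamic threshold)."""
    legacy = 500 + 0.20 * rows        # classic "500 + 20% of rows" rule
    dynamic = math.sqrt(1000 * rows)  # square-root rule that favors large tables
    return int(min(legacy, dynamic))  # the lower of the two triggers the update


print(stats_update_threshold(2_000_000))  # 44721
```

For large tables the square-root term dominates, so the threshold grows far more slowly than the row count.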

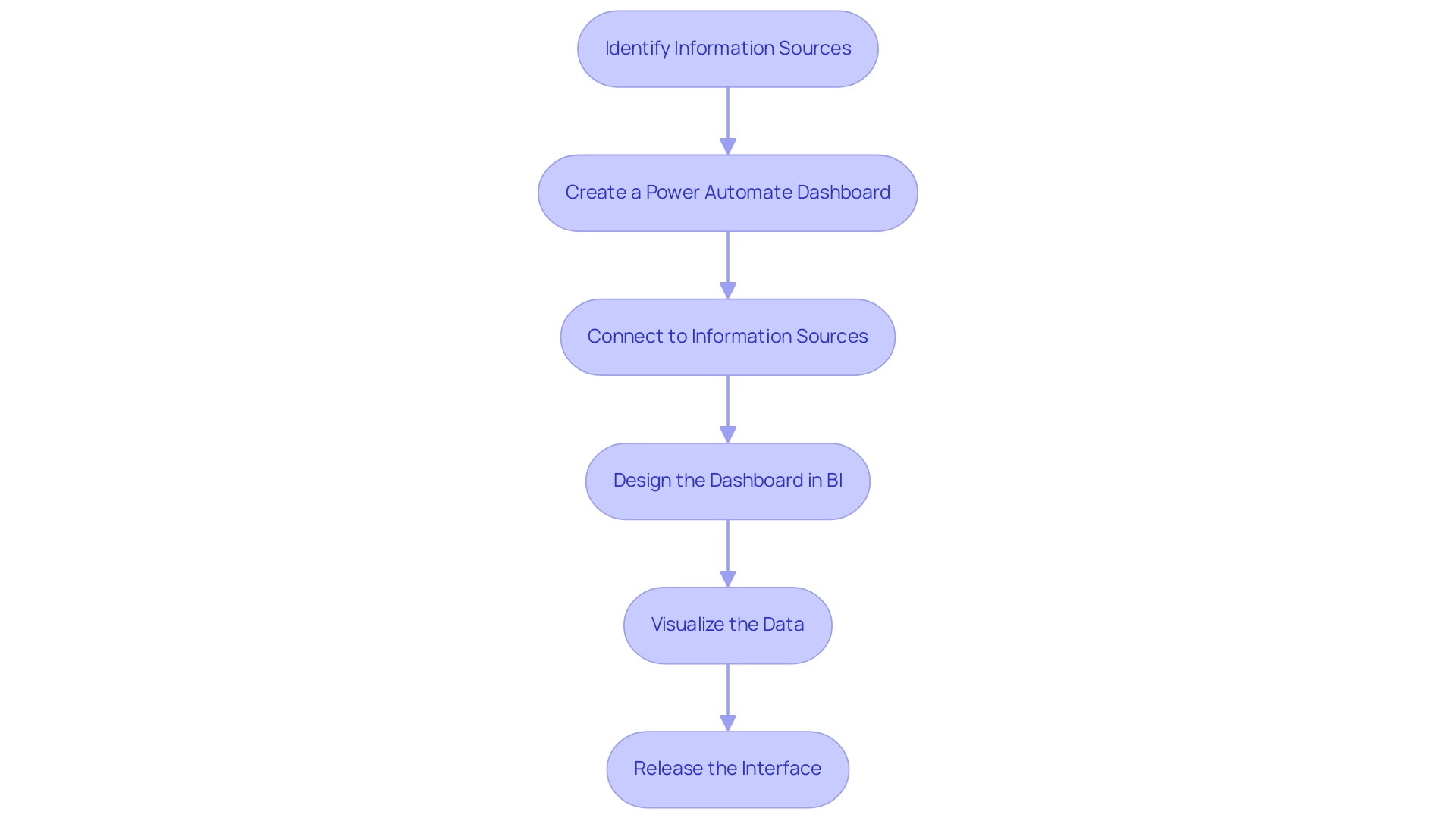

Integrating Power Automate with Other Tools for Enhanced Functionality

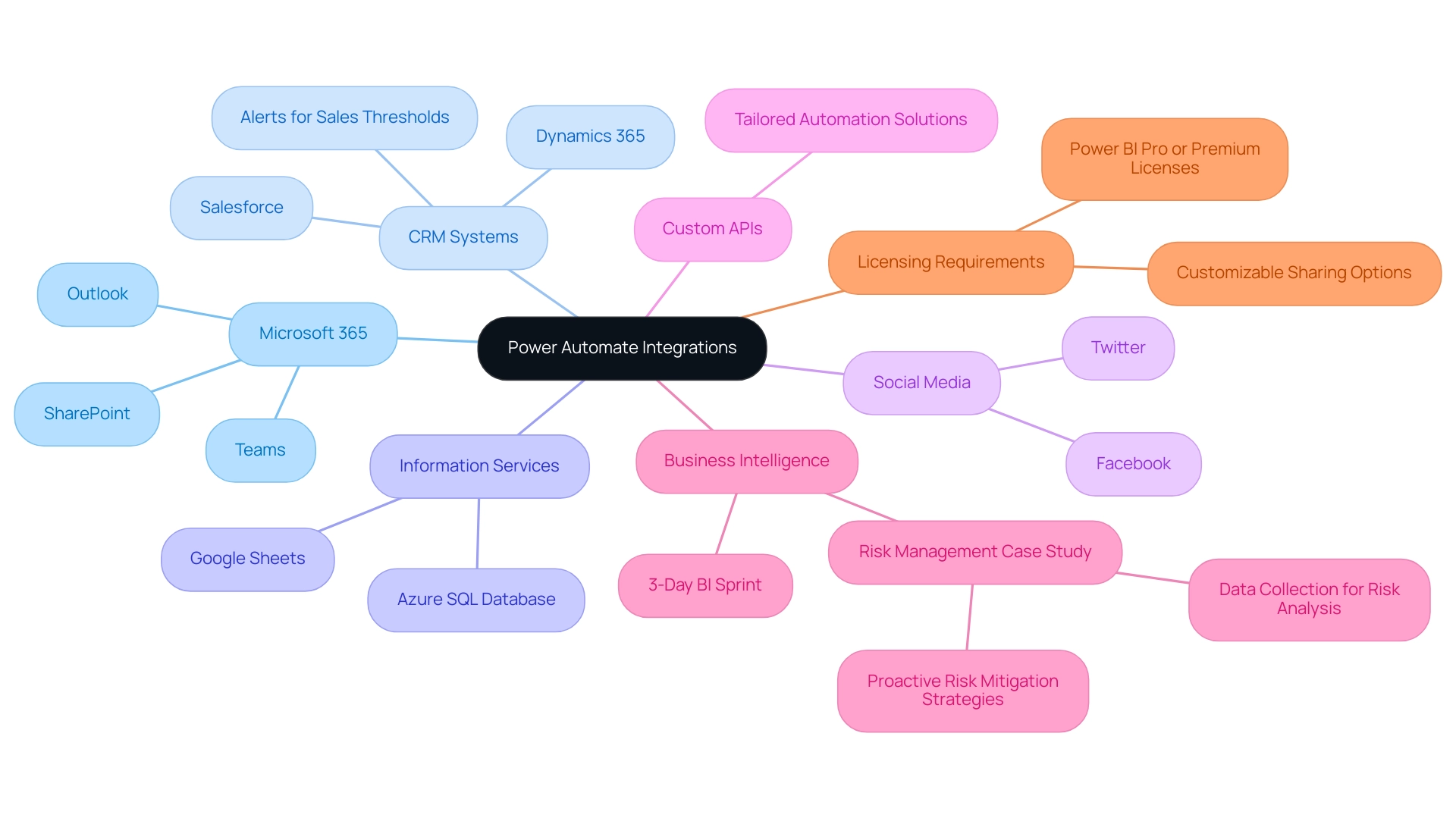

Power Automate stands out for its ability to connect seamlessly with a diverse range of tools and platforms, significantly enhancing operational functionality and efficiency. Key integrations include:

- Microsoft 365: Connect with essential applications such as Outlook, SharePoint, and Teams to streamline communication and automate collaboration tasks. This integration is particularly beneficial, as it allows for real-time updates and enhanced teamwork, leading to more informed decision-making.

- CRM Systems: Integrate with leading platforms like Salesforce and Dynamics 365 to automate customer relationship management processes. With a significant rise in usage anticipated for 2024, using Power Automate for CRM tasks can enhance customer interactions and improve data accuracy. It can also send alerts when sales fall below a specified threshold, boosting operational efficiency.

- Data Services: Use connectors for services like Azure SQL Database or Google Sheets to automate data retrieval and processing. This leads to more effective data management and reporting, which is essential for getting value from Business Intelligence.

- Social Media: Automate postings and interactions on social media platforms such as Twitter and Facebook, improving digital engagement without manual effort, thus freeing up resources for more strategic activities.

- Custom APIs: For advanced users, the automation solution provides the flexibility to create custom connectors, allowing for integration with proprietary systems. This capability fosters tailored automation solutions that directly meet unique business needs.
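The low-sales alert mentioned under CRM Systems boils down to a comparison against a threshold. In a flow, this would typically be a Condition step followed by a notification action. The Python sketch below shows only the decision logic. The SalesRecord shape, region names, and threshold value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SalesRecord:
    region: str    # hypothetical grouping for the alert
    amount: float  # sales total for the period


def low_sales_alerts(records: list[SalesRecord], threshold: float) -> list[str]:
    """Return one alert message per record whose sales fall below the threshold."""
    return [
        f"Alert: {r.region} sales of {r.amount:,.2f} are below {threshold:,.2f}"
        for r in records
        if r.amount < threshold
    ]


sample = [SalesRecord("North", 12_000.0), SalesRecord("South", 8_500.0)]
for message in low_sales_alerts(sample, threshold=10_000.0):
    print(message)  # Alert: South sales of 8,500.00 are below 10,000.00
```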

Additionally, the 3-Day BI Sprint enables teams to quickly create professionally designed reports, while the General Management App provides comprehensive management and smart reviews, enhancing the overall data reporting experience.

A relevant case study on Risk Management illustrates how organizations can use Power Automate to collect data on potential risks and feed it into Power BI for comprehensive analysis, enabling proactive risk mitigation through detailed risk analysis reports.

As Caroline Mayou aptly states,

Their primary role is to bridge the gap between the technical potential of Automate and the practical needs of your business, ensuring that your investment in automation yields measurable outcomes.

This highlights the importance of aligning technical integrations with operational goals to achieve significant efficiency gains. Furthermore, it’s important to note that users must have Power BI Pro or Premium licenses to share dashboards and reports, which is crucial for maximizing the benefits of these integrations.

By focusing on Business Intelligence and Robotic Process Automation, organizations can enhance productivity and drive data-driven insights that foster growth and innovation.

Conclusion

Power Automate offers organizations a powerful solution to streamline workflows and enhance productivity through automation. By simplifying the process of integrating diverse applications and services, it enables users to tackle repetitive tasks efficiently without the need for extensive coding skills. As discussed, the platform’s capabilities extend beyond basic automation, incorporating Robotic Process Automation (RPA) and Business Intelligence to drive informed decision-making and operational efficiency.

The step-by-step approach to exporting data to Excel illustrates how easy it is to harness the full potential of Power Automate to improve data management and reporting. Addressing common export issues and adhering to best practices further ensures that organizations can maximize their investment in this technology. By engaging in continuous learning and utilizing pre-built templates, users can build confidence and enhance their automation strategies over time.

Ultimately, the seamless integration of Power Automate with various tools not only enhances operational functionality but also fosters collaboration and data-driven insights. As organizations navigate the complexities of today’s data-rich environment, leveraging the capabilities of Power Automate becomes essential for driving growth and innovation. By embracing automation, businesses position themselves to thrive in an increasingly competitive landscape, transforming their operations and achieving significant efficiency gains.

Overview

Changing the date format in Power BI visualizations is crucial for accurate data representation and can be achieved through methods such as using the Modeling Tab, Power Query, DAX expressions, and the DATE() function. The article emphasizes that these techniques not only enhance clarity and consistency in reporting but also mitigate common errors stemming from regional settings and incorrect interpretations, thus improving the overall operational efficiency of data analysis.

Introduction

In the realm of data analysis, the significance of date formatting in Power BI cannot be overstated. As organizations strive to make sense of vast amounts of information, the way dates are presented can greatly influence the clarity and accuracy of insights derived from data. With various formats like ‘MM/DD/YYYY’, ‘DD/MM/YYYY’, and ‘YYYY-MM-DD’ in play, understanding these nuances becomes essential to avoid costly misinterpretations that can lead to operational inefficiencies.

This article delves into the intricacies of date formats, explores effective methods for changing them, and highlights best practices that ensure data integrity and enhance decision-making capabilities. By mastering date formatting in Power BI, professionals can transform their reports into powerful tools that drive actionable insights and foster trust in the data presented.

Understanding Date Formats in Power BI

In Power BI, the ability to change the date format in a visualization is essential, because the format determines how dates appear in dashboards and directly affects operational efficiency. Among the most common styles are ‘MM/DD/YYYY’, ‘DD/MM/YYYY’, and ‘YYYY-MM-DD’. A thorough understanding of these formats is crucial. Misinterpretations can cause significant errors in data analysis, compounding the time-consuming report creation and data inconsistencies that many organizations already face.

This issue is often made worse by the lack of a governance strategy, which can lead to discrepancies across reports. For instance, a date like ‘01/02/2023’ could be read as either January 2nd or February 1st, depending on the viewer’s regional settings. This ambiguity underscores the importance of clear communication in data visualization and actionable insights.
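This Python sketch makes the ‘01/02/2023’ ambiguity concrete by parsing the same string under the two conventions. Stating the expected order explicitly plays the same role as choosing a locale in Power Query. The code is an illustration, not Power BI's own parsing logic.

```python
from datetime import datetime

raw = "01/02/2023"

# US-style reading: month first
us = datetime.strptime(raw, "%m/%d/%Y").date()
# European-style reading: day first
eu = datetime.strptime(raw, "%d/%m/%Y").date()

# ISO 8601 output (YYYY-MM-DD) is unambiguous regardless of locale.
print(us.isoformat())  # 2023-01-02 (January 2nd)
print(eu.isoformat())  # 2023-02-01 (February 1st)
```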

A recent study reports that the error rate of its proposed approach to handling temporal representations was considerably lower than that of baseline methods, underscoring the importance of precise date representation. Furthermore, the correlation matrix case study shows how format issues can distort the analysis of relationships between data points in business contexts, ultimately leading to confusion and mistrust in the information presented.

As Zixuan Liang from the Department of Computer and Information Sciences at Harrisburg University of Science & Technology states,

We present a second Natural Language Processing (NLP) strategy for creating a similar temporal design space language with comparable accuracy, extending the algorithm to handle syntax variations and constraints unique to temporal structures.

This underscores the need for actionable insights grounded in precise data representation. By recognizing the various date representations and their implications, you can change the date format in your Power BI visualizations so that they convey precise information and offer clear, actionable guidance. Understanding these subtleties is crucial for improving the reliability of your analysis and ensuring that reports, while filled with information, also provide clear guidance on next steps.

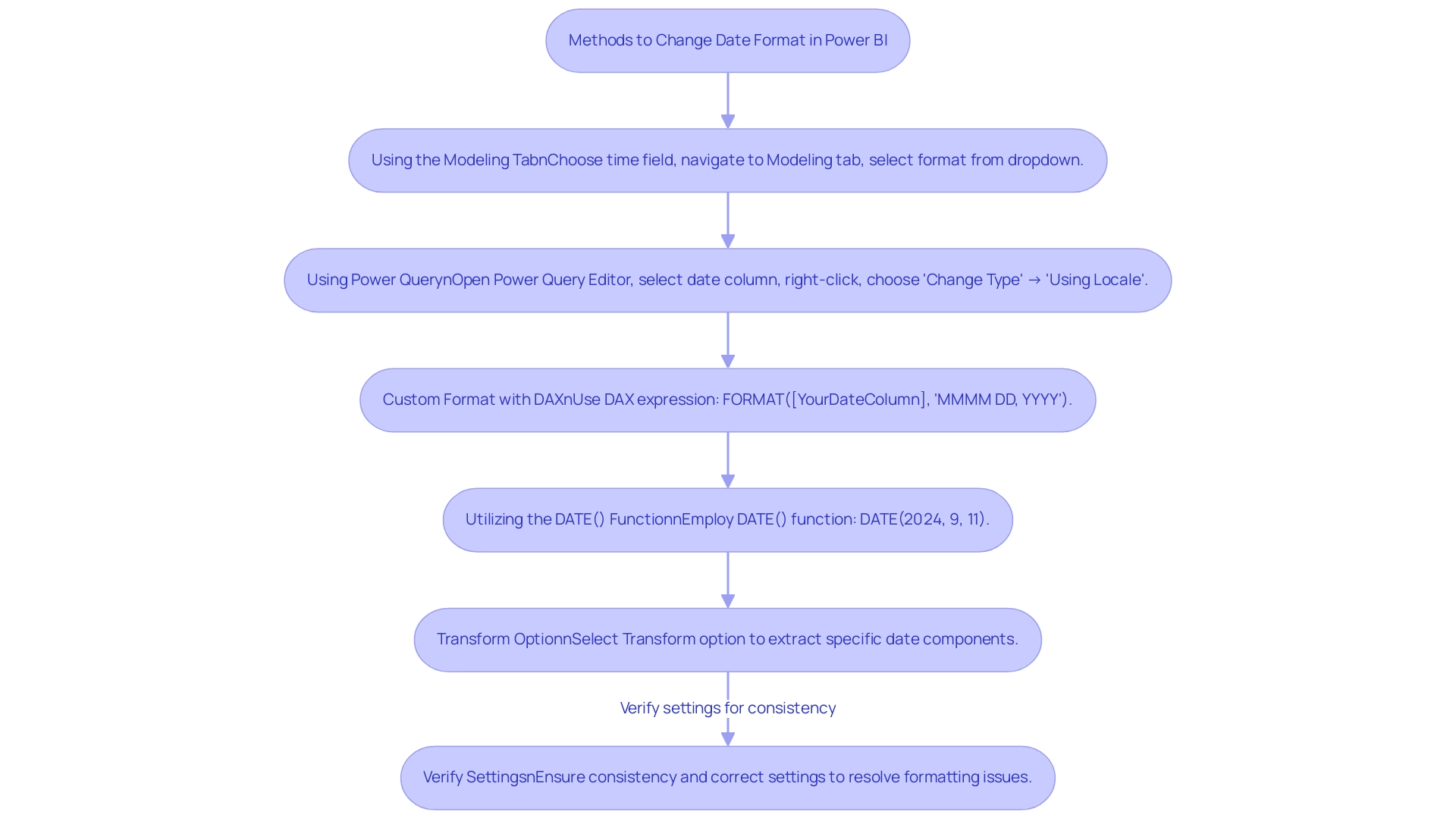

Methods to Change Date Format in Power BI

Changing date formats in Power BI can be accomplished through several effective methods, which not only enhance data presentation but also address common challenges faced by operations directors regarding report efficiency and clarity:

- Using the Modeling Tab: Begin by selecting your date field in the Fields pane. Navigate to the Modeling tab and choose your desired format from the ‘Format’ dropdown menu, ensuring that your data aligns with reporting standards and minimizes inconsistencies. This method also gives you direct control over how dates are presented.

- Using Power Query: Open the Power Query Editor, right-click the date column, and choose ‘Change Type’ followed by ‘Using Locale’. This lets you define the format based on your regional or organizational requirements, improving consistency and trust in your reports. It directly addresses the confusion that inconsistent presentation can cause.

- Custom Format with DAX: For unique formatting requirements, DAX expressions provide a powerful solution. For instance, FORMAT([YourDateColumn], "MMMM dd, yyyy") displays a date as ‘January 01, 2023’. This flexibility keeps your reports actionable and relevant, giving stakeholders clear next steps based on the information presented.

- Utilizing the DATE() Function: To generate specific dates, use the DATE() function, for example DATE(2024, 9, 11). This ensures the date is represented correctly in your reports, reducing the time spent on adjustments and reinforcing the reliability of your information.

- Transform Option: By selecting the Transform option in Power Query, users can extract specific date components, such as the year, which is particularly useful for analysis and reporting.
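The Python sketch below uses the standard datetime module to reproduce the DAX patterns above, so you can check what they should produce. It is an approximate analogue, not a re-implementation of DAX's FORMAT or DATE functions. It assumes the English month names of Python's default locale.

```python
from datetime import date

# Rough analogue of DATE(2024, 9, 11): build a date from year, month, day.
d = date(2024, 9, 11)

# Rough analogue of FORMAT(..., "MMMM dd, yyyy"):
# %B = full month name (locale-dependent), %d = zero-padded day, %Y = four-digit year.
long_form = date(2023, 1, 1).strftime("%B %d, %Y")

# Rough analogue of extracting the year with Power Query's Transform option.
year = d.year

print(long_form)  # January 01, 2023
print(year)       # 2024
```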

These methods not only provide flexibility in how timestamps are presented in your reports but also ensure clarity and relevance in your visual representations.

Moreover, common date formatting problems in Power BI, such as incorrect settings or invalid formats, can be resolved by verifying settings and keeping formats consistent when changing the date format in a visualization. The case study ‘Troubleshooting Date Formatting Issues’ emphasizes this, demonstrating how proper date formatting can reduce confusion and mistrust in information.

By effectively employing these techniques, you can enhance your reporting capabilities in Power BI, transforming the way you leverage insights and make decisions.

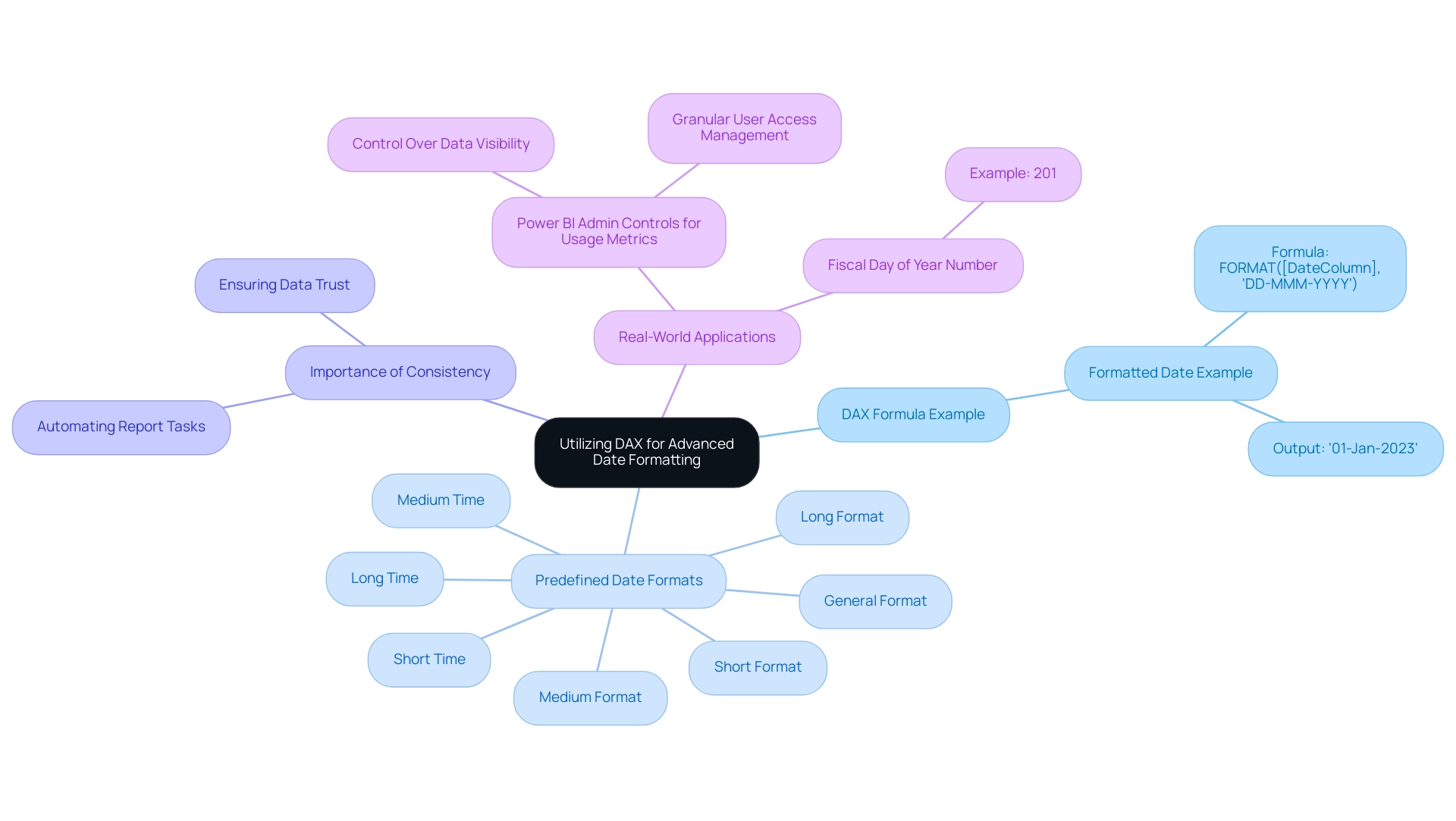

Utilizing DAX for Advanced Date Formatting

DAX (Data Analysis Expressions) is a powerful formula language within Power BI. It lets users perform complex calculations and custom formatting, helping to overcome typical obstacles such as time-consuming report creation and data inconsistencies. For example, the following DAX formula creates a formatted date string:

FormattedDate = FORMAT([DateColumn], "dd-MMM-yyyy")

This formula converts dates into a standardized format, producing outputs like ‘01-Jan-2023’. (DAX text literals such as the format string must use double quotes; single quotes denote table names.) By using DAX, users can automate tedious reporting tasks and ensure consistency across reports, which is crucial for maintaining trust in the information presented.
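Consistency across reports usually means converting every incoming date to one display pattern. The hedged Python sketch below parses strings from sources whose formats are known in advance. It then emits them in the dd-MMM-yyyy pattern used by the FormattedDate column. The source names and their formats are hypothetical.

```python
from datetime import datetime

# Hypothetical feeds; each is assumed to use one known, fixed pattern.
SOURCE_FORMATS = {
    "us_feed": "%m/%d/%Y",
    "eu_feed": "%d/%m/%Y",
    "iso_feed": "%Y-%m-%d",
}


def standardize(value: str, source: str) -> str:
    """Parse a date string from a known source and emit it as dd-MMM-yyyy."""
    parsed = datetime.strptime(value, SOURCE_FORMATS[source])
    # %d-%b-%Y mirrors "dd-MMM-yyyy", e.g. 01-Jan-2023 (English abbreviations in the default locale)
    return parsed.strftime("%d-%b-%Y")


print(standardize("01/15/2023", "us_feed"))   # 15-Jan-2023
print(standardize("15/01/2023", "eu_feed"))   # 15-Jan-2023
print(standardize("2023-01-15", "iso_feed"))  # 15-Jan-2023
```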

Additionally, establishing a governance strategy is essential to prevent inconsistencies and confusion in reporting. DAX’s versatility encompasses its use in calculated columns and measures, enabling dynamic formatting that reacts to user interactions or filters, thus offering actionable insights instead of merely raw data. For instance, using DAX to create measures that summarize key performance indicators can guide stakeholders in decision-making processes.

Predefined date/time formats available in DAX include:

- ‘General Date’

- ‘Long Date’

- ‘Medium Date’

- ‘Short Date’

- ‘Long Time’

- ‘Medium Time’

- ‘Short Time’

This variety gives users options for presenting information clearly. Furthermore, a Fiscal Day of Year Number value, such as 201, can be used in DAX calculations for fiscal reporting, illustrating the practical application of date functions in a business context. As mentioned in professional circles,

You should utilize the ‘Date/Time’ information type for information that requires both temporal and chronological details,

highlighting the importance of choosing appropriate data types for effective date manipulation.
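The text does not say how a Fiscal Day of Year Number is derived. A common definition counts days from the first day of the fiscal year. The Python sketch below assumes that definition and a fiscal year starting on 1 July (both assumptions). Under them, 17 January would be fiscal day 201.

```python
from datetime import date


def fiscal_day_of_year(d: date, start_month: int) -> int:
    """1-based day number of d within a fiscal year that begins on day 1 of start_month."""
    # Dates before the start month belong to a fiscal year that began last calendar year.
    start_year = d.year if d.month >= start_month else d.year - 1
    fiscal_start = date(start_year, start_month, 1)
    return (d - fiscal_start).days + 1


print(fiscal_day_of_year(date(2024, 1, 17), start_month=7))  # 201
```

Setting start_month=1 reduces this to the ordinary calendar day of the year.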

Moreover, the case study on Power BI Admin Controls for Usage Metrics demonstrates how DAX can be used to manage visibility and reporting, showcasing its real-world applications. Mastering DAX helps you adapt date formats to different reporting contexts and change the date format in any visualization. It also lets you apply advanced formatting techniques that can significantly improve the clarity and effectiveness of your information presentation.

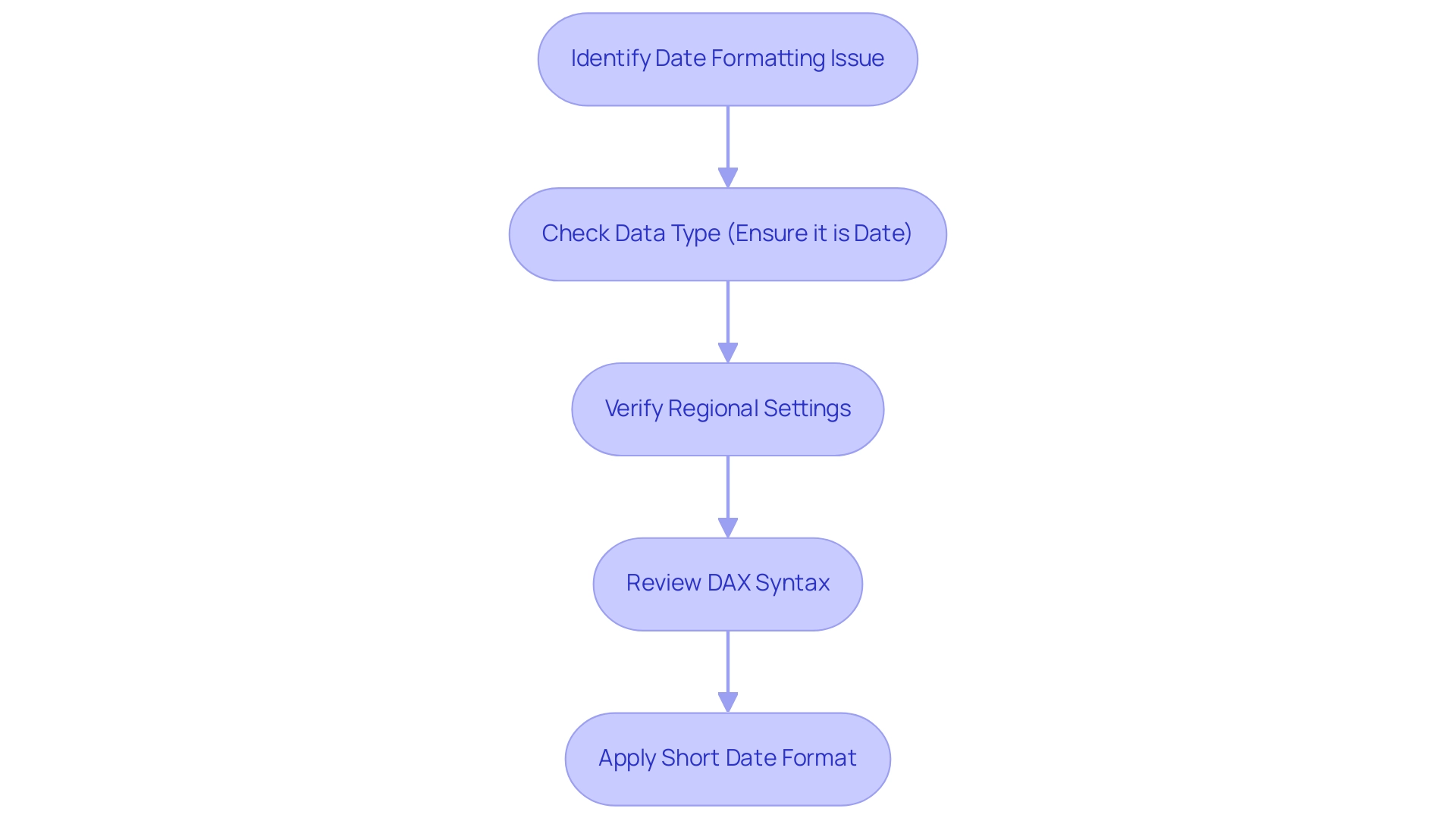

Troubleshooting Common Date Formatting Issues

Users face a variety of challenges when changing the date format in Power BI visualizations, and these challenges affect operational efficiency and the quality of data-driven insights. Understanding the common issues and their solutions can significantly improve the accuracy and presentation of your visualizations. Here are some key problems and recommended fixes:

-