Overview:

The article addresses the common causes of blank visuals in Power BI, identifying data connectivity problems, incorrect filters, and data refresh problems as key contributors. It supports these points with statistical data and practical solutions, emphasizing the importance of proper data management and the use of automation tools to enhance report integrity and operational efficiency.

Introduction

In the dynamic landscape of data visualization, blank visuals in Power BI pose significant challenges that can hinder effective decision-making and operational efficiency. Understanding the root causes behind these frustrating occurrences is crucial for any organization striving to harness the full potential of its data. From connectivity issues and incorrect filters to data refresh problems and type mismatches, the reasons for blank visuals are varied and often interlinked. By identifying these pitfalls and implementing proactive solutions, organizations can transform their reporting processes, ensuring that stakeholders receive accurate and actionable insights.

This article delves into the common scenarios leading to blank visuals, explores effective troubleshooting strategies, and offers best practices to maintain visual integrity, empowering teams to navigate the complexities of Power BI with confidence and clarity.

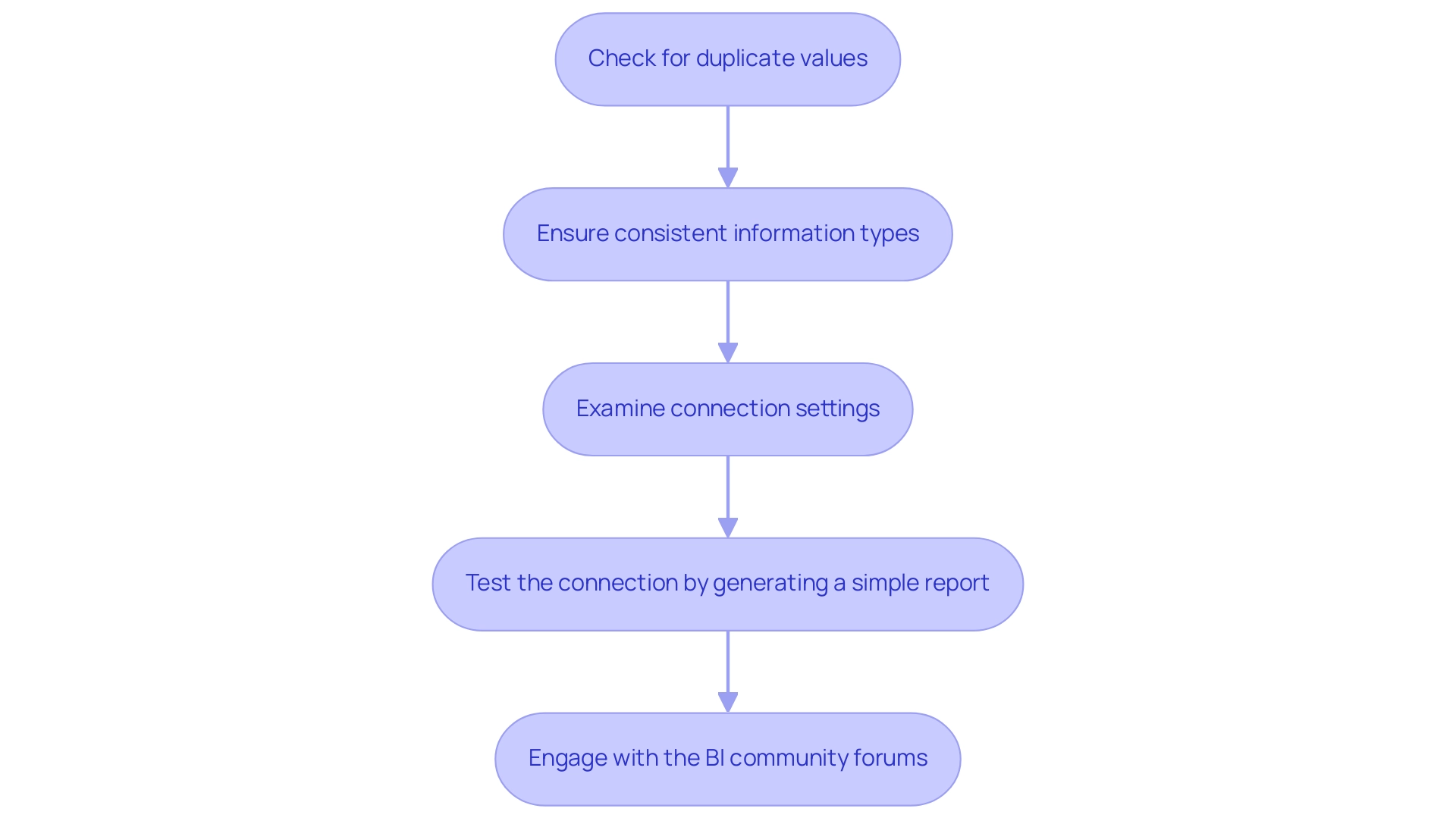

Understanding the Causes of Blank Visuals in Power BI

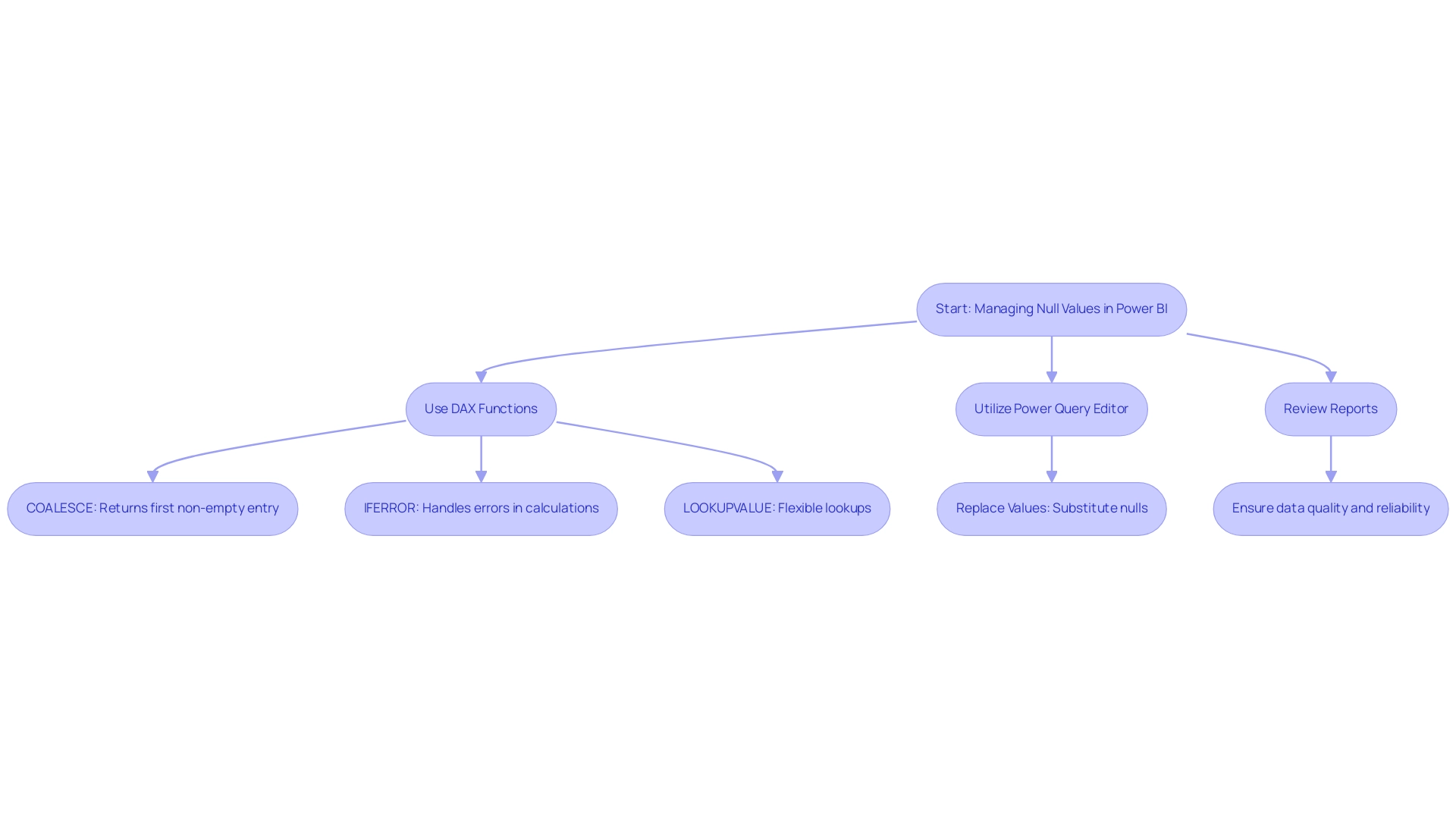

Blank visuals in Power BI can result from various factors, so determining the root cause is the first step in effective troubleshooting. Here are some common reasons that lead to blank visuals:

- Data Connectivity Problems: When Power BI fails to establish a connection with the data source, visuals may not render, leading to frustration and potential misinformation. Recent statistics indicate that approximately 30% of users experience connectivity issues, highlighting the prevalence of this challenge. RPA solutions can automate the monitoring of connections, ensuring seamless integration and reducing downtime.

- Incorrect Filters: Filters applied to visuals or to the report can inadvertently limit data visibility, resulting in empty outputs. It’s essential to ensure that filters align with the data being analyzed, as incorrect filtering has been a common source of frustration among users. RPA can help automate the validation of filter settings, ensuring they are set correctly before report generation.

- Data Refresh Problems: If data is not refreshed after updates, visuals may display outdated information or nothing at all, which hinders decision-making. This corresponds with the case study titled ‘Other Considerations for Usage Metrics,’ which highlights that users must interact with the content in their workspace to enable the processing of usage metrics data. RPA tools can automate refresh schedules, ensuring that reports reflect the most current data.

- Data Type Mismatches: Using incompatible data types within visuals can also lead to blank outputs, as Power BI requires consistent types to interpret values correctly. RPA can assist in standardizing data types across datasets, minimizing errors and enhancing visual accuracy.

- Missing Data: When the underlying data is missing expected values, visuals cannot render as intended, which highlights the need for thorough validation prior to visualization. RPA solutions can automate validation processes, ensuring that all necessary data is present and correct before visualization.
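The last two causes, type mismatches and missing values, can be caught before a report is built. The following is a minimal sketch of such a pre-visualization check, written in plain Python rather than in Power BI itself. The function name `find_blank_risks`, the sample rows, and the expected-type mapping are all hypothetical and only illustrate the idea.

```python
def find_blank_risks(rows, expected_types):
    """Return (row_index, column, problem) tuples for values likely to cause blanks."""
    problems = []
    for index, row in enumerate(rows):
        for column, expected in expected_types.items():
            value = row.get(column)
            if value is None or value == "":
                # A missing value has nothing to plot.
                problems.append((index, column, "missing"))
            elif not isinstance(value, expected):
                # For example, text stored where a number is expected.
                problems.append((index, column, "type mismatch"))
    return problems


# Hypothetical sample data: one clean row, one missing value, one wrong type.
sales = [
    {"region": "North", "units": 12},
    {"region": "South", "units": None},
    {"region": "East", "units": "7"},
]

issues = find_blank_risks(sales, {"region": str, "units": int})
for issue in issues:
    print(issue)
```

A check like this could run as part of an automated validation step, flagging rows for review before they reach a visual.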

As guillermosantos, a frequent visitor, noted, ‘I’ve been tracking the problem, and it looks like someone found a temporary solution.’ This emphasizes the persistent difficulties users encounter with blank visuals in Power BI.

Tackling these problems proactively can greatly enhance the precision and dependability of your reports, ensuring that stakeholders are provided with the insights they require. By utilizing Business Intelligence tools and RPA solutions such as EMMA RPA and Automate, you can enhance report creation, remove inconsistencies, and ultimately promote operational efficiency for business growth.

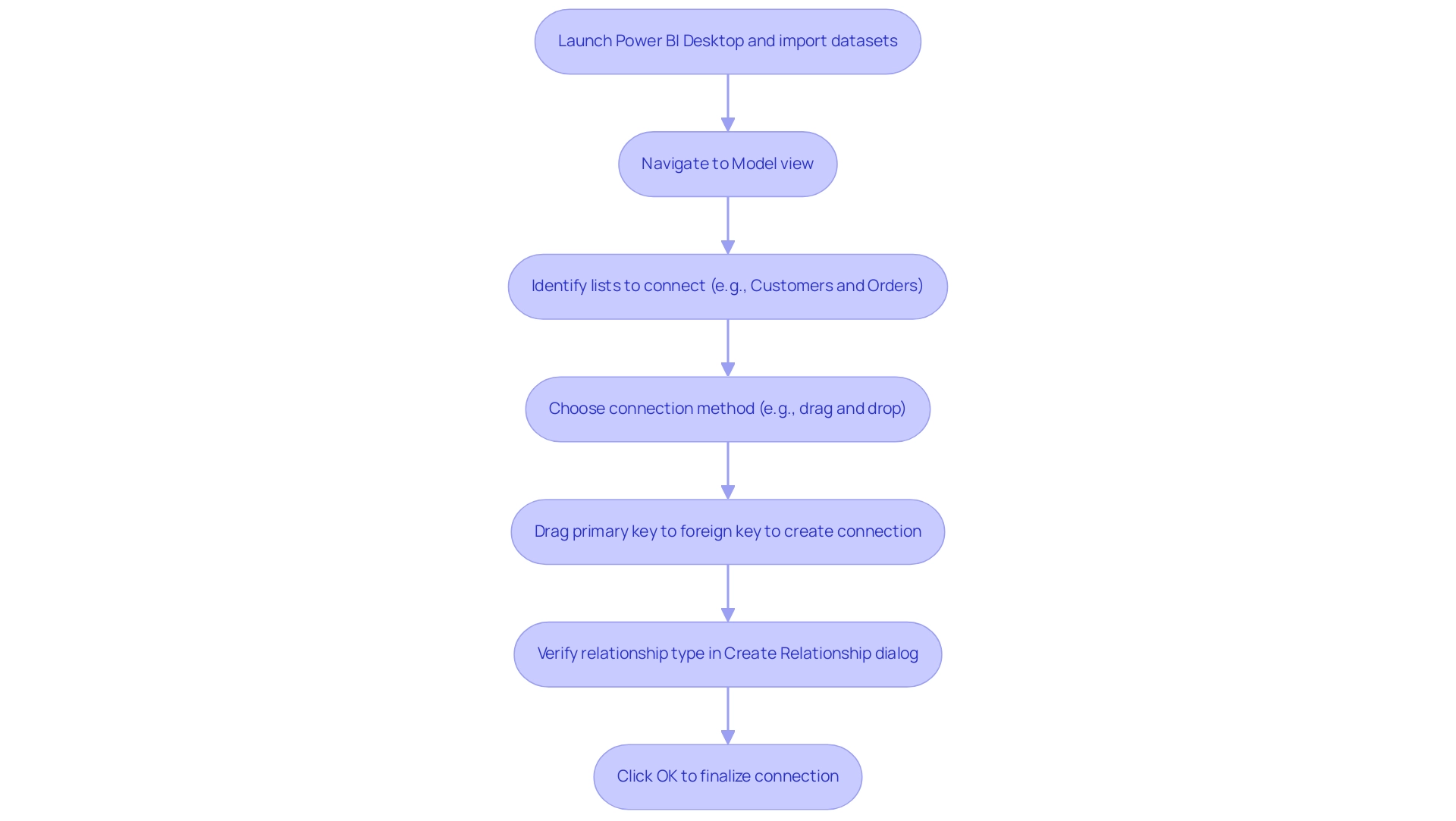

Effective Solutions to Resolve Blank Visuals in Power BI

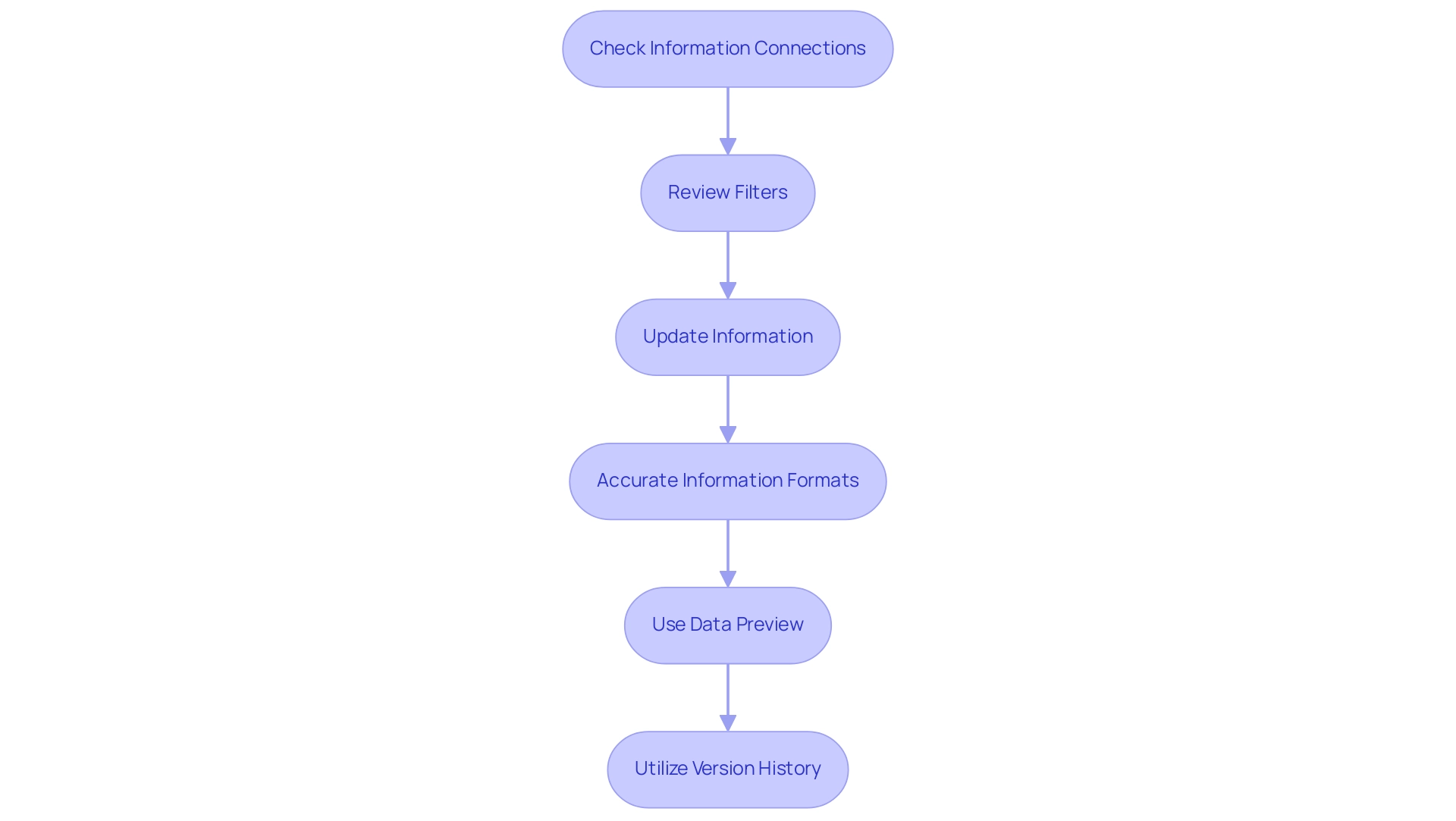

To effectively tackle blank visuals in Power BI and improve your operational efficiency, implement the following best practices:

- Check Data Connections: Confirm that your data sources are correctly connected and accessible. Use Power BI’s built-in connection testing to confirm that your visuals have the data they need to populate.

- Review Filters: Analyze any filters applied to your visuals. Adjust or remove filters as necessary to ensure they are not limiting the data you wish to display, thereby enhancing the actionable insights derived from your reports.

- Update Data: Refresh your data regularly to keep visuals current. Consider scheduling automatic refreshes to streamline this process and keep reports accurate, which is vital for informed decision-making.

- Accurate Data Formats: Ensure that the data types in your visuals align with the expected formats. This may require adjustments to your data model to maintain compatibility, reducing the risk of inconsistencies.

- Use Data Preview: Leverage Power BI’s data preview feature to inspect for any missing or unexpected data before creating visuals. This proactive step can save time and enhance the reliability of your reports, addressing the challenge of time-consuming report creation.

- Utilize Version History: Regularly review and utilize version history to track changes made to reports and dashboards. This practice is crucial for maintaining effective BI assets and ensuring that you can revert to previous versions if necessary.
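When reviewing filters, it often helps to find out which filter is the one that removes the last remaining rows. The sketch below illustrates that idea in plain Python. It does not use any Power BI API, and the names `rows_after_each_filter`, `orders`, and the two example filters are hypothetical.

```python
def rows_after_each_filter(rows, filters):
    """Apply named filters in order and record how many rows survive each one."""
    remaining = list(rows)
    counts = []
    for name, predicate in filters:
        remaining = [row for row in remaining if predicate(row)]
        counts.append((name, len(remaining)))
    return counts


# Hypothetical data and filters, standing in for report-level and visual-level filters.
orders = [
    {"year": 2023, "category": "Cat-A"},
    {"year": 2023, "category": "Cat-B"},
    {"year": 2024, "category": "Cat-B"},
]
filters = [
    ("year is 2024", lambda row: row["year"] == 2024),
    ("category is Cat-A", lambda row: row["category"] == "Cat-A"),
]

counts = rows_after_each_filter(orders, filters)
# The first filter that leaves zero rows is the likely cause of the blank visual.
first_empty = next((name for name, count in counts if count == 0), None)
print(counts)
print(first_empty)
```

Each filter here is reasonable on its own; only the combination leaves nothing to display, which is the situation most likely to go unnoticed in a report.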

Incorporating these strategies will help you maintain a reliable and efficient BI environment, ultimately empowering you to make data-driven decisions with confidence. Additionally, consider attending the upcoming Microsoft Fabric Community Conference from March 31 to April 2 in Las Vegas, Nevada, to further enhance your knowledge and connect with other BI professionals. It is important to recognize that failing to extract meaningful insights can place your business at a competitive disadvantage, making these practices essential.

Lastly, utilizing RPA alongside BI can streamline repetitive tasks, further enhancing your operational efficiency. The case study on backup and recovery strategies underscores the significance of resilience and continuity in managing your BI assets, which aligns with your operational efficiency goals and the transformative potential of RPA and BI in driving growth.

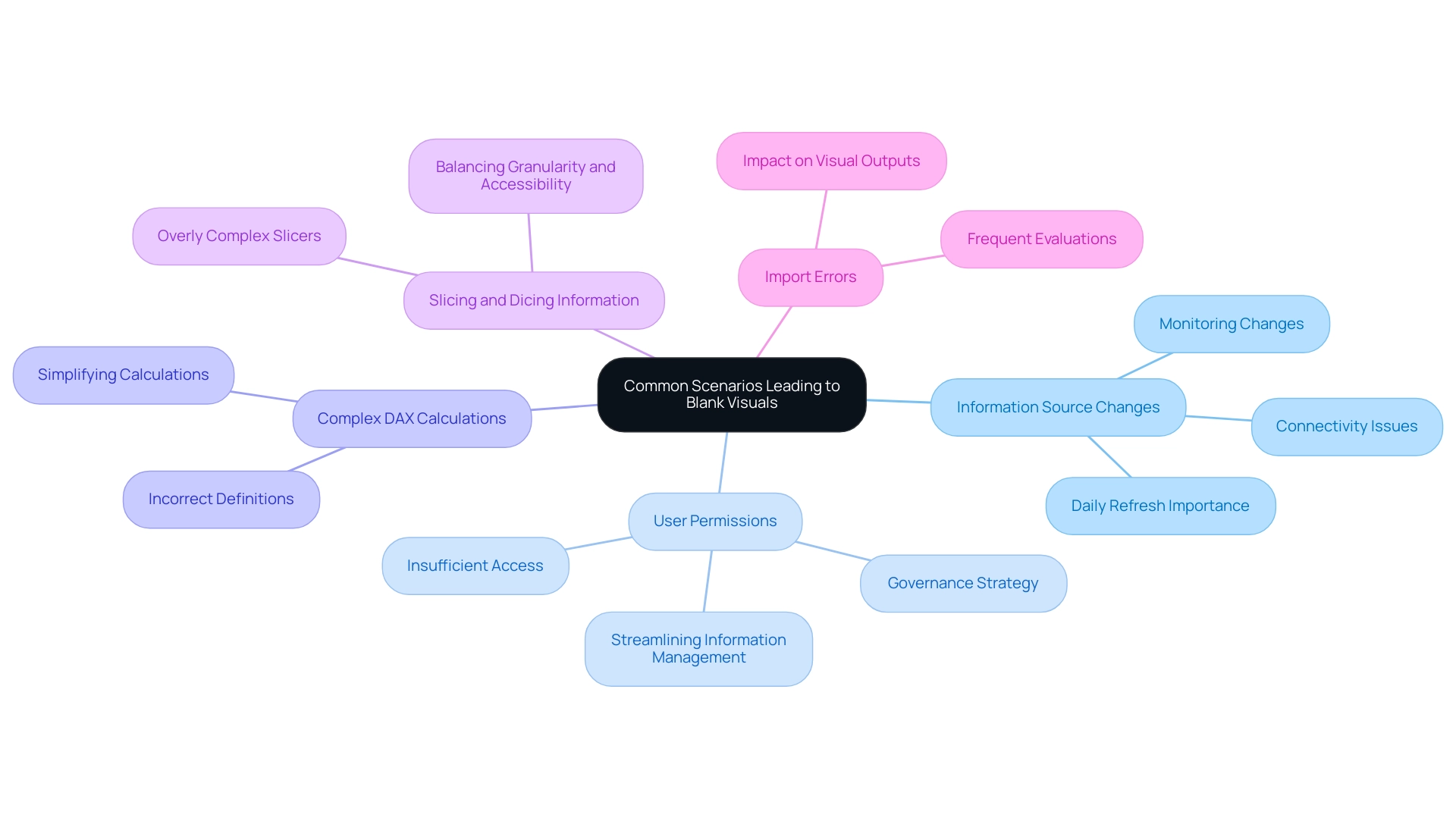

Common Scenarios Leading to Blank Visuals

Several scenarios can lead to blank visuals in Power BI, and understanding these can empower Directors of Operations Efficiency to troubleshoot effectively and streamline their reporting processes:

- Data Source Changes: Alterations to the structure or location of the data source can create connectivity issues. Monitoring these changes is crucial for maintaining visual integrity. Note that Power BI refreshes the usage metrics semantic model daily, underscoring the importance of accuracy in your reports.

- User Permissions: Insufficient permissions can hinder access to specific data, resulting in blank visuals for the affected users. As mentioned by a Super User at Data Insights, ‘This approach also allows for the exclusion of unnecessary columns and rows, streamlining information management.’ Properly managing user permissions ensures that all stakeholders have the necessary access to pertinent data, which is vital in establishing a reliable governance strategy.

- Complex DAX Calculations: Incorrectly defined or overly complex DAX measures may return blank results instead of the expected values. Simplifying these calculations can enhance visual output and provide clearer insights for decision-making.

- Slicing and Dicing Data: Overly complex slicers can inadvertently filter out all available data, leading to blank visuals. It’s essential to strike a balance between data granularity and accessibility, ensuring that reports not only present data but also offer actionable insights.

- Import Errors: Errors during import can produce empty datasets, which directly affects visual outputs. Reviewing imports frequently helps reduce these risks and uphold the integrity of your reporting.
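The first scenario, a change in the structure of the data source, can be detected by comparing the columns a report expects with the columns the source currently provides. The following is a minimal sketch in plain Python. The function name `schema_drift` and the column names are hypothetical.

```python
def schema_drift(expected_columns, actual_columns):
    """Compare the columns a report expects with the columns the source now has."""
    expected = set(expected_columns)
    actual = set(actual_columns)
    return {
        "missing": sorted(expected - actual),     # columns the report needs but cannot find
        "unexpected": sorted(actual - expected),  # new or renamed columns in the source
    }


# Hypothetical example: the source renamed OrderDate to Order_Date.
drift = schema_drift(
    ["OrderID", "OrderDate", "Quantity"],
    ["OrderID", "Order_Date", "Quantity"],
)
print(drift)
```

A renamed column typically shows up as one entry in each list, which makes a rename easy to tell apart from a dropped column.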

Furthermore, the case study on usage metrics in national/regional clouds illustrates how organizations can uphold compliance with local regulations while ensuring effective management practices. These insights underline the importance of proper user permissions and a robust governance strategy in ensuring effective visualization in BI, ultimately transforming your reporting process from time-consuming tasks into valuable insights.

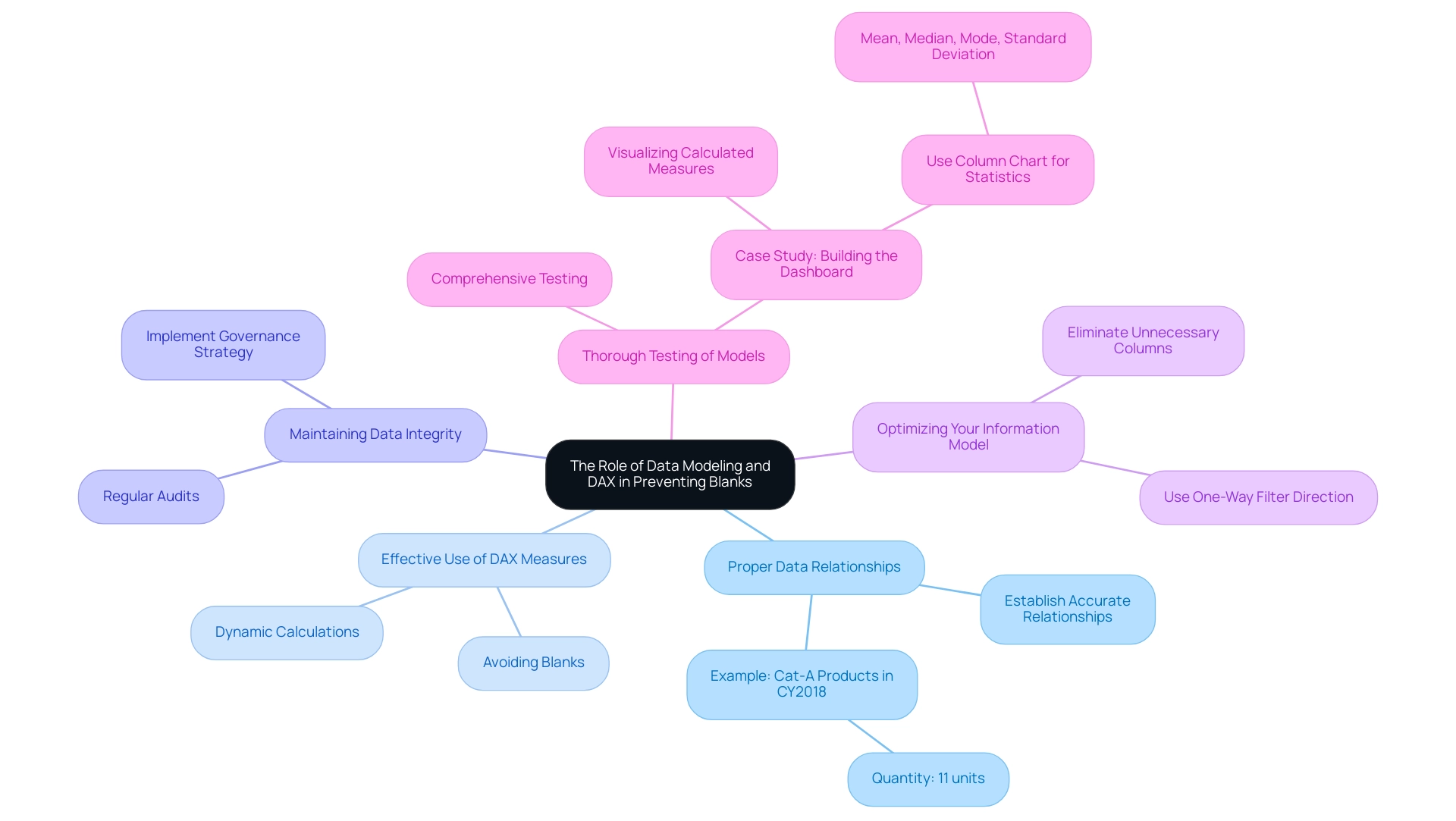

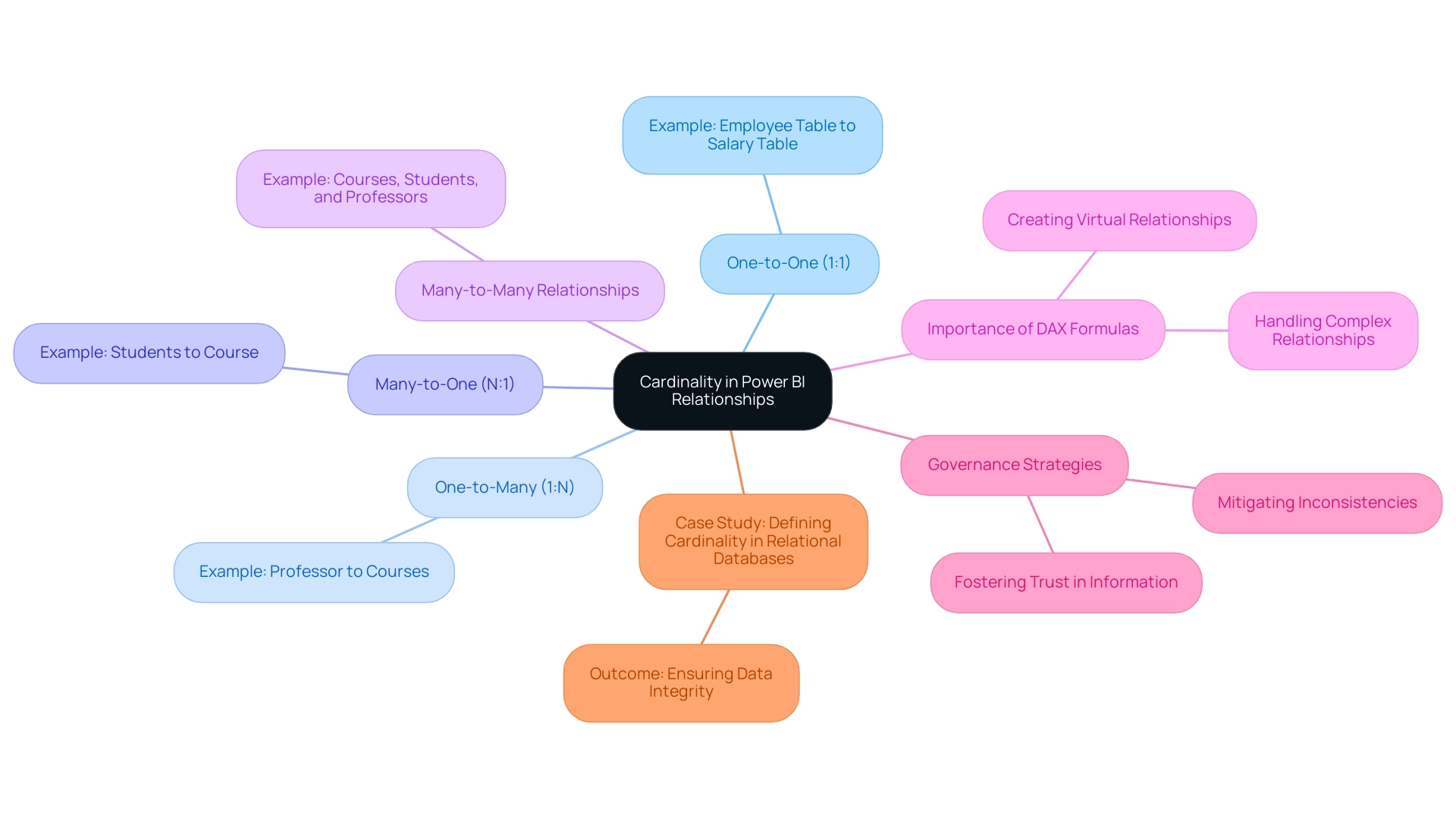

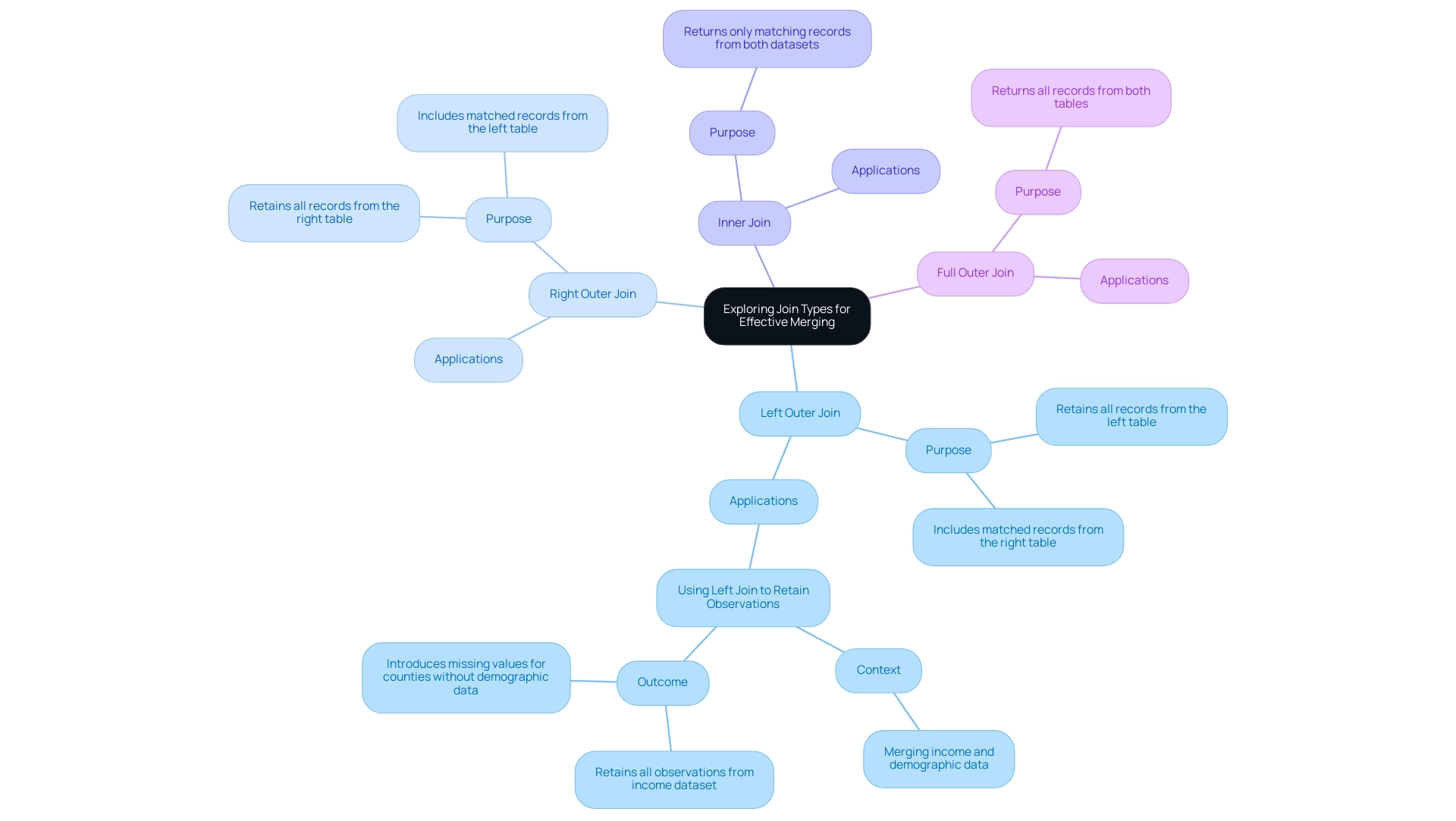

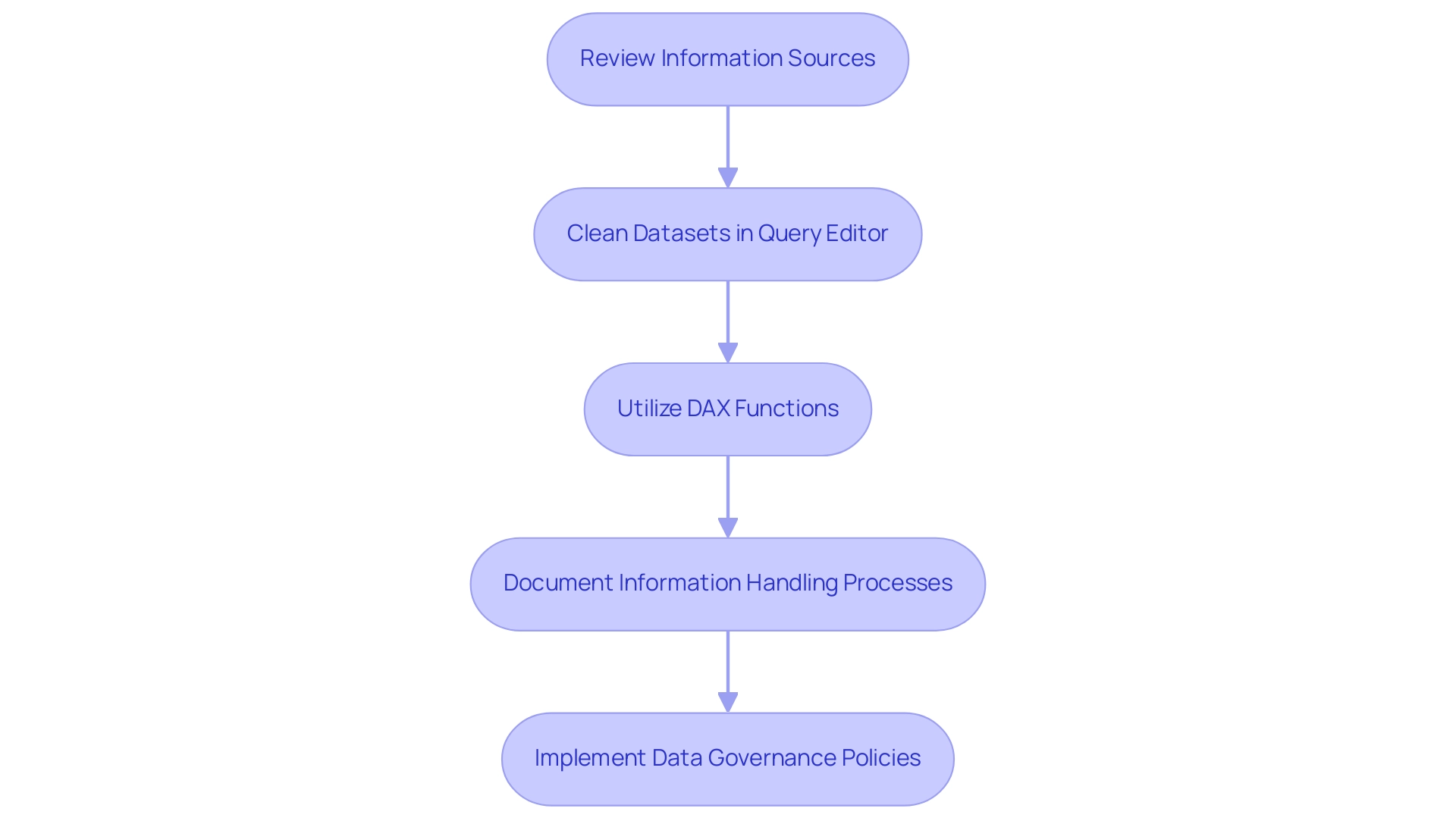

The Role of Data Modeling and DAX in Preventing Blanks

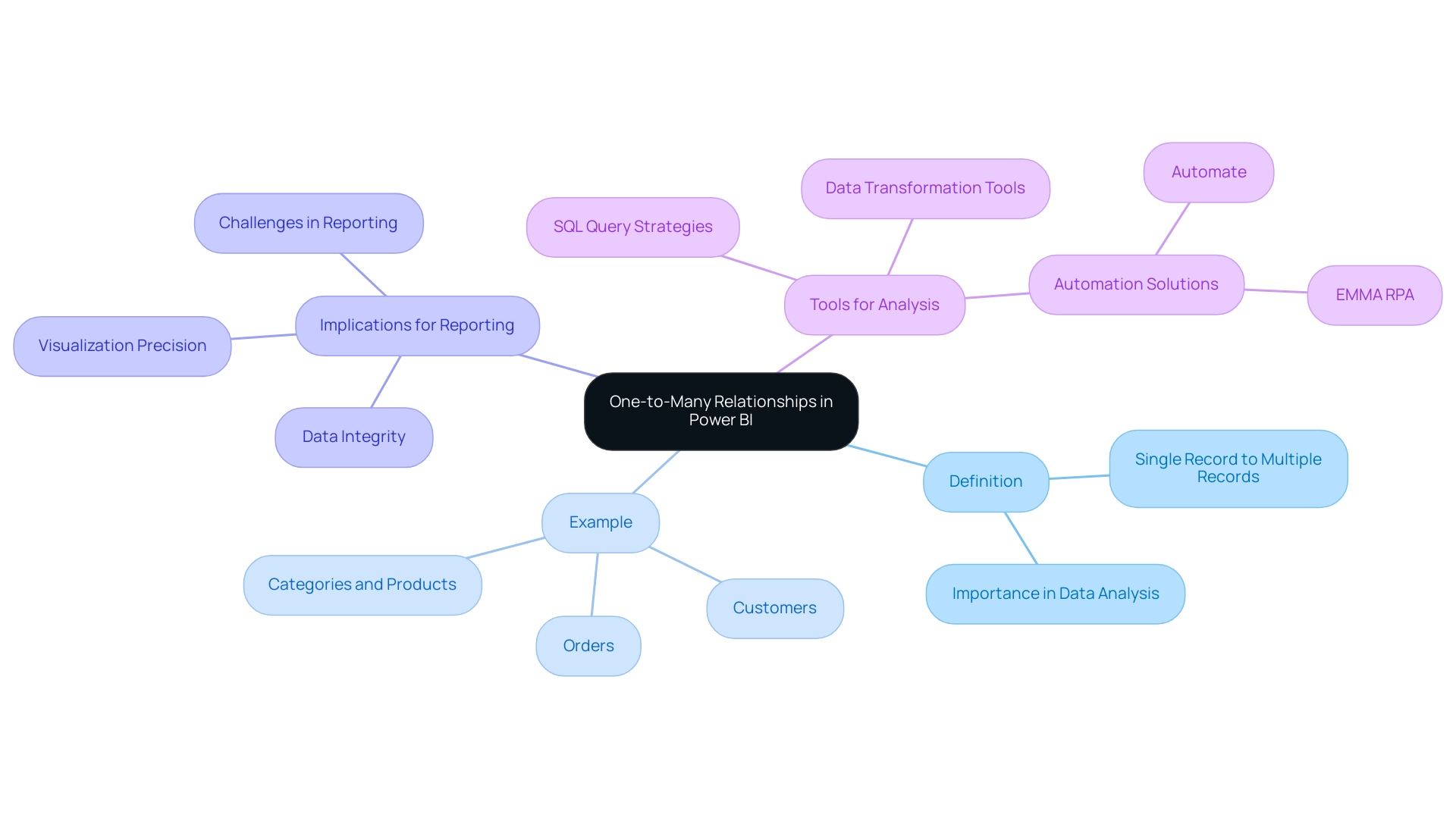

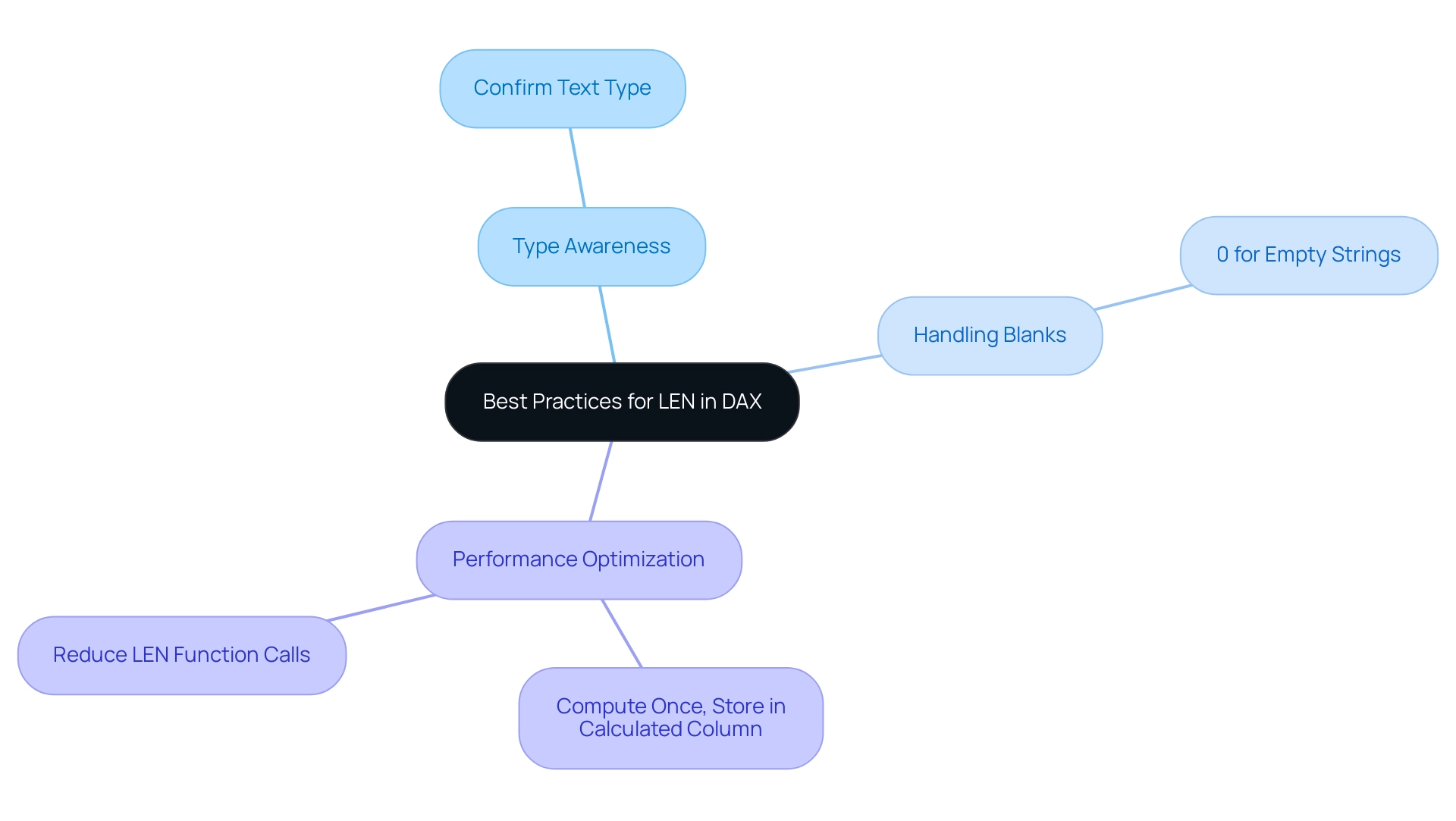

Data modeling and Data Analysis Expressions (DAX) are instrumental in preventing blank visuals in Power BI, especially when grappling with the challenges of time-consuming report creation and inconsistencies. Here are essential considerations to enhance your reporting experience:

- Proper Data Relationships: Establishing accurate relationships between tables is crucial. This foundational step ensures your data can be retrieved correctly, which is vital for meaningful analysis. For instance, the quantity value returned for products assigned to category Cat-A and ordered in year CY2018 is 11 units, highlighting the significance of accurate relationships in analyzing specific product performance.

- Effective Use of DAX Measures: Utilize DAX measures to dynamically calculate values. Well-constructed DAX formulas are key to avoiding blanks, as they ensure calculations yield valid results even in the presence of null values, directly addressing the issue of missing actionable guidance in your reports.

- Maintaining Data Integrity: Regular audits of your dataset for integrity issues, such as duplicates or null entries, are essential. These discrepancies can disrupt visual outputs, leading to confusion and mistrust in the data presented. Implementing a governance strategy can help maintain data quality and consistency across reports.

- Optimizing Your Data Model: Streamline your data model by eliminating unnecessary columns and tables to simplify calculations and enhance performance. A well-optimized model improves efficiency and makes the analytical process more intuitive. Using a one-way filter direction whenever possible helps avoid discrepancies and confusion.

- Thorough Testing of Models: Conduct comprehensive testing of your models before creating visuals. Recognizing potential problems early can conserve time and resources, ensuring your final visuals accurately depict the underlying data.
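One relationship problem that commonly surfaces as a blank entry in a visual is a fact row whose key has no match in the related lookup table. The sketch below shows how such orphaned keys could be found before modeling. It is plain Python, and the table contents and the function name `orphaned_keys` are hypothetical.

```python
def orphaned_keys(fact_rows, dimension_rows, key):
    """Return key values used in the fact table that the dimension table does not contain."""
    known = {row[key] for row in dimension_rows}
    return sorted({row[key] for row in fact_rows if row[key] not in known})


# Hypothetical tables: product 3 is sold but never defined in the products table.
products = [
    {"product_id": 1, "category": "Cat-A"},
    {"product_id": 2, "category": "Cat-B"},
]
sales = [
    {"product_id": 1, "quantity": 5},
    {"product_id": 3, "quantity": 2},
    {"product_id": 2, "quantity": 4},
]

orphans = orphaned_keys(sales, products, "product_id")
print(orphans)
```

Any key reported here has no category to be grouped under, so its quantities would likely appear against a blank label or drop out of a category-filtered visual.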

The case study titled ‘Building the Dashboard’ highlights the significance of visualizing calculated measures in a dashboard instead of merely in a view, recommending the use of a column chart to present mean, median, mode, and standard deviation.

As Software Engineer Douglas Rocha states, ‘My goal is fulfilled if you now know how to use BI’s assistance to find some statistics of your dataset.’

By applying these strategies and offering clear next steps based on the insights obtained from your reports, you can greatly enhance the quality and dependability of your BI reports, effectively addressing the challenges of report creation and information management.
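The idea of a measure that stays valid in the presence of null values can be pictured with a small Python analogy. The sketch below is loosely modeled on the behavior of a guarded division, where a fallback value is returned instead of failing or producing nothing. It is only an analogy and does not reproduce DAX semantics exactly. The function name `safe_divide` and its `alternate` parameter are hypothetical.

```python
def safe_divide(numerator, denominator, alternate=None):
    """Divide two values, returning `alternate` when the result would be undefined.

    None stands in for a blank value in this analogy.
    """
    if numerator is None or denominator is None or denominator == 0:
        return alternate
    return numerator / denominator


print(safe_divide(10, 4))               # normal case
print(safe_divide(10, 0))               # undefined, so the result stays blank (None)
print(safe_divide(10, 0, alternate=0))  # undefined, but an explicit fallback is shown
```

Whether a fallback of zero is appropriate depends on the report: showing 0 where no data exists can mislead as much as a blank can, so the choice is best made per measure.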

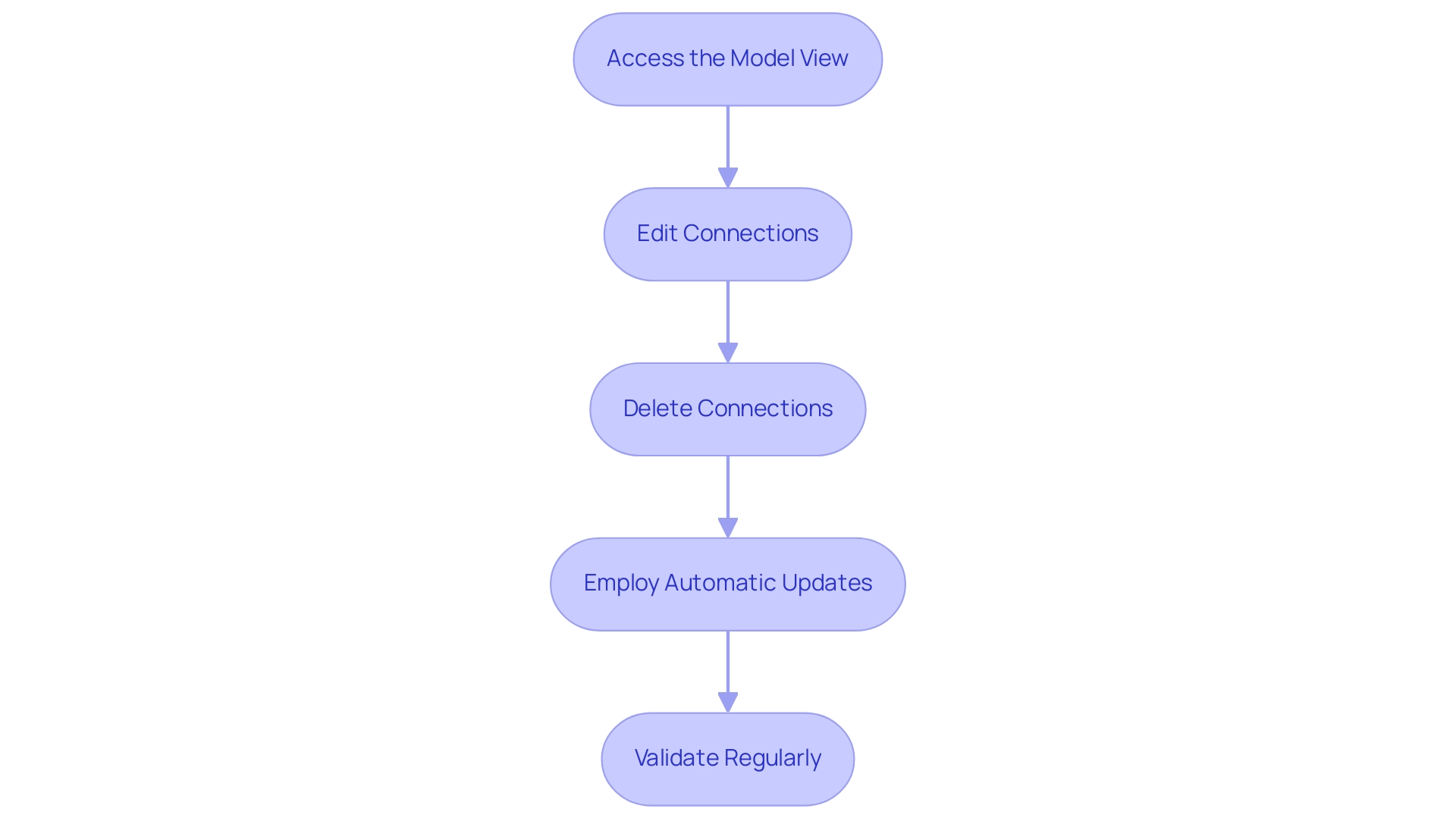

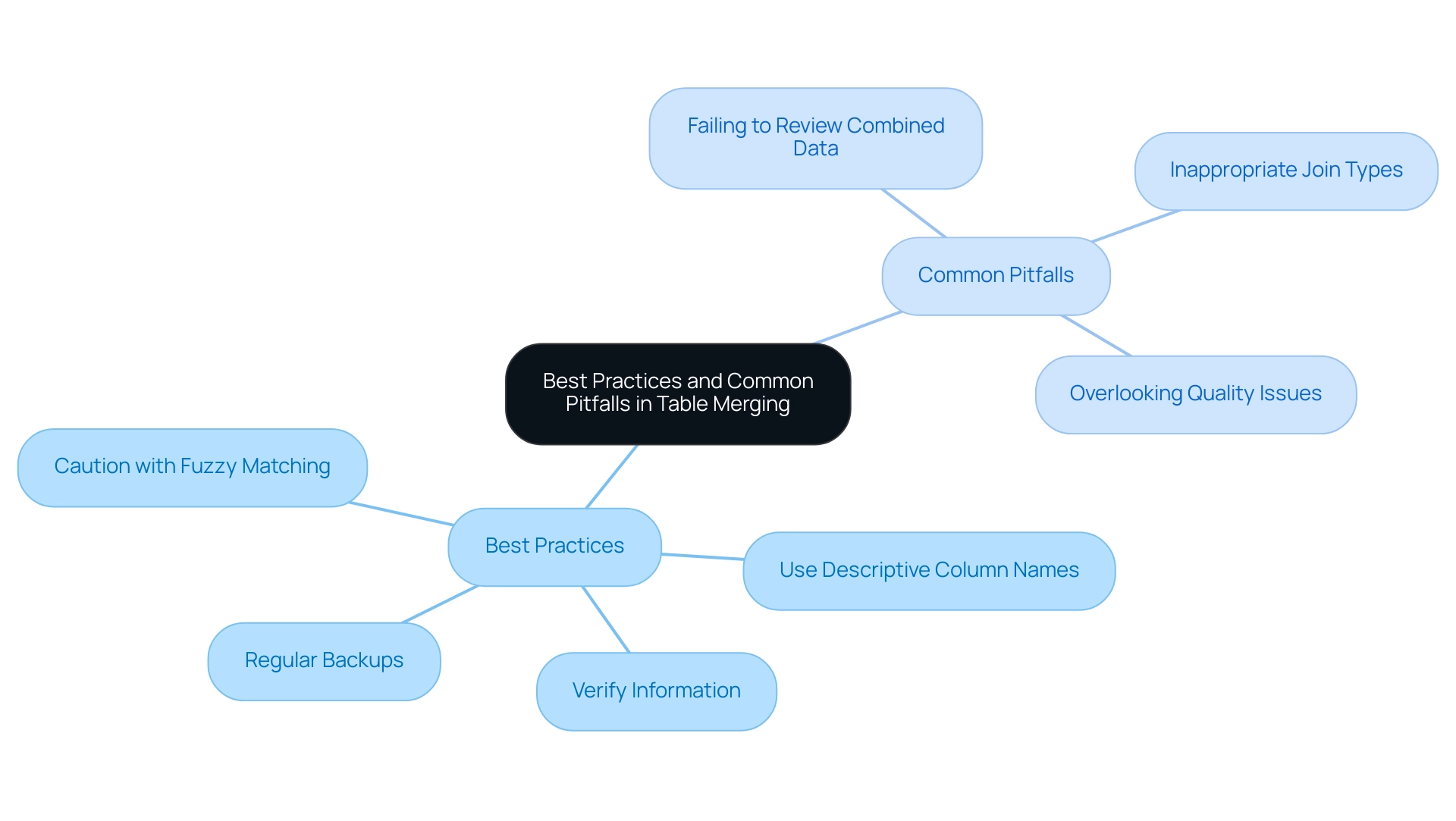

Best Practices for Maintaining Visual Integrity in Power BI

To uphold visual integrity in Power BI and eliminate blank visuals, implementing the following best practices is essential:

- Regular Data Audits: Conduct regular audits of your data to ensure accuracy and completeness. This proactive method detects inconsistencies early. It also underlines the value of keeping refresh operations within a 15-minute interval before 5 AM, so that your reports are based on the most up-to-date data. This is crucial for overcoming challenges related to data inconsistencies and reinforces the role of a governance strategy in maintaining data integrity.

- Consistent Naming Conventions: Adopting consistent naming conventions for tables and fields minimizes confusion and reduces errors, ultimately enhancing clarity. A well-structured naming system significantly improves the usability of Power BI reports, providing clear guidance for stakeholders.

- Documentation: Thoroughly documenting your data sources, relationships, and measures fosters transparency and collaboration. This clarity ensures that all stakeholders have access to essential information, addressing the common challenge of navigating complex data landscapes.

- User Training: Offering training sessions on best practices in Power BI is imperative. Well-informed users are less likely to make errors, enhancing overall report quality and efficiency. Empowering users with knowledge directly contributes to smoother operations and better utilization of insights, enabling the transformation of raw data into actionable insights.

- Feedback Mechanisms: Establishing robust feedback mechanisms enables users to communicate issues with visuals promptly. This continuous feedback loop facilitates quick resolution of issues and encourages ongoing improvement of reports. As Reza aptly states, there are now easier methods to fetch audit logs using PowerShell scripts, which eliminate the 5000 rows limitation, streamlining the auditing process.

- Collaboration with Power BI Premium Capacity Administrators: Engaging with Power BI Premium capacity administrators is crucial for troubleshooting semantic model issues. Their access to utilization and metrics applications offers valuable insights into memory usage and CPU performance, assisting in the resolution of model-related issues and improving quality.

- Utilization of Third-Party Tools: Incorporating third-party tools like DAX Studio simplifies access to DMVs and enhances your ability to analyze model structures. These tools can help maintain visual integrity and ensure that your reports are built on a solid foundation.
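Part of a regular data audit is confirming that a report's data is not older than expected. The sketch below shows one way such a staleness check might look in plain Python. The timestamps and the 24-hour threshold are hypothetical values chosen for illustration, and the function `is_stale` is not part of any Power BI API.

```python
from datetime import datetime, timedelta


def is_stale(last_refresh, now, max_age):
    """Return True when the last refresh is older than the allowed age."""
    return now - last_refresh > max_age


# Hypothetical timestamps: refreshed early one morning, checked the next day.
last_refresh = datetime(2024, 2, 1, 4, 45)
checked_at = datetime(2024, 2, 2, 9, 0)

stale = is_stale(last_refresh, checked_at, timedelta(hours=24))
print(stale)
```

In practice the last refresh time would come from wherever your organization records refresh history, and the threshold would follow the refresh schedule agreed for that report.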

By embracing these best practices, you can significantly enhance the integrity and reliability of your Power BI visuals, ultimately driving better decision-making and operational efficiency. This approach not only addresses the challenges of report creation and data governance but also leverages Business Intelligence effectively for growth and innovation.

Conclusion

Identifying and addressing the root causes of blank visuals in Power BI is essential for any organization looking to optimize its reporting capabilities. By understanding factors such as:

- Data connectivity issues

- Incorrect filters

- Data refresh problems

- Type mismatches

teams can proactively troubleshoot and resolve these challenges. Implementing best practices, such as:

- Regular data audits

- Maintaining consistent naming conventions

- Providing user training

can significantly enhance the integrity of visuals and ensure stakeholders receive accurate insights.

The integration of automation tools like RPA can further streamline these processes, making it easier to maintain data quality and refresh schedules. By simplifying complex data models and leveraging effective DAX measures, organizations can minimize the risk of encountering blank visuals and maintain a reliable reporting environment.

Ultimately, these strategies not only improve operational efficiency but also empower teams to make informed, data-driven decisions. Embracing these practices will transform the way organizations utilize Power BI, enabling them to extract valuable insights and foster a culture of continuous improvement. Now is the time to take decisive action, ensuring that reporting processes are robust, reliable, and aligned with the organization’s goals for growth and success.

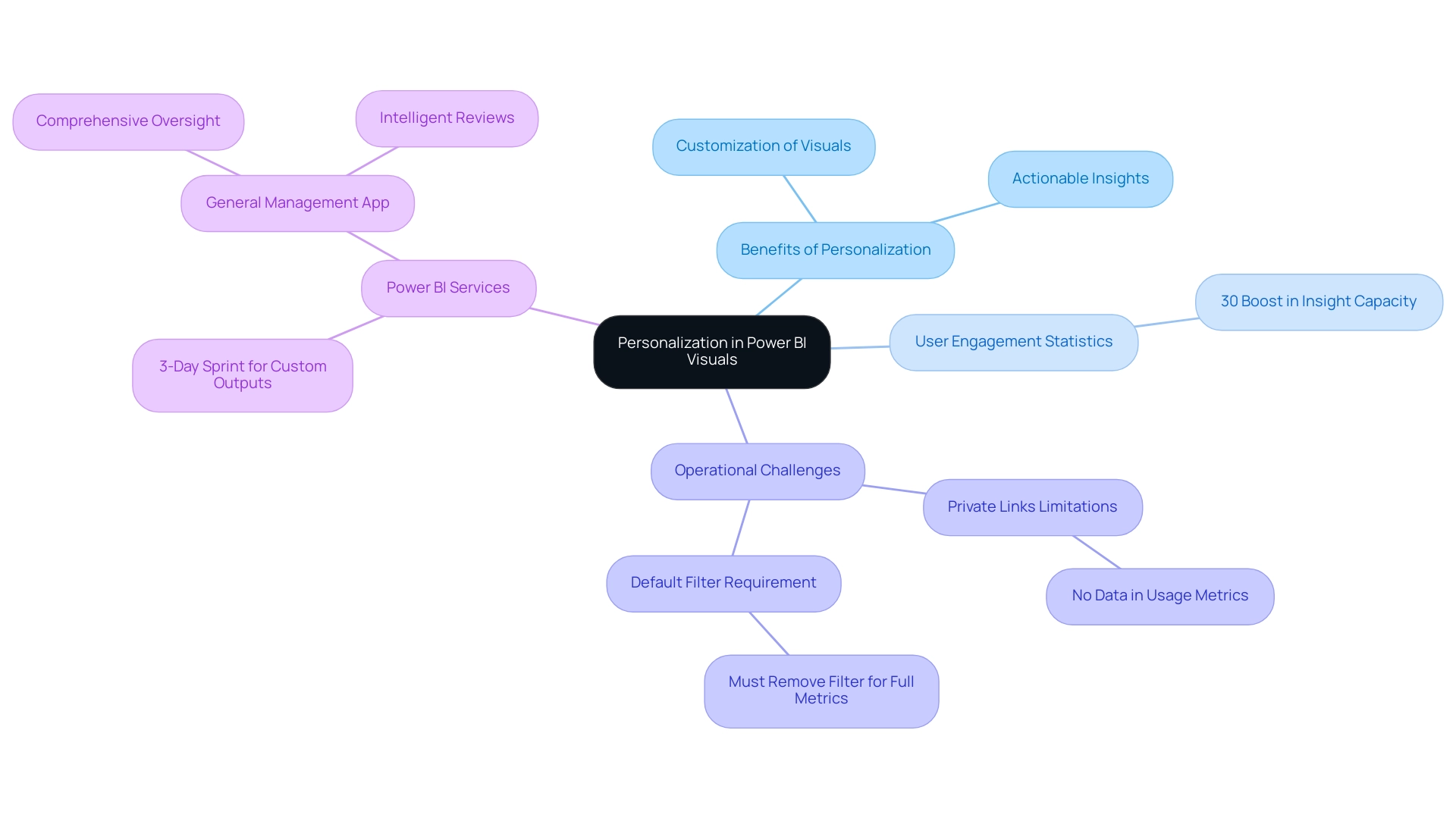

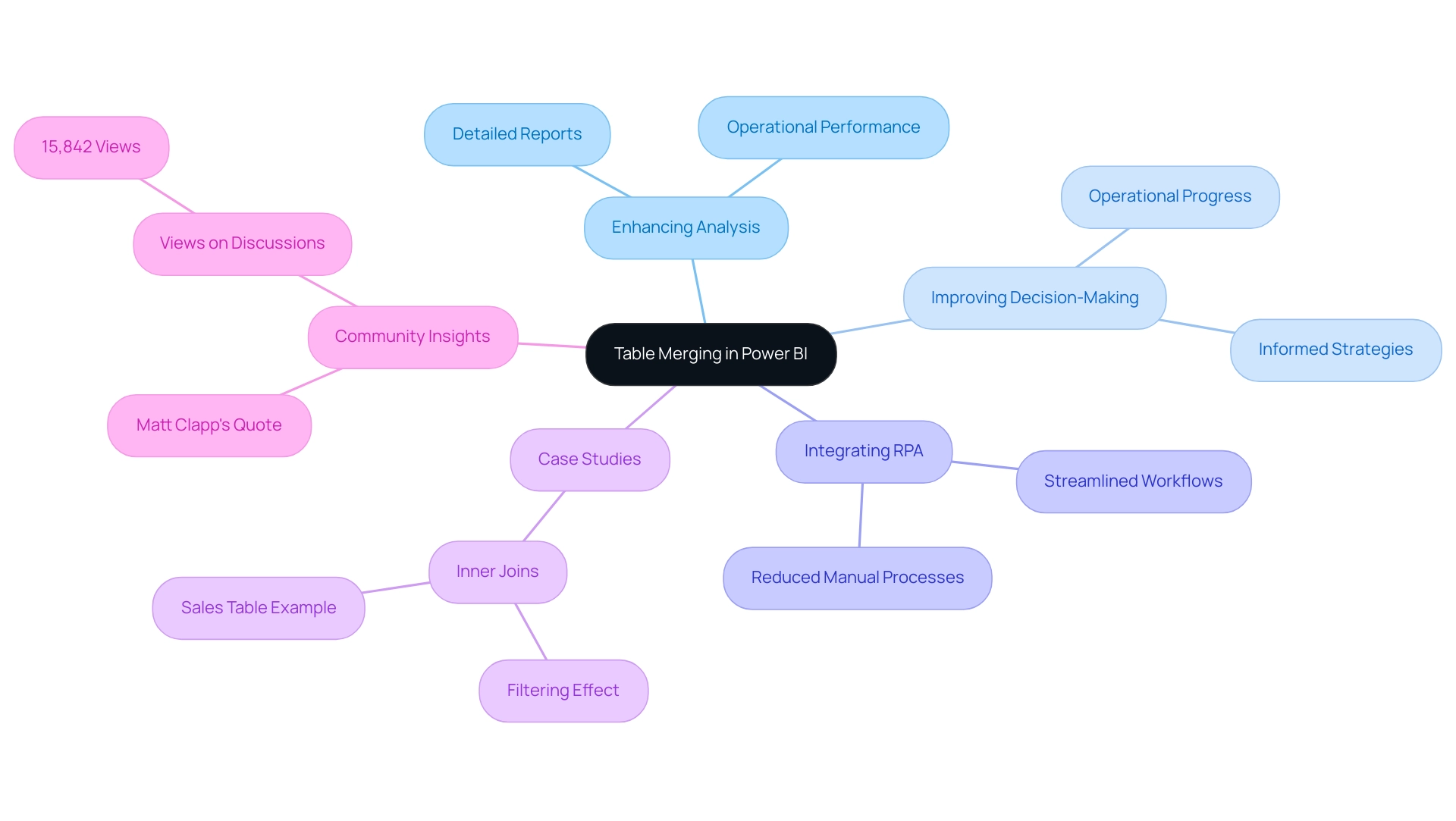

Overview:

Personalizing visuals in Power BI involves customizing report elements like colors, layouts, and data presentation to enhance user engagement and improve data insights. The article provides a detailed step-by-step guide that outlines the process for enabling personalization features, highlighting the benefits of tailored visuals while also addressing potential challenges such as repetitive report creation and collaboration issues.

Introduction

In the realm of data visualization, Power BI stands out as a transformative tool that empowers organizations to tailor their reporting experiences to meet specific needs. Personalization in Power BI visuals not only enhances user engagement but also turns complex data into actionable insights, driving informed decision-making.

As organizations grapple with the challenges of report creation and data inconsistencies, understanding how to effectively customize visuals becomes essential. This article delves into the intricacies of personalizing Power BI reports, offering a step-by-step guide to unlock features that enhance clarity and relevance.

It also addresses common pitfalls and limitations, ensuring users can navigate their data landscape with confidence and precision. From adjusting colors and layouts to managing personalized visuals effectively, the insights presented here will equip users to transform their reporting processes and maximize operational efficiency.

Understanding Personalization in Power BI Visuals

The ability to personalize visuals in Power BI enables individuals to customize their reports to align with their unique needs and preferences, significantly enhancing the experience. By tailoring visuals to specific roles, users can convert data into actionable insights. They can alter visual elements, including colors, layouts, and the data presented, to create an intuitive and efficient reporting environment.

Such customization not only enhances the relevance of information but also fosters deeper engagement with the data. Statistics indicate that users who interact with customized reports in Power BI experience a 30% boost in their capacity to obtain meaningful insights, directly influencing decision-making processes. Furthermore, our Power BI services, including the 3-Day Sprint for quick, professionally crafted reports, enable organizations to swiftly develop customized outputs that meet specific business needs, while the General Management App provides comprehensive oversight and intelligent reviews to enhance operational efficiency.

However, organizations using Private Links encounter restrictions in transferring client information, which can leave usage metrics without any data, as indicated in the case study ‘Usage Metrics Not Supported with Private Links.’ Additionally, to see metrics for all dashboards or reports in a workspace, users must remove the default filter applied to the report, which makes awareness of these operational nuances necessary. By leveraging Business Intelligence and RPA, users can maximize the effectiveness of their data presentations, ultimately leading to improved operational efficiency and informed strategic decisions.

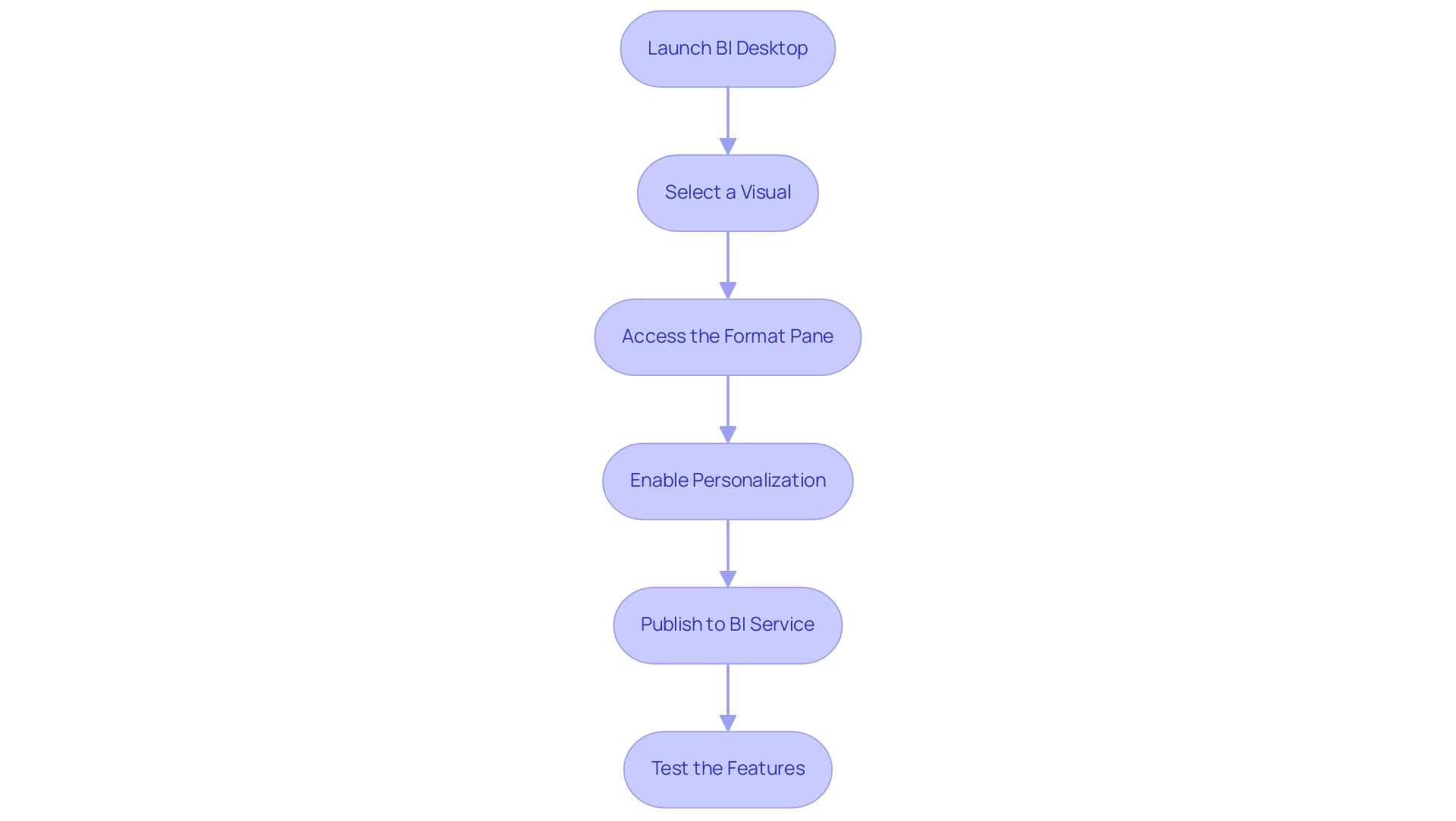

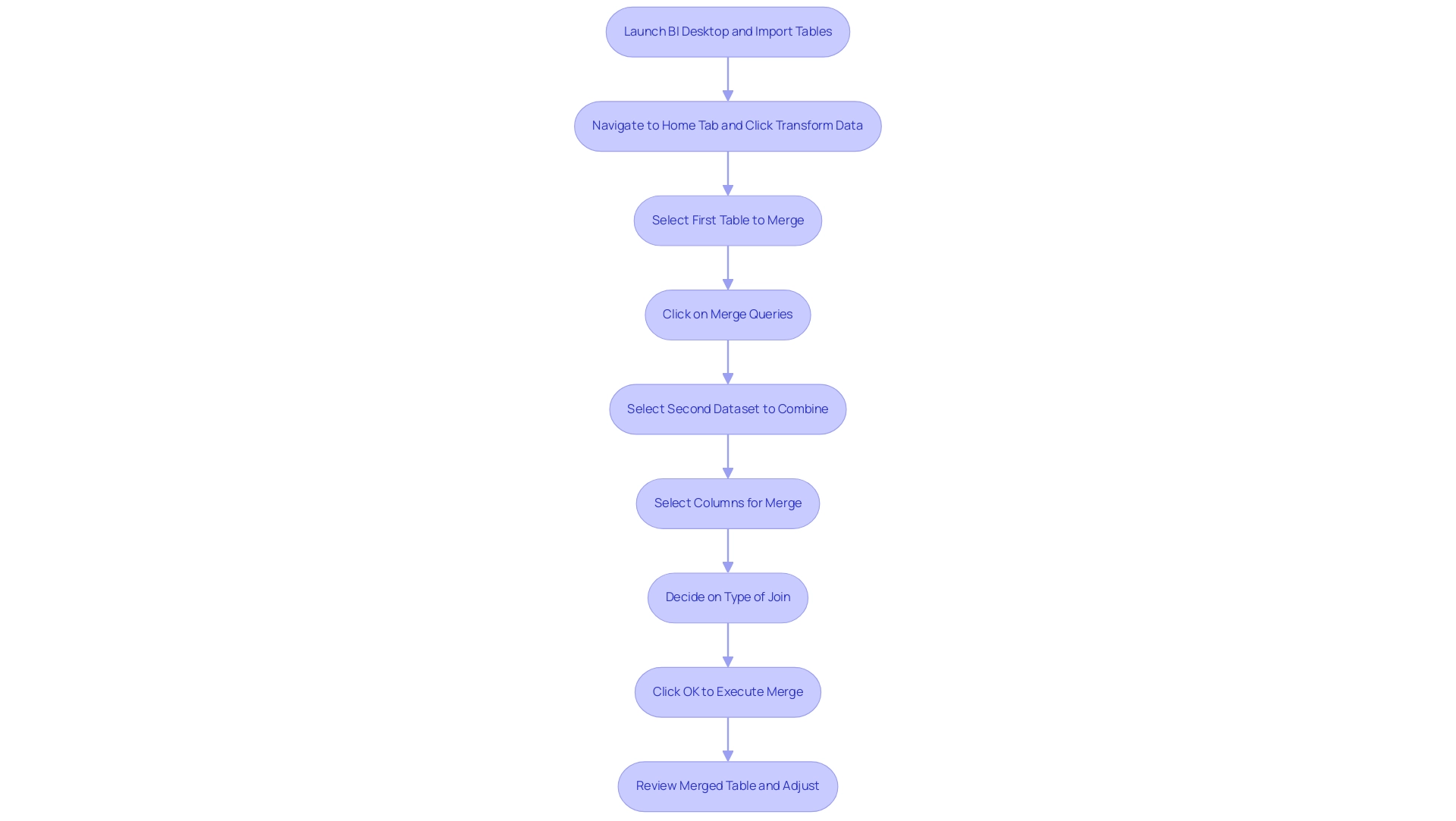

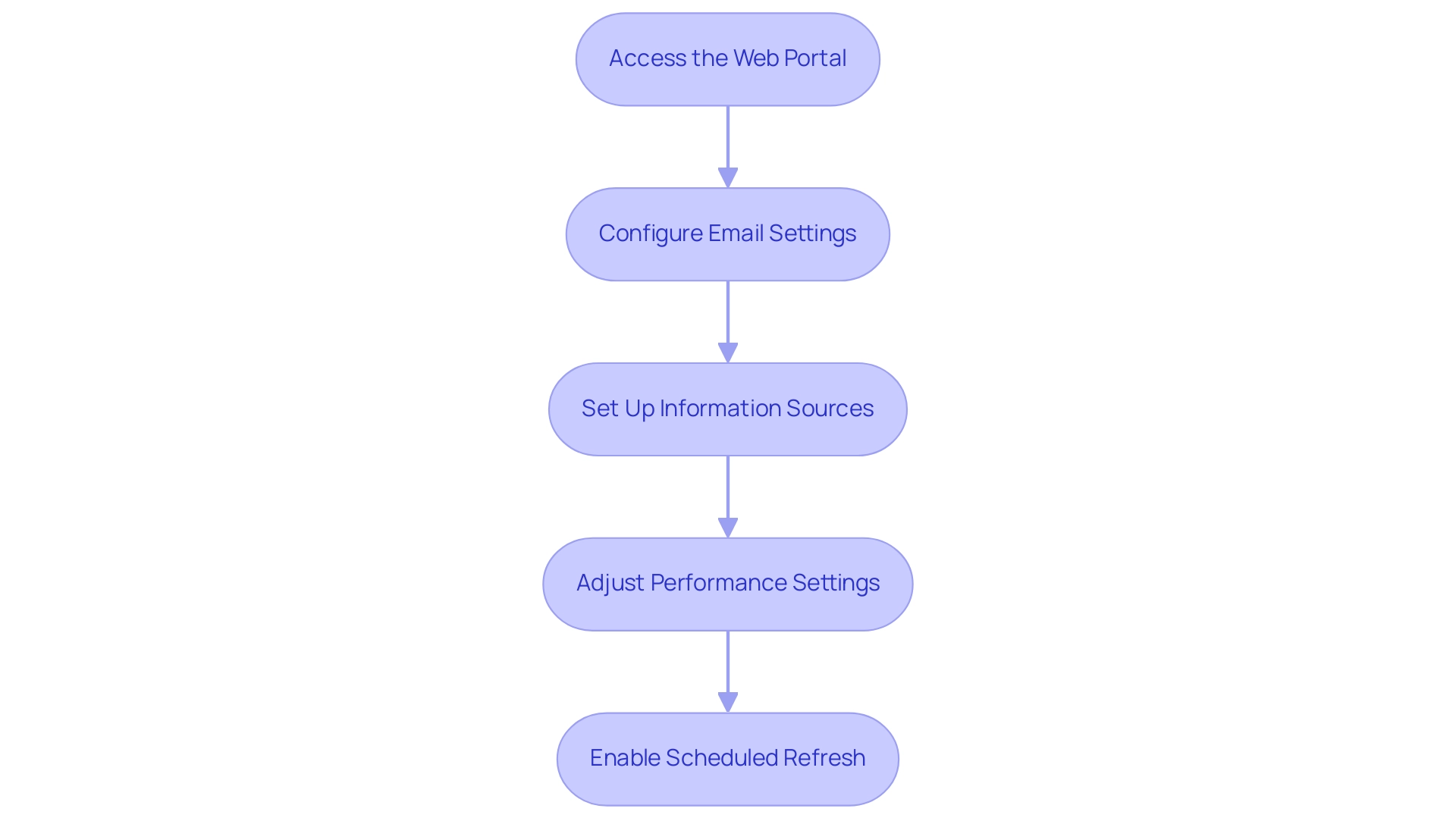

Step-by-Step Guide to Enabling Personalization Features

Activating the features that let users personalize visuals in Power BI is a robust way to improve your data visualization experience, particularly given issues such as time-consuming report creation and data inconsistencies arising from the absence of a governance strategy. By following this straightforward guide, you can empower users to customize their reports effectively, transforming these challenges into opportunities for improvement:

- Launch Power BI Desktop: Begin by starting the application and loading the report you wish to customize, an essential first step in addressing ineffective report creation.

- Select a Visual: Click on the specific visual element you want to personalize to focus your customization, which helps mitigate the confusion caused by inconsistent data presentations.

- Access the Format Pane: Locate the Format pane on the right side of your screen, represented by a paint roller icon.

- Enable Personalization: Scroll down in the Format pane until you find the ‘Personalize this visual’ option, then toggle it to ‘On’. This step activates personalization for that visual, addressing the need for clearer, actionable guidance in your reports.

- Publish to Power BI Service: Ensure your report is published to the Power BI Service, which is essential for utilizing personalization features online.

- Test the Features: Open your document within the Power BI Service environment and check that the personalization options are now accessible for interaction.

By implementing these steps, users gain the ability to personalize visuals in Power BI, better meeting their needs and fostering an environment of self-service business intelligence. This adaptability not only enhances user engagement but also increases the overall effectiveness of your analysis efforts. Notably, usage metrics reports refresh daily with new data, which underlines the value of keeping personalized content up-to-date and relevant.

As Andy states, ‘Interacting with each visual to edit as needed’ is essential for maximizing the value of BI. Furthermore, the case study titled ‘Conclusions on BI’s Personalize Visuals Power BI Feature’ illustrates that enabling the personalization features helps organizations embrace Self-Service BI, granting users enhanced control over their data presentation and ensuring that insights are effectively utilized. Clear, actionable guidance in reports is essential to help stakeholders navigate the data effectively, especially in the context of governance challenges.

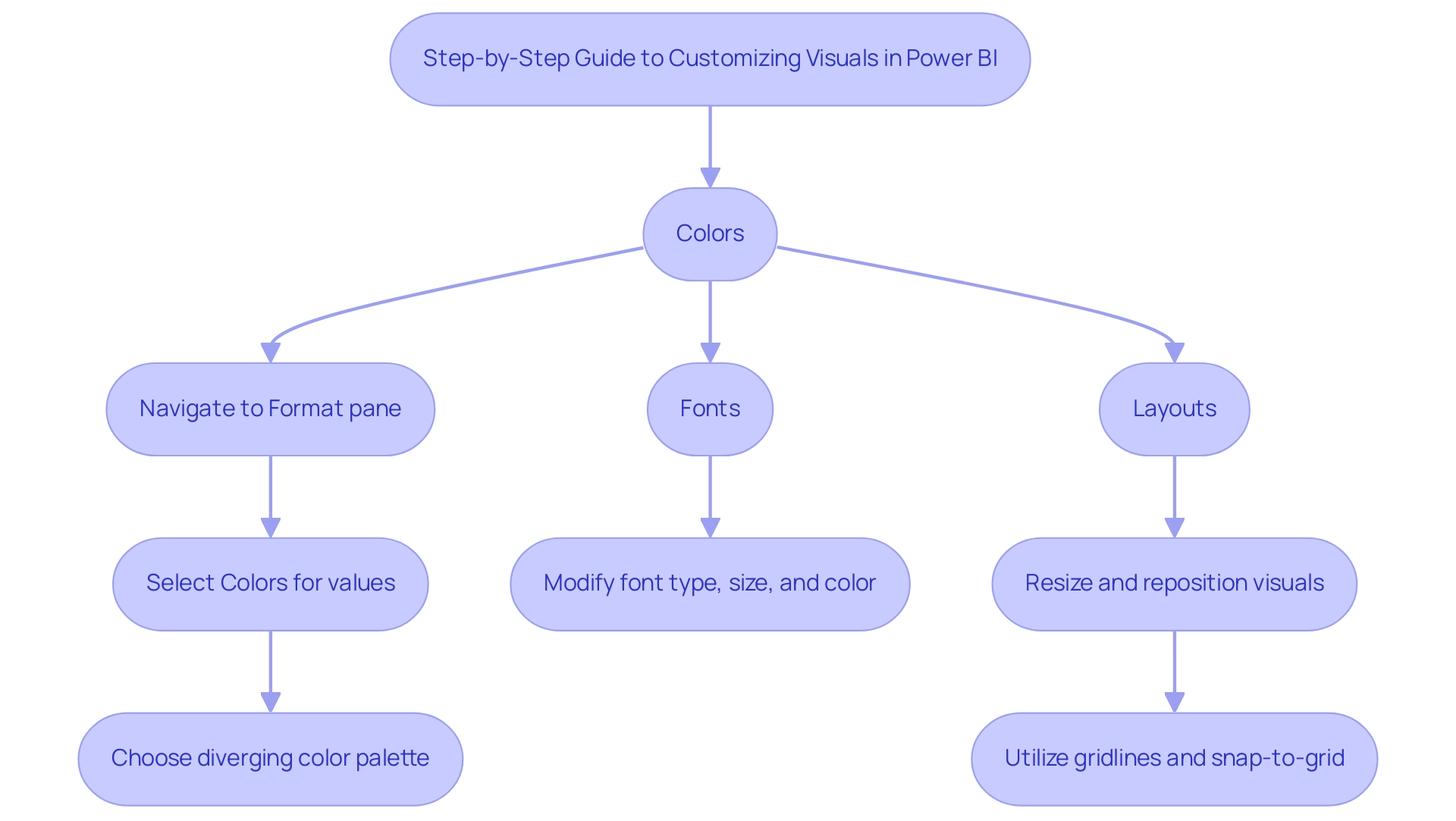

Customizing Visuals: Colors, Fonts, and Layouts

To improve report engagement and clarity while addressing common challenges like time-consuming report creation, inconsistencies, and the absence of a governance strategy, it is effective to personalize visuals in Power BI. Here’s a step-by-step guide to effectively adjust colors, fonts, and layouts:

- Colors: Navigate to the Format pane and select ‘Colors for the values’ to specify colors for your data points. Utilizing the color picker allows you to align with your organization’s branding or enhance visual distinctiveness. Current trends emphasize the use of diverging color palettes, which effectively display differences from a central point, such as a mean or median. A well-designed diverging palette, as detailed in the case study on diverging color palette design, enhances the viewer’s ability to interpret data by clearly indicating positive and negative values, thus improving the overall effectiveness of the visualization and assisting stakeholders in deriving actionable insights from complex datasets.

- Fonts: In the ‘Title’ and ‘Labels’ sections of the Format pane, you can modify the font type, size, and color. This customization is crucial for improving readability and making your visuals more visually appealing. According to Jonathan Schwabish from the Urban Institute, “considerate font selections can enhance audience engagement and satisfaction in information presentations,” ensuring that reports are not just filled with figures but also offer clear direction for subsequent actions, thereby addressing the need for practical insights in governance.

- Layouts: The ‘General’ section within the Format pane allows for resizing and repositioning visuals on the canvas. Utilizing gridlines and the snap-to-grid feature ensures precise alignment, contributing to a polished and professional layout. Practical uses, like a hospital’s implementation of a style guide to illustrate patient satisfaction scores, show how customized layouts can connect with particular audience needs and reduce confusion created by inconsistent data presentation.
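To make the diverging palette idea concrete, the sketch below maps a value to a color by interpolating between a low color, a neutral midpoint color, and a high color. It is plain Python and independent of Power BI. The function name `diverging_color` and the default red, near-white, and blue colors are assumptions chosen for illustration. In a report, the equivalent choice would be made through the formatting options rather than in code.

```python
def diverging_color(value, low, mid, high,
                    low_rgb=(202, 0, 32),
                    mid_rgb=(247, 247, 247),
                    high_rgb=(5, 113, 176)):
    """Map a value to a hex color on a diverging scale centered on `mid`."""
    # Clamp so out-of-range values take the end colors.
    value = max(low, min(high, value))
    if value <= mid:
        start, end = low_rgb, mid_rgb
        t = 0.0 if mid == low else (value - low) / (mid - low)
    else:
        start, end = mid_rgb, high_rgb
        t = (value - mid) / (high - mid)
    # Linear interpolation per color channel.
    channels = [round(s + (e - s) * t) for s, e in zip(start, end)]
    return "#{:02x}{:02x}{:02x}".format(*channels)


# Hypothetical scale from 0 to 100 with 50 (for example, a median) as the center.
print(diverging_color(0, 0, 50, 100))    # low end color
print(diverging_color(50, 0, 50, 100))   # neutral midpoint color
print(diverging_color(100, 0, 50, 100))  # high end color
```

Values near the midpoint come out close to neutral, while values far from it in either direction become strongly colored, which is what lets a viewer separate above-center from below-center values at a glance.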

By integrating these customization techniques, users can personalize visuals in Power BI to effectively communicate information and address the challenges of report creation along with the need for a strong governance strategy. This article reflects the latest trends as of February 2024, ensuring that the guidance provided remains relevant to current practices in data visualization.

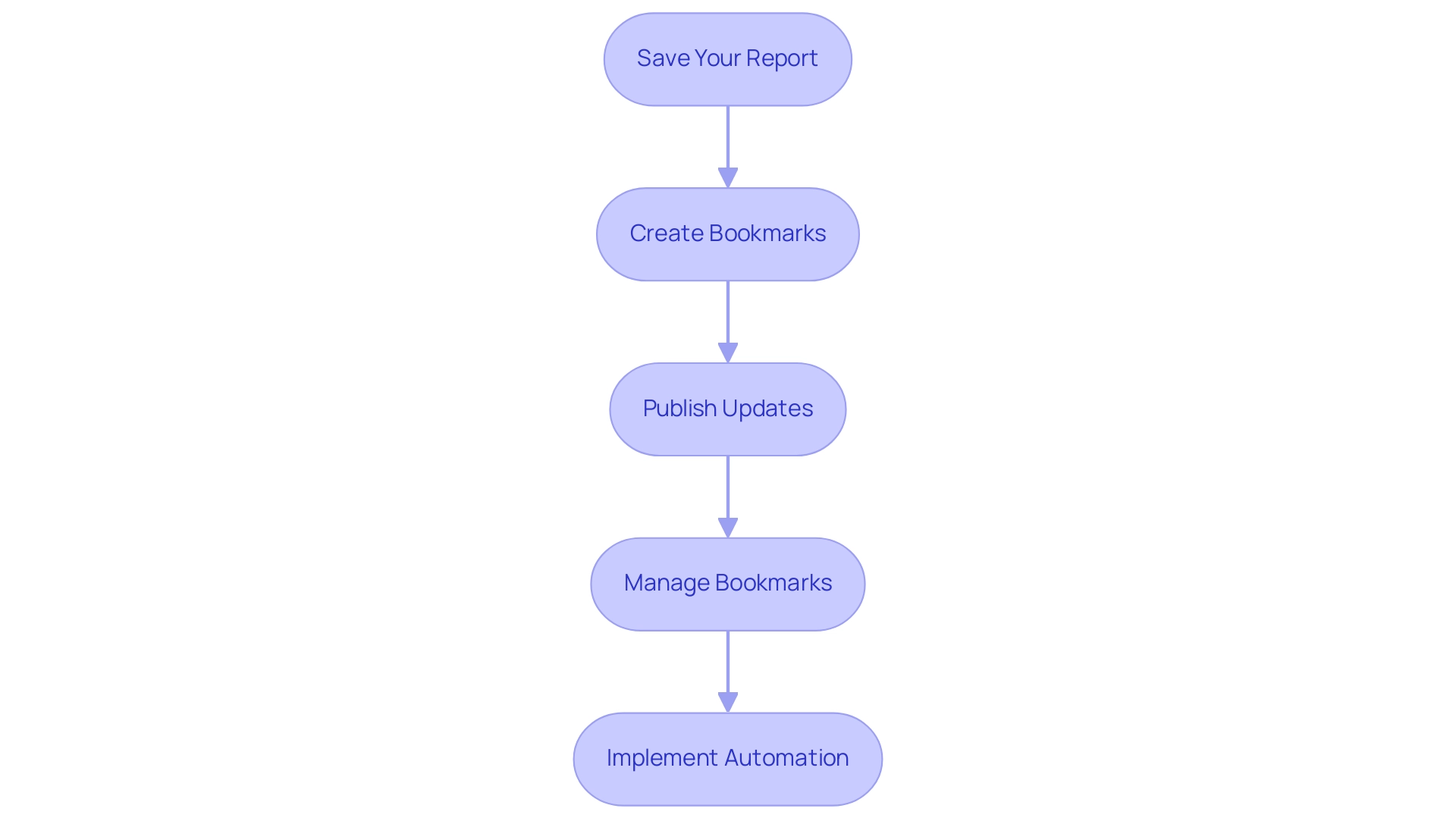

Saving and Managing Personalized Visuals

To effectively save and manage your personalized visuals in Power BI, adhere to the following streamlined steps:

- Save Your Report: After customizing your visuals, click on ‘File’ and select ‘Save’ to ensure that all your changes are securely stored. Keeping your reports up-to-date is crucial, especially considering that many reports go unused over time. This practice can alleviate some of the repetitive tasks that may burden your team, contributing to a more efficient workflow and helping to retain talent by reducing frustration with outdated processes.

- Create Bookmarks: Navigate to the ‘View’ tab to create bookmarks that capture your customized views. This feature allows you to swiftly return to specific settings or layouts, greatly enhancing your workflow. Additionally, consider pinning key metrics to a dashboard to make them readily accessible to interested parties, facilitating better decision-making. Automating these processes with Robotic Process Automation (RPA) can further streamline your operations, freeing your team to focus on strategic initiatives rather than repetitive tasks.

- Publish Updates: If you are using Power BI Service, don’t forget to publish your updates. This action makes the latest changes accessible to your team, fostering collaboration and ensuring everyone is on the same page. In a rapidly evolving AI landscape, maintaining up-to-date visualizations is essential for driving insight and operational efficiency for business growth, particularly in overcoming challenges posed by outdated systems.

- Manage Bookmarks: Access the ‘Bookmarks’ pane to manage your bookmarks effectively. Here, you can rename, reorder, or delete bookmarks as needed, streamlining your navigation process. Such organization is essential in tackling challenges associated with time-consuming report creation and data inconsistencies, which can impede talent retention by generating frustration among staff.

Utilizing these techniques not only improves your reporting experience but also aligns with best practices in behavior analysis. For instance, in a case study on ‘Custom Usage Report Creation’, users faced challenges with new usage metrics due to admin access restrictions. This illustrates the practical challenges that can arise when managing personalized visuals in Power BI.

As mentioned by a Microsoft staff member, ‘For your daily tasks moving files around, I’m guessing Automate could assist you there, but I don’t know the details of what you are doing.’ Implementing automation alongside these bookmarking strategies can further optimize your operations. By saving your preferences and leveraging bookmarks, you ensure a consistent and tailored reporting experience, empowering you to focus on the insights that matter most.

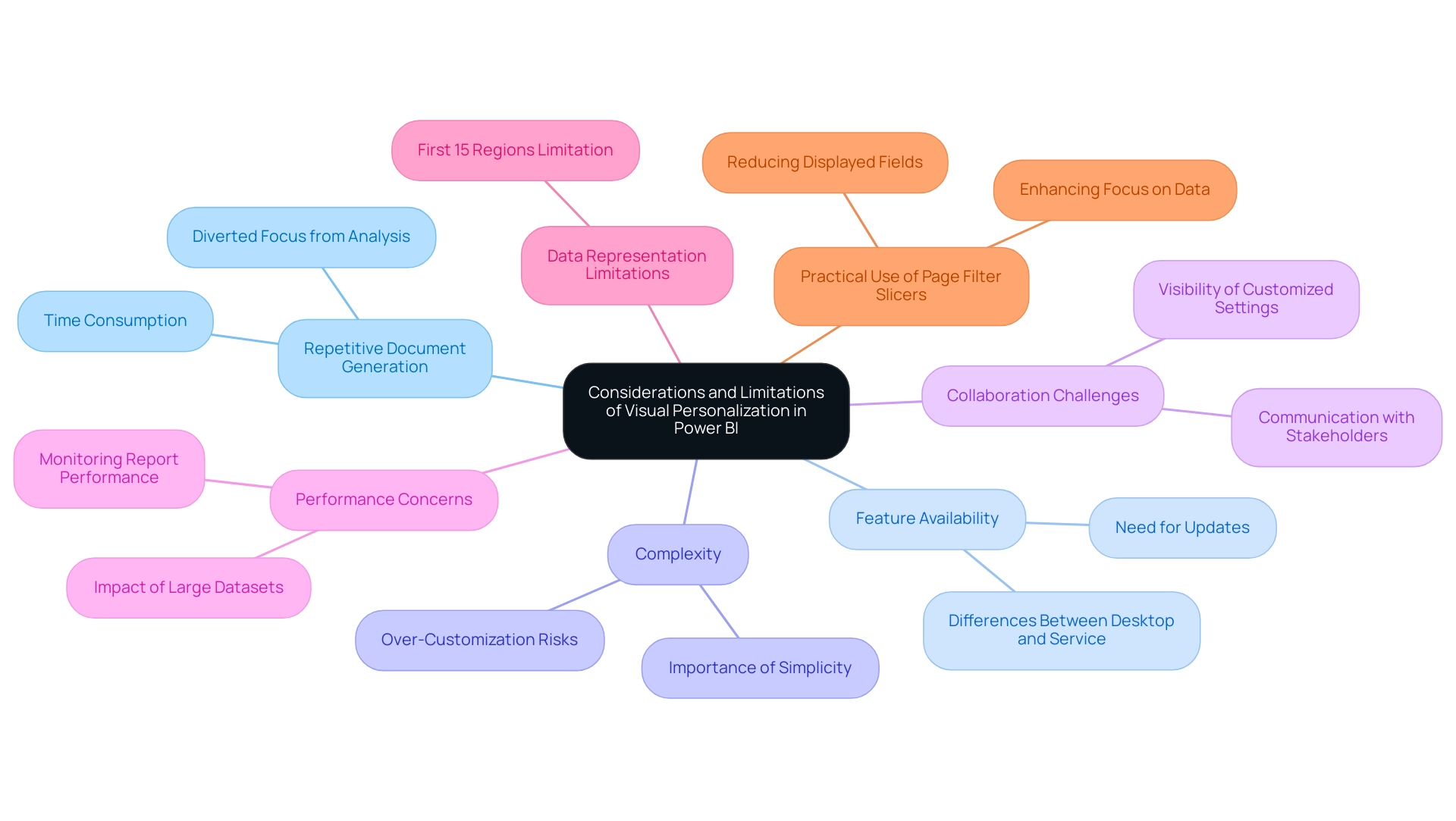

Considerations and Limitations of Visual Personalization

While personalizing visuals in Power BI can significantly enhance data presentation, it’s crucial to navigate several key considerations and limitations that often challenge operations directors:

- Repetitive Report Generation: Many operations directors find that they spend too much time creating reports instead of using insights from BI dashboards. This repetitive work diverts focus from meaningful analysis, which can lead to inefficiencies.

- Feature Availability: Users should be aware that not all personalization features may be accessible in both Power BI Desktop and Power BI Service simultaneously. Staying informed about Microsoft’s latest releases is essential to utilize the most current functionalities and avoid time-consuming creation processes.

- Complexity: Striking a balance is essential; over-customization can lead to confusion for viewers. Opt for simplicity and clarity in visuals to maintain focus and avoid overwhelming your audience with excessive detail, which is vital in ensuring actionable guidance is clear.

- Collaboration Challenges: Customized settings may not be consistently visible to all individuals, depending on how they access the report. It’s vital to communicate with key stakeholders about specific viewing options and how they can replicate or adapt personalized settings to ensure consistent reporting and mitigate data inconsistencies.

- Performance Concerns: Extensive personalization can degrade performance, particularly with large datasets. Regularly observe how your reports respond to changes in order to sustain performance and user experience, thus improving operational efficiency.

- Data Representation Limitations: It’s also important to note that the visualization will only show the bubbles for the first 15 regions, which may restrict insights when individuals analyze larger datasets.

- Practical Use of Page Filter Slicers: Page filter slicers can reduce the number of field values displayed, allowing for a more focused and manageable dataset in your visuals.

Considering these factors, comprehending the limitations of BI visual customization will enable individuals to personalize visuals in Power BI effectively, making informed choices and enhancing their data visualization efforts while being aware of possible constraints. Furthermore, as emphasized in the case analysis named ‘Online-Only Access,’ this service necessitates internet connection, which can create difficulties for individuals requiring offline access or those with unreliable connectivity. Users often resort to generating reports and downloading them in PDF or PPT formats to share information, emphasizing the importance of a robust governance strategy.

This sentiment is echoed by users transitioning from platforms like Tableau to Power BI, highlighting the need for clarity and strategy when adapting to Power BI’s unique environment. Furthermore, the struggle to extract meaningful insights can place businesses at a competitive disadvantage, underscoring the importance of Business Intelligence in driving data-driven growth.

Conclusion

Personalizing Power BI visuals is a game-changer for enhancing reporting experiences and driving operational efficiency. By enabling users to tailor reports to their specific needs—whether through adjusting colors, fonts, or layouts—organizations can transform complex datasets into clear, actionable insights. This customization fosters deeper engagement and significantly increases the ability to derive meaningful conclusions, ultimately empowering decision-makers to act with confidence.

However, it is essential to approach these personalization features with awareness of the potential limitations and challenges. The repetitive nature of report creation, variability in feature availability, and the risk of overwhelming viewers with overly complex visuals are all factors that must be navigated carefully. By following the outlined steps for enabling personalization and understanding the considerations involved, users can maximize the benefits while minimizing pitfalls.

In summary, leveraging the full potential of Power BI through personalized visuals not only enhances the clarity and relevance of reports but also positions organizations to thrive in a competitive landscape. Embracing these practices will not only streamline reporting processes but also ensure that insights are effectively utilized, leading to improved operational outcomes and informed strategic decisions. The time to embrace personalization in Power BI is now, as it paves the way for a more efficient, data-driven future.

Overview:

The article focuses on the step-by-step process and significance of adding titles to visuals in Power BI, emphasizing how effective titles enhance data interpretation and audience engagement. It provides a detailed guide on the procedure, highlights best practices for creating impactful titles, and discusses the importance of clear labeling in driving informed decision-making and operational efficiency.

Introduction

In the realm of data visualization, the significance of titles in Power BI cannot be overstated. They serve as the critical first impression, guiding viewers through complex datasets and ensuring clarity in communication. A well-crafted title not only captures attention but also sets the context, transforming mundane reports into engaging narratives.

As organizations grapple with the challenges of report creation and data inconsistencies, understanding the art of titling becomes paramount. By embracing best practices and leveraging innovative tools, professionals can enhance user engagement, streamline reporting processes, and ultimately drive informed decision-making.

This article delves into the strategies for creating impactful titles, troubleshooting common issues, and implementing dynamic features that elevate Power BI visuals from simple displays to powerful storytelling devices.

Understanding the Importance of Titles in Power BI Visuals

In Power BI, the title of a visual is the first touchpoint between your audience and the presented data, setting the stage for effective interpretation. A thoughtfully crafted title directs viewers’ focus and provides context that aids understanding. For instance, rather than defaulting to a vague label like ‘Sales Data,’ opt for a more descriptive alternative such as ‘Monthly Sales Performance by Region.’

This approach clarifies the content and draws the audience to the specific insights at hand. Given the common challenges of time-consuming report creation and data inconsistencies, it is worth noting that the effect of titles extends beyond mere labeling; they play a significant role in audience engagement and influence data interpretation. Enrico Bertini describes one such study: ‘to examine the impact of headings, they exposed the participants to different headings, one supporting and one not supporting a given policy.’

This demonstrates how different titles can influence audience perceptions and reactions. Furthermore, as emphasized in a 1914 book on charts, the choice of title can introduce biases that affect how data is perceived. By recognizing and applying these principles, professionals, particularly those in Decision Intelligence positions, can use titles effectively to convey their insights and strengthen their reporting.

Along with improving titles, integrating RPA solutions can streamline the reporting process, reduce time spent on manual tasks, and ultimately provide clearer, actionable guidance for stakeholders. This ensures that BI visuals not only inform but also inspire action, driving operational efficiency and informed decision-making.

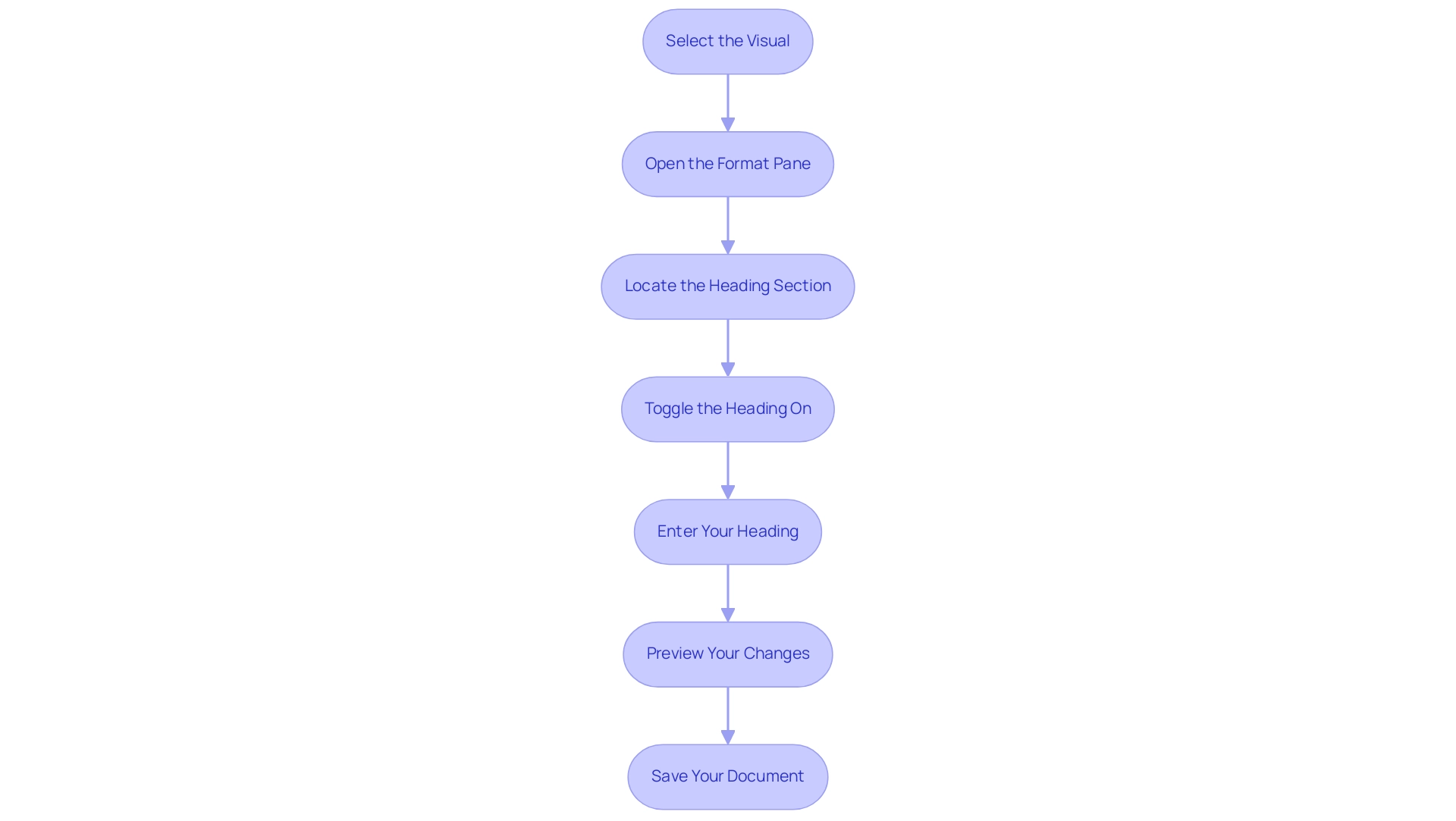

Step-by-Step Guide to Adding Titles to Your Power BI Visuals

To improve the clarity of your reports, particularly when facing common issues such as time-consuming report creation and data inconsistencies, add a title to each visual. This practice not only aids clear communication but also helps readers derive actionable insights from your data. Follow these steps to ensure your visuals communicate their purpose:

- Select the Visual: Begin by clicking on the visual that you wish to title.

- Open the Format Pane: In the Visualizations pane, click the Format icon (a paint roller or paintbrush, depending on your version of Power BI Desktop) to access the formatting options, a key area for customization.

- Locate the Title Section: Scroll to the ‘Title’ section within the Format pane, where you can make your title adjustments.

- Toggle the Title On: Switch the ‘Title’ toggle to ‘On’ to activate the title field, ensuring your visual has a designated area for the title.

- Enter Your Title: Type your desired title in the provided text box. Here, you can also modify the font size, color, and alignment to match your design.

- Preview Your Changes: After entering the title, click outside the text box to see how it appears on your visual. Make any necessary adjustments to improve its visibility and appeal.

- Save Your Report: Once you’re satisfied with how it looks, save your Power BI report to preserve your changes.

By following these steps, you can add titles that make your visuals more informative and contribute to a more structured and engaging experience. This practice is essential as organizations work to tackle the difficulties of report creation and improve data integrity. For instance, Avison Young’s client experienced a notable increase in report adoption and usage after transitioning from legacy tools, highlighting the benefits of clarity in analytics.

Additionally, developing data-oriented leaders and establishing an Analytics Council can propel organizational transformation, highlighting the significance of distinct roles in boosting user involvement and facilitating analytics efforts. Integrating RPA tools can further streamline the reporting process, ensuring that governance strategies are in place to minimize inconsistencies and enhance the actionable guidance provided by your reports. The ‘Checklist for Adoption Tracking’ case study offers a structured method for ensuring accountability and progress monitoring, further demonstrating how clear titles contribute to effective reporting and user engagement.

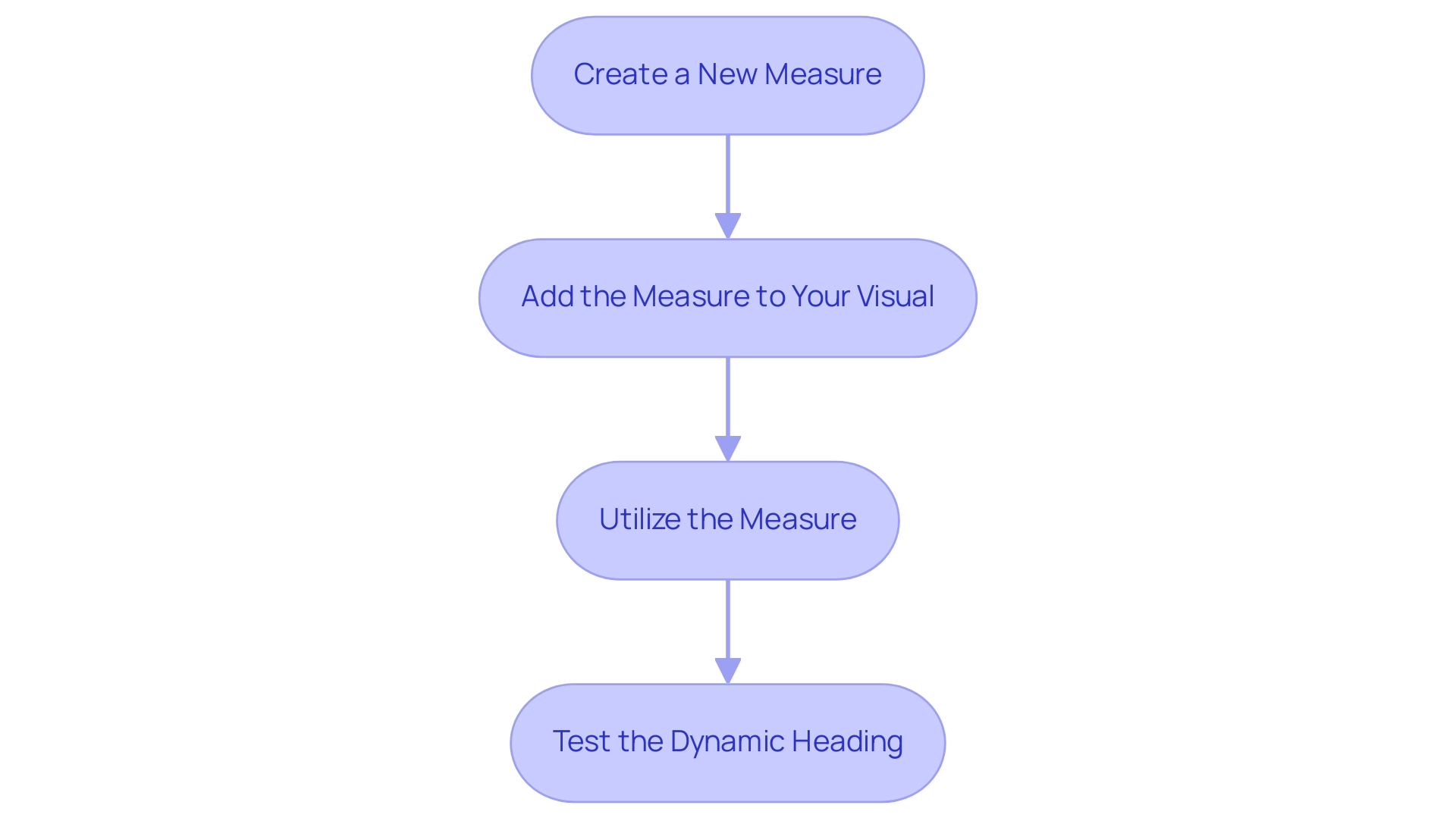

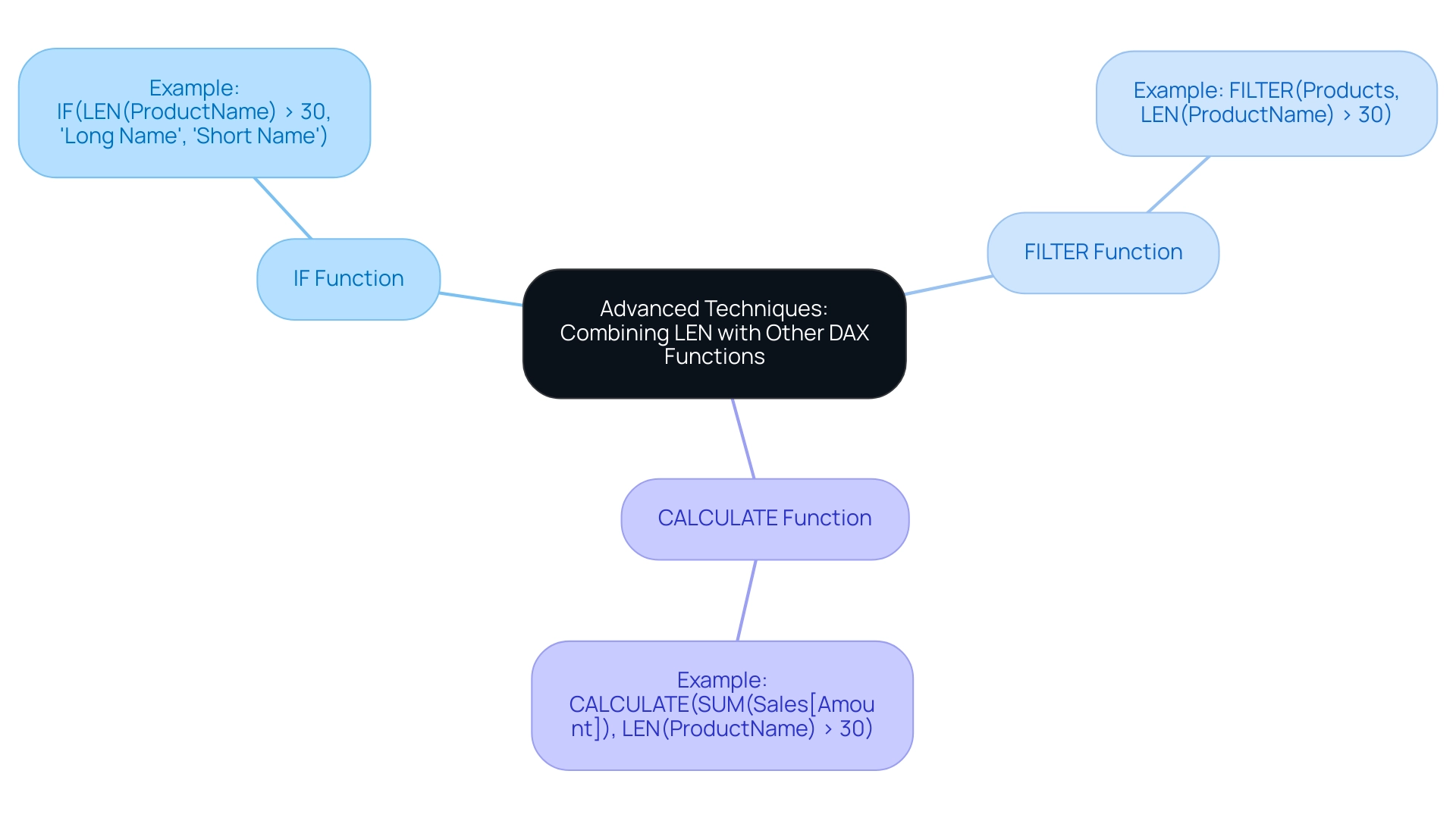

Creating Dynamic Titles for Enhanced Data Context

Dynamic titles in Power BI adapt a visual’s title to user interactions, enriching the storytelling aspect of data presentation. For organizations that track how many dashboards or reports had at least one view in the past 90 days, dynamic titles can help improve user engagement and report visibility. To create a dynamic title using DAX (Data Analysis Expressions), follow these steps:

- Create a New Measure: Navigate to the Data view and select ‘New Measure’. Enter a DAX formula to define your title, such as:

Dynamic Title = "Sales Data for " & SELECTEDVALUE(Region[RegionName])

This formula enables the title to dynamically reflect the selected region, providing immediate context for viewers.

- Add the Measure to Your Visual: Return to the visual where you want the dynamic title. In the Format pane, locate the Title section and click the fx (conditional formatting) button next to the ‘Title text’ option.

- Use the Measure: Rather than typing a fixed title, choose the Field value format style and select the measure you just created. The title then updates automatically in response to user selections.

- Test the Dynamic Title: Preview your visual and experiment with different selections to observe how the title adjusts in real time.
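The simple measure above produces an incomplete title (“Sales Data for ”) whenever no single region is selected, because SELECTEDVALUE returns BLANK when the filter context holds zero or several values. The following is a minimal sketch of one way to handle that case. It reuses the Region[RegionName] column from the formula above; the three-region cutoff and the fallback wording are illustrative assumptions, not Power BI defaults.

```dax
Dynamic Title =
-- Sketch only: the cutoff of 3 regions and the fallback text are assumptions.
VAR SelectedRegions = VALUES ( Region[RegionName] )          -- regions visible in the current filter context
VAR RegionCount = COUNTROWS ( SelectedRegions )
VAR TotalRegions = COUNTROWS ( ALL ( Region[RegionName] ) )  -- every region, ignoring filters
VAR RegionList =
    CONCATENATEX ( SelectedRegions, Region[RegionName], ", ", Region[RegionName], ASC )
RETURN
    SWITCH (
        TRUE (),
        RegionCount = TotalRegions, "Sales Data for All Regions",
        RegionCount <= 3, "Sales Data for " & RegionList,
        "Sales Data for " & RegionCount & " Regions"
    )
```

The measure is bound to the title in the same way as the simpler version; only the text it returns changes.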

Dynamic titles greatly enhance the interactivity of your reports, which is essential for operational efficiency, as emphasized in our 3-Day BI Sprint service. This quick turnaround not only streamlines report creation but also helps address challenges like inconsistencies and a lack of actionable guidance. Furthermore, clear titles on visuals make data insights easier to communicate, in line with the latest advancements in DAX for Business Intelligence.

Alongside BI, our General Management App and Automate play vital roles in enhancing operational efficiency by providing comprehensive management solutions and streamlined workflow automation. These tools work together to ensure that your organization can effectively leverage data-driven insights for informed decision-making. As software engineer Douglas Rocha puts it, “Hope I’ve helped you in some way and see you next time!” This sentiment reflects the collaborative spirit of leveraging tools like BI, alongside our General Management Application and Automate, to enhance operational efficiency and drive informed decision-making.

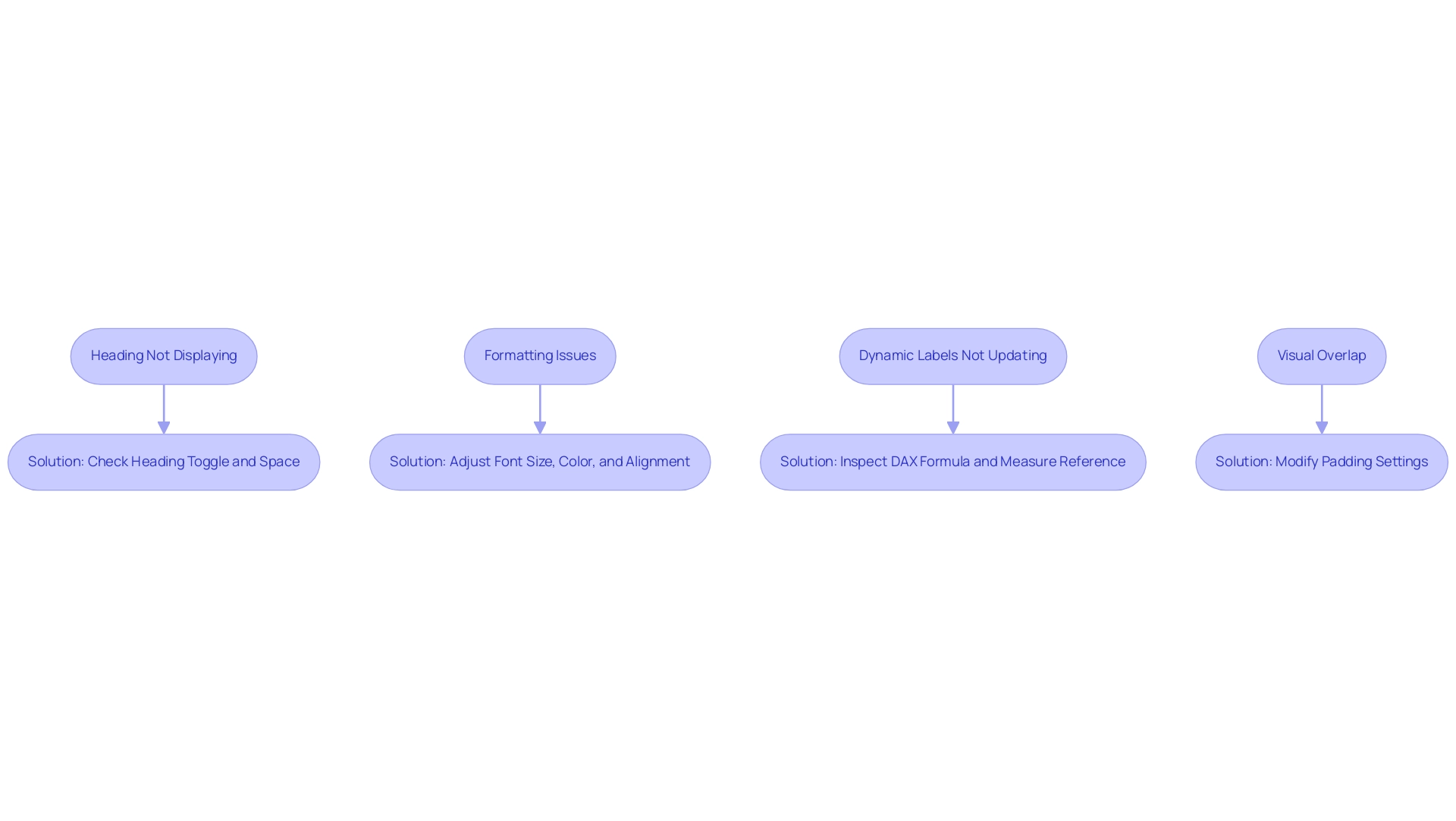

Troubleshooting Common Issues with Visual Titles in Power BI

When adding titles to visuals, users frequently run into a few issues that can undermine their reports. Here’s how to tackle them:

- Title Not Displaying: If your title fails to appear, first verify that the Title toggle in the Format pane is set to ‘On.’ Additionally, ensure there is sufficient space within the visual to accommodate the title.

- Formatting Issues: Should the title appear misaligned or inconsistent, revisit the Format pane. Adjust the font size, color, or alignment settings so they harmonize with the overall design of your report. Consistency in formatting enhances readability and professionalism.

- Dynamic Titles Not Updating: If a dynamic title doesn’t reflect the expected changes, inspect your DAX formula for potential errors. Confirm that the measure is properly referenced in the Title text option, as accurate formulas are key to dynamic functionality.

- Visual Overlap: Where the title overlaps with other elements, consider modifying the padding settings in the Format pane. Creating additional space around the title can significantly improve the visual layout and clarity.
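When a dynamic title does not update as expected, it can help to see what the measure sees. The following is a minimal diagnostic sketch, assuming the same Region[RegionName] column used in the dynamic title formula earlier in this article; the measure name is hypothetical. Placed in a card visual on the same page, it reports how many regions are in the current filter context and whether the column is filtered directly.

```dax
Title Debug =
-- Hypothetical helper measure for troubleshooting; remove it once the title works.
VAR RegionCount = COUNTROWS ( VALUES ( Region[RegionName] ) )
RETURN
    "Regions in context: " & RegionCount
        & " | Directly filtered: "
        & IF ( ISFILTERED ( Region[RegionName] ), "yes", "no" )
        & " | SELECTEDVALUE: "
        & SELECTEDVALUE ( Region[RegionName], "(none or multiple)" )
```

If the count is greater than one, SELECTEDVALUE falls back to its alternate result, which usually explains a title that looks blank or cut off.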

By tackling these typical title-related issues, you can enhance both the functionality and visual appeal of your BI visuals so that their titles convey your narrative effectively. This is especially crucial in a data-rich environment where extracting meaningful insights can provide a competitive advantage. Utilizing tools such as Robotic Process Automation (RPA) can help in automating report generation and cleaning processes, enabling your team to concentrate on analysis and strategy.

Additionally, tailored AI solutions can enhance the functionality of BI visuals by providing advanced analytics capabilities, further addressing the challenges of extracting actionable insights. As srinudatanalyst aptly puts it, “Hope this helps. Did I answer your question? Mark my post as a solution! Proud to be a Super User!” This community support can be invaluable when troubleshooting.

Remember, maintaining proper configuration is crucial for information integrity, especially when dealing with large datasets that may impact your visuals. Stay equipped with the latest strategies to tackle BI challenges.

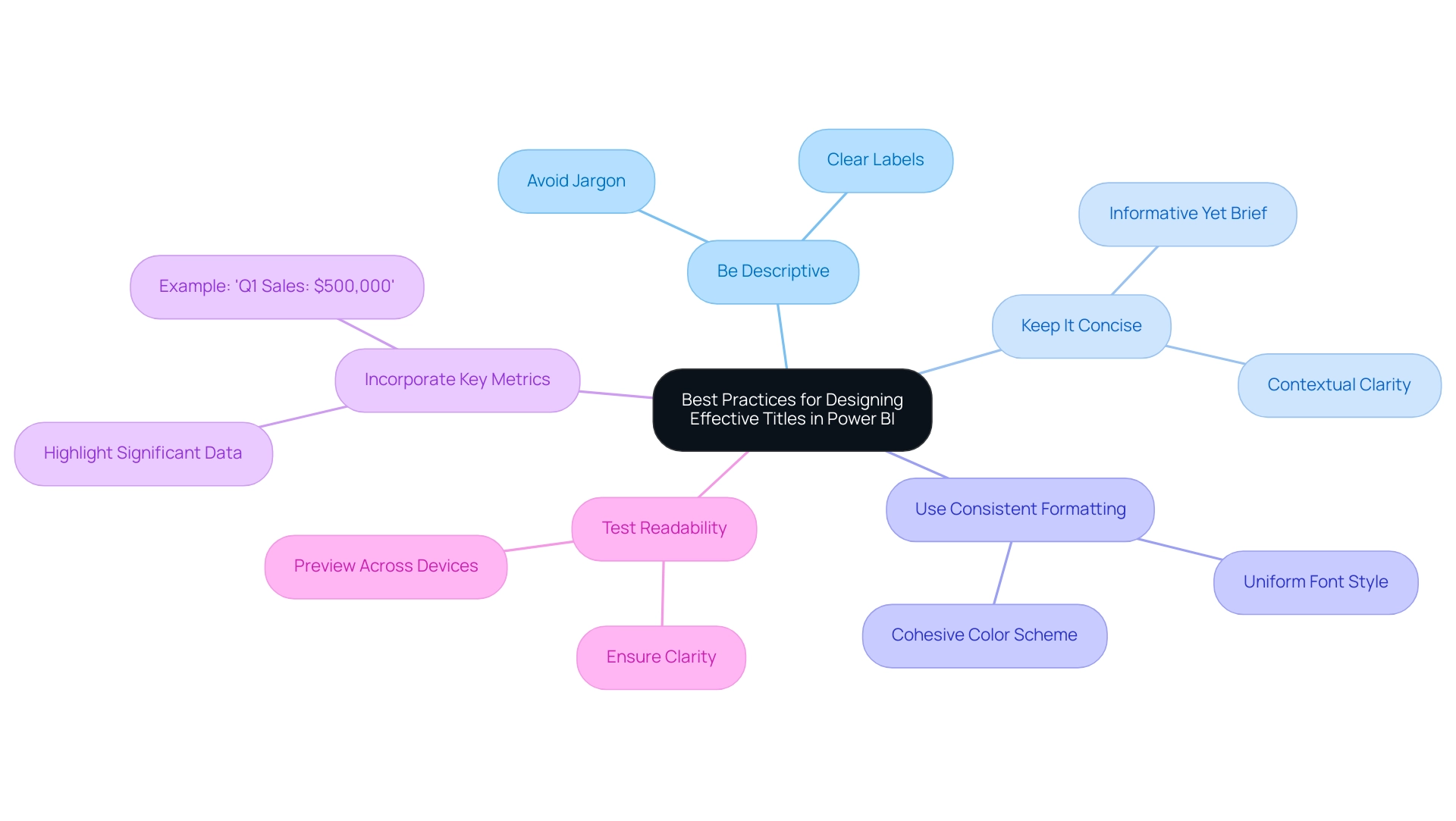

Best Practices for Designing Effective Titles in Power BI

Creating effective titles in Power BI is essential for efficient information presentation and storytelling, especially when addressing typical obstacles such as time-consuming report development and data inconsistencies. As Dorothy Walter, a statistics student, remarked, ‘It was really helpful for my research,’ emphasizing the importance of clear titles in understanding data insights. To achieve impactful titles and address these challenges, consider the following best practices:

- Be Descriptive: Choose clear and descriptive titles that communicate the central insight or message of the visual. Avoid jargon or overly technical language that could confuse viewers.

- Keep It Concise: Strive for brevity while providing sufficient context. A well-crafted title should be informative without overwhelming the audience.

- Use Consistent Formatting: Ensure a uniform font style, size, and color scheme across all titles in your report. This consistency fosters a cohesive look and enhances readability.

- Incorporate Key Metrics: When applicable, include key metrics or figures in the title to spotlight significant data points. For instance, a title like ‘Q1 Sales: $500,000’ immediately draws attention to the key figure, enhancing the visual’s impact.

- Test Readability: Preview your report in various formats (desktop, mobile) to confirm that titles remain clear and readable across devices.
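The ‘Incorporate Key Metrics’ practice can be combined with a measure-driven title so that the figure stays current as the data refreshes. The following is a minimal sketch, assuming a hypothetical [Total Sales] measure already exists in your model; the ‘Q1’ prefix and the format string are illustrative.

```dax
Sales Title =
-- [Total Sales] is a hypothetical measure; substitute your own.
-- FORMAT converts the number to text with a thousands separator and no decimals.
"Q1 Sales: " & FORMAT ( [Total Sales], "$#,##0" )
```

With a total of 500,000, this yields a title in the same style as the ‘Q1 Sales: $500,000’ example above.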

Effective titles not only improve the clarity of your visuals but also facilitate communication with stakeholders regarding model maintenance and modifications. In a data-rich environment, where deriving meaningful insights is crucial for competitive advantage, clear titles in BI visuals play a significant role in fostering informed decision-making and operational efficiency. By adhering to these best practices, you can significantly enhance the effectiveness of your titles, transforming your Power BI visuals into not just informative tools but also visually compelling narratives.

Additionally, by implementing a governance strategy, you can ensure data consistency across reports, further enhancing the clarity and actionable guidance provided by your titles.

Conclusion

Crafting effective titles in Power BI is not merely a matter of aesthetics; it is an essential strategy for enhancing data communication and user engagement. The article has explored the pivotal role titles play in guiding viewers, providing context, and transforming raw data into compelling narratives. By adopting best practices such as being descriptive, concise, and consistent in formatting, professionals can significantly improve the clarity of their reports, ultimately enabling better decision-making.

Moreover, the implementation of dynamic titles and troubleshooting common issues can further enhance the functionality of Power BI visuals, ensuring they resonate with the audience and adapt to their interactions. As organizations face challenges like time-consuming report creation and data inconsistencies, leveraging innovative tools and strategies becomes increasingly crucial.

In summary, the thoughtful application of titling techniques in Power BI not only elevates the quality of reports but also fosters a culture of data-driven decision-making. By prioritizing impactful titles, organizations can transform their data storytelling, making insights more accessible and actionable for all stakeholders involved. Embracing these strategies paves the way for operational efficiency and a deeper understanding of data narratives, ultimately driving success in today’s data-centric landscape.

Overview:

To effectively add images in Power BI, users can utilize various methods such as importing from local files, web visuals, or picture hosting services, each with its own advantages and privacy considerations. The article provides a comprehensive guide on these methods, detailing step-by-step processes and best practices to enhance visual appeal and audience engagement while addressing common challenges like image quality and alignment.

Introduction

In the realm of data visualization, the integration of images within Power BI reports stands as a transformative approach to enhancing both clarity and engagement. As organizations strive to convey complex data narratives, visuals emerge as powerful tools that can reinforce branding, illustrate critical insights, and provide essential context. With studies revealing that visuals can significantly reduce errors and boost user engagement, the importance of effectively incorporating images cannot be overstated.

However, many face hurdles such as time-consuming report creation and inconsistencies in data presentation.

This article delves into practical methods for importing images, formatting them for optimal display, and overcoming common challenges, all while empowering users to elevate their data storytelling capabilities. By embracing these strategies, organizations can not only enhance the aesthetic appeal of their reports but also foster deeper connections with their audience, ultimately driving informed decision-making and operational efficiency.

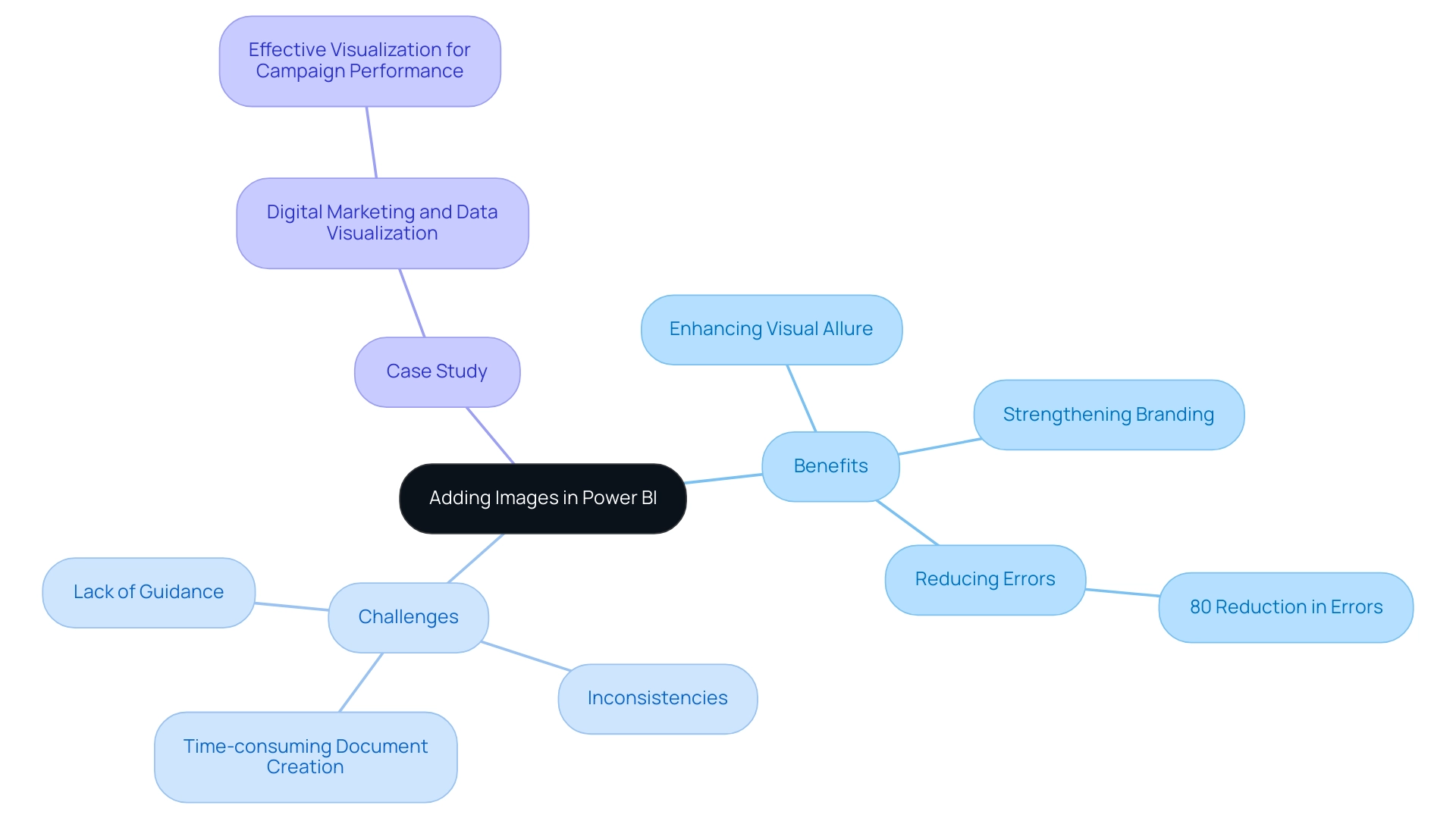

Introduction to Adding Images in Power BI

In Power BI, adding images can dramatically enhance both the visual appeal and the overall effectiveness of your reports. Images serve several purposes: they can strengthen branding, illustrate key information, or provide essential context for your analysis. Notably, studies indicate that some researchers experienced an impressive 80% reduction in errors when using visuals to clarify tasks, highlighting the power of visuals in enhancing comprehension.

Moreover, with 29% of businesses monitoring behavior through session replays, user engagement and tracking capabilities in your Power BI visuals can be significantly enhanced when you add images. However, many organizations encounter difficulties such as time-consuming report creation, inconsistencies in information, and a lack of clear, actionable guidance. These challenges can hinder the effective use of Business Intelligence (BI) tools, which are designed to transform raw information into actionable insights.

A case study titled ‘Digital Marketing and Data Visualization’ illustrates how effective visualization aids marketers in quickly understanding campaign performance and optimizing strategies. Additionally, leveraging Robotic Process Automation (RPA) solutions can streamline the reporting process, reduce errors, and enhance operational efficiency. This guide will equip you with the steps to bring in images and style them, ensuring that your reports not only showcase information but also captivate your audience on a deeper level.

By addressing these challenges and utilizing the right visuals, your data storytelling can resonate more profoundly, fostering better decision-making and insights that drive operational efficiency and business growth.

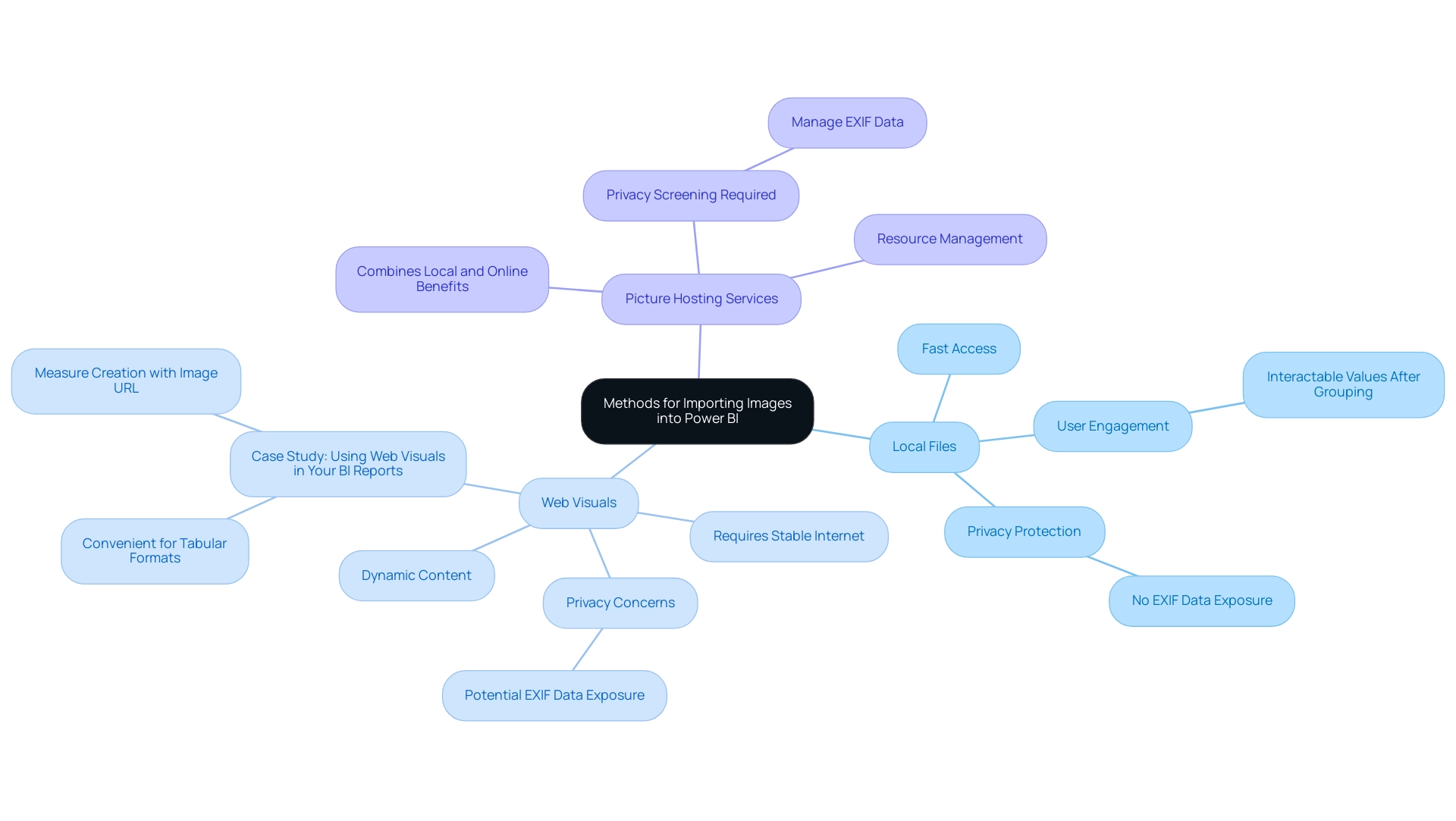

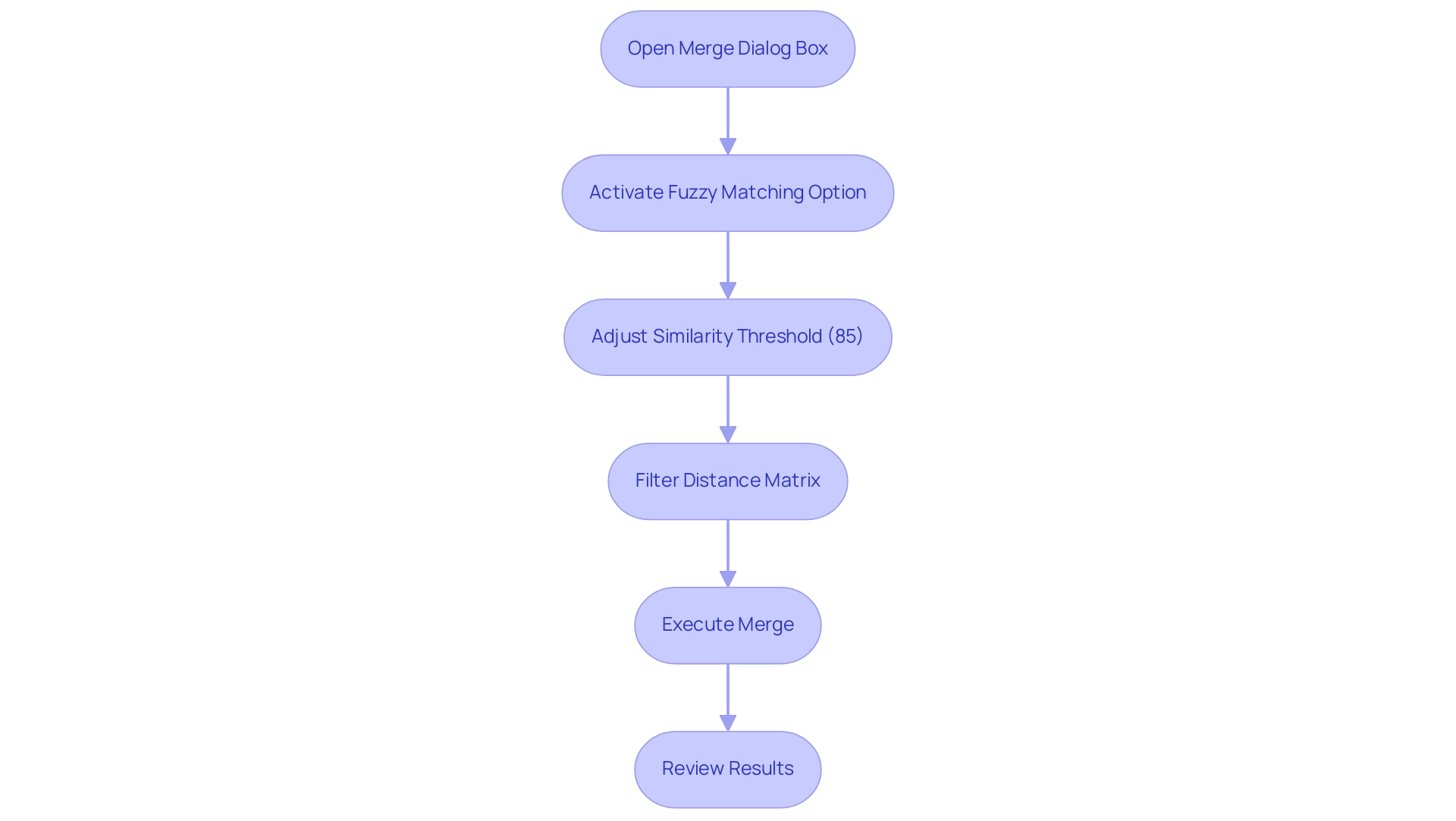

Methods for Importing Images into Power BI

When it comes to importing visuals into Power BI, there are several effective methods to consider, each suited for different needs while also keeping privacy concerns in mind:

- Local Files: A straightforward way to add an image in Power BI is to upload it directly from your computer. This method gives fast access to images without needing an internet connection, making it especially useful for static reports. Furthermore, individual values in the chart remain interactable even after grouping, enhancing user engagement. Because the image is not served from a public URL, using local files also lowers the risk of exposing embedded location data (EXIF GPS), although the metadata stays in the file unless it is stripped.

- Web Visuals: By connecting to images stored online via a URL, you can incorporate dynamic content into your reports. This method makes updating easy, as changes made to the original image online are reflected in your Power BI dashboard. However, it requires a stable internet connection to load the images each time the report is accessed. It is essential to verify that these images do not hold sensitive EXIF details, as visitors to your website can download them and retrieve such information. The main danger with web visuals is the possible disclosure of location information, which can have serious privacy consequences. A case study titled ‘Using Web Visuals in Your BI Reports’ highlights how this approach facilitates the incorporation of online images, emphasizing its convenience for managing images, especially in tabular formats.

- Picture Hosting Services: Platforms such as Imgur and Dropbox can be used to host pictures, supplying URLs for BI integration. This approach combines the advantages of local and online images, enabling effective handling of assets while reducing privacy concerns if the images are adequately screened for embedded information. However, as with web visuals, there is a risk of exposing EXIF data if the images are not carefully managed.

According to recent insights, images can be up to 250 KB after conversion for use in Power BI, which helps displays remain crisp and clear without compromising performance. Monika Kastner, a BI Developer in London, emphasizes the importance of selecting the appropriate import method, stating, “Hope it helped!”

Furthermore, cloud expertise can improve your Azure experience and help you implement cloud solutions efficiently, much as adding images can enhance visual data representation in Power BI.

Each method has its distinct benefits: local files offer reliability and privacy, web visuals provide flexibility but necessitate caution, and hosting services enhance accessibility. By understanding how local and online images are typically used, you can make informed decisions about adding images to your Power BI reports that suit your specific use case while addressing privacy concerns. The right approach will ultimately enhance your data storytelling and presentation, ensuring your reports are both visually appealing and functional.

Furthermore, aligning your import strategy with operational efficiency can lead to better resource management and streamlined reporting processes.

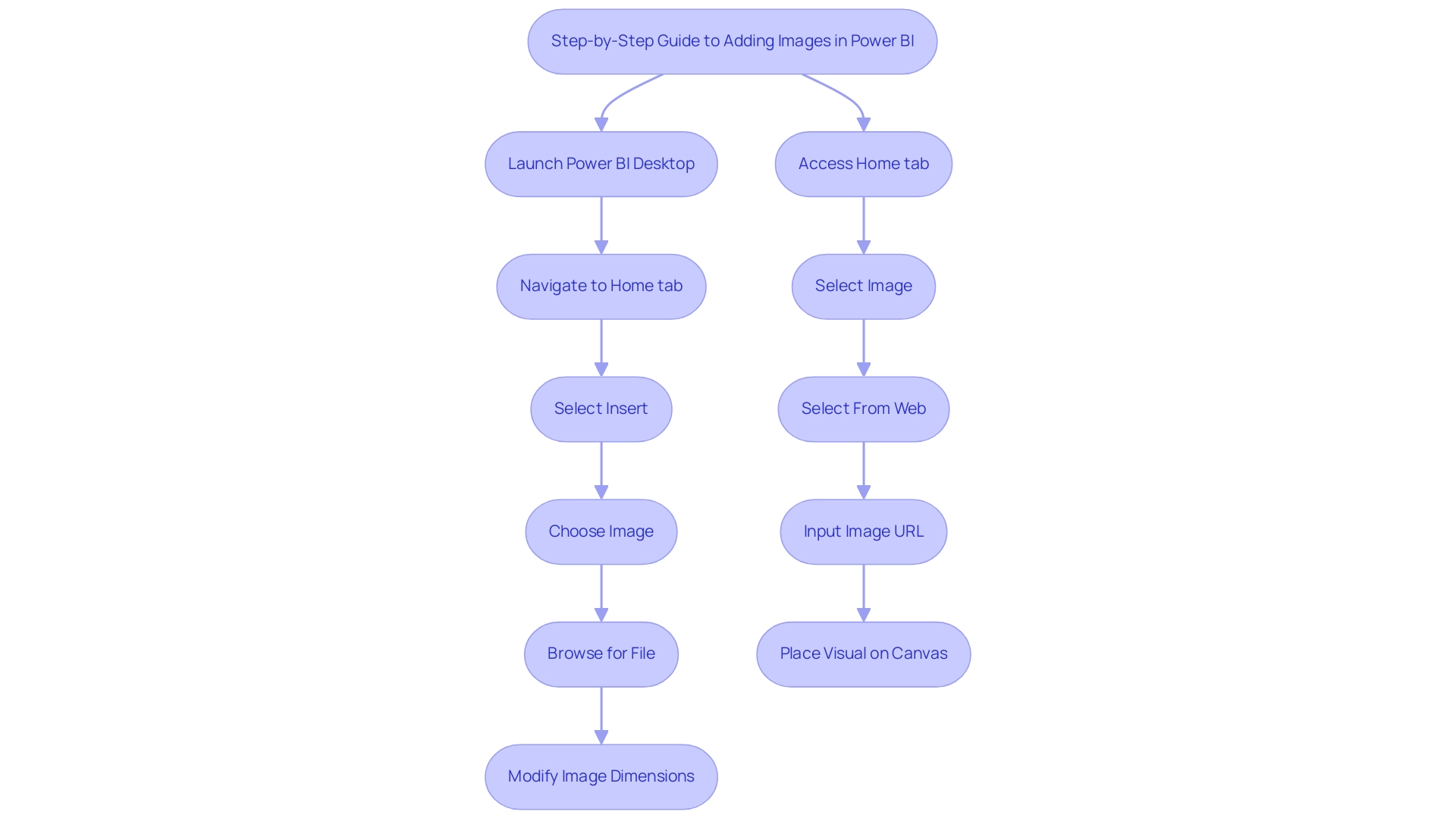

Step-by-Step Guide to Adding Images from Local and Web Sources

Adding Images from Local Files

- Begin by launching your Power BI Desktop application and opening your report.

- Navigate to the Home tab and click on Insert (in recent versions of Power BI Desktop, Insert is a separate tab).

- From the menu, select Image.

- In the file dialog that appears, browse to the location of your desired picture, select it, and click Open.

- Modify the dimensions and placement of the visual on your layout to meet your design specifications.

Adding Images from the Web

- Access the Home tab and click on Insert.

- Choose Image and then select From Web.

- Input the URL of the graphic you wish to add.

- Click OK and place the visual on your report canvas as desired.
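When images live on the web, a common alternative to inserting them one at a time is to hold their URLs in a column and let a table visual render them. The following is a minimal sketch as a DAX calculated column, assuming a hypothetical Product table with a ProductCode column and images published under a placeholder address (https://example.com/images/); none of these names come from the steps above. After creating the column, set its data category to Image URL so that table and matrix visuals display the picture rather than the URL text.

```dax
Image URL =
-- Product[ProductCode] and the example.com base address are placeholders.
"https://example.com/images/" & Product[ProductCode] & ".png"
```

Because each image is fetched from its URL when the report is viewed, the same connectivity and EXIF considerations described for web visuals apply here.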

When uploading photos, be aware of privacy issues, especially steering clear of those with embedded location information (EXIF GPS). Visitors can download and extract any location information, which emphasizes the need for careful selection of images. Visual content is crucial in enhancing user engagement; statistics show that tweets with visual elements receive 150% more retweets than their text-only counterparts.

Moreover, an enhancement in UX design that boosts customer retention by merely 5% can result in a 25% increase in profit, highlighting the importance of visual components in documents. However, creating these visuals can be time-consuming and may lead to inconsistencies in presentation if not managed properly. By effectively integrating images, you not only enhance the aesthetic appeal of your documents but also significantly boost audience interaction.

For instance, polls are effective for gathering audience opinions and preferences, as demonstrated by the NBA’s Twitter poll that garnered over 70,000 votes. As Nikola Gemes, a content marketer at Whatagraph, emphasizes, “It’s highly customizable so you can adapt the template to the individual needs of your firm or your clients.” This flexibility enables you to customize your presentations to connect with your audience, enhancing the storytelling experience.

Furthermore, the success of video content on platforms like Facebook and YouTube, where videos generate eight billion daily views, showcases the potential of visual content in reaching a wide audience. By tackling these challenges in document creation and ensuring data consistency, you can generate more actionable insights through your BI dashboards.

Formatting Images for Optimal Display in Power BI

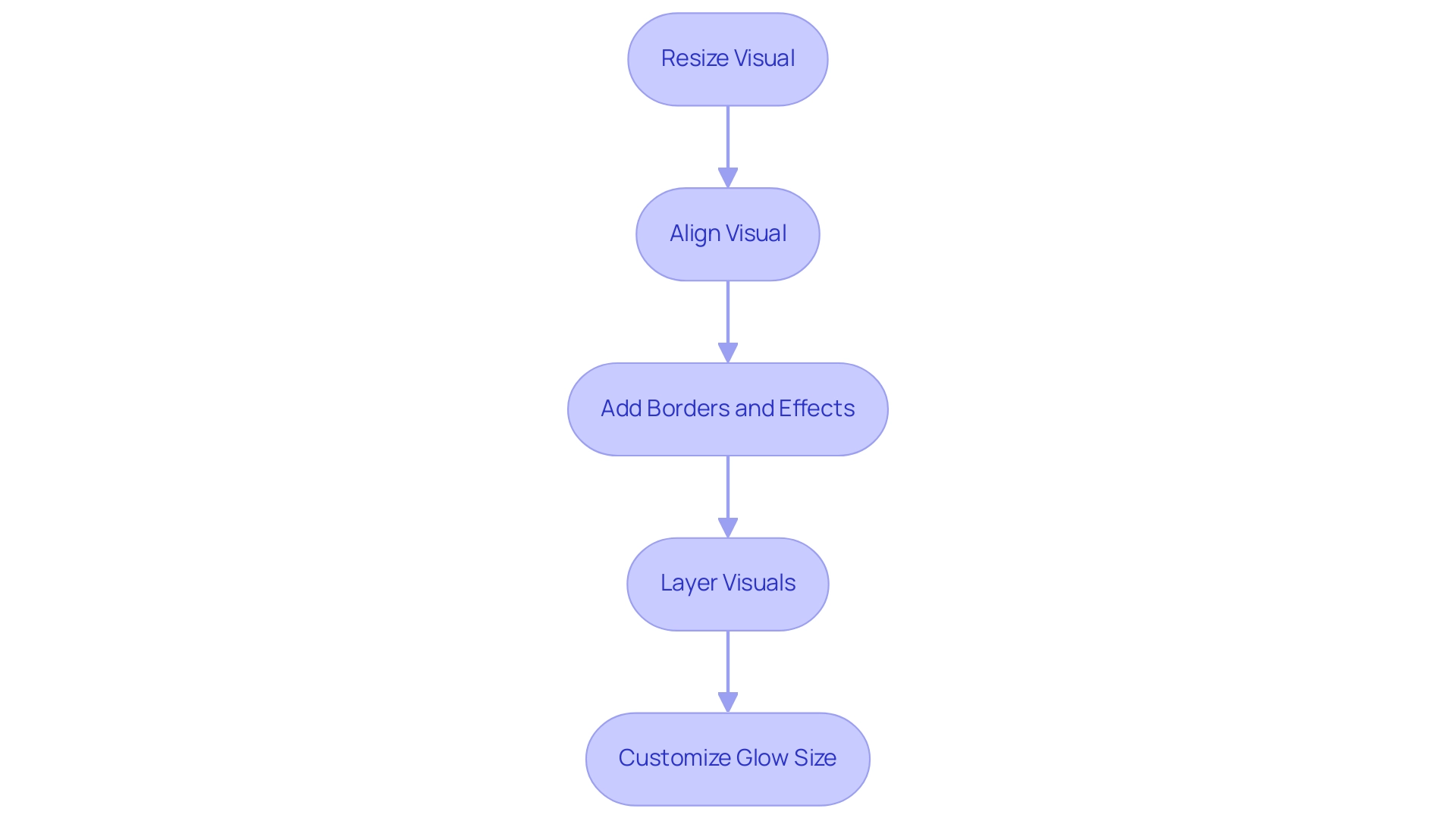

To format visuals effectively in Power BI, follow these essential steps:

- Resize: To maintain the aspect ratio while resizing, simply click and drag the corners of the visual. This method ensures that your visuals do not appear distorted, enhancing their professional look.

- Align: Utilize the alignment tools in the Format pane to position your visual accurately within your report layout. Proper alignment is essential for achieving a refined and orderly look.

- Add Borders and Effects: Enhance your visuals by incorporating borders, shadows, and other effects available in the Format pane. These features can enhance the visual allure of your visuals, making them stand out in your reports.

- Layering: When using multiple visuals, ensure they are layered correctly for optimal clarity and visual impact. This avoids clutter and confusion, enabling your audience to concentrate on the essential components of your report.

For instance, a user faced challenges while trying to create a traffic light indicator with a PNG file that had a transparent center. The problem arose from Power BI applying padding around the graphic, which created an undesirable ‘bleed’ effect. Jing Zhang, a community support expert, proposed a practical workaround: “Try inserting a blank button and using the logo graphic to fill the button.” Adjust the button’s height and width to leave no space around the logo. This approach not only resolved the ‘bleed’ issue but also showed how careful formatting can significantly improve the presentation quality of visuals.

Additionally, it’s important to note that the glow size of visuals can be customized from 0 to 10 pixels, allowing for further enhancement of your visuals.

By adopting these best practices and leveraging the latest image formatting features in Power BI, you can enhance the effectiveness of your reports, ensuring they communicate your data stories with clarity and professionalism. Furthermore, collecting user feedback can guide further formatting improvements, so engage with your audience for continuous improvement.

Common Challenges and Best Practices for Adding Images in Power BI

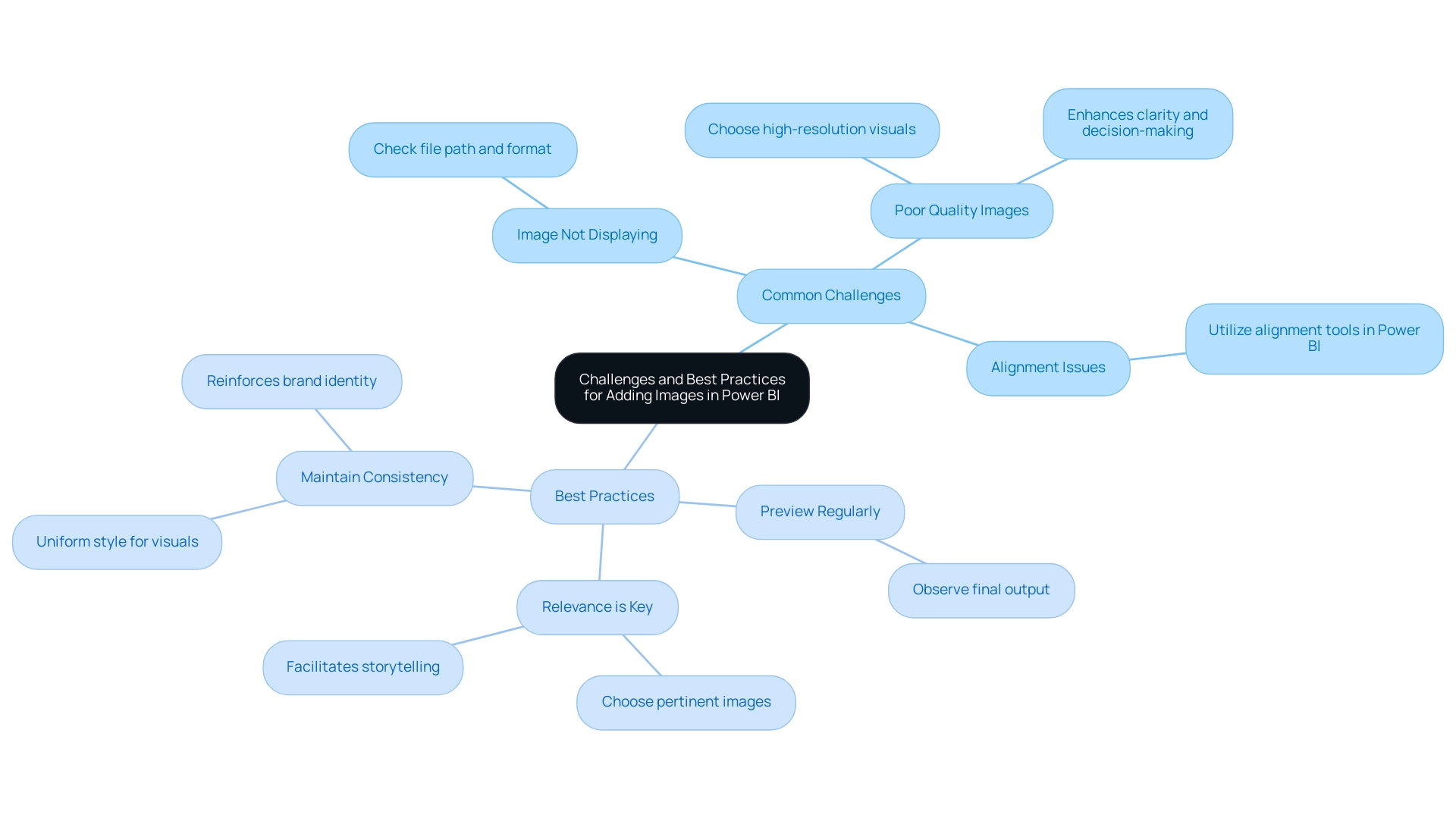

Common Challenges

- Image Not Displaying: One of the most frequent issues encountered is when images fail to display correctly. To resolve this, double-check the file path and ensure the file format is supported, such as JPEG or PNG.

- Poor Quality Images: The clarity of your documents can significantly impact data interpretation. Always choose high-resolution visuals to maintain professional quality and ensure that details are not lost. Research suggests that superior visual quality can enhance overall clarity of documentation, resulting in better decision-making. In fact, effective business data visualization can increase your ROI from business intelligence tools by producing faster and better insights.

- Alignment Issues: Correct positioning of visuals within your document layout can be tricky. Utilize the alignment tools available in Power BI to position visuals accurately, ensuring they complement the surrounding content without disrupting the flow.

Best Practices

- Preview Regularly: To get an accurate representation of your final output, always preview your report. This step enables you to observe how visuals combine with your representations and make necessary adjustments.

- Relevance is Key: Choose images that are not only pertinent but also improve the comprehension of the information being presented. Effective visuals can drive home key messages and facilitate storytelling within your documents. Justyna, a PMO Manager, emphasizes, “Let me be your single point of contact and guide you through the cooperation process,” highlighting the significance of clear communication and impactful visuals in presenting information.

- Maintain Consistency: Adopting a uniform style for visuals throughout your document fosters a professional appearance and reinforces brand identity. This consistency aids your audience in concentrating on the information without distractions.

By following these best practices, you can ensure that the images you add in Power BI enhance your visuals, making them more interactive and engaging for stakeholders. Additionally, consider the challenges highlighted in today’s data-rich environment, such as time-consuming report creation and data inconsistencies, which can be effectively addressed with RPA solutions. These solutions not only streamline report generation but also mitigate staffing shortages, ensuring that your organization remains competitive by leveraging data-driven insights for operational efficiency and business growth.

Conclusion

Integrating images into Power BI reports is a game-changer for enhancing visual appeal and engagement. By leveraging methods such as importing local files, utilizing web images, and employing image hosting services, organizations can effectively address privacy concerns while making their reports visually compelling. Each approach offers unique benefits, allowing users to choose the best fit for their specific needs and ensuring that data storytelling resonates with the audience.

Effective formatting techniques, including resizing, aligning, and layering visuals, are essential for optimizing image display. Implementing best practices can significantly enhance the clarity and professionalism of reports, ultimately leading to improved decision-making. By regularly previewing reports and maintaining consistency in style, businesses can create a cohesive narrative that highlights key insights and reinforces brand identity.

Recognizing and overcoming common challenges such as image display issues and quality concerns is crucial for maximizing the impact of visuals. By adopting a strategic approach to image integration and presentation, organizations can transform their data reporting processes, foster deeper connections with stakeholders, and drive operational efficiency. Embracing these strategies not only elevates the aesthetic quality of reports but also empowers users to make informed decisions based on clear and engaging visual narratives.

Overview:

Object Level Security (OLS) in Power BI is crucial for safeguarding sensitive data by allowing organizations to control access at the level of tables and columns, which also restricts any measures that depend on them. The article emphasizes that OLS not only helps in complying with privacy regulations but also enhances operational efficiency by enabling tailored access for users, thereby fostering trust and reducing the risk of unauthorized information exposure.

Introduction

In an age where data is both a valuable asset and a potential liability, Object Level Security (OLS) in Power BI emerges as a critical solution for organizations striving to protect sensitive information.

As compliance demands escalate and privacy regulations tighten, the implementation of OLS allows businesses to define precise access controls at the object level, ensuring that only authorized personnel can view sensitive data.

This strategic approach not only mitigates risks associated with unauthorized access but also fosters transparency, aligning with the growing public expectation for responsible data governance.

By integrating OLS with cutting-edge technologies like Robotic Process Automation (RPA), organizations can streamline their reporting processes while enhancing operational efficiency.

As the landscape of data security continues to evolve, understanding and applying OLS will empower organizations to navigate compliance challenges and bolster their data protection strategies effectively.

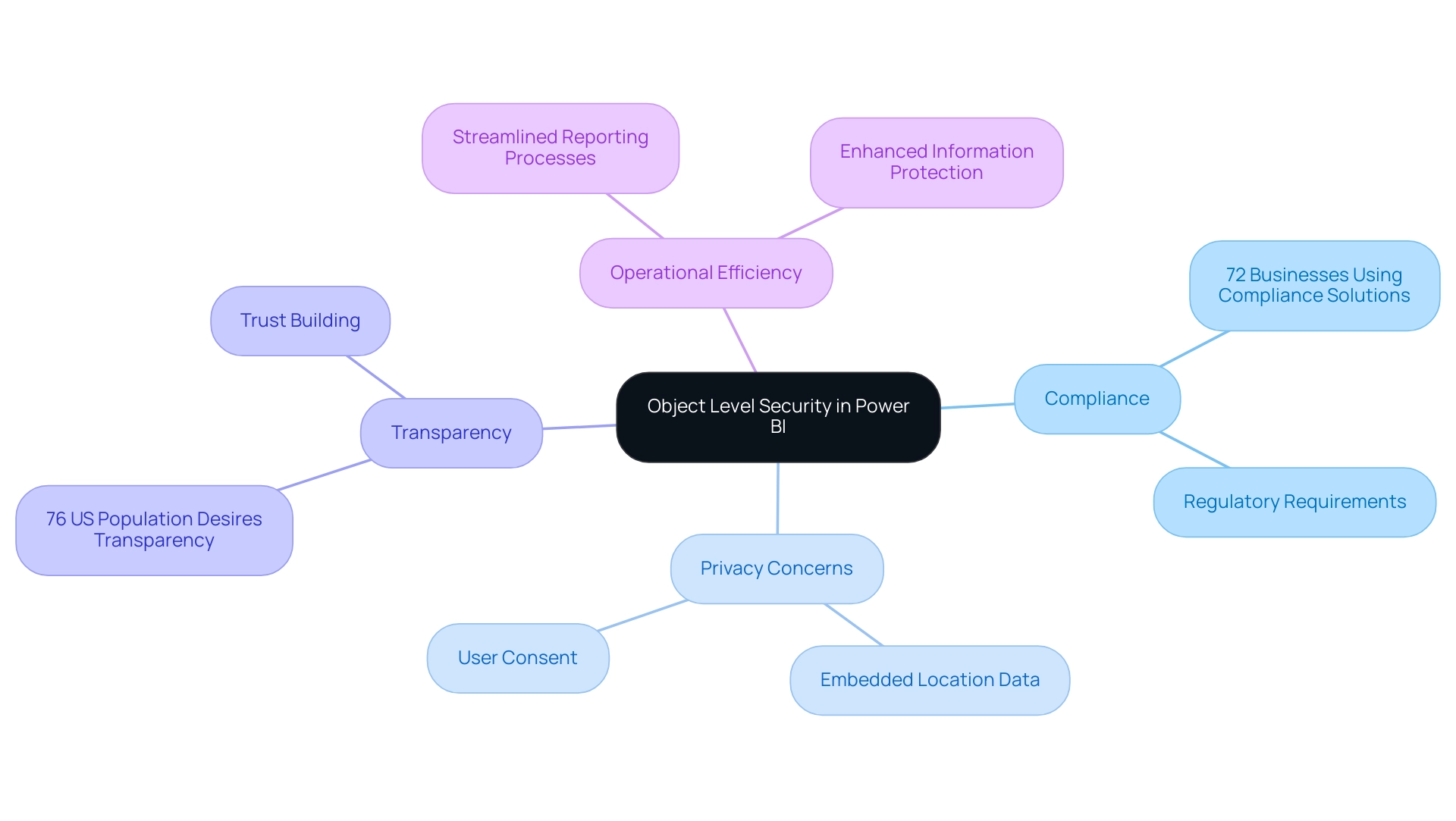

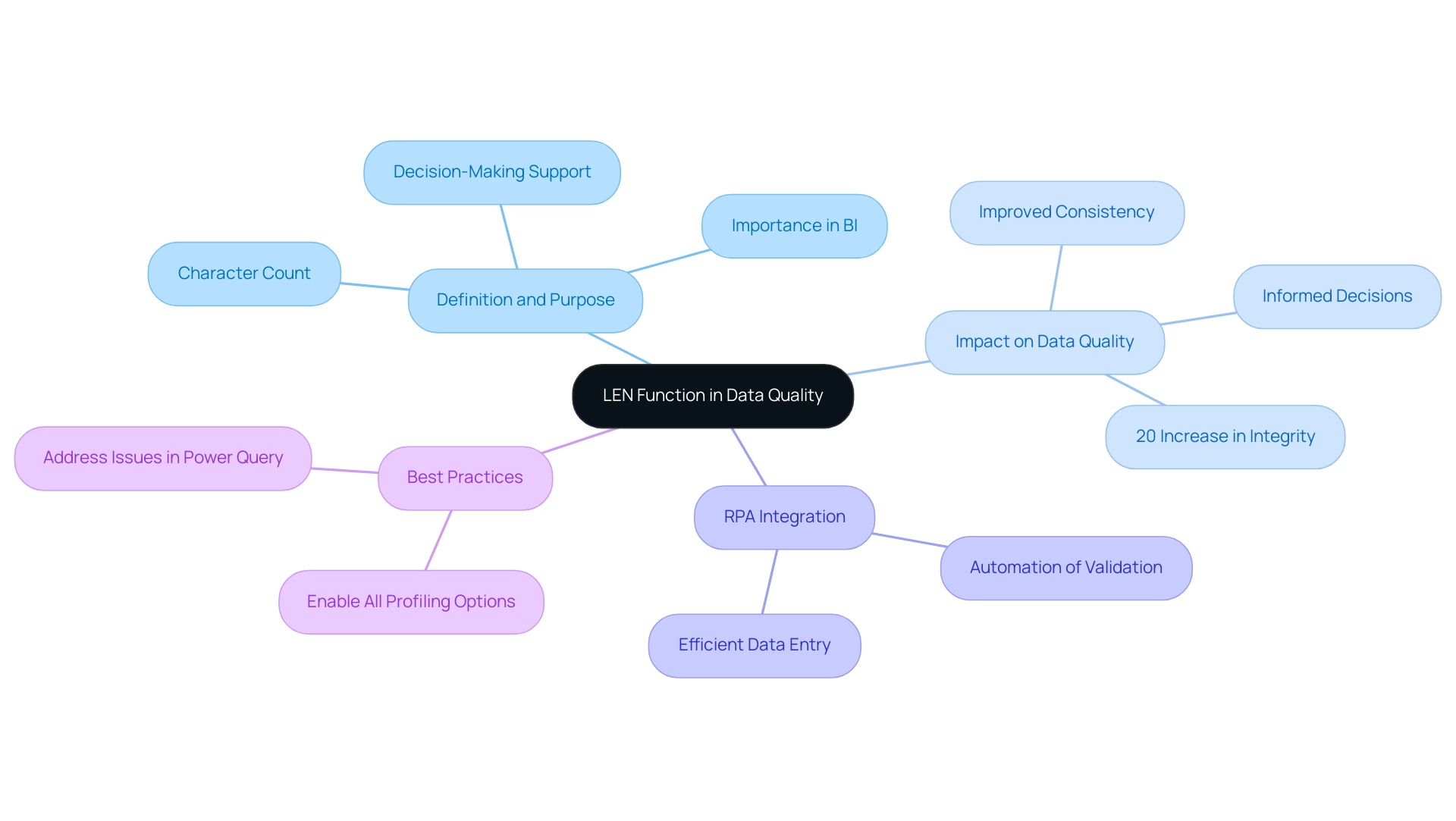

Understanding Object Level Security in Power BI

Object level security in Power BI protects sensitive information by letting organizations apply access controls at the object level: tables and columns, along with the measures that reference them. This functionality is not just a technical enhancement; it is essential for ensuring compliance with increasingly stringent privacy regulations. In today’s information-rich environment, organizations must also be aware of a related privacy concern: images uploaded with embedded location details (EXIF GPS) expose that information to anyone who can download them.

With 72% of businesses currently employing compliance solutions to navigate privacy law requirements, the implementation of object level security in Power BI becomes a strategic necessity. For example, a financial services company might limit access to customer financial information using object level security in Power BI, ensuring that only authorized personnel can view this sensitive content, while others can access only non-sensitive details. This practice not only reduces the risk of unauthorized information exposure but also aligns with the desires of 76% of the US population for greater transparency in information usage, highlighting the critical need for entities to communicate clearly about access.

As Osman Husain, a content lead at Enzuzo, aptly notes, ‘This indicates a failing on behalf of the industry as informed consent is a cornerstone of effective compliance.’ Furthermore, with 43% of websites employing tactics to nudge users towards accepting all cookies, entities must prioritize transparency to foster trust. Along with OLS, entities encounter difficulties in deriving insights from Power BI dashboards, including lengthy report generation and inconsistencies in information.

By integrating Business Intelligence and RPA solutions, companies can streamline their reporting processes and enhance operational efficiency. By adopting object level security in Power BI alongside robust Business Intelligence strategies, entities not only enhance their information protection measures but also build trust with stakeholders, reinforcing their commitment to responsible information governance and operational efficiency.

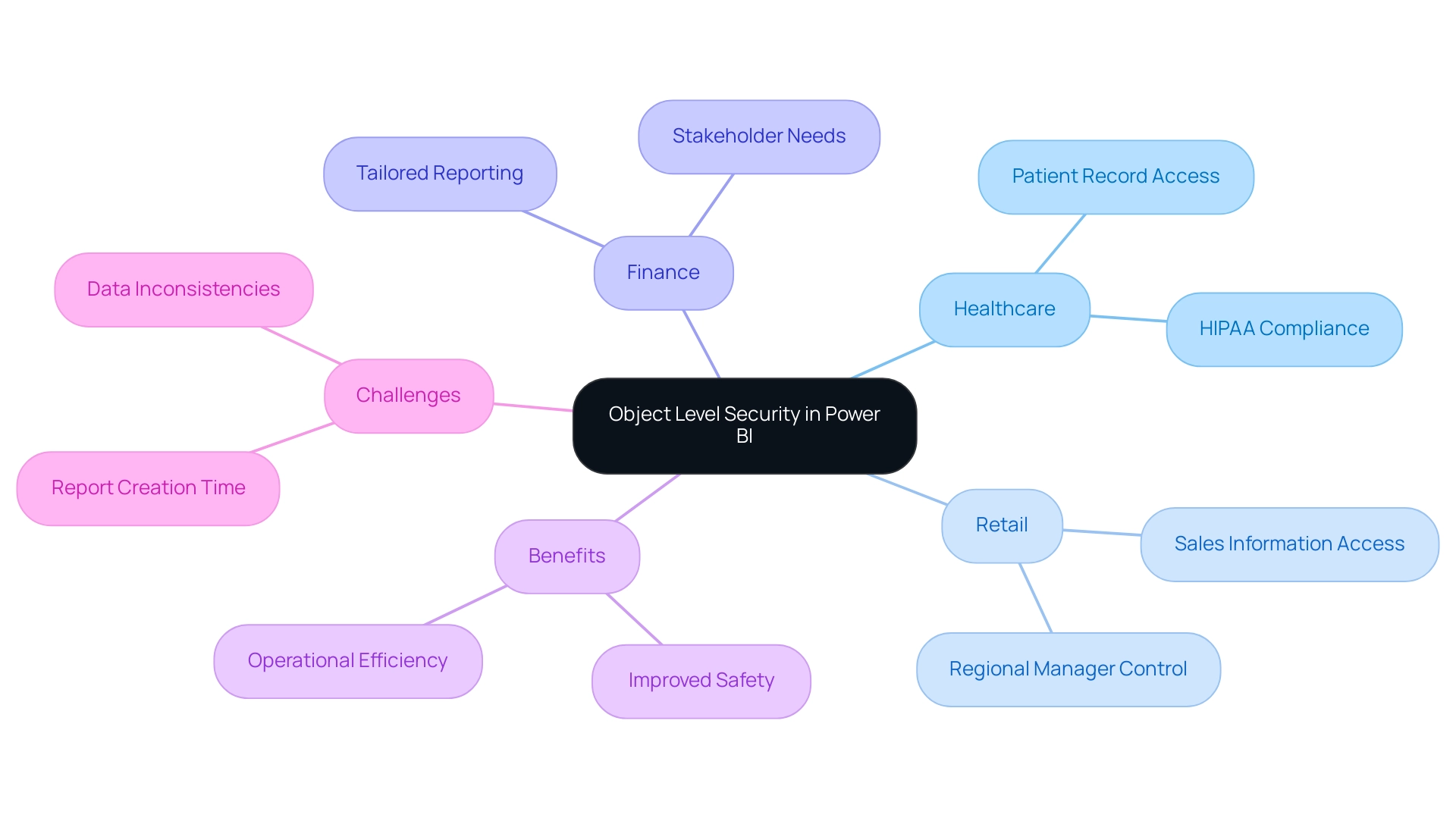

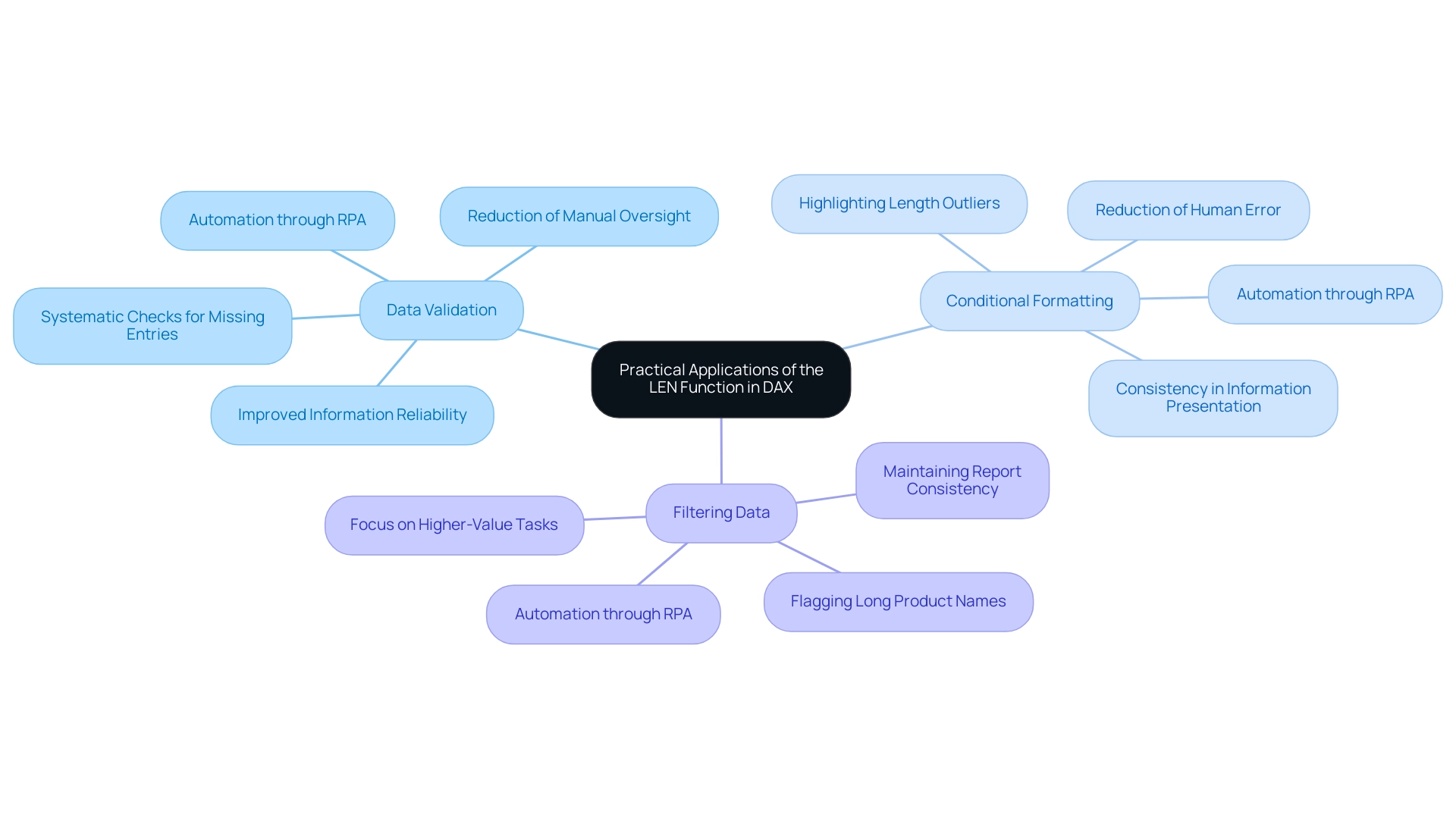

Practical Applications and Use Cases of Object Level Security

The practical applications of object level security in Power BI are extensive and impactful. In healthcare, for example, OLS can be employed to ensure that patient records are accessible only to authorized personnel, maintaining compliance with HIPAA regulations. This not only safeguards sensitive patient information but also reduces the risk of expensive breaches, which average nearly $11 million in the healthcare sector.

A recent case study titled ‘How to Prepare Your Entity to Avoid Vulnerabilities in 2024’ emphasizes that remediating cybersecurity vulnerabilities is an urgent priority for entities. In the retail sector, access to sales information can be restricted by geographic area, allowing regional managers to view only the details relevant to their operations. Strictly speaking, filtering rows by region is the job of row-level security (RLS), while OLS hides sensitive tables or columns (cost or margin figures, for instance) from roles that should not see them; the two are typically used together. Additionally, in financial reporting, various stakeholders frequently need different levels of detail; OLS supports the development of tailored reports that satisfy these unique requirements.

By utilizing OLS alongside Robotic Process Automation (RPA), entities can improve safety and usability, cultivating an atmosphere where enhanced decision-making and operational efficiency flourish. RPA not only streamlines repetitive tasks, reducing errors, but also frees up team resources for more strategic initiatives, allowing them to focus on high-value work. OLS ensures that sensitive information is protected while enabling this efficiency.

The capability to implement detailed access controls through OLS is not merely a protective measure; it’s a strategic asset entities must utilize to remain competitive in an ever more interconnected and susceptible environment. However, organizations often face challenges when leveraging insights from Power BI dashboards, such as time-consuming report creation and data inconsistencies. Addressing these challenges is crucial for maximizing the value of BI solutions.

With the growing number of known spear-phishing groups and the alarming statistics around supply chain attacks, the urgency to prioritize protective measures like object level security in Power BI becomes even more evident. In a study of 24 incidents, over 50% of supply chain attacks were linked to prominent cyber crime groups. Penetration testing is also essential for understanding vulnerabilities in interconnected computer systems, which reinforces the role of object level security in Power BI as one part of a broader protection strategy.

Implementing Object Level Security in Power BI

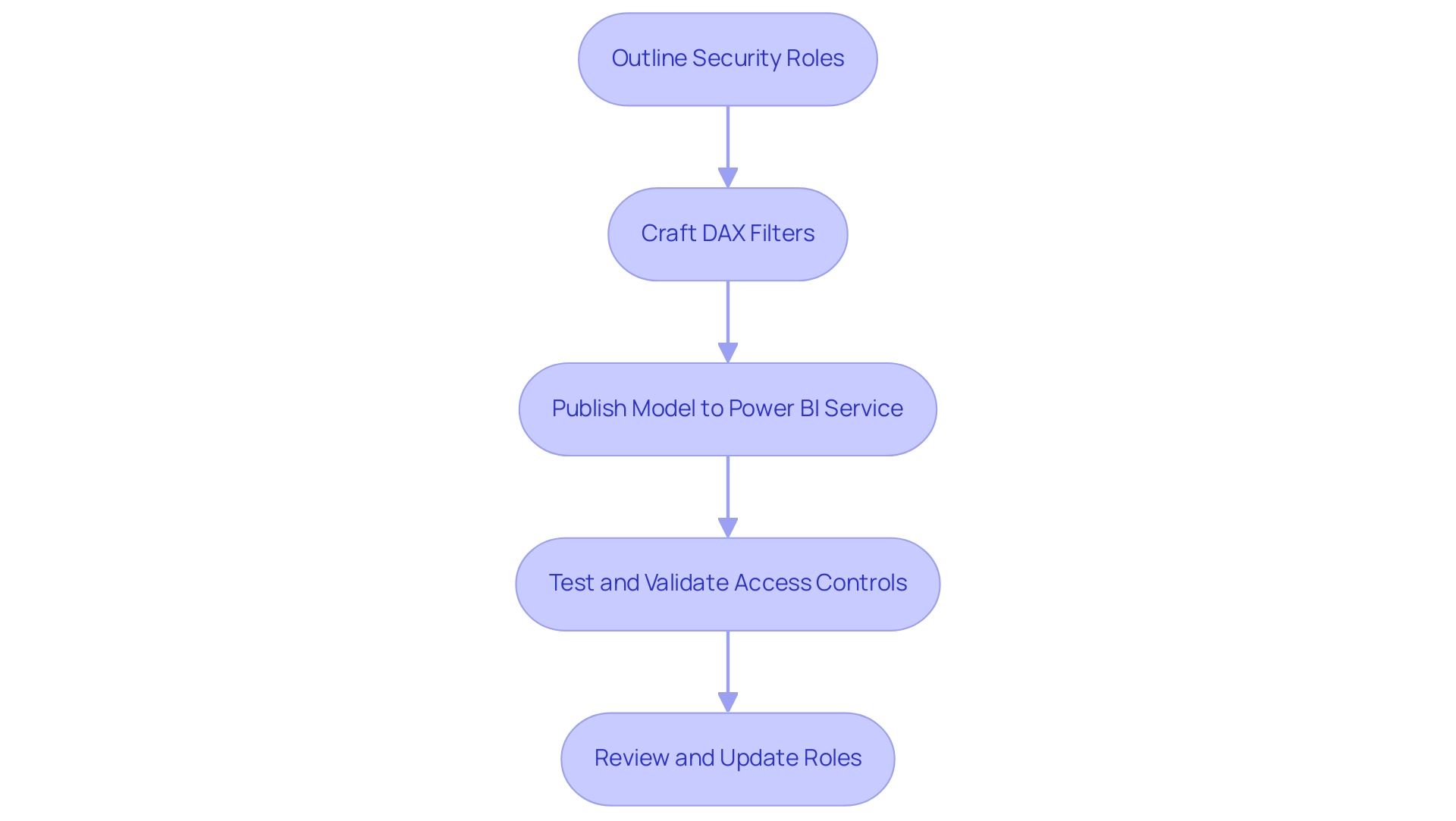

Implementing object level security in Power BI entails several crucial steps that empower organizations to manage access effectively while addressing common challenges such as time-consuming report creation, inconsistencies, and the lack of actionable guidance in reports. The process starts with outlining the specific security roles that will determine access to designated objects within the model. The roles themselves can be created in Power BI Desktop by selecting the ‘Modeling’ tab and clicking on ‘Manage Roles.’ The object-level permissions for those roles are commonly set with an external tool such as Tabular Editor, since the Manage Roles dialog is built around row filters.

Once roles are established, the next step is to define what each role can access. OLS rules are expressed as permissions on tables and columns (an object is either visible to a role or hidden from it), while DAX (Data Analysis Expressions) filters belong to row-level security and delineate which rows a role can see. For instance, a DAX filter might restrict a sales manager’s visibility to sales information for their designated region, and an OLS rule might hide a salary column from that same role. After establishing roles, permissions, and filters, it is crucial to publish the model to the Power BI service, where the applied access controls can be thoroughly tested and validated.
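As a rough illustration of what a role carrying both kinds of rule might look like, the sketch below builds a role definition as a plain Python dictionary and prints it as JSON. The layout (`tablePermissions`, `filterExpression`, `columnPermissions`, `metadataPermission`) mirrors the tabular model scripting format as commonly documented, but treat it as an assumption to verify against your own tooling. The helper `build_role` and the role, table, and column names are hypothetical.

```python
import json


def build_role(name, hidden_tables=(), hidden_columns=None, row_filters=None):
    """Return a role definition as a plain dict (assumed TMSL-like shape)."""
    hidden_columns = hidden_columns or {}
    row_filters = row_filters or {}
    tables = sorted(set(hidden_tables) | set(hidden_columns) | set(row_filters))
    permissions = []
    for table in tables:
        entry = {"name": table}
        if table in hidden_tables:
            # Object-level rule: the whole table is hidden from this role.
            entry["metadataPermission"] = "none"
        if table in row_filters:
            # Row-level rule: a DAX filter limits which rows are visible.
            entry["filterExpression"] = row_filters[table]
        if table in hidden_columns:
            # Object-level rule: individual columns are hidden from this role.
            entry["columnPermissions"] = [
                {"name": column, "metadataPermission": "none"}
                for column in hidden_columns[table]
            ]
        permissions.append(entry)
    return {"name": name, "modelPermission": "read", "tablePermissions": permissions}


# Hypothetical role: sees only West-region sales rows, never the credit limit column.
role = build_role(
    "Regional Sales Manager",
    hidden_columns={"Customer": ["CreditLimit"]},
    row_filters={"Sales": '[Region] = "West"'},
)
print(json.dumps(role, indent=2))
```

Keeping the row filter and the column permission in the same role is deliberate here: mixing row-level and object-level rules that come from different roles is generally reported to cause errors for users who belong to both.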

Consistently reviewing and updating these roles is essential to adjust to changes within the organizational structure or shifts in information sensitivity. By following these guidelines, organizations can effectively leverage object level security in Power BI, thereby enhancing their security posture and ensuring compliance with privacy standards. Furthermore, OLS not only assists in concealing sensitive information but also enables the creation of actionable insights by ensuring that users have access to the most pertinent details for their roles.
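A periodic review of this kind can be partly scripted. The following is a minimal sketch, assuming the hidden columns for each role have already been exported into a simple mapping. It lists, per role, the sensitive columns that are still visible so that a reviewer can confirm each exposure is intended. The function `find_exposed_columns` and all role, table, and column names are hypothetical.

```python
def find_exposed_columns(roles, sensitive_columns):
    """Map each role name to the sensitive (table, column) pairs it can still see."""
    exposed = {}
    for role_name, hidden in roles.items():
        visible = sorted(set(sensitive_columns) - set(hidden))
        if visible:
            exposed[role_name] = visible
    return exposed


# Columns the organization has classified as sensitive (hypothetical).
sensitive = [("Customer", "CreditLimit"), ("Employee", "Salary")]

# Columns hidden from each role by its object-level rules (hypothetical).
roles = {
    "Finance": [],
    "Regional Sales Manager": [("Customer", "CreditLimit"), ("Employee", "Salary")],
    "Support": [("Employee", "Salary")],
}

report = find_exposed_columns(roles, sensitive)
for role_name, columns in report.items():
    print(role_name, "can still see", columns)
```

A role appearing in the report is not necessarily a problem (Finance may legitimately need salary data); the point is to make each exposure an explicit decision rather than an oversight.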

As mentioned in recent discussions, the introduction of object level security in Power BI has been a game-changer since its release in February 2021, allowing users to hide columns and tables and, with them, the derived calculations that reference those objects, such as measures and calculated columns, thus providing an additional layer of protection. This capability is essential for driving data-driven insights that lead to informed decision-making, ultimately driving growth and operational efficiency. As Sapana Taneja remarked, ‘I hope you found this article helpful!’
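One way to picture how hiding a column or table carries over to the calculations built on it is to trace dependencies. The sketch below assumes that a measure becomes unavailable to a role whenever anything it references is hidden, including other measures that are themselves unavailable. It is a simplified model for reasoning about impact, not the engine’s actual implementation, and the function `restricted_measures` plus all measure, table, and column names are hypothetical.

```python
def restricted_measures(measure_refs, hidden_columns=(), hidden_tables=()):
    """Return the set of measures a role loses when the given objects are hidden.

    measure_refs maps a measure name to its references: (table, column) tuples
    for columns, plain strings for other measures.
    """
    hidden_columns = set(hidden_columns)
    hidden_tables = set(hidden_tables)
    restricted = set()

    def is_blocked(ref):
        if isinstance(ref, tuple):
            table, _column = ref
            return table in hidden_tables or ref in hidden_columns
        return ref in restricted  # reference to another measure

    # Repeat until no new measure is added, so chains of measures are covered.
    changed = True
    while changed:
        changed = False
        for measure, refs in measure_refs.items():
            if measure not in restricted and any(is_blocked(r) for r in refs):
                restricted.add(measure)
                changed = True
    return restricted


measure_refs = {
    "Total Sales": [("Sales", "Amount")],
    "Total Cost": [("Sales", "Cost")],
    "Margin": ["Total Sales", "Total Cost"],
    "Order Count": [("Sales", "OrderID")],
}

lost = restricted_measures(measure_refs, hidden_columns=[("Sales", "Cost")])
print(sorted(lost))
```

Running this kind of check before publishing helps anticipate which report visuals will break for a role, since a visual that uses an unavailable measure typically fails to render for that user.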

This sentiment reflects the importance of sharing knowledge on such pivotal topics. Furthermore, during an Unplugged session lasting 45:22, experts discussed the practical implications of object level security in Power BI, emphasizing its role in improving data protection and addressing the challenges associated with data governance. A case study on object level security in Power BI highlights how users can set security roles within Power BI Desktop and test the user experience, showcasing the effectiveness of object level security in Power BI in real-world scenarios.

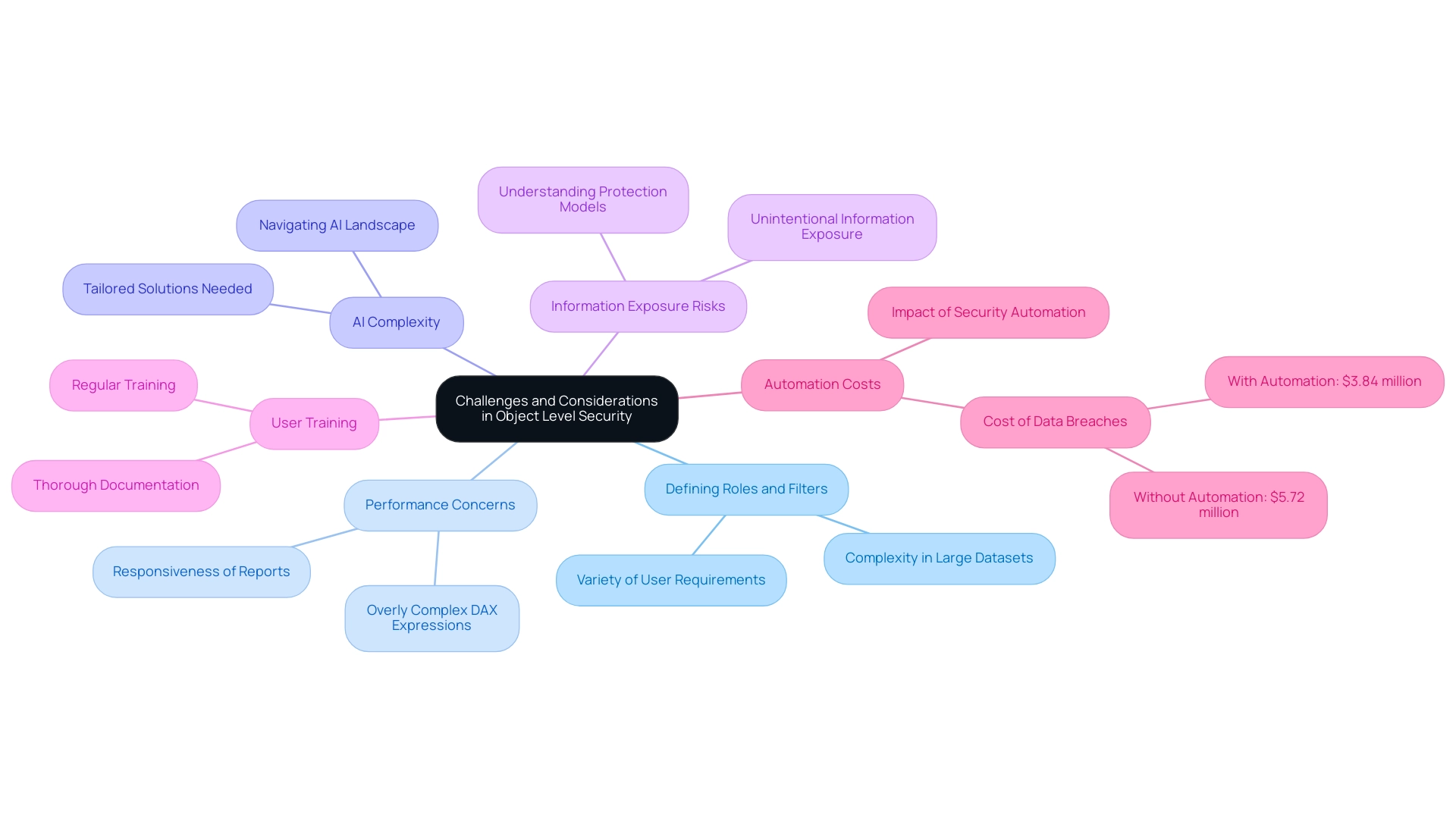

Challenges and Considerations in Object Level Security

Implementing object level security in Power BI within enterprises can yield significant advantages, yet several challenges often arise during its deployment. One prevalent issue is the intricacy involved in defining roles and filters, particularly in expansive datasets that cater to a variety of user requirements. Performance concerns may also emerge, especially if organizations rely on overly complex DAX expressions, which can hinder the responsiveness of Power BI reports.

Furthermore, navigating the overwhelming AI landscape necessitates tailored solutions that simplify these complexities, enhancing efficiency through tools like Robotic Process Automation (RPA). The risk of unintentional information exposure emphasizes the necessity of ensuring that all users understand the protection model effectively. With the average company sharing confidential information with 583 third-party vendors, the urgency of implementing robust protective measures cannot be overstated.

Regular training and thorough documentation play a crucial role in alleviating these obstacles; studies indicate that effective user training significantly enhances security awareness. Organizations should also prioritize the periodic review of their object level security in Power BI configurations to maintain their relevance and efficiency as business needs evolve. The challenges in leveraging insights from Power BI dashboards, such as time-consuming report creation and inconsistencies, can be effectively addressed through our 3-Day Power BI Sprint.

This service is designed to create professional reports quickly, focusing on actionable outcomes and ensuring that teams can leverage insights without the burden of extensive report-building processes. By providing a structured method for report creation, the 3-Day Power BI Sprint enables companies to streamline their reporting efforts, ultimately enhancing decision-making capabilities. Based on a case study examining the average expense of breaches related to automation levels, entities with significant use of automation encountered an average cost of $3.84 million in 2024, in contrast to $5.72 million for those without automation.
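To put the two breach-cost figures side by side, the short calculation below derives the absolute and relative difference. It uses only the two averages quoted above; the variable names are illustrative.

```python
# Average breach cost in USD millions, as quoted in the text.
cost_with_automation = 3.84
cost_without_automation = 5.72

# Absolute gap between the two averages.
savings = round(cost_without_automation - cost_with_automation, 2)

# Gap expressed as a share of the no-automation cost.
savings_pct = round(savings / cost_without_automation * 100, 1)

print(f"Difference: ${savings}M ({savings_pct}% lower with automation)")
```

On these figures the gap is $1.88 million, or roughly a third of the cost reported for entities without automation.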

As Nick Rago, Field CTO at Salt Security, notes, ‘In many instances, the barrier to breach was pretty low, and the attacker did not need any herculean effort to take advantage of a misconfigured API.’ By proactively tackling these challenges and leveraging tailored AI solutions, organizations can fully utilize the benefits of object level security in Power BI while effectively minimizing associated risks.

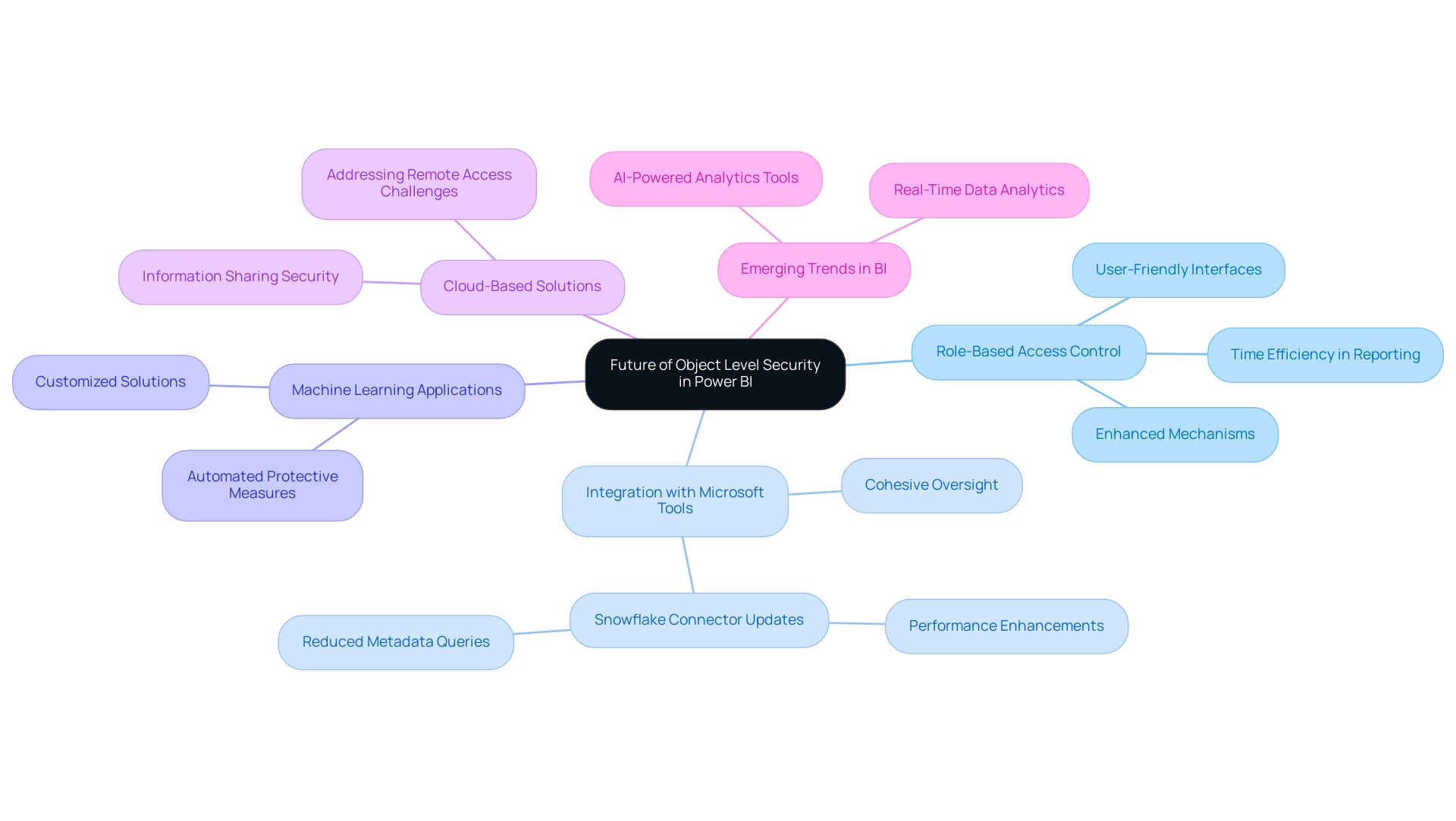

The Future of Object Level Security in Power BI

As the environment of information protection changes, notable progress is expected in object level security in Power BI, which will directly address typical challenges faced by Directors of Operations Efficiency. The enhanced role-based access control (RBAC) mechanisms guarantee that sensitive information is available solely to permitted individuals, highlighting the significance of protection in information access while decreasing the time invested in creating reports. Future advancements are anticipated to showcase more user-friendly interfaces for defining roles and filters, optimizing the management process and assisting in reducing inconsistencies.

Improved integration with other Microsoft protection tools is also on the horizon, offering a cohesive approach to oversight across platforms. Significantly, the incorporation of machine learning algorithms could transform how sensitive information is recognized, automating the suggestion of suitable protective measures customized to particular organizational requirements. With the growing adoption of cloud-based solutions, OLS will likely adapt to address new security challenges arising from remote access and information sharing.

For instance, recent updates to the Snowflake connector have enhanced efficiency in information handling and connectivity, showcasing practical advancements relevant to object level security in Power BI. Furthermore, as entities navigate the overwhelming options in the AI landscape, tailored AI solutions can help cut through the noise, aligning technologies with specific business goals and challenges. Insights from the community highlight emerging trends in Business Intelligence (BI), including the future of real-time information analytics and the necessity of AI-powered analytics tools.

By actively remaining aware of these trends, companies can enhance their information protection approaches, guaranteeing adherence and strong defense in a constantly changing digital environment. Jason Himmelstein stresses the urgency of these advancements, stating, ‘Users can now update their visuals in real time, interact with their information through Power BI’s standard features such as filtering, cross-highlighting, and tooltips.’ This adaptability is crucial as organizations prepare for the future of data security in 2024 and beyond.

Conclusion

Implementing Object Level Security (OLS) in Power BI is not just a technical necessity; it’s a strategic imperative for organizations aiming to safeguard sensitive data while fostering a culture of transparency and trust. By defining precise access controls at the object level, businesses can ensure that only authorized personnel have access to critical information. This capability is particularly vital in today’s regulatory environment, where compliance with data privacy laws is paramount.

The practical applications of OLS across various industries, from healthcare to finance, illustrate its versatility in enhancing data protection. By tailoring access to sensitive information, organizations can mitigate risks associated with unauthorized data exposure, ultimately protecting their assets and reputation. Moreover, integrating OLS with Robotic Process Automation (RPA) streamlines reporting processes, allowing teams to focus on high-value tasks and improving operational efficiency.