Introduction

In the realm of data analysis, the ability to generate dynamic sequences of numbers can be a game-changer for organizations striving for operational excellence. The GENERATESERIES function in DAX emerges as a powerful ally, enabling users to create single-column tables filled with sequential numeric values, tailored to their specific needs. This function not only simplifies complex calculations but also enhances data modeling within Power BI, setting the stage for insightful analytics that drive strategic decisions.

As organizations navigate the intricacies of data management, mastering GENERATESERIES can unlock new levels of efficiency, empowering teams to transform raw data into actionable insights and fostering a culture of informed decision-making that propels business growth.

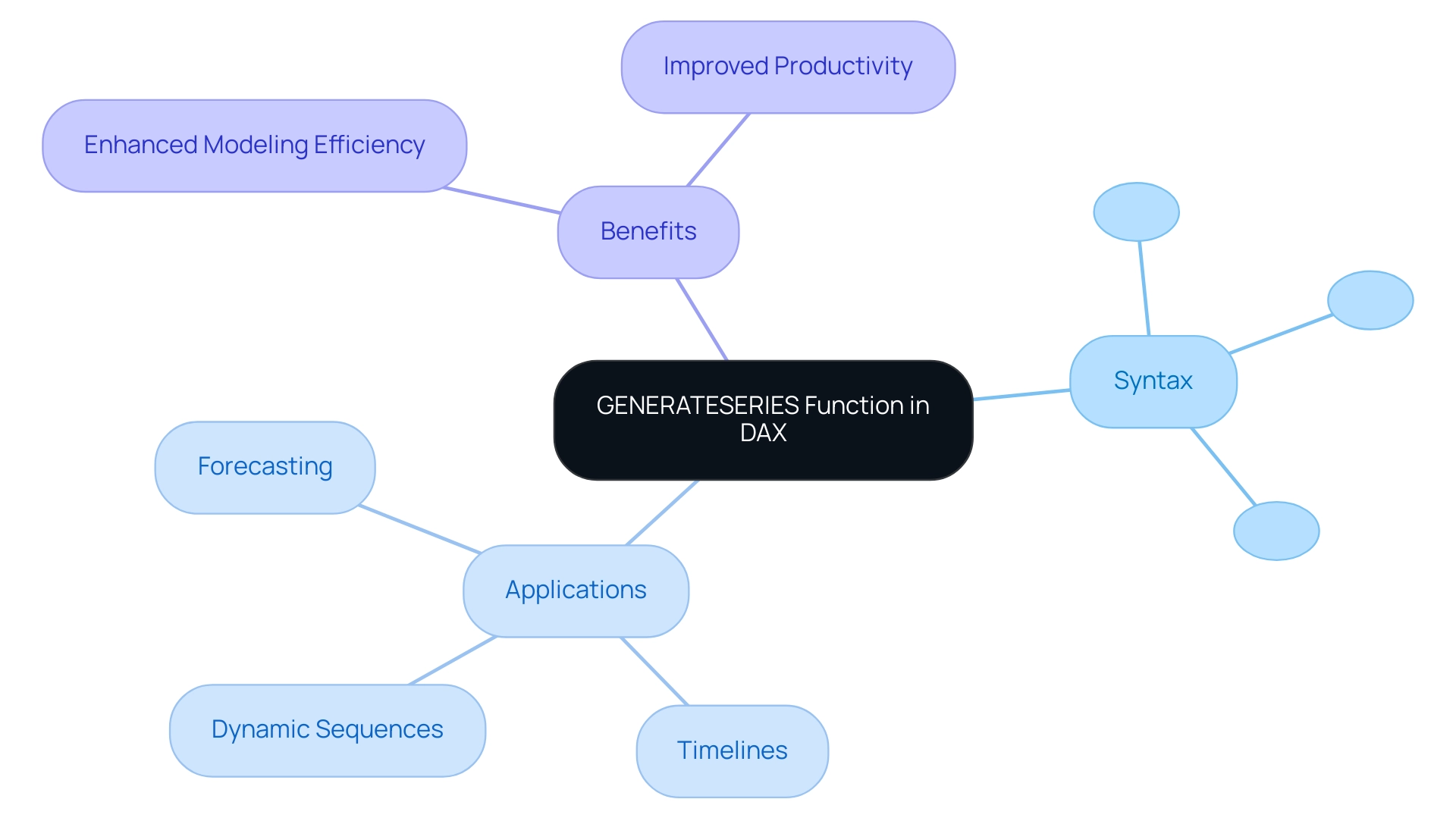

Understanding the GENERATESERIES Function in DAX

The GENERATESERIES function in DAX creates a single-column table filled with sequential numeric values. Its syntax is straightforward: GENERATESERIES(<startValue>, <endValue>[, <incrementValue>]). Here, <startValue> is the initial value, <endValue> is the final value, and the optional <incrementValue> is the step between consecutive values, which defaults to 1 when omitted. The single column in the returned table is named Value.

This function plays a vital role in producing dynamic sequences of numbers, which are needed for various computations, visualizations, and modeling tasks within Power BI. Notably, users should keep in mind that a maximum of 99,999 rows can be returned per query, which makes effective data modeling practices all the more important for operational efficiency.
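As a minimal sketch of the syntax described above, the query below can be run in a DAX query tool (for example the DAX query view in Power BI Desktop or DAX Studio; the choice of tool is an assumption, not something the article specifies). The start, end, and increment values are purely illustrative.

```dax
-- Returns the values 1, 3, 5, 7, 9 in a single column named Value.
-- The series stops at 9 because the next step (11) would exceed the end value.
EVALUATE
GENERATESERIES ( 1, 10, 2 )
```

Leaving out the third argument would step by 1 instead.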

By mastering GENERATESERIES, users can unlock the full potential of DAX and build robust models that enable insightful analytics. In practical applications, it lets users create timelines, generate series for forecasting, or establish ranges for visualizations, all key elements in producing data-driven insights that propel business growth. Recent improvements in DAX operations also boost overall performance and help users address time-consuming report creation and data inconsistencies.

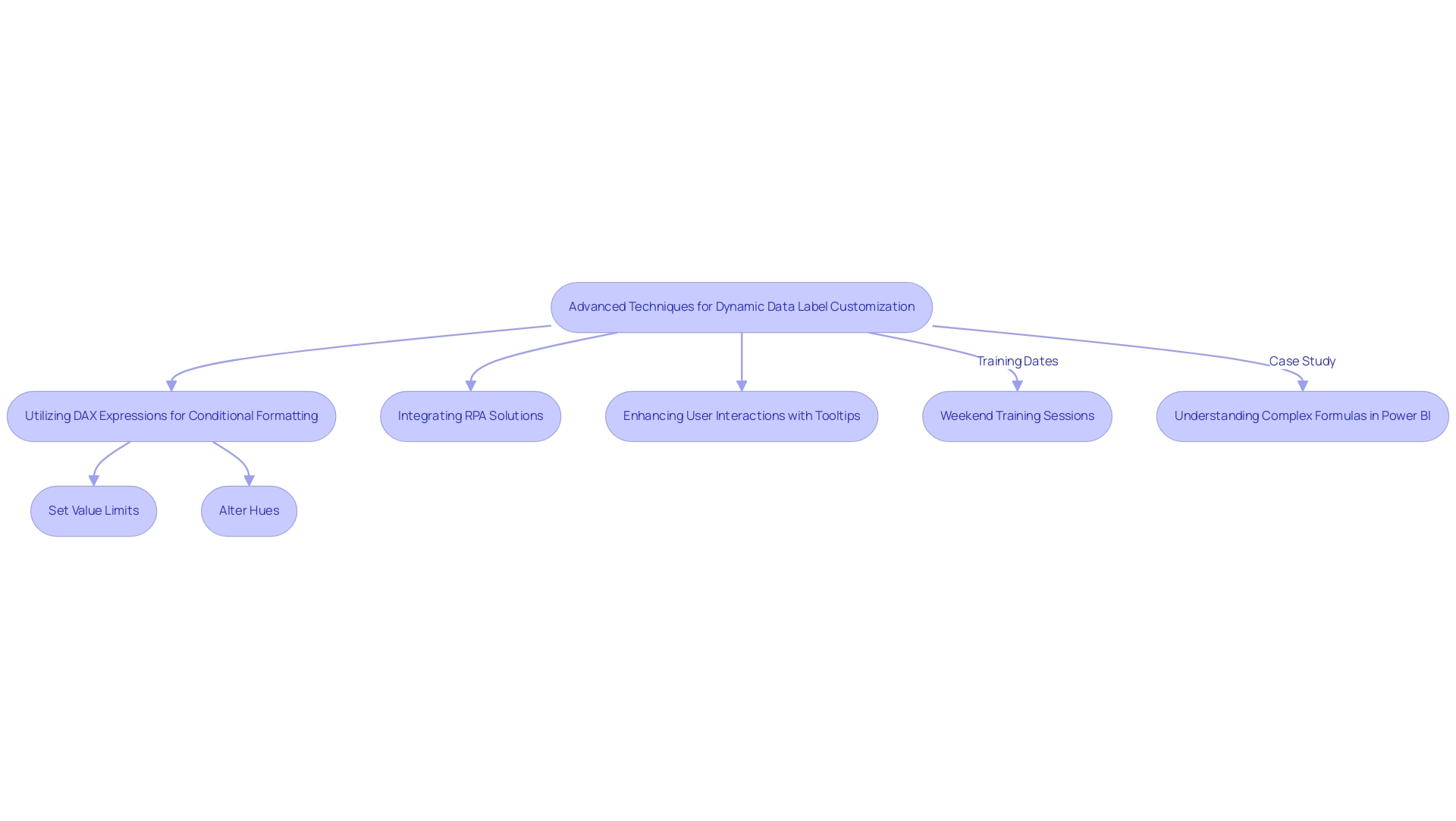

This is especially pertinent in the context of the ‘Query Tabs’ feature, which supports multiple queries, enabling users to manage and use GENERATESERIES more effectively, thereby enhancing productivity and operational insight. Furthermore, integrating RPA solutions like EMMA RPA and Power Automate can automate repetitive data manipulation tasks, thereby improving efficiency. As mentioned by Data Science enthusiast Douglas Rocha, ‘Last but certainly not least is the Mode,’ which highlights the significance of choosing the appropriate operations for effective use of data, especially when GENERATESERIES is involved.

Ultimately, understanding and using GENERATESERIES can greatly enhance modeling efficiency, allowing Directors of Operations Efficiency to leverage actionable insights for informed decision-making and innovation.

Practical Applications and Examples of GENERATESERIES in Power BI

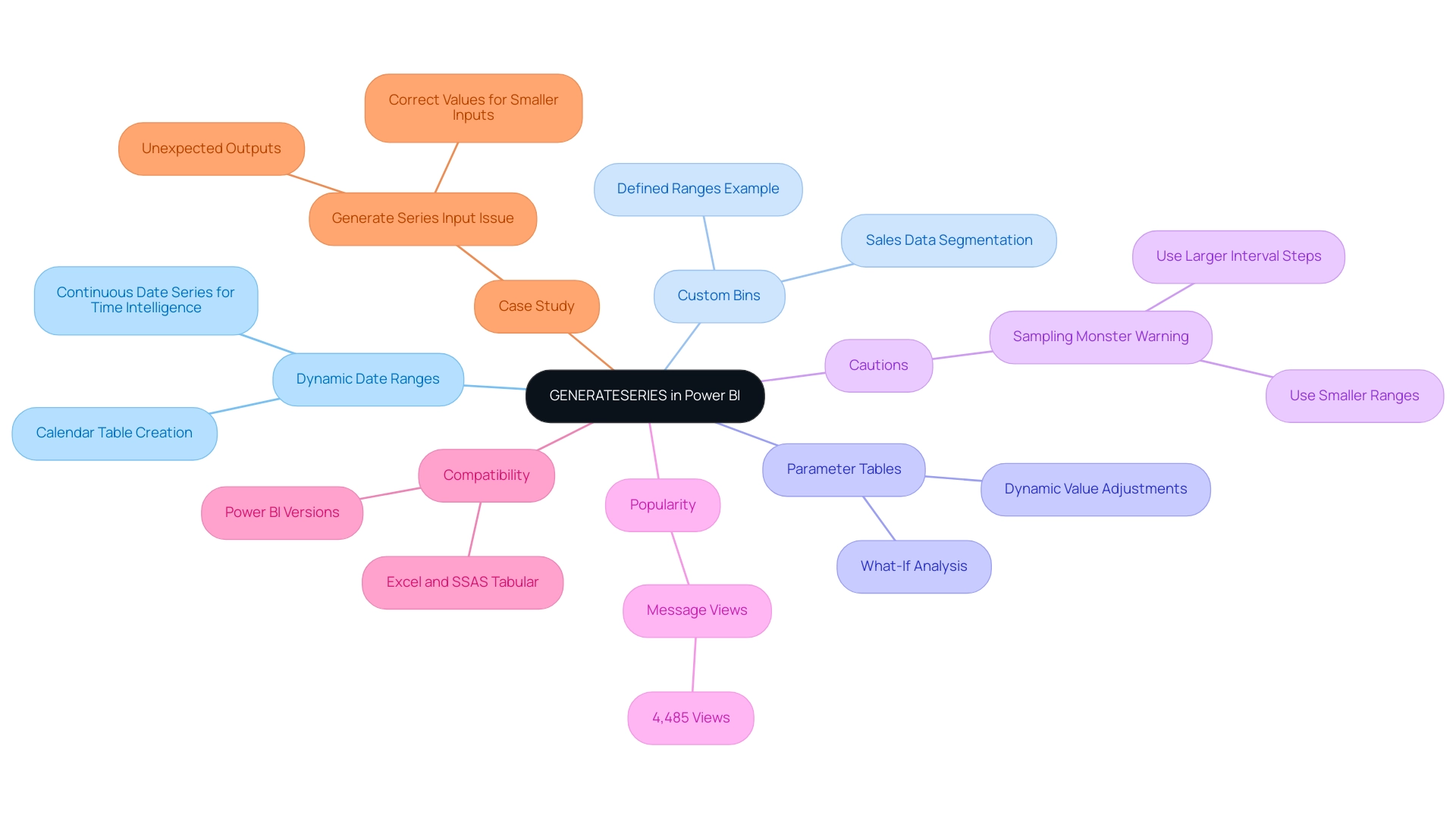

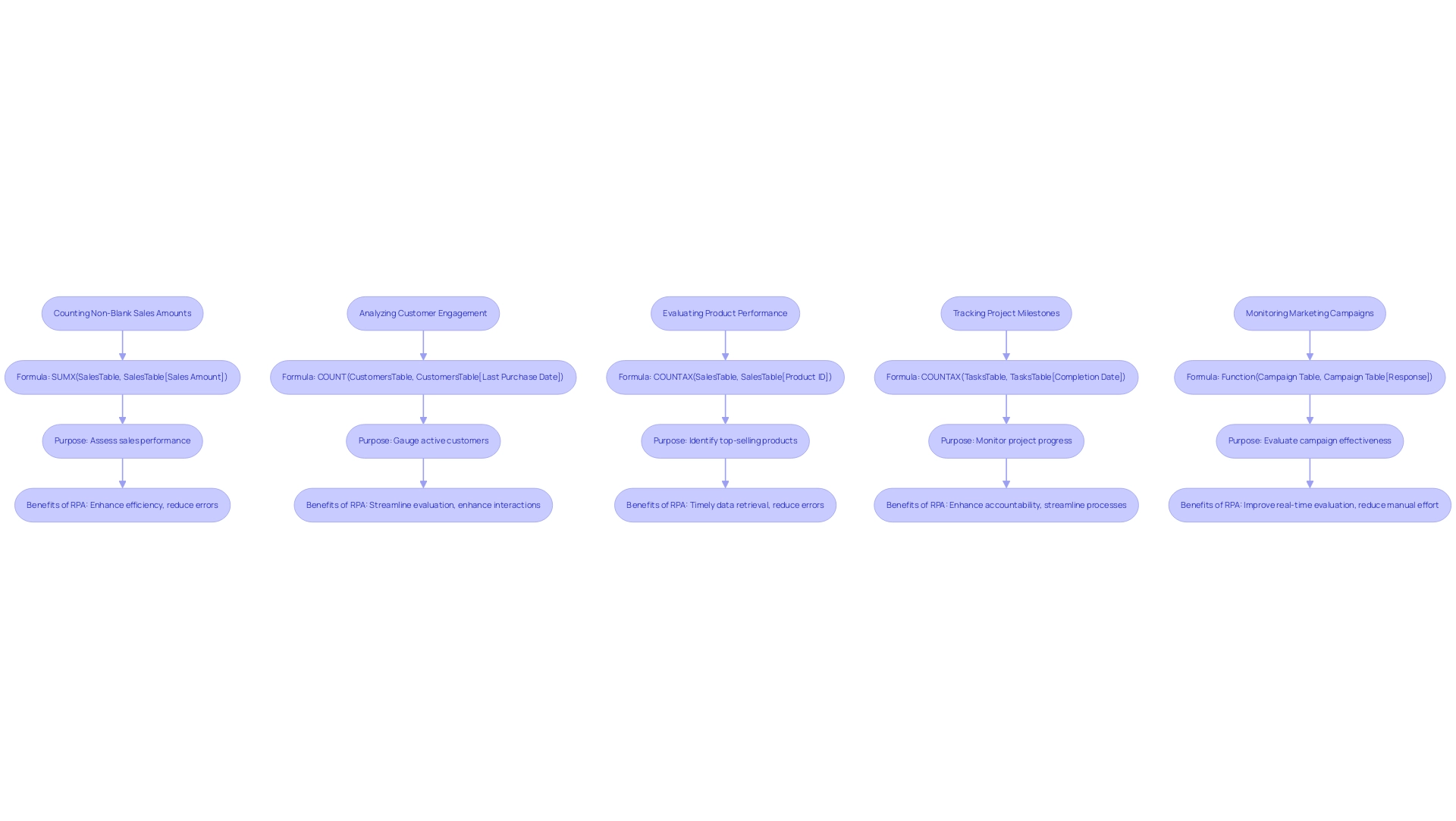

The GENERATESERIES function in Power BI offers versatile applications that can significantly enhance your data analysis capabilities, especially in the context of driving operational efficiency and extracting actionable insights:

- Dynamic Date Ranges: GENERATESERIES can generate a continuous series of dates, which is crucial for time intelligence calculations. For instance, passing a start date and an end date built with DATE, such as the first and last day of a year, together with an increment of 1 creates a comprehensive calendar table for that year, providing a robust framework for time-based analysis that can inform strategic decisions.

- Custom Bins: Another powerful application of GENERATESERIES is the creation of custom bins for numerical datasets. For example, to classify sales data into defined ranges, the function can be called as GENERATESERIES(0, 1000, 100). The resulting values 0, 100, 200, and so on up to 1000 act as bin boundaries, so sales can be segmented into bins such as 0-100, 101-200, and so on, allowing for clearer insights into sales performance and trends and addressing common challenges in reporting.

- Parameter Tables: Additionally, you can use GENERATESERIES to build parameter tables that support what-if analysis. By dynamically adjusting values such as discount percentages or interest rates, you enhance the interactivity and responsiveness of your reports, enabling more informed decision-making. This approach not only enriches your analytics but also empowers your team to explore various scenarios effectively, contributing to a culture of data-driven insights.
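The three applications above can be sketched as calculated tables. This is an illustration under stated assumptions rather than a prescribed model: the table names, the year 2024, and the 0 to 50 discount range are all hypothetical, and each definition would be entered as its own calculated table.

```dax
-- Each definition below is a separate calculated table.
-- Table names, the year 2024, and the ranges are illustrative assumptions.

-- Dynamic date range: one row per day of the year.
Calendar 2024 =
GENERATESERIES ( DATE ( 2024, 1, 1 ), DATE ( 2024, 12, 31 ), 1 )

-- Custom bins: boundaries 0, 100, 200, ..., 1000 (11 rows).
Sales Bins =
GENERATESERIES ( 0, 1000, 100 )

-- Parameter table: discount percentages 0, 5, 10, ..., 50 (11 rows).
Discount Pct =
GENERATESERIES ( 0, 50, 5 )
```

For the date series, the Value column may need to be set to a Date data type after the table is created. CALENDAR is a purpose-built alternative when the only goal is a date table.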

Furthermore, the popularity of this feature is underscored by the fact that the related message received 4,485 views, indicating significant interest in its applications. However, users should be cautious of the ‘sampling monster,’ as noted by Power BI expert lbendlin: “You have fallen victim to the sampling monster. Nothing you can do about it – except for using (much) smaller ranges or larger interval steps.”

This caution is especially pertinent considering a case study where a user faced a problem with the function, leading to unexpected outputs when larger values were inputted. The problem was attributed to the ‘sampling monster,’ highlighting the importance of using smaller ranges or larger interval steps to achieve accurate results.

Advanced Techniques: Leveraging GENERATESERIES for Complex Data Models

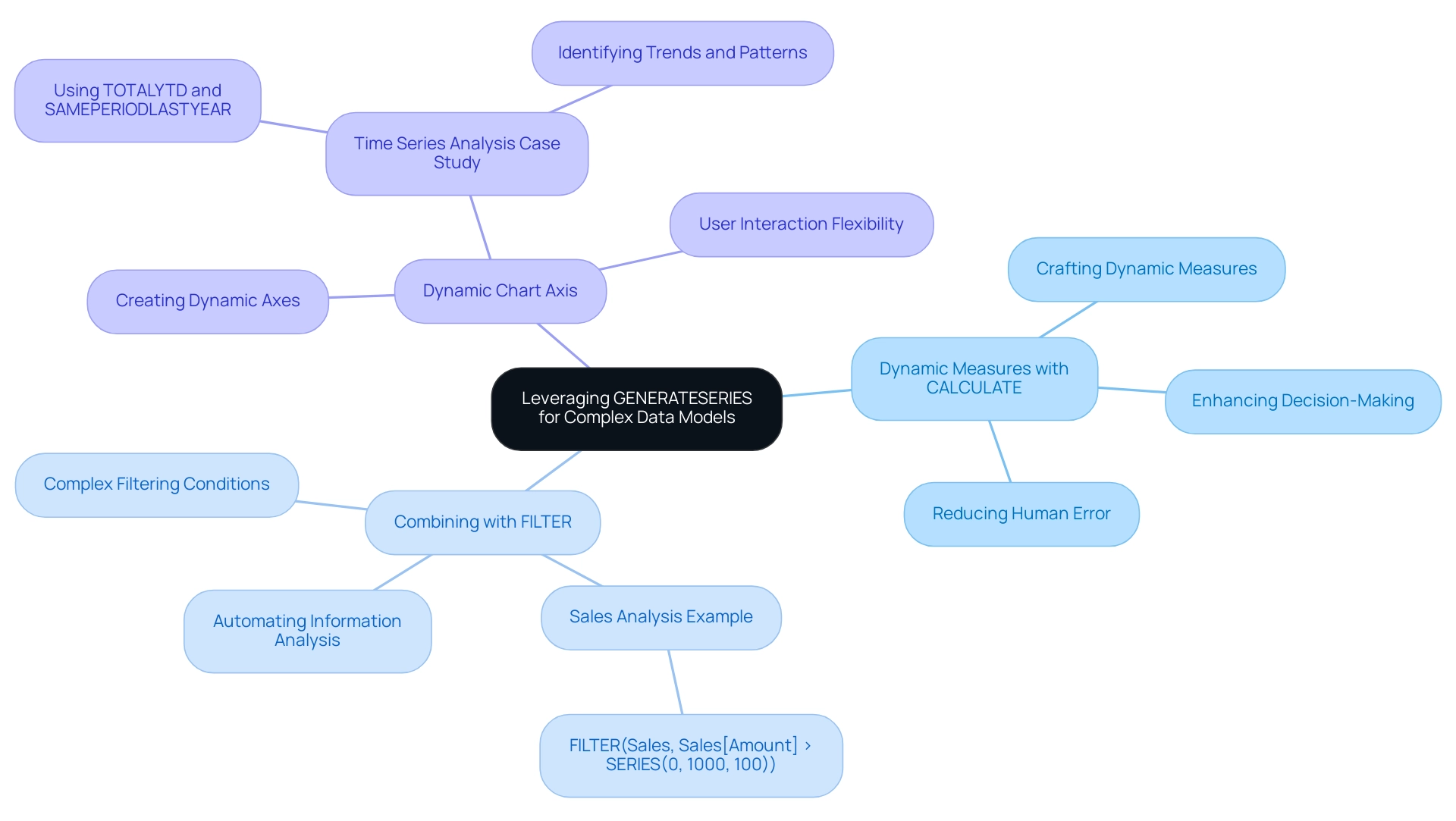

To make full use of GENERATESERIES in advanced data scenarios while leveraging Robotic Process Automation (RPA) for improved operational efficiency, consider the following strategies:

- Combining with CALCULATE: By using GENERATESERIES in conjunction with CALCULATE, you can craft dynamic measures tailored to user-selected parameters. This combination allows for more nuanced insights, adapting to the variables and filters applied by users, ultimately enhancing the decision-making process. RPA can automate data collection and processing, ensuring that the data feeding into these measures is timely and accurate. As experts suggest, it is vital to test extensively and validate formulas in different contexts, so that your insights are reliable. Moreover, automating these processes reduces the likelihood of human error, freeing up your team to focus on more strategic tasks.

- Combining with FILTER: Use GENERATESERIES to establish filtering conditions based on external criteria. A scalar such as Sales[Amount] cannot be compared directly with the table that GENERATESERIES returns, so the comparison needs a single value taken from the series. For example, with the output of GENERATESERIES(0, 1000, 100) stored in a threshold table (called Threshold here purely for illustration), the expression FILTER(Sales, Sales[Amount] > SELECTEDVALUE(Threshold[Value])) extracts the sales rows that surpass the selected threshold, allowing focused analysis of performance metrics. By automating this process through RPA, you can ensure consistent data analysis without manual intervention, which drives operational efficiency and mitigates the risks associated with repetitive manual tasks.

- Dynamic Chart Axis: A table created with GENERATESERIES can serve as a dynamic axis within visuals. This not only improves presentation but also gives users the flexibility to adjust ranges interactively, resulting in a more engaging user experience. A pertinent case study is Time Series Analysis, where DAX functions like TOTALYTD and SAMEPERIODLASTYEAR can be used alongside GENERATESERIES to analyze trends over time, identifying patterns and seasonality within sequential data points.
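The CALCULATE and FILTER strategies above can be sketched as measures. Everything named here is hypothetical: the sketch assumes two disconnected parameter tables, 'Discount Pct' and 'Threshold', each created with GENERATESERIES and exposed through a slicer, plus a Sales table with an Amount column.

```dax
-- Assumes 'Discount Pct' = GENERATESERIES ( 0, 50, 5 ) and
-- 'Threshold' = GENERATESERIES ( 0, 1000, 100 ); both names are hypothetical.

-- Value picked in the slicer, or 0 when nothing (or more than one value) is selected.
Selected Discount Pct =
SELECTEDVALUE ( 'Discount Pct'[Value], 0 )

-- Sales after applying the selected discount percentage.
Discounted Sales =
SUM ( Sales[Amount] ) * ( 1 - [Selected Discount Pct] / 100 )

-- FILTER compares each Sales[Amount] with ONE selected value,
-- not with the whole series returned by GENERATESERIES.
Sales Above Threshold =
VAR MinAmount = SELECTEDVALUE ( 'Threshold'[Value], 0 )
RETURN
    CALCULATE (
        SUM ( Sales[Amount] ),
        FILTER ( Sales, Sales[Amount] > MinAmount )
    )
```

Storing the slicer value in a variable before the FILTER keeps the comparison scalar-to-scalar, which is the main design point of the sketch.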

These techniques exemplify how advanced DAX capabilities, combined with RPA, can transform your insights, enabling more informed decision-making and driving business growth while addressing the challenges posed by manual workflows.

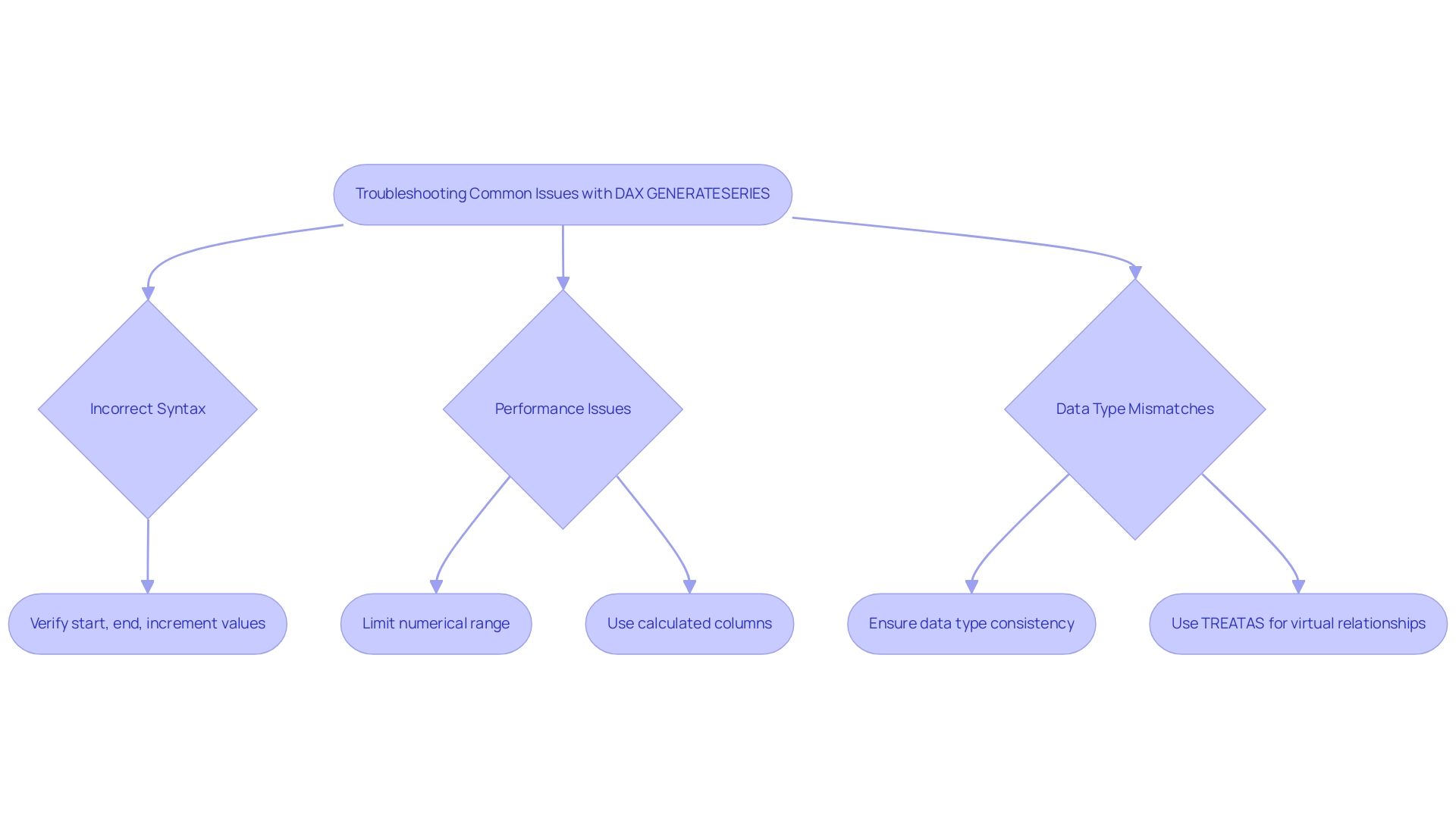

Troubleshooting Common Issues with GENERATESERIES

When using the GENERATESERIES function in DAX, several common issues may arise that can hinder performance and accuracy. Here are the key challenges and their solutions:

- Incorrect Syntax: It’s crucial to verify that the start, end, and increment values are accurately defined and compatible in terms of type. Misconfigurations in syntax can lead to errors or yield unexpected outcomes. Ensuring precision in these definitions is essential for generating reliable results.

- Performance Issues: Generating large series can adversely impact performance. To enhance efficiency, it is advisable to limit the numerical range being generated or to use calculated columns as an alternative approach. This strategy not only optimizes performance but also simplifies the overall calculation process within Power BI. In fact, creating calculated columns or flags in the backend can significantly improve performance, as demonstrated in the case study titled “Work Upstream for Complex Calculations.” Moreover, Robotic Process Automation (RPA) can be employed to automate repetitive tasks associated with DAX implementations, reducing the likelihood of manual errors and further streamlining the process.

- Data Type Mismatches: Alignment of types across DAX operations is essential. For instance, using an integer in place of a decimal can result in calculation errors. Ensuring that data types are consistent is a fundamental practice that can prevent complications during analysis.
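Two of the pitfalls above can be reproduced in a query tool. The sketch below is illustrative only: the first query shows what happens when the start and end values are swapped, and the second shows one way (an assumption about good practice, not the only option) to get decimal steps without relying on a fractional increment.

```dax
-- 1) Argument order: when the end value is less than the start value,
--    GENERATESERIES returns an empty table. The increment must be positive.
EVALUATE
GENERATESERIES ( 10, 1, 1 )

-- 2) Decimal steps: build the series from integers and scale it,
--    which can avoid accumulated floating-point rounding differences.
EVALUATE
SELECTCOLUMNS (
    GENERATESERIES ( 0, 10, 1 ),
    "Rate", [Value] / 10
)
```

The second query yields eleven rows running from 0 to 1 in steps of 0.1.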

Additionally, for virtual relationships, it is suggested to use TREATAS instead of INTERSECT or FILTER to maintain filter context effectively, which is essential for optimizing DAX operations.
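A minimal sketch of the TREATAS suggestion above, assuming a hypothetical model in which Budget and Sales both contain a ProductID column but have no physical relationship between them:

```dax
-- Hypothetical tables and columns: Budget[ProductID], Sales[ProductID], Sales[Amount].
-- TREATAS applies the products visible in Budget as a filter on Sales,
-- behaving like a relationship that exists only inside this measure.
Sales For Budgeted Products =
CALCULATE (
    SUM ( Sales[Amount] ),
    TREATAS ( VALUES ( Budget[ProductID] ), Sales[ProductID] )
)
```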

Mazhar Shakeel aptly notes, “Remember, Power BI is a tool that rewards careful planning and thoughtful design.” By addressing these common issues with foresight and strategic adjustments, you can significantly enhance the effectiveness of your DAX implementations and use GENERATESERIES to optimize performance in your reports. Embracing tailored AI solutions and leveraging Business Intelligence tools can further empower informed decision-making, ensuring your operations are both efficient and competitive.

Business Intelligence can convert insights obtained from DAX calculations into actionable strategies that promote growth. It is also important to note that the LINEST and LINESTX calculations require at least the Jan/Feb 2023 version of Power BI Desktop, which underscores the importance of using the latest tools for optimal performance.

Future Trends: The Evolution of DAX and GENERATESERIES

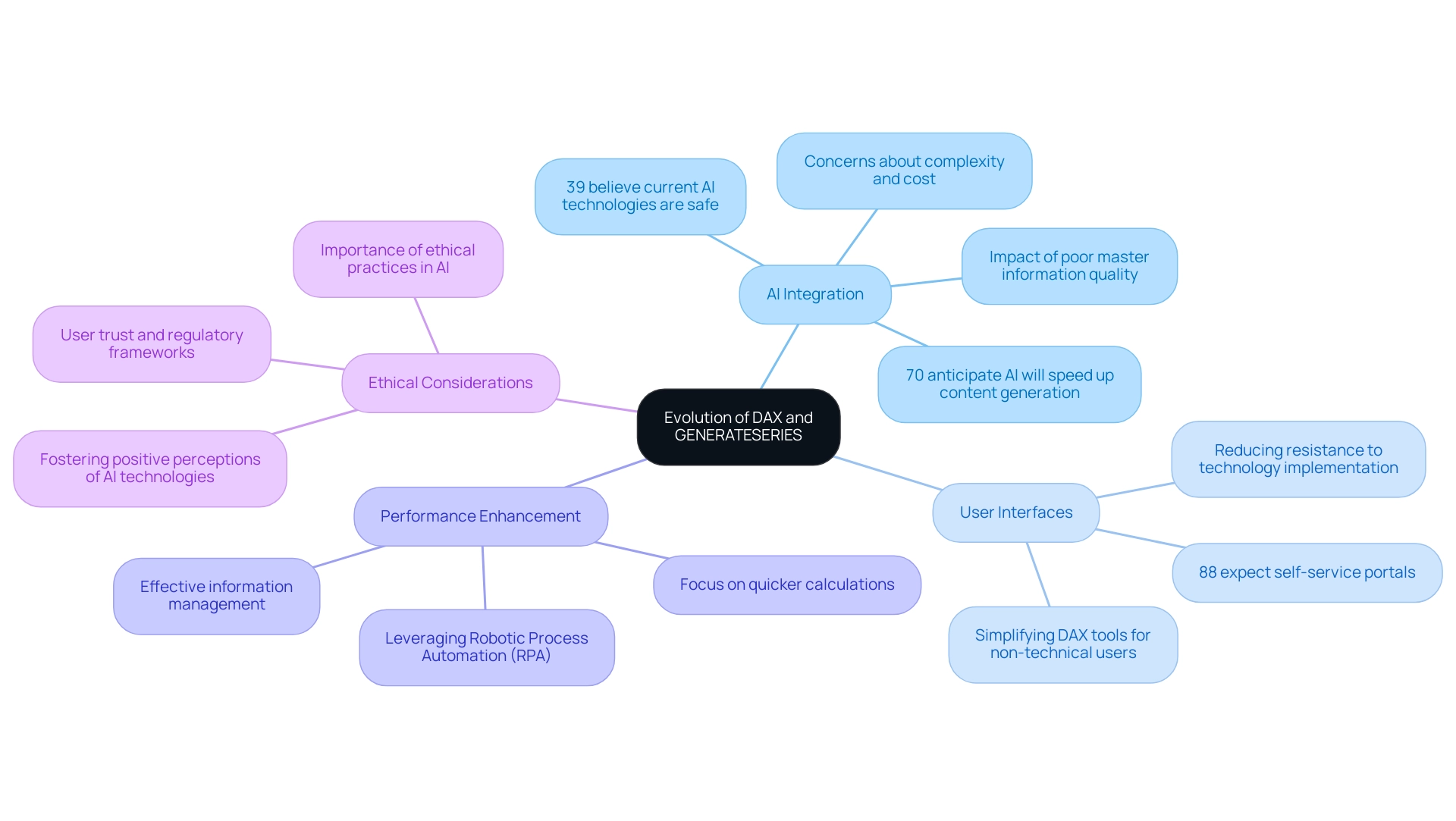

The evolution of DAX, particularly the GENERATESERIES feature, is poised to be influenced by several significant trends:

- Increased Integration with AI: As artificial intelligence continues to advance, we can anticipate a deeper integration within DAX functions. This evolution will facilitate smarter data manipulation and enhance predictive analytics capabilities, aligning with the expectations of 70% of individuals who foresee AI accelerating content generation processes. However, it’s crucial to acknowledge that only 39% of U.S. adults believe that current AI technologies are safe and secure, highlighting a common reluctance rooted in perceptions of complexity and cost. Addressing these concerns, including the impact of poor master data quality on AI effectiveness, will be essential for successful AI adoption.

- Enhanced User Interfaces: The future of DAX also points toward improved user interfaces, making DAX formulas more accessible for non-technical users. This development is crucial, especially as 88% of consumers now expect brands to provide self-service portals for quicker solutions. Simplifying how DAX functions such as GENERATESERIES are used will empower more users to harness their capabilities effectively, reducing the resistance linked to technology implementation.

- Greater Performance Enhancement: With the ever-increasing volume of data, future updates to DAX will likely focus on performance optimization. Improvements focused on providing quicker calculations and more effective data management will be crucial for sustaining operational efficiency and addressing the increasing demands of users. Leveraging innovative tools, such as Robotic Process Automation (RPA), can significantly enhance workflow efficiency, freeing up resources for more strategic initiatives and addressing issues related to data quality that can hinder AI implementation.

- Ethical Considerations in AI Integration: As DAX evolves with AI, it is imperative to consider ethical practices, user trust, and regulatory frameworks in AI development. Addressing these challenges will be essential for fostering a positive perception of AI technologies and ensuring that they are implemented responsibly, thus overcoming the barriers to their acceptance.

By staying ahead of these trends, operations leaders can not only improve their analytics capabilities but also build a data-driven culture that leverages Business Intelligence for informed decision-making. This approach will help mitigate the common perceptions that AI projects are time-intensive and costly, positioning their organizations to thrive in an increasingly competitive landscape.

Conclusion

The effective use of the GENERATESERIES function in DAX is essential for organizations aiming to enhance their data analysis capabilities. By mastering this function, teams can create dynamic series of numbers that facilitate a range of applications, from generating custom bins for data segmentation to developing comprehensive date ranges for time-based analysis. Such versatility not only streamlines complex calculations but also supports the creation of robust data models in Power BI, ultimately driving actionable insights that support strategic decisions.

Moreover, integrating GENERATESERIES with advanced techniques like CALCULATE and FILTER allows for more nuanced data analysis, enabling teams to extract meaningful insights tailored to specific criteria. As organizations increasingly adopt robotic process automation (RPA) solutions, they can automate repetitive tasks linked to data manipulation, further enhancing operational efficiency and accuracy. Addressing common challenges such as incorrect syntax and performance issues through careful planning and strategic adjustments ensures that the potential of DAX is fully realized.

Looking ahead, the evolution of DAX, particularly with trends like AI integration and user-friendly interfaces, presents exciting opportunities for operational leaders. By embracing these advancements, organizations can foster a culture of data-driven decision-making, empowering teams to leverage insights for innovation and growth. As the landscape of data analysis continues to evolve, staying informed and adaptable will be key to maintaining a competitive edge.

Introduction

In the world of data analysis, the ability to harness time intelligence functions like DATESMTD in DAX can transform how organizations approach financial metrics and operational efficiency. This powerful function not only facilitates the aggregation of month-to-date data but also empowers Directors of Operations Efficiency to make informed decisions based on real-time insights.

As businesses navigate the complexities of data, understanding how to effectively leverage DAX functions becomes essential for driving actionable insights and enhancing performance tracking. By exploring practical applications, comparing DATESMTD with other time intelligence functions, and addressing common challenges, this article aims to equip professionals with the knowledge needed to optimize their analytical capabilities and streamline operations in an increasingly data-driven landscape.

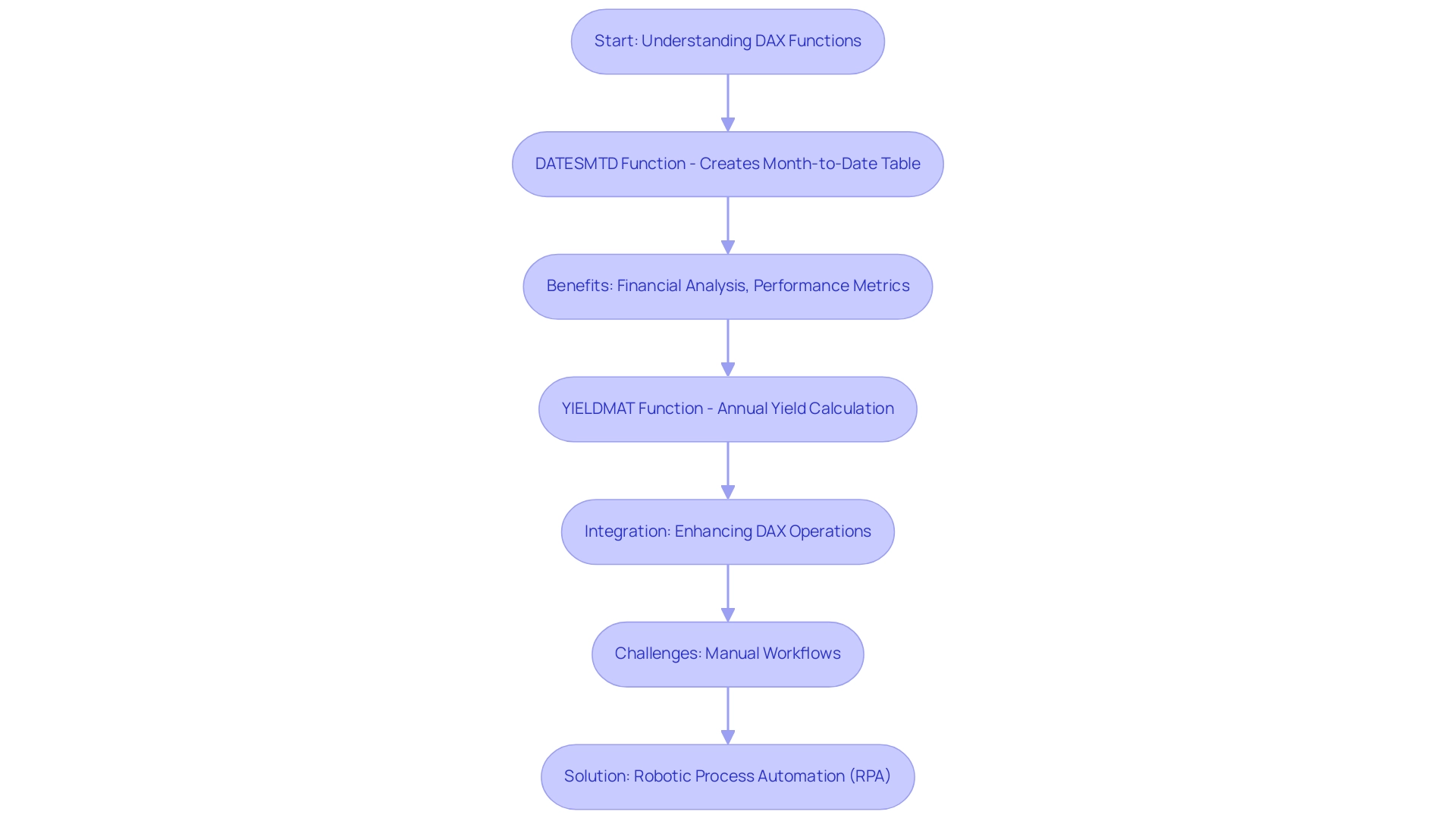

Understanding the DATESMTD Function in DAX

DAX (Data Analysis Expressions) includes the DATESMTD function, an essential time intelligence tool that returns a table of dates from the beginning of the month up to the last date in the current filter context, based on a designated date column. In a report evaluated as of today, that means the month to date. This functionality is particularly advantageous for financial analysis, enabling the calculation of essential metrics like revenue and expenses up to the present day. In a rapidly evolving AI landscape, where identifying the right solutions can be overwhelming, mastering such tools is vital for Directors of Operations Efficiency seeking to harness Business Intelligence to drive actionable insights and streamline operations.
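To make the behavior described above concrete, here is a small query sketch. It assumes a hypothetical date table named 'Date' with a Date column that covers June 2023; the specific date is only an example.

```dax
-- The filter narrows the context to a single day (25 June 2023).
-- DATESMTD then returns every date from the start of that month
-- up to and including that day, drawn from the 'Date'[Date] column.
EVALUATE
CALCULATETABLE (
    DATESMTD ( 'Date'[Date] ),
    'Date'[Date] = DATE ( 2023, 6, 25 )
)
```

Provided the date table contains those days, the result should list 1 June through 25 June 2023.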

Additionally, understanding the YIELDMAT function, which returns the annual yield of a security that pays interest at maturity, provides further context for effectively leveraging DAX operations in financial scenarios. By simplifying the aggregation of month-to-date data, DATESMTD enhances the efficiency of performance metrics tracking, allowing organizations to respond promptly to financial trends and make informed decisions. DAX also allows up to 64 levels of nested functions in calculated columns, which leaves considerable room for more elaborate analytical logic.

Moreover, the disadvantages of manual workflows, such as inefficiency and susceptibility to errors, can be effectively tackled through Robotic Process Automation (RPA), which enhances the application of DAX in optimizing financial analysis. Staying informed about recent updates and best practices in DAX capabilities further empowers you in optimizing financial analysis, while also aligning with tailored AI solutions that can help navigate the complexities of the AI landscape.

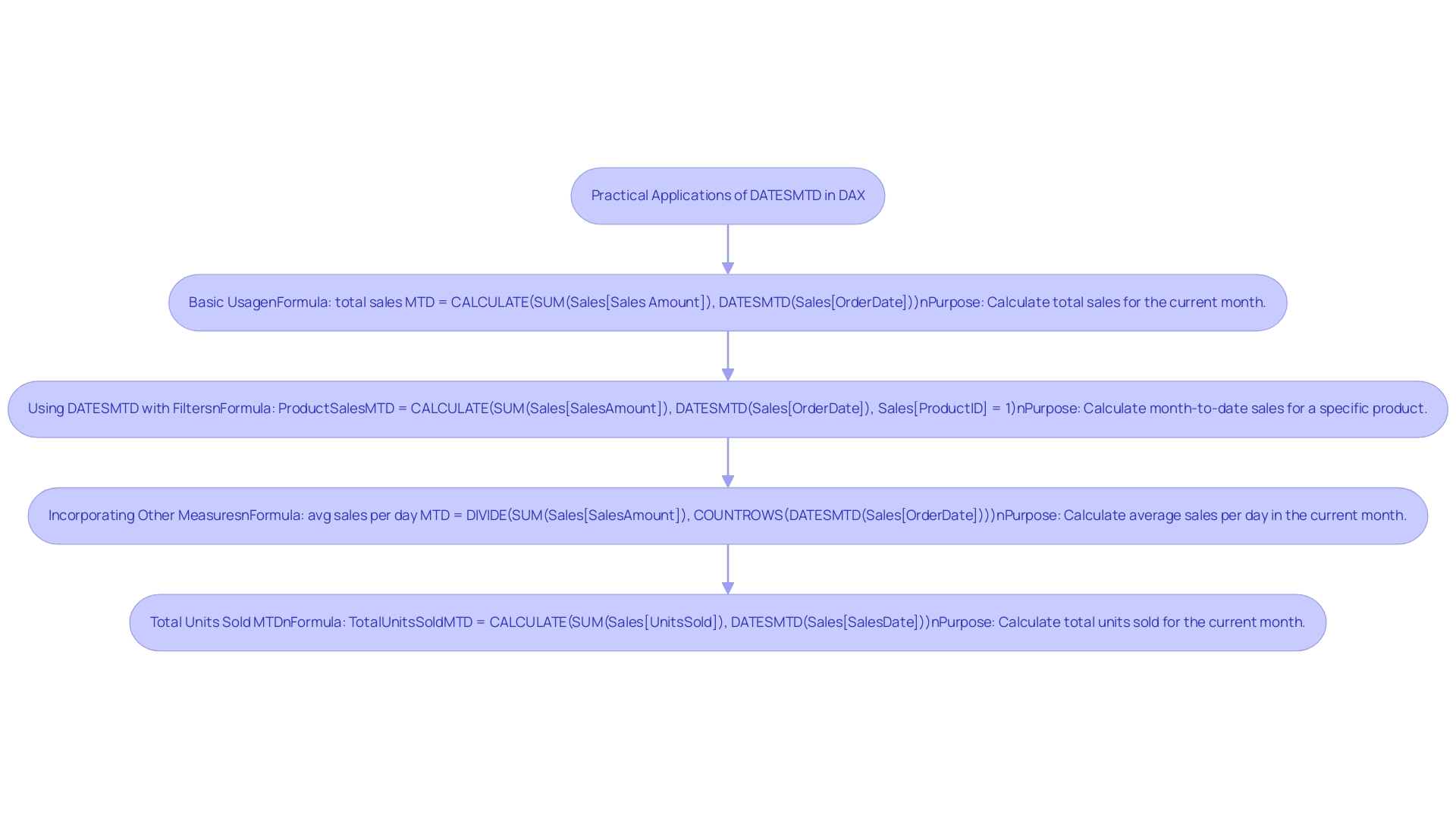

Practical Examples of Using DATESMTD in DAX

Explore these practical applications of the function in DAX to enhance your sales analysis capabilities:

- Basic Usage: To calculate total sales for the current month, the following formula can be employed:

DAX

total sales MTD = CALCULATE(SUM(Sales[SalesAmount]), DATESMTD(Sales[OrderDate]))

This straightforward formula aggregates the sales amount for all orders placed from the start of the month up to the current date, providing a clear snapshot of monthly performance. For instance, total sales on 2023-06-25 amounted to $1,800, illustrating the effectiveness of this calculation.

- Using DATESMTD with Filters: By combining DATESMTD with additional filters, you can calculate month-to-date sales for a specific product:

DAX

ProductSalesMTD = CALCULATE(SUM(Sales[SalesAmount]), DATESMTD(Sales[OrderDate]), Sales[ProductID] = 1)

In this case, the formula computes the sales for Product ID 1 during the current month, allowing for targeted insights into product performance.

- Incorporating Other Measures: DATESMTD can also be used alongside other measures, such as when calculating the average sales per day in the current month:

DAX

avg sales per day MTD = DIVIDE(CALCULATE(SUM(Sales[SalesAmount]), DATESMTD(Sales[OrderDate])), COUNTROWS(DATESMTD(Sales[OrderDate])))

Wrapping the sum in CALCULATE with the same DATESMTD filter keeps the numerator and the day count on the same month-to-date window. Because the dates come from Sales[OrderDate], only days that actually have orders are counted. This formula provides a more nuanced view of performance trends throughout the month, equipping you with the data needed to make informed operational decisions. Furthermore, the calculation of Total Units Sold MTD can be expressed as:

DAX

TotalUnitsSoldMTD = CALCULATE(SUM(Sales[UnitsSold]), DATESMTD(Sales[SalesDate]))

This reinforces the practical application of DATESMTD in analyzing sales performance.

Additionally, consider the broader context of time intelligence in DAX, as illustrated by the case study on Quarter-to-Date Sales Calculation using the DATESQTD() function. This illustrates the adaptability of DAX expressions in examining sales insights across various timeframes.
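As a rough sketch of the quarter-to-date variant mentioned above, the measure below reuses the Sales[SalesAmount] and Sales[OrderDate] columns from the earlier examples. The measure name is illustrative.

```dax
-- Same pattern as the month-to-date measures, with DATESQTD
-- supplying the dates from the start of the quarter instead.
Total Sales QTD =
CALCULATE (
    SUM ( Sales[SalesAmount] ),
    DATESQTD ( Sales[OrderDate] )
)
```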

As Andrew Hubbard, a data analyst using Microsoft Power BI, wisely observes, “Enhance your analytical skills by diving into the realm of time intelligence through DAX.” Embracing these techniques not only enhances your analytical capabilities but also empowers your strategic decision-making.

Comparing DATESMTD with TOTALMTD and Other Time Intelligence Functions

Grasping the differences between DATESMTD and the other DAX time intelligence functions is essential for effective data analysis over time. In a landscape where the significance of Business Intelligence (BI) and Robotic Process Automation (RPA) cannot be overstated, using these DAX capabilities can significantly drive data-driven insights and enhance operational efficiency for business growth. Here’s a closer look at these functions:

-

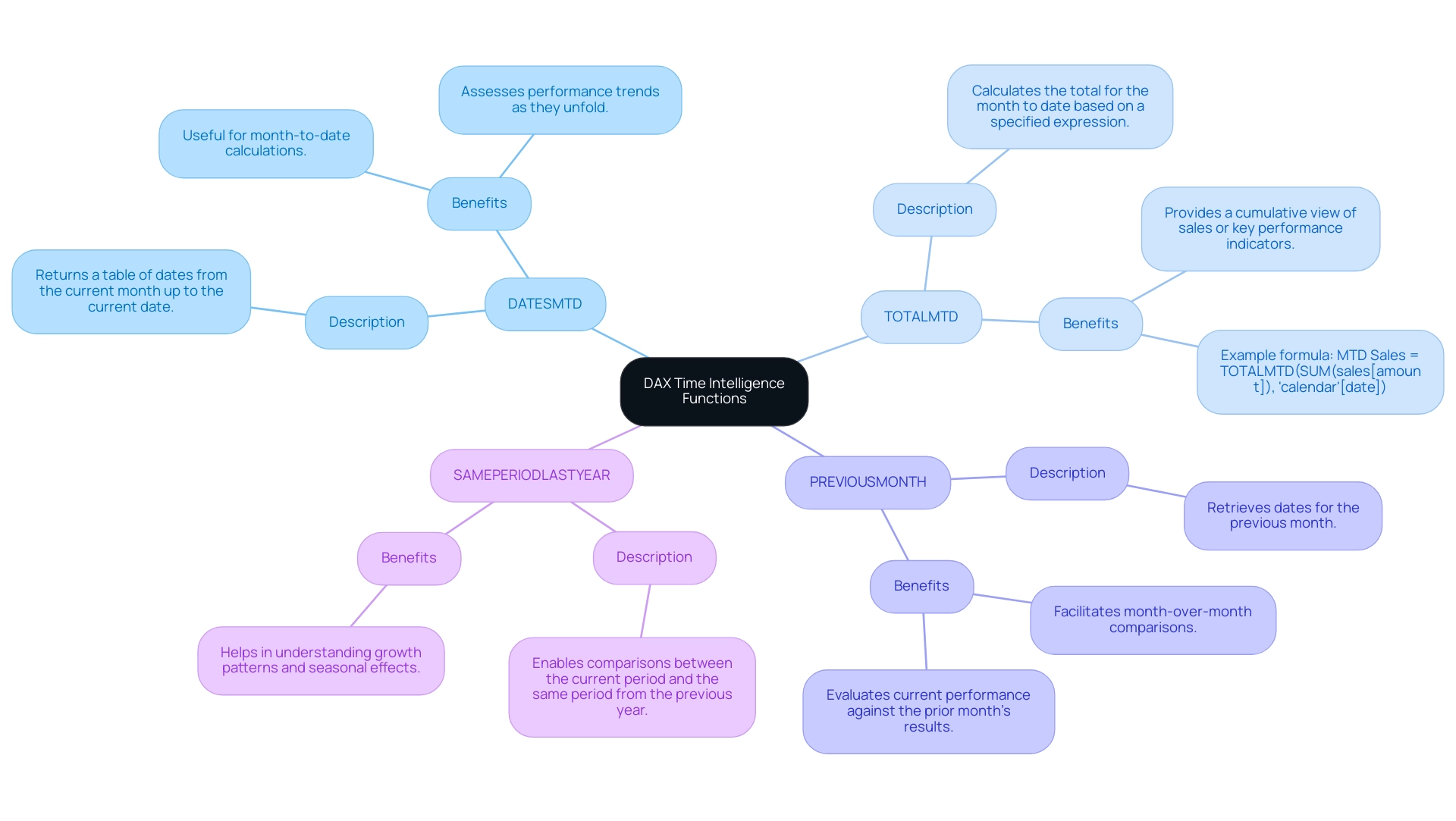

DATESMTD: This utility returns a table of dates from the current month up to the current date. It is particularly useful for month-to-date calculations, allowing analysts to assess performance trends as they unfold, which is critical in today’s data-rich environment where extracting meaningful insights can be challenging.

-

TOTALMTD: In contrast, TOTALMTD calculates the total for the month to date based on a specified expression. This feature is invaluable for aggregating metrics over the month, providing a cumulative view of sales or other key performance indicators. For example, to calculate the month running total for Internet sales, you can use the following formula:

MTD Sales = TOTALMTD(SUM(sales[amount]), 'calendar'[date])

-

PREVIOUSMONTH: This function retrieves dates for the previous month, facilitating month-over-month comparisons. It allows operational leaders to evaluate current performance against the prior month’s results, offering insights into trends and areas for improvement.

-

SAMEPERIODLASTYEAR: Essential for year-over-year analysis, this feature enables comparisons between the current period and the same period from the previous year. It helps in understanding growth patterns and seasonal effects, which are vital for informed decision-making.
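The relationship between these functions can be sketched with a few measures. The sketch reuses the sales[amount] and 'calendar'[date] names from the formula above; the measure names themselves are illustrative.

```dax
-- TOTALMTD is shorthand for CALCULATE with a DATESMTD filter,
-- so the next two measures are expected to return the same value.
MTD Sales Total =
TOTALMTD ( SUM ( sales[amount] ), 'calendar'[date] )

MTD Sales Calc =
CALCULATE ( SUM ( sales[amount] ), DATESMTD ( 'calendar'[date] ) )

-- Sales for the full previous month, for month-over-month comparison.
Sales Previous Month =
CALCULATE ( SUM ( sales[amount] ), PREVIOUSMONTH ( 'calendar'[date] ) )
```

The CALCULATE form is the more flexible of the two, since further filters can be added alongside DATESMTD.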

Choosing the appropriate function hinges on the specific analysis objectives. For instance, if you need the set of month-to-date dates to use as a filter inside CALCULATE, possibly together with other filters, DATESMTD is the ideal choice. On the other hand, if you simply want a cumulative month-to-date total from a single expression, TOTALMTD is the more direct option. As Dale from the Community Support Team aptly suggested, using both TOTALMTD and SAMEPERIODLASTYEAR can significantly enhance your calculations:

MTD Sales = TOTALMTD(SUM(sales[amount]), 'calendar'[date])

SalesMTDLastYear = CALCULATE([MTD Sales], SAMEPERIODLASTYEAR('calendar'[date]))

This approach not only streamlines your analysis but also enriches your insights, ensuring that you’re well-equipped to drive operational efficiency. Significantly, the interest in this subject is highlighted by a user request for foundational information that garnered 21,085 views, demonstrating the significance and necessity of comprehending these DAX procedures in addressing the difficulties of report generation and information inconsistencies. Furthermore, integrating RPA solutions can automate repetitive tasks, alleviating issues like task repetition fatigue and staffing shortages, ultimately enhancing the effectiveness of your BI efforts and ensuring that raw data is transformed into actionable insights for better decision-making.

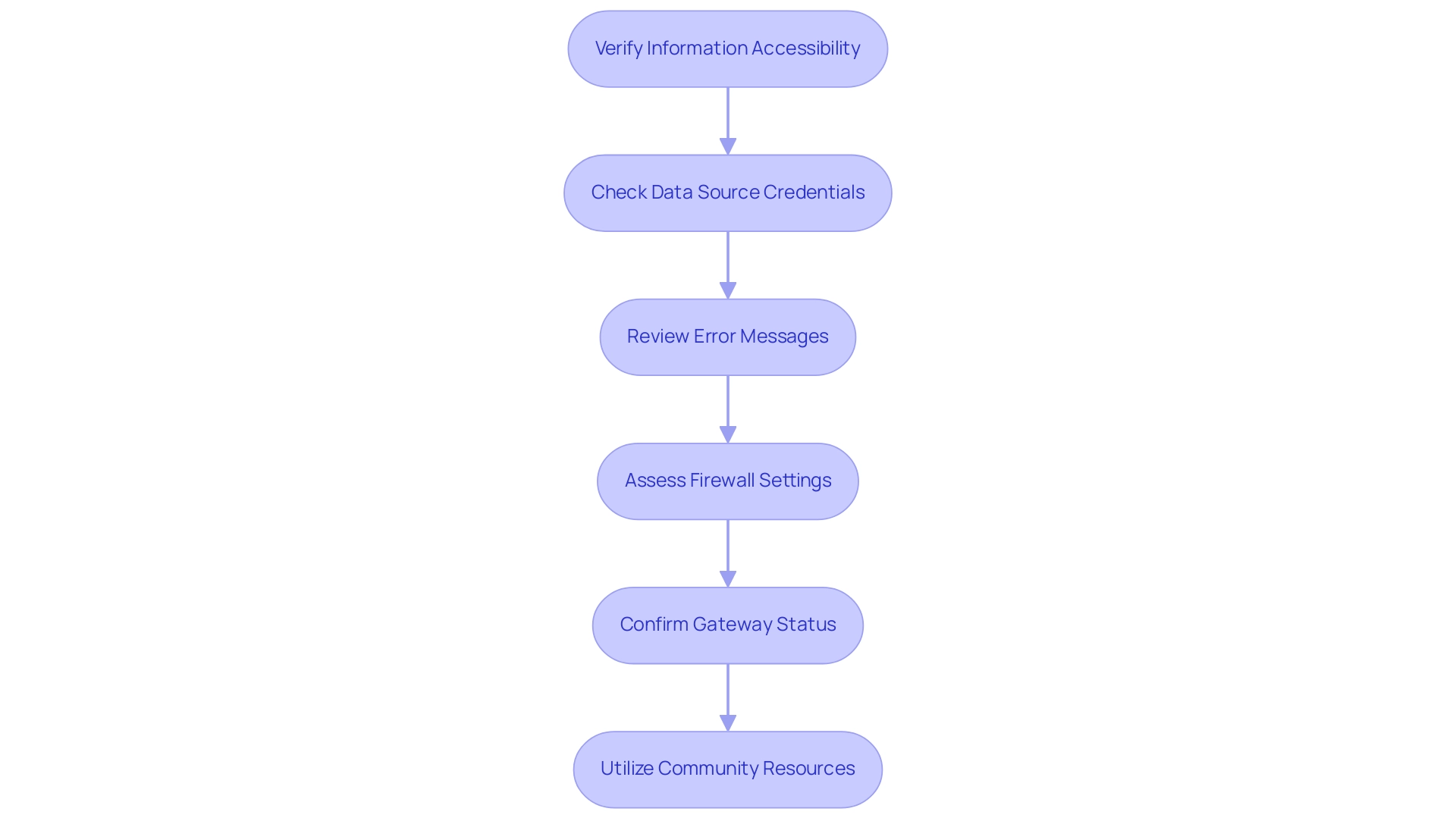

Challenges and Considerations When Using DATESMTD

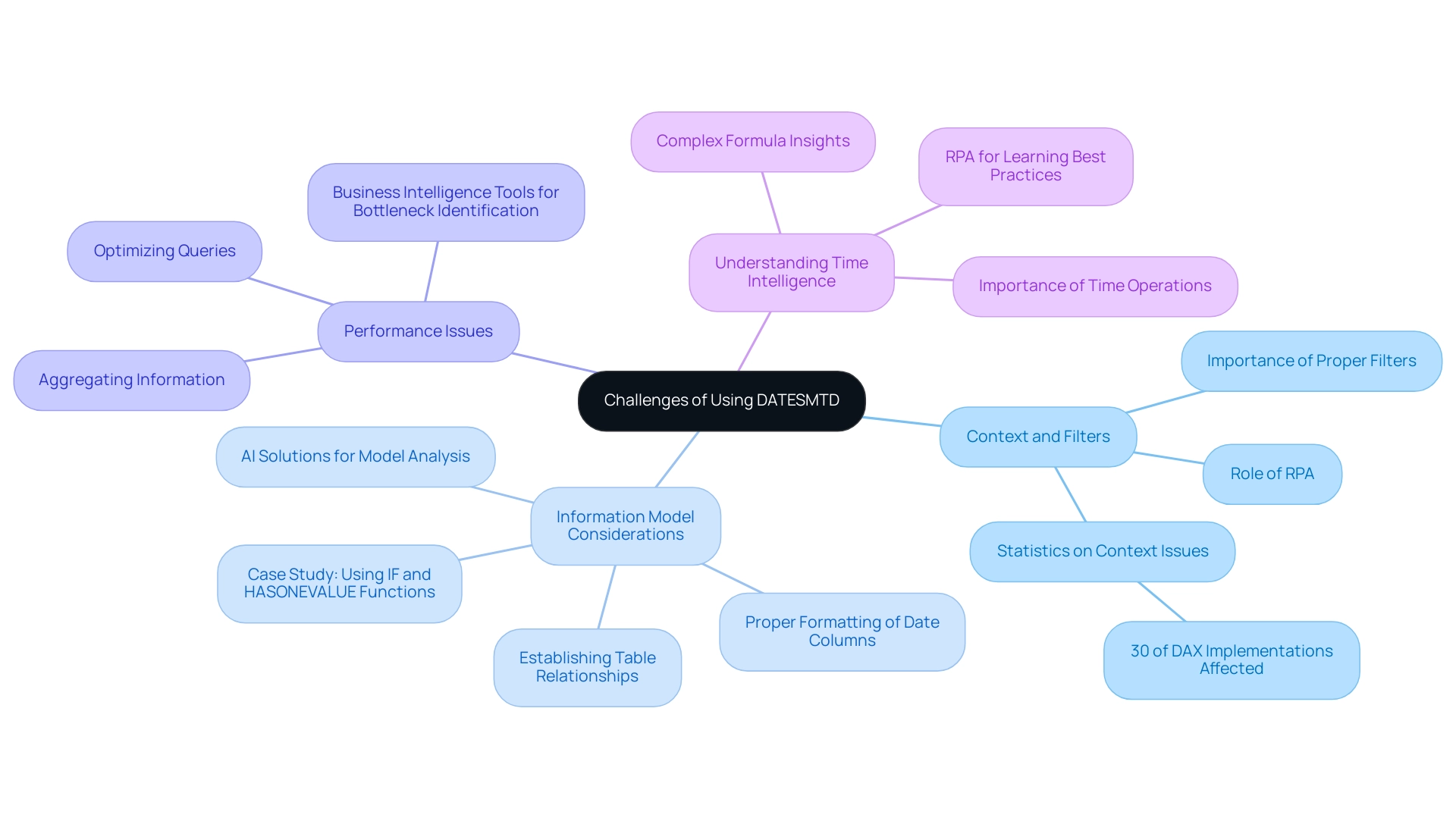

While the DATESMTD function is undoubtedly a powerful tool, users frequently encounter several challenges that can hinder its effectiveness:

- Context and Filters: One of the primary hurdles is the function’s dependence on the context in which it is applied. It is vital to ensure that filters are accurately set to avoid unexpected results, as incorrect contexts can lead to significant discrepancies in your analysis. According to recent statistics, approximately 30% of DAX implementations face issues related to context and filter settings, which is why this consideration matters when working with DATESMTD. Leveraging Robotic Process Automation (RPA) can help streamline the setup of these filters by automating repetitive tasks, thereby enhancing operational efficiency and reducing the risk of human error.

- Data Model Considerations: Problems often arise when the underlying data model is inadequately structured. For optimal performance, ensure that date columns are properly formatted and that relationships between tables are correctly established, which is foundational for accurate DATESMTD calculations. A case study titled “Using IF and HASONEVALUE Functions” illustrates how structuring models can help users effectively calculate cumulative sales based on selected months, demonstrating the importance of a well-organized model. Tailored AI solutions can assist in analyzing your model’s effectiveness, providing insights on how to optimize it further for DAX calculations.

- Performance Issues: In scenarios involving large datasets, DATESMTD can lead to performance lags. To counter this, it’s advisable to optimize the queries that use it and to consider aggregating data when feasible to enhance processing speed. Performance optimization is crucial, especially in enterprises where data volume can significantly impact analysis time. Using Business Intelligence tools can aid in quickly identifying bottlenecks in performance, allowing for timely adjustments that enhance analysis efficiency.

- Understanding Time Intelligence: A robust understanding of time intelligence concepts is essential. For those new to DAX, investing time to understand how time intelligence functions interact will pay off, especially when tackling more complex formulas. As Pieter aptly noted, “Understanding the nuances of DAX expressions is key to unlocking their full potential.” By embracing these insights and overcoming implementation challenges, users can maximize the impact of the function in their analyses. Additionally, automating the learning process through RPA can help teams stay updated on best practices, further enhancing their analytical capabilities.
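One common way to address the data model considerations above is a dedicated date table. The sketch below is an assumption-laden illustration rather than a required design: the table name, the date range, and the extra columns are hypothetical, and the table would still need to be marked as a date table and related to Sales[OrderDate].

```dax
-- Hypothetical calculated date table covering a fixed, contiguous range.
Date =
ADDCOLUMNS (
    CALENDAR ( DATE ( 2023, 1, 1 ), DATE ( 2024, 12, 31 ) ),
    "Year", YEAR ( [Date] ),
    "Month Number", MONTH ( [Date] ),
    "Year Month", FORMAT ( [Date], "yyyy-MM" )
)

-- A measure can then reference the date table instead of the fact table's date column.
Total Sales MTD via Date Table =
CALCULATE ( SUM ( Sales[SalesAmount] ), DATESMTD ( 'Date'[Date] ) )
```

A contiguous date column with no gaps is what time intelligence functions generally expect, which is the main reason for this design.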

The Role of Context and Filters in DATESMTD Calculations

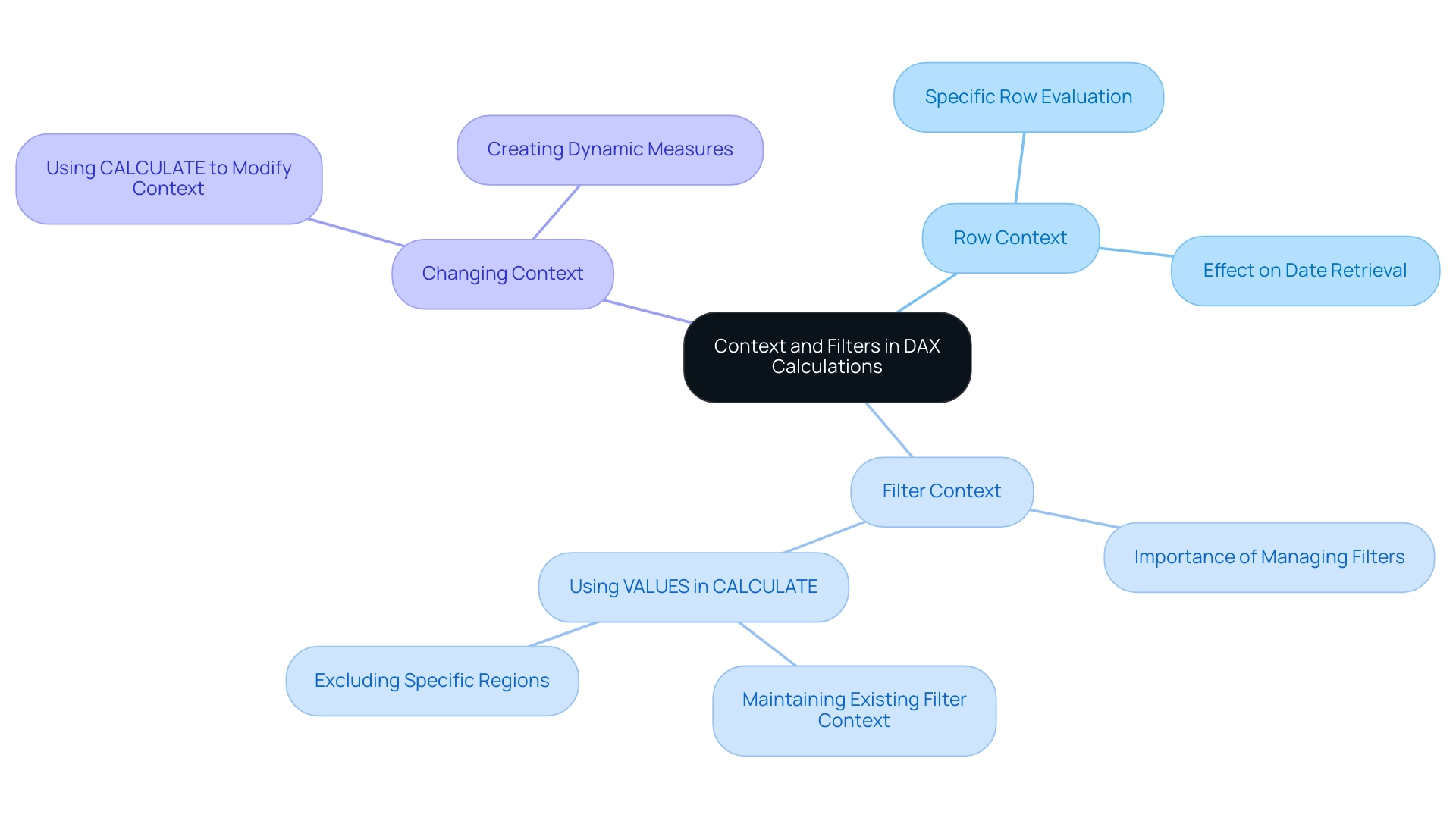

In DAX, the role of context is crucial for the precision and efficiency of calculations, particularly when using DATESMTD. Understanding the following components is essential:

- Row Context: This refers to the specific row being evaluated in your data model, for example inside a calculated column or an iterator. DATESMTD itself works from the filter context, so the current row only influences its result once that row has been turned into a filter, which CALCULATE does through context transition. It is therefore essential to understand how row context interacts with your datasets.

- Filter Context: The filters applied to your data model significantly affect the results generated by DATESMTD. Effectively managing these filters is vital for ensuring precise outcomes. For instance, when using the VALUES function within CALCULATE, you can preserve the current filter context while incorporating additional conditions, such as excluding specific regions from total sales calculations.

- Changing Context: Functions like CALCULATE empower users to modify the filter context, facilitating more intricate calculations. By using CALCULATE in conjunction with month-to-date functions, you can create dynamic measures that adjust according to user selections within reports.
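The filter-context points above can be sketched as a measure that combines DATESMTD with an extra condition. The Sales[Region] column and the "West" value are hypothetical and stand in for whatever attribute needs to be excluded.

```dax
-- Month-to-date sales that still respect the report's existing filters,
-- while additionally excluding one region.
-- KEEPFILTERS intersects the condition with any region selection
-- already made in the report instead of overwriting it.
MTD Sales Excl West =
CALCULATE (
    SUM ( Sales[SalesAmount] ),
    DATESMTD ( Sales[OrderDate] ),
    KEEPFILTERS ( Sales[Region] <> "West" )
)
```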

It is important to note that valid dates in DAX, including for functions like DATESMTD, are all dates after March 1, 1900, which is a crucial detail for understanding date-related functions. Mastering the nuances of row and filter context allows users to construct robust DAX formulas that enhance analysis capabilities. For instance, the case study titled “Designing Efficient Formulas with Proper Context Understanding” demonstrates how mastering these contexts can lead to enhanced analysis and reporting outcomes.

As Andy Brown from Wise Owl Training emphasizes, this understanding is foundational for creating efficient DAX formulas that can lead to improved reporting outcomes. By leveraging context and filters, you can unlock richer insights from your data.

Conclusion

Harnessing the power of the DATESMTD function in DAX is a game-changer for organizations seeking to enhance their financial analysis and operational efficiency. This function not only simplifies month-to-date calculations but also enables Directors of Operations Efficiency to derive actionable insights that drive informed decision-making. By understanding how to effectively apply DATESMTD alongside other time intelligence functions, professionals can refine their analytical capabilities and better respond to evolving business needs.

Practical applications of DATESMTD, such as calculating total sales or product-specific performance, demonstrate its versatility and significance in real-time analysis. Coupled with an awareness of context and filters, users can navigate common challenges, ensuring accurate and efficient calculations. The comparison with other DAX functions like TOTALMTD further emphasizes the necessity of selecting the right tools for specific analytical objectives, thereby optimizing performance tracking.

In a data-driven world where timely insights are paramount, mastering DAX functions like DATESMTD not only enhances analytical prowess but also positions organizations to remain agile and competitive. As businesses continue to leverage these powerful tools, the potential for unlocking deeper insights and driving operational success expands significantly. Embracing these strategies is essential for any organization committed to achieving excellence in data analysis and operational efficiency.

Introduction

In the realm of data analysis, harnessing the full power of DAX (Data Analysis Expressions) can transform how organizations interpret and utilize their data. Among the myriad of functions available, COUNTROWS and DISTINCTCOUNT stand out as essential tools for any data analyst aiming to derive meaningful insights from complex datasets. Understanding these functions is not just about counting; it’s about unlocking the potential for strategic decision-making and operational efficiency. As businesses increasingly integrate DAX with Robotic Process Automation (RPA), the ability to automate data tasks and streamline workflows becomes crucial. This article delves into the intricacies of COUNTROWS and DISTINCTCOUNT, offering practical examples and strategies that empower analysts to enhance their data capabilities and drive growth in their organizations.

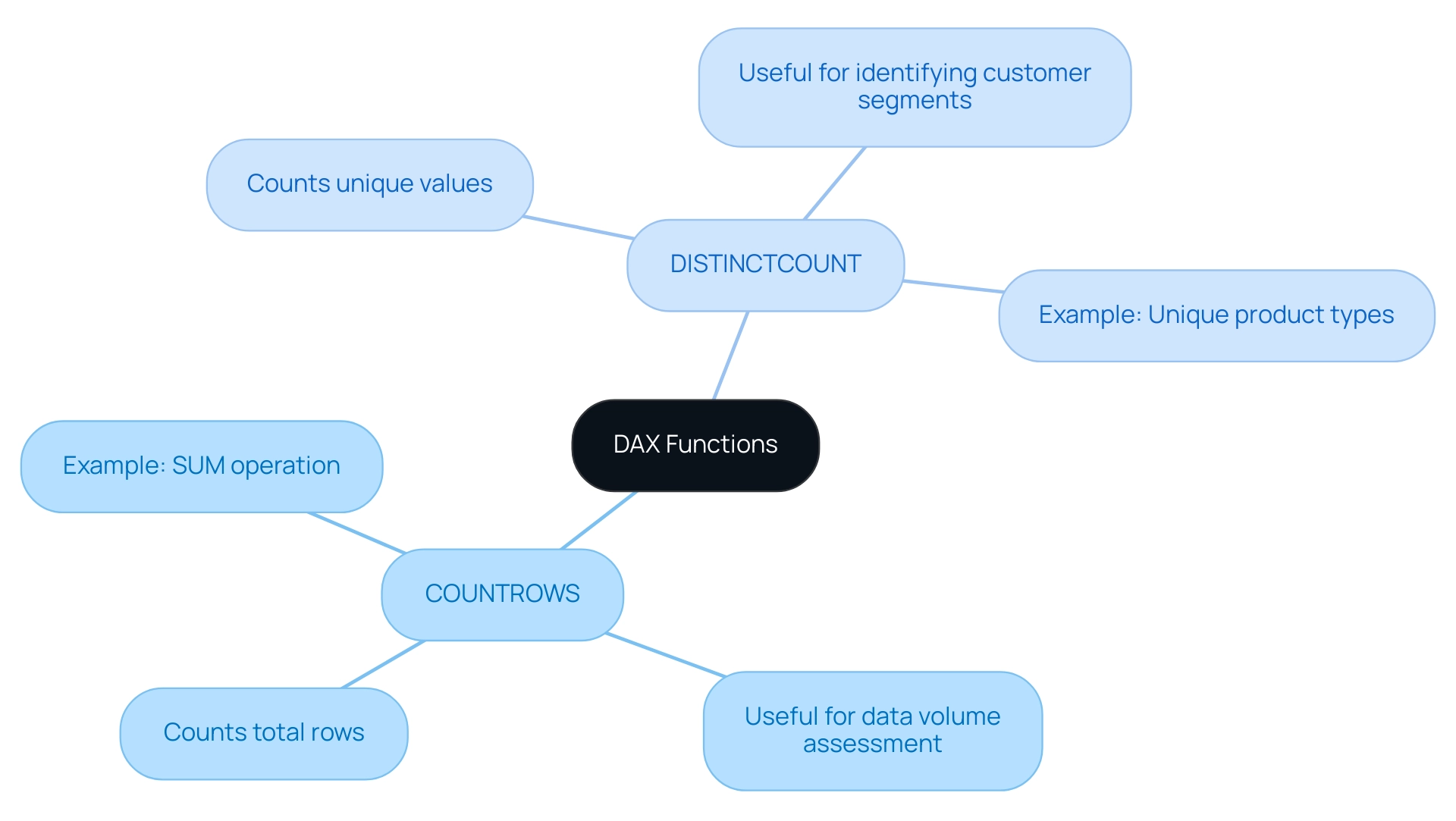

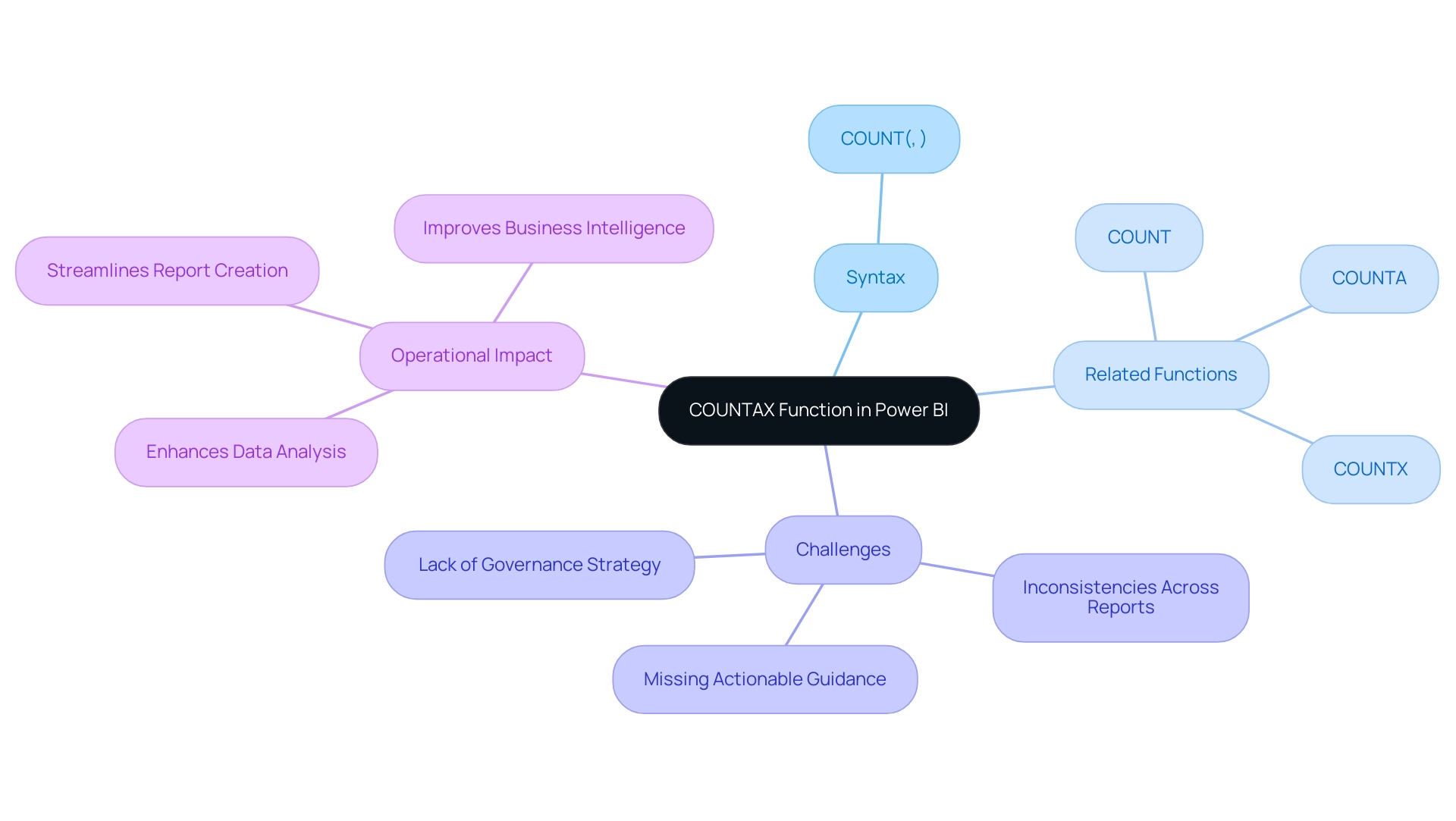

Understanding DAX Functions: COUNTROWS and DISTINCTCOUNT

DAX (Data Analysis Expressions) serves as a powerful formula language integral to Power BI, Excel, and other Microsoft applications, playing a crucial role in Business Intelligence and driving operational efficiency. Among its core functions, COUNTROWS and DISTINCTCOUNT are vital instruments for any analyst aiming to unlock the potential of their datasets.

- COUNTROWS counts the total number of rows within a table or table expression, making it invaluable for assessing the volume of entries present in a dataset.

This method is especially beneficial for rapidly assessing the volume of your information pool, directing later analysis and facilitating more efficient reporting. For example, in a recent case study, the SUM operation aggregates values in a column while SUMX assesses an expression for each row before summing results, illustrating how COUNTROWS complements these calculations by providing a clear count of total entries.

- Conversely, the function known as dax distinctcount with filter emphasizes counting unique values within a specified column, which is essential for comprehending variety, such as recognizing unique customer segments or product types. As emphasized by recent statistics, the utilization of DAX capabilities has surged in 2024, reflecting their increasing significance in successful information analysis practices.

Understanding these functions is essential for using DAX effectively, especially when DISTINCTCOUNT is combined with a filter to extract specific insights. By integrating DAX with RPA solutions, organizations can automate repetitive data tasks, reducing the time spent on report creation and minimizing data inconsistencies. Mastering COUNTROWS and DISTINCTCOUNT will empower you to make informed decisions, enhance your analytical capabilities, and ultimately drive growth and innovation in your organization.
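As a minimal sketch of how the two functions sit side by side, the measures below assume a hypothetical Sales table with a CustomerID column; the measure names are illustrative only, and each definition would be entered as a separate measure.

```dax
-- Assumption: a table named Sales with a CustomerID column exists in the model.

-- Total number of rows in Sales within the current filter context.
Total Sales Rows = COUNTROWS ( Sales )

-- Number of different CustomerID values within the current filter context.
Unique Customers = DISTINCTCOUNT ( Sales[CustomerID] )

-- Average number of sales rows per distinct customer.
-- DIVIDE returns BLANK instead of an error when the denominator is zero or blank.
Rows per Customer = DIVIDE ( [Total Sales Rows], [Unique Customers] )
```

Comparing the first two measures is a quick sanity check: if they are equal, every row belongs to a different customer; if the row count is much larger, customers appear repeatedly.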

Syntax Breakdown of DISTINCTCOUNT in DAX

DISTINCTCOUNT is a DAX function that counts the unique values within a specified column, which makes it useful for enhancing Business Intelligence capabilities and driving operational efficiency through RPA. Its syntax is straightforward:

DISTINCTCOUNT(columnName)

- columnName: The column whose distinct values will be counted. The function accepts a single column reference, so this column should belong to a well-organized data model to ensure precise calculations.

For instance, consider a table labeled ‘Sales’ where you aim to determine the number of distinct customers. Your DAX formula would be:

DISTINCTCOUNT(Sales[CustomerID])

Understanding this syntax is crucial because it lays the groundwork for applying filters and building more complex DAX expressions. Mastering DISTINCTCOUNT enhances your analytical capabilities and streamlines data processes by reducing repetitive tasks, which in turn helps you refine strategies based on diverse customer interactions.
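One detail worth knowing before adding filters is how blanks are treated. The sketch below assumes the same hypothetical Sales[CustomerID] column and contrasts DISTINCTCOUNT, which counts BLANK as one distinct value, with DISTINCTCOUNTNOBLANK, which skips it; the measure names are illustrative.

```dax
-- Assumption: Sales[CustomerID] may contain blank values (for example, anonymous sales).

-- Counts every distinct value, treating BLANK as one of them.
Unique Customers = DISTINCTCOUNT ( Sales[CustomerID] )

-- Counts distinct values but ignores BLANK.
Unique Customers Excluding Blanks = DISTINCTCOUNTNOBLANK ( Sales[CustomerID] )
```

If the two measures differ by one, the column contains at least one blank entry in the current filter context.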

As pointed out by expert Glenn Seibert, for a business with 209 shops in North America, the ability to tally unique values precisely can greatly affect business decisions across different locations. Furthermore, combining DISTINCTCOUNT with a filter helps overcome typical obstacles in deriving insights from Power BI dashboards, such as data inconsistencies and lengthy report generation. Courses such as the ‘Power BI Advanced Topics Course’ can give professionals the skills needed to apply these techniques effectively, resulting in valuable outcomes and enhanced operational efficiency.

It is also worth knowing that some DAX functions are volatile (for example RAND, NOW, and TODAY), returning different results for the same arguments, which highlights the importance of understanding DAX nuances in practical applications.

Practical Examples of DISTINCTCOUNT Usage

Combining DISTINCTCOUNT with a filter can greatly enhance your analysis capabilities, especially as the market for data quality tools is expanding at 16% each year. Accurate counting is an essential part of overcoming technology implementation challenges and of improving operational efficiency through sound data management. Furthermore, when combined with Robotic Process Automation (RPA), DAX functions can streamline workflows, reduce manual tasks, and free up your team for more strategic, value-adding work.

Here are several practical examples to illustrate its effectiveness:

- Counting Unique Products Sold: To determine the number of unique products sold, use the following DAX formula. Any period selected in the report (for example, through a date slicer) restricts the count to that period:

DISTINCTCOUNT(Sales[ProductID])

This calculation is crucial as the retail market for unique product sales is projected to grow significantly, emphasizing the importance of accurate inventory analysis in 2024. Furthermore, counting distinct values prevents duplicate entries from inflating your summaries, ensuring the integrity of your data and freeing up resources for more strategic efforts.

- Finding Unique Customers: To assess how many distinct customers made purchases, the following formula is effective:

DISTINCTCOUNT(Sales[CustomerID])

Understanding your customer base is vital for retention strategies, as highlighted by Databel’s upcoming analysis of customer usage patterns and competitor comparisons. This method also keeps duplicate entries from skewing your understanding of customer dynamics, thus enhancing your overall operational efficiency. Integrating tailored AI solutions can further refine this analysis, providing deeper insights into customer behaviors.

- Evaluating Unique Transactions: To count the number of distinct transactions, you can apply:

DISTINCTCOUNT(Sales[TransactionID])

This metric is essential for evaluating sales performance and identifying trends over time. As noted by industry expert Anna Dunay, effective data management strategies, including the reuse of components and avoiding duplicates, can lead to improved product quality without compromising overall outcomes. By using RPA together with DAX functions, companies can streamline the data gathering process, ensuring that these metrics remain consistently current and precise.

These examples show the usefulness of DISTINCTCOUNT across different contexts, providing you with practical tools to enhance the effectiveness and precision of your analysis. Leveraging such Business Intelligence tools, along with RPA and AI solutions, can transform raw data into actionable insights, enabling informed decision-making that drives growth and innovation.
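When the period should be fixed inside the measure rather than chosen in the report, the count can be wrapped in CALCULATE. The following is a sketch under stated assumptions: the Sales table has an OrderDate column of a date type, and the quarter boundaries and measure name are arbitrary illustrations.

```dax
-- Assumption: Sales[OrderDate] is a date column; the period below is only an example.
Unique Products Sold Q1 2024 =
CALCULATE (
    DISTINCTCOUNT ( Sales[ProductID] ),
    -- Both conditions apply together, keeping orders from 1 Jan to 31 Mar 2024.
    Sales[OrderDate] >= DATE ( 2024, 1, 1 ),
    Sales[OrderDate] <= DATE ( 2024, 3, 31 )
)
```

Because these conditions replace any existing filter on Sales[OrderDate], the measure returns the Q1 2024 figure regardless of which dates are selected elsewhere in the report.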

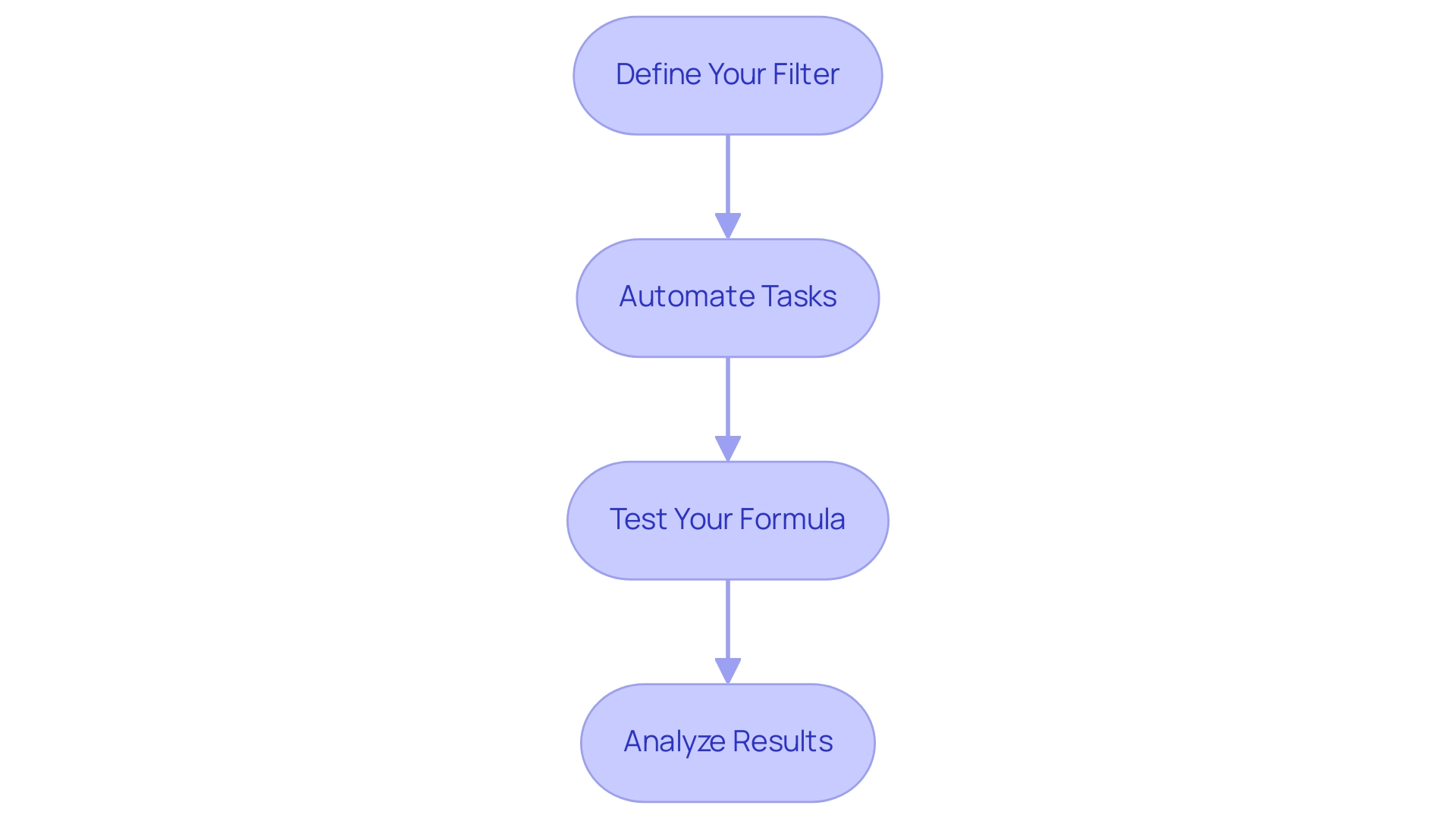

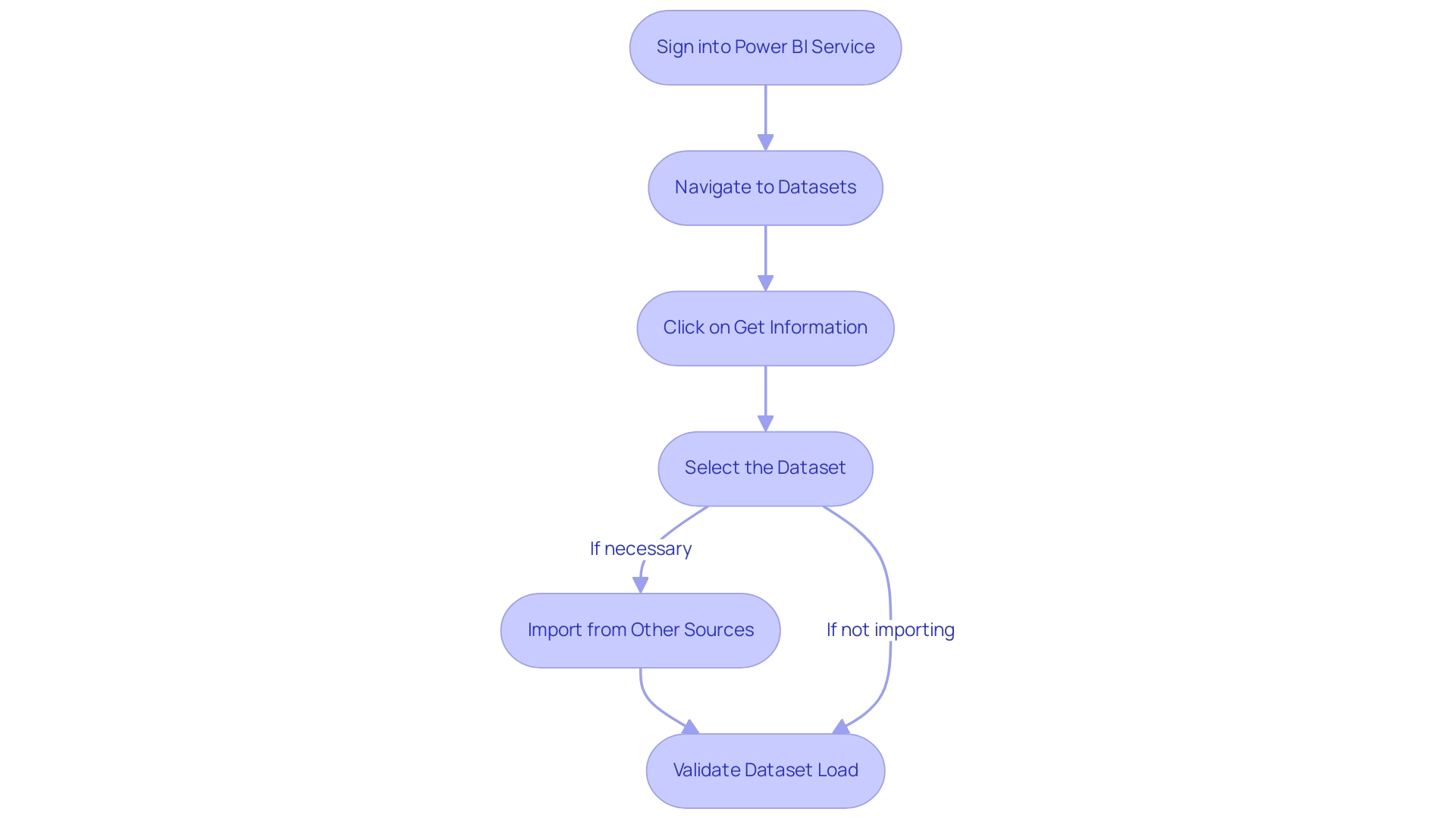

Applying Filters with DISTINCTCOUNT: A Step-by-Step Approach

To effectively apply filters with DISTINCTCOUNT in DAX while leveraging Robotic Process Automation (RPA) to enhance operational efficiency, follow these actionable steps:

- Define Your Filter: Begin by establishing the criteria for your filter. For instance, if your goal is to count unique customers who made purchases in a specific year, your filter criteria will focus on that year. You can use the ENDOFYEAR(<dates>) function to dynamically reference the end of the year, ensuring your analysis remains relevant.

- Write the DAX Expression: Combine DISTINCTCOUNT with the FILTER function inside CALCULATE. DISTINCTCOUNT accepts only a single column reference, so the filter is passed to CALCULATE rather than to DISTINCTCOUNT itself. Here’s a practical example:

CALCULATE(DISTINCTCOUNT(Sales[CustomerID]), FILTER(Sales, Sales[Year] = <specific_year>))

- Automate Tasks: Consider automating manual activities related to your DAX processes, such as data entry, report generation, or data cleaning, using RPA. This can significantly reduce errors and save time, allowing your team to focus on strategic insights.

- Test Your Formula: Once your DAX expression is written, test it within your model to verify that it yields the expected results. Adjust your filter criteria as needed to refine your analysis. Automating this process with RPA can further enhance accuracy and efficiency.

- Analyze Results: Use the output to extract meaningful insights from your data. For example, understanding customer behavior in a specific year can significantly enhance your marketing strategies. RPA can assist by automating the collection and reporting processes, facilitating quicker access to actionable insights.
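The writing and testing steps above can be sketched as a single DAX query, runnable in a tool such as DAX Studio or the DAX query view. It assumes a hypothetical Sales table with Year and CustomerID columns and uses 2023 as an arbitrary example year; the measure name is illustrative.

```dax
DEFINE
    -- Query-scoped measure: unique customers who purchased in the chosen year.
    MEASURE Sales[Unique Customers 2023] =
        CALCULATE (
            DISTINCTCOUNT ( Sales[CustomerID] ),
            Sales[Year] = 2023
        )

EVALUATE
ROW (
    -- Result of the measure under test.
    "Via DISTINCTCOUNT", [Unique Customers 2023],
    -- Independent cross-check: filter the rows, keep one column, remove duplicates, count.
    "Via COUNTROWS",
        COUNTROWS (
            DISTINCT (
                SELECTCOLUMNS (
                    FILTER ( Sales, Sales[Year] = 2023 ),
                    "CustomerID", Sales[CustomerID]
                )
            )
        )
)
```

If the two columns of the returned row agree, the filtered measure behaves as intended; a mismatch usually points to a filter that is not being applied the way you expect.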

This systematic method not only enables you to apply filters with DISTINCTCOUNT effectively but also improves your overall analysis capabilities. As Joleen Bothma states, “Discover how to make your Power BI reports more insightful, informative, and interactive with this advanced analytical features tutorial.” For further insights, consider exploring her advanced tutorial on analytical features in Power BI, which can elevate your reporting to new levels of interactivity and insight.

Additionally, the case study titled ‘Advanced Analytical Features in Power BI Tutorial’ illustrates how users have successfully enhanced their reports with advanced features, increasing interactivity and insight.

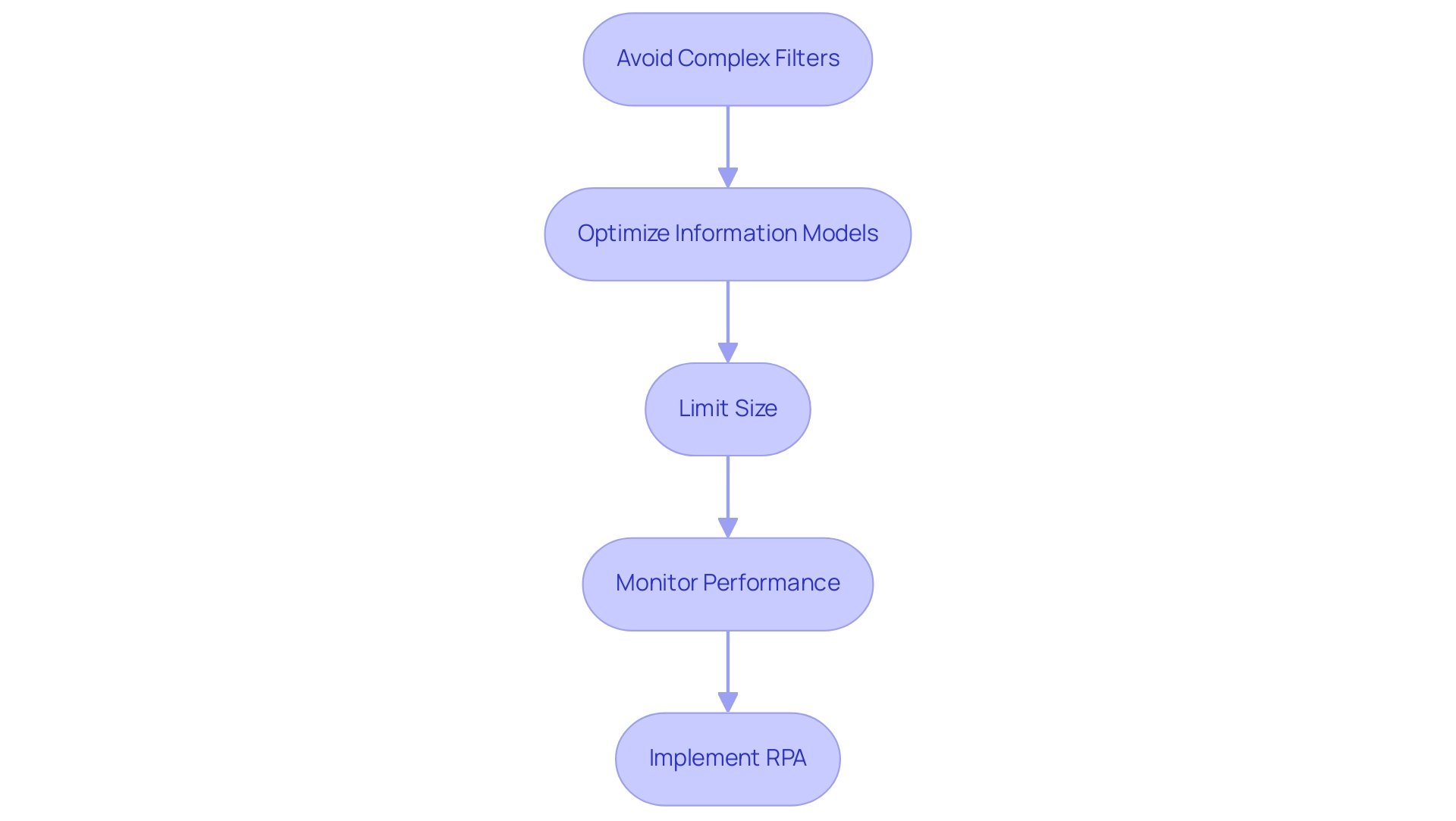

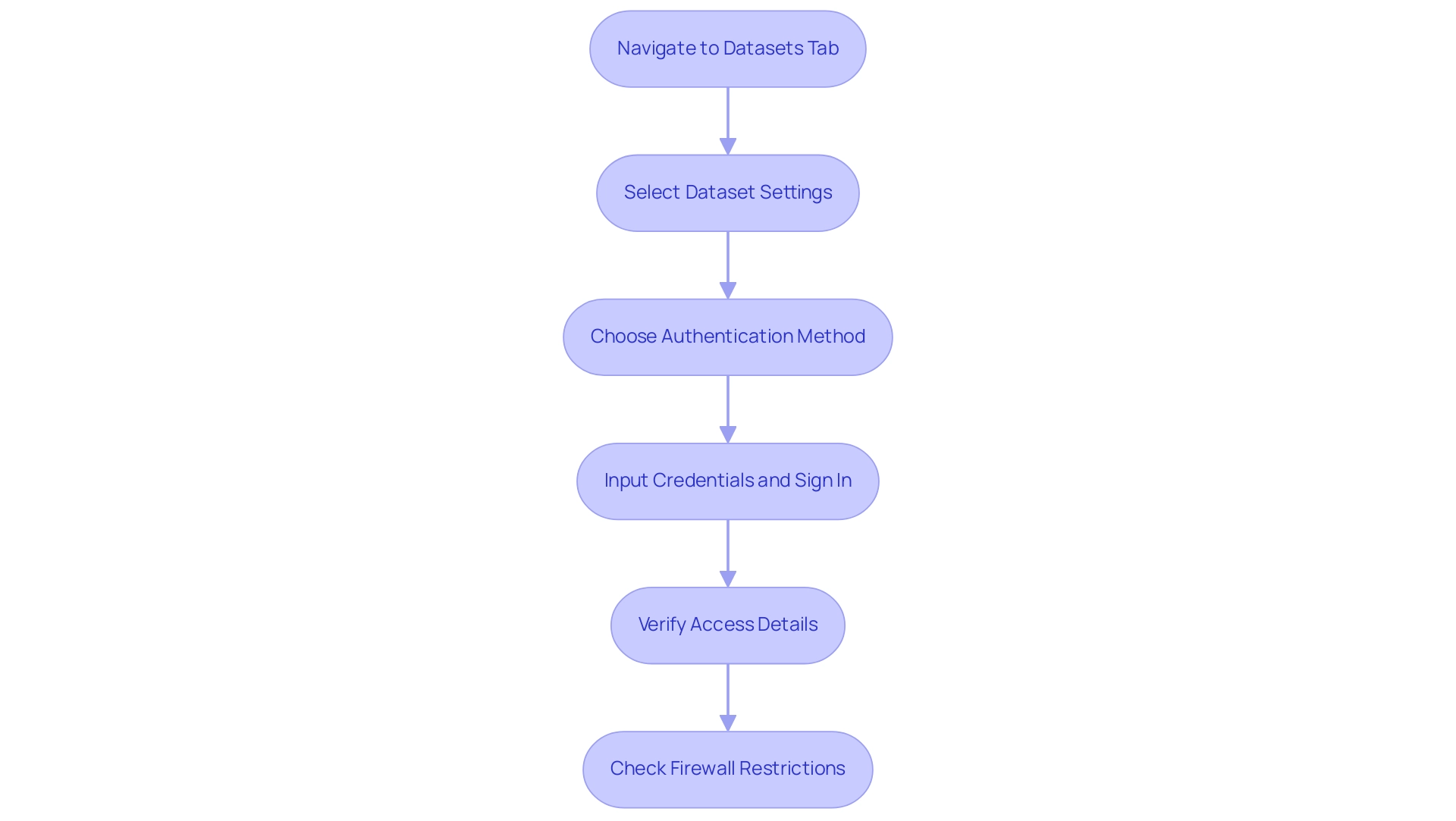

Performance Considerations for DISTINCTCOUNT in DAX

To enhance the performance of your DAX calculations when utilizing DISTINCTCOUNT, consider the following key strategies that align with Robotic Process Automation (RPA) principles, which can automate and streamline these processes:

- Avoid Complex Filters: Intricate filtering can significantly impede performance. Simplify your filters wherever feasible to boost speed, mirroring the efficiency RPA brings to workflow automation. By automating the filtering process, RPA can ensure that only essential data is processed, enhancing overall performance.

- Optimize Data Models: A well-structured data model is crucial. Proper optimization can lead to substantial improvements in DAX calculation performance, facilitating smoother operations. For instance, DAX Studio can export and import VertiPaq model metadata using .vpax files, aiding model optimization discussions and collaboration. RPA can automate parts of the modeling process, ensuring consistent updates and optimizations without manual intervention.

- Limit Size: When handling extensive datasets, applying a filter before DISTINCTCOUNT runs reduces the number of rows processed, thereby enhancing efficiency, an approach that complements RPA’s capability to streamline workflows. RPA can automate the aggregation process, ensuring that only relevant data is analyzed, thus improving calculation speed.

- Monitor Performance: Leverage tools like DAX Studio, which captures and formats Analysis Services traces, providing a user-friendly alternative to SQL Server Profiler. This tool enables you to analyze the performance of your DAX queries effectively and identify any bottlenecks. As Miguel Felix, a Super User, noted, “Can you share a sample file? I made a test file and it worked properly.” This highlights the importance of practical testing in optimizing performance, akin to testing RPA implementations for maximum effectiveness.

By implementing these performance optimization strategies in conjunction with RPA, users can not only unlock the full potential of their DAX calculations but also free up valuable team resources for more strategic, value-adding work. This dual approach leads to faster insights and more informed operational decision-making, helping navigate the complexities of the evolving AI landscape.
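To make the advice on simpler filters concrete, the sketch below contrasts two ways of writing the same filtered distinct count. It assumes a hypothetical Sales table with Year and CustomerID columns; actual timings depend on the model, so treat the comments as a rule of thumb to verify in DAX Studio rather than a guarantee.

```dax
-- Table filter: FILTER iterates the rows of Sales and passes the whole filtered table
-- to CALCULATE, which tends to be more expensive on large tables.
Unique Customers 2023 Table Filter =
CALCULATE (
    DISTINCTCOUNT ( Sales[CustomerID] ),
    FILTER ( Sales, Sales[Year] = 2023 )
)

-- Column filter: only the Year column is filtered, which is usually cheaper.
-- KEEPFILTERS makes the condition intersect with any existing filter on Sales[Year]
-- instead of replacing it, keeping the result close to the table-filter version.
Unique Customers 2023 Column Filter =
CALCULATE (
    DISTINCTCOUNT ( Sales[CustomerID] ),
    KEEPFILTERS ( Sales[Year] = 2023 )
)
```

Running both measures against the same visual and comparing server timings is a practical way to confirm whether the simpler filter pays off in a given model.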

Conclusion

Harnessing the power of DAX functions like COUNTROWS and DISTINCTCOUNT is essential for any data analyst seeking to enhance operational efficiency and drive informed decision-making within their organization. These functions serve as critical tools in understanding dataset composition, whether through counting total entries or identifying unique values. By mastering these techniques, analysts can streamline their reporting processes and ensure data integrity, ultimately leading to more strategic insights.

The practical applications of DISTINCTCOUNT in various scenarios, such as assessing unique products sold or distinct customer interactions, illustrate its importance in contemporary data analysis. Coupled with Robotic Process Automation (RPA), these DAX functions can significantly reduce manual workloads and enhance data accuracy, allowing teams to focus on higher-value tasks. The integration of automation not only expedites data tasks but also ensures that insights remain timely and relevant.

As organizations continue to embrace data-driven strategies, the ability to effectively utilize DAX functions will be paramount. By implementing the discussed strategies and performance considerations, analysts can optimize their workflows and leverage insights that drive growth and innovation. The journey toward operational excellence begins with a solid understanding of these powerful tools, paving the way for data analysts to become key contributors to their organizations’ success.

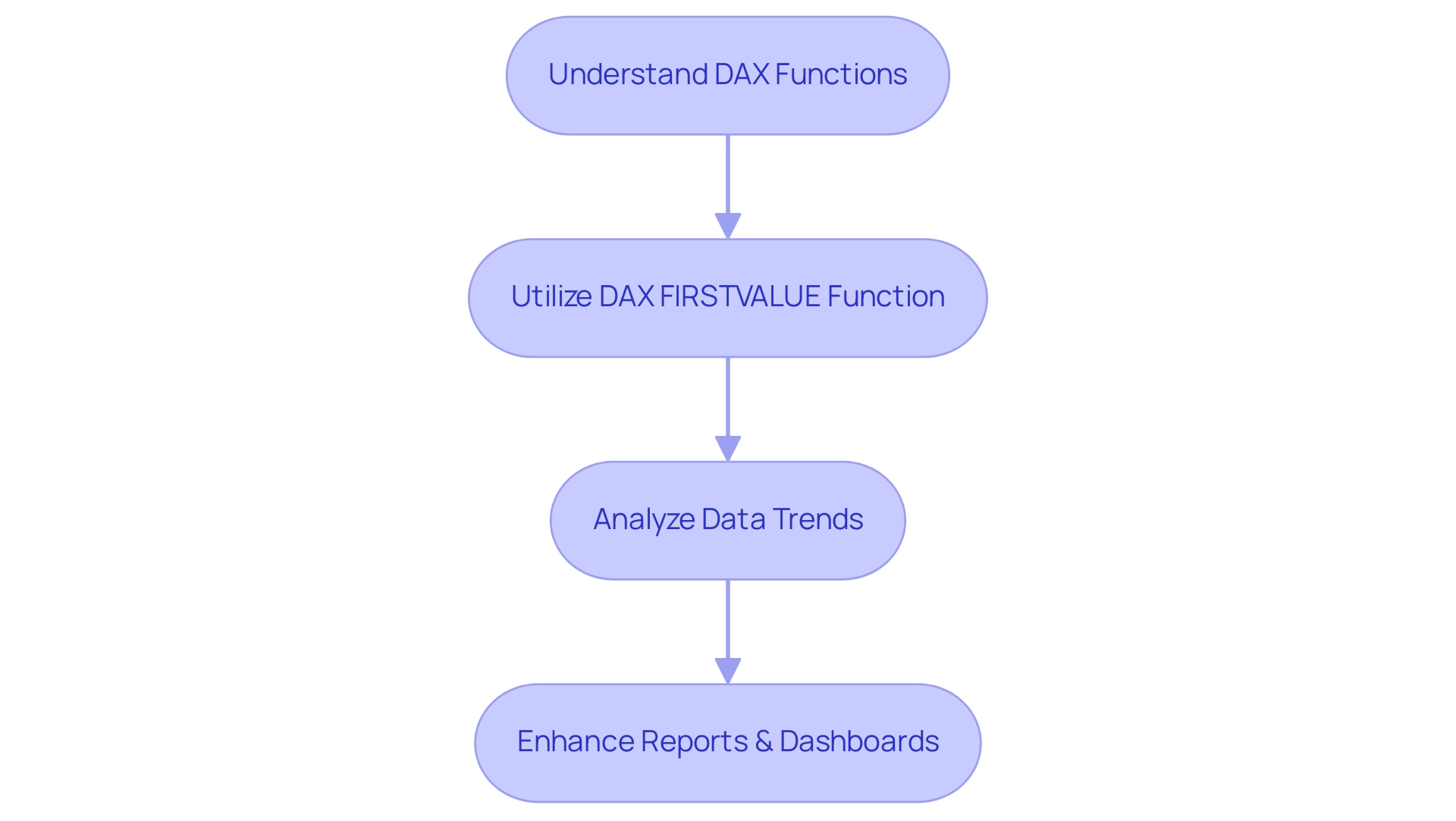

Introduction

In the dynamic world of data analysis, the DAX FIRSTVALUE function emerges as a pivotal tool for professionals seeking to extract meaningful insights from complex datasets. By enabling users to pinpoint the first occurrence of a value within a specified context, FIRSTVALUE empowers analysts to uncover trends and make informed decisions that drive operational efficiency.

As organizations navigate the challenges of data management, mastering this function becomes essential for enhancing reporting capabilities and fostering a culture of data-driven decision-making. With the integration of advanced technologies like Robotic Process Automation (RPA), the potential for streamlining workflows and improving accuracy is greater than ever.

This article delves into the intricacies of the FIRSTVALUE function, exploring its syntax, practical applications, and best practices to ensure users can harness its full potential in their analytical endeavors.

Introduction to the DAX FIRSTVALUE Function

The FIRSTVALUE function is presented here as a crucial analysis tool, enabling users to obtain the first value in a column based on a specified filter context. This capability is invaluable for analysts aiming to track trends over time or extract initial values from complex datasets, ultimately driving data-driven insights that enhance operational efficiency. By using it effectively, analysts can improve their reports and dashboards and build a foundation for more informed decision-making.

As Suhaib Arshad, a Sales Executive turned Data Scientist with over 3 years of experience addressing challenges in the Ecommerce, Finance, and Recruitment domains, emphasizes, the ability to use such DAX functions can greatly enhance one’s modeling skills in Power BI or Excel. Moreover, Analysis Services extends Power BI with advanced modeling features, compression, and query optimization, which further supports the effective use of DAX. Understanding and applying FIRSTVALUE not only improves analytical skills but also gives users tools they need in a rapidly changing analytics environment.

Notably, valid dates in DAX are all dates after March 1, 1900, which highlights the need for precise date handling in data models. As we move into 2024, mastering DAX functions such as FIRSTVALUE is essential, as they form the foundation of efficient modeling and insightful analysis. Furthermore, Robotic Process Automation (RPA) tools such as EMMA RPA and Power Automate can streamline manual workflows and address issues such as time-consuming report generation and data inconsistencies, ultimately promoting operational efficiency and enabling teams to concentrate on strategic initiatives.

The case study on comprehending information models and Analysis Services demonstrates how these connections facilitate cross-table analysis and deeper insights, emphasizing the practical applications and results of using DAX operations in real-world situations. Furthermore, tackling the difficulty of deriving valuable insights from data is essential, and mastering DAX capabilities is a crucial step in overcoming this challenge.

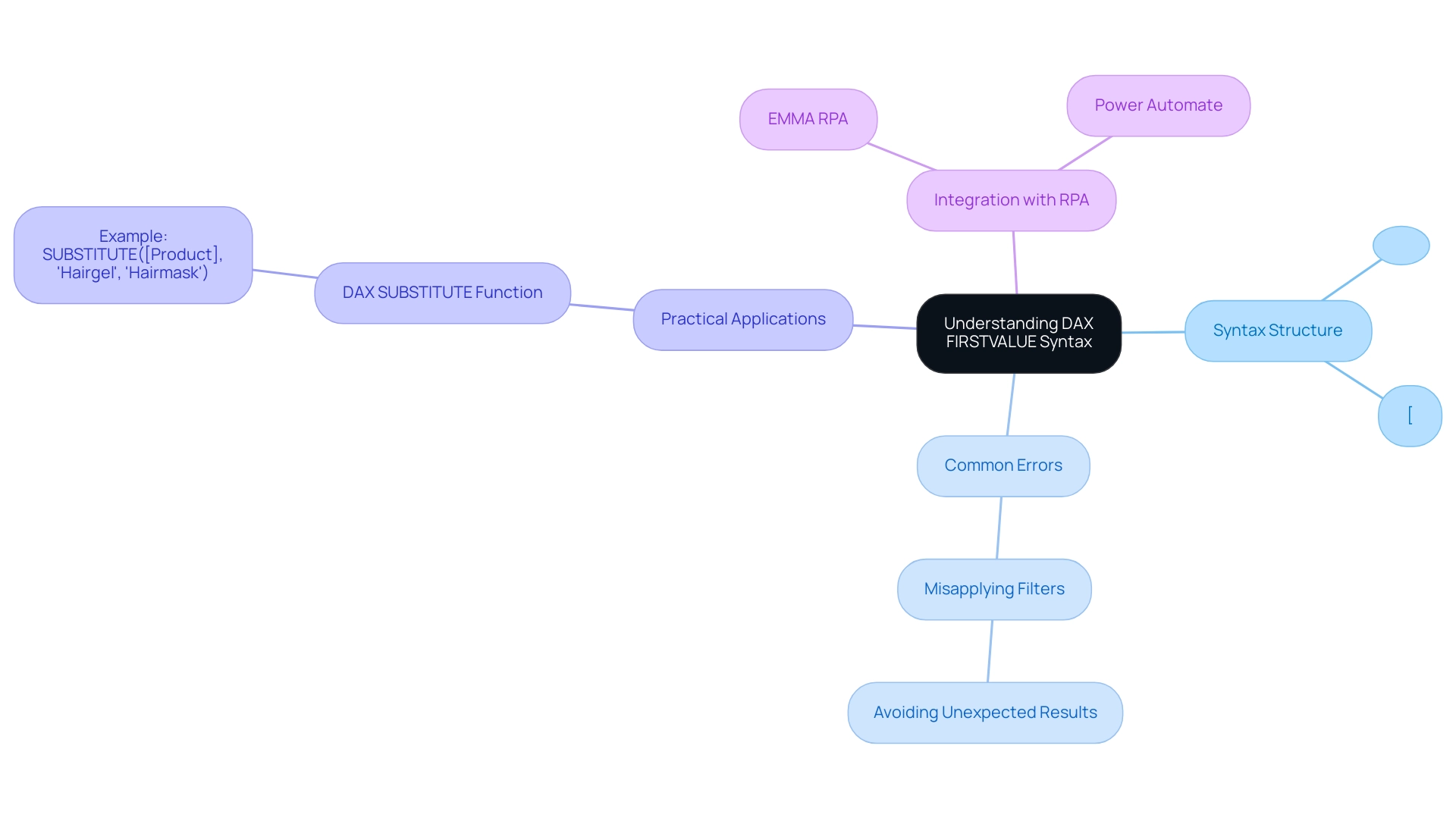

Understanding the Syntax of FIRSTVALUE

The syntax of the function is presented as follows: FIRSTVALUE(<column>, [<filter>]). Here, <column> is the column from which the first value is retrieved, while the optional [<filter>] specifies any conditions that must be met for the function to return a result. Note that Microsoft’s DAX function reference lists FIRSTDATE, FIRSTNONBLANK, and FIRSTNONBLANKVALUE rather than a function named FIRSTVALUE, so confirm which of these is available in your environment. For instance, to obtain the earliest order date from a sales table, you can use the formula: MIN(Sales[OrderDate]).

Mastering this syntax is essential for using FIRSTVALUE effectively in your analysis, particularly in a data-rich environment where actionable insights drive growth and innovation.

Common errors often arise from misapplying the filter, leading to unexpected results. By understanding these nuances, you can avoid pitfalls and enhance your data modeling skills, ultimately improving operational efficiency through effective use of BI tools.
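As a hedged illustration of retrieving a first value with functions that are documented in the DAX reference, the measures below assume a hypothetical Sales table with an OrderDate column and an Amount column (Amount is an assumption introduced only for this sketch); each definition would be entered as a separate measure.

```dax
-- Earliest order date in the current filter context.
First Order Date = MIN ( Sales[OrderDate] )

-- Same date obtained with FIRSTDATE, which returns a one-row, one-column table
-- that can be used wherever a single value is expected.
First Order Date via FIRSTDATE = FIRSTDATE ( Sales[OrderDate] )

-- First order date on which the total amount is not blank.
-- CALCULATE is needed so that SUM is evaluated for each individual date.
First Date With Sales =
FIRSTNONBLANK (
    Sales[OrderDate],
    CALCULATE ( SUM ( Sales[Amount] ) )
)
```

The filter mistakes mentioned above typically show up here as a first date that ignores a slicer or a region selection, so it is worth checking each measure in a visual that is filtered in different ways.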

Additionally, when testing formulas as DAX queries, note that the optional START AT clause follows the ORDER BY clause in an EVALUATE statement: ORDER BY defines the order of the query results, and START AT defines the value at which the ordered results begin. For users working with DAX formulas in SSDT, it also helps to be aware of SSDT compatibility, which ranges from v14.0.1.432 to v16.0.70.21.
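The clause order can be seen in a short query. This is a sketch that assumes a hypothetical Sales table whose CustomerID column is numeric; the starting value 1000 is arbitrary.

```dax
EVALUATE
SUMMARIZECOLUMNS (
    Sales[CustomerID],
    -- Number of sales rows for each customer.
    "Order Rows", COUNTROWS ( Sales )
)
-- ORDER BY must come before START AT.
ORDER BY Sales[CustomerID] ASC
-- Return only rows whose CustomerID is 1000 or greater, following the sort order.
START AT 1000
```

START AT takes one constant per ORDER BY column, matched by position, so a query sorted on two columns can supply up to two starting values.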

To illustrate practical applications, consider the SUBSTITUTE function, which replaces specified text in a string with new text. For instance, SUBSTITUTE([Product], "Hairgel", "Hairmask") substitutes “Hairgel” with “Hairmask” in the Product string (DAX string literals take double quotes), illustrating how DAX functions can be applied in real-world scenarios. As highlighted by expert Choy Chan Mun, ‘Excited to enhance your Power BI skills with this comprehensive guide! 💡’ Functions like this are powerful tools in your DAX arsenal, enabling clearer insights and more precise interpretations while addressing the challenges of report creation and data inconsistencies.

Moreover, to enhance operational efficiency, consider integrating RPA solutions like EMMA RPA and Power Automate to automate repetitive tasks, thereby freeing up resources for more strategic initiatives. Explore how these tools can transform your processes and drive growth in your organization.

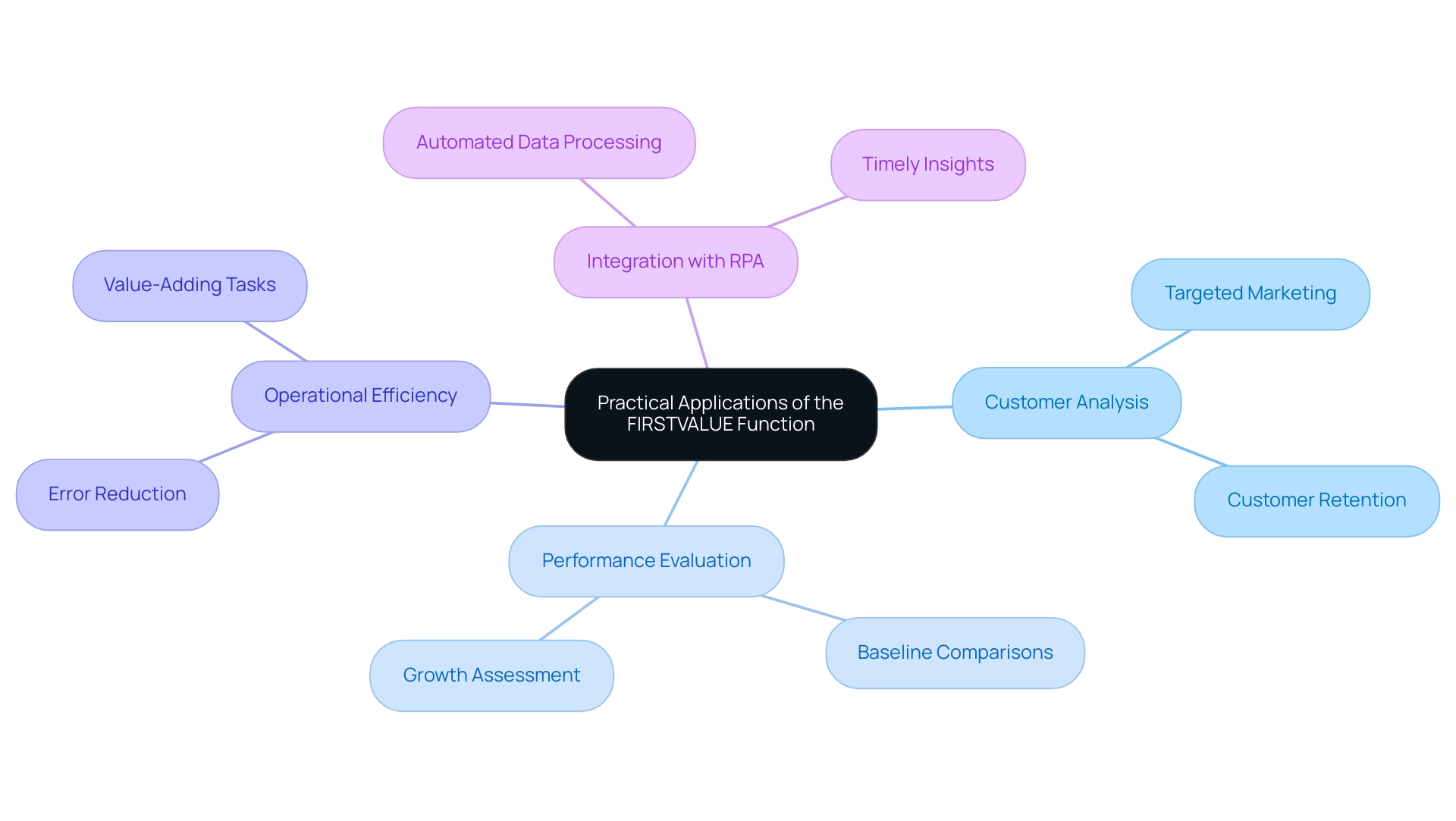

Practical Applications of the FIRSTVALUE Function

The FIRSTVALUE function serves as a powerful tool in various analytical contexts, enabling users to extract critical insights from their data. For instance, in customer analysis reports, it can identify each customer’s first purchase date, which is instrumental in refining targeted marketing strategies. By understanding when a customer first engaged with the brand, organizations can tailor their outreach efforts to enhance customer retention and loyalty.
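A first-purchase report of this kind can be sketched as a DAX query. It assumes a hypothetical Sales table with CustomerID and OrderDate columns and uses MIN, which is certain to be available, to find the earliest date for each customer.

```dax
EVALUATE
ADDCOLUMNS (
    -- One row per customer present in Sales.
    VALUES ( Sales[CustomerID] ),
    -- CALCULATE restricts Sales to the current customer before taking the earliest date.
    "First Purchase Date", CALCULATE ( MIN ( Sales[OrderDate] ) )
)
-- Oldest customers first.
ORDER BY [First Purchase Date] ASC
```

The resulting table can feed a cohort analysis, for example by grouping customers by the year or month of their first purchase.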

Furthermore, this method can be applied to evaluate performance metrics over time, establishing a baseline for future comparisons and enabling clear assessments of growth or decline. This capability is further enhanced when integrated with Robotic Process Automation (RPA), which can automate the collection and processing of information, ensuring that the insights derived are timely and relevant. RPA not only reduces errors but also frees up team members to focus on more strategic, value-adding tasks, thereby enhancing overall operational efficiency.

Alongside FIRSTVALUE, the CONCATENATEX function can be used to join the values produced for each row of a table into a single text string, producing detailed reports that combine textual insights with numerical data. This blend of capabilities simplifies analysis and enables decision-makers to use past insights for strategic planning. By automating the workflow with RPA, Directors of Operations Efficiency can significantly reduce the time spent on repetitive processing tasks, allowing them to focus on interpreting insights that drive business growth.
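As a small sketch of CONCATENATEX in this role, the measure below lists the distinct products in the current filter context as comma-separated text. It assumes a hypothetical Sales table with a ProductID column; the measure name and delimiter are illustrative.

```dax
Products Purchased =
CONCATENATEX (
    -- Distinct product identifiers visible in the current filter context.
    VALUES ( Sales[ProductID] ),
    -- Expression evaluated for each row; non-text values are converted to text.
    Sales[ProductID],
    -- Delimiter placed between values.
    ", ",
    -- Sort the values before joining them.
    Sales[ProductID], ASC
)
```

Placed next to a customer identifier in a table visual, the measure shows which products each customer bought alongside numeric columns such as a first purchase date.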

As emphasized by Prasanth Pandey, “In Power BI, implicit measures are automatically created for basic aggregations when fields are included in visuals,” signifying the significance of effectively utilizing DAX expressions to improve analytical capabilities. Furthermore, for extensive datasets, the refined median metric can execute in under half a second, demonstrating the effectiveness of DAX operations in managing significant information. This efficiency is crucial for Directors of Operations Efficiency who rely on timely insights for informed decision-making, further bolstered by the integration of RPA into their analytical processes.

The rapidly evolving AI landscape necessitates the adoption of technologies like RPA to overcome the challenges posed by manual, repetitive tasks, ensuring organizations remain competitive and agile.

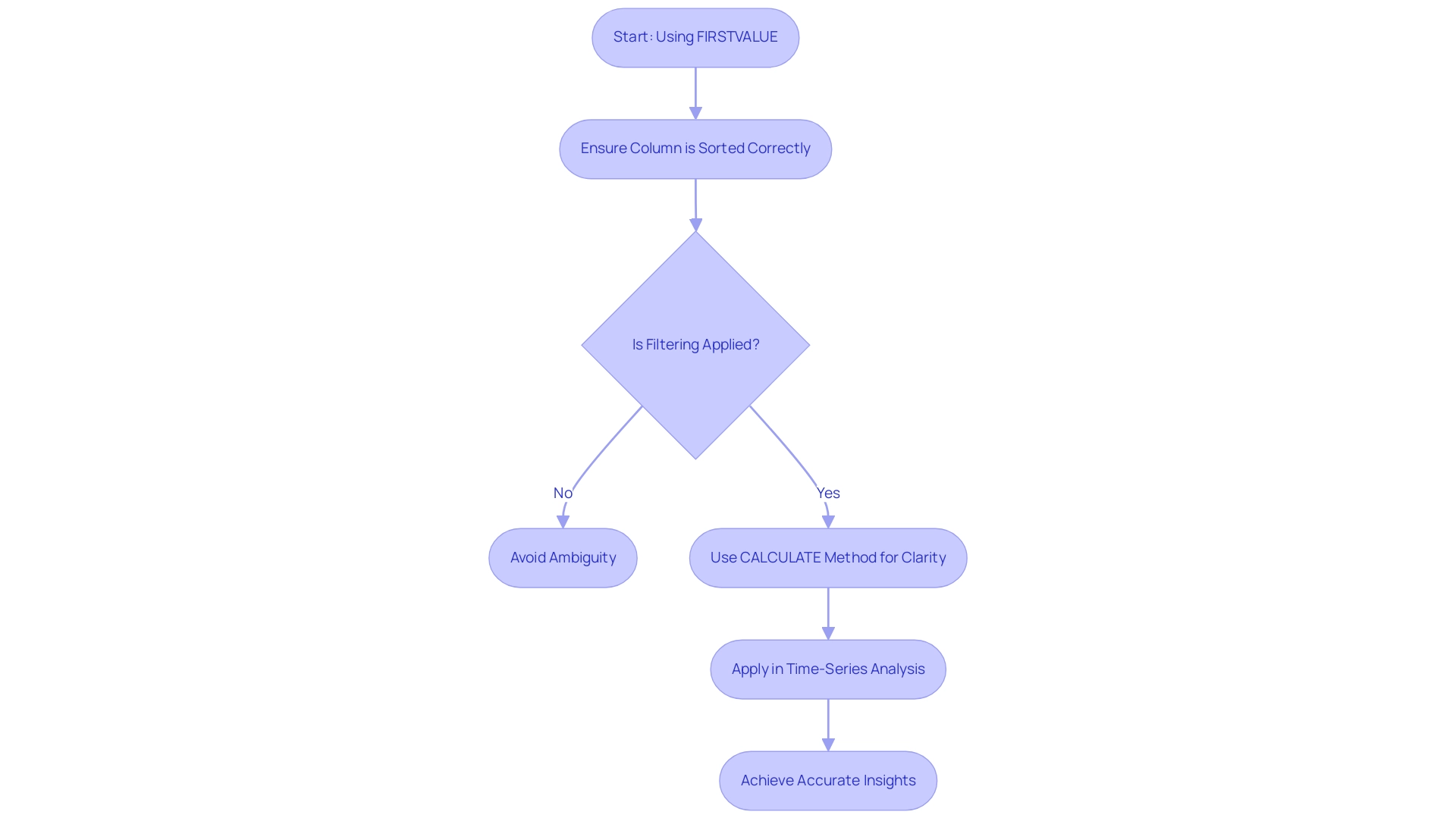

Best Practices and Common Pitfalls in Using FIRSTVALUE

Using FIRSTVALUE effectively requires careful consideration of the column’s order, since the specified column must be sorted correctly to obtain reliable results. Failing to do so can lead to insights that diverge from expectations, a common challenge in Power BI dashboards alongside time-consuming report creation and data inconsistencies. Moreover, it’s essential to avoid using this function in scenarios where multiple values may be returned without an explicit filter, which can introduce ambiguity into your analysis.

A best practice is to consistently pair the function with appropriate filtering to improve the accuracy of your results. For instance, using it together with CALCULATE provides a clearer context: CALCULATE(FIRSTVALUE(Sales[OrderDate]), Sales[Region] = "North"). This formula extracts the first order date specifically for the North region, providing clarity and precision in your analysis. If FIRSTVALUE is not available in your environment, MIN(Sales[OrderDate]) or FIRSTDATE(Sales[OrderDate]) in its place returns the same earliest date.

In practical scenarios such as time-series analysis, FIRSTVALUE can be crucial in recognizing trends and patterns over time. As Henry Chukwunwike Morgan-Dibie puts it, ‘Simplifying complex topics to empower understanding and decision-making,’ a principle that applies equally to the use of DAX functions. By integrating Business Intelligence best practices and automating manual workflows with RPA solutions like EMMA RPA and Power Automate, organizations can streamline operations and make informed decisions that drive growth and innovation.

Moreover, calculated columns in Power BI enable users to generate new information through user-defined expressions, further demonstrating how this concept integrates within the wider context of DAX capabilities and their uses. The visual representation of automation, depicted in the stylized illustration of a human figure with robotic counterparts, underscores the transformative role of technology in enhancing operational efficiency.

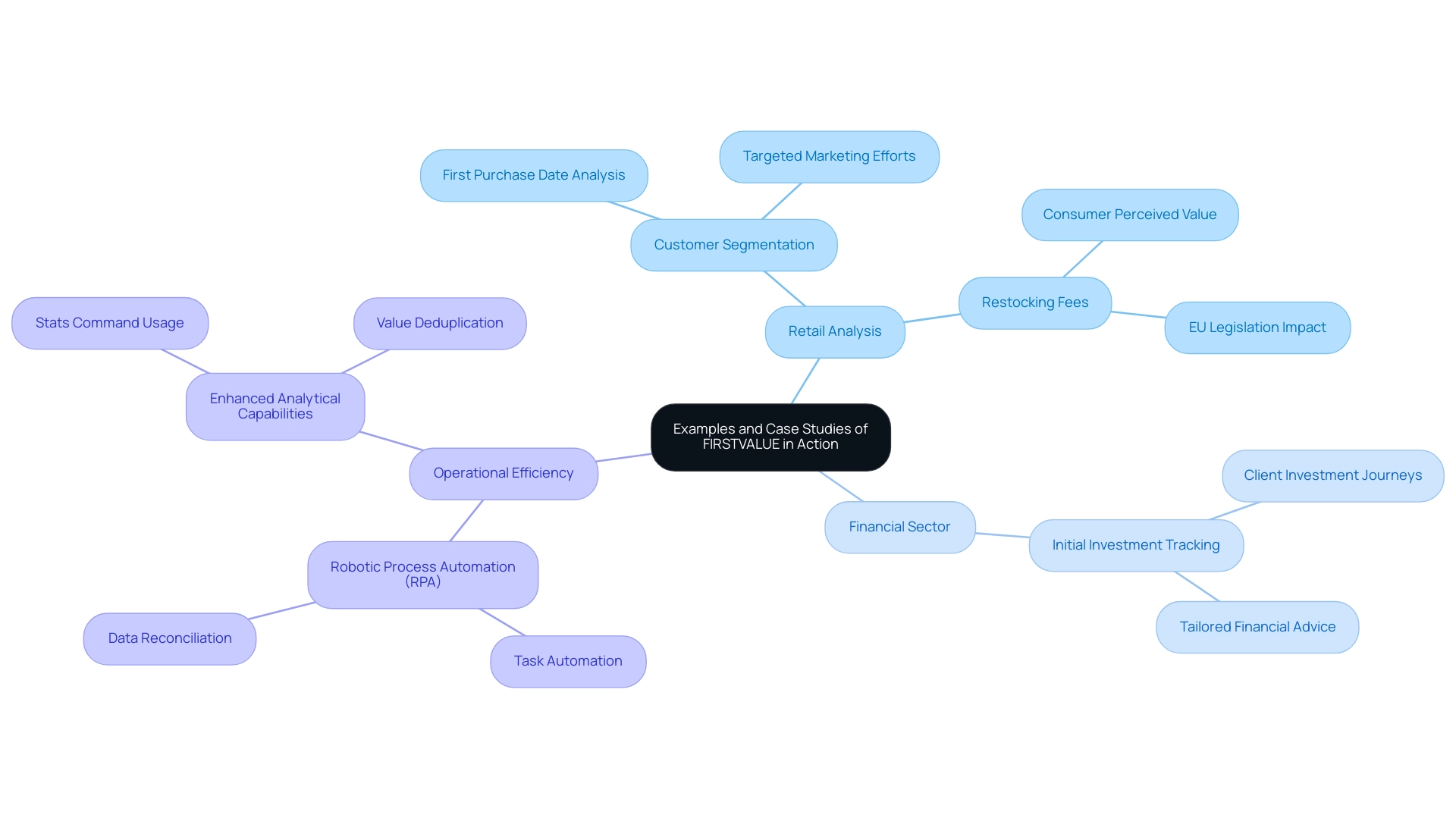

Examples and Case Studies of FIRSTVALUE in Action

In retail analysis, FIRSTVALUE can profoundly influence the understanding of customer purchasing behavior. For example, consider a case study named ‘Impact of Restocking Fees and EU Legislation,’ which explores how a leading retail firm used the function to assess its sales data. By pinpointing the first purchase date for each customer, the retailer was able to segment its clientele effectively based on distinct buying patterns.

This segmentation not only improved targeted marketing efforts but also optimized inventory management strategies by aligning stock levels with customer preferences.

Moreover, the integration of Robotic Process Automation (RPA) within this analytical framework can further streamline operations by automating tasks such as data entry, report generation, and data collection. This automation frees up valuable resources for more strategic initiatives, enhancing operational efficiency in a rapidly evolving AI landscape. RPA solutions are designed to adapt to new technologies, allowing businesses to remain agile and responsive to changes in the market.

In addition, this case study highlights how customer perceptions play a critical role in implementing restocking fees, especially in light of new EU legislation regarding free returns.

As Rita Maria DiFrancesco from Politecnico di Milano states, ‘Retailers charging a restocking fee should put more effort into increasing consumer perceived value, as customers will accept a restocking fee if they view the online purchase as having higher quality than a brick-and-mortar purchase.’ This insight highlights the necessity for retailers to enhance perceived value to successfully implement such fees, aligning with the findings from the case study.

Similarly, in the financial sector, analysts can use FIRSTVALUE to track the initial investment dates of their clients. This capability enables financial advisors to offer tailored advice that reflects each client’s unique investment journey, ultimately fostering stronger relationships and driving client satisfaction. Furthermore, using RPA to automate regular analytical tasks, such as transaction processing and data reconciliation, can improve the accuracy and speed of the insights obtained from such data.

Practical applications of this concept illustrate how data can be converted into actionable strategies, leading to enhanced operational efficiency and profitability. Comparable techniques exist outside DAX: in Splunk’s search language, for example, using the stats command with a multivalue BY field can deduplicate values and return the average of another field, which can complement the analysis performed with FIRSTVALUE.

Conclusion

The DAX FIRSTVALUE function is a transformative tool that allows analysts to extract crucial insights from complex datasets, making it indispensable for any data-driven organization. By mastering the syntax and practical applications of FIRSTVALUE, users can not only track initial values but also uncover trends that inform strategic decision-making. This capability is further enhanced by the integration of Robotic Process Automation (RPA), which streamlines workflows and promotes operational efficiency.

Best practices for using FIRSTVALUE emphasize the importance of accurate filtering and sorting, ensuring that the insights derived are both reliable and actionable. By combining FIRSTVALUE with other DAX functions, such as CALCULATE, analysts can refine their results and gain deeper insights into their data. Real-world case studies illustrate the function’s effectiveness in diverse sectors, from retail to finance, demonstrating how it can drive targeted marketing and optimize resource management.

As organizations continue to navigate the complexities of data analysis, the ability to leverage tools like FIRSTVALUE will be central to achieving operational excellence. Embracing these advanced analytical techniques, alongside automation solutions, empowers teams to focus on strategic initiatives that foster growth and innovation. Mastering DAX functions is not just a technical skill but a vital step towards cultivating a culture of informed decision-making in an increasingly competitive landscape.

Introduction

In the age of information, the ability to transform raw data into actionable insights is paramount for organizations striving to thrive in a competitive landscape. Data transformation in Power BI emerges as a pivotal process, enabling businesses to clean, shape, and integrate diverse data sources with precision.

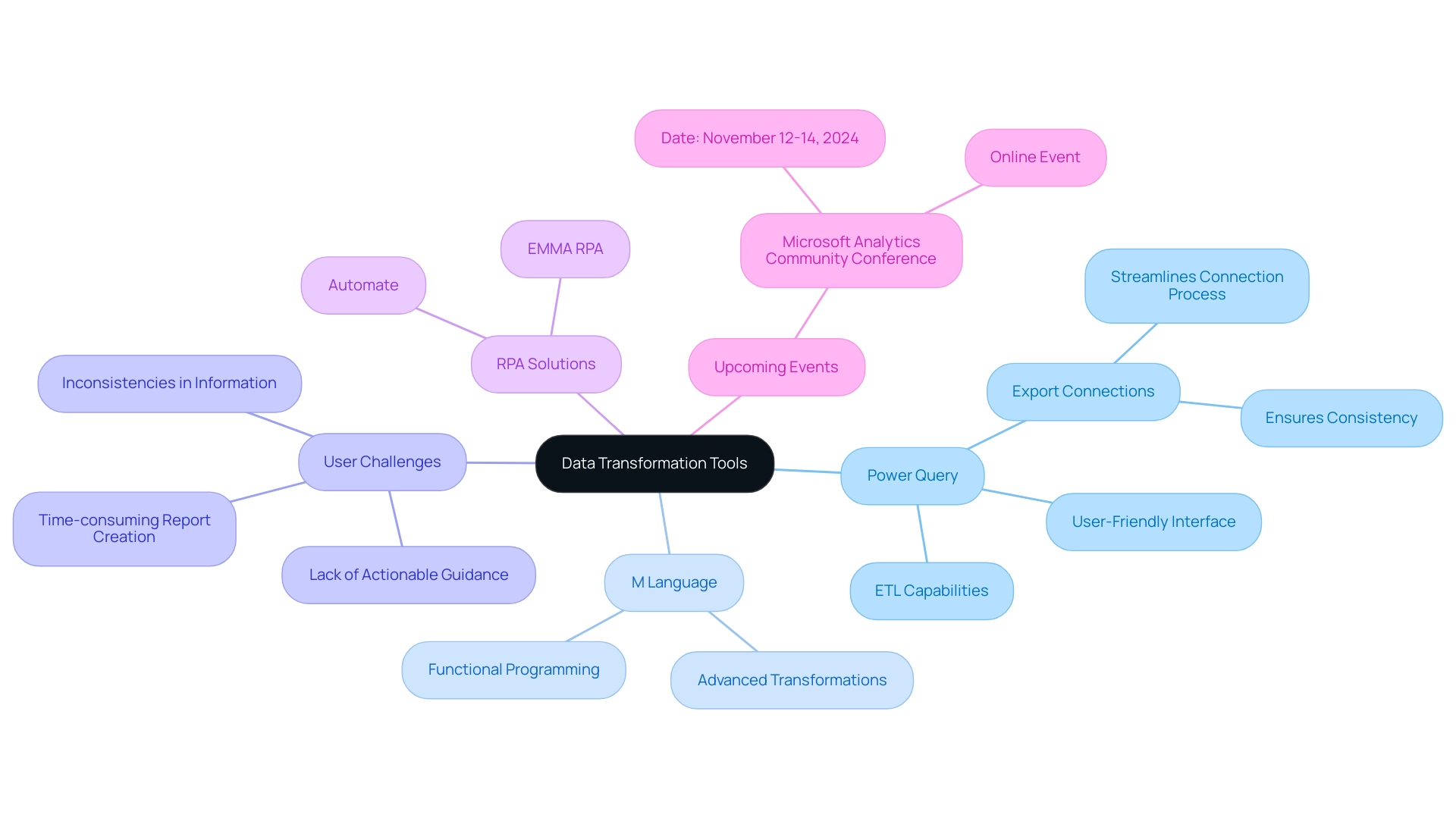

As companies grapple with challenges such as economic uncertainty and the complexities of digital transformation, understanding the nuances of data management becomes essential for operational success. By leveraging advanced tools like Power Query and M Language, along with innovative strategies such as Robotic Process Automation (RPA), organizations can streamline workflows, enhance data quality, and empower teams to focus on strategic initiatives.

This article delves into the critical techniques and best practices for effective data transformation, illuminating the path toward maximizing analytics capabilities and driving informed decision-making in an ever-evolving digital landscape.

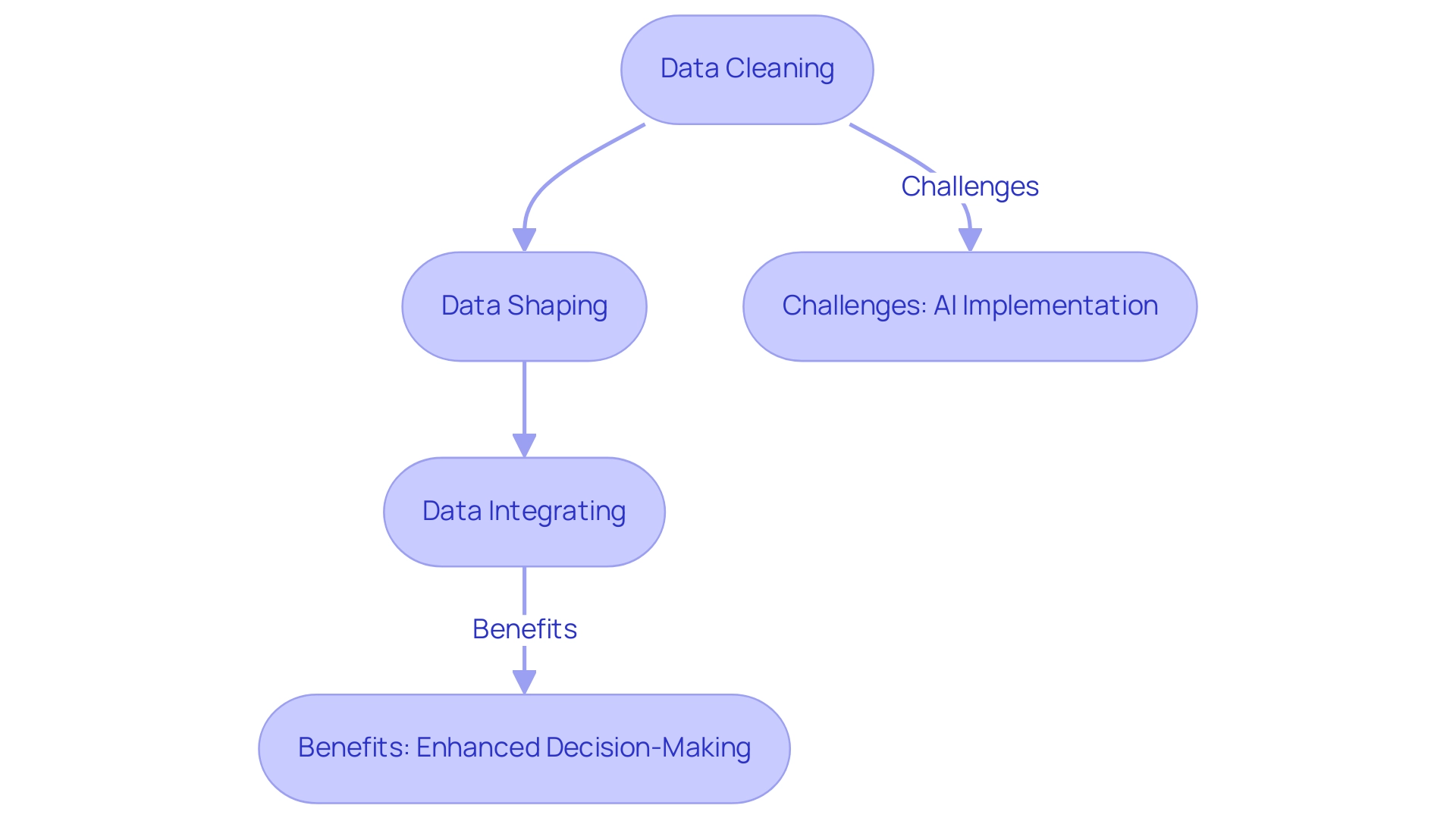

Understanding Data Transformation in Power BI

The process of data transformation in Power BI is essential, involving the conversion of raw data into a structured format suitable for analysis and reporting. This intricate process includes:

- Cleaning

- Shaping

- Integrating data from diverse sources

Together, these steps ensure both accuracy and consistency. As organizations traverse an increasingly intricate digital environment, understanding data transformation in Power BI is essential for enhancing analytics capabilities.

Moreover, addressing prevalent views regarding AI, especially the difficulties of implementation, such as inadequate master information quality and organizational reluctance to adopt AI, is crucial for operational success. A recent report indicates that 22% of IT leaders view economic uncertainty as a significant challenge to implementing digital changes, underscoring the need for reliable data practices amidst fluctuating market conditions.

Effective data transformation in Power BI not only enhances data quality but also empowers businesses to make informed decisions swiftly, improving their ability to adapt to market dynamics. Incorporating Robotic Process Automation (RPA) can further streamline workflows, reduce manual errors, and free up teams for more strategic tasks, ultimately driving data-driven insights and operational efficiency. While some may view AI projects as time-consuming and expensive, companies can alleviate these concerns by adopting structured approaches and utilizing tools that simplify integration.

Companies employing advanced technologies, like those provided by WalkMe’s Digital Adoption Platform, significantly enhance the chances of successful changes. Evidence suggests that organizations adapting digital tools to meet employee needs more than double the chances of achieving desired outcomes, leading to higher employee satisfaction and profitability. Furthermore, since digital changes can generate and remove jobs, it requires the advancement of new digital abilities, demonstrating the wider effects of data transformation in Power BI on today’s evolving workforce.

Exploring Tools for Data Transformation: Power Query and M Language

Power Query stands out as a formidable tool within Power BI, empowering users to seamlessly connect, combine, and refine data from diverse sources. Its user-friendly interface simplifies data transformation tasks, allowing users to execute operations such as filtering, sorting, and merging datasets with no extensive coding knowledge required. As emphasized by Sayantoni Das, Power Query is a commonly used ETL (Extract, Transform, Load) tool, underscoring its essential role in the analytics landscape.

However, many users still encounter challenges with BI dashboards, such as:

- Time-consuming report creation

- Inconsistencies in information

- A lack of actionable guidance

In this context, incorporating RPA solutions such as EMMA RPA and Power Automate can significantly improve operational efficiency by automating repetitive tasks and lessening the load on staff, enabling users to concentrate on extracting actionable insights from their data. M Language, by contrast, is the functional programming language in which Power Query's data transformation steps are expressed.

It caters to advanced users by offering enhanced flexibility and control, enabling them to implement complex transformations that exceed the capabilities of the graphical interface. Notably, selecting three columns can yield median or sum statistics, illustrating Power Query’s practical uses in analysis. Moreover, the upcoming Microsoft Analytics Community Conference, scheduled for November 12-14, 2024, presents an excellent opportunity for users to engage with the community and learn more about these tools.

Furthermore, the case study titled ‘Export Connections’ demonstrates how Power Query allows users to export connection details, streamlining the process of establishing connections and ensuring consistency across analysis projects. Together, Power Query and M Language establish a strong framework for effective data transformation in Power BI, equipping users with the essential tools to enhance their analysis projects while overcoming the typical challenges encountered in maximizing BI capabilities.

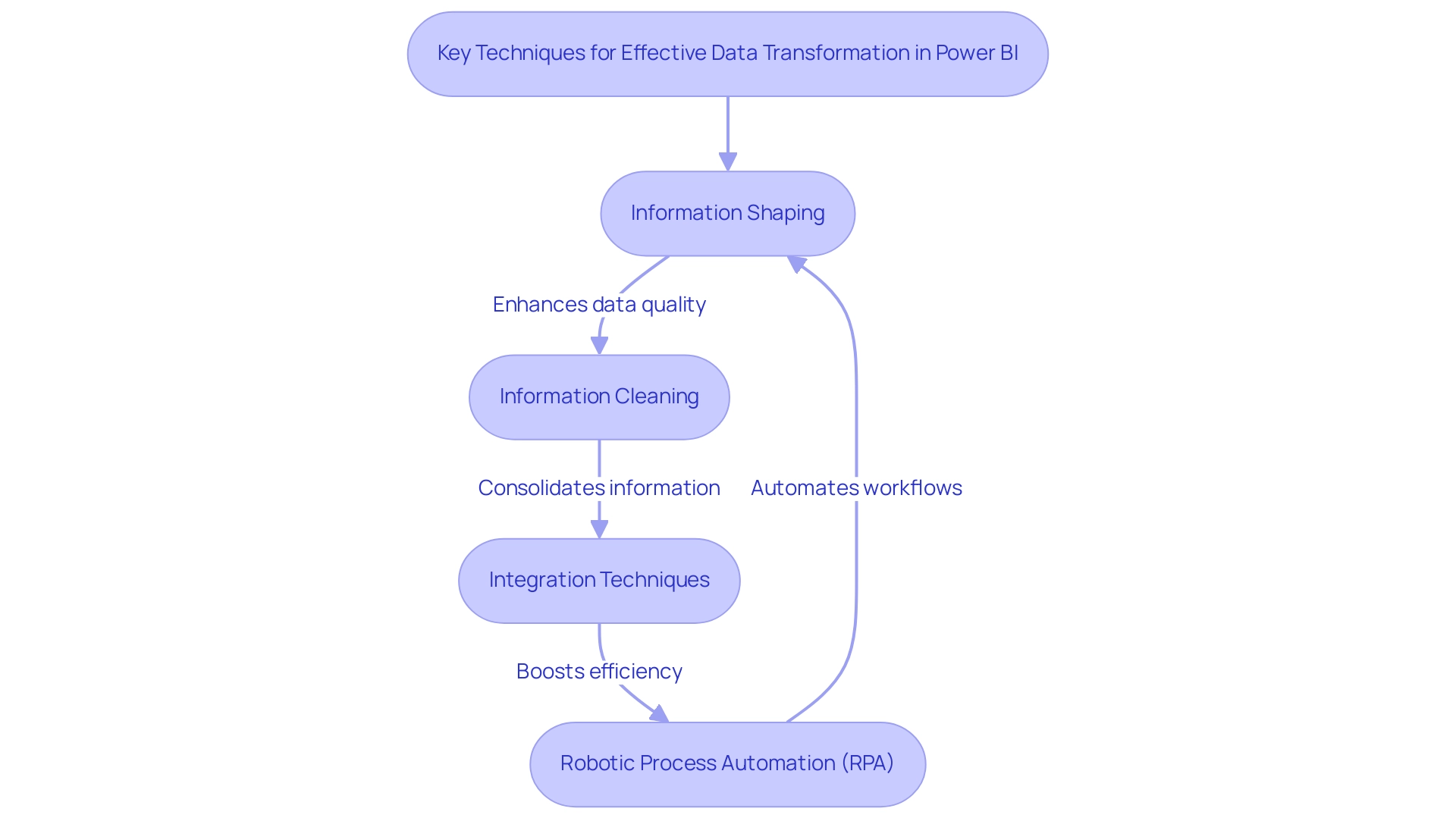

Key Techniques for Effective Data Transformation

Effective data transformation in Power BI is crucial for organizations aiming to leverage analytics for informed decision-making, especially in a rapidly evolving AI landscape. It relies on several key techniques, namely shaping, cleaning, and integration, each of which can be enhanced through Robotic Process Automation (RPA). Data shaping organizes and structures data to meet specific analytical requirements, so that it is presented in a form conducive to generating insight.

RPA can automate these manual workflows, boosting efficiency and reducing errors, thereby freeing your team for more strategic, value-adding work. Meanwhile, data cleaning focuses on identifying and rectifying errors or inconsistencies within the dataset, which is essential for maintaining data integrity. However, many organizations face significant challenges in implementing RPA and BI, including resistance to change and the complexity of integrating new technologies with existing systems.

According to recent studies, companies that adjust their technologies to meet employee needs can achieve up to 23% higher employee satisfaction and 22% greater profitability, emphasizing the role of accurate data in fostering a positive work environment. Yet in 2021 only 35% of companies worldwide achieved their digital objectives, which illustrates how difficult these initiatives are. Furthermore, 14% of respondents cite a resistant company culture as a challenge, suggesting that overcoming internal obstacles is crucial for successful data transformation.

Integration techniques further enhance data transformation in Power BI by allowing users to consolidate data from various sources into a comprehensive view for analysis. For instance, the BFSI segment leads the digital transformation market with the largest revenue share, driven by enhanced consumer experiences and advanced technology adoption, an example of how effective data management can drive growth. With 17% of IT projects failing to a degree that threatens company survival, as noted in a study by the University of Oxford and McKinsey, the importance of effective data management is hard to overstate.

By using these techniques alongside RPA, organizations not only ensure that their data is accurate and relevant but also lay the foundation for meaningful insights that drive strategic decision-making and enhance operational efficiency. Furthermore, tailoring AI solutions to specific business needs is essential, as it allows organizations to address unique challenges and leverage technology effectively.

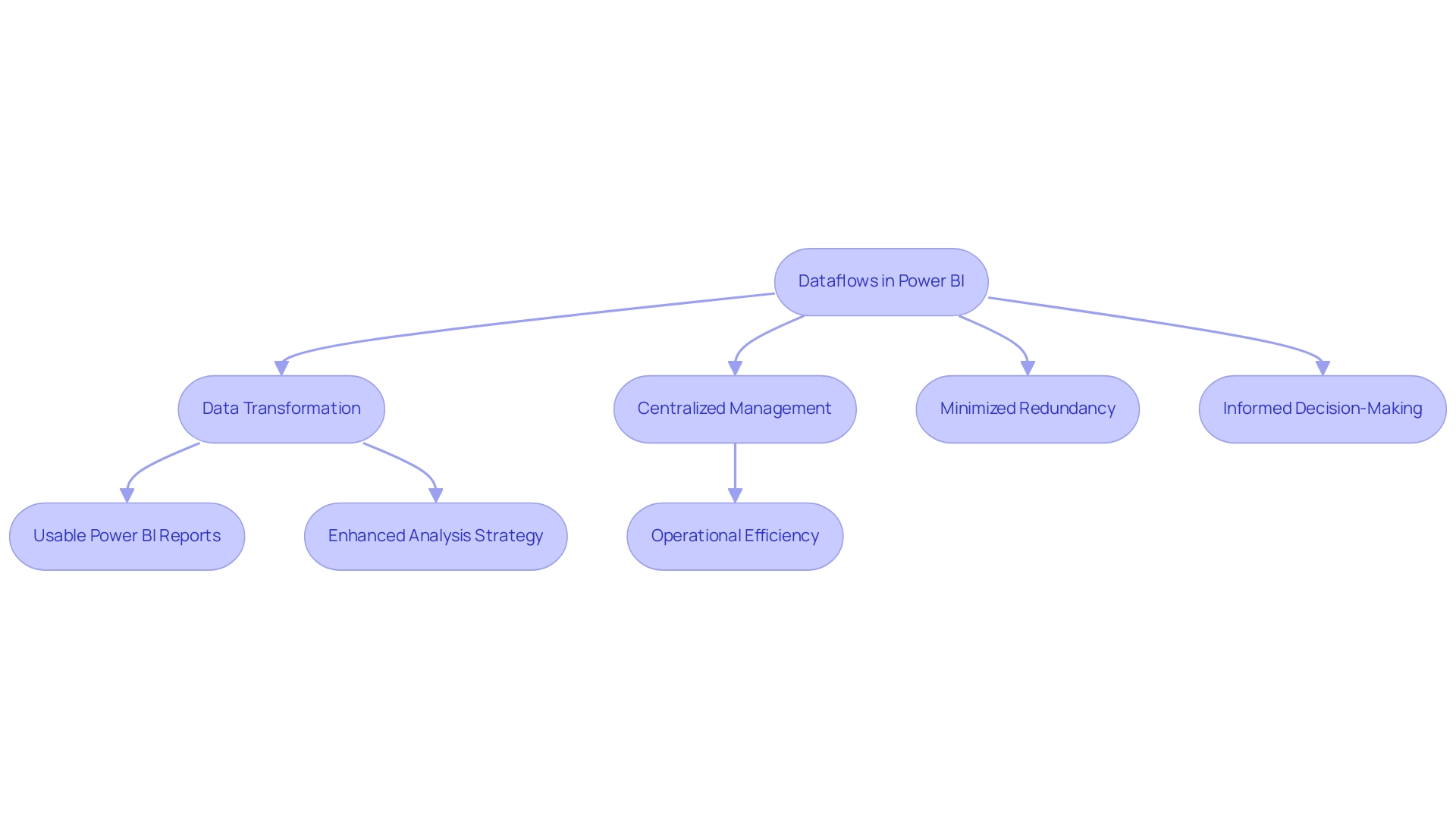

Leveraging Dataflows for Enhanced Data Management

Dataflows are central to data transformation in Power BI, optimizing transformation processes at scale and significantly improving efficiency across reports and dashboards. By allowing users to define, manage, and centralize transformation tasks, dataflows minimize redundancy and ensure that all stakeholders access the same up-to-date data, which is vital for informed decision-making. As Sascha Rudloff, Team Leader of IT and Process Management at PALFINGER, observed, ‘The outcomes of the sprint surpassed our expectations and were a significant enhancement for our analysis strategy.’

The recent success of CREATUM’s Power BI Sprint with PALFINGER demonstrates the impact: it not only delivered an immediately usable Power BI report and gateway setup but also accelerated PALFINGER’s overall Power BI development, enhancing their analysis strategy. Furthermore, dataflows can connect to a variety of sources, establishing a consistent pipeline that improves data quality and accessibility across departments. As of 2024, the use of dataflows has surged, underscoring their growing importance in managing data transformation in Power BI.

Recent advancements, such as saving dataflows as drafts, give organizations more flexibility in managing their transformation work. Additionally, leveraging refresh statistics helps optimize performance, while case studies such as ‘Mitigating Long Refresh Durations’ provide practical solutions for challenges with complex dataflows. Robotic Process Automation (RPA) further improves this process by automating repetitive tasks, which not only alleviates the burden of time-consuming report creation but also addresses data inconsistencies, empowering operations leaders to harness insights that drive business growth and efficiency.

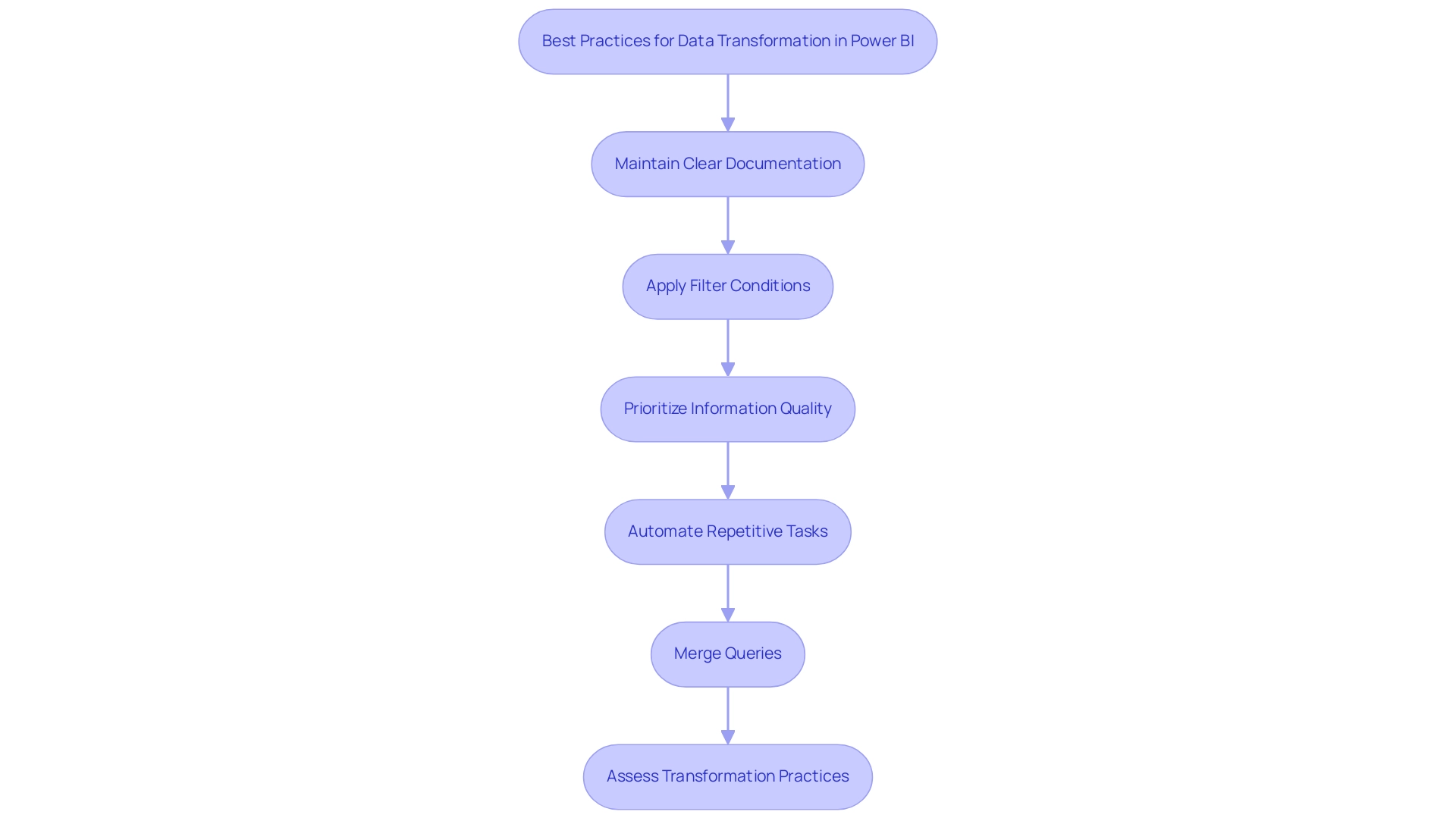

Best Practices for Transforming Data in Power BI

To enhance data transformation in Power BI, organizations must adopt a set of best practices that streamline processes and improve collaboration while leveraging Robotic Process Automation (RPA) to automate manual workflows.

First, maintaining clear documentation of all transformation steps is crucial; it fosters knowledge sharing and ensures that team members can easily understand and replicate processes. For instance, applying a filter condition that keeps only rows where Total Amount is greater than or equal to $100 is a practical illustration of a practice that prioritizes data quality.
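In Power Query this filter would normally be a row-selection step in the query itself. As a minimal sketch of the same condition in DAX, the calculated table below keeps only the qualifying rows. The table name Sales and the column name Total Amount are assumptions made for illustration; substitute the names used in your own model.

```dax
-- Hypothetical calculated table (created via New Table).
-- Assumes a table named Sales with a numeric column [Total Amount].
High Value Sales =
FILTER (
    Sales,
    Sales[Total Amount] >= 100
)
```

Filtering at the query stage usually keeps the model smaller, so a calculated table like this is best treated as a way to document or prototype the rule rather than as the default approach.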

Second, prioritizing data quality through systematic validation checks and thorough cleaning routines is essential, as it directly affects the reliability of analytics outcomes. Moreover, Power Query and the M language allow teams to automate repetitive tasks, minimizing human error and freeing analysts to concentrate on strategic, value-adding work. Merging queries to combine data on a common column is another key practice that enhances integration and analysis.

As organizations navigate the rapidly evolving AI landscape, routinely assessing and refreshing transformation practices to address shifting business needs and data sources is essential. This landscape presents challenges that tailored AI solutions can help address, ensuring organizations can cut through the noise and implement effective strategies. As a quote from Power BI puts it, ‘Learn about the Power BI security best practices to protect your valuable information’; implementing these strategies ensures high-quality data for reporting and maximizes the value of investments in advanced analytics.

By following these best practices and integrating RPA, organizations can significantly strengthen their data transformation capabilities in Power BI, ultimately leading to improved business outcomes.

Conclusion

The journey of effective data transformation in Power BI is pivotal for organizations aiming to thrive in a competitive landscape. Through the processes of cleaning, shaping, and integrating diverse data sources, businesses can enhance data quality and make informed decisions swiftly. The integration of advanced tools like Power Query and M Language, alongside Robotic Process Automation (RPA), empowers teams to streamline workflows, reduce manual errors, and focus on strategic initiatives that drive growth.

Implementing key techniques such as data shaping, cleaning, and integration not only enhances operational efficiency but also lays the groundwork for deriving meaningful insights. As organizations embrace dataflows, they can centralize and manage transformation tasks, ensuring that all stakeholders have access to the most accurate and up-to-date information. This capability is essential for informed decision-making in today’s rapidly evolving digital landscape.

By adopting best practices that prioritize documentation, data quality, and systematic validation, organizations can significantly improve their data transformation processes. This enables them to navigate the challenges posed by economic uncertainty and digital transformation with confidence. Ultimately, effective data management and transformation strategies are not just about technology; they are about empowering teams to harness the full potential of their data, driving operational efficiency and fostering a culture of data-driven decision-making. Embracing these practices today will pave the way for a more resilient and successful future.

Introduction

In the realm of data visualization, Power BI stands out as a powerful tool that transforms raw data into actionable insights. As organizations strive to enhance their reporting capabilities, understanding the nuances of data labels becomes paramount. These labels not only convey essential metrics but also facilitate a clearer interpretation of trends and patterns, making them indispensable for effective decision-making.

By mastering the art of customizing data labels, professionals can significantly elevate the clarity and impact of their visualizations. This article delves into practical strategies for optimizing data labels, from establishing a robust data model to employing advanced techniques that enhance user engagement.

As businesses navigate the complexities of data management, these insights will empower them to harness the full potential of Power BI, driving efficiency and fostering informed choices.

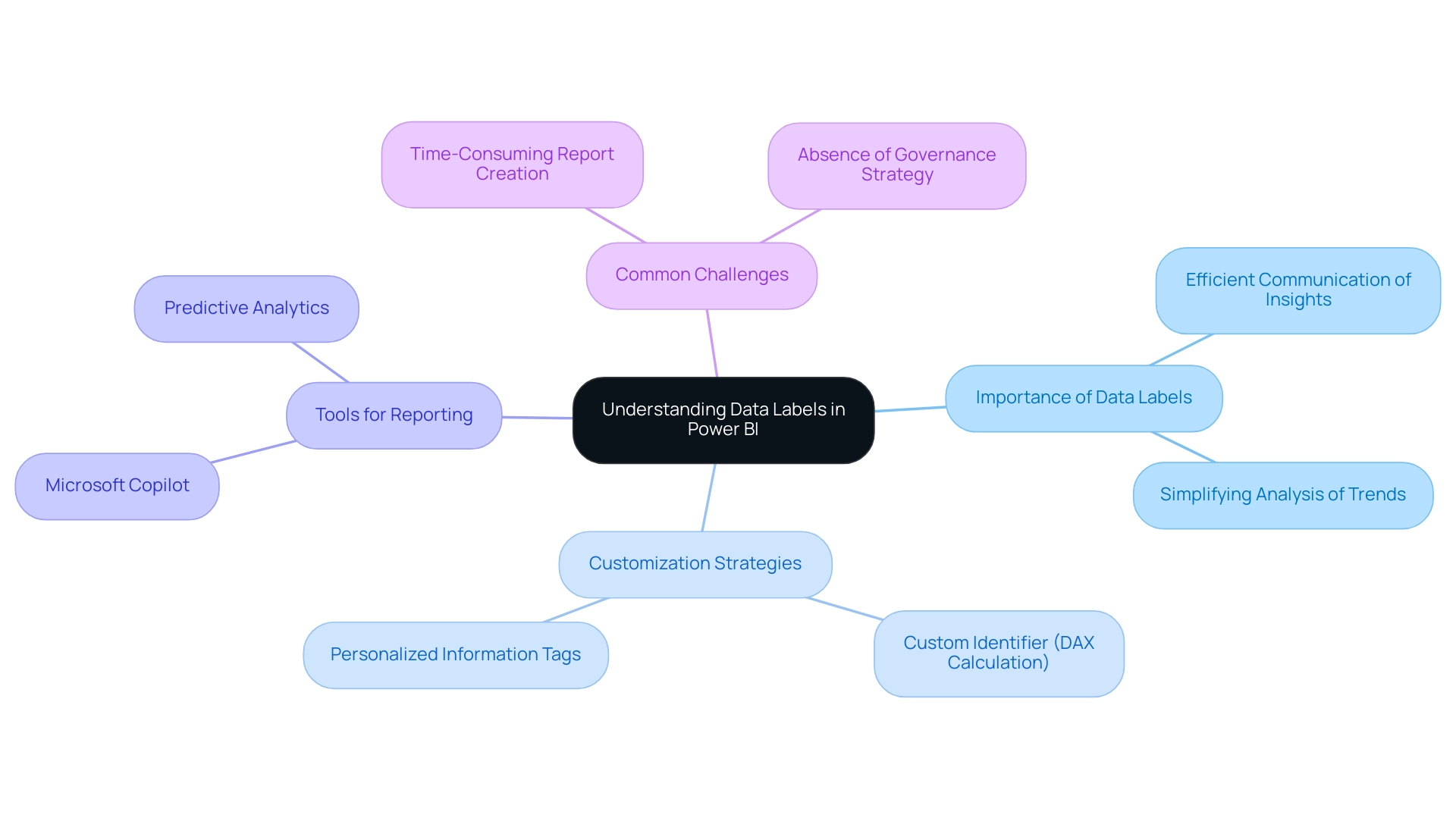

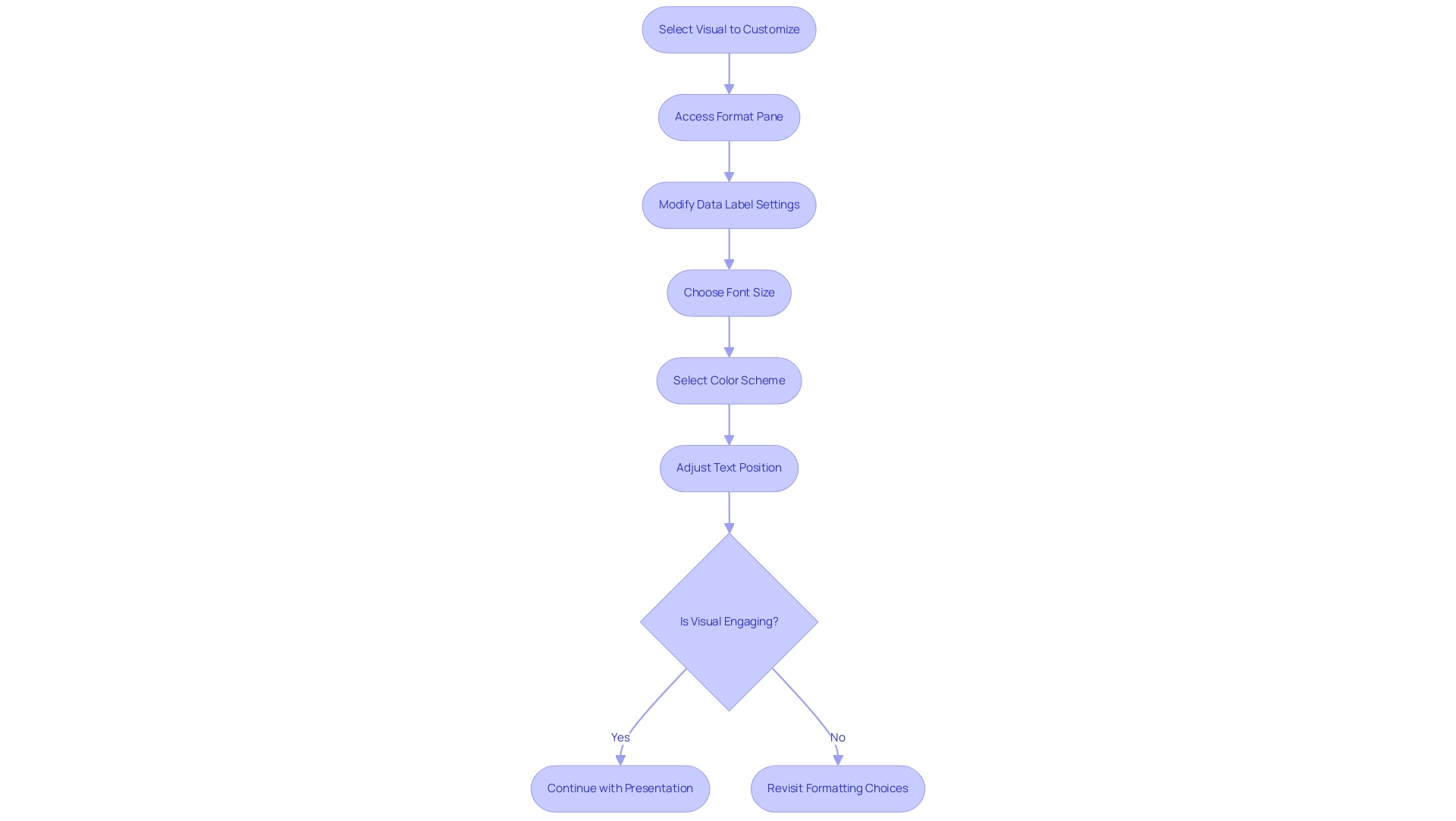

Understanding Data Labels in Power BI: An Overview

In Power BI, data labels are essential instruments for communicating insights within visuals, allowing viewers to quickly grasp important metrics. These labels show the values associated with particular data points, simplifying the analysis of trends and patterns. As Mitchell Pearson, an experienced Data Platform Consultant and Training Manager at Pragmatic Works, points out, ‘A key aspect is creating a DAX calculation named ‘custom identifier’, which allows for the display of desired information.’
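A custom label calculation of the kind described in the quote might look like the following sketch. The measure name Custom Label and its layout (a value followed by its share of the selected total) are illustrative assumptions, not Pearson's actual formula; the Sales[Amount] column is borrowed from the measure example later in this article.

```dax
-- Hypothetical label measure (created via New Measure).
-- Assumes a Sales table with a numeric [Amount] column.
Custom Label =
VAR CurrentSales = SUM ( Sales[Amount] )
VAR AllSales =
    CALCULATE ( SUM ( Sales[Amount] ), ALLSELECTED () )
RETURN
    -- Returns text such as a formatted value followed by its share in parentheses
    FORMAT ( CurrentSales, "#,0" )
        & " ("
        & FORMAT ( DIVIDE ( CurrentSales, AllSales ), "0.0%" )
        & ")"
```

Because the measure returns text, it is meant for display only. Depending on the visual type and the Power BI Desktop version, a measure like this can be chosen as the label field in the data label formatting options.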

Customizing data labels in Power BI can substantially enhance the clarity and impact of your reports, ensuring that your audience receives critical insights at a glance. Effective data label strategies also help address common challenges such as time-consuming report creation and data inconsistencies. The absence of a governance strategy can result in confusion and mistrust in the data presented, which makes clear data label practices even more important.

To create impactful dashboards, consider:

- Showing all important information on a single screen for quick access to essential details.

- Utilizing tools like Microsoft Copilot and predictive analytics to enhance your reporting capabilities.

The column statistics chart shown below the preview section can further enhance understanding.

By concentrating on customized data labels, as emphasized in numerous case studies, you can greatly enhance the clarity of your visuals, supporting better decision-making and ultimately fostering growth through insight-driven analysis.

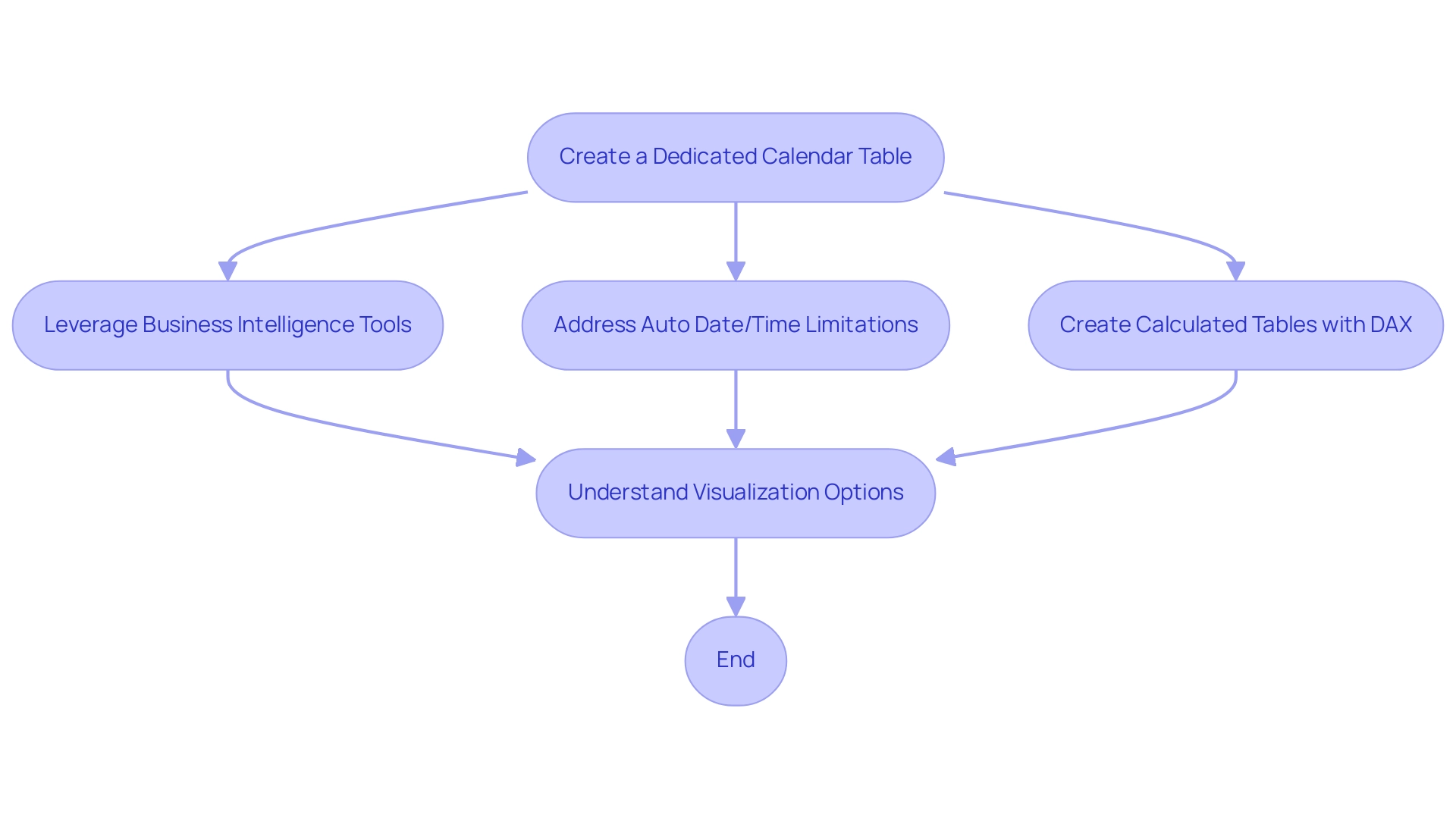

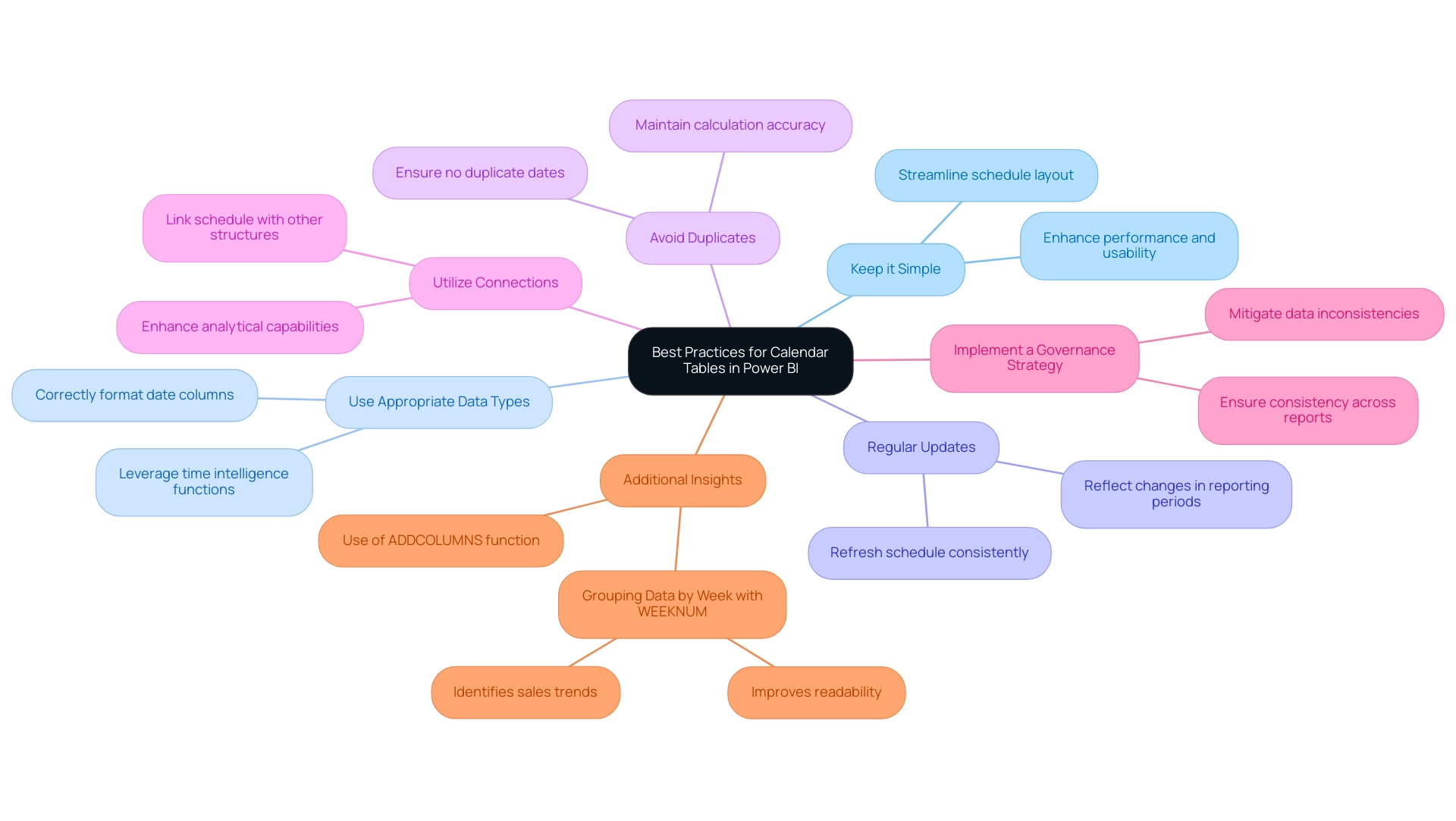

Essential Preparations for Customizing Data Labels

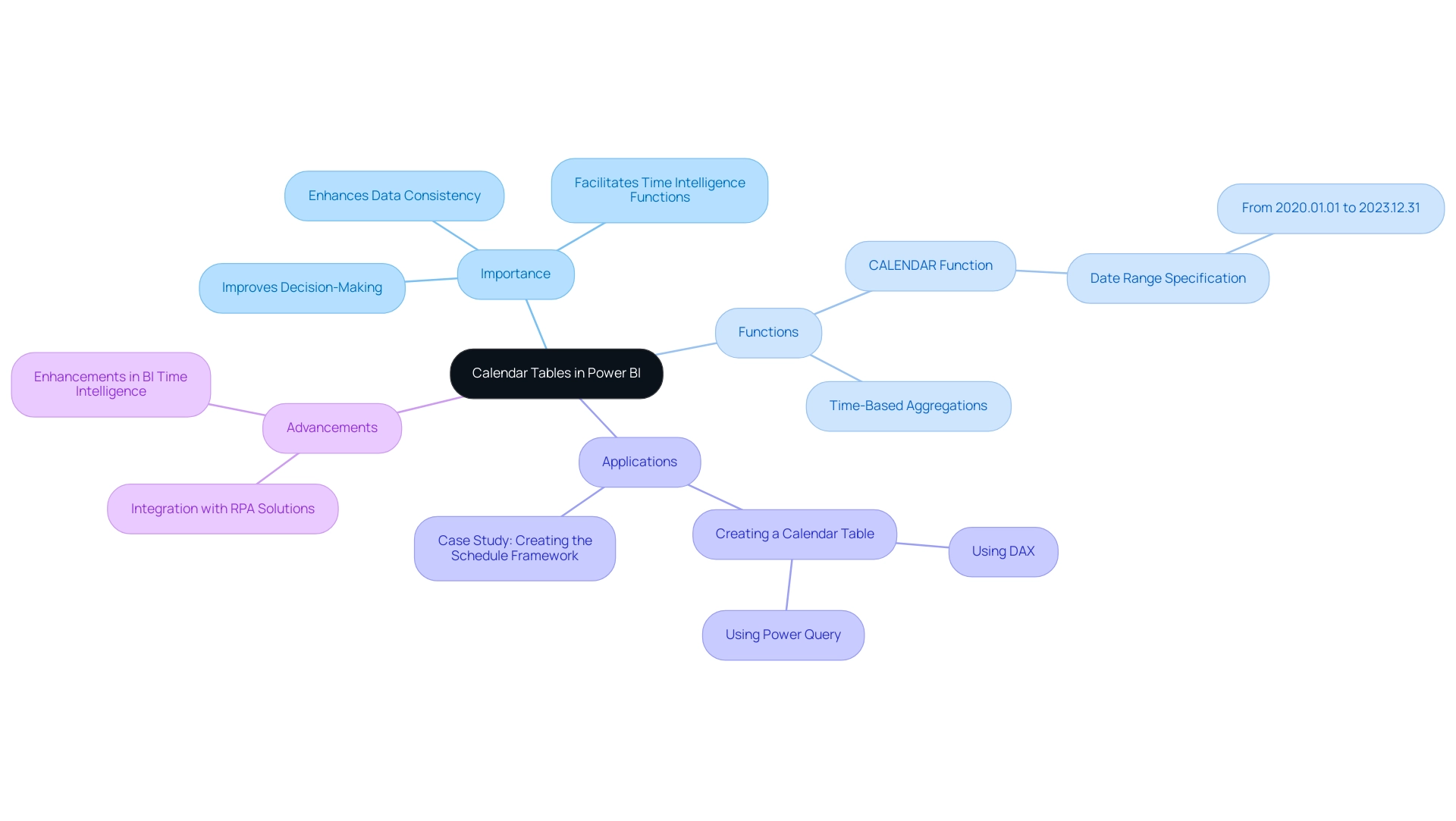

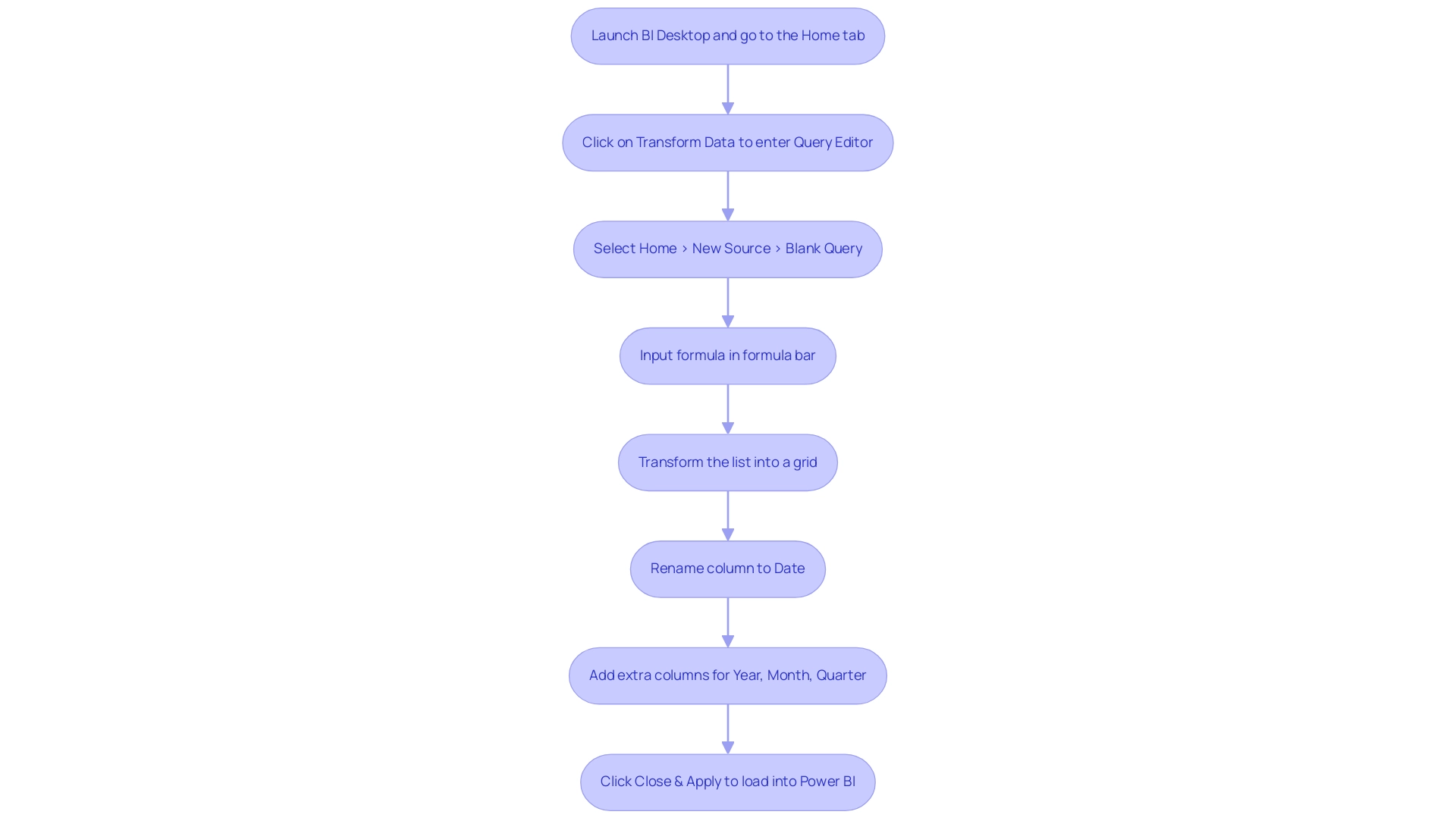

To customize data labels in Power BI effectively, it is essential to create a dedicated calendar table within your data model. This table serves as the backbone for time-based analysis and supports the measures that make your data labels more informative. Denys Velykozhon highlights the significance of such structures in his discussions on reporting, stressing that a solid foundation is key to effective data interpretation.
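One common way to build such a calendar table is a DAX calculated table based on the CALENDAR function. The sketch below is a minimal example: the date range and the extra columns are assumptions chosen for illustration and should be adjusted to cover the dates in your own data.

```dax
-- Hypothetical calendar table (created via New Table).
-- CALENDAR returns a single column named [Date]; the range below is assumed.
Date =
ADDCOLUMNS (
    CALENDAR ( DATE ( 2020, 1, 1 ), DATE ( 2025, 12, 31 ) ),
    "Year", YEAR ( [Date] ),
    "Month Number", MONTH ( [Date] ),
    "Month", FORMAT ( [Date], "MMM" ),
    "Year-Month", FORMAT ( [Date], "YYYY-MM" )
)
```

After creating the table, it is typically marked as a date table and related to the date columns of the fact tables so that time-based measures filter correctly.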

Furthermore, leveraging tools like EMMA RPA and Power Automate can streamline this process, transforming raw data into actionable insights that drive efficiency and growth. Additionally, while Power BI offers the Auto date/time option for simple model requirements and ad hoc data exploration, it does not support a single date table design for multiple tables, which makes a dedicated calendar table the better choice for complex models. This choice can alleviate common challenges such as inconsistencies and time-consuming report creation, enabling a more efficient workflow.

Moreover, when a model already has a date table and another is needed for a role-playing dimension, a calculated table can be created to clone the existing date table using DAX. Note, however, that the clone copies only the columns: model properties such as formats or descriptions are not applied, and hierarchies are not cloned. Presenting the earliest date in the model as Min Date and the latest date as Max Date further illustrates the value of a calendar table for effective data modeling, especially where data labels are concerned.
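The cloning step and the two date measures can be sketched in DAX as follows. The table names 'Date' and Ship Date are assumptions, as is the choice of the 'Date'[Date] column as the source for the Min Date and Max Date measures. Each definition is entered separately: the first as a calculated table, the other two as measures.

```dax
-- Calculated table (New Table): clones the columns of an existing 'Date' table.
-- Formats, descriptions, and hierarchies must be reapplied by hand.
Ship Date = 'Date'

-- Measures (New Measure): earliest and latest dates in the assumed 'Date' table.
Min Date = MIN ( 'Date'[Date] )

Max Date = MAX ( 'Date'[Date] )
```

The cloned table then needs its own relationship to the relevant fact table column, for example a shipping date, so that it can act as an independent role-playing dimension.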

Familiarizing yourself with the visualizations you plan to use is also essential, as each may provide different options for showing labels. Understanding the interplay between your data structure and visualization types will help you establish an effective groundwork for your customization efforts, ultimately driving insightful analysis and informed decision-making. By using RPA tools, organizations can automate repetitive reporting tasks, enabling teams to concentrate on deriving insights instead of getting bogged down in preparation.

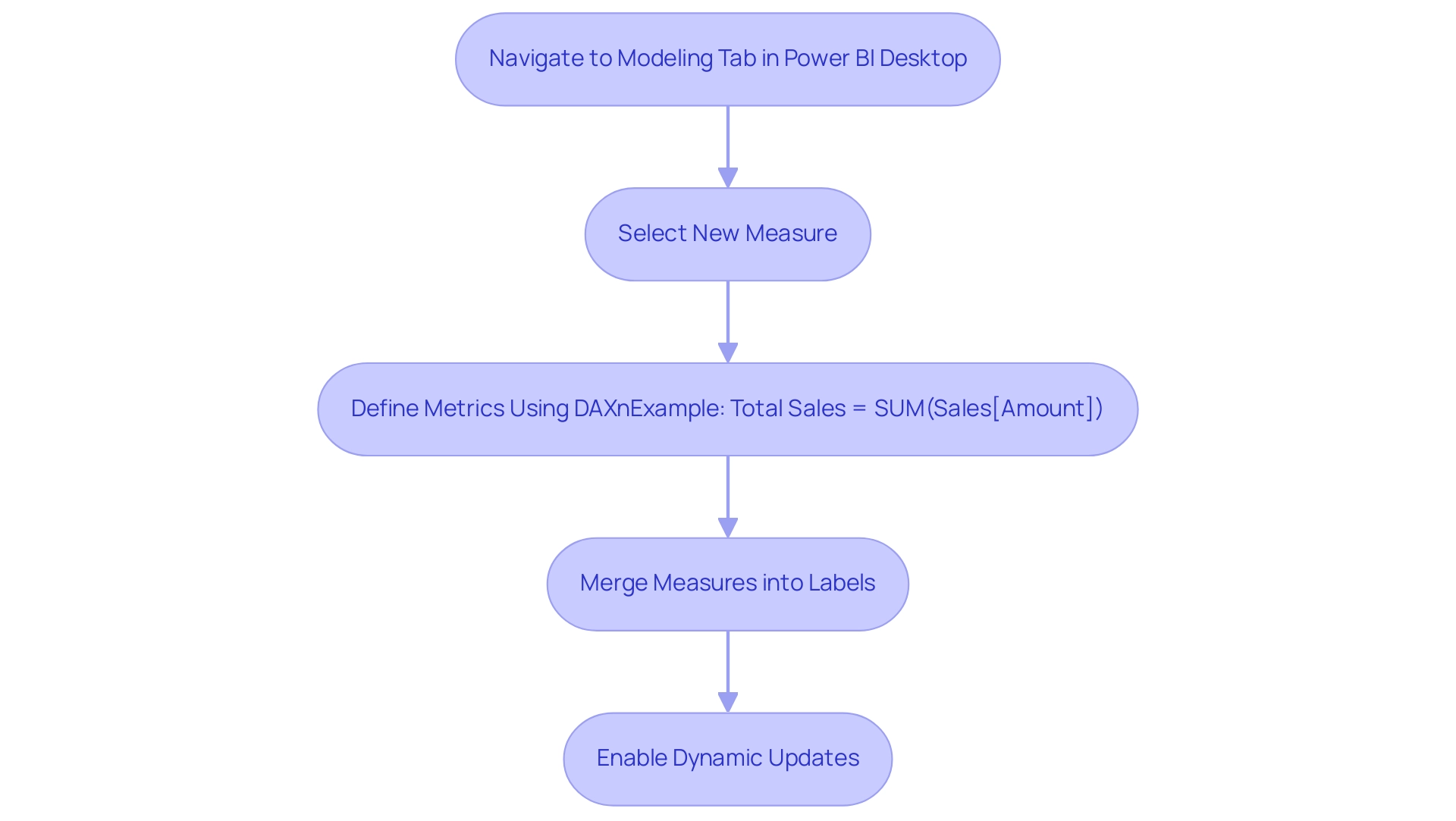

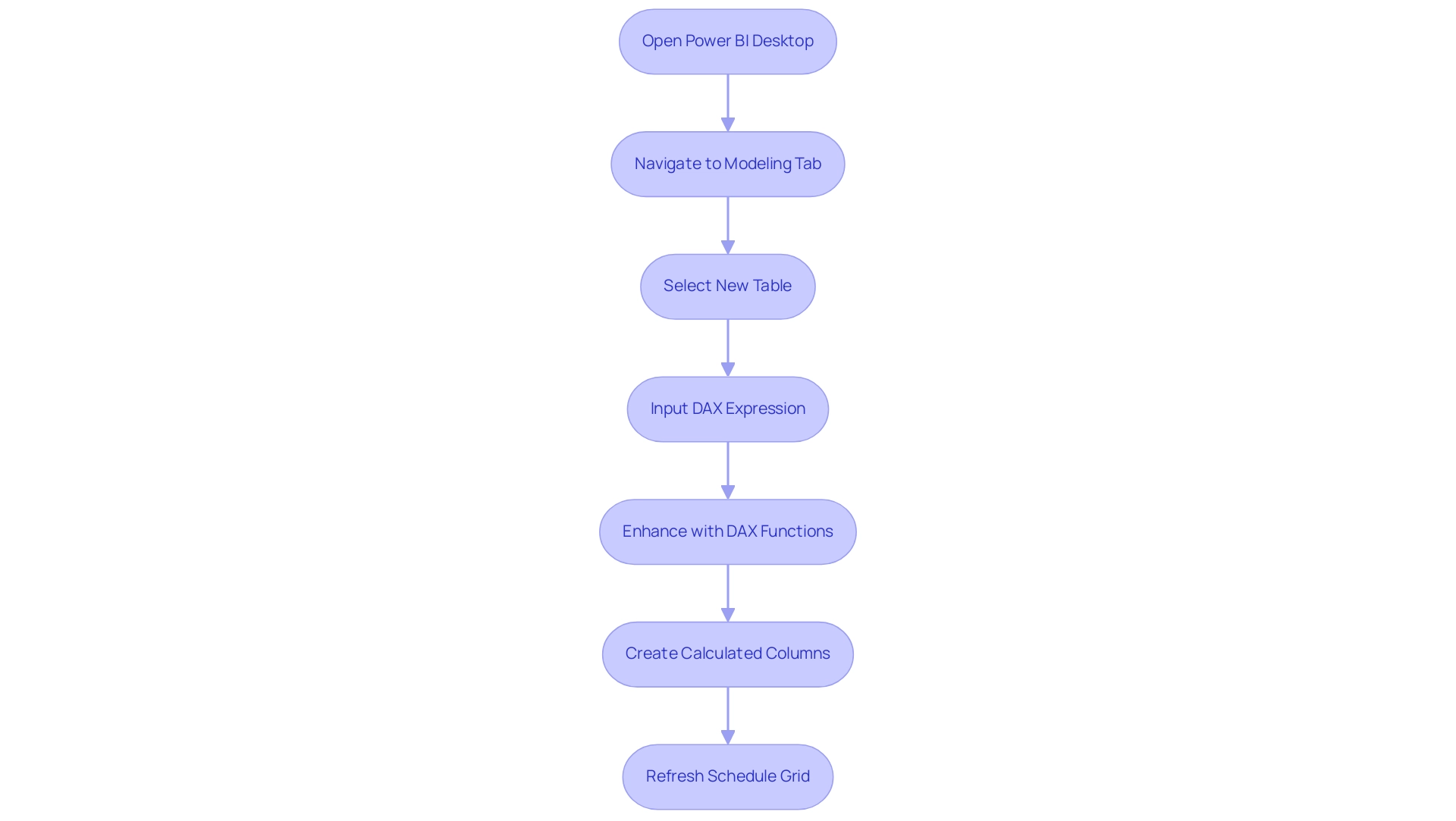

Creating Measures for Enhanced Data Labeling

Creating measures for better data labels is a simple yet impactful procedure that can greatly enhance your reporting and boost operational efficiency. Begin by navigating to the ‘Modeling’ tab in Power BI Desktop and selecting ‘New Measure.’ Here, you’ll use DAX (Data Analysis Expressions) to define the metrics you wish to display.

For example, to show total sales, your DAX formula would be structured as follows: Total Sales = SUM(Sales[Amount]). Placed in a visual that is grouped or filtered by category, the measure returns the total for each category. Once created, these measures integrate into your labels and update dynamically as users interact with the report. This method not only improves the clarity of your visuals but also enables stakeholders to extract insights swiftly and efficiently, tackling common issues such as time-consuming report creation and data inconsistencies.
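If a label should always show one particular category regardless of how the visual is grouped, the base measure can be wrapped in CALCULATE. The sketch below assumes a Sales[Category] column and a category called "Bikes"; both are hypothetical and stand in for whatever your model contains.

```dax
-- Base measure, as given in the text.
Total Sales = SUM ( Sales[Amount] )

-- Hypothetical variant pinned to a single category.
-- Sales[Category] and the value "Bikes" are assumed for illustration.
Bikes Sales =
CALCULATE (
    [Total Sales],
    Sales[Category] = "Bikes"
)
```

The base measure responds to whatever filters the report applies, while the pinned variant overrides any category filter, which is useful for fixed reference values shown alongside dynamic ones.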

Furthermore, it is essential to provide actionable guidance within these reports to ensure stakeholders understand the next steps they should take based on the information presented. As mentioned by Super User Amit Chandak at the Microsoft Analytics Community Conference: