Introduction

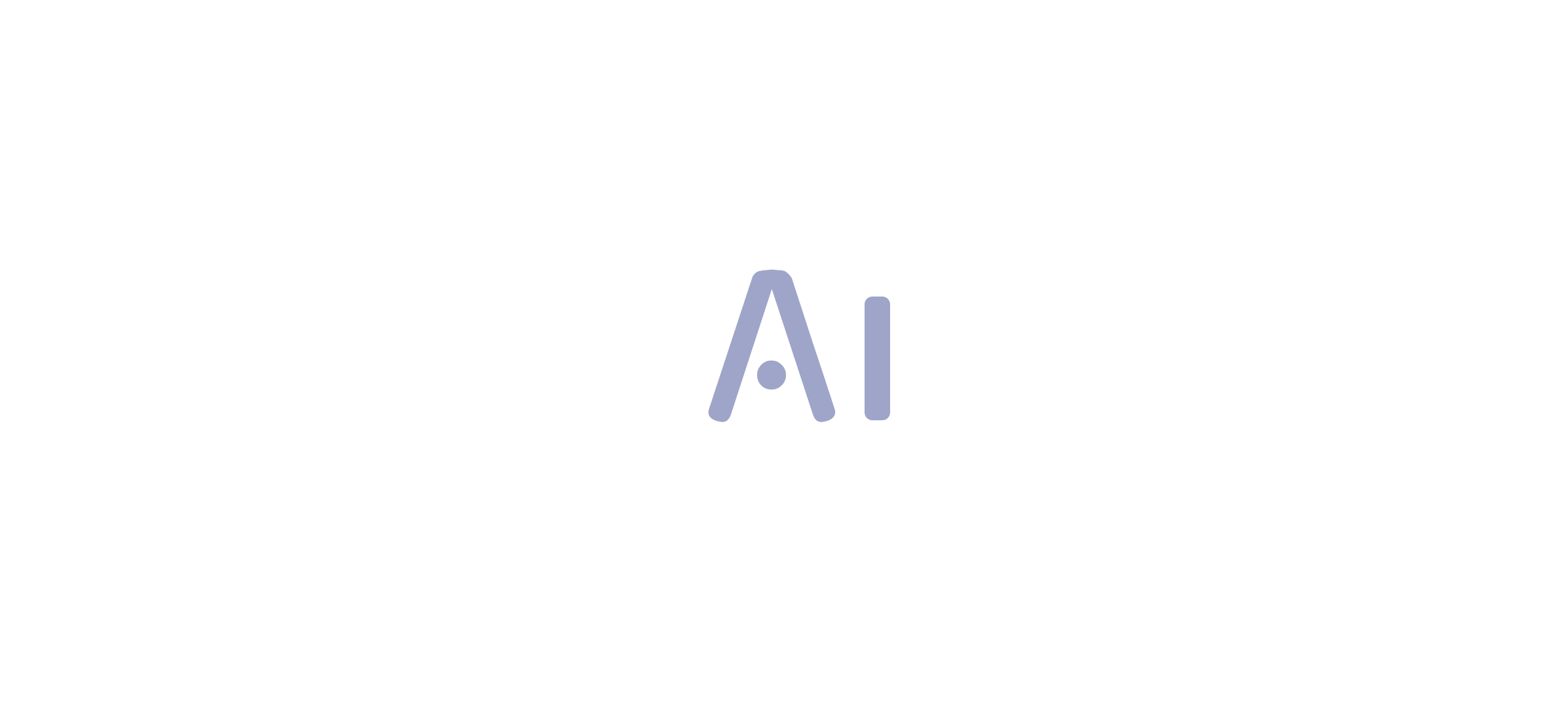

Navigating the complexities of data management in Power BI can be both a challenge and an opportunity for organizations aiming to enhance their operational efficiency. Understanding the various data types—text, numbers, dates, and boolean values—is crucial, as these dictate how data is processed and displayed, ultimately influencing the accuracy of insights derived from analysis.

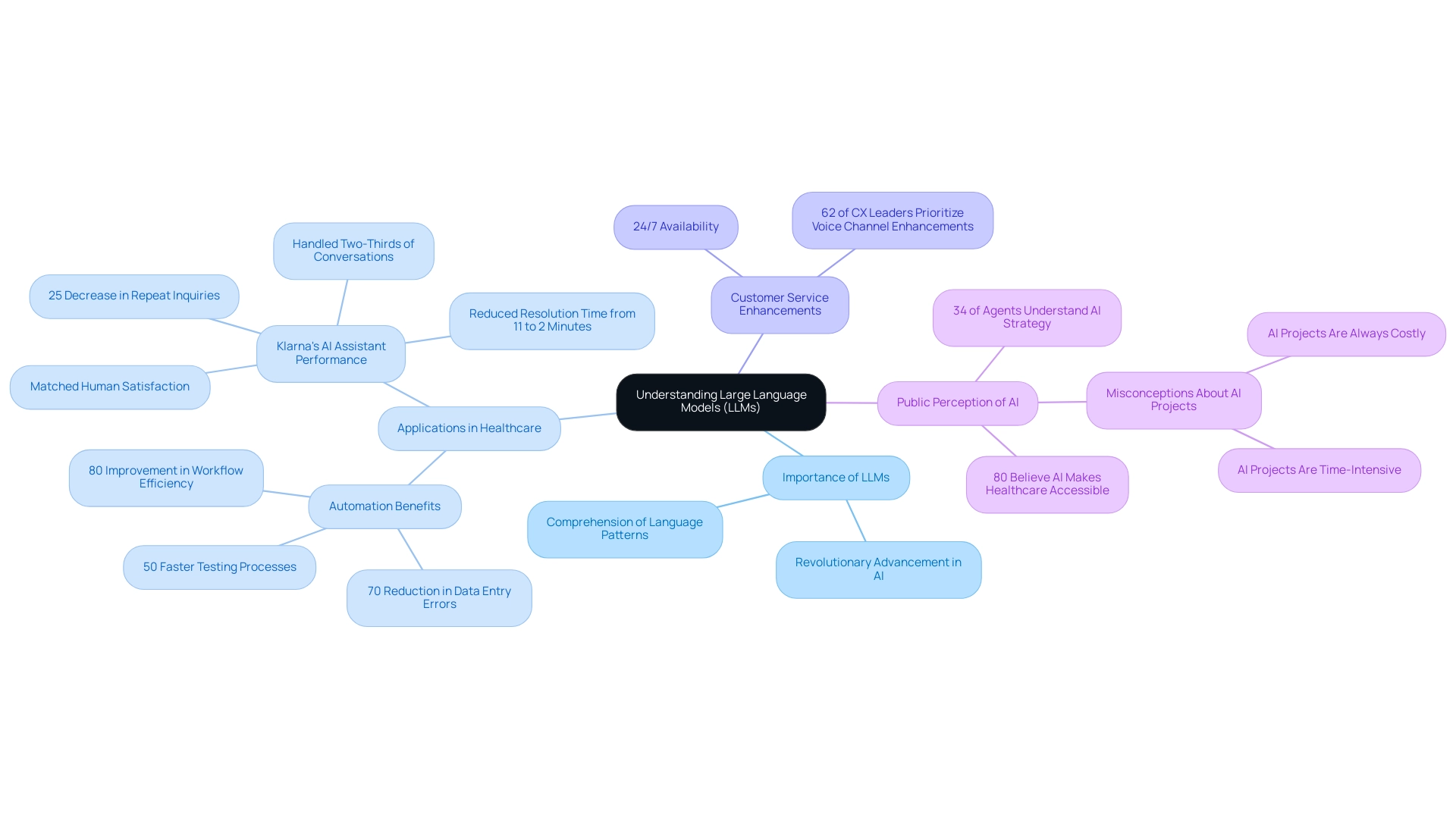

As organizations strive for data integrity, implementing best practices for managing these types becomes essential. From recognizing common pitfalls in data conversion to leveraging Robotic Process Automation (RPA) for streamlined processes, this article will explore practical strategies that empower professionals to transform their data handling practices.

By embracing these insights, organizations can unlock the full potential of their data, driving informed decision-making and fostering innovation in their operations.

Understanding Data Types in Power BI

In Power BI, the data type assigned to each column is essential, because it determines how values are stored, calculated, and displayed. Common data types include text, numbers, dates, and boolean values, each serving a distinct purpose in data manipulation. For instance, a column typed as text cannot be used in mathematical calculations, which is why understanding these types matters.

Getting the types right keeps your data represented accurately and your calculations returning the results you expect.

To prevent mistakes in your analysis, always confirm a column’s current data type before changing it. This diligence protects data integrity and leads to more reliable insights. By combining Business Intelligence and RPA, organizations can turn complex data into actionable insights, driving growth and innovation while addressing common challenges such as time-consuming report creation and data inconsistencies.
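As a rough illustration of why the type check matters, the Python sketch below refuses to total a column whose values are still text and succeeds once they are converted to numbers. It is not Power BI code, and the function name `sum_column` and the sample values are invented for this example.

```python
def sum_column(values):
    """Total a column, but only if every value is already numeric."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    non_numeric = [
        v for v in values
        if isinstance(v, bool) or not isinstance(v, (int, float))
    ]
    if non_numeric:
        raise TypeError(
            f"column contains non-numeric values, e.g. {non_numeric[0]!r}"
        )
    return sum(values)


# Hypothetical "Quantity" column that was loaded as text.
quantity_as_text = ["3", "5", "10"]

# Converting the values first makes the calculation possible.
quantity_as_numbers = [int(v) for v in quantity_as_text]
print(sum_column(quantity_as_numbers))  # 18
```

Calling `sum_column(quantity_as_text)` raises a `TypeError`, which mirrors the idea that a text column has to be retyped before it can take part in arithmetic.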

The case study on disabling automatic data type detection illustrates the practical implications of managing types deliberately. With this feature disabled, users keep control over column types and avoid errors caused by frequent column changes that disrupt data consistency and force repeated type corrections. This proactive strategy reduces problems in BI dashboards, improving operational efficiency and the reliability of analysis.

Douglas Rocha, a Software Engineer, underscores the significance of this practice, stating, ‘Hope I’ve assisted you in some way and see you next time!’ This personal viewpoint emphasizes the practical importance of understanding and handling data types. Moreover, figures from 2024 suggest that organizations emphasizing data management alongside strong BI strategies observe significant improvements in processing accuracy, underscoring how central this element is to Business Intelligence.

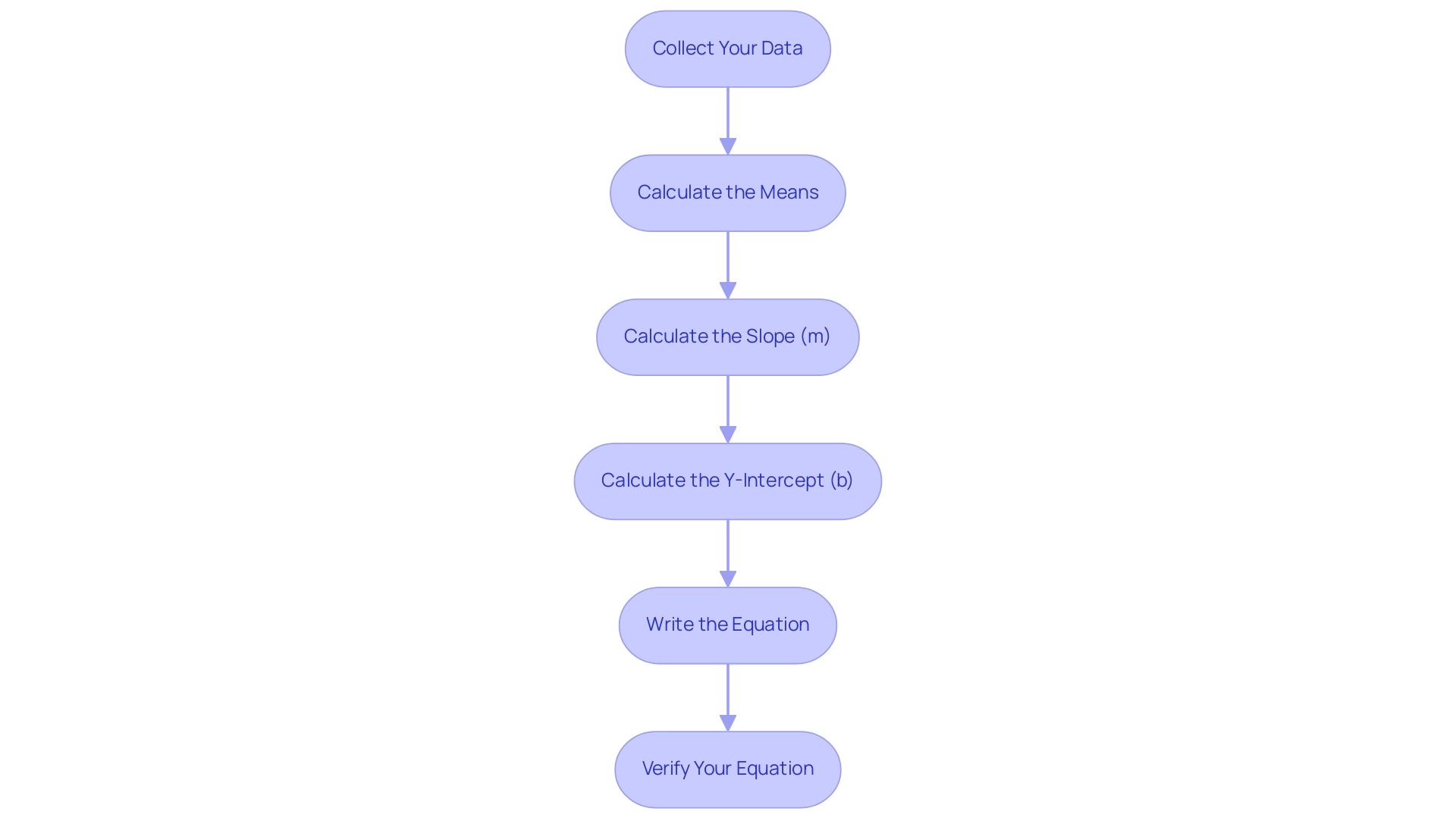

Step-by-Step Process to Change Data Types in Power BI

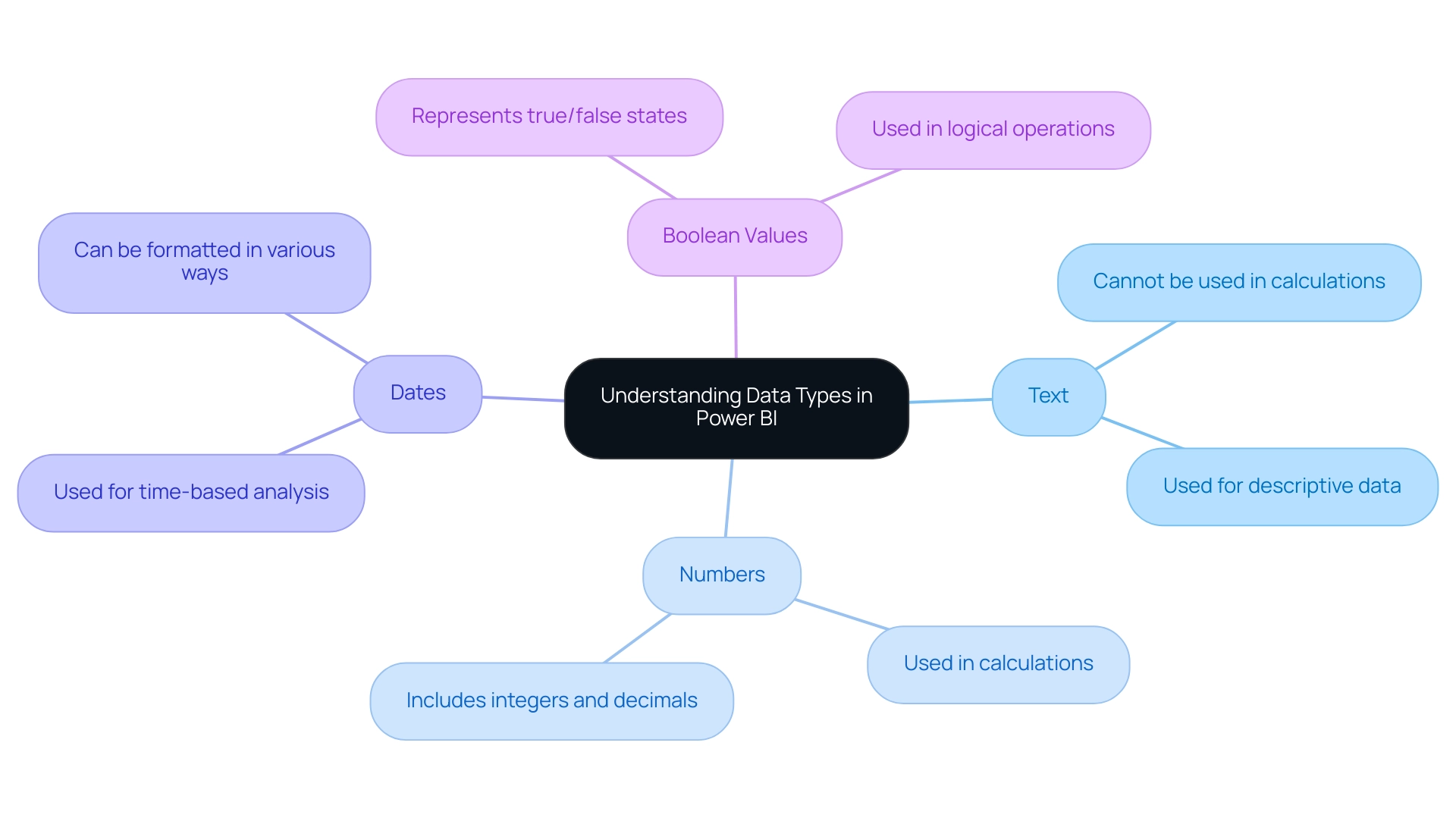

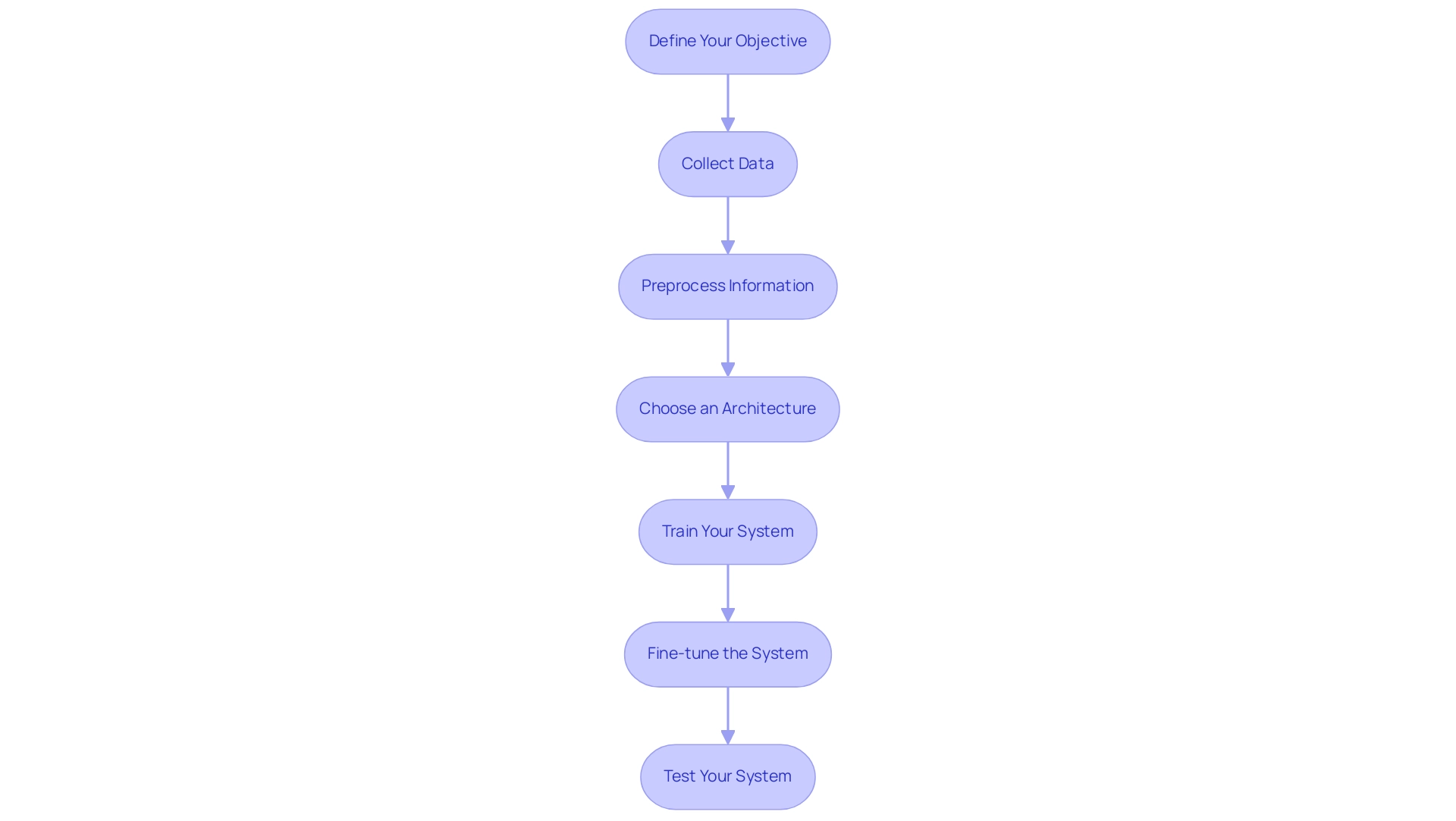

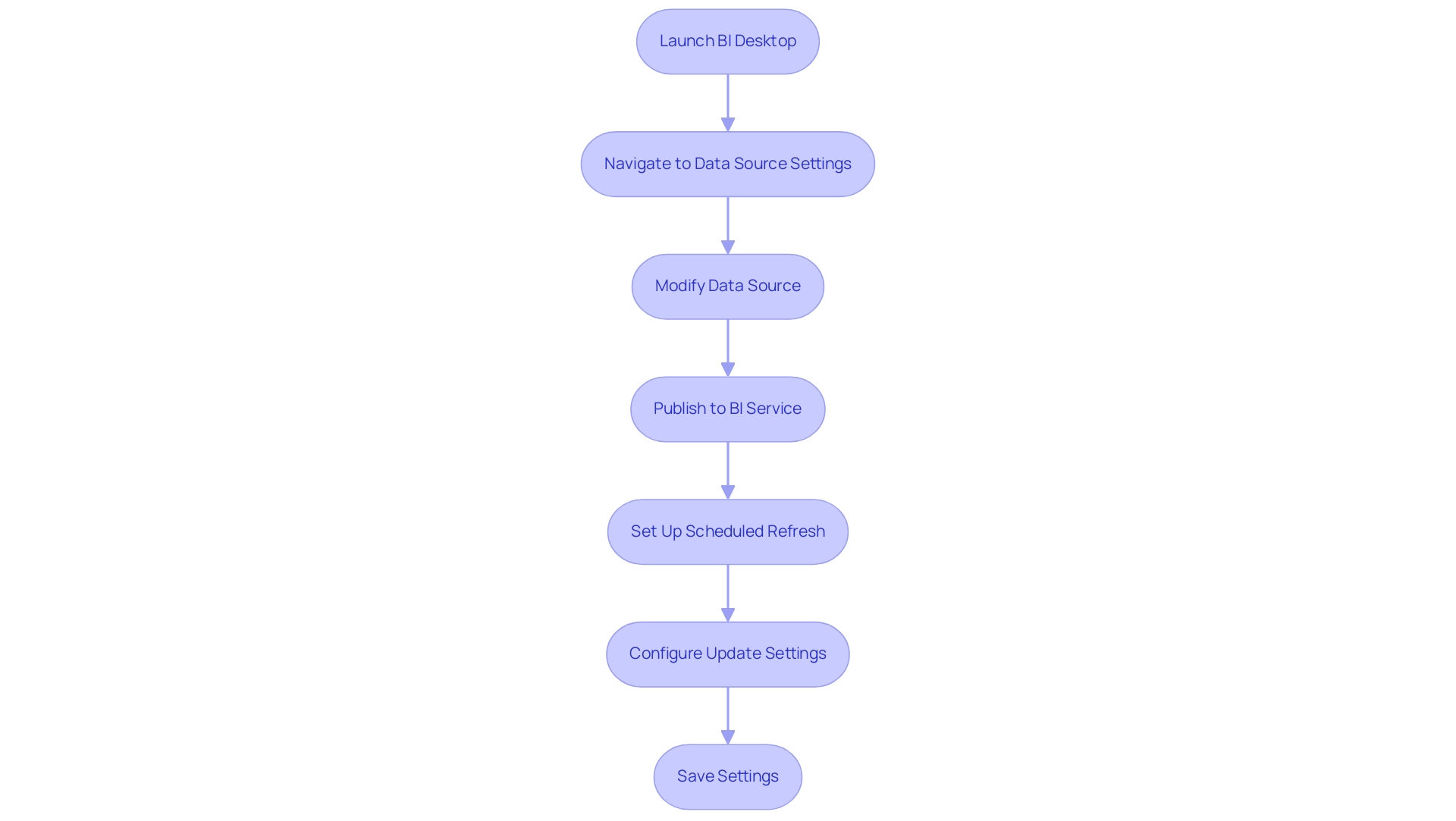

To ensure precise analysis and reporting, it is vital to assign the correct data type to each column in Power BI, a step that underpins using Business Intelligence for operational effectiveness. Incorporating Robotic Process Automation (RPA) can further improve this process by reducing repetitive work and streamlining data management. Follow these steps to change data types in Power BI Desktop:

- Open Power BI Desktop and load your dataset.

- Navigate to the Data view by selecting the table icon on the left sidebar.

- Select the column whose data type you want to change.

- In the Column tools tab at the top of the interface, locate the Data type dropdown menu.

- Click the dropdown and choose the appropriate data type from the options available (e.g., text, whole number, decimal number, date/time).

- Confirm your selection when prompted. Power BI will attempt to convert the existing values to the new type. If any values cannot be converted, a warning is displayed.

- Review the data to verify that the conversion succeeded, and make any adjustments needed to ensure accuracy.

For instance, in Scenario 8: Adjusting Sales Figures, an analyst was tasked with cleaning the sales data by addressing negative values and rounding figures to comply with corporate policy. The outcome was significant: negative values were converted to absolute values, and sales figures were rounded to one decimal place, ensuring compliance and improving the accuracy of reporting. This case illustrates the importance of precise type management in achieving reliable results and overcoming challenges such as time-consuming report creation and data inconsistencies.
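The cleanup described in this scenario can be sketched in a few lines of Python. This is only an illustration of the two rules (absolute values, one decimal place), not the analyst’s actual Power BI steps; `clean_sales` and the sample figures are hypothetical.

```python
def clean_sales(values, decimals=1):
    """Replace negative amounts with their absolute value, then round."""
    return [round(abs(v), decimals) for v in values]


# Made-up sales figures, including one negative entry.
raw_sales = [-12.34, 7.89, 100.0]
print(clean_sales(raw_sales))  # [12.3, 7.9, 100.0]
```

Note that Python’s built-in `round` works on binary floating-point values, so amounts that sit exactly on a rounding boundary may not round the way a corporate policy expects; the `decimal` module is the usual choice when a specific rounding rule is required.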

Furthermore, challenges such as staffing shortages can impede effective data management. By employing RPA solutions, businesses can automate repetitive tasks, allowing staff to focus on more strategic initiatives. Moreover, a statement from industry experts highlights that “orders were most likely to ship out on Tuesday,” underscoring the practical implications of precise data management in driving business growth.

By following these steps and considering the integration of RPA, you can set the correct data types in your Power BI reports, aligning them with your analytical goals and strengthening the integrity of your data while driving actionable insights for informed decision-making.

Common Challenges and Mistakes in Changing Data Types

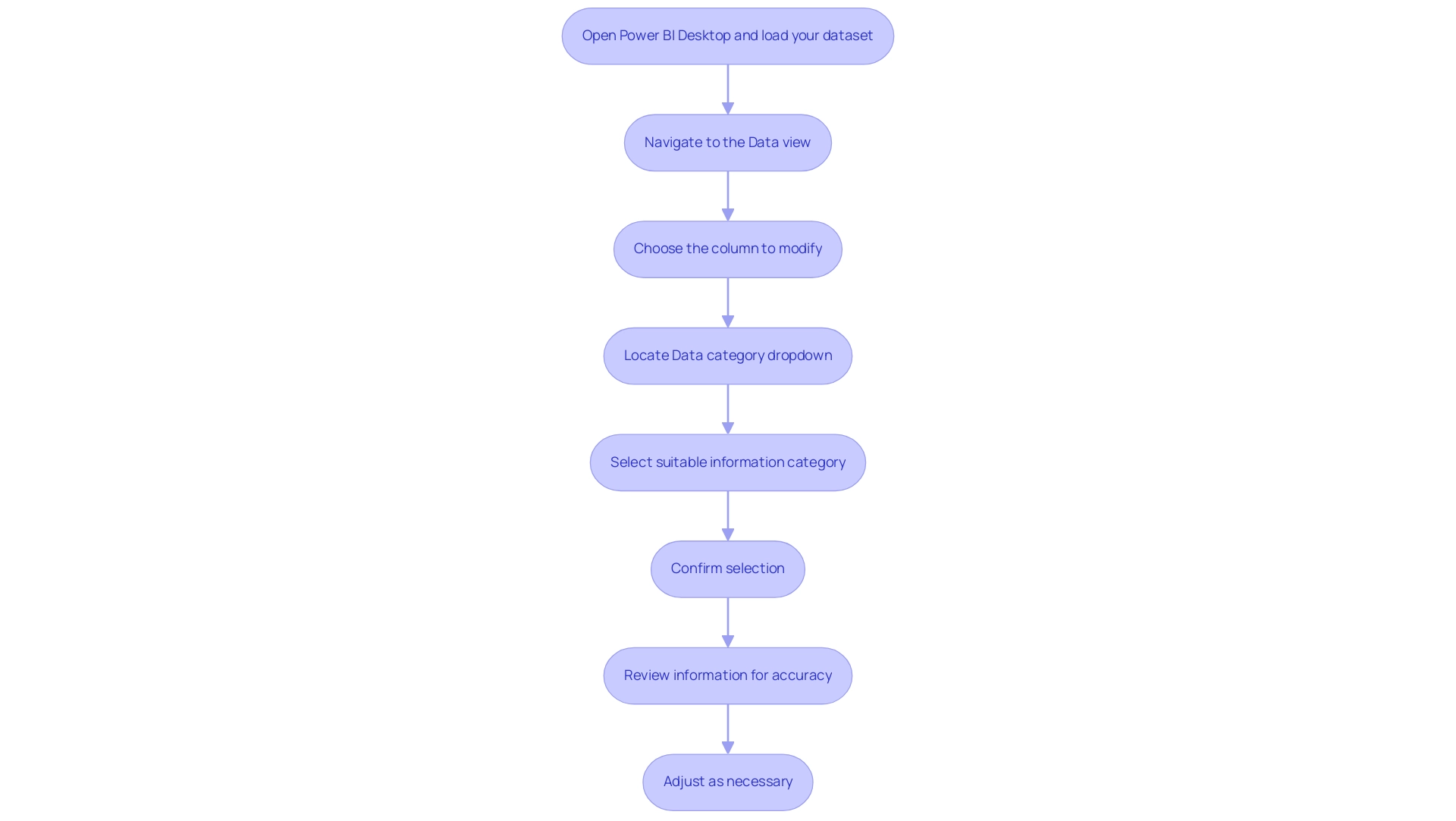

Changing data types in Power BI can pose several difficulties that affect your data management efforts. Awareness of these challenges is essential for maintaining data integrity and ensuring smooth operations:

- Data Conversion Errors: One common issue arises when existing data cannot be converted to the new type. For instance, attempting to convert a text string containing letters into a numeric format will trigger an error. A Super User remarked, “Hi @Anonymous I have a date/time value in one of my fields while I am attempting to convert this field into date/time format in PBI it gives me the following error.” The error read: “We cannot automatically convert the column to Date/Time format.” To avoid such traps, always confirm that the data matches the intended type before making any changes.

- Loss of Information: Another considerable risk is losing detail when changing data types, especially when simplifying data structures. For example, converting from a decimal number to a whole number can inadvertently eliminate critical decimal values. To protect your data, back it up before making any changes.

- Inconsistent Data Representation: Inconsistent types across related columns can lead to confusion during analysis. For instance, if a date field includes text entries, it can skew results and create misunderstandings. Ensuring consistency across related columns is crucial for accurate reporting and analysis.
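To make the loss-of-information risk concrete, the short Python sketch below lists the values in a decimal column that would lose their fractional part if the column were retyped as a whole number. The function name and sample prices are assumptions for illustration, and Power BI’s own rounding behavior during conversion may differ from Python’s.

```python
def fractional_values(values):
    """Return the values that are not already whole numbers."""
    return [v for v in values if v != int(v)]


# Hypothetical "Unit Price" column stored as decimal numbers.
unit_prices = [19.99, 5.0, 7.5]
at_risk = fractional_values(unit_prices)
print(at_risk)  # [19.99, 7.5]
```

An empty result suggests the conversion is safe for the current data; a non-empty one is a cue to keep the decimal type or to back up the column first.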

In the rapidly evolving landscape of data management, organizations often struggle with poor master data quality that can hinder decision-making. Leveraging Robotic Process Automation (RPA) can simplify data handling processes, while tailored AI solutions can enhance accuracy and streamline operations. Moreover, business intelligence tools can transform raw data into actionable insights, allowing for informed decisions that drive growth.

According to recent metrics, the topic of data conversion errors has drawn significant interest, with a total of 1,819 views on related discussions, highlighting the relevance of this issue in data management.

By proactively addressing these challenges, you can reduce the risks of changing data types and strengthen the overall integrity of your data management strategy. Additionally, use the column quality feature, which groups values into five categories: Valid (green), Error (red), Empty (dark grey), Unknown (dashed green), and Unexpected error (dashed red). It shows the quality of your data before you make adjustments, which makes it essential for identifying issues early.
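The idea behind column quality can be approximated outside Power BI. The Python sketch below is a simplified stand-in that covers only three of the five categories (valid, error, empty) and reports each as a percentage; `column_quality` and the sample column are hypothetical, and the real feature’s classification rules are not reproduced here.

```python
def column_quality(values, convert):
    """Return the percentage of valid, error, and empty values in a column."""
    counts = {"valid": 0, "error": 0, "empty": 0}
    for value in values:
        # Treat None and blank strings as empty cells.
        if value is None or (isinstance(value, str) and value.strip() == ""):
            counts["empty"] += 1
            continue
        try:
            convert(value)  # succeeds only if the value fits the target type
            counts["valid"] += 1
        except (TypeError, ValueError):
            counts["error"] += 1
    total = len(values) or 1  # avoid dividing by zero for an empty column
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


# Made-up "Order Qty" column that should become a whole number.
order_qty = ["12", "7", "", "abc", "30"]
print(column_quality(order_qty, int))
```

Passing a different converter, such as `float`, profiles the same column against another target type before any change is made.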

Additionally, examine the case study titled “Function to Fetch Error Count,” which explores a custom function in Power Query that counts the errors in a table. This practical solution can help you manage errors effectively and enrich your profiling reports, illustrating a hands-on approach to error management.

Impact of Data Type Changes on Data Modeling

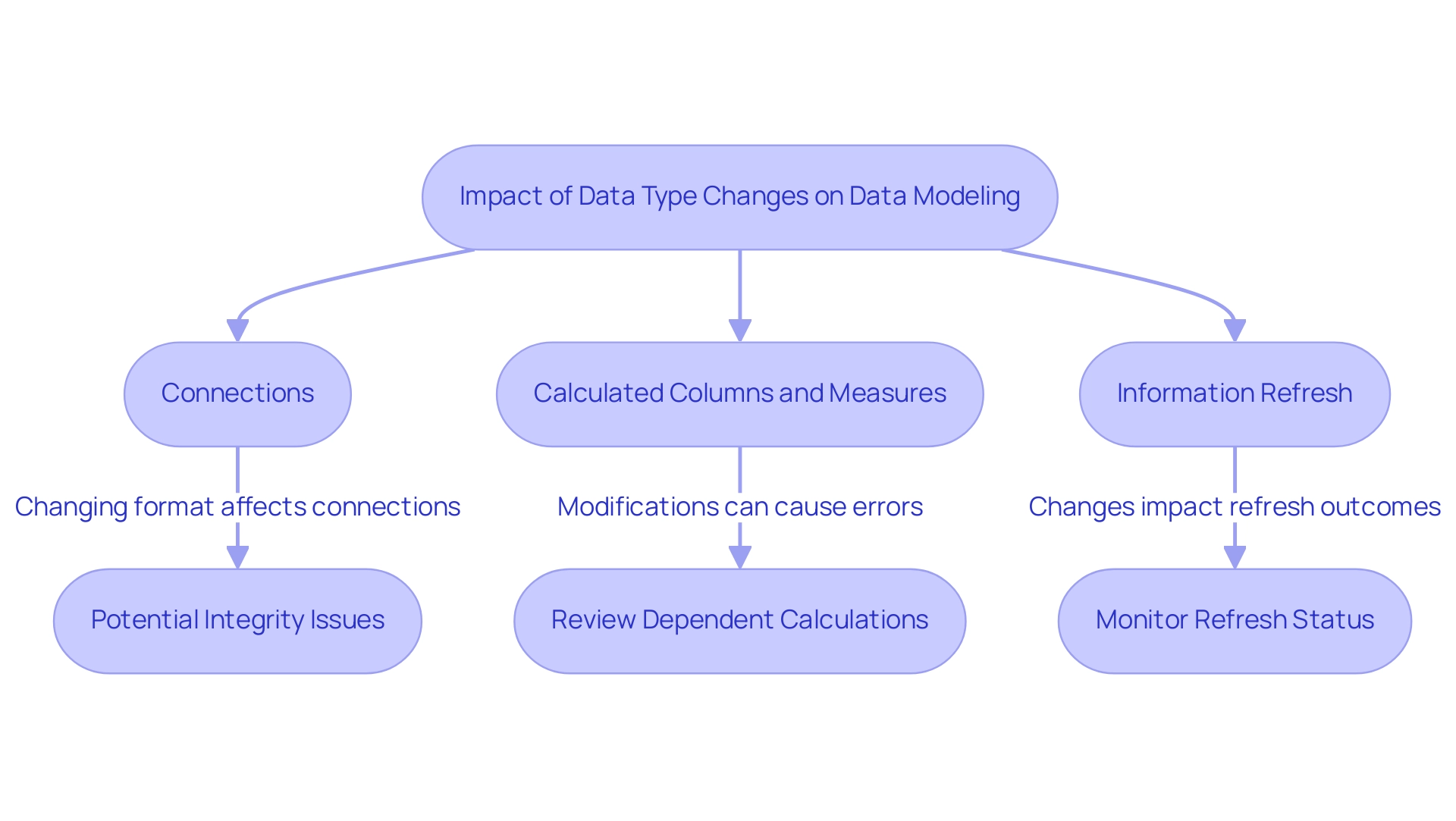

Changing a data type in Power BI can have significant effects on your data model. Here are the essential factors to keep in mind, particularly regarding the typical obstacles to using insights effectively:

- Relationships: Changing the data type of a column that is part of a relationship with another table can break that relationship and raise data integrity concerns. This is especially important because inconsistencies in data across reports, often worsened by a lack of governance strategy, can lead to confusion and mistrust. Confirm all relationships after making any changes to ensure continuity and accuracy in your data.

- Calculated Columns and Measures: Changing the type of a column used in calculations can cause errors or produce unexpected results in your reports. Because reports often contain numbers and graphs yet lack actionable guidance, a thorough review of all dependent calculations is essential. This lets you make the necessary corrections and preserve the integrity of your analytics, ultimately offering clearer guidance for stakeholders.

- Data Refresh: Changing data types can also affect subsequent data refreshes, potentially causing errors if the underlying data does not match the new type. Monitor your refresh status closely and address any issues promptly to avoid time-consuming report creation processes that detract from analysis.
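The relationship risk in the list above can be illustrated with a plain-Python analogy: when the key column on one side of a relationship changes type, values that look identical no longer match. The names below are hypothetical, and this sketch only models the mismatch itself, not how Power BI validates or repairs relationships.

```python
def unmatched_keys(fact_keys, dimension_keys):
    """Return fact-table keys that have no match in the dimension table."""
    lookup = set(dimension_keys)
    return [key for key in fact_keys if key not in lookup]


product_ids = [101, 102, 103]        # dimension table key, whole number
sales_product_ids = [101, 103, 103]  # fact table key, same type

print(unmatched_keys(sales_product_ids, product_ids))  # []

# After the fact column is retyped as text, no key matches any more.
sales_as_text = [str(k) for k in sales_product_ids]
print(unmatched_keys(sales_as_text, product_ids))  # ['101', '103', '103']
```

A check like this, run before and after a type change, is one way to confirm that both sides of a relationship still share the same key type.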

These considerations underscore the importance of managing data type changes carefully within your models. Data modeling is an iterative process that requires continuous review and updates as organizational needs evolve. By adopting a proactive approach, you can reduce risks and improve the overall quality of your data, ultimately leading to better insights and decision-making within your organization.

For instance, the case study titled ‘Early Detection of Issues & Errors’ illustrates how problems often go unnoticed until they affect users in production. Implementing a robust modeling approach helps identify these problems early, allowing for timely corrections and minimizing negative impacts on users. As one expert aptly stated,

“Data models have such a profound influence not only on how the software is written, but also on how we think about the problem at hand.”

Best Practices for Managing Data Types in Power BI

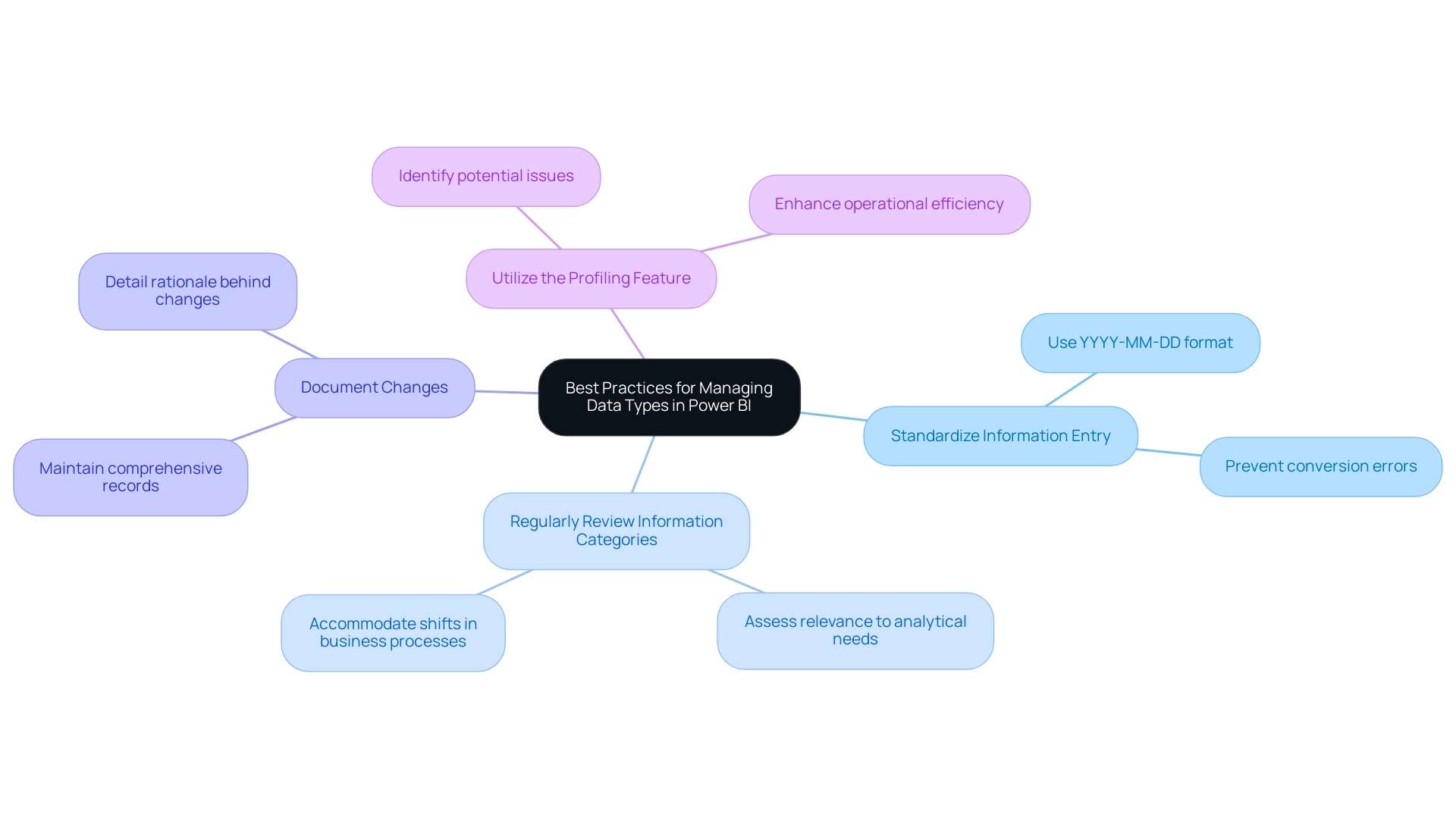

To handle data types effectively in Power BI and unlock actionable insights, adopt the following best practices:

- Standardize Data Entry: Consistency is key in data management. Implement standardized formats for entries, such as YYYY-MM-DD for dates, to prevent conversion errors when you change data types later in your analysis. This foundational step improves the dependability of the reporting delivered through our 3-Day BI Sprint service.

- Regularly Review Data Types: Periodically assess the data types used in your reports. This ensures they remain relevant to your analytical needs and can accommodate shifts in business processes or data sources, ultimately improving the effectiveness of your BI tools.

- Document Changes: Maintain a comprehensive record of changes made to data types, detailing the rationale behind each one. This documentation is a crucial resource for troubleshooting and maintaining data integrity over time, consistent with our dedication to delivering expert training and support.

- Utilize the Profiling Feature: Power BI’s profiling tools provide insight into the distribution and quality of your data. Use them to identify potential data type issues before making changes.
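The first practice above, standardizing dates as YYYY-MM-DD, lends itself to a quick pre-load check. The Python sketch below separates entries that parse in that format from those that do not; the function name and sample entries are invented, and `datetime.strptime` is somewhat lenient (it accepts months and days without leading zeros), so treat this as a first-pass filter rather than a strict validator.

```python
from datetime import datetime


def split_by_iso_date(entries):
    """Separate entries that parse as YYYY-MM-DD from those that do not."""
    valid, rejected = [], []
    for text in entries:
        try:
            valid.append(datetime.strptime(text, "%Y-%m-%d").date())
        except ValueError:
            # Wrong layout or an impossible calendar date.
            rejected.append(text)
    return valid, rejected


# Made-up entries: one standard, one day-first, one impossible date.
entries = ["2024-03-15", "15/03/2024", "2024-02-30"]
valid, rejected = split_by_iso_date(entries)
print(rejected)  # ['15/03/2024', '2024-02-30']
```

Entries in the rejected list are the ones most likely to cause conversion errors when the column is later typed as a date.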

Organizations that have switched to Delta Tables in Databricks have reported immediate improvements in query times, underscoring the significance of effective data management practices. By following these best practices, you improve your transformation processes and greatly enhance the quality and reliability of your BI reports. This strengthens your decision-making and propels growth, as highlighted by our extensive BI services, including custom dashboards and advanced analytics.

As highlighted in a case study on dedicated project management, where a dedicated project manager ensured regular updates and incorporated client feedback, effective oversight is crucial for managing data types, especially when types must change to meet operational standards. Additionally, as noted by esteemed contributor Szymon Dybczak, such practices are invaluable for streamlining day-to-day operations within Business Intelligence.

To experience the benefits of these best practices firsthand, consider booking our 3-Day Power BI Sprint service, where we promise to create a fully functional, professionally designed report on a topic of your choice.

This report can serve as a valuable template for your future projects, ensuring a professional design from the start.

Conclusion

Understanding and managing data types in Power BI is not just a technical necessity; it is a strategic advantage for organizations aiming to enhance their operational efficiency. By familiarizing oneself with the various data types—text, numbers, dates, and boolean values—professionals can ensure accurate data representation, thereby improving the reliability of insights derived from analysis. The article emphasizes the importance of verifying data types before transformations, avoiding common pitfalls like data conversion errors and loss of information, which can significantly undermine the analytical process.

Moreover, integrating Robotic Process Automation (RPA) into data management practices can alleviate repetitive tasks, enabling teams to focus on strategic initiatives. The step-by-step guide provided for changing data types highlights practical solutions that can streamline workflows and enhance data integrity. By implementing best practices such as:

- Standardizing data entry

- Utilizing data profiling features

organizations can create a robust framework for data management that drives informed decision-making.

Ultimately, the proactive management of data types is essential for fostering a culture of data integrity and operational excellence. As organizations navigate the complexities of data in Power BI, the insights and strategies discussed can empower them to transform challenges into opportunities, unlocking the full potential of their data assets. Embracing these practices not only enhances the quality of reporting but also positions organizations for sustained growth and innovation in an increasingly data-driven landscape.

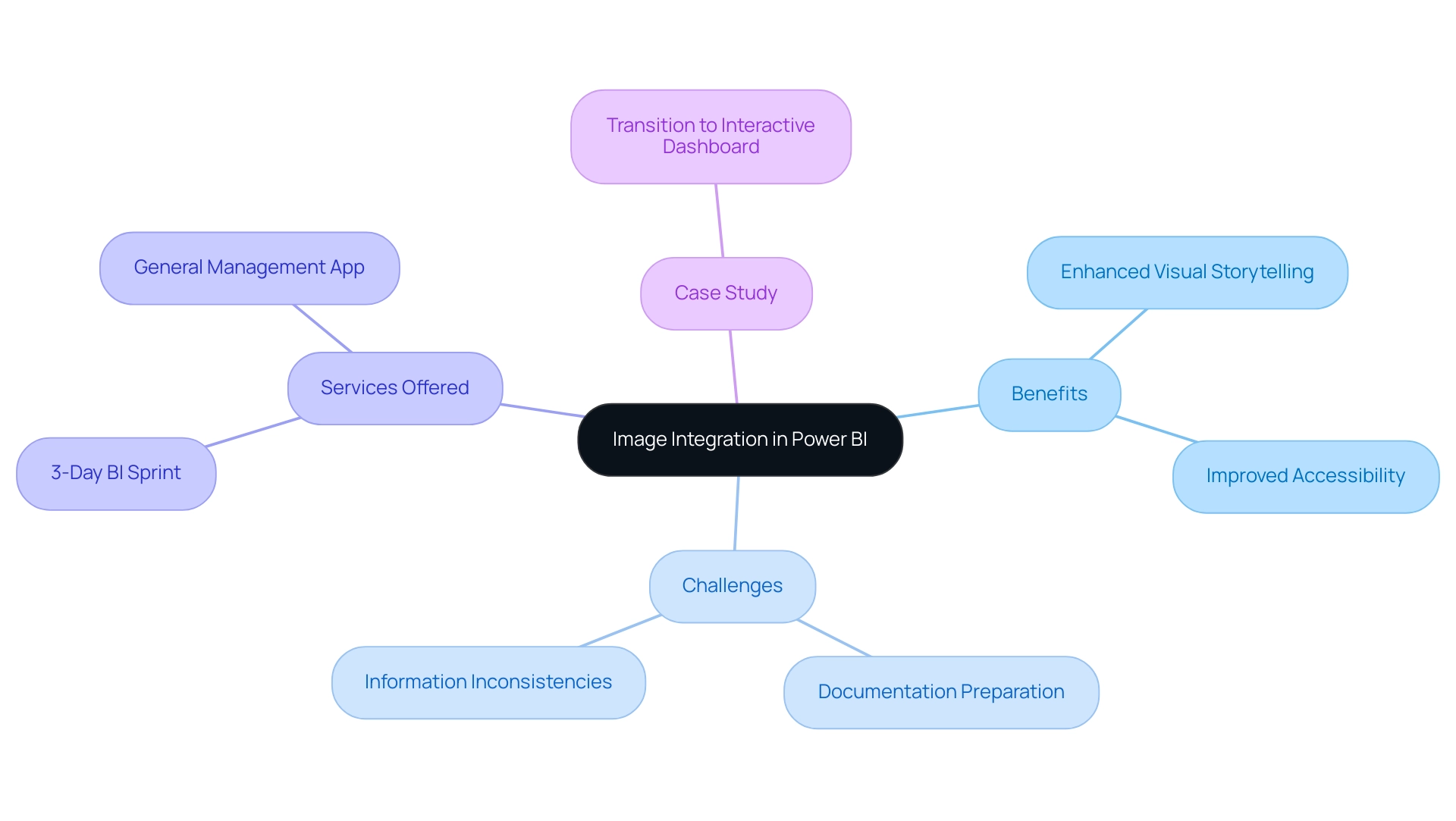

Introduction

In the realm of data analytics, the ability to transform raw information into actionable insights is paramount for organizations striving to maintain a competitive edge. Power BI emerges as a powerful ally, offering dynamic reporting capabilities that not only streamline the visualization process but also enhance decision-making across various sectors.

However, the journey doesn’t end with interactive dashboards; the need for meticulously formatted paginated reports is equally crucial, particularly in industries where compliance and detailed documentation are non-negotiable.

By navigating the nuances of both Power BI and paginated reports, organizations can harness the full potential of their data, ensuring clarity and precision in their reporting efforts.

This article delves into the strategic steps necessary for effectively converting Power BI reports into paginated formats, highlighting the integration of Robotic Process Automation (RPA) to further optimize operational efficiency and drive innovation.

Embracing these technologies not only mitigates the challenges of report creation but also positions businesses to thrive in an increasingly data-driven landscape.

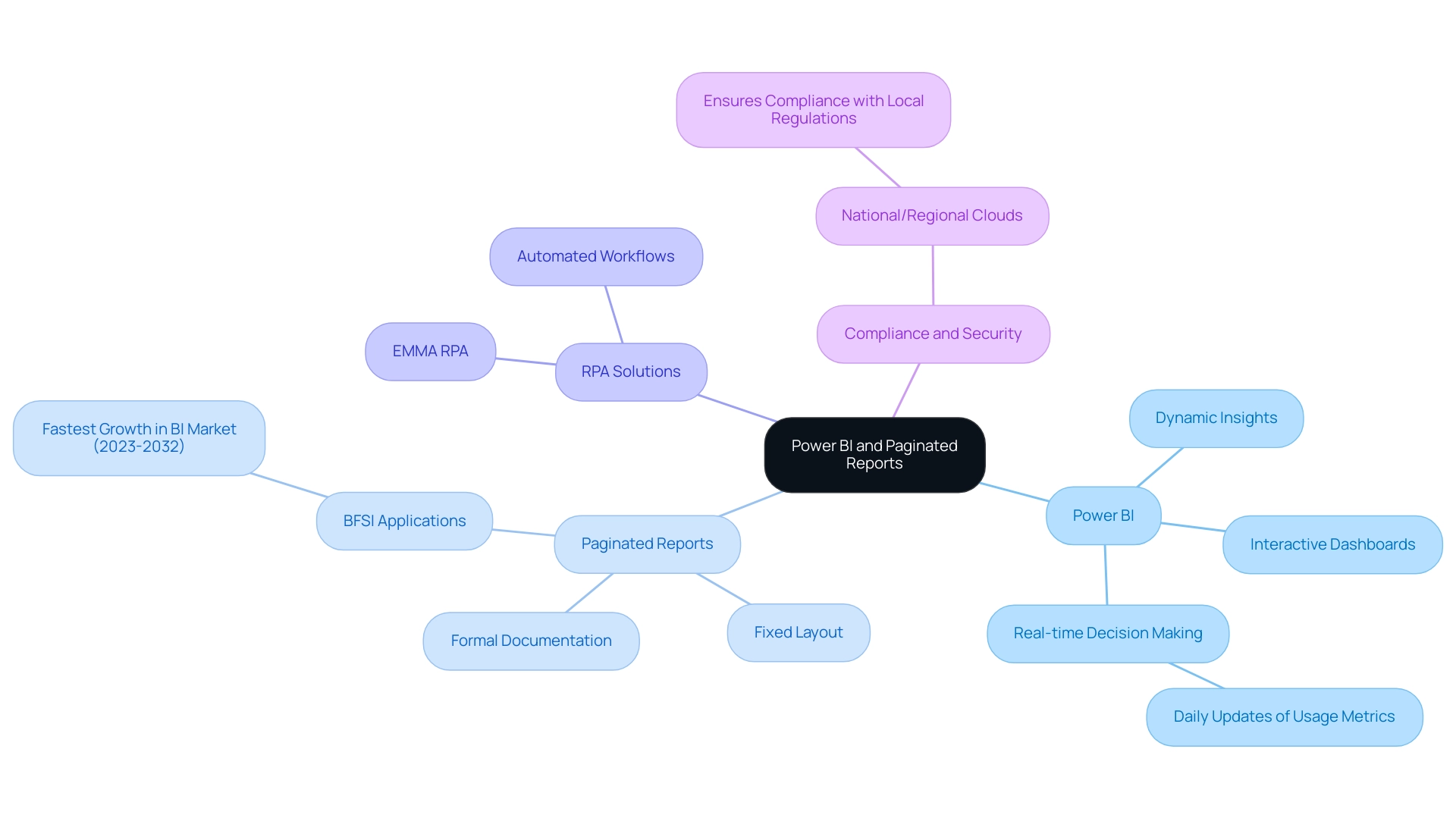

Understanding Power BI and Paginated Reports

Power BI stands out as a robust analytics tool, empowering organizations to visualize and share insights drawn from their data. It addresses common challenges in leveraging insights from dashboards, such as time-consuming report creation and data inconsistencies, by offering interactive reports and dashboards that support informed decision-making across sectors. Paginated reports, in contrast, serve a different need: they are designed for printing or sharing in a fixed layout, which makes them well suited to detailed material that requires precise formatting.

This distinction is critical, because paginated reports excel at handling large datasets and presenting them in a structured way. Such features are especially beneficial for formal documentation and compliance purposes, a key consideration for industries like banking, financial services, and insurance (BFSI), which, according to Inkwood Research, will experience the fastest growth in the business intelligence market from 2023 to 2032. RPA solutions such as EMMA RPA and Automated Workflows can further enhance operational efficiency by automating repetitive report-generation tasks, relieving staff of repetitive work.

Furthermore, the daily updates of usage metrics reports underscore the dynamic nature of Power BI, helping organizations base decisions on current data. Power BI’s availability in national and regional clouds also demonstrates its commitment to compliance with local regulations, as highlighted in case studies on usage metrics. By understanding these differences and leveraging both Power BI and RPA, organizations can better meet operational and compliance standards, driving growth and innovation.

Struggling to extract meaningful insights from data can leave businesses at a competitive disadvantage, underscoring the urgency of adopting these technologies.

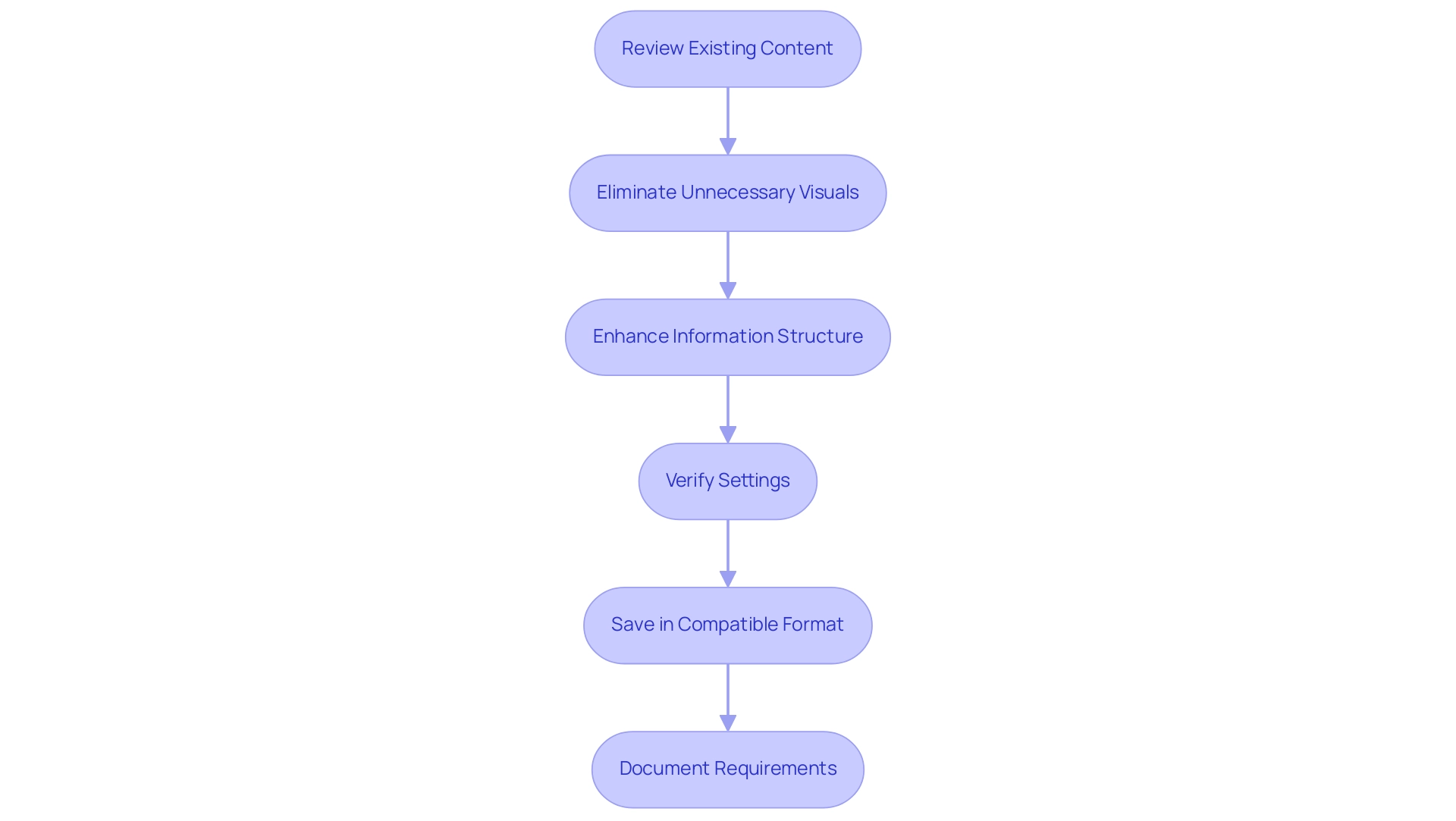

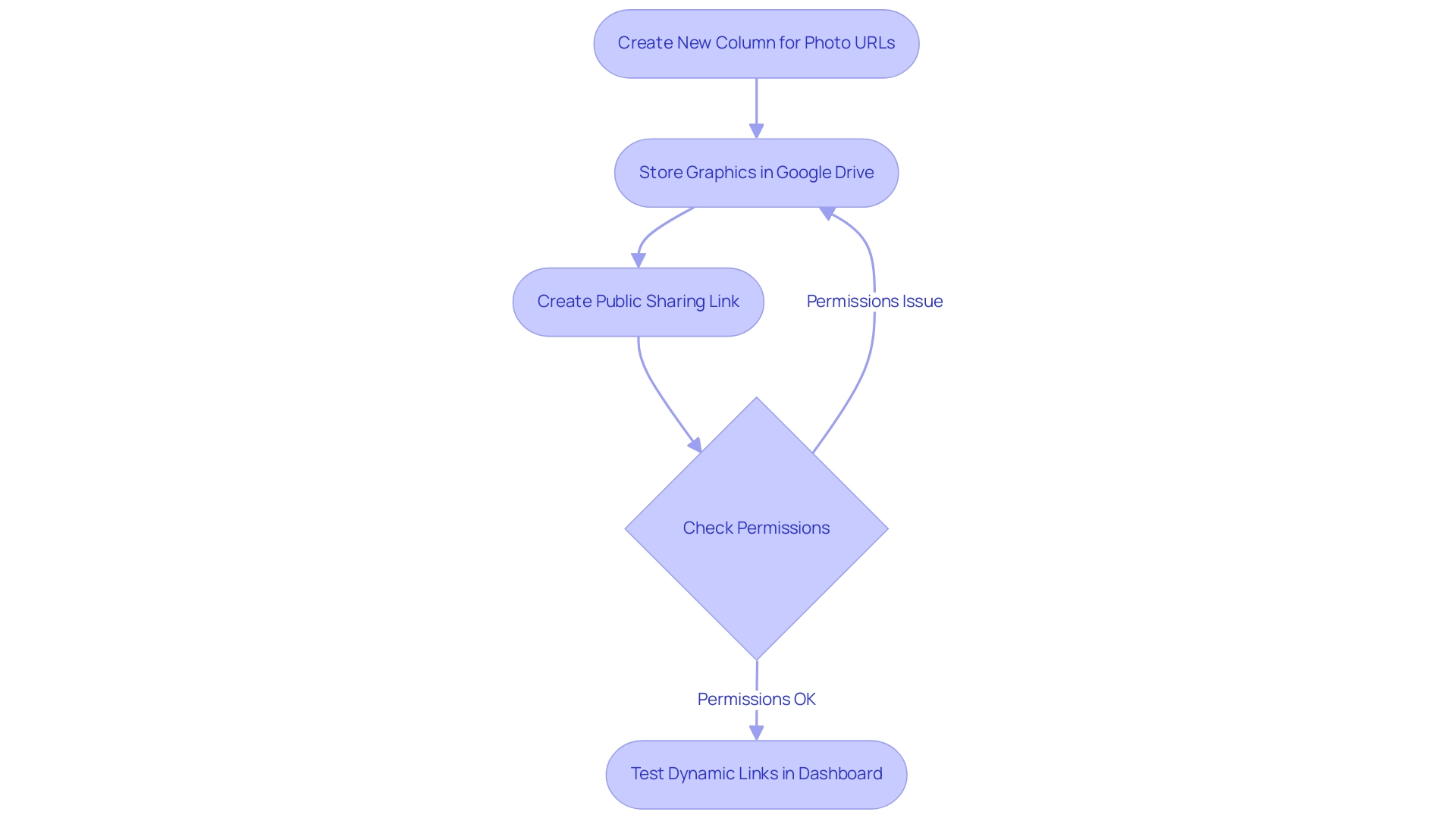

Step 1: Preparing Your Power BI Report for Conversion

To prepare your Power BI report for conversion to a paginated report, begin with a thorough review of the existing content. Ensure that all visuals and data elements are correctly formatted and functional, as this foundational step greatly influences the outcome. Remove visuals that will not translate well into a paginated format, streamlining the design for clarity and effectiveness.

Optimizing your data model is equally important; paginated reports can handle larger datasets effectively, and a well-structured model greatly improves query performance. For instance, organizations that switched to Delta Tables in Databricks reported immediate improvements in query times, underscoring the importance of optimizing models. Recent practice has also shown that verifying settings like Assume Referential Integrity in relationships can improve performance with DirectQuery sources.

When you are ready, save your Power BI report in a compatible format.

Document any specific requirements or modifications needed for the conversion process to ensure a smooth transition. To illustrate the importance of a well-structured dataset, consider the case study on managing duplicate values in relationships: by creating a dimension table with unique values, organizations established effective relationships across multiple data sources. As Szymon Dybczak, an esteemed contributor, noted, ‘Here’s a good article that answers your question. I think the author did a pretty good job – many of his advice I apply in my everyday job.’

This strategic approach not only resolved potential issues but also optimized the overall data model, showcasing how BI can drive data-driven insights for business growth. By utilizing RPA tools such as EMMA RPA and Automation, companies can automate repetitive tasks associated with report creation, significantly improving operational efficiency and decreasing the time spent on manual processes.

By following these steps, you can significantly improve the performance and usability of your reports in paginated form, and use the combined capabilities of BI and RPA to raise operational efficiency and avoid the competitive disadvantage of struggling to extract meaningful insights.
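The dimension-table idea from the case study can be sketched in Python: collect the distinct values of a key column so that each value appears exactly once on the “one” side of a relationship. The function name, column names, and rows below are hypothetical and only illustrate the deduplication step.

```python
def build_dimension(rows, key):
    """Collect the distinct values of `key`, keeping first-seen order."""
    # dict.fromkeys drops duplicates while preserving insertion order.
    return list(dict.fromkeys(row[key] for row in rows))


# Made-up fact rows in which the customer name repeats.
sales = [
    {"customer": "Contoso", "amount": 120},
    {"customer": "Fabrikam", "amount": 80},
    {"customer": "Contoso", "amount": 45},
]
customers = build_dimension(sales, "customer")
print(customers)  # ['Contoso', 'Fabrikam']
```

The resulting list plays the role of the dimension table, while the original rows remain the fact table that references it.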

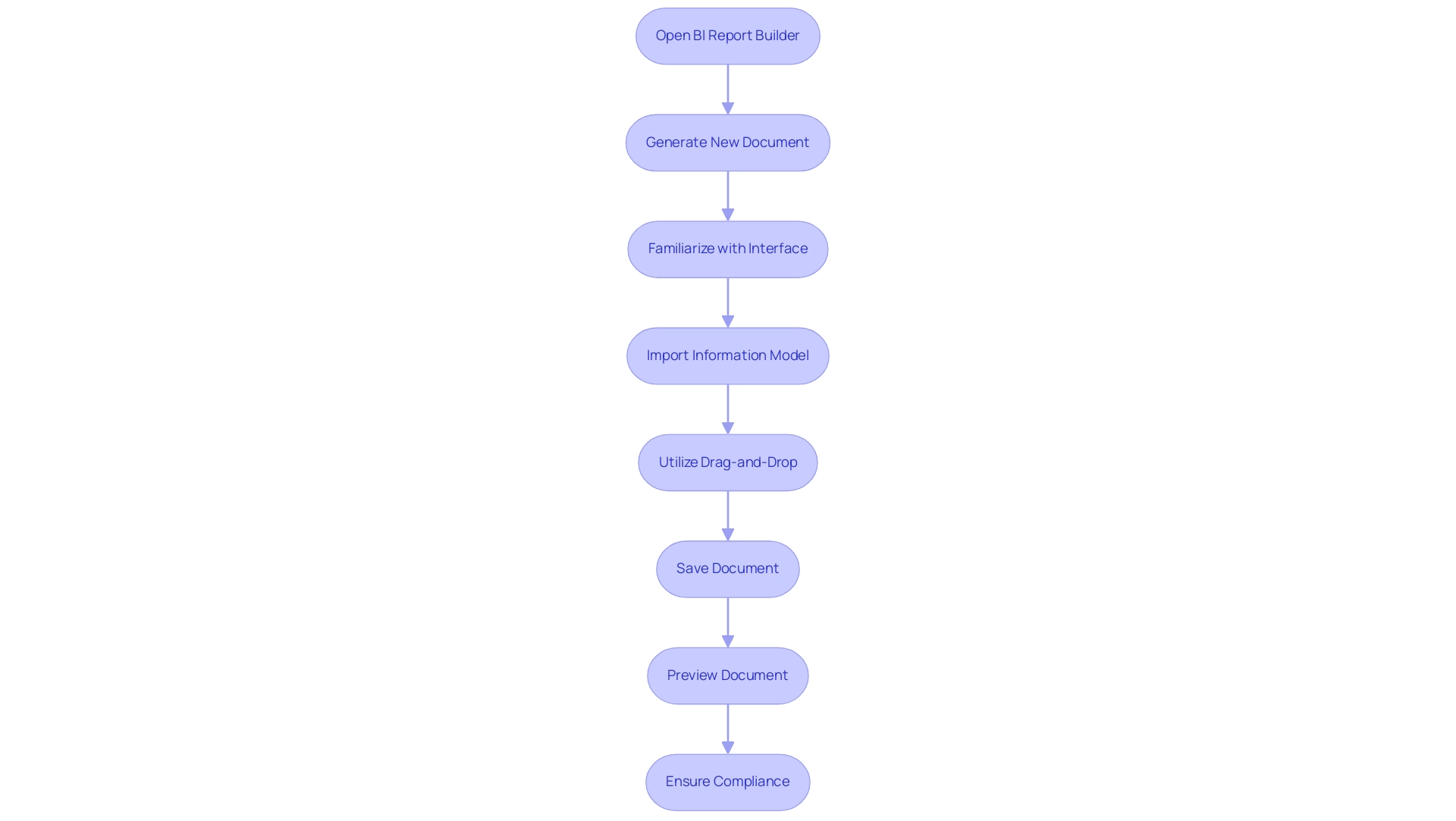

Using Power BI Report Builder for Paginated Reports

Start by opening Power BI Report Builder and creating a new report. Get accustomed to the interface, including the Report Data pane, the design surface, and the Properties pane, which together streamline report authoring. With nearly 10,000 users online, Power BI Report Builder is a widely utilized tool, underscoring its relevance in the field of business intelligence.

To build on your existing work, connect to the same data source that your Power BI file uses so that the paginated report draws on the same data. Use the drag-and-drop functionality to add tables, charts, and images, ensuring your report is both visually appealing and informative. Save your work regularly to avoid data loss, and use the preview feature to check how the report will look once published.

As emphasized by Ronnie from the Community Support Team, ‘You can only run usage metrics analysis in the Power BI service.’ However, if you save a usage metrics report or pin it to a dashboard, you can open and interact with that report on mobile devices. This capability is essential for accessibility across platforms, reinforcing the actionable insights your reports provide. Additionally, consider the case study on usage metrics in national/regional clouds, which emphasizes how Power BI maintains compliance with local regulations while ensuring security and privacy standards.

By adhering to these steps, including the 3-Day BI Sprint for swift report generation and the General Management App for thorough oversight, you can overcome time-consuming report creation and data inconsistencies and produce a paginated report that fulfills your operational needs.

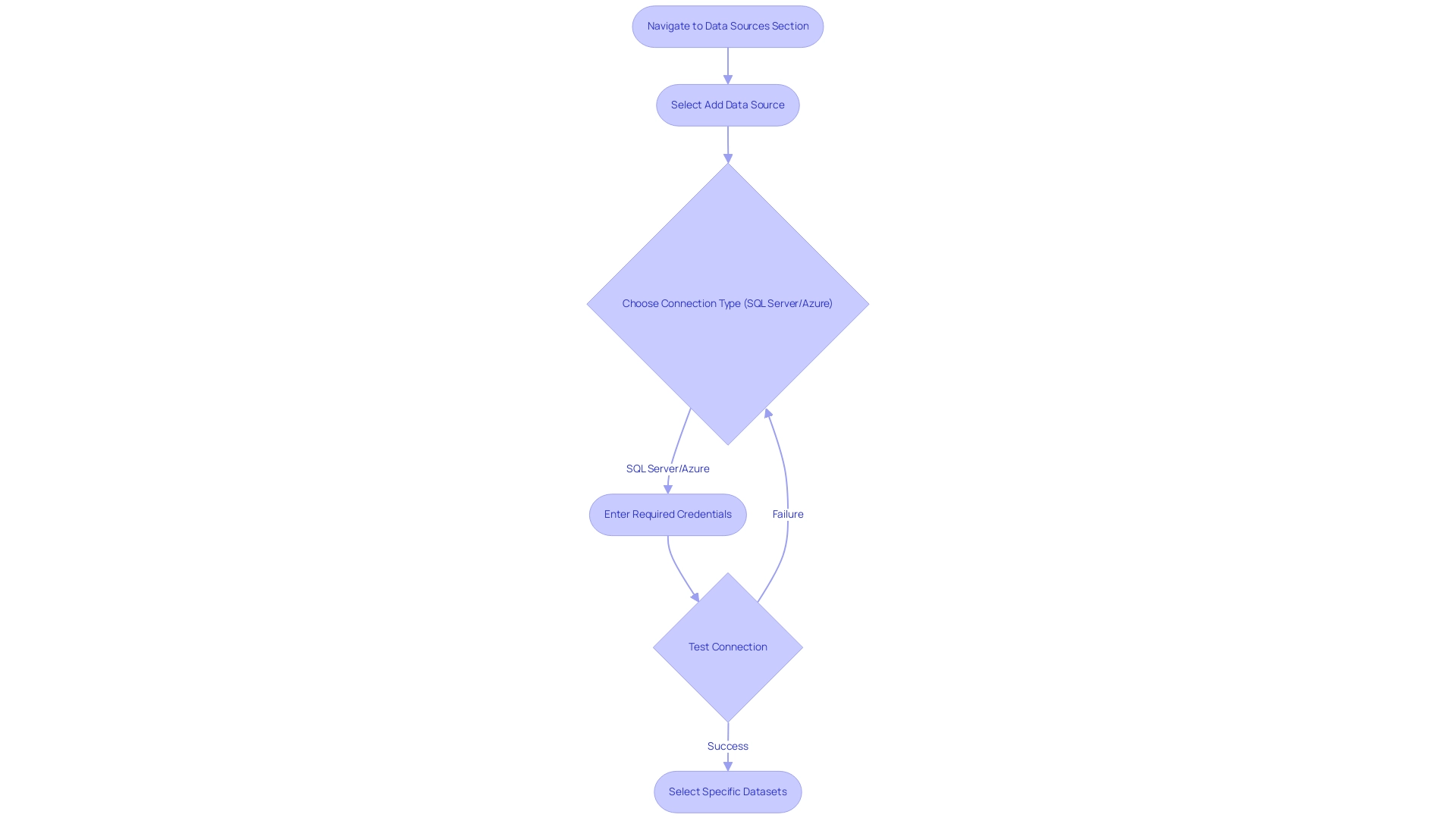

Step 2: Connecting to Data Sources for Your Paginated Report

Connecting to your data sources in Power BI Report Builder is a straightforward process that lays the foundation for effective reporting. Begin by navigating to the ‘Data Sources’ section and selecting ‘Add Data Source.’ Here, you will select the suitable connection type for your source, such as SQL Server or Azure.

Make sure to enter the required credentials and test the connection to confirm its success. Once it is established, you can select the specific datasets that your paginated report will use. This initial step is vital; the integrity and quality of your reporting depend on the accuracy of the data you connect.

As HadilBENAmor emphasizes,

BI, developed by Microsoft, is a robust tool that streamlines information integration and improves reporting capabilities.

To improve your data models in Power BI, consider using a star schema design and minimizing unnecessary relationships, as this approach significantly affects data integrity and reporting accuracy. Moreover, applying Robotic Process Automation (RPA) can simplify repetitive reporting tasks, shortening time-consuming creation processes, reducing errors, and resolving data inconsistencies.

RPA can free up your team’s resources, allowing them to focus on more strategic initiatives. The case study titled ‘AI and Power BI’ illustrates how specialized AI visuals enhance interactivity for report consumers, making information exploration more engaging. These AI visuals significantly enhance the user experience by providing interactive elements that facilitate deeper insights into the information.

Additionally, with the average salary for a Data Analyst in India being approximately ₹6,24,796 per year, the significance of effective data integration and reporting cannot be overstated. Ensuring strong connections not only enhances the reliability of your findings but also enables you to provide valuable and actionable information to stakeholders, driving business growth through informed decision-making.

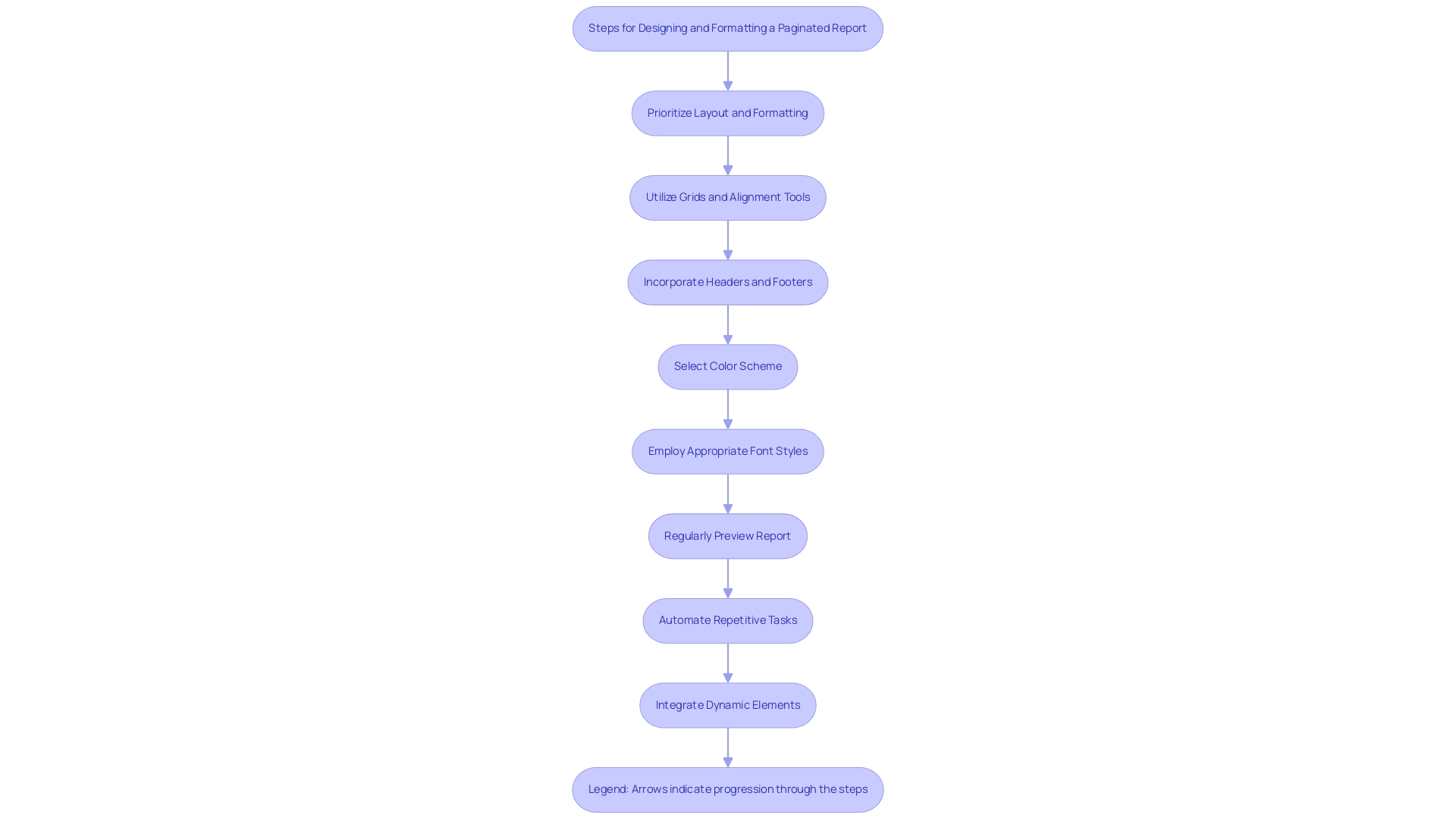

Step 3: Designing and Formatting Your Paginated Report

During the design phase of your paginated report, prioritizing layout and formatting is essential to improve readability and user engagement. In today’s data-rich environment, leveraging Business Intelligence and RPA tools, such as EMMA RPA and Power Automate, can empower organizations and drive meaningful action, helping to overcome challenges such as time-consuming report creation and data inconsistencies. As noted in industry insights, ‘When used to empower organizations and drive meaningful action, data visualization will continue to lead in business intelligence and analytics by adopting cutting-edge technologies.’

Utilizing grids and alignment tools will help you organize tables and charts, creating a visually appealing structure that enhances operational efficiency. Incorporating headers and footers not only provides context but also serves as navigation aids for users, facilitating easier access to insights. Select a color scheme that aligns with your organization’s branding while ensuring text remains legible against the background.

Furthermore, employing appropriate font styles and sizes for different sections establishes a clear hierarchy of information, making it easier for readers to digest content. Regularly previewing your report is crucial; this iterative approach allows you to make necessary adjustments, ensuring the final product meets both aesthetic and functional expectations. Additionally, visual level format strings, which require visual calculations to be enabled in Power BI, are a key consideration for effective formatting.

Automating repetitive tasks with tools like EMMA RPA can significantly enhance efficiency and employee morale, freeing up valuable time for more strategic activities. As demonstrated in the case study on diversity and inclusion at the workplace, a well-designed Power BI dashboard can effectively illustrate important organizational trends, showcasing male and female employee distribution across various dimensions. As per the latest design trends for 2024, integrating dynamic elements can also enhance user interaction, fostering a more engaging experience while unlocking actionable insights that drive business growth.

Conclusion

Transforming Power BI reports into paginated formats is a multifaceted process that can significantly enhance operational efficiency and decision-making capabilities within organizations. By understanding the distinct features of Power BI and paginated reports, companies can tailor their reporting strategies to meet both compliance and documentation needs effectively. The integration of Robotic Process Automation (RPA) further streamlines this transformation, reducing the burden of repetitive tasks and allowing teams to focus on strategic initiatives.

The steps outlined in this article highlight the importance of:

- Preparation

- Connection to data sources

- Thoughtful design

in creating impactful paginated reports. From optimizing data models to ensuring seamless integration with Power BI Report Builder, each stage plays a critical role in enhancing the clarity and precision of reports. Regularly previewing and refining the report design not only improves readability but also ensures that the final product meets the expectations of stakeholders.

Ultimately, embracing these technologies and methodologies empowers organizations to unlock the full potential of their data. By leveraging Power BI and RPA effectively, businesses can navigate the complexities of report generation, foster innovation, and maintain a competitive edge in an increasingly data-driven landscape. The time to act is now; harnessing these tools will pave the way for improved insights and informed decision-making, driving sustainable growth and success.

Introduction

In the realm of data visualization, Power BI stands out as a powerful tool that transforms raw data into actionable insights. One of its most impactful features is conditional formatting, which allows users to enhance their reports by dynamically altering visual presentations based on data attributes. This capability not only highlights critical metrics but also fosters informed decision-making, enabling organizations to respond swiftly to changing business landscapes.

As the demand for real-time intelligence grows, understanding how to effectively implement conditional formatting becomes essential for maximizing operational efficiency and driving business growth. This article delves into the intricacies of conditional formatting in Power BI, offering practical guidance and best practices to empower users in their reporting endeavors. From troubleshooting common issues to exploring diverse formatting options, readers will discover how to leverage this feature to elevate their data presentation and ensure clarity in communication.

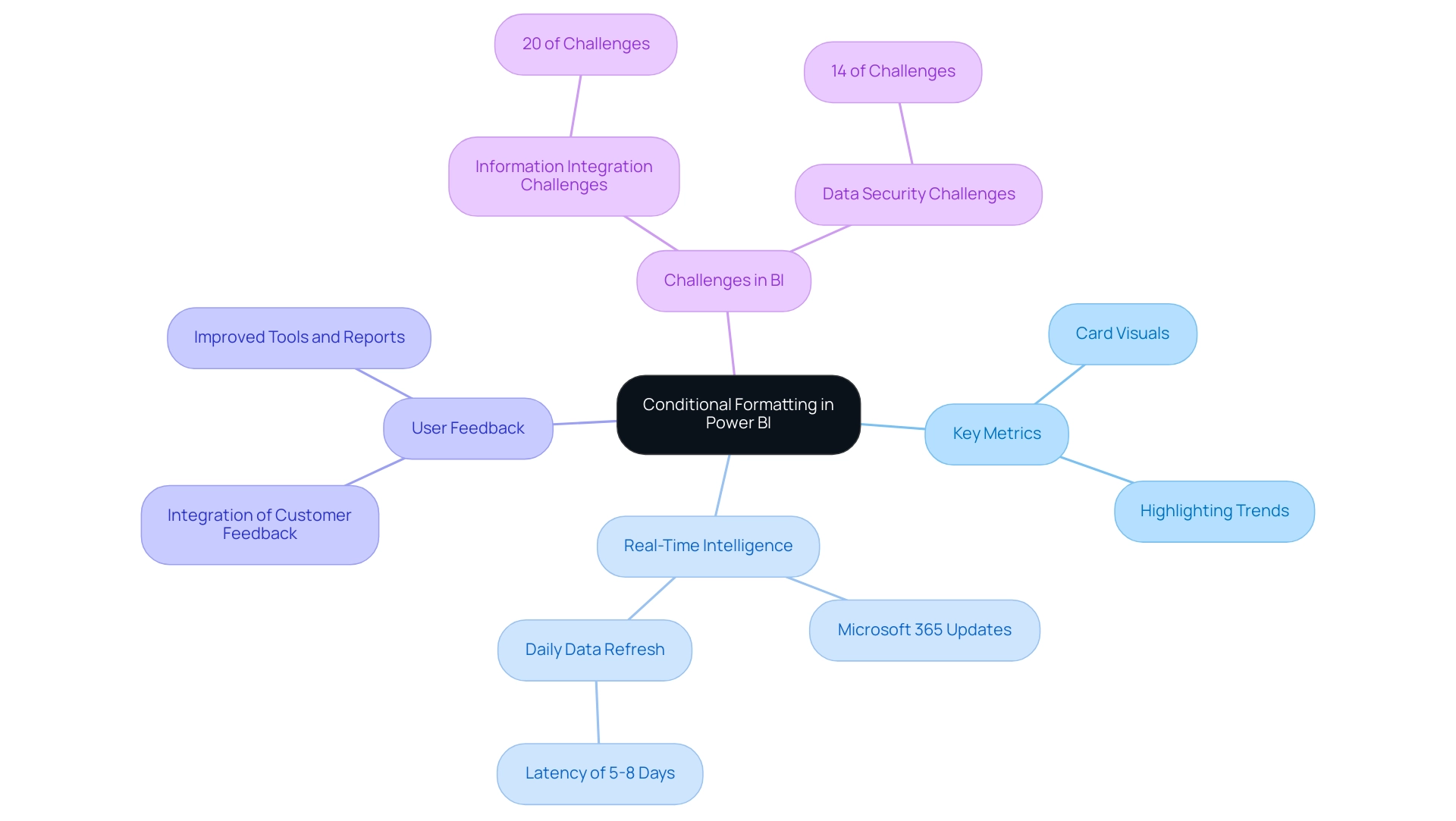

Understanding Conditional Formatting in Power BI

Conditional formatting in Power BI changes how users engage with data by letting them adjust a visual’s appearance based on specific values. This capability is particularly valuable in card visuals, where it highlights the key metrics and trends that drive decision-making. With our extensive Power BI services, including the 3-Day Power BI Sprint, users can swiftly generate professionally crafted reports that highlight key insights and improve reporting.

Additionally, the General Management App provides a robust platform for comprehensive management and smart reviews, further supporting actionable insights. By implementing rules that dictate visual changes, such as altering colors in response to data values, users can support informed choices. Recent advancements in Power BI have further enhanced these features, with the back-end Microsoft 365 service now refreshing data daily, albeit with a latency of 5-8 days.

This refresh cadence matters because it lets conditional formatting on cards reflect the most current data available. As expert Surya Teja Josyula notes, ‘Real-Time Intelligence is generally available now!’ This opens new avenues for utilizing Application Lifecycle Management (ALM) and REST APIs to enhance design, making real-time data visualization more effective.

Furthermore, integrating customer feedback during the preview period enriches the user experience, ensuring that essential insights are readily accessible with improved tools and reports. It is also important to acknowledge that data integration and security challenges in self-service business intelligence, accounting for 20% and 14% respectively, highlight the need for effective visualization techniques such as conditional formatting on cards. The Old Usage Metrics Dashboard is a prime example of how foundational tools can provide baseline inventory and usage statistics for Power BI items, laying the groundwork for meaningful analysis and visualization.

Embracing conditional formatting on card visuals not only enhances the effectiveness of data visualization but also empowers users to make data-driven decisions with confidence, ultimately supporting operational efficiency and driving business growth.

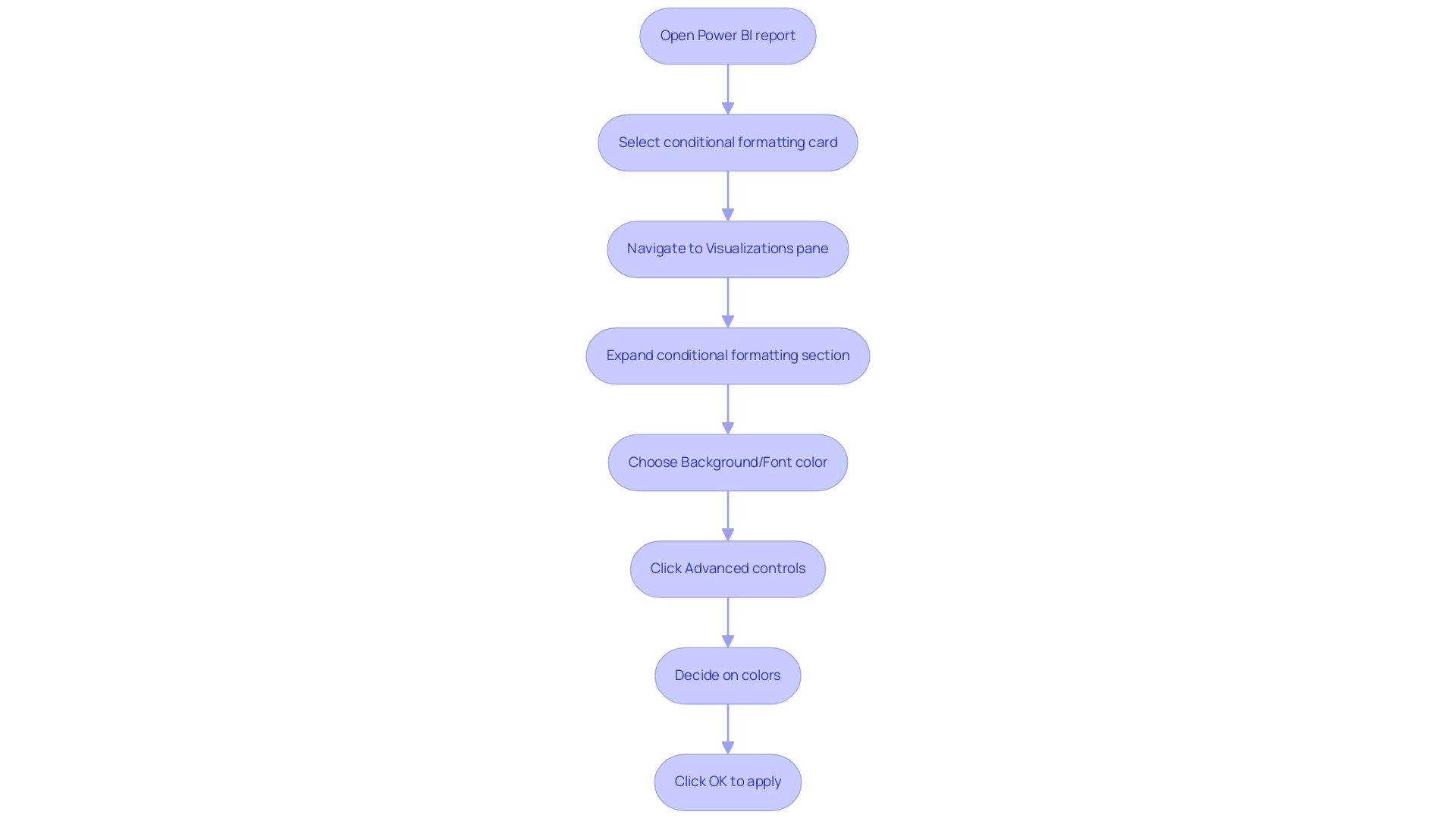

Step-by-Step Process to Apply Conditional Formatting to Cards

- Begin by opening your Power BI report and selecting the card visual that you intend to format.

- Navigate to the Visualizations pane and click on the ‘Format’ icon, resembling a paint roller.

- Scroll down until you locate the ‘Conditional formatting’ section and expand this option to reveal more settings.

- Choose either ‘Background color’ or ‘Font color’ depending on your preference for visual emphasis.

- Click on ‘Advanced controls’ to establish the specific formatting rules that will govern your visual.

- Choose the field that will drive the formatting and set your rules, such as formatting the card when the value surpasses a defined threshold. For instance, if you are using star ratings, remember that the number of ranges for these ratings is typically set to 5, which can guide your formatting decisions.

- Decide on the colors that will represent the different conditions, keeping the color palette simple and consistent to maintain clarity for your audience.

- Finally, click ‘OK’ to apply your changes. Your card will now display the designated formatting based on the underlying data, which makes it both more attractive and more effective.

As Joleen Bothma aptly states, “Unlock the power of Power BI slicers with our step-by-step tutorial. Learn to create, customize, and optimize slicers for improved documents.” This principle applies equally to formatting, where clarity is essential to overcoming challenges in report creation and data inconsistencies, which can obstruct effective decision-making.
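The rule set configured in the steps above can be modeled outside Power BI to check the thresholds before entering them in the dialog. The following is a minimal Python sketch, not Power BI's own implementation: the function name `pick_color`, the sales thresholds, and the hex colors are all hypothetical values chosen for illustration.

```python
from typing import List, Optional, Tuple

# Each rule is (inclusive lower bound, hex color). The values are hypothetical.
Rule = Tuple[float, str]


def pick_color(value: Optional[float], rules: List[Rule],
               default: str = "#808080") -> str:
    """Return the color of the highest rule whose lower bound the value meets."""
    if value is None:
        # A blank card value falls back to the default color.
        return default
    chosen = default
    for lower_bound, color in sorted(rules):  # ascending by lower bound
        if value >= lower_bound:
            chosen = color
    return chosen


# Assumed thresholds: red from 0, amber from 50,000, green from 100,000.
SALES_RULES = [(0, "#C62828"), (50_000, "#F9A825"), (100_000, "#2E7D32")]

print(pick_color(120_000, SALES_RULES))  # green band
print(pick_color(75_000, SALES_RULES))   # amber band
print(pick_color(None, SALES_RULES))     # default for blanks
```

Writing the rules down as ordered bounds makes gaps and overlaps between bands easy to spot before they show up as unexpected colors on the card.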

Additionally, consider the practical application of card conditional formatting illustrated in the case study titled ‘Format with Formula/Calculation.’ In this case study, sales figures are emphasized based on conditions such as surpassing the previous year’s sales by 190%, demonstrating how effective formatting can tackle challenges in data presentation and enhance operational efficiency. This example highlights the potential effect of well-designed formatting on sales performance and overall business growth, making it a powerful tool in your BI arsenal.

Exploring Different Types of Conditional Formatting Options

Power BI offers a variety of conditional formatting options that make visualizations more insightful, particularly when tackling the challenges of time-consuming report creation, data inconsistencies, and the need for a robust governance strategy. Here’s a closer look at the key features:

- Color Scales: This feature applies a gradient of colors based on the values of a selected field. It visually conveys the intensity of the data, allowing for quick evaluations of performance across various metrics. Percentages in these settings are measured along the range of values: in a range of percent values from 21.73% to 44.36%, 50% of that range is about 33%. The improvements made in 2024 have rendered these scales more user-friendly, facilitating quick interpretation of the data and decreasing the time required for report preparation. By applying color scales to a card, organizations can mitigate inconsistencies in data representation and improve overall governance in reporting.

- Data Bars: Adding horizontal bars within cards offers a clear visual representation of the relative size of values. This tool serves as an immediate visual cue, making it simple to compare performance metrics side by side without delving into complex details, thereby enhancing the clarity and effectiveness of reports. Data bars can help ensure that stakeholders are aligned on performance indicators, addressing the lack of actionable insights.

- Icons: Incorporating icons into conditional formatting allows for the depiction of different states, such as up or down arrows for performance metrics. As pointed out by Super User amitchandak, utilizing unichar, icon measures, and color measures can significantly enhance the clarity of your visuals (refer to https://exceleratorbi.com.au/conditional-formatting-using-icons-in-power-bi/). This type of formatting can quickly signal trends or changes in your data, enhancing decision-making processes and providing actionable guidance for stakeholders. By using icons in card conditional formatting, organizations can reinforce governance by making performance indicators instantly recognizable.

- Rules-based Formatting: This option allows users to establish specific rules that modify colors or styles based on predetermined thresholds. For instance, values exceeding a target can be highlighted in green, while those falling short can be displayed in red. This immediate visual feedback significantly enhances clarity in reports. One user shared a workaround: create a calculated column in DAX that compares the values and set its data category to Image URL. The new column shows the difference, and the user suggested coloring it by the difference value, with green for positive and red for negative. By applying rules-based formatting to cards, organizations can enforce governance strategies that ensure data integrity and clarity.

Incorporating these styling techniques not only enhances the aesthetic appeal of your reports but also improves their functional value, ensuring your team can easily interpret the critical metrics that drive operational efficiency and address the challenges of report creation and information governance.
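To make the color scale arithmetic above concrete, the sketch below computes the midpoint of the 21.73% to 44.36% range and maps a value to a gradient color. The linear interpolation between two RGB colors is an assumption made for illustration, not a statement of how Power BI computes its gradient; `midpoint`, `lerp_color`, and the white-to-blue palette are hypothetical.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def midpoint(lo: float, hi: float) -> float:
    """Value that sits 50% of the way along the range [lo, hi]."""
    return lo + 0.5 * (hi - lo)


def lerp_color(value: float, lo: float, hi: float,
               start_rgb: RGB, end_rgb: RGB) -> str:
    """Linearly interpolate between two RGB colors across [lo, hi]."""
    if hi == lo:
        t = 0.0
    else:
        # Clamp so values outside the range take the nearest end color.
        t = min(1.0, max(0.0, (value - lo) / (hi - lo)))
    channels = [round(s + t * (e - s)) for s, e in zip(start_rgb, end_rgb)]
    return "#{:02X}{:02X}{:02X}".format(*channels)


LOW, HIGH = 21.73, 44.36                    # range quoted in the text, in percent
WHITE, BLUE = (255, 255, 255), (0, 0, 255)  # assumed palette

print(f"{midpoint(LOW, HIGH):.1f}")         # about 33, as stated above
print(lerp_color(LOW, LOW, HIGH, WHITE, BLUE))
print(lerp_color(HIGH, LOW, HIGH, WHITE, BLUE))
```

The midpoint works out to roughly 33.045, which is why 50% of the range is quoted as 33% rather than 50%.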

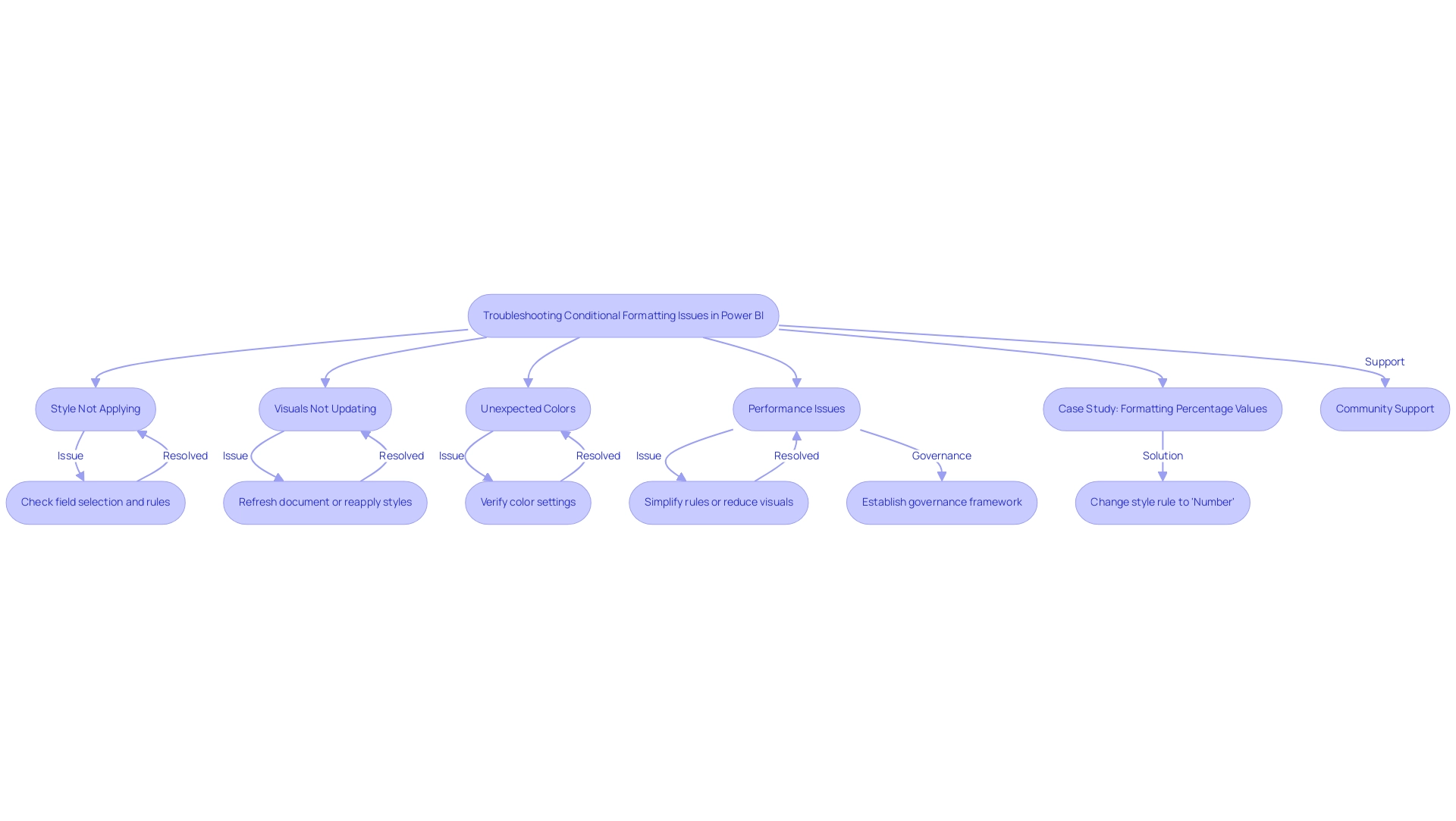

Troubleshooting Common Issues with Conditional Formatting in Cards

When applying conditional formatting to cards in Power BI, users often encounter several common challenges that can hinder their reporting efficiency and impact decision-making. Addressing these issues proactively can streamline your workflow and enhance the visual impact of your data presentations:

- Formatting Not Applying: A frequent hurdle is ensuring that the correct field is selected for your conditional formatting rules. It’s essential to double-check that the rules are set up accurately, as even minor misconfigurations can lead to unexpected results, diverting your focus from meaningful insights to frustrating report creation.

- Visuals Not Updating: Occasionally, visuals may fail to refresh automatically after changes are made. If your formatting changes are not shown, try refreshing the report or reapplying the conditional formatting. This simple step can often resolve the issue and allow you to focus on deriving insights instead of troubleshooting.

- Unexpected Colors: If the colors displayed do not match your expectations, verify the color settings within your conditional formatting rules. Making sure that these settings match your intended results avoids misunderstanding and mistrust in your data, which is essential for efficient communication with stakeholders.

- Performance Issues: Intricate conditional formatting can occasionally slow report performance. If you encounter lag, consider simplifying your rules or reducing the number of visuals that use complex formatting. Streamlining these elements can significantly enhance your report’s responsiveness, thereby helping you spend less time on report creation and more on actionable analysis. Moreover, inconsistencies across reports due to a lack of governance strategy can exacerbate these challenges, leading to confusion and mistrust in the data presented. Establishing a strong governance framework can reduce these risks, enabling more dependable data insights.

A pertinent case study demonstrates this: a user faced issues with conditional formatting while trying to show percentage values in a table. They aimed to format values equal to or higher than 95% in green and those lower than 95% in red but faced difficulties due to empty values in the percentage columns. The issue was resolved by changing the formatting rule to use ‘Number’ instead of ‘Percent’, showcasing how understanding the rules can lead to effective solutions.

As one user expressed, ‘It works! I don’t know why I didn’t think this way. I created my new column in Query Editor; I think it should be the same as your way, but it didn’t. Thank you very much!’

This sentiment captures the relief and satisfaction that comes with overcoming formatting challenges, highlighting the importance of community support and shared knowledge in troubleshooting. By understanding these common pitfalls and implementing effective strategies, you can ensure that the conditional formatting in your Power BI reports is not only visually appealing but also functional and efficient, ultimately empowering you to leverage insights more effectively.
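The ‘Number’ versus ‘Percent’ distinction in the 95% case study above is easy to misread, so a small model may help. The sketch assumes, following Microsoft’s description of rule boundaries, that ‘Percent’ means a position within the minimum-to-maximum range of the data while ‘Number’ compares against the raw value. The helper names, the sample values, and the way blanks are skipped are hypothetical.

```python
from typing import List, Optional


def threshold_as_number(threshold: float) -> float:
    """'Number': the threshold is compared with the raw value directly."""
    return threshold


def threshold_as_percent(threshold_pct: float,
                         values: List[Optional[float]]) -> float:
    """'Percent': the threshold is a position within the min-max range."""
    present = [v for v in values if v is not None]  # blanks are skipped here
    lo, hi = min(present), max(present)
    return lo + (threshold_pct / 100.0) * (hi - lo)


def color_for(value: Optional[float], cutoff: float) -> Optional[str]:
    """Green at or above the cutoff, red below it, no color for blanks."""
    if value is None:
        return None
    return "green" if value >= cutoff else "red"


# Percentages stored as fractions, so 95% is 0.95; one value is blank.
values = [0.90, 0.96, None, 0.99, 0.80]

number_cutoff = threshold_as_number(0.95)
percent_cutoff = threshold_as_percent(95, values)

print(color_for(0.96, number_cutoff))   # green: 0.96 is at least 0.95
print(color_for(0.96, percent_cutoff))  # red: the cutoff sits near 0.98
```

With this sample, a ‘Percent’ rule of 95 places the cutoff 95% of the way from 0.80 to 0.99, so a 96% value that looks like it should be green is colored red.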

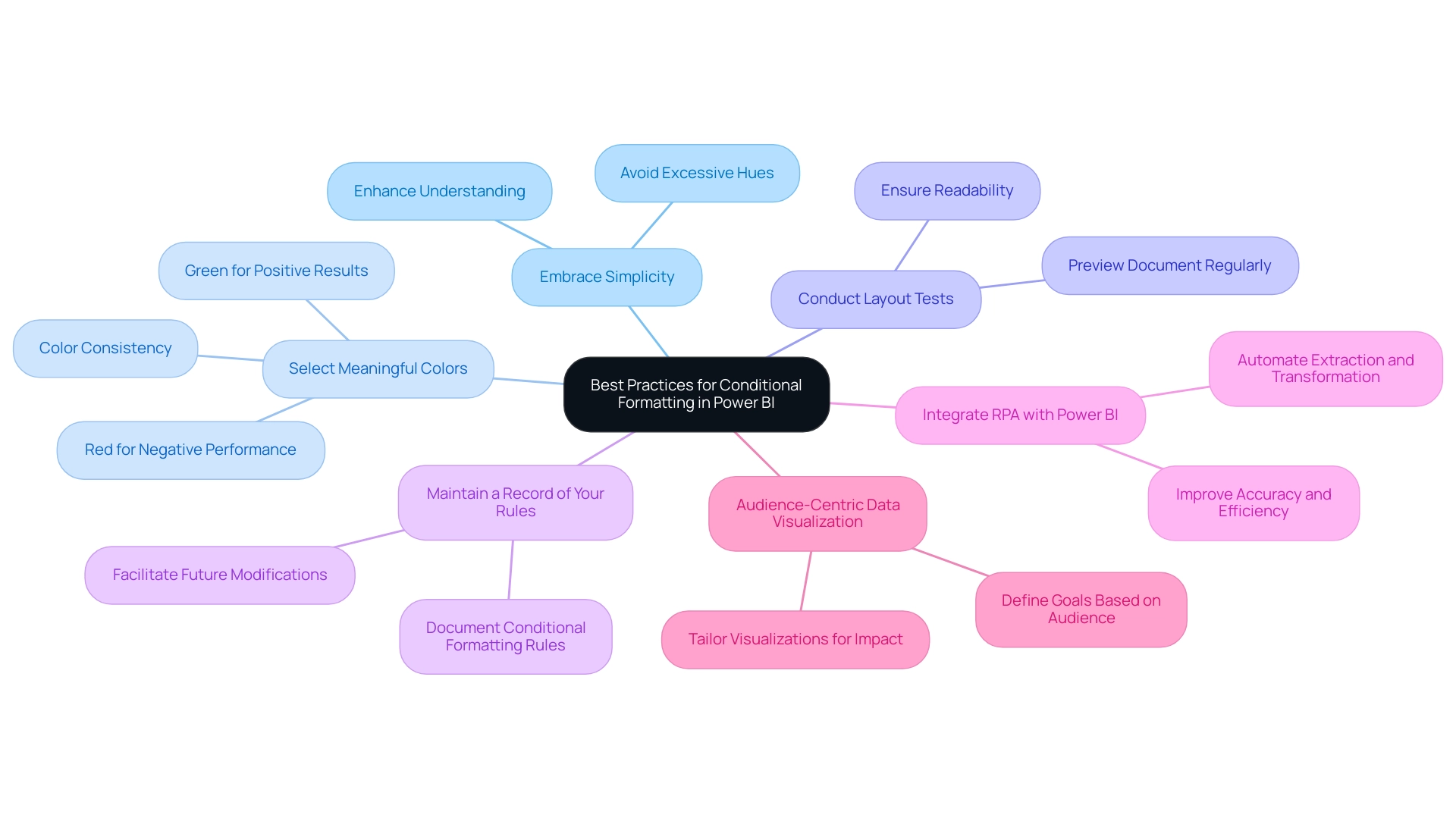

Best Practices for Effective Conditional Formatting in Power BI

To get the most out of conditional formatting in Power BI, follow these best practices:

- Embrace Simplicity: Aim for clarity by avoiding excessive colors or complicated formatting rules. The goal is to present data in a straightforward manner that enhances understanding, which is critical in today’s data-rich environment.

- Select Meaningful Colors: Choose colors that convey specific meanings, such as red indicating negative performance and green signaling positive results. This lets users quickly grasp the implications of the data presented and helps resolve data inconsistencies.

- Test the Formatting: Regularly preview your report to assess how the formatting appears in its actual context. This step is essential for ensuring that your visuals remain readable and convey the intended message, addressing challenges in time-intensive report creation.

- Maintain a Record of Your Rules: Document the conditional formatting rules you implement. This practice not only supports consistency but also enables easy revisiting and modifications in the future, facilitating ongoing improvements aligned with your operational efficiency goals.

Additionally, integrating Robotic Process Automation (RPA) with Power BI can streamline the report creation process, reducing the time spent on repetitive tasks and improving accuracy. For instance, RPA can automate extraction and transformation, ensuring that your Power BI dashboards reflect real-time insights without manual intervention. These best practices also highlight the increasing significance of visualization in Environmental, Social, and Governance (ESG) reporting, a priority for businesses worldwide.

As Microsoft states, ‘Environmental, Social, and Governance (ESG) reporting is becoming a priority for businesses globally.’ Effective information visualization is crucial in communicating ESG metrics clearly. Additionally, the case study on audience-centric data visualization underscores that starting with the audience and defining the goal is essential for impactful insights.

Tailoring visualizations to meet the specific needs of your audience enhances understanding and decision-making, ensuring your insights are both actionable and accessible as Power BI continues to evolve.

Conclusion

Embracing conditional formatting in Power BI is a transformative step towards enhancing data visualization and operational efficiency. This powerful feature allows users to tailor their reports dynamically, highlighting critical metrics and trends that drive informed decision-making. By applying various formatting options—such as color scales, data bars, and iconography—organizations can create visually compelling reports that communicate essential insights clearly and effectively.

Implementing best practices, such as maintaining simplicity and selecting meaningful colors, ensures that reports are not only aesthetically pleasing but also functional and easy to interpret. Regularly testing formatting and documenting the rules applied fosters consistency and allows for ongoing improvements, reinforcing the value of data-driven insights in strategic operations.

Moreover, addressing common challenges in conditional formatting, such as ensuring proper field selection and managing visual updates, empowers users to overcome hurdles and maximize the potential of their reporting tools. As Power BI continues to evolve, leveraging its conditional formatting capabilities will enable organizations to respond adeptly to changing business landscapes, ultimately driving growth and enhancing decision-making processes. The time to harness the full power of Power BI is now—empower your team to turn data into actionable insights and elevate your reporting standards.

Introduction

In the realm of data analysis, the ability to merge multiple tables into a single dataset is not just a convenience—it’s a transformative strategy that can elevate operational efficiency and enhance decision-making. Table appending in Power BI stands at the forefront of this evolution, addressing common challenges such as time-consuming report creation and data inconsistencies. As organizations increasingly rely on Business Intelligence to drive growth, mastering the art of table appending becomes essential.

This guide delves into the practical steps of executing this process, highlighting how to:

- Import tables

- Append queries

- Refine your datasets to unlock deeper insights

By embracing these techniques and leveraging tools like Robotic Process Automation, businesses can streamline their workflows and position themselves for success in a data-driven landscape.

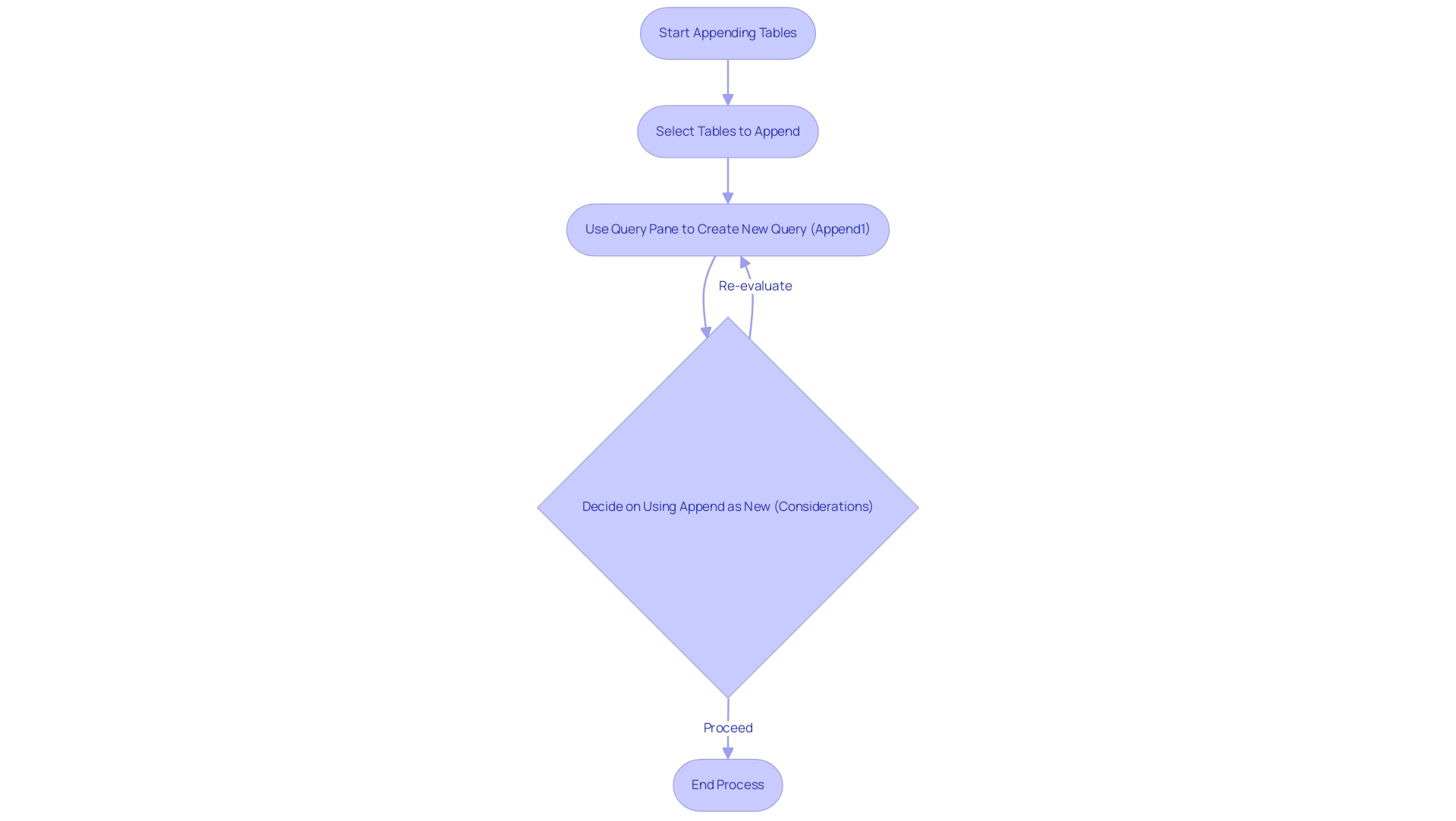

Understanding Table Appending in Power BI

The ability to append tables in Power BI is a robust feature that allows users to combine multiple tables into a single cohesive dataset, significantly improving the efficiency of analysis and reporting. In an era where Business Intelligence is crucial for driving growth, this process addresses common challenges such as time-consuming report creation and data inconsistencies. By appending tables, you form a unified dataset that not only streamlines your workflow but also supports better understanding and informed choices.

Furthermore, our General Management App complements this process by providing comprehensive management tools and intelligent reviews, further enhancing reporting and actionable insights. Carrying out the operation with ‘Append Queries as New’ introduces a new query called ‘Append1’ in the query pane, streamlining the management process. As edhans, a Super User, aptly puts it, ‘DAX is for Analysis. Power Query is for Data Modeling.’

This viewpoint emphasizes the significance of mastering data modeling techniques, including how to append tables in Power BI. It is essential to note that ‘Append as New’ should only be used if there is a reason to re-use the query in another query, as it complicates the Query Dependency View. Mastering this concept is crucial, as it lays the groundwork for the practical steps outlined in this guide, enabling you to harness the full potential of BI and RPA in your operations, ultimately driving data-driven insights and operational efficiency.

Moreover, utilizing Power Automate can further streamline your workflows, ensuring a risk-free ROI assessment and professional execution.

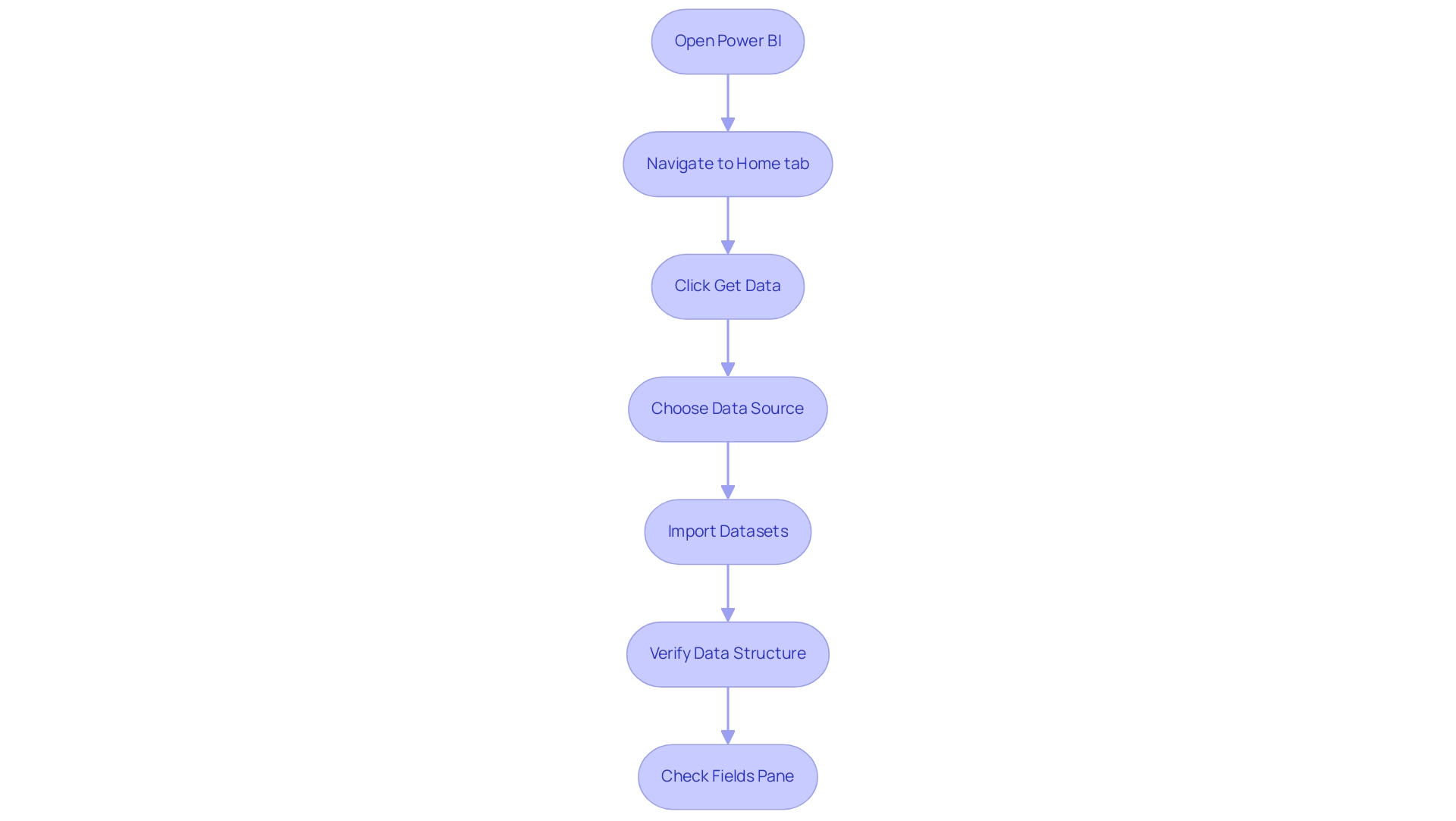

Step 1: Importing Multiple Tables into Power BI

To begin appending tables in Power BI, open the application and navigate to the ‘Home’ tab. Click on ‘Get Data’ to choose the source of your datasets, such as Excel or SQL Server. Import each dataset by following the prompts carefully.

It’s essential to verify that the tables you import have matching column names and compatible data types; this uniformity makes appending tables in Power BI smoother and reduces possible errors. After importing, you’ll find the tables listed in the ‘Fields’ pane on the right side of the Power BI interface. This approach not only simplifies your workflow but also follows the best practices for data sources used with Power BI in 2024.

As Praveen, a Digital Marketing Specialist with over three years of experience, advises, concentrating on consistency can significantly enhance your reporting efficiency. Indeed, balancing user queries with query scale-out is crucial for enhancing performance in Power BI, especially as new data sources arise. Furthermore, incorporating Robotic Process Automation (RPA) can automate repetitive data preparation tasks, such as cleaning and transformation, which decreases errors and allows your team to concentrate on more strategic initiatives.

Leveraging the latest features in Power BI, including new connectors like the OneStream Power BI Connector and the Zendesk Data Connector, can further improve your integration process and make appending tables easier. Moreover, the case study on ‘Automated Information Discovery’ illustrates how leveraging AI for automatic pattern discovery can lead to deeper insights with minimal effort, reinforcing the significance of effective data management practices in driving insight-driven results and operational efficiency for business growth. Addressing challenges like data inconsistencies and time-consuming report creation through RPA can significantly enhance your team’s productivity and decision-making capabilities.

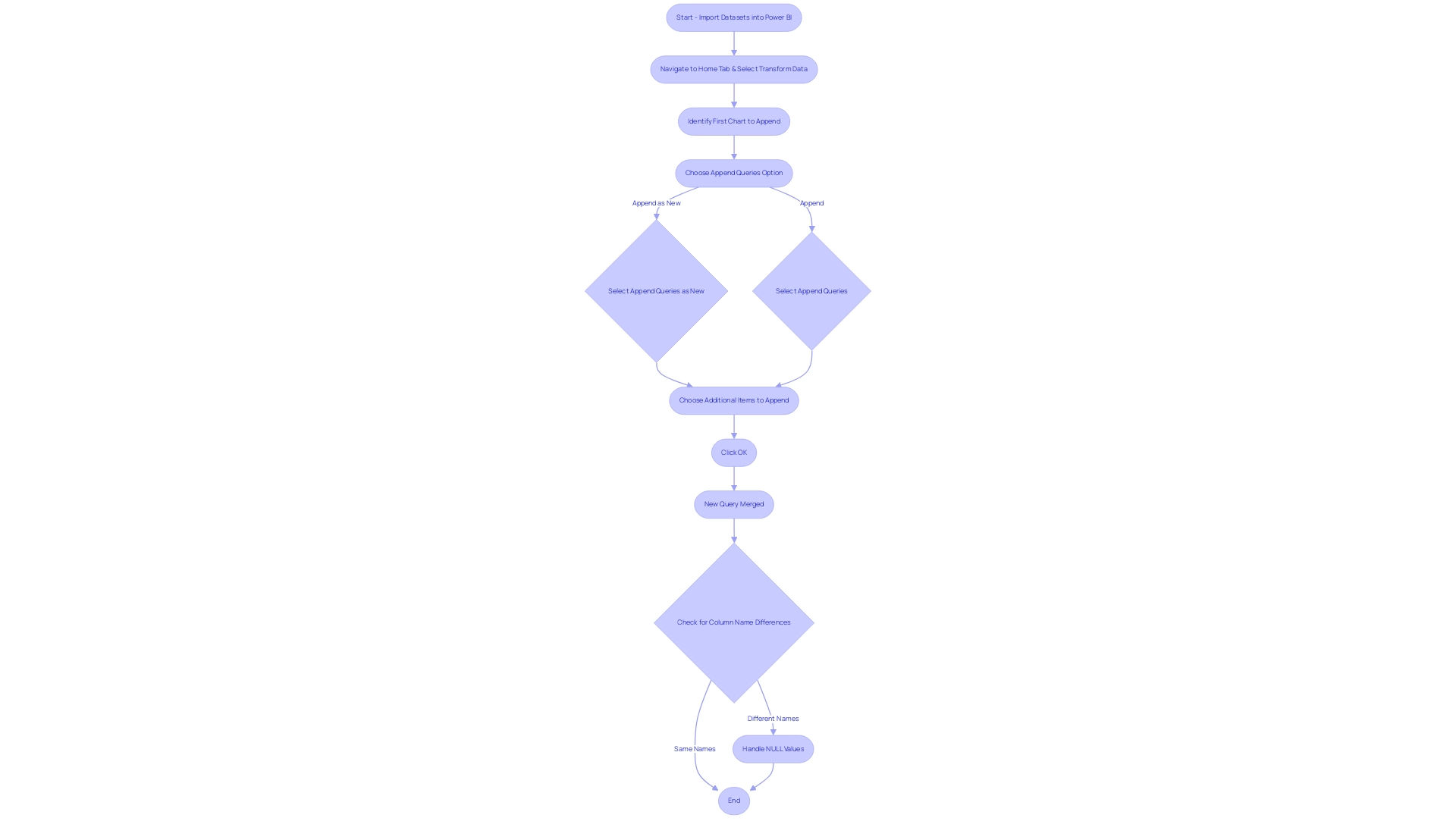

Step 2: Appending Queries in Power BI

Upon importing your datasets into Power BI, navigate to the ‘Home’ tab and select ‘Transform Data’ to access the Power Query Editor. Here, select the first table you wish to append, then click the ‘Append Queries’ option on the ‘Home’ tab.

You’ll have two options:

1. Select ‘Append Queries as New’ to keep the original queries unchanged and create a new combined query.

2. Choose ‘Append Queries’ to append the other tables directly into the selected query.

Choose the additional tables you wish to append and click ‘OK’. With ‘Append Queries as New’, this produces a new query that combines the rows from the selected sources.

Be aware that columns are matched by name, so columns with differing names are treated as separate columns. For example, a custom column such as ‘Company’ that exists in only one table (say, Sales) shows null for every row that comes from a table without it. The Power BI community, with 3,372 users online at the time of writing, is a lively place to exchange ideas on issues like this. As a BI Analyst for Ontiyj Retail Store stated, ‘Thank you for your time! Hope you find this insightful,’ underscoring the real-world utility of these processes.

Moreover, a case study named ‘Appending Information in Query’ outlines the essential steps to append tables in Power BI, reinforcing the significance of using the Power Query Editor for efficient data management. Tackling issues like lengthy report generation and data inconsistencies is essential for getting insights from Power BI dashboards.

Following these steps will not only enhance your data management capabilities but also empower you to tackle these challenges, ultimately driving growth and operational efficiency. Furthermore, integrating tools like EMMA RPA and Automation can streamline these processes, ensuring your organization remains competitive in a data-driven landscape.
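The null behavior described for mismatched columns can be reproduced with a small model of an append. This is an illustrative Python sketch, not Power Query itself: `append_tables` and the `sales` and `sales_2` sample tables are hypothetical, and tables are represented as lists of dictionaries.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def append_tables(*tables: List[Row]) -> List[Row]:
    """Stack the rows of all tables; columns are matched by exact name."""
    columns: List[str] = []
    for table in tables:
        for row in table:
            for name in row:
                if name not in columns:
                    columns.append(name)  # keep first-seen column order
    # A column missing from a source row is filled with None (null).
    return [{name: row.get(name) for name in columns}
            for table in tables for row in table]


sales = [{"Product": "A", "Amount": 10, "Company": "North"}]
sales_2 = [{"Product": "B", "Amount": 7}]  # has no 'Company' column

combined = append_tables(sales, sales_2)
for row in combined:
    print(row)
```

Because the function returns a new list and leaves its inputs untouched, it behaves like ‘Append Queries as New’; renaming columns so they match before appending is what avoids the null-filled column.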

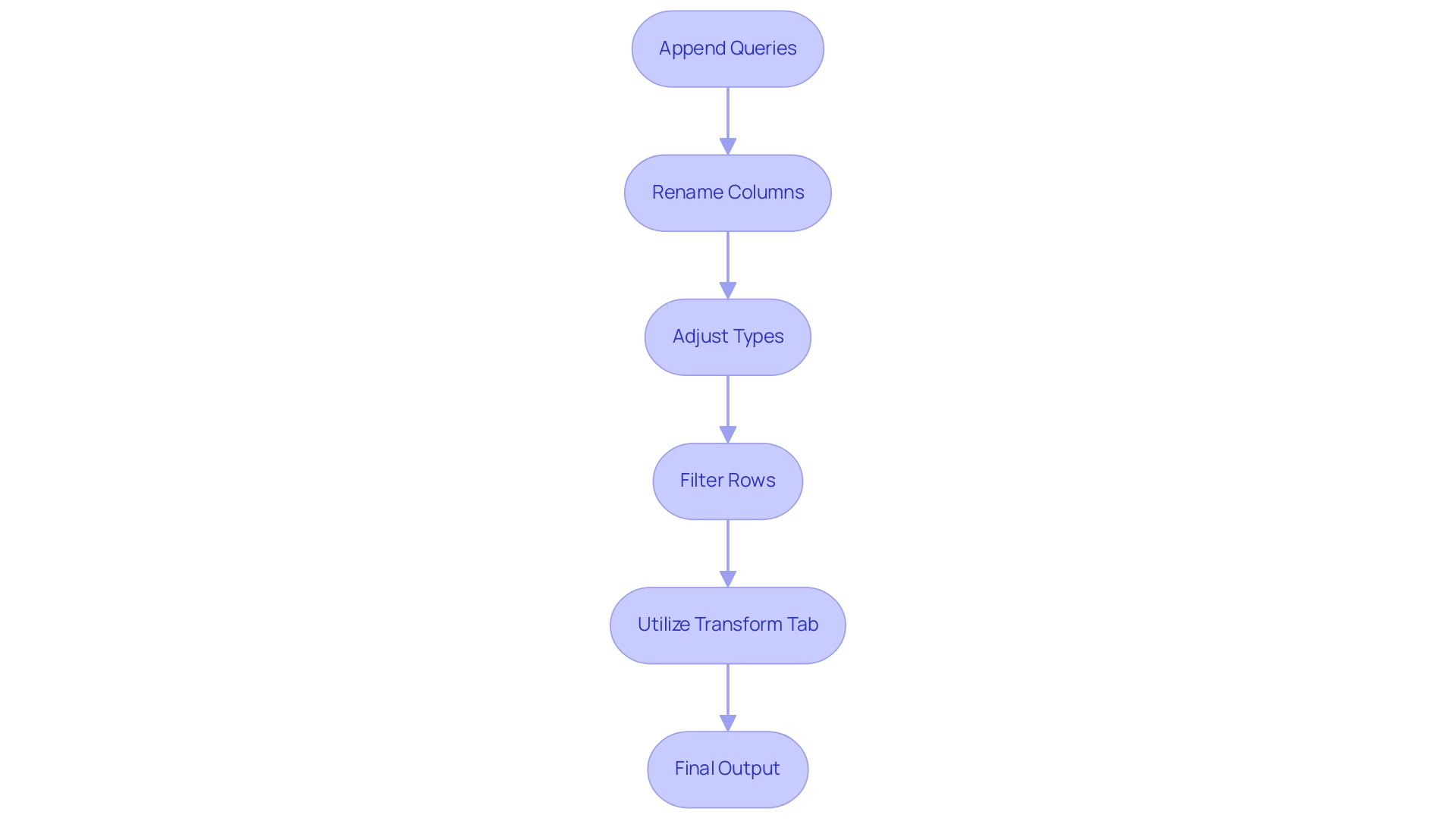

Step 3: Configuring Append Options for Tables

After appending your queries, it’s essential to refine the appended table within the Power Query Editor. Here, you can easily rename columns, adjust types, and filter rows according to your analytical requirements. The ‘Transform’ tab presents a variety of transformation options, providing the flexibility needed to customize your dataset effectively, enhancing the relevance of your analysis and ensuring that the final output is actionable.
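The three refinements named above (renaming columns, adjusting types, and filtering rows) can be sketched as a single pass over the appended rows. This is a plain Python model under stated assumptions, not Power Query code: the `refine` helper, the `amt` column, and the sample rows are hypothetical.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def refine(rows: List[Row],
           renames: Dict[str, str],
           types: Dict[str, Callable[[Any], Any]],
           keep: Callable[[Row], bool]) -> List[Row]:
    """Rename columns, convert column types, then filter rows."""
    refined = []
    for row in rows:
        renamed = {renames.get(name, name): value for name, value in row.items()}
        typed = {
            name: types[name](value) if name in types and value is not None else value
            for name, value in renamed.items()
        }
        if keep(typed):
            refined.append(typed)
    return refined


appended = [
    {"amt": "10", "Product": "A"},
    {"amt": "0", "Product": "B"},
    {"amt": None, "Product": "C"},  # null left over from the append
]

result = refine(
    appended,
    renames={"amt": "Amount"},
    types={"Amount": int},
    keep=lambda r: r["Amount"] is not None and r["Amount"] > 0,
)
print(result)
```

The order of the steps matters in the same way it does in the editor: the type conversion and the filter refer to the column by its new name, so the rename has to come first.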

As Denys Arkharov, a BI Engineer at Coupler.io, notes,

A notable achievement in my career was my involvement in creating Risk Indicators for Ukraine’s ProZorro platform, a project that led to my work being incorporated into the Ukrainian Law on Public Procurement.

This highlights the potential impact of well-transformed information in driving significant outcomes. In the context of operational efficiency, leveraging RPA can further streamline these processes, reducing manual effort and enhancing productivity, especially in repetitive tasks that slow down operations.

Furthermore, it’s essential to remember that Power BI refreshes the semantic model once daily, highlighting the significance of timely updates for precise analysis. By carefully arranging your appended tables, as shown in the case study on ‘Usage Metrics in National/Regional Clouds,’ you prepare for deeper understanding and more informed decision-making while ensuring compliance with local regulations. Moreover, although Power BI does not natively support box plots for visualizing percentiles and quartiles, alternative methods like plotting quartiles in a column chart can offer valuable perspectives on your data distribution.

Embracing these tools and methodologies ultimately supports your goal of driving data-driven insights for business growth. Additionally, utilizing the General Management App can facilitate comprehensive management and smart reviews, further enhancing operational efficiency. This holistic approach ensures that your team can focus on strategic, value-adding work, maximizing the benefits of both BI and RPA.

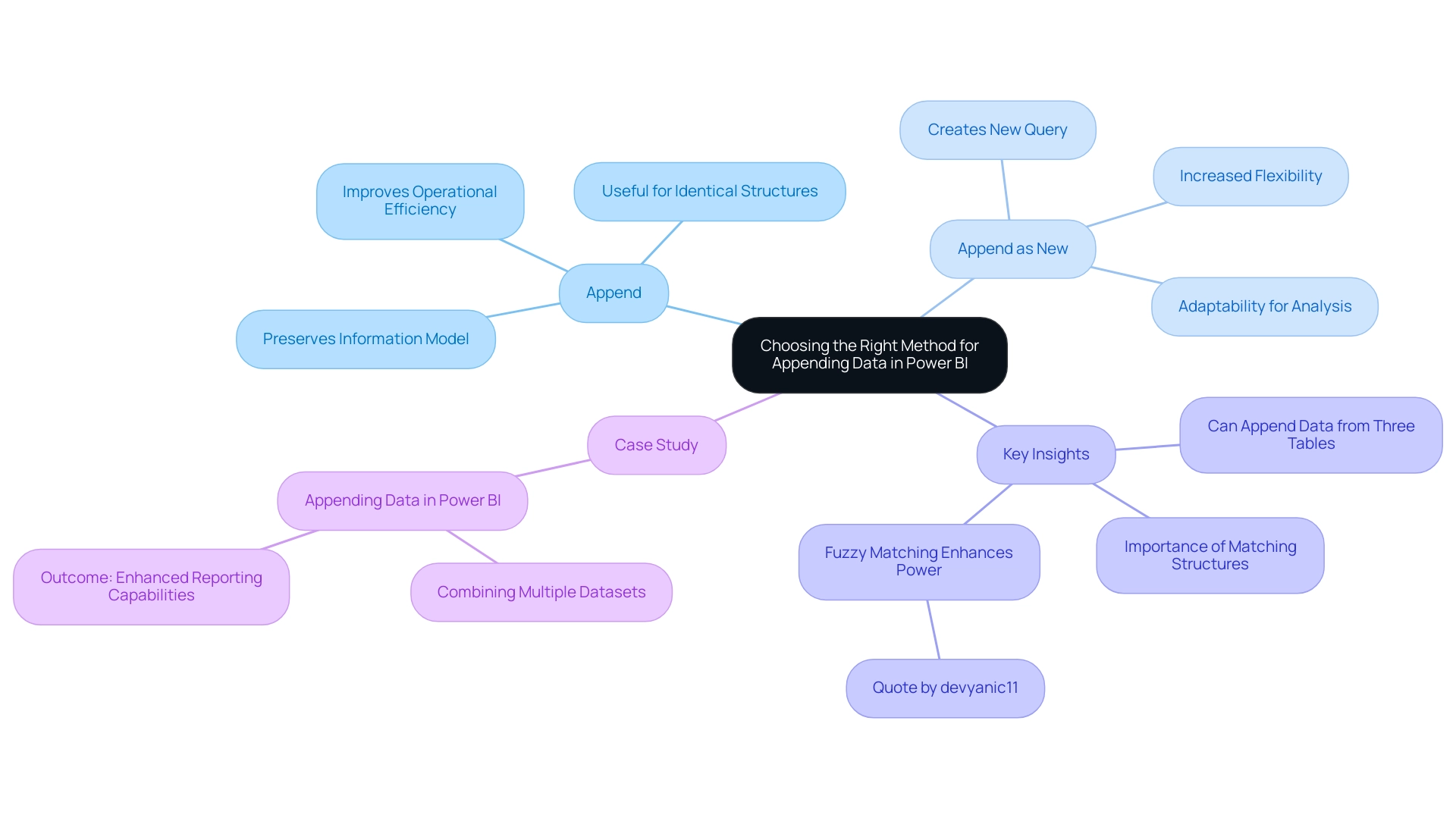

Append vs. Append as New: Choosing the Right Method

When appending tables in Power BI, you have two main choices: ‘Append’ and ‘Append as New’. Choosing ‘Append’ combines the selected tables into the existing query, which is beneficial for preserving a streamlined data model that improves operational efficiency. On the other hand, ‘Append as New’ creates a new query, keeping the original tables intact and providing more flexibility for analysis, which helps with the lengthy report generation and data inconsistencies frequently faced in Power BI dashboards.

This choice is especially significant as it aligns with the data management strategies essential for leveraging insights for growth. Notably, you can append three or more tables in a single operation, which makes it easier to handle larger datasets. Appending works best when the tables have the same structure, with matching column names and types, ensuring smooth integration.

According to recent insights, many users favor the ‘Append as New’ approach for its adaptability in complex analytical environments. A case study on appending tables in Power BI showed that combining multiple datasets into a single unified table greatly improved management and reporting capabilities: ‘Append’ allowed for more efficient analysis, while ‘Append as New’ provided the flexibility needed for varied analytical scenarios. Furthermore, as noted by devyanic11,

When merge queries contain the fuzzy matching feature, which joins two tables based on partial matches, they become far more powerful.

Ultimately, consider your information management strategy carefully and select the method that best suits your analytical workflow, as this decision plays a vital role in unlocking the full potential of Business Intelligence and driving operational success. Remember, failing to extract meaningful insights can leave your business at a competitive disadvantage. Explore how RPA solutions can further enhance your data management capabilities and drive efficiency in your operations.

Conclusion

Mastering the process of table appending in Power BI is a fundamental step toward achieving operational efficiency and enhancing data-driven decision-making. By importing multiple tables, appending queries, and refining datasets, organizations can transform their data management practices and unlock deeper insights. Each step in this process not only simplifies workflows but also addresses common challenges such as data inconsistencies and time-consuming report creation.

The choice between ‘Append’ and ‘Append as New’ is critical, impacting how datasets are managed and analyzed. Selecting the right method allows for streamlined data models or flexible analysis, catering to the unique needs of each analytical scenario. Embracing these techniques, along with integrating tools like Robotic Process Automation, can significantly enhance productivity and empower teams to focus on strategic initiatives.

In a world increasingly driven by data, the ability to leverage Power BI effectively is essential for staying competitive. By adopting these best practices, organizations can position themselves for growth, ensuring that their data not only informs but also drives strategic decisions. The journey toward operational excellence begins with mastering the art of data integration, setting the stage for a future of informed decision-making and sustainable success.

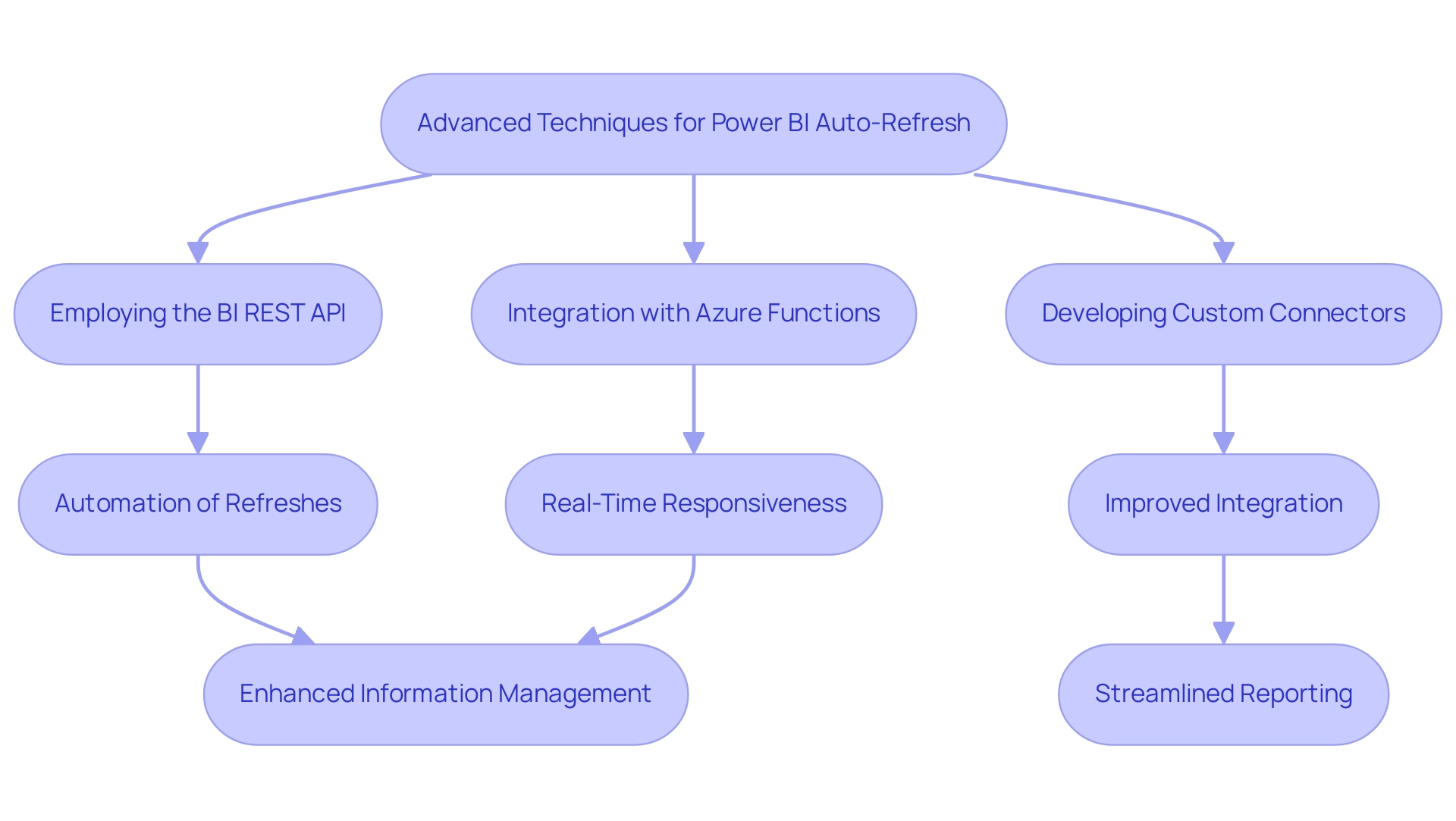

Introduction

In the dynamic landscape of data analytics, the significance of the last refresh date in Power BI cannot be overstated. This vital timestamp not only indicates when data was last updated but also serves as a cornerstone for maintaining trust and integrity in business intelligence efforts. As organizations increasingly rely on real-time insights to drive decision-making, understanding how to effectively manage and display this information becomes essential.

From implementing best practices to troubleshooting common issues, this article offers a comprehensive guide that empowers professionals to enhance operational efficiency and ensure their data remains relevant and actionable. By leveraging the right tools and strategies, businesses can transform raw data into valuable insights, paving the way for informed decisions that fuel growth and innovation.

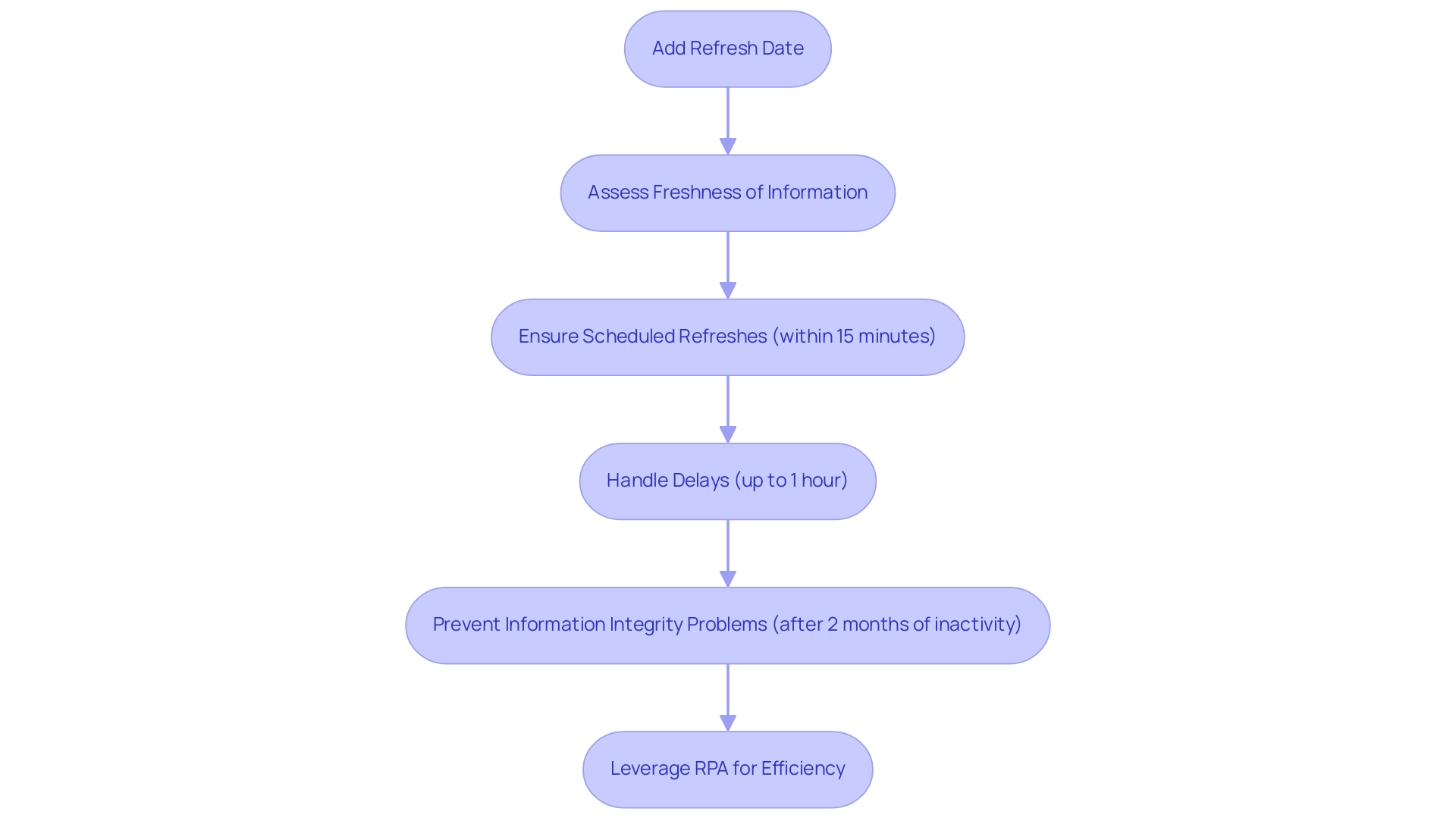

Understanding the Importance of Last Refresh Date in Power BI

In Power BI, the last refresh timestamp is a vital indicator of when the data was last updated, and it plays an essential role in maintaining the integrity of the content displayed. Power BI aims to initiate scheduled refreshes within 15 minutes of the scheduled time slot, though delays of up to one hour can occur. By prominently showcasing this timestamp, organizations enable users to assess the freshness of the data they are examining, fostering trust and aiding informed decision-making.

This transparency is particularly vital in fast-paced environments, where data-driven decisions can significantly impact outcomes. Displaying the refresh date also helps surface data integrity problems, especially since Power BI pauses scheduled refreshes after two months of inactivity. Ensuring that stakeholders are aware of the data’s timeliness not only enhances confidence in the analytics but also reinforces the organization’s commitment to operational excellence.

As Paul Turley, a Microsoft Data Platform MVP, aptly states, ‘All projects of any scale and size should adhere to the same general set of guidelines,’ emphasizing the importance of standard practices such as adding the refresh date to Power BI reports. This practice aids in decision-making and aligns with the broader goals of efficiency and reliability within operations. Additionally, the challenges of time-consuming report creation and data inconsistencies can be effectively mitigated through robust RPA solutions, which streamline processes and enhance operational efficiency.

By leveraging RPA, organizations can transform raw data into actionable insights, addressing the critical competitive disadvantage faced by businesses that struggle to extract meaningful insights from their data.

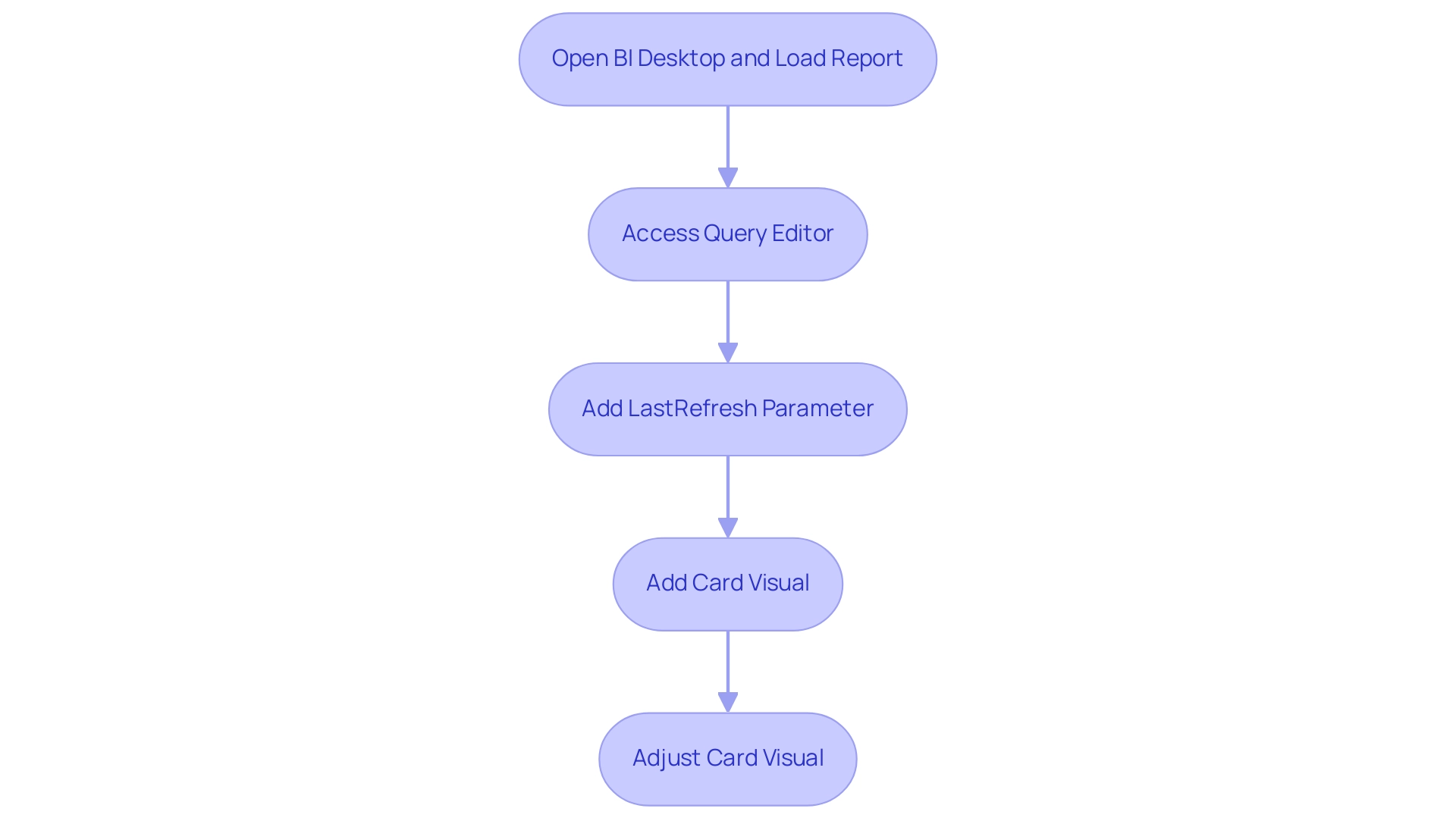

Step-by-Step Guide to Adding Last Refresh Date in Power BI Reports

To display the last refresh date in your Power BI report and enhance operational efficiency, follow this detailed guide:

- Open Power BI Desktop and load the report you wish to modify.

- Navigate to the ‘Home’ tab and click on ‘Transform Data’ to access the Power Query Editor.

- Create a blank query named ‘LastRefresh’, select ‘Home’ and then ‘Advanced Editor’, and enter the following expression, which captures the date and time at which the query runs, that is, at each refresh:

M

let LastRefresh = DateTime.LocalNow()
in LastRefresh

- Click ‘Done’ to save your modifications, and then close the Power Query Editor.

- In the report view, add a Card visual to your report canvas.

- Drag the ‘LastRefresh’ field into the Card visual to display the date and time of the last refresh.

- Adjust the Card visual to improve its visibility, making sure it stands out within your report.

With this approach, your report shows the refresh date and keeps your audience informed about when the data was last updated, thereby boosting transparency and trust in your reports. Utilizing features like this is vital for tackling challenges such as time-consuming report creation and data inconsistencies. Additionally, integrating RPA solutions can further streamline the process by automating repetitive tasks, thereby enhancing operational efficiency.

Insights from Power BI experts suggest that incorporating clear, actionable features can significantly enhance user adoption rates, as users appreciate clarity and precision in presentations. Furthermore, as you manage historical data, consider using a relational database management system (RDBMS) for better efficiency. A case study on usage metrics in national/regional clouds illustrates how Power BI not only maintains compliance with local regulations but also provides critical insights that address operational efficiency concerns, helping businesses avoid the competitive disadvantage of not extracting meaningful insights from their data.

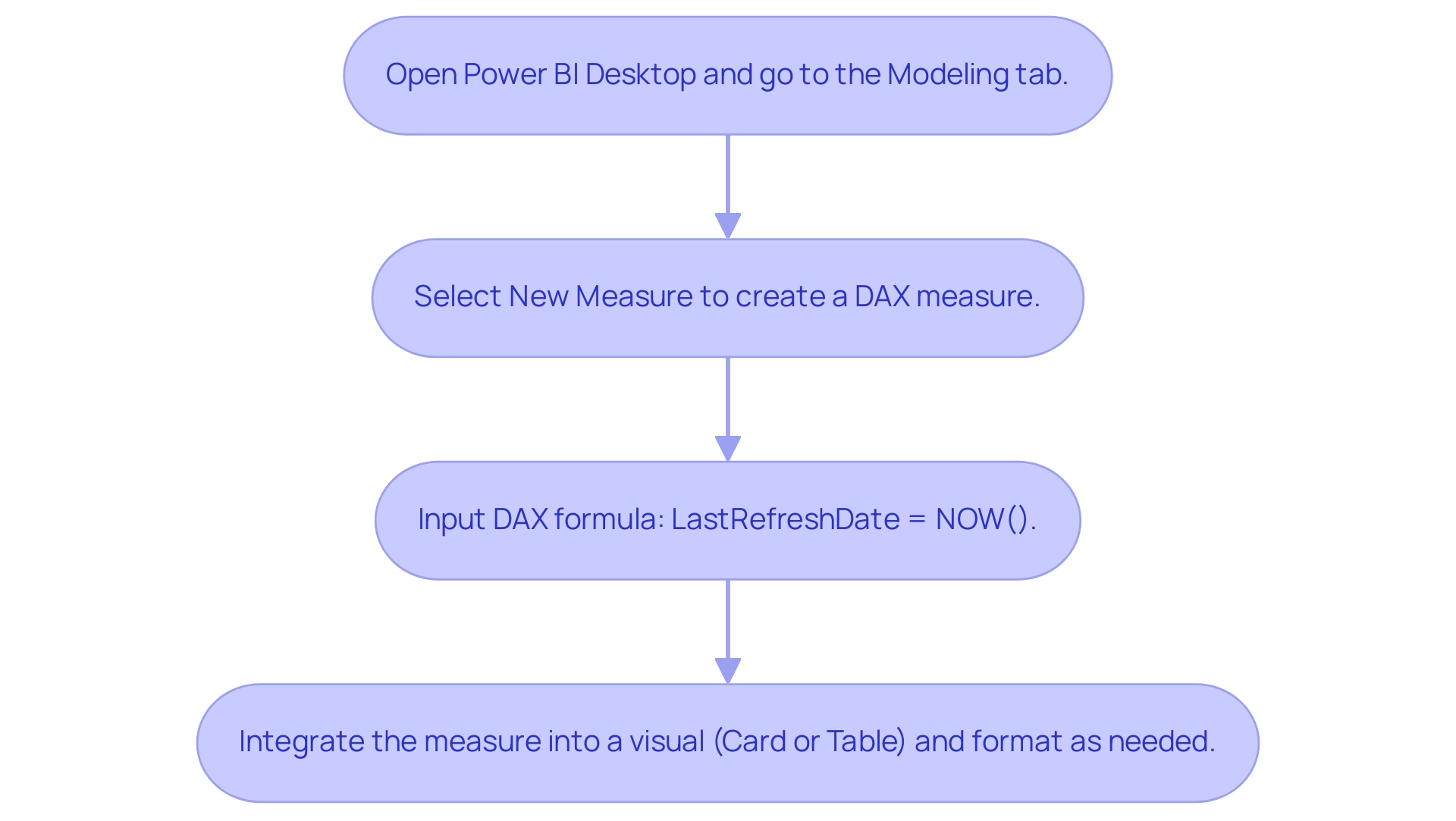

Using DAX Functions to Display Last Refresh Date

To show the last refresh timestamp in Power BI using DAX functions, follow these steps:

- Open Power BI Desktop and navigate to the ‘Modeling’ tab. Here, select ‘New Measure’ to create a new DAX measure.

- Input the following DAX formula, which returns the current date and time:

DAX

LastRefreshDate = NOW()

- Once the measure is created, you can add it to any visual in your report, such as a Card or Table. Because a measure is evaluated each time a visual is queried, NOW() in a measure reflects the moment the visual was rendered. To pin the value to the data refresh itself, evaluate NOW() in a calculated column or calculated table, which is recomputed only when the model refreshes.

- For a tailored presentation, use the ‘Format’ option within the Visualizations pane to show only the date or only the time, according to your requirements.

Mastering DAX functions like this is vital in today’s analytics-driven landscape, where the ability to extract valuable insights affects operational efficiency and business growth. Effective use of DAX can address time-consuming report creation and inconsistencies. For instance, functions like TOTALYTD and SAMEPERIODLASTYEAR can simplify report generation and improve consistency over time. Moreover, integrating RPA solutions can automate repetitive report-preparation tasks, allowing analysts to concentrate on deriving insights rather than on manual data handling.

The growing community around DAX functions in Power BI, with over 2,033 followers, highlights the importance of this skill. Recent discussions emphasize that mastering statistical functions in DAX can unlock the full potential of insights for business analysts and data professionals. As Douglas Rocha, a data science enthusiast, puts it, “Last but definitely not least is the Mode,” underscoring the value of understanding statistical functions in DAX for getting the most from your reports.

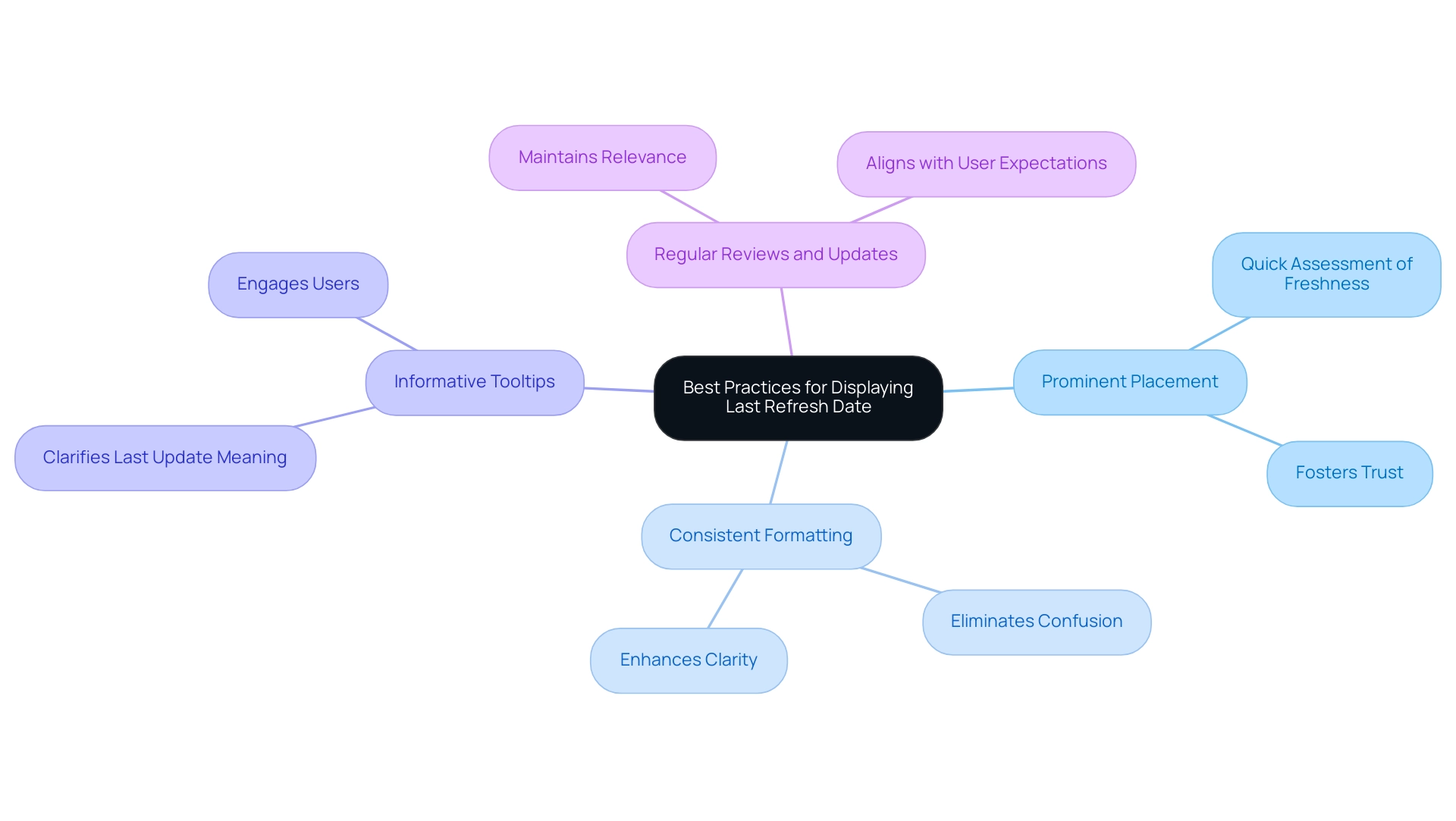

Best Practices for Displaying Last Refresh Date in Dashboards

To show the most recent refresh timestamp in your Power BI dashboards and improve data-driven insights, follow these best practices:

- Prominent Placement: Ensure the last refresh timestamp is positioned prominently on the dashboard, ideally adjacent to key metrics. This allows users to quickly assess the freshness of the data, fostering trust and informed decision-making.

- Consistent Formatting: Use a uniform format for the time and calendar to eliminate any potential confusion. Consistency aids in clarity, allowing users to easily interpret the information presented.

- Informative Tooltips: Consider adding a tooltip or a brief note that clarifies what the last update time indicates. This is particularly beneficial for users who may be less familiar with the feature, enhancing their understanding and engagement with the dashboard.

- Regular Reviews and Updates: Continuously assess and update your dashboard design to ensure that the last update timestamp remains visible and pertinent as your reporting needs change. Frequent updates not only preserve the accuracy of the information shown but also ensure the dashboard remains in line with user expectations.
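One way to apply the consistent-formatting practice is to build the display text once, in Power Query, so that every page shows the same pattern. The sketch below is illustrative only: the column names `LastRefresh` and `LastRefreshLabel`, the label wording, and the chosen pattern and culture are all assumptions, not requirements.

```powerquery
let
    // Timestamp taken when the query is evaluated, i.e. on each refresh
    RefreshedAt = DateTime.LocalNow(),
    // Fixed pattern and culture so the text looks the same wherever it is shown
    AsText = DateTime.ToText(RefreshedAt, [Format = "yyyy-MM-dd HH:mm", Culture = "en-US"]),
    // Keep both the typed value (for sorting or logic) and the ready-made label (for a Card)
    Result = #table(
        type table [LastRefresh = datetime, LastRefreshLabel = text],
        {{RefreshedAt, "Last refreshed: " & AsText}}
    )
in
    Result
```

Placing `LastRefreshLabel` in a Card gives a self-explanatory caption, which also covers part of what a tooltip would otherwise need to explain.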

Applying these methods will improve the visibility of the last update time in your Power BI dashboards and create a more efficient, user-friendly visualization experience. As noted by Noah Iliinsky and Julie Steele, “The designer’s purpose in creating a visualization is to produce a deliverable that will be well received and easily understood by the reader.” Keeping user needs at the forefront of your dashboard design will enhance overall engagement and satisfaction.

Moreover, employing the S.M.A.R.T framework to set specific, measurable, actionable, realistic, and time-based objectives can guide the implementation of these best practices effectively.

This approach ensures that your dashboard design is not only visually appealing but also functional and aligned with user needs. By using RPA solutions such as EMMA RPA, which automates repetitive tasks and minimizes information inconsistencies, along with Power Automate, which simplifies report generation, you can further improve operational efficiency and reduce the time spent creating reports.

Moreover, referring to Nathan Yau’s guidelines on effective chart design emphasizes the significance of accurate information representation, which is vital for showing the last update time effectively. Following best practices in chart design can prevent misleading representations of information, ultimately leading to improved decision-making and fostering business growth.

Troubleshooting Common Issues with Last Refresh Date in Power BI

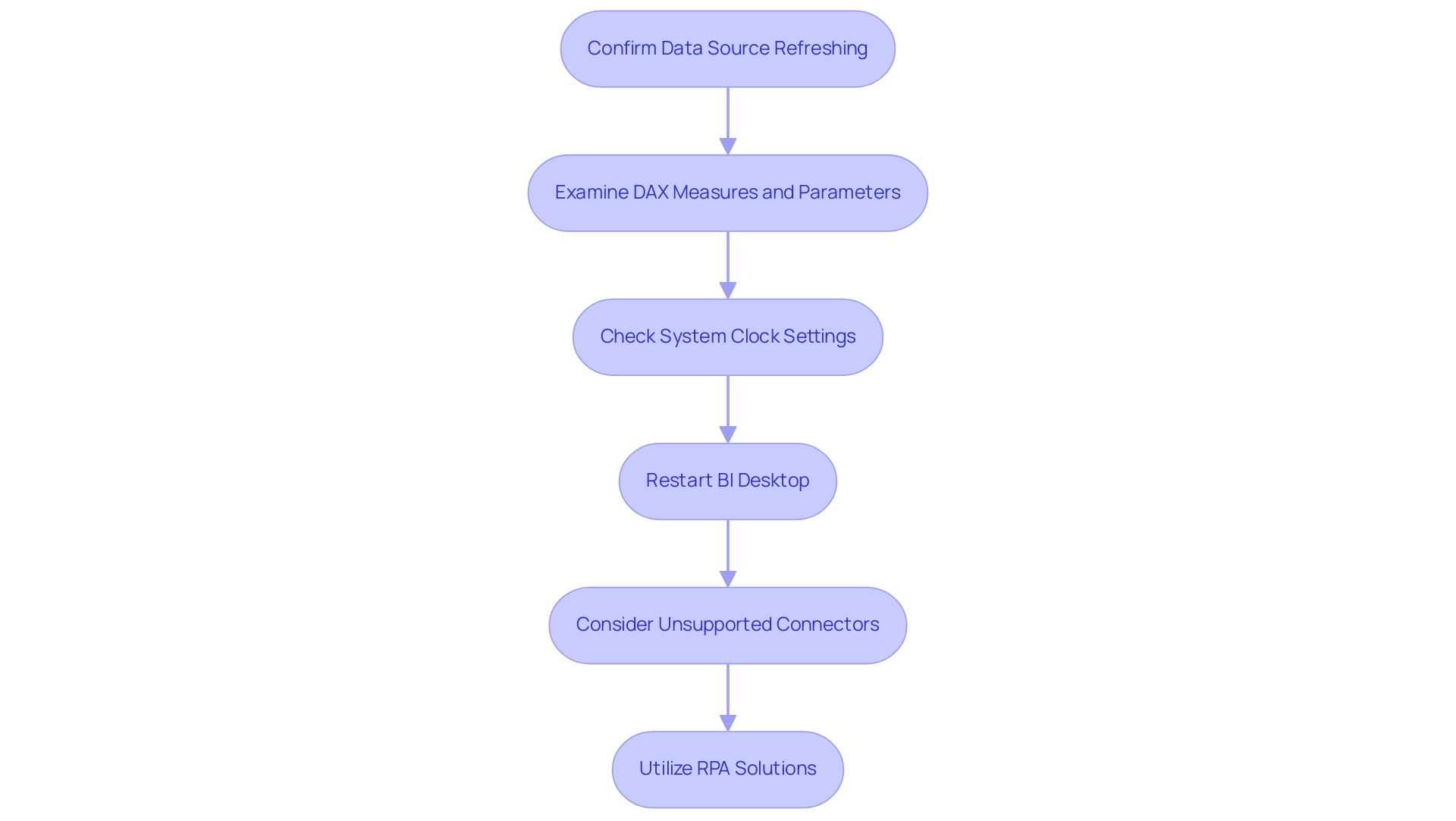

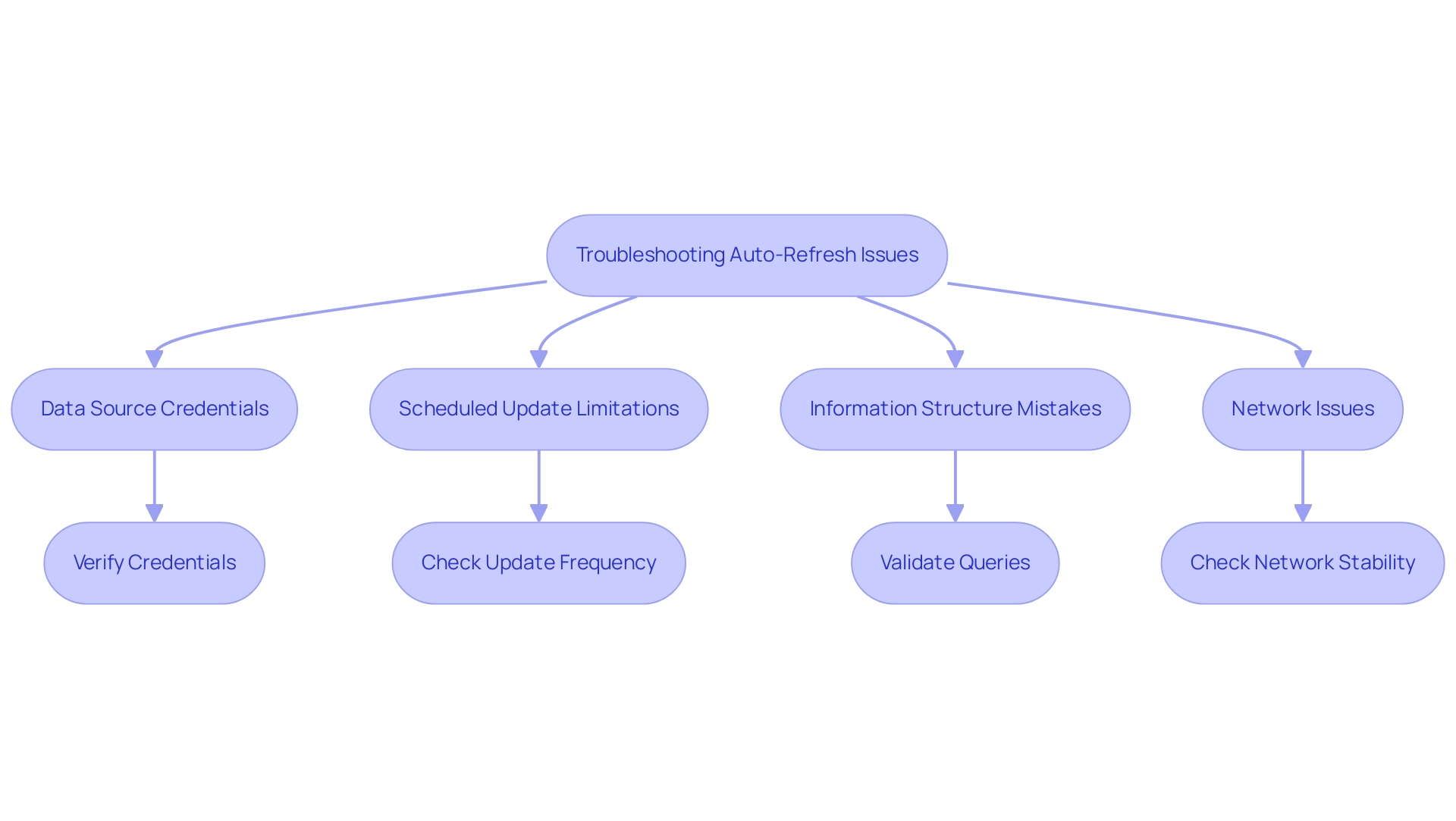

When the most recent update time does not display correctly in Power BI, it is crucial to tackle the problem systematically, because information integrity underpins informed decision-making. Here are some practical troubleshooting tips:

- Confirm Data Source Refreshing: Start by ensuring that your data source is refreshing properly. Access the refresh settings in the Power BI service to verify that scheduled refreshes are enabled. This is essential, as a failed scheduled refresh can lead to outdated information being displayed, which undermines the effectiveness of your Business Intelligence efforts. Keep in mind that in DirectQuery/LiveConnect mode, there is a one million-row limit for returning information, which may affect your update capabilities.

- Examine DAX Measures and Parameters: If the last update timestamp does not change, verify the setup of your DAX measure or Power Query parameter. Make sure that there are no mistakes in your formulas, as these can prevent the timestamp from reflecting the current state of your information. Poor master data quality can also hinder your ability to leverage BI insights effectively.

- Check System Clock Settings: An inaccurate system clock can lead to discrepancies in the displayed last refresh date. Verify that your system clock is set correctly to avoid any confusion.

- Restart Power BI Desktop: If you encounter any display glitches, consider restarting Power BI Desktop. This simple step can often resolve temporary issues and restore normal functionality.

- Consider Unsupported Connectors: Be aware that certain connectors are unsupported for dataflows and datamarts in Premium workspaces, leading to errors when used. Addressing these configuration issues is vital for ensuring seamless information refreshes.

- Utilize RPA Solutions: Think about using RPA tools such as EMMA RPA and Power Automate to automate the update process in Power BI. These solutions can help reduce manual errors and keep your information current, improving your ability to derive actionable insights.
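Related to the system clock item above: the timestamp is read from the clock of whichever machine runs the refresh, which may not be in the same time zone as the report's readers. A hedged sketch of one workaround is to start from UTC and shift by a fixed offset. The offset value and column names below are hypothetical, and a fixed offset does not account for daylight-saving changes.

```powerquery
let
    // Hypothetical offset: UTC+1. Adjust to the audience's zone (no daylight-saving handling)
    OffsetHours = 1,
    // Current time in UTC, independent of the refreshing machine's local zone
    UtcStamp = DateTimeZone.UtcNow(),
    // Same instant expressed in the chosen offset
    Shifted = DateTimeZone.SwitchZone(UtcStamp, OffsetHours),
    // Drop the zone information so the column loads as a plain datetime
    LocalStamp = DateTimeZone.RemoveZone(Shifted),
    Result = #table(
        type table [LastRefreshUtc = datetimezone, LastRefresh = datetime],
        {{UtcStamp, LocalStamp}}
    )
in
    Result
```

Keeping the UTC value alongside the shifted one makes it easier to tell whether a discrepancy comes from the clock itself or from the time-zone conversion.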

By systematically addressing these aspects, you can troubleshoot common problems with displaying the refresh date in Power BI, ensuring that the information you present is both accurate and timely. As Rita Fainshtein, a Microsoft MVP, emphasizes, “If this post helps, then please consider Accepting it as the solution to help the other members find it more quickly.” This reinforces the idea that collaborative knowledge-sharing enhances our collective efficiency and supports the overarching goal of leveraging data for growth and innovation.

Conclusion

The importance of the last refresh date in Power BI cannot be overstated, as it serves as a critical indicator of data integrity and timeliness. By following best practices for displaying this information, organizations can foster trust and empower users to make informed decisions based on current data. Implementing straightforward methods—such as using DAX functions and properly placing the last refresh date on dashboards—enhances transparency and operational efficiency.

Furthermore, addressing common challenges associated with the last refresh date ensures that data remains actionable and relevant. By leveraging tools like RPA solutions, businesses can streamline processes, reduce manual errors, and maintain the accuracy of their reports. This proactive approach not only mitigates potential issues but also positions organizations to capitalize on data insights effectively.

In a world where timely decision-making is paramount, ensuring the accuracy of the last refresh date is vital for any data-driven organization. Embracing these strategies will lead to enhanced operational excellence and a greater competitive edge, ultimately driving growth and innovation in an increasingly analytics-driven landscape.

Introduction

In the dynamic landscape of business intelligence, the choice between Power BI and SQL Server Reporting Services (SSRS) can significantly impact an organization’s reporting capabilities and overall efficiency. As companies strive to harness data for strategic advantage, understanding the unique strengths of each tool is crucial.

- Power BI, with its cloud-based flexibility and user-friendly interface, empowers teams to create interactive reports and dashboards that foster a culture of data-driven decision-making.

- On the other hand, SSRS offers a robust solution for traditional reporting needs, emphasizing precision and detailed operational insights.

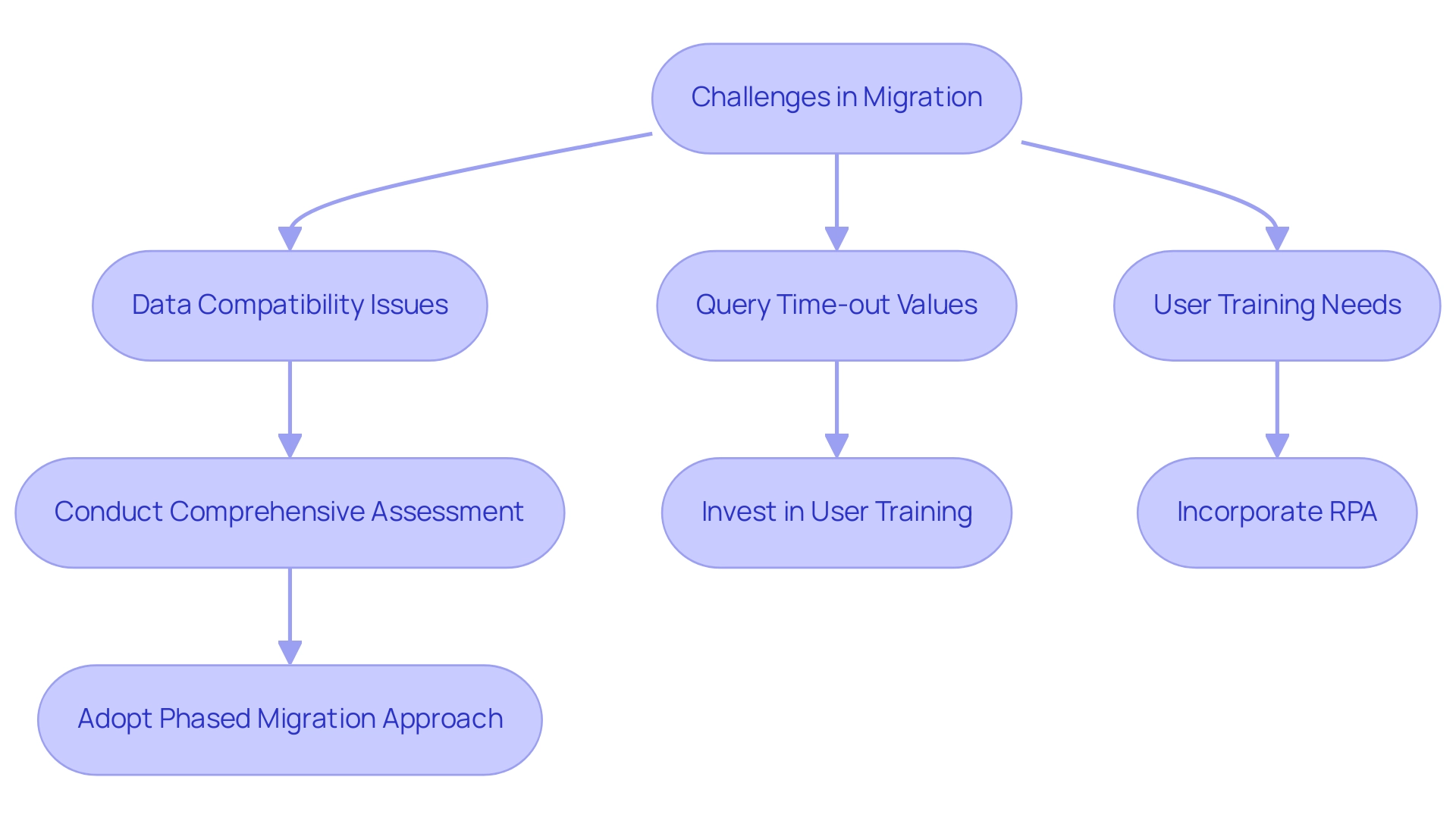

However, the transition from SSRS to Power BI is not without its challenges, including data compatibility and user training. By exploring deployment options, integration capabilities, and the potential of combining these tools with Robotic Process Automation (RPA), organizations can navigate their reporting journeys more effectively.

This article delves into the nuances of Power BI and SSRS, providing practical insights to help organizations make informed decisions that align with their specific reporting goals.

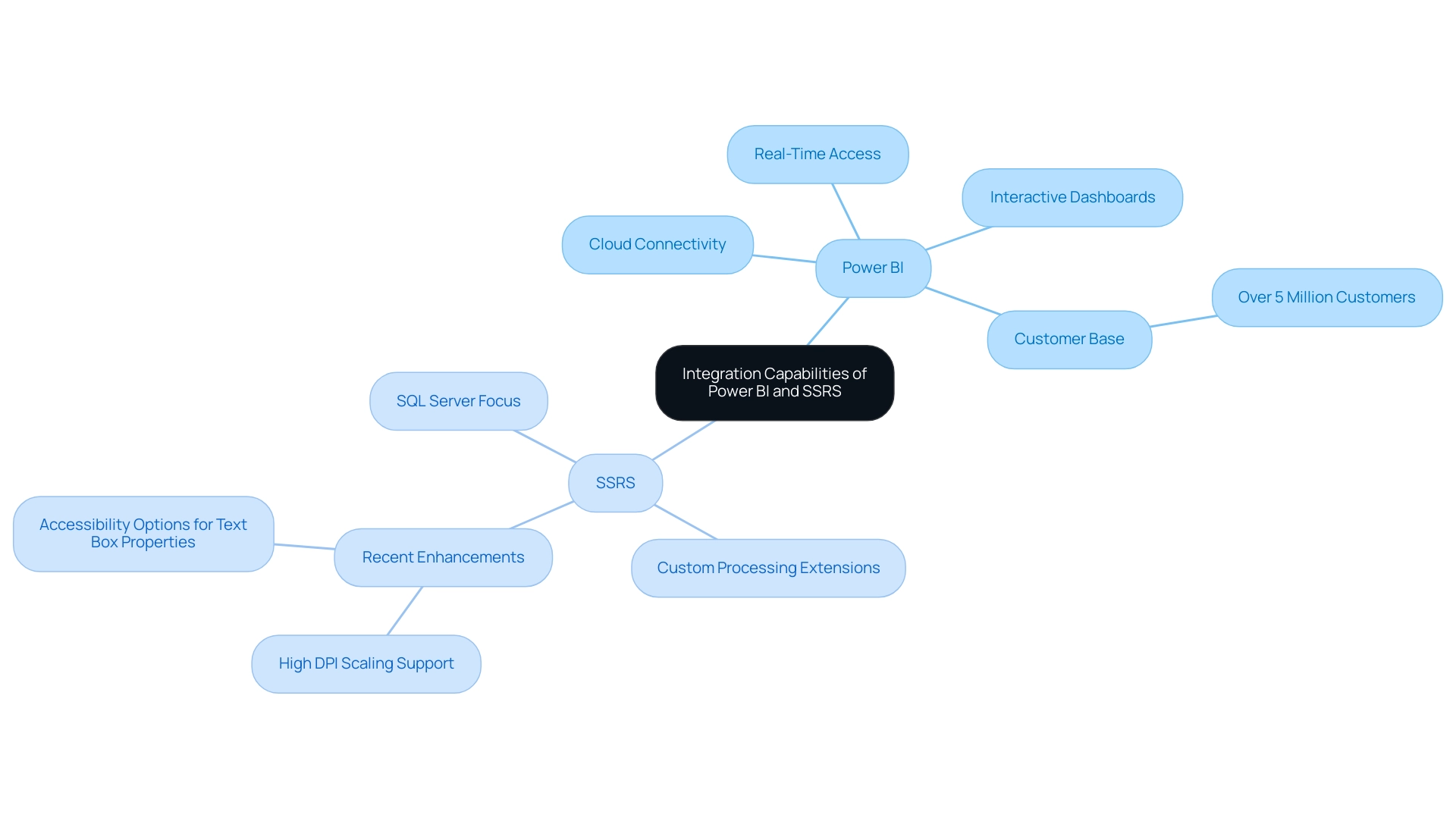

Introduction to Power BI and SQL Server Reporting Services (SSRS)

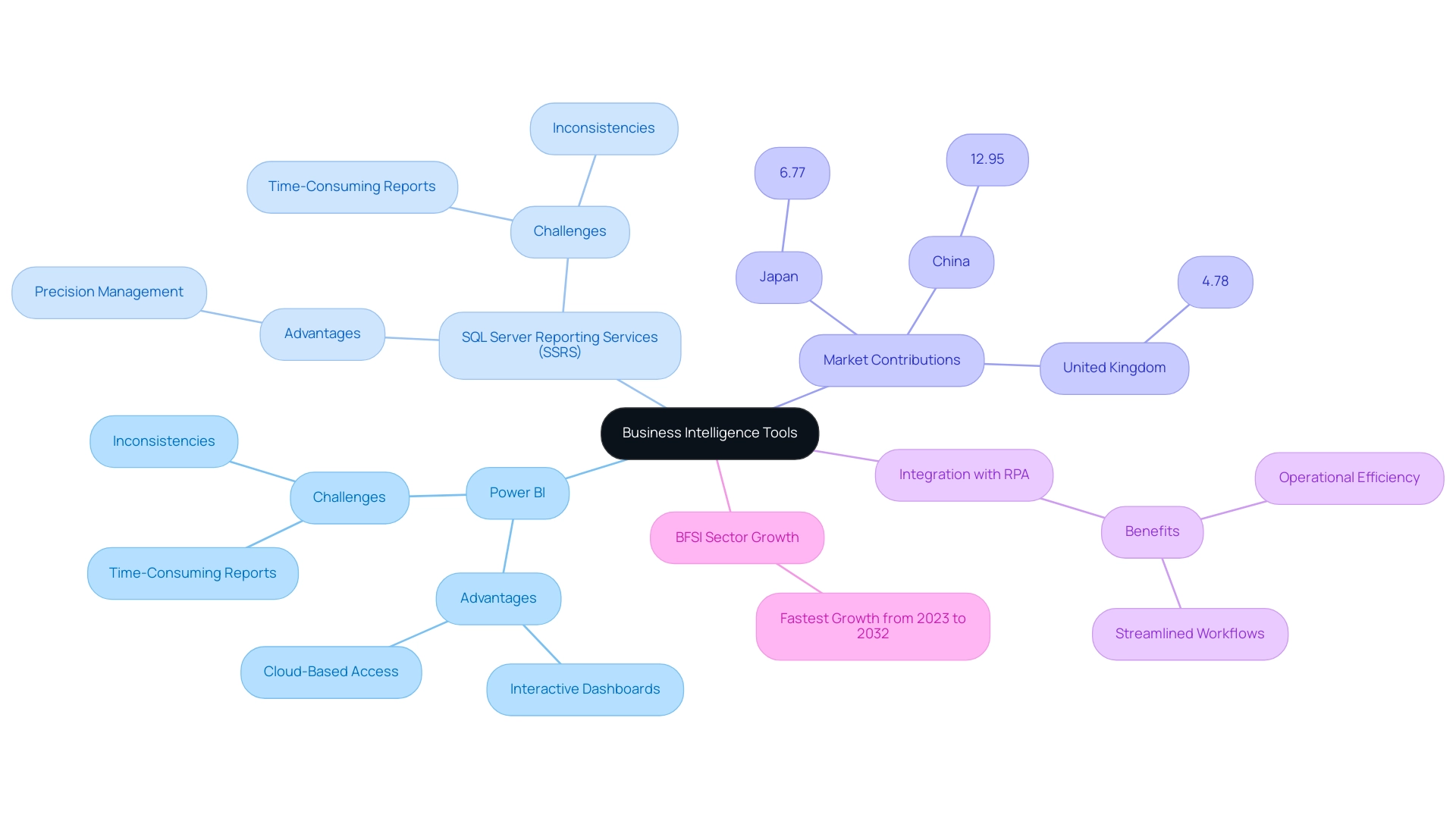

Power BI and SQL Server Reporting Services (SSRS) are integral tools in business intelligence reporting, each offering advantages suited to specific organizational needs. Power BI, a cloud-based service from Microsoft, enables users to visualize information and share insights across different tiers of an organization. Its interactive dashboards and customizable reports support a dynamic approach to analytics, fostering a culture of evidence-based decision-making.

However, turning Business Intelligence insights into action can pose challenges, such as time-consuming report creation, data inconsistencies, and a lack of actionable guidance. According to a survey by MicroStrategy, 88% of organizations using cloud-based BI reported greater flexibility in accessing and analyzing data from any location, at any time, which highlights Power BI’s benefits in contemporary analytics environments. In contrast, SSRS is a robust server-based tool for creating, deploying, and managing reports with precision.

Both tools serve similar objectives but are designed for different reporting structures and organizational frameworks. As organizations aim to improve their reporting capabilities, understanding the functionality and applications of Power BI and SSRS becomes essential. Notably, the BFSI sector is set for swift expansion in business intelligence tools from 2023 to 2032, indicating growing demand for efficient analysis solutions.

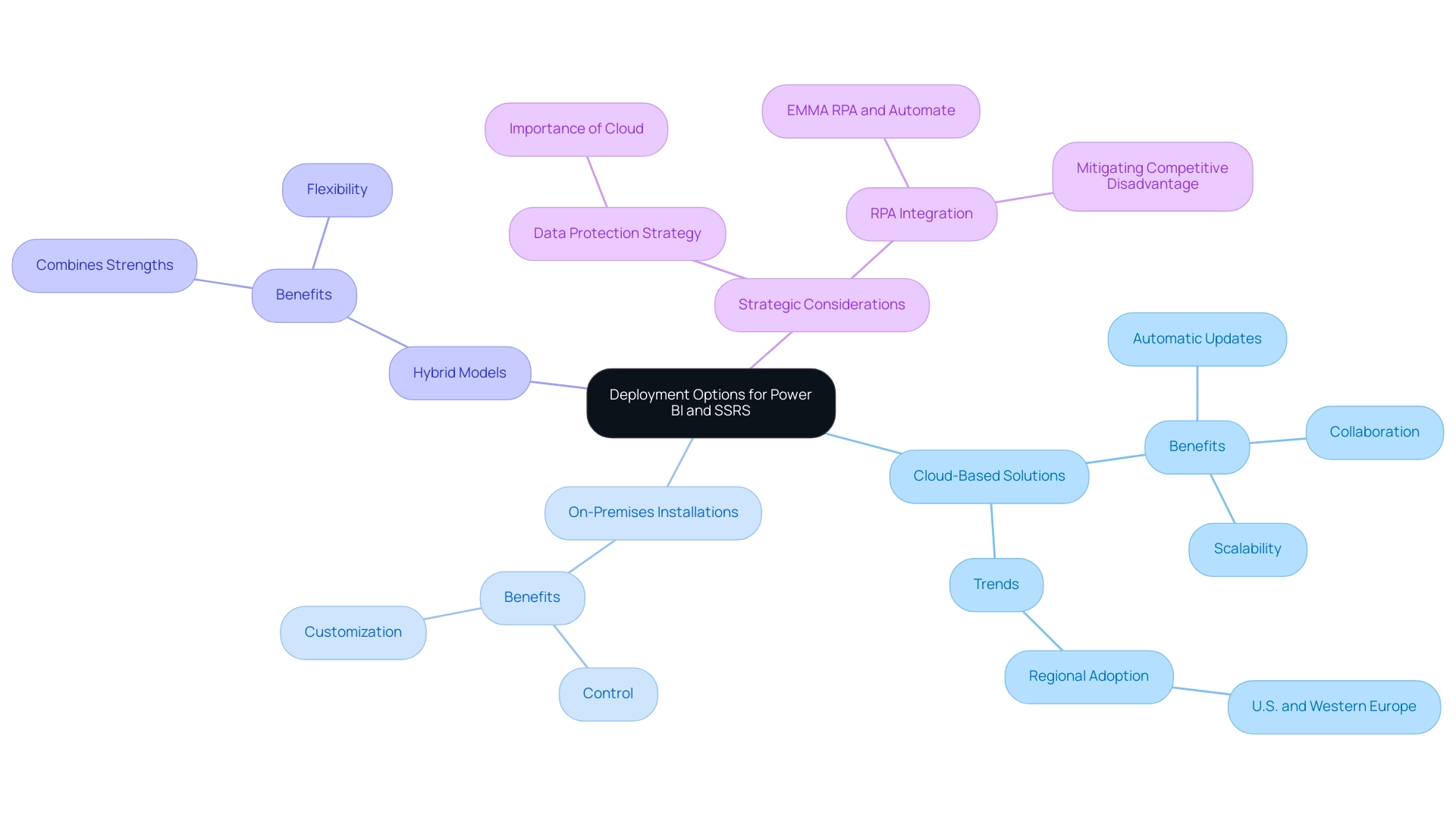

Additionally, significant markets such as China, Japan, and the United Kingdom contribute 12.95%, 6.77%, and 4.78% respectively to the BI landscape, further emphasizing the global relevance of these tools. Although Power BI’s use is growing, as recent statistics on its market position indicate, the choice between these tools ultimately depends on the organization’s specific analysis needs and objectives. Moreover, understanding the kinds of change organizations must manage when rolling out data and BI solutions, as emphasized in the case study on ‘Types of Change to Manage in Fabric Adoption,’ is crucial for effective integration and for getting the most from these analytical solutions.

Embracing RPA alongside BI can significantly enhance operational efficiency, ultimately driving business growth. EMMA RPA provides advanced automation features that streamline repetitive tasks, while Power Automate simplifies workflows, helping organizations manage their data processes efficiently. Failing to adopt these BI tools can leave organizations at a competitive disadvantage, underscoring the urgency for Directors of Operations Efficiency to prioritize these technologies.

A proactive strategy for integrating BI and RPA can not only reduce reporting challenges but also encourage a culture of continuous improvement and innovation.

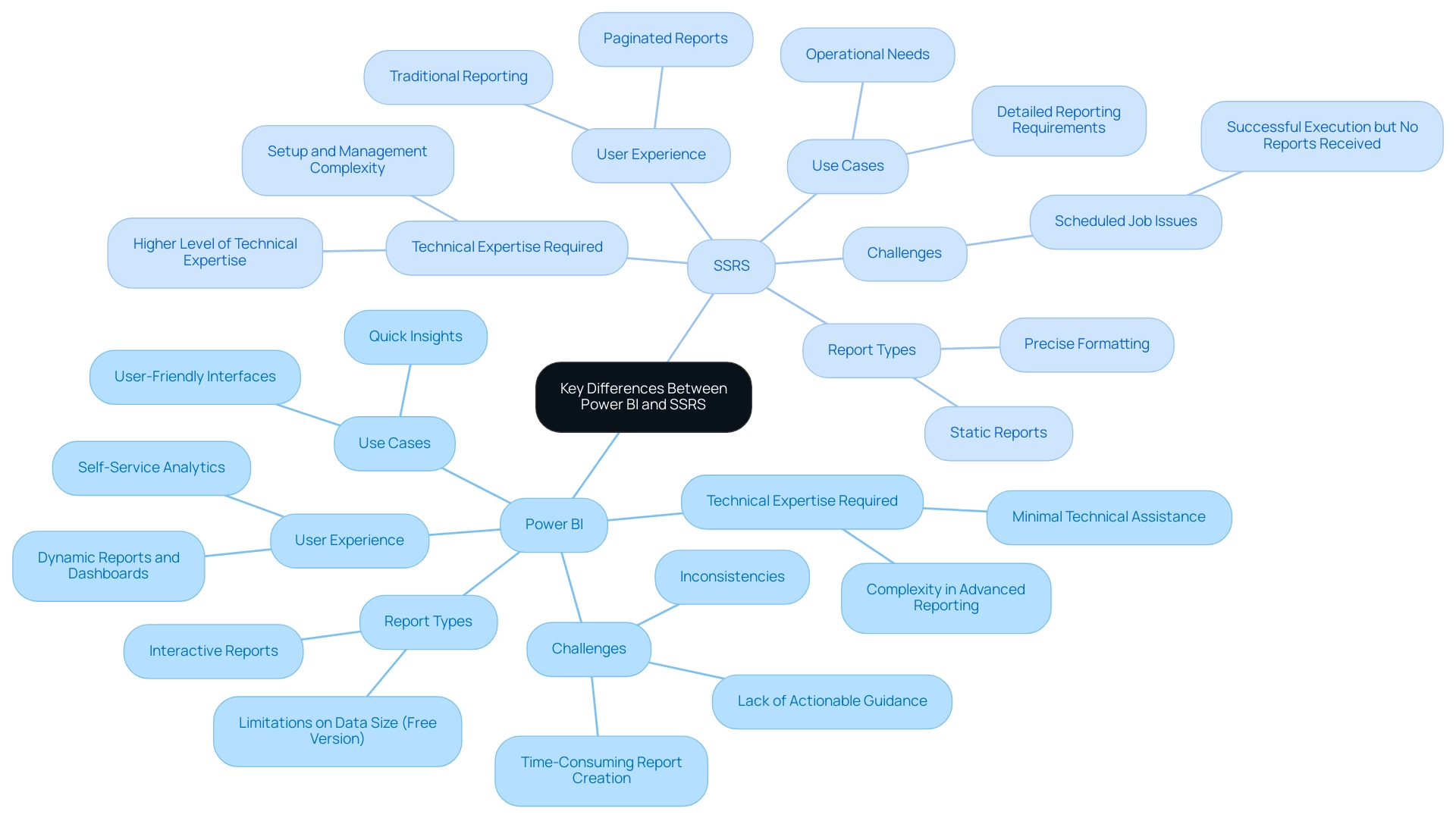

Key Differences Between Power BI and SSRS

Understanding the main differences between Power BI and SSRS is crucial for improving your reporting strategy. Power BI stands out as a solution for self-service analytics, enabling users to independently create dynamic reports and dashboards with minimal technical assistance. Its emphasis on visualization and interactivity enhances the user experience, making insights more accessible.

However, challenges such as time-consuming report creation, inconsistencies, and a lack of actionable guidance can impede its effectiveness. As part of our Power BI services, we offer a 3-Day Power BI Sprint to quickly develop professionally crafted reports, along with a General Management App for thorough management and insightful evaluations, supporting effective reporting and data consistency. Additionally, our actions portfolio provides a structured approach to implementing these solutions.

In contrast, SSRS is tailored for traditional reporting, emphasizing paginated reports that are crucial for operational needs. This tool demands a higher level of technical expertise for setup and management, which can be a barrier for some users. As noted by an anonymous user, “When we run scheduled jobs to email reports, jobs are executing successfully, but we don’t receive any reports.”

This illustrates the kind of operational challenge that must be addressed before insights reach their audience. Furthermore, Power BI Report Server (PBIRS), which combines Power BI report hosting with SSRS, requires a SQL Server volume license or a separate acquisition, adding complexity to the decision-making process for organizations. For those aiming for quick insights and user-friendly interfaces, Power BI, particularly with our tailored solutions, is often the preferred choice.