Introduction

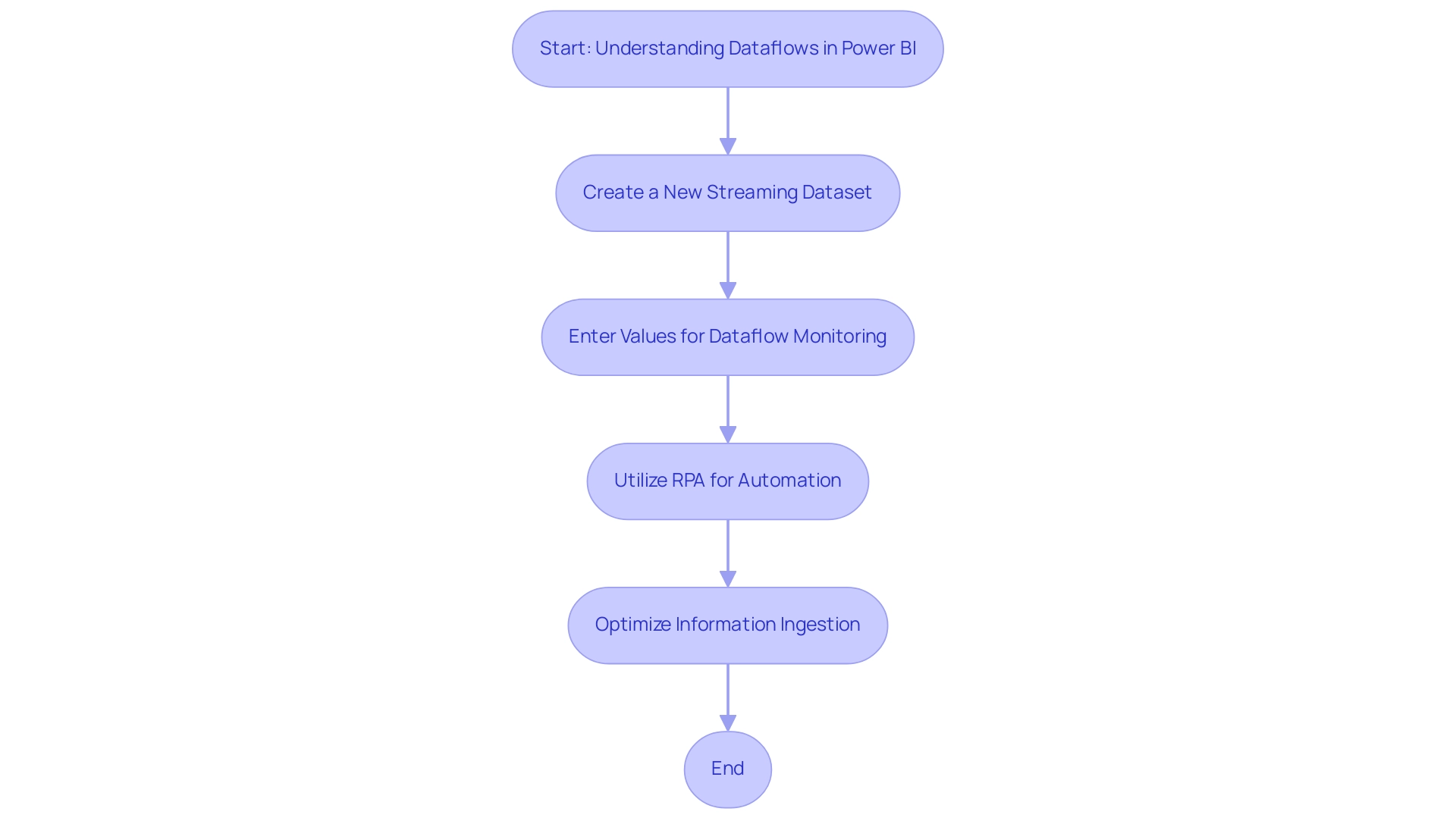

In an age where efficiency is paramount, mastering the task capture process has emerged as a crucial step for organizations striving to embrace automation successfully. By meticulously documenting and analyzing business processes, companies can uncover inefficiencies that hinder productivity and identify opportunities for innovative solutions like Robotic Process Automation (RPA).

With a growing number of businesses recognizing the transformative potential of automation—evidenced by significant reductions in errors and accelerated workflows—it’s clear that the journey toward operational excellence begins with a comprehensive understanding of task capture.

This article delves into effective strategies and best practices that empower organizations to optimize their automation efforts, ensuring they not only enhance efficiency but also position themselves for future growth in an increasingly competitive landscape.

Understanding the Task Capture Process for Effective Automation

Task capture is an essential element of effective automation. It encompasses the documentation and analysis of each step in a business process. This meticulous approach allows organizations to pinpoint bottlenecks and repetitive activities and to identify inefficiencies that can be addressed through solutions such as graphical user interface (GUI) automation. A recent case study involving a mid-sized healthcare company illustrates this: the company faced significant challenges such as manual data entry errors and slow software testing, but achieved a 70% reduction in data entry errors and a 50% acceleration in software testing by adopting GUI automation.
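As a rough illustration of how captured steps can expose bottlenecks and repetition, the sketch below totals the time recorded per step and lists the steps that occur more than once. The `CapturedStep` structure, the `summarize` helper, and the sample values are hypothetical and are not taken from the case study.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class CapturedStep:
    """One recorded step of a business process (hypothetical structure)."""
    name: str
    minutes: float  # observed time spent on this occurrence of the step


def summarize(steps):
    """Return the step with the largest total time and the steps seen more than once."""
    if not steps:
        raise ValueError("no steps were captured")
    total_by_step = Counter()
    count_by_step = Counter()
    for step in steps:
        total_by_step[step.name] += step.minutes
        count_by_step[step.name] += 1
    bottleneck = max(total_by_step, key=total_by_step.get)
    repeated = sorted(name for name, n in count_by_step.items() if n > 1)
    return bottleneck, repeated


# Illustrative data only.
captured = [
    CapturedStep("open invoice", 1.0),
    CapturedStep("key in data", 6.0),
    CapturedStep("open invoice", 1.0),
    CapturedStep("key in data", 5.5),
    CapturedStep("approve", 2.0),
]
bottleneck, repeated = summarize(captured)
print(bottleneck, repeated)
```

Steps that are both slow and repeated are usually the first candidates to review for automation.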

Research indicates that employees in the IT industry can save between 10% and 50% of their time by automating repetitive activities, underscoring the potential for significant productivity gains. For example, a company may discover that its invoice handling requires many manual steps, which could be streamlined with RPA tools such as EMMA RPA and Microsoft Power Automate. By carefully mapping these activities, organizations can ensure their automation strategies are focused and effective, ultimately leading to increased operational efficiency.

Moreover, there is still considerable room for adoption: nearly 37% of businesses report lacking the technology necessary to streamline and organize their onboarding processes. This gap offers leaders a notable opportunity to improve their operations through efficient task capture and automation. As Tidio reports, almost 70% of recruiters regard AI as an essential resource for removing unconscious bias in hiring, illustrating the wider consequences of adopting technology across different functions.

Notably, 25% of companies have already adopted HR technology for essential tasks such as screening resumes and scheduling interviews, allowing HR professionals to redirect their focus toward employee development. Staying up to date on the latest trends in RPA is also essential, as these advancements continue to evolve and influence how companies operate. Together, these trends show the value of task capture: recording business processes before automation initiatives begin paves the way for greater efficiency.

Importantly, in the healthcare case study, the ROI from the GUI automation implementation was achieved within six months, highlighting the effectiveness of this strategy.

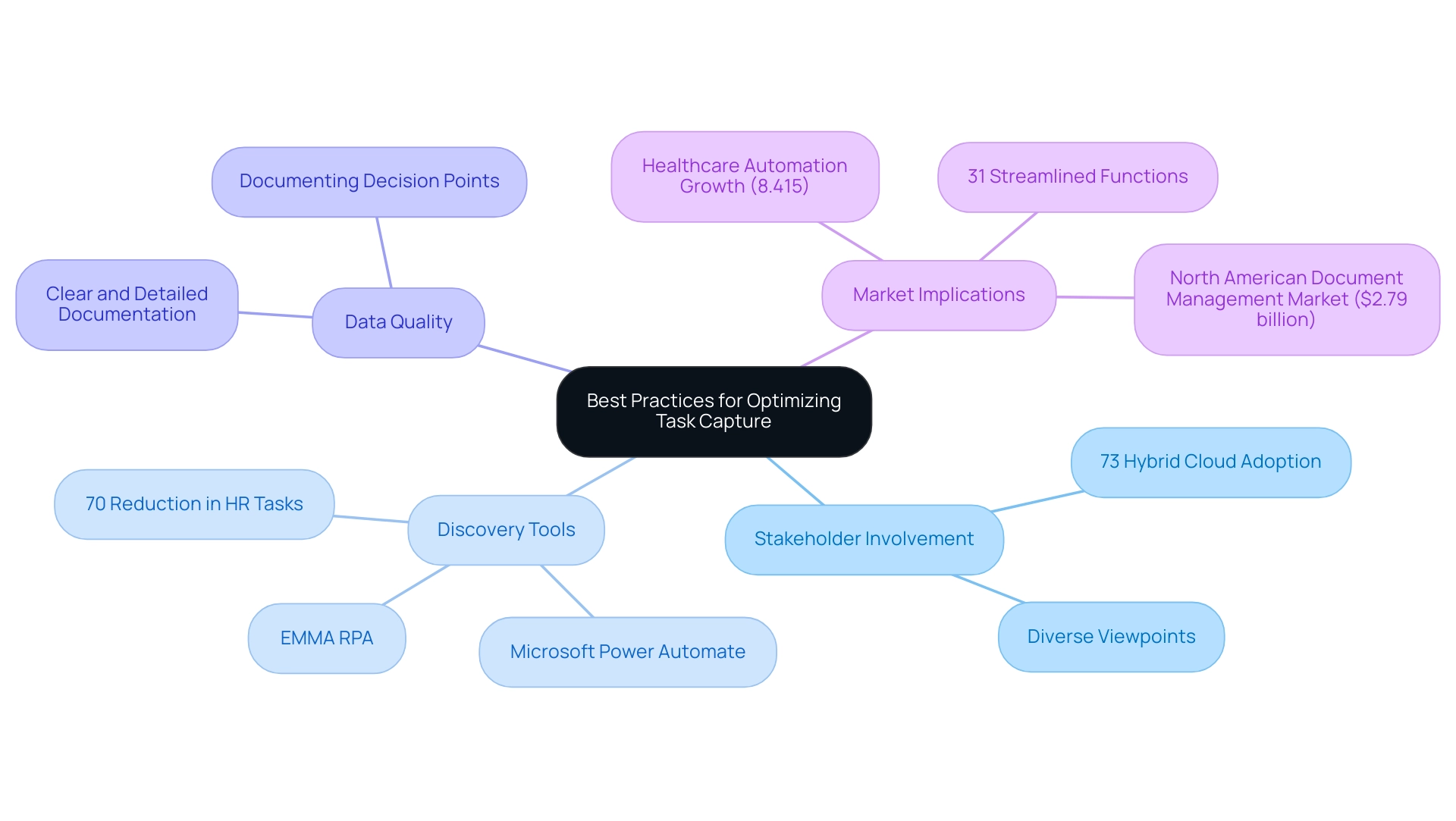

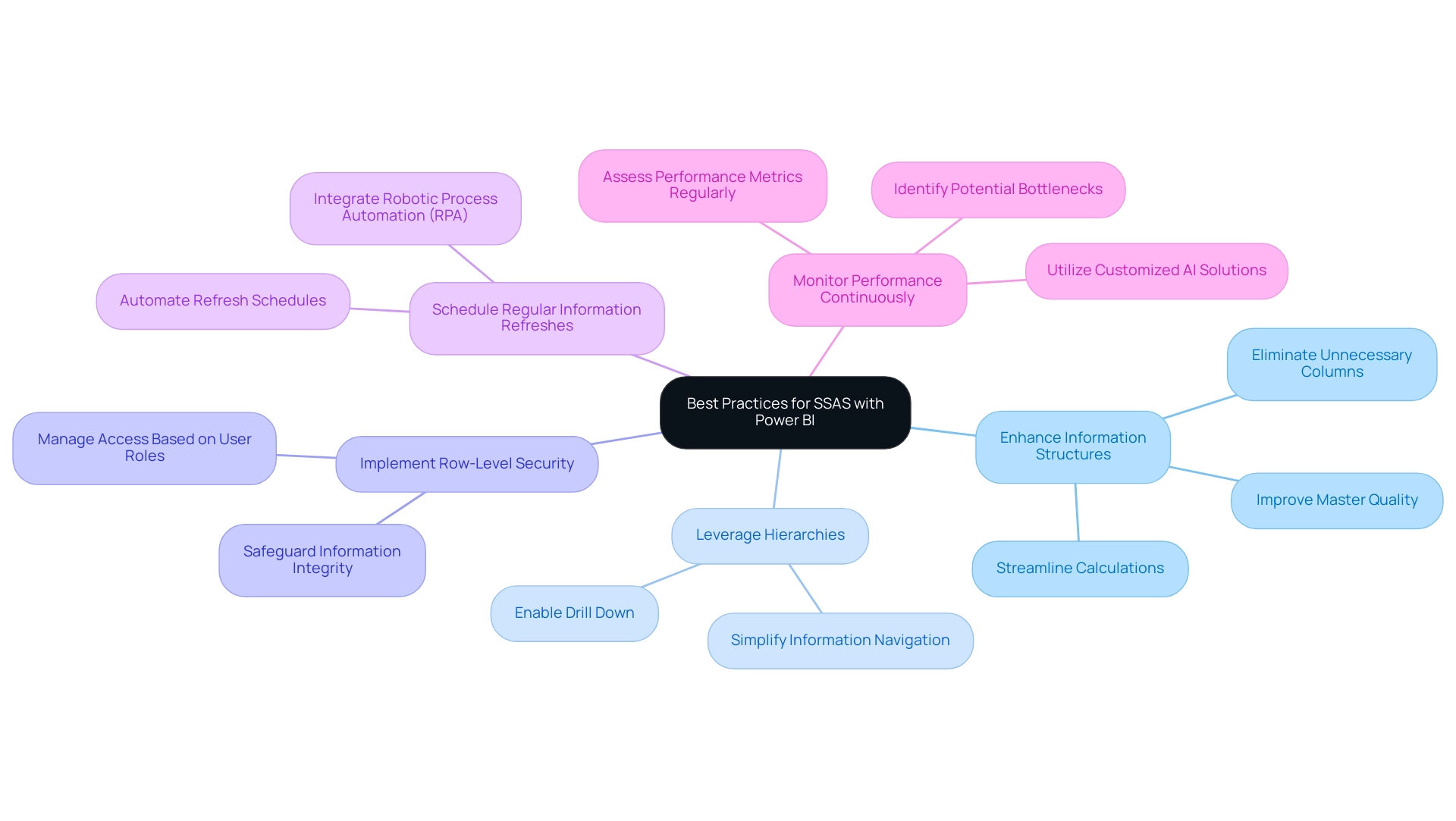

Best Practices for Optimizing Task Capture

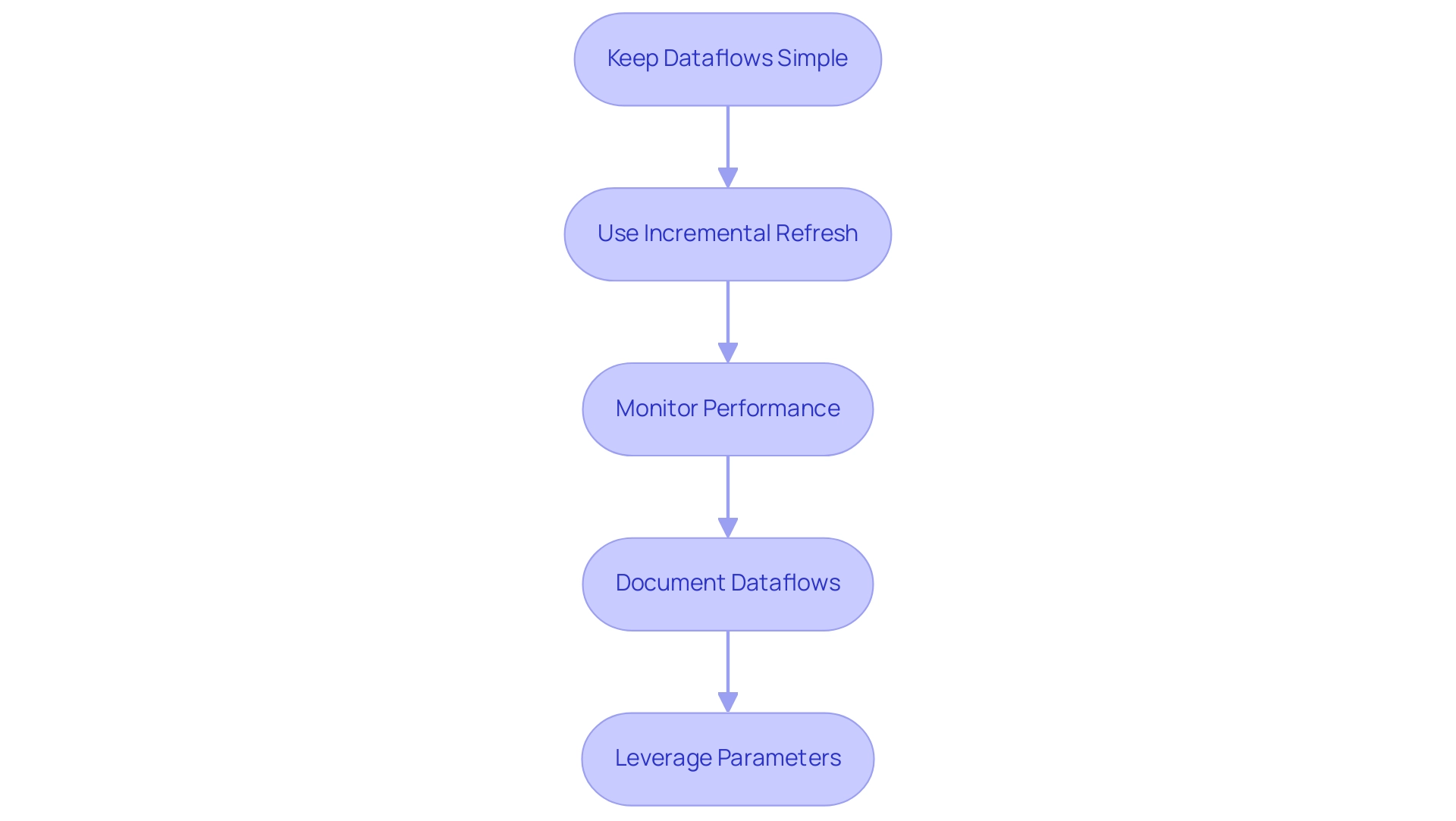

To improve the efficiency of task capture, organizations should adopt several best practices, particularly when addressing repetitive activities and outdated systems through RPA solutions. First, involve stakeholders from different departments, as this brings a diverse range of viewpoints that deepen the understanding of the activities being recorded. Recent data show that 73% of enterprises have adopted a hybrid cloud approach, suggesting a shift toward collaborative environments that can greatly support task capture efforts.

Second, use process discovery tools such as EMMA RPA and Microsoft Power Automate. These tools can automatically record user interactions across applications, offering a precise representation of workflows. For instance, Ula’s implementation of such tools resulted in a 70% reduction in mundane HR activities and a decrease in Slack queries from 100% to just 1.25% between review cycles, demonstrating the power of automated insights in enhancing efficiency and employee morale.

Third, the captured data must be both clear and detailed. This includes documenting decision points and any exceptions that may arise throughout the process. As the global healthcare automation market is expected to grow at a rate of 8.415% over the next seven years, organizations that prioritize high-quality task capture can position themselves for success in this expanding market.
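One way to keep decision points and exceptions explicit is to give each captured step a small record of its own. The following is a minimal sketch under that assumption; the `ProcessStep` and `DecisionPoint` classes and the invoice example are hypothetical and do not reflect the output format of any particular tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DecisionPoint:
    question: str
    outcomes: dict  # maps each possible answer to the name of the next step


@dataclass
class ProcessStep:
    name: str
    description: str
    decision: Optional[DecisionPoint] = None
    exceptions: list = field(default_factory=list)  # known ways the step can fail


def missing_targets(steps):
    """List (step, target) pairs where a decision leads to an undocumented step."""
    known = {step.name for step in steps}
    missing = []
    for step in steps:
        if step.decision is None:
            continue
        for target in step.decision.outcomes.values():
            if target not in known:
                missing.append((step.name, target))
    return missing


# Illustrative process only.
steps = [
    ProcessStep(
        name="receive invoice",
        description="Open the invoice from the shared mailbox.",
        decision=DecisionPoint(
            question="Is a PO number present?",
            outcomes={"yes": "match to PO", "no": "request PO"},
        ),
        exceptions=["attachment is unreadable"],
    ),
    ProcessStep(name="match to PO", description="Compare invoice lines with the PO."),
]
gaps = missing_targets(steps)
print(gaps)
```

A check like `missing_targets` flags branches that were mentioned during capture but never documented, which is a common source of surprises once a process is automated.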

Furthermore, considering that 31% of enterprises have fully automated at least one key function, task capture becomes increasingly vital in driving efficiency initiatives. RPA solutions can also help address staffing shortages by streamlining operations and reducing the burden of repetitive activities, making it easier to attract and retain talent. Automation can likewise ease the toll that such work takes on employee morale, enabling staff to concentrate on more engaging work.

With the North American document management software market valued at $2.79 billion, improving task capture methods can significantly enhance document management strategies within organizations. By following these best practices, organizations can improve their process documentation, boosting overall operational effectiveness and laying the groundwork for future automation.

The Importance of Document Download Tracking in Task Capture

Document download tracking plays a crucial role for organizations aiming to enhance their task capture processes, particularly when improving operational efficiency in healthcare. By monitoring which documents are downloaded and by whom, companies can gain valuable insights into user behavior and identify frequently accessed resources. For example, if a particular document is frequently downloaded before a task is performed, it may indicate a shared dependency that is a candidate for automation or simplification.
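The idea can be sketched with a simple download log. The example below assumes a hypothetical log of `(user, document, task)` entries, counts downloads per document, and flags documents that several different users fetch before the same task. The field layout and the `min_users` threshold are illustrative assumptions.

```python
from collections import Counter, defaultdict

# Hypothetical download log: (user, document, task started afterwards).
downloads = [
    ("ana", "pricing.xlsx", "create quote"),
    ("ben", "pricing.xlsx", "create quote"),
    ("ana", "policy.pdf", "onboard hire"),
    ("cy", "pricing.xlsx", "create quote"),
    ("ben", "pricing.xlsx", "renew contract"),
]


def downloads_per_document(log):
    """Count how often each document was downloaded."""
    return Counter(doc for _user, doc, _task in log)


def shared_dependencies(log, min_users=2):
    """Return (document, task) pairs downloaded by at least min_users distinct users."""
    users = defaultdict(set)
    for user, doc, task in log:
        users[(doc, task)].add(user)
    return sorted(key for key, who in users.items() if len(who) >= min_users)


counts = downloads_per_document(downloads)
shared = shared_dependencies(downloads)
print(counts.most_common(1), shared)
```

Counting distinct users rather than raw downloads keeps one very active person from making a document look like a team-wide dependency.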

This understanding is particularly timely, as:

- 27% of businesses cite spiraling costs as a significant barrier to implementing paperless office tools

- 41% of small businesses face skills shortages that complicate the adoption of effective document tracking systems

The ongoing evolution of these systems presents both opportunities and challenges, particularly concerning data privacy and cybersecurity risks. As document tracking becomes more interconnected and reliant on cloud infrastructure, organizations must address these challenges to ensure secure operations.

Leveraging RPA solutions like EMMA RPA and Microsoft Power Automate can transform business operations by automating repetitive tasks, ultimately enhancing efficiency and employee morale. Flexera’s findings show that 73% of companies adopted a hybrid cloud strategy in 2023, highlighting the need for strong monitoring systems that improve visibility into operations and guide strategic choices about efficiency opportunities.

In the case study of a mid-sized company, implementing GUI automation led to:

- A 70% reduction in data entry mistakes

- A 50% speedup in testing activities

- An 80% enhancement in workflow efficiency, with ROI realized within six months

Initially, the company faced significant challenges, including manual data entry errors and slow software testing, which highlighted the critical need for automation. As organizations navigate these complexities, effective document download tracking becomes indispensable for optimizing business processes and enhancing operational efficiency.


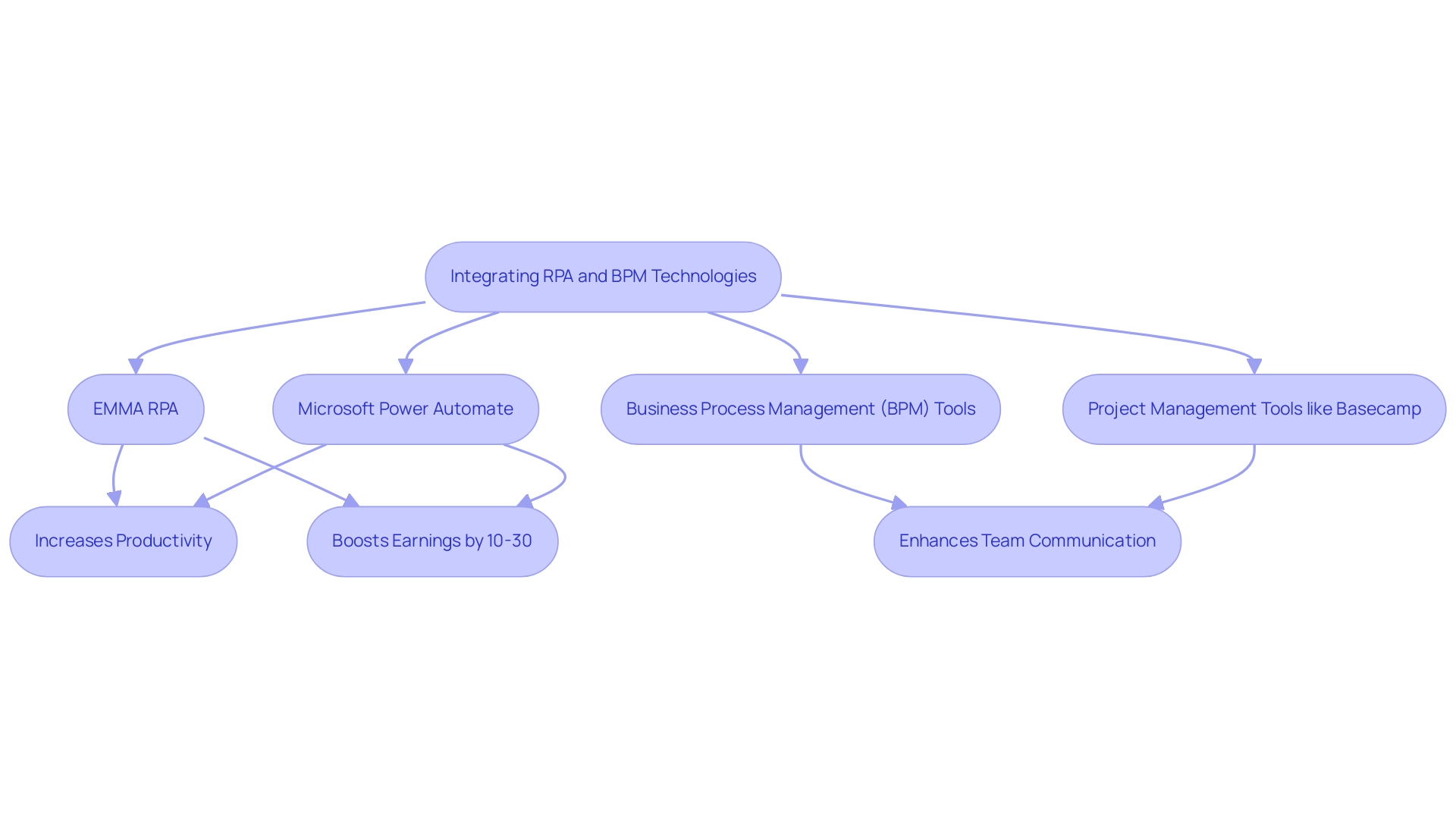

Leveraging Tools and Technologies for Enhanced Task Capture

Organizations today can significantly enhance workflow capture and overall efficiency by leveraging innovative Robotic Process Automation (RPA) tools like EMMA RPA and Microsoft Power Automate. EMMA RPA is designed for operational efficiency, enabling seamless automatic activity recording and addressing common challenges such as repetition fatigue and outdated systems. Microsoft Power Automate transforms operations by streamlining workflows, allowing employees to focus on higher-value activities, ultimately boosting morale and productivity.

Statistics indicate that automation can help companies achieve a 10-30% increase in earnings, illustrating the financial advantages of adopting these technologies. Additionally, Business Process Management (BPM) tools complement RPA by mapping workflows and identifying areas for improvement. For instance, Basecamp serves as an effective project management tool that enhances team communication and integrates with various applications to optimize productivity.

By integrating RPA and BPM technologies, organizations can enhance their task capture methods and pave the way for comprehensive automation. As Samuli Sipilä, an RPA Business Analyst at Elisa, remarks, ‘The task capture feature has provided us with a unified way of easily creating flowcharts to represent the high-level process flow. As a business analyst, I receive ready-to-go instructions which I then incorporate into the PDD.’

This methodology not only enhances efficiency but also empowers teams to dedicate their efforts to strategic initiatives, fostering a culture of innovation and growth. ETL tools such as Airbyte and Talend further illustrate the practical uses of automation in data collection, offering real-world perspectives on operational enhancement. Book a free consultation to explore how our RPA solutions can benefit your organization.

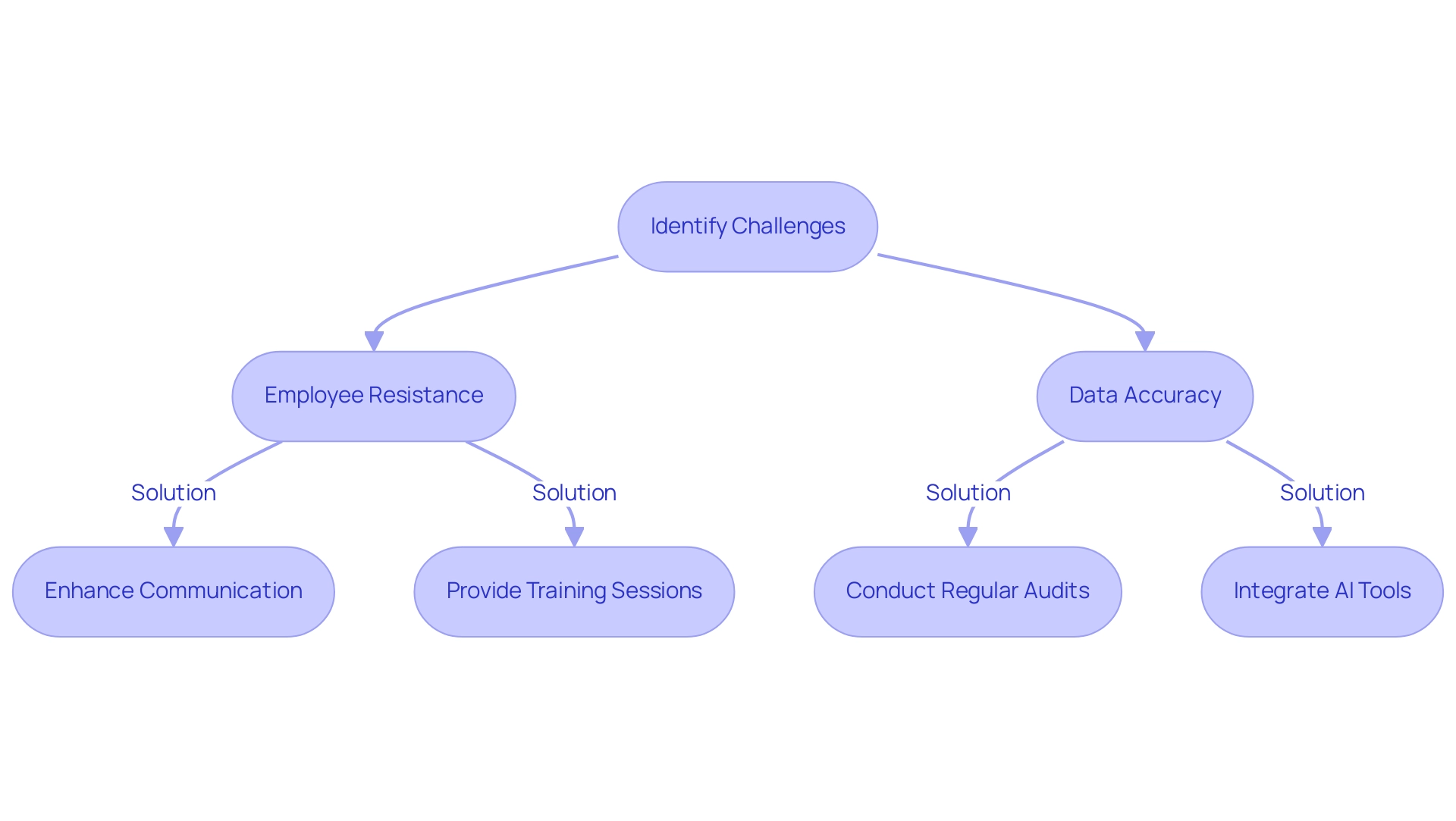

Overcoming Challenges in Task Capture Implementation

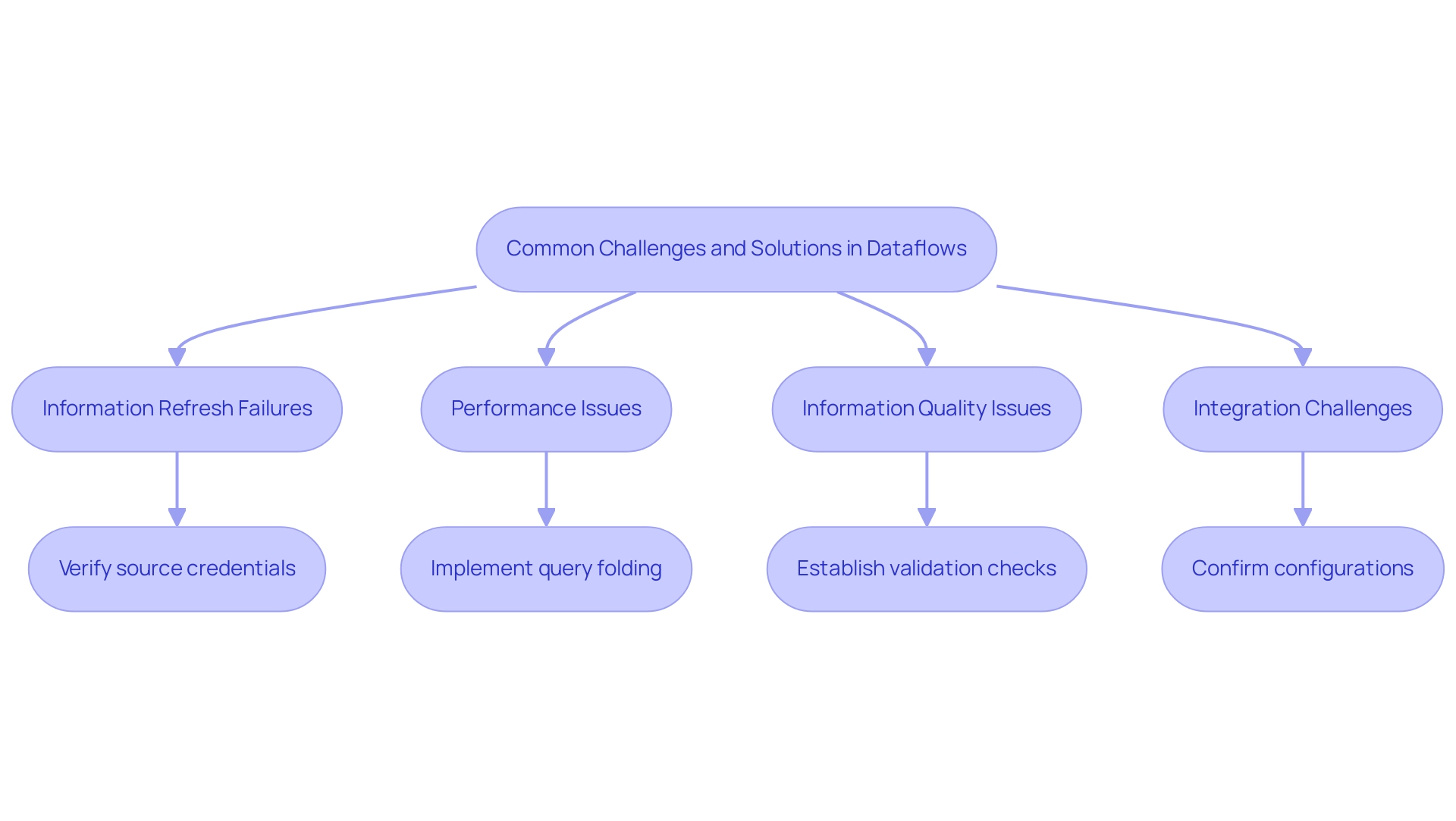

Implementing task capture poses several challenges, particularly employee resistance to adopting new procedures. To tackle this, organizations must emphasize clear communication and education about the advantages of task capture and automation. According to a recent report, 83% of employees using AI-driven tools believe they significantly reduce burnout and enhance job satisfaction, showcasing a clear advantage of embracing these technologies.

Arranging extensive training sessions, like those provided in our GenAI workshops, can further ease employees’ transition to new systems, enabling them to use task capture effectively while ensuring ethical AI practices are followed throughout the process.

Another common challenge is maintaining data accuracy during the recording process. Organizations can mitigate this risk by conducting regular audits and actively involving team members in the verification of captured data. This collaborative approach not only boosts accuracy but also fosters a sense of ownership among employees, enhancing their engagement in the automation process.
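A regular audit can be as simple as comparing a random sample of captured records with values that a team member has verified. The sketch below assumes both sets are available as dictionaries keyed by record ID. The `audit_sample` helper, the fixed `seed`, and the sample data are hypothetical.

```python
import random


def audit_sample(captured, verified, sample_size, seed=0):
    """Compare a random sample of captured records with reviewer-verified values.

    Returns the share of sampled records that match and the IDs that do not.
    """
    if not captured:
        raise ValueError("nothing to audit")
    rng = random.Random(seed)  # fixed seed so an audit run can be repeated
    ids = sorted(captured)
    sample = rng.sample(ids, min(sample_size, len(ids)))
    mismatches = sorted(i for i in sample if captured[i] != verified.get(i))
    accuracy = 1 - len(mismatches) / len(sample)
    return accuracy, mismatches


# Illustrative records only: record ID -> captured or verified value.
captured = {1: "A", 2: "B", 3: "C", 4: "D"}
verified = {1: "A", 2: "X", 3: "C", 4: "D"}
accuracy, mismatches = audit_sample(captured, verified, sample_size=4)
print(accuracy, mismatches)
```

The mismatched IDs give reviewers a concrete list to discuss with the people who perform the task, which supports the sense of ownership described above.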

With 31% of businesses having already fully automated at least one key function and the automation market expanding at a rate of 20% per year, organizations urgently need effective strategies for implementing task capture. Our case study on enhancing operational efficiency through GUI automation in a mid-sized company exemplifies this trend, demonstrating measurable outcomes such as a 70% reduction in data entry errors and an 80% improvement in workflow efficiency.

By proactively tackling these challenges and embracing tools like Grafana and Prometheus for observability, organizations can cultivate a culture of innovation and efficiency, positioning themselves for successful task capture and a risk-free, ROI-driven automation journey. Furthermore, the integration of personalized customer experiences through our GenAI services ensures that automation not only enhances operational efficiency but also enriches customer interactions.

Conclusion

Mastering the task capture process is essential for organizations aiming to harness the full potential of automation. By meticulously documenting and analyzing business processes, companies can identify inefficiencies and repetitive tasks that hinder productivity. The case studies and statistics highlighted in this article demonstrate that adopting innovative solutions such as Robotic Process Automation (RPA) can lead to significant improvements, including reduced errors and accelerated workflows.

Best practices in task capture, such as engaging diverse stakeholders and utilizing advanced process discovery tools, not only enhance the accuracy of data capture but also foster a culture of collaboration and innovation. As organizations increasingly adopt automation, understanding the intricacies of their processes becomes vital for optimizing operational efficiency and positioning themselves for future growth.

Moreover, the importance of effective document tracking and leveraging the right tools cannot be overstated. These strategies not only streamline operations but also provide invaluable insights that drive informed decision-making. As the automation landscape continues to evolve, embracing these practices will empower organizations to overcome challenges and thrive in a competitive environment. In this journey toward operational excellence, organizations that prioritize task capture will find themselves well-equipped to navigate the complexities of automation, ultimately achieving a remarkable return on investment and enhanced employee satisfaction.

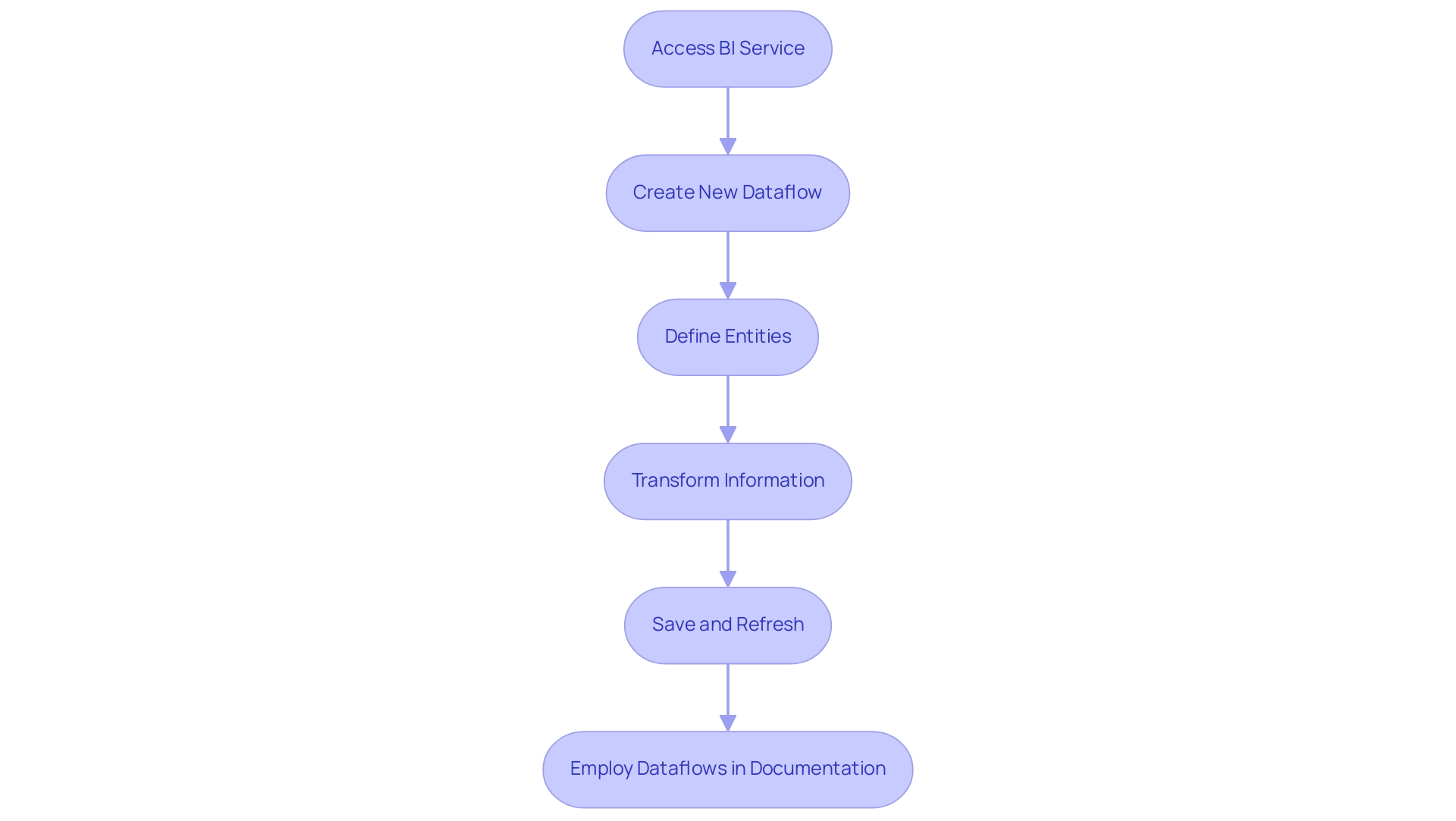

Introduction

In the realm of business analytics, Power BI stands out as a transformative tool that empowers organizations to visualize and share data insights like never before. As the demand for effective data management grows, so does the need for solutions that can seamlessly integrate various data sources and enhance decision-making processes.

However, many organizations encounter significant hurdles, such as:

- Data integration issues

- Security concerns

These challenges can impede their analytics efforts. This article delves into the core functionalities of Power BI, from its robust visualization tools to the intricacies of data preparation and the integration of advanced analytics through R and Python. By exploring these features, organizations can unlock the full potential of their data, turning challenges into opportunities for growth and efficiency.

Whether it’s creating compelling reports or enhancing operational workflows, mastering Power BI is essential for any organization looking to thrive in today’s data-driven landscape.

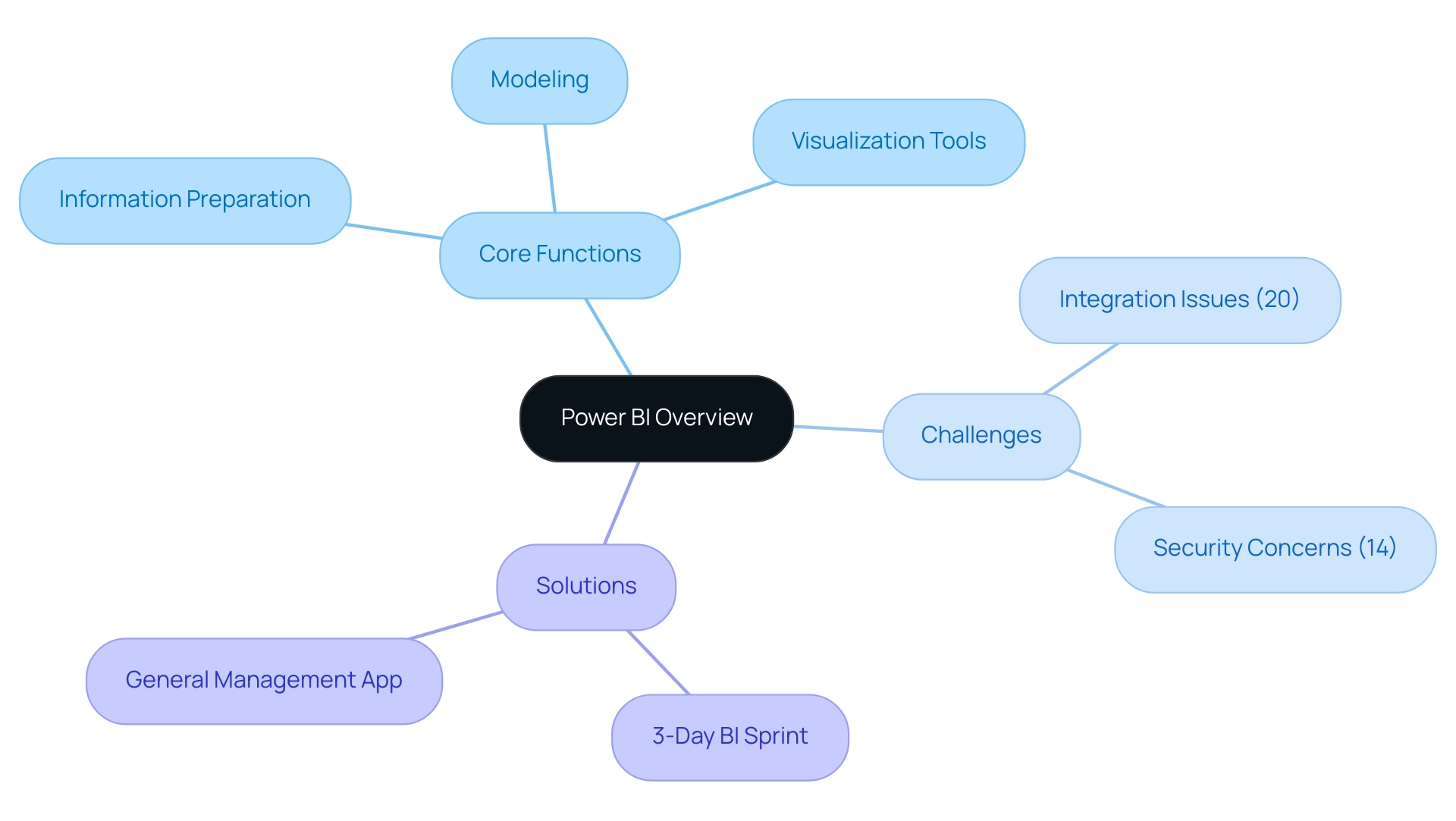

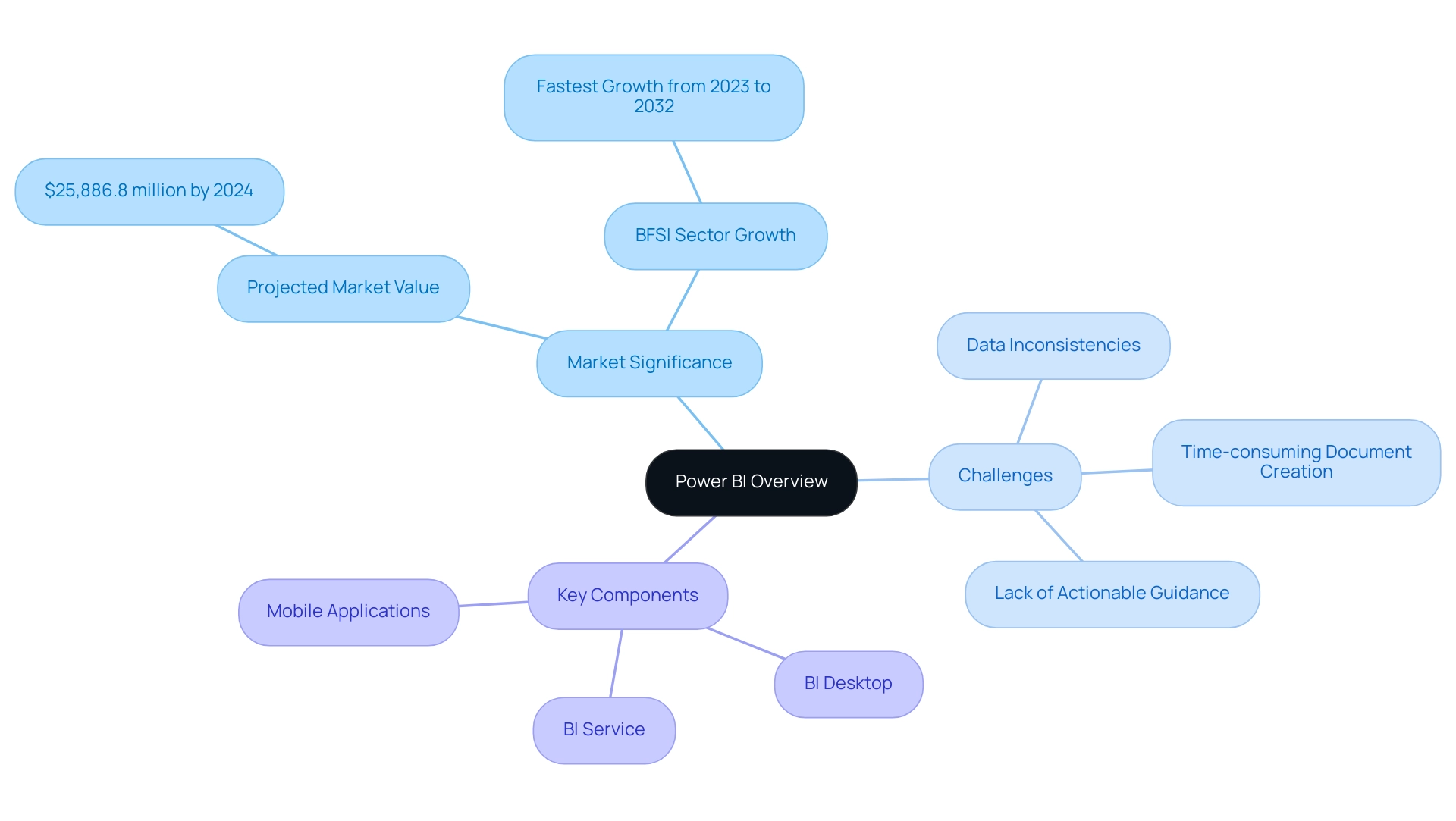

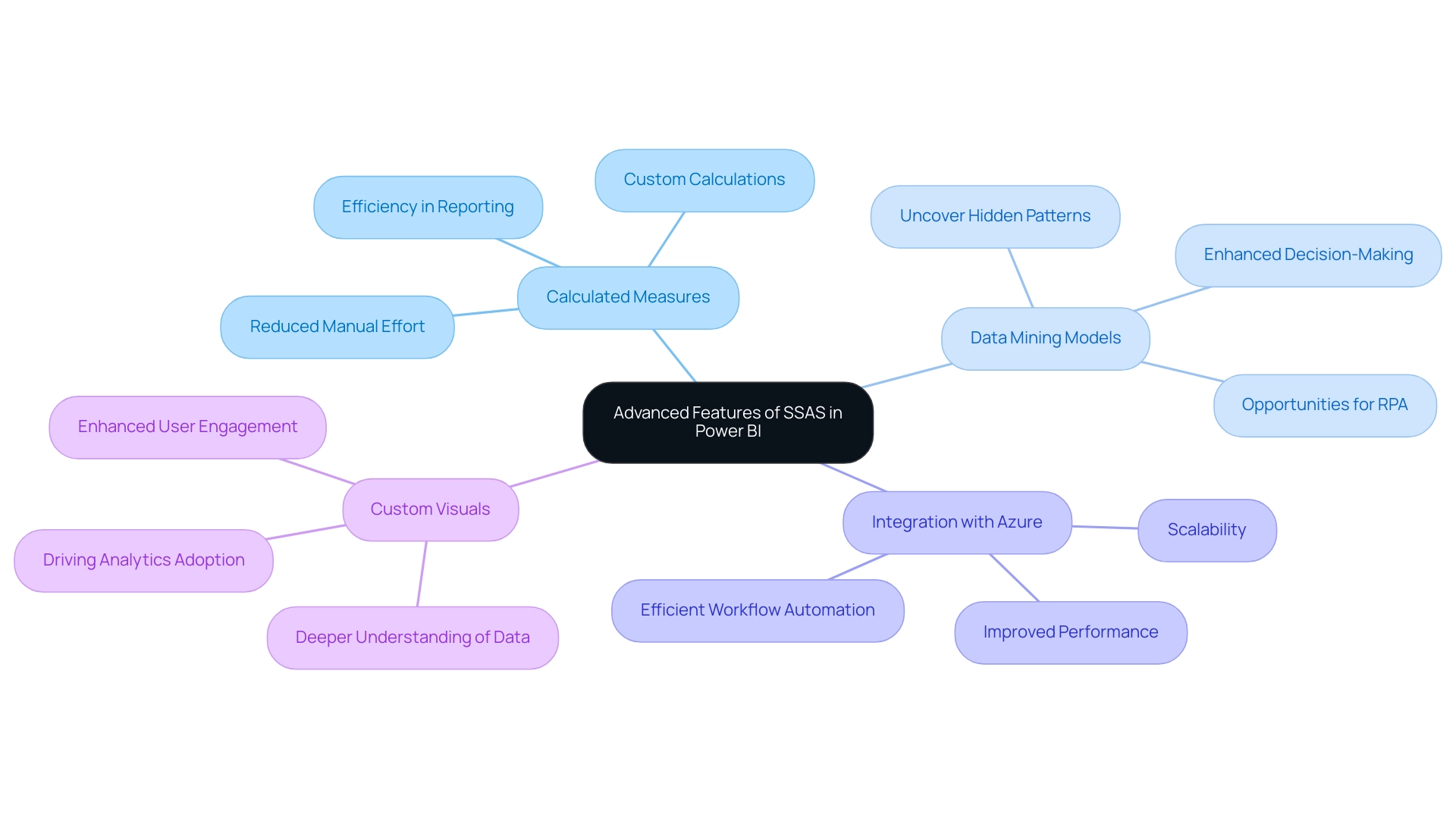

Introduction to Power BI: Understanding Its Core Functions

Power BI is a leading business analytics solution designed to enable organizations to visualize data effectively and share insights across all levels. With core functionality spanning data preparation, modeling, and visualization, users can seamlessly connect to a multitude of data sources. This integration is crucial, especially as the social business intelligence market is expected to reach $25,886.8 million by 2024.

However, organizations frequently encounter challenges in self-service business intelligence, particularly in integration and security, which represent 20% and 14% of the obstacles, respectively. To navigate these issues, our BI services offer improved reporting capabilities and actionable knowledge through features like:

- The 3-Day BI Sprint, which enables rapid creation of professionally designed reports.

- The General Management App, which provides comprehensive management tools and intelligent reviews to streamline decision-making processes.

Users can create interactive reports and dashboards with Power BI visualization tools that not only showcase information but also tell a compelling story, bolstered by built-in AI capabilities that provide deeper insights into trends and patterns.

For instance, the connected tables feature in the Power BI Datasets Add-in for Excel simplifies the process of integrating Power BI data into Excel workbooks, enhancing user-friendliness. As Kira Belova aptly states, ‘Embrace the power of BI with PixelPlex to transform your information into a strategic asset.’

By leveraging these capabilities, organizations can effectively overcome the challenges of information integration and security, turning raw information into actionable intelligence that drives performance and enhances decision-making across the board.

Additionally, explore our Actions portfolio and book a free consultation to discover how we can tailor our solutions to meet your specific business needs.

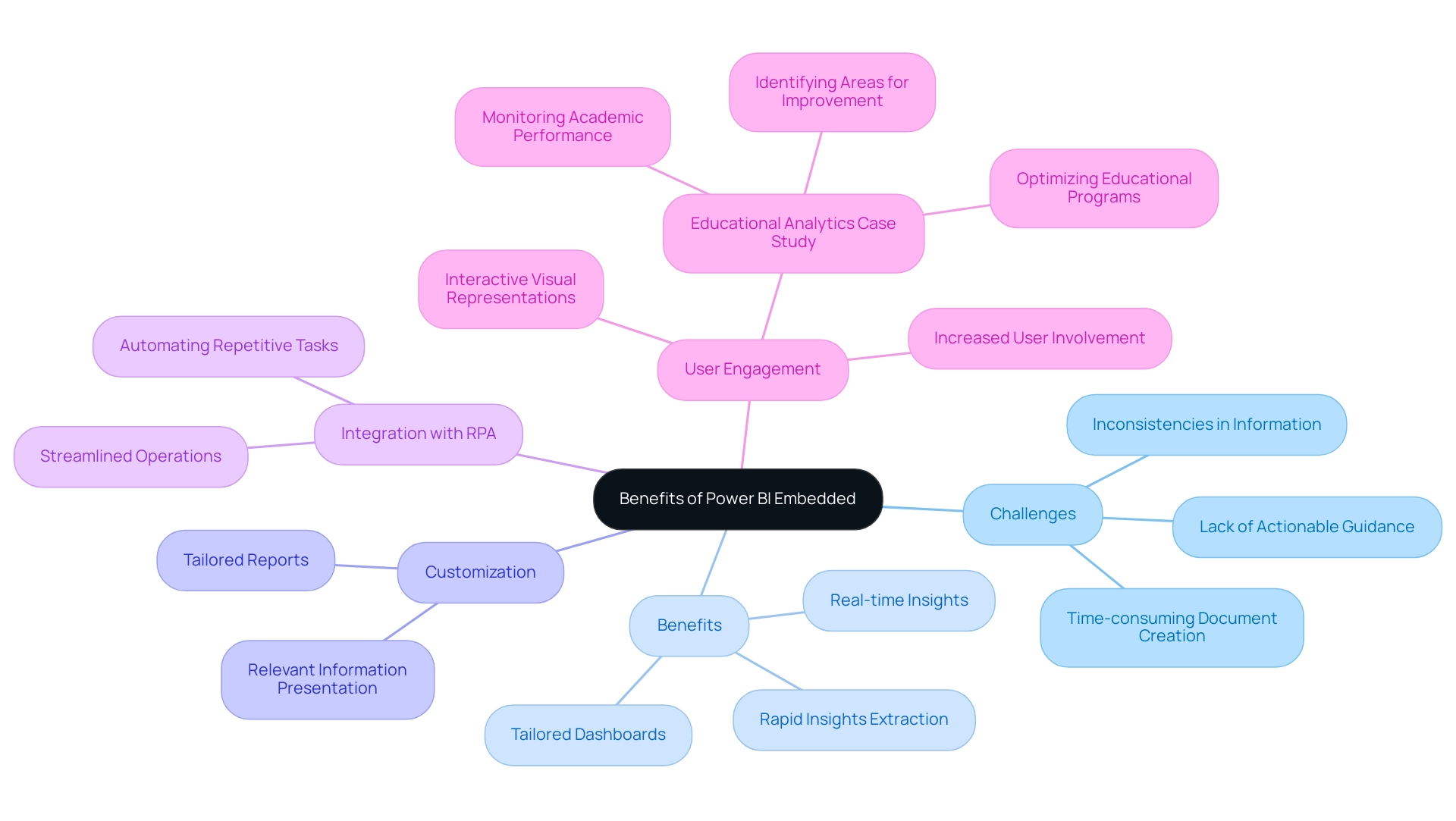

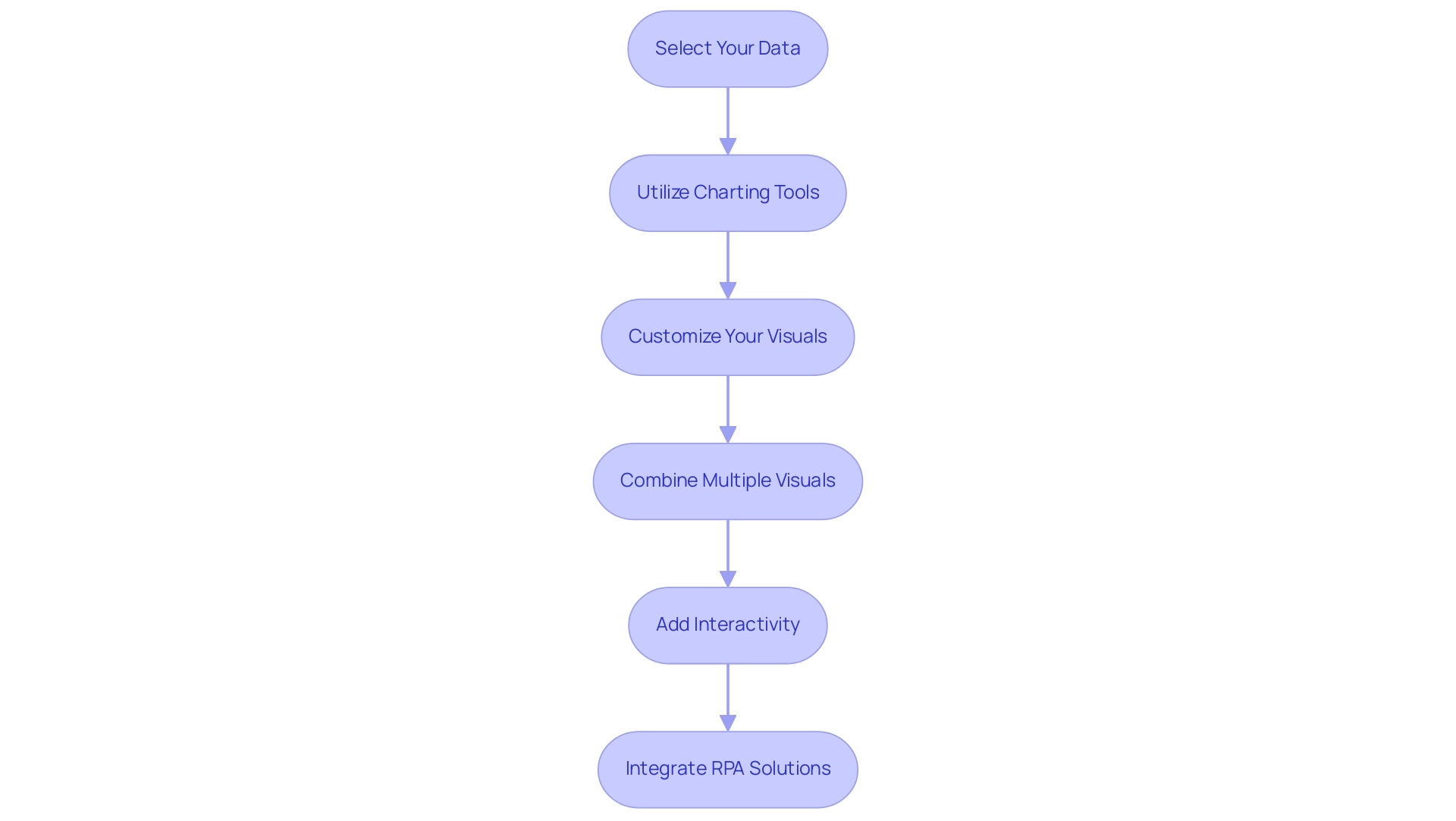

Exploring Power BI Visualization Tools: Types and Features

Power BI features a variety of visualization tools that are crucial for crafting interactive and insightful data presentations. Among the most popular options are:

- Bar charts

- Line graphs

- Pie charts

- Scatter plots

- Maps

Each serves a distinct analytical purpose. For instance, bar charts excel at comparing quantities across categories, while line graphs effectively illustrate trends over time.

Furthermore, tables in Power BI present related data in rows and columns, enabling quantitative comparisons and thorough analysis. However, numerous organizations encounter difficulties in using information from BI dashboards, including:

- Time-consuming report generation

- Inconsistencies, which can obstruct effective decision-making

The capability to tailor visual representations with filters, slicers, and drill-through features enables users to investigate their information dynamically, revealing findings that might otherwise stay concealed.

Recent advancements in data visualization methods and the new tools introduced to Power BI in 2024 further enhance these capabilities. Notably, AI-driven insights are integrated, allowing for more intuitive interpretation of data and helping to address issues of poor master data quality. As Ben Shneiderman aptly stated, ‘Visualization gives you answers to questions you didn’t know you had.’

Furthermore, with our 3-Day BI Sprint, organizations can quickly create professionally designed reports, while the General Management App ensures comprehensive management and smart reviews. Statistics indicate that organizations employing BI visualizations see a 30% rise in user engagement, emphasizing the effectiveness of these tools in narrative presentation. Mastering these resources, along with our GenAI Workshops and tailored Small Language Models, is crucial for anyone looking to elevate their data storytelling and engage their audience effectively.

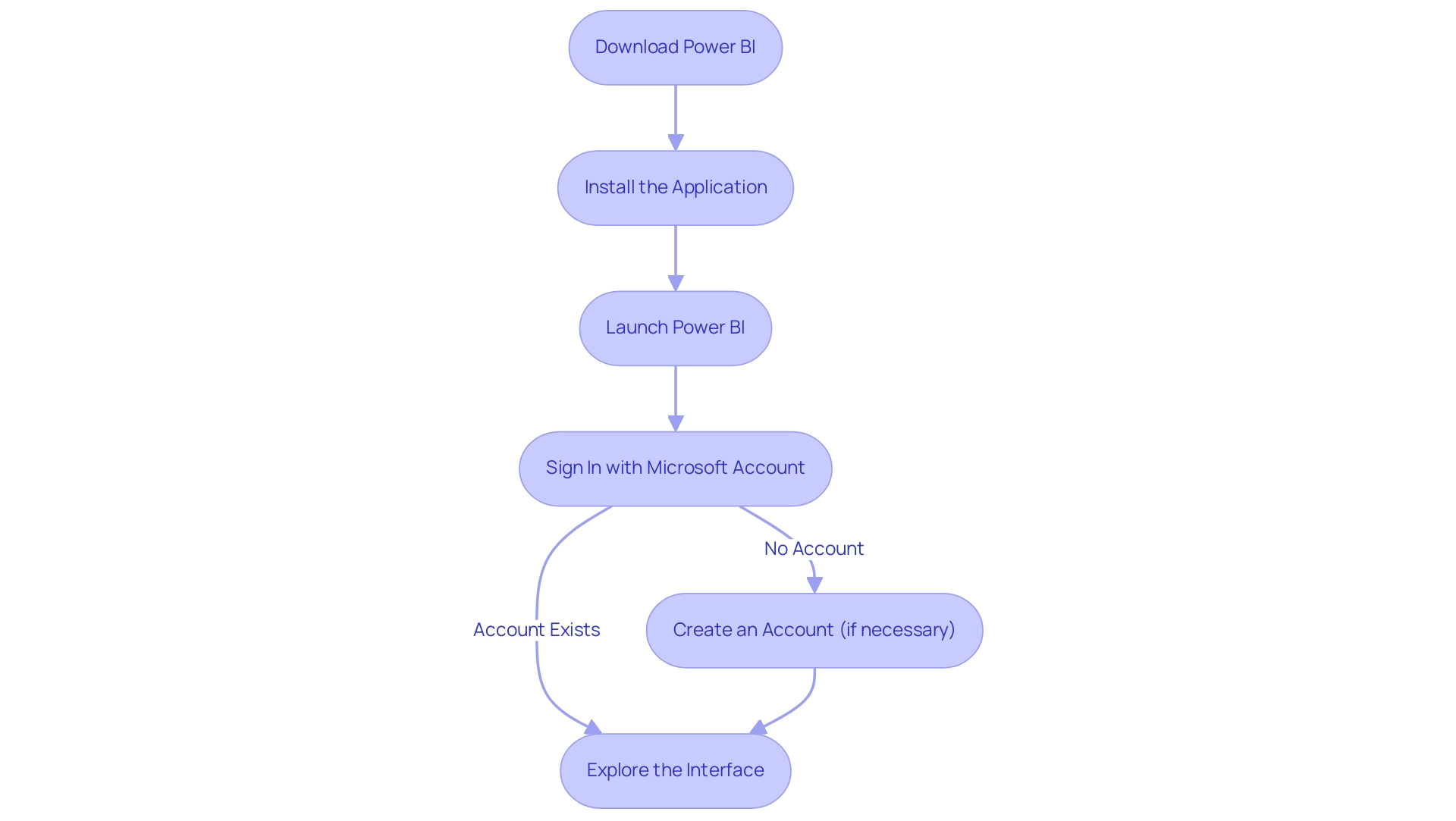

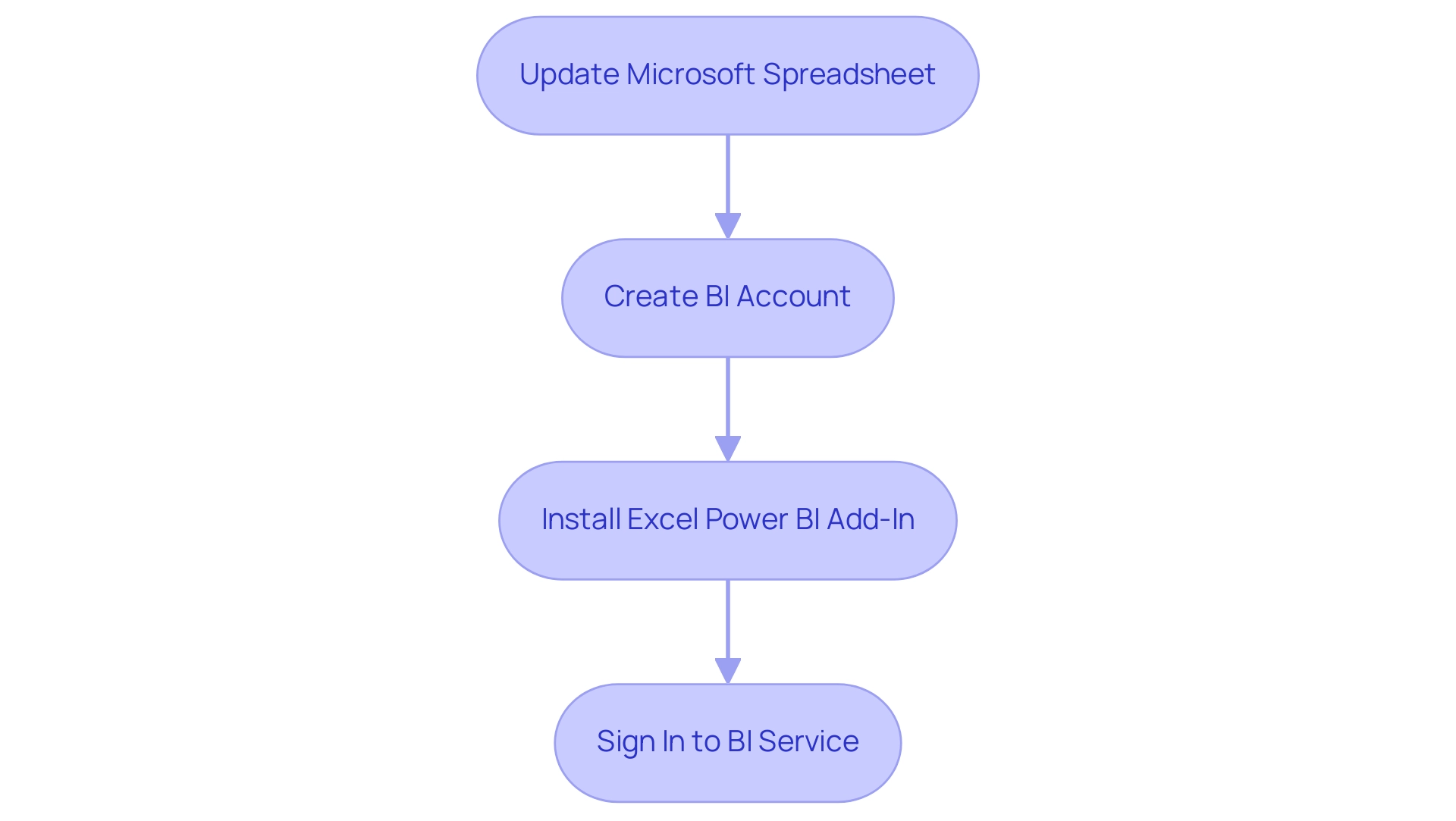

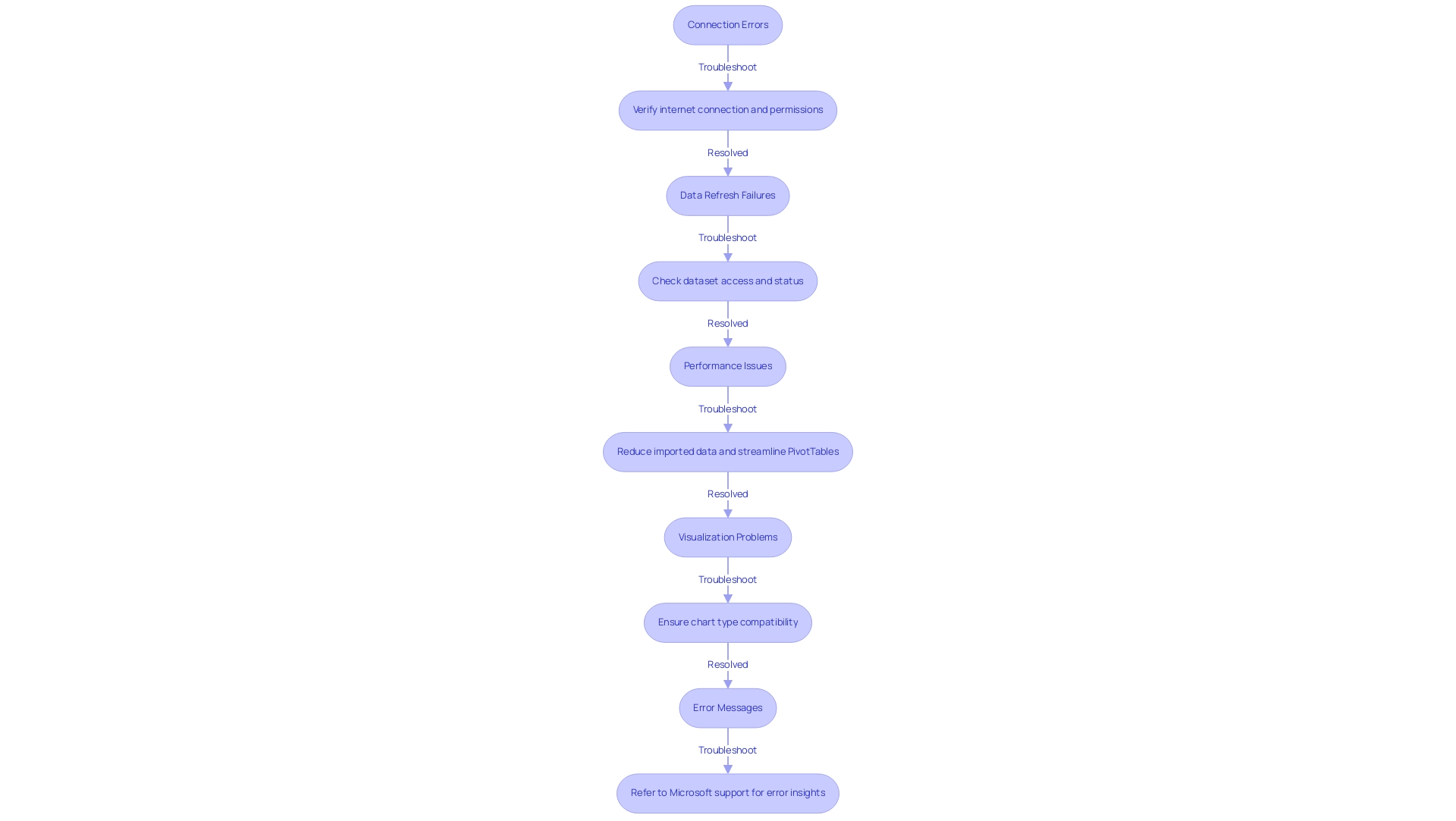

Getting Started with Power BI: Installation and Setup

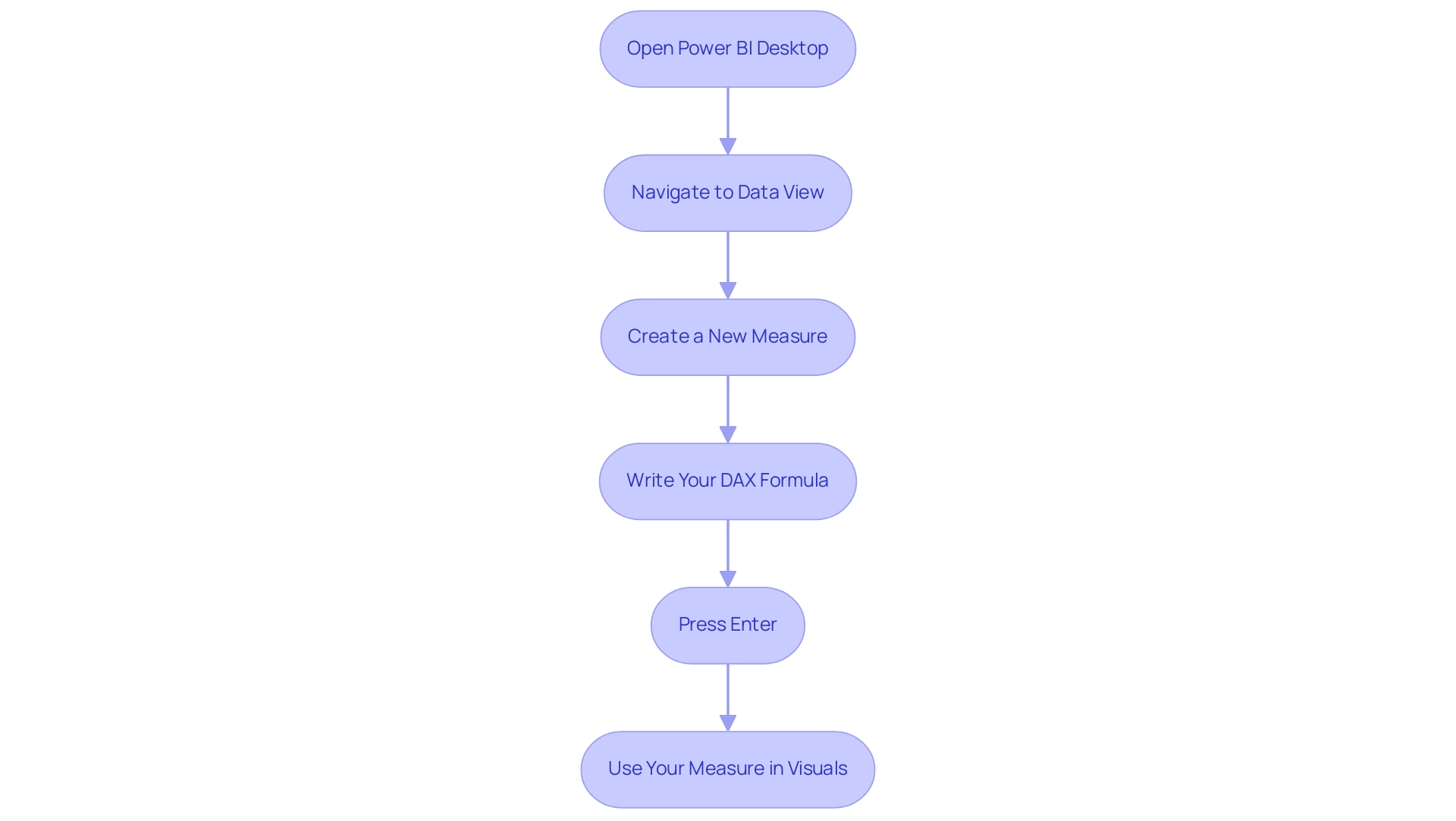

To begin your Power BI journey, the first step is to download the latest version of Power BI Desktop from the Microsoft website. The installation process is straightforward: simply follow the prompts to install the application on your device. After installation, launch Power BI and sign in using your Microsoft account.

If you don’t have an account yet, you will have the option to create one during the sign-in process. Once you’re logged in, take a moment to explore the interface. Familiarize yourself with key components such as the ribbon, navigation pane, and report canvas.

This foundational step will help you use Power BI’s visualization tools effectively as you start to analyze and visualize your data. In today’s competitive environment, it is essential to use Business Intelligence and RPA solutions to enhance operational efficiency and derive valuable insights. The global cloud-based BI market is projected to reach $15.2 billion by 2026, with 80% of organizations reporting enhanced scalability and flexibility in data access and 88% of organizations using cloud-based BI reporting increased flexibility in accessing and analyzing information, so there has never been a better time to invest in mastering this tool.

However, companies frequently encounter obstacles such as time-consuming report creation, data inconsistencies, and a lack of actionable guidance when working with insights from Power BI. By understanding and navigating these challenges, you can make the most of Power BI’s capabilities. Staying informed about tools such as Power BI in a constantly changing tech environment helps ensure your business can succeed amid these challenges.

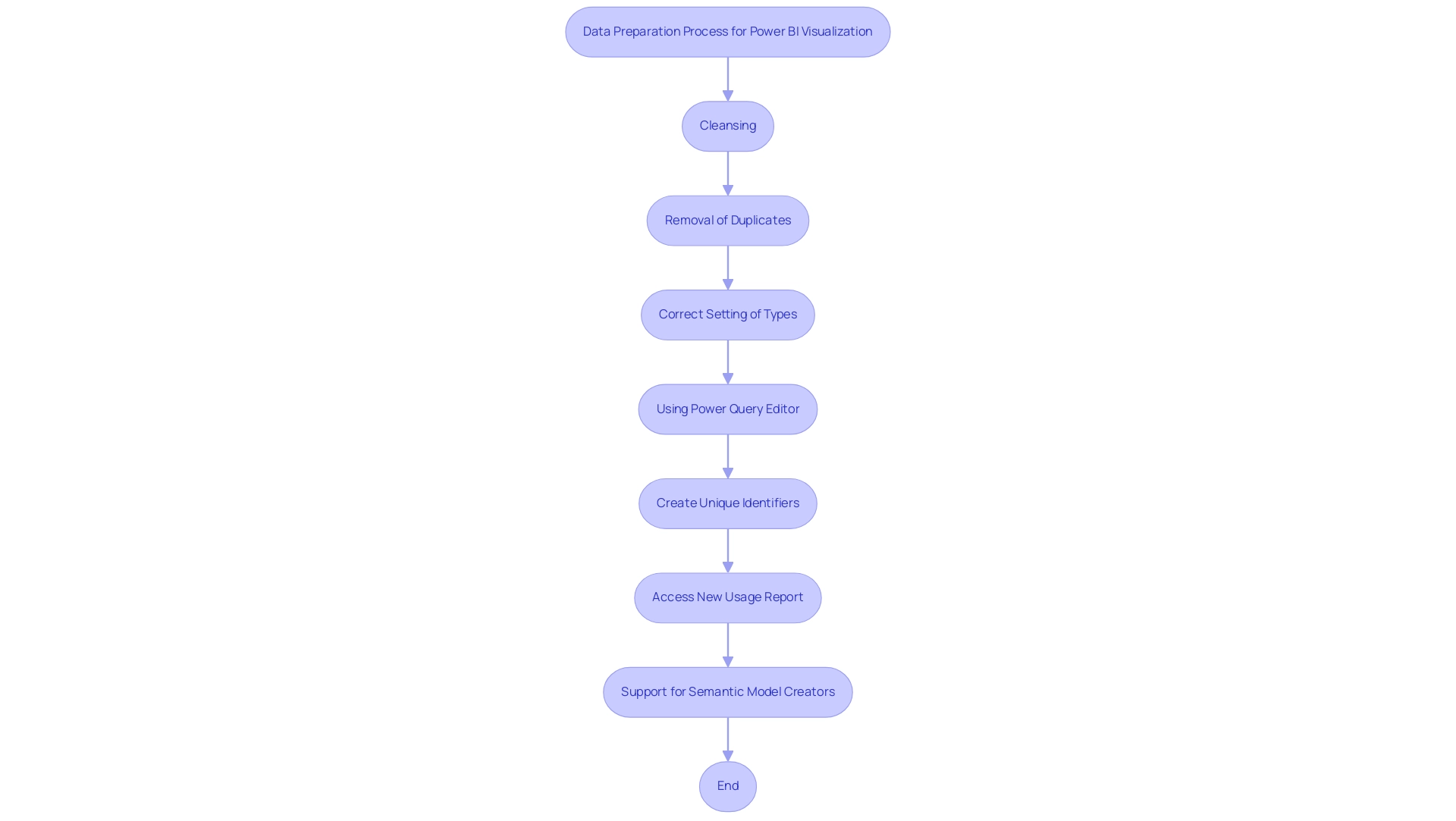

Data Preparation in Power BI: Transforming Your Data for Visualization

Effective visualization in Power BI begins with meticulous preparation and transformation of the data at hand, a critical phase that addresses common challenges such as poor master data quality and inconsistencies. This phase encompasses a variety of tasks, including:

- Cleansing

- Removal of duplicates

- Correct setting of types—ensuring dates, numbers, and other formats are accurately defined.

Validation rules matter as well: for example, email or phone number fields must adhere to a length limit of between 3 and 128 characters.
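The preparation tasks above can be illustrated outside Power BI with a few lines of plain Python. This is a sketch of the logic only, not Power Query code. The column names, the sample rows, and the `clean` helper are hypothetical, and the 3 to 128 character bounds come from the rule stated above.

```python
from datetime import datetime

# Illustrative raw rows, as they might arrive from a source system.
raw_rows = [
    {"email": " Ana@Example.com ", "order_date": "2024-01-09", "amount": "19.90"},
    {"email": "ana@example.com", "order_date": "2024-01-09", "amount": "19.90"},
    {"email": "b@", "order_date": "2024-01-10", "amount": "5"},
    {"email": "cy@example.com", "order_date": "2024-01-11", "amount": "7.50"},
]


def clean(rows, min_len=3, max_len=128):
    """Cleanse text, validate field length, set types, and drop duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()        # cleansing
        if not (min_len <= len(email) <= max_len):  # length validation
            continue
        typed = {                                   # explicit types
            "email": email,
            "order_date": datetime.strptime(row["order_date"], "%Y-%m-%d").date(),
            "amount": float(row["amount"]),
        }
        key = tuple(typed.values())
        if key in seen:                             # duplicate removal
            continue
        seen.add(key)
        cleaned.append(typed)
    return cleaned


prepared = clean(raw_rows)
print(prepared)
```

Note that the first two rows only become duplicates after cleansing, which is why the duplicate check runs on the cleaned values rather than the raw ones.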

Using the Power Query Editor is essential for executing these processes, as it enables the merging of diverse sources and the application of various transformations such as filtering and grouping. Additionally, unique identifiers can be created by adding prefixes to existing keys in the dataset, thus enhancing information management and tracking. As industry specialists highlight, the precision of visual interpretations greatly depends on the quality of the foundational information.
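To make these transformations concrete, here is a plain-Python sketch of the same ideas: two sources are combined, keys are prefixed so they stay unique, and the rows are then filtered and grouped. It is an analogue for illustration and not Power Query code. The source names, columns, and the `with_prefix` helper are hypothetical, and the sources are appended (stacked) rather than joined.

```python
from collections import defaultdict

# Two illustrative sources whose numeric ids overlap.
crm_orders = [
    {"id": 1, "region": "north", "amount": 120.0},
    {"id": 2, "region": "south", "amount": 80.0},
]
web_orders = [
    {"id": 1, "region": "north", "amount": 40.0},
    {"id": 2, "region": "north", "amount": 15.0},
]


def with_prefix(rows, prefix):
    """Copy rows, prefixing the key so ids stay unique once sources are combined."""
    return [{**row, "id": f"{prefix}-{row['id']}"} for row in rows]


# Combine the sources by appending one to the other.
combined = with_prefix(crm_orders, "crm") + with_prefix(web_orders, "web")

# Filter: keep orders of at least 40.
large = [row for row in combined if row["amount"] >= 40.0]

# Group: total amount per region.
totals = defaultdict(float)
for row in large:
    totals[row["region"]] += row["amount"]

print(dict(totals))
```

Without the prefixes, the two orders with id 1 would be indistinguishable after the sources are combined.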

This highlights the significance of thorough data preparation: it not only improves the clarity and effectiveness of Power BI visualizations but also ensures that the findings they produce are both precise and practical. Recent additions to Power BI, including the ‘New usage report on’ option, provide a fresh perspective on usage metrics, giving users detailed insights over the past 30 days, an invaluable resource for refining strategies. Moreover, RPA tools such as EMMA RPA and Microsoft Power Automate can streamline these processes, addressing task repetition fatigue and enhancing operational efficiency.

A case study on support for semantic model creators further illustrates the benefits of robust data preparation: it demonstrates how Power BI streamlines provisioning, reduces the workload for model creators, and maintains consistency across models. By concentrating on these best practices for data preparation in 2024, operations efficiency directors can significantly enhance their data storytelling and overcome barriers to effective AI integration. Even a simple finding such as ‘Orders were most likely to ship out on Tuesday’ illustrates how precise data preparation directly influences operational efficiency.

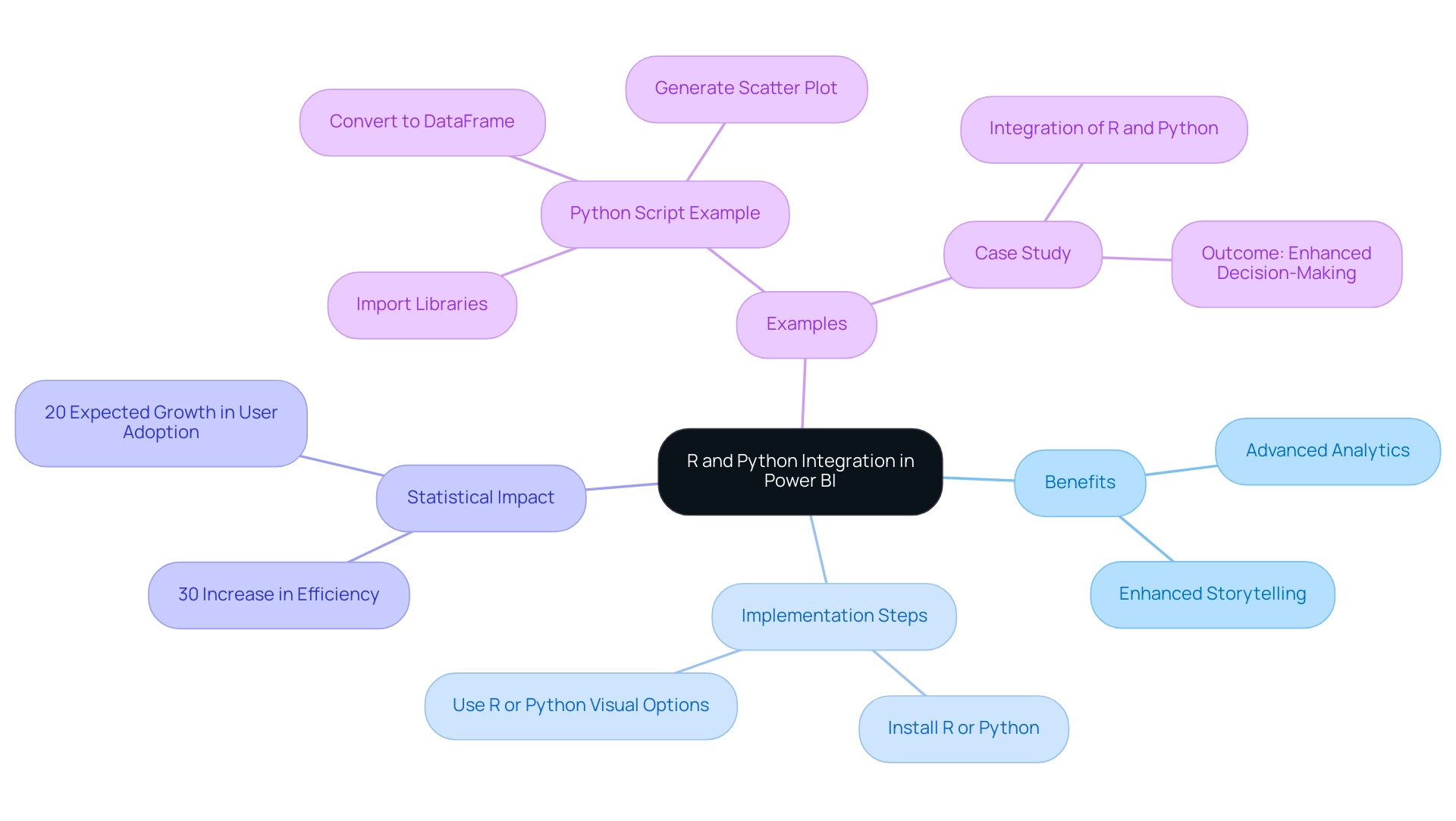

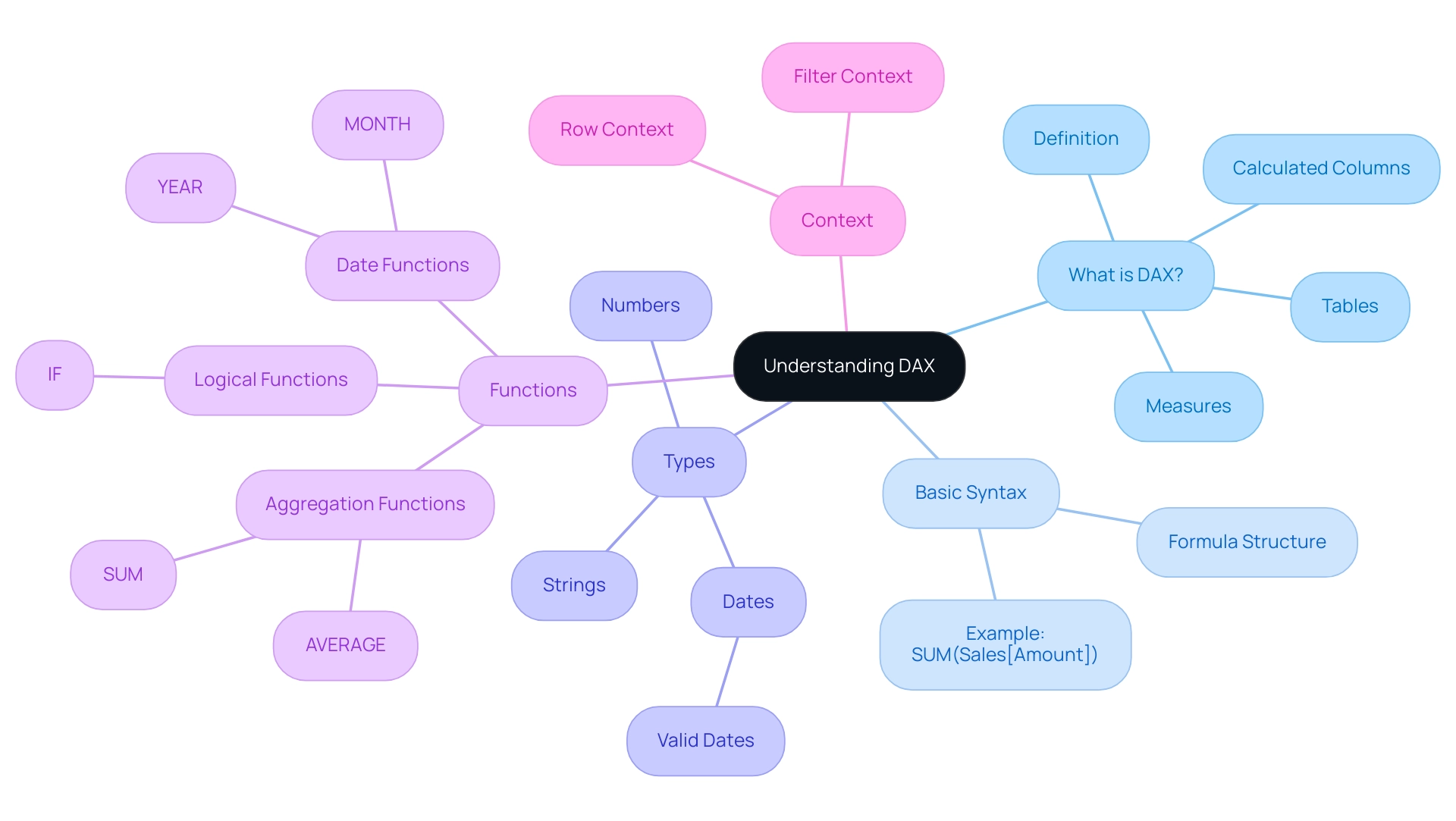

Enhancing Visualizations with R and Python Integration in Power BI

Power BI’s integration with R and Python lets users apply advanced analytics and create custom visualizations that enhance storytelling, ultimately leading to deeper understanding and better-informed decision-making. In an era where extracting actionable insights is crucial for maintaining a competitive advantage, these languages can significantly extend your analytical capabilities. To get started, ensure that either R or Python is installed on your machine.

Within Power BI, the R and Python visual options enable you to execute scripts directly in your reports, seamlessly merging coding capabilities with your analysis. This integration supports sophisticated statistical analysis and machine learning models, enriches your visualizations, and helps tackle common challenges such as time-consuming report creation and inconsistencies. Additionally, our RPA solutions can complement these resources by automating repetitive tasks, further enhancing operational efficiency.

As Nirupama Srinivasan points out, R and Python visual usage will not contribute towards your Microsoft Fabric capacity usage for the first month, making it an ideal time to explore these options. For example, a basic Python script can import libraries such as matplotlib and pandas, convert your data into a DataFrame, and generate a scatter plot to illustrate relationships within your data. A case study on the integration of R and Python in Power BI shows that organizations using these languages reported a 30% increase in analytical efficiency and a significant improvement in decision-making speed.
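A script along those lines might look like the sketch below, which assumes pandas and matplotlib are installed. The `build_scatter` helper, the column names, and the sample values are hypothetical and are used so the example also runs outside Power BI.

```python
import matplotlib.pyplot as plt
import pandas as pd


def build_scatter(data, x_col, y_col):
    """Convert the input to a DataFrame and draw a scatter plot of two columns."""
    frame = pd.DataFrame(data)
    fig, ax = plt.subplots()
    ax.scatter(frame[x_col], frame[y_col])
    ax.set_xlabel(x_col)
    ax.set_ylabel(y_col)
    ax.set_title(f"{y_col} vs {x_col}")
    return fig, ax


# Illustrative values only. Inside a Power BI Python visual, the selected
# fields arrive as a DataFrame named `dataset`, which would replace `sample`.
sample = {"ad_spend": [10, 20, 30, 40], "sales": [12, 25, 31, 48]}
fig, ax = build_scatter(sample, "ad_spend", "sales")
```

In a Python visual, the script would typically end with `plt.show()` so that Power BI renders the figure on the report canvas.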

By using these languages alongside RPA solutions, users can significantly improve their analysis and presentation abilities in Power BI, tackling the challenges of today’s information-rich environment and advancing their Power BI expertise. Furthermore, recent statistics suggest that adoption of R and Python in Power BI is expected to rise by 20% in 2024, underscoring the growing importance of these languages in the analytics landscape. To learn more about how our solutions can benefit your organization, book a free consultation today.

Conclusion

Power BI represents a pivotal advancement in business analytics, enabling organizations to transform raw data into actionable insights through robust visualization and data integration capabilities. As explored in this article, the platform’s core functionalities—from data preparation and modeling to advanced analytics with R and Python—equip users to navigate common challenges such as data integration issues and security concerns. By leveraging these features, businesses can enhance their decision-making processes and foster a culture of data-driven insights.

The diverse visualization tools available in Power BI, including bar charts, line graphs, and AI-driven insights, empower users to create compelling narratives around their data. This capability not only improves user engagement but also facilitates a deeper understanding of trends and patterns. Moreover, the integration of R and Python further elevates analytical capabilities, allowing for sophisticated statistical modeling and custom visualizations that can lead to improved operational efficiency.

In conclusion, mastering Power BI is essential for organizations striving to thrive in today’s competitive landscape. By investing time in understanding its functionalities and overcoming initial hurdles, businesses can turn their data into a strategic asset that drives performance and innovation. Embracing the full potential of Power BI not only addresses current challenges but also positions organizations for future growth and success in an increasingly data-centric world.

Introduction

In a world where data is the new currency, advanced analytics is emerging as a game-changer for organizations seeking to unlock their full potential. By employing sophisticated techniques such as predictive modeling and machine learning, businesses can transform raw data into strategic insights that inform decision-making and drive operational efficiency. As 2024 approaches, the urgency to harness these capabilities has never been greater.

With the advent of technologies like Small Language Models and Robotic Process Automation, companies are finding innovative ways to streamline processes, reduce costs, and enhance customer engagement. This article delves into the multifaceted landscape of advanced analytics, exploring its various types, real-world applications, and the benefits it offers, while also addressing the challenges organizations face in its implementation.

Embracing these advancements isn’t just beneficial; it’s essential for staying competitive in an increasingly data-driven marketplace.

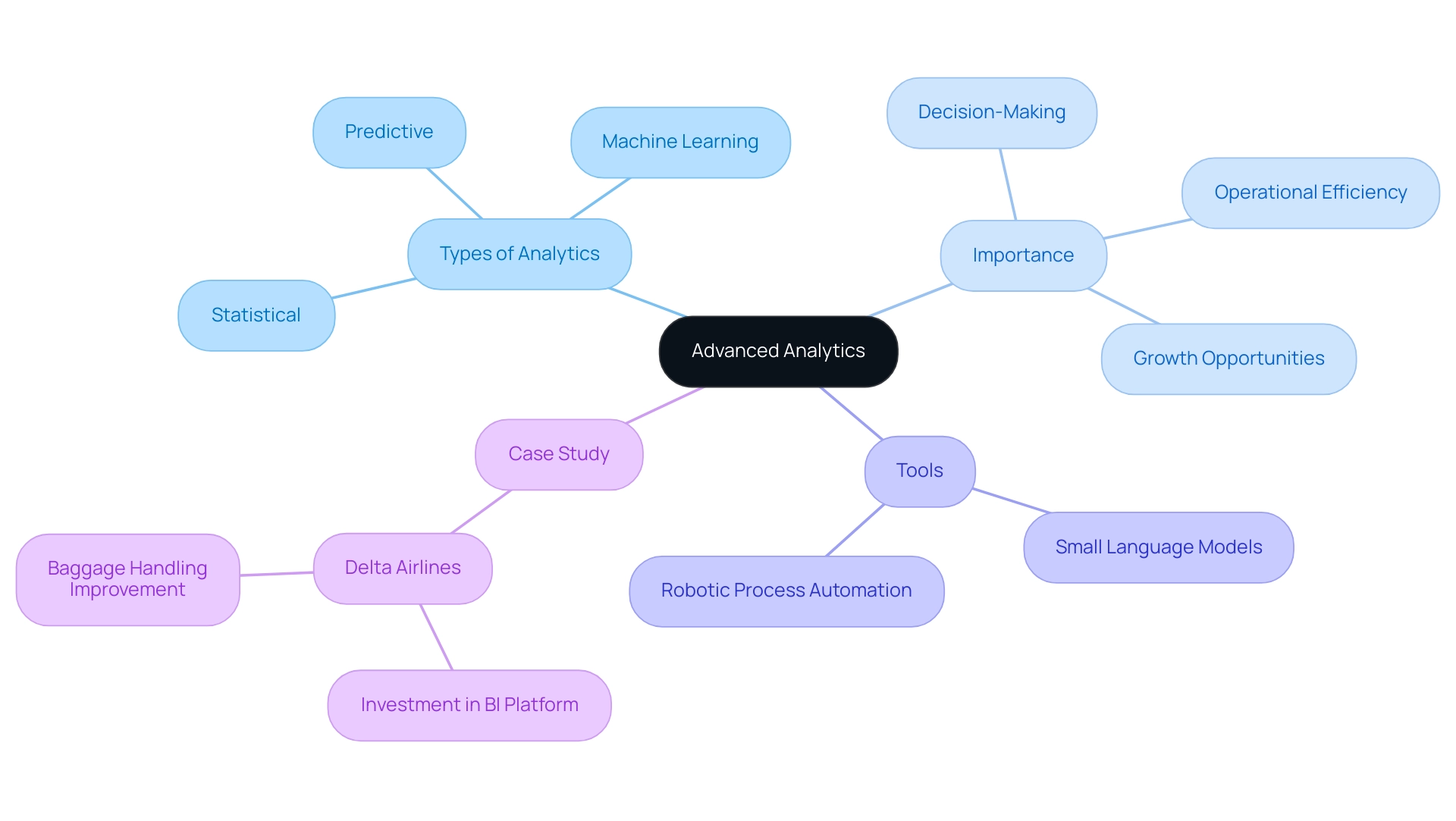

Understanding Advanced Analytics: Definition and Importance

Advanced analytics is a transformative approach that uses sophisticated techniques and tools to extract insights from data beyond what traditional methods offer. It encompasses a variety of statistical, predictive, and machine learning techniques, enabling organizations to uncover deeper insights and make more informed decisions. Its importance is underscored by its capacity to enhance decision-making processes, streamline operations, and reveal new growth opportunities.

As we near 2024, the significance of advanced analytics in business cannot be overstated: it allows organizations to use their data effectively, transforming raw data into actionable insights that enhance operational efficiency and competitive edge. Notably, Gartner predicts that by the end of this year, 60% of the data used by AI and analytics solutions will be synthetic, emphasizing a paradigm shift in data management. The introduction of Small Language Models (SLMs) is a good example of how organizations can achieve efficient, secure, and cost-effective data analysis tailored to specific industry needs.

SLMs can address the common perception that AI projects are costly and time-intensive, because they require less computational power and can be implemented more quickly than larger models. Furthermore, Alteryx’s integration of generative AI features, including ‘Magic Documents’ for summarizing insights and an OpenAI connector for generating reports, illustrates the ongoing evolution of data analysis capabilities. As Josh Howarth points out, the business intelligence sector, which includes advanced analytics, is expected to hit $54.27 billion by 2030, indicating the growing recognition of advanced analytics as an essential element of business strategy.

Moreover, Robotic Process Automation (RPA) enables organizations to automate manual workflows, significantly enhancing operational efficiency in a rapidly evolving AI landscape. RPA can help alleviate the burden of repetitive tasks, further countering the perception that AI is too complex or resource-heavy to implement. Real-time predictive insights further enhance agility and customer engagement, allowing organizations to make prompt data-driven decisions.

For instance, Delta Airlines invested over $100 million in a business intelligence platform to enhance baggage handling processes, effectively reducing customer stress associated with delays. This case illustrates how advanced analytics, combined with SLMs and RPA, can significantly improve operational results and marketing approaches, making it essential for enterprises aiming to succeed in today’s data-driven environment. Book a complimentary consultation to explore how our customized AI solutions can assist your organization in navigating these challenges.

Exploring the Key Types of Advanced Analytics

The terrain of advanced analytics is varied, with several key types that organizations can utilize to gain a competitive advantage, particularly in the manufacturing sector, where the market for extensive analytics is projected to reach $4,617.78 million by 2030:

- Descriptive Analytics: This foundational type uses historical data to explain past events. Through data aggregation and data mining techniques, it provides insights that help businesses understand trends and patterns over time. RPA can improve descriptive analytics by automating data collection, minimizing errors and freeing up resources for more in-depth examination.

- Diagnostic Analytics: Building on descriptive analytics, this type delves deeper to uncover the reasons behind specific outcomes. Through data correlation and statistical analysis, it reveals relationships between variables, enabling organizations to identify root causes of issues. RPA can streamline this process by automating the extraction and correlation of pertinent data, making it easier to identify underlying issues.

- Predictive Analytics: Employing statistical models and machine learning, predictive analytics anticipates future events based on historical data. This type is particularly beneficial for risk management and resource allocation, allowing companies to anticipate challenges and make informed decisions. RPA supports predictive analytics by automating data preparation, ensuring that the models are fed with accurate and timely data.

- Prescriptive Analytics: Taking a proactive approach, prescriptive analytics recommends actions to achieve desired outcomes. By combining predictive analytics with optimization techniques, it provides customized recommendations that enable organizations to make data-driven decisions effectively. RPA can facilitate prescriptive analytics by automating the implementation of recommended actions, thus enhancing operational responsiveness.

- Cognitive Analytics: An emerging frontier, cognitive analytics harnesses AI and machine learning to replicate human thought processes in examining complex datasets. This method enables deeper insights and more nuanced decision-making, helping organizations remain agile in a rapidly evolving market. RPA can support cognitive analytics by automating routine tasks, enabling human analysts to concentrate on more intricate analytical work.
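To make the distinction between the first three types concrete, the following is a minimal sketch in plain Python. The monthly figures and the function names (`describe`, `pearson`, `fit_line`) are invented for illustration and are not taken from any product mentioned in this article. It summarizes past sales (descriptive), measures how closely sales track ad spend (diagnostic), and fits a simple least-squares line to project sales at a new spend level (predictive).

```python
from statistics import mean

# Hypothetical monthly figures (in thousands), invented purely for illustration.
ad_spend = [10.0, 12.0, 14.0, 16.0, 18.0, 20.0]
sales = [100.0, 108.0, 123.0, 130.0, 142.0, 150.0]


def describe(values):
    """Descriptive analytics: summarize what happened."""
    return {"mean": mean(values), "min": min(values), "max": max(values)}


def pearson(xs, ys):
    """Diagnostic analytics: how strongly do two variables move together?"""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def fit_line(xs, ys):
    """Predictive analytics: least-squares line y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    slope = cov / var_x
    return slope, my - slope * mx


summary = describe(sales)
correlation = pearson(ad_spend, sales)
slope, intercept = fit_line(ad_spend, sales)
forecast = slope * 22.0 + intercept  # projected sales at a spend of 22

print(summary)
print(round(correlation, 3))
print(round(forecast, 1))
```

A prescriptive step would build on the fitted line, for example by comparing projected outcomes across several candidate spend levels and recommending one. In practice these steps would run on real data with a dedicated analytics tool, not on a six-point toy series.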

In addition to these types of advanced analytics, Robotic Process Automation (RPA) solutions play a vital role in boosting operational efficiency. By automating manual workflows, RPA reduces errors and addresses task repetition fatigue, freeing up team members for more strategic tasks. Tools such as EMMA RPA and Microsoft Power Automate illustrate how companies can enhance operations while elevating employee morale and tackling staffing shortages.

Significantly, 54% of firms are contemplating the adoption of cloud and business intelligence (BI) in their information analysis efforts, as emphasized by industry leaders like IBM and Microsoft. Moreover, a case study from the insurance and telecommunications sectors demonstrates that organizations are increasingly utilizing an average of 11.4 cloud services, showcasing a growing reliance on advanced data analysis and RPA to enhance operational efficiency.

Each of these types of data analysis, coupled with the strategic implementation of RPA, is crucial for organizations aiming to harness information effectively, driving efficiency and innovation in their operations.

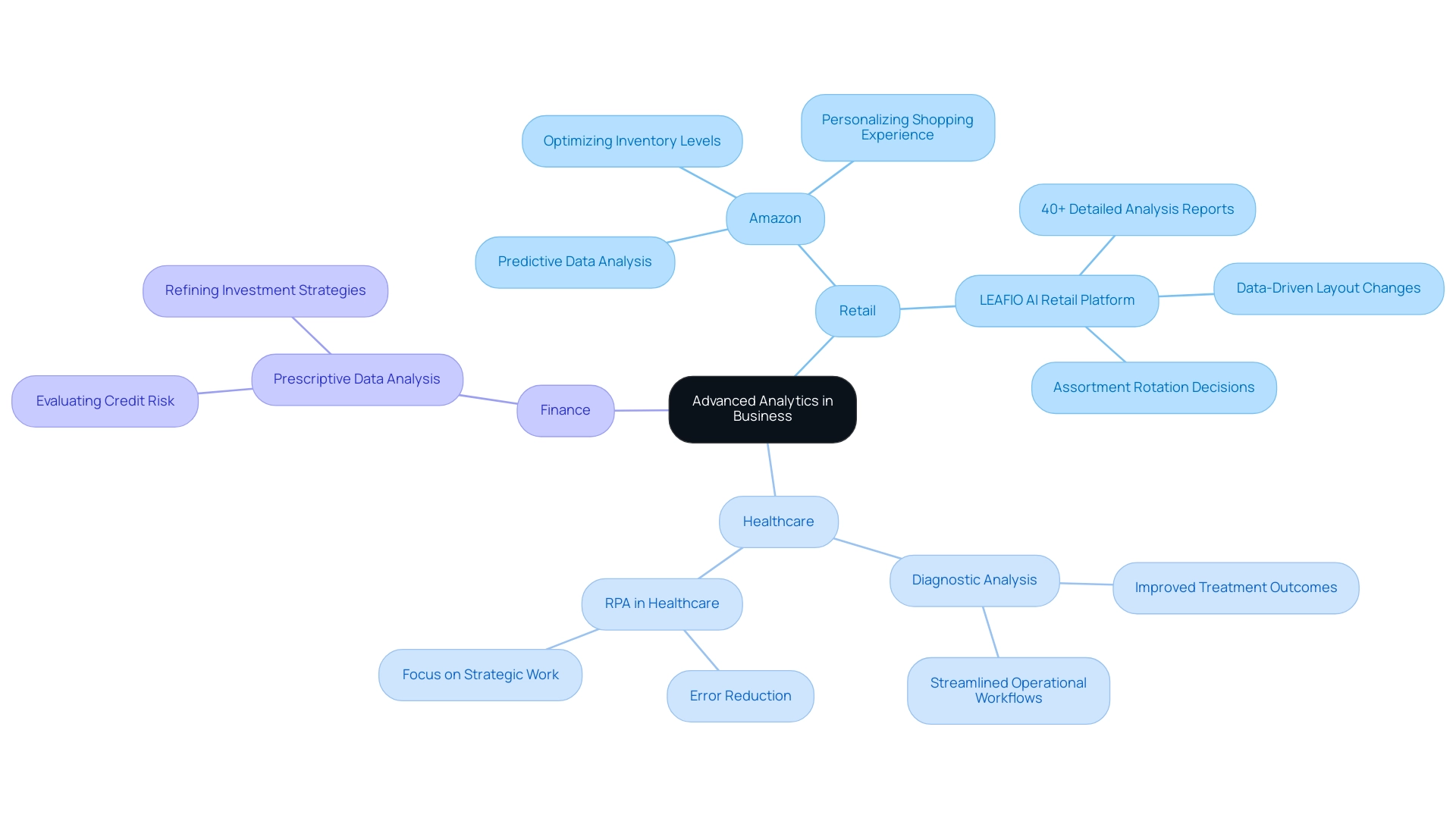

Real-World Applications of Advanced Analytics in Business

Advanced analytics, combined with Robotic Process Automation (RPA) and tailored AI solutions, is revolutionizing decision-making processes and operational efficiency across various sectors. In retail, for example, companies like Amazon illustrate the strength of predictive analytics by accurately forecasting demand, optimizing inventory levels, and personalizing the shopping experience for customers. The LEAFIO AI Retail Platform further illustrates this trend, enabling retailers to produce over 40 detailed analysis reports.

These insights empower businesses to make informed layout changes and assortment rotation decisions, ultimately driving sales growth and improving customer retention. Retailers are encouraged to schedule consultations to investigate how information analysis can enhance their operations. In the healthcare sector, organizations utilize diagnostic analysis alongside RPA to uncover patterns in patient data, resulting in improved treatment outcomes and streamlined operational workflows.

Experts in healthcare emphasize that every interaction and transaction is an opportunity to glean insights, as stated by Muhammad Ghulam Jillani, Senior Data Scientist and Machine Learning Engineer. RPA not only reduces errors but also frees up teams to focus on more strategic, value-adding work. Additionally, financial institutions utilize prescriptive data analysis to evaluate credit risk and refine investment strategies, enhanced by the efficiency of RPA.

By monitoring key performance indicators such as:

- Sales growth

- Customer retention

- Inventory turnover

- Cost savings

companies can assess the effect of their data analysis and automation efforts on profitability. Customized AI solutions play a crucial role in helping organizations navigate the rapidly evolving AI landscape, ensuring that technologies align with specific objectives. These practical applications of advanced analytics, RPA, and Business Intelligence highlight their potential to provide substantial performance improvements and establish a competitive advantage in various commercial settings.
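The four KPIs listed above can each be computed from a handful of figures. The sketch below uses common textbook definitions, which are an assumption here since the article does not specify formulas, and the input numbers are hypothetical.

```python
def sales_growth(current, previous):
    """Period-over-period sales growth as a fraction of the previous period."""
    return (current - previous) / previous


def customer_retention(start_customers, end_customers, new_customers):
    """Share of the starting customers who are still active at period end."""
    return (end_customers - new_customers) / start_customers


def inventory_turnover(cost_of_goods_sold, opening_inventory, closing_inventory):
    """How many times the average inventory was sold during the period."""
    average_inventory = (opening_inventory + closing_inventory) / 2
    return cost_of_goods_sold / average_inventory


def cost_savings(baseline_cost, current_cost):
    """Absolute reduction in cost relative to a baseline period."""
    return baseline_cost - current_cost


# Hypothetical figures, invented for illustration only.
kpis = {
    "sales_growth": sales_growth(1_200_000, 1_000_000),
    "customer_retention": customer_retention(500, 520, 70),
    "inventory_turnover": inventory_turnover(600_000, 140_000, 160_000),
    "cost_savings": cost_savings(250_000, 210_000),
}

for name, value in kpis.items():
    print(f"{name}: {value:.2f}")
```

Tracking the same definitions before and after an analytics or automation initiative is what makes the comparison meaningful; changing a formula midway would make the trend unreadable.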

To learn more about how these technologies can transform your operations, we invite you to book a consultation.

Benefits and Challenges of Implementing Advanced Analytics

Implementing advanced analytics, for example through Creatum’s 3-Day Power BI Sprint, offers numerous benefits, including:

- Enhanced decision-making abilities

- Increased operational efficiency

- Identification of new opportunities

In just three days, we promise to create a fully functional, professionally designed report on a topic of your choice, allowing you to focus on utilizing the insights. According to a study by IBM, Microsoft, and Humans of Data, 54% of firms are contemplating the integration of cloud computing and business intelligence into their data analysis strategies, indicating a notable shift towards advanced analytics.

Organizations that embrace these types of advanced analytics can anticipate:

- Enhanced customer insights

- Streamlined processes

- Increased profitability

Furthermore, interactive visualization simplifies complex information into graphical representations, enabling quicker decision-making. A significant case study is SAP’s incorporation of types of advanced analytics, including predictive data analysis, into its Cloud Analytics platform, which has enabled enterprises of all sizes to leverage enhanced data interpretation and machine learning, promoting automated workflows and the extraction of valuable insights from extensive datasets.

However, organizations must navigate several challenges to implement these strategies successfully. Challenges commonly encountered in adopting advanced analytics include:

- Data quality issues

- Employee resistance to change

- Need for skilled personnel

To counter these obstacles, it is crucial for organizations to cultivate a data-driven culture, invest in comprehensive employee training—including expert training from Creatum—and enforce robust data governance practices.

Additionally, our customized AI solutions can assist organizations in identifying the right technologies to align with their specific goals. By proactively tackling these challenges and utilizing RPA to automate manual workflows, businesses can unlock the full potential of types of advanced analytics, positioning themselves for greater success in a data-centric landscape.

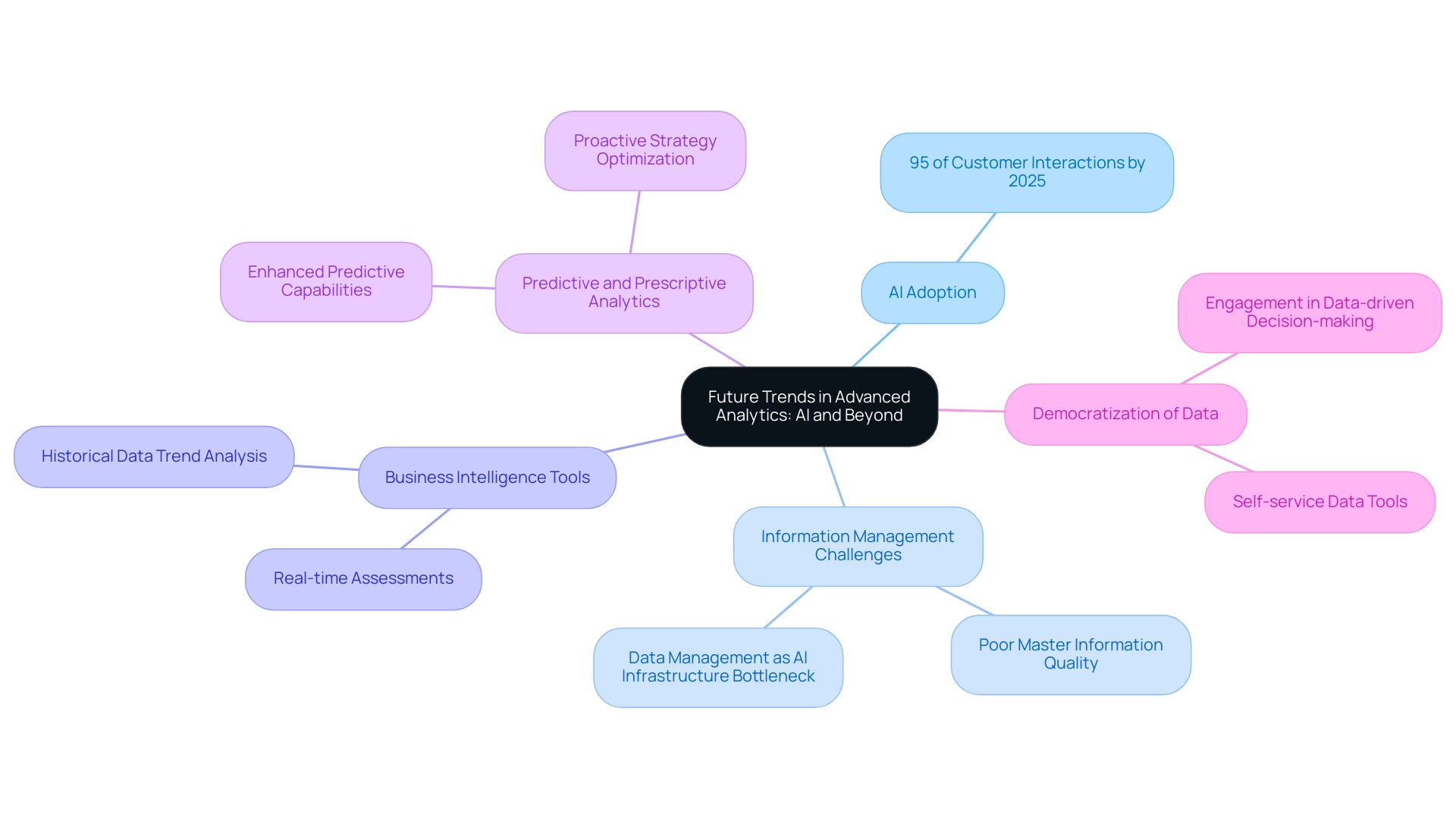

Future Trends in Advanced Analytics: AI and Beyond

The advanced analytics landscape is undergoing a profound transformation, driven by the rapid adoption of AI and machine learning technologies. With projections indicating that by 2025, AI will facilitate 95% of customer interactions, organizations must urgently strengthen their data management frameworks to overcome common challenges such as poor master data quality. Inconsistent, incomplete, or inaccurate data can lead to flawed decision-making and operational inefficiencies, making it crucial for companies to address these issues proactively.

As emphasized in the conversation on data management as an AI infrastructure bottleneck, tackling these issues is essential for effective AI implementation. Companies that adopt these technologies can anticipate substantial progress in automation, facilitating real-time assessments and accelerated decision-making processes. For instance, organizations can utilize Business Intelligence tools to analyze historical data trends, helping them make informed predictions about future market conditions.

Furthermore, integrating AI with advanced analytics will enhance predictive and prescriptive capabilities, empowering businesses to anticipate market shifts and optimize strategies proactively. The rise of self-service data tools democratizes access to insights, enabling non-technical users to engage in data-driven decision-making across all organizational levels. This democratization is essential, particularly as organizations integrate data leadership roles into broader technology functions, fostering a culture of analytics and AI.

Embracing these future trends is not just advantageous but crucial for businesses aiming to sustain a competitive edge in today’s data-centric environment.

Conclusion

Advanced analytics is no longer just an option; it has become a necessity for organizations striving to thrive in a data-driven world. By leveraging advanced techniques such as predictive modeling, machine learning, and robotic process automation, businesses can unlock valuable insights that enhance decision-making and operational efficiency. The various types of advanced analytics—from descriptive to cognitive—provide a comprehensive toolkit for organizations to understand their data, identify opportunities, and drive innovation.

Real-world applications across sectors showcase the transformative potential of these analytics. In retail and healthcare, for example, companies are harnessing advanced analytics to optimize operations, improve customer experiences, and enhance treatment outcomes. The integration of AI and RPA not only streamlines processes but also empowers teams to focus on strategic initiatives, significantly enhancing productivity.

However, the journey toward implementing advanced analytics is not without challenges. Organizations must address data quality issues, foster a culture of data-driven decision-making, and invest in employee training to fully realize the benefits. By proactively tackling these obstacles and leveraging tailored AI solutions, businesses can position themselves for success.

As we move forward, embracing the future trends in advanced analytics will be essential. With the increasing reliance on AI and self-service analytics tools, organizations must adapt their data management strategies to ensure they remain competitive. The time to act is now; harnessing the power of advanced analytics is crucial for unlocking growth, enhancing operational efficiency, and staying ahead in an ever-evolving marketplace.

Introduction

In a world where data is the new currency, mastering Power BI can be the key to unlocking unprecedented insights and driving operational success. As organizations strive to harness the power of business intelligence, the ability to visualize and share data effectively becomes paramount.

With the social business intelligence market projected to soar, understanding the intricacies of Power BI—from its essential components to advanced data modeling techniques—can empower teams to make informed decisions that propel growth.

However, many face hurdles such as:

- Time-consuming report generation

- Data inconsistencies that can stifle innovation

By embracing RPA solutions and leveraging the full capabilities of Power BI, organizations can transform their data landscape, streamline processes, and foster a culture of collaboration that ultimately leads to enhanced efficiency and success.

This article delves into the essential skills and strategies needed to navigate Power BI, ensuring that users are equipped to turn challenges into opportunities for impactful data storytelling.

Getting Started with Power BI: An Overview

Power BI, created by Microsoft, stands out as a strong business analytics tool that enables organizations to visualize their data and share insights across their teams. At a time when the social business intelligence market is anticipated to reach $25,886.8 million by 2024, the significance of effective data utilization cannot be overstated. As highlighted by Inkwood Research, the BFSI (Banking, Financial Services, and Insurance) sector is expected to experience rapid growth, driven by the consistent implementation of BI technologies that enhance operational efficiency.

However, many organizations encounter difficulties in utilizing insights from BI dashboards, including:

- Time-consuming report creation

- Data inconsistencies

- A lack of actionable guidance

For those new to Power BI, introductory training that familiarizes you with its interface is crucial. The platform comprises several key components:

- Power BI Desktop for report creation

- The Power BI Service for sharing and collaboration

- Mobile applications that enable users to access reports on-the-go

This foundational understanding of Power BI’s components will enable you to navigate the platform with confidence and overcome the hurdles of outdated tools and fear of new technology adoption. By mastering Power BI and integrating RPA solutions like EMMA RPA, which automates repetitive tasks, and Power Automate, which streamlines operations, you position yourself at the forefront of this evolving market. These tools not only enhance operational efficiency but also address task repetition fatigue and staffing shortages, driving data-driven insights that fuel business growth and innovation.

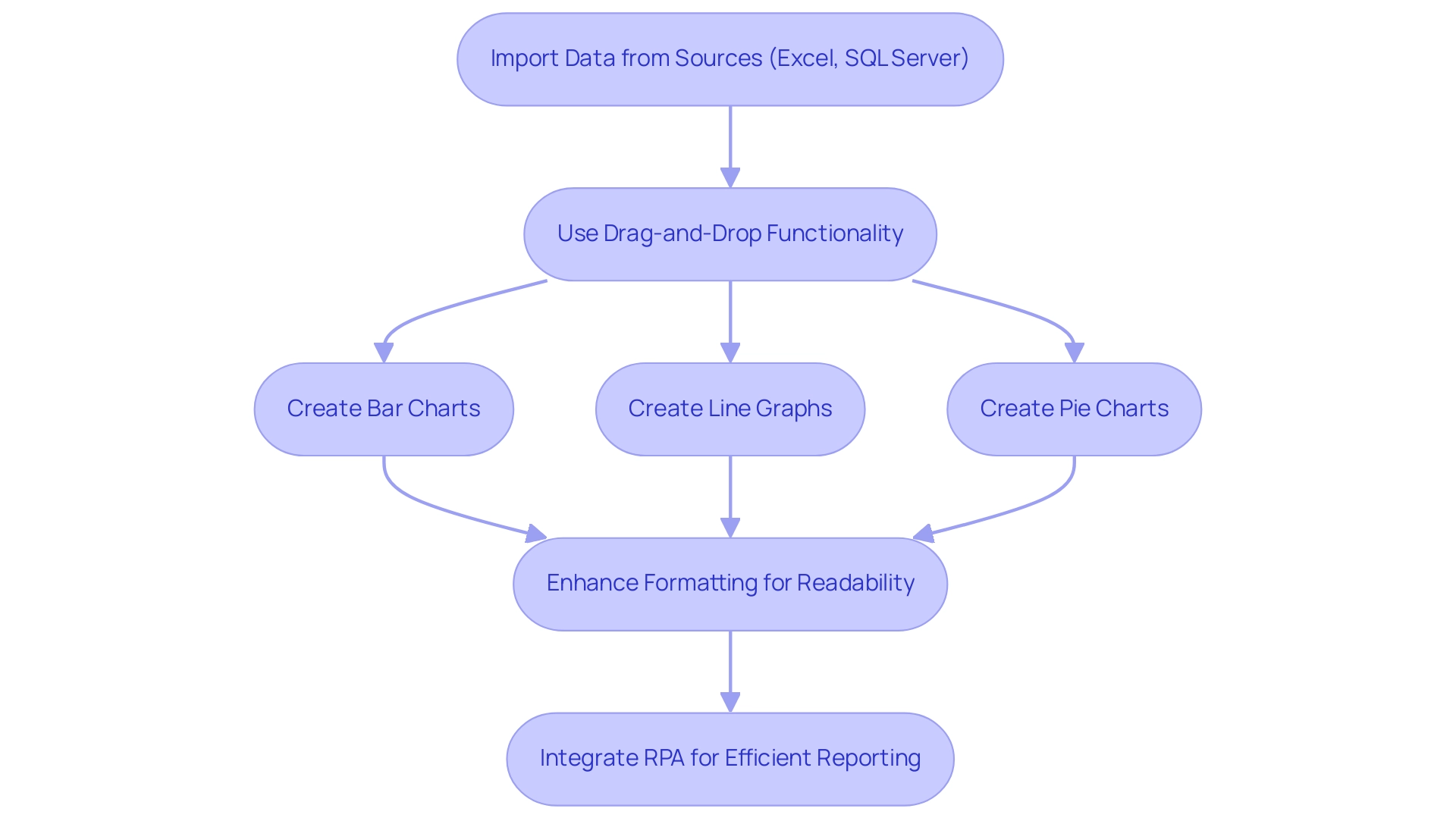

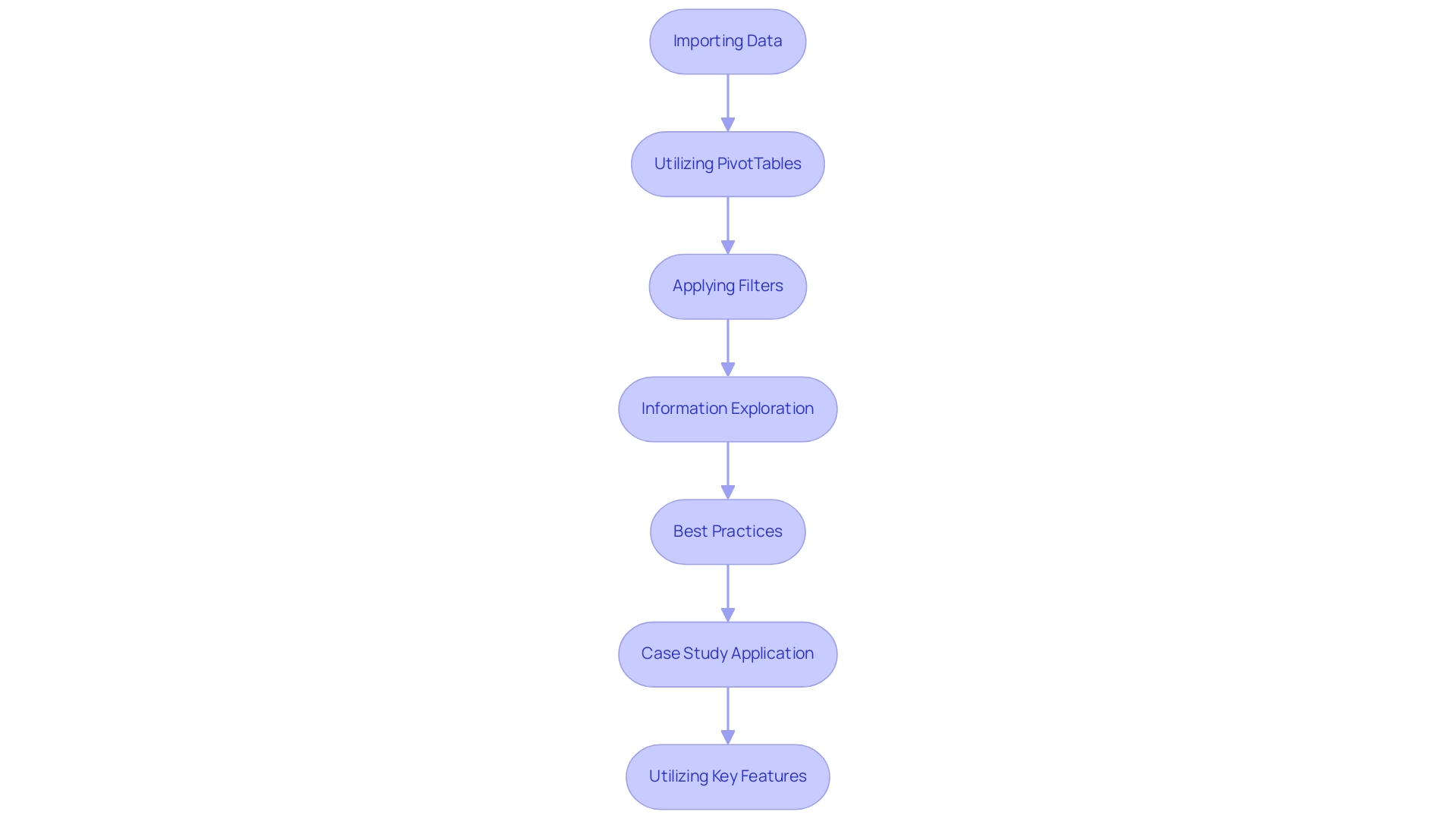

Essential Skills for Beginners: Data Visualization and Reporting in Power BI

For newcomers aiming to excel at visualization, Power BI introduction training is essential for building the fundamental skills needed to produce engaging charts, graphs, and dashboards. Start by importing data from diverse sources such as Excel and SQL Server into Power BI Desktop. Once your data is loaded, use the intuitive drag-and-drop functionality to craft visual representations of it.

Get acquainted with different visual types:

- Employ bar charts for straightforward comparisons

- Use line graphs to demonstrate trends over time

- Utilize pie charts to represent proportional information effectively

Pay careful attention to the formatting options available; this will significantly enhance the readability and impact of your visualizations. The growing interest in visualization tools is clear: search volume for ‘Tableau Public’ has risen by over 80% in the last five years, highlighting the value of mastering platforms such as Power BI.

Key sectors such as finance, healthcare, retail, manufacturing, and transportation are increasingly utilizing large-scale data. However, many organizations struggle to draw insights from Power BI dashboards because of time-consuming report creation and data inconsistencies. Integrating RPA solutions like EMMA RPA and Power Automate can streamline these processes, allowing for more efficient reporting and enhanced operational efficiency.

Thus, learning to present and visualize data efficiently through Power BI introduction training enables you to create meaningful reports that not only communicate your data narrative but also foster informed decision-making within your organization, ultimately improving operational efficiency through effective BI and RPA strategies.

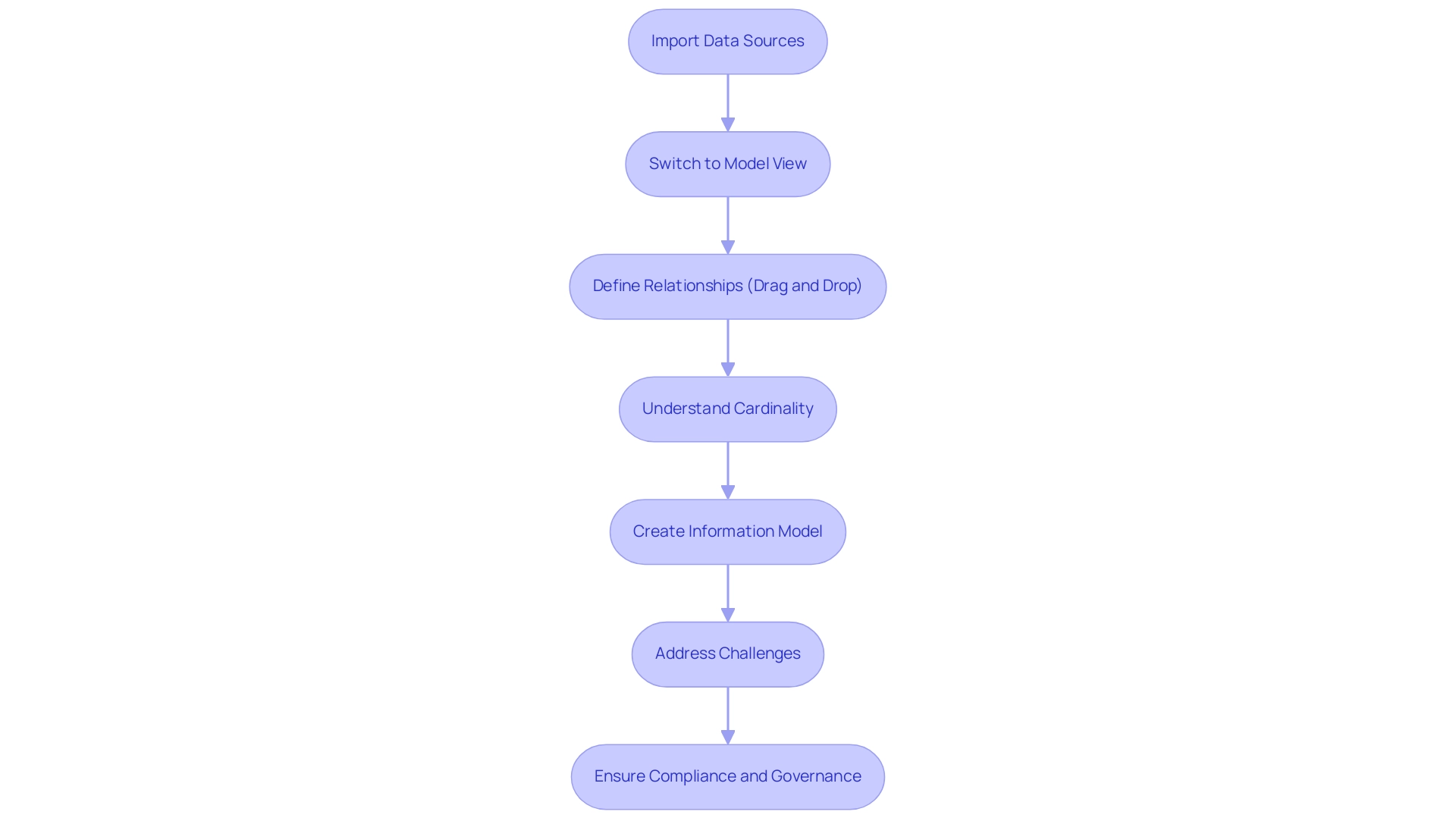

Exploring Power BI’s Data Modeling Capabilities

Effective data modeling in Power BI is crucial for creating insightful reports, as it entails establishing relationships among tables. To start, import all pertinent data sources into Power BI and switch to the ‘Model’ view in Power BI Desktop. From there, you can define relationships by dragging and dropping fields between tables.

A thorough understanding of cardinality—whether one-to-one or one-to-many—is essential for accurately setting up these relationships. As Juan Sequeda, a principal scientist and head of the AI Lab at data.world, notes,

Knowledge graphs simplify complex concepts at one glance by giving rich, meaningful context and connections between datasets.

This emphasizes the significance of a well-organized data model; without it, organizations risk creating systems that fail to meet customer needs.
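Cardinality can be checked on the data itself before a relationship is defined. The sketch below is plain Python, not Power BI functionality. The `customers` and `orders` tables and the helper names are hypothetical. It classifies a relationship by testing whether the key column is unique on each side.

```python
from collections import Counter


def key_is_unique(rows, key):
    """Return True when every row has a distinct value in the key column."""
    counts = Counter(row[key] for row in rows)
    return all(n == 1 for n in counts.values())


def cardinality(left_rows, left_key, right_rows, right_key):
    """Classify a relationship by the uniqueness of the key on each side."""
    left_unique = key_is_unique(left_rows, left_key)
    right_unique = key_is_unique(right_rows, right_key)
    if left_unique and right_unique:
        return "one-to-one"
    if left_unique:
        return "one-to-many"
    if right_unique:
        return "many-to-one"
    return "many-to-many"


# Hypothetical tables, invented for illustration.
customers = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": "Grace"},
]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 1},
    {"order_id": 12, "customer_id": 2},
]

relationship = cardinality(customers, "customer_id", orders, "customer_id")
print(relationship)
```

Here each customer appears once in `customers` but may appear several times in `orders`, so the relationship is one-to-many. An unexpected many-to-many result on real data usually points to duplicate keys that should be cleaned up before modeling.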

In today’s data-rich environment, struggling to extract meaningful insights can leave your business at a competitive disadvantage. Utilizing the capabilities of Business Intelligence and RPA solutions, like EMMA RPA and Power Automate, can convert raw data into actionable insights, facilitating informed decision-making that promotes growth and innovation. For instance, the network data model represents data in a complex network-like structure that allows multiple parent-child relationships, and it is often used for modeling intricate data relationships.

By investing time in creating a solid data model, you can ensure that your visualizations yield precise and meaningful insights, ultimately facilitating more informed decision-making. Recent statistics indicate that 55% of data engineers face challenges when incorporating various formats into cohesive models, underscoring the necessity of robust relationships in Power BI. Additionally, organizations face challenges like task repetition fatigue and staffing shortages, which RPA solutions can effectively address by automating repetitive tasks and streamlining operations.

Furthermore, as we approach 2024, data governance and ethical data handling practices become increasingly vital, shaping the landscape of modeling in Power BI and ensuring systems are built with compliance and responsibility in mind.

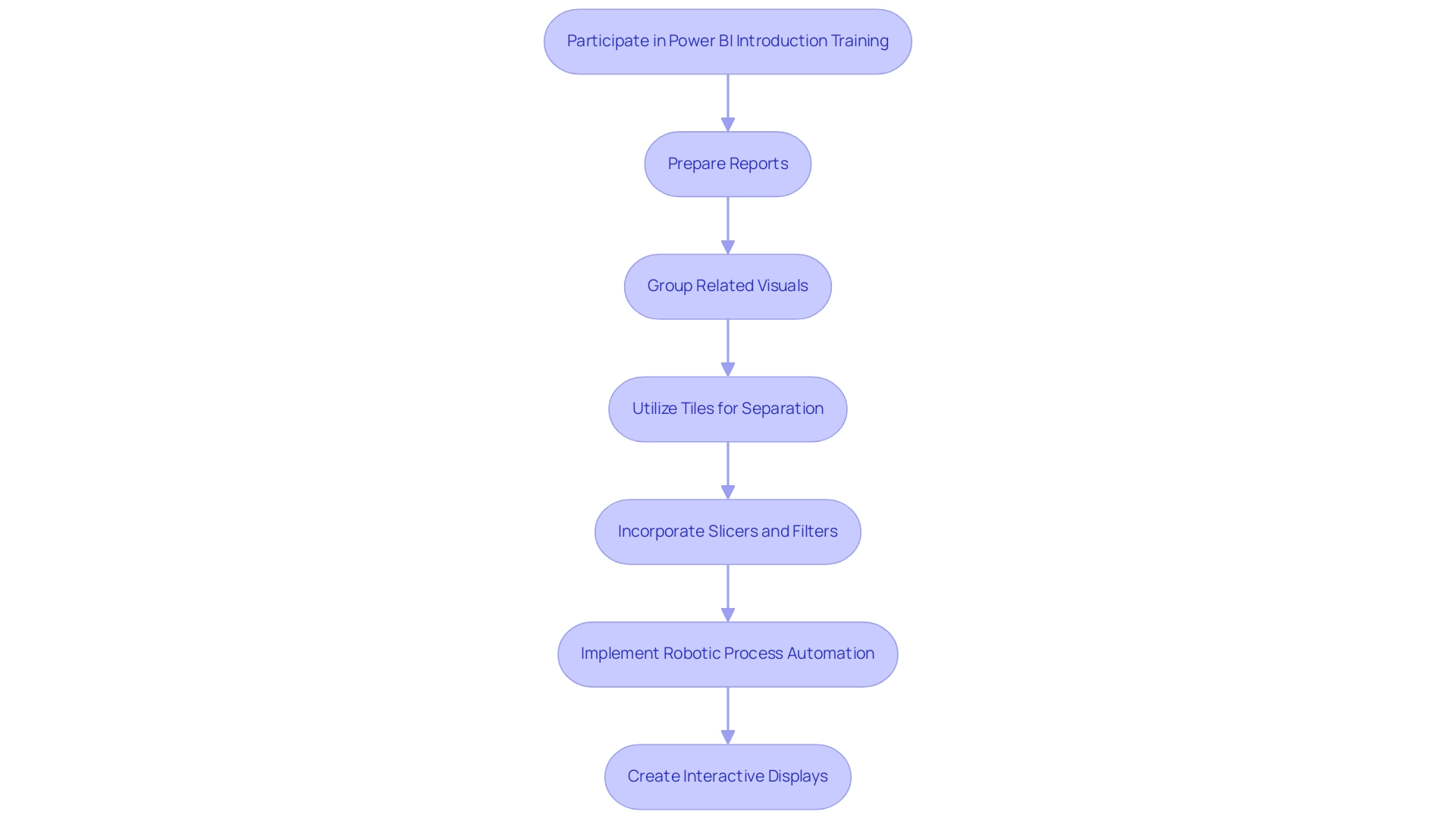

Creating Interactive Dashboards

Power BI introduction training enables you to create interactive dashboards in Power BI, providing a powerful way to convey your insights. However, challenges such as time-consuming report creation and data inconsistencies can hinder the process of extracting actionable insights. Once your reports are prepared, you can pin visualizations to a dashboard by selecting the pin icon on the desired visuals and choosing the appropriate dashboard.

As you create your dashboard, prioritize a thoughtful layout by:

- Grouping related visuals

- Utilizing tiles to distinctly separate various data categories

This approach not only enhances clarity but also ensures that the dashboard remains relevant to different user roles, spotlighting the metrics that matter most. To encourage interactivity, use features such as slicers and filters, allowing users to explore the data in greater depth.
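Conceptually, a slicer narrows the rows behind a visual and the visual then re-aggregates what remains. The following plain-Python sketch illustrates that idea only; it does not use or represent any Power BI API, and the rows and helper names (`apply_slicer`, `total_by`) are hypothetical.

```python
from collections import defaultdict

# Hypothetical sales rows, invented for illustration.
sales_rows = [
    {"region": "North", "category": "Bikes", "amount": 120.0},
    {"region": "North", "category": "Helmets", "amount": 30.0},
    {"region": "South", "category": "Bikes", "amount": 200.0},
    {"region": "South", "category": "Helmets", "amount": 45.0},
]


def apply_slicer(rows, field, selected):
    """Keep only the rows whose field value is among the selected values."""
    return [row for row in rows if row[field] in selected]


def total_by(rows, field):
    """Sum amounts per distinct value of a field, as a bar chart would show."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[field]] += row["amount"]
    return dict(totals)


all_regions = total_by(sales_rows, "category")
north_only = total_by(apply_slicer(sales_rows, "region", {"North"}), "category")

print(all_regions)
print(north_only)
```

The same chart definition produces different totals depending on the selection, which is why a single well-designed dashboard can serve several user roles.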

Furthermore, incorporating Robotic Process Automation (RPA) can simplify repetitive tasks linked to data preparation, significantly decreasing the time required for report generation and minimizing inconsistencies. As highlighted by ThoughtSpot, ‘AI-Powered Analytics allows everyone to create personalized insights to drive decisions and take action.’ A well-structured dashboard, combined with RPA, can convert intricate data into easily understandable insights, aiding informed decision-making for stakeholders at a glance.

In 2024, the emphasis on interactive dashboards will be crucial as organizations transition from data secrecy to a culture of openness, enhancing collaboration across all business functions. Moreover, companies implementing the AI TRiSM framework are anticipated to be 50% more successful in adoption, business objectives, and user acceptance by 2026, highlighting the significance of incorporating interactive dashboards and RPA into business intelligence strategies. By adopting these best practices, you can ensure that your dashboards, including those built during Power BI introduction training, not only convey insights but also drive meaningful actions within your organization.

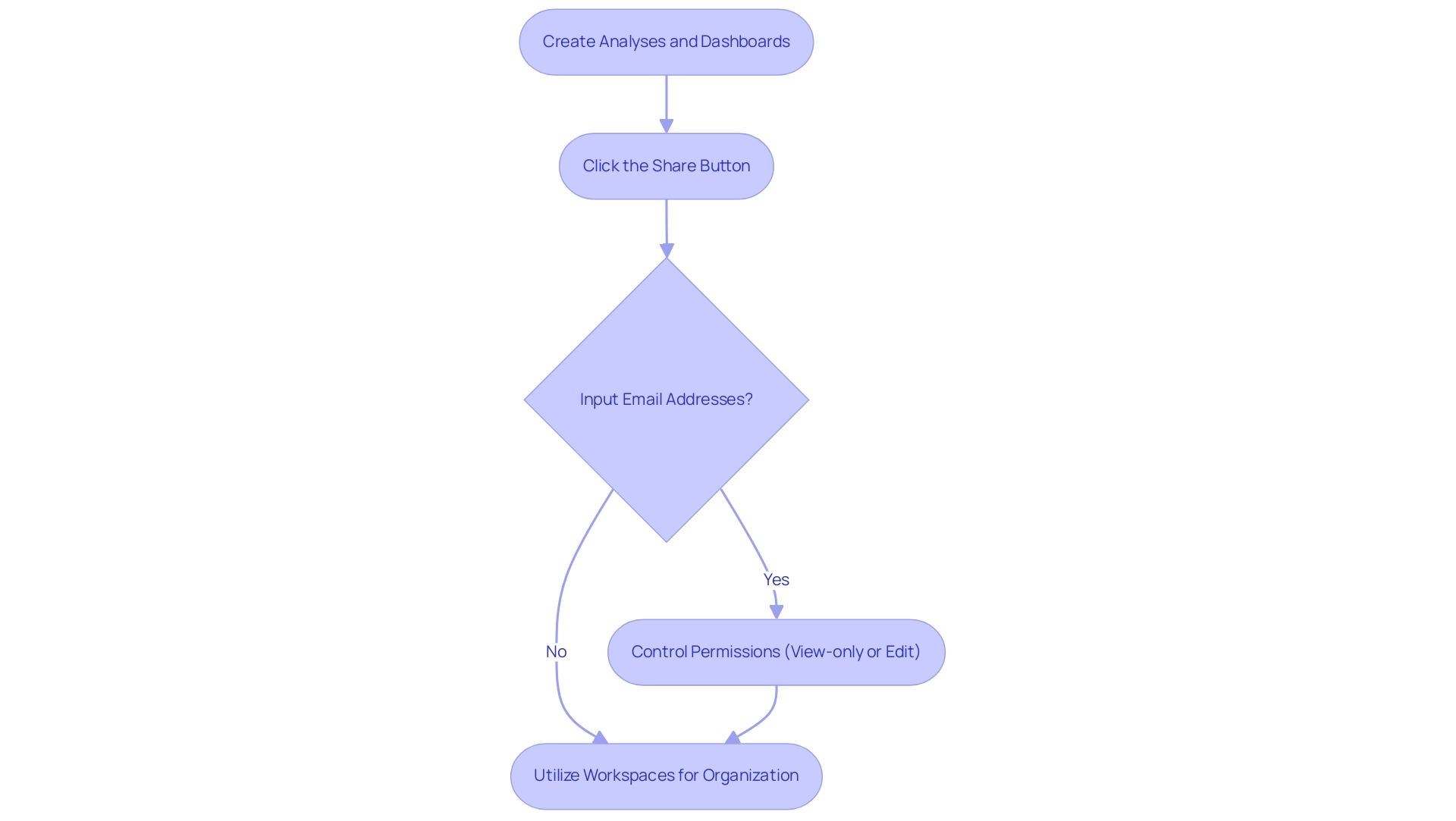

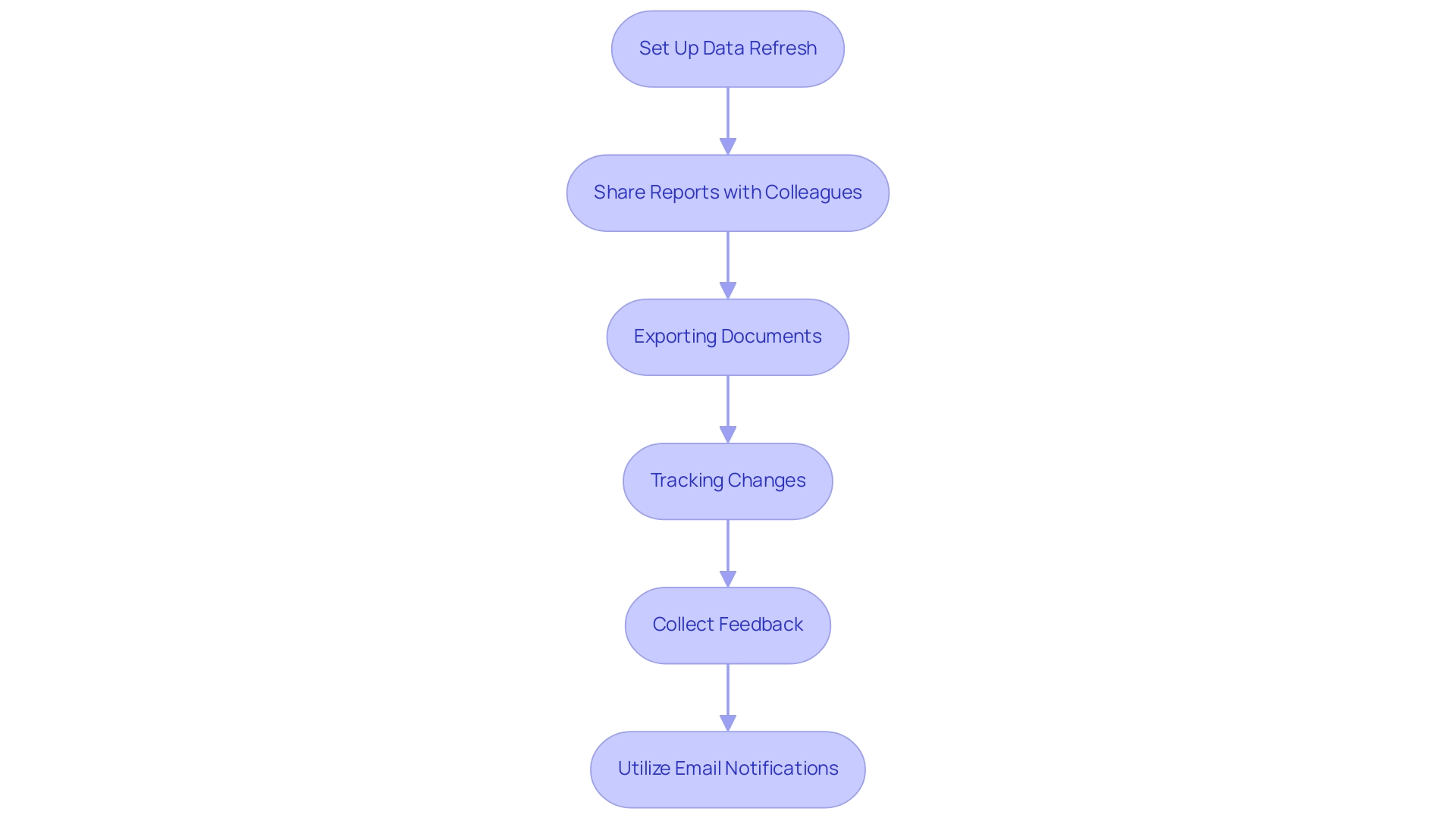

Sharing and Collaborating in Power BI

After creating insightful reports and dashboards in Power BI, the next step is sharing them with your team to support collaborative decision-making, a skill that Power BI introduction training should cover. The Power BI Service handles this process. To share a report, click the ‘Share’ button in the report view and enter the email addresses of your colleagues.

You can also control permissions, granting either view-only access or editing rights as required. Workspaces can further enhance your workflow by organizing related reports and dashboards, ensuring streamlined collaboration. As Kim Manis, Vice President of Product Management, aptly stated,

Fabric is a complete analytics platform that reshapes how your teams work with information by bringing everyone together with tools for every analytics professional.

Considering that 98% of employees voice dissatisfaction with video meetings held from home, learning to use Power BI’s sharing functionality becomes even more important. By fostering a data-driven culture within your organization, you can significantly improve user satisfaction in remote environments, where effective collaboration tools are essential. Moreover, for businesses struggling with time-consuming report creation and data inconsistencies, our 3-Day Power BI Sprint is designed to transform your approach, providing you with a fully functional, professionally designed report on a topic of your choice.

This not only enables actionable insights but also serves as a template for future projects, ensuring a professional design from the start. Microsoft’s recognition as a Leader in the 2024 Gartner Magic Quadrant for Analytics and Business Intelligence Platforms underscores the reliability of Power BI as a leading solution in this field. For assistance with Power BI, please contact +1 281 899 0865 or email arish.siddiqui@dynamicssquare.com.

Conclusion

Harnessing the full potential of Power BI is essential for organizations seeking to thrive in today’s data-driven landscape. By mastering its key components—from data visualization and reporting to effective data modeling and interactive dashboards—teams can transform raw data into actionable insights that drive informed decision-making. The integration of Robotic Process Automation (RPA) further enhances these capabilities, streamlining processes and alleviating common pain points such as time-consuming report generation and data inconsistencies.

As businesses navigate the complexities of data management, fostering a collaborative culture through effective sharing and communication becomes paramount. Power BI’s capabilities in facilitating teamwork and collaboration empower organizations to break down silos, ensuring that insights are readily accessible and actionable across all levels.

In conclusion, the journey to becoming proficient in Power BI is not just about acquiring technical skills; it’s about embracing a transformative mindset that prioritizes data-driven decision-making. By equipping teams with the right tools and strategies, organizations can turn challenges into opportunities, paving the way for sustained growth and operational excellence. Now is the time to invest in Power BI and RPA solutions to unlock the insights that will drive innovation and success in the future.

Introduction

In a rapidly evolving business landscape, organizations are increasingly turning to Centers of Excellence (CoEs) as a strategic response to enhance operational efficiency and drive innovation. CoEs serve as specialized hubs that foster expertise, streamline processes, and promote best practices across various domains, from technology to human resources. By focusing on specific challenges such as manual workflows and staffing shortages, these centers not only optimize performance but also empower teams to leverage advanced solutions like Robotic Process Automation (RPA).

As companies navigate the complexities of modern operations, understanding the purpose and implementation strategies of CoEs becomes essential for achieving measurable improvements and sustained competitive advantages.

This article delves into the significance of CoEs, exploring their diverse types, value propositions, and the strategies necessary for successful implementation and evolution.

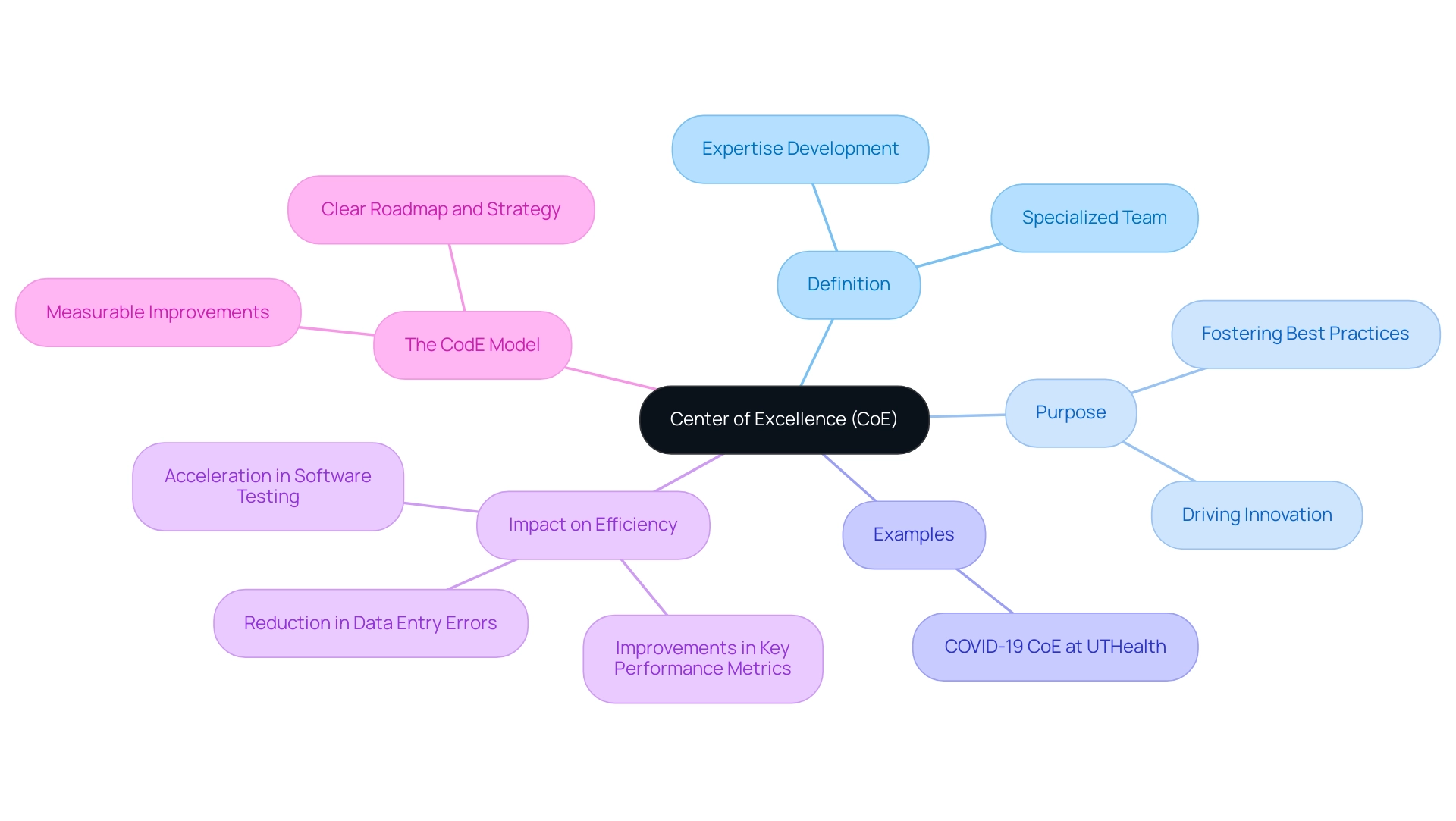

Defining the Center of Excellence: Purpose and Importance

A Center of Excellence (CoE) is a specialized team or entity within a business, dedicated to fostering expertise, best practices, and innovation in a particular domain. The essence of a CoE team lies in its ability to provide strategic leadership and facilitate knowledge sharing, ensuring that best practices are not merely theoretical but actively implemented across the organization. As Margaret Wilhelm, Vice President of Customer Insights at Fandango, aptly noted,

We’re so much closer to the customer now.

And that’s made our whole company better.

This sentiment reflects the transformative potential of a CoE, especially in enhancing operational efficiency and driving innovation. A notable example is the COVID-19 Center of Excellence at UTHealth, which was established to focus on research and response to the pandemic, illustrating how such centers can effectively manage crises and improve organizational responses.

By creating a clear roadmap and strategy, organizations can leverage the capabilities of a CoE team to address specific challenges, whether in robotic process automation (RPA) or analytics. Recent developments highlight that CoEs are increasingly crucial in optimizing operations and elevating organizational performance. For instance, a mid-sized company faced significant challenges with manual data entry errors and slow software testing processes, which hindered its operational efficiency.

By implementing GUI automation, they achieved a 70% reduction in data entry errors and a 50% acceleration in software testing processes, key metrics that demonstrate the effectiveness of centers of excellence in driving operational efficiency. Furthermore, RPA solutions like EMMA RPA, which automates repetitive tasks and enhances workflow efficiency, and Microsoft Power Automate, which streamlines processes and provides risk-free ROI assessments, have been instrumental in improving employee morale and productivity. In 2024, studies suggest that organizations with Centers of Excellence achieve up to 30% improvements in key performance metrics.

For leaders aiming to enhance their operations, understanding and implementing the CoE model is not just beneficial; it is essential for achieving measurable improvements in efficiency and effectiveness.

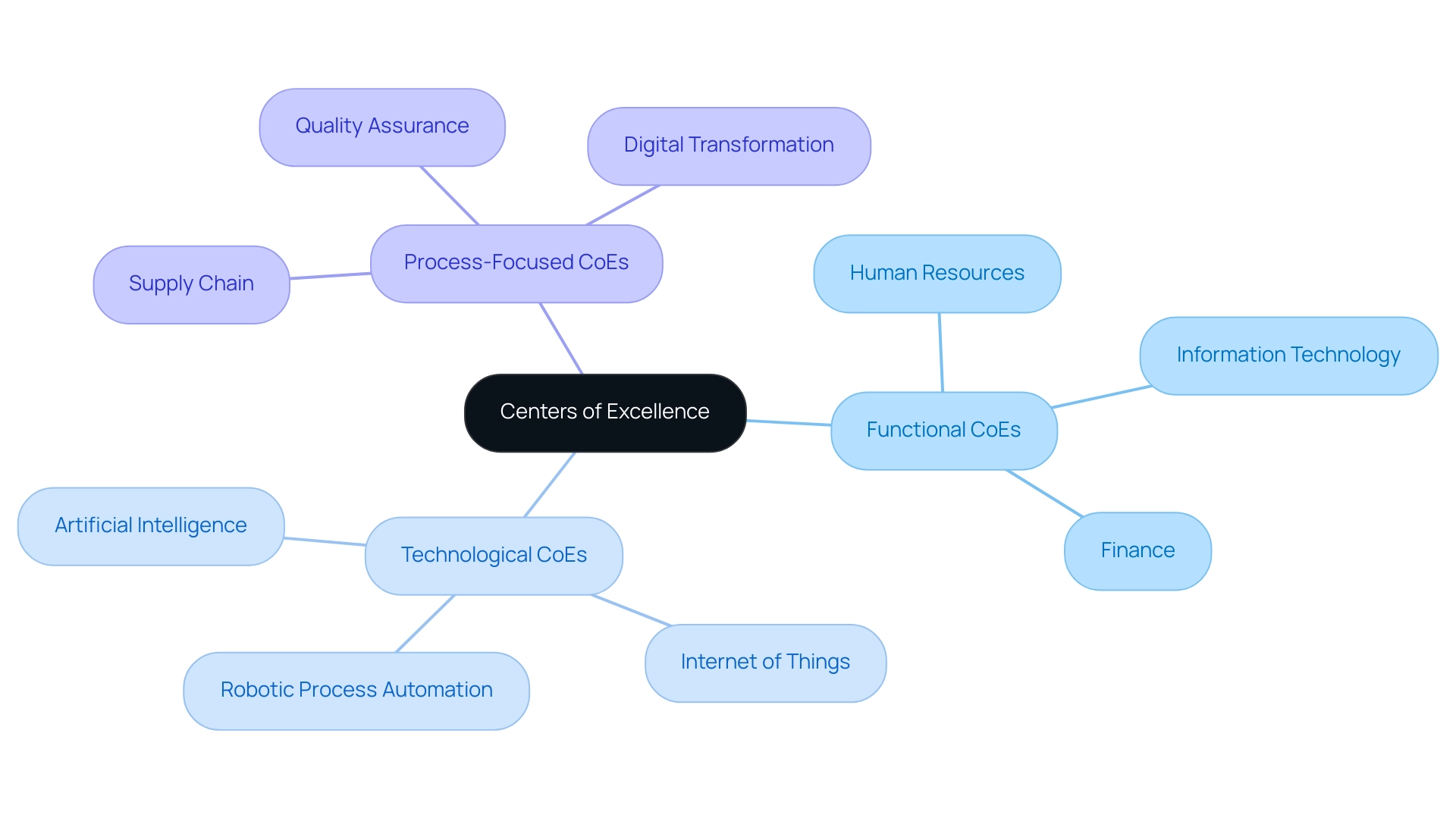

Exploring Different Types of Centers of Excellence

Organizations can leverage various types of Centers of Excellence to enhance their operational effectiveness amid challenges like repetitive tasks, staffing shortages, and outdated systems. Functional centers of excellence concentrate on specific business functions such as:

- Human Resources

- Information Technology

- Finance

This allows organizations to hone in on specialized expertise. Technological centers of excellence focus on harnessing innovations like:

- Artificial Intelligence

- Internet of Things

- Robotic Process Automation (RPA)

These innovations drive efficiency and combat the burden of manual workflows.

For instance, UserTesting’s enhancement of customers’ search experience has led to higher conversions and improved bottom-line revenue, illustrating the tangible advantages of implementing centers of excellence effectively. Moreover, RPA solutions play a vital role in modernizing operations by automating repetitive tasks, thereby enhancing productivity and reducing errors. Process-focused centers of excellence aim to refine workflows, which is crucial for addressing specific challenges within an entity’s processes.

Each type of CoE serves a unique purpose tailored to the distinct needs and challenges faced by an organization, thereby enhancing productivity. Additional types of centers of excellence include those focused on:

- Supply chain

- Quality assurance

- Digital transformation

These further illustrate the diverse applications of these entities across various sectors. Insights from case studies, such as the strategies detailed in ‘Three Ways to Make Your Information Do More,’ highlight the significance of implementing AI and minimizing complexity to enhance business outcomes.

By understanding these options, leaders can implement centers of excellence that not only align with their strategic objectives but also significantly enhance overall performance. Furthermore, the integration of Business Intelligence (BI) is crucial, as it transforms raw data into actionable insights, enabling informed decision-making that drives growth and innovation. As Margaret Wilhelm, Vice President of Customer Insights at Fandango, aptly states,

We’re so much closer to the customer now.

And that’s made our whole company better.

This sentiment highlights the transformative potential of well-structured centers of excellence in fostering collaboration, innovation, and ultimately, improved business outcomes.

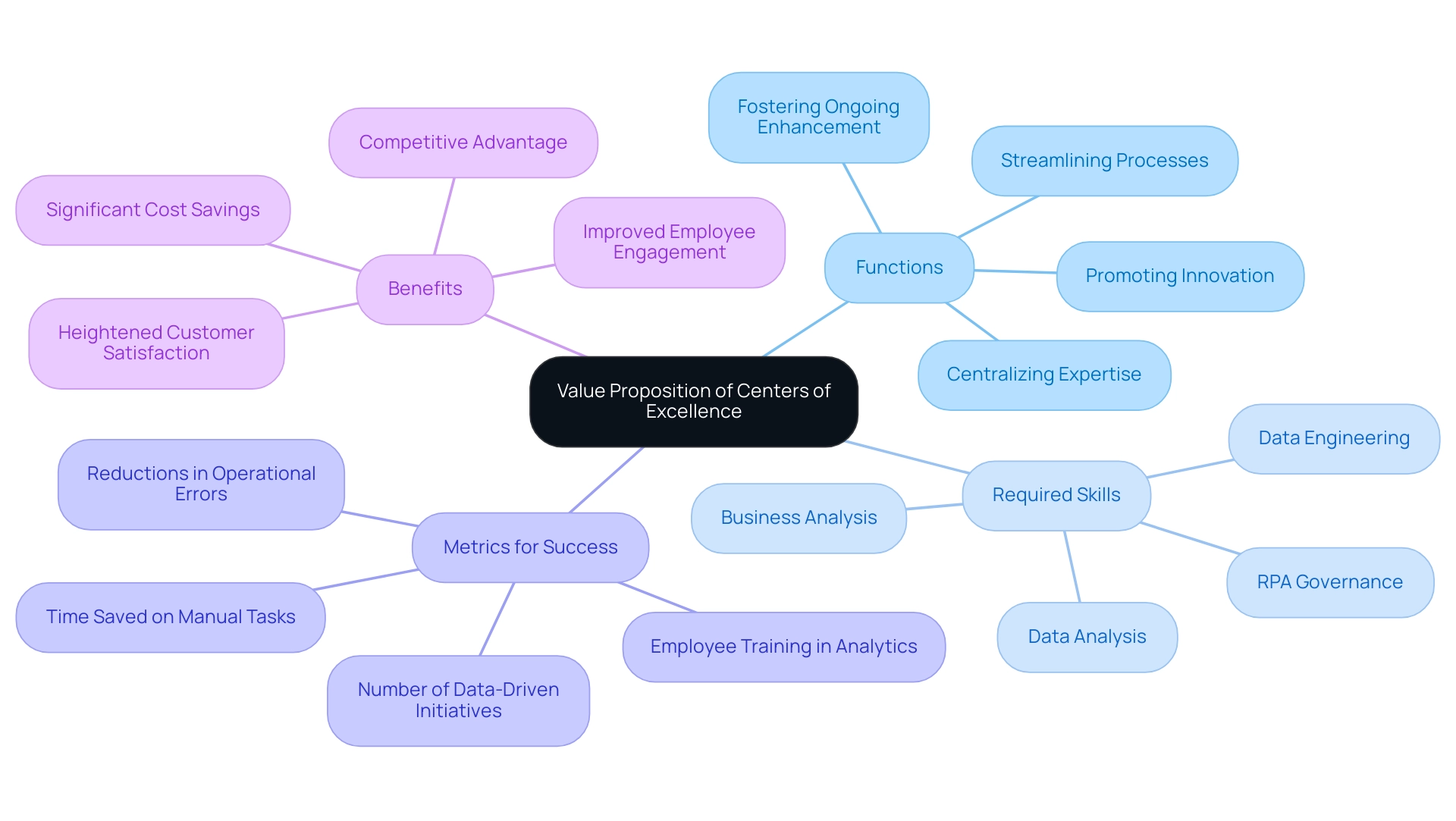

The Value Proposition of Centers of Excellence

Centers of Excellence serve a crucial function in organizations by fostering a culture of continuous improvement and promoting innovation. They act as hubs for specialized knowledge, streamlining processes and enhancing decision-making capabilities, particularly in leveraging Robotic Process Automation (RPA) to automate manual workflows. By centralizing expertise in data management and analytics, CoEs empower organizations to keep pace with the rapidly evolving AI landscape, ultimately fostering a competitive advantage.

Investing in a CoE is not merely an operational decision; it is a strategic move that can lead to substantial long-term benefits. An effective CoE requires a skilled team of data professionals and RPA specialists, which necessitates an investment in talent and training. This diverse CoE team should include individuals proficient in:

- Data analysis

- Data engineering

- Business analysis

- RPA governance

As noted by the Chief Data Officer,

Ultimately, the CDO’s leadership and strategic positioning are critical to harnessing the full potential of the CoE, driving innovation, and achieving sustainable competitive advantages.

Furthermore, organizations must establish clear metrics to measure the impact of their CoE initiatives. Defining metrics enables them to evaluate the value and effectiveness of the CoE over time, for example by tracking the number of data-driven initiatives, employee training in analytics, and the implementation of RPA solutions.

These metrics can include:

- Reductions in operational errors

- Time saved on manual tasks

- Improvements in team resource allocation towards strategic projects

The benefits of a well-run CoE include significant cost savings, improved employee engagement, and heightened customer satisfaction, reinforcing the value proposition of centers of excellence in driving organizational performance well into 2024 and beyond. Addressing the challenges posed by manual, repetitive tasks is also crucial, as these can hinder efficiency and waste resources, making the case for RPA even stronger.
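The metrics listed above can be computed from simple before-and-after measurements. The following is a minimal sketch, not a prescribed method: the `PeriodSnapshot` fields, the function names, and the sample figures are all hypothetical and would need to be replaced with whatever an organization actually records.

```python
from dataclasses import dataclass


@dataclass
class PeriodSnapshot:
    """Hypothetical measurements for one reporting period."""
    operational_errors: int    # errors logged during the period
    manual_task_hours: float   # hours spent on manual, repetitive tasks
    strategic_hours: float     # hours spent on strategic projects
    total_hours: float         # total team hours available


def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after` (negative if the value rose)."""
    if before == 0:
        return 0.0
    return (before - after) / before * 100


def coe_metrics(baseline: PeriodSnapshot, current: PeriodSnapshot) -> dict:
    """Compare two periods using the three metrics named in the text."""
    baseline_share = baseline.strategic_hours / baseline.total_hours
    current_share = current.strategic_hours / current.total_hours
    return {
        "error_reduction_pct": round(
            percent_reduction(baseline.operational_errors, current.operational_errors), 1
        ),
        "manual_hours_saved": round(
            baseline.manual_task_hours - current.manual_task_hours, 1
        ),
        # Change in the share of time spent on strategic work, in percentage points.
        "strategic_share_change_pts": round((current_share - baseline_share) * 100, 1),
    }


# Illustrative figures only; they are not taken from any real organization.
baseline = PeriodSnapshot(operational_errors=200, manual_task_hours=400.0,
                          strategic_hours=100.0, total_hours=1000.0)
current = PeriodSnapshot(operational_errors=60, manual_task_hours=250.0,
                         strategic_hours=250.0, total_hours=1000.0)
result = coe_metrics(baseline, current)
print(result)
```

Reporting the strategic share as a change in percentage points, rather than as a raw hour count, keeps the figure comparable when team size changes between periods.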

Implementing a Center of Excellence: Strategies for Success

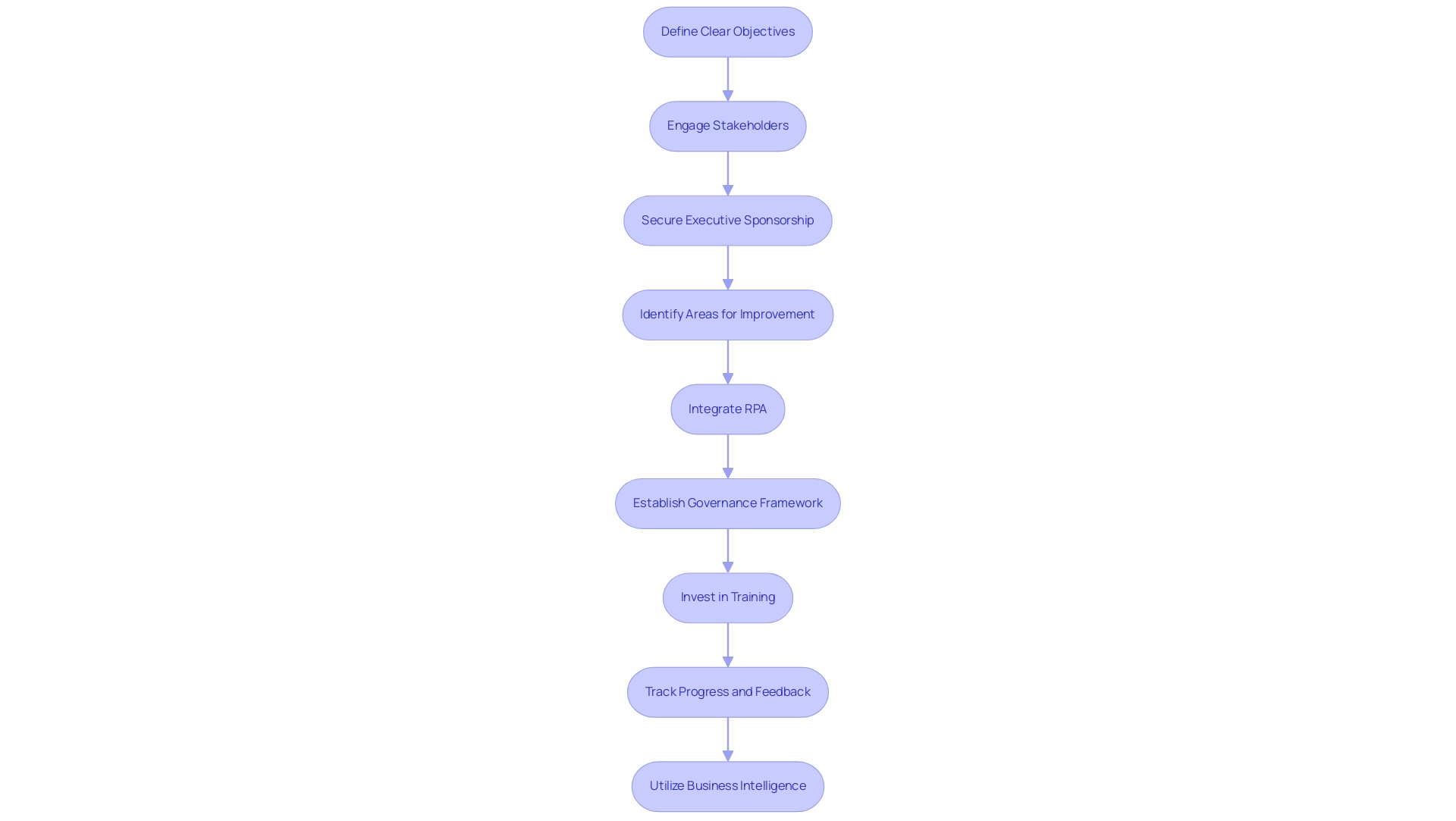

Successfully implementing a Center of Excellence (CoE) begins with defining clear objectives that align with organizational goals. It’s crucial to identify and engage the right stakeholders early in the process, securing executive sponsorship to ensure top management support. A recent observation from corporate experts highlighted a list of 100 areas needing improvement after site visits, underscoring the necessity of a structured approach to address specific challenges in CoE implementation.

Manual, repetitive tasks can slow down your operations considerably, wasting time and resources; integrating Robotic Process Automation (RPA) can streamline workflows, enhancing operational efficiency and allowing your team to focus on more strategic initiatives. A robust governance framework is essential; it should clearly define roles, responsibilities, and decision-making processes to foster accountability and transparency, especially in light of new SEC ESG regulations that may influence compliance requirements. Because the rapidly evolving AI landscape can be daunting, tailor AI solutions to your unique business context so that the tools you adopt align with your goals.

Investing in training and resources is equally important, as it equips CoE members with the necessary skills to drive initiatives effectively. Regularly tracking progress and soliciting feedback not only facilitates continuous improvement but also enhances stakeholder engagement, a critical component for success. According to a report by the Data Governance Institute, organizations with a CoE enjoy improved information quality and compliance: 80% of them have strong governance frameworks, compared to just 45% of those without one.

The College of New Jersey’s Center for Excellence in Teaching and Learning (CETL) exemplifies this structured approach, as it plans to expand its online resources and strengthen connections with schools over the next two years by focusing on tailored solutions for faculty development. By adopting these strategies and utilizing Business Intelligence to convert information into actionable insights, organizations can ensure their CoEs provide significant value.

Sustaining and Evolving Your Center of Excellence

To effectively sustain and evolve a Center of Excellence (CoE), leaders must proactively assess its relevance amidst the ever-changing organizational landscape and market dynamics. By leveraging Robotic Process Automation (RPA), companies can automate repetitive manual workflows, significantly enhancing operational efficiency, reducing errors, and freeing up team capacity for more strategic, value-adding work. Establishing clear metrics is essential for evaluating the CoE’s effectiveness and for collecting valuable insights from both internal and external stakeholders.

For instance, metrics such as the following can provide concrete measures of the CoE’s effectiveness:

- The number of evidence-based decisions made

- The level of information literacy among employees

- The speed of information processing

Fostering a culture of innovation within the CoE is crucial; this can be achieved by promoting experimentation and collaboration among team members. Additionally, navigating the rapidly evolving AI landscape is vital, and tailored AI solutions can help identify the right technologies that align with specific business goals.

Staying informed about the latest industry trends and emerging technologies, such as artificial intelligence and machine learning, will ensure that the CoE remains a leader in best practices. Statistics indicate that 80% of organizations with a CoE have strong governance frameworks in place, a stark contrast to the 45% of those lacking one. This highlights the role of the CoE team in enhancing efficiency and driving strategic decision-making.

The primary purpose of a Data and Analytics CoE is to centralize expertise and resources in data management and analytics, fostering a data-driven culture that supports strategic decision-making throughout the organization. As the Chief Data Officer aptly notes,

Ultimately, the CDO’s leadership and strategic positioning are critical to harnessing the full potential of the CoE, driving innovation, and achieving sustainable competitive advantages.

By fostering an adaptive CoE that incorporates RPA and Business Intelligence, organizations can continue to leverage its expertise and insights, leading to sustained improvements in operational efficiency and innovation.

We encourage you to explore RPA solutions to unlock these benefits and drive your organization’s success.

Conclusion

In the current business environment, the establishment of Centers of Excellence (CoEs) has emerged as a vital strategy for organizations seeking to enhance operational efficiency and foster innovation. By concentrating expertise in specific domains, CoEs not only facilitate knowledge sharing but also drive the implementation of best practices that lead to measurable improvements in performance. The diverse types of CoEs—from functional and technological to process-focused—provide tailored solutions to unique organizational challenges, enabling companies to optimize workflows and leverage advanced technologies such as Robotic Process Automation (RPA).

The value proposition of CoEs is clear: they cultivate a culture of continuous improvement and empower organizations to navigate the complexities of modern operations. By investing in skilled teams and establishing clear metrics, organizations can track the impact of their CoE initiatives, ensuring alignment with strategic objectives. The integration of RPA and Business Intelligence further enhances decision-making capabilities, transforming raw data into actionable insights that drive growth.

To sustain and evolve a CoE, it is crucial for leaders to regularly assess its relevance and effectiveness in light of shifting market dynamics. By fostering a culture of innovation and embracing tailored AI solutions, organizations can ensure their CoEs remain at the forefront of industry best practices. The leadership and strategic positioning of the CoE are pivotal in harnessing its full potential, ultimately leading to sustained competitive advantages and improved operational outcomes. By embracing these strategies, organizations can unlock the transformative benefits of CoEs and position themselves for long-term success in an ever-evolving landscape.

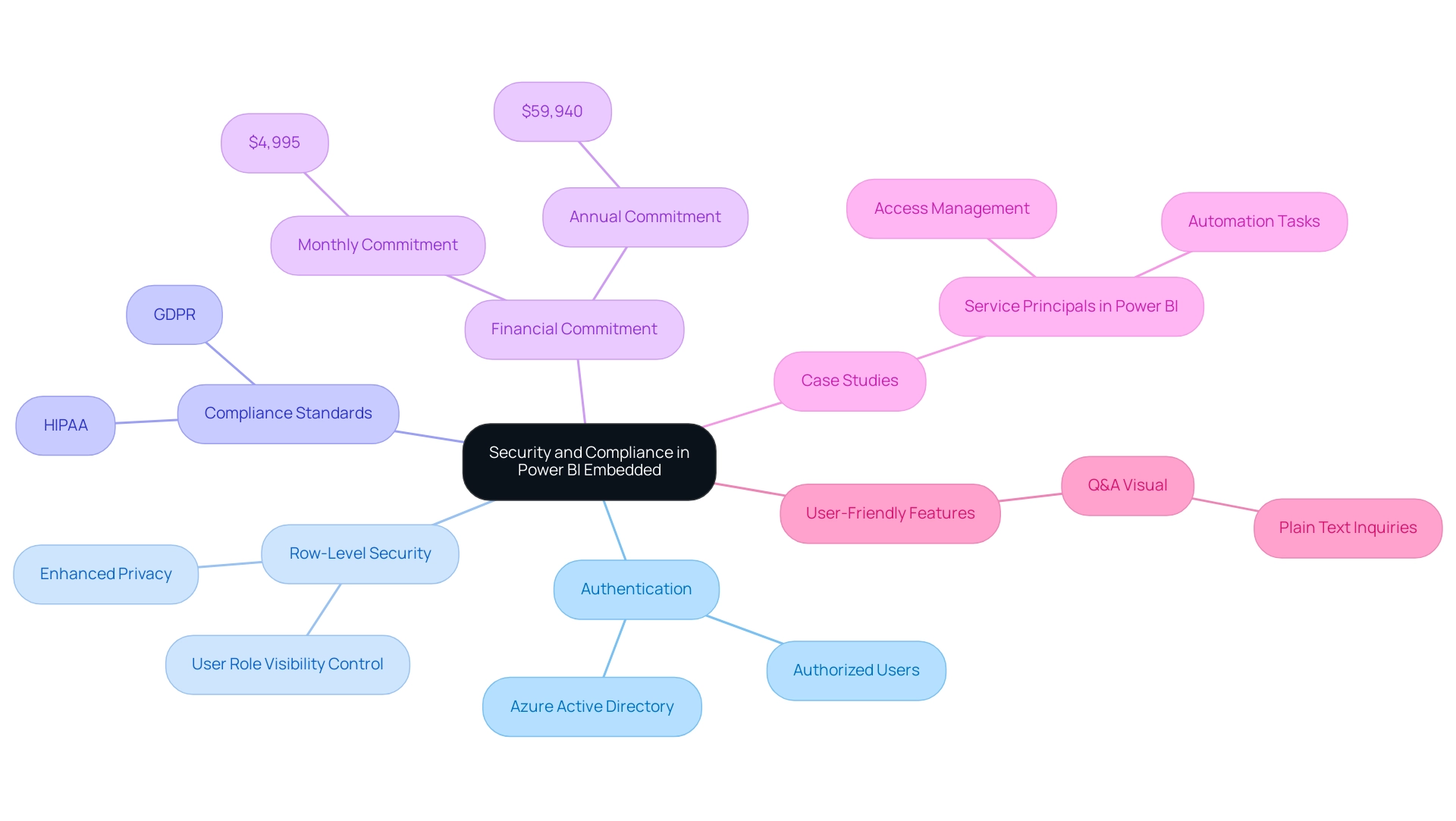

Introduction

In the rapidly evolving landscape of data analytics, organizations are increasingly turning to Power BI Embedded as a game-changing solution. This powerful Microsoft Azure service not only enhances the user experience by embedding interactive reports and dashboards directly into applications but also empowers businesses to make data-driven decisions with confidence.

As industries like banking and financial services anticipate significant growth, the ability to leverage real-time insights becomes paramount. By addressing common challenges such as:

- Time-consuming report creation

- Data inconsistencies

Power BI Embedded streamlines workflows and boosts productivity, allowing directors to focus on operational efficiency.

With a suite of best practices for deployment and robust security measures in place, organizations can unlock the full potential of their data, driving innovation and success in their operations.

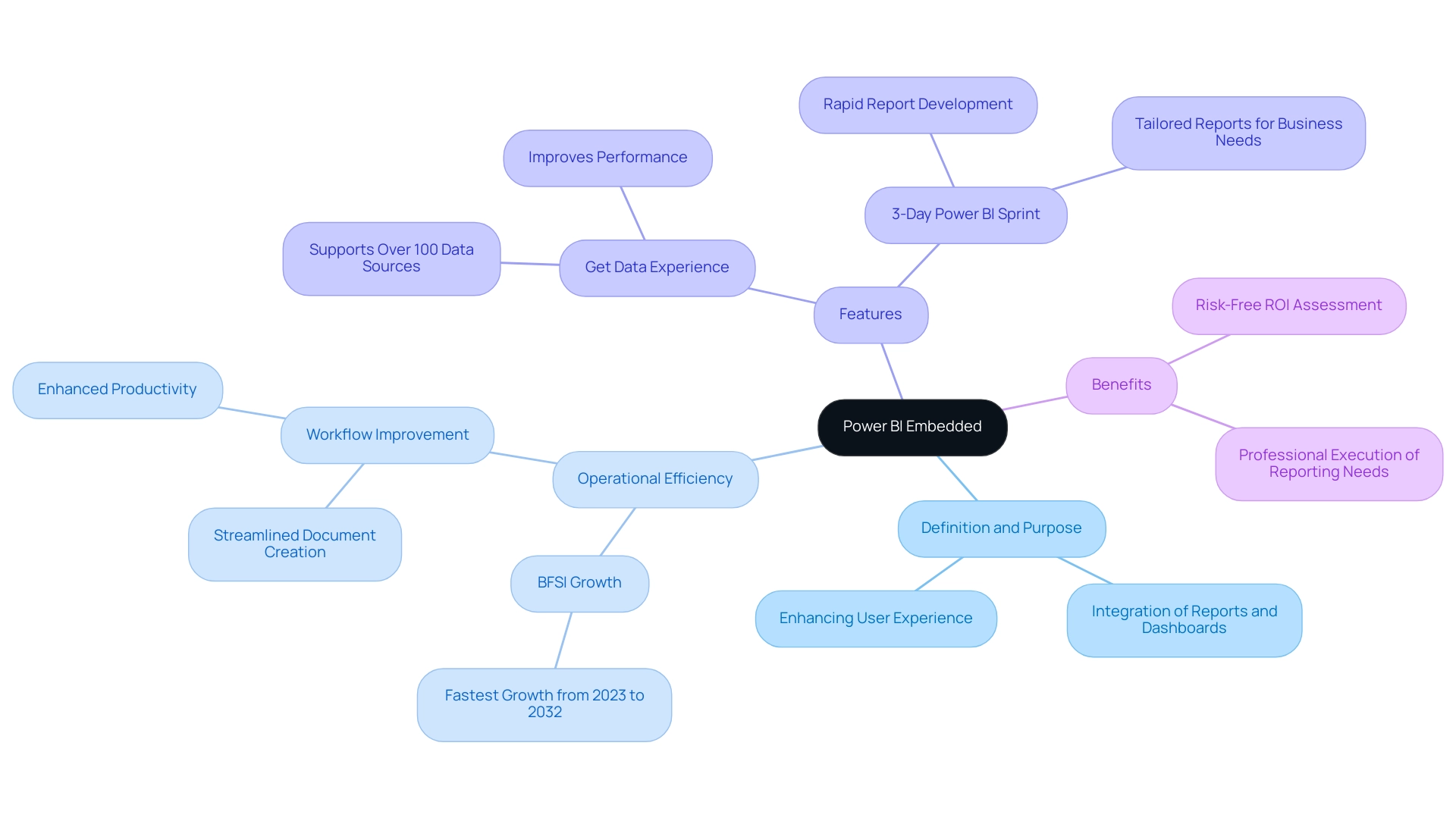

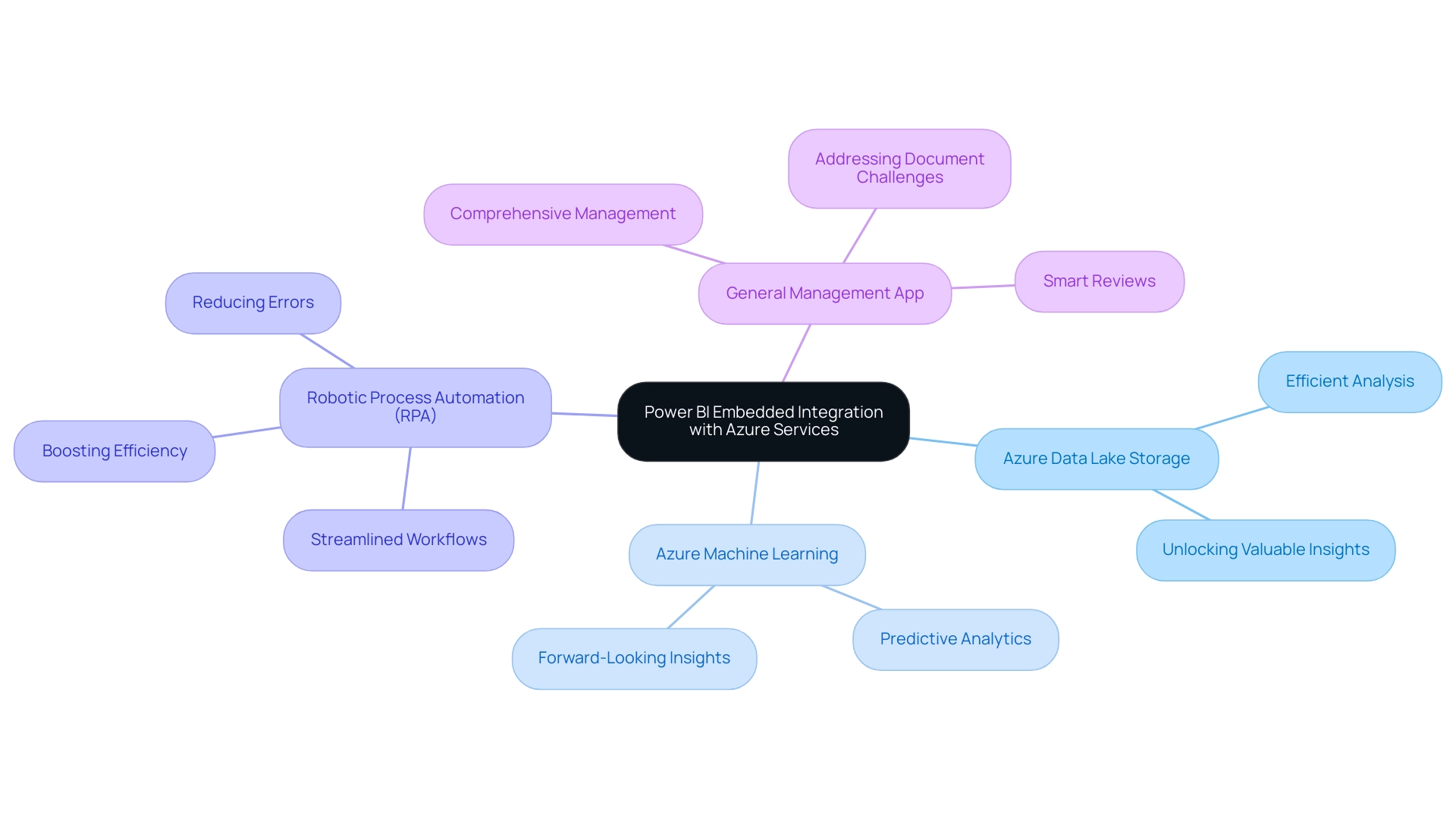

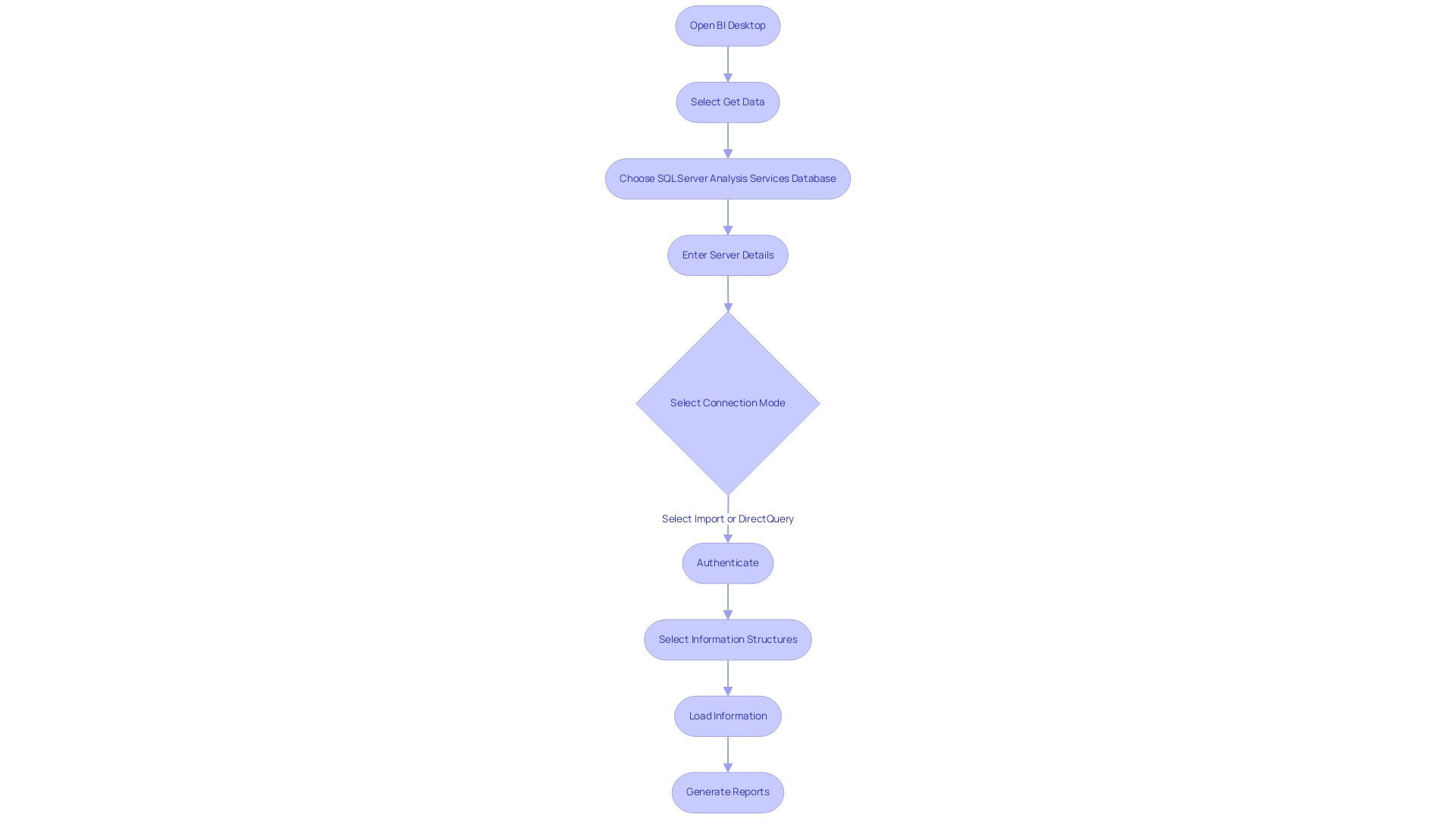

Understanding Power BI Embedded: Definition and Purpose

Power BI Embedded is a Microsoft Azure service that enables developers to incorporate interactive reports and dashboards into their applications. This integration allows organizations to provide comprehensive visualizations and strong analytics capabilities to users, all without requiring those users to have a Power BI account. The main objective of Power BI Embedded is to enhance user experience by providing direct access to insights within the application, thereby enabling informed decision-making through real-time analysis.
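Because end users need no Power BI account in this model, the host application typically requests a short-lived embed token on their behalf. The sketch below only assembles the URL and JSON body for the Power BI REST API's report `GenerateToken` call; it makes no network request, and the function name, the placeholder IDs, and the sample user are assumptions for illustration. Obtaining the Azure AD access token needed to authorize the call is not shown. The optional identity is one way to apply row-level security, which the deployment section discusses.

```python
import json
from typing import Optional

POWER_BI_API = "https://api.powerbi.com/v1.0/myorg"


def build_embed_token_request(
    workspace_id: str,
    report_id: str,
    dataset_id: str,
    username: Optional[str] = None,
    roles: Optional[list] = None,
) -> tuple:
    """Return (url, body) for a report embed-token request.

    If both `username` and `roles` are given, an effective identity is
    attached so that row-level security roles apply to the embedded report.
    """
    url = f"{POWER_BI_API}/groups/{workspace_id}/reports/{report_id}/GenerateToken"
    body = {"accessLevel": "View"}
    if username and roles:
        body["identities"] = [
            {"username": username, "roles": list(roles), "datasets": [dataset_id]}
        ]
    return url, body


# Placeholder IDs and user; real values come from your own workspace.
url, body = build_embed_token_request(
    "workspace-id", "report-id", "dataset-id",
    username="analyst@example.com", roles=["RegionalSales"],
)
print(url)
print(json.dumps(body, indent=2))
```

The application would send this body as an authenticated POST request and pass the returned token to the client-side embedding code.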

For operational efficiency, Power BI Embedded can be transformative, especially in fields such as BFSI (Banking, Financial Services, and Insurance), which is expected to see substantial growth from 2023 to 2032 according to Inkwood Research.

The introduction of the ‘Get Data Experience in Power BI Report Builder’ allows creators to build reports on over 100 data sources, improving performance and the report creation process for large datasets. This not only streamlines workflows but also enhances productivity, which is essential for directors focused on operational efficiency.

Additionally, the 3-Day Power BI Sprint offers a fast-track solution for organizations seeking to create professionally designed reports swiftly. This sprint is designed to rapidly develop tailored reports that meet specific business needs, significantly reducing the time from concept to deployment. Coupled with tools like the General Management App, which offers comprehensive management features such as smart reviews and performance tracking, businesses can achieve a risk-free ROI assessment and professional execution of their reporting needs.

These solutions, alongside the insights gained from the case study on enhancing operational efficiency through GUI automation, showcase how companies can overcome challenges in data entry, software testing, and legacy system integration while realizing measurable outcomes. By adopting Power BI Embedded, organizations can not only utilize its robust analytical features but also improve overall user experience and operational productivity.

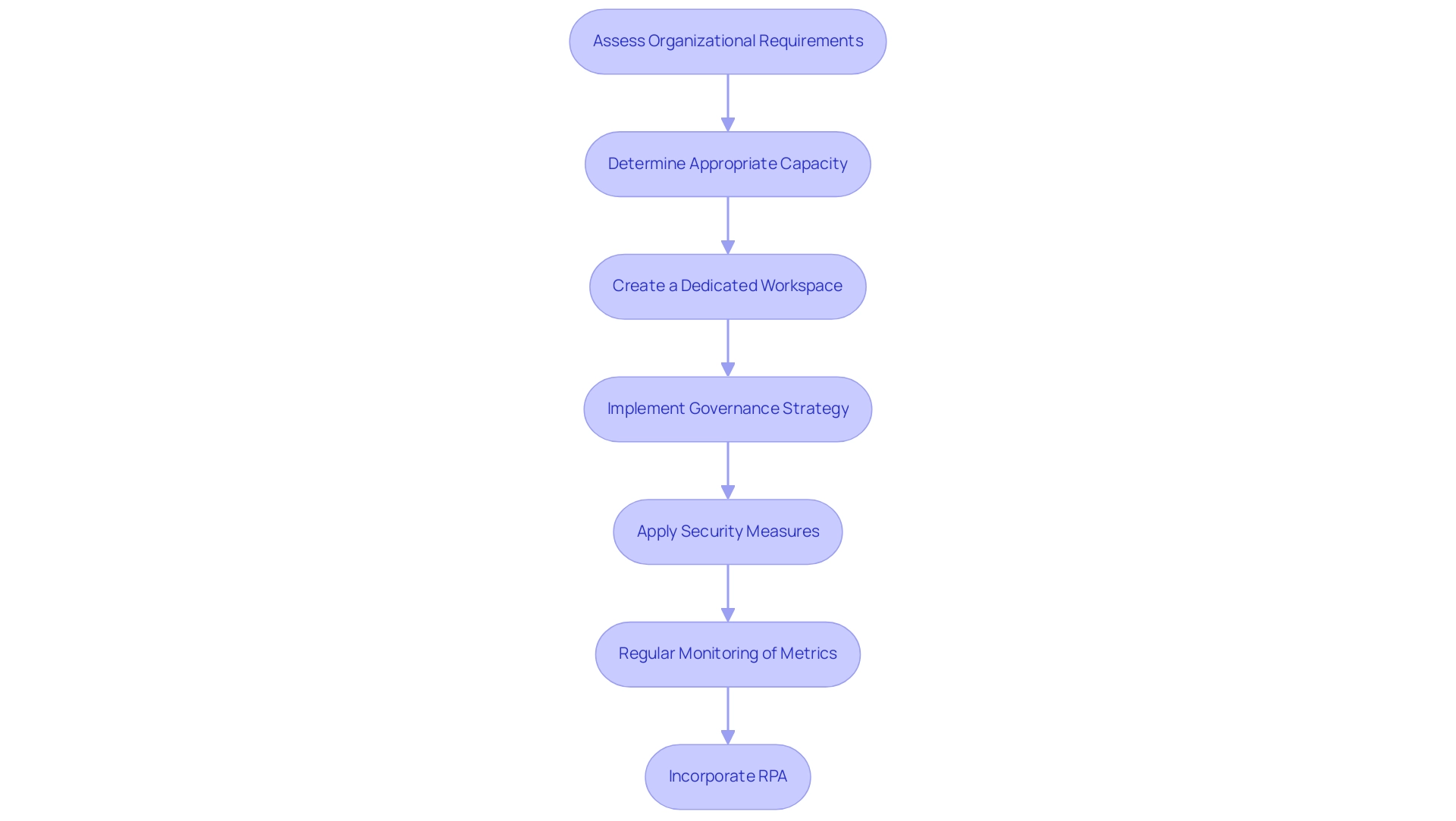

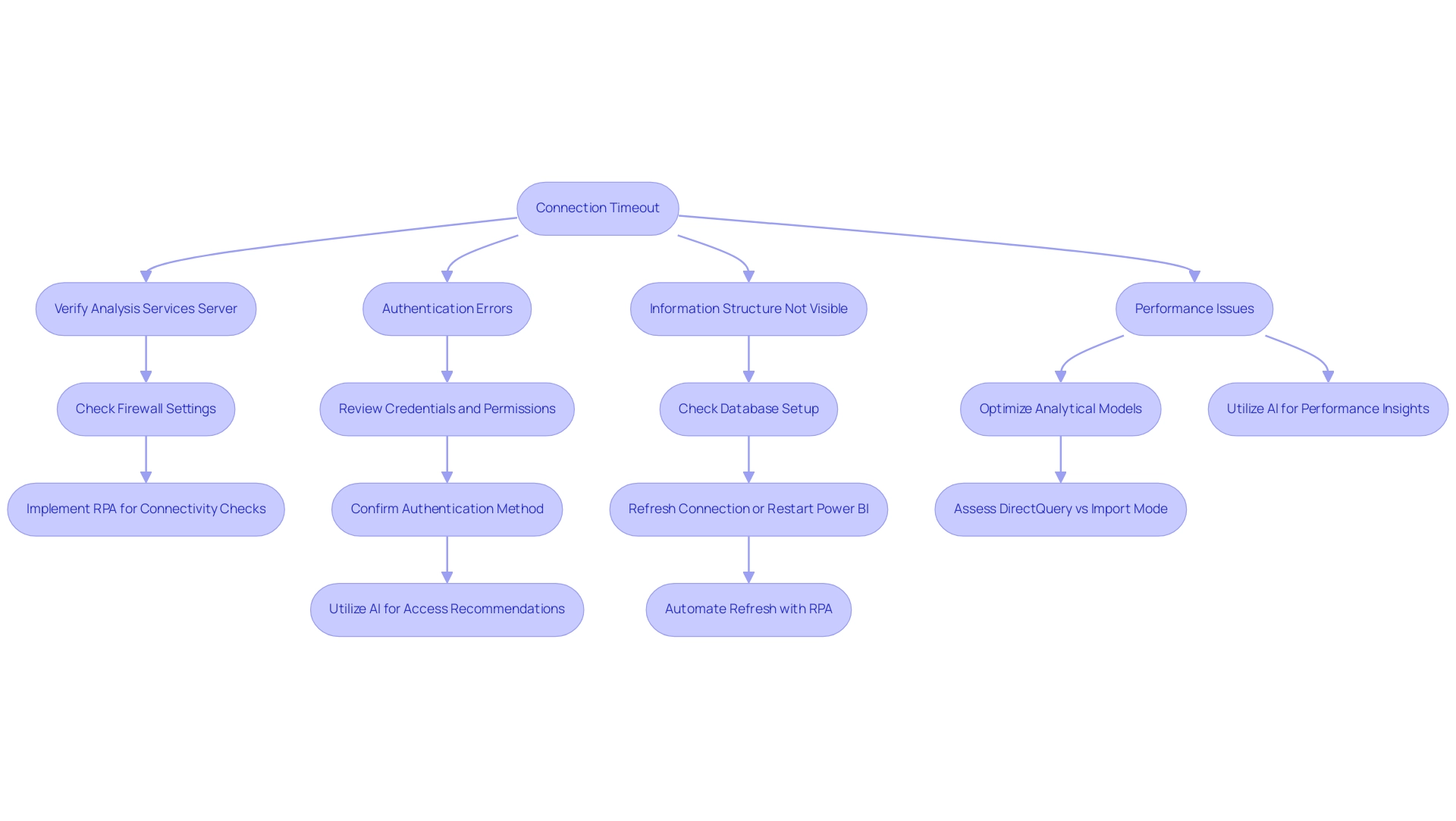

Deploying Power BI Embedded in Azure: Best Practices and Strategies

To successfully implement Power BI Embedded, organizations must adhere to a set of best practices that ensure smooth integration and optimal performance. Firstly, a thorough assessment of the organization’s specific requirements is critical for determining the appropriate capacity for Power BI Embedded, as this choice directly influences both performance and cost. According to recent studies, optimizing data loading can improve report speed by up to 40%, while efficient storage alternatives can significantly decrease latency.

Creating a Power BI Embedded capacity in the Azure portal and assigning a dedicated workspace to it is the next logical step, allowing developers to effectively manage reports and data sources. However, many organizations find themselves investing more time in creating reports than in leveraging insights from Power BI dashboards. This common challenge can lead to confusion and mistrust in the data presented, particularly when inconsistencies arise due to the lack of a governance strategy.

Implementing a robust governance strategy is essential to ensure data integrity and consistency across reports. Security measures, such as row-level security and robust authentication protocols, are needed to safeguard sensitive information from unauthorized access. A relevant case study titled ‘Avoiding Floating Point Data Types’ highlights how using whole numbers or fixed decimal numbers minimizes precision issues, leading to accurate analytical outputs.
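The precision issue behind the floating-point advice is easy to reproduce outside Power BI. The short illustration below uses Python's standard `decimal` module as a stand-in for a fixed decimal type; the sample amounts are made up, and the snippet demonstrates the general behavior of binary floating point rather than Power BI's own engine.

```python
from decimal import Decimal

# Two hypothetical line-item amounts, kept as text so no precision is lost on input.
line_items = ["0.10", "0.20"]

# Binary floating point cannot represent 0.1 or 0.2 exactly, so the sum drifts.
float_total = sum(float(amount) for amount in line_items)

# A decimal type stores the digits as written, so the sum is exact.
decimal_total = sum(Decimal(amount) for amount in line_items)

# Whole numbers (here, cents) are exact as well.
cents_total = sum(int(Decimal(amount) * 100) for amount in line_items)

print(float_total == 0.3)                # False
print(decimal_total == Decimal("0.30"))  # True
print(cents_total)                       # 30
```

Small discrepancies like this can accumulate across many rows, which is why totals built on floating-point columns may not reconcile with source systems.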

Additionally, regular monitoring of usage and performance metrics allows organizations to fine-tune their analytics solutions, including Power BI Embedded, facilitating ongoing optimization. As Ted Pattison noted, ‘Regular updates and assessments are key to leveraging the full potential of Power BI.’ By incorporating Robotic Process Automation (RPA) to streamline repetitive tasks and focusing on actionable insights rather than just data presentation, businesses can enhance their analytics-driven decision-making capabilities.
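One way to act on the monitoring advice above is to flag reports whose slowest renders exceed a target. The sketch below assumes render durations have already been exported from whatever monitoring source is in use; the report names, timings, threshold, and function names are hypothetical.

```python
import math


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def slow_reports(render_times: dict, threshold_seconds: float, pct: float = 95) -> list:
    """Return names of reports whose chosen percentile exceeds the threshold."""
    return sorted(
        name
        for name, times in render_times.items()
        if percentile(times, pct) > threshold_seconds
    )


# Hypothetical render durations in seconds, keyed by report name.
render_times = {
    "sales_overview": [1.2, 1.4, 1.1, 1.3, 9.8],
    "inventory": [0.8, 0.9, 1.0, 0.7, 0.9],
}
flagged = slow_reports(render_times, threshold_seconds=5.0)
print(flagged)
```

A high percentile is used instead of the average so that occasional very slow renders, which users tend to notice most, are not hidden by many fast ones.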

The General Management App, featuring Microsoft Copilot integration, also provides extensive management tools, personalized dashboards, and predictive analytics, making it easier for organizations to obtain actionable insights from their data. This helps keep their Power BI Embedded deployment both efficient and secure.

Benefits of Power BI Embedded for Businesses and Developers

Power BI Embedded offers a significant opportunity for businesses and developers, greatly improving data accessibility and visualization. In today’s data-rich environment, where obtaining valuable insights is essential, organizations can use BI tools to address challenges such as:

- Time-consuming report creation

- Data inconsistencies

- A lack of actionable guidance

By facilitating rapid insight extraction, Power BI Embedded helps organizations make informed, data-driven decisions with confidence.