Introduction

In the realm of modern business operations, the quest for efficiency is paramount. As organizations navigate the complexities of manual workflows, AWS Batch emerges as a transformative solution, streamlining batch computing workloads in the cloud. This fully managed service not only optimizes resource allocation but also integrates seamlessly with APIs to automate processes, ultimately empowering teams to focus on strategic initiatives rather than repetitive tasks.

Coupled with the insights derived from Business Intelligence, AWS Batch presents a robust framework for driving operational excellence. This article delves into the intricacies of AWS Batch, offering a comprehensive guide on:

- Job submission via API Gateway

- IAM configuration

- Monitoring strategies

- Best practices to harness its full potential

By understanding and implementing these strategies, businesses can enhance productivity and achieve a competitive edge in an increasingly data-driven landscape.

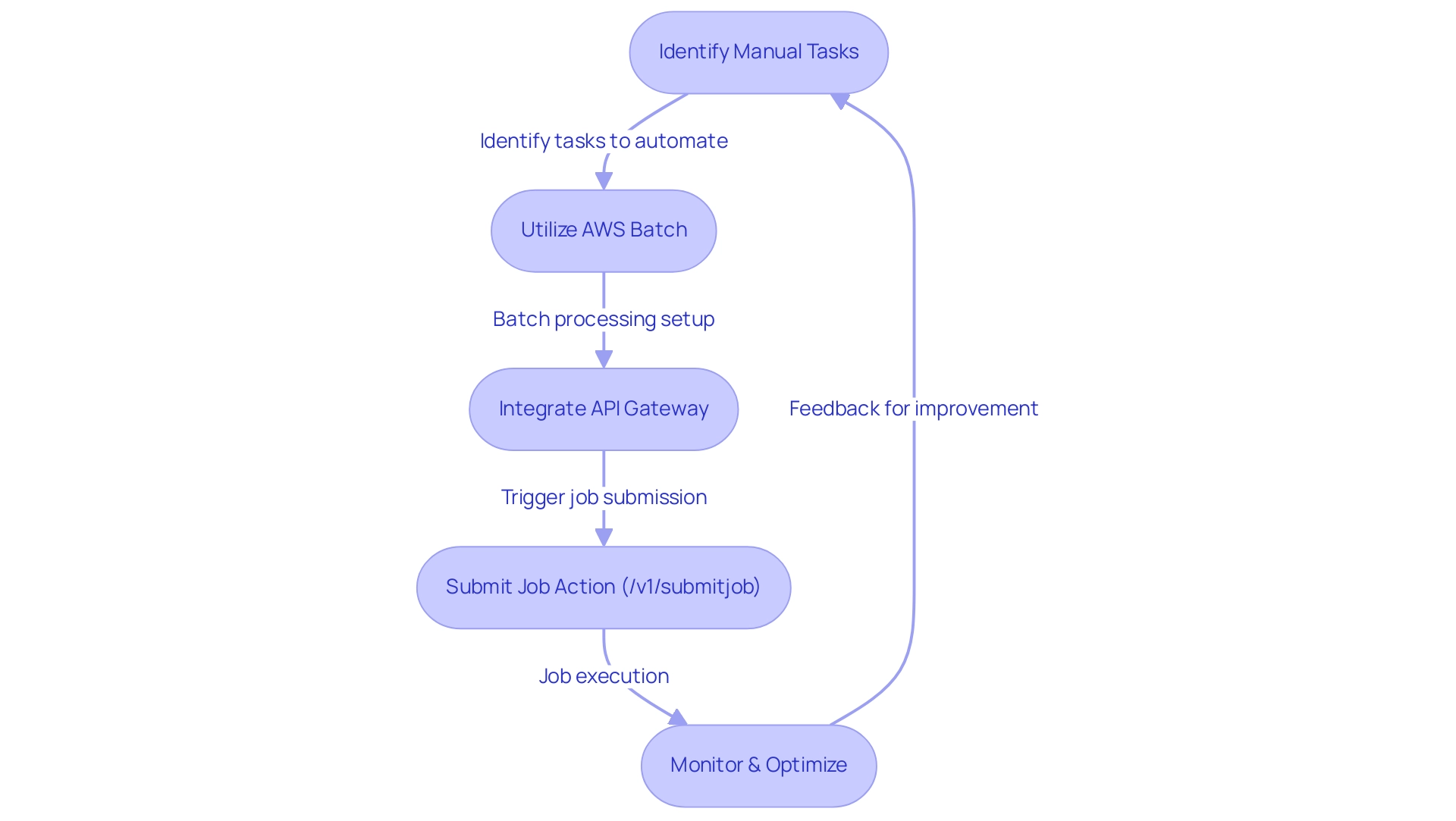

Understanding AWS Batch and API Integration

Manual, repetitive tasks can significantly hinder operational efficiency, wasting time and resources. AWS Batch is a fully managed service that lets organizations run batch computing workloads efficiently in the AWS Cloud. It dynamically provisions the optimal quantity and type of compute resources (for example, CPU-optimized or memory-optimized instances) based on the requirements of the submitted jobs, which removes much of the capacity planning that manual workflows demand.

This capability significantly enhances operational efficiency and resource utilization, making it an excellent complement to Robotic Process Automation (RPA) strategies aimed at automating these manual tasks.
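The resource allocation described above is configured through a compute environment and a job queue. The following is a minimal sketch, assuming a managed EC2 environment: it only builds request bodies whose field names follow the AWS Batch CreateComputeEnvironment and CreateJobQueue APIs, and every name, subnet, security group, and role value is a hypothetical placeholder.

```python
def compute_environment_request(name, subnets, security_groups, instance_role, max_vcpus=64):
    """Request body for a managed EC2 compute environment (CreateComputeEnvironment)."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch launches and scales the instances itself
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",
            "minvCpus": 0,  # scale down to zero when no jobs are waiting
            "maxvCpus": max_vcpus,  # upper bound on capacity (and therefore cost)
            "instanceTypes": ["optimal"],  # let AWS Batch pick instance types that fit the jobs
            "subnets": subnets,
            "securityGroupIds": security_groups,
            "instanceRole": instance_role,  # instance profile used by the EC2 hosts
        },
    }


def job_queue_request(name, compute_environment, priority=1):
    """Request body for a job queue attached to one compute environment (CreateJobQueue)."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,  # higher values are scheduled first on a shared compute environment
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment},
        ],
    }
```

With boto3 installed and credentials configured, these dictionaries could be passed as keyword arguments, for example `boto3.client("batch").create_compute_environment(**body)`; keeping them as plain data makes them easy to review and version.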

APIs further extend the usability of AWS Batch. Developers can submit jobs programmatically, automating workflows and triggering processing in response to a variety of events. Understanding how AWS Batch interacts with APIs is essential for realizing this potential and improving business productivity through customized AI solutions.

Business Intelligence also plays a vital role, transforming data into actionable insights that enable informed decisions and drive growth. On the integration side, Amazon API Gateway serves as a pivotal bridge, letting you create, publish, maintain, monitor, and secure APIs at scale. With API Gateway, your applications or other AWS services can submit AWS Batch jobs through an HTTP endpoint, streamlining operations and enhancing productivity.

Notably, the path override for the AWS Batch SubmitJob action is /v1/submitjob. While the API Gateway method exposed to clients can be either GET or POST, the integration request must use POST for the SubmitJob call.

A pertinent case study, ‘Submitting an AWS Task from AWS API Gateway’, examines how to submit AWS Batch jobs through API Gateway, highlighting how difficult it can be to understand the integration process and the specific settings it requires. The author documented the necessary configuration, including the integration type, HTTP method, and mapping templates, to make the process easier for others. This integration not only simplifies job submission but also enables faster, automated responses to business needs, freeing your team to focus on strategic, value-adding work.
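The integration type, HTTP method, path override, and mapping template discussed above can be captured in one place. This is a minimal sketch, assuming a non-proxy AWS service integration: the dictionary keys mirror parameters of API Gateway's PutIntegration call, the Velocity (VTL) template copies three fields from the client's JSON body, and the region and role ARN are hypothetical.

```python
def submit_job_mapping_template(fields=("jobName", "jobQueue", "jobDefinition")):
    """VTL mapping template that copies selected client fields into the SubmitJob body."""
    # $input.json('$.field') emits the field as JSON, so string values keep their quotes
    entries = [f'  "{field}": $input.json(\'$.{field}\')' for field in fields]
    return "{\n" + ",\n".join(entries) + "\n}"


def submit_job_integration(region, credentials_role_arn, template=None):
    """Keyword arguments for API Gateway PutIntegration targeting AWS Batch SubmitJob."""
    return {
        "type": "AWS",  # AWS service integration (non-proxy)
        "integrationHttpMethod": "POST",  # SubmitJob must be called with POST
        # The /v1/submitjob path override expressed as an API Gateway service URI
        "uri": f"arn:aws:apigateway:{region}:batch:path/v1/submitjob",
        "credentials": credentials_role_arn,  # role API Gateway assumes to call AWS Batch
        "requestTemplates": {
            "application/json": template or submit_job_mapping_template()
        },
    }
```

Before passing these to the boto3 `apigateway` client's `put_integration`, you would still add `restApiId`, `resourceId`, and `httpMethod` for your own API; the client-facing method can stay GET or POST while `integrationHttpMethod` remains POST.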

Furthermore, in a rapidly evolving AI landscape, choosing the right solutions for your business needs is crucial, and AWS Batch, combined with RPA and Business Intelligence, offers a robust framework for achieving operational excellence.

Step-by-Step Process for Submitting AWS Batch Jobs via API Gateway

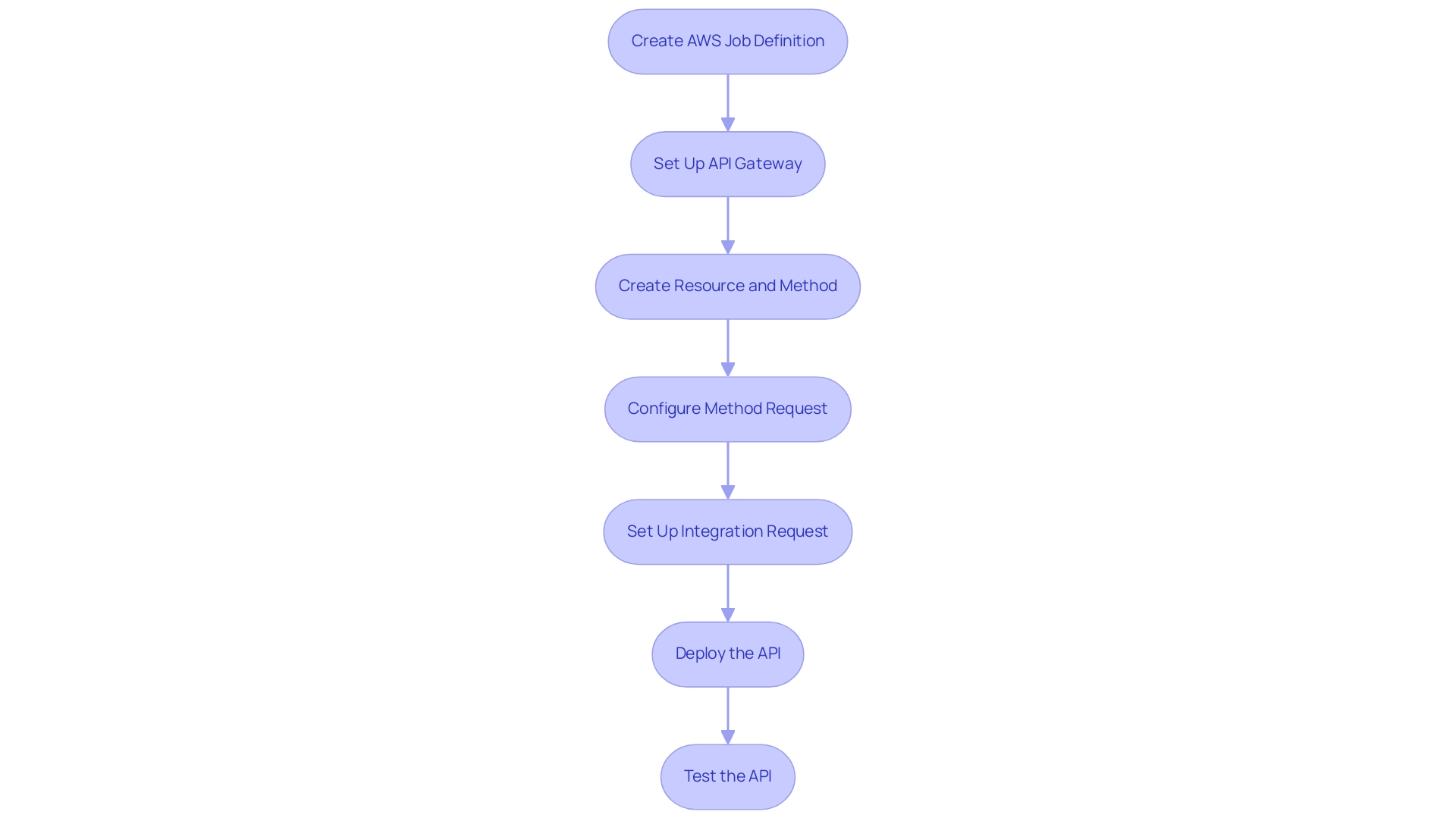

To automate the submission of AWS Batch jobs via the API Gateway, follow these structured steps:

- Create an AWS Batch Job Definition: Start by creating a job definition that describes how your job runs. In the AWS Batch console, create a new job definition and configure key attributes such as the job definition name, container image, and resource requirements (vCPUs and memory).

- Set Up API Gateway: In the AWS Management Console, open the API Gateway service. Create a new API, choose the REST API option, and tailor its configuration to your operational needs. Depending on your use case, choose a regional or edge-optimized endpoint.

- Create a Resource and Method: Within your API, create a new resource, such as /submit-job, and then a method (e.g., POST) for it. In the method settings, select AWS Service as the integration type and configure it to call AWS Batch.

- Configure Method Request: Define the request parameters and body that your API will accept. Include the essential job parameters: job name, job queue, and job definition.

- Set Up Integration Request: Map incoming API Gateway requests to the AWS Batch API. Make sure the request body matches the format expected by the AWS Batch SubmitJob API call.

- Deploy the API: Once the configuration is complete, deploy the API to a stage (e.g., production). Deployment creates an endpoint that you can call to submit AWS Batch jobs.

- Test the API: Use a tool such as Postman or curl to validate the endpoint. Send a POST request with the required parameters and confirm that your AWS Batch jobs are submitted as intended.
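The first step in the list, creating a job definition, can also be expressed as a RegisterJobDefinition request body. This is a minimal sketch for a single-container job; the definition name, image URI, command, and sizes are hypothetical, and `jobRoleArn` is only included when a job role is supplied.

```python
def job_definition_request(name, image, command, vcpus=1, memory_mib=2048, job_role_arn=None):
    """Request body for AWS Batch RegisterJobDefinition (single-container job)."""
    container = {
        "image": image,
        "command": list(command),
        # AWS Batch expects resource values as strings
        "resourceRequirements": [
            {"type": "VCPU", "value": str(vcpus)},
            {"type": "MEMORY", "value": str(memory_mib)},  # in MiB
        ],
    }
    if job_role_arn:
        container["jobRoleArn"] = job_role_arn  # role assumed by the job's container
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": container,
    }
```

Registering the same name again creates a new revision rather than overwriting the old one, which is what later makes pinning and rolling back revisions possible.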

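Important Considerations: If you run AWS Batch on Amazon EKS, note that AWS Batch does not provide managed-node orchestration for CoreDNS or other deployment pods, so those pods need capacity that you provision separately. This may affect how you plan your environment and, in turn, your job submission strategy.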

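Official AWS material also addresses questions such as “How do I run an AWS job using Java?”, a reminder that API Gateway is only one of several ways to submit jobs; the AWS SDKs can submit them directly from application code.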

Furthermore, the case study “Triggering AWS Mainframe Modernization Jobs” shows how specialists automated job scheduling and improved workflow management in AWS, highlighting the practical implications of this kind of automation.

By following these steps, you can simplify submitting AWS Batch jobs through API Gateway, improving your operational efficiency and automation capabilities.
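For the final testing step, a request like the one Postman or curl would send can also be built in code. This is a minimal sketch using only the Python standard library; the API ID, region, stage, and job names are hypothetical, and the `/submit-job` path assumes the resource created in the steps above.

```python
import json
import urllib.request


def build_submit_request(api_id, region, stage, job_name, job_queue, job_definition):
    """Build (but do not send) a POST to a deployed /submit-job resource."""
    # Default invoke URL format for a REST API stage
    url = f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/submit-job"
    body = json.dumps(
        {"jobName": job_name, "jobQueue": job_queue, "jobDefinition": job_definition}
    ).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"}
    )
```

Sending it with `urllib.request.urlopen(req)` should return the SubmitJob response (including `jobId` and `jobArn`) if the method has no authorization configured; if you protect the method with IAM authorization, the request would additionally need SigV4 signing, which this sketch does not do.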

Configuring IAM Roles and Permissions

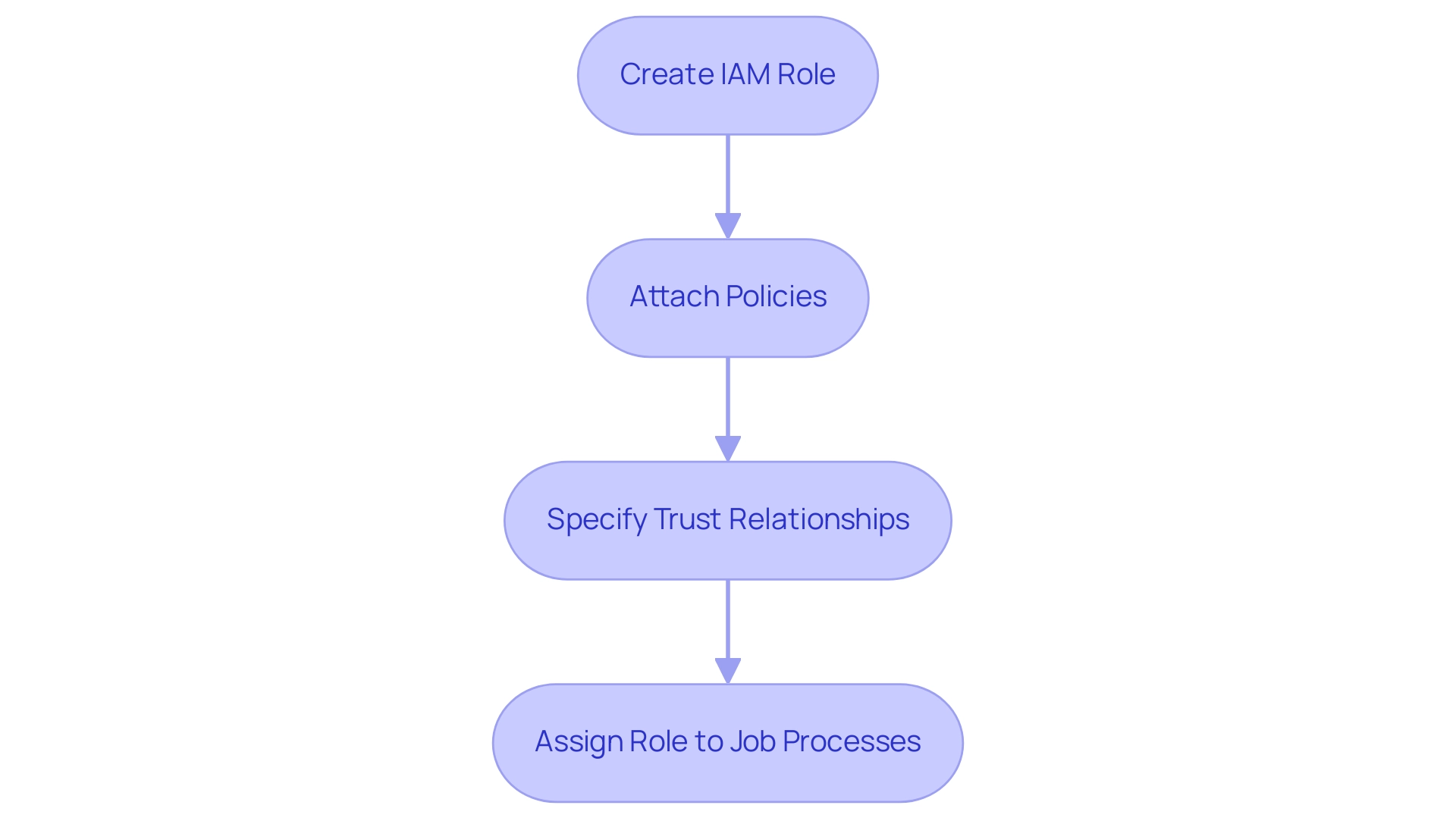

Setting up IAM roles and permissions for AWS Batch and API Gateway is vital for secure and efficient operation. Here’s a step-by-step guide:

- Create an IAM Role: In the AWS console, open IAM and create a new role, selecting AWS service as the trusted entity. Choose AWS Batch as the service that will use this role. Note that the service might return information about five existing IAM roles, which can help you understand the available options.

- Attach Policies: Next, attach policies that grant permissions for AWS Batch operations, such as AWSBatchFullAccess. If you want to monitor your jobs, also consider a logging policy such as CloudWatchLogsFullAccess so that job activity can be tracked. For production, a narrower custom policy (for example, one that allows only batch:SubmitJob) fits the principle of least privilege better than these broad managed policies.

- Specify Trust Relationships: Modify the role’s trust relationships so that API Gateway can assume it. To do this, add a trust policy that lists API Gateway (apigateway.amazonaws.com) as a trusted entity, which lets the integration call AWS Batch on your behalf. AWS Batch also supports service-linked roles in all Regions where the service is available, which is worth using for the service’s own permissions.

- Assign a Role to Your Jobs: When creating a job definition in AWS Batch, specify the IAM role your jobs should run with (the job role). This role must trust the Amazon ECS tasks service (ecs-tasks.amazonaws.com), so it is usually separate from the role API Gateway assumes. It lets your job containers access other AWS resources securely, in line with the principle of least privilege. If you run into problems when deleting a role, refer to the AWS Batch documentation on cleaning up the resources that depend on it first.
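The trust relationships and least-privilege permissions described in the list above are JSON policy documents. This is a minimal sketch that builds them as Python dictionaries; the account ID, queue, and job definition ARNs are hypothetical, and the permission policy is a narrower alternative to AWSBatchFullAccess rather than a replacement for the article’s setup.

```python
def trust_policy(service_principal):
    """Trust policy that lets a single AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def submit_job_permissions(job_queue_arn, job_definition_arn_base):
    """Least-privilege policy: only SubmitJob, only on one queue and one job definition."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "batch:SubmitJob",
                # ":*" matches every revision of the job definition
                "Resource": [job_queue_arn, f"{job_definition_arn_base}:*"],
            }
        ],
    }
```

In this sketch, the role API Gateway assumes would combine `trust_policy("apigateway.amazonaws.com")` with `submit_job_permissions(...)`, while the job role would use `trust_policy("ecs-tasks.amazonaws.com")` plus whatever access the job itself needs.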

By carefully configuring IAM roles and permissions, you let your AWS Batch jobs run securely and efficiently, accessing only the resources they need. This approach also mitigates the security risks associated with over-permissioning.

Monitoring and Troubleshooting AWS Batch Jobs

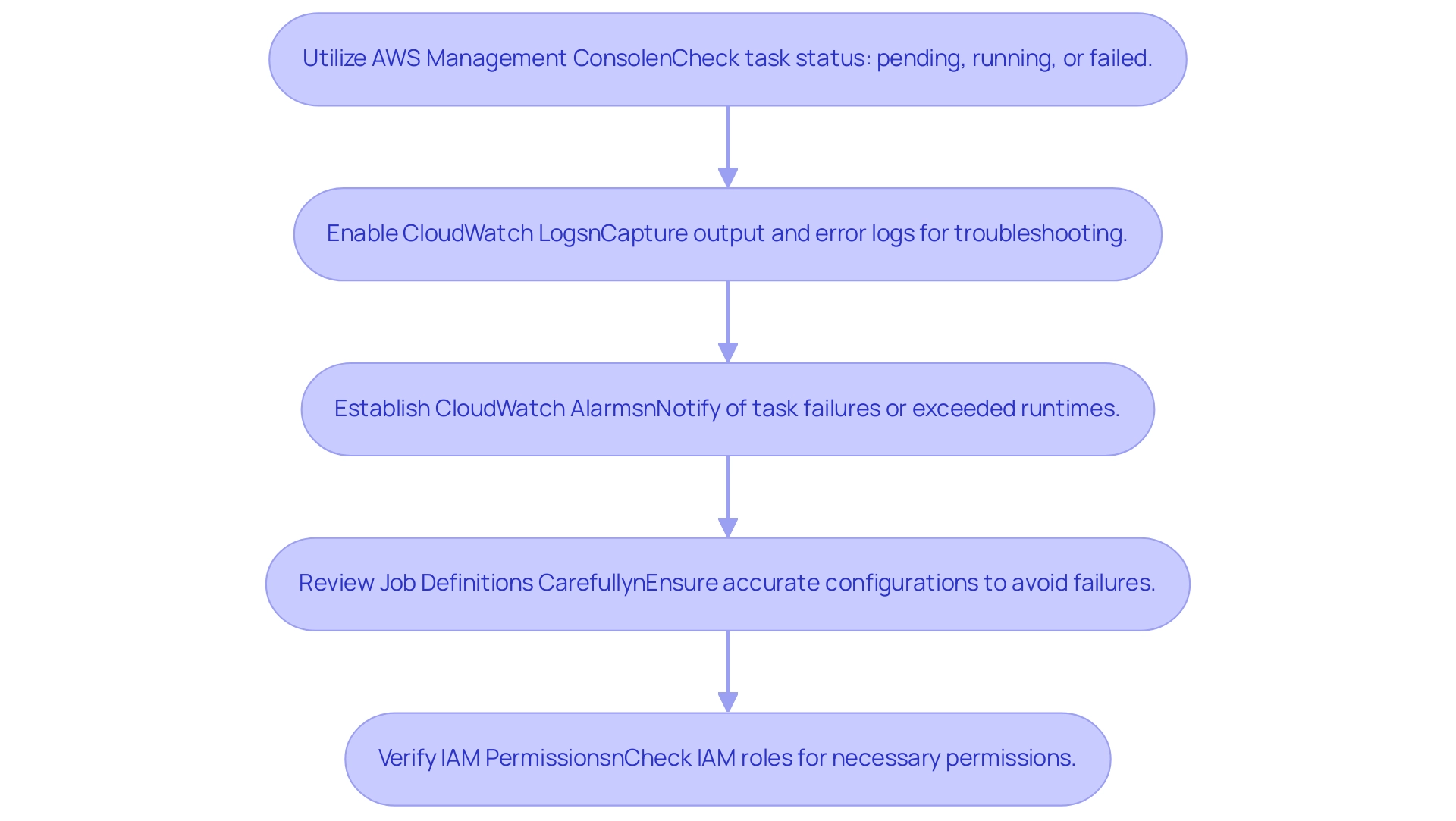

To effectively monitor and troubleshoot AWS Batch jobs, consider implementing the following strategies:

- Use the AWS Batch Console: Check the status of your jobs at a glance in the AWS Batch console. You can quickly see whether jobs are pending, running, or failed, enabling quick decisions. Keep job queue priority in mind as well: each queue has a priority value (1 in this example), and when several queues share a compute environment, the queue with the higher priority value is scheduled first.

- Enable CloudWatch Logs: Make sure logging is enabled for your jobs so that both output and error logs are captured; this detailed view is essential for troubleshooting. By default, AWS Batch container jobs send their logs to the /aws/batch/job log group. Reports on AWS Batch job monitoring for 2024 suggest that organizations using CloudWatch Logs see a significant reduction in troubleshooting time.

- Set Up CloudWatch Alarms: Configure CloudWatch alarms to notify you when jobs fail or exceed their expected runtime. This proactive approach helps you address issues before they escalate into critical failures.

- Review Job Definitions Carefully: When jobs fail, review the job definition to make sure every parameter is configured correctly. Common pitfalls include incorrect resource allocations and misconfigured environment variables. A case study on container image pull failures shows why precision matters: although the retry strategy allowed up to ten attempts, a ‘CannotPullContainerError’ caused by a misconfiguration ended the job immediately.

- Verify IAM Permissions: Check that the IAM roles attached to your jobs have the permissions they need; inadequate permissions cause job failures and reduce the efficiency of your operations. For EC2-based compute environments, creating an instance role and attaching it to an instance profile lets the instances interact properly with Amazon ECS. Retry strategies add another layer of reliability, letting you respond to infrastructure events or application signals: “By defining retry strategies you can react to an infrastructure event (like an EC2 Spot interruption), an application signal (via the exit code), or an event within the middleware (like a container image not being available).”
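The retry behavior described in the list above is set through a job definition’s retry strategy. This is a minimal sketch: the field names follow AWS Batch’s `retryStrategy` and `evaluateOnExit` structure, but the specific patterns are assumptions (in particular, that an image pull failure surfaces in the container’s reason string), so verify them against the reasons your own failed jobs report.

```python
def retry_strategy(attempts=3):
    """retryStrategy block for a job definition: retry host loss, fail fast otherwise."""
    if not 1 <= attempts <= 10:
        raise ValueError("AWS Batch allows between 1 and 10 attempts")
    return {
        "attempts": attempts,
        "evaluateOnExit": [
            # An image that cannot be pulled will not fix itself on retry: stop immediately
            {"onReason": "CannotPullContainerError*", "action": "EXIT"},
            # The host instance went away (for example, a Spot interruption): try again
            {"onStatusReason": "Host EC2*", "action": "RETRY"},
            # Any other failure (including application errors): stop
            {"onReason": "*", "action": "EXIT"},
        ],
    }
```

The catch-all `EXIT` entry means application failures are not retried in this sketch; dropping it, or adding an `onExitCode` rule, would let selected application errors be retried too.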

By adopting these monitoring and troubleshooting strategies, you can significantly improve the reliability of your AWS Batch jobs and keep them running smoothly.
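Failure notifications are often wired through Amazon EventBridge, because AWS Batch emits “Batch Job State Change” events; a rule with a pattern like the one below could forward failed jobs to an SNS topic. This is a sketch: the queue ARN is hypothetical, and `matches` is a simplified local stand-in for checking the pattern against a sample event, not EventBridge’s full matching engine.

```python
def failed_job_pattern(job_queue_arn):
    """EventBridge event pattern for AWS Batch jobs that reach FAILED in one queue."""
    return {
        "source": ["aws.batch"],
        "detail-type": ["Batch Job State Change"],
        "detail": {"status": ["FAILED"], "jobQueue": [job_queue_arn]},
    }


def matches(pattern, event):
    """Simplified check: every pattern key must exist and its value must be one of the listed values.
    Mimics only exact-value matching, not EventBridge's prefix, numeric, or exists filters."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True
```

Runtime overruns are a different signal: they would need either a job timeout in the job definition or a separate check on how long jobs stay in the RUNNING state.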

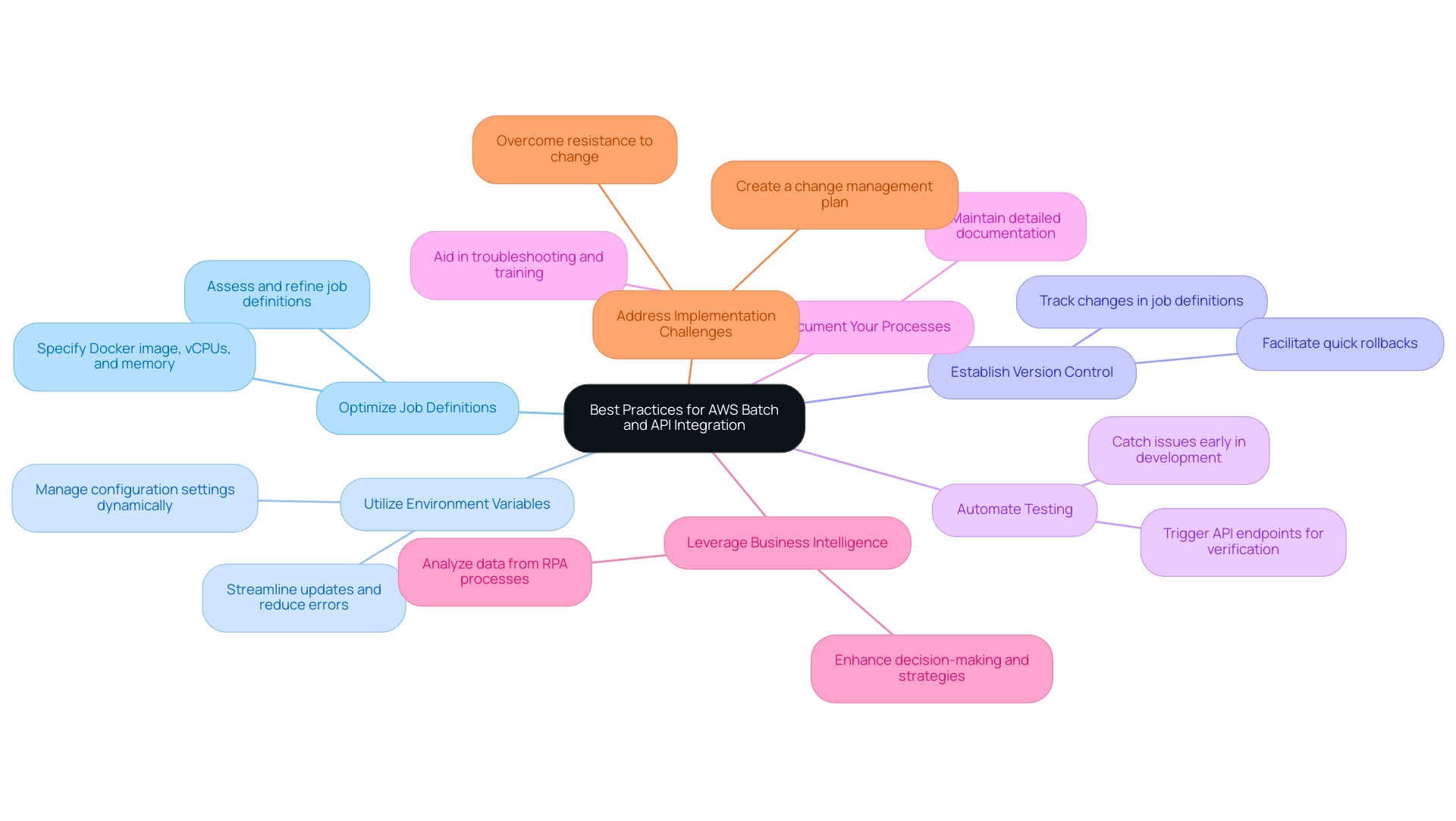

Best Practices for Using AWS Batch and API Integration

To harness the full potential of AWS Batch and API integration while using Robotic Process Automation (RPA) for greater operational efficiency, consider the following best practices:

- Optimize Job Definitions: Regularly review and refine your job definitions as operational needs evolve. Base the Docker image, vCPU, and memory settings on observed performance metrics; right-sizing improves efficiency and reduces costs, particularly when combined with RPA to automate repetitive tasks.

- Use Environment Variables: Use environment variables in your job definitions to manage configuration settings dynamically. This streamlines updates and reduces the risk of errors when deploying changes, improving reliability and supporting the agile integration of tailored AI solutions.

- Establish Version Control: Keep your job definitions and API Gateway configurations under version control. This makes changes traceable and allows quick rollbacks when something goes wrong, keeping operations stable and reinforcing the efficiency of RPA processes. AWS Batch also assigns a new revision number each time you register a job definition, so earlier revisions remain available to roll back to.

- Automate Testing: Introduce automated tests that call your API endpoints to verify their functionality consistently. Catching issues early in the development cycle leads to smoother deployments and greater confidence in your systems, which is critical in a rapidly evolving AI landscape.

- Document Your Processes: Create and maintain detailed documentation of your AWS Batch job scheduling and API Gateway configuration. This documentation aids troubleshooting and serves as a training resource for new team members, ensuring continuity and knowledge transfer, which is crucial for overcoming technology implementation challenges.

- Leverage Business Intelligence: Integrate Business Intelligence tools to analyze the data generated by your RPA processes. The resulting insights can sharpen decision-making and operational strategy, helping your organization stay competitive in a data-rich environment.

- Address Implementation Challenges: Be aware of common pitfalls in RPA deployment, such as inadequate training or resistance to change among staff. A change management plan can ease these difficulties and promote a smoother shift to automated workflows.
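The environment-variable and version-control practices in the list above meet at job submission time. This is a minimal sketch of a SubmitJob request body: it pins an explicit job definition revision when one is given and overrides environment variables for a single run. The job, queue, definition, and variable names are hypothetical.

```python
def submit_job_request(job_name, job_queue, definition_name, revision=None, env_overrides=None):
    """Request body for AWS Batch SubmitJob with optional revision pinning and env overrides."""
    # "name:revision" pins an exact revision (explicit rollbacks);
    # "name" alone resolves to the latest active revision
    definition = f"{definition_name}:{revision}" if revision is not None else definition_name
    body = {"jobName": job_name, "jobQueue": job_queue, "jobDefinition": definition}
    if env_overrides:
        # Override environment variables for this run without registering a new revision
        body["containerOverrides"] = {
            "environment": [
                {"name": key, "value": str(value)}
                for key, value in sorted(env_overrides.items())
            ]
        }
    return body
```

Pinning the revision trades convenience for predictability: a rollback becomes a one-character change in the caller instead of a re-registration of the old definition.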

By following these best practices, you can keep your AWS Batch usage efficient, secure, and scalable while managing the costs of your processing jobs. For instance, AWS lets you track and manage costs through cost allocation tags and budget alerts, a concrete way to gain better financial oversight and control. The flexibility of AWS Batch in managing and scaling workloads further enhances operational efficiency when combined with RPA and Business Intelligence.
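Cost allocation tags can be attached when a job is submitted. This is a minimal sketch that adds `tags` and `propagateTags` to any SubmitJob request body; the tag key and value are hypothetical, and user-defined tags generally have to be activated as cost allocation tags in the Billing console before they appear in cost reports.

```python
def with_cost_tags(submit_body, tags):
    """Return a copy of a SubmitJob body with cost allocation tags attached."""
    tagged = dict(submit_body)  # shallow copy so the caller's body is left unchanged
    tagged["tags"] = {**submit_body.get("tags", {}), **tags}
    # Propagate the job's tags to the underlying Amazon ECS task
    tagged["propagateTags"] = True
    return tagged
```

Tagging at submission time keeps cost attribution close to the code that launches the work, so a new workflow cannot forget to label its jobs.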

Conclusion

In summary, AWS Batch stands out as an essential tool for organizations aiming to enhance operational efficiency and streamline batch computing workloads in the cloud. By automating job submissions via the API Gateway, configuring IAM roles, and implementing effective monitoring strategies, businesses can alleviate the burdens of manual workflows and focus on strategic initiatives that drive growth.

The integration of AWS Batch with Business Intelligence and Robotic Process Automation creates a robust framework that not only empowers teams but also facilitates informed decision-making through actionable insights. By following best practices such as:

- optimizing job definitions

- utilizing environment variables

- maintaining thorough documentation

organizations can harness the full potential of AWS Batch while ensuring security and scalability.

Ultimately, embracing AWS Batch is not just about adopting a new technology; it’s about transforming operations to achieve a competitive edge in a data-driven landscape. By prioritizing efficiency and automation, businesses can position themselves for success in an ever-evolving marketplace, paving the way for innovation and growth.