Overview:

The article provides a comprehensive step-by-step guide on how to remove duplicates in Power BI, emphasizing the significance of maintaining data integrity for accurate analysis and decision-making. It outlines practical methods like using DAX and Power Query, along with best practices and troubleshooting tips, to ensure effective data cleaning and enhance operational efficiency.

Introduction

In the realm of data analysis, the integrity of information is paramount, and duplicates can pose significant challenges. Within Power BI, repeated entries not only skew analysis but also mislead decision-makers, potentially resulting in inflated metrics and misguided strategies. As organizations strive for accuracy and efficiency, understanding the implications of duplicate data becomes crucial.

This article delves into the importance of identifying and removing duplicates, offering practical methods and best practices to enhance data quality. From leveraging Power Query and DAX to implementing automated solutions like Robotic Process Automation, readers will discover actionable strategies that empower teams to maintain clean datasets and drive informed decision-making.

Embracing these insights can transform data management processes, ensuring that businesses are equipped to harness the full potential of their analytics.

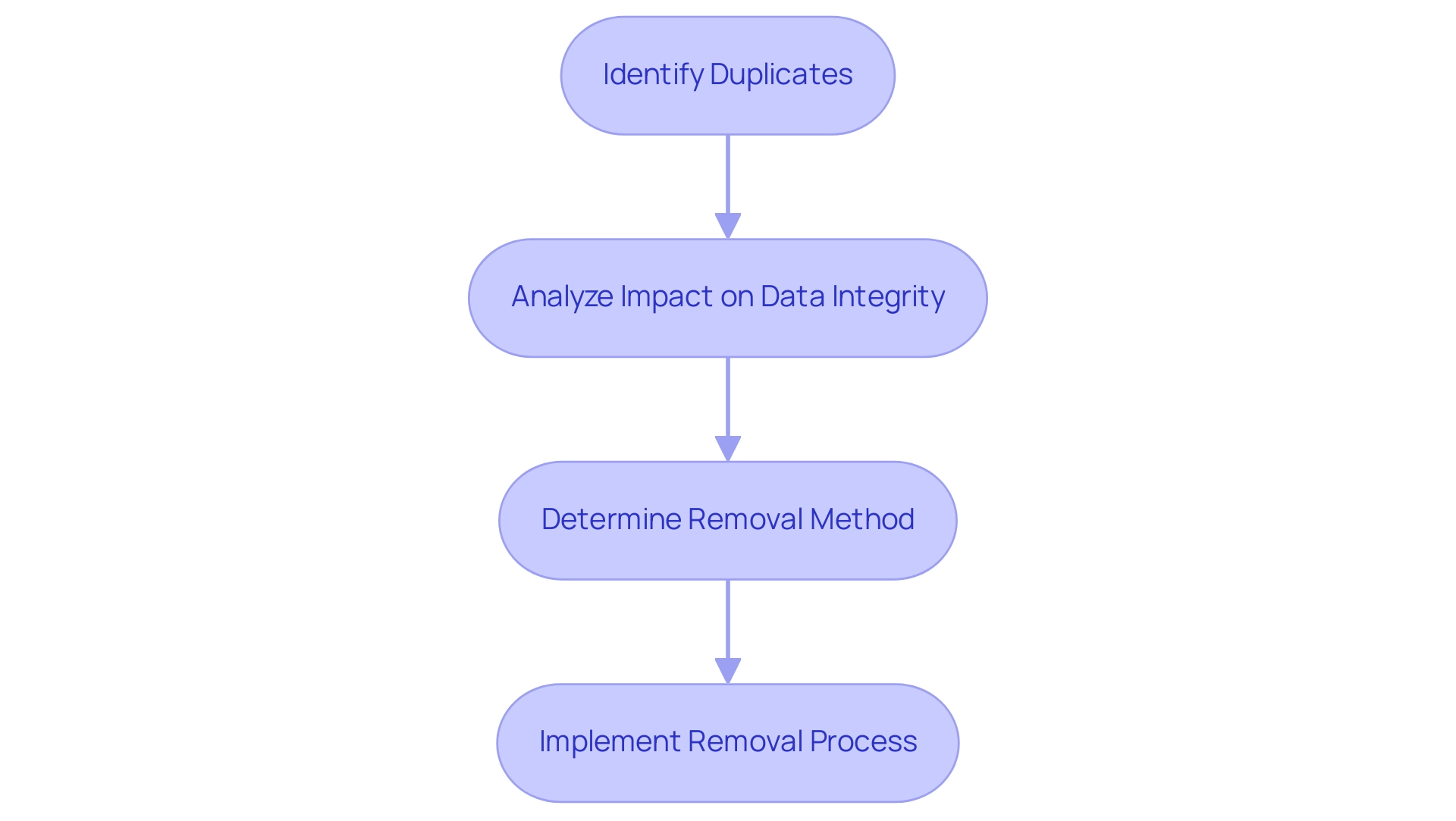

Understanding Duplicates in Power BI: Importance and Implications

In Power BI, duplicates are repeated entries for the same record within your dataset, and knowing how to remove them is essential because they can significantly distort analysis and lead to misleading insights. Duplicates skew key performance indicators and misrepresent critical trends. For example, consider a dataset of customer transactions in which some rows have been entered more than once.

Such redundancy can inflate sales figures and distort the financial outlook of the business. Notably, the training dataset in this context has over 78% redundancy: only 1,074,992 of its 4,898,431 records are distinct. As emphasized by Aditya Sasmal, a Microsoft-certified Business Analyst, ‘Duplicate information consists of repeated details within a dataset, often resulting from entry errors, multiple sources integration, or inadequate management.’
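To make the inflated-sales scenario concrete, here is a minimal Python sketch. The transaction rows, field layout, and function names are hypothetical and chosen only for illustration; this is not Power BI code. The last lines also check the redundancy figure quoted above, under the assumption that 1,074,992 is the number of distinct records.

```python
# Hypothetical transaction rows: (transaction_id, customer, amount).
# Transaction T002 was loaded twice, e.g. from two overlapping exports.
transactions = [
    ("T001", "Alice", 120.0),
    ("T002", "Bob", 80.0),
    ("T002", "Bob", 80.0),  # exact duplicate of the row above
    ("T003", "Carla", 50.0),
]


def total_sales(rows):
    """Sum the amount field (third element) of every row."""
    return sum(amount for _, _, amount in rows)


def drop_exact_duplicates(rows):
    """Keep the first occurrence of each identical row, preserving order."""
    return list(dict.fromkeys(rows))


def redundancy_rate(total_records, distinct_records):
    """Share of records that are repeats of some other record."""
    return 1 - distinct_records / total_records


inflated_total = total_sales(transactions)
corrected_total = total_sales(drop_exact_duplicates(transactions))
sample_rate = redundancy_rate(len(transactions), len(set(transactions)))

# Assumption: 1,074,992 is the count of distinct records out of 4,898,431.
quoted_rate = redundancy_rate(4_898_431, 1_074_992)

print(inflated_total, corrected_total, sample_rate, round(quoted_rate, 2))
```

In this sample a single repeated row raises the reported total from 250.0 to 330.0, which is the kind of distortion the paragraph describes.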

Consequently, protecting data integrity by removing duplicates is crucial for precise reporting and informed decision-making. This matters even more in light of recent discussions on establishing a knowledge management process to ensure consistency when teams work with the same dataset. By promoting openness about how data is gathered and the challenges involved, teams can reduce the risks linked to redundancies and maintain the integrity of their analyses.

Furthermore, time-consuming report creation often diverts attention from using insights effectively. As illustrated in the case study titled ‘Critical Thinking in Data Analysis,’ software can identify repeated data, but analysts must still apply critical thinking to decide the best approach for handling it. By integrating robust Business Intelligence practices with RPA solutions such as EMMA RPA, which streamlines repetitive tasks, and Power Automate, which enhances operational workflows, organizations can transform their analytical processes.

This supports not only the elimination of repeated entries but also actionable insights that drive business growth and operational efficiency. To explore how these solutions can benefit your organization, book a free consultation today.

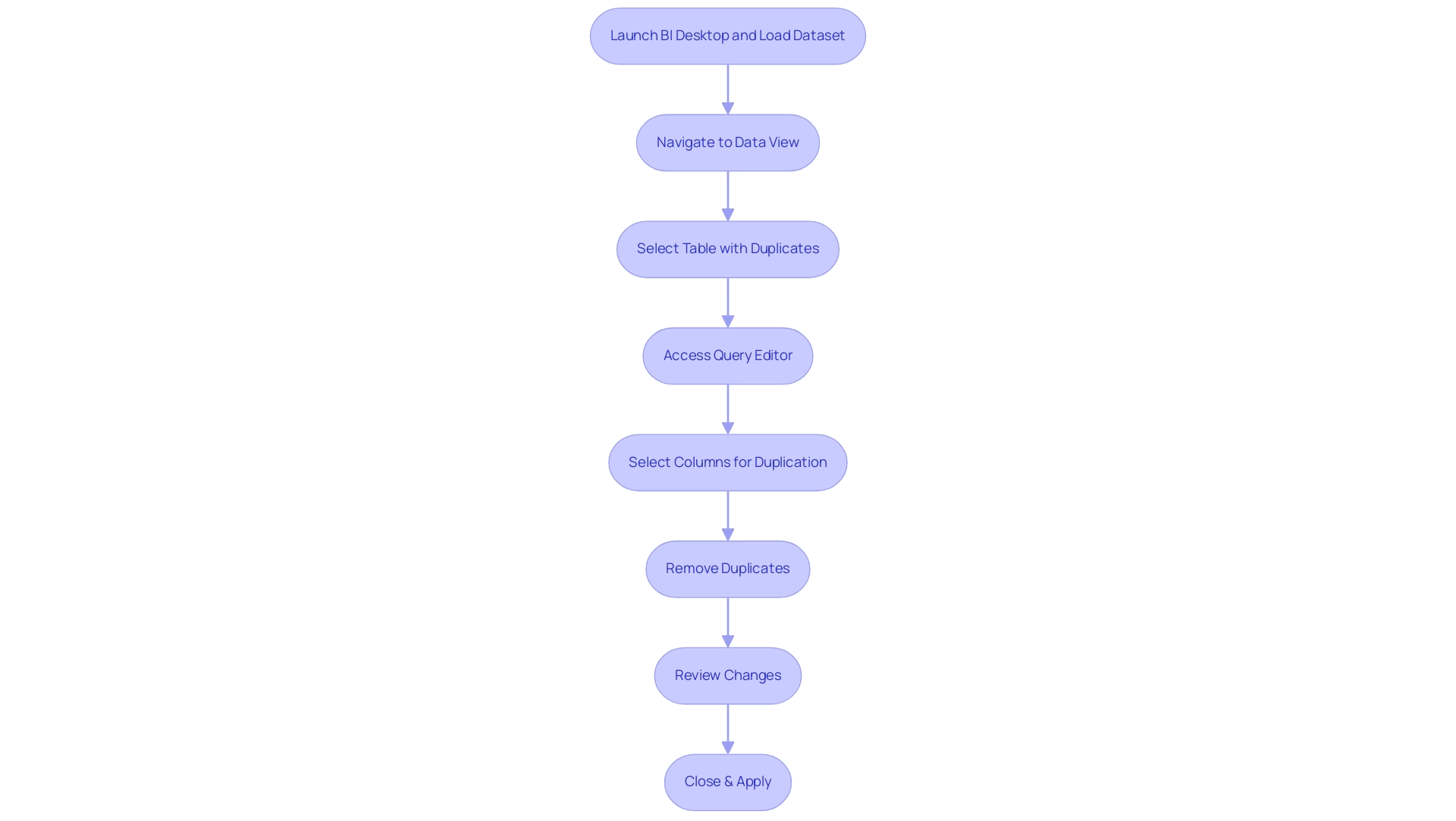

Step-by-Step Guide to Removing Duplicates in Power BI

- Start by launching Power BI Desktop and loading the dataset you wish to clean.

- Navigate to the ‘Data’ view by clicking on the table icon located on the left sidebar.

- Identify and select the table that contains the repeated entries.

- Click on the ‘Transform Data’ button to open the Power Query Editor.

- Within the Power Query Editor, select the specific columns where repetitions may occur. Only the selected columns are compared when duplicates are identified.

- In the ‘Home’ tab, find the ‘Remove Rows’ option and select ‘Remove Duplicates’.

- Carefully review the changes displayed in the preview pane to confirm that all duplicates have been effectively removed.

- Finally, click ‘Close & Apply’ to save your changes and return to the main Power BI interface.
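The logic behind the ‘Remove Duplicates’ step can be sketched in a few lines of Python. This is an illustration rather than Power BI code: in Power Query the step is typically recorded as a `Table.Distinct` call in M. The sample table and the `remove_duplicates` helper are hypothetical, and keeping the first row of each group is an assumption of this sketch; which row Power Query keeps can depend on the source and on any earlier sort or buffering steps.

```python
def remove_duplicates(rows, key_columns):
    """Return rows with duplicates removed, comparing only key_columns.

    The first row seen for each key is kept and input order is preserved.
    """
    seen = set()
    result = []
    for row in rows:
        key = tuple(row[col] for col in key_columns)
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


# Hypothetical customer table with one repeated email address.
customers = [
    {"customer_id": 1, "email": "ana@example.com", "city": "Lisbon"},
    {"customer_id": 2, "email": "ben@example.com", "city": "Oslo"},
    {"customer_id": 3, "email": "ana@example.com", "city": "Porto"},
]

# Selecting only the email column treats rows 1 and 3 as duplicates.
by_email = remove_duplicates(customers, ["email"])

# Selecting email and city together treats all three rows as distinct.
by_email_and_city = remove_duplicates(customers, ["email", "city"])

print(len(by_email), len(by_email_and_city))
```

The two calls show why the column selection in the earlier step matters: the same table yields a different result depending on which columns define a duplicate.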

Consistent data cleaning is essential for maintaining accuracy and integrity. These practices not only help remove duplicates in Power BI but also help tackle inconsistencies and reduce the time-consuming report creation that can obstruct effective decision-making. As Neeraj Sharma aptly puts it, ‘Remember, clean information is the foundation of accurate and reliable insights.’

By utilizing Business Intelligence tools like Power BI alongside RPA solutions such as EMMA RPA and Power Automate, you can automate these data cleaning tasks, significantly enhancing efficiency and ensuring actionable insights for your business growth. For further insights, consider the case study titled “Best Practices for Data Cleaning in Business Intelligence,” which outlines effective techniques that can be adopted.

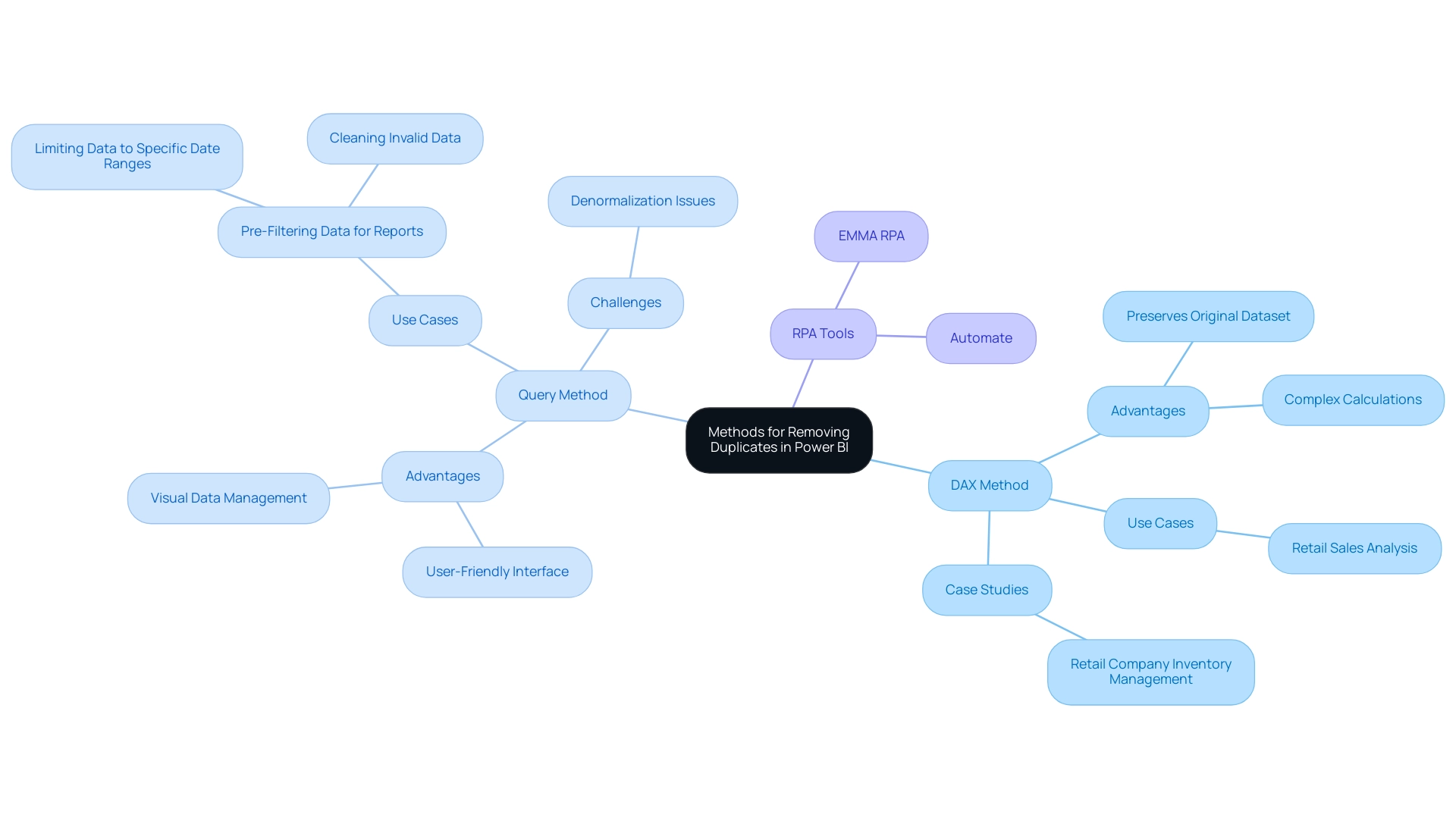

Methods for Removing Duplicates: DAX vs. Power Query

When it comes to removing duplicates in Power BI, two main methods stand out: DAX and Power Query. Both are crucial in leveraging Business Intelligence for operational efficiency and actionable insights.

- DAX Method: Using the DAX function DISTINCT, you can create a new table that retains only unique values. This approach is particularly advantageous for those who wish to preserve their original dataset while gaining insights from unique entries. Analysts often favor this method for its flexibility and precision, allowing for complex calculations without altering the original structure. For instance, a case study may highlight how a retail company used DAX to analyze sales information, identifying unique transactions for improved inventory management.

- Power Query Method: Power Query provides a user-friendly interface for removing duplicates directly from the dataset. This method is ideal for those who prefer a visual approach to data management and want to clean their datasets before analysis. However, as Eason from Community Support notes, one difficult case is the denormalization of tables originating from various data sources, because it would not be optimized by query folding in Power Query. This highlights the difficulty of preserving data integrity during cleaning, especially when reconciling discrepancies among diverse data sources.
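The DAX approach of deriving a new table of unique values while leaving the source untouched can be mirrored in plain Python. The sketch below is not DAX: the sample `sales` rows and both helper names are hypothetical, and it only imitates the idea that DISTINCT can be applied to a single column or to a whole table.

```python
def distinct_values(rows, column):
    """Unique values of one column, in first-seen order."""
    return list(dict.fromkeys(row[column] for row in rows))


def distinct_rows(rows):
    """Build a new list of unique rows; the input list is not modified."""
    seen = set()
    result = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the row
        if key not in seen:
            seen.add(key)
            result.append(dict(row))  # copy so the result is independent
    return result


# Hypothetical sales table in which order A1 appears twice.
sales = [
    {"order_id": "A1", "product": "Lamp"},
    {"order_id": "A2", "product": "Desk"},
    {"order_id": "A1", "product": "Lamp"},
]

unique_products = distinct_values(sales, "product")
unique_sales = distinct_rows(sales)

print(unique_products, len(unique_sales), len(sales))
```

Because `sales` still holds all three rows afterwards, the derived table can be used for analysis while the original data stays available for auditing.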

Alongside these techniques, incorporating RPA tools such as EMMA RPA and Power Automate can greatly improve operational efficiency. These tools can automate repetitive data cleaning tasks, reducing the time spent on manual processes and minimizing errors. For instance, EMMA RPA can be employed to automate the extraction and transformation of data, ensuring that only pertinent and unique entries are processed in Power BI.

Both approaches have unique benefits and support data-driven insights for business growth. The decision between them ultimately depends on your operational needs and familiarity with Power BI functionality. Recent conversations among analysts indicate a trend toward using DAX for unique value analysis, while Power Query remains a preferred choice for pre-filtering and cleaning datasets, particularly to improve report quality.

Common tasks executed with Power Query, such as restricting data to specific date ranges or sanitizing invalid content, further demonstrate its practical applications. Consider your team’s preferences and the specific nature of your data when deciding which method to implement, and don’t overlook the potential benefits of incorporating RPA to streamline these processes.

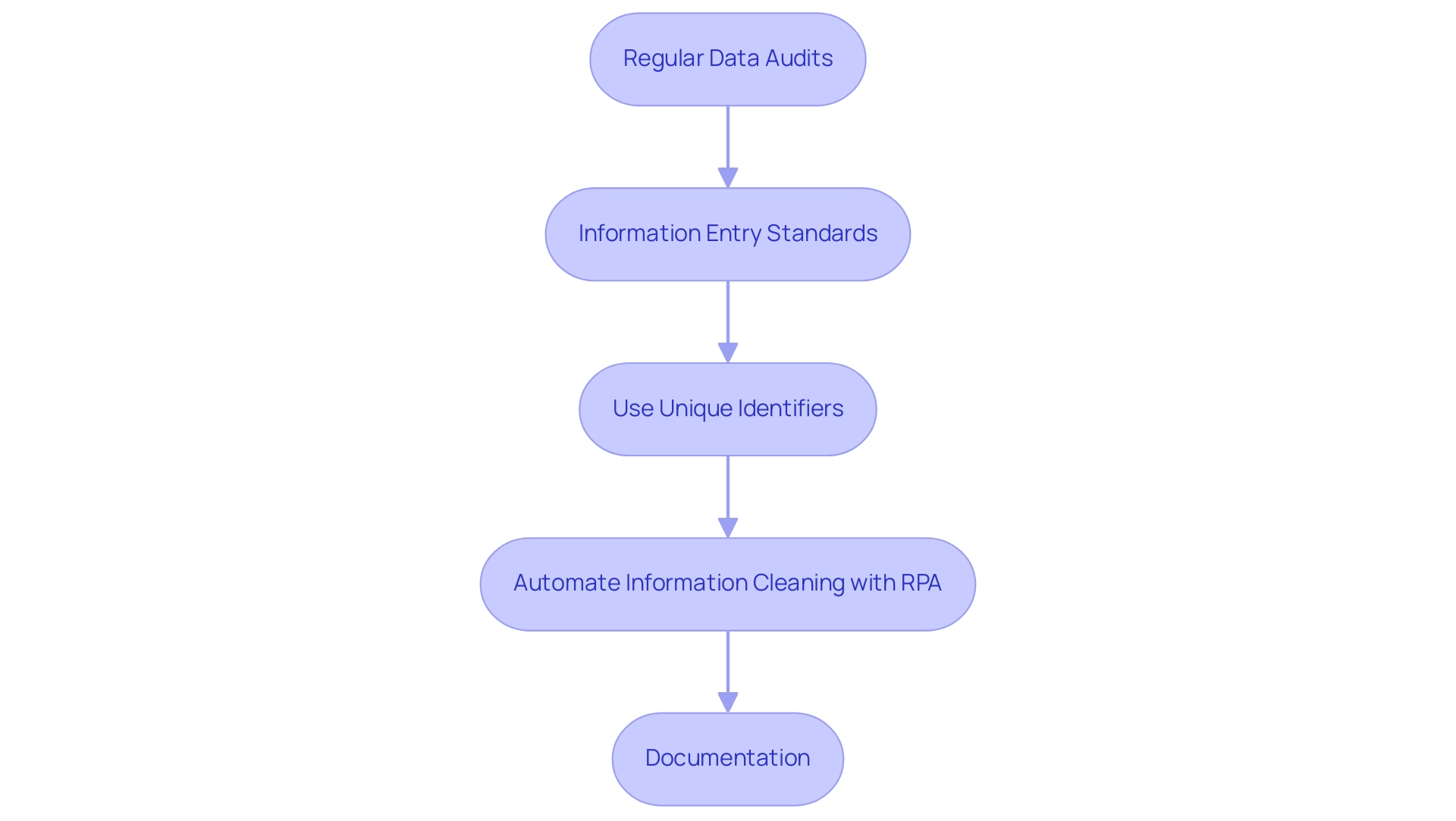

Best Practices for Managing Duplicates in Power BI

To effectively handle duplicates in Power BI and enhance operational efficiency, consider implementing the following best practices:

- Regular Data Audits: Conduct periodic reviews of your datasets to pinpoint and rectify duplicates. This proactive approach protects data integrity and reliability, allowing for informed decision-making. As highlighted in the article ‘Good enough practices in scientific computing,’ effective data management can significantly reduce errors, with studies indicating that poor data quality can cost organizations up to 25% of their revenue.

- Data Entry Standards: Establish comprehensive guidelines for data entry. By standardizing formats for names, addresses, and other critical fields, organizations can significantly reduce the likelihood of duplicates arising. Cindy Turner, SAS Insights Editor, emphasizes that ‘Most of them spend 50 to 80 percent of their model development time on information preparation alone,’ underscoring the importance of these standards.

- Use Unique Identifiers: Implement unique identifiers, such as customer IDs or transaction numbers, to distinguish records from one another. This practice not only aids data accuracy but also simplifies tracking and retrieval. The case analysis on data modeling demonstrates how understanding the relationships and flow of data can improve the management of redundancies, resulting in enhanced operational efficiency.

- Automate Data Cleaning with RPA: Use tools such as Power Query to automate duplicate removal during your data preparation phase. Incorporating Robotic Process Automation (RPA) not only streamlines operations but also minimizes manual errors, enhancing overall efficiency. By automating these manual workflows, your team can focus on more strategic, value-adding tasks. Our 3-Day BI Sprint can assist you in establishing these methods, delivering a fully operational, professionally crafted report on a subject of your choice within just three days, which can act as a template for upcoming projects.

- Documentation: Keep comprehensive records of your data sources and the cleaning methods used. This ensures transparency and reproducibility, which are essential for compliance and effective auditing. Moreover, the ‘Ethical Guidelines for Statistical Practice’ emphasize the significance of ethical considerations in data management, which is vital for preserving integrity.
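As a rough illustration of how a periodic audit can lean on unique identifiers, the Python sketch below counts how often each identifier occurs and reports the ones that repeat. The `orders` data, the `transaction_id` column, and the `audit_duplicate_ids` helper are hypothetical names introduced for this example only.

```python
from collections import Counter


def audit_duplicate_ids(rows, id_column):
    """Return {identifier: occurrences} for identifiers seen more than once."""
    counts = Counter(row[id_column] for row in rows)
    return {identifier: n for identifier, n in counts.items() if n > 1}


# Hypothetical order table; T-100 appears three times, once with a new amount.
orders = [
    {"transaction_id": "T-100", "amount": 40.0},
    {"transaction_id": "T-101", "amount": 15.5},
    {"transaction_id": "T-100", "amount": 40.0},
    {"transaction_id": "T-102", "amount": 99.0},
    {"transaction_id": "T-100", "amount": 42.0},
]

report = audit_duplicate_ids(orders, "transaction_id")
print(report)
```

A report like this flags records for review rather than deleting them, which matters when repeated identifiers carry different values, as the third T-100 row does here.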

By embracing these best practices, including using our 3-Day BI Sprint to generate professional reports, organizations can significantly enhance their data management procedures in Power BI, thereby promoting improved decision-making and operational efficiency.

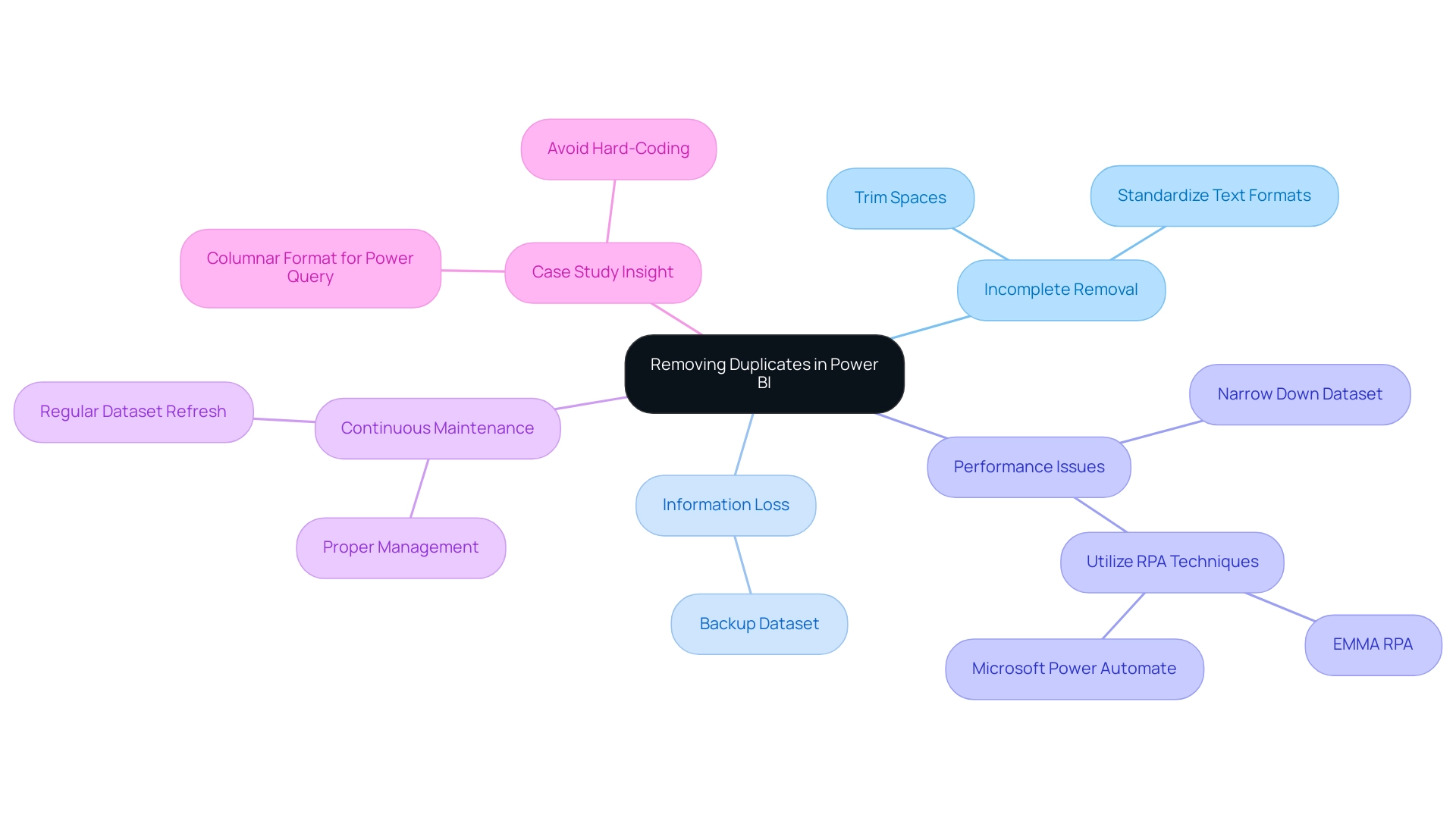

Troubleshooting Common Issues When Removing Duplicates

When removing duplicates in Power BI, users frequently encounter several common problems that can obstruct their efforts:

- Incomplete Removal: Variations in data entry, such as extra spaces or inconsistent casing, can leave duplicates in the dataset because the values are not treated as identical. To resolve this, clean your data beforehand by trimming spaces and standardizing text formats. This step greatly improves the precision of duplicate removal and helps ensure that your data is trustworthy and actionable.

- Data Loss: A common mistake is unintentionally removing essential data while eliminating duplicates. To safeguard against this, always back up your dataset before making any modifications. This precaution ensures that you can recover your original data if necessary, preserving the data integrity critical for informed decision-making.

- Performance Issues: Large datasets can slow down duplicate removal and hinder operations. To alleviate this, consider filtering your data to a more manageable size before removing duplicates. This not only speeds up processing but also improves overall efficiency, and RPA tools such as EMMA RPA or Microsoft’s Power Automate can further automate and expedite data handling tasks.

- Continuous Maintenance: Regular upkeep of the Power BI environment is essential for optimal performance and reliability. By ensuring that your datasets are regularly refreshed and properly managed, you can lower the chances of running into problems when removing duplicates. Using RPA to automate these maintenance tasks can greatly enhance operational efficiency.

- Case Study Insight: As noted in the case study concerning Excel’s matrix data models, improper formatting can complicate duplicate removal. Feeding data into Power Query in a columnar format minimizes transformation needs and reduces the risk of errors, making duplicate removal smoother. Additionally, as Les Isaac advises, content creators should avoid any kind of hard-coding in Power Query, which can complicate data management and increase the risk of errors during cleaning.

By being aware of these challenges and implementing these strategies, including tailored AI solutions for enhanced data cleaning, users can confidently remove duplicates in Power BI, preserving data integrity while leveraging Business Intelligence and RPA to enhance their analysis capabilities.
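The incomplete-removal problem described in this list can be reproduced in a short Python sketch. It assumes duplicates that differ only by surrounding spaces or letter case, and it normalizes each value before comparing while keeping the first original spelling. The sample names and the `dedupe_normalized` helper are hypothetical. Power Query's text comparisons are case-sensitive by default, which is why a comparable trim and case-standardizing step is usually applied there before removing duplicates.

```python
def normalize(value):
    """Trim surrounding whitespace and ignore letter case for comparison."""
    return value.strip().casefold()


def dedupe_normalized(values):
    """Keep the first original value for each normalized form."""
    seen = set()
    result = []
    for value in values:
        key = normalize(value)
        if key not in seen:
            seen.add(key)
            result.append(value)
    return result


# Hypothetical company names entered inconsistently.
names = ["Acme Corp", " acme corp ", "ACME CORP", "Globex"]

# A naive exact-match pass treats all four values as different.
naive = list(dict.fromkeys(names))

# Normalizing first collapses the three Acme variants into one entry.
cleaned = dedupe_normalized(names)

print(len(naive), cleaned)
```

Comparing on a normalized key while returning the original value is one possible design choice; replacing the stored values with their cleaned form is equally valid when a standardized spelling is wanted in reports.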

Conclusion

The significance of addressing duplicate data in Power BI cannot be overstated. As outlined in this article, duplicates distort analysis and mislead decision-makers, leading to inflated metrics and misguided strategies. By employing methods such as Power Query and DAX, organizations can effectively identify and remove duplicates, ensuring that their datasets reflect true insights. Regular audits, standardized data entry practices, and the use of unique identifiers are essential best practices that further enhance data integrity and operational efficiency.

Moreover, the integration of Robotic Process Automation (RPA) tools like EMMA RPA and Power Automate streamlines the data cleaning process, allowing teams to focus on strategic analysis rather than tedious data management tasks. By automating repetitive processes, organizations not only improve efficiency but also maintain high-quality data that drives informed decision-making.

In conclusion, embracing the strategies discussed in this article empowers organizations to transform their data management practices. By prioritizing the removal of duplicates and leveraging advanced tools and methodologies, businesses can unlock the full potential of their analytics, fostering a culture of accuracy that leads to better outcomes and sustainable growth. Now is the time to take decisive action to enhance data quality and ensure that every analysis leads to actionable insights.