Overview

Power BI stores data using various methods, including Import Mode, Direct Query Mode, and Composite Model, each designed to meet different analytical needs and performance requirements. The article emphasizes that understanding these storage options is crucial for optimizing reporting and insights, as each method impacts data refresh rates, query performance, and overall user experience in business intelligence applications.

Introduction

In the realm of data analytics, Power BI stands out as a powerful tool that offers several storage options designed to meet the needs of different organizations. With a clear understanding of these alternatives, businesses can unlock the full potential of their data and turn complex information into actionable insights. From the fast query performance of Import Mode to the real-time access of Direct Query, each method has distinct advantages and trade-offs.

As organizations navigate the intricacies of data management, embracing best practices and innovative solutions becomes essential for optimizing performance and enhancing decision-making. This article delves into the various data storage options available in Power BI, their impact on operational efficiency, and the critical role of data governance in achieving reliable and effective analytics.

Understanding Power BI Data Storage Options

Understanding where Power BI stores data is essential. Power BI offers a range of storage options, each designed for different business needs and situations, so choosing among them matters for any organization seeking to enhance its analytics capabilities. Understanding these options can greatly improve reporting and actionable insights, particularly when using our Power BI services, which feature a 3-Day Sprint for swift report creation and a General Management App to ensure consistency and clarity.

Import Mode: This approach loads data into Power BI's in-memory storage, so in this mode the data lives inside the model itself, in a highly compressed, optimized format that delivers rapid query performance.

However, imported data must be refreshed periodically to stay accurate, which is an important consideration in fast-changing business environments.

Direct Query Mode: In this mode, Power BI does not keep a copy of the data; it sends queries to the source system whenever a report needs data, so the data stays where it already lives. This makes the mode well suited to real-time information retrieval, but report performance depends on how quickly the underlying source responds.

Composite Model: This model combines Import and Direct Query, allowing users to import some tables while querying others directly at the source. This flexibility lets each table use the storage mode that best fits its analytical needs, making it a versatile choice for mixed workloads.

Dataflows: Power BI Dataflows enable reusable data transformation processes in the cloud, so prepared data is stored once and can be reused across reports.

This streamlines data preparation and storage and makes pipelines easier to manage.
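The difference between these storage modes can be pictured with a small conceptual model. This is a toy under stated assumptions, not how Power BI's engine works internally: the classes `SourceSystem`, `ImportTable`, and `DirectQueryTable` are hypothetical names. Import keeps a snapshot that only changes on refresh, Direct Query reads the source on every request, and a composite model simply assigns a different mode to each table.

```python
"""Conceptual toy model of Power BI storage modes (not Power BI internals)."""
from dataclasses import dataclass, field
from typing import Dict, List, Union

Row = Dict[str, float]


@dataclass
class SourceSystem:
    """Hypothetical stand-in for an external database whose contents keep changing."""
    rows: List[Row] = field(default_factory=list)

    def query(self) -> List[Row]:
        # Each call is a round trip to the source; return copies so callers
        # cannot mutate the source's rows.
        return [dict(r) for r in self.rows]


@dataclass
class ImportTable:
    """Import: a snapshot held in memory, only as fresh as the last refresh."""
    source: SourceSystem
    cache: List[Row] = field(default_factory=list)

    def refresh(self) -> None:
        self.cache = self.source.query()

    def read(self) -> List[Row]:
        return self.cache  # served from memory; no trip to the source


@dataclass
class DirectQueryTable:
    """Direct Query: nothing is stored; every read goes to the source."""
    source: SourceSystem

    def read(self) -> List[Row]:
        return self.source.query()  # always current; speed depends on the source


Table = Union[ImportTable, DirectQueryTable]


def total(table: Table) -> float:
    return sum(r["amount"] for r in table.read())


# A composite model mixes modes per table: imported targets, live sales.
sales_db = SourceSystem([{"amount": 100.0}])
targets_db = SourceSystem([{"amount": 500.0}])
targets = ImportTable(targets_db)
targets.refresh()
model: Dict[str, Table] = {"Targets": targets, "Sales": DirectQueryTable(sales_db)}

sales_db.rows.append({"amount": 50.0})    # a new sale lands in the source
targets_db.rows.append({"amount": 25.0})  # a target changes after the refresh

print(total(model["Sales"]))    # 150.0: Direct Query sees the new row
print(total(model["Targets"]))  # 500.0: Import is stale until the next refresh
```

The trade-off shows up directly: the imported table answers from memory but misses the change until `refresh()` runs again, while the Direct Query table is always current at the cost of a source round trip on every read.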

In addition to these storage methods, the integration of AI features such as Small Language Models can improve analysis by providing tailored insights and enhancing quality. Our GenAI Workshops further empower teams to create custom GPT solutions that address specific reporting challenges, making information management more efficient.

Moreover, the Control Chart XmR visual allows users to download calculated values such as the lower control limit (LCL), center line (CL), upper control limit (UCL), sigma levels, and signals, which can significantly enhance data management and analytics capabilities. Combined with AI-driven insights, this tool supports organizations in making informed decisions and unlocking the true potential of their data.
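For readers who want to see where values like CL, UCL, LCL, and sigma come from, here is a minimal sketch using the standard XmR formulas: the center line is the mean of the values, the limits are CL ± 2.66 × the average moving range, and sigma is estimated as the average moving range divided by 1.128. This is not the visual's own code; the function names are hypothetical, and only the most basic signal rule (a point outside the limits) is implemented.

```python
from statistics import mean
from typing import Dict, List

# Standard XmR constants: 2.66 = 3 / d2, with d2 = 1.128 for moving ranges of size 2.
E2 = 2.66
D2 = 1.128


def xmr_limits(values: List[float]) -> Dict[str, float]:
    """Center line, natural process limits and sigma estimate for an XmR chart."""
    if len(values) < 2:
        raise ValueError("an XmR chart needs at least two points")
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    cl = mean(values)
    mr_bar = mean(moving_ranges)
    return {
        "CL": cl,
        "UCL": cl + E2 * mr_bar,
        "LCL": cl - E2 * mr_bar,
        "sigma": mr_bar / D2,
    }


def points_outside_limits(values: List[float], limits: Dict[str, float]) -> List[int]:
    """Indices of points beyond the limits (the most basic XmR signal rule)."""
    return [i for i, v in enumerate(values) if v > limits["UCL"] or v < limits["LCL"]]


weekly_orders = [10, 12, 11, 13, 12, 30]  # hypothetical sample data
limits = xmr_limits(weekly_orders)
print({k: round(v, 2) for k, v in limits.items()})
print(points_outside_limits(weekly_orders, limits))  # [5]
```

Full XmR practice adds further run-based rules (for example, long runs on one side of the center line); those are omitted here to keep the sketch short.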

By addressing common challenges in drawing insights from BI dashboards, such as time-consuming report creation and inconsistent data, organizations can better harness their data for strategic decision-making. These storage options continue to evolve, with further advancements expected in 2024 aimed at improving functionality and user experience. Organizations must weigh the benefits and limitations of each method, as the choice of storage solution can strongly influence query performance, refresh intervals, and overall user satisfaction in Business Intelligence.

Years ago, Stacey Barr introduced many practitioners to Control Charts, highlighting the significance of making informed choices in data management to unlock the true potential of analytics.


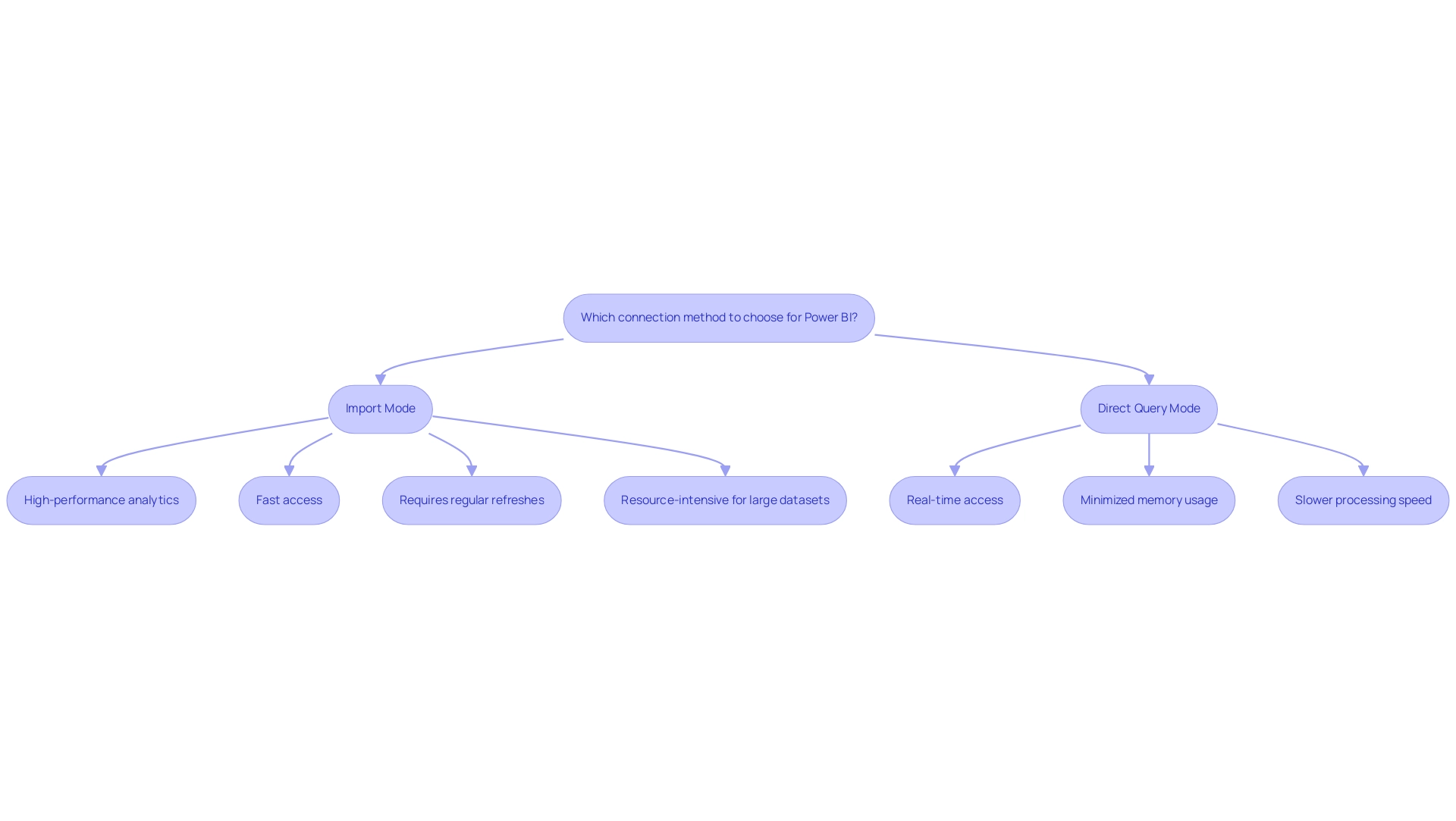

The Impact of Data Connection Methods on Storage

The choice of connection method in Power BI determines where data is stored and how it is accessed, directly affecting operational efficiency. The two primary connection methods are:

- Import Mode: This method loads information into Power BI’s in-memory storage, facilitating rapid access and analysis.

While Import Mode provides high-performance analytics, it requires regular refreshes that can be resource-intensive, especially with larger datasets. Integrating Robotic Process Automation (RPA) can further streamline this process by automating the refresh operations, reducing manual effort and minimizing errors. This is especially advantageous in tackling the challenge of time-consuming report creation, enabling teams to concentrate on analysis instead of preparation.

- Direct Query Mode: This alternative lets Power BI retrieve data in real time from the source, ensuring users see the most current details. Because the data stays in the source and is queried on demand, this method minimizes Power BI's memory usage, which is advantageous for very large datasets. However, this immediacy can come at the cost of processing speed, particularly if the underlying source is not optimized for efficient querying.

To strengthen this mode, organizations can use RPA to automate data validation and cleansing, ensuring that the data being queried is accurate and reliable and reducing inconsistencies.
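Automating Import Mode refreshes, as suggested above, usually comes down to calling the Power BI REST API on a schedule. The sketch below only builds the request for the "Refresh Dataset In Group" operation (`POST .../groups/{groupId}/datasets/{datasetId}/refreshes`) and does not send it. It assumes you already hold a valid Azure AD access token; the workspace ID, dataset ID, token, and the helper name `build_refresh_request` are placeholders, and the endpoint details should be checked against the current API reference.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(workspace_id: str, dataset_id: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST asking the service to refresh one dataset."""
    url = f"{API_ROOT}/groups/{workspace_id}/datasets/{dataset_id}/refreshes"
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",  # token acquisition not shown
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder IDs and token; substitute real values from your tenant.
req = build_refresh_request("<workspace-id>", "<dataset-id>", "<access-token>")
print(req.get_method(), req.full_url)
# An automation job would then send it, e.g. urllib.request.urlopen(req),
# ideally on an off-peak schedule.
```

Keeping request construction separate from sending makes the automation easy to test and lets the same helper be reused by whichever scheduler or RPA tool triggers it.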

Choosing between these methods should align with organizational objectives and specific data requirements. For instance, businesses that prioritize real-time insights may gravitate toward Direct Query, whereas those focused on high-performance analytics often prefer Import Mode. Not every report needs live data, though: Power BI's own usage metrics reports, for example, refresh once a day.

Moreover, organizations using Power BI Premium can take advantage of enhanced performance features for large datasets. Premium offers dedicated cloud resources, larger dataset sizes, and more frequent refreshes, making it suitable for intensive big-data analytics. As highlighted by Dr. Andrew Renda, Associate Vice President of Population Health Strategy at Humana, the ability to quickly build dashboards that visualize information and update in real time is critical for effective strategy formation and execution.

In a swiftly changing AI landscape, understanding these dynamics and integrating RPA can help organizations optimize their Power BI implementation, boosting both the efficiency and the effectiveness of their analysis.

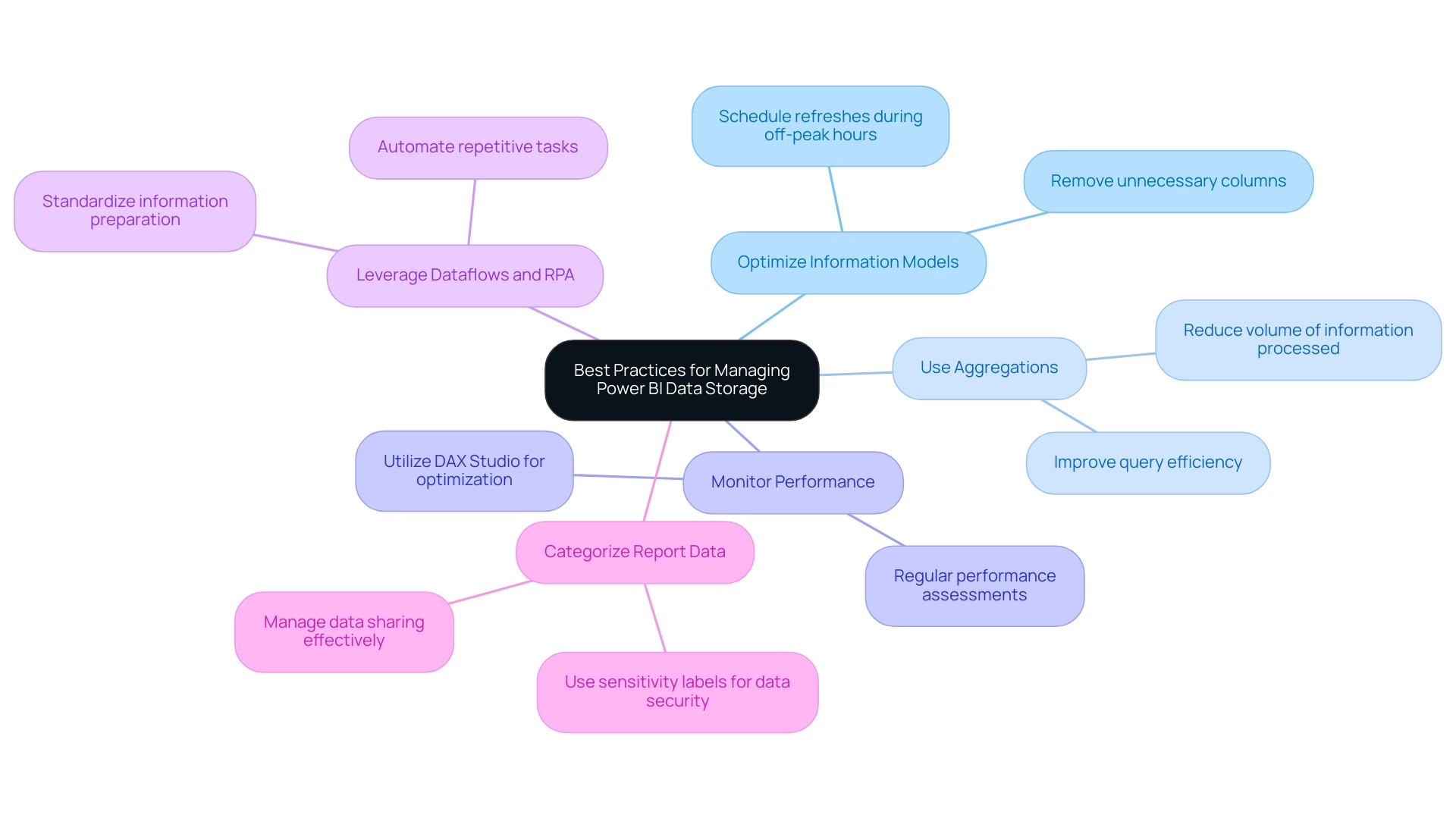

Best Practices for Managing Power BI Data Storage

To effectively manage Power BI information storage and harness the full potential of Business Intelligence, organizations should consider implementing the following best practices:

- Optimize Data Models: Streamlining data models is crucial; removing unnecessary columns and tables improves efficiency and reduces storage costs. A lean model is faster to query and easier to navigate, leading to quicker insights and better operational efficiency in today's information-rich environment.

- Plan for Refreshes: Schedule refreshes during off-peak hours to minimize their impact on report performance. Power BI's support for parallel loading lets multiple tables or partitions load concurrently, reducing overall refresh times. This keeps data current without slowing users down during the working day. As lbendlin puts it, "Anything above 5 seconds leads to bad user experience." Timing refreshes wisely therefore contributes to a smoother user experience and helps overcome delays in report creation.

- Use Aggregations: Aggregation tables store pre-summarized data, drastically reducing the volume of data Power BI must process for common queries. This improves query performance and speeds up report creation, enhancing overall user satisfaction.

- Monitor Performance: Regularly assess the performance of reports and dashboards. Recognizing bottlenecks or inefficiencies in data retrieval and storage helps preempt issues before they affect users. Tools such as DAX Studio can further optimize measures and queries, as SQLBI.com's extensive training resources explain, making your BI dashboards more actionable.

- Leverage Dataflows and RPA: Using Power BI Dataflows for data preparation and transformation standardizes logic across reports, which improves quality and consistency. Combining Dataflows with RPA automates repetitive data tasks, further improving operational efficiency and ensuring that data is reliable and readily available for analysis, which supports informed decision-making that drives growth and innovation.

- Categorize Report Data: Classifying report data by business impact with sensitivity labels raises awareness of data security and helps manage data sharing. This is essential for maintaining compliance and protecting sensitive information.
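The idea behind aggregations can be sketched in a few lines. Power BI's own feature maps an aggregated table onto a detail table inside the model; the sketch below only mimics the principle with hypothetical sample data and helper names (`build_aggregation`, `product_total`): summarize once at a coarser grain, then answer matching questions from the much smaller table.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical detail fact table: one row per sale.
sales: List[dict] = [
    {"date": "2024-01-01", "product": "A", "customer": "c1", "amount": 10.0},
    {"date": "2024-01-01", "product": "A", "customer": "c2", "amount": 5.0},
    {"date": "2024-01-01", "product": "B", "customer": "c1", "amount": 7.0},
    {"date": "2024-01-02", "product": "A", "customer": "c3", "amount": 3.0},
    {"date": "2024-01-02", "product": "B", "customer": "c2", "amount": 4.0},
    {"date": "2024-01-02", "product": "B", "customer": "c3", "amount": 6.0},
]

AggKey = Tuple[str, str]


def build_aggregation(rows: List[dict]) -> Dict[AggKey, float]:
    """Pre-sum amounts at (date, product) grain, dropping the customer detail."""
    agg: Dict[AggKey, float] = defaultdict(float)
    for r in rows:
        agg[(r["date"], r["product"])] += r["amount"]
    return dict(agg)


def product_total(agg: Dict[AggKey, float], product: str) -> float:
    """Answer a product-level question from the small table, without scanning detail rows."""
    return sum(v for (_, p), v in agg.items() if p == product)


sales_agg = build_aggregation(sales)
print(len(sales), "detail rows ->", len(sales_agg), "aggregated rows")
print(product_total(sales_agg, "A"))  # 18.0
```

The design trade-off is grain: any question that needs the dropped column (here, customer) still has to go to the detail table, so aggregations pay off when most queries are at the summarized level.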

By adhering to these best practices, organizations can maximize the efficiency of their Power BI implementations, leading to better analytics and more informed decision-making. Where DirectQuery sources enforce primary keys, enabling the Assume Referential Integrity setting on a relationship lets Power BI send INNER JOIN rather than OUTER JOIN queries to the source, which speeds up query execution and keeps joins efficient. Applying these strategies also helps tackle time-consuming report creation and data inconsistencies, ultimately producing more actionable insights from business intelligence dashboards.
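Why the Assume Referential Integrity setting is only safe when the data really has referential integrity can be shown with plain joins. This is a hypothetical in-memory sketch, not the SQL Power BI generates: when every fact key has a matching dimension row, an inner join returns exactly what a left outer join returns, so the cheaper inner join is harmless; when a key is orphaned, the inner join silently drops rows and totals change.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical dimension (product key -> category) and fact rows.
products: Dict[int, str] = {1: "Bikes", 2: "Helmets"}
clean_sales = [
    {"product_key": 1, "amount": 10.0},
    {"product_key": 2, "amount": 5.0},
    {"product_key": 2, "amount": 2.5},
]
orphaned_sales = clean_sales + [{"product_key": 3, "amount": 100.0}]  # key 3 has no product

Joined = List[Tuple[Optional[str], float]]


def left_outer_join(facts: List[dict], dim: Dict[int, str]) -> Joined:
    """Keep every fact row; unmatched keys get None (a blank category)."""
    return [(dim.get(f["product_key"]), f["amount"]) for f in facts]


def inner_join(facts: List[dict], dim: Dict[int, str]) -> Joined:
    """Keep only fact rows whose key exists in the dimension."""
    return [(dim[f["product_key"]], f["amount"]) for f in facts if f["product_key"] in dim]


def total(joined: Joined) -> float:
    return sum(amount for _, amount in joined)


# With referential integrity, both joins return identical results...
print(inner_join(clean_sales, products) == left_outer_join(clean_sales, products))  # True
# ...but an orphaned key makes the inner join silently drop rows.
print(total(left_outer_join(orphaned_sales, products)), total(inner_join(orphaned_sales, products)))  # 117.5 17.5
```

In practice this means the setting should be enabled only after confirming that the source actually guarantees a match for every key.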

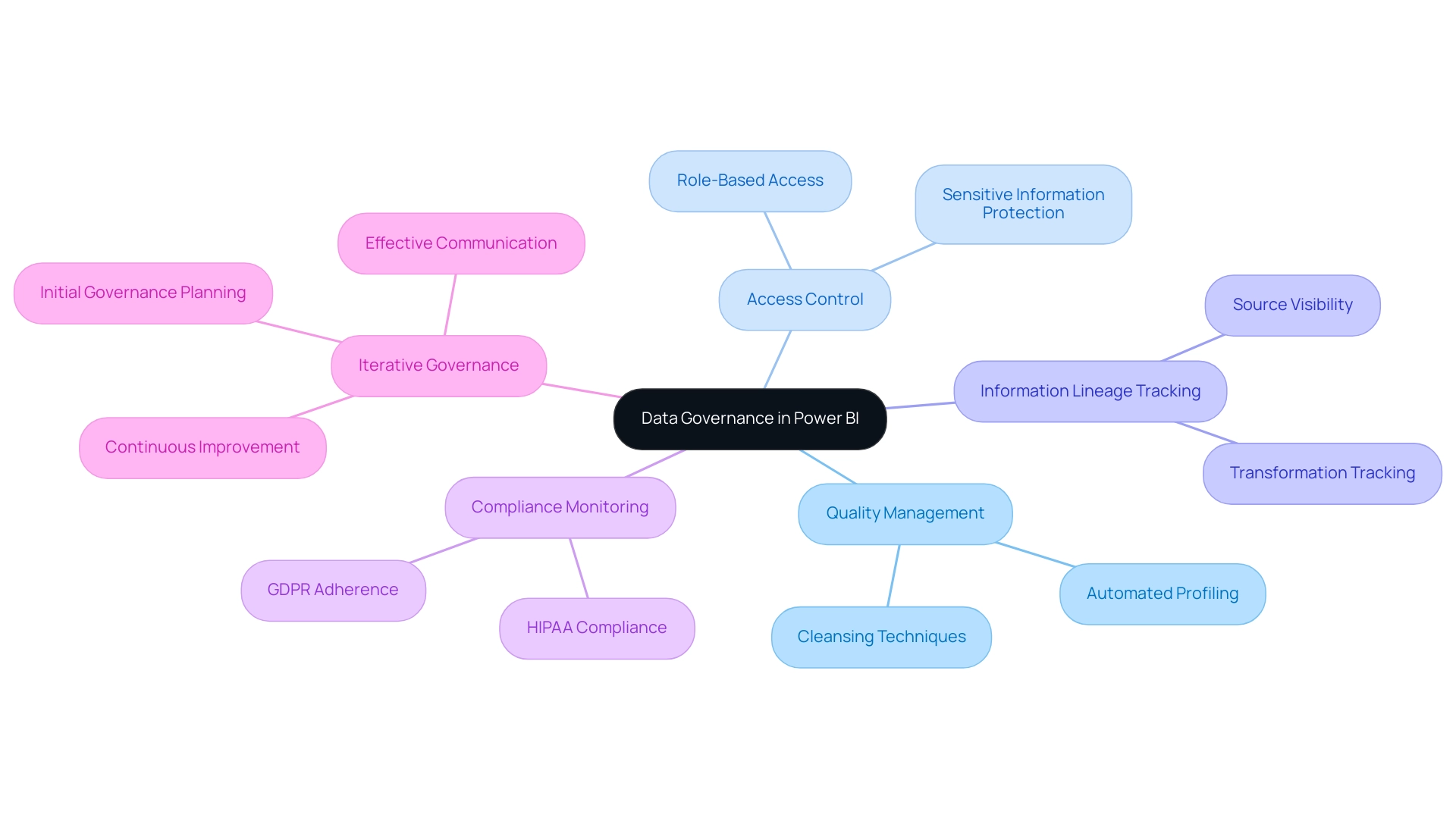

Understanding Data Governance in Power BI

Information governance is a cornerstone of effective data management in Power BI, ensuring that data, wherever Power BI stores it, remains accurate, accessible, and secure. Recent statistics reveal that approximately 47% of newly created data records contain at least one critical error, underscoring the urgent need for robust governance practices. Such errors can lead to misguided decisions and inefficiencies, which is why effective information governance strategies matter.
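A statistic like "share of records with at least one critical error" is straightforward to measure on your own data. The sketch below is a minimal, hypothetical record-level profiler: each rule returns an error message or `None`, and the report lists failing rows and the share of records affected. The rules `required` and `non_negative_number` and the sample customers are illustrative assumptions; real checks would reflect your own business definitions of a critical error.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, object]
Rule = Callable[[Record], Optional[str]]  # returns an error message, or None if the record passes


def required(field: str) -> Rule:
    def check(record: Record) -> Optional[str]:
        return None if record.get(field) not in (None, "") else f"missing {field}"
    return check


def non_negative_number(field: str) -> Rule:
    def check(record: Record) -> Optional[str]:
        value = record.get(field)
        ok = isinstance(value, (int, float)) and value >= 0
        return None if ok else f"invalid {field}"
    return check


def profile(records: List[Record], rules: List[Rule]) -> Dict[str, object]:
    """Report which records break at least one rule and what share of records that is."""
    errors_by_row: Dict[int, List[str]] = {}
    for i, record in enumerate(records):
        problems = [msg for msg in (rule(record) for rule in rules) if msg is not None]
        if problems:
            errors_by_row[i] = problems
    share = len(errors_by_row) / len(records) if records else 0.0
    return {"rows_with_errors": errors_by_row, "error_share": share}


customers = [
    {"customer_id": "C1", "amount": 120.0},
    {"customer_id": "", "amount": 80.0},
    {"customer_id": "C3", "amount": -5.0},
    {"customer_id": "C4", "amount": 42.0},
]
report = profile(customers, [required("customer_id"), non_negative_number("amount")])
print(report["error_share"])  # 0.5
```

Running a check like this before data reaches a Power BI model turns the abstract error rate into a number a team can track over time.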

Key elements of effective information governance include:

- Quality Management: Establish systematic processes to routinely assess and improve the quality of the data used in Power BI, wherever it is stored. Recent advances in data quality management, such as automated profiling and cleansing techniques, significantly boost accuracy and reliability, letting teams focus on insights rather than the tedious work of report creation.

- Access Control: Implementing role-based access controls ensures that sensitive information is only accessible to authorized personnel, mitigating risks related to breaches and safeguarding confidentiality.

- Information Lineage Tracking: Maintaining comprehensive visibility into sources and transformations is vital. This enables organizations to comprehend how information flows through the system, enhancing trust and clarity in decision-making based on insights.

- Compliance Monitoring: Adhering to relevant regulations and standards, such as GDPR or HIPAA, is crucial for safeguarding information integrity and promoting accountability.

- Iterative Governance with Rollouts: This approach balances agility with governance: organizations plan governance up front and then improve it iteratively alongside Microsoft Fabric development. It enhances user productivity and governance efficiency, but requires effective communication and discipline to keep documentation and training current.
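Role-based access control in Power BI is typically implemented with row-level security, where each role carries a filter and users see only the rows their roles allow. The sketch below models that idea in plain Python under stated assumptions: the role names, user emails, and data are hypothetical, and roles are combined additively (a row is visible if any assigned role allows it), mirroring how Power BI combines multiple roles. A user with no role sees nothing.

```python
from typing import Callable, Dict, List, Set

Row = Dict[str, object]
RoleFilter = Callable[[Row], bool]

# Hypothetical roles, each defined as a row filter (analogous to a DAX filter on a table).
ROLE_FILTERS: Dict[str, RoleFilter] = {
    "EuropeSales": lambda row: row["region"] == "Europe",
    "USSales": lambda row: row["region"] == "US",
}

# Hypothetical role membership.
USER_ROLES: Dict[str, Set[str]] = {
    "ana@example.com": {"EuropeSales"},
    "lee@example.com": {"EuropeSales", "USSales"},
    "sam@example.com": set(),
}


def visible_rows(user: str, rows: List[Row]) -> List[Row]:
    """A user sees a row if any of their roles allows it; no role means no rows."""
    filters = [ROLE_FILTERS[name] for name in USER_ROLES.get(user, set())]
    return [row for row in rows if any(f(row) for f in filters)]


sales = [
    {"region": "Europe", "amount": 100},
    {"region": "US", "amount": 200},
    {"region": "APAC", "amount": 50},
]
for user in USER_ROLES:
    print(user, [row["region"] for row in visible_rows(user, sales)])
```

Defaulting to "no rows" for users without a role is the safer design choice: a missing assignment hides data instead of exposing it.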

By prioritizing these governance strategies, organizations can greatly improve their data management practices, leading to more trustworthy and actionable insights from their BI analyses. As emphasized in our 3-Day Power BI Sprint, effective information governance not only simplifies report creation but also enables teams to use insights for significant decisions. The sprint specifically addresses poor master data quality by implementing governance best practices, clarifying where Power BI stores data, and providing tailored methodologies for AI integration.

Additionally, the reports produced during the sprint can serve as templates for future projects, ensuring a professional design from the start. As Grant Gamble of G Com Solutions emphasizes,

Mastering data analytics is fundamental for businesses, and effective data governance paves the way for that mastery.

The recent enhancements in Power BI, including the support for publishing and downloading enhanced report formats (PBIR), further facilitate collaboration and source control, driving better governance and user engagement.

Conclusion

Understanding the diverse data storage options available in Power BI is crucial for organizations striving to optimize their data analytics capabilities. Each method (Import Mode, Direct Query, Composite Model, and Dataflows) offers unique advantages tailored to various business scenarios, enabling rapid performance, real-time access, and efficient data management. By leveraging these options effectively, organizations can enhance reporting accuracy and generate actionable insights that drive strategic decision-making.

Implementing best practices for data storage management, such as:

- Optimizing data models

- Planning refresh schedules

- Utilizing aggregations

further elevates the efficiency of Power BI. Additionally, integrating data governance strategies ensures that the data remains accurate, secure, and compliant, ultimately leading to more reliable analytics. The combination of these approaches empowers organizations to harness the full potential of their data, addressing common challenges such as report creation delays and data inconsistencies.

As the landscape of data analytics continues to evolve, staying informed about the latest advancements and adopting innovative solutions remains essential. By embracing the right data storage and governance practices, organizations can transform their data into a powerful asset, enabling informed decision-making and fostering operational excellence. The journey to mastering data analytics begins with a commitment to understanding and optimizing the tools at one’s disposal, paving the way for sustainable growth and success.