Overview

This article is a guide to the MERGE statement in Spark SQL: its syntax, how it works, and the practices that make it efficient. Understanding MERGE is essential for effective data management and automation, because a single statement can apply inserts, updates, and deletes to a table. When implemented correctly, it can shorten processing times and simplify data pipelines. Consider how your current practices compare with the guidance below.

Introduction

In data management, the MERGE statement in Spark SQL stands out because it applies multiple operations (insert, update, and delete) to a target table in a single statement. This simplifies complex data workflows and fits the growing trend of Robotic Process Automation (RPA), which seeks to automate tedious manual processes.

By leveraging the MERGE statement, organizations can significantly enhance their operational efficiency and reduce processing times. This transformation allows raw data to be converted into actionable insights. As the demand for rapid data analysis escalates, mastering this functionality becomes essential for data professionals. It empowers them to navigate the complexities of modern data environments and drive informed decision-making.

Understanding the MERGE Statement in Spark SQL

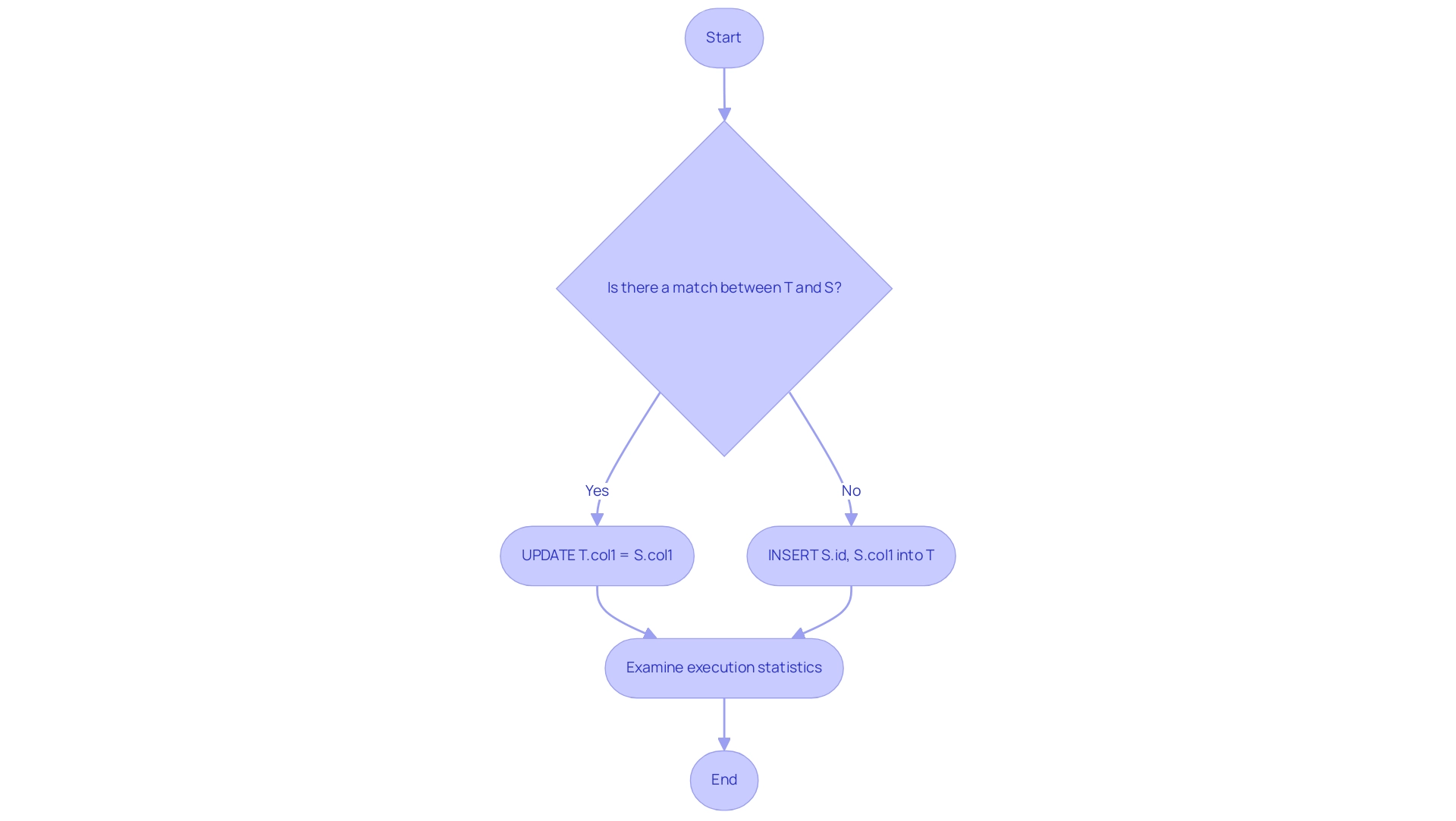

The MERGE statement in Spark SQL lets users execute multiple operations (insert, update, and delete) on a target table based on the results of a join with a source dataset. In Apache Spark, MERGE INTO works only with table formats that support row-level changes, such as Delta Lake, not with plain Parquet or CSV tables. This functionality is particularly relevant to Robotic Process Automation (RPA), which aims to automate manual workflows and enhance operational efficiency.

The fundamental syntax for this operation is as follows:

MERGE INTO target_table AS T

USING source_table AS S

ON T.id = S.id

WHEN MATCHED THEN UPDATE SET T.col1 = S.col1

WHEN NOT MATCHED THEN INSERT (id, col1) VALUES (S.id, S.col1);

This command compares the target and source tables using the ON condition. When a target row matches a source row, it is updated; when a source row has no match, it is inserted as a new record. Handling both cases in one statement removes the need for separate UPDATE and INSERT queries, which streamlines data management and cuts the manual steps that slow workflows down.
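To make the matched/not-matched logic concrete, here is a minimal plain-Python model of the statement above. It is not Spark code: rows are dictionaries, the ON condition is equality on the `id` key, and it assumes source keys are unique.

```python
from typing import Any

Row = dict[str, Any]


def merge_upsert(target: list[Row], source: list[Row], key: str = "id") -> list[Row]:
    """Model MERGE ... WHEN MATCHED THEN UPDATE ... WHEN NOT MATCHED THEN INSERT.

    Plain Python, not Spark. Assumes source keys are unique (Delta Lake itself
    raises an error when several source rows match the same target row).
    """
    # Index copies of the target rows by key: this plays the role of the ON clause.
    result = {row[key]: dict(row) for row in target}
    for src in source:
        match = result.get(src[key])
        if match is not None:
            # WHEN MATCHED THEN UPDATE SET T.col1 = S.col1
            match["col1"] = src["col1"]
        else:
            # WHEN NOT MATCHED THEN INSERT (id, col1) VALUES (S.id, S.col1)
            result[src[key]] = {key: src[key], "col1": src["col1"]}
    return list(result.values())


target = [{"id": 1, "col1": "a"}, {"id": 2, "col1": "b"}]
source = [{"id": 2, "col1": "B"}, {"id": 3, "col1": "c"}]
print(merge_upsert(target, source))
# [{'id': 1, 'col1': 'a'}, {'id': 2, 'col1': 'B'}, {'id': 3, 'col1': 'c'}]
```

The target is copied rather than modified, which mirrors how a MERGE produces a new table version instead of editing rows a reader may still be looking at.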

Organizations report that using the MERGE statement in Spark SQL has greatly improved workflow efficiency, with up to a 30% decrease in processing time for updates and inserts. For instance, in one worked example the data for the fourth load contains PersonId values 1001, 1005, and 1006, a concrete case of MERGE being applied to an incremental load. A notable case study titled ‘Business Intelligence Empowerment’ illustrates how businesses faced challenges in extracting meaningful insights from a data-rich environment.

By using the MERGE statement, they turned raw data into practical insights, enabling informed decision-making and improving data quality, which ultimately fostered growth and innovation.

Best practices for the MERGE statement include keeping the join condition selective, so that Spark reads and matches only the rows it needs, and, on Delta Live Tables (DLT), using the APPLY CHANGES INTO feature to handle out-of-order records in change data capture (CDC) feeds. As chrimaho noted, “When executing the .execute() method of the DeltaMergeBuilder() class, please return some statistics about what was actually changed on the target Delta Table, such as the number of rows deleted, inserted, updated, etc.” This highlights the value of examining execution statistics after a merge to evaluate its effect on the target Delta table and to catch inconsistencies; on Delta Lake, DESCRIBE HISTORY reports such counts in its operationMetrics column.
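The idea behind APPLY CHANGES INTO (its KEYS and SEQUENCE BY clauses) and the kind of statistics requested in the quote can be sketched in plain Python. This is not the DLT or Delta Lake API; it assumes each change event carries a key (`id`), a sequence number (`seq`), and an operation (`op`), all hypothetical field names, and it applies only the newest event per key regardless of arrival order.

```python
from typing import Any

Row = dict[str, Any]


def apply_changes(target: dict[Any, Row], events: list[Row]) -> dict[str, int]:
    """Apply change events to `target` (key -> row) in place and return counts.

    Hypothetical event shape: {"id": ..., "seq": int, "op": "UPSERT" or "DELETE", ...}.
    Only the event with the highest `seq` per key is applied, whatever the arrival order.
    """
    metrics = {"inserted": 0, "updated": 0, "deleted": 0, "skipped": 0}

    # Collapse the feed to the newest event per key (the role of SEQUENCE BY).
    latest: dict[Any, Row] = {}
    for ev in events:
        current = latest.get(ev["id"])
        if current is None or ev["seq"] > current["seq"]:
            latest[ev["id"]] = ev

    for key, ev in latest.items():
        existing = target.get(key)
        # Ignore events that are not newer than what the target already holds.
        if existing is not None and existing["seq"] >= ev["seq"]:
            metrics["skipped"] += 1
        elif ev["op"] == "DELETE":
            if existing is not None:
                del target[key]
                metrics["deleted"] += 1
            else:
                metrics["skipped"] += 1
        else:
            target[key] = {k: v for k, v in ev.items() if k != "op"}
            metrics["updated" if existing is not None else "inserted"] += 1
    return metrics


target = {1: {"id": 1, "seq": 1, "col1": "a"}, 2: {"id": 2, "seq": 1, "col1": "b"}}
events = [
    {"id": 1, "seq": 3, "op": "UPSERT", "col1": "a3"},
    {"id": 1, "seq": 2, "op": "UPSERT", "col1": "a2"},  # arrives late but is older
    {"id": 2, "seq": 2, "op": "DELETE"},
    {"id": 3, "seq": 1, "op": "UPSERT", "col1": "c"},
]
print(apply_changes(target, events))  # {'inserted': 1, 'updated': 1, 'deleted': 1, 'skipped': 0}
```

Because this sketch keeps no record of deleted keys, an older event for a key deleted in an earlier call would be re-inserted; a production CDC pipeline has to track deletions to avoid that.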

In summary, mastering MERGE in Spark SQL is essential for any professional aiming to improve workflows, drive operational efficiency, and use Business Intelligence for informed decision-making. Incorporating RPA into these processes lets organizations automate further manual tasks, giving a more streamlined approach to data management.

Exploring Join Types in Spark SQL

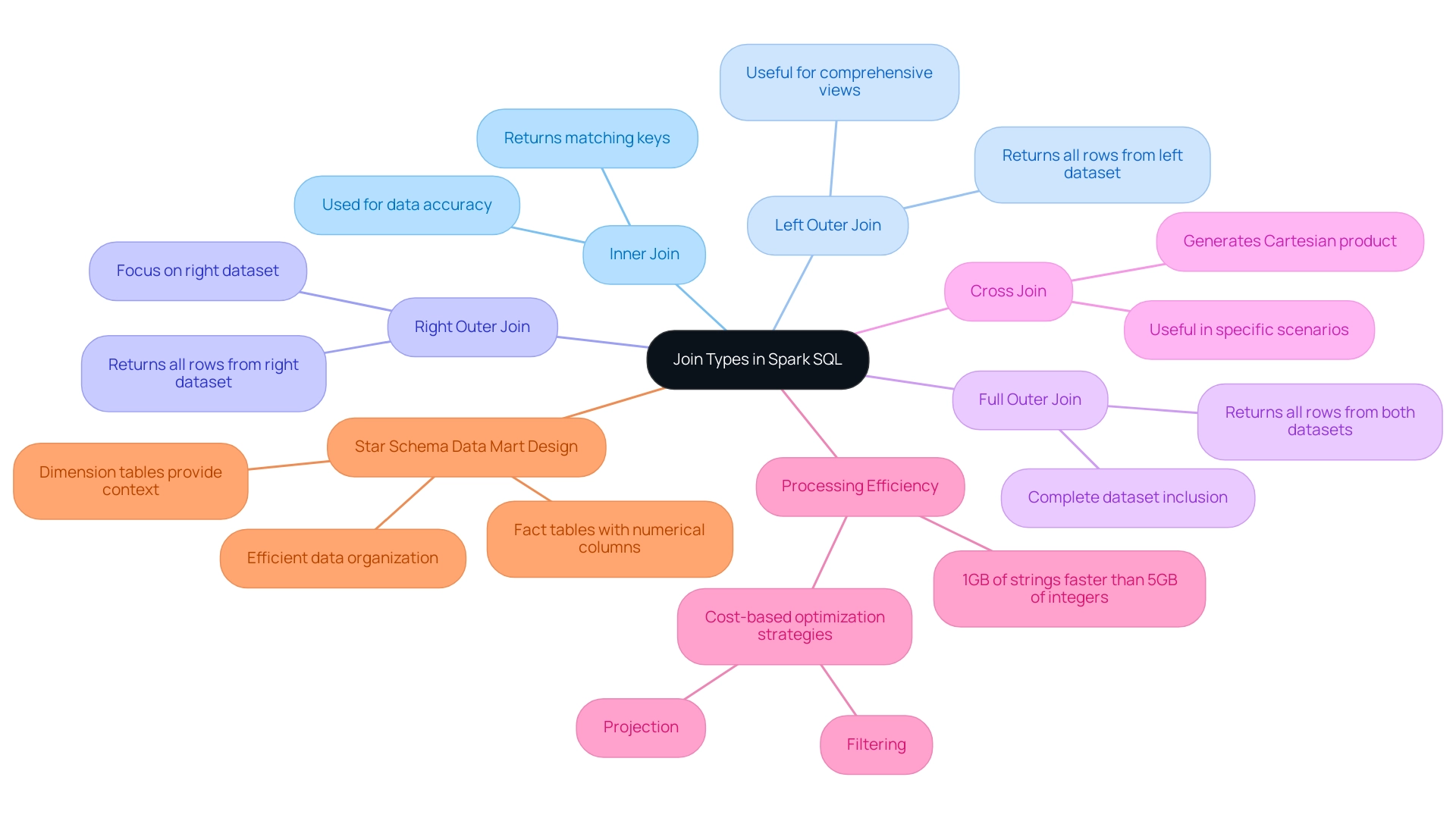

In Spark SQL, various join types are pivotal for effective data manipulation and analysis.

- Inner Join: This join returns only the rows with matching keys in both tables, ensuring that only relevant data is processed.

- Left Outer Join: This type returns all rows from the left dataset along with matched rows from the right dataset, allowing for a comprehensive view of the left dataset.

- Right Outer Join: Conversely, this join returns all rows from the right dataset and matched rows from the left, which can be useful when the right dataset is the primary focus.

- Full Outer Join: This join returns all rows from both datasets, pairing rows whose keys match and filling the missing side with NULLs where they do not.

- Cross Join: This join generates the Cartesian product of both datasets, which can be useful in specific analytical scenarios but may lead to large datasets.
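The semantics of these join types can be illustrated with a small plain-Python sketch. It is a nested-loop model for clarity only (Spark itself uses broadcast hash, shuffle hash, or sort-merge joins), and `None` stands in for the NULLs an outer join fills in.

```python
from itertools import product
from typing import Any, Optional

Row = dict[str, Any]
Pair = tuple[Optional[Row], Optional[Row]]


def join(left: list[Row], right: list[Row], key: str, how: str) -> list[Pair]:
    """Return (left_row, right_row) pairs; None marks the NULL side of an outer join."""
    if how == "cross":
        return list(product(left, right))  # Cartesian product: every left with every right
    if how not in ("inner", "left", "right", "full"):
        raise ValueError(f"unsupported join type: {how}")
    pairs: list[Pair] = []
    matched_right: set[int] = set()
    for lrow in left:
        hits = [i for i, rrow in enumerate(right) if rrow[key] == lrow[key]]
        pairs.extend((lrow, right[i]) for i in hits)
        matched_right.update(hits)
        if not hits and how in ("left", "full"):
            pairs.append((lrow, None))  # keep unmatched left rows
    if how in ("right", "full"):
        # keep unmatched right rows
        pairs.extend((None, rrow) for i, rrow in enumerate(right) if i not in matched_right)
    return pairs


orders = [{"id": 1}, {"id": 2}]
payments = [{"id": 2}, {"id": 3}]
for how in ("inner", "left", "right", "full", "cross"):
    print(how, len(join(orders, payments, "id", how)))
# inner 1, left 2, right 2, full 3, cross 4
```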

Choosing the right join logic is crucial for efficient MERGE operations in Spark SQL, because it determines how rows from the source and target are combined. MERGE itself does not take a join type; its clauses determine which rows are affected. WHEN MATCHED clauses act on the rows an inner join of target and source would produce, so only records present in both are updated or deleted. WHEN NOT MATCHED clauses act on source rows with no target match, as in an outer join that keeps every source row, and these rows are typically inserted. Recent Delta Lake releases also support WHEN NOT MATCHED BY SOURCE, which acts on target rows with no source match, so a MERGE that uses all three clause types behaves like a full outer join.
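A quick way to see which rows each kind of MERGE clause can touch is to compare key sets. This plain-Python sketch assumes an equality ON condition on a single key; it only classifies keys and does not apply any changes. WHEN NOT MATCHED BY SOURCE applies only where your Delta Lake version supports it.

```python
def classify_for_merge(target_keys: set, source_keys: set) -> dict[str, set]:
    """Group keys by the MERGE clause family that can act on them (equality ON condition)."""
    return {
        # In both tables (inner-join rows): WHEN MATCHED ... UPDATE or DELETE
        "matched": target_keys & source_keys,
        # Only in the source: WHEN NOT MATCHED ... INSERT
        "not_matched": source_keys - target_keys,
        # Only in the target: WHEN NOT MATCHED BY SOURCE, where supported
        "not_matched_by_source": target_keys - source_keys,
    }


print(classify_for_merge({1, 2, 3}, {2, 3, 4}))
```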

Understanding the structure of your data is essential when working with joins. Fact tables, which hold numeric measures and keys, and dimension tables, which provide descriptive context, are the basic building blocks for organizing data. Data volume and column types also affect join cost: for example, processing 1GB of strings may be faster than processing 5GB of integers, so reducing the amount of data a join must handle can matter as much as the type of the join key.

Reducing the data that reaches a join also improves performance. Projection (selecting only the columns a query needs) and filtering rows as early as possible mean less data is shuffled and compared, and Spark's optimizer, which can also apply cost-based optimization when table statistics are available, pushes these steps down where it can. Keeping up with developments in Spark SQL's join handling helps analysts refine their queries further.

In practice, the star schema design shows how join types are used when merging and querying data in Spark SQL. A star schema consists of one or more fact tables that reference surrounding dimension tables; queries join facts to dimensions on their keys, which keeps data well organized and efficient to process.
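Projection, early filtering, and the star schema come together in the following plain-Python sketch. It filters a fact table, keeps only the needed columns, and looks up each dimension through an in-memory index, similar in spirit to a broadcast hash join. Table and column names such as `sales`, `product_id`, and `store_id` are hypothetical, and dimension keys are assumed unique.

```python
from typing import Any, Callable

Row = dict[str, Any]


def star_join(
    facts: list[Row],
    dims: dict[str, tuple[list[Row], list[str]]],
    keep_fact: Callable[[Row], bool],
    fact_cols: list[str],
) -> list[Row]:
    """Filter and project a fact table, then inner-join it to its dimension tables.

    `dims` maps each foreign-key column of the fact table to (dimension rows,
    dimension columns to keep); each dimension row stores its key under the same name.
    """
    # Projection on the dimensions: one in-memory index per dimension, needed columns only.
    lookups = {
        fk: {d[fk]: {c: d[c] for c in cols} for d in rows}
        for fk, (rows, cols) in dims.items()
    }
    out = []
    for fact in facts:
        if not keep_fact(fact):  # filter early, before any lookup
            continue
        row = {c: fact[c] for c in fact_cols}  # projection on the fact table
        for fk, lookup in lookups.items():
            match = lookup.get(fact[fk])
            if match is None:  # inner-join semantics: drop facts with no dimension row
                break
            row.update(match)
        else:
            out.append(row)
    return out


sales = [
    {"sale_id": 1, "product_id": 100, "store_id": 7, "amount": 20.0},
    {"sale_id": 2, "product_id": 101, "store_id": 7, "amount": 5.0},
    {"sale_id": 3, "product_id": 999, "store_id": 7, "amount": 30.0},
]
products = [{"product_id": 100, "name": "Widget"}, {"product_id": 101, "name": "Gadget"}]
stores = [{"store_id": 7, "city": "Berlin"}]
dims = {"product_id": (products, ["name"]), "store_id": (stores, ["city"])}
print(star_join(sales, dims, lambda f: f["amount"] >= 10, ["sale_id", "amount"]))
# [{'sale_id': 1, 'amount': 20.0, 'name': 'Widget', 'city': 'Berlin'}]
```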

Setting Up Your Spark SQL Environment for MERGE Operations

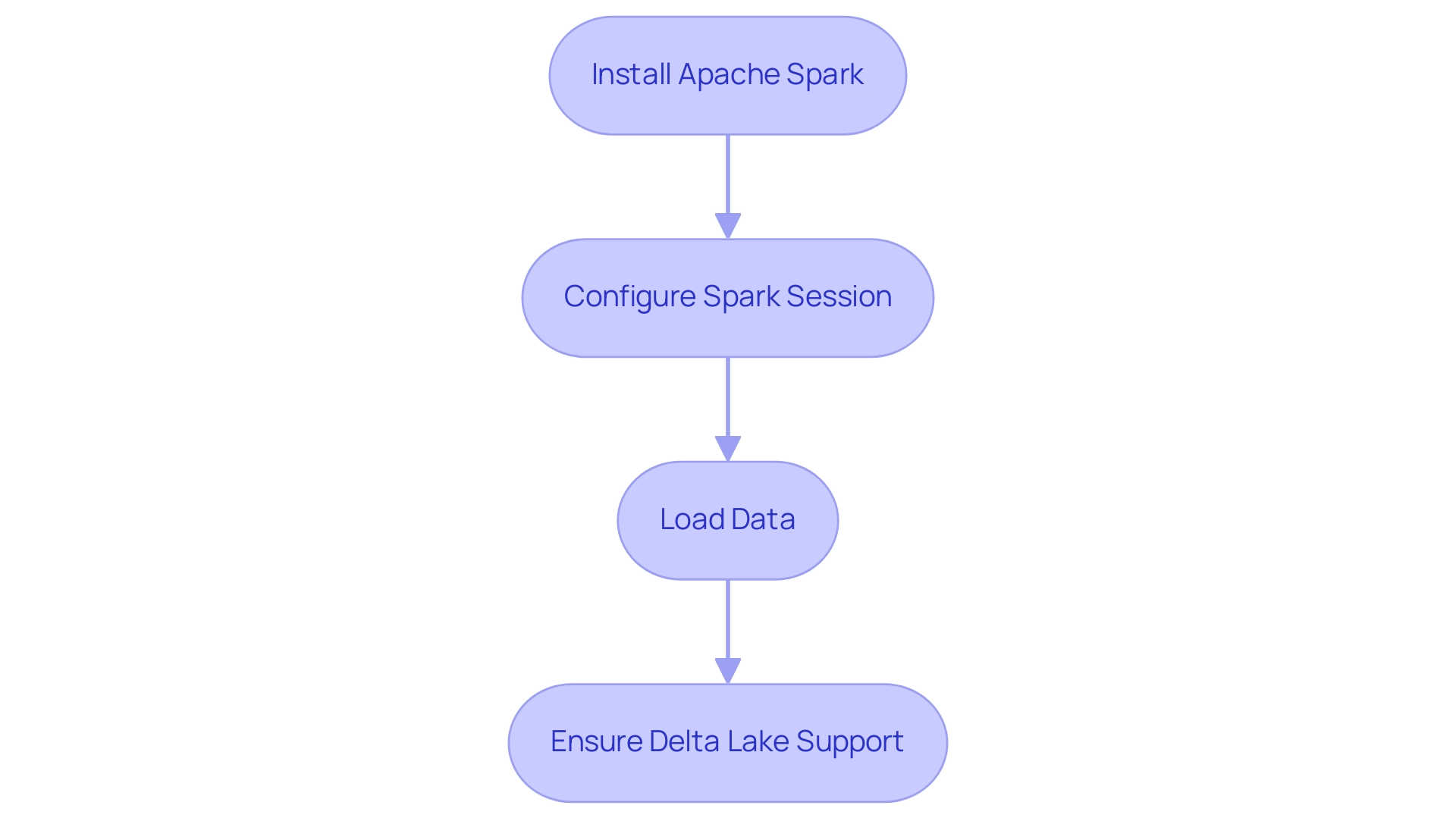

To effectively establish your Spark SQL environment for MERGE operations, follow these essential steps:

- Install Apache Spark: Ensure that a recent version of Apache Spark is installed on your system, compatible with the Delta Lake release you plan to use. Newer versions often include enhancements and bug fixes that improve performance and functionality.

- Configure Spark Session: Create a Spark session with configurations tailored for your MERGE operations. For instance:

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('MergeExample').getOrCreate()

This session serves as the entry point for all Spark functionality, enabling effective management of your data processing tasks.

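- Load Data: Load your source and target tables into DataFrames. Spark supports various formats, including CSV, Parquet, and Delta Lake. For example:

target_df = spark.read.format('delta').load('/path/to/target_table')

source_df = spark.read.format('delta').load('/path/to/source_table')

source_df.createOrReplaceTempView('source_table')  # expose the source to SQL under this name

The source of a MERGE can be any DataFrame registered as a view like this, while the target must be a table in a format that supports MERGE, such as a Delta table referenced by name or by path (for example delta.`/path/to/target_table`). This flexibility in data loading is part of Spark's appeal; over 66 percent of users cite the ability to process event streams as a key feature of Spark.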

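- Ensure Delta Lake Support: If you use Delta Lake for MERGE operations, make sure your Spark session includes the Delta Lake library and its configuration. With open-source Delta Lake this typically means adding the delta-spark package and setting spark.sql.extensions to io.delta.sql.DeltaSparkSessionExtension and spark.sql.catalog.spark_catalog to org.apache.spark.sql.delta.catalog.DeltaCatalog. This integration provides Delta Lake capabilities such as ACID transactions and scalable metadata handling.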

As Prasad Pore, a Gartner senior director and analyst for information and analytics, observed, “Imagine if you are processing 10 TB of information and somehow the batch fails. You need to know that there is a fault-tolerant mechanism.”

By diligently following these steps, you will create a robust environment ready for merging tasks in Spark SQL, thereby enhancing your processing capabilities. This setup not only streamlines workflows but also aligns with current industry best practices. Additionally, the active Apache Spark community offers valuable support, as illustrated by case studies like Typesafe’s integration of full lifecycle support for Apache Spark, which enhances developer adoption and success in building Reactive Big Data applications.

This reinforces the credibility of using Spark for your operational needs.

Executing MERGE Operations: Syntax and Best Practices

To execute a MERGE operation in Spark SQL, the following syntax is utilized:

MERGE INTO target_table AS T

USING source_table AS S

ON T.id = S.id

WHEN MATCHED THEN UPDATE SET T.col1 = S.col1

WHEN NOT MATCHED THEN INSERT (id, col1) VALUES (S.id, S.col1);
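MERGE also accepts conditional clauses, including DELETE. When there are several WHEN MATCHED clauses, Delta Lake evaluates them in the order written and applies only the first whose condition holds. The plain-Python model below sketches that behavior for the statement described in its top comment; the `is_deleted` column is hypothetical and source keys are assumed unique.

```python
from typing import Any, Callable, Optional

Row = dict[str, Any]
MatchedClause = tuple[Optional[Callable[[Row, Row], bool]], str]  # (condition, "update" | "delete")

# Models, for a hypothetical boolean source column is_deleted:
#   WHEN MATCHED AND S.is_deleted THEN DELETE
#   WHEN MATCHED THEN UPDATE SET T.col1 = S.col1
#   WHEN NOT MATCHED AND NOT S.is_deleted THEN INSERT (id, col1) VALUES (S.id, S.col1)


def merge_with_clauses(
    target: list[Row],
    source: list[Row],
    matched: list[MatchedClause],
    insert_if: Callable[[Row], bool],
    key: str = "id",
) -> list[Row]:
    rows = {t[key]: dict(t) for t in target}
    for s in source:  # source keys assumed unique
        t = rows.get(s[key])
        if t is None:
            if insert_if(s):
                rows[s[key]] = {key: s[key], "col1": s["col1"]}
            continue
        # Matched clauses are tried in order; only the first whose condition holds applies.
        for condition, action in matched:
            if condition is None or condition(t, s):
                if action == "delete":
                    del rows[s[key]]
                elif action == "update":
                    t["col1"] = s["col1"]
                break
    return list(rows.values())


clauses = [(lambda t, s: s["is_deleted"], "delete"), (None, "update")]
target = [{"id": 1, "col1": "a"}, {"id": 2, "col1": "b"}]
source = [
    {"id": 1, "col1": "x", "is_deleted": True},
    {"id": 2, "col1": "B", "is_deleted": False},
    {"id": 3, "col1": "c", "is_deleted": False},
    {"id": 4, "col1": "d", "is_deleted": True},
]
print(merge_with_clauses(target, source, clauses, lambda s: not s["is_deleted"]))
# [{'id': 2, 'col1': 'B'}, {'id': 3, 'col1': 'c'}]
```

Clause order matters: putting the unconditional update first would make the delete clause unreachable, which is why Delta Lake requires every WHEN MATCHED clause except the last to have a condition.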

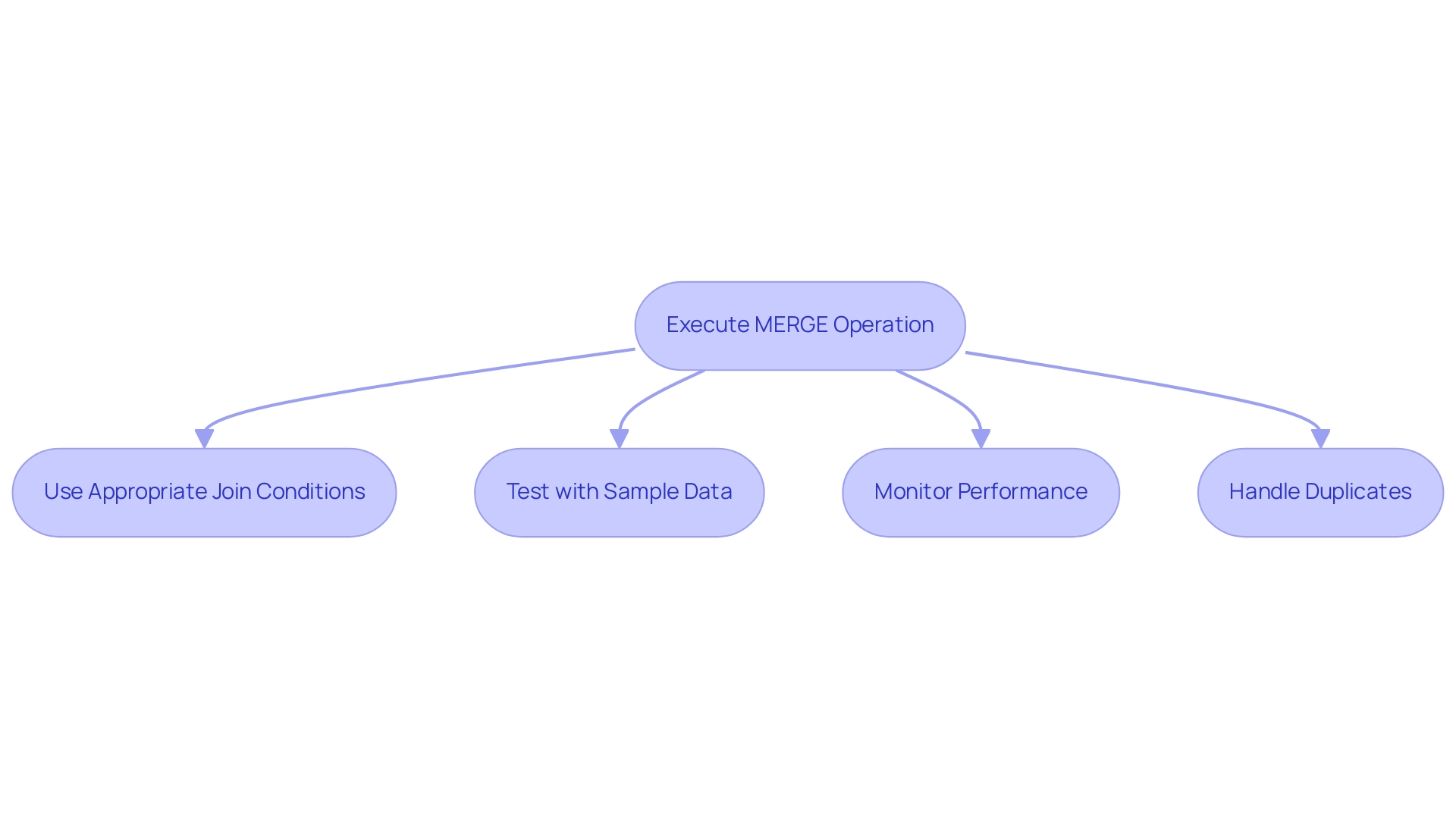

Best Practices for MERGE Operations:

- Use Appropriate Join Conditions: Make sure the ON clause accurately reflects the relationship between the source and target tables, ideally matching each source row to at most one target row. A condition that is too broad or too narrow can cause unintended updates or inserts and lead to integrity issues. Join behavior also drives performance, so choose join conditions deliberately.

- Test with Sample Data: Before running MERGE on large datasets, test it on a smaller sample. This lets you verify how the merge behaves and make adjustments without risking extensive changes to your data.

- Monitor Performance: Regularly assess the performance of your MERGE operations, particularly on large datasets. Partitioning the data can significantly improve efficiency and reduce processing time. Skew matters too: Spark's adaptive query execution uses a 256MB threshold (spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes) when deciding whether a partition is skewed, so that it can split oversized partitions in the joins a merge performs.

- Handle Duplicates: Ensure that the source data has no duplicate keys. On Delta Lake, MERGE fails if several source rows match and try to modify the same target row, and duplicates can otherwise produce unexpected results. In addition, as Alex Ott has pointed out, features such as data skipping and Bloom filters can greatly improve join performance by reducing the number of files read.
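Checking and deduplicating the source before a merge can be sketched as follows. In Spark this is commonly done with a window function, for example `row_number()` over a window partitioned by the key and ordered by a timestamp descending; the plain-Python version below shows the same idea, assuming a hypothetical `updated_at` column that identifies the most recent row.

```python
from collections import Counter
from typing import Any

Row = dict[str, Any]


def duplicate_keys(rows: list[Row], key: str) -> set:
    """Keys that occur more than once in `rows`."""
    counts = Counter(row[key] for row in rows)
    return {k for k, n in counts.items() if n > 1}


def latest_per_key(rows: list[Row], key: str, order_col: str) -> list[Row]:
    """Keep one row per key: the one with the largest `order_col` value."""
    best: dict[Any, Row] = {}
    for row in rows:
        current = best.get(row[key])
        if current is None or row[order_col] > current[order_col]:
            best[row[key]] = row
    return list(best.values())


source = [
    {"id": 1, "updated_at": 1, "col1": "a"},
    {"id": 1, "updated_at": 3, "col1": "c"},
    {"id": 2, "updated_at": 2, "col1": "b"},
]
print(duplicate_keys(source, "id"))  # {1}
print(latest_per_key(source, "id", "updated_at"))
# [{'id': 1, 'updated_at': 3, 'col1': 'c'}, {'id': 2, 'updated_at': 2, 'col1': 'b'}]
```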

By following these practices, you can make your MERGE operations in Spark SQL more reliable and efficient, improving data management overall. Integrating Robotic Process Automation (RPA) into your workflow can also automate these management tasks, letting your team concentrate on strategic initiatives that drive business growth. The case study titled ‘Understanding Join Types in Spark SQL’ further illustrates the practical impact of choosing suitable join types and file formats on data integration tasks.
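The 256MB figure mentioned above is one half of a two-part rule. Spark's documentation describes a partition as skewed when it is larger than both `spark.sql.adaptive.skewJoin.skewedPartitionFactor` (default 5) times the median partition size and `spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes` (default 256MB). The sketch below reproduces that check in plain Python for a list of partition sizes; it does not call Spark, and defaults may differ between versions.

```python
from statistics import median

MB = 1024 * 1024


def skewed_partitions(
    sizes: list[int], factor: float = 5.0, threshold: int = 256 * MB
) -> list[int]:
    """Indices of partitions that exceed BOTH factor * median size and the byte threshold.

    Defaults mirror spark.sql.adaptive.skewJoin.skewedPartitionFactor and
    spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes as documented.
    """
    med = median(sizes)
    return [i for i, size in enumerate(sizes) if size > factor * med and size > threshold]


print(skewed_partitions([64 * MB] * 9 + [1024 * MB]))  # [9]
print(skewed_partitions([1 * MB] * 9 + [10 * MB]))  # []: 10x the median, but under 256MB
```

The second call shows why the absolute threshold exists: a partition can be many times the median and still be too small for splitting to be worth the overhead.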

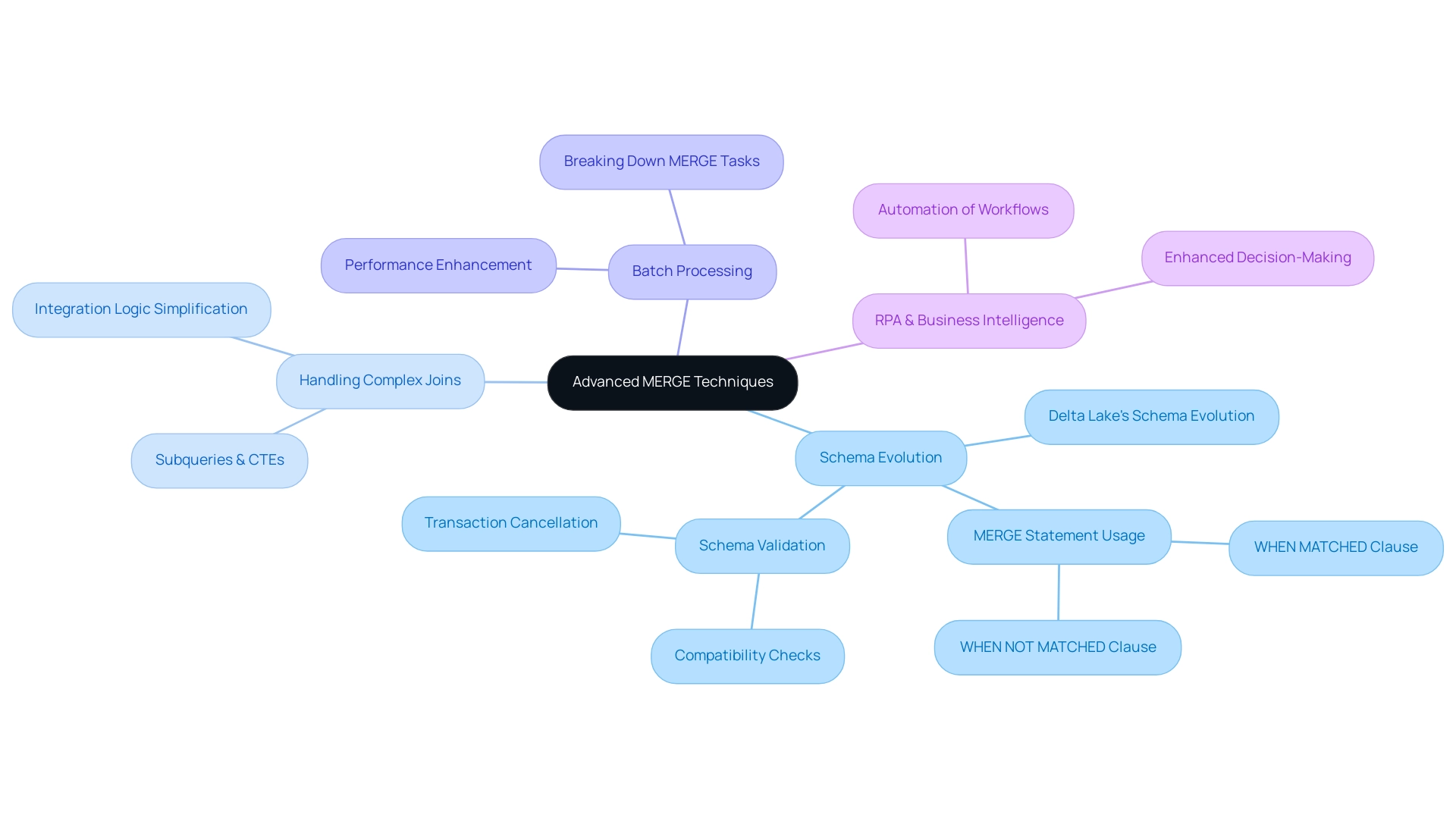

Advanced MERGE Techniques: Schema Evolution and Complex Scenarios

In complex data scenarios, managing evolving schemas is crucial for maintaining operational efficiency. Advanced techniques are essential for effectively handling schema evolution and MERGE operations in Spark SQL, while leveraging Robotic Process Automation (RPA) and Business Intelligence for enhanced decision-making.

- Schema Evolution: Delta Lake’s schema evolution feature can update the target table’s schema automatically during a MERGE operation. In a MERGE statement, the WHEN MATCHED clause updates existing records and the WHEN NOT MATCHED clause inserts new records that follow the updated schema; automatic evolution typically requires enabling schema auto-merge (spark.databricks.delta.schema.autoMerge.enabled) and using UPDATE SET * and INSERT * actions. This lets tables adapt to changing data requirements without disrupting ongoing processes. By contrast, plain Parquet tables have no transaction log recording their schema: by default Spark reads the schema from a single Parquet file unless schema merging (mergeSchema) is enabled, which is worth keeping in mind when reasoning about how schemas change.

- Expert Insight: According to Pranav Anand, “Schema evolution is a feature that enables users to easily modify a structure’s current schema to accommodate information that is evolving over time.” This emphasizes the significance of schema evolution in adjusting to dynamic information environments, which is further improved by RPA’s capability to automate manual workflows, thus enhancing operational efficiency.

- Handling Complex Joins: When managing intricate relationships between source and target tables, using subqueries or Common Table Expressions (CTEs) can significantly simplify your integration logic. This method enhances readability and improves maintainability, making it easier to adapt to future changes in structure. Leveraging Business Intelligence tools can provide insights into these relationships, aiding in more informed decision-making.

- Batch Processing: For large datasets, breaking down MERGE tasks into smaller batches can enhance performance and mitigate the risk of timeouts or memory issues. This technique enables more manageable transactions and can result in enhanced overall efficiency in processing, aligning with the objectives of RPA to streamline operations.

- Case Study Reference: Delta Lake validates the schema when writing data to a table, as demonstrated in the case study titled “How Schema Enforcement Works.” Delta Lake checks compatibility with the target table’s schema and rejects writes that contain additional columns, different data types, or column names that differ only in case. If a write is incompatible, Delta Lake cancels the transaction and raises an exception that describes the mismatch.

- Critical Perspective: Avoid enabling Delta Lake schema evolution where schema enforcement is desired, because evolution relaxes the enforcement checks and can disrupt downstream processes. This caution keeps your data management practices robust and reliable, supporting your operational efficiency goals.
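The difference between schema enforcement and schema evolution can be modeled with a simple check. This plain-Python sketch treats a schema as a mapping from column name to type name; it rejects new columns unless evolution is enabled, and always rejects type changes and case-only name differences. It approximates the behavior described above and is not Delta Lake's implementation.

```python
Schema = dict[str, str]  # column name -> type name


class SchemaMismatch(Exception):
    """Raised when an incoming write does not fit the target schema."""


def check_write(target: Schema, incoming: Schema, evolve: bool = False) -> Schema:
    """Return the target schema after the write, or raise SchemaMismatch.

    New columns are rejected unless `evolve` is True (schema evolution); type changes
    and names that differ only in case are always rejected in this sketch. Columns
    missing from the incoming data are allowed.
    """
    by_lower = {name.lower(): name for name in target}
    result = dict(target)
    for name, typ in incoming.items():
        existing = by_lower.get(name.lower())
        if existing is None:
            if not evolve:
                raise SchemaMismatch(f"column {name!r} is not in the target schema")
            result[name] = typ  # evolution: append the new column
        elif existing != name:
            raise SchemaMismatch(f"column {name!r} differs only in case from {existing!r}")
        elif target[existing] != typ:
            raise SchemaMismatch(f"column {name!r} has type {typ}, expected {target[existing]}")
    return result


target = {"id": "bigint", "col1": "string"}
print(check_write(target, {"id": "bigint", "col2": "int"}, evolve=True))
# {'id': 'bigint', 'col1': 'string', 'col2': 'int'}
```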

By applying these advanced strategies, you can navigate the intricacies of schema evolution and ensure that your merging tasks remain strong and flexible, fostering growth and innovation in your information management practices while utilizing RPA and Business Intelligence for improved operational efficiency.
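The batch-processing advice from the list above can be sketched as a loop that applies the source in fixed-size chunks. The `merge` argument is a hypothetical stand-in for whatever applies one batch (for example, one MERGE statement), and `upsert` is an illustrative in-memory version; everything here is plain Python.

```python
from typing import Any, Callable, Iterator

Row = dict[str, Any]


def batches(rows: list[Row], size: int) -> Iterator[list[Row]]:
    """Yield consecutive slices of at most `size` rows."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def merge_in_batches(
    target: list[Row],
    source: list[Row],
    size: int,
    merge: Callable[[list[Row], list[Row]], list[Row]],
) -> list[Row]:
    """Apply `source` to `target` one batch at a time using the supplied merge function."""
    for chunk in batches(source, size):
        # Each call stands in for one smaller MERGE, i.e. one separate transaction.
        target = merge(target, chunk)
    return target


def upsert(target: list[Row], chunk: list[Row]) -> list[Row]:
    # Hypothetical stand-in for one MERGE: source rows replace target rows by "id".
    merged = {r["id"]: r for r in target}
    merged.update({r["id"]: r for r in chunk})
    return list(merged.values())


source = [{"id": i, "v": i * 10} for i in range(5)]
print([len(b) for b in batches(source, 2)])  # [2, 2, 1]
print(merge_in_batches([{"id": 0, "v": -1}], source, 2, upsert))
```

Each batch commits separately, so a failure partway through leaves earlier batches applied. Design batches so that re-running them is safe, and keep all rows for a given key in the same batch when their order matters.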

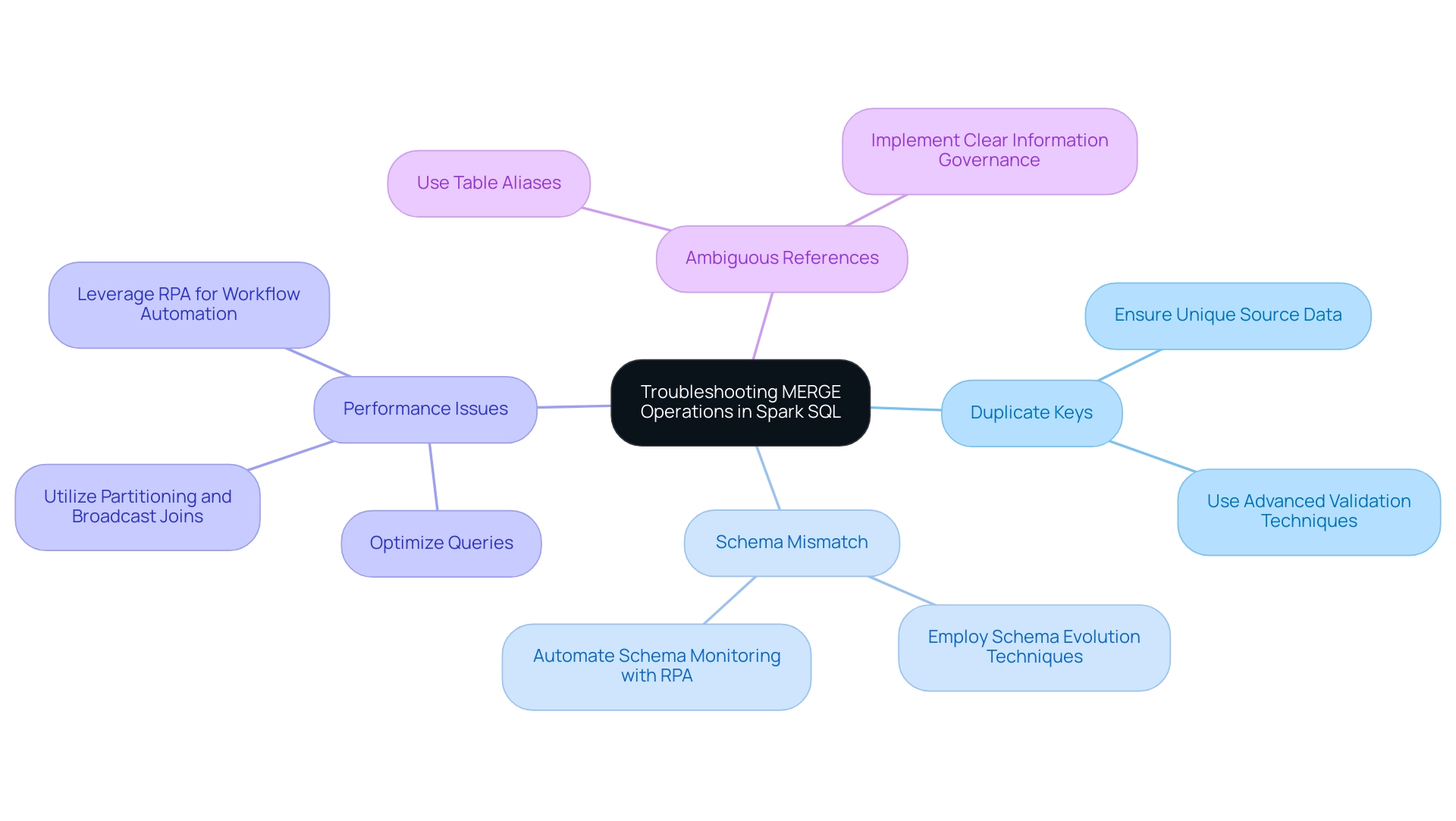

Troubleshooting MERGE Operations: Common Challenges and Solutions

When executing MERGE operations in Spark SQL, several common challenges can arise that may hinder the process:

- Duplicate Keys: One of the most frequent issues is duplicate keys in the source data. On Delta Lake, a MERGE fails when multiple source rows match the same target row, so make sure the source is unique on the key used in the ON clause. Statistics indicate that up to 30% of source datasets may contain duplicate keys, underscoring the importance of cleansing data before execution. Additionally, in some database systems data is persisted from memory to the storage volume whenever a savepoint is triggered or a delta merge is performed, which highlights the need to manage these operations carefully. Creatum GmbH’s customized AI solutions can help identify and resolve duplicate keys through advanced data validation techniques.

- Schema Mismatch: Errors can also occur if the schemas of the source and target tables do not match. Schema evolution techniques can help here: they allow changes in the source schema without disrupting the overall data integration process, keeping the tables compatible and reducing errors. RPA can automate the monitoring of schema changes so that discrepancies are flagged and resolved promptly.

- Performance Issues: Large datasets frequently cause performance bottlenecks during MERGE operations. To improve performance, consider optimizing your queries, partitioning your data effectively, or using broadcast joins. These strategies can significantly improve execution times and resource utilization. Robotic Process Automation (RPA) can also automate the surrounding manual workflows, further enhancing operational efficiency in a rapidly evolving AI landscape. Creatum GmbH’s RPA solutions can streamline processing tasks, reducing the time spent on manual interventions.

- Ambiguous References: Clear column references are essential, particularly in joins. When the source and target share column names, an unqualified reference is ambiguous and can cause errors or mistakes in processing. Use table aliases (such as T and S) to make clear which table each column belongs to. Creatum GmbH emphasizes the importance of clear data governance practices to prevent such issues.
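For the broadcast-join suggestion, the main setting is `spark.sql.autoBroadcastJoinThreshold` (10MB by default; -1 disables automatic broadcasting): Spark broadcasts a side whose estimated size is at or below it. The plain-Python function below sketches only that size-based decision. Real planning also depends on the join type, hints, and runtime statistics, so treat it as a simplification.

```python
MB = 1024 * 1024


def choose_join_strategy(left_bytes: int, right_bytes: int, threshold: int = 10 * MB) -> str:
    """Size-based choice between broadcasting one side and a shuffle-based join.

    `threshold` plays the role of spark.sql.autoBroadcastJoinThreshold; a negative
    value disables automatic broadcasting. Join type, hints, and runtime statistics,
    which Spark also considers, are ignored here.
    """
    if threshold < 0:
        return "shuffle"
    if min(left_bytes, right_bytes) <= threshold:
        return "broadcast left" if left_bytes <= right_bytes else "broadcast right"
    return "shuffle"


print(choose_join_strategy(5 * MB, 2000 * MB))  # broadcast left
print(choose_join_strategy(2000 * MB, 500 * MB))  # shuffle
```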

By understanding these challenges and applying the suggested solutions, you can troubleshoot MERGE operations in Spark SQL more effectively, protecting data integrity and operational efficiency. Real-world examples show that organizations that tackle these issues proactively improve their synchronization efforts, supporting business agility and informed decision-making. For instance, in the case study titled ‘Synchronizing Information Across Systems,’ strategies were developed to track updates, inserts, and deletions in real time or near real time, keeping systems synchronized.

As Daniel put it, “You can’t ignore the fundamentals or the new features will be of no use to you,” which underlines the importance of understanding these foundations before relying on MERGE. Beyond the technical side, organizational measures such as clear communication channels and training help teams overcome integration challenges and use Business Intelligence to turn raw data into actionable insights.

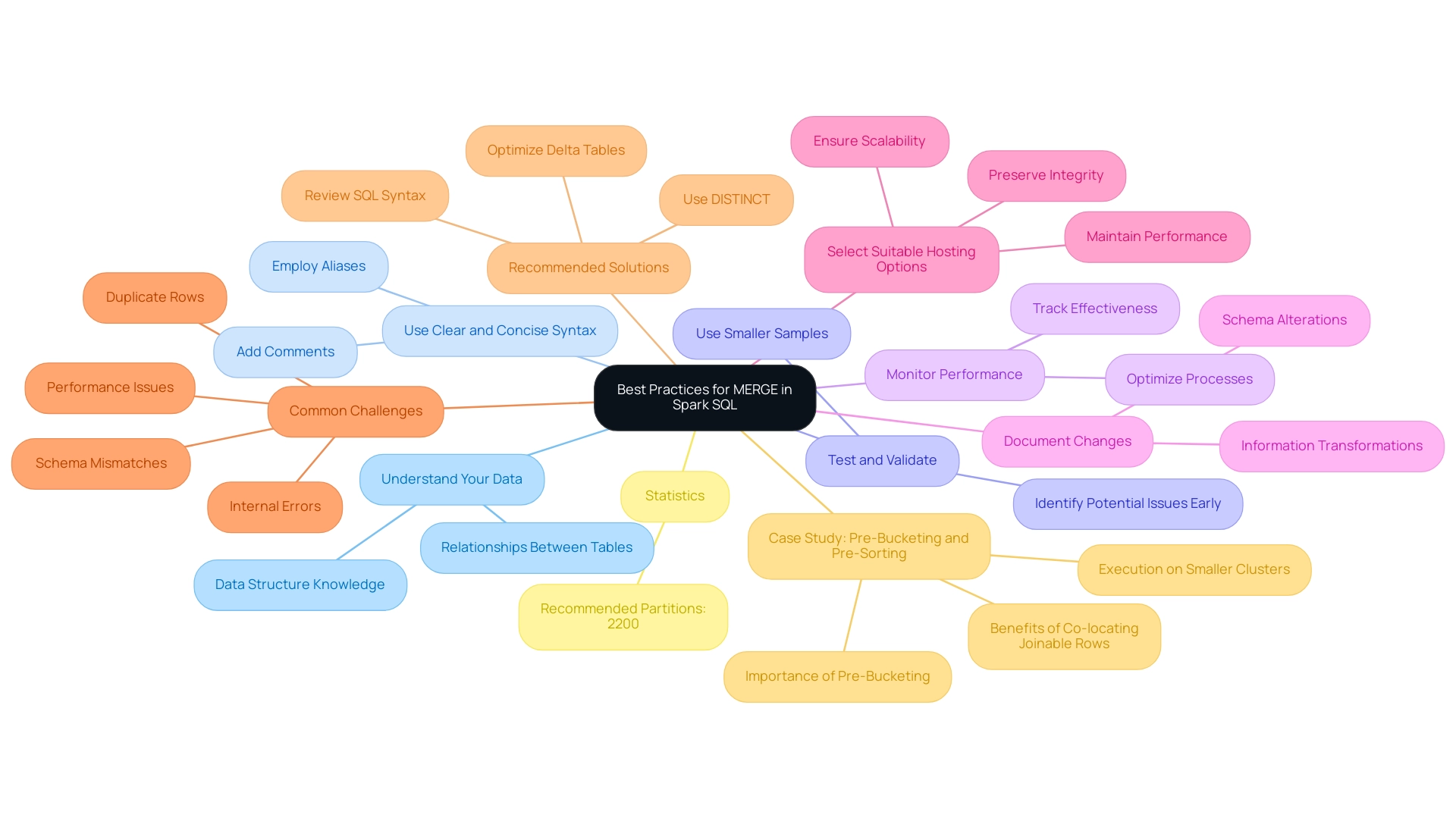

Best Practices for Using MERGE in Spark SQL

To maximize the effectiveness of your MERGE operations in Spark SQL, adopting several best practices is essential, especially when enhanced through Robotic Process Automation (RPA).

- Understand Your Data: Gain a thorough understanding of the structure and relationships of your source and target tables before executing a MERGE. This foundational knowledge is crucial for successful data integration and manipulation.

- Use Clear and Concise Syntax: Write your MERGE statements with clarity in mind. Aliases and comments improve readability and make the code easier to maintain over time.

- Test and Validate: Before running MERGE on larger datasets, test it on smaller samples. This confirms that the operation behaves as expected and surfaces potential issues early.

- Monitor Performance: Regularly track the performance of your MERGE operations, particularly on large datasets. Monitoring lets you optimize promptly and keep data processing efficient.

- Document Changes: Keep thorough documentation of your MERGE activities, including any schema changes or data transformations. This supports clarity and reproducibility, which are vital for ongoing data management.

- Select Suitable Hosting Options: Choosing the right hosting and storage for your merged data is essential for maintaining performance, scalability, and integrity during integration.
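The Test and Validate practice can be supported by a small check run after merging a sample. This plain-Python sketch assumes an update/insert-only MERGE (no deletes), unique source keys, and that every source column is copied to the target, as with `UPDATE SET *` and `INSERT *`; the function name and checks are illustrative, not a library API.

```python
from typing import Any

Row = dict[str, Any]


def validate_upsert(before: list[Row], source: list[Row], after: list[Row], key: str = "id") -> list[str]:
    """Return a list of problems found after an update/insert-only merge (empty means OK)."""
    problems = []
    after_by_key = {r[key]: r for r in after}
    if len(after_by_key) != len(after):
        problems.append("target contains duplicate keys")
    # Every pre-existing key and every source key should be present exactly once.
    expected = len({r[key] for r in before} | {r[key] for r in source})
    if len(after_by_key) != expected:
        problems.append(f"expected {expected} distinct keys, found {len(after_by_key)}")
    # Each source row's values should now be visible in the target.
    for s in source:
        got = after_by_key.get(s[key])
        if got is None or any(got.get(col) != val for col, val in s.items()):
            problems.append(f"source row {s[key]!r} not reflected in target")
    return problems


before = [{"id": 1, "col1": "a"}, {"id": 2, "col1": "b"}]
source = [{"id": 2, "col1": "B"}, {"id": 3, "col1": "c"}]
after = [{"id": 1, "col1": "a"}, {"id": 2, "col1": "B"}, {"id": 3, "col1": "c"}]
print(validate_upsert(before, source, after))  # []
```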

Integrating these best practices makes your MERGE operations in Spark SQL both more reliable and more efficient. For instance, a case study on pre-bucketing and pre-sorting in Spark shows how these techniques improve joins, allowing large joins to run on smaller clusters and saving time and resources. A partition count of around 2200 is also cited as a recommendation; the right number depends on data volume and cluster size, so treat such a figure as specific to its workload rather than as a universal target.
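Why pre-bucketing helps can be seen in a plain-Python sketch: if both tables are split into the same number of buckets by hashing the join key, matching keys always land in the same bucket number, so each bucket pair can be joined independently without reshuffling the whole dataset. The hash function here (`zlib.crc32`) is for illustration only; Spark uses its own bucketing hash, and it can exploit pre-sorted buckets for sort-merge joins, which this sketch does not model.

```python
import zlib
from collections import defaultdict
from typing import Any

Row = dict[str, Any]


def bucket_of(value: Any, num_buckets: int) -> int:
    # Deterministic illustrative hash; Spark's bucketing uses its own hash function.
    return zlib.crc32(str(value).encode()) % num_buckets


def bucketize(rows: list[Row], key: str, num_buckets: int) -> dict[int, list[Row]]:
    """Group rows by the bucket their join key hashes to."""
    buckets: dict[int, list[Row]] = defaultdict(list)
    for row in rows:
        buckets[bucket_of(row[key], num_buckets)].append(row)
    return buckets


def bucketed_join(left: list[Row], right: list[Row], key: str, num_buckets: int) -> list[Row]:
    """Inner join performed bucket by bucket; no row ever needs another bucket's data."""
    left_b, right_b = bucketize(left, key, num_buckets), bucketize(right, key, num_buckets)
    out = []
    for b, left_rows in left_b.items():
        index: dict[Any, list[Row]] = defaultdict(list)
        for r in right_b.get(b, []):
            index[r[key]].append(r)
        for lrow in left_rows:
            out.extend({**lrow, **rrow} for rrow in index[lrow[key]])
    return out


left = [{"id": i, "l": i} for i in range(20)]
right = [{"id": i, "r": 2 * i} for i in range(10, 30)]
print(sorted(row["id"] for row in bucketed_join(left, right, "id", 4)))
# [10, 11, 12, 13, 14, 15, 16, 17, 18, 19]
```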

As you implement these strategies, remember that common challenges such as duplicate rows, schema mismatches, and performance issues can arise. Addressing them proactively, for example by removing exact duplicate rows with DISTINCT and optimizing Delta tables, makes MERGE operations in Spark SQL run more smoothly. RPA can also automate repetitive tasks around these procedures, such as data validation and error handling, further improving operational efficiency.

Ultimately, a solid understanding of information structures and adherence to best practices will empower you to leverage Spark SQL’s capabilities effectively. As noted by Deloitte, “MarkWide Research is a trusted partner that provides us with the market insights we need to make informed decisions,” emphasizing the importance of informed decision-making in data operations. Explore RPA solutions to streamline your workflows and focus on strategic initiatives.

Conclusion

The MERGE statement in Spark SQL signifies a major leap in data management, facilitating the efficient execution of insert, update, and delete operations within a single command. This powerful functionality not only simplifies workflows but also meets the increasing demand for automation in data processing, especially through the integration of Robotic Process Automation (RPA). By mastering the MERGE statement, data professionals can significantly enhance operational efficiency, reduce processing times, and convert raw data into actionable insights.

Understanding the nuances of join types and best practices is vital for optimizing MERGE operations. Selecting the right join conditions and employing techniques like schema evolution can greatly enhance data handling and minimize errors. Moreover, addressing common challenges—such as duplicate keys and schema mismatches—ensures that data integrity is preserved throughout the process.

As organizations pursue agility in their data operations, leveraging the MERGE statement becomes essential. It empowers teams to streamline their data workflows, ultimately driving informed decision-making and fostering business growth. By embracing these advanced data management techniques alongside RPA, organizations position themselves to thrive in a rapidly evolving landscape where data-driven insights are crucial.

Frequently Asked Questions

What is the MERGE statement in Spark SQL?

The MERGE statement in Spark SQL allows users to perform multiple operations—insert, update, and delete—on a target dataset based on the results of a join with a source dataset.

What is the basic syntax of the MERGE statement?

The basic syntax is as follows:

MERGE INTO target_table AS T

USING source_table AS S

ON T.id = S.id

WHEN MATCHED THEN UPDATE SET T.col1 = S.col1

WHEN NOT MATCHED THEN INSERT (id, col1) VALUES (S.id, S.col1);

How does the MERGE statement improve operational efficiency?

The MERGE statement streamlines information management by reducing the need for multiple queries, which enhances operational efficiency, especially in environments where manual workflows can hinder productivity.

What are the reported benefits of using the MERGE statement in organizations?

Organizations have reported up to a 30% decrease in processing time for updates and inserts when applying the MERGE statement in Spark SQL.

Can you provide an example of how the MERGE statement is used?

One example is an incremental load in which the data for the fourth load contains PersonId values 1001, 1005, and 1006, showing the MERGE statement applied to a concrete batch of records.

What is the significance of execution statistics in the MERGE process?

Examining execution statistics after merging helps evaluate the impact on the target Delta Table, including the number of rows deleted, inserted, and updated, which is crucial for resolving any inconsistencies.

How do join types affect the MERGE statement in Spark SQL?

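MERGE does not take an explicit join type, but its clauses correspond to join semantics. WHEN MATCHED clauses act on rows present in both source and target (as in an inner join), WHEN NOT MATCHED clauses act on source rows with no target match, and, in recent Delta Lake releases, WHEN NOT MATCHED BY SOURCE clauses act on target rows with no source match.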

What types of joins are available in Spark SQL?

The available join types in Spark SQL include:

- Inner Join

- Left Outer Join

- Right Outer Join

- Full Outer Join

- Cross Join

Why is it important to understand the structure of information when working with joins?

Understanding the structure, including fact tables and dimension tables, is essential for effectively organizing information and optimizing join operations for better processing efficiency.

What strategies can enhance the performance of join operations in Spark SQL?

Projecting only the needed columns and filtering rows early reduce the data a join has to process, and Spark's cost-based optimizer can further improve join planning when table statistics are available.